Abstract

Purpose

To assess the interobserver and intraobserver agreement of fellowship-trained chest radiologists, nonchest fellowship-trained radiologists, and fifth-year radiology residents for COVID-19-related imaging findings based on the consensus statement released by the Radiological Society of North America (RSNA).

Methods

A survey of 70 chest CTs of polymerase chain reaction (PCR)-confirmed COVID-19 positive and COVID-19 negative patients was distributed to three groups of participating radiologists: five fellowship-trained chest radiologists, five nonchest fellowship-trained radiologists, and five fifth-year radiology residents. The survey asked participants to broadly classify the findings of each chest CT into one of the four RSNA COVID-19 imaging categories, then select which imaging features led to their categorization. After a 1-week washout period, a second survey composed of randomly selected exams from the initial survey was given to the participating radiologists.

Results

There was moderate overall interobserver agreement in each group (κ coefficient range 0.45-0.52 ± 0.02). There was substantial overall intraobserver agreement across the chest and nonchest groups (κ coefficient range 0.61-0.67 ± 0.06) and moderate overall intraobserver agreement within the resident group (κ coefficient 0.58 ± 0.06). For the image features that led to categorization, there were varied levels of agreement in the interobserver and intraobserver components that ranged from fair to perfect kappa values. When assessing agreement with PCR-confirmed COVID status as the key, we observed moderate overall agreement within each group.

Conclusion

Our results support the reliability of the RSNA consensus classification system for COVID-19-related image findings.

Key Words: Interobserver variability, intraobserver variability, RSNA COVID-19 chest CT consensus classification categories

Introduction

In response to the COVID-19 pandemic, the Radiological Society of North America (RSNA) released a consensus statement on the reporting of chest CT findings related to COVID-19 in March of 2020 (1). To date, there have been over 21.2 million confirmed cases worldwide, with over 2 million confirmed cases in the United States (2,3). Most professional radiological societies, as well as the Centers for Disease Control and Prevention, have recommended against the use of screening chest CTs for the detection of COVID-19. Despite this, chest CTs are performed frequently on patients with suspected or confirmed COVID-19 (4, 5, 6, 7, 8, 9, 10). To equip clinicians to make informed decisions about the management of COVID-19, imaging findings need to be reported accurately and precisely. The RSNA consensus statement proposed four categories for standardized CT reporting based on expert consensus with endorsement from the Society of Thoracic Radiology and the American College of Radiology (1). The purpose of this study is to assess the interobserver and intraobserver variability of COVID-19-related imaging findings based on the criteria outlined by the consensus statement released by RSNA.

Methods

Sample Selection

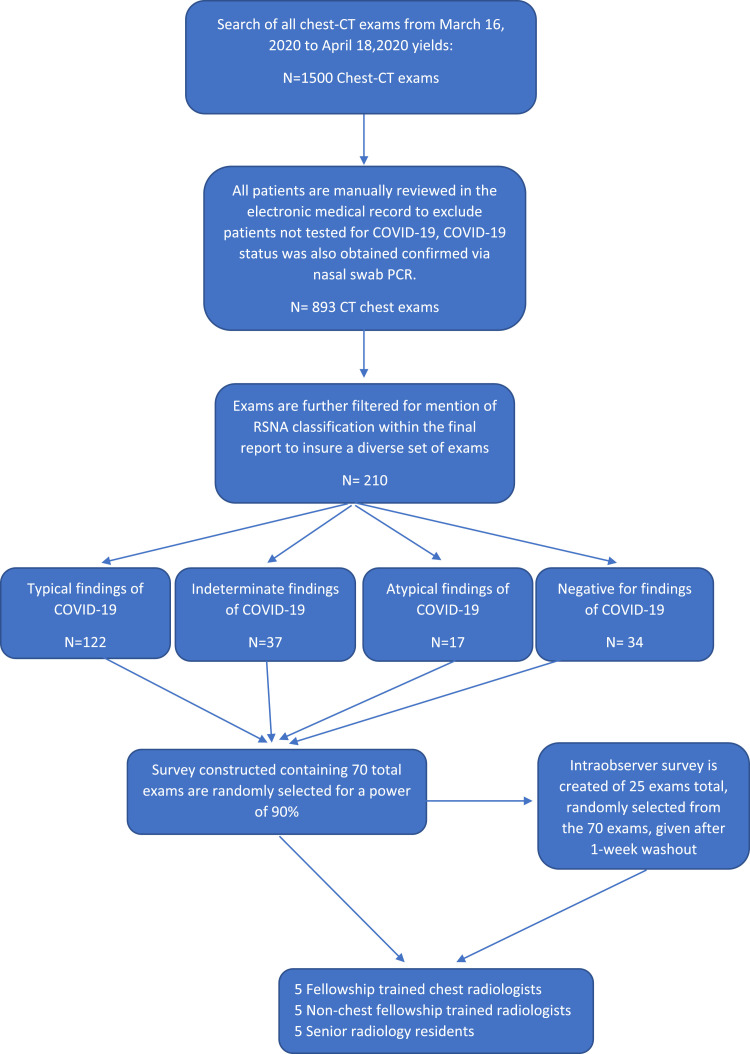

Institutional review board approval was obtained for this study and patient consent was waived. An automated data pull of all chest CT exams performed on patients from March 16, 2020 to April 18, 2020 at a single institution yielded 1500 eligible patients (Fig 1). All 1500 electronic record charts were manually reviewed to exclude patients who were not tested for COVID-19. This process yielded 893 records. These records were then manually filtered for mention of the RSNA classification system within the final CT dictation to ensure a diversity of cases, which yielded a total of 210 records. Of these 210 exams, 122 displayed findings that were reported as “Typical,” 37 were determined to be “Indeterminate,” 17 were deemed “Atypical,” and 34 were reported as “Negative” based on the RSNA classification system. The electronic medical record was searched manually to determine the COVID-19 status of the 210 patients, which was tested at this institution via nasal swab polymerase chain reaction (PCR).

Figure 1.

Flow diagram of patient exam selection. (Color version of figure is available online.)

Survey Design and Statistical Analysis

A statistical analysis demonstrated that 70 of the 210 exams would be necessary to appropriately power the study (11). Seventy exams were randomly selected from the 210 available chest CTs. The 70 exams were randomized, deidentified, and incorporated into an online research picture archiving and communication system developed at our institution. Survey participants were blinded to the image selection process, as well as the initial chest CT classification, and were told they would be receiving a random set of chest CTs from recent months to evaluate using the RSNA classification system. The 70 included chest CTs comprised COVID-19 positive and COVID-19 negative patients in a ratio of 46:24.
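The selection and recoding step described above could be sketched as follows. This is only an illustration under stated assumptions: the source does not say what tool was used, and the function name `select_and_recode`, the `CASE-001` style study codes, and the fixed seed are all hypothetical.

```python
import random


def select_and_recode(exam_ids, k=70, seed=0):
    """Randomly pick k exams and assign opaque study codes in random order.

    Hypothetical helper: the seed and the "CASE-###" code format are
    assumptions made for illustration, not details from the study.
    """
    rng = random.Random(seed)  # fixed seed so the draw can be reproduced
    # random.sample draws without replacement and returns a random ordering
    chosen = rng.sample(list(exam_ids), k)
    # Map each new study code to the original identifier (kept by the
    # study coordinator only, so readers never see the original ID).
    return {f"CASE-{i:03d}": exam_id for i, exam_id in enumerate(chosen, start=1)}


if __name__ == "__main__":
    pool = [f"ACC{i:04d}" for i in range(210)]  # placeholder accession numbers
    key = select_and_recode(pool, k=70, seed=2020)
    print(len(key), "exams selected")
```

Keeping the code-to-accession mapping separate from the reading worklist is what lets readers stay blinded while results can still be linked back to PCR status afterward.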

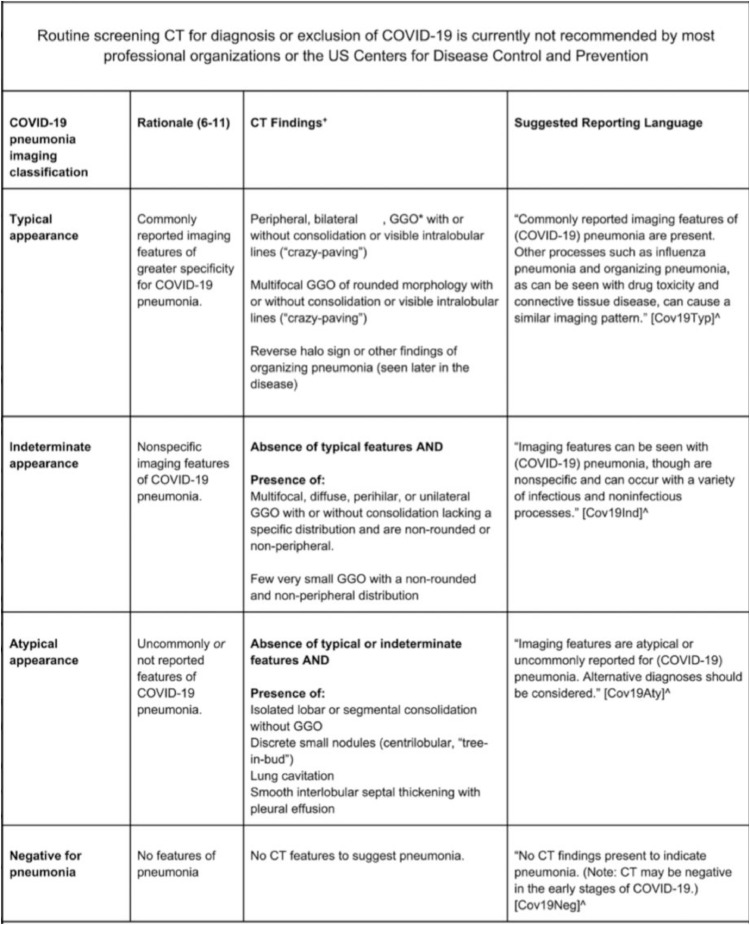

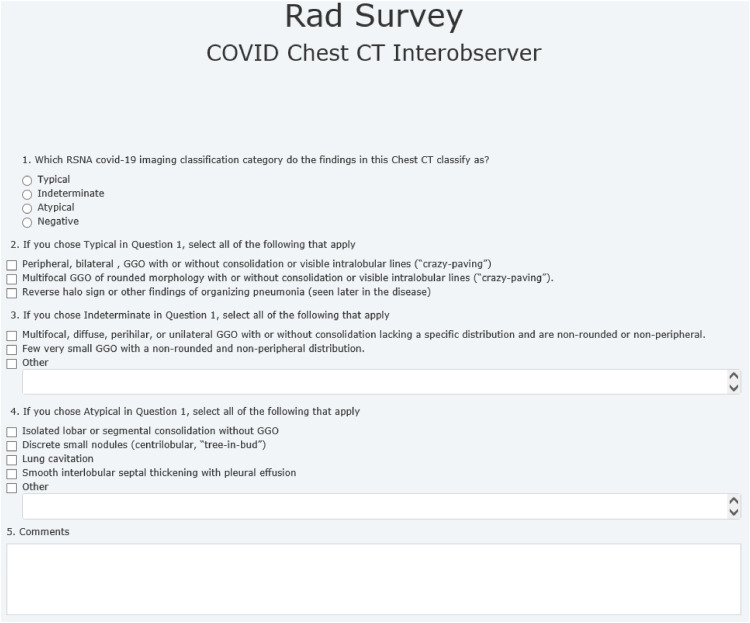

The survey asked participants to broadly classify the findings of each chest CT into one of the four RSNA COVID-19 imaging categories (Figs 2 and 3). After category selection, participants were asked to specify which imaging features led to their classification choice (Figs 2 and 3).

Figure 2.

Consensus RSNA classification system for chest CT imaging findings related to COVID-19 with four categories and suggested reporting language.

Figure 3.

Survey sheet distributed to participating radiologists.

Three different groups participated in the survey, varying in both level of training and subspecialization. The measure of inter-rater agreement for the categorical measurement of COVID-19 was the Kappa statistic. We followed Fleiss, Levin, and Paik's technique for multiple ratings per scan with different raters (12). Statistical analysis determined that a minimum of four participants per group was necessary to give the study a power of 90% to detect a Kappa statistic of 0.20 or more. The study participants included five fellowship-trained chest radiologists (Group 1), five nonchest fellowship-trained radiologists consisting of two emergency and three abdominal-trained radiologists (Group 2), and five fifth-year radiology residents (Group 3). Survey participants were blinded to both the COVID-19 status of each patient and the original radiology report.
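For readers unfamiliar with multi-rater kappa, the following is a minimal sketch of the standard Fleiss' kappa for a fixed number of raters per exam. It is not the authors' code: the exact computation they used (including how standard errors were obtained) is not given in the text, and the function name and input layout here are assumptions.

```python
from collections import Counter

CATEGORIES = ("Typical", "Indeterminate", "Atypical", "Negative")


def fleiss_kappa(ratings, categories=CATEGORIES):
    """Fleiss' kappa for a list of exams, each rated by the same number of raters.

    ratings: list of lists; ratings[i] holds one category label per rater
    for exam i (an assumed input layout).
    """
    n_exams = len(ratings)
    n_raters = len(ratings[0])
    counts = []
    for exam in ratings:
        tally = Counter(exam)
        row = [tally[c] for c in categories]
        if len(exam) != n_raters or sum(row) != n_raters:
            raise ValueError("each exam needs n_raters labels from `categories`")
        counts.append(row)
    # Observed agreement: mean over exams of the share of agreeing rater pairs
    p_i = [(sum(n * n for n in row) - n_raters) / (n_raters * (n_raters - 1))
           for row in counts]
    p_bar = sum(p_i) / n_exams
    # Chance agreement from the overall proportion of each category
    p_j = [sum(row[j] for row in counts) / (n_exams * n_raters)
           for j in range(len(categories))]
    p_e = sum(p * p for p in p_j)
    if p_e == 1.0:
        raise ValueError("kappa is undefined when only one category is used")
    return (p_bar - p_e) / (1.0 - p_e)


if __name__ == "__main__":
    demo = [["Typical"] * 5, ["Negative"] * 5]  # five raters, full agreement
    print(fleiss_kappa(demo))
```

In this layout, one group's interobserver survey would be a list of 70 inner lists with five labels each.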

The intraobserver survey component was completed after 1 week of washout time and contained 24 exams randomly selected from the initial survey for a power of 80% to detect a Kappa statistic of 0.40 or more. The image order and deidentified accession numbers were randomized again for the intraobserver survey. The 1-week washout was determined to be sufficient to prevent a learning curve given the randomization of image selection. COVID-19 positive and COVID-19 negative patients were included in the intraobserver survey in a ratio of 9:15.
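Agreement between one reader's first and second read of the same exams is commonly summarized with Cohen's kappa. The sketch below shows that calculation for a single reader; whether the authors used this statistic or pooled readers differently is not stated, so treat the choice and the function name as assumptions.

```python
from collections import Counter


def cohen_kappa(first_read, second_read):
    """Cohen's kappa between two sets of labels for the same exams."""
    if not first_read or len(first_read) != len(second_read):
        raise ValueError("both reads must cover the same non-empty exam list")
    n = len(first_read)
    # Observed agreement: fraction of exams given the same label twice
    p_o = sum(a == b for a, b in zip(first_read, second_read)) / n
    # Chance agreement from the marginal label frequencies of each read
    m1, m2 = Counter(first_read), Counter(second_read)
    p_e = sum(m1[c] * m2[c] for c in set(m1) | set(m2)) / (n * n)
    if p_e == 1.0:
        raise ValueError("kappa is undefined when only one label is used")
    return (p_o - p_e) / (1.0 - p_e)


if __name__ == "__main__":
    week_0 = ["Typical", "Typical", "Negative", "Negative"]
    week_1 = ["Typical", "Negative", "Negative", "Negative"]
    print(cohen_kappa(week_0, week_1))
```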

Results

The chest fellowship-trained radiologists had an average of 13.2 years of experience (range 3-35 years). The nonchest fellowship-trained radiologists had an average of 17.2 years of experience (range 2-27 years). Five tables display the kappa results discussed below; only statistically significant κ values are included in the tables.

Interobserver Agreement

There was moderate overall agreement (κ coefficient range 0.45-0.52 ± 0.02) for the four RSNA categories across all three participating groups (Table 1). For each individual category, there was moderate agreement across all three groups for the “Typical” category (κ coefficient range 0.44-0.55 ± 0.02), fair agreement across all groups for the “Indeterminate” category (κ coefficient range 0.20-0.36 ± 0.01-0.06), fair to moderate overall agreement for the “Atypical” category (κ coefficient range 0.37-0.45 ± 0.02), and substantial agreement across all three groups for the “Negative” category (κ coefficient range 0.71-0.78 ± 0.02).

Table 1.

Kappa Coefficients With Standard Errors for the Interobserver Agreement Survey

| Group | Overall | Typical | Indeterminate | Atypical | Negative |
|---|---|---|---|---|---|
| Chest | 0.52 ± 0.02 | 0.51 ± 0.02 | 0.36 ± 0.02 | 0.43 ± 0.02 | 0.78 ± 0.02 |
| Nonchest | 0.49 ± 0.02 | 0.55 ± 0.02 | 0.32 ± 0.02 | 0.37 ± 0.02 | 0.71 ± 0.02 |
| Resident | 0.45 ± 0.02 | 0.44 ± 0.02 | 0.20 ± 0.06 | 0.45 ± 0.02 | 0.76 ± 0.02 |
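The verbal labels used for kappa values (fair, moderate, substantial, and so on) follow a banded scale. The text does not state its exact cut-points; the sketch below assumes the commonly cited Landis and Koch bands, so values that sit on a boundary (such as 0.20) may be labeled differently here than in the text.

```python
# Upper bound of each band and its label (assumed Landis and Koch cut-points)
BANDS = (
    (0.20, "slight"),
    (0.40, "fair"),
    (0.60, "moderate"),
    (0.80, "substantial"),
    (1.00, "almost perfect"),
)


def agreement_label(kappa):
    """Map a kappa value to a verbal agreement label."""
    if kappa < 0:
        return "poor"  # less agreement than expected by chance
    for upper, label in BANDS:
        if kappa <= upper:
            return label
    raise ValueError("kappa cannot exceed 1")


if __name__ == "__main__":
    # Overall interobserver values from Table 1
    for group, k in (("Chest", 0.52), ("Nonchest", 0.49), ("Resident", 0.45)):
        print(group, k, agreement_label(k))
```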

For the secondary questions within each category, listed in Table 2, there was fair to moderate overall agreement across all three groups for the first set of “Typical” imaging features (i.e., peripheral, bilateral, ground-glass opacities [GGO] with or without consolidation or visible intralobular lines). There was substantial to perfect agreement among participating chest and nonchest radiologists for the imaging features of the “Indeterminate” category. The remainder of the agreement for the secondary questions varied from zero to perfect agreement, as shown in Table 2.

Table 2.

Kappa Coefficients for Secondary Questions of the Interobserver Survey

| Imaging Features | Chest | Nonchest | Resident |
|---|---|---|---|
| Typical: | | | |
| Peripheral, bilateral, GGO with or without consolidation or visible intralobular lines (i.e., crazy paving) | 0.57 ± 0.19 | 0.37 ± 0.10 | 0.15 ± 0.17 |
| Multifocal GGO of rounded morphology with or without consolidation or visible intralobular lines (“crazy paving”) | 0.30 ± 0.12 | 0.29 ± 0.16 | 0.24 ± 0.14 |
| Reverse halo sign or other findings of organizing pneumonia | −0.03 ± 0.16 | 0.08 ± 0.23 | −0.15 ± 0.32 |
| Indeterminate: | | | |
| Multifocal, diffuse, perihilar, or unilateral GGO with or without consolidation lacking a specific distribution and are nonrounded or nonperipheral | 1.00 ± 0.30 | 0.73 ± 0.26 | 0.19 ± 0.23 |
| Few very small GGO with a nonrounded and nonperipheral distribution | 1.00 ± 0.28 | 0.73 ± 0.26 | 0.31 ± 0.20 |
| Atypical: | | | |
| Isolated lobar or segmental consolidation without GGO | 0.65 ± 0.23 | 0.47 ± 0.19 | 0.60 ± 0.18 |
| Discrete small nodules (centrilobular, tree-in-bud) | 0.38 ± 0.36 | 0.47 ± 0.14 | 0.61 ± 0.17 |
| Lung cavitation | −0.37 ± 0.66 | 1.00 ± 0.78 | 0.16 ± 0.58 |
| Smooth interlobular septal thickening with pleural effusion | 1.00 ± 0.52 | 0.39 ± 0.29 | 0.70 ± 0.20 |

Intraobserver Agreement

There was substantial overall intraobserver agreement across the chest and nonchest groups (κ coefficient range 0.61-0.67 ± 0.05-0.06) and moderate overall agreement within the resident group (κ coefficient 0.58 ± 0.06). For the individual categories, there was moderate intraobserver agreement across all three groups for the “Typical” and “Atypical” categories, fair to moderate agreement for the “Indeterminate” category, and almost perfect agreement for the “Negative” category (Table 3).

Table 3.

Kappa Coefficient With Standard Errors for Intraobserver Variability Survey

| Group | Overall | Typical | Indeterminate | Atypical | Negative |
|---|---|---|---|---|---|
| Chest | 0.67 ± 0.05 | 0.57 ± 0.08 | 0.58 ± 0.08 | 0.50 ± 0.16 | 0.92 ± 0.04 |
| Nonchest | 0.61 ± 0.06 | 0.53 ± 0.09 | 0.48 ± 0.08 | 0.45 ± 0.12 | 0.92 ± 0.04 |
| Resident | 0.58 ± 0.06 | 0.60 ± 0.09 | 0.38 ± 0.09 | 0.46 ± 0.11 | 0.88 ± 0.05 |

For the secondary questions, the results varied among the participants, from fair to substantial agreement, as shown in Table 4. Of note, there was moderate to substantial agreement across all three groups for the first set of features in the “Indeterminate” category and the second set of features in the “Atypical” category. A few secondary choices were selected so infrequently that there were not enough results to produce a statistically significant kappa value. Negative kappa values indicate less agreement than expected by chance alone.
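To see why kappa can be negative, recall its general form. The symbols $p_o$ (observed proportion of agreement) and $p_e$ (proportion of agreement expected by chance) are introduced here for illustration and do not appear in the original text:

$$
\kappa = \frac{p_o - p_e}{1 - p_e}
$$

As a made-up example, if readers agree on 40% of exams ($p_o = 0.40$) while chance alone would produce 50% agreement ($p_e = 0.50$), then

$$
\kappa = \frac{0.40 - 0.50}{1 - 0.50} = \frac{-0.10}{0.50} = -0.20 .
$$

Whenever $p_o < p_e$ the numerator is negative, which is what the negative entries in the tables reflect.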

Table 4.

Kappa Coefficient for Secondary Questions of the Intraobserver Variability Survey

| Secondary Imaging Features | Chest | Nonchest | Resident |
|---|---|---|---|
| Typical: | | | |
| Peripheral, bilateral, GGO with or without consolidation or visible intralobular lines (i.e., crazy paving) | 0.55 ± 0.08 | 0.41 ± 0.10 | 0.33 ± 0.11 |
| Multifocal GGO of rounded morphology with or without consolidation or visible intralobular lines (“crazy paving”) | 0.50 ± 0.11 | 0.36 ± 0.13 | 0.50 ± 0.11 |
| Reverse halo sign or other findings of organizing pneumonia | 0.76 ± 0.13 | – | – |
| Indeterminate: | | | |
| Multifocal, diffuse, perihilar, or unilateral GGO with or without consolidation lacking a specific distribution and are nonrounded or nonperipheral | 0.61 ± 0.07 | 0.52 ± 0.08 | 0.41 ± 0.09 |
| Few very small GGO with a nonrounded and nonperipheral distribution | −0.01 ± 0.01 | 0.66 ± 0.32 | 0.11 ± 0.14 |
| Atypical: | | | |
| Isolated lobar or segmental consolidation without GGO | 0.27 ± 0.23 | 0.46 ± 0.14 | 0.57 ± 0.13 |
| Discrete small nodules (centrilobular, tree-in-bud) | 0.70 ± 0.14 | 0.72 ± 0.12 | 0.47 ± 0.16 |
| Lung cavitation | – | – | – |
| Smooth interlobular septal thickening with pleural effusion | – | 0.49 ± 0.30 | 0.65 ± 0.19 |

Correlation With PCR-Confirmed COVID-19 Status

Using PCR-confirmed COVID-19 status (i.e., positive vs negative) as the standard for the patient exams included in our study, there was moderate overall agreement across all three groups (κ coefficient range 0.53-0.57 ± 0.05, Table 5). Results were best when the agreed-upon “Typical” and “Indeterminate” categories were combined to represent COVID-19 positive status, which yielded moderate overall agreement with PCR. The COVID-19 negative group was a combination of the moderately agreed-upon “Atypical” and “Negative” categories.

Table 5.

Kappa Coefficient Using PCR-Confirmed COVID-19 Status as Key

| Group | Using COVID-19 Status |
|---|---|
| Chest | 0.55 ± 0.05 |
| Nonchest | 0.53 ± 0.05 |
| Resident | 0.57 ± 0.05 |
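The comparison with PCR relies on collapsing the four RSNA categories into a positive and a negative call. The following is a minimal sketch of that dichotomization and of kappa computed from the resulting 2×2 table; the function names and the per-exam input layout are assumptions, and the authors' exact procedure for pooling readers is not described in the text.

```python
CT_POSITIVE = {"Typical", "Indeterminate"}  # combined as COVID-19 positive
CT_NEGATIVE = {"Atypical", "Negative"}      # combined as COVID-19 negative


def ct_call(category):
    """Collapse an RSNA category into a positive (True) or negative (False) call."""
    if category in CT_POSITIVE:
        return True
    if category in CT_NEGATIVE:
        return False
    raise ValueError(f"unknown RSNA category: {category!r}")


def kappa_vs_pcr(categories, pcr_positive):
    """Kappa between dichotomized CT calls and PCR status (booleans)."""
    if not categories or len(categories) != len(pcr_positive):
        raise ValueError("inputs must be non-empty and of equal length")
    # 2x2 table: a = CT+/PCR+, b = CT+/PCR-, c = CT-/PCR+, d = CT-/PCR-
    a = b = c = d = 0
    for category, pcr in zip(categories, pcr_positive):
        ct = ct_call(category)
        if ct and pcr:
            a += 1
        elif ct:
            b += 1
        elif pcr:
            c += 1
        else:
            d += 1
    n = a + b + c + d
    p_o = (a + d) / n
    p_e = ((a + b) * (a + c) + (c + d) * (b + d)) / (n * n)
    if p_e == 1.0:
        raise ValueError("kappa is undefined when all calls fall in one cell")
    return (p_o - p_e) / (1.0 - p_e)


if __name__ == "__main__":
    reads = ["Typical", "Indeterminate", "Atypical", "Negative"]
    print(kappa_vs_pcr(reads, [True, True, False, False]))
```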

Chest CT Cases

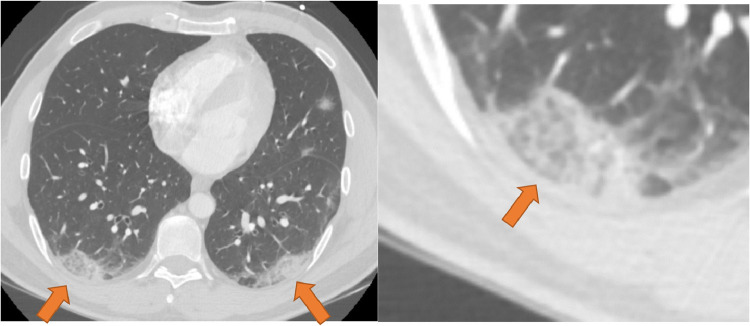

Below are a few case examples of chest CT exams included in our study. The first two (Figs 4 and 5) received unanimous agreement across all 15 participants as “Typical” and “Atypical,” respectively, for imaging findings related to COVID-19 based on the RSNA consensus reporting guidelines. The next two (Figs 6 and 7) received poor observer agreement, as described.

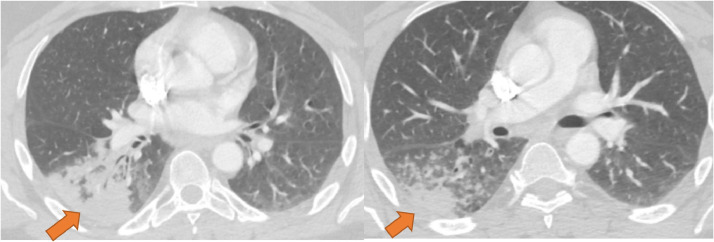

Figure 4.

CT imaging features unanimously agreed upon as “Typical” for COVID-19. Enhanced axial images of the lungs show bilateral, multifocal rounded, and peripheral opacities (orange arrows). Opacity at the right base has visible intralobular lines producing a “crazy-paving” appearance (inset). (Color version of figure is available online.)

Figure 5.

CT imaging features unanimously agreed upon as atypical for COVID-19. Enhanced axial CT images of the chest show dense multisegmental consolidation in the right lower lobe (left, orange arrow). Centrilobular and tree-in-bud type nodularity is present in the superior segment of the right lower lobe (right, orange arrow). (Color version of figure is available online.)

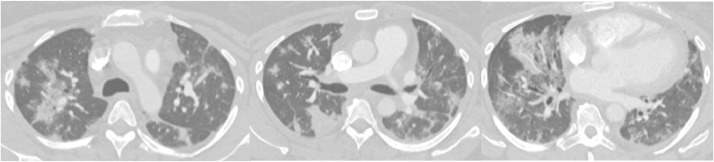

Figure 6.

Peripheral and peribronchial ground-glass opacities with rounded and nonrounded morphology. This case received split agreement with eight participants agreeing on typical and seven agreeing on indeterminate.

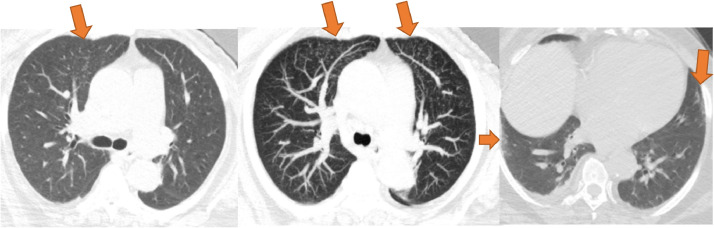

Figure 7.

This case demonstrates tree-in-bud nodularity in the anterior segment of the right upper lobe (left, orange arrow). Maximum intensity projection (MIP) makes the nodules more conspicuous and reveals additional tree-in-bud nodularity in the anterior left upper lobe (middle, orange arrows). There are also vague nonrounded areas of subpleural ground glass in the peripheral right lower lobe and inferior lingula (right, orange arrows). This case received mixed agreement with six atypical, six indeterminate, two negative, and one typical classification. (Color version of figure is available online.)

Discussion

Professional radiological societies and the Centers for Disease Control and Prevention currently recommend against the use of chest CTs for the detection of COVID-19. Despite this, clinicians frequently order these imaging studies to guide clinical management of suspected or confirmed COVID-19 patients (4, 5, 6, 7, 8, 9, 10). The role of chest CTs in the management of COVID-19 patients is evolving, especially given recent literature describing increased rates of thromboembolic complications, such as pulmonary embolism, among COVID-19 patients (14). Because chest CTs are frequently used for the detection of COVID-19 and the management of active COVID-19 cases and their complications, there is a significant need for reliable and accurate reporting of imaging studies by the responsible radiologist. The RSNA classification system was created to provide radiologists with criteria to appropriately classify imaging findings. The results of this study show moderate overall interobserver agreement across all three groups as well as moderate to substantial agreement in the intraobserver survey completed after a 1-week washout period.

The interobserver findings in this study agree with a recently published article on the topic by de Jaegere et al (13). This study expands on their findings by demonstrating moderate to substantial intraobserver agreement among our three groups when utilizing the RSNA classification. Compared to the study by de Jaegere et al, this study includes a larger group of survey participants: 15 total radiologists compared to 3. This study also expands on the findings of de Jaegere et al by assessing the agreement for each imaging feature within the broad categories, as opposed to reporting only on agreement between general categories.

Several factors may explain the overall fair agreement seen within the “Indeterminate” category for the interobserver survey. One major difference between the “Typical” and “Indeterminate” categories is that the former requires rounded and peripherally distributed GGO, while the latter typically demonstrates nonrounded or randomly distributed GGOs. In cases where a mixture of features from different categories was present, participants tended to disagree or split answers between two of the prominent features (Fig 6). It is possible that accurate recognition of which of the two features was present, as well as the presence of both features in a CT, posed difficulty to the participants in this study. This would explain why participants had some difficulty in determining whether to place a CT in the “Indeterminate” category or the “Typical” category. A potential solution to this dilemma would be emphasizing to practicing radiologists, as the RSNA classification system does, that the presence of any feature of the “Typical” category takes priority over the other features. In the absence of “Typical” features, “Indeterminate” features take priority over the other categories, and so on.
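The priority rule described above can be written as a short lookup. This is a sketch only: the function name and the representation of findings as a list of category names are hypothetical, and treating an exam with no listed features as “Negative” is an assumption made for illustration.

```python
# Categories in descending priority, following the rule in the text
PRIORITY = ("Typical", "Indeterminate", "Atypical")


def classify_by_priority(feature_categories):
    """Return the overall RSNA category for one exam.

    feature_categories: iterable naming the category of each imaging
    feature the reader identified (an assumed representation).
    """
    present = set(feature_categories)
    unknown = present - set(PRIORITY)
    if unknown:
        raise ValueError(f"unknown feature categories: {sorted(unknown)}")
    for category in PRIORITY:
        if category in present:
            return category  # highest-priority category wins
    return "Negative"  # assumption: no listed features present


if __name__ == "__main__":
    # Mixed rounded/peripheral and nonrounded GGO, as in the Fig 6 scenario
    print(classify_by_priority(["Indeterminate", "Typical"]))
```

Applied consistently, a rule like this would send a mixed case to “Typical” rather than leaving readers split between the two categories.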

Participants did not receive any structured training with the RSNA classification system prior to our survey other than their own acquired experience with reading chest CTs during the peak of COVID-19 cases. This is a potential limitation of the study, as lack of familiarity with the RSNA classification system may lead to misinterpreting or missing COVID-19-specific imaging findings. This would be expected to affect nonchest-trained participants to a greater degree.

When correlating the interobserver agreement results with the PCR-confirmed COVID-19 status of the patients, this study demonstrated moderate overall agreement. This indicates that classification with the RSNA system agrees moderately with COVID-19 status as determined by the established method of nasal swab PCR. Of note, a substantial number of COVID-19 positive patients were classified as “Indeterminate.” These results can be explained by the similarity in the classification criteria between the “Typical” and “Indeterminate” categories and the presence of mixed imaging features of COVID-19.

The study is limited by its retrospective design and single-institution analysis. Experience among participating radiologists varied widely, ranging from 3 to 35 years in the chest fellowship-trained group and from 2 to 27 years in the nonchest fellowship-trained group. The variation in years of experience can create mixed results, especially in cases with several or little to no findings (Fig 7). Several articles discuss the dramatic changes in chest CT findings in COVID-19 patients over time (15, 16, 17, 18). Our study did not adequately explore this phenomenon, as the exams included in our survey represent a single moment in the clinical course of the affected patients. Given this constraint, some patients may have presented earlier in the course of their disease without any imaging findings of COVID-19 and some may have presented later in the course of the disease with the most severe findings. The variation in time from symptom onset to chest CT likely skews the results.

Recently published literature describes a new classification system called CO-RADS, proposed by Prokop et al (19), which uses a six-point scale based on imaging findings similar to those discussed in the RSNA expert consensus. The CO-RADS system had not been introduced to our hospital system during data acquisition and, given the lack of familiarity, was not included in our study.

In conclusion, this study demonstrates moderate overall interobserver agreement and moderate to substantial intraobserver agreement for chest CT findings of COVID-19 based on the RSNA classification system. There is reliable utilization of the RSNA classification system criteria by radiologists when reporting on chest CT findings related to COVID-19.

Author Contributions

All authors substantially contributed to the conception or design of the study, the writing and/or revision of the manuscript, approved the final version of the manuscript, and are accountable for the manuscript's contents.

Funding

No funding was used for the completion of this study.

Disclosures

The authors have nothing to disclose.

Statement of Data Integrity

The authors declare that they had full access to all the data in this study and the authors take complete responsibility for the integrity of the data and the accuracy of the data analysis.

Acknowledgments

Priyanka Annigeri, MD

Vladimir Starcevic, MD

Ting Li, MD

Joshua Joseph, MD

Hannan Saad, MD

Thomas Keimig, MD

David Spizarny, MD

Daniel Myers, MD

Alexander Tassopoulos, MD

Matthew Rheinboldt, MD

John Blase, MD

Milan Pantelic, MD

References

- 1. Simpson S, Kay FU, Abbara S. Radiological Society of North America expert consensus statement on reporting chest CT findings related to COVID-19. Endorsed by the Society of Thoracic Radiology, the American College of Radiology, and RSNA. Radiology. 2020;2(2). doi: 10.1148/ryct.2020200152.

- 2. Johns Hopkins University. Coronavirus COVID-19 global cases by the Center for Systems Science and Engineering (CSSE). Available at: https://gisanddata.maps.arcgis.com/apps/opsdashboard/index.html#/bda7594740fd40299423467b48e9ecf6. 2020. Accessed June 19, 2020.

- 3. World Health Organization. Coronavirus disease (COVID-19) situation dashboard. Available at: https://experience.arcgis.com/experience/685d0ace521648f8a5beeeee1b9125cd. 2020. Accessed June 19, 2020.

- 4. ACR recommendations for the use of chest radiography and computed tomography (CT) for suspected COVID-19 infection. Available at: https://www.acr.org/Advocacy-and-Economics/ACR-Position-Statements/Recommendations-for-Chest-Radiography-and-CT-for-Suspected-COVID19-Infection. Accessed June 19, 2020.

- 5. Clinical questions about COVID-19: questions and answers. Center for Disease Control. 2020. Available at: https://www.cdc.gov/coronavirus/2019-ncov/hcp/faq.html. Accessed June 19, 2020.

- 6. Society of Thoracic Radiology. COVID-19 position statement. 2020. Available at: https://thoracicrad.org/. Accessed June 19, 2020.

- 7. American Society of Emergency Radiology. COVID-19 position statement. 2020. Available at: https://thoracicrad.org/wp-content/uploads/2020/03/STR-ASER-Position-Statement-1.pdf. Accessed June 19, 2020.

- 8. Rubin GD, Ryerson CJ, Haramati LB. The role of chest imaging in patient management during the COVID-19 pandemic: a multinational consensus statement from the Fleischner Society. Radiology. 2020. doi: 10.1148/radiol.2020201365.

- 9. Mossa-Basha M, Medverd J, Linnau K. Policies and guidelines for COVID-19 preparedness: experiences from the University of Washington. Radiology. 2020. doi: 10.1148/radiol.2020201326.

- 10. Hope MD. A role for CT in COVID-19? What data really tells us so far. Lancet. 2020;395:1189–1190. doi: 10.1016/S0140-6736(20)30728-5.

- 11. Bujang MA, Baharum N. A simplified guide to determination of sample size requirements for estimating the value of intraclass correlation coefficient: a review. Arch Orofac Sci. 2017;12:1–11.

- 12. Fleiss JL, Levin B, Paik MC. Statistical Methods for Rates and Proportions. 3rd ed. New York, New York: Wiley and Sons; 2003.

- 13. Jaegere TM, Krdzalic J, Fasen BA. Radiological Society of North America Chest CT classification system for reporting COVID-19 pneumonia: interobserver variability and correlation with RT-PCR. Radiology. 2020;2(3). doi: 10.1148/ryct.2020200213.

- 14. Poyiadji N, Cormier P, Patel PY. Acute pulmonary embolism and COVID-19. Radiology. 2020. doi: 10.1148/radiol.2020201955.

- 15. Wang Y, Dong C, Hu Y. Temporal changes of CT findings in 90 patients with COVID pneumonia: a longitudinal study. Radiology. 2020. doi: 10.1148/radiol.2020200843.

- 16. Li M, Lei P, Zeng B. Coronavirus disease (COVID-19): spectrum of CT findings and temporal progression of disease. Acad Radiol. 2020;27:603–608. doi: 10.1016/j.acra.2020.03.003.

- 17. Hu Q, Guan H, Sun Z. Early CT features and temporal lung changes in COVID-19 pneumonia in Wuhan, China. Eur J Radiol. 2020;128. doi: 10.1016/j.ejrad.2020.109017.

- 18. Salehi S, Abedi A, Balakrishnan S. Coronavirus disease 2019 (COVID-19): a systematic review of imaging findings in 919 patients. AJR Am J Roentgenol. 2020;215:1–7. doi: 10.2214/AJR.20.23034.

- 19. Prokop M, van Everdingen W, van Rees Vellinga T. CO-RADS—a categorical CT assessment scheme for patients with suspected COVID-19: definition and evaluation. Radiology. 2020;296:E97–E104. doi: 10.1148/radiol.2020201473.