Abstract

Deep learning of artificial neural networks has become the de facto standard approach to solving data analysis problems in virtually all fields of science and engineering. In biology and medicine, too, deep learning technologies are fundamentally transforming how we acquire, process, analyze, and interpret data, with potentially far-reaching consequences for healthcare. In this mini-review, we take a bird’s-eye view of the past, present, and future developments of deep learning, moving from science at large to biomedical imaging, and to bioimage analysis in particular.

Keywords: Deep learning, Artificial neural networks, Bioimage analysis, Microscopy imaging, Computer vision

1. Introduction

Ever since the introduction of digital scanning technologies in biological imaging [1], [2], [3], there has been a growing need for powerful computational methods to enable automated quantitative image analysis. Microscopy images potentially contain a wealth of information about the morphological, structural, and dynamical characteristics of tissues, cells, and molecules, which may go unnoticed even to the expert human eye [4], [5], [6]. However, designing computer algorithms to extract this information with high fidelity is a great challenge. This has been well recognized since the mid-1960s, after the first decade of serious attempts [7], [8], [9], and it remains true today.

Automated bioimage analysis typically requires executing an intricate series of operations, which may involve image restoration [10], [11], [12] and registration [13], [14], [15], object detection [16], [17], [18], segmentation [17], [19], [20], and tracking [21], [22], [23], as well as downstream image or object classification [24], [25], [26], quantification [27], [28], [29], and visualization [30], [31], [32]. As attested by the reviews and evaluations just cited, a plethora of methods and tools have been developed for this purpose in the first half-century of computational bioimage analysis, based on what may now be considered traditional image processing and computer vision paradigms.

Recently, a major paradigm shift has occurred with the massive adoption of deep learning technologies [33], [34], [35], which are now rapidly replacing traditional data analysis approaches in virtually all fields of science, including bioimage analysis. In a matter of just a few years, the scientific literature on deep learning has grown explosively, not only with research papers describing novel concepts, algorithms, software platforms, and applications, but also with an abundance of reviews and surveys exploring and commenting on the state of the art.

In this mini-review, we take stock and summarize the latest developments and the challenges ahead, starting from science at large, to biomedical imaging, and to bioimage analysis in particular. Rather than providing a technical introduction or an exhaustive review, we briefly discuss major trends in the past, present, and future of deep learning and their implications for bioimage analysis. Along the way, we mainly cite other reviews and surveys for further reading on specific subtopics.

2. Deep learning on the rise

Deep learning popularly refers to the use of artificial neural networks (ANNs) with multiple (ultimately many) layers of elementary computational cells (called “neurons” by analogy with neuronal cells in biological neural networks) to progressively extract higher-level representations of given input data in order to perform data analysis tasks [33], [35], [36]. It is a form of machine learning [34], [37], [38], a major branch of the field of artificial intelligence (AI) [39], [40], [41], which is concerned with the science and engineering of developing machines exhibiting characteristics associated with human intelligence. While deep learning is now taking the world by storm (Fig. 1), its road to success has been long and arduous.

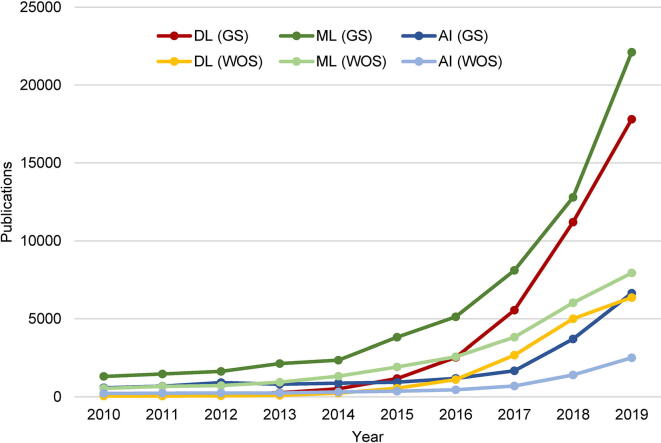

Fig. 1.

Explosive growth of the scientific literature on deep learning and related topics. The graph shows the number of publications per year in the past decade, having the terms deep learning (DL), machine learning (ML), or artificial intelligence (AI) in the title, according to Google Scholar (GS) and Web of Science (WOS) around the time of submission of this article.

2.1. A brief history of deep learning

The idea of using ANNs for data analysis dates back to the dawn of digital computing [42]. In the early 1940s, the first mathematical model of a biological neuron was proposed, providing “a tool for rigorous symbolic treatment of known nets and an easy method of constructing hypothetical nets of required properties” [43]. In order for the model to work, its parameters (weights) had to be set correctly, which initially was done manually. In the late 1950s, the perceptron became the first model capable of learning the weights from examples, illustrating “some of the fundamental properties of intelligent systems” [44]. However, by the end of the 1960s, it was clear that such models had severe limitations [45] and that multilayer perceptrons were required for more complex tasks, but it was not obvious how to train them.
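Both the perceptron's ability to learn weights from labelled examples and its limitation can be illustrated in a few lines. The following is a minimal sketch, not a reconstruction of the original 1950s formulation: it assumes binary targets in {0, 1}, a threshold unit with a bias input, and the classic error-driven update. The sketch learns logical AND, which is linearly separable, but cannot fit XOR, which is not.

```python
import numpy as np

def add_bias(X):
    # Append a constant input of 1 so the threshold is learned as an ordinary weight.
    return np.hstack([X, np.ones((X.shape[0], 1))])

def train_perceptron(X, y, epochs=20, lr=1.0):
    """Error-driven perceptron rule: weights change only when a prediction is wrong."""
    Xb = add_bias(X)
    w = np.zeros(Xb.shape[1])
    for _ in range(epochs):
        for xi, yi in zip(Xb, y):
            pred = 1 if xi @ w > 0 else 0
            w += lr * (yi - pred) * xi
    return w

def predict(w, X):
    return (add_bias(X) @ w > 0).astype(int)

X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y_and = np.array([0, 0, 0, 1])
y_xor = np.array([0, 1, 1, 0])

acc_and = (predict(train_perceptron(X, y_and), X) == y_and).mean()
acc_xor = (predict(train_perceptron(X, y_xor), X) == y_xor).mean()
print(acc_and, acc_xor)  # AND is learned perfectly; XOR never reaches 1.0
```

No single linear threshold can separate the XOR classes, which is why hidden layers, and a way to train them, were needed.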

After the ensuing first “AI winter”, from the late 1960s throughout the 1970s and for some time beyond, interest in ANNs resurged in the mid-1980s with the (re)invention of the back-propagation algorithm [46]. Important advances were made in the 1990s, including the development of recurrent neural networks (RNNs) such as the long short-term memory (LSTM) for modeling data sequences [47], and successful applications of multilayer convolutional neural networks (CNNs) in image analysis [48]. But by the end of the millennium, due to overinflated expectations created by AI-exploiting ventures that went unmet, and to successes in other areas of machine learning, interest in ANNs waned for the second time.
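Back-propagation is the chain rule applied layer by layer, from the loss back to every weight. The minimal sketch below assumes a hypothetical network with one tanh hidden layer, a linear output, and a squared-error loss. It computes the gradients this way and checks them against central finite differences. A gradient-descent step would then subtract a small multiple of each gradient from the corresponding parameter.

```python
import numpy as np

def loss_and_grads(params, X, Y):
    """One-hidden-layer tanh network, squared-error loss; gradients via back-propagation."""
    W1, b1, W2, b2 = params
    H = np.tanh(X @ W1 + b1)           # forward: hidden activations
    out = H @ W2 + b2                  # forward: linear output layer
    diff = out - Y
    n = X.shape[0]
    loss = 0.5 * np.sum(diff ** 2) / n
    # Backward pass: apply the chain rule from the loss back through each layer.
    d_out = diff / n
    dW2, db2 = H.T @ d_out, d_out.sum(axis=0)
    d_pre = (d_out @ W2.T) * (1.0 - H ** 2)   # derivative of tanh
    dW1, db1 = X.T @ d_pre, d_pre.sum(axis=0)
    return loss, [dW1, db1, dW2, db2]

def numerical_grads(params, X, Y, eps=1e-6):
    """Central finite differences, used only to check the analytic gradients."""
    grads = []
    for p in params:
        g = np.zeros_like(p)
        for idx in np.ndindex(p.shape):
            original = p[idx]
            p[idx] = original + eps
            loss_plus, _ = loss_and_grads(params, X, Y)
            p[idx] = original - eps
            loss_minus, _ = loss_and_grads(params, X, Y)
            p[idx] = original
            g[idx] = (loss_plus - loss_minus) / (2 * eps)
        grads.append(g)
    return grads

rng = np.random.default_rng(0)
X, Y = rng.normal(size=(5, 3)), rng.normal(size=(5, 2))
params = [rng.normal(size=(3, 4)), rng.normal(size=4),
          rng.normal(size=(4, 2)), rng.normal(size=2)]

_, analytic = loss_and_grads(params, X, Y)
numeric = numerical_grads(params, X, Y)
max_err = max(np.max(np.abs(a - b)) for a, b in zip(analytic, numeric))
print(f"max gradient discrepancy: {max_err:.2e}")
```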

Until the mid-2000s it was generally believed that deep ANNs were very hard to train. This perception started to change when it was shown that a particular type of multilayer ANN called a deep belief network (DBN), where each layer is a restricted Boltzmann machine (RBM), can be efficiently trained by greedy layer-wise unsupervised learning [49]. Soon after, based on the same principle, algorithms for training deep autoencoders (AEs) were proposed [50], as well as other deep architectures [33]. By this time, deep learning began to clearly outperform competing machine learning technologies for various data analysis tasks. This became most evident in the 2012 edition of the ImageNet challenge on image classification, where a CNN called AlexNet won by a large margin [51]. Since then, deep learning has gained ground at an exponential rate, including in the biomedical domain, as covered in later sections of this article.

2.2. Driving forces behind deep learning

In recent years, deep learning has been widely recognized as a breakthrough technology, so much so that in 2018 the Turing Award, given annually since 1966 by the Association for Computing Machinery (ACM) and generally considered to be the “Nobel Prize of Computing”, was awarded to three highly influential researchers “for conceptual and engineering breakthroughs that have made deep neural networks a critical component of computing”: Yoshua Bengio (University of Montreal), Geoffrey Hinton (University of Toronto & Google), and Yann LeCun (New York University & Facebook) [52].

Apart from groundbreaking research, two other factors have played an important role in the relatively recent rapid rise of deep learning [53]. Both relate directly to what deep learning algorithms need in order to succeed. The first is the need for large data sets to properly train the similarly large numbers of neural network parameters. Compiling such data sets has been greatly facilitated since the turn of the millennium by the increasing digitization of the world, leading to the present era of “big data”. The second is the need for large computing power to complete the many iterations of the training process within reasonable time. Increasingly powerful computing hardware has become affordable even for individual researchers in the form of general-purpose graphics processing units (GPUs).

Together, these advances have enabled the development of ever deeper neural networks, reaching ever higher accuracies and beating the state of the art in an ever-growing number of applications. The widespread usage of deep learning has been further accelerated by the development of open-source software libraries and frameworks [54], [55], [56], [57], greatly facilitating deep neural network (DNN) design and training even for non-computer scientists. Tech giants such as Google, Facebook, Apple, IBM, Intel, Microsoft, Amazon, Baidu, and many others invest heavily in deep learning, capitalizing on its potential and contributing to a world that is increasingly driven by DNNs, and it seems this is only the beginning [58].

2.3. Widespread impact of deep learning

The extraordinary power of deep learning in addressing intractable challenges has led to a competitive race for leadership among research groups, universities, companies, and even nations [59]. Every week, new papers appear, not infrequently by researchers without a solid background in computer science, commenting on the impact of deep learning in their field, or claiming victory with DNNs in yet another application domain, often simply by exploiting existing software tools and network architectures. The past few years have seen a flood of reviews and surveys on the subject, in virtually all fields of science, often by authors or in journals the seasoned computer scientist has never heard of. Apparently, despite many remaining challenges requiring further research (Section 5), a methodology has emerged that is relatively easy to use and that everyone is eager to own.

By now, deep learning has become the go-to data analysis technology in domains as diverse as agriculture [60], bioinformatics [61], biometrics [62], computational biology [63], consumer analytics [64], cyber security [65], dentistry [66], drug discovery [67], education [68], face recognition [69], gaming [70], health informatics [71], high-energy physics [72], hydrology [73], genomics [74], linguistics [75], mobile multimedia [76], mobile networking [77], multimedia analytics [78], nanotechnology [79], natural language processing [80], precision medicine [81], remote sensing [82], renewable energy forecasting [83], robotics [84], smart manufacturing [85], speech generation [86], surveillance [87], traffic control [88], video coding [89], and countless others [90].

3. Deep learning in biomedical imaging

A domain we focus on more specifically in this article is biomedical imaging (Fig. 2). Here we take biomedical imaging to be the broad, multidisciplinary field concerned with the acquisition, processing, visualization, and interpretation of structural and functional images of living organisms, whether for clinical or for research purposes. Celebrating a long history of its own [91], including multiple Nobel Prize-winning revolutions [92], biomedical imaging has become a cornerstone of modern healthcare and life sciences, to the extent that today “a world without imaging is clearly not imaginable” [93]. For the sake of brevity in this mini-review, we roughly divide the field into medical imaging, pathological imaging, preclinical imaging, and biological imaging in the life sciences, and summarize the impact of deep learning on each.

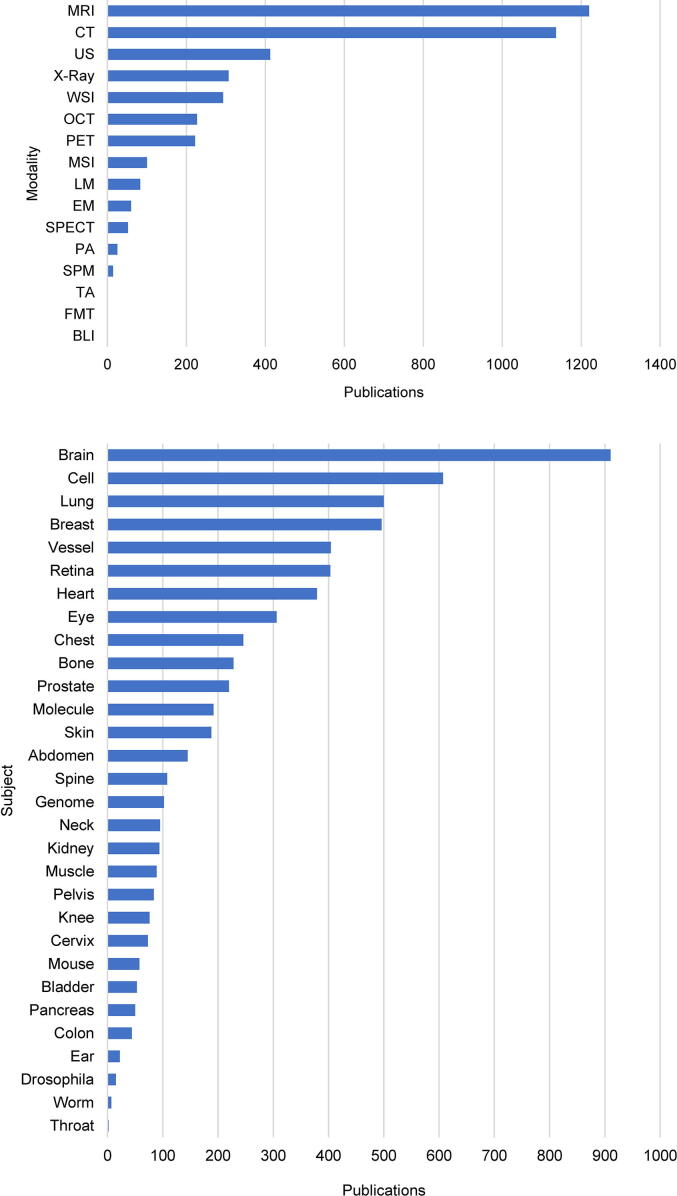

Fig. 2.

Impact of deep learning on biomedical imaging. The graphs show the number of peer-reviewed journal and selected conference proceedings publications on deep learning in different biomedical application areas, categorized by imaging modality (top, see text for abbreviations) and subject of study (bottom), ranked from most to least popular. Numbers were estimated from the PubMed database of the US National Library of Medicine, National Institutes of Health, around the time of submission of this article, by searching for publications having relevant terms in the title or abstract (Supplementary Data).

3.1. Deep learning in medical imaging

In clinical practice, the screening, diagnosis, prognosis, and treatment of disease in the human body all rely increasingly on advanced medical imaging technologies such as X-ray computed tomography (CT), magnetic resonance imaging (MRI), positron emission tomography (PET), single-photon emission computed tomography (SPECT), and ultrasound (US) imaging. Successful application of imaging technologies involves not only high-fidelity image acquisition but also reliable image interpretation [94]. While medical imaging devices have improved substantially in recent decades in terms of sensitivity, efficiency, and image quality, for a long time image interpretation was done primarily by humans. But even experts are known to suffer from subjectivity, variability, and fatigue. These impediments can potentially be overcome by computational methods, and deep learning in particular has emerged as a key enabling technology for this purpose, as attested by many recent overview articles in the field [95], [96], [97], [98], [99], [100], [101], [102].

The impact of deep learning has been reviewed more specifically in a wide range of medical imaging areas, including abdominal imaging [103], atherosclerosis imaging [104], structural and functional brain imaging [105], [106], in-vivo cancer imaging [107], dermatological imaging [108], endoscopy [109], mammography [110], musculoskeletal imaging [111], nuclear imaging [112], ophthalmology [113], pulmonary imaging [114], thoracic imaging [115], as well as in radiotherapy [116], interventional radiology [117], and radiology in general [118], [119], [120]. The massive body of papers on deep learning in virtually all areas of medical imaging has inspired many to write primers [121], [122], [123], guides [124], [125], [126], white papers or roadmaps [127], [128], [129], and other commentaries [130], [131], [132]. There is now growing evidence that deep learning methods can perform on par with, if not better than, radiologists in specific tasks [133], though the latter will continue to play a critical role in integrating such methods in clinical workflows [127].

3.2. Deep learning in pathological imaging

Disease diagnosis and prognosis cannot always be performed solely using structural or functional in-vivo medical imaging, but often also require complementary ex-vivo pathological imaging of tissue, cell, and body fluid samples extracted from the body. Perhaps even more than in medical imaging, visual image interpretation in pathology has traditionally been the task of human experts. However, the increasing adoption of digital whole-slide imaging (WSI) into routine clinical practice in recent years has created unprecedented opportunities for computer-aided diagnosis (CAD) in pathology [134], [135], [136], [137]. Here, too, deep learning is being rapidly and widely adopted for this purpose, as reported in many reviews [138], [139], [140], [141], [142], [143], [144], [145].

Pathological imaging plays a prominent role especially in cancer diagnosis and prognosis, and the impact of deep learning has been reviewed in various areas of oncological pathology, including in histopathology [141], cytopathology [146], and hematopathology [147]. Deep learning in pathology has been surveyed more specifically for breast cancer [142], [148], lung cancer [149], [150], tumor pathology in many other forms of cancer [151], and cancer prognosis [152], with many opinion articles commenting on challenges and opportunities [153], [154], [155], [156], [157]. As in medical imaging, there is mounting evidence for the potential of deep learning to provide fast and reliable image analysis at the performance level of a seasoned pathologist, or to serve as a synergistic tool for the latter to improve accuracy and throughput [131].

3.3. Deep learning in preclinical imaging

Innovative clinical medical imaging technologies and procedures are usually the fruit of preclinical imaging research with animal models representing humans in studying responses to physiological and environmental changes. Modern small-animal based anatomical, functional, and molecular imaging research involves a wide range of well-established as well as more experimental imaging modalities, including micro versions of clinical scanners (µCT, µMRI, µPET, µSPECT, µUS), optical coherence tomography (OCT), fluorescence molecular tomography (FMT), bioluminescence imaging (BLI), photoacoustic (PA) and thermoacoustic (TA) imaging, multispectral imaging (MSI), and others [93], [158], [159]. The use of deep learning for automated analysis of such imaging data is relatively uncharted territory, but recent studies have reported first applications in translational molecular imaging experiments [160], [161], [162], [163], [164].

3.4. Deep learning in biological imaging

Even more fundamental to our understanding of disease processes and the homeostatic mechanisms maintaining life down to the cellular and molecular levels is biological microscopy imaging, more succinctly also referred to as bioimaging. Revolutionary scientific discoveries and technological innovations in the past decades have spurred the development of a vast array of advanced light microscopy (LM), notably fluorescence microscopy (FM), as well as electron microscopy (EM) and scanning probe microscopy (SPM) imaging modalities that have proven key to much of the progress in modern biological research [165], [166], [167], [168], [169], [170].

Of all biomedical imaging fields, bioimaging arguably faces the biggest challenges in automating visual image interpretation tasks, due to the lack of standard imaging protocols, the high variability of experimental conditions, and the sheer volume of the data produced. Whereas (pre)clinical medical imaging systems typically generate data sets of dozens of megabytes (MB), and digital pathology scanners yield data sets of tens to hundreds of gigabytes (GB), automated microscopes may easily produce on the order of terabytes (TB) of image data in a single experiment [171], [172], [173], [174]. Here, deep learning is increasingly leveraged to improve not only image formation [175], [176], [177], [178], [179] but also subsequent image analysis, as discussed next.
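The terabyte figure follows from simple arithmetic on the acquisition dimensions. The sketch below uses a hypothetical multidimensional time-lapse experiment (2048 × 2048 pixels per image, 16 bits per pixel, 100 focal planes, 3 channels, 1000 time points). These numbers are illustrative assumptions, not values from the cited studies.

```python
def raw_size_bytes(width, height, z_slices, channels, time_points, bytes_per_pixel=2):
    """Uncompressed size of a 5D (x, y, z, channel, time) microscopy data set."""
    return width * height * z_slices * channels * time_points * bytes_per_pixel

size = raw_size_bytes(2048, 2048, z_slices=100, channels=3, time_points=1000)
print(f"{size / 1e12:.2f} TB")  # → 2.52 TB
```

Each factor multiplies the total, so adding a dimension such as multiple stage positions quickly pushes a single experiment well beyond this.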

4. Deep learning for bioimage analysis

First studies using ANNs for bioimage analysis date back to the late 1980s [180], soon after the popularization of the back-propagation algorithm. A review on future trends in microscopy around that time already commented that for complex visual tasks “a good deal of faith is now placed in electronic neural networks” [181]. Indeed, the use of ANNs caught on during the 1990s [182], [183], [184] and 2000s [185], [186], [187], but as in biomedical imaging at large, deep learning began to be massively adopted for bioimage analysis only in recent years [188], [189], [190], [191], [192], [193], [194]. We briefly discuss some of the common tasks in bioimage analysis (Fig. 3) where deep learning has been particularly successful.

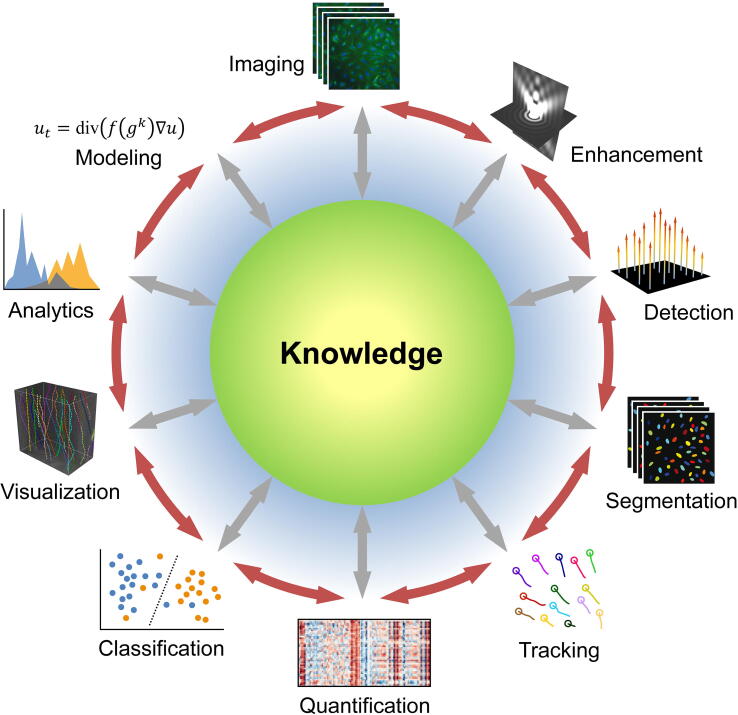

Fig. 3.

Common tasks in bioimage analysis. The ultimate goal is to gain knowledge of biological processes in health and disease by extracting relevant information from microscopy image or video recordings of these processes. Depending on the specific application, information extraction may involve image enhancement, object detection, image segmentation, object tracking, quantification, and classification, data visualization and analytics, and mathematical or statistical modeling. Deep learning is used increasingly in many of these tasks and we discuss several prominent ones in the main text. The diagram shows a typical order of tasks, with double-headed arrows indicating the possible interrelation and feedback between tasks, as well as the fact that any of them independently may also contribute to knowledge along the way, affecting other tasks. Modified from [6].

4.1. Deep learning for image enhancement

Many bioimage analysis tasks are greatly facilitated if the raw microscopy images are first enhanced by removing artifacts and restoring essential information as much as possible. Generally, a high signal-to-noise ratio (SNR) and spatial resolution are beneficial, but may not be achievable in a given experiment due to the required imaging speed and maximum allowable light exposure to avoid damaging the sample. Depending on the type of microscope used and the imaging conditions, different kinds of image enhancement operations may be applied, and deep learning has proven to be a powerful methodology for these. For instance, using well-registered pairs of low-quality and high-quality images, a CNN can be trained to perform denoising and recover resolution [175], [179], [200], [201], [202], [203], [204]. Similarly, trained with pairs of images from different imaging modalities, deep networks can predict fluorescent labels from transmitted-light microscopy images of unlabeled biological samples [179], [195], [205] (Fig. 4A), a technique referred to as cross-modality inference or transformation. Also, generative adversarial networks (GANs) have been shown to enable virtual refocusing of a two-dimensional (2D) fluorescence microscopy image onto a user-defined three-dimensional (3D) surface within a biological sample, correcting for sample drift, tilt, and other aberrations [178].
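The supervised principle behind such restoration networks, learning a mapping from registered low-quality inputs to high-quality targets, can be shown with a deliberately tiny stand-in. Instead of a deep CNN, the sketch below fits a single linear 3 × 3 convolution kernel plus bias by least squares. This is equivalent to a one-layer network without a nonlinearity. The synthetic blob images, the Gaussian noise level, and all function names are hypothetical. Real restoration networks are deep and nonlinear and are trained by stochastic gradient descent, but they are typically trained with a similar pixel-wise loss on image pairs.

```python
import numpy as np

def neighbourhoods(img, k=3):
    """Stack every k x k neighbourhood (valid region only) as one row of a matrix."""
    h, w = img.shape
    r = k // 2
    cols = [img[dy:h - 2 * r + dy, dx:w - 2 * r + dx].ravel()
            for dy in range(k) for dx in range(k)]
    return np.stack(cols, axis=1)

def crop(img, r):
    return img[r:img.shape[0] - r, r:img.shape[1] - r]

def fit_restoration_filter(noisy_imgs, clean_imgs, k=3):
    """Least-squares fit of one k x k convolution kernel plus bias on paired images."""
    X = np.vstack([neighbourhoods(n, k) for n in noisy_imgs])
    X = np.hstack([X, np.ones((X.shape[0], 1))])            # bias column
    y = np.concatenate([crop(c, k // 2).ravel() for c in clean_imgs])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef

def apply_restoration_filter(coef, noisy, k=3):
    r = k // 2
    out = neighbourhoods(noisy, k) @ coef[:-1] + coef[-1]
    return out.reshape(noisy.shape[0] - 2 * r, noisy.shape[1] - 2 * r)

def synthetic_cells(rng, size=64, n_blobs=8, sigma=3.0):
    """Hypothetical 'high-quality' image: a sum of Gaussian blobs."""
    yy, xx = np.mgrid[0:size, 0:size]
    img = np.zeros((size, size))
    for cy, cx in rng.uniform(0, size, size=(n_blobs, 2)):
        img += np.exp(-((yy - cy) ** 2 + (xx - cx) ** 2) / (2 * sigma ** 2))
    return img

rng = np.random.default_rng(0)
clean_train = [synthetic_cells(rng) for _ in range(20)]
noisy_train = [c + rng.normal(0, 0.2, c.shape) for c in clean_train]
coef = fit_restoration_filter(noisy_train, clean_train)

# Evaluate on a held-out pair that was not used for fitting.
clean_test = synthetic_cells(rng)
noisy_test = clean_test + rng.normal(0, 0.2, clean_test.shape)
restored = apply_restoration_filter(coef, noisy_test)
mse_noisy = np.mean((crop(noisy_test, 1) - crop(clean_test, 1)) ** 2)
mse_restored = np.mean((restored - crop(clean_test, 1)) ** 2)
print(f"MSE noisy: {mse_noisy:.4f}, MSE restored: {mse_restored:.4f}")
```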

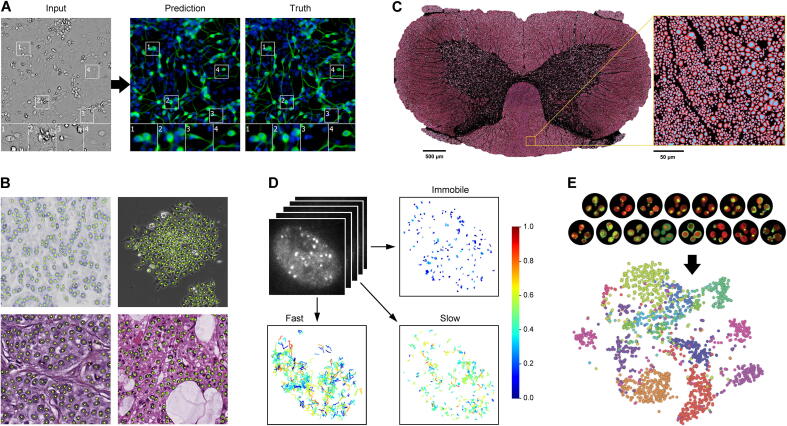

Fig. 4.

Examples of successful application of deep learning in bioimage analysis. A: Prediction of a fluorescence microscopy image (middle) from a bright-field microscopy image (left) compared to the ground truth (right) [195]. The image shows neurons in a culture of induced pluripotent stem cells differentiated toward the motor neuron lineage but containing other cell types as well. Fluorescent labels are TuJ1 (green) with Hoechst (blue) for the cell nuclei. The predicted image was obtained using a multiscale CNN inspired by U-Net. B: Detection of cells in various types of microscopy images [196]: Ki-67 stained bright-field microscopy image of neuroendocrine tumor tissue (top left), phase-contrast microscopy image of HeLa cervical cancer cells (top right), and H&E stained bright-field microscopy images of breast cancer tissue (bottom left) and human bone marrow tissue (bottom right). Detected cells are marked by yellow dots and ground-truth cells by green circles; the detections were obtained using a structured regression model based on a fully residual CNN. C: Segmentation of neuronal axons (blue) and myelin sheaths (red) in a full scanning electron microscopy image slice of a rat spinal cord [197]. The segmentation was obtained using a CNN called AxonDeepSeg. D: Motion analysis of tracked breast cancer susceptibility gene BRCA2 particles in time-lapse fluorescence microscopy images [198]. Tracks were segmented into tracklets showing consistent motion (no switching between different dynamic states) using an LSTM network. Subsequent moment scaling spectrum (MSS) analysis of the tracklets yielded an estimate of the number of mobility classes (three in this case) and their associated parameters. Color coding indicates the value of the MSS slope per tracklet. E: Classification of fluorescence microscopy images (examples at the top) of yeast cells expressing GFP-tagged proteins localizing to 15 subcellular compartments [199]. The classification was done using a CNN called DeepLoc. A visualization (bottom) of the activations of the final convolutional layer of the network in 2D using t-distributed stochastic neighbor embedding (t-SNE) illustrates the power of the model to distinguish the different classes. For more detailed information, see the cited papers, from which the shown examples were adapted with permission (see Acknowledgments section). (For interpretation of the references to color in this figure legend, the reader is referred to the web version of this article.)

4.2. Deep learning for object detection

Another challenge central to many bioimage analysis tasks is to determine whether certain objects of interest are present in given microscopy images. Object detection often goes hand in hand with object localization and has been the subject of intense research for more than half a century [206]. The problem can be solved by extracting features from local image patches and performing classification on them. Here, too, traditional approaches have made way for deep learning in numerous applications, with two-stage region-proposal CNN-based (R-CNN) and unified you-only-look-once (YOLO) approaches and variants being most popular [207], [208], [209]. Traditional object detection methods have found broad application in bioimage analysis for spotting intracellular particles [16], [18], [210], [211], cell nuclei [17], [26], and cellular events such as mitosis [212], [213], [214], whereas deep learning approaches for these tasks have only recently been explored. First results are promising [196], [215], [216], [217], [218] (Fig. 4B), but more extensive evaluations are needed to assess their general superiority.
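CNN detectors of both the two-stage and the single-shot kind commonly produce many overlapping candidate boxes per object and prune them with non-maximum suppression (NMS) before reporting detections. The following minimal sketch of greedy NMS assumes axis-aligned boxes given as (x1, y1, x2, y2), each with one confidence score. The 0.5 overlap threshold and the example boxes are illustrative, not taken from any cited method.

```python
import numpy as np

def iou(box, boxes):
    """Intersection-over-union between one box and an array of boxes, all (x1, y1, x2, y2)."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter)

def non_max_suppression(boxes, scores, iou_threshold=0.5):
    """Greedily keep the highest-scoring box and drop candidates that overlap it too much."""
    order = np.argsort(scores)[::-1]
    keep = []
    while order.size > 0:
        best, rest = order[0], order[1:]
        keep.append(int(best))
        order = rest[iou(boxes[best], boxes[rest]) <= iou_threshold]
    return keep

# Hypothetical candidate detections around two cells.
boxes = np.array([[10, 10, 30, 30], [12, 12, 32, 32],
                  [50, 50, 70, 70], [11, 9, 29, 31]], dtype=float)
scores = np.array([0.9, 0.8, 0.75, 0.6])
print(non_max_suppression(boxes, scores))  # → [0, 2]
```

Boxes 1 and 3 overlap box 0 with IoU of about 0.68 and 0.83, so only one detection per cell survives.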

4.3. Deep learning for image segmentation

One of the most ubiquitous tasks in bioimage analysis is the partitioning of images into meaningful segments for downstream quantification and statistical evaluation [17], [19], [26]. It is therefore no surprise that the bulk of literature on deep learning in many application areas of computer vision including bioimage analysis has focused on the potential for image segmentation [188], [190], [219], [220], [221], [222]. Similar to object detection, image segmentation can be cast as a classification problem, this time down to the pixel level rather than the object level, which indeed is the approach taken by many deep-learning based methods. In particular, fully convolutional neural networks (FCNs) [223] such as U-Net [224], SegNet [225], DeepLab [226], and variants [227] (Fig. 4C) have become immensely popular for image segmentation. Deep learning methods have also begun to feature prominently in recent international competitions in bioimage analysis, including on segmentation of EM brain images [228], cell nuclei in FM images [229], cells in a variety of time-lapse microscopy images [23], and glandular structures in microscopy images of histological slides [230]. No doubt the future will see more and more deep-learning based methods dominating the charts in such evaluation studies.
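Casting segmentation as pixel-level classification means the network outputs one score map per class, and training minimizes a classification loss averaged over pixels. The minimal sketch below assumes NumPy score arrays of shape (classes, height, width). It computes the softmax cross-entropy commonly used to train FCNs such as U-Net and turns the scores into a label map by taking the most probable class per pixel. The toy label map is hypothetical.

```python
import numpy as np

def pixelwise_cross_entropy(logits, labels):
    """Mean softmax cross-entropy over all pixels.

    logits: array of shape (C, H, W) with one score map per class.
    labels: integer array of shape (H, W) with values in [0, C).
    """
    z = logits - logits.max(axis=0, keepdims=True)          # numerical stability
    log_probs = z - np.log(np.exp(z).sum(axis=0, keepdims=True))
    h, w = labels.shape
    rows, cols = np.arange(h)[:, None], np.arange(w)[None, :]
    return -log_probs[labels, rows, cols].mean()             # log-prob of the true class per pixel

def segment(logits):
    """Hard segmentation: the most probable class at each pixel."""
    return logits.argmax(axis=0)

labels = np.array([[0, 0, 1],
                   [0, 1, 1]])
uninformative = np.zeros((2, 2, 3))                          # equal scores for both classes
confident = 10.0 * np.stack([labels == 0, labels == 1]).astype(float)

print(pixelwise_cross_entropy(uninformative, labels))        # log(2) ≈ 0.693
print(pixelwise_cross_entropy(confident, labels))            # close to 0
```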

4.4. Deep learning for object tracking

Characterizing real-life objects requires quantifying not only their spatial properties but also their temporal behavior. As advanced microscopes nowadays enable fully automated acquisition of time-lapse images of living cells and intracellular particles, this calls for robust computational methods capable of not only detecting and segmenting objects, but also tracking them over time in these images. Object tracking is generally considered to be “one of the most challenging computer vision problems” [231] and is a common task also in bioimage analysis [22], [232], [233], [234], [235]. The first international competition of particle tracking methods was held before deep learning broke through, but already led researchers to suggest the development of learning-based tracking methods [21]. Also, the continuing series of cell tracking challenges has seen the increasing use of deep learning methods for the problem [23]. As discussed in recent reviews, much of the work on deep learning for object tracking in bioimage analysis has focused on the spatial aspect of detection and segmentation [190], [193], while the temporal aspect of data association and linking is typically still solved using traditional computer vision methods. First studies have appeared using DNNs to address both [236], [237], as well as for subsequent trajectory analysis [198] (Fig. 4D), but the challenge remains to develop end-to-end deep-learning based cell and particle tracking methods [238].
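The data association step, which the text notes is still largely handled by traditional methods, can be sketched as frame-to-frame assignment. This minimal version assumes point detections per frame and a hypothetical maximum displacement `max_dist`, and it uses greedy nearest-neighbour matching. A globally optimal alternative would solve the same assignment with the Hungarian algorithm (for example `scipy.optimize.linear_sum_assignment`). Unmatched detections start new tracks. Track termination, gap closing, and division handling are deliberately omitted.

```python
import numpy as np

def link_frames(prev_pts, next_pts, max_dist=5.0):
    """Greedy one-to-one matching of detections in consecutive frames, closest pairs first."""
    d = np.linalg.norm(prev_pts[:, None, :] - next_pts[None, :, :], axis=-1)
    candidates = sorted((d[i, j], i, j)
                        for i in range(d.shape[0]) for j in range(d.shape[1])
                        if d[i, j] <= max_dist)
    used_prev, used_next, links = set(), set(), {}
    for _, i, j in candidates:
        if i not in used_prev and j not in used_next:
            links[i] = j
            used_prev.add(i)
            used_next.add(j)
    return links

def build_tracks(frames, max_dist=5.0):
    """Chain frame-to-frame links into tracks of (frame index, detection index) pairs."""
    tracks = [[(0, k)] for k in range(len(frames[0]))]
    track_of = {k: k for k in range(len(frames[0]))}   # detection in previous frame -> track id
    for t in range(1, len(frames)):
        links = link_frames(frames[t - 1], frames[t], max_dist)
        new_track_of = {}
        for i, j in links.items():
            tracks[track_of[i]].append((t, j))
            new_track_of[j] = track_of[i]
        for j in range(len(frames[t])):              # unmatched detections start new tracks
            if j not in new_track_of:
                new_track_of[j] = len(tracks)
                tracks.append([(t, j)])
        track_of = new_track_of
    return tracks

# Two hypothetical particles whose detection order changes between frames.
frames = [np.array([[0.0, 0.0], [10.0, 10.0]]),
          np.array([[11.0, 10.0], [1.0, 0.0]]),
          np.array([[2.0, 1.0], [12.0, 11.0]])]
print(build_tracks(frames))  # → [[(0, 0), (1, 1), (2, 0)], [(0, 1), (1, 0), (2, 1)]]
```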

4.5. Deep learning for object classification

The task of identifying images or objects therein as belonging to one of multiple predefined classes is a fundamental problem of computer vision in general [239], [240], [241] and a recurring theme also in bioimage analysis [242], [243], [244]. Traditionally, the problem has been addressed by extracting handcrafted image features and using these together with given class labels to train classifiers such as support vector machines (SVM) or random forests (RF) [25], [245], [246]. But the capacity of deep CNN-based classifiers to learn relevant image features autonomously gives them an edge over traditional approaches. Following their great success in the 2012 ImageNet challenge [51], CNN-based approaches have grown in popularity for image classification tasks across the board. In bioimage analysis, they have been shown to achieve expert-level performance in a wide range of cell classification and subcellular pattern recognition tasks [188], [189], [191] (Fig. 4E), although recent evaluations have revealed they do not necessarily outperform traditional approaches [244]. An issue in many studies is the lack of sufficient training data, which may be remedied by leveraging transfer learning [190] or crowd-sourcing strategies [247].
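The traditional pipeline described here, handcrafted features followed by a trained classifier, fits in a few lines. In the minimal sketch below, the two features (thresholded area and mean intensity), the threshold, and the synthetic "cells" are hypothetical. A nearest-centroid classifier is swapped in for the SVMs or random forests named in the text to keep the example dependency-free. A CNN would instead learn its features directly from the pixels.

```python
import numpy as np

def handcrafted_features(img, threshold=0.5):
    """Two hand-designed descriptors: foreground area and mean foreground intensity."""
    mask = img > threshold
    area = float(mask.sum())
    mean_intensity = float(img[mask].mean()) if area > 0 else 0.0
    return np.array([area, mean_intensity])

class NearestCentroid:
    """Assign each sample to the class whose (standardized) mean feature vector is closest."""
    def fit(self, F, labels):
        self.mu, self.sd = F.mean(axis=0), F.std(axis=0) + 1e-12
        Z = (F - self.mu) / self.sd
        self.classes = np.unique(labels)
        self.centroids = np.array([Z[labels == c].mean(axis=0) for c in self.classes])
        return self

    def predict(self, F):
        Z = (F - self.mu) / self.sd
        d = np.linalg.norm(Z[:, None, :] - self.centroids[None, :, :], axis=-1)
        return self.classes[d.argmin(axis=1)]

def synthetic_cell(rng, sigma, amplitude, size=32):
    """Hypothetical cell image: one Gaussian blob with additive noise."""
    yy, xx = np.mgrid[0:size, 0:size]
    cy, cx = rng.uniform(10, 22, size=2)
    img = amplitude * np.exp(-((yy - cy) ** 2 + (xx - cx) ** 2) / (2 * sigma ** 2))
    return img + rng.normal(0, 0.05, img.shape)

rng = np.random.default_rng(1)

def make_set(n):
    # Class 0: small bright cells; class 1: large dim cells (both hypothetical).
    imgs = ([synthetic_cell(rng, 2.0, 2.0) for _ in range(n)]
            + [synthetic_cell(rng, 6.0, 1.0) for _ in range(n)])
    return np.array([handcrafted_features(im) for im in imgs]), np.array([0] * n + [1] * n)

F_train, y_train = make_set(20)
F_test, y_test = make_set(10)
clf = NearestCentroid().fit(F_train, y_train)
accuracy = (clf.predict(F_test) == y_test).mean()
print(f"test accuracy: {accuracy:.2f}")
```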

5. Summary and outlook

Deep learning has had a long history of discoveries, inventions, expectations, disappointments, rejections, revivals, successes, declines, recoveries, and breakthroughs, but is now widely accepted as the most powerful computing paradigm for big data analysis. The impact of deep learning on our daily lives is already unlike any other technology in the history of computer science, yet it seems we have seen only the proverbial tip of the iceberg. In biomedical imaging, DNNs are beginning to outperform human experts in a growing number of visual interpretation tasks, which is fueling fierce debates among professionals on the future ramifications for the field. Zeroing in on biological imaging, we have reviewed the use of deep learning approaches for common tasks in bioimage analysis, where they are now increasingly favored over traditional computer vision methods. Notwithstanding impressive achievements reported to date, many scientific and engineering challenges remain to further improve deep learning. In closing this mini-review, we touch on several important developments addressing these challenges that are relevant to bioimage analysis (see Table 1 for a quick summary of key research topics with references to reviews and commentaries for further reading).

Table 1.

Overview of key reviews and commentaries for further reading on big research topics in deep learning (DL).

| Topic | References |
|---|---|
| Biological DL | Neuro-inspired AI [40]; Bio-inspired computer vision [248]; Integrating DL and neuroscience [249]; Biological vision and ANNs [250] |
| Optimal DL | User-friendly software platforms [56]; Neural architecture search [251]; AutoML in biomedical imaging [252] |
| Economical DL | Semi/weakly supervised learning [253]; Unsupervised learning strategies [254]; Transfer learning strategies [255] |
| Generalizable DL | Economical DL [256]; Open-set recognition [257]; Domain adaptation [255] |
| Multimodal DL | Multimodal learning models [258]; Data fusion strategies [259]; Omics applications [260] |
| Efficient DL | Parallelization and distribution [261]; Compression and acceleration [262]; Biomedical imaging applications [263] |
| Explainable DL | Interpretable AI approaches [264]; Visual analytics tools [265] |
| Responsible DL | On replacing radiologists [266]; On replacing physicians [267]; On replacing microscopists [268]; Biomedical students on AI [269] |

5.1. Biological deep learning

Biology has always been a great source of inspiration for technology. Recognizing the unparalleled capacity of the brain in processing information, researchers in computer vision have exploited models of human vision from very early on [248], [270], [271]. Similarly, the idea of developing ANNs for data analysis was born out of research into the workings of biological neural networks (BNNs) [40], [53], [249]. In both cases, however, the ties between computer science and neuroscience have not remained strong, perhaps because “we simply do not have enough information about the brain to use it as a guide” [53]. But as long as human experts continue to be the gold standard in critical vision-based decision-making tasks, it seems there is still much to be gained from renewed interactions between the fields [40], [248], [249], [250]. Bioimage analysis could play a pivotal role here, in a virtuous circle of helping to decipher BNNs at the microscopic level [272], [273], [274] and translating discoveries into improved ANNs for such studies [275], [276], [277].

5.2. Optimal deep learning

One of the key strengths of deep learning, and a major reason for its success, is that it automates the process of finding optimal feature descriptors for a given data analysis task. While this eliminates the cumbersome handcrafting of descriptors, it leaves the user with the responsibility to design the right DNN architecture and tune its hyperparameters to achieve satisfactory results. In practice, this may still require significant manual effort and can yield suboptimal results. Notwithstanding the great arsenal of software toolkits for deep learning available today [54], [55], [56], [57], there is still much room for the development of higher-level, user-friendly platforms that make it easier for non-experts, too, to adopt and use existing DNNs or to design and deploy their own solutions. The desire to further minimize human intervention in finding optimal solutions has given rise to the field of automated machine learning (AutoML). For deep learning, various neural architecture search (NAS) approaches have been proposed to automate the network engineering process [251], [278], [279]. The first successful applications have recently been reported in medical imaging [252], [280], [281], suggesting that NAS also holds great potential for bioimage analysis.
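To make the idea of automated architecture selection concrete, the following minimal Python sketch uses random search, one of the simplest baselines in the NAS literature, rather than any specific method cited above. The search space and the `evaluate` function are hypothetical: in a real NAS run, `evaluate` would train (or partially train) each candidate network and return its validation accuracy, whereas here it is a toy analytic score so that the example runs on its own.

```python
import random
from typing import Dict, Tuple

# Hypothetical search space for a convolutional network.
SEARCH_SPACE = {
    "depth": [2, 4, 6, 8],        # number of convolutional layers
    "width": [16, 32, 64, 128],   # channels per layer
    "kernel": [3, 5, 7],          # convolution kernel size
}


def sample_architecture(rng: random.Random) -> Dict[str, int]:
    """Draw one candidate architecture uniformly at random."""
    return {name: rng.choice(options) for name, options in SEARCH_SPACE.items()}


def evaluate(arch: Dict[str, int]) -> float:
    """Toy stand-in for 'train the network and return validation accuracy'.
    Hypothetical score in [0, 1] that peaks at depth 6, width 64, kernel 3."""
    penalty = (abs(arch["depth"] - 6) / 6
               + abs(arch["width"] - 64) / 128
               + abs(arch["kernel"] - 3) / 4) / 3
    return 1.0 - penalty


def random_search(n_trials: int, seed: int = 0) -> Tuple[Dict[str, int], float]:
    """Propose, evaluate, and keep the best candidate seen so far."""
    rng = random.Random(seed)
    best_arch, best_score = None, float("-inf")
    for _ in range(n_trials):
        arch = sample_architecture(rng)
        score = evaluate(arch)
        if score > best_score:
            best_arch, best_score = arch, score
    return best_arch, best_score


if __name__ == "__main__":
    arch, score = random_search(n_trials=50)
    print(arch, round(score, 3))
```

More sophisticated strategies, such as evolutionary or reinforcement-learning-based search, replace `sample_architecture` with a smarter proposal mechanism, while the basic loop of proposing, evaluating, and keeping the best candidate remains.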

5.3. Economical deep learning

The most common form of deep learning is supervised learning, which requires input data with corresponding labeled output data. Especially in biomedical imaging applications, the output labels are typically obtained by expert manual annotation of the input data. However, as deep learning methods are notoriously data hungry, preparing a training data set this way can be extremely burdensome. Humans themselves largely learn in an unsupervised fashion, as they “discover the structure of the world by observing it, not by being told the name of every object” [35]. More economical deep learning approaches, requiring less human input and/or less training data, are very much needed. Semi-supervised, weakly supervised, and unsupervised learning are important research topics [253], [254], [282] that are also receiving increasing attention in biomedical imaging [283], [284], [285]. An alternative approach is transfer learning between domains [255], [278], [286], which holds great promise for biomedical imaging as well [287], [288], [289], [290]. Another strategy popular in bioimage analysis is to use high-fidelity simulated data as a surrogate for real data [256], [291], [292], which allows supervised learning with any number of images without requiring manual annotation [293], [294], [295].
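One widely used semi-supervised strategy is self-training with pseudo-labels: a model trained on the few annotated images predicts classes for the unannotated ones, and only its most confident predictions are added to the training set as if they were expert labels. The minimal Python sketch below shows just that selection step. The input format (a mapping from hypothetical image identifiers to predicted class probabilities) and the 0.9 confidence threshold are assumptions for illustration, not taken from the cited works.

```python
from typing import Dict, List, Tuple


def select_pseudo_labels(
    probabilities: Dict[str, List[float]],
    threshold: float = 0.9,
) -> List[Tuple[str, int]]:
    """Return (image_id, predicted_class) for unlabeled images whose
    highest class probability reaches the confidence threshold."""
    selected = []
    for image_id, probs in probabilities.items():
        # Index of the most probable class for this image.
        best_class = max(range(len(probs)), key=probs.__getitem__)
        if probs[best_class] >= threshold:
            selected.append((image_id, best_class))
    return selected


if __name__ == "__main__":
    predictions = {"img_a": [0.95, 0.05], "img_b": [0.6, 0.4], "img_c": [0.02, 0.98]}
    print(select_pseudo_labels(predictions))
```

In a full self-training loop, the selected pairs would be merged with the annotated set, the model retrained, and the selection repeated; the threshold trades the number of pseudo-labels against their reliability.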

5.4. Generalizable deep learning

In experimental evaluations of deep learning methods, visual recognition tasks are typically framed as “closed set” problems, in which the possible conditions in the test set are exactly the same as those in the training set. But in many applications, including biomedical imaging, this assumption often does not hold. A more realistic scenario is that “incomplete knowledge of the world is present at training time, and unknown classes can be submitted to an algorithm during testing” [296]. This implies that current claims of machines outperforming humans must be taken with a grain of salt, and that more generalizable, “open set” approaches to developing and evaluating deep learning methods are needed. Open-set recognition (OSR) has been studied in the AI literature for some time [257], [296], [297] but has thus far received very little attention in biomedical imaging.
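A simple baseline that illustrates the difference between closed-set and open-set behavior is to reject a test sample as “unknown” whenever the classifier's maximum softmax probability falls below a threshold. The Python sketch below implements that baseline on hypothetical network outputs (logits); it is not one of the OSR methods cited above, which are more principled, and the default threshold of 0.7 is an arbitrary assumption that would in practice be tuned on validation data.

```python
import math
from typing import List, Optional


def softmax(logits: List[float]) -> List[float]:
    """Convert raw network outputs into class probabilities."""
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def open_set_predict(logits: List[float], threshold: float = 0.7) -> Optional[int]:
    """Return the index of the predicted known class, or None ('unknown')
    when the classifier is not confident enough in any known class."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return best if probs[best] >= threshold else None


if __name__ == "__main__":
    print(open_set_predict([5.0, 0.0, 0.0]))  # confident: a known class
    print(open_set_predict([1.0, 1.0, 1.0]))  # ambiguous: rejected as unknown
```

A purely closed-set classifier would always return one of the known classes, however unfamiliar the input; the threshold gives it a way to answer “none of the above”.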

5.5. Multimodal deep learning

Nowadays, biomedical studies are hardly ever based on data from one imaging modality alone. Multiple, complementary imaging modalities are often used to obtain a more complete picture of the subject or sample under study. An example in bioimaging is the correlative recording of structural and functional image data, using electron and fluorescence microscopy, respectively [298], [299], [300]. But it does not stop there. Experiments typically also involve collecting genomic, proteomic, metabolomic, or other “omic” information [260], [301], [302], and in clinical studies additional data may come from electronic patient records. To take full advantage of all available information in such studies, powerful multimodal deep learning methods are required. This has been well recognized in various other fields [258], [259], [303] but deserves more attention in bioimaging and calls for an integrative approach to bioimage analysis and bioinformatics.
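One of the simplest multimodal strategies is late fusion, in which a separate model is trained for each modality and their class probabilities are combined afterwards. The Python sketch below combines per-modality predictions by a normalized weighted average. The modality names (e.g. electron and fluorescence microscopy) and the weights are hypothetical, and more powerful approaches typically fuse learned features earlier in the network.

```python
from typing import Dict, List


def late_fusion(
    modality_probs: Dict[str, List[float]],
    weights: Dict[str, float],
) -> List[float]:
    """Combine per-modality class probabilities by a weighted average,
    with weights normalized to sum to one over the given modalities."""
    total_weight = sum(weights[m] for m in modality_probs)
    n_classes = len(next(iter(modality_probs.values())))
    fused = [0.0] * n_classes
    for modality, probs in modality_probs.items():
        if len(probs) != n_classes:
            raise ValueError(f"{modality}: expected {n_classes} classes, got {len(probs)}")
        w = weights[modality] / total_weight
        for k, p in enumerate(probs):
            fused[k] += w * p
    return fused


if __name__ == "__main__":
    predictions = {"em": [0.8, 0.2], "fluo": [0.4, 0.6]}
    print(late_fusion(predictions, {"em": 1.0, "fluo": 3.0}))
```

Weighting lets a more reliable modality dominate the decision, while the normalization keeps the fused output a valid probability distribution.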

5.6. Efficient deep learning

The ever-growing volume of biomedical data sets and the increasing complexity of DNNs for improved analysis place proportionally higher demands on computing power. Training a deep network to achieve super-human performance, particularly in highly specialized domains such as biomedical imaging, essentially requires super-computing technology. To some extent this is provided by modern multicore GPUs and, more recently, tensor processing units (TPUs), which enable single-machine parallelization. But greater efficiency is often needed to complete network training within a desired time frame. Codesigning architectural, algorithmic, software, and hardware solutions to allow multi-machine parallelism and scalable distributed deep learning for this purpose is an ongoing engineering challenge [261], [262], [304]. Biomedical imaging at large will greatly benefit from such solutions, as they also facilitate exploiting data from multiple institutes in training DNNs without actually having to share the data, thus mitigating legal or ethical concerns [263], [305], [306].
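Training on data from multiple institutes without sharing the data itself is the goal of federated learning. The Python sketch below shows the aggregation step of federated averaging (FedAvg), one common federated scheme: each institute trains locally and reports only its model parameters and sample count, and a central server averages the parameters, weighted by sample count. Representing a model as a flat list of floats is a simplifying assumption, and local training and secure communication are omitted.

```python
from typing import List, Tuple


def federated_average(site_updates: List[Tuple[List[float], int]]) -> List[float]:
    """Aggregate locally trained parameter vectors into a global model.

    Each entry is (parameters, n_samples) from one institute; sites with
    more training samples get proportionally more weight. Only parameters,
    never images, leave the institutes."""
    total_samples = sum(n for _, n in site_updates)
    if total_samples == 0:
        raise ValueError("no training samples reported")
    n_params = len(site_updates[0][0])
    global_params = [0.0] * n_params
    for params, n_samples in site_updates:
        weight = n_samples / total_samples
        for i, value in enumerate(params):
            global_params[i] += weight * value
    return global_params


if __name__ == "__main__":
    # Two hypothetical institutes with 100 and 300 training images.
    print(federated_average([([1.0, 2.0], 100), ([3.0, 4.0], 300)]))
```

In a full federated training run, the averaged parameters would be sent back to the institutes as the starting point for the next round of local training.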

5.7. Explainable deep learning

A major point of criticism, for which even the pioneers of deep learning had their early papers rejected by peers in computer science, is that the use of neural networks for any given perceptual task provides “no insight into how to design a vision system” [51]. Even today, many in the community still prefer carefully hand-designed solutions based on a solid understanding of the nature of the task. But the reality is that “methods that require careful hand-engineering by a programmer who understands the domain do not scale as well as methods that replace the programmer with a powerful general-purpose learning procedure” [51]. Nevertheless, the call for more explainability and interpretability of deep learning methods is legitimate and is receiving growing attention in many areas of AI research [307], [308], [264], including computer vision [309], [310], [311]. A host of visual analytics tools have been developed to dissect DNNs and uncover what they have actually learned [265], [312], [313]. Such tools have not yet found widespread application in bioimage analysis but could help practitioners better understand the predictions made by network models.
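Among the simplest of such tools is occlusion sensitivity analysis: parts of the input image are masked in turn, and the resulting drop in the network's output score indicates which regions the prediction depends on. The pure-Python sketch below assumes a hypothetical `score` function standing in for a trained network's class score, uses non-overlapping square patches, and fills occluded pixels with zero; all three choices are assumptions for illustration.

```python
from typing import Callable, List

Image = List[List[float]]


def occlusion_map(
    image: Image,
    score: Callable[[Image], float],
    patch: int = 2,
    fill: float = 0.0,
) -> Image:
    """For each non-overlapping patch, record how much the model score drops
    when that patch is replaced by `fill`. Large values mark regions the
    prediction depends on. The input image is not modified."""
    h, w = len(image), len(image[0])
    base = score(image)
    heat = [[0.0] * w for _ in range(h)]
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            occluded = [row[:] for row in image]  # copy before masking
            for y in range(top, min(top + patch, h)):
                for x in range(left, min(left + patch, w)):
                    occluded[y][x] = fill
            drop = base - score(occluded)
            for y in range(top, min(top + patch, h)):
                for x in range(left, min(left + patch, w)):
                    heat[y][x] = drop
    return heat


if __name__ == "__main__":
    # Hypothetical 'network' that only looks at the top-left 2x2 region.
    def toy_score(img: Image) -> float:
        return sum(img[y][x] for y in range(2) for x in range(2))

    for row in occlusion_map([[1.0] * 4 for _ in range(4)], toy_score):
        print(row)
```

With a real network, the resulting heat map would be overlaid on the microscopy image; smaller patches give finer maps at the cost of more forward passes through the network.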

5.8. Responsible deep learning

Ultimately, the goal of developing computational image analysis methods for the biomedical domain, from fundamental biological imaging to clinical medical imaging, is to improve the efficacy of healthcare. But in order for biomedical professionals to be willing to transfer their responsibilities to machines, and for those whose health depends on their care to accept such a transition, these methods need to be sufficiently trustworthy. In this regard, it seems we have not quite reached the tipping point. In the past few years, the question of whether or when AI will replace human experts has been pondered in many areas of biomedical imaging [132], [268], [267], [269], [266], [314], [315]. It goes without saying that decision making in biomedicine is more critical and risk-averse than in most other technological domains. Much work remains to be done to bring deep learning to the level of transparency, adaptability, creativity, empathy, and responsibility normally required of biomedical specialists. That said, as deep learning methods are already achieving human-competitive performance in specific subtasks and have only just begun showing their considerable potential, DNNs will increasingly play an integral role in biomedical procedures. Historically, “human-machine collaborations have performed better than either one alone” [266], and there are no compelling reasons to believe this will ever change.

CRediT authorship contribution statement

Erik Meijering: Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Resources, Visualization.

Declaration of Competing Interest

The author declares he has no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgments

The authors of the works cited in Fig. 4 are gratefully acknowledged. Examples shown in the figure were adapted from these works. Figs. 4A and 4B are © Elsevier, and Figs. 4C, 4D, and 4E are licensed under the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/).

Footnotes

Supplementary data to this article can be found online at https://doi.org/10.1016/j.csbj.2020.08.003.

Appendix A. Supplementary data


References

- 1. Tolles W.E. The Cytoanalyzer – an example of physics in medical research. Trans New York Acad Sci. 1955;17:250–256. doi: 10.1111/j.2164-0947.1955.tb01204.x.

- 2. Preston K. Machine techniques for automatic leukocyte pattern analysis. Ann N Y Acad Sci. 1962;97:482–490. doi: 10.1111/j.1749-6632.1962.tb34658.x.

- 3. Ledley R.S. High-speed automatic analysis of biomedical pictures. Science. 1964;146:216–223. doi: 10.1126/science.146.3641.216.

- 4. Murphy R.F., Meijering E., Danuser G. Special issue on molecular and cellular bioimaging. IEEE Trans Image Processing. 2005;14:1233–1236. doi: 10.1109/TIP.2005.855701.

- 5. Danuser G. Computer vision in cell biology. Cell. 2011;147:973–978. doi: 10.1016/j.cell.2011.11.001.

- 6. Meijering E., Carpenter A.E., Peng H., Hamprecht F.A., Olivo-Marin J.C. Imagining the future of bioimage analysis. Nat Biotechnol. 2016;34:1250–1255. doi: 10.1038/nbt.3722.

- 7. Prewitt J.M.S., Mendelsohn M.L. The analysis of cell images. Ann N Y Acad Sci. 1966;128:1035–1053. doi: 10.1111/j.1749-6632.1965.tb11715.x.

- 8. Lipkin L.E., Watt W.C., Kirsch R.A. The analysis, synthesis, and description of biological images. Ann N Y Acad Sci. 1966;128:984–1012. doi: 10.1111/j.1749-6632.1965.tb11712.x.

- 9. Ingram M., Preston K. Automatic analysis of blood cells. Scientific American. 1970;223:72–82. doi: 10.1038/scientificamerican1170-72.

- 10. Sarder P., Nehorai A. Deconvolution methods for 3-D fluorescence microscopy images. IEEE Signal Processing Magazine. 2006;23:32–45. doi: 10.1109/MSP.2006.1628876.

- 11. Roels J., Aelterman J., Luong H.Q., Lippens S., Pižurica A., Saeys Y. An overview of state-of-the-art image restoration in electron microscopy. J Microsc. 2018;271:239–254. doi: 10.1111/jmi.12716.

- 12. Meiniel W., Olivo-Marin J.C., Angelini E.D. Denoising of microscopy images: a review of the state-of-the-art, and a new sparsity-based method. IEEE Trans Image Processing. 2018;27:3842–3856. doi: 10.1109/TIP.2018.2819821.

- 13. Tsai C.L., Lister J.P., Bjornsson C.S., Smith K., Shain W., Barnes C.A. Robust, globally consistent and fully automatic multi-image registration and montage synthesis for 3-D multi-channel images. J Microsc. 2011;243:154–171. doi: 10.1111/j.1365-2818.2011.03489.x.

- 14. Lucotte B., Balaban R.S. Motion compensation for in vivo subcellular optical microscopy. J Microsc. 2014;254:9–12. doi: 10.1111/jmi.12116.

- 15. Qu L., Long F., Peng H. 3-D registration of biological images and models: registration of microscopic images and its uses in segmentation and annotation. IEEE Signal Processing Magazine. 2015;32:70–77. doi: 10.1109/MSP.2014.2354060.

- 16. Štěpka K., Matula P., Matula P., Wörz S., Rohr K., Kozubek M. Performance and sensitivity evaluation of 3D spot detection methods in confocal microscopy. Cytometry Part A. 2015;87:759–772. doi: 10.1002/cyto.a.22692.

- 17. Xing F., Yang L. Robust nucleus/cell detection and segmentation in digital pathology and microscopy images: a comprehensive review. IEEE Rev Biomed Eng. 2016;9:234–263. doi: 10.1109/RBME.2016.2515127.

- 18. Mabaso M.A., Withey D.J., Twala B. Spot detection methods in fluorescence microscopy imaging: a review. Image Anal Stereol. 2018;37:173–190. doi: 10.5566/ias.1690.

- 19. Meijering E. Cell segmentation: 50 years down the road. IEEE Signal Process Mag. 2012;29:140–145. doi: 10.1109/MSP.2012.2204190.

- 20. Beneš M., Zitová B. Performance evaluation of image segmentation algorithms on microscopic image data. J Microsc. 2014;257:65–85. doi: 10.1111/jmi.12186.

- 21. Chenouard N., Smal I., de Chaumont F., Maška M., Sbalzarini I.F., Gong Y., Cardinale J., Carthel C., Coraluppi S., Winter M., Cohen A.R., Godinez W.J., Rohr K., Kalaidzidis Y., Liang L., Duncan J., Shen H., Xu Y., Magnusson K.E.G., Jaldén J., Blau H.M., Paul-Gilloteaux P., Roudot P., Kervrann C., Waharte F., Tinevez J.Y., Shorte S.L., Willemse J., Celler K., van Wezel G.P., Dan H.W., Tsai Y.S., Ortiz de Solórzano C., Olivo-Marin J.C., Meijering E. Objective comparison of particle tracking methods. Nat Methods. 2014;11:281–289. doi: 10.1038/nmeth.2808.

- 22. Manzo C., Garcia-Parajo M.F. A review of progress in single particle tracking: from methods to biophysical insights. Rep Progress Phys. 2015;78. doi: 10.1088/0034-4885/78/12/124601.

- 23. Ulman V., Maška M., Magnusson K.E.G., Ronneberger O., Haubold C., Harder N., Matula P., Matula P., Svoboda D., Radojevic M., Smal I., Rohr K., Jaldén J., Blau H.M., Dzyubachyk O., Lelieveldt B., Xiao P., Li Y., Cho S.Y., Dufour A.C., Olivo-Marin J.C., Reyes-Aldasoro C.C., Solis-Lemus J.A., Bensch R., Brox T., Stegmaier J., Mikut R., Wolf S., Hamprecht F.A., Esteves T., Quelhas P., Demirel O., Malmström L., Jug F., Tomancak P., Meijering E., Muñoz-Barrutia A., Kozubek M., Ortiz-de Solorzano C. An objective comparison of cell-tracking algorithms. Nat Methods. 2017;14:1141–1152. doi: 10.1038/nmeth.4473.

- 24. Orlov N., Shamir L., Macura T., Johnston J., Eckley D.M., Goldberg I.G. WND-CHARM: multi-purpose image classification using compound image transforms. Pattern Recogn Lett. 2008;29:1684–1693. doi: 10.1016/j.patrec.2008.04.013.

- 25. Shamir L., Delaney J.D., Orlov N., Eckley D.M., Goldberg I.G. Pattern recognition software and techniques for biological image analysis. PLoS Comput Biol. 2010;6. doi: 10.1371/journal.pcbi.1000974.

- 26. Irshad H., Veillard A., Roux L., Racoceanu D. Methods for nuclei detection, segmentation, and classification in digital histopathology: a review – current status and future potential. IEEE Rev Biomed Eng. 2014;7:97–114. doi: 10.1109/RBME.2013.2295804.

- 27. Hamilton N. Quantification and its applications in fluorescent microscopy imaging. Traffic. 2009;10:951–961. doi: 10.1111/j.1600-0854.2009.00938.x.

- 28. Eliceiri K.W., Berthold M.R., Goldberg I.G., Ibáñez L., Manjunath B.S., Martone M.E., Murphy R.F., Peng H., Plant A.L., Roysam B., Stuurman N., Swedlow J.R., Tomancak P., Carpenter A.E. Biological imaging software tools. Nat Methods. 2012;9:697–710. doi: 10.1038/nmeth.2084.

- 29. Skylaki S., Hilsenbeck O., Schroeder T. Challenges in long-term imaging and quantification of single-cell dynamics. Nat Biotechnol. 2016;34:1137–1144. doi: 10.1038/nbt.3713.

- 30. Walter T., Shattuck D.W., Baldock R., Bastin M.E., Carpenter A.E., Duce S. Visualization of image data from cells to organisms. Nat Methods. 2010;7:S26–S41. doi: 10.1038/nmeth.1431.

- 31. Long F., Zhou J., Peng H. Visualization and analysis of 3D microscopic images. PLoS Comput Biol. 2012;8. doi: 10.1371/journal.pcbi.1002519.

- 32. Sailem H.Z., Cooper S., Bakal C. Visualizing quantitative microscopy data: history and challenges. Crit Rev Biochem Mol Biol. 2016;51:96–101. doi: 10.3109/10409238.2016.1146222.

- 33. Bengio Y. Learning deep architectures for AI. Foundations Trends Machine Learning. 2009;2:1–127. doi: 10.1561/2200000006.

- 34. Arel I., Rose D.C., Karnowski T.P. Deep machine learning – a new frontier in artificial intelligence research. IEEE Computational Intelligence Magazine. 2010;5:13–18. doi: 10.1109/MCI.2010.938364.

- 35. LeCun Y., Bengio Y., Hinton G. Deep learning. Nature. 2015;521:436–444. doi: 10.1038/nature14539.

- 36. Serre T. Deep learning: the good, the bad, and the ugly. Ann Rev Vision Sci. 2019;5:399–426. doi: 10.1146/annurev-vision-091718-014951.

- 37. Marx V. Machine learning, practically speaking. Nat Methods. 2019;16:463–467. doi: 10.1038/s41592-019-0432-9.

- 38. Baltrusaitis T., Ahuja C., Morency L.P. Multimodal machine learning: a survey and taxonomy. IEEE Trans Pattern Anal Mach Intell. 2019;41:423–443. doi: 10.1109/TPAMI.2018.2798607.

- 39. Tecuci G. Artificial intelligence. Wiley Interdisciplinary Reviews: Computational Statistics. 2012;4:168–180. doi: 10.1002/wics.200.

- 40. Hassabis D., Kumaran D., Summerfield C., Botvinick M. Neuroscience-inspired artificial intelligence. Neuron. 2017;95:245–258. doi: 10.1016/j.neuron.2017.06.011.

- 41. Hernández-Orallo J. Evaluation in artificial intelligence: from task-oriented to ability-oriented measurement. Artificial Intelligence Rev. 2017;48:397–447.

- 42. Schmidhuber J. Deep learning in neural networks: an overview. Neural Networks. 2015;61:85–117. doi: 10.1016/j.neunet.2014.09.003.

- 43. McCulloch W.S., Pitts W. A logical calculus of the ideas immanent in nervous activity. Bull Mathematical Biophys. 1943;5:115–133. doi: 10.1007/BF02478259.

- 44. Rosenblatt F. The perceptron: a probabilistic model for information storage and organization in the brain. Psychol Rev. 1958;65:386–408. doi: 10.1037/h0042519.

- 45. Minsky M.L., Papert S.A. Perceptrons: An Introduction to Computational Geometry. The MIT Press; Cambridge, MA, USA: 1969.

- 46. Rumelhart D.E., Hinton G.E., Williams R.J. Learning representations by back-propagating errors. Nature. 1986;323:533–536. doi: 10.1038/323533a0.

- 47. Hochreiter S., Schmidhuber J. Long short-term memory. Neural Computation. 1997;9:1735–1780. doi: 10.1162/neco.1997.9.8.1735.

- 48. LeCun Y., Bottou L., Bengio Y., Haffner P. Gradient-based learning applied to document recognition. Proc IEEE. 1998;86:2278–2324. doi: 10.1109/5.726791.

- 49. Hinton G.E., Osindero S., Teh Y.W. A fast learning algorithm for deep belief nets. Neural Comput. 2006;18:1527–1554. doi: 10.1162/neco.2006.18.7.1527.

- 50. Hinton G.E., Salakhutdinov R.R. Reducing the dimensionality of data with neural networks. Science. 2006;313:504–507. doi: 10.1126/science.1127647.

- 51. Krizhevsky A., Sutskever I., Hinton G.E. ImageNet classification with deep convolutional neural networks. Commun ACM. 2017;60:84–90. doi: 10.1145/3065386.

- 52. Savage N. Neural net worth. Commun ACM. 2019;62:10–12. doi: 10.1145/3323872.

- 53. Goodfellow I., Bengio Y., Courville A. Deep Learning. The MIT Press; Cambridge, MA, USA: 2016. https://www.deeplearningbook.org/.

- 54. Erickson B.J., Korfiatis P., Akkus Z., Kline T., Philbrick K. Toolkits and libraries for deep learning. J Digital Imaging. 2017;30:400–405. doi: 10.1007/s10278-017-9965-6.

- 55. Pouyanfar S., Sadiq S., Yan Y., Tian H., Tao Y., Reyes M.P. A survey on deep learning: algorithms, techniques, and applications. ACM Comput Surv. 2018;51:92. doi: 10.1145/3234150.

- 56. Nguyen G., Dlugolinsky S., Bobák M., Tran V., García A.L., Heredia I., Malík P., Hluchý L. Machine learning and deep learning frameworks and libraries for large-scale data mining: a survey. Artificial Intelligence Rev. 2019;52:77–124. doi: 10.1007/s10462-018-09679-z.

- 57. Shrestha A., Mahmood A. Review of deep learning algorithms and architectures. IEEE Access. 2019;7:53040–53065. doi: 10.1109/ACCESS.2019.2912200.

- 58. Sejnowski T.J. The Deep Learning Revolution. The MIT Press; Cambridge, MA, USA: 2018. https://www.amazon.com/dp/026203803X.

- 59. Williams M.A. The artificial intelligence race: will Australia lead or lose? J Proc Royal Soc New South Wales. 2019;152:105–114. https://royalsoc.org.au/council-members-section/435-v152-11007/s10462-016-9505-7.

- 60. Kamilaris A., Prenafeta-Boldú F.X. Deep learning in agriculture: a survey. Computers and Electronics in Agriculture. 2018;147:70–90. doi: 10.1016/j.compag.

- 61. Min S., Lee B., Yoon S. Deep learning in bioinformatics. Briefings in Bioinformatics. 2017;18:851–869. doi: 10.1093/bib/bbw068.

- 62. Sundararajan K., Woodard D.L. Deep learning for biometrics: a survey. ACM Computing Surveys. 2018;51:65. doi: 10.1145/3190618.

- 63. Angermueller C., Pärnamaa T., Parts L., Stegle O. Deep learning for computational biology. Molecular Syst Biol. 2016;12:878. doi: 10.15252/msb.20156651.

- 64. Zhang S., Yao L., Sun A., Tay Y. Deep learning based recommender system: a survey and new perspectives. ACM Comput Surv. 2019;52:5. doi: 10.1145/3285029.

- 65. Berman D.S., Buczak A.L., Chavis J.S., Corbett C.L. A survey of deep learning methods for cyber security. Information. 2019;10:122. doi: 10.3390/info10040122.

- 66. Hwang J.J., Jung Y.H., Cho B.H., Heo M.S. An overview of deep learning in the field of dentistry. Imaging Sci Dentistry. 2019;49:1–7. doi: 10.5624/isd.2019.49.1.1.

- 67. Gawehn E., Hiss J.A., Schneider G. Deep learning in drug discovery. Molecular Informatics. 2016;35:3–14. doi: 10.1002/minf.201501008.

- 68. Hernández-Blanco A., Herrera-Flores B., Tomás D., Navarro-Colorado B. A systematic review of deep learning approaches to educational data mining. Complexity. 2019;2019:1306039. doi: 10.1155/2019/1306039.

- 69. Guo G., Zhang N. A survey on deep learning based face recognition. Comp Vision Image Understanding. 2019;189. doi: 10.1016/j.cviu.2019.102805.

- 70. Justesen N., Bontrager P., Togelius J., Risi S. Deep learning for video game playing. IEEE Trans Games. 2020;12:1–20. doi: 10.1109/TG.2019.2896986.

- 71. Ravi D., Wong C., Deligianni F., Berthelot M., Andreu-Perez J., Lo B. Deep learning for health informatics. IEEE J Biomed Health Inf. 2017;21:4–21. doi: 10.1109/JBHI.2016.2636665.

- 72. Abdughani M., Ren J., Wu L., Yang J.M., Zhao J. Supervised deep learning in high energy phenomenology: a mini review. Commun Theor Phys. 2019;71:955. doi: 10.1088/0253-6102/71/8/955.

- 73. Shen C. A transdisciplinary review of deep learning research and its relevance for water resources scientists. Water Resources Res. 2018;54:8558–8593. doi: 10.1029/2018WR022643.

- 74. Eraslan G., Avsec Z., Gagneur J., Theis F.J. Deep learning: new computational modelling techniques for genomics. Nat Rev Genetics. 2019;20:389–403. doi: 10.1038/s41576-019-0122-6.

- 75. Monroe D. Deep learning takes on translation. Commun ACM. 2017;60:12–14. doi: 10.1145/3077229.

- 76. Ota K., Dao M.S., Mezaris V., De Natale F.G.B. Deep learning for mobile multimedia: a survey. ACM Trans Multimedia Comput Commun Appl. 2017;13:34. doi: 10.1145/3092831.

- 77. Zhang C., Patras P., Haddadi H. Deep learning in mobile and wireless networking: a survey. IEEE Commun Surveys Tutorials. 2019;21:2224–2287. doi: 10.1109/COMST.2019.2904897.

- 78. Zhang W., Yao T., Zhu S. Deep learning-based multimedia analytics: a review. ACM Trans Multimedia Comp Commun Appl. 2019;15:2. doi: 10.1145/3279952.

- 79. Sacha G.M., Varona P. Artificial intelligence in nanotechnology. Nanotechnology. 2013;24. doi: 10.1088/0957-4484/24/45/452002.

- 80. Young T., Hazarika D., Poria S., Cambria E. Recent trends in deep learning based natural language processing. IEEE Comput Intelligence Magazine. 2018;13:55–75. doi: 10.1109/MCI.2018.2840738.

- 81. Parekh V.S., Jacobs M.A. Deep learning and radiomics in precision medicine. Expert Rev Precision Med Drug Devel. 2019;4:59–72. doi: 10.1080/23808993.2019.1585805.

- 82. Zhu X.X., Tuia D., Mou L., Xia G.S., Zhang L., Xu F. Deep learning in remote sensing: a comprehensive review and list of resources. IEEE Geosci Remote Sens Mag. 2017;5:8–36. doi: 10.1109/MGRS.2017.2762307.

- 83. Wang H., Lei Z., Zhang X., Zhou B., Peng J. A review of deep learning for renewable energy forecasting. Energy Conversion Manag. 2019;198. doi: 10.1016/j.enconman.2019.111799.

- 84. Pierson H.A., Gashler M.S. Deep learning in robotics: a review of recent research. Adv Robotics. 2017;31:821–835. doi: 10.1080/01691864.2017.1365009.

- 85. Wang J., Ma Y., Zhang L., Gao R.X., Wu D. Deep learning for smart manufacturing: methods and applications. J Manuf Syst. 2018;48:144–156. doi: 10.1016/j.jmsy.2018.01.003.

- 86. Ling Z.H., Kang S.Y., Zen H., Senior A., Schuster M., Qian X.J. Deep learning for acoustic modeling in parametric speech generation: a systematic review of existing techniques and future trends. IEEE Signal Processing Magazine. 2015;32:35–52. doi: 10.1109/MSP.2014.2359987.

- 87. Sreenu G., Durai M.A.S. Intelligent video surveillance: a review through deep learning techniques for crowd analysis. J Big Data. 2019;6:48. doi: 10.1186/s40537-019-0212-5.

- 88. Nguyen H., Kieu L.M., Wen T., Cai C. Deep learning methods in transportation domain: a review. IET Intelligent Trans Syst. 2018;12:998–1004. doi: 10.1049/iet-its.2018.0064.

- 89. Liu D., Li Y., Lin J., Li H., Wu F. Deep learning-based video coding: a review and a case study. ACM Comput Surv. 2020;53:11. doi: 10.1145/3368405.

- 90. Khamparia A., Singh K.M. A systematic review on deep learning architectures and applications. Expert Syst. 2019;36. doi: 10.1111/exsy.12400.

- 91.Robb R.A. Biomedical imaging: past, present and predictions. Med Imaging Tech. 2006;24:25–37. doi: 10.11409/mit.24.25. [DOI] [Google Scholar]

- 92.Wallyn J., Anton N., Akram S., Vandamme T.F. Biomedical imaging: principles, technologies, clinical aspects, contrast agents, limitations and future trends in nanomedicines. Pharmaceutical Res. 2019;36:78. doi: 10.1007/s11095-019-2608-5. [DOI] [PubMed] [Google Scholar]

- 93.Weissleder R., Nahrendorf M. Advancing biomedical imaging. Proc National Acad Sci United States of America. 2015;112:14424–14428. doi: 10.1073/pnas.1508524112. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 94.Greenspan H., van Ginneken B., Summers R.M. Deep learning in medical imaging: overview and future promise of an exciting new technique. IEEE Trans Medical Imaging. 2016;35:1153–1159. doi: 10.1109/TMI.2016.2553401. [DOI] [Google Scholar]

- 95.Litjens G., Kooi T., Bejnordi B.E., Setio A.A.A., Ciompi F., Ghafoorian M., van der Laak J.A.W.M., van Ginneken B., Sánchez C.I. A survey on deep learning in medical image analysis. Med Image Anal. 2017;42:60–88. doi: 10.1016/j.media.2017.07.005. [DOI] [PubMed] [Google Scholar]

- 96.Shen D., Wu G., Suk H.I. Deep learning in medical image analysis. Ann Rev Biomed Eng. 2017;19:221–248. doi: 10.1146/annurev-bioeng-071516-044442. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 97.Suzuki K. Overview of deep learning in medical imaging. Radiol Phys Tech. 2017;10:257–273. doi: 10.1007/s12194-017-0406-5. [DOI] [PubMed] [Google Scholar]

- 98.Lee J.G., Jun S., Cho Y.W., Lee H., Kim G.B., Seo J.B. Deep learning in medical imaging: general overview. Korean J Radiol. 2017;18:570–584. doi: 10.3348/kjr.2017.18.4.570. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 99.Ker J., Wang L., Rao J., Lim T. Deep learning applications in medical image analysis. IEEE Access. 2018;6:9375–9389. doi: 10.1109/ACCESS.2017.2788044. [DOI] [Google Scholar]

- 100.Biswas M., Kuppili V., Saba L., Edla D.R., Suri H.S., Cuadrado-Godia E., Laird J.R., Marinhoe R.T., Sanches J.M., Nicolaides A., Suri J.S. State-of-the-art review on deep learning in medical imaging. Front Biosci. 2019;24:392–426. doi: 10.2741/4725. [DOI] [PubMed] [Google Scholar]

- 101.Kaji S., Kida S. Overview of image-to-image translation by use of deep neural networks: denoising, super-resolution, modality conversion, and reconstruction in medical imaging. Radiol Phys Tech. 2019;12:235–248. doi: 10.1007/s12194-019-00520-y. [DOI] [PubMed] [Google Scholar]

- 102.Chan H.P., Samala R.K., Hadjiiski L.M., Zhou C. Deep learning in medical image analysis. Adv Exp Med Biol. 2020;1213:3–21. doi: 10.1007/978-3-030-33128-3_1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 103.Brattain L.J., Telfer B.A., Dhyani M., Grajo J.R., Samir A.E. Machine learning for medical ultrasound: status, methods, and future opportunities. Abdominal Radiol. 2018;43:786–799. doi: 10.1007/s00261-018-1517-0. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 104.Kolossváry M., De Cecco C.N., Feuchtner G., Maurovich-Horvat P. Advanced atherosclerosis imaging by CT: radiomics, machine learning and deep learning. J Cardiovascular Computed Tomography. 2019;13:274–280. doi: 10.1016/j.jcct.2019.04.007. [DOI] [PubMed] [Google Scholar]

- 105.Nadeem M.W., Ghamdi M.A.A., Hussain M., Khan M.A., Khan K.M., Almotiri S.H., Butt S.A. Brain tumor analysis empowered with deep learning: a review, taxonomy, and future challenges. Brain Sci. 2020;10:118. doi: 10.3390/brainsci10020118. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 106.Zhu G., Jiang B., Tong L., Xie Y., Zaharchuk G., Wintermark M. Applications of deep learning to neuro-imaging techniques. Front Neurol. 2019;10:869. doi: 10.3389/fneur.2019.00869. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 107.Munir K., Elahi H., Ayub A., Frezza F., Rizzi A. Cancer diagnosis using deep learning: a bibliographic review. Cancers. 2019;11:1235. doi: 10.3390/cancers11091235. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 108.Chan S, Reddy V, Myers B, Thibodeaux Q, Brownstone N, Liao W. Machine learning in dermatology: current applications, opportunities, and limitations. Dermatol Therapy 2020;10:365–86, 10.1007/s13555-020-00372-0. [DOI] [PMC free article] [PubMed]

- 109.Min J.K., Kwak M.S., Cha J.M. Overview of deep learning in gastrointestinal endoscopy. Gut and Liver. 2019;13:388–393. doi: 10.5009/gnl18384.

- 110.Zou L., Yu S., Meng T., Zhang Z., Liang X., Xie Y. A technical review of convolutional neural network-based mammographic breast cancer diagnosis. Comput Math Methods Med. 2019;2019:6509357. doi: 10.1155/2019/6509357.

- 111.Kijowski R., Liu F., Caliva F., Pedoia V. Deep learning for lesion detection, progression, and prediction of musculoskeletal disease. J Magnetic Resonance Imaging. 2020;51:Forthcoming. doi: 10.1002/jmri.27001.

- 112.Currie G.M. Intelligent imaging: artificial intelligence augmented nuclear medicine. J Nuclear Medicine Tech. 2019;47:217–222. doi: 10.2967/jnmt.119.232462.

- 113.Ting D.S.W., Peng L., Varadarajan A.V., Keane P.A., Burlina P.M., Chiang M.F., Schmetterer L., Pasquale L.R., Bressler N.M., Webster D.R., Abramoff M., Wong T.Y. Deep learning in ophthalmology: the technical and clinical considerations. Progress Retinal Eye Res. 2019;72 doi: 10.1016/j.preteyeres.2019.04.003.

- 114.Ma J., Song Y., Tian X., Hua Y., Zhang R., Wu J. Survey on deep learning for pulmonary medical imaging. Front Med. 2020;14:Forthcoming. doi: 10.1007/s11684-019-0726-4.

- 115.Chassagnon G., Vakalopoulou M., Paragios N., Revel M.P. Deep learning: definition and perspectives for thoracic imaging. Eur Radiol. 2020;30:2021–2030. doi: 10.1007/s00330-019-06564-3.

- 116.Meyer P., Noblet V., Mazzara C., Lallement A. Survey on deep learning for radiotherapy. Comput Biol Med. 2018;98:126–146. doi: 10.1016/j.compbiomed.2018.05.018.

- 117.Chassagnon G., Vakalopoulou M., Paragios N., Revel M.P. Deep learning: definition and perspectives for thoracic imaging. Eur Radiol. 2020;30:2021–2030. doi: 10.1007/s00330-019-06564-3.

- 118.McBee M.P., Awan O.A., Colucci A.T., Ghobadi C.W., Kadom N., Kansagra A.P. Deep learning in radiology. Acad Radiol. 2018;25:1472–1480. doi: 10.1016/j.acra.2018.02.018.

- 119.Yasaka K., Abe O. Deep learning and artificial intelligence in radiology: current applications and future directions. PLoS Med. 2018;15 doi: 10.1371/journal.pmed.1002707.

- 120.Hosny A., Parmar C., Quackenbush J., Schwartz L.H., Aerts H.J.W.L. Artificial intelligence in radiology. Nat Rev Cancer. 2018;18:500–510. doi: 10.1038/s41568-018-0016-5.

- 121.Chartrand G., Cheng P.M., Vorontsov E., Drozdzal M., Turcotte S., Pal C.J., Kadoury S., Tang A. Deep learning: a primer for radiologists. Radiographics. 2017;37:2113–2131. doi: 10.1148/rg.2017170077.

- 122.Montagnon E., Cerny M., Cadrin-Chênevert A., Hamilton V., Derennes T., Ilinca A., Vandenbroucke-Menu F., Turcotte S., Kadoury S., Tang A. Deep learning workflow in radiology: a primer. Insights Into Imaging. 2020;11:22. doi: 10.1186/s13244-019-0832-5.

- 123.Do S., Song K.D., Chung J.W. Basics of deep learning: a radiologist’s guide to understanding published radiology articles on deep learning. Korean J Radiol. 2020;21:33–41. doi: 10.3348/kjr.2019.0312.

- 124.England J.R., Cheng P.M. Artificial intelligence for medical image analysis: a guide for authors and reviewers. Am J Roentgenol. 2019;212:513–519. doi: 10.2214/AJR.18.20490.

- 125.Soffer S., Ben-Cohen A., Shimon O., Amitai M.M., Greenspan H., Klang E. Convolutional neural networks for radiologic images: a radiologist’s guide. Radiology. 2019;290:590–606. doi: 10.1148/radiol.2018180547.

- 126.Faes L., Liu X., Wagner S.K., Fu D.J., Balaskas K., Sim D.A., Bachmann L.M., Keane P.A., Denniston A.K. A clinician’s guide to artificial intelligence: how to critically appraise machine learning studies. Transl Vision Sci Tech. 2020;9:7. doi: 10.1167/tvst.9.2.7.

- 127.Tang A., Tam R., Cadrin-Chênevert A., Guest W., Chong J., Barfett J., Chepelev L., Cairns R., Mitchell J.R., Cicero M.D., Poudrette M.G., Jaremko J.L., Reinhold C., Gallix B., Gray B., Geis R. Canadian Association of Radiologists white paper on artificial intelligence in radiology. Canadian Assoc Radiologists J. 2018;69:120–135. doi: 10.1016/j.carj.2018.02.002.

- 128.Langlotz C.P., Allen B., Erickson B.J., Kalpathy-Cramer J., Bigelow K., Cook T.S. A roadmap for foundational research on artificial intelligence in medical imaging. Radiology. 2019;291:781–791. doi: 10.1148/radiol.2019190613.

- 129.European Society of Radiology (ESR). What the radiologist should know about artificial intelligence – an ESR white paper. Insights Into Imaging. 2019;10:44. doi: 10.1186/s13244-019-0738-2.

- 130.Saba L., Biswas M., Kuppili V., Cuadrado Godia E., Suri H.S., Edla D.R., Omerzu T., Laird J.R., Khanna N.N., Mavrogeni S., Protogerou A., Sfikakis P.P., Viswanathan V., Kitas G.D., Nicolaides A., Gupta A., Suri J.S. The present and future of deep learning in radiology. Eur J Radiol. 2019;114:14–24. doi: 10.1016/j.ejrad.2019.02.038.

- 131.Kulkarni S., Seneviratne N., Baig M.S., Khan A.H.A. Artificial intelligence in medicine: where are we now? Acad Radiol. 2020;27:62–70. doi: 10.1016/j.acra.2019.10.001.

- 132.Pesapane F., Tantrige P., Patella F., Biondetti P., Nicosia L., Ianniello A., Rossi U.G., Carrafiello G., Ierardi A.M. Myths and facts about artificial intelligence: why machine- and deep-learning will not replace interventional radiologists. Med Oncol. 2020;37:40. doi: 10.1007/s12032-020-01368-8.

- 133.Shen J., Zhang C.J.P., Jiang B., Chen J., Song J., Liu Z. Artificial intelligence versus clinicians in disease diagnosis: systematic review. JMIR Med Informatics. 2019;7 doi: 10.2196/10010.

- 134.Bhargava R., Madabhushi A. Emerging themes in image informatics and molecular analysis for digital pathology. Annu Rev Biomed Eng. 2016;18:387–412. doi: 10.1146/annurev-bioeng-112415-114722.

- 135.Dimitriou N., Arandjelović O., Caie P.D. Deep learning for whole slide image analysis: an overview. Front Med. 2019;6:264. doi: 10.3389/fmed.2019.00264.

- 136.Aeffner F., Zarella M.D., Buchbinder N., Bui M.M., Goodman M.R., Hartman D.J., Lujan G.M., Molani M.A., Parwani A.V., Lillard K., Turner O.C., Vemuri V.N.P., Yuil-Valdes A.G., Bowman D. Introduction to digital image analysis in whole-slide imaging: a white paper from the digital pathology association. J Pathol Informatics. 2019;10:9. doi: 10.4103/jpi.jpi_82_18.

- 137.Nam S., Chong Y., Jung C.K., Kwak T.Y., Lee J.Y., Park J., Rho M.J., Go H. Introduction to digital pathology and computer-aided pathology. J Pathol Trans Med. 2020;54:125–134. doi: 10.4132/jptm.2019.12.31.

- 138.Janowczyk A., Madabhushi A. Deep learning for digital pathology image analysis: a comprehensive tutorial with selected use cases. J Pathol Informatics. 2016;7:29. doi: 10.4103/2153-3539.186902.

- 139.Madabhushi A., Lee G. Image analysis and machine learning in digital pathology: challenges and opportunities. Med Image Anal. 2016;33:170–175. doi: 10.1016/j.media.2016.06.037.

- 140.Zhong C., Han J., Borowsky A., Parvin B., Wang Y., Chang H. When machine vision meets histology: a comparative evaluation of model architecture for classification of histology sections. Med Image Anal. 2017;35:530–543. doi: 10.1016/j.media.2016.08.010.

- 141.Komura D., Ishikawa S. Machine learning methods for histopathological image analysis. Computational Struct Biotech J. 2018;16:34–42. doi: 10.1016/j.csbj.2018.01.001.

- 142.Hamidinekoo A., Denton E., Rampun A., Honnor K., Zwiggelaar R. Deep learning in mammography and breast histology, an overview and future trends. Med Image Anal. 2018;47:45–67. doi: 10.1016/j.media.2018.03.006.

- 143.Wang S., Yang D.M., Rong R., Zhan X., Xiao G. Pathology image analysis using segmentation deep learning algorithms. Am J Pathol. 2019;189:1686–1698. doi: 10.1016/j.ajpath.2019.05.007.

- 144.Serag A., Ion-Margineanu A., Qureshi H., McMillan R., Saint Martin M.J., Diamond J. Translational AI and deep learning in diagnostic pathology. Front Med. 2019;6:185. doi: 10.3389/fmed.2019.00185.

- 145.Pantanowitz L., Sharma A., Carter A.B., Kurc T., Sussman A., Saltz J. Twenty years of digital pathology: an overview of the road travelled, what is on the horizon, and the emergence of vendor-neutral archives. J Pathol Informatics. 2018;9:40. doi: 10.4103/jpi.jpi_69_18.

- 146.Landau M.S., Pantanowitz L. Artificial intelligence in cytopathology: a review of the literature and overview of commercial landscape. J Am Soc Cytopathol. 2019;8:230–241. doi: 10.1016/j.jasc.2019.03.003.

- 147.El Achi H., Khoury J.D. Artificial intelligence and digital microscopy applications in diagnostic hematopathology. Cancers. 2020;12:797. doi: 10.3390/cancers12040797.

- 148.Saxena S., Gyanchandani M. Machine learning methods for computer-aided breast cancer diagnosis using histopathology: a narrative review. J Med Imaging Radiation Sci. 2020;51:182–193. doi: 10.1016/j.jmir.2019.11.001.

- 149.Wang S., Yang D.M., Rong R., Zhan X., Fujimoto J., Liu H. Artificial intelligence in lung cancer pathology image analysis. Cancers. 2019;11:1673. doi: 10.3390/cancers11111673.

- 150.Cong L., Feng W., Yao Z., Zhou X., Xiao W. Deep learning model as a new trend in computer-aided diagnosis of tumor pathology for lung cancer. J Cancer. 2020;11:3615–3622. doi: 10.7150/jca.43268.

- 151.Jiang Y., Yang M., Wang S., Li X., Sun Y. Emerging role of deep learning-based artificial intelligence in tumor pathology. Cancer Commun. 2020;40:154–166. doi: 10.1002/cac2.12012.

- 152.Zhu W., Xie L., Han J., Guo X. The application of deep learning in cancer prognosis prediction. Cancers. 2020;12:603. doi: 10.3390/cancers12030603.

- 153.Tizhoosh H.R., Pantanowitz L. Artificial intelligence and digital pathology: challenges and opportunities. J Pathol Informatics. 2018;9:38. doi: 10.4103/jpi.jpi_53_18.

- 154.Niazi M.K.K., Parwani A.V., Gurcan M.N. Digital pathology and artificial intelligence. Lancet Oncol. 2019;20:e253–e261. doi: 10.1016/S1470-2045(19)30154-8.

- 155.Bera K., Schalper K.A., Rimm D.L., Velcheti V., Madabhushi A. Artificial intelligence in digital pathology – new tools for diagnosis and precision oncology. Nat Rev Clin Oncol. 2019;16:703–715. doi: 10.1038/s41571-019-0252-y.

- 156.Colling R., Pitman H., Oien K., Rajpoot N., Macklin P., CM-Path AI in Histopathology Working Group, Snead D., Sackville T., Verrill C. Artificial intelligence in digital pathology: a roadmap to routine use in clinical practice. J Pathol. 2019;249:143–150. doi: 10.1002/path.5310.

- 157.Acs B., Rantalainen M., Hartman J. Artificial intelligence as the next step towards precision pathology. J Internal Med. 2020;288:62–81. doi: 10.1111/joim.13030.

- 158.Stout D.B., Zaidi H. Preclinical multimodality imaging in vivo. PET Clinics. 2008;3:251–273. doi: 10.1016/j.cpet.2009.03.001.

- 159.Alam I.S., Steinberg I., Vermesh O., van den Berg N.S., Rosenthal E.L., van Dam G.M. Emerging intraoperative imaging modalities to improve surgical precision. Mol Imag Biol. 2018;20:705–715. doi: 10.1007/s11307-018-1227-6.

- 160.Xue Y., Chen S., Qin J., Liu Y., Huang B., Chen H. Application of deep learning in automated analysis of molecular images in cancer: a survey. Contrast Media Molecular Imaging. 2017;2017:9512370. doi: 10.1155/2017/9512370.

- 161.Choi H. Deep learning in nuclear medicine and molecular imaging: current perspectives and future directions. Nuclear Med Molecular Imaging. 2018;52:109–118. doi: 10.1007/s13139-017-0504-7.

- 162.Cook G.J.R., Goh V. What can artificial intelligence teach us about the molecular mechanisms underlying disease? Eur J Nucl Med Mol Imaging. 2019;46:2715–2721. doi: 10.1007/s00259-019-04370-z.