Abstract

Background

The role of emotion is crucial to the learning process, as it is linked to motivation, interest, and attention. Affective states are expressed in the brain and in overall biological activity. Biosignals such as heart rate (HR), electrodermal activity (EDA), and electroencephalography (EEG) are physiological expressions affected by emotional state. Analyzing these biosignal recordings can point to a person’s emotional state. Contemporary medical education has progressed extensively towards diverse learning resources using virtual reality (VR) and mixed reality (MR) applications.

Objective

This paper aims to study the efficacy of wearable biosensors for affect detection in a learning process involving a serious game in the Microsoft HoloLens VR/MR platform.

Methods

A wearable array of sensors recording HR, EDA, and EEG signals was deployed during 2 educational activities conducted by 11 participants of diverse educational levels (undergraduates, postgraduates, and specialist neurosurgeons). The first scenario was a conventional virtual patient case used for establishing the personal biosignal baselines for the participant. The second was a case in a VR/MR environment regarding neuroanatomy. The affective measures that we recorded were EEG (theta/beta ratio and alpha rhythm), HR, and EDA.

Results

Results were recorded and aggregated across all 3 groups. Average EEG theta/beta ratios of the virtual patient (VP) versus the MR serious game cases were recorded at 3.49 (SD 0.82) versus 3.23 (SD 0.94) for students, 2.59 (SD 0.96) versus 2.90 (SD 1.78) for neurosurgeons, and 2.33 (SD 0.26) versus 2.56 (SD 0.62) for postgraduate medical students. Average alpha rhythm amplitudes of the VP versus the MR serious game cases were recorded at 7.77 (SD 1.62) μV versus 8.42 (SD 2.56) μV for students, 7.03 (SD 2.19) μV versus 7.15 (SD 1.86) μV for neurosurgeons, and 11.84 (SD 6.15) μV versus 9.55 (SD 3.12) μV for postgraduate medical students. Average HR values of the VP versus the MR serious game cases were recorded at 87 (SD 13) versus 86 (SD 12) bpm for students, 81 (SD 7) versus 83 (SD 7) bpm for neurosurgeons, and 81 (SD 7) versus 77 (SD 6) bpm for postgraduate medical students. Average EDA values of the VP versus the MR serious game cases were recorded at 1.198 (SD 1.467) μS versus 4.097 (SD 2.79) μS for students, 1.890 (SD 2.269) μS versus 5.407 (SD 5.391) μS for neurosurgeons, and 0.739 (SD 0.509) μS versus 2.498 (SD 1.72) μS for postgraduate medical students. The variations of these metrics have been correlated with existing theoretical interpretations regarding educationally relevant affective analytics, such as engagement and educational focus.

Conclusions

These results demonstrate that this novel sensor configuration can lead to credible affective state detection and can be used in platforms like intelligent tutoring systems for providing real-time, evidence-based, affective learning analytics using VR/MR-deployed medical education resources.

Keywords: virtual patients, affective learning, electroencephalography, medical education, virtual reality, wearable sensors, serious medical games

Introduction

Affective Learning

According to Bloom’s taxonomy of learning domains, there are three main domains of learning, namely cognitive (thinking), affective (emotion/feeling), and psychomotor (physical/kinesthetic) [1]. Specifically, in the affective domain, learning objectives focus on the learner’s interests, feelings, emotions, perceptions, attitudes, tones, aspirations, and degree of acceptance or rejection of instructional content [2]. Despite the fact that defining emotion is a rather daunting process, the term has been defined as “an episode of interrelated, synchronized changes in the states of all or most of the five organismic subsystems in response to the evaluation of an external or internal stimulus event as relevant to major concerns of the organism” [3]. Furthermore, emotion has been defined from a psychological stance as a conscious representation of what individuals feel, whereas from a neuropsychological point of view, emotion “is seen as a set of coordinated responses that take place when an individual faces a personally salient situation” [4].

The role of affect (emotions) is considered crucial in the learning process, as well as in influencing learning itself, since it is linked to notions such as motivation, interest, and attention [5]. Earlier still, it was postulated [6] that learning most often takes place during an emotional episode; thus, the interaction of affect and learning may provide valuable insight in how people learn. Similarly, the relationship between learning and affective states is evident in other studies as well. In this way, a person’s affective condition may systematically influence how they process new knowledge. It has been reported [7] that expert teachers are able to have a positive impact on their students’ learning by recognizing and responding to their emotional states. Accordingly, attributes like curiosity, among other affective states, are identified as an indicator of motivation [8]. Such attributes constitute a driver for learning, being useful to motivated and affectively engaged learners in order to become more involved and to display less stress and anger [9-11], greater pleasure and involvement [10], and less boredom [11].

The well-established pleasure, arousal, dominance (PAD) psychological model of emotional states [12,13] represents all emotions along these three dimensions, each on a scale from negative to positive values. Pleasure concerns how pleasant (joy) or unpleasant (anger, fear) one feels about something. Arousal corresponds to how energized or bored one feels, and dominance refers to how dominant or submissive one feels. Even though the PAD model was originally configured with three components, the first two, pleasure and arousal, seem to have been used to a greater extent by researchers than dominance [14], mainly due to the fact that “all affective states arise from two fundamental neurophysiological systems, one related to valence (a pleasure–displeasure continuum) and the other to arousal” [15].

Sensor-Based Affect Recognition

Biosignals such as heart rate (HR), blood volume pressure, external body temperature, electrodermal activity (EDA), and electroencephalography (EEG) are physiological signals of the human body that change markedly with changes in emotional state [16]. Analyzing recordings of these signals, acquired with devices called biosensors that record and track their changes [18,19], can help determine a person’s emotional state [17]. Analyzing the data recorded by such devices for emotional state detection has been the focus of recent studies. EDA and EEG biosignals have been used for the detection of stress during indoor mobility [20,21]. Additionally, EEG biosignal classification for emotion detection using the valence-arousal model of affect classification was explored by our group [22].

Emotional Content and Brain Activation With Regards to Learning

Affective states, such as fear, anger, sadness, and joy, alter brain activity [23,24] and are associated with the neurophysiological interaction between cortical-based cognitive states and subcortical valence and arousal systems [15]. Today, ever-increasing neuroimaging data verify the importance of specific emotion-related brain regions, such as the orbitofrontal cortex, the dorsolateral prefrontal cortex, the cingulate gyrus, the hippocampus, the insula, the temporal regions, and the amygdala, in forming emotions and in the process of learning [25-32]. The hippocampus and amygdala form an apparatus of memory that houses two distinct yet functionally interacting mnemonic systems of declarative and nondeclarative memory [33,34]. Along with the other previously mentioned areas, they belong to the limbic system and the pathway of memory formation, consolidation, and learning [35], although those are wider processes that are not intrinsically tied to anatomical restraints and cannot be precisely localized [36,37].

Based on studies in animals and humans, which showed evidence of the critical role of the amygdaloid complex (AC) in emotional reactions [38-40], other studies in humans using functional magnetic resonance imaging have identified AC activation in response to affectively loaded visual stimuli [41-43]. Additionally, positron emission tomography scan studies have identified a connection between emotional stimuli and activity in the left AC [44]. The AC takes part in a system of nondeclarative memory formation and emotional conditioning using mechanisms such as long-term potentiation [45,46]. A functional asymmetry has also been identified between left and right AC, as negative emotional conditioning, especially based on fear, leads to a nondeclarative learning process, tracked predominantly in left AC [47]. Emotion valence and information encoding favor this asymmetry with regard to both positive and negative stimuli [48,49], while arousal has been linked to electroencephalographic theta waves from the amygdala [50]. This asymmetry also entails different modalities of encoded information and, while the left side has been functionally associated with language and detailed affective information, the right side has been functionally associated with imagery [49]. Moreover, it has been demonstrated that the declarative and nondeclarative systems interact during the process of learning, as emotion influences encoding by modulating the qualitative characteristics of attention, while episodic and active learning have also been proven to condition emotional response through memory formation [47,51-54].

Age difference is another factor that may potentially moderate cognitive appraisal of emotional content. Taking into consideration the age-related positivity effect, eye-tracking was used [55] to test for potential age differences in visual attention and emotional reactivity to positive and negative information between older and younger adults. It was discovered that when older adults processed negative emotional stimuli, they attended less to negative image content compared with younger adults, but they reacted with greater negative emotions. Finally, several studies have explored sex differences in the neural correlates of emotional content reactivity [41,56]. These have often highlighted the key role of the amygdala. It was found [57] that women exhibited increased activation in the amygdala, dorsal midbrain, and hippocampus. In contrast, men exhibited increased activation in the frontal pole, the anterior cingulate cortex and medial prefrontal cortex, and the mediodorsal nucleus of the thalamus.

Technology-Enhanced Immersive Medical Education and Virtual Reality

Information and communication technologies (ICT) have shaped interventions for health care and wellness from their beginning. Digital innovations reduce costs, increase capacities to support growth and address social inequalities, and improve diagnostic efficacy and treatment effectiveness. Contemporary medical education in particular has progressed extensively towards widely diverse learning resources and health care–specific educational activities in the ICT domain [58]. The incentive behind this lies with the necessity for worldwide access to clinical skills, unconstrained by time and place [59]. This potential of ICT in medical education is multiplied by the parallel advancement of web technologies and the proliferation of interactive learning environments with immediate, content-related feedback [60].

Currently, medical education is mostly based on case-based or problem-based learning and other small-group instructional models [61,62]. These include simulations, scenario narratives and other structured, task-based learning episodes. Scenario narratives in particular, termed virtual patients (VPs) in the health care sector, are serious game episodes custom designed for the learning objectives but also aligned with the expectations and skill sets of students in order to provide a game-informed, media-saturated learning environment. In that way, students can explore a case through multiple avenues, exercise their decision-making skills, and explore the impact of those decisions in a safe but engaging way [63,64]. VPs are defined as “interactive computer simulations of real-life clinical scenarios for the purpose of healthcare and medical training, education or assessment” [65]. Web-based VPs, unlike real patients, are consistently repeatable, since they are structured as branching narratives [66], offering few limitations with respect to time, place, and failure during the practice of clinical skills. Medical students have the opportunity to practice on a diverse set of rare and difficult diseases that they may later encounter in clinical practice [67]. Finally, the reproducibility of the case outcomes and the provisions for standardized validated assessment that exist in most VP platforms have established the use of VPs as an effective and important tool for modern medical education [68-70]. Due to these advantages, there is a global trend towards increased development of VPs, with many academic institutions working towards this goal [71]. The extended impact of VPs for medical education has been recognized, and standardization solutions for repurposing, reusing, and transferability were initiated early on [65], with a formal standard, the MedBiquitous virtual patient standard, being finalized as early as 2010 [72,73]. Contemporary improvements, such as semantic annotations, have been implemented for easy reusability of VP content [74], while other efforts have focused on various fields, like elderly care [75], and even on intensifying experiential means, like virtual worlds [76,77], virtual reality, and augmented reality [78].

On the experiential front, many ideas have been implemented. One of them is the virtual laboratory. Virtual labs use simulations and computer models, along with a multitude of other media, such as video, to replace real-life laboratory interactions. A virtual lab consists of several digital simulations supported by discussion forums and video demonstrations, or even collaboration tools and stand-alone complex simulations [79]. Such interactive environments facilitate self-directed, self-paced learning (eg, repeating content, accessing content at off hours). That way, learners maintain initiative and increased engagement in the learning process, while interactivity hones laboratory skills that go beyond simple knowledge transfer. These laboratory skills strengthen the core areas of weakness in the contemporary medical curriculum. Hands-on laboratory techniques are usually not available for training to students due to cost, time, or safety constraints [80,81]. This leaves medical students with theoretical understanding but a lack of real-world clinical and lab skills [81].

An approach readily supportive of the virtual lab that can incorporate VP serious gaming is the implementation of virtual reality (VR), augmented reality, and, recently, mixed reality (MR) technologies, especially with the advent of devices like the Microsoft HoloLens (Microsoft Corp). The distinctions are somewhat blurred at times, but virtual reality is the substitution of external sensory inputs (mainly visual and audio) with computer-generated ones using a headset device. Augmented reality is the superposition of digital content over the real world that uses either 2-dimensional (2D) or 3-dimensional (3D) markers in the real-world environment. Finally, mixed reality is similar to augmented reality, with one key difference. Instead of the real-world marker being a preprogrammed static item or image, the superposition of content is done after a 3D mapping of the current environment has been completed. This way, features can be used in intuitive ways, like 3D models positioned on tables or 2D images or notes hanging on walls. There is evidence that such technologies significantly increase the educational impact of a learning episode and can subsequently greatly affect educational outcomes [82]. Realized examples include experiential world exploration [83], physics and chemistry concept visualizations with high engagement impact [84-86], and even the incorporation of such modalities for VPs [78]. It is this immediate engagement capacity of these modalities that can not only motivate the student but also allow for internalization of the educational material and, thus, avoidance of conceptual errors [87].

Aim and Scope of This Work

From this introduction, it becomes apparent that there is currently a sufficient body of research identifying both the impact and the capacity of digital tools for detecting and affecting the emotional state of users in their learning activities. Contemporary integrated wearable and unobtrusive sensor suites can provide objective, biosignal-based (as opposed to self-reported or inferred) emotion recognition. In addition, the proliferation and impact of immersive resources as medical education support tools provide a strong motive to explore the feasibility of implementing real-time, evidence-based affective analytics in them.

Using commercial wearables and EEG sensors, this work presents the first, to the authors’ knowledge, feasibility pilot of real-time, evidence-based affective analytics in a VR/MR-enhanced medical education VP serious game.

Methods

Equipment, Affective, and Educational Setup

A total of 11 healthy participants took part in our study after providing written informed consent. The participants included medical students (4 participants), medical school postgraduates (3 participants), and neurosurgeons (4 participants). The participants were informed that they would take part in 2 VP scenarios. The 2 scenarios were (1) a simple emergency response VP scenario that is familiar to all medical students beyond the third year of their studies, implemented on a simple web-based platform (OpenLabyrinth), and (2) a neuroanatomy-focused case regarding the ascending and descending pathways of the central nervous system, designed for a specialized neuroanatomy lecture at the graduate/postgraduate level and implemented in the MR HoloLens platform. The choice of scenario for the MR platform (Microsoft HoloLens) was constrained to this specific resource, as it was the only one available in a scenario-based format. Both scenarios were medical narrative games that were guided by player choice. In the case of the web-based VP game, the users chose their responses from a multiple-choice panel on each of the VP game’s pages. In the MR VP game, the users visualized the actual case through the HoloLens device, seeing and tacitly manipulating the relevant parts of the anatomy as they were described to them by narrative text. Selections in this modality were conducted by hand gestures in relevant locations of the actual anatomy that was presented to the user.

Due to the diverse background of the participants, they were exposed to the educational episodes in different ways. All groups tackled the first scenario on their own according to their knowledge. The medical students were fully guided in the MR scenario, since its educational content was beyond their knowledge. That is, they were free to ask the research team any technical or medical question, and they were provided with full guidance to select the correct answer. The postgraduate medical students were asked to resolve the MR scenario on their own and were offered assistance if they appeared to be stuck at a specific stage of the scenario and unable to proceed. In that case, they were free to ask the research team any technical or medical question, and they were provided with full guidance to select the correct answer. The neurosurgeons were all asked to complete the case on their own and were provided help only with usage issues regarding the HoloLens device. That is, they were allowed to ask only technical questions about the functionality of the device.

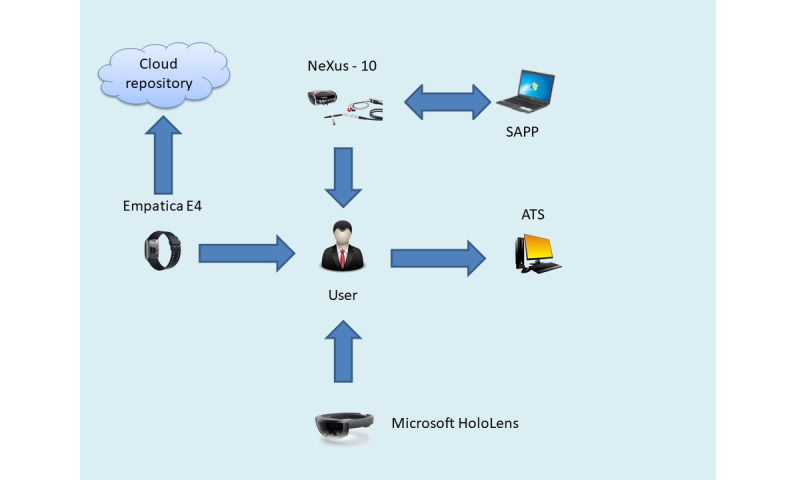

In both scenarios, brain activity was recorded via an EEG, along with biosignals through a wearable unit. EEG signals were acquired using a 2-channel EEG amplifier (Nexus-10; Mind Media) [88] connected via Bluetooth to the PC, where signals were recorded (sampling rate 256 Hz) and preprocessed in real time. EEG electrodes were placed at the Fz and Cz positions, references at A1 and A2 (earlobes), and ground electrode at Fpz of the international 10-20 electrode placement system. Preprocessing included automatic EEG artifact removal and generation of real-time theta (4 to 8 Hz), alpha (8 to 12 Hz), and beta (13 to 21 Hz) rhythms. The composite ratio of theta over beta was additionally generated.
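As an illustration of the composite metric, the following is a minimal sketch of a theta/beta power ratio computed from one window of samples. It is not the Nexus-10 processing pipeline, whose internals are not described here. The plain discrete Fourier transform, the half-open treatment of the band edges, the function names, and the synthetic test signal are all assumptions made for this example; only the sampling rate and the band limits come from the text.

```python
import cmath
import math

FS = 256  # sampling rate in Hz, as stated in the text

# Band edges in Hz from the text; treating them as half-open [lo, hi)
# intervals is an assumption of this sketch.
THETA = (4.0, 8.0)
BETA = (13.0, 21.0)


def band_power(samples, fs, band):
    """Sum of squared DFT magnitudes over the bins that fall inside `band`."""
    n = len(samples)
    lo, hi = band
    power = 0.0
    for k in range(1, n // 2):
        freq = k * fs / n
        if lo <= freq < hi:
            coeff = sum(
                x * cmath.exp(-2j * math.pi * k * i / n)
                for i, x in enumerate(samples)
            )
            power += abs(coeff) ** 2
    return power


def theta_beta_ratio(samples, fs=FS):
    """Composite theta/beta power ratio for one window of samples."""
    return band_power(samples, fs, THETA) / band_power(samples, fs, BETA)


# Synthetic 1-second window (hypothetical data): a 6 Hz sine of amplitude 2
# plus a 17 Hz sine of amplitude 1, so the power ratio should be close to 4.
window = [
    2.0 * math.sin(2 * math.pi * 6 * i / FS) + math.sin(2 * math.pi * 17 * i / FS)
    for i in range(FS)
]
ratio = theta_beta_ratio(window)
```

A real-time implementation would more likely use band-pass filters or a windowed FFT over a sliding window; the direct sum above is chosen only because it keeps the example dependency-free.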

For continuous, real-time physiological signals for stress detection, the E4 wearable multisensory smart wristband (Empatica Inc) was used [89]. HR and EDA were recorded with a sampling rate of 1 Hz and 4 Hz, respectively.

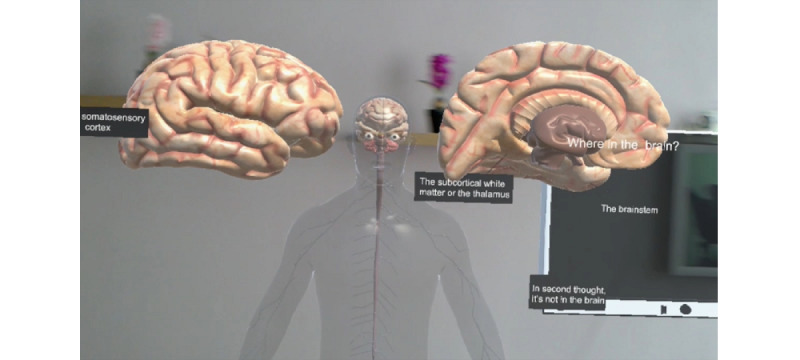

In order to implement the MR component of this experiment, the Microsoft HoloLens holographic computer headset was used [90]. Microsoft HoloLens is the world's first fully untethered holographic computer, providing holographic experiences in order to empower the user in novel ways. It blends optics and sensors to deliver seamless 3D content interaction with the real world. Advanced sensors capture information about what the user is doing, as well as the environment the user is in, allowing mapping and understanding of the physical places, spaces, and things around the user. The scenario used for the experiment was an exploratory interactive tutorial on the main central nervous system pathways in the brain and spinal cord (Figure 1). For the needs of this pilot experimental setup, 2 standard PC units were also used.

Figure 1.

Part of the HoloAnatomy neuroanatomy virtual scenario.

All real-time signal acquisition and postprocessing were conducted in a dedicated signal acquisition and postprocessing (SAPP) PC unit, while subjective emotion self-reporting, VP educational activity, and overall time stamp synchronization were conducted through an activity and time stamp synchronization (ATS) PC unit.

In the ATS unit, the Debut video capture software (NCH Software) [91] was used to record and time-stamp all of the participant’s on-screen activities for reference and manual synchronization with the internal clocks of the EEG and wearable sensor recordings from the SAPP unit.

The overall equipment setup and synchronization is demonstrated in Figure 2.

Figure 2.

Multimodal biosignal sensors and mixed reality equipment setup. ATS: activity and time stamp synchronization; SAPP: signal acquisition and postprocessing.

Experimental Methodology

The experiment and relevant recordings took place on the premises of the Lab of Medical Physics of the Aristotle University of Thessaloniki in a quiet space. For the first session, the participant was invited to sit comfortably on a chair in front of the ATS unit’s screen, located at a distance of 60 cm. While the participant was seated, the Empatica E4 sensor was provided to them to wear and the Nexus-10 EEG electrodes were applied. The ATS unit’s screen displayed the initial page of the VP scenario and the time in Coordinated Universal Time (UTC) to allow for precise time recording. Data synchronization was conducted manually. The biosignal data acquired by the Empatica E4 and Nexus-10 devices were exported with a time stamp in UTC. Having a global time stamp both in the sensor recordings and in the educational activity computer (ATS) allowed for later offline synchronization. Specifically, the start of the time series for each sensor acquisition was synchronized in global time, together with the educational events as they were annotated manually, also in global time. The scenario is, in short, an interlinked web of pages that describes a coherent medical case.
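The offline synchronization described above amounts to converting each annotated event time into a sample index within each sensor's time series. The sketch below shows one way this could be done; the function name and the example time stamps are hypothetical, and only the sampling rates (1 Hz for HR, 4 Hz for EDA, 256 Hz for EEG) come from the text.

```python
from datetime import datetime, timezone


def sample_index(event_utc, start_utc, fs_hz):
    """Index of the sample recorded at `event_utc` in a series that began at
    `start_utc` and was sampled at `fs_hz` samples per second."""
    offset_s = (event_utc - start_utc).total_seconds()
    if offset_s < 0:
        raise ValueError("event precedes the start of the recording")
    return int(offset_s * fs_hz)


# Hypothetical time stamps, for illustration only.
recording_start = datetime(2020, 1, 1, 10, 0, 0, tzinfo=timezone.utc)
node_change = datetime(2020, 1, 1, 10, 0, 2, 500000, tzinfo=timezone.utc)

hr_idx = sample_index(node_change, recording_start, fs_hz=1)     # HR at 1 Hz
eda_idx = sample_index(node_change, recording_start, fs_hz=4)    # EDA at 4 Hz
eeg_idx = sample_index(node_change, recording_start, fs_hz=256)  # EEG at 256 Hz
```

In practice each device would have its own start time, so the same event maps to a different offset per sensor; a single shared start time is used here only to keep the example short.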

The users navigated the interlinked web of pages by selecting their preferred answers in each part of the case from a predetermined multiple-choice list. The coordinator of the experiment was seated close to the participant, though outside the participant’s visual field so as not to affect participant behavior, and operated the SAPP unit, continuously overseeing the acquisition process. The recordings of this session constituted the personalized sensor baseline for this user.

For the second session, the Empatica E4 and the Nexus-10 devices were used in the same manner as in the first session. Additionally, the HoloLens holographic computer unit was worn by the subject. Sitting comfortably on their chair, the participant viewed through the holographic unit an interactive exploratory neuroanatomy tour. Interaction with the HoloLens was conducted with gestures. In order not to contaminate the EEG recordings with the motor cortex EEG responses, the gestures were conducted by the coordinator after a preset time had passed (approximately 4 seconds). The whole session was video recorded in order to facilitate activity annotation at a later time. All participants took approximately the same time to finish their session. This time was approximately 35 to 40 minutes. About 10 minutes were used for orientation and equipment placement, 10 minutes were used for the VP case, and another 15 minutes were used for the MR experience.

Data Analysis

The acquired data (HR, EDA, alpha amplitude, theta/beta ratio) were annotated according to user activity data taken from the ATS unit and the video recording of the second session. Annotation marks in the web-based VP session were placed at the time points where the user moved to a new node in the VP scenario. These data segments of all the data sets (HR, EDA, alpha, theta/beta) formed the baseline values of each biosignal modality for this user. The acquired data of the MR VP session were annotated with marks placed at time points of the interaction gestures as they appeared in the video recording of the session. The first session segments were averaged in order to extract a global baseline average for each biosignal modality. The second session data were averaged on a per-segment basis, and the resulting data series (one data point per gesture per signal modality) were explored using descriptive statistics for quantitative differences from the baseline values.
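The segmentation and averaging steps above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' analysis code: the function name, the convention that the stretch before the first mark counts as a segment, and the heart rate samples are all hypothetical.

```python
from statistics import mean


def segment_means(samples, marks):
    """Average `samples` between consecutive annotation marks.

    `marks` are sample indices of the annotated events. The series is split
    at each mark, and the stretch before the first mark is the first segment
    (an assumption of this sketch).
    """
    bounds = [0, *sorted(marks), len(samples)]
    return [
        mean(samples[a:b])
        for a, b in zip(bounds, bounds[1:])
        if b > a  # skip empty segments
    ]


# Hypothetical heart rate samples (bpm) at 1 Hz, for illustration only.
vp_hr = [86, 86, 90, 90, 88, 88]
mr_hr = [94, 96, 95, 97, 93, 93]

# Session 1: average the segment means into one global baseline value.
baseline = mean(segment_means(vp_hr, marks=[2, 4]))

# Session 2: one data point per gesture-delimited segment.
mr_segments = segment_means(mr_hr, marks=[2, 4])

# Difference of each MR segment from the baseline.
shifts = [m - baseline for m in mr_segments]
```

The resulting `shifts` series is what a plot of segment averages against a constant baseline line would display.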

Results

In the conventional educational episode, the participants explored a VP scenario. As previously mentioned, the data were annotated and segmented after each user transitioned from one stage of the VP scenario to the next. Thus, after averaging all biosignal data that were annotated and segmented for these stages, we extracted the averages for the biosignals recorded in this experimental setup. These included the alpha amplitude and the theta over beta ratio for the Cz EEG position (where the sensor was placed), as well as HR and EDA values. Representative results for one participant are summarized in Table 1.

Table 1.

Biosignal baseline averages of a representative participant (neurosurgeon) during the conventional educational episode.

| Segment | Alpha, mean (SD), μV | Theta/beta power ratio, mean (SD) | HRa, mean (SD), bpm | EDAb, mean (SD), μS |
| --- | --- | --- | --- | --- |
| Segment 1 | 4.886 (0.1822) | 3.198 (0.5675) | 86 (2) | 0.227 (0.0114) |
| Segment 2 | 5.259 (0.1822) | 2.235 (0.5675) | 90 (2) | 0.209 (0.0114) |
| Segment 3 | 4.883 (0.1822) | 3.047 (0.5675) | 88 (2) | 0.203 (0.0114) |
| Segment 4 | 4.921 (0.1822) | 3.584 (0.5675) | 89 (2) | 0.203 (0.0114) |
| Average | 4.987 (0.1822) | 3.016 (0.5675) | 87 (2) | 0.215 (0.0114) |

aHR: heart rate.

bEDA: electrodermal activity.

A similar process was followed for the second session, where the VR/MR neuroanatomy resource was explored by the user. The time series of the biosignals were annotated and segmented on the time stamps corresponding to each gesture-based transition that the user experienced in this resource.

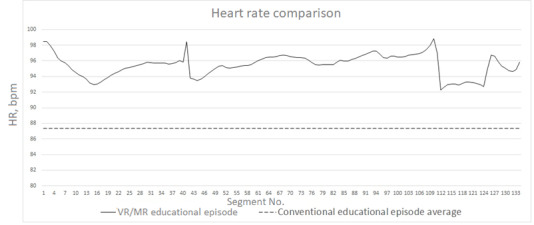

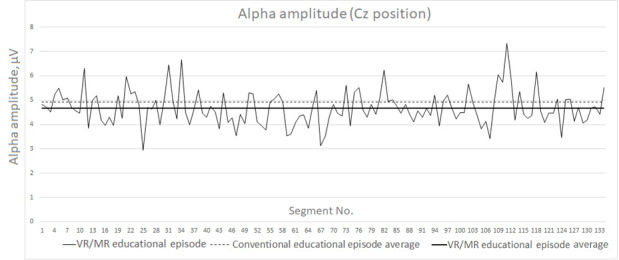

For each segment and for the HR and EDA, average values were recorded along with the segment number. Example plots are presented for a representative participant in Figure 3 and Figure 4. For reference purposes, the baseline average was also plotted as a constant in these graphs.

Figure 3.

Representative heart rate segment plot for the VR/MR educational episode. Dashed line denotes the baseline established in the first experimental session. HR: heart rate; MR: mixed reality; VR: virtual reality.

Figure 4.

Alpha amplitude (μV) segment plot for the VR/MR educational episode for the Cz position. MR: mixed reality; VR: virtual reality.

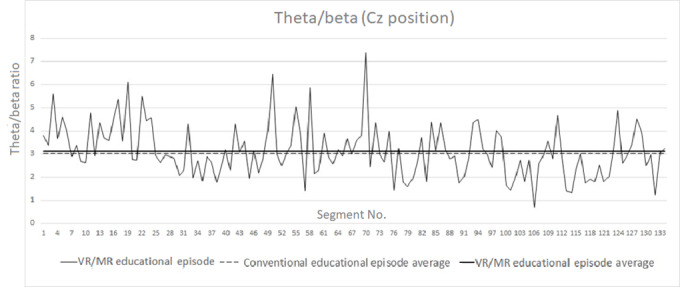

The same process was followed for the alpha rhythm and theta/beta ratio at the Cz sensor position. Similar plots of the rhythms and ratios are presented for a representative participant in Figure 4 and Figure 5.

Figure 5.

Theta/beta ratio segment plot for the VR/MR educational episode for the Cz position. MR: mixed reality; VR: virtual reality.

In Figure 4 and Figure 5, we have also included, as a plotted line, the average of the recorded signal in order to reveal even nondefinitive increases or decreases between the two experimental sessions. A representative value set is summarized in Table 2.

Table 2.

Biosignal average of a representative participant (neurosurgeon) during the virtual reality/mixed reality educational episode.

| | Alpha, mean (SD), μV | Theta/beta power ratio, mean (SD) | HRa, mean (SD), bpm | EDAb, mean (SD), μS |
| --- | --- | --- | --- | --- |
| Average | 4.680 (0.711) | 3.131 (1.137) | 95 (1) | 3.475 (0.865) |

aHR: heart rate.

bEDA: electrodermal activity.

This analysis was performed for all 11 participants. The averaged results for each participant are presented in Table 3. Given the differentiation of each group’s affective and educational setup, the participants are also partitioned according to group in this table. Table 4 presents a per-group average for all metrics recorded in the web-based VP and MR scenarios. Because some of these metrics (eg, EDA) vary so widely across participants that group averages are of limited use (see the standard deviations for EDA in Table 4), Table 5 presents the direction of the average value shifts between the two scenarios. Specifically, we present the total and per-group number of participants who had increased theta/beta ratios, decreased alpha rhythm amplitude, increased HR, and increased EDA in the MR scenario compared with the web-based VP scenario.

Table 3.

Biosignal averages per participant for the virtual reality/mixed reality educational episode.

| Participant No. | Amplitude theta/beta (Cz), mean: VPc | Amplitude theta/beta (Cz), mean: MRd | Amplitude alpha (Cz), mean, μV: VP | Amplitude alpha (Cz), mean, μV: MR | HRa, mean, bpm: VP | HRa, mean, bpm: MR | EDAb, mean, μS: VP | EDAb, mean, μS: MR |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Students | | | | | | | | |
| 1 | 4.315 | 4.417 | 8.024 | 8.540 | 97 | 96 | 3.733 | 6.479 |
| 2 | 2.734 | 1.788 | 10.228 | 12.443 | 92 | 92 | 0.525 | 2.438 |
| 3 | 4.306 | 3.425 | 5.794 | 5.529 | 66 | 65 | 0.268 | 0.382 |
| 4 | 2.611 | 3.284 | 7.040 | 7.157 | 96 | 90 | 0.265 | 7.090 |
| Neurosurgeons | | | | | | | | |
| 1 | 3.016 | 3.131 | 7.033 | 7.146 | 87 | 95 | 0.215 | 3.475 |
| 2 | 1.438 | 1.598 | 6.023 | 6.223 | 88 | 82 | 5.788 | 14.539 |
| 3 | 1.986 | 1.679 | 6.396 | 8.072 | 75 | 76 | 1.019 | 3.076 |
| 4 | 3.935 | 5.420 | 10.725 | 9.606 | 73 | 79 | 0.538 | 0.540 |
| Postgraduates | | | | | | | | |
| 1 | 2.439 | 2.532 | 7.831 | 7.801 | 75 | 70 | 0.371 | 0.307 |
| 2 | 2.580 | 3.331 | 20.523 | 13.933 | 90 | 84 | 0.387 | 4.516 |
| 3 | 1.971 | 1.823 | 7.163 | 6.926 | 77 | 79 | 1.459 | 2.671 |

aHR: heart rate.

bEDA: electrodermal activity.

cVP: virtual patient.

dMR: mixed reality.

Table 4.

Biosignal averages per group for the virtual reality/mixed reality educational episode.

| Group | Amplitude theta/beta power ratio (Cz), mean (SD): VPc | Amplitude theta/beta power ratio (Cz), mean (SD): MRd | Amplitude alpha (Cz), mean (SD), μV: VP | Amplitude alpha (Cz), mean (SD), μV: MR | HRa, mean (SD), bpm: VP | HRa, mean (SD), bpm: MR | EDAb, mean (SD), μS: VP | EDAb, mean (SD), μS: MR |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Students | 3.49 (0.82) | 3.23 (0.94) | 7.77 (1.62) | 8.42 (2.56) | 87 (13) | 86 (12) | 1.198 (1.467) | 4.097 (2.79) |
| Neurosurgeons | 2.59 (0.96) | 2.90 (1.78) | 7.03 (2.19) | 7.15 (1.86) | 81 (7) | 83 (7) | 1.890 (2.269) | 5.407 (5.391) |
| Postgraduates | 2.33 (0.26) | 2.56 (0.62) | 11.84 (6.15) | 9.55 (3.12) | 81 (7) | 77 (6) | 0.739 (0.509) | 2.498 (1.72) |

aHR: heart rate.

bEDA: electrodermal activity.

cVP: virtual patient.

dMR: mixed reality.
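As a worked check of how a group row relates to the per-participant values, the following sketch recomputes the student theta/beta entries from the four student rows of Table 3. It uses the population standard deviation (`statistics.pstdev`), which happens to reproduce the tabulated SDs of 0.82 and 0.94 for these two cells. That choice is inferred from the arithmetic and is not a confirmed description of the authors' procedure; other cells may not reproduce exactly from the rounded per-participant means.

```python
from statistics import mean, pstdev

# Student theta/beta values (Cz) copied from Table 3, participants 1-4.
students_vp = [4.315, 2.734, 4.306, 2.611]  # web-based virtual patient
students_mr = [4.417, 1.788, 3.425, 3.284]  # mixed reality serious game


def summarize(values):
    """Return (mean, SD) rounded to 2 decimals.

    The population SD is an assumption made for this sketch.
    """
    return round(mean(values), 2), round(pstdev(values), 2)


vp_summary = summarize(students_vp)
mr_summary = summarize(students_mr)
print(f"Students VP: {vp_summary[0]} (SD {vp_summary[1]})")
print(f"Students MR: {mr_summary[0]} (SD {mr_summary[1]})")
```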

Table 5.

Biosignal average value shifts for the virtual reality/mixed reality educational episode.

| Group | Increased amplitude theta/beta power ratio (Cz), n | Decreased amplitude alpha (Cz), n, μV | Increased HRa, n, bpm | Increased EDAb, n, μS |
| --- | --- | --- | --- | --- |
| Total | 6 | 5 | 2 | 9 |
| Students | 2 | 1 | 0 | 4 |
| Neurosurgeons | 3 | 1 | 3 | 4 |
| Postgraduates | 2 | 3 | 0 | 2 |

aHR: heart rate.

bEDA: electrodermal activity.
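The shift counts can be sketched as a per-participant comparison of the MR mean against the VP mean. The example below copies the rounded means from Table 3; the dictionary layout and the `count_shifts` helper are illustrative names, not part of the study's tooling. Because Table 3 reports rounded means, counts recomputed this way need not match every cell of Table 5.

```python
# Per-participant means copied from Table 3 as (VP, MR) pairs.
TABLE_3 = {
    "Students": [
        {"theta_beta": (4.315, 4.417), "alpha": (8.024, 8.540), "hr": (97, 96), "eda": (3.733, 6.479)},
        {"theta_beta": (2.734, 1.788), "alpha": (10.228, 12.443), "hr": (92, 92), "eda": (0.525, 2.438)},
        {"theta_beta": (4.306, 3.425), "alpha": (5.794, 5.529), "hr": (66, 65), "eda": (0.268, 0.382)},
        {"theta_beta": (2.611, 3.284), "alpha": (7.040, 7.157), "hr": (96, 90), "eda": (0.265, 7.090)},
    ],
    "Neurosurgeons": [
        {"theta_beta": (3.016, 3.131), "alpha": (7.033, 7.146), "hr": (87, 95), "eda": (0.215, 3.475)},
        {"theta_beta": (1.438, 1.598), "alpha": (6.023, 6.223), "hr": (88, 82), "eda": (5.788, 14.539)},
        {"theta_beta": (1.986, 1.679), "alpha": (6.396, 8.072), "hr": (75, 76), "eda": (1.019, 3.076)},
        {"theta_beta": (3.935, 5.420), "alpha": (10.725, 9.606), "hr": (73, 79), "eda": (0.538, 0.540)},
    ],
    "Postgraduates": [
        {"theta_beta": (2.439, 2.532), "alpha": (7.831, 7.801), "hr": (75, 70), "eda": (0.371, 0.307)},
        {"theta_beta": (2.580, 3.331), "alpha": (20.523, 13.933), "hr": (90, 84), "eda": (0.387, 4.516)},
        {"theta_beta": (1.971, 1.823), "alpha": (7.163, 6.926), "hr": (77, 79), "eda": (1.459, 2.671)},
    ],
}


def count_shifts(rows, metric, direction):
    """Count participants whose MR mean is above ("up") or below ("down") the VP mean."""
    if direction == "up":
        return sum(1 for row in rows if row[metric][1] > row[metric][0])
    return sum(1 for row in rows if row[metric][1] < row[metric][0])


shifts = {
    group: {
        "theta_beta_up": count_shifts(rows, "theta_beta", "up"),
        "alpha_down": count_shifts(rows, "alpha", "down"),
        "hr_up": count_shifts(rows, "hr", "up"),
        "eda_up": count_shifts(rows, "eda", "up"),
    }
    for group, rows in TABLE_3.items()
}

for group, counts in shifts.items():
    print(group, counts)
```

Equal VP and MR means (as for the HR of student 2) count as neither an increase nor a decrease in this sketch.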

Discussion

Originality

This work presented a pilot study for technically achieving the capacity to obtain evidence-based, real-time affective analytics from users of a VR/MR educational serious game resource. Three participant groups were included, namely undergraduate medical students, medical school postgraduates, and neurosurgeons. This is the first time that a multitude of recording and interaction devices were integrated within a cohesive and contemporary educational episode. This integration led to a plausible neurophysiological interpretation of recorded biosignals regarding engagement and other affective analytics metrics.

The technical barriers for this endeavor were significant. While the wrist-wearable sensor is unobtrusive and easy to wear, obtaining EEG recordings while simultaneously wearing a highly sophisticated electronic device like the Microsoft HoloLens is not easily accomplished. The literature has significant findings regarding EEG and VR, but these usually involve virtual environments (3D environments projected on a 2D screen) instead of truly immersive virtual reality, which requires a dedicated headset [92]. In other cases, successful endeavors incorporating EEG into VR scenarios require expensive and cumbersome simulation rooms [93]. To the authors’ knowledge, this work is the first to involve (1) the first wearable, truly immersive mixed reality holographic computer (the MS HoloLens) and (2) real-time concurrent EEG recordings in an unconfined, free-roaming setting easily transferable to real-world educational settings.

Principal Neurophysiological Results

Our neurophysiological investigation focuses primarily on the theta/beta ratio (the ratio of powers of theta rhythm to beta rhythm) and the amplitude of alpha rhythm (8 to 12 Hz). An increase of theta (4 to 8 Hz) power in EEG recordings has been documented, corresponding to neurophysiological processes that facilitate both working memory and episodic memory, as well as the encoding of new information [94,95]. Moreover, when recorded at the area over midline brain regions, theta activity is also related to cognitive processes that involve concentration, sustained attention, and creativity [96-100]. In line with this, higher theta activity has been reported in the frontal-midline regions during a task of high cognitive demand, a task with increasing working memory needs [101,102], or even a high-attention process [103-105]. On the other hand, engagement in attention-demanding tasks or judgment calls is reported to lead to alpha power suppression [106,107].
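To make the theta/beta ratio concrete, the following dependency-free sketch estimates band power from a single-channel signal with a direct discrete Fourier transform and divides theta power by beta power. The theta (4 to 8 Hz) and alpha (8 to 12 Hz) edges follow the text and the 256 Hz rate is the EEG sampling rate reported in this paper; the beta range of 13 to 30 Hz, the function names, and the synthetic test signal are assumptions for illustration. This is not the processing pipeline used in the study, and a real analysis would normally rely on an established spectral estimator and artifact handling.

```python
import cmath
import math

# Band edges in Hz. Theta and alpha follow the text; the beta range is an
# assumption, since this section does not state it.
THETA = (4.0, 8.0)
ALPHA = (8.0, 12.0)
BETA = (13.0, 30.0)  # assumed


def band_power(samples, fs, band):
    """Sum periodogram power over DFT bins whose frequency lies in [lo, hi)."""
    n = len(samples)
    offset = sum(samples) / n
    centered = [x - offset for x in samples]  # remove the DC component
    lo, hi = band
    power = 0.0
    for k in range(1, n // 2 + 1):
        freq = k * fs / n
        if lo <= freq < hi:
            # Direct DFT at bin k: fine for short windows, slow for long ones.
            coeff = sum(
                x * cmath.exp(-2j * math.pi * k * i / n)
                for i, x in enumerate(centered)
            )
            power += abs(coeff) ** 2 / n
    return power


def theta_beta_ratio(samples, fs):
    """Ratio of theta-band power to beta-band power."""
    return band_power(samples, fs, THETA) / band_power(samples, fs, BETA)


# Synthetic 2-second test signal: a 6 Hz (theta) component with twice the
# amplitude of a 20 Hz (beta) component, so the power ratio should be close to 4.
fs = 256
signal = [
    2.0 * math.sin(2 * math.pi * 6 * t / fs) + 1.0 * math.sin(2 * math.pi * 20 * t / fs)
    for t in range(2 * fs)
]
ratio = theta_beta_ratio(signal, fs)
print(f"theta/beta power ratio: {ratio:.3f}")
```

The same `band_power` helper can be called with `ALPHA` to track alpha-band activity, keeping in mind that this sketch returns power while the tables report alpha amplitude in μV.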

Our results, in the context of the educational and affective setup of the experiment, agree with the previously reported literature.

The undergraduate student group presented a decrease in theta/beta ratio activity and an increase in alpha rhythm amplitude. These results can be interpreted as engagement in high-demand cognitive functions while judgment calls were suppressed. As we described in the “Equipment, Affective, and Educational Setup” section, undergraduate students were offered the correct choices by the researchers during the MR scenario, given its very demanding neuroanatomy content. In that context, undergraduate students concentrated on the mechanical tasks of using the MR equipment, while they made no judgment calls themselves (it can be said that cognitive control of the scenario was relegated to the researcher facilitating the student).

The second group, the postgraduate students, presented an increase in theta/beta ratio activity. They also presented a decrease in alpha rhythm amplitude during the MR serious game scenario. As we previously described, these students were asked to individually solve a very challenging (for their education level) medical scenario. Thus, they had to use all their cognitive faculties in order to overcome this challenge. The results are consistent with the educational setup for this group.

The third group, consisting of specialist neurosurgeon doctors, presented an increase in theta/beta ratio. They also presented an increase in alpha rhythm amplitude, similar to the undergraduate medical students. In this case, the participants (neurosurgeons) were presented with a scenario that required their concentration and initiative to solve, but they were not seriously challenged, since the educational material covered in the case was well within their skills. Thus, they had to commit cognitively to the task, especially to use the MR equipment, but not fully. This cognitive engagement ambivalence is demonstrated by the concurrent increase in theta/beta ratios and alpha rhythm amplitudes.

Regarding the EDA and HR results, these values were significantly elevated in the VR/MR session versus the baseline educational episode. Elevated HR and EDA are established signs of high arousal, independent of valence [108,109]. HR remained more or less steady on average in all groups, a fact that can be attributed to the overall relaxed environment of the experiment (seated participation, silent room, etc) and the resilience of the average HR to short-term variations. However, almost all the participants presented a significant increase in EDA, which can be attributed to the overall novelty factor of wearing a sense-altering digital device, as well as the immersion and excitement of the interaction with the VR/MR educational resource.

Limitations

Despite the overall promising results, this work contains some inherent limitations, mostly linked with the novelty of its aim and scope. A significant limitation is the small and diverse group of subjects that were used for the pilot run of the multimodal signal acquisition configuration. It must be emphasized that given the sampling rate of the biosensors, every 1 minute of recording provided 15,360 samples (256 Hz × 60 s) of EEG per channel, 60 samples (1 Hz × 60 s) of HR, and 240 samples (4 Hz × 60 s) of EDA. These high-density data allowed rather definitive biosignal results to be extracted on a per-participant basis throughout the study. While the extensive data set gathered from participants provided credible results for this feasibility study, there is a clear need to expand the participant sample in order to explore personalization and statistical verification challenges.
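The per-minute sample counts quoted above follow directly from the sampling rates. A minimal sketch of that arithmetic, with illustrative names that are not part of the study's software, is:

```python
# Sampling rates in Hz as stated in the text.
SAMPLING_RATES_HZ = {"EEG (per channel)": 256, "HR": 1, "EDA": 4}


def samples_recorded(rate_hz, seconds):
    """Number of samples a sensor produces at rate_hz over the given duration."""
    return rate_hz * seconds


per_minute = {
    name: samples_recorded(rate, 60) for name, rate in SAMPLING_RATES_HZ.items()
}
for name, count in per_minute.items():
    print(f"{name}: {count} samples per minute")
```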

Another core limitation of this study is the low affective impact of the MR case. Furthermore, all participants experienced the educational content only once. The case had significant immersive content, including animation and audio cues as rewards and motivations for the user. However, it lacked impact and significant narrative consequences for the users’ actions.

Thus, future work will require a larger user base, as well as more frequent exposure of participants to affective educational stimuli with emotional and narrative specificity when this suite is used in a VR/MR approach. Specific emotion-inducing content needs to be implemented in this modality in order to assess the biosignal variations as the emotional content clashes (or cooperates) with the VR/MR platform’s inherently high arousal and emotional impact (for example, error-based training). Finally, as previously mentioned, a future goal to pursue based on this work is the integration of this biosensor suite in an intelligent tutoring system (ITS) for immediate medical teaching support that includes VR/MR resources.

Comparison With Prior Work

Similar endeavors for affective analytics in non–technology-heavy medical education episodes have already been initially explored [110]. However, this work is the first proof of application of at least one configuration that is realistically unconfined and applicable in a simple educational setting for emotion detection in a technology-heavy VR/MR-based medical education episode. The full scope of application for this work is the integration of sensors and devices for incorporating objective, sensor-based affective analytics in VR/MR educational resources provided by ITSs.

ITSs are computer systems that aim to provide immediate and customized instruction or feedback to learners [80], usually without requiring intervention from a human teacher. ITSs typically aim to replicate the demonstrated benefits of one-to-one (personalized) tutoring in contexts where students would otherwise receive one-to-many instruction from a single teacher (eg, classroom lectures) or no teacher at all (eg, online homework) [111]. An ITS implementation is based on students’ characteristics and needs, and it analyzes and anticipates their affective responses and behaviors in order to collect information on a student’s performance more efficiently, handle content adjustment, tailor tutoring instructions based on inferred strengths and weaknesses, suggest additional work, and in general improve the learning level of students [112].

In that context, the integration of emotion detection for content and experience customization is not a new endeavor. Affective tutoring systems use bias-free physiological expressions of emotion (facial expressions, eye-tracking, EEG, biosensors, etc) in order “to detect and analyze the emotional state of a learner and respond in a way similar to human tutors” [113], hence being able to adapt to the affective state of students [114]. The results of this work provide initial evidence for the integration of multisensor configurations with a VR/MR platform and the synchronization and coordination of such a suite by an affect-aware ITS.

Conclusions

VR/MR is a versatile educational modality, especially in the highly sensitive medical domain. It provides the capacity both for highly impactful narrative, which is crucial for making doctors invested in the educational process, and for building decision-making skills and competences. It also provides the ability, through immersive simulation, for the medical learner to practice manual skills in surgical specialties. This dual capacity makes this modality highly impactful and sought after in the medical field. Therefore, the capacity to provide personalized, context-specific content and feedback based on the learner’s emotional state is crucial in this modality for both self-directed and standard guided medical learning. This work provides the first proof of application for such endeavors.

Abbreviations

- 2D: 2-dimensional

- 3D: 3-dimensional

- AC: amygdaloid complex

- ATS: activity and time stamp synchronization

- EDA: electrodermal activity

- EEG: electroencephalography

- HR: heart rate

- ICT: information and communication technologies

- ITS: intelligent tutoring system

- MR: mixed reality

- PAD: pleasure, arousal, dominance

- SAPP: signal acquisition and postprocessing

- UTC: Coordinated Universal Time

- VP: virtual patient

- VR: virtual reality

Footnotes

Conflicts of Interest: None declared.

References

- 1. Bloom B. Bloom's taxonomy of learning domains. NWlink. [2020-03-09]. http://www.nwlink.com/~donclark/hrd/bloom.html.

- 2. Krathwohl DR, Bloom BS, Masia BB. Taxonomy of Educational Objectives, Handbook II: Affective Domain. New York, NY: David McKay Co; 1964.

- 3. Scherer KR. Toward a Dynamic Theory of Emotion: The Component Process Model of Affective States. Geneva Studies in Emotion and Communication. 1987;1:1–198. https://pdfs.semanticscholar.org/4c23/c3099b3926d4b02819f2af196a86d2ef16a1.pdf.

- 4. Valenzi S, Islam T, Jurica P, Cichocki A. Individual Classification of Emotions Using EEG. JBiSE. 2014;07(08):604–620. doi: 10.4236/jbise.2014.78061.

- 5. Picard RW, Papert S, Bender W, Blumberg B, Breazeal C, Cavallo D, Machover T, Resnick M, Roy D, Strohecker C. Affective Learning — A Manifesto. BT Technology Journal. 2004 Oct;22(4):253–269. doi: 10.1023/b:bttj.0000047603.37042.33.

- 6. Stein NL, Levine LJ. Making sense out of emotion: The representation and use of goal-structured knowledge. In: Kessen W, Ortony A, Craik F, editors. Memories, thoughts, and emotions: essays in honor of George Mandler. New York: Psychology Press; 1991. p. 322.

- 7. Goleman D. Emotional intelligence. New York, NY: Bantam Book Publishing; 1995.

- 8. Stipek DJ. Motivation to learn: from theory to practice. 3rd ed. Boston, MA: Allyn and Bacon; 1998.

- 9. Patrick BC, Skinner EA, Connell JP. What motivates children's behavior and emotion? Joint effects of perceived control and autonomy in the academic domain. Journal of Personality and Social Psychology. 1993;65(4):781–791. doi: 10.1037/0022-3514.65.4.781.

- 10. Tobias S. Interest, Prior Knowledge, and Learning. Review of Educational Research. 2016 Jun 30;64(1):37–54. doi: 10.3102/00346543064001037.

- 11. Miserandino M. Children who do well in school: Individual differences in perceived competence and autonomy in above-average children. Journal of Educational Psychology. 1996;88(2):203–214. doi: 10.1037/0022-0663.88.2.203.

- 12. Mehrabian A, Russell JA. An approach to environmental psychology. Cambridge, MA: MIT Press; 1974.

- 13. Russell JA. A circumplex model of affect. Journal of Personality and Social Psychology. 1980;39(6):1161–1178. doi: 10.1037/h0077714.

- 14. Bakker I, van der Voordt T, Vink P, de Boon J. Pleasure, Arousal, Dominance: Mehrabian and Russell revisited. Curr Psychol. 2014 Jun 11;33(3):405–421. doi: 10.1007/s12144-014-9219-4.

- 15. Posner J, Russell JA, Peterson BS. The circumplex model of affect: An integrative approach to affective neuroscience, cognitive development, and psychopathology. Develop Psychopathol. 2005 Nov 1;17(03). doi: 10.1017/s0954579405050340.

- 16. Chang C, Zheng J, Wang C. Based on Support Vector Regression for emotion recognition using physiological signals. The 2010 International Joint Conference on Neural Networks (IJCNN); July 18-23, 2010; Barcelona, Spain. 2010.

- 17. Niu X, Chen Q. Research on genetic algorithm based on emotion recognition using physiological signals. 2011 International Conference on Computational Problem-Solving (ICCP); Oct 21-23, 2011; Chengdu, China. 2011.

- 18. Takahashi K. Remarks on SVM-based emotion recognition from multi-modal bio-potential signals. RO-MAN 2004. 13th IEEE International Workshop on Robot and Human Interactive Communication (IEEE Catalog No.04TH8759); Sep 22, 2004; Kurashiki, Japan. 2004.

- 19. Kim J, Bee N, Wagner J, Andre E. Emote to win: Affective interactions with a computer game agent. Lecture Notes in Informatics. 2004;1:159–164.

- 20. Kalimeri K, Saitis C. Exploring multimodal biosignal features for stress detection during indoor mobility. Proceedings of the 18th ACM International Conference on Multimodal Interaction - ICMI; 18th ACM International Conference on Multimodal Interaction - ICMI; Nov 12-16, 2016; Tokyo, Japan. 2016.

- 21. Ollander S, Godin C. A comparison of wearable and stationary sensors for stress detection. IEEE International Conference on Systems, Man, and Cybernetics (SMC); Oct 9-12, 2016; Budapest, Hungary. 2016.

- 22. Frantzidis C, Bratsas C, Klados M, Konstantinidis E, Lithari C, Vivas A, Papadelis C, Kaldoudi E, Pappas C, Bamidis P. On the Classification of Emotional Biosignals Evoked While Viewing Affective Pictures: An Integrated Data-Mining-Based Approach for Healthcare Applications. IEEE Trans Inform Technol Biomed. 2010 Mar;14(2):309–318. doi: 10.1109/titb.2009.2038481.

- 23. Lane RD, Reiman EM, Ahern GL, Schwartz GE, Davidson RJ. Neuroanatomical correlates of happiness, sadness, and disgust. Am J Psychiatry. 1997 Jul;154(7):926–33. doi: 10.1176/ajp.154.7.926.

- 24. Damasio AR, Grabowski TJ, Bechara A, Damasio H, Ponto LL, Parvizi J, Hichwa RD. Subcortical and cortical brain activity during the feeling of self-generated emotions. Nat Neurosci. 2000 Oct;3(10):1049–56. doi: 10.1038/79871.

- 25. Grodd W, Schneider F, Klose U, Nägele T. [Functional magnetic resonance tomography of psychological functions exemplified by experimentally induced emotions]. Radiologe. 1995 Apr;35(4):283–9.

- 26. Schneider F, Gur RE, Mozley LH, Smith RJ, Mozley P, Censits DM, Alavi A, Gur RC. Mood effects on limbic blood flow correlate with emotional self-rating: A PET study with oxygen-15 labeled water. Psychiatry Research: Neuroimaging. 1995 Nov;61(4):265–283. doi: 10.1016/0925-4927(95)02678-q.

- 27. Maddock RJ, Buonocore MH. Activation of left posterior cingulate gyrus by the auditory presentation of threat-related words: an fMRI study. Psychiatry Research: Neuroimaging. 1997 Aug;75(1):1–14. doi: 10.1016/s0925-4927(97)00018-8.

- 28. Canli T, Desmond JE, Zhao Z, Glover G, Gabrieli JDE. Hemispheric asymmetry for emotional stimuli detected with fMRI. Neuroreport. 1998 Oct 05;9(14):3233–9. doi: 10.1097/00001756-199810050-00019.

- 29. Phillips ML, Young AW, Scott SK, Calder AJ, Andrew C, Giampietro V, Williams SCR, Bullmore ET, Brammer M, Gray JA. Neural responses to facial and vocal expressions of fear and disgust. Proc Biol Sci. 1998 Oct 07;265(1408):1809–17. doi: 10.1098/rspb.1998.0506. http://europepmc.org/abstract/MED/9802236.

- 30. Paradiso S, Johnson DL, Andreasen NC, O'Leary DS, Watkins GL, Ponto LL, Hichwa RD. Cerebral blood flow changes associated with attribution of emotional valence to pleasant, unpleasant, and neutral visual stimuli in a PET study of normal subjects. Am J Psychiatry. 1999 Oct;156(10):1618–29. doi: 10.1176/ajp.156.10.1618.

- 31. Lane RD, Reiman EM, Axelrod B, Yun L, Holmes A, Schwartz GE. Neural correlates of levels of emotional awareness. Evidence of an interaction between emotion and attention in the anterior cingulate cortex. J Cogn Neurosci. 1998 Jul;10(4):525–35. doi: 10.1162/089892998562924.

- 32. Wright CI, Fischer H, Whalen PJ, McInerney SC, Shin LM, Rauch SL. Differential prefrontal cortex and amygdala habituation to repeatedly presented emotional stimuli. Neuroreport. 2001 Feb 12;12(2):379–83. doi: 10.1097/00001756-200102120-00039.

- 33. Izquierdo I, Bevilaqua LRM, Rossato JI, Bonini JS, Silva WCD, Medina JH, Cammarota M. The connection between the hippocampal and the striatal memory systems of the brain: A review of recent findings. Neurotox Res. 2006 Jun;10(2):113–121. doi: 10.1007/bf03033240.

- 34. Klados M, Pandria N, Athanasiou A, Bamidis PD. A novel neurofeedback protocol for memory deficits induced by left temporal lobe injury [forthcoming]. International Journal of Bioelectromagnetism. 2018.

- 35. Vann SD. Re-evaluating the role of the mammillary bodies in memory. Neuropsychologia. 2010 Jul;48(8):2316–27. doi: 10.1016/j.neuropsychologia.2009.10.019.

- 36. Gaffan D. What is a memory system? Horel's critique revisited. Behav Brain Res. 2001 Dec 14;127(1-2):5–11. doi: 10.1016/s0166-4328(01)00360-6.

- 37. Horel JA. The neuroanatomy of amnesia. A critique of the hippocampal memory hypothesis. Brain. 1978 Sep;101(3):403–45. doi: 10.1093/brain/101.3.403.

- 38. Adolphs R, Tranel D, Damasio H, Damasio A. Fear and the human amygdala. J Neurosci. 1995 Sep 01;15(9):5879–5891. doi: 10.1523/jneurosci.15-09-05879.1995.

- 39. Davis M. The role of the amygdala in conditioned fear. In: Aggleton JP, editor. The Amygdala: Neurobiological Aspects of Emotion, Memory, and Mental Dysfunction. New York, NY: Wiley; 1992. p. 395.

- 40. LeDoux JE. The neurobiology of emotion. In: Oakley DA, editor. Mind and Brain. Cambridge, MA: Cambridge University Press; 1986. p. 354.

- 41. Reiman EM, Lane RD, Ahern GL, Schwartz GE, Davidson RJ, Friston KJ, Yun LS, Chen K. Neuroanatomical correlates of externally and internally generated human emotion. Am J Psychiatry. 1997 Jul;154(7):918–25. doi: 10.1176/ajp.154.7.918.

- 42. Irwin W, Davidson RJ, Lowe MJ, Mock BJ, Sorenson JA, Turski PA. Human amygdala activation detected with echo-planar functional magnetic resonance imaging. Neuroreport. 1996 Jul 29;7(11):1765–9. doi: 10.1097/00001756-199607290-00014.

- 43. Müller JL, Sommer M, Wagner V, Lange K, Taschler H, Röder CH, Schuierer G, Klein HE, Hajak G. Abnormalities in emotion processing within cortical and subcortical regions in criminal psychopaths. Biological Psychiatry. 2003 Jul;54(2):152–162. doi: 10.1016/s0006-3223(02)01749-3.

- 44. Taylor SF, Liberzon I, Fig LM, Decker LR, Minoshima S, Koeppe RA. The effect of emotional content on visual recognition memory: a PET activation study. Neuroimage. 1998 Aug;8(2):188–97. doi: 10.1006/nimg.1998.0356.

- 45. Phelps EA. Human emotion and memory: interactions of the amygdala and hippocampal complex. Curr Opin Neurobiol. 2004 Apr;14(2):198–202. doi: 10.1016/j.conb.2004.03.015.

- 46. Maren S. Long-term potentiation in the amygdala: a mechanism for emotional learning and memory. Trends in Neurosciences. 1999 Dec;22(12):561–567. doi: 10.1016/s0166-2236(99)01465-4.

- 47. Phelps EA, O'Connor KJ, Gatenby JC, Gore JC, Grillon C, Davis M. Activation of the left amygdala to a cognitive representation of fear. Nat Neurosci. 2001 Apr;4(4):437–41. doi: 10.1038/86110.

- 48. Lanteaume L, Khalfa S, Régis J, Marquis P, Chauvel P, Bartolomei F. Emotion induction after direct intracerebral stimulations of human amygdala. Cereb Cortex. 2007 Jun;17(6):1307–13. doi: 10.1093/cercor/bhl041.

- 49. Markowitsch HJ. Differential contribution of right and left amygdala to affective information processing. Behav Neurol. 1998;11(4):233–244. doi: 10.1155/1999/180434.

- 50. Paré D, Collins DR, Pelletier JG. Amygdala oscillations and the consolidation of emotional memories. Trends Cogn Sci. 2002 Jul 01;6(7):306–314. doi: 10.1016/s1364-6613(02)01924-1.

- 51. Anderson AK, Phelps EA. Lesions of the human amygdala impair enhanced perception of emotionally salient events. Nature. 2001 May 17;411(6835):305–9. doi: 10.1038/35077083.

- 52. Fox E, Russo R, Bowles R, Dutton K. Do threatening stimuli draw or hold visual attention in subclinical anxiety? Journal of Experimental Psychology: General. 2001;130(4):681–700. doi: 10.1037/0096-3445.130.4.681.

- 53. Ochsner KN, Bunge SA, Gross JJ, Gabrieli JDE. Rethinking feelings: an FMRI study of the cognitive regulation of emotion. J Cogn Neurosci. 2002 Nov 15;14(8):1215–29. doi: 10.1162/089892902760807212.

- 54. Funayama ES, Grillon C, Davis M, Phelps EA. A double dissociation in the affective modulation of startle in humans: effects of unilateral temporal lobectomy. J Cogn Neurosci. 2001 Aug 15;13(6):721–9. doi: 10.1162/08989290152541395.

- 55. Wirth M, Isaacowitz DM, Kunzmann U. Visual attention and emotional reactions to negative stimuli: The role of age and cognitive reappraisal. Psychol Aging. 2017 Sep;32(6):543–556. doi: 10.1037/pag0000188.

- 56. Lithari C, Frantzidis CA, Papadelis C, Vivas AB, Klados MA, Kourtidou-Papadeli C, Pappas C, Ioannides AA, Bamidis PD. Are females more responsive to emotional stimuli? A neurophysiological study across arousal and valence dimensions. Brain Topogr. 2010 Mar;23(1):27–40. doi: 10.1007/s10548-009-0130-5. http://europepmc.org/abstract/MED/20043199.

- 57. Filkowski MM, Olsen RM, Duda B, Wanger TJ, Sabatinelli D. Sex differences in emotional perception: Meta analysis of divergent activation. Neuroimage. 2017 Feb 15;147:925–933. doi: 10.1016/j.neuroimage.2016.12.016.

- 58. Fry H, Ketteridge S, Marshall S. A Handbook for Teaching and Learning in Higher Education: Enhancing Academic Practice. New York, NY: Routledge; 2009.

- 59. Downes S. Distance Educators Before the River Styx Learning. [2013-05-25]. http://technologysource.org/article/

- 60. Kaldoudi E, Konstantinidis S, Bamidis PD. Web 2.0 approaches for active, collaborative learning in medicine and health. In: Mohammed S, Fiaidhi J, editors. Ubiquitous Health and Medical Informatics: The Ubiquity 2.0 Trend and Beyond. Hershey, PA: IGI Global; 2010.

- 61. Williams B. Case based learning--a review of the literature: is there scope for this educational paradigm in prehospital education? Emerg Med J. 2005 Aug;22(8):577–81. doi: 10.1136/emj.2004.022707. http://emj.bmj.com/cgi/pmidlookup?view=long&pmid=16046764.

- 62. Larson RD. In Search of Synergy in Small Group Performance. New York, NY: Psychology Press; 2010.

- 63. Brief description. ePBLnet Project; [2020-03-01]. http://www.epblnet.eu/content/brief-description.

- 64. Poulton T, Ellaway RH, Round J, Jivram T, Kavia S, Hilton S. Exploring the efficacy of replacing linear paper-based patient cases in problem-based learning with dynamic Web-based virtual patients: randomized controlled trial. J Med Internet Res. 2014 Nov 05;16(11):e240. doi: 10.2196/jmir.3748. https://www.jmir.org/2014/11/e240/

- 65. Ellaway R, Poulton T, Fors U, McGee JB, Albright S. Building a virtual patient commons. Medical Teacher. 2009 Jul 03;30(2):170–174. doi: 10.1080/01421590701874074.

- 66. Cook DA, Erwin PJ, Triola MM. Computerized Virtual Patients in Health Professions Education: A Systematic Review and Meta-Analysis. Academic Medicine. 2010;85(10):1589–1602. doi: 10.1097/acm.0b013e3181edfe13.

- 67. Saleh N. The Value of Virtual Patients in Medical Education. Ann Behav Sci Med Educ. 2015 Oct 16;16(2):29–31. doi: 10.1007/bf03355129.

- 68. Zary N, Johnson G, Boberg J, Fors UG. Development, implementation and pilot evaluation of a Web-based Virtual Patient Case Simulation environment--Web-SP. BMC Med Educ. 2006 Feb 21;6:10. doi: 10.1186/1472-6920-6-10. https://bmcmededuc.biomedcentral.com/articles/10.1186/1472-6920-6-10.

- 69. Bateman J, Davies D. Virtual Patients: Are We in a New Era? Academic Medicine. 2011;86(2):151. doi: 10.1097/acm.0b013e3182041db4.

- 70. Huwendiek S, De Leng BA, Zary N, Fischer MR, Ruiz JG, Ellaway R. Towards a typology of virtual patients. Medical Teacher. 2009 Sep 09;31(8):743–748. doi: 10.1080/01421590903124708.

- 71. Huang G, Reynolds R, Candler C. Virtual patient simulation at US and Canadian medical schools. Acad Med. 2007 May;82(5):446–51. doi: 10.1097/ACM.0b013e31803e8a0a.

- 72. MedBiquitous Standards. MedBiquitous; [2020-02-29]. https://www.medbiq.org/medbiquitous_virtual_patient.

- 73. MedBiquitous virtual patient summary. MedBiquitous; [2020-03-01]. https://www.medbiq.org/sites/default/files/vp_tech_overview/player.html.

- 74. Dafli E, Antoniou PE, Ioannidis L, Dombros N, Topps D, Bamidis PD. Virtual patients on the semantic Web: a proof-of-application study. J Med Internet Res. 2015 Jan 22;17(1):e16. doi: 10.2196/jmir.3933. https://www.jmir.org/2015/1/e16/

- 75. Antoniou PE, Sidiropoulos EA, Bamidis PD. DISCOVER-ing beyond openSim; immersive learning for carers of the elderly in the VR/AR era. Communications in Computer and Information Science; Third International Conference, Immersive Learning Research Network; June 26-29, 2017; Coimbra, Portugal. 2017. http://link.springer.com/10.1007/978-3-319-60633-0_16.

- 76. Antoniou PE, Athanasopoulou CA, Dafli E, Bamidis PD. Exploring design requirements for repurposing dental virtual patients from the web to second life: a focus group study. J Med Internet Res. 2014 Jun 13;16(6):e151. doi: 10.2196/jmir.3343. https://www.jmir.org/2014/6/e151/

- 77. Antoniou PE, Ioannidis L, Bamidis PD. OSCase: Data Schemes, Architecture and Implementation details of Virtual Patient repurposing in Multi User Virtual Environments. EAI Endorsed Transactions on Future Intelligent Educational Environments. 2016 Jun 27;2(6):151523. doi: 10.4108/eai.27-6-2016.151523. http://eudl.eu/doi/10.4108/eai.27-6-2016.151523.

- 78. Antoniou PE, Dafli E, Arfaras G, Bamidis PD. Versatile mixed reality medical educational spaces; requirement analysis from expert users. Pers Ubiquit Comput. 2017 Aug 23;21(6):1015–1024. doi: 10.1007/s00779-017-1074-5. https://link.springer.com/article/10.1007/s00779-017-1074-5.

- 79. Scheckler RK. Virtual labs: a substitute for traditional labs? Int J Dev Biol. 2003;47(2-3):231–6. http://www.intjdevbiol.com/paper.php?doi=12705675.

- 80. de Jong T, Linn MC, Zacharia ZC. Physical and virtual laboratories in science and engineering education. Science. 2013 Apr 19;340(6130):305–8. doi: 10.1126/science.1230579.

- 81. Weller JM. Simulation in undergraduate medical education: bridging the gap between theory and practice. Med Educ. 2004 Jan;38(1):32–8. doi: 10.1111/j.1365-2923.2004.01739.x.

- 82. Chiu JL, DeJaegher CJ, Chao J. The effects of augmented virtual science laboratories on middle school students' understanding of gas properties. Computers & Education. 2015 Jul;85:59–73. doi: 10.1016/j.compedu.2015.02.007.

- 83. Dede C. Immersive interfaces for engagement and learning. Science. 2009 Jan 02;323(5910):66–9. doi: 10.1126/science.1167311.

- 84. Klopfer E, Squire K. Environmental Detectives—the development of an augmented reality platform for environmental simulations. Education Tech Research Dev. 2007 Apr 5;56(2):203–228. doi: 10.1007/s11423-007-9037-6.

- 85. Dunleavy M, Dede C, Mitchell R. Affordances and Limitations of Immersive Participatory Augmented Reality Simulations for Teaching and Learning. J Sci Educ Technol. 2008 Sep 3;18(1):7–22. doi: 10.1007/s10956-008-9119-1.

- 86. Wu H, Lee SW, Chang H, Liang J. Current status, opportunities and challenges of augmented reality in education. Computers & Education. 2013 Mar;62:41–49. doi: 10.1016/j.compedu.2012.10.024.

- 87. Olympiou G, Zacharia ZC. Blending physical and virtual manipulatives: An effort to improve students' conceptual understanding through science laboratory experimentation. Sci Ed. 2011 Dec 20;96(1):21–47. doi: 10.1002/sce.20463.

- 88. NeXus-10 product page. Mind Media; [2020-03-01]. https://www.mindmedia.com/en/products/nexus-10-mkii/

- 89. Empatica E4 product page. Empatica; [2020-03-01]. https://www.empatica.com/en-eu/research/e4/

- 90. Microsoft HoloLens product page. Microsoft; [2020-03-01]. https://www.microsoft.com/en-us/hololens.

- 91. Debut Video Capture Software product page. NCH Software; [2020-03-01]. http://www.nchsoftware.com/capture/index.html.

- 92. Charalambous EF, Hanna SE, Penn AL. Visibility analysis, spatial experience and EEG recordings in virtual reality environments: The experience of 'knowing where one is' and isovist properties as a means to assess the related brain activity. Proceedings of the 11th Space Syntax Symposium; The 11th Space Syntax Symposium; July 3-7, 2017; Lisbon, Portugal. 2017. p. 128.

- 93. Lin CT, Chung IF, Ko LW, Chen YC, Liang SF, Duann JR. EEG-Based Assessment of Driver Cognitive Responses in a Dynamic Virtual-Reality Driving Environment. IEEE Trans Biomed Eng. 2007 Jul;54(7):1349–1352. doi: 10.1109/tbme.2007.891164.

- 94. Karrasch M, Laine M, Rapinoja P, Krause CM. Effects of normal aging on event-related desynchronization/synchronization during a memory task in humans. Neurosci Lett. 2004 Aug 05;366(1):18–23. doi: 10.1016/j.neulet.2004.05.010.

- 95.Klimesch W. EEG alpha and theta oscillations reflect cognitive and memory performance: a review and analysis. Brain Research Reviews. 1999 Apr;29(2-3):169–195. doi: 10.1016/s0165-0173(98)00056-3. [DOI] [PubMed] [Google Scholar]

- 96.Kubota Y, Sato W, Toichi M, Murai T, Okada T, Hayashi A, Sengoku A. Frontal midline theta rhythm is correlated with cardiac autonomic activities during the performance of an attention demanding meditation procedure. Cognitive Brain Research. 2001 Apr;11(2):281–287. doi: 10.1016/s0926-6410(00)00086-0. [DOI] [PubMed] [Google Scholar]

- 97.Başar-Eroglu C, Başar E, Demiralp T, Schürmann M. P300-response: possible psychophysiological correlates in delta and theta frequency channels. A review. International Journal of Psychophysiology. 1992 Sep;13(2):161–179. doi: 10.1016/0167-8760(92)90055-g. [DOI] [PubMed] [Google Scholar]

- 98.Missonnier P, Deiber M, Gold G, Millet P, Gex-Fabry Pun M, Fazio-Costa L, Giannakopoulos P, Ibáñez V. Frontal theta event-related synchronization: comparison of directed attention and working memory load effects. J Neural Transm (Vienna) 2006 Oct;113(10):1477–86. doi: 10.1007/s00702-005-0443-9. [DOI] [PubMed] [Google Scholar]

- 99.Lagopoulos J, Xu J, Rasmussen I, Vik A, Malhi GS, Eliassen CF, Arntsen IE, Saether JG, Hollup S, Holen A, Davanger S, Ellingsen Ø. Increased theta and alpha EEG activity during nondirective meditation. J Altern Complement Med. 2009 Nov;15(11):1187–92. doi: 10.1089/acm.2009.0113. [DOI] [PubMed] [Google Scholar]

- 100.Gruzelier J. A theory of alpha/theta neurofeedback, creative performance enhancement, long distance functional connectivity and psychological integration. Cogn Process. 2009 Feb;10 Suppl 1:S101–9. doi: 10.1007/s10339-008-0248-5. [DOI] [PubMed] [Google Scholar]

- 101.Jensen O, Tesche CD. Frontal theta activity in humans increases with memory load in a working memory task. Eur J Neurosci. 2002 Apr;15(8):1395–9. doi: 10.1046/j.1460-9568.2002.01975.x. [DOI] [PubMed] [Google Scholar]

- 102.Grunwald M, Weiss T, Krause W, Beyer L, Rost R, Gutberlet I, Gertz H. Theta power in the EEG of humans during ongoing processing in a haptic object recognition task. Cognitive Brain Research. 2001 Mar;11(1):33–37. doi: 10.1016/s0926-6410(00)00061-6. [DOI] [PubMed] [Google Scholar]

- 103.Gevins A, Zeitlin G, Yingling C, Doyle J, Dedon M, Schaffer R, Roumasset J, Yeager C. EEG patterns during ‘cognitive’ tasks. I. Methodology and analysis of complex behaviors. Electroencephalography and Clinical Neurophysiology. 1979 Dec;47(6):693–703. doi: 10.1016/0013-4694(79)90296-7. [DOI] [PubMed] [Google Scholar]

- 104.Gevins A, Zeitlin G, Doyle J, Schaffer R, Callaway E. EEG patterns during ‘cognitive’ tasks. II. Analysis of controlled tasks. Electroencephalography and Clinical Neurophysiology. 1979 Dec;47(6):704–710. doi: 10.1016/0013-4694(79)90297-9. [DOI] [PubMed] [Google Scholar]

- 105.Gevins A, Zeitlin G, Doyle J, Yingling C, Schaffer R, Callaway E, Yeager C. Electroencephalogram correlates of higher cortical functions. Science. 1979 Feb 16;203(4381):665–8. doi: 10.1126/science.760212. [DOI] [PubMed] [Google Scholar]

- 106.Adrian ED, Matthews BH. The interpretation of potential waves in the cortex. J Physiol. 1934 Jul 31;81(4):440–71. doi: 10.1113/jphysiol.1934.sp003147. https://onlinelibrary.wiley.com/resolve/openurl?genre=article&sid=nlm:pubmed&issn=0022-3751&date=1934&volume=81&spage=440. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 107.Niedermeyer E, Lopes da Silva F. Electroencephalography: Basic principles, clinical applications, and related fields. Baltimore, MD: Williams & Wilkins; 2004. [Google Scholar]

- 108.Fowles DC. The three arousal model: implications of gray's two-factor learning theory for heart rate, electrodermal activity, and psychopathy. Psychophysiology. 1980 Mar;17(2):87–104. doi: 10.1111/j.1469-8986.1980.tb00117.x. [DOI] [PubMed] [Google Scholar]

- 109.Drachen A, Nacke L, Yannakakis G, Pedersen A. Correlation between heart rate, electrodermal activity and player experience in first-person shooter games. Proceedings of the 5th ACM SIGGRAPH Symposium on Video Games; 5th ACM SIGGRAPH Symposium on Video Games; July 28, 2010; Los Angeles, CA. 2010. pp. 49–54. [DOI] [Google Scholar]

- 110.Antoniou P, Spachos D, Kartsidis P, Konstantinidis E, Bamidis P. Towards Classroom Affective Analytics. Validating an Affective State Self-reporting tool for the medical classroom. MedEdPublish. 2017;6(3) doi: 10.15694/mep.2017.000134. doi: 10.15694/mep.2017.000134. [DOI] [Google Scholar]

- 111.Blandford A. Intelligent tutoring systems: Lessons learned. Computers & Education. 1990 Jan;14(6):544–545. doi: 10.1016/0360-1315(90)90114-m. [DOI] [Google Scholar]

- 112.VanLehn K. The Relative Effectiveness of Human Tutoring, Intelligent Tutoring Systems, and Other Tutoring Systems. Educational Psychologist. 2011 Oct;46(4):197–221. doi: 10.1080/00461520.2011.611369. [DOI] [Google Scholar]

- 113.Mohanan R, Stringfellow C, Gupta D. An emotionally intelligent tutoring system. 2017 International Conference on Distributed Computing Systems; July 18-20, 2017; London, England. 2017. [DOI] [Google Scholar]

- 114.Sarrafzadeh A, Alexander S, Dadgostar F, Fan C, Bigdeli A. “How do you know that I don’t understand?” A look at the future of intelligent tutoring systems. Computers in Human Behavior. 2008 Jul;24(4):1342–1363. doi: 10.1016/j.chb.2007.07.008. [DOI] [Google Scholar]