Abstract

Facial expressions are important for intentional display of emotions in social interaction. For people with severe paralysis, the ability to display emotions intentionally can be impaired. Current brain–computer interfaces (BCIs) allow for linguistic communication but are cumbersome for expressing emotions. Here, we investigated the feasibility of a BCI to display emotions by decoding facial expressions. We used electrocorticographic recordings from the sensorimotor cortex of people with refractory epilepsy and classified five facial expressions, based on neural activity. The mean classification accuracy was 72%. This approach could be a promising avenue for development of BCI‐based solutions for fast communication of emotions. ANN NEUROL 2020;88:631–636

Introduction

Locked‐in syndrome (LIS) is a condition in which people are (almost) completely paralyzed while mental capacity remains intact 1 and in which communication through the normal means of vocalization and body language is completely lost. A promising technological aid is the brain–computer interface (BCI), which enables communication by recording brain signals and translating these into computer output. 2 However, the speed of communication with the current generation of BCIs 3 , 4 , 5 is substantially lower than that of normal speech.

Intentional communication of emotions is usually conveyed by facial expressions, 6 which allow people to convey their feelings quickly, without linguistic utterance. Although people with LIS cannot make deliberate facial expressions, attempts to do so might lead to recognizable and distinctive neural activity patterns, which can potentially contribute to restoration of the communication of emotion using a BCI approach. Indications for the feasibility of such an “intentional emotion display” BCI are sparse (but see Chin and colleagues 7 ), and it is currently unclear whether deliberate facial expressions, which require the integration of different facial movements at the same time, 6 , 8 can be distinguished from one another based on neural activity. In the present proof‐of‐concept study, we measured electrocorticographic sensorimotor cortex (SMC) neural activity associated with posed facial expressions in two individuals and classified the facial expressions based on the neural activity patterns.

Patients and Methods

Participants

Two participants (A and B; both female, aged 30 and 41 years, respectively) were included in this study after giving written informed consent. They underwent surgery for epilepsy treatment at the University Medical Center Utrecht, during which subdural electrocorticography electrodes were temporarily implanted for clinical purposes. For research purposes only, and with written informed consent of the patients, an additional high‐density electrode grid of 128 electrodes (electrode diameter 1.17 and 1 mm, interelectrode distance 4 and 3 mm, for Subjects A and B, respectively) was placed over the clinically non‐relevant SMC (Subject A, left hemisphere; Subject B, right hemisphere). Only the high‐density electrodes were used for the present analysis. The Ethics Committee of the University Medical Center Utrecht approved this study, which was carried out in accordance with the Declaration of Helsinki (2013).

Task

We examined five facial expressions (happy, sad, surprised, disgusted, and neutral), which are clearly distinct from each other with respect to the required muscles and can be distinguished accurately with video‐analysis. 6 , 9 The participants were asked to generate the facial expressions cued either by pictures of facial expressions (Cohn–Kanade database 10 , 11 ) or by the associated words. We used both picture and word cues because neural changes in the SMC can be induced by visual perception of actions, 12 which would confound decoding if only pictures were used. Trials started with a 2‐second cue (picture or word). The participants were instructed to make the expression quickly and hold it for as long as the cue was visible. The intertrial interval was 3 seconds. Each facial expression was presented 5 times as a picture and 5 times as a word, in random order, interleaved with rest trials, during which the participants were instructed to keep a neutral face.

Data Acquisition and Preprocessing

Data acquisition and preprocessing were similar to those described by Salari and colleagues 13 unless mentioned otherwise.

Classification Procedure

Trials were epoched between cue onset and offset and were classified by template matching with leave‐one‐trial‐out cross‐validation, using high‐frequency‐band (65–130 Hz) power as a feature (for further details, see Salari and colleagues 13 ). To generate classification templates, we used all grid electrodes (excluding noisy, flat, or re‐reference electrodes) and we combined picture and text trials. The effect of presentation type on classification was assessed separately by inspecting cue‐specific templates and by classifying text trials using picture templates and vice versa.
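As an illustration only, the template‐matching idea can be sketched in Python. The sketch assumes that each trial is already reduced to one high‐frequency‐band power value per electrode and that similarity is measured with the Pearson correlation; both are assumptions, and the actual procedure is the one described by Salari and colleagues 13 . All function names and the synthetic data are hypothetical.

```python
import math
import random


def pearson(x, y):
    """Pearson correlation between two equal-length vectors."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def mean_pattern(trials):
    """Electrode-wise mean over a list of trial feature vectors."""
    return [sum(col) / len(trials) for col in zip(*trials)]


def classify_leave_one_out(features, labels):
    """Predict each trial from templates built without that trial."""
    predictions = []
    for i, trial in enumerate(features):
        templates = {}
        for c in sorted(set(labels)):
            members = [f for j, (f, l) in enumerate(zip(features, labels))
                       if l == c and j != i]
            templates[c] = mean_pattern(members)
        # Assumed decision rule: pick the class whose template correlates best.
        predictions.append(max(templates, key=lambda c: pearson(trial, templates[c])))
    return predictions


# Synthetic stand-in data: 5 expressions x 10 trials x 128 electrodes.
random.seed(0)
classes = ["happy", "sad", "surprised", "disgusted", "neutral"]
prototypes = {c: [random.gauss(0, 1) for _ in range(128)] for c in classes}
labels = [c for c in classes for _ in range(10)]
features = [[p + random.gauss(0, 1) for p in prototypes[c]] for c in labels]

predictions = classify_leave_one_out(features, labels)
accuracy = sum(p == t for p, t in zip(predictions, labels)) / len(labels)
print(f"accuracy on synthetic data: {accuracy:.2f}")
```

Holding out the test trial before the templates are averaged keeps the trial from contributing to its own template, which is the point of the leave‐one‐trial‐out scheme.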

Neural Activity Localization and Trial Consistency

To gain insight into which areas were activated by which expression, we visualized, per expression, the average neural activity pattern on the brain. Furthermore, to see how consistent neural activity patterns were, we calculated the average within‐class correlation between trials and the average between‐class correlation. This was done for only the four emotional expressions, because the neutral expression did not involve any movement and therefore was not expected to be associated with neural activity changes.
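The consistency measure can be sketched as follows, assuming (this is not stated in the text) that the correlations are pairwise Pearson correlations between single‐trial electrode patterns. The function names and the synthetic data are hypothetical.

```python
import itertools
import math
import random


def pearson(x, y):
    """Pearson correlation between two equal-length vectors."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def class_consistency(features, labels):
    """Mean pairwise correlation within classes and between classes."""
    within, between = [], []
    pairs = itertools.combinations(zip(features, labels), 2)
    for (fa, la), (fb, lb) in pairs:
        (within if la == lb else between).append(pearson(fa, fb))
    return sum(within) / len(within), sum(between) / len(between)


# Synthetic stand-in data: four movement expressions, 10 trials, 64 electrodes.
# A component shared by all classes mimics areas active during every movement.
random.seed(0)
classes = ["happy", "sad", "surprised", "disgusted"]
shared = [random.gauss(0, 1) for _ in range(64)]
specific = {c: [random.gauss(0, 1) for _ in range(64)] for c in classes}
labels = [c for c in classes for _ in range(10)]
features = [[s + p + random.gauss(0, 2) for s, p in zip(shared, specific[c])]
            for c in labels]

within_r, between_r = class_consistency(features, labels)
print(f"within-class r = {within_r:.2f}, between-class r = {between_r:.2f}")
```

A within‐class value that exceeds the between‐class value indicates that trials of the same expression resemble each other more than trials of different expressions do.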

Informative Locations and Grid Sizes

With BCI applications in mind, and to help minimize the risks of electrocorticography grid implants, 14 we determined which brain regions were most informative for classification. For this purpose, we used a random search procedure (described by Salari and colleagues 13 ), in which random combinations of electrodes were chosen 5,000 times and subsequently used for classification. The average accuracy of each electrode was then determined and z‐scored. In addition, we used a searchlight procedure 13 to investigate what the classification accuracy would be if we used a smaller portion of the grid. This approach was also used to determine the maximum possible accuracy over all investigated grid locations for different grid sizes. Finally, we investigated which grid size yielded the highest accuracy, to find the minimally required grid size for maximal accuracy.
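The random search idea can be sketched in Python as follows. This is a minimal sketch under stated assumptions: the subset size, the correlation‐based template classifier, and all names are hypothetical, and the demo uses 300 rather than 5,000 iterations to keep the run short. The actual procedure is the one described by Salari and colleagues 13 .

```python
import math
import random
import statistics


def pearson(x, y):
    """Pearson correlation between two equal-length vectors."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def loo_accuracy(features, labels, electrodes):
    """Leave-one-trial-out template matching on a subset of electrodes."""
    sub = [[f[e] for e in electrodes] for f in features]
    correct = 0
    for i, trial in enumerate(sub):
        best_class, best_score = None, -2.0
        for c in sorted(set(labels)):
            members = [s for j, (s, l) in enumerate(zip(sub, labels))
                       if l == c and j != i]
            template = [sum(col) / len(members) for col in zip(*members)]
            score = pearson(trial, template)
            if score > best_score:
                best_class, best_score = c, score
        correct += best_class == labels[i]
    return correct / len(labels)


def random_search(features, labels, n_iterations, subset_size, rng):
    """z-scored mean accuracy of the random subsets each electrode was part of."""
    n_electrodes = len(features[0])
    totals, counts = [0.0] * n_electrodes, [0] * n_electrodes
    for _ in range(n_iterations):
        subset = rng.sample(range(n_electrodes), subset_size)
        acc = loo_accuracy(features, labels, subset)
        for e in subset:
            totals[e] += acc
            counts[e] += 1
    # Every electrode must be drawn at least once for its mean to exist.
    assert all(counts), "increase n_iterations so every electrode is sampled"
    means = [t / c for t, c in zip(totals, counts)]
    mu, sigma = statistics.mean(means), statistics.pstdev(means)
    return [(m - mu) / sigma for m in means]


# Synthetic stand-in data: only electrodes 0-4 carry class information.
rng = random.Random(1)
n_electrodes, informative = 20, range(5)
classes = [0, 1, 2]
prototypes = {c: [rng.gauss(0, 3) if e in informative else 0.0
                  for e in range(n_electrodes)] for c in classes}
labels = [c for c in classes for _ in range(6)]
features = [[p + rng.gauss(0, 1) for p in prototypes[c]] for c in labels]

z = random_search(features, labels, n_iterations=300, subset_size=5, rng=rng)
print([round(v, 2) for v in z])
```

Electrodes that tend to appear in high‐accuracy subsets receive a high z‐score, so the score acts as an indirect measure of how much each electrode contributes to classification.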

Data Availability

Data can be made available upon reasonable request.

Results

Task Performance

Participants performed the task well, although for Subject B some expressions, for example surprise, were noted to be rather subtle. This participant did report, however, that the movements she made represented a surprised face for her, and therefore we did not exclude any trials.

Spatial Classification Accuracy

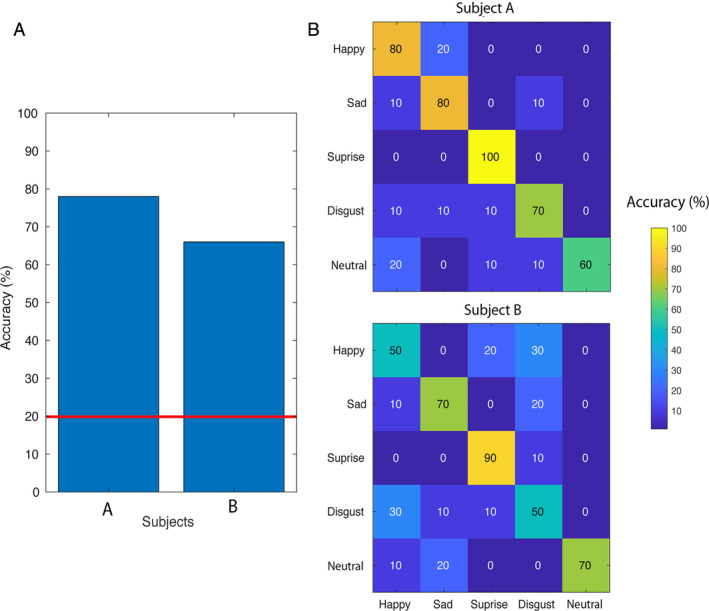

Classification accuracy was 78% and 66% for Subjects A and B, respectively (mean = 72%, SD = 8.49%, n = 2), and chance level was 20% (Fig 1). Classification was significantly above chance level (p < 0.001) for both participants.
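The summary statistics can be reproduced with a few lines of Python. The mean and the sample SD follow directly from the two reported accuracies. The significance check is only a sketch: the text does not state which test was used, so a one‐sided binomial test is assumed here, together with 50 classified trials per participant (5 expressions × 10 cues), which would correspond to 39 and 33 correct trials.

```python
import math
import statistics

accuracies = [78, 66]  # reported accuracies (%) for Subjects A and B
mean_accuracy = statistics.mean(accuracies)
sd_accuracy = statistics.stdev(accuracies)  # sample SD, divides by n - 1
print(mean_accuracy, round(sd_accuracy, 2))  # 72 8.49


def binomial_tail(k, n, p):
    """P(X >= k) for X ~ Binomial(n, p)."""
    return sum(math.comb(n, i) * p**i * (1 - p) ** (n - i)
               for i in range(k, n + 1))


N_TRIALS = 50  # assumption: 5 expressions x 10 cued trials each
CHANCE = 1 / 5  # five classes
correct = {"A": 39, "B": 33}  # assumption: 78% and 66% of 50 trials

p_values = {s: binomial_tail(k, N_TRIALS, CHANCE) for s, k in correct.items()}
for subject, p in p_values.items():
    print(f"Subject {subject}: p = {p:.2e}")
```

Under these assumptions both tail probabilities fall far below 0.001, in line with the reported significance level.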

FIGURE 1.

Classification accuracies and confusion matrices. (A) The classification accuracies (y‐axis) for both participants (x‐axis). The red line represents chance level. (B) The confusion matrices are shown for Subjects A and B, respectively, with the actual expression on the y‐axis and the predicted expression on the x‐axis. [Color figure can be viewed at www.annalsofneurology.org]

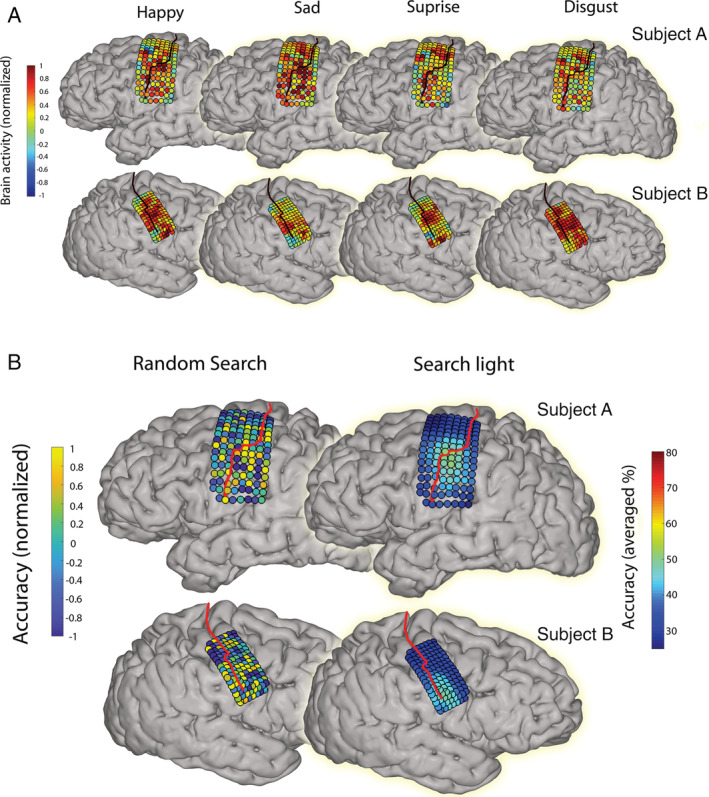

Neural Activity Localization and Trial Consistency

Of all electrodes, 84% (106 of 126 for Subject A) and 82% (103 of 125 for Subject B) showed a significant correlation with at least one of the posed facial expressions. Although some areas were active during all investigated facial movements, there were also many areas where high‐frequency‐band activity differed between expressions (Fig 2A). On average, trials within the same class correlated with a value of 0.32 (SD = 0.05) for Subject A and 0.32 (SD = 0.09) for Subject B. Trials of different classes correlated on average with a value of 0.21 (SD = 0.05) and 0.20 (SD = 0.05) for Subject A and B, respectively.

FIGURE 2.

The average neural activity patterns and informative areas. (A) The average neural activity pattern is shown for each expression that involved movement, for both Subjects A and B. The black line indicates the central sulcus. Colors indicate the level of normalized high‐frequency band power. (B) On the left, the most informative electrodes are shown for both Subjects A and B. The red line indicates the central sulcus. Colors indicate the level of attributed information for each electrode, which was determined by a random search procedure. Warm colors indicate high classification accuracies and therefore more information. On the right, the results of the searchlight procedure are shown, with the most informative areas shown in warm colors. We used a 3 × 3 electrode configuration for the creation of the current plot (three electrode rows and columns), corresponding to approximately 12 mm × 12 mm for Subject A and 9 mm × 9 mm for Subject B. Colors represent, for each electrode, the accuracy score averaged over all configurations containing that electrode. [Color figure can be viewed at www.annalsofneurology.org]

Informative Locations and Grid Sizes

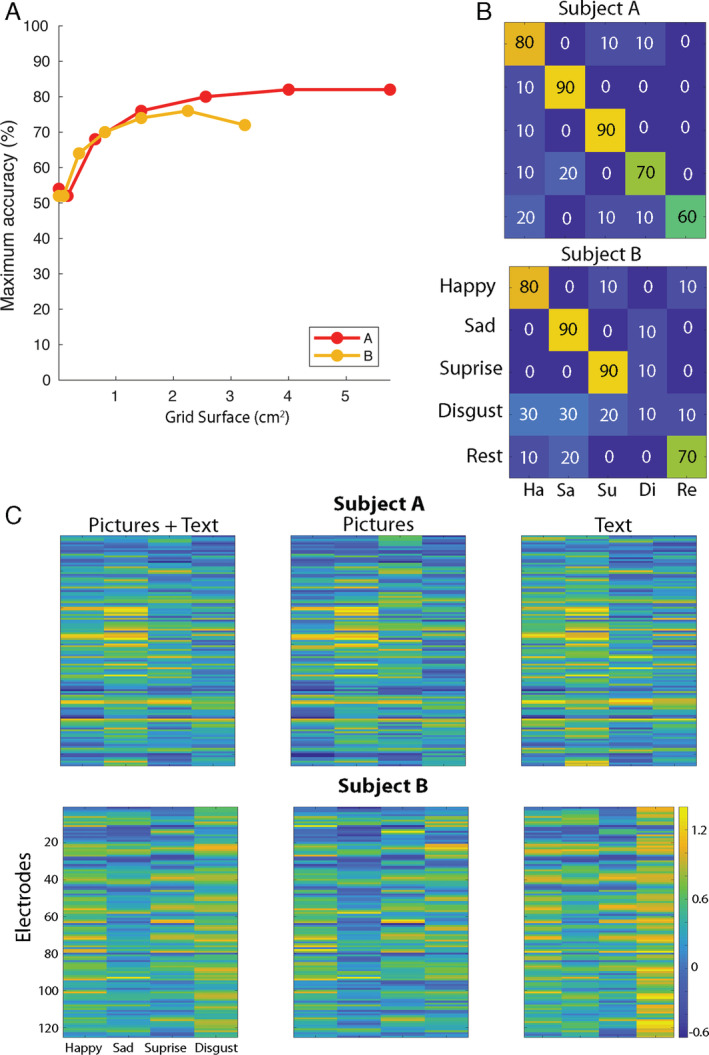

The most informative electrodes were located in the face area of the SMC, immediately below the hand area (Fig 2B). The minimum required grid size to obtain maximum accuracy was 4 cm² (6 × 6 electrodes with 4 mm distance) and 2.25 cm² (6 × 6 electrodes with 3 mm distance) for Subjects A and B, respectively (mean, 3.13 cm²; Fig 3).
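The reported surface areas can be checked with a short calculation. The sketch below assumes that the side of a grid is measured between the centers of its outermost electrodes, that is, (number of electrodes − 1) × interelectrode distance; this convention is an assumption, chosen because it reproduces the reported values. The function name is hypothetical.

```python
def grid_area_cm2(n_rows, n_cols, spacing_mm):
    """Area between the centers of the outermost electrodes, in cm^2."""
    height_mm = (n_rows - 1) * spacing_mm
    width_mm = (n_cols - 1) * spacing_mm
    return height_mm * width_mm / 100.0  # 100 mm^2 per cm^2


area_a = grid_area_cm2(6, 6, 4)  # Subject A: 20 mm x 20 mm
area_b = grid_area_cm2(6, 6, 3)  # Subject B: 15 mm x 15 mm
mean_area = (area_a + area_b) / 2

print(area_a, area_b, mean_area)  # 4.0 2.25 3.125
```

The mean of 3.125 cm² corresponds to the reported 3.13 cm² after rounding half up to two decimals.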

FIGURE 3.

The influence of grid size and presentation type on classification accuracy. (A) On the y‐axis, the maximum classification accuracy (from all grid locations in the searchlight) is indicated for both participants per size of cortical surface, which is indicated on the x‐axis. Results were obtained using the searchlight approach, in which, for each sampled area of cortex, the classification accuracy was determined. Note that the number of electrodes of each consecutive data point in this plot is equal for both participants, but owing to differences in the resolution, the covered surfaces differ. (B) The confusion matrices of classification with templates based on the opposite presentation type are shown. That is, picture trials were classified using templates based only on text trials and vice versa. The confusion matrices are shown for Subjects A and B, respectively, with the real expression on the y‐axis and the predicted expression on the x‐axis. With this approach, classification can be driven only by activity common to both presentation types and is therefore more likely to be attributable to the facial movements than to the stimulus presentation. Note, however, that as the number of trials per presentation type is rather limited (5 per class), stability of the templates may be reduced. Therefore, in all other classification analyses, we included both text and picture trials. (C) The facial expression neural activity templates are shown for Subjects A and B. On the x‐axis, the facial expressions are indicated. On the y‐axis, electrodes are shown. The color scale indicates the normalized neural activity level, and each cell indicates the average neural activity level for one electrode based on all trials (left), on picture trials only (middle), or text trials only (right). [Color figure can be viewed at www.annalsofneurology.org]

Presentation Type

Classification of picture trials based on text templates and vice versa yielded results similar to those obtained with combined text and picture templates (78 and 68% accuracy for Subjects A and B, respectively). Visual inspection of the average neural pattern per class showed a clear influence of presentation type only for the expression of disgust by Subject B. In the combined (picture + text) template, however, this effect was reduced (see Fig 3).
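The cross‐presentation analysis can be sketched in Python as follows: templates are built from the trials of one cue type and used to classify the trials of the other. The Pearson correlation as similarity measure, the function names, and the synthetic data are assumptions for illustration.

```python
import math
import random


def pearson(x, y):
    """Pearson correlation between two equal-length vectors."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def build_templates(features, labels):
    """One electrode-wise mean pattern per class."""
    templates = {}
    for c in sorted(set(labels)):
        members = [f for f, l in zip(features, labels) if l == c]
        templates[c] = [sum(col) / len(members) for col in zip(*members)]
    return templates


def cross_cue_accuracy(train_features, train_labels, test_features, test_labels):
    """Classify trials of one cue type with templates from the other cue type."""
    templates = build_templates(train_features, train_labels)
    hits = 0
    for trial, label in zip(test_features, test_labels):
        predicted = max(templates, key=lambda c: pearson(trial, templates[c]))
        hits += predicted == label
    return hits / len(test_labels)


# Synthetic stand-in data: 5 expressions, 5 trials per cue type, 64 electrodes.
random.seed(0)
classes = ["happy", "sad", "surprised", "disgusted", "neutral"]
prototypes = {c: [random.gauss(0, 1) for _ in range(64)] for c in classes}
labels = [c for c in classes for _ in range(5)]


def simulate(labels):
    return [[p + random.gauss(0, 1) for p in prototypes[c]] for c in labels]


picture_trials, text_trials = simulate(labels), simulate(labels)

acc_text_to_picture = cross_cue_accuracy(text_trials, labels, picture_trials, labels)
acc_picture_to_text = cross_cue_accuracy(picture_trials, labels, text_trials, labels)
print(acc_text_to_picture, acc_picture_to_text)
```

Because the training and test trials come from different cue types, no trial can contribute to its own template, so no additional cross‐validation is needed in this sketch.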

Discussion

In this proof‐of‐concept study, we showed that five posed facial expressions can be classified well based on SMC neural activity. Both the left and the right hemisphere could be used for classification, which corresponds to earlier demonstrations of bilateral activation during production of a willful smile. 15 The facial SMC area was most informative for classification. Furthermore, we found that even with a grid size of only 3.13 cm², good performance could be reached. Importantly, given that the participants had to hold the facial expressions for only 2 seconds, we show that classification of posed facial expressions can be established relatively quickly.

Our results add a new conceptual dimension to previous studies that classified isolated articulator movements, 13 , 16 by showing that posed facial expressions, which entail simultaneous movements of multiple effectors (lips, jaw, nose and eyebrows), can be identified to a high degree by using neural activity alone. Importantly, this study focused on facial expressions, which allows the decoding of activity that corresponds to willful expression of feelings and is therefore conceptually different from earlier reports on decoding mood itself (eg, Sani and colleagues 17 ). Indeed, a double dissociation between genuine and mimicked facial movements exists in the motor pathways, with the mimicked movements originating from the motor cortex and the emotional state mostly from subcortical structures. 18 , 19 Given that it is important for BCI users to have control over what they want to communicate, we believe that classification of willful facial expressions benefits the development of communication BCIs.

In the present study, we investigated executed movements produced by able‐bodied people. The feasibility of classifying attempted facial expressions in people with LIS is likely 20 but requires confirmation. Another limitation is the small number of participants and expressions. The influence of the presentation type on the classification seemed to be limited, but further research on this topic is needed because the number of trials per presentation type was low.

Conclusion

We demonstrate that posed, intentional facial expressions can be distinguished from each other based on SMC activity. These results suggest that the deliberate display of emotions in the form of facial expressions can be added to communication BCIs and potentially increase the utility of neuroprosthetic communication devices. Enrichment of BCI communication with emotional messaging is likely to enhance the quality of social interaction for people with LIS.

Author Contributions

E.S., Z.F., M.V., and N.R. contributed to the conception and design of the study; E.S., Z.F., and M.V. contributed to the acquisition and analysis of data; E.S., Z.F., M.V., and N.R. contributed to drafting the text and preparing the figures.

Potential Conflicts of Interest

Nothing to report.

Acknowledgments

This work was supported by the European Union (ERC‐Advanced “iConnect” grant 320708).

We would like to thank the participants and the clinical staff for their contribution to this experiment. In addition, we would like to thank Philippe Cornelisse, Andreas Wolters, and Joosje Kist for their help and advice with respect to the development of the task and their suggestions for relevant literature.

References

- 1. American Congress of Rehabilitation Medicine. Recommendations for use of uniform nomenclature pertinent to patients with severe alterations in consciousness. Arch Phys Med Rehabil 1995;76:205–209.

- 2. Wolpaw JR, Birbaumer N, McFarland DJ, et al. Brain‐computer interfaces for communication and control. Clin Neurophysiol 2002;113:767–791.

- 3. Jarosiewicz B, Sarma AA, Bacher D, et al. Virtual typing by people with tetraplegia using a self‐calibrating intracortical brain‐computer interface. Sci Transl Med 2015;7:313ra179.

- 4. Vansteensel MJ, Pels EGM, Bleichner MG, et al. Fully implanted brain–computer interface in a locked‐in patient with ALS. N Engl J Med 2016;375:2060–2066.

- 5. Speier W, Arnold C, Chandravadia N, et al. Improving P300 spelling rate using language models and predictive spelling. Brain Comput Interfaces (Abingdon) 2018;5:13–22.

- 6. Ekman P, Friesen WV. Unmasking the face: a guide to recognizing emotions from facial clues. Los Altos, California, USA: ISHK, 2003.

- 7. Chin ZY, Ang KK, Guan C. Multiclass voluntary facial expression classification based on Filter Bank Common Spatial Pattern. Conf Proc IEEE Eng Med Biol Soc 2008;2008:1005–1008.

- 8. Cattaneo L, Pavesi G. The facial motor system. Neurosci Biobehav Rev 2014;38:135–159.

- 9. Taner Eskil M, Benli KS. Facial expression recognition based on anatomy. Comput Vis Image Underst 2014;119:1–14.

- 10. Kanade T, Cohn JF, Tian Y. Comprehensive database for facial expression analysis. In: Proceedings Fourth IEEE International Conference on Automatic Face and Gesture Recognition (Cat. No. PR00580). 2000: 46–53.

- 11. Lucey P, Cohn JF, Kanade T, et al. The extended Cohn‐Kanade dataset (CK+): a complete dataset for action unit and emotion‐specified expression. In: 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition ‐ Workshops 2010: 94–101.

- 12. Pineda JA. Sensorimotor cortex as a critical component of an “extended” mirror neuron system: does it solve the development, correspondence, and control problems in mirroring? Behav Brain Funct 2008;4:47.

- 13. Salari E, Freudenburg ZV, Branco MP, et al. Classification of articulator movements and movement direction from sensorimotor cortex activity. Sci Rep 2019;9:1–12.

- 14. Wong CH, Birkett J, Byth K, et al. Risk factors for complications during intracranial electrode recording in presurgical evaluation of drug resistant partial epilepsy. Acta Neurochir 2009;151:37–50.

- 15. Iwase M, Ouchi Y, Okada H, et al. Neural substrates of human facial expression of pleasant emotion induced by comic films: a PET study. Neuroimage 2002;17:758–768.

- 16. Bleichner MG, Jansma JM, Salari E, et al. Classification of mouth movements using 7 T fMRI. J Neural Eng 2015;12:066026.

- 17. Sani OG, Yang Y, Lee MB, et al. Mood variations decoded from multi‐site intracranial human brain activity. Nat Biotechnol 2018;36:954–961.

- 18. Rinn WE. The neuropsychology of facial expression: a review of the neurological and psychological mechanisms for producing facial expressions. Psychol Bull 1984;95:52–77.

- 19. Morecraft RJ, Stilwell‐Morecraft KS, Rossing WR. The motor cortex and facial expression: new insights from neuroscience. Neurologist 2004;10:235–249.

- 20. Bruurmijn MLCM, Pereboom IPL, Vansteensel MJ, et al. Preservation of hand movement representation in the sensorimotor areas of amputees. Brain 2017;140:3166–3178.
