Abstract

The infant brain may be predisposed to identify perceptually salient cues that are common to both signed and spoken languages. Recent theory based on spoken languages has advanced sonority as one of these potential language acquisition cues. Using a preferential looking paradigm with an infrared eye tracker, we explored visual attention of hearing 6- and 12-month-olds with no sign language experience as they watched fingerspelling stimuli that either conformed to high sonority (well-formed) or low sonority (ill-formed) values, which are relevant to syllabic structure in signed language. Younger babies showed highly significant looking preferences for well-formed, high sonority fingerspelling, while older babies showed no preference for either fingerspelling variant, despite showing a strong preference in a control condition. The present findings suggest babies possess a sensitivity to specific sonority-based contrastive cues at the core of human language structure that is subject to perceptual narrowing, irrespective of language modality (visual or auditory), shedding new light on universals of early language learning.

A large component of human language development research has focused on identifying the specific cues in the language signal that capture infants’ attention in early language learning. A common bias, however, is that the biological foundation of language learning is tied to properties of speech, and that brain regions are “hardwired” at birth to propel spoken language acquisition (Hickok & Poeppel, 2007; Liberman & Mattingly, 1989; MacNeilage & Davis, 2000; Seidenberg, 1997). For instance, Gómez et al. (2014), in a neuroimaging study of neonates’ perception of spoken phonological features, claimed to have discovered “language universals” present at birth that “shape language perception and acquisition.” Yet only spoken language phonological features were studied. If such universals are truly “universal,” they should also be observable in signed languages and should therefore be amodal.

Past studies have uncovered other putative universals of human language acquisition by applying the same logic and looking for these phenomena in signed languages. For example, perceptual narrowing, the well-documented phenomenon (cf. Werker & Tees, 1984) whereby infants are initially able to discriminate among the full set of phonetic categories in the world’s spoken languages but by their first birthday attenuate to only the phonetic categories of their native language(s), has been extended to signed languages and offered up as a universal feature of language acquisition (Baker, Golinkoff, & Petitto, 2006; Palmer, Fais, Golinkoff, & Werker, 2012). Another putative language universal is infants’ attraction to linguistic over non-linguistic input: widely demonstrated for speech over nonspeech sounds (reviewed in Vouloumanos & Werker, 2007), it has also been found for linguistic signs over non-linguistic gestures (Bosworth, Hwang, & Corina, 2013; Krentz & Corina, 2008). Over the course of language acquisition, identical maturational patterns and timetables for the stages of language learning have been observed in speaking and signing children (Bellugi & Klima, 1982; Newport & Meier, 1985; Petitto, Holowka, Sergio, Levy, & Ostry, 2004; Petitto, Holowka, Sergio, & Ostry, 2001; Petitto & Marentette, 1991).

One hypothesis offered to account for the identical maturational patterns in sign and speech is that “the brain at birth cannot be working under rigid genetic instruction to produce and receive language via the auditory-speech modality” (Petitto, 2000, p. 12). Instead, the brain may be predisposed to identify salient cues that are common to both signed and spoken languages, in other words, language universals (Petitto, 2000, 2009). What, then, might such a salient cue be that is present in both the visual-manual and the auditory-spoken modalities? To address this question, we selected sonority, a component of spoken language structure that Gómez et al. (2014) implicated as a “language universal,” examined the same component in signed language structure, and asked whether infants also demonstrate sensitivity to it in this modality. Answering this question could shed new light on a supposed universal of early language learning by testing for it in the visual modality, and could challenge the commonly held bias that human language acquisition is tied uniquely to speech.

Sonority is defined as the relative perceptual salience of units in the language signal (Brentari, 1998; Corina, 1990; Corina, 1996; Jantunen & Takkinen, 2010; MacNeilage, Krones, & Hanson, 1970; Ohala, 1990; Perlmutter, 1992; Sandler, 1993). In spoken languages, sonority usually refers to the relative audibility of speech sounds, as modulated by the openness of the vocal tract during phonation. Speech sounds with greater sonority are more perceptible (louder). Hence, vowels are more sonorant than glides and liquids, which are more sonorant than nasals and obstruents (MacNeilage et al., 1970; Ohala, 1990). Sonority constrains syllable formation across all spoken languages. One such constraint is the “Sonority Sequencing Principle” (SSP), which holds that syllables are best formed when they follow a sonority contour (i.e., a pattern of rise and fall in intensity and loudness) in which the syllable nucleus is the sonority peak, that is, the loudest point (Ohala, 1990; Parker, 2008). Within a single syllable, sonority rises from onset to nucleus and falls from nucleus to coda, so consonant clusters in onsets are organized by rising sonority and those in codas by falling sonority.
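The SSP can be read as a simple check on a sequence of sonority values: a single peak, with strictly rising values before it and strictly falling values after it. The sketch below is illustrative only. The numeric ranks, the letter-to-class table, and the function name `obeys_ssp` are assumptions introduced here, and spelling stands in for segments, which works only for simple toy forms such as the blif/lbif contrast studied by Berent and colleagues.

```python
# Toy sonority ranks (higher = more sonorant). The numbers are assumptions; the
# text only orders vowels > glides/liquids > nasals/obstruents.
SONORITY = {"obstruent": 1, "nasal": 2, "liquid": 3, "glide": 3, "vowel": 4}

# Hypothetical letter-to-class table, sufficient only for simple toy forms.
SEGMENT_CLASS = {
    "b": "obstruent", "f": "obstruent", "p": "obstruent", "t": "obstruent",
    "m": "nasal", "n": "nasal",
    "l": "liquid", "r": "liquid",
    "w": "glide", "j": "glide",
    "a": "vowel", "e": "vowel", "i": "vowel", "o": "vowel", "u": "vowel",
}


def obeys_ssp(syllable: str) -> bool:
    """True if sonority rises strictly to one peak and then falls strictly.

    Strict inequalities treat sonority plateaus as violations, a simplification.
    """
    values = [SONORITY[SEGMENT_CLASS[seg]] for seg in syllable]
    peak = values.index(max(values))
    rising = all(a < b for a, b in zip(values[:peak], values[1:peak + 1]))
    falling = all(a > b for a, b in zip(values[peak:], values[peak + 1:]))
    return rising and falling


print(obeys_ssp("blif"))  # True: 1, 3, 4, 1 rises to the vowel, then falls
print(obeys_ssp("lbif"))  # False: the onset falls from 3 to 1 before the peak
```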

Psycholinguistic studies of adults by Berent and colleagues have uncovered intriguing properties of the SSP. English-speaking adults appear to consistently prefer syllables that conform to sonority constraints and are considered “well-formed.” For example, blif is favored over lbif, whose onset falls rather than rises in sonority and which is therefore less well-formed (or “ill-formed”) (Berent, 2013; Berent, Steriade, Lennertz, & Vaknin, 2007). Even for unfamiliar or artificial languages, hearing children and adults increasingly misidentify syllables as the SSP is increasingly violated (Berent et al., 2007; Berent, Harder, & Lennertz, 2011; Berent & Lennertz, 2010; for a review, see Berent, 2013). This sensitivity has also been observed in infants as young as two days old, who exhibit preferences for well-formed syllables (Gómez et al., 2014). In that study, functional near-infrared spectroscopy (fNIRS) revealed greater left temporal lobe activation in neonates presented with well-formed than with ill-formed syllables, suggesting a selective neural sensitivity to sonority. Hence, it has been suggested that sonority is one of the fundamental linguistic cues to which humans may possess a biologically governed sensitivity and, in turn, that sonority may be a key linguistic mechanism at the heart of human language structure that imposes universal restrictions on syllable well-formedness.

But is sonority (again, the relative audibility of speech sounds modulated by the openness of the vocal tract during phonation) restricted to the spoken language modality? Some have assumed that sonority is indeed a unique property of spoken language (MacNeilage et al., 1970; Ohala, 1990). However, recent studies of adult deaf signers have suggested a remarkable parallel: visual sonority exists in signed languages and is analogous to aural sonority (Brentari, 1998; Jantunen & Takkinen, 2010; Sandler & Lillo-Martin, 2006), suggesting that at the structural linguistic level, sonority is present in both signed and spoken languages. While the precise definition of sonority within signed languages continues to be debated, most sign linguists agree that sonority refers to the relative visibility of sign movements, moderated by the proximity of the articulating joint to the body’s midline (Brentari, 1998; Sandler & Lillo-Martin, 2006). Signs with greater sonority are larger and easier to perceive: signs produced with movement at the shoulder are more sonorant than those with movement at the elbow or wrist, which in turn are more sonorant than those produced at the knuckle or finger joints. Going forward, we adopt Brentari’s definition of sonority, in which variations in visual sonority are central to sign syllable structure and the movement in a sign is specified as both the syllable nucleus and the sonority peak (Brentari, 1998; also see Jantunen & Takkinen, 2010; Perlmutter, 1992; Wilbur, 2011). We selected Brentari’s model because of (1) its parallels to how aural sonority constrains spoken syllable formation, with the sonority peak serving as the spoken syllable nucleus, and (2) its use in recent experimental studies of sonority in signed languages. For example, both Berent, Dupuis, and Brentari (2013) and Williams and Newman (2016) employ Brentari’s model of sonority to demonstrate that, among adult L2 learners, more sonorant signs (that is, signs incorporating movement at more proximal joints) are easier to perceive and learn than less sonorant signs.

The theoretical discussions of sonority in signed languages, and of its structural parallels to sonority in spoken languages, raise the possibility that visual sonority could influence how infants perceive a signed language, akin to how aural sonority interacts with infants’ perception of speech (Gómez et al., 2014). Drawing on evidence from a soundless natural signed language, we hypothesize that sonority may be a linguistic feature1 that serves as a salient cue for all early language learning. To date, we know of no studies that have investigated this phenomenon in infant signed language acquisition. The study of signed languages permits the examination of fascinating questions about language universals that have hitherto been among the knottiest issues to resolve in early human language learning.

This study addresses two hypotheses about how infants learn language. The first, which we call the Biologically-Governed Hypothesis, holds that infants have biologically governed sensitivities that guide language identification and learning. Support for this hypothesis comes from findings of early language sensitivities in newborn infants. Newborns can discriminate between languages of different rhythmic classes despite having no prenatal experience with them (Nazzi, Bertoncini, & Mehler, 1998), discriminate between well-formed and ill-formed syllables based on sonority constraints (Gómez et al., 2014), and possess encoding biases for positional, relational, and sequential information in linguistic structure, which are key abilities permitting abstract language processing (Gervain, Berent, & Werker, 2012; Gervain, Macagno, Cogoi, Peña, & Mehler, 2008). That newborns just two or three days old possess such abstract linguistic capabilities has led these and other researchers to hypothesize that this capacity may not be wholly learned in so short a period. Instead, it may derive from the infant’s biologically driven sensitivity to specific, maximally contrastive rhythmic-temporal cues (e.g., sonority in syllabic structure) that are common to all the world’s languages, over other input features (Petitto et al., 2001, 2004, 2016).

The second hypothesis, here called the Entrainment Hypothesis, is that language learning is enabled and guided by sensitivity to relative prosodic features that are learned not within the first two days of life but during prenatal language exposure. Humans in utero are able to hear language beginning in the second trimester (approximately five months’ gestation), and prosodic and syllabic features involving loudness, fundamental frequency, tempo, and pauses (i.e., alternating patterns of loud and soft sounds and of high and low tones) are transmitted to the fetus (DeCasper & Fifer, 1980; DeCasper & Spence, 1986; May, Byers-Heinlein, Gervain, & Werker, 2011; Mehler et al., 1988). On this view, the infant is not born sensitive to these sound patterns but has instead heard and learned them before birth. Interestingly, this view pushes learning farther and farther back in human development, to a time even before birth.

The existence of silent, natural signed languages, for which there is no apparent prenatal exposure, provides a critical test that can adjudicate between these two equally plausible hypotheses and may offer new answers about the nature of language learning in early life. According to the Biologically-Governed Hypothesis, if aspects of early language learning involve infants’ biologically driven sensitivity to specific contrastive cues in the input, then all infants, irrespective of the languages their parents use at home, should show an early sensitivity to both visual and auditory contrastive language cues. Indeed, infants who have never seen a signed language should nonetheless demonstrate an early sensitivity to visual contrastive cues in signed languages. Conversely, according to the Entrainment Hypothesis, hearing fetuses can hear and directly learn spoken language contrastive cues, primarily from the pregnant mother’s voice, such as variation in intonation, pitch, and volume, which are grossly transmitted through the uterine wall (for a review, see Houston, 2005). However, a fetus (hearing or deaf) of a deaf mother who uses only sign language receives no prenatal exposure to her language, because a visual signal cannot reach the fetus, making direct learning from prenatal language exposure impossible. If entrainment and prenatal learning drive the mechanism of early language learning, then infants who have never seen a signed language should show no sensitivity to the visual contrastive cues (such as sonority) present in signed languages.

To test the above hypotheses, we ask whether young infants, who have never seen sign language, are sensitive to sonority constraints in a soundless signed language. All infants were tested using a preferential looking paradigm and an infrared eye tracker that precisely recorded eye gaze position as the infants watched videos of fingerspelled items that were well-formed and contained high sonority values (HS) or were comparatively ill-formed and contained lower sonority values (LS).

One distinctive design feature of the present study, and one that provides a powerful test of these two hypotheses, is our population: we investigate hearing infants who have never seen a signed language. If sensitivity to sonority constraints is universal irrespective of the home language, then a hearing baby is predicted to show sensitivity to sonority constraints in a signed language, just as they would in any spoken language to which they might be exposed, such as French, Chinese, or Hindi (see Baker, Golinkoff, & Petitto, 2006, for further explication of this reasoning).

A second design feature is the comparison of two age groups of hearing infants, 6- and 12-month-olds, who fall at either end of the developmental period of “perceptual narrowing” in linguistic sensitivity. This term refers to the well-documented phenomenon in spoken language acquisition whereby young infants are initially able to discriminate among the full set of phonetic categories found in the world’s languages, but as they near their first birthday, their phonetic sensitivities attenuate (i.e., “perceptually narrow”) to only the phonetic inventory of their native language(s) (see Kuhl et al., 2006; Werker & Tees, 1984, for monolingual infants, and Petitto et al., 2012, for bilingual infants). This perceptual narrowing pattern has been extended to sign language: young infants unfamiliar with sign language are able to discriminate sign-phonetic units, whereas older infants are not (Baker et al., 2006; Palmer, Fais, Golinkoff, & Werker, 2012). If the Biologically-Governed Hypothesis holds, then younger babies, irrespective of language experience, should demonstrate sensitivity to visual sonority constraints because of their biological sensitivity to specific contrastive cues in the input. Furthermore, given that the infants span this developmental period, the strongest prediction is that this sensitivity should attenuate with age in the absence of regular exposure to a signed language, so that by 12 months infants remain sensitive to aural sonority but no longer to visual sonority.

A third design feature of the present study is our use of lexicalized fingerspelling. Fingerspelling is the serial presentation of specific hand and finger configurations representing each letter of a written word, and it has existed in signed languages for centuries (Grushkin, 1998; Padden & Gunsauls, 2003). Because fingerspelling obeys many of the same phonological constraints as regular lexical signs, it is considered part of the lexicon of its signed language rather than a wholly separate system that only represents written orthography (Brentari & Padden, 2001; Cormier, Schembri, & Tyrone, 2008). Since signed languages coexist within predominantly spoken language environments, fingerspelling provides a way for signed languages to convey non-native vocabulary borrowed from spoken language for which there is no common or agreed-upon lexical sign, as well as a way to represent proper nouns, including names, locations, and product brands. These fingerspelled items may then undergo nativization processes in which they increasingly adhere to the phonological constraints of native lexical signs by reducing non-native elements (e.g., dropping letters), acquiring native elements such as movement, or blending native and non-native elements (Cormier et al., 2008). One outcome, among many, of these nativization processes is lexicalized fingerspelling: rapidly fingerspelled sequences, blended with native syllabic structure including sonority peaks, that incorporate a reduced set of letters from the original fingerspelled sequence (Brentari, 1998). After frequent use, these lexicalized fingerspelling items typically become stable, holistic sign forms. For example, the American Sign Language (ASL) signs BACK, JOB, and CHARITY began as fingerspelled words that underwent such nativization processes to become lexicalized fingerspelling items, reducing the number of handshapes used and taking on native ASL syllabic and prosodic properties; they are now signed as stable lexical items.

The use of ASL lexicalized fingerspelled forms as stimuli has two experimental advantages in a study that uses infants’ looking biases as a measure of sensitivity to perceptual features in language. First, fingerspelled stimuli allow us to contrast infants’ looking preferences for two stimuli that differ only along the sonority dimension. Here, we present infants with high vs. low sonority variants, which therefore differ in their “well-formedness” (Brentari, 1998; Gómez et al., 2014). Had we used full signs as stimuli, other perceptual features, such as changes in signing space size and in hand and arm location, orientation, and velocity, could explain infants’ looking biases instead of variation in sonority. Fingerspelling thus allows us to test sensitivity to visual sonority in the absence of these other visual confounds, which are otherwise difficult, if not impossible, to control. Second, fingerspelled words need not be produced in conjunction with the face or body, images of which could also capture infants’ attention and make it difficult to gauge sensitivity to linguistic cues presented specifically on the hands. In addition, any findings of looking biases mediated by sonority values in fingerspelling stimuli may generalize to regular lexical signs, in light of past studies demonstrating visual sonority effects on adults’ acquisition and perception of lexical signs (Berent, Dupuis, & Brentari, 2013; Williams & Newman, 2016).

Studying early perception of fingerspelling stimuli, and how infants recognize these forms, has important implications for understanding how signing children learn fingerspelling early in language acquisition and could even generalize to language and literacy acquisition in all children. Children with signing parents, both hearing and deaf, have been shown to acquire these lexicalized forms very early in life, as they would any other signed lexical item. Additionally, they perceive these forms as whole words before they understand that they are composed of individual letters, just as hearing children perceive whole words before learning that they are composed of individual speech phonemes (Akamatsu, 1985; Blumenthal-Kelly, 1995; Erting, Thumann-Prezioso, & Benedict, 2000; Padden & Le Master, 1985). Upon learning the alphabetic principle and how to read, they begin to link these lexicalized fingerspelling forms to the printed word form, not to the spoken word form (Padden, 1991, 2006). Together, these findings suggest that infants and children may be sensitive to the well-formedness of fingerspelled stimuli. Finally, early exposure to fingerspelling provides a crucial foundation for young deaf children’s early literacy acquisition and for their awareness of important correspondences between sign phonology and English orthography (Morere & Allen, 2012; Petitto et al., 2016; Stone et al., 2015), an awareness that is highly predictive of literacy skills later in life (Corina, Hafer, & Welch, 2014; McQuarrie & Abbott, 2013; McQuarrie & Parrila, 2014; Padden & Ramsey, 2000). Hearing children’s reading ability has also been found to benefit from fingerspelling instruction (almost always in the context of sign language immersion or instruction) in early elementary classrooms (Daniels, 2004; Wilson, Teague, & Teague, 1984).

Because the current study uses eye gaze to measure preferential looking, two outcomes are possible. When presented with a choice between a high and a low sonority stimulus, infants could show a significant looking preference for one of them, which can be taken as evidence of sensitivity to visual sonority constraints. Conversely, if infants show no difference in time spent looking at the high versus the low sonority stimulus, this lack of preference could reflect either a true insensitivity to sonority or a failure to elicit behavioral preferences for some other reason (e.g., both stimuli were equally interesting, even if infants were sensitive to the difference between them). To clarify this issue, we also contrasted looking preferences in an additional video orientation condition: upright versus inverted fingerspelling, with exactly the same fingerspelling sequence on each side. This condition was chosen because infants show upright vs. inverted orientation preferences for various images, such as faces (Chien, 2011; Farroni et al., 2005; Slater, Quinn, Hayes, & Brown, 2000) and biological-motion point-light displays (Bertenthal, Proffitt, Kramer, & Spetner, 1987; Fox & McDaniel, 1982; Kuhlmeier, Troje, & Lee, 2010; Simion, Regolin, & Bulf, 2008). If infants fail to demonstrate a sonority preference (that is, a preference for either HS or LS fingerspelling) but do demonstrate an orientation preference (that is, a preference for either upright or inverted fingerspelling) for exactly the same stimuli altered by one small, simple manipulation (inversion), then we can confirm that the sample of infants tested was capable of demonstrating looking preferences. If a null sonority result merely reflected a general failure to elicit preferences in this sample, the same null result should also appear in the orientation condition.
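The inferential logic of the control condition can be summarized as a small decision rule. The sketch below is a hedged illustration, not the authors' analysis: the function names are hypothetical, the booleans stand for the outcome of whatever significance test is applied (which lies outside this sketch), and the proportion-of-looking score is one common convention in preferential looking work, assumed here because the measure is not specified at this point in the text.

```python
def preference_proportion(look_a: float, look_b: float) -> float:
    """Share of total looking time spent on stimulus A (0.5 = no preference).

    Assumed convention for illustration; not stated as the measure in the text.
    """
    total = look_a + look_b
    if total <= 0:
        raise ValueError("total looking time must be positive")
    return look_a / total


def interpret(sonority_pref: bool, orientation_pref: bool) -> str:
    """Map outcomes of the sonority and orientation conditions to the text's reasoning."""
    if sonority_pref:
        return "sensitivity to visual sonority"
    if orientation_pref:
        # The same infants showed a preference in the control condition,
        # so a general failure to show looking preferences is ruled out.
        return "no sonority preference, not explained by a general failure"
    return "inconclusive: no preference in either condition"


print(preference_proportion(6.0, 4.0))  # 0.6
print(interpret(False, True))
```

Keeping the control outcome as an explicit input makes the asymmetry visible: only a null in the sonority condition paired with a preference in the orientation condition licenses reading the null as a genuine absence of a sonority preference.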

In summary, the existence of visual sonority contrastive cues in adult signed languages permits us to investigate whether sonority is a relevant linguistic cue in early life, one that attracts infants’ attention to core features of syllabic structure and thus potentially facilitates all human language learning. It also permits us to address difficult questions about the essential nature of early human language learning: Do infants show an early, biologically governed sensitivity to sonority that guides learning, or does sensitivity to sonority require experience with ambient patterns? Are early patterns of language biases and language learning similar for signed and spoken languages? To test these ideas, we studied sensitivity to visual sonority constraints in young infants who had no experience with signed language2.

Method

Participants

A total of 38 infants participated. All infants were recruited through mass mailings of generic recruitment letters to new parents residing in San Diego County. Four younger and five older infants were excluded because of fussiness or failure to achieve accurate eye-tracker calibration, leaving a final sample of 16 younger infants (mean age = 5.6 months, SD = 0.6, range = 4.4–6.7 months; 8 female) and 13 older infants (mean age = 11.8 months, SD = 0.9, range = 10.6–12.8 months; 7 female).

Parents reported that no infant had complications during pregnancy or delivery and that all had normal hearing and vision. Per our inclusion criteria, the infants had not seen any sign language at home and had never been exposed to baby sign instruction videos; only spoken English was used at home. This study was approved by the Institutional Review Board at the University of California, San Diego. Testing was conducted in a single 20-minute visit. Parents were compensated for their time with payment and an infant t-shirt.

Materials

We aimed to create well-formed and ill-formed (i.e., less well-formed) samples of signed language stimuli based on sonority restrictions, analogous to the well-formed and ill-formed speech syllables used in psycholinguistic studies of sonority (Berent et al., 2011; Berent et al., 2007; Berent, Lennertz, Jun, Moreno, & Smolensky, 2008; Gómez et al., 2014; Maïonchi-Pino, de Cara, Ecalle, & Magnan, 2012). To do so, we took advantage of known sonority restrictions in the lexicalization processes governing fingerspelling in ASL. In ASL, fingerspelling in typical conversation varies along a continuum of conventionalization, ranging from serial fingerspelling at one end to lexicalized fingerspelling at the other (Akamatsu, 1985; Brentari & Padden, 2001; Padden, 1991, 2006; Stone et al., 2015; Wilcox, 1992). Serial fingerspelling consists of a linear string of hand configurations that represent individual letters of the English alphabet and show little or no phonological assimilation. In lexicalized fingerspelling, a fingerspelled sequence has undergone extensive phonological changes such that it is conventionalized and formed with the syllabic structure native to the phonological organization of ASL signs, assuming the typical linguistic properties of a loan word and functioning as a stable lexical item (common examples include BACK, JOB, CHARITY, and INITIALIZED).

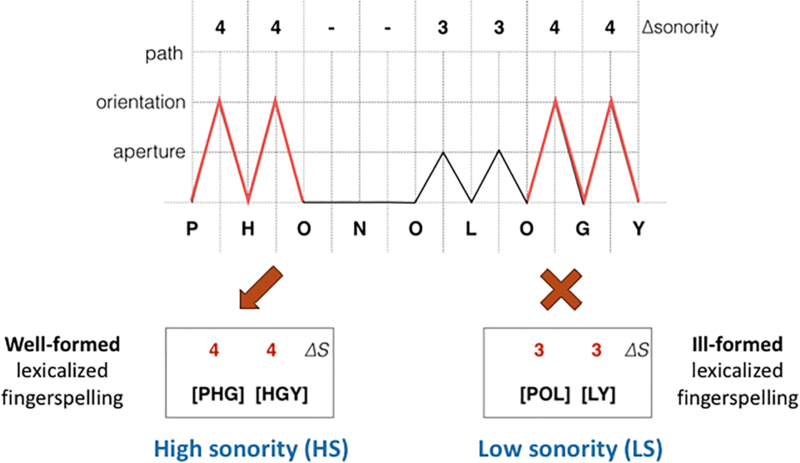

According to Brentari (1998), the lexicalization process for ASL fingerspelling appears to be restricted in part by sonority constraints, which we call the “Sonority in Lexicalization Principle” (SLP). During fingerspelling, the transition between two letters can require a hand movement consisting of a change in selected fingers (e.g., S-T), an aperture change, that is, a change in whether the hand is open or closed (e.g., S-C), an orientation change (e.g., S-P), or a path change (e.g., S-Z). Aperture, orientation, and path changes have ordinal sonority values that increase with movement amplitude: aperture (3) < orientation (4) < path (5). To use an example from Brentari (1998), consider the English word phonology, which may be fingerspelled serially as P-H-O-N-O-L-O-G-Y with little ASL prosodic or syllabic structure or patterning. As shown in the top row of Figure 1, each letter-to-letter transition in phonology can be categorized and assigned a sonority value. As this word undergoes lexicalization, several changes take place: handshapes are dropped, ASL prosodic features are added, phonological co-articulation occurs in which two letters may combine to form one handshape (as with H-G), and the overall sequence is reduced to a new disyllabic ASL form representing the semantic item for phonology. Crucially, the SLP constrains this lexicalization process such that the new lexicalized form contains no more than two syllables and retains the highest sonority values, that is, the most sonorant transitions, from the original serial fingerspelling sequence. In this case, the most sonorant transitions in P-H-O-N-O-L-O-G-Y are those in P-H-O and O-G-Y (see Figure 1, top row, lines in red), all of which involve orientation changes (sonority value = 4). The SLP therefore predicts that the final disyllabic lexicalized form is P-[HG]-Y, in which the [HG] unit represents letters that are co-articulated (that is, have undergone handshape merger). The two syllables in this final lexicalized form, P-[HG] and [HG]-Y, both involve orientation changes and capture the most sonorant transitions in the original serial fingerspelling sequence (see Figure 1, bottom left).
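The first step of the SLP, scoring every transition in a serial sequence and keeping the most sonorant ones, can be sketched as follows. This is a hedged illustration of that ranking step only: the function names and the fallback score for unlisted transition types are assumptions, and the sketch does not model handshape merger (the [HG] unit) or the two-syllable limit. For phonology, the text states only that P-H, H-O, O-G, and G-Y are orientation changes; the values of the remaining transitions appear in Figure 1 but not in the text, so they are left as placeholders rather than guessed.

```python
# Ordinal sonority values for letter-to-letter transitions, as given in the text:
# aperture (3) < orientation (4) < path (5).
TRANSITION_SONORITY = {"aperture": 3, "orientation": 4, "path": 5}

# Assumption: any other label (a selected-finger change, or a transition whose
# type is not stated) ranks below aperture and is scored 0.
DEFAULT_SONORITY = 0


def score_transitions(letters, transition_types):
    """Pair each adjacent letter pair with the sonority value of its transition."""
    if len(transition_types) != len(letters) - 1:
        raise ValueError("need exactly one transition type per adjacent letter pair")
    return [
        ((a, b), TRANSITION_SONORITY.get(kind, DEFAULT_SONORITY))
        for a, b, kind in zip(letters, letters[1:], transition_types)
    ]


def most_sonorant(scored, k):
    """Return the k highest-valued transitions, kept in their original order.

    Ties favor earlier transitions because sorted() is stable.
    """
    top = sorted(range(len(scored)), key=lambda i: -scored[i][1])[:k]
    return [scored[i] for i in sorted(top)]


letters = list("PHONOLOGY")
types = [
    "orientation", "orientation",                     # P-H, H-O (stated in the text)
    "unstated", "unstated", "unstated", "unstated",   # O-N, N-O, O-L, L-O (Figure 1 only)
    "orientation", "orientation",                     # O-G, G-Y (stated in the text)
]
scored = score_transitions(letters, types)
print(most_sonorant(scored, 4))
# [(('P', 'H'), 4), (('H', 'O'), 4), (('O', 'G'), 4), (('G', 'Y'), 4)]
```

Under these assumptions the retained transitions are exactly those the text identifies as the basis of P-[HG]-Y; an LS variant would instead draw on lower-ranked transitions.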

Figure 1.

Example of Calculations of High vs. Low Sonority Forms. Based on Brentari (1998), this sonority graph shows the value of each transition between letters when phonology is fingerspelled serially, with some transitions being higher and more sonorant than others. Aperture, orientation, and path are phonetic features that refer to increasingly large (and more sonorant) changes in hand and wrist position between letters. The lexicalization process takes sonority into account by retaining high sonority transitions and dropping low sonority transitions. The bottom left shows a well-formed, “high” sonority (HS) lexicalized fingerspelling form that uses higher sonority transitions; the bottom right shows an ill-formed, “low” sonority (LS) lexicalized fingerspelling form that uses lower sonority transitions. In this way, we created lexicalized fingerspelling variants for the HS and LS conditions used in the current experiment.

While the SLP makes predictions about well-formed lexicalized forms derived from serial fingerspelling sequences, “ill-formed” (i.e., less well-formed) fingerspelling forms can also be created as experimental contrasts by deliberately violating the SLP and retaining less sonorant transitions between letters. Using lexicalized fingerspelling, high and low sonority stimuli can thus be created from the varying sonority values of the transitions between individual fingerspelled letters. In the case of phonology, we can create an ill-formed, disyllabic lexicalized form, P-O-L-Y, by taking non-maximal sonority transitions from the serial fingerspelled sequence while retaining the same initial and final letters and the same number of syllables as the well-formed variant (see Figure 1, bottom right). In a study of visual sonority perception, fingerspelling has advantages over lexical signs because it eliminates possible perceptual confounds, such as variation in signing space size and in the direction or speed of motion paths. For example, lexical signs with high sonority are also, by definition, larger and are usually produced with faster motion, both of which can create significant perceptual confounds when attempting to study sonority perception per se. In addition, fingerspelling is largely independent of face and body motion, so we can create hands-only stimuli that eliminate possible visual confounds stemming from face and body elements, which are almost always required when showing ASL lexical signs.

The stimulus set comprised 25 ten-letter English words with no doubled letters, randomly selected from Luciferous Logolepsy, a database of very low-frequency English words (http://www.kokogiak.com/logolepsy/). Lexical frequency was verified via the Corpus of Contemporary American English (COCA; corpus.byu.edu/coca), and none of the words had a lexical frequency greater than 31 out of 450 million words. We chose highly unfamiliar words (in both English and ASL) so that the signer would not be more familiar with some items than others, which could make some forms more rehearsed than others. Following Brentari (1998), we calculated sonority values for the handshape transitions between adjacent fingerspelled letters in each word, and generated well-formed lexicalized³ fingerspelling variants (high sonority, HS) by retaining the most sonorant transitions present within the word while also obeying the other lexicalization rules outlined in Brentari (1998). Next, we generated ill-formed lexicalized fingerspelling forms (low sonority, LS) by selecting less sonorant transitions between handshapes while adhering to all other lexicalization rules.

To control for perceptual differences, the HS and LS variants shared the same initial and final handshapes. In addition, Williams and Newman (2016) have suggested that handshape markedness may interact with sonority values in sign language perception studies. To control for markedness, we compared the number of unmarked handshapes (B, O, A, S, C, 1, 5) in the HS and LS variants of each word (Battison, 1978; Boyes Braem, 1990). No HS and LS pair differed by more than one unmarked handshape, except the pair for the word PARAENETIC, which differed by two handshapes (HS: PARAIC; LS: PENIC); this pair was piloted but not used in the present study.
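The markedness control amounts to counting unmarked handshapes in each variant and comparing the counts. The Python sketch below assumes that only the letters A, B, C, O, and S are fingerspelled with unmarked handshapes (the numeral handshapes 1 and 5 are not mapped onto letters here); the function and variable names are hypothetical. Under that assumption it reproduces the pattern described above: the four retained pairs differ by at most one unmarked handshape, and PARAENETIC differs by two.

```python
# Unmarked handshapes named in the text (Battison, 1978; Boyes Braem, 1990).
# Assumption: only the letters A, B, C, O and S count as unmarked here; the
# numeral handshapes "1" and "5" are not mapped onto any letter in this sketch.
UNMARKED_LETTERS = frozenset("ABCOS")

PAIRS = {  # word: (HS variant, LS variant)
    "ASTROPHILE": ("APHLE", "ASLE"),
    "CATAPHASIA": ("CAPHIA", "CATIA"),
    "KIESELGUHR": ("KESGHR", "KESUR"),
    "TRITANOPIA": ("TRIPIA", "TRNIA"),
    "PARAENETIC": ("PARAIC", "PENIC"),  # piloted, then excluded
}


def count_unmarked(variant: str) -> int:
    """Number of letters in a fingerspelled variant with an unmarked handshape."""
    return sum(1 for letter in variant.upper() if letter in UNMARKED_LETTERS)


def markedness_difference(hs: str, ls: str) -> int:
    """Absolute difference in unmarked-handshape counts between HS and LS."""
    return abs(count_unmarked(hs) - count_unmarked(ls))


if __name__ == "__main__":
    for word, (hs, ls) in PAIRS.items():
        diff = markedness_difference(hs, ls)
        verdict = "keep" if diff <= 1 else "exclude"
        print(f"{word}: HS={count_unmarked(hs)} LS={count_unmarked(ls)} diff={diff} {verdict}")
```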

A native ASL signer was filmed producing all stimulus items. She practiced each item at least three times until she felt comfortable signing it, following Brentari (1998), who suggested that the lexicalization process requires at least three repetitions of the same fingerspelling sequence before the form becomes stable and conforms to native ASL phonological constraints. The signer, in front of a blue chromakey screen, fingerspelled the 25 HS and 25 LS sequences at a natural rate. Using Final Cut Pro X, we trimmed each video clip to begin one frame before the onset of fingerspelling and to end one frame after the final handshape hold. The video background was changed to white because, based on our laboratory's piloting, a bright display yielded the best conditions for capturing eye gaze data by maximizing the corneal reflection. Only the signer's fingers, hand, and wrist were visible.

Based on a companion adult study (Stone, Bosworth, & Petitto, 2017), which served as stimuli piloting for the present study, we identified four words that elicited the largest and most consistent sonority preferences. That is, adult signers most consistently preferred the HS variants of these four words to their LS counterparts. Thus, the HS and LS variants of these four words were selected for the present study with infants: ASTROPHILE, CATAPHASIA, KIESELGUHR, and TRITANOPIA (see Table 1).

Table 1. Fingerspelling Stimuli.

Listed are the four words used in the infant study, with HS and LS fingerspelling variants for each word.

| Full word | Well-formed, high sonority (HS) | Ill-formed, low sonority (LS) |
|---|---|---|
| ASTROPHILE | APHLE | ASLE |
| CATAPHASIA | CAPHIA | CATIA |
| KIESELGUHR | KESGHR | KESUR |
| TRITANOPIA | TRIPIA | TRNIA |

Next, we optimized these stimuli for presentation to infants by reducing the video speed by 50%. This manipulation is important because natural adult fingerspelling is very rapid, and infants need to be able to perceive the stimuli optimally. We chose this reduction because past research on infant-directed language has shown that both spoken English and ASL signs are produced approximately half as fast when directed to infants as when addressed to adults (Bryant & Barrett, 2007; Holtzrichter & Meier, 2000). Next, because the HS and LS variants of each word were of slightly different lengths but needed to be exactly equal in order to be presented simultaneously in a looking preference paradigm, we increased or decreased the playback speed of the HS and LS versions until they were of equal duration. For example, for CATAPHASIA, the HS video at 50% speed was 1.87 s and the LS video was 1.33 s, a difference of 0.53 s. We therefore shortened the HS video by 0.27 s and lengthened the LS video by an equal amount, so that both videos had a duration of 1.60 s (see video examples in Appendix A). We verified that the speed reduction was imperceptible to native deaf signers, all of whom reported that the speed looked natural and "just right." We also calculated the fingerspelling speed of all eight fingerspelling sequences (4 HS and 4 LS) after the speed adjustments. The average speed of 4.19 letters per second falls well within the range of published natural fingerspelling rates, 3–7 letters per second (Hanson, 1982; Jerde, Soechting, & Flanders, 2003; Wilcox, 1992; Zakia & Harber, 1971). Therefore, our speed adjustments kept the stimuli within the range of normal language production while making them more perceptible for infants.
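The duration-matching step for CATAPHASIA can be checked with a few lines of arithmetic. This is a minimal Python sketch, assuming that both clips were matched to the mean of their two durations (which reproduces the 1.60 s target and the 0.27 s shift described above); the function names are hypothetical and do not correspond to any Final Cut Pro feature.

```python
def slowed_duration(natural_s: float, speed: float = 0.5) -> float:
    """Clip duration after playback at `speed` times natural speed (0.5 = half speed)."""
    return natural_s / speed


def equalize_durations(hs_s: float, ls_s: float) -> tuple[float, float, float]:
    """Return (target_s, hs_rate, ls_rate) that give both clips the same length.

    Assumption: the target is the mean of the two durations, so the longer clip
    is shortened and the shorter clip lengthened by the same amount of time.
    A rate > 1 plays faster (shorter clip); a rate < 1 plays slower (longer clip).
    """
    target = (hs_s + ls_s) / 2
    return target, hs_s / target, ls_s / target


if __name__ == "__main__":
    target, hs_rate, ls_rate = equalize_durations(1.87, 1.33)
    print(f"target {target:.2f} s, HS rate {hs_rate:.3f}x, LS rate {ls_rate:.3f}x")
```

For the CATAPHASIA values, the HS clip is played about 1.17 times faster and the LS clip about 0.83 times as fast, each moving 0.27 s toward the common 1.60 s duration.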

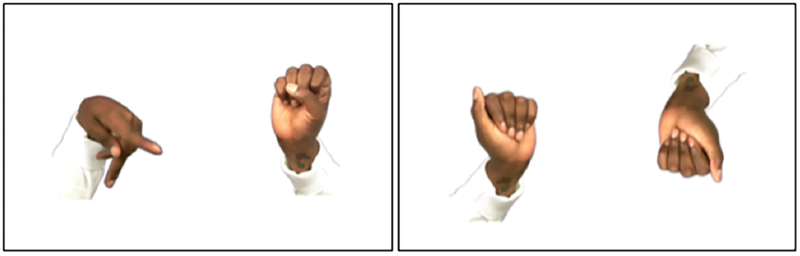

In this looking preference paradigm, two different visual stimuli were presented to the infant, one on the left half of the monitor and one on the right half, and we recorded the total amount of time the infant looked at each stimulus during each trial. In the sonority condition, infants saw the HS and LS fingerspelling movies side by side, with each hand centered within its visual hemifield and subtending a visual angle of 5° by 15°. Both movies started and ended at the same time, and the signing on the right was always flipped horizontally to create a symmetric appearance (see Figure 2, left panel). The signing looped continuously for ten seconds; for all four words, the signing looped six to seven times in a single trial.
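Because stimulus size is specified as a visual angle, the corresponding physical size on the screen depends on viewing distance. The sketch below converts between the two with the standard relation size = 2·d·tan(θ/2), using the 55–65 cm viewing distances given under Procedure; the helper names are hypothetical, and the centimetre values it prints are illustrations, not measurements reported by the authors. It also checks how often a 1.60 s clip repeats in a ten-second trial.

```python
import math


def size_for_angle(angle_deg: float, distance_cm: float) -> float:
    """On-screen extent (cm) that subtends `angle_deg` at `distance_cm`."""
    return 2 * distance_cm * math.tan(math.radians(angle_deg) / 2)


def angle_for_size(size_cm: float, distance_cm: float) -> float:
    """Visual angle (degrees) subtended by an extent of `size_cm` at `distance_cm`."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))


if __name__ == "__main__":
    for d in (55, 60, 65):  # viewing-distance range reported under Procedure
        print(f"{d} cm: 5 deg -> {size_for_angle(5, d):.1f} cm, "
              f"15 deg -> {size_for_angle(15, d):.1f} cm")
    # A 1.60 s clip (the equalized CATAPHASIA duration) in a 10 s trial:
    print(f"{10 / 1.60:.2f} loops per trial")
```

At 60 cm, 5° and 15° correspond to roughly 5.2 cm and 15.8 cm, and a 1.60 s clip loops 6.25 times per trial, consistent with the six to seven loops stated above.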

Figure 2.

Sonority and Video Orientation Conditions. Left panel: In the Sonority condition, infants were presented with the HS and LS variants created from the same word (a low-frequency English word), with HS and LS appearing randomly on the left or right across trials. Right panel: In the Video Orientation condition, infants were presented with an upright and an inverted (and horizontally flipped) version of the same stimulus, which was either HS or LS. The side of presentation and the order of HS and LS stimuli were randomized across trials.

The video orientation condition (upright vs. inverted) was created using the same procedure as the sonority condition, except that (1) both sides showed exactly the same fingerspelling sequence, which was either HS or LS, and (2) one side was also flipped vertically (i.e., inverted) in addition to horizontally (see Figure 2, right panel). Here, the experimental manipulation was the orientation of the fingerspelling hand: upright or inverted. Thus, there were eight instances of the video orientation condition: four consisting of the HS variants of the four words, and four consisting of the LS variants.

Apparatus

Stimuli were presented on an HP p1230 monitor (1024 × 768 pixels, 75 Hz) powered by a Dell Precision T5500 Workstation computer. A Tobii X120 remote infrared camera device was placed directly under the monitor, about 15 cm in front of the monitor and at an angle of 23.2°, facing the infant’s face. The eye tracker detects the positions of the pupils and the corneal reflection of the infrared light emitting diodes (LEDs) in both eyes. The x-y coordinates, averaged across both eyes for a single binocular value, corresponded to the observer’s fixation point during stimulus presentation recorded at a sampling rate of 120 hertz (i.e., gaze position and direction toward the monitor every 8.33 milliseconds). A separate remote camera also recorded the observer’s full face view during the stimuli presentation. We used Tobii Studio Pro version 3.4.0 to present the stimuli, record the eye movements, and perform the gaze analysis.

Procedure

In a dimly lit room, the infant was placed on the parent's lap, 55–65 cm from the monitor and eye tracker. For additional stability, some infants were strapped into a booster seat cushion, which was then placed on the parent's lap. The parent wore lab-provided occluding glasses to avoid biasing the infant toward one side and to prevent the eye tracker from inadvertently tracking the parent's eyes. The Tobii X120 was calibrated for each infant using the five-point infant calibration procedure built into Tobii Studio Pro. Calibration was verified with a three-point calibration check (upper right, center, and lower right) immediately before and after the experiment. If calibration was not within 1 degree of the center of the target, testing was aborted and calibration repeated. For all infants who provided good calibration, no apparent changes were observed between the pre- and post-experiment calibration checks. We also plotted gaze x and y positions over the entire recording session for a random sample of infants and observed neither systematic lags in the number of samples recorded over the session nor spatial drifts in gaze position from the start to the end of the session.

Participants saw 16 ten-second sonority trials and 16 ten-second video orientation trials, intermixed (i.e., not blocked by condition). We created two experimental runs (A and B), with the order of stimuli and left/right sides randomly generated under two constraints: the same word × condition video was never shown twice in a row (e.g., no infant saw TRITANOPIA in the sonority condition twice in a row), and the same word was never shown more than twice in a row, regardless of condition (e.g., TRITANOPIA never appeared three times in a row). Participants were randomly assigned to run A or B, and an equal number of infants saw A vs. B. Before each trial, an image of a puppy (cycling through ten different puppies; visual angle of 5° by 5°) was presented to attract the infant's interest and draw the gaze to the center of the monitor. As soon as the infant fixated on the puppy's face, the experimenter began the ten-second trial, ensuring that the infant's gaze was directed to the center of the monitor at stimulus onset. The total procedure lasted approximately 10 minutes. If an infant became irrecoverably fussy, the experiment was terminated early.
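One simple way to generate run orders that satisfy both constraints is rejection sampling: shuffle the trial list and keep the first order that passes the checks. The Python sketch below assumes a trial composition the text does not spell out (each word four times in the sonority condition, with HS on the left in two of those trials; each word × variant pair twice in the orientation condition, with the upright hand on the left once), and all names and seeds are hypothetical. It is not the authors' randomization script.

```python
import random
from itertools import product

WORDS = ("ASTROPHILE", "CATAPHASIA", "KIESELGUHR", "TRITANOPIA")


def build_trials() -> list[dict]:
    """Hypothetical composition of one run: 16 sonority + 16 orientation trials."""
    trials = []
    # Sonority: each word 4 times, HS on the left in two of them (assumption).
    for word, hs_left in product(WORDS, (True, True, False, False)):
        trials.append({"word": word, "condition": "sonority", "hs_left": hs_left})
    # Orientation: each word x variant twice, upright on the left once (assumption).
    for word, variant, upright_left in product(WORDS, ("HS", "LS"), (True, False)):
        trials.append({"word": word, "condition": "orientation",
                       "variant": variant, "upright_left": upright_left})
    return trials


def is_valid(order: list[dict]) -> bool:
    """Check the two ordering constraints stated in the text."""
    for i in range(1, len(order)):
        prev, cur = order[i - 1], order[i]
        # 1) the same word x condition video is never shown twice in a row
        if prev["word"] == cur["word"] and prev["condition"] == cur["condition"]:
            return False
        # 2) the same word never appears more than twice in a row, in any condition
        if i >= 2 and order[i - 2]["word"] == prev["word"] == cur["word"]:
            return False
    return True


def constrained_shuffle(trials: list[dict], seed: int, max_tries: int = 100_000) -> list[dict]:
    """Rejection sampling: reshuffle a copy until it satisfies both constraints."""
    rng = random.Random(seed)
    order = list(trials)
    for _ in range(max_tries):
        rng.shuffle(order)
        if is_valid(order):
            return order
    raise RuntimeError("no valid order found; relax constraints or raise max_tries")


if __name__ == "__main__":
    run_a = constrained_shuffle(build_trials(), seed=1)  # hypothetical seeds
    run_b = constrained_shuffle(build_trials(), seed=2)
    print([t["word"][:4] for t in run_a[:8]])
```

Rejection sampling is used here because it keeps the accepted orders uniformly distributed over all valid permutations; with only two local constraints, a valid order is typically found within a few dozen shuffles.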

Data Analysis

Data Processing:

For each eye gaze data sample, the positions of the two eyes were averaged. No spatial-temporal filter was used to define fixations; instead, all gaze points were included in the analysis, because infants were allowed to move their eyes freely. We applied Tobii Studio Pro's default noise reduction algorithm, which merges 3 adjacent samples using a non-weighted moving average to smooth out microsaccades. This served as a low-pass filter that attenuates high-frequency components of the gaze signal.
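The two preprocessing steps, binocular averaging followed by an unweighted 3-sample moving average, can be sketched as below. Tobii Studio Pro's internal implementation is not reproduced here: falling back to one eye when the other is lost, leaving missing samples missing, and shrinking the window at the first and last samples are all assumptions of this sketch, and the function names are hypothetical.

```python
from typing import Optional, Sequence

Point = tuple[float, float]


def binocular_average(left: Optional[Point], right: Optional[Point]) -> Optional[Point]:
    """Average the two eyes' gaze; use one eye if the other is missing (assumption)."""
    if left is None and right is None:
        return None
    if left is None:
        return right
    if right is None:
        return left
    return ((left[0] + right[0]) / 2, (left[1] + right[1]) / 2)


def moving_average_3(samples: Sequence[Optional[Point]]) -> list[Optional[Point]]:
    """Centered, unweighted 3-sample moving average (a simple low-pass filter).

    Missing samples stay missing and are excluded from their neighbours'
    windows; at the ends, the window shrinks to the available samples.
    """
    out: list[Optional[Point]] = []
    for i, s in enumerate(samples):
        if s is None:
            out.append(None)
            continue
        window = [p for p in samples[max(0, i - 1): i + 2] if p is not None]
        n = len(window)
        out.append((sum(p[0] for p in window) / n, sum(p[1] for p in window) / n))
    return out
```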

Sonority Preference:

We examined the looking time data to test two parts of our hypothesis: (a) younger infants exposed only to a spoken language would show sensitivity to sonority constraints in a signed language, whereas (b) older infants, also exposed only to a spoken language, would not. Looking times were derived from the eye tracker output by counting, across all trials within a condition, the gaze points (sampled every 8.33 ms) that fell within each of two areas of interest (AOIs), and converting these counts to seconds. The AOIs were 600 × 900 pixel rectangles on a 1280 × 960 pixel video, one centered on the LS stimulus and the other on the HS stimulus, separated by a 25-pixel gap at the center of the monitor. For each infant, we calculated a Sonority Preference Index by dividing the looking time (in seconds) for the HS stimulus by the looking time for the LS stimulus. We normalized the distribution of Sonority Preference Index values with a logarithmic transformation (Keene, 1995). This transformation makes the index symmetric around zero, so that indices of 1.0 and −1.0 represent the same strength of preference in opposite directions. It also eases interpretation: positive values indicate an HS preference, and negative values indicate an LS preference.
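The looking-time and index computation can be sketched as follows. Several details are assumptions not stated in the text: both AOIs are vertically centered in the 1280 × 960 frame on either side of a centered 25-pixel gap, gaze samples are already expressed in that frame's pixel coordinates, and the logarithm is base 10 (any base preserves the sign and the symmetry between a ratio r and 1/r). All names are hypothetical.

```python
import math
from dataclasses import dataclass

SAMPLE_INTERVAL_S = 1 / 120        # Tobii X120: one sample every ~8.33 ms
FRAME_W, FRAME_H = 1280, 960       # video frame size given in the text
AOI_W, AOI_H, GAP = 600, 900, 25   # AOI size and central gap given in the text


@dataclass(frozen=True)
class Rect:
    x0: float
    y0: float
    x1: float
    y1: float

    def contains(self, x: float, y: float) -> bool:
        return self.x0 <= x < self.x1 and self.y0 <= y < self.y1


# Assumed layout: AOIs vertically centered, separated by a centered 25-px gap.
_TOP = (FRAME_H - AOI_H) / 2
LEFT_AOI = Rect(FRAME_W / 2 - GAP / 2 - AOI_W, _TOP, FRAME_W / 2 - GAP / 2, _TOP + AOI_H)
RIGHT_AOI = Rect(FRAME_W / 2 + GAP / 2, _TOP, FRAME_W / 2 + GAP / 2 + AOI_W, _TOP + AOI_H)


def looking_time(samples, aoi: Rect) -> float:
    """Seconds of gaze in `aoi`: number of samples inside it x sampling interval."""
    return sum(1 for s in samples if s is not None and aoi.contains(*s)) * SAMPLE_INTERVAL_S


def preference_index(t_target: float, t_other: float) -> float:
    """log10(target / other): positive favours the target, negative the other."""
    return math.log10(t_target / t_other)


def sonority_index(trials) -> float:
    """trials: (gaze_samples, hs_on_left) pairs for one infant's sonority trials."""
    hs = ls = 0.0
    for samples, hs_left in trials:
        left = looking_time(samples, LEFT_AOI)
        right = looking_time(samples, RIGHT_AOI)
        hs += left if hs_left else right
        ls += right if hs_left else left
    return preference_index(hs, ls)
```

Looking times are summed over trials before the ratio is taken, matching the description above; an infant with zero LS looking time would raise `ZeroDivisionError` and would need separate handling.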

Upright Preference:

We also examined looking time in the video orientation condition, in which both sides showed either HS or LS fingerspelling, with one hand upright and one hand inverted. For each infant, we calculated an Upright Preference Index separately for the HS and LS conditions by dividing the looking time for upright signing by the looking time for inverted signing, and normalized these values with a logarithmic transformation. As with the Sonority Preference Index, positive values indicate a preference for upright signing, and negative values indicate a preference for inverted signing.

Independent-samples t-tests were used to compare age groups, and paired t-tests were used to compare within-group looking times for HS vs. LS signing. To test whether the upright vs. inverted orientation effect differed between the HS and LS conditions and between younger and older infants, we conducted a two-way mixed ANOVA (Age group × Sonority, with Sonority as a repeated-measures factor) and report effect sizes as partial eta-squared (partial η²).
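The t statistics themselves need only the Python standard library. This sketch computes the paired t and the pooled-variance (Student's) independent-samples t with their degrees of freedom; p-values require the t distribution, available for example through `scipy.stats.ttest_rel` and `scipy.stats.ttest_ind`, which are omitted to keep the example dependency-free. The text does not say which variant of Cohen's d was used, so the two versions below (d_z for paired data and pooled-SD d for independent groups) are common choices, not necessarily the authors'. Function names are hypothetical.

```python
import math
import statistics as st
from typing import Sequence


def paired_t(x: Sequence[float], y: Sequence[float]) -> tuple[float, int]:
    """Paired-samples t statistic and degrees of freedom (n - 1)."""
    if len(x) != len(y):
        raise ValueError("paired samples must have equal length")
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    return st.mean(diffs) / (st.stdev(diffs) / math.sqrt(n)), n - 1


def pooled_variance(x: Sequence[float], y: Sequence[float]) -> float:
    """Pooled within-group variance used by Student's t and pooled-SD d."""
    nx, ny = len(x), len(y)
    return ((nx - 1) * st.variance(x) + (ny - 1) * st.variance(y)) / (nx + ny - 2)


def independent_t(x: Sequence[float], y: Sequence[float]) -> tuple[float, int]:
    """Student's independent-samples t (equal variances) and df (nx + ny - 2)."""
    nx, ny = len(x), len(y)
    se = math.sqrt(pooled_variance(x, y) * (1 / nx + 1 / ny))
    return (st.mean(x) - st.mean(y)) / se, nx + ny - 2


def cohens_dz(x: Sequence[float], y: Sequence[float]) -> float:
    """Paired effect size: mean difference divided by the SD of the differences."""
    diffs = [a - b for a, b in zip(x, y)]
    return st.mean(diffs) / st.stdev(diffs)


def cohens_d_pooled(x: Sequence[float], y: Sequence[float]) -> float:
    """Independent-groups effect size: mean difference divided by the pooled SD."""
    return (st.mean(x) - st.mean(y)) / math.sqrt(pooled_variance(x, y))
```

With 16 younger and 13 older infants (the group sizes implied by the paired t(15) and t(12) below), `independent_t` returns df = 27, matching the reported t(27).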

Results

Sonority Preference:

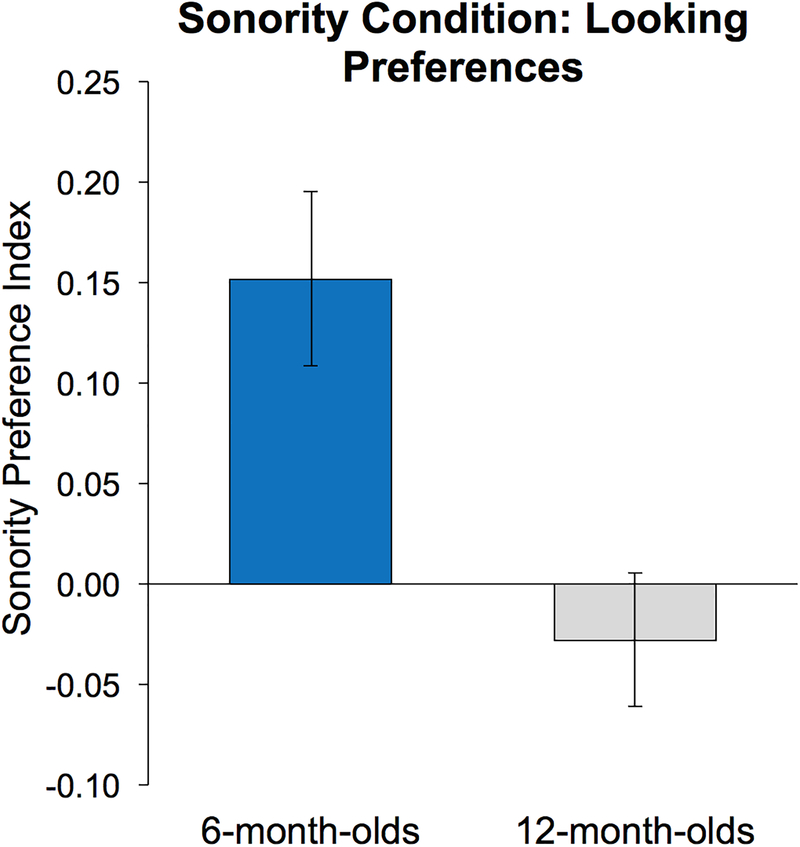

First, total looking time did not differ significantly between age groups (Means, younger vs. older: 48.8 vs. 36.7 seconds; t(27) = 1.71, p = 0.10). This suggests that data loss due to extraneous age-related factors, such as fussiness, head turning, inattentiveness, or eye blinking, did not differ between groups. However, younger infants looked significantly longer at HS than at LS signing (Means: 28.6 vs. 20.2 seconds; paired t(15) = 4.03, p = 0.001, Cohen's d = 0.74), whereas older infants showed very little difference in their average looking time for HS vs. LS signing (Means: 18.1 vs. 18.6 seconds; paired t(12) = 0.29, p = 0.78). Sonority Preference Index values (Figure 3) were significantly larger for younger than for older infants (Means: 0.15 vs. −0.03; t(27) = 3.35, p = 0.002, Cohen's d = 0.74).

Figure 3.

Sonority Condition: Looking Preferences. Sonority Preference Index values greater than zero indicate more time spent looking at the HS fingerspelling than the LS fingerspelling, when both were presented simultaneously on the monitor. 6-month-olds show a strong HS preference, while 12-month-olds show no preference for HS or LS fingerspelling (p = 0.002).

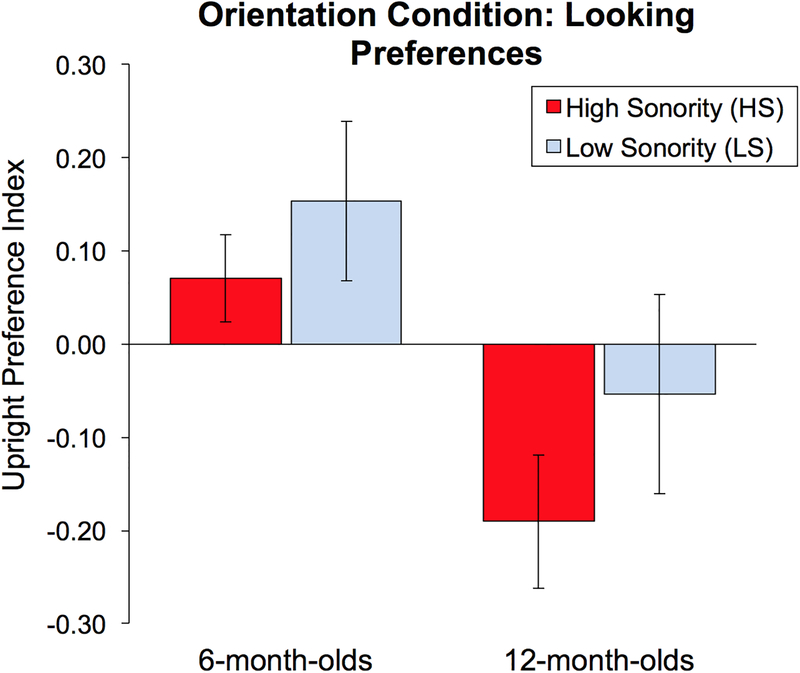

Upright Preference:

Mean Upright Preference Index values are presented in Figure 4. A two-way mixed ANOVA with repeated-measures factor Sonority (HS vs. LS) and between-subjects factor Age (younger vs. older) was conducted on Upright Preference Index values. There was a significant main effect of Age (F(1, 27) = 6.815, p = 0.015, partial η² = 0.20), indicating that younger and older infants had different viewing preferences for simultaneously presented upright and inverted signing stimuli. This effect was driven by a strong preference for upright stimuli in younger infants (M = 0.11) and a strong preference for inverted stimuli in older infants (M = −0.12). There was no main effect of Sonority (F(1, 27) = 2.04, p = 0.165, partial η² = 0.07), indicating that sonority did not modulate Upright Preference Index values. No significant Sonority × Age interaction was found (F(1, 27) = 0.12, p = 0.73, partial η² = 0.004).
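Partial η² can be recovered from each reported F statistic and its degrees of freedom, which offers a quick consistency check on the values above. The sketch uses the standard identity η²_p = SS_effect / (SS_effect + SS_error) = F·df_effect / (F·df_effect + df_error); the function name is hypothetical.

```python
def partial_eta_squared(f: float, df_effect: int, df_error: int) -> float:
    """Partial eta-squared from an F statistic and its degrees of freedom.

    eta_p^2 = SS_effect / (SS_effect + SS_error) = F*df_effect / (F*df_effect + df_error)
    """
    return f * df_effect / (f * df_effect + df_error)


if __name__ == "__main__":
    reported_f = {"Age": 6.815, "Sonority": 2.04, "Sonority x Age": 0.12}
    for effect, f in reported_f.items():
        print(f"{effect}: partial eta^2 = {partial_eta_squared(f, 1, 27):.3f}")
```

For the three effects this gives about 0.202, 0.070, and 0.004, in line with the reported 0.20, 0.07, and 0.004.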

Figure 4.

Video Orientation Condition: Looking Preferences. Upright Preference Index values greater than zero indicate more time spent looking at the upright stimulus than the inverted stimulus. There was a main effect for Age, where 6-month-olds (left) showed a strong upright preference for both stimuli types, while the 12-month-olds (right) show a strong inverted preference (p = 0.015). Looking preferences for HS vs. LS did not differ within each age group.

In summary, 6-month-olds demonstrated a significant preference for HS over LS fingerspelling, while 12-month-olds showed no evidence of a sonority preference. For the video orientation condition, the two-way ANOVA and independent t-tests revealed a main effect of age: 6-month-olds had a greater preference for upright hands, and 12-month-olds had a greater preference for inverted hands. Importantly, these results show that the same infants who failed to demonstrate a looking preference in the sonority condition nonetheless demonstrated a significant novelty preference in the video orientation condition, using exactly the same stimuli. Hence, the failure to find a sonority preference in the older infants is likely due not to general disinterest in or inattentiveness to the HS and LS stimuli, but to an insensitivity to sonority constraints in a signed language.

Discussion

In this study, we asked whether sonority could be a principal cue to which infants universally attend in order to facilitate language acquisition. We explored whether infants, during the early period of language learning, possess a bias to attend to sonority-based constraints that underlie universals of syllabic structure present in both signed and spoken languages. Using a preferential looking paradigm, we tested whether hearing infants with no prior sign language experience were sensitive to sonority constraints in a visual-manual signed language. When presented with a choice between well-formed and ill-formed lexicalized fingerspelling, 6-month-olds looked significantly longer at the well-formed (high sonority, HS) fingerspelling. Hence, our results support the Biologically-Governed Hypothesis: infants showed sensitivity to a structured, contrastive cue in a language and sensory modality they had never seen before.

Older infants at around 12 months of age, who also had not received any signed language experience, did not show sensitivity to sonority-based constraints on syllable structure in signed language and did not demonstrate a preference for either well-formed or ill-formed fingerspelling. These age windows fit with well-known findings of perceptual hyper-sensitivity around six months and then a diminishing of sensitivity by around 12 months, known as perceptual narrowing, which has been extensively reported in the literature for both spoken and signed languages (Baker et al., 2006; Kuhl et al., 2006; Palmer et al., 2012; Werker & Tees, 1984).

We further explored sign-naïve infants' sensitivity to sonority by presenting them with upright and inverted fingerspelling. Young infants without sign language exposure showed a greater preference for the upright orientation, while older infants favored the inverted orientation. We reasoned that this may be explained by younger infants being predisposed to attend to what is familiar, while older infants favor what is novel (Roder, Bushnell, & Sasseville, 2000; Rose, Gottfried, Melloy-Carminar, & Bridger, 1982; Wetherford & Cohen, 1973). Importantly, the upright preference was not modulated by whether the variant was HS or LS. The primary purpose of the video orientation condition was to serve as a control, because testing our hypotheses involved the possibility of interpreting null findings. Such interpretations can be problematic: if infants failed to demonstrate a sonority preference, this could reflect either a true insensitivity or a failure of the paradigm to detect an effect. Because we were able to demonstrate a preference using exactly the same stimuli with one small, simple manipulation (here, inversion), we can confirm that the infants we tested were capable of demonstrating looking preferences. Thus, an absence of a looking preference is likely due to a true insensitivity.

One consequence of the present study is that it helps us to better understand the phonological processes underlying lexicalized fingerspelling, which constitutes a part of the ASL lexicon (Brentari & Padden, 2001; Cormier et al., 2008) and how its production may be regarded as natural linguistic input by infants. Specifically, the findings present evidence that lexicalization processes, in part, obey sonority-based constraints, and that infants show a perceptual preference for lexicalized forms that meet these phonological constraints. Furthermore, the present study opens up new pathways for further investigation of the intriguing link between fingerspelling (both lexicalized and serial) and reading acquisition. Early developmental studies have reported that deaf parents fingerspell frequently to their infants, and that these infants spontaneously produce lexicalized fingerspelling even in the earliest stages of language production (Akamatsu, 1985; Blumenthal-Kelly, 1995; Erting et al., 2000; Padden & Le Master, 1985). It has been theorized that early exposure to fingerspelling is advantageous for early reading acquisition (Morere & Allen, 2012; Petitto et al., 2016), which is further corroborated by classroom studies of young deaf readers benefiting from explicit serial and lexicalized fingerspelling instruction (Haptonstall-Nykaza & Schick, 2007; Hile, 2009). In deaf adults, high fingerspelling ability is correlated with reading ability (Stone et al., 2015), and brain systems traditionally attributed to orthographic processing, including the visual word form area (VWFA), have shown neural activation for fingerspelling tasks (Emmorey, Weisberg, McCullough, & Petrich, 2013; Waters et al., 2007). 
Future studies would do well to move beyond correlations between fingerspelling and reading ability, which are now well established, by testing models of how, and which, components of fingerspelling contribute to reading in children of different developmental ages and language experiences (Petitto et al., 2016).

The present study demonstrates that human visual processes for language acquisition are developmentally sensitive and change over the first year of life in the absence of sign language exposure. That our youngest hearing infants were sensitive to syllable structure in fingerspelling helps clarify at what age young deaf children are best exposed to sign languages so as to promote dual sign and speech language mastery, as well as successful English reading. At the very least, our findings suggest that early exposure to an accessible natural language, such as a signed language, is optimal for ensuring that young deaf children have healthy linguistic and developmental outcomes, particularly given that spoken language cues are inaccessible to them in the first several months of life (Humphries et al., 2012; 2013; 2014). This does not exclude exposure to speech when it is feasible. Many studies have demonstrated that early bilingual speech and sign exposure (made possible through, for example, cochlear implants, hearing aids, speech training, etc.) affords powerful language benefits to young deaf children (Petitto et al., 2001; 2016).

The present findings shed new light on the decades-old "nature vs. nurture" debate in early language learning. Do infants possess biological predispositions to select aspects of natural language structure? Or do infants learn that structure entirely through environmental input, in the absence of any biological predispositions? The sonority sensitivity observed in young infants was found for a visual language that they had never seen before. Hence, we suggest that the present results support the view that infants have a biological predisposition to attend to and learn sonority-based constraints derived from universals of syllabic structure present in both signed and spoken languages. Sonority and syllables may be important cues that help infants home in on language signals in their environment. Our results are corroborated by findings that hearing babies not exposed to signed languages are nonetheless able to discriminate among soundless, signed phonemic categories (Baker et al., 2006) and show preferences for signed language over gesture (Bosworth, Hwang, Corina, & Dobkins, 2013; Krentz & Corina, 2008). Crucially, experience appears necessary to maintain sensitivity to important, sonority-based linguistic properties in a given language and modality, which can be further confirmed by investigating looking preferences in infants who are exposed to signed language (e.g., infants with signing parents).

We conclude that fingerspelling sequences containing complex local features constrained by sonority properties that maximized the contrastive, alternating patterns of language were highly attractive to infants who had no prior experience with signed language. Our findings advance current linguistic theory about sonority and how it operates within signed languages vis-à-vis spoken languages, particularly by providing psycholinguistic evidence for the reality of sonority in lexicalized fingerspelled sequences, per Brentari (1998). Our findings also shed new light on universals of early language learning that are not unique to spoken language but are also present in signed languages. These new understandings argue for a reconceptualization of the biological foundations of language acquisition that clarifies the role of structured, amodal cues that captivate infants' attention in the first year of life.

Acknowledgments

Dr. Adam Stone acknowledges with gratitude a Graduate Fellowship from the NSF Science of Learning Center Grant (SBE-1041725), a Graduate Assistantship from the PhD in Educational Neuroscience (PEN) program at Gallaudet University, an NIH NRSA Fellowship (F31HD087085) to conduct his graduate training with Petitto in her Brain and Language Laboratory for Neuroimaging (BL2) at Gallaudet University, and an NIH postdoctoral supplemental award (R01EY024623, Bosworth & Dobkins, PIs). Dr. Laura-Ann Petitto gratefully acknowledges funding for this project from the National Science Foundation, Science of Learning Center Grant (SBE-1041725), specifically involving Petitto (PI)’s NSF-SLC project funding of her study entitled, “The impact of early visual language experience on visual attention and visual sign phonological processing in young deaf emergent readers using early reading apps: A combined eye-tracking and fNIRS brain imaging investigation.” Dr. Rain Bosworth gratefully acknowledges funding for this project from the NSF Science of Learning Center Grant (SBE-1041725), specifically involving Bosworth’s (PI) NSF-SLC project funding of her study entitled, “The temporal and spatial dynamics of visual language perception and visual sign phonology: Eye-tracking in infants and children in a perceptual discrimination experiment of signs vs gestures” and an NIH R01 award (R01EY024623, Bosworth & Dobkins, PIs) entitled, “Impact of deafness and language experience on visual development.” Data collection for the present study was conducted in the UCSD Mind, Experience, & Perception Lab (Dr. Rain Bosworth) while Stone was conducting his PhD in Educational Neuroscience summer lab rotation in cognitive neuroscience; there, Stone was also the recipient of a UCSD Elizabeth Bates Graduate Research Award. We are grateful to the Petitto BL2 student and faculty research team at Gallaudet University and the student research team at the UCSD Mind, Experience, & Perception Lab.
We extend our sincerest thanks to Felicia Williams, our sign model, and to the babies and families in San Diego, California, who participated in this study.

Appendix A

Video stimuli examples can be viewed on YouTube via this unlisted link: https://youtu.be/QoDC7YQitgg

The first half (0:00–0:10) shows an example of a video in the sonority condition, and the second half (0:10–0:20) a video in the orientation condition.

Footnotes

There is debate about whether sonority is a phonological or phonetic construct. Here, we adopt the same position as Gomez et al. (2014): “Our results do not speak to this debate, because we have no basis to determine whether responses of infants reflect phonetic or phonological preferences” (p. 5837). However, other studies (e.g., Berent et al., 2011, 2013) suggest that sonority is at least partially phonological.

Some may contend that testing newborns, instead of 6-month-olds, for sensitivity to sonority constraints would offer stronger support for the Biologically-Governed Hypothesis. However, the present study with 6- and 12-month-old infants remains a strong test of either hypothesis. First, we confirmed that none of the infants had been systematically exposed to any visual signed language at any point in their lives. Second, the 6-month age criterion is significant for infants' emerging perceptual capabilities. Newborns do have sufficient hearing and can be exposed to speech in utero. However, they have very poor sight at birth, seeing at best an extremely blurry image at arm's length. By six months, their acuity and contrast sensitivity have sharpened substantially (Teller, 1997). Hence, this is the best age to test: their vision has just markedly improved, so they are able to see the fine details of sign language stimuli well, and, during this experiment, they saw such stimuli for the first time in their lives. This reasoning should not be construed to mean that deaf infants do not need exposure to a visual signed language until they are six months old, because they do have coarse vision that is rapidly improving and is sufficient to see faces and moving hands and arms at close distances.

Or more accurately, “lexicalized-like,” given that these fingerspelling forms were generated specifically for the present study and were not part of the ASL lexicon at that time.

References

- Akamatsu T (1985). Fingerspelling formulae: A word is more or less the sum of its letters. SLR, 83, 126–132. [Google Scholar]

- Baker SA, Golinkoff RM, & Petitto LA (2006). New insights into old puzzles from infants’ categorical discrimination of soundless phonetic units. Language Learning and Development, 2(3), 147–162. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Battison R (1978). Lexical borrowing in American Sign Language. Silver Spring, MD: Linstok Press. [Google Scholar]

- Bellugi U, & Klima ES (1982). The Acquisition of Three Morphological Systems in American Sign Language. [Google Scholar]

- Berent I (2013). The Phonological Mind. Cambridge: Cambridge University Press. [Google Scholar]

- Berent I, Dupuis A, & Brentari D (2013). Amodal aspects of linguistic design. Plos One, 8(4), e60617. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Berent I, Harder K, & Lennertz T (2011). Phonological universals in early childhood: Evidence from sonority restrictions. Language Acquisition, 18(4), 281–293.

- Berent I, & Lennertz T (2010). Universal constraints on the sound structure of language: Phonological or acoustic? Journal of Experimental Psychology: Human Perception and Performance, 36(1), 212.

- Berent I, Lennertz T, Jun J, Moreno MA, & Smolensky P (2008). Language universals in human brains. Proceedings of the National Academy of Sciences, 105(14), 5321–5325.

- Berent I, Steriade D, Lennertz T, & Vaknin V (2007). What we know about what we have never heard: Evidence from perceptual illusions. Cognition, 104(3), 591–630.

- Bertenthal BI, Proffitt DR, Kramer SJ, & Spetner NB (1987). Infants’ encoding of kinetic displays varying in relative coherence. Developmental Psychology, 23(2), 171.

- Blumenthal-Kelly A (1995). Fingerspelling interaction: A set of deaf parents and their deaf daughter. Sociolinguistics in Deaf Communities, 62–73.

- Bosworth RG, Hwang S-O, Corina D, & Dobkins KR (2013). Biological attraction for natural language rhythm: Eye tracking in infants and children using reversed videos of signs and gestures. Paper presented at Theoretical Issues in Sign Language Research, London, UK.

- Boyes Braem P (1990). Acquisition of the handshape in American Sign Language: A preliminary analysis. In Volterra V & Padden C (Eds.), From Gesture to Language in Hearing and Deaf Children (pp. 107–127). Berlin: Springer Berlin Heidelberg.

- Brentari D (1998). A prosodic model of sign language phonology. Cambridge, MA: MIT Press.

- Brentari D, & Padden C (2001). Native and foreign vocabulary in American Sign Language: A lexicon with multiple origins. Foreign vocabulary in sign languages, 87–120.

- Bryant GA, & Barrett HC (2007). Recognizing intentions in infant-directed speech: evidence for universals. Psychological Science, 18(8), 746–751.

- Chien SH-L (2011). No more top-heavy bias: Infants and adults prefer upright faces but not top-heavy geometric or face-like patterns. Journal of Vision, 11(6), 13.

- Corina D (1990). Reassessing the role of sonority in syllable structure: evidence from a visual-gestural language. CLS, 26(2), 31–44.

- Corina DP (1996). ASL syllables and prosodic constraints. Lingua, 98(1), 73–102.

- Corina DP, Hafer S, & Welch K (2014). Phonological awareness for American Sign Language. Journal of Deaf Studies and Deaf Education, 19(4), 530–545.

- Cormier K, Schembri A, & Tyrone ME (2008). One hand or two? Nativisation of fingerspelling in ASL and BANZSL. Sign Language & Linguistics, 11(1), 3–44.

- Daniels M (2004). Happy hands: The effect of ASL on hearing children’s literacy. Reading Research and Instruction, 44(1), 86–100.

- DeCasper AJ, & Fifer WP (1980). Of human bonding: newborns prefer their mothers’ voices. Science, 208(4448), 1174–1176.

- DeCasper AJ, & Spence MJ (1986). Prenatal maternal speech influences newborns’ perception of speech sounds. Infant Behavior & Development, 9(2), 133–150.

- Emmorey K (2001). Language, Cognition, and the Brain: Insights From Sign Language Research. Burlington, MA: Taylor & Francis.

- Emmorey K, Weisberg J, McCullough S, & Petrich JAF (2013). Mapping the reading circuitry for skilled deaf readers: An fMRI study of semantic and phonological processing. Brain and Language, 126(2), 169–180.

- Erting CJ, Thumann-Prezioso C, & Benedict BS (2000). Bilingualism in a deaf family: Fingerspelling in early childhood. The deaf child in the family and at school: Essays in honor of Kathryn P. Meadow-Orlans, 41–54.

- Farroni T, Johnson MH, Menon E, Zulian L, Faraguna D, & Csibra G (2005). Newborns’ preference for face-relevant stimuli: Effects of contrast polarity. Proceedings of the National Academy of Sciences of the United States of America, 102(47), 17245–17250.

- Fox R, & McDaniel C (1982). The perception of biological motion by human infants. Science, 218(4571), 486–487.

- Gervain J, Berent I, & Werker JF (2012). Binding at birth: the newborn brain detects identity relations and sequential position in speech. Journal of Cognitive Neuroscience, 24(3), 564–574.

- Gervain J, Macagno F, Cogoi S, Peña M, & Mehler J (2008). The neonate brain detects speech structure. Proceedings of the National Academy of Sciences, 105(37), 14222–14227.

- Gómez DM, Berent I, Benavides-Varela S, Bion RA, Cattarossi L, Nespor M, & Mehler J (2014). Language universals at birth. Proceedings of the National Academy of Sciences, 111(16), 5837–5841.

- Grushkin DA (1998). Lexidactylophobia: the (irrational) fear of fingerspelling. American Annals of the Deaf, 143(5), 404–415.

- Hanson VL (1982). Use of orthographic structure by deaf adults: Recognition of fingerspelled words. Applied Psycholinguistics, 3(4), 343–356.

- Haptonstall-Nykaza TS, & Schick B (2007). The transition from fingerspelling to English print: Facilitating English decoding. Journal of Deaf Studies and Deaf Education, 12(2), 172–183.

- Hickok G, & Poeppel D (2007). The cortical organization of speech processing. Nature Reviews Neuroscience, 8(5), 393–402.

- Hile A (2009). Deaf children’s acquisition of novel fingerspelled words (Doctoral dissertation, University of Colorado).

- Holzrichter AS, & Meier RP (2000). Child-directed signing in American Sign Language. In Chamberlain C, Morford JP & Mayberry RI (Eds.), Language acquisition by eye. Mahwah, NJ: Lawrence Erlbaum Associates.

- Houston D (2005). Infant speech perception. In Pisoni DB & Remez RE (Eds.), The Handbook of Speech Perception (pp. 47–62). Oxford: Blackwell Publishing.

- Humphries T, Kushalnagar P, Mathur G, Napoli DJ, Padden C, & Rathmann C (2014). Ensuring language acquisition for deaf children: What linguists can do. Language, 90(2), e31–e52.

- Humphries T, Kushalnagar P, Mathur G, Napoli DJ, Padden C, Rathmann C, & Smith SR (2012). Language acquisition for deaf children: Reducing the harms of zero tolerance to the use of alternative approaches. Harm Reduction Journal, 9(1), 1.

- Humphries T, Kushalnagar R, Mathur G, Napoli DJ, Padden C, Rathmann C, & Smith S (2013). The right to language. The Journal of Law, Medicine & Ethics, 41(4), 872–884.

- Jantunen T, & Takkinen R (2010). Syllable structure in sign language phonology. In Brentari D (Ed.), Sign Languages (pp. 312–331). Cambridge: Cambridge University Press.

- Jerde TE, Soechting JF, & Flanders M (2003). Coarticulation in fluent fingerspelling. The Journal of Neuroscience, 23(6), 2383–2393.

- Keene ON (1995). The log transformation is special. Statistics in Medicine, 14(8), 811–819.

- Krentz UC, & Corina DP (2008). Preference for language in early infancy: the human language bias is not speech specific. Developmental Science, 11(1), 1–9.

- Kuhl PK, Stevens E, Hayashi A, Deguchi T, Kiritani S, & Iverson P (2006). Infants show a facilitation effect for native language phonetic perception between 6 and 12 months. Developmental Science, 9(2), F13–F21.

- Kuhlmeier VA, Troje NF, & Lee V (2010). Young infants detect the direction of biological motion in point-light displays. Infancy, 15(1), 83–93.

- Liberman AM, & Mattingly IG (1989). A specialization for speech perception. Science, 243(4890), 489–494.

- MacNeilage PF, & Davis BL (2000). Deriving speech from nonspeech: a view from ontogeny. Phonetica, 57(2–4), 284–296.

- MacNeilage PF, Krones R, & Hanson R (1970). Closed-Loop Control of the Initiation of Jaw Movement for Speech. The Journal of the Acoustical Society of America, 47(1A), 104.

- MacSweeney M, Capek CM, Campbell R, & Woll B (2008). The signing brain: the neurobiology of sign language. Trends in Cognitive Sciences, 12(11), 432–440.

- Maïonchi-Pino N, de Cara B, Ecalle J, & Magnan A (2012). Are French dyslexic children sensitive to consonant sonority in segmentation strategies? Preliminary evidence from a letter detection task. Research in Developmental Disabilities, 33(1), 12–23.

- May L, Byers-Heinlein K, Gervain J, & Werker JF (2011). Language and the newborn brain: does prenatal language experience shape the neonate neural response to speech? Frontiers in Psychology, 2, 222.

- McQuarrie L, & Abbott M (2013). Bilingual deaf students’ phonological awareness in ASL and reading skills in English. Sign Language Studies, 14(1), 80–100.

- McQuarrie L, & Parrila R (2014). Literacy and linguistic development in bilingual deaf children: Implications of the “and” for phonological processing. American Annals of the Deaf, 159(4), 372–384.

- Mehler J, Jusczyk P, Lambertz G, Halsted N, Bertoncini J, & Amiel-Tison C (1988). A precursor of language acquisition in young infants. Cognition, 29(2), 143–178.

- Morford J, Wilkinson E, Villwock A, Pinar P, & Kroll JF (2011). When deaf signers read English: Do written words activate their sign translations? Cognition, 118(2), 286–292.

- Morere D, & Allen T (2012). Assessing literacy in deaf individuals: Neurocognitive measurement and predictors. New York: Springer Science & Business Media.

- Newport EL, & Meier RP (1985). The acquisition of American Sign Language. Mahwah, NJ: Lawrence Erlbaum Associates, Inc.

- Ohala JJ (1990). The phonetics and phonology of aspects of assimilation. Papers in laboratory phonology, 1, 258–275.

- Padden C (1991). The acquisition of fingerspelling by deaf children. Theoretical issues in sign language research, 2, 191–210.

- Padden CA (2006). Learning to fingerspell twice: Young signing children’s acquisition of fingerspelling. In Schick B, Marschark M, & Spencer P (Eds.), Advances in Sign Language Development by Deaf Children (pp. 189–201). New York, NY: Oxford University Press.

- Padden C, & Gunsauls DC (2003). How the alphabet came to be used in a sign language. Sign Language Studies, 4(1), 10–33.

- Padden CA, & Le Master B (1985). An alphabet on hand: The acquisition of fingerspelling in deaf children. Sign Language Studies, 47(1), 161–172.

- Padden CA, & Ramsey C (1998). Reading ability in signing deaf children. Topics in Language Disorders, 18(4), 30.

- Palmer SB, Fais L, Golinkoff RM, & Werker JF (2012). Perceptual narrowing of linguistic sign occurs in the 1st year of life. Child Development, 83(2), 543–553.

- Parker S (2008). Sound level protrusions as physical correlates of sonority. Journal of Phonetics, 36(1), 55–90.

- Penhune VB, Cismaru R, Dorsaint-Pierre R, Petitto LA, & Zatorre RJ (2003). The morphometry of auditory cortex in the congenitally deaf measured using MRI. NeuroImage, 20(2), 1215–1225.

- Perlmutter DM (1992). Sonority and syllable structure in American Sign Language. Linguistic Inquiry, 23(3), 407–442.

- Petitto LA (2000). On the Biological Foundations of Human Language. In Lane H & Emmorey K (Eds.), The signs of language revisited: An anthology in honor of Ursula Bellugi and Edward Klima (pp. 447–471). Mahwah, NJ: Lawrence Erlbaum.

- Petitto LA (2009). New discoveries from the bilingual brain and mind across the life span: Implications for education. Mind, Brain and Education, 3(4), 185–197.

- Petitto LA, Berens MS, Kovelman I, Dubins MH, Jasinska K, & Shalinsky M (2012). The “perceptual wedge hypothesis” as the basis for bilingual babies’ phonetic processing advantage: New insights from fNIRS brain imaging. Brain and Language, 121(2), 130–143.

- Petitto LA, Holowka S, Sergio LE, Levy B, & Ostry DJ (2004). Baby hands that move to the rhythm of language: hearing babies acquiring sign languages babble silently on the hands. Cognition, 93(1), 43–73.

- Petitto LA, Holowka S, Sergio LE, & Ostry D (2001). Language rhythms in baby hand movements. Nature, 413(6851), 35–36.

- Petitto LA, Langdon C, Stone A, Andriola D, Kartheiser G, & Cochran C (2016). Visual sign phonology: insights into human reading and language from a natural soundless phonology. Wiley Interdisciplinary Reviews. Cognitive Science, 7(6), 366–381.

- Petitto LA, & Marentette PF (1991). Babbling in the Manual Mode: Evidence for the Ontogeny of Language. Science, 251(5000), 1493–1496.

- Petitto LA, Zatorre RJ, Gauna K, Nikelski EJ, Dostie D, & Evans AC (2000). Speech-like cerebral activity in profoundly deaf people processing signed languages: implications for the neural basis of human language. Proceedings of the National Academy of Sciences of the United States of America, 97(25), 13961–13966.

- Roder BJ, Bushnell EW, & Sasseville AM (2000). Infants’ preferences for familiarity and novelty during the course of visual processing. Infancy, 1(4), 491–507.

- Rose SA, Gottfried AW, Melloy-Carminar P, & Bridger WH (1982). Familiarity and novelty preferences in infant recognition memory: Implications for information processing. Developmental Psychology, 18(5), 704–713.

- Sandler W (1993). A sonority cycle in American Sign Language. Phonology, 10(2), 243–279.

- Sandler W, & Lillo-Martin D (2006). Sign Language and Linguistic Universals. Cambridge: Cambridge University Press.

- Seidenberg MS (1997). Language acquisition and use: learning and applying probabilistic constraints. Science, 275(5306), 1599–1603.

- Simion F, Regolin L, & Bulf H (2008). A predisposition for biological motion in the newborn baby. Proceedings of the National Academy of Sciences, 105(2), 809–813.

- Slater A, Quinn PC, Hayes R, & Brown E (2000). The role of facial orientation in newborn infants’ preference for attractive faces. Developmental Science, 3(2), 181–185.