Introduction

Do preschool curricula promote child development? The vast majority of publicly funded preschool programs (center-based early education for three- and four-year-olds) require the use of a “research-based” curriculum. Head Start programs are mandated to use research-based “whole-child” curricula. Federally and state-sponsored quality rating and improvement systems (QRIS) incorporate curriculum into their rankings and consider the use of a developmentally appropriate, research-based curriculum an indication of program quality (e.g., Auger, Karoly, & Schwartz, 2015). Tax dollars invested in public preschool programs, which totaled $18.3 billion in 2015, are thereby also invested in curricula (Barnett, Carolan, Squires, Brown, & Horowitz, 2015; Isaacs, Edelstein, Hahn, Steele, & Steuerle, 2015). With an average price tag of $2,000 per classroom, curricular policies benefit publishers, but it is unclear whether they benefit preschool children.

In fact, we know very little about whether and how commonly used preschool curricula influence children’s school readiness. Although most publishers claim their curricula are research-based, few describe the research on which the claim is based or how the curricular materials are explicitly linked to children’s development (Clements, 2007). Data from Head Start programs and from a national sample of child care centers indicate that the most commonly used curriculum is the Creative Curriculum (Hulsey et al., 2011; Jenkins & Duncan, 2017), despite its being rated by the What Works Clearinghouse as having “no discernible effects” on school readiness (U.S. Department of Education, 2013). The second most commonly used curriculum is HighScope (Hulsey et al., 2011; Jenkins & Duncan, 2017), whose only rigorous evidence comes from the Perry Preschool study, a small, intensive demonstration program conducted in the 1960s. The counterfactual condition in that study (children who did not attend center-based preschool) no longer applies to the current preschool population (Belfield, Nores, Barnett, & Schweinhart, 2006; Duncan & Magnuson, 2013; Schweinhart, 2005).

Also unknown is whether different curricular packages vary in the activities and instructional practices implemented in classrooms (e.g., language and literacy activities, small- versus large-group instruction), which form the very basis of children’s preschool experiences. Furthermore, prior research consists primarily of studies of researcher-designed curricula implemented in highly controlled settings or in limited samples of preschool classrooms; few studies have examined the relationship between curricula, classroom activities, and children’s school readiness in business-as-usual preschool settings.

Our study is a comprehensive examination of widely used preschool curricula and their associations with preschool classroom environments and children’s academic and social-emotional development, using five large samples of low-income three- and four-year-old children attending public preschool programs operating at scale. We examine patterns in classroom activities and in emotional, instructional, and overall quality in classrooms with and without a whole-child curriculum in use, and we compare associations between curricula and quality by curricular package (e.g., Creative Curriculum vs. HighScope). Our study provides the first detailed description of the curricular landscape in preschool programs using the best available data (samples that include classroom observations, teacher surveys, curricular package information, and child outcome assessments). In addition to these descriptive analyses, we estimate quasi-experimental impact models (with Head Start grantee fixed effects or state fixed effects) to analyze the relationship between classroom curricular package and children’s school readiness outcomes. Examining how different curricula influence the quality and type of activities in preschool classrooms, and subsequently children’s development, is essential to understanding the policy levers that make preschool effective for low-income children.
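The grantee (or state) fixed-effects models mentioned above compare children only with other children in the same Head Start grantee (or state), which absorbs stable differences between grantees that could otherwise be confused with curriculum effects. A minimal sketch of that idea is the within estimator below. It assumes a single binary curriculum indicator, no child covariates, no survey weights, and no clustered standard errors, all of which are simplifications relative to a full impact model. The function names and toy data are hypothetical and are not drawn from any of our datasets.

```python
from collections import defaultdict


def demean_by_group(values, groups):
    """Subtract each group's mean from its members (the 'within' transformation)."""
    sums = defaultdict(float)
    counts = defaultdict(int)
    for v, g in zip(values, groups):
        sums[g] += v
        counts[g] += 1
    return [v - sums[g] / counts[g] for v, g in zip(values, groups)]


def within_estimate(y, x, groups):
    """OLS slope of y on one regressor x after absorbing group fixed effects."""
    y_dm = demean_by_group(y, groups)
    x_dm = demean_by_group(x, groups)
    num = sum(a * b for a, b in zip(x_dm, y_dm))
    den = sum(a * a for a in x_dm)
    if den == 0:
        raise ValueError("x never varies within a group; the effect is not identified")
    return num / den


# Hypothetical toy data: two grantees with different baseline outcome levels.
grantee = ["A", "A", "A", "B", "B", "B"]
package = [1, 0, 1, 0, 1, 0]        # hypothetical: 1 = package X, 0 = package Y
score = [12, 10, 12, 20, 22, 20]    # grantee baseline (10 or 20) + 2 * package

print(round(within_estimate(score, package, grantee), 6))  # 2.0
```

In the toy data, children in grantee B score 10 points higher overall than those in grantee A, yet the estimate recovers only the built-in within-grantee difference of 2 points. A grantee in which every classroom uses the same package has no within-grantee variation in the indicator and therefore contributes nothing to the estimate.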

Curricula and Children’s Development

Curricula set goals for the knowledge and skills that children should acquire in an educational setting. They guide and support educators in planning the day-to-day learning experiences that cultivate those skills, through daily lesson plans, materials, and other pedagogical tools (Goffin & Wilson, 1994; Ritchie & Willer, 2008). Curricula differ across a number of dimensions, such as philosophy, materials, the role of the teacher, pedagogy or modality (e.g., small- or large-group settings), classroom design, and child assessment. Preschool programs can choose their own curricula, but their choices are often constrained by pre-approved lists developed by state agencies, accrediting bodies, or funding sources (Clifford & Crawford, 2009). Most programs, including Head Start, require a curriculum that provides enriching experiences across the multiple domains of children’s development (e.g., health, social-emotional, academic), known as a “whole-child” curriculum. The whole-child approach is anchored in Piagetian theory, which emphasizes child-centered active learning cultivated through strategic arrangement of the classroom environment (DeVries & Kohlberg, 1987; Piaget, 1976; Weikart & Schweinhart, 1987), and in sociocultural theory, in which the teacher provides supportive and responsive interactions with children (Vygotsky, 1978). Whole-child curricula purport to emphasize critical thinking and problem-solving skills by providing open-ended learning opportunities while simultaneously cultivating the interrelated domains of children’s development (Diamond, 2010; Elkind, 2007; Zigler & Bishop-Josef, 2006).

In addition to Creative Curriculum and HighScope, Scholastic and High Reach are whole-child curricula widely used in preschool programs, including Head Start and state pre-k (Clifford et al., 2005; Hulsey et al., 2011; Jenkins & Duncan, 2017; Phillips, Gormley, & Lowenstein, 2009). Despite their widespread adoption, little empirical support exists for HighScope and none exists for Creative Curriculum; neither curriculum has demonstrated effectiveness under rigorous standards when compared with business-as-usual preschool settings (i.e., teacher-developed curricula or no curricula; Belfield et al., 2006; Preschool Curriculum Evaluation Research Consortium, 2008; Schweinhart, 2005; U.S. Department of Education, 2013).

The dearth of evidence supporting Creative Curriculum and HighScope is not unique to whole-child curricula. Most recently, the National Center on Quality Teaching and Learning of the Office of Head Start (2014) released the “Preschool Curriculum Consumer Report”, the first of its kind, which reviewed the curricula most commonly used in Head Start programs nationwide and rated each against 13 criteria, one of which is “Curriculum is Evidence-Based”. Of the 14 curricula reviewed, seven had “no evidence”, five had “minimal evidence”, one had “some evidence”, and only one (Opening the World of Learning) was rated as having “solid, high-quality evidence” of effects on child outcomes. One of the first research projects funded by the Institute of Education Sciences was the Preschool Curriculum Evaluation Research (PCER) initiative (2008), a large, multi-site, random-assignment study of 14 different preschool curricula. In this study, only two curricula, both content-specific (i.e., math- or literacy-focused), were found to be effective at promoting children’s school readiness when compared with business-as-usual counterfactual settings (which included classrooms using whole-child curricula).

However, evidence does suggest that other, less commonly used types of curricula, when implemented with high-quality professional development that includes coaching, can have strong impacts on children’s early academic and social-emotional development. Findings from small randomized controlled trials of well-implemented, content-specific curricula that target single developmental domains show small to moderate positive impacts on the skills targeted by the curricular materials (Bierman et al., 2008; Clements & Sarama, 2008; Diamond, Barnett, Thomas, & Munro, 2007; Fantuzzo, Gadsden, & McDermott, 2011; Morris et al., 2014). For example, children who received a literacy-targeted curriculum showed improvements in their literacy and language skills compared with children in business-as-usual conditions (i.e., HighScope, Creative Curriculum, or teacher-developed curricular models; Justice et al., 2010; Lonigan, Farver, Phillips, & Clancy-Menchetti, 2011). Clements and Sarama (2007, 2008) found large gains in math achievement from a targeted preschool mathematics curriculum relative to classrooms using business-as-usual curricula. Results are comparable for curricula aimed at promoting children’s social-emotional development (Bierman et al., 2008; Morris et al., 2014). Boston’s successful public pre-k program achieves its impacts on children’s learning with a distinctive approach that combines two content-specific curricula with strong, ongoing professional development, including coaching, for its teachers, who are also well paid and highly educated (Weiland & Yoshikawa, 2013).

One might argue that, if implemented with similarly strong professional development supports, whole-child curricula may do just as well as the successful content-specific curricula described above, and that these supports, rather than the curriculum per se, drive the impacts. However, evidence from the PCER study (2008) does not suggest this is the case. One of the study sites randomly assigned classrooms to Creative Curriculum as the treatment condition, and these classrooms therefore received the training and implementation supports afforded to experimental sites to ensure program fidelity. Still, Creative Curriculum classrooms in the treatment condition were no more effective in promoting children’s outcomes than the locally developed curricular approach that the schools otherwise would have used. Professional development is an important component of any preschool program, but there are few data to suggest that the lack of evidence on whole-child curricular effectiveness is due to professional development models alone. If early learning policies require the use of whole-child curricula, greater empirical support is needed to understand their value added to the preschool experience.

Curricula and Early Childhood Education Policy

A substantial body of research shows that high-quality preschool can promote children’s cognitive and physical development, particularly for low-income children (Barnett, 2011; Duncan & Magnuson, 2013; Gormley, Phillips, & Gayer, 2008; Yoshikawa et al., 2013). Yet the tremendous variability in preschool quality, implementation, and effectiveness within and between different types of programs (e.g., Head Start and state prekindergarten) and between states reveals how little is known about precisely what makes preschool effective (Bloom & Weiland, 2015; Dotterer, Burchinal, Bryant, Early, & Pianta, 2009; Jenkins, 2014; Jenkins, Farkas, Duncan, Burchinal, & Vandell, 2016; Karoly, Ghosh-Dastidar, Zellman, Perlman, & Fernyhough, 2008; Walters, 2015). Furthermore, widespread recent attention to the persistence or fadeout of preschool impacts raises concerns among policy stakeholders about how programs can ensure continued learning gains and produce “returns” on these human capital investments as pre-k programs continue to expand (Phillips et al., 2017). Policy efforts at the federal, state, and local levels traditionally use three main levers to improve the effectiveness of public preschool programs: 1) increasing teachers’ skills by raising educational requirements and funding professional development, 2) creating quality improvement, licensing, and monitoring systems, and 3) requiring preschool curricula to guide instruction.

Although often overlooked, curricular requirements and curriculum use are embedded in these and other policies that govern early care and education systems. Preschool programs mandate that teachers use a curriculum, curricula prescribe specific classroom activities and practices using various pedagogical approaches, and these activities constitute the learning experiences that cultivate children’s readiness for school. Therefore, instructional materials and the strategies promoted by curricula are among the most direct policy-relevant connections to learning activities in the classroom, especially in light of the strong impact evidence from studies of content-specific curricula.

Still, such requirements can be vague. For example, a recent survey of state education agencies revealed that states have loose requirements for pre-k curricular decisions (e.g., “research-based” curricula, with “research-based” ill-defined) or only basic selection guidelines, such as alignment with state early learning standards (Dahlin & Squires, 2016). In most cases, educators choose among preselected curricular options based on local or state policies; they do so with little scientific guidance, among a few popular selections, and at substantial cost.

Most importantly, published curricular packages may differ, on average, in the experiences they shape for children in preschool classrooms. In other words, it is unclear whether, when enacted in preschool programs at scale, certain curricular packages are more likely than others to promote developmentally appropriate learning activities. Additionally, there is no population-level information about the extent to which classroom experiences and instruction vary across classrooms using different curricular packages, or even the same package. In theory, the curriculum drives classroom activities, so classrooms whose teachers report using the same curriculum should be comparable with respect to the quantity and type of activities (e.g., math and literacy instruction), and perhaps in overall instructional quality. This assumption depends on the curriculum being enacted with fidelity across preschool classrooms. However, if program features such as length of day or funding for materials vary between classrooms and centers, the classroom experiences generated by the same curricular package may differ. Similarly, teacher training and attitudes toward curricula likely affect implementation (e.g., using only part of a curriculum, modifying instruction). Although policy-mandated curricula are likely not, on their own, the primary determinant of children’s development in preschool, it is important to know whether curricula steer classroom experiences and raise the overall quality of instruction and teacher support for children’s learning. Empirically derived curricular guidance or restrictions may be an efficient mechanism through which policy can improve the consistency and effectiveness of preschool programs.

Another critical policy consideration is that curricula are a significant investment for preschool programs. In the first column of Table 1, we present the approximate per-classroom costs of commonly used curricula, which range from $1,125 to $4,190. These estimates exclude the costs of supplemental materials and of the additional professional development activities that publishers often strongly recommend for implementing their curricula with fidelity. The Head Start program alone has over 50,000 classrooms, making the costs of such policies nontrivial (Office of Head Start, 2010). Given the wide array of curricular choices available, the government expenditures on required curricula, and our insufficient understanding of whether commonly used whole-child curricula promote children’s school readiness, a comprehensive study of preschool curricula is badly needed.
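As a rough illustration of scale, multiplying the Head Start classroom count by per-classroom prices from Table 1 gives the one-time purchase cost if every classroom bought a single package. This is a back-of-the-envelope calculation under simplifying assumptions: exactly 50,000 classrooms (the program has over 50,000, so these figures are lower bounds), one purchase per classroom at the approximate listed price, and no professional development or supplemental materials.

```latex
\begin{aligned}
50{,}000 \times \$1{,}125 &= \$56{,}250{,}000 &&\text{(High Reach, lowest listed price)}\\
50{,}000 \times \$2{,}149 &= \$107{,}450{,}000 &&\text{(Creative Curriculum, most commonly used)}
\end{aligned}
```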

Table 1.

Descriptions of Analysis Sample and Key Measures by Study

| | PCER | NCEDL | HSIS | FACES 2003 | FACES 2009 |
|---|---|---|---|---|---|
| Classroom sample | 170 | 245 | 997 | 308 | 456 |
| Child sample | - | 394 | 1654 | 1565 | 2401 |
| Year of preschool data collection | 2003 | 2001 | 2002 | 2003 | 2009 |
| Percent Head Start classrooms | 35 | 29 | 100 | 100 | 100 |
| Percent state prekindergarten classrooms | 53 | 71 | - | - | - |
| Curricula included (cost per classroom) | | | | | |
| HighScope ($1150) | Classrooms = 40, Children = 380 | Classrooms = 84, Children = 359 | Classrooms = 350, Children = 656 | Classrooms = 53, Children = 325 | Classrooms = 66, Children = 424 |
| Creative Curriculum ($2149) | Classrooms = 50, Children = 360 | Classrooms = 38, Children = 161 | Classrooms = 416, Children = 854 | Classrooms = 161, Children = 1123 | Classrooms = 251, Children = 1699 |
| DLM Express ($4108) | Classrooms = 10, Children = 100 | | | | |
| High Reach ($1125) | | | Classrooms = 40, Children = 74 | Classrooms = 17, Children = 130 | Classrooms = 24, Children = 179 |
| Scholastic ($2900) | | | Classrooms = 58, Children = 114 | | Classrooms = 14, Children = 106 |
| Other Published Curriculum | | Classrooms = 32, Children = 135 | Classrooms = 193, Children = 361 | Classrooms = 77, Children = 514 | Classrooms = 101, Children = 709 |
| No Published Curriculum | Classrooms = 70, Children = 600 | Classrooms = 80, Children = 360 | | | |
| Classroom Activities | Teacher Behavior Rating Scale (Literacy and Math) | Emerging Academics Snapshot | Teacher survey responses | Teacher survey responses (Literacy) | Teacher survey responses (Literacy and Math) |
| Classroom Quality | ECERS-R, Arnett Caregiver Interaction Scale | ECERS-R, CLASS | ECERS-R, Arnett Caregiver Interaction Scale | ECERS-R, Arnett Caregiver Interaction Scale | ECERS-R, CLASS |
| Child Outcomes | | | | | |
| Early Academic | - | PPVT, WJ-III Letter Word, Applied Problems | PPVT, WJ-III Letter Word, Spelling, Applied Problems | PPVT, WJ-III Letter Word, Spelling, Applied Problems | PPVT, WJ-III Letter Word, Spelling, Applied Problems |
| Social and Behavioral | - | Teacher-Child Rating Scale | Problem Behavior Rating Scale | Teacher Rating Scale | Teacher Rating Scale |

Note: PCER = Preschool Curriculum Evaluation Research; NCEDL = National Center for Early Development and Learning; HSIS = Head Start Impact Study; FACES = Family and Child Experiences Survey; TBRS = Teacher Behavior Rating Scale; ECERS = Early Childhood Environment Rating Scale; ECERS-R = Early Childhood Environment Rating Scale-Revised; CLASS = Classroom Assessment Scoring System; PPVT = Peabody Picture Vocabulary Test; WJ = Woodcock-Johnson. The PCER classroom count is rounded to the nearest 10 per NCES license requirements. The PCER child sample and outcome measures are not shown because we could not conduct our child-level analyses using PCER due to the study’s sample design; see Analyses in the main text for more detail. Classroom and child samples reflect our study’s analytic sample, not the original study samples. Per-classroom cost estimates approximate the 2015 cost of purchasing the curriculum teacher’s manual (or equivalent) and the baseline set of materials required to implement the curriculum. Publishers offer different sets of materials, so costs will vary by publisher and curriculum.

Present Study

Our study examines widely used published preschool curricula, including Creative Curriculum, HighScope, Scholastic, High Reach, and DLM Express. Four of the five curricula are marketed as “research-based”; however, there is little to no empirical evidence linking these curricula to children’s outcomes (National Center on Quality Teaching and Learning, 2014). Using five large samples of low-income, racially and ethnically diverse preschool children, we aim to understand how preschool curricula relate to classroom activities and quality as they are used in business-as-usual, center-based settings, and in turn to children’s academic and social-emotional development. Specifically, our three research questions (RQs) are:

RQ1: To what extent do classroom activities and quality ratings vary by whether a published curriculum is in use, and, in classrooms that do use a published curriculum, do activities vary by the specific curricular package (e.g., HighScope compared with Creative Curriculum)?

RQ2: To what extent is having a published curriculum in use in a preschool classroom associated with children’s academic and social-emotional school readiness, and does children’s readiness vary by the specific curricular package?

RQ3: To what extent are classroom activities, overall classroom quality ratings, and teachers’ attitudes toward and perceptions of curricula consistent among classrooms using the same, or different, curricular packages?

Little prior research exists on whole-child curricula, which makes it difficult to predict which packages may improve classroom quality and child outcomes. However, because curricula inherently guide classroom processes, we expect differences in classroom process quality between classrooms that do and do not have a published curriculum in use. Because all whole-child curricular packages aim to promote development across multiple domains and are similar in their theoretical approach and pedagogy, we expect these packages to function similarly across classrooms and to be similarly related to classroom quality and child outcomes. Specifically, we hypothesize that (1) classroom process quality differs between classrooms with and without a curriculum in use; (2) classrooms using different whole-child curricular packages have similar levels of process quality, albeit with different ways of structuring classroom activities; and (3) classroom activities, overall classroom quality ratings, and teachers’ attitudes toward and perceptions of curricula are consistent across classrooms using the same curricular package.

Hereafter, we use the term “curricular status” to describe whether a classroom has any published curriculum in use (i.e., yes/no), whereas “curricular package” refers to the specific published curriculum in use (e.g., Creative Curriculum).

Method

Data

Our study uses secondary data from five studies of children in preschool settings between the 2001 and 2009 school years: the Preschool Curriculum Evaluation Research Study (PCER), the National Center for Early Development and Learning Multi-State Study of Pre-Kindergarten (NCEDL), the Head Start Impact Study (HSIS), the Head Start Family and Child Experiences Survey, 2003 Cohort (FACES 2003), and the Head Start Family and Child Experiences Survey, 2009 Cohort (FACES 2009). Each dataset contains information on curricula, classroom activities, and children’s academic and social-emotional outcomes. In all five studies, data collection took place in center-based preschool settings, and the child participants were predominantly low-income and racially and ethnically diverse. We describe each study’s sample and measures in the following sections and summarize this information in Table 1 (additional information on measures is presented in Appendices A.1–A.5).

Before proceeding, we acknowledge that our study datasets are somewhat dated and therefore may not reflect the most current classroom practices and activities. To examine differences in practice across years, we assessed how the 2009 FACES cohort, the most recent snapshot of curricula and classroom practices in Head Start centers, compares with both the 2003 FACES cohort and the 2002 HSIS sample. This comparison indicates that the curricular choices of Head Start centers remained fairly stable over time (Creative Curriculum, HighScope, High Reach, and Scholastic, in order of frequency) and that they closely match the most recent available national data on curriculum use (from the 2012 National Survey of Early Care and Education; Jenkins & Duncan, 2017). Descriptive analyses are discussed in greater detail in the Results section and displayed in Table 4. In addition, our data are heavily weighted toward Head Start centers: three of the datasets (HSIS, FACES 2003, and FACES 2009) include only Head Start programs, and the other two include a combination of center-based preschool settings, including state pre-k and Head Start. Although this somewhat limits the interpretation of our results, we also consider it a strength, because Head Start programs are universally subject to the whole-child curricular mandates imposed by federal policy.

Table 4.

Classroom Activity and Quality Rating Comparisons by Curriculum

| PCER | HighScope | Creative Curriculum | DLM Express | No Published Curriculum | Diff | F-stat |
|---|---|---|---|---|---|---|
| Classroom Activities (0-3 scale) | | | | | | |
| TBRS Math Quantity | 1.15 | 1.29 | 1.21 | .94 | * | 4.06 |
| TBRS Literacy Quantity | 1.53 | 1.47 | 1.60 | 1.19 | * | 8.04 |
| Classroom Quality | | | | | | |
| Total ECERS Score | 4.19 | 4.31 | 4.77 | 3.34 | * | 14.84 |
| ECERS Factor 1 Language/Interactions | 4.84 | 4.95 | 5.25 | 3.91 | * | 7.99 |
| ECERS Factor 2 Provisions for Learning | 4.33 | 4.22 | 4.65 | 3.26 | * | 16.34 |
| Arnett Caregiver Interaction Score | 3.12 | 3.30 | 3.25 | 2.95 | * | 4.19 |
| Observations (Classrooms) | 40 (23%) | 50 (29%) | 10 (6%) | 70 (41%) | | |

| NCEDL | HighScope | Creative Curriculum | No Published Curriculum | Other Published Curriculum | Diff | F-stat |
|---|---|---|---|---|---|---|
| Classroom Activities (in proportion of day) | | | | | | |
| Snapshot: Math Activity | .05 | .07 | .07 | .08 | * | 3.21 |
| Snapshot: Literacy Activity | .15 | .14 | .15 | .16 | | .37 |
| Classroom Quality | | | | | | |
| Total ECERS Score | 3.95 | 3.76 | 3.60 | 3.91 | * | 2.92 |
| ECERS Factor 1 Language/Interactions | 4.60 | 4.18 | 4.33 | 4.70 | | 1.71 |
| ECERS Factor 2 Provisions for Learning | 4.05 | 3.92 | 3.46 | 3.87 | * | 6.87 |
| CLASS Emotional Support Scale | 5.34 | 5.14 | 5.42 | 5.45 | | 1.42 |
| CLASS Instructional Support Scale | 1.89 | 1.80 | 1.99 | 2.10 | | 1.03 |
| Observations (Classrooms) | 84 (36%) | 38 (16%) | 80 (34%) | 32 (14%) | | |

| HSIS | HighScope | Creative Curriculum | High Reach | Scholastic | Other Published Curriculum | Diff | F-stat |
|---|---|---|---|---|---|---|---|
| Classroom Activities (in times per month) | | | | | | | |
| Total Math Activities | 149.81 | 153.68 | 169.43 | 159.71 | 152.49 | * | 6.53 |
| Total Literacy Activities | 107.40 | 114.09 | 119.33 | 125.80 | 107.51 | | 1.92 |
| Classroom Quality | | | | | | | |
| Total ECERS Score | 5.32 | 5.05 | 4.68 | 4.90 | 5.06 | * | 7.31 |
| ECERS Factor 1 Language/Interactions | 5.79 | 5.47 | 5.50 | 5.41 | 5.54 | * | 4.21 |
| ECERS Factor 2 Provisions for Learning | 5.21 | 4.96 | 4.01 | 4.65 | 4.88 | * | 12.04 |
| Arnett Caregiver Interaction Score | 2.56 | 2.50 | 2.66 | 2.51 | 2.53 | * | 2.42 |
| Observations (Classrooms) | 350 (33%) | 416 (40%) | 40 (4%) | 58 (5%) | 193 (18%) | | |

| FACES 2003 | HighScope | Creative Curriculum | High Reach | Other Published Curriculum | Diff | F-stat |
|---|---|---|---|---|---|---|
| Classroom Activities (in times per month) | | | | | | |
| Total Literacy Activities | 163.08 | 164.68 | 187.76 | 160.96 | | 1.08 |
| Classroom Quality | | | | | | |
| Total ECERS Score | 4.21 | 4.24 | 3.58 | 4.28 | * | 3.29 |
| ECERS Factor 1 Language/Interactions | 4.37 | 4.31 | 3.63 | 4.38 | * | 2.90 |
| ECERS Factor 2 Provisions for Learning | 4.30 | 4.41 | 3.72 | 4.43 | * | 2.99 |
| Arnett Caregiver Interaction Score | 14.47 | 14.46 | 14.65 | 15.09 | | 1.14 |
| Observations (Classrooms) | 53 (17%) | 161 (52%) | 17 (6%) | 77 (25%) | | |

| FACES 2009 | HighScope | Creative Curriculum | High Reach | Scholastic | Other Published Curriculum | Diff | F-stat |
|---|---|---|---|---|---|---|---|
| Classroom Activities (in times per month) | | | | | | | |
| Total Math Activities | 107.88 | 114.33 | 119.50 | 125.52 | 106.65 | * | 6.36 |
| Total Literacy Activities | 151.40 | 153.74 | 167.33 | 160.11 | 152.62 | | 1.28 |
| Classroom Quality | | | | | | | |
| Total ECERS Score | 4.14 | 4.45 | 4.16 | 3.60 | 4.17 | * | 43.42 |
| ECERS Factor 1 Language/Interactions | 5.34 | 5.04 | 4.69 | 4.92 | 5.01 | * | 7.79 |
| ECERS Factor 2 Provisions for Learning | 2.56 | 2.49 | 2.67 | 2.51 | 2.54 | * | 2.83 |
| CLASS Emotional Support Scale | 5.17 | 5.38 | 5.35 | 5.13 | 5.28 | * | 21.51 |
| CLASS Instructional Support Scale | 2.10 | 2.32 | 2.24 | 2.11 | 2.30 | * | 7.54 |
| Observations (Classrooms) | 70 (15%) | 228 (48%) | 31 (6%) | 36 (7%) | 112 (23%) | | |

Note. The largest significant value(s) for each measure are italicized. In the Diff column, * denotes p < .05 from ANOVA. PCER = Preschool Curriculum Evaluation Research; ECERS = Early Childhood Environment Rating Scale; TBRS = Teacher Behavior Rating Scale; NCEDL = National Center for Early Development and Learning; FACES = Family and Child Experiences Survey; CLASS = Classroom Assessment Scoring System; Snapshot = Emerging Academics Snapshot; HSIS = Head Start Impact Study.

All PCER classroom observations are rounded to the nearest 10 per NCES data security policy. Classroom math activities were not included in the FACES 2003 teacher survey. Comparisons of classroom and center characteristics, as well as of classroom activities and quality by curriculum, are shown in Appendices A.1–A.5.
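The F-statistics in Table 4 compare mean classroom ratings across curriculum groups within each study. Assuming they come from a standard one-way ANOVA (the table note says only "ANOVA"), each F is the ratio of the between-group mean square to the within-group mean square. The sketch below computes that ratio from hypothetical ratings using only the Python standard library; it does not reproduce any value in Table 4 and ignores survey weights.

```python
from statistics import fmean


def one_way_anova_f(groups):
    """One-way ANOVA F: between-group mean square / within-group mean square."""
    k = len(groups)                      # number of curriculum groups
    n = sum(len(g) for g in groups)      # total number of classrooms
    if k < 2 or n <= k:
        raise ValueError("need at least two groups and more observations than groups")
    grand_mean = fmean(x for g in groups for x in g)
    ss_between = sum(len(g) * (fmean(g) - grand_mean) ** 2 for g in groups)
    ss_within = sum((x - fmean(g)) ** 2 for g in groups for x in g)
    ms_between = ss_between / (k - 1)    # df = k - 1
    ms_within = ss_within / (n - k)      # df = n - k
    return ms_between / ms_within


# Hypothetical quality ratings for three curriculum groups.
ratings = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
print(one_way_anova_f(ratings))  # 27.0
```

A large F means group means are far apart relative to the spread within groups; the corresponding p-value would come from the F distribution with (k - 1, n - k) degrees of freedom, which this sketch does not compute.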

Samples.

PCER.

Beginning in 2003, 12 grantees across the country were funded to study the effect of preschool curricula on children’s academic and social-emotional outcomes in the PCER study. Each grantee selected its own study curricula; in total, 14 different curricula were tested at 18 different locations. Mathematica Policy Research and Research Triangle Institute assisted with the evaluation to ensure consistent data collection at each site, but each grantee was in charge of its own evaluation. Individual grantees were responsible for recruiting preschool centers to participate in the study. At each grantee site, either classrooms within preschool centers or entire centers were randomly assigned to a treatment (experimental curriculum) or control condition. For feasibility and to prevent cross-contamination across classrooms, most research sites assigned only one curriculum to each preschool center. Baseline data on children, parents, and preschools were collected in the fall of 2003, and post-treatment data were collected in the spring of 2004. Approximately 2,900 children in 320 preschool classrooms participated in the study. The subsample of PCER most relevant to our study consists of the grantee sites and classrooms that used one of our focal whole-child curricula (HighScope, Creative Curriculum, or DLM Express) and the classrooms with no published curriculum in use (N = 1,450 children). These data include children in Head Start, private child care, or public preschool. For more information on the study, see the PCER Final Report (2008).

NCEDL.

NCEDL comprises two stratified random samples of children within preschool programs across 11 states. States were purposely selected if they had large numbers of children enrolled in pre-existing public pre-k programs. The sample for the Multi-State Study of Pre-Kindergarten includes six states (California, Illinois, Georgia, Kentucky, New York, and Ohio). No systematic intervention was tested in NCEDL; data were collected to examine characteristics of, and variations in, programs that lead to children’s development. The follow-up study, the State-Wide Early Education Programs Study, was not included in our analyses because the dataset did not include curriculum indicators. Preschool programs were randomly sampled within states, and 29% were Head Start programs. One classroom was then randomly sampled within each program, and 94% of classroom teachers agreed to participate. In the selected classrooms, approximately 60% of parents gave consent for their child to participate, and from this subsample, four children were randomly selected to participate (N = 1,015). Forty preschool programs were selected in each state, for a total of 245 classrooms. Child assessment data were collected during the fall and spring of the 2001–2002 preschool year. For more information, see Early et al. (2005).

HSIS.

The HSIS is a nationally representative study of Head Start participants and a group of comparable non-participants from 23 states, sampled using a complex multi-stage stratified design. Head Start grantees were divided into geographic clusters and stratified by grantee characteristics, and three grantees or delegate agencies were randomly selected from each cluster. Within each delegate agency, Head Start centers were stratified in the same way as grantees and randomly selected. This process yielded 84 grantees and delegate agencies operating a total of 383 preschool centers. The full sample included newly entering 3- and 4-year-old Head Start applicants at randomly selected oversubscribed centers, where children were randomly assigned to receive an offer of Head Start. A total of 4,442 children were selected: 2,646 for Head Start and 1,796 for the control condition. Children in the control group either attended other child care arrangements or were cared for at home. Study investigators (Westat) collected baseline surveys and child assessments in the fall of 2002, and post-treatment child assessments at the end of the Head Start year in the spring of 2003.

We restrict the sample for our study to those children who were randomly assigned to, and actually attended, a Head Start program because only under these conditions were classrooms required to have a curricular package in use. Control children in the HSIS were omitted from our study due to the extensive variation in counterfactual care conditions. For more information, see the HSIS Final Evaluation Report (Puma et al., 2012).

FACES.

The Head Start Family and Child Experiences Survey (FACES) is a multi-wave, large-scale investigation of children, families, and educators in Head Start programs that aims to understand how the program operates and how it contributes to the well-being of the families and children it serves. Like NCEDL, FACES is not an intervention study. The FACES data contain nationally representative longitudinal data on five cohorts of Head Start children and their families (FACES 1997, 2000, 2003, 2006, and 2009), as well as staff qualifications, classroom practices, and quality measures, including curricula indicators. Our analyses use data from the 2003 and 2009 cohorts: we selected the 2003 cohort because its timeframe closely aligned with our other study datasets, and the 2009 cohort because it was the most recent FACES data available at the time of our study. FACES used a four-stage sampling process to select a representative group of Head Start (1) grantees, (2) centers, (3) classrooms, and (4) newly enrolled children. Sampling at the first three stages was done with probability proportional to size. Data were collected in the fall and spring of the children’s first year in Head Start, and in the spring of their second year if they were three years old at first entry. Although teachers could select multiple published curricula used in their classrooms, FACES also asked teachers to name the primary curriculum they used, which we use as our key independent variable. In total, the FACES 2003 sample included 63 grantees, 182 centers, 409 classrooms, and 2,816 children, and the FACES 2009 sample included 60 grantees, 129 centers, 486 classrooms, and 3,349 children. For more information, see the FACES User’s Guides (Malone et al., 2013; Zill, Kim, Sorongon, Shapiro, & Herbison, 2008).

Measures.

Preschool curricula.

Each dataset includes classrooms using published curricula. In addition, the NCEDL and PCER samples include preschool classrooms with no published curriculum in use. “No published curriculum” means that the classroom did not use a published or packaged curriculum but may have used a “locally developed” or teacher-designed curriculum. Although we cannot know the exact content of these curricula or the curricular models on which they are based, we consider the “no published curriculum” and locally or “teacher-developed curriculum” designations to represent another common practice in early childhood education, and thus important to include in our study. In the NCEDL, HSIS, FACES 2003, and FACES 2009 studies, an “Other published curricula” category represents classrooms for which we do not have specific curricular package information, or classrooms using a curriculum package reported by fewer than 10 classrooms; these classrooms were collapsed into a single group for analysis. Fewer than five classrooms in FACES 2003 reported using Scholastic, and these classrooms were not included in the analysis.

We acknowledge that teachers may report “using” a curriculum when it merely sits on their classroom bookshelves. However, the aim of our study is to understand the implications of policy-mandated curricula. As such, our data represent the de facto classroom environments of children who experienced different curricular choices under at-scale, business-as-usual implementation.

To provide context on curricular implementation and teachers’ perspectives on curricula, our descriptive analyses of curricular variation (RQ 3) use the available teacher survey items related to curriculum in the NCEDL, HSIS, and FACES datasets (PCER did not collect these items). The items, aggregated by curricular package and shown in Appendix Tables A.2–A.5, capture teachers’ attitudes toward the curriculum, whether they have been trained in it, whether they have the materials needed to implement it, and whether it leaves room for teacher creativity. All items are indicator variables equal to 1 if the teacher responded “yes” to the question prompt.

Classroom quality.

Quality of care was measured with several instruments across the four studies. The Early Childhood Environment Rating Scale-Revised (ECERS-R; Harms, Clifford, & Cryer, 1998), a widely used observer-rated measure of global classroom quality designed for classrooms serving children between 2.5 and 5 years of age, was used in each study. ECERS-R scores range from 1 to 7, with 1 indicating “inadequate,” 3 “minimal,” 5 “good,” and 7 “excellent” quality. The scale’s authors report a total-scale internal consistency of .92. We report the total ECERS score and the “Provisions for Learning” and “Interactions” factor scores for each study. We focus our classroom-level quality analyses on the ECERS because it was collected in all four studies. However, our descriptive analyses, shown in Tables 3 and 4, also incorporate two additional quality measures, each collected in two or three studies.

Table 3.

Classroom Activity Comparison by Presence of Published Curricula in PCER and NCEDL

| Measure | PCER: Published Curriculum | PCER: No Published Curriculum | PCER: Diff | NCEDL: Published Curriculum | NCEDL: No Published Curriculum | NCEDL: Diff |
|---|---|---|---|---|---|---|
| Classroom Activities | | | | | | |
| TBRS Math Quantity (0-3 scale) | 1.22 | .94 | * | - | - | |
| TBRS Literacy Quantity (0-3 scale) | 1.51 | 1.19 | * | - | - | |
| Snapshot: Math Activity (proportion of day) | - | - | | .06 | .07 | |
| Snapshot: Literacy Activity (proportion of day) | - | - | | .15 | .15 | |
| Classroom Quality | | | | | | |
| Arnett Caregiver Interaction | 3.21 | 2.95 | * | - | - | |
| Total ECERS Score | 4.31 | 3.34 | * | 3.89 | 3.59 | * |
| ECERS Factor 1 Language/Interactions | 4.94 | 3.91 | * | 4.52 | 4.31 | |
| ECERS Factor 2 Provisions for Learning | 4.32 | 3.26 | * | 3.98 | 3.46 | * |
| CLASS Emotional Support Scale | - | - | | 5.31 | 5.40 | |
| CLASS Instructional Support Scale | - | - | | 1.91 | 1.98 | |
| Observations (Classrooms) | 100 | 70 | | 154 | 91 | |

Note. PCER = Preschool Curriculum Evaluation Research; NCEDL = National Center for Early Development and Learning; ECERS = Early Childhood Environment Rating Scale; TBRS = Teacher Behavior Rating Scale; CLASS = Classroom Assessment Scoring System; Snapshot = Emerging Academics Snapshot.

* p < .05 from t-tests for differences in means. All PCER classroom counts are rounded to the nearest 10 per NCES data security policy. Comparisons of all classroom characteristics and activities by curriculum are shown in Appendix B.

To capture caregiver interactions, the HSIS, PCER, and FACES 2003 studies used the Arnett Caregiver Interaction Scale (Arnett, 1989), an observational measure of 26 items reflecting teacher sensitivity, harshness, and detachment. Each item is rated from 1 (not at all) to 4 (very much) according to how characteristic it is of the teacher. Psychometric analyses suggest that the items load onto a single factor (Cronbach’s α = .93).
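Cronbach’s α, reported for this and most other measures in this section, summarizes internal consistency for k items as α = k/(k - 1) × (1 - Σ item variances / variance of the total score). The minimal Python sketch below computes it from a matrix with one row per rated teacher or child and one column per item; the function name and the example ratings are hypothetical and do not come from any of the study datasets.

```python
from statistics import pvariance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of rows (one row per rated person, one column per item).

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores)
    Population variances are used; the ratio is identical with sample variances.
    """
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha requires at least two items")
    item_columns = list(zip(*responses))  # transpose rows into item columns
    sum_item_var = sum(pvariance(col) for col in item_columns)
    total_var = pvariance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum_item_var / total_var)


# Hypothetical 1-4 ratings of four teachers on three items.
ratings = [[4, 4, 3], [2, 2, 2], [3, 3, 4], [1, 2, 1]]
print(round(cronbach_alpha(ratings), 3))  # 0.916
```

Items that move together shrink the summed item variance relative to the total-score variance, which pushes α toward 1.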

The NCEDL and FACES 2009 studies also included the Classroom Assessment Scoring System (CLASS; Pianta, La Paro, & Hamre, 2008), an observer-rated assessment of teacher-child interactions in three domains: emotional support (climate, teacher sensitivity, regard), classroom organization (behavior management, productivity, instructional learning formats), and instructional support (concept development, feedback quality, language modeling). Cronbach’s α is .88 for Classroom Organization, .90 for Emotional Support, and .93 for Instructional Support.

Classroom learning activities.

We used different instruments and data sources in each study to create aggregate measures of total classroom literacy and mathematics activities. Detailed lists of the individual items used, along with mean values by curricular package, are available in Appendices A.1–A.5.

The Teacher Behavior Rating Scale (TBRS; Landry et al., 2001), used in the PCER study, relies on trained observers to rate the quality and quantity of academic activities in a classroom. The TBRS measures two content areas: math and literacy. Literacy comprises five subdomains (written expression, print and letter knowledge, book reading, oral language, and phonological awareness). Activity quality was rated from 0 to 3 (0 = activity not present; 3 = high-quality activity), and activity quantity was similarly rated from 0 to 3 (0 = activity not present; 3 = activity occurred often or many times). Our analyses use only the quantity ratings, computed as the mean across all rated activities. Cronbach’s α is .94 for the math scale and .87 for the literacy scale.

The Emerging Academics Snapshot (EAS) used in the NCEDL study is also an observer-rated measure; it captures children’s moment-to-moment classroom engagement (Ritchie, Howes, Kraft-Sayre, & Weiser, 2001). Observations were conducted over one or two days in the spring of the preschool year. The data collector observed each study child for a 20-second “snapshot,” followed by a 40-second coding period, then coded the other three study children in the classroom before returning to the first child; this cycle was repeated for the entire observation period. In each snapshot, children are coded in one of six mutually exclusive activity settings (basics, free choice, individual time, meals, small group, and whole group). The activity is also coded for early academic content area (aesthetics, fine motor skills, gross motor skills, letter and sound, mathematics, oral language development, read to, science, social studies, and writing). For example, to obtain the classroom-level proportion of the day spent in math activities, coders averaged, across the sampled children, the time each child was observed engaged in math activities divided by the total observation time. The last coded component of each snapshot is the type of teacher-child interaction (routine, minimal, simple, elaborated, scaffolding, and didactic). Kappas range from .70 to .87.
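The classroom-level proportion described above can be sketched in Python. This is an illustrative reading of the procedure, assuming each snapshot is stored as the set of content codes recorded for it and that every sampled child contributes equally to the classroom mean; the data layout, child identifiers, and function name are hypothetical rather than part of the NCEDL files. Because every snapshot is a fixed-length interval, a child’s share of snapshots equals that child’s share of observed time.

```python
from statistics import mean


def classroom_content_share(snapshots_by_child, content):
    """Classroom-level share of observed time spent on one academic content area.

    snapshots_by_child maps a child identifier to that child's snapshots, where each
    snapshot is the set of content codes recorded for it (possibly empty).
    Each child's share = snapshots coded with `content` / that child's snapshots;
    the classroom value is the mean share across the sampled children.
    """
    shares = [
        sum(content in snap for snap in snaps) / len(snaps)
        for snaps in snapshots_by_child.values()
        if snaps  # skip children with no recorded snapshots
    ]
    return mean(shares)


# Hypothetical codes for two of the four sampled children in one classroom.
classroom = {
    "child_a": [{"mathematics"}, {"read to"}, set(), {"mathematics", "science"}],
    "child_b": [{"oral language development"}, set(), set(), set()],
}
print(classroom_content_share(classroom, "mathematics"))  # 0.25
```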

End-of-year teacher surveys were used in the HSIS, FACES 2003, and FACES 2009 studies to capture different types of classroom activities. Teachers were asked how many times in the past week their class engaged in specific literacy or math activities (shown in Appendices A.3–A.5). Following Claessens, Engel, and Curran (2013), we converted each teacher-reported frequency into times per month by taking the mean value of the answer category (e.g., never = 0; 1-2 times per week = 1.5) and multiplying by 4. We then standardized this measure to have a mean of 0 and a standard deviation of 1. Prior research indicates that teacher survey instruments are valid for assessing the quantity, but not the quality, of instruction (Herman, Klein, & Abedi, 2000). FACES 2003 did not ask teachers about the quantity of math activities in the classroom, so this outcome was excluded from the analyses for that dataset.
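The frequency conversion above can be sketched in Python. The two category values quoted in the text (never = 0; 1-2 times per week = 1.5) are used as given; the other category labels and their values are hypothetical placeholders, and summing the converted items into one total per teacher and using the population standard deviation when standardizing are assumptions about details the text does not spell out.

```python
from statistics import mean, pstdev

# Mean value of each answer category, in times per week. "never" and
# "1-2 times per week" follow the text; the other two labels are hypothetical.
WEEKLY_MIDPOINT = {
    "never": 0.0,
    "1-2 times per week": 1.5,
    "3-4 times per week": 3.5,  # hypothetical category
    "every day": 5.0,           # hypothetical category (five school days)
}


def monthly_frequency(responses):
    """Total frequency across one teacher's items, in times per month (weekly value x 4)."""
    return sum(WEEKLY_MIDPOINT[r] * 4 for r in responses)


def standardize(values):
    """Rescale to mean 0 and standard deviation 1 (population SD)."""
    mu, sd = mean(values), pstdev(values)
    return [(v - mu) / sd for v in values]


# Hypothetical answers from three teachers to two literacy items.
teachers = [
    ["never", "1-2 times per week"],
    ["1-2 times per week", "1-2 times per week"],
    ["3-4 times per week", "every day"],
]
monthly = [monthly_frequency(t) for t in teachers]
print(monthly)  # [6.0, 12.0, 34.0]
z_scores = standardize(monthly)
```

The literacy and math items would be converted separately, giving one standardized literacy measure and one standardized math measure per classroom.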

Child school readiness skills.

Our analyses use multiple literacy, language, math, and social-emotional assessments that are considered valid and reliable and are widely used in the field of child development. We examine children’s skills in several outcome domains because a central tenet of the whole-child curricular model is that the experiences generated by the curriculum cultivate all aspects of children’s development. In each study, children were assessed at the beginning and end of their preschool year, so the baseline (fall) score can be used as a control variable. We do not describe PCER’s school readiness measures because we are unable to estimate our child-level analytic models with those data (see Analyses below).

Receptive language was measured in each study with the Peabody Picture Vocabulary Test (PPVT; Dunn & Dunn, 1997), which asks children to point to the picture that most closely represents a word spoken by the test administrator. Reliability for the PPVT ranges from .92 to .98. In the HSIS and FACES, children’s emergent literacy skills were also measured with the Letter Word subtest of the Woodcock-Johnson Psycho-Educational Battery-Revised III (WJ; Woodcock, McGrew, & Mather, 2001). In the Letter Word (LW) test, the child is first asked to identify letters; as the test increases in difficulty, the child is asked to read and pronounce written words correctly. This assessment measures children’s ability to recognize and sound out letters and sight words. Reliability is between .97 and .99 for preschool children. HSIS and FACES also included the WJ Spelling subtest, which requires children to trace letters, write letters in upper- and lowercase, and spell words, measuring early writing and spelling skills (Cronbach’s α = .90).

Children’s general mathematical knowledge was assessed by the WJ Applied Problems subtest in all studies (Woodcock et al., 2001). The Applied Problems (AP) subtest examines early numeracy, and the child’s ability to analyze and solve math problems. The reliability coefficient for the 3- to 5-year-old age group ranges from .92 to .94.

The Teacher-Child Rating Scale (TCRS; Hightower, 1986) was used to measure children’s social and emotional skills in the NCEDL study. This behavioral rating scale assesses children’s social competence and problem behaviors. The Social Competence scale was computed as the mean of 20 items (Cronbach’s α = .95), and the Problem Behavior scale as the mean of 18 items (Cronbach’s α = .91). The HSIS study included the 28-item Behavior Problems Index (Zill, 1990), a parent report of problem behaviors related to emotional status, school behavior, and interpersonal relationships, with items drawn from several other child behavior scales (e.g., the Child Behavior Checklist). Items are rated on a 3-point scale, and the index has a 2-week test-retest reliability of .92. In the FACES studies, problem behaviors and social skills were measured using items from an abbreviated adaptation of the Personal Maturity Scale (Alexander, Entwisle, Blyth, & McAdoo, 1988), the Child Behavior Checklist for Preschool-Aged Children, Teacher Report (Achenbach, Edelbrock, & Howell, 1987), the Behavior Problems Index (Zill, 1990), and the Social Skills Rating System (Gresham & Elliott, 1990).

Covariates.

Each dataset contains several child and parent characteristics that we include as control variables: child gender and race, the education level and age of the mother or primary caregiver, and family income. These characteristics were collected via parent report during the preschool year. We also include children’s baseline outcome assessments from the fall of the preschool year as covariates. Given the nature of these samples, we include an indicator for family poverty in the NCEDL analyses and an indicator for teen mother in the HSIS analyses (teen motherhood is not reported in FACES). The classroom, teacher, and center covariates are teachers’ education, race, and years of experience; classroom-level aggregates of children’s race, gender, and parental education; whether the classroom is located in a public school or is a Head Start provider (PCER and NCEDL only); and an indicator for full-day programs (available only in NCEDL and FACES 2009; collected at the center level in the HSIS). Because PCER was an experimental study, we control for classroom treatment status to adjust for researcher involvement in curricular implementation.

Missing data.

Rates of missingness on key study variables range from 0 to 14 percent across datasets. The largest source of missingness was curricula information, owing to teacher or director non-response. We used complete case analysis and compared the characteristics of children and teachers in classrooms with and without curricula information to assess whether the dropped cases differed systematically from the analysis sample. No consistent pattern of missingness emerged across the five datasets, but in three of them teachers with a high school degree or less were less likely to report curricula information, which could bias our estimates of curricula use upward. We assume that data are missing at random (i.e., missingness is a function of other observed variables), which is plausible given our rich set of covariates, and that the distribution of the variables with missing values is jointly normal (Allison, 2002).

Analyses

We summarize the study hypotheses and analyses by research question in Table 2, indicating the dataset in which each analysis was conducted. The Creative Curriculum serves as the reference category for both the classroom- and child-level outcome analyses because it was the most frequently used curriculum in each dataset, providing a common comparison group for all analyses.1

Table 2.

Research Questions, Hypotheses, and Descriptions of Analyses by Study

|

RQ 1: To what extent do classroom activities and quality ratings vary by whether a published curriculum is in use, and in classrooms that do use a published curriculum, do activities vary by the specific curricula package (e.g., HighScope compared with Creative Curriculum)? | |||||

| Hypothesis: Classroom process quality level will differ between classrooms that do and do not have a published curriculum, with classrooms using published curricula having higher levels of quality. We anticipate quality levels will not significantly differ between specific curricula packages. | |||||

| Analysis: | PCER | NCEDL | HSIS | FACES 2003 | FACES 2009 |

| T-tests of means or z-tests of proportion to compare classroom activities by curricular status | X | X | |||

| ANOVAs to compare classroom activities and quality ratings by curriculum package | X | X | X | X | X |

| Regressions of classroom activities on indicators for curricular package | X | X | X | X | X |

|

RQ 2: To what extent is having a published curriculum in use in a preschool classroom associated with children’s academic and social-emotional school readiness, and do children’s readiness vary by the specific curricular package? | |||||

| Hypothesis: Classroom process quality level will differ between classrooms that do and do not have a published curriculum, with classrooms using published curricula having higher levels of quality. We anticipate quality levels will not significantly differ between specific curricula packages. | |||||

| Analysis: | PCER | NCEDL | HSIS | FACES 2003 | FACES 2009 |

| Curricular status state fixed effects models | X | ||||

| Curricular package grantee fixed effects models | X | X | |||

| Curricular package state fixed effects models | X | ||||

| Meta-analysis of curricular package estimates | X | X | X | X | |

|

RQ 3: To what extent are the classroom activities, overall classroom quality ratings, and teacher’s attitudes and perceptions of curriculum consistent amongst classrooms using the same, or different, curricular packages? | |||||

| Hypothesis: Classroom activities, overall classroom quality ratings, and teacher’s attitudes and perceptions of curriculum are consistent across classrooms using the same curricular package. | |||||

| Analysis: | PCER | NCEDL | HSIS | FACES 2003 | FACES 2009 |

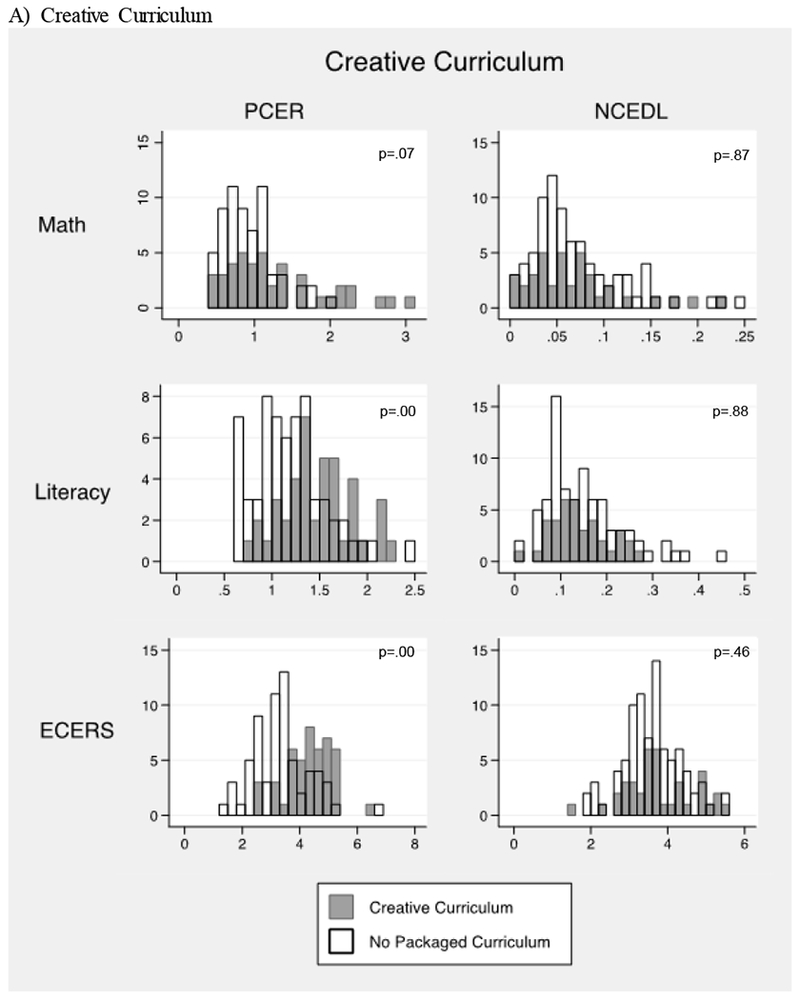

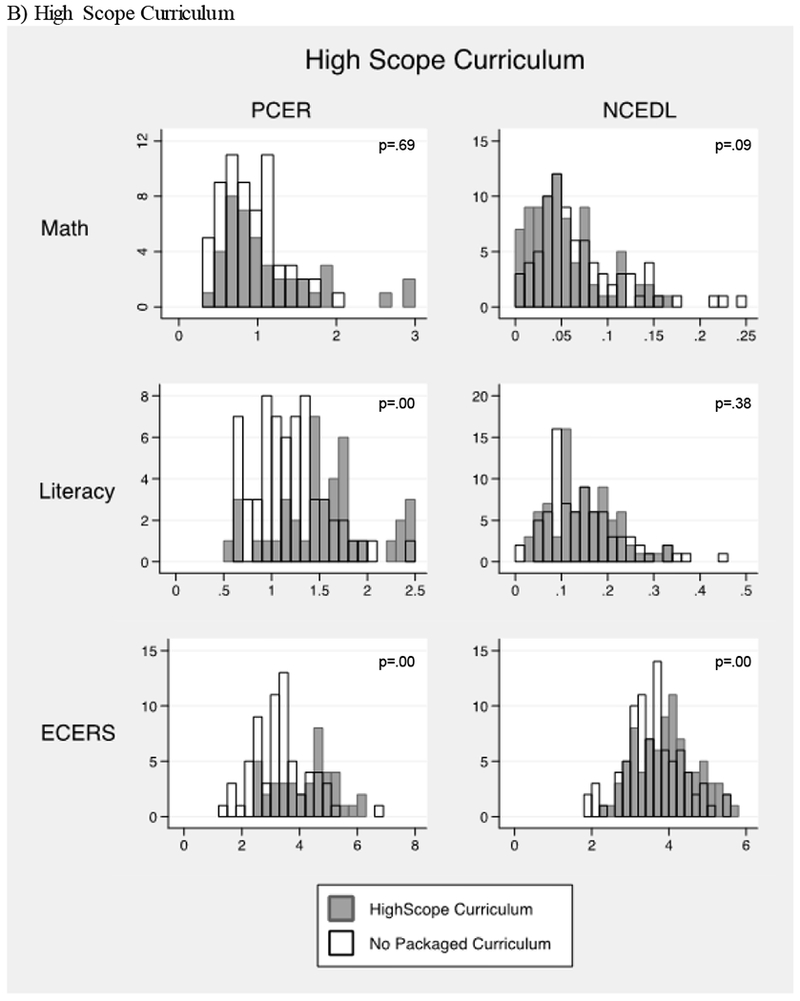

| Histograms of classroom quality and classroom activities by curricular package overlaid with no packaged curriculum | X | X | |||

| Histograms of classroom quality and classroom activities by curricular package | X | X | X | ||

| Descriptive comparison of teacher survey items on curricula | X | X | X | X | |

Note. The term “curricular status” describes whether a classroom has any curriculum in use (i.e., yes/no), whereas “curricular package” refers to the specific published curriculum in use (e.g., Creative Curriculum). PCER = Preschool Curriculum Evaluation Research; NCEDL = National Center for Early Development and Learning; HSIS = Head Start Impact Study; FACES = Family and Child Experiences Survey.

Research Question 1: Descriptive analyses of classroom activities.

A first-order question in the investigation of preschool curricula and children’s school readiness is whether children’s preschool classroom experiences differ by curricular status (i.e., whether a published curriculum is in use). To answer this question, we first compare the available measures of classroom activities, quality, and other key classroom features (e.g., teachers’ education, classroom-level aggregates of child characteristics) by curricular status in PCER and NCEDL, using t-tests of means or z-tests of proportions. Because all Head Start programs are required to use a curriculum, HSIS and FACES cannot be used to examine differences by curricular status. We then compare the measures of math and literacy activities, quality, and other classroom features by curricular package (e.g., HighScope, Creative Curriculum, Scholastic) using ANOVA. For this set of analyses we use all five datasets.
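The mean comparisons described above rest on three standard statistics. The Python sketch below computes a Welch two-sample t statistic (an assumption: the text does not say whether pooled-variance or unequal-variance t-tests were used), a pooled two-proportion z-test, and a one-way ANOVA F statistic. The function names and toy inputs are illustrative only; p-values for t and F would require the t and F distributions (e.g., from SciPy) and are omitted to keep the block dependency-free.

```python
from math import sqrt
from statistics import NormalDist, mean, variance


def welch_t(a, b):
    """Welch two-sample t statistic and Welch-Satterthwaite degrees of freedom."""
    va, vb = variance(a) / len(a), variance(b) / len(b)  # squared standard errors
    t = (mean(a) - mean(b)) / sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


def two_proportion_z(x1, n1, x2, n2):
    """Pooled two-proportion z statistic and its two-sided normal p-value."""
    p1, p2, pooled = x1 / n1, x2 / n2, (x1 + x2) / (n1 + n2)
    z = (p1 - p2) / sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    return z, 2 * (1 - NormalDist().cdf(abs(z)))


def one_way_anova_f(*groups):
    """One-way ANOVA F: between-group mean square over within-group mean square."""
    scores = [v for g in groups for v in g]
    grand = mean(scores)
    ss_between = sum(len(g) * (mean(g) - grand) ** 2 for g in groups)
    ss_within = sum((v - mean(g)) ** 2 for g in groups for v in g)
    return (ss_between / (len(groups) - 1)) / (ss_within / (len(scores) - len(groups)))


# Hypothetical inputs: scores by curricular status, and counts of a yes/no feature.
t, df = welch_t([2, 4, 6], [1, 2, 3])
z, p = two_proportion_z(60, 100, 40, 100)
f = one_way_anova_f([1, 2, 3], [4, 5, 6])
print(round(t, 2), round(df, 2), round(z, 2), round(f, 1))  # 1.55 2.94 2.83 13.5
```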

We also test for differences using ordinary least squares (OLS) regression, regressing each measure of math and literacy activities and quality on curricular status or curricular package. These models control for other classroom characteristics that may influence the measurement of classroom-level processes and activities (e.g., classroom-level child characteristics) or may affect implementation (e.g., teacher education), and we conduct F-tests to determine whether the set of curricular package coefficients jointly equals zero.2 However, we also recognize that curricula, classroom characteristics, and classroom processes may be jointly determined, so controlling for these factors may complicate inference. Because the relative size of the two biases (from measurement or implementation context versus confounding from simultaneity) is unclear, we prefer the straightforward mean comparisons and focus our results and discussion on these analyses. Results from regressions of classroom processes on curricular status and curricular package indicators with covariates included are available from the authors.
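The joint test of the curricular package coefficients can be illustrated as a nested-model F-test. The NumPy sketch below compares a restricted OLS model (constant plus controls) with an unrestricted model that adds package indicators. It assumes homoskedastic, independent errors, so it is a simplification of whatever variant the analyses used; with classrooms nested in centers, a cluster-robust Wald test would be the natural alternative. The function names and simulated data are hypothetical.

```python
import numpy as np


def rss(y, X):
    """Residual sum of squares from an OLS fit of y on X (X already holds a constant)."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    return float(resid @ resid)


def joint_f_test(y, X_controls, X_packages):
    """Classical F statistic for H0: every curricular-package coefficient equals zero.

    Restricted model: constant + controls. Unrestricted model: adds the package
    indicators (reference package omitted). Returns (F, numerator df, denominator df).
    """
    n = len(y)
    X_r = np.hstack([np.ones((n, 1)), X_controls])
    X_u = np.hstack([X_r, X_packages])
    q = X_packages.shape[1]  # number of restrictions tested
    df_resid = n - X_u.shape[1]
    rss_r, rss_u = rss(y, X_r), rss(y, X_u)
    return ((rss_r - rss_u) / q) / (rss_u / df_resid), q, df_resid


# Simulated (hypothetical) classrooms: one control, three packages, package 0 = reference.
rng = np.random.default_rng(0)
n = 200
controls = rng.normal(size=(n, 1))
package = rng.integers(0, 3, size=n)
packages = np.column_stack([package == 1, package == 2]).astype(float)
y = 1 + 0.5 * controls[:, 0] + packages @ np.array([1.0, -1.0]) + rng.normal(size=n)
F, q, df = joint_f_test(y, controls, packages)
print(f"F({q}, {df}) = {F:.2f}")
```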

Research Question 2: Grantee and state fixed effects analyses of child outcomes.

Curricula are not randomly assigned to grantees, centers, teachers, or children. Unobserved or unmeasured characteristics may be associated with both curricula and children’s outcomes, so we cannot use observational data to determine causally whether a curriculum affects children’s school readiness. To mitigate such bias, we test for associations between curricular status, curricular package, and children’s school readiness outcomes using two types of fixed effects. Fixed effects is an econometric technique that removes from the estimate of interest any observed or unobserved characteristics that are constant within a context (here, a Head Start grantee or a state) and that may influence both the choice of curriculum and children’s outcomes. These models compare the outcomes of children who share the same proximal (Head Start grantee) or distal (state policy context) environment. We also conduct F-tests of the joint hypothesis that children’s outcomes do not differ across curricular packages, to test for systematic variation. The PCER sample did not contain enough states or grantees with variation in curricular status to test for differences in outcomes, and because no common curricular reference group exists across states or grantees in PCER, we are also unable to test for differences in child outcomes by curricular package. In total, we examine relationships between curricula and children’s outcomes in the HSIS, NCEDL, and FACES samples.

Curricular status state fixed effects models.

We estimate the association between curricular status and children’s outcomes in the NCEDL dataset using state fixed effects models. This model compares children within the same state who attend classrooms that use a published curriculum with children in classrooms that do not. We acknowledge that state fixed effects do not address classroom-level selection bias, but within the constraints of our data, this approach mitigates bias from cross-state variation in the policies, regulations, and funding streams that affect preschool quality and curricular requirements (Barnett et al., 2017; Gilliam & Ripple, 2004; Jenkins, 2014; Kirp, 2007; Pianta, Barnett, Burchinal, & Thornburg, 2009). The general form of this model is as follows:

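$$\text{ChildOutcome}_{ic} = \beta_0 + \beta_1\,\text{CurricularStatus}_{c} + \boldsymbol{\beta}_2\,\text{ChildControls}_{i} + \boldsymbol{\beta}_3\,\text{ClassroomControls}_{c} + \boldsymbol{\beta}_4\,\text{State}_{k} + e_{ic} \tag{1}$$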

where ChildOutcome represents child i’s school readiness outcome (e.g., PPVT) at the end of the preschool year; Child Controls is a vector of child and family control variables that also includes children’s baseline skill assessment scores; State is a vector of indicators for each state k included in the study; and e represents the remaining variation in children’s school readiness from unaccounted-for factors. β1, our coefficient of interest, represents the association between the curricular status of classroom c and children’s school readiness. We account for the clustered sample design at the classroom level using Huber-White standard errors.

Because curricula are not randomly assigned, the interpretation of β1 (and A below) must allow for the possibility that the curriculum indicators capture other classroom or center characteristics correlated with curricula. We attempt to minimize this problem by including a vector of teacher-, classroom-, and center-level controls, denoted Classroom Controls (i.e., teacher’s education, teacher’s years of experience, and ECERS score).

Curricular package grantee fixed effects models.

The analysis most robust to bias from unobserved center and classroom characteristics comes from the HSIS and FACES data, where we are able to estimate grantee fixed effects models. For example, in the HSIS data, this method takes advantage of differences in classroom curricula within the grantee where families applied for, and were randomly assigned to receive, Head Start services at one of the centers operated by that grantee. In other words, this analysis allows us to compare the outcomes of children living in the same area who received Head Start services from the same grantee, reducing the possibility of omitted variables bias, but not eliminating it. The general form of this model is as follows:

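$$\text{ChildOutcome}_{ic} = \beta_0 + \mathbf{A}\,\text{Curricula}_{c} + \boldsymbol{\beta}_2\,\text{ChildControls}_{i} + \boldsymbol{\beta}_3\,\text{ClassroomControls}_{c} + \boldsymbol{\beta}_4\,\text{Grantee}_{z} + e_{ic} \tag{2}$$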

where Curricula is a vector of curriculum indicator variables that vary by classroom, Grantee is a vector of indicators for each Head Start grantee z included in the study, and all other terms are identical to those in Equation 1. The coefficients in A are our estimates of interest because they represent the differential associations between each preschool curriculum and children’s school readiness relative to the reference category. Of the 84 grantees in the HSIS, 62 (74%) had variation in curricular package across classrooms, with Creative Curriculum as the most common curriculum in use. In FACES 2003, 26 (41%) of the 63 grantees had such variation, as did 28 (47%) of the 60 grantees in FACES 2009. In each sample, we have 80% power to detect effect sizes of .20. We account for the clustered sample designs at the grantee level using Huber-White standard errors.
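A grantee fixed effects model with Huber-White standard errors clustered by grantee can be sketched in NumPy by demeaning the outcome and regressors within each grantee (the “within” transformation) and then forming the sandwich variance from grantee-level sums of the score. This is a minimal illustration under stated assumptions: it uses the CR0 form without the finite-sample correction that statistical packages typically apply, a single simulated regressor stands in for the curriculum indicators and controls, and all names and data are hypothetical.

```python
import numpy as np


def within_demean(a, groups):
    """Subtract each group's mean (the fixed-effects 'within' transformation)."""
    out = np.asarray(a, dtype=float).copy()
    for g in np.unique(groups):
        mask = groups == g
        out[mask] -= out[mask].mean(axis=0)
    return out


def fe_ols_cluster(y, X, groups):
    """Group fixed-effects OLS with CR0 cluster-robust (Huber-White) standard errors.

    y: (n,) outcomes; X: (n, p) regressors without a constant (absorbed by the
    fixed effects); groups: (n,) array of labels used for both the fixed effects
    and the clustering.
    """
    y_w, X_w = within_demean(y, groups), within_demean(X, groups)
    bread = np.linalg.inv(X_w.T @ X_w)
    beta = bread @ X_w.T @ y_w
    resid = y_w - X_w @ beta
    meat = np.zeros((X_w.shape[1], X_w.shape[1]))
    for g in np.unique(groups):
        mask = groups == g
        score = X_w[mask].T @ resid[mask]  # summed score for one cluster
        meat += np.outer(score, score)
    vcov = bread @ meat @ bread  # sandwich: bread * meat * bread
    return beta, np.sqrt(np.diag(vcov))


# Simulated (hypothetical) data: 40 grantees whose unobserved effect shifts both x and y.
rng = np.random.default_rng(1)
groups = np.repeat(np.arange(40), 50)
grantee_effect = rng.normal(size=40)[groups]
x = rng.normal(size=groups.size) + grantee_effect
y = 0.3 * x + 2.0 * grantee_effect + rng.normal(size=groups.size)
beta, se = fe_ols_cluster(y, x[:, None], groups)
print(beta, se)
```

Because demeaning sets a curriculum indicator to zero in every grantee where all classrooms share one curriculum, only grantees with within-grantee variation in curricular package provide the variation that identifies A.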

Curricular package state fixed effects models.

Because of differences in sampling and study design, we cannot estimate a comparable grantee fixed effects model by curricular package in NCEDL; instead, we estimate a state fixed effects model with the NCEDL dataset. This model compares children within the same state who attend classrooms using different curricula, with Creative Curriculum as the reference group. It replaces Grantee in Equation 2 with indicators for the states included in the NCEDL study (State_k, as in Equation 1).

Meta-analysis of curricular package estimates.

We use meta-analytic techniques to summarize the four sets of coefficients produced by the curricular package child outcome models. The meta-analysis treats the standardized regression coefficients for each curricular package from Equation 2 as observations in a regression predicting children’s school readiness outcomes at the end of preschool. Following standard meta-analytic practice, we weight each regression coefficient by the inverse of its variance (Hedges & Olkin, 1985).
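Inverse-variance weighting can be sketched in a few lines of Python. Each coefficient is weighted by 1/SE², and the pooled estimate is the weighted mean, which is what an intercept-only, inverse-variance weighted meta-regression returns. The sketch shows the common-effect (fixed-effect) version only; it does not estimate between-study heterogeneity as a random-effects model would, and the function name and inputs are hypothetical.

```python
from math import sqrt


def inverse_variance_pool(estimates, std_errors):
    """Common-effect meta-analytic summary: inverse-variance weighted mean and its SE."""
    weights = [1 / se ** 2 for se in std_errors]  # precision weights
    total_weight = sum(weights)
    pooled = sum(w * b for w, b in zip(weights, estimates)) / total_weight
    return pooled, sqrt(1 / total_weight)


# Hypothetical standardized coefficients for one curriculum from two datasets.
pooled, pooled_se = inverse_variance_pool([0.10, 0.30], [0.10, 0.20])
print(round(pooled, 3), round(pooled_se, 3))  # 0.14 0.089
```

The more precise estimate (SE = .10) receives four times the weight of the less precise one, pulling the pooled value toward .10.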

Research Question 3: Consistency in classroom activities, quality, and teacher perceptions.

We conduct several descriptive analyses to examine variation in classroom processes and activities across classrooms using the same curricular package. First, we create histograms of ECERS scores and of the frequency of math and literacy activities for the two most commonly used curricula: Creative Curriculum and HighScope. We then overlay data for the “no published curricula” classrooms on the same histograms to show how classrooms without a published curriculum in use are distributed on these classroom variables relative to classrooms using a published curriculum. Because all Head Start classrooms are required to use a published curriculum, this overlay is not possible in the HSIS and FACES graphs, so we conduct these comparison analyses only with the PCER and NCEDL datasets.3 In addition to the graphical analyses, we conduct Kolmogorov-Smirnov tests of the equality of distributions to determine whether the distributions of classroom quality differ significantly.
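The two-sample Kolmogorov-Smirnov test compares entire distributions rather than means. The pure-Python sketch below computes the statistic D, the largest gap between two empirical cumulative distribution functions, with an asymptotic p-value. In practice a library routine such as SciPy’s `scipy.stats.ks_2samp` would typically be used, and it also offers exact p-values for small samples. The function name and example scores are hypothetical.

```python
from bisect import bisect_right
from math import exp, sqrt


def ks_two_sample(a, b):
    """Two-sample Kolmogorov-Smirnov statistic D and an asymptotic p-value.

    D is the largest absolute gap between the two empirical CDFs; because both
    ECDFs only jump at observed values, checking every pooled value suffices.
    The p-value uses the limiting Kolmogorov distribution, which is only an
    approximation in small samples.
    """
    a, b = sorted(a), sorted(b)
    n, m = len(a), len(b)
    d = max(abs(bisect_right(a, x) / n - bisect_right(b, x) / m) for x in a + b)
    lam = sqrt(n * m / (n + m)) * d
    if lam < 0.2:  # series converges slowly here and the tail probability is ~1
        return d, 1.0
    p = 2 * sum((-1) ** (j - 1) * exp(-2 * j * j * lam * lam) for j in range(1, 101))
    return d, min(max(p, 0.0), 1.0)


# Hypothetical ECERS totals for classrooms with and without a published curriculum.
with_curriculum = [3.9, 4.2, 4.4, 4.8, 5.1, 5.6]
without_curriculum = [2.9, 3.1, 3.4, 3.8, 4.0, 4.5]
d_stat, p_value = ks_two_sample(with_curriculum, without_curriculum)
print(d_stat, p_value)
```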

We then descriptively examine teacher responses to the available survey items on classroom curricula, aggregated by curricular package, to better understand teachers’ perspectives on their classroom curricula and the supports they receive to implement them, and to look for differences across curricula. We conduct these analyses in the datasets where such items were available (NCEDL, HSIS, FACES 2003, and FACES 2009). Although these data do not capture implementation as assessed by an objective observer, they provide a better sense of teachers’ curriculum use, supports for implementation, and overall perspectives on their curricula.

Results

Curricular Status and Curricular Package Differences in Classroom Activities and Quality

Curricular status.

We computed descriptive statistics and t-tests to assess whether having a curriculum in use makes a difference in the quality of children's preschool classroom experiences and in their classroom's math and literacy activities in the PCER and NCEDL samples (Table 3). Because all Head Start classrooms use curricula, the HSIS and FACES data are omitted from the curricular status analysis. Here we discuss mean differences between classrooms with and without published curricula in math and literacy activities and quality scores. Mean comparisons of additional classroom characteristics by curricular status are shown in Appendix B. Regression-adjusted comparisons that control for classroom characteristics are available from the authors.
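For the mean comparisons by curricular status, a two-sample t-test can be computed as below. The text does not say whether pooled or unequal variances were assumed, so this sketch uses Welch's unequal-variance form as an assumption. It reports only the t statistic and the Welch-Satterthwaite degrees of freedom (a p-value would come from the t distribution, for example via `scipy.stats.ttest_ind(..., equal_var=False)`), and the activity counts are made up.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(x, y):
    """Welch's two-sample t statistic and Welch-Satterthwaite degrees of freedom."""
    nx, ny = len(x), len(y)
    se2_x = variance(x) / nx  # squared standard error of mean(x)
    se2_y = variance(y) / ny  # squared standard error of mean(y)
    t = (mean(x) - mean(y)) / sqrt(se2_x + se2_y)
    df = (se2_x + se2_y) ** 2 / (se2_x ** 2 / (nx - 1) + se2_y ** 2 / (ny - 1))
    return t, df


# Hypothetical weekly literacy-activity counts by curricular status
with_curriculum = [12, 15, 14, 18, 16, 13]
without_curriculum = [10, 11, 14, 9, 12]
print(welch_t(with_curriculum, without_curriculum))
```

Welch's form does not assume the two groups of classrooms have equal variances, which matters when group sizes differ as much as curriculum users and non-users can.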

PCER.

The PCER results indicate that classrooms reporting use of a published curriculum have significantly more literacy and math activities and higher quality ratings on the ECERS (both subscales) and the Arnett Caregiver Interaction Scale than classrooms whose teachers report using no published curriculum. In regression analyses controlling for a comprehensive set of potential confounds (teacher characteristics and classroom-level aggregates of children's race, gender, and parental education), these differences remain in the same direction but are no longer statistically significant.

NCEDL.

Descriptive analyses in the NCEDL sample reveal that classrooms using a published curriculum score higher on the total ECERS and on the Provisions for Learning ECERS factor than classrooms not using a curriculum. No significant differences by curricular status emerge in the amount of classroom math and literacy learning activities or on the two CLASS subscales. Regression models including the set of control variables confirm these results.

Curricular package.

Table 4 presents descriptive statistics and ANOVAs for each dataset to examine differences by curricular package in the means and proportions of classroom activities and quality. Counter to our hypothesis of no differences between whole-child curricular packages, there were significant differences across curricular packages in both the quantity of math activities and overall classroom quality based on the ECERS, Arnett, and CLASS scales in all five samples. Other significant differences emerged between curricular packages in each dataset, but without a clear rank ordering of packages in terms of their allocation of literacy and math activities or superior quality.

In PCER, Creative Curriculum classrooms had the most math activities, DLM Express classrooms had the most literacy activities and the highest ECERS scores, and both packages had the highest Arnett Caregiver Interaction scores. NCEDL revealed the fewest differences between packages, with HighScope and the Other Published Curriculum category showing the highest quality on the ECERS. HSIS results indicate that HighScope classrooms had the highest ECERS ratings and that High Reach classrooms had the most math activities and the highest Arnett scores. FACES 2003 results favored the Other Published Curriculum category on all ECERS ratings. In FACES 2009, Scholastic classrooms implemented the most math activities and High Reach classrooms the most literacy activities. Overall ECERS quality was highest in Creative Curriculum classrooms, but HighScope had the highest Language/Interactions subscale score and High Reach the highest Provisions for Learning subscale score; CLASS subscale scores also favored Creative Curriculum and High Reach.

Regressions of classroom activities on curricular package indicators, controlling for other classroom characteristics, are available from the authors. As a complement to the ANOVAs, this analysis allowed us to compare each curriculum directly with the reference category (Creative Curriculum) while holding other classroom characteristics constant. Results closely mirror the patterns in Table 4. Joint F-tests of overall differences among the curricular packages rejected the null hypothesis of no differences in 4 of the 14 estimated models, providing mixed evidence that curricular packages make a unique contribution to classroom processes. Overall, these descriptive analyses did not reveal a top performer across the five datasets.
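The joint F-tests ask whether the curricular package indicators, taken together, add explanatory power beyond the other classroom characteristics. The sketch below computes the classical restricted-versus-unrestricted F statistic with NumPy on simulated data. It assumes homoskedastic, independent errors; if these models use clustered standard errors, as the child outcome models in Table 5 do, a cluster-robust Wald test would be needed instead and would give different numbers. The helper name and all data are hypothetical.

```python
import numpy as np


def joint_f_test(y, controls, package_dummies):
    """Classical F test that all curricular-package coefficients equal zero.

    Compares residual sums of squares from a restricted model (intercept and
    controls) and an unrestricted model that adds the package indicators.
    Assumes homoskedastic, independent errors (no clustering).
    """
    y = np.asarray(y, dtype=float)
    n = y.shape[0]
    x_restricted = np.column_stack([np.ones(n), controls])
    x_full = np.column_stack([x_restricted, package_dummies])

    def rss(x):
        beta, *_ = np.linalg.lstsq(x, y, rcond=None)
        resid = y - x @ beta
        return float(resid @ resid)

    q = x_full.shape[1] - x_restricted.shape[1]  # number of restrictions tested
    df_resid = n - x_full.shape[1]
    f_stat = ((rss(x_restricted) - rss(x_full)) / q) / (rss(x_full) / df_resid)
    return f_stat, q, df_resid


rng = np.random.default_rng(0)
n = 200
control = rng.normal(size=n)            # e.g., one classroom characteristic
package = rng.integers(0, 3, size=n)    # 0 = reference package
dummies = np.column_stack([package == 1, package == 2]).astype(float)
y_effect = 0.5 * control + 2.0 * dummies[:, 0] + rng.normal(size=n)
y_null = 0.5 * control + rng.normal(size=n)
print(joint_f_test(y_effect, control, dummies))
print(joint_f_test(y_null, control, dummies))
```

Unlike the individual coefficients, which each compare one package with the reference category, the joint test asks whether any package differs at all.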

Curricular Status and Curricular Package Differences in Child School Readiness

Curricular status.

State fixed effects models testing for differences in children’s school readiness in the Spring of their preschool year by curricular status in NCEDL are presented in Appendix C. We find no significant differences in children’s math, literacy, or social skills depending on whether the classroom used a published curriculum. However, teachers reported significantly fewer problem behaviors in classrooms where a curricular package was used.

Curricular package.

Table 5 presents the results for models examining differences in children’s outcomes in the Spring of their preschool year by curricular package. The reference group is Creative Curriculum in each dataset. All outcomes are in standard deviation (SD) units.

Table 5.

Fixed Effects Results for Associations between Classroom Curricula and Children’s School Readiness

| HSIS† | PPVT | WJAP | WJLW | WJSP | Behavior Problems |
|---|---|---|---|---|---|
| HighScope | 0.02 (0.08) | 0.18* (0.09) | 0.09 (0.12) | 0.27* (0.11) | 0.12 (0.15) |
| High Reach | −0.19* (0.09) | 0.19 (0.19) | 0.26 (0.20) | 0.24 (0.15) | −0.18 (0.23) |
| Scholastic | 0.11 (0.07) | 0.25* (0.11) | 0.25* (0.12) | 0.47 (0.29) | 0.10 (0.15) |
| Other Published Curriculum | 0.03 (0.08) | 0.10 (0.08) | 0.04 (0.09) | 0.27* (0.12) | 0.20 (0.13) |
| F-test (p-value) | 0.10 | 0.00 | 0.22 | 0.03 | 0.63 |
| Observations | 1709 | 1700 | 1711 | 1709 | 1654 |

| FACES 2003† | PPVT | WJAP | WJLW | WJSP | Behavior Problems | Social Skills |
|---|---|---|---|---|---|---|
| HighScope | −0.24 (0.16) | −0.09 (0.18) | −0.04 (0.17) | −0.04 (0.13) | −0.08 (0.21) | −0.26 (0.22) |
| High Reach | −0.32 (0.23) | −0.08 (0.20) | −0.10 (0.19) | 0.29 (0.37) | −0.43 (0.27) | −0.25 (0.45) |
| Other Published Curriculum | −0.01 (0.08) | −0.11 (0.09) | −0.19 (0.14) | −0.17 (0.12) | 0.01 (0.13) | −0.34* (0.15) |
| F-test (p-value) | 0.52 | 0.57 | 0.68 | 0.43 | 0.60 | 0.10 |
| Observations | 1637 | 1631 | 1628 | 1565 | 1787 | 1777 |

| FACES 2009† | PPVT | WJAP | WJLW | WJSP | Behavior Problems | Social Skills |
|---|---|---|---|---|---|---|
| HighScope | −0.05 (0.05) | −0.04 (0.09) | 0.18 (0.12) | −0.01 (0.06) | −0.27 (0.25) | −0.06 (0.26) |
| High Reach | −0.33** (0.10) | −0.18* (0.08) | −0.11 (0.13) | −0.24 (0.16) | 0.09 (0.13) | −0.29+ (0.17) |
| Scholastic | −0.13 (0.16) | 0.04 (0.08) | −0.04 (0.08) | −0.02 (0.15) | −0.29 (0.18) | −0.09 (0.23) |
| Other Published Curriculum | 0.04 (0.06) | 0.12+ (0.07) | 0.04 (0.09) | 0.06 (0.07) | −0.15 (0.10) | 0.16 (0.11) |
| F-test (p-value) | 0.09 | 0.11 | 0.74 | 0.72 | 0.45 | 0.24 |
| Observations | 2611 | 2397 | 2401 | 2477 | 2691 | 2736 |

| NCEDL | PPVT | WJAP | HT Problem Behaviors | HT Competency |
|---|---|---|---|---|
| HighScope | 0.02 (0.09) | −0.04 (0.13) | 0.08 (0.12) | 0.15 (0.13) |
| Other Published Curriculum | 0.09 (0.11) | −0.02 (0.14) | −0.07 (0.14) | 0.16 (0.16) |
| No Published Curriculum | 0.11 (0.09) | 0.03 (0.14) | 0.33* (0.15) | 0.07 (0.16) |
| F-test (p-value) | 0.54 | 0.68 | 0.10 | 0.59 |
| Observations | 398 | 394 | 450 | 452 |

Note. † Program/grantee fixed effects (HSIS, FACES 2003, and FACES 2009); NCEDL models include state fixed effects. Clustered standard errors in parentheses. Creative Curriculum is the reference group. All outcomes are in standard deviation units. The HSIS sample includes only treated children from the HSIS experiment dataset. All models include child race, gender, age, and baseline assessment score for each outcome; mother's education; classroom quality (ECERS); and teacher controls (teacher's education, race, and years of experience). An indicator for full-day preschool status was included in the NCEDL and FACES 2009 analyses (this information was not collected in FACES 2003 and was collected only at the center level in HSIS). Teen mother status was included in the HSIS analyses (not available in NCEDL or FACES). An indicator for income under 150% of the poverty line was included in the NCEDL analyses (all HSIS and FACES participants were considered poor). PPVT = Peabody Picture Vocabulary Test, WJAP = Woodcock Johnson Applied Problems, WJLW = Woodcock Johnson Letter Word, WJSP = Woodcock Johnson Spelling, HT = Hightower. For all problem behavior scores, a higher score indicates a more serious problem. The F-test row reports the p-value from a joint test that the curricular package indicators all equal 0.

Significance: + p < .10, * p < .05, ** p < .01.

HSIS.

After controlling for Head Start grantee with grantee fixed effects, which absorb the grantee-level factors (observed and unobserved) shared by classrooms within the same grantee, results suggest that children in Head Start classrooms using the Scholastic curriculum outperform children in Creative Curriculum classrooms operated by the same grantee. We detect a 0.25 SD difference between Scholastic and Creative Curriculum classrooms on both the WJ-Applied Problems and WJ-Letter Word subtests. Children in classrooms using the HighScope curriculum also scored 0.18 SD higher on the WJ-Applied Problems subtest than children in Creative Curriculum classrooms. Children's WJ-Spelling scores were significantly lower in Creative Curriculum classrooms than in HighScope and Other Published Curriculum classrooms (by 0.27 SD in both cases). Children in classrooms using High Reach scored significantly lower on the PPVT (−0.19 SD) than children in Creative Curriculum classrooms. F-test results indicate overall differences across curricular packages in children's WJ-Applied Problems and WJ-Spelling scores, marginal differences in PPVT scores, and no differences in WJ-Letter Word scores or behavior problems.
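To make the within-grantee comparison concrete, here is a minimal sketch of the fixed-effects (within) transformation on hypothetical data: outcomes and the curriculum indicator are demeaned inside each grantee before running OLS, which is equivalent to including a full set of grantee indicators. The helper `within_estimator` and the data are hypothetical, and the sketch omits the child, family, classroom, and teacher covariates and the clustered standard errors used in the paper's models.

```python
import numpy as np


def within_estimator(y, x, groups):
    """Fixed-effects (within) estimator: demean y and x inside each group, then OLS.

    Equivalent to OLS with a full set of group (e.g., grantee) indicators.
    """
    y = np.asarray(y, dtype=float)
    x = np.asarray(x, dtype=float)
    if x.ndim == 1:
        x = x[:, None]  # treat a single regressor as one column
    groups = np.asarray(groups)
    y_dm, x_dm = y.copy(), x.copy()
    for g in np.unique(groups):
        in_g = groups == g
        y_dm[in_g] -= y[in_g].mean()
        x_dm[in_g] -= x[in_g].mean(axis=0)
    beta, *_ = np.linalg.lstsq(x_dm, y_dm, rcond=None)
    return beta


# Hypothetical data: three grantees with very different baseline scores, and the
# curriculum is more common in the higher-scoring grantees (confounding).
grantee = np.repeat([0, 1, 2], 4)
scholastic = np.array([0, 0, 0, 1, 0, 1, 1, 1, 0, 1, 1, 1])
score = np.array([0.0, 5.0, 10.0])[grantee] + 0.25 * scholastic
print(within_estimator(score, scholastic, grantee))  # within-grantee effect
print(np.polyfit(scholastic, score, 1)[0])           # pooled slope, confounded
```

The pooled slope mixes grantee differences into the curriculum estimate, whereas the within estimator recovers only the comparison among classrooms run by the same grantee.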

FACES 2003.

Grantee fixed effects models for the FACES 2003 dataset indicate very few differences in children’s outcomes at the end of preschool by curricular package. Children in classrooms using “other” published curricula scored 0.34 SD lower on social skills compared with children in Creative Curriculum classrooms. F-test results indicate that there are marginal differences in curricular package associations with social skills, and no differences with PPVT, WJ subscale scores, or behavior problems.

FACES 2009.

Grantee fixed effects models using the FACES 2009 dataset show that children in classrooms using High Reach had substantially lower scores on the PPVT (−0.33 SD) and the WJ-Applied Problems subtest (−0.18 SD) than children in classrooms using Creative Curriculum, as well as marginally significantly lower social skills (−0.29 SD). F-test results indicate marginal differences across curricular packages in PPVT scores and no differences in WJ subtest scores, behavior problems, or social skills.

NCEDL.

State fixed effects models in the NCEDL dataset indicate that, at the end of the preschool year, children in classrooms with no published curriculum in use had problem behavior scores 0.33 SD higher than children in Creative Curriculum classrooms. This corresponds with the earlier curricular status finding that teachers reported more problem behaviors in classrooms without a published curriculum. No other significant differences in children's outcomes emerged. F-test results indicate marginal differences on the behavior problems measure and no differences in PPVT, WJ-Applied Problems, or social competency scores.

Meta-analyses.

We summarize our findings with a meta-analysis of the 74 coefficients drawn from the regressions estimating the relationship between curricular packages and children's outcomes (Table 5); results are shown in Table 6. Because some outcomes have as few as eight observations in the meta-analytic regression, we have limited statistical power to detect even meaningful differences. We therefore view these analyses as exploratory, and their results should be interpreted with caution. Overall, the meta-analytic regressions show that most curricular packages in our sample are not differentially associated with children's school readiness at the end of preschool. The exception is High Reach: children in High Reach classrooms had substantially lower PPVT (−0.26 SD) and social skills (−0.29 SD) scores than children in classrooms using Creative Curriculum.

Table 6.

Meta-Analytic Regression Results from Table 5 Coefficients.

| | PPVT | WJAP | WJLW | WJSP | Behavior Problems | Social Skills/Competency |
|---|---|---|---|---|---|---|
| HighScope | −0.03 (0.07) | 0.03 (0.12) | 0.10 (0.10) | 0.04 (0.14) | 0.03 (0.14) | 0.03 (0.21) |
| High Reach | −0.26** (0.08) | −0.12 (0.15) | −0.03 (0.19) | 0.04 (0.30) | −0.04 (0.24) | −0.29** (0.12) |
| Scholastic | 0.07 (0.12) | 0.11 (0.14) | 0.05 (0.19) | 0.08 (0.28) | −0.06 (0.27) | - |
| Other Published Curriculum | 0.03 (0.03) | 0.05 (0.11) | 0.00 (0.11) | 0.06 (0.17) | −0.02 (0.15) | 0.03 (0.27) |
| No Published Curriculum | - | - | - | - | - | - |
| Observations | 13 | 13 | 11 | 11 | 13 | 8 |