Abstract

We propose the epsilon-tau procedure to determine up- and down-trends in a time series, which also serves as a tool for its segmentation. The name of the method reflects the use of a tolerance level ε for the series values and a patience level τ in the time axis to delimit the trends. We first illustrate the procedure on discrete random walks, deriving the exact probability distributions of trend lengths and trend amplitudes, and then apply it to segment and analyze the trends of U.S. dollar (USD)/Japanese yen (JPY) market time series from 2015 to 2018. Besides studying the statistics of trend lengths and amplitudes, we investigate the internal structure of the trends by grouping trends with similar shapes and selecting clusters of shapes that rarely occur in the randomized data. In particular, we identify a set of down-trends presenting a similar sharp appreciation of the yen that are associated with exceptional events such as the Brexit Referendum in 2016.

Introduction

Time series segmentation consists of dividing the original time series into segments with similar behavior according to some criterion. It can be either a preprocessing step to represent the time series more efficiently or a data mining technique in its own right, able to extract information about the dynamics of the underlying phenomenon [1, 2]. Segmentation methods have been used to analyze time series from diverse fields, including biological, climate, remote sensing and crime-related data [3–9].

Especially in the context of finance, various techniques have been developed to segment time series by identifying periods with similar behavior or by finding switching points [10–17]. A particularly relevant category of such procedures is the segmentation of time series into up- and down-trends, since identifying periods with a general tendency to increase or decrease is fundamental for risk management and for recognizing investment opportunities. There are several methods to determine such trends in financial time series, often referred to as drawdowns and drawups [18–22]. A strict definition of drawdown (drawup) is a continuous decrease (increase) of the series values, terminated by any movement in the opposite direction. Such a definition, however, may not be adequate to correctly assess market risks because it is too sensitive to noise. To address this limitation, the epsilon-drawdown method was proposed; its improvement is the introduction of a tolerance ε within which fluctuations are ignored and the trend is not interrupted [23–25]. Another way to reduce noise sensitivity is to ignore movements within a time horizon τ, a method suggested but not discussed in [23]. In this work, we unify and extend these previous ideas and propose the epsilon-tau procedure, which simultaneously uses a tolerance level ε and a patience level τ to determine up- and down-trends in time series.

As for the outline of the paper, in the following section we present the epsilon-tau procedure as a method to define the up- or down-trend associated with a given reference point in a time series. We then illustrate its application to simple random walks: we derive exact expressions for the marginal probability distributions of trend lengths and trend amplitudes and explain how to employ the epsilon-tau procedure to segment time series. Finally, we apply the segmentation to analyze foreign exchange data consisting of U.S. dollar/Japanese yen market time series from 2015 to 2018. We pay special attention to the internal structure of the trends, performing a systematic investigation of the trend shapes that occur in the market time series and introducing an approach based on Fisher’s exact test to select abnormal shapes that are rarely produced when the data are randomized.

Epsilon-tau procedure

Consider a time series and a reference point $t = m$ with value $x_m$. We say that the trend associated with the reference point is an up-trend if $x_{m+1} - x_m > 0$ and a down-trend if $x_{m+1} - x_m < 0$; if $x_{m+1} - x_m = 0$, the trend is not determined for that reference point.

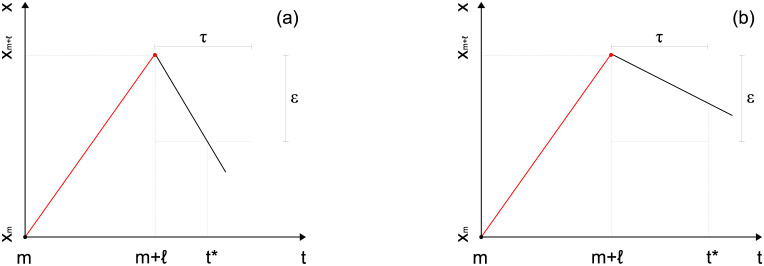

For the up-trend case (the down-trend case is analogous), the epsilon-tau procedure with tolerance level $\varepsilon > 0$ and patience level $\tau \ge 1$, both possibly time-dependent, consists of comparing the values $x_t$, $t \ge m + 1$, with the previous rightmost maximum value $\max_{m+1 \le t' \le t}\{x_{t'}\}$. The procedure stops at $t = t^*$ when one of the following conditions is met:

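- (a) the value of the time series reaches the tolerance level ε (Fig 1a):

$$\max_{m+1 \le t' \le t}\{x_{t'}\} - x_t \ge \varepsilon; \tag{1}$$

- (b) the time between consecutive maximum values reaches the patience level τ (Fig 1b):

$$t - \operatorname*{arg\,max}_{m+1 \le t' \le t}\{x_{t'}\} \ge \tau. \tag{2}$$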

Fig 1. Stop conditions of the epsilon-tau procedure for the up-trend case (analogous for the down-trend case).

The procedure stops when: (a) the value of the time series reaches the tolerance level ε; or (b) the time between consecutive maximum values reaches the patience level τ. It defines the up-trend (red) of length $\ell \ge 1$ and amplitude $a = x_{m+\ell} - x_m > 0$.

The trend determined by this procedure is the up-trend $[m+1, m+\ell]$ of length $\ell = \operatorname*{arg\,max}_{m+1 \le t' \le t^*}\{x_{t'}\} - m$, $\ell \ge 1$, and amplitude $a = x_{m+\ell} - x_m$, $a > 0$, where $x_{m+\ell} = \max_{m+1 \le t' \le t^*}\{x_{t'}\}$. Observe that the trend ends at $m + \ell$ and not at $t^*$; the point $t^*$ marks the stop of the procedure, and at least one and at most τ points beyond the end of the trend must be checked to determine it.

We remark that the epsilon-tau procedure does not require a predetermined functional form to approximate trends, as is the case, for instance, in piecewise linear methods, where trends are approximated by straight lines [2]; this is an important feature that allows us to explore the diversity of possible trend shapes.

The epsilon-drawdown method used in [23–25] can be regarded as a particular instance of the epsilon-tau procedure with infinite patience level τ → ∞. Developed in a financial context, the cited works selected a time-dependent tolerance level ε proportional to the volatility (a measure of price variation over time) estimated over a preceding time window, which is more permissive when the market presents high volatility and stricter during calmer periods. Instead, throughout this paper we use the time-dependent tolerance level $\varepsilon = \max_{m+1 \le t' \le t}\{x_{t'}\} - x_m$ for the up-trend case (the down-trend case is analogous). With this tolerance level, stop condition (a) translates into $x_{t^*} \le x_m$, i.e., the procedure stops when the reference value $x_m$ is reached, so all points in the up-trend $[m+1, m+\ell]$ lie between the reference value $x_m$ and the maximum value $x_{m+\ell}$: $x_m < x_t \le x_{m+\ell}$ for all $t \in [m+1, m+\ell]$. This choice of tolerance level is related to trading psychology: the initial price level is the tolerance within which one keeps believing that an up- or down-trend will recover and continue (naturally, this tolerance can be set higher for more aggressive traders or lower for risk-averse ones). As for the patience level τ, we use it here as a constant parameter and interpret it as the interval of time that the observer is willing to wait to confirm that an up- or down-trend has ended. The choice of its value is thus connected to the characteristics of the observer and her/his intentions; for example, a small τ is more suitable for real-time applications, whereas larger τ values can provide valuable information for historical analysis.
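As an illustration only, the procedure for a single reference point can be sketched in Python as below, assuming the tolerance level $\varepsilon = \max_{m+1 \le t' \le t}\{x_{t'}\} - x_m$ chosen above (so that stop condition (a) becomes $x_t \le x_m$) and a constant patience level τ. The function name `epsilon_tau_trend` is hypothetical; we also assume that a value equal to the current maximum moves the maximum to the later position (our reading of "rightmost maximum"), and we handle the down-trend case by flipping the sign of the series.

```python
from typing import Optional, Sequence, Tuple


def epsilon_tau_trend(x: Sequence[float], m: int, tau: int) -> Optional[Tuple[int, int, float]]:
    """Trend associated with reference index m (hypothetical helper).

    Returns (end_index, length, amplitude) with length = end_index - m and
    amplitude = x[end_index] - x[m], or None if the trend is not determined
    (zero first increment, or the series ends before a stop condition).
    """
    if tau < 1:
        raise ValueError("patience level tau must be >= 1")
    if m + 1 >= len(x) or x[m + 1] == x[m]:
        return None
    sign = 1 if x[m + 1] > x[m] else -1
    # Work with y_t = sign * (x_t - x_m): a down-trend becomes an up-trend.
    best, argbest = sign * (x[m + 1] - x[m]), m + 1
    for t in range(m + 2, len(x)):
        y = sign * (x[t] - x[m])
        if y >= best:
            # new maximum; ties move it to the rightmost position
            best, argbest = y, t
        elif y <= 0 or t - argbest >= tau:
            # (a) reference value reached (eps = max - x_m), or
            # (b) tau steps elapsed since the last maximum
            return argbest, argbest - m, x[argbest] - x[m]
    return None  # series ended before a stop condition was met
```

Since the trend ends at the last maximum and not at the stopping point $t^*$, the function returns the index of that maximum; for example, `epsilon_tau_trend([0, 1, 2, 1, 3, 2, 0], 0, 2)` returns `(4, 4, 3)`, whereas with τ = 1 the same call stops earlier and returns `(2, 2, 2)`.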

In the next section, we apply the epsilon-tau procedure with the described tolerance and patience levels to simple random walks, to illustrate the theoretical study of the trend length and trend amplitude probability distributions for different values of τ and to introduce time series segmentation using the procedure.

Up- and down-trends in random walks

Consider the random walk

$$x_t = x_{t-1} + \xi_t, \tag{3}$$

where the independent and identically distributed increments $\xi_t$ take the value $+1$ with probability $p$, $-1$ with probability $q$, or $0$ with probability $r = 1 - p - q$.
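A minimal sketch for generating realizations of this walk in Python (standard library only); the function name and the seed argument are our own choices, not part of the original study.

```python
import random
from typing import List, Optional


def random_walk(n_steps: int, p: float, q: float,
                seed: Optional[int] = None, x0: int = 0) -> List[int]:
    """Lattice random walk x_t = x_{t-1} + xi_t with xi_t in {+1, -1, 0}."""
    if p < 0 or q < 0 or p + q > 1:
        raise ValueError("need p, q >= 0 and p + q <= 1")
    rng = random.Random(seed)
    x = [x0]
    for _ in range(n_steps):
        u = rng.random()  # uniform in [0, 1)
        # +1 with probability p, -1 with probability q, 0 with probability r = 1 - p - q
        step = 1 if u < p else (-1 if u < p + q else 0)
        x.append(x[-1] + step)
    return x
```

For example, `random_walk(10**5, 0.4, 0.4, seed=1)` produces a series with the parameters of Fig 2a that can then be fed to the epsilon-tau procedure.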

For the strict definition of drawdown, which corresponds to tolerance level ε approaching zero or patience level τ = 1 in the epsilon-tau procedure, it was shown in [22] that the trend length and trend amplitude marginal probability distributions are asymptotically exponential when the time series increments are independent with a non-heavy-tailed distribution. Such asymptotic exponential behavior also occurs when applying the epsilon-tau procedure to the considered random walk, as we show next by presenting the exact expressions for the probability distributions and numerical simulations. The detailed derivation of the distributions can be found in S1 Appendix.

Trend length marginal probability distribution

The up-trend length ℓ probability distribution for patience level τ = 1 is given by (analogous for down-trend):

| (4) |

For patience level τ = 2, the distribution is:

| (5) |

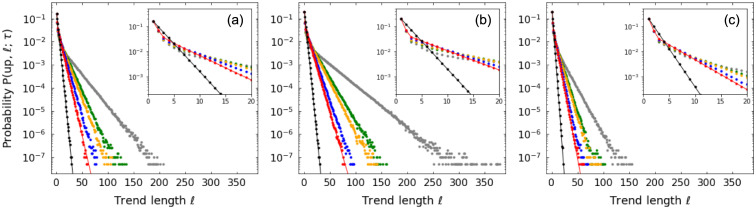

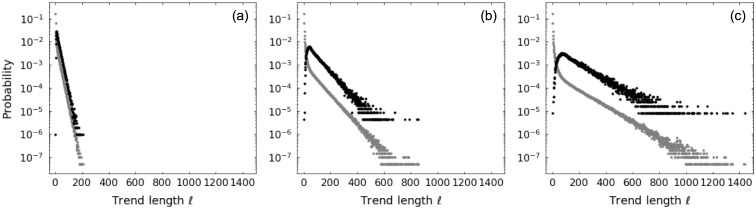

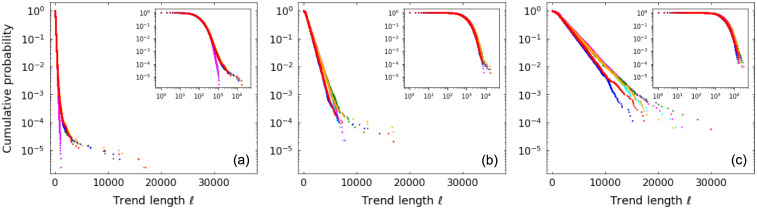

For patience levels τ ≥ 3, the computation becomes involved and we do not derive those distributions here. Instead, Fig 2 shows length distributions from numerical simulations for different values of τ and random walk parameters. We observe agreement with the theoretical distributions for τ = 1 and τ = 2, and exponential tails in all cases, with the value of τ controlling the decay rate.

Fig 2. Up-trend length ℓ probability distributions for random walks.

Distributions for patience levels τ = 1 (black), τ = 2 (red), τ = 3 (blue), τ = 4 (orange), τ = 5 (green), τ = 10 (gray) and for random walk parameters: (a) p = 0.4, q = 0.4; (b) p = 0.5, q = 0.4; (c) p = 0.4, q = 0.5. Symbols refer to results from numerical simulations and lines represent theoretical values. Insets detail distributions for small ℓ.

Trend amplitude marginal probability distribution

The up-trend amplitude a probability distribution for arbitrary patience level τ is given by the following expression:

| (6) |

where (using results on lattice path enumeration and powers of tridiagonal Toeplitz matrices [26–28]):

| (7) |

with .

| (8) |

And:

| (9) |

For large amplitudes a > τ we can highlight the exponential behavior and write:

| (10) |

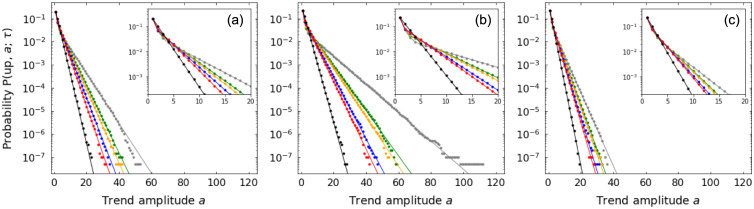

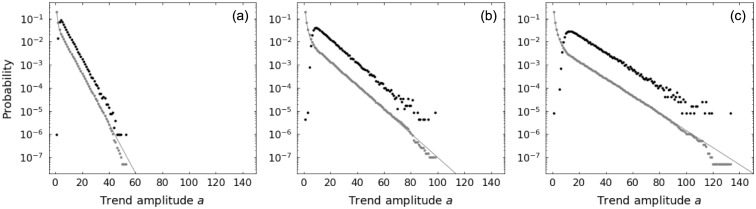

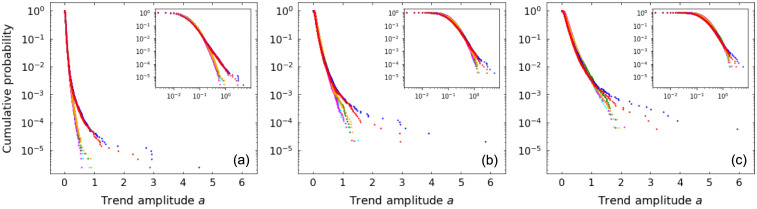

Fig 3 shows amplitude distributions from numerical simulations for different values of τ and random walk parameters. Simulation results agree with the theoretical distributions for all values of τ, which also controls the decay rate of the exponential tails.

Fig 3. Up-trend amplitude a probability distributions for random walks.

Distributions for patience levels τ = 1 (black), τ = 2 (red), τ = 3 (blue), τ = 4 (orange), τ = 5 (green), τ = 10 (gray) and for random walk parameters: (a) p = 0.4, q = 0.4; (b) p = 0.5, q = 0.4; (c) p = 0.4, q = 0.5. Symbols refer to results from numerical simulations and lines represent theoretical values. Insets detail distributions for small a.

Time series segmentation

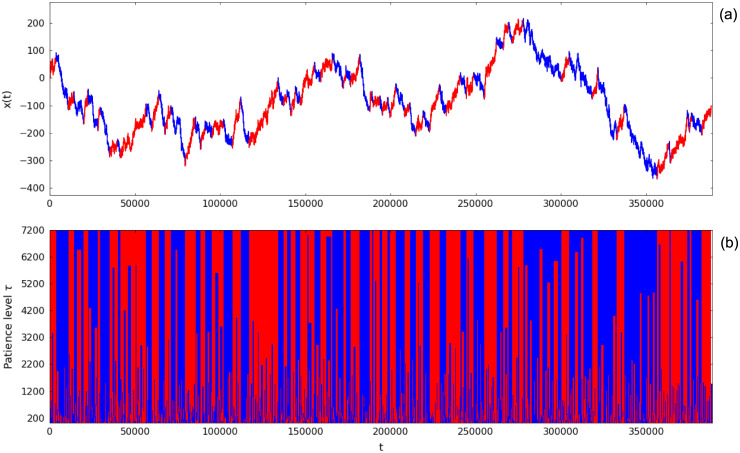

The epsilon-tau procedure can be straightforwardly employed to segment a time series into alternating up- and down-trends by setting the end of a trend as the reference point for the next one. An up-trend is always followed by a down-trend and vice versa (except at the end of the time series, where the last trend may not be determined due to the finite size of the series). An example of a segmented random walk using patience level τ = 7200 is displayed in Fig 4a, where up-trends are colored red and down-trends blue. Fig 4b shows the dependence of the segmentation result on the patience level τ, with larger values of τ producing a coarser segmentation with larger trends on average.
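The segmentation rule (the end of each trend becomes the reference point of the next) can be sketched as follows. This self-contained Python illustration assumes the tolerance level chosen in the previous section ($\varepsilon = \max - x_m$), a constant τ, and that ties move the maximum to the later point; the helper names are hypothetical, and skipping leading zero increments to find the first reference point is our own assumption.

```python
from typing import List, Optional, Sequence, Tuple


def _trend_end(x: Sequence[float], m: int, tau: int) -> Optional[int]:
    """End index of the epsilon-tau trend from reference m, or None if undetermined."""
    if m + 1 >= len(x) or x[m + 1] == x[m]:
        return None
    sign = 1 if x[m + 1] > x[m] else -1
    best, arg = sign * (x[m + 1] - x[m]), m + 1
    for t in range(m + 2, len(x)):
        y = sign * (x[t] - x[m])
        if y >= best:                      # new (rightmost) maximum
            best, arg = y, t
        elif y <= 0 or t - arg >= tau:     # stop conditions (a) and (b)
            return arg
    return None


def segment(x: Sequence[float], tau: int) -> List[Tuple[int, int, float]]:
    """Alternating trends as (reference index, end index, amplitude)."""
    m = 0
    # assumption: skip leading zero increments until a trend is defined
    while m + 1 < len(x) and x[m + 1] == x[m]:
        m += 1
    trends = []
    while True:
        end = _trend_end(x, m, tau)
        if end is None:        # last trend undetermined (finite series)
            break
        trends.append((m, end, x[end] - x[m]))
        m = end                # end of a trend = reference point of the next
    return trends
```

For instance, `segment([0, 1, 2, 1, 3, 2, 0, -1, 0, 1, 2, 3], 2)` gives an up-trend followed by a down-trend, `[(0, 4, 3), (4, 7, -4)]`; the final rise is left undetermined because the series ends before a stop condition is met.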

Fig 4. Time series segmentation of a random walk realization.

(a) Up- and down-trends segmentation using patience level τ = 7200 for random walk parameters p = 0.4, q = 0.4. (b) Segmentation results for different patience levels τ. Red indicates up-trends, blue indicates down-trends and light-gray (in (a)) or white (in (b)) shows points where the trend is not determined (in the end of the time series—an effect of the finite size of the series).

Note that the marginal probability distributions of length ℓ and amplitude a of trends obtained from the segmentation of a random walk differ from the ones derived previously. This difference arises because the reference point used to define a given trend is no longer arbitrary but is conditioned to be the end of the previous trend. Figs 5 and 6 make explicit the distinction between the two cases for trend length and trend amplitude, respectively: gray symbols correspond to trends produced by taking arbitrary reference points and black symbols indicate trends resulting from time series segmentation. In the segmentation case, the stop conditions of the epsilon-tau procedure acting on one trend restrict the next trend, strongly affecting the probability of small trends (both in length and in amplitude); nevertheless, the decay rates of the exponential tails appear to be the same as in the arbitrary reference point case.

Fig 5. Comparison between up-trend length ℓ probability distributions for random walk with parameters p = 0.4, q = 0.4.

Distributions considering arbitrary reference point (gray) and considering the trends obtained from time series segmentation (black) using patience levels: (a) τ = 10; (b) τ = 50; and (c) τ = 100. Symbols refer to results from numerical simulations.

Fig 6. Comparison between up-trend amplitude a probability distributions for random walk with parameters p = 0.4, q = 0.4.

Distributions considering arbitrary reference point (gray) and considering the trends obtained from time series segmentation (black) using patience levels: (a) τ = 10; (b) τ = 50; and (c) τ = 100. Symbols refer to results from numerical simulations and lines represent theoretical values.

Up- and down-trends in financial time series

We now use time series segmentation by the epsilon-tau procedure to analyze actual financial time series from the foreign exchange market, which has the largest trading volume among all financial markets (6.6 trillion U.S. dollars per day as reported in April 2019 [29]). We use the dataset from the Electronic Broking Service (EBS), one of the main trading platforms in this market, continuously open during weekdays from Sunday 21:00:00 GMT to Friday 21:00:00 GMT. Traders on this platform, mostly banks and financial institutions, can place buy and sell quotes for a given currency pair; the mid-quote is defined at each time as the average of the highest buy quote and the lowest sell quote, and a deal occurs when there is a match between those quotes. We study here the mid-quote time series of the currency pair U.S. dollar (USD)/Japanese yen (JPY) at a time resolution of one second from 2015 to 2018: 51 weeks of 2015, from 2015 January 05 to 2015 December 25; 52 weeks of 2016, from 2016 January 04 to 2016 December 30; 52 weeks of 2017, from 2017 January 02 to 2017 December 29; and 51 weeks of 2018, from 2018 January 08 to 2018 December 28 (each week from Monday 00:00:00 GMT to Friday 12:00:00 GMT).

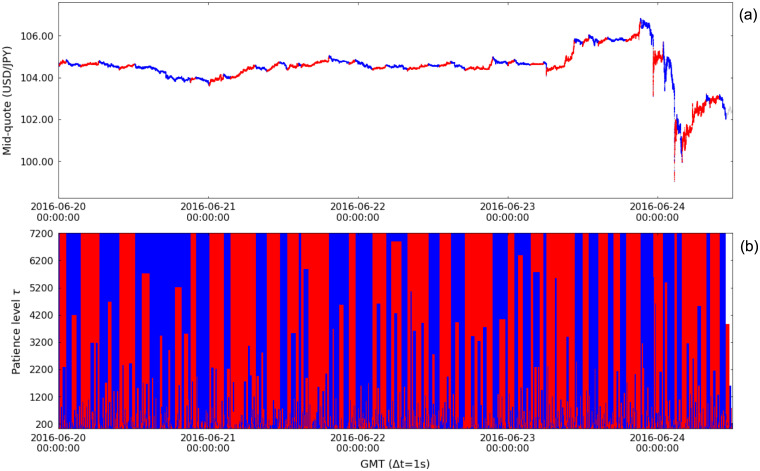

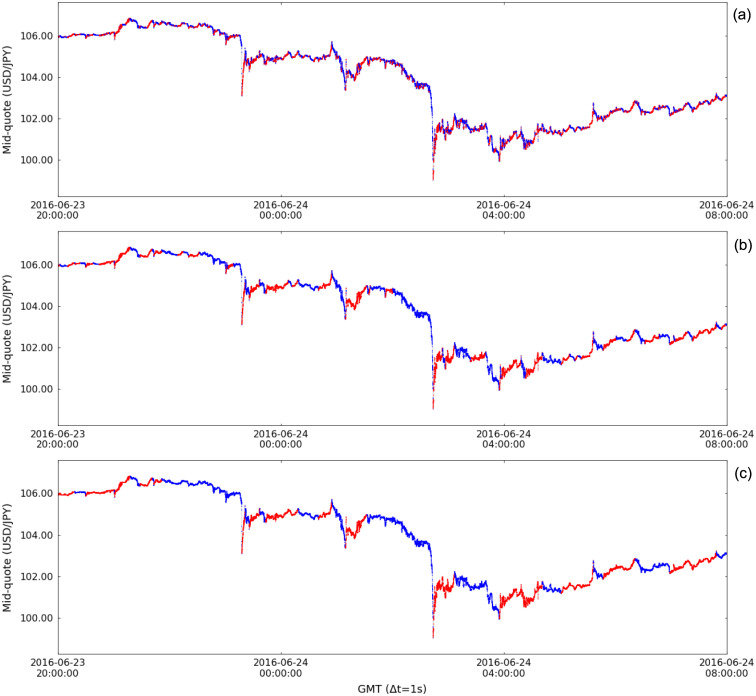

The considered period includes several events that impacted the financial markets, the Brexit Referendum in June 2016 being one of the most relevant [30, 31]. In the foreign exchange market, this event caused the pound sterling to fall against the U.S. dollar to its lowest level since 1985 and a strong appreciation of the Japanese yen. We use the week when the Brexit Referendum took place to illustrate the segmentation of financial time series. Fig 7a displays the segmentation of the mid-quote time series of the currency pair USD/JPY in that week, in which the surge of the Japanese yen against the U.S. dollar reflects the market’s realization of the United Kingdom’s decision to leave the European Union on the night of June 23 and the morning of June 24. In this example, we use patience level τ = 7200 (2h) for better visualization of the trends in the one-week time frame; Fig 7b shows the segmentation results for different values of τ. Focusing on the yen surge, Fig 8 details the effect of the value of τ on the up- and down-trends of the segmented time series, with small τ emphasizing microtrends and large τ emphasizing the trends regarded as macrotrends (for the one-week time frame).

Fig 7. Time series segmentation of the mid-quote time series of the currency pair USD/JPY during the week from June 20 2016 00:00:00 GMT to June 24 2016 12:00:00 GMT, when the Brexit Referendum took place.

(a) Up- and down-trends segmentation using patience level τ = 7200 (2h). (b) Segmentation results for different patience levels τ. Red indicates up-trends, blue indicates down-trends and light-gray (in (a)) or white (in (b)) shows points where the trend is not determined.

Fig 8. Time series segmentation of the mid-quote time series of the currency pair USD/JPY during the 2016 Brexit Referendum.

Segmentation results depend on the patience level used: (a) τ = 60 (1min); (b) τ = 600 (10min); and (c) τ = 1800 (30min).

Trend length and trend amplitude marginal cumulative probability distributions

We start the statistical analysis of the trends obtained from the segmentation of the financial time series over the whole four-year period by constructing the marginal (complementary) cumulative distributions of trend lengths ℓ and absolute trend amplitudes |a| for three values of the patience level: τ = 60 (1min) (highlighting microtrends), τ = 600 (10min) (intermediate case) and τ = 1800 (30min) (highlighting macrotrends). We separate the up- and down-trend cases and also construct the distributions for randomized data. For the randomization, we shuffle the increments of the mid-quote time series of each week individually. Because of the high fraction of zero increments in the one-second-resolution mid-quote time series (82.87% zero, 8.56% positive and 8.57% negative increments), we consider two kinds of randomization: fixed-zeros randomization, where we keep the zero increments in their original positions and shuffle only the positive and negative ones, and total randomization, where all increments are shuffled.
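The two randomization schemes can be sketched as follows for the series of a single week (so they would be applied week by week). This is an illustrative Python sketch with hypothetical function names; it rebuilds each randomized series from the original first value by cumulative summation, so both schemes preserve the multiset of increments and the net change over the week.

```python
import random
from itertools import accumulate
from typing import List, Sequence


def _increments(x: Sequence[float]) -> List[float]:
    return [b - a for a, b in zip(x, x[1:])]


def _rebuild(x0: float, inc: Sequence[float]) -> List[float]:
    # cumulative sum of the increments, starting from the original first value
    return [x0] + [x0 + s for s in accumulate(inc)]


def total_randomization(x: Sequence[float], rng: random.Random) -> List[float]:
    """Shuffle all increments, zeros included."""
    inc = _increments(x)
    rng.shuffle(inc)
    return _rebuild(x[0], inc)


def fixed_zeros_randomization(x: Sequence[float], rng: random.Random) -> List[float]:
    """Keep zero increments in place; shuffle only the nonzero ones."""
    inc = _increments(x)
    nonzero = [i for i, d in enumerate(inc) if d != 0]
    values = [inc[i] for i in nonzero]
    rng.shuffle(values)
    for i, v in zip(nonzero, values):
        inc[i] = v
    return _rebuild(x[0], inc)
```

Passing a seeded `random.Random` instance keeps each randomization reproducible.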

The distributions of trend lengths ℓ are presented in Fig 9. For the small value τ = 60 (1min) (Fig 9a), the distributions for the total randomization case decay exponentially, while those for the fixed-zeros randomization are similar to the distributions of trends from the original mid-quote data, which have tails heavier than exponential for both up- and down-trends. The similarity between the fixed-zeros randomization case and the original data indicates that sequences of zero increments control the length of microtrends; in fact, the trend length distributions for small τ shed light on the silent periods of the market, i.e., when there is no trading activity that changes the mid-quote. The effect of the sequences of zeros is reduced for large values of τ (see the results for τ = 1800 (30min) in Fig 9c), for which both randomization cases yield similar distributions with exponential tails, and the distributions for the original data lose the heavy tail, presenting an approximately exponential decay that is nonetheless distinct from the random cases. We also note that the probabilities of long up- and down-trends differ significantly, with long up-trends occurring more frequently than long down-trends.
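The complementary cumulative distributions in Figs 9 and 10 can be estimated empirically, for example as in the sketch below, which assumes the convention $P(X \ge v)$ at each observed value $v$ (the exact convention used for the figures is not stated); the function name `ccdf` is ours.

```python
from collections import Counter
from typing import List, Sequence, Tuple


def ccdf(values: Sequence[float]) -> List[Tuple[float, float]]:
    """Empirical complementary cumulative distribution P(X >= v) at each distinct v."""
    n = len(values)
    counts = Counter(values)
    out = []
    remaining = n  # number of samples >= current value
    for v in sorted(counts):
        out.append((v, remaining / n))
        remaining -= counts[v]
    return out
```

Applied separately to up-trend and down-trend lengths (or absolute amplitudes), an exponential tail appears as a straight line when the probabilities are plotted on a logarithmic axis, and a power-law tail appears as a straight line on log-log axes, as in the insets.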

Fig 9. Trend length ℓ cumulative probability distributions for mid-quote time series of the currency pair USD/JPY from 2015 to 2018.

Distributions for up-trends (red) and down-trends (blue) obtained from the segmentation of the mid-quote data, for up-trends (orange) and down-trends (green) obtained from the segmentation of the randomized mid-quote data with fixed zeros, and for up-trends (magenta) and down-trends (cyan) obtained from the segmentation of the totally randomized mid-quote data using patience levels: (a) τ = 60 (1min); (b) τ = 600 (10min); and (c) τ = 1800 (30min). Insets show log-log plots.

Fig 10 shows the distributions of absolute trend amplitudes |a|, which present no major qualitative differences for different values of τ. The distributions for both randomization types decay exponentially, confirming that the sequences of zero increments are less important for the amplitudes. The distributions for the original market data decay more slowly than the exponential ones of the random cases, with tails approximated by power laws, in accordance with results obtained with the epsilon-drawdown method in financial markets [24]. The asymmetry between up- and down-trends is more explicit for the amplitudes: large down-trends (movements of depreciation of the U.S. dollar against the Japanese yen) have higher probability than large trends in the opposite direction and can reach more extreme amplitudes, e.g., ∼6 JPY per USD in the τ = 1800 (30min) case (Fig 10c). This behavior is explained by the fact that the Japanese yen is seen as a safe-haven currency, a safe asset that protects investors during periods of uncertainty [32].

Fig 10. Absolute trend amplitude |a| cumulative probability distributions for mid-quote time series of the currency pair USD/JPY from 2015 to 2018.

Distributions for up-trends (red) and down-trends (blue) obtained from the segmentation of the mid-quote data, for up-trends (orange) and down-trends (green) obtained from the segmentation of the randomized mid-quote data with fixed zeros, and for up-trends (magenta) and down-trends (cyan) obtained from the segmentation of the totally randomized mid-quote data using patience levels: (a) τ = 60 (1min); (b) τ = 600 (10min); and (c) τ = 1800 (30min). Insets show log-log plots.

Trend shape clustering

The probability distributions above are important for understanding market dynamics, but quantities such as length and amplitude summarize the whole trend in a single number and ignore its internal structure; we cannot know, for example, whether a down-trend falls uniformly or accelerates. Aiming at a more detailed picture of USD/JPY market trends, we proceed to investigate trend shape, i.e., the relative position of all points (or a sample of points) of the trend.

Here we group similar trend shapes using cluster analysis to describe the different types of shapes that occur in the USD/JPY mid-quote time series; we are particularly interested in finding trend shapes that are rare in the randomized data and possibly related to exceptional events. For this task we need to choose a clustering method and a measure of distance between trends that reflects their shapes. For the distance between trends, we normalize the trends to unit length and unit amplitude (that is, we rescale each trend horizontally by its length and vertically by its absolute amplitude) and sample a fixed number of points from the normalized trend at fixed positions (we take 100 equidistant points); we define the distance between trends as the Euclidean distance between the vectors formed by the sampled points of the corresponding normalized trends. For example, the distance between two perfectly linear trends in the same direction is zero regardless of their lengths or amplitudes, confirming that they have exactly the same shape; conversely, two trends with the same length and amplitude can be at a distance greater than zero because they have distinct shapes. For the clustering method, we select agglomerative hierarchical clustering with the complete-linkage criterion: starting from clusters formed by individual trends, at each step we merge the two clusters with the shortest distance, where the distance between clusters X and Y is defined as the maximum distance between a trend in X and a trend in Y [33–35].
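The normalization and distance described above can be sketched as follows. This is an illustration, not the authors’ code: we assume that the reference point $x_m$ is included as the first point of the trend (so a normalized trend goes from 0 to ±1) and that the 100 samples are obtained by linear interpolation, neither of which is specified in the text; the function names are hypothetical.

```python
import math
from typing import List, Sequence


def normalize_trend(values: Sequence[float], n_points: int = 100) -> List[float]:
    """Rescale a trend to unit length and unit absolute amplitude.

    values[0] is the reference point x_m and values[-1] the trend end x_{m+l}.
    Returns n_points samples at equidistant positions in [0, 1], obtained by
    linear interpolation (an assumption of this sketch).
    """
    length = len(values) - 1
    amplitude = values[-1] - values[0]
    if length < 1 or amplitude == 0 or n_points < 2:
        raise ValueError("need length >= 1, nonzero amplitude and n_points >= 2")
    samples = []
    for k in range(n_points):
        s = k * length / (n_points - 1)   # position in original time units
        i = min(int(s), length - 1)       # left neighbour index
        v = values[i] + (s - i) * (values[i + 1] - values[i])
        samples.append((v - values[0]) / abs(amplitude))  # sign kept: up -> +1, down -> -1
    return samples


def trend_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two normalized trends."""
    return math.dist(a, b)
```

Two straight-line up-trends such as `[0, 1, 2, 3]` and `[10, 12, 14, 16, 18]` map to the same normalized vector, so their distance is zero up to rounding, while `[0, 1, 1, 3]` and `[0, 2, 2, 3]` share length and amplitude but not shape. Keeping the sign of the amplitude is what separates up- from down-trends under this distance.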

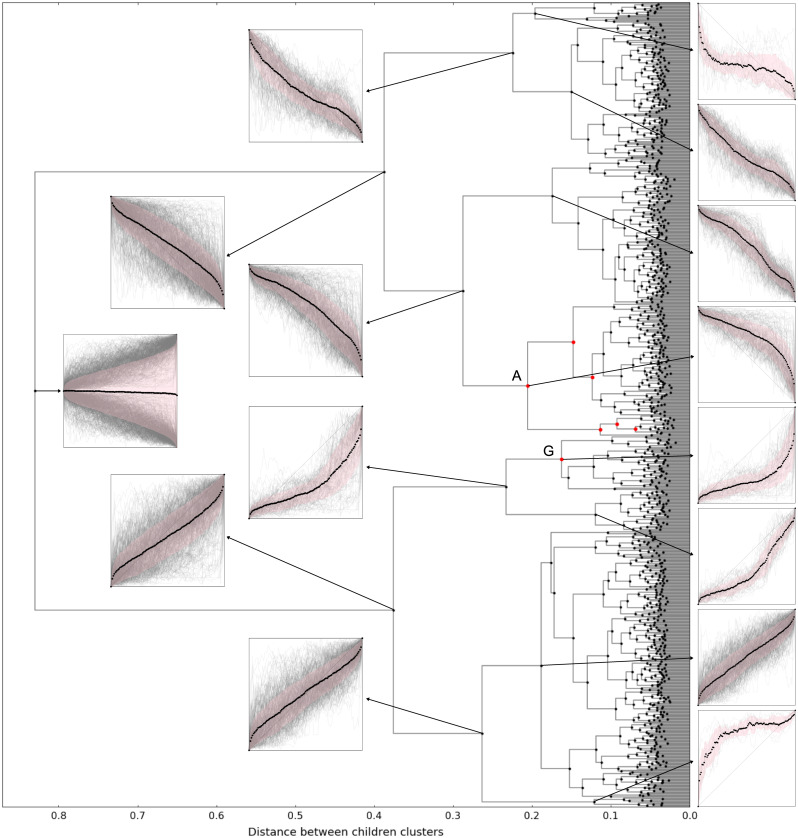

We apply the described method to the trends obtained by segmenting the mid-quote time series of the currency pair USD/JPY from 2015 to 2018 with τ = 1800 (30min), that is, focusing on macrotrends. We work with the subset of trends with absolute amplitude |a| > 0.5, filtering out small trends. Fig 11 presents the dendrogram generated by the clustering process, which shows the clusters of similar trend shapes and their relations. The first cluster, on the left, contains all trends; its children are the cluster of all up-trends and the cluster of all down-trends, which in turn have their own children clusters, down to the last clusters on the far right, which correspond to individual trends. In the graphs, we show the normalized trends in each cluster by plotting all normalized trends in gray, their average in black and the standard deviation in pink. The clusters represented by red symbols are those whose trends have shapes that deviate from the randomized data case, as explained next.
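The complete-linkage agglomeration that produces such a dendrogram can be written naively from a precomputed distance matrix, as in the sketch below (cubic in the number of clusters per merge and meant only for small examples; the function name is hypothetical). In practice a library routine such as SciPy’s `scipy.cluster.hierarchy.linkage` with `method="complete"` would typically be used instead.

```python
from typing import FrozenSet, List, Sequence, Tuple

Merge = Tuple[FrozenSet[int], FrozenSet[int], float]


def complete_linkage(dist: Sequence[Sequence[float]]) -> List[Merge]:
    """Naive agglomerative clustering with the complete-linkage criterion.

    dist[i][j] is the distance between items i and j. Returns the merges in
    order as (cluster_a, cluster_b, linkage_distance).
    """
    clusters = [frozenset([i]) for i in range(len(dist))]
    merges: List[Merge] = []
    while len(clusters) > 1:
        best = None  # (distance, index_a, index_b)
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                # complete linkage: largest distance between members of a and b
                d = max(dist[i][j] for i in clusters[a] for j in clusters[b])
                if best is None or d < best[0]:
                    best = (d, a, b)
        d, a, b = best
        merges.append((clusters[a], clusters[b], d))
        merged = clusters[a] | clusters[b]
        clusters = [c for k, c in enumerate(clusters) if k not in (a, b)]
        clusters.append(merged)
    return merges
```

Each entry of the returned list records the two merged clusters and their complete-linkage distance, which is the height at which they join in the dendrogram.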

Fig 11. Dendrogram indicating the similarities between shapes of trends obtained by the segmentation of the mid-quote time series of the currency pair USD/JPY from 2015 to 2018 using patience level τ = 1800 (30min).

Only trends with absolute amplitude |a|>0.5 are considered. Each symbol represents a cluster of shapes and graphs show the normalized trends (gray lines), the average (black symbols) and the standard deviation (pink shade). Red symbols in the dendrogram indicate the clusters that deviate from the random case.

After grouping similar trend shapes, we look for the clusters deviating from the random case, i.e., the clusters containing trend shapes that rarely occur in the randomized data. First, we take the randomized mid-quote data with fixed zeros and extract the trends using segmentation with the same patience level τ = 1800 (30min) and the same condition |a| > 0.5. Next, for each cluster of the original data and each trend from the randomized data, we compute the distance between the cluster and the randomized trend (using the definition of distance between clusters) and count the number of randomized trends whose distance is shorter than the maximum distance between trends within the cluster. Finally, having for each cluster a number of trends from the original data and a number of trends with similar shapes from the randomized data, we apply Fisher’s exact test to check whether the proportion of original and randomized trends in a cluster is incompatible with the proportion of the total trends of each category, under the null hypothesis that the trends composing the cluster are selected at random. The probability of grouping $n_{\text{data}}$ out of a total of $N_{\text{data}}$ trends and $n_{\text{rand}}$ out of a total of $N_{\text{rand}}$ trends, assuming that all trends have the same probability of being chosen, is given by the hypergeometric distribution [36]:
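The counting step can be sketched as below, operating on normalized trend vectors. Following the text, the distance between a randomized trend and a cluster is the complete-linkage distance (the largest distance to any member), and a randomized trend counts as similar when this distance is shorter than the largest distance between two trends within the cluster. The function name is hypothetical.

```python
import math
from typing import Sequence


def count_similar(cluster: Sequence[Sequence[float]],
                  random_trends: Sequence[Sequence[float]]) -> int:
    """Number of randomized trends whose complete-linkage distance to the
    cluster is shorter than the cluster's largest internal distance."""
    diameter = max(
        (math.dist(a, b) for i, a in enumerate(cluster) for b in cluster[i + 1:]),
        default=0.0,
    )
    count = 0
    for r in random_trends:
        # complete linkage between the singleton {r} and the cluster
        if max(math.dist(r, c) for c in cluster) < diameter:
            count += 1
    return count
```

For a cluster with a single trend the largest internal distance is zero, so under this rule no randomized trend is counted.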

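$$P(n_{\text{data}}, n_{\text{rand}}) = \frac{\binom{N_{\text{data}}}{n_{\text{data}}}\binom{N_{\text{rand}}}{n_{\text{rand}}}}{\binom{N_{\text{data}}+N_{\text{rand}}}{n_{\text{data}}+n_{\text{rand}}}} \tag{11}$$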

The total numbers of trends from the original data, $N_{\text{data}}$, and from the randomized data, $N_{\text{rand}}$, are fixed by the results of the segmentation: $N_{\text{data}} = 1055$ trends and $N_{\text{rand}} = 1376$ trends. The number of trends to be selected under the null hypothesis to compose each cluster is also fixed and equal to $n_{\text{data}} + n_{\text{rand}}$. We then use as p-value the probability that the number of trends selected from the $N_{\text{data}}$ trends under the null hypothesis is greater than or equal to the observed $n_{\text{data}}$:

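$$p = \sum_{k = n_{\text{data}}}^{\min(N_{\text{data}},\, n)} \frac{\binom{N_{\text{data}}}{k}\binom{N_{\text{rand}}}{n-k}}{\binom{N_{\text{data}}+N_{\text{rand}}}{n}}, \qquad n = n_{\text{data}} + n_{\text{rand}} \tag{12}$$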

We apply Fisher’s exact test only to clusters where $n_{\text{data}} > n_{\text{rand}}$; the others are regarded as non-deviant. Table 1 lists the clusters for which the p-value is below 10⁻⁵, interpreted as the clusters that deviate from the random case. The same clusters are shown in red in the dendrogram of Fig 11 and detailed in Fig 12. Note that the deviations from the random case that we found relate solely to trend shapes, disregarding trend length or amplitude.
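Equation (12) and the decision rule above can be evaluated exactly with integer binomial coefficients, as in this sketch using Python’s `math.comb` (available since Python 3.8); the function names are hypothetical. With $N_{\text{data}} = 1055$ and $N_{\text{rand}} = 1376$, it should reproduce, up to rounding, the p-values listed in Table 1 for clusters D, E and F, which contain no randomized trends.

```python
from math import comb


def fisher_p_value(n_data: int, n_rand: int, N_data: int, N_rand: int) -> float:
    """One-sided p-value P(X >= n_data), X hypergeometric: n = n_data + n_rand
    trends drawn from N_data original and N_rand randomized trends."""
    n = n_data + n_rand
    total = comb(N_data + N_rand, n)
    # exact integer arithmetic; comb(N_rand, n - k) is 0 when n - k > N_rand
    tail = sum(comb(N_data, k) * comb(N_rand, n - k)
               for k in range(n_data, min(N_data, n) + 1))
    return tail / total


def is_deviant(n_data: int, n_rand: int, N_data: int = 1055,
               N_rand: int = 1376, alpha: float = 1e-5) -> bool:
    """Clusters with n_data <= n_rand are regarded as non-deviant."""
    if n_data <= n_rand:
        return False
    return fisher_p_value(n_data, n_rand, N_data, N_rand) < alpha
```

Because the sum starts at the observed $n_{\text{data}}$, the result is the upper tail of the hypergeometric distribution, i.e., a one-sided test for an excess of original-data trends in the cluster.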

Table 1. Clusters of trend shapes that deviate from the random case.

| Cluster | Trend type | $n_{\text{data}}$ | $n_{\text{rand}}$ | p-value |
|---|---|---|---|---|
| A | Down | 174 | 82 | 4.742×10⁻¹⁷ |
| B | Down | 146 | 87 | 3.986×10⁻¹⁰ |
| C | Down | 134 | 94 | 6.940×10⁻⁷ |
| D | Down | 28 | 0 | 5.753×10⁻¹¹ |
| E | Down | 25 | 0 | 7.345×10⁻¹⁰ |
| F | Down | 15 | 0 | 3.447×10⁻⁶ |
| G | Up | 70 | 30 | 3.749×10⁻⁸ |

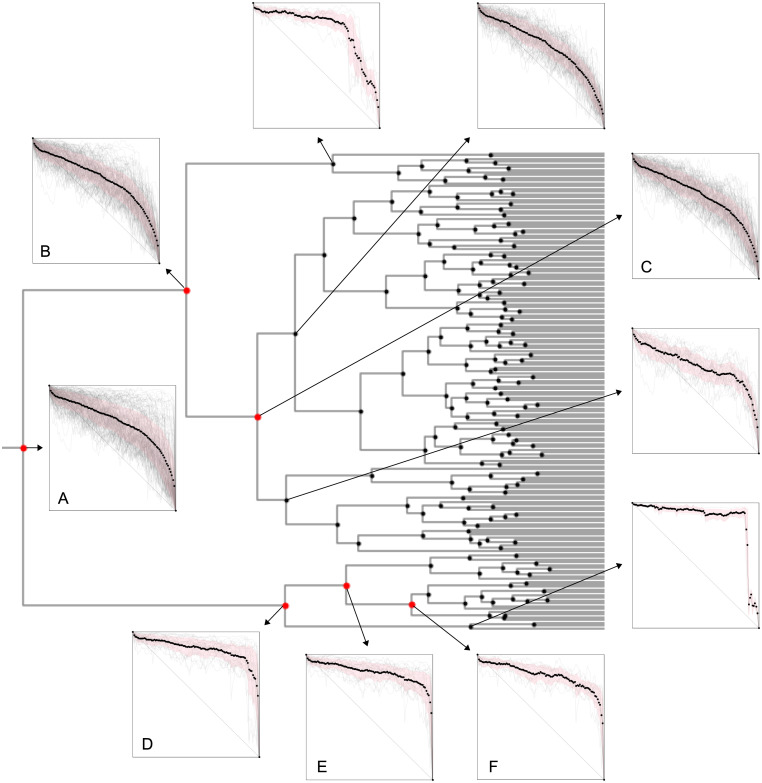

Fig 12. Portion of dendrogram detailing the clusters of down-trend shapes that deviate from the random case.

Graphs show the normalized trends (gray lines), the average (black symbols) and the standard deviation (pink shade). Cluster labels correspond to the ones in Table 1.

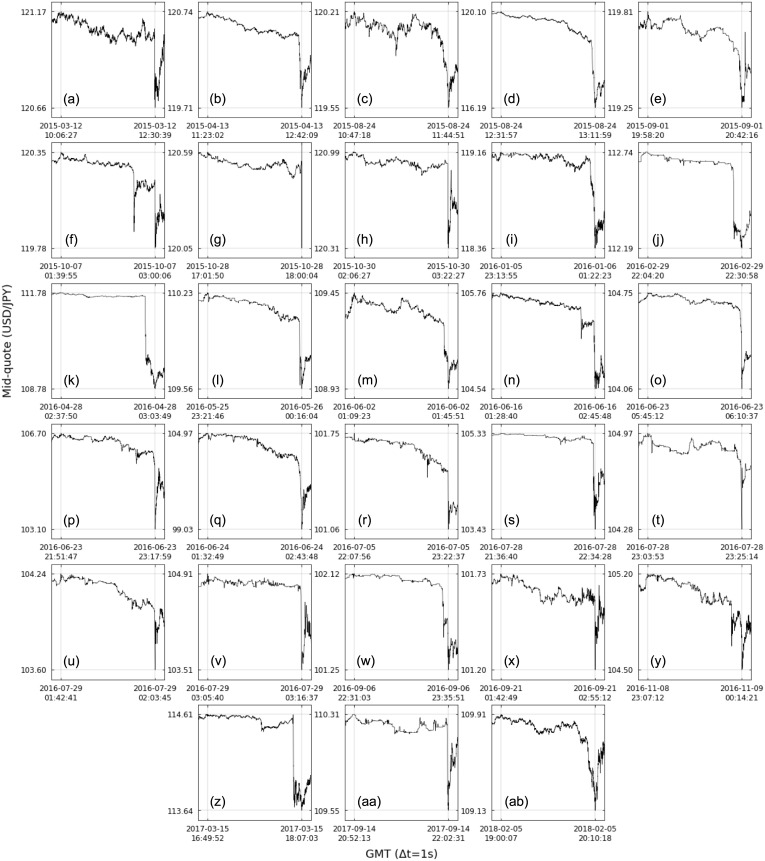

For a more detailed analysis, we turn our attention to cluster D, the largest one with no trend from the random case, i.e., no trend from the shuffled time series data has a shape similar to the original USD/JPY market data trends in the cluster. The average trend shape of cluster D is characterized by a sharp fall at the end of the trend, with the last ∼10% of the trend length accounting for ∼80% of its amplitude. Fig 13 depicts all 28 down-trends in cluster D with their original lengths and amplitudes, and Table 2 details them (labels in the first column correspond to those in Fig 13): date of the minimum (i.e., end of the trend), time of the minimum, trend length, trend amplitude and associated event.
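The property "the last ∼10% of the length accounts for ∼80% of the amplitude" can be quantified with a simple descriptor such as the one sketched below. It is a hypothetical helper of our own, not a quantity defined in this work, and it assumes linear interpolation between points, with the reference point as the first value of the trend.

```python
from typing import Sequence


def late_drop_fraction(values: Sequence[float], tail: float = 0.1) -> float:
    """Fraction of the trend amplitude realized in the last `tail` of its length.

    values[0] is the reference point x_m and values[-1] the trend end.
    """
    length = len(values) - 1
    amplitude = values[-1] - values[0]
    if length < 1 or amplitude == 0 or not 0 < tail <= 1:
        raise ValueError("need length >= 1, nonzero amplitude and 0 < tail <= 1")
    s = (1 - tail) * length                  # position where the tail starts
    i = min(int(s), length - 1)
    v = values[i] + (s - i) * (values[i + 1] - values[i])  # linear interpolation
    return (values[-1] - v) / amplitude
```

A perfectly linear trend gives 0.1 by construction, so values well above that indicate a fall concentrated at the end, as in cluster D.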

Fig 13. All 28 down-trends of the USD/JPY market data from 2015 to 2018 in cluster D.

Trend shapes in this cluster are marked by a sharp fall at the end of the trend, with ∼80% of the amplitude in the last ∼10% of the length (trends are delimited by the gray lines). See Table 2 for trend details.

Table 2. Details of all 28 down-trends of the USD/JPY market data from 2015 to 2018 in cluster D.

| Trend | Date of minimum | Time of minimum (GMT) | Length (s) | Amplitude (JPY per USD) | Associated event |
|---|---|---|---|---|---|
| (a) | 2015-03-12 | 12:30:39 | 8652 | -0.5050 | - |
| (b) | 2015-04-13 | 12:42:09 | 4747 | -1.0300 | - |
| (c) | 2015-08-24 | 11:44:51 | 3453 | -0.6650 | China’s Black Monday |
| (d) | 2015-08-24 | 13:11:59 | 2402 | -3.9075 | China’s Black Monday |
| (e) | 2015-09-01 | 20:42:16 | 2636 | -0.5625 | - |
| (f) | 2015-10-07 | 03:00:06 | 4811 | -0.5725 | BOJ Announcement |
| (g) | 2015-10-28 | 18:00:04 | 3494 | -0.5400 | Fed Announcement |
| (h) | 2015-10-30 | 03:22:27 | 4560 | -0.6775 | BOJ Announcement |
| (i) | 2016-01-06 | 01:22:23 | 7708 | -0.8025 | - |
| (j) | 2016-02-29 | 22:30:58 | 1598 | -0.5525 | - |
| (k) | 2016-04-28 | 03:03:49 | 1559 | -3.0100 | BOJ Announcement |
| (l) | 2016-05-26 | 00:16:04 | 3258 | -0.6800 | - |
| (m) | 2016-06-02 | 01:45:51 | 2188 | -0.5175 | - |
| (n) | 2016-06-16 | 02:45:48 | 4628 | -1.2175 | BOJ Announcement |
| (o) | 2016-06-23 | 06:10:37 | 1525 | -0.6850 | Brexit Referendum |
| (p) | 2016-06-23 | 23:17:59 | 5172 | -3.5975 | Brexit Referendum |
| (q) | 2016-06-24 | 02:43:48 | 4259 | -5.9425 | Brexit Referendum |
| (r) | 2016-07-05 | 23:22:37 | 4481 | -0.6900 | - |
| (s) | 2016-07-28 | 22:34:28 | 3468 | -1.9025 | BOJ Announcement(*) |
| (t) | 2016-07-28 | 23:25:14 | 1281 | -0.6825 | BOJ Announcement(*) |
| (u) | 2016-07-29 | 02:03:45 | 1264 | -0.6375 | BOJ Announcement(*) |
| (v) | 2016-07-29 | 03:16:37 | 657 | -1.4025 | BOJ Announcement |
| (w) | 2016-09-06 | 23:35:51 | 3888 | -0.8725 | - |
| (x) | 2016-09-21 | 02:55:12 | 4343 | -0.5250 | BOJ Announcement |
| (y) | 2016-11-09 | 00:14:21 | 4029 | -0.6975 | U.S. Election |
| (z) | 2017-03-15 | 18:07:03 | 4631 | -0.9700 | Fed Announcement |
| (aa) | 2017-09-14 | 22:02:31 | 4218 | -0.7650 | - |
| (ab) | 2018-02-05 | 20:10:18 | 4211 | -0.7775 | - |

Trend labels in the first column correspond to the ones in Fig 13.

(*) Those trends occurred hours before the BOJ Announcement associated with trend (v), but they are related to this event (see text).

By searching for the dates and times of the trends in specialized media, it was possible to identify associated events for 17 of the 28 trends, including 3 trends ((o), (p) and (q)) connected with the already mentioned Brexit Referendum in 2016 [37–39], notably trend (q), with an extreme amplitude of ∼6 JPY per USD, when the victory of the Leave side was consolidating (see the down-trend distribution for mid-quote data in Fig 10c). Trends (c) and (d) correspond to the so-called China’s Black Monday on 2015 August 24, when the Shanghai main share index fell 8.49%, affecting other financial markets [40–42]. Trend (y) is linked to the 2016 United States elections won by Donald Trump [43, 44]. The remaining trends are related to monetary policy announcements from the central banking system of the United States, the Federal Reserve (Fed): trends (g) [45] and (z) [46, 47]; and from the Bank of Japan (BOJ): trends (f) [48], (h) [49, 50], (k) [51–53], (n) [54–56], (v) [57–59] and (x) [60–62]. In particular, the BOJ announcement on 2016 July 29 associated with trend (v) defined monetary easing actions to stimulate investment (partially as a response to the Brexit Referendum result) that disappointed investors, who had been expecting more aggressive measures; these expectations caused strong speculation before the announcement itself, probably responsible for trends (s), (t) and (u) [63, 64]. Thus, the trends in cluster D with associated events are related either to an exceptional event causing yen appreciation, which supports its status as a safe-haven currency, or to the market’s reaction to central bank announcements. Note, however, that no associated events were found for the remaining 11 trends in cluster D, and there are probably other major events associated with different trend shapes, reminding us that this is still an incipient study and that the relationship between trend shapes and market events needs further investigation.

Final remarks

The epsilon-tau procedure proposed in this work extends previous methods to determine up- and down-trends in time series, particularly the epsilon-drawdown method. Besides a tolerance level to decide the end of a trend, it introduces a patience level, a kind of tolerance limit in the time axis that controls the time scales of the trends, highlighting microtrends when its value is small and macrotrends when it is large.

We first studied the epsilon-tau procedure applied to discrete random walks. We derived exact expressions for the marginal probability distributions of trend lengths and trend amplitudes, which, together with numerical results, confirmed the expected exponential decay when increments are independent. We also explained how to segment a time series into alternating up- and down-trends by applying the method successively, and how the segmentation result depends on the choice of the patience level.
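The successive segmentation summarized above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not the authors' implementation: we assume that a trend ends at its running extreme as soon as the series moves more than ε against that extreme (the tolerance level) or τ steps pass without a new extreme (the patience level), and that each new trend, in the opposite direction, starts where the previous one ended. The function names `trend_end` and `segment`, and the rule for choosing the first direction, are hypothetical. Setting `eps=math.inf` leaves the patience level as the only control, which makes the role of τ easy to explore on a simple random walk.

```python
import math
import random
from typing import List, Sequence, Tuple


def trend_end(x: Sequence[float], start: int, up: bool, eps: float, tau: int) -> int:
    """Index where a trend starting at `start` ends, under an assumed epsilon-tau rule.

    The running extreme (maximum for an up-trend, minimum for a down-trend) is
    tracked; the trend ends at that extreme as soon as the series moves more
    than `eps` against it, or `tau` steps pass without a new extreme.
    """
    sign = 1.0 if up else -1.0
    ext = start  # index of the current extreme
    for t in range(start + 1, len(x)):
        gain = sign * (x[t] - x[ext])
        if gain > 0:
            ext = t  # new extreme: the trend continues
        elif -gain > eps or t - ext >= tau:
            return ext  # tolerance exceeded or patience exhausted
    return ext  # the series ended before the trend was closed


def segment(x: Sequence[float], eps: float, tau: int) -> List[Tuple[int, int, bool]]:
    """Split x into alternating (start, end, is_up) trends.

    Hypothetical conventions: the first direction follows the first nonzero
    increment, and each trend starts where the previous one ended.
    """
    up = next((x[t + 1] > x[t] for t in range(len(x) - 1) if x[t + 1] != x[t]), True)
    trends: List[Tuple[int, int, bool]] = []
    start = 0
    while start < len(x) - 1:
        end = trend_end(x, start, up, eps, tau)
        if end == start:  # no progress (possible only on flat stretches)
            break
        trends.append((start, end, up))
        start, up = end, not up
    return trends


if __name__ == "__main__":
    # Simple +/-1 random walk with independent increments.
    rng = random.Random(0)
    walk = [0]
    for _ in range(100_000):
        walk.append(walk[-1] + rng.choice((-1, 1)))
    lengths = [end - start for start, end, _ in segment(walk, eps=math.inf, tau=5)]
    # Fraction of trends longer than l; expected to fall off roughly exponentially in l.
    for l in (10, 20, 30, 40):
        print(l, sum(n > l for n in lengths) / len(lengths))
```

Increasing `tau` in this sketch lengthens the trends, in line with the microtrend/macrotrend behavior described for the patience level.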

We then used this segmentation to analyze financial data represented by the USD/JPY mid-quote time series. The probability distributions of trend lengths and trend amplitudes for the market data were compared with those for randomized data. For amplitudes in particular, the tails of the distributions for the market data are heavier than those for the randomized data. We also observed an asymmetry between up- and down-trends: down-trends with large amplitudes, corresponding to the appreciation of the JPY, happen more often than large up-trends and can reach more extreme values. The Japanese yen's status as a safe-haven currency explains this asymmetry.
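One common way to build randomized data for this kind of comparison is to shuffle the increments of the original series, which keeps their distribution but removes their temporal order. The sketch below assumes that surrogate (the randomization used in the study may differ) and compares tails through the empirical complementary cumulative distribution. The helpers `shuffled_surrogate` and `ccdf` are hypothetical names introduced for illustration.

```python
import random
from typing import List, Sequence


def shuffled_surrogate(x: Sequence[float], seed: int = 0) -> List[float]:
    """Rebuild a series from a random permutation of its increments.

    The start value and the increment distribution are kept (so the end value
    is also kept, up to floating-point rounding); the temporal order of the
    increments, and hence any correlation between them, is destroyed.
    """
    increments = [b - a for a, b in zip(x[:-1], x[1:])]
    random.Random(seed).shuffle(increments)
    surrogate = [x[0]]
    for d in increments:
        surrogate.append(surrogate[-1] + d)
    return surrogate


def ccdf(values: Sequence[float], thresholds: Sequence[float]) -> List[float]:
    """Empirical tail probability P(V >= v) for each threshold v."""
    n = len(values)
    return [sum(v >= th for v in values) / n for th in thresholds]
```

Segmenting both the original series and `shuffled_surrogate(x)`, then evaluating `ccdf` on their trend amplitudes at large thresholds, is one way to quantify "heavier tails"; applying `ccdf` separately to up- and down-trend amplitudes of the same series exposes the asymmetry.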

Finally, we carried out a more detailed analysis of the internal structure of the market macrotrends using the concept of trend shape. We grouped trends with similar shapes through complete-linkage clustering and used Fisher's exact test to identify clusters containing shapes that rarely occur in the random case. We found a particular cluster whose average trend shape is characterized by a sharp fall at the end of the trend, with no similar shape in the randomized data. For 17 of its 28 down-trends, we could associate the sharp mid-quote drops with exceptional events in the studied period (China's Black Monday in 2015, the Brexit Referendum in 2016 and the 2016 U.S. elections) and with announcements from the Federal Reserve and the Bank of Japan. This type of analysis shows the potential of the epsilon-tau procedure for the historical analysis of market trends, e.g., to determine in which situations, or through what kind of events, trends with large amplitudes or uncommon shapes arise. Real-time market applications and uses in other fields are left for future work.
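The shape analysis can be sketched with SciPy's hierarchical clustering (`linkage` with `method="complete"`, cut by `fcluster`) and `scipy.stats.fisher_exact`. All details here are assumptions for illustration: a trend shape is taken to be the trend with time and values both rescaled to [0, 1] and resampled on a fixed grid; market and randomized shapes are clustered together and cut into `n_clusters` groups; and a cluster is flagged when a one-sided Fisher's exact test on the 2×2 table (market or randomized, inside or outside the cluster) gives p < `alpha`. The names `trend_shape` and `rare_clusters`, and the parameter values, are hypothetical.

```python
from typing import List, Sequence, Tuple

import numpy as np
from scipy.cluster.hierarchy import fcluster, linkage
from scipy.stats import fisher_exact


def trend_shape(values: Sequence[float], n_points: int = 50) -> np.ndarray:
    """Hypothetical trend shape: time and values rescaled to [0, 1].

    Values are mapped so the trend goes from 0 (start) to 1 (end), for up- and
    down-trends alike, then resampled on `n_points` evenly spaced times.
    Assumes at least two points and a nonzero amplitude.
    """
    y = np.asarray(values, dtype=float)
    y = (y - y[0]) / (y[-1] - y[0])
    t = np.linspace(0.0, 1.0, len(y))
    return np.interp(np.linspace(0.0, 1.0, n_points), t, y)


def rare_clusters(market: np.ndarray, randomized: np.ndarray,
                  n_clusters: int, alpha: float = 0.01) -> List[Tuple[int, int, int, float]]:
    """Cluster market and randomized shapes together (complete linkage) and
    return (label, n_market, n_randomized, p) for clusters in which market
    shapes are over-represented according to a one-sided Fisher's exact test.
    """
    shapes = np.vstack([market, randomized])
    is_market = np.r_[np.ones(len(market), dtype=bool), np.zeros(len(randomized), dtype=bool)]
    tree = linkage(shapes, method="complete")  # Euclidean distances by default
    labels = fcluster(tree, t=n_clusters, criterion="maxclust")
    flagged = []
    for c in np.unique(labels):
        inside = labels == c
        a = int(np.sum(inside & is_market))   # market shapes in cluster c
        b = int(np.sum(inside & ~is_market))  # randomized shapes in cluster c
        table = [[a, b], [len(market) - a, len(randomized) - b]]
        _, p = fisher_exact(table, alternative="greater")
        if p < alpha:
            flagged.append((int(c), a, b, float(p)))
    return flagged
```

In use, `market` and `randomized` would be stacks of `trend_shape` outputs for the down-trends of the mid-quote series and of its randomized counterpart; the number of clusters and `alpha` are free choices in this sketch, not values taken from the study.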

Supporting information

(PDF)

Data Availability

The raw data used in this study were purchased from EBS Service Company Limited, with no special access privileges. Under the contract between EBS and the authors, the authors are not allowed to distribute the raw data. Researchers interested in analyzing similar data sets are advised to follow the same procedure as the authors and contact EBS Service Company Limited about the availability and purchase of the data (see https://www.cmegroup.com/tools-information/contacts-list/ebs-support.html).

Funding Statement

This study was supported by the Joint Collaborative Research Laboratory for MUFG AI Financial Market Analysis. The funder had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript. Author HT is employed by Sony Computer Science Laboratories, Inc., which provided support in the form of salaries for author HT but did not have any additional role in the study design, data collection and analysis, decision to publish, or preparation of the manuscript. The specific roles of this author are articulated in the ‘author contributions’ section.

References

- 1. Fu TC. A review on time series data mining. Eng Appl Artif Intell. 2011;24(1):164–81. 10.1016/j.engappai.2010.09.007

- 2. Keogh E, Chu S, Hart D, Pazzani M. Segmenting time series: A survey and novel approach. In: Last M, Kandel A, Bunke H, editors. Data mining in time series databases. Singapore: World Scientific; 2004. p. 1–21.

- 3. Peng CK, Buldyrev SV, Goldberger AL, Havlin S, Sciortino F, Simons M, Stanley HE. Fractal landscape analysis of DNA walks. Physica A. 1992;191(1-4):25–9. 10.1016/0378-4371(92)90500-P

- 4. Ducré-Robitaille JF, Vincent LA, Boulet G. Comparison of techniques for detection of discontinuities in temperature series. Int J Climatol. 2003;23(9):1087–101. 10.1002/joc.924

- 5. Reeves J, Chen J, Wang XL, Lund R, Lu QQ. A review and comparison of changepoint detection techniques for climate data. J Appl Meteorol Climatol. 2007;46(6):900–15. 10.1175/JAM2493.1

- 6. Verbesselt J, Hyndman R, Newnham G, Culvenor D. Detecting trend and seasonal changes in satellite image time series. Remote Sens Environ. 2010;114(1):106–15. 10.1016/j.rse.2009.08.014

- 7. Jamali S, Jönsson P, Eklundh L, Ardö J, Seaquist J. Detecting changes in vegetation trends using time series segmentation. Remote Sens Environ. 2015;156:182–95. 10.1016/j.rse.2014.09.010

- 8. Omranian N, Mueller-Roeber B, Nikoloski Z. Segmentation of biological multivariate time-series data. Sci Rep. 2015;5:8937. 10.1038/srep08937

- 9. Albertetti F, Grossrieder L, Ribaux O, Stoffel K. Change points detection in crime-related time series: an on-line fuzzy approach based on a shape space representation. Appl Soft Comput. 2016;40:441–54. 10.1016/j.asoc.2015.12.004

- 10. Preis T, Stanley HE. Switching phenomena in a system with no switches. J Stat Phys. 2010;138(1-3):431–46. 10.1007/s10955-009-9914-y

- 11. Rivera-Castro MA, Miranda JG, Cajueiro DO, Andrade RF. Detecting switching points using asymmetric detrended fluctuation analysis. Physica A. 2012;391(1-2):170–9. 10.1016/j.physa.2011.07.009

- 12. Sato AH, Takayasu H. Segmentation procedure based on Fisher’s exact test and its application to foreign exchange rates. arXiv:1309.0602 [Preprint]. 2013 [cited 2020 Aug 1]: [16 p.]. Available from: https://arxiv.org/abs/1309.0602

- 13. Yura Y, Takayasu H, Sornette D, Takayasu M. Financial Knudsen number: Breakdown of continuous price dynamics and asymmetric buy-and-sell structures confirmed by high-precision order-book information. Phys Rev E. 2015;92(4):042811. 10.1103/PhysRevE.92.042811

- 14. Yamashita Rios de Sousa AM, Takayasu H, Takayasu M. Detection of statistical asymmetries in non-stationary sign time series: Analysis of foreign exchange data. PLoS One. 2017;12(5):e0177652. 10.1371/journal.pone.0177652

- 15. Clara-Rahola J, Puertas AM, Sanchez-Granero MA, Trinidad-Segovia JE, de las Nieves FJ. Diffusive and arrestedlike dynamics in currency exchange markets. Phys Rev Lett. 2017;118(6):068301. 10.1103/PhysRevLett.118.068301

- 16. Sánchez-Granero MA, Trinidad-Segovia JE, Clara-Rahola J, Puertas AM, De las Nieves FJ. A model for foreign exchange markets based on glassy Brownian systems. PLoS One. 2017;12(12):e0188814. 10.1371/journal.pone.0188814

- 17. Jurczyk J, Rehberg T, Eckrot A, Morgenstern I. Measuring critical transitions in financial markets. Sci Rep. 2017;7(1):1–6.

- 18. Chekhlov A, Uryasev S, Zabarankin M. Drawdown measure in portfolio optimization. Int J Theor Appl Finance. 2005;8(01):13–58. 10.1142/S0219024905002767

- 19. Rotundo G, Navarra M. On the maximum drawdown during speculative bubbles. Physica A. 2007;382(1):235–46. 10.1016/j.physa.2007.02.021

- 20. Vecer J. Preventing portfolio losses by hedging maximum drawdown. Wilmott. 2007;5(4):1–8.

- 21. Zhang H, Hadjiliadis O. Drawdowns and rallies in a finite time-horizon. Methodol Comput Appl Probab. 2010;12(2):293–308. 10.1007/s11009-009-9139-1

- 22. Johansen A, Sornette D. Large stock market price drawdowns are outliers. J Risk. 2002;4:69–110.

- 23. Johansen A, Sornette D. Shocks, crashes and bubbles in financial markets. Brussels Economic Review. 2010;53(2):201–53.

- 24. Filimonov V, Sornette D. Power law scaling and “Dragon-Kings” in distributions of intraday financial drawdowns. Chaos Solitons Fractals. 2015;74:27–45. 10.1016/j.chaos.2014.12.002

- 25. Gerlach JC, Demos G, Sornette D. Dissection of Bitcoin’s multiscale bubble history from January 2012 to February 2018. R Soc Open Sci. 2019;6(7):180643. 10.1098/rsos.180643

- 26. Cicuta GM, Contedini M, Molinari L. Enumeration of simple random walks and tridiagonal matrices. J Phys A Math Gen. 2002;35(5):1125. 10.1088/0305-4470/35/5/302

- 27. Felsner S, Heldt D. Lattice path enumeration and Toeplitz matrices. J Integer Seq. 2015;18(2):3.

- 28. Salkuyeh DK. Positive integer powers of the tridiagonal Toeplitz matrices. International Mathematical Forum. 2006;1(22):1061–5.

- 29. Bank for International Settlements. Triennial Central Bank Survey: Foreign exchange turnover in April 2019. 2019 Sep 16 [cited 2020 Mar 10]. In: Bank for International Settlements [Internet]. Available from: https://www.bis.org/statistics/rpfx19_fx.htm

- 30. Aristeidis S, Elias K. Empirical analysis of market reactions to the UK’s referendum results – How strong will Brexit be? J Int Financial Mark Inst Money. 2018;53:263–86. 10.1016/j.intfin.2017.12.003

- 31. Dao TM, McGroarty F, Urquhart A. The Brexit vote and currency markets. J Int Financial Mark Inst Money. 2019;59:153–64. 10.1016/j.intfin.2018.11.004

- 32. Lee KS. Safe-haven currency: An empirical identification. Rev Int Econ. 2017;25(4):924–47. 10.1111/roie.12289

- 33. Krznaric D, Levcopoulos C. Fast algorithms for complete linkage clustering. Discrete Comput Geom. 1998;19(1):131–45. 10.1007/PL00009332

- 34. Dawyndt P, De Meyer H, De Baets B. The complete linkage clustering algorithm revisited. Soft Comput. 2005;9(5):385–92. 10.1007/s00500-003-0346-3

- 35. Müllner D. Modern hierarchical, agglomerative clustering algorithms. arXiv:1109.2378 [Preprint]. 2011 [cited 2020 Mar 10]: [29 p.]. Available from: https://arxiv.org/abs/1109.2378

- 36. Weisstein EW. Fisher’s Exact Test. [cited 2020 Mar 10]. In: MathWorld–A Wolfram Web Resource [Internet]. Available from: https://mathworld.wolfram.com/FishersExactTest.html

- 37. Mackenzie M, Platt E. How global markets are reacting to UK’s Brexit vote. 2016 Jun 25 [cited 2020 Mar 10]. In: Financial Times [Internet]. Available from: https://www.ft.com/content/50436fde-39bb-11e6-9a05-82a9b15a8ee7

- 38. Vaswani K. Brexit: Asian investors in panic mode. 2016 Jun 24 [cited 2020 Mar 10]. In: BBC [Internet]. Available from: https://www.bbc.com/news/business-36614979

- 39. Nagata K. Yen leaps on referendum surprise; Nikkei tumbles. 2016 Jun 24 [cited 2020 Mar 10]. In: The Japan Times [Internet]. Available from: https://www.japantimes.co.jp/news/2016/06/24/business/financial-markets/brexit-referendum-forecasts-send-yen-soaring-to-an-almost-2%C2%BD-year-high/#.Xl9LDKgzZPZ

- 40. Yeomans J. How did Black Monday start? 2015 Aug 24 [cited 2020 Mar 10]. In: The Telegraph [Internet]. Available from: https://www.telegraph.co.uk/finance/china-business/11821043/How-did-Black-Monday-start.html

- 41. Denyer S. China’s ‘Black Monday’ spreads stock market fears worldwide. 2015 Aug 24 [cited 2020 Mar 10]. In: The Washington Post [Internet]. Available from: https://www.washingtonpost.com/world/world-markets-lose-ground-amid-black-monday-for-shanghai-index/2015/08/24/a1c88a48-0161-404c-a48b-6cee7d04f864_story.html

- 42. Wearden G, Rankin J, Farrer M. China’s ‘Black Monday’ sends markets reeling across the globe – as it happened. 2015 Aug 24 [cited 2020 Mar 10]. In: The Guardian [Internet]. Available from: https://www.theguardian.com/business/live/2015/aug/24/global-stocks-sell-off-deepens-as-panic-grips-markets-live

- 43. Rabouin D. Dollar hits new highs as yields spike after Trump win. 2016 Nov 9 [cited 2020 Mar 10]. In: Reuters [Internet]. Available from: https://www.reuters.com/article/uk-global-forex/dollar-hits-new-highs-as-yields-spike-after-trump-win-idUSKBN1332SK

- 44. Tomisawa A. Nikkei suffers biggest daily drop since Brexit vote as Trump nears shock victory. 2016 Nov 9 [cited 2020 Mar 10]. In: Reuters [Internet]. Available from: https://www.reuters.com/article/uk-japan-stocks/nikkei-suffers-biggest-daily-drop-since-brexit-vote-as-trump-nears-shock-victory-idUKKBN1340YI

- 45. Federal Reserve System [Internet]. Federal Reserve issues FOMC statement. 2015 Oct 28 [cited 2020 Mar 10]. Available from: https://www.federalreserve.gov/newsevents/pressreleases/monetary20151028a.htm

- 46. Federal Reserve System [Internet]. Federal Reserve issues FOMC statement. 2017 Mar 15 [cited 2020 Mar 10]. Available from: https://www.federalreserve.gov/newsevents/pressreleases/monetary20170315a.htm

- 47. Mahmudova A, Sjolin S. Dollar drops against rivals after Fed raises rates, hints at 2 hikes in 2017. 2017 Mar 15 [cited 2020 Mar 10]. In: MarketWatch [Internet]. Available from: https://www.marketwatch.com/story/dollar-trades-in-narrow-band-ahead-of-crucial-day-for-currency-markets-2017-03-15

- 48. Bank of Japan [Internet]. Statement on Monetary Policy. 2015 Oct 7 [cited 2020 Mar 10]. Available from: https://www.boj.or.jp/en/announcements/release_2015/k151007a.pdf

- 49. Bank of Japan [Internet]. Statement on Monetary Policy. 2015 Oct 30 [cited 2020 Mar 10]. Available from: https://www.boj.or.jp/en/announcements/release_2015/k151030a.pdf

- 50. B T. The Bank of Japan keeps printing money at speed. 2015 Oct 30 [cited 2020 Mar 10]. In: The Economist [Internet]. Available from: https://www.economist.com/free-exchange/2015/10/30/the-bank-of-japan-keeps-printing-money-at-speed

- 51. Bank of Japan [Internet]. Statement on Monetary Policy. 2016 Apr 28 [cited 2020 Mar 10]. Available from: https://www.boj.or.jp/en/announcements/release_2016/k160428a.pdf

- 52. Harding R. Yen surges and stocks sink after Bank of Japan keeps policy on hold. 2016 Apr 28 [cited 2020 Mar 10]. In: Financial Times [Internet]. Available from: https://www.ft.com/content/95509c6c-0ce0-11e6-b41f-0beb7e589515

- 53. NewsRise. Shares slump after BoJ stands pat, derivatives expiry weighs. 2016 Apr 28 [cited 2020 Mar 10]. In: Nikkei Asian Review [Internet]. Available from: https://asia.nikkei.com/Business/Markets/Stocks/Shares-slump-after-BoJ-stands-pat-derivatives-expiry-weighs

- 54. Bank of Japan [Internet]. Statement on Monetary Policy. 2016 Jun 16 [cited 2020 Mar 10]. Available from: https://www.boj.or.jp/en/announcements/release_2016/k160616a.pdf

- 55. Sano H, Coghill K. Yen hits 20-month high vs dollar after BOJ stands pat, Nikkei futures tumble. 2016 Jun 16 [cited 2020 Mar 10]. In: Reuters [Internet]. Available from: https://www.reuters.com/article/global-forex-yen/yen-hits-20-month-high-vs-dollar-after-boj-stands-pat-nikkei-futures-tumble-idUSL4N1980L3

- 56. Shaffer L. Japanese yen pushed higher by Fed, safe-haven flows amid Brexit fears. 2016 Jun 16 [cited 2020 Mar 10]. In: CNBC [Internet]. Available from: https://www.cnbc.com/2016/06/16/japanese-yen-pushed-higher-by-fed-safe-haven-flows-amid-brexit-fears.html

- 57. Bank of Japan [Internet]. Enhancement of Monetary Easing. 2016 Jul 29 [cited 2020 Mar 10]. Available from: https://www.boj.or.jp/en/announcements/release_2016/k160729a.pdf

- 58. Reuters. Bank of Japan Expands Stimulus, Disappointing Investors. 2016 Jul 29 [cited 2020 Mar 10]. In: Fortune [Internet]. Available from: https://fortune.com/2016/07/29/bank-of-japan-stimulus-expand-interest-rates-brexit

- 59. Soble J. Bank of Japan Resists Strong Medicine for Stimulus. 2016 Jul 29 [cited 2020 Mar 10]. In: The New York Times [Internet]. Available from: https://www.nytimes.com/2016/07/30/business/international/japan-bank-economy-abe-yen.html

- 60. Bank of Japan [Internet]. New Framework for Strengthening Monetary Easing: “Quantitative and Qualitative Monetary Easing with Yield Curve Control”. 2016 Sep 21 [cited 2020 Mar 10]. Available from: https://www.boj.or.jp/en/announcements/release_2016/k160921a.pdf

- 61. Harding R. BoJ launches new form of policy easing. 2016 Sep 21 [cited 2020 Mar 10]. In: Financial Times [Internet]. Available from: https://www.ft.com/content/028d47a8-7faa-11e6-bc52-0c7211ef3198

- 62. DeCambre M, Kachi H, Vlastelica R. Dollar hits lowest in a month versus yen after BOJ move; Fed stays put on rates. 2016 Sep 21 [cited 2020 Mar 10]. In: MarketWatch [Internet]. Available from: https://www.marketwatch.com/story/yen-slides-losing-safe-haven-allure-after-bank-of-japan-decision-2016-09-21

- 63. Diedrich S. All Eyes On Bank Of Japan And The Possibility Of ‘Helicopter Money’. 2016 Jul 26 [cited 2020 Mar 10]. In: Forbes [Internet]. Available from: https://www.forbes.com/sites/greatspeculations/2016/07/26/all-eyes-on-bank-of-japan-and-the-possibility-of-helicopter-money/#7d7ee6386c5d

- 64. Adinolfi J. Here’s how the yen might react to the BOJ decision. 2016 Jul 28 [cited 2020 Mar 10]. In: MarketWatch [Internet]. Available from: https://www.marketwatch.com/story/heres-how-the-yen-might-react-to-the-boj-decision-2016-07-28