Significance

Here we show robust face-selectivity in the lateral fusiform gyrus of congenitally blind participants during haptic exploration of 3D-printed stimuli, indicating that neither visual experience, nor fovea-biased input, nor visual expertise is necessary for face-selectivity to arise in its characteristic location. Similar resting fMRI correlation fingerprints in individual blind and sighted participants suggest a role for long-range connectivity in the specification of the cortical locus of face-selectivity.

Keywords: face selectivity, congenital blindness, development, haptics, fusiform gyrus

Abstract

The fusiform face area responds selectively to faces and is causally involved in face perception. How does face-selectivity in the fusiform arise in development, and why does it develop so systematically in the same location across individuals? Preferential cortical responses to faces develop early in infancy, yet evidence is conflicting on the central question of whether visual experience with faces is necessary. Here, we revisit this question by scanning congenitally blind individuals with fMRI while they haptically explored 3D-printed faces and other stimuli. We found robust face-selective responses in the lateral fusiform gyrus of individual blind participants during haptic exploration of stimuli, indicating that neither visual experience with faces nor fovea-biased inputs is necessary for face-selectivity to arise in the lateral fusiform gyrus. Our results instead suggest a role for long-range connectivity in specifying the location of face-selectivity in the human brain.

Neuroimaging research over the last 20 years has provided a detailed picture of the functional organization of the cortex in humans. Dozens of distinct cortical regions are each found in approximately the same location in essentially every typically developing adult. How does this complex and systematic organization get constructed over development, and what is the role of experience? Here we address one facet of this longstanding question by testing whether the fusiform face area (FFA), a key cortical locus of the human face processing system, arises in humans who have never seen faces.

Both common sense and some data suggest a role for visual experience in the development of face perception. First, faces constitute a substantial percentage of all visual experience in early infancy (1), and it would be surprising if this rich teaching signal were not exploited. Second, face perception abilities and face-specific neural representations continue to mature for many years after birth (2–6). Although these later changes could in principle reflect either experience or biological maturation or both, some evidence indicates that the amount (7, 8) and kind (9, 10) of face experience during childhood affect face perception abilities in adulthood. In all of these cases, however, it is not clear whether visual experience plays an instructive role in wiring up or refining the circuits for face perception, or a permissive role in maintaining those circuits (11).

Indeed, several lines of evidence suggest that some aspects of face perception may develop with little or no visual experience. Within a few minutes of birth, or perhaps even before birth (12), infants track schematic faces more than scrambled faces (13, 14). Within a few days of birth, infants can behaviorally discriminate individual faces across changes in viewpoint, and specifically for upright but not inverted faces (15–17). EEG data from infants 1 to 4 d old show stronger cortical responses to upright than inverted schematic faces (18). Finally, functional MRI (fMRI) data show a recognizably adultlike spatial organization of face responses in the cortex of infant monkeys (19) and 6-mo-old human infants (20). These findings show that many behavioral and neural signatures of the adult face-processing system can be observed very early in development, often before extensive visual experience with faces.

To more powerfully address the causal role of visual experience in the development of face processing mechanisms, what is needed is a comparison of those mechanisms in individuals with and without the relevant visual experience. Two recent studies have done just that. Arcaro et al. (21) raised baby monkeys without exposing them to faces (while supplementing visual and social experience with other stimuli), and found that these face-deprived monkeys did not develop face-selective regions of the cortex. This finding seems to provide definitive evidence that seeing faces is necessary for the formation of the face-selective cortex, as the authors concluded. However, another recent study in humans (22) argued for the opposite conclusion. Building upon a large earlier literature providing evidence for category-selective responses for scenes (23), objects, and tools (24–32) in the ventral visual pathway of congenitally blind participants, the study reported preferential responses for face-related sounds in the fusiform gyrus of congenitally blind humans. These two studies differ in stimulus modality, species, and the nature of the deprivation, and hence their findings are not strictly inconsistent. Nonetheless, they suggest different conclusions about the role of visual experience with faces in the development of the face-selective cortex, leaving this important question unresolved.

If indeed true face-selectivity can be found in congenitally blind participants, in the same region of the lateral fusiform gyrus as sighted participants, this would conclusively demonstrate that visual experience is not necessary for face-selectivity to arise in this location. Although the van den Hurk et al. study (22) provides evidence for this hypothesis, it did not show face-selectivity in individual blind participants, as needed to precisely characterize the location of the activation, and it did not provide an independent measure of the response profile of this region, as needed to establish true face-selectivity (i.e., substantially and significantly higher response to faces than to each of the other conditions tested). Furthermore, the auditory stimuli used by van den Hurk et al. (22) do not enable the discovery of any selective responses that may be based on amodal shape information (25, 33, 34), which would be carried by visual or tactile but not auditory stimuli. Here, we use tactile stimuli and individual-subject analysis methods in an effort to determine whether true face-selectivity can arise in congenitally blind individuals with no visual experience with faces.

We also address the related question: Why does the FFA develop so systematically in its characteristic location, on the lateral side of the midfusiform sulcus (35)? According to one hypothesis (36, 37) this region becomes tuned to faces because it receives structured input preferentially from the foveal retinotopic cortex, which in turn disproportionately receives face input (because faces are typically foveated). Another (nonexclusive) hypothesis holds that this region of the cortex may have preexisting feature biases, for example for curved stimuli, leading face stimuli to preferentially engage and experientially modify responses in this region (38–45). A third class of hypotheses argues that it is not bottom-up input, but rather interactions with higher-level regions engaged in social cognition and reward, that bias this region to become face-selective (38, 39). This idea dovetails with the view that category-selective regions in the ventral visual pathway are not just visual processors extracting information about different categories in similar ways, but that each is optimized to provide a different kind of representation tailored to the distinctive postperceptual use of this information (29, 46). Such rich interactions with postperceptual processing may enable these typically visual regions to take on higher-level functions in blind people (47, 48). These three hypotheses make different predictions about face-selectivity in the fusiform gyrus of congenitally blind people, which we test here.

Results

Face-Selectivity in Sighted Controls.

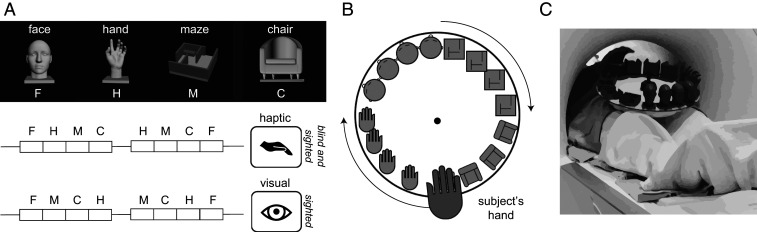

To validate our methods, we first tested for face-selectivity in sighted control participants (n = 15) by scanning them with fMRI as they viewed rendered videos of 3D-printed face, maze, hand, and chair stimuli, and as they haptically explored the same stimuli with their eyes closed (Fig. 1).

Fig. 1.

Haptic experiment stimuli and design. (A, Upper) Images showing a rendered example stimulus from each of the four stimulus categories used in Exp. 1: faces (F), hands (H), mazes (M), and chairs (C). (Lower) Experimental design in which the participants haptically explored the stimuli presented in blocks. Sighted participants viewed these rendered stimuli rotating in depth; both sighted and blind subjects haptically explored 3D-printed versions of these stimuli. Both haptic and visual experiments included two blocks per stimulus category per run, with 30-s rest blocks at the beginning, middle, and end of each run. During the middle rest period in the haptic condition, the turntable was replaced to present new stimuli for the second half of the run. (B) The stimuli were presented on a rotating turntable to minimize hand/arm motion. The subjects explored each 3D-printed stimulus for 6 s, after which the turntable was rotated to present the next stimulus. (C) Image showing an example participant inside the scanner bore extending his hand out to explore the 3D-printed stimuli on the turntable.

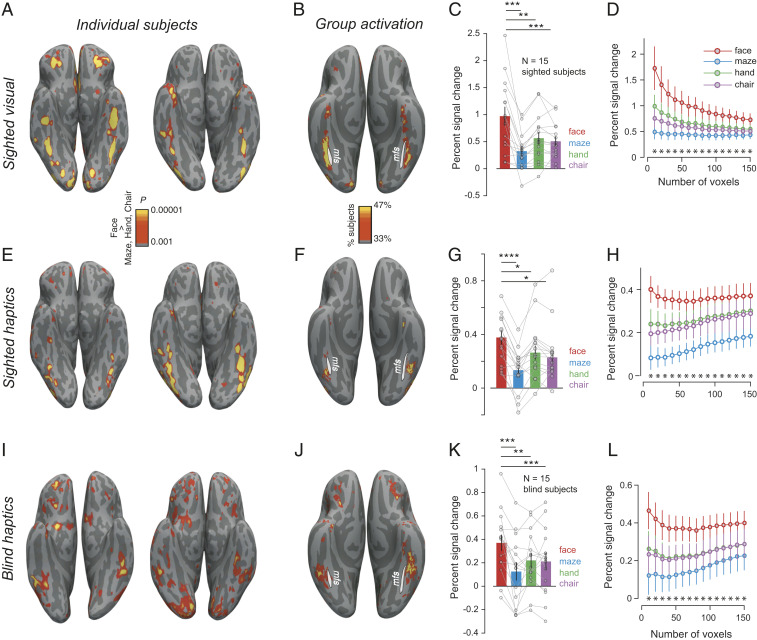

Whole-brain contrasts of the response during viewing of faces versus viewing hands, chairs, and mazes showed the expected face-selective activations in the canonical location lateral to the midfusiform sulcus (35) in both individual participants (Fig. 2A) and in a group-activation overlap map (Fig. 2B). Following established methods in our laboratory (3, 49, 50), we identified the top face-selective voxels for each participant within a previously reported anatomical constraint parcel (49) for the FFA, quantified the fMRI response in these voxels in held-out data, and statistically tested selectivity of those response magnitudes across participants (Fig. 2C). This method avoids subjectivity in the selection of the functional region-of-interest (fROI), successfully finds face-selective fROIs in most sighted subjects, and avoids double-dipping or false-positives by requiring cross-validation (see SI Appendix, Fig. S3 for control analyses). As expected for sighted participants in the visual condition, the response to faces was significantly higher than each of the other three stimulus categories in the held-out data (Fig. 2 C and D) (all P < 0.005, paired t test).
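The fROI procedure described above (select the top face-selective voxels inside an anatomical parcel, then measure their responses in held-out data) can be sketched roughly as follows. This is a minimal illustration on synthetic data, not the analysis pipeline used in the study: the function name `froi_response`, the array layout, and the single selection/held-out split are assumptions made for the example. Only the idea of taking the top 50 voxels within a parcel comes from the text.

```python
import numpy as np

def froi_response(t_select, parcel_mask, betas_heldout, n_top=50):
    """Select the n_top most face-selective voxels inside a parcel and
    return their mean held-out response for each condition.

    t_select      : (n_voxels,) faces > others t-values from the selection runs
    parcel_mask   : (n_voxels,) boolean anatomical constraint parcel
    betas_heldout : (n_conditions, n_voxels) responses from the held-out runs
    """
    # Voxels outside the parcel can never be selected.
    t_in_parcel = np.where(parcel_mask, t_select, -np.inf)
    n_top = min(n_top, int(parcel_mask.sum()))
    # Indices of the n_top largest t-values; no significance threshold is applied.
    top_idx = np.argsort(t_in_parcel)[::-1][:n_top]
    return betas_heldout[:, top_idx].mean(axis=1), top_idx

# Synthetic example (hypothetical sizes): 500 voxels, parcel = voxels 100..299,
# four conditions (face, maze, hand, chair).
rng = np.random.default_rng(0)
n_voxels = 500
parcel_mask = np.zeros(n_voxels, dtype=bool)
parcel_mask[100:300] = True
t_select = rng.normal(size=n_voxels)
betas_heldout = rng.normal(size=(4, n_voxels))

profile, top_idx = froi_response(t_select, parcel_mask, betas_heldout)
print(profile)  # mean held-out response per condition in the fROI
```

Because the voxels are chosen from one portion of the data and their responses are read out from another, noise that happened to favor faces during selection does not inflate the measured response profile.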

Fig. 2.

Face-selectivity in sighted and blind. (A) Visual face-selective activations (faces > hands, chairs, and mazes) on the native reconstructed surface for two sighted subjects. (B) Percent of sighted subjects showing significant visual face-selectivity (at the P < 0.001 uncorrected level in each participant) in each voxel registered to fsaverage surface. White line shows the location of the midfusiform sulcus (mfs). (C) Mean and SEM of fMRI BOLD response across sighted subjects in the top 50 face-selective voxels (identified using independent data) during visual inspection of face, maze, hand, and chair images. Individual subject data are overlaid as connected lines. (D) Response profile of visual face-selective region (in held-out data) in sighted participants as a function of fROI size (error bars indicate the SEM). Asterisks (*) indicate significant (P < 0.05) difference between faces and each of the three other stimulus categories across subjects. (E–H) Same as A–D but for sighted subjects during haptic exploration of 3D-printed stimuli. (I–L) Same as A–D but for blind subjects during haptic exploration of 3D-printed stimuli. *P < 0.05, **P < 0.005, ***P < 0.0005, and ****P < 0.00005.

In the haptic condition, whole-brain contrasts revealed face-selective activations in a similar location to visual face-selectivity in individual sighted participants (Fig. 2E) and in the group overlap map (Fig. 2F). fROI analyses using haptic data to both select and quantify held-out responses revealed significantly higher response to faces than each of the three other conditions (all P < 0.05, paired t test). Furthermore, when the visual data from the same subject were used to define face-selective fROIs, we observed similar haptic face-selectivity (all P < 0.05, paired t test). Note that the absolute magnitude of the fMRI signal was lower for the haptic condition in sighted participants relative to the visual condition, but the selectivity of the response was similar in the two modalities [t(28) = 0.13, P = 0.89, unpaired t test, on selectivity index] (see Methods). The observation of haptic face-selectivity in sighted participants demonstrates the effectiveness of our stimuli and methods, and presumably reflects visual imagery of the haptic stimuli (51, 52). But the sighted haptic responses do not resolve the main question of this paper, of whether face-selectivity in the fusiform can arise without visual experience with faces. To answer that question, we turn to congenitally blind participants.

Face-Selectivity in Blind Participants.

To test whether blind participants show selectivity for faces despite their lack of visual experience, we scanned congenitally blind participants on the same paradigm as sighted participants, as they haptically explored 3D-printed face, maze, hand, and chair stimuli. Indeed, whole-brain contrast maps reveal face-selective activations in the group activation overlap map (Fig. 2J) and in most blind subjects analyzed individually (Fig. 2I and SI Appendix, Fig. S1). These activations were found in the canonical location (Fig. 2 I and J), lateral to the midfusiform sulcus (35). Following the method used for sighted participants, we identified the top haptic face-selective voxels for each subject within the previously published anatomical constraint parcels for the visual FFA (SI Appendix, Fig. S2) and measured the fMRI response in these voxels in the held-out data. The response to haptic faces was significantly higher than to each of the other three stimulus classes (all P < 0.005, paired t test). Although the absolute magnitude of the fMRI signal was lower for blind participants haptically exploring the stimuli than for sighted participants viewing the stimuli, the selectivity of the response was similar in the two groups [t(28) = 0.05, P = 0.96, unpaired t test, on selectivity index between blind-haptics and sighted-visual and t(28) = 0.12, P = 0.90, on selectivity index between blind-haptics and sighted-haptics]. Note that fROIs were defined in each participant as the top 50 most significant voxels within the anatomical constraint parcel, even if no voxels actually reached significance in that participant, thus providing an unbiased measure of average face-selectivity of the whole group. These analyses reveal clear face-selectivity in the majority of congenitally blind participants, in a location similar to where it is found in sighted participants. Thus, seeing faces is not necessary for the development of face-selectivity in the lateral fusiform gyrus.

Three further analyses support the similarity in anatomical location of the face-selective responses for blind participants feeling faces and sighted participants viewing faces. First, a whole-brain group analysis found no voxels showing an interaction of subject group (sighted visual versus haptic blind) by face/nonface stimuli even at the very liberal threshold of P < 0.05 uncorrected, aside from a region in the collateral sulcus that showed greater scene selectivity in sighted than blind participants. Second, a new fROI-based analysis using a hand-drawn anatomical constraint region for the FFA based on precise anatomical landmarks from Weiner et al. (35) showed similar face-selectivity in the haptic blind data (SI Appendix, Fig. S2). Finally, there is no evidence for differential lateralization of face-selectivity in haptic blind versus visual sighted (see SI Appendix, Fig. S4 for related statistics). Taken together, these analyses suggest that haptic face-selectivity in blind persons arises in a similar anatomical location to visual face-selectivity in the sighted.

Why Do Face-Selective Activations Arise where They Do?

Our finding of haptic face-selective activations in the fusiform gyrus of blind subjects raises another fundamental question: Why does face-selectivity arise so systematically in that specific patch of cortex, lateral to the midfusiform sulcus (39)? According to one widespread hypothesis, this cortical locus becomes face-selective because it receives structured visual information routed through specific connections from the foveal (versus peripheral) retinotopic cortex, where face stimuli most often occur (40–43). This hypothesis cannot account for the anatomical location of face-selectivity in the blind group observed here, because these subjects never received any structured visual input at all.

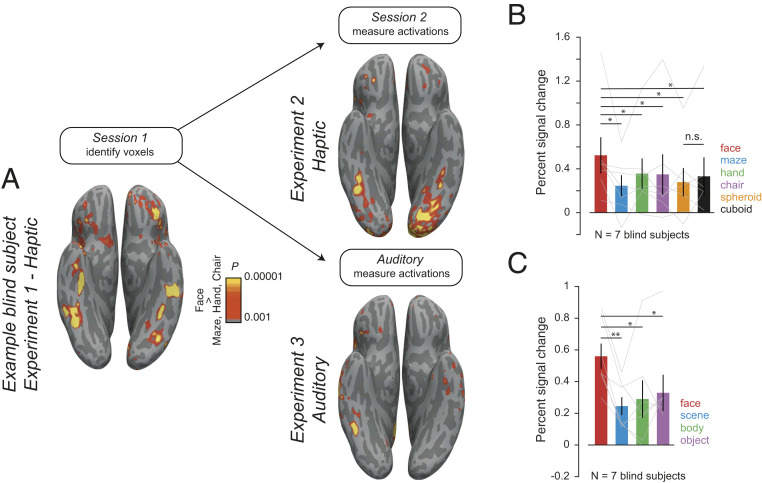

According to another widely discussed hypothesis, this region is biased for curved stimuli, as part of an early-developing shape-based proto-map upon which higher-level face-selectivity is subsequently constructed (53). If this proto-map is strictly visual, then it cannot account for the location of the face selectivity we observe in blind participants. However, if it is amodal, responding also to haptic shape (33, 54), it could. In this case, haptic face-selective regions in blind participants should respond preferentially to curved compared to rectilinear shapes. We tested this hypothesis in a second experiment in which seven of our original congenitally blind participants returned for a new scanning session in which they haptically explored a stimulus set comprising the four stimulus categories used previously and two additional categories: Spheroids and cuboids. This experimental design enabled us to both replicate face-selectivity in an additional experimental session and determine whether the face-selective voxels were preferentially selective for curvilinear shapes (spheroids) over rectilinear shapes (cuboids). Face-selective activations were replicated in the second experiment session (Fig. 3 A, Upper Right). In a strong test of the replication, we quantified face-selectivity by choosing the top face-selective voxels from the first experiment session within the FFA parcel, spatially registered these data to those from the second session in the same participant, and measured the response of this same fROI in the second experimental session. We replicated the face-selectivity from our first experiment (Fig. 3B). Furthermore, we found that the face-selective voxels did not respond more strongly to spheroids than cuboids [t(6) = −0.65, P = 0.54, paired t test]. These data argue against a curvature bias in a preexisting amodal shape map as a determinant of the cortical locus of face-selectivity in blind subjects.

Fig. 3.

Responses of face-selective regions in blind participants during haptic exploration of curved stimuli and auditory listening to sound categories. (A) Face-selective activation (faces > hands, chairs, and scenes) in an example blind subject during haptic exploration of 3D-printed stimuli in session 1 (Left), replicated in an additional follow-up scan with additional curved stimuli (Upper Right) and in an auditory paradigm (Lower Right). (Right) Mean and SEM across participants of fMRI BOLD response in the top 50 face-selective voxels (identified from session 1) during the session. (B) Haptic exploration of spheroids and cuboids (in addition to face, maze, hand, chair stimuli as before) and (C) auditory presentation of face, scene, body, and object related sounds. In B and C, individual subject data are overlaid as connected lines; *P < 0.05, **P < 0.005 (two-tailed t test). n.s. is not significant.

If it is neither early-developing retinotopic nor feature-based proto-maps (40, 55) that specify the cortical locus of face-selectivity in blind participants, what does? In sighted subjects, category-selective regions in the ventral visual pathway serve not just to analyze visual information, but to extract the very different representations that serve as inputs to higher-level regions engaged in social cognition, visually guided action, and navigation (29, 38, 39, 47). Perhaps it is their interaction with these higher-level cortical regions that drives the development of face-selectivity in this location (38, 39). The previously mentioned preferential response to face-related sounds in the fusiform of blind subjects (22) provides some evidence for this hypothesis. To test the robustness of that result, and to ask whether face-selectivity in blind participants arises in the same location for auditory and haptic stimuli, we next ran a close replication of the van den Hurk et al. (22) study on seven of the congenitally blind participants in our pool.

Participants heard the same short clips of face-, body-, object-, and scene-related sounds used in the previous study (22), while being scanned with fMRI. Examples of face-related sounds included audio clips of people laughing or chewing, body-related sounds included clapping or walking, object-related sounds included a ball bouncing and a car starting, and scene-related sounds included clips of waves crashing and a crowded restaurant. Indeed, we found robustly selective responses to face sounds compared to object-, scene-, and body-related sounds in individual blind participants, replicating van den Hurk et al. (22). Auditory face activations were found in similar approximate locations as haptic face activations (Fig. 3A and SI Appendix, Fig. S1), although the voxel-wise relationship between the two activation patterns within the FFA parcel in each participant was weak (mean across subjects of the Pearson correlation between observed t-values in the face parcel between the haptic and auditory experiment, R = 0.14; correlation between haptic Exp. 1 and haptic Exp. 2, R = 0.23). To quantify auditory face-selectivity, we chose face-selective voxels in the FFA parcels from the haptic paradigm and tested them on the auditory paradigm. This analysis revealed clear selectivity for face sounds across the group (Fig. 3C) in the region showing haptic face-selectivity in blind participants.
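The voxel-wise comparison reported above (a Pearson correlation between two t-maps within the FFA parcel, computed per subject and then averaged) can be illustrated with a short sketch. The function name `mean_pattern_correlation`, the synthetic maps, and the parcel masks are all hypothetical; the numbers it produces have no relation to the reported values.

```python
import numpy as np

def mean_pattern_correlation(t_maps_a, t_maps_b, parcel_masks):
    """Correlate two t-maps within each subject's parcel, then average.

    t_maps_a, t_maps_b : lists of (n_voxels,) arrays, one pair per subject
    parcel_masks       : list of (n_voxels,) boolean arrays
    """
    rs = [np.corrcoef(a[m], b[m])[0, 1]
          for a, b, m in zip(t_maps_a, t_maps_b, parcel_masks)]
    return float(np.mean(rs)), rs

# Synthetic example: three hypothetical subjects whose two maps share some signal.
rng = np.random.default_rng(0)
masks = [rng.random(400) < 0.5 for _ in range(3)]
haptic = [rng.normal(size=400) for _ in range(3)]
auditory = [0.3 * h + rng.normal(size=400) for h in haptic]

mean_r, per_subject_r = mean_pattern_correlation(haptic, auditory, masks)
print(round(mean_r, 2))
```

Comparing the cross-modality value against a within-modality value (here, haptic Exp. 1 versus haptic Exp. 2 in the text) gives a rough reference for how much correlation is achievable given measurement noise.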

Do Similar Connectivity Fingerprints Predict the Locus of Face-Selectivity in the Sighted and Blind?

The question remains: What is it about the lateral fusiform gyrus that marks this region as the locus where face-selectivity will develop? A longstanding hypothesis holds that the long-range connectivity of the brain, much of it present at birth, constrains the functional development of the cortex (39, 46, 56–62). Evidence for this “connectivity constraint” hypothesis comes from demonstrations of distinctive “connectivity fingerprints” of many cortical regions in adults (57, 63, 64), including the FFA (57, 64). To test this hypothesis in our group of sighted and blind participants, we used resting-state fMRI correlations as a proxy for long-range connectivity (65). We first asked whether the “correlation fingerprint” (CF) of face-selective regions was similar in blind and sighted participants. The fingerprint of every vertex in the fusiform was defined as the correlation between the resting-state time course of that vertex and the average time course within each of 355 cortical regions from a standard whole-brain parcellation (66). Fingerprints corresponding to face-selective vertices were highly correlated between sighted and blind subjects in both hemispheres. Specifically, the 355-dimensional vector of correlations between the face-selective voxels (averaged across the top 200 most face-selective voxels within each hemisphere and individual, then averaged across participants) and each of the 355 anatomical parcels was highly correlated between the blind and sighted participants (mean ± SD across 1,000 bootstrap estimates across subjects, left hemisphere Pearson R = 0.82 ± 0.08, right hemisphere Pearson R = 0.76 ± 0.09). In contrast, the analogous correlations between the fingerprints of the best face-selective and scene-selective vertices were lower (bootstrap resampled 1,000 times across subjects, left hemisphere Pearson R = 0.55 ± 0.12, right hemisphere Pearson R = 0.60 ± 0.09; P = 0, permutation test between face- and scene-selective correlations after Fisher transformation).
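To make the fingerprint definition concrete, the sketch below computes a CF for every vertex (its correlation with each parcel-averaged time course) and then estimates the between-group similarity of group-averaged fingerprints by bootstrap resampling of subjects. It runs on synthetic data. The function names, the group sizes, and the choice to resample subjects independently within each group are assumptions for illustration; the text specifies only 355 parcels and 1,000 bootstrap estimates.

```python
import numpy as np

def correlation_fingerprints(vertex_ts, parcel_ts):
    """Pearson correlation of every vertex time course with every parcel time course.

    vertex_ts : (n_timepoints, n_vertices)
    parcel_ts : (n_timepoints, n_parcels), parcel-averaged time courses
    returns   : (n_vertices, n_parcels)
    """
    vz = (vertex_ts - vertex_ts.mean(axis=0)) / vertex_ts.std(axis=0)
    pz = (parcel_ts - parcel_ts.mean(axis=0)) / parcel_ts.std(axis=0)
    return vz.T @ pz / vertex_ts.shape[0]

def bootstrap_group_similarity(fp_a, fp_b, n_boot=1000, seed=0):
    """Mean and SD of the correlation between two group-averaged fingerprints.

    fp_a, fp_b : (n_subjects, n_parcels) per-subject fingerprints of the
                 face-selective vertices. Subjects are resampled with
                 replacement within each group (an assumed scheme).
    """
    rng = np.random.default_rng(seed)
    r = np.empty(n_boot)
    for i in range(n_boot):
        a = fp_a[rng.integers(0, len(fp_a), len(fp_a))].mean(axis=0)
        b = fp_b[rng.integers(0, len(fp_b), len(fp_b))].mean(axis=0)
        r[i] = np.corrcoef(a, b)[0, 1]
    return r.mean(), r.std()

# Synthetic resting-state data: 120 time points, 10 vertices, 355 parcels.
rng = np.random.default_rng(1)
vertex_ts = rng.normal(size=(120, 10))
parcel_ts = rng.normal(size=(120, 355))
cf = correlation_fingerprints(vertex_ts, parcel_ts)

# Two hypothetical groups of 12 subjects sharing a common fingerprint plus noise.
shared = rng.normal(size=355)
fp_group_a = shared + 0.5 * rng.normal(size=(12, 355))
fp_group_b = shared + 0.5 * rng.normal(size=(12, 355))
mean_r, sd_r = bootstrap_group_similarity(fp_group_a, fp_group_b)
print(cf.shape, round(mean_r, 2), round(sd_r, 3))
```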

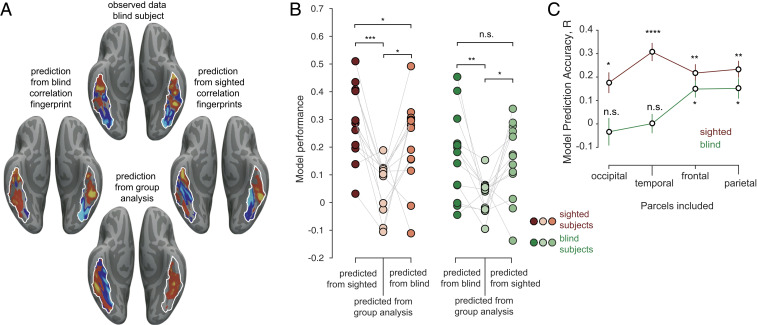

Next, we tested a stronger version of the connectivity hypothesis by building computational models that learn the mapping from the CF of each vertex to the functional activations. Specifically, following established methods (64, 65), we trained models to learn the voxel-wise relationship between CFs and face-selectivity, and tested them using leave-one-subject-out cross-validation. We first tested the efficacy of this approach within the sighted and blind groups separately. Models trained on data within each group (blind or sighted) on average predicted the spatial pattern of each held-out subject’s face-selective activations significantly better than a group analysis of the functional selectivity from the other participants in that group (Fig. 4B) (P < 0.05, paired t test with predictions from a random-effects group analysis). The observed predictions were significantly higher in the sighted participant group than the blind participant group [unpaired t test between Fisher-transformed correlations between sighted predictions based on sighted data and blind predictions based on blind data, t(24) = 2.13, P = 0.043], which is consistent with the observation that face-selectivity is more variable in blind participants (see Discussion) than sighted participants. As expected, the model predictions were also correlated with the observed variability in the fusiform target region (Pearson correlation between variance of t-values in the fusiform and the observed prediction, R = 0.47, P = 0.016). This result shows that it is indeed possible to learn a robust mapping from voxel-wise CFs to voxel-wise face-selective functional activations within each group.
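The leave-one-subject-out scheme described above can be sketched as follows. The model family is not specified in this section, so the example substitutes closed-form ridge regression purely for illustration; the function names, the regularization strength `alpha`, and the synthetic data are likewise assumptions. Prediction accuracy is scored as the Pearson correlation between the predicted and observed maps of the held-out subject.

```python
import numpy as np

def ridge_fit(X, y, alpha=1.0):
    """Closed-form ridge regression; the intercept is handled by centering."""
    x_mean, y_mean = X.mean(axis=0), y.mean()
    Xc, yc = X - x_mean, y - y_mean
    w = np.linalg.solve(Xc.T @ Xc + alpha * np.eye(X.shape[1]), Xc.T @ yc)
    return w, y_mean - x_mean @ w

def loso_prediction_accuracy(cfs, maps, alpha=1.0):
    """Leave-one-subject-out prediction of selectivity maps from CFs.

    cfs  : list of (n_vertices, n_parcels) fingerprint matrices, one per subject
    maps : list of (n_vertices,) face-selectivity values, one per subject
    """
    scores = []
    for held in range(len(cfs)):
        train = [s for s in range(len(cfs)) if s != held]
        X = np.vstack([cfs[s] for s in train])
        y = np.concatenate([maps[s] for s in train])
        w, b = ridge_fit(X, y, alpha)
        pred = cfs[held] @ w + b  # the held-out subject never enters training
        scores.append(np.corrcoef(pred, maps[held])[0, 1])
    return np.array(scores)

# Synthetic example: 5 hypothetical subjects, 200 vertices, 20 parcels.
rng = np.random.default_rng(2)
w_true = rng.normal(size=20)
cfs = [rng.normal(size=(200, 20)) for _ in range(5)]
maps = [c @ w_true + 0.5 * rng.normal(size=200) for c in cfs]

scores = loso_prediction_accuracy(cfs, maps)
print(scores.round(2))
```

Training on one group and predicting the other, as in the cross-group test, would amount to fitting on all subjects of the first group and scoring each subject of the second.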

Fig. 4.

CFs predict the spatial locations of face selectivity. (A, Top) Observed face-selective activation in an example blind subject (z-scored units). Predicted activations for the same subject based on that individual’s CF using a model trained on other blind subjects’ haptic activations (Left), or on sighted subjects’ visual activations (Right). (Bottom) predicted activation based on group analysis of face-selective responses in other blind subjects. (B) Model prediction performance across blind and sighted subjects. Each dot is the model prediction accuracy for a single sighted (Left, red) and blind (Right, green) subject. Model predictions were obtained from CFs derived from either the same group (left column in each set), the functional group analysis of the remaining subjects (middle column in each set) or from CFs of the opposite group (right column in each set). Paired t tests were performed on Fisher-transformed data. *P < 0.05, **P < 0.005, ***P < 0.0005, ****P < 0.00005, and n.s., not significant. (C) Model prediction accuracy of face selectivity for sighted (visual responses) and blind (haptic responses) based on CFs including only target regions within one lobe at a time. Statistics indicate significantly better prediction from CFs than from the random-effects analysis of face-selectivity in the rest.

We then asked whether the model trained on one group of subjects (e.g., sighted participants) would generalize to the other group of subjects (e.g., blind participants), as a stronger test of the similarity in the connectivity of face-selective regions between sighted and blind participants. Indeed, these predictions of the spatial pattern of face-selectivity were found not only for the held-out subjects within each group, but also for subjects in the other group, significantly outperforming the predictions from the functional activations of other participants within the same group (Fig. 4 A and B) (P < 0.05, paired t test with predictions from a random-effects group analysis). Note, however, that the face-selective activations in sighted participants were better predicted by the CFs from the sighted participants than blind participants [paired t test t(12) = 2.32, P = 0.039]. Although resting fMRI correlations are an imperfect proxy for structural connectivity (67), these results suggest that face-selectivity in the fusiform is predicted in part by similar long-range connectivity in sighted and blind participants.

The previous analysis does not tell us which connections are most predictive of the functional activations in sighted and blind participants. To address this question, we reanalyzed the CFs using only target regions from a single lobe (frontal, parietal, occipital, and temporal) at a time. Here we find that although voxel-wise visual face-selectivity in sighted subjects was significantly predicted from CFs to each of the four lobes of the brain individually (P < 0.05) (Fig. 4C), in blind subjects, haptic face-selectivity was significantly predicted from parietal and frontal regions only. These findings implicate top-down inputs in the development of face-selectivity in the fusiform in blind subjects.

Discussion

How are functionally specific cortical regions wired up in development, and what is the role of experience? This study tests three widely discussed classes of (nonexclusive) hypotheses about the origins of the FFA and presents evidence against two of them. Our finding of robust face-selective responses in the lateral fusiform gyrus of most congenitally blind participants indicates that neither preferential foveal input, nor perceptual expertise, nor indeed any visual experience at all is necessary for the development of face-selective responses in this region. Our further finding that the same region does not respond more to curvy than rectilinear shapes in blind participants casts doubt on the hypothesis that face-selectivity arises where it does in the cortex because this region constitutes a curvature-biased part of an early-developing proto-map. On the other hand, our finding of very similar CFs predictive of face-selectivity for sighted and blind participants, particularly from parietal and frontal regions, supports the hypothesis that top-down connections play a role in specifying the locus where face-selectivity develops.

Our findings extend previous work in two important ways. First, although previous studies have reported some evidence for cortical selectivity in congenitally blind participants for stimulus categories, such as scenes and large objects (23, 24), tools (68), and bodies (69, 70), prior work has either failed to find face-selectivity in the fusiform at all in blind participants (27, 71, 72) or reported only preferential responses in a group analysis (22, 30). Our analyses meet a higher bar for demonstrating face-selectivity by showing: 1) Robust face-selectivity in individual blind subjects, 2) significantly stronger responses of these regions to faces than to each of three other stimulus conditions, 3) a similar selectivity index of this region for blind and sighted participants, 4) internal replications of face-selectivity in the fusiform across imaging sessions and across sensory modalities, and 5) a demonstration that haptic face-selectivity in the blind cannot be explained by selectivity for haptic curvature. Second, our findings provide evidence against the most widespread theories for why face-selectivity develops in its particular stereotyped cortical location. Apparently, neither foveal face experience nor a bias for curvature is necessary. Instead, we present evidence that the pattern of structural connections to other cortical regions may play a key role in determining the location where face-selectivity will arise. In particular, we demonstrated that the CF predictive of visual face-selectivity for sighted subjects can also predict the pattern of haptic face-selectivity in blind subjects (and vice versa). Using this predictive modeling approach, we also find preliminary evidence for a dominant role of top-down connections from frontal and parietal cortices in determining the locus of face-selectivity in blind subjects (38).

A common limitation of studies with blind participants is the lack of documentation of the complete absence of visual pattern perception abilities in the first weeks of life. Blind newborns are rarely tested with careful psychophysical methods, and few still have medical records from their first weeks of life. The strongest evidence comes from the rare participants with congenital bilateral anophthalmia, but only one study has included enough of these participants to analyze their data separately (22). Here we have followed the practice of most other studies of congenitally blind people: we collected all the evidence our participants were able to provide about the precise nature of their blindness (SI Appendix, Table S1) and included participants in our study if they reported having been diagnosed with blindness at birth and having no evidence or memory of ever having seen visual patterns. Although we cannot definitively rule out some very rudimentary early pattern vision in this group, our subgroup reporting no light perception at all from birth is the least likely to have ever seen any visual patterns. This subgroup included only five individuals, which was not enough for their haptic face-selectivity to reach significance when analyzed separately, but the data from this subgroup look very similar to those from the rest of our participants (SI Appendix, Fig. S6).

Many important questions remain. First, is face-selectivity innate, in the sense of requiring no perceptual experience with faces at all? The present study does not answer this question because even though our congenitally blind participants have never seen faces, they have certainly felt them and heard the sounds they produce. Blind people do not routinely touch faces during social interactions, but they certainly feel their own faces and occasionally the faces of loved ones. It remains unknown whether this haptic experience with faces is necessary for the formation of face-selectivity in the fusiform.

Second, what is actually computed in the face-selective cortex in the fusiform of blind participants? It seems unlikely that the main function of this region in blind people is haptic discrimination of individual faces, a task they perform rarely. One possibility is that the “native” function of the FFA is individual recognition, which is primarily visual in sighted people (i.e., face recognition) but can take on analogous functions in other modalities in the case of blindness (25, 33, 58, 62, 73), such as voice identity processing (74, 75). This hypothesis has received mixed support in the past (76, 77) but could potentially account for the observed higher responses to haptic faces and auditory face-related sounds found here. Another possibility is that this region takes on a higher-level social function in blind people, such as inferring mental states in others [but see Bedny et al. (78)]. An interesting parallel case is the finding that the “visual word form area” in the blind actually processes not orthographic but high-level linguistic information (47). Similarly, if the “blind FFA” in fact computes higher-level social information, that could account for another puzzle in our data, which is that not all blind participants show the face-selective response to haptic and auditory stimuli. This variability in our data is not explained by variability in age (correlation of age with selectivity index, R = 0.03, P = 0.89), sex (R = −0.12, P = 0.65), or task performance (R = 0.06, P = 0.81). Perhaps the higher-level function implemented in this region in blind participants is not as automatic as visual face recognition in sighted participants.

Finally, while our results are clearly consistent with those of van den Hurk et al. (22), they seem harder to reconcile with the prior finding that monkeys reared without face experience do not show face-selective responses (21). Importantly, however, auditory and haptic responses to faces have not to our knowledge been tested in face-deprived monkeys, and it is possible that they would show the same pattern we see in humans. If so, it would be informative to test whether auditory or tactile experience with faces during development is necessary for face-selective responses to arise in monkeys deprived of visual experience with faces (something we cannot test in humans). In advance of those data, a key difference to note between data from face-deprived monkeys and blind humans is that the monkeys introduced to visual faces did not show any indication of recognizing them, with no preference in looking behavior for faces vs. hands. In contrast, all of the blind participants in our study immediately recognized the 3D-printed stimuli as faces, without being told. Detecting the presence of a fellow primate may be critical for obtaining a face-selective response.

In sum, we show that visual experience is not necessary for face-selectivity to develop in the lateral fusiform gyrus, and neither apparently is a feature-based proto-map in this region of the cortex. Instead, our data suggest that the long-range connectivity of this region, which develops independent of visual experience, may mark the lateral fusiform gyrus as the site of the face-selective cortex.

Methods

Participants.

Fifteen sighted and 15 blind subjects participated in the experiment (6 females in the sighted and 7 females in the blind group, mean ± SEM age 29 ± 2 y for sighted; 28 ± 2 y for blind participants). Two additional blind subjects who were recruited had to be excluded because they were not comfortable in the scanner. Seven subjects from the blind pool participated in Exps. 2 and 3 in an additional experiment session (four females, mean ± SEM age 28 ± 3 y). All studies were approved by the Committee on the Use of Humans as Experimental Subjects of the Massachusetts Institute of Technology (MIT). Participants provided informed written consent before the experiment and were compensated for their time. All blind participants recruited for the study were either totally blind or had only light perception from birth. None of our subjects reported any memory of spatial or object vision. Details on the blind subjects are summarized in SI Appendix, Table S1.

Stimuli.

Experiment 1.

In Exp. 1, blind subjects explored 3D-printed stimuli haptically and sighted subjects explored the same stimuli visually and haptically. The haptic stimuli comprised five exemplars each from four stimulus categories: faces, mazes, chairs, and hands. The 3D models for the face stimuli were generated using FaceGen 3D print (software purchased and downloaded from https://facegen.com/3dprint.htm). Face stimuli were printed on a 4 × 4-cm base and were 7 cm high. Three-dimensional models of mazes were similar to house layouts and were designed in Autodesk 3ds Max (Academic License, v2017). The maze layouts were 5 × 5 cm and consisted of straight walls with entryways and exits and a small raised platform. The 3D models for the hand stimuli were purchased from Dosch Design (Dosch 3D: Hands dataset, from doschdesign.com). The downloaded 3D stimuli were thereafter customized to remove the forearm and include only the wrist and the hand. The stimuli were fixed to a 4 × 4-cm base and were ∼7 cm high and included five exemplar hand configurations. Finally, the 3D models for chairs were downloaded from publicly available databases (from archive3d.net). The stimuli were all armchairs of different types and were each printed on a 5 × 5-cm base and were ∼7 cm tall. Because subjects performed a one-back identification task inside the scanner, we 3D-printed two identical copies of each stimulus, generating a total set of 40 3D-printed models (4 object categories × 5 exemplars per category × 2 copies). The 3D models were printed on a FormLabs stereolithography (SLA) style 3D-printer (Form-2) using the gray photopolymer resin (FLGPGR04). This ensured that the surface-texture properties could not distinguish the stimulus exemplars or categories. The 3D prints generated from the SLA style 3D printers have small deformities at the locations where the support scaffolding attaches to the model. We ensured that these deformities were not diagnostic by either using the same pattern of scaffolding (for the face and hand stimuli) or filing them away after the models were processed and cured (chair stimuli). The mazes could be printed without support scaffolds, so there were no 3D-printing–related deformities on those stimuli. Nonetheless, we instructed subjects to ignore small deformities when judging the one-back conditions. Visual stimuli for the sighted subjects included short 6-s video animations of the 3D renderings of the same 3D-printed stimuli (in gray on a black background) rotating in depth. The animations were rendered directly from Autodesk 3ds Max. Each stimulus subtended about 8° of visual angle around a centrally located black fixation dot. The .STL files used to 3D print the different stimuli and the animation video files can be downloaded from GitHub at https://github.com/ratanmurty/pnas2020.

Experiment 2.

Stimuli for Exp. 2 included stimuli from Exp. 1 (face, maze, hand, and chair) and two additional object categories: spheroids and cuboids. The 3D models were designed from scratch in Autodesk 3ds Max. The spheroids were egg-shaped and the cuboids were box-shaped, and each was printed on a 4 × 4-cm base. For both the spheroids and the cuboids, we 3D-printed two copies of each of four exemplars (three variations in the x, y, and z planes plus one sphere/cube) that varied in elongation. Each stimulus was ∼7 cm high.

Experiment 3.

The auditory stimuli used in Exp. 3 were downloaded from https://osf.io/s2pa9/, graciously provided by the authors of a previous study (22). Each auditory stimulus was a short ∼1,800-ms audio clip and the stimulus set consisted of 64 unique audio clips, 16 from each of four auditory categories (face, body, object, and scene). Face-related stimuli included recorded sounds of people laughing and chewing, body-related stimuli included audio clips of people clapping and walking, object-related stimuli included sounds of a ball bouncing and a car starting, and scene-related stimuli included clips of waves crashing and a crowded restaurant. The overall sound intensity was matched across stimuli by normalizing the root-mean-square (rms) value of the sound-pressure levels. We did not perform any additional normalization of the stimuli, except to make sure that the sound-intensity levels were within a comfortable auditory range for our subjects.
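The rms matching described above can be illustrated with a minimal sketch. The text does not state the target level, the sampling rate, or the software used, so the target rms of 0.1, the 16-kHz sampling rate, and the function names below are all assumptions made for illustration, and the clips are synthetic tones rather than the actual stimuli.

```python
import numpy as np

def rms(x):
    """Root-mean-square amplitude of a 1-D signal."""
    return float(np.sqrt(np.mean(np.square(x))))

def match_rms(clips, target_rms=0.1):
    """Scale every clip so that its rms amplitude equals target_rms.

    target_rms is an arbitrary illustrative value, not one from the study.
    """
    matched = []
    for clip in clips:
        clip = np.asarray(clip, dtype=float)
        level = rms(clip)
        if level == 0:
            raise ValueError("cannot normalize a silent clip")
        matched.append(clip * (target_rms / level))
    return matched

# Synthetic stand-ins for two ~1,800-ms clips (hypothetical 16-kHz sampling rate).
fs = 16000
t = np.arange(int(1.8 * fs)) / fs
quiet = 0.05 * np.sin(2 * np.pi * 220 * t)
loud = 0.80 * np.sin(2 * np.pi * 440 * t)

matched = match_rms([quiet, loud])
print([round(rms(c), 6) for c in matched])
```

After scaling, both clips have the same rms amplitude even though they started at very different levels; only the overall gain of each clip changes, not its spectral content.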

Paradigm.

All 15 blind and 15 sighted subjects participated in Exp. 1. Blind and sighted subjects explored 3D-printed faces, mazes, hands, and chairs presented on an MR-compatible turntable inside the fMRI scanner while performing an orthogonal one-back identity task (mean subject accuracy on the one-back task: 79%, 81%, 89%, and 86% for face, maze, hand, and chair stimuli, respectively). A two-way ANOVA with subject group (blind and sighted) and stimulus type (face, maze, hand, and chair) as factors revealed a significant effect of stimulus category [F(3, 112) = 18.28, P < 0.00005] but no effect of subject group, and no interaction between subject group and stimulus category. Each run contained three 30-s rest periods at the beginning, middle, and end of the run, and eight 24-s-long haptic stimulus blocks (two blocks per stimulus category) (Fig. 1). During each haptic stimulus block, blind subjects haptically explored four 3D-printed stimuli (three unique stimuli plus a one-back stimulus) in turn for 6 s each, timed by the rotation of the turntables to which the stimuli were velcroed (Fig. 1). Once every stimulus had been explored across four blocks (one per stimulus category), the turntable was replaced during the middle rest period with a new ordering of stimuli (Fig. 1). The haptic sessions were interleaved with 3- to 4-min resting-state scans during which subjects were instructed to remain as still as possible. Each turntable included 16 stimuli (4 stimulus categories × 4 exemplars per category) and the stimulus and category orders were randomized across experimental runs (by changing the position of the stimuli on the turntable). Each run lasted 4 min 42 s and 14 of 15 blind subjects completed 10 runs (1 subject completed 8 runs) of the experiment. Blind subjects were not trained on the stimuli or the task but were only familiarized with the procedure for less than 5 min prior to scanning. Care was also taken to ensure that no information about any of the stimulus categories was provided to the participants prior to scanning. Sighted participants performed the analogous task visually (mean accuracy on the one-back task: 99%, 97%, 99%, and 99% for face, maze, hand, and chair stimuli, respectively), viewing renderings of the same 3D-printed stimuli rotating in depth, presented at the same rate and in the same design (four exemplar stimuli per stimulus category per block). Sighted subjects completed five runs over the course of an experimental session.

A subset of blind subjects (n = 7) returned for a second session (a few days to a few months after the first session) and participated in Exps. 2 and 3. In Exp. 2, subjects performed 10 runs of a haptic paradigm similar to that of Exp. 1, but the turntables included two additional stimulus categories beyond faces, mazes, hands, and chairs: spheroids and cuboids. In the additional session, runs of Exp. 2 were alternated with runs from Exp. 3, which was a direct replication of the auditory paradigm from a previously published study (22). The stimulus presentation and task design were the same as in the previous study (22). Briefly, subjects were instructed to compare the conceptual dissimilarity of each auditory stimulus with the preceding auditory stimulus on a scale of 1 to 4. Each auditory stimulus was a short ∼1,800-ms audio clip and the stimulus set consisted of 64 unique audio clips, 16 from each of four auditory categories (face, body, object, and scene). The stimuli were presented in a block design, with four blocks per category in each run. Each block consisted of eight stimuli from one category, chosen at random from the set of 16 possible stimuli. Each run lasted 7 min 30 s and each subject participated in nine runs of this auditory paradigm interleaved with the Exp. 2 haptic paradigm.

Data Acquisition.

All experiments were performed at the Athinoula A. Martinos Imaging Center at MIT on a Siemens 3-T MAGNETOM Prisma Scanner with a 32-channel head coil. We acquired a high-resolution T1-weighted (multiecho MPRAGE) anatomical scan during the first scanning session (acquisition parameters: 176 slices, voxel size: 1 × 1 × 1 mm, repetition time [TR] = 2,500 ms, echo time [TE] = 2.9 ms, flip angle = 8°). Functional scans included 141 and 225 T2*-weighted echoplanar blood-oxygen level-dependent (BOLD) images for each haptic and auditory run, respectively (acquisition parameters: simultaneous interleaved multislice acquisition 2, TR = 2,000 ms, TE = 30 ms, voxel size 2-mm isotropic, number of slices = 52, flip angle: 90°, echo-spacing 0.54 ms, 7/8 phase partial Fourier acquisition). Resting-state data were acquired for 13 of 15 blind and 13 of 15 sighted subjects who participated in Exp. 1 (scanning parameters: TR = 1,500 ms, TE = 32 ms, voxel size 2.5 × 2.5 × 2.5-mm isotropic, flip angle 70°, duration of resting-state data: 27.5 ± 0.5 min for sighted and 27.2 ± 0.5 min for blind subjects). Sighted participants closed their eyes during the resting-state runs.
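The run durations given under Paradigm and the volume counts given here can be cross-checked with a few lines of arithmetic. The sketch below uses only numbers stated in the text (three 30-s rest periods, eight 24-s blocks, a 2,000-ms functional TR, and 225 volumes per auditory run); the variable and function names are introduced here for illustration.

```python
REST_S = 30       # duration of each rest period in a haptic run
N_REST = 3        # rest periods at the beginning, middle, and end
BLOCK_S = 24      # each haptic block: 4 stimuli x 6 s
N_BLOCKS = 8      # two blocks per stimulus category
TR_S = 2.0        # functional repetition time (2,000 ms)
AUDITORY_VOLUMES = 225

def as_min_sec(seconds):
    """Format a duration in seconds as 'M min S s'."""
    minutes, secs = divmod(int(round(seconds)), 60)
    return f"{minutes} min {secs} s"

haptic_run_s = N_REST * REST_S + N_BLOCKS * BLOCK_S
haptic_volumes = haptic_run_s / TR_S
auditory_run_s = AUDITORY_VOLUMES * TR_S

print(as_min_sec(haptic_run_s), haptic_volumes)   # haptic run length and volume count
print(as_min_sec(auditory_run_s))                 # auditory run length
```

The haptic design sums to 282 s, that is 4 min 42 s or 141 volumes at a 2-s TR, and 225 auditory volumes correspond to 7 min 30 s, in agreement with the values reported in the text.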

Data Preprocessing and Modeling.

fMRI data preprocessing and general linear modeling were performed in Freesurfer (v6.0.0; downloaded from: https://surfer.nmr.mgh.harvard.edu/fswiki/). Data preprocessing included slice time correction, motion correction of each functional run, alignment to each subject’s anatomical data, and smoothing using a 5-mm full-width at half-maximum Gaussian kernel. General linear modeling included one regressor per stimulus condition, as well as nuisance regressors for linear drift removal and motion correction per run. Data were analyzed in each subject’s native volume (analysis on the subjects’ native surface also resulted in qualitatively similar results). Activation maps were projected on the native reconstructed surface to aid visualization. For group-level analyses, data were coregistered to standard anatomical surface coordinates using Freesurfer’s fsaverage (MNI305) template. Because the exact anatomical location of the FFA varies across participants, this activation often fails to reach significance in a random-effects analysis, even with a sizable number of sighted participants. So, to visualize the average location of face-selective activations in each group, each voxel was color-coded according to the number of participants showing a selective activation in that voxel.
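The participant-count map described at the end of this paragraph amounts to thresholding each participant's face-selectivity map and summing the resulting binary maps. A minimal sketch follows, assuming that all maps have already been brought into a common space; the threshold value, the array layout, and the function name are illustrative assumptions, since the text does not specify the criterion used for "selective activation".

```python
import numpy as np

def overlap_map(stat_maps, threshold):
    """Count, for every voxel, how many participants exceed the threshold.

    stat_maps: array-like of shape (n_participants, n_voxels), one
               face-selectivity statistic map per participant, all in the
               same space.
    threshold: hypothetical cutoff defining a 'selective' voxel.
    """
    stat_maps = np.asarray(stat_maps, dtype=float)
    return (stat_maps > threshold).sum(axis=0)

# Toy example: three participants, five voxels (made-up values).
maps = [
    [3.5, 0.2, 4.1, -1.0, 3.9],
    [4.0, 3.2, 0.1, 0.0, 3.3],
    [0.5, 3.8, 5.0, 1.0, 3.1],
]
counts = overlap_map(maps, threshold=3.0)
print(counts)
```

Each entry of `counts` would then be mapped to a color, so that voxels selective in many participants stand out even when their exact location differs slightly from person to person.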

Resting-state data were preprocessed using the CONN toolbox (https://www.nitrc.org/projects/conn). Structural data were segmented and normalized. Functional data were motion-corrected and nuisance regression was performed to remove head-motion artifacts and ventricular and white-matter signals. Subject motion threshold was set at 0.9 mm to remove time points with high motion. The resting-state data were thereafter filtered with a bandpass filter (0.009 to 0.08 Hz). All data were projected onto the fsaverage surface using Freesurfer (freesurfer.net) for further analysis.
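To make the bandpass step concrete, the sketch below applies a 0.009 to 0.08 Hz filter to a synthetic time course sampled at the resting-state TR of 1,500 ms. It is a plain FFT-masking filter written in NumPy for illustration only; it is not the CONN toolbox code, whose filter implementation may differ, and the function name and test signal are assumptions.

```python
import numpy as np

def fft_bandpass(ts, tr_s, low_hz=0.009, high_hz=0.08):
    """Zero all Fourier components outside [low_hz, high_hz].

    ts:   time course(s), with time on the last axis
    tr_s: sampling interval in seconds
    """
    ts = np.asarray(ts, dtype=float)
    n = ts.shape[-1]
    freqs = np.fft.rfftfreq(n, d=tr_s)
    spectrum = np.fft.rfft(ts, axis=-1)
    keep = (freqs >= low_hz) & (freqs <= high_hz)
    spectrum[..., ~keep] = 0
    return np.fft.irfft(spectrum, n=n, axis=-1)

# Synthetic time course: 400 samples at TR = 1.5 s (10 min).
tr_s = 1.5
t = np.arange(400) * tr_s
slow = np.sin(2 * np.pi * 0.05 * t)   # inside the passband
fast = np.sin(2 * np.pi * 0.20 * t)   # above the passband
offset = 5.0                          # constant baseline (0 Hz)

filtered = fft_bandpass(slow + fast + offset, tr_s)
print(np.max(np.abs(filtered - slow)))
```

In this toy case the filter recovers the 0.05-Hz component and removes both the baseline and the 0.2-Hz component. Real data are not periodic in the analysis window, so an FFT mask of this kind would produce some edge effects that this example does not show.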

fROI Analysis in the Fusiform.

We used fROI analyses in sighted and blind subjects to quantify face selectivity. Specifically, we used previously published (49) anatomical constraint parcels downloaded from https://web.mit.edu/bcs/nklab/GSS.shtml within which to define the FFA. These parcels were projected to each subject’s native volume. The face-selective ROI for each subject was identified as the top n voxels within the anatomical constraint parcel for the FFA that responded most significantly to face relative to maze, hand, and chair stimuli (regardless of whether any of those voxels actually reached any statistical criterion). We fixed n as the 50 top voxels (or 400 mm³) prior to analyzing the data, but also varied n from 10 to 150 voxels (i.e., 80 to 1,200 mm³) to estimate selectivity as a function of ROI size. We always used independent data to select the top voxels and estimate the activations (based on an odd–even run cross-validation routine) to avoid double-dipping. The estimated beta values were converted into BOLD percent signal-change values by dividing by the mean signal strength (see SI Appendix, Fig. S5 for analyses on raw beta estimates instead of percent signal change). The statistical significance was assessed using two-tailed paired t tests between object categories across subjects.
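The logic of the top-n selection with odd–even cross-validation can be sketched as follows. This is an illustration on synthetic data rather than the analysis code used in the study: the array layout, the function names, and the choice to average the two cross-validation folds are assumptions, and the simulated effect sizes are arbitrary.

```python
import numpy as np

VOXEL_MM3 = 2 ** 3  # 2-mm isotropic functional voxels

def top_n_voxels(selectivity_t, parcel_mask, n=50):
    """Indices of the n voxels inside the parcel with the highest face > others statistic."""
    candidates = np.flatnonzero(parcel_mask)
    order = np.argsort(selectivity_t[candidates])[::-1]
    return candidates[order[:n]]

def cross_validated_response(t_odd, t_even, psc_odd, psc_even, parcel_mask, n=50):
    """Select voxels on one half of the runs and measure them on the other half.

    t_*:   (n_voxels,) face-selectivity statistic from odd or even runs
    psc_*: (n_conditions, n_voxels) percent signal change from odd or even runs
    Returns the mean response per condition, averaged over both folds (an assumption).
    """
    roi_from_odd = top_n_voxels(t_odd, parcel_mask, n)
    roi_from_even = top_n_voxels(t_even, parcel_mask, n)
    resp_even = psc_even[:, roi_from_odd].mean(axis=1)
    resp_odd = psc_odd[:, roi_from_even].mean(axis=1)
    return (resp_even + resp_odd) / 2

# Synthetic data: 500 voxels, parcel = first 200, conditions = face, maze, hand, chair.
rng = np.random.default_rng(0)
n_vox = 500
parcel_mask = np.zeros(n_vox, dtype=bool)
parcel_mask[:200] = True
face_effect = np.zeros(n_vox)
face_effect[:60] = 1.0       # face-preferring voxels inside the parcel
face_effect[300:320] = 1.0   # face-preferring voxels outside the parcel (ignored)

def simulate_half():
    t_map = 5 * face_effect + rng.normal(size=n_vox)
    psc = rng.normal(0.2, 0.05, size=(4, n_vox))
    psc[0] += face_effect
    return t_map, psc

t_odd, psc_odd = simulate_half()
t_even, psc_even = simulate_half()

roi = top_n_voxels(t_odd, parcel_mask, n=50)
response = cross_validated_response(t_odd, t_even, psc_odd, psc_even, parcel_mask)
print(len(roi) * VOXEL_MM3, "mm^3;", np.round(response, 2))
```

Because the voxels are chosen from one half of the runs and their responses are read out from the other half, noise that happens to favor faces in the selection data cannot inflate the measured response. With 2-mm isotropic voxels, 50 voxels correspond to the 400 mm³ stated in the text.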

CF-Based Prediction.

This analysis tests how well we could predict the functional activations in each participant from their resting-state fMRI correlation data, using the approach described in Osher et al. (65). This method is a variant of the method used in refs. 57, 64, and 79, but applied to resting-state fMRI data. Briefly, we used the Glasser multimodal parcellations from the Human Connectome Project (66) to define a target region (within the fusiform) and search regions (the remaining parcels). The fusiform target region in each hemisphere included the following five Glasser parcels: area V8, fusiform face complex, area TF, area PHT, and ventral visual complex. Next, we defined the CF for each vertex in the fusiform target region. The CF of each vertex was a 355-dimensional vector, each dimension representing the Pearson correlation between the time course of that vertex at rest, and the time course of one of the 355 remaining Glasser parcels in the search space during the same resting scan (averaged across all vertices in the chosen target Glasser parcel).
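The CF computation reduces to correlating each target-vertex time course with each parcel-averaged time course. The sketch below shows one way to do this in NumPy on random data; the array shapes, the function name, and the use of precomputed parcel-mean time courses are assumptions made for illustration.

```python
import numpy as np

N_SEARCH_PARCELS = 355  # remaining Glasser parcels, as stated in the text

def connectivity_fingerprints(target_ts, parcel_ts):
    """Pearson correlation of every target vertex with every search parcel.

    target_ts: (n_vertices, n_timepoints) resting time courses of target vertices
    parcel_ts: (n_parcels, n_timepoints) time courses averaged within each parcel
    Returns an (n_vertices, n_parcels) matrix; row i is the CF of vertex i.
    """
    def zscore(x):
        x = np.asarray(x, dtype=float)
        return (x - x.mean(axis=1, keepdims=True)) / x.std(axis=1, keepdims=True)

    zt = zscore(target_ts)
    zp = zscore(parcel_ts)
    # The mean product of z-scores equals the Pearson correlation.
    return zt @ zp.T / zt.shape[1]

# Random stand-in data: 20 target vertices, 355 parcels, 300 time points.
rng = np.random.default_rng(1)
target_ts = rng.normal(size=(20, 300))
parcel_ts = rng.normal(size=(N_SEARCH_PARCELS, 300))

cf = connectivity_fingerprints(target_ts, parcel_ts)
print(cf.shape)
```

Each row of `cf` is the 355-dimensional fingerprint of one vertex, and these rows serve as the predictors in the regression model described next.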

Predictive Model.

We next trained a ridge-regression model to predict the face-selectivity of each voxel in the fusiform target region directly from the CFs (65). The T-statistic map from the contrast of face > maze, hand, and chair was used for the predictions as in previous studies (57, 64, 65). The model was trained using standardized data (mean centered across all vertices in the fusiform search space and unit SD) using a leave-one-subject-out cross-validation routine. This method ensures that the individual subject data being predicted is never used in the training procedure. The ridge-regression model includes a regularization parameter λ, which was determined using an inner-loop leave-one-subject-out cross-validation. Each inner loop was repeated 100 times, each with a different λ coefficient (λ values logarithmically spaced between 10⁻⁵ and 10²). The optimal λs and βs were chosen from the inner-loop models that minimized the mean-square error between the predicted and observed activation values and used to predict the responses for the held-out subject. The model prediction accuracy was estimated as the Pearson correlation between the predicted and observed patterns of face selectivity across vertices in the fusiform target regions for each subject. We evaluated the predictions from the model against the benchmark of predictions from a random-effects group analysis using the general linear model (in Freesurfer) applied to the face-selectivity of the other subjects.
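The nested cross-validation scheme can be sketched with a closed-form ridge solution in NumPy. This is a simplified illustration on synthetic data, not the code used in the study: the helper names are hypothetical, the number of predictors is reduced from 355 to 20 to keep the example fast, and, after the inner loop selects λ, the model is refit on all training subjects, which is an assumption about a detail the text does not spell out.

```python
import numpy as np

def standardize(a):
    """Mean-center and scale to unit SD across vertices (axis 0)."""
    return (a - a.mean(axis=0)) / a.std(axis=0)

def ridge_fit(X, y, lam):
    """Closed-form ridge weights: (X'X + lam * I)^-1 X'y."""
    return np.linalg.solve(X.T @ X + lam * np.eye(X.shape[1]), X.T @ y)

def choose_lambda(Xs, ys, lambdas):
    """Inner leave-one-subject-out loop: lambda with the lowest summed MSE."""
    errors = np.zeros(len(lambdas))
    for held_out in range(len(Xs)):
        X_train = np.vstack([X for i, X in enumerate(Xs) if i != held_out])
        y_train = np.concatenate([y for i, y in enumerate(ys) if i != held_out])
        for k, lam in enumerate(lambdas):
            w = ridge_fit(X_train, y_train, lam)
            errors[k] += np.mean((Xs[held_out] @ w - ys[held_out]) ** 2)
    return lambdas[int(np.argmin(errors))]

def loso_predict(Xs, ys, lambdas):
    """Outer loop: predict each subject from a model trained on all the others."""
    scores = []
    for test in range(len(Xs)):
        X_tr = [X for i, X in enumerate(Xs) if i != test]
        y_tr = [y for i, y in enumerate(ys) if i != test]
        lam = choose_lambda(X_tr, y_tr, lambdas)
        w = ridge_fit(np.vstack(X_tr), np.concatenate(y_tr), lam)
        predicted = Xs[test] @ w
        scores.append(np.corrcoef(predicted, ys[test])[0, 1])
    return np.array(scores)

# Synthetic data: 6 subjects, 80 vertices each, 20 predictors (355 in the study).
rng = np.random.default_rng(0)
true_w = rng.normal(size=20)
Xs, ys = [], []
for _ in range(6):
    X = standardize(rng.normal(size=(80, 20)))
    y = standardize(X @ true_w + rng.normal(scale=0.5, size=80))
    Xs.append(X)
    ys.append(y)

lambdas = np.logspace(-5, 2, 100)  # 100 values between 10^-5 and 10^2
scores = loso_predict(Xs, ys, lambdas)
print(np.round(scores, 3))
```

The key property is that the held-out subject never contributes to either the choice of λ or the fitted weights, so each entry of `scores` is an out-of-sample prediction accuracy for one subject.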

The model training and benchmark procedures were similar to Osher et al. (65), except for one important difference. We split all of the functional and resting-state data into two independent groups (even–odd run split). We trained all our models based on data from even runs and evaluated the model performances based on data from the odd runs. This additional procedure ensures that the predictions for each subject are independent samples for subsequent statistical analyses. Statistical significance was assessed using two-tailed paired t tests on Fisher-transformed prediction scores across subjects. To assess the degree to which we could predict functional activations from parcels in individual lobes, we divided the search regions into frontal, parietal, occipital, and temporal lobes based on the Glasser parcels (66). These regions included 162, 66, 60, and 67 parcels in the frontal, parietal, occipital, and temporal regions, respectively. We retrained our models from scratch, limiting the predictors to the parcels within each of the lobes, and evaluated the model performance and performed statistical tests as before.
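Two small pieces of this procedure lend themselves to a worked example: the Fisher transformation followed by a paired t statistic, and the check that the four lobe-wise parcel counts add up to the 355 search parcels. The prediction scores below are made-up numbers used only to exercise the code, and the function name is hypothetical.

```python
import numpy as np

LOBE_PARCELS = {"frontal": 162, "parietal": 66, "occipital": 60, "temporal": 67}
total_parcels = sum(LOBE_PARCELS.values())

def paired_t_on_fisher_z(r_a, r_b):
    """Paired t statistic on Fisher z-transformed correlation scores.

    r_a, r_b: per-subject prediction accuracies (Pearson r) from two models.
    Returns (t, degrees_of_freedom); a two-tailed P value would then be read
    from the t distribution with that many degrees of freedom.
    """
    z_a = np.arctanh(np.asarray(r_a, dtype=float))  # Fisher transform
    z_b = np.arctanh(np.asarray(r_b, dtype=float))
    diff = z_a - z_b
    n = diff.size
    t = diff.mean() / (diff.std(ddof=1) / np.sqrt(n))
    return float(t), n - 1

# Illustrative (invented) prediction scores for five subjects.
r_model = [0.45, 0.52, 0.38, 0.60, 0.41]
r_benchmark = [0.30, 0.35, 0.33, 0.42, 0.28]

t_stat, dof = paired_t_on_fisher_z(r_model, r_benchmark)
print(total_parcels, dof, t_stat > 0)
```

The Fisher transformation is applied because correlation coefficients are bounded and their sampling distribution is skewed, whereas the transformed values are closer to normally distributed, as the t test assumes.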

Supplementary Material

Acknowledgments

The authors would like to thank Lindsay Yazzolino and Marina Bedny for thoughtful discussions and feedback, and Job van den Hurk and Hans Op de Beeck for graciously providing the auditory stimuli used in their study. This work was supported by the NIH Shared Instrumentation Grant (S10OD021569) to the Athinoula Martinos Center at MIT, the National Eye Institute Training Grant (5T32EY025201-03) and Smith-Kettlewell Eye Research Institute Rehabilitation Engineering Research Center Grant to S.T., the Office of Naval Research Vannevar Bush Faculty Fellowship (N00014-16-1-3116) to A.O., the NIH Pioneer Award (NIH DP1HD091957) to N.K., and the NSF Science and Technology Center–Center for Brains, Minds, and Machines Grant (NSF CCF-1231216).

Footnotes

The authors declare no competing interest.

This article contains supporting information online at https://www.pnas.org/lookup/suppl/doi:10.1073/pnas.2004607117/-/DCSupplemental.

Data Availability.

All study data are included in the main text and SI Appendix.

References

- 1. Smith L. B., Jayaraman S., Clerkin E., Yu C., The developing infant creates a curriculum for statistical learning. Trends Cogn. Sci. 22, 325–336 (2018).

- 2. Golarai G., Liberman A., Yoon J. M. D., Grill-Spector K., Differential development of the ventral visual cortex extends through adolescence. Front. Hum. Neurosci. 3, 80 (2010).

- 3. Cohen M. A. et al., Representational similarity precedes category selectivity in the developing ventral visual pathway. Neuroimage 197, 565–574 (2019).

- 4. Aylward E. H. et al., Brain activation during face perception: Evidence of a developmental change. J. Cogn. Neurosci. 17, 308–319 (2005).

- 5. Carey S., Diamond R., Woods B., Development of face recognition: A maturational component? Dev. Psychol. 16, 257–269 (1980).

- 6. Diamond R., Carey S., Developmental changes in the representation of faces. J. Exp. Child Psychol. 23, 1–22 (1977).

- 7. Balas B., Auen A., Saville A., Schmidt J., Harel A., Children are sensitive to mutual information in intermediate-complexity face and non-face features. J. Vis. 20, 6 (2020).

- 8. Balas B., Saville A., Schmidt J., Neural sensitivity to natural texture statistics in infancy. Dev. Psychobiol. 60, 765–774 (2018).

- 9. McKone E., Boyer B. L., Sensitivity of 4-year-olds to featural and second-order relational changes in face distinctiveness. J. Exp. Child Psychol. 94, 134–162 (2006).

- 10. Gilchrist A., McKone E., Early maturity of face processing in children: Local and relational distinctiveness effects in 7-year-olds. Vis. Cogn. 10, 769–793 (2003).

- 11. Crair M. C., Neuronal activity during development: Permissive or instructive? Curr. Opin. Neurobiol. 9, 88–93 (1999).

- 12. Reid V. M. et al., The human fetus preferentially engages with face-like visual stimuli. Curr. Biol. 27, 1825–1828.e3 (2017).

- 13. Johnson M. H., Dziurawiec S., Ellis H., Morton J., Newborns’ preferential tracking of face-like stimuli and its subsequent decline. Cognition 40, 1–19 (1991).

- 14. Johnson M. H., “Face perception: A developmental perspective” in Oxford Handbook of Face Perception, Rhodes G., Calder A., Johnson M., Haxby J. V., Eds. (Oxford University Press, 2012).

- 15. Turati C., Why faces are not special to newborns: An alternative account of the face preference. Curr. Dir. Psychol. Sci. 13, 5–8 (2004).

- 16. Turati C., Di Giorgio E., Bardi L., Simion F., Holistic face processing in newborns, 3-month-old infants, and adults: Evidence from the composite face effect. Child Dev. 81, 1894–1905 (2010).

- 17. Turati C., Bulf H., Simion F., Newborns’ face recognition over changes in viewpoint. Cognition 106, 1300–1321 (2008).

- 18. Buiatti M. et al., Cortical route for facelike pattern processing in human newborns. Proc. Natl. Acad. Sci. U.S.A. 116, 4625–4630 (2019).

- 19. Livingstone M. S. et al., Development of the macaque face-patch system. Nat. Commun. 8, 14897 (2017).

- 20. Deen B. et al., Organization of high-level visual cortex in human infants. Nat. Commun. 8, 13995 (2017).

- 21. Arcaro M. J., Schade P. F., Vincent J. L., Ponce C. R., Livingstone M. S., Seeing faces is necessary for face-domain formation. Nat. Neurosci. 20, 1404–1412 (2017).

- 22. van den Hurk J., Van Baelen M., Op de Beeck H. P., Development of visual category selectivity in ventral visual cortex does not require visual experience. Proc. Natl. Acad. Sci. U.S.A. 114, E4501–E4510 (2017).

- 23. Wolbers T., Klatzky R. L., Loomis J. M., Wutte M. G., Giudice N. A., Modality-independent coding of spatial layout in the human brain. Curr. Biol. 21, 984–989 (2011).

- 24. He C. et al., Selectivity for large nonmanipulable objects in scene-selective visual cortex does not require visual experience. Neuroimage 79, 1–9 (2013).

- 25. Mahon B. Z., Anzellotti S., Schwarzbach J., Zampini M., Caramazza A., Category-specific organization in the human brain does not require visual experience. Neuron 63, 397–405 (2009).

- 26. Mahon B. Z., Schwarzbach J., Caramazza A., The representation of tools in left parietal cortex is independent of visual experience. Psychol. Sci. 21, 764–771 (2010).

- 27. Pietrini P. et al., Beyond sensory images: Object-based representation in the human ventral pathway. Proc. Natl. Acad. Sci. U.S.A. 101, 5658–5663 (2004).

- 28. Amedi A. et al., Shape conveyed by visual-to-auditory sensory substitution activates the lateral occipital complex. Nat. Neurosci. 10, 687–689 (2007).

- 29. Peelen M. V., Downing P. E., Category selectivity in human visual cortex: Beyond visual object recognition. Neuropsychologia 105, 177–183 (2017).

- 30. Mattioni S. et al., Categorical representation from sound and sight in the ventral occipito-temporal cortex of sighted and blind. eLife 9, e50732 (2020).

- 31. Handjaras G. et al., Modality-independent encoding of individual concepts in the left parietal cortex. Neuropsychologia 105, 39–49 (2017).

- 32. Handjaras G. et al., How concepts are encoded in the human brain: A modality independent, category-based cortical organization of semantic knowledge. Neuroimage 135, 232–242 (2016).

- 33. Amedi A., Hofstetter S., Maidenbaum S., Heimler B., Task selectivity as a comprehensive principle for brain organization. Trends Cogn. Sci. 21, 307–310 (2017).

- 34. Striem-Amit E., Bubic A., Amedi A., “Neurophysiological mechanisms underlying plastic changes and rehabilitation following sensory loss in blindness and deafness” in The Neural Bases of Multisensory Processes, Murray M. M., Wallace M. T., Eds. (CRC Press, 2011), pp. 395–422.

- 35. Weiner K. S. et al., The mid-fusiform sulcus: A landmark identifying both cytoarchitectonic and functional divisions of human ventral temporal cortex. Neuroimage 84, 453–465 (2014).

- 36. Amedi A., Malach R., Hendler T., Peled S., Zohary E., Visuo-haptic object-related activation in the ventral visual pathway. Nat. Neurosci. 4, 324–330 (2001).

- 37. Kanwisher N., Faces and places: Of central (and peripheral) interest. Nat. Neurosci. 4, 455–456 (2001).

- 38. Powell L. J., Kosakowski H. L., Saxe R., Social origins of cortical face areas. Trends Cogn. Sci. 22, 752–763 (2018).

- 39.Op de Beeck H. P., Pillet I., Ritchie J. B., Factors determining where category-selective areas emerge in visual cortex. Trends Cogn. Sci. 23, 784–797 (2019). [DOI] [PubMed] [Google Scholar]

- 40.Arcaro M. J., Livingstone M. S., A hierarchical, retinotopic proto-organization of the primate visual system at birth. eLife 6, e26196 (2017). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Hasson U., Levy I., Behrmann M., Hendler T., Malach R., Eccentricity bias as an organizing principle for human high-order object areas. Neuron 34, 479–490 (2002). [DOI] [PubMed] [Google Scholar]

- 42.Levy I., Hasson U., Avidan G., Hendler T., Malach R., Center-periphery organization of human object areas. Nat. Neurosci. 4, 533–539 (2001). [DOI] [PubMed] [Google Scholar]

- 43.Malach R., Levy I., Hasson U., The topography of high-order human object areas. Trends Cogn. Sci. 6, 176–184 (2002). [DOI] [PubMed] [Google Scholar]

- 44. Yue X., Pourladian I. S., Tootell R. B. H., Ungerleider L. G., Curvature-processing network in macaque visual cortex. Proc. Natl. Acad. Sci. U.S.A. 111, E3467–E3475 (2014).

- 45. Rajimehr R., Devaney K. J., Bilenko N. Y., Young J. C., Tootell R. B. H., The “parahippocampal place area” responds preferentially to high spatial frequencies in humans and monkeys. PLoS Biol. 9, e1000608 (2011).

- 46. Mahon B. Z., Caramazza A., What drives the organization of object knowledge in the brain? Trends Cogn. Sci. 15, 97–103 (2011).

- 47. Kim J. S., Kanjlia S., Merabet L. B., Bedny M., Development of the visual word form area requires visual experience: Evidence from blind braille readers. J. Neurosci. 37, 11495–11504 (2017).

- 48. Bedny M., Evidence from blindness for a cognitively pluripotent cortex. Trends Cogn. Sci. 21, 637–648 (2017).

- 49. Julian J. B., Fedorenko E., Webster J., Kanwisher N., An algorithmic method for functionally defining regions of interest in the ventral visual pathway. Neuroimage 60, 2357–2364 (2012).

- 50. Norman-Haignere S. V. et al., Pitch-responsive cortical regions in congenital amusia. J. Neurosci. 36, 2986–2994 (2016).

- 51. Amedi A., Malach R., Pascual-Leone A., Negative BOLD differentiates visual imagery and perception. Neuron 48, 859–872 (2005).

- 52. O’Craven K. M., Kanwisher N., Mental imagery of faces and places activates corresponding stimulus-specific brain regions. J. Cogn. Neurosci. 12, 1013–1023 (2000).

- 53. Srihasam K., Vincent J. L., Livingstone M. S., Novel domain formation reveals proto-architecture in inferotemporal cortex. Nat. Neurosci. 17, 1776–1783 (2014).

- 54. Amedi A., Raz N., Azulay H., Malach R., Zohary E., Cortical activity during tactile exploration of objects in blind and sighted humans. Restor. Neurol. Neurosci. 28, 143–156 (2010).

- 55. Srihasam K., Mandeville J. B., Morocz I. A., Sullivan K. J., Livingstone M. S., Behavioral and anatomical consequences of early versus late symbol training in macaques. Neuron 73, 608–619 (2012).

- 56. Sur M., Garraghty P. E., Roe A. W., Experimentally induced visual projections into auditory thalamus and cortex. Science 242, 1437–1441 (1988).

- 57. Osher D. E. et al., Structural connectivity fingerprints predict cortical selectivity for multiple visual categories across cortex. Cereb. Cortex 26, 1668–1683 (2016).

- 58. Wang X. et al., How visual is the visual cortex? Comparing connectional and functional fingerprints between congenitally blind and sighted individuals. J. Neurosci. 35, 12545–12559 (2015).

- 59. Wang X. et al., Domain selectivity in the parahippocampal gyrus is predicted by the same structural connectivity patterns in blind and sighted individuals. J. Neurosci. 37, 4705–4716 (2017).

- 60. Dubois J. et al., The early development of brain white matter: A review of imaging studies in fetuses, newborns and infants. Neuroscience 276, 48–71 (2014).

- 61. Striem-Amit E. et al., Functional connectivity of visual cortex in the blind follows retinotopic organization principles. Brain 138, 1679–1695 (2015).

- 62. Bi Y., Wang X., Caramazza A., Object domain and modality in the ventral visual pathway. Trends Cogn. Sci. 20, 282–290 (2016).

- 63. Passingham R. E., Stephan K. E., Kötter R., The anatomical basis of functional localization in the cortex. Nat. Rev. Neurosci. 3, 606–616 (2002).

- 64. Saygin Z. M. et al., Anatomical connectivity patterns predict face selectivity in the fusiform gyrus. Nat. Neurosci. 15, 321–327 (2011).

- 65. Osher D. E., Brissenden J. A., Somers D. C., Predicting an individual’s dorsal attention network activity from functional connectivity fingerprints. J. Neurophysiol. 122, 232–240 (2019).

- 66. Glasser M. F. et al., A multi-modal parcellation of human cerebral cortex. Nature 536, 171–178 (2016).

- 67. Buckner R. L., Krienen F. M., Yeo B. T. T., Opportunities and limitations of intrinsic functional connectivity MRI. Nat. Neurosci. 16, 832–837 (2013).

- 68. Peelen M. V. et al., Tool selectivity in left occipitotemporal cortex develops without vision. J. Cogn. Neurosci. 25, 1225–1234 (2013).

- 69. Striem-Amit E., Amedi A., Visual cortex extrastriate body-selective area activation in congenitally blind people “seeing” by using sounds. Curr. Biol. 24, 687–692 (2014).

- 70. Kitada R. et al., The brain network underlying the recognition of hand gestures in the blind: The supramodal role of the extrastriate body area. J. Neurosci. 34, 10096–10108 (2014).

- 71. Goyal M. S., Hansen P. J., Blakemore C. B., Tactile perception recruits functionally related visual areas in the late-blind. Neuroreport 17, 1381–1384 (2006).

- 72. Kitada R. et al., Early visual experience and the recognition of basic facial expressions: Involvement of the middle temporal and inferior frontal gyri during haptic identification by the early blind. Front. Hum. Neurosci. 7, 7 (2013).

- 73. Merabet L. B., Rizzo J. F., Amedi A., Somers D. C., Pascual-Leone A., What blindness can tell us about seeing again: Merging neuroplasticity and neuroprostheses. Nat. Rev. Neurosci. 6, 71–77 (2005).

- 74. Hölig C., Föcker J., Best A., Röder B., Büchel C., Crossmodal plasticity in the fusiform gyrus of late blind individuals during voice recognition. Neuroimage 103, 374–382 (2014).

- 75. Hölig C., Föcker J., Best A., Röder B., Büchel C., Brain systems mediating voice identity processing in blind humans. Hum. Brain Mapp. 35, 4607–4619 (2014).

- 76. Dormal G. et al., Functional preference for object sounds and voices in the brain of early blind and sighted individuals. J. Cogn. Neurosci. 30, 86–106 (2018).

- 77. Fairhall S. L. et al., Plastic reorganization of neural systems for perception of others in the congenitally blind. Neuroimage 158, 126–135 (2017).

- 78. Bedny M., Pascual-Leone A., Saxe R. R., Growing up blind does not change the neural bases of Theory of Mind. Proc. Natl. Acad. Sci. U.S.A. 106, 11312–11317 (2009).

- 79. Saygin Z. M. et al., Connectivity precedes function in the development of the visual word form area. Nat. Neurosci. 19, 1250–1255 (2016).

Data Availability Statement

All study data are included in the main text and SI Appendix.