Abstract

Negative visual stimuli have been found to elicit stronger brain activation than do neutral stimuli. Such emotion effects have been shown for pictures, faces, and words alike, but the literature suggests stimulus‐specific differences regarding locus and lateralization of the activity. In the current functional magnetic resonance imaging study, we directly compared brain responses to passively viewed negative and neutral pictures of complex scenes, faces, and words (nouns) in 43 healthy participants (21 males) varying in age and demographic background. Both negative pictures and faces activated the extrastriate visual cortices of both hemispheres more strongly than neutral ones, but effects were larger and extended more dorsally for pictures, whereas negative faces additionally activated the superior temporal sulci. Negative words differentially activated typical higher‐level language processing areas such as the left inferior frontal and angular gyrus. There were small emotion effects in the amygdala for faces and words, which were both lateralized to the left hemisphere. Although pictures elicited overall the strongest amygdala activity, amygdala response to negative pictures was not significantly stronger than to neutral ones. Across stimulus types, emotion effects converged in the left anterior insula. No gender effects were apparent, but age had a small, stimulus‐specific impact on emotion processing. Our study specifies similarities and differences in effects of negative emotional content on the processing of different types of stimuli, indicating that brain response to negative stimuli is specifically enhanced in areas involved in processing of the respective stimulus type in general and converges across stimuli in the left anterior insula.

Keywords: emotion, faces, fMRI, pictures, visual processing, words

In an fMRI study, we investigated brain responses to negative versus neutral pictures, faces, and words under natural viewing conditions. For all three stimulus types, negative stimuli elicited stronger BOLD response than neutral stimuli in areas involved in the processing of the respective stimulus type in general. In this respect, differences were especially evident between pictorial stimuli (i.e., pictures and faces), modulating activity in visual areas, and words, which modulated activity in language processing areas.

1. INTRODUCTION

The human brain is challenged with selecting important information from the multitude of visual input from the environment. In this selection process, it is adaptive to prioritize emotional, and especially negative stimuli, as these often require behavioral responses to ensure survival. The prioritized processing of negative stimuli is reflected by faster detection (Fox et al., 2000; Soares, Lindström, Esteves, & Öhman, 2014) and stronger capture and holding of attention (Alpers & Gerdes, 2007; Bach, Schmidt‐Daffy, & Dolan, 2014). On a neural level, such emotion effects (negative > neutral) are observable in enhanced blood oxygenation level dependent (BOLD) response to negative stimuli, often particularly in visual cortices. This has not only been shown for pictures of negative compared to neutral complex scenes (Aldhafeeri, Mackenzie, Kay, Alghamdi, & Sluming, 2012; Lang et al., 1998; Sabatinelli, Bradley, Fitzsimmons, & Lang, 2005; Sambuco, Bradley, Herring, Hillbrandt, & Lang, 2020a) and fearful versus neutral faces (Vuilleumier, Armony, Driver, & Dolan, 2001), but also for emotional words (Herbert et al., 2009; Hoffmann, Mothes‐Lasch, Miltner, & Straube, 2015). In line with studies showing converging emotional modulation of brain activation even across different modalities (Hayes & Northoff, 2011; Kim, Shinkareva, & Wedell, 2017; Whitehead & Armony, 2019), for example, visual and auditory, these results suggest a common network of brain structures underlying emotion processing (Lindquist, Wager, Kober, Bliss‐Moreau, & Barrett, 2012), independent from the induction context.

Particularly emotion effects in visual brain areas for verbal material are remarkable, as words gain their emotionality from ontogenetically learned, per se arbitrary associations. There is typically no physical resemblance between sign and denoted concept. In contrast, the affective response to facial expressions or complex emotional scenes has developed over the course of evolution and the mapping between physical configuration and affective significance is relatively fixed. Accordingly, some electroencephalography (EEG) evidence suggests that emotion effects for words only occur at higher cognitive processing stages and not in early sensory processing, as shown for faces (Rellecke, Palazova, Sommer, & Schacht, 2011) or pictures (Schupp, Junghöfer, Weike, & Hamm, 2003). Likewise, meta‐analyses of functional magnetic resonance imaging (fMRI) studies indicate that pictures and faces elicit similar emotion effects in the extrastriate visual cortex (Sabatinelli et al., 2011), showing no overlap with the emotion effects for words (García‐García et al., 2016). This is in line with fMRI studies directly comparing BOLD response to emotional pictures and words in orthogonal discrimination tasks (Flaisch et al., 2015; Kensinger & Schacter, 2006). Here, emotion effects in visual brain areas were evident only for pictures, but not for words, which instead activated semantic processing areas of the left hemisphere, such as the superior and inferior frontal gyrus and superior parietal areas. However, some studies have demonstrated emotion effects for words even at early perceptual processing stages in the EEG (Kissler, Herbert, Winkler, & Junghöfer, 2009; Schacht & Sommer, 2009) and, using fMRI, in visual cortices (Herbert et al., 2009; Schlochtermeier et al., 2013). Schlochtermeier et al. (2013) report even stronger emotion effects for emotional words than for pictures, especially in the right hemisphere. 
This conflicts with the more common finding of stronger emotion effects for pictures than for words (de Houwer & Hermans, 1994; Hinojosa, Carretié, Valcárcel, Méndez‐Bértolo, & Pozo, 2009) as well as with findings of predominantly left‐lateralized emotion effects for verbal material (Flaisch et al., 2015; Herbert et al., 2009; Kensinger & Schacter, 2006; Kuchinke et al., 2005), in line with the general predominance of the left hemisphere in language processing (Price, 2012; Pujol, Deus, Losilla, & Capdevila, 1999). By contrast, the right‐lateralization in the study of Schlochtermeier et al. (2013) would be in agreement with the right hemisphere hypothesis of emotion derived from studies with brain‐damaged patients (Borod, Tabert, Santschi, & Strauss, 2000; Gainotti, 2019; Heller, Nitschke, & Miller, 1998). So far, no fMRI study has directly investigated differences in emotion effects between faces and words or simultaneously compared pictures, faces, and words in one sample. Extant evidence suggests that pictures and faces trigger similar emotion effects in visual processing areas, but it is unclear whether processing of negative words is similarly enhanced at the perceptual level or only at a higher‐order processing level.

Moreover, despite some parallels between emotion effects for pictures and faces, a meta‐analysis of Sabatinelli et al. (2011) also indicates differences in extent and exact localization, revealing specific emotion effects for pictures and faces: Faces uniquely triggered emotion effects in the core face processing network suggested by Haxby, Hoffman, and Gobbini (2002), including the fusiform gyrus, superior temporal sulcus, and inferior occipital cortex. By contrast, pictures elicited overall more widespread emotion effects, with unique activations in the lateral occipital and orbitofrontal cortex. These results were further supported by fMRI studies, directly comparing enhanced BOLD response to negative pictures and faces in a passive viewing paradigm (Britton, Taylor, Sudheimer, & Liberzon, 2006) and a matching task (Hariri, Tessitore, Mattay, Fera, & Weinberger, 2002). Compared to emotional faces, pictures induced more pronounced emotion effects in the primary visual cortex (Britton et al., 2006) and in bilateral posterior fusiform and parahippocampal gyri (Hariri et al., 2002). Faces elicited stronger emotion effects than pictures in the bilateral superior temporal gyri and left anterior insula (Britton et al., 2006), which both are also involved in face processing in general (Haxby et al., 2002). This suggests that even within pictorial stimuli (i.e., pictures of complex scenes and faces), stimulus type influences visual emotion processing, with emotion effects occurring in areas specialized for processing of the respective stimulus type in general. However, as Hariri et al. (2002) used simple geometrical forms as control condition, stimulus‐specific activations could reflect differences between picture and face processing in general rather than differences in their emotion processing. Moreover, sample sizes of 12 participants in each of both previous studies were rather small (Britton et al., 2006; Hariri et al., 2002). 
Thus, differences between emotion effects for negative pictures and faces still need further investigation.

Emotionally negative stimuli have also been found to activate subcortical brain structures, in particular the amygdala which is assumed to be a key structure for emotional processing and part of a core affect network (Barrett, Bliss‐Moreau, Duncan, Rauch, & Wright, 2007). A pronounced response to negative pictures has repeatedly been shown in both amygdalae (Hariri et al., 2002; Kensinger & Schacter, 2006; Sabatinelli et al., 2011), although some studies did not reveal stronger amygdala activation for emotional than for neutral pictures at all (Britton et al., 2006; Flaisch et al., 2015). Bilateral amygdala activation was also reported for emotional faces (Hariri et al., 2002; Sabatinelli et al., 2011), with the right amygdala showing a preference for faces compared to pictures (Hariri et al., 2002). However, since, as mentioned above, the control condition in the study by Hariri et al. (2002) was not matched for perceptual features, the lateralization might reflect the general right hemisphere dominance for face processing (Damaskinou & Watling, 2018; De Renzi, 1986) rather than right‐lateralized emotion effects. In fact, several other imaging studies reported left‐lateralized emotion effects for negative faces (Morris et al., 1998; Vuilleumier et al., 2001; Zald, 2003). Left‐lateralization of amygdala activity has also been found for emotional words (Flaisch et al., 2015; Herbert et al., 2009; Kensinger & Schacter, 2006). In general, many factors have been found to influence amygdala activation (Costafreda, Brammer, David, & Fu, 2008) and correlations of amygdala response to emotional stimuli across different tasks are surprisingly low (Villalta‐Gil et al., 2017). Therefore, it is difficult to generalize findings or compare emotion effects in the amygdala between studies using different experimental paradigms and tasks. Thus, extent and lateralization of emotion processing in the amygdala await further empirical clarification.

In sum, the modulation of cerebral activity by negative stimuli seems to depend on the stimulus type. This is especially evident when comparing pictorial and verbal stimuli, as the latter might elicit emotion effects particularly in higher‐order processing regions. However, the pattern and extent of emotional enhancement may also differ between faces and scenes. Furthermore, lateralization of emotion effects in the amygdala may depend on the stimulus type: Pictures and faces have been reported to exhibit bilateral emotion effects, with faces potentially showing stronger activation of the left amygdala. Words seem to elicit clearly left‐lateralized emotion effects. If confirmed, such discrepancies in emotion effects between different types of visual stimuli would argue against a common network of brain regions underlying emotion processing, independent of the induction context (Lindquist et al., 2012). Instead, such evidence would suggest that emotion effects differ not only between induction modalities (Sambuco, Bradley, Herring, & Lang, 2020b; Shinkareva et al., 2014), but also between different types of stimuli within the same (i.e., visual) modality. However, as findings from different meta‐analyses and studies with different tasks are hardly comparable or generalizable, the question if different types of visual stimuli induce specific emotional enhancements in brain activation is not yet conclusively answered. Therefore, we investigated stimulus‐specific differences in cerebral processing as well as amygdala lateralization in response to different types of visually presented negative stimuli under natural viewing conditions. In a passive viewing fMRI study, we compared modulations of BOLD responses by negative pictures of complex scenes, faces, and words within one relatively large and demographically diverse sample. Within this sample, we were also able to explore gender (Sabatinelli, Flaisch, Bradley, Fitzsimmons, & Lang, 2004; S. 
Schneider et al., 2011) and age effects (Gunning‐Dixon et al., 2003; Kehoe, Toomey, Balsters, & Bokde, 2013), which have previously been reported for emotional picture and face processing.

2. MATERIALS AND METHOD

2.1. Participants

Forty‐three German‐speaking participants (22 female, mean age M = 32.19 years, SD = 11.47, range: 18–55) were included in the analyses. Four additional participants had been excluded due to elevated depressive symptoms (N = 3) or reported drug use on the day before the experiment (N = 1). Vision was normal or corrected to normal and all remaining participants were free from self‐reported current or previous brain injuries or neurological and psychiatric problems. Current psychological status was confirmed by German versions of the Beck Depression Inventory II (BDI‐II; Hautzinger, Keller, & Kühner, 2009) and the State–Trait Anxiety Inventory (STAI; Laux, Glanzmann, Schaffner, & Spielberger, 1981). The maximum sum score in this sample was 11 (M = 2.90, SD = 2.85) in the BDI‐II and 44 (M = 31.18, SD = 5.23) for state anxiety in the STAI. For trait anxiety, the STAI showed a maximum standard score of T = 63 (M = 47.67, SD = 8.54). Two participants reported being left‐handed but were included in the analysis because they showed typical language lateralization during viewing of negative and neutral words. All participants gave written informed consent according to the Declaration of Helsinki. The study was approved by the Ethics Commission of the German Psychological Society.

2.2. Stimuli

A list of all stimuli used in the experiment is provided in Appendix A. Picture stimuli consisted of 60 negative and 60 neutral scenes, taken from the International Affective Picture System (Lang, Bradley, & Cuthbert, 2008; Table A2 in Appendix A shows normative ratings of the pictures used in our experiment), supplemented by our own analogously constructed and validated pictures. Negative and neutral pictures were matched for the number of people or animals depicted and for low‐level visual characteristics such as contrast, brightness, or complexity. Face stimuli stemmed from the NimStim set of facial expressions (Tottenham et al., 2009), the FACES database (Ebner, Riediger, & Lindenberger, 2010), and the Karolinska Directed Emotional Faces (Lundqvist, Flykt, & Öhman, 1998), with 120 identities each representing both a neutral and a negative expression. Fearful facial expressions were used as negative faces, as fear is the emotion most likely to activate the amygdala (Costafreda et al., 2008). Out of the 120 identities, 60 identities were pseudo‐randomly assigned to the negative and 60 identities to the neutral condition, so that each identity appeared in only one emotion condition. Identities in the two emotion conditions were matched for gender and age, because faces of young, middle, and old age from the FACES database (Ebner et al., 2010) were included. Word stimuli consisted of 60 negative and 60 neutral German nouns from a self‐constructed and validated dataset used in previous studies (e.g., Kissler, Herbert, Peyk, & Junghofer, 2007). Negative and neutral words were matched regarding non‐emotional characteristics such as word length, orthographic neighborhood density, and word frequency determined using dlexDB (http://www.dlexdb.de/; see Table A3 in Appendix A).

2.3. Procedure

In a free viewing fMRI task, pictures, faces, and words were presented in three event‐related blocks. Each block consisted of 40 negative and 40 neutral stimuli of one stimulus category, randomly selected from the corresponding stimulus set. Each stimulus was presented only once. The order of stimulus categories was randomized across participants (number of participants from the final sample in each randomization after exclusion for unrelated reasons of three participants who viewed the pictures first and one who viewed faces first: pictures‐faces‐words: 3, pictures‐words‐faces: 5, faces‐pictures‐words: 4, faces‐words‐pictures: 10, words‐pictures‐faces: 10, words‐faces‐pictures: 11). Within each block, stimuli were pseudo‐randomized. Each run started with a fixation cross for 3,000 ms. Stimuli were presented centered on a black background for 2,000 ms with a jittered inter‐stimulus interval (i.e., fixation cross), ranging from 2,500 to 23,000 ms.

After scanning, participants rated 10 negative and 10 neutral stimuli of each category, randomly selected from the stimuli shown in the fMRI experiment, on a 7‐point scale regarding valence and arousal. Participants also filled out a questionnaire on demographic information as well as the BDI‐II and the STAI. All experiments were created using Presentation software (Neurobehavioral Systems Inc.; http://www.neurobs.com).

2.4. Image acquisition

MRI data were recorded using a 3T Magnetom Verio Scanner (Siemens, Erlangen, Germany) with a 12‐channel head coil. High‐resolution T1‐weighted structural images were acquired in 192 sagittal slices (TR = 1,900 ms, TE = 2.5 ms, voxel size = 0.75 × 0.75 × 0.8 mm, matrix size = 320 × 320 × 192). Functional echo‐planar images were collected in 35 coronal slices (TR = 3,000 ms, TE = 33 ms, flip angle = 90°, voxel size = 2.4 × 2.4 × 4 mm, matrix size = 80 × 80 × 35). Functional scans were oriented orthogonal to the hippocampus to minimize signal loss in amygdala and hippocampus. The first three volumes were excluded as dummies, resulting in a total of 207 volumes for each run.

2.5. Analyses

Analysis of behavioral data was conducted using IBM SPSS Statistics, version 25. Ratings of valence and arousal of the stimuli were each analyzed as manipulation check in a 3 × 2 analysis of variance (ANOVA) with the within‐subjects factors stimulus type (picture, face, word) and emotion condition (negative, neutral).

Preprocessing and analysis of fMRI data were conducted using SPM12 (https://www.fil.ion.ucl.ac.uk/spm/software/spm12/) running under MATLAB R2015a (The MathWorks, Inc., Natick, MA). Preprocessing comprised slice timing correction, manual artifact correction using the ArtRepair toolbox (Mazaika, Whitfield, & Cooper, 2005), realignment, co‐registration to the structural images, normalization to MNI space, and spatial smoothing with an 8 mm full‐width‐half‐maximum Gaussian kernel. Manual artifact correction resulted in an interpolation of 0.56% of all collected volumes (maximum of 2.58% in a single participant).

Two‐stage mixed effect models were set up for statistical analyses. For each participant, first‐level contrasts were created by modeling each of the six conditions (3 stimulus types: picture, face, word × 2 emotion conditions: negative, neutral) against baseline (i.e., fixation cross) with the hemodynamic response function. Movement parameters from the realignment procedure were included in the model as covariates of no interest. Individual contrast images were then entered into second‐level analyses.
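To illustrate what modeling a condition "with the hemodynamic response function" involves, the following minimal sketch builds one condition regressor by convolving stimulus events with a double‐gamma response and sampling it once per volume. It is not the SPM12 implementation used in the study. The shape parameters (6 and 16, undershoot ratio 1/6) are a commonly used approximation and are assumptions here, as are the function names and the onset times. Only the stimulus duration (2 s), the TR (3 s), and the number of volumes (207) are taken from the text.

```python
import math


def double_gamma_hrf(t, peak_shape=6.0, under_shape=16.0, ratio=1.0 / 6.0):
    """Double-gamma response at time t in seconds (assumed parameters)."""
    if t <= 0:
        return 0.0
    peak = t ** (peak_shape - 1) * math.exp(-t) / math.gamma(peak_shape)
    under = t ** (under_shape - 1) * math.exp(-t) / math.gamma(under_shape)
    return peak - ratio * under


def build_regressor(onsets, duration, n_scans, tr, dt=0.1):
    """Convolve an event boxcar with the HRF and sample it at each volume."""
    n_fine = int(round(n_scans * tr / dt))
    boxcar = [0.0] * n_fine
    for onset in onsets:
        start = int(round(onset / dt))
        stop = int(round((onset + duration) / dt))
        for i in range(start, min(stop, n_fine)):
            boxcar[i] = 1.0
    # HRF kernel covering 32 s on the fine time grid
    kernel = [double_gamma_hrf(i * dt) for i in range(int(round(32.0 / dt)))]
    step = int(round(tr / dt))
    regressor = []
    for scan in range(n_scans):
        idx = scan * step
        acc = 0.0
        for k, h in enumerate(kernel):
            j = idx - k
            if j < 0:
                break
            acc += boxcar[j] * h
        regressor.append(acc * dt)  # discrete approximation of the integral
    return regressor


# Hypothetical onsets (s) for one condition; duration, TR, volumes from the text
regressor = build_regressor(onsets=[3.0, 30.0, 55.5], duration=2.0,
                            n_scans=207, tr=3.0)
```

In a full first‐level model, one such regressor per condition would enter the design matrix together with the movement parameters, and the fitted weights would form the contrast images passed to the second level.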

To test for differences in emotion effects between stimuli, the interaction of stimulus type (picture, face, word) and emotion condition (negative, neutral) was examined in a 3 × 2 whole‐brain ANOVA using a full‐factorial design. To further explore significant interactions, mean contrast estimates of significant clusters were extracted using the Marsbar toolbox in SPM12 (Brett, Anton, Valabregue, & Poline, 2002) and differences in emotion effects between stimuli were statistically tested post hoc using paired t tests conducted with SPSS 25. To allow for more precise characterization of emotion effects in spite of the considerable differences between the stimulus types in global hemodynamic responses, emotional enhancement of BOLD response was additionally explored within each stimulus type using one‐sample t tests on the emotion effects (negative > neutral). The influence of age and gender was also analyzed within each stimulus type. To test for the influence of age, age was included as a covariate in the one‐sample t tests on the emotion effects. The influence of gender was explored by examining the interaction of emotion condition and gender as a between‐group factor in a 2 × 2 full‐factorial analysis, separately for each stimulus type. All whole‐brain analyses were thresholded at p < .001 uncorrected at the voxel level with an additional cluster‐forming threshold k according to p < .05 familywise error (FWE) rate corrected, unless stated otherwise.
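The post hoc comparison described above can be sketched as follows: for each participant, the emotion effect is the negative minus neutral contrast estimate within a cluster, and emotion effects for two stimulus types are then compared with a paired t test. This is a minimal illustration, not the SPSS procedure used in the study. The function names and all numbers are hypothetical, and only the t statistic and degrees of freedom are computed (a p value would additionally require the t distribution).

```python
import math
from statistics import mean, stdev


def emotion_effect(negative, neutral):
    """Per-participant emotion effect: negative minus neutral estimate."""
    return [neg - neu for neg, neu in zip(negative, neutral)]


def paired_t(x, y):
    """Paired t statistic and degrees of freedom for two matched samples."""
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1


# Hypothetical mean contrast estimates for five participants in one cluster
pictures_effect = emotion_effect([1.2, 0.9, 1.5, 1.1, 0.8],
                                 [0.6, 0.5, 0.9, 0.7, 0.4])
faces_effect = emotion_effect([0.9, 0.7, 1.0, 0.8, 0.6],
                              [0.7, 0.5, 0.6, 0.6, 0.5])
t_value, df = paired_t(pictures_effect, faces_effect)
```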

Emotion processing in the amygdala was examined in structural regions of interest (ROI), defined using the SPM Anatomy toolbox in SPM12 (Eickhoff et al., 2005), from which mean contrast estimates for each condition were extracted using the Marsbar toolbox in SPM12 (Brett et al., 2002). These were statistically tested for an interaction of side, stimulus type and valence using a three‐way ANOVA in SPSS 25. Where appropriate, degrees of freedom were corrected according to Greenhouse–Geisser. For readability purposes, F values are reported with uncorrected degrees of freedom and corrected p values.
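As a rough illustration of the Greenhouse–Geisser correction mentioned above, the sketch below estimates the sphericity parameter ε for a single within‐subject factor from the double‐centered covariance matrix of the factor levels. It is a textbook formulation, not the SPSS routine used in the study, and the function name and data are hypothetical. The corrected degrees of freedom are the uncorrected ones multiplied by ε. For a factor with three levels, such as stimulus type, ε lies between 0.5 and 1.

```python
from statistics import mean


def greenhouse_geisser_epsilon(data):
    """Estimate epsilon; data holds one row per participant, one value per level."""
    n, k = len(data), len(data[0])
    level_means = [mean(row[j] for row in data) for j in range(k)]
    # Sample covariance matrix of the k factor levels
    cov = [[sum((row[i] - level_means[i]) * (row[j] - level_means[j])
                for row in data) / (n - 1)
            for j in range(k)] for i in range(k)]
    row_means = [mean(cov[i]) for i in range(k)]
    grand_mean = mean(row_means)
    # Double-center the (symmetric) covariance matrix
    centered = [[cov[i][j] - row_means[i] - row_means[j] + grand_mean
                 for j in range(k)] for i in range(k)]
    trace = sum(centered[i][i] for i in range(k))
    sum_of_squares = sum(v * v for row in centered for v in row)
    return trace ** 2 / ((k - 1) * sum_of_squares)


# Hypothetical contrast estimates: five participants x three stimulus types
estimates = [
    [0.9, 0.7, 0.2],
    [1.1, 0.6, 0.4],
    [0.5, 0.8, 0.1],
    [1.4, 0.9, 0.3],
    [0.7, 0.4, 0.5],
]
epsilon = greenhouse_geisser_epsilon(estimates)
corrected_df = (epsilon * 2, epsilon * 2 * (len(estimates) - 1))
```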

3. RESULTS

3.1. Behavioral data

Rating data of 39 participants were included in the analysis, as four additional data sets were missing due to technical problems. Table 1 shows the ratings for valence and arousal of the stimuli. Main effects of emotional condition confirmed that negative stimuli were perceived to be more negative in their valence (F[1,38] = 167.67, p < .001, η² = .82) and more arousing (F[1,38] = 79.03, p < .001, η² = .68). An interaction of emotional condition and stimulus type indicated that differences between negative and neutral stimuli were smaller for faces than for pictures and words in both valence (F[2,76] = 65.53, p < .001, η² = .63) and arousal (F[2,76] = 9.98, p < .001, η² = .21) ratings, although ratings still differed significantly (valence: t(38) = −5.76, p < .001; arousal: t(38) = 6.20, p < .001). Age and gender had no effect on the ratings.
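The effect sizes reported above appear to be partial eta squared, since each value can be recovered from its F value and degrees of freedom as F × df_effect / (F × df_effect + df_error). This reading is an inference from the reported numbers, not something the text states. The short check below uses only the statistics given above, and the function name is illustrative.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its dfs."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# (label, F, df_effect, df_error, reported effect size) from the text above
reported = [
    ("valence, emotion", 167.67, 1, 38, 0.82),
    ("arousal, emotion", 79.03, 1, 38, 0.68),
    ("valence, emotion x stimulus", 65.53, 2, 76, 0.63),
    ("arousal, emotion x stimulus", 9.98, 2, 76, 0.21),
]

for label, f_value, df1, df2, eta_reported in reported:
    eta = partial_eta_squared(f_value, df1, df2)
    print(f"{label}: computed {eta:.2f}, reported {eta_reported:.2f}")
```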

TABLE 1.

Means and SDs for the ratings of valence and arousal

| | | Pictures | Faces | Words |
|---|---|---|---|---|
| Valence | Negative | 2.00 (0.74) | 2.90 (0.63) | 2.03 (0.69) |
| | Neutral | 4.40 (0.89) | 3.67 (0.71) | 4.32 (0.71) |
| Arousal | Negative | 5.03 (1.37) | 4.04 (1.46) | 4.75 (1.51) |
| | Neutral | 2.91 (1.37) | 2.81 (1.13) | 2.80 (1.25) |

Note: The scale for valence ratings ranged from 1 = negative to 7 = positive and the scale for arousal ratings from 1 = low arousing to 7 = high arousing.

3.2. Imaging data

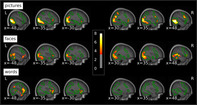

3.2.1. Interaction of stimulus and emotion

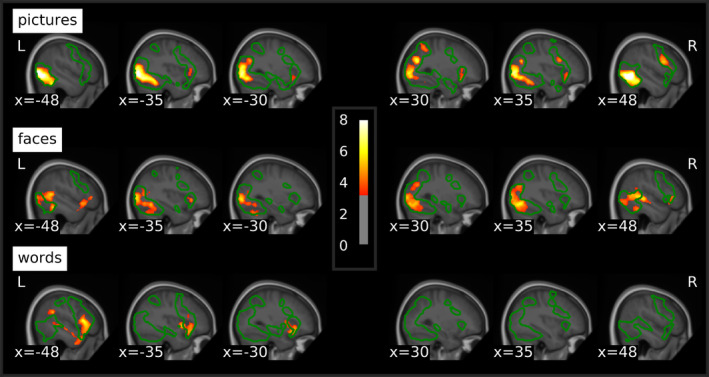

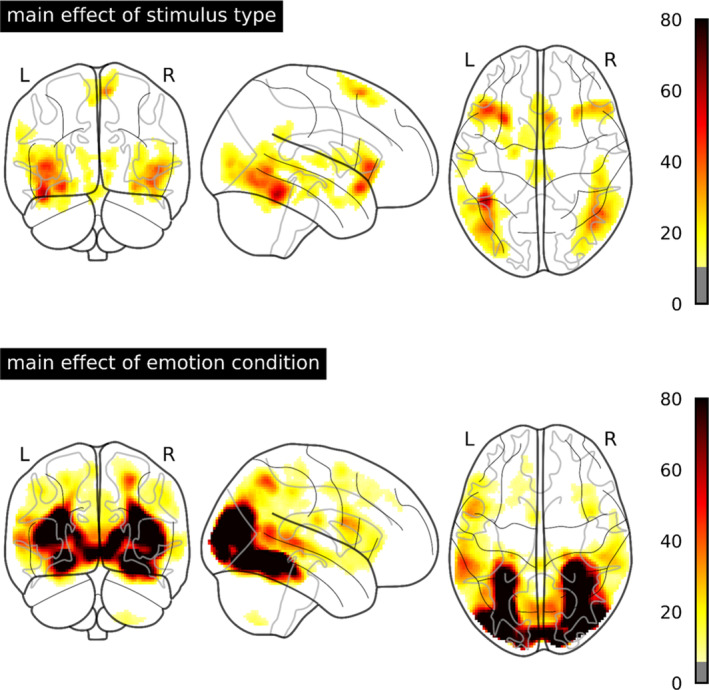

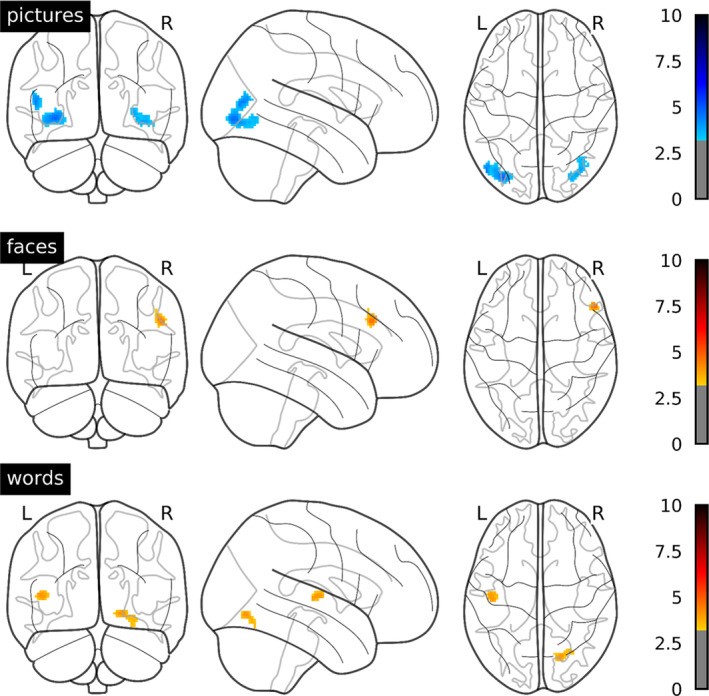

Whole‐brain ANOVA revealed several clusters showing an interaction of stimulus type and emotion condition, indicating differences in emotion effects between stimuli (Table 2; Figure 1). In bilateral extrastriate cortices, extending from lateral occipital cortex over the middle temporal gyrus to the inferior temporal and fusiform gyrus, emotion effects were most pronounced for pictures, followed by faces, and not significant for words (Figure 2a,b). A similar pattern was evident in a cluster spanning the right inferior frontal and precentral gyrus. In the right superior parietal lobe, pictures and faces showed comparable emotion effects, whereas words did not show enhanced activation for negative stimuli. Stronger emotion effects for words than for pictures or faces were observed in the left inferior frontal gyrus (Figure 2c), angular gyrus (Figure 2d), and superior frontal gyrus. Negative faces uniquely triggered enhanced activation in a cluster spanning the right lingual gyrus extending to the cerebellar vermis and in the precuneus. As this article focuses on potential stimulus‐dependent differences in emotion effects, that is, the interaction between stimulus type and emotion, main effects of stimulus type and emotion are reported only in Appendix B. Additionally, Figure 3 shows average stimulus‐specific activation against the fixation cross separately for each stimulus type outlined in green.

TABLE 2.

Peak‐voxels of clusters showing an interaction of stimulus and emotion in the whole‐brain ANOVA and post hoc tests on emotion effects (negative‐neutral) and their differences in mean contrast estimates of significant clusters

| Side | Region | Cluster size | F | MNI x | MNI y | MNI z | Emotion effects (M [SE]) | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|
| R | Lateral occipital cortex/middle temporal gyrus/inferior temporal gyrus/fusiform gyrus | 1,285 | 31.13 | 50 | −56 | −4 | Pictures 0.52 (0.06)** | >* | Faces 0.30 (0.06)** | >** | Words −0.02 (0.06) |
| L | Lateral occipital cortex/middle temporal gyrus/inferior temporal gyrus/fusiform gyrus | 1,039 | 16.81 | −46 | −62 | −2 | Pictures 0.51 (0.06)** | >** | Faces 0.24 (0.05)** | >* | Words 0.01 (0.05) |
| R | Inferior frontal gyrus/precentral gyrus | 264 | 15.58 | 46 | 8 | 30 | Pictures 0.47 (0.09)** | >** | Faces 0.06 (0.06) | > † | Words −0.08 (0.08) |
| R | Superior parietal lobe | 242 | 11.30 | 24 | −68 | 48 | Pictures 0.37 (0.09)** | = | Faces 0.19 (0.07)* | >* | Words −0.17 (0.09) |
| L | Superior frontal gyrus | 206 | 11.81 | −16 | 50 | 30 | Words 0.36 (0.07)** | >* | Pictures 0.11 (0.08) | >* | Faces −0.08 (0.06) |
| L | Inferior frontal gyrus | 210 | 14.09 | −48 | 18 | 10 | Words 0.39 (0.06)** | >** | Faces 0.11 (0.05)* | = | Pictures −0.01 (0.06) |
| L | Angular gyrus | 483 | 11.91 | −42 | −50 | 28 | Words 0.20 (0.05)** | > † | Faces 0.08 (0.05) | >* | Pictures −0.13 (0.05)* |
| R | Lingual gyrus/cerebellar vermis | 208 | 10.29 | 6 | −46 | −2 | Faces 0.27 (0.11)* | >* | Words −0.10 (0.09) | > † | Pictures −0.26 (0.07)* |
| R/L | Precuneus | 923 | 15.65 | −4 | −66 | 50 | Faces 0.32 (0.10)* | >** | Words −0.22 (0.09)* | = | Pictures −0.30 (0.10)* |

Note: All results thresholded at p < .001 uncorrected and an FWE‐corrected cluster‐forming threshold p < .05. Asterisks indicate significance of post hoc tests: *p < .05; **p < .001; †p < .10.

Abbreviations: ANOVA, analysis of variance; FWE, familywise error.

FIGURE 1.

F values of the interaction of stimulus type and emotion condition thresholded at p < .001 uncorrected and an FWE‐corrected cluster‐forming threshold p < .05 superimposed on the mean structural T1‐image of the participants. FWE, familywise error

FIGURE 2.

Mean contrast estimates in extrastriate clusters showing an interaction of emotion condition and stimulus type. Error bars denote 95% confidence intervals. Asterisks indicate significance of post hoc tests: *p < .05; **p < .001

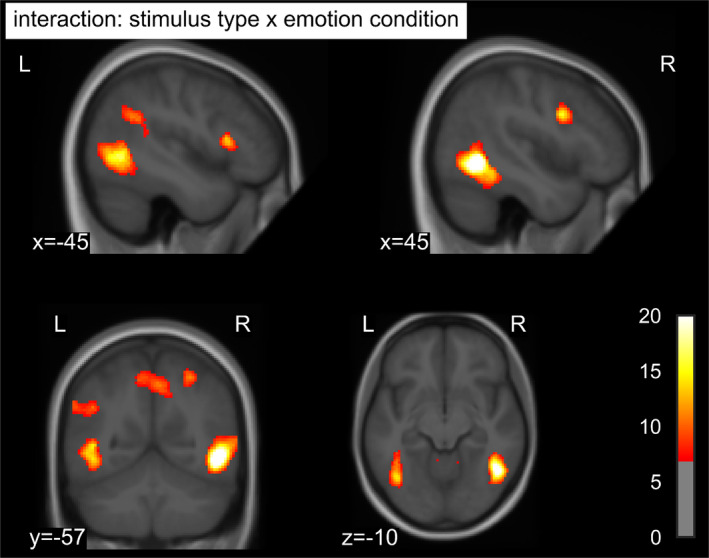

FIGURE 3.

T values of emotion effects within each stimulus type thresholded at p < .001 uncorrected and a cluster‐forming threshold of p < .05 FWE‐corrected superimposed on the mean structural T1‐image of the participants. Green contours delineate the average activation against the fixation cross for the respective stimulus type at the same threshold. FWE, familywise error

3.2.2. Emotion effects within stimulus types

Whole‐brain analyses of emotion effects within stimulus types revealed enhanced responses to negative stimuli within all three stimulus types (Table 3; Figure 3). These effects were mainly located in regions activated by the respective stimulus type in general (see green outlines in Figure 3), irrespective of emotion condition. Negative compared to neutral pictures elicited widespread emotion effects in extrastriate visual cortices of both hemispheres, spanning lateral occipital cortex as well as regions of the ventral and, in the right hemisphere, also the dorsal visual processing stream. Further activations were located in right inferior frontal and precentral gyri and bilaterally in the anterior insula. Emotion effects for faces were evident in right primary visual cortex and precuneus as well as bilateral extrastriate visual cortices, including occipital, inferior temporal and fusiform areas and extending to the superior temporal sulci and the cerebellum. Additional emotional enhancement was observed in bilateral inferior frontal gyri and the left anterior insula. For words, there were no emotion effects in visual processing areas. Instead, differential activations for negative words were located in a cluster extending from the left inferior frontal gyrus over the anterior insula to the temporal pole and middle temporal gyrus. Further emotion effects were evident in the superior frontal gyrus and supplementary motor area, supramarginal and angular gyrus as well as in the posterior cingulate. Emotional modulation did not differ between males and females. Correlations between emotion effects and age were evident only at a more liberal cluster‐forming threshold of k = 50 voxels (Appendix C), with a negative correlation for pictures in bilateral extrastriate visual cortices and positive correlations in the right dorsolateral prefrontal cortex for faces and in the left Heschl's gyrus and right fusiform gyrus for words.

TABLE 3.

Peaks of emotion effects (negative > neutral) for each stimulus type

| Side | Region | Cluster size | T | MNI x | MNI y | MNI z |
|---|---|---|---|---|---|---|
| Pictures | | | | | | |
| R | Lateral occipital cortex/inferior temporal gyrus/fusiform gyrus | 3,344 | 10.19 | 50 | −58 | −6 |
| L | Lateral occipital cortex/inferior temporal gyrus/fusiform gyrus | 4,245 | 11.01 | −40 | −62 | −12 |
| R | Superior parietal lobe | 845 | 6.74 | 28 | −70 | 28 |
| R | Precentral gyrus/inferior frontal gyrus/anterior insula | 1,038 | 5.83 | 38 | 12 | 26 |
| L | Anterior insula | 328 | 5.38 | −26 | 16 | −12 |
| Faces | | | | | | |
| R | Primary visual cortex/precuneus/lateral occipital cortex/inferior temporal gyrus/fusiform gyrus/superior temporal sulcus/cerebellum | 5,692 | 7.00 | 56 | −52 | 6 |
| L | Lateral occipital cortex/inferior temporal gyrus/fusiform gyrus/superior temporal sulcus/cerebellum | 3,830 | 7.85 | −42 | −44 | −18 |
| R | Inferior frontal gyrus | 260 | 5.87 | 54 | 30 | 4 |
| L | Inferior frontal gyrus/anterior insula | 653 | 5.13 | −50 | 16 | −8 |
| Words | | | | | | |
| L | Inferior frontal gyrus/anterior insula/temporal pole/middle temporal gyrus | 2,589 | 6.51 | −46 | 22 | 6 |
| L | Superior frontal gyrus/supplementary motor area | 1,255 | 6.43 | −6 | 16 | 62 |
| L | Supramarginal gyrus/angular gyrus | 412 | 5.44 | −50 | −46 | 28 |
| L | Posterior cingulate | 268 | 4.45 | −8 | −50 | 30 |

Note: All results thresholded at p < .001 uncorrected and a cluster‐forming threshold of p < .05 FWE‐corrected.

Abbreviation: FWE, familywise error.

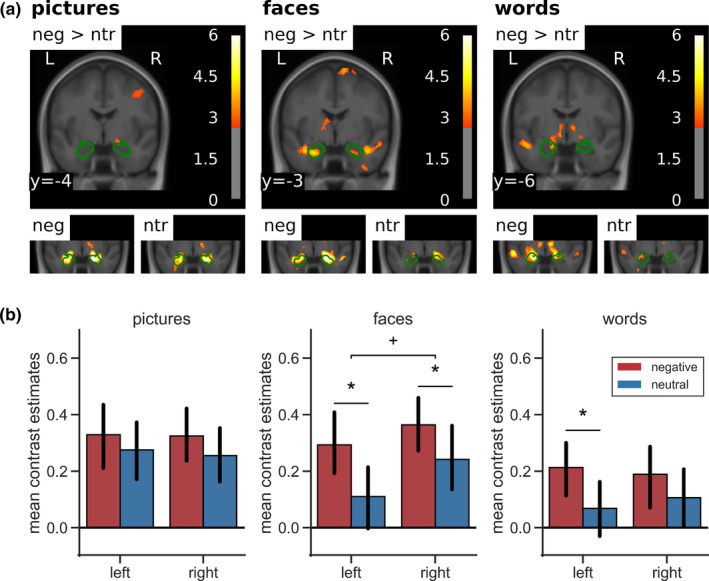

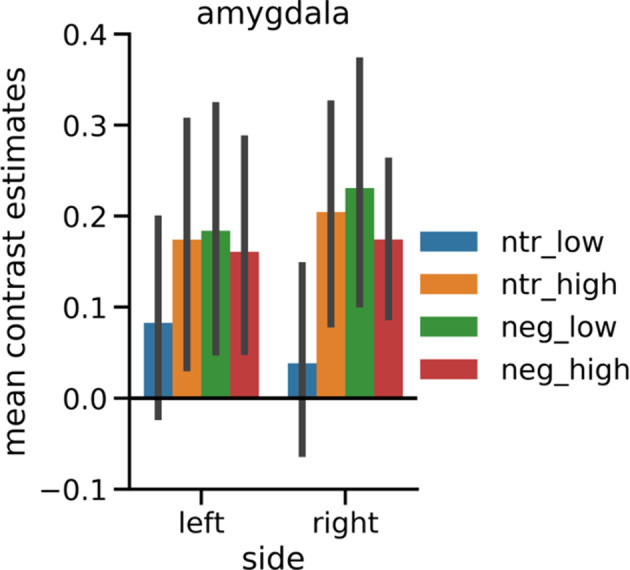

As there were no emotion effects observable in the amygdala at the threshold applied to the whole‐brain analyses, in line with Herbert et al. (2009), emotion effects were also explored at a more liberal statistical threshold of p < .005 (uncorrected) and a cluster‐forming threshold of k = 20 (see Figure 4a). This revealed a bilateral emotion effect for faces and a left‐lateralized effect for words in the amygdala. For pictures, there was no clear differential activation in the amygdala.

FIGURE 4.

Amygdala activation by negative versus neutral stimuli. (a) Top row: emotion effects in a coronal slice of the mean structural T1‐image of the participants including the amygdala in whole‐brain analyses thresholded at p < .005 (uncorrected) and a cluster‐forming threshold of k = 20. Green contours outline structural ROI for the analysis of lateralization of emotion effects in the amygdala. Bottom row: amygdala activation separately for negative and neutral stimuli against baseline in a coronal section zoomed in on the amygdala. (b) Mean contrast estimates in pre‐defined ROI. Error bars denote 95% confidence intervals. Symbols indicate significance of post hoc tests: +p < .10; *p < .05. ROI, regions of interest.

3.2.3. ROI analysis of lateralization of emotion effects in the amygdala

The ANOVA conducted on the mean contrast estimates in structural ROI (Figure 4b) revealed a main effect in the amygdala for emotion (F[1,42] = 11.11, p = .002, η² = .21) and stimulus type (F[2,84] = 3.75, p = .028, η² = .08). Negative stimuli activated the amygdalae more strongly than neutral stimuli and BOLD response was most pronounced for pictures, closely followed by faces and less for the words. The main effect of side was only marginally significant (F[1,42] = 3.07, p = .087, η² = .07), with the right amygdala showing slightly stronger activation than the left amygdala. Furthermore, there was an interaction of side and stimulus type (F[2,84] = 3.91, p = .030, η² = .09). While activation in the left amygdala was strongest for pictures, followed by faces and words, the right amygdala exhibited strongest BOLD response to faces, closely followed by pictures and less activation for words. There was also a tendency to laterality differences in emotion effects between the three stimulus types (side × stimulus × valence: F[2,84] = 2.45, p = .092, η² = .06). For pictures, there were no emotion‐specific effects in the amygdalae, since there was already relatively high activation of both amygdalae by neutral stimuli. Additional analyses of subsets of the pictures based on the normative arousal ratings (Lang et al., 2008) showed that only very low arousing neutral pictures (arousal: M = 2.99, SE = 0.08) did not induce amygdala activation, while higher arousing neutral pictures (arousal: M = 4.01, SE = 0.09) activated the amygdala as much as the negative pictures (Appendix D). Although normative arousal ratings still differed significantly between the higher arousing neutral, the lower arousing negative (arousal: M = 5.08, SE = 0.10), and the higher arousing negative pictures (arousal: M = 6.28, SE = 0.09), there was no further increase of amygdala activation with increasing arousal.
Of note, arousal ratings in neutral pictures were not characterized by larger variability suggestive of emotional ambiguity experienced by the raters. Inspection for specific contents did not suggest any contents that were particularly effective or ineffective in activating the amygdala. In particular, an additional analysis focusing on negative pictures depicting human attack, mutilation, or injuries (pictures: 2,683, 2,811, 3,051, 3,181, 3,185, 3,213, 3,301, 3,550, 6,021, 6,313, 6,520, 6,821, 6,834, 8,230, 8,485, 9,265, 9,402, 9,421, 9,433) versus neutral pictures matched for overall composition (2,026, 2,038, 2,372, 2,384, 2,390, 2,393, 2,396, 2,397, 2,400, 2,435, 2,525, 2,579, 2,594, 2,745, 2,749, 2,850, 7,550, 8,241, own: people sitting at a table) did not show major changes in the results regarding amygdala activation. Fearful faces elicited a bilateral emotion effect, which was marginally larger in the left hemisphere. For words, only the left amygdala showed an emotion effect, although this did not differ significantly from the right amygdala.
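The effect sizes reported for the ROI ANOVA can be cross‐checked against the F values and degrees of freedom. The sketch below assumes that the reported η² values are partial eta squared (the text does not state this explicitly), for which η²p = (F × df_effect) / (F × df_effect + df_error). The function name and the layout of the list are illustrative choices; the numbers are taken from the paragraph above.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its dfs."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# (effect, F, df_effect, df_error, eta squared as reported in the text)
reported = [
    ("emotion", 11.11, 1, 42, 0.21),
    ("stimulus type", 3.75, 2, 84, 0.08),
    ("side", 3.07, 1, 42, 0.07),
    ("side x stimulus type", 3.91, 2, 84, 0.09),
    ("side x stimulus x valence", 2.45, 2, 84, 0.06),
]

for name, f_value, df1, df2, eta_reported in reported:
    eta = partial_eta_squared(f_value, df1, df2)
    print(f"{name}: computed {eta:.3f}, reported {eta_reported:.2f}")
```

Under this assumption, all five recomputed values round to the reported two‐decimal figures.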

4. DISCUSSION

We have investigated similarities and differences in activation induced by negative versus neutral pictures, faces, and words in a relatively large and demographically diverse sample. Across stimulus types, our results revealed stronger cerebral activation in multiple areas for negative than for neutral stimuli. Moreover, these emotion effects interacted with the stimulus type. In extrastriate visual areas and the right inferior frontal gyrus, negative pictures triggered strongest emotion effects, followed by fearful faces, while there was no enhancement for negative words. Stronger emotion effects for words than for pictures and faces were observed in the left superior and inferior frontal gyrus and the left angular gyrus. Unique emotion effects for faces were located in the right lingual gyrus, the precuneus and the cerebellum. Examination of emotion effects within each stimulus type suggested some similarities between visual emotion processing of pictures and faces, both triggering emotional enhancement in ventral visual pathways of both hemispheres as well as in the right inferior frontal gyrus and the left anterior insula. However, there were also stimulus‐specific differences, with pictures additionally activating right dorsal visual areas and also the right anterior insula, while unique activation for faces was located in the superior temporal sulcus, especially in the right hemisphere, and bilaterally in the cerebellum. Emotional activation for words exhibited a different pattern, with words eliciting emotion effects in typical higher‐order semantic regions of the left hemisphere rather than in perceptual processing regions. In line with recent theoretical considerations regarding emotion coding in visual cortex (Miskovic & Anderson, 2018), these findings suggest that emotion effects occur specifically in brain areas which are involved in the processing of the respective stimulus type in general. 
Regarding the lateralization of emotion processing in the amygdala, we found the expected bilateral emotion effect for faces, which showed a tendency to be more pronounced in the left hemisphere. We also replicated the left‐lateralized emotion effect in the amygdala for words. Unlike in several previous studies, amygdala activation was not significantly stronger for negative than for neutral pictures in our study, although overall pictures elicited the strongest amygdala activation.

The stimulus‐specific emotional enhancement of activation in brain areas typically involved in the general processing of the respective stimulus type corresponds to the observation of modality‐specific enhanced activation in sensory regions during visual, auditory, and somatosensory emotion processing (Satpute et al., 2015). Moreover, our results demonstrate that emotion effects in brain activation do not only depend on the induction modality, but also differ between various stimulus types within the same (i.e., visual) modality. Therefore, our findings match previous studies, which showed that emotion processing is highly dependent on the induction context (Sambuco, Bradley, Herring, & Lang, 2020b; Shinkareva et al., 2014).

Differences in emotion effects in our study were especially evident between the pictures and faces on the one hand and the words on the other hand. That enhanced BOLD response in visual cortices was only evident for negative pictures and faces, but not for words, is in line with findings from an EEG study, demonstrating emotion effects for words only at higher‐order processing stages and not at the perceptual level (Rellecke et al., 2011). This supports the hypothesis that emotion processing in written words requires a certain degree of semantic processing (Hinojosa, Méndez‐Bértolo, & Pozo, 2010). Accordingly, similar to previous findings (Flaisch et al., 2015; Kensinger & Schacter, 2006), we found enhanced activation for negative words in typical language processing areas of the left hemisphere. This left‐lateralization argues against the right hemisphere hypothesis of emotion supported by results of Schlochtermeier et al. (2013) and in favor of emotion effects occurring in the hemisphere dominant for language (Price, 2012; Pujol et al., 1999). With the inferior frontal gyrus, the supramarginal and the angular gyrus, emotion effects for words spanned the locations of Broca's and Wernicke's area. Furthermore, these areas are part of a reading network, involved in transferring orthography to phonology (Joubert et al., 2004; Schlaggar & McCandliss, 2007), which precedes semantic processing (Grainger & Holcomb, 2009), and the preparation of speech (Schlaggar & McCandliss, 2007). However, in contrast to the present results, Herbert et al. (2009) showed emotion effects for words primarily in visual processing areas. One simple explanation for this diverging evidence might be that Herbert et al. (2009), who had fewer participants, used a more liberal threshold than we did. An exploratory analysis of our data with the same liberal threshold revealed emotion effects for negative words in lateral occipital cortex of both hemispheres and in the left fusiform gyrus. 
Still, these clusters were smaller than reported by Herbert et al. (2009) which might be due to the use of different valence (negative vs. negative and positive) or word class (nouns vs. adjectives) in the experiments. Evidence suggests that emotion effects for words are more pronounced for positive stimuli (Bayer & Schacht, 2014; Herbert et al., 2009). However, this is unlikely to account for the lack of visual emotion effects in our study at a more conservative threshold, as we did find quite large emotion effects for negative words in other brain regions. Moreover, in an EEG study (Palazova, Mantwill, Sommer, & Schacht, 2011), stronger emotion effects for positive words were only shown for adjectives and verbs, whereas positive and also negative nouns, as presently used, did elicit emotion effects. Word class could influence the pattern of emotion effects, as adjectives might be perceived as more self‐relevant and therefore more salient. This might lead to emotional modulation of word processing even at early perceptual stages. In sum, the factors contributing to enhanced BOLD response for emotional words in visual versus higher‐order brain areas need further investigation. Present data indicate a predominance of conceptual over perceptual processing for emotional words, while emotion modulation of pictorial stimuli takes place primarily at perceptual stages. The only overlap of emotion effects for all three stimulus types in our study was observed in the left anterior insula, which is involved in the subjective experience of emotional states (Gu, Hof, Friston, & Fan, 2013; Zaki, Davis, & Ochsner, 2012) and also in salience detection (Uddin, 2015). Overlap of emotional enhancement in the left anterior insula has also been shown for visual, gustatory, and olfactory emotion induction (Brown, Gao, Tisdelle, Eickhoff, & Liotti, 2011), supporting the hypothesis that the anterior insula plays a general role in affective awareness (Lindquist et al., 2012).

In line with previous fMRI studies (Britton et al., 2006; Hariri et al., 2002) and meta‐analyses (García‐García et al., 2016; Sabatinelli et al., 2011), we found similar emotion effects for negative pictures and fearful faces in extrastriate visual cortices, which were more pronounced for pictures. Activated areas included the lateral occipital cortex and the inferior temporal and fusiform gyri of both hemispheres, which are part of the ventral visual processing stream relevant for object recognition (Ungerleider & Mishkin, 1982). This suggests a common underlying mechanism of emotion processing in pictorial stimuli. However, as about half of the pictures used in our experiment depicted social situations and therefore also contained human faces, these similarities might be overestimated in our study.

Still, we also revealed important differences between the emotion effects elicited by negative pictures and fearful faces. In addition to the ventral visual stream, negative pictures elicited enhanced activation in the dorsal visual stream, important for processing the spatial location of an object (Ungerleider & Mishkin, 1982). This might reflect that in pictures of natural scenes, processing of affective significance not only requires object recognition, but also some degree of spatial analysis, such as the localization of the relevant (i.e., emotional) object in its context. Together with the emotion effect in frontal regions, this frontoparietal activation could be a manifestation of motivated attention (Moratti, Keil, & Stolarova, 2004; Pessoa & Adolphs, 2010; Sabatinelli & Frank, 2019). By contrast, specific emotion effects for faces were found in the superior temporal sulcus, which has a central role in the processing of dynamic facial features and changeable aspects of faces (Haxby et al., 2002), such as facial expression. Emotion effects for fearful faces also extended to parts of the cerebellum, which contributes to emotional face recognition (Adamaszek et al., 2015), especially for negative expression (Schraa‐Tam et al., 2012). Thus, even the pictorial stimulus types differed in the exact localization of emotion effects, with specific emotion effects arising in areas specialized in processing characteristic features of the respective stimulus type.

However, there are some limitations to the comparison of the pictorial stimuli in our study. Picture and face stimuli differed in their visual complexity with faces being presented in front of a white background, whereas the pictures provided much more contextual information. This might account for the stronger emotion effect for pictures. The type of emotion triggered by pictures and faces might also differ, as fearful faces are cues for environmental threat (Wieser & Keil, 2014), while the pictures probably elicit various negative emotions in the observer. For example, pictures depicting mutilations, violence, or a graveyard might also elicit disgust, anger, or sadness. This could lead to differences in cerebral activation (Hamann, 2012; Kragel & LaBar, 2016), although there is also evidence for a common emotion network underlying all basic emotions (Touroutoglou, Lindquist, Dickerson, & Barrett, 2015). Future studies should further explore similarities and differences between emotional processing of pictures and faces, while controlling for content, context, and complexity of the stimuli as well as the targeted basic emotion category. This might further reduce similarities and emphasize specific emotion effects for negative pictures and faces.

Contrary to previous imaging studies on emotion effects for pictures (Hariri et al., 2002; Kensinger & Schacter, 2006; Sabatinelli et al., 2005), faces (Hariri et al., 2002; Vuilleumier et al., 2001), or words (Herbert et al., 2009; Kensinger & Schacter, 2006), whole‐brain analyses in our study showed no emotion effects in the amygdala. These were evident only at a more liberal statistical threshold, similar to those also used in some previous studies (Hariri et al., 2002; Herbert et al., 2009; Kensinger & Schacter, 2006). One reason for weak emotion effects might be the use of perceptually well‐matched neutral control conditions, which reduces the probability of emotion‐specific amygdala activation (Costafreda et al., 2008). Moreover, our results showed that particularly emotionally neutral pictures are also capable of activating the amygdala (see Figure 4), thereby reducing differences to activation by negative stimuli. This overall finding held in spite of several follow‐up analyses that attempted to identify emotion‐specific activity for several subsets of the pictures, for instance focusing on mutilation and attack versus neutral or excluding animal pictures and also when applying less smoothing (5 mm) to the data. Some evidence also suggests that passive viewing paradigms might be less capable of inducing amygdala activity than explicit emotional tasks (García‐García et al., 2016), although our results appear to indicate that, if present, this effect would seem to apply for emotion‐specific amygdala activation, rather than amygdala activation in general, since the pictures were overall quite potent in activating the amygdalae. Task characteristics also seem to influence amygdala activation in general (Villalta‐Gil et al., 2017).

ROI analyses showed a tendency to stimulus‐specific lateralization of emotion effects in the amygdala. For pictures, we found no significant differences between amygdala activation for negative and neutral pictures on either side, because even neutral pictures elicited rather strong amygdala activation as evident from the main effect of stimulus type on amygdala activation. This is in line with some studies (Britton et al., 2006; Flaisch et al., 2015), which already reported a lack of emotion‐specific effects for pictures in the amygdala. An explanation for this could be that the amygdala acts as a relevance detector (Sander, Grafman, & Zalla, 2003) and might be more active the more demanding the viewing conditions are. As in the present study the neutral pictures were just as complex as the negative pictures, this complexity might complicate relevance detection and thus lead to more amygdala activation, even for pictures subjectively appraised as emotionally neutral. Another explanation might be the arousal of the neutral stimuli used in this study. Consistent with the previously shown arousal dependency of emotion effects in the amygdala (Garavan, Pendergrass, Ross, Stein, & Risinger, 2001), the lowest arousing neutral pictures in our study induced no amygdala activation, while higher arousing neutral pictures elicited activation comparable to negative pictures. Although negative pictures were still more arousing than the higher arousing neutral stimuli and variability in ratings did not differ between subsets, excluding emotional ambiguity as a potential confound (Schneider, Veenstra, van Harreveld, Schwarz, & Koole, 2016), there was no further gain of amygdala activation with increasing arousal in negative stimuli. This suggests a discrete arousal threshold above which the amygdala is activated.
Unfortunately, unbalanced randomization of the order of the three stimulus types in the experiment meant that about half of the participants viewed the pictures in the last block. Although follow‐up analyses did not reveal clear habituation of emotion effects in the amygdala in our study, this could have further reduced emotion effects for the pictures. If so, habituation effects would have to be specific for negative but not neutral pictures, since overall amygdala activity was highest for pictures throughout the experiment.

Of note, similar to previous findings regarding emotion effects for pictures (Bisby, Horner, Hørlyck, & Burgess, 2016), despite similar activation levels in the amygdala, visual cortex responses markedly differed between negative and neutral stimuli. A consistent emotional response to aversive stimuli across different tasks in the visual cortex, but not the amygdala, has also been reported by Villalta‐Gil et al. (2017) and highlights the role of extra‐amygdalar sources in emotional enhancement of visual processing (Pessoa & Adolphs, 2010; Petro et al., 2017), likely including the sensory cortices themselves (Miskovic & Anderson, 2018). For fearful faces, amygdala activation was elevated in both hemispheres, but there was a trend for a stronger emotion effect in the left amygdala. This is in line with previous studies, reporting left‐lateralized emotion effects in the amygdala (Morris et al., 1998; Vuilleumier et al., 2001; Zald, 2003), which contrasts with the right‐lateralization of face processing in general (Damaskinou & Watling, 2018; De Renzi, 1986). However, our results showed that the right, but not the left, amygdala was consistently activated above baseline by neutral faces. This suggests that the right amygdala might respond to faces in general, whereas the left amygdala responds more specifically to emotional facial expressions. For words, an emotion effect arose in the left amygdala only, which replicates previous findings (Herbert et al., 2009; Kensinger & Schacter, 2006), in line with the left‐hemisphere dominance for language in general.

We also explored effects of age and gender on the emotion effects. Only small clusters below our statistical threshold revealed a correlation between age and emotion effects. For pictures, emotion effects in bilateral extrastriate visual cortices decreased with age, which is in line with previous findings of reduced reactivity to emotional arousal in older subjects (Kehoe et al., 2013). Also in line with previous research (Yurgelun‐Todd & Killgore, 2006), we found increasing emotion effects with age for faces in the dorsolateral prefrontal cortex. For words, results indicated increasing emotion effects in the left Heschl's gyrus and right fusiform gyrus. Contrary to previous imaging studies, we found no gender differences in extent (Sabatinelli et al., 2004) or lateralization (Schneider et al., 2011) of emotion effects. However, Sabatinelli et al. (2004) found gender differences in particular for high arousing positive, but not for negative stimuli. This suggests that gender differences in affective stimulus processing may be restricted to specific emotion categories or require very large samples to detect.

In general, one limitation of our study is that we used negatively arousing emotional stimuli only and did not include positive stimuli. Therefore, we cannot differentiate between effects of valence and arousal on the emotion effects and their differences between the stimulus types. Although emotion effects on the behavioral level (Anderson, 2005) and in brain activation (Lang et al., 1998) were shown to be driven mainly by arousal, future studies should also take valence‐specific differences into account. Furthermore, we based our study on the analysis of briefly presented, static, and highly controlled visual stimuli, which reduces ecological validity of our results. Emotion processing in daily life must deal with a continuous stream of complex and multimodal information and processing of naturalistic stimuli might differ from processing of simplistic stimuli. For example, naturalistic audiovisual stimuli, such as emotional film scenes, have been shown to elicit the strongest emotion effects in dorsal visual areas (Goldberg, Preminger, & Malach, 2014), whereas our static scenes mainly activated the ventral visual stream. So far, there are only few data regarding processing of continuous stimuli with emotionality varying over time, but evidence suggests that inter‐subject correlations during viewing of a film scene vary with the suspense of the scene in brain regions included in salience and executive networks, but not in sensory areas (Schmälzle & Grall, in press). This might emphasize the role of higher‐order cognitive processes in emotion processing of naturalistic stimuli. While the stimuli used in our experiment are suitable for the investigation of basic mechanisms of emotion processing in the brain, naturalistic paradigms may induce larger effects (Kim et al., 2017; Kim, Wang, Wedell, & Shinkareva, 2016; Trautmann, Fehr, & Herrmann, 2009) and generalize better to everyday life.
By virtue of their higher power and intrinsic appeal, they may also be particularly suitable for investigation of various patient groups when maximal group differences and minimal task demands are desired. On the other hand, for those complex naturalistic stimuli, it may not always be clear what psychological or perceptual dimension or mix of dimensions causes the observed effects, even if there might be good inter‐subject correlation in such effects. Presently, this is already evident from the activity elicited by the complex scenes, which is larger, but also somewhat more difficult to interpret, than the activity elicited by the other stimulus types. At any rate, future studies should also address emotion processing of more naturalistic and dynamic stimuli and investigate how results might differ from or converge with the processing of simpler emotional stimuli.

5. CONCLUSION

Taken together, across three different stimulus types, we confirmed that negative stimuli trigger stronger cerebral activation than neutral stimuli. Differences in emotion effects were particularly evident between pictorial (pictures and faces) and verbal stimulus types, with only the former eliciting emotion effects in visual brain areas, while negative words (nouns) enhanced activation in higher‐order language processing regions. However, there were also specific emotion effects for pictures in the dorsal stream and for faces in the superior temporal sulcus. Additionally, activation extent and lateralization of emotion effects in the amygdala differed as a function of stimulus type, whereas the left anterior insula showed similar emotion effects across all three stimulus types. Our results suggest that emotion effects for each stimulus type arise specifically in brain areas involved in the processing of the respective stimulus type in general and converge in the left anterior insula. Therefore, emotion processing of a given stimulus type seems to depend on a combination of the involvement of stimulus‐specific brain regions, such as sensory or specialized higher‐order processing areas, and common regions involved in emotion processing irrespective of the context of the emotion induction, such as the anterior insula.

CONFLICT OF INTEREST

C. G. B. obtained honoraria for speaking engagements from UCB (Monheim, Germany), Desitin (Hamburg, Germany), and Euroimmun (Lübeck, Germany). C. G. B. and J. K. receive research support from Deutsche Forschungsgemeinschaft (German Research Council, Bonn, Germany) and Gerd‐Altenhof‐Stiftung (Deutsches Stiftungs‐Zentrum, Essen, Germany). The remaining authors declare no conflict of interest.

ETHICS STATEMENT

The study was approved by the Ethics Commission of the German Psychological Society (DGPs).

PATIENT CONSENT STATEMENT

All participants gave written informed consent according to the Declaration of Helsinki.

ACKNOWLEDGMENTS

This work was supported by the Deutsche Forschungsgemeinschaft (BI1254/8‐1 and KI1286/6‐1).

APPENDIX A: STIMULUS SET 1.

TABLE A1.

List of stimuli used in the experiment

| Pictures | Negative | Neutral |

|---|---|---|

| IAPS pictures (Lang et al., 2008) | 1,120 | 1,450 |

| 1,205 | 1,675 | |

| 1,275 | 1,910 | |

| 1,300 | 2,026 | |

| 1,304 | 2,038 | |

| 1,932 | 2,102 | |

| 2,095 | 2,308 | |

| 2,205 | 2,372 | |

| 2,276 | 2,377 | |

| 2,683 | 2,384 | |

| 2,688 | 2,390 | |

| 2,710 | 2,393 | |

| 2,800 | 2,396 | |

| 2,811 | 2,397 | |

| 2,981 | 2,400 | |

| 3,051 | 2,411 | |

| 3,103 | 2,435 | |

| 3,181 | 2,440 | |

| 3,185 | 2,484 | |

| 3,213 | 2,487 | |

| 3,230 | 2,495 | |

| 3,300 | 2,499 | |

| 3,301 | 2,513 | |

| 3,350 | 2,521 | |

| 3,550 | 2,525 | |

| 6,021 | 2,575 | |

| 6,311 | 2,579 | |

| 6,313 | 2,580 | |

| 6,415 | 2,594 | |

| 6,520 | 2,635 | |

| 6,821 | 2,745 | |

| 6,834 | 2,749 | |

| 7,380 | 2,840 | |

| 8,230 | 2,850 | |

| 8,485 | 5,471 | |

| 9,000 | 5,531 | |

| 9,006 | 7,009 | |

| 9,007 | 7,187 | |

| 9,040 | 7,476 | |

| 9,041 | 7,491 | |

| 9,043 | 7,512 | |

| 9,140 | 7,513 | |

| 9,265 | 7,550 | |

| 9,402 | 7,632 | |

| 9,421 | 8,241 | |

| 9,432 | 8,312 | |

| 9,433 | ||

| 9,480 | ||

| 9,560 | ||

| 9,584 | ||

| 9,611 | ||

| 9,630 | ||

| 9,800 | ||

| 9,810 | ||

| 9,909 | ||

| 9,940 | ||

| Own dataset | Child soldier; Burning house; Moldy orange; Moldy toast | Wooden chair; Empty dental practice; People sitting at a table; Car; Church building; Hiking shoes and socks; Train; Crowned crane; Mallard duck; Pot‐bellied pig; Fox; Toad; Bird; Person cooking a meal |

| Faces | Negative | Neutral |

| NimStim faces (Tottenham et al., 2009) | Female | Female |

| 01F_FE_O | 01F_NE_C | |

| 02F_FE_O | 02F_NE_C | |

| 03F_FE_O | 03F_NE_C | |

| 05F_FE_O | 05F_NE_C | |

| 06F_FE_O | 06F_NE_C | |

| 07F_FE_O | 07F_NE_C | |

| 08F_FE_O | 08F_NE_C | |

| 09F_FE_O | 09F_NE_C | |

| 10F_FE_O | 10F_NE_C | |

| 11F_FE_O | 11F_NE_C | |

| 17F_FE_O | 17F_NE_C | |

| 18F_FE_O | 18F_NE_C | |

| Male | Male | |

| 20M_FE_O | 20M_NE_C | |

| 21M_FE_O | 21M_NE_C | |

| 22M_FE_O | 22M_NE_C | |

| 23M_FE_O | 23M_NE_C | |

| 24M_FE_O | 24M_NE_C | |

| 25M_FE_O | 25M_NE_C | |

| 27M_FE_O | 27M_NE_C | |

| 28M_FE_O | 28M_NE_C | |

| 29M_FE_O | 29M_NE_C | |

| 30M_FE_O | 30M_NE_C | |

| 36M_FE_O | 36M_NE_C | |

| 37M_FE_O | 37M_NE_C | |

| FACES database (Ebner et al., 2010) | Female—Young age | Female—Young age |

| 010_y_f_f_b | 010_y_f_n_a | |

| 020_y_f_f_a | 020_y_f_n_a | |

| 022_y_f_f_a | 022_y_f_n_a | |

| 040_y_f_f_b | 040_y_f_n_a | |

| 048_y_f_f_b | 048_y_f_n_a | |

| 054_y_f_f_a | 054_y_f_n_a | |

| 098_y_f_f_a | 098_y_f_n_a | |

| 101_y_f_f_b | 101_y_f_n_a | |

| 106_y_f_f_b | 106_y_f_n_a | |

| 115_y_f_f_a | 115_y_f_n_a | |

| 163_y_f_f_b | 163_y_f_n_a | |

| 182_y_f_f_b | 182_y_f_n_a | |

| Male—Young age | Male—Young age | |

| 008_y_m_f_b | 008_y_m_n_a | |

| 013_y_m_f_a | 013_y_m_n_a | |

| 041_y_m_f_b | 041_y_m_n_a | |

| 057_y_m_f_a | 057_y_m_n_a | |

| 066_y_m_f_a | 066_y_m_n_a | |

| 072_y_m_f_a | 072_y_m_n_a | |

| 089_y_m_f_a | 089_y_m_n_a | |

| 109_y_m_f_b | 109_y_m_n_a | |

| 114_y_m_f_a | 114_y_m_n_a | |

| 123_y_m_f_a | 123_y_m_n_a | |

| 167_y_m_f_a | 167_y_m_n_a | |

| 170_y_m_f_b | 170_y_m_n_a | |

| Female—Middle age | Female—Middle age | |

| 006_m_f_f_b | 006_m_f_n_a | |

| 011_m_f_f_b | 011_m_f_n_b | |

| 019_m_f_f_a | 019_m_f_n_b | |

| 029_m_f_f_a | 029_m_f_n_a | |

| 035_m_f_f_b | 035_m_f_n_a | |

| 050_m_f_f_a | 050_m_f_n_b | |

| 052_m_f_f_b | 052_m_f_n_a | |

| 061_m_f_f_a | 061_m_f_n_a | |

| 064_m_f_f_b | 064_m_f_n_b | |

| 073_m_f_f_a | 073_m_f_n_b | |

| 080_m_f_f_b | 080_m_f_n_a | |

| 084_m_f_f_b | 084_m_f_n_b | |

| Male—Middle age | Male—Middle age | |

| 007_m_m_f_a | 007_m_m_n_a | |

| 014_m_m_f_a | 014_m_m_n_a | |

| 026_m_m_f_a | 026_m_m_n_b | |

| 032_m_m_f_b | 032_m_m_n_a | |

| 038_m_m_f_b | 038_m_m_n_a | |

| 045_m_m_f_a | 045_m_m_n_b | |

| 056_m_m_f_a | 056_m_m_n_a | |

| 058_m_m_f_b | 058_m_m_n_a | |

| 068_m_m_f_b | 068_m_m_n_b | |

| 070_m_m_f_a | 070_m_m_n_b | |

| 077_m_m_f_b | 077_m_m_n_b | |

| 082_m_m_f_a | 082_m_m_n_b | |

| Female—Old age | Female—Old age | |

| 005_o_f_f_a | 005_o_f_n_b | |

| 012_o_f_f_b | 012_o_f_n_b | |

| 021_o_f_f_b | 021_o_f_n_a | |

| 024_o_f_f_b | 024_o_f_n_a | |

| 036_o_f_f_b | 036_o_f_n_a | |

| 044_o_f_f_a | 044_o_f_n_a | |

| 047_o_f_f_a | 047_o_f_n_a | |

| 055_o_f_f_b | 055_o_f_n_b | |

| 060_o_f_f_a | 060_o_f_n_a | |

| 067_o_f_f_a | 067_o_f_n_b | |

| 075_o_f_f_a | 075_o_f_n_a | |

| 079_o_f_f_b | 079_o_f_n_b | |

| Male—Old age | Male—Old age | |

| 004_o_m_f_a | 004_o_m_n_b | |

| 015_o_m_f_b | 015_o_m_n_a | |

| 018_o_m_f_b | 018_o_m_n_a | |

| 027_o_m_f_b | 027_o_m_n_a | |

| 039_o_m_f_b | 039_o_m_n_b | |

| 042_o_m_f_a | 042_o_m_n_a | |

| 046_o_m_f_a | 046_o_m_n_a | |

| 053_o_m_f_a | 053_o_m_n_b | |

| 059_o_m_f_a | 059_o_m_n_b | |

| 065_o_m_f_a | 065_o_m_n_b | |

| 074_o_m_f_b | 074_o_m_n_b | |

| 076_o_m_f_a | 076_o_m_n_b | |

| KDEF (Lundqvist et al., 1998) | Female | Female |

| AF01AFS | AF01NES | |

| AF06AFS | AF06NES | |

| AF07AFS | AF07NES | |

| AF11AFS | AF11NES | |

| AF13AFS | AF13NES | |

| AF14AFS | AF14NES | |

| AF15AFS | AF15NES | |

| AF16AFS | AF16NES | |

| AF18AFS | AF18NES | |

| AF19AFS | AF19NES | |

| AF30AFS | AF30NES | |

| AF31AFS | AF31NES | |

| Male | Male | |

| AM01AFS | AM01NES | |

| AM02AFS | AM02NES | |

| AM04AFS | AM04NES | |

| AM05AFS | AM05NES | |

| AM06AFS | AM06NES | |

| AM07AFS | AM07NES | |

| AM08AFS | AM08NES | |

| AM10AFS | AM10NES | |

| AM11AFS | AM11NES | |

| AM13AFS | AM13NES | |

| AM22AFS | AM22NES | |

| AM23AFS | AM23NES | |

| Words | Negative | Neutral |

| Own dataset | Angst (fear) | Papier (paper) |

| Blut (blood) | Spiegel (mirror) | |

| Opfer (victim) | Objekt (object) | |

| Teufel (devil) | Sicht (view) | |

| Leiden (suffering) | Eigenschaft (characteristic) | |

| Hunger (hunger) | Flasche (bottle) | |

| Kälte (cold) | Information (information) | |

| Elend (misery) | Kasten (box) | |

| Katastrophe (catastrophe) | Post (post) | |

| Untergang (doom) | Ufer (shore) | |

| Wunde (wound) | Symbol (symbol) | |

| Selbstmord (suicide) | Bewohner (inhabitant) | |

| Verrat (betrayal) | Flugzeug (aircraft) | |

| Explosion (explosion) | Faktor (factor) | |

| Eifersucht (jealousy) | Vorhang (curtain) | |

| Fluch (curse) | Computer (computer) | |

| Ekel (disgust) | Getreide (grain) | |

| Diebstahl (robbery) | Plastik (plastic) | |

| Panik (panic) | Merkmal (feature) | |

| Henker (executioner) | Kurve (curve) | |

| Folter (torture) | Siedlung (settlement) | |

| Wahn (delusion) | Geschirr (dishes) | |

| Sklaverei (slavery) | Batterie (battery) | |

| Ungerechtigkeit (injustice) | Reifen (tire) | |

| Diktator (dictator) | Rasen (lawn) | |

| Hetze (rabble‐rousing) | Bleistift (pencil) | |

| Hilflosigkeit (helplessness) | Unterlage (base) | |

| Brutalität (brutality) | Detail (detail) | |

| Seuche (epidemic) | Ruder (rudder) | |

| Narbe (scar) | Gerüst (scaffold) | |

| Rassismus (racism) | Reflex (reflex) | |

| Vergewaltigung (rape) | Tablett (tray) | |

| Kerker (dungeon) | Beleg (receipt) | |

| Nazi (Nazi) | Quadrat (square) | |

| Lügner (liar) | Regal (shelf) | |

| Spritze (syringe) | Motorrad (motorcycle) | |

| Demütigung (humiliation) | Klingel (bell) | |

| Isolation (isolation) | Ziegel (brick) | |

| Bestie (beast) | Mikroskop (microscope) | |

| Hungersnot (famine) | Votum (vote) | |

| Durchfall (diarrhea) | Truhe (chest) | |

| Kreuzigung (crucifixion) | Aktentasche (briefcase) | |

| Eiter (pus) | Biegung (bend) | |

| Blamage (disgrace) | Tastatur (keyboard) | |

| Beklemmung (anxiety) | Fahrkarte (ticket) | |

| Alptraum (nightmare) | Natrium (sodium) | |

| Habgier (greed) | Kran (crane) | |

| Geisel (hostage) | Partikel (particle) | |

| Verstümmelung (mutilation) | Stellvertretung (representation) | |

| Perversion (perversion) | Brause (sherbet) | |

| Erpresser (blackmailer) | Automat (automat) | |

| Geschwür (ulcer) | Bügeleisen (flat iron) | |

| Heroin (heroin) | Turban (turban) | |

| Tumor (tumor) | Kastanie (chestnut) | |

| Warze (wart) | Kanister (canister) | |

| Ekzem (eczema) | Hausschuhe (slippers) | |

| Lepra (leprosy) | Pronomen (pronoun) | |

| Lungenkrebs (lung cancer) | Rolltreppe (escalator) | |

| Pisse (piss) | Armbeuge (arm bend) | |

| Fixer (junkie) | Kleiderbügel (clothes hanger) |

TABLE A2.

Means, SDs, and t tests of normative ratings of valence and arousal (Lang et al., 2008) of the IAPS pictures used in our experiment

| | Negative | Neutral | t (100) | p |
|---|---|---|---|---|
| Valence | 2.57 (0.61) | 5.24 (0.52) | 23.48 | <.001 |
| Arousal | 5.72 (0.73) | 3.53 (0.65) | −15.87 | <.001 |

TABLE A3.

Means, SDs, and t tests of characteristics of negative and neutral words

| | Negative | Neutral | t (118) | p |
|---|---|---|---|---|
| Word length (characters) | 7.37 (2.69) | 7.32 (2.10) | 0.11 | .91 |
| Word frequency | 1,019.27 (1,826.27) | 1,015.50 (1,130.77) | 0.01 | .99 |
| Orthographic neighbors (Coltheart) | 8.10 (9.34) | 7.77 (11.09) | 0.18 | .86 |
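The t values in Tables A2 and A3 can be approximately recomputed from the tabulated means and SDs. The following is a minimal sketch, not the authors' analysis code: it assumes a pooled-variance (Student) independent-samples t test and equal group sizes of 60 words per condition, which is inferred from the reported 118 degrees of freedom rather than stated in the table. The function name `pooled_t` is hypothetical.

```python
import math


def pooled_t(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Independent-samples t from summary statistics (equal variances assumed)."""
    df = n_a + n_b - 2
    pooled_var = ((n_a - 1) * sd_a ** 2 + (n_b - 1) * sd_b ** 2) / df
    se = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (mean_a - mean_b) / se, df


# Means and SDs copied from Table A3 (negative vs. neutral words).
# ASSUMPTION: n = 60 per group, inferred from df = 118.
N = 60
rows = {
    "Word length": (7.37, 2.69, 7.32, 2.10),
    "Word frequency": (1019.27, 1826.27, 1015.50, 1130.77),
    "Orthographic neighbors": (8.10, 9.34, 7.77, 11.09),
}

results = {}
for label, (m_neg, sd_neg, m_neu, sd_neu) in rows.items():
    t, df = pooled_t(m_neg, sd_neg, N, m_neu, sd_neu, N)
    results[label] = (round(t, 2), df)
    print(f"{label}: t({df}) = {t:.2f}")
```

Under these assumptions the sketch yields t(118) = 0.11, 0.01, and 0.18, in line with Table A3. Because the inputs are rounded summary values, recomputed statistics for other tables may deviate slightly from the published ones.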

APPENDIX B: MAIN EFFECTS OF STIMULUS TYPE AND EMOTION CONDITION

Table B1 and Figure B1 summarize the main effects of stimulus type and emotion condition in the whole‐brain ANOVA. There were widespread main effects of stimulus type, especially in the primary and extrastriate visual cortex, where pictures triggered the strongest brain activation, followed by faces and words. These main effects were not explored further, as our study focused on the emotion effects and their differences between stimulus types. However, the large differences in general activation between the stimulus types suggest that it may be reasonable to analyze emotion effects separately for each stimulus type. Main effects of emotion condition were observed in the extrastriate visual cortex, anterior insula and inferior frontal gyrus, supplementary motor area, stria terminalis, and brain stem of both hemispheres. All of these effects indicated stronger activation by negative than by neutral stimuli. Only the left Heschl's gyrus showed stronger activation by neutral stimuli.

TABLE B1.

Peak‐voxels main effects in the whole‐brain ANOVA

| Side | Region | Cluster size | F | MNI x | MNI y | MNI z |
|---|---|---|---|---|---|---|
| Stimulus type | | | | | | |
| R/L | Visual cortex | 38,599 | 320.43 | 28 | −50 | −10 |
| R | Middle/inferior frontal gyrus | 452 | 23.24 | 42 | 6 | 30 |
| R | Angular gyrus | 1,138 | 22.77 | 52 | −62 | 44 |
| L | Supplementary motor area | 654 | 19.70 | −4 | 2 | 62 |
| R | Cerebellum | 219 | 15.34 | 22 | −62 | −60 |
| R | Postcentral gyrus | 324 | 14.17 | 24 | −28 | 62 |
| R | Prefrontal cortex | 230 | 13.08 | 38 | 22 | 42 |
| Emotion condition | | | | | | |
| Negative > neutral | | | | | | |
| L | Lateral occipital cortex/inferior temporal gyrus/fusiform gyrus | 3,774 | 60.80 | −42 | −48 | −20 |
| R | Lateral occipital cortex/inferior temporal gyrus/fusiform gyrus/superior temporal sulcus | 3,253 | 42.36 | 42 | −66 | −10 |
| L | Anterior insula/inferior frontal gyrus/amygdala | 2,268 | 48.34 | −28 | 18 | −14 |
| R | Anterior insula/inferior frontal gyrus | 961 | 34.20 | 30 | 22 | −14 |
| R/L | Supplementary motor area | 907 | 37.22 | 6 | 18 | 60 |
| R/L | Stria terminalis | 470 | 23.94 | 8 | −2 | 6 |
| R/L | Brain stem | 376 | 23.51 | 8 | −28 | −8 |
| Neutral > negative | | | | | | |
| L | Heschl's gyrus | 223 | 20.58 | −56 | −14 | 6 |

Note: All results are thresholded at p < .001 uncorrected, with a cluster‐forming threshold of p < .05 FWE‐corrected.

Abbreviations: ANOVA, analysis of variance; FWE, familywise error.

FIGURE B1.

F values for the main effects of stimulus type and emotion condition thresholded at p < .001 uncorrected and a cluster‐forming threshold of p < .05 FWE‐corrected. FWE, familywise error

APPENDIX C: INFLUENCES OF GENDER AND AGE ON THE EMOTION EFFECTS

We found no interaction between gender and emotion effects, even at a more liberal statistical threshold. There were no effects of age on the emotion effects at the applied cluster‐forming threshold k corresponding to p < .05, familywise error rate corrected. However, in an exploratory analysis of age effects at a more liberal, arbitrary cluster threshold of k = 50 voxels (Figure C1), age correlated negatively with the emotion effects for pictures in the lateral occipital cortex of both hemispheres. Age was positively correlated with the emotion effect in the right dorsolateral prefrontal cortex for faces and in the right fusiform gyrus and the left Heschl's gyrus for words.

FIGURE C1.

T values for the correlation of emotion effects and age thresholded at p < .001 uncorrected and a cluster‐forming threshold of k = 50 voxels within each stimulus type. Blue: negative correlation. Red: positive correlation

APPENDIX D: THE INFLUENCE OF AROUSAL ON THE AMYGDALA ACTIVATION BY PICTURES

FIGURE D1.

Mean contrast estimates in the structural ROI of the amygdala for negative and neutral pictures split into higher and lower arousal subgroups. After exclusion of all pictures depicting animals, negative and neutral pictures were each divided into halves by a median split of the normative arousal ratings (Lang et al., 2008), and the four subsets were approximately matched for social content. Normative arousal ratings of all subgroups differed significantly: neutral_low (arousal: M = 2.99, SE = 0.08): pictures 2026, 2038, 2102, 2384, 2393, 2396, 2397, 2411, 2440, 2495, 2499, 2513, 2580, 2840, 2850, 5471, 7009, 7187, 7491, 7513, 8312; neutral_high (arousal: M = 4.01, SE = 0.09): pictures 2308, 2372, 2377, 2390, 2400, 2435, 2484, 2487, 2525, 2575, 2579, 2594, 2635, 2745, 2749, 5531, 7476, 7512, 7550, 7632, 8241; negative_low (arousal: M = 5.08, SE = 0.10): pictures 2095, 2205, 2276, 2710, 3051, 3185, 3230, 3300, 3301, 6311, 9000, 9006, 9007, 9041, 9043, 9265, 9402, 9421, 9432, 9480, 9584, 9611; negative_high (arousal: M = 6.28, SE = 0.09): pictures 2683, 2811, 2981, 3103, 3213, 3550, 6021, 6313, 6520, 6821, 6834, 7380, 8230, 8485, 9040, 9433, 9630, 9800, 9810, 9909, 9940. Error bars denote 95% confidence intervals.
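The median split described in the caption of Figure D1 can be illustrated with a short sketch. It is only an illustration under stated assumptions: the function name `median_split`, the item labels, and the ratings are hypothetical and are not the normative IAPS values, and assigning items at the median to the lower half is an assumed tie rule (it would be consistent with the 22/21 division of the negative pictures, but the source does not state it).

```python
from statistics import median


def median_split(ratings):
    """Split {item_id: rating} into (low, high) lists at the median rating.

    ASSUMPTION: items rated at or below the median go to the low subgroup.
    """
    cut = median(ratings.values())
    low = sorted(item for item, value in ratings.items() if value <= cut)
    high = sorted(item for item, value in ratings.items() if value > cut)
    return low, high


# Hypothetical arousal ratings, for illustration only.
demo_ratings = {"pic_a": 2.8, "pic_b": 3.1, "pic_c": 3.6, "pic_d": 4.2, "pic_e": 4.4}
low, high = median_split(demo_ratings)
print("low arousal:", low)
print("high arousal:", high)
```

In this sketch the split would be applied separately to the negative and the neutral pictures, yielding the four subgroups named in the caption. Matching the subsets for social content is a further step that is not covered here.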

Reisch LM, Wegrzyn M, Woermann FG, Bien CG, Kissler J. Negative content enhances stimulus‐specific cerebral activity during free viewing of pictures, faces, and words. Hum Brain Mapp. 2020;41:4332–4354. 10.1002/hbm.25128

Funding information: Deutsche Forschungsgemeinschaft, Grant/Award Numbers: KI1286/6‐1, BI1254/8‐1

DATA AVAILABILITY STATEMENT

The data that support the findings of this study are available from the corresponding author upon reasonable request.

REFERENCES

- Adamaszek, M., Kirkby, K. C., Olbrich, S., Langner, S., Steele, C., Sehm, B., … Hamm, A. (2015). Neural correlates of impaired emotional face recognition in cerebellar lesions. Brain Research, 1613, 1–12. 10.1016/j.brainres.2015.01.027

- Aldhafeeri, F. M., Mackenzie, I., Kay, T., Alghamdi, J., & Sluming, V. (2012). Regional brain responses to pleasant and unpleasant IAPS pictures: Different networks. Neuroscience Letters, 512(2), 94–98. 10.1016/j.neulet.2012.01.064

- Alpers, G. W., & Gerdes, A. B. M. (2007). Here is looking at you: Emotional faces predominate in binocular rivalry. Emotion, 7(3), 495–506. 10.1037/1528-3542.7.3.495

- Anderson, A. K. (2005). Affective influences on the attentional dynamics supporting awareness. Journal of Experimental Psychology: General, 134(2), 258–281. 10.1037/0096-3445.134.2.258

- Bach, D. R., Schmidt‐Daffy, M., & Dolan, R. J. (2014). Facial expression influences face identity recognition during the attentional blink. Emotion, 14(6), 1007–1013. 10.1037/a0037945

- Barrett, L. F., Bliss‐Moreau, E., Duncan, S. L., Rauch, S. L., & Wright, C. I. (2007). The amygdala and the experience of affect. Social Cognitive and Affective Neuroscience, 2(2), 73–83. 10.1093/scan/nsl042

- Bayer, M., & Schacht, A. (2014). Event‐related brain responses to emotional words, pictures, and faces—A cross‐domain comparison. Frontiers in Psychology, 5, 1106. 10.3389/fpsyg.2014.01106

- Bisby, J. A., Horner, A. J., Hørlyck, L. D., & Burgess, N. (2016). Opposing effects of negative emotion on amygdalar and hippocampal memory for items and associations. Social Cognitive and Affective Neuroscience, 11(6), 981–990. 10.1093/scan/nsw028

- Borod, J. C., Tabert, M. H., Santschi, C., & Strauss, E. (2000). Neuropsychological assessment of emotional processing in brain‐damaged patients. In The neuropsychology of emotion (pp. 80–105). New York, NY: Oxford University Press.

- Brett, M., Anton, J.‐L., Valabregue, R., & Poline, J.‐B. (2002). Region of interest analysis using an SPM toolbox. Paper presented at the 8th International Conference on Functional Mapping of the Human Brain, Sendai, Japan.

- Britton, J. C., Taylor, S. F., Sudheimer, K. D., & Liberzon, I. (2006). Facial expressions and complex IAPS pictures: Common and differential networks. NeuroImage, 31(2), 906–919. 10.1016/j.neuroimage.2005.12.050

- Brown, S., Gao, X., Tisdelle, L., Eickhoff, S. B., & Liotti, M. (2011). Naturalizing aesthetics: Brain areas for aesthetic appraisal across sensory modalities. NeuroImage, 58(1), 250–258. 10.1016/j.neuroimage.2011.06.012

- Costafreda, S. G., Brammer, M. J., David, A. S., & Fu, C. H. Y. (2008). Predictors of amygdala activation during the processing of emotional stimuli: A meta‐analysis of 385 PET and fMRI studies. Brain Research Reviews, 58(1), 57–70. 10.1016/j.brainresrev.2007.10.012

- Damaskinou, N., & Watling, D. (2018). Neurophysiological evidence (ERPs) for hemispheric processing of facial expressions of emotions: Evidence from whole face and chimeric face stimuli. Laterality, 23(3), 318–343. 10.1080/1357650X.2017.1361963

- De Renzi, E. (1986). Prosopagnosia in two patients with CT scan evidence of damage confined to the right hemisphere. Neuropsychologia, 24(3), 385–389. 10.1016/0028-3932(86)90023-0

- Ebner, N. C., Riediger, M., & Lindenberger, U. (2010). FACES—A database of facial expressions in young, middle‐aged, and older women and men: Development and validation. Behavior Research Methods, 42(1), 351–362. 10.3758/BRM.42.1.351

- Eickhoff, S. B., Stephan, K. E., Mohlberg, H., Grefkes, C., Fink, G. R., Amunts, K., & Zilles, K. (2005). A new SPM toolbox for combining probabilistic cytoarchitectonic maps and functional imaging data. NeuroImage, 25(4), 1325–1335. 10.1016/j.neuroimage.2004.12.034