Abstract

Objective:

The objective of the present study was to determine whether long-term cochlear implant (CI) users would show greater variability in rapid phonological coding skills and greater reliance on slow-effortful compensatory executive functioning (EF) skills than normal hearing (NH) peers on perceptually challenging high-variability sentence recognition tasks. We tested the following three hypotheses: First, CI users would show lower scores on sentence recognition tests involving high speaker and dialect variability than NH controls, even after adjusting for poorer sentence recognition performance by CI users on a conventional low-variability sentence recognition test. Second, variability in fast-automatic rapid phonological coding skills would be more strongly associated with performance on high-variability sentence recognition tasks for CI users than NH peers. Third, compensatory EF strategies would be more strongly associated with performance on high-variability sentence recognition tasks for CI users than NH peers.

Design:

Two groups of children, adolescents, and young adults aged 9 to 29 years participated in this cross-sectional study: 49 long-term CI users (≥7 years of CI use) and 56 NH controls. All participants were tested on measures of rapid phonological coding (Children’s Test of Nonword Repetition), conventional sentence recognition (Harvard Sentence Recognition Test), and two novel high-variability sentence recognition tests that varied the indexical attributes of speech [Perceptually Robust English Sentence Test Open-set (PRESTO) and PRESTO Foreign-Accented English test]. Measures of EF included verbal working memory (WM), spatial WM, controlled cognitive fluency, and inhibition-concentration.

Results:

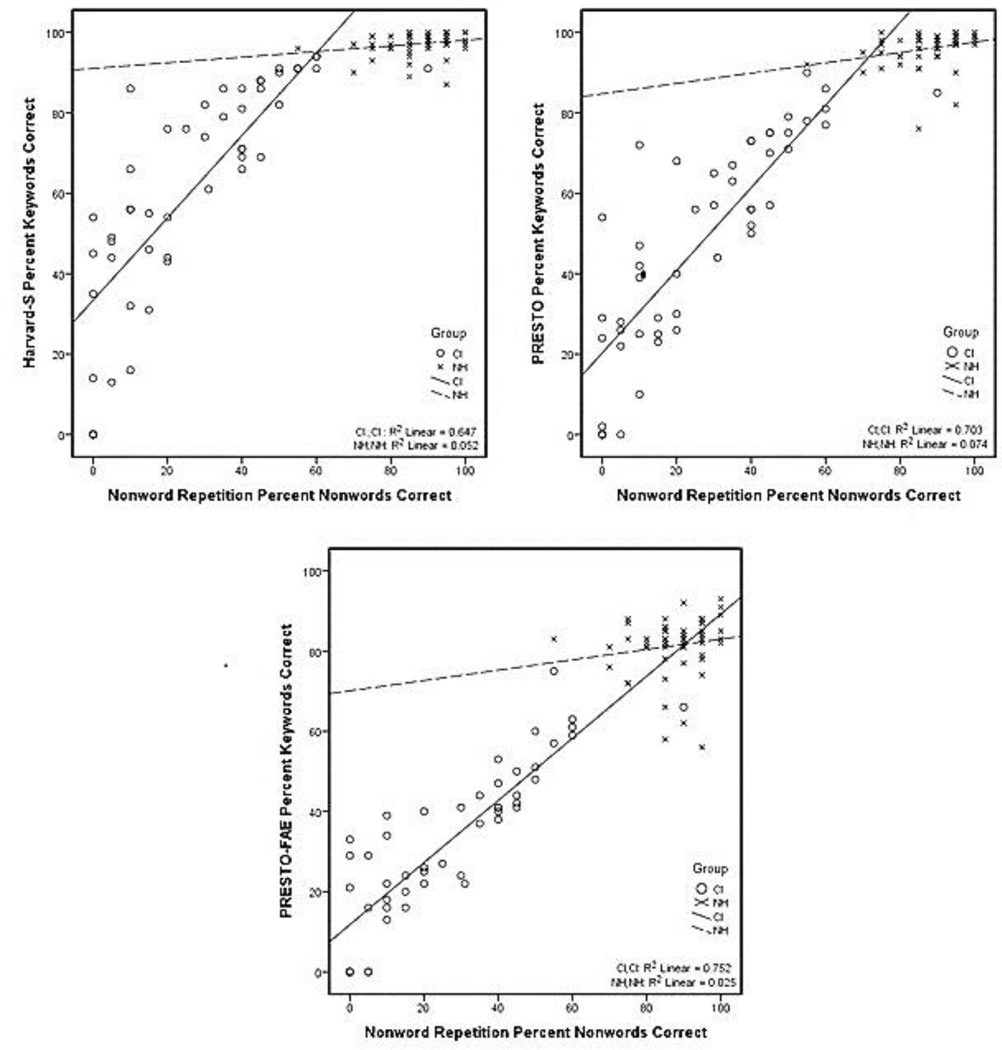

CI users scored lower than NH peers on both tests of high-variability sentence recognition, even after conventional sentence recognition skills were statistically controlled. Correlations between rapid phonological coding and high-variability sentence recognition scores were stronger for the CI sample than for the NH sample, even after basic sentence perception skills were statistically controlled. Scatterplots revealed different ranges and slopes for the relationship between rapid phonological coding skills and high-variability sentence recognition performance in CI users and NH peers. Although no statistically significant correlations between EF strategies and sentence recognition were found in the CI or NH sample after a conservative Bonferroni-type correction, medium to large effect sizes for correlations between verbal WM and sentence recognition in the CI sample suggest that further investigation of this relationship is needed.

Conclusions:

These findings provide converging support for neurocognitive models (Ease of Language Understanding, Framework for Understanding Effortful Listening, and Auditory Neurocognitive Model) that propose two channels for speech-language processing: a fast-automatic channel that predominates whenever possible and a compensatory slow-effortful channel that is activated during perceptually challenging speech processing tasks that the fast-automatic channel cannot fully manage. CI users showed significantly poorer performance on measures of high-variability sentence recognition than NH peers, even after simple sentence recognition was controlled. Nonword repetition scores showed almost no overlap between the CI and NH samples, and correlations between nonword repetition scores and high-variability sentence recognition were consistent with greater reliance on fast-automatic phonological coding for high-variability sentence recognition in the CI sample than in the NH sample. Further investigation of the verbal WM-sentence recognition relationship in CI users is recommended. In the clinical setting, assessment of fast-automatic phonological processing and slow-effortful EF skills may provide a better understanding of speech perception outcomes in CI users.

INTRODUCTION

Cochlear implants (CIs) provide auditory stimulation and novel sensory experience for prelingually deaf children, supporting the development of speech perception and spoken language skills after a period in early life without exposure to sound. However, speech perception and spoken language outcomes vary substantially among individual CI users. Some CI users achieve near-normal speech perception skills in quiet, while others struggle with simple speech perception tasks assessing open-set spoken word recognition and basic sentence perception. Demographic and hearing history variables such as age at implantation, duration of CI use, communication mode, education and marital status of the parents, size of the family, socioeconomic status of the family, level of speech emphasis at home and school, and nonverbal IQ have been associated with individual differences in speech perception and spoken language outcomes (e.g., Geers & Brenner 2003; Moog & Geers 2003). However, these demographic and hearing history variables do not fully account for the individual differences and variability in speech perception and spoken language outcomes observed in CI users.

Information processing models of neurocognition offer the potential to account for heretofore unexplained variance in speech perception and spoken language outcomes for CI users (Pisoni 2000). These approaches, based on the premise that “hearing loss is primarily a brain issue, not an ear issue” (Flexer 2011, p. S21), are beginning to address a significant gap in our understanding of the role of cognition, neural plasticity, learning, and early experience in hearing and spoken language processing. Several information processing-based theories have recently been developed to address the interface between neurocognitive abilities and speech perception, particularly speech perception under challenging conditions. A central premise of these models is that speech perception outcomes for any given listener result from a combination of fast-automatic processing operations and slow-effortful compensatory processing operations. The extent to which fast-automatic vs. slow-effortful language processing occurs depends on the complexity and difficulty of the speech stimuli, with the fast-automatic processing channel predominating whenever possible and the slow-effortful channel activating when fast-automatic processing is insufficient (Kronenberger & Pisoni in press; Pichora-Fuller et al. 2016; Rönnberg et al. 2013). The goal of the present study was to determine whether long-term CI users would show greater variability in fast-automatic phonological coding skills and greater reliance on slow-effortful compensatory executive functioning (EF) skills than normal hearing (NH) peers during perceptually challenging sentence recognition tasks. The engagement of slow-effortful controlled processing to compensate for delays in fast-automatic processing of speech-language information may be especially salient under the challenging language processing conditions regularly encountered outside the clinic or laboratory.
These ecologically valid, challenging language processing conditions include high-variability sentence recognition tasks when the speech of different talkers must be processed. Under high-variability sentence recognition conditions, CI users are unlikely to rely solely on fast-automatic processing because of increased demands on listening effort, working memory (WM) capacity, and active search of the mental lexicon, and may rely instead on compensatory engagement of slow-effortful EF processes (Pisoni et al. 2011; Rönnberg et al. 2013). Understanding the difficulty that arises in spoken language processing in these types of high-variability sentence recognition conditions would begin to address a critical barrier to explaining and remediating individual differences in spoken language outcomes in CI users.

Neurocognitive Models of Fast-Automatic Versus Slow-Effortful Speech Perception and Spoken Language Processing

The Ease of Language Understanding model (ELU; Rönnberg et al. 2013) posits that when the incoming speech signal matches phonological and semantic representations stored in long-term memory (LTM), speech processing is fast and automatic, requiring little if any engagement of active neurocognitive processing resources. However, when challenging listening conditions such as hearing loss, signal degradation, or background noise weaken the match between the incoming speech signal and representations in LTM, explicit, controlled WM mechanisms are engaged to reconcile the mismatch. These explicit WM storage and processing mechanisms act in a compensatory manner to predict and fill in the information missing from the compromised speech signal and thereby facilitate comprehension of the talker’s intended message. Because WM is a limited-capacity neurocognitive resource, engaging WM in spoken language processing reduces the WM capacity available for other cognitive functions. The Auditory Neurocognitive Model (ANM; Kronenberger & Pisoni in press) was developed to explain individual differences in language and EF in CI users. Like ELU, ANM proposes that, compared with NH peers, CI users rely more heavily on slow, controlled-effortful EF strategies such as verbal WM and controlled fluency-speed (controlled attention and concentration under time-pressured, speed-demanding conditions) to compensate for delays in fast-automatic processing during challenging spoken language processing tasks. The Framework for Understanding Effortful Listening (FUEL) model (Pichora-Fuller et al. 2016) adapts Kahneman’s (1973) capacity model of attention to listening, using a set of principles for conceptualizing how the amount of cognitive effort expended on speech perception varies as a function of task demands and of a listener’s motivation to use limited cognitive resources to overcome challenging listening conditions.
Challenging listening conditions include degraded acoustic-phonetic quality of the signal, limited contextual cues, and listener characteristics such as weaker listening or cognitive abilities relative to peers (Pichora-Fuller et al. 2016). To manage these listening demands, a listener may need to rely more on controlled cognitive effort directed toward speech perception (Pichora-Fuller et al. 2016). However, cognitive effort is a limited-capacity resource that is deployed intentionally to overcome challenging listening demands, and a listener with sufficient cognitive resources to complete a speech perception task may still disengage from a challenging listening condition if the task does not engage attention, arousal, and other motivation-related responses (Pichora-Fuller et al. 2016).

These 3 theories linking neurocognitive functioning and language processing share the common hypothesis that speech perception and spoken language processing rely on two potential information processing channels: (1) fast-automatic processing of phonological, lexical, and semantic attributes of language, which predominates whenever possible, particularly when the incoming speech signal is well-specified and closely matches phonological and semantic representations; and (2) slow-effortful processing strategies that actively utilize cognitive resources to process underspecified and sparsely-coded signals in challenging listening environments that cannot be managed by the fast-automatic channel alone. For children with cochlear implants, it is likely that both channels contribute to spoken language processing, and that slow-effortful processing strategies using EF skills and resources are relied on more often under challenging speech perception and language processing conditions because of the increased demands of such tasks on cognitive resources for CI users.

Rapid Phonological Coding

The speech perception skills of almost all typically-developing NH children are sufficiently fast and automatic that routine spoken language processing outcomes (even under mildly challenging conditions such as multiple talkers, dialects, and accents) vary less across the population of NH children than across the population of prelingually deaf children with CIs, who show more variability in speech perception tasks (Eisenberg et al. 2002; Pisoni et al. 2017; Roman et al. 2017). One contributor to the variability in speech perception skills observed in children with CIs is rapid phonological coding—that is, the ability to efficiently (quickly, accurately, and with relatively little effort) detect and manipulate (decompose and reassemble) sounds of speech separate from their meaning. While some children with CIs are able to make use of rapid phonological coding strategies to process speech signals, other children with CIs struggle to use this skill to facilitate fast, automatic speech perception (e.g., Cleary et al. 2002; Dillon et al. 2004a, b, c; Nittrouer et al. 2014).

Previous studies have shown that nonword repetition tests, which require children to listen to and repeat back unfamiliar phonological forms, can be used to measure variability and study individual differences in rapid phonological coding consistently observed in children with CIs (e.g., Casserly & Pisoni 2013; Cleary et al. 2002; Dillon et al. 2004a, b, c). Nonword repetition tests have also been found to be a useful clinical tool for assessing speech and language delays in children with CIs (Nittrouer et al. 2014). Studies of reading development show that rapid automatic decoding of words in print is one of the necessary skills for the development of basic reading skills (Shaywitz 1998) and that these skills develop and can be assessed in spoken language earlier in life using measures including nonword repetition (Wagner et al. 2013). Nonword repetition scores are one way to quantify fluid, efficient phonological processing, consistent with the fast-automatic channel of language processing (Gathercole & Baddeley 1996; Wagner et al. 2013).

One consistent finding from studies of nonword repetition in children with CIs and NH children is that nonword repetition skills are weaker and less efficient in children with CIs compared to NH peers (e.g., Casserly & Pisoni 2013; Cleary et al. 2002; Hansson et al. 2017; Nittrouer et al. 2014; Willstedt-Svensson et al. 2004). It is likely that underspecified phonological representations contribute to the variance on nonword repetition tasks observed in CI users compared to NH peers (e.g., Nittrouer et al. 2013, 2014, 2017). Furthermore, poor rapid phonological coding (the skill routinely measured by nonword repetition tests) underlies delays in reading observed in some children with CIs (Dillon et al. 2012; Dillon & Pisoni 2006; Geers 2003; Geers & Hayes 2011; Johnson & Goswami 2010). Casserly and Pisoni (2013) reported that nonword repetition was a reliable predictor of long-term speech and language skills in children with CIs.

Nonword repetition tests also measure numerous other speech and language processing abilities that are closely related to, but distinct from, rapid phonological coding, including auditory processing, phonological decomposition and reassembly, phonological memory and storage, and speech and motor planning/articulation (Coady & Evans 2008; Gathercole 2006). In fact, individual differences in nonword repetition performance among children with CIs routinely show bidirectional predictive associations with many speech and language abilities that tap basic neurocognitive information processing skills related to the speed, precision, and efficiency with which children with CIs process and encode speech. For example, nonword repetition scores are correlated with digit span, which is an index of WM capacity (Pisoni & Cleary 2003; Pisoni et al. 2011), and with speaking rate, which has been used as a proxy for subvocal verbal rehearsal speed (Pisoni & Cleary 2003). Furthermore, sentence production duration, which is an index of subvocal verbal rehearsal speed, has been associated with accuracy ratings on nonword repetition in children with CIs (Dillon et al. 2004b). More recently, Casserly and Pisoni (2013) found that performance on nonword repetition in children with CIs at ages 8–10 years predicted forward digit span, backward digit span, and speaking rate in the same children after 8 years of CI use.

Compensatory EF and Real-World Speech-Language Processing

Studies have found correlations between speech-language skills (including speech perception, vocabulary, and verbal skills) and the executive functions of WM, controlled fluency-speed, and inhibition-concentration in samples of children with CIs (e.g., Kronenberger et al. 2013, 2014; Pisoni et al. 2011). Furthermore, in many cases, the correlations between EF and language in these CI samples substantially exceed those found in age- and IQ-matched NH samples. For example, Kronenberger et al. (2014) found that verbal WM and nonverbal information processing speed were both significantly correlated with speech perception and language skills in long-term CI users to a greater extent than in NH peers.

Of the EF domains that CI users rely on more heavily than NH peers, verbal WM has received much of the empirical focus. Verbal WM has been found to be significantly associated with speech perception (Cleary et al. 2000; Nittrouer et al. 2013), vocabulary knowledge (Cleary et al. 2000; Geers et al. 2013; Nittrouer et al. 2013), novel word learning (Willstedt-Svensson et al. 2004), grammar (Willstedt-Svensson et al. 2004), reading (Geers et al. 2013), and social communication (Ibertsson et al. 2009; Lyxell et al. 2008). In contrast to verbal WM, spatial WM has not shown associations with speech and language skills in CI users (Kronenberger et al. 2014; Lyxell et al. 2008; Wass et al. 2008). Some studies have found associations between speech and language outcomes and non-WM domains of EF such as verbal processing speed (Pisoni et al. 2011) and inhibition (Figueras et al. 2008; Horn et al. 2004), while other studies have not reported these same types of associations (Nittrouer et al. 2012, 2013). Figueras and colleagues (2008) suggested that EF domains that rely more heavily on language skills (e.g., verbally-mediated/regulated WM, impulse control, and inhibition) will be more important for speech and language outcomes in CI users compared to NH peers than EF domains that are more dependent on visual-spatial abilities.

Ecologically Valid Measures of Spoken Language Processing

Although recognition of high-variability sentences represents an important functional outcome and long-term goal of cochlear implantation, the most commonly used, conventional measures of speech recognition have relied on simple spoken language processing repetition tasks, such as recognition of isolated words and words in sentences presented by the same talker in quiet. In fact, CI users routinely score better on conventional low-variability speech recognition measures than on more ecologically-valid, perceptually-challenging, high-variability robust measures of spoken language (Gilbert et al. 2013).

Perceptually robust measures of speech perception utilize sentence materials that vary on parameters such as background noise, degree of semantic contextual cues, and indexical characteristics of the speaker (e.g., speaking rate, gender, and regional dialect/accent). Measures of speech perception in noise [e.g., Hearing In Noise Test (HINT)], for example, provide more ecological validity than conventional measures of speech perception in quiet because speech perception in the real world routinely takes place against a backdrop of noise. However, most conventional measures of speech perception in noise still lack the robustness of speech that occurs in natural environments, which frequently includes additional sources of variability pertaining to the indexical attributes of speech. In real-world spoken language tasks, listeners must routinely compensate for differences in the indexical attributes of speech during speech perception. Therefore, it is critically important to broaden the outcome measures used to assess speech and language processing for CI users to include measures that vary the indexical attributes of speech (Bernstein et al. 2016; Gaudrain & Başkent 2018; Ji et al. 2013; Pisoni 1997).

Speaker variability occurs regularly in real-world speech recognition but has received less attention in CI outcome studies. A reasonable expectation is that high-variability speech perception and sentence recognition environments involving multiple speakers and a diverse range of regional dialects will be more demanding for CI users than for NH peers, because CI users encode and store less detailed acoustic-phonetic information from their CI than is routinely available to NH listeners, leaving them less information to draw on when recognizing highly variable speech (Ji et al. 2014; Tamati & Pisoni 2014). Thus, there is a pressing need not only to explain individual differences and variability in speech perception outcomes in CI users, but also to identify the sources of variability in more ecologically valid, perceptually challenging, robust measures of speech perception and sentence recognition. Based on dual-channel theories of language processing such as ELU, FUEL, and ANM, CI users will likely engage active, slow-effortful compensatory neurocognitive processes when processing speech under these challenging conditions.

Hypotheses

We examined 3 hypotheses in this study. Our first hypothesis was that CI users would show poorer performance on high-variability sentence recognition tests (i.e., tasks involving multiple talkers and dialect variability) than NH controls, even after adjusting for poorer sentence recognition performance by CI users on a conventional, low-variability, single-talker sentence recognition test. To address this hypothesis, we used 2 novel sentence recognition tests that differed in the number of talkers and in the regional and international dialects of the speakers. Our second hypothesis was that variability in fast-automatic rapid phonological coding skills would play a greater role in high-variability sentence recognition for CI users than for NH controls. This hypothesis is based on the finding that CI users display greater variability at the low end of the range of fast-automatic rapid phonological coding skills than NH peers. Variability across the range of fast-automatic phonological coding skills, particularly at the low end, is expected to be critically important for high-variability sentence recognition under challenging listening conditions because fast-automatic processing operations are critical for reducing the amount of active listening effort (a limited resource) needed during complex, challenging speech perception tasks. Our third hypothesis was that EF strategies would play a greater role in high-variability sentence recognition under challenging listening conditions for CI users than for NH peers, because spoken language processing in CI users requires greater engagement of slow, effortful, limited processing resources to compensate for reduced efficiency and effectiveness of fast-automatic processing.
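Hypotheses 1 and 2 both involve statistically controlling for conventional sentence recognition. For a correlation, one standard way to do this is the first-order partial correlation, which removes the linear effect of a covariate (here, Harvard-S scores) from both variables before they are correlated. The sketch below illustrates that technique under our own assumptions: the text does not state that this exact statistic was used, and the function names (`pearson_r`, `partial_r`) and all data values are hypothetical.

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation between two equal-length samples."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("x and y must have the same length, with n >= 3")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def partial_r(x: Sequence[float], y: Sequence[float], z: Sequence[float]) -> float:
    """First-order partial correlation r_xy.z.

    Equivalent to correlating the residuals of x and y after each has been
    linearly regressed on the covariate z.
    """
    r_xy, r_xz, r_yz = pearson_r(x, y), pearson_r(x, z), pearson_r(y, z)
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz**2) * (1 - r_yz**2))


if __name__ == "__main__":
    # Hypothetical scores for six listeners (illustrative only, not study data).
    nonword_pct = [40.0, 55.0, 62.0, 70.0, 81.0, 90.0]  # CNRep % correct
    presto_pct = [30.0, 42.0, 50.0, 49.0, 66.0, 71.0]   # PRESTO % keywords
    harvard_pct = [60.0, 72.0, 75.0, 85.0, 88.0, 95.0]  # Harvard-S % keywords
    print(f"zero-order r = {pearson_r(nonword_pct, presto_pct):.3f}")
    print("partial r (controlling Harvard-S) = "
          f"{partial_r(nonword_pct, presto_pct, harvard_pct):.3f}")
```

Comparing the zero-order and partial coefficients shows how much of the nonword repetition and high-variability sentence recognition association is shared with basic sentence recognition. Testing whether the CI and NH correlations differ would require an additional between-group comparison not shown here.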

METHOD

Participants

Forty-nine children, adolescents, and young adults with long-term CI use (7 years or more) (CI sample) and 56 NH peers (NH sample) participated in this study. Participants were recruited from a larger study of long-term CI users and NH peers (Kronenberger et al. 2013).

Inclusion criteria for the CI sample were: severe-to-profound hearing loss [>70 dB Hearing Level (HL)] before age 3 years 0 months, cochlear implantation before age 7 years 0 months, use of a modern, multichannel CI system for ≥7 years, and at time of testing enrolled in a rehabilitative program or living in an environment that encouraged the development and use of spoken language skills. Inclusion criteria for both the CI and NH samples were: under 30 years of age, English the primary language spoken in the household, no other neurological or neurodevelopmental disorders or delays documented in medical chart or reported by parents, and a nonverbal IQ >70 as measured by the Comprehensive Test of Nonverbal Intelligence, Second Edition (CTONI-2; Hammill et al. 2009) Geometric Nonverbal IQ Composite Index (normed standard score).

Procedures

Study procedures were approved by the local Institutional Review Board. Written consent and assent were obtained before administration of study procedures. Participants took part in 2 waves of data collection separated by an average of 2.18 (SD 1.06) years in the CI sample and 1.54 (SD 0.76) years in the NH sample. EF measures used in this study were obtained during the first wave, and measures of rapid phonological coding, simple/conventional sentence recognition, and high-variability sentence recognition were obtained during the second wave. Each wave of data collection was performed in 1 to 2 visits to the laboratory consisting of a total of about 4 hours for each visit. Rapid phonological coding and high-variability sentence recognition tests were presented in quiet at 65 dB using a high-quality loudspeaker (Advent AV570, Audiovox Electronics) positioned on a table approximately 3 feet in front of the participant. All tests were administered by ASHA-certified speech-language pathologists using the same standard directions for both the CI and NH samples. All tests and directions were administered in auditory-verbal format without the use of any sign language.

Measures

Rapid phonological coding.

The Children’s Test of Nonword Repetition (CNRep; Gathercole et al. 1994; Gathercole & Baddeley 1996) was used to measure rapid phonological coding. Participants repeated spoken nonwords that were presented via audio recording. Percentage of nonwords reproduced correctly was the primary dependent measure used in the current data analysis.

Conventional sentence recognition.

The Harvard Standard Sentence test (Harvard-S) (Egan 1948; IEEE Subcommittee on Subjective Measurements 1969) was used to measure simple/conventional sentence recognition skills. Participants repeated 28 semantically complex, meaningful sentences taken from the IEEE corpus. Sentences were produced in quiet by a male speaker and consisted of 6 to 10 total words (mean=8.0 words), with 5 words per sentence identified as keywords (e.g., “The boy was there when the sun rose”). These sentences are grammatically correct, are phonetically balanced, and have been used extensively to assess speech recognition skills. Percentage of keywords correctly repeated was the primary dependent measure used in the current data analysis.
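The scoring rule above (percentage of keywords correctly repeated) can be sketched as follows. This is a minimal illustration under stated assumptions: a keyword is credited only on an exact, case-insensitive whole-word match, and the percentage is pooled over all keywords in the list. Published scoring rules for these tests may differ (for example, in how morphological errors are treated), and the function names and the keyword set in the example are hypothetical, not the official Harvard-S keywords.

```python
from collections import Counter
from typing import Iterable, Sequence, Tuple


def score_sentence(keywords: Sequence[str], response: str) -> int:
    """Number of keywords found in a transcribed spoken response.

    Matching is exact and case-insensitive on whole words after stripping
    surrounding punctuation; each response word can credit at most one keyword.
    """
    said = Counter(w.strip(".,!?;:\"'").lower() for w in response.split())
    hits = 0
    for kw in keywords:
        k = kw.lower()
        if said[k] > 0:
            said[k] -= 1
            hits += 1
    return hits


def percent_keywords_correct(trials: Iterable[Tuple[Sequence[str], str]]) -> float:
    """Pooled percentage of keywords correct across (keywords, response) pairs."""
    total = correct = 0
    for keywords, response in trials:
        total += len(keywords)
        correct += score_sentence(keywords, response)
    return 100.0 * correct / total


if __name__ == "__main__":
    # Hypothetical keyword set for the example sentence quoted in the text.
    kws = ["boy", "there", "when", "sun", "rose"]
    trials = [
        (kws, "The boy was there when the sun rose"),
        (kws, "The toy was there when the sun goes"),
    ]
    print(percent_keywords_correct(trials))  # 8 of 10 keywords -> 80.0
```

Pooling over keywords and averaging per-sentence percentages give the same result when every sentence has the same number of keywords, as in Harvard-S (5 per sentence), but the two can differ for PRESTO and PRESTO-FAE, where the number of keywords varies from sentence to sentence.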

High-variability sentence recognition.

Two tests were used to measure high-variability sentence recognition: the Perceptually Robust English Sentence Test Open-Set (PRESTO) (Gilbert et al. 2013) and PRESTO-Foreign Accented English (PRESTO-FAE) (Tamati & Pisoni 2015). We refer to these as high-variability sentence recognition tests because they use linguistically challenging sentences that place heavy processing demands on controlled attention, WM, and encoding due to high variability in the sentence materials, such as changes in talker and regional dialect from one sentence to the next. Consequently, we assumed these sentences may be more ecologically valid than conventional clinical sentence recognition tests such as the HINT (Nilsson et al. 1996) or AzBio sentences (Spahr & Dorman 2004), which do not place the same additional information processing demands on the listener. In addition, unlike conventional sentence recognition tests such as the HINT or AzBio, the PRESTO and PRESTO-FAE tests are challenging enough to eliminate or substantially reduce ceiling effects in NH samples, who routinely show low error rates on sentence recognition tests in quiet.

PRESTO.

PRESTO is a new high-variability sentence recognition test consisting of 30 sentences drawn from the TIMIT database (Garofolo et al. 1993). Each sentence was spoken by a different male or female talker from 1 of 6 regional United States dialects, with 3–5 words in each sentence serving as keywords (e.g., “A flame would use up air”, “John cleaned shellfish for a living”). Successful performance on PRESTO requires the listener to adapt quickly to changes in the vocal sound source (Gilbert et al. 2013; Tamati et al. 2013). Test-retest reliability and list equivalency of PRESTO were demonstrated in an earlier study (Gilbert et al. 2013). Percentage of keywords correctly recognized was the primary dependent measure used in the current data analysis.

PRESTO-FAE.

PRESTO-FAE is a new variation of the PRESTO protocol in which sentences are produced by non-native English speakers who differ in accent and international dialect (Tamati & Pisoni 2015). Like PRESTO, PRESTO-FAE is a high-variability sentence test that requires the listener to rapidly adjust to changes in the vocal sound source, but PRESTO-FAE adds the challenge of stronger and less familiar (for United States listeners) talker accents. The sentences were selected from the Multitalker Corpus of Foreign Accented English (MCFAE) database (Tamati et al. 2011). The 26 sentences used in PRESTO-FAE were based on the SPIN sentence lists (Kalikow et al. 1977), with 3–6 words per sentence identified as keywords (e.g., “It was stuck together with glue”, “My jaw aches when I chew gum”). Percentage of keywords correctly repeated was the primary dependent measure used in the current data analysis.

Executive functioning.

A battery of neurocognitive assessments was used to evaluate the following 4 core domains of EF: (1) verbal WM, (2) spatial WM, (3) controlled cognitive fluency, and (4) inhibition-concentration (see Kronenberger et al. 2014 for factor analysis supporting these EF domains and composite measures and details of methods used to obtain and sum z-transformed scores to obtain composites).

Verbal WM.

Verbal WM is a limited-capacity neurocognitive system for immediate, short-term updating, storage, and retrieval of phonological and lexical information under conditions of cognitive load (Baddeley 2007). Verbal WM was assessed using the following tests: the Digit Span Forward (DSF) and Digit Span Backward (DSB) subtests of the Wechsler Intelligence Scale for Children, Third Edition (WISC-III) (Wechsler 1991), and the Visual Digit Span (VDS) subtest of the Wechsler Intelligence Scale for Children, Fourth Edition Integrated (WISC-IV-I) (Wechsler et al. 2004). In all of the verbal memory span tests, the examiner presented a sequence of items in either an auditory (DSF, DSB) or visual (VDS) modality, and the participant was asked to reproduce the sequence in forward (DSF, VDS) or backward (DSB) order. Age-normed scaled scores were computed for each of the 3 tests of verbal WM. The primary measure used in the current data analysis was a composite Verbal WM score, computed as the sum of the 3 scaled scores after each was z-transformed using the mean and SD of the entire sample.
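The composite described above (and the analogous Spatial WM, Controlled Cognitive Fluency, and Inhibition-Concentration composites) can be sketched as follows. This assumes complete data and the sample (n − 1) standard deviation; the text specifies neither the SD formula nor how missing scores were handled, and the function names and example scores are hypothetical.

```python
from statistics import mean, stdev
from typing import Dict, List, Sequence


def z_scores(values: Sequence[float]) -> List[float]:
    """Standardize scores using the mean and SD of the whole (pooled) sample."""
    m, s = mean(values), stdev(values)  # stdev uses the n - 1 denominator
    return [(v - m) / s for v in values]


def composite(scores_by_test: Dict[str, Sequence[float]]) -> List[float]:
    """Sum of per-test z-scores for each participant.

    Every list must hold one score per participant, in the same participant
    order, pooled across the CI and NH samples.
    """
    lengths = {len(v) for v in scores_by_test.values()}
    if len(lengths) != 1:
        raise ValueError("all tests need one score per participant")
    columns = [z_scores(v) for v in scores_by_test.values()]
    return [sum(per_participant) for per_participant in zip(*columns)]


if __name__ == "__main__":
    # Hypothetical age-scaled scores for three participants.
    verbal_wm = composite({
        "DSF": [8, 10, 12],
        "DSB": [7, 10, 13],
        "VDS": [9, 10, 11],
    })
    print(verbal_wm)
```

Standardizing before summing puts each test on a common metric, so a test reported on a wider scale (for example, a standard score with SD 15, as for Pair Cancellation, versus a Wechsler scaled score with SD 3) does not dominate the composite. Because the z-scores use the pooled CI and NH mean and SD, a composite of 0 corresponds to the pooled-sample average rather than to the normative mean.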

Spatial WM.

Spatial WM is a limited-capacity neurocognitive system for immediate, short-term updating, storage, and retrieval of the locations of objects in space under conditions of cognitive load (Vandierendonck & Szmalec 2011). Spatial WM was assessed using 2 tests: the Spatial Span Forward and Spatial Span Backward subtests of the WISC-IV-I (Wechsler et al. 2004). In these spatial span tests, stimuli are presented sequentially in different spatial locations on a visual display, and participants are asked to reproduce the sequences by pointing in forward or backward order. Age-normed scaled scores were computed for each of the 2 tests of spatial WM. The primary measure used in the current data analysis was a composite Spatial WM score, computed as the sum of the 2 scaled scores after each was z-transformed using the mean and SD of the entire sample.

Controlled Cognitive Fluency.

Controlled cognitive fluency is the regulation of attention and concentration in order to achieve goals rapidly, efficiently, and accurately (Kronenberger et al. 2018). Controlled cognitive fluency was assessed using the Coding and Coding Copy subtests of the WISC-IV-I (Wechsler et al. 2004) and the Pair Cancellation subtest of the Woodcock-Johnson III Tests of Cognitive Abilities (WJ-III-Cog) (Woodcock et al. 2001). Coding and Coding Copy are timed tests that require the participant to copy symbols quickly, based either on a number-symbol coding key or on an answer grid consisting of the symbols alone. Pair Cancellation is a timed test in which multiple pictures of a dog, a ball, and a cup are presented, and the participant must circle every instance in which the ball is followed by the dog. Age-normed scaled scores were computed for Coding and Coding Copy, and standard scores were computed for Pair Cancellation. The composite Controlled Cognitive Fluency score, the sum of the z-scores of these 3 tests (each z-score computed using the mean and SD of the entire sample), was the primary measure used in the current data analysis.

Inhibition-Concentration.

Inhibition-concentration is the active control of attention in the presence of distraction and prepotent responses while evaluating and responding appropriately to stimuli (Miyake et al. 2000). Inhibition-concentration was assessed using the Test of Variables of Attention (TOVA) (Greenberg & Waldman 1993). In the TOVA, participants are required to respond to a target square presented at the top of a computer screen while ignoring squares presented at the bottom of the screen. Stimuli (squares at the top or bottom of the screen) are presented sequentially in random order. Standard scores were computed for omission errors and reaction time variability. The composite Inhibition-Concentration score, the sum of the z-scores of these 2 standard scores (each z-score computed using the mean and SD of the entire sample), was the primary measure used in the current data analysis.

Data Analysis Approach

First, descriptive statistics were examined, and the groups were compared on sample characteristics using t tests (Fisher's exact test for sex). To test our first hypothesis (that CI users would perform more poorly than NH controls on high-variability sentence recognition tests, even after adjusting for their poorer performance on simple, single-talker sentence recognition), t tests were used to compare the CI and NH samples on PRESTO and PRESTO-FAE scores, and ANCOVAs were used to compare the groups on these scores while statistically controlling for Harvard-S performance. Holm-Bonferroni corrections for multiple statistical tests were used to adjust the p-value required for statistical significance, based on a familywise alpha of 0.05 across all tests. Next, to test our second hypothesis (that variability in fast-automatic rapid phonological coding skills would play a greater role in high-variability sentence recognition in CI users than in NH controls), we computed, separately for each group, zero-order correlations between nonword repetition and each sentence recognition score (Harvard-S, PRESTO, and PRESTO-FAE), as well as partial correlations between nonword repetition and PRESTO and PRESTO-FAE controlling for Harvard-S. Finally, to test our third hypothesis (that EF strategies would play a greater role in high-variability sentence recognition under challenging listening conditions for CI users than for NH peers), we computed analogous correlations between each EF composite and nonword repetition, Harvard-S, PRESTO, and PRESTO-FAE scores.
For the correlational analyses, Holm-Bonferroni corrections were applied separately within each sample using a familywise alpha of 0.05 (e.g., for 5 correlations in the CI sample and 5 correlations in the NH sample, the correction was based on 5 tests and applied separately to the CI and NH results).
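Holm's step-down procedure can be sketched as follows: sort the m p-values, compare the k-th smallest (k = 1, ..., m) with α/(m − k + 1), and stop at the first comparison that fails. This is a generic sketch rather than the authors' code; the function name and the p-values in the example are hypothetical.

```python
def holm_bonferroni(p_values, alpha=0.05):
    """Holm step-down procedure controlling the familywise error rate at alpha.

    Returns a list of booleans (True = reject the null), in the input order.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # indices, smallest p first
    reject = [False] * m
    for rank, i in enumerate(order):            # rank = 0, 1, ..., m - 1
        if p_values[i] <= alpha / (m - rank):   # threshold loosens after each rejection
            reject[i] = True
        else:
            break                               # all larger p-values are retained
    return reject


# Hypothetical p-values for 5 correlations within one sample
print(holm_bonferroni([0.0004, 0.03, 0.009, 0.2, 0.011]))  # [True, False, True, False, True]
```

Applying the function once per sample, as described above, treats the CI and NH results as separate families.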

RESULTS

Sample Characteristics

Table 1 summarizes descriptive characteristics of the samples. On average, participants in the CI sample were 3.0 months old (SD 7.8) when deafness was identified and 37.5 months old (3.1 years; SD 19.6 months) at implantation, and had used their CIs for 13.9 years (SD 4.3) at the time of initial testing. Duration of deafness and age at implantation were very highly correlated in the CI sample (r = 0.96). Eighty-two percent of the CI participants were deaf at birth. Mean pre-implant unaided pure-tone average for frequencies 500, 1000, and 2000 Hz was 106.2 dB HL (SD 11.9). The preferred mode of communication for most participants in the CI sample was auditory-verbal (87.8%). The CI and NH samples did not differ in age, sex, income, or nonverbal IQ (Table 1).

Table 1.

Sample Characteristics

| | CI Sample Mean (SD) | CI Sample Range | NH Sample Mean (SD) | NH Sample Range | t |
|---|---|---|---|---|---|
| Demographics and Hearing History | | | | | |
| Chronological Ageᵃ | 17.0 (5.2) | 9.3–29.9 | 17.4 (5.1) | 10.0–29.3 | −0.38 (ns) |
| Age at Implantationᵇ | 37.5 (19.6) | 8.3–75.8 | NA | NA | |
| Duration of CI Useᵃ | 13.9 (4.3) | 7.3–24.5 | NA | NA | |
| Age Deafness Identifiedᵇ | 3.0 (7.8) | 0–36 | NA | NA | |
| Preimplant PTAᶜ | 106.2 (11.9) | 85.0–118.4 | NA | NA | |
| Communication Modeᵈ | 4.7 (0.8) | 2–5 | NA | NA | |
| Income Levelᵉ | 7.3 (2.7) | 1–10 | 7.2 (2.5) | 1–10 | 0.19 (ns) |
| Nonverbal Intelligenceᶠ | 103.0 (14.1) | 89–130 | 106.1 (11.7) | 78–127 | −1.25 (ns) |
| | CI Sample | | NH Sample | | p |
| Sex (Female/Male) | 23/26 | | 36/20 | | .08 |

Note: CI, cochlear implant; NH, normal-hearing; SD, standard deviation; degrees of freedom (df) for t tests = 103, except Income (df = 97); (ns), not significant at the .05 level. The p value for Sex was obtained from a 2-sided Fisher's exact test.

ᵃ In years.

ᵇ In months.

ᶜ PTA, preimplant unaided pure-tone average for frequencies 500, 1000, and 2000 Hz, in dB HL.

ᵈ Communication mode coded from 1 (mostly sign) to 6 (auditory-verbal) (Geers & Brenner 2003).

ᵉ On a scale from 1 (under $5,500) to 10 ($95,000+) (Kronenberger et al. 2013).

ᶠ CTONI-2 Geometric Nonverbal IQ Composite Index (normed standard score).
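The sex comparison in Table 1 used a 2-sided Fisher's exact test. A standard-library sketch of that test is shown below, using the common definition of the two-sided p value (the summed probability of all tables, with the same margins, that are no more probable than the observed one) and the Female/Male counts from Table 1. The function name is hypothetical; this is not the authors' code, and software packages can differ slightly in how they define the two-sided p value.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]].

    The p value is the total probability (under fixed row and column margins)
    of all tables that are no more probable than the observed table.
    """
    row1, row2 = a + b, c + d
    col1 = a + c
    n = row1 + row2

    def table_prob(x):
        # Hypergeometric probability that the top-left cell equals x
        return comb(row1, x) * comb(row2, col1 - x) / comb(n, col1)

    p_observed = table_prob(a)
    lo, hi = max(0, col1 - row2), min(row1, col1)  # feasible values of the top-left cell
    # Small relative tolerance so tables tied with the observed one are not lost to rounding
    return sum(
        p for p in (table_prob(x) for x in range(lo, hi + 1))
        if p <= p_observed * (1 + 1e-7)
    )


# Female/Male counts from Table 1: CI sample 23/26, NH sample 36/20
p = fisher_exact_two_sided(23, 26, 36, 20)
print(f"Two-sided Fisher's exact p = {p:.3f}")
```

The printed value can be compared with the p = .08 reported for sex in Table 1.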

Comparison of CI and NH Samples on Rapid Phonological Coding and High-Variability Sentence Recognition

The top section of Table 2 summarizes a comparison of performance on rapid phonological coding, simple sentence recognition, and high-variability sentence recognition measures for the CI and NH samples. The CI sample scored significantly lower than the NH sample on rapid phonological coding, simple sentence recognition (Harvard-S), and the 2 measures of high-variability sentence recognition (PRESTO and PRESTO-FAE). Differences in performance between the CI and NH samples on the 2 high-variability measures of sentence recognition remained significant even after the measure of simple sentence recognition (Harvard-S) was statistically controlled, as shown in the bottom section of Table 2.

Table 2.

Comparison of Rapid Phonological Coding Scores and Simple and High-Variability Sentence Recognition Scores

| Measure | CI Sample Mean (SD) | NH Sample Mean (SD) | t |
|---|---|---|---|
| Rapid Phonological Coding | | | |
| Nonword Repetition | 27.4 (21.5) | 87.7 (9.1) | −19.11*** |
| Simple Sentence Recognition | | | |
| Harvard-S | 61.4 (27.4) | 97.2 (2.8) | −9.70*** |
| High-Variability Sentence Recognition | | | |
| PRESTO | 48.4 (26.4) | 96.0 (4.3) | −13.31*** |
| PRESTO-FAE | 33.0 (19.2) | 81.4 (7.4) | −17.44*** |

| High-Variability Sentence Recognition Controlling for Simple Sentence Recognition | CI Sample Marginal Mean (SE) | NH Sample Marginal Mean (SE) | F |
|---|---|---|---|
| PRESTO controlling for Harvard-S | 66.3 (0.8) | 80.4 (0.8) | 116.45*** |
| PRESTO-FAE controlling for Harvard-S | 44.8 (1.4) | 71.0 (1.3) | 143.55*** |

Note: Values are scores for % of nonwords (for Nonword Repetition) or % of keywords (for sentence recognition tests) correct. CI, cochlear implant; NH, normal-hearing; SD, standard deviation; SE, standard error; df for t tests = 103; corrected df for ANCOVAs = 2, 104.

Significant values after Holm-Bonferroni correction are indicated by *** p ≤ 0.001; ** p ≤ 0.01; * p ≤ 0.05.
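The df = 103 in the Table 2 note (49 + 56 − 2) is consistent with a pooled-variance (Student's) independent-samples t test. As a check on the table, the sketch below recomputes the t statistics from the reported means and SDs. The function name is hypothetical, and because the means and SDs are rounded, the recomputed values agree with the table only to within a few hundredths.

```python
from math import sqrt


def pooled_t(mean1, sd1, n1, mean2, sd2, n2):
    """Student's independent-samples t statistic (pooled variance) from summary statistics."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / df
    se_diff = sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / se_diff, df


# Summary statistics from Table 2 (CI: n = 49; NH: n = 56)
rows = {
    "Nonword Repetition": (27.4, 21.5, 87.7, 9.1),
    "Harvard-S": (61.4, 27.4, 97.2, 2.8),
    "PRESTO": (48.4, 26.4, 96.0, 4.3),
    "PRESTO-FAE": (33.0, 19.2, 81.4, 7.4),
}
for name, (m_ci, sd_ci, m_nh, sd_nh) in rows.items():
    t, df = pooled_t(m_ci, sd_ci, 49, m_nh, sd_nh, 56)
    print(f"{name}: t({df}) = {t:.2f}")
```

A Welch (unequal-variance) test would instead yield fractional degrees of freedom, which is why the pooled form is assumed here.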

Correlations Between Nonword Repetition and High-Variability Sentence Recognition

Table 3 summarizes the correlations computed between scores on rapid phonological coding, simple sentence recognition, and high-variability sentence recognition measures for the CI and NH samples. The associations between rapid phonological coding ability and both the simple and high-variability sentence recognition scores were stronger for the CI sample than for the NH sample, as shown in the substantially higher r values for the CI sample than for the NH sample across all correlation analyses. For the CI sample, performance on nonword repetition was positively correlated with all 3 sentence recognition tests (simple and high-variability) (see top and middle rows in Table 3). For the NH sample, no correlations were significant after correcting for multiple tests. For the CI sample, performance on rapid phonological coding was also correlated with performance on PRESTO and PRESTO-FAE after statistically controlling for Harvard-S (bottom section in Table 3). In contrast, for the NH sample, performance on nonword repetition was not significantly correlated with scores on PRESTO or PRESTO-FAE after controlling for Harvard-S.

Table 3.

Correlations Between Rapid Phonological Coding Scores and Simple and High-Variability Sentence Recognition Scores

| Measure | CI Sample: Nonword Repetition (Rapid Phonological Coding) r | NH Sample: Nonword Repetition (Rapid Phonological Coding) r |
|---|---|---|
| Simple Sentence Recognition | | |
| Harvard-S | .81*** (.69***) | .23 (.23) |
| High-Variability Sentence Recognition | | |
| PRESTO | .84*** (.75***) | .27 (.24) |
| PRESTO-FAE | .87*** (.84***) | .16 (.20) |
| High-Variability Sentence Recognition Controlling for Simple Sentence Recognition | | |
| PRESTO controlling for Harvard-S | .40** (.42*) | .15 (.10) |
| PRESTO-FAE controlling for Harvard-S | .57*** (.73***) | .02 (.07) |

Note: CI, cochlear implant; NH, normal-hearing; r values are Pearson correlation coefficients; values in parentheses are correlations computed within Nonword Repetition ranges of equal size for the CI (30–60% nonwords correct; N = 23) and NH (70–100% nonwords correct; N = 55) samples. Significant values after Holm-Bonferroni correction for each set of 5 correlations or partial correlations within a sample are indicated by *** p ≤ 0.001; ** p ≤ 0.01; * p ≤ 0.05.

Nonword repetition scores showed a more restricted range in the NH sample (range = 55–100% nonwords correct, with all subjects except 1 scoring 70% or higher) than in the CI sample (range = 0–90% nonwords correct, with only 1 subject scoring above 60%). Nevertheless, NH subjects showed a fairly balanced distribution of nonword repetition scores within the 70–100% range: 64% scored at or below 90% nonwords correct, and 21% scored at or below 80% nonwords correct. To investigate whether the differences between the CI and NH samples in nonword repetition-sentence recognition correlations were solely a result of the restricted range of nonword repetition in the NH sample, we recalculated the correlations using only the 55 NH subjects with nonword repetition scores of 70–100% (dropping 1 outlier NH subject with a score of 55%) and only the 23 CI subjects with scores of 30–60% (dropping 1 outlier CI subject with a score of 90% as well as 25 CI subjects with scores below 30%). These subsamples had identical 30-point nonword repetition ranges and no overlapping nonword repetition scores (i.e., the lowest NH score was 70% and the highest CI score was 60%). After restricting the range to an identical 30 points in both groups, removing the 2 outliers, and obtaining non-overlapping ranges in the groups, correlations between nonword repetition and sentence recognition scores changed minimally, with consistently significant relationships in the CI sample and no significant relationships in the NH sample (Table 3, values in parentheses). Furthermore, these correlational patterns held after controlling for demographic variables (age, income, nonverbal IQ) and vocabulary knowledge (PPVT standard score) (for CI, all r > 0.75, p < 0.001; for NH, all r < 0.27, p > 0.05).

Figure 1 shows 3 scatterplots displaying the individual scores for nonword repetition on each x axis with the data for the measure of simple sentence recognition (Harvard-S) and the 2 measures of high-variability sentence recognition (PRESTO, PRESTO-FAE) on each y axis, to illustrate the strength, direction, and nature of the association between these variables for the CI and NH groups. The scatterplots show different ranges and slopes for the relationship between nonword repetition and sentence recognition depending on sample and nonword repetition score. A strong positive relationship exists between nonword repetition and sentence recognition for nonword repetition scores of 60% and lower, which includes almost the entire CI sample and only 1 NH subject. On the other hand, the relationship between nonword repetition and sentence recognition is minimal above a nonword repetition score of 60%, which includes almost the entire NH sample and only 1 subject with a CI. Importantly, for all 3 scatterplots the point at which the relationship between nonword repetition and sentence recognition changes corresponds approximately to the low end of the range for nonword repetition for the NH sample (see data points indicated primarily by “x”) and the upper end of the range for the CI sample (see data points indicated by “o”), accounting for the differences in correlations found in Table 3. While ceiling effects may account for the curvilinear relationship for Harvard-S and nonword repetition (top left panel) and PRESTO and nonword repetition (top right panel), ceiling effects are absent for PRESTO-FAE and nonword repetition (bottom panel), indicating that ceiling effects alone cannot account for the difference in slopes for the nonword repetition-sentence recognition relationship between the CI and NH samples.

Figure 1.

Scatterplots of the Association Between Nonword Repetition Score and Scores on the Simple Sentence Recognition Test (Harvard-S) and Complex Sentence Recognition Tests (PRESTO, PRESTO-FAE).

Correlations Between EF and Rapid Phonological Coding and High-variability Sentence Recognition

Table 4 displays correlations between EF, nonword repetition, and simple and high-variability sentence recognition. No correlation met criteria for statistical significance after Holm-Bonferroni correction, which required p-values below 0.004 for the 4 most statistically significant correlations. Notably, all 4 correlations between verbal WM and nonword repetition, simple sentence recognition, and high-variability sentence recognition in the CI sample were in the medium effect size range (r = .30 to .39; Cohen 1992); 3 of them had uncorrected p-values of 0.009 or lower, and the fourth had an uncorrected p-value below 0.05, although none reached the corrected threshold of 0.004 required for statistical significance. No other EF measure reached an uncorrected p-value of 0.05 or a medium effect size in the CI sample, and only one correlation in the NH sample reached an uncorrected p-value of 0.05 (verbal WM and Harvard-S score), with all other NH correlations falling in the small range or lower.
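The "below 0.004" criterion follows from Holm's procedure if each sample's family comprises 16 correlations (4 EF composites × 4 speech measures in Table 4, consistent with the 16 NH correlations mentioned in the Discussion). That family size is the assumption in the short sketch below, which lists the critical values for the 4 smallest p-values; the function name is hypothetical.

```python
def holm_thresholds(m, k, alpha=0.05):
    """Holm critical values for the k smallest of m p-values: alpha/m, alpha/(m-1), ..."""
    return [alpha / (m - rank) for rank in range(k)]


thresholds = holm_thresholds(m=16, k=4)            # assumed family size: 16 per sample
print([round(t, 4) for t in thresholds])            # [0.0031, 0.0033, 0.0036, 0.0038]
print(max(thresholds) < 0.004)                      # True
```

An uncorrected p-value of 0.009 therefore exceeds every one of these thresholds, which is why the verbal WM correlations in the CI sample were not declared significant.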

Table 4.

Correlations Between Executive Functioning Scores and Rapid Phonological Coding and High-Variability Sentence Recognition Scores

| | CI Sample | | | | NH Sample | | | |
|---|---|---|---|---|---|---|---|---|
| Executive Functioning | VWMᵃ | SWMᵇ | CCFᶜ | I-Cᵈ | VWMᵃ | SWMᵇ | CCFᶜ | I-Cᵈ |
| Rapid Phonological Coding | | | | | | | | |
| Nonword Repetition | .37 | .22 | .21 | −.01 | .16 | .03 | −.12 | .23 |
| Simple Sentence Recognition | | | | | | | | |
| Harvard-S | .37 | .08 | .12 | −.12 | .36 | .12 | .05 | .04 |
| High-Variability Sentence Recognition | | | | | | | | |
| PRESTO | .39 | .13 | .15 | −.10 | .23 | .06 | −.01 | .01 |
| PRESTO-FAE | .30 | .16 | .18 | −.12 | −.04 | −.02 | −.06 | −.16 |

Note: CI, cochlear implant; NH, normal-hearing; r values are Pearson correlation coefficients.

ᵃ Verbal Working Memory.

ᵇ Spatial Working Memory.

ᶜ Controlled Cognitive Fluency.

ᵈ Inhibition-Concentration.

Only significant values after Holm-Bonferroni correction are indicated, by *** p ≤ 0.001; ** p ≤ 0.01; * p ≤ 0.05.

DISCUSSION

The purpose of this study was to examine the contributions of fast-automatic rapid phonological coding and slow-effortful compensatory EF mechanisms to sentence recognition under perceptually challenging conditions. We first tested the hypothesis that CI users would perform more poorly than NH controls on high-variability sentence recognition tests that reflect the everyday, real-world challenges of speaker and dialect variability, even when simple, low-variability (single talker in quiet) sentence recognition skills were statistically controlled. The results supported our hypothesis. CI users scored significantly lower than NH peers on both tests of high-variability sentence recognition, and this difference held even after performance on a conventional sentence recognition test (Harvard-S) was statistically partialled out of performance on the high-variability sentence recognition tests (PRESTO, PRESTO-FAE). This finding suggests that individual differences in sentence recognition under high-variability conditions cannot be fully explained by conventional, low-variability, single-talker sentence recognition tests administered in quiet. More robust, ecologically valid measures of spoken language processing are needed to broaden outcome measures and to fully understand individual differences in outcomes after cochlear implantation.

Next, we tested the hypothesis that fast-automatic rapid phonological coding skills would be more strongly related to high-variability sentence recognition in CI users than in NH peers. The results also supported this hypothesis. Rapid phonological coding ability was more strongly correlated with performance on both tests of high-variability sentence recognition in the CI sample than in the NH sample, even after statistically controlling for the less demanding simple sentence recognition test. This novel finding is consistent with our hypothesis that rapid phonological coding skills are critical for high-variability sentence recognition in CI users (vs. NH peers), especially when the complexity and variability of speech more closely match those of natural language conditions, where additional challenges such as variation in talkers are present.

An examination of the scatterplots sheds further light on the nature of the association between rapid phonological coding and high-variability sentence recognition in the CI and NH groups, and supports our prediction that variability, particularly at the low end of the range of fast-automatic phonological coding skills in CI users, is a critical factor in sentence recognition outcomes. The different correlations between nonword repetition and sentence recognition in the CI and NH samples suggest fundamental differences between these samples in the role that fast-automatic phonological coding skills play in sentence recognition. For CI users, almost all of whom had nonword repetition scores of 60% or less, fast-automatic phonological coding skills were very strongly related to sentence recognition. In contrast, for NH peers, almost all of whom had nonword repetition scores of 70% or greater, fast-automatic phonological coding skills were minimally related to sentence recognition. The 60% nonword repetition cutoff at which the strength of the relationship with sentence recognition changes may reflect hearing status/experience (CI vs. NH), proficiency with fast-automatic phonological coding skills (a nonword repetition score above vs. below 60%), or both. Because hearing status and nonword repetition score overlap so strongly in these samples, it is not possible to separate the effects of hearing status from those of proficiency with fast-automatic phonological coding skills as drivers of the differences in correlations between nonword repetition and sentence recognition.
Nevertheless, the fact that almost all CI users scored below this nonword repetition cutoff of 60%, while almost all NH peers scored above it, suggests that this level of nonword repetition is critically important for developing the type of high-variability sentence recognition fluency typical of the NH population. There may be a cutoff level of rapid phonological coding above which sentence recognition skills are uniformly strong regardless of further improvement in rapid phonological coding ability, as suggested by the flat slope of the nonword repetition-sentence recognition association in the NH sample above a nonword repetition score of 60%. Furthermore, based on our sample statistics, this cutoff value for rapid phonological coding is attained by almost all NH listeners, supporting fast, automatic sentence recognition in almost the entire NH population. In contrast, only a small minority of CI users attained this cutoff, requiring the majority of individuals with CIs to allocate additional resources to sentence recognition.

In their seminal research on speech intelligibility, Miller et al. (1951) showed a nonlinear relationship between recognition of words in isolation and sentence recognition performance. In their study, spoken sentences containing 5 keywords, and the same keywords scrambled and spoken in isolation, were presented to NH listeners and scored as the percentage of keywords correctly recognized in each condition. They found that a cutoff level of 80% recognition of isolated words corresponded to ceiling-level sentence recognition (see Miller et al. 1951, Fig. 3). Similarly, in a sample of children with CIs, Nittrouer et al. (2014) found a 70% cutoff level of phoneme recognition needed to attain vocabulary skills at a level of 85% or higher (see their Table 2). The cutoff levels observed in these studies and in the current study, at which fast-automatic processing of phonological and lexical information benefits more complex speech and language processing, suggest that these levels should serve as goals for speech and language assessment and for interventions designed for CI users.

Next, we tested the hypothesis that CI users would rely more heavily than NH peers on slow-effortful-controlled compensatory EF strategies for high-variability sentence recognition under challenging listening conditions. After correcting for multiple statistical tests, no correlation reached the p-value required for statistical significance. However, this result should be interpreted with caution, because this conservative correction may have adversely affected the trade-off between alpha and beta error. Specifically, all 4 correlations between verbal working memory and nonword repetition, simple sentence recognition, and high-variability sentence recognition in the CI sample were in the medium effect size range or greater and had uncorrected p-values below 0.05. In contrast, only 1 of the 16 correlations between EF and sentence recognition in the NH sample reached an uncorrected p-value below 0.05. Given this pattern of correlations, additional investigation of a potential relationship between verbal WM, nonword repetition, and high-variability sentence recognition is warranted before concluding that no relationship exists between these constructs. It is possible that the verbal WM subdomain of EF plays a central, foundational role in processing high-variability indexical information in sentences (e.g., by processing and storing phonological information until additional strategies can be used to improve sentence recognition) and that larger samples targeting verbal WM specifically will be necessary to demonstrate this effect.

Findings obtained in this study for rapid phonological coding in zero-order correlations are consistent with compensatory models that propose 2 pathways for spoken language processing: a fast-automatic channel (rapid-automatic phonological coding, which predominates whenever possible) and a slow-effortful channel that engages compensatory EF mechanisms when the fast-automatic channel is insufficient (e.g., ELU, FUEL, and the Auditory Neurocognitive Model [ANM; Kronenberger & Pisoni, in press]). Results for nonword repetition in this study support a strong role for fast-automatic processing in sentence recognition in CI users, but results for verbal WM, a component of EF, provided less clear support for use of the slow-effortful channel in CI users. Other research has more clearly shown a strong relationship between verbal WM and spoken language outcomes in CI users, one that is greater than that for NH peers (Kronenberger & Pisoni, in press). A central premise of compensatory language models such as the ANM, ELU, and FUEL is that explicit, effortful EF processes that rely on WM mechanisms are recruited to resolve poorly specified input signals under challenging conditions that produce a mismatch between the incoming speech signal and cognitive representations in memory (see Rönnberg et al. 2013). An important premise of the models proposing 2 channels for speech and language processing is not that NH listeners never rely on compensatory EF under perceptually challenging conditions, but that CI users rely on these slow, effortful, resource-demanding, conscious processing mechanisms earlier and more often than NH peers, in order to compensate for delays in fast-automatic processing of language.
One clinical implication of these findings is the need to test CI users under a broader range of high-variability language processing conditions, not just conventional, low-variability speech recognition tests that are routinely administered in clinic and laboratory settings.

Coarsely coded auditory input and substantial variability in auditory fidelity among CI users may explain some of the shared variance between nonword repetition and sentence recognition in the CI sample. Variability in auditory fidelity could also contribute to the observed correlations between verbal WM and sentence recognition in the CI sample (two of the three verbal WM subtests used spoken stimuli), although those correlations did not survive the Holm-Bonferroni correction. Thus, a critical topic for future investigation is how variability in auditory outcomes might influence or interact with the fast-automatic and slow-effortful pathways of spoken language processing.

In sum, the present findings suggest that CI users show poorer recognition of high-variability sentences than NH peers and that high-variability sentence recognition performance is more strongly related to rapid-automatic phonological coding skills in CI users than in NH peers. The results of this study should be interpreted in the context of study limitations. First, the EF measures and the measures of rapid phonological coding, speech perception, and high-variability sentence recognition were obtained at 2 different points in time, approximately 2 years apart. Thus, changes in EF over time may have attenuated some of the EF-sentence recognition relationships, although EF scores have been found to be somewhat stable over time (Barkley 2012). Second, sample sizes in the current study, while comparable to or larger than those of most other studies of long-term CI users, were powered to detect medium-to-large effect sizes; some small effects may not have been detected. Third, the Holm-Bonferroni correction increased the chance of beta error in the correlational analyses of EF, nonword repetition, and sentence recognition skills in CI users, with some effect sizes in the medium range or larger (with uncorrected p-values below 0.01 in several cases) not meeting criteria for statistical significance. Furthermore, because our measures of sentence recognition were correlated with each other, a Bonferroni-type correction is very conservative. Fourth, the correlational design of this study does not allow causal conclusions to be drawn; nevertheless, study findings are consistent with existing theory and empirical research supporting dual-channel models of language processing in CI users. Finally, the novel high-variability speech perception measures used in this study were based on sentences that differ in their indexical properties.
Although these measures introduced several challenging components to the speech recognition process, they are still only modestly challenging in terms of length and linguistic complexity. Future research should investigate more complex, higher-order language processing tasks such as comprehending spoken paragraphs, retrieval fluency of words from the mental lexicon, and perception of sentences in background noise and under concurrent cognitive load. These higher-order language processing tasks may require more compensatory executive processing to be carried out successfully than the high-variability sentence recognition tasks used in the present study.

Findings from this study have important clinical and translational implications that should be investigated in future research. First, the importance of fast-automatic phonological coding and the potential contribution of EF (particularly WM) to high-variability sentence recognition suggest that these domains of speech-language and neurocognitive processing could serve as novel targets for assessment and intervention for CI users. Second, the present findings demonstrate the importance of using high-variability sentence recognition tests that vary talker, dialect, and other indexical characteristics of speech when evaluating speech perception outcomes of CI users. Third, the different ranges and slopes of nonword repetition-sentence recognition associations in the CI and NH samples (Figure 1) suggest that there may be a minimal level of rapid phonological coding skill that is associated with ideal speech recognition outcomes for CI users; this level may be clinically useful in setting goals and evaluating test results. Finally, these results support the application of dual-channel (fast-automatic vs. slow-effortful processing) models in the clinical setting, although additional research with a broader set of language measures (beyond speech and sentence recognition) and further investigation of verbal working memory are recommended.

Future Directions

Speech recognition is only the initial stage of language processing, which continues with more complex, higher-order processing activities, including answering questions, following directions, and completing tasks that require integrating and coordinating information from multiple domains, such as problem solving, reasoning, and decision making. Compared to speech recognition, spoken language comprehension has received less attention in the clinical literature on CI outcomes, leaving a pressing need for new studies of higher-level cognition and language processing. Individual differences and variability in speech and language outcomes after cochlear implantation are significant clinical problems in the field. Limitations in our knowledge about the causal factors and mechanisms of action underlying individual differences and variability in speech perception and language outcomes in CI users represent a critical barrier to the development of novel interventions for patients with CIs whose outcomes fall at the lower end of the range of sentence recognition outcome measures after several years of CI use. New methods and tools are needed to identify the causes of poor outcomes and to motivate the development of new intervention approaches that help CI patients achieve optimal levels of performance after implantation.

Acknowledgments

The authors thank Shirley Henning and Bethany Colson for administering the speech, language, and neurocognitive tests and Allison Ditmars for coordinating the study. We also thank Luis Hernandez for his assistance with data analysis for the speech production measures. This research was funded by NIH-NIDCD R01DC015257 (to W.G.K. and D.B.P.) and NIH-NIDCD R01DC009581 (to D.B.P.). G.N.L.S. analyzed the data, wrote the first draft of the paper, and provided critical revisions to subsequent drafts. D.B.P. conceptualized the study, designed the experiments, and provided critical revisions to all drafts of the paper. W.G.K. conceptualized the study, designed the experiments, analyzed the data, wrote sections of the paper, and provided critical revisions to the paper. G.N.L.S., D.B.P., and W.G.K. gave final approval of the paper prior to submission. Portions of this paper were presented at the American Cochlear Implant Alliance CI2018 Emerging Issues Symposium, Washington, D.C., March 8, 2018 (by W.G.K.) and the Cognitive Neuroscience Society 25th Annual Meeting, Boston, Massachusetts, March 27, 2018 (by G.N.L.S.). W.G.K. is a paid consultant for Shire Pharmaceuticals and the Indiana Hemophilia and Thrombosis Center. G.N.L.S. and D.B.P. have no conflicts of interest to declare.

Conflicts of Interest and Sources of Funding:

W.G.K. is a paid consultant for Shire Pharmaceuticals and the Indiana Hemophilia and Thrombosis Center. D.B.P. and G.N.L.S. have no conflicts of interest to declare. This research was funded by R01DC015257 (to W.G.K. and D.B.P.) and R01DC009581 (to D.B.P.).

References

- Baddeley A. (2007). Working Memory, Thought, and Action. New York, NY, US: Oxford University Press.

- Bernstein JG, Goupell MJ, Schuchman GI, Rivera AL, & Brungart DS (2016). Having Two Ears Facilitates the Perceptual Separation of Concurrent Talkers for Bilateral and Single-Sided Deaf Cochlear Implantees. Ear Hear, 37(3), 289–302. doi: 10.1097/aud.0000000000000284

- Casserly ED, & Pisoni DB (2013). Nonword Repetition as a Predictor of Long-Term Speech and Language Skills in Children with Cochlear Implants. Otol Neurotol, 34(3), 460–470. doi: 10.1097/MAO.0b013e3182868340

- Cleary M, Dillon C, & Pisoni DB (2002). Imitation of Nonwords by Deaf Children After Cochlear Implantation: Preliminary Findings. Ann Otol Rhinol Laryngol Suppl, 189, 91–96.

- Cleary M, Pisoni DB, & Kirk KI (2000). Working Memory Spans as Predictors of Spoken Word Recognition and Receptive Vocabulary in Children with Cochlear Implants. Volta Rev, 102(4), 259.

- Coady JA, & Evans JL (2008). Uses and Interpretations of Non-Word Repetition Tasks in Children With and Without Specific Language Impairments (SLI). Int J Lang Commun Disord, 43(1), 1–40. doi: 10.1080/13682820601116485

- Cohen J. (1992). A Power Primer. Psychol Bull, 112(1), 155–159.

- Dillon C, Pisoni DB, Cleary M, & Carter AK (2004). Nonword Imitation by Children with Cochlear Implants: Consonant Analyses. Arch Otolaryngol Head Neck Surg, 130(5), 587–591. doi: 10.1001/archotol.130.5.587

- Dillon CM, Burkholder RA, Cleary M, & Pisoni DB (2004). Nonword Repetition by Children With Cochlear Implants: Accuracy Ratings From Normal-Hearing Listeners. J Speech Lang Hear Res, 47(5), 1103–1116. doi: 10.1044/1092-4388(2004/082)

- Dillon CM, Cleary M, Pisoni DB, & Carter AK (2004). Imitation of Nonwords by Hearing-impaired Children With Cochlear Implants: Segmental Analyses. Clin Linguist Phon, 18(1), 39–55.

- Dillon CM, de Jong K, & Pisoni DB (2012). Phonological Awareness, Reading Skills, and Vocabulary Knowledge in Children Who Use Cochlear Implants. J Deaf Stud Deaf Educ, 17(2), 205–226. doi: 10.1093/deafed/enr043

- Dillon CM, & Pisoni DB (2006). Nonword Repetition and Reading Skills in Children Who Are Deaf and Have Cochlear Implants. Volta Rev, 106(2), 121–145.

- Egan JP (1948). Articulation testing methods. Laryngoscope, 58, 955–991. doi: 10.1288/00005537-194809000-00002

- Eisenberg LS, Martinez AS, Holowecky SR, & Pogorelsky S. (2002). Recognition of Lexically Controlled Words and Sentences by Children With Normal Hearing and Children With Cochlear Implants. Ear Hear, 23(5), 450–462. doi: 10.1097/01.aud.0000034736.42644.be

- Figueras B, Edwards L, & Langdon D. (2008). Executive Function and Language in Deaf Children. J Deaf Stud Deaf Educ, 13(3), 362–377. doi: 10.1093/deafed/enm067

- Flexer C. (2011). Cochlear Implants and Neuroplasticity: Linking Auditory Exposure and Practice. Cochlear Implants Int, 12(S1), S19–S21. doi: 10.1179/146701011X13001035752255

- Garofolo JS, Lamel LF, Fisher WM, Fiscus JG, Pallett DS, Dahlgren NL, & Zue V. (1993). The DARPA TIMIT Acoustic-Phonetic Continuous Speech Corpus LDC93S1. Philadelphia, PA: Linguistic Data Consortium.

- Gathercole SE (2006). Nonword Repetition and Word Learning: The Nature of the Relationship. Appl Psycholinguist, 27(4), 513–543. doi: 10.1017/S0142716406060383

- Gathercole SE, & Baddeley AD (1996). Children’s Test of Nonword Repetition. London, UK: Psychological Corporation.

- Gathercole SE, Willis CS, Baddeley AD, & Emslie H. (1994). The Children’s Test of Nonword Repetition: A Test of Phonological Working Memory. Memory, 2(2), 103–127. doi: 10.1080/09658219408258940

- Gaudrain E, & Baskent D. (2018). Discrimination of Voice Pitch and Vocal-Tract Length in Cochlear Implant Users. Ear Hear, 39(2), 226–237. doi: 10.1097/aud.0000000000000480

- Geers A, & Brenner C. (2003). Background and Educational Characteristics of Prelingually Deaf Children Implanted by Five Years of Age. Ear Hear, 24(1 Suppl), 2S–14S. doi: 10.1097/01.AUD.0000051685.19171.BD

- Geers AE (2003). Predictors of Reading Skill Development in Children with Early Cochlear Implantation. Ear Hear, 24(1), 59S–68S. doi: 10.1097/01.aud.0000051690.43989.5d

- Geers AE, & Hayes H. (2011). Reading, Writing, and Phonological Processing Skills of Adolescents with 10 or More Years of Cochlear Implant Experience. Ear Hear, 32(1), 49S–59S. doi: 10.1097/AUD.0b013e3181fa41fa

- Geers AE, Pisoni DB, & Brenner C. (2013). Complex Working Memory Span in Cochlear Implanted and Normal Hearing Teenagers. Otol Neurotol, 34(3), 396–401. doi: 10.1097/MAO.0b013e318277a0cb

- Gilbert JL, Tamati TN, & Pisoni DB (2013). Development, Reliability and Validity of PRESTO: A New High-Variability Sentence Recognition Test. J Am Acad Audiol, 24(1), 26–36. doi: 10.3766/jaaa.24.1.4

- Greenberg LM, & Waldman ID (1993). Developmental Normative Data on The Test of Variables of Attention (T.O.V.A.™). J Child Psychol Psychiatry, 34(6), 1019–1030. doi: 10.1111/j.1469-7610.1993.tb01105.x

- Hammill D, Pearson N, & Wiederholt JL (1996). Comprehensive Test of Nonverbal Intelligence (C-TONI).

- Hansson K, Ibertsson T, Asker-Árnason L, & Sahlén B. (2017). Phonological Processing, Grammar and Sentence Comprehension in Older and Younger Generations of Swedish Children With Cochlear Implants. Autism Dev Lang Impair, 2, 1–14. doi: 10.1177/2396941517692809

- Horn DL, Davis RAO, Pisoni DB, & Miyamoto RT (2004). Visual Attention, Behavioral Inhibition and Speech/Language Outcomes in Deaf Children With Cochlear Implants. Int Congr Ser, 1273, 332–335. doi: 10.1016/j.ics.2004.07.048

- Ibertsson T, Hansson K, Asker-Arnason L, & Sahlén B. (2009). Speech Recognition, Working Memory and Conversation in Children With Cochlear Implants. Deafness Educ Int, 11(3), 132–151.

- IEEE. (1969). IEEE recommended practice for speech quality measurements.

- Ji C, Galvin JJ 3rd, Xu A, & Fu QJ (2013). Effect of Speaking Rate on Recognition of Synthetic and Natural Speech by Normal-Hearing and Cochlear Implant Listeners. Ear Hear, 34(3), 313–323. doi: 10.1097/AUD.0b013e31826fe79e

- Ji C, Galvin JJ, Chang YP, Xu A, & Fu QJ (2014). Perception of Speech Produced by Native and Nonnative Talkers by Listeners With Normal Hearing and Listeners With Cochlear Implants. J Speech Lang Hear Res, 57(2), 532–554. doi: 10.1044/2014_JSLHR-H-12-0404

- Johnson C, & Goswami U. (2010). Phonological Awareness, Vocabulary, and Reading in Deaf Children With Cochlear Implants. J Speech Lang Hear Res, 53(2), 237–261. doi: 10.1044/1092-4388(2009/08-0139)

- Kahneman D. (1973). Attention and Effort. Prentice-Hall.

- Kalikow DN, Stevens KN, & Elliott LL (1977). Development of a Test of Speech Intelligibility in Noise Using Sentence Materials With Controlled Word Predictability. J Acoust Soc Am, 61(5), 1337–1351. doi: 10.1121/1.381436

- Kronenberger WG, Colson BG, Henning SC, & Pisoni DB (2014). Executive Functioning and Speech-Language Skills Following Long-Term Use of Cochlear Implants. J Deaf Stud Deaf Educ, 19(4), 456–470. doi: 10.1093/deafed/enu011

- Kronenberger WG, Henning SC, Ditmars AM, & Pisoni DB (2018). Language Processing Fluency and Verbal Working Memory in Prelingually Deaf Long-Term Cochlear Implant Users: A Pilot Study. Cochlear Implants Int, 1–12. doi: 10.1080/14670100.2018.1493970

- Kronenberger WG, & Pisoni DB (In press). Neurocognitive Functioning in Deaf Children with Cochlear Implants. In Knoors H & Marschark M (Eds.), Educating Deaf Learners.

- Kronenberger WG, Pisoni DB, Henning SC, & Colson BG (2013). Executive Functioning Skills in Long-Term Users of Cochlear Implants: A Case Control Study. J Pediatr Psychol, 38(8), 902–914. doi: 10.1093/jpepsy/jst034

- Lyxell B, Sahlén B, Wass M, Ibertsson T, Larsby B, Hällgren M, & Mäki-Torkko E. (2008). Cognitive Development in Children With Cochlear Implants: Relations to Reading and Communication. Int J Audiol, 47(sup2), S47–S52. doi: 10.1080/14992020802307370

- Miller GA, Heise GA, & Lichten W. (1951). The Intelligibility of Speech as a Function of the Context of the Test Materials. J Exp Psychol, 41(5), 329–335.

- Miyake A, Friedman NP, Emerson MJ, Witzki AH, Howerter A, & Wager TD (2000). The Unity and Diversity of Executive Functions and Their Contributions to Complex “Frontal Lobe” Tasks: A Latent Variable Analysis. Cogn Psychol, 41(1), 49–100. doi: 10.1006/cogp.1999.0734

- Moog J, & Geers A. (2003). Epilogue: Major Findings, Conclusions and Implications for Deaf Education. Ear Hear, 24(1 Suppl), 121S–125S. doi: 10.1097/01.AUD.0000052759.62354.9F

- Nilsson MJ, Soli SD, & Gelnett DJ (1996). Development of the Hearing in Noise Test for Children (HINT-C). Los Angeles, CA: House Ear Institute.

- Nittrouer S, Caldwell-Tarr A, & Holloman C. (2012). Measuring What Matters: Effectively Predicting Language and Literacy in Children With Cochlear Implants. Int J Pediatr Otorhinolaryngol, 76(8), 1148–1158. doi: 10.1016/j.ijporl.2012.04.024

- Nittrouer S, Caldwell-Tarr A, Low KE, & Lowenstein JH (2017). Verbal Working Memory in Children With Cochlear Implants. J Speech Lang Hear Res, 60(11), 3342–3364. doi: 10.1044/2017_JSLHR-H-16-0474

- Nittrouer S, Caldwell-Tarr A, & Lowenstein JH (2013). Working Memory in Children with Cochlear Implants: Problems Are in Storage, Not Processing. Int J Pediatr Otorhinolaryngol, 77(11), 1886–1898. doi: 10.1016/j.ijporl.2013.09.001

- Nittrouer S, Caldwell-Tarr A, Sansom E, Twersky J, & Lowenstein JH (2014). Nonword Repetition in Children With Cochlear Implants: A Potential Clinical Marker of Poor Language Acquisition. Am J Speech Lang Pathol, 23(4), 679–695. doi: 10.1044/2014_AJSLP-14-0040

- Pichora-Fuller MK (2016). How Social Psychological Factors May Modulate Auditory and Cognitive Functioning During Listening. Ear Hear, 37 Suppl 1, 92s–100s. doi: 10.1097/aud.0000000000000323

- Pichora-Fuller MK, Kramer SE, Eckert MA, Edwards B, Hornsby BW, Humes LE, . . . Wingfield A. (2016). Hearing Impairment and Cognitive Energy: The Framework for Understanding Effortful Listening (FUEL). Ear Hear, 37 Suppl 1, 5s–27s. doi: 10.1097/aud.0000000000000312