Abstract

Introduction

Acquiring practical skills is essential for dental students. These practical skills are assessed throughout their training, both formatively and summatively. However, by means of visual inspection alone, assessment cannot always be performed objectively. A computerised evaluation system may serve as an objective tool to assist the assessor.

Aim

The aim of the study was to evaluate prepCheck as a tool to assess students’ practical skills and as a means to provide feedback in dental education.

Methods

As part of a previously scheduled practical examination, students made a preparation for a retentive crown on the maxillary right central incisor—tooth 11. Assessments were made four times by two independent assessors in two different ways: (a) conventionally and (b) assisted by prepCheck. By means of Cohen's kappa coefficient, agreements between conventional and digitally assisted assessments were compared. Questionnaires were used to assess how students experienced working with prepCheck.

Results

Without the use of prepCheck, ratings given by teachers differed considerably (mean κ = 0.19), whereas the differences with prepCheck assistance were very small (mean κ = 0.96). Students found prepCheck a helpful tool for teachers to assess practical skills. Extra feedback given by prepCheck was considered useful and effective. However, some students complained about too few scanners and too little time for practice, and some believed that prepCheck is too strict.

Conclusion

prepCheck can be used to assist assessors in order to obtain a more objective outcome. Results showed that practicing with feedback from both prepCheck and the teacher contributes to an effective learning process. Most students appreciated prepCheck for learning practical skills, but introducing prepCheck requires enough equipment and preparation time.

Keywords: assessment software, digital assessment, prepCheck

1. INTRODUCTION

Acquiring practical skills is an important element of the dentistry degree programme. All dental students are frequently assessed on their manual skills. In many dental schools, students prepare for these practical examinations by practicing on artificial teeth. Instructors provide feedback during practical classes subsequently followed by summative assessments.

Assessment of these examinations and the subsequent feedback must be as objective and consistent as possible. Unfortunately, despite assessor calibration, conventional assessments by means of visual inspection have been shown to result in subjectivity and inconsistency. 1 , 2 , 3

Confronted with diversity of assessment and inconsistent feedback, students lose confidence in the feedback. 4 Results from surveys show that students feel that inconsistent feedback impacts the learning process negatively. 4 , 5

Computer‐aided assessment systems can provide objective and consistent feedback. 6 , 7 , 8 , 9 , 10 Several studies have compared the conventional assessment method with the digital assessment method, and all of them found the digital method to be more objective and consistent. 3 , 8 , 11 Taylor et al compared traditional ratings of regular undergraduate crown preparations on a typodont with ratings provided by a software program. They state that a digital assessment system alone cannot mark the students’ work in a valid manner, mainly due to shortcomings of the system used (Prepassistant). 12 Nevertheless, researchers are positive about the opportunities offered by the integration of digital equipment into the teaching of practical dental skills. 10 , 11 , 13 , 14 , 15 , 16 , 17

It is expected that adding digital information to the conventional feedback will help students understand the elements of the feedback better and thus achieve the desired results. This deeper understanding enhances the mastering of practical skills.

One of the available digital preparation assessment tools is the prepCheck (Dentsply Sirona, Bensheim, Germany). The system was introduced to the University of Groningen, the Netherlands in order to improve the precision of the pre‐clinical preparation assessment.

The software can compare a preparation with a master preparation or use a geometric analysis function. For the retentive crown restoration, the geometric analysis covers a number of aspects: undercut, preparation taper, occlusal reduction, axial reduction, preparation type, margin and surface quality. At the University of Groningen, the axial reduction is not taken into consideration for the assessment of the student's preparation, because axial reduction is already obviated by a correct taper. Furthermore, not all aspects are weighted equally (Appendices 1 and 2).

The aim of the present study was to compare the inter‐rater concordance of the conventional assessment method with that of assessments made by instructors with the help of prepCheck. A further aim was to evaluate how students felt about practicing with prepCheck and having their examinations assessed with the aid of prepCheck.

2. MATERIAL AND METHODOLOGY

In the academic year 2016/2017, 41 Bachelor students participated in the course “Chamfer preparation for placement of a full crown practical” for the first time. The dentistry degree programme of the University of Groningen made use of a digital 3D scanner (CEREC Omnicam; Dentsply Sirona, Bensheim, Germany) for assessing retentive crown preparations (maxillary right central incisor—tooth 11). Preparations were scanned by the students themselves and then independently assessed by two experienced instructors on various criteria, both digitally in the prepCheck software program (prepCheck, version 2.1 PRO; Dentsply Sirona, Bensheim, Germany) and in the conventional way, through visual inspection. After the examination, students were asked to give feedback about the procedure by means of a questionnaire.

To prepare the students for the examination, three practical sessions of four hours each were organised. At that time, they could practice preparations, scanning and receive feedback. Students could additionally practice preparations, scanning and familiarise themselves with the digital assessment in their own time. The fourth session comprised the summative examination. Four scanners were available to the students during the practical sessions and the examination. Ethical approval was obtained from the Netherlands Association for Medical Education (NVMO) Ethical Review Board (NERB file number #832).

2.1. Inclusion and exclusion of students and instructors

Preparations of students who had already taken the course unit “Chamfer preparation for placement of a full crown practical” before 2016/2017 were not included in the study, nor were the assessments of junior instructors (Table 1).

Table 1.

Inclusion and exclusion criteria of the research population

| Research population | |

|---|---|

| Students: inclusion criteria | Third‐year dentistry students from academic year 2016/2017 |

| Students: exclusion criteria | Students who had worked with prepCheck before |

| Instructors: inclusion criteria | Senior dentistry lecturers of the University of Groningen who had supervised the assessment procedure several times before |

| Instructors: exclusion criteria | Junior dentistry lecturers of the University of Groningen |

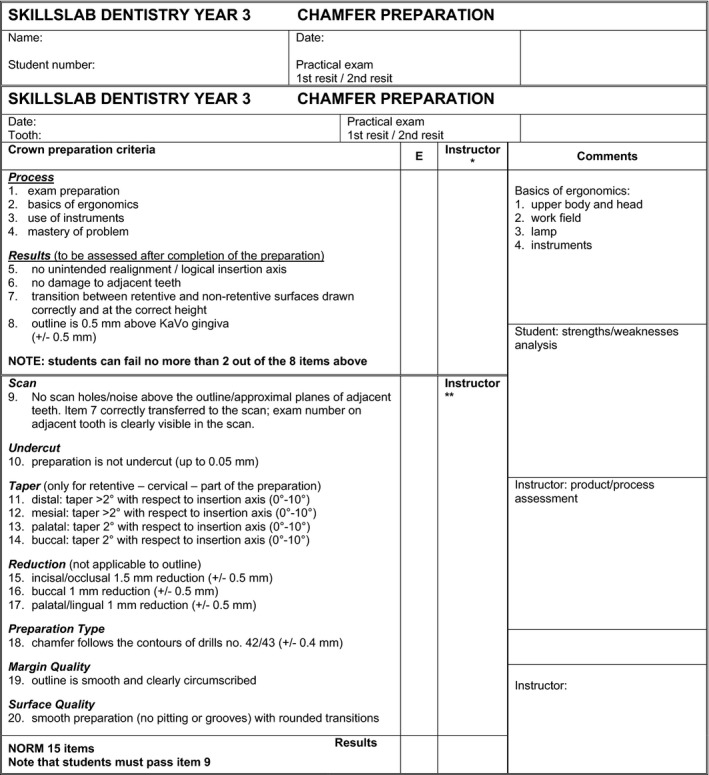

2.2. Criteria

The criteria that the students’ examination preparations must have met are set out in the assessment form (Appendix 1).

This study only covers criteria 10 to 20 since these can be assessed with both the conventional method and the prepCheck software. These criteria are listed in Table 2.

Table 2.

Assessment criteria for the chamfer preparation

| Criteria included in the assessment form and prepCheck | Explanatory notes | Maximum allowed deviation |
|---|---|---|
| Undercut | The preparation is not undercut | To a maximum of 0.05 mm |
| Taper | Taper measured on four planes | |
| | distal: taper > 2° with respect to the insertion axis | 0°‐10° |
| | mesial: taper > 2° with respect to the insertion axis | 0°‐10° |
| | palatal: taper 2° with respect to the insertion axis | 0°‐10° |
| | buccal: taper 2° with respect to the insertion axis | 0°‐10° |
| Incisal reduction | 1.5 mm incisal/occlusal reduction | Reduced by 0.5 mm too much or too little |
| Buccal reduction | 1 mm buccal reduction | Reduced by 0.5 mm too much or too little |
| Palatal reduction | 1 mm of tissue reduced in the palatal plane | Reduced by 0.5 mm too much or too little |
| Preparation type | The type of preparation is a chamfer. The chamfer conforms to the shape of drills no. 42/43 a | Prepared by 0.4 mm too much or too little |
| Margin quality | The preparation margin (the outline) has a smooth finish and is clearly circumscribed | Smoothing to 0% warning |
| Surface quality | Surface finish. Smooth preparation (no pitting or grooves) | Smoothing to 0% warning |

a Drills no. 42/43 (numbers 6856/8856.314.018, Komet Dental, Lemgo, Germany).

Zero or one point was awarded for each criterion, except for “taper,” for which a maximum of four points could be awarded.
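The point scheme can be illustrated with a short sketch. The function names and the measurements are hypothetical, and the one‐point‐per‐plane reading of the “taper” criterion is an assumption made for illustration; none of this is taken from prepCheck or from the assessment form.

```python
# Hypothetical sketch of the point scheme in Table 2; not prepCheck's own logic.

def within_tolerance(measured_mm: float, target_mm: float, tolerance_mm: float) -> int:
    """Return 1 point if the measured reduction is within the tolerance, else 0."""
    return 1 if abs(measured_mm - target_mm) <= tolerance_mm else 0


def taper_points(taper_deg_by_plane: dict) -> int:
    """Assumed reading: one point per plane whose taper lies within 0 to 10 degrees."""
    return sum(1 for angle in taper_deg_by_plane.values() if 0.0 <= angle <= 10.0)


# Made-up measurements for one preparation (illustrative only).
incisal = within_tolerance(measured_mm=1.8, target_mm=1.5, tolerance_mm=0.5)
buccal = within_tolerance(measured_mm=1.7, target_mm=1.0, tolerance_mm=0.5)
taper = taper_points({"distal": 6.0, "mesial": 4.5, "palatal": 12.0, "buccal": 8.0})

print(incisal, buccal, taper)
```

In this made‐up case the incisal reduction earns its point, the buccal reduction does not, and three of the four planes fall inside the allowed taper range.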

2.3. Software used

The scanning process of the CEREC Omnicam was controlled by Cerec software, version 4.4.4 (both Dentsply Sirona, Bensheim, Germany). The software in which the assessment criteria were programmed is prepCheck, version 2.1 PRO (Dentsply Sirona, Bensheim, Germany). The assessment matrix (Appendix 2) provides more detailed information about the assessment settings of the prepCheck software, which are based on the criteria in the assessment form. Appendix 3 lists the settings for each criterion.

2.4. Assessment procedure

In the examination, students were given 120 minutes to prepare the maxillary right central incisor—tooth 11 (KaVo EWL model teeth, numbered “roots”; KaVo, Biberach/Riß, Germany) for subsequent placement of a retentive crown.

In order to assess the preparations anonymously, each student was randomly assigned an examination number, which was printed on the assessment form (Appendix 1). The students had to engrave their examination number in the apical part of the maxillary right central and lateral incisor—teeth 11 and 12. To ensure that the examination number is also visible on the scan, the students engraved their examination number in the buccal plane of the maxillary left central incisor—tooth 21 (Figure 1).

Figure 1.

Scan with examination number engraved in the buccal plane of the maxillary left central incisor—tooth 21

When the students finished their preparation, they had to draw the occlusal line with a red pencil on the preparation (Figure 4). This was checked, amongst other criteria, by the instructor (Appendix 1). Subsequently, they (partially) scanned the jaw with the CEREC Omnicam. To prevent fraud, the students were only allowed to remove the preparation (examination element) from the KaVo jaw (Basic study models, KaVo, Biberach/Riß, Germany) after finishing this first scan. Next, the element was replaced by an unprepared maxillary right central incisor (tooth 11, referred to as the “biocopy”), which was scanned as well to allow the prepCheck software to calculate the amount of tissue removed (see Figure 2).

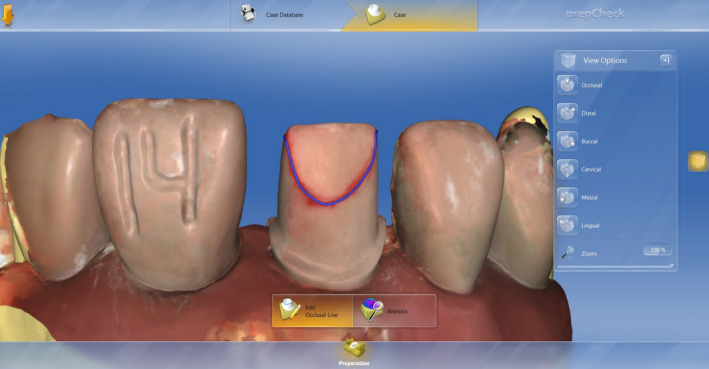

Figure 4.

Drawing the occlusal line, first with a red pencil, then digitally following the red line

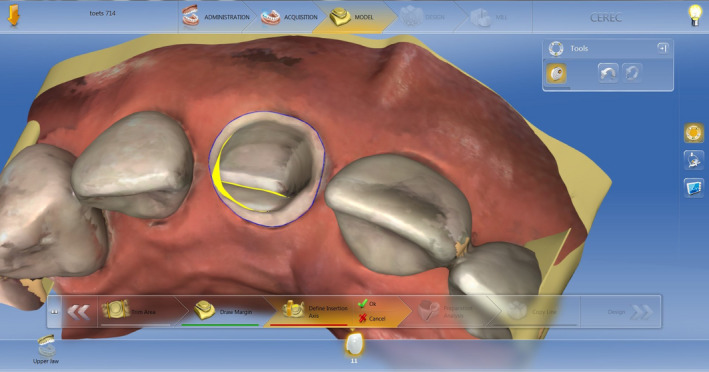

Figure 2.

Superimposed scans of a prepared and an unprepared maxillary right central incisor—tooth 11 (“biocopy”) to enable calculation of removed tissue

The students had to enter the insertion axis, the outline and the occlusal line (representing the beginning of the non‐retentive surfaces of the preparation) also digitally in the prepCheck software (see Figures 3, 4, 5). However, if the drawing of the occlusal line appeared to be incorrectly located, the student had to correct the position prior to scanning (with the result of zero points for item 7). The students stored the files on a USB stick (with date, course name and examination number as file name), which they handed in together with their examination teeth to the instructors.

Figure 3.

Drawing the outline

Figure 5.

Setting the insertion axis. The model must be positioned in such a way that the yellow shadow is the smallest possible. In this figure, the insertion axis is incorrect

Two instructors independently assessed the examinations using both assessment methods—the conventional method and the prepCheck method—and entered the results on a blank assessment form.

The conventional assessment method involved the preparations being assessed on the criteria on the assessment form after visual inspection with the available instruments (sickle probe, double preparation gauge and KaVo jaw). Where necessary, the instructor could use a magnifying lamp.

The prepCheck assessment method involved the instructor assessing the scan on the criteria set out on the assessment form using information provided by prepCheck. prepCheck calculated the acceptable margins for a preparation. Based on these calculations, the instructors awarded points for each criterion. The instructors were allowed to examine the physical tooth as well, in case there was concern about the scan (item No. 9, Appendix 1).

All examination work was therefore assessed four times:

instructor 1, conventional method

instructor 2, conventional method

instructor 1, prepCheck method

instructor 2, prepCheck method

To prevent bias being introduced by prepCheck, both instructors first assessed the work using the conventional method and a week later using prepCheck. Before the results were shared, all students were asked to anonymously fill in a questionnaire (Appendix 4).

2.5. Variables and methodology

All calculations were performed in SPSS. The significance level used was P < .05.

2.6. Inter‐rater agreement

Inter‐rater agreement was determined by calculating Cohen's kappa (κ) for each assessment criterion (Table 3).

Table 3.

Cohen's kappa values (κ) for 2 instructors by criterion and by examination assessment method (N = 41), differences between kappa values and mean kappa values

| Criterion | κ, instructors, conventional method | κ, instructors, prepCheck method | Kappa difference |
|---|---|---|---|
| Undercut | 0.044 | 1.000 | 0.956 |
| Taper (weighted κ) | 0.359 | 0.769 | 0.410 |
| Incisal reduction | 0.470 | 0.945 | 0.475 |
| Buccal reduction | 0.272 | 0.902 | 0.630 |
| Palatal reduction | −0.007 | 1.000 | 1.007 |
| Chamfer | 0.378 | 1.000 | 0.622 |
| Margin | 0.044 | 0.951 | 0.907 |
| Surface | 0.114 | 0.949 | 0.835 |
| Mean κ (without Taper) | 0.188 | 0.964 | 0.776 |

A weighted kappa was calculated for the “taper” criterion. Both instructors used both assessment methods.

Cohen suggested the kappa result be interpreted as follows: values ≤ 0 as indicating no agreement, 0.01‐0.20 as none to slight, 0.21‐0.40 as fair, 0.41‐0.60 as moderate, 0.61‐0.80 as substantial and 0.81‐1.00 as almost perfect agreement. 18
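The interpretation bands can be written as a small lookup. This is only a sketch: the function name is hypothetical, and treating the bands as contiguous (so that a value such as 0.205 falls into “none to slight”) is an assumption, since the quoted ranges leave small gaps between bands.

```python
def interpret_kappa(kappa: float) -> str:
    """Map a kappa value to the agreement label quoted in the text."""
    if kappa <= 0:
        return "no agreement"
    if kappa <= 0.20:
        return "none to slight"
    if kappa <= 0.40:
        return "fair"
    if kappa <= 0.60:
        return "moderate"
    if kappa <= 0.80:
        return "substantial"
    return "almost perfect"


# Example values taken from Table 3.
for value in (-0.007, 0.188, 0.470, 0.769, 0.964):
    print(value, interpret_kappa(value))
```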

For each criterion, the kappa was calculated for assessment with and without the aid of prepCheck. Kappa can vary between −1 and +1 and is a measure of the agreement between the assessments of instructors 1 and 2. Since a binary value (0 or 1) was given for each criterion, unweighted kappa values could be calculated for all criteria except for “taper.” Because 0, 1, 2, 3 or 4 points could be awarded for this criterion, a weighted kappa was calculated for “taper” by using a linearly weighted 5‐point scale.
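For readers who want to see the calculation, the following is a minimal sketch of Cohen's kappa with optional linear weights. The study itself used SPSS; the function name and the scores below are made up for illustration and are not the study data.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b, categories, weighted=False):
    """Cohen's kappa for two raters scoring the same items.

    With weighted=True, disagreements are penalised linearly by their distance
    on the ordered category scale; otherwise every disagreement counts equally.
    """
    n = len(rater_a)
    k = len(categories)
    position = {category: i for i, category in enumerate(categories)}
    observed = Counter(zip(rater_a, rater_b))  # joint counts per score pair
    totals_a = Counter(rater_a)                # marginal counts, rater A
    totals_b = Counter(rater_b)                # marginal counts, rater B

    observed_disagreement = 0.0
    expected_disagreement = 0.0
    for a in categories:
        for b in categories:
            distance = abs(position[a] - position[b])
            if weighted:
                weight = distance / (k - 1)
            else:
                weight = 1.0 if distance else 0.0
            observed_disagreement += weight * observed[(a, b)] / n
            expected_disagreement += weight * totals_a[a] * totals_b[b] / (n * n)

    # Undefined (division by zero) if both raters give every item the same single score.
    return 1.0 - observed_disagreement / expected_disagreement


# Made-up scores for illustration only; these are not the study data.
binary_a = [1, 1, 0, 0, 1, 0, 1, 1, 0, 0]
binary_b = [1, 0, 0, 0, 1, 0, 1, 1, 1, 0]
taper_a = [4, 3, 2, 4, 1, 0, 3, 2]
taper_b = [4, 2, 2, 3, 1, 1, 3, 4]

kappa_binary = cohens_kappa(binary_a, binary_b, categories=[0, 1])
kappa_taper = cohens_kappa(taper_a, taper_b, categories=[0, 1, 2, 3, 4], weighted=True)
print(round(kappa_binary, 3), round(kappa_taper, 3))
```

With two categories the linear weights reduce to the unweighted case, which is why the same function can serve both the binary criteria and the 0 to 4 point “taper” scale.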

As the taper criterion was not binary, the weighted kappa was not taken into account when calculating the mean kappa value for each assessment method and the differences between the kappa values.

2.7. Student perception of prepCheck as a learning aid and assessment tool

The students completed the questionnaire (Appendix 4) anonymously, before the official examination results were published.

The questionnaire has ten items. For the first seven items, the students indicated on a visual analogue scale (VAS) to what extent they agreed with the statements given. The range of the VAS was 0 to 100, with 0 representing “totally disagree” and 100 “totally agree.”

The seven statements were followed by two multiple‐choice questions, allowing students to state how they preferred to receive feedback and what type of assessment they preferred.

The final item was an open‐ended question, to give students the opportunity to make critical suggestions or comments on the practical sessions and the assessment procedure. The responses were processed in SPSS, with the exception of the answers to the open‐ended question.

3. RESULTS

3.1. Inter‐rater agreement and total points

Agreement between the instructors was poor for the conventional assessment method (n = 41; Cohen's kappa values ranged between −0.007 and 0.470, see Tables 3 and 4). The total number of points awarded across all items was 278 (instructor 1) vs. 289 (instructor 2).

Table 4.

Overall results of the different assessment methods

| Criterion | Conventional method, instructor 1 | Conventional method, instructor 2 | prepCheck method, instructor 1 | prepCheck method, instructor 2 |
|---|---|---|---|---|
| Undercut | 16 | 32 | 35 | 35 |
| Taper | 128 | 120 | 113 | 112 |
| Incisal reduction | 20 | 31 | 28 | 27 |
| Buccal reduction | 24 | 28 | 23 | 21 |
| Palatal reduction | 21 | 6 | 4 | 4 |
| Preparation type | 15 | 22 | 18 | 18 |
| Margin quality | 38 | 20 | 21 | 22 |
| Surface quality | 16 | 30 | 17 | 16 |
| Total points | 278 | 289 | 259 | 255 |

For the prepCheck assessment method (n = 41), the agreement between the instructors was substantial to perfect, with Cohen's kappa values between 0.769 and 1. The prepCheck assessment resulted in perfect agreement on “undercut,” “palatal reduction” and “chamfer.” The total number of points awarded across all items was 259 (instructor 1) vs. 255 (instructor 2).
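The totals in Table 4 can be recomputed from the per‐criterion points. The sketch below also sums the absolute per‐criterion gap between the two instructors; that gap is an illustrative summary introduced here, not a statistic used in the study, and the variable names are hypothetical.

```python
# Points per criterion from Table 4, in the order:
# (conventional instr. 1, conventional instr. 2, prepCheck instr. 1, prepCheck instr. 2)
points = {
    "Undercut": (16, 32, 35, 35),
    "Taper": (128, 120, 113, 112),
    "Incisal reduction": (20, 31, 28, 27),
    "Buccal reduction": (24, 28, 23, 21),
    "Palatal reduction": (21, 6, 4, 4),
    "Preparation type": (15, 22, 18, 18),
    "Margin quality": (38, 20, 21, 22),
    "Surface quality": (16, 30, 17, 16),
}

# Column sums reproduce the "Total points" row.
totals = [sum(column) for column in zip(*points.values())]

# Illustrative only: summed absolute difference between instructors per method.
gap_conventional = sum(abs(row[0] - row[1]) for row in points.values())
gap_prepcheck = sum(abs(row[2] - row[3]) for row in points.values())

print(totals)
print(gap_conventional, gap_prepcheck)
```

The totals per instructor are close under both methods, but the per‐criterion gaps are far larger for the conventional method, which is the pattern the kappa values capture.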

The average kappa value for the prepCheck assessment was 0.776 higher than the average kappa value for the conventional assessment method.

The weighted kappa value for the taper was also more than twice as high for the prepCheck assessment as for the conventional method (0.769 vs. 0.359).
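The mean values and the difference reported above follow directly from the binary criteria in Table 3. A minimal check, with hypothetical variable names, might look like this:

```python
from statistics import mean

# Kappa values from Table 3 as (conventional, prepCheck); "taper" is kept separate
# because its weighted kappa is excluded from the means.
kappas = {
    "Undercut": (0.044, 1.000),
    "Incisal reduction": (0.470, 0.945),
    "Buccal reduction": (0.272, 0.902),
    "Palatal reduction": (-0.007, 1.000),
    "Chamfer": (0.378, 1.000),
    "Margin": (0.044, 0.951),
    "Surface": (0.114, 0.949),
}
taper_conventional, taper_prepcheck = 0.359, 0.769

mean_conventional = mean(conv for conv, _ in kappas.values())
mean_prepcheck = mean(prep for _, prep in kappas.values())
difference = mean_prepcheck - mean_conventional
taper_ratio = taper_prepcheck / taper_conventional

print(round(mean_conventional, 3), round(mean_prepcheck, 3), round(difference, 3))
print(round(taper_ratio, 2))
```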

3.2. Student experiences with prepCheck

Thirty‐seven of the 41 students (90%) completed the questionnaire. Of these, thirty (81%) answered the open‐ended question.

prepCheck provided the students with a good understanding of the quality of their preparations, enabling them to specifically train certain aspects (mean VAS score 84.6). In addition, they felt that the feedback given by prepCheck clearly helped them prepare for the examination during the practice sessions (score 78.3). In general, they believed that prepCheck is a positive addition to the assessment procedure and made the examination results more objective (score 77.3). The students indicated that prepCheck gave them a better understanding of their progress during the practice sessions (score 77.1). Overall, they believed that the instructors had made an honest assessment of their work (score 72.8). Instructor feedback had been coaching in nature rather than judging ever since prepCheck had been providing feedback in the practical sessions (score 63.1). Interestingly, the only statement on which students agreed far less was that instructors (without using prepCheck) were consistent in their feedback during the practice sessions preparing them for the examination (score 58.2). The responses to this statement were the most varied, leading to the highest standard deviation.

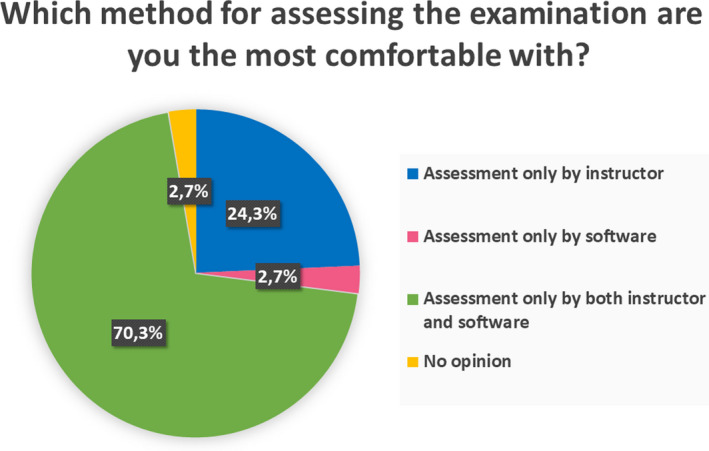

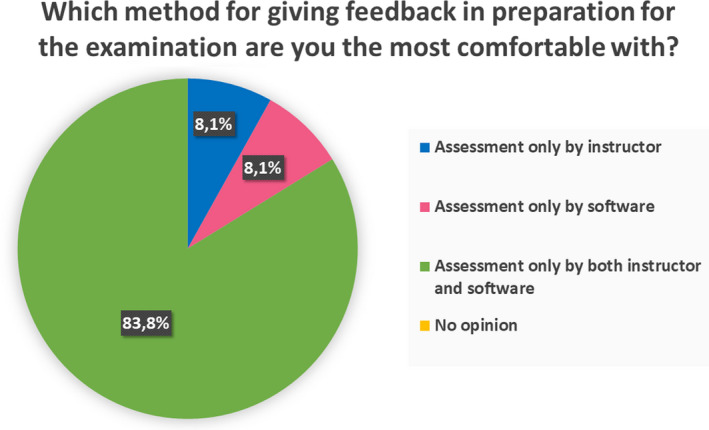

Table 5 presents the mean and the standard deviation for each statement. The mean VAS scores of statements 2, 4, 5 and 6 were between 75 and 100, indicating that on average the students strongly agreed with these statements. For statements 1, 3 and 7, the mean score was between 50 and 74, indicating reasonable agreement. The highest standard deviation is accompanied by the lowest mean and vice versa. Figures 6 and 7 show pie charts representing the responses to the multiple‐choice questions. A large majority of the students indicated that they were most satisfied with the combination of instructor and prepCheck for both feedback (83.8%) and examination assessment (70.3%). With respect to examination assessment, nearly one‐quarter (24.3%) preferred the instructor only. The percentage who preferred assessment by prepCheck only was 2.7%.

Table 5.

Student responses to the first seven questionnaire items

| Statements | Mean (scale from 0 to 100) | SD |

|---|---|---|

| Examination assessment by instructors is an honest procedure | 72.8 | 17.1 |

| prepCheck has been added to the assessment procedure to achieve objective test results | 77.3 | 21.0 |

| I believe that the instructors (without the use of prepCheck) are consistent in their feedback during the practice sessions leading up to the examination | 58.2 | 26.5 |

| The feedback given by prepCheck helps me during the practice sessions leading up to the examination | 78.3 | 19.6 |

| prepCheck helps me to evaluate my preparations, so that I can concentrate on certain elements of the preparation | 84.6 | 13.5 |

| prepCheck helps me to monitor my progress during the practice sessions | 77.1 | 19.9 |

| Since prepCheck has been used to give feedback during the practice sessions, instructors give feedback like a coach rather than an assessor | 63.1 | 23.2 |

Means and standard deviations (SD) of student responses to statements about their experiences with prepCheck on a visual analogue scale (VAS) ranging from 0 (“totally disagree”) to 100 (“totally agree”).
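The grouping of the statements into agreement bands can be reproduced from Table 5. In this sketch the statements are numbered 1 to 7 in table order; the band labels follow the text, while the function and variable names are hypothetical.

```python
# (mean, SD) per statement from Table 5, numbered in table order.
statements = {
    1: (72.8, 17.1),
    2: (77.3, 21.0),
    3: (58.2, 26.5),
    4: (78.3, 19.6),
    5: (84.6, 13.5),
    6: (77.1, 19.9),
    7: (63.1, 23.2),
}


def agreement_band(mean_score: float) -> str:
    """Band a mean VAS score (0 to 100) using the cut-offs given in the text."""
    if mean_score >= 75:
        return "strong agreement"
    if mean_score >= 50:
        return "reasonable agreement"
    return "below the scale midpoint"


strong = [n for n, (m, _) in statements.items() if agreement_band(m) == "strong agreement"]
reasonable = [n for n, (m, _) in statements.items() if agreement_band(m) == "reasonable agreement"]
lowest_mean = min(statements, key=lambda n: statements[n][0])
highest_sd = max(statements, key=lambda n: statements[n][1])

print(strong, reasonable, lowest_mean, highest_sd)
```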

Figure 6.

Responses to the multiple‐choice question “Which method for assessing the examination are you most comfortable with?” represented as a pie chart (N = 37)

Figure 7.

Responses to the multiple‐choice question “Which method for giving feedback in preparation for the examination are you most comfortable with?” represented as a pie chart (N = 37)

The percentage of students who were comfortable with the feedback given by prepCheck alone (8.1%) was the same as the percentage (8.1%) who preferred feedback from the instructor only. None of the students was indifferent about the feedback given during the practice sessions.

3.3. Open‐ended questions

Feedback by prepCheck was felt to be consistent, objective, specific and accurate.

When explicitly asked about aspects to improve, 43% of the students who filled in the open‐ended questionnaire (n = 13) would have liked to have access to more scanning units. Due to the long waiting time, the assessment was seen as more hectic and chaotic than necessary.

Additionally, learning the scanning procedure took a long time too: 37% of the students (n = 11) required longer preparation time before the assessment in order to practice scanning.

Another point of criticism was that the scanner settings were too strict and making a scan without scanning clutter often proved difficult too. Finally, prepCheck rejected elements of the preparation that could not be seen with the naked eye or felt with the probe, which 27% of the students (n = 8) felt was unjustifiable.

10% of the students (n = 3) said that prepCheck could be a good instrument for formative evaluation during the examination. Students should be allowed to modify their chamfer preparation if desired. Another advantage of this approach is that this procedure would be more in line with clinical practice.

Because the question was open‐ended, a student could raise more than one point. All comments are listed unchanged in Appendix 5.
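The percentages in this section are all expressed relative to the 30 students who answered the open‐ended question. A short check, using hypothetical labels for the comment themes, shows how they follow from the counts:

```python
invited = 41          # students in the cohort
completed = 37        # completed the questionnaire
open_ended = 30       # answered the open-ended question

# Counts per theme as reported in the text; the labels are shorthand, not quotes.
theme_counts = {
    "more scanning units": 13,
    "more time to practise scanning": 11,
    "prepCheck too strict": 8,
    "use prepCheck formatively during the examination": 3,
}

response_rate = round(100 * completed / invited)
open_ended_rate = round(100 * open_ended / completed)
theme_percentages = {theme: round(100 * n / open_ended) for theme, n in theme_counts.items()}

print(response_rate, open_ended_rate)
print(theme_percentages)
# The counts add up to more than 30 because one student could raise several points.
print(sum(theme_counts.values()))
```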

4. DISCUSSION

4.1. Key findings

The instructors’ conventional assessments differed markedly although they used the same criteria. When they used prepCheck, their assessments were in much closer agreement. Most of the students felt that prepCheck is a good additional teaching tool when learning practical skills. They preferred the combination of instructor and prepCheck for both feedback and assessment. Learning the scanning process and interpreting the scans took a lot of time, however, which made the students feel that there was not enough time to prepare for the examination.

4.2. Inter‐rater agreement

When calculating the weighted Cohen's kappa, five categories (the number of points, ranging from 0 to 4) are involved instead of two (0 or 1). For this reason, Cohen's kappa for “taper” is not included in the calculation of the mean of all kappas. Moreover, the weighted kappa for “taper” for the prepCheck assessment method is considerably lower than the other kappas. Had this deviant value been included in the calculation of the mean kappas, it would have introduced additional variance in the comparison of the mean kappas.

Table 4 shows that the two instructors awarded a quite similar total number of points, regardless of which assessment method was used, but the distribution of points over the individual items within the conventional method seems to be quite arbitrary.

There was hardly any agreement between the instructors when they used the conventional assessment method. The “palatal reduction” criterion even had a negative Cohen's kappa value, which indicates no agreement beyond chance between the assessors. By contrast, substantial to perfect agreement was found for the prepCheck assessment method.

The large differences between the kappa values for the two assessment methods show that the use of prepCheck clearly improves the precision of the assessment. Because of the high agreement between the prepCheck assessments, it appears that using prepCheck leads to more objective, consistent and calibrated instructor feedback and assessment.

Similar studies involving digital assessment systems show that such a system can increase inter‐rater agreement for anatomical wax‐up examinations 11 and that preparations can be assessed in a consistent and reliable manner. 3 , 10 These studies are in line with our findings and show digital assessment systems to be more precise than conventional methods. 3

Several studies show that dentistry departments have difficulties calibrating their instructors and objectifying assessments. 1 , 3 , 8 , 11 Since a digital assessment can lead to fewer differences of opinion amongst instructors, both assessment and feedback become more consistent.

4.3. Student experiences with prepCheck

The students’ ideas about prepCheck and how they feel about working with prepCheck were investigated with the questionnaire. The analysis of the students’ responses shows that, on average, students agree with most of the statements in the questionnaire (Appendix 4). All statement means are higher than 50 on the VAS scale ranging from 0 to 100.

A possible explanation for the small percentage of students who preferred prepCheck as the only source of feedback and assessment might be that students feel that prepCheck assesses their work more strictly than the instructor. Nearly one‐quarter of the students still preferred the instructor as the only assessor, perhaps because they do not yet sufficiently trust the new technology for the reasons outlined above.

Learning to operate the scanner and the scanning process itself were experienced as clinically relevant but time‐consuming, and some students indicated that they would prefer to spend their time on practicing their preparation skills instead. Some students felt that they had too little time during the practical to prepare for the examination. This is in line with the findings of another study. 19

In another survey involving prepCheck, some students felt that prepCheck should not be implemented in the curriculum, because they did not find assessment software useful for improving their performance. In that study, a wax‐up examination was assessed in three ways: with the conventional method, with prepCheck and with E4D Compare. In general, the negative student attitude towards the new teaching technologies was caused by insufficient time to master the scanning skills and the assessment software, with the rest of the curriculum staying the same except for the addition of the digital components. 15 This is in line with the findings in the present study. However, the overall attitude of the students in the present study was much more positive towards digital assessment, and it must be emphasised that the students were explicitly asked to give critical feedback in the open‐ended question to improve the teaching method. The majority of students state that they prefer digital assessment to conventional assessment. It is reported that digital systems provide fast, objective feedback and show exactly where their work is below level. For this reason, these systems support them in their endeavour to produce flawless work. 3 , 17

A study by Gratton et al compares two digital assessment systems (one of which is prepCheck), showing that the two systems are equally effective and that there are no significant differences between them. This study includes a student‐based questionnaire about their perception of and satisfaction with the use of scanners in the curriculum. The majority of students feels that digital techniques should be integrated into the teaching process. 20 The students also appreciate that they were given the opportunity to learn to work with new digital devices and technologies. Those findings are in line with our results.

Also, results of the study by Callan et al show that students find it difficult to produce a scan of their preparations and that they like that the digital assessment eliminates the subjective element. Another advantage is that they do not have to look for an instructor for an assessment. However, it also appears to be difficult and time‐consuming to navigate the software with the assessment criteria and to produce an accurate scan. Again, some students stated that they prefer to spend the time they now have to take to evaluate their preparations on actual practice. Nevertheless, the majority of students is generally positive about the option to modify their preparations after their flaws had been mapped. 19

4.4. Outline, occlusal line and insertion axis

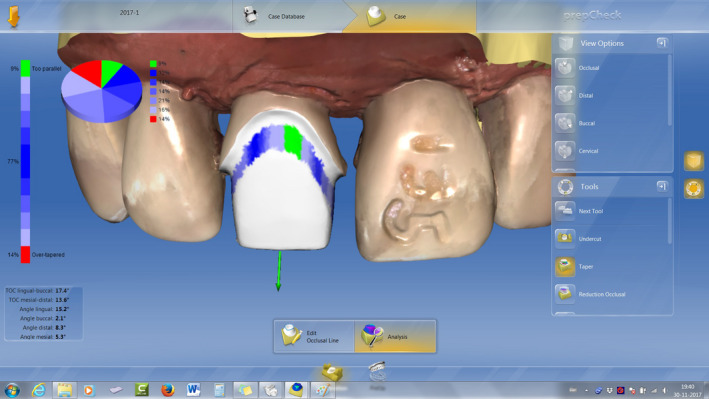

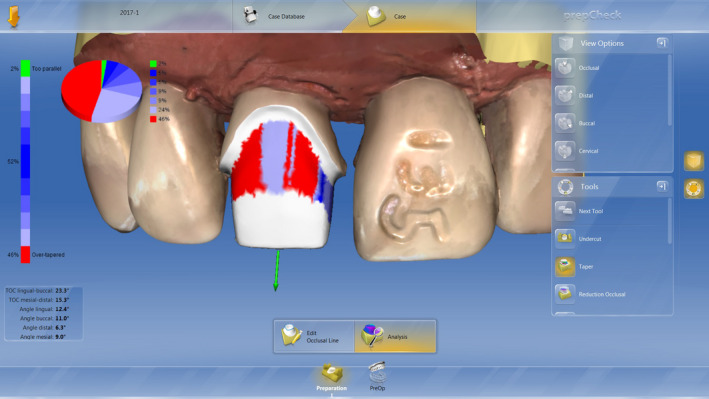

In addition to determining the insertion axis, students also had to mark the outline and the occlusal plane on the scan. Based on the lines they draw, prepCheck calculates an assessment of the scanned element based on the criteria. The student determines where these lines must be drawn, which means they can vary. The closer the line for the occlusal plane is to the gingiva, the lower the number of degrees for the “taper” criterion will be. The closer this is to the tooth's incisal edge, the higher the number of degrees for the “taper” criterion will be, and thus, the greater the risk that “taper” will be higher than allowed (see Figures 8 and 9).

Figure 8.

Because the line for the occlusal plane has been drawn closer to the gingiva, prepCheck concludes that 77% of the taper is within the margins allowed for this criterion

Figure 9.

Because the line for the occlusal plane has been drawn closer to the incisal edge, prepCheck concludes that only 52% of the taper is within the margins allowed for this criterion

Where the outline is drawn determines the shape of the chamfer and thus the “preparation type,” but it may also have an impact on “surface quality.” If there is a rough area or sharp edge inside or above the outline whilst the outline is drawn above this flaw, it will be ignored in the assessment of the “preparation type,” “margin quality” and “surface quality” criteria. This allows students to influence the prepCheck assessment and thus give themselves an advantage (or disadvantage) in the preparation assessment by drawing the line for the occlusal plane closer to the incisal plane. Gratton et al came to a similar conclusion. They found that drawing the outline and the occlusal plane remains a subjective element in a computer‐aided assessment system. 20

Therefore, the outline, occlusal line and insertion axis should be marked by the students according to the logical and intended position in the preparation rather than strategically in order to pass the examination. Consequently, the correct digital position of the outline, occlusal line and insertion axis was part of the assessment in the present study (items 5, 8 and 9).

4.5. Examination of the process versus examination of the result

Taylor et al support the notion that the use of a digital assessment system alone is insufficient to validly assess student work. They examined the PrepAssistant digital assessment system (KaVo, Biberach, Germany). The main limitation of a scanner as a digital assessment system, they found, is that it can only assess differences in measurements. 12 prepCheck also measures differences, calculates whether the results are within the margins set for the criteria and shows this as percentages. prepCheck does not mark the scanned work either.

In the present study, some criteria were still assessed traditionally by the instructors. The student's preparation for the examination, observance of the basics of ergonomics, correct use of instruments and mastery of the problem relate more to the process. The following items relate to the result (“the preparation”): no unintended realignment and a logical insertion axis; no damage to adjacent teeth; transitions between retentive and non‐retentive surfaces drawn correctly and at the correct height; and an outline 0.5 mm above the KaVo gingiva. Assessing these criteria and calculating the total score for all criteria on the assessment form still require an instructor, who also gives the final mark.

4.6. Relevance of the findings

Teaching dental skills can be improved by adding prepCheck to the assessment procedure since the instructors are considerably more in agreement when they use prepCheck in their assessments.
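Agreement between two assessors, as referred to here, is expressed in this study as Cohen's kappa, which corrects the observed proportion of identical ratings for the agreement expected by chance. The Python sketch below illustrates the calculation. The function name and the pass/fail ratings are invented for illustration and are not the study data or the authors' analysis script.

```python
from collections import Counter


def cohen_kappa(ratings_a, ratings_b):
    """Cohen's kappa for two raters who scored the same items."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("both raters must score the same non-empty set of items")
    n = len(ratings_a)
    # Observed agreement: share of items that received identical ratings.
    p_o = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n
    # Chance agreement, derived from each rater's marginal rating frequencies.
    freq_a, freq_b = Counter(ratings_a), Counter(ratings_b)
    p_e = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if p_e == 1.0:
        return 1.0  # both raters used one and the same category throughout
    return (p_o - p_e) / (1 - p_e)


# Hypothetical ratings of eight preparations (illustration only).
rater_1 = ["pass", "pass", "fail", "pass", "fail", "fail", "pass", "fail"]
rater_2 = ["pass", "fail", "fail", "pass", "fail", "pass", "pass", "fail"]
kappa = cohen_kappa(rater_1, rater_2)
```

In this invented example the assessors agree on six of eight preparations, and half of that agreement would be expected by chance, so kappa is 0.5.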

The results of the present study suggest that practicing with prepCheck is an effective aid for learning practical dental skills. Moreover, learning to work with modern digital technology is important for dentistry students because such techniques will become increasingly incorporated into the dental practice.

Based on these experiences, prepCheck was further implemented into the Bachelor curriculum of the dentistry degree programme at the University of Groningen.

5. CONCLUSIONS

Despite using the same criteria, instructors differ considerably in their assessments of preparations with the conventional assessment method. prepCheck increases agreement between instructor assessments. This calibration may be used to achieve objective assessment. Feedback given by prepCheck was seen as consistent, objective and accurate, and allowed students to practice preparations effectively. The students preferred receiving a combination of feedback from instructors as well as prepCheck. They felt that the examination should also be assessed by both the instructor and prepCheck. However, the scanning process took a lot of time, which meant there was insufficient time to practice in preparation for the examination.

Students see prepCheck as an objective source of feedback and a valuable addition to the teaching of practical dental skills, but introducing prepCheck requires enough equipment and preparation time.

CONFLICT OF INTEREST

The authors declare no conflicts of interest.

AUTHORS' CONTRIBUTIONS

US built the design of the study, collected the data and secured funding. MvWP collected and analysed the data, designed the questionnaires, drafted the first manuscript of this paper, revised the article critically and added content. EM had the idea to use prepCheck for teaching, helped to install prepCheck into the practicum, revised the article critically and added content. WK designed the study together with US and MC, analysed the data statistically, took care of the ethical review approval (NVMO‐ERB), revised the article critically and added content. MC substantially contributed to the design of the study, revised the article critically and added content. BB supervised the use of the prepCheck technically and developed the parameters that were used in this study, revised the article critically and added content. All authors approved the final version of the manuscript and agree to be accountable.

ACKNOWLEDGEMENT

This study was financially supported by Dentsply Sirona and by the authors' institutions. Materials were purchased by the authors' institutions with allowance provided by Dentsply Sirona. The authors would like to thank MS Marianne Offereins for her valuable feedback and support for the first version of the manuscript. Also, the authors would like to thank Alexander Krol for introducing prepCheck in the student course and continuously improving the use of it.

Appendix 1. Assessment form

*The instructor is called before the jaw is removed and then assesses items 5‐8.

**With the help of the prepCheck software and the assessment matrix.

Appendix 2. Assessment matrix

| Assessment form | prepCheck |
|---|---|
| Scan | |
| 9. No scan holes/noise above the outline/approximal planes of adjacent teeth | 9. Scan is complete, without holes above the outline and proximal planes of adjacent teeth |
| Undercut | |
| 10. preparation is not undercut (up to 0.05 mm) | 10. prepCheck (PC) is no more than 0% in the last segment (>0.05) of undercut |
| Taper (only for the retentive, cervical, part of the preparation) | 11‐14. the percentage within the margins (blues) results in a maximum score of: |
| 11. distal: taper > 2° with respect to insertion axis (0°‐10°) | 4 points (100%) |
| 12. mesial: taper > 2° with respect to insertion axis (0°‐10°) | 3 points (≥75%) |
| 13. palatal: taper 2° with respect to insertion axis (0°‐10°) | 2 points (≥50%) |
| 14. buccal: taper 2° with respect to insertion axis (0°‐10°) | 1 point (≥25%) |
| Reduction (not applicable to outline) | |
| 15. incisal/occlusal 1.5 mm reduction (± 0.5 mm) | 15. PC makes an axial section to measure the distance between preoperative and postoperative incisal edge. |
| 16. buccal (if necessary, approximal as occlusal plane was unnecessarily reduced to approximal) 1 mm reduction (± 0.5 mm) | 16‐17. PC distinguishes between axial and occlusal reduction. Axial reduction is obviated by the taper. Occlusal reduction concerns the buccal and palatal planes. PC is no more than 0% in the first and last segments (too much/too little reduction) of the occlusal reduction |
| 17. palatal/lingual 1 mm reduction (± 0.5 mm) | |
| Preparation Type | |
| 18. chamfer follows the semi‐circular contour of drill no. 42/43 (± 0.4 mm) | 18. PC is no more than 0% in the first and last segments (too much/too little reduction) of the preparation type |
| Margin Quality | |
| 19. outline is smooth and clearly circumscribed | 19. Under margin quality, PC indicates a maximum of 0% warning |
| Surface Quality | |
| 20. smooth preparation (no pitting or grooves) with rounded transitions | 20. Under surface quality, PC indicates a maximum of 0% warning. |
| NB Despite the maximum of 0%, PC may still colour the preparation. Although this falls within the margins, it is an indication that the work could be improved. | |
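The scoring rule for the taper items (11‐14) in the matrix can be written as a simple threshold lookup. The following Python sketch is an illustration only: the function name is hypothetical, and the score of 0 points for less than 25% within the margins is an assumption, since the matrix does not state what is awarded below that threshold.

```python
def taper_points(percent_within_margins: float) -> int:
    """Map the percentage of the taper within the margins to points (items 11-14)."""
    if not 0 <= percent_within_margins <= 100:
        raise ValueError("percentage must lie between 0 and 100")
    # Thresholds taken from the assessment matrix, highest first.
    thresholds = [(100, 4), (75, 3), (50, 2), (25, 1)]
    for minimum, points in thresholds:
        if percent_within_margins >= minimum:
            return points
    return 0  # assumption: the matrix does not specify a score below 25%


# Percentages of the size shown in Figures 8 and 9, applied to a single surface.
points_line_near_gingiva = taper_points(77)
points_line_near_incisal_edge = taper_points(52)
```

Under this rule, a surface with 77% of the taper within the margins would score 3 points, whereas one with 52% would score 2 points, which shows how the position of the occlusal line can shift a score by a full point.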

Appendix 3. Programmed settings for each criterion

Table A1.

Programmed settings for each criterion and prepCheck parameters

| Assessment form: criteria | prepCheck: analysis tool | prepCheck: parameters | prepCheck: settings |
|---|---|---|---|
| Undercut | Analysis of undercut | End of tolerance range | 0.050 mm |
| | | Begin of tolerance range | 0.000 mm |
| Taper | Analysis of preparation taper | Buccal taper: | |
| | | Activated | Yes |
| | | Maximal taper | 10° |
| | | Minimal taper | 0° |
| | | Maximal vertical height | 90% |
| | | Minimal vertical height | 0% |
| | | Maximal sector | 180° |
| | | Minimal sector | 0° |
| | | Cusp taper: | |
| | | Activated | No |
| | | Lingual taper: | |
| | | Activated | Yes |
| | | Maximal taper | 10° |
| | | Minimal taper | 0° |
| | | Maximal vertical height | 90% |
| | | Minimal vertical height | 0% |
| | | Maximal sector | 360° |
| | | Minimal sector | 180° |
| | | Distance to preparation line | 1.000 mm |
| | | Number of points | 180 |
| Incisal reduction | Analysis of occlusal reduction | End of tolerance range | |
| | | Begin of tolerance range | |
| | | Begin of measuring zone | |
| | | End of measuring zone | |
| Buccal reduction | Analysis of occlusal reduction | End of tolerance range | |
| | | Begin of tolerance range | |
| | | Begin of measuring zone | |
| | | End of measuring zone | |
| Palatal reduction | Analysis of occlusal reduction | End of tolerance range | |
| | | Begin of tolerance range | |
| | | Begin of measuring zone | |
| | | End of measuring zone | |
| Preparation type | Analysis of preparation type | Height of interest | 1.000 mm |
| | | Number of points where the evaluation shall be done | 72 |
| | | Tolerance | 0.400 mm |
| Margin quality | Analysis of margin quality | Half window size | 0.400 mm |
| | | Tube radius | 0.065 mm |
| Surface quality | Analysis of surface quality | Taper of a sharp edge | 50.000° |

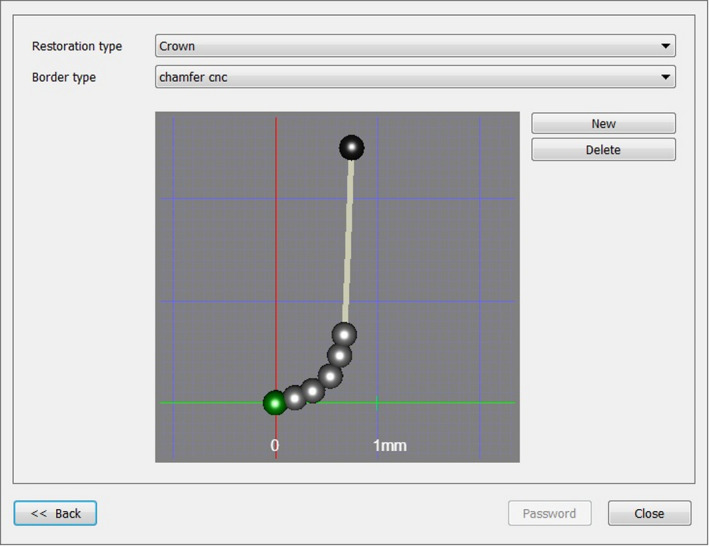

Addition to the “preparation type” criterion

Border type: chamfer

Table A2.

X and Y values in Figure A3

| x | y |
|---|---|
| 0.00 | 0.00 |
| 0.19 | 0.05 |
| 0.36 | 0.12 |
| 0.54 | 0.26 |
| 0.63 | 0.47 |
| 0.67 | 0.67 |
| 0.75 | 2.50 |

Figure A1.

The form of the chamfer represented as points on a graph. The coordinates are given in Table A2. The axes represent drills no. 42 and 43 (Komet no. 6856 and no. 8856.314.018, Komet Dental, Lemgo, Germany) [Colour figure can be viewed at wileyonlinelibrary.com]
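The coordinates in Table A2 can be read as a piecewise‐linear reference profile of the chamfer. The Python sketch below interpolates that profile and checks a measured point against the 0.400 mm tolerance listed for the “preparation type” criterion in Table A1. It rests on several assumptions: that the coordinates are in millimetres, that straight lines between the tabulated points are an acceptable approximation and that the deviation is measured vertically. How prepCheck actually performs this comparison is not described here, and all names in the code are hypothetical.

```python
from bisect import bisect_right

# Reference chamfer profile from Table A2 (units assumed to be mm).
PROFILE = [
    (0.00, 0.00), (0.19, 0.05), (0.36, 0.12), (0.54, 0.26),
    (0.63, 0.47), (0.67, 0.67), (0.75, 2.50),
]
TOLERANCE_MM = 0.400  # "Tolerance" setting for preparation type in Table A1


def profile_height(x: float) -> float:
    """Linearly interpolate the reference profile at horizontal position x."""
    xs = [point[0] for point in PROFILE]
    if not xs[0] <= x <= xs[-1]:
        raise ValueError("x lies outside the tabulated profile")
    # Index of the right-hand end of the segment that contains x.
    i = min(bisect_right(xs, x), len(xs) - 1)
    (x0, y0), (x1, y1) = PROFILE[i - 1], PROFILE[i]
    return y0 + (y1 - y0) * (x - x0) / (x1 - x0)


def within_tolerance(x: float, y_measured: float) -> bool:
    """Assumed check: vertical deviation from the profile within the tolerance."""
    return abs(y_measured - profile_height(x)) <= TOLERANCE_MM


midpoint_height = profile_height(0.45)  # halfway between x = 0.36 and x = 0.54
```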

Appendix 4. Questionnaire

The purpose of this questionnaire is to learn your opinions about and experiences with the practical work and the assessment procedure used in the Chamfer preparation for a full crown practical examination. You are not obliged to complete this questionnaire.

By placing a vertical dash on the scale printed under each of the statements, you can indicate to what extent you agree with the statement.

To round off, there are two multiple‐choice questions. Please circle your answer.

Completing this questionnaire takes about a minute.

- 1. Examination assessment by instructors is an honest procedure.

- 2. prepCheck is an improvement to the assessment procedure in order to achieve objective test results.

- 3. I believe that the instructors (without the use of prepCheck) are consistent in their feedback during the practice sessions leading up to the examination.

- 4. The feedback given by prepCheck helps me during the practice sessions leading up to the examination.

- 5. prepCheck helps me to evaluate my preparations, so that I can concentrate on certain elements of the preparation.

- 6. prepCheck helps me to monitor my progress during the practice sessions.

- 7. Since prepCheck has been used to give feedback during the practice sessions, instructors give feedback like a coach rather than an assessor.

- 8. Which method for assessing the examination are you most comfortable with? Please circle the answer that best reflects your opinion.

  - a. Assessment only by instructor

  - b. Assessment only by the software

  - c. Assessment by both instructor and software

  - d. I don't know

- 9. Which method for giving feedback in preparation for the examination are you most comfortable with?

  - a. Feedback only by instructor

  - b. Feedback only by the software

  - c. Feedback by both instructor and software

  - d. I don't know

If you have any suggestions for improvement or comments about the practice sessions, the examination or the way in which your chamfer preparation was assessed during the skills lab, or if you feel an issue is not addressed in this questionnaire, please state them below.

End of the questionnaire

Thank you for completing this questionnaire. Your answers will be processed anonymously. Once our study is completed, the questionnaires will be destroyed.

Appendix 5. Critical answers given to the open‐ended question in the questionnaire

- 1. Suggestion: The process could be improved by being allowed to use the scanner for formative evaluation during the examination and subsequently improving the preparation if necessary.

- 2. The scanner is a good additional element in the practical but there was not quite enough time for learning tooth preparation and learning to work with the scanner.

- 3. The scanner is a good additional element in preparing for the examination. I would prefer it if the scanner were not used as the sole assessor, since the prepCheck settings are too strict because the scanner sees things that cannot be observed with the naked eye.

- 4. There was not enough time to practise. It is difficult to obtain a high‐quality scan. The instructors are not in agreement. I felt like a guinea pig.

- 5. Assessment by the scanner is fine but the prepCheck settings are unreasonable. It would be better to use a margin of 1% instead of 0% for the criteria.

- 6. The examination is hectic because of the unavailability of (and waiting for) the scanners.

- 7. The scanner is a very handy tool during the practice sessions. It is too strict for the assessment, because whilst working on the preparation, you cannot assess it as the scanner does. The combination of scanner and instructor is better.

- 8. There was not enough time to practise because using the scanner took a lot of time during the practice sessions.

- 9. The scanner is a good additional element whilst practicing but less suitable for examination assessment. The scanner is too strict, and when you are preparing the chamfer, you cannot see/assess the criteria as the scanner does.

- 10. The scanner is a good aid when practicing. The scanner allows practicing your preparation and having it assessed without the need for an instructor. The scanner is not suitable for the examination assessment because it is too strict. Plus, you cannot pass the examination if there are computer malfunctions in the scanner. If you need to remove more tissue at a later stage, this is possible in the clinical situation but not (yet) during the examination, which means you will fail it.

- 11. Not enough time.

- 12. The assessment process was chaotic. We had to wait for a scanner to become available. There was not enough time. Learning the scanning process took a lot of time and energy on the part of the students.

- 13. There was not enough time to practise, and we need more practice to use the scanner properly. It is difficult to obtain a scan without grey blocks. Disadvantage: making a scan during the examination raised the work pressure. Waiting for a scanner and the scanning itself took too much time during the examination.

- 14. It would have been more comfortable if there had been more supervision whilst we were practicing with the scanner. Making a good scan is difficult. The examination was chaotic because we had to wait for the scanner and had to make a temporary preparation in the meantime.

- 15. During practicing, the scanner has a major advantage: it helps one to improve (one's preparation) on very specific points. The disadvantage is that the scanner is very strict and assesses details at one hundredth of a millimetre (eg 0.03 mm) which cannot be seen with the naked eye.

- 16. Too little time to practise.

- 17. The examination was chaotic. The scanner does add value to the learning process.

- 18. The examination is not anonymous if you have to send an e‐mail with your self‐assessment, although this is not such an issue with the scan results as the scanner makes anonymous assessments.

- 19. It sometimes took a very long time before you could make a scan.

- 20. Working with the scanner is insightful, which makes practicing easier. The problem is that there are not enough scanners, which meant we had to wait a long time for one to become available.

- 21. More time should be allocated to practising.

- 22. Having more scanners would be nice, but this is not necessary. Not all instructors worked equally well with the scanner. It would be better if all instructors had a thorough understanding of the scanner (better supervision whilst learning to work with the scanner). Even if you scan your work correctly, the scan still contains noise.

- 23. It is nice that the scanner measures more objectively than an instructor but I’m afraid the scanner is too strict. The scanner says that I have not removed sufficient tissue but such a small amount cannot be seen with the naked eye. The same applies to the taper criterion. I now have the impression that students will not pass the examination if it is (only) assessed by the scanner instead of by an instructor.

- 24. Because there is too little time to practise and there are not a lot of scanners (and scanning takes a while), I have only been able to make a few scans as feedback.

- 25. Could make only a few scans during the practice sessions.

- 26. Waiting for a scanner when another student is scanning takes a long time. The examination procedure is less clear than it usually is.

- 27. I have not been able to practise sufficiently with the scanner. We had to wait for a scanner often; this took time off the practical work. The 0% norm is too strict, because the scanner sees things that you cannot see with your own eyes or feel with a probe. It would therefore be better if students are allowed during the examination to improve their preparations after making a scan.

- 28. Had to wait too long for a scanner. If possible, make more scanners available as a suggestion for subsequent practicals and agree on a maximum time to use the scanner.

- 29. Had to wait a long time for a scanner during the examination.

- 30. prepCheck allows for a consistent and highly accurate assessment of the preparation. Instructors sometimes say something is “just about right”; prepCheck is much clearer in this respect.

Schepke U, van Wulfften Palthe ME, Meisberger EW, Kerdijk W, Cune MS, Blok B. Digital assessment of a retentive full crown preparation—An evaluation of prepCheck in an undergraduate pre‐clinical teaching environment. Eur J Dent Educ. 2020;24:407–424. 10.1111/eje.12516

Footnotes

This method obviously does not follow the clinical workflow. The limited number of available scanners made “biocopy” scanning of the original tooth prior to preparation impossible.

REFERENCES

- 1. Sharaf AA, AbdelAziz AM, El Meligy OA. Intra‐ and inter‐examiner variability in evaluating preclinical pediatric dentistry operative procedures. J Dent Educ. 2007;71(4):540‐544.

- 2. Satterthwaite JD, Grey NJ. Peer‐group assessment of pre‐clinical operative skills in restorative dentistry and comparison with experienced assessors. Eur J Dent Educ. 2008;12(2):99‐102.

- 3. Renne WG, McGill ST, Mennito AS, et al. E4D compare software: an alternative to faculty grading in dental education. J Dent Educ. 2013;77(2):168‐175.

- 4. Henzi D, Davis E, Jasinevicius R, Hendricson W. North American dental students' perspectives about their clinical education. J Dent Educ. 2006;70(4):361‐377.

- 5. Henzi D, Davis E, Jasinevicius R, Hendricson W. In the students' own words: what are the strengths and weaknesses of the dental school curriculum? J Dent Educ. 2007;71(5):632‐645.

- 6. Kournetas N, Jaeger B, Axmann D, et al. Assessing the reliability of a digital preparation assistant system used in dental education. J Dent Educ. 2004;68(12):1228‐1234.

- 7. Cardoso JA, Barbosa C, Fernandes S, Silva CL, Pinho A. Reducing subjectivity in the evaluation of pre‐clinical dental preparations for fixed prosthodontics using the Kavo PrepAssistant. Eur J Dent Educ. 2006;10(3):149‐156.

- 8. Esser C, Kerschbaum T, Winkelmann V, Krage T, Faber FJ. A comparison of the visual and technical assessment of preparations made by dental students. Eur J Dent Educ. 2006;10(3):157‐161.

- 9. Lenherr P, Marinello CP. prepCheck computer‐supported objective evaluation of students preparation in preclinical simulation laboratory. Swiss Dent J. 2014;124(10):1085‐1092.

- 10. Kateeb ET, Kamal MS, Kadamani AM, Abu Hantash RO, Abu Arqoub MM. Utilising an innovative digital software to grade pre‐clinical crown preparation exercise. Eur J Dent Educ. 2017;21(4):220‐227.

- 11. Kwon SR, Restrepo‐Kennedy N, Dawson DV, et al. Dental anatomy grading: comparison between conventional visual and a novel digital assessment technique. J Dent Educ. 2014;78(12):1655‐1662.

- 12. Taylor CL, Grey NJ, Satterthwaite JD. A comparison of grades awarded by peer assessment, faculty and a digital scanning device in a pre‐clinical operative skills course. Eur J Dent Educ. 2013;17(1):e16‐21.

- 13. Welk A, Splieth C, Wierinck E, Gilpatrick RO, Meyer G. Computer‐assisted learning and simulation systems in dentistry–a challenge to society. Int J Comput Dent. 2006;9(3):253‐265.

- 14. Browning WD, Reifeis P, Willis L, Kirkup ML. Including CAD/CAM dentistry in a dental school curriculum. J Indiana Dent Assoc. 2013;92(4):40‐45.

- 15. Kwon SR, Hernandez M, Blanchette DR, Lam MT, Gratton DG, Aquilino SA. Effect of computer‐assisted learning on students' dental anatomy waxing performance. J Dent Educ. 2015;79(9):1093‐1100.

- 16. Cho GC, Chee WW, Tan DT. Dental students' ability to evaluate themselves in fixed prosthodontics. J Dent Educ. 2010;74(11):1237‐1242.

- 17. Hamil LM, Mennito AS, Renne WG, Vuthiganon J. Dental students' opinions of preparation assessment with E4D compare software versus traditional methods. J Dent Educ. 2014;78(10):1424‐1431.

- 18. McHugh ML. Interrater reliability: the kappa statistic. Biochem Med (Zagreb). 2012;22(3):276‐282.

- 19. Callan RS, Palladino CL, Furness AR, Bundy EL, Ange BL. Effectiveness and feasibility of utilizing E4D technology as a teaching tool in a preclinical dental education environment. J Dent Educ. 2014;78(10):1416‐1423.

- 20. Gratton DG, Kwon SR, Blanchette DR, Aquilino SA. Performance of two different digital evaluation systems used for assessing pre‐clinical dental students' prosthodontic technical skills. Eur J Dent Educ. 2017;21(4):252‐260.