Abstract

Typical optical coherence tomographic angiography (OCTA) acquisition areas on commercial devices are 3×3- or 6×6-mm. Compared to 3×3-mm angiograms with proper sampling density, 6×6-mm angiograms have significantly lower scan quality, with reduced signal-to-noise ratio and worse shadow artifacts due to undersampling. Here, we propose a deep-learning-based high-resolution angiogram reconstruction network (HARNet) to generate enhanced 6×6-mm superficial vascular complex (SVC) angiograms. The network was trained on data from 3×3-mm and 6×6-mm angiograms from the same eyes. The reconstructed 6×6-mm angiograms have significantly lower noise intensity, stronger contrast and better vascular connectivity than the original images. The algorithm did not generate false flow signal at the noise level presented by the original angiograms. The image enhancement produced by our algorithm may improve biomarker measurements and qualitative clinical assessment of 6×6-mm OCTA.

1. Introduction

Optical coherence tomographic angiography (OCTA) is a non-invasive imaging technology that can capture retinal and choroidal microvasculature in vivo [1]. Clinicians are rapidly adopting OCTA for evaluation of various diseases, including diabetic retinopathy (DR) [2,3], age-related macular degeneration (AMD) [4,5], glaucoma [6,7], and retinal vessel occlusion (RVO) [8,9]. High-resolution and large-field-of-view OCTA improve clinical observations, provide useful biomarkers and enhance the understanding of retinal and choroidal microvascular circulations [10–13]. Many enhancement techniques have been applied to improve OCTA image quality, including a regression-based bulk motion subtraction algorithm [14], multiple en face image averaging [15,16], enhancement of morphological and vascular features using a modified Bayesian residual transform [17], and quality improvement with elliptical directional filtering [18]. These approaches can improve vessel continuity and suppress the background noise on angiograms with proper sampling density (i.e., sampling density that meets the Nyquist criterion). However, while commercial systems offer a range of fields of view, only 3×3-mm angiograms are adequately sampled for capillary resolution, because the OCTA system scanning speed limits the number of A-lines included in each cross-sectional B-scan. Conventional image enhancement techniques like those mentioned above are not effective on the under-sampled 6×6-mm angiograms. This is unfortunate, since the larger scans, with their reduced resolution, are in greater need of enhancement. The difficulty in enlarging the field of view without sacrificing resolution is a significant issue for the development of OCTA technology, as its field of view is significantly smaller than that of modalities such as fluorescein angiography (FA).

Recently, deep learning has achieved dramatic breakthroughs, and researchers have proposed a number of convolutional neural networks (CNNs) for OCTA image processing [19–26]. As an important branch of image processing, super-resolution image reconstruction and enhancement have also benefited from deep-learning-based methods [27–31]. Here, we propose a high-resolution angiogram reconstruction network (HARNet) to reconstruct high-resolution angiograms of the superficial vascular complex (SVC). We evaluated the reconstructed high-resolution OCTA for noise level in the foveal avascular zone (FAZ), contrast, vascular connectivity, and false flow signal. We also demonstrate that HARNet is capable of improving not just under-sampled 6×6-mm angiograms, but 3×3-mm angiograms as well.

2. Methods

2.1. Data acquisition

The 6×6- and 3×3-mm OCTA scans of the macula used in this study were acquired with 304×304 A-lines using a 70-kHz commercial OCTA system (RTVue-XR; Optovue, Inc.). Two repeated B-scans were taken at each of the 304 raster positions, and each B-scan consisted of 304 A-lines. The split-spectrum amplitude-decorrelation angiography (SSADA) algorithm was used to generate the OCTA data [32]. The reflectance values on structural OCT and flow values on OCTA were normalized and converted to unitless values in the range [0, 255]. A guided bidirectional graph search algorithm was employed to segment retinal layer boundaries [33] [Figs. 1(A1), 1(B1)]. 3×3- and 6×6-mm angiograms of the SVC [Figs. 1(A2), 1(B2)] were generated by maximum projection of the OCTA signal in a slab including the nerve fiber layer (NFL) and ganglion cell layer (GCL).

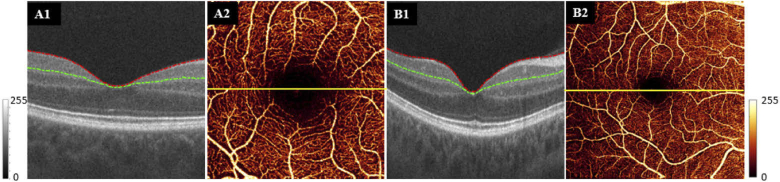

Fig. 1.

Data acquisition for HARNet. (A1) Cross-sectional structural OCT of a 3×3-mm scan volume, with overlaid boundaries showing the top (red) and bottom (green) of the SVC slab. (A2) 3×3-mm angiogram of the superficial vascular complex (SVC) generated by maximum projection of the OCTA signal in the slab delineated in (A1). The yellow line shows the location of the B-scan in (A1). (B1) and (B2) Equivalent images for 6×6-mm angiograms from the same eye capture more peripheral features, but are of lower quality.
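The maximum projection used to form the en face SVC angiograms can be illustrated with a minimal sketch. The function name `max_projection`, the `volume[z][y][x]` layout, and the per-pixel `top`/`bottom` boundary arrays are assumptions made for illustration; they are not taken from the authors' code.

```python
def max_projection(volume, top, bottom):
    """Project a flow volume onto a 2D en face angiogram.

    volume[z][y][x] holds flow values (hypothetical layout).
    top[y][x] and bottom[y][x] are the first (inclusive) and last
    (exclusive) depth indices of the slab at each A-line.
    """
    depth = len(volume)
    height, width = len(top), len(top[0])
    enface = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            z0 = max(0, top[y][x])
            z1 = min(depth, bottom[y][x])
            if z1 > z0:
                # Keep the strongest flow value found inside the slab.
                enface[y][x] = max(volume[z][y][x] for z in range(z0, z1))
    return enface


# Toy volume: 4 depth samples, 1 x 2 en face pixels.
volume = [
    [[10, 200]],  # z = 0 (above the slab for the first pixel)
    [[30, 5]],    # z = 1
    [[90, 7]],    # z = 2
    [[250, 1]],   # z = 3 (below the slab for both pixels)
]
top = [[1, 0]]
bottom = [[3, 3]]
enface = max_projection(volume, top, bottom)
```

In this toy case the bright value at z = 3 lies outside the slab and is ignored, which is the reason the projection is restricted to the segmented layer boundaries.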

2.2. Network architecture

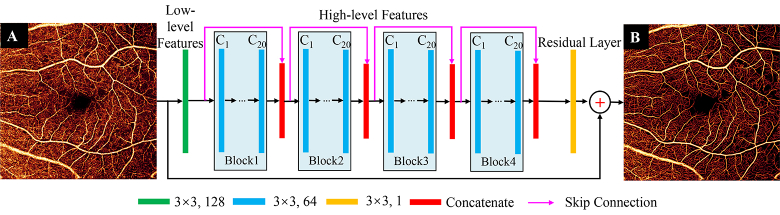

Our network structure is composed of a low-level feature extraction layer, high-level feature extraction layers, and a residual layer (Fig. 2). Input to the network consists of SVC angiograms. The network first extracts shallow features from the input image through one convolutional layer with 128 channels. The high-level features are then extracted through four convolutional blocks. Each convolutional block is composed of 20 convolutional layers (C1-C20) with 64 channels. The kernel size in all the convolutional layers is 3×3 pixels. Skip connections concatenate the output and input of each convolutional block to form the input to the next convolutional block. The output and input of the last convolutional block are concatenated and then fed to the residual layer. The residual layer contains a single channel that produces the residual image. The residual image and input image are summed to produce the final reconstructed output image. For the most part, low-resolution and high-resolution images share the same low-frequency information, so the output consists of the original input plus the residual high-frequency components predicted by HARNet. By learning only these high-frequency components, we were able to improve the convergence rate of HARNet [27]. After each convolutional layer, excluding the residual layer, we added a rectified linear unit (ReLU) [34] to accelerate the convergence of HARNet.

Fig. 2.

Algorithm flowchart. The network is composed of three parts: a low-level feature extraction layer, high-level feature extraction layers, and a residual layer. The kernel size in all the convolutional layers is 3×3. The numbers of channels in the green, blue, and yellow convolutional layers are 128, 64, and 1, respectively. Red layers are concatenation layers that concatenate the output of the convolution block with its input via skip connections. (A) Example input and (B) output 6×6-mm angiogram.
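To make the effect of the concatenating skip connections concrete, the following sketch tallies the channel counts through the network and the receptive field of the full stack. It assumes a literal reading of the description: each block's input is concatenated with its 64-channel output to form the next block's input, and all convolutions are stride-1 and undilated. The function names are hypothetical.

```python
def harnet_channel_plan(shallow_channels=128, block_channels=64, n_blocks=4):
    """Return (input channels of each block, input channels of the residual layer)."""
    block_inputs = []
    current = shallow_channels
    for _ in range(n_blocks):
        block_inputs.append(current)
        # Skip connection: concatenate the block input with the block output.
        current = current + block_channels
    return block_inputs, current


def receptive_field(n_conv_layers, kernel=3):
    """Receptive field (in pixels) of a stack of stride-1, undilated convolutions."""
    return 1 + n_conv_layers * (kernel - 1)


block_inputs, residual_input = harnet_channel_plan()
# One shallow layer, four blocks of 20 layers, one residual layer.
n_layers = 1 + 4 * 20 + 1
rf = receptive_field(n_layers)
```

Under these assumptions the residual layer sees 384 channels, and each output pixel depends on a 165×165-pixel neighborhood of the input.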

2.3. Training

2.3.1. Training data preprocessing

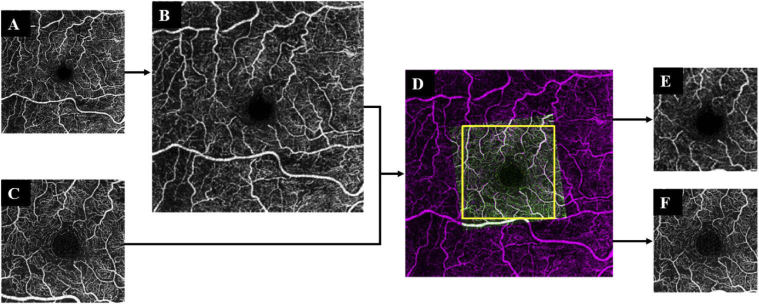

We trained HARNet to reconstruct 6×6-mm angiograms using their densely sampled 3×3-mm equivalents as the target. To do so, we first used bicubic interpolation to scale the size of the 6×6-mm SVC angiograms [Fig. 3(A)] by a factor of 2, so that they would be on the same scale as a 3×3-mm scan. Then we used intensity-based automatic image registration [35] [Fig. 3(D)] to register the scaled 6×6-mm angiograms [Fig. 3(B)] with the 3×3-mm angiograms [Fig. 3(C)]. The registration algorithm produces a transformation matrix that encodes translation, rotation, and scaling operations. Finally, we cropped the overlapping region from each by taking the maximum inscribed rectangle to construct the input for HARNet and the ground truth [Figs. 3(E) and 3(F)].

Fig. 3.

Data preprocessing flow chart. (A) The original 6×6-mm superficial vascular complex (SVC) angiogram. (B) Up-sampled 6×6-mm SVC angiogram. (C) Original 3×3-mm SVC angiogram. (D) Registered image combining both angiograms. The yellow box is the largest inscribed rectangle. (E) Cropped central 3×3-mm section from the 6×6-mm angiogram. (F) Cropped original 3×3-mm angiogram.
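The scale factor and the cropping step can be sketched as follows. This is a simplified illustration that assumes the registration reduces to a pure translation; the study's registration also allows rotation and scaling, in which case the maximum inscribed rectangle is harder to compute. The function names and the example offset are hypothetical.

```python
def pixel_pitch_um(fov_mm, n_samples):
    """Lateral sampling interval in micrometers."""
    return fov_mm * 1000.0 / n_samples


def overlap_crop(shape_a, shape_b, offset):
    """Overlap of image A and image B under a pure translation.

    offset = (dy, dx) is the position of B's top-left corner in A's
    pixel coordinates. Returns the (rows, cols) slices for A and for B.
    """
    (ha, wa), (hb, wb), (dy, dx) = shape_a, shape_b, offset
    y0, y1 = max(0, dy), min(ha, dy + hb)
    x0, x1 = max(0, dx), min(wa, dx + wb)
    if y1 <= y0 or x1 <= x0:
        raise ValueError("images do not overlap")
    crop_a = (slice(y0, y1), slice(x0, x1))
    crop_b = (slice(y0 - dy, y1 - dy), slice(x0 - dx, x1 - dx))
    return crop_a, crop_b


# Both scan patterns use 304 A-lines per B-scan, so the 6x6-mm scan is
# sampled at half the density of the 3x3-mm scan.
scale = pixel_pitch_um(6.0, 304) / pixel_pitch_um(3.0, 304)
upsampled_size = int(round(304 * scale))

# Hypothetical registration result: the 3x3-mm scan sits slightly low.
crop_a, crop_b = overlap_crop(
    (upsampled_size, upsampled_size), (304, 304), (310, 150)
)
```

The ratio of the two sampling intervals is what motivates the factor-of-2 interpolation, and the example offset shows how a 3×3-mm scan that extends past the edge of the upsampled 6×6-mm image yields a slightly smaller training pair.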

2.3.2. Loss function

We trained the network on a ground truth composed of the original 3×3-mm angiograms filtered with a bilateral filter. To minimize the difference between the output of the network and the ground truth, the loss function used in the learning stage was a linear combination of the mean square error [MSE; Eq. (1)] and the structural similarity [SSIM; Eq. (2)] index [36,37]. MSE measures the pixel-wise difference, and SSIM is based on three comparison measurements: reflectance amplitude, contrast, and structure:

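$$\mathrm{MSE} = \frac{1}{w \times h}\sum_{i=1}^{w}\sum_{j=1}^{h}\left(X(i,j) - Y(i,j)\right)^{2} \tag{1}$$

$$\mathrm{SSIM} = \frac{\left(2\mu_X\mu_Y + C_1\right)\left(2\sigma_{XY} + C_2\right)}{\left(\mu_X^{2} + \mu_Y^{2} + C_1\right)\left(\sigma_X^{2} + \sigma_Y^{2} + C_2\right)} \tag{2}$$

$$\mathrm{Loss} = \mathrm{MSE} + \left(1 - \mathrm{SSIM}\right) \tag{3}$$

where $w$ and $h$ refer to the width and height of the image, $X$ and $Y$ refer to the output of HARNet and the ground truth, respectively, $\mu_X$ and $\mu_Y$ are their mean pixel values, $\sigma_X$ and $\sigma_Y$ are their standard deviations, and $\sigma_{XY}$ is the covariance. The values of the constants $C_1$ and $C_2$ were taken from the literature [37]. The loss function [Eq. (3)] was a linear combination of the MSE and the SSIM.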
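The loss terms can be sketched in plain Python as below. Several choices here are assumptions rather than details confirmed by the text: SSIM is computed from global image statistics instead of local windows, the constants follow the commonly used form C1 = (0.01·L)² and C2 = (0.03·L)² with dynamic range L = 1 for normalized images, and the two terms are combined with unit weights as MSE + (1 − SSIM). Images are passed as flat lists of pixel values.

```python
def mean(values):
    return sum(values) / len(values)


def mse(x, y):
    """Mean square error between two equally sized pixel lists."""
    return mean([(a - b) ** 2 for a, b in zip(x, y)])


def ssim_global(x, y, dynamic_range=1.0, k1=0.01, k2=0.03):
    """SSIM computed from whole-image statistics (assumed simplification)."""
    c1 = (k1 * dynamic_range) ** 2
    c2 = (k2 * dynamic_range) ** 2
    mu_x, mu_y = mean(x), mean(y)
    var_x = mean([(a - mu_x) ** 2 for a in x])
    var_y = mean([(b - mu_y) ** 2 for b in y])
    cov = mean([(a - mu_x) * (b - mu_y) for a, b in zip(x, y)])
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator


def loss(x, y):
    """Assumed unit-weight combination: MSE + (1 - SSIM)."""
    return mse(x, y) + (1.0 - ssim_global(x, y))


output = [0.0, 0.5, 1.0, 0.5]
target = [0.1, 0.4, 0.9, 0.6]
perfect = loss(target, target)
imperfect = loss(output, target)
```

Because SSIM equals 1 for identical images, the combined loss is zero only when the output matches the ground truth, and both terms push in the same direction during training.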

2.3.3. Subjects and training parameters

The data set used in this study consisted of 298 eyes scanned from 196 participants. Each eye was scanned with both a 3×3-mm and a 6×6-mm scan pattern. Ten healthy eyes from 10 participants were intentionally defocused and used in defocusing experiments. Of the remaining 288 paired scans, we used 210 (randomly selected) for training and reserved the rest for testing (N=78). The training data includes eyes with DR (N=195) and healthy eyes (N=15). The performance of this network on testing data was separately evaluated on eyes with diabetic retinopathy (N=53) and healthy controls (N=25). Finally, false-flow generation experiments also used 10 cases from the test set of healthy eyes. We used several data augmentation methods to expand the training dataset, including horizontal flipping, vertical flipping, transposition, and 90-degree rotation. For training, considering the hardware capability and computation cost, we used 38×38-pixel sub-images. To avoid the exploding-gradient problem, we normalized the pixel value range to 0–1 using Eq. (4),

$$I_N(x,y) = \frac{I(x,y) - I_{\min}}{I_{\max} - I_{\min}} \tag{4}$$

where $I(x,y)$ is the pixel value, ranging from 0 to 255, at position $(x,y)$ of the angiogram, $I_N(x,y)$ is the normalized pixel value at location $(x,y)$, and $I_{\min}$ and $I_{\max}$ are the minimum and maximum pixel values of the overall image, respectively. The 1050 images in the training dataset after augmentation were thus decomposed into 176,405 sub-images, which were extracted from the cropped SVC angiograms with a stride of 19 pixels. Since HARNet is a fully convolutional neural network, it can be applied to images of arbitrary size. Thus, we input the entire image to the model for testing, as the entire image is the clinically relevant data.
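The normalization, augmentation, and sub-image extraction steps can be sketched as follows. The function names are hypothetical, the rotation direction (clockwise) is an assumption, and the toy image size is chosen only to keep the example small.

```python
def normalize(image):
    """Min-max normalization of a 2D image to the range 0-1."""
    lo = min(min(row) for row in image)
    hi = max(max(row) for row in image)
    if hi == lo:
        return [[0.0 for _ in row] for row in image]
    return [[(v - lo) / (hi - lo) for v in row] for row in image]


def augment(image):
    """Original plus four augmented copies (five images per scan)."""
    flipped_h = [row[::-1] for row in image]
    flipped_v = image[::-1]
    transposed = [list(col) for col in zip(*image)]
    rotated = [list(col) for col in zip(*image[::-1])]  # 90 degrees clockwise
    return [image, flipped_h, flipped_v, transposed, rotated]


def extract_patches(image, size=38, stride=19):
    """Overlapping size x size sub-images taken with the given stride."""
    height, width = len(image), len(image[0])
    patches = []
    for top in range(0, height - size + 1, stride):
        for left in range(0, width - size + 1, stride):
            patches.append([row[left:left + size] for row in image[top:top + size]])
    return patches


# Toy 76 x 57 image with values in 0-255.
image = [[(r * 57 + c) % 256 for c in range(57)] for r in range(76)]
normalized = normalize(image)
patches = extract_patches(normalized)
n_training_images = 210 * len(augment(image))
```

With five images per training scan, 210 scans give the 1050 images quoted in the text. The number of sub-images per image depends on the size of each cropped angiogram, which varies with the registration result.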

An Adam optimizer [38] with an initial learning rate of 0.01 was used to train HARNet by minimizing the loss. We used a global learning rate decay strategy in which the learning rate was reduced by 90% when the loss showed no decline after 3 epochs, provided the rate was greater than 1 × 10⁻⁶. Training ceased when the loss did not change by more than 1 × 10⁻⁵ in 5 epochs. The training batch size was 128.
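The learning rate decay and stopping rules can be replayed on a list of per-epoch losses, as in the sketch below. The exact bookkeeping (how "no decline" and "no change" are counted) is an assumption; the text does not specify it. Keras callbacks such as `ReduceLROnPlateau` and `EarlyStopping` provide comparable behavior, although the paper does not state whether they were used.

```python
def train_schedule(losses, lr=0.01, factor=0.1, patience=3,
                   min_lr=1e-6, stop_patience=5, min_delta=1e-5):
    """Replay per-epoch losses; return (learning rate per epoch, stop epoch)."""
    best = float("inf")
    epochs_without_decline = 0
    reference = None
    epochs_without_change = 0
    lr_history = []
    for epoch, loss in enumerate(losses):
        # Decay rule: cut the rate by 90% after `patience` epochs with no decline.
        if loss < best:
            best = loss
            epochs_without_decline = 0
        else:
            epochs_without_decline += 1
            if epochs_without_decline >= patience and lr > min_lr:
                lr = max(lr * factor, min_lr)
                epochs_without_decline = 0
        # Stopping rule: halt once the loss has moved by no more than
        # `min_delta` for `stop_patience` consecutive epochs.
        if reference is None or abs(reference - loss) > min_delta:
            reference = loss
            epochs_without_change = 0
        else:
            epochs_without_change += 1
        lr_history.append(lr)
        if epochs_without_change >= stop_patience:
            return lr_history, epoch
    return lr_history, None


losses = [1.0, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5]
lr_history, stop_epoch = train_schedule(losses)
```

In this toy run the loss plateaus from the second epoch onward, so the rate is cut once before the stopping rule ends training.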

We implemented HARNet in Python 3.6 with Keras (TensorFlow backend) on a PC with 16 GB of RAM, an Intel i7 CPU, and two NVIDIA GeForce GTX 1080Ti graphics cards.

3. Results

To validate the performance of our algorithm, we used a test dataset composed of 78 paired original 3×3- and 6×6-mm angiograms and evaluated the reconstructed 3×3-mm and 6×6-mm angiograms using three metrics: noise intensity in the FAZ, global contrast, and vascular connectivity. In addition, we performed experiments on defocused SVC angiograms, angiograms with different simulated noise intensities, and DR angiograms.

3.1. Evaluation metrics

3.1.1. Noise intensity

In healthy eyes, the FAZ is avascular, so to obtain an estimate of noise intensity, we consider the pixel values in a 0.3-mm-diameter circle R centered in the FAZ

| (5) |

where $I(x,y)$ is the pixel value at position $(x,y)$.

3.1.2. Image contrast

The global contrast of the SVC angiograms produced by the network was measured by the root-mean-square (RMS) contrast [39],

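$$C_{\mathrm{RMS}} = \sqrt{\frac{1}{A}\sum_{(x,y)}\left(I(x,y) - \bar{I}\right)^{2}} \tag{6}$$

where $I(x,y)$ is the pixel value at position $(x,y)$, $A$ is the total area of the SVC angiogram, and $\bar{I}$ is its mean value.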
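As a small illustration, RMS contrast can be computed as the root-mean-square deviation of the pixel values from their mean, with the area taken as the pixel count. The function name is hypothetical and the normalization by the full pixel count is an assumption consistent with the standard definition.

```python
import math


def rms_contrast(image):
    """Root-mean-square deviation of pixel values from the image mean."""
    pixels = [v for row in image for v in row]
    mean_value = sum(pixels) / len(pixels)
    return math.sqrt(sum((v - mean_value) ** 2 for v in pixels) / len(pixels))


checkerboard = [[0, 255], [255, 0]]
flat = [[128, 128], [128, 128]]
high = rms_contrast(checkerboard)
low = rms_contrast(flat)
```

A uniform image has zero RMS contrast, while an image split evenly between the darkest and brightest values reaches the maximum for the 0–255 range.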

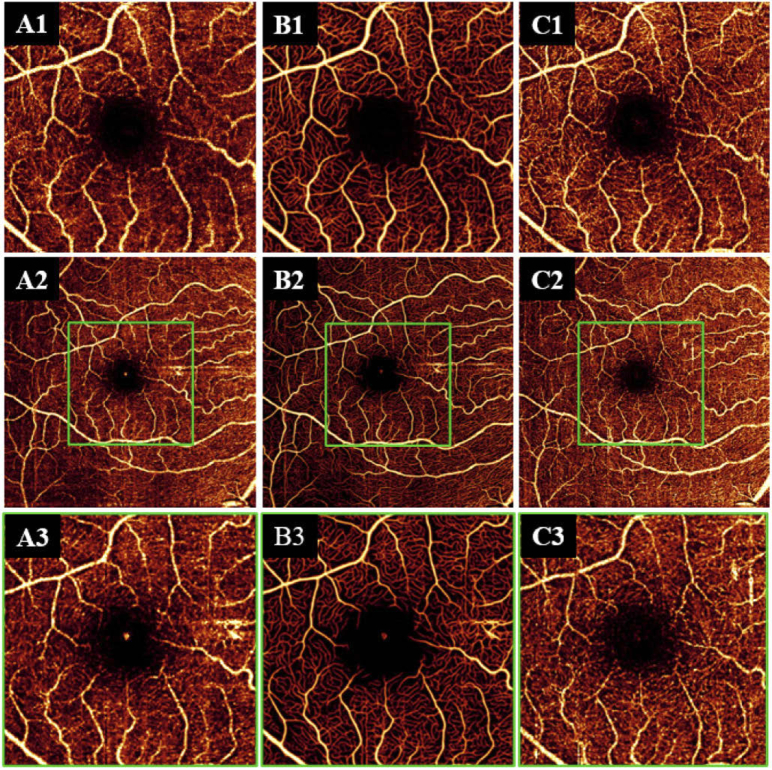

3.1.3. Vascular connectivity

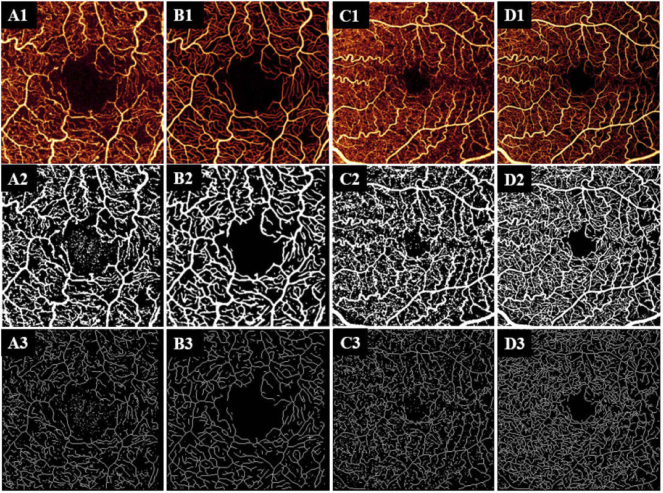

We also assessed vascular connectivity. To do so, we first binarized the angiograms [Figs. 4(A2)–4(D2)] using a global adaptive threshold method [40], then skeletonized the binary map to obtain the vessel skeleton map [Figs. 4(A3)–4(D3)]. Connected flow pixels were defined as any contiguous flow region with a length of at least 5 (including diagonal connections), and the vascular connectivity was defined as the ratio of the number of connected flow pixels to the total number of pixels on the skeleton map [32].

Fig. 4.

The performance of HARNet. Row 1: (A1) Original 3×3-mm superficial vascular complex (SVC) angiogram and (B1) HARNet output from (A1). (C1) Original 6×6-mm angiogram, and (D1) HARNet output from (C1). Row 2: adaptive threshold binarization of the corresponding images in row 1. Row 3: skeletonization of the corresponding images in row 2. HARNet outputs show enhanced connectivity relative to the original images.
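The connectivity metric can be sketched on a binary skeleton map as below. Two points are assumptions made for illustration: the length of a contiguous region is approximated by its pixel count, and the denominator is the number of skeleton (flow) pixels. Diagonal neighbors count as connected, as stated in the text. The function name is hypothetical.

```python
from collections import deque


def vascular_connectivity(skeleton, min_length=5):
    """Fraction of skeleton pixels in 8-connected regions of >= min_length pixels."""
    height, width = len(skeleton), len(skeleton[0])
    seen = [[False] * width for _ in range(height)]
    total = 0
    connected = 0
    for r in range(height):
        for c in range(width):
            if not skeleton[r][c] or seen[r][c]:
                continue
            # Breadth-first search over the 8-neighborhood.
            size = 0
            queue = deque([(r, c)])
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                size += 1
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < height and 0 <= nx < width
                                and skeleton[ny][nx] and not seen[ny][nx]):
                            seen[ny][nx] = True
                            queue.append((ny, nx))
            total += size
            if size >= min_length:
                connected += size
    return connected / total if total else 0.0


skeleton = [
    [1, 0, 0, 0, 0, 0, 0],
    [0, 1, 0, 0, 0, 1, 0],
    [0, 0, 1, 0, 0, 1, 0],
    [0, 0, 0, 1, 0, 0, 0],
    [0, 0, 0, 0, 1, 0, 0],
]
connectivity = vascular_connectivity(skeleton)
```

Here the five-pixel diagonal segment counts as connected and the two-pixel fragment does not, so 5 of the 7 skeleton pixels contribute to the ratio.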

3.2. Performance on defocused angiograms

To further verify that our algorithm can improve the image quality of low-quality scans, we also evaluated its performance on defocused angiograms. To obtain defocused scans, we first performed autofocus to optimize the focal length and acquire optimal scans, and then manually adjusted the focal length to obtain angiograms defocused by 3 diopters. In this way, 10 defocused 3×3-mm angiograms and 10 defocused 6×6-mm angiograms were obtained. Defocused angiograms have lower signal-to-noise ratios than correctly focused angiograms, and vessels also appear dilated. The results show that angiograms reconstructed from defocused 3×3- and 6×6-mm angiograms had lower noise intensity and better connectivity than scans acquired under optimal focusing conditions (Fig. 5; Table 1). Therefore, our algorithm is also applicable to defocused angiograms and improves the quality of such scans. Since defocus leads to a general reduction in scan quality, this result also implies that our algorithm could be applicable to low-quality scans.

Fig. 5.

Qualitative demonstration of image quality improvement by the proposed reconstruction method. (A1) 3 diopter defocused 3×3-mm superficial vascular complex (SVC) angiogram. (B1) Reconstruction of (A1). (C1) 3×3-mm OCTA acquired under optimal conditions. (A2) 3 diopter defocused 6×6-mm SVC angiogram. (B2) Reconstruction of (A2). (C2) 6×6-mm angiogram acquired under optimal conditions. (A3) Central 3×3-mm section from the defocused 6×6-mm SVC angiogram. (B3) Reconstruction of (A3). (C3) Central 3×3-mm section from the 6×6-mm angiogram acquired under optimal focusing conditions. The green box is the central 3×3-mm section in the 6×6-mm SVC angiograms.

Table 1. Noise intensity, contrast and vascular connectivity (mean ± std.) of reconstructed defocused SVC angiograms, and angiograms captured under optimal conditions.

| | | Noise intensity | Contrast | Connectivity |
|---|---|---|---|---|
| 3×3-mm (N = 10) | SVC angiograms with optimal focus | 80.89 ± 79.87 | 54.70 ± 1.29 | 0.83 ± 0.04 |
| | Reconstructed SVC on defocused angiograms | 1.12 ± 0.56 | 56.33 ± 2.23 | 0.91 ± 0.02 |
| | Optimal vs. Reconstructed | P<0.001 (Mann-Whitney U test)ᵃ | P=0.216 (t-test) | P<0.001 (t-test) |
| 6×6-mm (N = 10) | SVC angiograms with optimal focus | 139.71 ± 86.90 | 56.64 ± 2.00 | 0.78 ± 0.02 |
| | Reconstructed SVC on defocused angiograms | 1.28 ± 3.50 | 56.00 ± 2.17 | 0.95 ± 0.01 |
| | Optimal vs. Reconstructed | P<0.001 (Mann-Whitney U test)ᵃ | P=0.856 (t-test) | P<0.001 (t-test) |

ᵃ The Shapiro-Wilk test was used to check for normality of all variables. The Mann-Whitney U test was used for noise intensity, which deviates statistically significantly from a normal distribution. N is the number of eyes.

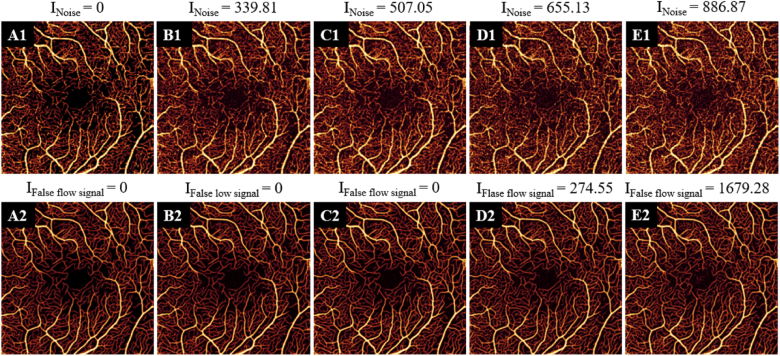

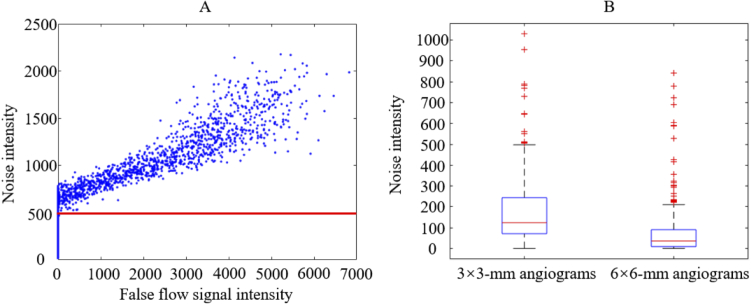

3.3. Assessment of the false flow signal

One concern in OCTA reconstruction is the generation of false flow signal. Because OCTA reconstruction methods are designed to enhance vascular detail, they are susceptible to mistakenly enhancing background that may randomly share some features with true vessels. To evaluate whether HARNet produces such artifacts, we selected 10 good-quality 3×3-mm angiograms from 10 healthy eyes and then produced denoised angiograms by applying a simple Gabor and median filter to the original 3×3-mm angiograms [Fig. 6(A1)]. Then we added Gaussian noise with different parameters to the denoised angiograms [Figs. 6(B1)–6(E1)]. We varied the two noise parameters separately in increments of 0.005, from 0.001 to 0.1 and from 0.001 to 0.05, respectively, to obtain 2000 noisy 3×3-mm SVC angiograms with different noise intensities (0 - 2100). Next, we input the denoised and noisy angiograms into the network to obtain reconstructed angiograms from each [Figs. 6(A2)–6(E2)]. The false flow signal intensity was defined as

| (7) |

where the false flow signal intensity is computed from the pixel values $I(x,y)$ at positions $(x,y)$ within R, the same physiologically flow-free 0.3-mm-diameter circle within the FAZ used previously. We found that our algorithm did not generate false flow signal when the noise intensity was under 500, which is far above the noise intensity measured in original 3×3-mm (146.77 ± 145.87) and 6×6-mm (93.10 ± 159.05) angiograms (Fig. 7).
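The count of 2000 noisy angiograms follows from the parameter sweep, as the short check below shows. The helper name `parameter_grid` is hypothetical, and the two swept quantities are left unnamed because the text does not identify them.

```python
def parameter_grid(start, stop, step):
    """Values start, start + step, ... that do not exceed stop."""
    values = []
    k = 0
    # Rounding guards against floating-point drift in the comparison.
    while round(start + k * step, 6) <= stop:
        values.append(round(start + k * step, 6))
        k += 1
    return values


first_values = parameter_grid(0.001, 0.1, 0.005)
second_values = parameter_grid(0.001, 0.05, 0.005)
n_eyes = 10
n_noisy = n_eyes * len(first_values) * len(second_values)
```

The first sweep yields 20 values and the second yields 10, so each of the 10 denoised angiograms produces 200 noisy versions.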

Fig. 6.

3×3-mm superficial vascular complex (SVC) angiograms with different noise intensities. (A1) In 3×3-mm angiograms denoised with Gabor and median filtering, the noise intensity is 0. (B1-E1) 3×3-mm SVC angiograms with different noise intensities. (A2-E2) 3×3-mm angiograms reconstructed from the corresponding angiograms in row 1. When the noise intensity is less than 500, there is no false flow signal.

Fig. 7.

(A) The relationship between noise intensity and false flow signal intensity. Each point represents one of 2000 noise-enhanced scans. The red line indicates the measured cutoff value for producing false flow signal. (B) Box plots of the noise intensity of 3×3- and 6×6-mm superficial vascular complex (SVC), non-defocused angiograms in the data set (N=298). The noise intensities measured in original 3×3-mm and 6×6-mm angiograms are far below the cutoff value for false flow generation, with the exception of outlier images corrupted by apex reflection or true flow signals in the 0.3-mm-diameter circle centered in the FAZ.

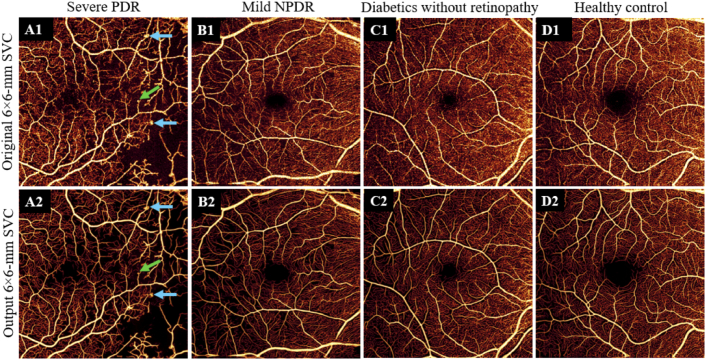

3.4. Performance on DR angiograms

Many diseases present outside of the central area of the macula. Enhancing the resolution and image quality of larger-field-of-view angiograms may improve the measurement of disease biomarkers such as non-perfusion area and vessel density, thereby further helping ophthalmologists diagnose such diseases. However, since features in diseased eyes may differ from those in healthy eyes, it is possible that image reconstruction algorithms could suffer from reduced performance on such images. To investigate, we examined reconstructed 6×6-mm angiograms (Fig. 8) of eyes with DR, a leading cause of blindness [41]. Although the 6×6-mm angiograms of eyes with DR have higher noise intensity than those of healthy eyes, the results show that the reconstructed DR angiograms also demonstrate improvements in noise intensity, contrast, and connectivity comparable to those of healthy controls (Table 2). Because morphologically abnormal vessels play a very important role in diagnosis, it is essential to retain the abnormal vascular morphology when processing images. The DR angiograms reconstructed by our algorithm preserve pathological vascular abnormalities such as intraretinal microvascular abnormalities (IRMA), early neovascularization and microaneurysms [Fig. 8(A2)].

Fig. 8.

HARNet performance on eyes with DR. Top row: original 6×6-mm superficial vascular complex (SVC) angiograms from an eye with active proliferative diabetic retinopathy (PDR) (A1), an eye with mild non-proliferative diabetic retinopathy (NPDR) (B1), an eye with diabetes without retinopathy (C1), and a healthy control (D1). Bottom row (A2-D2): HARNet output for (A1-D1). A microaneurysm (green arrow) and intraretinal microvascular abnormalities (IRMA) (blue arrows) appear the same in the reconstructed and original angiograms, demonstrating that HARNet preserves the vascular pathologies.

Table 2. Noise intensity, contrast, and vascular connectivity (mean ± std.) of reconstructed 6×6-mm SVC angiograms in eyes with diabetic retinopathy and healthy controls.

| | | Noise intensity | Contrast | Connectivity |
|---|---|---|---|---|
| Healthy controls (N = 25) | Original | 50.77 ± 59.39 | 55.61 ± 1.23 | 0.80 ± 0.02 |
| | Reconstructed | 0.16 ± 0.26 | 59.61 ± 1.71 | 0.96 ± 0.01 |
| | Improvement | 99.69% | 7.20% | 19.51% |
| Diabetic retinopathy (N = 53) | Original | 109.63 ± 106.34 | 55.44 ± 2.65 | 0.80 ± 0.02 |
| | Reconstructed | 9.76 ± 26.76 | 58.11 ± 2.68 | 0.97 ± 0.01 |
| | Improvement | 91.10% | 8.57% | 21.06% |

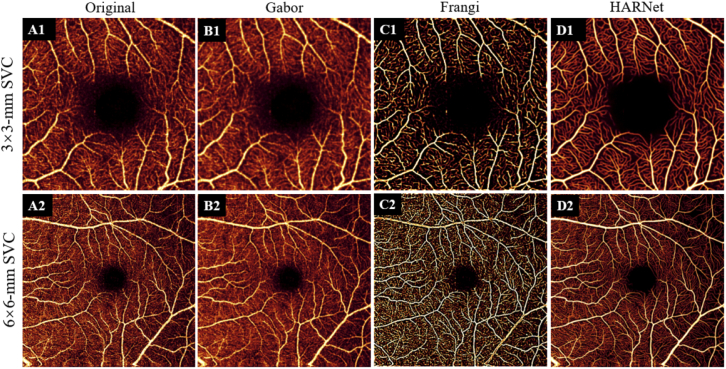

3.5. Performance of different methods

We also compared our algorithm with commonly used image enhancement methods, including Gabor and Frangi filters. Compared to the original angiograms, our method significantly reduces noise and improves the vascular connectivity without producing false flow signal on all sizes of scans. There is no significant improvement in image contrast on 3×3-mm scans [Fig. 9(D1)], whereas the contrast shows significant improvement on 6×6-mm scans [Fig. 9(D2); Table 3]. The Gabor filter reduces the noise intensity and improves vascular connectivity, but the contrast is greatly reduced [Figs. 9(B1), 9(B2); Table 3]. The Frangi filter significantly enhances the contrast and improves vascular connectivity, but the noise intensity is significantly increased and the filter may produce false flow signal [Figs. 9(C1), 9(C2); Table 3].

Fig. 9.

Performance of different methods on image enhancement. Top row: original 3×3-mm superficial vascular complex (SVC) angiograms from a healthy eye; (A1) original data, (B1) after applying a Gabor filter, (C1) after applying a Frangi filter, and (D1) reconstructed using the proposed method. Bottom row: equivalent from a 6×6-mm scan.

Table 3. Comparison of noise intensity, contrast, and vascular connectivity (mean ± std.) between original angiograms and angiograms processed by different methods. N is the number of eyes.

| | | Noise intensity | Contrast | Connectivity |
|---|---|---|---|---|
| 3×3-mm (N=78) | Original | 163.87 ± 136.13 | 53.53 ± 2.31 | 0.83 ± 0.04 |
| | Gabor | 108.15 ± 94.13ᵃ | 44.97 ± 2.27 | 0.87 ± 0.03ᵃ |
| | Frangi | 260.92 ± 278.00 | 82.42 ± 4.37ᵃ | 0.90 ± 0.03ᵃ |
| | HARNet (proposed) | 5.79 ± 7.83ᵃ | 53.82 ± 3.05 | 0.93 ± 0.02ᵃ |
| 6×6-mm (N=78) | Original | 98.10 ± 113.12 | 54.19 ± 2.10 | 0.80 ± 0.02 |
| | Gabor | 63.92 ± 83.35 | 44.39 ± 2.04 | 0.83 ± 0.02ᵃ |
| | Frangi | 167.17 ± 268.57 | 82.03 ± 2.96ᵃ | 0.88 ± 0.02ᵃ |
| | HARNet (proposed) | 8.22 ± 24.32ᵃ | 58.59 ± 2.50ᵃ | 0.96 ± 0.00ᵃ |

ᵃ Validation metrics showing significant improvement over the original images (paired t-test, P-value<0.001).

4. Discussion

Image analysis of low-quality or under-sampled OCTA is challenging in several respects. Noise affects the visibility of small blood vessels, especially capillaries, leading to artifactual vessel fragmentation. Motion and shadow artifacts are common, and amplified by under-sampling. OCTA quality, then, can have a significant impact on the judgment of ophthalmologists or researchers. To help mitigate this concern, several noise reduction and image enhancement procedures have been proposed. To reduce noise and enhance vascular connectivity, datasets are sometimes obtained by acquiring multiple images of the same location over time, making it possible to apply various averaging techniques [15,16,42,43]. However, the acquisition of larger and larger amounts of data makes the total acquisition time longer, increasing the probability of image artifacts caused by eye motions and introducing additional difficulty for clinical imaging. Filtering is also often applied to OCTA images to improve image quality [18,44], but typical problems in data filtering are reduced image resolution and the loss of capillary signal. Other noise reduction strategies suffer similar issues. For instance, a regression-based algorithm [14] that can remove decorrelation noise due to bulk motion in OCTA has been reported. Although image contrast was improved by this method, the drawback is worse vessel continuity, and it also suffers the loss of capillaries with weak signal.

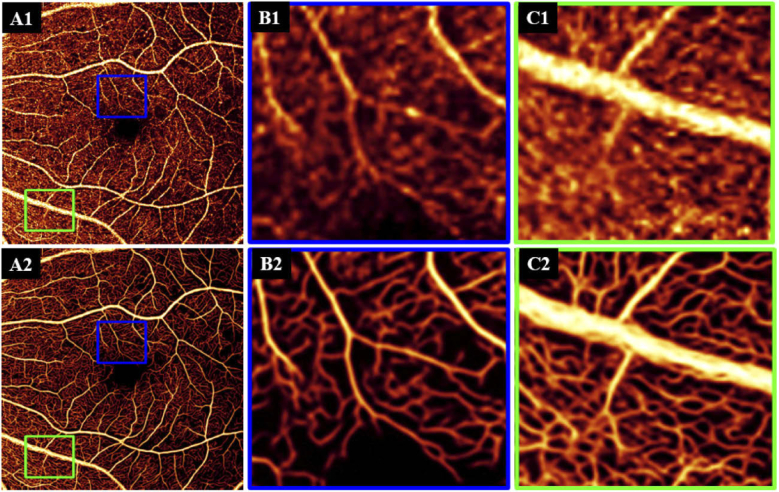

In this study, our proposed method not only reduces noise and enhances connectivity, but also improves the capability to resolve capillaries in large-field-of-view scans. The two most common scan patterns used in research and the clinic are 3×3-mm and 6×6-mm [45,46]. While the smaller 3×3-mm OCTA can obtain higher image quality due to the denser scanning pattern, its small field of view is a major limitation. Our algorithm’s ability to enhance 6×6-mm OCTA is a step toward compensating for this limitation. We achieved this enhancement by training a network to reconstruct images by learning features from the high-definition 3×3-mm images. This means that we did not need to manually segment vasculature to generate the ground truth, or generate high-definition scans by using a new scanning protocol in a prototype [19]. Therefore, our approach is a practical method to enhance 6×6-mm images by using an acquired 3×3-mm image, and it could in principle also be extended to even larger fields of view with sparser sampling. Such enhancement via intelligent software could prove to be a superior method for achieving high-quality, large-field scans, since hardware solutions (for example, increasing sampling density or incorporating adaptive optics) quickly lead to prohibitive cost and imaging times. Improving image quality and resolution may in turn promote better measurements of disease biomarkers such as non-perfusion area and vessel density; by extending improved image quality to a larger field of view, we also increase the chance of detecting pathology, since disease can manifest outside of the central macular region usually imaged with OCTA [13,47].

We investigated the quality of our algorithm’s output by evaluating reconstructed angiograms with three metrics: noise intensity in the FAZ, global contrast, and vessel connectivity. The 6×6-mm angiograms obtained by our algorithm have almost no noise in the FAZ (0.16 ± 0.26) and vascular connectivity was likewise increased in the HARNet-processed images. In addition to these quantitative improvements, we consider the HARNet output images to appear qualitatively cleaner than the unprocessed input. We also performed experiments on defocused SVC angiograms, and the results show that the algorithm can improve such scans, which is an indication of robustness and broad utility. To demonstrate that the restored flow signal in the reconstructed angiograms is real, we verified whether a false flow signal is generated by using angiograms with different simulated noise intensities. The results show that our algorithm did not generate false flow signal when the noise intensity was under 500. This value far exceeds the noise intensity in the clinically-realistic OCTA angiograms examined in this study. Because the noise intensity in the FAZ and inter-capillary space is similar, we also think that artifactual vessels should not be generated outside of the FAZ.

HARNet improved the quality of both 3×3- and 6×6-mm OCTA angiograms according to the metrics examined in this study. Specifically, HARNet enhanced the quality of under-sampled 6×6-mm OCTA, while other enhancement algorithms perform poorly on such scans [48,49]. Interestingly, while HARNet was trained to reconstruct high-resolution 6×6-mm angiograms from sparsely sampled scans, the network also improved 3×3-mm images. In particular, the angiograms reconstructed from defocused scans compared favorably to equivalent images acquired at optimal focus for both scanning patterns. This implies that HARNet is effective as a general OCTA image enhancement tool, outside of the specific context of 6×6-mm angiogram reconstruction. Additionally, the image improvement provided by HARNet is more than just cosmetic, as demonstrated by the improvement in vessel connectivity. Although beyond the scope of this study, we speculate that other OCTA metrics (e.g., non-perfusion area or vessel density) may also prove to be more accurately measured on HARNet-reconstructed images.

Deep-learning-based algorithms are “black boxes” compared to conventional image processing algorithms. Interpretability of deep learning is an important field of research in machine learning. Matthew et al. [50] tried to understand CNNs using a kernel visualization technique. More recently, researchers have proposed many methods to explain how CNNs work [51–53]. For a specific CNN, we can use kernel visualization techniques or heat maps to understand what features the CNN used to make decisions [54]. In future work, we could use the same visualization techniques to understand why HARNet is effective at reconstructing angiograms, and employ an ablation study to gain a deeper understanding of the structure of HARNet. The biggest advantage of deep-learning-based methods is that they have strong generalizability, which means CNNs can make a reliable prediction on unseen data. Furthermore, transfer learning can be used to transfer the knowledge learned from one dataset to a new dataset using a small number of samples. OCTA data from different pathologies of the retina share a similar feature space. Thus, with its innate strong generalizability and the transfer-learning technique, HARNet should be able to handle OCTA data from different pathologies of the retina (e.g., age-related macular degeneration and glaucoma).

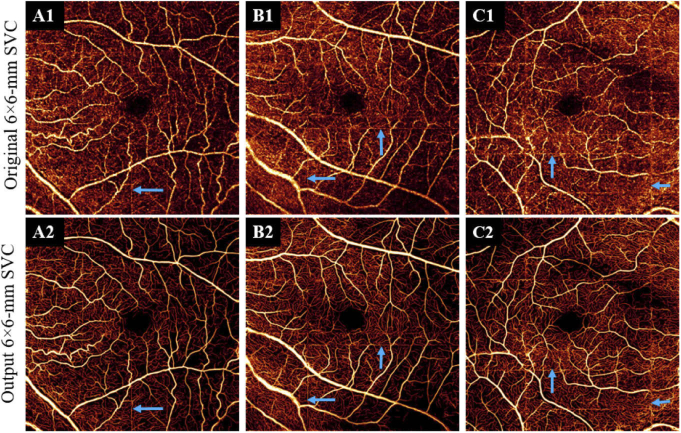

There are some limitations to this study. Since we trained HARNet by using optimally sampled, centrally located 3×3-mm angiograms, features specific to the periphery, e.g., the grating-like vascular structure of the radial peripapillary capillaries [Fig. 10(C1)], could not be learned during training. HARNet therefore may introduce features that are physiologically specific to the central macula into more peripheral regions [Figs. 10(B2), 10(C2)]. Likewise, HARNet may remove features specific to peripheral regions, particularly if there are disease-specific features that are more prevalent in the periphery than in the macula, such as neovascularization elsewhere, which tends to occur more along the major vessels, away from the central macula. Unfortunately, due to the lack of a high-resolution ground truth for the region outside the central macula, we can only speculate on this issue. HARNet also currently works on only one vascular complex (the superficial), but the intermediate and deep capillary plexuses, as well as the choriocapillaris, are important in several diseases [55–60]. Reconstruction of these vascular layers would also be beneficial; however, issues such as shadowing that present preferentially in low-density scanning patterns are only exacerbated in these deeper layers. This makes image reconstruction in these locations significantly more challenging. Finally, to completely characterize HARNet, it will also be important to assess performance on pathological scans. While our data indicate that HARNet can perform well on DR angiograms, there are of course many other diseases that could be examined for a more thorough assessment. Furthermore, a complete investigation of HARNet’s performance on these diseases would include the extraction of relevant biomarkers to determine if they are more or less accurately measured on reconstructed images.

Due to eye motion, OCTA produces bright strip artifacts that are also passed to reconstructed angiograms (Fig. 11). However, our algorithm makes no attempt to correct these artifacts, since commercial systems can remove most motion artifacts by tracking at the scan acquisition level, and such artifacts can also frequently be removed by other software means [14,61].

Fig. 10.

(A1) Original 6×6-mm superficial vascular complex (SVC) angiograms. (B1) The centrally located 3×3-mm angiograms. (C1) The region outside the centrally located 3×3-mm angiograms. (A2-C2) HARNet output for (A1-C1). Since the ground truth used in training did not include the specific vascular patterns present in the green square, the reconstruction here may not be ideal (C2).

Fig. 11.

Top row: (A1-C1) Original 6×6-mm superficial vascular complex (SVC) angiograms with motion artifacts. Bottom row: (A2-C2) HARNet output for (A1-C1). Blue arrows indicate the position of motion artifacts.

5. Conclusions

We proposed an end-to-end image reconstruction technique for high-resolution 6×6-mm SVC angiograms based on high-resolution 3×3-mm angiograms. The high-resolution 6×6-mm angiograms produced by our network had lower noise intensity and better vasculature connectivity than original 6×6-mm SVC angiograms, and we found our algorithm did not generate false flow signal at realistic noise intensities. The enhanced 6×6-mm angiograms may improve the measurements of disease biomarkers such as non-perfusion area and vessel density.

Funding

National Institutes of Health (P30 EY010572, R01 EY024544, R01 EY027833); Research to Prevent Blindness (unrestricted departmental funding grant, William & Mary Greve Special Scholar Award).

Disclosures

Oregon Health & Science University (OHSU) and Yali Jia have a significant financial interest in Optovue, Inc. These potential conflicts of interest have been reviewed and managed by OHSU.

References

- 1. Jia Y., Bailey S. T., Hwang T. S., McClintic S. M., Gao S. S., Pennesi M. E., Flaxel C. J., Lauer A. K., Wilson D. J., Hornegger J., Fujimoto J. G., Huang D., “Quantitative optical coherence tomography angiography of vascular abnormalities in the living human eye,” Proc. Natl. Acad. Sci. 112(18), E2395–E2402 (2015). 10.1073/pnas.1500185112

- 2. Hwang T. S., Jia Y., Gao S. S., Bailey S. T., Lauer A. K., Flaxel C. J., Wilson D. J., Huang D., “Optical coherence tomography angiography features of diabetic retinopathy,” Retina 35(11), 2371–2376 (2015). 10.1097/IAE.0000000000000716

- 3. Rosen R. B., Andrade Romo J. S., Krawitz B. D., Mo S., Fawzi A. A., Linderman R. E., Carroll J., Pinhas A., Chui T. Y. P., “Earliest evidence of preclinical diabetic retinopathy revealed using optical coherence tomography angiography perfused capillary density,” Am. J. Ophthalmol. 203, 103–115 (2019). 10.1016/j.ajo.2019.01.012

- 4. Jia Y., Bailey S. T., Wilson D. J., Tan O., Klein M. L., Flaxel C. J., Potsaid B., Liu J. J., Lu C. D., Kraus M. F., Fujimoto J. G., Huang D., “Quantitative optical coherence tomography angiography of choroidal neovascularization in age-related macular degeneration,” Ophthalmology 121(7), 1435–1444 (2014). 10.1016/j.ophtha.2014.01.034

- 5. Roisman L., Zhang Q., Wang R. K., Gregori G., Zhang A., Chen C. L., Durbin M. K., An L., Stetson P. F., Robbins G., Miller A., Zheng F., Rosenfeld P. J., “Optical coherence tomography angiography of asymptomatic neovascularization in intermediate age-related macular degeneration,” Ophthalmology 123(6), 1309–1319 (2016). 10.1016/j.ophtha.2016.01.044

- 6. Takusagawa H. L., Liu L., Ma K. N., Jia Y., Gao S. S., Zhang M., Edmunds B., Parikh M., Tehrani S., Morrison J. C., Huang D., “Projection-resolved optical coherence tomography angiography of macular retinal circulation in glaucoma,” Ophthalmology 124(11), 1589–1599 (2017). 10.1016/j.ophtha.2017.06.002

- 7. Rao H. L., Pradhan Z. S., Weinreb R. N., Reddy H. B., Riyazuddin M., Dasari S., Palakurthy M., Puttaiah N. K., Rao D. A. S., Webers C. A. B., “Regional comparisons of optical coherence tomography angiography vessel density in primary open-angle glaucoma,” Am. J. Ophthalmol. 171, 75–83 (2016). 10.1016/j.ajo.2016.08.030

- 8. Patel R. C., Wang J., Hwang T. S., Zhang M., Gao S. S., Pennesi M. E., Bailey S. T., Lujan B. J., Wang X., Wilson D. J., Huang D., Jia Y., “Plexus-specific detection of retinal vascular pathologic conditions with projection-resolved OCT angiography,” Ophthalmol. Retin. 2(8), 816–826 (2018). 10.1016/j.oret.2017.11.010

- 9. Tsuboi K., Sasajima H., Kamei M., “Collateral vessels in branch retinal vein occlusion: anatomic and functional analyses by OCT angiography,” Ophthalmol. Retin. 3(9), 767–776 (2019). 10.1016/j.oret.2019.04.015

- 10. de Carlo T. E., Romano A., Waheed N. K., Duker J. S., “A review of optical coherence tomography angiography (OCTA),” Int. J. Retin. Vitr. 1(1), 5–15 (2015). 10.1186/s40942-015-0005-8

- 11. Jia Y., Simonett J. M., Wang J., Hua X., Liu L., Hwang T. S., Huang D., “Wide-field OCT angiography investigation of the relationship between radial peripapillary capillary plexus density and nerve fiber layer thickness,” Invest. Ophthalmol. Visual Sci. 58(12), 5188–5194 (2017). 10.1167/iovs.17-22593

- 12. Ishibazawa A., de Pretto L. R., Yasin Alibhai A., Moult E. M., Arya M., Sorour O., Mehta N., Baumal C. R., Witkin A. J., Yoshida A., Duker J. S., Fujimoto J. G., Waheed N. K., “Retinal nonperfusion relationship to arteries or veins observed on widefield optical coherence tomography angiography in diabetic retinopathy,” Invest. Ophthalmol. Visual Sci. 60(13), 4310–4318 (2019). 10.1167/iovs.19-26653

- 13. You Q. S., Guo Y., Wang J., Wei X., Camino A., Zang P., Flaxel C. J., Bailey S. T., Huang D., Jia Y., Hwang T. S., “Detection of clinically unsuspected retinal neovascularization with wide-field optical coherence tomography angiography,” Retina 40(5), 891–897 (2020). 10.1097/IAE.0000000000002487

- 14. Camino A., Jia Y., Liu G., Wang J., Huang D., “Regression-based algorithm for bulk motion subtraction in optical coherence tomography angiography,” Biomed. Opt. Express 8(6), 3053–3066 (2017). 10.1364/BOE.8.003053

- 15. Uji A., Balasubramanian S., Lei J., Baghdasaryan E., Al-Sheikh M., Borrelli E., Sadda S. V. R., “Multiple enface image averaging for enhanced optical coherence tomography angiography imaging,” Acta Ophthalmol. 96(7), e820–e827 (2018). 10.1111/aos.13740

- 16. Camino A., Zhang M., Dongye C., Pechauer A. D., Hwang T. S., Bailey S. T., Lujan B., Wilson D. J., Huang D., Jia Y., “Automated registration and enhanced processing of clinical optical coherence tomography angiography,” Quant. Imaging Med. Surg. 6(4), 391–401 (2016). 10.21037/qims.2016.07.02

- 17. Tan B., Wong A., Bizheva K., “Enhancement of morphological and vascular features in OCT images using a modified Bayesian residual transform,” Biomed. Opt. Express 9(5), 2394–2406 (2018). 10.1364/BOE.9.002394

- 18. Chlebiej M., Gorczynska I., Rutkowski A., Kluczewski J., Grzona T., Pijewska E., Sikorski B. L., Szkulmowska A., Szkulmowski M., “Quality improvement of OCT angiograms with elliptical directional filtering,” Biomed. Opt. Express 10(2), 1013–1031 (2019). 10.1364/BOE.10.001013

- 19. Prentašic P., Heisler M., Mammo Z., Lee S., Merkur A., Navajas E., Beg M. F., Šarunic M., Loncaric S., “Segmentation of the foveal microvasculature using deep learning networks,” J. Biomed. Opt. 21(7), 075008 (2016). 10.1117/1.JBO.21.7.075008

- 20. Guo Y., Camino A., Wang J., Huang D., Hwang T. S., Jia Y., “MEDnet, a neural network for automated detection of avascular area in OCT angiography,” Biomed. Opt. Express 9(11), 5147–5158 (2018). 10.1364/BOE.9.005147

- 21. Nagasato D., Tabuchi H., Masumoto H., Enno H., Ishitobi N., Kameoka M., Niki M., Mitamura Y., “Automated detection of a nonperfusion area caused by retinal vein occlusion in optical coherence tomography angiography images using deep learning,” PLoS One 14(11), e0223965–14 (2019). 10.1371/journal.pone.0223965

- 22. Guo M., Zhao M., Cheong A. M. Y., Dai H., Lam A. K. C., Zhou Y., “Automatic quantification of superficial foveal avascular zone in optical coherence tomography angiography implemented with deep learning,” Vis. Comput. Ind. Biomed. Art 2(1), 1–9 (2019). 10.1186/s42492-019-0012-y

- 23. Guo Y., Hormel T. T., Xiong H., Wang B., Camino A., Wang J., Huang D., Hwang T. S., Jia Y., “Development and validation of a deep learning algorithm for distinguishing the nonperfusion area from signal reduction artifacts on OCT angiography,” Biomed. Opt. Express 10(7), 3257–3268 (2019). 10.1364/BOE.10.003257

- 24. Lauermann J. L., Treder M., Alnawaiseh M., Clemens C. R., Eter N., Alten F., “Automated OCT angiography image quality assessment using a deep learning algorithm,” Graefe’s Arch. Clin. Exp. Ophthalmol. 257(8), 1641–1648 (2019). 10.1007/s00417-019-04338-7

- 25. Wang J., Hormel T. T., Gao L., Zang P., Guo Y., Wang X., Bailey S. T., Jia Y., “Automated diagnosis and segmentation of choroidal neovascularization in OCT angiography using deep learning,” Biomed. Opt. Express 11(2), 927–944 (2020). 10.1364/BOE.379977

- 26. Wang J., Hormel T. T., You Q., Guo Y., Wang X., Chen L., Hwang T. S., Jia Y., “Robust non-perfusion area detection in three retinal plexuses using convolutional neural network in OCT angiography,” Biomed. Opt. Express 11(1), 330–345 (2020). 10.1364/BOE.11.000330

- 27. Kim J., Lee J. K., Lee K. M., “Accurate image super-resolution using very deep convolutional networks,” in Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (2016), pp. 1646–1654.

- 28. Ledig C., Theis L., Huszár F., Caballero J., Cunningham A., Acosta A., Aitken A., Tejani A., Totz J., Wang Z., Shi W., “Photo-realistic single image super-resolution using a generative adversarial network,” in Proceedings - 30th IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2017 (2017), pp. 105–114.

- 29. Tong T., Li G., Liu X., Gao Q., “Image super-resolution using dense skip connections,” in Proceedings of the IEEE International Conference on Computer Vision (2017), pp. 4799–4807.

- 30. Xu J., Chae Y., Stenger B., Datta A., “Dense bynet: Residual dense network for image super resolution,” in Proceedings - International Conference on Image Processing, ICIP (2018), pp. 71–75.

- 31. Zhang K., Zuo W., Zhang L., “Deep plug-and-play super-resolution for arbitrary blur kernels,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (2019), pp. 1671–1681.

- 32. Jia Y., Tan O., Tokayer J., Potsaid B., Wang Y., Liu J. J., Kraus M. F., Subhash H., Fujimoto J. G., Hornegger J., Huang D., “Split-spectrum amplitude-decorrelation angiography with optical coherence tomography,” Opt. Express 20(4), 4710–4725 (2012). 10.1364/OE.20.004710

- 33. Guo Y., Camino A., Zhang M., Wang J., Huang D., Hwang T., Jia Y., “Automated segmentation of retinal layer boundaries and capillary plexuses in wide-field optical coherence tomographic angiography,” Biomed. Opt. Express 9(9), 4429–4442 (2018). 10.1364/BOE.9.004429

- 34. Nair V., Hinton G. E., “Rectified linear units improve restricted boltzmann machines,” in Proceedings of the 27th International Conference on Machine Learning (ICML-10) (2010), pp. 807–814.

- 35. Klein S., Staring M., Murphy K., Viergever M. A., Pluim J. P. W., “Elastix: A toolbox for intensity-based medical image registration,” IEEE Trans. Med. Imaging 29(1), 196–205 (2010). 10.1109/TMI.2009.2035616

- 36. Horé A., Ziou D., “Image quality metrics: PSNR vs. SSIM,” in Proceedings - International Conference on Pattern Recognition (2010), pp. 2366–2369.

- 37. Wang Z., Bovik A. C., Sheikh H. R., Simoncelli E. P., “Image quality assessment: From error visibility to structural similarity,” IEEE Trans. on Image Process. 13(4), 600–612 (2004). 10.1109/TIP.2003.819861

- 38. Kingma D. P., Ba J. L., “Adam: A method for stochastic optimization,” in 3rd International Conference on Learning Representations, ICLR 2015 - Conference Track Proceedings (2015), pp. 1–15.

- 39. Peli E., “Contrast in complex images,” J. Opt. Soc. Am. A 7(10), 2032–2040 (1990). 10.1364/JOSAA.7.002032

- 40. Otsu N., “A threshold selection method from gray-level histograms,” IEEE Trans. Syst., Man, Cybern. 9(1), 62–66 (1979). 10.1109/TSMC.1979.4310076

- 41. Ogurtsova K., da Rocha Fernandes J. D., Huang Y., Linnenkamp U., Guariguata L., Cho N. H., Cavan D., Shaw J. E., Makaroff L. E., “IDF Diabetes Atlas: Global estimates for the prevalence of diabetes for 2015 and 2040,” Diabetes Res. Clin. Pract. 128, 40–50 (2017). 10.1016/j.diabres.2017.03.024

- 42. Mo S., Phillips E., Krawitz B. D., Garg R., Salim S., Geyman L. S., Efstathiadis E., Carroll J., Rosen R. B., Chui T. Y. P., “Visualization of radial peripapillary capillaries using optical coherence tomography angiography: The effect of image averaging,” PLoS One 12(1), e0169385 (2017). 10.1371/journal.pone.0169385

- 43. Maloca P. M., Spaide R. F., Rothenbuehler S., Scholl H. P. N., Heeren T., de Carvalho J. E. R., Okada M., Hasler P. W., Egan C., Tufail A., “Enhanced resolution and speckle-free three-dimensional printing of macular optical coherence tomography angiography,” Acta Ophthalmol. 97(2), e317–e319 (2019). 10.1111/aos.13567

- 44. Hendargo H. C., Estrada R., Chiu S. J., Tomasi C., Farsiu S., Izatt J. A., “Automated non-rigid registration and mosaicing for robust imaging of distinct retinal capillary beds using speckle variance optical coherence tomography,” Biomed. Opt. Express 4(6), 803–821 (2013). 10.1364/BOE.4.000803

- 45. Kashani A. H., Chen C. L., Gahm J. K., Zheng F., Richter G. M., Rosenfeld P. J., Shi Y., Wang R. K., “Optical coherence tomography angiography: A comprehensive review of current methods and clinical applications,” Prog. Retinal Eye Res. 60, 66–100 (2017). 10.1016/j.preteyeres.2017.07.002

- 46. Spaide R. F., Fujimoto J. G., Waheed N. K., Sadda S. R., Staurenghi G., “Optical coherence tomography angiography,” Prog. Retinal Eye Res. 64, 1–55 (2018). 10.1016/j.preteyeres.2017.11.003

- 47. Russell J. F., Flynn H. W., Sridhar J., Townsend J. H., Shi Y., Fan K. C., Scott N. L., Hinkle J. W., Lyu C., Gregori G., Russell S. R., Rosenfeld P. J., “Distribution of diabetic neovascularization on ultra-widefield fluorescein angiography and on simulated widefield OCT angiography,” Am. J. Ophthalmol. 207, 110–120 (2019). 10.1016/j.ajo.2019.05.031

- 48. Li P., Huang Z., Yang S., Liu X., Ren Q., Li P., “Adaptive classifier allows enhanced flow contrast in OCT angiography using a histogram-based motion threshold and 3D Hessian analysis-based shape filtering,” Opt. Lett. 42(23), 4816–4819 (2017). 10.1364/OL.42.004816

- 49. Ting D. S. W., Pasquale L. R., Peng L., Campbell J. P., Lee A. Y., Raman R., Tan G. S. W., Schmetterer L., Keane P. A., Wong T. Y., “Artificial intelligence and deep learning in ophthalmology,” Br. J. Ophthalmol. 103(2), 167–175 (2019). 10.1136/bjophthalmol-2018-313173

- 50. Zeiler M. D., Fergus R., “Visualizing and understanding convolutional networks,” in Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics) (Springer Verlag, 2014), 8689 LNCS(PART 1), pp. 818–833.

- 51. Zhang Q., Wu Y. N., Zhu S.-C., “Interpretable convolutional neural networks,” in Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (IEEE Computer Society, 2017), pp. 8827–8836.

- 52. Samek W., Wiegand T., Müller K.-R., “Explainable artificial intelligence: Understanding, visualizing and interpreting deep learning models,” arXiv preprint arXiv:1708.08296 (2017).

- 53. Zhang Q.-S., Zhu S.-C., “Visual interpretability for deep learning: a survey,” Front. Inf. Technol. Electron. Eng. 19(1), 27–39 (2018). 10.1631/FITEE.1700808

- 54. Zintgraf L. M., Cohen T. S., Adel T., Welling M., “Visualizing deep neural network decisions: Prediction difference analysis,” 5th Int. Conf. Learn. Represent. ICLR 2017 - Conf. Track Proc. (2019).

- 55. Toto L., Borrelli E., Di Antonio L., Carpineto P., Mastropasqua R., “Retinal vascular plexuses’ changes in dry age-related macular degeneration, evaluated by means of optical coherence tomography angiography,” Retina 36(8), 1566–1572 (2016). 10.1097/IAE.0000000000000962

- 56. Chi Y. T., Yang C. H., Cheng C. K., “Optical coherence tomography angiography for assessment of the 3-dimensional structures of polypoidal choroidal vasculopathy,” JAMA Ophthalmol. 135(12), 1310–1316 (2017). 10.1001/jamaophthalmol.2017.4360

- 57. Onishi A. C., Nesper P. L., Roberts P. K., Moharram G. A., Chai H., Liu L., Jampol L. M., Fawzi A. A., “Importance of considering the middle capillary plexus on OCT angiography in diabetic retinopathy,” Invest. Ophthalmol. Visual Sci. 59(5), 2167–2176 (2018). 10.1167/iovs.17-23304

- 58. Hwang T. S., Hagag A. M., Wang J., Zhang M., Smith A., Wilson D. J., Huang D., Jia Y., “Automated quantification of nonperfusion areas in 3 vascular plexuses with optical coherence tomography angiography in eyes of patients with diabetes,” JAMA Ophthalmol. 136(8), 929–936 (2018). 10.1001/jamaophthalmol.2018.2257

- 59. Camino A., Guo Y., You Q., Wang J., Huang D., Bailey S. T., Jia Y., “Detecting and measuring areas of choriocapillaris low perfusion in intermediate, non-neovascular age-related macular degeneration,” Neurophotonics 6(04), 1 (2019). 10.1117/1.NPh.6.4.041108

- 60. Liu L., Edmunds B., Takusagawa H. L., Tehrani S., Lombardi L. H., Morrison J. C., Jia Y., Huang D., “Projection-resolved optical coherence tomography angiography of the peripapillary retina in glaucoma,” Am. J. Ophthalmol. 207, 99–109 (2019). 10.1016/j.ajo.2019.05.024

- 61. Zang P., Liu G., Zhang M., Dongye C., Wang J., Pechauer A. D., Hwang T. S., Wilson D. J., Huang D., Li D., Jia Y., “Automated motion correction using parallel-strip registration for wide-field en face OCT angiogram,” Biomed. Opt. Express 7(7), 2823 (2016). 10.1364/BOE.7.002823