Abstract

Background

Crisis resolution teams (CRTs) offer brief, intensive home treatment for people experiencing mental health crisis. CRT implementation is highly variable; positive trial outcomes have not been reproduced in scaled-up CRT care.

Aims

To evaluate a 1-year programme to improve CRTs’ model fidelity in a non-masked, cluster-randomised trial (part of the Crisis team Optimisation and RElapse prevention (CORE) research programme, trial registration number: ISRCTN47185233).

Method

Fifteen CRTs in England received an intervention, informed by the US Implementing Evidence-Based Practice project, involving support from a CRT facilitator, online implementation resources and regular team fidelity reviews. Ten control CRTs received no additional support. The primary outcome was patient satisfaction, measured by the Client Satisfaction Questionnaire (CSQ-8), completed by 15 patients per team at CRT discharge (n = 375). Secondary outcomes (CRT model fidelity, continuity of care, staff well-being, in-patient admissions and bed use, and CRT readmissions) were also evaluated.

Results

All CRTs were retained in the trial. Median follow-up CSQ-8 score was 28 in each group: the adjusted average in the intervention group was higher than in the control group by 0.97 (95% CI −1.02 to 2.97) but this was not significant (P = 0.34). There were fewer in-patient admissions, lower in-patient bed use and better staff psychological health in intervention teams. Model fidelity rose in most intervention teams and was significantly higher than in control teams at follow-up. There were no significant effects for other outcomes.

Conclusions

The CRT service improvement programme did not achieve its primary aim of improving patient satisfaction. It showed some promise in improving CRT model fidelity and reducing acute in-patient admissions.

Keywords: Acute care, crisis resolution, service improvement, mental health services, randomised controlled trial

Crisis resolution teams (CRTs) are specialist, multidisciplinary mental health teams that provide short-term, intensive home treatment as an alternative to acute hospital admission.1 CRTs were implemented nationally in England following the National Health Service (NHS) Plan2 in 2000 and have been established elsewhere in Europe and in Australasia.3 Trial evidence suggests CRTs can reduce in-patient admissions and improve patients' satisfaction with acute care.4,5 When CRTs were scaled up to national level in England, however, patients reported dissatisfaction with the quality of care6,7 and CRTs' impact on admission rates has been disappointing.8 English CRTs' service organisation and delivery are highly variable, and adherence to national policy guidance is only partial.9,10 In the initial stages of the Crisis team Optimisation and RElapse prevention (CORE) research programme (as part of which the trial reported in this paper was conducted; trial registration number: ISRCTN47185233), a measure of model fidelity for CRTs11 and a service improvement programme for CRTs12 were developed. This followed a model for supporting the implementation of complex mental health interventions that has been widely and successfully used in the USA13 (but not so far in Europe). The trial reported in this paper evaluated whether the CORE CRT service improvement programme increased model fidelity and improved outcomes in CRT teams over a 1-year intervention period.

Method

A non-blind cluster-randomised trial evaluated whether a CRT service improvement programme improved patients' experience of CRT care, reduced acute service use and improved CRT staff well-being. The trial also investigated whether the Fidelity Scale scores of CRTs receiving the service improvement programme increased over the 1-year intervention period compared with control sites, and whether change in team Fidelity Scale score was associated with change in service outcomes. Cluster randomisation was used because the trial involved a team-level intervention. The primary hypothesis was that patients' satisfaction with CRT care, measured using the Client Satisfaction Questionnaire (8-item version, CSQ-8),14 would be greater in the intervention group than in the control group at the end-of-intervention 1-year follow-up.

The trial was registered with the ISRCTN registry (ISRCTN47185233) in August 2014 and the protocol has been published.12 This paper reports the main trial results and relationships between teams' model fidelity and outcomes. Economic, process and qualitative evaluations will be reported elsewhere. Ethical approval was granted by the Camden & Islington Research Ethics Committee (Ref: 14/LO/0107). Trial reporting in this paper conforms to extended CONSORT guidelines for cluster-randomised trials15 (see supplementary data 1 available at https://doi.org/10.1192/bjp.2019.21). Teams were recruited to the study between September and December 2014, with a 1-year trial intervention period.

Setting

The trial involved 25 CRT teams in eight different health regions (NHS trusts) across Southern England and the Midlands, selected to include inner-city and mixed suburban and rural areas. CRT teams were eligible if no other major service reorganisations were planned over the trial period. At least two participating CRTs were required from each NHS trust, to ensure teams from each trust could be allocated to each group.

Participants

We recruited (a) CRT patients and (b) CRT staff, and collected (c) anonymised data about use of acute care from service records.

(a) Patient experience outcomes: we aimed to recruit a cohort of recently discharged CRT patients, 15 per team (n = 375), at baseline, and another cohort of 15 per team (n = 375) at follow-up (between months 10 and 12 of the study period). All patients admitted to the CRT during these 3-month periods were eligible if they had used the CRT service for at least 7 days; were able to read and understand English and had capacity to provide informed consent; and were not assessed by CRT clinical staff as posing too high a risk to others to participate (even via interview on NHS premises or by phone).

(b) Staff well-being outcomes: all current staff in participating CRTs were invited to complete study questionnaires at baseline and follow-up (months 10–12 of the study intervention period).

(c) Patient-record data were collected for two separate cohorts at each time point. (i) In-patient service-use outcomes: anonymised data about all admissions to in-patient services were collected retrospectively from services' electronic patient records for two periods, the 6 months before study baseline and months 7–12 of the study intervention period. (ii) Readmission following CRT care outcome: for all patients admitted to the CRT during two 1-month periods, the first 6 months before baseline and the second in month 7 of the study follow-up period, anonymised data about readmissions to any acute care service (including CRTs or in-patient wards) over a 6-month period were collected retrospectively from electronic patient records.

Randomisation and masking

The 25 teams were randomised, stratified by NHS trust, after a baseline fidelity review had been conducted for all teams within each NHS trust. In order to maximise learning about implementation of the service improvement programme within available study resources, more teams (n = 15) were randomly allocated to the service improvement programme than to a control group (n = 10). A statistician independent of the study generated allocation sequence lists and conducted randomisations. Researchers and staff in participating services were aware of teams' allocation status in this non-masked trial. Patient-participants and trusts' informatics teams providing data from patient records were not informed of teams' allocation status.
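A minimal Python sketch of stratified randomisation with unequal allocation follows. It assumes that the number of intervention teams in each trust is fixed in advance, so that the overall split is 15:10 and every trust has teams in both arms. The trust sizes, per-trust counts, team labels, seed and the function name `stratified_allocation` are all hypothetical; the paper does not report how the independent statistician constructed the allocation lists.

```python
import random

def stratified_allocation(teams_by_trust, n_intervention_by_trust, seed):
    """Randomly allocate teams to arms within each trust (stratum).

    teams_by_trust: dict mapping a trust label to a list of team labels.
    n_intervention_by_trust: dict mapping the same trust label to the number
        of that trust's teams to allocate to the intervention arm.
    Returns a dict mapping each team label to "intervention" or "control".
    """
    rng = random.Random(seed)  # seeded so the allocation list is reproducible
    allocation = {}
    for trust, teams in teams_by_trust.items():
        k = n_intervention_by_trust[trust]
        if not 1 <= k < len(teams):
            raise ValueError(f"{trust}: each arm needs at least one team")
        shuffled = teams[:]    # copy so the caller's list is left untouched
        rng.shuffle(shuffled)  # random permutation within the stratum
        for i, team in enumerate(shuffled):
            allocation[team] = "intervention" if i < k else "control"
    return allocation

# Hypothetical layout: 8 trusts, 25 teams, 15 intervention and 10 control.
sizes = [3, 3, 3, 3, 3, 3, 3, 4]
n_int = [2, 2, 2, 2, 2, 2, 1, 2]
teams = {f"trust{t}": [f"t{t}_crt{j}" for j in range(n)] for t, n in enumerate(sizes)}
targets = {f"trust{t}": k for t, k in enumerate(n_int)}
alloc = stratified_allocation(teams, targets, seed=2014)
```

Fixing the per-trust counts, rather than tossing a biased coin for each team, guarantees both the overall 15:10 ratio and the requirement that each trust contributes teams to both groups.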

The intervention

The team-level service improvement programme supported CRT teams to achieve high fidelity to a model of best practice, defined in the CORE CRT Fidelity Scale11 and informed by a systematic evidence review,16 a national CRT survey9 and qualitative interviews with stakeholders.17 The service improvement programme was delivered over 1 year and consisted of: (a) ‘fidelity reviews’ at baseline, 6 months and 12 months, in which teams were assessed and given feedback on adherence to 39 best practice standards for CRTs; (b) coaching from a CRT facilitator (an experienced clinician or manager) working 0.1 full-time equivalent, who could offer the CRT manager and staff advice and support with developing and implementing service improvement plans; (c) access to an online resource kit of materials and guidance to support CRT service improvement for each fidelity item; and (d) access to two ‘learning collaborative’ events where participating teams could meet to share experiences and strategies for improving services. Structures to support service improvement in each team included an initial ‘scoping day’ for the whole team to prioritise and plan service improvement goals, and regular meetings of a CRT management group and the CRT facilitator to develop and review detailed service improvement plans.

Through these resources and structures, the service improvement programme constituted a sustained, multicomponent programme of support to CRTs. It aimed to address, in a way tailored to individual services' needs, the domains of implementation support identified as contributing to attainment of high fidelity in the US Implementing Evidence-Based Practices Program:18 programme prioritisation, leadership support, workforce development, workflow re-engineering and practice reinforcement.

Teams in the control group received a fidelity review and brief feedback at baseline and 12-month follow-up, but no other aspects of the study intervention.

Measures

Patient experience outcomes

Data were collected using two self-report structured questionnaires: the CSQ-8,14 which assesses satisfaction with care; and Continu-um,19 which assesses perceived continuity of care.
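The CSQ-8 total is the sum of eight items, each scored 1 to 4, giving a range of 8 to 32; a high share of respondents at 32 signals a ceiling effect. The sketch below assumes item responses have already been coded so that 4 is the most satisfied response. The function names `csq8_total` and `ceiling_share` and the response data are hypothetical, for illustration only.

```python
def csq8_total(item_scores):
    """Sum eight CSQ-8 item scores, each assumed already coded 1-4 (4 = most satisfied)."""
    if len(item_scores) != 8:
        raise ValueError("CSQ-8 has exactly eight items")
    if any(s not in (1, 2, 3, 4) for s in item_scores):
        raise ValueError("each item must be coded 1, 2, 3 or 4")
    return sum(item_scores)  # possible range 8 to 32

def ceiling_share(totals, maximum=32):
    """Proportion of respondents scoring the maximum possible total."""
    return sum(t == maximum for t in totals) / len(totals)

# Hypothetical responses (not trial data).
responses = [
    [4, 4, 4, 4, 4, 4, 4, 4],
    [3, 4, 3, 3, 4, 3, 3, 4],
    [2, 3, 2, 3, 3, 2, 2, 3],
    [4, 4, 4, 4, 4, 4, 4, 4],
]
totals = [csq8_total(r) for r in responses]
```

Here `totals` is `[32, 27, 20, 32]` and `ceiling_share(totals)` is 0.5; differences between groups among respondents already at 32 cannot be registered by the scale.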

Staff well-being outcomes

Staff burnout was assessed using the Maslach Burnout Inventory20 (emotional exhaustion, personal accomplishment and depersonalisation subscales were scored and reported separately, as recommended21); work engagement using the Utrecht Work Engagement Scale;22 general psychological health using the General Health Questionnaire;23 and psychological flexibility using the Work-Related Acceptance and Action Questionnaire.24

Team outcomes

Participating teams' CRT model fidelity was assessed at baseline and 12-month follow-up using the CORE CRT Fidelity Scale,11 which scores teams from one to five on 39 fidelity items in four subscales (referrals and access, content and delivery of care, staffing and team procedures, timing and location of care), yielding a total score ranging from 39 to 195.
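The stated score range follows directly from 39 items rated 1 to 5 (39 × 1 = 39, 39 × 5 = 195). A minimal sketch of scoring, assuming integer item ratings, is below. The item-to-subscale assignment is not given here, so `subscale_totals` takes it as an input, and the two-label split used in the usage note is purely hypothetical, not the measure's real four subscales.

```python
N_ITEMS = 39            # items on the CORE CRT Fidelity Scale
MIN_ITEM, MAX_ITEM = 1, 5

def fidelity_total(item_scores):
    """Total Fidelity Scale score: the sum of 39 item ratings, each 1 to 5."""
    if len(item_scores) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} item scores, got {len(item_scores)}")
    for score in item_scores:
        if not MIN_ITEM <= score <= MAX_ITEM:
            raise ValueError(f"item score {score} is outside {MIN_ITEM}-{MAX_ITEM}")
    return sum(item_scores)

def subscale_totals(item_scores, subscale_of_item):
    """Sum item scores within each subscale.

    subscale_of_item gives, position by position, the subscale label of each
    item; the real assignment must be taken from the measure itself.
    """
    if len(item_scores) != len(subscale_of_item):
        raise ValueError("need one subscale label per item")
    totals = {}
    for score, label in zip(item_scores, subscale_of_item):
        totals[label] = totals.get(label, 0) + score
    return totals

lowest = fidelity_total([MIN_ITEM] * N_ITEMS)   # 39
highest = fidelity_total([MAX_ITEM] * N_ITEMS)  # 195
```

For example, with a hypothetical split of 20 items labelled "A" and 19 labelled "B", a team rated 3 on every item would have subscale totals of 60 and 57.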

In-patient admissions

Data from the records of all patients in each participating CRT's catchment area during the baseline and follow-up periods (whether or not they used the CRT itself) were used to generate three team-level outcomes: total number of psychiatric hospital admissions from the catchment area over 6 months, total number of compulsory psychiatric hospital admissions and total occupied in-patient bed days.
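The counting rules for these outcomes are not specified in the text. One plausible convention, sketched below as an assumption, counts an admission if its admission date falls inside the 6-month window, and counts bed days as the nights of each stay that overlap the window, so stays crossing a window boundary contribute only their in-window days. The record layout, dates and the function name `team_inpatient_outcomes` are hypothetical.

```python
from datetime import date

def team_inpatient_outcomes(admissions, window_start, window_end):
    """Team-level in-patient outcomes for one catchment area and one period.

    admissions: iterable of (admit_date, discharge_date, is_compulsory), where
        discharge_date is None for patients still in hospital.
    window_start is inclusive and window_end is exclusive.
    """
    n_admissions = n_compulsory = bed_days = 0
    for admit, discharge, is_compulsory in admissions:
        # Admissions are attributed to the window in which they start.
        if window_start <= admit < window_end:
            n_admissions += 1
            if is_compulsory:
                n_compulsory += 1
        # Bed days: nights of the stay [admit, discharge) that fall inside the window.
        stay_end = discharge if discharge is not None else window_end
        overlap = (min(stay_end, window_end) - max(admit, window_start)).days
        bed_days += max(0, overlap)
    return {"admissions": n_admissions, "compulsory": n_compulsory, "bed_days": bed_days}

# Hypothetical records for one catchment area (not trial data).
records = [
    (date(2015, 3, 20), date(2015, 4, 10), False),  # admitted before the window
    (date(2015, 5, 1), date(2015, 5, 15), True),    # compulsory, wholly inside
    (date(2015, 9, 25), None, False),               # still in hospital at window end
    (date(2015, 1, 1), date(2015, 2, 1), False),    # wholly before the window
]
outcomes = team_inpatient_outcomes(records, date(2015, 4, 1), date(2015, 10, 1))
```

With these records the window contains two admissions (one compulsory) and 9 + 14 + 6 = 29 occupied bed days.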

Readmission following CRT care

Patient-record data for all patients admitted to the CRT during the baseline and follow-up periods were used to calculate the number of CRT patients accepted for treatment by any acute care service during the 6-month follow-up following discharge from the index acute admission.
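A minimal sketch of this patient-level count is given below, assuming that a readmission is any acute episode starting strictly after the index discharge and no more than 183 days later (an approximation of 6 months), and that each patient is counted once however many episodes they have. The record layout, patient IDs and the function name `count_readmitted` are hypothetical.

```python
from datetime import date, timedelta

def count_readmitted(index_discharge, acute_episodes, follow_up_days=183):
    """Count index CRT patients accepted by any acute service after discharge.

    index_discharge: dict mapping a patient ID to the discharge date of that
        patient's index CRT episode.
    acute_episodes: iterable of (patient_id, start_date) for every later
        acceptance by an acute service (CRT or in-patient ward).
    follow_up_days: follow-up length; 183 days approximates 6 months.
    """
    readmitted = set()  # a set, so each patient is counted at most once
    for patient_id, start in acute_episodes:
        discharge = index_discharge.get(patient_id)
        if discharge is None:
            continue  # not part of the index cohort
        if discharge < start <= discharge + timedelta(days=follow_up_days):
            readmitted.add(patient_id)
    return len(readmitted)

index = {"p1": date(2015, 7, 10), "p2": date(2015, 7, 20), "p3": date(2015, 7, 31)}
episodes = [
    ("p1", date(2015, 8, 1)),  # within 6 months: counted
    ("p1", date(2015, 9, 1)),  # same patient again: not double-counted
    ("p2", date(2016, 3, 1)),  # more than 183 days after discharge: excluded
    ("p3", date(2015, 7, 1)),  # before the index discharge: excluded
    ("p4", date(2015, 8, 1)),  # not in the index cohort: ignored
]
n_readmitted = count_readmitted(index, episodes)
```

Only patient p1 qualifies here, so `n_readmitted` is 1.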

Procedures

Patient experience and staff well-being participants

Patients were initially screened for eligibility and contacted about the study by CRT staff. Research staff attempted to contact all identified potential participants consecutively, in the order they were discharged, until the site recruitment target was achieved. Researchers sent potential participants an information sheet about the study, and participants provided informed consent before completing questionnaires by face-to-face interview, online or by phone. Patient-participants were given a thank-you gift of £10 in cash or vouchers. CRT staff were contacted by study researchers and invited to consent and complete measures using an online questionnaire.

In-patient admissions and readmission following CRT care

Information technology staff from participating NHS trusts provided anonymised data from patient records about all acute service use during data-collection periods. Study researchers calculated study outcomes from these raw data (further details in supplementary data 2).

Fidelity Scale scores were derived for each team from a structured, 1-day ‘fidelity review’ audit following a well-defined protocol, involving three independent reviewers from the study team (including at least one clinician and one patient or carer-researcher). Reviewers interviewed the CRT manager and staff, patients, carers, and managers from other local services, and conducted a case-note audit and review of team policies and procedures. They then used the information gathered to score the team on each fidelity item, in accordance with the criteria and guidance set out in the measure.

Patient experience and staff well-being outcomes data were entered directly into the ‘Opinio’ UCL secure online database, then downloaded as Excel files. Patient-record data were provided by NHS trusts in Excel or Word files. All data were transferred to Stata 14 software for analysis.

Analysis

The primary hypothesis, that patients' satisfaction with the CRT, measured by the CSQ,14 would be greater in intervention group teams than in control teams, was analysed using a linear random-effects (mixed) model with a random intercept for CRT, controlling for mean baseline CSQ score by CRT. Patient-reported perceived continuity of care and measures of staff well-being were analysed similarly using linear random-effects models. Regression coefficients and 95% confidence intervals are reported. The calculated sample size provided 95% power to detect a difference of half a standard deviation between groups in mean satisfaction measured by the CSQ (3.5 points, assuming a typical s.d. of 7.0) using a two-sided test, allowing for within-team clustering (intraclass correlation coefficient 0.05).
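The exact power formula is not stated. A common normal-approximation, assumed here, inflates the variance by the design effect 1 + (m − 1) × ICC, where m is the number of patients per team, and ignores the extra precision gained from adjusting for baseline scores. With the stated inputs (15 patients per team, 15 intervention and 10 control teams, ICC 0.05, standardised difference 3.5/7.0 = 0.5) this approximation gives power of about 0.95. The function name `cluster_trial_power` is hypothetical.

```python
from math import erf, sqrt

def normal_cdf(x):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + erf(x / sqrt(2.0)))

def cluster_trial_power(effect_size, m, k_intervention, k_control, icc, z_alpha=1.959964):
    """Approximate power of a two-sided 5% z-test comparing arm means in a cluster trial.

    effect_size: standardised difference (mean difference / s.d.).
    m: patients per cluster; k_*: clusters per arm; icc: intraclass correlation.
    Clustering inflates the variance by the design effect 1 + (m - 1) * icc.
    """
    design_effect = 1.0 + (m - 1) * icc
    n_eff_intervention = k_intervention * m / design_effect
    n_eff_control = k_control * m / design_effect
    se = sqrt(1.0 / n_eff_intervention + 1.0 / n_eff_control)  # s.e. in s.d. units
    return normal_cdf(effect_size / se - z_alpha)  # opposite tail ignored (negligible)

# Inputs from the text: 3.5-point difference, s.d. 7.0, 15 patients per team,
# 15 intervention and 10 control teams, ICC 0.05.
power = cluster_trial_power(3.5 / 7.0, m=15, k_intervention=15, k_control=10, icc=0.05)
```

Here the design effect is 1.7, so the 375 patients carry roughly the information of 220 independent patients.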

Service-use outcomes at follow-up (in-patient admissions, bed days and readmissions following CRT care) were compared between intervention and control groups using Poisson random-effects modelling with a random intercept for trust. For each outcome, the baseline score was used as the exposure variable, because it accounts for the baseline population and health of the catchment area as well as local admissions policies. Incidence rate ratios and 95% confidence intervals are reported. A second set of analyses was also conducted, using catchment area population as the exposure variable.
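To show what using the baseline score as the exposure means, the sketch below fits a simplified Poisson model, log E[follow-up count] = log(baseline count) + b0 + b1 × intervention, so that exp(b1) is the incidence rate ratio. This is a fixed-effects simplification: it omits the random trust intercept used in the trial analysis. With a single binary covariate the maximum-likelihood estimates have a closed form (each arm's rate is its total follow-up count over its total exposure), and the Wald standard error of log IRR is √(1/ΣyI + 1/ΣyC). The team counts and the function name `poisson_offset_irr` are hypothetical.

```python
from math import exp, log, sqrt

def poisson_offset_irr(fu_control, base_control, fu_intervention, base_intervention, z=1.959964):
    """IRR (intervention vs control) with a Wald 95% CI, baseline count as exposure.

    Fixed-effects Poisson model with offset log(baseline); no random effects.
    """
    rate_control = sum(fu_control) / sum(base_control)
    rate_intervention = sum(fu_intervention) / sum(base_intervention)
    irr = rate_intervention / rate_control  # exp(b1)
    se_log_irr = sqrt(1 / sum(fu_intervention) + 1 / sum(fu_control))
    return irr, exp(log(irr) - z * se_log_irr), exp(log(irr) + z * se_log_irr)

# Hypothetical team-level admission counts (not trial data).
irr, ci_low, ci_high = poisson_offset_irr(
    fu_control=[168, 205, 148], base_control=[170, 200, 150],
    fu_intervention=[130, 110, 80], base_intervention=[150, 120, 90],
)
```

An IRR below 1 means follow-up counts fell further, relative to each team's own baseline, in the intervention arm than in the control arm. Substituting catchment population for the baseline counts as exposure gives the second analysis described above.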

At team level, follow-up Fidelity Scale scores in the intervention and control groups were compared, adjusting for baseline scores. We explored the relationship between change in team Fidelity Scale score from baseline to 12-month follow-up and changes in five study outcomes: patient satisfaction, staff work engagement, in-patient admissions, in-patient bed use and readmission following CRT care. Relationships at team level between change in outcomes and change in Fidelity Scale subscale scores were also explored. For patient satisfaction and staff work engagement, we fitted linear regression models relating change in outcome (follow-up − baseline) to change in Fidelity Scale score (follow-up − baseline). For the remaining outcomes, we used the normalised change in outcome, (follow-up − baseline)/square-root(baseline). To aid interpretation, we present correlations summarising these relationships, with the corresponding P-values from the regression/correlation analyses.

Results

Trial recruitment

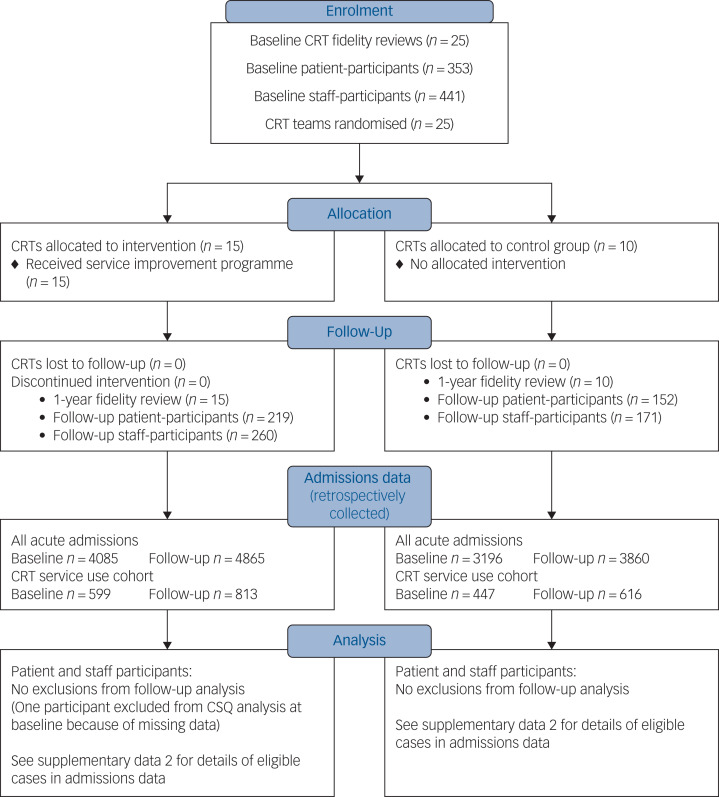

Recruitment to the trial is summarised in the CONSORT diagram in Fig. 1. All 25 CRT teams were retained in the trial. We did not achieve our recruitment target of 15 patients per team in six teams at baseline and in one team at follow-up. These shortfalls occurred in teams with smaller case-loads, or where eliciting staff help to contact potential participants was problematic. At each time point, 62% of eligible patients whose contact details were provided to researchers agreed to participate (353/567 at baseline; 371/594 at follow-up); however, the proportion of eligible patients in each CRT who were initially approached by CRT staff is unknown. At each time point, 79% of all current staff in trial CRTs participated (441/560 at baseline and 431/544 at follow-up). One NHS trust, covering five participating CRTs, was unable to provide data at follow-up from patient records about whether in-patient admissions were compulsory or voluntary; complete patient records data were otherwise obtained.

Fig. 1.

Crisis team Optimisation and RElapse prevention (CORE) crisis resolution team (CRT) service improvement programme cluster randomised trial – CONSORT flow diagram.

CSQ, Client Satisfaction Questionnaire.

The characteristics of participants recruited for patient experience and staff well-being outcomes are summarised in Tables 1 and 2 (further information in supplementary data 3). More patient-participants were men in the control teams than the intervention teams at follow-up (43% compared with 34%); more staff participants were men in control teams than intervention teams at both time points. No other marked differences between groups were apparent. No serious adverse events were identified during participant recruitment or data collection; no study-related harms were reported by participating CRTs.

Table 1.

Patient-participant characteristics in the Crisis team Optimisation and RElapse prevention (CORE) crisis resolution team service improvement programme trial

| Characteristic | Baseline: control teams (n = 10) | Baseline: intervention teams (n = 15) | Follow-up: control teams (n = 10) | Follow-up: intervention teams (n = 15) |
|---|---|---|---|---|
| Gender, n/N (%) | | | | |
| Men | 56/141 (40) | 85/212 (40) | 66/152 (43) | 74/218 (34) |
| Women | 84/141 (60) | 126/212 (59) | 84/152 (55) | 143/218 (66) |
| Transgender | 1/141 (1) | 1/212 (0.5) | 2/152 (1) | 1/218 (0.5) |
| Age, years: mean (s.d.) | 44 (15) | 42 (15) | 42 (14) | 42 (13) |
| Ethnicity, n/N (%) | | | | |
| White | 121/141 (86) | 184/212 (87) | 121/152 (80) | 179/219 (82) |
| Asian | 12/141 (9) | 10/212 (5) | 9/152 (6) | 12/219 (5) |
| Black | 6/141 (4) | 14/212 (7) | 14/152 (9) | 16/219 (7) |
| Mixed or other | 1/141 (1) | 4/212 (2) | 8/152 (5) | 12/219 (5) |
| Episodes of CRT care, n/N (%) | | | | |
| 1 | 59/141 (42) | 81/212 (38) | 57/151 (38) | 92/219 (42) |
| 2–5 | 58/141 (41) | 89/212 (42) | 72/151 (48) | 90/219 (41) |
| >5 | 24/141 (17) | 42/212 (20) | 22/151 (15) | 37/219 (17) |
| Previous hospital admission, n/N (%) | | | | |
| Yes | 47/141 (33) | 63/212 (30) | 46/152 (30) | 62/219 (28) |
| No | 94/141 (67) | 149/212 (70) | 106/152 (70) | 157/219 (72) |
| Years since first contact with mental health services, n/N (%) | | | | |
| <1 | 53/141 (38) | 77/212 (36) | 38/152 (25) | 64/219 (29) |
| 1–5 | 32/141 (23) | 43/212 (20) | 44/152 (29) | 44/219 (20) |
| 6–10 | 11/141 (8) | 24/212 (11) | 20/152 (13) | 32/219 (15) |
| >10 | 45/141 (32) | 68/212 (32) | 50/152 (33) | 79/219 (36) |
| Length of index CRT period of support, n/N (%) | | | | |
| <2 weeks | 46/141 (33) | 48/212 (23) | 45/150 (30) | 70/219 (32) |
| 2 weeks to 1 month | 32/141 (23) | 62/212 (29) | 48/150 (32) | 55/219 (25) |
| 1–2 months | 28/141 (20) | 63/212 (30) | 41/150 (27) | 63/219 (29) |
| >2 months | 35/141 (25) | 39/212 (18) | 16/150 (11) | 31/219 (14) |

Table 2.

Staff characteristics in the Crisis team Optimisation and RElapse prevention (CORE) crisis resolution team service improvement programme trial

| Characteristic | Baseline: control teams (n = 10) | Baseline: intervention teams (n = 15) | Follow-up: control teams (n = 10) | Follow-up: intervention teams (n = 15) |
|---|---|---|---|---|
| Gender, n/N (%) | | | | |
| Men | 69/175 (39) | 79/266 (30) | 70/166 (42) | 85/252 (34) |
| Women | 106/175 (61) | 187/266 (70) | 96/166 (58) | 167/252 (66) |
| Age, years: mean (s.d.) | 43 (10) | 42 (10) | 45 (10) | 43 (10) |
| Ethnicity, n/N (%) | | | | |
| White | 118/175 (67) | 181/266 (68) | 107/164 (65) | 165/252 (65) |
| Asian | 18/175 (10) | 29/266 (11) | 24/164 (15) | 26/252 (10) |
| Black | 28/175 (16) | 45/266 (17) | 25/164 (15) | 50/252 (20) |
| Mixed or other | 11/175 (7) | 11/266 (4) | 8/164 (5) | 11/252 (4) |
| Professional group, n/N (%) | | | | |
| Nurse | 100/175 (57) | 127/266 (48) | 81/165 (49) | 112/252 (44) |
| Occupational therapist | 3/175 (2) | 5/266 (2) | 3/165 (2) | 8/252 (3) |
| Psychiatrist | 12/175 (7) | 19/266 (7) | 16/165 (10) | 20/252 (8) |
| Psychologist | 4/175 (2) | 7/266 (3) | 4/165 (2) | 6/252 (2) |
| Social worker | 12/175 (7) | 26/266 (10) | 8/165 (5) | 22/252 (9) |
| Support worker | 30/175 (17) | 49/266 (18) | 37/165 (22) | 55/252 (22) |
| Other | 14/175 (8) | 33/266 (12) | 16/165 (10) | 29/252 (12) |
| Length of time worked in current team, n/N (%) | | | | |
| <1 year | 31/175 (18) | 58/265 (22) | 26/173 (15) | 54/258 (21) |
| 1 to <2 years | 30/175 (17) | 43/265 (16) | 25/173 (14) | 40/258 (16) |
| 2 to <5 years | 48/175 (27) | 74/265 (28) | 71/173 (41) | 73/258 (28) |
| 5 to <10 years | 45/175 (26) | 60/265 (23) | 36/173 (21) | 52/258 (20) |
| ≥10 years | 21/175 (12) | 30/265 (11) | 25/173 (14) | 39/258 (15) |

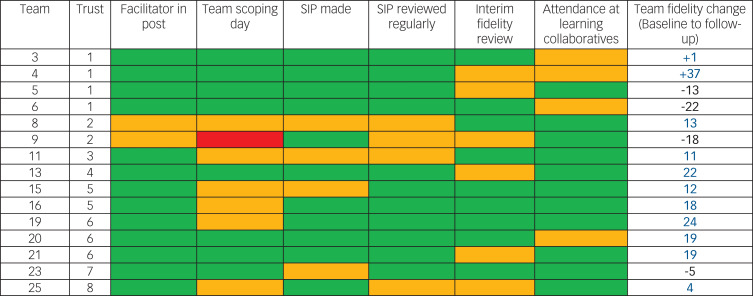

Intervention delivery

Figure 2 describes the implementation of the trial intervention. In one CRT, the manager was unable to organise a scoping day for the whole team. Otherwise, all the main components of the intervention were provided in all teams, although not always as promptly or completely as planned. Over the year, teams targeted a median of eight fidelity items each (selected as priorities by the team) in their service improvement plans. Further information about the content of intervention group teams' service improvement plans is provided in supplementary data 8.

Fig. 2.

Implementation of the crisis resolution team (CRT) service improvement programme trial intervention.

Facilitator in post: green, yes, throughout; amber, yes, but with a change in facilitator during the intervention year; red, no facilitator for the full year. Team scoping day: green, held within first 3 months; amber, held later than 3 months; red, not held. Service improvement plan (SIP) made: green, within first 3 months; amber, later than 3 months; red, plan not made. SIP reviewed regularly: green, reviewed at least 3 times during study year; amber, reviewed fewer than 3 times; red, not reviewed. Interim fidelity review: green, held in month 6 or 7; amber, held later than month 7; red, not held. Attendance at learning collaboratives: green, facilitator and CRT team members attended events; amber, only the facilitator attended.

Fidelity Scale scores

Teams' baseline scores for fidelity to a model of good CRT practice ranged from 97 (low fidelity) to 134 (moderate fidelity), compared with a median score of 122 in a 2014 national survey of 75 teams.11 Eleven of the 15 teams in the intervention group improved their Fidelity Scale score from baseline to follow-up, including teams from seven of the eight participating trusts and teams with both higher and lower model fidelity at baseline. Change scores on the CORE CRT Fidelity Scale in intervention group teams ranged from −22 to +37, with a mean change of +8.1 points (mean score 116.4 at baseline and 124.5 at follow-up). By contrast, none of the ten control teams increased their Fidelity Scale score from baseline to follow-up: change scores ranged from −20 to 0, with a mean change of −9.7 points (mean score 122.2 at baseline and 112.5 at follow-up). Follow-up Fidelity Scale scores differed significantly between the intervention and control groups after adjustment for baseline scores (P = 0.0060). Further details of participating teams' Fidelity Scale scores are provided in supplementary data 4.

Trial outcomes

There was no significant difference between intervention and control group teams in the trial's primary outcome, patient satisfaction: regression analysis suggested slightly higher satisfaction in the intervention group (coefficient 0.97, 95% CI −1.02 to 2.97), but this difference was not significant (P = 0.34). There was also no statistically significant difference between groups in patient-rated continuity of care or in four of the six staff well-being measures; staff psychological health and psychological flexibility were significantly better in the intervention group. The trial outcomes are summarised in Table 3. Descriptive data for patient experience and staff well-being outcomes at team level are provided in supplementary data 5.

Table 3.

Crisis team Optimisation and RElapse prevention (CORE) crisis resolution team (CRT) service improvement trial results – patient, staff and service-use outcomes

| Measure | Control (n = 10 CRTs) | Intervention (n = 15 CRTs) | Adjusted analysis,a coefficient (95% CI), P | Adjusted analysis,b IRR (95% CI), P |
|---|---|---|---|---|
| Patient experience outcomes | | | | |
| Client Satisfaction Questionnaire, satisfaction with CRT service (primary outcome), median (IQR) | | | 0.97 (−1.02 to 2.97), 0.34 | |
| Baseline | 27 (22–30) | 27 (22–31) | | |
| Follow-up | 28 (23–31) | 28 (24–32) | | |
| Continu-um, perceived continuity of care, mean (s.d.) | | | −0.06 (−2.78 to 2.66), 0.97 | |
| Baseline | 42 (10) | 43 (10) | | |
| Follow-up | 40 (9) | 40 (10) | | |
| Staff well-being outcomes | | | | |
| Maslach Burnout Inventory, emotional exhaustion, mean (s.d.) | | | −1.92 (−4.30 to 0.46), 0.11 | |
| Baseline | 18 (10) | 18 (10) | | |
| Follow-up | 20 (11) | 18 (11) | | |
| Maslach Burnout Inventory, personal accomplishment, mean (s.d.) | | | 0.19 (−1.39 to 1.78), 0.81 | |
| Baseline | 37 (7) | 38 (7) | | |
| Follow-up | 36 (8) | 37 (8) | | |
| Maslach Burnout Inventory, depersonalisation, mean (s.d.) | | | −0.26 (−1.13 to 0.60), 0.55 | |
| Baseline | 5 (4) | 4 (4) | | |
| Follow-up | 5 (4) | 4 (5) | | |
| Utrecht Work Engagement Scale, mean (s.d.) | | | 1.07 (−0.81 to 2.96), 0.27 | |
| Baseline | 39 (8) | 40 (8) | | |
| Follow-up | 38 (9) | 40 (8) | | |
| General Health Questionnaire, mean (s.d.) | | | −1.29 (−2.38 to −0.20), 0.020 | |
| Baseline | 10 (5) | 11 (5) | | |
| Follow-up | 12 (6) | 11 (5) | | |
| Work-related Acceptance and Action Questionnaire, mean (s.d.) | | | 1.16 (0.07 to 2.25), 0.037 | |
| Baseline | 39 (6) | 40 (5) | | |
| Follow-up | 38 (6) | 40 (5) | | |
| In-patient service-use outcomes | | | | |
| In-patient admissions, median (IQR) | | | | 0.88 (0.83 to 0.94), <0.001 |
| Baseline | 170 (129–245) | 152 (60–219) | | |
| Follow-up | 170 (121–236) | 119 (42–179) | | |
| Compulsory admissions, median (IQR)c | | | | 1.03 (0.91 to 1.17), 0.63 |
| Baseline | 70 (26–77) | 54 (19–77) | | |
| Follow-up | 56 (32–72) | 42 (23–42) | | |
| In-patient bed days, median (IQR) | | | | 0.96 (0.95 to 0.97), <0.001 |
| Baseline | 6061 (4331–6683) | 4294 (2614–5703) | | |
| Follow-up | 4685 (2846–9296) | 3830 (2356–6161) | | |
| Readmission following CRT care outcomes | | | | |
| Readmissions, median (IQR) | | | | 0.87 (0.72 to 1.06), 0.17 |
| Baseline | 16 (10–22) | 12 (7–16) | | |
| Follow-up | 22 (8–31) | 12 (3–25) | | |

IQR, interquartile range.

a. Staff and patient analysis: mixed modelling (CRT as random effect).

b. Incidence rate ratio (IRR) baseline score on outcome measure as exposure variable (trust as random effect).

c. Compulsory admissions data missing for five teams at follow-up (all from the same National Health Service trust: three in the intervention group and two in the control group).

At team level, there were significantly fewer total in-patient admissions and in-patient bed days in the intervention group than the control group over 6 months, after adjustment for baseline rates, suggesting that admissions were reduced more in intervention teams than in controls during the study period. These results were not replicated in a second analysis that adjusted for catchment area population instead of baseline rates, suggesting that admission rates relative to the size of the local population may not have been significantly lower in intervention teams than controls at follow-up, although we note that this second analysis does not adjust for differences in patient case-mix across areas. There was no difference between groups in rates of compulsory in-patient admissions or in rates of readmission to acute care following an episode of CRT care. Further details are provided in supplementary data 6.

Relationship between outcomes and model fidelity

There was a weak positive correlation (0.34) between change in Fidelity Scale score and change in patient satisfaction, corresponding to a mean increase of 0.65 points on the CSQ for a ten-point increase in Fidelity Scale score. There was a weak negative correlation (−0.38, i.e. in the expected direction) between change in Fidelity Scale score and change in readmissions following CRT care. There was no evidence of associations between change in total Fidelity Scale score and change in in-patient admission rates, bed days or staff work engagement. In post hoc exploratory analyses of subscale scores, a reduction in in-patient admissions was associated with an increase in the referrals and access subscale score (correlation −0.32). Readmissions following CRT care, by contrast, were most closely correlated with the timing and location of care (−0.45) and content and delivery of care (−0.34) subscales. Patient satisfaction was most closely correlated with the content and delivery of care subscale (correlation 0.36). Illustrative graphs are provided in supplementary data 7.

Discussion

Main findings

For the primary outcome, patient satisfaction was not significantly greater in teams receiving the programme than in control teams. Model fidelity improved in 11 of the 15 teams receiving the CRT service improvement programme over the study period, compared with none of the 10 control teams. After adjustment for baseline rates, intervention teams had significantly lower hospital admission rates and in-patient bed use than control teams over the study period, although this was not replicated in an analysis adjusting for catchment area population. Staff psychological health and psychological flexibility were better at follow-up in the intervention group. There were non-significant trends favouring the intervention group teams regarding patient satisfaction, readmission to acute services following CRT care, and staff morale and job satisfaction. There was no evidence that the trial intervention reduced rates of compulsory admissions.

Altogether, this suggests the intervention was insufficient to achieve all its intended service improvements, but did achieve some, notably better model fidelity and reduced in-patient admissions. It may thus help unlock the potential benefits of CRTs in reducing the high costs and negative patient experience associated with in-patient admissions. Positive results from our study also provide international validation for the process developed by the US Implementing Evidence-Based Practice project,13 which had not previously been trialled in a UK NHS context, as a means to support implementation of complex interventions in mental healthcare.

Limitations

Three limitations of the study relate to its design. First, other local and national service initiatives that arose during the year of the trial may have influenced CRT implementation and outcomes independently of the trial intervention. Second, because CRTs in each trust share senior managers and communicate regularly at management level, there is a possibility of contamination, where elements of the trial intervention were also accessed by control teams. Third, the 1-year follow-up period may have been too short for teams to fully enact their service improvement plans and to capture all changes in model fidelity and outcomes resulting from the trial intervention.

Four further limitations of the study relate to data collection. First, for patient experience outcomes, the participants recruited were highly satisfied with care, compared with previous studies,4,25 and may well over-represent those who were best engaged and most easily contactable by CRT staff. CRTs may have varied in the proportion of patients they approached and asked about taking part in the trial, with possible resulting bias. There appears to have been a ceiling effect in our sample with the trial primary satisfaction outcome measure (CSQ-8): 26% of participants in the treatment group at follow-up gave a maximum score of 32, compared with 12% in the control group. There may have been differences between groups in patients' satisfaction with CRTs that our evaluation failed to capture. We further note that fidelity gains regarding service organisation or the extent of care, even where achieved, may not have translated into increased patient satisfaction as broadly measured by the CSQ-8, if not accompanied by improvements in staff's clinical competence26 and reduction in negative interactions with individual staff,27 both of which have been identified as important to patient experience.

Second, data regarding acute service use were provided in anonymised form from NHS patient records and could not be verified as complete by researchers. Information about whether in-patient admissions were compulsory or voluntary was not available for five teams at follow-up.

Third, neither fidelity reviewers nor participating services could be masked to teams' trial allocation status during follow-up CRT fidelity reviews. Intervention group teams may have been more motivated to prepare thoroughly for their review and thus maximise their score. Reviewers may have unconsciously favoured the intervention group when assessing fidelity.

Fourth, we could not confirm the accuracy of data on CRTs' catchment area population sizes, some of which were based on general practitioner registration rather than geographical area (see supplementary data 2). This possible measurement error does not affect the service-use results reported in the main text of this paper, which adjust for baseline service use in each team and are in any case better able to assess change in service use (and therefore the impact of the intervention) during the study period. It may, however, have affected the second analysis of service-use outcomes (supplementary data 6), which adjusted for catchment area population.

Implications for research

A future economic evaluation of the study will explore the cost-effectiveness of the intervention as delivered in this trial. Qualitative and process evaluations (also to be reported separately) will explore in more depth the content of support provided by facilitators in the project, the focus of teams' service improvement plans, the organisational contexts in which the intervention was delivered, and how these factors may relate to the extent of teams' success in improving model fidelity during the project. This may inform the development, and then evaluation, of a revised CRT service improvement programme that better targets critical components of care, engages stakeholders and addresses organisational barriers to service change.

Our trial sought to improve outcomes in CRTs through the mechanism of increasing teams' model fidelity, but only weak relationships between changes in teams' overall Fidelity Scale score and outcomes were established, and not for all outcomes. Three possible reasons for this could be explored in future research. First, the reliability of the CORE CRT Fidelity Scale has not been unequivocally established.11 In vivo testing of interrater reliability is desirable. Second, some critical components of CRTs may not be assessed by the CORE CRT Fidelity Scale. All fidelity scales are better able to audit team organisation and the extent of service provision than to assess the clinical competence with which care is delivered,26 and relationships between fidelity and outcomes are moderate even for the most well-established scales.28 Other methods, possibly involving direct observation of practice, may be required to evaluate clinical competence in CRTs. Third, critical components of effective CRT services may be present but insufficiently weighted in teams' overall Fidelity Scale score for it to relate closely to service outcomes. The closer relationships with some outcomes that we found for specific fidelity subscales provide some support for this idea. A large observational study, evaluating model fidelity and outcomes across many CRTs, could test hypotheses about the relationship between team outcomes and Fidelity Scale item or subscale scores.

Implications for policy and practice

In general, for the control teams in our trial, Fidelity Scale scores dropped, readmissions following CRT care rose, and in-patient service-use outcomes were worse than for the intervention group teams. This suggests that there is a pressing need for effective CRT service improvement support. This trial suggests that considerable input is needed to improve service quality in CRTs: our successfully implemented, multicomponent programme of sustained support for CRTs did not demonstrate improved patient satisfaction, and only partially achieved its aims. The CORE CRT service improvement programme provides a useful starting point for developing future CRT service improvement initiatives, having achieved improvements in model fidelity in teams from varied geographical and provider-trust contexts and a range of baseline levels of fidelity, and having some evidence of effectiveness in reducing admissions and in-patient service use. It is informed by a model of implementing complex interventions in mental health settings with prior evidence of effectiveness in US contexts13 that now also has evidence of applicability to English services, with potential usefulness beyond CRTs. The CORE service improvement structures and the online CRT Resource Pack29 are publicly available and provide guidance, materials and case examples to support good practice in CRTs. Clear specification of a CRT service model and development of effective resources to support CRT service improvement can help ensure that consistent provision of acceptable and effective home treatment for people experiencing mental health crisis is fully achieved.

Acknowledgements

Our colleague Steve Onyett died suddenly in September 2015. He contributed to the design of this study and oversaw delivery of the service improvement programme trial intervention. We miss his warmth and wisdom. Thank you to the members of the CORE Study service user and carer working groups, who contributed to planning the trial and provided peer-reviewers for the trial fidelity reviews: Stephanie Driver, Pauline Edwards, Jackie Hardy, David Hindle, Kate Holmes, Lyn Kent, Jacqui Lynskey, Brendan Macken, Jo Shenton, Jules Tennick, Gen Wallace, Mary Clarke, Kathleen Fraser-Jackson, Mary Plant, Anthony Rivett, Geoff Stone and Janice Skinner. We are grateful for the help of staff and patients from all the CRT teams that participated in this study.

Funding

This study was undertaken as part of the CORE Programme, which is funded by the UK Department of Health National Institute for Health Research (NIHR) under its Programme Grants for Applied Research programme (Reference Number: RP-PG-0109-10078). The views expressed in this paper are those of the authors and not necessarily those of the NHS, the NIHR or the Department of Health. Neither the study funders nor its sponsors had any role in study design, in the collection, analysis or interpretation of data, or in the writing of this report or the decision to submit it for publication. S.J. and D.O. were in part supported by the NIHR Collaboration for Leadership in Applied Health Research and Care (CLAHRC) North Thames at Barts Health NHS Trust.

Supplementary material

For supplementary material accompanying this paper visit https://doi.org/10.1192/bjp.2019.21.

References

- 1. Department of Health. Crisis Resolution/Home Treatment Teams. The Mental Health Policy Implementation Guide. Department of Health, 2001 (http://webarchive.nationalarchives.gov.uk/20130107105354/http://www.dh.gov.uk/prod_consum_dh/groups/dh_digitalassets/@dh/@en/documents/digitalasset/dh_4058960.pdf).

- 2. Department of Health. The NHS Plan: A Plan for Investment, a Plan for Reform. Department of Health, 2000 (http://webarchive.nationalarchives.gov.uk/20130107105354/http://www.dh.gov.uk/prod_consum_dh/groups/dh_digitalassets/@dh/@en/@ps/documents/digitalasset/dh_118522.pdf).

- 3. Johnson S. Crisis resolution and home treatment teams: an evolving model. Adv Psychiatr Treat 2013; 19: 115–23.

- 4. Johnson S, Nolan F, Pilling S, Sandor A, Hoult J, McKenzie N, et al. Randomised controlled trial of acute mental health care by a crisis resolution team: the north Islington crisis study. BMJ 2005; 331: 599.

- 5. Murphy SM, Irving CB, Adams CE, Waqar M. Crisis intervention for people with severe mental illnesses. Cochrane Database Syst Rev 2015; 12: CD001087.

- 6. Hopkins C, Niemiec S. Mental health crisis at home: service user perspectives on what helps and what hinders. J Psychiatr Ment Health Nurs 2007; 14: 310–8.

- 7. MIND. Listening to Experience: An Independent Inquiry into Acute and Crisis Mental Healthcare. MIND, 2011.

- 8. Jacobs R, Barrenho E. The impact of crisis resolution and home treatment teams on psychiatric admissions in England. J Ment Health Policy Econ 2011; 14: S13.

- 9. Lloyd-Evans B, Paterson B, Onyett S, Brown E, Istead H, Gray R, et al. National implementation of a mental health service model: a survey of Crisis Resolution Teams in England. Int J Ment Health Nurs 2017; January 11 (Epub ahead of print).

- 10. Lloyd-Evans B, Lamb D, Barnby J, Eskinazi M, Turner A, Johnson S. Mental health crisis resolution teams and crisis care systems in England: a national survey. BJPsych Bull 2018; 42: 146–51.

- 11. Lloyd-Evans B, Bond G, Ruud T, Ivanecka A, Gray R, Osborn D, et al. Development of a measure of model fidelity for mental health crisis resolution teams. BMC Psychiatry 2016; 16: 427.

- 12. Lloyd-Evans B, Fullarton K, Lamb D, Johnston E, Onyett S, Osborn D, et al. The CORE Service Improvement Programme for mental health crisis resolution teams: study protocol for a cluster-randomised controlled trial. Trials 2016; 17: 158.

- 13. McHugo GJ, Drake RE, Whitley R, Bond G, Campbell K, Rapp C, et al. Fidelity outcomes in the national implementing evidence-based practices project. Psychiatr Serv 2007; 58: 1279–84.

- 14. Attkisson CC, Zwick R. The client satisfaction questionnaire: psychometric properties and correlations with service utilisation and psychotherapy outcome. Eval Program Plann 1982; 5: 233–7.

- 15. Campbell MK, Piaggio G, Elbourne DR, Altman DG for the CONSORT Group. CONSORT 2010 statement: extension to cluster randomised trials. BMJ 2012; 345: e5661.

- 16. Wheeler C, Lloyd-Evans B, Churchard A, Fitzgerald C, Fullarton K, Mosse L, et al. Implementation of the crisis resolution team model in adult mental health settings: a systematic review. BMC Psychiatry 2015; 15: 74.

- 17. Morant N, Lloyd-Evans B, Lamb D, Fullarton K, Brown E, Paterson B, et al. Crisis resolution and home treatment: stakeholders’ views on critical ingredients and implementation in England. BMC Psychiatry 2017; 17: 254.

- 18. Torrey WC, Bond GR, McHugo GJ, Swain K. Evidence-based practice implementation in community mental health settings: the relative importance of key domains of implementation activity. Adm Policy Ment Health 2012; 39: 353–64.

- 19. Rose D, Sweeney A, Leese M, Clement S, Burns T, Catty J, et al. Developing a user-generated measure of continuity of care: brief report. Acta Psychiatr Scand 2009; 119: 320–4.

- 20. Maslach C, Jackson SE. The measurement of experienced burnout. J Occup Behav 1981; 2: 99–113.

- 21. Maslach C, Jackson S. The Maslach Burnout Inventory. Consulting Psychologists Press, 1981.

- 22. Schaufeli W, Bakker A. The measurement of work engagement with a short questionnaire. Educ Psychol Meas 2006; 66: 701–16.

- 23. Goldberg D, Williams P. The General Health Questionnaire. NFER-Nelson, 1988.

- 24. Bond F, Lloyd J, Guenole N. The Work-related Acceptance and Action Questionnaire (WAAQ): initial psychometric findings and their implications for measuring psychological flexibility in specific contexts. J Occup Organ Psychol 2013; 86: 331–47.

- 25. Johnson S, Nolan F, Hoult J, White R. Outcomes of crises before and after introduction of a crisis resolution team. Br J Psychiatry 2005; 187: 68–75.

- 26. Bond GR, McGovern MP. Measuring organizational capacity for integrated treatment. J Dual Diagn 2013; 9: 165–70.

- 27. Sweeney A, Fahmy S, Nolan F, Morant N, Fox Z, Lloyd-Evans B, et al. The relationship between therapeutic alliance and service user satisfaction in mental health inpatient wards and crisis house alternatives: a cross-sectional study. PLoS One 2014; 9: e100153.

- 28. Kim SJ, Bond GR, Becker DR, Swanson SJ, Reese SL. Predictive validity of the individual placement and support fidelity scale (IPS-25): a replication study. J Vocat Rehabil 2015; 43: 209–16.

- 29. Lloyd-Evans B, Johnson S. CORE CRT Resource Pack. UCL, 2014 (https://www.ucl.ac.uk/core-resource-pack).