Abstract

Networks used in biological applications at different scales (molecule, cell and population) are of different types: neuronal, genetic and social, but they share the same dynamical concepts, in their continuous differential versions (e.g., the non-linear Wilson-Cowan system) as well as in their discrete Boolean versions (e.g., the non-linear Hopfield system). In both cases, the notion of interaction graph G(J) associated with the Jacobian matrix J, together with the concepts of frustrated nodes, positive or negative circuits of G(J), kinetic energy, entropy, attractors, structural stability, etc., is relevant and useful for studying the dynamics and the robustness of these systems. We give some general results available for both continuous and discrete biological networks, and then study some specific applications of three new notions of entropy: (i) attractor entropy, (ii) isochronal entropy and (iii) entropy centrality, in three domains: a neural network involved in memory evocation, a genetic network responsible for iron control and a social network accounting for the spread of obesity in a high-school environment.

Keywords: biological networks, dynamic entropy, isochronal entropy, attractor entropy, entropy centrality, robustness

1. Introduction

Biological networks are currently widely used to represent the mechanisms of reciprocal interaction between different biological entities and at different scales [1,2,3]. For example, if the entities are molecular, the networks can concern genes and the control of their expression, or enzymes and the regulation of metabolism in the living systems that possess them. At the other end of the life spectrum, at the population level, biological networks are used to model interactions between individuals, for example during the spread of contagious diseases, whether social or infectious [4,5]. Between these molecular and population levels, biological networks also apply at the cellular level: for example, in a given neural tissue, cells such as neurons work together to give rise to emergent properties, such as those of the central nervous system related to memory [6] or motion control [7].

Among the tools used to quantify the complexity of a biological network, network entropies are among the easiest to calculate. They can be related to the network dynamics, i.e., calculated from the Kolmogorov-Sinaï formula when the dynamics is Markovian, or they can represent the richness of the network attractors or confinors [8], based on the relative weight of their attraction or confinement basins in the network state space. A final way to calculate the entropy of a random network is to use the invariant measure of its dynamics, which gives rise to probability measures related to characteristics of its nodes such as centrality, connectivity, size of strongly connected components, etc. The literature on network entropies is abundant; definitions and calculation algorithms, from reference works to recent papers, can be found in [9,10,11,12,13,14,15,16,17,18,19,20,21,22].

Dynamical systems, discrete or continuous, share common mathematical notions such as initial conditions and attraction basin, final conditions and attractor, Jacobian graph, stability and robustness. Moreover, from an energetic viewpoint, there are in both cases two extreme kinds of systems: on the one hand, conservative systems, whose energy is constant along trajectories (e.g., Hamiltonian systems) and, on the other hand, dissipative systems, whose energy decreases along trajectories (e.g., potential systems). For both Hamiltonian and potential systems, it is possible to define at each point x of the state space E a Jacobian matrix J(x), made of the partial derivatives of the flow of the network dynamics; the graph having J(x) as incidence matrix is called the interaction graph G(x). The description of the dynamics in terms of energy conservation or dissipation is identical whether the system is discrete or continuous in time.

Common definitions of the concepts of attraction basin and attractor are recalled in Section 2 (Material and Methods), in which we also give some examples of Hamiltonian and potential systems by making explicit the energy functions underlying their dynamics, which allows us to interpret these functions in the precise context of different applications. For example, the energy functions can be related to the notion of frustration in statistical mechanics [23] or to that of kinetic energy, which can be calculated in discrete systems by using an analogue of the continuous velocity [24]. In Section 3, we present the Results through applications in three domains: neural (memory evocation based on the synchronization of neural networks), genetic (regulation of iron metabolism) and social (dynamics of the spread of obesity in a school environment). We define the thermodynamic notions of attractor entropy, dynamic (or evolutionary) entropy and entropy centrality to interpret the results, and we relate the dynamic entropy to the robustness (or structural stability) of the dynamical systems presented. Section 4 (Discussion) then presents the interest of a thermodynamic approach in social networks for simulating and evaluating the efficiency of public health policies, and finally Section 5 gives the Conclusion and Perspectives of the paper.

2. Material and Methods

2.1. The Dynamical Tools

Several definitions of attractor and stability have been proposed over the past 50 years. Here we use that of Cosnard and Demongeot [25,26], which covers both the continuous and the discrete cases. More specific approaches to the notion of attractor, especially for network dynamics, can be found in [3,27,28,29].

2.1.1. Definition of the Notion of Attractor

Let consider a trajectory starting at the point a of the state space provided with a dynamic flow f and a distance d. , called the limit set of the trajectory starting in a, is the set of accumulation points of the trajectory as time t goes to infinity in the time set T (either discrete or continuous) such as:

where is one time greater than t for which the trajectory passes into a ball of radius centred in y.

If the initial state a lies in a set A, is the union of all limit sets , for a belonging to A:

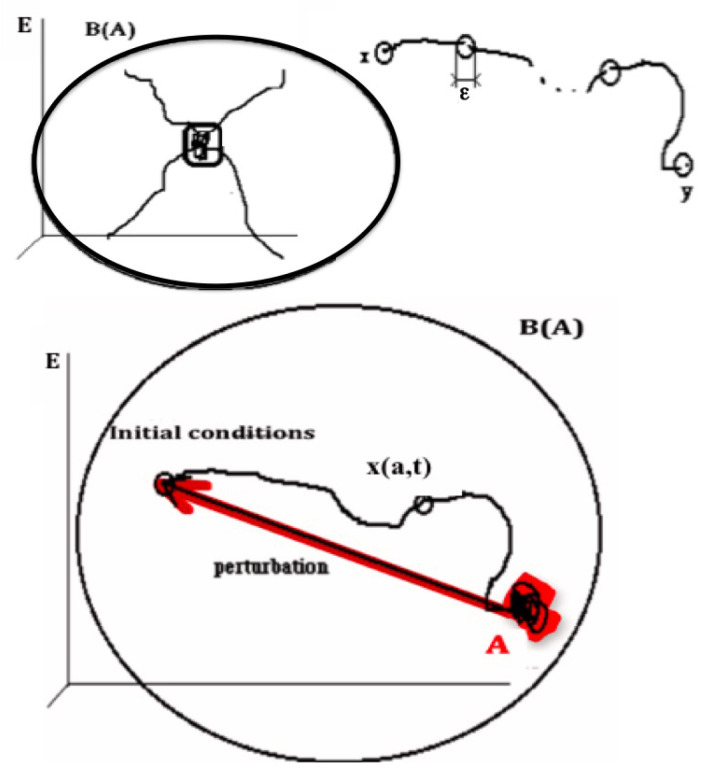

Conversely, is the set of all initial conditions a, whose limit set is included in A (Figure 1). is called the attraction basin of A.

Figure 1.

Top left: attractor A is invariant under the composed operator L∘B. Top right: shadow-trajectory between x and y. Bottom: state of the attractor A returning to A after a perturbation within the attraction basin B(A).

A subset A of E is an attractor if it satisfies the following three conditions:

- (i) $L(B(A)) = A$, where the composed operator $L \circ B$ is obtained by applying the basin operator B and then the limit operator L to the set A;
- (ii) there is no set A′ strictly containing A, shadow-connected to A and verifying (i);
- (iii) there is no set A″ strictly contained in A verifying (i) and (ii).

The definition of the “shadow-connectivity” connecting two subsets A and A′ of E relies on the existence of a “shadow-trajectory” between A and A′ (Figure 1 Top right, between the states x and y). The notion of “shadow-trajectory” was first defined in [30]:

x in A and y in A′ belong to the same shadow-trajectory between A and A′ if, for any $\varepsilon > 0$, there are an integer $n_\varepsilon$, times $t_1, \dots, t_{n_\varepsilon}$ and states $x_0 = x, x_1, \dots, x_{n_\varepsilon} = y$ such that all Hausdorff distances between the successive parts of the shadow-trajectory are less than $\varepsilon$:

$$d\big(f(x_{k-1}, t_k),\, x_k\big) < \varepsilon, \qquad k = 1, \dots, n_\varepsilon$$

The above definition of attractor applies to all known dynamical systems, agrees with common sense and is meaningful for both discrete and continuous time sets T, provided the dynamical system is autonomous with respect to time; the other definitions proposed since 1970, such as those in [31,32,33,34], are all less general.
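For a finite, time-discrete system, such as a Boolean network with a deterministic update, the operators L and B can be computed by brute force, because every trajectory ends in a cycle. The Python sketch below is only an illustration: the six-state map `f` is hypothetical, and we assume that, with the discrete metric and ε < 1, shadow-trajectories reduce to ordinary trajectories, so that the attractors are the cycles of the map. Only condition (i) is checked explicitly.

```python
def limit_set(a, f):
    """L(a): the cycle finally reached from a (states visited infinitely often)."""
    first_visit = {}
    path = []
    x = a
    while x not in first_visit:
        first_visit[x] = len(path)
        path.append(x)
        x = f[x]
    return frozenset(path[first_visit[x]:])


def limit_of_set(A, f):
    """L(A): union of the limit sets L(a) for a in A."""
    return frozenset().union(*(limit_set(a, f) for a in A))


def basin(A, f):
    """B(A): initial conditions whose limit set is included in A."""
    return frozenset(a for a in f if limit_set(a, f) <= A)


# Hypothetical map on six states: a cycle {1, 2} and a fixed point {3}
f = {0: 1, 1: 2, 2: 1, 3: 3, 4: 3, 5: 0}
A = frozenset({1, 2})
print(sorted(basin(A, f)))                 # [0, 1, 2, 5]
print(limit_of_set(basin(A, f), f) == A)   # True: condition (i), L(B(A)) = A
```

Condition (i) alone does not single out attractors: the union {1, 2, 3} of both cycles also satisfies L(B(A)) = A, which is why conditions (ii) and (iii) are needed.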

2.1.2. Potential Dynamics

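A dynamical system is potential (or gradient) if its velocity is equal to minus the gradient of a scalar potential P defined on the state space E:

$$\frac{dx}{dt} = -\operatorname{grad} P(x)$$

where the gradient vector $\operatorname{grad} P(x)$ equals $(\partial P/\partial x_1, \dots, \partial P/\partial x_n)$ and the state at time t is $x(t) = (x_1(t), \dots, x_n(t))$ [35]. The system is dissipative, because P decreases along trajectories until they reach the attractors, located at the minima of P.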

2.1.3. Hamiltonian Dynamics

A dynamical system is Hamiltonian if its velocity is tangent to the projection on E of the contour lines of the surface representing an energy function H on E:

$$\frac{dx}{dt} = \operatorname{rot} H(x)$$

where $\operatorname{rot} H(x)$ is the vector tangent at x to the projection on E of the equi-altitude set of altitude $H(x)$. If the dimension of the system is two, then $\operatorname{rot} H(x)$ is equal to $(\partial H/\partial x_2, -\partial H/\partial x_1)$ [36]. The system is said to be conservative, because the energy function H is constant along trajectories. An example of a conservative system will be given in Section 4.2 (Equation (16)).

2.1.4. Potential-Hamiltonian Dynamics

A dynamical system is potential-Hamiltonian if its velocity can be decomposed into two parts, one potential and one Hamiltonian [37]:

$$\frac{dx}{dt} = -\operatorname{grad} P(x) + \operatorname{rot} H(x)$$

If the set of minima of P is a contour line of the surface H on E, then the strong shadow-connected components of this set (i.e., the sets in which any pair of states is shadow-connected in both directions) are the attractors of the system.
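The three kinds of dynamics can be compared on a planar example of our own choosing (not taken from the cited works): $P(x, y) = (x^2 + y^2 - 1)^2/4$, whose minima form the unit circle, and $H(x, y) = (x^2 + y^2)/2$, whose contour lines are circles, so that the set of minima of P is a contour line of H. Switching off one part or the other gives a pure potential or a pure Hamiltonian system. The sketch integrates each case with a classical fourth-order Runge-Kutta scheme; step size and horizon are arbitrary choices.

```python
import numpy as np

def P(z):
    """Potential energy (example of ours): zero on the unit circle."""
    return 0.25 * (z[0] ** 2 + z[1] ** 2 - 1.0) ** 2

def H(z):
    """Hamiltonian energy (example of ours): contour lines are circles."""
    return 0.5 * (z[0] ** 2 + z[1] ** 2)

def velocity(z, potential=True, hamiltonian=True):
    """dz/dt = -grad P(z) + rot H(z), with rot H = (dH/dy, -dH/dx)."""
    x, y = z
    v = np.zeros(2)
    if potential:
        v -= (x * x + y * y - 1.0) * np.array([x, y])   # -grad P
    if hamiltonian:
        v += np.array([y, -x])                          # rot H
    return v

def integrate(z0, steps=5000, dt=0.01, **parts):
    """Classical RK4 integration; returns the whole trajectory."""
    z = np.asarray(z0, dtype=float)
    traj = [z]
    for _ in range(steps):
        k1 = velocity(z, **parts)
        k2 = velocity(z + 0.5 * dt * k1, **parts)
        k3 = velocity(z + 0.5 * dt * k2, **parts)
        k4 = velocity(z + dt * k3, **parts)
        z = z + dt / 6.0 * (k1 + 2 * k2 + 2 * k3 + k4)
        traj.append(z)
    return np.array(traj)

z0 = (0.2, 0.1)
grad_only = integrate(z0, hamiltonian=False)   # dissipative: P decreases
ham_only = integrate(z0, potential=False)      # conservative: H constant
both = integrate(z0)                           # converges to the unit circle

print(P(grad_only[-1]))                        # close to 0
print(abs(H(ham_only[-1]) - H(ham_only[0])))   # close to 0
print(np.hypot(*both[-1]))                     # close to 1
```

In the combined system, the rotation is tangent to the circles on which P is constant, so P still decreases along trajectories while the state keeps turning around the attracting circle.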

2.2. Examples of Dissipative Energy

In a gradient system, the potential energy is dissipative, because it decreases along the trajectories; moreover, minus its gradient is exactly the system velocity. The existence of a morphogenetic dissipative energy was postulated by Waddington’s school of embryology [38] as an ontogenetic rule governing the development of living systems, and we give in Section 2.2.2 an example of such a dissipative energy.

2.2.1. Discretization of the Continuous Potential System with Block-Parallel Updating

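Let us consider a discrete Hopfield dynamics where $x_i(t) = 1$ if the gene i is expressing its protein at time t, else $x_i(t) = 0$. The probability that $x_i(t+1) = 1$, knowing the states $x_j(t)$ in an interaction neighbourhood $V_i$ of i, is given by:

$$P\big(x_i(t+1) = 1 \mid x_j(t),\, j \in V_i\big) = \frac{\exp\Big[\big(\sum_{j \in V_i} w_{ij} x_j(t) - \theta_i\big)/T\Big]}{1 + \exp\Big[\big(\sum_{j \in V_i} w_{ij} x_j(t) - \theta_i\big)/T\Big]} \tag{1}$$

where $w_{ij}$ is a parameter which represents the influence (inhibitory if $w_{ij} < 0$, activatory if $w_{ij} > 0$ and null if $w_{ij} = 0$) that gene j from the interaction neighbourhood $V_i$ exerts on gene i, and $\theta_i$ is a threshold corresponding to the external field in statistical mechanics and, here, to a minimal value of activation for the interaction potential $\sum_{j \in V_i} w_{ij} x_j(t)$. T is a temperature that introduces a certain degree of indeterminism in the rule of change of state. In particular, if $T \approx 0$, the rule is practically deterministic and, if H denotes the Heaviside function, we have:

$$x_i(t+1) = H\Big(\sum_{j \in V_i} w_{ij} x_j(t) - \theta_i\Big) \tag{2}$$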
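Rule (1) is a logistic function of the interaction potential. The sketch below, with hypothetical weights and function names of our own, evaluates it and illustrates the deterministic limit (2): as T decreases, the probability tends to H(u) for any u ≠ 0, whereas at u = 0 it stays equal to 1/2, so that (2) needs a convention for H(0) (here H(0) = 0, an assumption).

```python
import math
import random

def prob_expressed(w_row, x, theta_i, T):
    """Rule (1): probability that gene i is expressed at t+1.

    w_row[j] is w_ij, x[j] the state of gene j at time t, T > 0 the temperature.
    e^{u/T} / (1 + e^{u/T}) is evaluated as (1 + tanh(u / 2T)) / 2,
    the same logistic function written so that it cannot overflow.
    """
    u = sum(w * xj for w, xj in zip(w_row, x)) - theta_i  # potential minus threshold
    return 0.5 * (1.0 + math.tanh(u / (2.0 * T)))

def heaviside(u):
    """H(u) = 1 if u > 0, else 0 (the value at u = 0 is an assumed convention)."""
    return 1 if u > 0 else 0

def update_gene(w_row, x, theta_i, T, rng=random):
    """Draw the new state of gene i according to rule (1)."""
    return 1 if rng.random() < prob_expressed(w_row, x, theta_i, T) else 0

# Hypothetical gene i, activated by gene 0 and inhibited by gene 1
w_row, theta_i = [1.0, -1.0], 0.0
for T in (10.0, 1.0, 0.01):
    print(T, prob_expressed(w_row, [1, 0], theta_i, T))
```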

Once this rule is defined, three graphs and their associated incidence matrices are needed to specify the network dynamics precisely:

- interaction matrix W: $w_{ij}$ represents the action of gene j on gene i. W plays the role of a discrete Jacobian matrix. We can consider the associated signed matrix S, with $s_{ij} = 1$ if $w_{ij} > 0$, $s_{ij} = -1$ if $w_{ij} < 0$ and $s_{ij} = 0$ if $w_{ij} = 0$;

- updating matrix U: $u_{ij} = 1$ if j is updated before or with i, else $u_{ij} = 0$;

- trajectory matrix F: $f_{bc} = 1$, where b and c are two states of E, if and only if c is the state reached from b after one step of the dynamics, else $f_{bc} = 0$.
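The three matrices can be built explicitly for a small network. In the sketch below, the 3-gene weight matrix is hypothetical, the updating is parallel and the deterministic rule (2) is used with H(0) = 0 (an assumption); F is indexed by the $2^n$ configurations listed in lexicographic order.

```python
import itertools
import numpy as np

def signed_matrix(W):
    """S: s_ij = 1, -1 or 0 according to the sign of w_ij."""
    return np.sign(W).astype(int)

def parallel_updating_matrix(n):
    """U for the parallel mode: every gene j is updated with every gene i."""
    return np.ones((n, n), dtype=int)

def parallel_step(x, W, theta):
    """Deterministic rule (2) applied to all genes at once (H(0) = 0 assumed)."""
    u = W @ np.asarray(x) - theta
    return tuple(int(v > 0) for v in u)

def trajectory_matrix(W, theta):
    """F: f_bc = 1 iff configuration c follows configuration b (parallel mode)."""
    n = len(theta)
    states = list(itertools.product((0, 1), repeat=n))
    index = {s: k for k, s in enumerate(states)}
    F = np.zeros((len(states), len(states)), dtype=int)
    for b in states:
        F[index[b], index[parallel_step(b, W, theta)]] = 1
    return states, F

# Hypothetical 3-gene network (w_ij = action of gene j on gene i)
W = np.array([[1, -1, 0],
              [1, 0, -1],
              [0, 1, 1]])
theta = np.zeros(3)
S = signed_matrix(W)
U = parallel_updating_matrix(3)
states, F = trajectory_matrix(W, theta)
print(S)
print(F)
```

Each row of F contains exactly one 1, since a deterministic dynamics has a single successor per configuration; with a random rule such as (1), F would be replaced by a matrix of transition probabilities.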

These matrices can be constant or can depend on time t:

- in the case of W, the most frequent dependence is the Hebbian dynamics: if the vectors and have a correlation coefficient , then , with , which corresponds to a reinforcement of the absolute value of the interactions that succeeded in inhibiting or activating their target gene i: in the case where, for , remained equal to one, this leads to an increase of the ’s if the ’s were positive and, conversely, to a decrease of the ’s if the ’s were negative;

- in the case of U, we can have an autonomous (in time) clock based on the behaviour of r chromatin clock genes having indices , with three possible behaviours:

- if , then the rule (1) is available

- if and , where is the last time between and t, where

- if and , then (by exhaustion of the pool of expressed genes).

This dynamical system ruling the evolution of U remains autonomous, but the updating is state-dependent. If all $u_{ij}$ equal one, the updating is called parallel; if there is a sequence $(i_1, \dots, i_n)$ such that $u_{i_k i_l} = 1$ for any k and l such that $l \le k$, the other $u_{ij}$ being equal to zero, then the updating is called sequential. Between these two extreme cases lie the block-sequential updatings (the updating is parallel within a block and the blocks are updated sequentially). A mode of updating that is more realistic in genetics is called block-parallel: there are blocks made of genes updated sequentially, and these blocks are updated in parallel. Interactions between these blocks can exist (i.e., there are $w_{ij} \neq 0$ with i and j belonging to two different blocks) and, because the block sizes are different, the time at which the stationary state is reached in a block is not necessarily synchronized with the corresponding times in other blocks: the synchronization depends on the intra-block as well as on the inter-block interactions. All the genes of a block can reach their stationary state long before the genes of another block. This behaviour explains in particular why the state of the genes of a block, corresponding for example to the chromatin clock, depends strongly on the state of the genes in other blocks connected to them (acting for example as transcription factors of the clock genes), and reciprocally.
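One common way to formalize block-parallel updating, assumed here rather than taken from the text, is to let every block advance by one gene at each sub-step, all blocks simultaneously, so that the whole schedule repeats after the least common multiple of the block sizes. The sketch also shows that parallel and sequential updating are the two extreme cases (one block per gene, or a single block); the ring rule `shift` is a hypothetical example. `math.lcm` with several arguments requires Python 3.9 or later.

```python
from math import lcm  # Python 3.9+

def block_parallel_schedule(blocks):
    """Turn block-parallel updating into a periodic list of parallel sub-steps.

    Each block is an ordered list of genes updated sequentially; all blocks
    run in parallel, so sub-step s updates the (s mod len(block))-th gene of
    every block at once. The schedule repeats after lcm of the block sizes.
    """
    period = lcm(*(len(block) for block in blocks))
    return [frozenset(block[s % len(block)] for block in blocks)
            for s in range(period)]

def apply_schedule(x, schedule, rule):
    """Apply one full period of the schedule to configuration x.

    Genes of the same sub-step all read the configuration produced by the
    previous sub-step; the other genes keep their state.
    """
    x = tuple(x)
    for subset in schedule:
        x = tuple(rule(x, i) if i in subset else xi for i, xi in enumerate(x))
    return x

def shift(x, i):
    """Hypothetical rule: each gene copies its left neighbour on a ring."""
    return x[i - 1]

parallel = block_parallel_schedule([[0], [1], [2]])    # one block per gene
sequential = block_parallel_schedule([[0, 1, 2]])      # a single block
print(block_parallel_schedule([[0, 1, 2], [3, 4]]))    # period lcm(3, 2) = 6
print(apply_schedule((1, 0, 0), parallel, shift))      # (0, 1, 0)
print(apply_schedule((1, 0, 0), sequential, shift))    # (0, 0, 0)
```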

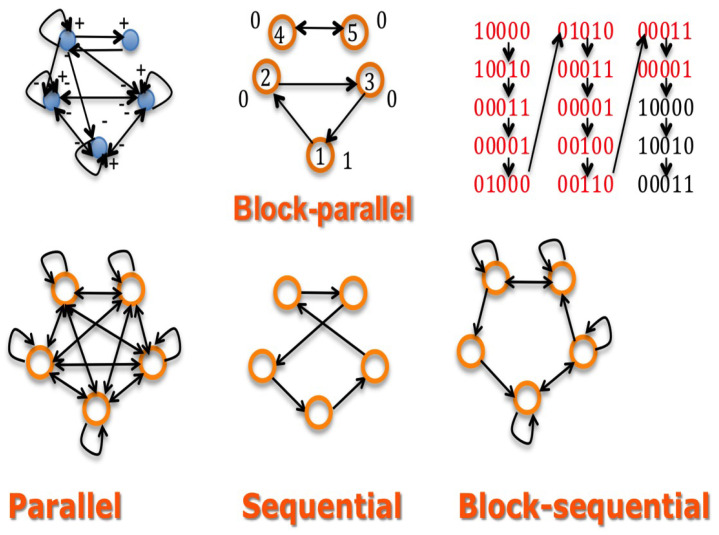

An example of such block-parallel dynamics is given in Figure 2 Top middle by a short block of chromatin clock genes (nodes 4 and 5), which rapidly reaches its limit-cycle and entrains other blocks of metabolic (nuclear or mitochondrial) genes. The second block of genes is made of the morphogens responsible for the apex growth of a plant and of the auxin gene inhibiting the morphogens (node 1) [39,40]. When the first block reaches its limit-cycle (possibly entrained by external “Zeitgebers” related to an exogenous seasonal rhythm), it blocks the dynamics of the second block, releasing first the first and then the second axillary-bud morphogens (nodes 2 and 3), but only after the apex morphogens have spent sufficient time in state 1, which corresponds to an apex growth sufficient to limit the diffusion of auxin and thus cancel its inhibitory power. This dynamical behaviour can easily be iterated for other successive blocks related to the lower buds.

Figure 2.

Top left: interaction graph of a network made of a 3-switch (a system of three genes that all inhibit one another, each having an auto-activation) representing morphogens, linked to a regulon representing chromatin clock genes. Top middle: the updating graph corresponding to a block-parallel dynamics ruling the network. Top right: part of the trajectory graph, exhibiting a limit-cycle of period 12 that contains a cycle of period four for the chromatin clock genes. Bottom: updating graphs corresponding successively (from left to right) to the parallel, sequential and block-sequential dynamics.

More precisely, we can implement the dynamics of the morphogenesis network as follows; let us suppose that:

- (i) there are two chromatin clock genes involved in a regulon, i.e., the minimal network having only one negative circuit and one positive circuit reduced to an autocatalytic loop;
- (ii) there are three morphogens, corresponding to the apex and to the axillary buds, involved as nodes of a fully connected 3-switch network, with all interactions negative except for the autocatalytic loop at each vertex;
- (iii) there are three inhibitory interactions from the auto-catalysed node of the regulon onto the nodes of the 3-switch, as indicated in Figure 2 Top left.

Figure 2 Top shows the three graphs defining the morphogenesis network dynamics, i.e., the interaction (left), updating (middle) and trajectory (right) graphs. The dynamics is assumed to be ruled by a deterministic Hopfield network, with temperature and thresholds equal to zero and interaction weights equal to one (if positive), −1 (if negative) or zero. An attractor limit-cycle of this dynamics is shown in Figure 2 Top right, exhibiting two intricate rhythms: one of period four, corresponding to the chromatin clock dynamics, embedded in a rhythm of period 12, corresponding to the successive expression of the apex and axillary-bud morphogens. Figure 2 Bottom shows other updating graphs, less realistic than the block-parallel one for representing the action of the chromatin clock regulon.

2.2.2. Discrete Lyapunov Function (Neural Network)

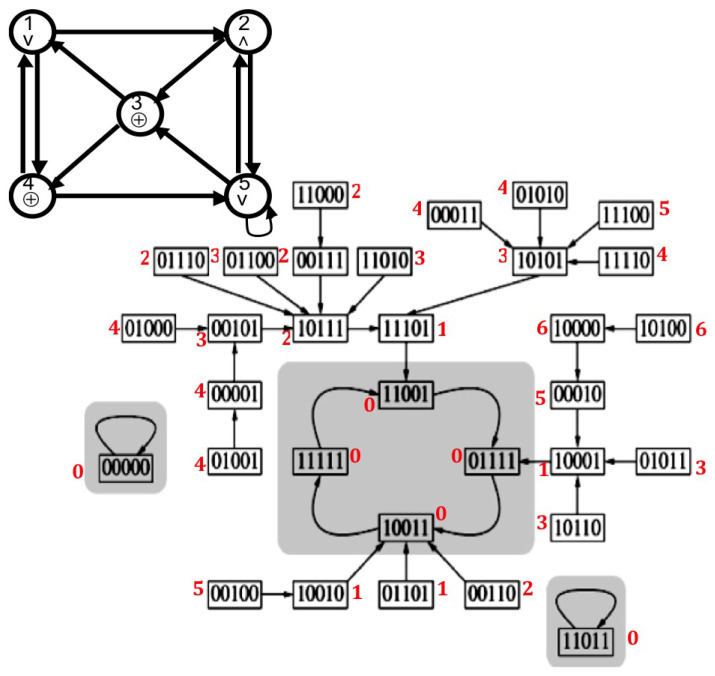

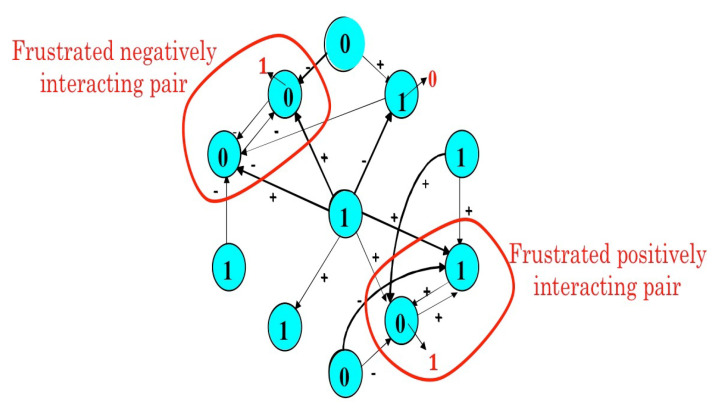

A logic neural network has local transition rules of type ⊕, ∨ and ∧ (Figure 3). Applying these local rules, ∧ (“and”), ∨ (inclusive “or”) and ⊕ (exclusive “or”), we can define on each configuration of the logic neural network of Figure 3 a Lyapunov function L from the global frustration of order four, which decreases along trajectories and vanishes on the attractors of the network dynamics:

with , where is the local frustration of order four, also equal to the discrete kinetic energy between and multiplied by 32:

Figure 3.

Top: Logic neural network with local transition rules ⊕, ∨ and ∧. Bottom: Discrete trajectory graph in the state space with indication of the values of , where L is the Lyapunov function (in red).

In this example of a discrete neural network ruled by Boolean logic functions applied at each node to its inputs (proposed in [41,42] as a genetic network), the dynamical behaviour is guided by an energy function derived from the discrete kinetic energy, built with the discrete equivalent of the velocity [24]. We can indeed write the Lyapunov function of the trajectory as the mean global discrete kinetic energy calculated between times t and :

3. Results

3.1. Attractor Entropy and Neural Networks

The attractor entropy measures the heterogeneity of the attractor landscape on the state space E of a dynamical system. It can be evaluated by the quantity:

$$E_{\text{attractor}} = -\sum_{k=1}^{m} \mu_k \log \mu_k \tag{3}$$

where $\mu_k$ is equal to the attraction basin size of the kth attractor among the m attractors of the network dynamics, divided by $2^n$, n being the number of genes of the network. We give an example of use of this notion in the case of a continuous neural network, the Wilson-Cowan oscillator, which describes the biophysical interactions between two populations of neurons:

| (4) |

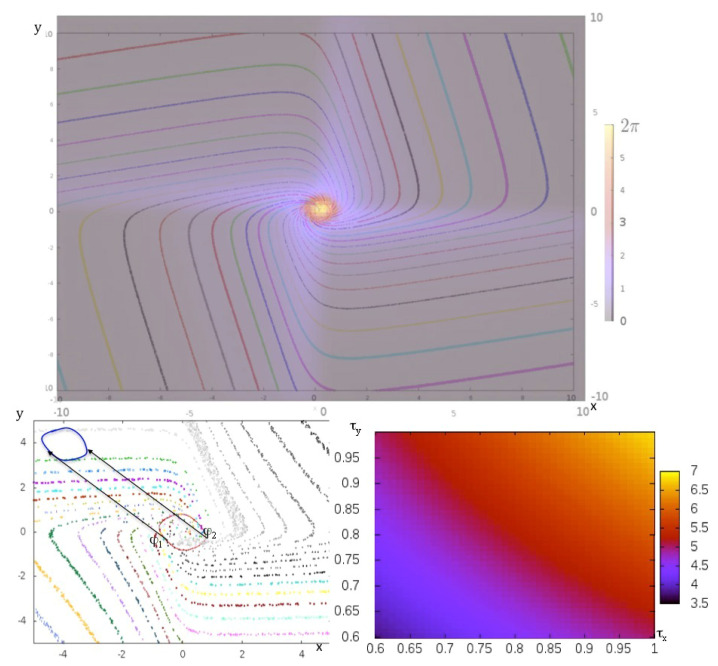

The variables x and y represent the global electrical activity of the two populations, respectively excitatory and inhibitory, whose interaction is described through a sigmoid function parametrized by , which controls its stiffness and accounts for the synaptic response to an input signal: this response is non-linear when is large (>>1), depending on whether the input is greater or less than a potential barrier (here ), and is an affine function of the input signal when and are small (<<1). The parameters and refer to the membrane potential characteristics of the neuronal populations having respective activities x and y. If and , the Wilson-Cowan oscillator undergoes a Hopf bifurcation when crosses the value one [43], moving from a dynamics involving only an attracting fixed point to a dynamics comprising a repulsor at the origin of E, surrounded by an attractor limit-cycle C. If T denotes the period of the limit-cycle C, let us consider the point of C reached after a time (or phase) h on the trajectory f starting on C at a reference point called the origin . In Figure 4 Top, and this trajectory on C defines a one-to-one map F between the interval and C. We now introduce the notion of isochron of phase h in the attraction basin as the set of points x of such that tends to zero when t tends to infinity. extends the map F to all the points of B(C). If we consider as new state space , a new time space and the restriction of the flow f on , such that: , we have: .

Figure 4.

Top: asymptotic phase shift between two close isochrons in false colors from 0 to , when an instantaneous perturbation is made on the Wilson-Cowan oscillator. Bottom left: perturbations of same intensity made at two different phases and on the limit-cycle of the Wilson-Cowan oscillator. Bottom right: value of the period of the limit-cycle in false colors depending on the values of the parameters and from 3.5 to 7.

Then, h can be considered as an attractor for f, whose basin is . Let us now decompose the interval in n equidistant sub-intervals . If we now consider the time space , the subset of C denoted is an attractor with an attraction basin equal to , for the flow restricted to , and we have: . Finally, we can define the entropy related to these attractors in E, called isochronal entropy of degree n, which is just the attractor entropy calculated for the dynamics having as flow :

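$$E_{\text{isochronal}}(n) = -\sum_{k=1}^{n} \mu_k \log \mu_k \tag{5}$$

where $\mu_k$ is the relative size of the attraction basin of the kth phase sub-interval.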

If all the isochron basins have the same size, then the isochronal entropy equals log n. If not, it reflects a spatial heterogeneity of the dynamics: certain basins can be wide (where the flow f is rapid inside them) and others thin (where the flow f is slow inside them). In the example of the Wilson-Cowan oscillator, when , and grows from zero to infinity, the isochronal entropy decreases from to .
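The isochronal entropy is hard to compute for the Wilson-Cowan system itself, but its behaviour can be checked on a toy oscillator chosen by us for this purpose (it is not system (4)): $dr/dt = r(1 - r^2)$, $d\theta/dt = 1 - a\cos\theta$, with $0 \le a < 1$. Its limit-cycle is the unit circle and, because the angular speed does not depend on r, its isochrons are rays; the basin of the kth phase sub-interval is then an angular sector, whose relative size (in any disk centred at the origin) is its angle divided by $2\pi$. Sectors are wide where the flow is rapid and thin where it is slow, as described above.

```python
import numpy as np

def isochronal_entropy(a, n, grid=200_000):
    """Isochronal entropy of degree n for the toy oscillator (not system (4))
    dr/dt = r (1 - r^2), dtheta/dt = 1 - a cos(theta), with 0 <= a < 1.

    Returns (entropy, period of the limit-cycle).
    """
    theta = np.linspace(0.0, 2.0 * np.pi, grid + 1)
    inv_speed = 1.0 / (1.0 - a * np.cos(theta))
    # h(theta): time needed on the cycle to go from angle 0 to angle theta
    dh = 0.5 * (inv_speed[1:] + inv_speed[:-1]) * np.diff(theta)
    h = np.concatenate(([0.0], np.cumsum(dh)))
    period = h[-1]
    # angles at which the phase equals k T / n: borders of the n isochron sectors
    borders = np.interp(np.linspace(0.0, period, n + 1), h, theta)
    mu = np.diff(borders) / (2.0 * np.pi)   # relative basin sizes (sector angles)
    return float(-np.sum(mu * np.log(mu))), float(period)

for a in (0.0, 0.3, 0.6, 0.9):
    entropy, period = isochronal_entropy(a, n=12)
    print(a, round(period, 4), round(entropy, 4), round(np.log(12), 4))
```

For a = 0 all sectors are equal and the entropy is log n; as a grows, the angular speed becomes more heterogeneous along the cycle and the entropy decreases, while the period $2\pi/\sqrt{1 - a^2}$ increases.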

It is possible to change the value of the period T of the Wilson-Cowan oscillator by tuning the parameters and (Figure 4 Bottom right). Consider now values of the parameters, and , close to the bifurcation in the parameter space, and superimpose the isochron landscape on a false-color map giving the asymptotic phase shift between a trajectory starting at a point x on the isochron and a trajectory starting, after an instantaneous perturbation e, at the point x+e belonging to the isochron (Figure 4 Bottom left). The maximum phase shift of the Wilson-Cowan oscillator is inversely proportional to the intensity of this instantaneous perturbation, which means that a population of oscillators can be synchronized by a sufficiently intense perturbation. Indeed, isochrons are spirals that diverge from one another as they move away from the attractor limit-cycle. The phenomenon of memory evocation after a sensory stimulation relies on the synchronizability of a population of identical (but phase-shifted) oscillators whose successive limit-cycle states store information [44,45]. This evocation is all the more effective as all oscillators have the same phase after stimulation, which is the case if the perturbation, applied at different phases and on the limit-cycle, brings the oscillator between two successive isochrons (Figure 4 Bottom left). By using either the discrete version of the Wilson-Cowan system, namely the Kauffman-Thomas cellular automaton (the deterministic version of the Hopfield model), or its continuous version (Figure 4), it is easy to see that the potential part of the system is responsible for the spacing between isochrons away from the limit-cycle, causing the decrease of the isochronal entropy, and that the Hamiltonian part is responsible for their spiralization (Figure 4 Top).

By playing with the balance between the potential and Hamiltonian parts [46], it is therefore possible, if the system is instantaneously perturbed, either to increase the efficiency of the post-perturbation synchronization (due to the potential return to the limit-cycle) or to allow desynchronization (due to the Hamiltonian spiralization), which is necessary to avoid perseveration in a synchronized state (observed in many neuro-degenerative pathologies).

3.2. Dynamic Entropy and Genetic Networks

We first define the energy U, the frustration F and the dynamic entropy E of a genetic network N of n interacting genes [47,48,49,50,51,52,53,54,55,56,57,58,59].

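$$\forall x \in \Omega, \quad U(x) = \sum_{i,j=1}^{n} \alpha_{ij} x_i x_j = Q^{+}(G) - F(x) \tag{6}$$

where x is a configuration of gene expression ($x_i = 1$ if gene i is expressed and $x_i = 0$ if not), $\Omega$ denotes the set of all configurations of gene expression (i.e., the hypercube $\{0,1\}^n$) and $\alpha_{ij}$ is the sign of the interaction weight $w_{ij}$ quantifying the influence that gene j exerts on gene i: $\alpha_{ij} = 1$ (resp. −1) if j is an activator (resp. inhibitor) of the expression of i, and $\alpha_{ij} = 0$ if there is no influence of j on i. $Q^{+}(G)$ is the number of positive edges of the interaction graph G of the network N, whose incidence matrix is given by $(\alpha_{ij})$. $F(x)$ denotes the global frustration of x, i.e., the number of pairs of genes (i, j) for which the values of $x_i$ and $x_j$ are contradictory with $\alpha_{ij}$, the sign of the interaction of j on i:

$$F(x) = \sum_{i,j=1}^{n} F_{ij}(x)$$

where $F_{ij}(x)$ is the local frustration of the pair (i, j), equal to:

$$F_{ij}(x) = \begin{cases} 1 & \text{if } \alpha_{ij} = 1 \text{ and } x_i x_j = 0, \text{ or } \alpha_{ij} = -1 \text{ and } x_i x_j = 1,\\ 0 & \text{otherwise.} \end{cases}$$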

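The dynamics of the network can be defined by a deterministic transition operator such as the following threshold Boolean automaton with a majority rule, which we call in the following the deterministic Hopfield transition:

$$x_i(t) = H\Big(\sum_{j \in N_i} w_{ij} x_j(t-1) - \theta_i\Big) \tag{7}$$

where H is the Heaviside step function defined by $H(y) = 1$ if $y > 0$ and $H(y) = 0$ if $y \le 0$, $w_{ij}$ is the interaction weight of j on i, $\theta_i$ is an activation threshold (whose exponential is called the tolerance h) and $N_i$ is the set of genes j such that $w_{ij} \neq 0$. Table 1 shows the parallel simulation of such a Boolean automaton, with $|w_{ij}| = 1$ for the non-zero weights and $\theta_i = 0$. This deterministic dynamics is a particular case of the random dynamics defined by the following transition, analogous to Equation (7):

$$P\big(x_i(t) = 1 \mid x(t-1) = y\big) = \frac{\exp\Big[\big(\sum_{j \in N_i} w_{ij} y_j - \theta_i\big)/T\Big]}{1 + \exp\Big[\big(\sum_{j \in N_i} w_{ij} y_j - \theta_i\big)/T\Big]} \tag{8}$$

obtained when the threshold is equal to zero and the temperature tends to zero.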
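Table 1 results from running the deterministic transition (7) in parallel mode from all $2^n$ initial configurations and grouping them by the attractor they reach. The sketch below does the same on hypothetical two-gene networks (the iron network of Figure 5 is not reproduced here); it assumes H(0) = 0 and identifies each attractor with the set of configurations of its cycle, so that fixed points and limit-cycles are handled alike.

```python
import itertools
from collections import Counter
import numpy as np

def hopfield_step(x, W, theta):
    """Deterministic Hopfield transition (7), parallel mode, H(0) = 0 assumed."""
    u = W @ np.array(x) - theta
    return tuple(int(v > 0) for v in u)

def attractor_of(x, W, theta):
    """Iterate until a configuration repeats; return the cycle reached."""
    first_visit, path = {}, []
    while x not in first_visit:
        first_visit[x] = len(path)
        path.append(x)
        x = hopfield_step(x, W, theta)
    return frozenset(path[first_visit[x]:])

def basin_table(W, theta):
    """Relative attraction basin size of every attractor (as in Table 1)."""
    n = len(theta)
    counts = Counter(attractor_of(x, W, theta)
                     for x in itertools.product((0, 1), repeat=n))
    return {attractor: c / 2 ** n for attractor, c in counts.items()}

# Hypothetical positive circuit of two genes activating each other
table = basin_table(np.array([[0, 1], [1, 0]]), np.zeros(2))
for attractor, size in table.items():
    print(sorted(attractor), size)
```

For this positive circuit, the two fixed points (0, 0) and (1, 1) each attract a quarter of the configurations and the limit-cycle {(1, 0), (0, 1)} attracts the remaining half.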

Table 1.

Summary of the attractors of the iron regulatory network of Figure 5 for the parallel updating mode, with the list of expressed (state 1) and non-expressed (state 0) genes and their relative attraction basin sizes, obtained by simulating in parallel updating mode, from all possible initial configurations, a threshold Boolean network whose non-zero weights all have absolute value one and whose thresholds are equal to zero.

| Order | Gene | Fixed Point 1 | Fixed Point 2 | Limit-Cycle 1 | Limit-Cycle 2 | | | |
|---|---|---|---|---|---|---|---|---|
| 1 | TfR1 | 0 | 0 | 0 | 0 | 1 | 1 | 0 |
| 2 | FPN1a | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 3 | C-Myc | 0 | 1 | 0 | 0 | 0 | 0 | 0 |
| 4 | Notch | 0 | 0 | 1 | 1 | 1 | 1 | 1 |
| 5 | GATA-3 | 0 | 0 | 1 | 1 | 1 | 1 | 1 |
| 6 | IRP | 0 | 0 | 0 | 1 | 0 | 0 | 1 |
| 7 | Ft | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 8 | Fe | 0 | 0 | 0 | 0 | 1 | 0 | 1 |
| 9 | MiR-485 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 10 | CiRs-7 anti-sense | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Relative Attraction Basin Size | | 512/1024 | 256/1024 | 216/1024 | 40/1024 | | | |

$P(x_i(t) = 1 \mid x(t-1) = y)$ is the probability of expression of gene i at time t, knowing that the configuration at time t − 1 is equal to y; from these probabilities, we can easily calculate the Markov transition matrix of the dynamics (8), assumed to be sequential [48,60], i.e., with the genes updated sequentially in a predefined order.

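Let $P = (P_{xy})$ denote the Markov matrix giving the transition probabilities between the configurations x and y of $\Omega$, defined by the rule (8) and an updating mode, and let $\mu$ be its stationary distribution on $\Omega$. The dynamic entropy E can be calculated as:

$$E = -\sum_{x \in \Omega} \mu(x) \sum_{y \in \Omega} P_{xy} \log P_{xy}$$

In the sequential updating mode, where the nodes are updated in the order 1, 2, …, n, we have, by denoting $p_i(z)$ the probability (8) that gene i is expressed given the configuration z, $z^{(i)} = (y_1, \dots, y_{i-1}, x_i, \dots, x_n)$ the configuration seen by gene i when it is updated, and identifying y with the set of the indices i such that $y_i = 1$:

$$P_{xy} = \prod_{i \in y} p_i\big(z^{(i)}\big) \prod_{i \notin y} \Big(1 - p_i\big(z^{(i)}\big)\Big)$$

and $\mu$ is classically the Gibbs measure [48,60] defined, for symmetric weights ($w_{ij} = w_{ji}$, $w_{ii} = 0$), by:

$$\mu(x) = \frac{1}{Z} \exp\Big[\sum_{i \in x}\Big(\sum_{j \in x,\, j < i} w_{ij} - \theta_i\Big)\Big/T\Big]$$

where $Z = \sum_{y \in \Omega} \exp\big[\sum_{i \in y}\big(\sum_{j \in y,\, j < i} w_{ij} - \theta_i\big)/T\big]$. When $T \to 0$, $\mu$ is concentrated on the attractors of the deterministic dynamics, and its entropy is then defined by: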

| (9) |

where m is the number of final classes of the Markov transition matrix related to the dynamics (8). When $T \to \infty$, $\mu$ is spread uniformly over $\Omega$ and $E = n \log 2$. An example of calculation of relative attraction basin sizes is given in Table 1. We will estimate E between and from the attractor entropy [51] by using the following approximate equality:

| (10) |

E serves as a robustness parameter, being related to the capacity of the genetic network to return to equilibrium after endogenous or exogenous perturbations [53,59]. More generally, we can prove that the robustness of the network, assumed to be sequentially updated, decreases when the variance of the frustration F of the network increases, because of the formula: , where is the absolute value, assumed to be the same for all the non-zero interaction weights between the genes of the network [51].
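For a few genes, the dynamic entropy can be computed exactly by building the full $2^n \times 2^n$ matrix P for rule (8) in sequential mode. The sketch below uses hypothetical symmetric weights with zero diagonal and hypothetical thresholds, obtains μ as the left eigenvector of P for the eigenvalue 1 and evaluates E. For such symmetric weights, μ coincides with the Gibbs measure, and E approaches n log 2 as T grows; all function names are ours.

```python
import itertools
import numpy as np

def logistic(u):
    """e^u / (1 + e^u), written with tanh to avoid overflow."""
    return 0.5 * (1.0 + np.tanh(0.5 * u))

def sequential_transition_matrix(W, theta, T):
    """P_xy for rule (8), genes updated one after the other in order 0..n-1."""
    n = len(theta)
    states = list(itertools.product((0, 1), repeat=n))
    index = {s: k for k, s in enumerate(states)}
    P = np.zeros((len(states), len(states)))
    for x in states:
        partial = {x: 1.0}  # distribution of partially updated configurations
        for i in range(n):
            nxt = {}
            for z, p in partial.items():
                p1 = logistic((W[i] @ np.array(z) - theta[i]) / T)
                for value, pv in ((1, p1), (0, 1.0 - p1)):
                    z2 = z[:i] + (value,) + z[i + 1:]
                    nxt[z2] = nxt.get(z2, 0.0) + p * pv
            partial = nxt
        for y, p in partial.items():
            P[index[x], index[y]] = p
    return states, P

def stationary(P):
    """Left eigenvector of P for the eigenvalue closest to 1, normalized."""
    vals, vecs = np.linalg.eig(P.T)
    v = np.real(vecs[:, np.argmin(np.abs(vals - 1.0))])
    return v / v.sum()

def dynamic_entropy(P, mu):
    """E = -sum_x mu(x) sum_y P_xy log P_xy, with 0 log 0 = 0."""
    terms = np.zeros_like(P)
    mask = P > 0
    terms[mask] = P[mask] * np.log(P[mask])
    return float(-mu @ terms.sum(axis=1))

W = np.array([[0.0, 1.0, -1.0],
              [1.0, 0.0, 2.0],
              [-1.0, 2.0, 0.0]])     # hypothetical symmetric weights, zero diagonal
theta = np.array([0.5, -0.2, 0.1])   # hypothetical thresholds
for T in (0.2, 1.0, 100.0):
    _, P = sequential_transition_matrix(W, theta, T)
    print(T, dynamic_entropy(P, stationary(P)), 3 * np.log(2))
```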

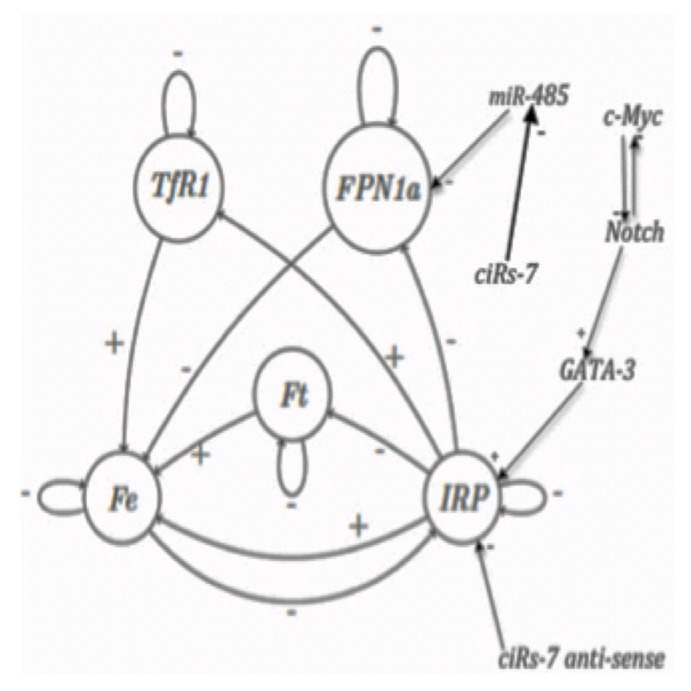

The regulatory network controlling iron (Fe) metabolism is given in Figure 5. The central proteins controlling iron metabolism are the iron regulatory proteins IRP1 and IRP2, represented in Figure 5 by a single entity called IRP, which can be active or inactive, only the active form having a regulatory role. In the absence of iron, IRP shows an mRNA-binding activity on a specific RNA motif called IRE, and when the iron concentration is sufficiently high, it loses its mRNA-binding activity on the IRE motif. The iron node (Fe) in Figure 5 represents the cellular iron available for various cellular processes and the node ferritin (Ft) represents a protein capable of internalizing thousands of iron ions.

Figure 5.

The interaction graph of the iron regulatory network, whose interactions can be activatory (+) or inhibitory (−), such as those of microRNAs, like miR-485.

The complex mechanism of iron loading into, and release from, ferritin is taken into account here in a very simplified way: ferritin mRNA contains two IRE motifs in its 5’-UTR regions, and IRP consequently inhibits its translation. These IRE motifs are also inhibited by micro-RNAs like miR-485, itself inhibited by the circular RNA ciRs-7. IRP is also directly inhibited by ciRs-7 antisense and activated by GATA-3, itself controlled by the positive circuit Notch/c-Myc. The transferrin receptor (TfR1), located at the cell surface, allows iron uptake. TfR1 mRNA contains five IRE motifs in its 3’-UTR region; hence, the density of TfR1 receptors at the cell surface is correlated with IRP activity. Finally, ferroportin (FPN1a) is an iron exporter located at the cell surface, whose mRNA contains one IRE motif in its 5’-UTR region, so that its translation is inhibited by IRP.

By studying the dynamics of the iron regulatory network (assumed to be Boolean, i.e., with only two states, 1 and 0, for each entity, state 1 corresponding to a proportion of its active form above a certain threshold), it is possible to calculate its asymptotic states (i.e., those observed when time goes to infinity), obtained for any initial condition in the attraction basins (Table 1). From this study of the dynamics, we can then easily calculate , hence , which corresponds to a relatively low robustness, the maximum being equal to four.
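Equation (3) only needs the basin sizes. As an illustration, the sketch below feeds it the four basin sizes listed in Table 1 (n = 10 genes). The natural logarithm is our assumption; another base only rescales the value.

```python
import math

def attractor_entropy(basin_sizes, n):
    """Equation (3) with mu_k = |B(A_k)| / 2**n (natural logarithm assumed)."""
    total = 2 ** n
    if sum(basin_sizes) != total:
        raise ValueError("basin sizes must add up to 2**n configurations")
    return -sum((b / total) * math.log(b / total) for b in basin_sizes if b > 0)

# Basin sizes of the four attractors of Table 1
print(attractor_entropy([512, 256, 216, 40], n=10))   # about 1.148
print(attractor_entropy([256] * 4, n=10))             # log 4: four equal basins
```

With four attractors, the attractor entropy cannot exceed log 4, the value reached when all basins have the same size.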

The notion of robustness is related to the capacity to reduce the consequences, on the entropy E, of endogenous or exogenous variations affecting the common absolute value of the non-zero interaction weights of the genetic network. This notion of robustness is close to that of structural stability in dynamical systems. If the variability of the global frustration F of the network is large, then its robustness diminishes. This is, for example, the case if a gene belonging to a positive circuit [61,62] of a strongly connected component of the interaction graph of the network is randomly inhibited. In this case, the inhibited gene behaves as a sink (i.e., without outgoing interaction) in state 0, which can contradict the state predicted by the states and interactions of its neighbours, increasing the variance of F and consequently diminishing the dynamic entropy E.
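The link between the energy U and the frustration F stated in Equation (6) can be checked exhaustively on a small network. The sketch below uses a hypothetical sign matrix α and our reading of the local frustration (an activation is frustrated unless both genes are expressed, an inhibition is frustrated when both are), and verifies $U(x) = Q^{+}(G) - F(x)$ on every configuration of Ω.

```python
import itertools
import numpy as np

def local_frustration(alpha_ij, xi, xj):
    """F_ij(x) under our reading of the definition."""
    if alpha_ij == 1:
        return 1 - xi * xj   # activation contradicted unless both are expressed
    if alpha_ij == -1:
        return xi * xj       # inhibition contradicted when both are expressed
    return 0

def energy(alpha, x):
    """U(x) = sum_ij alpha_ij x_i x_j."""
    x = np.asarray(x)
    return int(x @ alpha @ x)

def frustration(alpha, x):
    """Global frustration F(x) = sum_ij F_ij(x)."""
    n = len(x)
    return sum(local_frustration(alpha[i][j], x[i], x[j])
               for i in range(n) for j in range(n))

alpha = np.array([[1, -1, 0],
                  [1, 0, -1],
                  [0, 1, 1]])        # hypothetical signs of interaction
q_plus = int((alpha == 1).sum())     # number of positive edges Q+(G)
for x in itertools.product((0, 1), repeat=3):
    assert energy(alpha, x) == q_plus - frustration(alpha, x)
print("U(x) = Q+(G) - F(x) holds on all", 2 ** 3, "configurations")
```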

3.3. Centrality of Nodes and Social Networks

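There are four classical types of centrality in a graph (Figure 7). The first is the betweenness centrality [63]. It is defined for a node k in the interaction graph G as follows:

$$C_B(k) = \sum_{i \neq j,\; i \neq k,\; j \neq k} \frac{\sigma_{ij}(k)}{\sigma_{ij}} \tag{11}$$

where $\sigma_{ij}(k)$ is the total number of shortest paths from node i to node j that pass through node k, and $\sigma_{ij}$ is the total number of shortest paths from node i to node j.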

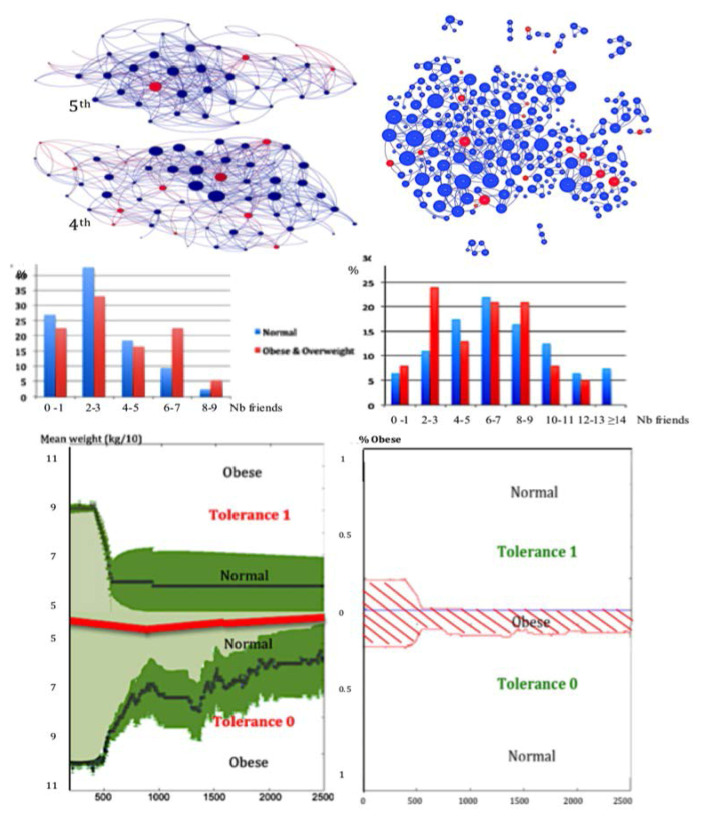

Let consider now a social network with a threshold Boolean dynamics following the Equation (7), containing overweight or obese (state 1) and normal (state 0) individuals as nodes. By concentrating the pedagogic efforts on the betweenness central nodes overweight or obese with tolerance 1, which are “hubs” of the social network, it results a rapid decrease of the number of overweight or obese, more important if the educative effort has been done on individuals with tolerance 1 than with tolerance 0 (Figure 6 Bottom right), as well as a decrease of their mean weight (Figure 6 Bottom left). The second corresponds to the degree centrality, which can be defined from the notions of out-, in- or total degree of a node i, corresponding to the number of arrows of the interaction graph, respectively outing, entering, or both, connected to i. For example, the in-degree centrality is defined by:

$$C_D^{in}(i) = \sum_{j \neq i} a_{ij} \tag{12}$$

where $a_{ij}$, the general coefficient of the adjacency matrix A of the graph, equals one if there is a link from j to i, and zero otherwise.
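As a small illustration of Equation (12) and of the out- and total degrees, the sketch below works on a dense 0/1 adjacency matrix, assuming the orientation convention a[i][j] = 1 for a link j → i. The function names are hypothetical and the counts are left unnormalized (some references divide by n − 1).

```python
def in_degree_centrality(a):
    """Equation (12): C_in(i) = sum over j != i of a[i][j] (links j -> i)."""
    n = len(a)
    return [sum(a[i][j] for j in range(n) if j != i) for i in range(n)]


def out_degree_centrality(a):
    """Out-degree of node i: links i -> j, i.e. the sum of column i of A."""
    n = len(a)
    return [sum(a[j][i] for j in range(n) if j != i) for i in range(n)]


def total_degree_centrality(a):
    """Total degree: in-degree plus out-degree."""
    return [x + y for x, y in zip(in_degree_centrality(a), out_degree_centrality(a))]


# a[0][1] = 1 encodes the link 1 -> 0, a[2][1] = 1 the link 1 -> 2, etc.
A = [[0, 1, 1],
     [0, 0, 0],
     [0, 1, 0]]
print(in_degree_centrality(A), out_degree_centrality(A), total_degree_centrality(A))
# [2, 0, 1] [0, 2, 1] [2, 2, 2]
```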

Figure 6.

Top left: social graph of the friendship relationships between pupils (overweight or obese in red, non-obese in blue) in the 5th and 4th classes of a French high school, corresponding to ages 11 to 13 years. Top right: analogous graph for the corresponding classes of a Tunisian high school in Tunis. Middle: histograms of the number of friends of pupils from the French (left) and Tunisian (right) high schools. Bottom left: mean weight (in black, with its 95% confidence interval in green) of pupils returning to an acceptable "normality" after a preventive education of the 10% of obese nodes that are most central for betweenness, calculated for two sub-populations of tolerance h = 1 (top) and h = 0 (bottom). Bottom right: percentage of obese pupils in these two sub-populations.

The third type of centrality is the closeness centrality. The closeness of a node i is the reciprocal of its average farness, obtained by averaging, over all nodes j of the network other than i, a distance d(i,j) between i and j (n denoting the number of nodes):

$$C_C(i) = \frac{n-1}{\sum_{j \neq i} d(i,j)} \tag{13}$$

where the chosen distance d(i,j) is the length of the shortest path between i and j. The last classical type of centrality is the spectral centrality, or eigen-centrality, which takes into account that the neighbors of a node i can themselves be highly connected to the rest of the graph (connections to highly connected nodes contribute more than connections to weakly connected ones). Hence, the eigen-centrality rather measures the global influence of i on the network, and satisfies:

$$C_E(i) = \frac{1}{\lambda} \sum_{j} a_{ij}\, C_E(j) \tag{14}$$

where λ is the largest eigenvalue of the adjacency matrix A = (a_ij) of the graph.
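Equations (13) and (14) can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the authors' implementation: closeness uses breadth-first-search distances and requires every node to be reachable, and the eigen-centrality is obtained by power iteration, which converges only when A is nonnegative, irreducible and aperiodic (for a bipartite graph such as a star, the iteration oscillates).

```python
from collections import deque


def closeness(adj, i):
    """Equation (13): (n - 1) / sum_{j != i} d(i, j), d = BFS shortest-path length."""
    dist = {i: 0}
    queue = deque([i])
    while queue:
        u = queue.popleft()
        for v in adj[u]:
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    if len(dist) != len(adj):
        raise ValueError(f"some nodes are unreachable from {i!r}")
    return (len(adj) - 1) / sum(dist.values())


def eigen_centrality(a, iters=1000, tol=1e-12):
    """Equation (14) by power iteration: c = A c / lambda. The vector is
    rescaled so that its largest entry is 1; at convergence the scaling
    factor equals the largest eigenvalue lambda."""
    n = len(a)
    c = [1.0] * n
    lam = 0.0
    for _ in range(iters):
        new = [sum(a[i][j] * c[j] for j in range(n)) for i in range(n)]
        lam = max(new)
        new = [x / lam for x in new]
        if max(abs(x - y) for x, y in zip(new, c)) < tol:
            c = new
            break
        c = new
    return lam, c


path = {"a": ["b"], "b": ["a", "c"], "c": ["b"]}
print(closeness(path, "b"))                               # 1.0
print(eigen_centrality([[0, 1, 1], [1, 0, 1], [1, 1, 0]]))  # (2.0, [1.0, 1.0, 1.0])
```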

Each of the four centralities above has its own interest: (i) the betweenness centrality of a node is related to its global connectivity with all the nodes of the network; (ii) the degree centralities indicate the level of local connectivity (in-, out- or total) of a node with its nearest neighbors only; (iii) the closeness centrality measures how close a node is to the other nodes of the network for a given distance on the interaction graph; and (iv) the eigen-centrality reflects the ability of a node to be connected to possibly only a few nodes that are themselves highly connected, which indicates, for example, an important relay for the dissemination of news in social networks. Social networks are also used to model the spread of rumors, political opinions and social diseases, such as obesity and its most important consequence, type II diabetes.

For example, to cure a social disease, a Hospitaller Order of Saint Anthony was founded in 1095 at La Motte (now Saint Antoine, near Grenoble in France) by Gaston du Dauphiné, whose son suffered from a fungal disease known in the Middle Ages as Saint Anthony's fire, or ergotism. This disease is caused by the transformation of grain such as rye into a drug (ergotamine) present in the flour, provoking convulsions that often led to death. The members of the Order of Saint Anthony specialized in curing patients suffering from ergotism, and in the fourteenth century they had spread to about 370 hospitals over the whole of Western Europe, able to treat about 4000 patients. They observed that the propagation of the disease was due to social habits such as eating the same type of bread; hence ergotism is considered the first social disease whose contagion process depends on dietary behaviour.

Obesity is now considered the most characteristic social "contagious" disease, and has been defined as a pandemic (i.e., an epidemic with a worldwide prevalence) by the WHO. Both nutrition mimicking and social stigmatization explain the dissemination of obesity in a social network [64,65]. Obesity consists of an excessive accumulation of fat in adipose tissue, favoring chronic diabetic and cardiovascular diseases. In the UK, for example, 36% of men and 33% of women are predicted to be obese in 2030, compared with 26% of both sexes in 2010 [66,67,68,69], which shows the critical character of the present explosion of obesity, especially in young people. To assess the prevalence of the disease in the young population, we have observed social graphs based on the existence or absence of friendship between pupils in the 5th and 4th classes of two high schools (corresponding to ages 11 to 13 years), one in France at Jœuf, already published in [5], and a new one in Tunisia at Tunis. The corresponding graphs (Figure 6 Top) and the histograms of the number F of friends of the pupils show in both cases that the distribution of F is unimodal for normal-weight pupils and bimodal for overweight or obese ones (Figure 6 Middle), because of a double phenomenon in obese pupils: some of them attract friendship links owing to their open personality, while others repel them. We then simulated a preventive education of central obese nodes, following the WHO recommendations [70], during the dynamics of obesity spread governed by Equation (7), in which we give the tolerance h = 0 (resp. h = 1) to the obese pupils of the first (resp. second) mode. In Figure 6, this education has been given to the obese nodes that are central for betweenness in the social graph G. This gives the best result among the four centralities defined above, but an irreducible percentage of obese pupils remains in the population of tolerance zero (Figure 6 Bottom right).
For that reason, we need to introduce a new notion of centrality based on the notion of entropy.
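To make the education protocol concrete, here is a toy Python sketch. Equation (7) is not restated in this section, so the update rule below is a hypothetical stand-in: node i becomes or stays obese (1) iff (number of obese neighbours − number of normal neighbours) + h_i > 0, with h_i a per-node threshold parameter loosely playing the role of tolerance. Educated nodes are reset to 0 at t = 0 and then follow the rule like everyone else. The function names `step`, `simulate` and `top_obese` are illustrative.

```python
def step(adj, x, h):
    """One synchronous step of the hypothetical threshold rule (an assumption,
    not necessarily Equation (7))."""
    return {i: int(sum(2 * x[j] - 1 for j in adj[i]) + h[i] > 0) for i in adj}


def simulate(adj, state, h, educated=(), max_steps=1000):
    """Reset the educated nodes to the normal state 0 at t = 0, then iterate
    until a configuration repeats (fixed point or limit cycle reached).
    Returns the final configuration and the number of steps taken."""
    x = dict(state)
    for i in educated:
        x[i] = 0
    seen = set()
    for t in range(max_steps):
        key = tuple(sorted(x.items()))
        if key in seen:
            return x, t
        seen.add(key)
        x = step(adj, x, h)
    return x, max_steps


def top_obese(centrality, state, n):
    """The n obese nodes with the largest value of a given centrality."""
    obese = [i for i in state if state[i] == 1]
    return sorted(obese, key=lambda i: centrality[i], reverse=True)[:n]


# Toy triangle in which everybody is obese and h = 0.
tri = {0: [1, 2], 1: [0, 2], 2: [0, 1]}
obese = {0: 1, 1: 1, 2: 1}
h = {0: 0, 1: 0, 2: 0}
print(simulate(tri, obese, h)[0])  # {0: 1, 1: 1, 2: 1}
print(simulate(tri, obese, h, educated=top_obese({0: 3, 1: 2, 2: 1}, obese, 1))[0])
# {0: 0, 1: 0, 2: 0}
```

Any of the centralities above (or the entropy centrality) can be passed to `top_obese`, so the same loop compares targeting strategies on a given graph.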

4. Discussion

4.1. The Notion of Entropy Centrality

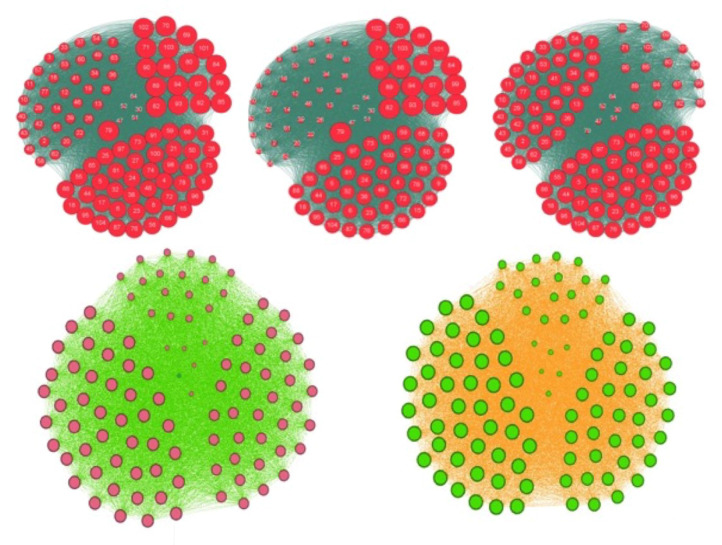

Indeed, despite the interesting properties of the various classical centralities (Figure 6, Figure 7 and Figure 8), therapeutic education targeting the most central nodes generally leaves a non-negligible percentage of obese individuals after education. We therefore now discuss a new notion of centrality, called the entropy centrality, which takes into account not only the connectivity of the graph but also the heterogeneity, in the neighborhood of node i, of the vector whose components are the state and the tolerance:

$$C_{ent}(i) = -\sum_{k=1}^{s} \nu_k \log \nu_k \tag{15}$$

where $\nu_k$ denotes the k-th of the s frequencies of the histogram of the values of the vector (state, tolerance) observed in the neighborhood $V_i$, the set of nodes linked to node i.
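A minimal Python sketch of Equation (15), assuming the neighbourhood V_i is given as a list of neighbours of i (node i itself excluded) and using the natural logarithm (the base only rescales the centrality). The function name is hypothetical.

```python
import math
from collections import Counter


def entropy_centrality(adj, state, tol):
    """Shannon entropy of the histogram of (state, tolerance) pairs observed
    over the neighbourhood adj[i] of each node i. An empty neighbourhood
    yields entropy 0 (the sum below is then empty)."""
    result = {}
    for i, neigh in adj.items():
        counts = Counter((state[j], tol[j]) for j in neigh)
        total = sum(counts.values())
        result[i] = -sum((c / total) * math.log(c / total) for c in counts.values())
    return result


# Star: the centre "c" sees the pairs (1,0), (0,1), (1,0), (0,0), i.e.
# frequencies 1/2, 1/4, 1/4, hence entropy 1.5 * ln 2.
adj = {"c": ["p", "q", "r", "s"], "p": ["c"], "q": ["c"], "r": ["c"], "s": ["c"]}
state = {"c": 1, "p": 1, "q": 0, "r": 1, "s": 0}
tol = {"c": 0, "p": 0, "q": 1, "r": 0, "s": 0}
print(entropy_centrality(adj, state, tol)["c"])
```

A node whose neighbours all share the same (state, tolerance) pair gets centrality 0, while a node surrounded by a mixed population scores high, which is the heterogeneity the entropy centrality is meant to capture.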

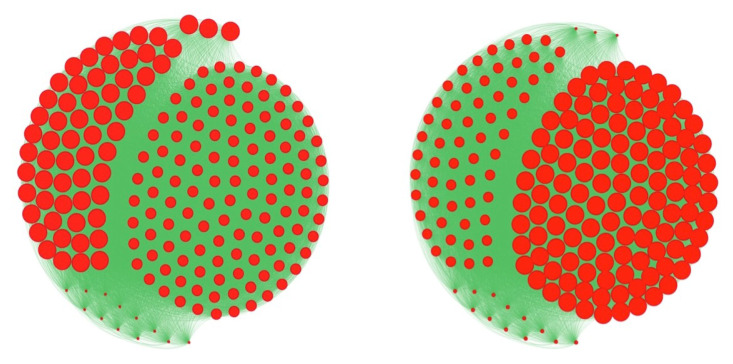

Figure 7.

Comparison of two classical types of centrality in the graph of the Tunisian high school: eigenvector centrality (left) and total degree centrality (right); node size is proportional to centrality.

Figure 8.

Top: representation of the whole graph of the French high school; node size corresponds to in-degree (left), eigenvector (middle) and total degree (right) centrality. Bottom: threshold for a therapeutic education that brings back to the normal weight state the N obese individuals with maximal entropy centrality: after stabilization of the social network dynamics, all individuals are overweight or obese (in red, left) if N = 20, and all individuals are normal (in green, right) if N = 21, this number being the success threshold of the education.

This new notion of centrality is more useful than the others for detecting good candidates for education, i.e., for changing their state to the normal state 0, because these individuals can more efficiently influence a heterogeneous environment to recover normality. This is illustrated by the example of Section 3.3.

Comparing the results of a preventive and therapeutic education bringing the overweight or obese nodes back to normal weight, we observe that educating only the 21 individuals with the highest entropy centrality (Figure 8 Bottom right) brings the whole population of pupils to the normal weight state, whereas 68 individuals are needed with the total degree centrality, and 85 with the in-degree and eigenvector centralities (Figure 8 Top and Bottom left). Hence, the best public health policy against the obesity pandemic consists in using the notion of entropy centrality to select the targets of therapeutic education [5].

4.2. The Mathematical Problem of Robustness and the Notion of Global Frustration

Each of the examples presented in Section 3 used the dynamics governed by the deterministic Hopfield transition of Equation (7) [71]. We have exhibited complex multi-attractor asymptotic behaviors, and the central problem to discuss now is the conservation of the attractor organization (namely the number of attractors, the size of their attraction basins, and their nature, i.e., fixed configurations or limit cycles corresponding to oscillatory states).

This problem of robustness of the attractor landscape is related to the architecture of the interaction graph associated with the (discrete or continuous) Jacobian matrix J. The problem of robustness has been studied for about 50 years [41,72] under different names (bifurcation, structural stability, resilience, etc.), and it is still relevant [73,74].

If we consider the Hopfield rule with a constant absolute value w for its non-zero interaction weights, we can study the robustness of the network with respect to variations of w by using the following two propositions [51,52]:

Proposition 1.

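Let us consider a deterministic Hopfield Boolean network which is a circuit, sequentially or synchronously updated, with constant absolute value w for its non-zero interaction weights. Then its dynamics is conservative, keeping constant along the trajectories the Hamiltonian function H defined by:

$$H(x(t)) = \frac{1}{2}\sum_{i=1}^{n}\Big[g\Big(\sum_{k=1}^{n} w_{ik}\,x_k(t)-\theta_i\Big)-x_i(t)\Big]^2 \tag{16}$$

where g is the Heaviside function and θ_i the threshold of node i in the Hopfield transition of Equation (7). H(x(t)) is equal to the total discrete kinetic energy $E_c(x(t)) = \frac{1}{2}\sum_{i=1}^{n}\big(x_i(t+1)-x_i(t)\big)^2$, and to half of the global dynamic frustration $F(x) = \sum_{(k,i)} F_{ki}(x)$, where $F_{ki}(x)$ is the local dynamic frustration related to the interaction between the nodes k and i: $F_{ki}(x) = 1$ if $\mathrm{sign}(w_{ik}) = 1$ and $x_k \neq x_i$, or $\mathrm{sign}(w_{ik}) = -1$ and $x_k = x_i$; $F_{ki}(x) = 0$ otherwise.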
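The conservation stated in Proposition 1 can be checked exhaustively on small circuits. The sketch below is an illustration under assumptions: the circuit is written directly with identity (sign +1) or negation (sign −1) local functions instead of Heaviside thresholds, only synchronous updating is covered, and the kinetic energy is taken as E_c = ½ Σ(x_i(t+1) − x_i(t))². Node i reads node i − 1, indices modulo n.

```python
from itertools import product


def step(x, signs):
    """Synchronous update: node i copies (sign +1) or negates (sign -1) node i-1."""
    return tuple(x[i - 1] if s == 1 else 1 - x[i - 1] for i, s in enumerate(signs))


def frustration(x, signs):
    """Global frustration F(x): number of frustrated arrows i-1 -> i."""
    return sum(
        (s == 1 and x[i - 1] != x[i]) or (s == -1 and x[i - 1] == x[i])
        for i, s in enumerate(signs)
    )


def kinetic_energy(x, signs):
    """E_c(x(t)) = 1/2 * sum_i (x_i(t+1) - x_i(t))**2."""
    y = step(x, signs)
    return 0.5 * sum((a - b) ** 2 for a, b in zip(y, x))


def check_proposition_1(signs, steps=20):
    """From every initial configuration, check E_c = F/2 and F constant."""
    for x in product((0, 1), repeat=len(signs)):
        f0 = frustration(x, signs)
        for _ in range(steps):
            assert kinetic_energy(x, signs) == f0 / 2
            x = step(x, signs)
            assert frustration(x, signs) == f0
    return True


print(check_proposition_1((1, -1, 1, 1, -1, 1, 1, 1)))  # True
```

The reason it works: node i changes state exactly when the arrow i − 1 → i is frustrated, and since each local function is a bijection, a frustrated arrow at time t becomes a frustrated arrow one position further along the circuit at time t + 1, so the count F is preserved.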

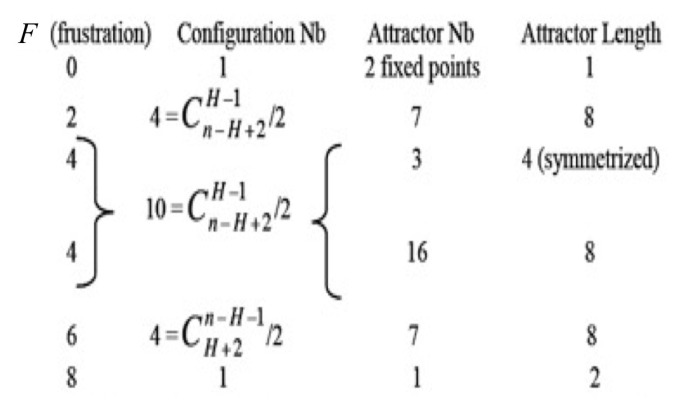

Proposition 1 holds when the network is a circuit whose local transition functions are the Boolean identity or negation [75]; on such circuits, the global frustration F is easy to calculate, and one can show that it characterizes the trajectories and remains constant along them (Figure 9). Consider now the dynamic entropy E of a Hopfield network [53,76] as a robustness parameter quantifying the capacity of the network to return to its equilibrium measure on the configuration space after a perturbation. E can be calculated using the following equality:

where , being the value of the invariant measure at the configuration x, and , m being the number of the network attractors and the attraction basin of the attractor .

Figure 9.

Description of the attractors of circuits of length 8 whose Boolean local transition functions are either identity or negation.
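The attractors of such circuits can be enumerated by brute force over the 2⁸ configurations. A minimal sketch, assuming synchronous updating (the function names are illustrative): each trajectory is followed until a configuration repeats, the repeated part is recorded as the attractor, and the number of initial configurations reaching it is its basin size.

```python
from itertools import product


def step(x, signs):
    """Synchronous update: node i copies (sign +1) or negates (sign -1) node i-1."""
    return tuple(x[i - 1] if s == 1 else 1 - x[i - 1] for i, s in enumerate(signs))


def attractors(signs):
    """Map each attractor (frozenset of configurations) to its basin size."""
    basins = {}
    for start in product((0, 1), repeat=len(signs)):
        order = {}
        x = start
        while x not in order:
            order[x] = len(order)
            x = step(x, signs)
        # The configurations visited from the first occurrence of x form the cycle.
        cycle = frozenset(y for y, k in order.items() if k >= order[x])
        basins[cycle] = basins.get(cycle, 0) + 1
    return basins


positive = attractors((1,) * 8)          # 8 identities: positive circuit
negative = attractors((1,) * 7 + (-1,))  # one negation: negative circuit
print(len(positive), sorted({len(c) for c in positive}))  # 36 [1, 2, 4, 8]
print(len(negative), sorted({len(c) for c in negative}))  # 16 [16]
```

Because the circuit dynamics is a bijection, every configuration lies on a cycle. The positive circuit acts as a rotation (36 attractors, the binary necklaces of length 8, with periods dividing 8), while after 8 steps the negative circuit complements every configuration, so all of its attractors have period 16.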

The derivative ∂E/∂w of the dynamic entropy E with respect to the absolute value w of the interaction weights can be considered as a new robustness parameter, and we can prove the following result [8,51]:

Proposition 2.

In the parallel updating mode of a Hopfield network, we have:

where is taken for the invariant Gibbs measure μ defined by: , with .
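As an illustration under an explicit assumption, take the Gibbs measure to be μ_w(x) = exp(−w F(x))/Z_w over all configurations x. For this assumed measure, the Shannon entropy S(w) = −Σ μ_w log μ_w equals w⟨F⟩ + log Z_w, and differentiating gives dS/dw = −w Var_μ(F). The identity is a property of the assumed measure, offered only to make concrete the link between the sensitivity of an entropy to w and the variance of the frustration; it is not claimed to be the exact statement of Proposition 2. The sketch checks it by central finite differences on a small circuit.

```python
import math
from itertools import product


def frustration(x, signs):
    """Global frustration of a Boolean circuit with signed arrows i-1 -> i."""
    return sum(
        (s == 1 and x[i - 1] != x[i]) or (s == -1 and x[i - 1] == x[i])
        for i, s in enumerate(signs)
    )


def gibbs(w, values):
    """Assumed Gibbs measure mu_w(x) = exp(-w F(x)) / Z_w."""
    weights = [math.exp(-w * f) for f in values]
    z = sum(weights)
    return [q / z for q in weights]


def entropy(p):
    return -sum(q * math.log(q) for q in p if q > 0)


def variance(p, values):
    mean = sum(q * f for q, f in zip(p, values))
    return sum(q * (f - mean) ** 2 for q, f in zip(p, values))


signs = (1, 1, -1, 1, 1, 1)
values = [frustration(x, signs) for x in product((0, 1), repeat=len(signs))]
w, dw = 0.7, 1e-5
numeric = (entropy(gibbs(w + dw, values)) - entropy(gibbs(w - dw, values))) / (2 * dw)
analytic = -w * variance(gibbs(w, values), values)
print(numeric, analytic)  # agree up to finite-difference error
```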

Proposition 2 indicates a close relationship between the robustness (or structural stability) with respect to the parameter w and the global dynamic frustration F, which is in general easy to calculate. For the configuration x of the genetic network controlling the flowering of Arabidopsis thaliana [77], there are only two frustrated pairs (Figure 10).

Figure 10.

Frustrated pairs of nodes belonging to positive circuits of length 2 in the genetic network controlling the flowering of Arabidopsis thaliana. The network evolves by decreasing the global frustration until it reaches the attractor (here a fixed configuration, whose last changes are indicated in red), on which the frustration remains constant.

More generally, all the energy functions introduced in the examples are theoretically calculable and could serve, as in physics, to quantify structural stability. When the number of states in a discrete state space E is too large, or when the integrals over a continuous state space E are difficult to evaluate, Monte Carlo procedures can provide good estimates of quantities such as the dynamic entropy or the variance of the global frustration.
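A minimal Monte Carlo sketch of the last point, under assumptions: the signed graph below is hypothetical, and the measure is taken to be the Gibbs form μ_w(x) ∝ exp(−w F(x)). The variance of the global frustration is estimated with a Metropolis sampler (single-node flips, acceptance probability min(1, e^(−w ΔF))) and compared with exact enumeration, which is still feasible for 6 nodes; on realistic networks only the sampler would be run.

```python
import math
import random
from itertools import product

# Hypothetical signed interaction graph: (k, i, sign of w_ik) is an arrow k -> i.
EDGES = [(0, 1, 1), (1, 2, -1), (2, 0, 1), (2, 3, 1),
         (3, 4, 1), (4, 5, -1), (5, 3, -1), (1, 4, 1)]
N = 6


def frustration(x):
    """Global frustration F(x): number of frustrated signed arrows."""
    return sum((s == 1 and x[k] != x[i]) or (s == -1 and x[k] == x[i])
               for k, i, s in EDGES)


def exact_variance(w):
    """Var(F) under mu_w(x) = exp(-w F(x)) / Z_w, by full enumeration."""
    values = [frustration(x) for x in product((0, 1), repeat=N)]
    weights = [math.exp(-w * f) for f in values]
    z = sum(weights)
    mean = sum(q * f for q, f in zip(weights, values)) / z
    return sum(q * (f - mean) ** 2 for q, f in zip(weights, values)) / z


def metropolis_variance(w, steps=200_000, burn_in=10_000, seed=0):
    """Metropolis estimate of Var(F) under mu_w (symmetric single-flip proposal)."""
    rng = random.Random(seed)
    x = [rng.randint(0, 1) for _ in range(N)]
    f = frustration(x)
    s1 = s2 = 0.0
    count = 0
    for t in range(steps):
        i = rng.randrange(N)
        x[i] ^= 1                      # propose flipping node i
        f_new = frustration(x)
        if f_new <= f or rng.random() < math.exp(-w * (f_new - f)):
            f = f_new                  # accept the move
        else:
            x[i] ^= 1                  # reject: undo the flip
        if t >= burn_in:
            s1 += f
            s2 += f * f
            count += 1
    mean = s1 / count
    return s2 / count - mean * mean


print(exact_variance(0.5), metropolis_variance(0.5))
```

The sample size, burn-in and seed are arbitrary choices for the illustration; in practice they would be tuned by monitoring the autocorrelation of F along the chain.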

5. Conclusions and Perspectives

We have defined some new tools, grounded in thermodynamic concepts, that rely on the notion of entropy and are common to both continuous and discrete dynamical systems. These notions are useful in biological modeling to express how precise energy functions, related to the various concentrations or population sizes involved in the transition equation of a biological dynamical system, can be conserved or dissipated along the trajectories until their ultimate asymptotic behavior, the attractors. Future work could be done in the framework of more general random systems [78,79], in which the notions of entropy, invariant measure and stochastic attractors (also called confiners in [54]) are classical, in order to interpret the data observed in all the fields (neural, genetic and social) considered in the present study and to use these interpretations in practical applications (see, for example, [19,80] for some biomedical applications).

Acknowledgments

We are indebted to the Projects PHC Maghreb SCIM (Complex Systems and Medical Engineering), ANR e-swallhome, and H3ABioNet for providing research grants and administrative support.

Author Contributions

All authors contributed equally to the research presented in the paper and the development of the article.

Conflicts of Interest

The authors declare no conflict of interest.

References

- 1. Mason O., Verwoerd M. Graph theory and networks in biology. IET Syst. Biol. 2007;1:89–119. doi: 10.1049/iet-syb:20060038.

- 2. Gosak M., Markovič R., Dolenšek J., Rupnik M.S., Marhl M., Stožer A., Perc M. Network Science of Biological Systems at Different Scales: A Review. Phys. Life Rev. 2017 doi: 10.1016/j.plrev.2017.11.003.

- 3. Kurz F.T., Kembro J.M., Flesia A.G., Armoundas A.A., Cortassa S., Aon M.A., Lloyd D. Network dynamics: Quantitative analysis of complex behavior in metabolism, organelles, and cells, from experiments to models and back. Wiley Interdiscip. Rev. Syst. Biol. Med. 2017;9 doi: 10.1002/wsbm.1352.

- 4. Christakis N.A., Fowler J.H. The spread of obesity in a large social network over 32 years. N. Engl. J. Med. 2007;357:370–379. doi: 10.1056/NEJMsa066082.

- 5. Demongeot J., Jelassi M., Taramasco C. From susceptibility to frailty in social networks: The case of obesity. Math. Popul. Stud. 2017;24:219–245. doi: 10.1080/08898480.2017.1348718.

- 6. Federer C., Zylberberg J. A self-organizing memory network. BioRxiv. 2017 doi: 10.1101/144683.

- 7. Grillner S. Biological pattern generation: the cellular and computational logic of networks in motion. Neuron. 2006;52:751–766. doi: 10.1016/j.neuron.2006.11.008.

- 8. Demongeot J., Benaouda D., Jezequel C. “Dynamical confinement” in neural networks and cell cycle. Chaos Interdiscip. J. Nonlinear Sci. 1995;5:167–173. doi: 10.1063/1.166064.

- 9. Erdös P., Rényi A. On random graphs. Pub. Math. 1959;6:290–297.

- 10. Barabási A.L., Albert R. Emergence of scaling in random networks. Science. 1999;286:509–512. doi: 10.1126/science.286.5439.509.

- 11. Albert R., Jeong H., Barabási A.L. Error and attack tolerance of complex networks. Nature. 2000;406:378–382. doi: 10.1038/35019019.

- 12. Demetrius L. Thermodynamics and evolution. J. Theor. Biol. 2000;206:1–16. doi: 10.1006/jtbi.2000.2106.

- 13. Jeong H., Mason S.P., Barabási A.L., Oltvai Z.N. Lethality and centrality in protein networks. Nature. 2001;411:41–42. doi: 10.1038/35075138.

- 14. Demetrius L., Manke T. Robustness and network evolution—An entropic principle. Phys. A Stat. Mech. Appl. 2005;346:682–696. doi: 10.1016/j.physa.2004.07.011.

- 15. Manke T., Demetrius L., Vingron M. An entropic characterization of protein interaction networks and cellular robustness. J. R. Soc. Interface. 2006;3:843–850. doi: 10.1098/rsif.2006.0140.

- 16. Gómez-Gardeñes J., Latora V. Entropy rate of diffusion processes on complex networks. Phys. Rev. E Stat. Nonlinear Soft Matter Phys. 2008;78:065102. doi: 10.1103/PhysRevE.78.065102.

- 17. Li L., Bing-Hong W., Wen-Xu W., Tao Z. Network entropy based on topology configuration and its computation to random networks. Chin. Phys. Lett. 2008;25:4177–4180. doi: 10.1016/j.physleta.2008.03.061.

- 18. Teschendorff A.E., Severini S. Increased entropy of signal transduction in the cancer metastasis phenotype. BMC Syst. Biol. 2010;4:104. doi: 10.1186/1752-0509-4-104.

- 19. West J., Bianconi G., Severini S., Teschendorff A. Differential network entropy reveals cancer system hallmarks. Sci. Rep. 2012;2 doi: 10.1038/srep00802.

- 20. Banerji C.R., Miranda-Saavedra D., Severini S., Widschwendter M., Enver T., Zhou J.X., Teschendorff A.E. Cellular network entropy as the energy potential in Waddington’s differentiation landscape. Sci. Rep. 2013;3:3039. doi: 10.1038/srep03039.

- 21. Teschendorff A.E., Sollich P., Kuehn R. Signalling entropy: A novel network-theoretical framework for systems analysis and interpretation of functional omic data. Methods. 2014;67:282–293. doi: 10.1016/j.ymeth.2014.03.013.

- 22. Teschendorff A.E., Banerji C.R.S., Severini S., Kuehn R., Sollich P. Increased signaling entropy in cancer requires the scale-free property of protein interaction networks. Sci. Rep. 2015;5:9646. doi: 10.1038/srep09646.

- 23. Toulouse G. Theory of the frustration effect in spin glasses: I. Commun. Phys. 1977;2:115–119.

- 24. Robert F. Discrete Iterations: A Metric Study. Volume 6 Springer; Berlin, Germany: 1986.

- 25. Cosnard M., Demongeot J. Attracteurs: Une approche déterministe. C. R. Acad. Sci. Ser. I Math. 1985;300:551–556.

- 26. Cosnard M., Demongeot J. On the definitions of attractors. Lect. Notes Comput. Sci. 1985;1163:23–31.

- 27. Szabó K.G., Tél T. Thermodynamics of attractor enlargement. Phys. Rev. E. 1994;50:1070–1082. doi: 10.1103/PhysRevE.50.1070.

- 28. Wang J., Xu L., Wang E. Potential landscape and flux framework of nonequilibrium networks: Robustness, dissipation, and coherence of biochemical oscillations. Proc. Natl. Acad. Sci. USA. 2008;105:12271–12276. doi: 10.1073/pnas.0800579105.

- 29. Menck P.J., Heitzig J., Marwan N., Kurths J. How basin stability complements the linear-stability paradigm. Nat. Phys. 2013;9:89–92. doi: 10.1038/nphys2516.

- 30. Bowen R. ω-limit sets for axiom A diffeomorphisms. J. Differ. Equ. 1975;18:333–339. doi: 10.1016/0022-0396(75)90065-0.

- 31. Williams R. Expanding attractors. Publ. Math. l’Inst. Hautes Études Sci. 1974;43:169–203. doi: 10.1007/BF02684369.

- 32. Ruelle D. Small random perturbations of dynamical systems and the definition of attractors. Commun. Math. Phys. 1981;82:137–151. doi: 10.1007/BF01206949.

- 33. Ruelle D. Small random perturbations and the definition of attractors. Geom. Dyn. 1983;82:663–676.

- 34. Haraux A. Attractors of asymptotically compact processes and applications to nonlinear partial differential equations. Commun. Part. Differ. Equ. 1988;13:1383–1414. doi: 10.1080/03605308808820580.

- 35. Hale J. Asymptotic behavior of dissipative systems. Bull. Am. Math. Soc. 1990;22:175–183.

- 36. Audin M. Hamiltonian Systems and Their Integrability. American Mathematical Society; Providence, RI, USA: 2008.

- 37. Demongeot J., Glade N., Forest L. Liénard systems and potential-Hamiltonian decomposition I–methodology. C. R. Math. 2007;344:121–126. doi: 10.1016/j.crma.2006.10.016.

- 38. Waddington C. Organizers and Genes. Cambridge University Press; Cambridge, UK: 1940.

- 39. Demongeot J., Thomas R., Thellier M. A mathematical model for storage and recall functions in plants. C. R. Acad. Sci. Ser. III. 2000;323:93–97. doi: 10.1016/S0764-4469(00)00103-7.

- 40. Thellier M., Demongeot J., Norris V., Guespin J., Ripoll C., Thomas R. A logical (discrete) formulation for the storage and recall of environmental signals in plants. Plant Biol. 2004;6:590–597. doi: 10.1055/s-2004-821090.

- 41. Thomas R. Boolean formalization of genetic control circuits. J. Theor. Biol. 1973;42:563–585. doi: 10.1016/0022-5193(73)90247-6.

- 42. Thomas R. On the relation between the logical structure of systems and their ability to generate multiple steady states or sustained oscillations. Springer Ser. Synerg. 1981;9:180–193.

- 43. Tonnelier A., Meignen S., Bosch H., Demongeot J. Synchronization and desynchronization of neural oscillators. Neural Netw. 1999;12:1213–1228. doi: 10.1016/S0893-6080(99)00068-4.

- 44. Demongeot J., Kaufman M., Thomas R. Positive feedback circuits and memory. C. R. Acad. Sci. Ser. III. 2000;323:69–79. doi: 10.1016/S0764-4469(00)00112-8.

- 45. Ben Amor H., Glade N., Lobos C., Demongeot J. The isochronal fibration: Characterization and implication in biology. Acta Biotheor. 2010;58:121–142. doi: 10.1007/s10441-010-9099-4.

- 46. Demongeot J., Hazgui H., Ben Amor H., Waku J. Stability, complexity and robustness in population dynamics. Acta Biotheor. 2014;62:243–284. doi: 10.1007/s10441-014-9229-5.

- 47. Demongeot J., Jezequel C., Sené S. Asymptotic behavior and phase transition in regulatory networks. I. Theoretical results. Neural Netw. 2008;21:962–970. doi: 10.1016/j.neunet.2008.04.005.

- 48. Demongeot J., Elena A., Sené S. Robustness in neural and genetic networks. Acta Biotheor. 2008;56:27–49. doi: 10.1007/s10441-008-9029-x.

- 49. Demongeot J., Ben Amor H., Elena A., Gillois P., Noual M., Sené S. Robustness in regulatory interaction networks. A generic approach with applications at different levels: physiologic, metabolic and genetic. Int. J. Mol. Sci. 2009;10:4437–4473. doi: 10.3390/ijms10104437.

- 50. Demongeot J., Elena A., Noual M., Sené S., Thuderoz F. “Immunetworks”, intersecting circuits and dynamics. J. Theor. Biol. 2011;280:19–33. doi: 10.1016/j.jtbi.2011.03.023.

- 51. Demongeot J., Waku J. Robustness in biological regulatory networks I: Mathematical approach. C. R. Math. 2012;350:221–224. doi: 10.1016/j.crma.2012.01.003.

- 52. Demongeot J., Cohen O., Henrion-Caude A. Systems Biology of Metabolic and Signaling Networks. Springer; Berlin, Germany: 2013. MicroRNAs and robustness in biological regulatory networks. A generic approach with applications at different levels: physiologic, metabolic, and genetic; pp. 63–114.

- 53. Demongeot J., Demetrius L. Complexity and stability in biological systems. Int. J. Bifurc. Chaos. 2015;25:1540013. doi: 10.1142/S0218127415400131.

- 54. Demongeot J., Jacob C. Confineurs: une approche stochastique des attracteurs. C. R. Acad. Sci. Ser. I Math. 1989;309:699–702.

- 55. Demetrius L. Statistical mechanics and population biology. J. Stat. Phys. 1983;30:709–753. doi: 10.1007/BF01009685.

- 56. Demetrius L., Demongeot J. A Thermodynamic approach in the modeling of the cellular-cycle. Biometrics. 1984;40:259–260.

- 57. Demetrius L. Directionality principles in thermodynamics and evolution. Proc. Natl. Acad. Sci. USA. 1997;94:3491–3498. doi: 10.1073/pnas.94.8.3491.

- 58. Demetrius L., Gundlach V., Ochs G. Complexity and demographic stability in population models. Theor. Popul. Biol. 2004;65:211–225. doi: 10.1016/j.tpb.2003.12.002.

- 59. Demetrius L., Ziehe M. Darwinian fitness. Theor. Popul. Biol. 2007;72:323–345. doi: 10.1016/j.tpb.2007.05.004.

- 60. Demongeot J., Sené S. Asymptotic behavior and phase transition in regulatory networks. II Simulations. Neural Netw. 2008;21:971–979. doi: 10.1016/j.neunet.2008.04.003.

- 61. Delbrück M. Discussion au Cours du Colloque International sur les Unités Biologiques Douées de Continuité Génétique. Volume 8. CNRS; Paris, France: 1949. pp. 33–35.

- 62. Cinquin O., Demongeot J. Positive and negative feedback: Striking a balance between necessary antagonists. J. Theor. Biol. 2002;216:229–241. doi: 10.1006/jtbi.2002.2544.

- 63. Barthelemy M. Betweenness centrality in large complex networks. Eur. Phys. J. B Conden. Matter Complex Syst. 2004;38:163–168. doi: 10.1140/epjb/e2004-00111-4.

- 64. Myers A., Rosen J.C. Obesity stigmatization and coping: Relation to mental health symptoms, body image, and self-esteem. Int. J. Obes. Related Metab. Disord. 1999;23 doi: 10.1038/sj.ijo.0800765.

- 65. Cohen-Cole E., Fletcher J.M. Is obesity contagious? Social networks vs. environmental factors in the obesity epidemic. J. Health Econ. 2008;27:1382–1387. doi: 10.1016/j.jhealeco.2008.04.005.

- 66. Cauchi D., Gauci D., Calleja N., Marsh T., Webber L. Application of the UK foresight obesity model in Malta: Health and economic consequences of projected obesity trend. Obes. Facts. 2015;8:141. doi: 10.1371/journal.pone.0079827.

- 67. Jones R., Breda J. Surveillance of Overweight including Obesity in Children Under 5: Opportunities and Challenges for the European Region. Obes. Facts. 2015;8:124.

- 68. Shaw A., Retat L., Brown M., Divajeva D., Webber L. Beyond BMI: projecting the future burden of obesity in England using different measures of adiposity. Obes. Facts. 2015;8:135–136.

- 69. Demongeot J., Elena A., Taramasco C. Social Networks and Obesity. Application to an interactive system for patient-centred therapeutic education. In: Ahima R.S., editor. Metabolic Syndrome: A Comprehensive Textbook. Springer; New York, NY, USA: 2015. pp. 287–307.

- 70. Breda J. WHO plans for action on primary prevention of obesity. Obes. Facts. 2015;8:17.

- 71. Hopfield J. Neural networks and physical systems with emergent collective computational abilities. Proc. Natl. Acad. Sci. USA. 1982;79:2554–2558. doi: 10.1073/pnas.79.8.2554.

- 72. Gardner M., Ashby W. Connectance of large dynamic (cybernetic) systems: Critical values for stability. Nature. 1970;228:784. doi: 10.1038/228784a0.

- 73. Wagner A. Princeton Studies in Complexity. Princeton University Press; Princeton, NJ, USA: 2005. Robustness and evolvability in living systems.

- 74. Gunawardena S. The robustness of a biochemical network can be inferred mathematically from its architecture. Biol. Syst. Theory. 2010;328:581–582.

- 75. Demongeot J., Noual M., Sené S. Combinatorics of Boolean automata circuits dynamics. Discret. Appl. Math. 2012;160:398–415. doi: 10.1016/j.dam.2011.11.005.

- 76. Demongeot J., Demetrius L. La dérive démographique et la sélection naturelle: Étude empirique de la France (1850–1965). Population. 1989;2:231–248.

- 77. Demongeot J., Goles E., Morvan M., Noual M., Sené S. Attraction basins as gauges of robustness against boundary conditions in biological complex systems. PLoS ONE. 2010;5:e11793. doi: 10.1371/journal.pone.0011793.

- 78. Ventsell A., Freidlin M. On small random perturbations of dynamical systems. Russ. Math. Surv. 1970;25:1–55. doi: 10.1070/RM1970v025n01ABEH001254.

- 79. Young L. Some Large Deviation Results for Dynamical Systems. Trans. Am. Math. Soc. 1990;318:525–543. doi: 10.2307/2001318.

- 80. Weaver D., Workman C., Stormo G. Modeling regulatory networks with weight matrices. Proc. Pac. Symp. Biocomput. 1999;4:112–123. doi: 10.1142/9789814447300_0011.