Abstract

Models can be simple for different reasons: because they yield a simple and computationally efficient interpretation of a generic dataset (e.g., in terms of pairwise dependencies), as in statistical learning, or because they capture the laws of a specific phenomenon, as in physics, leading to non-trivial falsifiable predictions. In information theory, the simplicity of a model is quantified by the stochastic complexity, which measures the number of bits needed to encode its parameters. In order to understand what simple models look like, we study the stochastic complexity of spin models with interactions of arbitrary order. We show that bijections within the space of possible interactions preserve the stochastic complexity, which allows us to partition the space of all models into equivalence classes. We thus find that the simplicity of a model is not determined by the order of the interactions, but rather by their mutual arrangement. Models where statistical dependencies are localized on non-overlapping groups of few variables are simple, affording predictions on independencies that are easy to falsify. On the contrary, fully connected pairwise models, which are often used in statistical learning, appear to be highly complex because of their extended set of interactions, and they are hard to falsify.

Keywords: statistical inference, model complexity, minimum description length, spin models

1. Introduction

Science, as the endeavour of reducing complex phenomena to simple principles and models, has been instrumental in solving practical problems. Yet, problems such as image or speech recognition and language translation have shown that Big Data can solve problems without necessarily understanding them [1,2,3]. A statistical model trained on a sufficiently large number of instances can learn how to mimic the performance of the human brain on these tasks [4,5]. These models are simple in the sense that they are easy to evaluate, train, and/or infer. They offer simple interpretations in terms of low order (typically pairwise) dependencies, which in turn afford an explicit graph theoretical representation [6]. Their aim is not to uncover fundamental laws but to “generalize well”, i.e., to describe yet unseen data well. For this reason, machine learning relies on “universal” models that are apt to describe any possible data on which they can be trained [7], using suitable “regularization” schemes in order to tame parameter fluctuations (overfitting) and achieve small generalization error [8]. Scientific models, instead, are the simplest possible descriptions of experimental results. A physical model is a representation of a real system and its structure reflects the laws and symmetries of Nature. It predicts well not because it generalizes well, but rather because it captures essential features of the specific phenomena that it describes. It should depend on few parameters and is designed to provide predictions that are easy to falsify [9]. For example, Newton’s laws of motion are consistent with momentum conservation, a fact that can be checked in scattering experiments.

The intuitive notion of a “simple model” hints at a succinct description, one that requires few bits [10]. The stochastic complexity [11], derived within Minimum Description Length (MDL) [12,13], provides a quantitative measure for “counting” the complexity of models in bits. The question this paper addresses is: what are the features of simple models according to MDL, and are they simple in the sense surmised in statistical learning or in physics? In particular, are models with up to pairwise interactions, which are frequently used in statistical learning, simple?

We address this issue in the context of spin models, describing the statistical dependence among n binary variables. There has been a surge of recent interest in the inference of spin models [14] from high dimensional data, most of which was limited to pairwise models. This is partly because pairwise models allow for an intuitive graph representation of statistical dependencies. Most importantly, since the number of k-variable interactions grows as $n^k$, the number of samples is hardly sufficient to go beyond $k=2$. For this reason, efforts to go beyond pairwise interactions have mostly focused on low order interactions (e.g., $k=3$, see [15] and references therein). Reference [16] recently suggested that even for data generated by models with higher order interactions, pairwise models may provide a sufficiently accurate description of the data. Within the class of pairwise models, L1 regularization [17] has proven to be a remarkably efficient heuristic for model selection (but see also [18]).

Here we focus on the exponential family of spin models with interactions of arbitrary order. This class of models assumes a sharp separation between relevant observables and irrelevant ones, the expected value of which is predicted by the model. In this setting, the stochastic complexity [11] computed within MDL coincides with the penalty that, in Bayesian model selection, accounts for the model’s complexity under non-informative (Jeffreys’) priors [19].

1.1. The Exponential Family of Spin Models (With Interactions of Arbitrary Order)

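Consider n spin variables, $\mathbf{s}=(s_1,\ldots,s_n)$, taking values $s_i=\pm 1$, and the set of all product spin operators, $\phi^{\mu}(\mathbf{s})=\prod_{i\in\mu}s_i$, where $\mu$ is any subset of the indices $\{1,\ldots,n\}$. Each operator $\phi^{\mu}$ models the interaction that involves all the spins in the subset $\mu$.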

Definition 1.

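The probability distribution of $\mathbf{s}$ under spin model $\mathcal{M}$ is defined as:

$$P(\mathbf{s}\mid\mathbf{g},\mathcal{M})=\frac{1}{Z_{\mathcal{M}}(\mathbf{g})}\exp\Big(\sum_{\mu\in\mathcal{M}}g^{\mu}\phi^{\mu}(\mathbf{s})\Big),\tag{1}$$

with

$$Z_{\mathcal{M}}(\mathbf{g})=\sum_{\mathbf{s}}\exp\Big(\sum_{\mu\in\mathcal{M}}g^{\mu}\phi^{\mu}(\mathbf{s})\Big)\tag{2}$$

being the partition function, which ensures normalisation. The model $\mathcal{M}$ is identified by the set of product spin operators $\phi^{\mu}$, $\mu\in\mathcal{M}$, that it contains.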

Note that, under this definition, we consider interactions of arbitrary order (see Section SM-0 of the Supplementary Material). For instance, for pairwise interaction models, the operators $\phi^{\mu}$ are single spins $s_i$ or products of two spins $s_i s_j$, for $i,j\in\{1,\ldots,n\}$. The $g^{\mu}$ are the conjugate parameters [20] that modulate the strength of the interaction associated with $\phi^{\mu}$.
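As an illustration of Definition 1, the following minimal sketch evaluates the distribution by brute-force enumeration of the $2^n$ states. The representation of an operator as a tuple of spin indices, the function names, and the example couplings are assumptions made here for illustration; they are not taken from the paper or its Supplementary Material.

```python
import itertools
import math

def operator(mu, s):
    """Product spin operator: product of s[i] over the indices i in mu."""
    return math.prod(s[i] for i in mu)

def distribution(model, g, n):
    """Return {state: probability} for the operators in `model` with couplings `g`.

    Brute force over all 2**n states, so only practical for small n.
    """
    states = list(itertools.product((-1, 1), repeat=n))
    weights = [
        math.exp(sum(gm * operator(mu, s) for mu, gm in zip(model, g)))
        for s in states
    ]
    Z = sum(weights)  # partition function, ensures normalisation
    return {s: w / Z for s, w in zip(states, weights)}

# Hypothetical example: two spins, a field on each spin and one pairwise coupling.
model = [(0,), (1,), (0, 1)]
g = [0.2, -0.1, 0.5]
p = distribution(model, g, n=2)
```

With all couplings set to zero the same function returns the uniform distribution, which is a quick sanity check on the normalisation.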

We remark that the model defined in Equation (1) belongs to the exponential family of spin models. In other words, it can be derived as the maximum entropy distribution that is consistent with the requirement that the model reproduces the empirical averages of the operators $\phi^{\mu}(\mathbf{s})$ for all $\mu\in\mathcal{M}$ on a given dataset [21,22]. Consequently, the empirical averages of the $\phi^{\mu}(\mathbf{s})$ are sufficient statistics, i.e., their values are enough to compute the maximum likelihood parameters $\hat{\mathbf{g}}$. Therefore the choice of the operators in $\mathcal{M}$ inherently entails a sharp separation between relevant variables (the sufficient statistics) and irrelevant ones, which may have important consequences in the inference process. For example, if statistical inference assumes pairwise interactions, it might be blind to relevant patterns in the data resulting from higher order interactions. Without prior knowledge, all models $\mathcal{M}$ should be compared. According to MDL and Bayesian model selection (see Section SM-0 of the Supplementary Material), models should be compared on the basis of their maximum (log)likelihood penalized by their complexity. In other words, simple models should be preferred a priori.

1.2. Stochastic Complexity

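The complexity of a model can be defined unambiguously within MDL as the number of bits needed to specify a priori the parameters $\hat{\mathbf{g}}$ that best describe a dataset $\hat{\mathbf{s}}$ consisting of N samples independently drawn from the distribution $P(\mathbf{s}\mid\mathbf{g},\mathcal{M})$ for some unknown $\mathbf{g}$ (see Section SM-0 of the Supplementary Material). Asymptotically for $N\to\infty$, for systems of discrete variables, the MDL complexity is given by [23,24]:

$$\log\sum_{\hat{\mathbf{s}}}P(\hat{\mathbf{s}}\mid\hat{\mathbf{g}}(\hat{\mathbf{s}}),\mathcal{M})\simeq\frac{|\mathcal{M}|}{2}\log\frac{N}{2\pi}+c_{\mathcal{M}}.\tag{3}$$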

The first term on the right-hand side, which is the basis of the Bayesian Information Criterion (BIC) [25,26], captures the increase of the complexity with the number $|\mathcal{M}|$ of model parameters and with the number N of data points. This accounts for the fact that the uncertainty in each parameter decreases with N as $1/\sqrt{N}$, so its description requires $\sim\frac{1}{2}\log N$ bits. The second term, $c_{\mathcal{M}}$, quantifies the statistical dependencies between the parameters, and encodes the intrinsic notion of simplicity we are interested in. The sum of these two terms, on the right-hand side of (3), is generally referred to as the stochastic complexity [11,26]. However, to distinguish these two terms, we will refer to $c_{\mathcal{M}}$ as the stochastic complexity and to the other as the BIC term.

Definition 2.

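For models of the exponential family, the stochastic complexity $c_{\mathcal{M}}$ in Equation (3) is given by [23,24]

$$c_{\mathcal{M}}=\log\int d\mathbf{g}\,\sqrt{\det\mathbf{J}(\mathbf{g})},\tag{4}$$

where $\mathbf{J}(\mathbf{g})$ is the Fisher Information matrix with entries

$$J_{\mu\nu}(\mathbf{g})=\frac{\partial^{2}}{\partial g^{\mu}\,\partial g^{\nu}}\log Z_{\mathcal{M}}(\mathbf{g}).\tag{5}$$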

For the exponential family of models, the MDL criterion (3) coincides with the Bayesian model selection approach, assuming Jeffreys’ prior over the parameters $\mathbf{g}$ [26,27,28] (see Section SM-0 of the Supplementary Material). Notice, however, that we take $c_{\mathcal{M}}$ as an information theoretic measure of model complexity, and abstain from entering into the debate on whether Jeffreys’ prior is an adequate choice in Bayesian inference (see e.g., [29]).

Within a fully Bayesian approach, the model that maximises its posterior given the data $\hat{\mathbf{s}}$, $P(\mathcal{M}\mid\hat{\mathbf{s}})$, is the one to be selected. Therefore, if two models have the same number of parameters (same BIC term), the simplest one, i.e., the one with the lowest stochastic complexity $c_{\mathcal{M}}$, has to be chosen a priori. However, the number of possible interactions among n spins is $2^{n}-1$, and therefore the number of spin models is $2^{2^{n}-1}$. The super-exponential growth of the number of models with the number of spins n makes selecting the best model infeasible even for moderate n. Our aim is then to understand how the stochastic complexity depends on the structure of the model and eventually provide guidelines for the search of simpler models in such a huge space.
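To make the growth of the model space concrete, here is a short sketch that counts operators and models for small n. It assumes that an interaction is any non-empty subset of the n spins and that a model is any subset of those interactions (the empty model included); the function names are illustrative.

```python
def count_interactions(n: int) -> int:
    """Number of non-empty subsets of n spins, i.e. candidate product operators."""
    return 2**n - 1

def count_models(n: int) -> int:
    """Number of subsets of operators (the model with no operator included)."""
    return 2 ** count_interactions(n)

# Print the counts for a few small system sizes.
for n in range(1, 6):
    print(n, count_interactions(n), count_models(n))
```

Already for n = 5 the count exceeds two billion, which is why exhaustive comparison of models is out of reach beyond very small systems.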

2. Results

2.1. Gauge Transformations

Let’s start by showing that low order interactions do not have a privileged status and are not necessarily related to low complexity $c_{\mathcal{M}}$, with the following argument: Alice is interested in finding which model $\mathcal{M}$ best describes a dataset $\hat{\mathbf{s}}$; Bob is interested in the same problem, but his dataset $\hat{\boldsymbol{\sigma}}$ is related to Alice’s dataset by a gauge transformation.

Definition 3.

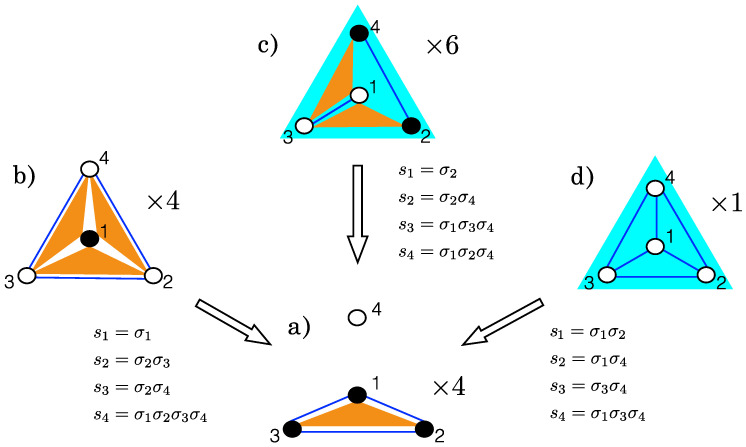

We define a gauge transformation as a bijective transformation between n spin variables $\mathbf{s}$ and n spin variables $\boldsymbol{\sigma}$ that corresponds to a bijection from the set of all operators to itself, $\phi^{\mu}(\mathbf{s})\to\phi^{\mu'}(\boldsymbol{\sigma})$ (see Section SM-1 of the Supplementary Material). Gauge transformations preserve the structure of the set of all operators. For examples of gauge transformations see Figure 1.

Figure 1.

Example of gauge transformations between models with spins. Models are represented by diagrams (color online): spins are full dots • in presence of a local field, empty dots ∘ otherwise; blue lines are pairwise interactions (); orange triangles denote 3-spin interactions (); and the 4-spin interaction () is a filled blue triangle. Note that model a) has all its interactions grouped on 3 spins; the gauge transformations leading to this model are shown along the arrows. All the models belong to the same complexity class, with , and a number of independent operators (e.g., and in model a)—see tables in Section SM-6 of the Supplementary Material. The class contains in total 15 models, which are grouped, with respect to the permutation of the spins, behind the 4 representatives shown here with their multiplicity (). Models a), b), c) and d) are respectively identified by the following sets of operators: (a) ; (b) ; (c) ; and (d) .

This gauge transformation induces a bijective transformation between Alice’s models and those of Bob, as shown in Figure 1, that preserves the number of interactions $|\mathcal{M}|$. Whatever conclusion Bob draws on the relative likelihood of models can be translated into Alice’s world, where it has to coincide with Alice’s result. It follows that two models, $\mathcal{M}$ and $\mathcal{M}'$, related by a gauge transformation, must also have the same complexity, $c_{\mathcal{M}}=c_{\mathcal{M}'}$. In particular, pairwise interactions can be mapped to interactions of any order (see Figure 1), and, consequently, low order interactions are not necessarily simpler than higher order ones.

Proposition 1.

Two models related by a gauge transformation have the same complexity.

Proposition 2.

The stochastic complexity of a model is not defined by the order of its interactions. Models with low order interactions don’t necessarily have a low complexity, and, reciprocally, high order interactions don’t necessarily imply large complexity.

Observe that models connected by gauge transformations have remarkably different structures. In Figure 1, model a) has all the possible interactions concentrated on 3 spins, having the properties of a simplicial complex [30,31]; however, its gauge-transformed counterparts are not simplicial complexes. Model d) is invariant under any permutation of the four spins, whereas the other models have a lower degree of symmetry under permutations (see the different multiplicities in Figure 1).

Gauge transformations are discussed in more detail in Section SM-1 of the Supplementary Material. One can also see them as a change of the basis in which the operators are expressed. Counting the number of possible bases then gives us the number of gauge transformations (see Section SM-1 of the Supplementary Material).
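The change-of-basis picture can be sketched in a few lines. The sketch below assumes a particular encoding that is not spelled out in the text: each operator is an integer bitmask (bit i set when spin i belongs to the operator), the product of two operators is the XOR of their masks, and a gauge transformation is specified by the images of the n single-spin operators, which must be independent over GF(2). The function names and the example transformation are hypothetical.

```python
def gf2_rank(vectors):
    """Rank over GF(2) of a list of integer bitmasks (Gaussian elimination)."""
    pivots = {}  # highest set bit -> reduced vector with that leading bit
    for v in vectors:
        while v:
            top = v.bit_length() - 1
            if top not in pivots:
                pivots[top] = v
                break
            v ^= pivots[top]
    return len(pivots)

def apply_gauge(images, mu):
    """Image of operator `mu`: XOR of the images of its single-spin factors."""
    out = 0
    for i, img in enumerate(images):
        if (mu >> i) & 1:
            out ^= img
    return out

def transform_model(images, model):
    """Apply the transformation to every operator of a model."""
    if gf2_rank(images) != len(images):
        raise ValueError("images of the single-spin operators must be independent")
    return sorted(apply_gauge(images, mu) for mu in model)

# Hypothetical transformation on n = 4 spins: s2 -> s1*s2, other spins unchanged.
images = [0b0001, 0b0011, 0b0100, 0b1000]
pairwise = [0b0011, 0b0110, 0b1100]  # s1*s2, s2*s3, s3*s4
transformed = transform_model(images, pairwise)
```

In this example the pairwise operator s2*s3 is mapped to the 3-spin operator s1*s2*s3, while the number of operators and their GF(2) rank are unchanged.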

Proposition 3.

The total number of gauge transformations for a system of n spin variables is:

$$\mathcal{N}_{GT}(n)=\prod_{i=1}^{n}\left(2^{n}-2^{\,i-1}\right).\tag{6}$$

Notice that the number of gauge transformations (6) is much smaller than the number of possible bijections of the set of states into itself. Indeed, a generic bijection between the state spaces of $\mathbf{s}$ and $\boldsymbol{\sigma}$ maps each product operator $\phi^{\mu}(\mathbf{s})$ to one of the binary functions of $\boldsymbol{\sigma}$, which does not necessarily correspond to a product operator $\phi^{\mu'}(\boldsymbol{\sigma})$.
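The gap between the two counts can be checked numerically. This sketch assumes that a gauge transformation amounts to choosing an ordered basis of n independent operators (first any of the $2^n-1$ operators, then any operator outside the span of those already chosen), and that the bijections of the state space number $(2^n)!$; both function names are illustrative.

```python
import math

def n_gauge(n: int) -> int:
    """Ordered bases of n independent operators: prod_{i=0}^{n-1} (2^n - 2^i)."""
    return math.prod(2**n - 2**i for i in range(n))

def n_state_bijections(n: int) -> int:
    """Permutations of the 2^n spin configurations."""
    return math.factorial(2**n)

# Compare the two counts for small n.
for n in range(1, 5):
    print(n, n_gauge(n), n_state_bijections(n))
```

For n = 4 this gives 20160 transformations against 16! (about 2.1e13) bijections of the state space.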

2.2. Complexity Classes

Gauge transformations define equivalence relations, which partition the set of all models into equivalence classes. Models belonging to the same class are related to each other by a gauge transformation, and thus, from Proposition 1, have the same complexity $c_{\mathcal{M}}$, which leads us to introduce the notion of complexity classes.

Definition 4.

A complexity class is an equivalence class of models defined by gauge transformations.

This classification suggests the presence of “quantum numbers” (invariants), in terms of which models can be classified. These invariants emerge explicitly when writing the cluster expansion of the partition function [32,33,34] (see Section SM-2 of the Supplementary Material):

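$$Z_{\mathcal{M}}(\mathbf{g})=2^{n}\Big(\prod_{\mu\in\mathcal{M}}\cosh g^{\mu}\Big)\sum_{\ell\in\mathcal{L}}\;\prod_{\mu\in\ell}\tanh g^{\mu}.\tag{7}$$

The sum runs on the set $\mathcal{L}$ of all possible loops ℓ that can be formed with the operators $\mu\in\mathcal{M}$, including the empty loop $\ell=\emptyset$.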

Definition 5.

A loop is any subset $\ell\subseteq\mathcal{M}$ such that $\prod_{\mu\in\ell}\phi^{\mu}(\mathbf{s})=1$ for any value of $\mathbf{s}$, i.e., such that each spin occurs zero or an even number of times in this product.

The structure of $Z_{\mathcal{M}}$ in (7) depends on few characteristics of the model $\mathcal{M}$: the number $|\mathcal{M}|$ of operators (or, equivalently, of parameters) and the structure of its set of loops $\mathcal{L}$ (which operator is involved in which loop). The invariance under gauge transformations of the complexity $c_{\mathcal{M}}$ in (4) reveals itself in the fact that the partition functions of models related by a gauge transformation have the same functional dependence on their parameters, up to relabeling.
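The loop expansion can be checked against a brute-force sum over states. The sketch below assumes the expansion has the standard form obtained from $e^{g\phi}=\cosh g\,(1+\phi\tanh g)$ for $\phi=\pm1$, namely $Z=2^n\prod_\mu\cosh g^\mu\sum_{\ell}\prod_{\mu\in\ell}\tanh g^\mu$ with the sum over loops as in Definition 5. Operators are tuples of spin indices; the function names and couplings are illustrative.

```python
import itertools
import math

def op(mu, s):
    """Product spin operator for the index tuple mu."""
    return math.prod(s[i] for i in mu)

def Z_brute(model, g, n):
    """Partition function by direct summation over the 2**n states."""
    return sum(
        math.exp(sum(gm * op(mu, s) for mu, gm in zip(model, g)))
        for s in itertools.product((-1, 1), repeat=n)
    )

def is_loop(subset):
    """True when every spin appears an even number of times in the subset."""
    counts = {}
    for mu in subset:
        for i in mu:
            counts[i] = counts.get(i, 0) + 1
    return all(c % 2 == 0 for c in counts.values())

def Z_loops(model, g, n):
    """Partition function from the loop expansion (empty loop contributes 1)."""
    loop_sum = 0.0
    for r in range(len(model) + 1):
        for sub in itertools.combinations(range(len(model)), r):
            if is_loop([model[k] for k in sub]):
                loop_sum += math.prod(math.tanh(g[k]) for k in sub)
    return 2**n * math.prod(math.cosh(x) for x in g) * loop_sum

# Hypothetical example: the only non-empty loop is {s1, s2, s1*s2}.
model = [(0,), (1,), (0, 1)]
g = [0.3, -0.7, 0.5]
```

Enumerating all subsets of operators is exponential in the number of operators, so this is a check for small models rather than a practical way to evaluate the partition function.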

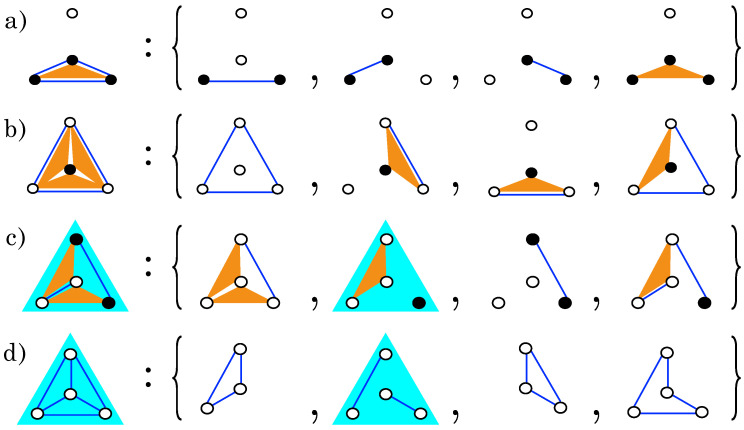

Let us focus on the loop structure of models belonging to the same class. The set of loops $\mathcal{L}$ of any model $\mathcal{M}$ has the structure of a finite Abelian group: if $\ell_1,\ell_2\in\mathcal{L}$, then $\ell_1\oplus\ell_2$ is also a loop of $\mathcal{M}$, where ⊕ is the symmetric difference [35] of two sets (see Section SM-3 of the Supplementary Material). As a consequence, for each model one can identify a minimal generating set of loops, such that any loop in $\mathcal{L}$ can be uniquely expressed as the symmetric difference of loops in the minimal generating set. Note that the choice of the generating set is not unique, though all choices have the same cardinality $\lambda$; Figure 2 gives examples of this decomposition for the models of Figure 1. Note also that $\ell\oplus\ell=\emptyset$ for each loop $\ell$. As a consequence, the cardinality of the loop group is $|\mathcal{L}|=2^{\lambda}$ (including the empty loop ∅). We found that $\lambda$ is related to the number of operators $|\mathcal{M}|$ of the model by $\lambda=|\mathcal{M}|-n_{\mathcal{M}}$ (see Section SM-3 of the Supplementary Material), where $n_{\mathcal{M}}$ is the number of independent operators of a model $\mathcal{M}$, i.e., the maximal number of operators that can be taken in $\mathcal{M}$ without forming any loop. This implies that $\lambda$ attains its minimal value, $\lambda=0$, for models with only independent operators ($|\mathcal{M}|=n_{\mathcal{M}}$), and its maximal value, $\lambda=2^{n}-1-n$, for the complete model $\overline{\mathcal{M}}$, which contains all the $2^{n}-1$ possible operators. The number of independent operators, $n_{\mathcal{M}}$, is preserved by gauge transformations, and, as the total number of operators, $|\mathcal{M}|$, is also an invariant of the class, so is the cardinality $\lambda$ of the minimal generating set. For example, all models in Figure 1 have $n_{\mathcal{M}}=3$ independent operators and $\lambda=4$ (see Figure 2). It can also be shown that gauge transformations imply a duality relation, which associates to each class of models with $|\mathcal{M}|$ operators a class of models with the complementary $2^{n}-1-|\mathcal{M}|$ operators (see Section SM-3 of the Supplementary Material).
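The invariants discussed above can be computed directly for small models. This sketch assumes the bitmask encoding of operators (bit i set when spin i is in the operator), under which the number of independent operators is the rank over GF(2) and a loop is a subset of operators whose XOR vanishes. The names `n_indep` and `lam` stand for the number of independent operators and the size of a minimal generating set of loops; they are illustrative.

```python
import itertools

def gf2_rank(vectors):
    """Rank over GF(2) of a list of integer bitmasks (Gaussian elimination)."""
    pivots = {}  # highest set bit -> reduced vector with that leading bit
    for v in vectors:
        while v:
            top = v.bit_length() - 1
            if top not in pivots:
                pivots[top] = v
                break
            v ^= pivots[top]
    return len(pivots)

def loop_invariants(model):
    """Return (number of independent operators, number of loop generators)."""
    n_indep = gf2_rank(model)
    lam = len(model) - n_indep
    return n_indep, lam

def count_loops(model):
    """Brute-force count of subsets whose XOR is zero (empty loop included)."""
    count = 0
    for r in range(len(model) + 1):
        for sub in itertools.combinations(model, r):
            x = 0
            for mu in sub:
                x ^= mu
            count += (x == 0)
    return count

# Complete model on 3 spins: all 7 non-empty subsets of {s1, s2, s3}.
complete3 = list(range(1, 8))
```

For the complete model on 3 spins this yields 3 independent operators, 4 generators, and 16 loops, in line with the relation between the size of the loop group and the number of generators.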

Figure 2.

Example of a minimal generating set of loops for each model of Figure 1. As these models belong to the same class, their (respective) sets of loops have the same cardinality, $|\mathcal{L}|=2^{\lambda}$, where $\lambda=4$ is the number of generators (as shown here). For model a), one can easily check that the 4 loops of the set are independent, as each of them contains at least one operator that doesn’t appear in the other 3 loops (see Section SM-3 of the Supplementary Material). Within each column, on the r.h.s., loops are related by the same gauge transformation morphing models into one another on the l.h.s. (i.e., the transformations displayed in Figure 1). This shows that the loops of these 4 generating sets have the same structure, which implies that the loop structure of the 4 models is the same. Any loop of a model can finally be obtained by combining a subset of its generating loops. Note that the choice of the generating set is not unique. (a–d) refer to the same models as in Figure 1.

To summarize, the distinctive features of a complexity class are given in the following Proposition.

Proposition 4.

The number of operators, $|\mathcal{M}|$, the number of independent operators, $n_{\mathcal{M}}$, and the structure of the set of loops, $\mathcal{L}$ (through its generators), fully characterize a complexity class.

3. Discussion: What Do Simple Models Look Like?

3.1. Fewer Independent Operators, Shorter Loops

Coming to the quantitative estimate of the complexity, $c_{\mathcal{M}}$ generally depends on the extent to which ensemble averages of the operators in the model constrain each other. This appears explicitly by rewriting (4) as an integral over the ensemble averages of the operators, $\varphi^{\mu}=\langle\phi^{\mu}(\mathbf{s})\rangle$, exploiting the bijection between the parameters $\mathbf{g}$ and their dual parameters $\boldsymbol{\varphi}$ and re-parameterization invariance [28,36]:

$$c_{\mathcal{M}}=\log\int_{\mathcal{F}}d\boldsymbol{\varphi}\,\sqrt{\det\mathbf{J}(\boldsymbol{\varphi})},\tag{8}$$

where $\mathbf{J}(\boldsymbol{\varphi})$ is the Fisher Information Matrix in the $\boldsymbol{\varphi}$-coordinates. The new domain of integration, $\mathcal{F}$, is the set of values of $\boldsymbol{\varphi}$ that can be realized in any empirical sample drawn from the model (known in this context as the marginal polytope [37,38]) and is related to the mutual constraints between the ensemble averages, $\varphi^{\mu}$ (see Section SM-4 of the Supplementary Material for more details).

Proposition 5.

The complexity of a model $\mathcal{M}$ without loops, i.e., $\mathcal{L}=\{\emptyset\}$, and $|\mathcal{M}|$ independent operators is $c_{\mathcal{M}}=|\mathcal{M}|\log\pi$.

Indeed, if the model contains no loop, then $\mathbf{J}$ is diagonal: the integral in (8) factorizes and Proposition 5 follows. In this case, the variables $\varphi^{\mu}$ are not constrained at all and the domain of integration is $\mathcal{F}=[-1,1]^{|\mathcal{M}|}$. If instead the model contains loops, the variables $\varphi^{\mu}$ become constrained and the marginal polytope, $\mathcal{F}$, is reduced. For example, for a model with a single loop of length three (e.g., $s_1$, $s_2$, and $s_1 s_2$), the values of $\boldsymbol{\varphi}$ in $[-1,1]^{3}$ are not all attainable; indeed $\mathcal{F}$ is reduced, which decreases the complexity.
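The single-operator case behind Proposition 5 can be worked out by hand. The derivation below is a sketch that assumes the complexity is $\log\int dg\,\sqrt{J(g)}$ with $J$ the second derivative of $\log Z$, and considers one non-empty operator $\phi$ on n spins with coupling $g$.

```latex
% One operator \phi(\mathbf{s}) = \pm 1, taking each value on half of the 2^n states
Z(g) = \sum_{\mathbf{s}} e^{g\,\phi(\mathbf{s})} = 2^{n}\cosh g, \qquad
J(g) = \frac{d^{2}}{dg^{2}}\log Z(g) = 1-\tanh^{2} g = \frac{1}{\cosh^{2} g},

\int_{-\infty}^{+\infty}\sqrt{J(g)}\,dg
  = \int_{-\infty}^{+\infty}\frac{dg}{\cosh g}
  = \Big[\,2\arctan\!\big(\tanh\tfrac{g}{2}\big)\Big]_{-\infty}^{+\infty}
  = \pi
\quad\Longrightarrow\quad c = \log\pi .

% Equivalently, with \varphi = \tanh g \in [-1,1]:
\int_{-1}^{1}\frac{d\varphi}{\sqrt{1-\varphi^{2}}} = \pi .
```

With several operators and no loop, the Fisher Information matrix is diagonal and the integral is a product of such factors, one $\log\pi$ per operator.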

Proposition 6.

The complexity of models with a fixed number of operators and a single (non-empty) loop increases with the length of the loop.

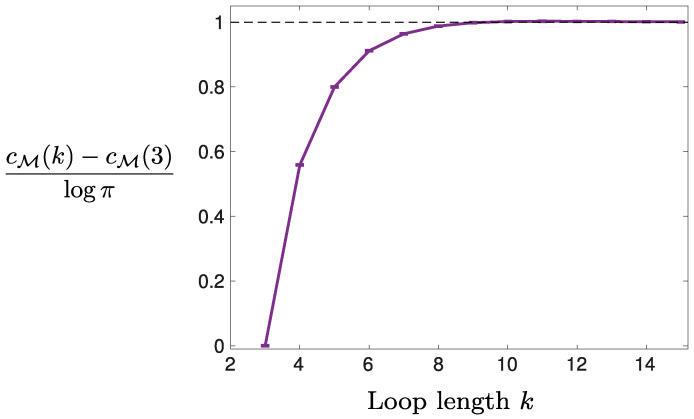

The complexity, $c_{\mathcal{M}}$, of models with a fixed number, $|\mathcal{M}|$, of parameters and a single (non-empty) loop of length k is shown in Figure 3 (see Section SM-6 of the Supplementary Material): $c_{\mathcal{M}}$ increases with k and saturates at $|\mathcal{M}|\log\pi$, which is the value one would expect if all operators were unconstrained. This is consistent with the expectation that longer loops induce weaker constraints among the operators. Note that the number of independent operators is kept constant here, equal to $|\mathcal{M}|-1$.

Figure 3.

Complexity, , of models with a single loop of length k, and free operators, i.e., not involved in any loop. For , can be computed analytically from (4). Values of are averaged over numerical estimates of the integral in (4), using Monte Carlo samples each. Error bars correspond to their standard deviation.

Proposition 7.

At fixed number of operators, the complexity of a model increases with the number of independent operators.

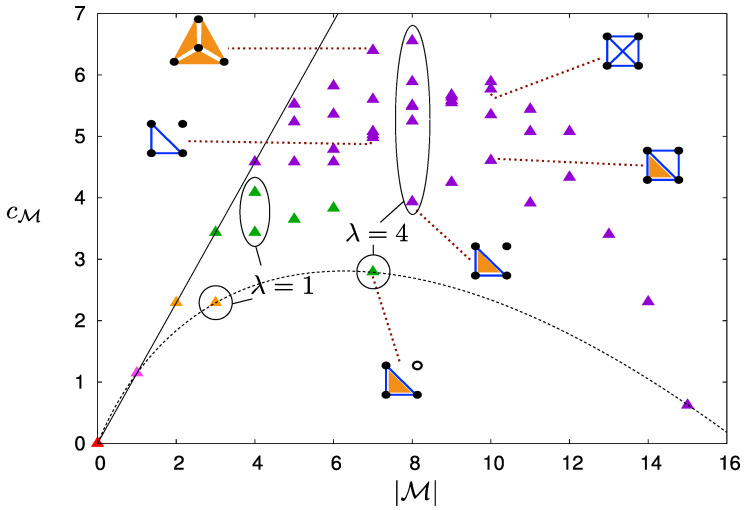

The single loop calculation allows computing the complexity of models with non-overlapping loops ($\ell\cap\ell'=\emptyset$ for all $\ell\neq\ell'$ in a generating set), for which $c_{\mathcal{M}}$ is the sum over the complexity, $c_{\ell}$, associated to each loop. In the general case of models with more complex loop structures, the explicit calculation of $c_{\mathcal{M}}$ is non-trivial. Yet, the argument above suggests that, at fixed number of parameters $|\mathcal{M}|$, $c_{\mathcal{M}}$ should increase with the number of independent operators. Figure 4 summarises the results for all models with $n=4$ spins and supports this conclusion: for a given value of $|\mathcal{M}|$, classes with lower values of $n_{\mathcal{M}}$ (i.e., with fewer independent operators) are less complex.

Figure 4.

(color online) Complexity of models for $n=4$ as a function of the number $|\mathcal{M}|$ of operators: each triangle represents a class of complexity, which contains one or more models (see Section SM-6 of the Supplementary Material). For each class, the value of $c_{\mathcal{M}}$ was obtained from a representative of the class; some of them are shown here with their corresponding diagram (same notation as in Figure 1). The triangle colors indicate the values of the number of independent operators $n_{\mathcal{M}}$: violet for $n_{\mathcal{M}}=4$, green for 3, yellow for 2, pink for 1, and red for 0 (model with no operator). Models on the black line have only independent operators ($|\mathcal{M}|=n_{\mathcal{M}}$) and complexity $c_{\mathcal{M}}=|\mathcal{M}|\log\pi$; models on the dashed curve are complete models, the complexities of which are given in (9). Complexity classes with the same values of $|\mathcal{M}|$ and $n_{\mathcal{M}}$ have the same value of $\lambda=|\mathcal{M}|-n_{\mathcal{M}}$, i.e., the same number of loops, $|\mathcal{L}|=2^{\lambda}$, but a different loop structure.

Proposition 8.

At fixed number of operators, complete models on a subset of spins and their equivalents are the simplest models. We refer to these models as sub-complete models. Classes of sub-complete models are the classes of minimal complexity.

A surprising result of Figure 4 is that $c_{\mathcal{M}}$ is not monotonic with the number, $|\mathcal{M}|$, of operators of the model, increasing first with $|\mathcal{M}|$ and then decreasing. Complete models turn out to be the simplest (see the dashed curve in Figure 4). As a consequence, for a given $|\mathcal{M}|$, models that contain a complete model on a subset of spins are generally simpler than models where operators have support on all the spins. For instance, the complexity class displayed in Figure 1 is the class of models with $|\mathcal{M}|=7$ operators that has the lowest complexity (see green triangle on the dashed curve in Figure 4).

Figure 4 also confirms that pairwise models are not simpler than models with higher order interactions. Indeed, for instance for $|\mathcal{M}|=7$, $c_{\mathcal{M}}$ increases drastically when changing model a) of Figure 1 into a pairwise model by turning the 3-spin interaction into an external field acting on $s_4$. Likewise, the model with all six pairwise interactions for $n=4$ is more complex than the one where one of them is turned into a 3-spin interaction.

3.2. Complete and Sub-Complete Models

It is possible to compute explicitly the complexity of a complete model, $\overline{\mathcal{M}}$, with n spins using the mapping between the $2^{n}-1$ parameters $\mathbf{g}$ of $\overline{\mathcal{M}}$ and the $2^{n}$ probabilities $p(\mathbf{s})$ constrained by their normalization [39]. The complexity in (4) being invariant under reparametrization [36], one can easily re-write this integral in terms of the variables $p(\mathbf{s})$. Finally, using that $\det\mathbf{J}(\mathbf{p})=\prod_{\mathbf{s}}1/p(\mathbf{s})$, we obtain the following proposition (see Section SM-5 of the Supplementary Material).

Proposition 9.

The complexity of a complete model with n spins is:

$$c_{\overline{\mathcal{M}}}=2^{\,n-1}\log\pi-\log\Gamma\!\left(2^{\,n-1}\right).\tag{9}$$

Note that, for $n\geq 5$, $c_{\overline{\mathcal{M}}}$ becomes negative (for $n=5$, $c_{\overline{\mathcal{M}}}\approx-9.6$ with natural logarithms). This suggests that the class of least complex models with $|\mathcal{M}|$ interactions is the one that contains the model where the maximal number of loops are concentrated on the smallest number of spins. This agrees with our previous observations on single loop models and sub-complete models. On the contrary, models where interactions are distributed uniformly across the variables (e.g., models with only single spin operators for $|\mathcal{M}|\leq n$ or with non-overlapping sets of loops) have higher complexity.
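The sign change of the complete-model complexity is easy to tabulate. This sketch assumes the closed form $2^{n-1}\log\pi-\log\Gamma(2^{n-1})$, i.e., the logarithm of the Jeffreys normalisation $\pi^{k/2}/\Gamma(k/2)$ on the simplex of $k=2^n$ probabilities, with natural logarithms; the function name is illustrative.

```python
import math

def complete_model_complexity(n: int) -> float:
    """Stochastic complexity of the complete model on n spins (natural log)."""
    half = 2 ** (n - 1)
    return half * math.log(math.pi) - math.lgamma(half)

# Tabulate the complexity against the number of operators 2^n - 1.
for n in range(1, 8):
    print(n, 2**n - 1, round(complete_model_complexity(n), 3))
```

Under this assumption the value for n = 1 reduces to $\log\pi$, the single-operator result, and the value for n = 2 is $2\log\pi$, below the $3\log\pi$ that three unconstrained operators would give.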

We finally note that complete models are also extremely simple to infer from empirical data. Indeed, the maximum likelihood estimates of the parameters $g^{\mu}$ are trivially given by $\hat{g}^{\mu}=2^{-n}\sum_{\mathbf{s}}\phi^{\mu}(\mathbf{s})\log\hat{p}(\mathbf{s})$, where $\hat{p}(\mathbf{s})$ is the empirical distribution. By contrast, learning the parameters of pairwise interacting models can be a daunting task [14].
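The inference step for complete models can be sketched as a projection of $\log\hat{p}$ onto the product operators. The code assumes the estimate $\hat{g}^{\mu}=2^{-n}\sum_{\mathbf{s}}\phi^{\mu}(\mathbf{s})\log\hat{p}(\mathbf{s})$, which follows from the orthogonality of the operators, and requires every state to have non-zero empirical frequency. Function names and the round-trip example are illustrative.

```python
import itertools
import math

def op(mu, s):
    """Product spin operator for the index tuple mu."""
    return math.prod(s[i] for i in mu)

def complete_model_mle(p_hat, n):
    """Couplings of the complete model from a strictly positive distribution."""
    states = list(itertools.product((-1, 1), repeat=n))
    subsets = [mu for r in range(1, n + 1)
               for mu in itertools.combinations(range(n), r)]
    return {
        mu: sum(op(mu, s) * math.log(p_hat[s]) for s in states) / 2**n
        for mu in subsets
    }

# Round trip on n = 2 spins with hypothetical couplings.
g_true = {(0,): 0.3, (1,): -0.2, (0, 1): 0.7}
states = list(itertools.product((-1, 1), repeat=2))
w = {s: math.exp(sum(g * op(mu, s) for mu, g in g_true.items())) for s in states}
Z = sum(w.values())
p_hat = {s: w[s] / Z for s in states}
g_hat = complete_model_mle(p_hat, n=2)
```

No iterative optimisation is involved: each coupling is a single weighted sum over states, although that sum still runs over all $2^n$ configurations.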

3.3. Maximally Overlapping Loops

This finally leads us to conjecture that stochastic complexity is related to the localization properties of the set of loops (i.e., its group structure) rather than to the order of the interactions: models where the loops, $\ell,\ell'$, have a “large” overlap, $|\ell\cap\ell'|$, are simple, whereas models with an extended homogeneous network of interactions (e.g., fully connected Ising models with up-to pairwise interaction) have many non-overlapping loops, $\ell\cap\ell'=\emptyset$, and therefore are rather complex. It is interesting to note that the former (simple models) lend themselves to predictions on the independence of different groups of spins. These predictions suggest “fundamental” properties of the system under study (i.e., invariance properties, spin permutation symmetry breaking) and are easy to falsify (i.e., it is clear how to devise a statistical test for these hypotheses to any given confidence level). On the contrary, complex models (e.g., fully connected pairwise Ising models) are harder to falsify as their parameters can be adjusted to fit reasonably well any sample, irrespective of the system under study.

3.4. Summary

We find that at fixed number, $|\mathcal{M}|$, of operators, simpler models are those with fewer independent operators (i.e., smaller $n_{\mathcal{M}}$, the number of independent operators). For the same value of $n_{\mathcal{M}}$, models can still have different complexities. The simpler ones are then those with a loop structure that will impose the most constraints between the operators of the model. More generally, we show that the complexity of a model is not defined by the order of the interactions involved, but is, instead, intimately connected to its internal geometry, i.e., how interactions are arranged in the model. The geometry of this arrangement implies mutual dependencies between interactions that constrain the states accessible to the system. More complex models are those that implement fewer constraints, and can thus account for broader types of data. This result is consistent with the information geometric approach of Reference [26], which studies model complexity in terms of the geometry of the space of probability distributions [40]. The contribution of this paper clarifies the relation between the information geometric point of view and the specific structure of the model, i.e., the actual arrangement of its interactions. We remark that these results apply to non-degenerate spin models. In the broader class of degenerate models [20], arrangement of the operators and degeneracy of the parameters may interact non-trivially in terms of complexity. Our preliminary numerical investigation of single loop models (see Section SM-7 of the Supplementary Material) indicates that degeneracy decreases complexity by constraining the parameters and therefore the statistics of the operators. Also, Reference [41] discusses model selection on mixture models of spin variables, and shows that they can be cast in the form of Equation (1), where the parameters $g^{\mu}$ are subject to linear constraints. For these models, Bayesian model selection can be performed exactly without resorting to the approach discussed here.

A rough estimate of the number, N, of data samples beyond which the complexity term becomes negligible in Bayesian inference can be obtained with the following argument: An upper bound for the complexity of models with n spins and m parameters is given by , i.e., when all operators are independent. As a lower bound, we take Equation (9) with . This implies that an upper bound for the variation of the complexity is given by . When this is much smaller than the BIC term, the stochastic complexity can be neglected. For large m this implies , which may be relevant for the applicability of fully connected pairwise models () in typical cases, for instance when samples cannot be considered as independent observations from a stationary distribution (see [18]).

4. Conclusions

As pointed out by Wigner [42] long ago, the unreasonable effectiveness of mathematical models relies on isolating phenomena that depend on few variables, whose mutual variation is described by simple models and is independent of the rest. Remarkably we find that, for a fixed number of spin variables and parameters, simple models, according to MDL, are precisely of this form: statistical dependencies are concentrated on the smallest subset of variables and these are independent of all the rest. Such simple models are not optimal for generalizing, i.e., for describing generic statistical dependencies; rather, they are easy to falsify. They are designed for spotting independencies that may hint at deeper principles (e.g., symmetries or conservation laws) that may “take us beyond the data” [43], meaning that they can hint at hypotheses (on e.g., symmetries or other regularities) that can be tested in future experiments. On the contrary, fully connected pairwise models, which provide a simple interpretation of statistical dependencies in terms of direct interactions, appear to be rather complex. This, we conjecture, is the origin of pairwise sufficiency [16] that makes them so successful in describing a wide variety of data, from neural tissues [44] to voting behaviour [45].

Our results, however, show that any model that can be obtained from a pairwise model via a gauge transformation has the same complexity and hence the same generalisation power, but has higher order interactions. Hence, gauge transformations can be used to compare pairwise models with models in the same complexity class, in order to quantitatively assess when a dataset is genuinely described by pairwise interactions. Notice that this comparison can be done directly on the basis of their maximum likelihood alone.

This is only one of the possible applications of gauge transformations, which are one of the main results of this paper. In loose words, these transformations preserve the topology of models, i.e., the manner in which interactions are mutually arranged, but change the “basis” of the operators that embody these interactions. Besides model selection within the same complexity class, as in the example of pairwise models above, we can think of selecting the appropriate complexity class according to the availability of data. One particularly interesting avenue of future research is to perform model selection among models of minimal complexity (i.e., sub-complete models). Model comparison between and within these classes may be relevant given the high degree of clustering and modularity in neural, social, metabolic, and regulatory networks [46]. These models offer the simplest possible explanation of a dataset, not necessarily the most accurate one (e.g., in terms of generalisation error), but the one that can potentially reveal regularities and symmetries in the data. Interestingly, parameter inference for these models is also remarkably simple.

In conclusion, when data are scarce and high dimensional, Bayesian inference should privilege simple models, i.e., those with small stochastic complexity, over more complex ones, such as the fully connected pairwise models that are often used [14,44,45]. A full Bayesian model selection approach is hampered by the calculation of the stochastic complexity, which is a daunting task. Developing approximate heuristics for accomplishing this task is a challenging avenue for future research.

Supplementary Materials

The Supplementary Material is available online at http://www.mdpi.com/1099-4300/20/10/739/s1.

Author Contributions

Conceptualization, I.M. and M.M.; Formal analysis, A.B., C.B. and C.d.M.; Methodology, A.B., C.B. and C.d.M.; Software, A.B.; Writing-original draft, C.B., C.d.M. and M.M.

Funding

C.B. acknowledges financial support from the Kavli Foundation and the Norwegian Research Council’s Centre of Excellence scheme (Centre for Neural Computation, grant number 223262). A.B. acknowledges financial support from the International School for Advanced Studies (SISSA).

Conflicts of Interest

The authors declare no conflict of interest. The founding sponsors had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, and in the decision to publish the results.

References and Notes

- 1. Mayer-Schönberger V., Cukier K. Big Data: A Revolution That Will Transform How We Live, Work and Think. John Murray Publishers; London, UK: 2013.

- 2. Anderson C. The End of Theory: The Data Deluge Makes the Scientific Method Obsolete. Wired. 2008. Available online: https://www.wired.com/2008/06/pb-theory/ (accessed on 20 September 2018).

- 3. Cristianini N. Are we there yet? Neural Netw. 2010;23:466–470. doi: 10.1016/j.neunet.2010.01.006.

- 4. LeCun Y., Kavukcuoglu K., Farabet C. Convolutional networks and applications in vision; Proceedings of the 2010 IEEE International Symposium on Circuits and Systems; Paris, France. 30 May–2 June 2010; pp. 253–256.

- 5. Hannun A., Case C., Casper J., Catanzaro B., Diamos G., Elsen E., Prenge R., Satheesh S., Sengupta S., Coates A., Ng A. Deep Speech: Scaling up end-to-end speech recognition. arXiv. 2014. cs.CL/1412.5567

- 6. Bishop C. Pattern Recognition and Machine Learning. Springer-Verlag; New York, NY, USA: 2006. (Information Science and Statistics)

- 7. Wu X., Kumar V., Quinlan J.R., Ghosh J., Yang Q., Motoda H., McLachlan G.J., Ng A., Liu B., Yu P.S., et al. Top 10 algorithms in data mining. Knowl. Inf. Syst. 2008;14:1–37. doi: 10.1007/s10115-007-0114-2.

- 8. Goodfellow I., Bengio Y., Courville A. Deep Learning. MIT Press; Cambridge, MA, USA: 2016. Available online: http://www.deeplearningbook.org (accessed on 20 September 2018).

- 9. Popper K. The Logic of Scientific Discovery. Taylor & Francis; London, UK: 2002. (Routledge Classics)

- 10. Chater N., Vitányi P. Simplicity: A unifying principle in cognitive science? Trends Cogn. Sci. 2003;7:19–22. doi: 10.1016/S1364-6613(02)00005-0.

- 11. Rissanen J. Stochastic complexity in learning. J. Comput. Syst. Sci. 1997;55:89–95. doi: 10.1006/jcss.1997.1501.

- 12. Rissanen J. Modeling by shortest data description. Automatica. 1978;14:465–471. doi: 10.1016/0005-1098(78)90005-5.

- 13. Grünwald P. The Minimum Description Length Principle. MIT Press; Cambridge, MA, USA: 2007. (Adaptive Computation and Machine Learning)

- 14. Chau Nguyen H., Zecchina R., Berg J. Inverse statistical problems: From the inverse Ising problem to data science. arXiv. 2017. cond-mat.dis-nn/1702.01522

- 15. Margolin A., Wang K., Califano A., Nemenman I. Multivariate dependence and genetic networks inference. IET Syst. Biol. 2010;4:428–440. doi: 10.1049/iet-syb.2010.0009.

- 16. Merchan L., Nemenman I. On the Sufficiency of Pairwise Interactions in Maximum Entropy Models of Networks. J. Stat. Phys. 2016;162:1294–1308. doi: 10.1007/s10955-016-1456-5.

- 17. Ravikumar P., Wainwright M.J., Lafferty J.D. High-dimensional Ising model selection using ℓ1-regularized logistic regression. Ann. Stat. 2010;38:1287–1319. doi: 10.1214/09-AOS691.

- 18. Bulso N., Marsili M., Roudi Y. Sparse model selection in the highly under-sampled regime. J. Stat. Mech. Theor. Exp. 2016;2016:093404. doi: 10.1088/1742-5468/2016/09/093404.

- 19. Balasubramanian V. Statistical inference, Occam’s razor, and statistical mechanics on the space of probability distributions. Neural Comput. 1997;9:349–368. doi: 10.1162/neco.1997.9.2.349.

- 20. There is a broader class of models, where subsets 𝒱 ⊆ ℳ of operators have the same parameter, i.e., gμ = g𝒱 for all μ ∈ 𝒱, or where the gμ are subject to linear constraints. These degenerate models are rarely considered in the inference literature. Here we confine our discussion to non-degenerate models and refer the reader to Section SM-7 of the Supplementary Material for more discussion.

- 21. Jaynes E.T. Information Theory and Statistical Mechanics. Phys. Rev. 1957;106:620–630. doi: 10.1103/PhysRev.106.620.

- 22. Tikochinsky Y., Tishby N.Z., Levine R.D. Alternative approach to maximum-entropy inference. Phys. Rev. A. 1984;30:2638–2644. doi: 10.1103/PhysRevA.30.2638.

- 23. Rissanen J.J. Fisher information and stochastic complexity. IEEE Trans. Inf. Theory. 1996;42:40–47. doi: 10.1109/18.481776.

- 24. Rissanen J. Strong optimality of the normalized ML models as universal codes and information in data. IEEE Trans. Inf. Theory. 2001;47:1712–1717. doi: 10.1109/18.930912.

- 25. Schwarz G. Estimating the dimension of a model. Ann. Stat. 1978;6:461–464. doi: 10.1214/aos/1176344136.

- 26. Myung I.J., Balasubramanian V., Pitt M.A. Counting probability distributions: Differential geometry and model selection. Proc. Natl. Acad. Sci. USA. 2000;97:11170–11175. doi: 10.1073/pnas.170283897.

- 27. Jeffreys H. An Invariant Form for the Prior Probability in Estimation Problems. Proc. R. Soc. Lond. A Math. Phys. Eng. Sci. 1946;186:453–461. doi: 10.1098/rspa.1946.0056.

- 28. Amari S. Information Geometry and Its Applications. Springer; Tokyo, Japan: 2016. (Applied Mathematical Sciences)

- 29. Kass R.E., Wasserman L. The selection of prior distributions by formal rules. J. Am. Stat. Assoc. 1996;91:1343–1370. doi: 10.1080/01621459.1996.10477003.

- 30. A simplicial complex [31], in our notation, is a model such that, for any interaction μ ∈ ℳ, any interaction that involves any subset ν ⊆ μ of spins is also contained in the model (i.e., ν ∈ ℳ).

- 31. Courtney O.T., Bianconi G. Generalized network structures: The configuration model and the canonical ensemble of simplicial complexes. Phys. Rev. E. 2016;93:062311. doi: 10.1103/PhysRevE.93.062311.

- 32. Landau L., Lifshitz E. Statistical Physics. 3rd ed. Elsevier Science; London, UK: 2013.

- 33. Kramers H.A., Wannier G.H. Statistics of the Two-Dimensional Ferromagnet. Part II. Phys. Rev. 1941;60:263–276. doi: 10.1103/PhysRev.60.263.

- 34. Pelizzola A. Cluster variation method in statistical Physics and probabilistic graphical models. J. Phys. A Math. Gen. 2005;38:R309–R339. doi: 10.1088/0305-4470/38/33/R01.

- 35. The symmetric difference of two sets ℓ1 and ℓ2 is defined as the set that contains the elements that occur in ℓ1 but not in ℓ2 and vice versa: ℓ1 ⊕ ℓ2 = (ℓ1 ∪ ℓ2) \ (ℓ1 ∩ ℓ2). It corresponds to the XOR operator between the operators of the two loops.

- 36. Amari S., Nagaoka H. Methods of Information Geometry. American Mathematical Society; Providence, RI, USA: 2007. (Translations of Mathematical Monographs)

- 37. Wainwright M.J., Jordan M.I. Graphical Models, Exponential Families, and Variational Inference. Found. Trends® Mach. Learn. 2008;1:1–305. doi: 10.1561/2200000001.

- 38. Wainwright M.J., Jordan M.I. Variational inference in graphical models: The view from the marginal polytope; Proceedings of the Forty-First Annual Allerton Conference on Communication, Control, and Computing; Monticello, NY, USA. 1–3 October 2003; pp. 961–971.

- 39. Mastromatteo I. On the typical properties of inverse problems in statistical mechanics. arXiv. 2013. cond-mat.stat-mech/1311.0190

- 40. In information geometry [28,36], a model ℳ defines a manifold in the space of probability distributions. For exponential models (1), the natural metric, in the coordinates gμ, is given by the Fisher Information (5), and the stochastic complexity (4) is the volume of the manifold [26].

- 41. Gresele L., Marsili M. On maximum entropy and inference. Entropy. 2017;19:642. doi: 10.3390/e19120642.

- 42. Wigner E.P. The unreasonable effectiveness of mathematics in the natural sciences. Commun. Pure Appl. Math. 1960;13:1–14. doi: 10.1002/cpa.3160130102.

- 43. In his response to Reference [2] on edge.org, W.D. Willis observes that “Models are interesting precisely because they can take us beyond the data”.

- 44. Schneidman E., Berry M.J., II, Bialek W. Weak pairwise correlations imply strongly correlated network states in a neural population. Nature. 2006;440:1007–1012. doi: 10.1038/nature04701.

- 45. Lee E., Broedersz C., Bialek W. Statistical mechanics of the US Supreme Court. J. Stat. Phys. 2015;160:275–301. doi: 10.1007/s10955-015-1253-6.

- 46. Albert R., Barabási A.L. Statistical mechanics of complex networks. Rev. Mod. Phys. 2002;74:47–97. doi: 10.1103/RevModPhys.74.47.
