Abstract

Zipf’s, Heaps’ and Taylor’s laws are ubiquitous in many different systems where innovation processes are at play. Together, they represent a compelling set of stylized facts regarding the overall statistics, the innovation rate and the scaling of fluctuations for systems as diverse as written texts and cities, ecological systems and stock markets. Many modeling schemes have been proposed in literature to explain those laws, but only recently a modeling framework has been introduced that accounts for the emergence of those laws without deducing the emergence of one of the laws from the others or without ad hoc assumptions. This modeling framework is based on the concept of adjacent possible space and its key feature of being dynamically restructured while its boundaries get explored, i.e., conditional to the occurrence of novel events. Here, we illustrate this approach and show how this simple modeling framework, instantiated through a modified Pólya’s urn model, is able to reproduce Zipf’s, Heaps’ and Taylor’s laws within a unique self-consistent scheme. In addition, the same modeling scheme embraces other less common evolutionary laws (Hoppe’s model and Dirichlet processes) as particular cases.

Keywords: innovation dynamics; stylized facts; Zipf’s law; Heaps’ law, Taylor’s law; adjacent possible; Pólya’s Urns; Poisson-Dirichlet processes

1. Introduction

Innovation processes are ubiquitous. New elements constantly appear in virtually all systems and the occurrence of the new goes well beyond what we now call innovation. The term innovation refers to a complex set of phenomena that includes not only the appearance of new elements in a given system, e.g., technologies, ideas, words, cultural products, etc., but also their adoption by a given population of individuals. From this perspective one can distinguish between a personal, or local, experience of the new—for instance when we discover a new favorite writer or a new song—and a global occurrence of the new, i.e., every time something appears that never appeared before—for instance, if we write a new book or write a new song. In all these cases there is something new entering the history of a given system or a given individual.

Given the paramount relevance of innovation processes, it is highly important to grasp their nature and understand how the new emerges in all its possible instantiations. To this end, it is essential to fix a certain number of stylized facts characterizing the overall phenomenology of the new and quantifying its occurrence and its dynamical properties. Here we focus in particular on three basic laws whose general validity has been assessed in virtually all systems displaying innovation. The Zipf’s law [1,2,3,4], quantifying the frequency distribution of elements in a given system, the Heaps’ law [5,6], quantifying the rate at which new elements enter a given system and the Taylor’s law [7], quantifying the intrinsic fluctuations of variables associated to the occurrence of the new. Any basic theory, supposedly close to the actual phenomenology of innovation processes, should be able at least to explain those three laws from first principles. Despite an abundant literature on the subject related to many different disciplines, a clear and self-consistent framework to explain the above-mentioned stylized facts has been missing for a very long time. Many approaches have been proposed so far, often adopting ad-hoc assumptions or attempting to derive the three laws while taking the others for granted. The aim of this paper is that of trying to put order in the often scattered and disordered literature, by proposing a self-consistent framework that, in its simplicity and generality, is able to account for the existence of the three laws from very first principles.

The framework we propose is based on the notion of “Adjacent Possible” and, more generally, on the interplay between what Francois Jacob named the dichotomy between the “actual” and the “possible”, the actual realization of a given phenomenon and the space of possibilities still unexplored. Originally introduced by the famous biologist and complex-systems scientist Stuart Kauffman, the notion of the adjacent possible [8,9] refers to the progressive expansion, or restructuring, of the space of possibilities, conditional to the occurrence of novel events. Based on this early intuition, we recently introduced, in collaboration with Steven Strogatz, a mathematical framework [10,11] to investigate the dynamics of the new via the adjacent possible. The modeling scheme is based on older schemes, named Polya’s urns and it mathematically predicts the notion that “one thing leads to another”, i.e., the intuitive idea, presumably we all have, that innovation processes are non-linear and the conditions for the occurrence of a given event could realize only after something else happened.

It turns out that the mathematical framework encoding the notion of adjacent possible represents a sufficient first-principle scheme to explain the Zipf’s, Heaps’ and Taylor’s laws on the same ground. In this paper we present this approach and we discuss the links it bears with other approaches. In particular, we discuss the relation of our approach with well known stochastic processes, widely studied in the framework of nonparametric Bayesian inference, namely the Dirichlet and the Poisson-Dirichlet processes [12,13,14]. In addition, based on this comparison, a coherent framework emerges where the importance of the adjacent possible scheme appears as crucial to understand the basic phenomenology of innovation processes. Though we can only conjecture that the expansion of the adjacent possible space is also a necessary condition for the validity of the three laws mentioned above, no counterexamples have been found so far that, without a dynamical space of possibilities, that one can use to satisfactorily explain the empirically observed laws.

2. Zipf’s and Heaps’ Laws

2.1. Frequency-Rank Relations: the Estoup-Zipf’s Law

Let us consider a generic text and count the number of occurrences of each word. Now, suppose one repeats the same operation for all the distinct words in a long text, and ranks all the words according to their frequency of occurrence and plots them in a graph showing the number of occurrences vs. the rank. This is what George Kingsley Zipf did [2,3,4] in the 1920s. A more recent analysis of the same behaviour is reported in Figure 1, based on data of the Gutenberg corpus [15].

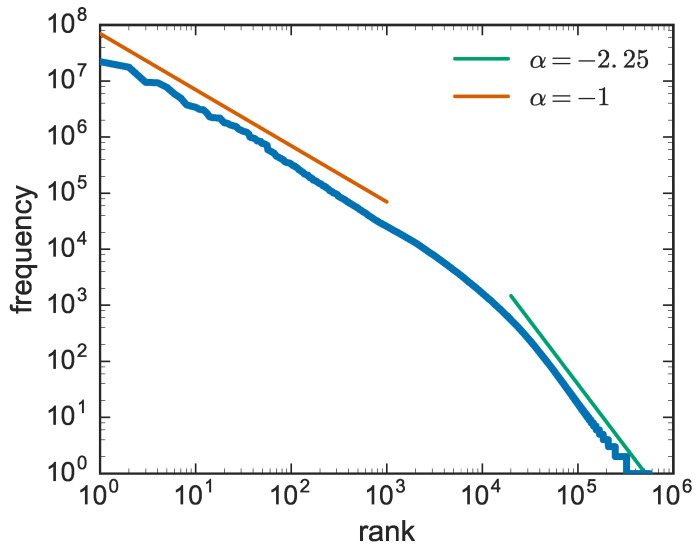

Figure 1.

Zipf’s law computed on the Gutenberg corpus [15]. In this case, the exponent of the asymptotic behaviour is . Similar behaviours are observed in many other systems.

The existence of straight lines in the log-log plot is the signature of power-law functions of the form:

| (1) |

The original result obtained by Zipf, corresponding to the first slope with , revealed a striking regularity in the way words are adopted in texts: said the frequency of the most frequent word (rank ), the frequency of the second most frequent word is , that of the third and so on. For high rankings, i.e., highly infrequent words, one observes a second slope, with an exponent larger than two.

It should be remarked that perhaps the first one to observe the above reported law was Jean-Baptiste Estoup, who was the General Secretary of the Institut Sténographique de France. In his book Gammes sténographiques [1,16], pioneered the investigation of the regularity of word frequencies and observed that the frequency with which words are used in texts appears to follow a power law behaviour. This observation was later acknowledged by Zipf [2] and examined in depth to bring what is also known as Estoup-Zipf’s law. From now on we shall refer to this law as Zipf’s law.

It is also important to remark that Zipf-like behaviours have been observed in a large variety of cases and situations. Zipf itself reported [4] about the distribution of metropolitan districts in 1940 in the USA, as well as service establishments, manufacturers, and retails stores in the USA in 1939. As the years pass, examples and situations where Zipf’s law has been invoked has been steadily growing. For instance, Zipf’s law has been invoked in city populations, the statistics of webpage visits and other internet traffic data, company sizes and other economic data, science citations and other bibliometric data, as well as in scaling in natural and physical phenomena. A thorough account of all these cases is out of the scope of the present paper and we refer to recent reviews and references therein for an account of the latest developments [17,18,19].

2.2. The Innovation Rate: Herdan-Heaps’ Law

Let us now make a step forward and look at a generic text (or, without loss of generality, at a generic sequence of characters) and focus now on the occurrence of the novelties. For a generic text, one can ask when new words, i.e., never occurred before in the text, appear. Now, if one plots the number of new words as a function of the number of words read (which is our measure of the intrinsic time), one gets a plot like that of Figure 2, where one observes two main behaviors.

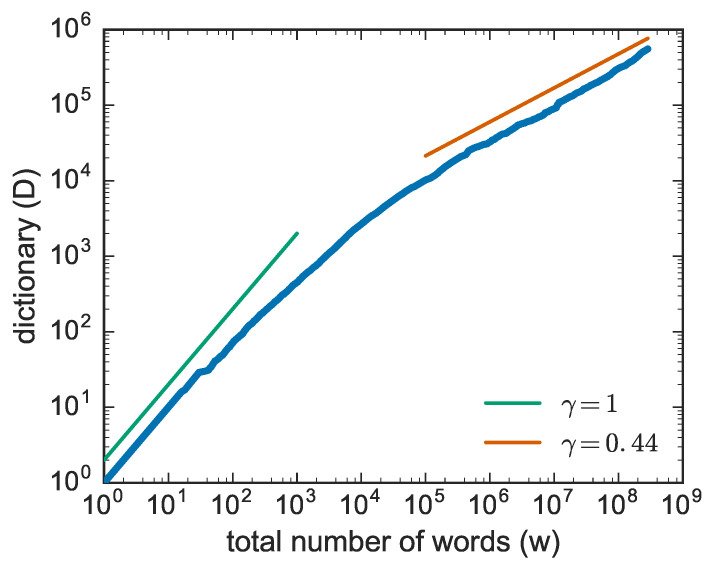

Figure 2.

Growth of the number of distinct words computed on the Gutenberg corpus of texts [15]. The position of texts in the corpus is chosen at random. In this case . Similar behaviours are observed in many other systems.

A linear growth for short times where at the beginning, basically all the words are appearing for the first time. Later on the growth slows down and an asymptotic behavior is observed of the form:

| (2) |

with . In the specific case of Figure 2 but the exponent slightly changes from text to text. The relation of Equation (2) is known as Heaps’ law from Harold Stanley Heaps [6], who formulated it in the framework of information retrieval (see also [20]), though its first discovery is due to Gustav Herdan [5] in the framework of linguistics (see also [21,22]). From now onward we shall refer to it as Heaps’ law.

2.3. Zipf’s vs. Heaps’ Laws

In this section we compare the two laws just observed, Zipf’s law for the frequencies of occurrence of the elements in a system and Heaps’ law for their temporal appearance. It has often been claimed that Heaps’ and Zipf’s law are trivially related and that one can derive Heaps’s law once the Zipf’s is known. This is not true in general. It turns out to be true only under the specific hypothesis of random-sampling as follows. Suppose the existence of a strict power-law behaviour of the frequency-rank distribution, , and construct a sequence of elements by randomly sampling from this Zipf distribution . Through this procedure, one recovers a Heaps’ law with the functional form [23,24] with . In order to do that we need to consider the correct expression for that includes the normalisation factor, whose expression can be derived through the following approximated integral:

| (3) |

Let us now distinguish the two cases. For one has

| (4) |

while for one obtains:

| (5) |

When , one can neglect the term in Equation (4), and when , one can write .

Summarizing one has then:

| (6) |

| (7) |

| (8) |

We are now interested in estimating the number, D, of distinct elements appearing in the sequence as a function of its length N. To do that, let us consider the entrance of a new element (never appeared before) in the sequence and let the number of distinct elements in the sequence be D after this entrance. This new element will have maximum rank , and frequency . From Equation (6) we obtain:

| (9) |

which, after an inversion gives:

| (10) |

The same reasoning can be extended to generic functional forms for as follows:

| (11) |

| (12) |

| (13) |

Inverting these relations, one eventually finds:

| (14) |

| (15) |

| (16) |

Summarizing, under the hypothesis of random sampling from a frequency rank distribution expressed by a power-law function , one recovers a Heaps’ law with the following relation between and :

| (17) |

In [24], it has been demonstrated that finite-size effects can affect the above-seen relationships that happen to be true only for very long sequences. For short enough sequences, one observes a systematic deviation from Equation (17), especially for values close to 1.

Another important observation is now in order. The assumption of random sampling considered above is strong and sometimes unrealistic (e.g., [25]). First of all it implies the a priori existence of Zipf’s law with an infinite support. In addition, the frequency-rank plots one empirically observes are far from featuring a pure power-law behavior. In all those cases, the relation between the Zipf’s law and the Heaps’ law seen above and summarized by Equation (17) happens to hold only when looking at the tail of the Zipf’s plot, i.e., for high ranks (small frequencies) in the frequency-rank plots and long times, i.e., high N, in the plot expressing the Heaps’ law. In a later section we shall also discuss the so-called Taylor’s law that connects the standard deviation s of a random variable (for instance the size D of the dictionary) to its mean . Simple analytic calculations [26] show that the poissonian sampling of a power-law leads to a Taylor’s law with exponent , i.e., . This is not the case for real texts for which one observes an exponent close to 1 [26].

The ensemble of all these facts implies that the explanation of the empirical findings of the Zipf’s and Heaps’ law cannot be done by only deriving one of the laws and deducing the other one accordingly, based on Equation (17). Rather, both Zipf’s and Heaps’ laws and the Taylor’s law should all be derived in the framework of a self-consistent theory. This is precisely the aim of this paper.

3. Urn Model with Triggering

We now introduce a simple modeling scheme able to reproduce both Zipfs’ and Heaps’ laws simultaneously. Crucial for this result is the conditional expansion of the space of possibilities, that we will elucidate in the following. In [8,9], S. Kauffman introduces and discusses the notion of the adjacent possible, which is all those things that are one step away from what actually exists. The idea is that evolution does not proceed by leaps, but moves in a space where each element should be connected with its precursor. The Kauffman’s theoretical concept of adjacent possible, originally discussed in his investigations of molecular and biological evolution, has also been applied to the study of innovation and technological evolution [27,28]. To clarify the concept, let us think about a baby that is learning to talk. We can say almost surely that she will not utter “serendipity” as the first word in her life. More than this, we can safely guess that her first word will be “papa”, or “mama”, or one among a list of few other possibilities. In other words, in the period of lallation, only few words belong to the space of the adjacent possible and can be actualized in the next future. Once the baby has learned how to utter simple words, she can try more sophisticated ones, involving more demanding articulation efforts. In the process of learning, her space of possibilities (her adjacent possible) considerably grows, with the result that guessing a priori the first 100 words learned by a child is much less obvious than guessing which will be the first one.

Here we formalize the idea that by opening up new possibilities, an innovation paves the way for other innovations, explicitly introducing this concept in a Pólya’s urn based model. In particular, we will discuss the simplest version of the model introduced in [10], which we will name Pólya’s urn model with triggering (PUT). The interest of this model lies, on the one hand, in its generality, the only assumptions it makes refer to the general and not system-specific mechanisms for the expansion into the adjacent possible; on the other hand, its simplicity allows to draw analytical solutions.

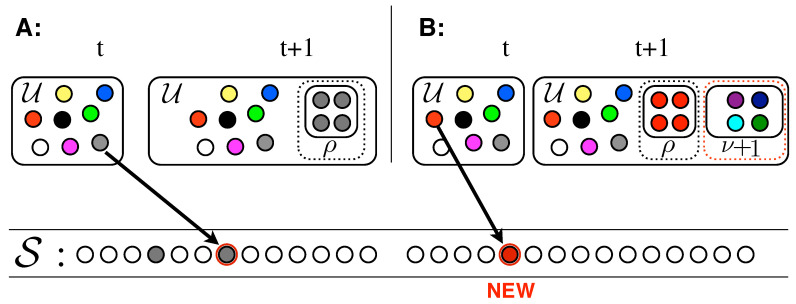

The model works as follows (please refer to Figure 3). An urn initially contains distinct elements, represented by balls of different colors. By randomly extracting elements from the urn, we construct a sequence mimicking the evolution of our system (e.g., the sequence of words in a given text). Both the urn and the sequence enlarge during the process: (i) at each time step t, an element is drawn at random from the urn, added to the sequence, and put back in the urn along with additional copies of it (Figure 3A); (ii) iff the chosen element is new (i.e., it appears for the first time in the sequence ), brand new distinct elements are also added to the urn (Figure 3B). These new elements represent the set of new possibilities opened up by the seed . Hence is the size of the new adjacent possible available once an innovation occurs.

Figure 3.

Urn model with triggering. (A) An element that had previously been drawn from the urn, is drawn again: the element is added to and it is put back in the urn along with additional copies of it. (B) An element that never appeared in the sequence is drawn: the element is added to , put back in the urn along with additional copies of it, and brand new and distinct balls are also added to the urn.

Simple asymptotic formulas for the number of distinct elements appearing in the sequence as a function of the sequence’s length n (Heaps’ law), and for the asymptotic power-law behavior of the frequency-rank distribution (Zipf’s law), in terms of the model parameters and can be derived. In order to do so, one can write a recursive formula for as:

| (18) |

where we have defined as the probability of drawing a new ball (never extracted before) at time n (note that we consider intrinsic time, that is we identify the time elapsed with the length of the sequence constructed). The probability is equal to the ratio (at time n) between the number of elements in the urn never extracted and the total number of elements in the urn. Approximating Equation (18) with its continuous limit, we can write:

| (19) |

where is the number of balls, all distinct, initially placed in the urn. This equation can be integrated analytically in the limit of large n, when can be neglected, by performing a change of variable . After some algebra (detailed computations can be found in [11] and in Appendix A for an extended model), we find the asymptotic solutions (valid for large n):

| (20) |

| (21) |

| (22) |

For the derivation of the Zipf’s law we refer the reader to the SI of [10] and to Appendix B for an alternative derivation based on the continuous approximation. Results contrasting numerical results and theoretical predictions for the Heaps’ and Zipf’s laws are reported in Figure 4.

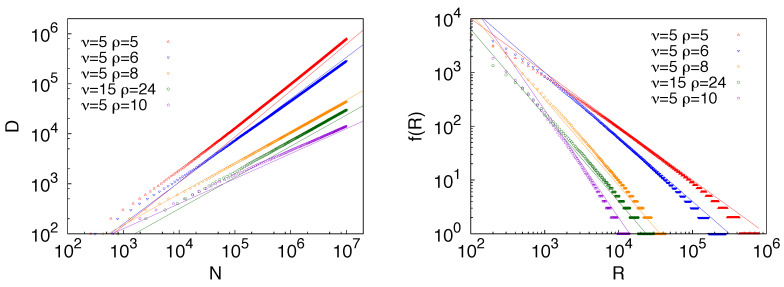

Figure 4.

Heaps’ law (left) and Zipf’s law (right) in the urn model with triggering. Straight lines in the Heaps’ law plots show functions of the form with the exponent as predicted by the analytic results and confirmed in the numerical simulations. Straight lines in the Zipf’s law plots show functions of the form , with .

The Role of the Adjacent Possible: Heaps’ and Zipf’s Laws in the Classic Multicolors Pólya Urn Model

One question that naturally emerges concerns the relevance of the notion of adjacent possible and its conditional growth. One could for instance argue that the same predictions of the PUT model could be replicated having all the possible outcomes of a process immediately available from the outset, instead of appearing progressively through the conditional process related to the very notion of adjacent possible. In order to remove all doubt, we consider an urn initially filled with distinct colors, with arbitrarily large, with no other colors entering into the urn during the process of construction of the sequence . This is the Pólya multicolors urn model [29] and we here briefly discuss the Heaps’ and Zipf’s laws emerging from it. Let us thus consider an urn initially containing balls, all of different colors. At each time step, a ball is withdrawn at random, added to a sequence, and placed back in the urn along with additional copies of it. This process corresponds to the one depicted in Figure 3A, that is to the rule of the PUT model in the case that the drawn element is not new.

Note that although in this case the urn does not acquire new colors during the process, we can still study the dynamic of innovation by looking at the entrance of new color in the growing sequence. Let us then consider very large, so that we can consider a long time interval far from saturation (when there are still many colors in the urn that have not already appeared in ). The number of different colors added to the sequence at time n follows the equation (when the continuous limit is taken):

| (23) |

We thus obtain that for , follows a linear behaviour (), while for large n saturates at , failing to predict the power law (sublinear) growth of new elements. In Figure 5, we report results for both the Heaps’ and Zipf’s laws predicted by the model along with their theoretical predictions, referring the reader to [30] for a detailed derivation of the Zipf’s law. It is evident that a simple exploration of a static, though large, space of possibilities cannot account for the empirical observations summarized by the Zipf’s and the Heaps’s laws.

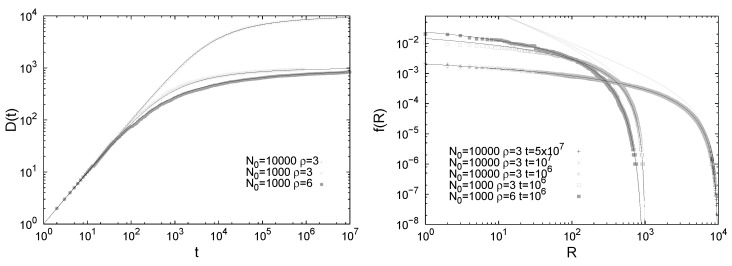

Figure 5.

Results for the multicolors Pólya urn model without innovation. Results are reported both from simulations of the process (points) and from the analytical predictions (straight lines), for different values of the initial number of balls and of the reinforcement parameter . Left: Number of different colors added in the sequence as a function of the total number t of extracted balls. The curves from analytical predictions of Equation (23) exactly overlap the simulated points. Right: Frequency-rank distribution. Simulations of the process are here reported along with both: (i) the prediction obtained by inverting the relation ; (ii) the asymptotic solution, valid for , obtained by inverting Equation (23) (refer to [30] for their derivation).

4. Connection of the Urn Model with Triggering and with Stochastic Processes Featuring Innovation

The PUT model is closely related to well known stochastic processes, widely studied in the framework of nonparametric Bayesian inference, namely the Dirichlet and the Poisson-Dirichlet processes. We will discuss here those processes in terms of their predictive probabilities, referring to excellent reviews [12,13,14] for a complete and formal definition of them.

The problem can be framed in the following way. Given a sequence of events , we want to estimate the probability that the next event will be , where can be one of the already seen events , , or a completely new one, unseen until the intrinsic time n.

4.1. Urn Model with Triggering and the Poisson-Dirichlet Process

Let us first discuss the Poisson-Dirichlet process, whose predictive conditional probability reads:

| (24) |

where and are parameters of the model, a given continuous probability distribution defined a priori on the possible values the variables can take, named base probability distribution, and the D distinct values appearing in the sequence , respectively with multiplicity . Let us briefly discuss Equation (24). The first term on the right hand side refers to the probability that takes a value that has never appeared before, i.e., a novel event. This happens with probability , depending both on the total number n of events seen until time n, and on the total number D of distinct events seen until time n. In this way, in the Poisson-Dirichlet process, the concept that the more novelties that are actualized, the higher the probability of encountering further novelties is implicit. The second term in Equation (24) weights the probability that equals one of the events that has previously occurred, and differs from a bare proportionality rule when .

The Poisson-Dirichlet process predicts an asymptotic power-law behavior for the number of distinct elements seen as a function of the sequence length n. The exact expression for the expected value of can be found in [12]. Here we report the results obtained under the same approximations made for the urn model with triggering:

| (25) |

that can be solved by separation of variables, leading to:

| (26) |

Note that the Poisson-Dirichlet process predicts a sublinear power law behavior for but cannot reproduce a linear growth for it, being only defined for .

The ubiquity of the Poisson-Dirichlet process is due, together with its ability of producing sequences featuring Heaps’ and Zipf’s laws, to the fundamental property of exchangeability [12,31]. This refers to the fact that the probability of a sequence generated by the Poisson-Dirichlet process does not depend on the order of the elements in the sequence: for any permutation of the sequence elements, so that we can write the joint probability distribution for the number of occurrences of the variables . Exchangeability is a powerful property related to the de Finetti theorem [32,33]; it is also a strong and sometimes unrealistic assumption on the lack of correlations and causality in the data.

Returning to the PUT model, we observe that the model produces, in general, sequences that are not exchangeable. It recovers exchangeability in a particular case, corresponding to a slightly different definition of rule (i): the drawn element is put back in the urn along with additional copies of it iff is not new; in the other case (i.e., when we apply rule (ii)), is put back in the urn along with additional copies of it, with . In this particular case the PUT model corresponds exactly to the Poisson-Dirichlet process, with and . In this case, at odds with the previously discussed version of the model, the urn acquires the same number of balls at each time step, regardless of whether a novelty occurs. This variant makes the generated sequences exchangeable, but imposes the constraint , and thus in this case we cannot recover the linear growth of ; this is the same for the Poisson-Dirichlet process. We demonstrate in Appendix A that the dependence of the power law’s exponents of the Heaps’ and Zipf’s laws on the PUT model’s parameters and reads the same as in Equations (20–22) if we modify rule (i) with any .

Here we wish to remark that the urn representation of the PUT model allows for straightforward generalizations where correlations can be explicitly taken into account (see for instance [10] for a first step in this direction). In addition, it can be easily rephrased in terms of walks in a complex space (for instance a graph), allowing to consider more complex underlying structures for the space of possibilities (see for instance the SI of [10,34,35]).

4.2. Urn Model with Triggering, Dirichlet Process and Hoppe Model

By setting in Equation (24), we obtain the predictive conditional probability for the Dirichlet process, predicting a logarithmic growth of [12]. Correspondingly, if we chose in the urn model, we obtain:

| (27) |

If we now neglect in the denominator of (27), we can solve in the large limit of large n:

| (28) |

The same asymptotic growth of is also found in one of the first models introducing innovation in the framework of Pólya’s urn, namely the Hoppe’s model [36]. The motivation of the Hoppe’s work was to derive the Ewens’ sampling formula [37], describing the allelic partition at equilibrium of a sample from a population evolved according to a discrete time Wright-Fisher process [38,39]. In the Hoppe model, innovations are introduced through a special ball, the “mutator”. In particular, the process starts with only the mutator in the urn, with a mass . At any time n, a ball is withdrawn with a probability proportional to its mass, and, if the ball is the mutator, it is placed back in the urn along with a ball of a brand new color, with unitary mass, thus increasing the number of different colors present in the urn. Otherwise, the selected ball is placed back in the urn along with another ball of the same color. Writing the recursive formula for and taking the continuous limit, we obtain:

| (29) |

that is exactly Equation (27) with . It predicts a logarithmic increase of new colors in the urn:

| (30) |

corresponds to Equation (28), by identifying with . Hoppe’s urn scheme is non-cooperative in the sense that one novelty does nothing to facilitate another. In other words, while in the Hoppe’s model a mechanism is already present that allows for the expansion of the space of possibilities, this mechanism is completely independent of the actual realization of a novelty, and fails to reproduce both the Heaps’ and the Zipf’s laws.

5. Fluctuation Scaling (Taylor’s Law)

From Equations (14)–(16), it is clear that randomly sampling a Zipf’s law with a given exponent results in a Heaps’ law with linear and sublinear exponents tuned by the exponent of the Zipf’s. On the other hand, Equations (20)–(22) show that the PUT model is also producing the same Heaps’ exponents with the same relation to the Zipf’s exponent as in the random sampling. Therefore, one legitimate question is whether the PUT is also actually performing a kind of sophisticated random sampling of an underlying Zipf’s law. One possible way to discriminate PUT from a random sampling is to look at the fluctuation scaling, i.e., the Taylor’s law discussed in Section 2.3, which connects the standard deviation s of a random variable to its mean . Simple analytic calculations [26] show that the Poissonian sampling of a power-law leads to a Taylor’s law with exponent 1/2, i.e., .

Real text analysis shows instead a Taylor exponent of 1 [26], which points to the obvious conclusion that the process of writing texts is not an uncorrelated choice of words from a fixed distribution. In [26] this was explained by a “topic-dependent frequencies of individual words”. The empirical observation therein, was that the frequency of a given word changes according to the topic of the writing. For example, the term “electron” has a high frequency in physics books and a low frequency in fairy tales, so that its rank is low in the first case and high in the second. The result is that there exist different Zipf’s laws with the same exponent according to the topic and the enhanced variance of the dictionary size is ascribable to this multitude of Zipf’s laws that add a further variability to the sampling process.

In PUT there is certainly no topicality as in real texts. Nevertheless, we find numerically a linear Taylor’s law in the case of sublinear Heaps’ exponents (). In PUT, there is no Zipf’s law beforehand: it is built during the process instead and this is sufficient to boost the variance of the dictionary, on average, at any given time.

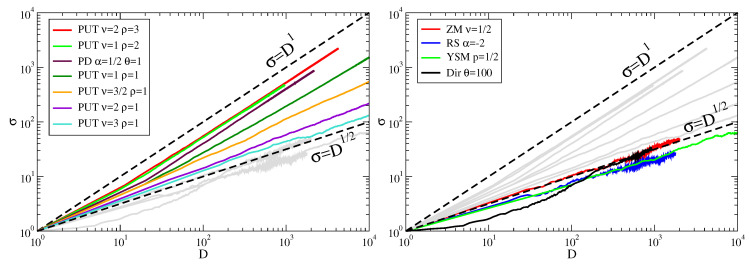

In Figure 6, we show the numerical results of two simulations of PUT with , one with and one with , in order to cover all the possible cases of Equations (20)–(22), plus the random sampling from a zipfian distribution with exponent . Besides the interesting linearity of the fluctuation scaling in the case of , its behaviour in case of fast growing spaces can also be pointed out. In that case, Heaps’ law is linear as shown in Equation (21), and the traditional model of reference is the Yule-Simon model (YSM) [40]. The Yule-Simon model generates a sequence of characters with the following iterative rule. Starting from an initial character, at each time with a constant probability p, a brand new character is chosen while with probability one selects one of the characters already present in the sequence (which implies drawing them with their multiplicity). In this way, in YSM, the rate of growth of different characters is constant and equal to p and this constant rate of innovation yields a linear Heaps’ law. The preferential attachment rule leads to a Zipf’s law with exponent . This is consistent with Equation (16) of random sampling and even with Equation (21) of PUT. The difference between YSM and PUT can be appreciated with Taylor’s law. In YSM, new characters appear with probability p so that the average number of different characters at step N is , and the variance as in the binomial distribution. As a result, in YSM one gets the Poissonian result . In contrast, PUT features numerically an exponent of , i.e., larger than but still less than 1 (see Figure 6).

Figure 6.

Taylor’s law in various generative models. (Left panel) Models that do not display a square root dependence of the dictionary standard deviation versus the dictionary itself are shown in color, the others in gray. Curves are listed from top to bottom according to their visual ordering. The Pólya’s urn model with triggering (PUT) shows an exponent one when and exponents in the range from 1/2 to ca. 0.87 when . The Poisson-Dirichlet (PD) process also displays a unity exponent. (Right panel) Models with a square root dependence of the dictionary standard deviation versus the dictionary itself are shown in color, the rest (highlighted in the left panel) in gray. The models are Zanette-Montemurro (ZM), Random Sampling (RS), Yule-Simon Model (YSM) and the Dirichlet process (Dir). All these four as well as the PUT with parameters and , the PD with and produce the same Heaps’ law with exponent . Each curve is the result of 100 runs of steps each. The dashed lines with exponents 1/2 and 1 are shown as a guide for the eye.

Given the intrinsic inability of YSM to accomplish for sub-linear dictionary growths, Zanette and Montemurro [41] proposed a simple variant of it. In this variant (ZM), the rate of introduction of new characters, i.e., p, is not constant any more. It is made instead time-dependent with an ad hoc chosen functional form able to reproduce the right range for the Heaps’ exponents. For a Heaps’ exponent , the rate of innovation p is chosen proportional to . This expedient allows to reproduce both Zipf’s law and, by construction, Heaps’ law. The two mechanisms for Zipf’s and Heaps’ production are independent of each other as in YSM so that we expect for Taylor’s law the same behavior of YSM, i.e., an exponent . After all, ZM can be seen as a YSM with a diluting time flow, which might not affect the scaling of the fluctuations of YSM at a given time. In Figure 6 we show that indeed ZM features a Taylor’s exponent of (magenta curve).

For the Poisson-Dirichlet and the Dirichlet processes, analytical solutions can be computed for the moments of the probability distribution [13,42], yielding the asymptotic exponents respectively 1 and in the Taylor’s law. Numerical results are given in Figure 6. Note that a non trivial exponent in the Taylor’s law is featured by the Poisson-Dirichlet process, where the probability of a novelty to occur does depend on the number of previous novelties, while the Dirichlet process lacks both properties.

6. Discussion

In this paper we have argued that the notion of adjacent possible is key to explain the occurrence of the Zipf’s, Heaps’ and Taylor’s laws in a very general way. We have presented a mathematical framework, based on the notion of adjacent possible, and instantiated through a Polya’s urn modelling scheme, that accounts for the simultaneous validity of the three laws just mentioned in all their possible regimes.

We think this a very important result that will help in assessing the relevance and the scope of the many approaches proposed so far in the literature. In order to be as clear as possible, let us itemize the key points:

The first point we make is about the many claims made in literature about the possibility to deduce the Heaps’ law by simply sampling a Zipf-like distribution of frequencies of events. Though, as seen above, it is possible to deduce a power-law behaviour for the growth of distinct elements by randomly drawing from a Zipf-like distribution, this procedure does not allow to reproduce the empirical results. It has been conjectured in [26], that texts are subject to a topicality phenomenon, i.e., writers do not sample the same Zipf’s law. This implies that the same word can appear at different ranking positions depending on the specific context. Though this is an interesting point, we think that the deduction of the Heaps’ law from the sampling of a Zipfian distribution is not satisfactory from two different points of view. First of all, the empirical Heaps’ and Zipf’s laws are never pure power-laws. We have seen for instance that for written texts the frequency-rank plot features a double slope. Nevertheless, we have seen that a relation exists between the exponent of the frequency-rank distribution at high ranks (rare words) and the asymptotic exponent of the Heaps’ law. In other words, the behaviour of the rarest words is responsible for the entrance rate of new words (or new items). Even though a pure power-law behaviour was observed, we have shown that the statistics of fluctuations, represented by the Taylor’s law, would not reproduce the empirical results (unless a specific sampling procedure based on the hypothesis of topicality is adopted [26]). The conclusion to be taken is that in general the Heaps’ and the Zipf’s laws are non-trivially related and their explanation should be made based instead on first-principle.

Models featuring a fixed space of possibilities are not able to reproduce the simultaneous occurrence of the three laws. For instance, a multicolor Polya’s urn model [29] does not even produce power-law-like behaviours for the Zipf’s and the Heaps’ laws. It rather features a saturation phenomenon, related to the exploration of the predefined boundaries of the space of possibilities. The conclusion here is that one needs a modelling scheme featuring a space of possibilities with dynamical boundaries, for instance expanding ones.

Models that incorporate the possibility to expand the space of possibilities like the Yule-Simon [40] model or the Hoppe model fail in explaining the empirical results. In the Yule-Simon model, the innovation rate is constant and the the Heaps’ law is reproduced with the trivial unitary exponent. An ad-hoc correction to this has been proposed by Zanette and Montemurro [41], who postulate a sublinear power-law Heaps’s law form the outset, without providing any first-principle explanation for it. In addition, in this case the result is not satisfactory because the resulting time-series does not obey the Taylor’s law, being instead compatible with a series of i.i.d variables. The question is now why this approach is not reproducing Taylor’s law despite the fact that it fixes the expansion of the space of possibilities. In our opinion what is lacking in the scheme by Zanette and Montemurro is the interplay between the preferential attachment mechanism and the exploration of new possibilities. In other words, the triggering effect which is instead a key features of the PUT model (see next item). The situation for the Hoppe model is different [36], i.e., a multicolor Polya’s urn with a special replicator color. In this case, though a self-consistent expansion of the space of possibilities is in place, an explicit mechanism of triggering, in which the realization of an innovation facilitates the realization of further innovations, lacks. In this case the innovation rate is too weak and the Heap’s law features only a logarithmic growth, i.e., it is slower than any power-law sublinear behaviour.

The Polya’s urn model with triggering (PUT) [10], incorporating the notion of adjacent possible, allows to simultaneously account for the three laws, Zipf’s, Heaps’ and Taylor’s, in all their regimes, without ad-hoc or arbitrary assumptions. In this case, the space of possibilities expands conditional to the occurrence of novel events in a way that is compatible with the empirical findings. From the mathematical point of view, the expansion into the adjacent possible solves another issue related to Zipf’s and Heaps’ generative models. In fact, in PUT one can switch with continuity from the sublinear to the linear regime of the dictionary growth and vice-versa and this by tuning one parameter only: the ratio . This ratio is not limited to a ratio of integers. In fact, in the SI of [10] it was demonstrated that the same expressions for the Heaps’ and Zipf’s laws are recovered if one uses parameters and extracted from a distribution with fixed means. One possible strategy is to fix an integer while can assume any value in the real numbers (in simulations this is a floating point value), and the mantissa can be taken into account by resorting to probabilities. Therefore, it is perfectly sound to state that one switches with continuity from the sublinear regime to the linear one in the interval , with , although the rigorous mathematical characterization of the transition is far from being understood.

It should be remarked that the Poisson-Dirichlet process [12,13,14] is also able to explain the three Zipf’s, Heaps’ and Taylor’s laws only in the strict sub-linear regime for the Heaps’ law. It cannot however account for a constant innovation rate as in the PUT modelling scheme. We also point out that the PUT model embraces the Poisson-Dirichlet and the Dirichlet processes as particular cases.

In this paper we highlighted that the simultaneous occurrence of the Zipf’s, Heaps’ and Taylor’s laws can be explained in the framework of the adjacent possible scheme. This implies considering a space of possibilities that expands or gets restructured conditional to the occurrence of a novel event. The Pólya’s urn with triggering features these properties. Poisson-Dirichlet processes can also be said to belong to the adjacent possible scheme. Though no explicit mention is made about the space of possibilities in those schemes, the probability of the occurrence of a novel event closely depends on how many novelties occurred in the past. We recall that the PUT model includes Dirichlet-like processes as particular cases. From this perspective, PUT-like models seem to be good candidates to explain higher order features connected to innovation processes. We conclude by saying that the very notion of adjacent possible, though sufficient to explain the stylized facts of innovation processes, can be only conjecture as a necessary condition for the validity of the three laws mentioned above. No counterexamples have been found so far with which, without a dynamically restructured space of possibilities, one can satisfactorily explain the empirically observed laws.

Acknowledgments

We thank S. H. Strogatz for interesting discussions. V.D.P.S. is grateful to M. Osella for interesting discussions on the relevance of Taylor’s law. V.D.P.S. acknowledges the Austrian Research Promotion Agency FFG under grant #857136 for financial support.

Abbreviations

The following abbreviations are used in this manuscript:

Appendix A. Analytic Derivation of Heaps’ law in the Urn Model with Triggering

We here derive the Heaps’ law for a general variation of the urn model with triggering. For the sake of completeness, we recall here the model: An urn initially contains distinct elements, represented by balls of different colors. By randomly extracting elements from the urn, we construct a sequence . Both the urn and the sequence enlarge during the process. At each time step t, an element is drawn at random from the urn: (i) iff the chosen element is old (i.e., it already appeared in the sequence ), it is added to the sequence, and put back in the urn along with additional copies of it; (ii) iff the chosen element is new (i.e., it appears for the first time in the sequence ), it is added to the sequence, and put back in the urn along with additional copies of it. Further, brand new distinct elements are also added to the urn.

We can now write the equation governing the growth of the number of distinct elements as a function of the total number n of elements in the sequence (n is also obviously denoting the time step t above):

| (A1) |

where we have defined .

By defining and neglecting , we can write:

| (A2) |

which gives:

| (A3) |

Here we note that by definition , and , for a given such that the solutions we found are valid for any . In order to integrate Equation (A3) we need to study the sign of the expression . Let us do this by considering separately the case and , and postponing the computation for .

Appendix A.1. Case 1: ρ>ν

In this case, if we have (and thus obviously ), while if it exists a such that for . Thus, if is decreasing in n, we can safely perform the integration for any , for some . Let us make this assumption and verify it at the end of the computation. By integrating Equation (A3) we thus obtain:

| (A4) |

and solving:

| (A5) |

We can now substitute , and after some algebra we can write:

| (A6) |

that gives the solution:

| (A7) |

We observe that monotonically, so that the assumption made above is satisfied.

Appendix A.2. Case 2: ρ<ν

Let us now assume and let us verify the assumption at the end. We thus write:

| (A8) |

After similar calculation as in the case , we arrive to the relation:

| (A9) |

that gives the solution:

| (A10) |

Note that per . We already discussed in the main text the case , that has to be treated separately, so we here consider . From (A10) we observe that is increasing in n: with . Thus , with limit zero in the asymptotic limit . The initial assumption is thus satisfied in the entire range of the z values.

Appendix B. Analytic Determination of Zipf’s Law in the Continuous Approximation

In the following we derive the expression of Zipf’s exponents for the model of Pólya’s urn with innovations, by exploiting the continuous approximation.

Appendix B.1. Preliminary Considerations

The time evolution of the number of different colors D in the stream can be approximated by the following equation (see Equation (19) with ):

| (A11) |

Putting aside the particular cases and , Equation (A11) can be solved analytically to yield, in the leading terms at large t (see also Appendix A):

| (A12) |

The two regimes given by the relative values of and result in two different Heaps’ exponents , i.e., and .

In the denominator of Equation (A11), the total number of balls in the urn appears: , so that we can write:

| (A13) |

Appendix B.2. Master Equation

We denote with the number of balls with a given color occurring k-times in the urn. In particular, we have . The following master equation can be written for the :

| (A14) |

We introduce now the probability that a given color appears k-times in the urn, i.e., the corresponding normalized version of the number of occurrences . In order to have , we must choose . The idea is that, as the time runs, the probabilities will tend to a stationary distribution, i.e., a distribution independent of t. By substituting in Equation (A14), we get

| (A15) |

This equation can be solved easily by substituting and solving for , which leads to

| (A16) |

and conversely the frequency-rank exponent . Note that, while depends on the relative values of and , does not. To relate the distribution in the urn to that of the stream is an easy task.

Appendix B.3. Particular case ν=ρ

In addition, in this case Equation (A11) can be solved analytically with the solution including the Lambert W function. At large values of t, the solution can be approximated as

| (A17) |

so that . Equation (A14) can be written as before as:

| (A18) |

which results in and .

Appendix B.4. Particular Case ν=0

This case is identical to the Hoppe’s urn model. When a ball with a brand new color is extracted, exactly one new color enters the urn so that the number of unobserved colors stays the same during the whole dynamics. If we start with one single ball, there will always be only one unobserved color in the urn and this color would have exactly the same function of the black ball with weight one in Hoppe’s model. The equation for the growth of novelties will be:

| (A19) |

while the frequency-rank will be decaying exponentially. In order to introduce the equivalent counterpart of the weight of the black ball in the Hoppe’s model, whenever a novelty is extracted, w balls of the same brand new color could be added to the urn.

Author Contributions

Conceptualization, F.T., V.L. and V.D.P.S.; Data curation, F.T. and V.D.P.S.; Formal analysis, F.T. and V.D.P.S.; Funding acquisition, V.L.; Investigation, F.T., V.L. and V.D.P.S.; Methodology, F.T., V.L. and V.D.P.S.; Resources, V.L.; Software, V.D.P.S., F.T.; Validation, F.T., V.L. and V.D.P.S.; Writing—Original Draft, F.T., V.L. and V.D.P.S.; Writing—Review & Editing, F.T., V.L. and V.D.P.S.

Funding

This research was partially funded by the Sony Computer Science Laboratories Paris.

Conflicts of Interest

The authors declare no conflict of interest.

References

- 1.Estoup J.B. Les Gammes Sténographiques. Institut Sténographique de France; Paris, France: 1916. [Google Scholar]

- 2.Zipf G.K. Relative Frequency as a Determinant of Phonetic Change. Harvard Stud. Class. Philol. 1929;40:1–95. doi: 10.2307/310585. [DOI] [Google Scholar]

- 3.Zipf G.K. The Psychobiology of Language. Houghton-Mifflin; New York, NY, USA: 1935. [Google Scholar]

- 4.Zipf G.K. Human Behavior and the Principle of Least Effort. Addison-Wesley; Reading, MA, USA: 1949. [Google Scholar]

- 5.Herdan G. Type-Token Mathematics: A Textbook of Mathematical Linguistics. Mouton en Company; The Hague, The Netherlands: 1960. (Janua linguarum. Series Maior. No. 4). [Google Scholar]

- 6.Heaps H.S. Information Retrieval-Computational and Theoretical Aspects. Academic Press; Orlando, FL, USA: 1978. [Google Scholar]

- 7.Taylor L. Aggregation, Variance and the Mean. Nature. 1961;189:732. doi: 10.1038/189732a0. [DOI] [Google Scholar]

- 8.Kauffman S.A. Investigations: The Nature of Autonomous Agents and the Worlds They Mutually Create. Santa Fe Institute; Santa Fe, NM, USA: 1996. SFI Working Papers. [Google Scholar]

- 9.Kauffman S.A. Investigations. Oxford University Press; New York, NY, USA: Oxford, UK: 2000. [Google Scholar]

- 10.Tria F., Loreto V., Servedio V.D.P., Strogatz S.H. The dynamics of correlated novelties. Nat. Sci. Rep. 2014;4 doi: 10.1038/srep05890. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Loreto V., Servedio V.D.P., Tria F., Strogatz S.H. Dynamics on expanding spaces: modeling the emergence of novelties. In: Altmann E., Esposti M.D., Pachet F., editors. Universality and Creativity in Language. Springer; Cham, Switzerland: 2016. pp. 59–83. [Google Scholar]

- 12.Pitman J. Lecture Notes in Mathematics. Volume 1875. Springer-Verlag; Berlin, Germany: 2006. Combinatorial stochastic processes; p. x+256. [Google Scholar]

- 13.Buntine W., Hutter M. A Bayesian View of the Poisson-Dirichlet Process. arXiv. 2010. 1007.0296

- 14.De Blasi P., Favaro S., Lijoi A., Mena R.H., Pruenster I., Ruggiero M. Are Gibbs-Type Priors the Most Natural Generalization of the Dirichlet Process? IEEE Trans. Pattern Anal. Mach. Intel. 2015;37:212–229. doi: 10.1109/TPAMI.2013.217. [DOI] [PubMed] [Google Scholar]

- 15.Hart M. Project Gutenberg. 1971 Available online: http://www.gutenberg.org/

- 16.Petruszewycz M. L’histoire de la loi d’Estoup-Zipf: Documents. Math. Sci. Hum. 1973;44:41–56. [Google Scholar]

- 17.Li W. Zipf’s Law everywhere. Glottometrics. 2002;5:14–21. [Google Scholar]

- 18.Newman M.E.J. Power laws, Pareto distributions and Zipf’s law. Contemp. Phys. 2005;46:323–351. doi: 10.1080/00107510500052444. [DOI] [Google Scholar]

- 19.Piantadosi S.T. Zipf’s word frequency law in natural language: A critical review and future directions. Psychon. Bull. Rev. 2014;21:1112–1130. doi: 10.3758/s13423-014-0585-6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Baeza-Yates R., Navarro G. Block addressing indices for approximate text retrieval. J. Am. Soc. Inf. Sci. 2000;51:69–82. doi: 10.1002/(SICI)1097-4571(2000)51:1<69::AID-ASI10>3.0.CO;2-C. [DOI] [Google Scholar]

- 21.Baayen R. Word Frequency Distributions. Springer; Dordrecht, The Netherlands: 2001. Number v. 1 in Text, Speech and Language Technology. [Google Scholar]

- 22.Egghe L. Untangling Herdan’s law and Heaps’ Law: Mathematical and informetric arguments. J. Am. Soc. Inf. Sci. Technol. 2007;58:702–709. doi: 10.1002/asi.20524. [DOI] [Google Scholar]

- 23.Serrano M.A., Flammini A., Menczer F. Modeling statistical properties of written text. PLoS ONE. 2009;4:e5372. doi: 10.1371/journal.pone.0005372. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Lü L., Zhang Z.K., Zhou T. Zipf’s law leads to Heaps’ law: Analyzing their relation in finite-size systems. PLoS ONE. 2010;5:e14139. doi: 10.1371/journal.pone.0014139. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Cristelli M., Batty M., Pietronero L. There is More than a Power Law in Zipf. Sci. Rep. 2012;2:812. doi: 10.1038/srep00812. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Gerlach M., Altmann E.G. Scaling laws and fluctuations in the statistics of word frequencies. New J. Phys. 2014;16:113010. doi: 10.1088/1367-2630/16/11/113010. [DOI] [Google Scholar]

- 27.Johnson S. Where Good Ideas Come From: The Natural History of Innovation. Riverhead Hardcover; New York, NY, USA: 2010. [Google Scholar]

- 28.Wagner A., Rosen W. Spaces of the possible: universal Darwinism and the wall between technological and biological innovation. J. R. Soc. Interface. 2014;11:20131190. doi: 10.1098/rsif.2013.1190. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Gouet R. Strong Convergence of Proportions in a Multicolor P’olya Urn. J. Appl. Probab. 1997;34:426–435. doi: 10.2307/3215382. [DOI] [Google Scholar]

- 30.Tria F. The dynamics of innovation through the expansion in the adjacent possible. Nuovo Cim. C Geophys. Space Phys. C. 2016;39:280. [Google Scholar]

- 31.Pitman J. Exchangeable and partially exchangeable random partitions. Probab. Theory Relat. Fields. 1995;102:145–158. doi: 10.1007/BF01213386. [DOI] [Google Scholar]

- 32.De Finetti B. La Prévision: Ses Lois Logiques, Ses Sources Subjectives. Annales de l’Institut Henri Poincaré. 1937;17:1–68. [Google Scholar]

- 33.Zabell S. Predicting the unpredictable. Synthese. 1992;90:205–232. doi: 10.1007/BF00485351. [DOI] [Google Scholar]

- 34.Monechi B., Ruiz-Serrano A., Tria F., Loreto V. Waves of Novelties in the Expansion into the Adjacent Possible. PLoS ONE. 2017;12:e0179303. doi: 10.1371/journal.pone.0179303. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Iacopini I., Milojević S.C.V., Latora V. Network Dynamics of Innovation Processes. Phys. Rev. Lett. 2018;120:048301. doi: 10.1103/PhysRevLett.120.048301. [DOI] [PubMed] [Google Scholar]

- 36.Hoppe F.M. Pólya-like urns and the Ewens’ sampling formula. J. Math. Biol. 1984;20:91–94. doi: 10.1007/BF00275863. [DOI] [Google Scholar]

- 37.Ewens W. The Sampling Theory of Selectively Neutral Alleles. Theor. Popul. Biol. 1972;3:87–112. doi: 10.1016/0040-5809(72)90035-4. [DOI] [PubMed] [Google Scholar]

- 38.Fisher R.A. The Genetical Theory of Natural Selection. Clarendon Press; Oxford, UK: 1930. [Google Scholar]

- 39.Wright S. Evolution in Mendelian populations. Genetics. 1931;16:97. doi: 10.1093/genetics/16.2.97. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40.Simon H. On a class of skew distribution functions. Biometrika. 1955;42:425–440. doi: 10.1093/biomet/42.3-4.425. [DOI] [Google Scholar]

- 41.Zanette D., Montemurro M. Dynamics of Text Generation with Realistic Zipf’s Distribution. J. Quant. Linguist. 2005;12:29. doi: 10.1080/09296170500055293. [DOI] [Google Scholar]

- 42.Yamato H., Shibuya M. Moments of some statistics of pitman sampling formula. Bull. Inf. Cybern. 2000;32:1–10. [Google Scholar]