Abstract

Information is often described as a reduction of uncertainty associated with a restriction of possible choices. Despite appearing in Hartley’s foundational work on information theory, there is a surprising lack of a formal treatment of this interpretation in terms of exclusions. This paper addresses the gap by providing an explicit characterisation of information in terms of probability mass exclusions. It then demonstrates that different exclusions can yield the same amount of information and discusses the insight this provides about how information is shared amongst random variables—lack of progress in this area is a key barrier preventing us from understanding how information is distributed in complex systems. The paper closes by deriving a decomposition of the mutual information which can distinguish between differing exclusions; this provides surprising insight into the nature of directed information.

Keywords: entropy, mutual information, pointwise, directed, information decomposition

1. Introduction

In information theory, there is a duality between the concepts of entropy and information: entropy is a measure of uncertainty or freedom of choice, whereas information is a measure of reduction of uncertainty (increase in certainty) or restriction of choice. Interestingly, this description of information as a restriction of choice predates even Shannon [1], originating with Hartley [2]:

“By successive selections a sequence of symbols is brought to the listener’s attention. At each selection there are eliminated all of the other symbols which might have been chosen. As the selections proceed more and more possible symbol sequences are eliminated, and we say that the information becomes more precise.”

Indeed, this interpretation led Hartley to derive the measure of information associated with a set of equally likely choices, which Shannon later generalised to account for unequally likely choices. Nevertheless, despite being used since the foundation of information theory, there is a surprising lack of a formal characterisation of information in terms of the elimination of choice. Both Fano [3] and Ash [4] motivate the notion of information in this way, but go on to derive the measure without explicit reference to the restriction of choice. More specifically, their motivational examples consider a set of possible choices modelled by a random variable X. Then in alignment with Hartley’s description, they consider information to be something which excludes possible choices x, with more eliminations corresponding to greater information; however, this approach does not capture the concept of information in its most general sense since it cannot account for information provided by partial eliminations which merely reduce the likelihood of a choice x occurring. (Of course, despite motivating the notion of information in this way, both Fano and Ash provide Shannon’s generalised measure of information which can account for unequally likely choices.) Nonetheless, Section 2 of this paper generalises Hartley’s interpretation of information by providing a formal characterisation of information in terms of probability mass exclusions.

Our interest in providing a formal interpretation of information in terms of exclusions is driven by a desire to understand how information is distributed in complex systems [5,6]. In particular, we are interested in decomposing the total information provided by a set of source variables about one or more target variables into the following atoms of information: the unique information provided by each individual source variable, the shared information that could be provided by two or more source variables, and the synergistic information which is only available through simultaneous knowledge of two or more variables [7]. This idea was originally proposed by Williams and Beer who also introduced an axiomatic framework for such a decomposition [8]. However, flaws have been identified with a specific detail in their approach regarding “whether different random variables carry the same information or just the same amount of information” [9] (see also [10,11]). With this problem in mind, Section 3 discusses how probability mass exclusions may provide a principled method for determining if variables provide the same information. Based upon this, Section 4 derives an information-theoretic expression which can distinguish between different probability mass exclusions. Finally, Section 5 closes by discussing how this expression could be used to identify when distinct events provide the same information.

2. Information and Eliminations

Consider two random variables X and Y with discrete sample spaces $\mathcal{X}$ and $\mathcal{Y}$, and say that we are trying to predict or infer the value of an event x from X using an event y from Y which has occurred jointly. Ideally, there is a one-to-one correspondence between the occurrence of events from X and Y such that an event x can be exactly predicted using an event y. However, in most complex systems, the presence of noise or some other such ambiguity means that we typically do not have this ideal correspondence. Nevertheless, when a particular event y is observed, knowledge of the distributions $P(Y)$ and $P(X,Y)$ can be utilised to improve the prediction on average by using the posterior $p(x|y)$ in place of the prior $p(x)$. Our goal now is to understand how Hartley’s description relates to the notion of conditional probability.

When a particular event y is observed, we know that the complementary event $\bar{y}$ did not occur. Thus we can consider the joint distribution $P(X,Y)$ and eliminate the probability mass which is associated with this complementary event $\bar{y}$. In other words, we exclude the probability mass $P(X,\bar{y})$, which leaves only the probability mass $P(X,y)$ remaining. The surviving probability mass can then be normalised by dividing by $p(y)$, which, by definition, yields the conditional distribution $P(X|y)$. Hence, with this elimination process in mind, consider the following definition:

Definition 1

(Probability Mass Exclusion). A probability mass exclusion induced by the event y from the random variable Y is the probability mass associated with the complementary event $\bar{y}$, i.e., $p(\bar{y})$.

Echoing Hartley’s description, it is perhaps tempting to think that the greater the probability mass exclusion $p(\bar{y})$, the greater the information that y provides about x; however, this is not true in general. To see this, consider the joint event x from the random variable X. Knowing the event x occurred enables us to categorise the probability mass exclusions induced by y into two distinct types: the first is the portion of the probability mass exclusion associated with the complementary event $\bar{x}$, i.e., $p(\bar{x},\bar{y})$; while the second is the portion of the exclusion associated with the event x, i.e., $p(x,\bar{y})$. Before discussing these distinct types of exclusion, consider the conditional probability of x given y written in terms of these two categories,

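$$p(x|y) = \frac{p(x) - p(x,\bar{y})}{1 - p(\bar{y})} = \frac{p(x) - p(x,\bar{y})}{1 - p(\bar{x},\bar{y}) - p(x,\bar{y})}. \tag{1}$$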
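The elimination process can be checked numerically. The following is a minimal sketch, assuming a hypothetical two-by-two joint distribution (the event labels and probabilities are illustrative and do not come from the paper) and assuming that (1) expresses $p(x|y) = p(x,y)/p(y)$ in terms of the prior and the two portions of the exclusion. It computes the posterior once by discarding the mass associated with the complementary event and renormalising, and once from the prior and the exclusions.

```python
# Hypothetical joint distribution P(X, Y); the labels and numbers are illustrative only.
joint = {
    ("x", "y"): 0.3,
    ("x", "not_y"): 0.1,
    ("not_x", "y"): 0.2,
    ("not_x", "not_y"): 0.4,
}


def posterior_by_exclusion(joint, x, y):
    """Discard all mass that does not coincide with y, then renormalise by p(y)."""
    surviving = {event: mass for event, mass in joint.items() if event[1] == y}
    p_y = sum(surviving.values())
    return surviving[(x, y)] / p_y


def posterior_from_exclusions(p_x, exclusion_not_x, exclusion_x):
    """Posterior written in terms of the prior and the two portions of the exclusion.

    exclusion_not_x is the excluded mass associated with the complement of x,
    exclusion_x is the excluded mass associated with x itself.
    """
    return (p_x - exclusion_x) / (1.0 - exclusion_not_x - exclusion_x)


p_x = joint[("x", "y")] + joint[("x", "not_y")]
post_a = posterior_by_exclusion(joint, "x", "y")
post_b = posterior_from_exclusions(
    p_x, joint[("not_x", "not_y")], joint[("x", "not_y")]
)
print(post_a, post_b)
```

Both routes give the same posterior for this example, which is all the sketch is meant to show.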

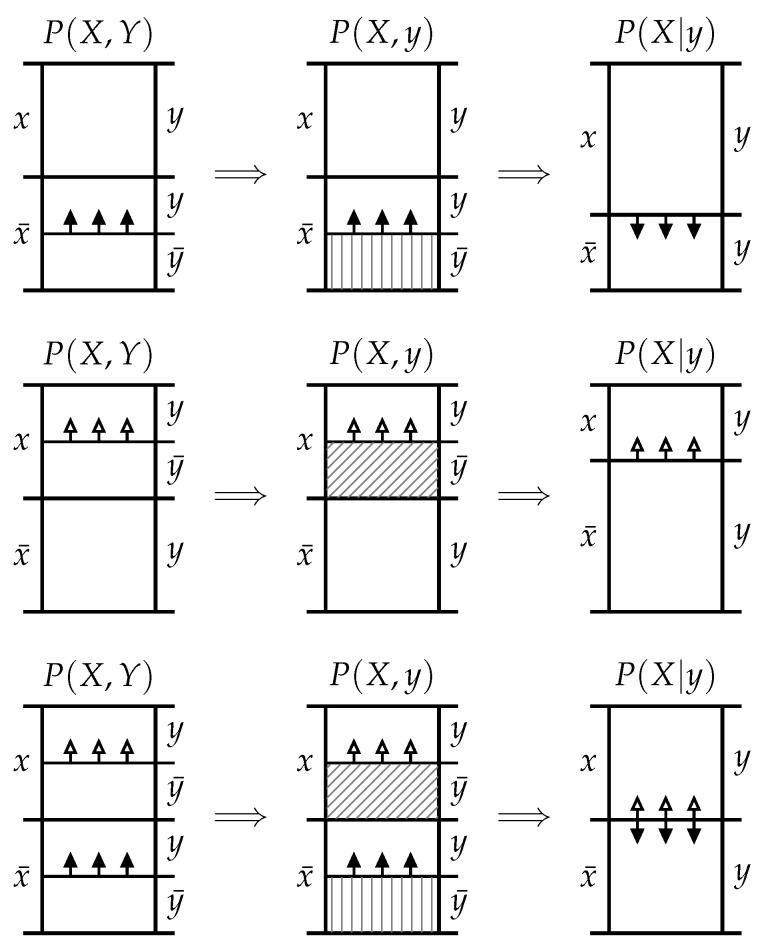

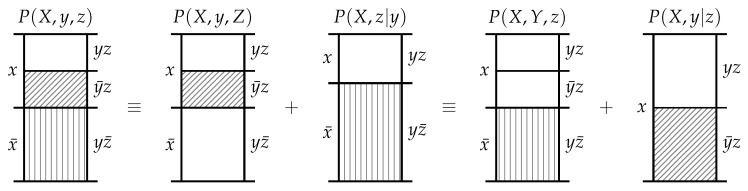

To see why these two types of exclusions are distinct, consider two special cases: The first special case is when the event y induces exclusions which are confined to the probability mass associated with the complementary event $\bar{x}$. This means that the portion of exclusion $p(\bar{x},\bar{y})$ is non-zero while the portion $p(x,\bar{y}) = 0$. In this case the posterior $p(x|y)$ is larger than the prior $p(x)$ and is an increasing function of the exclusion $p(\bar{x},\bar{y})$ for a fixed $p(x)$. This can be seen visually in the probability mass diagram at the top of Figure 1 or can be formally demonstrated by inserting $p(x,\bar{y}) = 0$ into (1). In this case, the mutual information

$$i(x;y) = \log \frac{p(x|y)}{p(x)} \tag{2}$$

is a strictly positive, increasing function of $p(\bar{x},\bar{y})$ for a fixed $p(x)$. (Note that this is the mutual information between events rather than the average mutual information between variables; depending on the context, it is also referred to as the information density, the pointwise mutual information, or the local mutual information.) For this special case, it is indeed true that the greater the probability mass exclusion $p(\bar{y})$, the greater the information y provides about x. Hence, we define this type of exclusion as follows:

Definition 2

(Informative Probability Mass Exclusion). For the joint event $xy$ from the random variables X and Y, an informative probability mass exclusion induced by the event y is the portion of the probability mass exclusion associated with the complementary event $\bar{x}$, i.e., $p(\bar{x},\bar{y})$.

Figure 1.

In probability mass diagrams, height represents the probability mass of each joint event from $\mathcal{X} \times \mathcal{Y}$ which must sum to 1. The leftmost of the diagrams depicts the joint distribution $P(X,Y)$, while the central diagrams depict the joint distribution after the occurrence of the event y leads to exclusion of the probability mass associated with the complementary event $\bar{y}$. By convention, vertical and diagonal hatching represent informative and misinformative exclusions, respectively. The rightmost diagrams represent the conditional distribution $P(X|y)$ after the remaining probability mass has been normalised. Top row: A purely informative probability mass exclusion, $p(\bar{x},\bar{y}) > 0$ and $p(x,\bar{y}) = 0$, leading to $p(x|y) > p(x)$ and hence $i(x;y) > 0$. Middle row: A purely misinformative probability mass exclusion, $p(\bar{x},\bar{y}) = 0$ and $p(x,\bar{y}) > 0$, leading to $p(x|y) < p(x)$ and hence $i(x;y) < 0$. Bottom row: The general case $p(\bar{x},\bar{y}) > 0$ and $p(x,\bar{y}) > 0$. Whether $p(x|y)$ turns out to be greater or less than $p(x)$ depends on the size of both the informative and misinformative exclusions.

The second special case is when the event y induces exclusions which are confined to the probability mass associated with the event x. This means that the portion of exclusion $p(\bar{x},\bar{y}) = 0$ while the portion $p(x,\bar{y})$ is non-zero. In this case, the posterior $p(x|y)$ is smaller than the prior $p(x)$ and is a decreasing function of the exclusion $p(x,\bar{y})$ for a fixed $p(x)$. This can be seen visually in the probability mass diagram in the middle row of Figure 1 or can be formally demonstrated by inserting $p(\bar{x},\bar{y}) = 0$ into (1). In this case, the mutual information (2) is a strictly negative, decreasing function of $p(x,\bar{y})$ for fixed $p(x)$. (Although the mutual information is non-negative when averaged across events from both variables, it may be negative between pairs of events.) This second special case demonstrates that it is not true that the greater the probability mass exclusion $p(\bar{y})$, the greater the information y provides about x. Hence, we define this type of exclusion as follows:

Definition 3

(Misinformative Probability Mass Exclusion). For the joint event $xy$ from the random variables X and Y, a misinformative probability mass exclusion induced by the event y is the portion of the probability mass exclusion associated with the event x, i.e., $p(x,\bar{y})$.
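The two special cases can be illustrated with a short numerical sketch. It assumes the pointwise mutual information is $\log_2$ of the posterior over the prior, with the posterior written in terms of the prior and the two portions of the exclusion; the prior of 0.4 and the exclusion sizes are hypothetical values chosen only so that all probabilities stay valid.

```python
import math


def pointwise_mi(p_x, informative, misinformative):
    """Pointwise mutual information (in bits) from the prior and the two exclusions."""
    posterior = (p_x - misinformative) / (1.0 - informative - misinformative)
    return math.log2(posterior / p_x)


p_x = 0.4  # hypothetical prior

# Purely informative: all excluded mass belongs to the complement of x.
purely_informative = [pointwise_mi(p_x, e, 0.0) for e in (0.1, 0.3, 0.5)]

# Purely misinformative: all excluded mass belongs to x itself.
purely_misinformative = [pointwise_mi(p_x, 0.0, m) for m in (0.1, 0.2, 0.3)]

print(purely_informative)
print(purely_misinformative)
```

In this sketch the first list is positive and grows with the size of the exclusion, while the second is negative and falls as the exclusion grows, matching the behaviour described for the two cases.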

Now consider the general case where both informative and misinformative probability mass exclusions are present simultaneously. It is not immediately clear whether the posterior $p(x|y)$ is larger or smaller than the prior $p(x)$, as this depends on the relative size of the informative and misinformative exclusions. Indeed, for a fixed prior $p(x)$, we can vary the informative exclusion $p(\bar{x},\bar{y})$ whilst still maintaining a fixed posterior $p(x|y)$ by co-varying the misinformative exclusion $p(x,\bar{y})$ appropriately; specifically by choosing

$$p(x,\bar{y}) = \frac{p(x) - p(x|y)\,\big(1 - p(\bar{x},\bar{y})\big)}{1 - p(x|y)}. \tag{3}$$
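As a rough check of this co-variation, the sketch below assumes that (3) is obtained by solving the expression for the posterior for the misinformative exclusion. The prior 0.4, the target posterior 0.5 and the three informative exclusions are hypothetical values, not taken from the paper.

```python
def misinformative_for_fixed_posterior(p_x, posterior, informative):
    """Misinformative exclusion needed to hold the posterior fixed for a given informative exclusion."""
    return (p_x - posterior * (1.0 - informative)) / (1.0 - posterior)


def posterior_from_exclusions(p_x, informative, misinformative):
    """Posterior in terms of the prior and the two portions of the exclusion."""
    return (p_x - misinformative) / (1.0 - informative - misinformative)


p_x, target = 0.4, 0.5  # hypothetical prior and posterior

# Vary the informative exclusion and co-vary the misinformative exclusion.
pairs = [
    (e, misinformative_for_fixed_posterior(p_x, target, e)) for e in (0.2, 0.3, 0.4)
]
posteriors = [posterior_from_exclusions(p_x, e, m) for e, m in pairs]

for (e, m), post in zip(pairs, posteriors):
    print(f"informative={e:.1f} misinformative={m:.3f} posterior={post:.3f}")
```

Each pair of exclusions differs in size, yet every pair returns the same posterior and therefore the same pointwise mutual information.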

Although it is not immediately clear whether the posterior $p(x|y)$ is larger or smaller than the prior $p(x)$, the general case maintains the same monotonic dependence as the two constituent special cases. Specifically, if we fix $p(x)$ and the misinformative exclusion $p(x,\bar{y})$, then the posterior $p(x|y)$ is an increasing function of the informative exclusion $p(\bar{x},\bar{y})$. On the other hand, if we fix $p(x)$ and the informative exclusion $p(\bar{x},\bar{y})$, then the posterior $p(x|y)$ is a decreasing function of the misinformative exclusion $p(x,\bar{y})$. This can be seen visually in the probability mass diagram at the bottom of Figure 1 or can be formally demonstrated by fixing $p(x)$ and varying the appropriate values for each case in (1). Finally, the relationship between the mutual information and the exclusions in this general case can be explored by inserting (1) into (2), which yields

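$$i(x;y) = \log \frac{p(x) - p(x,\bar{y})}{p(x)\,\big(1 - p(\bar{x},\bar{y}) - p(x,\bar{y})\big)} = \log \frac{1 - p(x,\bar{y})/p(x)}{1 - p(\bar{x},\bar{y}) - p(x,\bar{y})}. \tag{4}$$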

If $p(x)$ and the misinformative exclusion $p(x,\bar{y})$ are fixed, then $i(x;y)$ is an increasing function of the informative exclusion $p(\bar{x},\bar{y})$. On the other hand, if $p(x)$ and the informative exclusion $p(\bar{x},\bar{y})$ are fixed, then $i(x;y)$ is a decreasing function of the misinformative exclusion $p(x,\bar{y})$. Finally, if both the informative exclusion $p(\bar{x},\bar{y})$ and the misinformative exclusion $p(x,\bar{y})$ are fixed, then $i(x;y)$ is an increasing function of $p(x)$.

Now that a formal relationship between eliminations and information has been established using probability theory, we return to the motivational question—can this understanding of information in terms of exclusions aid in our understanding of how random variables share information?

3. Information Decomposition and Probability Mass Exclusions

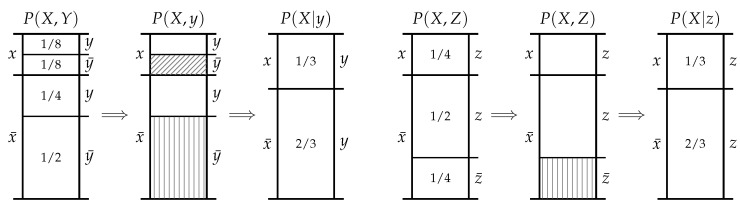

Consider the example in Figure 2 where the events y and z each induce different exclusions, both in terms of size and type, and yet provide the same amount of information about the event x since

$$i(x;y) = \log \frac{p(x|y)}{p(x)} = \log \frac{p(x|z)}{p(x)} = i(x;z). \tag{5}$$

Figure 2.

Top: probability mass diagram for $\mathcal{X} \times \mathcal{Y}$. Bottom: probability mass diagram for $\mathcal{X} \times \mathcal{Z}$. Note that the events y and z can induce different exclusions in $P(X)$ and yet still yield the same conditional distributions $P(X|y) = P(X|z)$ and hence provide the same amount of information about the event x.

The events y and z reduce our uncertainty about x in distinct ways and yet, after making the relevant exclusions, we have the same freedom of choice about x. It is our belief that the information provided by y and z should only be deemed to be the same information if they both reduce our uncertainty about x in the same way; we contend that for the events y and z to reduce our uncertainty about x in the same way, they would have to identically restrict our choice, or make the same exclusions with respect to x.

What this example demonstrates is that the mutual information does not—and indeed cannot—distinguish between how events provide information about other events. By definition, the mutual information only depends on the prior and posterior probabilities. Although the posterior depends on both the informative and misinformative exclusions, there is no one-to-one correspondence between these exclusions and the resultant mutual information. Indeed, as we saw in (3), there is a continuous range of informative and misinformative exclusions which could yield any given value for the mutual information. As such, any information decomposition based upon the mutual information alone could never distinguish between how events provide information in terms of exclusions. Thus the question naturally arises—can we express the exclusions in terms of information-theoretic measures such that there is a one-to-one correspondence between exclusions and the measures? Such an expression could be utilised in an information decomposition which can distinguish between whether events provide the same information or merely the same amount of information.

4. The Directed Components of Mutual Information

The mutual information cannot distinguish between events which induce different exclusions because any given value could arise from a whole continuum of possible informative and misinformative exclusions. Hence, consider decomposing the mutual information into two separate information-theoretic components. Motivated by the strictly positive mutual information observed in the purely informative case and the strictly negative mutual information observed in the purely misinformative case, let us demand that one of the components be positive while the other component is negative.

Postulate 1

(Decomposition). The information provided by y about x can be decomposed into two non-negative components, such that $i(x;y) = i^+(y \to x) - i^-(y \to x)$.

Furthermore, let us demand that the two components preserve the functional dependencies between the mutual information and the informative and misinformative exclusion observed in (4) for the general case.

Postulate 2

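(Monotonicity). The functions $i^+(y \to x)$ and $i^-(y \to x)$ should satisfy the following conditions:

- 1. For all fixed $p(x)$ and $p(x,\bar{y})$, the function $i^+(y \to x)$ is a continuous, increasing function of $p(\bar{x},\bar{y})$.

- 2. For all fixed $p(x)$ and $p(\bar{x},\bar{y})$, the function $i^-(y \to x)$ is a continuous, increasing function of $p(x,\bar{y})$.

- 3. For all fixed $p(\bar{x},\bar{y})$ and $p(x,\bar{y})$, the functions $i^+(y \to x)$ and $i^-(y \to x)$ are increasing and decreasing functions of $p(x)$, respectively.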

Before considering the functions which might satisfy Postulates 1 and 2, there are two further observations to be made about probability mass exclusions. The first observation is that an event x could never induce a misinformative exclusion about itself, since the misinformative exclusion $p(x,\bar{x}) = 0$. Indeed, inserting this result into the self-information in terms of (4) yields the Shannon information content of the event x,

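$$i(x;x) = \log \frac{1}{1 - p(\bar{x},\bar{x})} = \log \frac{1}{1 - p(\bar{x})} = \log \frac{1}{p(x)} = h(x). \tag{6}$$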

Postulate 3

(Self-Information). An event cannot misinform about itself, hence $i^+(x \to x) = i(x;x) = -\log_b p(x)$.

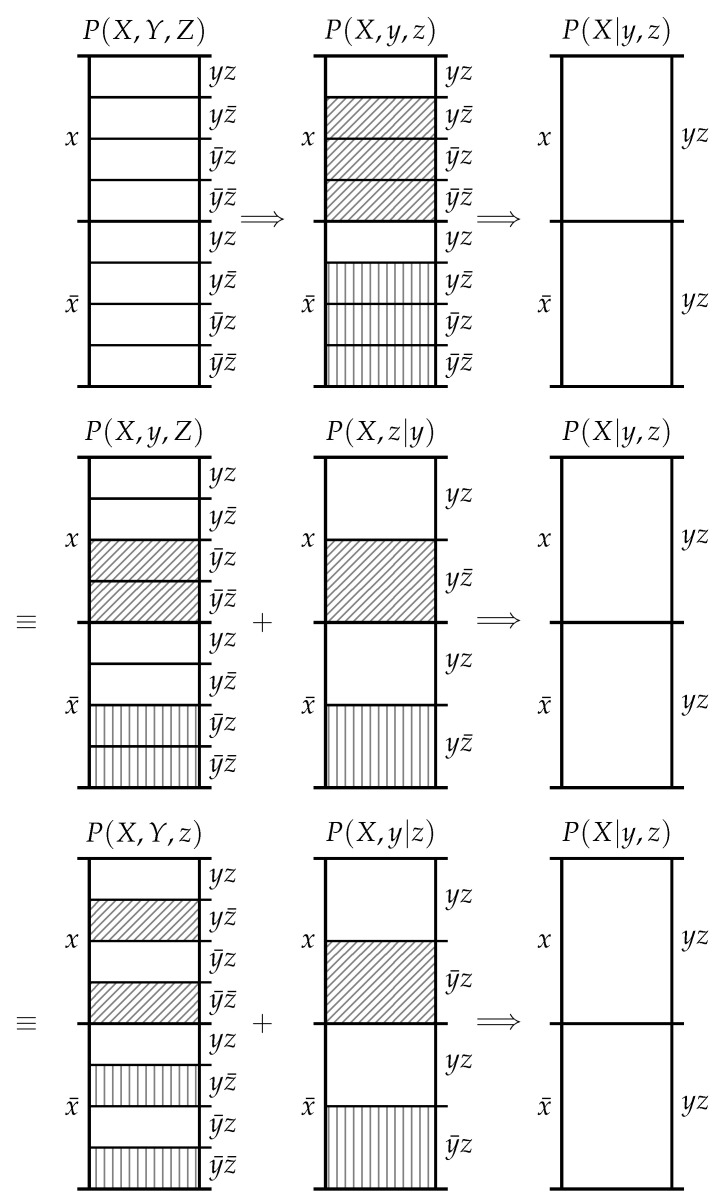

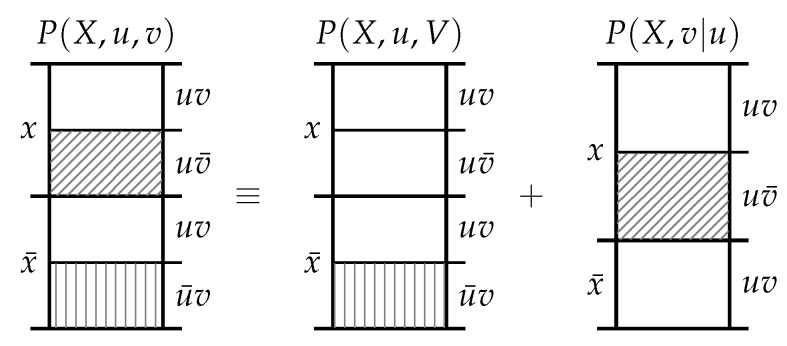

The second observation is that the informative and misinformative exclusions must individually satisfy the chain rule of probability. As shown in Figure 3, there are three equivalent ways to consider the exclusions induced in $P(X)$ by the events y and z. Firstly, we could consider the information provided by the joint event $yz$ which excludes the probability mass in $P(X)$ associated with the joint events $y\bar{z}$, $\bar{y}z$ and $\bar{y}\bar{z}$. Secondly, we could first consider the information provided by y which excludes the probability mass in $P(X)$ associated with the joint events $\bar{y}z$ and $\bar{y}\bar{z}$, and then subsequently consider the information provided by z which excludes the probability mass in $P(X|y)$ associated with the joint event $y\bar{z}$. Thirdly, we could first consider the information provided by z which excludes the probability mass in $P(X)$ associated with the joint events $y\bar{z}$ and $\bar{y}\bar{z}$, and then subsequently consider the information provided by y which excludes the probability mass in $P(X|z)$ associated with the joint event $\bar{y}z$. Regardless of the chaining, we start with the same $p(x)$ and finish with the same $p(x|yz)$.

Figure 3.

Top: y and z both simultaneously induce probability mass exclusions in $P(X)$ leading directly to $P(X|yz)$. Middle: y could induce exclusions in $P(X)$ yielding $P(X|y)$, and then z could induce exclusions in $P(X|y)$ leading to $P(X|yz)$. Bottom: the same as the middle, only vice versa in y and z.
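The equivalence of the three orderings can be sketched numerically. The example below assumes a hypothetical joint distribution over three binary variables (the weights are illustrative only) and applies the exclusions jointly, as y then z, and as z then y; the helper names `exclude` and `marginal_x` are introduced here for illustration.

```python
from itertools import product

# Hypothetical joint distribution over binary (x, y, z); the weights are illustrative.
weights = [4, 1, 1, 2, 1, 2, 3, 2]
joint = {xyz: w / 16 for xyz, w in zip(product((0, 1), repeat=3), weights)}


def exclude(dist, keep):
    """Drop every outcome rejected by `keep`, then renormalise the surviving mass."""
    surviving = {outcome: p for outcome, p in dist.items() if keep(outcome)}
    total = sum(surviving.values())
    return {outcome: p / total for outcome, p in surviving.items()}


def marginal_x(dist):
    """Marginal distribution of the first coordinate."""
    out = {}
    for (x, _, _), p in dist.items():
        out[x] = out.get(x, 0.0) + p
    return out


y, z = 1, 0  # the observed events

direct = marginal_x(exclude(joint, lambda o: o[1] == y and o[2] == z))
y_then_z = marginal_x(exclude(exclude(joint, lambda o: o[1] == y), lambda o: o[2] == z))
z_then_y = marginal_x(exclude(exclude(joint, lambda o: o[2] == z), lambda o: o[1] == y))

print(direct, y_then_z, z_then_y)
```

All three routes end at the same conditional distribution over x, which is the property the chain rule postulate builds on.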

Postulate 4

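(Chain Rule). The functions $i^+(y \to x)$ and $i^-(y \to x)$ satisfy a chain rule; i.e.,

$$i^+(yz \to x) = i^+(y \to x) + i^+(z \to x|y) = i^+(z \to x) + i^+(y \to x|z),$$

$$i^-(yz \to x) = i^-(y \to x) + i^-(z \to x|y) = i^-(z \to x) + i^-(y \to x|z),$$

where the conditional notation denotes the same function only with conditional probability as an argument.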

Theorem 1.

The unique functions satisfying Postulates 1–4 are

$$i^+(y \to x) = h(y) = -\log p(y), \tag{7}$$

$$i^-(y \to x) = h(y|x) = -\log p(y|x). \tag{8}$$

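By rewriting (7) and (8) in terms of probability mass exclusions, it is easy to verify that the functions in Theorem 1 satisfy Postulates 1–4. Perhaps unsurprisingly, this yields a decomposed version of (4),

$$i^+(y \to x) = h(y) = \log \frac{1}{1 - p(\bar{x},\bar{y}) - p(x,\bar{y})}, \tag{9}$$

$$i^-(y \to x) = h(y|x) = \log \frac{p(x)}{p(x) - p(x,\bar{y})} = \log \frac{1}{1 - p(x,\bar{y})/p(x)}. \tag{10}$$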

Hence, in order to prove Theorem 1 we must demonstrate that (7) and (8) are the unique functions which satisfy Postulates 1–4. This proof is provided in full in Appendix A.
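The verification that the components satisfy the postulates can be sketched numerically. The example below assumes the components are $h(y) = -\log_2 p(y)$ and $h(y|x) = -\log_2 p(y|x)$, with their conditional versions obtained by conditioning on the extra event; the joint distribution over three binary variables, the helper `prob` and all variable names are hypothetical.

```python
import math
from itertools import product

# Hypothetical joint distribution over binary (x, y, z); the weights are illustrative.
weights = [4, 1, 1, 2, 1, 2, 3, 2]
joint = {xyz: w / 16 for xyz, w in zip(product((0, 1), repeat=3), weights)}
POSITION = {"x": 0, "y": 1, "z": 2}


def prob(**events):
    """Probability that every named variable takes the given value."""
    return sum(
        p
        for outcome, p in joint.items()
        if all(outcome[POSITION[name]] == value for name, value in events.items())
    )


def h(p):
    """Information content in bits of an event with probability p."""
    return -math.log2(p)


x, y, z = 1, 1, 1

# The two components: h(y) and h(y|x).
i_plus = h(prob(y=y))
i_minus = h(prob(x=x, y=y) / prob(x=x))

# Postulate 1: the difference recovers the pointwise mutual information.
pmi = math.log2(prob(x=x, y=y) / (prob(x=x) * prob(y=y)))

# Postulate 3: an event does not misinform about itself.
self_minus = h(prob(x=x) / prob(x=x))

# Postulate 4: chain rule over the source events y and z.
i_plus_joint = h(prob(y=y, z=z))
i_plus_chain = i_plus + h(prob(y=y, z=z) / prob(y=y))
i_minus_joint = h(prob(x=x, y=y, z=z) / prob(x=x))
i_minus_chain = i_minus + h(prob(x=x, y=y, z=z) / prob(x=x, y=y))

print(i_plus, i_minus, pmi)
```

This only checks the postulates on one example; it says nothing about uniqueness, which is the part that requires proof.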

5. Discussion

Theorem 1 answers the question posed at the end of Section 3—although there is no one-to-one correspondence between these exclusions and the mutual information, there is a one-to-one correspondence between exclusions and the decomposition

$$i(x;y) = i^+(y \to x) - i^-(y \to x) = h(y) - h(y|x). \tag{11}$$

It is important to note the directed nature of this decomposition: this equation considers the exclusions induced by y with respect to x. What is novel is that it is this particular decomposition, $i(x;y) = h(y) - h(y|x)$, which enables us to uniquely determine the size of the exclusions induced by y with respect to x, rather than the decomposition $i(x;y) = h(x) - h(x|y)$, which would not satisfy Postulate 4. Indeed, this latter decomposition is more typically associated with the information provided by y about x since it reflects the change from the prior $p(x)$ to the posterior $p(x|y)$. Of course, by Theorem 1 this latter decomposition would allow us to uniquely determine the size of the exclusions induced by x with respect to y.

There is another important asymmetry which can be seen from (9) and (10). The negative component depends on the size of only the misinformative exclusion while the positive component depends on the size of both the informative and misinformative exclusions. The positive component depends on the total size of the exclusions induced by y and hence has no functional dependence on x. It quantifies the specificity of the event y: the less likely the outcome y is to occur, the greater the total amount of probability mass excluded by y and therefore the greater the potential for y to inform about x. On the other hand, the negative component quantifies the ambiguity of y given x: the less likely the outcome y is to coincide with the outcome x, the greater the misinformative probability mass exclusion and therefore the greater the potential for y to misinform about x. This asymmetry between the components is apparent when considering the two special cases. In the purely informative case where $p(x,\bar{y}) = 0$, only the positive informational component is non-zero. On the other hand, in the purely misinformative case, both the positive and negative informational components are non-zero, although it is clear that $i^-(y \to x) > i^+(y \to x)$ and hence $i(x;y) < 0$.

Let us now consider how this information-theoretic expression (which has a one-to-one correspondence with exclusion) could be utilised to provide an information decomposition that can distinguish between whether events provide the same information or merely the same amount of information. Recall the example from Section 3 where y and z provide the same amount of information about x, and consider this example in terms of the decomposition (11),

| (12) |

In contrast to the mutual information in (5), the decomposition reflects the different ways y and z provide information through differing exclusions even if they provide the same amount of information. How to decompose multivariate information using this decomposition is not the subject of this paper; those who are interested in seeing an operational definition of shared information based on redundant exclusions should see [12].
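A small numerical sketch makes the contrast concrete. It assumes the positive and negative components can be written in terms of the exclusions as $-\log_2\big(1 - p(\bar{x},\bar{y}) - p(x,\bar{y})\big)$ and $-\log_2\big(1 - p(x,\bar{y})/p(x)\big)$ respectively. The prior and the two sets of exclusions are hypothetical and are not the values from Figure 2.

```python
import math


def components(p_x, informative, misinformative):
    """Positive and negative components (in bits) written in terms of the exclusions."""
    i_plus = -math.log2(1.0 - informative - misinformative)
    i_minus = -math.log2(1.0 - misinformative / p_x)
    return i_plus, i_minus


p_x = 0.4  # hypothetical prior shared by both examples

# Event y: a purely informative exclusion.
y_plus, y_minus = components(p_x, informative=0.2, misinformative=0.0)

# Event z: larger exclusions of both types, chosen to give the same posterior.
z_plus, z_minus = components(p_x, informative=0.4, misinformative=0.2)

mi_y = y_plus - y_minus
mi_z = z_plus - z_minus

print(f"y: i+={y_plus:.4f} i-={y_minus:.4f} i={mi_y:.4f}")
print(f"z: i+={z_plus:.4f} i-={z_minus:.4f} i={mi_z:.4f}")
```

In this sketch the two events provide the same amount of information, yet their components differ: z is both more specific and more ambiguous than y.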

Acknowledgments

We thank Mikhail Prokopenko, Nathan Harding, Nils Bertschinger, and Nihat Ay for helpful discussions relating to this manuscript. We especially thank Michael Wibral for some of our earlier discussions regarding information and exclusions. Finally, we would like to thank the anonymous “Reviewer 2” of [12] for their helpful feedback regarding this paper.

Appendix A

This section contains the proof of Theorem 1. Since it is trivial to verify that (7) and (8) satisfy Postulates 1–4, the proof will focus on establishing uniqueness. The proof is structured as follows: Lemma A1 considers the functional form required when and is used in the proof of Lemma A3; Lemmas A2 and A3 consider the purely informative and misinformative special cases respectively; finally, the proof of Theorem 1 brings these two special cases together for the general case.

The proof of Theorem 1 may seem convoluted; however, there are two points to be made about this. Firstly, the proof of Lemma A1 is well-known in functional equation theory [13] and is only given for the sake of completeness. (Accepting this substantially reduces the length of the proof.) Secondly, when establishing uniqueness of the two components, we cannot assume that the components share a common base for the logarithm. Specifically, when considering the purely informative case, Lemma A2 shows that the positive component is a logarithm with the same base as the logarithm from Postulate 3, denoted as b throughout. On the other hand, considering the purely misinformative case in Lemma A3 demonstrates that the negative component is a logarithm with base k which is greater than or equal to b. When combining these in the proof of Theorem 1, it is necessary to show that $k = b$ in order to prove that the components have a common base.

Lemma A1.

In the special case where , we have that with , where b is the base of the logarithm from Postulate 3.

Proof.

That the logarithm is the unique function which satisfies Postulates 2–4 is well-known in functional equation theory [13]; however, for the sake of completeness the proof is given here in full. Since , we have that and hence by Postulate 1, that . Furthermore, we also have that ; thus, without loss of generality, we will consider to be a function of rather than . As such, let be our candidate function for where . First consider the case where , such that . Postulate 4 demands that and hence , i.e., if there is no misinformative exclusion, then the negative informational component should be zero.

Now consider the case where so that m is a positive integer greater than 1. If r is an arbitrary positive integer, then lies somewhere between two powers of m, i.e., there exists a positive integer n such that

(A1)

So long as the base k is greater than 1, the logarithm is a monotonically increasing function, thus

(A2)

or equivalently,

(A3)

By Postulate 2, is a monotonically increasing function of m, hence applying it to (A1) yields

(A4)

Note that, by Postulate 4 and mathematical induction, it is trivial to verify that

(A5)

Hence, by (A4) and (A5), we have that

(A6)

Now, (A3) and (A6) have the same bounds, hence

(A7)

Since m is fixed and r is arbitrary, let . Then, by the squeeze theorem, we get that

(A8)

and hence

(A9)

Now consider the case where so that m is a rational number; in particular, let where s and r are positive integers. By Postulate 4,

(A10)

Thus, combining (A9) and (A10), we get that

(A11)

Now consider the case where such that m is a real number. By Postulate 2, the function (A11) is the unique solution, and hence, .

Finally, to show that , consider an event . By Postulate 3, . Furthermore, since , by Postulate 2, . Thus, , and hence . □

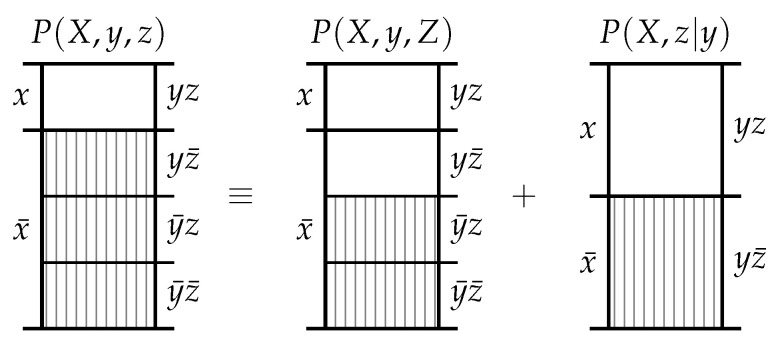

Figure A1.

The probability mass diagram associated with (A12). Lemma A2 uses Postulates 3 and 4 to provide a solution for the purely informative case.

Lemma A2.

In the purely informative case where , we have that and , where b is the base of the logarithm from Postulate 3.

Proof.

Consider an event z such that and . By Postulate 4,

(A12)

as depicted in Figure A1. By Postulate 3, and , where the latter equality follows from the equivalence of the events x and z given y. Furthermore, since , we have that , and hence that . Thus, from (A12), we have that

(A13)

Finally, by Postulate 1, . □

Lemma A3.

In the purely misinformative case where , we have that and with , where b is the base of the logarithm from Postulate 3.

Proof.

Consider an event . By Postulate 4,

(A14)

(A15)

as depicted in Figure A2. Since , by Postulate 3, , , and . Furthermore, since , by Lemma A1, , hence, from (A14) and (A15), we get that

(A16)

(A17)

as required. □

Figure A2.

The diagram corresponding to (A14) and (A15). Lemma A3 uses Postulate 4 and Lemma A1 to provide a solution for the purely misinformative case.

Proof of Theorem 1.

In the general case, both and are non-zero. Consider two events, u and v, such that , and . By Postulate 4,

(A18)

(A19)

as depicted in Figure A3. Since , by Lemma A2, and ; furthermore, we also have that , and hence . In addition, since , by Lemma A3, we have that and where . Therefore, by (A18) and (A19),

(A20)

(A21)

Finally, since Postulate 1 requires that , we have that , or equivalently,

(A22)

This must hold for all and , which is only true in general for . Hence, and therefore

(A23)

(A24)

Figure A3.

The probability mass diagram associated with (A18) and (A19). Theorem 1 uses Lemmas A2 and A3 to provide a solution to the general case.

Corollary A1.

The conditional decomposition of the information provided by y about x given z is given by

$$i^+(y \to x|z) = h(y|z) = -\log p(y|z), \tag{A25}$$

$$i^-(y \to x|z) = h(y|xz) = -\log p(y|xz). \tag{A26}$$

Proof.

Follows trivially using conditional distributions. □

Corollary A2.

The joint decomposition of the information provided by y and z about x is given by

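$$i^+(yz \to x) = h(yz) = -\log p(yz), \tag{A27}$$

$$i^-(yz \to x) = h(yz|x) = -\log p(yz|x). \tag{A28}$$

The joint decomposition of the information provided by y about x and z is given by

$$i^+(y \to xz) = h(y) = -\log p(y), \tag{A29}$$

$$i^-(y \to xz) = h(y|xz) = -\log p(y|xz). \tag{A30}$$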

Proof.

Follows trivially using joint distributions. □

Corollary A3.

We have the following three identities,

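$$i^+(y \to xz) = i^+(y \to x), \tag{A31}$$

$$i^+(y \to z|x) = i^-(y \to x), \tag{A32}$$

$$i^-(y \to xz) = i^-(y \to z|x). \tag{A33}$$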

Proof.

The identity (A31) follows from (7), while (A32) follows from (8) and (A25); finally, (A33) follows from (A26) and (A30). □

Finally, it is not true that the components satisfy a target chain rule. That is, in general the relation $i^+(y \to xz) = i^+(y \to x) + i^+(y \to z|x)$ does not hold, nor does $i^-(y \to xz) = i^-(y \to x) + i^-(y \to z|x)$. However, the mutual information must satisfy a chain rule over target events. Thus, it is interesting to observe how the target chain rule for mutual information arises in terms of exclusions. The key observation is that the positive informational component provided by y about z given x equals the negative informational component provided by y about x, as per (A32).

Corollary A4.

The information provided by y about x and z satisfies the following chain rule,

$$i(xz;y) = i(x;y) + i(z;y|x). \tag{A34}$$

Proof.

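Starting from the joint decomposition (A29) and (A30), the identities (A31) and (A33) give

$$i(xz;y) = i^+(y \to xz) - i^-(y \to xz) = i^+(y \to x) - i^-(y \to z|x). \tag{A35}$$

Then, by identity (A32) and recomposition, we get that

$$i(xz;y) = i^+(y \to x) - i^-(y \to x) + i^+(y \to z|x) - i^-(y \to z|x) = i(x;y) + i(z;y|x). \tag{A36}$$

□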
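The way the target chain rule arises can be sketched numerically. The example assumes the components take the forms $h(y)$ and $h(y|x)$, with conditional and joint versions obtained by conditioning on, or joining, the relevant events; the joint distribution, the helper `prob` and the variable names are hypothetical.

```python
import math
from itertools import product

# Hypothetical joint distribution over binary (x, y, z); the weights are illustrative.
weights = [4, 1, 1, 2, 1, 2, 3, 2]
joint = {xyz: w / 16 for xyz, w in zip(product((0, 1), repeat=3), weights)}
POSITION = {"x": 0, "y": 1, "z": 2}


def prob(**events):
    """Probability that every named variable takes the given value."""
    return sum(
        p
        for outcome, p in joint.items()
        if all(outcome[POSITION[name]] == value for name, value in events.items())
    )


def h(p):
    """Information content in bits of an event with probability p."""
    return -math.log2(p)


x, y, z = 1, 1, 1

h_y = h(prob(y=y))
h_y_given_x = h(prob(x=x, y=y) / prob(x=x))
h_y_given_xz = h(prob(x=x, y=y, z=z) / prob(x=x, z=z))

# Components for the target x, for z given x, and for the joint target xz.
plus_x, minus_x = h_y, h_y_given_x
plus_z_given_x, minus_z_given_x = h_y_given_x, h_y_given_xz
plus_xz, minus_xz = h_y, h_y_given_xz

# The components do not chain over targets individually ...
plus_gap = (plus_x + plus_z_given_x) - plus_xz
minus_gap = (minus_x + minus_z_given_x) - minus_xz

# ... but the surplus is the same in both, so it cancels in the mutual information.
mi_joint = plus_xz - minus_xz
mi_chain = (plus_x - minus_x) + (plus_z_given_x - minus_z_given_x)

print(plus_gap, minus_gap, mi_joint, mi_chain)
```

In this example both components overshoot by the same amount, $h(y|x)$, which is why the chain rule holds for the mutual information even though it fails for each component separately.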

Author Contributions

C.F. and J.L. conceived the idea and wrote the manuscript.

Funding

J.L. was supported through the Australian Research Council DECRA grant DE160100630.

Conflicts of Interest

The authors declare no conflict of interest. The funding sponsors had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, and in the decision to publish the results.

References

- 1. Shannon C.E. A Mathematical Theory of Communication. Bell Syst. Tech. J. 1948;27:379–423. doi: 10.1002/j.1538-7305.1948.tb01338.x.

- 2. Hartley R.V.L. Transmission of information. Bell Syst. Tech. J. 1928;7:535–563. doi: 10.1002/j.1538-7305.1928.tb01236.x.

- 3. Fano R. Transmission of Information. The MIT Press; Massachusetts, MA, USA: 1961.

- 4. Ash R. Information Theory. Interscience Tracts in Pure and Applied Mathematics. Interscience Publishers; Hoboken, NJ, USA: 1965.

- 5. Lizier J.T. The Local Information Dynamics of Distributed Computation in Complex Systems. Springer; Berlin/Heidelberg, Germany: 2013. Computation in Complex Systems; pp. 13–52.

- 6. Prokopenko M., Boschetti F., Ryan A.J. An information-theoretic primer on complexity, self-organization, and emergence. Complexity. 2008;15:11–28. doi: 10.1002/cplx.20249.

- 7. Lizier J.T., Bertschinger N., Jost J., Wibral M. Information Decomposition of Target Effects from Multi-Source Interactions: Perspectives on Previous, Current and Future Work. Entropy. 2018;20:307. doi: 10.3390/e20040307.

- 8. Williams P.L., Beer R.D. Nonnegative decomposition of multivariate information. arXiv. 2010. 1004.2515.

- 9. Bertschinger N., Rauh J., Olbrich E., Jost J. Shared information—New insights and problems in decomposing information in complex systems. Mathematics. 2012:251–269.

- 10. Harder M., Salge C., Polani D. Bivariate measure of redundant information. Phys. Rev. E. 2013;87:012130. doi: 10.1103/PhysRevE.87.012130.

- 11. Griffith V., Koch C. Quantifying Synergistic Mutual Information. In: Prokopenko M., editor. Guided Self-Organization: Inception. Springer; Berlin/Heidelberg, Germany: 2014. pp. 159–190.

- 12. Finn C., Lizier J.T. Pointwise Partial Information Decomposition Using the Specificity and Ambiguity Lattices. Entropy. 2018;20:297. doi: 10.3390/e20040297.

- 13. Yuichiro K. Abstract Methods in Information Theory. World Scientific; Singapore: 2016.