Abstract

Coherent neuronal activity is believed to underlie the transfer and processing of information in the brain. Coherent activity in the form of synchronous firing and oscillations has been measured in many brain regions and has been correlated with enhanced feature processing and other sensory and cognitive functions. In the theoretical context, synfire chains and the transfer of transient activity packets in feedforward networks have been appealed to in order to describe coherent spiking and information transfer. Recently, it has been demonstrated that the classical synfire chain architecture, with the addition of suitably timed gating currents, can support the graded transfer of mean firing rates in feedforward networks (called synfire-gated synfire chains—SGSCs). Here we study information propagation in SGSCs by examining mutual information as a function of layer number in a feedforward network. We explore the effects of gating and noise on information transfer in synfire chains and demonstrate that asymptotically, two main regions exist in parameter space where information may be propagated and its propagation is controlled by pulse-gating: a large region where binary codes may be propagated, and a smaller region near a cusp in parameter space that supports graded propagation across many layers.

Keywords: pulse-gating, channel capacity, neural coding, feedforward networks, neural information propagation

1. Introduction

Faithful information transmission between neuronal populations is essential to computation in the brain. Correlated spiking activity has been measured experimentally between many brain areas [1,2,3,4,5,6,7,8]. Experimental and theoretical studies have shown that synchronized volleys of spikes can propagate within cortical networks and are thus capable of transmitting information between neuronal populations on millisecond timescales [9,10,11,12,13,14,15,16,17]. Many such mechanisms have been proposed for feedforward networks [14,15,17,18,19,20,21,22,23]. Commonly, mechanisms use transient synchronization to provide windows in time during which spikes may be transferred more easily from layer to layer [14,15,17,18,19,20,21,22].

For instance, the successful propagation of synchronous activity has been identified in “synfire chains” [18,19,21,24,25], wherein volleys of transiently synchronous spikes can be propagated through a predominantly excitatory feedforward architecture. Studies have shown that synchronous spike volleys can reliably drive responses through the visual cortex [13] with the temporal precision required for neural coding [26,27]. However, only sufficiently strong stimuli can elicit transient spike volleys that can successfully propagate through the network, and the waveform of spiking tends to an attractor with a single, fixed amplitude [21,28]. Thus, it is not possible to transfer graded information in the amplitudes of synchronously propagating spike volleys in this type of synfire chain.

Nonetheless, recent work has shown that synfire chains may be used as a pulse-gating mechanism coupled with a parallel “graded” chain (a “synfire-gated synfire chain”, or SGSC) to transfer arbitrary firing-rate amplitudes (graded information) through many layers in a feedforward neural circuit [14,15,16,17]. The companion gating circuit also provides a new mechanism for controlling information propagation in neural circuits [14,15,16,17]. Within a feedforward network, graded-rate transfer manifests as approximately time-translationally invariant spiking probabilities that propagate through many layers (guided by gating pulses) [17]. Using a Fokker–Planck (FP) approach, it has been demonstrated that this time-translational invariance arises near a cusp catastrophe in the parameter space of the gating current, synaptic strength and synaptic noise [17].

While many researchers have studied the dynamics of activity transmission in feedforward networks [21,23,25,29], few have examined these networks as information channels (however, see [30]). Shannon information [31,32] provides a natural framework with which to quantify the capacity of neural information transmission, by providing a measure of the correlation between input and output variables.

In particular, mutual information (MI), and measures based on MI, can be used to evaluate the expected reduction in entropy, for example, of the input, from the measurement of the output. Much work has focused on estimating probability distributions from spiking data (see, e.g., [33,34,35,36,37,38]). At the circuit level, studies have shown that MI is maximized in balanced networks (in cortical cultures, rats and macaques) that admit neuronal avalanches [39,40]. Furthermore, by examining the effects of gamma oscillations on MI in infralimbic and prelimbic cortex of mice, it has been shown that gamma rhythms enhance information transfer by reducing noise and amplifying signals [7].

Theoretically, maximizing MI has been used to find nonlinear “infomax” networks [41] that can find statistically independent components capable of separating features in the visual scene [42]. MI has been used to assess the effectiveness and precision of population codes [43]. By combining decoding and MI, one can extract single-trial information from population activity and, at the same time, a quantitative estimate of how each neuron in the population contributes to the internal representation of the stimulus [44]. Furthermore, information-theoretic measures such as MI have been used on large-scale measurements of brain activity to estimate connectivity between different brain regions [45].

Here we consider information propagation properties in feedforward networks, and in particular, examine MI of mean firing-rate transfers across many layers of an SGSC neural circuit. We make use of an FP model to describe the dynamic evolution of membrane-potential probability densities in a pulse-gated, feedforward, integrate-and-fire (I&F) neuronal network [17]. We investigate the efficacy of information transfer in the parameter space near where graded mean firing-rate transfer is possible. We find that MI can be optimized by adjusting the strength of the gating (gating current), the feedforward synaptic strength, the level of synaptic noise, and the input distribution. Furthermore, our results reveal that via the coordination of pulse-gating and synaptic noise, a graded channel may be transformed into a binary channel. Our results demonstrate a wide range of possible information propagation choices in feedforward networks and the dynamic coding capacity of SGSCs.

2. Materials and Methods

We use a neuronal network model consisting of a set of populations, each with excitatory, current-based, I&F point neurons whose membrane potential, , and (feedforward) synaptic current, , are described by

| (1a) |

| (1b) |

where is the reset voltage, is the synaptic timescale, S is the synaptic coupling strength, is a Bernoulli distributed random variable and is the mean synaptic coupling probability. The lth spike time of the kth neuron in layer is determined by , that is, when the neuron reaches the threshold. The gating current, , is a white-noise process with a square pulse envelope, , where is a Heaviside theta function and T is the pulse length [14] of pulse height and variance . We note that with the equation, an exponentially decaying current is injected into population 1, providing graded synchronized activity that subsequently propagates downstream through populations .

Assuming the spike trains in Equation (1b) to be Poisson-distributed, the collective behavior of this feedforward network may be described by the FP equations:

| (2a) |

| (2b) |

(While the output spike-train of a single neuron in general does not obey Poisson statistics, the spike train obtained from the summed output of all neurons in a single population does obey these statistics asymptotically for a large network size N. In the case of pulse-gating, the summed output spikes of a single population tend to a time-dependent Poisson process.) These equations describe the evolution of the probability density function (PDF), , in terms of the probability density flux, , the mean feedforward synaptic current, , and the population firing rate, . For each layer j, the probability density function gives the probability of finding a neuron with membrane potential at time t.

The probability density flux is given by

where indicates the mean gating current. The effective diffusivity is

| (3) |

In the simulations reported below, we have taken , thus ignoring the second term in Equation (3) (i.e., ignoring diffusion due to finite size effects). The population firing rate is the flux of the PDF at threshold:

| (4) |

The boundary conditions for the FP equations are

| (5) |

| (6) |

and

| (7) |

To efficiently obtain solutions to the FP equations [17], we have used an approximate Gaussian initial distribution, , with width and mean , where is a normalization factor that accounts for the truncation of the Gaussian at threshold, . At the onset of gating, the distribution is advected toward the voltage threshold, , and the population starts to fire. The advection neglects a small amount of firing due to a diffusive flux across the firing threshold; thus the fold bifurcation occurs at a slightly larger value of synaptic coupling, S, for this approximation, relative to numerical simulations [17]. Because the pulse is fast, neurons only have time to fire once (approximately). Thus, we neglect the re-emergent population at , which does not have enough time to advect to and therefore does not contribute to firing during the transient pulse.
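The truncation normalization described above can be sketched numerically. This is a minimal illustration, assuming a Gaussian restricted to sub-threshold voltages and rescaled to unit mass; the names `mean`, `sigma` and `v_threshold` and their numerical values are placeholders chosen for the example, not the values used in the simulations.

```python
import math

def truncated_gaussian_pdf(v, mean, sigma, v_threshold):
    """Gaussian density restricted to v <= v_threshold and renormalized."""
    if v > v_threshold:
        return 0.0
    # Mass of the untruncated Gaussian that lies below threshold.
    mass_below = 0.5 * (1.0 + math.erf((v_threshold - mean) / (sigma * math.sqrt(2.0))))
    gauss = math.exp(-0.5 * ((v - mean) / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))
    return gauss / mass_below

# Illustrative (hypothetical) values: mean 0.5, width 0.3, threshold 1.0.
mean, sigma, v_threshold = 0.5, 0.3, 1.0

# Midpoint-rule check that the truncated density integrates to one.
lower = mean - 10.0 * sigma
n_steps = 20000
dv = (v_threshold - lower) / n_steps
total_mass = sum(
    truncated_gaussian_pdf(lower + (i + 0.5) * dv, mean, sigma, v_threshold) * dv
    for i in range(n_steps)
)
```

Dividing by the sub-threshold mass is what keeps the density normalized once the tail above threshold is cut off; without it, `total_mass` would fall short of one by the amount of probability that the untruncated Gaussian places above threshold.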

Using this approximation, Equation (2a) gives rise to , where , with upstream current . Setting , this integrates to

| (8) |

and from Equation (4), we have

| (9) |

which, from Equation (2b), results in a downstream synaptic current at :

| (10) |

After the gating pulse terminates, the current decays exponentially. This decaying current feeds forward and is integrated by the next layer. For an exact transfer, .

To compute MI, we generated a distribution of upstream current amplitudes . These were typically within or near the range of fixed points of the map . Using Equations (8)–(10), we generated a distribution of downstream current amplitudes with as initial values. The joint probability distribution was estimated by forming a two-dimensional histogram with . MI, , was computed (in bits) with and , where the marginal probabilities and were computed from the histogram.
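The histogram-based estimate described above can be sketched as follows. This is a plug-in estimator using the standard definition of MI, the sum over bins of p(x, y) log2[p(x, y) / (p(x) p(y))]. The bin count of 32, the unit amplitude range and the two idealized channels are assumptions made for illustration, not the settings used for the figures.

```python
import math

def mutual_information_bits(x, y, bins=32, lo=0.0, hi=1.0):
    """Plug-in estimate of I(X;Y) in bits from paired samples via a 2D histogram."""
    n = len(x)
    width = (hi - lo) / bins

    def bin_index(value):
        # Clamp so that values at or beyond the range edges land in an edge bin.
        return min(bins - 1, max(0, int((value - lo) / width)))

    joint = [[0] * bins for _ in range(bins)]
    for xv, yv in zip(x, y):
        joint[bin_index(xv)][bin_index(yv)] += 1

    # Marginals are obtained by summing the joint histogram over rows and columns.
    px = [sum(row) / n for row in joint]
    py = [sum(joint[i][j] for i in range(bins)) / n for j in range(bins)]

    mi = 0.0
    for i in range(bins):
        for j in range(bins):
            count = joint[i][j]
            if count:
                pxy = count / n
                mi += pxy * math.log2(pxy / (px[i] * py[j]))
    return mi

# Evenly spaced upstream amplitudes (100 samples per bin).
upstream = [(i + 0.5) / 3200 for i in range(3200)]
exact_downstream = list(upstream)                             # idealized exact transfer
binary_downstream = [0.0 if a < 0.5 else 1.0 for a in upstream]  # idealized binary transfer

mi_exact = mutual_information_bits(upstream, exact_downstream)
mi_binary = mutual_information_bits(upstream, binary_downstream)
```

With uniformly filled bins, the exact channel attains log2(32) = 5 bits, the ceiling set by the histogram resolution, while the idealized binary channel carries 1 bit. Plug-in estimates of this kind are biased when counts per bin are small, so the number of samples should be large relative to the number of occupied bins.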

3. Results

We consider the transfer of spiking activity between two successive layers of a pulse-gated feedforward network. The spiking activity of feedforward propagation quickly converges to a stereotypical firing-rate waveform with arbitrary amplitudes (within a certain range) and with associated dynamics of the membrane potential PDF, , for each layer. This waveform essentially represents a volley of spikes that propagate downstream within the network. Furthermore, the temporal evolution of the first two moments (i.e., mean and variance) of suffice to capture the dynamics during pulse-gating [17]. Therefore, as [17] showed, the population dynamics across a large region of parameter space can be mapped by using a Gaussian approximation to the membrane potential PDF (see Materials and Methods), revealing the bifurcation structure underlying a cusp catastrophe.

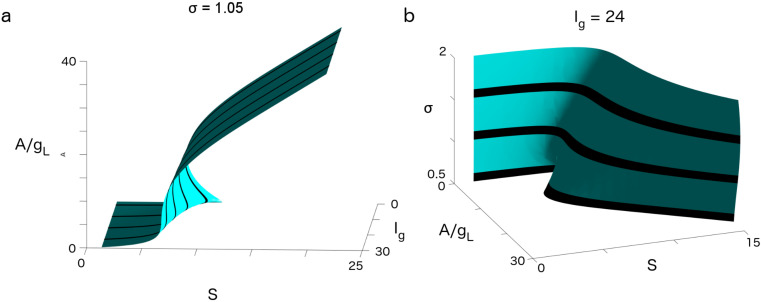

We first examined the cusp catastrophe in SGSC systems and its role in shaping the transfer of mean firing rates. Because of the existence of stable, attracting, translationally invariant firing-rate waveforms, we could capture and understand the dynamics in the SGSC system as an iterated map describing the firing-rate amplitude as it changed between successive network layers. Figure 1 shows two cases of the fixed points of this iterated map. Figure 1a plots the firing-rate amplitude, A, at the fixed points at the end of the pulse-gating period as a function of the strength of the gating current, , and synaptic coupling strength, S, for fixed . For small , there existed a range in S where the system was bistable; however, as we increased the strength of the gating, the region of bistability (two stable attracting solutions with an unstable solution in between) disappeared at a cusp where the manifold of the fixed point was nearly vertical. It was shown in [17] that near this cusp, the slow dynamics along the unstable manifold of the unstable fixed point allow for the nearly graded transfer of firing rates between successive layers, giving rise to an approximate line attractor in the amplitude of the output firing rates. Figure 1b shows that this cusp catastrophe also existed at fixed gating current as we varied both the feedforward synaptic coupling strength S and the variance of the initial PDF, , just before pulse-gating. As the variance, , was increased, the bistable region in S also disappeared.

Figure 1.

Cusp enabling graded and binary propagation in a synfire-gated synfire chain (SGSC). (a) View for fixed noise, , as a function of firing-rate amplitude, ; synaptic strength, S; and gating current, . (b) View for fixed gating current, , as a function of , S, and . Plotted in both panels are zeros of the function (the difference between input and output firing-rate amplitudes in a given layer). This function becomes zero at a fixed point. At a fixed point, a firing-rate amplitude propagates exactly from one layer to the next. When this function is small but non-zero, the firing-rate amplitude only changes slowly as it propagates. We note that the cusp is high-dimensional and can be viewed in various projections. Graded propagation (approximately exact amplitude propagation) is enabled as a result of ghost (slow) dynamics near the cusp, such that the firing-rate amplitude A varies only slowly from layer to layer. At the cusp, an approximate line attractor exists where an input firing rate in a given layer changes only slowly as it propagates downstream. Away from the cusp, firing rates rapidly approach attractors. For many parameter regions, there are two attractors giving rise to the propagation of a binary code.
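The way the number of fixed points changes across a cusp can be illustrated with the textbook cusp normal form. The sketch below is a toy stand-in, not the FP-derived map: the residual function and the control parameters `a` and `b` are hypothetical and do not correspond to the synaptic strength, gating current or noise of the SGSC. It locates zeros of the residual (output minus input amplitude) by scanning for sign changes and refining with bisection.

```python
def cusp_residual(amplitude, a, b):
    """Toy (output - input) amplitude: minus the cusp normal form A^3 - a*A - b."""
    return -(amplitude ** 3 - a * amplitude - b)

def fixed_points(a, b, lo=-2.0, hi=2.0, n=4001):
    """Find zeros of the residual on [lo, hi] by sign changes plus bisection."""
    roots = []
    step = (hi - lo) / (n - 1)
    for i in range(n - 1):
        left, right = lo + i * step, lo + (i + 1) * step
        if cusp_residual(left, a, b) * cusp_residual(right, a, b) < 0:
            for _ in range(60):
                mid = 0.5 * (left + right)
                if cusp_residual(left, a, b) * cusp_residual(mid, a, b) <= 0:
                    right = mid
                else:
                    left = mid
            roots.append(0.5 * (left + right))
    return roots

bistable = fixed_points(a=1.0, b=0.1)     # inside the fold: three fixed points
monostable = fixed_points(a=-1.0, b=0.1)  # beyond the cusp: a single fixed point
```

In the three-root case, the outer two zeros are stable and the middle one is unstable, mirroring the bistable region described in the text. As `a` decreases toward zero the three zeros merge, and near that point the residual stays small over a range of amplitudes, which is the toy analogue of the slow, nearly graded dynamics near the cusp.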

Next, we examine the evolution of MI through the network. Figure 2 shows the transmission of the mean firing rate, , in a pulse-gated feedforward network, as well as the associated MI for three types of channels. Figure 2a plots the firing rate for an idealized exact transfer across many layers (), where we utilized a thresholded linear f–I curve to model each layer [14]. In this toy model, the transfer was exact and the mean firing rate propagated indefinitely without change. Figure 2b shows the propagation of firing rates for an SGSC situated near the cusp of Figure 1a. The long-term propagation of the initially uniform input firing rates revealed the dynamical structure near the cusp, namely, two stable, attracting firing rates (top and bottom) with an unstable saddle in between. Because of the slowness of the dynamics along the unstable manifold of the saddle, the transfer of mean firing rates through the network was approximately graded for many layers ( 1–30). Furthermore, this transfer of the mean firing rates was order preserving (i.e., the relative ordering of amplitudes was maintained) across many more layers (j up to 100). Figure 2c demonstrates the propagation of firing rates for a binary transfer, where the unstable saddle was strongly repelling; for most initial conditions, the rates converged to one of two rates within 10–15 layers. To investigate the effects of the SGSC dynamics on information propagation, we computed the MI for each of the three cases. Figure 2d demonstrates the effect of the SGSC dynamics on information propagation. In the exact transfer case, the MI between the input and each layer remained constant and represented an upper bound on the information transfer. In the binary transfer case, the MI quickly decayed to 1 bit, but, as for the exact case, was stable over long timescales. 
Near the cusp, where the firing-rate transfer was approximately graded, the MI decayed slowly, so that even after transfers, the channel retained almost 4 bits of information. In Figure 2e, the joint probabilities from which the MI was computed for representative layers are plotted. For the exact case, the distribution is always along the diagonal. The fast transition from diagonal to binary is evident for binary transfers, and a slow transition from diagonal to binary is seen for the graded case.
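The qualitative contrast between graded and binary channels can be reproduced with a toy bistable map. The sketch below is an illustration under stated assumptions: `toy_layer` is a hypothetical cubic map with stable fixed points at 0 and 1 and an unstable one at 0.5, and the rate parameter `k` is a stand-in for how strongly the unstable fixed point repels. It is not the map obtained from Equations (8)–(10), and the 32-bin histogram is likewise an assumption.

```python
import math

def mutual_information_bits(x, y, bins=32):
    """Plug-in estimate of I(X;Y) in bits for samples in [0, 1]."""
    n = len(x)

    def bin_index(value):
        return min(bins - 1, max(0, int(value * bins)))

    joint = [[0] * bins for _ in range(bins)]
    for xv, yv in zip(x, y):
        joint[bin_index(xv)][bin_index(yv)] += 1
    px = [sum(row) / n for row in joint]
    py = [sum(joint[i][j] for i in range(bins)) / n for j in range(bins)]
    mi = 0.0
    for i in range(bins):
        for j in range(bins):
            if joint[i][j]:
                pxy = joint[i][j] / n
                mi += pxy * math.log2(pxy / (px[i] * py[j]))
    return mi

def toy_layer(amplitude, k):
    """One layer of a toy bistable map; larger k means faster repulsion from 0.5."""
    return amplitude + k * amplitude * (1.0 - amplitude) * (amplitude - 0.5)

def mi_by_layer(k, n_layers=100, n_samples=3200):
    """MI between the input amplitudes and the amplitudes at each layer."""
    inputs = [(i + 0.5) / n_samples for i in range(n_samples)]
    current = list(inputs)
    mi = [mutual_information_bits(inputs, current)]
    for _ in range(n_layers):
        current = [toy_layer(a, k) for a in current]
        mi.append(mutual_information_bits(inputs, current))
    return mi

mi_slow = mi_by_layer(k=0.05)  # weak repulsion: approximately graded for many layers
mi_fast = mi_by_layer(k=1.0)   # strong repulsion: rapid collapse to a binary code
```

Both channels start at the 5-bit ceiling set by the 32 bins. With `k=1.0` the amplitudes collapse onto the two attractors and the MI settles at 1 bit, whereas with `k=0.05` the MI at layer 30 remains well above the binary value. Because the map is monotonic, the ordering of amplitudes is preserved throughout; the information loss comes only from amplitudes crowding into the same histogram bins.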

Figure 2.

Mutual information (MI) for transfers across a 100 layer synfire-gated synfire chain (SGSC). (a) Exact transfer: Firing-rate amplitudes for theoretically perfect transfer. The range of is the same for (a–c). Amplitudes transfer exactly from one layer to the next (). This practically unattainable communication mode is shown for comparison and attains the maximum possible information transfer (MI; see (d)) as a function of the layer. (b) Graded transfer: Firing-rate amplitudes from approximate Fokker–Planck (FP) solutions in a multi-layer SGSC. Parameters: ; ; . We note that as the number of layers through which the initial amplitudes propagate increases, the amplitudes drift slowly away from an unstable attractor in the center of the amplitude distribution towards stable attractors at the sides of the distribution. (c) Binary transfer: Firing-rate amplitudes from approximate FP solutions in a multi-layer SGSC. Parameters: ; ; . For these parameters, the unstable attractor rapidly repels the amplitudes toward stable attractors on either side, resulting in binary transfer. Binary transfer is extremely stable in the SGSC. (d) MI as a function of layer, j, for each of the above types of channel (a–c). (e) The joint probabilities, , , and , from which the MI was computed for exact, graded and binary transfer. Here, the exact case is as would be expected. The graded case shows a gradual deformation away from the diagonal that approaches binary transfer asymptotically. The binary case rapidly approaches the propagation of only two states. We note that in much of the parameter space, the approach to binary is much faster than that shown here. We used these parameters so that the transition was slower and therefore more evident.

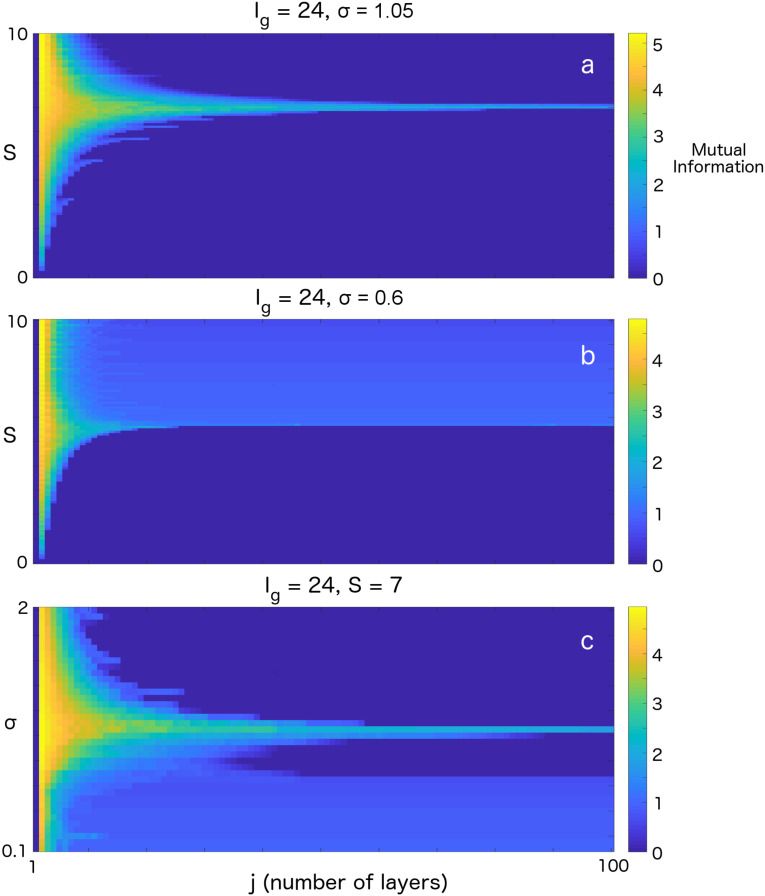

An FP analysis of the SGSC system reveals four important system parameters: strength of the gating current, ; strength of the feedforward synaptic coupling, S; mean membrane potential distribution at the beginning of pulse-gating, ; and variance of the synaptic current, (see Materials and Methods). Figure 3 shows where graded and binary codes are supported. Figure 3a,b plots the MI as a function of S and the network layer (which is equivalent to propagation time) for fixed , and (which equals 0 for all panels). As we can see, for both cases, there exists an optimal S for which MI (and approximate graded activity) can be maintained for many layers. As we move away from this optimal S, we quickly go into a binary coding regime (). Figure 3c demonstrates that similar qualitative behavior can be obtained by varying .

Figure 3.

Mutual information (MI) for parameters supporting graded and binary codes. (a) MI propagation across 100 layers for , and S ranging from 0 to 10. We note that graded transfer allows high values of MI to propagate across 100 layers at . (b) MI propagation across 100 layers for , and S from 0 to 10. We note that above , there is a large range of S for which binary propagation (MI of 1 bit; see colorbar) is supported. (c) MI propagation for , and from to 2. Here, both graded and binary information propagate depending on the value of .

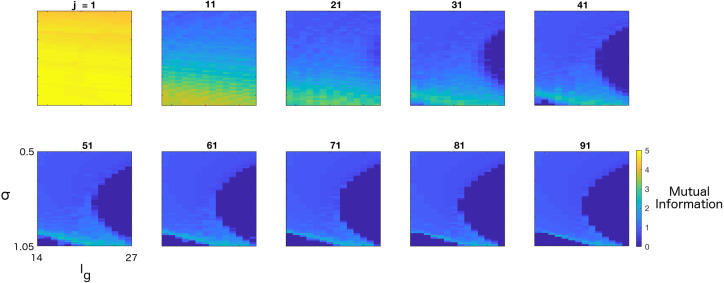

Out of the four system parameters, it is easiest to manipulate either the gating current or the variance of the synaptic current. Both can be viewed as controls independent of the SGSC system. Therefore, in Figure 4, we examine the evolution of MI through the network as a function of the gating current and the variance of the synaptic current. As we expect from our results thus far, large regions of parameter space support binary coding; however, there is a thin line that materializes towards the bottom of the binary region that corresponds to the location of the cusp, where MI can be maintained at high levels () through many layers. We note that asymptotically, as the number of layers grows, the MI approaches 1 bit (a binary channel) because of the existence of two attractors, even near the cusp.

Figure 4.

Regions of parameter space supporting graded and binary information propagation. Visualization of mutual information (MI) for between and and between 14 and 27 at successive layers j; and axes as denoted in the lower left panel are the same for all panels. Colorbar for lower right panel is the same for all panels. We note that across a few layers, MI remains high for a large region of parameter space, but by , only binary and graded codes persist. By , it is seen that a large region of parameter space supports binary propagation. Along a thin diagonal line at the bottom of the binary region, corresponding to the location of the cusp, graded information may be transferred.

4. Discussion

Although coherent activity has now been measured in many regions of the mammalian brain, the precise mechanism and the extent to which the brain can make use of synchronous spiking activity to transfer information have remained unclear. Many mechanisms have been proposed for information transfer via transiently synchronous spiking that relies on oscillations and gating [18,20,22,46,47,48,49,50,51,52]. These mechanisms make use of the fact that coherent input can provide temporal windows during which spiking activity may be more easily transferred between sending and receiving populations. However, from the theoretical perspective, how MI and other measures of communication capacity can be related to the underlying neuronal network architecture and the emergent network dynamics has remained unexplored.

Here we study the capacity for information transfer of feedforward networks by examining the evolution of mutual information through many layers of an SGSC system. Previous work has shown that by introducing suitably timed pulses, graded information could be transferred and controlled in a feedforward excitatory neuronal network [14,15,16,17]. In this context, pulse-gating has allowed us to understand information propagation as an iterated map. FP analysis of this map has enabled the identification of a cusp catastrophe in the relevant parameter space. Our results here demonstrate that the dynamics of the SGSC system naturally give rise to two different types of channel (as measured by MI). Large regions of parameter space support binary coding by using transiently synchronous propagation of high and low firing rates. (In the classical synfire chain case, the low firing-rate state is a silent state. In the SGSC case, as a result of the gating current, it is small, but non-zero.) In the binary regime, the distance between low and high firing rates is much larger than the variance of the distributions; therefore propagation is stable. Furthermore, by systematically varying the relevant SGSC system parameters, we were able to optimize graded-rate transfer near the fold of a cusp catastrophe, which enabled us to maintain a relatively high MI through many layers.

Evidence exists for graded information coding in visual and other cortices [53], and there is some evidence for binary coding in the auditory cortex [54]; sparse coding mechanisms for its use have been put forward [55,56]. Luczak et al. [51] have argued that spike packets and stereotypical and repeating sequences (similar to sequences observed in implementations of information processing algorithms in graded-transfer SGSCs [15]) underlie neural coding. More recently, Piet et al. [57] have used attractor networks to model frontal orienting fields in rat cortices to argue that bistable attractor dynamics can account for the memory observed in a perceptual decision-making task. Indeed, in many decision-making tasks, cortical activity appears to be holding graded information in working memory, before a decision forces the activity into a binary code [58]. Therefore, it is of interest that both graded and binary pulse-gated channels are supported by the SGSC mechanism and that it is fairly simple and rapid to convert a graded code to a binary code.

Examining the structure of the cusp catastrophe in the parameter space, it appears that in general, weaker and higher S are correlated with bistability, while stronger can support graded information transfer at lower S (see Figure 1a); at the same time, for fixed , a lower tends to bistable states (see Figure 1b).

In previous theoretical studies of graded propagation and line attractors, it has been shown that some fine tuning of system parameters is required [59,60,61]. However, graded propagation and line attractors have been observed in many areas of the brain (see, e.g., [62,63,64,65]). Our results here demonstrate that an important consideration for understanding graded propagation is the depth of the circuit being used to propagate the information. That is, graded information propagation circuits with a depth of 20 to 30 layers are not particularly fine-tuned. In this depth range, about th of the parameter space (e.g., S or ; see Figure 3, top and bottom plots) is capable of propagating graded information (with relatively high MI). As the depth of the circuit grows, the MI approaches 1 bit, and hence graded propagation in deep circuits is not possible (with an SGSC with parameters near the cusp).

A clear advantage of a graded information channel is that a vector of high-resolution graded information (resolution $2^n$, where n is the number of bits of MI; up to 32 levels of resolution in the graded channel shown in Figure 2) could be rapidly processed in a network with linear synaptic connectivity. Thus, synaptic processing such as Gabor transforms, seen in the visual cortex, would most naturally operate on graded information, rapidly reducing the dimension of and orthogonalizing input data. However, the stable processing of information through deep and complicated neural logic circuits would take better advantage of binary channels. Here, essentially exact pulse-gated binary transformations and decisions [15] can make use of the attractor structure of the channel to reduce noise and maintain discrete states. In order to make use of high-bandwidth graded processing, at some point in a neural circuit, graded information would need to be transformed to binary information. Mechanisms to do this are beyond the scope of this paper, but they could make use of dimension-reducing transforms on the input (e.g., Gabor transform) and subsequent digitization of the data subspace.

Finally, we note that the symmetric nature of the binary SGSC channel may be an advantage for logic circuit gating, as either of the parallel synfire chains can operate as information or the gate [15]. It should also be remembered that with a stable binary code, binary digit coding can be constructed, effectively increasing the information resolution propagating in a binary circuit.

5. Conclusions

We have performed an investigation of the communication capabilities of SGSCs using the metric of MI. The main conclusion of this investigation is that SGSCs sustain two types of channel: the first is binary transfer (MI = 1, in bits), which is supported for a wide range of parameters. For circuit depths of up to 30–40 layers, in a narrower range of parameters, graded transfer is also supported. A secondary conclusion is that, because of the dependence of MI on the depth of a circuit in some parameter regimes, circuit depth should be taken into account when considering the communication capacity of a neural circuit.

Acknowledgments

This work was supported by the Natural Science Foundation of China, Grant Nos. 31771147 and 91232715 (B.W. and L.T.); by the Open Research Fund of the State Key Laboratory of Cognitive Neuroscience and Learning, Grant No. CNLZD1404 (B.W. and L.T.); by the Beijing Municipal Science and Technology Commission under contract Z151100000915070 (B.W. and L.T.); by the SLS-Qidong Innovation Fund (B.W. and L.T.); and by the LANL ASC Beyond Moore’s Law project (A.T.S.).

Author Contributions

All authors conceived and designed the numerical simulations. A.T.S. and L.T. wrote the paper, with major input from Z.X. and B.W. All authors have read and approved the final manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

- 1. Gray C., König P., Engel A., Singer W. Oscillatory responses in cat visual cortex exhibit inter-columnar synchronization which reflects global stimulus properties. Nature. 1989;338:334–337. doi: 10.1038/338334a0.

- 2. Livingstone M. Oscillatory firing and interneuronal correlations in squirrel monkey striate cortex. J. Neurophysiol. 1996;75:2467–2485. doi: 10.1152/jn.1996.75.6.2467.

- 3.Bragin A., Jandó G., Nádasdy Z., Hetke J., Wise K., Buzsáki G. Gamma (40–100 Hz) oscillation in the hippocampus of the behaving rat. J. Neurosci. 1995;15:47–60. doi: 10.1523/JNEUROSCI.15-01-00047.1995. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Brosch M., Budinger E., Scheich H. Stimulus-related gamma oscillations in primate auditory cortex. J. Neurophysiol. 2002;87:2715–2725. doi: 10.1152/jn.2002.87.6.2715. [DOI] [PubMed] [Google Scholar]

- 5.Bauer M., Oostenveld R., Peeters M., Fries P. Tactile spatial attention enhances gamma-band activity in somatosensory cortex and reduces low-frequency activity in parieto-occipital areas. J. Neurosci. 2006;26:490–501. doi: 10.1523/JNEUROSCI.5228-04.2006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Pesaran B., Pezaris J., Sahani M., Mitra P., Andersen R. Temporal structure in neuronal activity during working memory in macaque parietal cortex. Nat. Neurosci. 2002;5:805–811. doi: 10.1038/nn890. [DOI] [PubMed] [Google Scholar]

- 7.Sohal V.S., Zhang F., Yizhar O., Deisseroth K. Parvalbumin neurons and gamma rhythms enhance cortical circuit performance. Nature. 2009;459:698–702. doi: 10.1038/nature07991. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Popescu A., Popa D., Paré D. Coherent gamma oscillations couple the amygdala and striatum during learning. Nat. Neurosci. 2009;12:801–807. doi: 10.1038/nn.2305. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Bair W., Koch C. Temporal precision of spike trains in extrastriate cortex of the behaving macaque monkey. Neural Comput. 1996;8:1185–1202. doi: 10.1162/neco.1996.8.6.1185. [DOI] [PubMed] [Google Scholar]

- 10.Butts D.A., Weng C., Jin J., Yeh C.I., Lesica N.A., Alonso J.M., Stanley G.B. Temporal precision in the neural code and the timescales of natural vision. Nature. 2007;449:92–95. doi: 10.1038/nature06105. [DOI] [PubMed] [Google Scholar]

- 11.Varga C., Golshani P., Soltesz I. Frequency-invariant temporal ordering of interneuronal discharges during hippocampal oscillations in awake mice. Proc. Natl. Acad. Sci. USA. 2012;109:E2726–E2734. doi: 10.1073/pnas.1210929109. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Reyes A.D. Synchrony-dependent propagation of firing rate in iteratively constructed networks in vitro. Nat. Neurosci. 2003;6:593–599. doi: 10.1038/nn1056. [DOI] [PubMed] [Google Scholar]

- 13.Wang H.P., Spencer D., Fellous J.M., Sejnowski T.J. Synchrony of thalamocortical inputs maximizes cortical reliability. Science. 2010;328:106–109. doi: 10.1126/science.1183108. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Sornborger A.T., Wang Z., Tao L. A mechanism for graded, dynamically routable current propagation in pulse-gated synfire chains and implications for information coding. J. Comput. Neurosci. 2015;39:181–195. doi: 10.1007/s10827-015-0570-8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15. Wang Z., Sornborger A., Tao L. Graded, dynamically routable information processing with synfire-gated synfire chains. PLoS Comput. Biol. 2016;12:e1004979. doi: 10.1371/journal.pcbi.1004979.

- 16. Shao Y., Sornborger A., Tao L. A pulse-gated, predictive neural circuit. In: Proceedings of the 50th Asilomar Conference on Signals, Systems and Computers; Pacific Grove, CA, USA; 6–9 November 2016.

- 17. Xiao Z., Zhang J., Sornborger A., Tao L. Cusps enable line attractors for neural computation. Phys. Rev. E. 2017;96:052308. doi: 10.1103/PhysRevE.96.052308.

- 18. Abeles M. Role of the cortical neuron: Integrator or coincidence detector? Isr. J. Med. Sci. 1982;18:83–92.

- 19. König P., Engel A., Singer W. Integrator or coincidence detector? The role of the cortical neuron revisited. Trends Neurosci. 1996;19:130–137. doi: 10.1016/S0166-2236(96)80019-1.

- 20. Fries P. A mechanism for cognitive dynamics: Neuronal communication through neuronal coherence. Trends Cogn. Sci. 2005;9:474–480. doi: 10.1016/j.tics.2005.08.011.

- 21. Diesmann M., Gewaltig M.O., Aertsen A. Stable propagation of synchronous spiking in cortical neural networks. Nature. 1999;402:529–533. doi: 10.1038/990101.

- 22. Rubin J., Terman D. High frequency stimulation of the subthalamic nucleus eliminates pathological thalamic rhythmicity in a computational model. J. Comput. Neurosci. 2004;16:211–235. doi: 10.1023/B:JCNS.0000025686.47117.67.

- 23. Van Rossum M.C., Turrigiano G.G., Nelson S.B. Fast propagation of firing rates through layered networks of noisy neurons. J. Neurosci. 2002;22:1956–1966. doi: 10.1523/JNEUROSCI.22-05-01956.2002.

- 24. Kistler W., Gerstner W. Stable propagation of activity pulses in populations of spiking neurons. Neural Comput. 2002;14:987–997. doi: 10.1162/089976602753633358.

- 25. Litvak V., Sompolinsky H., Segev I., Abeles M. On the transmission of rate code in long feedforward networks with excitatory-inhibitory balance. J. Neurosci. 2003;23:3006–3015. doi: 10.1523/JNEUROSCI.23-07-03006.2003.

- 26. Kumar A., Rotter S., Aertsen A. Conditions for propagating synchronous spiking and asynchronous firing rates in a cortical network model. J. Neurosci. 2008;28:5268–5280. doi: 10.1523/JNEUROSCI.2542-07.2008.

- 27. Nemenman I., Lewen G.D., Bialek W., de Ruyter van Steveninck R.R. Neural coding of natural stimuli: Information at sub-millisecond resolution. PLoS Comput. Biol. 2008;4:e1000025. doi: 10.1371/journal.pcbi.1000025.

- 28. Moldakarimov S., Bazhenov M., Sejnowski T.J. Feedback stabilizes propagation of synchronous spiking in cortical neural networks. Proc. Natl. Acad. Sci. USA. 2015;112:2545–2550. doi: 10.1073/pnas.1500643112.

- 29. Kumar A., Rotter S., Aertsen A. Spiking activity propagation in neuronal networks: Reconciling different perspectives on neural coding. Nat. Rev. Neurosci. 2010;11:615–627. doi: 10.1038/nrn2886.

- 30. Cannon J. Analytical calculation of mutual information between weakly coupled Poisson-spiking neurons in models of dynamically gated communication. Neural Comput. 2017;29:118–145. doi: 10.1162/NECO_a_00915.

- 31. Shannon C. A mathematical theory of communication. Bell Syst. Tech. J. 1948;27:379–423. doi: 10.1002/j.1538-7305.1948.tb01338.x.

- 32. Cover T., Thomas J. Elements of Information Theory. 2nd ed. Wiley; Hoboken, NJ, USA: 2006.

- 33. Rieke F., Warland D., de Ruyter van Steveninck R., Bialek W. Spikes: Exploring the Neural Code. The MIT Press; Cambridge, MA, USA: 1997.

- 34. Paninski L. Estimation of entropy and mutual information. Neural Comput. 2003;15:1191–1253. doi: 10.1162/089976603321780272.

- 35. Victor J.D. Binless strategies for estimation of information from neural data. Phys. Rev. E Stat. Nonlinear Soft Matter Phys. 2002;66:051903. doi: 10.1103/PhysRevE.66.051903.

- 36. Nemenman I., Bialek W., de Ruyter van Steveninck R. Entropy and information in neural spike trains: Progress on the sampling problem. Phys. Rev. E Stat. Nonlinear Soft Matter Phys. 2004;69:056111. doi: 10.1103/PhysRevE.69.056111.

- 37. Shapira A.H., Nelken I. Binless estimation of mutual information in metric spaces. In: DiLorenzo P., Victor J., editors. Spike Timing: Mechanisms and Function. CRC Press; Boca Raton, FL, USA: 2013. pp. 121–135. Chapter 5.

- 38. Lopes-dos Santos V., Panzeri S., Kayser C., Diamond M.E., Quian Quiroga R. Extracting information in spike time patterns with wavelets and information theory. J. Neurophysiol. 2015;113:1015–1033. doi: 10.1152/jn.00380.2014.

- 39. Shew W.L., Plenz D. The functional benefits of criticality in the cortex. Neuroscientist. 2013;19:88–100. doi: 10.1177/1073858412445487.

- 40. Shew W.L., Yang H., Yu S., Roy R., Plenz D. Information capacity and transmission are maximized in balanced cortical networks with neuronal avalanches. J. Neurosci. 2011;31:55–63. doi: 10.1523/JNEUROSCI.4637-10.2011.

- 41. Bell A.J., Sejnowski T.J. An information-maximization approach to blind separation and blind deconvolution. Neural Comput. 1995;7:1129–1159. doi: 10.1162/neco.1995.7.6.1129.

- 42. Bell A.J., Sejnowski T.J. The “independent components” of natural scenes are edge filters. Vis. Res. 1997;37:3327–3338. doi: 10.1016/S0042-6989(97)00121-1.

- 43. Yarrow S., Challis E., Series P. Fisher and Shannon information in finite neural populations. Neural Comput. 2012;24:1740–1780. doi: 10.1162/NECO_a_00292.

- 44. Quiroga R.Q., Panzeri S. Extracting information from neuronal populations: Information theory and decoding approaches. Nat. Rev. Neurosci. 2009;10:173–185. doi: 10.1038/nrn2578.

- 45. Bullmore E., Sporns O. Complex brain networks: Graph theoretical analysis of structural and functional systems. Nat. Rev. Neurosci. 2009;10:186–198. doi: 10.1038/nrn2575.

- 46. Fries P., Reynolds J., Rorie A., Desimone R. Modulation of oscillatory neuronal synchronization by selective visual attention. Science. 2001;291:1560–1563. doi: 10.1126/science.1055465.

- 47. Salinas E., Sejnowski T. Correlated neuronal activity and the flow of neural information. Nat. Rev. Neurosci. 2001;2:539–550. doi: 10.1038/35086012.

- 48. Csicsvari J., Jamieson B., Wise K., Buzsáki G. Mechanisms of gamma oscillations in the hippocampus of the behaving rat. Neuron. 2003;37:311–322. doi: 10.1016/S0896-6273(02)01169-8.

- 49. Womelsdorf T., Schoffelen J., Oostenveld R., Singer W., Desimone R., Engel A., Fries P. Modulation of neuronal interactions through neuronal synchronization. Science. 2007;316:1609–1612. doi: 10.1126/science.1139597.

- 50. Colgin L., Denninger T., Fyhn M., Hafting T., Bonnevie T., Jensen O., Moser M., Moser E. Frequency of gamma oscillations routes flow of information in the hippocampus. Nature. 2009;462:75–78. doi: 10.1038/nature08573.

- 51. Luczak A., McNaughton B.L., Harris K.D. Packet-based communication in the cortex. Nat. Rev. Neurosci. 2015;16:745–755. doi: 10.1038/nrn4026.

- 52. Yuste R., MacLean J., Smith J., Lansner A. The cortex as a central pattern generator. Nat. Rev. Neurosci. 2005;6:477–483. doi: 10.1038/nrn1686.

- 53. Hubel D., Wiesel T. Receptive fields and functional architecture in two nonstriate visual areas (18 and 19) of the cat. J. Neurophysiol. 1965;28:229–289. doi: 10.1152/jn.1965.28.2.229.

- 54. DeWeese M., Wehr M., Zador A. Binary coding in auditory cortex. J. Neurosci. 2003;23:7940–7949. doi: 10.1523/JNEUROSCI.23-21-07940.2003.

- 55. Olshausen B., Field D. Sparse coding of sensory inputs. Curr. Opin. Neurobiol. 2004;14:481–487. doi: 10.1016/j.conb.2004.07.007.

- 56. Hromádka T., DeWeese M., Zador A. Sparse representation of sounds in the unanesthetized auditory cortex. PLoS Biol. 2008;6:e16. doi: 10.1371/journal.pbio.0060016.

- 57. Piet A.T., Erlich J.C., Kopec C.D., Brody C.D. Rat prefrontal cortex inactivations during decision making are explained by bistable attractor dynamics. Neural Comput. 2017;29:2861–2886. doi: 10.1162/neco_a_01005.

- 58. Brody C.D., Hernandez A., Zainos A., Romo R. Timing and neural encoding of somatosensory parametric working memory in macaque prefrontal cortex. Cereb. Cortex. 2003;13:1196–1207. doi: 10.1093/cercor/bhg100.

- 59. Seung H.S. How the brain keeps the eyes still. Proc. Natl. Acad. Sci. USA. 1996;93:13339–13344. doi: 10.1073/pnas.93.23.13339.

- 60. Koulakov A.A., Raghavachari S., Kepecs A., Lisman J.E. Model for a robust neural integrator. Nat. Neurosci. 2002;5:775–782. doi: 10.1038/nn893.

- 61. Goldman M. Memory without feedback in a neural network. Neuron. 2008;61:621–634. doi: 10.1016/j.neuron.2008.12.012.

- 62. Brody C.D., Romo R., Kepecs A. Basic mechanisms for graded persistent activity: Discrete attractors, continuous attractors, and dynamic representations. Curr. Opin. Neurobiol. 2003;13:204–211. doi: 10.1016/S0959-4388(03)00050-3.

- 63. Major G., Tank D. Persistent neural activity: Prevalence and mechanisms. Curr. Opin. Neurobiol. 2004;14:675–684. doi: 10.1016/j.conb.2004.10.017.

- 64. Aksay E., Olasagasti I., Mensh B.D., Baker R., Goldman M.S., Tank D.W. Functional dissection of circuitry in a neural integrator. Nat. Neurosci. 2007;10:494–504. doi: 10.1038/nn1877.

- 65. Mante V., Sussillo D., Shenoy K.V., Newsome W.T. Context-dependent computation by recurrent dynamics in prefrontal cortex. Nature. 2013;503:78–84. doi: 10.1038/nature12742.