Abstract

The evaluation of complexity in univariate signals has attracted considerable attention in recent years. This is often done using the framework of Multiscale Entropy, which entails two basic steps: coarse-graining to consider multiple temporal scales, and evaluation of irregularity for each of those scales with entropy estimators. Recent developments in the field have proposed modifications to this approach to facilitate the analysis of short time series. However, the role of downsampling in the classical coarse-graining process and its relationships with alternative filtering techniques have not been systematically explored yet. Here, we assess the impact of coarse-graining in multiscale entropy estimations based on both Sample Entropy and Dispersion Entropy. We compare the classical moving average approach with low-pass Butterworth filtering, both with and without downsampling, and with empirical mode decomposition in Intrinsic Multiscale Entropy, using selected synthetic data and two real physiological datasets. The results show that when the sampling frequency is low or high, downsampling respectively decreases or increases the entropy values. Our results suggest that, when dealing with long signals and relatively low levels of noise, the refined composite method makes little difference in the quality of the entropy estimation at the expense of considerable additional computational cost. It is also found that downsampling within the coarse-graining procedure may not be required to quantify the complexity of signals, especially for short ones. Overall, we expect these results to contribute to the ongoing discussion about the development of stable, fast and robust-to-noise multiscale entropy techniques suited for either short or long recordings.

Keywords: complexity, multiscale dispersion and sample entropy, refined composite technique, intrinsic mode dispersion and sample entropy, moving average, Butterworth filter, empirical mode decomposition, downsampling

1. Introduction

A system is complex when it entails a number of components intricately entwined together (e.g., the subway network of New York City) [1]. Following Costa’s framework [2,3], complexity in univariate signals denotes “meaningful structural richness”, which may be in contrast with regularity measures defined from entropy metrics such as sample entropy (SampEn), permutation entropy (PerEn), and dispersion entropy (DispEn) [3,4,5,6]. In fact, these entropy techniques assess repetitive patterns and return maximum values for completely random processes [3,5,7]. However, both a completely ordered signal with a small entropy value and a completely disordered signal with maximum entropy value are the least complex [3,5,8]. For instance, white noise is more irregular than $1/f$ noise (pink noise), although the latter is more complex because $1/f$ noise contains long-range correlations and its $1/f$ decay produces a fractal structure in time [3,5,8].

From the perspective of physiology, some diseased individuals’ recordings, when compared with those for healthy subjects, are associated with the emergence of more regular behavior, thus leading to lower entropy values [3,9]. In contrast, certain pathologies, such as cardiac arrhythmias, are associated with highly erratic fluctuations with statistical characteristics resembling uncorrelated noise. The entropy values of these noisy signals are higher than those of healthy individuals, even though the healthy individuals’ time series show more physiologically complex adaptive behavior [3,10].

In brief, the concept of complexity for univariate physiological signals builds on the following three hypotheses [3,5]:

- The complexity of a biological or physiological time series indicates its ability to adapt and function in an ever-changing environment.
- A biological system operates across multiple temporal and spatial scales, so its complexity is similarly multiscaled and hierarchical.
- A wide class of disease states, in addition to ageing, which decrease the adaptive capacity of the individual, appear to degrade the information carried by output variables.

Therefore, the multiscale-based methods focus on quantifying the information expressed by the physiological dynamics over multiple temporal scales.

To provide a unified framework for evaluating the impact of diseases on physiological signals, multiscale SampEn (MSE) [3] was proposed to quantify the complexity of signals over multiple temporal scales. The MSE algorithm includes two main steps: (1) the coarse-graining technique, i.e., the combination of a moving average (MA) filter and a downsampling (DS) process; and (2) the calculation of SampEn of the coarse-grained signals at each scale factor [3]. A low-pass Butterworth (BW) filter was used as an alternative to MA to limit aliasing and avoid ripples [11]. To differentiate it from the original MSE, we call this method MSEBW herein.

Since their introduction, MSE and MSEBW have been widely used to characterize physiological and non-physiological signals [12]. However, they have two main shortcomings [12,13,14]. First, the coarse-graining process shortens the signal by the scale factor as a consequence of the downsampling step. Therefore, as the scale factor increases, the number of samples in the coarse-grained sequence decreases considerably [14], which may yield an unstable estimation of entropy. Second, SampEn is either undefined or unreliable for short coarse-grained time series [13,14].

To alleviate the first problem of MSE, intrinsic mode SampEn (InMSE) [15] and refined composite MSE (RCMSE) [14] were developed. In InMSE, the coarse-graining technique is replaced by an approach based on empirical mode decomposition (EMD). The length of the coarse-grained series obtained by InMSE is equal to that of the original signal, leading to more stable entropy values. Nevertheless, EMD-based approaches have certain limitations, such as sensitivity to noise and sampling [16]. At scale factor $\tau$, RCMSE considers $\tau$ different coarse-grained signals, corresponding to different starting points of the coarse-graining process [14]. Therefore, RCMSE yields more stable results than MSE. Nevertheless, both InMSE and RCMSE may lead to undefined values for short signals as a consequence of using SampEn in the second step of their algorithms [13]. Additionally, the SampEn-based approaches may not be fast enough for some real-time applications.

To deal with these deficiencies, multiscale DispEn (MDE), based on our previously introduced DispEn, was developed [13]. Refined composite MDE (RCMDE) was then proposed to improve the stability of the MDE-based values [13]. MDE and RCMDE were found to have the following advantages over MSE and RCMSE: (1) they are noticeably faster as a consequence of using DispEn, whose computational cost is $O(N)$ (where $N$ is the signal length), compared with $O(N^2)$ for SampEn; (2) they result in more stable profiles for synthetic and real signals; (3) they discriminate different kinds of physiological time series better than MSE and RCMSE; and (4) they do not yield undefined values [13].

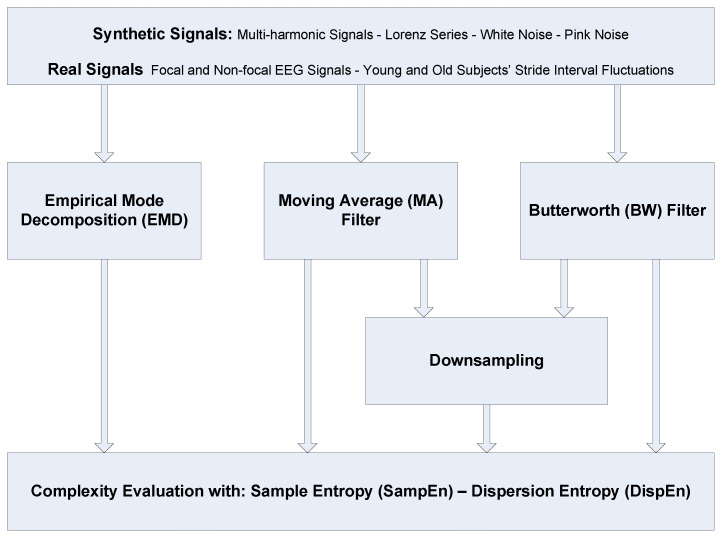

The aim of this research is to contribute to the understanding of different alternatives to coarse-graining in complexity approaches. To this end, we first review the frequency responses of the three main filtering processes (i.e., MA, BW, and EMD) used in such methods. We then investigate the role of downsampling in the classical coarse-graining process, which has not been systematically explored yet. We assess the impact of coarse-graining in multiscale entropy estimations based on both SampEn and DispEn. To compare these methods, several synthetic signals and two real physiological datasets are employed. For the sake of clarity, a flowchart of the alternatives to the coarse-graining method and the datasets used in this article is shown in Figure 1.

Figure 1.

Flowchart of the alternatives to the coarse-graining method and the datasets used in this study.

2. Multiscale Entropy-Based Approaches

The MSE- and MDE-based methods include two main steps: (1) a coarse-graining process; and (2) the calculation of SampEn or DispEn at each scale factor $\tau$. For simplicity, we detail only the DispEn-based complexity algorithms; the SampEn-based algorithms are defined analogously.

2.1. MDE Based on Moving Average (MA) and Butterworth (BW) Filters with and without Downsampling (DS)

2.1.1. Coarse-Graining Approaches

A coarse-graining technique with DS amounts to decimation by the scale factor $\tau$. Decimation consists of two steps [17,18]: (1) reducing the high-frequency components of the time series with a digital low-pass filter; and (2) downsampling the filtered time series by $\tau$, that is, keeping only one of every $\tau$ sample points.

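Assume that we have a univariate signal of length $L$: $u = \{u_1, u_2, \dots, u_L\}$. In the coarse-graining process, the original signal $u$ is first filtered by an MA, a low-pass finite-impulse response (FIR) filter, as follows:

$$
y_i^{(\tau)} = \frac{1}{\tau} \sum_{k=0}^{\tau-1} u_{i-k}, \qquad i = \tau, \dots, L \qquad (1)
$$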

The frequency response of the MA filter is as follows [19]:

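$$
\left| H_{\mathrm{MA}}(f) \right| = \left| \frac{\sin(\pi f \tau)}{\tau \sin(\pi f)} \right| \qquad (2)
$$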

where $f$ denotes the normalized frequency, ranging from 0 to 0.5 cycles per sample (the normalized Nyquist frequency). The frequency response of the MA filter has several shortcomings: (1) a slow roll-off of the main lobe; (2) a large transition band; and (3) significant side lobes in the stop-band. To alleviate these problems, a low-pass BW filter was proposed [11]. This filter provides a maximally flat response without ripples [19]. The squared magnitude of the frequency response of the BW filter is defined as follows:

$$
\left| H_{\mathrm{BW}}(f) \right|^2 = \frac{1}{1 + \left( f / f_c \right)^{2n}} \qquad (3)
$$

where $f_c$ and $n$ denote the normalized cut-off frequency and the filter order, respectively [11,19]; herein, their values are set following [11]. The original signal $u$ is then filtered by the BW filter. In both cases, the low-pass filter eliminates the fast temporal scales (higher frequency components) so that progressively slower time scales (lower frequency components) are taken into account.
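To make Equations (2) and (3) concrete, the following Python sketch (NumPy/SciPy) evaluates both magnitude responses at scale $\tau = 4$. It assumes $n = 6$ and $f_c = 0.5/\tau$ cycles per sample for the BW filter; these are illustrative choices, not necessarily the exact values used in this study. The digital filter is designed with `scipy.signal.butter`, which matches Equation (3) exactly at $f = 0$ and $f = f_c$ but warps the frequency axis elsewhere (bilinear transform). The helper names are hypothetical.

```python
import numpy as np
from scipy import signal


def ma_magnitude(f, tau):
    """|H_MA(f)| from Equation (2); f in cycles per sample (0 to 0.5)."""
    f = np.atleast_1d(np.asarray(f, dtype=float))
    mag = np.ones_like(f)                      # limit value at f = 0
    nz = np.sin(np.pi * f) != 0
    mag[nz] = np.abs(np.sin(np.pi * f[nz] * tau) / (tau * np.sin(np.pi * f[nz])))
    return mag


def bw_lowpass_sos(tau, order=6):
    """Digital Butterworth low-pass for scale tau > 1 (order and f_c are assumptions)."""
    fc = 0.5 / tau                             # assumed cut-off, cycles per sample
    # SciPy normalises Wn to the Nyquist frequency (0.5 cycles/sample), hence fc / 0.5
    sos = signal.butter(order, fc / 0.5, btype="low", output="sos")
    return sos, fc


tau = 4
sos, fc = bw_lowpass_sos(tau)
freqs = np.array([fc, 1.0 / tau, 0.375])       # cut-off, first MA zero, MA side lobe
_, h_bw = signal.sosfreqz(sos, worN=freqs, fs=1.0)
mag_ma = ma_magnitude(freqs, tau)
mag_bw = np.abs(h_bw)
print("MA:", mag_ma.round(4))
print("BW:", mag_bw.round(4))
```

At $f = 1/\tau$ the MA response is zero, while at $f = 0.375$ its side lobe is still above 0.2; the BW response equals $1/\sqrt{2}$ at $f_c$ and is negligible at both higher frequencies.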

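Next, the time series $y^{(\tau)}$ filtered by either MA or BW is downsampled by the scale factor $\tau$, giving the downsampled signal $x^{(\tau)}$ with $x_j^{(\tau)} = y_{j\tau}^{(\tau)}$, $j = 1, \dots, \lfloor L/\tau \rfloor$.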

In this study, we consider the coarse-graining process with and without DS. The versions of MSE and MDE with the MA filter but without DS are named MSEMA and MDEMA, respectively, whereas the MA-based versions with DS are termed MSE and MDE herein.
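The coarse-graining variants can be sketched as follows. This minimal sketch assumes a causal MA with zero initial conditions (so the filtered series keeps the original length $L$) and, for BW, a causal sixth-order filter with $f_c = 0.5/\tau$; both are assumptions for illustration, and the function names are hypothetical. Keeping every $\tau$th sample starting from index $\tau - 1$ (0-based) reproduces the classical non-overlapping block averages of MSE.

```python
import numpy as np
from scipy import signal


def ma_filter(u, tau):
    """Causal MA of Equation (1) with zero initial conditions; same length as u."""
    return np.convolve(u, np.ones(tau) / tau)[: len(u)]


def bw_filter(u, tau, order=6):
    """Causal Butterworth low-pass; order 6 and f_c = 0.5/tau are assumptions."""
    if tau == 1:
        return np.asarray(u, dtype=float).copy()   # scale 1: no filtering
    sos = signal.butter(order, (0.5 / tau) / 0.5, btype="low", output="sos")
    return signal.sosfilt(sos, u)


def coarse_grain(u, tau, filt=ma_filter, downsample=True):
    """Low-pass filter u at scale tau and optionally keep one of every tau samples."""
    y = filt(u, tau)
    # For MA, y[tau - 1] is the first output whose window lies fully inside the signal,
    # so y[tau-1::tau] gives x_j = y_{j*tau} (1-based), j = 1..floor(L/tau).
    # For BW the same offset is used only for consistency.
    return y[tau - 1 :: tau] if downsample else y


rng = np.random.default_rng(0)
u = rng.standard_normal(1000)
tau = 7
classic = u[: (len(u) // tau) * tau].reshape(-1, tau).mean(axis=1)  # MSE block averages
mse_style = coarse_grain(u, tau)                   # MA + DS (as in MSE/MDE)
ma_no_ds = coarse_grain(u, tau, downsample=False)  # MA without DS (MSEMA/MDEMA)
bw_no_ds = coarse_grain(u, tau, bw_filter, False)  # BW without DS
```

Without DS, every scale keeps all $L$ samples; with DS, only $\lfloor L/\tau \rfloor$ remain (142 here).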

2.1.2. Calculation of DispEn or SampEn at Every Scale Factor

The DispEn or SampEn value is calculated for each coarse-grained signal $x^{(\tau)}$. It is worth noting that MDE is more than the combination of coarse-graining [3] with DispEn: the mapping based on the normal cumulative distribution function (NCDF) used in the calculation of DispEn [6] for the first temporal scale is maintained across all scales. That is, in MDE and RCMDE, the mean $\mu$ and standard deviation $\sigma$ of the NCDF are set at the average and standard deviation (SD) of the original signal, respectively, and they remain constant for all scale factors. This approach is similar to keeping the threshold $r$ fixed (usually at 0.15 times the SD of the original signal) in the MSE-based algorithms [3]. Several studies (e.g., [3,20]) found that keeping $r$ constant is preferable to recalculating the threshold $r$ at each scale factor separately.
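The sketch below illustrates DispEn and classical MDE with the NCDF parameters $\mu$ and $\sigma$ fixed at the mean and SD of the original signal. The defaults $m = 3$, $c = 6$, and $d = 1$ are illustrative assumptions, the class mapping $z = \mathrm{round}(c\,y + 0.5)$ follows the usual DispEn definition, and the function names are hypothetical; this is not the authors' Matlab code.

```python
import numpy as np
from scipy.stats import norm


def dispersion_entropy(x, m=3, c=6, d=1, mu=None, sigma=None):
    """DispEn (natural log). mu/sigma fix the NCDF mapping; default: stats of x."""
    x = np.asarray(x, dtype=float)
    mu = x.mean() if mu is None else mu
    sigma = x.std() if sigma is None else sigma        # population SD (ddof=0)
    y = norm.cdf(x, loc=mu, scale=sigma)                # map samples into (0, 1)
    z = np.clip(np.round(c * y + 0.5), 1, c).astype(np.int64)  # classes 1..c
    n_vec = len(z) - (m - 1) * d                        # number of embedding vectors
    codes = np.zeros(n_vec, dtype=np.int64)
    for k in range(m):                                  # encode each pattern in base c
        codes = codes * c + (z[k * d : k * d + n_vec] - 1)
    p = np.bincount(codes, minlength=c**m) / n_vec      # relative pattern frequencies
    p = p[p > 0]
    return -np.sum(p * np.log(p))


def mde(u, max_scale, m=3, c=6):
    """Classical MDE: MA coarse-graining with DS; NCDF fixed at the stats of u."""
    u = np.asarray(u, dtype=float)
    mu, sigma = u.mean(), u.std()                       # kept constant for all scales
    values = []
    for tau in range(1, max_scale + 1):
        n = len(u) // tau
        x = u[: n * tau].reshape(n, tau).mean(axis=1)   # coarse-grained series
        values.append(dispersion_entropy(x, m, c, mu=mu, sigma=sigma))
    return np.array(values)


rng = np.random.default_rng(1)
profile = mde(rng.standard_normal(8000), max_scale=10)
```

Because $\sigma$ is not recomputed, the shrinking variance of the coarse-grained white noise concentrates samples in the middle classes, so the profile decreases with scale.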

2.2. Refined Composite Multiscale Dispersion Entropy (RCMDE)

At scale factor $\tau$, RCMDE considers $\tau$ different coarse-grained signals, corresponding to different starting points of the coarse-graining process. Then, for each of these shifted series, the relative frequency of each dispersion pattern is calculated. Finally, the RCMDE value is defined as the Shannon entropy of the averages of the relative frequencies of the dispersion patterns over those shifted sequences [13]. The MA filter used in RCMDE and RCMSE may be replaced by the BW filter; the resulting methods are called RCMDEBW and RCMSEBW here, respectively.

2.3. Intrinsic Mode Dispersion Entropy (InMDE)

Due to the advantages of DispEn over SampEn for short signals, intrinsic mode DispEn (InMDE) based on the algorithm of InMSE is proposed herein. The algorithm of InMDE includes the following two key steps:

- Calculation of the cumulative sums of the intrinsic mode functions (IMFs) obtained by EMD: In this step, the original signal $u$ is decomposed into $K$ IMFs, $IMF_1, \dots, IMF_K$, and a residual signal $\mathrm{res}$. It is worth noting that the first IMF, $IMF_1$, shows the highest frequency component in a signal, while the last IMF, $IMF_K$, reflects the trend of the time series. Next, the cumulative sums of IMFs (CSI) for each scale factor $\tau$ are defined as follows [15]:

  $$
  CSI^{(\tau)} = \sum_{k=\tau}^{K} IMF_k + \mathrm{res}, \qquad \tau = 1, \dots, K \qquad (4)
  $$

  where $IMF_k$ denotes the $k$th IMF obtained by EMD. Thus, $CSI^{(1)}$ is equal to the original signal $u$.
- Calculation of DispEn of $CSI^{(\tau)}$ at each scale factor: The DispEn value is calculated at each scale factor. As in MDE and RCMDE, $\mu$ and $\sigma$ of the NCDF are set at the average and SD of the original signal, respectively, and they remain constant for all scale factors in InMDE.
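The cumulative sums of IMFs can be sketched as follows, assuming that the CSI at scale $\tau$ sums $IMF_\tau$ through $IMF_K$ plus the residual, so that the CSI at scale 1 equals $u$, and that the IMFs are stored as rows ordered from $IMF_1$ (fastest) to $IMF_K$. EMD itself is not implemented here: the three sinusoids and the linear trend are hypothetical stand-ins for EMD output, used only to show that each higher scale drops one more fast component.

```python
import numpy as np


def cumulative_sums_of_imfs(imfs, residual):
    """CSI at scales tau = 1..K: sum of IMF_tau..IMF_K plus the residual.

    imfs: array of shape (K, L); row 0 holds IMF_1 (highest frequency).
    """
    imfs = np.asarray(imfs, dtype=float)
    K = imfs.shape[0]
    return np.array([imfs[tau - 1 :].sum(axis=0) + residual for tau in range(1, K + 1)])


# Hypothetical stand-ins for EMD output (NOT computed by EMD):
# three oscillations from fast to slow plus a linear trend as the residual.
t = np.arange(1000) / 100.0
imfs = np.vstack([np.sin(2 * np.pi * 20 * t),
                  np.sin(2 * np.pi * 5 * t),
                  np.sin(2 * np.pi * 1 * t)])
residual = 0.1 * t
u = imfs.sum(axis=0) + residual
csi = cumulative_sums_of_imfs(imfs, residual)     # csi[tau - 1] is the CSI at scale tau
```

In InMDE, DispEn would then be computed on each row of `csi`, keeping the NCDF parameters fixed at the statistics of `u`.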

It is worth noting that InMSE and InMDE do not downsample the filtered signals. That is, the number of samples in each $CSI^{(\tau)}$ is equal to that of the original signal, leading to more reliable results at higher scale factors. The complexity metrics for univariate signals and their characteristics are summarized in Table 1. The Matlab codes used in this study are described in the Appendix.

Table 1.

Characteristics of the complexity metrics for univariate signals.

2.4. Parameters of the Multiscale Entropy Approaches

For all the SampEn-based methods, we set , , and of the SD of the original signal [3]. For all the DispEn-based approaches, we set and . For more information about c and d, please refer to [6,13].

For the DispEn-based complexity measures without DS, as the length of coarse-grained signals is equal to that of the original signal, it is advisable to follow . For the SampEn-based complexity approaches without DS, it is recommended to have at least (or preferably ) sample points for the embedding dimension m [21,22].

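For the DispEn-based multiscale approaches with DS, the decimation process reduces the length of the signal to $\lfloor L/\tau \rfloor$, so the length recommendation has to be applied to the decimated series rather than to the original signal. Similarly, for the SampEn-based complexity techniques with DS, the recommendation of [3] applies to the decimated length.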

On the other hand, in RCMDE, we consider $\tau$ coarse-grained time series with length $\lfloor L/\tau \rfloor$. Therefore, the total number of sample points used in RCMDE is $\tau \lfloor L/\tau \rfloor \approx L$. Thus, RCMDE follows the same length recommendation as the DispEn-based measures without DS, leading to more reliable results, especially for short signals. Likewise, RCMSE follows the same minimum-length recommendation as the SampEn-based approaches without DS.
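The sample-count argument can be checked with simple arithmetic. The hypothetical helper below counts the samples entering the entropy estimate at scale $\tau$ for one decimated series (MDE) and for the $\tau$ series pooled by RCMDE. Because shifted starting points can make some series one sample shorter in practice, the pooled count is an upper bound.

```python
def sample_counts(L, tau):
    """Samples at scale tau: one decimated series (MDE) vs. tau pooled series (RCMDE)."""
    per_series = L // tau            # length of each coarse-grained series with DS
    rcmde_total = tau * per_series   # RCMDE pools tau shifted series (upper bound)
    return per_series, rcmde_total


for L in (400, 8000):
    print(L, sample_counts(L, tau=10))
```

For a 400-sample signal at scale 10, MDE estimates the pattern distribution from 40 samples, whereas RCMDE pools about 400.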

3. Evaluation Signals

In this section, the synthetic and real signals used in this study to evaluate the behaviour of the multiscale entropy approaches are described.

3.1. Synthetic Signals

White noise is more irregular than pink ($1/f$) noise, although the latter is more complex because pink noise contains long-range correlations and its $1/f$ decay produces a fractal structure in time [3,5,8]. Therefore, white and pink noise are two important signals for evaluating multiscale entropy techniques [3,5,8,23,24,25].
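One common way to generate such test signals is sketched below; the exact generator used in this study is not specified here, so this recipe is an assumption. Gaussian white noise is shaped in the frequency domain so that its power decays as $1/f$, and a least-squares fit of the log-log periodogram serves as a rough check that the slope is close to $-1$.

```python
import numpy as np


def pink_noise(n, rng):
    """1/f (pink) noise by spectral shaping of Gaussian white noise (one common recipe)."""
    spectrum = np.fft.rfft(rng.standard_normal(n))
    f = np.fft.rfftfreq(n)                    # cycles per sample, 0..0.5
    gain = np.zeros_like(f)                   # DC component removed
    gain[1:] = 1.0 / np.sqrt(f[1:])           # power ~ 1/f  =>  amplitude ~ 1/sqrt(f)
    x = np.fft.irfft(spectrum * gain, n=n)
    return (x - x.mean()) / x.std()           # zero mean, unit variance


rng = np.random.default_rng(2)
white = rng.standard_normal(8000)
pink = pink_noise(8000, rng)

# Rough check: slope of the log-log periodogram should be close to -1 for pink noise
f = np.fft.rfftfreq(8000)[1:]
power = np.abs(np.fft.rfft(pink))[1:] ** 2
slope = np.polyfit(np.log(f), np.log(power), 1)[0]
```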

In order to investigate the change in the behavior of a nonlinear system, the Lorenz attractor is used. Further details can be found in [26,27]. To evaluate the effect of filtering and downsampling processes on different frequency components of time series, multi-harmonic signals are employed [16]. Finally, to inspect the effect of noise on multiscale approaches, white noise was added to the Lorenz and multi-harmonic time series.

3.2. Real Biomedical Datasets

Multiscale entropy techniques are broadly used to characterize physiological recordings [2,3,12,25]. Accordingly, electroencephalograms (EEGs) [28] and stride interval fluctuations [29] are used here to evaluate how well the methods distinguish different kinds of dynamics in real time series.

3.2.1. Dataset of Focal and Non-Focal Brain Activity

The ability of complexity measures to discriminate focal from non-focal signals is evaluated using an EEG dataset (publicly available at [30]) [28]. The dataset includes five patients and, for each patient, there are 750 focal and 750 non-focal bivariate time series. The length of each signal was 20 s with a sampling frequency of 512 Hz (10,240 samples). For more information, please refer to [28]. All subjects gave written informed consent that their signals from long-term EEG might be used for research purposes [28]. Before computing the entropies, the EEG signals were digitally band-pass filtered between 0.5 Hz and 150 Hz using a fourth-order Butterworth filter.
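The pre-processing step can be sketched with SciPy as follows. Two points are assumptions: passing `N=4` to `scipy.signal.butter` for a band-pass design yields an eighth-order filter overall, so it is not certain that this matches the "fourth-order" filter described above; and zero-phase forward-backward filtering (`sosfiltfilt`) is one reasonable choice rather than the documented one. The random input is a placeholder for a real EEG channel.

```python
import numpy as np
from scipy import signal

fs = 512.0  # sampling frequency of the EEG recordings (Hz)
# N=4 is passed to SciPy; for band-pass designs SciPy returns a filter of order 2N.
sos = signal.butter(4, [0.5, 150.0], btype="bandpass", fs=fs, output="sos")


def bandpass_eeg(x):
    """Zero-phase band-pass filtering (forward-backward filtering is an assumption)."""
    return signal.sosfiltfilt(sos, x)


# Single-pass gain at a few frequencies (Hz): ~1 in the passband, ~0 near Nyquist
_, h = signal.sosfreqz(sos, worN=[10.0, 50.0, 250.0], fs=fs)

# Placeholder for one 20 s channel (10,240 samples)
eeg_filtered = bandpass_eeg(np.random.default_rng(5).standard_normal(10240))
```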

3.2.2. Dataset of Stride Interval Fluctuations

To compare multiscale entropy methods, stride interval recordings are used [29,31]. The time series were recorded from five young, healthy men (23–29 years old) and five healthy old adults (71–77 years old). All the individuals walked continuously on level ground around an obstacle-free path for 15 min. The stride interval was measured by the use of ultra-thin, force sensitive resistors placed inside the shoe. For more information, please refer to [29].

4. Results and Discussion

4.1. Synthetic Signals

4.1.1. Frequency Responses of Cumulative Sums of IMFs (CSI), and Moving Average (MA) and Butterworth (BW) Filters

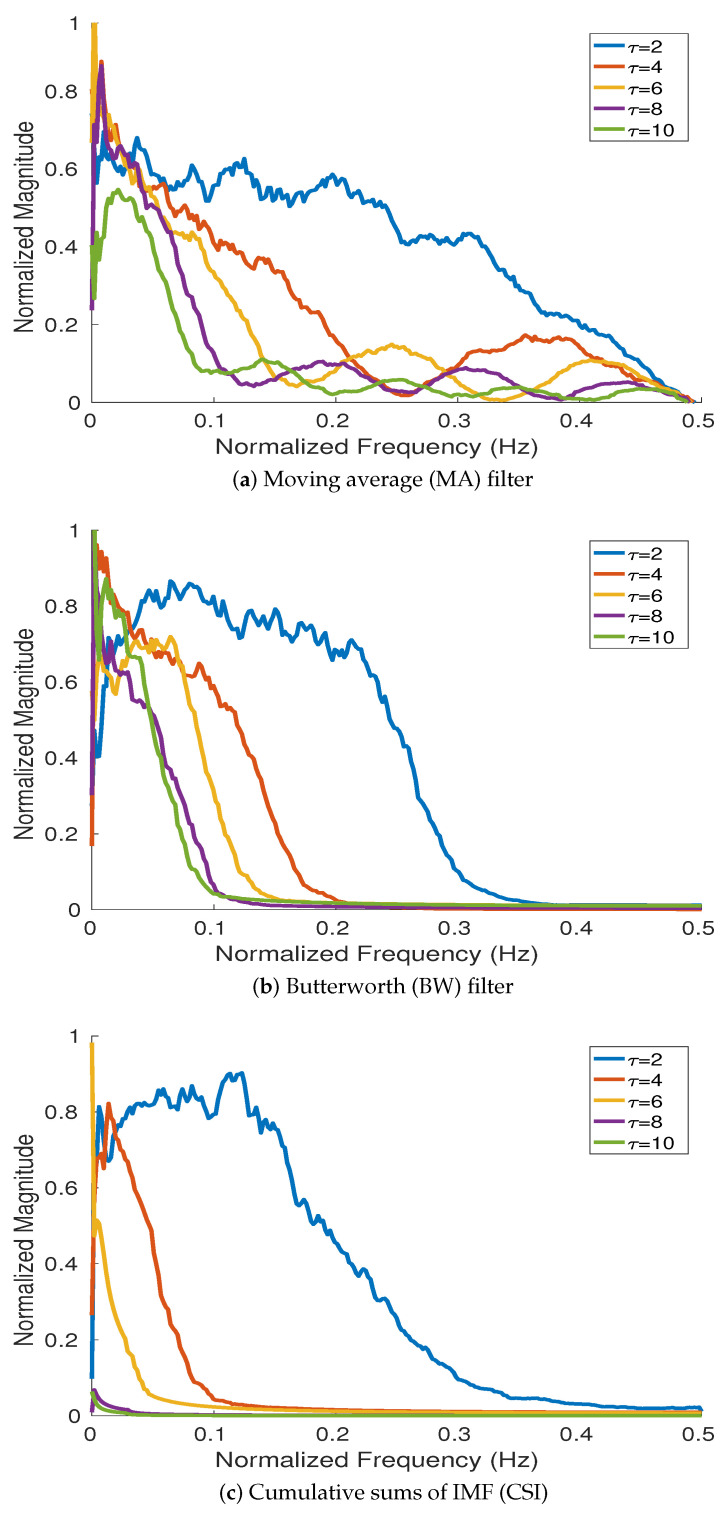

To investigate the frequency responses of MA, BW, and CSI, we used 200 realizations of white noise with length 512 sample points following [32,33]. The average Fourier spectra obtained by MA, BW, and CSI at different scale factors (i.e., 2, 4, 6, 8, and 10) are depicted in Figure 2. The results show that BW, MA, and CSI can be considered as low-pass filters with different cut-off frequencies. The results for the MA and BW filters agree with their theoretical frequency responses given in Equations (2) and (3), respectively. The results for CSI also agree with the fact that $IMF_1$ corresponds to a half-band high-pass filter and $IMF_k$ ($k \geq 2$) can be considered as a filter bank of overlapping band-pass filters [33].

Figure 2.

Magnitude of the frequency response for (a) MA, (b) BW, and (c) CSI at different scale factors (2, 4, 6, 8, and 10) computed from 200 realizations of white noise with length 512 sample points.

Compared with that of MA, the magnitude of the frequency response of BW is flatter in the passband, has no side lobes in the stopband, and has a faster roll-off. Therefore, BW more accurately eliminates the components with frequencies above the cut-off frequency, which reduces aliasing when the filtered signals are downsampled. The frequency response of CSI behaves similarly to that of BW. However, the cut-off frequencies obtained by CSI are considerably lower than those of BW, which results in very low entropy values at high scale factors.
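The white-noise procedure described at the beginning of this subsection can be sketched for MA at scale 4 as follows. Here the spectrum is estimated by averaging the periodograms of 200 filtered realizations of 512 samples and taking the square root, which is an assumption about how the average spectrum is formed; the estimate is then compared with the magnitude given by Equation (2).

```python
import numpy as np

rng = np.random.default_rng(3)
n_real, L, tau = 200, 512, 4
kernel = np.ones(tau) / tau

power = np.zeros(L // 2 + 1)
for _ in range(n_real):
    w = rng.standard_normal(L)                   # unit-variance white noise
    y = np.convolve(w, kernel)[:L]               # causal MA, same length as w
    power += np.abs(np.fft.rfft(y)) ** 2 / L     # periodogram; expectation ~ |H(f)|^2
empirical = np.sqrt(power / n_real)              # estimated magnitude response

f = np.fft.rfftfreq(L)                           # 0..0.5 cycles per sample
theory = np.ones_like(f)
theory[1:] = np.abs(np.sin(np.pi * f[1:] * tau) / (tau * np.sin(np.pi * f[1:])))
```

The same loop with a BW filter, or with a CSI computed from an EMD of each realization, would give the other panels of such a comparison.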

4.1.2. Effect of Different Low-Pass Filters on Multi-Harmonic and Lorenz Series

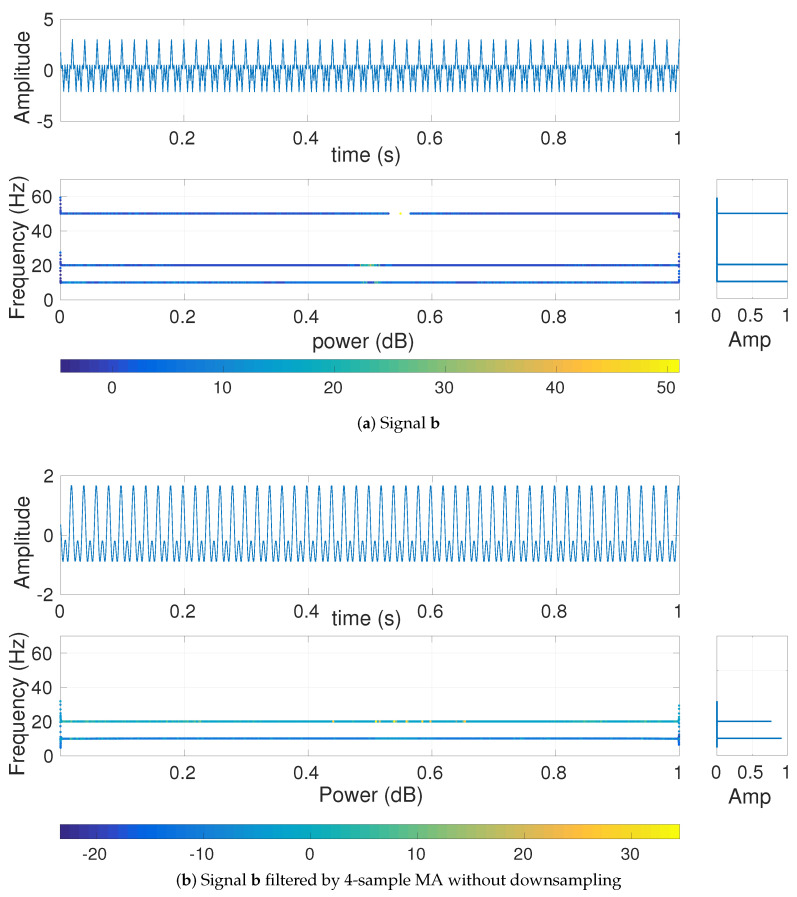

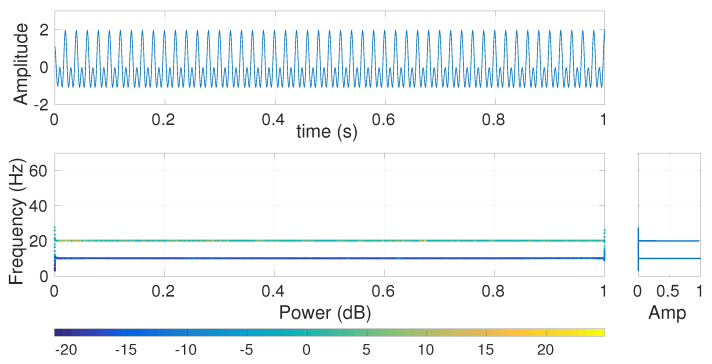

To understand the effect of MA, BW, and CSI on multi-harmonic signals, we use a multi-harmonic signal $b$ with a sampling frequency of 200 Hz and a length of 20 s. The first second of the signal $b$ is depicted in Figure 3. To show the frequency components of $b$ and their amplitudes, we used a combination of the Hilbert transform and the recently introduced variational mode decomposition (VMD). VMD is a generalization of the classic Wiener filter into adaptive, multiple bands [16]. After decomposing the original signal into its IMFs using VMD, we employ the Hilbert transform to find the instantaneous frequency of each IMF [16,34].

Figure 3.

Hilbert transform of the decomposed VMD-based IMFs obtained from (a) $b$ and (b) $b$ filtered by a 20-sample MA (scale 20).

The frequency components of $b$ and their corresponding amplitudes are depicted in Figure 3a. The Hilbert transform of $b$ filtered by a 4-sample MA (Figure 3b) illustrates that the corresponding harmonic is completely eliminated. This agrees with Equation (2): MA is a low-pass filter whose magnitude response has its first zero at the normalized frequency $f = 1/\tau$, i.e., at $f_s/\tau$ Hz for a sampling frequency $f_s$ (here, $200/4 = 50$ Hz), so the component at that frequency is completely eliminated [11].
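The zero of the MA response at $f_s/\tau$ can be checked numerically. The two-tone signal below (20 Hz and 50 Hz, sampled at 200 Hz for 20 s) is a hypothetical stand-in, since the exact definition of $b$ is not reproduced here. After a 4-sample MA, a joint least-squares fit of both tones shows the 50 Hz component vanishing and the 20 Hz component attenuated by the gain predicted by Equation (2).

```python
import numpy as np

fs, tau = 200.0, 4
t = np.arange(int(20 * fs)) / fs                                # 20 s at 200 Hz
x = np.sin(2 * np.pi * 20 * t) + np.sin(2 * np.pi * 50 * t)     # hypothetical two-tone signal

y = np.convolve(x, np.ones(tau) / tau, mode="valid")            # 4-sample MA (scale 4)
ty = t[tau - 1 :]                                               # time of newest sample per window


def amplitudes(sig, time, freqs):
    """Amplitude of each listed frequency (Hz) via a joint least-squares sine/cosine fit."""
    cols = []
    for fr in freqs:
        cols += [np.sin(2 * np.pi * fr * time), np.cos(2 * np.pi * fr * time)]
    coef, *_ = np.linalg.lstsq(np.column_stack(cols), sig, rcond=None)
    return np.hypot(coef[0::2], coef[1::2])


a20, a50 = amplitudes(y, ty, [20.0, 50.0])
f20 = 20.0 / fs                                                 # normalized frequency of the 20 Hz tone
gain_theory = np.sin(np.pi * f20 * tau) / (tau * np.sin(np.pi * f20))   # Equation (2), ~0.769
```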

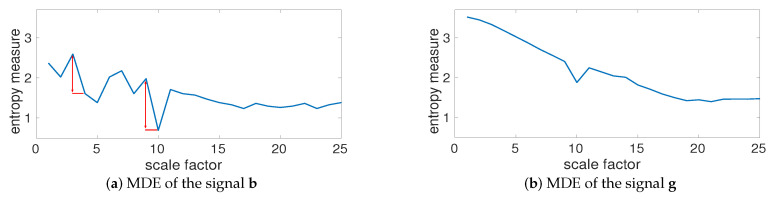

The MDE values for $b$, depicted in Figure 4a, show that the largest changes in entropy values occur at temporal scales 4 and 10 (see the red double arrows in Figure 4). In fact, the largest changes in entropy values are related to the main frequency components of a multi-harmonic time series. To investigate the effect of noise on MDE values, we created the signal $g$ by adding noise, based on a uniform random variable between 0 and 1, to $b$. The MDE values for $g$, plotted in Figure 4b, decrease from temporal scale 1 to 19, after which the entropy values become approximately constant. This is in agreement with the fact that smaller scale factors correspond to higher frequency components, whereas larger scales correspond to lower frequencies [35]. Comparing Figure 4a,b shows that, once the MA filter has removed the effect of the white noise, the profiles for $b$ and $g$ are very close (temporal scales 19 to 25). This suggests that white noise mainly affects the lower temporal scales. It is worth noting that MDEBW and MDEMA behave similarly.

Figure 4.

MDE values for (a) $b$ and (b) $g$. The largest changes in entropy values (the red double arrows) occur at temporal scales 4 and 10, corresponding to the two main frequency components of $b$.

The effect of CSI at scale 2 on $b$ is shown in Figure 5. Compared with those for MA (see Figure 4b), the results illustrate that CSI at scale 2 and MA at scale 4 behave similarly in eliminating the highest frequency component of $b$. This is in agreement with the fact that, at a given scale factor, the cut-off frequency of CSI is considerably lower than that of MA or BW (see Figure 2).

Figure 5.

Hilbert transform of the decomposed VMD-based IMFs obtained from the signal b for CSI at scale 2.

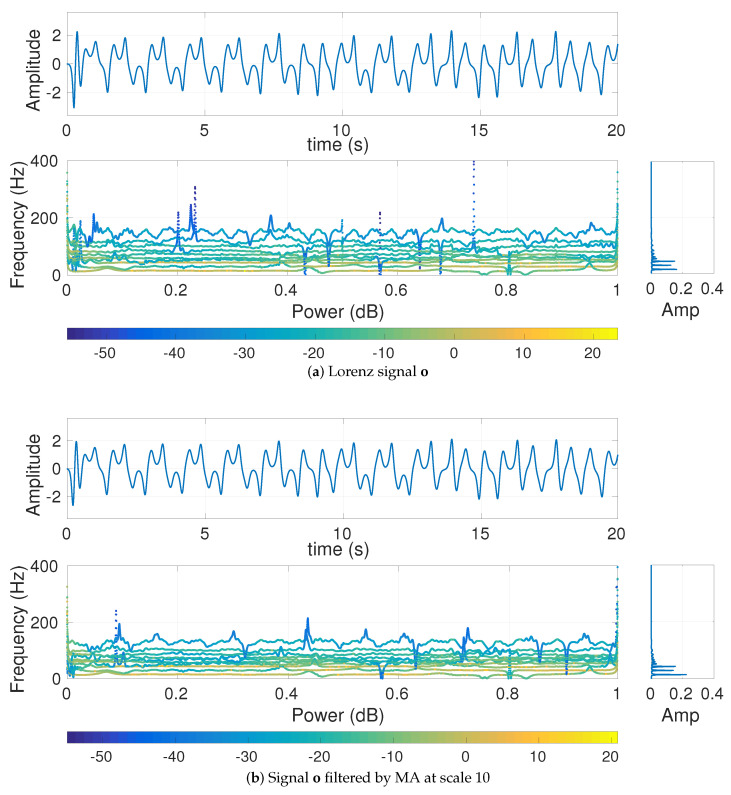

We also generated the Lorenz signal $o$ with a length of 10,000 sample points and a sampling frequency ($f_s$) of 300 Hz, with the Lorenz parameters chosen to produce nonlinear behavior [26,27]. The signal $o$ and its version filtered by MA at scale 10 are shown in Figure 6. The MDE-based values for $o$ are depicted in Figure 7a. The Nyquist frequency of the signal ($f_s/2 = 150$ Hz) is close to its highest frequency component (around 150 Hz); therefore, choosing a lower sampling frequency may result in aliasing. As the main frequency components of this time series are around 20–30 Hz, the MA filter is not able to completely eliminate them at scale 10. As a result, the amplitude values of the filtered signal at scale 10 (without downsampling) are very close to those of the original time series $o$.

Figure 6.

Hilbert transform of the decomposed VMD-based IMFs obtained from (a) the Lorenz signal $o$ and (b) $o$ filtered by MA at scale 10.
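A minimal way to generate such a Lorenz signal with SciPy is sketched below. The classical chaotic parameter values $\sigma = 10$, $\rho = 28$ (often written $r$), and $\beta = 8/3$, the initial condition, the use of the $x$ component, and the mapping of one sample to $1/f_s$ time units are all assumptions for illustration, not the settings of [26,27]; the function names are hypothetical.

```python
import numpy as np
from scipy.integrate import solve_ivp


def lorenz_rhs(t, s, sigma, rho, beta):
    """Right-hand side of the Lorenz system."""
    x, y, z = s
    return [sigma * (y - x), x * (rho - z) - y, x * y - beta * z]


def lorenz_signal(n=10_000, fs=300.0, sigma=10.0, rho=28.0, beta=8.0 / 3.0, s0=(1.0, 1.0, 1.0)):
    """x-component of the Lorenz system sampled every 1/fs time units (assumed choices)."""
    t = np.arange(n) / fs
    sol = solve_ivp(lorenz_rhs, (t[0], t[-1]), s0, t_eval=t,
                    args=(sigma, rho, beta), rtol=1e-8, atol=1e-8)
    return sol.y[0]


o = lorenz_signal()
```

A noisy version for the robustness test could then be formed by adding a scaled random sequence to `o`.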

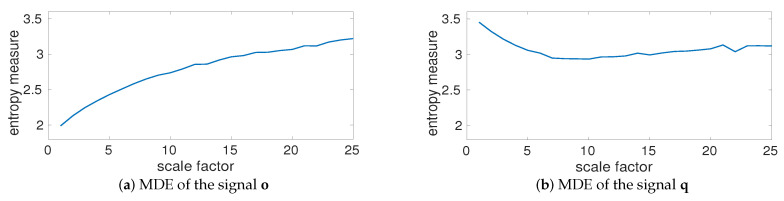

Figure 7.

MDE results for (a) the Lorenz signal $o$ and (b) $q$.

To inspect the effect of additive noise on MDE values, we created the signal $q$ by adding noise, based on a random variable between 0 and 1, to $o$. The MDE values for $q$, plotted in Figure 7b, decrease at low temporal scales and then increase at high scale factors. The MDE values of $o$ and $q$ are also approximately equal at scales between 18 and 25. This is consistent with the fact that lower scale factors correspond to higher frequency components, whereas larger scales correspond to lower frequencies [35].

4.1.3. Effect of Downsampling and Sampling Frequency on Multiscale Entropy Methods

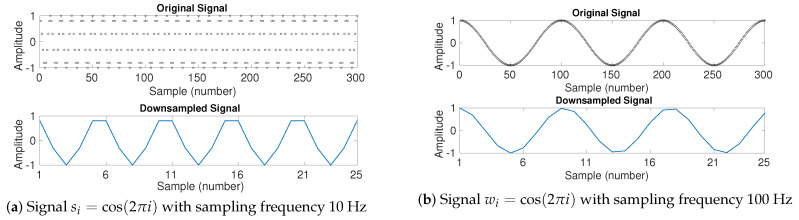

To investigate the effect of downsampling (without low-pass filtering) on multiscale entropy approaches, we created (a) the signal $s$ with a length of 300 sample points and a sampling frequency of 10 Hz, and (b) the signal $w$ with a length of 300 sample points and a sampling frequency of 100 Hz. The signals and their versions downsampled by a factor of 12 are depicted in Figure 8.

Figure 8.

Downsampling (a) the signal $s$ with length 300 sample points and sampling frequency 10 Hz, and (b) the signal $w$ with length 300 sample points and sampling frequency 100 Hz. The downsampling factor is 12.

When the sampling frequency of a time series is close to its main frequency components (see $s$ in Figure 8a), the downsampled signal may exhibit a lower frequency component than the original signal, which illustrates the effect of aliasing. Accordingly, the downsampled signal is more regular (has a smaller entropy value). This is confirmed by the DispEn values of $s$ and its downsampled series, which are 2.0267 and 1.6058, respectively.

On the other hand, when the sampling frequency is high (see $w$ in Figure 8b), the amplitude values of the downsampled signal are approximately equal to those of the original signal. However, as the number of sample points decreases by a factor of 12, the change between consecutive samples is about 12 times larger than in the original signal. Thus, the original signal is more regular than its downsampled series. This is confirmed by the DispEn values of $w$ and its downsampled series, which are 1.9618 and 2.5539, respectively.
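Both effects can be illustrated with two hypothetical signals; these are not $s$ and $w$ themselves, whose definitions are not reproduced here. The first part folds a 4.5 Hz tone sampled at 10 Hz into the band of the decimated rate $10/12$ Hz; the second compares the mean absolute first difference of a slow 1 Hz sine sampled at 100 Hz before and after keeping every 12th sample. The helper name is hypothetical.

```python
import numpy as np


def alias_frequency(f, fs):
    """Apparent frequency (Hz) of a pure tone f sampled at fs, folded into [0, fs/2]."""
    r = f % fs
    return min(r, fs - r)


# (1) Low sampling rate: a 4.5 Hz tone sampled at 10 Hz, then every 12th sample kept
print(alias_frequency(4.5, 10.0 / 12))   # about 0.33 Hz: a much slower apparent oscillation

# (2) High sampling rate: a 1 Hz sine, 300 samples at 100 Hz, downsampled by 12
t = np.arange(300) / 100.0
w = np.sin(2 * np.pi * 1.0 * t)
ratio = np.mean(np.abs(np.diff(w[::12]))) / np.mean(np.abs(np.diff(w)))
```

In the second case `ratio` comes out close to the downsampling factor of 12, matching the larger sample-to-sample changes described above.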

4.1.4. Multiscale Entropy Methods vs. Noise

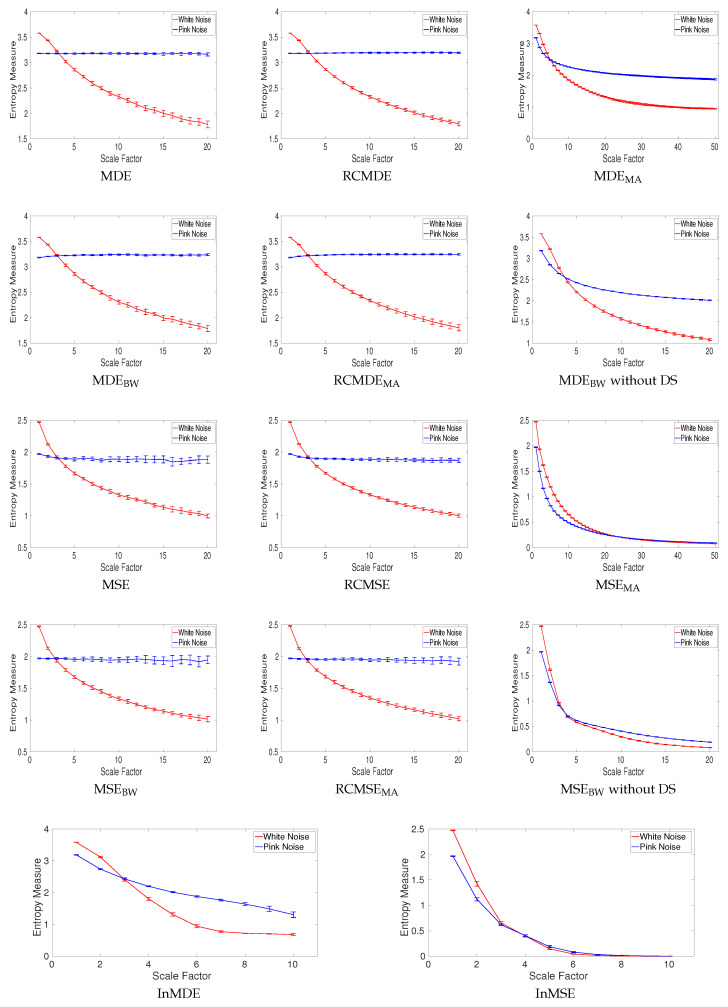

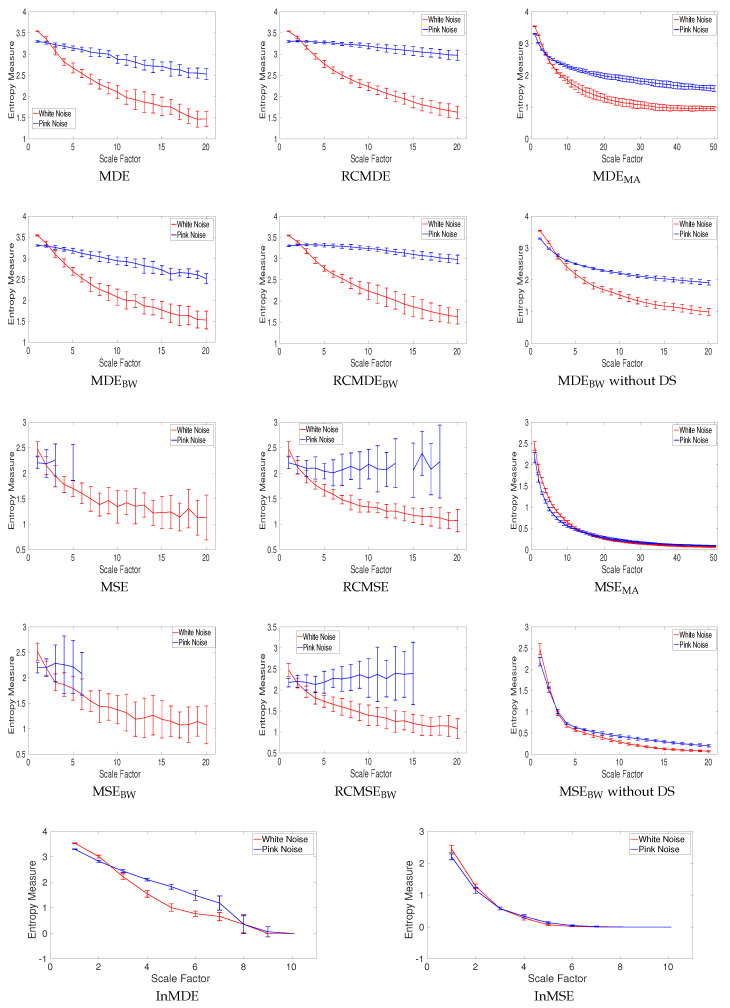

All of the complexity methods are used to distinguish the dynamics of white noise from those of pink noise. The mean and SD of the results for signals with lengths of 8000 (long series) and 400 (short series) sample points are depicted in Figure 9 and Figure 10, respectively. The results obtained by the complexity techniques with DS show that the entropy values decrease monotonically with the scale factor for white noise. However, for pink noise, the entropy values become approximately constant at larger scale factors. These results are in agreement with the fact that, unlike white noise, $1/f$ noise has structure across temporal scales [3,5]. The profiles for MDEMA and MSEMA, MDEBW and MSEBW without DS, InMSE, and InMDE decrease along the temporal scales, as there is no DS process to increase the rate of change between consecutive samples and, hence, the entropy values. It should be mentioned that, as the crossing point of the profiles for white and pink noise is at scale 23, the maximum scale factor for the MA-based coarse-graining is set to 50. Furthermore, the maximum scale factor for InMSE and InMDE is 10, as the entropy values at higher scales are close to 0.

Figure 9.

Mean value and SD of results obtained by the complexity measures computed from 40 different realizations of pink and white noise with length 8000 samples. Red and blue denote white and pink noise, respectively.

Figure 10.

Mean value and SD of results obtained by the complexity measures computed from 40 different realizations of pink and white noise with length 400 samples. Entropy values obtained by MSE, RCMSE, MSEBW, and RCMSEBW are undefined at several high scale factors. Red and blue denote white and pink noise, respectively.

Entropy values obtained by MSE, RCMSE, MSEBW, and RCMSEBW are undefined at high scale factors. Comparing Figure 9 and Figure 10 demonstrates that the longer the signals, the more robust the multiscale entropy estimations. The results also show that InMDE, compared with InMSE, better discriminates white from pink noise.

To compare the results obtained by the complexity algorithms, we used the coefficient of variation (CV), defined as the SD divided by the mean. We use this metric because the SDs of signals may increase or decrease proportionally to the mean. The CV values at scale 10, as a trade-off between low and high scale factors, for noise signals with lengths of 8000 and 400 sample points are shown in Table 2 and Table 3, respectively. Note that we consider scale 25 for the MSEMA and MDEMA profiles and scale 5 for the InMSE and InMDE profiles. The refined composite technique decreases the CVs for all the MSE- and MDE-based algorithms, showing its advantage in improving the stability of results for short and long noise. The smallest CVs for long pink and white noise are obtained by our developed MDEBW without DS and RCMDEBW methods, respectively. The smallest CVs for short pink and white noise are achieved by RCMDEBW and RCMDE, respectively. Overall, the smallest CVs are obtained by the DispEn-based complexity measures.
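The CV itself is a one-line computation. The sketch below assumes the SD is computed with the $N - 1$ normalization, which is MATLAB's default for `std`; NumPy's default is $N$, which gives slightly smaller values for 40 realizations. The input values are made up for illustration, and the function name is hypothetical.

```python
import numpy as np


def coefficient_of_variation(values, ddof=1):
    """CV = SD / mean across realizations (ddof=1 mirrors MATLAB's default std)."""
    values = np.asarray(values, dtype=float)
    return values.std(ddof=ddof) / values.mean()


# Usage with made-up entropy values at one scale for three realizations
print(coefficient_of_variation([1.0, 2.0, 3.0]))   # 0.5
```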

Table 2.

CV values obtained by the complexity measures at scale factor 10 for forty realizations of pink and white noise with length 8000 sample points. Note that the scales 25 and 5 are considered for MSEMA and MDEMA, and InMSE and InMDE, respectively.

| Noise | MDE | RCMDE | MDEMA (Scale 25) | MDEBW | RCMDEBW | MDEBW without DS | InMDE (Scale 5) |
|---|---|---|---|---|---|---|---|
| Pink | 0.0058 | 0.0038 | 0.0069 | 0.0044 | 0.0038 | 0.0031 | 0.0091 |
| White | 0.0174 | 0.0124 | 0.0246 | 0.0166 | 0.0115 | 0.0182 | 0.0394 |

| Noise | MSE | RCMSE | MSEMA (Scale 25) | MSEBW | RCMSEBW | MSEBW without DS | InMSE (Scale 5) |
|---|---|---|---|---|---|---|---|
| Pink | 0.0186 | 0.0105 | 0.0131 | 0.0176 | 0.0124 | 0.0130 | 0.0982 |
| White | 0.0201 | 0.0133 | 0.0135 | 0.0219 | 0.0203 | 0.0308 | 0.1330 |

Table 3.

CV values obtained by the complexity measures at scale factor 10 for forty realizations of pink and white noise with length 400 sample points. Note that the scales 25 and 5 are considered for MSEMA and MDEMA, and InMSE and InMDE, respectively.

| Noise | MDE | RCMDE | MDEMA (Scale 25) | MDEBW | RCMDEBW | MDEBW without DS | InMDE (Scale 5) |
|---|---|---|---|---|---|---|---|
| Pink | 0.0317 | 0.0194 | 0.0473 | 0.0320 | 0.0141 | 0.0204 | 0.0522 |
| White | 0.0726 | 0.0415 | 0.1116 | 0.0929 | 0.0876 | 0.0726 | 0.1435 |

| Noise | MSE | RCMSE | MSEMA (Scale 25) | MSEBW | RCMSEBW | MSEBW without DS | InMSE (Scale 5) |
|---|---|---|---|---|---|---|---|
| Pink | undefined | 0.1327 | 0.0434 | undefined | 0.2008 | 0.0822 | 0.2351 |
| White | 0.2385 | 0.0738 | 0.0605 | 0.2024 | 0.1736 | 0.1060 | 0.3779 |

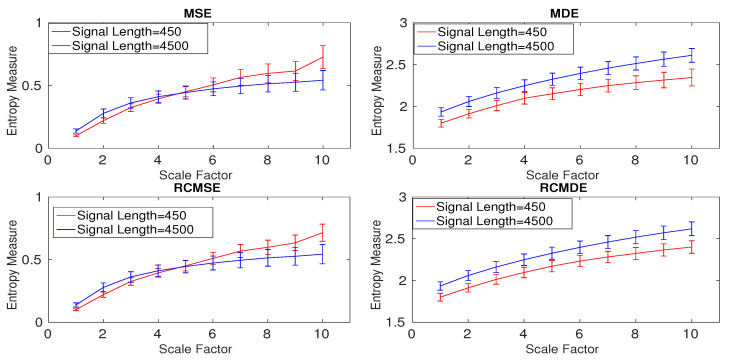

4.1.5. Effect of Refined Composite on Nonlinear Systems without Noise

To understand the effect of the refined composite technique on nonlinear signals without noise, we created 40 realizations of Lorenz signals with lengths of 450 and 4500 sample points and a sampling frequency ($f_s$) of 150 Hz, with the Lorenz parameters chosen to produce nonlinear behavior [26,27]. The results obtained by MSE, MDE, RCMSE, and RCMDE are depicted in Figure 11 and are in agreement with [25,27]. Of note, the entropy values for RCMSEBW and RCMDEBW are similar to those for RCMSE and RCMDE, respectively; thus, these results are not shown herein.

Figure 11.

Mean and SD of the results obtained by the MSE, MDE, RCMSE, and RCMDE for the Lorenz series with lengths 450 and 4500 sample points.

To investigate the effect of the refined composite technique on the stability of results, the CVs of the multiscale approaches at scale 5 are calculated. The smallest CVs, shown in Table 4, are obtained by the MDE and RCMDE approaches. The results also suggest that the refined composite technique does not improve the stability of the profiles for the signals with length 4500 samples (long signals). For the Lorenz series with length 450 sample points, RCMSE and RCMDE lead to smaller CV values than MSE and MDE, respectively, showing the importance of the refined composite method for characterizing short time series.

Table 4.

CVs of MSE, RCMSE, MDE, and RCMDE values for the 40 different realizations of the Lorenz signals with length 450 and 4500 samples at scale five.

| Signal Length | MSE | MDE | RCMSE | RCMDE |
|---|---|---|---|---|
| 450 sample points | 0.1000 | 0.0898 | 0.0700 | 0.0309 |
| 4500 sample points | 0.1156 | 0.0310 | 0.1134 | 0.0312 |

4.2. Real Signals

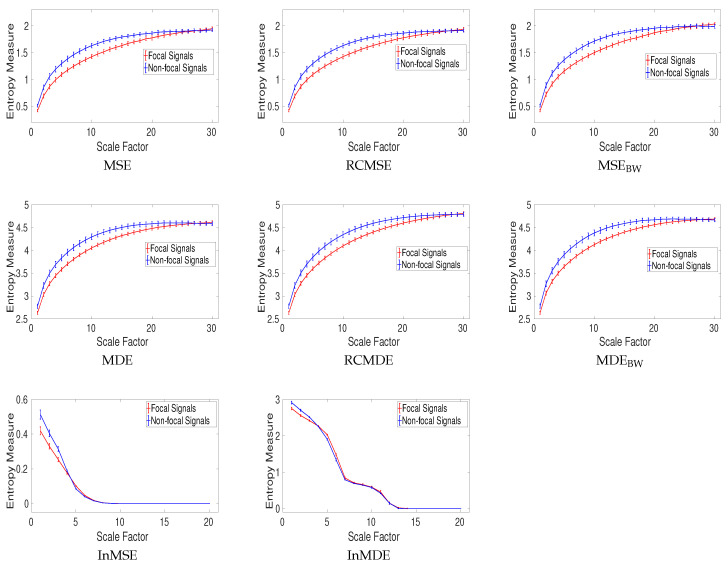

4.2.1. Dataset of Focal and Non-Focal Brain Activity

For the focal and non-focal EEG dataset, the results obtained by MSE, MDE, RCMSE, RCMDE, MSEBW, MDEBW, InMSE, and InMDE, depicted in Figure 12, show that the non-focal signals are more complex than the focal ones. This fact is in agreement with previous studies [28,36].

Figure 12.

Mean value and SD of results obtained by the MSE, MDE, RCMSE, RCMDE, MSEBW, MDEBW, InMSE, and InMDE computed from the focal and non-focal EEGs.

The results for RCMSEBW and RCMDEBW were respectively similar to those for MSEBW and MDEBW. Thus, they are not shown herein. Note that, for MDE and RCMDE, and m, respectively, were 30 and 3. It should also be mentioned that the average entropy values over two channels for these bivariate EEG signals are reported for the univariate complexity techniques.

To compare the results, the CV values obtained by the univariate multiscale approaches, except InMSE and InMDE, are calculated at scale factor 15. These are shown in Table 5. The CV values for MDE, RCMDE, MSE, and RCMSE illustrate that the refined composite approach does not enhance the stability of the MDE and MSE profiles. Overall, the smallest CV values are achieved by DispEn-based complexity methods.

Table 5.

CVs of MSE, RCMSE, MSEBW, MDE, RCMDE, and MDEBW values for the focal and non-focal EEGs at scale 15.

| Signals | MSE | RCMSE | MSEBW | MDE | RCMDE | MDEBW |
|---|---|---|---|---|---|---|
| Focal EEGs | 0.0229 | 0.0229 | 0.0224 | 0.0083 | 0.0089 | 0.0083 |
| Non-focal EEGs | 0.0178 | 0.0191 | 0.0172 | 0.0111 | 0.0121 | 0.0109 |

4.2.2. Dataset of Stride Interval Fluctuations

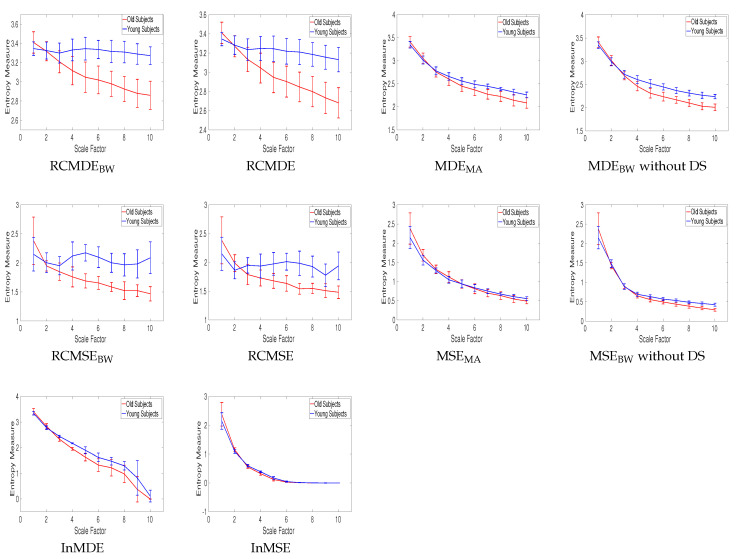

Figure 13 shows the mean and SD of the RCMDEBW, RCMDE, MDEMA, MDEBW without DS, InMDE, RCMSEBW, RCMSE, MSEMA, MSEBW without DS, and InMSE values computed from the stride interval fluctuations of young and old subjects. As these time series contain only 400 to 800 samples, we do not use MSE, MDE, MSEBW, and MDEBW, whose downsampling step would leave too few samples at large scale factors.
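A short worked count shows how quickly downsampling shortens these recordings. The scale τ = 10 is an arbitrary illustrative value. The length without downsampling assumes a moving average computed only over complete windows.

$$N_\tau^{\mathrm{DS}} = \left\lfloor \frac{N}{\tau} \right\rfloor = \left\lfloor \frac{400}{10} \right\rfloor = 40, \qquad N_\tau^{\mathrm{no\ DS}} = N - \tau + 1 = 400 - 10 + 1 = 391.$$

With downsampling, a 400-sample stride series is reduced to 40 points at this scale. Without downsampling, almost the full length is retained.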

Figure 13.

Mean value and SD of results obtained by the complexity measures computed from the young and old subjects’ stride interval recordings.

For each scale factor, the average entropy value for the elderly subjects is smaller than that for the young ones. This agrees with the results of other entropy-based methods [37] and with the notion that recordings from healthy young subjects correspond to more complex states, owing to their ability to adapt to adverse conditions, whereas signals from aged individuals show a loss of complexity [3,5,38]. The results also suggest that, when dealing with short signals, the complexity measures without downsampling (i.e., MSEMA, MDEMA, and MSEBW and MDEBW without DS) are appropriate for distinguishing different kinds of dynamics in real signals.

Table 6 lists the CV values at the scale factors at which the profiles of the young and old subjects do not overlap. MDEBW without DS leads to the smallest CV values.

Table 6.

CV values obtained by the complexity measures for the stride interval recordings for young and old subjects.

| Signals | RCMDEBW | RCMDE | MDEBW without DS | RCMSEBW |
|---|---|---|---|---|
| Young subjects | 0.0355 | 0.0410 | 0.0334 | 0.0644 |
| Old subjects | 0.0517 | 0.0540 | 0.0449 | 0.0723 |

5. Time Delay, Downsampling, and Nyquist Frequency

In line with previous complexity-based approaches [2,3,13,15], the time delay was set to 1 in this study. Nevertheless, if the sampling frequency is considerably higher than the highest frequency component of a signal, the first minimum or zero crossing of the autocorrelation function, or the first minimum of the mutual information, can be used to select an appropriate time delay [39].
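A minimal sketch of the autocorrelation-based rule, for illustration only. It returns the first lag at which the (biased) autocorrelation reaches zero. If there is none, it falls back to the first local minimum, and then to 1. The mutual-information criterion is not implemented here, and the search range (a quarter of the signal length) is an assumption.

```python
import math


def autocorrelation(x, max_lag):
    """Biased, normalized autocorrelation for lags 0..max_lag."""
    n = len(x)
    mean = sum(x) / n
    centered = [v - mean for v in x]
    var = sum(v * v for v in centered)
    return [sum(centered[i] * centered[i + lag] for i in range(n - lag)) / var
            for lag in range(max_lag + 1)]


def select_time_delay(x, max_lag=None):
    """First zero crossing of the ACF; else its first local minimum; else 1.

    ASSUMPTION: lags are searched up to len(x) // 4 by default.
    """
    if max_lag is None:
        max_lag = len(x) // 4
    acf = autocorrelation(x, max_lag)
    for lag in range(1, max_lag + 1):
        if acf[lag] <= 0:
            return lag
    for lag in range(1, max_lag):
        if acf[lag] < acf[lag - 1] and acf[lag] <= acf[lag + 1]:
            return lag
    return 1


# Oversampled sinusoid: 40 samples per period, so the ACF first crosses
# zero near a quarter period (about 10 samples).
x = [math.sin(2 * math.pi * k / 40) for k in range(400)]
tau = select_time_delay(x)
```

For a strongly oversampled signal, the selected delay is well above 1, which is the situation the paragraph above describes.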

Alternatively, a signal may be downsampled before the complexity-based entropy approaches are applied, so that its Nyquist frequency (half the sampling frequency) approaches its highest frequency component [40]. In this way, the low-pass filtering in the coarse-graining process already affects the highest frequency components of the signal at low temporal scale factors. It is worth noting that if the main frequency components of the signal are considerably lower than its highest frequency component (e.g., the signal o; see Figure 7), the filtering may change the amplitude values of the signal only slightly, even at large scales.
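The choice of downsampling factor reduces to simple arithmetic. The sketch below picks the largest integer factor q that keeps the new Nyquist frequency, fs/(2q), at or above the highest frequency component f_max. It assumes the signal is already band-limited below f_max, so plain sample dropping is used without a separate anti-aliasing filter. The values fs = 150 Hz and f_max = 10 Hz are hypothetical.

```python
import math


def downsampling_factor(fs, f_max):
    """Largest integer q such that the new Nyquist frequency fs / (2 q) >= f_max."""
    if not 0 < f_max <= fs / 2:
        raise ValueError("f_max must lie in (0, fs/2]")
    return max(1, math.floor(fs / (2.0 * f_max)))


def downsample(x, q):
    """Keep every q-th sample (ASSUMES x is already band-limited below fs / (2 q))."""
    return x[::q]


fs, f_max = 150.0, 10.0           # hypothetical sampling rate and highest component (Hz)
q = downsampling_factor(fs, f_max)
new_nyquist = fs / (2 * q)        # about 10.71 Hz, just above f_max
```

Choosing the largest admissible q moves the Nyquist frequency as close to f_max as an integer factor allows. As a result, the first coarse-graining scales already act on the signal's own bandwidth.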

6. Future Work

The wavelet transform, a powerful filter bank widely used for the analysis of non-stationary recordings, can be employed to decompose a signal into several series with specific frequency bands [41]. A wavelet-based filter bank could therefore be used for coarse-graining in a complexity approach. VMD can also be used as an alternative to EMD in InMSE and InMDE; unlike EMD, VMD provides a theoretically well-founded solution to the decomposition problem and is more robust to noise [16]. Recent developments in the field have sought to generalize univariate and multivariate multiscale algorithms to a family of statistics by using different moments (e.g., variance, skewness, and kurtosis) in the coarse-graining process [25,42,43,44]. These techniques should be compared from a signal processing perspective, and their interpretations investigated. Finally, as the existing univariate and even multivariate coarse-graining processes filter each channel separately [38,43,45], new multivariate filters that deal with the spatial and temporal domains simultaneously are needed.
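To make the moment-based idea concrete, here is a sketch of non-overlapping coarse-graining in which each window is summarized by a chosen moment. With the mean, it reduces to the classical MSE coarse-graining. With the variance, it follows the spirit of [42], although the exact normalization used there is an assumption here (population variance). Skewness or kurtosis could be added as further options in the same way.

```python
import statistics


def coarse_grain_moment(x, scale, moment="mean"):
    """Non-overlapping coarse-graining that summarizes each window by one moment.

    moment="mean": classical MSE-style coarse-graining.
    moment="variance": variance-based variant (ASSUMPTION: population variance).
    Trailing samples that do not fill a complete window are dropped.
    """
    n_windows = len(x) // scale
    windows = [x[j * scale:(j + 1) * scale] for j in range(n_windows)]
    if moment == "mean":
        return [statistics.fmean(w) for w in windows]
    if moment == "variance":
        return [statistics.pvariance(w) for w in windows]
    raise ValueError(f"unsupported moment: {moment}")


x = [1.0, 3.0, 5.0, 5.0, 9.0]
means = coarse_grain_moment(x, 2, "mean")          # [2.0, 5.0]
variances = coarse_grain_moment(x, 2, "variance")  # [1.0, 0.0]
```

The mean tracks the slow trend of each window, whereas the variance tracks its volatility. The same entropy estimator can therefore quantify two different aspects of a signal.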

7. Conclusions

In summary, we have compared existing and newly proposed coarse-graining approaches for univariate multiscale entropy estimation. As expected from the filter bank properties of EMD [33], our results indicate that its cut-off frequencies at each temporal scale are considerably lower than those of moving average and Butterworth filtering. Therefore, InMSE and the InMDE developed here yield entropy values very close to 0 even at relatively low temporal scales, because the bandwidth depends exponentially, rather than linearly, on the scale. We also inspected the effect of downsampling in the coarse-graining process on the entropy values, showing that it may increase or decrease the entropy depending on the sampling frequency of the time series.
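A back-of-the-envelope comparison illustrates the exponential-versus-linear argument. The sketch uses two idealizations, both of them assumptions. First, the nominal cut-off of moving-average or Butterworth coarse-graining at scale τ is taken as fs/(2τ), the Nyquist frequency of the downsampled series. Second, EMD is treated as an ideal dyadic filter bank [33] in which scale τ discards the first τ - 1 IMFs, giving a cut-off of fs/2^τ.

```python
def ma_cutoff_hz(fs, scale):
    """Nominal cut-off of moving-average/Butterworth coarse-graining: fs / (2 * scale)."""
    return fs / (2.0 * scale)


def emd_cutoff_hz(fs, scale):
    """Idealized cut-off when the first (scale - 1) IMFs are discarded,
    ASSUMING EMD behaves as a dyadic filter bank: fs / 2**scale."""
    return fs / 2.0 ** scale


fs = 150.0  # Hz, as for the Lorenz series
table = [(tau, ma_cutoff_hz(fs, tau), emd_cutoff_hz(fs, tau)) for tau in (1, 2, 5, 10)]
for tau, ma, emd in table:
    print(f"scale {tau:2d}: MA/BW {ma:8.4f} Hz, EMD {emd:8.4f} Hz")
```

Under these idealizations, the two cut-offs coincide at scales 1 and 2. By scale 10, however, the EMD-based cut-off is roughly 50 times lower, which is consistent with InMSE and InMDE approaching 0 at moderate scales.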

Our results confirm previous reports indicating that, when dealing with short or noisy signals, the refined composite approach [14,25] may improve the stability of entropy results. On the other hand, for long signals with relatively low levels of noise, the refined composite method makes little difference to the quality of the entropy estimation, at the expense of considerable additional computational cost. In all cases, using dispersion entropy instead of sample entropy led to more stable results in terms of CV values and ensured that the entropy values were defined at all temporal scales.
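The point about definedness can be seen directly from the two estimators. SampEn is -ln(A/B) and is undefined when no template matches are found, whereas DispEn is a Shannon entropy over the observed dispersion patterns and always returns a finite value. The following compact sketch is written after the definitions in [21] and [6]. It is not the Matlab code linked in the Appendix. The choices of population SD, r = 0.15 SD, m = 2, and c = 6 are assumptions for illustration.

```python
import math
from collections import Counter


def sample_entropy(x, m=2, r_factor=0.15):
    """SampEn after [21]; returns None when undefined (A or B equals 0)."""
    n = len(x)
    mean = sum(x) / n
    r = r_factor * math.sqrt(sum((v - mean) ** 2 for v in x) / n)

    def count_pairs(k):
        # Same n - m template start points for k = m and k = m + 1
        templates = [x[i:i + k] for i in range(n - m)]
        count = 0
        for i in range(len(templates)):
            for j in range(i + 1, len(templates)):
                if max(abs(a - b) for a, b in zip(templates[i], templates[j])) <= r:
                    count += 1
        return count

    b, a = count_pairs(m), count_pairs(m + 1)
    if a == 0 or b == 0:
        return None  # -ln(A/B) is undefined
    return -math.log(a / b)


def dispersion_entropy(x, m=2, c=6, delay=1):
    """Normalized DispEn after [6], using the normal-CDF mapping."""
    n = len(x)
    mu = sum(x) / n
    sd = math.sqrt(sum((v - mu) ** 2 for v in x) / n) or 1.0  # guard constant input
    # Normal CDF maps x into (0, 1); round-half-up of c*y + 0.5 gives classes 1..c
    y = [0.5 * (1.0 + math.erf((v - mu) / (sd * math.sqrt(2.0)))) for v in x]
    z = [min(c, max(1, math.floor(c * yi + 1.0))) for yi in y]
    patterns = Counter(tuple(z[i + k * delay] for k in range(m))
                       for i in range(n - (m - 1) * delay))
    total = sum(patterns.values())
    h = -sum((cnt / total) * math.log(cnt / total) for cnt in patterns.values())
    return h / math.log(c ** m)  # normalize by the maximum entropy ln(c^m)


ramp = [float(i) for i in range(10)]   # very short series with no repeated templates
se = sample_entropy(ramp)               # None: no matches within r
de = dispersion_entropy(ramp)           # finite value in [0, 1]
```

The short ramp mimics what happens to a heavily coarse-grained series. No two templates fall within the tolerance r, so SampEn is undefined, while DispEn still returns a finite value.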

Finally, the profiles obtained by the multiscale techniques with and without downsampling led to similar findings (e.g., pink noise is more complex than white noise according to all the complexity methods), although the specific entropy values may differ depending on the coarse-graining used. This suggests that downsampling within the coarse-graining procedure may not be needed to quantify the complexity of signals, especially short ones. In fact, techniques without downsampling still eliminate the fast temporal scales, dealing with progressively slower time scales as the scale factor increases, and thus take into account the multiple time scales inherent in time series.
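The difference between the two families can be sketched with the moving-average case. Classical coarse-graining averages non-overlapping windows and therefore downsamples by the scale factor. The variant without downsampling slides the same window one sample at a time. This is a minimal illustration assuming a causal, complete-window moving average. The function names are hypothetical and are not taken from the article's code.

```python
def coarse_grain_with_ds(x, scale):
    """Classical coarse-graining: means of non-overlapping windows (length N // scale)."""
    return [sum(x[j * scale:(j + 1) * scale]) / scale for j in range(len(x) // scale)]


def moving_average_without_ds(x, scale):
    """Same window, sliding by one sample (length N - scale + 1)."""
    if scale > len(x):
        return []
    window = sum(x[:scale])
    out = [window / scale]
    for i in range(scale, len(x)):
        window += x[i] - x[i - scale]  # running sum: add newest, drop oldest sample
        out.append(window / scale)
    return out


x = [float(i) for i in range(12)]
with_ds = coarse_grain_with_ds(x, 3)          # [1.0, 4.0, 7.0, 10.0]
without_ds = moving_average_without_ds(x, 3)  # [1.0, 2.0, ..., 10.0], 10 values
```

The downsampled series is exactly every third value of the sliding average. Both versions remove the same fast time scales, and the difference lies only in how many samples remain for the entropy estimator.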

Overall, we expect these findings to contribute to the ongoing discussion regarding the development of stable, fast, and less noise-sensitive complexity approaches suitable for either short or long time series. We recommend that future studies explicitly justify their choice of coarse-graining procedure in light of the characteristics of the signals under analysis and the hypothesis of the study, and that they discuss their findings in light of the behaviour of the selected entropy metric and coarse-graining procedure.

Appendix. Matlab Codes used in this Article

The Matlab codes of DispEn and MDE are available at https://datashare.is.ed.ac.uk/handle/10283/2637. The codes of SampEn and MSE can be found at https://physionet.org/physiotools/matlab/wfdb-app-matlab/. The code of EMD is also available at http://perso.ens-lyon.fr/patrick.flandrin/emd.html. For the Butterworth filter, we used the functions “butter” and “filter” in Matlab R2015a.
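For readers without Matlab, the following is a dependency-free sketch of a Butterworth low-pass stage comparable to calling `butter` and then `filter`. SciPy users would normally reach for `scipy.signal.butter` and `scipy.signal.lfilter` instead. The filter order and the cut-off used in this study are not stated in this section, so the second-order design and the cut-off fs/(2τ) at scale τ are assumptions. The helper names are hypothetical.

```python
import math


def butter2_lowpass(cutoff_hz, fs):
    """(b, a) of a 2nd-order Butterworth low-pass via the pre-warped bilinear transform."""
    k = math.tan(math.pi * cutoff_hz / fs)
    norm = 1.0 + math.sqrt(2.0) * k + k * k
    b0 = k * k / norm
    b = [b0, 2.0 * b0, b0]
    a = [1.0, 2.0 * (k * k - 1.0) / norm, (1.0 - math.sqrt(2.0) * k + k * k) / norm]
    return b, a


def apply_filter(b, a, x):
    """Causal direct-form difference equation, like Matlab's filter(b, a, x) with a[0] == 1."""
    y = []
    for n in range(len(x)):
        acc = sum(b[i] * x[n - i] for i in range(len(b)) if n - i >= 0)
        acc -= sum(a[i] * y[n - i] for i in range(1, len(a)) if n - i >= 0)
        y.append(acc)
    return y


def butterworth_coarse_grain(x, fs, scale):
    """Low-pass at fs / (2 * scale) (ASSUMED cut-off); scale 1 returns x unchanged."""
    if scale == 1:
        return list(x)
    b, a = butter2_lowpass(fs / (2.0 * scale), fs)
    return apply_filter(b, a, x)


smoothed = butterworth_coarse_grain([1.0] * 500, 150.0, 5)  # settles to 1 (unit DC gain)
```

Like Matlab's `filter`, this is a causal, single-pass filter, not a zero-phase one such as `filtfilt`. It therefore introduces a phase lag but no look-ahead.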

Author Contributions

Hamed Azami and Javier Escudero conceived and designed the methodology. Hamed Azami was responsible for the analysis and for writing the paper. Both authors critically revised and discussed the results, and both have read and approved the final manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

- 1. Yang A.C., Tsai S.J. Is mental illness complex? From behavior to brain. Prog. Neuro-Psychopharmacol. Biol. Psychiatry. 2013;45:253–257. doi: 10.1016/j.pnpbp.2012.09.015.

- 2. Costa M., Goldberger A.L., Peng C.K. Multiscale entropy analysis of complex physiologic time series. Phys. Rev. Lett. 2002;89:068102. doi: 10.1103/PhysRevLett.89.068102.

- 3. Costa M., Goldberger A.L., Peng C.K. Multiscale entropy analysis of biological signals. Phys. Rev. E. 2005;71:021906. doi: 10.1103/PhysRevE.71.021906.

- 4. Bar-Yam Y. Dynamics of Complex Systems. Addison-Wesley Reading; Boston, MA, USA: 1997.

- 5. Fogedby H.C. On the phase space approach to complexity. J. Stat. Phys. 1992;69:411–425. doi: 10.1007/BF01053799.

- 6. Rostaghi M., Azami H. Dispersion entropy: A measure for time series analysis. IEEE Signal Process. Lett. 2016;23:610–614. doi: 10.1109/LSP.2016.2542881.

- 7. Zhang Y.C. Complexity and 1/f noise. A phase space approach. J. Phys. I. 1991;1:971–977. doi: 10.1051/jp1:1991180.

- 8. Silva L.E.V., Cabella B.C.T., da Costa Neves U.P., Junior L.O.M. Multiscale entropy-based methods for heart rate variability complexity analysis. Phys. A Stat. Mech. Appl. 2015;422:143–152. doi: 10.1016/j.physa.2014.12.011.

- 9. Goldberger A.L., Peng C.K., Lipsitz L.A. What is physiologic complexity and how does it change with aging and disease? Neurobiol. Aging. 2002;23:23–26. doi: 10.1016/S0197-4580(01)00266-4.

- 10. Hayano J., Yamasaki F., Sakata S., Okada A., Mukai S., Fujinami T. Spectral characteristics of ventricular response to atrial fibrillation. Am. J. Physiol. Heart Circ. Physiol. 1997;273:H2811–H2816. doi: 10.1152/ajpheart.1997.273.6.H2811.

- 11. Valencia J.F., Porta A., Vallverdu M., Claria F., Baranowski R., Orlowska-Baranowska E., Caminal P. Refined multiscale entropy: Application to 24-h holter recordings of heart period variability in healthy and aortic stenosis subjects. IEEE Trans. Biomed. Eng. 2009;56:2202–2213. doi: 10.1109/TBME.2009.2021986.

- 12. Humeau-Heurtier A. The multiscale entropy algorithm and its variants: A review. Entropy. 2015;17:3110–3123. doi: 10.3390/e17053110.

- 13. Azami H., Rostaghi M., Abasolo D., Escudero J. Refined Composite Multiscale Dispersion Entropy and its Application to Biomedical Signals. IEEE Trans. Biomed. Eng. 2017;64:2872–2879. doi: 10.1109/TBME.2017.2679136.

- 14. Wu S.D., Wu C.W., Lin S.G., Lee K.Y., Peng C.K. Analysis of complex time series using refined composite multiscale entropy. Phys. Lett. A. 2014;378:1369–1374. doi: 10.1016/j.physleta.2014.03.034.

- 15. Amoud H., Snoussi H., Hewson D., Doussot M., Duchêne J. Intrinsic mode entropy for nonlinear discriminant analysis. IEEE Signal Process. Lett. 2007;14:297–300. doi: 10.1109/LSP.2006.888089.

- 16. Dragomiretskiy K., Zosso D. Variational mode decomposition. IEEE Trans. Signal Process. 2014;62:531–544. doi: 10.1109/TSP.2013.2288675.

- 17. Unser M., Aldroubi A., Eden M. B-spline signal processing. I. Theory. IEEE Trans. Signal Process. 1993;41:821–833. doi: 10.1109/78.193220.

- 18. Fliege N.J. Multirate Digital Signal Processing. John Wiley; Hoboken, NJ, USA: 1994.

- 19. Oppenheim A.V. Discrete-Time Signal Processing. Pearson Education India; Delhi, India: 1999.

- 20. Castiglioni P., Coruzzi P., Bini M., Parati G., Faini A. Multiscale sample entropy of cardiovascular signals: Does the choice between fixed-or varying-tolerance among scales influence its evaluation and interpretation? Entropy. 2017;19:590. doi: 10.3390/e19110590.

- 21. Richman J.S., Moorman J.R. Physiological time-series analysis using approximate entropy and sample entropy. Am. J. Physiol. Heart Circ. Physiol. 2000;278:H2039–H2049. doi: 10.1152/ajpheart.2000.278.6.H2039.

- 22. Chen W., Wang Z., Xie H., Yu W. Characterization of surface EMG signal based on fuzzy entropy. IEEE Trans. Neural Syst. Rehabil. Eng. 2007;15:266–272. doi: 10.1109/TNSRE.2007.897025.

- 23. Wu S.D., Wu C.W., Humeau-Heurtier A. Refined scale-dependent permutation entropy to analyze systems complexity. Phys. A Stat. Mech. Appl. 2016;450:454–461. doi: 10.1016/j.physa.2016.01.044.

- 24. Humeau-Heurtier A., Wu C.W., Wu S.D., Mahé G., Abraham P. Refined Multiscale Hilbert–Huang Spectral Entropy and Its Application to Central and Peripheral Cardiovascular Data. IEEE Trans. Biomed. Eng. 2016;63:2405–2415. doi: 10.1109/TBME.2016.2533665.

- 25. Azami H., Fernández A., Escudero J. Refined multiscale fuzzy entropy based on standard deviation for biomedical signal analysis. Med. Biol. Eng. Comput. 2017;55:2037–2052. doi: 10.1007/s11517-017-1647-5.

- 26. Baker G.L., Gollub J.P. Chaotic dynamics: an introduction. Cambridge University Press; Cambridge, UK: 1996.

- 27. Thuraisingham R.A., Gottwald G.A. On multiscale entropy analysis for physiological data. Phys. A Stat. Mech. Appl. 2006;366:323–332. doi: 10.1016/j.physa.2005.10.008.

- 28. Andrzejak R.G., Schindler K., Rummel C. Nonrandomness, nonlinear dependence, and nonstationarity of electroencephalographic recordings from epilepsy patients. Phys. Rev. E. 2012;86:046206. doi: 10.1103/PhysRevE.86.046206.

- 29. Gait in Aging and Disease Database. Available online: https://www.physionet.org/physiobank/database/gaitdb (accessed on 17 February 2018).

- 30. The Bern-Barcelona EEG database. Available online: http://ntsa.upf.edu/downloads/andrzejak-rg-schindler-k-rummel-c-2012-nonrandomness-nonlinear-dependence-and (accessed on 17 February 2018).

- 31. Hausdorff J.M., Purdon P.L., Peng C., Ladin Z., Wei J.Y., Goldberger A.L. Fractal dynamics of human gait: stability of long-range correlations in stride interval fluctuations. J. Appl. Physiol. 1996;80:1448–1457. doi: 10.1152/jappl.1996.80.5.1448.

- 32. Wu Z., Huang N.E. A study of the characteristics of white noise using the empirical mode decomposition method. Proc. R. Soc. Lond. A Math. Phys. Eng. Sci. 2004;460:1597–1611. doi: 10.1098/rspa.2003.1221.

- 33. Flandrin P., Rilling G., Goncalves P. Empirical mode decomposition as a filter bank. IEEE Signal Process. Lett. 2004;11:112–114. doi: 10.1109/LSP.2003.821662.

- 34. Huang N.E., Shen Z., Long S.R., Wu M.C., Shih H.H., Zheng Q., Yen N.C., Tung C.C., Liu H.H. The empirical mode decomposition and the Hilbert spectrum for nonlinear and non-stationary time series analysis. Proc. R. Soc. Lond. A Math. Phys. Eng. Sci. 1998;454:903–995. doi: 10.1098/rspa.1998.0193.

- 35. Gow B.J., Peng C.K., Wayne P.M., Ahn A.C. Multiscale entropy analysis of center-of-pressure dynamics in human postural control: methodological considerations. Entropy. 2015;17:7926–7947. doi: 10.3390/e17127849.

- 36. Sharma R., Pachori R.B., Acharya U.R. Application of entropy measures on intrinsic mode functions for the automated identification of focal electroencephalogram signals. Entropy. 2015;17:669–691. doi: 10.3390/e17020669.

- 37. Nemati S., Edwards B.A., Lee J., Pittman-Polletta B., Butler J.P., Malhotra A. Respiration and heart rate complexity: effects of age and gender assessed by band-limited transfer entropy. Respir. Physiol. Neurobiol. 2013;189:27–33. doi: 10.1016/j.resp.2013.06.016.

- 38. Ahmed M.U., Mandic D.P. Multivariate multiscale entropy: A tool for complexity analysis of multichannel data. Phys. Rev. E. 2011;84:061918. doi: 10.1103/PhysRevE.84.061918.

- 39. Kaffashi F., Foglyano R., Wilson C.G., Loparo K.A. The effect of time delay on approximate & sample entropy calculations. Phys. D Nonlinear Phenom. 2008;237:3069–3074.

- 40. Berger S., Schneider G., Kochs E.F., Jordan D. Permutation Entropy: Too Complex a Measure for EEG Time Series? Entropy. 2017;19:692. doi: 10.3390/e19120692.

- 41. Strang G., Nguyen T. Wavelets and Filter Banks. Wellesley-Cambridge Press; Wellesley, MA, USA: 1996.

- 42. Costa M.D., Goldberger A.L. Generalized multiscale entropy analysis: application to quantifying the complex volatility of human heartbeat time series. Entropy. 2015;17:1197–1203. doi: 10.3390/e17031197.

- 43. Azami H., Escudero J. Refined composite multivariate generalized multiscale fuzzy entropy: A tool for complexity analysis of multichannel signals. Phys. A Stat. Mech. Appl. 2017;465:261–276. doi: 10.1016/j.physa.2016.07.077.

- 44. Xu M., Shang P. Analysis of financial time series using multiscale entropy based on skewness and kurtosis. Phys. A Stat. Mech. Appl. 2018;490:1543–1550. doi: 10.1016/j.physa.2017.08.136.

- 45. Azami H., Fernández A., Escudero J. Multivariate Multiscale Dispersion Entropy of Biomedical Times Series. arXiv:1704.03947, 2017.