Abstract

Understanding how different information sources together transmit information is crucial in many domains. For example, understanding the neural code requires characterizing how different neurons contribute unique, redundant, or synergistic pieces of information about sensory or behavioral variables. Williams and Beer (2010) proposed a partial information decomposition (PID) that separates the mutual information that a set of sources contains about a set of targets into nonnegative terms interpretable as these pieces. Quantifying redundancy requires assigning an identity to different information pieces, to assess when information is common across sources. Harder et al. (2013) proposed an identity axiom that imposes necessary conditions to quantify qualitatively common information. However, Bertschinger et al. (2012) showed that, in a counterexample with deterministic target-source dependencies, the identity axiom is incompatible with ensuring PID nonnegativity. Here, we study systematically the consequences of information identity criteria that assign identity based on associations between target and source variables resulting from deterministic dependencies. We show how these criteria are related to the identity axiom and to previously proposed redundancy measures, and we characterize how they lead to negative PID terms. This constitutes a further step to more explicitly address the role of information identity in the quantification of redundancy. The implications for studying neural coding are discussed.

Keywords: information theory, mutual information decomposition, synergy, redundancy

MSC: 94A15, 94A17

1. Introduction

The characterization of dependencies between the parts of a multivariate system helps to understand its function and its underlying mechanisms. Within the information-theoretic framework, this problem can be investigated by decomposing the joint entropy of a set of variables [1,2,3] or the mutual information between sets of variables [4,5,6]. These approaches have been widely applied to study dependencies in complex systems such as gene networks (e.g., [7,8,9]), neural coding and communication (e.g., [10,11,12]), or interactive agents (e.g., [13,14,15]).

An important aspect of how information is distributed across a set of variables concerns whether different variables provide redundant, unique, or synergistic information when combined with other variables. Intuitively, variables share redundant information if each variable individually carries the same information carried by other variables. Information carried by a certain variable is unique if it is not carried by any other variables or their combination, and a group of variables carries synergistic information if some information arises only when they are combined. The presence of these different types of information has implications, for example, for how the information can be decoded [16], how robust it is to disruptions of the system [17], or how the set of variables can be compressed without information loss [18].

Characterizing the distribution of redundant, unique, and synergistic information is especially relevant in systems neuroscience, to understand how information is distributed in neural population responses. This requires identifying the features of neural responses that represent sensory stimuli and behavioral actions [19,20] and how this information is transmitted and transformed across brain areas [21,22]. The breakdown of information into these different types of components can determine the contribution of different classes of neurons and of different spatiotemporal components of population activity [23,24]. Moreover, the identification of synergistic or redundant components of information transfer may help to map dynamic functional connectivity and the integration of information across neurons or networks [25,26,27,28].

Although the notions of redundant, unique, and synergistic information seem at first intuitive, their rigorous quantification within the information-theoretic framework has proven to be elusive. Synergy and redundancy have traditionally been quantified with the measure called interaction information [29] or co-information [30], but this measure does not quantify them separately: its sign only indicates whether synergy or redundancy predominates. Synergy has also been quantified using maximum entropy models as the information that can only be retrieved from the joint distribution of the variables [1,31,32].

However, a recent seminal work of [33] introduced a framework, called Partial Information Decomposition (PID), to more precisely and simultaneously quantify the redundant, unique, and synergistic information that a set of variables (or primary sources) S has about a target X. This decomposition has two cornerstones. The first is the definition of a general measure of redundancy following a set of axioms that impose desirable properties, in agreement with the corresponding abstract notion of redundancy [34]. The second is the construction of a redundancy lattice, structured according to these axioms, which reflects a partial ordering of redundancies for different sets of variables [33].

The PID framework has been further developed by others (e.g., [35,36,37,38,39,40]). However, the properties that the PID should have continue to be debated [38,41]. In particular, properly quantifying redundancy is inherently difficult because it requires assigning an identity to different pieces of information. This is needed to assess when different sources carry the same information about the target. The work in [35] argued that the original redundancy measure of [33] quantifies only quantitatively equal amounts of information and not information that is qualitatively the same. They introduced a new axiom, namely the identity axiom, which states that, for the concrete case of a target that is a copy of two sources, redundancy should correspond to the mutual information between the sources, and thus vanish for independent sources. Several redundancy measures that fulfill the identity axiom have been subsequently proposed [35,36,37]. However, although this axiom imposes a necessary condition to capture qualitatively common information, the question of how to generally determine the identity of different pieces of information to assess redundancy has not yet been solved, and information identity criteria are implicit in the axioms and measures used. Furthermore, the identity axiom is incompatible with ensuring the nonnegativity of the PID terms when there are more than two sources (multivariate case). This was proven by [42] with a counterexample that involves deterministic target-source dependencies, just like the target-source copy example used to motivate the axiom.

In this work, we examine in more detail how assumptions on the assignment of information identity determine the properties of the PIDs. We study in a general way the form of the PID terms for systems with deterministic target-source dependencies. These dependencies are particularly relevant to address the question of information identity because they allow exploring the consequences of alternative assumptions about how target-source identity associations constrain the existence of synergistic information contributions. These target-source identity associations naturally occur, for example, when the same variable appears both as a source and in the target: if some piece of information is assumed to be associated only with a variable that appears both as a source and as part of the target, this identity association would imply that there is no need to combine that source with any other to retrieve that piece of information. In other words, the corresponding synergy should be zero. Importantly, the deterministic relationships between the target and sources allow us to analyze how information identity criteria constrain the properties of the PIDs without the need to rely on any specific definition of PID measures.

To formalize the effect of deterministic target-source dependencies on the PID terms, we enunciate and compare the implications of two axioms that propose two alternative ways in which deterministic dependencies can constrain synergistic contributions because of assumptions on target-source identity associations. These axioms impose constraints on synergy for any (possibly multivariate) system with deterministic target-source dependencies, while the identity axiom only concerns a particular class of bivariate systems. We prove that the fulfillment of these axioms implies the fulfillment of the identity axiom and that several measures that fulfill the identity axiom also comply with one of the synergy axioms in general [36], or at least for a wider class of systems [35,43] than the one addressed by the identity axiom. The proof of the existence of negative terms when adopting the identity axiom was based on a concrete counterexample [41,42,44]. In contrast, the stricter conditions of our synergy axioms allow us to explain in general how negative PID terms result from the specific information identity criteria underlying these axioms. More concretely, we derive, specifically for each of the two axioms, general expressions for deterministic components of the PID terms, which occur in the presence of deterministic target-source dependencies.

The comparison of the two axioms allows us to better understand the role of information identity in the quantification of redundancy. When the target contains a copy of some primary sources, an important difference between the redundancy measures derived from the two axioms concerns their invariance, or lack thereof, to a transformation that reduces the target by removing all variables within it that are determined by this copy. This transformation does not alter the entropy of the target, and thus, a redundancy measure not invariant under it depends on semantic aspects regarding the identity of the variables within the target. We discuss why, in contrast to the mutual information itself, the PID terms may not be invariant to this transformation and depend on semantic aspects, as a consequence of the assignment of identity to the pieces of information, which is intrinsic to the notion of redundancy. In particular, we indicate how the overall composition of the target can affect the identity of the pieces of information and also can determine the existence of redundancy for independent sources (mechanistic redundancy [35]). Furthermore, based on this analysis, we identify the minimal set of assumptions that, when added to the original PID axioms [33,34], can lead to negative PID terms. We indicate that this set comprises the assumption of the target invariance mentioned above. Overall, we conclude that if the redundancy lattice of [33] is to remain as the backbone of a nonnegative decomposition of the mutual information, a new criterion of information identity should be established that is compatible with the identity axiom, considers the semantic aspects of redundancy, and results in less restrictive constraints on synergy in the presence of deterministic target-source dependencies than the two synergy axioms studied here. Alternatively, the redundancy lattice would have to be modified to preserve nonnegativity.

We start this work by reviewing the PIDs (Section 2). We then introduce two alternative axioms that impose constraints on the value of synergistic terms in the presence of deterministic target-source dependencies, following an information identity criterion based on target-source identity associations (Section 3). Using these axioms, we derive general expressions that separate each PID term into a stochastic and a deterministic component for the bivariate (Section 4.1) and trivariate (Section 5.1) case. We show how these axioms constitute two alternative extensions of the identity axiom (Section 4.2) and examine if several previously-proposed redundancy measures conform to our axioms (Section 4.3). We reconsider the examples used by [42], characterizing their bivariate and trivariate decompositions and illustrating how in general negative PID terms can occur as a consequence of the information identity criteria underlying the synergy axioms (Section 4.4 and Section 5.2). The comparison between the two axioms allows us to discuss the implications of using an information identity criterion that, in the presence of deterministic target-source dependencies, identifies pieces of information in the target by assuming that their identity is related to specific sources. More generally, we discuss how our results constitute a further step to more explicitly address the role of information identity in the quantification of information (Section 4.5, Section 4.6 and Section 5.3).

2. A Review of the PID Framework

The seminal work of [33] introduced a new approach to decompose the mutual information into a set of nonnegative contributions. Let us consider first the bivariate case. Assume that we have a target X formed by one variable or by a set of variables and two variables (primary sources) 1 and 2 from which we want to characterize the information about X. The work in [33] argued that the mutual information of each variable about the target can be expressed as:

$$I(X;1) = I(X;1.2) + I(X;1\backslash 2) \tag{1}$$

and similarly for $I(X;2)$. The term $I(X;1.2)$ refers to a redundancy component between variables 1 and 2, which can be obtained by observing either 1 or 2 separately. The terms $I(X;1\backslash 2)$ and $I(X;2\backslash 1)$ quantify a component that is unique to 1 and to 2, respectively, that is, the information that can be obtained from one of the variables alone, but that cannot be obtained from the other alone. Furthermore, the joint information of 12 can be expressed as:

$$I(X;12) = I(X;1.2) + I(X;1\backslash 2) + I(X;2\backslash 1) + I(X;12\backslash 1,2) \tag{2}$$

where the term $I(X;12\backslash 1,2)$ refers to the synergistic information of the two variables, that is, information that can only be obtained when combining the two variables. Therefore, given the standard information-theoretic chain rule equalities [45]:

$$I(X;12) = I(X;1) + I(X;2|1) = I(X;2) + I(X;1|2) \tag{3}$$

the conditional mutual information $I(X;2|1)$, that is, the average information that 2 provides about X once the value of 1 is known, is decomposed as:

$$I(X;2|1) = I(X;2\backslash 1) + I(X;12\backslash 1,2) \tag{4}$$

and analogously for $I(X;1|2)$. Conditioning removes the redundant component, but adds the synergistic component, so that conditional information is the sum of the unique and synergistic terms.
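The bookkeeping of the bivariate decomposition can be checked numerically. The following is a minimal sketch, not part of the original analysis: it assumes a joint distribution stored as a dictionary over outcomes `(x, s1, s2)`, and the helper names (`marginal`, `mi`, `cmi`, `bivariate_terms`) are hypothetical. It shows that, once a redundancy value is fixed, the unique and synergistic terms follow from the mutual informations alone, and that the conditional information equals the sum of a unique and the synergistic term. The XOR system is an illustrative choice; its redundancy is set to zero, which is the only value compatible with nonnegative terms because each source alone carries no information.

```python
from collections import defaultdict
from math import log2

def marginal(p, idx):
    """Marginalize a joint pmf {(x, s1, s2): prob} onto the coordinates in idx."""
    out = defaultdict(float)
    for outcome, prob in p.items():
        out[tuple(outcome[i] for i in idx)] += prob
    return out

def entropy(p):
    """Shannon entropy in bits of a pmf given as a dict."""
    return -sum(q * log2(q) for q in p.values() if q > 0)

def mi(p, a, b):
    """I(A;B) = H(A) + H(B) - H(A,B); a and b are tuples of coordinates."""
    return entropy(marginal(p, a)) + entropy(marginal(p, b)) - entropy(marginal(p, a + b))

def cmi(p, a, b, c):
    """I(A;B|C) = I(A;BC) - I(A;C), the chain rule for mutual information."""
    return mi(p, a, b + c) - mi(p, a, c)

def bivariate_terms(p, redundancy):
    """Unique and synergistic terms implied by a given redundancy value."""
    X, S1, S2 = (0,), (1,), (2,)
    unique_1 = mi(p, X, S1) - redundancy
    unique_2 = mi(p, X, S2) - redundancy
    synergy = mi(p, X, S1 + S2) - redundancy - unique_1 - unique_2
    return unique_1, unique_2, synergy

# Illustrative system (an assumption of this sketch): X = S1 XOR S2,
# with independent uniform binary sources.
xor = {(s1 ^ s2, s1, s2): 0.25 for s1 in (0, 1) for s2 in (0, 1)}
u1, u2, syn = bivariate_terms(xor, redundancy=0.0)
cond = cmi(xor, (0,), (2,), (1,))  # I(X;2|1)
print(u1, u2, syn, cond)
```

In this example all the information is synergistic, and `cond` coincides with `u2 + syn`, as the decomposition of the conditional information requires.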

In this decomposition, a redundancy and a synergy component can exist simultaneously. The work in [33] showed that the measure of co-information [30] that previously had been used to quantify synergy and redundancy, defined as:

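$$C(X;1;2) = I(i;j) - I(i;j|k) = I(i;j) + I(i;k) - I(i;jk) \tag{5}$$

for any assignment of $\{X,1,2\}$ to $\{i,j,k\}$, corresponds to the difference between the redundancy and the synergy terms of Equation (2):

$$C(X;1;2) = I(X;1.2) - I(X;12\backslash 1,2) \tag{6}$$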
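As a hedged illustration of why the co-information cannot separate the two contributions, the sketch below evaluates it for two toy systems chosen here for illustration: a purely synergistic XOR target and a purely redundant system in which both sources and the target are copies of the same bit. The function names are hypothetical, and the co-information is computed as $I(i;j) - I(i;j|k)$ with the conditional term expanded through the chain rule.

```python
from collections import defaultdict
from math import log2

def entropy_of(p, idx):
    """Entropy in bits of the marginal of pmf p over the coordinates in idx."""
    marg = defaultdict(float)
    for outcome, prob in p.items():
        marg[tuple(outcome[i] for i in idx)] += prob
    return -sum(q * log2(q) for q in marg.values() if q > 0)

def mi(p, a, b):
    """I(A;B) = H(A) + H(B) - H(A,B)."""
    return entropy_of(p, a) + entropy_of(p, b) - entropy_of(p, a + b)

def co_information(p, i=(0,), j=(1,), k=(2,)):
    """C = I(i;j) - I(i;j|k), with I(i;j|k) = I(i;jk) - I(i;k)."""
    return mi(p, i, j) - (mi(p, i, j + k) - mi(p, i, k))

# Outcomes are (x, s1, s2). Both systems are illustrative assumptions.
xor = {(s1 ^ s2, s1, s2): 0.25 for s1 in (0, 1) for s2 in (0, 1)}
copy = {(s, s, s): 0.5 for s in (0, 1)}

c_xor = co_information(xor)
c_copy = co_information(copy)
# Any assignment of the three variables to (i, j, k) gives the same value.
c_xor_perm = co_information(xor, i=(1,), j=(2,), k=(0,))
print(c_xor, c_copy, c_xor_perm)
```

The sign distinguishes the two extreme cases, but a system containing equal amounts of redundancy and synergy would yield zero, which is why a separate quantification is needed.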

More generally, [33] defined decompositions of the mutual information about a target X for any multivariate set of variables S. This general formulation relies on the definition of a general measure of redundancy and the construction of a redundancy lattice. In more detail, to decompose the information $I(X;S)$, [33] defined a source A as a subset of the variables in S and a collection $\alpha$ as a set of sources. They then introduced a measure of redundancy to quantify for each collection the redundancy between the sources composing the collection, and constructed a redundancy lattice, which reflects the relation between the redundancies of all different collections. Here, we will generically refer to the redundancy of a collection by $I(X;\alpha)$. Furthermore, following [46], we use a more concise notation than in [33]: for example, instead of writing $\{1\}\{23\}$ for the collection composed of the source containing variable 1 and the source containing variables 2 and 3, we write $1.23$, that is, we omit the curly brackets that indicate for each source the set of variables and instead use a dot to separate the sources. We will also refer to the single variables in S as primary sources when we want to specifically distinguish them from general sources that can contain several variables.

The work in [34] argued that a measure of redundancy should comply with the following axioms:

Symmetry: $I(X;\alpha)$ is invariant to the order of the sources in the collection.

Self-redundancy: The redundancy of a collection formed by a single source is equal to the mutual information of that source.

Monotonicity: Adding sources to a collection can only decrease the redundancy of the resulting collection, and redundancy is kept constant when adding a superset of any of the existing sources.

The monotonicity property allows introducing a partial ordering between the collections, which is reflected in the redundancy lattice. Self-redundancy links the lattice to the joint mutual information because at its top there is the collection formed by a single source including all the variables in S. Furthermore, the number of collections to be included in the lattice is limited by the fact that adding a superset of any source does not change redundancy. For example, the redundancy between the source 12 and the source 2 is all the information $I(X;2)$. The set of collections that can be included in the lattice is defined as:

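$$\mathcal{A}(S) = \{\alpha \in \mathcal{P}_1(\mathcal{P}_1(S)) : \forall A_i, A_j \in \alpha,\ A_i \not\subset A_j\} \tag{7}$$

where $\mathcal{P}_1(S) = \mathcal{P}(S) \setminus \{\emptyset\}$ denotes the set of nonempty subsets of S, so that $\mathcal{P}_1(\mathcal{P}_1(S))$ is the set of all nonempty subsets of the set of nonempty sources that can be formed from S. This domain reflects the symmetry axiom in that it does not distinguish the order of the sources. For this set of collections, [33] defined a partial ordering relation to construct the lattice:

$$\forall \alpha, \beta \in \mathcal{A}(S),\ \left(\alpha \preceq \beta \Leftrightarrow \forall B \in \beta,\ \exists A \in \alpha,\ A \subseteq B\right) \tag{8}$$

that is, for two collections $\alpha$ and $\beta$, $\alpha \preceq \beta$ if for each source in $\beta$ there is a source in $\alpha$ that is a subset of that source. This partial ordering relation is reflexive, transitive, and antisymmetric. In fact, the consistency of the redundancy measures with the partial ordering of the collections, that is, that $I(X;\alpha) \leq I(X;\beta)$ if $\alpha \preceq \beta$, represents a stronger condition than the monotonicity axiom. This is because the monotonicity axiom only considers the cases in which $\alpha$ is obtained from $\beta$ by adding more sources (e.g., $\alpha = 1.2.3$ and $\beta = 1.2$), while the partial ordering also comprises the removal of variables from sources (e.g., $\alpha = 1.2$ and $\beta = 1.23$, or $\alpha = 2.3$ and $\beta = 12.3$).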
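The domain of collections and the partial ordering just described can be enumerated directly for small S. The sketch below is an illustration under stated assumptions rather than the construction used in [33]: sources are represented as `frozenset`s of variable labels, collections as `frozenset`s of sources, and the helper names (`collections`, `leq`, `label`, `node`) are hypothetical.

```python
from itertools import combinations

def nonempty_subsets(items):
    """All nonempty subsets of items, each returned as a frozenset."""
    items = list(items)
    return [frozenset(c) for r in range(1, len(items) + 1)
            for c in combinations(items, r)]

def collections(S):
    """Collections of nonempty sources in which no source is a proper subset of another."""
    sources = nonempty_subsets(S)
    return [alpha for alpha in nonempty_subsets(sources)
            if not any(a < b for a in alpha for b in alpha)]

def leq(alpha, beta):
    """alpha precedes beta if every source in beta contains some source of alpha."""
    return all(any(a <= b for a in alpha) for b in beta)

def label(alpha):
    """Dot notation, e.g. the collection {1}{23} is printed as '1.23'."""
    return ".".join(sorted("".join(map(str, sorted(a))) for a in alpha))

def node(*sources):
    """Build a collection from tuples of variables, e.g. node((1,), (2, 3))."""
    return frozenset(frozenset(s) for s in sources)

bivariate = collections((1, 2))
trivariate = collections((1, 2, 3))
print(sorted(label(a) for a in bivariate))
print(len(trivariate))
print(leq(node((1,), (2,)), node((1, 2))))  # 1.2 lies below 12
```

The enumeration yields 4 nodes for two primary sources and 18 for three, matching the sizes of the bivariate and trivariate redundancy lattices.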

The mutual information multivariate decomposition was constructed in [33] by implicitly defining partial information measures associated with each node of the redundancy lattice, such that redundancy measures are obtained from the sum of partial information measures:

$$I(X;\alpha) = \sum_{\beta \in \downarrow\alpha} \Delta(X;\beta) \tag{9}$$

where $\Delta(X;\beta)$ denotes the partial information measure associated with node $\beta$, and $\downarrow\alpha$ refers to the set of collections lower than or equal to $\alpha$ in the partial ordering, and hence reachable by descending from $\alpha$ in the lattice. The partial information measures are obtained by inverting Equation (9), applying the Möbius inversion to the terms in the lattice [33]. Redundancy lattices for S being bivariate and trivariate are shown in Figure 1. As studied in [46], a mapping exists between the terms of the trivariate and bivariate PIDs, as indicated by the colors and labels.
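The inversion that recovers the partial information measures from the redundancy values can be sketched as a bottom-up recursion: each node's term is its redundancy minus the terms of all nodes strictly below it. The example below is a minimal illustration with the bivariate lattice hard-coded; the function name `mobius_inversion` and the numerical redundancy values are assumptions made for this sketch (they correspond to a hypothetical target that copies two independent bits, with a redundancy of 1 bit assigned to node 1.2).

```python
def mobius_inversion(redundancy, strictly_below, bottom_up_order):
    """Recover partial information terms from cumulative redundancy values.

    redundancy[node] is the redundancy of the collection at that node,
    strictly_below[node] lists the nodes strictly lower in the partial ordering,
    and bottom_up_order visits every node after all nodes below it.
    """
    delta = {}
    for n in bottom_up_order:
        delta[n] = redundancy[n] - sum(delta[b] for b in strictly_below[n])
    return delta

# Bivariate lattice: 1.2 at the bottom, then 1 and 2, and 12 at the top.
strictly_below = {"1.2": [], "1": ["1.2"], "2": ["1.2"], "12": ["1.2", "1", "2"]}
order = ["1.2", "1", "2", "12"]

# Illustrative values in bits (assumed, not taken from the source).
redundancy = {"1.2": 1.0, "1": 1.0, "2": 1.0, "12": 2.0}
delta = mobius_inversion(redundancy, strictly_below, order)
print(delta)
```

With these assumed values, the whole 2 bits of joint information are split into 1 bit of redundancy and 1 bit of synergy, with no unique information.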

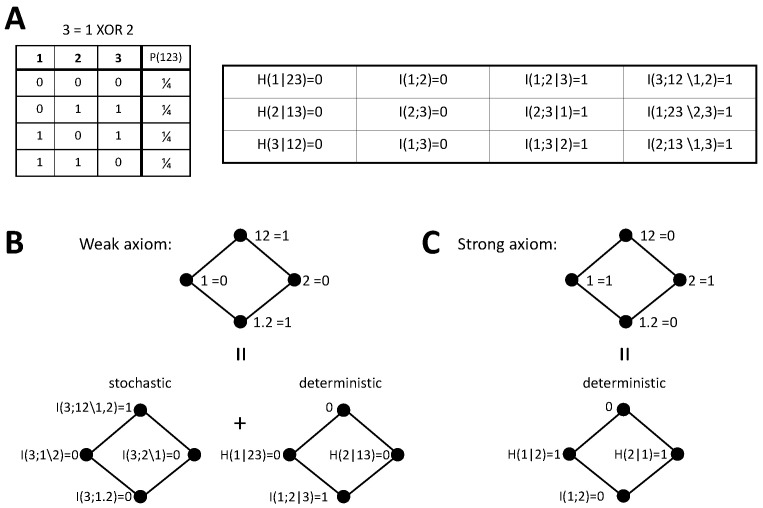

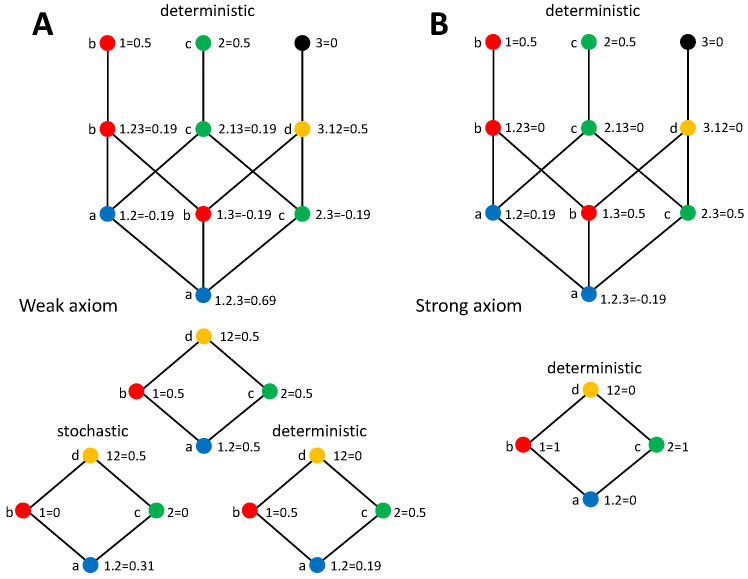

Figure 1.

Redundancy lattices of [33]. The lattices reflect the partial ordering defined by Equation (8). (A) Bivariate lattice corresponding to the decomposition of $I(X;12)$. (B) Trivariate lattice corresponding to the decomposition of $I(X;123)$. The color and label of the nodes indicate the mapping of partial information decomposition (PID) terms from the trivariate to the bivariate lattice; in particular, nodes with the same color in the trivariate lattice are accumulated in the corresponding node in the bivariate lattice.

An extra axiom, called the identity axiom, was later introduced by [35] specifically for the bivariate redundancy measure:

Identity axiom: For two sources $A_1$ and $A_2$, $I(A_1 \cup A_2; A_1.A_2)$ is equal to $I(A_1;A_2)$.

The work in [35] pointed out that with the original measure of redundancy of [33] a nonzero redundancy is obtained for two independent variables and a target being a copy of them, while a measure quantifying the amount of qualitatively common information and not the quantitatively equal amount of information should be zero in this case. The work in [38] has specifically differentiated between the identity axiom, which states the form of redundancy for any degree of dependence between the primary sources when the target is a copy of them, and a more specific property, namely the independent identity property:

Independent identity property: For two sources $A_1$ and $A_2$, $I(A_1;A_2) = 0 \Rightarrow I(A_1 \cup A_2; A_1.A_2) = 0$.

This means that the independent identity property is fulfilled whenever the identity axiom is fulfilled, but fulfilling the independent identity property does not necessarily imply fulfilling the identity axiom. Several alternative measures have been proposed that fulfill the identity axiom [35,36,37]. The properties of the PID terms have been characterized, either based on the axioms and the structure of the redundancy lattice [46,47], or also considering the properties of specific measures [37,41,42,44,48,49]. However, only for specific cases, such as multivariate Gaussian systems with univariate targets, has it been shown that several of the proposed measures are actually equivalent [50,51].
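The copy example that motivates the identity axiom can be reproduced numerically. The sketch below assumes that the original redundancy measure of [33] takes the form of the expected minimum specific information, $\sum_x p(x) \min_i I(X = x; A_i)$; this form, and the helper names `specific_information`, `i_min`, and `source_mutual_information`, are assumptions of this illustration and should be checked against [33]. The system is a target that copies two independent uniform bits.

```python
from collections import defaultdict
from math import log2

def specific_information(p, x, src):
    """I(X=x; A) = sum_a p(a|x) * log2(p(x|a) / p(x)) for a pmf p over (x, s1, s2)."""
    p_x = sum(q for o, q in p.items() if o[0] == x)
    p_a = defaultdict(float)   # marginal p(a) of the chosen source
    p_xa = defaultdict(float)  # joint p(x, a) for the fixed target value x
    for o, q in p.items():
        p_a[o[src]] += q
        if o[0] == x:
            p_xa[o[src]] += q
    return sum((q / p_x) * log2((q / p_a[a]) / p_x)
               for a, q in p_xa.items() if q > 0)

def i_min(p, sources=(1, 2)):
    """Expected minimum specific information over the given sources."""
    total = 0.0
    for x in {o[0] for o in p}:
        p_x = sum(q for o, q in p.items() if o[0] == x)
        total += p_x * min(specific_information(p, x, s) for s in sources)
    return total

def source_mutual_information(p):
    """Mutual information between the two sources."""
    p1, p2, p12 = defaultdict(float), defaultdict(float), defaultdict(float)
    for o, q in p.items():
        p1[o[1]] += q
        p2[o[2]] += q
        p12[(o[1], o[2])] += q
    return sum(q * log2(q / (p1[a] * p2[b])) for (a, b), q in p12.items() if q > 0)

# Target X = (S1, S2) is a copy of two independent uniform bits.
copy = {((s1, s2), s1, s2): 0.25 for s1 in (0, 1) for s2 in (0, 1)}
red = i_min(copy)
between_sources = source_mutual_information(copy)
print(red, between_sources)
```

Under these assumptions the measure assigns 1 bit of redundancy although the sources are independent, whereas the identity axiom would require the redundancy to equal the mutual information between the sources, which is zero.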

3. Stochasticity Axioms for Synergistic Information

In this section, we analyze the consequences of information identity criteria that, in the presence of deterministic target-source dependencies, identify pieces of information in the target by assuming that their identity is related to specific primary sources. As a first step, we formulate two axioms that impose constraints on synergistic information due to the presence of identity associations between variables within the target and the primary sources. Both axioms assume that, when a subset $X(i)$ of the target X can be completely determined by one primary source $i$ from the set of primary sources $S$, the identity of the bits of $X(i)$ is associated with $i$. This target-source identity association between $X(i)$ and $i$ then imposes constraints on the synergistic information that $i$ can provide about X combined with the other variables in S, because $i$ alone can already provide all the information about $X(i)$. That is, the amount of synergy is constrained by the degree of stochasticity of the target variables with respect to the sources. The strength of the synergy constraints varies between the two axioms, as we will describe below, and we distinguish them as the weak and strong axiom. We start by formulating conceptually the constraints that each axiom imposes on synergy, and subsequently, we will propose concrete constraints for the PID synergistic terms following from these axioms.

Weak axiom on stochasticity requirements for the presence of synergy: Any primary source $i$ that completely determines a subset $X(i)$ of variables of the target X does not provide information about $X(i)$ synergistically, since $i$ alone provides all the information about $X(i)$.

Strong axiom on stochasticity requirements for the presence of synergy: Any primary source $i$ that completely determines a subset $X(i)$ of variables of the target X does not provide information about $X(i)$ synergistically, since $i$ alone provides all the information about $X(i)$. Furthermore, $i$ can only provide synergistic information about the rest of the target, $X \setminus X(i)$, to the extent that there is some remaining uncertainty in both $X \setminus X(i)$ and $i$ after determining $X(i)$.

Both axioms impose a common conceptual constraint on the presence of synergy, and the strong axiom imposes an extra constraint. The difference between the two axioms, as we will see in Section 4, can be understood in terms of the order in which $i$ is used to obtain information about the target. According to the weak axiom, there are no constraints on the synergistic contributions of $i$ to the information about $X \setminus X(i)$. In contrast, the logic of the strong axiom is that, because $i$ alone already provides the information associated with the entropy $H(X(i))$, only if $H(X \setminus X(i)|X(i)) > 0$ and $H(i|X(i)) > 0$ can $i$ still provide some extra information about X, and only in this case can this information possibly be synergistic.

The axioms constrain synergy on the basis that an identity is assigned to the bits of information related to the uncertainty $H(X(i))$ as corresponding to source $i$. In general, in the presence of dependencies between the variables constituting the target, bits cannot be associated univocally with specific variables within the target. Therefore, the identification of the bits of $X(i)$ with source $i$ does not follow univocally from the joint distribution of the variables. The assignment of an identity to the different pieces of information determines the assessment of whether different sources provide the same information and thus determines the quantification of redundant, unique, and synergistic information. This means that this quantification will also in general depend on the criterion used to assign identity, and will not be reducible to an analysis of the dependencies that are present in the joint distribution.

For simplicity, we will from now on refer to the axioms as the weak or strong stochasticity axioms, or simply the weak or strong axiom. In order to render these axioms operative, we have to formalize their conceptual formulation into sets of constraints imposed on the synergistic PID terms. We now propose the concrete formalization of the axioms. For the weak axiom, we will propose constraints on synergistic PID terms resulting from the existence of functional dependencies of target variables on primary sources (Section 3.1). For the strong axiom, we will also propose constraints resulting from these general functional dependencies, and moreover, we will propose extra constraints specific for the case in which some of the sources themselves are contained in the target (Section 3.2). Finally, we will briefly discuss the motivation to study these axioms in our subsequent analyses, namely as a way to examine how information identity criteria determine the PID terms (Section 3.3).

3.1. Constraints on Synergistic PID Terms That Formalize the Weak Axiom

We propose the following constraints to formalize the weak axiom:

Constraints imposed by functional dependencies of target variables on primary sources: For a target X and a set of n variables (primary sources) $S$, consider the subsets $X(i)$ of X, $i = 1, \ldots, n$, such that $X(i)$ can be determined completely by the single primary source $i$. Define $X(S) = \bigcup_{i} X(i)$ as the subset of X determined by single primary sources, then the partial information terms $\Delta(X;\alpha)$ satisfy:

$$\Delta(X;\alpha) = \Delta(X \setminus X(S); \alpha) \quad \forall \alpha \notin \bigcup_{i \in S} \downarrow i \tag{10}$$

where $\downarrow i$ indicates the collections reachable by descending the lattice from node i, corresponding to primary source $i$.

The above means that the synergy about X is equal to the synergy in the lattice associated with the decomposition of the mutual information about a target that does not include the variables in $X(S)$ determined by single primary sources alone. This implies that the primary sources cannot have synergistic information about a part of the target that is deterministically related to any of them. However, if we define $S(X)$ as the subset of S comprising any primary source that determines some of the target variables (i.e., having a nonempty $X(i)$), the weak axiom does not prevent the variables in $S(X)$ from providing information about other parts of the target in a synergistic way. Conversely, the strong axiom imposes that the variables in $S(X)$ can only provide synergistic information to the extent that they are not themselves deterministically related to the variables in $X(S)$.

3.2. Constraints on Synergistic PID Terms that Formalize the Strong Axiom

We propose the following constraints as a formalization of the strong axiom. First, we propose general constraints for any system with functional dependencies of target variables on primary sources:

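Constraints imposed by functional dependencies of target variables on primary sources: For a target X and a set of n variables (primary sources) $S$, consider the subsets $X(i)$ of X, $i = 1, \ldots, n$, such that $X(i)$ can be determined completely by the single primary source $i$. Define $X(S) = \bigcup_{i} X(i)$ as the subset of X determined by single primary sources, then the partial information terms $\Delta(X;\alpha)$ satisfy:

$$\Delta(X;\alpha) = \Delta(X \setminus X(S); \alpha | X(S)) \quad \forall \alpha \notin \bigcup_{i \in S} \downarrow i \tag{11}$$

That is, the synergy about X is equal to the synergy in the lattice associated with the decomposition of the mutual information that S has about $X \setminus X(S)$ conditioned on $X(S)$. Note that the PID of $I(X \setminus X(S); S | X(S))$ is the same as the one of $I(X; S | X(S))$, and thus, $\Delta(X \setminus X(S); \alpha | X(S)) = \Delta(X; \alpha | X(S))$.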

Comparing Equations (10) and (11), we can outline an important difference between the PIDs derived from each axiom. Define $X'(S)$ as the variables in $X \setminus X(S)$ that can be determined as a function of $X(S)$. Because in Equation (11) the synergistic PID terms are related to the decomposition of $I(X \setminus X(S); S | X(S))$, given the conditioning on $X(S)$, these terms are invariant to a transformation of the target that removes from it all variables $X'(S)$, i.e., $X \rightarrow X \setminus X'(S)$. Note that $H(X)$ and $I(X;S)$ are themselves also invariant to this transformation. In contrast, according to Equation (10), the synergistic PID terms are related to the decomposition of $I(X \setminus X(S); S)$, which is not invariant to the removal of $X'(S)$ from $X \setminus X(S)$. As we will discuss in detail in Section 4.6, the invariance, or lack thereof, to this transformation plays an important role in the characterization of the notion of redundancy that underpins the PIDs, and in particular determines the sensitivity of the PID terms to the overall composition of the target, comprising semantic aspects beyond the statistical properties of the joint distribution of the target variables.
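The invariance of the entropy of the target and of the mutual information under the removal of deterministically determined target variables can be checked on a toy system. The sketch below is an illustration built on assumptions of our own: the target contains copies of two independent uniform binary sources together with their XOR, so that the XOR is a function of the copies but not of any single source, and the helper names are hypothetical.

```python
from collections import defaultdict
from math import log2

def entropy_of(p, idx):
    """Entropy in bits of the marginal of pmf p over the coordinates in idx."""
    marg = defaultdict(float)
    for outcome, prob in p.items():
        marg[tuple(outcome[i] for i in idx)] += prob
    return -sum(q * log2(q) for q in marg.values() if q > 0)

def mi(p, a, b):
    """I(A;B) = H(A) + H(B) - H(A,B)."""
    return entropy_of(p, a) + entropy_of(p, b) - entropy_of(p, a + b)

# Coordinates: 0 = copy of source 1, 1 = copy of source 2, 2 = XOR of the copies,
# 3 = source 1, 4 = source 2 (independent uniform bits; an assumed toy system).
p = {(s1, s2, s1 ^ s2, s1, s2): 0.25 for s1 in (0, 1) for s2 in (0, 1)}

full_target = (0, 1, 2)   # target including the XOR variable
reduced_target = (0, 1)   # target after removing the determined variable
sources = (3, 4)

h_full, h_reduced = entropy_of(p, full_target), entropy_of(p, reduced_target)
i_full, i_reduced = mi(p, full_target, sources), mi(p, reduced_target, sources)
print(h_full, h_reduced, i_full, i_reduced)
```

Both quantities are unchanged by the reduction, so any difference between PID terms computed for the full and the reduced target cannot be attributed to the statistics of the target alone.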

In general, Equation (11) only expresses the synergistic terms of the PID of $I(X;S)$ in terms of the synergistic terms of the PID of $I(X \setminus X(S); S | X(S))$, and these latter PID terms are themselves only specified after the definition of particular measures to implement the PID. However, in the more specific case where the primary sources in $S(X)$ are in fact contained in the target (i.e., $X(S) = S(X)$), the logic of the conceptual formulation of the strong axiom leads to more specific constraints on the synergistic terms. In particular, because $X(S) = S(X)$, then $H(S(X)|X(S)) = 0$, and the primary sources in $S(X)$ cannot provide other information about the target than the information about themselves. Since such information is already available without combining the sources in $S(X)$ with any other source, this implies that the primary sources in $S(X)$ do not provide any information about X synergistically. Therefore, we propose the following extra constraints for the synergistic PID terms specifically for the case in which $X(S) = S(X)$.

Constraints imposed by copies of the primary sources within the target: For a target X and a set of n variables (primary sources) $S$, consider the subset $X(S)$ formed by all variables in X that are a copy of one of the primary sources. Similarly, consider the subset $S(X)$ formed by all primary sources with a copy within the target, i.e., $X(S) = S(X)$. Then:

$$\Delta(X;\alpha) = 0 \quad \forall \alpha \notin \bigcup_{i \in S} \downarrow i \ \text{ such that } \ \exists A \in \alpha,\ A \cap S(X) \neq \emptyset \tag{12}$$

That is, there is no synergy for those nodes whose collection has a source A containing a variable from $S(X)$.
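To make the scope of this constraint concrete, the following sketch lists which nodes of the trivariate lattice it would affect when a single primary source has a copy within the target. It rests on two readings that are assumptions of this illustration: that the synergistic nodes are those not reachable by descending from any single-primary-source node (equivalently, collections containing no single-variable source), and that the constraint sets to zero every synergistic node with at least one source containing a copied variable. The helper names are hypothetical.

```python
from itertools import combinations

def nonempty_subsets(items):
    """All nonempty subsets of items, each returned as a frozenset."""
    items = list(items)
    return [frozenset(c) for r in range(1, len(items) + 1)
            for c in combinations(items, r)]

def collections(S):
    """Collections of nonempty sources in which no source is a proper subset of another."""
    sources = nonempty_subsets(S)
    return [alpha for alpha in nonempty_subsets(sources)
            if not any(a < b for a in alpha for b in alpha)]

def label(alpha):
    """Dot notation, e.g. the collection {12}{13} is printed as '12.13'."""
    return ".".join(sorted("".join(map(str, sorted(a))) for a in alpha))

def is_synergy_node(alpha):
    """True if the collection lies below no single-primary-source node."""
    return all(len(a) > 1 for a in alpha)

def zeroed_by_copy(alpha, copied):
    """True if a synergistic node has a source containing a copied primary source."""
    return is_synergy_node(alpha) and any(a & copied for a in alpha)

lattice = collections((1, 2, 3))
copied = frozenset({1})  # assumed case: only primary source 1 is copied in the target
synergy = sorted(label(a) for a in lattice if is_synergy_node(a))
zeroed = sorted(label(a) for a in lattice if zeroed_by_copy(a, copied))
unconstrained = sorted(set(synergy) - set(zeroed))
print(synergy)
print(unconstrained)
```

Under these readings, 8 of the 18 trivariate nodes are synergistic, and with source 1 copied in the target only node 23 remains free to carry synergy.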

Since we have separately proposed the constraints of Equations (11) and (12) from the conceptual formulation of the strong axiom, they constitute, for the case of $X(S) = S(X)$, complementary requirements that should be fulfilled by a PID to be compatible with the strong axiom. However, we will show that for those previously proposed measures that comply with Equation (12) at least for some class of systems [35,36,43], Equation (11) is consistently fulfilled (Appendix D). More generally, the constraints of Equation (12) can be derived from Equation (11) in the case of $X(S) = S(X)$ if an extra desirable property is imposed to construct the PIDs (Appendix A). This extra property requires that the PID of $I(X \setminus X(S); S | X(S))$ is equivalent to the PID of $I(X \setminus X(S); S \setminus S(X) | X(S))$.

Furthermore, although the distinction between a weak and a strong axiom is motivated by the fact that the strong axiom conceptually imposes an extra requirement for the presence of synergy, this hierarchical relation is not conferred by construction to the concrete constraints imposed on the synergistic PID terms. The PIDs depend on the specific definition of the measures used to construct them, and these measures are expected to comply with one or the other axiom, so that PIDs complying with the axioms cannot be compared on the same measures. However, in agreement with the conceptual formulation, synergistic PID terms are expected to be smaller under the strong axiom because, in $\Delta(X \setminus X(S); \alpha | X(S))$ (Equation (11)), for primary sources $i$ with a nonempty $X(i)$, the synergy that other primary sources may have with $i$ will already be partially accounted for by the combination of these other primary sources with $X(i)$, which is part of $X(S)$. See Appendix A for further details on the effect of conditioning on $X(S)$ for the specific case of $X(S) = S(X)$.

3.3. Using the Stochasticity Axioms to Examine the Role of Information Identity Criteria in the Mutual Information Decomposition

In this work, we study how deterministic relations between the target and the primary sources affect bivariate and trivariate PIDs, given the constraints that the two stochasticity axioms impose on the synergistic PID terms. Before turning to that analysis, we complete the general formulation of the axioms with some considerations about their role in this work and their generality.

Regarding their role, we do not introduce the stochasticity axioms in order to propose that they be added to the set of axioms that PID measures should satisfy. Instead, the axioms are introduced to study the implications of identifying different pieces of information based on the target-source identity associations that result from deterministic target-source dependencies. The final objective is to better understand the role of information identity criteria in the quantification of redundancy. As we will see, these axioms are instrumental in characterizing how the underlying information identity criterion can lead to negative PID terms. This characterization is also relevant in relation to previous studies because we prove that several previously proposed measures conform to the strong axiom generally [36] or at least for a wider class of systems [35,43] than the one concerned by the identity axiom.

Since our intention is not to formulate these axioms in their most general form, we have only considered their conceptual formulation, and propose concrete constraints to synergistic PID terms following from them, for the case in which there exist functional relations of target variables on single primary sources, that is, . The same logic could be applied to formulate the axioms more generally and propose further constraints regarding functional relations of target variables on a subset of S. The PID terms affected by these other functional relations differ from the ones involved in Equations (10)–(12). For example, the existence of a functional relation depending on sources 1 and 2, would constrain the synergy of 12 with other variables, but not the synergy between 1 and 2. We will not pursue this more general formulation of synergy constraints. Conversely, to further simplify the derivations, we will focus on cases where the target X contains some of the primary sources themselves, that is, when the target overlaps with the sources as . The more general formulation that considers target variables determined as a function of primary sources leads to the same main qualitative conclusions. All the general derivations in the rest of this work follow from the relations characteristic of the redundancy lattice (Equation (9)) and from the constraints to synergistic PID terms proposed following the axioms (Equations (10)–(12)). We do not need to select any specific measure of redundant, unique or synergistic information. For simplicity, from now on we will not distinguish between the conceptual formulation of the axioms and the constraints to synergistic PID terms proposed following from them, and we will refer to them as the weak and strong stochasticity axioms.

4. Bivariate Decompositions with Deterministic Target-Source Dependencies

We start with the bivariate case. Consider that the target X may have some overlap with the sources 1 and 2. Following the weak stochasticity axiom (Equation (10)), synergy is expressed as:

| (13) |

On the other hand, the strong stochasticity axiom (Equation (12)) implies that:

| (14) |

From these expressions of the synergistic terms, we will now derive how deterministic relations affect the other PID terms.

4.1. General Formulation

For both forms of the stochasticity axiom, we will derive expressions of unique and redundant information in the presence of a target-source overlap. These derivations follow the same procedure: First, given that unique and synergistic information are related to conditional mutual information by Equation (4), the synergy stochasticity axioms determine the form of the unique information terms. Second, once the unique information terms are derived, their relation to the mutual information together with the redundancy term (Equation (1)) allows identifying redundancy. For both unique and redundant information terms this procedure separates the PID term into stochastic and deterministic components. These stochastic and deterministic components quantify contributions associated with the information that the sources provide about the non-overlapping part of the target, , and the overlapping part, , respectively. However, how these components are combined depends on the order in which stochastic and deterministic target-source dependencies are partitioned. In particular, using the chain rule [45] of the mutual information, we can separate the information about the target in two different ways:

| (15a) |

| (15b) |

The first case considers the stochastic dependencies first and then the conditional deterministic dependencies. In the second case, this order is reversed. We will see that for each axiom only one of these partitioning orders leads to expressions that additively separate stochastic and deterministic components for each PID term. In contrast, the other partitioning order leads to cross-over components across PID terms, in particular to some PID terms being expressed in terms of the stochastic component of another PID term.
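As a numerical illustration of the two partitioning orders, the following sketch evaluates both chain-rule expansions for the XOR system analyzed in Section 4.4.1, with target 123 and sources 12. The helper names `H` and `I`, the index convention (positions 0, 1, 2 for variables 1, 2, 3), and the variable names are assumptions made for this example only; they are not part of the original formulation.

```python
from collections import defaultdict
from itertools import product
from math import log2

def H(p, idx):
    """Entropy in bits of the marginal of p over the positions in idx."""
    marg = defaultdict(float)
    for state, prob in p.items():
        marg[tuple(state[i] for i in idx)] += prob
    return -sum(q * log2(q) for q in marg.values() if q > 0)

def I(p, a, b, c=()):
    """Conditional mutual information I(a; b | c) in bits, from joint entropies."""
    a, b, c = set(a), set(b), set(c)
    return (H(p, sorted(a | c)) + H(p, sorted(b | c))
            - H(p, sorted(a | b | c)) - H(p, sorted(c)))

# XOR system: independent uniform inputs 1 and 2, output 3 = 1 XOR 2.
xor = {(a, b, a ^ b): 0.25 for a, b in product((0, 1), repeat=2)}

sources = (0, 1)   # primary sources 1 and 2
overlap = (0, 1)   # target variables that are copies of the sources
rest = (2,)        # non-overlapping target variable 3

total = I(xor, overlap + rest, sources)

# Stochastic dependencies first, then conditional deterministic ones.
stochastic_first = (I(xor, rest, sources), I(xor, overlap, sources, rest))

# Deterministic dependencies first, then conditional stochastic ones.
deterministic_first = (I(xor, overlap, sources), I(xor, rest, sources, overlap))

print(total)                # 2.0
print(stochastic_first)     # (1.0, 1.0)
print(deterministic_first)  # (2.0, 0.0)
```

Both orders add up to the same two bits, but they split them differently between the stochastic and deterministic summands, which is the property exploited in the derivations that follow.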

4.1.1. PIDs with the Weak Axiom

We start with the PID of derived from the weak axiom (Equation (13)). Consider the mutual information partitioning order of Equation (15a), which can be re-expressed as:

| (16) |

that is, the second summand corresponds to the conditional entropy of the overlapping target variables given the non-overlapping ones. We now proceed analogously for the PID terms. Since conditional mutual informations are the sum of a unique and a synergistic information component (Equation (4)), we have that:

| (17) |

The first equality indicates that unique information is conditional mutual information minus synergy. The second equality uses the chain rule to separate the conditional mutual information into stochastic and deterministic components, and applies the stochasticity axiom to remove the overlapping part of the target in the synergy term. Using again the relation between conditional mutual information and unique and synergistic terms (Equation (4)), but now for the target , we get:

| (18) |

where we also used that equals the entropy . Accordingly, the unique information of 1 can be separated into a stochastic component, the unique information about target , and a deterministic component, the entropy . This last term is zero if the target does not contain source 1. If it does, it quantifies the entropy that only 1 as a source can explain about itself as part of the target, which is thus an extra contribution to the unique information.

Once we have identified the stochastic and deterministic components of the unique information we can use Equation (1) to characterize the redundancy. Combining Equations (1) and (18), we obtain:

| (19) |

Therefore, it suffices that one of the two primary sources overlaps with the target for their conditional mutual information given the non-overlapping target variables to contribute to redundancy. Note that when then ; hence, the axiom has no effect on the redundancy.
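The steps described in words above can be written out as follows. This is only a sketch in an assumed shorthand: $X'$ denotes the non-overlapping part of the target, $X''$ the part of the target formed by copies of sources 1 and/or 2, and $U$ and $S$ denote unique and synergistic information. These symbols are introduced here for illustration, and the original equations may group the terms differently.

```latex
% Assumed shorthand (illustrative, not the original notation):
%   X'  : target variables that do not overlap with sources 1 and 2
%   X'' : target variables that are copies of source 1 and/or source 2
\begin{align}
U(X;1\setminus 2) &= I(X;1|2) - S(X;1.2) \nonumber\\
  &= I(X';1|2) + I(X'';1|2,X') - S(X';1.2) \nonumber\\
  &= U(X';1\setminus 2) + I(X'';1|2,X'), \\
I(X'';1|2,X') &=
  \begin{cases}
    H(1|2,X') & \text{if } 1 \in X'' \\
    0 & \text{otherwise,}
  \end{cases} \\
I(X;1.2) &= I(X;1) - U(X;1\setminus 2) \nonumber\\
  &= I(X';1.2) + I(X'';1|X') - I(X'';1|2,X') \nonumber\\
  &= I(X';1.2) + I(1;2|X') \quad \text{if } X'' \neq \emptyset .
\end{align}
```

The last line holds for any nonempty overlap: if $1 \in X''$ the difference is $H(1|X') - H(1|2,X')$, and if only $2 \in X''$ it is $I(2;1|X') - 0$; both equal $I(1;2|X')$.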

Following the same procedure, the unique and redundant information terms can also be derived by applying the other mutual information partitioning order (Equation (15b)). The resulting terms can be compared in Table 1 and are derived in more detail in Appendix B, where we also show the consistency between the expressions obtained with each partitioning order. We present in the upper part of the table the decompositions into stochastic and deterministic contributions for each PID term and for the two partitioning orders. To simplify the expressions, their form is shown only for the case of . With the alternative partitioning order, both the expressions of unique information and redundancy contain a cross-over component, namely the synergy about , instead of being expressed in terms of the unique information and redundancy of , respectively. Furthermore, the separation of the deterministic and stochastic components is not additive. This indicates that, while the chain rule holds for the mutual information, it is not guaranteed that the same type of separation holds separately for each PID term. Only for one partitioning order, the one in which stochastic dependencies are considered first, can the unique and redundant information terms derived from the weak axiom both be separated additively into a stochastic and a deterministic component without cross-over terms. We single out in the lower part of the table the deterministic PID components obtained from the partitioning order for which each PID term is separated additively into a stochastic and a deterministic component.

Table 1.

Decompositions of synergistic, unique, and redundant information terms into stochastic and deterministic contributions obtained assuming the weak stochasticity axiom. For each term we show the decompositions resulting from two alternative mutual information partitioning orders (Equation (15)), which are consistent with each other (see Appendix B). For the partitioning order leading to an additive separation of each partial information decomposition (PID) term into a stochastic and a deterministic component, we also single out the deterministic contributions . Synergy has only a stochastic component, according to the axiom (Equation (13)). Expressions of unique information come from Equations (18) and (A3), and the ones of redundancy from Equations (19) and (A5). The expressions have been simplified with respect to the equations, indicating their form for the case . The terms have analogous expressions for when a symmetry exists between i and j and are zero otherwise.

| Term | Decomposition |

|---|---|

| Term | Measure |

| 0 | |

4.1.2. PIDs with the Strong Axiom

The procedure to derive the unique and redundant PID terms is the same if the strong stochasticity axiom is assumed, but determining synergy with Equation (14) instead of Equation (13). To simplify the expressions we indicate in advance that if each PID term with target X is by definition equal to the one with target and we only provide expressions derived with some target-source overlap. In contrast to the weak axiom, with the strong axiom an additive separation of stochastic and deterministic components is obtained with the partitioning order of Equation (15b). See Appendix B for details about the other partitioning order. For the unique information the strong axiom implies that:

| (20) |

and for the redundancy:

| (21) |

As before, we summarize the PIDs in Table 2. Comparing Table 1 and Table 2, we see that the expressions obtained with the weak and strong axiom differ because of a cross-over contribution, corresponding to the synergy about , which is transferred from redundancy to unique information. This is due to the synergy constraints imposed by each axiom: the strong axiom imposes that there is no synergy, and hence this part of the information has to be transferred to the unique information because the sum of synergy and unique information is constrained to equal the conditional mutual information. As a consequence, redundancy is reduced by an equivalent amount to comply with the constraints that relate unique informations and redundancy to mutual informations (Equation (1)). Furthermore, like for the weak axiom, the chain rule property does not generally hold for each PID term separately. This has been previously proven for specific measures. The work in [42] provided a counterexample for the original redundancy measure of [33] () and for the one of [35] (). The work in [44] provided counterexamples for the redundancy and synergy measures of the decomposition based on maximum conditional entropy [36]. Our results prove this for any measure conforming to the stochasticity axioms. In particular, they show that the PID terms are consistent with the mutual information decompositions obtained applying the chain rule, but that, depending on the partitioning order and on the version of the axiom assumed, information contributions are redistributed between different PID terms, and between their stochastic and deterministic components.

Table 2.

Decompositions of synergistic, unique, and redundant information terms into stochastic and deterministic contributions obtained assuming the strong stochasticity axiom. The table is analogous to Table 1. Synergy is null according to the axiom (Equation (14)). Expressions of unique information come from Equations (A8) and (20), and the ones of redundancy from Equations (A9) and (21). Again, expressions are shown for the case , with the corresponding symmetries holding for and with terms equal to zero otherwise.

| Term | Decomposition |

|---|---|

| 0 | |

| Term | Measure |

| 0 | |

4.2. The Relation between the Stochasticity Axioms and the Identity Axiom

In the previous section, we derived how the two stochasticity axioms imply different expressions for the redundancy term. We now examine how these expressions are related to the redundancy term stated by the identity axiom [35]. It is straightforward to show that the identity axiom is subsumed by both stochasticity axioms:

Proposition 1.

The fulfillment of the synergy weak or strong stochasticity axioms implies the fulfillment of the identity axiom.

Proof.

If then and . If the weak stochasticity axiom holds, redundancy (Equation (19)) reduces to . If the strong stochasticity axiom holds, Equation (21) is already . ☐

Therefore, the stochasticity axioms represent two alternative extensions of the identity axiom: First, they do not only consider a target that is a copy of the primary sources, but a target with any degree of overlap or functional dependence with the primary sources. Second, they are not restricted to the bivariate case but are formulated for any number of primary sources. This means that their fulfillment imposes stricter conditions on the redundancy measures. Redundancy terms derived from each axiom coincide for the particular case that is addressed by the identity axiom, but more generally they differ. We will further discuss these differences below based on concrete examples.

4.3. How Different PID Measures Comply with the Stochasticity Axioms

We now investigate whether several proposed measures conform to the predictions of the stochasticity axioms. We examine the original redundancy measure of [33] (), the one based on the pointwise common change in surprisal of [38] (), the one based on maximum conditional entropy of [36] (), the one based on projected information of [35] (), and the one based on dependency constraints of [43] ().

It is well-known that the redundancy measure does not comply with the identity axiom [35]. Even if , a redundancy can be obtained. Nor does comply with the identity axiom. Since the fulfillment of the stochasticity axioms implies the fulfillment of the identity axiom, none of these measures complies with the stochasticity axioms.

On the other hand, , , and fulfill the identity axiom. We will show that always conforms to the strong stochasticity axiom. For , we will show that it complies with the strong axiom at least when some primary source is part of the target, i.e., . We will also show that complies with the strong axiom at least for the case of . This latter case is particularly relevant to examine nonnegativity (Section 5.3).

We proceed as follows: For , we now prove that it complies with Equation (12) specifically for the case in which some primary sources are also part of the target, which is the case considered throughout this work. The longer complete proof of compliance with Equation (11) for systems comprising any type of functional relation between parts of the target and single primary sources is left for Appendix C. For , we also provide here the proof of compliance with Equation (12) for the case of primary sources being part of the target. Again because of length reasons, the proof for is left for Appendix C. In all cases, we also prove in Appendix D that, for those cases herein studied in which these measures comply with Equation (12), Equation (11) is consistently fulfilled. We start with :

Proposition 2.

The PID associated with the redundancy measure [36] conforms to the synergy strong stochasticity axiom when some primary source is part of the target.

Proof.

Consider a target X and two sources 1 and 2. The redundancy measure is defined as:

| (22) |

where the co-information is maximized within the family of distributions that preserves the marginals and . We will now show that conforms to Equation (21) when . It is a general property following from the definition of the co-information (Equation (5)) that, if either 1 or 2 are in X, that is, , then . Further, because preserves and , it suffices that so that is preserved. This means that is preserved and for all . This leads to . Given that for any valid bivariate PID one of the four PID terms already determines the other PID terms, because they have to comply with Equations (1) and (4), this shows that the PID equals the one derived from the strong axiom. ☐

We now continue with the proof for :

Proposition 3.

The PID associated with the redundancy measure [43] conforms to the synergy strong stochasticity axiom when some primary source is part of the target.

Proof.

The work in [43] defined unique information based on the construction of a dependency constraints lattice in which constraints to maximum entropy distributions are hierarchically added. The unique information is defined as the least increase in the information when adding the constraint of preserving the distribution to the list of constraints imposed to the maximum entropy distribution Q. This results in the following expression for , according to Appendix B of [43]:

| (23) |

where indicates the conditional mutual information for the maximum entropy distribution preserving and , and analogously for . Now, consider that some source is part of the target, in particular, without loss of generality, that . In this case , and preserving and implies preserving the joint distribution , given that 1 is part of X. This means that . Furthermore, . Since the unique information is , which already determines the PID, and in particular leads to the redundancy being . ☐

4.4. Illustrative Systems

So far, we have derived the predictions for the PIDs according to each version of the stochasticity axiom, pointed out the relation with the identity axiom, and checked how different previously proposed measures conform to these predictions. We now analyze concrete examples to further examine the implications of our axioms for the PIDs. In particular, we reconsider two examples that have been previously studied in [42,44], namely the decompositions of the mutual information about a target jointly formed by the inputs and the output of a logical XOR operation or of an AND operation (see Figure 2A and Figure 3A, respectively). We first describe the decompositions obtained; then, in Section 4.5, we discuss these decompositions in the light of the underlying assumptions on how to assign an identity to different pieces of information of the target. The deterministic components for these examples are derived without assuming any specific measure of redundant, unique, or synergistic information. The stochastic components have already been studied previously, and some of the terms depend on the measures selected to compute the PID terms. We will indicate previous work examining these terms when required.

Figure 2.

Bivariate decomposition of for the XOR system. (A) Joint distribution of the inputs 1 and 2 and the output 3 for the XOR operation. We also collect the value of the information-theoretic quantities used to calculate this bivariate decomposition and the trivariate decomposition in Section 5.2. (B) Bivariate decomposition derived from the weak stochasticity axiom. Stochastic and deterministic components are separated in agreement with Table 1. (C) Bivariate decomposition derived from the strong axiom. Only deterministic components are present, following Table 2.

Figure 3.

Bivariate decomposition of for the AND system. The structure of the figure is analogous to Figure 2. (A) Joint distribution of the inputs 1 and 2 and the output 3 for the AND operation. (B) Bivariate decomposition derived from the weak stochasticity axiom. (C) Bivariate decomposition derived from the strong axiom.

4.4.1. XOR

We first examine the XOR system. Consider an output variable 3 determined through the operation , resulting in the joint probability displayed in Figure 2A. We also indicate the values of the information-theoretic measures needed to calculate the PID bivariate decompositions studied here and that will also serve for the trivariate decompositions addressed in Section 5.2. We want to examine the decomposition of , where the target is composed of the three variables. For each version of the stochasticity axiom we will focus on the mutual information partitioning order that allows separating additively a stochastic and a deterministic component of each PID term.

Since , for the weak axiom the PID (Figure 2B) can be obtained by implementing the decomposition of and separately calculating the deterministic PID components as collected in Table 1. As indicated in [37], the decomposition of for the XOR operation can be derived without adopting any particular redundancy measure, just using Equations (1) and (4) and the axioms of [34] described in Section 2. Furthermore, the same value is obtained with , which does not fulfill the nonnegativity axiom. There is no stochastic component of redundancy or unique information because for , and synergy contributes one bit of information. Regarding the deterministic components, redundancy has 1 bit because . The deterministic unique information components are zero because for , and according to the axiom, there is no deterministic component of synergy.

In the case of the strong axiom (Figure 2C), since both primary sources overlap with the target, only deterministic components are larger than zero in the decomposition when selecting the partitioning order that additively separates stochastic and deterministic contributions, as indicated in Table 2. By assumption, there is no synergy. Since , the redundancy is also zero and all the information is contained in the unique information terms. As pointed out for the generic expressions, the two decompositions differ in the transfer of the stochastic component of synergy to unique information, which in turn forces an equivalent transfer from redundancy to unique information.
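The two XOR decompositions described above can be checked numerically with a short sketch. The component formulas below are our reading of the text for this specific system, with deterministic redundancy I(1;2|3) and deterministic unique terms H(1|2,3) and H(2|1,3) under the weak axiom, and redundancy I(1;2) with unique terms H(1|2) and H(2|1) under the strong axiom. The helper functions, dictionary keys, and index convention (positions 0, 1, 2 for variables 1, 2, 3) are assumptions made for illustration.

```python
from collections import defaultdict
from itertools import product
from math import log2

def H(p, idx):
    """Entropy in bits of the marginal of p over the positions in idx."""
    marg = defaultdict(float)
    for state, prob in p.items():
        marg[tuple(state[i] for i in idx)] += prob
    return -sum(q * log2(q) for q in marg.values() if q > 0)

def I(p, a, b, c=()):
    """Conditional mutual information I(a; b | c) in bits, from joint entropies."""
    a, b, c = set(a), set(b), set(c)
    return (H(p, sorted(a | c)) + H(p, sorted(b | c))
            - H(p, sorted(a | b | c)) - H(p, sorted(c)))

xor = {(a, b, a ^ b): 0.25 for a, b in product((0, 1), repeat=2)}
one, two, three = (0,), (1,), (2,)

# Weak axiom. Stochastic part: I(3;1) = I(3;2) = 0, so nonnegative redundancy
# and unique terms about 3 are zero and the stochastic synergy equals I(3;12).
weak = {
    "redundancy": 0.0 + I(xor, one, two, three),           # 0 + I(1;2|3)
    "unique_1": 0.0 + H(xor, (0, 1, 2)) - H(xor, (1, 2)),  # 0 + H(1|2,3)
    "unique_2": 0.0 + H(xor, (0, 1, 2)) - H(xor, (0, 2)),  # 0 + H(2|1,3)
    "synergy": I(xor, three, one + two),                   # I(3;12), no deterministic part
}

# Strong axiom: no synergy; only deterministic components remain.
strong = {
    "redundancy": I(xor, one, two),                # I(1;2)
    "unique_1": H(xor, (0, 1)) - H(xor, two),      # H(1|2)
    "unique_2": H(xor, (0, 1)) - H(xor, one),      # H(2|1)
    "synergy": 0.0,
}

total = I(xor, (0, 1, 2), (0, 1))  # I(123;12)
print(weak, strong, total)
```

Both dictionaries sum to the two bits of the joint mutual information: one bit of redundancy and one of synergy under the weak axiom, and one bit of unique information per source under the strong axiom.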

4.4.2. AND

As a second example, we now consider the AND system. Following the weak axiom, again the decomposition can be obtained by implementing the PID of and separately calculating the deterministic PID components from Table 1, using the joint distribution of inputs and output displayed in Figure 3A. The PID of for the AND operation has also already been characterized and coincides for [33], [35], and [36]. However, in contrast to the XOR case, this decomposition depends on the redundancy measure used and for example differs for . Each PID term contributes half a bit to . Unique contributions come exclusively from the deterministic components. Each unique information amounts to half a bit because the output and one input determine the other input only when not both have a value of 0. Redundancy is also half a bit, but it comes in part from a stochastic component and in part from a deterministic one. The stochastic component appears intrinsically because of the AND mechanism, even if the inputs are independent. This type of redundancy has been called mechanistic redundancy [35]. The deterministic component appears because, although the inputs are independent, conditioned on the output . The synergy was also previously determined [33,35,36]. This PID differs from the one obtained with the weak axiom for the XOR example. Conversely, with the strong axiom the decomposition is the same as for the XOR example, because it is completely determined by . This latter decomposition is again in agreement with the arguments of [42,44] based on the identity axiom.
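The half-bit values quoted for the AND system under the weak axiom can be reproduced with the sketch below. It relies on one explicit assumption taken from the statement that unique contributions come exclusively from the deterministic components: the stochastic unique information about 3 is set to zero, so that the stochastic redundancy equals I(3;1) and the stochastic synergy equals I(3;12) minus that redundancy. Helper names, dictionary keys, and the index convention (positions 0, 1, 2 for variables 1, 2, 3) are illustrative.

```python
from collections import defaultdict
from itertools import product
from math import log2

def H(p, idx):
    """Entropy in bits of the marginal of p over the positions in idx."""
    marg = defaultdict(float)
    for state, prob in p.items():
        marg[tuple(state[i] for i in idx)] += prob
    return -sum(q * log2(q) for q in marg.values() if q > 0)

def I(p, a, b, c=()):
    """Conditional mutual information I(a; b | c) in bits, from joint entropies."""
    a, b, c = set(a), set(b), set(c)
    return (H(p, sorted(a | c)) + H(p, sorted(b | c))
            - H(p, sorted(a | b | c)) - H(p, sorted(c)))

and_ = {(a, b, a & b): 0.25 for a, b in product((0, 1), repeat=2)}
one, two, three = (0,), (1,), (2,)

# Stochastic part, PID of I(3;12). ASSUMPTION: zero stochastic unique information,
# hence redundancy = I(3;1) and synergy = I(3;12) - I(3;1).
red_stoch = I(and_, three, one)
syn_stoch = I(and_, three, one + two) - red_stoch

weak = {
    "redundancy": red_stoch + I(and_, one, two, three),  # stochastic + I(1;2|3)
    "unique_1": H(and_, (0, 1, 2)) - H(and_, (1, 2)),    # H(1|2,3)
    "unique_2": H(and_, (0, 1, 2)) - H(and_, (0, 2)),    # H(2|1,3)
    "synergy": syn_stoch,
}

# Strong axiom: fully determined by I(1;2), as in the XOR example.
strong = {
    "redundancy": I(and_, one, two),               # I(1;2)
    "unique_1": H(and_, (0, 1)) - H(and_, two),    # H(1|2)
    "unique_2": H(and_, (0, 1)) - H(and_, one),    # H(2|1)
    "synergy": 0.0,
}

total = I(and_, (0, 1, 2), (0, 1))  # I(123;12)
print({k: round(v, 3) for k, v in weak.items()})
print({k: round(v, 3) for k, v in strong.items()})
```

Under this assumption the redundancy of half a bit splits into roughly 0.311 bits of stochastic (mechanistic) redundancy and 0.189 bits of deterministic redundancy.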

4.5. Implications of Target-Source Identity Associations for the Quantification of Redundant, Unique, and Synergistic Information

Each version of the stochasticity axiom implies a different quantification of redundancy. We now examine in more detail how these different quantifications are related to the notion of redundancy as common information about the target that can be obtained by observing either source alone. The key point is how identity is assigned to different pieces of information in order to assess which information about the target carried by the sources is qualitatively common to the sources. In particular, the logic of the strong axiom is that if a source is part of the target it cannot provide any information about the target other than the information about itself. As a consequence, if the other source does not contain information about the former source, this information is unique. This logic rests on the assumption that when there is a copy of a primary source in the target we can identify and separate the bits of information about that copy from the information about the rest of the target. The idea of assigning an identity to bits of information in the target by associating them with specific variables also motivated the introduction of the identity axiom. Although this axiom was formulated for sources with any degree of dependence, its motivation [35] was mainly based on the case of independent sources, that is, the particular case considered by the independent identity property. For that case, we can identify the bits of information associated with variable 1 and the ones with variable 2, and thus redundancy, which should quantify the qualitatively equal information shared among the sources and not merely common amounts of information, has to be null.

However, assigning an identity to pieces of information in the target is in general less straightforward. For example, in the XOR system, with target 123 and sources 1 and 2, we have two target-source identity associations, namely between each source and its copy in the target. However, the two bits of 123 cannot be identified as belonging to a certain variable, because of the conditional dependencies between the variables. The only information identity criterion that seems appropriate in this case to identify the two bits is the following: the bit that any first variable provides alone, and the bit that a second variable provides combined with the first. This lack of correspondence between pieces of information and individual variables is incompatible with the identification of the pieces of information based on the target-source identity associations that are formalized by the stochasticity axioms. To show this, we now consider different combinations of mutual information partitioning orders for and and show how, if the assignment of identity to the bits in the target 123 is based on target-source identity associations, the interpretation of redundant and unique information is ambiguous. First, consider that we decompose the information of each primary source as follows:

| (24) |

If we assume that we can identify the bit of information carried by each primary source about the target using the target-source identity associations, these decompositions would suggest that there is no redundant information. This is because each source only carries one bit of information about its associated copy within the target and for the XOR system. However, keeping the same decomposition of , we can consider alternative decompositions of :

| (25a) |

| (25b) |

The redundancy and unique information terms should not depend on how we apply the chain rule to . However, in contrast to Equation (24), the first decomposition of Equation (25a) suggests, based on the target-source identity associations, that there is redundancy between sources 1 and 2. In particular, in Equation (25a) can be interpreted as information that source 2 provides about the copy of source 1 within the target, thus redundant with the information = in Equation (24) that source 1 has about its copy. The second decomposition in Equation (25b) further challenges the interpretation of redundancy and unique information based on the assignment of an identity to bits of information in the target given their association with the overlapping target variables. Given , source 2 provides information about 3. However, the bit of 3 is shared with the copies of 1 and 2 within the target, given the conditional dependencies of the XOR system. Moreover, the information in is information that source 2 provides about 3 after conditioning on the copy of source 1 within the target, so that the target-source identity association of 1 suggests that both sources are combined to retrieve this information. Note that for both and in Equation (25), we expressed the information in terms of the entropy of the target variable, 1 and 3, respectively, because it is the identity of the pieces of information within the target that determines their assignment to a certain PID term.

In summary, when using the target-source identity associations to identify pieces of information, different partitioning orders of the mutual information ambiguously suggest that the same information can be obtained uniquely, redundantly, or even in a synergistic way. These problems arise because, in contrast to the case of with independent sources, in the XOR system the two bits of 123 cannot be identified as belonging to a certain variable, and thus the target-source identity associations between the variables cannot identify the bits unambiguously.
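The ambiguity summarized above can be made concrete by expanding the information that source 2 carries about the XOR target 123 with the chain rule in three different orders, of the kind compared in Equations (24) and (25). The sketch below is illustrative: the helper functions, the labels of the orderings, and the index convention (positions 0, 1, 2 for variables 1, 2, 3) are assumptions, and the orderings are not claimed to match the original equations term by term.

```python
from collections import defaultdict
from itertools import product
from math import log2

def H(p, idx):
    """Entropy in bits of the marginal of p over the positions in idx."""
    marg = defaultdict(float)
    for state, prob in p.items():
        marg[tuple(state[i] for i in idx)] += prob
    return -sum(q * log2(q) for q in marg.values() if q > 0)

def I(p, a, b, c=()):
    """Conditional mutual information I(a; b | c) in bits, from joint entropies."""
    a, b, c = set(a), set(b), set(c)
    return (H(p, sorted(a | c)) + H(p, sorted(b | c))
            - H(p, sorted(a | b | c)) - H(p, sorted(c)))

xor = {(a, b, a ^ b): 0.25 for a, b in product((0, 1), repeat=2)}
one, two, three = (0,), (1,), (2,)

# Three chain-rule expansions of I(123; 2); each list holds the summands in order.
orders = {
    # copy of source 2 first: I(2;2) + I(13;2|2)
    "copy of 2 first": [I(xor, two, two), I(xor, one + three, two, two)],
    # output first: I(3;2) + I(1;2|3) + I(2;2|1,3)
    "3, then 1, then 2": [I(xor, three, two), I(xor, one, two, three),
                          I(xor, two, two, one + three)],
    # other input first: I(1;2) + I(3;2|1) + I(2;2|1,3)
    "1, then 3, then 2": [I(xor, one, two), I(xor, three, two, one),
                          I(xor, two, two, one + three)],
}

for label, terms in orders.items():
    print(label, terms, sum(terms))
```

Every ordering sums to the same single bit, but that bit appears in the term about the copy of 2, about the copy of 1 given 3, or about 3 given the copy of 1, depending on the order chosen.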

The differences in the quantification of redundancy with each stochasticity axiom are related to the alternative interpretations of identity discussed for Equations (24) and (25). A notion of redundancy compatible with the weak axiom considers the common information about the target that can be obtained by observing either source alone or conditioned on variables in the target, which means that redundancy depends on the overall composition of the target. Indeed, the deterministic component of redundancy comprises the conditional dependence of the sources given the rest of the target, , when there is a target-source overlap, and thus fits to Equation (25a), where the term appears. Conversely, with the strong axiom, when there is a target-source overlap, redundancy equals independently of , in agreement with Equation (24). We will now further discuss the implications of this independence or dependence of redundancy on the overall composition of the target.

4.6. The Notion of Redundancy and the Identity of Target Variables

We showed above that enforcing the identification of the bits of 123 based on target-source identity associations between the variables leads to ambiguous interpretations of whether this information is retrieved redundantly, uniquely, or synergistically, depending on the partitioning order used to decompose the target. That is, the ambiguity arises because we consider 1, 2, and 3 as three separate variables within the target, which furthermore can be observed sequentially in any order, and not only simultaneously. The possibility to separately observe these variables is not relevant to quantify their entropy or the mutual information , but, as we will argue below, it is potentially relevant to determine the PID terms.

In particular, for both the XOR and AND systems, 3 is completely determined by 12, so that and . That is, the entropy and mutual information do not depend on whether we consider 3 as a separate variable or it is removed from the target. We can then ask how the assignment of the two bits to the PID terms depends on reducing the target 123 to 12. We repeat the comparison of different partitioning orders of and of Section 4.5 but now after this reduction. For the only possible partitioning orders are:

| (26) |

and analogously for . Since , in all cases each source retrieves information about its associated copy in the target, and thus all information is contained in the unique information terms. Therefore, with the reduction of 123 to 12, the decompositions obtained are consistent with the ones derived from the strong axiom, which effectively also reduces 123 to 12 since the decomposition is independent of when 12 is part of the target. Indeed, [44] derived for the AND system the same decomposition as with the strong axiom using the measure and the reduction of 123 to 12.

The consistency between the strong axiom and the reduction of 123 to 12 is also reflected in the equality between the PIDs derived from the strong axiom for the XOR and AND systems. This is because the distributions of the targets 123 of these systems are isomorphic, i.e., one can be mapped to the other by relabeling the states, and are indistinguishable after the reduction to 12. However, the decompositions of the XOR and AND systems differ when derived with the weak axiom, and are not consistent with the reduction of 123 to 12. This is because with the weak axiom the PID terms have components in which 12 is explicitly separated from 3, in particular the redundancy contains the terms and I(3;1.2) (Table 1).
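The invariance of the target entropy under the reduction of 123 to 12, and the relabeling that maps one target onto the other, can be checked numerically. The following is a minimal sketch, assuming that 1 and 2 are independent uniform binary variables and that 3 is their XOR or AND. The helper names `joint`, `entropy`, and `relabel` are hypothetical and introduced only for illustration.

```python
from collections import defaultdict
from itertools import product
from math import log2

def joint(gate):
    # Joint distribution of (x1, x2, x3) with x3 = gate(x1, x2).
    # Assumption: x1 and x2 are independent, uniform binary variables.
    return {(a, b, gate(a, b)): 0.25 for a, b in product((0, 1), repeat=2)}

def entropy(p, idx):
    # Shannon entropy (in bits) of the marginal over the positions in idx.
    marg = defaultdict(float)
    for state, prob in p.items():
        marg[tuple(state[i] for i in idx)] += prob
    return -sum(q * log2(q) for q in marg.values() if q > 0)

xor = joint(lambda a, b: a ^ b)
and_ = joint(lambda a, b: a & b)

# Removing variable 3 leaves the entropy of the target unchanged.
for name, p in (("XOR", xor), ("AND", and_)):
    print(name, "H(123) =", entropy(p, (0, 1, 2)), "H(12) =", entropy(p, (0, 1)))

# Relabeling of target states that maps the XOR target onto the AND target.
relabel = {(a, b, a ^ b): (a, b, a & b) for a, b in product((0, 1), repeat=2)}
mapped = {relabel[s]: q for s, q in xor.items()}
print("XOR target relabeled equals AND target:", mapped == and_)
```

Under these assumptions both targets carry two bits before and after the reduction, so any difference between the decompositions of the two systems has to come from how 3 is treated as a separate variable, not from the entropy of the target.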

Therefore, an important difference between the redundancy measures derived from the two stochasticity axioms regards their invariance to transformations of the target consisting in the removal of the variables within it that are completely determined by copies of the primary sources contained in the target. We will in general call this type of invariance TSC (target to sources copy) reduction invariance. Because the removal of these variables does not alter the entropy of the target, the mutual information is TSC reduction invariant. The lack of TSC reduction invariance implies that the redundancy depends on semantic aspects of the joint probability distribution of the target, related to the identity of the variables. The reason why the redundancy measure following from the weak axiom depends on these semantic aspects, while the measure following from the strong axiom does not, can be understood from how the identification of pieces of information based on the target-source variable associations is later used to constrain synergy in each case. With the weak axiom, the bits of identified with the primary source due to the presence of within the target are constrained to be non-synergistic in nature when the primary sources provide information about . In contrast, the weak axiom imposes no restriction on synergy about . However, if there is some dependence between the variables and (i.e., ), part of the bits of are shared by the variables . This means that the same bits that are constrained to be non-synergistic in nature when the primary sources provide information about are still allowed to be synergistic when the primary sources provide information about . Therefore, it is the identity of the variables about which the primary sources provide information that determines whether the same bits are subjected to the synergy constraints or not. Because synergistic terms depend on the semantic aspects of the probability distribution, redundancy terms inherit this dependence through their relation as terms of the PID. In contrast, in the case of the strong axiom, once the identity of the bits of is associated with the primary source due to the presence of within the target, the fact that due to these bits can also be associated with is not considered, and they are constrained to be non-synergistic in nature without any consideration of the identity of the target variables about which the primary sources provide information.

Whether or not redundancy is invariant to the TSC reduction has implications for other properties of the PIDs. In particular, it plays a crucial role in the counterexamples provided by [42,44] to prove that nonnegativity and left monotonicity are not compatible with the independent identity property. We will address in detail the counterexample of nonnegativity after studying trivariate PIDs with the stochasticity axioms, in Section 5. With regard to left monotonicity, it is useful to recall that [44] assumed the invariance of when reducing 123 to 12 to prove that left monotonicity is violated in the decomposition of of the AND system, because . As can be seen in Figure 3, although we have that with the strong axiom, the opposite holds with the weak axiom, for which the invariance under reduction of 123 to 12 does not hold.

More generally, the TSC reduction is just one type of isomorphism of the target to which entropy and mutual information are always invariant. The comparison of the decompositions obtained with the two stochasticity axioms raises the question of whether we should expect the PIDs to be invariant to isomorphisms of the target, as the entropy and mutual information are. This question is intrinsically related to the role assigned to information identity in the notion of redundancy. Two aspects of this notion would justify a lack of invariance. First, the assessment of redundancy implies assigning an identity to pieces of information, and this identity can change depending on the variables included in the target. For example, for the target 123 in the XOR and AND systems, if 1, 2, and 3 are taken as variables that can be observed separately and sequentially, the bits of 123 cannot be identified as belonging to a certain variable, because of the conditional dependence . However, after the reduction of 123 to 12, the two bits can each be associated with a single variable of the target because . Second, mechanistic redundancy can only be assessed when explicitly considering the mechanism of the input-output deterministic relation generating 3 from 12. This mechanism is not preserved under isomorphic transformations, and the information about it is lost when reducing 123 to 12 for the XOR and AND systems. These two arguments highlight the role of information identity in quantifying redundancy, and indicate that requiring or not that the redundancy measures be invariant to target isomorphisms requires further specifying which underlying notion of redundancy is being quantified.
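The contrast between the dependence of 1 and 2 with and without 3 can be made concrete with a short computation. This is a minimal sketch, assuming independent uniform binary inputs 1 and 2; the choice of the quantities I(1;2|3) and I(1;2) as the relevant ones is our reading of the argument above, and the helper names `joint`, `H`, and `cond_mi` are hypothetical.

```python
from collections import defaultdict
from itertools import product
from math import log2

def joint(gate):
    # Assumption: x1 and x2 are independent, uniform bits; x3 = gate(x1, x2).
    return {(a, b, gate(a, b)): 0.25 for a, b in product((0, 1), repeat=2)}

def H(p, idx):
    # Shannon entropy (in bits) of the marginal over the positions in idx.
    marg = defaultdict(float)
    for state, prob in p.items():
        marg[tuple(state[i] for i in idx)] += prob
    return -sum(q * log2(q) for q in marg.values() if q > 0)

def cond_mi(p, a, b, c=()):
    # I(A;B|C) = H(A,C) + H(B,C) - H(A,B,C) - H(C); with c=() this is I(A;B).
    return H(p, a + c) + H(p, b + c) - H(p, a + b + c) - H(p, c)

xor = joint(lambda a, b: a ^ b)
and_ = joint(lambda a, b: a & b)

for name, p in (("XOR", xor), ("AND", and_)):
    print(name,
          "I(1;2) =", round(cond_mi(p, (0,), (1,)), 4),
          "I(1;2|3) =", round(cond_mi(p, (0,), (1,), (2,)), 4))
```

With these assumptions, 1 and 2 are independent when considered alone but become dependent once 3 is observed, by one bit for XOR and by roughly 0.19 bits for AND, which is why the bits of 123 cannot be attributed to single variables when 3 is kept as a separate variable.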

5. Trivariate Decompositions with Deterministic Target-Source Dependencies

We now extend the analysis to the trivariate case. This is particularly relevant because, in contrast to the bivariate case, it has been proven that, in the multivariate case, the PIDs that jointly comply with the monotonicity and the identity axioms do not guarantee the nonnegativity of the PID terms [42]. In particular, [42] used the XOR example we reconsidered above as a counterexample to show that PID terms can be negative. The work in [41] reexamined this counterexample, indicating that the independent identity property, which is a weaker condition than the identity axiom, already implies the existence of negative terms. Therefore, we would like to extend the general formulation of Section 4.1 to the trivariate case, and thus apply it to further examine the XOR and AND examples by identifying each component of the trivariate decomposition of and not only of the decomposition of .

5.1. General Formulation

While in the bivariate lattice there is a single PID term that involves synergistic information, in the trivariate lattice of Figure 1B, all nodes that are not reached descending from 1, 2, or 3 imply by definition synergistic information, and the nodes of the form too. This is because these nodes correspond to collections containing sources composed of several primary sources, and hence quantify information only obtained by combining primary sources. The weak and strong axioms impose constraints on these terms given Equations (10) and (11), respectively.

5.1.1. PIDs with the Weak Axiom

We begin with the weak stochasticity axiom, for a target X and three primary sources 1, 2, and 3. Particularizing the general constraints of the weak axiom (Equation (10)) to the trivariate case, and separating the stochastic and deterministic components of the PID terms as in Table 1, that is, as , Equation (10) can be expressed as:

| (27) |

To characterize the remaining deterministic contributions to PID terms, analogously to the bivariate case, we apply the mutual information chain rule to separate stochastic and deterministic dependencies. Again we focus on the partitioning order that considers first the stochastic dependencies, since only this order leads to an additive separation of stochastic and deterministic components for each PID term. With this partitioning order, we obtain:

| (28) |

Following derivations analogous to the ones of Section 4.1 (see Appendix E), if a certain primary source i does not overlap with the target, the nodes that can only be reached descending from its corresponding node will not have a deterministic component. Accordingly, deterministic contributions are further restricted by:

| (29) |

This can be understood by examining the term in Equation (28). For example, suppose that the target includes 1 and 2 but not 3. Then the entropy in Equation (28) is , corresponding to . The PID terms that can be reached descending from 3 and not from 1 or 2 are and (see Figure 1B). The first quantifies information that can only be obtained from 3, and not from 12. The second is information that can be obtained from 3 or from 12, but not from 1 or 2 alone. However, the information can be obtained from either 1 or 2 alone, so there is no information exclusive to 12. This means that and do not contribute to the decomposition of .

Using the condition of Equation (29), we can follow the same procedure as in Section 4.1 to derive the expressions of all the deterministic components of the trivariate PID. These terms are collected in Table 3, and we leave the detailed derivations and discussion for Appendix E. Their expressions are indicated for the case in which variable i is part of the target and are symmetric with respect to j or k when this symmetry is characteristic of a certain PID term, or vanish otherwise, consistently with Equation (29).

Table 3.

Deterministic components of the PID terms for the trivariate decomposition derived from the weak stochasticity axiom. All terms not included in the table have no deterministic component due to the axiom. These expressions correspond to the case in which the primary source i overlaps with the target. If i does not overlap, and are zero, while the other terms depend on their characteristic symmetry for the other variables j and k, and vanish if none of the variables with the corresponding symmetry overlaps with the target. See the main text and Appendix E for details.

| Term | Measure |
|---|---|

The first two terms and are nonnegative, the former because it is an entropy and the latter because according to the axiom adding a new source can only reduce synergy. However, for the terms and it is not guaranteed that they are nonnegative. For , we will see examples of negative values below. For , the conditional co-information can be negative if there is synergy between the primary sources when conditioning on the non-overlapping target variables, and this can happen when there is no synergy about the target, leading to a negative value. Therefore, following the weak stochasticity axiom, the PID cannot ensure the nonnegativity of all terms when deterministic target-source dependencies are in place. We will further discuss this limitation after examining the full trivariate decomposition for the XOR and AND examples.
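The sign behavior of the co-information invoked here can be illustrated with the simplest unconditioned case. This is a minimal sketch, assuming the convention C(1;2;3) = I(1;2) - I(1;2|3), under which negative values indicate synergy. It is not the conditional co-information of Table 3 itself, and the two toy distributions and the helper names `H` and `coinfo` are chosen only for illustration.

```python
from collections import defaultdict
from math import log2

def H(p, idx):
    # Shannon entropy (in bits) of the marginal over the positions in idx.
    marg = defaultdict(float)
    for state, prob in p.items():
        marg[tuple(state[i] for i in idx)] += prob
    return -sum(q * log2(q) for q in marg.values() if q > 0)

def coinfo(p):
    # Assumed convention: C(1;2;3) = I(1;2) - I(1;2|3).
    i12 = H(p, (0,)) + H(p, (1,)) - H(p, (0, 1))
    i12_given_3 = H(p, (0, 2)) + H(p, (1, 2)) - H(p, (0, 1, 2)) - H(p, (2,))
    return i12 - i12_given_3

# Purely synergistic dependence: x3 = x1 XOR x2 with uniform independent inputs.
xor = {(a, b, a ^ b): 0.25 for a in (0, 1) for b in (0, 1)}
# Purely redundant dependence: three copies of the same uniform bit.
copy = {(a, a, a): 0.5 for a in (0, 1)}

print("co-information, XOR :", coinfo(xor))
print("co-information, copy:", coinfo(copy))
```

In this toy setting the XOR distribution gives a co-information of minus one bit and the copy distribution gives plus one bit, which illustrates how a co-information term appearing in a PID component can take that component below zero when the dependence it captures is synergistic.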

5.1.2. PIDs with the Strong Axiom

With the strong axiom, not only deterministic but also stochastic components of synergy are restricted. There cannot be any synergistic contribution that involves a source overlapping with the target. Equation (12) can be applied with . Furthermore, since synergistic terms have to vanish not only for the terms of the trivariate lattice but also of any bivariate lattice associated with it, given the mapping of PID terms between these lattices (Figure 1), this implies that in the trivariate lattice the PID terms of the form are also constrained. For the trivariate case, there is only one situation in which synergistic contributions can be nonzero in the presence of a target-source overlap, namely when only one variable overlaps. Consider that only variable 1 is part of the target. Since there cannot be any synergy involving 1, all synergistic PID terms contained in , , or have to vanish, and also and . It can be checked that this includes all synergistic terms except and . The former quantifies synergy about other target variables and the latter synergy redundant with the information of 1 itself. With more than one primary source overlapping with the target, all synergistic terms have to vanish for the trivariate case.

As for the weak axiom, we leave the derivations for Appendix E. The deterministic PID terms are collected in Table 4, again for simplicity showing their expressions for the case in which i overlaps with the target. The form of the expressions respects the symmetries of each term. For example, if j instead of i overlaps with the target then . Note however that, because when i overlaps, if both i and j overlap then . See Appendix E for further details.

Table 4.

Deterministic components of the PID terms for the trivariate decomposition derived from the strong stochasticity axiom. All terms not included in the table have no deterministic component due to the axiom. Again, the expressions shown here correspond to the case in which the source i overlaps with the target. For we further consider that neither j nor k overlap with the target, and otherwise this term vanishes. If i does not overlap, is zero, while the other terms depend on their characteristic symmetry for the other variables j and k and vanish otherwise. See the main text and Appendix E for details.

| Term | Measure |
|---|---|