Abstract

We derive a lower bound on the differential entropy of a log-concave random variable X in terms of the p-th absolute moment of X. The new bound leads to a reverse entropy power inequality with an explicit constant, and to new bounds on the rate-distortion function and the channel capacity. Specifically, we study the rate-distortion function for log-concave sources and distortion measure $d(x, \hat{x}) = |x - \hat{x}|^r$, with $r \ge 1$. We establish that the difference between the rate-distortion function and the Shannon lower bound is at most $\log(\sqrt{\pi e}) \approx 1.5$ bits, independently of r and the target distortion d. For mean-square error distortion, the difference is at most $\log(\sqrt{\pi e/2}) \approx 1$ bit, regardless of d. We also provide bounds on the capacity of memoryless additive noise channels when the noise is log-concave. We show that the difference between the capacity of such channels and the capacity of the Gaussian channel with the same noise power is at most $\log(\sqrt{\pi e/2}) \approx 1$ bit. Our results generalize to the case of a random vector X with possibly dependent coordinates. Our proof technique leverages tools from convex geometry.

Keywords: differential entropy, reverse entropy power inequality, rate-distortion function, Shannon lower bound, channel capacity, log-concave distribution, hyperplane conjecture

1. Introduction

It is well known that the differential entropy among all zero-mean random variables with the same second moment is maximized by the Gaussian distribution:

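$$h(X) \le \frac{1}{2}\log\left(2\pi e\, \mathbb{E}[X^2]\right). \tag{1}$$

More generally, the differential entropy under a p-th moment constraint is upper bounded as follows (see e.g., [1] (Appendix 2)). For $p > 0$,

$$h(X) \le \log\left(\alpha_p \|X\|_p\right), \tag{2}$$

where

$$\alpha_p = 2 e^{1/p}\, \Gamma\!\left(1 + \frac{1}{p}\right) p^{1/p}. \tag{3}$$

Here, $\Gamma$ denotes the Gamma function and $\|X\|_p = (\mathbb{E}|X|^p)^{1/p}$. Of course, if $p = 2$, then $\alpha_p = \sqrt{2\pi e}$, and Equation (2) reduces to Equation (1). A natural question is whether a matching lower bound on $h(X)$ can be found in terms of the p-norm of X, $\|X\|_p$. Without additional assumptions on the density of X the question is meaningless, because $h(X) = -\infty$ is possible even if $\|X\|_p$ is finite. In this paper, we show that if the density of X, $f_X$, is log-concave (that is, $\log f_X$ is concave), then $h(X)$ stays within a constant of the upper bound in Equation (2) (see Theorem 3 in Section 2 below):

$$h(X) \ge \log \frac{2\,\|X - \mathbb{E}[X]\|_p}{\Gamma(p+1)^{1/p}}, \tag{4}$$

where $p \ge 1$. Moreover, the bound (4) tightens for $p = 2$, where we have

$$h(X) \ge \frac{1}{2}\log\left(4\,\mathrm{Var}[X]\right). \tag{5}$$

The bound (4) actually holds for $p > -1$ if, in addition to being log-concave, X is symmetric (that is, $f_X(x) = f_X(-x)$) (see Theorem 1 in Section 2 below).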
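The upper bound (2) and the lower bound (4) can be checked on closed-form examples. The following minimal sketch evaluates both bounds for a Laplace and a Gaussian source, using the known entropies $\log(2eb)$ and $\frac12\log(2\pi e s^2)$. The helper names (`alpha`, `entropy_upper`, `entropy_lower`) and the parameter values are illustrative assumptions and do not come from the paper. For these two sources the upper bound (2) is attained at $p = 1$ and $p = 2$, respectively.

```python
import math

def alpha(p: float) -> float:
    """alpha_p = 2 e^{1/p} Gamma(1 + 1/p) p^{1/p}, as in (3); requires p > 0."""
    return 2.0 * math.exp(1.0 / p) * math.gamma(1.0 + 1.0 / p) * p ** (1.0 / p)

def entropy_upper(norm_p: float, p: float) -> float:
    """Upper bound (2): log(alpha_p * ||X||_p), in nats."""
    return math.log(alpha(p) * norm_p)

def entropy_lower(centered_norm_p: float, p: float) -> float:
    """Lower bound (4): log(2 ||X - E X||_p / Gamma(p + 1)^{1/p}), in nats."""
    return math.log(2.0 * centered_norm_p / math.gamma(p + 1.0) ** (1.0 / p))

# Laplace(0, b): h = log(2 e b) and ||X||_1 = b, so (2) is tight at p = 1.
b = 1.7
h_laplace = math.log(2.0 * math.e * b)

# Gaussian N(0, s^2): h = 0.5 log(2 pi e s^2) and ||X||_2 = s, so (2) is tight at p = 2.
s = 0.6
h_gauss = 0.5 * math.log(2.0 * math.pi * math.e * s**2)

print("Laplace, p = 1:", entropy_lower(b, 1.0), "<=", h_laplace, "<=", entropy_upper(b, 1.0))
print("Gaussian, p = 2:", entropy_lower(s, 2.0), "<=", h_gauss, "<=", entropy_upper(s, 2.0))
print("Gaussian, bound (5):", 0.5 * math.log(4.0 * s**2), "<=", h_gauss)
```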

The class of log-concave distributions is rich and contains important distributions in probability, statistics and analysis. The Gaussian distribution, the Laplace distribution, the uniform distribution on a convex set, and the chi distribution are all log-concave. The class of log-concave random vectors is well behaved under natural probabilistic operations. In particular, a famous result of Prékopa [2] states that sums of independent log-concave random vectors, as well as marginals of log-concave random vectors, are log-concave. Furthermore, log-concave distributions have moments of all orders.

Together with the classical bound in Equation (2), the bound in (4) tells us that entropy and moments of log-concave random variables are comparable.

Using a different proof technique, Bobkov and Madiman [3] recently showed that the differential entropy of a log-concave X satisfies

| (6) |

Our results in (4) and (5) tighten (6), in addition to providing a comparison with other moments.

Furthermore, this paper generalizes the lower bound on differential entropy in (4) to random vectors. If the random vector $X = (X_1, \ldots, X_n)$ consists of independent random variables, then the differential entropy of X equals the sum of the differential entropies of its components, and one can trivially apply (4) component-wise to obtain a lower bound on $h(X)$. In this paper, we show that the same kind of bound holds even for dependent components: as long as the density of the random vector X is log-concave and satisfies a symmetry condition, its differential entropy is bounded from below in terms of the covariance matrix of X (see Theorem 4 in Section 2 below). As noted in [4], such a generalization is related to the famous hyperplane conjecture in convex geometry. We also extend our results to a more general class of random variables, namely, the class of γ-concave random variables, with $\gamma < 0$.

The bound (4) on the differential entropy allows us to derive reverse entropy power inequalities with explicit constants. The fundamental entropy power inequality of Shannon [5] and Stam [6] states that for all independent continuous random vectors X and Y in $\mathbb{R}^n$,

$$N(X + Y) \ge N(X) + N(Y), \tag{7}$$

where

$$N(X) = e^{\frac{2}{n}h(X)} \tag{8}$$

denotes the entropy power of X. It is of interest to characterize the distributions for which a reverse form of (7) holds. In this direction, Bobkov and Madiman [7] showed the following. Given any continuous log-concave random vectors X and Y in $\mathbb{R}^n$, there exist affine volume-preserving maps $u_1, u_2$ such that a reverse entropy power inequality holds for $u_1(X)$ and $u_2(Y)$:

$$N\big(u_1(X) + u_2(Y)\big) \le c\,\big(N(X) + N(Y)\big), \tag{9}$$

for some universal constant $c$ (independent of the dimension).

In applications, it is important to know the precise value of the constant c that appears in (9). It was shown by Cover and Zhang [8] that, if X and Y are identically distributed (possibly dependent) log-concave random variables, then

$$N(X + Y) \le 4N(X) = 2\big(N(X) + N(Y)\big). \tag{10}$$

Inequality (10) easily extends to random vectors (see [9]). A similar bound for the difference of i.i.d. log-concave random vectors was obtained in [10], and reads as

| (11) |

Recently, a new form of reverse entropy power inequality was investigated in [11], and a general reverse entropy power-type inequality was developed in [12]. For further details, we refer to the survey paper [13]. In Section 5, we provide explicit constants for uncorrelated (possibly dependent) log-concave random vectors that need not be identically distributed. In particular, we prove that as long as log-concave random variables X and Y are uncorrelated,

$$N(X + Y) \le \frac{\pi e}{2}\big(N(X) + N(Y)\big). \tag{12}$$

A generalization of (12) to arbitrary dimension is stated in Theorem 8 in Section 2 below.

The bound (4) on the differential entropy is essential in the study of the difference between the rate-distortion function and the Shannon lower bound, which we describe next. Given a nonnegative number d, the rate-distortion function under the r-th moment distortion measure is given by

$$R(d) = \inf_{P_{\hat{X}|X}:\ \mathbb{E}|X - \hat{X}|^r \le d} I(X; \hat{X}), \tag{13}$$

where the infimum is over all transition probability kernels $P_{\hat{X}|X}$ satisfying the moment constraint. The celebrated Shannon lower bound [14] states that the rate-distortion function is lower bounded by

$$R(d) \ge R_{\mathrm{SLB}}(d) := h(X) - \log\left(\alpha_r\, d^{1/r}\right), \tag{14}$$

where $\alpha_r$ is defined in (3). For mean-square distortion ($r = 2$), (14) simplifies to

$$R(d) \ge h(X) - \frac{1}{2}\log(2\pi e\, d). \tag{15}$$

The Shannon lower bound states that the rate-distortion function is lower bounded by the difference between the differential entropy of the source and a term, $\log(\alpha_r d^{1/r})$, that increases with the target distortion d. It thereby explicitly links the storage requirements for X to the information content of X (measured by $h(X)$) and to the desired reproduction distortion d. As shown in [15,16,17] under progressively less stringent assumptions (Koch [17] showed that (16) holds as long as $H(\lfloor X \rfloor) < \infty$), the Shannon lower bound is tight in the limit of low distortion,

$$\lim_{d \to 0}\big(R(d) - R_{\mathrm{SLB}}(d)\big) = 0. \tag{16}$$

The speed of convergence in (16) and its finite blocklength refinement were recently explored in [18]. Due to its simplicity and its tightness in the high-resolution/low-distortion limit, the Shannon lower bound can serve as a proxy for the rate-distortion function $R(d)$, which rarely has an explicit representation. Furthermore, the tightness of the Shannon lower bound at low d is linked to the optimality of simple lattice quantizers [18], an insight with evident practical significance. Gish and Pierce [19] showed that, for mean-square error distortion, the difference between the entropy rate of a scalar quantizer, $H_1$, and the rate-distortion function $R(d)$ converges to $\frac{1}{2}\log\frac{2\pi e}{12} \approx 0.254$ bit/sample in the limit $d \to 0$. Ziv [20] proved that $\tilde{H}_1 - R(d)$ is bounded by $\frac{1}{2}\log\frac{4\pi e}{12} \approx 0.754$ bit/sample, universally in d, where $\tilde{H}_1$ is the entropy rate of a dithered scalar quantizer.

In this paper, we show that the gap between $R(d)$ and $R_{\mathrm{SLB}}(d)$ is bounded universally in d, provided that the source density is log-concave. For mean-square error distortion ($r = 2$ in (13)), we have

$$R(d) - R_{\mathrm{SLB}}(d) \le \log\sqrt{\frac{\pi e}{2}} \approx 1.05 \text{ bits}. \tag{17}$$

Besides leading to the reverse entropy power inequality and the reverse Shannon lower bound, the new bounds on the differential entropy allow us to bound the capacity of additive noise memoryless channels, provided that the noise follows a log-concave distribution.

The capacity of a channel that adds a memoryless noise Z is given by (see e.g., [21] (Chapter 9))

$$C_Z(P) = \sup_{X:\ \mathbb{E}[X^2] \le P} I(X; X + Z), \tag{18}$$

where P is the power allotted for the transmission. As a consequence of the entropy power inequality (7) (or, more elementarily, of the worst additive noise lemma, see [22,23]), it holds that

$$C_Z(P) \ge C_{Z^*}(P) = \frac{1}{2}\log\left(1 + \frac{P}{\mathrm{Var}[Z]}\right) \tag{19}$$

for arbitrary noise Z, where $C_{Z^*}(P)$ denotes the capacity of the additive white Gaussian noise channel with noise variance $\mathrm{Var}[Z]$. This fact is well known (see e.g., [21] (Chapter 9)) and is referred to as the saddle-point condition.

In this paper, we show that, whenever the noise Z is log-concave, the difference between the capacity $C_Z(P)$ and the capacity of a Gaussian channel with the same noise power satisfies

$$0 \le C_Z(P) - C_{Z^*}(P) \le \log\sqrt{\frac{\pi e}{2}} \approx 1.05 \text{ bits}. \tag{20}$$

Let us mention a similar result by Zamir and Erez [24], who showed that the capacity of an arbitrary memoryless additive noise channel is well approximated by the mutual information between the Gaussian input and the output of the channel:

$$C_Z(P) - I(X^*; X^* + Z) \le \frac{1}{2} \text{ bit}, \tag{21}$$

where $X^* \sim \mathcal{N}(0, P)$ is a Gaussian input satisfying the power constraint. The bounds (20) and (21) are not directly comparable.

The rest of the paper is organized as follows. Section 2 presents and discusses our main results: the lower bounds on differential entropy in Theorems 1, 3 and 4, the reverse entropy power inequalities with explicit constants in Theorems 7 and 8, the upper bounds on the gap between the rate-distortion function and the Shannon lower bound in Theorems 9 and 10, and the bounds on the capacity of memoryless additive channels in Theorems 12 and 13. The convex geometry tools used to prove the bounds on differential entropy in Theorems 1, 3 and 4 are presented in Section 3. In Section 4, we extend our results to the class of γ-concave random variables. The reverse entropy power inequalities in Theorems 7 and 8 are proven in Section 5. The bounds on the rate-distortion function in Theorems 9 and 10 are proven in Section 6. The bounds on the channel capacity in Theorems 12 and 13 are proven in Section 7.

2. Main Results

2.1. Lower Bounds on the Differential Entropy

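A function $f: \mathbb{R}^n \to [0, +\infty)$ is log-concave if $\log f: \mathbb{R}^n \to [-\infty, +\infty)$ is a concave function. Equivalently, f is log-concave if for every $\lambda \in [0, 1]$ and for every $x, y \in \mathbb{R}^n$, one has

$$f\big((1 - \lambda)x + \lambda y\big) \ge f(x)^{1-\lambda} f(y)^{\lambda}. \tag{22}$$

We say that a random vector X in $\mathbb{R}^n$ is log-concave if it has a probability density function $f_X$ with respect to Lebesgue measure in $\mathbb{R}^n$ such that $f_X$ is log-concave.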

Our first result is a lower bound on the differential entropy of a symmetric log-concave random variable in terms of its moments.

Theorem 1.

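Let X be a symmetric log-concave random variable. Then, for every $p > -1$,

$$h(X) \ge \log\frac{2\,\|X\|_p}{\Gamma(p+1)^{1/p}}. \tag{23}$$

Moreover, (23) holds with equality for the uniform distribution in the limit $p \to -1$.

As we will see in Theorem 3, for $p = 2$, the bound (23) tightens as

$$h(X) \ge \frac{1}{2}\log\left(4\,\mathrm{Var}[X]\right). \tag{24}$$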

The difference between the upper bound in (2) and the lower bound in (23) grows as $\log p$ as $p \to +\infty$ and as $\log\sqrt{1/p}$ as $p \to 0$. It reaches its minimum value of $\log(e) \approx 1.4$ bits at $p = 1$.
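The norms cancel in the difference between (2) and (23), so the gap depends only on p and equals $\log\big(\alpha_p\, \Gamma(p+1)^{1/p}/2\big)$. The following sketch evaluates that expression on a grid to illustrate the stated behaviour. The grid, its range, and the function name `gap_bits` are arbitrary illustrative choices.

```python
import math

def gap_bits(p: float) -> float:
    """Gap between the upper bound (2) and the lower bound (23), in bits, for p > 0.

    The norms cancel, leaving log2(alpha_p * Gamma(p + 1)^{1/p} / 2).
    """
    alpha_p = 2.0 * math.exp(1.0 / p) * math.gamma(1.0 + 1.0 / p) * p ** (1.0 / p)
    return math.log2(alpha_p * math.gamma(p + 1.0) ** (1.0 / p) / 2.0)

grid = [k / 100 for k in range(10, 1001)]  # p = 0.10, 0.11, ..., 10.00
p_best = min(grid, key=gap_bits)
print("minimising p on the grid:", p_best, "gap:", gap_bits(p_best), "log2(e):", math.log2(math.e))

# The gap grows at both ends of the range of p.
for p in (0.01, 0.1, 10.0, 100.0):
    print(p, gap_bits(p))
```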

The next theorem, due to Karlin, Proschan and Barlow [25], shows that the moments of a symmetric log-concave random variable are comparable. It also shows that the bound in Theorem 1 tightens as $p \downarrow -1$.

Theorem 2.

Let X be a symmetric log-concave random variable. Then, for every $-1 < p \le q$,

$$\frac{\|X\|_q}{\Gamma(q+1)^{1/q}} \le \frac{\|X\|_p}{\Gamma(p+1)^{1/p}}. \tag{25}$$

Moreover, the Laplace distribution satisfies (25) with equality [25].

Combining Theorem 2 with the well-known fact that $\|X\|_p$ is non-decreasing in p, we deduce that for every symmetric log-concave random variable X and for every $-1 < p \le q$,

$$\|X\|_p \le \|X\|_q \le \frac{\Gamma(q+1)^{1/q}}{\Gamma(p+1)^{1/p}}\,\|X\|_p. \tag{26}$$
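Theorem 2 says that $p \mapsto \|X\|_p/\Gamma(p+1)^{1/p}$ is non-increasing on $(-1, \infty)$ for symmetric log-concave X. The following sketch checks this numerically for three closed-form examples: Laplace ($\mathbb{E}|X|^p = b^p\Gamma(p+1)$, for which the ratio is constant), standard Gaussian ($\mathbb{E}|X|^p = 2^{p/2}\Gamma(\frac{p+1}{2})/\sqrt{\pi}$), and uniform on $[-1, 1]$ ($\mathbb{E}|X|^p = 1/(p+1)$). The grid skips $p = 0$, where the ratio is only defined as a limit. The dictionary keys and the grid are illustrative choices.

```python
import math

def ratio(abs_moment, p: float) -> float:
    """||X||_p / Gamma(p+1)^{1/p} = (E|X|^p / Gamma(p+1))^{1/p}, for p > -1, p != 0."""
    return (abs_moment(p) / math.gamma(p + 1.0)) ** (1.0 / p)

# Closed-form absolute moments E|X|^p of three symmetric log-concave laws.
moments = {
    "laplace(b=2)": lambda p: 2.0 ** p * math.gamma(p + 1.0),
    "gaussian(0,1)": lambda p: 2.0 ** (p / 2) * math.gamma((p + 1) / 2) / math.sqrt(math.pi),
    "uniform[-1,1]": lambda p: 1.0 / (p + 1.0),
}

ps = [k / 10 for k in range(-9, 61) if k != 0]  # p in (-1, 6], skipping p = 0
for name, m in moments.items():
    values = [ratio(m, p) for p in ps]
    non_increasing = all(x >= y - 1e-12 for x, y in zip(values, values[1:]))
    print(name, "non-increasing:", non_increasing, "first:", values[0], "last:", values[-1])
```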

Using Theorem 1 and (24), we immediately obtain the following upper bound on the relative entropy between a symmetric log-concave random variable X and a Gaussian $G_X$ with the same variance as X.

Corollary 1.

Let X be a symmetric log-concave random variable. Then, for every $p > -1$,

(27) where , and

(28)

Remark 1.

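The uniform distribution achieves equality in (27) in the limit $p \to -1$. Indeed, if U is uniformly distributed on a symmetric interval and $G_U$ is a Gaussian random variable with the same variance as U, then

$$D(U \,\|\, G_U) = \log\sqrt{\frac{\pi e}{6}}, \tag{29}$$

and so, in the limit $p \to -1$, the upper bound in Corollary 1 coincides with the true value of $D(U \,\|\, G_U)$: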

(30)
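Remark 1 can be illustrated numerically. The following sketch computes $D(U\|G_U) = h(G_U) - h(U)$ exactly for U uniform on $[-a, a]$, and compares it with the bound obtained by replacing $h(U)$ with the right-hand side of (23). It does not reproduce the exact form of (27). The gap between the two equals $\frac{1}{p}\log\Gamma(p+2)$, which vanishes as $p \to -1$. The half-width `a` and the list of p values are arbitrary choices.

```python
import math

a = 3.0                  # half-width of [-a, a]; the result does not depend on a
h_u = math.log(2.0 * a)  # h(U) in nats
var_u = a**2 / 3.0       # Var[U]

# Exact relative entropy to the variance-matched Gaussian: h(G_U) - h(U).
d_exact = 0.5 * math.log(2.0 * math.pi * math.e * var_u) - h_u

def d_upper(p: float) -> float:
    """h(G_U) minus the lower bound (23) evaluated at U, for p > -1, p != 0."""
    norm_p = a / (p + 1.0) ** (1.0 / p)  # ||U||_p = a (p + 1)^{-1/p}
    lower = math.log(2.0 * norm_p / math.gamma(p + 1.0) ** (1.0 / p))
    return 0.5 * math.log(2.0 * math.pi * math.e * var_u) - lower

print("D(U||G_U) in bits:", d_exact / math.log(2),
      "vs log2 sqrt(pi e / 6):", 0.5 * math.log2(math.pi * math.e / 6))
for p in (2.0, 0.5, -0.5, -0.9, -0.99, -0.999):
    print(p, d_upper(p) / math.log(2))  # decreases towards D(U||G_U) as p -> -1
```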

We next provide a lower bound for the differential entropy of log-concave random variables that are not necessarily symmetric.

Theorem 3.

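Let X be a log-concave random variable. Then, for every $p \ge 1$,

$$h(X) \ge \log\frac{2\,\|X - \mathbb{E}[X]\|_p}{\Gamma(p+1)^{1/p}}. \tag{31}$$

Moreover, for $p = 2$, the bound (31) tightens as

$$h(X) \ge \frac{1}{2}\log\left(4\,\mathrm{Var}[X]\right). \tag{32}$$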
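Theorem 3 can be checked on a source that is not symmetric. The following sketch uses $X \sim \mathrm{Exp}(1)$, for which $h(X) = 1$ nat, $\mathbb{E}[X] = \mathrm{Var}[X] = 1$ and $\mathbb{E}|X - 1| = 2/e$. The central moment is obtained by splitting at the mean: $\mathbb{E}|X-1|^p = e^{-1}\big(\int_0^1 u^p e^u\,du + \Gamma(p+1)\big)$. The remaining integral is computed with a composite Simpson rule. The choice of source, the quadrature and the helper names are assumptions made for illustration.

```python
import math

def simpson(f, a: float, b: float, n: int = 2000) -> float:
    """Composite Simpson rule on [a, b] with n (even) subintervals."""
    h = (b - a) / n
    s = f(a) + f(b)
    s += 4 * sum(f(a + (2 * k - 1) * h) for k in range(1, n // 2 + 1))
    s += 2 * sum(f(a + 2 * k * h) for k in range(1, n // 2))
    return s * h / 3

def central_abs_moment_exp1(p: float) -> float:
    """E|X - 1|^p for X ~ Exp(1), split at the mean x = 1 (p >= 1)."""
    # Over [0, 1]: integral of (1 - x)^p e^{-x} dx = e^{-1} * integral of u^p e^{u} du.
    left = simpson(lambda u: u**p * math.exp(u), 0.0, 1.0)
    # Over [1, inf): integral of (x - 1)^p e^{-x} dx = e^{-1} * Gamma(p + 1).
    return math.exp(-1.0) * (left + math.gamma(p + 1.0))

h_exp = 1.0  # differential entropy of Exp(1), in nats
for p in (1.0, 1.5, 2.0, 3.0, 5.0):
    norm_p = central_abs_moment_exp1(p) ** (1.0 / p)
    bound = math.log(2.0 * norm_p / math.gamma(p + 1.0) ** (1.0 / p))  # right side of (31)
    print(p, round(bound, 4), bound <= h_exp)
print("p = 2, bound (32):", 0.5 * math.log(4.0 * 1.0), "<=", h_exp)
```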

The next proposition is an analog of Theorem 2 for log-concave random variables that are not necessarily symmetric.

Proposition 1.

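Let X be a log-concave random variable. Then, for every $1 \le p \le q$,

$$\|X - \mathbb{E}[X]\|_q \le \frac{2\,\Gamma(q+1)^{1/q}}{\Gamma(p+1)^{1/p}}\,\|X - \mathbb{E}[X]\|_p. \tag{33}$$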

Remark 2.

Contrary to Theorem 2, we do not know whether there exists a distribution that realizes equality in (33).

Using Theorem 3, we immediately obtain the following upper bound for the relative entropy between an arbitrary log-concave random variable X and a Gaussian with same variance as that of X. Recall the definition of in (28).

Corollary 2.

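Let X be a zero-mean, log-concave random variable. Then, for every $p \ge 1$,

(34) where . In particular, by taking $p = 2$ and letting $G_X$ denote a Gaussian random variable with the same variance as X, we obtain

$$D(X \,\|\, G_X) \le \log\sqrt{\frac{\pi e}{2}}. \tag{35}$$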

For a given distribution of X, one can optimize over p to further tighten (35), as seen in (29) for the uniform distribution.

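We now present a generalization of the bound in Theorem 1 to random vectors satisfying a symmetry condition. A function $f: \mathbb{R}^n \to \mathbb{R}$ is called unconditional if, for every $(x_1, \ldots, x_n) \in \mathbb{R}^n$ and every $(\varepsilon_1, \ldots, \varepsilon_n) \in \{-1, 1\}^n$, one has

$$f(\varepsilon_1 x_1, \ldots, \varepsilon_n x_n) = f(x_1, \ldots, x_n). \tag{36}$$

For example, the probability density function of the standard Gaussian distribution is unconditional. We say that a random vector X in $\mathbb{R}^n$ is unconditional if it has a probability density function $f_X$ with respect to Lebesgue measure in $\mathbb{R}^n$ such that $f_X$ is unconditional.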

Theorem 4.

Let X be a symmetric log-concave random vector in , . Then,

(37) where denotes the determinant of the covariance matrix of X, and . If, in addition, X is unconditional, then .

By combining Theorem 4 with the well-known upper bound on the differential entropy, we deduce that, for every symmetric log-concave random vector X in ,

| (38) |

where in general, and if, in addition, X is unconditional.

Using Theorem 4, we immediately obtain the following upper bound for the relative entropy between a symmetric log-concave random vector X and a Gaussian with the same covariance matrix as that of X.

Corollary 3.

Let X be a symmetric log-concave random vector in . Then,

(39) where , with in general, and when X is unconditional.

For isotropic unconditional log-concave random vectors (whose definition we recall in Section 3.3 below), we extend Theorem 4 to other moments.

Theorem 5.

Let be an isotropic unconditional log-concave random vector. Then, for every ,

(40) where . If, in addition, is invariant under permutations of coordinates, then .

2.2. Extension to γ-Concave Random Variables

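The bound in Theorem 1 can be extended to a class of random variables larger than the log-concave one, namely the class of γ-concave random variables, which we describe next.

Let $\gamma < 0$. We say that a probability density function $f: \mathbb{R}^n \to [0, +\infty)$ is γ-concave if $f^{\gamma}$ is convex. Equivalently, f is γ-concave if for every $\lambda \in [0, 1]$ and every $x, y \in \mathbb{R}^n$, one has

$$f\big((1 - \lambda)x + \lambda y\big) \ge \big((1 - \lambda) f(x)^{\gamma} + \lambda f(y)^{\gamma}\big)^{1/\gamma}. \tag{41}$$

As $\gamma \to 0$, (41) agrees with (22), and thus 0-concave distributions correspond to log-concave distributions. The class of γ-concave distributions has been studied in depth in [26,27].

For fixed $a, b > 0$ and $\lambda \in [0, 1]$, the function $\gamma \mapsto \big((1 - \lambda)a^{\gamma} + \lambda b^{\gamma}\big)^{1/\gamma}$ is non-decreasing in $\gamma$. We deduce that any log-concave distribution is γ-concave, for any $\gamma < 0$.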
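The monotonicity used here is the classical power-mean inequality. In the limit $\gamma \to 0$, the power mean becomes the weighted geometric mean $a^{1-\lambda} b^{\lambda}$ that appears in (22). The following short sketch evaluates the power mean along an increasing sequence of negative $\gamma$. The values of a, b, λ and the γ grid are arbitrary illustrative choices.

```python
def power_mean(a: float, b: float, lam: float, gamma: float) -> float:
    """((1 - lam) a^gamma + lam b^gamma)^(1/gamma); gamma = 0 is the geometric mean."""
    if gamma == 0:
        return a ** (1 - lam) * b ** lam
    return ((1 - lam) * a**gamma + lam * b**gamma) ** (1 / gamma)

a, b, lam = 0.3, 2.5, 0.4
gammas = [-5.0, -2.0, -1.0, -0.5, -0.1, -0.01, -0.001, 0.0]
values = [power_mean(a, b, lam, g) for g in gammas]
for g, v in zip(gammas, values):
    print(f"gamma = {g:>7}: {v:.6f}")  # non-decreasing in gamma, tending to the geometric mean
```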

For example, extended Cauchy distributions, that is, distributions of the form

| (42) |

where is the normalization constant, are -concave distributions (but are not log-concave).

We say that a random vector X in $\mathbb{R}^n$ is γ-concave if it has a probability density function $f_X$ with respect to Lebesgue measure in $\mathbb{R}^n$ such that $f_X$ is γ-concave.

We derive the following lower bound on the differential entropy for one-dimensional symmetric -concave random variables, with .

Theorem 6.

Let . Let X be a symmetric γ-concave random variable. Then, for every ,

(43)

Notice that (43) reduces to (23) as . Theorem 6 implies the following relation between entropy and second moment, for any .

Corollary 4.

Let . Let X be a symmetric γ-concave random variable. Then,

(44)

2.3. Reverse Entropy Power Inequality with an Explicit Constant

As an application of Theorems 3 and 4, we establish in Theorems 7 and 8 below a reverse form of the entropy power inequality (7) with explicit constants, for uncorrelated log-concave random vectors. Recall the definition of the entropy power (8).

Theorem 7.

Let X and Y be uncorrelated log-concave random variables. Then,

$$N(X + Y) \le \frac{\pi e}{2}\big(N(X) + N(Y)\big). \tag{45}$$
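Theorem 7 can be checked on a simple pair of independent (hence uncorrelated) log-concave variables. The following sketch takes X and Y i.i.d. uniform on $[0, 1]$, so that $X + Y$ has the triangular density on $[0, 2]$. It computes the entropies by a midpoint rule and compares $N(X+Y)/(N(X)+N(Y))$ with the EPI lower limit 1, the Cover and Zhang limit 2 from (10), and the constant $\pi e/2$. Any constant factor in the definition of N cancels in this ratio. The choice of distributions and the quadrature are illustrative assumptions.

```python
import math

def entropy_numeric(density, lo: float, hi: float, n: int = 200_000) -> float:
    """Differential entropy -integral of f log f (nats), by the midpoint rule on [lo, hi]."""
    step = (hi - lo) / n
    total = 0.0
    for k in range(n):
        fx = density(lo + (k + 0.5) * step)
        if fx > 0:
            total -= fx * math.log(fx) * step
    return total

def uniform(x: float) -> float:
    return 1.0 if 0.0 <= x <= 1.0 else 0.0

def triangle(s: float) -> float:
    """Density of X + Y on [0, 2] for X, Y i.i.d. uniform on [0, 1]."""
    return s if s <= 1.0 else 2.0 - s

h_x = entropy_numeric(uniform, 0.0, 1.0)     # h(X) = h(Y) = 0 nats
h_sum = entropy_numeric(triangle, 0.0, 2.0)  # h(X + Y) = 1/2 nat

def entropy_power(h: float) -> float:
    return math.exp(2.0 * h)                 # one-dimensional entropy power, up to a constant factor

ratio = entropy_power(h_sum) / (entropy_power(h_x) + entropy_power(h_x))
print("N(X+Y) / (N(X) + N(Y)) =", ratio)
print("EPI: >= 1;  Cover-Zhang (10): <= 2;  Theorem 7: <=", math.pi * math.e / 2)
```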

As a consequence of Corollary 4, reverse entropy power inequalities for more general distributions can be obtained. In particular, for any uncorrelated symmetric -concave random variables X and Y, with ,

| (46) |

One cannot have a reverse entropy power inequality in higher dimensions for arbitrary log-concave random vectors. Indeed, just consider X uniformly distributed on and Y uniformly distributed on in , with small enough so that and are arbitrarily small compared to . Hence, we need to put X and Y in a certain position so that a reverse form of (7) is possible. While the isotropic position (discussed in Section 3) will work, it can be relaxed to the weaker condition that the covariance matrices are proportional. Recall that we denote by the covariance matrix of X.

Theorem 8.

Let X and Y be uncorrelated symmetric log-concave random vectors in $\mathbb{R}^n$ whose covariance matrices are proportional. Then,

(47) If, in addition, X and Y are unconditional, then

(48)

2.4. New Bounds on the Rate-distortion Function

As an application of Theorems 1 and 3, we show in Corollary 5 below that in the class of one-dimensional log-concave distributions, the rate-distortion function does not exceed the Shannon lower bound by more than bits (which can be refined to bits when the source is symmetric), independently of d and . Denote for brevity

| (49) |

and recall the definition of in (3).

We start by giving a bound on the difference between the rate-distortion function and the Shannon lower bound, which applies to general, not necessarily log-concave, random variables.

Theorem 9.

Let and . Let X be an arbitrary random variable.

Let . If , then

(50) If , then .

Let . If , then

(51) If , then . If and , then .

Remark 3.

For Gaussian X and $r = 2$, the upper bound in (50) is 0, as expected.

The next result refines the bounds in Theorem 9 for symmetric log-concave random variables when $r > 2$.

Theorem 10.

Let and . Let X be a symmetric log-concave random variable.

If , then

(52) If or , then . If and , then .

To bound the gap between the rate-distortion function and the Shannon lower bound independently of the distribution of X, we apply the bound (35) on the relative entropy to Theorems 9 and 10:

Corollary 5.

Let X be a log-concave random variable. For , we have

(53) For , we have

(54) If, in addition, X is symmetric, then, for we have

(55)

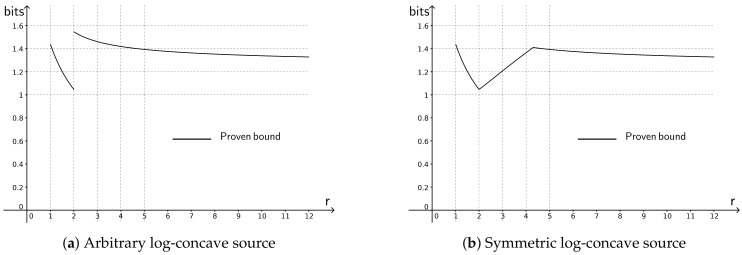

Figure 1a presents our bound for different values of r. Regardless of r and d,

Figure 1.

The bound on the difference between the rate-distortion function under r-th moment constraint and the Shannon lower bound, stated in Corollary 5.

| (56) |

The bounds in Figure 1a tighten for symmetric log-concave sources when . Figure 1b presents this tighter bound for different values of r. Regardless of r and d,

| (57) |

One can see that the graph in Figure 1b is continuous at $r = 2$, contrary to the graph in Figure 1a. This is because Theorem 2, which applies to symmetric log-concave random variables, is strong enough to imply the tightening of (51) given in (52). Proposition 1, the counterpart of Theorem 2 for all log-concave random variables, is not strong enough to yield a similar tightening in that more general setting.

Remark 4.

While Corollary 5 bounds the difference by a universal constant independent of the distribution of X, tighter bounds can be obtained by giving up such universality. For example, for mean-square distortion ($r = 2$) and a uniformly distributed source U, Remark 1 gives

$$R(d) - R_{\mathrm{SLB}}(d) \le \log\sqrt{\frac{\pi e}{6}} \approx 0.25 \text{ bit}. \tag{58}$$
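For mean-square distortion, the classical Gaussian upper bound $R(d) \le \frac12\log(\mathrm{Var}[X]/d)$ (valid for $d < \mathrm{Var}[X]$) minus the Shannon lower bound (15) equals $h(G_X) - h(X) = D(X\|G_X)$, independently of d. The following sketch evaluates that difference for a uniform source on a few distortion levels below $\mathrm{Var}[U] = 1/3$. The function name `slb_bits` and the list of d values are illustrative assumptions.

```python
import math

def slb_bits(h_nats: float, d: float, r: float = 2.0) -> float:
    """Shannon lower bound (14), h(X) - log(alpha_r d^{1/r}), returned in bits."""
    alpha_r = 2.0 * math.exp(1.0 / r) * math.gamma(1.0 + 1.0 / r) * r ** (1.0 / r)
    return (h_nats - math.log(alpha_r * d ** (1.0 / r))) / math.log(2)

a = 1.0                                  # U uniform on [-a, a]
h_u, var_u = math.log(2 * a), a**2 / 3
for d in (1e-4, 1e-2, 0.1, 0.3):         # all below Var[U] = 1/3
    r_upper = 0.5 * math.log2(var_u / d)  # Gaussian upper bound on R(d)
    print(d, r_upper - slb_bits(h_u, d))  # constant in d: log2 sqrt(pi e / 6)
```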

Theorem 9 easily extends to random vector X in , , with a similar proof. The only difference being an extra term of that will appear on the right-hand side of (50) and (51), and will come from the upper bound on the differential entropy (38). Here,

As a result, the bound can be arbitrarily large in higher dimensions because of the term . However, for isotropic random vectors (whose definition we recall in Section 3.3 below), one has . Hence, using the bound (39) on , we can bound independently of the distribution of isotropic log-concave random vector X in , .

Corollary 6.

Let X be an isotropic log-concave random vector in , . Then,

(59) where in general, and if, in addition, X is unconditional.

Let us consider the rate-distortion function under the determinant constraint for random vectors in , :

| (60) |

where the infimum is taken over all joint distributions satisfying the determinant constraint . For this distortion measure, we have the following bound.

Theorem 11.

Let X be a symmetric log-concave random vector in . If , then

(61) with . If, in addition, X is unconditional, then . If , then .

2.5. New Bounds on the Capacity of Memoryless Additive Channels

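As another application of Theorem 3, we compare the capacity of a channel with log-concave additive noise Z with the capacity of the Gaussian channel. Recall that the capacity of the Gaussian channel with noise variance $\mathrm{Var}[Z]$ is

$$C_{Z^*}(P) = \frac{1}{2}\log\left(1 + \frac{P}{\mathrm{Var}[Z]}\right). \tag{62}$$

Theorem 12.

Let Z be a log-concave random variable. Then,

$$C_{Z^*}(P) \le C_Z(P) \le C_{Z^*}(P) + \log\sqrt{\frac{\pi e}{2}}. \tag{63}$$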
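For a concrete log-concave noise, the upper bound can be compared with the one that follows directly from maximum entropy, $I(X; X+Z) \le \frac12\log\big(2\pi e(P + \mathrm{Var}[Z])\big) - h(Z)$, whose excess over $C_{Z^*}(P)$ is $D(Z\|G_Z)$. The following sketch does this for Laplace noise, where $D(Z\|G_Z) = \frac12\log(\pi/e) \approx 0.10$ bit, well below the universal $\log\sqrt{\pi e/2} \approx 1.05$ bits. The noise scale and the power levels are illustrative choices, and `capacity_bounds_laplace` is a hypothetical helper.

```python
import math

def capacity_bounds_laplace(P: float, b: float):
    """Bounds (in bits) on C_Z(P) for Laplace(0, b) noise Z.

    Returns (Gaussian-channel lower bound (19),
             max-entropy upper bound h(G) - h(Z) with Var[G] = P + Var[Z],
             universal log-concave upper bound (63)).
    """
    var_z = 2.0 * b**2                # Var[Z] for Laplace(0, b)
    h_z = math.log(2.0 * math.e * b)  # h(Z) in nats
    c_gauss = 0.5 * math.log2(1.0 + P / var_z)
    c_maxent = 0.5 * math.log2(2.0 * math.pi * math.e * (P + var_z)) - h_z / math.log(2)
    c_universal = c_gauss + 0.5 * math.log2(math.pi * math.e / 2.0)
    return c_gauss, c_maxent, c_universal

for P in (0.1, 1.0, 10.0):
    print(P, capacity_bounds_laplace(P, b=0.8))
```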

Remark 5.

Theorem 12 tells us that the capacity of a channel with log-concave additive noise exceeds the capacity of a Gaussian channel with the same noise power by no more than $\log\sqrt{\pi e/2} \approx 1.05$ bits.

As an application of Theorem 4, we can provide bounds for the capacity of a channel with log-concave additive noise Z in , . The formula for capacity (18) generalizes to dimension n as

| (64) |

Theorem 13.

Let Z be a symmetric log-concave random vector in . Then,

(65) where . If, in addition, Z is unconditional, then .

The upper bound in Theorem 13 can be arbitrarily large by inflating the ratio . For isotropic random vectors (whose definition is recalled in Section 3.3 below), one has , and the following corollary follows.

Corollary 7.

Let Z be an isotropic log-concave random vector in . Then,

(66) where . If, in addition, Z is unconditional, then .

3. New Lower Bounds on the Differential Entropy

3.1. Proof of Theorem 1

The key to our development is the following result for one-dimensional log-concave distributions, well-known in convex geometry. It can be found in [28], in a slightly different form.

Lemma 1.

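The function

$$\Phi(p) = \frac{1}{\Gamma(p+1)}\int_0^{+\infty} x^p f(x)\, dx \tag{67}$$

is log-concave on $[-1, +\infty)$ whenever $f: [0, +\infty) \to [0, +\infty)$ is log-concave [28]. At $p = -1$, $\Phi(-1) := f(0)$ is the limit of $\Phi(p)$ as $p \to -1$.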
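Lemma 1 can be sanity-checked on an explicit log-concave function. For the half-Gaussian profile $f(x) = e^{-x^2}$ on $[0, \infty)$, one has $\int_0^\infty x^p e^{-x^2}dx = \Gamma(\frac{p+1}{2})/2$, so $\Phi(p) = \Gamma(\frac{p+1}{2})/(2\Gamma(p+1))$. The following sketch checks that second differences of $\log\Phi$ are negative on a grid inside $(-1, 9]$. For $f(x) = e^{-x}$ one gets $\Phi \equiv 1$, the log-affine boundary case. The grid and step size are arbitrary choices.

```python
import math

def log_phi_half_gaussian(p: float) -> float:
    """log Phi(p) for f(x) = exp(-x^2): log Gamma((p+1)/2) - log 2 - log Gamma(p+1)."""
    return math.lgamma((p + 1) / 2) - math.log(2.0) - math.lgamma(p + 1)

step = 0.05
ps = [-0.95 + k * step for k in range(200)]  # grid inside (-1, 9.0]
second_diffs = [
    log_phi_half_gaussian(p - step) - 2 * log_phi_half_gaussian(p) + log_phi_half_gaussian(p + step)
    for p in ps[1:-1]
]
print("log Phi concave on the grid:", max(second_diffs) < 0.0)
```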

Proof of Theorem 1.

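Let $p > 0$, and denote by $\Phi$ the function in (67) with $f = f_X$ restricted to $[0, +\infty)$. Applying Lemma 1 to the values $-1 < 0 < p$, we have

$$\Phi(0)^{p+1} \ge \Phi(-1)^{p}\,\Phi(p). \tag{68}$$

The bound in Theorem 1 follows by computing the values $\Phi(0)$, $\Phi(-1)$ and $\Phi(p)$.

One has

$$\Phi(0) = \int_0^{+\infty} f_X(x)\,dx = \frac{1}{2}, \qquad \Phi(p) = \frac{\mathbb{E}|X|^p}{2\,\Gamma(p+1)}. \tag{69}$$

To compute $\Phi(-1)$, we first provide a different expression for $h(X)$. Notice that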

| (70) |

Denote the generalized inverse of by , . Since is log-concave and

| (71) |

it follows that is non-increasing on . Therefore, . Hence,

| (72) |

We deduce that

| (73) |

Plugging (69) and (73) into (68), we obtain

| (74) |

It follows immediately that

| (75) |

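For $p \in (-1, 0)$, the bound is obtained similarly by applying Lemma 1 to the values $-1 < p < 0$.

We now show that equality is attained, in the limit $p \to -1$, by U uniformly distributed on a symmetric interval $[-a, a]$, for some $a > 0$. In this case, we have

$$h(U) = \log(2a), \qquad \|U\|_p = \frac{a}{(p+1)^{1/p}}. \tag{76}$$

Hence,

$$\log\frac{2\,\|U\|_p}{\Gamma(p+1)^{1/p}} = \log(2a) - \frac{1}{p}\log\Gamma(p+2) \xrightarrow[p \to -1]{} \log(2a) = h(U). \tag{77}$$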

☐
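For $p > 0$, the algebra that turns (68) into (23) can be traced in a few lines. The sketch below uses the function $\Phi(p) = \Gamma(p+1)^{-1}\int_0^\infty x^p f_X(x)\,dx$ of Lemma 1. It follows one possible route, not necessarily the one taken through (70) to (73): it uses $\Phi(-1) = f_X(0)$ together with the elementary bound $f_X(0) = \max f_X \ge e^{-h(X)}$. That bound holds because a symmetric log-concave density peaks at the origin and $h(X) = \mathbb{E}[-\log f_X(X)] \ge -\log \max f_X$.

```latex
\begin{align*}
\Phi(0)^{p+1} &\ge \Phi(-1)^{p}\,\Phi(p)
  && \text{log-concavity of } \Phi \text{ at } -1 < 0 < p\\
\Phi(0) = \tfrac12,\quad \Phi(p) &= \frac{\mathbb{E}|X|^p}{2\,\Gamma(p+1)},\quad
  \Phi(-1) = f_X(0) \ge e^{-h(X)}\\
\Longrightarrow\quad \frac{1}{2^{p+1}} &\ge e^{-p\,h(X)}\,\frac{\mathbb{E}|X|^p}{2\,\Gamma(p+1)}\\
\Longrightarrow\quad e^{p\,h(X)} &\ge \frac{2^p\,\mathbb{E}|X|^p}{\Gamma(p+1)}\\
\Longrightarrow\quad h(X) &\ge \log\frac{2\,\|X\|_p}{\Gamma(p+1)^{1/p}}.
\end{align*}
```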

Remark 6.

From (71) and (74), we see that the following statement holds: For every symmetric log-concave random variable , for every , and for every ,

(78) Inequality (78) is the main ingredient in the proof of Theorem 1. It is instructive to provide a direct proof of inequality (78) without appealing to Lemma 1, the ideas going back to [25]:

Proof of inequality (78)

By considering , where X is symmetric log-concave, it is enough to show that for every log-concave density f supported on , one has

(79) By a scaling argument, one may assume that . Take . If , then the result follows by a straightforward computation. Assume that . Since and , the function changes sign at least one time. However, since , f is log-concave and g is log-affine, the function changes sign exactly once. It follows that there exists a unique point such that for every , , and for every , . We deduce that for every , and ,

(80) Integrating over , we arrive at

(81) which yields the desired result. ☐

Actually, the powerful and versatile result of Lemma 1, which implies (78), is also proved using the technique in (79)–(81). In the context of information theory, Lemma 1 has been previously applied to obtain reverse entropy power inequalities [7], as well as to establish optimal concentration of the information content [29]. In this paper, we make use of Lemma 1 to prove Theorem 1. Moreover, Lemma 1 immediately implies Theorem 2. Below, we recall the argument for completeness.

Proof of Theorem 2.

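The result follows by applying Lemma 1, with $f = f_X$ restricted to $[0, +\infty)$, to the values $0$, $p$, $q$. If $0 < p \le q$, then, since $p = (1 - p/q)\cdot 0 + (p/q)\cdot q$,

$$\frac{\mathbb{E}|X|^p}{2\,\Gamma(p+1)} \ge \left(\frac{1}{2}\right)^{1 - p/q}\left(\frac{\mathbb{E}|X|^q}{2\,\Gamma(q+1)}\right)^{p/q}. \tag{82}$$

Hence,

$$\left(\frac{\mathbb{E}|X|^p}{\Gamma(p+1)}\right)^{1/p} \ge \left(\frac{\mathbb{E}|X|^q}{\Gamma(q+1)}\right)^{1/q}, \tag{83}$$

which yields the desired result. The bound is obtained similarly if $p \le q < 0$ or if $p < 0 < q$. ☐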

3.2. Proof of Theorem 3 and Proposition 1

The proof leverages the ideas from [10].

Proof of Theorem 3.

Let Y be an independent copy of X. Jensen’s inequality yields

(84) Since is symmetric and log-concave, we can apply inequality (74) to to obtain

(85) where the last inequality again follows from Jensen’s inequality. Combining (84) and (85) leads to the desired result:

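$$h(X) \ge \log\frac{2\,\|X - \mathbb{E}[X]\|_p}{\Gamma(p+1)^{1/p}}. \tag{86}$$

For $p = 2$, one may tighten (85) by noticing that

$$\|X - Y\|_2 = \sqrt{2\,\mathrm{Var}[X]}. \tag{87}$$

Hence,

$$h(X) \ge \frac{1}{2}\log\left(4\,\mathrm{Var}[X]\right). \tag{88}$$

☐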

Proof of Proposition 1.

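Let Y be an independent copy of X and let $1 \le p \le q$. Since $X - Y$ is symmetric and log-concave, we can apply Theorem 2 to $X - Y$. Jensen’s inequality and the triangle inequality yield:

$$\|X - \mathbb{E}[X]\|_q \le \|X - Y\|_q \le \frac{\Gamma(q+1)^{1/q}}{\Gamma(p+1)^{1/p}}\,\|X - Y\|_p \le \frac{2\,\Gamma(q+1)^{1/q}}{\Gamma(p+1)^{1/p}}\,\|X - \mathbb{E}[X]\|_p. \tag{89}$$

☐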

3.3. Proof of Theorem 4

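We say that a random vector $X \in \mathbb{R}^n$ is isotropic if X is symmetric and, for all unit vectors $\theta \in \mathbb{R}^n$, one has

$$\mathbb{E}\big[\langle X, \theta \rangle^2\big] = m_X^2 \tag{90}$$

for some constant $m_X > 0$. Equivalently, X is isotropic if its covariance matrix $K_X$ is a multiple of the identity matrix $I_n$,

$$K_X = m_X^2\, I_n, \tag{91}$$

for some constant $m_X > 0$. The constant

$$L_X = \|f_X\|_{\infty}^{1/n}\, m_X \tag{92}$$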

is called the isotropic constant of X.
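Two familiar examples make the definition concrete. The following sketch assumes the standard normalization $L_X = \|f_X\|_\infty^{1/n}\,|K_X|^{1/(2n)}$, which reduces to (92) for isotropic X. The uniform distribution on the cube $[-\frac12, \frac12]^n$ has $f_X \equiv 1$ and $K_X = I_n/12$. The standard Gaussian has $\|f_X\|_\infty = (2\pi)^{-n/2}$ and $K_X = I_n$. In both cases the isotropic constant does not depend on the dimension. The helper name `isotropic_constant` is hypothetical.

```python
import math

def isotropic_constant(sup_density: float, cov_det: float, n: int) -> float:
    """L_X = ||f_X||_inf^{1/n} * det(K_X)^{1/(2n)} (standard normalization, assumed)."""
    return sup_density ** (1.0 / n) * cov_det ** (1.0 / (2 * n))

for n in (1, 2, 10, 100):
    cube = isotropic_constant(1.0, (1.0 / 12.0) ** n, n)           # uniform on [-1/2, 1/2]^n
    gauss = isotropic_constant((2 * math.pi) ** (-n / 2), 1.0, n)  # standard Gaussian
    print(n, round(cube, 4), round(gauss, 4))  # 1/sqrt(12) and 1/sqrt(2 pi) for every n
```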

It is well known that the isotropic constant $L_X$ is bounded from below by a positive constant independent of the dimension [30]. A long-standing conjecture in convex geometry, the hyperplane conjecture, asks whether the isotropic constant of an isotropic log-concave random vector is also bounded from above by a universal constant (independent of the dimension). This conjecture holds under additional assumptions, but, in full generality, $L_X$ is known to be bounded only by a constant that depends on the dimension. For further details, we refer the reader to [31]. We will use the following upper bounds on $L_X$ (see [32] for the best dependence on the dimension to date).

Lemma 2.

Let X be an isotropic log-concave random vector in , with . Then, . If, in addition, X is unconditional, then .

If X is uniformly distributed on a convex set, these bounds hold without factor .

Even though the bounds in Lemma 2 are well known, we could not find a reference in the literature. We thus include a short proof for completeness.

Proof.

It was shown by Ball [30] (Lemma 8) that if X is uniformly distributed on a convex set, then . If X is uniformly distributed on a convex set and is unconditional, then it is known that (see e.g., [33] (Proposition 2.1)). Now, one can pass from uniform distributions on a convex set to log-concave distributions at the expense of an extra factor , as shown by Ball [30] (Theorem 7). ☐

We are now ready to prove Theorem 4.

Proof of Theorem 4.

Let be an isotropic log-concave random vector. Notice that , hence, using Lemma 2, we have

(93) with . If, in addition, is unconditional, then again by Lemma 2, .

Now consider an arbitrary symmetric log-concave random vector X. One can apply a change of variable to put X in isotropic position. Indeed, by defining , one has for every unit vector ,

(94) It follows that is an isotropic log-concave random vector with isotropic constant 1. Therefore, we can use (93) to obtain

(95) where in general, and when X is unconditional. We deduce that

(96) ☐

3.4. Proof of Theorem 5

First, we need the following lemma.

Lemma 3.

Let be an isotropic unconditional log-concave random vector. Then, for every ,

(97) where is the marginal distribution of the i-th component of X, i.e., for every ,

(98) Here, . If, in addition, is invariant under permutations of coordinates, then [33] (Proposition 3.2).

Proof of Theorem 5.

Let . We have

(99) Since is unconditional and log-concave, it follows that is symmetric and log-concave, so inequality (74) applies to :

(100) We apply Lemma 3 to pass from to in the right side of (100):

(101) Thus,

(102) ☐

4. Extension to γ-Concave Random Variables

In this section, we prove Theorem 6, which extends Theorem 1 to the class of -concave random variables, with . First, we need the following key lemma, which extends Lemma 1.

Lemma 4.

Let be a γ-concave function, with . Then, the function

(103) is log-concave on [34] (Theorem 7).

One can recover Lemma 1 from Lemma 4 by letting $\gamma$ tend to 0 from below.

Proof of Theorem 6.

Let us first consider the case . Let us denote by the probability density function of X. By applying Lemma 4 to the values , we have

From the proof of Theorem 1, we deduce that . In addition, notice that, for ,

(104) Hence,

(105) and the bound on differential entropy follows:

(106) For the case , the bound is obtained similarly by applying Lemma 4 to the values . ☐

5. Reverse Entropy Power Inequality with Explicit Constant

5.1. Proof of Theorem 7

Proof.

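Using the upper bound on the differential entropy (1), we have

$$h(X + Y) \le \frac{1}{2}\log\big(2\pi e\,\mathrm{Var}[X + Y]\big) = \frac{1}{2}\log\big(2\pi e\,(\mathrm{Var}[X] + \mathrm{Var}[Y])\big), \tag{107}$$

the last equality being valid since X and Y are uncorrelated. Hence,

$$e^{2h(X + Y)} \le 2\pi e\,\big(\mathrm{Var}[X] + \mathrm{Var}[Y]\big). \tag{108}$$

Inequality (32) gives $\mathrm{Var}[X] \le e^{2h(X)}/4$ and $\mathrm{Var}[Y] \le e^{2h(Y)}/4$. Since any constant factor in the definition of N cancels, we conclude that

$$N(X + Y) \le \frac{\pi e}{2}\big(N(X) + N(Y)\big). \tag{109}$$

☐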

5.2. Proof of Theorem 8

Proof.

Since X and Y are uncorrelated and their covariance matrices are proportional,

(110) Using (110) and the upper bound on the differential entropy (38), we obtain

(111) Using Theorem 4, we conclude that

(112) where in general, and if X and Y are unconditional. ☐

6. New Bounds on the Rate-Distortion Function

6.1. Proof of Theorem 9

Proof.

Under mean-square error distortion (), the result is implicit in [21] (Chapter 10). Denote for brevity .

(1) Let . Assume that . We take

(113) where is independent of X. This choice of is admissible since

(114) where we used and the left-hand side of inequality (26). Upper-bounding the rate-distortion function by the mutual information between X and , we obtain

(115) where we used homogeneity of differential entropy for the last equality. Invoking the upper bound on the differential entropy (1), we have

(116) and (50) follows.

If , then , and setting leads to .

(2) Let . The argument presented here works for every . However, for , the argument in part (1) provides a tighter bound. Assume that . We take

(117) where Z is independent of X and realizes the maximum differential entropy under the r-th moment constraint, . The probability density function of Z is given by

(118) Notice that

(119) We have

(120)

(121) where is defined in (49). Hence,

(122) If , then setting leads to . Finally, if and , then, from (120), we obtain

(123) ☐

6.2. Proof of Theorem 10

Proof.

Denote for brevity , and recall that X is a symmetric log-concave random variable.

Assume that . We take

(124) where is independent of X. This choice of is admissible since

(125) where we used and Theorem 2. Using the upper bound on the differential entropy (1), we have

(126) Hence,

(127) If , then from Theorem 2 , hence . Finally, if and , then, from (126), we obtain

(128) ☐

Remark 7.

1) Let us explain the strategy in the proof of Theorems 9 and 10. By definition, for any satisfying the constraint. In our study, we chose of the form , with , where Z is independent of X. To find the best bounds possible with this choice of , we need to minimize over λ. Notice that if and Z symmetric, then .

To estimate in terms of and , one can use triangle inequality and the convexity of to get the bound

(129) or one can apply Jensen’s inequality directly to get the bound

| (130) |

A simple study shows that (130) provides a tighter bound than (129). This justifies choosing as in (117) in the proof of (51).

To justify the choice of in (113) (and in (124)), which leads to the tightening of (51) for in (50) (and in (52)), we bound the r-th norm by the second norm and note that, by the independence of X and Z,

| (131) |

A simple study shows that (131) provides a tighter bound than (130).
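Several displays in this remark are not legible here, so the following is a hedged sketch of two elementary facts the argument leans on, assuming Z has zero mean and, for the norm comparison, r ≤ 2. First, multiplying by λ ≠ 0 is invertible, so I(X; λ(X + Z)) = I(X; X + Z) and λ only affects the distortion; by independence, E[(X − λ(X + Z))²] = (1 − λ)² E[X²] + λ² E[Z²], which is minimized at λ* = E[X²]/(E[X²] + E[Z²]). Second, the r-th norm is at most the second norm. The code checks the closed-form minimizer against a grid search and, by simulation with Laplace X and Gaussian Z, checks both facts. The distributions, parameter values and function names are illustrative assumptions; the sketch does not reproduce the exact choices in (113), (117) or (124).

```python
import math
import random


def mse(lam: float, m2_x: float, m2_z: float) -> float:
    """E[(X - lam*(X+Z))^2] for independent X, Z with E[Z] = 0,
    where m2_x = E[X^2] and m2_z = E[Z^2]; the cross term vanishes."""
    return (1.0 - lam) ** 2 * m2_x + lam ** 2 * m2_z


def best_lambda(m2_x: float, m2_z: float) -> float:
    """Minimizer of mse over lam (set the derivative to zero)."""
    return m2_x / (m2_x + m2_z)


def noise_power_for_target(d: float, m2_x: float) -> float:
    """E[Z^2] for which the minimal MSE equals d (requires 0 < d < m2_x)."""
    return d * m2_x / (m2_x - d)


def simulate(lam=0.6, b=1.0, sigma_z=0.8, r=1.5, n=200_000, seed=1):
    """Empirical ||W||_r, empirical ||W||_2 and predicted ||W||_2 for
    W = X - lam*(X + Z), X ~ Laplace(0, b), Z ~ N(0, sigma_z^2) independent."""
    rng = random.Random(seed)
    w = []
    for _ in range(n):
        # Laplace(0, b): random sign times an exponential with mean b
        x = rng.expovariate(1.0 / b) * (1.0 if rng.random() < 0.5 else -1.0)
        z = rng.gauss(0.0, sigma_z)
        w.append(x - lam * (x + z))
    norm_r = (sum(abs(v) ** r for v in w) / n) ** (1.0 / r)
    norm_2 = math.sqrt(sum(v * v for v in w) / n)
    # E[X^2] = 2 b^2 for the Laplace law
    predicted_2 = math.sqrt(mse(lam, 2.0 * b ** 2, sigma_z ** 2))
    return norm_r, norm_2, predicted_2


# Closed-form minimizer versus a grid search, for a target MSE d.
m2_x, d = 2.0, 0.5
m2_z = noise_power_for_target(d, m2_x)
lam = best_lambda(m2_x, m2_z)              # mathematically (m2_x - d) / m2_x
grid_best = min((k / 10_000 for k in range(10_001)),
                key=lambda t: mse(t, m2_x, m2_z))
print(lam, grid_best, mse(lam, m2_x, m2_z))

# Monte Carlo check of the independence identity and of ||W||_r <= ||W||_2.
norm_r, norm_2, predicted_2 = simulate()
print(norm_r, norm_2, predicted_2)
```

The norm comparison holds for every sample, since it is the power-mean inequality for the empirical law; the second-moment identity holds only up to Monte Carlo error.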

2) Using Corollary 2, if , one may rewrite our bound in terms of the rate-distortion function of a Gaussian source as follows:

| (132) |

where is defined in (28), and where

| (133) |

is the rate-distortion function of a Gaussian source with the same variance as X. It is well known that, for an arbitrary source under mean-square error distortion (see e.g., [21] (Chapter 10)),

| (134) |

By taking in (132), we obtain

| (135) |

The bounds in (134) and (135) show that the rate-distortion function of any log-concave source is well approximated by that of a Gaussian source with the same variance. In particular, by approximating of an arbitrary log-concave source by

| (136) |

we guarantee an approximation error of at most bits.
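The expressions (133) to (136) are not legible here. As a hedged numerical illustration, assume (134) is the classical sandwich h(X) − ½ log(2πe d) ≤ R(d) ≤ ½ log(σ²/d) for mean-square error and 0 < d ≤ σ², where σ² is the variance of X. The width of this sandwich, ½ log(2πeσ²) − h(X), does not depend on d. The sketch evaluates it in bits for two log-concave sources, Laplace and uniform, and compares it with ½ log(πe/2) ≈ 1.05 bits, which is what the width becomes under the additional assumption h(X) ≥ ½ log(4σ²) for log-concave X. Function names are illustrative.

```python
import math


def gaussian_rd_bits(var: float, d: float) -> float:
    """R(d) of a Gaussian source with variance var under MSE, in bits."""
    return max(0.0, 0.5 * math.log2(var / d))


def shannon_lower_bound_bits(h_bits: float, d: float) -> float:
    """Shannon lower bound h(X) - (1/2) log2(2 pi e d) under MSE, in bits."""
    return h_bits - 0.5 * math.log2(2 * math.pi * math.e * d)


def laplace_entropy_bits(var: float) -> float:
    """h of a Laplace law with variance var: log2(2 e b), where var = 2 b^2."""
    return math.log2(2 * math.e * math.sqrt(var / 2))


def uniform_entropy_bits(var: float) -> float:
    """h of a uniform law with variance var: log2(width), width = sqrt(12 var)."""
    return math.log2(math.sqrt(12 * var))


var = 3.0
# Width of the sandwich if one assumes h(X) >= (1/2) log2(4 var) (assumption).
cap = 0.5 * math.log2(math.pi * math.e / 2)
for name, h in (("Laplace", laplace_entropy_bits(var)),
                ("uniform", uniform_entropy_bits(var))):
    gaps = [gaussian_rd_bits(var, d) - shannon_lower_bound_bits(h, d)
            for d in (0.01, 0.5, 2.9)]
    print(name, [round(g, 4) for g in gaps], "cap", round(cap, 4))
```

For every d ≤ σ² the gap is ½ log₂(π/e) ≈ 0.104 bits for the Laplace source and ½ log₂(πe/6) ≈ 0.255 bits for the uniform source.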

6.3. Proof of Theorem 11

Proof.

If , then we choose , where is independent of X. This choice is admissible by the independence of X and Z and the fact that and are proportional. Upper-bounding the rate-distortion function by the mutual information between X and , we have

| (137) |

Since the Shannon lower bound for the determinant constraint coincides with that for the mean-square error constraint,

| (138) |

On the other hand, using (137), we have

| (139) |

where (139) follows from Corollary 3.

If , then we put , which leads to . ☐

7. New Bounds on the Capacity of Memoryless Additive Channels

Recall that the capacity of such a channel is

| $C(P) = \sup_{X:\ \mathbb{E}[X^2] \le P} I(X; X+Z)$ | (140) |

We compare the capacity of a channel with log-concave additive noise with the capacity of the Gaussian channel with the same noise power.

7.1. Proof of Theorem 12

Proof.

The lower bound is well known, as mentioned in (19). To obtain the upper bound, we first use the upper bound on the differential entropy (1) to conclude that

| (141) |

for every random variable X such that . By combining (140), (141) and (32), we deduce that

| (142) |

which is the desired result. ☐
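A hedged numerical sketch of the argument above for one concrete noise law. Assuming the capacity (140) is sup I(X; X + Z) over inputs with E[X²] ≤ P, and that Z has zero mean and variance σ², the bound (1) gives I(X; X + Z) = h(X + Z) − h(Z) ≤ ½ log(2πe(P + σ²)) − h(Z), while the Gaussian-channel value ½ log(1 + P/σ²) is the lower bound. The gap between the two, ½ log(2πeσ²) − h(Z), does not depend on P. The sketch uses logistic noise with scale s, a log-concave law with variance π²s²/3 and entropy ln s + 2 nats; this choice and the function names are illustrative.

```python
import math

LN2 = math.log(2.0)


def gaussian_capacity_bits(p: float, noise_var: float) -> float:
    """Capacity of the Gaussian channel with the same noise power, in bits."""
    return 0.5 * math.log2(1.0 + p / noise_var)


def capacity_upper_bound_bits(p: float, noise_var: float, h_noise_bits: float) -> float:
    """(1/2) log2(2 pi e (P + sigma^2)) - h(Z): Gaussian max-entropy bound on
    h(X + Z) minus h(Z), assuming E[Z] = 0 and E[X^2] <= P."""
    return 0.5 * math.log2(2 * math.pi * math.e * (p + noise_var)) - h_noise_bits


s = 0.9                                   # logistic scale parameter (illustrative)
noise_var = math.pi ** 2 * s ** 2 / 3     # variance of the logistic law
h_logistic_bits = (math.log(s) + 2.0) / LN2
for p in (0.1, 1.0, 100.0):
    lower = gaussian_capacity_bits(p, noise_var)
    upper = capacity_upper_bound_bits(p, noise_var, h_logistic_bits)
    print(f"P={p}: {lower:.4f} <= C(P) <= {upper:.4f}  (gap {upper - lower:.4f} bits)")
```

For logistic noise the gap is about 0.02 bits for every P and every s, well below ½ log₂(πe/2) ≈ 1.05 bits.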

7.2. Proof of Theorem 13

Proof.

The lower bound is well known, as mentioned in (19). To obtain the upper bound, we write

| (143) |

where in general, and if Z is unconditional. The first inequality in (143) is obtained from the upper bound on the differential entropy (38). The last inequality in (143) is obtained by applying the arithmetic-geometric mean inequality and Theorem 4. ☐
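Equation (143) is not legible in this text. Assuming the arithmetic-geometric mean step is used in its standard matrix form, det(K)^{1/n} ≤ tr(K)/n for a positive semidefinite n × n matrix K (the geometric mean of the eigenvalues is at most their arithmetic mean), the following pure-Python sketch checks that inequality on random covariance matrices. The helper names are hypothetical.

```python
import random


def random_covariance(n: int, rng: random.Random) -> list[list[float]]:
    """K = A A^T + 0.1 I, a symmetric positive definite matrix."""
    a = [[rng.gauss(0.0, 1.0) for _ in range(n)] for _ in range(n)]
    return [[sum(a[i][k] * a[j][k] for k in range(n)) + (0.1 if i == j else 0.0)
             for j in range(n)] for i in range(n)]


def determinant(m: list[list[float]]) -> float:
    """Determinant by Gaussian elimination with partial pivoting."""
    m = [row[:] for row in m]
    n = len(m)
    det = 1.0
    for c in range(n):
        p = max(range(c, n), key=lambda i: abs(m[i][c]))
        if m[p][c] == 0.0:
            return 0.0
        if p != c:                      # a row swap flips the sign
            m[c], m[p] = m[p], m[c]
            det = -det
        det *= m[c][c]
        for i in range(c + 1, n):
            factor = m[i][c] / m[c][c]
            for k in range(c, n):
                m[i][k] -= factor * m[c][k]
    return det


rng = random.Random(0)
for _ in range(5):
    n = rng.randint(1, 6)
    k_mat = random_covariance(n, rng)
    geo = determinant(k_mat) ** (1.0 / n)              # geometric mean of eigenvalues
    arith = sum(k_mat[i][i] for i in range(n)) / n     # arithmetic mean of eigenvalues
    print(n, round(geo, 4), "<=", round(arith, 4))
```

Equality holds exactly when all eigenvalues coincide, for example when K is a multiple of the identity.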

8. Conclusions

Several recent results show that the entropies of log-concave probability densities have nice properties. For example, reverse, strengthened and stable versions of the entropy power inequality were recently obtained for log-concave random vectors (see e.g., [3,11,35,36,37,38]). This line of developments suggests that, in some sense, log-concave random vectors behave like Gaussians.

Our work follows this line of results by establishing new lower bounds on the differential entropy of log-concave random variables in (4), of log-concave random vectors with possibly dependent coordinates in (37), and of -concave random variables in (43). We made use of the new lower bounds in several applications. First, we derived reverse entropy power inequalities with explicit constants for uncorrelated, possibly dependent log-concave random vectors in (12) and (47). We also showed a universal bound on the difference between the rate-distortion function and the Shannon lower bound for log-concave random variables in Figure 1a and Figure 1b, and for log-concave random vectors in (59). Finally, we established an upper bound on the capacity of memoryless additive noise channels when the noise is a log-concave random vector in (20) and (66).

Under the Gaussian assumption, information-theoretic limits in many communication scenarios admit simple closed-form expressions. Our work demonstrates that, at least in three such scenarios (source coding, channel coding and joint source-channel coding), the information-theoretic limits admit a closed-form approximation with at most 1 bit of error if the Gaussian assumption is relaxed to the log-concave one. We hope that the approach will be useful in gaining insights into those communication and data processing scenarios in which the Gaussianity of the observed distributions is violated but the log-concavity is preserved.

Acknowledgments

This work is supported in part by the National Science Foundation (NSF) under Grant CCF-1566567, and by the Walter S. Baer and Jeri Weiss CMI Postdoctoral Fellowship. The authors would also like to thank an anonymous referee for pointing out that the bound (23) and, up to a factor of 2, the bound (25) also apply to the non-symmetric case if .

Author Contributions

Arnaud Marsiglietti and Victoria Kostina contributed equally to the research and writing of the paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Zamir R., Feder M. On Universal Quantization by Randomized Uniform/Lattice Quantizers. IEEE Trans. Inf. Theory. 1992;38:428–436. doi: 10.1109/18.119699.

2. Prékopa A. On logarithmic concave measures and functions. Acta Sci. Math. 1973;34:335–343.

3. Bobkov S., Madiman M. The entropy per coordinate of a random vector is highly constrained under convexity conditions. IEEE Trans. Inf. Theory. 2011;57:4940–4954. doi: 10.1109/TIT.2011.2158475.

4. Bobkov S., Madiman M. Entropy and the hyperplane conjecture in convex geometry; Proceedings of the 2010 IEEE International Symposium on Information Theory Proceedings (ISIT); Austin, TX, USA. 13–18 June 2010; pp. 1438–1442.

5. Shannon C.E. A mathematical theory of communication. Bell Syst. Tech. J. 1948;27:379–423, 623–656. doi: 10.1002/j.1538-7305.1948.tb01338.x.

6. Stam A.J. Some inequalities satisfied by the quantities of information of Fisher and Shannon. Inf. Control. 1959;2:101–112. doi: 10.1016/S0019-9958(59)90348-1.

7. Bobkov S., Madiman M. Reverse Brunn-Minkowski and reverse entropy power inequalities for convex measures. J. Funct. Anal. 2012;262:3309–3339. doi: 10.1016/j.jfa.2012.01.011.

8. Cover T.M., Zhang Z. On the maximum entropy of the sum of two dependent random variables. IEEE Trans. Inf. Theory. 1994;40:1244–1246. doi: 10.1109/18.335945.

9. Madiman M., Kontoyiannis I. Entropy bounds on abelian groups and the Ruzsa divergence. IEEE Trans. Inf. Theory. 2018;64:77–92. doi: 10.1109/TIT.2016.2620470.

10. Bobkov S., Madiman M. Limit Theorems in Probability, Statistics and Number Theory. Volume 42. Springer; Berlin/Heidelberg, Germany: 2013. On the problem of reversibility of the entropy power inequality; pp. 61–74. Springer Proceedings in Mathematics and Statistics.

11. Ball K., Nayar P., Tkocz T. A reverse entropy power inequality for log-concave random vectors. Studia Math. 2016;235:17–30. doi: 10.4064/sm8418-6-2016.

12. Courtade T.A. Links between the Logarithmic Sobolev Inequality and the convolution inequalities for Entropy and Fisher Information. arXiv. 2016. arXiv:1608.05431.

13. Madiman M., Melbourne J., Xu P. Forward and Reverse Entropy Power Inequalities in Convex Geometry. In: Carlen E., Madiman M., Werner E., editors. Convexity and Concentration. Volume 161. Springer; New York, NY, USA: 2017. pp. 427–485. The IMA Volumes in Mathematics and Its Applications.

14. Shannon C.E. Coding theorems for a discrete source with a fidelity criterion. IRE Int. Conv. Rec. 1959;7:142–163. Reprinted with changes in Information and Decision Processes; Machol, R.E., Ed.; McGraw-Hill: New York, NY, USA, 1960; pp. 93–126.

15. Linkov Y.N. Evaluation of ϵ-entropy of random variables for small ϵ. Probl. Inf. Transm. 1965;1:18–26.

16. Linder T., Zamir R. On the asymptotic tightness of the Shannon lower bound. IEEE Trans. Inf. Theory. 1994;40:2026–2031. doi: 10.1109/18.340474.

17. Koch T. The Shannon Lower Bound is Asymptotically Tight. IEEE Trans. Inf. Theory. 2016;62:6155–6161. doi: 10.1109/TIT.2016.2604254.

18. Kostina V. Data compression with low distortion and finite blocklength. IEEE Trans. Inf. Theory. 2017;63:4268–4285. doi: 10.1109/TIT.2017.2676811.

19. Gish H., Pierce J. Asymptotically efficient quantizing. IEEE Trans. Inf. Theory. 1968;14:676–683. doi: 10.1109/TIT.1968.1054193.

20. Ziv J. On universal quantization. IEEE Trans. Inf. Theory. 1985;31:344–347. doi: 10.1109/TIT.1985.1057034.

21. Cover T.M., Thomas J.A. Elements of Information Theory. John Wiley & Sons; Hoboken, NJ, USA: 2012.

22. Ihara S. On the capacity of channels with additive non-Gaussian noise. Inf. Control. 1978;37:34–39. doi: 10.1016/S0019-9958(78)90413-8.

23. Diggavi S.N., Cover T.M. The worst additive noise under a covariance constraint. IEEE Trans. Inf. Theory. 2001;47:3072–3081. doi: 10.1109/18.959289.

24. Zamir R., Erez U. A Gaussian input is not too bad. IEEE Trans. Inf. Theory. 2004;50:1340–1353. doi: 10.1109/TIT.2004.828153.

25. Karlin S., Proschan F., Barlow R.E. Moment inequalities of Pólya frequency functions. Pac. J. Math. 1961;11:1023–1033. doi: 10.2140/pjm.1961.11.1023.

26. Borell C. Convex measures on locally convex spaces. Ark. Mat. 1974;12:239–252. doi: 10.1007/BF02384761.

27. Borell C. Convex set functions in d-space. Period. Math. Hungar. 1975;6:111–136. doi: 10.1007/BF02018814.

28. Borell C. Complements of Lyapunov’s inequality. Math. Ann. 1973;205:323–331. doi: 10.1007/BF01362702.

29. Fradelizi M., Madiman M., Wang L. High Dimensional Probability VII 2016. Volume 71. Birkhäuser; Cham, Switzerland: 2016. Optimal concentration of information content for log-concave densities; pp. 45–60.

30. Ball K. Logarithmically concave functions and sections of convex sets in ℝⁿ. Studia Math. 1988;88:69–84. doi: 10.4064/sm-88-1-69-84.

31. Brazitikos S., Giannopoulos A., Valettas P., Vritsiou B.H. Geometry of Isotropic Convex Bodies. American Mathematical Society; Providence, RI, USA: 2014. Mathematical Surveys and Monographs, 196.

32. Klartag B. On convex perturbations with a bounded isotropic constant. Geom. Funct. Anal. 2006;16:1274–1290. doi: 10.1007/s00039-006-0588-1.

33. Bobkov S., Nazarov F. Geometric Aspects of Functional Analysis. Springer; Berlin/Heidelberg, Germany: 2003. On convex bodies and log-concave probability measures with unconditional basis; pp. 53–69.

34. Fradelizi M., Guédon O., Pajor A. Thin-shell concentration for convex measures. Studia Math. 2014;223:123–148. doi: 10.4064/sm223-2-2.

35. Ball K., Nguyen V.H. Entropy jumps for isotropic log-concave random vectors and spectral gap. Studia Math. 2012;213:81–96. doi: 10.4064/sm213-1-6.

36. Toscani G. A concavity property for the reciprocal of Fisher information and its consequences on Costa’s EPI. Physica A. 2015;432:35–42. doi: 10.1016/j.physa.2015.03.018.

37. Toscani G. A strengthened entropy power inequality for log-concave densities. IEEE Trans. Inf. Theory. 2015;61:6550–6559. doi: 10.1109/TIT.2015.2495302.

38. Courtade T.A., Fathi M., Pananjady A. Wasserstein Stability of the Entropy Power Inequality for Log-Concave Densities. arXiv. 2016. arXiv:1610.07969.