Abstract

What are the distinct ways in which a set of predictor variables can provide information about a target variable? When does a variable provide unique information, when do variables share redundant information, and when do variables combine synergistically to provide complementary information? The redundancy lattice from the partial information decomposition of Williams and Beer provided a promising glimpse at the answer to these questions. However, this structure was constructed using a much criticised measure of redundant information, and despite sustained research, no completely satisfactory replacement measure has been proposed. In this paper, we take a different approach, applying the axiomatic derivation of the redundancy lattice to a single realisation from a set of discrete variables. To overcome the difficulty associated with signed pointwise mutual information, we apply this decomposition separately to the unsigned entropic components of pointwise mutual information which we refer to as the specificity and ambiguity. This yields a separate redundancy lattice for each component. Then based upon an operational interpretation of redundancy, we define measures of redundant specificity and ambiguity enabling us to evaluate the partial information atoms in each lattice. These atoms can be recombined to yield the sought-after multivariate information decomposition. We apply this framework to canonical examples from the literature and discuss the results and the various properties of the decomposition. In particular, the pointwise decomposition using specificity and ambiguity satisfies a chain rule over target variables, which provides new insights into the so-called two-bit-copy example.

Keywords: mutual information, pointwise information, information decomposition, unique information, redundant information, complementary information, redundancy, synergy

PACS: 89.70.Cf, 89.75.Fb, 05.65.+b, 87.19.lo

1. Introduction

The aim of information decomposition is to divide the total amount of information provided by a set of predictor variables, about a target variable, into atoms of partial information contributed either individually or jointly by the various subsets of the predictors. Suppose that we are trying to predict a target variable T, with discrete state space $\mathcal{T}$, from a pair of predictor variables $S_1$ and $S_2$, with discrete state spaces $\mathcal{S}_1$ and $\mathcal{S}_2$. The mutual information $I(S_1;T)$ quantifies the information $S_1$ individually provides about T. Similarly, the mutual information $I(S_2;T)$ quantifies the information $S_2$ individually provides about T. Now consider the joint variable $S_{1,2}$ with the state space $\mathcal{S}_1 \times \mathcal{S}_2$. The (joint) mutual information $I(S_{1,2};T)$ quantifies the total information $S_1$ and $S_2$ together provide about T. Although Shannon’s information theory provides the prior three measures of information, there are four possible ways $S_1$ and $S_2$ could contribute information about T: the predictor $S_1$ could uniquely provide information about T; or the predictor $S_2$ could uniquely provide information about T; both $S_1$ and $S_2$ could individually, yet redundantly, provide the same information about T; or the predictors $S_1$ and $S_2$ could synergistically provide information about T which is not available in either predictor individually. Thus we have the following underdetermined set of equations,

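$$
\begin{aligned}
I(S_1;T) &= R(S_1,S_2 \to T) + U(S_1 \setminus S_2 \to T), \\
I(S_2;T) &= R(S_1,S_2 \to T) + U(S_2 \setminus S_1 \to T), \\
I(S_{1,2};T) &= R(S_1,S_2 \to T) + U(S_1 \setminus S_2 \to T) + U(S_2 \setminus S_1 \to T) + C(S_1,S_2 \to T),
\end{aligned}
\tag{1}
$$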

where $U(S_1 \setminus S_2 \to T)$ and $U(S_2 \setminus S_1 \to T)$ are the unique information provided by $S_1$ and $S_2$ respectively, $R(S_1,S_2 \to T)$ is the redundant information, and $C(S_1,S_2 \to T)$ is the synergistic or complementary information. (The directed notation is utilised here to emphasise the privileged role of the variable T.) Together, the equations in (1) form the bivariate information decomposition. The problem is to define one of the unique, redundant or complementary information (something not provided by Shannon’s information theory) in order to uniquely evaluate the decomposition.
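To make the underdetermination concrete, the following minimal sketch computes the three Shannon quantities for a toy distribution and then solves the three equations in (1) for the remaining atoms once a redundancy value is supplied from outside. The helper names (`marginal`, `mutual_information`, `bivariate_atoms`) and the XOR example are illustrative assumptions, not part of the framework itself.

```python
from collections import defaultdict
from math import log2

def marginal(joint, idx):
    """Marginalise a joint pmf {(s1, s2, t): p} onto the coordinates in idx."""
    out = defaultdict(float)
    for outcome, p in joint.items():
        out[tuple(outcome[i] for i in idx)] += p
    return dict(out)

def mutual_information(joint, src_idx, tgt_idx=(2,)):
    """Average mutual information I(source; target) in bits."""
    p_st = marginal(joint, src_idx + tgt_idx)
    p_s = marginal(joint, src_idx)
    p_t = marginal(joint, tgt_idx)
    k = len(src_idx)
    return sum(p * log2(p / (p_s[st[:k]] * p_t[st[k:]]))
               for st, p in p_st.items() if p > 0)

def bivariate_atoms(joint, redundancy):
    """Solve the three equations in (1) given an externally supplied redundancy."""
    i1 = mutual_information(joint, (0,))
    i2 = mutual_information(joint, (1,))
    i12 = mutual_information(joint, (0, 1))
    u1 = i1 - redundancy            # unique information from S1
    u2 = i2 - redundancy            # unique information from S2
    c = i12 - redundancy - u1 - u2  # complementary information
    return {"R": redundancy, "U1": u1, "U2": u2, "C": c}

# Toy example (assumption): T = S1 XOR S2 with uniform, independent inputs.
xor = {(a, b, a ^ b): 0.25 for a in (0, 1) for b in (0, 1)}
atoms = bivariate_atoms(xor, redundancy=0.0)
print(atoms)
```

Shannon's measures fix only the three left-hand sides; the value passed as `redundancy` is a free input here, which is exactly the quantity a decomposition must define.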

Now suppose that we are trying to predict a target variable T from a set of n finite state predictor variables $\boldsymbol{S} = \{S_1, \ldots, S_n\}$. In this general case, the aim of information decomposition is to divide the total amount of information $I(\boldsymbol{S};T)$ into atoms of partial information contributed either individually or jointly by the various subsets of $\boldsymbol{S}$. But what are the distinct ways in which these subsets of predictors might contribute information about the target? Multivariate information decomposition is more involved than the bivariate information decomposition because it is not immediately obvious how many atoms of information one needs to consider, nor is it clear how these atoms should relate to each other. Thus the general problem of information decomposition is to provide both a structure for multivariate information which is consistent with the bivariate decomposition, and a way to uniquely evaluate the atoms in this general structure.

In the remainder of Section 1, we will introduce an intriguing framework called partial information decomposition (PID), which aims to address the general problem of information decomposition, and highlight some of the criticisms and weaknesses of this framework. In Section 2, we will consider the underappreciated pointwise nature of information and discuss the relevance of this to the problem of information decomposition. We will then propose a modified pointwise partial information decomposition (PPID), but then quickly repudiate this approach due to complications associated with decomposing the signed pointwise mutual information. In Section 3, we will discuss circumventing this issue by examining information on a more fundamental level, in terms of the unsigned entropic components of pointwise mutual information which we refer to as the specificity and the ambiguity. Then in Section 4, the main section of this paper, we will introduce the PPID using the specificity and ambiguity lattices and the measures of redundancy in Definitions 1 and 2. In Section 5, we will apply this framework to a number of canonical examples from the PID literature, discuss some of the key properties of the decomposition, and compare these to existing approaches to information decomposition. Section 6 will conclude the main body of the paper. Appendix A contains discussions regarding the so-called two-bit-copy problem in terms of Kelly gambling, Appendix B contains many of the technical details and proofs, while Appendix C contains some more examples.

1.1. Notation

The following notational conventions are observed throughout this article:

- $T$, $\mathcal{T}$, $t$, $t^c$ denote the target variable, event space, event and complementary event respectively;
- $S$, $\mathcal{S}$, $s$, $s^c$ denote the predictor variable, event space, event and complementary event respectively;
- $\boldsymbol{S}$, $\boldsymbol{s}$ represent the set of n predictor variables and events respectively;
- $\mathcal{T}^t$, $\mathcal{S}^s$ denote the two-event partition of the event space, i.e., $\mathcal{T}^t = \{t, t^c\}$ and $\mathcal{S}^s = \{s, s^c\}$;
- $H(T)$, $I(S;T)$ uppercase function names are used for average information-theoretic measures;
- $h(t)$, $i(s;t)$ lowercase function names are used for pointwise information-theoretic measures.

When required, the following index conventions are observed:

- $t^1$, $t^2$, $s^1$, $s^2$ superscripts distinguish between different events in a variable;
- $S_1$, $S_2$, $s_1$, $s_2$ subscripts distinguish between different variables;

- $S_{1,2}$, $s_{1,2}^{1,2}$ multiple superscripts represent joint variables and joint events.

Finally, to be discussed in more detail when appropriate, consider the following:

- sources are sets of predictor variables, i.e., $A_i \in \mathcal{P}_1(\boldsymbol{S})$ where $\mathcal{P}_1$ is the power set without ∅;
- source events are sets of predictor events, i.e., $a_i \in \mathcal{P}_1(\boldsymbol{s})$.

1.2. Partial Information Decomposition

The partial information decomposition (PID) of Williams and Beer [1,2] was introduced to address the problem of multivariate information decomposition. The approach taken is appealing as rather than speculating about the structure of multivariate information, Williams and Beer took a more principled, axiomatic approach. They start by considering potentially overlapping subsets of $\boldsymbol{S}$ called sources, denoted $A_1, \ldots, A_k$. To examine the various ways these sources might contain the same information, they introduce three axioms which “any reasonable measure for redundant information [$I_\cap$] should fulfil” ([3], p. 3502). Note that the axioms appear explicitly in [2] but are discussed in [1] as mere properties; a published version of the axioms can be found in [4].

W&B Axiom 1 (Commutativity).

Redundant information is invariant under any permutation σ of sources,

$$
I_\cap(A_1, \ldots, A_k \to T) = I_\cap(\sigma(A_1), \ldots, \sigma(A_k) \to T).
$$

W&B Axiom 2 (Monotonicity).

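Redundant information decreases monotonically as more sources are included,

$$
I_\cap(A_1, \ldots, A_{k-1} \to T) \geq I_\cap(A_1, \ldots, A_{k-1}, A_k \to T),
$$

with equality if $A_k \supseteq A_i$ for any $A_i \in \{A_1, \ldots, A_{k-1}\}$.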

W&B Axiom 3 (Self-redundancy).

Redundant information for a single source equals the mutual information,

$$
I_\cap(A_i \to T) = I(A_i;T).
$$

These axioms are based upon the intuition that redundancy should be analogous to the set-theoretic notion of intersection (which is commutative, monotonically decreasing and idempotent). Crucially, Axiom 3 ties this notion of redundancy to Shannon’s information theory. In addition to these three axioms, there is an (implicit) axiom assumed here known as local positivity [5], which is the requirement that all atoms be non-negative. Williams and Beer [1,2] then show how these axioms reduce the number of sources to the collection of sources such that no source is a superset of any other. These remaining sources are called partial information atoms (PI atoms). Each PI atom corresponds to a distinct way the set of predictors can contribute information about the target T. Furthermore, Williams and Beer show that these PI atoms are partially ordered and hence form a lattice which they call the redundancy lattice. For the bivariate case, the redundancy lattice recovers the decomposition (1), while in the multivariate case it provides a meaningful structure for decomposition of the total information provided by an arbitrary number of predictor variables.
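The reduction to collections of sources in which no source is a superset of any other can be illustrated by brute-force enumeration. The sketch below is only an illustration under our own naming (`nonempty_subsets`, `is_antichain`, `pi_atoms`, `label` are hypothetical helpers); it lists the node labels of the redundancy lattice but does not construct the partial order.

```python
from itertools import combinations

def nonempty_subsets(n):
    """All non-empty subsets (sources) of the predictor indices {1, ..., n}."""
    items = range(1, n + 1)
    return [frozenset(c) for r in range(1, n + 1) for c in combinations(items, r)]

def is_antichain(sources):
    """True if no source in the collection is a proper subset of another."""
    return not any(a < b for a in sources for b in sources)

def pi_atoms(n):
    """Enumerate collections of sources in which no source contains another."""
    subsets = nonempty_subsets(n)
    atoms = []
    for r in range(1, len(subsets) + 1):
        for collection in combinations(subsets, r):
            if is_antichain(collection):
                atoms.append(collection)
    return atoms

def label(atom):
    """Render a collection such as ({1}, {2}) as the string '{1}{2}'."""
    return "".join("{" + ",".join(map(str, sorted(a))) + "}" for a in atom)

print([label(a) for a in pi_atoms(2)])  # four atoms for two predictors
print(len(pi_atoms(3)))                 # 18 atoms for three predictors
```

For two predictors the four collections `{1}{2}`, `{1}`, `{2}` and `{1,2}` correspond to the redundant, the two unique, and the complementary atoms of the decomposition (1). The exhaustive search grows very quickly with n and is meant for small cases only.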

While the redundancy lattice of PID provides a structure for multivariate information decomposition, it does not uniquely determine the value of the PI atoms in the lattice. To do so requires a definition of a measure of redundant information which satisfies the above axioms. Hence, in order to complete the PID framework, Williams and Beer simultaneously introduced a measure of redundant information called $I_{\min}$, which quantifies redundancy as the minimum information that any source provides about a target event t, averaged over all possible events from T. However, not long after its introduction $I_{\min}$ was heavily criticised. Firstly, $I_{\min}$ does not distinguish between “whether different random variables carry the same information or just the same amount of information” ([5], p. 269; see also [6,7]). Secondly, $I_{\min}$ does not possess the target chain rule introduced by Bertschinger et al. [5] (under the name left chain rule). This latter point is problematic as the target chain rule is a natural generalisation of the chain rule of mutual information, i.e., one of the fundamental, and indeed characterising, properties of information in Shannon’s theory [8,9].

These issues with $I_{\min}$ prompted much research attempting to find a suitable replacement measure compatible with the PID framework. Using the methods of information geometry, Harder et al. [6] focused on a definition of redundant information called $I_{\text{red}}$ (see also [10]). Bertschinger et al. [11] defined a measure of unique information $\widetilde{UI}$ based upon the notion that if one variable contains unique information then there must be some way to exploit that information in a decision problem. Griffith and Koch [12] used an entirely different motivation to define a measure of synergistic information $S_{\text{VK}}$ whose decomposition transpired to be equivalent to that of [11]. Despite this effort, none of these proposed measures are entirely satisfactory. Firstly, just as for $I_{\min}$, none of these proposed measures possess the target chain rule. Secondly, these measures are not compatible with the PID framework in general, but rather are only compatible with PID for the special case of bivariate predictors, i.e., the decomposition (1). This is because they all simultaneously satisfy the Williams and Beer axioms, local positivity, and the identity property introduced by Harder et al. [6]. In particular, Rauh et al. [13] proved that no measure satisfying the identity property and the Williams and Beer Axioms 1–3 can yield a non-negative information decomposition beyond the bivariate case of two predictor variables. In addition to these proposed replacements for $I_{\min}$, there is also a substantial body of literature discussing either PID, similar attempts to decompose multivariate information, or the problem of information decomposition in general [3,4,5,7,10,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28]. Furthermore, the current proposals have been applied to various problems in neuroscience [29,30,31,32,33,34]. Nevertheless (to date), there is no generally accepted measure of redundant information that is entirely compatible with the PID framework, nor has any other well-accepted multivariate information decomposition emerged.

To summarise the problem, we are seeking a meaningful decomposition of the information provided by an arbitrarily large set of predictor variables about a target variable, into atoms of partial information contributed either individually or jointly by the various subsets of the predictors. Crucially, the redundant information must capture when two predictor variables are carrying the same information about the target, not merely the same amount of information. Finally, any proposed measure of redundant information should satisfy the target chain rule so that net redundant information can be computed consistently for multiple target events.

2. Pointwise Information Theory

Both the entropy and mutual information can be derived from first principles as fundamentally pointwise quantities which measure the information content of individual events rather than entire variables. The pointwise entropy $h(t) = -\log p(t)$ quantifies the information content of a single event t, while the pointwise mutual information

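$$
i(s;t) = \log \frac{p(t|s)}{p(t)} = \log \frac{p(s,t)}{p(s)\,p(t)} = \log \frac{p(s|t)}{p(s)}
\tag{2}
$$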

quantifies the information provided by s about t, or vice versa. To our knowledge, these quantities were first considered by Woodward and Davies [35,36] who noted that the average form of Shannon’s entropy “tempts one to enquire into other simpler methods of derivation [of the per state entropy]” ([35], p. 51). Indeed, they went on to show that the pointwise entropy and pointwise mutual information can be derived from two axioms concerning the addition of the information provided by the occurrence of individual events [36]. Fano [9] further formalised this pointwise approach by deriving both quantities from four postulates which “should be satisfied by a useful measure of information” ([9], p. 31). Taking the expectation of these pointwise quantities over all events recovers the average entropy and average mutual information first derived by Shannon [8]. Although both approaches arrive at the same average quantities, Shannon’s treatment obfuscates the pointwise nature of the fundamental quantities. In contrast, the approach of Woodward, Davies and Fano makes this pointwise nature manifestly obvious.

It is important to note that, in contrast to the average mutual information, the pointwise mutual information is not non-negative. Positive pointwise information corresponds to the predictor event s raising the posterior probability $p(t|s)$ relative to the prior probability $p(t)$. Hence when the event t occurs it can be said that the event s was informative about the event t. Conversely, negative pointwise information corresponds to the event s lowering the posterior probability $p(t|s)$ relative to the prior probability $p(t)$. Hence when the event t occurs we can say that the event s was misinformative about the event t. (Not to be confused with disinformation, i.e., intentionally misleading information.) Although a source event s may be misinformative about a particular target event t, a source event s is never misinformative about the target variable T since the pointwise mutual information averaged over all target realisations is non-negative [9]. The information provided by s is helpful for predicting T on average; however, in certain instances this (typically helpful) information is misleading in that it lowers $p(t|s)$ relative to $p(t)$. Typically helpful information which subsequently turns out to be misleading is misinformation.
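The sign behaviour described above can be checked numerically. The following sketch uses a hypothetical noisy binary channel (the probabilities are assumed values chosen for illustration) and evaluates the pointwise mutual information for an informative and a misinformative pairing, together with its average over target events given s.

```python
from math import log2

# Hypothetical joint pmf p(s, t) for a noisy binary channel (assumed values).
joint = {(0, 0): 0.4, (0, 1): 0.1, (1, 0): 0.1, (1, 1): 0.4}

def p_s(s):
    """Marginal probability of the predictor event s."""
    return sum(p for (si, _), p in joint.items() if si == s)

def p_t(t):
    """Marginal (prior) probability of the target event t."""
    return sum(p for (_, ti), p in joint.items() if ti == t)

def pmi(s, t):
    """Pointwise mutual information i(s;t) = log2 p(s,t) / (p(s) p(t)), in bits."""
    return log2(joint[(s, t)] / (p_s(s) * p_t(t)))

def information_about_variable(s):
    """Pointwise information averaged over target events, weighted by p(t|s)."""
    return sum(joint[(s, t)] / p_s(s) * pmi(s, t) for t in (0, 1))

print(pmi(0, 0))                      # positive: s = 0 raised the probability of t = 0
print(pmi(0, 1))                      # negative: s = 0 lowered the probability of t = 1
print(information_about_variable(0))  # non-negative on average
```

The event s = 0 is misinformative about t = 1 in this example, yet its information about the variable T remains non-negative once averaged over target events.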

Finally, before continuing, there are two points to be made about the terminology used to describe pointwise information. Firstly, in certain literature (typically in the context of time-series analysis), the word local is used instead of pointwise, e.g., [4,18]. Secondly, in contemporary information theory, the word average is generally omitted while the pointwise quantities are explicitly prefixed; however, this was not always the accepted convention. Woodward [35] and Fano [9] both referred to pointwise mutual information as the mutual information and then explicitly prefixed the average mutual information. To avoid confusion, we will always prefix both pointwise and average quantities.

2.1. Pointwise Information Decomposition

Now that we are familiar with the pointwise nature of information, suppose that we have a discrete realisation from the joint event space $\mathcal{T} \times \mathcal{S}_1 \times \mathcal{S}_2$ consisting of the target event t and predictor events $s_1$ and $s_2$. The pointwise mutual information $i(s_1;t)$ quantifies the information provided individually by $s_1$ about t, while the pointwise mutual information $i(s_2;t)$ quantifies the information provided individually by $s_2$ about t. The pointwise joint mutual information $i(s_{1,2};t)$ quantifies the total information provided jointly by $s_1$ and $s_2$ about t. In correspondence with the (average) bivariate decomposition (1), consider the pointwise bivariate decomposition, first suggested by Lizier et al. [4],

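$$
\begin{aligned}
i(s_1;t) &= r(s_1,s_2 \to t) + u(s_1 \setminus s_2 \to t), \\
i(s_2;t) &= r(s_1,s_2 \to t) + u(s_2 \setminus s_1 \to t), \\
i(s_{1,2};t) &= r(s_1,s_2 \to t) + u(s_1 \setminus s_2 \to t) + u(s_2 \setminus s_1 \to t) + c(s_1,s_2 \to t).
\end{aligned}
\tag{3}
$$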

Note that the lower case quantities denote the pointwise equivalent of the corresponding upper case quantities in (1). This decomposition could be considered for every discrete realisation on the support of the joint distribution $P(S_1,S_2,T)$. Hence, consider taking the expectation of these pointwise atoms over all discrete realisations,

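$$
\begin{aligned}
U(S_1 \setminus S_2 \to T) &= \mathbb{E}\big[u(s_1 \setminus s_2 \to t)\big], &
U(S_2 \setminus S_1 \to T) &= \mathbb{E}\big[u(s_2 \setminus s_1 \to t)\big], \\
R(S_1,S_2 \to T) &= \mathbb{E}\big[r(s_1,s_2 \to t)\big], &
C(S_1,S_2 \to T) &= \mathbb{E}\big[c(s_1,s_2 \to t)\big].
\end{aligned}
\tag{4}
$$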

Since the expectation is a linear operation, this will recover the (average) bivariate decomposition (1). Equation (3) for every discrete realisation, together with (1) and (4), forms the bivariate pointwise information decomposition. Just as in (1), these equations are underdetermined, requiring a separate definition of either the pointwise unique, redundant or complementary information for uniqueness. (Defining an average atom is sufficient for a unique bivariate decomposition (1), but still leaves the pointwise decomposition (3) within each realisation underdetermined.)

2.2. Pointwise Unique

Now consider applying this pointwise information decomposition to the probability distribution Pointwise Unique (PwUnq) in Table 1. In PwUnq, observing a 0 in either of $S_1$ or $S_2$ provides zero information about the target T, while complete information about the outcome of T is obtained by observing a 1 or a 2 in either predictor. The probability distribution is structured such that in each of the four realisations, one predictor provides complete information while the other predictor provides zero information. The two predictors never provide the same information about the target, which is justified by noting that one of the two predictors always provides zero pointwise information.

Table 1.

Example PwUnq. For each realisation, the pointwise mutual information provided by each individual and joint predictor event about the target event has been evaluated. Note that one predictor event always provides full information about the target while the other provides zero information. Based on this, it is assumed that there must be zero redundant information. The pointwise partial information (PPI) atoms are then calculated via (3).

| $p$ | $s_1$ | $s_2$ | $t$ | $i(s_1;t)$ | $i(s_2;t)$ | $i(s_{1,2};t)$ | $u(s_1 \setminus s_2 \to t)$ | $u(s_2 \setminus s_1 \to t)$ | $r(s_1,s_2 \to t)$ | $c(s_1,s_2 \to t)$ |
|---|---|---|---|---|---|---|---|---|---|---|
| 1/4 | 0 | 1 | 1 | 0 | 1 | 1 | 0 | 1 | 0 | 0 |
| 1/4 | 1 | 0 | 1 | 1 | 0 | 1 | 1 | 0 | 0 | 0 |
| 1/4 | 0 | 2 | 2 | 0 | 1 | 1 | 0 | 1 | 0 | 0 |
| 1/4 | 2 | 0 | 2 | 1 | 0 | 1 | 1 | 0 | 0 | 0 |
| Expected values | | | | 1/2 | 1/2 | 1 | 1/2 | 1/2 | 0 | 0 |

Given that redundancy is supposed to capture the same information, it seems reasonable to assume there must be zero pointwise redundant information for each realisation. This assumption is made without any measure of pointwise redundant information; however, no other possibility seems justifiable. This assertion is used to determine the pointwise redundant information terms in Table 1. Using the pointwise information decomposition (3), we can then evaluate the other pointwise atoms of information in Table 1. Finally, using (4), we get that there is zero (average) redundant information, and $1/2$ bit of (average) unique information from each predictor. From the pointwise perspective, the only reasonable conclusion seems to be that the predictors in PwUnq must contain only unique information about the target.
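The entries of Table 1 can be recomputed directly from the PwUnq distribution. The sketch below is a minimal illustration (the helper names `prob` and `pmi` are our own): it evaluates the pointwise mutual information terms for each realisation, imposes the assumption of zero pointwise redundancy argued above, solves the pointwise decomposition for the remaining atoms, and takes expectations.

```python
from math import log2

# PwUnq: four equiprobable realisations (s1, s2, t).
pwunq = {(0, 1, 1): 0.25, (1, 0, 1): 0.25, (0, 2, 2): 0.25, (2, 0, 2): 0.25}

def prob(joint, idx, values):
    """Probability that the coordinates listed in idx take the given values."""
    return sum(p for o, p in joint.items()
               if all(o[i] == v for i, v in zip(idx, values)))

def pmi(joint, src_idx, outcome):
    """Pointwise mutual information between the source event and t, in bits."""
    s = [outcome[i] for i in src_idx]
    t = outcome[2]
    p_joint = prob(joint, list(src_idx) + [2], s + [t])
    return log2(p_joint / (prob(joint, src_idx, s) * prob(joint, [2], [t])))

rows = []
for outcome, p in pwunq.items():
    i1 = pmi(pwunq, [0], outcome)
    i2 = pmi(pwunq, [1], outcome)
    i12 = pmi(pwunq, [0, 1], outcome)
    r = 0.0                  # assumption from the text: no pointwise redundancy
    u1, u2 = i1 - r, i2 - r  # pointwise unique atoms
    c = i12 - r - u1 - u2    # pointwise complementary atom
    rows.append((p, i1, i2, i12, u1, u2, r, c))

# Expectation of each column (i1, i2, i12, u1, u2, r, c) over the realisations.
expected = [sum(row[0] * row[k] for row in rows) for k in range(1, 8)]
print(expected)
```

Under the zero-redundancy assumption the expected unique information from each predictor is 1/2 bit and the expected complementary information is zero.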

However, in contrast to the above, $I_{\min}$, $I_{\text{red}}$, $\widetilde{UI}$, and $S_{\text{VK}}$ all say that the predictors in PwUnq contain no unique information, rather only $1/2$ bit of redundant information plus $1/2$ bit of complementary information. This problem, which will be referred to as the pointwise unique problem, is a consequence of the fact that these measures all satisfy Assumption ($\ast$) of Bertschinger et al. [11], which (in effect) states that the unique and redundant information should only depend on the marginal distributions $P(S_1,T)$ and $P(S_2,T)$. In particular, any measure which satisfies Assumption ($\ast$) will yield zero unique information when $P(S_1,T)$ is isomorphic to $P(S_2,T)$, as is the case for PwUnq. (Here, isomorphic should be taken to mean isomorphic probability spaces, e.g., [37], p. 27 or [38], p. 4.) It arises because Assumption ($\ast$) (and indeed the operational interpretation that led to its introduction) does not respect the pointwise nature of information. This operational view does not take into account the fact that individual events $s_1$ and $s_2$ may provide different information about the event t, even if the probability distributions $P(S_1,T)$ and $P(S_2,T)$ are the same. Hence, we contend that for any measure to capture the same information (not merely the same amount), it must respect the pointwise nature of information.
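As an illustration of the first of these measures, the sketch below evaluates the Williams and Beer redundancy on PwUnq, following the description in Section 1.2: for each target event, take the minimum information any source provides about it, then average over target events. The specific-information formula used here is our reading of that measure, and the helper names (`prob`, `specific_information`, `i_min`) are hypothetical.

```python
from math import log2

T = 2  # index of the target coordinate in each outcome (s1, s2, t)
pwunq = {(0, 1, 1): 0.25, (1, 0, 1): 0.25, (0, 2, 2): 0.25, (2, 0, 2): 0.25}

def prob(joint, idx, values):
    """Probability that the coordinates listed in idx take the given values."""
    return sum(p for o, p in joint.items()
               if all(o[i] == v for i, v in zip(idx, values)))

def specific_information(joint, src_idx, t):
    """Information a source provides about the target event t:
    sum over source events a of p(a|t) * log2(p(t|a) / p(t))."""
    p_t = prob(joint, [T], [t])
    source_events = {tuple(o[i] for i in src_idx) for o in joint if o[T] == t}
    total = 0.0
    for a in source_events:
        p_at = prob(joint, list(src_idx) + [T], list(a) + [t])
        if p_at > 0:
            p_a = prob(joint, src_idx, a)
            total += (p_at / p_t) * log2((p_at / p_a) / p_t)
    return total

def i_min(joint, sources):
    """Minimum specific information over sources, averaged over target events."""
    targets = {o[T] for o in joint}
    return sum(prob(joint, [T], [t]) *
               min(specific_information(joint, src, t) for src in sources)
               for t in targets)

redundancy = i_min(pwunq, [[0], [1]])
unique_1 = i_min(pwunq, [[0]]) - redundancy   # a single source gives I(S1;T)
unique_2 = i_min(pwunq, [[1]]) - redundancy
complementary = i_min(pwunq, [[0, 1]]) - redundancy - unique_1 - unique_2
print(redundancy, unique_1, unique_2, complementary)
```

Each predictor provides the same amount of information (1/2 bit) about each target event, so the minimum never drops to zero even though, within any single realisation, only one predictor is informative.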

2.3. Pointwise Partial Information Decomposition

With the pointwise unique problem in mind, consider constructing an information decomposition with the pointwise nature of information as an inherent property. Let $a_1, \ldots, a_k$ be potentially intersecting subsets of the predictor events $\boldsymbol{s} = \{s_1, \ldots, s_n\}$, called source events. Now consider rewriting the Williams and Beer axioms in terms of a measure of pointwise redundant information $i_\cap$, where the aim is to derive a pointwise partial information decomposition (PPID).

PPID Axiom 1 (Symmetry).

Pointwise redundant information is invariant under any permutation σ of source events,

$$
i_\cap(a_1, \ldots, a_k \to t) = i_\cap(\sigma(a_1), \ldots, \sigma(a_k) \to t).
$$

PPID Axiom 2 (Monotonicity).

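Pointwise redundant information decreases monotonically as more source events are included,

$$
i_\cap(a_1, \ldots, a_{k-1} \to t) \geq i_\cap(a_1, \ldots, a_{k-1}, a_k \to t),
$$

with equality if $a_k \supseteq a_i$ for any $a_i \in \{a_1, \ldots, a_{k-1}\}$.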

PPID Axiom 3 (Self-redundancy).

Pointwise redundant information for a single source event equals the pointwise mutual information,

$$
i_\cap(a_i \to t) = i(a_i;t).
$$

It seems that the next step should be to define some measure of pointwise redundant information which is compatible with these PPID axioms; however, there is a problem: the pointwise mutual information is not non-negative. While this would not be an issue for examples like PwUnq, where none of the source events provide negative pointwise information, it is an issue in general (e.g., see RdnErr in Section 5.4). The problem is that the set-theoretic intuition behind Axiom 2 (monotonicity) makes little sense when considering signed measures like the pointwise mutual information.

Given the desire to address the pointwise unique problem, there is a need to overcome this issue. Ince [18] suggested that the set-theoretic intuition is only valid when all source events provide either positive or negative pointwise information. Ince contends that information and misinformation are “fundamentally different” ([18], p. 11) and that the set-theoretic intuition should be abandoned in the difficult to interpret situations where both are present. We, however, will take a different approach: one which aims to deal with these difficult to interpret situations whilst preserving the set-theoretic intuition that redundancy corresponds to overlapping information.

By way of a preview, we first consider precisely how an event $s$ provides information about an event t by means of two distinct types of probability mass exclusion. We show how considering the process in this way naturally splits the pointwise mutual information into particular entropic components, and how one can consider redundancy on each of these components separately. Splitting the signed pointwise mutual information into these unsigned entropic components circumvents the above issue with Axiom 2 (monotonicity). Crucially, however, by deriving these entropic components from the probability mass exclusions, we retain the set-theoretic intuition of redundancy: redundant information will correspond to overlapping probability mass exclusions in the two-event partition $\mathcal{T}^t$.

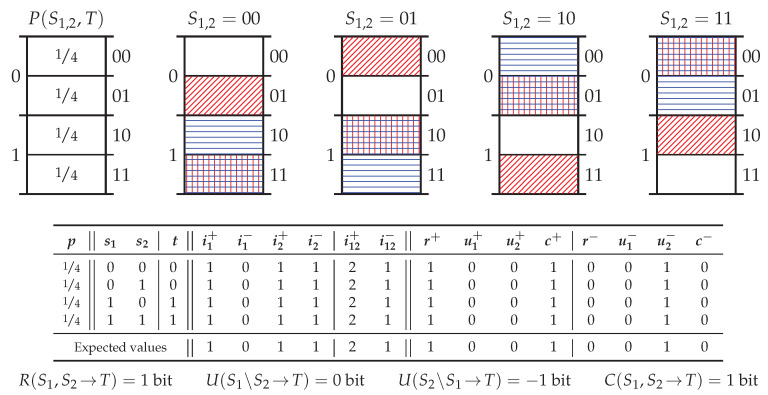

3. Probability Mass Exclusions and the Directed Components of Pointwise Mutual Information

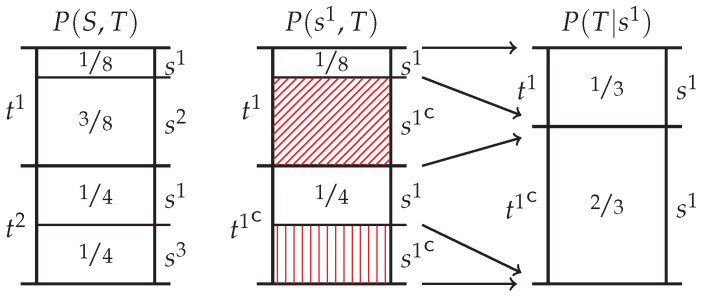

By definition, the pointwise information provided by s about t is associated with a change from the prior $p(t)$ to the posterior $p(t|s)$. As we explored from first principles in Finn and Lizier [39], this change is a consequence of the exclusion of probability mass in the target distribution $P(T)$ induced by the occurrence of the event s and inferred via the joint distribution $P(S,T)$. To be specific, when the event s occurs, one knows that the complementary event $s^c$ did not occur. Hence one can exclude the probability mass in the joint distribution associated with the complementary event, i.e., exclude $P(s^c,T)$, leaving just the probability mass $P(s,T)$ remaining. The new target distribution $P(T|s)$ is evaluated by normalising this remaining probability mass. In [39] we introduced probability mass diagrams in order to visually explore the exclusion process. Figure 1 provides an example of such a diagram. Clearly, this process is merely a description of the definition of conditional probability. Nevertheless, we contend that by viewing the change from the prior to the posterior in this way (by focusing explicitly on the exclusions rather than the resultant conditional probability) the vague intuition that redundancy corresponds to overlapping information becomes more apparent. This point will be elaborated upon in Section 3.3. However, in order to do so, we need to first discuss the two distinct types of probability mass exclusion (which we do in Section 3.1) and then relate these to information-theoretic quantities (which we do in Section 3.2).

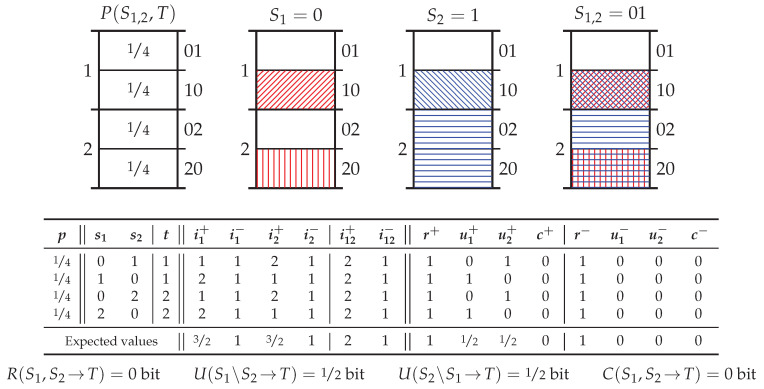

Figure 1.

Sample probability mass diagrams, which use length to represent the probability mass of each joint event from $\mathcal{T} \times \mathcal{S}$. (Left) The joint distribution $P(S,T)$; (Middle) The occurrence of the event $s^1$ leads to exclusions of the complementary event $s^{1c}$, which consists of two elementary events, i.e., $s^{1c} = \{s^2, s^3\}$. This leaves the probability mass $P(s^1,T)$ remaining. The exclusion of the probability mass $p(s^{1c},t^1)$ was misinformative since the event $t^1$ did occur. By convention, misinformative exclusions will be indicated with diagonal hatching. On the other hand, the exclusion of the probability mass $p(s^{1c},t^{1c})$ was informative since the complementary event $t^{1c}$ did not occur. By convention, informative exclusions will be indicated with horizontal or vertical hatching; (Right) This remaining probability mass can be normalised, yielding the conditional distribution $P(T|s^1)$.

3.1. Two Distinct Types of Probability Mass Exclusions

In [39] we examined the two distinct types of probability mass exclusions. The difference between the two depends on where the exclusion occurs in the target distribution $P(T)$ and the particular target event t which occurred. Informative exclusions are those which are confined to the probability mass associated with the set of elementary events in the target distribution which did not occur, i.e., exclusions confined to the probability mass of the complementary event $t^c$. They are called such because the pointwise mutual information $i(s;t)$ is a monotonically increasing function of the total size of these exclusions $p(s^c,t^c)$. By convention, informative exclusions are represented on the probability mass diagrams by horizontal or vertical lines. On the other hand, the misinformative exclusion is confined to the probability mass associated with the elementary event in the target distribution which did occur, i.e., an exclusion confined to $p(t)$. It is referred to as such because the pointwise mutual information $i(s;t)$ is a monotonically decreasing function of the size of this type of exclusion $p(s^c,t)$. By convention, misinformative exclusions are represented on the probability mass diagrams by diagonal lines.

Although an event s may exclusively induce either type of exclusion, in general both types of exclusion are present simultaneously. The distinction between the two types of exclusions leads naturally to the following question—can one decompose the pointwise mutual information into a positive informational component associated with the informative exclusions, and a negative informational component associated with the misinformative exclusions? This question is considered in detail in Section 3.2. However, before moving on, there is a crucial observation to be made about the pointwise mutual information which will have important implications for the measure of redundant information to be introduced later.

Remark 1.

The pointwise mutual information $i(s;t)$ depends only on the size of informative and misinformative exclusions. In particular, it does not depend on the apportionment of the informative exclusions across the set of elementary events contained in the complementary event $t^c$.

In other words, whether the event s turns out to be net informative or misinformative about the event t (whether $i(s;t)$ is positive or negative) depends on the size of the two types of exclusions; but, to be explicit, does not depend on the distribution of the informative exclusion across the set of target events which did not occur. This remark will be crucially important when it comes to providing the operational interpretation of redundant information in Section 3.3. (It is also further discussed in terms of Kelly gambling [40] in Appendix A.)
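Remark 1 can be checked on a small example. In the sketch below, two hypothetical joint distributions (assumed values) share the same prior for the realised target event and the same sizes of informative and misinformative exclusion, but apportion the informative exclusion differently across the target events which did not occur. The function names are our own.

```python
from math import log2

def exclusions(joint, s, t):
    """Sizes of the informative and misinformative exclusions induced by s,
    given that the target event t occurred."""
    informative = sum(p for (si, ti), p in joint.items() if si != s and ti != t)
    misinformative = sum(p for (si, ti), p in joint.items() if si != s and ti == t)
    return informative, misinformative

def pmi(joint, s, t):
    """Pointwise mutual information i(s;t) in bits."""
    p_s = sum(p for (si, _), p in joint.items() if si == s)
    p_t = sum(p for (_, ti), p in joint.items() if ti == t)
    return log2(joint[(s, t)] / (p_s * p_t))

# Predictor events 'a', 'b'; target events 1, 2, 3. Realisation: s = 'a', t = 1.
spread = {("a", 1): 0.2, ("b", 1): 0.1,
          ("a", 2): 0.2, ("b", 2): 0.2,
          ("a", 3): 0.2, ("b", 3): 0.1}
concentrated = {("a", 1): 0.2, ("b", 1): 0.1,
                ("a", 2): 0.4, ("b", 2): 0.0,
                ("a", 3): 0.0, ("b", 3): 0.3}

print(exclusions(spread, "a", 1), exclusions(concentrated, "a", 1))
print(pmi(spread, "a", 1), pmi(concentrated, "a", 1))
```

Both distributions exclude 0.3 of informative and 0.1 of misinformative probability mass, and the pointwise mutual information agrees (up to floating-point rounding) even though the informative exclusion falls on different target events.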

3.2. The Directed Components of Pointwise Information: Specificity and Ambiguity

We return now to the idea that one might be able to decompose the pointwise mutual information into a positive and negative component associated with the informative and misinformative exclusions respectively. In [39] we proposed four postulates for such a decomposition. Before stating the postulates, it is important to note that although there is a “surprising symmetry” ([41], p. 23) between the information provided by s about t and the information provided by t about s, there is nothing to suggest that the components of the decomposition should be symmetric. Indeed, the intuition behind the decomposition only makes sense when the information is considered in a directed sense. As such, directed notation will be used to explicitly denote the information provided by s about t.

Postulate 1 (Decomposition).

The pointwise information provided by s about t can be decomposed into two non-negative components, such that $i(s;t) = i^+(s \to t) - i^-(s \to t)$.

Postulate 2 (Monotonicity).

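For all fixed $p(s,t)$ and $p(s^c,t)$, the function $i^+(s \to t)$ is a monotonically increasing, continuous function of $p(s^c,t^c)$. For all fixed $p(s^c,t^c)$ and $p(s,t)$, the function $i^-(s \to t)$ is a monotonically increasing, continuous function of $p(s^c,t)$. For all fixed $p(s,t)$ and $p(s,t^c)$, the functions $i^+(s \to t)$ and $i^-(s \to t)$ are monotonically increasing and decreasing functions of $p(s^c,t^c)$, respectively.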

Postulate 3 (Self-Information).

An event cannot misinform about itself, $i^+(s \to s) = i(s;s) = -\log p(s)$.

Postulate 4 (Chain Rule).

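The functions $i^+(s_{1,2} \to t)$ and $i^-(s_{1,2} \to t)$ satisfy a chain rule, i.e.,

$$
\begin{aligned}
i^+(s_{1,2} \to t) &= i^+(s_1 \to t) + i^+(s_2 \to t \,|\, s_1) = i^+(s_2 \to t) + i^+(s_1 \to t \,|\, s_2), \\
i^-(s_{1,2} \to t) &= i^-(s_1 \to t) + i^-(s_2 \to t \,|\, s_1) = i^-(s_2 \to t) + i^-(s_1 \to t \,|\, s_2).
\end{aligned}
$$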

In Finn and Lizier [39], we proved that these postulates lead to the following forms which are unique up to the choice of the base of the logarithm in the mutual information in Postulates 1 and 3,

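$$
i^+(s \to t) = h(s) = -\log p(s), \tag{5}
$$

$$
i^-(s \to t) = h(s|t) = -\log p(s|t), \tag{6}
$$

$$
i^+(s_1 \to t \,|\, s_2) = h(s_1|s_2) = -\log p(s_1|s_2), \tag{7}
$$

$$
i^-(s_1 \to t \,|\, s_2) = h(s_1|t,s_2) = -\log p(s_1|t,s_2), \tag{8}
$$

$$
i^+(s_{1,2} \to t) = h(s_{1,2}) = -\log p(s_{1,2}), \tag{9}
$$

$$
i^-(s_{1,2} \to t) = h(s_{1,2}|t) = -\log p(s_{1,2}|t). \tag{10}
$$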

That is, the Postulates 1–4 uniquely decompose the pointwise information provided by s about t into the following entropic components,

| $i(s; t) = i^{+}(s \rightarrow t) - i^{-}(s \rightarrow t) = h(s) - h(s \mid t)$ | (11) |

Although the decomposition of mutual information into entropic components is well-known, it is non-trivial that Postulates 1 and 3, based on the size of the two distinct types of probability mass exclusions, lead to this particular form, but not or .

It is important to note that although the original motivation was to decompose the pointwise mutual information into separate components associated with informative and misinformative exclusion, the decomposition (11) does not quite possess this direct correspondence:

The positive informational component does not depend on t but rather only on s. This can be interpreted as follows: the less likely s is to occur, the more specific it is when it does occur, the greater the total amount of probability mass excluded , and the greater the potential for s to inform about t (or indeed any other target realisation).

The negative informational component depends on both s and t, and can be interpreted as follows: the less likely s is to coincide with the event t, the more uncertainty in s given t, the greater size of the misinformative probability mass exclusion , and therefore the greater the potential for s to misinform about t.

In other words, although the negative informational component does correspond directly to the size of the misinformative exclusion , the positive informational component does not correspond directly to the size of the informative exclusion . Rather, the positive informational component corresponds to the total size of the probability mass exclusions , which is the sum of the informative and misinformative exclusions. For the sake of brevity, the positive informational component will be referred to as the specificity, while the negative informational component will be referred to as the ambiguity. The term ambiguity is due to Shannon: “[equivocation] measures the average ambiguity of the received signal” ([42], p. 67). Specificity is an antonym of ambiguity and the usage here is in line with the definition, since the more specific an event s, the more information it could provide about t after the ambiguity is taken into account.
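As a minimal numerical sketch of these two components (not part of the formal development), the following assumes that the specificity is the pointwise entropy −log2 p(s) and that the ambiguity is the pointwise conditional entropy −log2 p(s|t), in line with the entropic interpretation above. The function name and the probabilities are hypothetical and chosen purely for illustration.

```python
from math import log2


def pointwise_components(p_s, p_s_given_t):
    """Return (specificity, ambiguity, pointwise mutual information) in bits.

    Assumption: specificity = -log2 p(s) and ambiguity = -log2 p(s|t).
    """
    specificity = log2(1 / p_s)          # grows as s becomes less likely
    ambiguity = log2(1 / p_s_given_t)    # grows as s becomes less likely given t
    return specificity, ambiguity, specificity - ambiguity


# Hypothetical informative event: s is more likely once t is known.
spec, amb, pmi = pointwise_components(p_s=0.25, p_s_given_t=0.5)
print(spec, amb, pmi)  # 2.0 1.0 1.0

# Hypothetical misinformative event: s is less likely once t is known.
spec2, amb2, pmi2 = pointwise_components(p_s=0.5, p_s_given_t=0.25)
print(spec2, amb2, pmi2)  # 1.0 2.0 -1.0
```

Both components are non-negative in either case; only their difference changes sign.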

3.3. Operational Interpretation of Redundant Information

Arguing about whether one piece of information differs from another piece of information is nonsensical without some kind of unambiguous definition of what it means for two pieces of information to be the same. As such, Bertschinger et al. [11] advocate the need to provide an operational interpretation of what it means for information to be unique or redundant. This section provides our operational definition of what it means for information to be the same. This definition provides a concrete interpretation of what it means for information to be redundant in terms of overlapping probability mass exclusions.

The operational interpretation of redundancy adopted here is based upon the following idea: since the pointwise information is ultimately derived from probability mass exclusions, the same information must induce the same exclusions. More formally, the information provided by a set of predictor events about a target event t must be the same information if each source event induces the same exclusions with respect to the two-event partition . While this statement makes the motivational intuition clear, it is not yet sufficient to serve as an operational interpretation of redundancy: there is no reference to the two distinct types of probability mass exclusions, the specific reference to the pointwise event space has not been explained, and there is no reference to the fact the exclusions from each source may differ in size.

Informative exclusions are fundamentally different from misinformative exclusions and hence each type of exclusion should be compared separately: informative exclusions can overlap with informative exclusions, and misinformative exclusions can overlap with misinformative exclusions. In information-theoretic terms, this means comparing the specificity and the ambiguity of the sources separately—i.e., considering a measure of redundant specificity and a separate measure of redundant ambiguity. Crucially, these quantities (being pointwise entropies) are unsigned meaning that the difficulties associated with Axiom 2 (Monotonicity) and signed pointwise mutual information in Section 2.3 will not be an issue here.

The specific reference to the two-event partition in the above statement is based upon Remark 1 and is crucially important. The pointwise mutual information does not depend on the apportionment of the informative exclusions across the set of events which did not occur, hence the pointwise redundant information should not depend on this apportionment either. In other words, it is immaterial if two predictor events and exclude different elementary events within the target complementary event (assuming the probability mass excluded is equal) since with respect to the realised target event t the difference between the exclusions is only semantic. This has important implications for the comparison of exclusions from different predictor events. As the pointwise mutual information depends on, and only depends on, the size of the exclusions, then the only sensible comparison is a comparison of size. Hence, the common or overlapping exclusion must be the smallest exclusion. Thus, consider the following operational interpretation of redundancy:

Operational Interpretation (Redundant Specificity).

The redundant specificity between a set of predictor events is the specificity associated with the source event which induces the smallest total exclusions.

Operational Interpretation (Redundant Ambiguity).

The redundant ambiguity between a set of predictor events is the ambiguity associated with the source event which induces the smallest misinformative exclusion.

3.4. Motivational Example

To motivate the above operational interpretation, and in particular the need to treat the specificity separately to the ambiguity, consider Figure 2. In this pointwise example, two different predictor events provide the same amount of pointwise information since , and yet the information provided by each event is in some way different since each excludes different sections of the target distribution . In particular, and both preclude the target event , while additionally excludes probability mass associated with target events and . From the perspective of the pointwise mutual information the events and seem to be providing the same information as

| (12) |

Figure 2.

Sample probability mass diagrams for two predictors and to a given target T. Here events in the two different predictor spaces provide the same amount of pointwise information about the target event, bits, since , although each excludes different sections of the target distribution . Since they both provide the same amount of information, is there a way to characterise what information the additional unique exclusions from the event are providing?

However, from the perspective of the specificity and the ambiguity it can be seen that information is being provided in different ways since

| (13) |

Now consider the problem of decomposing information into its unique, redundant and complementary components. Figure 2 shows where exclusions induced by and overlap where they both exclude the target event which is an informative exclusion. This is the only exclusion induced by and hence all of the information associated with this exclusion must be redundantly provided by the event . Without any formal framework, consider taking the redundant specificity and redundant ambiguity,

| (14) |

| (15) |

This would mean that the event provides the following unique specificity and unique ambiguity,

| (16) |

| (17) |

The redundant specificity log bit accounts for the overlapping informative exclusion of the event . The unique specificity and unique ambiguity from are associated with its non-overlapping informative and misinformative exclusions; however, both of these are 1 bit and hence, on net, is no more informative than . Although obtained without a formal framework, this example highlights a need to consider the specificity and ambiguity rather than merely the pointwise mutual information.

4. Pointwise Partial Information Decomposition Using Specificity and Ambiguity

Based upon the argumentation of Section 3, consider the following axioms:

Axiom 1 (Symmetry).

Pointwise redundant specificity and pointwise redundant ambiguity are invariant under any permutation σ of source events,

Axiom 2 (Monotonicity).

Pointwise redundant specificity and pointwise redundant ambiguity decrease monotonically as more source events are included,

with equality if for any .

Axiom 3 (Self-redundancy).

Pointwise redundant specificity and pointwise redundant ambiguity for a single source event equals the specificity and ambiguity respectively,

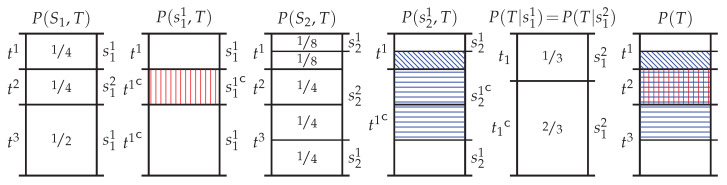

As shown in Appendix B.1, Axioms 1–3 induce two lattices, namely the specificity lattice and the ambiguity lattice, which are depicted in Figure 3. Furthermore, each lattice is defined for every discrete realisation from . The redundancy measures or can be thought of as cumulative information functions which integrate the specificity or ambiguity uniquely contributed by each node as one moves up each lattice. Finally, just as in PID, performing a Möbius inversion over each lattice yields the unique contributions of specificity and ambiguity from each source event.

Figure 3.

The lattice induced by the partial order ⪯ (A15) over the sources (A14). (Left) the lattice for ; (Right) the lattice for . See Appendix B for further details. Each node corresponds to the self-redundancy (Axiom 3) of a source event, e.g., corresponds to the source event , while corresponds to the source event . Note that the specificity and ambiguity lattices share the same structure as the redundancy lattice of partial information decomposition (PID) (cf. Figure 2 in [1]).

Similarly to PID, the specificity and ambiguity lattices provide a structure for information decomposition, but unique evaluation requires a separate definition of redundancy. However, unlike PID (or even PPID), this evaluation requires both a definition of pointwise redundant specificity and pointwise redundant ambiguity. Before providing these definitions, it is helpful to first see how the specificity and ambiguity lattices can be used to decompose multivariate information in the now familiar bivariate case.

4.1. Bivariate PPID Using the Specificity and Ambiguity

Consider again the bivariate case where the aim is to decompose the information provided by and about t. The specificity lattice can be used to decompose the pointwise specificity,

| (18) |

while the ambiguity lattice can be used to decompose the pointwise ambiguity,

| (19) |

These equations share the same structural form as (3), only now they decompose the specificity and the ambiguity rather than the pointwise mutual information, e.g., denotes the redundant specificity while denotes the unique ambiguity from . Just as for (3), this decomposition could be considered for every discrete realisation on the support of the joint distribution .

There are two ways one can combine these values. Firstly, in a similar manner to (4), one could take the expectation of the atoms of specificity, or the atoms of ambiguity, over all discrete realisations yielding the average PI atoms of specificity and ambiguity,

| (20) |

Alternatively, one could subtract the pointwise unique, redundant and complementary ambiguity from the pointwise unique, redundant and complementary specificity yielding the pointwise unique, pointwise redundant and pointwise complementary information, i.e., recover the atoms from PPID,

| (21) |

Both (20) and (21) are linear operations, hence one could perform both of these operations (in either order) to obtain the average unique, average redundant and average complementary information, i.e., recover the atoms from PID,

| (22) |
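The bookkeeping in this subsection can be sketched in a few lines. The sketch assumes that (18) and (19) have the usual bivariate lattice form, in which each single-source value is the redundant atom plus one unique atom and the joint value is the sum of all four atoms. The function names and the numerical values are hypothetical, and the redundancy value is simply supplied by the caller, since its definition is a separate matter.

```python
def invert_bivariate(i1, i2, i12, red):
    """Return (redundant, unique1, unique2, complementary) atoms.

    i1, i2 and i12 are the values for source 1, source 2 and the joint source.
    """
    u1 = i1 - red
    u2 = i2 - red
    comp = i12 - red - u1 - u2
    return (red, u1, u2, comp)


def recombine(spec_atoms, amb_atoms):
    """Subtract each atom of ambiguity from the matching atom of specificity."""
    return tuple(plus - minus for plus, minus in zip(spec_atoms, amb_atoms))


def expectation(atoms_per_realisation, probs):
    """Average each atom over realisations with the given probabilities."""
    return tuple(
        sum(p * atoms[k] for p, atoms in zip(probs, atoms_per_realisation))
        for k in range(4)
    )


# Two hypothetical realisations, each with probability 1/2.
probs = [0.5, 0.5]
spec = [invert_bivariate(1.0, 2.0, 3.0, red=1.0), invert_bivariate(1.0, 1.0, 2.0, red=1.0)]
amb = [invert_bivariate(0.5, 1.0, 1.5, red=0.5), invert_bivariate(1.0, 1.0, 1.0, red=1.0)]

# Recombine within each realisation and then average ...
recombine_then_average = expectation([recombine(s, a) for s, a in zip(spec, amb)], probs)
# ... or average each lattice first and then recombine.
average_then_recombine = recombine(expectation(spec, probs), expectation(amb, probs))
print(recombine_then_average, average_then_recombine)
```

Because both operations are linear, the two orders agree, which is the property used to pass from the pointwise atoms to the average atoms.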

4.2. Redundancy Measures on the Specificity and Ambiguity Lattices

Now that we have a structure for our information decomposition, there is a need to provide a definition of the pointwise redundant specificity and pointwise redundant ambiguity. However, before attempting to provide such a definition, there is a need to consider Remark 1 and the operational interpretation in Section 3.3. In particular, the pointwise redundant specificity and pointwise redundant ambiguity should only depend on the size of informative and misinformative exclusions. They should not depend on the apportionment of the informative exclusions across the set of elementary events contained in the complementary event . Formally, this requirement will be enshrined via the following axiom.

Axiom 4 (Two-event Partition).

The pointwise redundant specificity and pointwise redundant ambiguity are functions of the probability measures on the two-event partitions .

Since the pointwise redundant specificity is the specificity associated with the source event which induces the smallest total exclusions, and the pointwise redundant ambiguity is the ambiguity associated with the source event which induces the smallest misinformative exclusion, consider the following definitions.

Definition 1.

The pointwise redundant specificity is given by

(23)

Definition 2.

The pointwise redundant ambiguity is given by

(24)
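As a hedged illustration of Definitions 1 and 2, the sketch below assumes that the redundant specificity is the minimum of −log2 p(a) over the source events a, and that the redundant ambiguity is the minimum of −log2 p(a|t) over the same events, which is how the operational interpretation of Section 3.3 reads. The function names and probabilities are hypothetical.

```python
from math import log2


def redundant_specificity(p_sources):
    """Minimum specificity over source events, given their probabilities p(a_i)."""
    return min(log2(1 / p) for p in p_sources)


def redundant_ambiguity(p_sources_given_t):
    """Minimum ambiguity over source events, given the probabilities p(a_i | t)."""
    return min(log2(1 / p) for p in p_sources_given_t)


# Three hypothetical source events of differing specificity and ambiguity.
r_plus = redundant_specificity([0.5, 0.25, 0.125])
r_minus = redundant_ambiguity([1.0, 0.5, 0.25])
print(r_plus, r_minus, r_plus - r_minus)  # 1.0 0.0 1.0

# Two hypothetical source events which are both misinformative about t:
# the recombined pointwise redundant information is negative.
r_plus_mis = redundant_specificity([0.5, 0.5])
r_minus_mis = redundant_ambiguity([0.25, 0.125])
print(r_plus_mis - r_minus_mis)  # -1.0
```

Taking a minimum over more source events can never increase the result, which is the behaviour required by Axiom 2.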

Theorem 1.

The definitions of and satisfy Axioms 1–4.

Theorem 2.

The redundancy measures and increase monotonically on the .

Theorem 3.

The atoms of partial specificity and partial ambiguity evaluated using the measures and on the specificity and ambiguity lattices (respectively), are non-negative.

Appendix B.2 contains the proof of Theorems 1–3 and further relevant consideration of Definitions 1 and 2. As in (20), one can take the expectation of either the pointwise redundant specificity or the pointwise redundant ambiguity to get the average redundant specificity or the average redundant ambiguity . Alternatively, just as in (21), one can recombine the pointwise redundant specificity and the pointwise redundant ambiguity to get the pointwise redundant information . Finally, as per (22), one could perform both of these (linear) operations in either order to obtain the average redundant information . Note that while Theorem 3 proves that the atoms of partial specificity and partial ambiguity are non-negative, it is trivial to see that could be negative since source events can redundantly provide misinformation about a target event. As shown in the following theorem, can also be negative.

Theorem 4.

The atoms of partial average information evaluated by recombining and averaging are not guaranteed to be non-negative.

This means that the measure does not satisfy local positivity. Nonetheless the negativity of is readily explainable in terms of the operational interpretation of Section 3.3, as will be discussed further in Section 5.4. However, failing to satisfy local positivity does mean that and do not satisfy the target monotonicity property first discussed in Bertschinger et al. [5]. Despite this, as the following theorem shows, the measures do satisfy the target chain rule.

Theorem 5 (Pointwise Target Chain Rule).

Given the joint target realisation , the pointwise redundant information satisfies the following chain rule,

(25)

The proof of the last theorem is deferred to Appendix B.3. Note that since the expectation is a linear operation, Theorem 5 also holds for the average redundant information . Furthermore, as these results apply to any of the source events, the target chain rule will hold for any of the PPI atoms, e.g., (21), and any of the PI atoms, e.g., (22). However, no such rule holds for the pointwise redundant specificity or ambiguity. The specificity depends only on the predictor event, i.e., does not depend on the target events. As such, when an increasing number of target events are considered, the specificity remains unchanged. Hence, a target chain rule cannot hold for the specificity, or the ambiguity alone.

5. Discussion

PPID using the specificity and ambiguity takes the ideas underpinning PID and applies them on a pointwise scale while circumventing the monotonicity issue associated with the signed pointwise mutual information. This section will explore the various properties of the decomposition in an example-driven manner and compare the results to the most widely used measures from the existing PID literature. (Further examples can be found in Appendix C.) The following shorthand notation will be utilised in the figures throughout this section:

5.1. Comparison to Existing Measures

A similar approach to the decomposition presented in this paper is due to Ince [18], who also sought to define a pointwise information decomposition. Despite the similarity in this regard, the redundancy measure presented in [18] approaches the pointwise monotonicity problem of Section 2.3 in a different way to the decomposition presented in this paper. Specifically, aims to utilise the pointwise co-information as a measure of pointwise redundant information since it “quantifies the set-theoretic overlap of the two univariate [pointwise] information values” ([18], p. 14). There are, however, difficulties with this approach. Firstly (unlike the average mutual information and the Shannon inequalities), there are no inequalities which support this interpretation of pointwise co-information as the set-theoretic overlap of the univariate pointwise information terms—indeed, both the univariate pointwise information and the pointwise co-information are signed measures. Secondly, the pointwise co-information conflates the pointwise redundant information with the pointwise complementary information, since by (3) we have that

| (26) |

Aware of these difficulties, Ince defines such that it only interprets the pointwise co-information as a measure of set-theoretic overlap in the case where all three pointwise information terms have the same sign, arguing that these are the only situations which admit a clear interpretation in terms of a common change in surprisal. In the other difficult to interpret situations, defines the pointwise redundant information to be zero. This approach effectively assumes that in (26) when , and all have the same sign.

In a subsequent paper, Ince [19] also presented a partial entropy decomposition which aims to decompose multivariate entropy rather than multivariate information. As such, this decomposition is more similar to PPID using specificity and ambiguity than Ince’s aforementioned decomposition. Although similar in this regard, the measure of pointwise redundant entropy presented in [19] takes a different approach to the one presented in this paper. Specifically, also uses the pointwise co-information as a measure of set-theoretic overlap and hence as a measure of pointwise redundant entropy. As the pointwise entropy is unsigned, the difficulties are reduced but remain present due to the signed pointwise co-information. In a manner similar to , Ince defines such that it only interprets the pointwise co-information as a measure of set-theoretic overlap when it is positive. As per , this effectively assumes that in (26) when all information terms have the same sign. When the pointwise co-information is negative, simply ignores the co-information by defining the pointwise redundant information to be zero. In contrast to both of Ince’s approaches, PPID using specificity and ambiguity does not dispose of the set-theoretic intuition in these difficult to interpret situations. Rather, our approach considers the notion of redundancy in terms of overlapping exclusions—i.e., in terms of the underlying, unsigned measures which are amenable to a set-theoretic interpretation.

The measures of pointwise redundant specificity and pointwise redundant ambiguity , from Definitions 1 and 2 are also similar to both the minimum mutual information [17] and the original PID redundancy measure [1]. Specifically, all three of these approaches consider the redundant information to be the minimum information provided about a target event t. The difference is that applies this idea to the sources , i.e., to collections of entire predictor variables from , while apply this notion to the source events , i.e., to collections of predictor events from . In other words, while the measure can be regarded as being semi-pointwise (since it considers the information provided by the variables about an event t), the measures are fully pointwise (since they consider the information provided by the events about an event t). This difference in approach is most apparent in the probability distribution PwUnq—unlike PID, PPID using the specificity and ambiguity respects the pointwise nature of information, as we will see in Section 5.3.

PPID using specificity and ambiguity also shares certain similarities with the bivariate PID induced by the measure of Bertschinger et al. [11]. Firstly, Axiom 4 can be considered to be a pointwise adaptation of their Assumption (), i.e., the measures depend only on the marginal distributions and with respect to the two-event partitions and . Secondly, in PPID using specificity and ambiguity, the only way one can decide if there is complementary information is by knowing the joint distribution with respect to the joint two-event partitions . This is (in effect) a pointwise form of their Assumption (). Thirdly, by definition are given by the minimum value that any one source event provides. This is the largest possible value that one could take for these quantities whilst still requiring that the unique specificity and ambiguity be non-negative. Hence, within each discrete realisation, minimise the unique specificity and ambiguity whilst maximising the redundant specificity and ambiguity. This is similar to which minimises the (average) unique information while still satisfying Assumption (). Finally, note that since the measure produces a bivariate decomposition which is equivalent to that of [11], the same similarities apply between PPID using specificity and ambiguity and the decomposition induced by from Griffith and Koch [12].

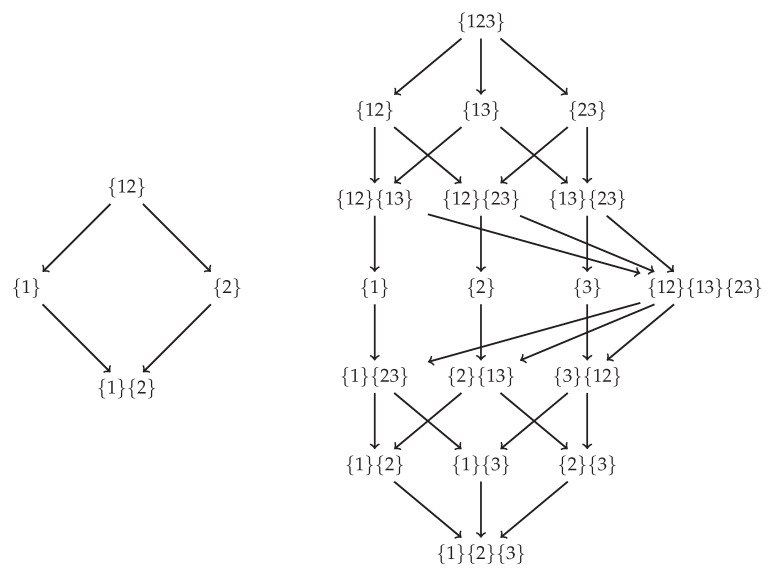

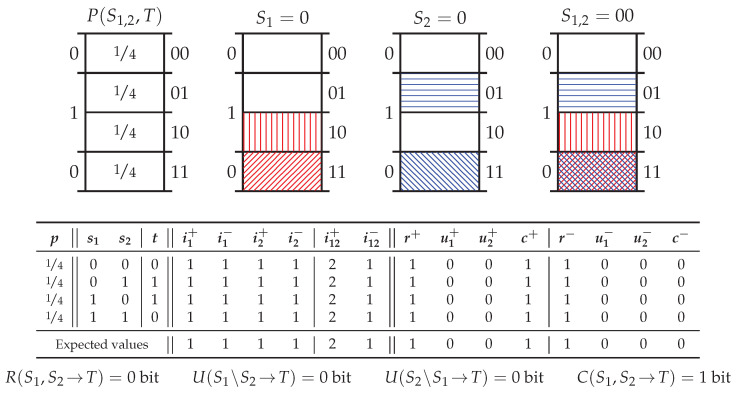

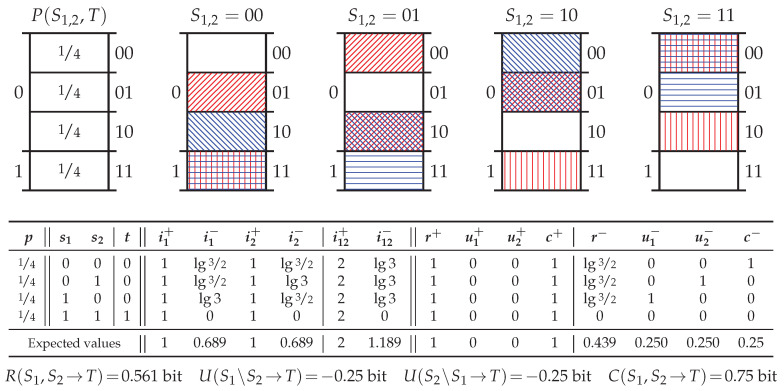

5.2. Probability Distribution Xor

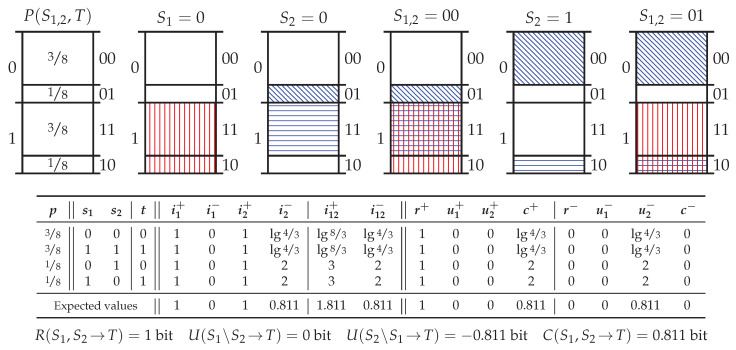

Figure 4 shows the canonical example of synergy, exclusive-or (Xor) which considers two independently distributed binary predictor variables and and a target variable . There are several important points to note about the decomposition of Xor. Firstly, despite providing zero pointwise information, an individual predictor event does indeed induce exclusions. However, the informative and misinformative exclusions are perfectly balanced such that the posterior (conditional) distribution is equal to the prior distribution, e.g., see the red coloured exclusions induced by in Figure 4. In information-theoretic terms, for each realisation, the pointwise specificity equals 1 bit since half of the total probability mass remains while the pointwise ambiguity also equals 1 bit since half of the probability mass associated with the event which subsequently occurs (i.e., ), remains. These are perfectly balanced such that when recombined, as per (11), the pointwise mutual information is equal to 0 bit, as one would expect.

Figure 4.

Example Xor. (Top) probability mass diagrams for the realisation ; (Middle) For each realisation, the pointwise specificity and pointwise ambiguity has been evaluated using (5) and (8) respectively. The pointwise redundant specificity and pointwise redundant ambiguity are then determined using (23) and (24). The decomposition is calculated using (18) and (19). The expected specificity and ambiguity are calculated with (20); (Bottom) The average information is given by (22). As expected, Xor yields 1 bit of complementary information.

Secondly, and both induce the same exclusions with respect to the target pointwise event space . Hence, as per the operational interpretation of redundancy adopted in Section 3.3, there is 1 bit of pointwise redundant specificity and 1 bit of pointwise redundant ambiguity in each realisation. The presence of (a form of) redundancy in Xor is novel amongst the existing measures in the PID literature. (Ince [19] also identifies a form of redundancy in Xor.) Thirdly, despite the presence of this redundancy, recombining the atoms of pointwise specificity and ambiguity for each realisation, as per (21), leaves only one non-zero PPI atom: namely the pointwise complementary information = 1 bit. Furthermore, this is true for every pointwise realisation and hence, by (22), the only non-zero PI atom is the average complementary information = 1 bit.
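The Xor calculation can be reproduced with a short script. This is a sketch under stated assumptions rather than a reference implementation: it assumes the specificity and ambiguity are −log2 p(a) and −log2 p(a|t), that the redundant value in each lattice is the minimum over the two individual source events, and that the remaining atoms follow from the bivariate lattice. The function names and the dictionary layout `{(s1, s2, t): probability}` are hypothetical.

```python
from collections import defaultdict
from math import log2


def marginal(joint, keep):
    """Marginalise a {(s1, s2, t): prob} table onto the index positions in keep."""
    out = defaultdict(float)
    for event, p in joint.items():
        out[tuple(event[i] for i in keep)] += p
    return out


def lattice_atoms(h1, h2, h12):
    """(redundant, unique1, unique2, complementary) with redundancy = minimum."""
    red = min(h1, h2)
    u1, u2 = h1 - red, h2 - red
    return (red, u1, u2, h12 - red - u1 - u2)


def average_atoms(joint):
    """Average (redundant, unique1, unique2, complementary) information in bits."""
    sources = ((0,), (1,), (0, 1))          # s1, s2 and the joint source
    p_src = [marginal(joint, k) for k in sources]
    p_src_t = [marginal(joint, k + (2,)) for k in sources]
    p_t = marginal(joint, (2,))
    totals = [0.0] * 4
    for event, p in joint.items():
        if p == 0:
            continue
        t = event[2]
        spec, amb = [], []
        for k, ps, pst in zip(sources, p_src, p_src_t):
            a = tuple(event[i] for i in k)
            spec.append(log2(1 / ps[a]))                    # -log2 p(a)
            amb.append(log2(p_t[(t,)] / pst[a + (t,)]))     # -log2 p(a|t)
        plus, minus = lattice_atoms(*spec), lattice_atoms(*amb)
        for j in range(4):
            totals[j] += p * (plus[j] - minus[j])           # recombine, then average
    return tuple(totals)


xor = {(a, b, a ^ b): 0.25 for a in (0, 1) for b in (0, 1)}
print(average_atoms(xor))  # (0.0, 0.0, 0.0, 1.0)
```

The redundant specificity and redundant ambiguity are both 1 bit in every realisation and cancel upon recombination, leaving only the 1 bit of complementary information.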

5.3. Probability Distribution PwUnq

Figure 5 shows the probability distribution PwUnq introduced in Section 2.2. Recombining the decomposition via (21) yields the pointwise information decomposition proposed in Table 1—unsurprisingly, the explicitly pointwise approach results in a decomposition which does not suffer from the pointwise unique problem of Section 2.2.

Figure 5.

Example PwUnq. (Top) probability mass diagrams for the realisation ; (Middle) For each realisation, the pointwise partial information decomposition (PPID) using specificity and ambiguity is evaluated (see Figure 4 for details). Upon recombination as per (21), the PPI decomposition from Table 1 is attained; (Bottom) as does the average information—the decomposition does not have the pointwise unique problem.

In each realisation, observing a 0 in either source provides the same balanced informative and misinformative exclusions as in Xor. Observing either a 1 or 2 provides the same misinformative exclusion as observing the 0, but provides a larger informative exclusion than 0. This leaves only the probability mass associated with the event which subsequently occurs remaining (hence why observing a 1 and 2 is fully informative about the target). Information theoretically, in each realisation the predictor events provide 1 bit of redundant pointwise specificity and 1 bit of redundant pointwise ambiguity while the fully informative event additionally provides 1 bit of unique specificity.

5.4. Probability Distribution RdnErr

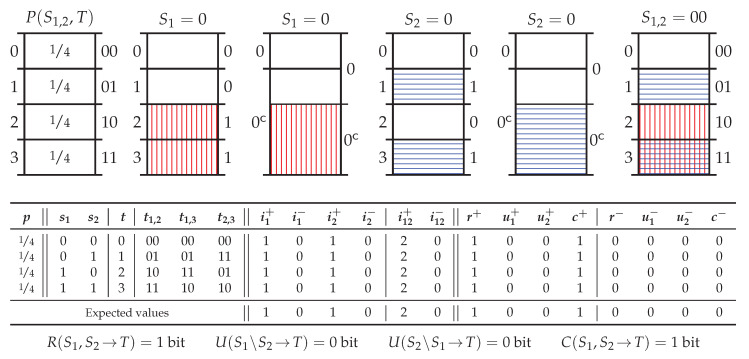

Figure 6 shows the probability distribution redundant-error (RdnErr) which considers two predictors which are nominally redundant and fully informative about the target, but where one predictor occasionally makes an erroneous prediction. Specifically, Figure 6 shows the decomposition of RdnErr where makes an error with a probability . The important feature to note about this probability distribution is that upon recombining the specificity and ambiguity and taking the expectation over every realisation, the resultant average unique information from is = −0.811 bit.

Figure 6.

Example RdnErr. (Top) probability mass diagrams for the realisations and ; (Middle) for each realisation, the PPID using specificity and ambiguity is evaluated (see Figure 4 for details); (Bottom) the average PI atoms may be negative as the decomposition does not satisfy local positivity.

On first inspection, the result that the average unique information can be negative may seem problematic; however, it is readily explainable in terms of the operational interpretation of Section 3.3. In RdnErr, a source event always excludes exactly of the total probability mass, thus every realisation contains 1 bit of redundant pointwise specificity. The events of the error-free induce only informative exclusions and as such provide 0 bit of pointwise ambiguity in each realisation. In contrast, the events in the error-prone always induce a misinformative exclusion, meaning that provides unique pointwise ambiguity in every realisation. Since never provides unique specificity, the average unique information is negative.

Despite the negativity of the average unique information, it is important to observe that provides 0.189 bit of information since also provides 1 bit of average redundant information. It is not that provides negative information on average (as this is not possible); rather it is that not all of the information provided by (i.e., the specificity) is “useful” ([42], p. 21). This is in contrast to which only provides useful specificity. To summarise, it is the unique ambiguity which distinguishes the information provided by variable from , and hence why is deemed to provide negative average unique information. This form of uniqueness can only be distinguished by allowing the average unique information to be negative. This, of course, requires abandoning local positivity as a required property, as per Theorem 4. Few of the existing measures in the PID literature consider dropping this requirement as negative information quantities are typically regarded as being “unfortunate” ([43], p. 49). However, in the context of the pointwise mutual information, negative information values are readily interpretable as being misinformative values. Despite this, the average information from each predictor must be non-negative; however, it may be that what distinguishes one predictor from another are precisely the misinformative predictor events, meaning that the unique information is, in actual fact, unique misinformation. Forgoing local positivity makes the PPID using specificity and ambiguity novel (the other exception in this regard is Ince [18], who was first to consider allowing negative average unique information).
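The figures quoted for RdnErr can be checked numerically. The sketch below assumes a uniform binary target, an error-free first predictor which copies the target, and a second predictor which copies the target but flips with probability eps. An error probability of 1/4 is assumed, this being the value consistent with the −0.811 bit and 0.189 bit quoted above. The function name is hypothetical, and the specificity and ambiguity are taken to be −log2 p(a) and −log2 p(a|t), with the redundant value in each lattice being the minimum over the two individual source events.

```python
from math import log2


def rdnerr_average_atoms(eps):
    """Average (redundant, unique1, unique2, complementary) information in bits."""
    totals = [0.0, 0.0, 0.0, 0.0]
    # q = p(s2 | t): 1 - eps in error-free realisations, eps in erroneous ones.
    # Realisations of each kind carry total probability q.
    for q in (1 - eps, eps):
        spec = (1.0, 1.0, log2(2 / q))          # h(s1), h(s2), h(s1, s2)
        amb = (0.0, log2(1 / q), log2(1 / q))   # h(s1|t), h(s2|t), h(s1, s2|t)
        atoms = []
        for h1, h2, h12 in (spec, amb):
            red = min(h1, h2)
            u1, u2 = h1 - red, h2 - red
            atoms.append((red, u1, u2, h12 - red - u1 - u2))
        for k in range(4):
            totals[k] += q * (atoms[0][k] - atoms[1][k])
    return tuple(totals)


R, U1, U2, C = rdnerr_average_atoms(0.25)
print(round(R, 3), round(U2, 3), round(R + U2, 3))  # 1.0 -0.811 0.189
```

The negative unique term arises entirely from the unique ambiguity of the error-prone predictor, while the four atoms still sum to the 1 bit of joint mutual information.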

5.5. Probability Distribution Tbc

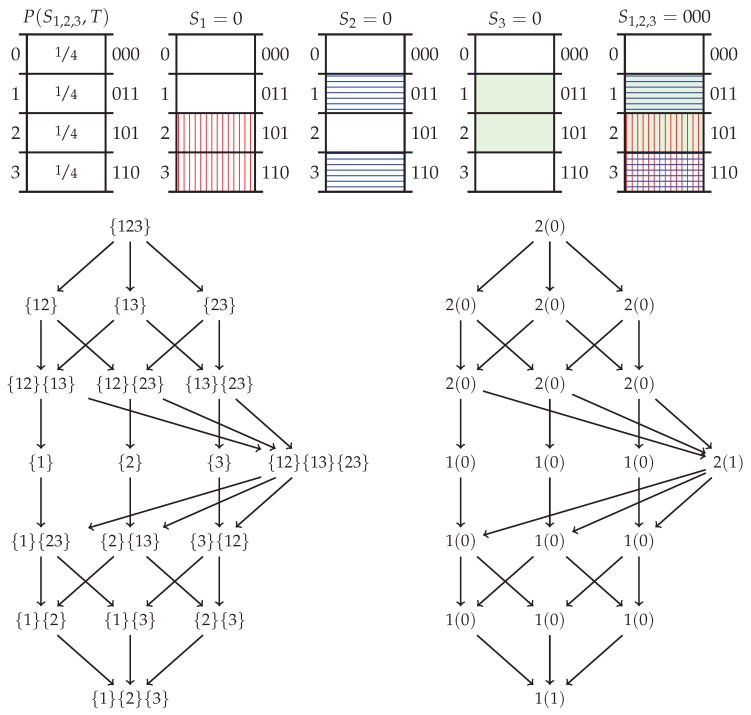

Figure 7 shows the probability distribution two-bit-copy (Tbc) which considers two independently distributed binary predictor variables and , and a target variable T consisting of a separate elementary event for each joint event . There are several important points to note about the decomposition of Tbc. Firstly, due to the symmetry in the probability distribution, each realisation will have the same pointwise decomposition. Secondly, due to the construction of the target, there is an isomorphism (Again, isomorphism should be taken to mean isomorphic probability spaces, e.g., [37], p. 27 or [38], p. 4) between and , and hence the pointwise ambiguity provided by any (individual or joint) predictor event is 0 bit (since given t, one knows and ). Thirdly, the individual predictor events and each exclude of the total probability mass in and so each provide 1 bit of pointwise specificity; thus, by (23), there is 1 bit of redundant pointwise specificity in each realisation. Fourthly, the joint predictor event excludes of the total probability mass, providing 2 bit of pointwise specificity; hence, by (18), each joint realisation provides 1 bit of pointwise complementary specificity in addition to the 1 bit of redundant pointwise specificity. Finally, putting this together via (22), Tbc consists of 1 bit of average redundant information and 1 bit of average complementary information.
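To make the role of the exclusions concrete, the following sketch enumerates them for one realisation of Tbc. It assumes uniform, independent binary predictors, so that each of the four target events has probability 1/4, and it takes the specificity to be −log2 of the probability mass which survives the exclusions. All names are hypothetical.

```python
from itertools import product
from math import log2

# Each target event is a pair (s1, s2); all four are assumed equiprobable.
p_t = {t: 0.25 for t in product((0, 1), repeat=2)}


def excluded(is_consistent):
    """Target events ruled out by an observation."""
    return {t for t in p_t if not is_consistent(t)}


def specificity(excl):
    """-log2 of the probability mass that survives the exclusions, in bits."""
    return log2(1 / (1 - sum(p_t[t] for t in excl)))


# Realisation s1 = 0, s2 = 0, t = (0, 0).
excl_s1 = excluded(lambda t: t[0] == 0)
excl_s2 = excluded(lambda t: t[1] == 0)
excl_joint = excluded(lambda t: t == (0, 0))

h1, h2, h12 = (specificity(e) for e in (excl_s1, excl_s2, excl_joint))
redundant = min(h1, h2)
complementary = h12 - redundant - (h1 - redundant) - (h2 - redundant)

# The realised target event is never excluded, so the ambiguity is 0 bit.
misinformative = [(0, 0) in e for e in (excl_s1, excl_s2, excl_joint)]
print(excl_s1 == excl_s2, h1, h2, h12, redundant, complementary)
# False 1.0 1.0 2.0 1.0 1.0
```

The two individual predictor events exclude different elementary target events, yet the excluded mass is the same, which is why they are assigned 1 bit of redundant specificity.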

Figure 7.

Example Tbc. (Top) the probability mass diagrams for the realisation ; (Middle) for each realisation, the PPID using specificity and ambiguity is evaluated (see Figure 4); (Bottom) the decomposition of Tbc yields the same result as .

Although “surprising” ([5], p. 268), according to the operational interpretation adopted in Section 3.3, two independently distributed predictor variables can share redundant information. That is, since the exclusions induced by and are the same with respect to the two-event partition , the information associated with these exclusions is regarded as being the same. Indeed, this probability distribution highlights the significance of specific reference to the two-event partition in Section 3.3 and Axiom 4. (This can be seen in the probability mass diagram in Figure 7, where the events and exclude different elementary target events within the complementary event and yet are considered to be the same exclusion with respect to the two-event partition .) That these exclusions should be regarded as being the same is discussed further in Appendix A. Now however, there is a need to discuss Tbc in terms of Theorem 5 (Target Chain Rule).

Tbc was first considered as a “mechanism” ([6], p. 3) where “the wires don’t even touch” ([12], p. 167), which merely copies or concatenates and into a composite target variable where and . However, using causal mechanisms as a guiding intuition is dubious since different mechanisms can yield isomorphic probability distributions ([44], and references therein). In particular, consider two mechanisms which generate the composite target variables and where . As can be seen in Figure 7, both of these mechanisms generate the same (isomorphic) probability distribution as the mechanism generating . If an information decomposition is to depend only on the probability distribution , and no other semantic details such as labelling, then all three mechanisms must yield the same information decomposition—this is not clear from the mechanistic intuition.

Although the decomposition of the various composite target variables must be the same, there is no requirement that the three systems must yield the same decomposition when analysed in terms of the individual components of the composite target variables. Nonetheless, there ought to be a consistency between the decomposition of the composite target variables and the decomposition of the component target variables—i.e., there should be a target chain rule. As shown in Theorem 5, the measures and satisfy the target chain rule, whereas , , and do not [5,7]. Failing to satisfy the target chain rule can lead to inconsistencies between the composite and component decompositions, depending on the order in which one considers decomposing the information (this is discussed further in Appendix A.3). In particular, Table 2 shows how , and all provide the same inconsistent decomposition for Tbc when considered in terms of the composite target variable . In contrast, produces a consistent decomposition of . Finally, based on the above isomorphism, consider the following (the proof is deferred to Appendix B.3).

Table 2.

Decomposition of the quantities in the first row induced by the measures in the first column. For consistency, the decomposition of should equal both the sum of the decomposition of and , and the sum of the decomposition of and . Note that the decompositions induced by , and are not consistent. In contrast, is consistent due to Theorem 5.

Theorem 6.

The target chain rule, identity property, and local positivity cannot be simultaneously satisfied.

5.6. Summary of Key Properties

The following are the key properties of the PPID using the specificity and ambiguity. Property 1 follows directly from Definitions 1 and 2. Property 2 follows from Theorems 3 and 4. Property 3 follows from the probability distribution Tbc in Section 5.5. Property 4 was discussed in Section 4.2. Property 5 is proved in Theorem 5.

Property 1.

When considering the redundancy between the source events , at least one source event will provide zero unique specificity, and at least one source event will provide zero unique ambiguity. The events and are not necessarily the same source event.

Property 2.

The atoms of partial specificity and partial ambiguity satisfy local positivity, . However, upon recombination and averaging, the atoms of partial information do not satisfy local positivity, .

Property 3.

The decomposition does not satisfy the identity property.

Property 4.

The decomposition does not satisfy the target monotonicity property.

Property 5.

The decomposition satisfies the target chain rule.

6. Conclusions

The partial information decomposition of Williams and Beer [1,2] provided an intriguing framework for the decomposition of multivariate information. However, it was not long before “serious flaws” ([11], p. 2163) were identified. Firstly, the measure of redundant information failed to distinguish between whether predictor variables provide the same information or merely the same amount of information. Secondly, fails to satisfy the target chain rule, despite this additivity being one of the defining characteristics of information. Notwithstanding these problems, the axiomatic derivation of the redundancy lattice was too elegant to be abandoned and hence several alternate measures were proposed, i.e., , and [6,11,12]. Nevertheless, as these measures all satisfy the identity property, they cannot produce a non-negative decomposition for an arbitrary number of variables [13]. Furthermore, none of these measures satisfy the target chain rule, meaning they produce inconsistent decompositions for multiple target variables. Finally, in spite of satisfying the identity property (which many consider to be desirable), these measures still fail to identify when variables provide the same information, as exemplified by the pointwise unique problem presented in Section 2.

This paper took the axiomatic derivation of the redundancy lattice from PID and applied it to the unsigned entropic components of the pointwise mutual information. This yielded two separate redundancy lattices: the specificity and the ambiguity lattices. Then, based upon an operational interpretation of redundancy, measures of pointwise redundant specificity and pointwise redundant ambiguity were defined. Together with the specificity and ambiguity lattices, these measures were used to decompose multivariate information for an arbitrary number of variables. Crucially, upon recombination, the measure satisfies the target chain rule. Furthermore, when applied to PwUnq, these measures do not result in the pointwise unique problem. In our opinion, this demonstrates that the decomposition is indeed correctly identifying redundant information. However, others will likely disagree with this point given that the measure of redundancy does not satisfy the identity property. According to the identity property, independent variables can never provide the same information. In contrast, according to the operational interpretation adopted in this paper, independent variables can provide the same information if they happen to provide the same exclusions with respect to the two-event target distribution. In any case, the proof of Theorem 6 and the subsequent discussion in Appendix B.3 highlight the difficulties that the identity property introduces when considering the information provided about events in separate target variables (see further discussion in Appendix A.3).

Our future work with this decomposition will be both theoretical and empirical. Regarding future theoretical work, given that the aim of information decomposition is to derive measures pertaining to sets of random variables, it would be worthwhile to derive the information decomposition from first principles in terms of measure theory. Indeed, such an approach would surely eliminate the semantic arguments (about what it means for information to be unique, redundant or complementary), which currently plague the problem domain. Furthermore, this would certainly be a worthwhile exercise before attempting to generalise the information decomposition to continuous random variables. Regarding future empirical work, there are many rich data sets which could be decomposed using this decomposition, including financial time-series and neural recordings, e.g., [28,33,34].

Acknowledgments

Joseph T. Lizier was supported through the Australian Research Council DECRA grant DE160100630. We thank Mikhail Prokopenko, Richard Spinney, Michael Wibral, Nathan Harding, Robin Ince, Nils Bertschinger, and Nihat Ay for helpful discussions relating to this manuscript. We also thank the anonymous reviewers for their particularly detailed and helpful feedback.

Appendix A. Kelly Gambling, Axiom 4, and Tbc

In Section 3.3, it was argued that the information provided by a set of predictor events about a target event t is the same information if each source event induces the same exclusions with respect to the two-event partition . This was based on the fact that pointwise mutual information does not depend on the apportionment of the exclusions across the set of events which did not occur . It was argued that since the pointwise mutual information is independent of these differences, the redundant mutual information should also be independent of these differences. This requirement was then integrated into the operational interpretation of Section 3.3 and was later enshrined in the form of Axiom 4. This appendix aims to justify this operational interpretation and argue why redundant information in Tbc is not “unreasonably large” ([5], p. 269).

Appendix A.1. Pointwise Side Information and the Kelly Criterion

Consider a set of horses running in a race which can be considered a random variable T with distribution . Say that for each a bookmaker offers odds of -for-1, i.e., the bookmaker will pay out dollars on a $1 bet if the horse t wins. Furthermore, say that there is no track take as , and these odds are fair, i.e., for all [40]. Let be the fraction of a gambler’s capital bet on each horse and assume that the gambler stakes all of their capital on the race, i.e., .

Now consider an i.i.d. series of these races such that for all and let represent the winner of the k-th race. Say that the bookmaker offers the same odds on each race and the gambler bets their entire capital on each race. The gambler’s capital after m races is a random variable which depends on two factors per race: the amount the gambler staked on each race winner , and the odds offered on each winner . That is,

| (A1) |

where monetary units $ have been chosen such that = $1. The gambler’s wealth grows (or shrinks) exponentially, i.e.,

| (A2) |

where

| (A3) |

is the doubling rate of the gambler’s wealth using a betting strategy . Here, the last equality is by the weak law of large numbers for large m.

Any reasonable gambler would aim to use an optimal strategy which maximises the doubling rate . Kelly [40,43] proved that the optimal doubling rate is given by

| (A4) |

and is achieved by using the proportional gambling scheme . When the race occurs and the horse wins, the gambler will receive a payout of = $1, i.e., the gambler receives their stake back regardless of the outcome. In the face of fair odds, the proportional Kelly betting scheme is the optimal strategy—non-terminating repeated betting with any other strategy will result in losses.
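The claim that proportional betting is optimal under fair odds can be illustrated numerically. The sketch below assumes the usual textbook form of the doubling rate, the expected value of log2 of (fraction bet on the winner times the odds on the winner); the three-horse distribution and the names `doubling_rate`, `proportional`, `uniform` and `favourite_heavy` are hypothetical.

```python
from math import log2


def doubling_rate(p, bets, odds):
    """Expected log2 growth of capital per race, E[log2(bets(T) * odds(T))]."""
    return sum(p[t] * log2(bets[t] * odds[t]) for t in p)


# Hypothetical race: win probabilities for three horses.
p = {"horse_a": 0.5, "horse_b": 0.25, "horse_c": 0.25}

# Fair odds: the payout per dollar is the reciprocal of the win probability.
fair_odds = {t: 1 / p[t] for t in p}

# Three strategies that each stake the entire capital.
proportional = dict(p)                                  # bet in proportion to p(t)
uniform = {t: 1 / len(p) for t in p}                    # spread capital evenly
favourite_heavy = {"horse_a": 0.8, "horse_b": 0.1, "horse_c": 0.1}

w_proportional = doubling_rate(p, proportional, fair_odds)
w_uniform = doubling_rate(p, uniform, fair_odds)
w_favourite = doubling_rate(p, favourite_heavy, fair_odds)

# Payout of the proportional gambler for each possible winner.
payouts = {t: proportional[t] * fair_odds[t] for t in p}

print(w_proportional, w_uniform, w_favourite)
print(payouts)
```

In this example the proportional gambler receives exactly their capital back whichever horse wins, so the doubling rate is zero, while both alternative strategies have a negative doubling rate and therefore lose money in the long run.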

Now consider a gambler with access to a private wire S which provides (potentially useful) side information about the upcoming race. Say that these messages are selected from the set , and that the gambler receives the message before the race . Kelly [40,43] showed that the optimal doubling rate in the presence of this side information is given by

| (A5) |

and is achieved by using the conditional proportional gambling scheme . Both the proportional gambling scheme and the conditional proportional gambling scheme are based upon the Kelly criterion whereby bets are apportioned according to the best estimation of the outcome available. The financial value of the private wire to a gambler can be ascertained by comparing the doubling rate of the gambler with access to the private wire to that of a gambler with no side information, i.e.,

| (A6) |

This important result due to Kelly [40] equates the increase in the doubling rate due to the presence of side information, with the mutual information between the private wire S and the horse race T. If on average, the gambler receives 1 bit of information from their private wire, then on average the gambler can expect to double their money per race. Furthermore, as one would expect, independent side information does not increase the doubling rate.
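For reference, the chain of results leading to this statement can be summarised in the notation commonly used in textbook treatments of Kelly gambling. This is a sketch under assumed notation and is not a transcription of the numbered equations in this appendix: b(t) denotes the fraction of capital bet on horse t, o(t) the odds offered on horse t, W the doubling rate, and a star marks the optimum.

```latex
\begin{align}
W(b, p) &= \mathbb{E}\big[\log_2 b(T)\,o(T)\big] = \sum_{t} p(t) \log_2 b(t)\,o(t), \\
W^{*}(T) &= \max_{b} W(b, p) = \sum_{t} p(t) \log_2 o(t) - H(T),
  \qquad b^{*}(t) = p(t), \\
W^{*}(T|S) &= \sum_{t} p(t) \log_2 o(t) - H(T|S),
  \qquad b^{*}(t|s) = p(t|s), \\
\Delta W &= W^{*}(T|S) - W^{*}(T) = H(T) - H(T|S) = I(S;T).
\end{align}
```

With fair odds, o(t) = 1/p(t), the first term in the optimal doubling rates equals H(T), so the gambler without side information has a doubling rate of zero and the gambler with side information has a doubling rate of I(S;T).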

With no side information, the Kelly gambler always received their original stake back from the bookmaker. However, this is not true for the Kelly gambler with side information. Although their doubling rate is greater than or equal to that of the gambler with no side information, this is only true on average. Before the race , the gambler receives the private wire message and then, the horse wins the race. From (A6), one can see that the return for the k-th race is given by the pointwise mutual information,

| (A7) |

Hence, just like the pointwise mutual information, the per race return can be positive or negative: if it is positive, the gambler will make a profit; if it is negative, the gambler will sustain a loss. Despite the potential for pointwise losses, the average return (i.e., the doubling rate) is, just like the average mutual information, non-negative and, indeed, optimal. Furthermore, while a Kelly gambler with side information can lose money on any single race, they can never actually go bust. The Kelly gambler with side information s still hedges their risk by placing bets on all horses with a non-zero probability of winning according to their side information, i.e., according to . The only reason they would fail to place a bet on a horse is if their side information completely precludes any possibility of that horse winning. That is, a Kelly gambler with side information will never fall foul of gambler’s ruin.
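The behaviour described here can be made concrete with a small example. The following sketch assumes fair odds and conditional proportional betting, so that the per race return is log2 of p(t|s)/p(t); the two-horse race with a private wire that names the winner correctly three times out of four is hypothetical, as are all variable names.

```python
from math import log2

# Hypothetical joint distribution p(s, t): wire message s, race winner t.
# Both horses are equiprobable and the wire is correct with probability 0.75.
p_joint = {
    (0, 0): 0.375, (0, 1): 0.125,
    (1, 0): 0.125, (1, 1): 0.375,
}
p_s = {s: sum(p for (s2, _), p in p_joint.items() if s2 == s) for s in (0, 1)}
p_t = {t: sum(p for (_, t2), p in p_joint.items() if t2 == t) for t in (0, 1)}


def race_return(s, t):
    """Log2 growth of capital in one race: the pointwise mutual information."""
    p_t_given_s = p_joint[(s, t)] / p_s[s]
    return log2(p_t_given_s / p_t[t])


returns = {(s, t): race_return(s, t) for (s, t) in p_joint}

# Capital multiplier per race; it stays strictly positive, so no ruin.
payout_factors = {st: 2 ** r for st, r in returns.items()}

# The average return should match the mutual information H(T) - H(T|S).
average_return = sum(p * returns[st] for st, p in p_joint.items())
h_t = -sum(p * log2(p) for p in p_t.values())
h_t_given_s = -sum(p * log2(p / p_s[s]) for (s, _), p in p_joint.items())
mutual_information = h_t - h_t_given_s

print(returns)
print(payout_factors)
print(average_return, mutual_information)
```

When the wire is misleading the gambler's capital halves (a return of -1 bit), and when it is correct the capital grows by a factor of 1.5. The average over many races is nevertheless positive and agrees with the mutual information between the wire and the race.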

Appendix A.2. Justification of Axiom 4 and Redundant Information in Tbc

Consider Tbc semantically described in terms of a horse race. That is, consider a four horse race T where each horse has an equiprobable chance of winning, and consider the binary variables , , and which represent the following, respectively: the colour of the horse, black 0 or white 1; the sex of the jockey, female 0 or male 1; and the colour of the jockey’s jersey, red 0 or green 1. Say that the four horses have the following attributes:

- Horse 0 is a black horse (0), ridden by a female jockey (0), who is wearing a red jersey (0).

- Horse 1 is a black horse (0), ridden by a male jockey (1), who is wearing a green jersey (1).

- Horse 2 is a white horse (1), ridden by a female jockey (0), who is wearing a green jersey (1).

- Horse 3 is a white horse (1), ridden by a male jockey (1), who is wearing a red jersey (0).

There are two important points to note. Firstly, the horses in the race T could also be uniquely described in terms of the composite binary variables , or . Secondly, if one knows and then one knows (which can be represented by the relationship ). Finally, consider private wires and which independently provide the colour of the horse and the colour of the jockey’s jersey (respectively) before the upcoming race, i.e., and .
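The two points noted above can be verified directly from the list of horse attributes. In the sketch below the labels `s1`, `s2` and `s3` are hypothetical names for the horse colour, jockey sex and jersey colour respectively, using the binary encoding given in the text, and the exclusive-or relationship is inferred from the attribute list.

```python
from collections import Counter
from itertools import combinations

# Attributes (s1, s2, s3) = (horse colour, jockey sex, jersey colour).
horses = {
    0: (0, 0, 0),  # black horse, female jockey, red jersey
    1: (0, 1, 1),  # black horse, male jockey, green jersey
    2: (1, 0, 1),  # white horse, female jockey, green jersey
    3: (1, 1, 0),  # white horse, male jockey, red jersey
}

# First point: any pair of attributes uniquely identifies the horse.
pair_is_unique = {}
for i, j in combinations(range(3), 2):
    descriptions = [(attrs[i], attrs[j]) for attrs in horses.values()]
    pair_is_unique[(i, j)] = len(set(descriptions)) == len(horses)

# Second point: the jersey colour is the exclusive-or of the other two.
xor_holds = all(s3 == s1 ^ s2 for s1, s2, s3 in horses.values())

# Private wires: horse colour (s1) and jersey colour (s3). Each message
# leaves two of the four equiprobable horses as candidates.
candidates_s1 = Counter(attrs[0] for attrs in horses.values())
candidates_s3 = Counter(attrs[2] for attrs in horses.values())

print(pair_is_unique, xor_holds)
print(candidates_s1, candidates_s3)
```

Each wire message on its own rules out two of the four horses, while any two attributes together single out the winner.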