Abstract

A conditional Gaussian framework for understanding and predicting complex multiscale nonlinear stochastic systems is developed. Despite the conditional Gaussianity, such systems are nevertheless highly nonlinear and are able to capture the non-Gaussian features of nature. The special structure of the system allows closed analytical formulae for solving the conditional statistics and is thus computationally efficient. A rich gallery of examples of conditional Gaussian systems are illustrated here, which includes data-driven physics-constrained nonlinear stochastic models, stochastically coupled reaction–diffusion models in neuroscience and ecology, and large-scale dynamical models in turbulence, fluids and geophysical flows. Making use of the conditional Gaussian structure, efficient statistically accurate algorithms involving a novel hybrid strategy for different subspaces, a judicious block decomposition and statistical symmetry are developed for solving the Fokker–Planck equation in large dimensions. The conditional Gaussian framework is also applied to develop extremely cheap multiscale data assimilation schemes, such as the stochastic superparameterization, which use particle filters to capture the non-Gaussian statistics on the large-scale part whose dimension is small whereas the statistics of the small-scale part are conditional Gaussian given the large-scale part. Other topics of the conditional Gaussian systems studied here include designing new parameter estimation schemes and understanding model errors.

Keywords: conditional Gaussian systems, multiscale nonlinear stochastic systems, physics-constrained nonlinear stochastic models, stochastically coupled reaction–diffusion models, conditional Gaussian mixture, superparameterization, conformation theory, model error, hybrid strategy, parameter estimation

Contents

1. Introduction

Multiscale nonlinear dynamical systems are ubiquitous in different areas, including geoscience, engineering, neural science and material science [1,2,3,4,5,6,7,8]. They are characterized by a large dimensional phase space and a large dimension of strong instabilities which transfer energy throughout the system. Many non-Gaussian characteristics, such as extreme and rare events, intermittency and fat-tailed probability density functions (PDFs), are often observed in these multiscale nonlinear dynamical systems [1,9,10]. Key mathematical issues are their basic mathematical structural properties and qualitative features [2,3,11,12], short- and long-range forecasting [13,14,15], uncertainty quantification (UQ) [16,17,18], and state estimation, filtering or data assimilation [15,19,20,21,22]. Due to the lack of physical understanding or the inadequate resolution in the models because of the current computing power, coping with the inevitable model errors that arise in approximating such complex systems becomes a necessary and crucial issue in dealing with these multiscale nonlinear dynamical systems [16,23,24,25,26]. Understanding and predicting complex multiscale turbulent dynamical systems involve the blending of rigorous mathematical theory, qualitative and quantitative modelling, and novel numerical procedures [2,27].

Despite the fully nonlinearity in many multiscale turbulent dynamical systems and the non-Gaussian features in both the marginal and joint PDFs, these systems have conditional Gaussian structures [1,15,28,29]. Writing the state variables as , the conditional Gaussianity means that once the trajectories of are given, the dynamics of conditioned on these highly nonlinear observed trajectories become Gaussian processes. In this article, we develop a general conditional Gaussian framework for mathematical modeling, prediction, state estimation and UQ. One of the desirable features of such conditional Gaussian system is that it allows closed analytical formulae for updating the conditional statistics of given the trajectories of [28,30]. This facilitates the development of both rigorous mathematical theories and efficient numerical algorithms for these complex multiscale turbulent dynamical systems.

With the great progress in developing and improving the observational networks, a vast amount of observational data is now available. An important scientific issue is to make use of these observational data to build data-driven models that advance the understanding of the underlying physical processes and improve the prediction skill. Physics-constrained nonlinear stochastic models are the recent development of data-driven statistical-dynamical models for the time series of a partial subset of observed variables, which arise from observations of nature or from an extremely complex physical model [31,32]. The physics-constrained nonlinear stochastic modeling framework builds on early works for single layer models without memory effects, which uses physical analytic properties to constrain data-driven methods. They succeed in overcoming both the finite-time blowup and the lack of physical meaning issues in various ad hoc multi-layer regression models [31,33]. Many of the physics-constrained nonlinear stochastic models belong to the conditional Gaussian framework, including the noisy version of the famous Lorenz 63 and 84 models as well as a two-layer Lorenz 96 model [34,35,36]. In addition, two important conceptual models for turbulent dynamical systems [37,38] and a conceptual model of the coupled atmosphere and ocean [39] also fit perfectly into the physics-constrained nonlinear stochastic modeling framework with conditional Gaussian structures. These models are extremely useful for testing various new multiscale data assimilation and prediction schemes [1,38,40]. Other physics-constrained nonlinear stochastic models with conditional Gaussian structures include a low-order model of Charney–DeVore flows [29] and a paradigm model for topographic mean flow interaction [32]. Notably, the physics-constrained nonlinear stochastic models combining with the conditional Gaussian framework have been successfully applied for the real-time prediction and data assimilation of the Madden–Julian oscillation (MJO) and monsoon [41,42,43,44], which are the dominant modes of intraseasonal variabilities in nature.

In many multiscale turbulent dynamical systems, there is a natural time scale separation between different variables. Therefore, the MTV strategy [45,46,47,48] (named after Majda, Timofeyev and Vanden-Eijnden) can be applied to these systems for stochastic mode reduction. Using the MTV strategy, the equations of motion for the unresolved fast modes are modified by representing the nonlinear self-interactions terms between unresolved modes by damping and stochastic terms. The resulting systems then naturally belong to the conditional Gaussian framework and they also preserve physics-constrained properties. Examples include a simple stochastic model with key features of atmospheric low-frequency variability [49] and a simple prototype nonlinear stochastic model with triad interactions that mimics structural features of low-frequency variability of global circulation models (GCM) [50] .

In addition to the data-driven physics-constrained nonlinear stochastic models, many large-scale dynamical models in turbulence, fluids and geophysical flows also have conditional Gaussian structures. These stochastic partial differential equation (SPDE) models include the stochastic skeleton model for the MJO [51] and a coupled El Niño dynamical model capturing the El Niño diversity [52], where nonlinearity interacts with state-dependent (multiplicative) noise and many salient non-Gaussian features such as intermittency and fat-tailed PDFs are observed in these systems. The stochastically forced Boussinesq equation [11] for the Rayleigh–Bénard convection [53,54] and strongly stratified flows in geophysics [55] as well as its generalized version, namely the Darcy–Brinkman–Oberbeck–Boussinesq system [56] for the convection phenomena in porous media, also belong to the conditional Gaussian framework. Likewise, the multiscale shallow water equation [11] with coupled geostrophically balanced (GB) and gravity modes fits into the conditional Gaussian model family. On the other hand, quite a few stochastically coupled reaction–diffusion models in neuroscience and ecology illustrate conditional Gaussian structures as well. Examples include the stochastically coupled FitzHugh–Nagumo (FHN) models [57], the predator–prey models [58], a stochastically coupled Susceptible-Infectious-Recovered (SIR) epidemic model [59] and a nutrient-limited model for avascular cancer growth [60]. Furthermore, the conditional Gaussian framework can be applied to model the coupled observation-filtering systems for the state estimation of turbulent ocean flows using Lagrangian tracers [61,62,63]. The framework can also be utilized to develop cheap and effective stochastic parameterizations for turbulent dynamical systems [64,65].

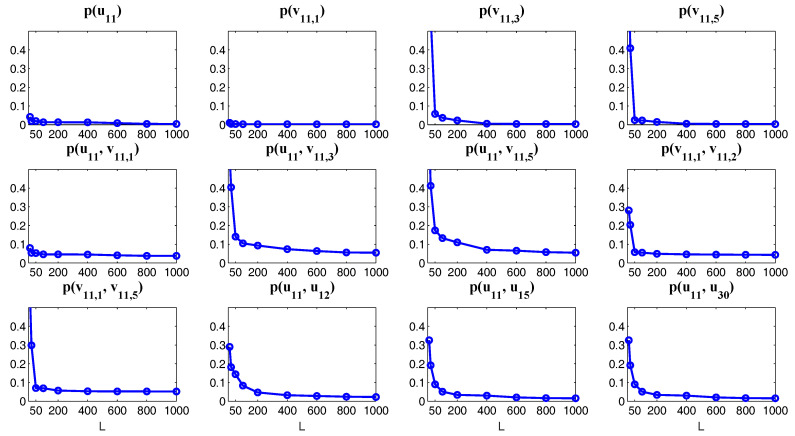

One of the key issues in studying the complex multiscale nonlinear turbulent dynamical systems is to solve the time evolution of the associated PDFs, which is extremely useful in ensemble prediction, data assimilation as well as understanding the intermittency and extreme events. The time evolution of the PDFs associated with the underlying turbulent dynamical systems is described by the so-called Fokker–Planck equation [66,67]. Due to the complexity of many multiscale turbulent dynamical systems, the dimension of the systems can be quite large. However, solving the Fokker–Planck equation in high dimensions is a well-known notoriously difficult problem. Traditional numerical methods such as finite element and finite difference as well as the direct Monte Carlo simulations all suffer from the curse of dimension [68,69]. Nevertheless, for the conditional Gaussian systems, efficient statistically accurate algorithms can be developed for solving the Fokker–Planck equation in high dimensions and thus beat the curse of dimension. The algorithms involve a hybrid strategy that requires only a small number of ensembles [38]. Specifically, a conditional Gaussian mixture in a high-dimensional subspace via an extremely efficient parametric method is combined with a judicious non-parametric Gaussian kernel density estimation in the remaining low-dimensional subspace. The parametric method provides closed analytical formulae for determining the conditional Gaussian distributions in the high-dimensional subspace and is therefore computationally efficient and accurate. The full non-Gaussian PDF of the system is then given by a Gaussian mixture [38]. For even larger dimensional systems, a judicious block decomposition and statistical symmetry are further applied that facilitate an extremely efficient parallel computation and a significant reduction of sample numbers [36]. These algorithms are applied here to the statistical prediction of a stochastically coupled FHN model with 1500 dimensions and an inhomogeneous two-layer Lorenz 96 model with 240 dimensions. Significant prediction skill shows the advantages of these algorithms in terms of both accuracy and efficiency.

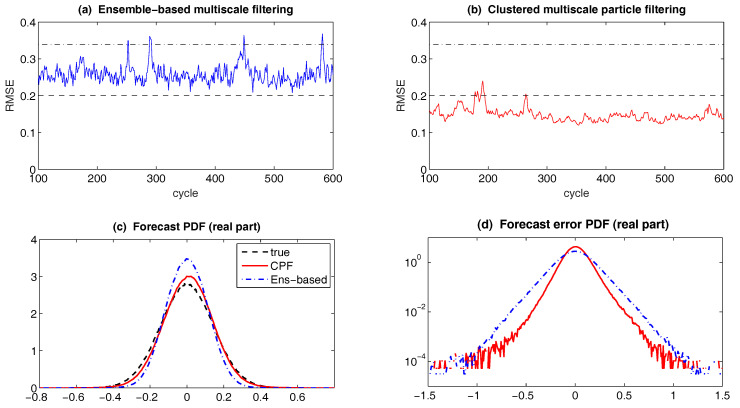

The conditional Gaussian framework also provides a power tool for the multiscale data assimilation. In fact, data assimilation of turbulent signals is an important challenging problem because of the extremely complicated large dimension of the signals and incomplete partial noisy observations that usually mix the large scale mean flow and small scale fluctuations. Due to the limited computing power, it is desirable to use multiscale forecast models which are cheap and fast to mitigate the curse of dimensionality in turbulent systems. An extremely cheap multiscale data assimilation scheme, called stochastic superparameterization [70,71,72,73], resolves the large-scale mean flow on a coarse grid and replaces the nonlinear small-scale eddy terms by quasilinear stochastic processes on formally infinite embedded domains where the stochastic processes are Gaussian conditional to the large scale mean flow. The key ingredient of such a multiscale data assimilation method is the systematic use of conditional Gaussian mixtures which make the methods efficient by filtering a subspace whose dimension is smaller than the full state. This conditional Gaussian closure approximation results in a seamless algorithm without using the high resolution space grid for the small scales and is much cheaper than the conventional superparameterization, with significant success in difficult test problems [71,72,74] including the Majda-McLaughlin-Tabak (MMT) model [71,75] and ocean turbulence [76,77,78]. The method uses particle filters [20,79] or ensemble filters on the large scale part [75,76] whose dimension is small enough so that the non-Gaussian statistics of the large scale part can be calculated from a particle filter, whereas the statistics of the small scale part are conditionally Gaussian given the large scale part. This framework is not restricted to superparameterization as the forecast model and other cheap forecast models can also be employed. In fact, another multiscale filtering algorithm with quasilinear Gaussian dynamically orthogonality method as the forecast method in an adaptively evolving low dimensional subspace has been developed [80]. The multiscale filtering also provides a mathematical framework for representation errors, which are due to the contribution of unresolved scales [81,82] in the observations. Other theoretic and applied studies of the conditional Gaussian framework include effective parameter estimation, model reduction and the understanding of various model errors using information theory.

The remaining of the article is organized as follows. Section 2 provides an overview of data, model and data-driven modeling framework as well as efficient data assimilation and prediction strategies with solvable conditional statistics. Section 3 summarizes the general mathematical structure of nonlinear conditional Gaussian systems, the physics-constrained nonlinear stochastic models and the application of the MTV strategy to the conditional Gaussian systems. Then, a gallery of examples of conditional Gaussian systems is shown in Section 4. Section 5 involves the effective statistically accurate algorithms that beat the curse of dimension for Fokker–Planck equation for conditional Gaussian systems together with their applications to statistical prediction. The topics related to the parameter estimation, model error and multiscale data assimilation utilizing the conditional Gaussian framework are shown in Section 6. The article is concluded in Section 7.

2. Overview: Data vs. Model, Data-Driven Modeling Framework, and Efficient Data Assimilation and Prediction Strategies with Solvable Conditional Statistics

A central contemporary issue for complex turbulent systems is to use data to improve scientific understanding of the underlying physics, make real-time predictions, and quantify the uncertainty in long range forecasting [1,27]. Recently, with the great progress in developing and improving the observational networks, vast amount of observational data are now available. Many purely data-driven statistical methods (regression, clustering and analog forecast etc.) [83,84,85,86,87] are thus developed and have become popular approaches in attempting to understand and predict nature. Despite the success in a crude understanding of nature in terms of explaining and forecasting some of the coarse-grained variables in the largest scale to some extent, these purely data-driven statistical methods usually cannot discover the nonlinear and intermittent nature in the underlying complex dynamics. They often fail to reveal the non-trivial interactions between physical processes in different scales either. In addition, these purely data-driven statistical methods typically require a large training dataset in order to obtain complete and unbiased information from nature, which is however infeasible in many areas, including climate, atmosphere and ocean science. In fact, satellite observations are only available for a few decades [88,89] which are far from enough in understanding decadal or interannual variabilities containing rare and extreme events. Note that many complex turbulent systems in nature are in high dimensions, and therefore most purely data driven statistical methods are extremely expensive to use.

Therefore, combining model with data becomes necessary in understanding and predicting nature. Suitable models involve important physical mechanisms and they can be used for real-time prediction by incorporating only a small amount of data. In many simple but natural scenarios, some low-dimensional physical variables are observed and low-order nonlinear stochastic models are preferred for describing their behavior. Using data outcome to fit a quadratic regression model [90,91] is a data-driven modeling strategy which outweighs the linear regression models and allows nonlinearity and memory in time. However, there is no physical information in such quadratic regression models. In fact, it has been shown in [33] via rigorous mathematical theory that such ad hoc nonlinear regression strategies can exhibit finite time blow-up and pathological invariant measures even though they fit the data with high precision. To include physical information into the models, systematic physics-constrained multi-level quadratic regression models have been developed [31,32]. These models avoid pathological behavior by incorporating physical constraints with nonlinear energy-conserving principles developed in the earlier stochastic climate modeling strategy [45]. Meanwhile, these physics-constrained models allow memory in effects. Although the number of the parameters in physics-constrained nonlinear models can be large, the models are in general robust with respect to the perturbation of the parameters around their optimal values [41]. This is crucial in practice because it requires only a crude estimation of the parameters for the model, which greatly reduces the computational cost for searching in high-dimensional parameter space. A striking real-world application of these physics-constrained nonlinear models is to assess the predictability limits of time series associated with the tropical intraseasonal variability such as the the Madden–Julian oscillation (MJO) and monsoon. It has been shown in [41,42,43] that these physics-constrained nonlinear models are able to reach the predictability limits of the large-scale MJO and monsoon and improves prediction skill using the low-order models. Notably, the physics-constrained nonlinear stochastic models require only a short training period [42,44] because the model development automatically involves a large portion of the information of nature. This is extremely important since practically only a limited observational data is available. In fact, comparable and even slightly more skillful prediction results have been found using the physics-constrained nonlinear model compared with those using non-parametric methods in predicting the MJO and monsoon intermittent time series [92,93], but the prediction using the physics-constrained nonlinear model adopted a much shorter training period [44].

Another important reason that entails the model in understanding and predicting nature is due to the fundamental limitations in measuring the observations. In fact, only partial observations are available in most applications and observational noise is inevitable. For example, the sea surface temperature is easy to measure, but the temperature in the deep ocean is hard to estimate. In addition, although the surface wind in the entire earth can be measured by satellites, the measured signals usually contain large noise. Therefore, models are combined with the available observations for the state estimation of the unobserved variables as well as reducing the noise in the observed ones. This is known as data assimilation or filtering [15,20,22]. Note that most of the complex multiscale dynamical systems involve strong nonlinearity and extreme events. Thus, the classical Kalman filter that works only for linear models [94] fails to capture the nonlinear and non-Gaussian features in nature. Although the so-called particle filter [95,96] is able to recover all the non-Gaussian statistics, it is computationally expensive and can only be applied to low-dimensional scenarios, which are typically not the case in most applications. In fact, even a global weather model with very coarse grid points at every 200 km already has about state variables. Other direct strategies in handling large dimensional systems, such as the ensemble Kalman filters [97,98], can also be problematic. For example, they are not immune from “catastrophic filter divergence” (diverge beyond machine infinity) when observations are sparse, even when the true signal is a dissipative system [99,100]. Therefore, designing new efficient strategies for data assimilation and prediction that are accurate and can overcome the curse of dimension is crucial in studying complex multiscale nonlinear dynamical systems with only noisy partial observations [20,27,65,101,102]. Since both the data assimilation and prediction involve running forward the dynamical models (known as the forecast models), the development of new strategies entails the understanding and utilization of model structures.

Due to the complexity in many complex multiscale turbulent dynamical systems, developing cheap and effective approximate models which nevertheless capture the main characteristics of the underlying dynamics becomes necessary in data assimilation, prediction and the understanding of nature. In particular, novel stochastic parameterization schemes play a significant role in reducing the model complexity while retaining the key features of various multiscale turbulent dynamical systems. These key features include the statistical feedback from small to large scales, accurate dynamical and statistical behavior in large scale variables, and the main effect of the large-scale variables on the statistical evolution of small scale processes. Then, efficient hybrid strategy can be developed for dealing with large and small scale variables, respectively.

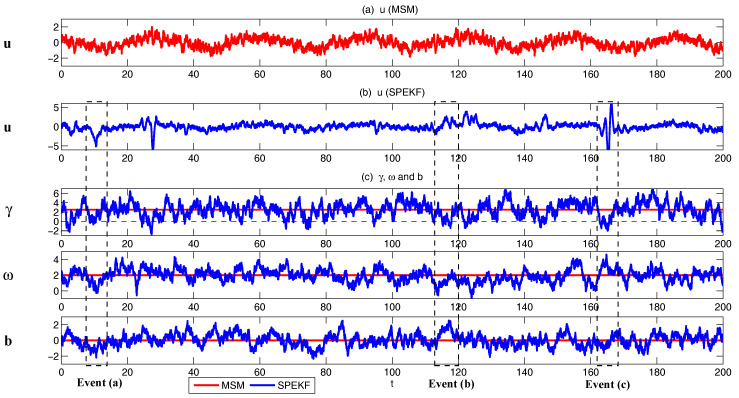

A simple yet practically useful strategy in filtering nonlinear and intermittent signals is via the so-called stochastic parameterized extended Kalman filter (SPEKF) type of the forecast model [64,65]. The idea of the SKEPF model is that the small or unresolved scale variables are stochastically parameterized by cheap linear and Gaussian processes, representing stochastic damping, stochastic phase and stochastic forcing. Despite the model error in using such Gaussian approximations for the unresolved nonlinear dynamics, these Gaussian processes succeed in providing accurate statistical feedback from the unresolved scales to the resolved ones and thus the intermittency and non-Gaussian features as observed in the resolved variables can be accurately recovered. The statistics in the SPEKF model can also be solved with exact and analytic formulae, which allow an accurate and efficient estimation of the model states. The SPEKF type of model has been used for filtering mutiscale turbulent dynamical systems [20], stochastic superresolution [103] and filtering Navier–Stokes equations with model error [104]. A detailed description of the SPEKF model can be found in Section 4.5.1. Notably, the SPEKF model involves a hybrid approach, where certain cheap and statistically solvable Gaussian approximations are used to describe the statistical features of the unresolved (or small scale) variables in a large dimensional subspace while the highly nonlinear dynamical features of the resolved (or large scale) variables as well as their interactions with the unresolved variables are retained in a relatively low dimensional subspace. Such a strategy can be used as a guideline for designing suitable approximate forecast models for various complex multiscale turbulent dynamical systems.

For multiscale data assimilation of many realistic and complex turbulent dynamical systems, an extremely cheap scheme, called stochastic superparameterization [70,71,72,73], has been developed, which makes use of a similar hybrid strategy as discussed above but with a more refined setup. In the stochastic superparameterization, the large-scale mean flow is resolved on a coarse grid and the nonlinear small-scale eddy terms are replaced by quasilinear stochastic processes on formally infinite embedded domains where these stochastic processes are Gaussian conditional to the large scale mean flow. The key ingredient of such a multiscale data assimilation method is the systematic use of conditional Gaussian mixtures which make the methods efficient by filtering a subspace whose dimension is smaller than the full state. This conditional Gaussian closure approximation results in a seamless algorithm without using the high resolution space grid for the small scales and is thus extremely efficient. The method uses particle filters [20,79] or ensemble filters on the large scale part [75,76] whose dimension is small enough so that the non-Gaussian statistics of the large scale part can be calculated from a particle filter, whereas the statistics of the small scale part are conditionally Gaussian given the large scale part. See Section 6.3 for more details. Note that such ideas can also be applied to other cheap forecast models in addition to the superparameterization. For example, another multiscale filtering algorithm with quasilinear Gaussian dynamically orthogonality method as the forecast method in an adaptively evolving low dimensional subspace has been developed in [80].

Due to the significance in model approximation, data assimilation and prediction, it is necessary to develop a general framework for the complex multiscale nonlinear turbulent dynamical systems with conditional Gaussian structures. The key idea of such conditional Gaussian systems is that a hybrid strategy can be applied to deal with the state variables , where particle methods are used to solve the non-Gaussian statistics in the relatively low dimensional subspace associated with and extremely cheap algorithms with closed analytical formulae are adopted to solve the conditional Gaussian statistics of conditioned on in the remaining high dimensional subspace. The Gaussianity in the conditional statistics are usually quite accurate as approximations since the small or unresolved scales with the given (or fixed) large-scale variables usually represent fluctuations in multiscale systems and the statistics are close to Gaussian. Nevertheless, the marginal statistics of the small-scale variables themselves can be highly non-Gaussian, which is one of the salient features as in nature [37]. Notably, developing such computationally efficient models that explores conditional statistics also involves the mutual feedback between large scale and small scale variables. Thus, the full system is completely nonlinear and allows physics-constrained nonlinear interactions. The general conditional Gaussian nonlinear modeling framework provides a powerful tool in multiscale data assimilation, statistical prediction, solving high-dimensional PDFs as well as parameter estimation, causality analysis and understanding model errors.

3. A Summary of the General Mathematical Structure of Nonlinear Conditional Gaussian Systems

3.1. Conditional Gaussian Nonlinear Dynamical Systems

The conditional Gaussian systems have the following abstract form [28]:

| (1) |

| (2) |

Once for is given, conditioned on becomes a Gaussian process:

| (3) |

Despite the conditional Gaussianity, the coupled system (1) and (2) remains highly nonlinear and is able to capture the non-Gaussian features as in nature. The conditional Gaussian distribution in (3) has closed analytic form [30]

| (4) |

| (5) |

which can be solved in an exact and efficient way. The conditional Gaussian framework (1)-(2)-(5) is useful in the parameter estimation, data assimilation, prediction and uncertainty quantification of complex turbulent dynamical systems as will be discussed throughout this article.

In this article, many conditional Gaussian systems with complex turbulent dynamical structures will be studied. For the convenience of the reader, we will always use a blue color to represent the variables that belong to and use a magenta color to denote those for .

3.2. Kalman–Bucy Filter: The Simplest and Special Data Assimilation Example within Conditional Gaussian Framework

A special case of the general conditional Gaussian framework (1) and (2) is the so-called Kalman–Bucy filter [105,106,107,108]. The Kalman–Bucy filter is a continuous time version of the Kalman filter [21,94] and it deals with the linear coupled systems:

| (6) |

| (7) |

In (6) and (7), all the vectors and matrices and are functions of only t and they have no dependence on in order to guarantee the linearity in the coupled system. In the Kalman–Bucy filter, (7) is the underlying dynamics and (6) is the observational process. The observation cannot change the underlying dynamics and therefore no appears in (7).

The filter estimate (also known as the posterior distribution) is the conditional distribution of given the observation , i.e., . In light of (4) and (5), the mean and variance of has the following explicit expressions:

Corresponding to (4) and (5), Chapter 6 of Bensoussan’s book [109] includes rigorous mathematical derivations of the exact solutions of the Kalman–Bucy filter and some other more general conditional Gaussian filters. It is also pointed out in [109] that all these filters belong to the general conditional Gaussian filtering framework in (1)-(2)-(4)-(5) introduced in [110], which is an early version of the book authored by Liptser and Shiryaev [30].

3.3. Physics-Constrained Nonlinear Models with Conditional Gaussian Statistics

Physics-constrained nonlinear stochastic models are the recent development of data driven statistical-dynamical models for the time series of a partial subset of observed variables, which arise from observations of nature or from an extremely complex physical model [31,32]. The physics-constrained nonlinear stochastic modeling framework builds on early works for single layer models without memory effects, which uses physical analytic properties to constrain data driven methods. These physics-constrained nonlinear stochastic models succeed in overcoming both the finite-time blowup and the lack of physical meaning issues in various ad hoc multi-layer regression models [31,33].

The physics-constrained nonlinear stochastic models contain energy-conserving quadratic nonlinear interactions [1,31,32], namely

| (8) |

where and the dimensions of and are and , respectively. In (8), is a linear operator representing dissipation and dispersion. Here, is skew symmetric representing dispersion and is a negative definite symmetric operator representing dissipative process such as surface drag, radiative damping, viscosity, etc. is a bilinear term and it satisfies energy conserving property with .

Many of the physics-constrained nonlinear stochastic models belong to the conditional Gaussian framework. The goal here is to derive a general class of conditional Gaussian physics-constrained nonlinear stochastic models. To this end, we rewrite the Equation (8) in the following way:

| (9) |

where the explicit dependence of the coefficients on time t has been omitted for notation simplicity. In (9), , , and correspond to the the linear term in (8) while and represent the nonlinear terms in the processes associated with the variables in (1) and those in (2), respectively. Since the conditional Gaussian systems do not allow quadratic nonlinear interactions between and itself, both and can be written down as follows:

| (10) |

where stands for the quadratic terms involving only and represents the quadratic interactions between and . Given the nonlinear terms in (10), the energy-conserving quadratic nonlinearity in (8) implies

| (11) |

Inserting (10) into (9) yields the conditional Gaussian systems with energy-conserving quadratic nonlinear interactions,

| (12) |

| (13) |

Now, we explore the detailed forms of the energy-conserving nonlinear terms in (12) and (13).

We start with , which can be written as

| (14) |

where each is a skew-symmetric matrix with and is the j-th entry of . The energy-conserving property is easily seen by multiplying to in (14),

Due to the skew-symmetric property of . In fact, usually represents the internal oscillation with non-constant oscillation frequency that depends on .

Next, contains three components,

| (15) |

One of the components in (15), say , has its own energy conservation, i.e.,

Here, and therefore

| (16) |

where each column of is given by

| (17) |

with being a skew-symmetric matrix. Thus, with (17) in hand, (16) becomes

where is the j-th entry of .

The other two components of in (12) involve the interactions with in (13). On one hand, the energy-conserving property in the following two terms is obvious,

| (18) |

| (19) |

where each is a matrix, is the j-th entry of and is a vector of size with the j-th entry being . On the other hand, the remaining two terms and are similar to those in (18)–(20) but deal with the cross-interactions between different components of such as replacing by in (20). To this end, we define the following

| (20) |

which satisfies

| (21) |

In fact, (18)–(21) are important for generating the intermittent instability, where plays the role of both damping and anti-damping for the dynamics of .

Finally, involves any iterations between and itself that satisfies

| (22) |

Therefore, with (14)–(22) in hand, the conditional Gaussian system with energy-conserving quadratic nonlinear interactions (12) and (13) has the following form:

| (23) |

| (24) |

3.4. Multiscale Conditional Gaussian with MTV Stochastic Modeling Strategy

Let’s start with a general nonlinear deterministic model with quadratic nonlinearity,

| (25) |

where the notations of vectors and matrices are the same as in Section 3.3. In (25), the state variables are again decomposed into . Here, has multiscale features, where denotes the resolved variables that evolve slowly in time (e.g., climate variables) while are unresolved or unobserved fast variables (e.g., weather variables). The system (25) can be written down into more detailed forms:

| (26) |

| (27) |

Different from (12) and (13), the nonlinear interaction between fast scale variables themselves and are also included in (26) and (27). To make the mutiscale system (26) and (27) fit into the conditional Gaussian framework, two modifications are needed. First, if we link (26) and (27) with the general conditional Gaussian framework (1) and (2), the quadratic terms involving the interactions between and itself, namely and , are not allowed there. Second, stochastic noise is required at least to the system of .

To fill in these gaps, the most natural way is to apply idea from the MTV strategy to the multiscale system (26) and (27). The MTV strategy [45,46,47,48], named after Majda, Timofeyev and Vanden-Eijnden, is a systematic mathematical strategy for stochastic mode reduction. The MTV strategy is a two-step procedure:

The equations of motion for the unresolved fast modes are modified by representing the nonlinear self-interactions terms between unresolved modes by stochastic terms.

The equations of motion for the unresolved fast modes are eliminated using the standard projection technique for stochastic differential equations.

In fact, we only need to take the first step in the MTV strategy here. Using to represent the time scale separation between and , the terms with quadratic nonlinearity of and itself are approximated by

| (28) |

That is, we replace the quadratic nonlinearity of by a linear damping and stochastic noise, where , , and are positive definite symmetric matrices. The motivation of (28) is that the nonlinear self-interacting terms of fast variables are responsible for the chaotic sensitive dependence on small perturbations and do not require a more detailed description if their effect on the coarse-grained dynamics for the climate variables alone is the main objective. On the other hand, the quadratic nonlinear interactions between and are retained.

Note that in the second step of the MTV strategy, standard techniques of averaging and adiabatic elimination of fast modes in stochastic equations are applied, assuming that . Here, we assume is a finite small value and thus do not apply the second step of the MTV strategy.

Now, inserting (28) into (26) and (27) yields

| (29) |

| (30) |

where , , and . Clearly, (29) and (30) belongs to the conditional Gaussian framework. Notably, if the nonlinear terms in (29) and (30) satisfy the conditions in Section 3.3, then (29) and (30) becomes a physics-constrained nonlinear model.

4. A Gallery of Examples of Conditional Gaussian Systems

This section includes various conditional Gaussian complex nonlinear dynamical systems and their applications.

4.1. Physics-Constrained Nonlinear Low-Order Stochastic Models

4.1.1. The Noisy Versions of Lorenz Models

Lorenz proposed three famous models in 1963, 1984 and 1996. These models are widely used as simplified models to mimic the turbulent and chaotic behavior and as test models for data assimilation and prediction in atmosphere and ocean science. By incorporating noise and other small-scale parameterizations, these models all belong to the conditional Gaussian family.

1. The Noisy Lorenz 63 (L-63) Model

| (31) |

The deterministic L-63 model ( in (31)) is proposed by Lorenz in 1963 [34]. It is a simplified mathematical model for atmospheric convection. The equations relate the properties of a two-dimensional fluid layer uniformly warmed from below and cooled from above. In particular, the equations describe the rate of change of three quantities with respect to time: x is proportional to the rate of convection, y to the horizontal temperature variation, and z to the vertical temperature variation. The constants , , and are system parameters proportional to the Prandtl number, Rayleigh number, and certain physical dimensions of the layer itself [111]. The L-63 model is also widely used as simplified models for lasers, dynamos, thermosyphons, brushless direct current DC motors, electric circuits, chemical reactions and forward osmosis [112,113,114,115,116,117,118]. the noisy version of the L-63 includes more turbulent and small-scale features and their interactions with the three large scale variables while retains the characteristics in the original L-63. The noisy L-63 model is a conditional Gaussian system (1) and (2) with and . It also belongs to the conditional Gaussian family with and .

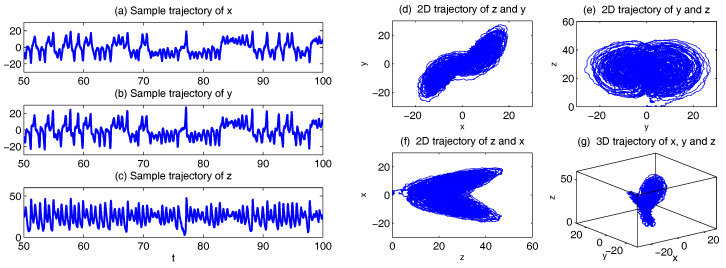

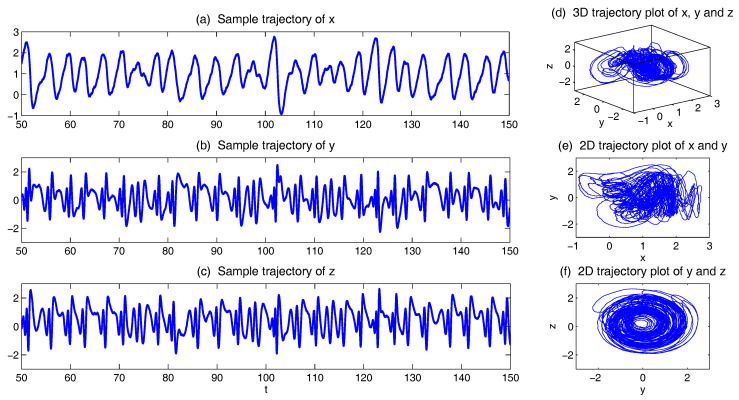

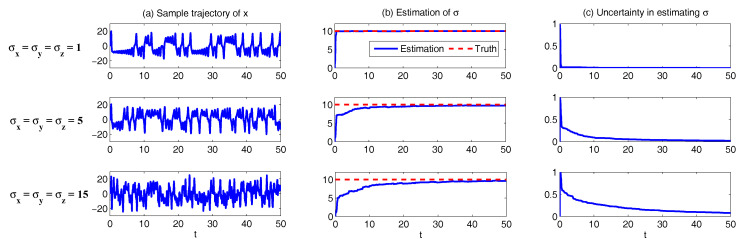

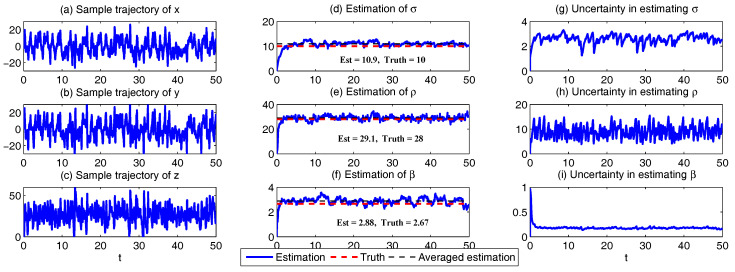

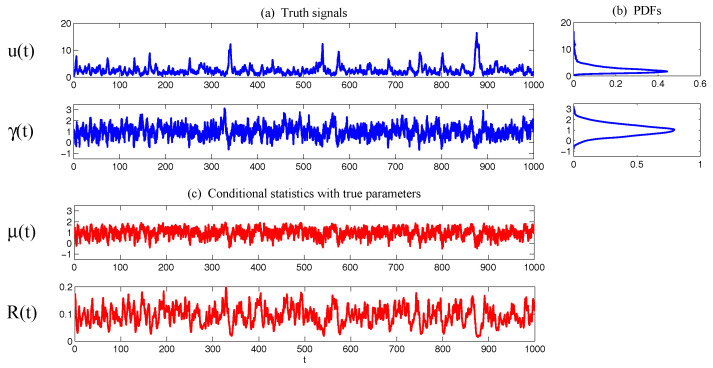

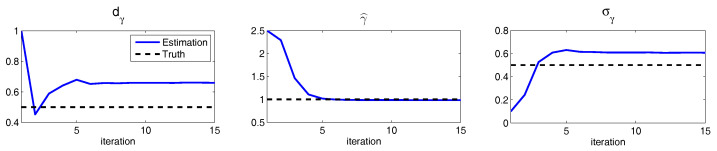

In Figure 1, we show some sample trajectories of the noisy L-63 model (31) with the typical values that Lorenz used [34],

| (32) |

Figure 1.

Sample trajectories of the noisy L-63 model (31) with parameters = 28, = 10, = 8/3, . (a–c) 1D trajectories of x, y and z, respectively; (d–f) 2D trajectories of , and ; (g) 3D trajectory of .

Together with a moderate noise level

| (33) |

In addition to the chaotic behavior and the butterfly profile, the small fluctuations due to the noise are also clearly observed in these trajectories.

2. The Noisy Lorenz 96 (L-96) and Two-Layer L-96 Models

The original L-96 model with noise is given by

| (34) |

The model can be regarded as a coarse discretization of atmospheric flow on a latitude circle with complicated wave-like and chaotic behavior. It schematically describes the interaction between small-scale fluctuations with larger-scale motions. However, the noisy L-96 model in (34) usually does not have the conditional Gaussian structure unless a careful selection of a subset of for . Nevertheless, some two-layer L-96 models do belong to conditional Gaussian framework. These two-layer L96 models are conceptual models in geophysical turbulence that includes the interaction between small-scale fluctuations in the second layer with the larger-scale motions. They are widely used as a testbed for data assimilation and parameterization in numerical weather forecasting [36,40,119,120]. One natural choice of the two-layer L-96 model is the following [36]:

| (35) |

Linking the general conditional Gaussian framework (1) and (2) with the two-layer L-96 model in (35), represents the large-scale motions while involves the small-scale variables.

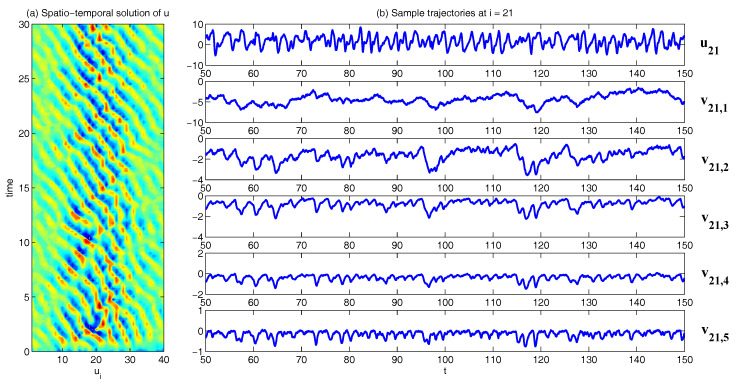

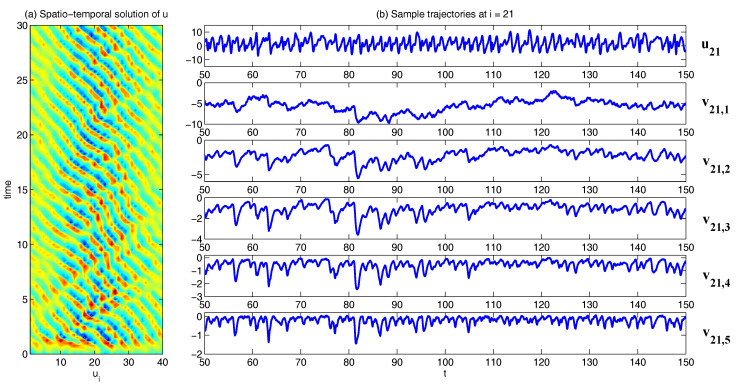

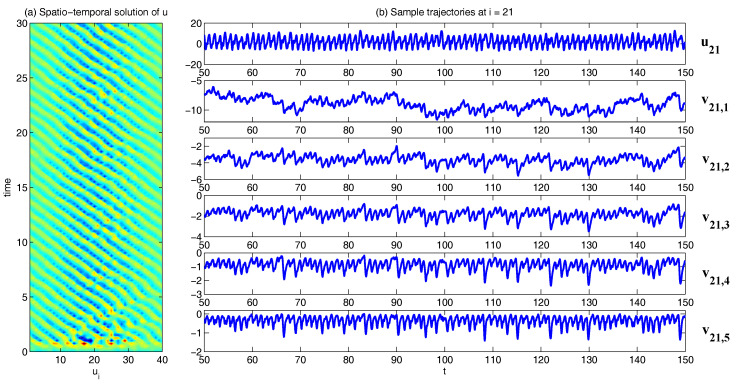

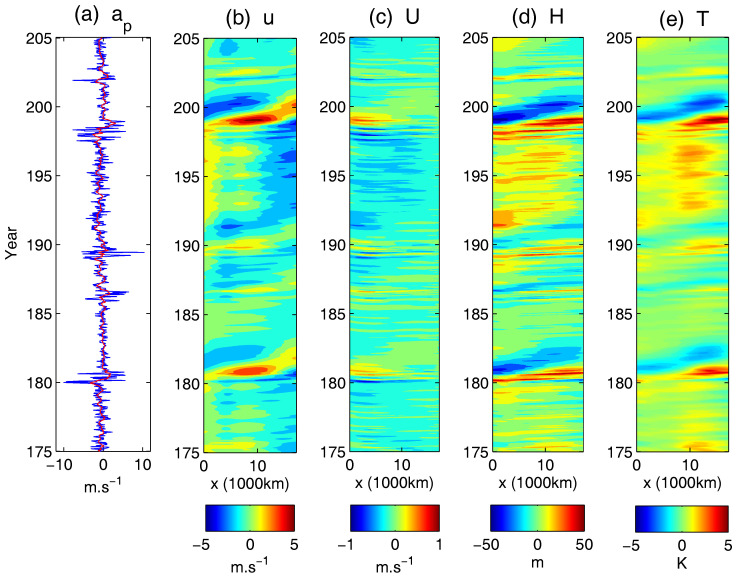

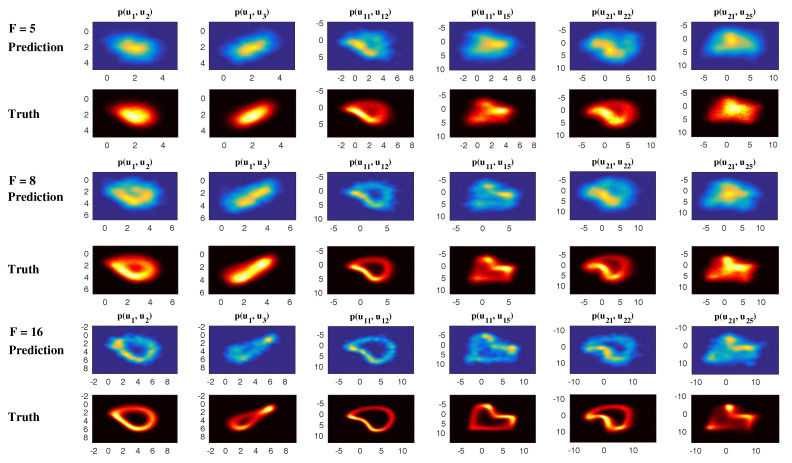

In Figure 2, Figure 3 and Figure 4, numerical simulations of the two-layer L-96 model in (35) with and are shown. Here, the damping and coupling coefficients are both functions of space with

| (36) |

Figure 2.

Simulations of the two-layer L-96 model in (35) with inhomogeneous parameters (36). Here, . (a) spatio-temporal evolution of the large-scale variable ; (b) time series of and at ; from top to bottom: larger to smaller scales with increasing intermittency.

Figure 3.

Simulations of the two-layer L-96 model in (35) with inhomogeneous parameters (36). Here, . (a) spatio-temporal evolution of the large-scale variable ; (b) time series of and at ; from top to bottom: larger to smaller scales with increasing intermittency.

Figure 4.

Simulations of the two-layer L-96 model in (35) with inhomogeneous parameters (36). Here, . (a) spatio-temporal evolution of the large-scale variable ; (b) time series of and at ; from top to bottom: larger to smaller scales with increasing intermittency.

These mimic the situation that the damping and coupling above the ocean are weaker than those above the land since the latter usually have stronger friction or dissipation. Therefore the model is inhomogeneous and the large-scale wave patterns over the ocean are more significant, where a westward propagation is clearly seen in all these figures (Panel (a)). The other parameters are as follows:

| (37) |

which imply that the damping time is shorter in the smaller scales. The model in (35) has many desirable properties as in more complicated turbulent systems. Particularly, the smaller scales are more intermittent (Panel (b)) with stronger fat tails in PDFs. Different constant forcing and 16 are adopted in Figure 2, Figure 3 and Figure 4, which result in various chaotic behavior for the system. With the forcing increase, the oscillation patterns in u become more regular while the small scale variables at each fixed grid point show more turbulent behavior.

3. The Noisy Lorenz 84 (L-84) Model

The noisy L-84 model is an extremely simple analogue of the global atmospheric circulation [121,122], which has the following form [35,123]:

| (38) |

In (38), the zonal flow x represents the intensity of the mid-latitude westerly wind current (or the zonally averaged meridional temperature gradient, according to thermal wind balance), and a wave component exists with y and z representing the cosine and sine phases of a chain of vortices superimposed on the zonal flow. Relative to the zonal flow, the wave variables are scaled so that is the total scaled energy (kinetic plus potential plus internal). Note that these equations can be derived as a Galerkin truncation of the two-layer quasigeostrophic potential vorticity equations in a channel.

In the L-84 model (38), the vortices are linearly damped by viscous and thermal processes. The damping time defines the time unit and is a Prandtl number. The terms and represent the amplification of the wave by interaction with the zonal flow. This occurs at the expense of the westerly current: the wave transports heat poleward, thus reducing the temperature gradient, at a rate proportional to the square of the amplitudes, as indicated by the term . The terms and represent the westward (if ) displacement of the wave by the zonal current, and allows the displacement to overcome the amplification. The zonal flow is driven by the external force which is proportional to the contrast between solar heating at low and high latitudes. A secondary forcing g affects the wave, it mimics the contrasting thermal properties of the underlying surface of zonally alternating oceans and continents. When and , the system has a single steady solution , representing a steady Hadley circulation. This zonal flow becomes unstable for , forming steadily progressing vortices. For , the system clearly shows chaotic behavior.

Linking (38) to the general conditional Gaussian framework (1) and (2), it is clear that the zonal wind current is the variable for state estimation or filtering given the two phases of the large-scale vortices .

In Figure 5, we show the sample trajectories of the system with the following parameters:

| (39) |

which were Lorenz adopted [35]. Small noise is also added to the system. It is clear that y and z are quite chaotic and they appear as a pair (Panels (b,c,f)). On the other hand, x is less turbulent and occurs in a relatively slower time scale. It plays an important role in changing both the phase and amplitude of y and z.

Figure 5.

Simulations of the L-84 model (38) with parameters in (39). (a–c) sample trajectories of x, y and z, respectively; (d) 3D trajectory of ; (e,f) 2D trajectories of and .

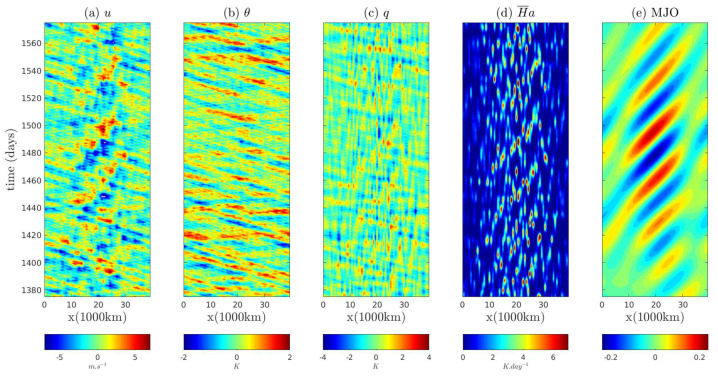

4.1.2. Nonlinear Stochastic Models for Predicting Intermittent MJO and Monsoon Indices

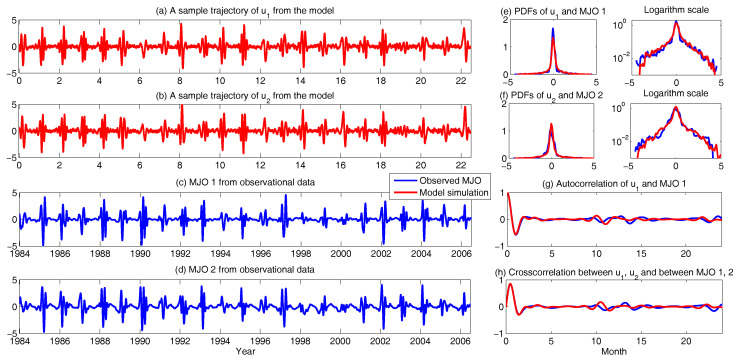

Assessing the predictability limits of time series associated with the tropical intraseasonal variability such as the the Madden–Julian oscillation (MJO) and monsoon [41,42,43] is an important topic. They yield an interesting class of low-order turbulent dynamical systems with extreme events and intermittency. For example, Panels (c,d) in Figure 6 show the MJO time series [41], measured by outgoing longwave radiation (OLR; a proxy for convective activity) from satellite data [88]. These time series are obtained by applying a new nonlinear data analysis technique, namely nonlinear Laplacian spectrum analysis [124], to these observational data. To describe and predict such intermittent time series, the following model is developed:

| (40) |

with

| (41) |

Figure 6.

Using physics-constrained nonlinear low-order stochastic model (6) to capture the key features of the observed MJO indices. (a,b) sample trajectories from the model (6); (c,d) MJO indices from observations; (e,f) comparison of the PDFs in both linear and logarithm scales; (g,h) comparison of the autocorrelation and cross-correlation functions.

Such a model is derived based on the physics-constrained nonlinear data-driven strategy [31,32]. In this model, are the two-dimensional indices obtained from observational data while are the two hidden unobserved variables representing other important underlying physical processes that interact with the observational ones. The coupled system is a conditional Gaussian one, which plays an important role here since there is no direct observational data for the two hidden processes v and . In fact, in order to obtain the initial values of v and for ensemble forecast, the data assimilation formula in (4) and (5) is used given the observational trajectories of and . The parameters here are estimated via information theory. With the calibrated parameters, the sample trajectories as shown in Panels (a,b) capture all the important features as in the MJO indices from observations. In addition, the non-Gaussian PDFs (Panels (e,f)) and the correlation and cross-correlation functions (Panels (g,h)) from the model nearly perfectly match those associated with the observations. In [41], significant prediction skill of these MJO indices using the physics-constrained nonlinear stochastic model (6) was shown. The prediction based on ensemble mean can have skill even up to 40 days. In addition, the ensemble spread accurately quantify the forecast uncertainty in both short and long terms. In light of a twin experiment, it was also revealed in [41] that the model in (6) is able to reach the predictability limit of the large-scale cloud patterns of the MJO.

4.1.3. A Simple Stochastic Model with Key Features of Atmospheric Low-Frequency Variability

This simple stochastic climate model [49,125] presented below is set-up in such a way that it features many of the important dynamical features of comprehensive global circulation models (GCMs) but with many fewer degree of freedom. Such simple toy models allow the efficient exploration of the whole parameter space that is impossible to conduct with GCMs:

| (42) |

where . It contains a quadratic nonlinear part that conserves energy as well as a linear operator. Therefore, this model belongs to physics-constrained nonlinear stochastic model family. The linear operator includes a skew-symmetric part that mimics the Coriolis effect and topographic Rossby wave propagation, and a negative definite symmetric part that is formally similar to the dissipation such as the surface drag and radiative damping. The two variables and can be regarded as climate variables while the other two variables and become weather variables that occur in a much faster time scale when is small. In fact, the MTV strategy as described in Section 3.4 is applied to and that introduce this together with stochastic noise and damping terms. The coupling in different variables is through both linear and nonlinear terms, where the nonlinear coupling through produces multiplicative noise. Note that when , applying an explicit stochastic mode reduction results in a two-dimensional system for the climate variables [45,46]. Clearly, the 4D stochastic climate model (42) is a conditional Gaussian system with and .

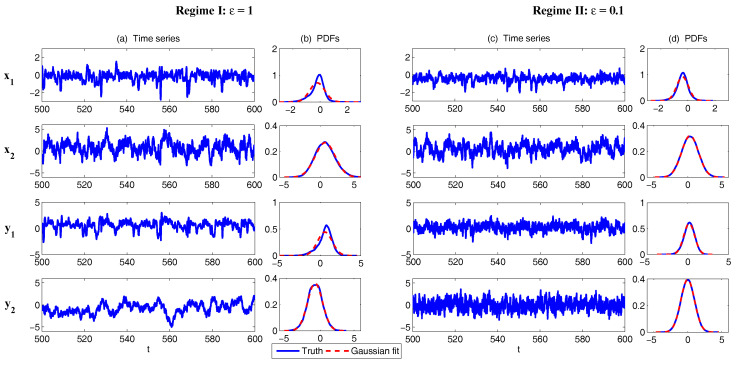

In Figure 7, sample trajectories and the associated PDFs in two dynamical regimes are shown. The two regimes differ by the scale separation with (Regime I) and (Regime II), respectively. The other parameters are the same in the two regimes:

| (43) |

Figure 7.

Simulations of the simple stochastic climate model (42) with parameters given by (43). (Left) Regime I with . (Right) Regime II with .

It is obvious that with , all the four variables lie in the same time scale. Both “climate variable” and “weather variable” can have intermittent behavior with non-Gaussian PDFs. On the other hand, with , a clear scale separation can be seen in the time series, where and occur in a much faster time scale than and . Since the memory time due to the strong damping becomes much shorter in and , the associated PDFs for these “weather variables” become Gaussian.

4.1.4. A Nonlinear Triad Model with Multiscale Features

The following nonlinear triad system is a simple prototype nonlinear stochastic model that mimics structural features of low-frequency variability of GCMs with non-Gaussian features [50] and it was used to test the skill for reduced nonlinear stochastic models for the fluctuation dissipation theorem [126]:

| (44) |

| (45) |

| (46) |

The triad model (44)–(46) involves a quadratic nonlinear interaction between and with energy-conserving property that induces intermittent instability. On the other hand, the coupling between and is linear and is through the skew-symmetric term with coefficient , which represents an oscillation structure of and . Particularly, when is large, fast oscillations become dominant for and while the overall evolution of can still be slow provided that the feedback from and is damped quickly. Such multiscale structure appears in the turbulent ocean flows described for example by shallow water equation, where stands for the geostrophically balanced part while and mimics the fast oscillations due to the gravity waves [11,122]. The large-scale forcing represents the external time-periodic input to the system, such as the seasonal effects or decadal oscillations in a long time scale [121,127]. In addition, the scaling factor plays the same role as in the 4D stochastic climate model (42) that allows a difference in the memory of the three variables. Note that the MTV strategy as described in Section 3.4 is applied to and that introduces the factor . The nonlinear triad model in (44)–(46) belongs to conditional Gaussian model family with and .

To understand the dynamical behavior of the nonlinear triad model (44)–(46), we show the model simulations in Figure 8 for the following two regimes:

| (47) |

Figure 8.

Model simulation of the triad model (44)–(46) with parameters in (47). (a) Regime I with ; (b) Regime II with .

In Regime I, and therefore and occur in the same time scale. Since a large noise coefficient is adopted in the dynamics of , the PDF of is nearly Gaussian and the variance is large. The latter leads to a skewed PDF of due to the nonlinear feedback term , where the extreme events are mostly towards the negative side of due to the negative sign in front of the nonlinear term. As a consequence, the also has a skewed PDF but the skewness is positive. On the other hand, in Regime I, where , and both have a short decorrelation time and the associated PDFs are nearly Gaussian. Nevertheless, the slow variable is highly non-Gaussian due to the direct nonlinear interaction between in which plays the role of stochastic damping and it results in the intermittent instability in the signal of .

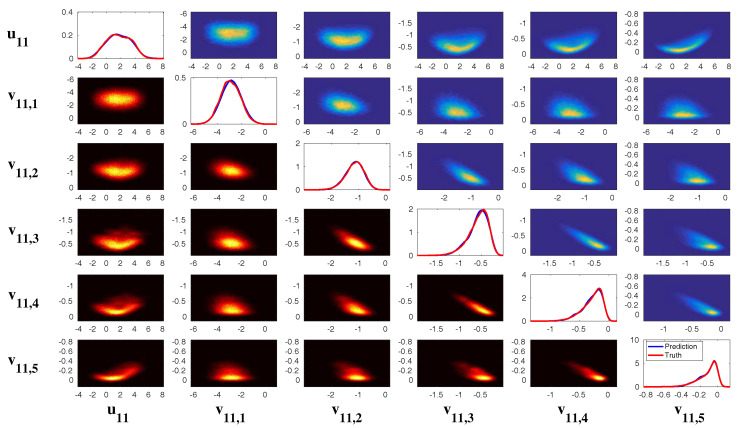

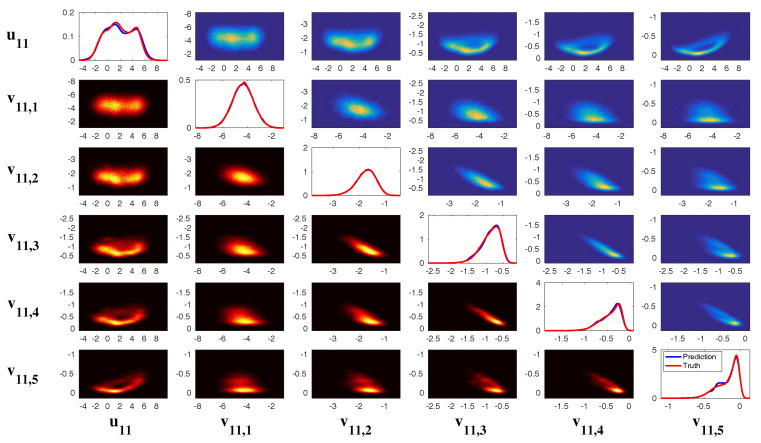

4.1.5. Conceptual Models for Turbulent Dynamical Systems

Understanding the complexity of anisotropic turbulence processes over a wide range of spatiotemporal scales in engineering shear turbulence [128,129,130] as well as climate atmosphere ocean science [73,121,122] is a grand challenge of contemporary science. This is especially important from a practical viewpoint because energy often flows intermittently from the smaller scales to affect the largest scales in such anisotropic turbulent flows. The typical features of such anisotropic turbulent flows are the following: (A) The large-scale mean flow is usually chaotic but more predictable than the smaller-scale fluctuations. The overall single point PDF of the flow field is nearly Gaussian whereas the mean flow pdf is sub-Gaussian, in other words, with less extreme variability than a Gaussian random variable; (B) There are nontrivial nonlinear interactions between the large-scale mean flow and the smaller-scale fluctuations which conserve energy; (C) There is a wide range of spatial scales for the fluctuations with features where the large-scale components of the fluctuations contain more energy than the smaller-scale components. Furthermore, these large-scale fluctuating components decorrelate faster in time than the mean-flow fluctuations on the largest scales, whereas the smaller-scale fluctuating components decorrelate faster in time than the larger-scale fluctuating components; (D) The PDFs of the larger-scale fluctuating components of the turbulent field are nearly Gaussian, whereas the smaller-scale fluctuating components are intermittent and have fat-tailed PDFs, in other words, a much higher probability of extreme events than a Gaussian distribution.

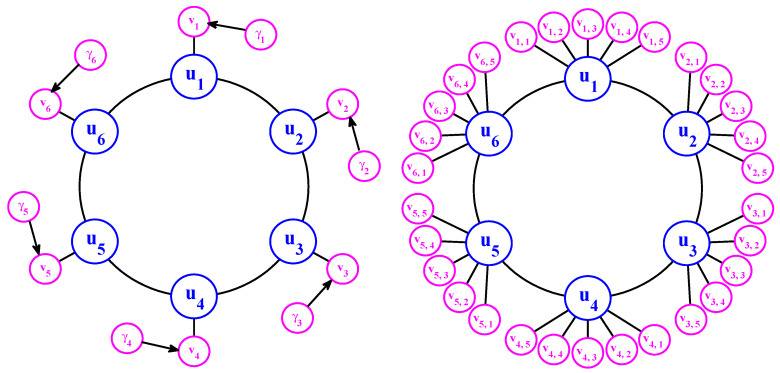

Denote u the largest variable and a family of small-scale variables. One can think of u as the large-scale spatial average of the turbulent dynamics at a single grid point in a more complex system and as the turbulent fluctuations at the grid point. To add a sense of spatial scale, one can also regard as the amplitude of the k-th Fourier cosine mode evaluated at a grid point. A hallmark of turbulence is that the large scales can destabilize the smaller scales in the turbulent fluctuations intermittently and this increased small-scale energy can impact the large scales; this key feature is captured in the conceptual models. With the above discussion, here are the simplest models with all these features, the conceptual dynamical models for turbulence [37]:

| (48) |

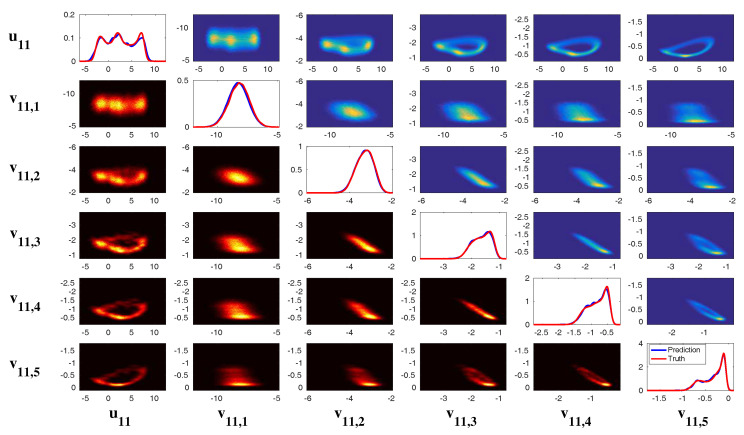

Now, let us take and use the following parameters which have been tested in [37] that represent the features of turbulent flows:

| (49) |

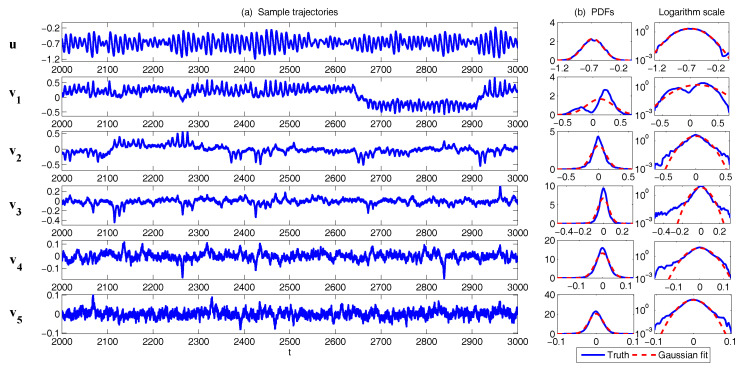

The sample trajectories and the associated PDFs are shown in Figure 9. The largest scale u is nearly Gaussian while more intermittent behavior is observed in the smaller scale variables. Here, the nonlinear interaction plays an important role in generating these turbulent features and the total energy in the nonlinear terms is conserved. Thus, all the features (A)–(D) above are addressed in this model. It is also clear that the conceptual turbulent model (48) is a conditional Gaussian system with , .

Figure 9.

Sample trajectories (a) and the associated PDFs (b) of the conceptual turbulent model (48) with parameters in (49).

In [36], a modified version of the conceptual model was developed:

| (50) |

| (51) |

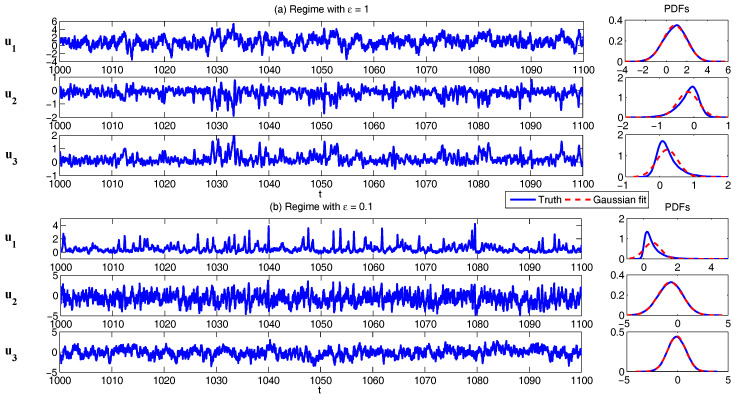

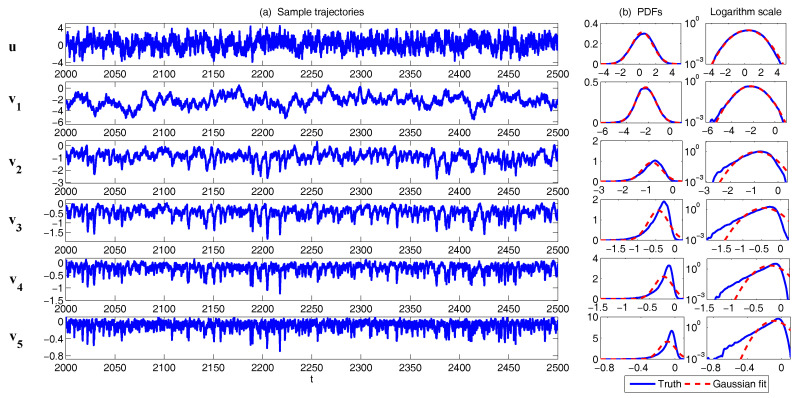

This modified conceptual turbulent model again fits into the conditional Gaussian framework, where includes the largest scale variable and represents small-scale ones. The modified conceptual turbulent model (50) and (51) inherits many important features from the dynamics in (48). For example, with suitable choices of parameters, the large-scale observed variable u is nearly Gaussian while small-scale variables becomes more intermittent with the increasing of k. In addition, the small-scale turbulent flows provide feedback to large scales through the nonlinear coupling with energy-conserving property. An example is shown in Figure 10 with the following choice of parameters:

| (52) |

Figure 10.

Sample trajectories (a) and the associated PDFs (b) of the modified conceptual turbulent model (50) and (51) with parameters in (52).

4.1.6. A Conceptual Model of the Coupled Atmosphere and Ocean

In [39], the signatures of feedback between the atmosphere and the ocean are studied with a simple coupled model, which can be used to exam the effects of oceanic variability and seasonality.

The atmosphere component is the Lorenz 1984 model (38) discussed in Section 4.1.1, except that the forcing has an explicit form

which represents seasonal effect. Therefore, the atmosphere model reads

| (53) |

Again, x represents the amplitude of the zonally averaged meridional temperature gradient while y and z denote the amplitudes of the cosine and sine longitudinal phases of a chain of large-scale waves. The poleward heat transport is achieved by the eddies at a rate proportional to , and this heat transport reduces the zonally averaged temperature gradient. The term represents the zonally averaged forcing due to the equator-pole difference in solar heating and it varies on a seasonal timescale.

On the other hand, the oceanic module simulates the wind-driven circulation in a basin that occupies a fraction r of the longitudinal extent of the atmosphere. Its dynamics are described by a set of four ordinary differential equations, namely,

| (54) |

Here, p represents the zonally averaged meridional temperature gradient at the sea surface, while q represents the basin-averaged sea surface temperature. The poleward heat transport is achieved by a large-scale flow, at a rate proportional to . The average temperature Q is conserved in the absence of any coupling with the atmosphere. The transport is represented by two phases of the streamfunction, and . The streamfunction undergoes damped oscillations with a period, , of y and a decay time, , of 17 y. This damped oscillation is the only source of internal variability in the ocean and is due to the intrinsic decadal variability of the wind-driven circulation. Note that the equations for the two phases of the streamfunction can be derived as a Galerkin truncation of the one-and-a-half-layer quasigeostrophic potential vorticity equation for long linear Rossby waves. It is suggested in [131,132] that basin modes with decadal frequencies can be excited by stochastic atmospheric forcing and represent a resonant response of the ocean. This model essentially assumes that the intrinsic decadal variability of the ocean wind-driven circulation is described by one such mode. For weak flow, the wind-driven gyres reduce the zonally averaged north-south temperature gradient in a basin.

The feedback between the ocean and the atmosphere are constructed so as to conserve total heat in the air–sea exchange. The coupled atmosphere-ocean model reads:

| (55) |

where the terms with underlines represent the coupling between atmosphere and ocean. In (55), the term on the right-hand side of the evolution of p is parameterized by a constant n such that the ocean part become a linear model. Therefore, (55) includes a nonlinear atmosphere model and a linear ocean model. The coupling is through linear terms. Thus, treating the atmosphere variables as and the ocean variables as , the coupled system (55) belongs to conditional Gaussian framework. It is also obvious that the coupled model belongs to the physics-constrained regression model family.

In (55), the air–sea heat fluxes are proportional to the difference between the oceanic and the atmospheric temperature: these are the terms and . The bulk transfer coefficient, m, is assumed to be constant. In the atmospheric model, this term needs to be multiplied by the fraction of earth covered by ocean, r. In the oceanic model, the air–sea flux is divided by c, which is the ratio of the vertically integrated heat capacities of the atmosphere and the ocean. The constant represents the fraction of solar radiation that is absorbed directly by the ocean. There is no heat exchange between the atmospheric standing wave z and the ocean because z represents the sine phase of the longitudinal eddies and has zero zonal mean across the ocean. A feedback between z and the ocean would appear if we added an equation for the longitudinal temperature gradient of the ocean. The effect of the wind stress acting on the ocean is represented as a linear forcing proportional to x and y in the equations for the streamfunction, . The coupling constants , , , and are chosen to produce realistic values for the oceanic heat transport. Detailed discussions of the parameter choices and model behaviors in different dynamical regimes are shown in [39].

4.1.7. A Low-Order Model of Charney–DeVore Flows

The concept of multiple equilibria in a severely truncated “low-order” image of the atmospheric circulation was proposed by Charney and DeVore [133] (the CdV model). The simplest CdV model describes a barotropic zonally unbounded flow over a sinusoidal topography in a zonal channel with quasigeostrophic dynamics. The vorticity balance of such a flow

| (56) |

needs an additional constraint to determine the boundary values of the streamfunction on the channel walls. The vorticity concept has eliminated the pressure field and its reconstruction in a multiconnected domain requires the validity of the momentum balance, integrated over the whole domain,

| (57) |

Here, U is the zonally and meridionally averaged zonal velocity and is the vorticity and the zonal momentum imparted into the system.

The depth of the fluid is and the topography height b is taken sinusoidal, with , where L is the length and the width of the channel. A heavily truncated expansion

represents the flow in terms of the zonal mean U and a wave component with sine and cosine amplitudes A and B. It yields the low-order model [29,133]:

| (58) |

where is the barotropic Rossby wave speed and . Apparently, (58) belongs to the conditional Gaussian framework when the zonal mean flow is treated as while the two wave components belong to . This model also belongs to physics-constrained nonlinear modeling family.

Without the stochastic noise, three equilibria are found if is well above . The three possible steady states can be classified according to the size of the mean flow U compared to the wave amplitudes: (a) The high zonal index regime is frictionally controlled, the flow is intense and the wave amplitude is low; (b) The low zonal index regime is controlled by form stress, the mean flow is weak and the wave is intense; (c) The intermediate state is transitional, it is actually unstable to perturbations. This “form stress instability” works obviously when the slope of the resonance curve is below the one associated with friction, i.e., , so that a perturbation must run away from the steady state.

4.1.8. A Paradigm Model for Topographic Mean Flow Interaction

A barotropic quasi-geostrophic equation with a large scale zonal mean flow can be used to solve topographic mean flow interaction. The full equations are given as follows [2]:

| (59) |

Here, q and are the small-scale potential vorticity and stream function, respectively. The large scale zonal mean flow is characterized by and the topography is defined by function . The parameter is associated with the -plane approximation to the Coriolis force. The domain considered here is a periodic box .

Now, we construct a set of special solutions to (59), which inherit the nonlinear coupling of the small-scale flow with the large-scale mean flow via topographic stress. Consider the following Fourier decomposition:

| (60) |

where and . By assuming and inserting (60) into (59), we arrive at the layered topographic equations in Fourier form:

where and . Consider a finite Fourier truncation. By adding extra damping and stochastic noise for compensating the information truncated in the finite Fourier decomposition in ,

We arrive at a conditional Gaussian system with and .

In [2], very rich chaotic dynamics are reached with two-layer topographic models. Here as a concrete example, we consider a single layer topography in the zonal direction [32],

where and H denotes the topography amplitude. Choose

and rotate the variables counterclockwise by 45° to coordinate . We arrive at

| (61) |

where = . This model is similar to the Charney and Devore model for nonlinear regime behavior without dissipation and forcing. Then, with additional damping and noise in and approximating the interaction with the truncated Rossby wave modes, we have the following system:

| (62) |

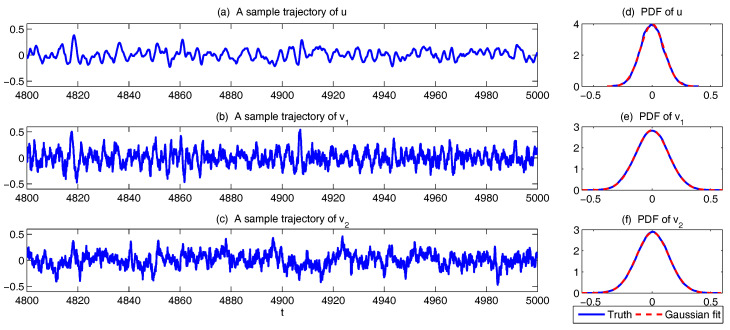

Linear stability is satisfied for while there is only neutral stability of u. The system in (62) satisfies the conditional Gaussian framework where and . Notably, this model also belongs to the physics-constrained nonlinear model family. One interesting property of this model is that, if the invariant measure exists, then, despite the nonlinear terms, the invariant measure is Gaussian. The validation of this argument can be easily reached following [31]. A numerical illustration is shown Figure 11 with the following parameters:

| (63) |

Figure 11.

Sample trajectories (a–c) and the associated PDFs (d–f) of the single layer topography model (62) with parameters in (63). Note that the invariant measure is Gaussian.

Note that, if stochastic noise is also added in the evolution equation of u, then the system (62) also belongs to the conditional Gaussian model family with and .

4.2. Stochastically Coupled Reaction–Diffusion Models in Neuroscience and Ecology

4.2.1. Stochastically Coupled FitzHugh–Nagumo (FHN) Models

The FitzHugh–Nagumo model (FHN) is a prototype of an excitable system, which describes the activation and deactivation dynamics of a spiking neuron [57]. Stochastic versions of the FHN model with the notion of noise-induced limit cycles were widely studied and applied in the context of stochastic resonance [134,135,136,137]. Furthermore, its spatially extended version has also attracted much attention as a noisy excitable medium [138,139,140,141].

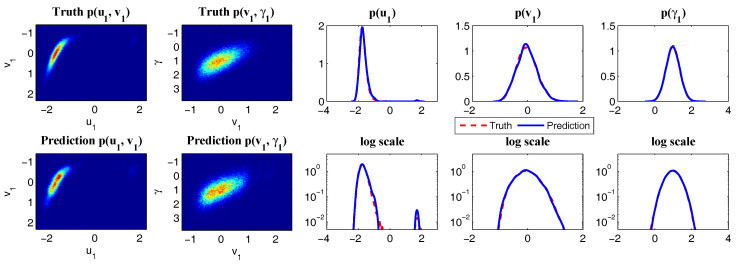

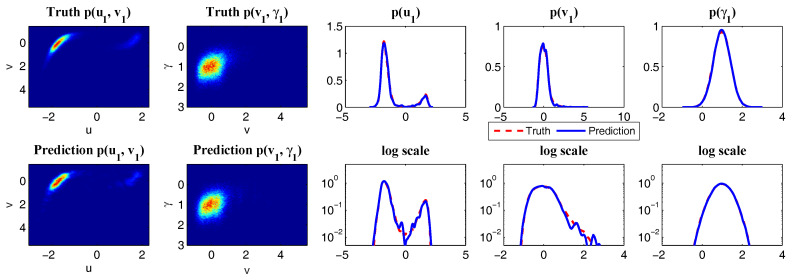

One common representation of the stochastic FHN model is given by

| (64) |

where the time scale ratio is much smaller than one (e.g., ), implying that is the fast and is the slow variable. The coupled FHN system in (64) is obviously a conditional Gaussian system with and . The nonlinear function is one of the nullclines of the deterministic system. A common choice for this function is

| (65) |

where the parameter c is either 1 or . On the other hand, is usually a linear function of u. In (64), is an external source and it can be a time-dependent function. In the following, we set to be a constant external forcing for simplicity. Diffusion term is typically imposed in the dynamics of u. Thus, with these choices, a simple stochastically coupled spatial-extended FHN model is given by

| (66) |

With and , the model in (66) contains the model families with both coherence resonance and self-induced stochastic resonance [142]. Applying a finite difference discretization to the diffusion term in (66), we arrive at the FHN model in the lattice form

| (67) |

Note that the parameter is required in order to guarantee that the system has a global attractor in the absence of noise and diffusion. The random noise is able to drive the system above the threshold level of global stability and triggers limit cycles intermittently. The model behavior of (67) in various dynamical regimes has been studied in [36]. The model can show both strongly coherent and irregular features as well as scale-invariant structure with different choices of noise and diffusion coefficients.

There are several other modified versions of the FHN model that are widely used in applications. One that appears in the thermodynamic limit of an infinitely large ensemble is the so-called globally coupled FHN model

| (68) |

where . Different from the model in (67) where each is only directly coupled to its two nearest neighbors and , each in the globally coupled FHN model (68) is directly affected by all . In [57], various closure methods are developed to solve the time evolution of the statistics associated with the globally coupled FHN model (68).

Another important modification of the FHN model is to include the colored noise into the system as suggested by [57,143]. For example, the constant a on the right-hand side of (67) can be replaced by an OU process [143] that allows the memory of the additive noise. Below, by introducing a stochastic coefficient in front of the linear term u, a stochastically coupled FHN model with multiplicative noise is developed. The model reads

| (69) |

The stochastically coupled FHN model with multiplicative noise belongs to the conditional Gaussian framework with and .

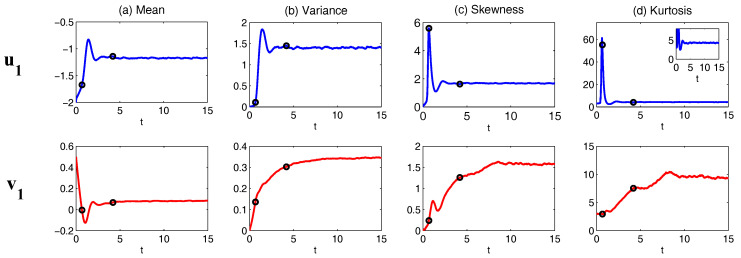

To provide intuition about the dynamical behavior of the stochastically coupled FHN model with multiplicative noise (69), we show the time series of the model with , namely there is no diffusion term and the coupled model is three-dimensions. The three-dimensional model with no diffusion reads,

| (70) |

with the following parameters

| (71) |

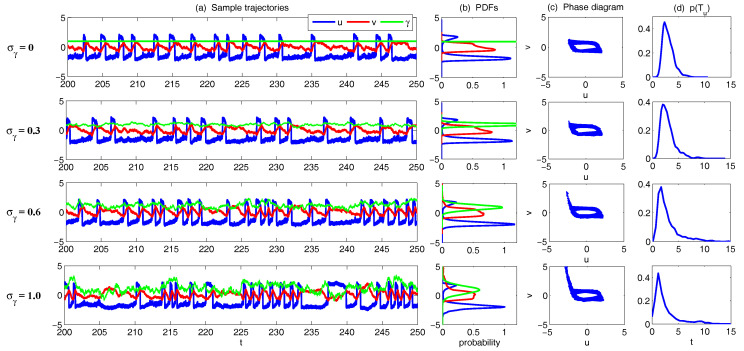

Figure 12 shows the model simulations with different . Note that, when , the model (70) reduces to a two-dimensional model with . From Figure 12, it is clear that u is always intermittent. On the other hand, v has only a small variation when is nearly a constant while more extreme events in v are observed when increases (see the PDF of v). Note that the extreme events in v are strongly correlated with the quiescent phase of u according to the phase diagram. These extreme events do not affect the regular ones that form a closed loop with the signal of u. With the increase of , the periods of u becomes more irregular. This can be seen in (d), which shows the distribution of the time interval T between successive oscillations in u. With a large , this distribution not only has a large variance but also shows a fat tail.

Figure 12.

Simulations of the three-dimensional stochastically coupled FHN model with multiplicative noise (70). Different values of noise coefficient are used here. (a) sample trajectories of u (blue), v (red) and (green); (b) the associated PDFs; (c) phase diagram of ; (d) distribution of the time interval T between successive oscillations in u.

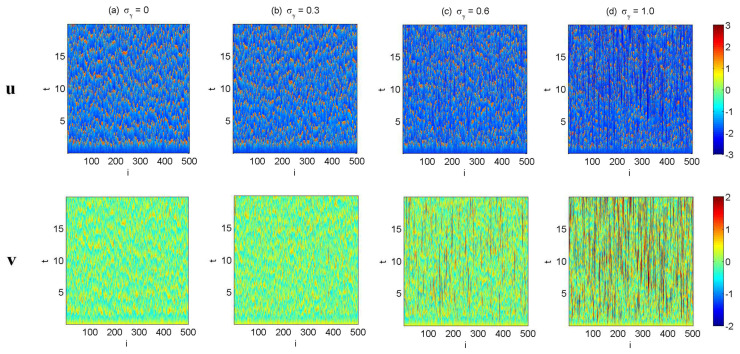

Finally, Figure 13 shows the model simulation in the spatial-extended system (69), where . The same parameters as in (71) are taken and and for all i. Homogeneous initial conditions and are adopted for all . The four rows show the simulation with different , where is the noise coefficient at all the grid points, namely for all i. With the increase of , the spatial structure of u becomes less coherent and more disorganized, which is consistent with the temporal structure as shown in Figure 12. In addition, more extreme events can be observed in the field of v due to the increase of the multiplicative noise.

Figure 13.

Simulations of the stochastically coupled FHN model with multiplicative noise system with spatial-extended structure (69). The same parameters as in (71) are taken and and for all i. Homogeneous initial conditions and are adopted for all . (a–d) show the simulation with different and , where is the noise coefficient at all the grid points, namely for all i.

4.2.2. The Predator–Prey Models

The functioning of a prey–predator community can be described by a reaction–diffusion system. The standard deterministic predator–prey models have the following form [58]:

| (72) |

where u and v represent predator and prey, respectively. Here, , and are constants: is the maximal growth rate of the prey, b is the carrying capacity for the prey population, and H is the half-saturation abundance of prey. Introducing dimensionless variables

and, using the dimensionless parameters,

The non-dimensional system (by dropping the primes) becomes

| (73) |

Note that, in the predator–prey system, both u and v must be positive.

Clearly, the deterministic model is highly idealized and therefore stochastic noise is added into the system [144,145]. In order to keep the positive constraints for the variables u and v, multiplicative noise is added to the system. One natural choice of the noise is the following:

| (74) |

Here, is a function of u and its value vanishes at to guarantee the positivity of the system. The most straightforward choice of is , which leads to small noise when the signal of u is small and large noise when the amplitude of u is large. Another common choice of this multiplicative noise is such that the noise level remains nearly constant when u is larger than a certain threshold. Applying a spatial discretization to the diffusion terms, the stochastic coupled predator–prey in (74) belongs to the conditional Gaussian framework with and . Note that when , the conditional Gaussian estimation (4) and (5) will become singular due to the term . Nevertheless, the limit cycle in the dynamics will not allow the solution to be trapped at . Assessing this issue using rigorous analysis is an interesting future work.

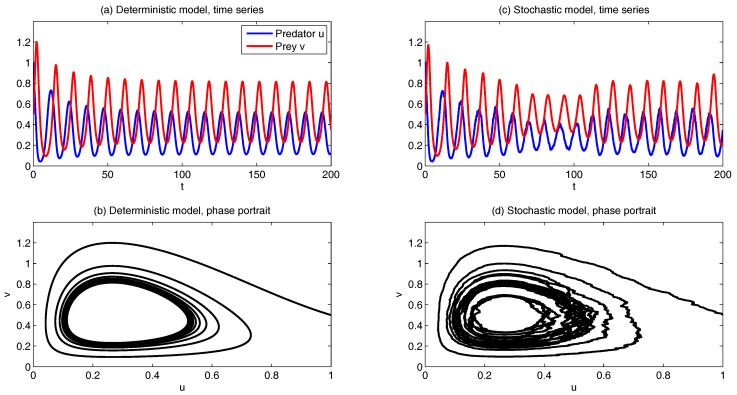

Figure 14 shows sample trajectories and phase portraits under the simplest setup simulation of (74) without diffusion terms and . The parameters are given as follows:

| (75) |

Figure 14.

Sample trajectories and phase portraits under the simplest setup simulation of (74) without diffusion terms and . The parameters are given in (75). (a,b) simulations without stochastic noise; (c,d) simulations with a multiplicative noise .

Panels (a,b) show the simulations without stochastic noise while Panels (c,d) show the results with a multiplicative noise . The intermittent behavior is found in both u and v.

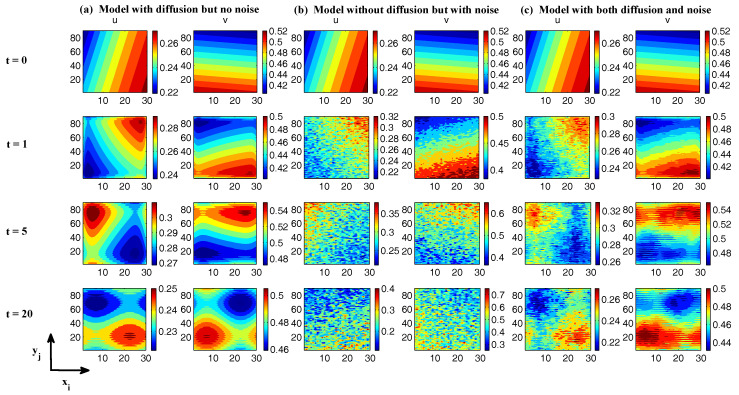

In Figure 15, we show the simulations of the spatial-extended system in (74), where we take 30 and 90 grid points in x and y directions, respectively. Spatially periodic boundary conditions are used here. In all three of the simulations in Columns (a–c), the initial values for both u and v are the same. Column (a) shows the model simulation of (74) with diffusion terms and , but the noise coefficient is zero, namely . Therefore, the model is deterministic and spatial patterns can be seen in all the time instants. In Column (b), we ignore the diffusion terms and thus the system is spatially decoupled. Nevertheless, a stochastic noise is added to the system. The consequence is that the initial correlated structure will be removed by the noise and, at , the spatial structures are purely noisy. Finally, in Column (c), the simulations of the model with both the diffusion terms , and the stochastic noise are shown. Although after , the initial structure completely disappears, the diffusion terms correlate the nearby grid points and spatial structures are clearly observed in these simulations. Due to the stochastic noise, the patterns are polluted and are more noisy than those shown in Column (a). In addition, the structure in v is more clear since the noise is directly imposed only on u.

Figure 15.

Snapshots at and of the spatial-extended system (74). Spatially periodic boundary conditions are used here. The parameters are given in (75). In all the three simulations in (a–c), the initial values for both u and v are the same. (a) model simulation of (74) with diffusion terms and but the noise coefficient ; (b) model simulation of (74) without diffusion terms . Therefore, the system is spatially decoupled. However, a stochastic noise is added to the system; (c) simulations with both the diffusion terms and and the stochastic noise .

4.2.3. A Stochastically Coupled SIR Epidemic Model

The SIR model is one of the simplest compartmental models for epidemiology, and many models are derivations of this basic form [59,146]. The model consists of three compartments: “S” for the number susceptible, “I” for the number of infectious, and “R” for the number recovered (or immune). Each member of the population typically progresses from susceptible to infectious to recovered, namely

| (76) |

This model is reasonably predictive for infectious diseases which are transmitted from human to human, and where recovery confers lasting resistance, such as measles, mumps and rubella.

The classical SIR model is as follows:

| (77) |

where the total population size has been normalized to one and the influx of the susceptible comes from a constant recruitment rate b. The death rate for the and R class is, respectively, given by and . Biologically, it is natural to assume that . The standard incidence of disease is denoted by , where is the constant effective contact rate, which is the average number of contacts of the infectious per unit time. The recovery rate of the infectious is denoted by such that is the mean time of infection.

When the distribution of the distinct classes is in different spatial locations, the diffusion terms should be taken into consideration and random noise can also be added. Thus, an extended version of the above SIR system (77) can be described as the following [147,148,149]:

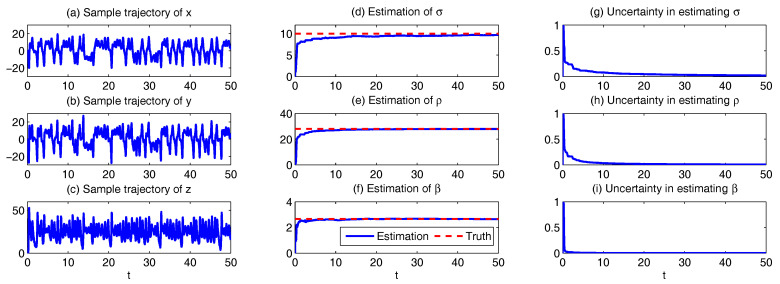

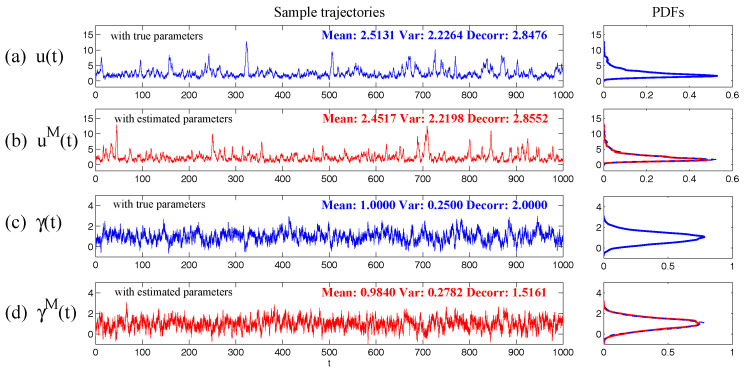

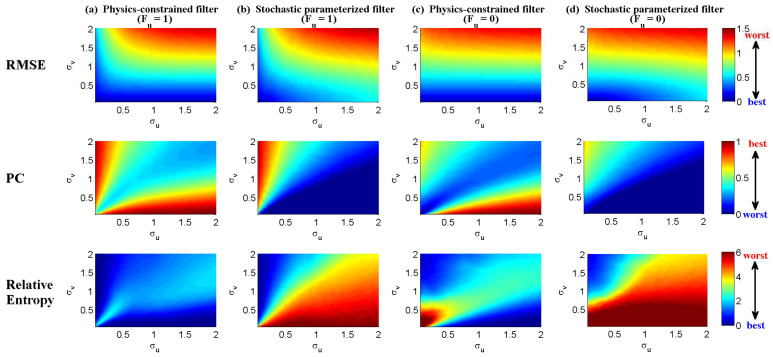

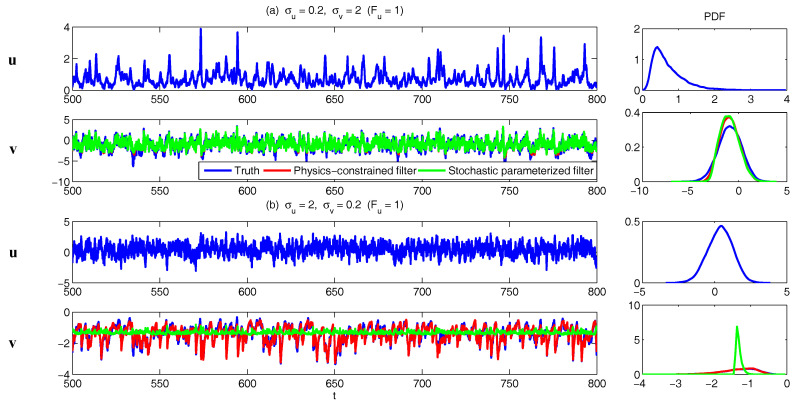

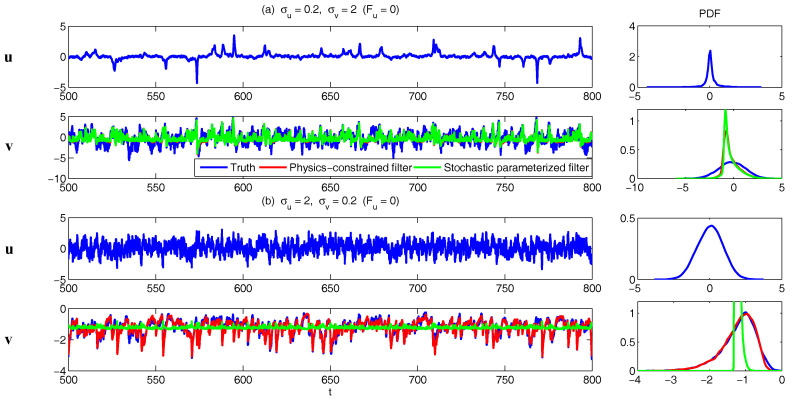

| (78) |