Abstract

The time evolution of stochastic reaction networks can be modeled with the chemical master equation of the probability distribution. Alternatively, the numerical problem can be reformulated in terms of probability moment equations. Herein we present a new alternative method for numerically solving the time evolution of stochastic reaction networks. Based on the assumption that the entropy of the reaction network is maximum, Lagrange multipliers are introduced. The proposed method derives equations that model the time derivatives of these Lagrange multipliers. We present detailed steps to transform moment equations to Lagrange multiplier equations. In order to demonstrate the method, we present examples of non-linear stochastic reaction networks of varying degrees of complexity, including multistable and oscillatory systems. We find that the new approach is as accurate and significantly more efficient than Gillespie’s original exact algorithm for systems with small number of interacting species. This work is a step towards solving stochastic reaction networks accurately and efficiently.

Keywords: stochastic chemical reactions, probability distributions, maximum entropy, lagrange multipliers

1. Introduction

The traditional approach to describe chemically reacting systems involves the use of deterministic rate laws. This macroscopic, continuous-deterministic modeling formalism is appropriate at the thermodynamic limit, when the volume of the system and the numbers of molecules of reactants and products all tend to very large values [1]. However, this approach fails to describe molecular populations that are small, finite and countable [2]. Such populations must be described by variables that can only change stochastically and by discrete amounts [3,4,5,6,7,8].

Markov chain models can be used to describe chemical reactions away from the thermodynamic limit [9]. When the system evolves stochastically, the all-encompassing chemical master equation (CME) can model the probability distribution of the system being at a particular state at time t [3,4].

The historical difficulties in solving the CME are well documented in the literature [5,9,10,11]. The CME is not a single equation but an infinite set of coupled equations. As a result the CME is analytically unsolvable for the majority of systems, with only few exceptions [12,13]. An established way to sample the master probability distribution is to use Gillespie’s Stochastic Simulation Algorithm (SSA) [6]. Despite the accuracy of SSA, the method is a kinetic Monte Carlo algorithm and hence computationally expensive, especially for systems with large numbers of molecules, or with reaction kinetics that span multiple time scales [14,15].

A proposed alternative to solve the CME is by calculating the probability moments [3]. Moments are expected values, e.g., the mean and the variance of the number of molecules [5,16,17,18]. This approach is based on the concept that any probability distribution can be completely described by its moments [19]. The starting point of moment equations is to rewrite the CME in terms of moments describing the master probability distribution [18,20]. Instead of a master equation that governs the probability distribution in time, one can then write a set of ordinary differential equations evolving the moments of the probability distribution [15,21]. Efficient algorithms have been developed to generate these moment equations for arbitrary networks [10,15,17,18,21]. Yet, because the dynamics of lower-order moments depend on the higher ones for non-linear reaction networks, the system of ODEs needs to be closed or somehow truncated in order to be solved [18,22].

A common approach to tackle the moment equation closure challenge is to a priori assume a specific form of the species behavior. By assuming a molecular component’s behavior one can relate the higher order moments to the lower order ones [19,23,24]. An important subset of the literature approaches the issue by assuming a specific form of the system’s probability distribution, such as normal, Poison, lognormal, Gamma etc. [10,21,24,25,26]. In cases when the molecular components behave in a known fashion, such distribution assumptions may produce reasonably accurate results. However, in most systems, there is a lack of knowledge of the species time evolution and thus such assumptions may break down or become impractical.

Recent advances in probability moment equation closure schemes have made it possible to efficiently calculate the stationary probability distribution [22]. These methods may quite accurately calculate the stationary behavior, however, they still face significant numerical challenges calculating the time evolution of the moments [15,19,27].

To circumvent the challenges of solving moment equations in time, we propose the use of Lagrange multipliers [22,28,29]. The connection between moments and Lagrange multipliers relies on the maximum entropy principle, which states the system attains a probability distribution that maximizes its entropy [20,22,28,30,31].

By Shannon’s definition entropy is given by [30], where is the number of molecules for each component and P the probability function. Using this definition and assuming that S is maximum, the system of moment equations can be transformed into a system of equations that depend only on the Lagrange multipliers [20,22]. We refer to the new system as “Lagrange multiplier equations (LMEs)”. The LMEs set, unlike moment equations [3,18], is a closed system, i.e., it has the same number of unknowns with equations. Thus, an intial value problem numerical technique, like Runge-Kutta [32], can be used to solve the system in time.

Herein, we unpack in full mathematical detail the derivation of a general system of LMEs in the next section. We then apply the LMEs method to multiple examples of non-linear reaction networks, including the bistable Schlögl model [33], the multistable Wilhelm model [34], the oscillatory Brusselator system [35,36] and the viral infection model [37,38]. After presenting a numerical procedure for the implementation of the method, we present results comparing the accuracy of LMEs solutions to SSA simulations. We conclude that the significance of this new method is that LMEs can solve reaction networks as accurate as SSA with significantly less computational cost. SSA has been used widely in the past two decades to capture the stochastic nature of chemical and biological systems away from the thermodynamic limit. LMEs may offer a potentially more efficient alternative to SSA, enabling practitioners to model stochastic reacting networks.

2. Materials and Methods/Theory

2.1. Lagrange Multiplier Equations

2.1.1. Connect Moments to Lagrange Multipliers

The general form of the moment equations for any arbitrary chemical reaction network is:

| (1) |

where is the lower-order moment vector (not including the zeroth-order moment, which is always equal to 1), is the higher-order moment vector, is a vector of constants and t represents time. A and are constant matrices that represent the linear and non-linear components of the network, respectively. In most cases, the vectors and have different dimensions [20]. For non-linear reaction networks, the vector is nonempty.

For a chemical reaction network with N reactants and products, the moment is connected to the probability function P through the following expression:

| (2) |

where is the functional form of the ith moment . For example, for a one component system the functional form of the 3rd polynomial moment is , where Y is the system’s component. The number of molecules of each component is contained in the matrix and corresponds to the N-dimensional state space for all the possible values of . The differences between and becomes clear if one compares Equation (2) and the definition of entropy ().

One can connect the probability distribution to Lagrange multipliers by maximizing the entropy [22]:

| (3) |

where is the jth Lagrange multiplier and M is the number of lower-order moments and also the size of vector . Thus, moments can be related to Lagrange multipliers by combing Equations (2) and (3):

| (4) |

2.1.2. Time Derivatives

In Equation (3), only the Lagrange multipliers depend on time; the state space and the functional form of the moments are time-independent. Hence, the time derivative of the probability distribution is:

| (5) |

This equation and the definition of moments (Equation (2)) connect the time derivative of the moments to the time derivative of the Lagrange multipliers:

| (6) |

Thus, the moments’ time derivative is transformed to:

| (7) |

where is the combined moment of and , given by

| (8) |

The sum of the probabilities across the whole state space is always 1 by definition. As a result, the zeroth-order moment is 1 () and its time derivative is zero (). This results in the zeroth Lagrange multiplier to be dependent on the rest of Lagrange multipliers

| (9) |

Hence, the time derivative of the zeroth Lagrange multiplier depends on the rest of the time derivatives as follows:

| (10) |

Based on this relation, Equation (7) can be modified as:

| (11) |

We have previously proved [39] that:

| (12) |

Hence, the time derivative of one moment is given by (Equations (11) and (12)):

| (13) |

or in matrix form:

| (14) |

J represents the Jacobian matrix of the system. Equation (14) also represents the chain rule of differentiation. There is now a way to connect the moment equations to equations that involve the Lagrange multipliers. The Lagrange multiplier equations can be derived by Equation (14) and the moment equations (Equation (1)):

| (15) |

where is the inverse of the Jacobian matrix. In the Appendix A, we discuss a computationally efficient way to calculate it. Even though the above equation also includes the moments of the probability distribution, it only depends on the Lagrange multipliers since the moments are directly correlated with Lagrange multipliers (Equation (4)).

All the Lagrange multipliers can be calculated through Equation (15) except the zeroth-order one . As discussed earlier, depends on the rest of the Lagrange multipliers through the relation [39]:

| (16) |

We refer to Equation (15) as the “Lagrange multiplier equations (LMEs)”. To our knowledge, this is the first time that LMEs are being discussed and derived in the literature. Through this step, the problem of calculating the probability for each point of the state space of stochastic networks has been transformed into a problem of calculating a finite set of Lagrange multipliers. The system is closed, it has the same number of equations and unknowns, and it is an initial value problem. An iterative numerical method can be used to solve this system. Appendix B outlines a proposed numerical approach to solve LMEs based on the Dormand-Prince Runge-Kutta RK5(4)7M method [32].

2.2. Initial Value from Moments to Lagrange Multipliers

The LMEs problem is an initial value problem; the values of the Lagrange multipliers for the initial state are required. However, Lagrange multipliers are numerical variables with no physical meaning and as a result an initial value is unknown. What is known is either the initial probability distribution or the values of its moments. Hence, a way to calculate Lagrange multipliers from moments is required. It should be noted that there is not a universally acceptable way to back calculate probability distributions from moments and the problem is not trivial [40].

Let’s assume that the moments, of the initial distribution, are known. If only the initial distribution is known, its moments can be calculated from Equation (2). The moments are related to the Lagrange multipliers by Equation (4). Hence:

| (17) |

G denotes the functional form of the matrix with dependences of the Lagrange multipliers. To calculate the Lagrange multipliers at the initial time from the moments, one has to solve a problem of the form: . This is an infinite set and in order to be numerically solvable the user should decide the necessary number of lower-order moments (i.e., to specify the closure order [22]). When closure order is specified, the system has the same number of equations and unknowns (the Lagrange multipliers) and a root-finding method such as Newton-Rapshon can be used. The residual of the method is and the Jacobian matrix is [39]. With this approach all the Lagrange multipliers can be calculated other than the zeroth one. The zeroth one can be calculated from Equation (16).

The knowledge of moments is used here to calculate the Lagrange multipliers, which then can be used to calculate the probability distribution based on Equation (3). This approach allows to calculate probability distributions from its moments. The novelty of the method is the connection of the probability distribution with Lagrange multipliers by maximizing the entropy of the system.

3. Results

To demonstrate the proposed Lagrange multiplier equations (LMEs) approach, we employ four different example networks: the bistable Schlögl model [33], the multistable Wilhelm’s system [34], the oscillatory Brusselator [35,36] and the viral infection [37,38]. The reaction networks and kinetic constants for each model are reported in Table 1. To assess the accuracy and computational efficiency of this method, the results are compared to SSA results using the same initial condition and kinetic constants.

Table 1.

The table shows the reaction networks with their kinetic constants for the bistable Schlögl, multistable Wilhelm, oscillatory Brusselator and viral infection networks. The kinetic constants values and initial condition for each system are also presented. For the third order reactions the kinetic constants are in (molecules· s) units, for the second order in (molecules · s), for the first order in s and for the zeroth order in molecules · s. For the Schlögl model the initial distribution is bimodal. All the other systems have a unimodal initial distribution. The first and second-order factorial moments of the initial distribution is reported for each network.

| Initial Moments | ||||

|---|---|---|---|---|

| Models | Reactions | Kinetic Constants | First-Order | Second-Order |

| Schlögl | ||||

| Wilhelm |

|

|

||

| Brusselator |

|

|

||

|

Viral Infection*

|

|

|

||

For all the systems the initial condition is a distribution generated with SSA, away from the steady state. The first and second-order factorial moments [17] of each initial distribution can be found in Table 1. Using the algorithm in Section 2.2, the initial distribution was transformed into an initial set of Lagrange multipliers. Based on the initial Lagrange multipliers and the Prince-Dormant algorithm outlined in Appendix B, the Lagrange multipliers for every time point were calculated until steady state was reached. Equations (3) and (4) were then used to calculate the probability distribution and factorial moments for each time point based on the calculated Lagrange multipliers.

All the LMEs results obtained with RK5(4)M are compared with SSA results on Table 2, in order to draw conclusions about the accuracy and the time efficiency of the method. The solution for each time point was compared to the one obtained from SSA simulations with 500,000 trajectories. The average difference among all time points between the two methods can be found in Table 2. Results for both the probability distribution and the first two orders of factorial moments are presented. For the system with more than one components (i.e., Wilhelm’s, Brusselator and viral infection models), the moments of the same order are averaged. The difference between LMEs results and the SSA probability distribution is calculated with the Kullbalck-Leiber divergence [41], whereas the second norm is used to calculate the errors in the moments.

Table 2.

The table presents the results of the LMEs solved with RK5(4)M compared to the ones obtained from SSA alongside the required computational time for each reaction network of Table 1. Each SSA simulation used 500,000 trajectories. This number of trajectories is sufficient for the errors for the mean and variance to be at most and , respectively. The first three columns show the average error between the two methods, which is calculated for each time point until steady state is reached and then averaged across all of them. For the probability distribution (), the Kullback-Leibler divergence [41] is used. For the first and second-order moments the second norm is used. The last three columns present the time that is required for each method. Each system has different initial and final time as well as different step size. To take into account these differences, we report the solving time per each method’s time step in CPU seconds. The last column presents the ratio of SSA time required over the RK5(4)M time.

| Network | Average Error (%) of | Time per Step (CPU s) | ||||

|---|---|---|---|---|---|---|

| 1st-Orde Moments |

2nd-Order Moments |

LMEs | SSA | Time Ratio |

||

| Schlögl | 0.05 | 0.22 | 0.22 | 0.02 | 419.2 | 20,960 |

| Wilhelm | 0.61 | 0.56 | 0.71 | 0.15 | 732.75 | 4,885 |

| Brusselator | 9.90 | 2.81 | 6.07 | 0.74 | 68.05 | 92 |

| Viral Infection | 0.43 | 0.01 | 0.02 | 8.97 | 64.96 | 7 |

3.1. Multistable Systems: The Schlögl and Wilhelm Models

The Schlögl model is one of the most commonly used theoretical bistable systems and is the simplest single-component system that can exhibit bistability [9,34]. Biological systems exhibiting bistability, both natural and synthetic, have gained an increasing interest recently [42,43,44], and the Schlögl model is a simple prototypical bistable model.

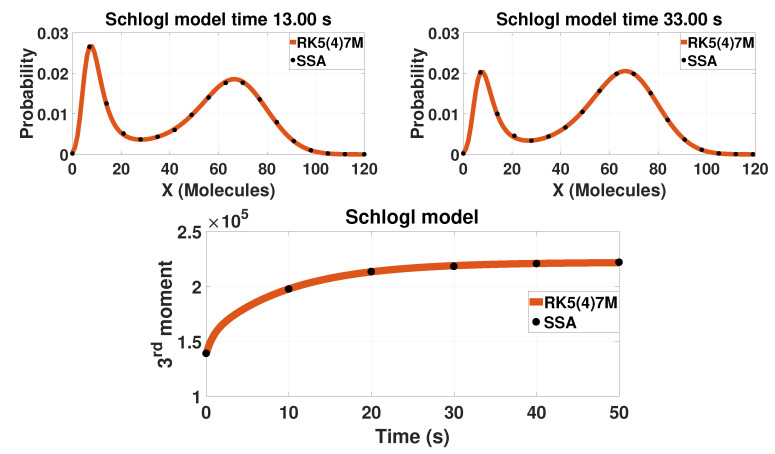

For different kinetic constants, the model has either a unimodal or a bimodal distribution. The kinetic constants selected in Table 1 result in a bimodal network. Results for the bistable model are reported on Figure 1. The probability distribution of the model is a bimodal distribution that changes form with time.

Figure 1.

The probability distribution and the third-order moment of the bistable Schlögl model. The first row presents the probability distribution of the single component for two different time points (13 s after the initial condition the left and 33 s the right one). The second row presents the time evolution of the third moment. The solid lines are solutions calculated based on LMEs with tenth-order closure (). The closure order is chosen based on the findings of [29]. The dots represent results from 500,000 SSA trajectories.

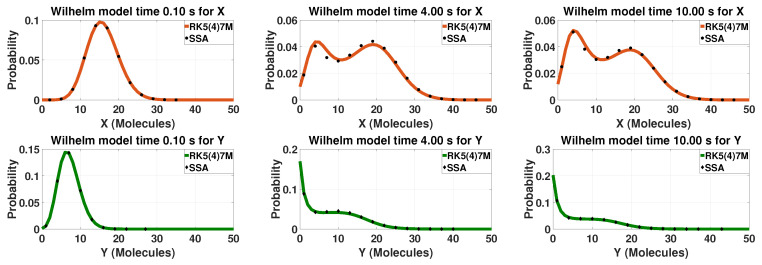

Another example of a theoretical system that can exhibit bistability is Wilhelm’s network. The network is a non-linear two-component model and it was created by Wilhelm as the smallest known bistable chemical reaction system [34]. Depending on the kinetic constants, both components can exhibit bistability simultaneously which results in a multistable system overall. This is the case for the kinetic constants reported in Table 1. The probability distribution for different time points for the two component of the network can be found in Figure 2. The system starts with a unimodal distribution for both components. As the time progresses, both components have a different bimodal distribution resulting in a mutlistable network. Close to the steady state, both components still have bimodal distributions but different than before.

Figure 2.

The probability distributions of the multistable Wilhelm’s model. The first row presents results for component X and the second row for component Y. The first column presents the time close to the initial condition (0.1 s), the second a mid-time point (4 s) and the last column a time point close to steady-state (10 s). The solid lines are solutions calculated based on LMEs and the dots/diamonds SSA solutions. The LMEs are calculated with up to sixth-order moments (). The closure order is chosen based on the findings of [39,45]. For SSA, 500,000 trajectories were used.

For these two example systems, the LMEs solutions are as accurate as the SSA results (Table 2). All the probability and moments errors are lower than 1%. The accuracy of the LMEs approach does not seem to be affected by the number of stability points of the system. There is also no significant difference between the first and second-order moment errors.

3.2. Oscillatory System: The Brusselator Model

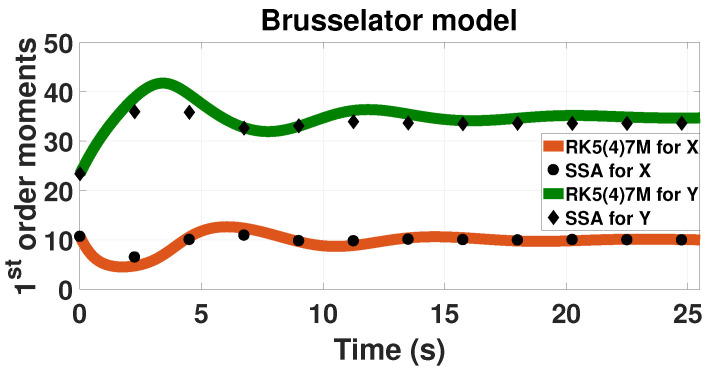

Oscillatory behaviors are particularly challenging to capture with stochastic models [46]. We have reported earlier how methods that can capture multistable behaviors fail to appropriately capture oscillatory ones [39].

We used the LMEs to investigated the Brusselator as a simple example of a theoretical oscillatory network [36]. We study the network in its oscillatory region as shown in Figure 3. Both network’s first-order moments oscillate with respect to time.

Figure 3.

Time evolution of the first-order probability moments for the oscillatory Brusselator model. The solid-line upper green line presents the moment for Y component calculated based on LMEs. The solid-line lower orange line represents the moment for X. SSA results are also included from 500,000 trajectories; dots represent component X and diamonds component Y. The results are calculated with up to fourth-order moments ().

As observed, the LMEs can capture the damped oscillatory behavior of the Brusselator. However, the method exhibits limited accuracy for solving this model (Table 2). The solution by LMEs of the Brusselator network has an increased error in all categories compared to the other networks. The error in both probability and moments reaches up to 10%. In this case, unlike in the other networks, there is a significant increase in the error of the second-order moments compared to the first-order ones.

The difference in the accuracy can be associated with the order of closure that is used to generate the LMEs (in this case 4 or , Figure 3). The accuracy of the Lagrange multipliers approach depends on the number of lower-order moments, M [29]. The higher the value of M, the more accurate results the method can produce. After, a certain value of M, the improvement in accuracy is insignificant. For more information on how the lower-order moments affects probability distributions the reader is directed to the literature [29]. It has been reported that the Brusselator requires more than fourth-order moments for accurate results [46].

A low order is used for this system due to the numerical difficulties of the algorithm that generates the initial condition (Section 2.2). In the LMEs approach, the closure order is specified through the initial condition. This is the number of Lagrange multipliers with known values at the initial time. For the rest of the time points, the number of Lagrange multipliers is the same as the initial time. For this system, the initial probability distribution was created with SSA and then, based on the algorithm described in Section 2.2, it was translated into the initial Lagrange multipliers. However, the algorithm in Section 2.2 faced significant numerical issues for moment orders higher than 4 and we were not able to generate initial conditions for higher lower-order moments. Thus, we did not provide the LMEs approach with the necessary number of lower-order moments that produce accurate results. The high error was generated by the algorithm that calculates the initial condition and not the Runge-Kutta algorithm of the LMEs. The Brusselator results are not ideal; however, given the difficulties of current methods to solve oscillatory systems, this is a step in the right direction.

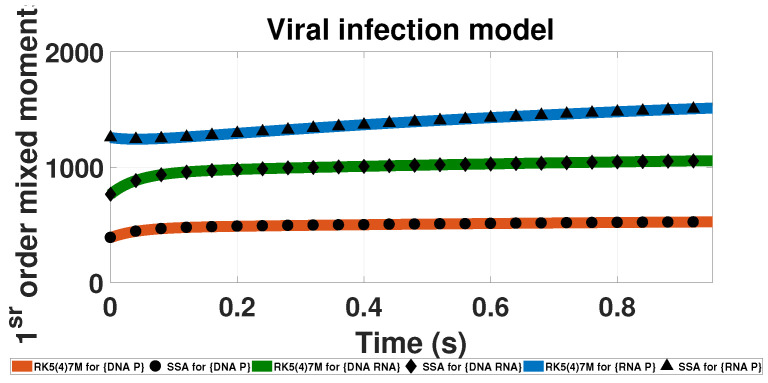

3.3. Multicomponent System: The Viral Infection Model

The LMEs approach is only limited to theoretical systems with complex behavior but can also be applied to natural and synthetic biological networks. One example is the viral infection model. The model was first created by Haseltine [37] as a general model of a cell infection by a virus. The model was then revised and simplified by Goutsias [38]. The network in Table 1 reflects the revised version of Goutsias. Results for the mixed first-order moments (moments than depend on more than one component) are reported in Figure 4. The LMEs approach accurately captures the different dynamics of all components and their combinations throughout the entire simulated time (Table 2).

Figure 4.

Time evolution of the first-order mixed probability moments for the Viral infection model. The solid-lines present results calculated based on LMEs and the doted points present SSA results. The results are calculated with up to second-order moment closure () and 500,000 SSA trajectories. The upper blue line with triangles present the mixed moment, the mid green line with diamonds corresponds to the mixed moment and the lower orange line with dots the mixed moment.

3.4. Computational Cost of LMEs

Aside from the accuracy of the approach, Table 2 also includes the computational time that is required for RK5(4)M to solve the LMEs. In all the systems, the LMEs approach is significantly faster than SSA. Each model has different computational time steps that are used; in order to take this difference into account, the table shows the time required for one time point per system. For each system, at least 10,000 time steps were used. As observed, the total amount of time that is required for LMEs approach is significantly lower than for SSA.

Among all systems, the LMEs approach requires less computational time to solve the two multistable systems (the Schlögl and the Wilhelm’s models). The LMEs approach is significantly faster than SSA (at least 4000 times faster, Table 2) and advantageous especially for multistable systems. As for oscillatory dynamics, they do not seem to affect the computational time of the LMEs approach.

The number of components, on the other hand, affects significantly the computational time of the LMEs approach. The Wilhelm’s and Brusselator models (both two-component systems) are slower than the Schlögl model (one-component system) and faster that the viral infection model (three-component system).

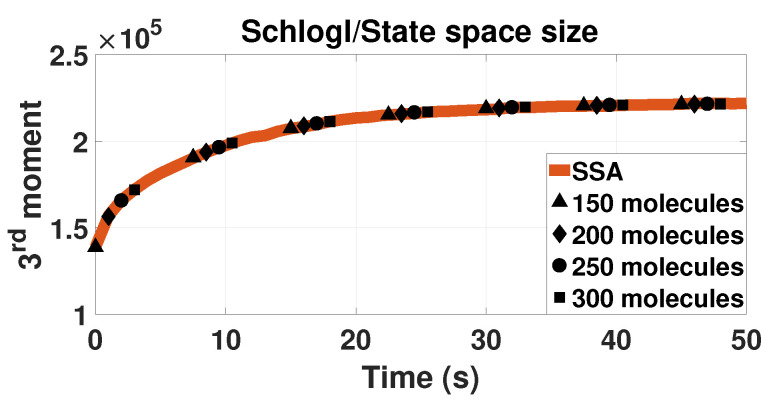

3.5. Dependence on State Space Size

The proposed method is a numerical method to solve stochastic reaction networks. The LMEs include summations across all the possible values of the state space. The state space needs to be specified and be finite in order for the algorithm to perform numerical calculations. This is a known case with numerical methods [22,47] and it can raise concerns about the accuracy of a method, especially in the case of systems with unknown state space. Some ways to calculate the appropriate state space of stochastic networks have been discussed in the literature [11,47].

To evaluate the stability of the Lagrange multipliers approach with the state space size, we use the Schlögl model as an example. The model has one component and thus the connection between state space size and solution accuracy can be drawn easily; yet it has a complex enough bistable behavior. Figure 5 shows results of the LMEs approach for different state space sizes. The solutions are also compared with the SSA results. The accuracy of the method is not affected by the state space size in this example. All different state space lengths provide the same value for the moments of the probability.

Figure 5.

Time evolution of the third-order moment for the Schögl model. The plot is similar to the bottom plot of Figure 1. Here, four different state space lengths are plotted. All the cases have zero as the lower bound of the state space. The coloring in this picture is reverse than the rest of them. The solid orange line is the SSA solution for 500,000 trajectories. The black dots are results with tenth-closure LMEs (). The triangles represent the solution with 150 molecules as the upper bound of the state space, diamonds use 200 molecules limit, circles 250 molecules and the squares 300 molecules.

4. Discussion

Stochasticity governs reaction networks away from the thermodynamic limit. A common approach to solve stochastic chemically reacting systems is through moment equations. However, moment equation systems are not closed for non-linear chemical reactions, and require important assumptions about the form of the probability function.

Here, we present an alternative method to solve stochastic reaction networks by using Lagrange multipliers. An approach to transform moment equations into a closed system of Lagrange multiplier equations (LMEs) is described. Since, LMEs is a closed system, Runge-Kutta methods (e.g., Durmand-Prince RK5(4)7M) can be employed to solve the transient problem and calculate the Lagrange multipliers for different time points. With the knowledge of the Lagrange multipliers, one then can calculate the probability distribution and its moments at any given time.

We show that this is a novel approach to bypass numerical challenges with chemical master equations and moment equations. The only assumption used is that the entropy of the system is maximum at all times. The LMEs solutions are as accurate as alternative methods, such as SSA, with significant computational advantages. Four different non-linear reaction networks are employed to demonstrate the advantages of the new LMEs approach. The networks vary in numbers of components (from one to three) and complexity in behavior (including multistability and oscillations). The number of stable solutions of the network does not affect the accuracy of the LMEs approach. On the other hand, the number of the network’s components can affect the method’s computational requirements. Future studies may be required to assess how the method scales with the size of the reaction network.

For all of the examples studied, the LMEs approach is faster than SSA. Notably, the method is computationally efficient for multistable systems, which are of interest to a large community [42,43,44]. The method also appears unaffected by the size of the system’s state space; the method is stable and accurate for multiple state space sizes. On the other hand, oscillatory behaviors can reduce the method’s accuracy.

Appendix A. Inverse of Jacobian

The Jacobian matrix J of the moment equations for a stochastic reaction network is calculated as:

| (A1) |

By the definition (Equation (8)), it is true that:

| (A2) |

Thus, . It is then apparent that the Jacobian matrix is symmetric since:

| (A3) |

As a symmetric matrix, J can be calculated as [48]:

| (A4) |

where is a diagonal matrix with the diagonal elements being the eigenvalues of J and Q the associated eigenvector matrix. Q is also real orthogonal [48], i.e., . The inverse of the Jacobian matrix, can be calculated as:

| (A5) |

Since is diagonal, is also diagonal with elements the reciprocal elements of (). Additionally, each element of , , represents each eigenvalue of the Jacobian J.

Based on Equation (A5), in order to calculate the inverse of the Jacobian matrix, one only needs to solve the eigenproblem of the Jacobian.

Appendix B. Runge-Kutta

As mentioned, the LMEs problem is an initial value problem. A proposed way to solve the system is the Dormand-Prince RKF5(4)7M Runge-Kutta method [32]. RKF5(4)7M is an explicit numerical method with adaptive step size. Dormand and Prince formulated the method in order to create a stable approach for local extrapolations; this is the reason it is chosen in this work as it will help to create more stable solution for time calculations by extrapolating the knowledge of past time solutions. A table that includes all the constants of the RKF5(4)7M method (often called “Butcher tableau" [49] or simply “tableau") can be found in the Appendix B.1.

As derived in Equation (15), the LMEs are:

| (A6) |

where F denotes the functional form of the LMEs.

The calculation of Lagrange multipliers, , is desired for multiple time points. To calculate the multipliers at the time point , the knowledge of the multipliers at time n is required. The Lagrange multipliers at time , , are calculated as:

| (A7) |

where are the Lagrange multipliers at time n, are constants of RKF5(4)7M and are the Runge-Kutta coefficients:

| (A8) |

h is the adaptive step size and are RKF5(4)7M constants.

In RKF5(4)7M, a lower-order solution, , is used to evaluate the error of the solution and change accordingly the adaptive step size h. The lower-order solution at time , , are calculated as:

| (A9) |

where ’s are another set of RKF5(4)7M constants. All the constants (, & ) can be found in the Appendix B.1.

More details about the algorithm of RKF5(4)7M used in this work can be found in the Appendix B.2.

Appendix B.1. Runge-Kutta Tableau

In this section we list the Runge-Kutta RK5(4)7M tableau. The method was first described by Dormand and Prince [32] and we just list it here for the convenience of the reader. The Runge-Kutta tableau of the method is:

| 1 | 2 | 3 | 4 | 5 | 6 | ||||

| i | |||||||||

| 1 | 0 | ||||||||

| 2 | 0 | 0 | |||||||

| 3 | |||||||||

| 4 | − | ||||||||

| 5 | − | − | − | − | |||||

| 6 | 1 | − | − | ||||||

| 7 | 1 | 0 | − | 0 | |||||

Appendix B.2. Runge-Kutta Algorithm

The proposed algorithm to solve LMEs based on the Dormand-Prince RKF5(4)M method is:

Calculate vector and matrices A and as in [17]. These matrices depend only on the kinetic constants of the system and they are constant for all time points.

Introduce the time step size h. The time point of this iteration is , where is the time point of the previous iteration.

Increase the iteration counter by 1. For the rest of the algorithm the iteration step will be denoted by . At the first iteration, .

Introduce the previous step (iteration n) Lagrange multipliers . At the first iteration, introduce the initial condition . The subscripts here refers to the time step and are applied on the whole Lagrange multiplier vector.

Calculate the ’s coefficients from equation and the tableau (Appendix B.1). Each coefficient depends on the functional form F of the Lagrange multiplier equations () and on different set of Lagrange multipliers (). To account for the change of the Lagrange multipliers, there is a need for a subroutine to calculate .

- This is a proposed subroutine for

-

(a)Calculate the augmented Lagrange multipliers for the specific , . The values of can be found in the tableau in Appendix B.1.

-

(b)Calculate the lower () and higher-order moments () for the augmented Lagrange multipliers based on equation .

-

(c)Calculate the inverse of the Jacobian, for the augmented multipliers as described in Appendix A.

-

(d)Calculate the functional form of the Lagrange multiplier equations ().

-

(e)Calculate based on equations .

-

(a)

Repeat step 6 for all seven coefficients, . Its coefficient depends on all the previous coefficients, thus the calculation of each one needs to be in sequence and include the subroutine. For example, the calculations of step 6 should first performed for , then based on to be performed for , then based on to be performed for etc.

Calculate the Lagrange multipliers for the current iteration , , based on equation and the tableau (Appendix B.1).

Calculate the lower-order solution for the current iteration , , based on equation and the tableau (Appendix B.1).

- Check the accuracy of the solution by calculating the error :

(A10) - If the error is smaller than the wanted tolerance accept the solution and proceed to the next step. Otherwise, set a new time step size and go back to step 5 and re-perform the calculation with the new step size. s is used to adjust the step size based on the accuracy of the solution and is given by:

(A11) Accept the solution of Lagrange multipliers for the current iteration () and current time point () as calculated at step 8.

Set the new step size to and proceed to step 3 to calculate the solution for the rest of the time points until the final is reached.

For any given time t, the probability distribution and its moments can be calculated based on the Lagrange multipliers () and equations & .

Author Contributions

M.V. and Y.N.K. designed the study and wrote the manuscript. M.V. conducted the simulations. Conceptualization, M.V. and Y.N.K.; Formal analysis, M.V.; Funding acquisition, Y.N.K.; Investigation, M.V.; Methodology, M.V.; Project administration, Y.N.K.; Resources, Y.N.K.; Software, M.V.; Validation, M.V.; Visualization, M.V.; Writing—original draft, M.V. and Y.N.K.

Funding

This work was supported by the National Institutes of Health [Grant No. GM111358]; National Science Foundation [Grant No. CBET-1412283]; Extreme Science and Engineering Discovery Environment (XSEDE) [National Science Foundation Grant No. ACI-10535753]; Minnesota Supercomputing Institute (MSI); and the University of Minnesota Digital Technology Center.

Conflicts of Interest

The authors declare no conflict of interest.

References

- 1.Folger H. Elements of Chemical Reaction Engineering. 4th ed. Prentice Hall; Upper Saddle River, NJ, USA: 2016. [Google Scholar]

- 2.Schnoerr D., Sanguinetti G., Grima R. Approximation and inference methods for stochastic biochemical kinetics-A tutorial review. J. Phys. A. 2017;50:093001. doi: 10.1088/1751-8121/aa54d9. [DOI] [Google Scholar]

- 3.McQuarrie D.A. Stochastic approach to chemical kinetics. J. Appl. Probab. 1967;4:413–478. doi: 10.2307/3212214. [DOI] [Google Scholar]

- 4.Oppenheim I., Shuler K.E. Master Equations and Markov Processes. Phys. Rev. 1965;138:B1007. doi: 10.1103/PhysRev.138.B1007. [DOI] [Google Scholar]

- 5.Kampen N.V. Stochastic Processes in Physics and Chemistry. 5th ed. Elsevier; Amsterdam, The Netherlands: 2004. [Google Scholar]

- 6.Gillespie D.T. A general method for numerically simulating the stochastic time evolution of coupled chemical reactions. J. Comput. Phys. 1976;22:403–434. doi: 10.1016/0021-9991(76)90041-3. [DOI] [Google Scholar]

- 7.Gibson M.A., Bruck J. Efficient Exact Stochastic Simulation of Chemical Systems with Many Species and Many Channels. J. Phys. Chem. A. 2000;104:1876–1889. doi: 10.1021/jp993732q. [DOI] [Google Scholar]

- 8.Cao Y., Li H., Petzold L. Efficient formulation of the stochastic simulation algorithm for chemically reacting systems. J. Chem. Phys. 2004;121:4059–4067. doi: 10.1063/1.1778376. [DOI] [PubMed] [Google Scholar]

- 9.Gillespie D.T. Markov Processes, An Introduction for Physical Scientists. Academic Press; San Diego, CA, USA: 1992. [Google Scholar]

- 10.Lakatos E., Ale A., Kirk P.D.W., Stumpf M.P.H. Multivariate moment closure techniques for stochastic kinetic models. J. Chem. Phys. 2015;143:094107. doi: 10.1063/1.4929837. [DOI] [PubMed] [Google Scholar]

- 11.Munsky B., Khammash M. The finite state projection algorithm for the solution of the chemical master equation. J. Chem. Phys. 2006;124:044104. doi: 10.1063/1.2145882. [DOI] [PubMed] [Google Scholar]

- 12.Jahnke T., Huisinga W. Solving the chemical master equation for monomolecular reaction systems analytically. J. Math. Bio. 2006;54:1–26. doi: 10.1007/s00285-006-0034-x. [DOI] [PubMed] [Google Scholar]

- 13.Grima R., Schmidt D.R., Newman T.J. Steady-state fluctuations of a genetic feedback loop: An exact solution. J. Chem. Phys. 2012;137:035104. doi: 10.1063/1.4736721. [DOI] [PubMed] [Google Scholar]

- 14.Sotiropoulos V., Kaznessis Y.N. An adaptive time step scheme for a system of stochastic differential equations with multiple multiplicative noise: Chemical Langevin equation, a proof of concept. J. Chem. Phys. 2008;128:014103. doi: 10.1063/1.2812240. [DOI] [PubMed] [Google Scholar]

- 15.Ale A., Kirk P., Stumpf M.P.H. A general moment expansion method for stochastic kinetic models. J. Chem. Phys. 2013;138:174101. doi: 10.1063/1.4802475. [DOI] [PubMed] [Google Scholar]

- 16.Sotiropoulos V., Kaznessis Y.N. Analytical Derivation of Moment Equations in Stochastic Chemical Kinetics. Chem. Eng. Sci. 2011;66:268–277. doi: 10.1016/j.ces.2010.10.024. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Smadbeck P., Kaznessis Y.N. Efficient Moment Matrix Generation for Arbitrary Chemical Networks. Chem. Eng. Sci. 2012;84:612–618. doi: 10.1016/j.ces.2012.08.031. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Gillespie C. Moment-closure approximations for mass-action models. IET Syst. Biol. 2009;3:52–58. doi: 10.1049/iet-syb:20070031. [DOI] [PubMed] [Google Scholar]

- 19.Grima R. A study of the accuracy of moment-closure approximations for stochastic chemical kinetics. J. Chem. phys. 2012;136:154105. doi: 10.1063/1.3702848. [DOI] [PubMed] [Google Scholar]

- 20.Constantino P.H., Vlysidis M., Smadbeck P., Kaznessis Y.N. Modeling stochasticity in biochemical reaction networks. J. Phys. D Appl. Phys. 2016;49:093001. doi: 10.1088/0022-3727/49/9/093001. [DOI] [Google Scholar]

- 21.Milner P., Gillespie C.S., Wilkinson D.J. Moment closure based parameter inference of stochastic kinetic models. Stat. Comput. 2013;23:287–295. doi: 10.1007/s11222-011-9310-8. [DOI] [Google Scholar]

- 22.Smadbeck P., Kaznessis Y.N. A closure scheme for chemical master equations. Proc. Natl. Acad. Sci. USA. 2013;110:14261–14265. doi: 10.1073/pnas.1306481110. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Zechner C., Ruess J., Krenn P., Pelet S., Peter M., Lygeros J., Koeppl H. Moment-based inference predicts bimodality in transient gene expression. Proc. Natl. Acad. Sci. USA. 2012;109:8340–8345. doi: 10.1073/pnas.1200161109. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Singh A., Hespanha J. Moment closure techniques for stochastic models in population biology; Proceedings of the 2006 American Control Conference; Minneapolis, MN, USA. 14–16 June 2006. [Google Scholar]

- 25.Krishnarajah I., Cook A., Marion G., Gibson G. Novel moment closure approximations in stochastic epidemics. Bull. Math. Biol. 2005;67:855–873. doi: 10.1016/j.bulm.2004.11.002. [DOI] [PubMed] [Google Scholar]

- 26.Schnoerr D., Sanguinetti G., Grima R. Comparison of different moment-closure approximations for stochastic chemical kinetics. J. Chem. Phys. 2015 doi: 10.1063/1.4934990. [DOI] [PubMed] [Google Scholar]

- 27.Goutsias J., Jenkinson G. Markovian dynamics on complex reaction networks. Phys. Rep. 2013;2:199–264. doi: 10.1016/j.physrep.2013.03.004. [DOI] [Google Scholar]

- 28.Kapur J. Maximum-Entropy Models in Science and Engineering. Wiley Eastern Ltd.; Darya Ganj, New Delhi: 1989. [Google Scholar]

- 29.Vlysidis M., Constantino P.H., Kaznessis Y.N. Stochastic Processes, Multiscale Modeling, and Numerical Methods for Computational Cellular Biology. Springer International Publishing; Cham, Switzerland: 2017. ZI-Closure Scheme: A Method to Solve and Study Stochastic Reaction Networks; pp. 159–174. [Google Scholar]

- 30.Shannon C.E. A Mathematical Theory of Communication. Bell Syst. Tech. J. 1948;27:379–423. doi: 10.1002/j.1538-7305.1948.tb01338.x. [DOI] [Google Scholar]

- 31.Sutter T., Sutter D., Esfahani P.M., Lygeros J. Generalized Maximum Entropy Estimation. [(accessed on 6 September 2018)];arXiv. 2004 Available online: https://arxiv.org/abs/1708.07311.1708.07311 [Google Scholar]

- 32.Dormand J., Prince P. A family of embedded Runge-Kutta formulae. J. Comput. Appl. Math. 1980;6:19–26. doi: 10.1016/0771-050X(80)90013-3. [DOI] [Google Scholar]

- 33.Schlogl F. On Thermodynamics Near a Steady State. Z. Physik. 1971;458:446–458. doi: 10.1007/BF01395694. [DOI] [Google Scholar]

- 34.Wilhelm T. The smallest chemical reaction system with bistability. BMC Syst. Biol. 2009;3:90. doi: 10.1186/1752-0509-3-90. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Lefever R., Nicolis G. Chemical instabilities and sustained oscillations. J. Theor. Biol. 1971;30:267–284. doi: 10.1016/0022-5193(71)90054-3. [DOI] [PubMed] [Google Scholar]

- 36.Lavenda B., Nicolis G., Herschkowitz-Kaufman M. Chemical instabilities and relaxation oscillations. J. Theor. Biol. 1971;32:283–292. doi: 10.1016/0022-5193(71)90166-4. [DOI] [PubMed] [Google Scholar]

- 37.Haseltine E.L., Rawlings J.B. Approximate simulation of coupled fast and slow reactions for stochastic chemical kinetics. J. Chem. Phys. 2002;117:6959–6969. doi: 10.1063/1.1505860. [DOI] [Google Scholar]

- 38.Goutsias J. Quasiequilibrium approximation of fast reaction kinetics in stochastic biochemical systems. J. Chem. Phys. 2005;122:184102. doi: 10.1063/1.1889434. [DOI] [PubMed] [Google Scholar]

- 39.Vlysidis M., Kaznessis Y.N. A linearization method for probability moment equations. Comput. Chem. Eng. 2018;112:1–5. doi: 10.1016/j.compchemeng.2018.01.015. [DOI] [Google Scholar]

- 40.Munkhammar J., Mattsson L., Rydén J. Polynomial probability distribution estimation using the method of moments. PLoS ONE. 2017;12:e0174573. doi: 10.1371/journal.pone.0174573. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Kullback S., Leibler R.A. On Information and Sufficiency. Ann. Math. Stat. 1951;22:79–86. doi: 10.1214/aoms/1177729694. [DOI] [Google Scholar]

- 42.Gardner T.S., Cantor C.R., Collins J.J. Construction of a genetic toggle switch in Escherichia coli. Nature. 2000;403:339–342. doi: 10.1038/35002131. [DOI] [PubMed] [Google Scholar]

- 43.Angeli D., Ferrell J.E., Sontag E.D., Sontag E.D. Detection of multistability, bifurcations, and hysteresis in a large class of biological positive-feedback systems. Proc. Natl. Acad. Sci. USA. 2004;101:1822–1827. doi: 10.1073/pnas.0308265100. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 44.Artyomov M.N., Das J., Kardar M., Chakraborty A.K. Purely stochastic binary decisions in cell signaling models without underlying deterministic bistabilities. Proc. Natl. Acad. Sci. USA. 2007;104:18958–18963. doi: 10.1073/pnas.0706110104. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 45.Vlysidis M., Schiek A.C., Kaznessis Y.N. ZICS: An Application for Calculating the Stationary Probability Distribution of Stochastic Reaction Networks. [(accessed on 6 September 2018)];arXiv. 2018 Available online: https://arxiv.org/abs/1806.06428.1806.06428 [Google Scholar]

- 46.Constantino P.H., Kaznessis Y.N. Maximum entropy prediction of non-equilibrium stationary distributions for stochastic reaction networks with oscillatory dynamics. Chem. Eng. Sci. 2017;171:139–148. doi: 10.1016/j.ces.2017.05.029. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 47.Peleš S., Munsky B., Khammash M. Reduction and solution of the chemical master equation using time scale separation and finite state projection. J. Chem. Phys. 2006;125:204104. doi: 10.1063/1.2397685. [DOI] [PubMed] [Google Scholar]

- 48.Golub G.H., Van Loan C.F. Matrix Computations. 4th ed. The Johns Hopkins University Press; Baltimore, MD, USA: 2013. [Google Scholar]

- 49.Butcher J.C. A stability property of implicit Runge-Kutta methods. BIT. 1975;15:358–361. doi: 10.1007/BF01931672. [DOI] [Google Scholar]