Abstract

OBJECTIVES:

To introduce cost-effective expert clinical diagnoses of dementia into population-based research using an online platform and to demonstrate their validity against in-person clinical assessment and diagnosis.

DESIGN:

The online platform provides standardized data necessary for clinicians to rate participants on the Clinical Dementia Rating (CDR®). Using this platform, clinicians diagnosed 60 patients at a range of CDR levels at two clinical sites. The online consensus diagnosis was compared with in-person clinical consensus diagnosis.

SETTING:

All India Institute of Medical Sciences (AIIMS), Delhi, and National Institute of Mental Health and Neurosciences (NIMHANS), Bengaluru, India.

PARTICIPANTS:

Thirty patients each at AIIMS and NIMHANS, recruited to target equal numbers of patients previously rated independently in person by experts as CDR 0 (cognitively normal), CDR 0.5 (mild cognitive impairment), and CDR 1 or greater (dementia).

MEASUREMENTS:

Multiple clinicians independently rate each participant on each CDR domain using standardized data and expert clinical judgment. The overall summary CDR is calculated algorithmically from the domain ratings. When clinician ratings disagree, the clinicians discuss the case in a virtual consensus conference and arrive at a consensus overall rating.

RESULTS:

Online clinical consensus diagnosis based on standardized interview data was consistent with in-person clinical assessment and consensus diagnosis (κ coefficient = 0.76).

CONCLUSION:

A web-based clinical consensus platform built on the Harmonized Diagnostic Assessment of Dementia for the Longitudinal Aging Study in India interview data is a cost-effective way to obtain reliable expert clinical judgments. A similar approach can be used for other epidemiological studies of dementia.

Keywords: Clinical Dementia Rating, clinical judgment, Longitudinal Aging Study in India, Harmonized Cognitive Assessment Protocol, population-based research

For clinical syndromes, such as dementia, there is no single definitive diagnostic test. Many clinical researchers rely on a process of data review, adjudication, and consensus by a panel of expert clinicians.1 The panel meets in person to review detailed information on various aspects of the clinical assessment of a given patient, discusses the findings, and renders a consensus diagnosis using standardized criteria. This process allows the data of each study participant to be individually considered in detail, taking advantage of a wealth of collective clinical expertise and judgment. However, it involves the cost of the time spent by experts in examining the patients, the inefficiency of scheduling meetings at a time and location that all experts can attend, and the near impossibility of including experts at different sites.

With the aim of bringing together expert clinical judgment for the diagnosis of dementia, we developed an online clinical consensus panel approach for the Longitudinal Aging Study in India–Diagnostic Assessment of Dementia (LASI-DAD). We used the Clinical Dementia Rating (CDR®)2 as the basis for the clinical diagnosis of dementia. Although alternative approaches to dementia classification based on psychometric assessment3–5 and well-characterized convenience samples6 have been used successfully, such approaches cannot be used in India, because the distribution of psychometric test scores in the Indian population is largely unknown and because India is a subcontinent that includes many population groups differing by language, ethnicity, religion, and caste.7

In this article, we describe the web portal we developed for clinical consensus diagnosis and the process of obtaining consensus diagnoses. We then report the results of a validation study examining whether online clinical consensus diagnosis based on the LASI-DAD interview data yields CDR ratings consistent with the clinical consensus diagnosis from cliniciansʼ in-person assessment of patients. Having established its validity, we are now obtaining online clinical consensus diagnoses for all LASI-DAD respondents. Once all diagnoses are completed, we plan to release the deidentified clinical consensus diagnosis data. To examine its applicability in another setting, we also used the online clinical consensus portal to obtain clinical consensus diagnoses for another study sample in Korea, in a successful feasibility study.

METHODS

Web Interface for Clinical Consensus Diagnosis

Despite high costs associated with implementing the gold standard of an “in-person consensus conference,” there have only been limited attempts to use a web-based approach to bring in expertsʼ review, analysis, and consensus. Building on the online consensus approach developed by the Monongahela-Youghiogheny Healthy Aging Team (MYHAT) in Pittsburgh, PA,1,8 we have developed an online clinical consensus site for the LASI-DAD. The MYHAT study is an ongoing population-based cohort study of individuals aged 65 years and older in southwestern Pennsylvania. In the MYHAT study, interviewers who have been trained and certified in CDR rating by the Washington University online program collect data in the field, which are then uploaded to the “consensus diagnosis” website. Each expert clinician (geriatric psychiatrist, neuropsychologist, and neurologist) logs in at his/her own convenience, reviews the data presented, and renders a CDR rating.

A key feature of the consensus diagnosis process is that expert clinician judgment is used to weigh variables that may have nonspecific contributions or may be part of complex interactions contributing to the dementia syndrome, a task not readily replicated by a statistical algorithm. Our objective, therefore, was to involve clinical experts in reviewing and rating standardized assessment data from the LASI-DAD interview to render a standardized diagnostic rating, and then to arrive at a consensus among the clinical experts for each participant.

We used the CDR, a global rating instrument that is one of the most widely used measures for dementia diagnosis,9 as the basis for clinical diagnosis. The CDR comprises six cognitively driven functional domains: (1) memory; (2) orientation; (3) judgment and problem solving; (4) community affairs; (5) home and hobbies; and (6) personal care. We designed the consensus web portal to provide the information clinicians need to make domain-specific ratings. We developed a demonstration site by extracting data from the LASI-DAD interview and organizing the information according to CDR criteria to facilitate the cliniciansʼ review. For each domain, we pulled relevant information from the respondentʼs self-report; from various rating scales, including the Hindi Mental State Examination,10 the Telephone Interview for Cognitive Status,11 and the Community Screening Interview for Dementia;12 and from the informantʼs report. For details on the informant reports, see the article in this issue13 and elsewhere.14

After building the demonstration site, we conducted a feasibility study from February to April 2018. Specifically, we identified five patients whom clinicians at the National Institute of Mental Health and Neurosciences (NIMHANS), Bengaluru, India, had recently assessed: two with dementia, two with mild cognitive impairment (MCI), and one cognitively normal. The LASI-DAD interview team interviewed these five patients and their informants following the LASI-DAD protocol, and the collected data were uploaded to the secure server and fed into the online diagnosis and consensus website.

We invited eight CDR-certified expert clinicians to the demonstration site and asked them to provide CDR ratings and to evaluate the website. We then held a debriefing session with them to identify missing information, solicit suggestions for improving the website, and measure the time required for an online rating. All clinicians agreed that the website provided relevant information, enabling them to develop a good understanding of the patientʼs cognitive status and everyday functioning, but suggested that we include additional information on judgment and problem solving and on self-reports of memory loss. Following this advice, we added questions assessing judgment and problem solving2 and self-reports of memory loss to the phase 2 instrument (following the core LASI fieldwork schedule, the LASI-DAD fieldwork was performed in phases). We also made further refinements to the site reflecting the expert cliniciansʼ suggestions.

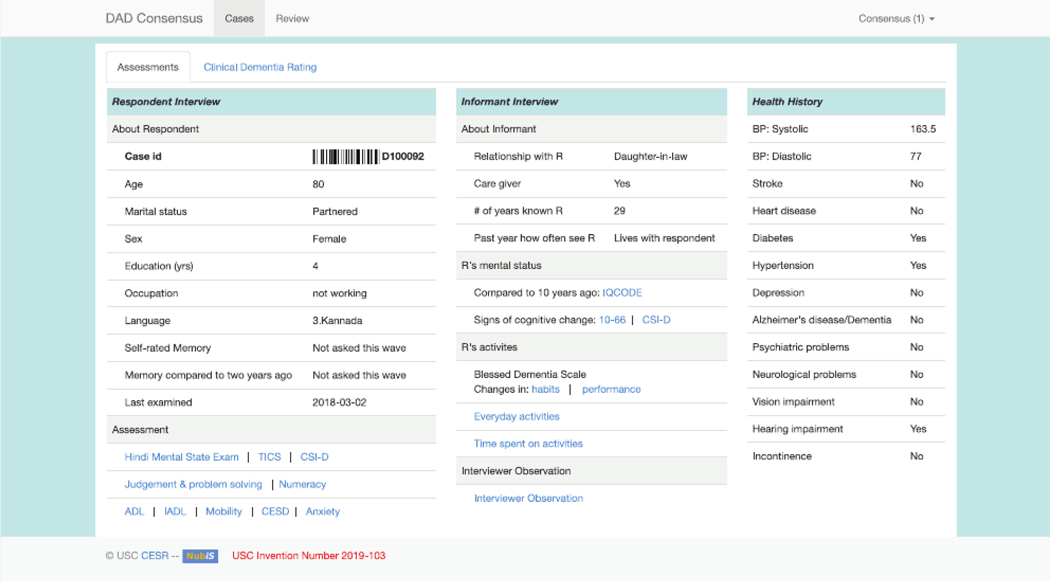

The resulting consensus website is organized into two main screens. The first screen shows (1) basic background information, such as age, sex, education, occupation, and self-rated memory loss; (2) the results of global cognitive screening tests obtained from the LASI-DAD; (3) information extracted from the informant interview; and (4) the respondentʼs health history collected from both the LASI-DAD geriatric assessment and the core LASI interview (Figure 1). The second screen reorganizes the information according to the six CDR domains and invites clinicians to provide domain-specific ratings. Supplementary Figure S1 shows the second screen, and Supplementary Figure S2 shows a pop-up screen for the orientation domain that summarizes all relevant information from the respondent and informant interviews. The overall summary CDR is then calculated algorithmically from the clinicianʼs domain-specific ratings.

Figure 1.

Online consensus site screen 1. ADL, activity of daily living; BP, blood pressure; CESD, Center for Epidemiological Studies-Depression; CSI-D, Community Screening Interview for Dementia; DAD, Diagnostic Assessment of Dementia; IADL, instrumental ADL; ID, identifier; IQCODE, Informant Questionnaire on Cognitive Decline in the Elderly; R, respondent.
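The summary CDR mentioned above is derived from the six domain (box) scores, with memory as the primary domain and the other five as secondary domains. The portal's own implementation is not described in this article; the following is a minimal Python sketch of the published CDR scoring rules (Morris, reference 2) as we understand them. The function name `global_cdr` and its argument layout are illustrative assumptions, not the portal's interface.

```python
from collections import Counter

BOX_SCORES = {0.0, 0.5, 1.0, 2.0, 3.0}


def global_cdr(memory: float, secondary: list) -> float:
    """Global CDR from the memory box score (M) and the five secondary box
    scores (orientation, judgment/problem solving, community affairs,
    home and hobbies, personal care). Sketch of the Morris (1993) rules."""
    if len(secondary) != 5:
        raise ValueError("expected five secondary domain scores")
    if memory not in BOX_SCORES or any(s not in BOX_SCORES for s in secondary):
        raise ValueError("box scores must be 0, 0.5, 1, 2 or 3")
    m = memory
    above = [s for s in secondary if s > m]
    below = [s for s in secondary if s < m]
    same = len(secondary) - len(above) - len(below)

    if m == 0:
        # CDR = 0 unless two or more secondary domains show impairment (>= 0.5).
        return 0.5 if sum(s >= 0.5 for s in secondary) >= 2 else 0.0
    if m == 0.5:
        # CDR can only be 0.5 or 1; it is 1 when three or more secondary
        # domains are scored 1 or greater.
        return 1.0 if sum(s >= 1 for s in secondary) >= 3 else 0.5

    # The remaining rules apply when M >= 1.
    if same >= 3:
        return m
    if len(above) >= 3 or len(below) >= 3:
        if sorted((len(above), len(below))) == [2, 3]:
            return m  # a 3-versus-2 split around M keeps CDR = M
        side = above if len(above) > len(below) else below
        counts = Counter(side)
        top = max(counts.values())
        # Most frequent score on the larger side; ties go to the score closest to M.
        cdr = min((s for s, c in counts.items() if c == top), key=lambda s: abs(s - m))
        return 0.5 if cdr == 0 else cdr  # CDR cannot be 0 when M >= 1
    # One or two secondary scores equal M and no more than two lie on either side.
    return m
```

For example, `global_cdr(0.5, [1, 1, 1, 0.5, 0])` returns 1.0, `global_cdr(1, [0, 0, 0, 1, 1])` returns 0.5 (the majority of secondary scores is 0, but the CDR cannot be 0 when memory is impaired), and `global_cdr(2, [3, 3, 3, 1, 1])` returns 2 (a 3-versus-2 split). Computing the summary score from domain ratings, rather than asking raters for it directly, keeps the global rating reproducible across clinicians.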

Consensus Diagnosis Process

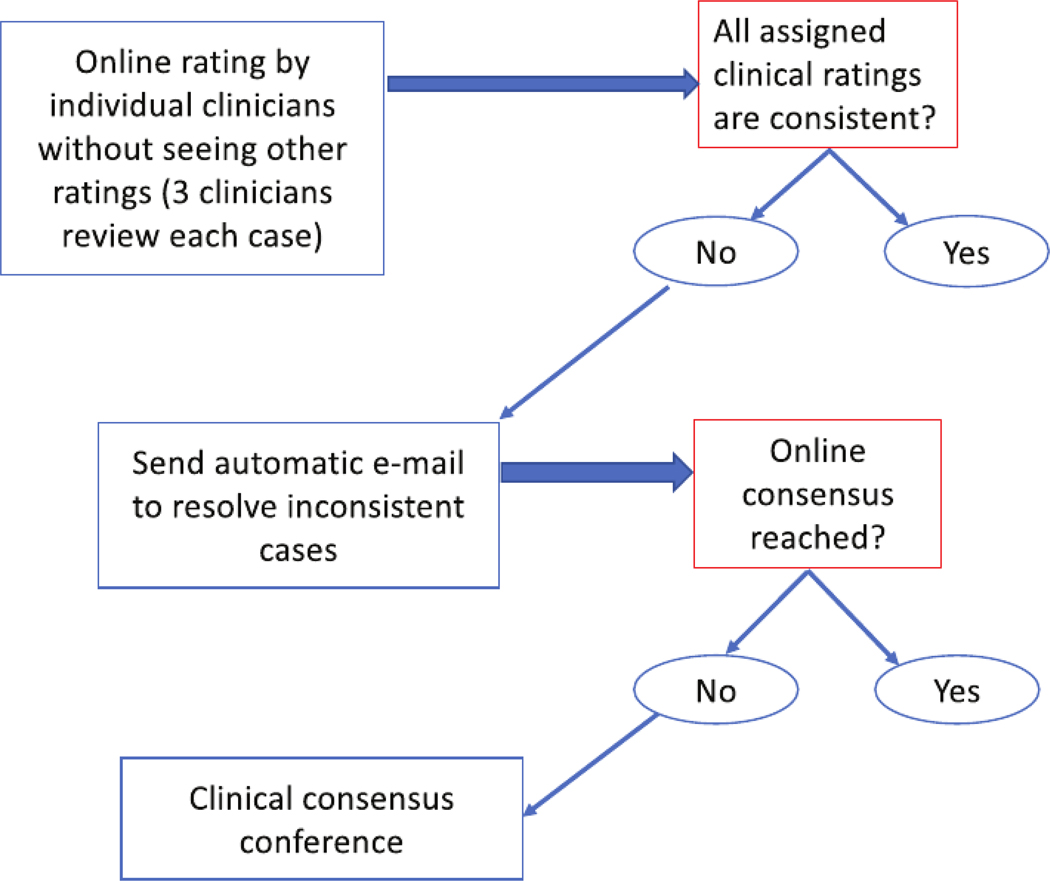

For the online clinical consensus review, we assigned three CDR-certified clinicians to each case. Each rater first reviewed cases independently. After logging in to the website with their credentials, raters could see their assigned cases, and the website automatically selected the next case to be rated. Raters could move between screens before providing their initial rating but could not leave an assigned case before completing its rating.
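The rule that a rater cannot leave an assigned case before rating it amounts to a simple per-rater work queue. A minimal Python sketch follows; the class, field, and method names are hypothetical, and the sketch does not model navigation between screens within a case, which the portal allows.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class RaterQueue:
    """Hypothetical per-clinician work queue: serves assigned cases one at a
    time and refuses to move on until the open case has been rated."""
    rater_id: str
    pending: list[str]                      # case IDs assigned to this rater
    ratings: dict[str, dict[str, float]] = field(default_factory=dict)
    current: str | None = None              # case currently open for rating

    def next_case(self) -> str | None:
        """Automatically select the next assigned case (None when finished)."""
        if self.current is not None:
            raise RuntimeError(f"case {self.current} must be rated before moving on")
        self.current = self.pending.pop(0) if self.pending else None
        return self.current

    def submit(self, case_id: str, domain_ratings: dict[str, float]) -> None:
        """Record the domain ratings for the open case and release it."""
        if case_id != self.current:
            raise ValueError("ratings can only be submitted for the open case")
        self.ratings[case_id] = dict(domain_ratings)
        self.current = None
```

Calling `next_case()` a second time before `submit()` raises an error, which mirrors the constraint that a rater must finish the open case first.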

Once all raters had completed their ratings for a case, an automatic e-mail was sent whenever the ratings were inconsistent. To resolve these cases, each rater was encouraged to review the other ratersʼ comments, to engage in online chat, and, if they wished, to update their own rating. Figure 2 shows the online consensus process.

Figure 2.

Online clinical consensus diagnosis process.

Consensus was reached in many cases through this process, but in some cases it could not be reached. For such cases, we organized an online consensus meeting to discuss each ambiguous case and try to reach a consensus. An expert clinician moderated the meeting and recorded whether a consensus was reached for each case. If no consensus was reached, a majority rating was recorded together with an indication of the differences in clinical diagnosis.
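The decision sequence for a single case can be summarized as a small function. This is a sketch under stated assumptions: ratings are compared on the global CDR only, the function name and status labels are hypothetical, and the situation in which there is no majority (possible when three raters give three different ratings) is not addressed in the text, so the sketch simply reports it.

```python
from __future__ import annotations

from collections import Counter


def resolve_case(initial: dict[str, float],
                 revised: dict[str, float] | None = None,
                 meeting_rating: float | None = None) -> tuple[float | None, str]:
    """Return (final global CDR, status) for one case rated by several clinicians.

    initial        -- each rater's independent global CDR, keyed by rater ID
    revised        -- ratings updated after the online chat (optional)
    meeting_rating -- rating agreed at the moderated meeting, if consensus was reached
    """
    if len(set(initial.values())) == 1:
        return next(iter(initial.values())), "agreed"
    # Inconsistent ratings: raters are notified and may revise after discussion.
    ratings = {**initial, **(revised or {})}
    if len(set(ratings.values())) == 1:
        return next(iter(ratings.values())), "consensus_online"
    if meeting_rating is not None:
        return meeting_rating, "consensus_meeting"
    # Still no consensus: record the majority rating and flag the disagreement.
    value, count = Counter(ratings.values()).most_common(1)[0]
    if 2 * count <= len(ratings):
        return None, "no_majority"  # e.g. three raters, three different ratings
    return value, "majority_flagged"
```

For instance, initial ratings of 0.5, 1, and 1 that remain unchanged after discussion and are not resolved at the meeting yield `(1, "majority_flagged")`.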

Validation Study Design

We conducted a validation study to examine whether online clinical consensus diagnoses based on interviews administered with the LASI-DAD protocol were consistent with in-person clinical consensus diagnoses based on cliniciansʼ in-person assessment of patients.

To do so, we recruited 60 participants from two partner hospitals in India, the All India Institute of Medical Sciences (AIIMS) and NIMHANS, where several CDR-certified clinicians were available to provide the gold standard of in-person clinical consensus diagnosis.

We recruited 30 participants from each institution, targeting one-third to have dementia, one-third to have MCI, and one-third to be cognitively normal. First, expert clinical teams of three to four CDR-certified clinicians at each institution conducted in-person assessments of patients and their informants, followed by a traditional in-person diagnostic consensus conference. The LASI-DAD interview team, consisting of trained interviewers without clinical training, then conducted the LASI-DAD interview with the same patients and collected standardized data. Supplementary Table S1 provides sample characteristics.

Once all data were collected, we invited the team of CDR-certified clinicians from the other institution, who had not been exposed to this group of patients, to rate the patients using the consensus website. We provided the collected LASI-DAD data for their review and obtained online clinical consensus diagnoses following the procedure outlined above.

To compare the in-person and online consensus diagnoses, we calculated the κ interrater agreement statistic. κ values above 0.75 are generally considered excellent, and values between 0.40 and 0.75 are considered fair to good.15 According to a power analysis based on the target one-third fractions above and a standard underlying psychometric model, a sample of 60 cases gave an almost 90% chance of obtaining a κ of 0.75 or higher if the population κ of the process is 0.80.

RESULTS

The validation study was performed at AIIMS and NIMHANS from January through March 2019. Table 1 presents the results. The in-person clinical consensus diagnoses after in-person clinical assessment were consistent with the online clinical consensus diagnoses based on the LASI-DAD interview data. Specifically, in 49 of 60 cases (82%), “on-diagonal” agreement was achieved between the in-person and online consensus diagnoses, meaning that the global CDR scores were the same. No case had a global rating difference greater than 0.5. The agreement between the in-person and online ratings was 90.8% (z = 7.52; P < .001). The κ statistic was 0.76, with a standard error of 0.10, indicating excellent agreement.

However, 11 of 60 cases (18%) were misclassified, all of which involved an MCI rating in one of the two assessments: 4/20 (20%) MCI cases were misclassified as either dementia or normal, 5/30 (17%) dementia cases were misclassified as MCI, and 2/10 (20%) normal cases were misclassified as MCI. For the cases with inconsistent in-person and online global CDR scores, we further examined domain-specific CDR score differences and found that differences occurred most frequently in the community affairs and home and hobbies domains (Supplementary Table S2 provides case-specific comparisons of domain rating differences). To provide additional information on these domains, we extracted relevant information from the core LASI survey data and updated the consensus website for the LASI-DAD cases.

Table 1.

Results of Validation Study: Consensus Clinical Dementia Rating

| In-person consensus rating | Online: 0 | Online: 0.5 | Online: ≥1 | Total |
|---|---|---|---|---|
| 0 | 8 | 2 | 0 | 10 |
| 0.5 | 2 | 16 | 2 | 20 |
| ≥1 | 0 | 5 | 25 | 30 |
| Total | 10 | 23 | 27 | 60 |

Abbreviations: 0, cognitively normal; 0.5, mild cognitive impairment; ≥1, dementia.
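Because the three categories are ordered, the agreement figures reported in the Results can be checked against Table 1. The weighting scheme is not stated in the text; as a sketch under that assumption, Cohen's κ with linear agreement weights (adjacent categories count as half agreement) reproduces both the 90.8% agreement and κ = 0.76, whereas unweighted κ for the same table is 0.70. The function name `kappa` is illustrative.

```python
def kappa(table, weighted=True):
    """Cohen's kappa for a square agreement table (rows: in-person, columns: online).
    With weighted=True, linear agreement weights w_ij = 1 - |i - j| / (k - 1) are
    used; with weighted=False, only exact (diagonal) agreement counts."""
    k = len(table)
    n = sum(sum(row) for row in table)
    row_tot = [sum(row) for row in table]
    col_tot = [sum(table[i][j] for i in range(k)) for j in range(k)]

    def w(i, j):
        if not weighted:
            return 1.0 if i == j else 0.0
        return 1 - abs(i - j) / (k - 1)

    cells = [(i, j) for i in range(k) for j in range(k)]
    p_obs = sum(w(i, j) * table[i][j] for i, j in cells) / n
    p_exp = sum(w(i, j) * row_tot[i] * col_tot[j] for i, j in cells) / n ** 2
    return p_obs, (p_obs - p_exp) / (1 - p_exp)


# Table 1 counts; category order 0, 0.5, >=1.
TABLE_1 = [
    [8, 2, 0],
    [2, 16, 2],
    [0, 5, 25],
]

for weighted in (True, False):
    p_obs, k_hat = kappa(TABLE_1, weighted)
    print(f"weighted={weighted}: agreement = {p_obs:.1%}, kappa = {k_hat:.2f}")
# weighted=True: agreement = 90.8%, kappa = 0.76
# weighted=False: agreement = 81.7%, kappa = 0.70
```

By the thresholds cited in the Methods,15 the weighted value falls in the excellent range and the unweighted value in the fair-to-good range, so the choice of weights matters when interpreting the result.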

We also assessed the reliability of each clinician by calculating κ statistics based on individual ratings. The κ statistics for the eight raters who participated in the online consensus diagnosis ranged from 0.72 to 0.90, indicating good to excellent agreement. Because this exercise revealed interrater differences in reliability, we introduced additional screening criteria. Before inviting clinicians to participate in the online consensus diagnosis, we asked all interested clinicians to upload their CDR certificates to the consensus website. They were then asked to review 10 to 15 cases, after which we examined the consistency of their individual ratings with the in-person consensus ratings. Only clinicians whose κ statistics were above 0.70 were invited to participate.
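The screening rule can be stated compactly. The sketch below assumes unweighted Cohen's κ on the three study categories (0, 0.5, ≥1) and a strict threshold of 0.70; the text does not specify which κ variant was used for screening, and the names `collapse`, `cohen_kappa`, `eligible_raters`, and `min_cases` are hypothetical.

```python
def collapse(cdr):
    """Map a global CDR to the three study categories: 0, 0.5, >=1 (coded 1.0)."""
    return min(cdr, 1.0)


def cohen_kappa(a, b):
    """Unweighted Cohen's kappa for two equal-length lists of ratings."""
    n = len(a)
    cats = set(a) | set(b)
    p_obs = sum(x == y for x, y in zip(a, b)) / n
    p_exp = sum((a.count(c) / n) * (b.count(c) / n) for c in cats)
    if p_exp == 1:  # both used one identical category; kappa undefined, treat as 1
        return 1.0
    return (p_obs - p_exp) / (1 - p_exp)


def eligible_raters(calibration, reference, threshold=0.70, min_cases=10):
    """Return IDs of candidate raters whose kappa against the in-person
    consensus on the calibration cases exceeds `threshold`.

    calibration -- rater ID -> global CDRs on the same cases as `reference`
    reference   -- in-person consensus global CDRs for the calibration cases
    """
    if len(reference) < min_cases:
        raise ValueError(f"need at least {min_cases} calibration cases")
    ref = [collapse(r) for r in reference]
    keep = []
    for rater, ratings in calibration.items():
        if len(ratings) != len(ref):
            raise ValueError(f"{rater} did not rate every calibration case")
        if cohen_kappa([collapse(r) for r in ratings], ref) > threshold:
            keep.append(rater)
    return sorted(keep)
```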

Clinicians reviewed a case in an average of 3.6 minutes (standard deviation = 3.8 minutes). After the individual ratings, discrepancies among the clinicians were found in 7 of 60 cases, triggering a virtual consensus conference. During the regularly scheduled 60-minute teleconference, moderated by senior clinicians who are coauthors of this article, clinicians reviewed every inconsistent case and collectively reached a consensus diagnosis for each, taking approximately 6 to 9 minutes per case.

CONCLUSION

We developed a web portal for clinical consensus diagnosis as a cost-effective alternative to the in-person clinical diagnosis consensus conference. Results from a validation study demonstrate that online clinical consensus diagnosis based on the LASI-DAD interview data yields results consistent with in-person clinical assessment and diagnosis. We are currently conducting online consensus diagnosis for the entire LASI-DAD sample, and the results will be released as part of a public data set once completed.

The online consensus site we developed also holds promise for other population-based studies of dementia that need expert cliniciansʼ judgment to identify dementia status in a cost-effective manner. In low- and middle-income countries, and in parts of high-income countries outside major cities, there are few specialist clinicians with expertise in geriatrics and dementia. As a result, obtaining in-person consensus diagnoses of cognitive impairment outside specialized memory clinics and dementia research centers takes time and effort, requiring careful scheduling and, frequently, travel.

For research purposes, where a live “diagnostic consensus conference” or adjudication conference is the norm, the online approach provides further levels of flexibility, efficiency, and cost-effectiveness. Here, expert clinicians at a specialty center can remotely view and rate standardized data from multiple patients or study participants at their convenience and without the necessity of travel. This differs from standard telemedicine because experts are not directly viewing the patients, but rather evaluating standardized data collected by trained interviewers. In turn, respondents are less burdened because they can be assessed in their homes or at nonspecialist locations by a single trained interviewer rather than by multiple clinicians.

Building on the success of LASI-DAD, we are currently applying this online consensus approach in another epidemiological Harmonized Cognitive Assessment Protocol (HCAP) study in Korea. The consensus website was first translated into Korean and reviewed to ensure its cultural appropriateness for administration in South Korea. Four clinicians from Seoul National University Hospital were invited to participate in the online consensus process and to review the interviewer-administered cases. The Korean clinicians also confirmed the feasibility of this approach and concluded that it is suitable for CDR rating.

Online clinical consensus diagnosis has several notable limitations. With this approach, clinicians do not observe respondents themselves; we attempt to compensate for this by asking interviewers to add notes based on their observations. Additional information is required to adequately assess judgment and problem-solving ability, which we aim to improve in our follow-up effort. Although the approach is less costly and time-consuming than in-person diagnosis, clinicians still need to be compensated for their time and effort. On average, an individual rating takes about 4 minutes. For cases in which initial clinical ratings differ across clinicians, a virtual consensus conference call of about 60 minutes is typically needed to reach consensus on 7 to 10 cases. The current online consensus diagnosis portal does not support diagnosis of the underlying cause of impairment, which we plan to develop further by identifying and collecting relevant information in follow-up studies. Once its full potential is realized, online clinical consensus diagnosis could transform the diagnosis of cognitive impairment and dementia in epidemiological studies.

Supplementary Material

Supplementary Figure S1: Online consensus site screen 2.

Supplementary Figure S2: Pop-up screen for orientation domain.

Supplementary Table S1: Sample Characteristics

Supplementary Table S2: Domain-Specific Clinical Dementia Rating Difference: In-Person Rating–Online Rating

ACKNOWLEDGMENT

We thank Kenneth Langa, David Llewellyn, Pranali Y. Khobragade, Joyita Banerjee, Erik Meijer, Prasun Chatterjee, Gaurav R. Deasi, Krishna Prasad, Sivakumar Thangaraju, Preeti Sinha, Santosh Loganathan, Abhijit Rao, Rishav Bansal, Sunny Singhal, Swaroop Bhatankar, Swati Bajpai, Gihwan Byeon, Kiyoung Sung, and Dongkyun Han.

Financial Disclosure:

This project is funded by the National Institute on Aging, National Institutes of Health (R01AG051125 and R56 AG056117).

Sponsorʼs Role: The National Institute on Aging had no role in preparing the data or the manuscript.

Footnotes

Conflict of Interest: The authors have no conflicts of interest to declare.

Ethics Approval: We obtained ethics approval from the Indian Council of Medical Research and all collaborating institutions, including the University of Southern California; the All India Institute of Medical Sciences, New Delhi; the National Institute of Mental Health and Neurosciences, Bengaluru; and Seoul National University Hospital.

SUPPORTING INFORMATION

Additional Supporting Information may be found in the online version of this article.

REFERENCES

1. Weir DR, Wallace RB, Langa KM, et al. Reducing case ascertainment costs in U.S. population studies of Alzheimerʼs disease, dementia, and cognitive impairment—part 1. Alzheimers Dement. 2011;7(1):94–109.
2. Morris JC. The clinical dementia rating (CDR): current version and scoring rules. Neurology. 1993;43(11):2412–2414.
3. John SE, Gurnani AS, Bussell C, Saurman JL, Griffin JW, Gavett BE. The effectiveness and unique contribution of neuropsychological tests and the δ latent phenotype in the differential diagnosis of dementia in the uniform data set. Neuropsychology. 2016;30:946–960.
4. Gavett BE, Vudy V, Jeffrey M, John SE, Gurnani A, Adams J. The δ latent dementia phenotype in the NACC UDS: cross-validation and extension. Neuropsychology. 2015;29:344–352.
5. Royall DR, Palmer RF, Markides KS. Exportation and validation of latent constructs for dementia case-finding in a Mexican-American population-based cohort. J Gerontol B Psychol Sci Soc Sci. 2017;72:947–955.
6. Royall DR, Palmer RF, Matsuoka T, et al. Greater than the sum of its parts: δ can be constructed from item level data. J Alzheimers Dis. 2016;49:571–579.
7. James KS. Indiaʼs demographic change: opportunities and challenges. Science. 2011;333:576–580.
8. Becker JT, Duara R, Lee CW, et al. Cross-validation of brain structural bio-markers and cognitive aging in a community-based study. Int Psychogeriatr. 2012;24(7):1065–1075.
9. Gross AL, Hassenstab JJ, Johnson SC, et al. A classification algorithm for predicting progression from normal cognition to mild cognitive impairment across five cohorts: the preclinical AD consortium. Alzheimers Dement. 2017;8:147–155.
10. Ganguli M, Ratcliff G, Chandra V, et al. A Hindi version of the MMSE: the development of a cognitive screening instrument for a largely illiterate rural elderly population in India. Int J Geriatr Psychiatry. 1995;10(5):367–377.
11. Brandt J, Spencer M, Folstein M. The telephone interview for cognitive status. Neuropsychiatry Neuropsychol Behav Neurol. 1988;1(2):111–117.
12. Hall K, Hendrie HC, Brittain HM, et al. The development of a dementia screening interview in two distinct languages. Int J Methods Psychiatr Res. 1993;3:1–28.
13. Lee J, Khobragade PY, Banerjee J, et al. Design and methodology of the Longitudinal Aging Study in India – Diagnostic Assessment of Dementia (LASI-DAD). J Am Geriatr Soc. 2020;68:S5–S10.
14. Lee J, Banerjee J, Khobragade PY, Angrisani M, Dey AB. LASI-DAD study: a protocol for a prospective cohort study of late-life cognition and dementia in India. BMJ Open. 2019;9:e030300. doi:10.1136/bmjopen-2019-030300.
15. Fleiss JL, Levin B, Paik MC. Statistical Methods for Rates and Proportions. 3rd ed. Hoboken, NJ: John Wiley & Sons, Inc; 2003:598–622.
