Abstract

OBJECTIVES:

To test whether a relatively complex model of human cognitive abilities based on Cattell-Horn-Carroll (CHC) theory, developed mainly in English-speaking samples, adequately describes correlations among tests in the Longitudinal Aging Study in India–Diagnostic Assessment of Dementia (LASI-DAD), and to develop accurate measures of cognition for older individuals in India.

DESIGN:

LASI-DAD participants were recruited from core LASI survey respondents aged 60 years and older in 14 states, using a stratified sampling design.

SETTING:

Participants were interviewed at home or in a participating hospital, according to their preferences.

PARTICIPANTS:

Community-residing older adults aged 60 years and older (N = 3,224).

MEASUREMENTS:

A variety of cognitive tests were administered during two pretests and chosen for their appropriateness for measuring cognition in older adults in India and suitability for calibration with the core LASI survey and the Harmonized Cognitive Assessment Protocol.

RESULTS:

We evaluated the factor structure of the test battery and its conformity with a classical CHC factor model that incorporated measurement models for general cognition, five broad domains (orientation, executive functioning, language/fluency, memory, and visuospatial), and five narrow domains (reasoning, attention/speed, immediate memory, delayed memory, and recognition memory) of cognitive performance. Model fit was adequate (root mean square error of approximation = 0.051; comparative fit index = 0.916; standardized root mean squared residual = 0.060).

CONCLUSION:

We demonstrated configural factorial invariance of a cognitive battery in the Indian LASI-DAD using CHC theory. Broad domain factors may be used in future research to rank individuals with respect to cognitive performance and classify cognitive impairment. J Am Geriatr Soc 68:S11-S19, 2020.

Keywords: Harmonized Cognitive Assessment Protocol, Cattell-Horn-Carroll theory, factor analysis, epidemiology, international comparisons

INTRODUCTION

Cognitive functions can be organized into domains of ability, including memory, attention, language, visuospatial ability, and others. Impairment in certain functions may indicate an underlying brain disorder, such as dementia, stroke, or traumatic brain injury. More than 500 neuropsychological tests, each designed to measure one or more of these cognitive functions, are available for clinical and research use.1

There is no strong consensus about how many domains exist empirically.2 For example, should immediate and delayed episodic memory be separate, or is memory an adequate factor? Are receptive and expressive language domains distinguishable from one another? Given a sufficiently broad range of cognitive tests in a large enough and diverse sample, factor analysis methods can be used to address these issues by distinguishing different domains.3–6 Factor analysis can provide such clarity through quantification of what model—in other words, what domains and subdomains—best describes the correlations among tests. Clinically, establishing the factor structure of a neuropsychological test battery can facilitate interpretation of test results for differential diagnosis (e.g., does a problem with serial 7 subtraction indicate an attentional deficit or a numeracy problem). Moreover, cognitive domains can be used for validation of new tests: a clinician who implements a test or devises a new test intended to measure a domain can correlate scores on the new test with scores of tests from that domain as well as other domains to evaluate convergent and divergent validity.

Complicating the search for adequate cognitive domains, there are no “domain-pure” tests of a given domain; for instance, a test of category fluency requires expressive language ability, long-term episodic memory, and even some degree of organizational ability (e.g., executive function) to provide responses within the required category (e.g., animals).7 Again, factor analysis methods are used to isolate common variability between tests designed to measure a domain. Such common variability can represent the domain, sans extraneous measurement error or nondomain variance that is not shared by other tests.

The factor structure of a neuropsychological test battery offers insight into the abilities tested by the battery.8 Derived from intelligence research rather than clinical neuropsychology,9 Cattell-Horn-Carroll (CHC) taxonomy is a comprehensive attempt to organize human cognitive abilities. This theory is an integration of previous theoretical models of fluid and crystallized intelligence,10–12 coupled with Carroll’s13 three-stratum theory in which individual differences in cognition are organized into major (e.g., broad domains), minor (e.g., narrow domains), and general sources. Although there are many applications of CHC theory to data,14 common features of CHC-derived models are classification of cognitive tests into narrow (e.g., reasoning and attention/speed), broad (e.g., executive functioning and memory), and general cognitive ability.15 Clinically, the general and broadest domains are typically of most interest; however, the narrow domains may have utility for differential diagnoses.9,15

An important limitation of previously published multiple-domain factor analyses of cognitive batteries is that many, if not most, have been conducted among samples composed of U.S. and European populations who took tests administered in just one or a few languages, such as English or Spanish.16 The structure of domains for tests administered in other languages is worth exploring in greater detail because translation issues as well as cultural differences in how certain stimuli are understood can lead to different test interpretations. Cross-national research provides an opportunity to identify drivers of both individual variation and population average differences across different groups defined by country and language; however, such research mandates the use of tests that carry the same meaning across cultures and countries. In particular, India represents a heterogeneous population in terms of language, culture, and socioeconomic characteristics.17 Classical tests, such as the Tower of Hanoi (a measure of problem solving), have been shown to not perform as well in India as in Western countries,18 calling into question their utility as a measure of cognitive functioning that means the same thing across people. As a further example, a common test of general language comprehension is to ask participants to read and follow a command (e.g., close your eyes), but often illiterate persons are asked instead to follow the example of an interviewer who closes his/her eyes. It is arguable whether this modified item can still be considered a test of language comprehension.

The objectives of this study were to evaluate the factor structure of a broad cognitive test battery in India, and in particular to test whether a hierarchical, multiple-domain model based on CHC taxonomy, developed mainly from English-speaking samples, adequately describes the correlations among tests. We additionally tested whether the factor structure was similar across 10 languages of administration in our sample.

METHODS

We used data from the Longitudinal Aging Study in India–Diagnostic Assessment of Dementia (LASI-DAD). This is a substudy of the core LASI study, which starting in 2010 recruited over 70,000 participants aged 45 years and older from all 29 states in India using a stratified, multistage area probability sample.19 LASI collected rich cognitive testing and other information from participants. LASI-DAD leveraged the LASI sampling framework to administer a more detailed neuropsychological battery, based largely on tests from the Harmonized Cognitive Assessment Protocol (HCAP) from the U.S. Health and Retirement Study (HRS), to a subsample of 3,300 adults aged 60 years and older in 14 states. LASI-DAD oversampled individuals with lower scores on cognitive tests in the core LASI survey. Participants were interviewed at home or in a participating hospital, according to their preferences.

Variables

The cognitive test battery in LASI-DAD was adapted from tests in the HCAP.20 The HCAP battery was designed to assess cognitive impairment without dementia and dementia in the HRS and has been successfully adapted in the United States, England, Mexico, China, and South Africa.21 For LASI-DAD, some culturally and linguistically appropriate modifications were made to the HCAP, including identification of tests less dependent on schooling and literacy.

We organized tests into broad domains (orientation, executive functioning, language/fluency, memory, and visuospatial) and further into narrow subdomains to be consistent with CHC theory of human cognitive abilities. Tests included in the cognitive battery are in Table 1. Tests were assigned to domains based on a priori knowledge and theory. The broad domains reflect largely well-accepted categories of cognitive functioning,1 whereas narrower domains serve to further partition domains with more tests (in this study, memory and executive functioning). The domain to which a test belongs is partly dependent on other tests in the battery; more tests of a domain, such as language, may merit stronger evidence for narrower subdomains, such as expressive versus receptive or semantic versus nonsemantic aspects of language.

Table 1.

Characteristics of the LASI-DAD Sample (N = 3,224)

| Characteristic | Mean or No. (SD or %) | Range | % Missing |
|---|---|---|---|
| Age, mean (SD), y | 69.3 (7.7) | 60.0–104.0 | 0.0 |
| Female sex, No. (%) | 1,737 (53.9) | | 0.0 |
| Any education, No. (%) | 1,702 (52.8) | | 0.0 |
| Educational attainment, No. (%) | | | 0.0 |
| Never attended school | 1,522 (47.22) | | |
| Less than primary school (standard = 1–4) | 448 (13.90) | | |
| Completed primary school (standard = 5–7) | 402 (12.47) | | |
| Completed middle school (standard = 8–9) | 286 (8.87) | | |
| Completed secondary school (standard = 10–11) | 300 (9.31) | | |
| Completed higher secondary (standard = 12) | 101 (3.13) | | |
| Completed diploma and certificate | 24 (0.74) | | |
| Graduate degree (BA/BS) | 81 (2.51) | | |
| Postgraduate degree (MA, MS, PhD) | 35 (1.09) | | |
| Professional degree (MBBS, MD, MB) | 24 (0.74) | | |
| Language of interview, No. (%) | 8.5 (6.5) | 1.0–19.0 | 0.0 |
| English | 10 (0.3) | | |
| Hindi | 1,018 (31.6) | | |
| Kannada | 244 (7.6) | | |
| Malayalam | 349 (10.8) | | |
| Tamil | 299 (9.3) | | |
| Urdu | 152 (4.7) | | |
| Bengali | 294 (9.1) | | |
| Assamese | 199 (6.2) | | |
| Odiya | 252 (7.8) | | |
| Marathi | 218 (6.8) | | |
| Telugu | 189 (5.9) | | |
| Orientation, mean (SD) | | | |
| Month | 0.8 (0.4) | 0.0–1.0 | 3.7 |
| Year | 0.5 (0.5) | 0.0–1.0 | 13.0 |
| Day of the week | 0.8 (0.4) | 0.0–1.0 | 4.6 |
| Day of the month | 0.6 (0.5) | 0.0–1.0 | 6.8 |
| Season | 0.8 (0.4) | 0.0–1.0 | 2.2 |
| State | 0.6 (0.5) | 0.0–1.0 | 6.6 |
| City | 0.9 (0.2) | 0.0–1.0 | 2.2 |
| Hospital name (or district if at home) | 0.8 (0.4) | 0.0–1.0 | 3.8 |
| Area of town/village or street name | 0.9 (0.3) | 0.0–1.0 | 3.8 |
| Floor of the building | 0.9 (0.3) | 0.0–1.0 | 2.3 |
| Prime Minister | 0.6 (0.5) | 0.0–1.0 | 1.6 |
| Immediate memory, mean (SD) | | | |
| 3-Word recall (three trials) | 8.6 (1.4) | 0.0–9.0 | 2.0 |
| 10-Word recall (three trials) | 11.4 (5.2) | 0.0–28.0 | 2.7 |
| Brave Man, immediate recall | 1.1 (1.2) | 0.0–6.0 | 0.0 |
| Logical memory, immediate recall | 1.1 (1.6) | 0.0–12.0 | 0.0 |
| Delayed memory, mean (SD) | | | |
| 10-Word delayed recall | 3.1 (2.4) | 0.0–10.0 | 2.9 |
| 3-Word delayed recall | 1.9 (1.1) | 0.0–3.0 | 1.8 |
| Brave Man, delayed recall | 0.5 (1.0) | 0.0–5.0 | 0.0 |
| Logical memory, delayed recall | 0.7 (1.4) | 0.0–11.0 | 0.0 |
| Constructional praxis, delayed | 1.1 (1.0) | 0.0–4.0 | 15.0 |
| Recognition memory, mean (SD) | | | |
| 10-Word recognition | 8.0 (2.4) | 0.0–10.0 | 4.7 |
| Logical memory, recognition | 7.5 (3.2) | 0.0–15.0 | 10.2 |
| Executive/abstract reasoning, mean (SD) | | | |
| Raven progressive matrices | 7.5 (3.4) | 0.0–17.0 | 8.1 |
| Go/no-go trial 1 | 6.4 (3.4) | 0.0–10.0 | 4.7 |
| Go/no-go trial 2 | 4.9 (3.6) | 0.0–10.0 | 5.2 |
| Clock drawing | 1.4 (1.7) | 0.0–5.0 | 9.7 |
| Attention/speed, mean (SD) | | | |
| Serial 7s | 1.8 (1.8) | 0.0–5.0 | 10.6 |
| Backward day naming | 3.8 (2.6) | 0.0–6.0 | 5.5 |
| Symbol cancelation | 6.9 (8.3) | 0.0–30.0 | 0.0 |
| Digit span, backwards score | 0.3 (0.5) | 0.0–1.0 | 5.6 |
| Digit span, forwards score | 0.3 (0.4) | 0.0–1.0 | 4.8 |
| Language/fluency, mean (SD) | | | |
| Naming described objects (coconut, scissors) | 1.8 (0.4) | 0.0–2.0 | 2.2 |
| Animal naming | 11.7 (4.6) | 0.0–25.0 | 2.8 |
| Writing or saying sentence | 0.9 (0.3) | 0.0–1.0 | 2.4 |
| Repeat a phrase | 0.9 (0.3) | 0.0–1.0 | 3.6 |
| Close your eyes | 1.5 (0.9) | 0.0–3.0 | 2.3 |
| 3-Stage task | 2.6 (0.7) | 0.0–3.0 | 2.6 |
| Naming common objects | 4.9 (1.2) | 0.0–6.0 | 2.4 |
| Visuospatial, mean (SD) | | | |
| Interlocking pentagons | 0.5 (0.9) | 0.0–2.0 | 7.5 |
| Constructional praxis | 2.0 (1.1) | 0.0–4.0 | 8.9 |

Abbreviation: LASI-DAD, Longitudinal Aging Study in India–Diagnostic Assessment of Dementia.

There were 11 questions assessing orientation to time (e.g., name the current month, year, and season) and place (e.g., state and city); further subdomain classifications for time and place proved unnecessary in preliminary analyses. Memory tests included immediate recall, delayed recall, and recognition of a 10-word list22; immediate recall, delayed recall, and recognition of the logical memory story recall test (names of persons and places were modified from the original so that the Indian population could relate to them)23; immediate and delayed recall of the Brave Man story learning test20; and a three-object recall task administered over three trials. Additionally, delayed recall of the constructional praxis test, which requires participants to remember a prior drawing,24,25 was used to measure delayed memory. For the three-object recall task, the first of three trials was a registration task. The second trial was administered a few minutes later, but only if participants could not name all objects during the first trial. Analogously, a third trial followed a few minutes after the second trial only if the three objects could not be recalled at trial 2. This administration is designed to ascertain learning of new material, which reflects memory.1 To score this item for use in factor analyses, we summed the three trials, with up to three points per trial, and assigned the full three points to any second or third trial that was skipped.
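The scoring rule for the three-object recall task can be sketched in a few lines. This is a minimal illustration, not the study's scoring code: the function name `score_three_word_recall` and the use of `None` to mark a skipped trial are assumptions made here.

```python
from typing import Optional, Sequence

MAX_PER_TRIAL = 3  # three objects, one point each


def score_three_word_recall(trials: Sequence[Optional[int]]) -> int:
    """Sum the three trials of the three-object recall task.

    `trials` holds the number of objects recalled on trials 1 to 3.
    `None` marks a trial that was skipped because all objects had already
    been recalled; skipped trials receive the full three points.
    """
    if len(trials) != 3:
        raise ValueError("expected exactly three trials")
    if trials[0] is None:
        raise ValueError("trial 1 (registration) is always administered")

    total = 0
    for recalled in trials:
        if recalled is None:
            total += MAX_PER_TRIAL  # skipped trial: assign 3
        elif 0 <= recalled <= MAX_PER_TRIAL:
            total += recalled
        else:
            raise ValueError("each trial score must be between 0 and 3")
    return total


# All three objects named at registration, so trials 2 and 3 were skipped.
print(score_three_word_recall([3, None, None]))
# Two objects at trial 1, all three at trial 2, trial 3 skipped.
print(score_three_word_recall([2, 3, None]))
```

Under this rule the summed score runs from 0 to 9, which matches the range shown for 3-word recall in Table 1.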

Reasoning ability, a narrow domain of executive functioning, was represented by the Raven progressive matrices task,26 clock drawing,27 and two trials of the go/no-go test.28 Although clock drawing also reflects planning and visuospatial ability, and the go/no-go test also requires response control and sustained attention, there is an element of reasoning ability underlying each of these items that we sought to represent in a narrow domain. Attention/speed, a second narrow domain of executive functioning, was represented by a numeracy task,20 backwards day counting,29 symbol cancelation,30 and the digit span forwards and backwards tasks.22 We included attention/processing speed as a narrow subdomain of executive functioning rather than its own factor because each of the available items also requires elements of executive functioning (e.g., planning and response control) and thus the factor should be correlated with the other executive functioning factor. More generally, inability to distinguish attention/speed from executive functioning is a common problem for large epidemiological studies that do not administer high-quality tests of processing speed in sufficient number to support an independent factor.31

The language/fluency domain was represented by animal naming,32 writing or saying a sentence,29 phrase repetition,29 naming of common objects by sight (watch and pencil),29 naming common objects from the Community Screening Instrument for Dementia (elbow, hammer, store, and pointing to a window and a door),33 naming described objects (scissors and coconut),34 following a read or acted command to close one’s eyes, and comprehending and doing a three-stage task.29 In preliminary analyses, we explored semantic (e.g., animal naming and naming objects) and nonsemantic narrow subdomains for the language factor, but these factors were too highly correlated to be meaningfully different.

Visuospatial function was measured by constructional praxis (drawing a circle, rectangle, cube, and diamond) and interlocking pentagons.24

Analysis Plan

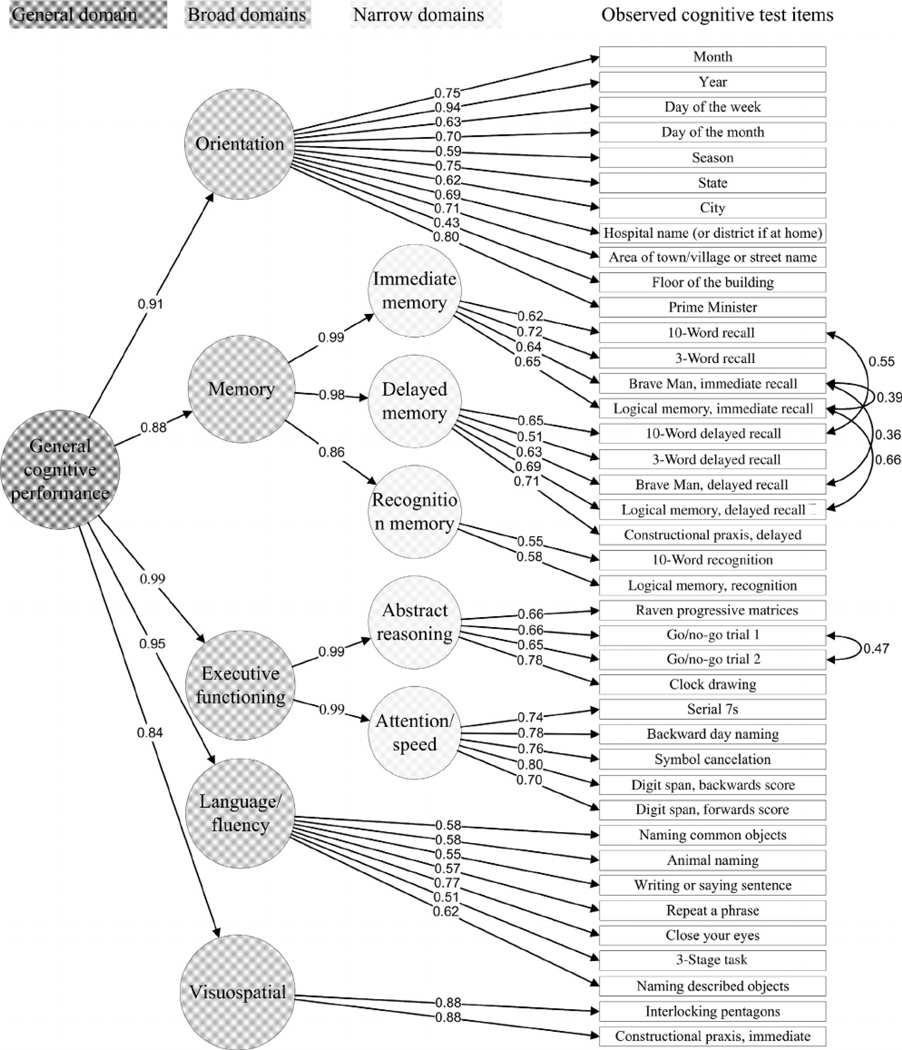

We used descriptive statistics, including means and proportions, to describe the LASI-DAD sample. Table 1 shows the percentage of observations with missing data on each cognitive test; no variable was missing more than 15% in the sample, so as is common in survey data, we imputed most missing observations (see Supplemental Information for details). We then estimated a series of unidimensional factor analysis models for each narrow and broad cognitive domain. Variance in the factors represents only the shared variance among indicators given the estimated factor model, as opposed to the total variance of each indicator. In this way, factors are thought to minimize random error in tests that is not shared by other tests. These factors are designed to represent cognitive domains. Once adequate fit was obtained for each model, we combined each measurement model into a hierarchical multiple domain factor analysis that included a general factor and that represented all domains (Figure 1).

Figure 1.

Structural equation model diagram of the hierarchical confirmatory factor analysis: results from Longitudinal Aging Study in India–Diagnostic Assessment of Dementia (N = 3,224). Note: Latent variables (shown in circles) representing general cognition, broad, and narrow cognitive domains were formed from sets of observed cognitive tests (shown in boxes). Residual correlations between test scores are shown with curved double-headed arrows. Numbers on the paths are standardized factor loadings, with a range from 0 to 1, representing the strength of the relationship (e.g., a correlation) between a test item and a latent variable, or among latent variables.
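The hierarchical structure described above and drawn in Figure 1 can be written compactly. The notation below is an assumption introduced here for illustration and does not appear in the article; it sketches a generic higher-order factor model rather than the exact Mplus specification.

```latex
% Hypothetical notation (not from the article):
%   y_{ij} : score of person i on test j
%   n(j)   : narrow domain to which test j is assigned
%   b(n)   : broad domain containing narrow domain n
%   g_i    : general cognition factor for person i
\begin{align}
  y_{ij} &= \nu_j + \lambda_j \,\eta^{\text{narrow}}_{i,\,n(j)} + \varepsilon_{ij}
    && \text{(test on narrow domain)} \\
  \eta^{\text{narrow}}_{i,\,n} &= \gamma_n \,\eta^{\text{broad}}_{i,\,b(n)} + \zeta_{i,n}
    && \text{(narrow on broad domain)} \\
  \eta^{\text{broad}}_{i,\,b} &= \beta_b \, g_i + \xi_{i,b}
    && \text{(broad domain on general factor)}
\end{align}
% Theory-based residual correlations relax the usual independence assumption
% for selected pairs of tests (j, k):
\[
  \operatorname{Cov}(\varepsilon_{ij}, \varepsilon_{ik}) \neq 0 .
\]
```

For broad domains without narrow subdomains (orientation, language/fluency, and visuospatial), the first equation would link each test directly to its broad factor.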

To improve fit of the unidimensional domain models to the data that did not fit well initially, we considered adding theory-based residual correlations that allowed particular sets of items within domains to be correlated with each other over and above the correlation accounted for by the factor. Model fit was evaluated based on a set of a priori cutoffs for the comparative fit index (CFI), root mean square error of approximation (RMSEA), and the standardized root mean squared residual (SRMR).35 We characterized model fit as perfect if CFI = 1 and RMSEA = 0 and SRMR = 0, good if CFI ≥ 0.95 and RMSEA ≤ 0.05 and SRMR ≤ 0.05, adequate if CFI ≥ 0.90 and RMSEA ≤ 0.08 and SRMR ≤ 0.08, and poor if CFI < 0.90 or RMSEA > 0.08 or SRMR > 0.08. We chose this combination of fit statistics because each statistic has advantages and disadvantages. Although low SRMR implies low model residuals, it does not incorporate model complexity and may be partial to overly complex models. The RMSEA provides an index of model discrepancy per degree of freedom (which accounts for model complexity); however, it tends to improve with larger sample size. The CFI compares an estimated model with a hypothetical null baseline model, which may itself be incorrect. Considered together, these three statistics reduce the risk of choosing a bad model.36
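The cutoffs above amount to a simple decision rule, sketched here for concreteness. The function name `classify_fit` and the example inputs are hypothetical; only the thresholds come from the text.

```python
def classify_fit(rmsea: float, cfi: float, srmr: float) -> str:
    """Label model fit using the a priori cutoffs stated in the text.

    Categories are checked from strictest to most lenient, so a model
    receives the best label whose three conditions it satisfies jointly.
    """
    if cfi == 1.0 and rmsea == 0.0 and srmr == 0.0:
        return "perfect"
    if cfi >= 0.95 and rmsea <= 0.05 and srmr <= 0.05:
        return "good"
    if cfi >= 0.90 and rmsea <= 0.08 and srmr <= 0.08:
        return "adequate"
    # Reached when CFI < 0.90 or RMSEA > 0.08 or SRMR > 0.08.
    return "poor"


# Illustrative (made-up) inputs, one per category.
for stats in [(0.000, 1.000, 0.000),
              (0.030, 0.980, 0.020),
              (0.060, 0.930, 0.040),
              (0.090, 0.960, 0.030)]:
    print(stats, "->", classify_fit(*stats))
```

Because all three conditions must hold jointly, a single statistic outside a band is enough to move a model down a category.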

After developing the final model, we then tested for differences in the correlational structure by language; the LASI-DAD cognitive tests were translated into 11 languages. Tests might have a different correlational structure in different language groups attributable to differences in translations and cultural factors. To evaluate this, multiple-group versions of the best-fitting hierarchical confirmatory factor analysis (CFA) were fit to evaluate whether tests varied by language group. We tested three levels of invariance: configural, metric, and scalar.37 Configural invariance is met when tests are related to the same underlying factors across language groups. Metric invariance tests whether the magnitude of correlations (factor loadings) of tests with their underlying factor is equal across groups. Satisfaction of metric invariance implies the tests comparably measure their underlying cognitive domain in different languages. Scalar invariance tests whether tests are similarly difficult across languages, where difficulty is defined relative to other tests. Satisfaction of scalar invariance implies metric invariance and further that cognitive test thresholds for impairment are comparable across groups. In this analysis, due to small numbers, we excluded people tested in English (N = 10).
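The three nested levels of invariance can be summarized as increasingly strict equality constraints across language groups. The symbols below are assumptions introduced for illustration (they are not the article's notation): $\Lambda^{(g)}$ collects the factor loadings and $\tau^{(g)}$ the item thresholds or intercepts in language group $g = 1, \dots, G$.

```latex
% Hypothetical notation: Lambda^{(g)} = loadings, tau^{(g)} = thresholds/intercepts
% in language group g; G = number of language groups compared.
\begin{align}
  \text{Configural:}\quad
    & \Lambda^{(g)} \text{ has the same pattern of zero and nonzero loadings for all } g,
      \text{ with values free to differ} \\
  \text{Metric:}\quad
    & \Lambda^{(1)} = \Lambda^{(2)} = \dots = \Lambda^{(G)} \\
  \text{Scalar:}\quad
    & \Lambda^{(1)} = \dots = \Lambda^{(G)}
      \quad\text{and}\quad
      \tau^{(1)} = \dots = \tau^{(G)}
\end{align}
```

Each level adds constraints to the one before it, which is why satisfying scalar invariance implies metric invariance.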

The LASI-DAD study by design included people with probable dementia. In main analyses, we included all participants because the goal was to test a CHC factor structure in a representative sample in India. However, dementia may affect the correlational structure of some tests.2 Because of this, we reran models in a subsample of N = 733 participants known to not have dementia at the time of interview, based on an online clinical consensus procedure.21 From the full sample of N = 3,224 LASI-DAD participants, adjudicated clinical dementia rating (CDR) scores are available for N = 829 participants, of whom N = 96 had a CDR of one or higher.

All factor analyses were estimated using the weighted least squares estimator in Mplus software.38

RESULTS

The N = 3,224 LASI-DAD participants were on average 69 years old (range = 60–104 years) (Table 1). Just over half of the sample was female (54%), and just over half had at least 1 year of education (53%). Of those with any education, N = 1,436 (84%) had completed a secondary school education or less (Table 1); educational attainment was inversely related with age, such that younger cohorts were more likely to obtain more education (data not shown). Interviews were conducted in 11 different languages; Hindi was the most common language of administration. Statistics for cognitive test scores are also provided in Table 1.

Unidimensional CFAs of individual domains each provided good, adequate, or perfect fit to the data (Table 2). The worst fit, which was still adequate, was evident for the reasoning and attention/speed domains, both of which comprised the broad executive functioning domain; this is consistent with the notion that these domains incorporate tests that measure related but more heterogeneous cognitive processes (e.g., set shifting and planning) compared with, for example, tests of language or of memory.39 We were able to improve the fit of the unidimensional model of immediate memory by allowing an additional, or residual, correlation specifically between the Brave Man and logical memory immediate recall. For the delayed memory factor, the same was the case for the counterparts of these tests. Fit for the reasoning factor was improved as well by including a residual correlation between the two go/no-go trials.

Table 2.

Fit Statistics for Unidimensional and Hierarchical CFA Models: Results from LASI-DAD (N = 3,224)

| Domain specificity | Model | No. of items | RMSEA | CFI | SRMR | Descriptor |
|---|---|---|---|---|---|---|
| Single-domain models | | | | | | |
| Broad | Orientation | 11 | 0.053 | 0.969 | 0.077 | Good |
| Narrow | Memory-episodic-immediate | 4 | 0.025 | 0.999 | 0.005 | Good |
| Narrow | Memory-episodic-delayed | 5 | 0.040 | 0.991 | 0.012 | Good |
| Narrow | Memory-episodic-recognition | 2 | 0.000 | 1.000 | 0.000 | Perfect |
| Narrow | EF-reasoning | 4 | 0.060 | 0.993 | 0.006 | Adequate |
| Narrow | EF-attention/speed | 5 | 0.056 | 0.992 | 0.020 | Adequate |
| Broad | Language/fluency | 7 | 0.045 | 0.978 | 0.027 | Good |
| Broad | Visuospatial | 2 | 0.000 | 1.000 | 0.000 | Perfect |
| Multiple-domain model | | | | | | |
| | Full hierarchical CFA | 40 | 0.048 | 0.918 | 0.055 | Adequate |

Abbreviations: CFA, confirmatory factor analysis; CFI, comparative fit index; EF, executive functioning; LASI-DAD, Longitudinal Aging Study in India–Diagnostic Assessment of Dementia; RMSEA, root mean square error of approximation; SRMR, standardized root mean squared residual.

Supplementary Table S1 lists item parameters (loadings and thresholds) for each item from each single domain CFA. All standardized factor loadings were in an acceptable range (0.4–0.9 on a 0 to 1 scale). As evidence of unidimensionality, no standardized loadings were exceptionally higher than others in a given domain.

We estimated a full hierarchical model, including broad domains for memory and executive functioning that were second-order factors as well as the general factor. The overall fit of this model was adequate (Table 2). Standardized loadings are shown in Figure 1: all loadings were relatively uniform, suggesting the domains were not dominated by a particular test. Factor loadings for the general factor with the broad domains were all high (between r = 0.84 and r = 0.99), suggesting a high degree of shared covariance among the domains. In addition to the within-domain residual correlations mentioned earlier, fit for the hierarchical multiple domain factor analysis was further improved by including a residual correlation between immediate and delayed subtests of the Brave Man story, immediate and delayed subtests for logical memory, and immediate and delayed 10-word recall.

We evaluated levels of measurement invariance of the hierarchical CFA by language. Across data sets, absolute fit was acceptable for multiple-group models conforming to configural (RMSEA = 0.054; CFI = 0.912; SRMR = 0.077), metric (RMSEA = 0.039; CFI = 0.912; SRMR = 0.110), and scalar invariance (RMSEA = 0.049; CFI = 0.919; SRMR = 0.101). These absolute fit statistics suggest the CFA provides an adequate, and comparable, fit for the structure of cognitive domains in each language. Relative fit statistics, reflecting differences in relative distributions of tests by language, did not appear to differ significantly between configural and scalar invariance (χ² = 505.5; df = 531; P = .79).
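As a rough check on the relative fit comparison, the upper-tail probability of the reported statistic can be approximated with the standard library alone. This sketch assumes the reported statistic is referred to an ordinary chi-square distribution with the reported degrees of freedom (the article does not spell out the computation), and it uses the Wilson-Hilferty normal approximation rather than an exact routine; the function name is hypothetical.

```python
import math


def chi2_sf_wilson_hilferty(x: float, df: int) -> float:
    """Approximate P(X > x) for X ~ chi-square(df).

    Uses the Wilson-Hilferty cube-root transformation, under which
    (X / df) ** (1/3) is approximately normal with mean 1 - 2/(9 df)
    and variance 2/(9 df). The approximation is accurate for large df.
    """
    if df <= 0 or x < 0:
        raise ValueError("require df > 0 and x >= 0")
    h = 2.0 / (9.0 * df)
    z = ((x / df) ** (1.0 / 3.0) - (1.0 - h)) / math.sqrt(h)
    # Upper tail of the standard normal distribution at z.
    return 0.5 * math.erfc(z / math.sqrt(2.0))


# Statistic and degrees of freedom as reported in the text.
p = chi2_sf_wilson_hilferty(505.5, 531)
print(f"approximate P = {p:.2f}")
```

The result should land close to the reported P = .79 and well above conventional significance thresholds; small differences can arise from rounding of the reported statistic.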

When we reestimated the domain-specific and final models among N = 733 participants known to not have dementia, fit statistics for unidimensional and hierarchical models did not change considerably (Supplementary Table S2).

DISCUSSION

We evaluated the factor structure of the LASI-DAD neuropsychological test battery, and demonstrated configural measurement invariance with a CHC-inspired measurement model that is popular in Western models of human cognition. Clinically and for research purposes, the broad domains of memory, executive functioning, orientation, visuospatial ability, and language/fluency may be used to rank people and to classify cognitive impairment in future work.

The correlations among variables in the HCAP battery, adapted for LASI-DAD, are well described by five broad cognitive domains: orientation, executive functioning, language/fluency, memory, and visuospatial ability. Intercorrelations among these broad domains are represented by a general factor. Executive functioning items were further divided into narrow subdomains of reasoning and attention/speed. Memory items were further divided into narrow subdomains of immediate, delayed, and recognition memory. Although our final model included these broad and narrow domains, other organizations are possible. For example, we might drop narrow domains and instead estimate a factor analysis with general and broad factors only; however, such a model would be inconsistent with CHC theory, which we sought to test. Conversely, there could be other narrow domains, such as semantic and nonsemantic language subdomains of language/fluency; when we evaluated this in LASI-DAD (results available on request), the correlation between these two factors was above r = 0.97, which suggests those domains are indistinguishable. That said, other narrow domains are possible.

Estimation of domains for cognitive functioning, rather than a general factor, is a choice motivated not just by statistical considerations but also scientific and clinical needs. Clinicians suspecting deficits in certain cognitive abilities may be inclined to evaluate tests of those particular abilities, rather than assessing general mental status through an examination such as the Mini-Mental State Examination (MMSE)40 or the Hindi-MMSE.29 Assessment of function across multiple domains can also reveal impairment on one or multiple cognitive domains, which can be useful clinically and in research (e.g., Crane and colleagues41 evaluated relative impairments in several cognitive domains and were able to isolate genetic and neuropathological risk factors specific to subtypes of dementia patients).

Advantages of this study include a large, well-characterized sample of Indian older adults with detailed neuropsychological testing that was designed to include instruments from HCAP that are comparable to the HRS. There are some study limitations as well. We did not include tests of crystallized cognitive abilities, and so the CHC model tested here is not as complete a representation of cognitive abilities as it could be. An additional limitation is that the tests selected to represent certain domains are often imperfect measures of those domains. For example, with respect to what we call reasoning, clock drawing also taps planning and visuospatial ability, whereas the go/no-go test also measures response control and sustained attention. In the particular case of this narrow domain, as for all domains in the final model presented in this study, tests were assigned a priori based on substantive theory by neuropsychologists and the final solution was validated by the uniformity of standardized loadings, as indicated in Figure 1. A final limitation of this study is that education is profoundly related to later-life cognitive outcomes.42–44 Educational systems are rapidly changing in India; although we noted that 47% of participants in this cross-sectional sample had no formal education, we also noted that amount of education was inversely related to age. The World Bank estimates that 75% of children aged 7 to 10 years were literate in 2011.45 Thus, as younger cohorts that have more education, different occupational exposures, and other early life determinants of later-life cognition age into older adulthood, findings from this study will need to be updated.

In conclusion, we found that a CHC model of cognitive abilities adequately characterizes the factor structure of the LASI-DAD adaptation of the HCAP battery, an extensive battery of tests that is being deployed in several other studies around the world. Although this model is more complex than many in neuropsychology because of the incorporation of broad and narrow domains, it adequately represents the factor structure in LASI-DAD and has potential future research uses.

Supplementary Material

Supplementary Table S1: Item Parameters from Unidimensional CFA Models: Results from LASI-DAD (N = 3,224)

Supplementary Table S2: Fit Statistics for Unidimensional and Hierarchical Confirmatory Factor Analysis Models Among Participants Without Dementia: Results from LASI-DAD (N = 733)

ACKNOWLEDGMENTS

Financial Disclosure: This work was supported by the National Institute on Aging (K01-AG050699 to Gross and R01-AG051125 to Jinkook Lee).

Sponsor’s Role: Sponsors had no direct role in the design, methods, subject recruitment, data collection, analysis, or preparation of this article.

Footnotes

Conflict of Interest: The authors have no conflicts, whether personal, financial, or otherwise.

SUPPORTING INFORMATION

Additional Supporting Information may be found in the online version of this article.

REFERENCES

- 1. Lezak MD, Howieson DB, Loring DW. Neuropsychological Assessment. New York, NY: Oxford University Press; 2004.

- 2. Delis DC, Jacobson M, Bondi MW, Hamilton JM, Salmon DP. The myth of testing construct validity using factor analysis or correlations with normal or mixed clinical populations: lessons from memory assessment. J Int Neuropsychol Soc. 2003;9(6):936–946.

- 3. Crooks VC, Petitti DB, Robins SB, Buckwalter JG. Cognitive domains associated with performance on the telephone interview for cognitive status-modified. Am J Alzheimers Dis Other Demen. 2006;21(1):45–53.

- 4. Kanne SM, Balota DA, Storandt M, McKeel DW Jr, Morris JC. Relating anatomy to function in Alzheimer’s disease: neuropsychological profiles predict regional neuropathology 5 years later. Neurology. 1998;50(4):979–985.

- 5. Ritchie SJ, Tucker-Drob EM, Cox SR, et al. Predictors of ageing-related decline across multiple cognitive functions. Intelligence. 2016;59:115–126.

- 6. Tucker-Drob EM. Global and domain-specific changes in cognition throughout adulthood. Dev Psychol. 2011;47(2):331–343.

- 7. Ruff RM, Light RH, Parker SB, Levin HS. The psychological construct of word fluency. Brain Lang. 1997;57(3):394–405.

- 8. Widaman KF, Reise SP. Exploring the measurement invariance of psychological instruments: applications in the substance use domain. In: Bryant KJ, Windle M, West SG, eds. The Science of Prevention: Methodological Advances from Alcohol and Substance Abuse Research. Washington, DC: American Psychological Association; 1997:281–324.

- 9. McGrew KS. CHC theory and the human cognitive abilities project: standing on the shoulders of the giants of psychometric intelligence research. Intelligence. 2009;37(1):1–10.

- 10. Cattell RB. Some theoretical issues in adult intelligence testing. Psychol Bull. 1941;38:592.

- 11. Horn JL. Fluid and crystallized intelligence: a factor analytic and developmental study of the structure among primary mental abilities [unpublished doctoral dissertation]. Champaign: University of Illinois; 1965.

- 12. Keith TZ, Reynolds MR. Cattell–Horn–Carroll abilities and cognitive tests: what we’ve learned from 20 years of research. Psychol Sch. 2010;47(7):635–650.

- 13. Carroll JB. Human Cognitive Abilities: A Survey of Factor-Analytic Studies. Cambridge, UK: Cambridge University Press; 1993.

- 14. Schneider WJ, Flanagan DP. The relationship between theories of intelligence and intelligence tests. In: Goldstein S, Princiotta D, Naglieri JA, eds. Handbook of Intelligence: Evolutionary Theory, Historical Perspective, and Current Concepts. New York, NY: Springer; 2015:317–340.

- 15. Jewsbury PA, Bowden SC, Strauss ME. Integrating the switching, inhibition, and updating model of executive function with the Cattell-Horn-Carroll model. J Exp Psychol Gen. 2016;145(2):220–245.

- 16. Siedlecki KL, Manly JJ, Brickman AM, Schupf N, Tang MX, Stern Y. Do neuropsychological tests have the same meaning in Spanish speakers as they do in English speakers? Neuropsychology. 2010;24(3):402–411.

- 17. Porrselvi AP, Shankar V. Status of cognitive testing of adults in India. Ann Indian Acad Neurol. 2017;20(4):334–340.

- 18. Balachandar R, Tripathi R, Bharath S, Kumar K. Classic tower of Hanoi, planning skills, and the Indian elderly. East Asian Arch Psychiatry. 2015;25:108–114.

- 19. Arokiasamy P, Bloom DE, Lee J, Feeney K, Ozolins M. Longitudinal aging study in India: vision, design, implementation and preliminary findings. In: Smith JP, Majmundar M, eds. Aging in Asia: Findings from New and Emerging Data Initiatives. Washington, DC: National Academies Press; 2012.

- 20. Weir DR, Langa KM, Ryan LH. 2016. Harmonized cognitive assessment protocol (HCAP): study protocol summary. http://hrsonline.isr.umich.edu/index.php?p=shoavail&iyear=ZU. Accessed May 20, 2020.

- 21. Lee J, Banerjee J, Khobragade PY, Angrisani M, Dey AB. LASI-DAD study: a protocol for a prospective cohort study of late-life cognition and dementia in India. BMJ Open. 2019;9(7):e030300.

- 22. Strauss ME, Fritsch T. Factor structure of the CERAD neuropsychological battery. J Int Neuropsychol Soc. 2004;10(4):559–565.

- 23. Wechsler D. Wechsler Memory Scale—Fourth Edition (WMS–IV) Technical and Interpretive Manual. San Antonio, TX: Pearson; 2009.

- 24. Rosen WG, Mohs RC, Davis KL. A new rating scale for Alzheimer’s disease. Am J Psychiatry. 1984;141(11):1356–1364.

- 25. Yuspeh RL, Vanderploeg RD, Kershaw AJ. CERAD praxis memory and recognition in relation to other measures of memory. Clin Neuropsychol. 1998;12:468–474.

- 26. Raven J. The Raven’s progressive matrices: changes and stability over culture and time. Cogn Psychol. 2000;41:1–48.

- 27. Agrell B, Dehlin O. The clock-drawing test. Age Ageing. 1998;27:399–403.

- 28. Gordon B, Caramazza A. Lexical decision for open- and closed-class words: failure to replicate differential frequency sensitivity. Brain Lang. 1982;15:143–160.

- 29. Ganguli M, Ratcliff G, Chandra V, et al. A Hindi version of the MMSE: the development of a cognitive screening instrument for a largely illiterate rural elderly population in India. Int J Geriatr Psychiatry. 1995;10(5):367–377.

- 30. Lowery N, Ragland D, Gur RC, Gur RE, Moberg PJ. Normative data for the symbol cancellation test in young healthy adults. Appl Neuropsychol. 2004;11(4):216–219.

- 31. Park LQ, Gross AL, Pa J, et al. Confirmatory factor analysis of the ADNI neuropsychological battery. Brain Imaging Behav. 2012;6(4):528–539.

- 32. Benton A, Hamsher K. Multilingual Aphasia Examination. Iowa City: University of Iowa; 1976.

- 33. Hall KS, Gao S, Emsley CL, Ogunniyi AO, Morgan O, Hendrie HC. Community screening interview for dementia (CSI ‘D’); performance in five disparate study sites. Int J Geriatr Psychiatry. 2000;15(6):521–531.

- 34. Brandt J, Spencer M, Folstein M. The telephone interview for cognitive status. Neuropsychiatry Neuropsychol Behav Neurol. 1988;1(2):111–117.

- 35. Hu L, Bentler PM. Cutoff criteria for fit indices in covariance structure analysis: conventional versus new alternatives. Struct Equ Modeling. 1999;6:1–55.

- 36. Kenny DA, Kaniskan B, McCoach B. The performance of RMSEA in models with small degrees of freedom. Sociol Methods Res. 2015;44(3):486–507.

- 37. Kline R. Principles and Practice of Structural Equation Modeling. 4th ed. New York, NY: Guilford Press; 2016.

- 38. Muthén LK, Muthén BO. Mplus User’s Guide. 8th ed. Los Angeles, CA: Muthén & Muthén; 2017. https://statmodel.com. Accessed July 17, 2020.

- 39. Gross AL, Mungas DM, Crane PK, et al. Effects of education and race on cognitive decline: an integrative study of generalizability versus study-specific results. Psychol Aging. 2015;30(4):863–880.

- 40. Folstein MF, Folstein SE, McHugh PR. “Mini–mental state”: a practical method for grading the cognitive state of patients for the clinician. J Psychiatr Res. 1975;12:189–198.

- 41. Crane PK, Trittschuh E, Mukherjee S, et al. Incidence of cognitively defined late-onset Alzheimer’s dementia subgroups from a prospective cohort study. Alzheimers Dement. 2017;13(12):1307–1316.

- 42. Angel L, Fay S, Bouazzaoui B, Baudouin A, Isingrini M. Protective role of educational level on episodic memory aging: an event-related potential study. Brain Cogn. 2010;74(3):312–323.

- 43. Clouston SA, Kuh D, Herd P, Elliott J, Richards M, Hofer SM. Benefits of educational attainment on adult fluid cognition: international evidence from three birth cohorts. Int J Epidemiol. 2012;41(6):1729–1736.

- 44. Dekhtyar S, Wang HX, Fratiglioni L, Herlitz A. Childhood school performance, education and occupational complexity: a life-course study of dementia in the Kungsholmen project. Int J Epidemiol. 2016;45(4):1207–1215.

- 45. World Bank. Education in India. https://www.worldbank.org/en/news/feature/2011/09/20/education-in-india. Accessed March 20, 2020.
