Abstract

This paper adopts the information and fractional calculus tools for studying the dynamics of a national soccer league. A soccer league season is treated as a complex system (CS) with a state observable at discrete time instants, that is, at the time of rounds. The CS state, consisting of the goals scored by the teams, is processed by means of different tools, namely entropy, mutual information and Jensen–Shannon divergence. The CS behavior is visualized in 3-D maps generated by multidimensional scaling. The points on the maps represent rounds and their relative positioning allows for a direct interpretation of the results.

Keywords: entropy, mutual information, Jensen–Shannon divergence, fractional calculus, multidimensional scaling, complex systems

1. Introduction

Soccer (also known as association football, or football) is one of the most popular team sports around the world. It involves more than 250 million players in about 200 countries [1,2]. Five of the most prestigious national soccer leagues are located in Europe, namely the English “Premier League”, the Spanish “La Liga”, the German “Bundesliga”, the Italian “Serie A”, and the French “Ligue 1”. The total annual revenue of these leagues amounts to nearly 17 billion Euros. The game is played by two teams, composed of 11 players each, on a rectangular field with a goal placed at each end. The objective of the game is to score by getting a spherical ball into the opposing goal. The 10 field players can maneuver the ball using any part of the body except hands and arms, while the goalkeeper is allowed to touch the ball with the whole body, as long as he/she stays in his/her penalty area. Otherwise, the rules of the field players apply. The match has two periods of 45 min each. The winning team is the one that scores more goals by the end of the match.

For most European leagues, one season includes two parts, so that the home and away teams swap places between the first and second halves. All teams start with zero points and, at every round, each {victory, draw, defeat} is worth {3, 1, 0} points. By the end of the last round, the team that has accumulated the most points is crowned champion.

A soccer league can be seen as a complex system (CS) constituted by multiple agents that interact at different scales in time and space. For example, at the match time scale, we observe interactions between players, coaches, referees, supporters, and environment, among others, that lead to a certain team performance during the match [1,3,4,5,6,7,8,9,10,11,12]. On the other hand, at the season time scale, we observe interactions between teams across several matches, while the teams' behavior evolves subject to transfers of players and coaches, injuries, suspensions, physical and mental stress, administrative decisions, and others [13,14,15]. Therefore, a plethora of elements gives rise to collective dynamics, with time-space patterns that can be analyzed by the mathematical and computational tools adopted for tackling dynamical systems [16,17].

Entropy-based techniques have been successfully applied in the study of many problems in sciences and engineering [18,19,20,21]. Divergence measures are tightly connected to entropy and assume a key role in theoretical and applied statistical inference and data processing problems, namely estimation, classification, detection, recognition, compression, indexation, diagnosis, and others [22]. We can mention the Kullback–Leibler [23], Hellinger [24], Csiszár [22], Rényi [25], Jensen–Rényi [26,27], Jensen–Shannon [28] formulations, among others [22].

Fractional Calculus (FC) generalizes the classical differential operations to non-integer orders [29,30,31,32,33]. The area of FC dates back to the year 1695, in the follow-up of several letters between l’Hôpital and Leibniz about the meaning and apparent paradox of the nth-order derivative $\frac{d^n y}{dx^n}$, for $n = \frac{1}{2}$. However, only in recent decades was FC recognized to play an important role in the modeling and control of many physical phenomena. FC emerged as a key tool in the area of dynamical systems with complex behavior and non-locality. Nowadays, the FC concepts are applied in different scientific fields, namely mathematics, physics, biology, finance and geophysics [34,35,36,37,38,39,40,41,42]. Indeed, fractional derivatives capture memory effects [43] and hereditary properties, providing a more insightful description of the phenomena [31,44,45,46].

In this paper, we adopt the information and FC theories for studying the evolution of a national soccer league season, while unveiling possible patterns in successive seasons. A soccer league season is treated as a CS with a state observable at discrete time instants, that is, at the time of rounds. The CS state consists of the goals scored by the teams, which is processed by means of different tools, namely entropy, mutual information and Jensen–Shannon divergence. The CS behavior is visualized using multidimensional scaling (MDS). The MDS generates maps of points in the 3-D space that represent the CS dynamics. The relative positioning of the points and the emerging patterns allow for a direct interpretation of the CS behavior. Therefore, with this scheme we can investigate the dynamics along each season, while embedding, indirectly, different time scales and entities at distinct levels of detail. In other words, we are not tackling a specific player, team, match, or entity, but the behavior of the CS that involves all aspects in a macro scale.

Bearing these ideas in mind, this paper is organized as follows. Section 2 describes the experimental dataset and includes a summary of the main characteristics and rules of each league. Section 3 introduces the main mathematical tools for processing the data. Section 4 analyses two top European soccer leagues. Finally, Section 5 outlines the main conclusions.

2. Dataset and Description of the Leagues

Data for worldwide soccer is available at http://www.worldfootball.net/. The database contains information about national leagues and international competitions. For the national leagues, the results of the matches are organized on a per season basis. For each match we know the names of the home and away teams, the goals scored, the points gathered, and the date of the match, along with other information.

We consider 23 seasons, from 1995/1996 up to 2017/2018, of two top national European leagues, namely the English “Premier League” and the Spanish “La Liga”. The “Premier League”, or “Premiership”, was established in 1992 as the most important league of English association football. It involves 20 teams and adopts a system of promotion and relegation with the “Championship”, meaning that the three lowest placed teams of the “Premier League” are relegated to the “Championship” and the three best placed teams of the “Championship” are promoted to the “Premier League”. The “Premier League” is now the most popular football league in the world, and the one that registers the highest stadium occupancy among all soccer leagues in Europe. “La Liga” started in 1929 as the top division of the Spanish soccer league system. It has been considered by UEFA the strongest league in Europe in recent years. Since 1997, “La Liga” has comprised 20 teams. At the end of every season, the three lowest placed teams are relegated to the “Second Division”, and are replaced by the top two teams of this league plus the winner of a play-off competition.

3. Mathematical Fundamentals

This section introduces the main mathematical tools adopted for data processing, namely entropy, mutual information, Jensen–Shannon divergence and MDS.

3.1. Information Measures

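Let us consider a discrete 1-D random variable $X$ with possible values in $\{x_1, x_2, \ldots, x_n\}$ and probability mass function $P(X)$. An event $x_i$ with probability of occurrence $p_i = P(X = x_i)$, $i = 1, \ldots, n$, has information content, $I$, given by:

$$I(x_i) = -\ln p_i \tag{1}$$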

The expected value of I is the Shannon entropy:

$$S(X) = E\left[-\ln P(X)\right] = -\sum_{i=1}^{n} p_i \ln p_i \tag{2}$$

where $E[\cdot]$ denotes the expected value operator. Expression (2) satisfies the four Khinchin axioms [47,48] and measures the uncertainty in $X$.
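As a minimal illustration of the Shannon entropy, the following Python sketch evaluates it (in nats) for a probability mass function stored as a plain list. The function name `shannon_entropy` and the two example distributions are assumptions made for illustration only and are not part of the original study.

```python
import math

def shannon_entropy(pmf):
    """Shannon entropy (in nats) of a discrete pmf given as a sequence of probabilities."""
    if abs(sum(pmf) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    # Terms with p = 0 are skipped, following the usual convention 0 ln 0 = 0.
    return -sum(p * math.log(p) for p in pmf if p > 0.0)

# Hypothetical distributions: a uniform one and a skewed one over four outcomes.
uniform = [0.25, 0.25, 0.25, 0.25]
skewed = [0.7, 0.1, 0.1, 0.1]

print(shannon_entropy(uniform))
print(shannon_entropy(skewed))
```

The uniform distribution attains the largest entropy among distributions on four outcomes, which is why entropy is read as a measure of uncertainty.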

The joint entropy quantifies the shape of the mass function associated with a set of random variables [49]. The joint Shannon entropy of a pair of discrete random variables $(X, Y)$ is defined as:

$$S(X, Y) = -\sum_{x} \sum_{y} P(x, y) \ln P(x, y) \tag{3}$$

where $P(x, y)$ denotes the joint probability mass function. If $X$ and $Y$ are independent, then their joint entropy is the sum of the individual entropies, meaning that $S(X, Y) = S(X) + S(Y)$.

The mutual information is also an important concept in information theory. The mutual information, $I(X;Y)$, quantifies the information shared by the two random variables. Loosely speaking, $I(X;Y)$ measures the average amount of information that one random variable conveys about the other. Formally, the mutual information of the random variables $X$ and $Y$, with marginal probability mass functions $P(x)$ and $P(y)$, respectively, is given by [50,51]:

$$I(X;Y) = \sum_{x} \sum_{y} P(x, y) \ln \frac{P(x, y)}{P(x)\,P(y)} \tag{4}$$

where $\ln \frac{P(x, y)}{P(x)\,P(y)}$ denotes the pointwise mutual information.

When the random variables $X$ and $Y$ are independent, there is no shared information between them, and the mutual information is $I(X;Y) = 0$.
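The joint entropy and the mutual information can be checked numerically on small examples. The sketch below assumes that the joint probability mass function is stored as a list of rows. The helper names and the two toy distributions (`independent`, `coupled`) are hypothetical.

```python
import math

def entropy(probs):
    """Shannon entropy (nats) of a flat sequence of probabilities."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)

def marginals(joint):
    """Row (X) and column (Y) marginals of a 2-D pmf stored as a list of rows."""
    px = [sum(row) for row in joint]
    py = [sum(col) for col in zip(*joint)]
    return px, py

def joint_entropy(joint):
    """Joint entropy: the entropy of all cells of the 2-D pmf."""
    return entropy([p for row in joint for p in row])

def mutual_information(joint):
    """Average of the pointwise mutual information over the joint pmf."""
    px, py = marginals(joint)
    total = 0.0
    for i, row in enumerate(joint):
        for j, pxy in enumerate(row):
            if pxy > 0.0:
                total += pxy * math.log(pxy / (px[i] * py[j]))
    return total

# Independent variables: the joint pmf is the product of the marginals.
independent = [[0.3 * 0.6, 0.3 * 0.4],
               [0.7 * 0.6, 0.7 * 0.4]]
# Fully coupled variables: knowing X determines Y.
coupled = [[0.5, 0.0],
           [0.0, 0.5]]

print(mutual_information(independent), joint_entropy(independent))
print(mutual_information(coupled), joint_entropy(coupled))
```

For the independent case the mutual information vanishes (up to rounding) and the joint entropy equals the sum of the marginal entropies, in line with the properties stated in the text.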

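The Jensen–Shannon divergence between the two probability mass functions $P$ and $Q$ is given by [52]:

$$JSD(P \parallel Q) = \frac{1}{2}\left[\sum_{i} p_i \ln p_i + \sum_{i} q_i \ln q_i\right] - \sum_{i} m_i \ln m_i \tag{5}$$

where $M = \frac{1}{2}(P + Q)$, that is, $m_i = \frac{1}{2}(p_i + q_i)$.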
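A short Python sketch of the Jensen–Shannon divergence follows, written in the entropy-difference form (sums of $p \ln p$ terms for the two distributions and for their midpoint mixture). The function name `jensen_shannon` and the example vectors are illustrative assumptions.

```python
import math

def jensen_shannon(p, q):
    """Jensen-Shannon divergence (nats) between two pmfs of equal length."""
    if len(p) != len(q):
        raise ValueError("pmfs must have the same length")

    def plogp(values):
        # Sum of v ln v, skipping zeros (0 ln 0 = 0 by convention).
        return sum(v * math.log(v) for v in values if v > 0.0)

    m = [0.5 * (pi + qi) for pi, qi in zip(p, q)]  # midpoint mixture M
    return 0.5 * (plogp(p) + plogp(q)) - plogp(m)

# Hypothetical distributions over three outcomes.
p = [0.6, 0.3, 0.1]
q = [0.2, 0.3, 0.5]

print(jensen_shannon(p, q))
print(jensen_shannon(p, p))
```

The divergence is symmetric, vanishes for identical distributions, and is bounded above by $\ln 2$ when natural logarithms are used, which makes it convenient as a dissimilarity measure.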

3.2. A Fractional Calculus Approach to Information Measures

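In the scope of the Shannon approach, we note that the information, $I(p_i) = -\ln p_i$, is a function between the cases $\mathcal{I}^{1} I(p_i) = p_i (1 - \ln p_i)$ and $D^{1} I(p_i) = -\frac{1}{p_i}$, where $\mathcal{I}$ and $D$ denote the integral and derivative operators, respectively. The interpretation of these expressions in the perspective of FC led to rewriting the information and entropy of order $\alpha \in \mathbb{R}$ as follows [53,54]:

$$I_\alpha(p_i) = D^{\alpha} I(p_i) = -\frac{p_i^{-\alpha}}{\Gamma(\alpha + 1)} \left[\ln p_i + \psi(1) - \psi(1 - \alpha)\right] \tag{6}$$

$$S_\alpha(X) = \sum_{i} \left\{-\frac{p_i^{-\alpha}}{\Gamma(\alpha + 1)} \left[\ln p_i + \psi(1) - \psi(1 - \alpha)\right]\right\} p_i \tag{7}$$

where $\Gamma(\cdot)$ and $\psi(\cdot)$ represent the gamma and digamma functions, respectively.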

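Similarly, for the pair of random variables $X$ and $Y$ the joint fractional entropy of order $\alpha$ can be written as:

$$S_\alpha(X, Y) = \sum_{x} \sum_{y} \left\{-\frac{P(x, y)^{-\alpha}}{\Gamma(\alpha + 1)} \left[\ln P(x, y) + \psi(1) - \psi(1 - \alpha)\right]\right\} P(x, y) \tag{8}$$

Expressions (6)–(8) lead to the Shannon information and entropies when $\alpha = 0$.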

The fractional mutual information results in:

| (9) |

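Combining Equations (5) and (6) leads to the fractional (generalized) Jensen–Shannon divergence:

$$JSD_\alpha(P \parallel Q) = \frac{1}{2} \sum_{i} p_i \left\{\frac{p_i^{-\alpha}}{\Gamma(\alpha + 1)} \left[\ln p_i + \psi(1) - \psi(1 - \alpha)\right]\right\} + \frac{1}{2} \sum_{i} q_i \left\{\frac{q_i^{-\alpha}}{\Gamma(\alpha + 1)} \left[\ln q_i + \psi(1) - \psi(1 - \alpha)\right]\right\} - \sum_{i} m_i \left\{\frac{m_i^{-\alpha}}{\Gamma(\alpha + 1)} \left[\ln m_i + \psi(1) - \psi(1 - \alpha)\right]\right\} \tag{10}$$

where $m_i = \frac{1}{2}(p_i + q_i)$.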
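The fractional-order measures can be sketched in a few lines of Python. The code below assumes the fractional information $-\frac{p^{-\alpha}}{\Gamma(\alpha+1)}[\ln p + \psi(1) - \psi(1-\alpha)]$, takes the fractional entropy as its expected value, and obtains the fractional Jensen–Shannon divergence by replacing the Shannon entropies of $P$, $Q$ and their midpoint mixture with fractional ones. The digamma routine is a simple stand-in written for this example (recurrence plus asymptotic series), and the restriction $-1 < \alpha < 1$ is an assumption of the sketch, not a statement about the original method.

```python
import math

def digamma(x):
    """Digamma function psi(x) for x > 0, via recurrence and an asymptotic series."""
    if x <= 0.0:
        raise ValueError("this sketch only supports x > 0")
    shift = 0.0
    # psi(x) = psi(x + 1) - 1/x moves the argument to where the series is accurate.
    while x < 10.0:
        shift -= 1.0 / x
        x += 1.0
    inv2 = 1.0 / (x * x)
    series = (math.log(x) - 0.5 / x
              - inv2 * (1.0 / 12.0 - inv2 * (1.0 / 120.0 - inv2 / 252.0)))
    return shift + series

def fractional_information(p, alpha):
    """Fractional information of order alpha for a probability p > 0."""
    if not -1.0 < alpha < 1.0:
        raise ValueError("this sketch assumes -1 < alpha < 1")
    bracket = math.log(p) + digamma(1.0) - digamma(1.0 - alpha)
    return -(p ** -alpha) / math.gamma(alpha + 1.0) * bracket

def fractional_entropy(pmf, alpha):
    """Expected value of the fractional information (zero-probability terms skipped)."""
    return sum(p * fractional_information(p, alpha) for p in pmf if p > 0.0)

def fractional_jsd(p, q, alpha):
    """Fractional Jensen-Shannon divergence built from fractional entropies."""
    m = [0.5 * (pi + qi) for pi, qi in zip(p, q)]
    return fractional_entropy(m, alpha) - 0.5 * (
        fractional_entropy(p, alpha) + fractional_entropy(q, alpha))

# Hypothetical distributions and order.
p = [0.6, 0.3, 0.1]
q = [0.2, 0.3, 0.5]
print(fractional_entropy(p, 0.0), fractional_entropy(p, 0.5))
print(fractional_jsd(p, q, 0.0), fractional_jsd(p, q, 0.5))
```

Setting `alpha = 0.0` recovers the Shannon entropy and the classical Jensen–Shannon divergence, which is a convenient sanity check for any implementation of the fractional measures.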

3.3. Multidimensional Scaling

MDS is a computational technique for clustering and visualizing data [55]. In a first phase, given $W$ items in a $c$-dim space and a measure of dissimilarity, we calculate a $W \times W$ symmetric matrix, $\Delta = [\delta_{ij}]$, $i, j = 1, \ldots, W$, of item to item dissimilarities. The matrix $\Delta$ represents the input of the MDS numerical scheme. The MDS rationale is to assign points for representing items in a $d$-dim space, with $d < c$, and to reproduce the measured dissimilarities, $\delta_{ij}$. In a second phase, the MDS evaluates different configurations for optimizing some fitness function, arriving at a set of point coordinates (and, therefore, at a symmetric matrix $\hat{\Delta} = [\hat{\delta}_{ij}]$ of distances that represents the reproduced dissimilarities) that best approximates the original $\Delta$. A fitness function used often is the raw stress:

$$\mathcal{S} = \sum_{i<j} \left[\hat{\delta}_{ij} - f(\delta_{ij})\right]^2 \tag{11}$$

where $f(\cdot)$ indicates some type of transformation.
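To make the stress criterion concrete, the sketch below evaluates a raw stress for a candidate configuration of points. It assumes the common sum-of-squares form over all item pairs and, by default, an identity transformation. The function names and the triangle example are hypothetical, and an actual MDS solver would search for the configuration that minimizes this quantity.

```python
import math

def pairwise_distances(points):
    """Euclidean distance matrix of a list of coordinate tuples."""
    n = len(points)
    return [[math.dist(points[i], points[j]) for j in range(n)] for i in range(n)]

def raw_stress(dissimilarities, points, transform=lambda d: d):
    """Sum over pairs i < j of (reproduced distance - transformed dissimilarity)^2."""
    dist = pairwise_distances(points)
    n = len(points)
    return sum((dist[i][j] - transform(dissimilarities[i][j])) ** 2
               for i in range(n) for j in range(i + 1, n))

# Three items whose dissimilarities are the sides of a 3-4-5 right triangle.
triangle = [(0.0, 0.0), (3.0, 0.0), (0.0, 4.0)]
delta = pairwise_distances(triangle)

# A 2-D configuration reproduces the dissimilarities exactly ...
print(raw_stress(delta, triangle))
# ... while a 1-D configuration cannot, so its stress is larger.
line = [(0.0,), (3.0,), (4.0,)]
print(raw_stress(delta, line))
```

Comparing the stress of configurations with different numbers of dimensions is the idea behind the stress plot used to choose the dimension of the map.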

The MDS interpretation is based on the patterns of points visualized in the generated map. Similar (dissimilar) objects are represented by points that are close to (far from) each other. Thus, the information retrieval is not based on the point coordinates, or the geometrical form of the clusters, and we can rotate, translate and magnify the map, since the distances remain identical. The MDS axes have neither units nor special meaning.

The quality of the MDS is evaluated by means of the stress and Shepard plots. The stress plot represents the locus of $\mathcal{S}$ versus the number of dimensions $d$. The stress plot is a monotonically decreasing chart and choosing the value of $d$ is a compromise between achieving low values of $\mathcal{S}$ and of $d$. Often we adopt the values $d = 2$ or $d = 3$, since they allow a direct visualization. The Shepard diagram, for a particular value of $d$, compares $\hat{\delta}_{ij}$ and $\delta_{ij}$. A narrow scatter around the 45 degree line represents a good fit between $\hat{\delta}_{ij}$ and $\delta_{ij}$.

4. Analysis and Visualization of Soccer Data

4.1. Analysis of Soccer Data Based on Information Measures

The top European leagues engage $M$ teams that play $R = 2(M - 1)$ rounds. Throughout one season, each team has $M - 1$ matches at home and $M - 1$ matches away from home. For the $m$th team, $m = 1, \ldots, M$, at round $r$, $r = 1, \ldots, R$, we define the variables:

—goals scored at home;

—goals scored at home of the adversary.

For a league season the data is processed by means of the following steps:

- define a dimensional matrix, $A$, initialized with void elements;

- at the end of round $r$ update $A$ such that and . Therefore, at each round, $r$, a total of cells of $A$ are updated with new information based on the results of the matches;

- normalize the matrix $A$ by calculating , where:

| (12) |

- interpret as a 2-D probability mass function, and calculate the information measures $S$, $S_\alpha$, $I$ and $I_\alpha$.
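The normalization and measurement steps above can be sketched as follows. This is only an illustration under stated assumptions: the matrix is taken to hold non-negative goal counts, void cells are represented by `None` and treated as zero, and normalization divides every cell by the sum of all cells. The 3-team matrix `A` is invented for the example and does not come from the dataset.

```python
import math

def normalize(matrix):
    """Turn a matrix of non-negative counts into a 2-D pmf; void cells (None) count as zero."""
    filled = [[0.0 if a is None else float(a) for a in row] for row in matrix]
    total = sum(sum(row) for row in filled)
    if total <= 0.0:
        raise ValueError("matrix has no positive entries to normalize")
    return [[a / total for a in row] for row in filled]

def joint_entropy(pmf):
    """Shannon entropy (nats) taken over all cells of the 2-D pmf."""
    return -sum(p * math.log(p) for row in pmf for p in row if p > 0.0)

# Hypothetical state after a few matches in a 3-team league (None = not yet played).
A = [[None, 2, 0],
     [1, None, None],
     [3, None, None]]

P = normalize(A)
print(P)
print(joint_entropy(P))
```

As more rounds are played, more cells of the matrix carry information, so the measures computed from the normalized matrix can be tracked round by round.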

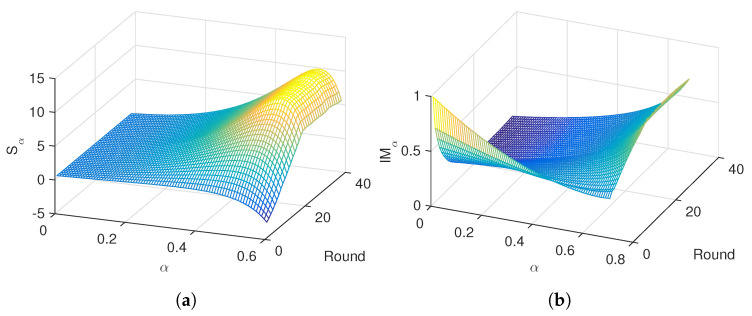

In the follow-up we apply the proposed numerical scheme. The order $\alpha$ was adjusted experimentally as a compromise between maximizing the sensitivity of the information measures and smoothing the transient at the beginning of the curves. Figure 1 illustrates the variation of $S_\alpha$ and $I_\alpha$ versus $\alpha$ and round, $r$, for the “Premier League” in season 2014/2015. We verify that close to the entropy, $S_\alpha$, is maximum, while for both $S_\alpha$ and mutual information, $I_\alpha$, the transients are smooth. For other seasons and leagues we obtain the same type of results.

Figure 1.

Evolution of (a) $S_\alpha$ and (b) $I_\alpha$ versus $\alpha$ and round, $r$, for the “Premier League” in season 2014/2015.

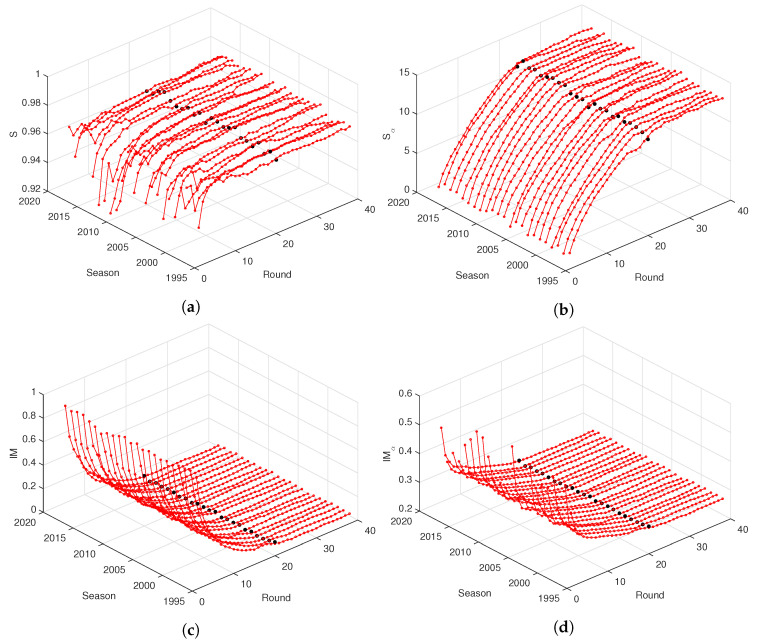

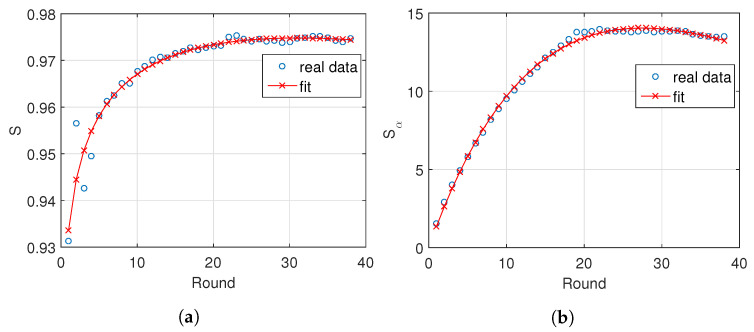

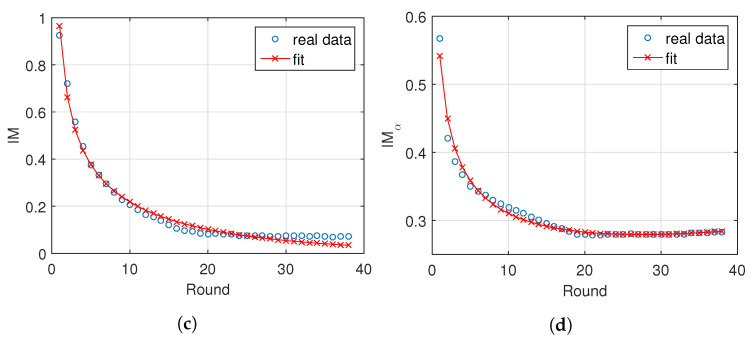

Figure 2 and Figure 3 depict the entropy, $S$ and $S_\alpha$, and mutual information, $I$ and $I_\alpha$, versus round, $r$, for the “Premier League” and “La Liga”, during the seasons from 1995/1996 up to 2017/2018. For other national leagues, the graphs are of the same type. We verify the emergence of similar patterns on the charts both for $S$ and $S_\alpha$, and for $I$ and $I_\alpha$, independently of the season. In fact, after an initial transient due to data scarcity and the period of adaptation of the teams, visible mainly in $S$, all information measures evolve smoothly with $r$. The entropy increases and the mutual information decreases in time, while we observe that the fractional measures are less noisy.

Figure 2.

The entropy, (a) $S$ and (b) $S_\alpha$, and mutual information, (c) $I$ and (d) $I_\alpha$, at the adopted order $\alpha$, versus round, $r$, for the “Premier League” during the seasons 1995/1996 up to 2017/2018. The black marks denote the half season, $r = 19$.

Figure 3.

The entropy, (a) $S$ and (b) $S_\alpha$, and mutual information, (c) $I$ and (d) $I_\alpha$, at the adopted order $\alpha$, versus round, $r$, for “La Liga” during the seasons 1995/1996 up to 2017/2018. The black marks denote the half season.

For each league and season the information measures $S$, $S_\alpha$, $I$ and $I_\alpha$ are approximated by the function:

| (13) |

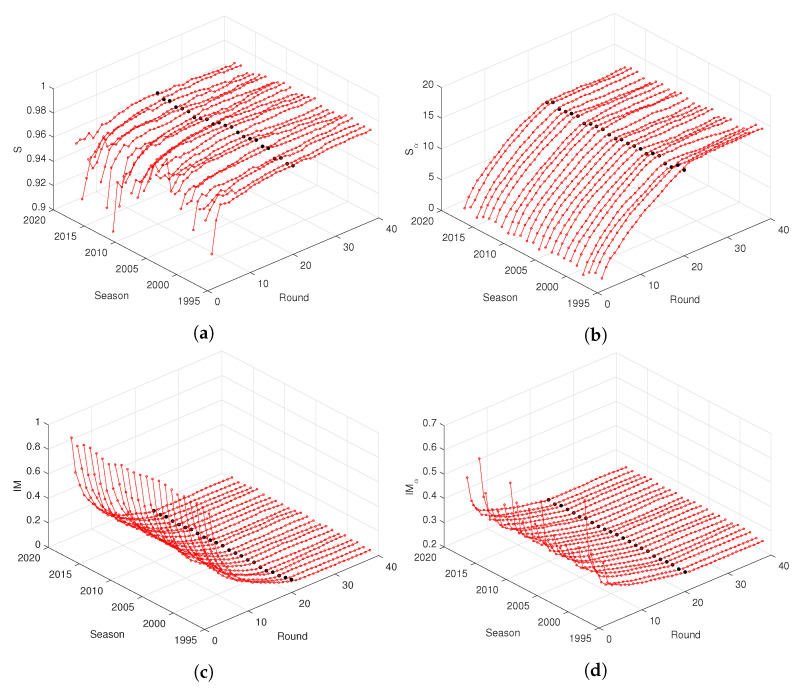

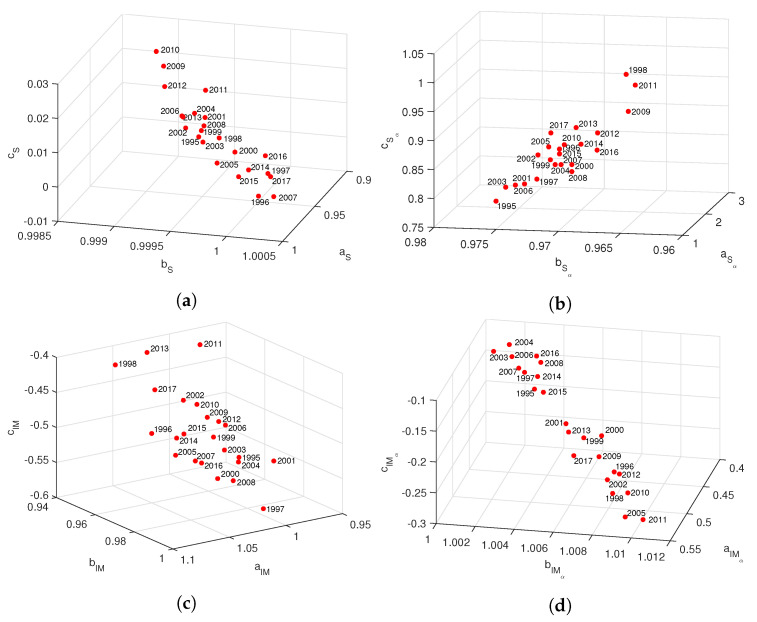

Several numerical experiments demonstrated that model (13) fits the data well with a reduced number of parameters. Figure 4 illustrates the fit of $S$, $S_\alpha$, $I$ and $I_\alpha$, at the adopted order $\alpha$, for the “Premier League” in season 2014/2015. Figure 5 depicts the loci of the parameters of model (13) for seasons 1995/1996 to 2017/2018, where we observe a strong correlation between the three parameters. On the other hand, comparing the time evolution we find a strong variation, due to the known volatility of the results.

Figure 4.

The fit of the information measures, (a) $S$, (b) $S_\alpha$, (c) $I$ and (d) $I_\alpha$, at the adopted order $\alpha$, for the “Premier League” in season 2014/2015.

Figure 5.

Loci of the parameters of model (13) for the information measures, (a) $S$, (b) $S_\alpha$, (c) $I$ and (d) $I_\alpha$, at the adopted order $\alpha$, for the “Premier League” in seasons 1995/1996 to 2017/2018.

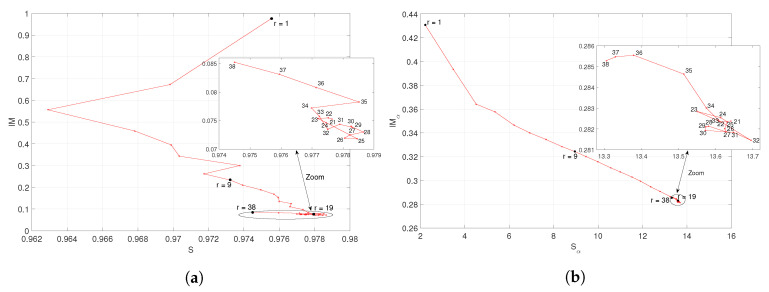

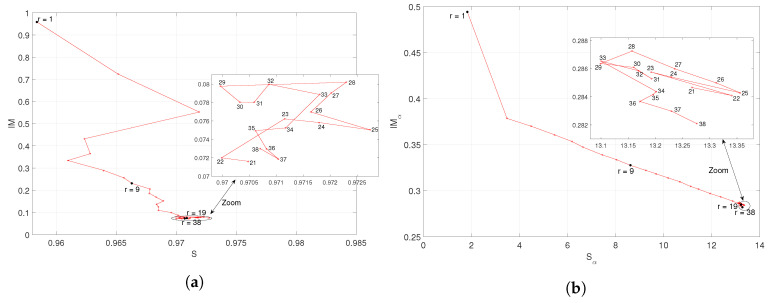

Inspired by the phase plane technique, widely used in the analysis of dynamical systems [42,56], we interpret the entropy and mutual information as phase variables of a soccer league season and we analyze the phase plane trajectories. Figure 6 and Figure 7 depict the loci of $I$ versus $S$ and $I_\alpha$ versus $S_\alpha$, respectively, for the “Premier League” and “La Liga” in season 2014/2015. For other seasons, the loci are of the same type. We note that after an initial transient, the loci converge smoothly towards a given region of the plane. In the final part, the trajectories unveil a complex behavior. We conjecture that such patterns are due to the proximity of the end of the season. In fact, a few rounds before the end of the season, we verify that some teams play with little motivation, since they have already been relegated to the lower division, or they have already achieved their objectives. Conversely, other teams compete with extra motivation, since they are close to achieving their main goal.

Figure 6.

Loci of (a) ($S$, $I$) and (b) ($S_\alpha$, $I_\alpha$), respectively, for the “Premier League” in season 2014/2015.

Figure 7.

Loci of (a) ($S$, $I$) and (b) ($S_\alpha$, $I_\alpha$), respectively, for “La Liga” in season 2014/2015.

4.2. Clustering and Visualization of Soccer Data Based on Information Measures

In this subsection we adopt the Jensen–Shannon divergence and the MDS technique to study the dynamics of a soccer league season. The MDS input are the dissimilarity matrices:

| (14) |

| (15) |
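A possible way to assemble such an MDS input is sketched below, under the assumption that each item is a round, that each round is summarized by a probability mass function, and that the dissimilarity between two rounds is their Jensen–Shannon divergence. The three short distributions in `rounds` are invented for illustration, and the fractional variant would follow the same pattern with the fractional divergence in place of `jsd`.

```python
import math

def jsd(p, q):
    """Jensen-Shannon divergence (nats) between two pmfs of equal length."""
    def plogp(values):
        return sum(v * math.log(v) for v in values if v > 0.0)
    m = [0.5 * (a + b) for a, b in zip(p, q)]
    return 0.5 * (plogp(p) + plogp(q)) - plogp(m)

def dissimilarity_matrix(pmfs):
    """Symmetric matrix of pairwise divergences with a zero diagonal."""
    n = len(pmfs)
    delta = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            delta[i][j] = delta[j][i] = jsd(pmfs[i], pmfs[j])
    return delta

# Hypothetical flattened pmfs for three consecutive rounds.
rounds = [
    [0.50, 0.30, 0.20, 0.00],
    [0.40, 0.30, 0.20, 0.10],
    [0.30, 0.30, 0.20, 0.20],
]

delta = dissimilarity_matrix(rounds)
for row in delta:
    print(row)
```

In this toy example the first and third rounds are farther apart than the first and second, so an MDS map built from `delta` would place the points in temporal order.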

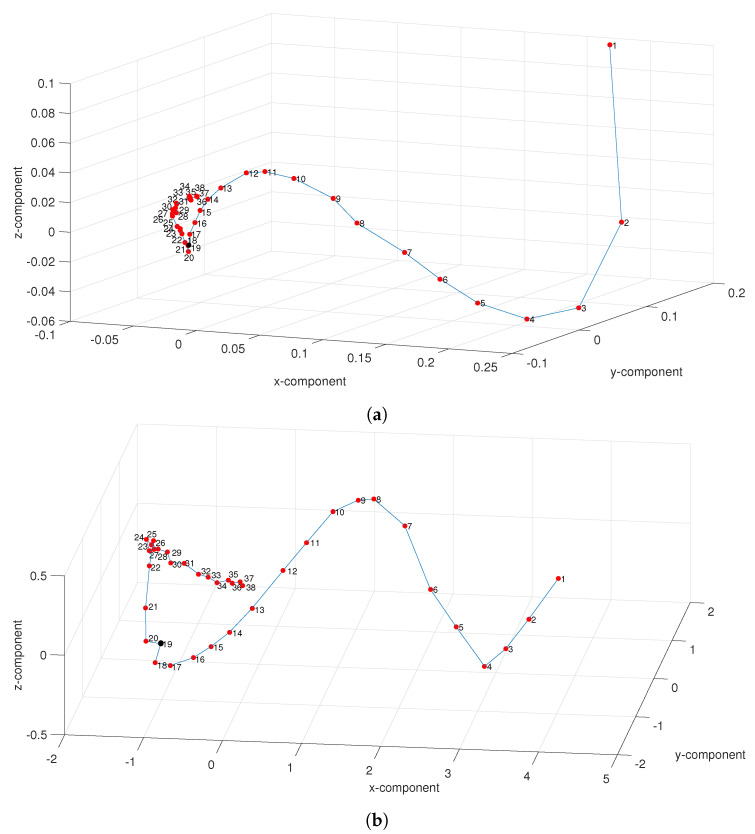

Figure 8 depicts the 3-D MDS maps (i.e., $d = 3$) obtained with the dissimilarity matrices (14) and (15) for the “Premier League” in season 2014/2015. For other seasons the results are of the same type. On both maps we observe the time-flow captured by the relative position of the points that represent rounds. For matrix (15) the evolution is clearer, namely in the second part of the league, revealing the superiority of the fractional information measures. Nonetheless, we find a considerable volatility between the distinct seasons, confirming the previous plots of the parameters of model (13).

Figure 8.

The 3-D MDS map based on matrices (a) (14) and (b) (15), at the adopted order $\alpha$, for the “Premier League” in season 2014/2015. The black mark denotes the half season, $r = 19$.

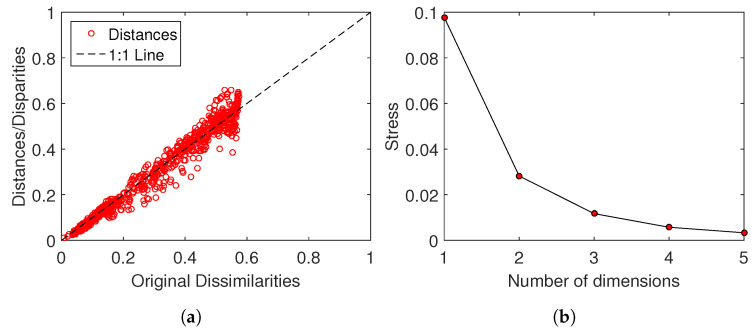

Figure 9 shows the Shepard and stress plots that assess the MDS results obtained with the distance matrix for the “Premier League” in season 2014/2015. The Shepard diagram shows that the points are distributed around the 45 degree line, indicating a good fit between the original and the reproduced distances. The stress plot reveals that a 3-D space ($d = 3$) describes well the locus of points, since for $d = 3$ we obtain the maximum curvature of the line and, therefore, 3-dim maps represent a good compromise between accuracy and visualization performance. For the matrix the Shepard and stress plots lead to similar conclusions.

Figure 9.

(a) Shepard and (b) stress plots assessing the quality of the MDS with matrix for the “Premier League” in season 2014/2015.

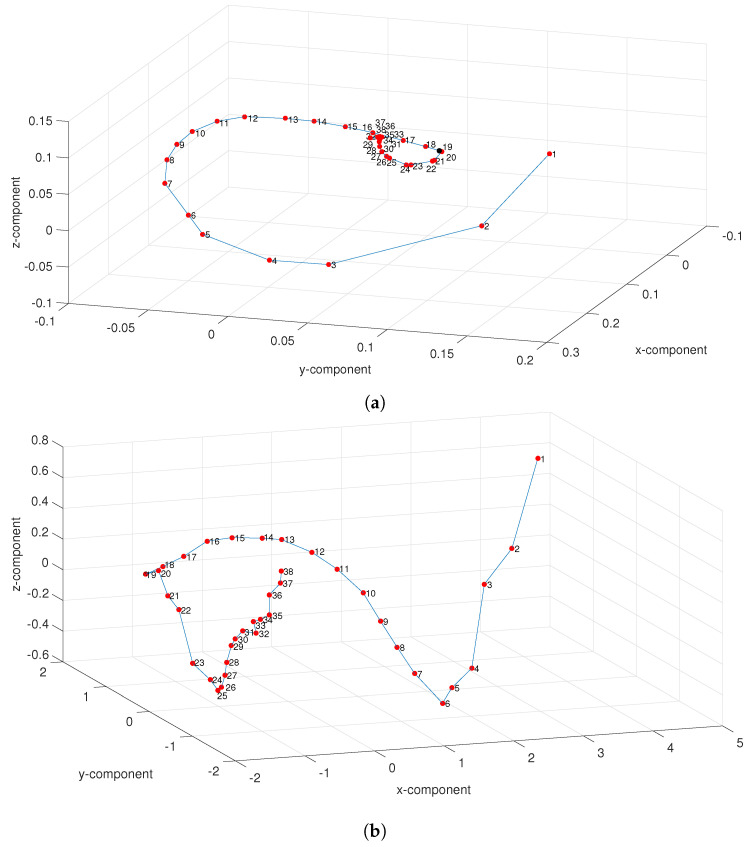

Figure 10 shows the 3-D MDS maps obtained with the dissimilarity matrices (14) and (15) for the “La Liga” in season 2014/2015. The results are consistent with those obtained for the “Premier League”, demonstrating that both leagues have the same type of dynamics when viewed in the perspective of the adopted information measures.

Figure 10.

The 3-D MDS map based on matrices (a) (14) and (b) (15), at the adopted order $\alpha$, for “La Liga” in season 2014/2015. The black marks denote the half season, $r = 19$.

The adopted ideas and tools were applied to a non-standard field, yielding realistic results. We do not expect them to be directly applicable in the field, but we believe that they will trigger future developments.

5. Conclusions

We proposed an original scheme based on information and fractional calculus tools for studying the dynamics of a national soccer league. A soccer league season was treated as a CS that evolves in discrete time, i.e., the rounds time. We considered that the CS state consisted of the goals scored by the teams, and we processed it by means of different tools, namely entropy, mutual information and Jensen–Shannon divergence. The CS behavior was visualized in 3-D maps generated by MDS. Fractional-order information measures were accurate in describing the complex behavior of such challenging systems.

The area of application is not classic and present-day tools adopted in that area are very limited. The proposed idea follows the perspective of applied mathematics, computer science and physics. It is not intended to develop a product directly applicable in the field. The authors believe that this initial study and others to follow will trigger future applications.

Acknowledgments

The authors acknowledge the worldfootball.net (http://www.worldfootball.net/) for the data used in this paper.

Author Contributions

A.M.L. and J.A.T.M. conceived, designed and performed the experiments, analyzed the data and wrote the paper.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

- 1. Wallace J.L., Norton K.I. Evolution of World Cup soccer final games 1966–2010: Game structure, speed and play patterns. J. Sci. Med. Sport. 2014;17:223–228. doi: 10.1016/j.jsams.2013.03.016.

- 2. Dunning E. Sport Matters: Sociological Studies of Sport, Violence and Civilisation. Routledge; London, UK; New York, NY, USA: 1999.

- 3. Vilar L., Araújo D., Davids K., Bar-Yam Y. Science of winning soccer: Emergent pattern-forming dynamics in association football. J. Syst. Sci. Complex. 2013;26:73–84. doi: 10.1007/s11424-013-2286-z.

- 4. Passos P., Davids K., Araujo D., Paz N., Minguéns J., Mendes J. Networks as a novel tool for studying team ball sports as complex social systems. J. Sci. Med. Sport. 2011;14:170–176. doi: 10.1016/j.jsams.2010.10.459.

- 5. Zengyuan Y., Broich H., Seifriz F., Mester J. Kinetic energy analysis for soccer players and soccer matches. Prog. Appl. Math. 2011;1:98–105.

- 6. Couceiro M.S., Clemente F.M., Martins F.M., Machado J.A.T. Dynamical stability and predictability of football players: The study of one match. Entropy. 2014;16:645–674. doi: 10.3390/e16020645.

- 7. McGarry T., Anderson D.I., Wallace S.A., Hughes M.D., Franks I.M. Sport competition as a dynamical self-organizing system. J. Sports Sci. 2002;20:771–781. doi: 10.1080/026404102320675620.

- 8. Grehaigne J.F., Bouthier D., David B. Dynamic-system analysis of opponent relationships in collective actions in soccer. J. Sports Sci. 1997;15:137–149. doi: 10.1080/026404197367416.

- 9. Camerino O.F., Chaverri J., Anguera M.T., Jonsson G.K. Dynamics of the game in soccer: Detection of T-patterns. Eur. J. Sports Sci. 2012;12:216–224. doi: 10.1080/17461391.2011.566362.

- 10. Mack S.H.M.G., Dutler K.E., Mintah J.K. Chaos Theory: A New Science for Sport Behavior? Athl. Insight. 2000;2:8–16.

- 11. Travassos B., Araújo D., Correia V., Esteves P. Eco-dynamics approach to the study of team sports performance. Open Sports Sci. J. 2010;3:56–57. doi: 10.2174/1875399X01003010056.

- 12. Neuman Y., Israeli N., Vilenchik D., Cohen Y. The Adaptive Behavior of a Soccer Team: An Entropy-Based Analysis. Entropy. 2018;20:758. doi: 10.3390/e20100758.

- 13. Barros C.P., Leach S. Performance evaluation of the English Premier Football League with data envelopment analysis. Appl. Econ. 2006;38:1449–1458. doi: 10.1080/00036840500396574.

- 14. Criado R., García E., Pedroche F., Romance M. A new method for comparing rankings through complex networks: Model and analysis of competitiveness of major European soccer leagues. Chaos Interdiscip. J. Nonlinear Sci. 2013;23:043114. doi: 10.1063/1.4826446.

- 15. Callaghan T., Mucha P.J., Porter M.A. Random walker ranking for NCAA division IA football. Am. Math. Mon. 2007;114:761–777. doi: 10.1080/00029890.2007.11920469.

- 16. Machado J.T., Lopes A.M. Multidimensional scaling analysis of soccer dynamics. Appl. Math. Model. 2017;45:642–652. doi: 10.1016/j.apm.2017.01.029.

- 17. Machado J.T., Lopes A.M. On the mathematical modeling of soccer dynamics. Commun. Nonlinear Sci. Numer. Simul. 2017;53:142–153. doi: 10.1016/j.cnsns.2017.04.024.

- 18. Pincus S.M. Approximate entropy as a measure of system complexity. Proc. Natl. Acad. Sci. USA. 1991;88:2297–2301. doi: 10.1073/pnas.88.6.2297.

- 19. Latora V., Baranger M., Rapisarda A., Tsallis C. The rate of entropy increase at the edge of chaos. Phys. Lett. A. 2000;273:97–103. doi: 10.1016/S0375-9601(00)00484-9.

- 20. Cloude S.R., Pottier E. An entropy based classification scheme for land applications of polarimetric SAR. IEEE Trans. Geosci. Remote Sens. 1997;35:68–78. doi: 10.1109/36.551935.

- 21. Machado J.T., Costa A.C., Quelhas M.D. Entropy analysis of the DNA code dynamics in human chromosomes. Comput. Math. Appl. 2011;62:1612–1617. doi: 10.1016/j.camwa.2011.03.005.

- 22. Basseville M. Divergence measures for statistical data processing: An annotated bibliography. Signal Process. 2013;93:621–633. doi: 10.1016/j.sigpro.2012.09.003.

- 23. Seghouane A.K. A Kullback–Leibler divergence approach to blind image restoration. IEEE Trans. Image Process. 2011;20:2078–2083. doi: 10.1109/TIP.2011.2105881.

- 24. Bissinger B.E., Culver R.L., Bose N. Minimum Hellinger distance based classification of underwater acoustic signals; Proceedings of the 43rd Annual Conference on Information Sciences and Systems; Baltimore, MD, USA. 18–20 March 2009; pp. 47–49.

- 25. Van Erven T., Harremoës P. Rényi divergence and Kullback–Leibler divergence. IEEE Trans. Inf. Theory. 2014;60:3797–3820. doi: 10.1109/TIT.2014.2320500.

- 26. Hamza A.B., Krim H. Jensen–Rényi divergence measure: Theoretical and computational perspectives; Proceedings of the IEEE International Symposium on Information Theory; Yokohama, Japan. 29 June–4 July 2003; p. 257.

- 27. Mohamed W., Hamza A.B. Medical image registration using stochastic optimization. Opt. Lasers Eng. 2010;48:1213–1223. doi: 10.1016/j.optlaseng.2010.06.011.

- 28. Machado J.A.T., Mendes Lopes A. Fractional Jensen–Shannon analysis of the scientific output of researchers in fractional calculus. Entropy. 2017;19:127. doi: 10.3390/e19030127.

- 29. Changpin L., Zeng F. Numerical Methods for Fractional Calculus. Chapman and Hall/CRC; Boca Raton, FL, USA: 2015.

- 30. Changpin L., Yujiang W., Ruisong Y. Recent Advances in Applied Nonlinear Dynamics with Numerical Analysis. World Scientific; Singapore: 2013.

- 31. Kilbas A., Srivastava H., Trujillo J. Theory and Applications of Fractional Differential Equations. Volume 204. Elsevier; Amsterdam, The Netherlands: 2006. North-Holland Mathematics Studies.

- 32. Gorenflo R., Mainardi F. Fractals and Fractional Calculus in Continuum Mechanics. Springer; Vienna, Austria: 1997. Fractional calculus.

- 33. Baleanu D., Diethelm K., Scalas E., Trujillo J.J. Models and Numerical Methods. Volume 3. World Scientific; Singapore: 2012.

- 34. Ionescu C.M. The Human Respiratory System: An Analysis of the Interplay between Anatomy, Structure, Breathing and Fractal Dynamics. Springer; London, UK: 2013.

- 35. Yang X.J., Baleanu D., Baleanu M.C. Observing diffusion problems defined on Cantor sets in different coordinate systems. Therm. Sci. 2015;19:151–156. doi: 10.2298/TSCI141126065Y.

- 36. Yang X.J., Gao F., Srivastava H. A new computational approach for solving nonlinear local fractional PDEs. J. Comput. Appl. Math. 2018;339:285–296. doi: 10.1016/j.cam.2017.10.007.

- 37. Tar J.K., Bitó J.F., Kovács L., Faitli T. Fractional Order PID-type Feedback in Fixed Point Transformation-based Adaptive Control of the FitzHugh-Nagumo Neuron Model with Time-delay. IFAC-PapersOnLine. 2018;51:906–911. doi: 10.1016/j.ifacol.2018.06.108.

- 38. Lopes A.M., Machado J. Fractional order models of leaves. J. Vib. Control. 2014;20:998–1008. doi: 10.1177/1077546312473323.

- 39. Machado J.T., Lopes A.M. The persistence of memory. Nonlinear Dyn. 2015;79:63–82. doi: 10.1007/s11071-014-1645-1.

- 40. Machado J., Lopes A.M. Analysis of natural and artificial phenomena using signal processing and fractional calculus. Fract. Calc. Appl. Anal. 2015;18:459–478. doi: 10.1515/fca-2015-0029.

- 41. Machado J., Lopes A., Duarte F., Ortigueira M., Rato R. Rhapsody in fractional. Fract. Calc. Appl. Anal. 2014;17:1188–1214. doi: 10.2478/s13540-014-0206-0.

- 42. Machado J., Mata M.E., Lopes A.M. Fractional state space analysis of economic systems. Entropy. 2015;17:5402–5421. doi: 10.3390/e17085402.

- 43. Sumelka W., Voyiadjis G.Z. A hyperelastic fractional damage material model with memory. Int. J. Solids Struct. 2017;124:151–160. doi: 10.1016/j.ijsolstr.2017.06.024.

- 44. Hilfer R. Applications of Fractional Calculus in Physics. World Scientific Publishing; Singapore: 2000.

- 45. Podlubny I. Fractional Differential Equations. Academic Press; San Diego, CA, USA: 1999.

- 46. Samko S., Kilbas A., Marichev I. Fractional Integrals and Derivatives, Translated from the 1987 Russian Original. Gordon and Breach; Yverdon, Switzerland: 1993.

- 47. Jaynes E. Information Theory and Statistical Mechanics. Phys. Rev. 1957;106:620–630. doi: 10.1103/PhysRev.106.620.

- 48. Khinchin A. Mathematical Foundations of Information Theory. Dover; New York, NY, USA: 1957.

- 49. Korn G.A., Korn T.M. Mathematical Handbook for Scientists and Engineers: Definitions, Theorems, and Formulas for Reference and Review. McGraw-Hill; New York, NY, USA: 1968.

- 50. Shannon C., Weaver W. The Mathematical Theory of Communication. University of Illinois Press; Urbana, IL, USA: 1949.

- 51. Cover T., Thomas J. Elements of Information Theory. 1st ed. John Wiley & Sons; New York, NY, USA: 1991.

- 52. Cover T.M., Thomas J.A. Elements of Information Theory. 2nd ed. John Wiley & Sons; New York, NY, USA: 2012.

- 53. Valério D., Trujillo J., Rivero M., Machado J., Baleanu D. Fractional Calculus: A Survey of Useful Formulas. Eur. Phys. J. Spec. Top. 2013;222:1827–1846. doi: 10.1140/epjst/e2013-01967-y.

- 54. Machado J. Fractional order generalized information. Entropy. 2014;16:2350–2361. doi: 10.3390/e16042350.

- 55. Saeed N., Nam H., Haq M.I.U., Muhammad Saqib D.B. A Survey on Multidimensional Scaling. ACM Comput. Surv. (CSUR) 2018;51:47. doi: 10.1145/3178155.

- 56. Willems J.C., Polderman J.W. Introduction to Mathematical Systems Theory: A Behavioral Approach. Volume 26. Springer Science & Business Media; New York, NY, USA: 2013.