Abstract

In its most basic form, decision-making can be viewed as a computational process that progressively eliminates alternatives, thereby reducing uncertainty. Such processes are generally costly, meaning that the amount of uncertainty that can be reduced is limited by the amount of available computational resources. Here, we introduce the notion of elementary computation based on a fundamental principle for probability transfers that reduce uncertainty. Elementary computations can be considered as the inverse of Pigou–Dalton transfers applied to probability distributions, closely related to the concepts of majorization, T-transforms, and generalized entropies that induce a preorder on the space of probability distributions. Consequently, we can define resource cost functions that are order-preserving and therefore monotonic with respect to the uncertainty reduction. This leads to a comprehensive notion of decision-making processes with limited resources. Along the way, we prove several new results on majorization theory, as well as on entropy and divergence measures.

Keywords: uncertainty, entropy, divergence, majorization, decision-making, bounded rationality, limited resources, Bayesian inference

1. Introduction

In rational decision theory, uncertainty may have multiple sources that ultimately share the commonality that they reflect a lack of knowledge on the part of the decision-maker about the environment. A paramount example is the perfectly rational decision-maker [1] that has a probabilistic model of the environment and chooses its actions to maximize the expected utility entailed by the different choices. When we consider bounded rational decision-makers [2], we may add another source of uncertainty arising from the decision-maker’s limited processing capabilities, since the decision-maker will not only accept a single best choice, but will accept any satisficing option. Today, bounded rationality is an active research topic that crosses multiple scientific fields such as economics, political science, decision theory, game theory, computer science, and neuroscience [3,4,5,6,7,8,9,10,11,12,13,14,15,16,17,18,19,20,21], where uncertainty is one of the most important common denominators.

Uncertainty is often equated with Shannon entropy in information theory [22], measuring the average number of yes/no-questions that have to be answered to resolve the uncertainty. Even though Shannon entropy has many desirable properties, there are plenty of alternative suggestions for entropy measures in the literature, known as generalized entropies, such as Rényi entropy [23] or Tsallis entropy [24]. Closely related to entropies are divergence measures, which express how probability distributions differ from a given reference distribution. If the reference distribution is uniform then divergence measures can be expressed in terms of entropy measures, which is why divergences can be viewed as generalizations of entropy, for example the Kullback-Leibler divergence [25] generalizing Shannon entropy.

Here, we introduce the concept of elementary computation based on a slightly stronger notion of uncertainty than is expressed by Shannon entropy, or any other generalized entropy alone, but is equivalent to all of them combined. Equating decision-making with uncertainty reduction, this leads to a new comprehensive view of decision-making with limited resources. Our main contributions can be summarized as follows:

- (i) Based on a fundamental concept of probability transfers related to the Pigou–Dalton principle of welfare economics [26], we promote a generalized notion of uncertainty reduction of a probability distribution that we call elementary computation. This leads to a natural definition of cost functions that quantify the resource costs for uncertainty reduction necessary for decision-making. We generalize these concepts to arbitrary reference distributions. In particular, we define Pigou–Dalton-type transfers for probability distributions relative to a reference or prior distribution, which induce a preorder that is slightly stronger than Kullback-Leibler divergence, but is equivalent to the notion of divergence given by all f-divergences combined. We prove several new characterizations of the underlying concept, known as relative majorization.

- (ii) An interesting property of cost functions is their behavior under coarse-graining, which plays an important role in decision-making and formalizes the general notion of making abstractions. More precisely, if a decision in a set $\Omega$ is split up into two steps by partitioning $\Omega = \bigcup_{i \in I} A_i$ and first deciding in the set of (coarse-grained) partitions $\{A_i\}_{i \in I}$ and secondly choosing a fine-grained option inside the selected partition $A_i$, then an important question is how the cost for the total decision-making process differs from the sum of the costs in each step. We show that f-divergences are superadditive with respect to coarse-graining, which means that decision-making costs can potentially be reduced by splitting up the decision into multiple steps. In this regard, we find evidence that the well-known property of Kullback-Leibler divergence of being additive under coarse-graining might be viewed as describing the minimal amount of processing cost that cannot be reduced by a more intelligent decision-making strategy.

- (iii) We define bounded rational decision-makers as decision-making processes that are optimizing a given utility function under a constraint on the cost function, or minimizing the cost function under a minimal requirement on expected utility. As a special case for Shannon-type information costs, we arrive at information-theoretic bounded rationality, which may form a normative baseline for bounded-optimal decision-making in the absence of process-dependent constraints. We show that bounded-optimal posteriors with informational costs trace a path through probability space that can itself be seen as an anytime decision-making process, where each step optimally trades off utility and processing costs.

- (iv) We show that Bayesian inference can be seen as a decision-making process with limited resources given by the number of available datapoints.

Section 2 deals with Items (i) and (ii), aiming at a general characterization of decision-making in terms of uncertainty reduction. Item (iii) is covered in Section 3, deriving information-theoretic bounded rationality as a special case. Section 4 illustrates the concepts with an example including Item (iv). Sections 5 and 6 contain a general discussion and concluding remarks, respectively.

Notation

Let denote the real numbers, the set of non-negative real numbers, and the rational numbers. We write for the number of elements contained in a countable set A, and for the set difference, that is the set of elements in B that are not in A. denotes the set of probability distributions on a set , in particular, any is normalized so that . Random variables are denoted by capital letters , while their explicit values are denoted by small letters . For the probability distribution of a random variable X we write , and for the values of . Correspondingly, the expectation is also written as , , or . We also write , to denote the approximation of by an average over samples from .

2. Decision-Making with Limited Resources

In this section, we develop the notion of a decision-making process with limited resources following the simple assumption that any decision-making process

- (i) reduces uncertainty

- (ii) by spending resources.

Starting from an intuitive interpretation of uncertainty and resource costs, these concepts are refined incrementally until a precise definition of a decision-making process is given at the end of this section (Definition 7) in terms of elementary computations. Here, a decision-making process is a comprehensive term that describes all kinds of biological as well as artificial systems that are searching for solutions to given problems, for example a human decision-maker that burns calories while thinking, or a computer that uses electric energy to run an algorithm. However, resources do not necessarily refer to a real consumable quantity but can also represent more explicit resources (e.g., time) as a proxy, for example the number of binary comparisons in a search algorithm, the number of forward simulations in a reinforcement learning algorithm, the number of samples in a Monte Carlo algorithm, or, even more abstractly, they can express the limited availability of some source of information, for example the number of data that are available to an inference algorithm (see Section 4).

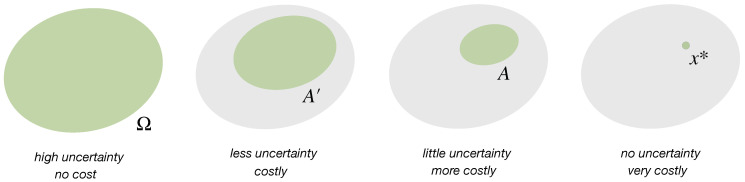

2.1. Uncertainty Reduction by Eliminating Options

In its most basic form, the concept of decision-making can be formalized as the process of looking for a decision $x$ in a discrete set of options $\Omega = \{x_1, \dots, x_N\}$. We say that a decision is certain, if repeated queries of the decision-maker will result in the same decision, and it is uncertain, if repeated queries can result in different decisions. Uncertainty reduction then corresponds to reducing the number of uncertain options. Hence, a decision-making process that transitions from a space of options $\Omega$ to a strictly smaller subset $A \subsetneq \Omega$ reduces the number of uncertain options from $N = |\Omega|$ to $|A| < N$, with the possible goal to eventually find a single certain decision $x^*$. Such a process is generally costly: the more uncertainty is reduced, the more resources it costs (Figure 1). The explicit mapping between uncertainty reduction and resource cost depends on the details of the underlying process and on which explicit quantity is taken as the resource. For example, if the resource is given by time (or any monotone function of time), then a search algorithm that eliminates options sequentially until the target value is found (linear search) is less cost efficient than an algorithm that takes a sorted list and in each step removes half of the options by comparing the midpoint to the target (logarithmic search). Abstractly, any real-valued function $C$ on the power set of $\Omega$ that satisfies $C(A') < C(A)$ whenever $A \subsetneq A'$ might be used as a cost function in the sense that $C(A)$ quantifies the expenses of reducing the uncertainty from $\Omega$ to $A$.
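The contrast between linear and logarithmic search can be made concrete by counting comparisons. The following is a minimal sketch, assuming that one comparison against the target counts as one unit of resource; the function names and the example list are illustrative and not taken from the text.

```python
def linear_search_steps(sorted_items, target):
    """Eliminate options one by one; return the number of comparisons used."""
    steps = 0
    for item in sorted_items:
        steps += 1
        if item == target:
            break
    return steps


def binary_search_steps(sorted_items, target):
    """Discard half of the remaining options per step; return the number of comparisons used."""
    lo, hi = 0, len(sorted_items) - 1
    steps = 0
    while lo <= hi:
        mid = (lo + hi) // 2
        steps += 1
        if sorted_items[mid] == target:
            break
        if sorted_items[mid] < target:
            lo = mid + 1
        else:
            hi = mid - 1
    return steps


# Hypothetical decision space with N = 1024 sorted options.
options = list(range(1024))
target = 1000
linear_cost = linear_search_steps(options, target)
binary_cost = binary_search_steps(options, target)
```

Both procedures end with the same single remaining option, but the resources spent to reach it differ, which is why the cost of uncertainty reduction depends on the underlying process.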

Figure 1.

Decision-making as search in a set of options. At the expense of more and more resources, the number of uncertain options is progressively reduced until $x^*$ is the only remaining option.

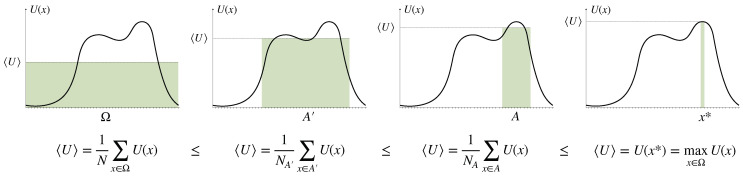

In utility theory, decision-making is modeled as an optimization process that maximizes a so-called utility function (which can itself be an expected utility with respect to a probabilistic model of the environment, in the sense of von Neumann and Morgenstern [1]). A decision-maker that is optimizing a given utility function U obtains a utility of on average after reducing the amount of uncertain options from N to (see Figure 2). A decision-maker that completely reduces uncertainty by finding the optimum is called rational (without loss of generality we can assume that is unique, by redefining in the case when it is not). Since uncertainty reduction generally comes with a cost, a utility optimizing decision-maker with limited resources, correspondingly called bounded rational (see Section 3), in contrast will obtain only uncertain decisions from a subset . Such decision-makers seek satisfactory rather than optimal solutions, for example by taking the first option that satisfies a minimal utility requirement, which Herbert A. Simon calls a satisficing solution [2].

Figure 2.

Decision-making as utility optimization process.

Summarizing, we conclude that a decision-making process with decision space that successively eliminates options can be represented by a mapping between subsets of , together with a cost function C that quantifies the total expenses of arriving at a given subset,

| (1) |

such that

$$C(A') < C(A) \quad \text{whenever} \quad A \subsetneq A'. \tag{2}$$

For example, a rational decision-maker can afford , whereas a decision-maker with limited resources can typically only afford uncertainty reduction with cost .

From a probabilistic perspective, a decision-making process as described above is a transition from a uniform probability distribution over $N$ options to a uniform probability distribution over $N' < N$ options, that converges to the Dirac measure $\delta_{x^*}$ centered at $x^*$ in the fully rational limit. From this point of view, the restriction to uniform distributions is artificial. A decision-maker that is uncertain about the optimal decision might indeed have a bias towards a subset $A$ without completely excluding other options (the ones in $\Omega \setminus A$), so that the behavior must be properly described by a probability distribution $p$. Therefore, in the following section, we extend Equations (1) and (2) to transitions between probability distributions. In particular, we must replace the power set of $\Omega$ by the space of probability distributions on $\Omega$, denoted by $\mathbb{P}_\Omega$.

2.2. Probabilistic Decision-Making

Let $\Omega$ be a discrete decision space of $N$ options, so that $\mathbb{P}_\Omega$ consists of discrete distributions $p$, often represented by probability vectors $p = (p_1, \dots, p_N)$. However, many of the concepts presented in this and the following section can be generalized to the continuous case [27,28].

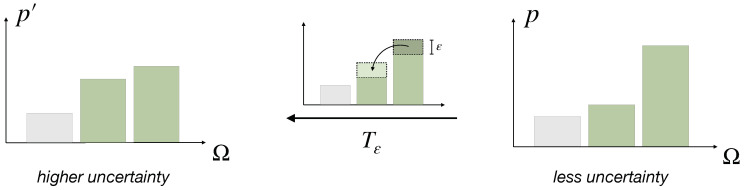

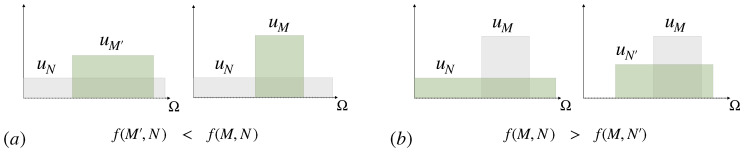

Intuitively, the uncertainty contained in a distribution is related to the relative inequality of its entries: the more similar its entries are, the higher the uncertainty. This means that uncertainty is increased by moving some probability weight from a more likely option to a less likely option. It turns out that this simple idea leads to a concept widely known as majorization [27,29,30,31,32,33], which has roots in the economic literature of the early 20th century [26,34,35], where it was introduced to describe income inequality, later known as the Pigou–Dalton Principle of Transfers. Here, the operation of moving weight from a more likely to a less likely option corresponds to the transfer of income from one individual of a population to a relatively poorer individual (also known as a Robin Hood operation [30]). Since a decision-making process can be viewed as a sequence of uncertainty reducing computations, we call the inverse of such a Pigou–Dalton transfer an elementary computation.

Definition 1

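(Elementary computation). A transformation on $\mathbb{P}_\Omega$ of the form

$$p = (p_1, \dots, p_N) \;\mapsto\; (p_1, \dots, p_m + \varepsilon, \dots, p_n - \varepsilon, \dots, p_N), \tag{3}$$

where $m, n$ are such that $p_m \le p_n$, and $0 < \varepsilon \le \frac{p_n - p_m}{2}$, is called a Pigou–Dalton transfer (see Figure 3). We call its inverse an elementary computation.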

Figure 3.

A Pigou–Dalton transfer as given by Equation (3). The transfer of probability from a more likely to a less likely option increases uncertainty.
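To make Definition 1 concrete, the following minimal sketch implements a Pigou–Dalton transfer and its inverse on a probability vector. It assumes the standard parameterization in which an amount $\varepsilon$ with $0 < \varepsilon \le (p_n - p_m)/2$ is moved from a more likely option $n$ to a less likely option $m$; the function names and the example vector are illustrative.

```python
from fractions import Fraction


def pigou_dalton_transfer(p, m, n, eps):
    """Move eps from the more likely option n to the less likely option m (raises uncertainty)."""
    if not p[m] <= p[n]:
        raise ValueError("option m must not be more likely than option n")
    if not 0 < eps <= (p[n] - p[m]) / 2:
        raise ValueError("eps must satisfy 0 < eps <= (p[n] - p[m]) / 2")
    out = list(p)
    out[m] += eps
    out[n] -= eps
    return out


def elementary_computation(p, m, n, eps):
    """Inverse transfer: move eps from the less likely option m to the more likely option n."""
    if not p[m] <= p[n]:
        raise ValueError("option m must not be more likely than option n")
    if not 0 < eps <= p[m]:
        raise ValueError("eps must satisfy 0 < eps <= p[m]")
    out = list(p)
    out[m] -= eps
    out[n] += eps
    return out


# Hypothetical distribution over three options, in exact arithmetic.
p = [Fraction(1, 10), Fraction(3, 10), Fraction(6, 10)]
more_uncertain = pigou_dalton_transfer(p, 0, 2, Fraction(1, 10))
recovered = elementary_computation(more_uncertain, 0, 2, Fraction(1, 10))
```

The bound on `eps` in `pigou_dalton_transfer` prevents the two entries from swapping their order, so permutations are not produced by a single transfer.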

Since making two probability values more similar or more dissimilar are the only two possibilities to minimally transform a probability distribution, elementary computations are the most basic principle of how uncertainty is reduced. Hence, we conclude that a distribution $p'$ has more uncertainty than a distribution $p$ if and only if $p$ can be obtained from $p'$ by finitely many elementary computations (and permutations, which are not considered an elementary computation due to the choice of $\varepsilon$).

Definition 2

(Uncertainty). We say that $p' \in \mathbb{P}_\Omega$ contains more uncertainty than $p \in \mathbb{P}_\Omega$, denoted by

$$p' \prec p, \tag{4}$$

if and only if $p$ can be obtained from $p'$ by a finite number of elementary computations and permutations.

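Note that, mathematically, this defines a preorder on $\mathbb{P}_\Omega$, i.e., a reflexive ($p \prec p$ for all $p \in \mathbb{P}_\Omega$) and transitive (if $p'' \prec p'$ and $p' \prec p$, then $p'' \prec p$ for all $p, p', p'' \in \mathbb{P}_\Omega$) binary relation.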

In the literature, there are different names for the relation between p and expressed by Definition 2, for example is called more mixed than p [36], more disordered than p [37], more chaotic than p [32], or an average of p [29]. Most commonly, however, p is said to majorize , which started with the early influences of Muirhead [38], and Hardy, Littlewood, and Pólya [29] and was developed by many authors into the field of majorization theory (a standard reference was published by Marshall, Olkin, and Arnold [27]), with far reaching applications until today, especially in non-equilibrium thermodynamics and quantum information theory [39,40,41].

There are plenty of equivalent (arguably less intuitive) characterizations of $p' \prec p$, some of which are summarized below. One characterization, however, makes use of a concept very closely related to Pigou–Dalton transfers, known as T-transforms [27,32], which expresses the fact that moving some weight from a more likely option to a less likely option is equivalent to taking (weighted) averages of the two probability values. More precisely, a T-transform is a linear operator on $\mathbb{R}^N$ with a matrix of the form $T = (1 - \lambda) I + \lambda \Pi$, where $I$ denotes the identity matrix on $\mathbb{R}^N$, $\Pi$ denotes a permutation matrix of two elements, and $0 \le \lambda \le 1$. If $\Pi$ permutes $p_m$ and $p_n$, then $(Tp)_k = p_k$ for all $k \notin \{m, n\}$, and

$$(Tp)_m = (1 - \lambda)\, p_m + \lambda\, p_n, \qquad (Tp)_n = \lambda\, p_m + (1 - \lambda)\, p_n. \tag{5}$$

Hence, a T-transform considers any two probability values $p_m$ and $p_n$ of a given $p \in \mathbb{P}_\Omega$, calculates their weighted averages with weights $(1 - \lambda, \lambda)$ and $(\lambda, 1 - \lambda)$, and replaces the original values with these averages. From Equation (5), it follows immediately that a T-transform with parameter $0 < \lambda \le \frac{1}{2}$ and a permutation $\Pi$ of $p_m$ and $p_n$ with $p_m \le p_n$ is a Pigou–Dalton transfer with $\varepsilon = \lambda (p_n - p_m)$. In addition, allowing $\frac{1}{2} < \lambda \le 1$ means that T-transforms include permutations, in particular, $p' \prec p$ if and only if $p'$ can be derived from $p$ by successive applications of finitely many T-transforms.
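The relation between T-transforms and Pigou–Dalton transfers can be checked numerically. The sketch below assumes the usual form $T = (1 - \lambda) I + \lambda \Pi$ with $\Pi$ swapping two entries; the function name and the example values are illustrative.

```python
from fractions import Fraction


def t_transform(p, m, n, lam):
    """Apply T = (1 - lam) * I + lam * Pi, where Pi swaps the entries m and n."""
    if not 0 <= lam <= 1:
        raise ValueError("lam must lie in [0, 1]")
    out = list(p)
    out[m] = (1 - lam) * p[m] + lam * p[n]
    out[n] = lam * p[m] + (1 - lam) * p[n]
    return out


# Hypothetical distribution over three options, in exact arithmetic.
p = [Fraction(1, 10), Fraction(3, 10), Fraction(6, 10)]

# lam <= 1/2 acts like a Pigou-Dalton transfer with eps = lam * (p[2] - p[0]) = 1/10.
averaged = t_transform(p, 0, 2, Fraction(1, 5))

# lam = 1 is a pure permutation of the two entries.
swapped = t_transform(p, 0, 2, Fraction(1))
```

With `lam = 1/5` the result coincides with moving $\varepsilon = 1/10$ from the third to the first entry, while `lam = 1` only relabels the two options.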

Due to a classic result by Hardy, Littlewood and Pólya ([29] (p. 49)), this characterization can be stated in an even simpler form by using doubly stochastic matrices, i.e., matrices with and for all . By writing for all , and , these conditions are often stated as

| (6) |

Note that doubly stochastic matrices can be viewed as generalizations of T-transforms in the sense that a T-transform takes an average of two entries, whereas if $p' = Ap$ with a doubly stochastic matrix $A$, then $p'_j = \sum_i A_{ji}\, p_i$ is a convex combination, or a weighted average, of $p$ with coefficients $(A_{ji})_i$ for each $j$. This is also why $p'$ is then called more mixed than $p$ [36]. Therefore, similar to T-transforms, we might expect that, if $p'$ is the result of an application of a doubly stochastic matrix, $p' = Ap$, then $p'$ is an average of $p$ and therefore contains more uncertainty than $p$. This is exactly what is expressed by Characterization (iii) in the following theorem. A similar characterization of $p' \prec p$ is that $p'$ must be given by a convex combination of permutations of the elements of $p$ (see property (iv) below).

Without having the concept of majorization, Schur proved that functions of the form $p \mapsto \sum_i f(p_i)$ with a convex function $f$ are monotone with respect to the application of a doubly stochastic matrix [42] (see property (v) below). Functions of this form are an important class of cost functions for probabilistic decision-makers, as we discuss in Example 1.

Theorem 1

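(Characterizations of $p' \prec p$ [27]). For $p, p' \in \mathbb{P}_\Omega$, the following are equivalent:

- (i) $p' \prec p$, i.e., $p'$ contains more uncertainty than $p$ (Definition 2)

- (ii) $p'$ is the result of finitely many T-transforms applied to $p$

- (iii) $p' = Ap$ for a doubly stochastic matrix $A$

- (iv) $p' = \sum_{k=1}^{K} \theta_k \Pi_k(p)$ where $K \in \mathbb{N}$, $\theta_k \ge 0$, $\sum_{k=1}^{K} \theta_k = 1$, and $\Pi_k$ is a permutation for all $k \in \{1, \dots, K\}$

- (v) $\sum_{i=1}^{N} f(p'_i) \le \sum_{i=1}^{N} f(p_i)$ for all continuous convex functions $f$

- (vi) $\sum_{i=1}^{k} (p')^{\downarrow}_i \le \sum_{i=1}^{k} p^{\downarrow}_i$ for all $k \in \{1, \dots, N-1\}$, where $p^{\downarrow}$ denotes the decreasing rearrangement of $p$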

As argued above, the equivalence between (i) and (ii) is straightforward. The equivalences among (ii), (iii), and (vi) are due to Muirhead [38] and Hardy, Littlewood, and Pólya [29]. The implication (v) $\Rightarrow$ (iii) is due to Karamata [43] and Hardy, Littlewood, and Pólya [44], whereas (iii) $\Rightarrow$ (v) goes back to Schur [42]. Mathematically, (iv) means that $p'$ belongs to the convex hull of all permutations of the entries of $p$, and the equivalence (iii) $\Leftrightarrow$ (iv) is known as the Birkhoff–von Neumann theorem. Here, we state all relations for probability vectors $p \in \mathbb{P}_\Omega$, even though they are usually stated for all $p, p' \in \mathbb{R}^N$ with the additional requirement that $\sum_{i=1}^{N} p_i = \sum_{i=1}^{N} p'_i$.

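Condition (vi) is the classical and most commonly used definition of majorization [27,29,34], since it is often the easiest to check in practical examples. For example, from (vi), it immediately follows that uniform distributions over $N$ options contain more uncertainty than uniform distributions over $N' < N$ options, since $\frac{k}{N} \le \min\{\frac{k}{N'}, 1\}$ for all $k \in \{1, \dots, N-1\}$, i.e., for $N' < N$ we have

$$\Big(\tfrac{1}{N}, \dots, \tfrac{1}{N}\Big) \prec \Big(\tfrac{1}{N'}, \dots, \tfrac{1}{N'}, 0, \dots, 0\Big). \tag{7}$$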

In particular, if $A \subsetneq A'$, then the uniform distribution over $A$ contains less uncertainty than the uniform distribution over $A'$, which shows that the notion of uncertainty introduced in Definition 2 is indeed a generalization of the notion of uncertainty given by the number of uncertain options introduced in the previous section.
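The partial-sum criterion is straightforward to check in code. The following minimal sketch tests majorization by comparing partial sums of the decreasingly sorted entries; the function names and all example vectors are illustrative assumptions rather than values from the text.

```python
from fractions import Fraction


def is_majorized_by(p_prime, p):
    """Return True if p_prime is majorized by p, i.e. p_prime contains more uncertainty than p."""
    if len(p_prime) != len(p):
        raise ValueError("both vectors must have the same length")
    sum_a = sum_b = Fraction(0)
    for x, y in zip(sorted(p_prime, reverse=True), sorted(p, reverse=True)):
        sum_a += x
        sum_b += y
        if sum_a > sum_b:
            return False
    return sum_a == sum_b  # both vectors must carry the same total mass


def uniform_on_first(k, n):
    """Uniform distribution over the first k of n options."""
    return [Fraction(1, k)] * k + [Fraction(0)] * (n - k)


u4 = uniform_on_first(4, 4)
u2 = uniform_on_first(2, 4)

# Hypothetical pair of distributions that cannot be compared.
a = [Fraction(1, 2), Fraction(1, 4), Fraction(1, 4)]
b = [Fraction(2, 5), Fraction(2, 5), Fraction(1, 5)]
```

Here `u4` is majorized by `u2` but not vice versa, while `a` and `b` fail the criterion in both directions, which illustrates that majorization is only a preorder.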

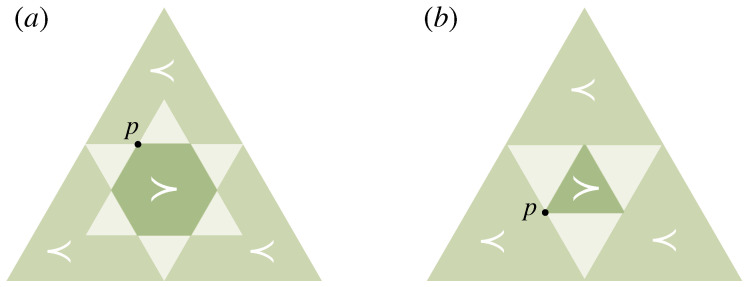

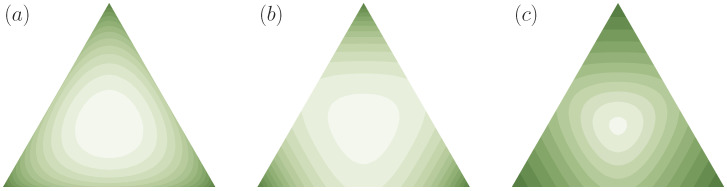

Note that, since ≺ is only a preorder on $\mathbb{P}_\Omega$, two distributions are in general not necessarily comparable, i.e., we can have both $p' \nprec p$ and $p \nprec p'$. In Figure 4, we visualize the regions of all comparable distributions for two exemplary distributions on a three-dimensional decision space ($N = 3$), represented on the two-dimensional simplex of probability vectors $p = (p_1, p_2, p_3)$. For example, and cannot be compared under ≺, since , but .

Figure 4.

Comparability of probability distributions in . The region in the center consists of all that are majorized by p, i.e., , whereas the outer region consists of all that majorize p, . The bright regions are not comparable to p. (a) ; (b) .

Cost functions can now be generalized to probabilistic decision-making by noting that the property $C(A') < C(A)$ whenever $A \subsetneq A'$ in Equation (2) means that $C$ is strictly monotonic with respect to the preorder given by set inclusion.

Definition 3

(Cost functions on $\mathbb{P}_\Omega$). We say that a function $C : \mathbb{P}_\Omega \to \mathbb{R}$ is a cost function, if it is strictly monotonically increasing with respect to the preorder ≺, i.e., if

$$p' \prec p \;\Longrightarrow\; C(p') \le C(p), \tag{8}$$

with equality only if $p$ and $p'$ are equivalent, $p \sim p'$, which is defined as $p' \prec p$ and $p \prec p'$. Moreover, for a parameterized family of posteriors $(p_r)_r$, we say that $r$ is a resource parameter with respect to a cost function $C$, if the mapping $r \mapsto C(p_r)$ is strictly monotonically increasing.

Since monotonic functions with respect to majorization were first studied by Schur [42], functions with this property are usually called (strictly) Schur-convex ([27] (Ch. 3)).

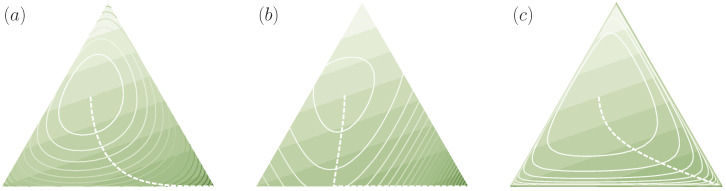

Example 1

(Generalized entropies). From (v) in Theorem 1, it follows that functions of the form

$$C(p) = \sum_{i=1}^{N} f(p_i), \tag{9}$$

where $f$ is strictly convex, are examples of cost functions. Since many entropy measures used in the literature can be seen to be special cases of Equation (9) (with a concave $f$), functions of this form are often called generalized entropies [45]. In particular, for the choice $f(t) = t \log t$, we have $C(p) = -H(p)$, where $H(p) = -\sum_{i} p_i \log p_i$ denotes the Shannon entropy of $p$. Thus, if $p'$ contains more uncertainty than $p$ in the sense of Definition 2 ($p' \prec p$) then the Shannon entropy of $p'$ is larger than the Shannon entropy of $p$ and therefore $p'$ also contains more uncertainty than $p$ in the sense of classical information theory. Similarly, for $f(t) = -\log t$ we obtain the (negative) Burg entropy, and for functions of the form $f(t) = \pm t^{\alpha}$ for $\alpha \in \mathbb{R} \setminus \{0, 1\}$ we get the (negative) Tsallis entropy, where the sign is chosen depending on α such that $f$ is convex (see, e.g., [46] for more examples). Moreover, the composition of any (strictly) monotonically increasing function $g$ with Equation (9) generates another class of cost functions, which contains for example the (negative) Rényi entropy [23]. Note also that entropies of the form of Equation (9) are special cases of Csiszár’s f-divergences [47] for uniform reference distributions (see Example 3 below). In Figure 5, several examples of cost functions are shown for $N = 3$. In this case, the two-dimensional probability simplex is given by the triangle in $\mathbb{R}^3$ with vertices $(1,0,0)$, $(0,1,0)$, and $(0,0,1)$. Cost functions are visualized in terms of their level sets.
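As a numerical illustration of Example 1, the sketch below evaluates two cost functions of the form $\sum_i f(p_i)$ and checks that they decrease when uncertainty increases. It assumes $f(t) = t \log t$ for the negative Shannon entropy and the common normalization $f(t) = (t^{\alpha} - t)/(\alpha - 1)$ for the negative Tsallis entropy, which may differ from the text's convention by an affine transformation; all names and example values are illustrative.

```python
import math


def generalized_entropy_cost(p, f):
    """Cost of the form sum_i f(p_i) for a convex function f."""
    return sum(f(x) for x in p)


def neg_shannon(t):
    """f(t) = t log t, with the usual convention 0 log 0 = 0."""
    return t * math.log(t) if t > 0 else 0.0


def neg_tsallis(alpha):
    """f(t) = (t**alpha - t) / (alpha - 1), convex for alpha > 0, alpha != 1."""
    def f(t):
        return (t ** alpha - t) / (alpha - 1)
    return f


# Hypothetical distributions: p_prime results from p by a Pigou-Dalton transfer of 0.1.
p = [0.1, 0.3, 0.6]
p_prime = [0.2, 0.3, 0.5]
uniform = [1 / 3, 1 / 3, 1 / 3]

shannon_costs = [generalized_entropy_cost(d, neg_shannon) for d in (uniform, p_prime, p)]
tsallis_costs = [generalized_entropy_cost(d, neg_tsallis(2.0)) for d in (uniform, p_prime, p)]
```

Both lists are increasing from the uniform distribution to `p`, in line with the ordering given by majorization for these three distributions.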

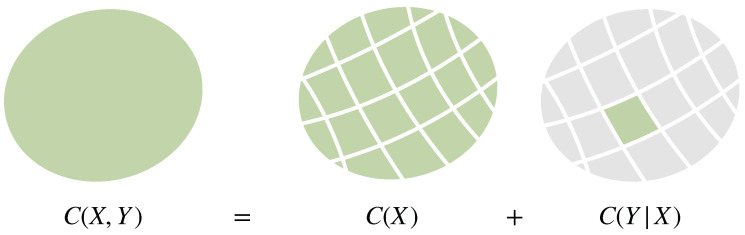

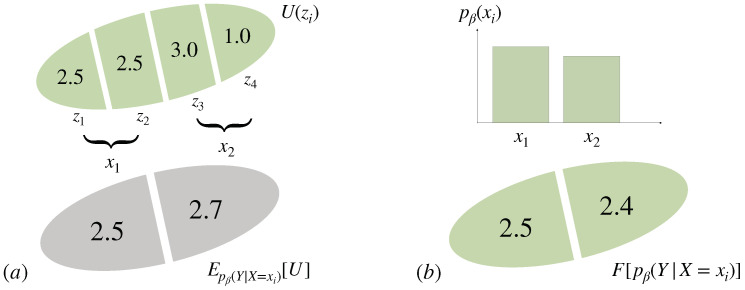

We prove in Proposition A1 in Appendix A that all cost functions of the form of Equation (9) are superadditive with respect to coarse-graining. This seems to be a new result and an improvement upon the fact that generalized entropies (and f-divergences) satisfy information monotonicity [48]. More precisely, if a decision in Ω, represented by a random variable Z, is split up into two steps by partitioning and first deciding about the partition , correspondingly described by a random variable X with values in I, and then choosing an option inside of the selected partition , represented by a random variable Y, i.e., , then

(10) where and . For symmetric cost functions (such as Equation (9)) this is equivalent to

(11) The case of equality in Equations (10) and (11) (see Figure 6) is sometimes called separability [49], strong additivity [50], or recursivity [51], and it is often used to characterize Shannon entropy [23,52,53,54,55,56]. In fact, we also show in Appendix A (Proposition A2) that cost functions C that are additive under coarse-graining are proportional to the negative Shannon entropy . See also Example 3 in the next section, where we discuss the generalization to arbitrary reference distributions.

Figure 5.

Examples of cost functions for decision spaces with three elements (): (a) Shannon entropy; (b) Tsallis entropy of order ; and (c) Rényi entropy of order .

Figure 6.

Additivity under coarse-graining. If the cost for $Z = (X, Y)$ is the sum of the cost for $X$ and the cost for $Y$ given $X$, then the cost function is proportional to Shannon entropy.
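The additivity of Shannon entropy under coarse-graining can be verified numerically. The following sketch assumes that the cost of $Y$ given $X$ is the average, under $p(X)$, of the entropies of the conditional distributions inside each block (the standard chain rule); the function names, the distribution, and the partition are illustrative, and every block is assumed to have positive probability.

```python
import math


def shannon_entropy(p):
    """Shannon entropy in nats, with the convention 0 log 0 = 0."""
    return -sum(x * math.log(x) for x in p if x > 0)


def coarse_grain(p_z, partition):
    """Split p(Z) into p(X) over blocks and the conditionals p(Y | X = i) inside each block."""
    p_x = [sum(p_z[j] for j in block) for block in partition]
    p_y_given_x = [[p_z[j] / p_x[i] for j in block] for i, block in enumerate(partition)]
    return p_x, p_y_given_x


# Hypothetical distribution over four options, grouped into two blocks.
p_z = [0.1, 0.2, 0.3, 0.4]
partition = [[0, 1], [2, 3]]

p_x, p_y_given_x = coarse_grain(p_z, partition)
total = shannon_entropy(p_z)
two_step = shannon_entropy(p_x) + sum(
    weight * shannon_entropy(cond) for weight, cond in zip(p_x, p_y_given_x)
)
```

Up to floating-point error, `total` and `two_step` agree, so deciding in two steps neither saves nor wastes Shannon-type cost.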

We can now refine the notion of a decision-making process introduced in the previous section as a mapping together with a cost function C satisfying Equation (2). Instead of simply mapping from sets to smaller subsets by successively eliminating options, we now allow to be a mapping between probability distributions such that can be obtained from p by a finite number of elementary computations (without permutations), and we require C to be a cost function on , so that

| (12) |

Here, quantifies the total costs of arriving at a distribution p, and means that and . In other words, a decision-making process can be viewed as traversing probability space by moving pieces of probability from one option to another option such that uncertainty is reduced.

Up to now, we have ignored one important property of a decision-making process, the distribution $q$ with minimal cost, i.e., satisfying $C(q) \le C(p)$ for all $p$, which must be identified with the initial distribution of a decision-making process with cost function $C$. As one might expect (see Figure 5), it turns out that all cost functions according to Definition 3 have the same minimal element.

Proposition 1

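(Uniform distributions are minimal). The uniform distribution $(\frac{1}{N}, \dots, \frac{1}{N})$ is the unique minimal element in $\mathbb{P}_\Omega$ with respect to ≺, i.e.

$$\Big(\tfrac{1}{N}, \dots, \tfrac{1}{N}\Big) \prec p \quad \forall p \in \mathbb{P}_\Omega. \tag{13}$$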

Once Equation (13) is established, it follows from Equation (8) that $C\big((\frac{1}{N}, \dots, \frac{1}{N})\big) \le C(p)$ for all $p$; hence, the uniform distribution corresponds to the initial state of all decision-making processes with cost function $C$ satisfying Equation (12). In particular, it contains the maximum amount of uncertainty with respect to any entropy measure of the form of Equation (9), known as the second Khinchin axiom [49], e.g., for Shannon entropy $H(p) \le \log N$. Proposition 1 follows from Characterization (iv) in Theorem 1 after noticing that every $p \in \mathbb{P}_\Omega$ can be transformed to a uniform distribution by averaging over the cyclic permutations of its elements (see Proposition A3 in Appendix A for a detailed proof).
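The argument behind Proposition 1 can be illustrated with a few lines of code: averaging all cyclic shifts of a probability vector with equal weights $1/N$ yields the uniform distribution, which is a convex combination of permutations of the original vector. The function names and the example vector in this sketch are illustrative.

```python
from fractions import Fraction


def cyclic_shift(p, k):
    """Rotate the entries of p by k positions."""
    return p[k:] + p[:k]


def average_of_cyclic_shifts(p):
    """Convex combination of all N cyclic permutations of p with equal weights 1/N."""
    n = len(p)
    shifts = [cyclic_shift(p, k) for k in range(n)]
    return [sum(shift[i] for shift in shifts) / n for i in range(n)]


# Hypothetical distribution over three options, in exact arithmetic.
p = [Fraction(1, 10), Fraction(3, 10), Fraction(6, 10)]
averaged = average_of_cyclic_shifts(p)
```

Each position of the average collects every entry of `p` exactly once, so each entry of `averaged` equals the total mass divided by $N$.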

Regarding the possibility that a decision-maker may have prior information, for example originating from the experience of previous comparable decision-making tasks, the assumption of a uniform initial distribution seems to be artificial. Therefore, in the following section, we arrive at the final notion of a decision-making process by extending the results of this section to allow for arbitrary initial distributions.

2.3. Decision-Making with Prior Knowledge

From the discussion at the end of the previous section we conclude that, in full generality, a decision-maker transitions from an initial probability distribution $q \in \mathbb{P}_\Omega$, called prior, to a terminal distribution $p \in \mathbb{P}_\Omega$, called posterior. Note that, since options that have been eliminated once are excluded from the rest of the decision-making process, a posterior $p$ must be absolutely continuous with respect to the prior $q$, denoted by $p \ll q$, i.e., $p(x)$ can be non-zero for a given $x \in \Omega$ only if $q(x)$ is non-zero.

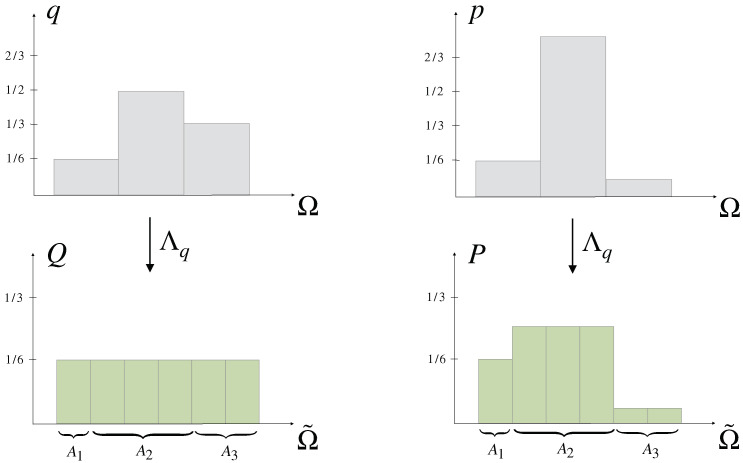

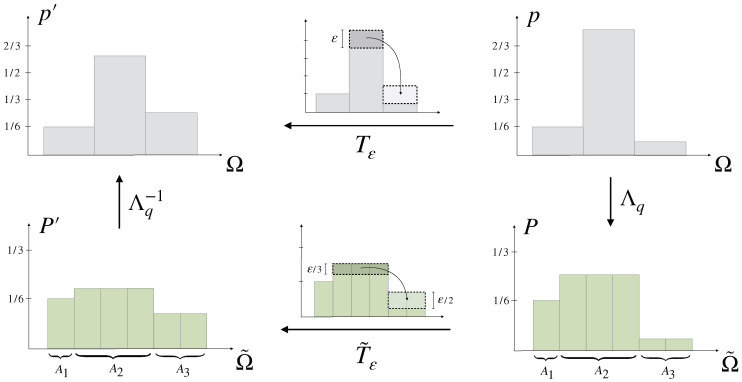

The notion of uncertainty (Definition 2) can be generalized with respect to a non-uniform prior $q$ by viewing the probabilities $q_i$ as the probabilities of partitions $\tilde{A}_i$ of an underlying elementary probability space $\tilde{\Omega}$ of equally likely elements under $Q$, in particular $Q$ represents $q$ as the uniform distribution on $\tilde{\Omega}$ (see Figure 7). The similarity of the entries of the corresponding representation $P$ of any $p \in \mathbb{P}_\Omega$ (its uncertainty) then contains information about how close $p$ is to $q$, which we call the relative uncertainty of $p$ with respect to $q$ (Definition 4 below).

Figure 7.

Representation of $q$ and $p$ by $Q$ and $P$ on $\tilde{\Omega}$ (Example 2), such that the probabilities $q_i$ and $p_i$ are given by the probabilities of the partitions $\tilde{A}_i$ with respect to $Q$ and $P$, respectively.

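The formal construction is as follows: Let $p, q \in \mathbb{P}_\Omega$ be such that $p \ll q$ and $q_i \in \mathbb{Q}$ for all $i$. The case when $q_i \in \mathbb{R} \setminus \mathbb{Q}$ then follows from a simple approximation of each entry by a rational number. Let $\alpha \in \mathbb{N}$ be such that $\alpha q_i \in \mathbb{N}$ for all $i \in \{1, \dots, N\}$, for example $\alpha$ could be chosen as the least common multiple of the denominators of the $q_i$. The underlying elementary probability space $\tilde{\Omega}$ then consists of $\alpha$ elements and there exists a partitioning $\{\tilde{A}_i\}_{i=1}^{N}$ of $\tilde{\Omega}$ such that

$$Q(\omega) = \frac{1}{\alpha} = \frac{q_i}{|\tilde{A}_i|} \quad \forall \omega \in \tilde{A}_i, \tag{14}$$

where $Q$ denotes the uniform distribution on $\tilde{\Omega}$. In particular, it follows that

$$Q(\tilde{A}_i) = \sum_{\omega \in \tilde{A}_i} Q(\omega) = q_i, \tag{15}$$

i.e., $Q$ represents $q$ in $\tilde{\Omega}$ with respect to the partitioning $\{\tilde{A}_i\}_{i=1}^{N}$. Similarly, any $p \in \mathbb{P}_\Omega$ can be represented as a distribution $P$ on $\tilde{\Omega}$ by requiring that $P(\tilde{A}_i) = p_i$ for all $i$ and letting $P$ be constant inside of each partition, i.e., similar to Equation (15) we have $p_i = |\tilde{A}_i|\, P(\omega)$ for all $\omega \in \tilde{A}_i$ and therefore by Equation (14)

$$P(\omega) = \frac{p_i}{|\tilde{A}_i|} = \frac{p_i}{\alpha q_i} \quad \forall \omega \in \tilde{A}_i. \tag{16}$$

Note that, if $q_i = 0$ then $p_i = 0$ by absolute continuity ($p \ll q$) in which case we can either exclude option $i$ from $\Omega$ or set $\tilde{A}_i = \emptyset$.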

Example 2.

For a prior we put , so that should be partitioned as . Then, corresponds to the probability of the ith partition under the uniform distribution , while the distribution is represented on by the distribution (see Figure 7).

Importantly, if the components of the representation in given by Equation (16) are similar to each other, i.e., if P is close to uniform, then the components of p must be very similar to the components of q, which we express by the concept of relative uncertainty.

Definition 4

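(Uncertainty relative to q). We say that $p' \in \mathbb{P}_\Omega$ contains more uncertainty with respect to a prior $q$ than $p \in \mathbb{P}_\Omega$, denoted by $p' \prec_q p$, if and only if the representation $P'$ of $p'$ contains more uncertainty than the representation $P$ of $p$, i.e.

$$p' \prec_q p \;:\Longleftrightarrow\; P' \prec P, \tag{17}$$

where $P'$ and $P$ are given by Equation (16).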
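The construction behind Definition 4 can be sketched in code for a rational prior with strictly positive entries: each option $i$ is expanded into $\alpha q_i$ elementary elements over which $p_i$ is spread evenly, and relative uncertainty is then ordinary majorization between the expanded vectors. The function names and the example prior and posterior below are hypothetical and chosen only for illustration.

```python
from fractions import Fraction
from functools import reduce
from math import gcd


def lcm_of(numbers):
    """Least common multiple of a list of positive integers."""
    return reduce(lambda a, b: a * b // gcd(a, b), numbers, 1)


def represent(p, q):
    """Spread each p_i evenly over alpha * q_i elementary elements (q rational, q_i > 0)."""
    alpha = lcm_of([qi.denominator for qi in q])
    representation = []
    for pi, qi in zip(p, q):
        block_size = int(alpha * qi)  # number of elementary elements in block i
        representation.extend([Fraction(pi) / block_size] * block_size)
    return representation


def majorized_by(a, b):
    """True if a is majorized by b (partial sums of decreasingly sorted entries)."""
    sum_a = sum_b = Fraction(0)
    for x, y in zip(sorted(a, reverse=True), sorted(b, reverse=True)):
        sum_a += x
        sum_b += y
        if sum_a > sum_b:
            return False
    return True


def relatively_majorized_by(p_prime, p, q):
    """True if p_prime contains more uncertainty relative to q than p."""
    return majorized_by(represent(p_prime, q), represent(p, q))


# Hypothetical prior and posterior.
q = [Fraction(1, 6), Fraction(1, 2), Fraction(1, 3)]
p = [Fraction(1, 2), Fraction(1, 4), Fraction(1, 4)]
Q = represent(q, q)  # the prior becomes uniform on the elementary space
P = represent(p, q)
```

In this example the prior is represented by the uniform distribution on six elementary elements, and `relatively_majorized_by(q, p, q)` holds while the reverse does not, so the prior contains more uncertainty relative to itself than the posterior.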

As we show in Theorem 2 below, it turns out that coincides with a known concept called q-majorization [57], majorization relative to q [27,28], or mixing distance [58]. Due to the lack of a characterization by partial sums, it is usually defined as a generalization of Characterization in Theorem 1, that is is q-majorized by p iff , where A is a so-called q-stochastic matrix, which means that it is a stochastic matrix () with . In particular, does not depend on the choice of in the definition of . Here, we provide two new characterizations of q-majorization, the one given by Definition 4, and one using partial sums generalizing the original definition of majorization.

Theorem 2

(Characterizations of ). The following are equivalent

- (i)

, i.e., contains more uncertainty relative to q than p (Definition 4).

- (ii)

can be obtained from by a finite number of elementary computations and permutations on .

- (iii)

for a q-stochastic matrix A, i.e., and .

- (iv)

for all continuous convex functions f.

- (v)

for all and , where , and the arrows indicate that is ordered decreasingly.

To prove that , , and are equivalent (see Proposition A4 in Appendix A), we make use of the fact that has a left inverse . This can be verified by simply multiplying the corresponding matrices given in the proof of Proposition A4. The equivalence between and is shown in [28] (see also [27,58]). Characterization follows immediately from Definitions 2 and 4.

As required from the discussion at the end of the previous section, $q$ is indeed minimal with respect to $\prec_q$, which means that it contains the largest amount of uncertainty with respect to itself.

Proposition 2

(The prior is minimal). The prior $q$ is the unique minimal element in $\mathbb{P}_\Omega$ with respect to $\prec_q$, that is

$$q \prec_q p \quad \forall p \in \mathbb{P}_\Omega. \tag{18}$$

This follows more or less directly from Proposition 1 and the equivalence of and in Theorem 2 (see Proposition A5 in Appendix A for a detailed proof).

Order-preserving functions with respect to generalize cost functions introduced in the previous section (Definition 3). According to Proposition 2, such functions have a unique minimum given by the prior q. Since cost functions are used in Definition 7 below to quantify the expenses of a decision-making process, we require their minimum to be zero, which can always be achieved by redefining a given cost function by an additive constant.

Definition 5

(Cost functions relative to q). We say that a function is a cost function relative to q, if , if it is invariant under relabeling , and if it is strictly monotonically increasing with respect to the preorder , that is if

(19) with equality only if , i.e., if and . Moreover, for a parameterized family of posteriors , we say that r is a resource parameter with respect to a cost function , if the mapping is strictly monotonically increasing.

Similar to generalized entropy functions discussed in Example 1, in the literature, there are many examples of relative cost functions, usually called divergences or measures of divergence.

Example 3

(f-divergences). From in Theorem 2, it follows that functions of the form

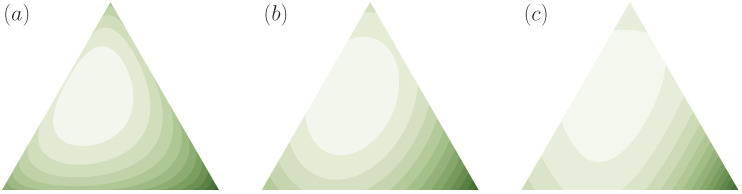

(20) where f is continuous and strictly convex with , are examples of cost functions relative to q. Many well-known divergence measures can be seen to belong to this class of relative cost functions, also known as Csiszár’s f-divergences [47]: the Kullback-Leibler divergence (or relative entropy), the squared distance, the Hartley entropy, the Burg entropy, the Tsallis entropy, and many more [46,50] (see Figure 8 for visualizations of some of them in relative to a non-uniform prior).
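A minimal sketch of such cost functions, assuming the standard form $\sum_x q(x) f(p(x)/q(x))$ with $f(1) = 0$. The two generator functions below (Kullback-Leibler and chi-squared) are common textbook choices, and the numerical values are illustrative only.

```python
import math

def f_divergence(p, q, f):
    """Sum over x of q(x) * f(p(x) / q(x)); assumes q(x) > 0 for all x."""
    return sum(qi * f(pi / qi) for pi, qi in zip(p, q))

def f_kl(t):
    """f(t) = t log2 t (with f(0) = 0) yields the Kullback-Leibler divergence in bits."""
    return t * math.log2(t) if t > 0 else 0.0

def f_chi2(t):
    """f(t) = (t - 1)^2 yields the chi-squared divergence."""
    return (t - 1.0) ** 2

q = [0.5, 0.3, 0.2]                              # non-uniform prior
p = [0.8, 0.1, 0.1]                              # posterior
mid = [0.5 * a + 0.5 * b for a, b in zip(p, q)]  # halfway between p and q

for name, f in (("KL", f_kl), ("chi2", f_chi2)):
    at_prior = f_divergence(q, q, f)   # zero, since f(1) = 0
    at_mid = f_divergence(mid, q, f)
    at_p = f_divergence(p, q, f)
    print(name, at_prior, at_mid, at_p)
```

Both generators vanish at the prior and assign a larger cost to `p` than to the mixture `mid`, in line with the requirement that a cost function relative to q has its minimum at q.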

As a generalization of Proposition A1 (superadditivity of generalized entropies), we prove in Proposition A6 in Appendix A that f-divergences are superadditive under coarse-graining, that is

(21) whenever , and and ,

This generalizes Equation (10) to the case of a non-uniform prior. Similar to entropies, the case of equality in Equation (21) is sometimes called composition rule [59], chain rule [60], or recursivity [50], and is often used to characterize Kullback-Leibler divergence [8,50,59,60].

Indeed, we also show in Appendix A (Proposition A7) that all additive cost functions with respect to q are proportional to Kullback-Leibler divergence (relative entropy). This goes back to Hobson’s modification [59] of Shannon’s original proof [22], after establishing the following monotonicity property for uniform distributions: If denotes the cost of a uniform distribution over M elements relative to a uniform distribution over elements, then (see Figure 9).

(22) Note that, even though our proof of Proposition A7 uses additivity under coarse graining to show the monotonicity property in Equation (22), it is easy to see that any relative cost function of the form of Equation (20) also satisfies Equation (22) by using the convexity of f as with .

In terms of decision-making, superadditivity under coarse-graining means that decision-making costs can potentially be reduced by splitting up the decision into multiple steps, for example by a more intelligent search strategy. For example, if for some and is superadditive, then the cost for reducing uncertainty to a single option, i.e., , when starting from a uniform distribution q, satisfies

where , and we have set as unit cost (corresponding to 1 bit in the case of Kullback-Leibler divergence). Thus, intuitively the property of the Kullback-Leibler divergence of being additive under coarse-graining might be viewed as describing the minimal amount of processing costs that must be contained in any cost function, because it cannot be reduced by changing the decision-making process. Therefore, in the following, we call cost functions that are proportional to the Kullback-Leibler divergence simply informational costs.
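For the Kullback-Leibler divergence, the additive case can be checked directly. The sketch below assumes $N = 2^k$ options and a uniform prior, and compares the one-shot cost of singling out one option with the summed costs of a halving search that discards half of the remaining options in each step. The target index and the helper names are hypothetical.

```python
import math

def kl_bits(p, q):
    """Kullback-Leibler divergence in bits; terms with p_i = 0 contribute 0."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def uniform_on(support, n):
    """Uniform distribution over the index set `support` inside {0, ..., n-1}."""
    w = 1.0 / len(support)
    return [w if i in support else 0.0 for i in range(n)]

k = 4
n = 2 ** k
target = 11   # arbitrary option to be identified

# One-shot decision: from the uniform prior directly to a single option.
prior = uniform_on(range(n), n)
one_shot = kl_bits(uniform_on([target], n), prior)

# Multi-step decision: halve the remaining options until one is left.
support = list(range(n))
step_costs = []
while len(support) > 1:
    half = len(support) // 2
    lower, upper = support[:half], support[half:]
    new_support = lower if target in lower else upper
    step_costs.append(kl_bits(uniform_on(new_support, n), uniform_on(support, n)))
    support = new_support

print(one_shot, step_costs, sum(step_costs))
```

Each halving step costs one bit, and the steps add up to the one-shot cost of $\log_2 N$ bits. For a strictly superadditive cost function, the sum of the step costs would instead lie below the one-shot cost.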

Figure 8.

Examples of cost functions for relative to : (a) Kullback-Leibler divergence; (b) Squared distance; and (c) Tsallis relative entropy of order .

Figure 9.

Monotonicity property in Equation (22): and (a) the cost is higher when more uncertainty has been reduced; and (b) if the posterior is the same, then it is cheaper to start from a prior with fewer options.

In contrast to the previous section, in the definition of and its characterizations, we never use elementary computations on directly. This is because permutations interact with the uncertainty relative to q, and therefore cannot be characterized by a finite number of elementary computations and permutations on . However, we can still define elementary computations relative to q by the inverse of Pigou–Dalton transfers of the form of Equation (3) such that for , which is arguably the most basic form of how to generate uncertainty with respect to q.

Even for small , a regular Pigou–Dalton transfer does not necessarily increase uncertainty relative to q, because the similarity of the components now needs to be considered with respect to q. Instead, we compare the components of the representation of , and move some probability weight from to whenever for and , by distributing evenly among the elements in (see Figure 10), denoted by the transformation . Here, must be small enough such that the inequality is invariant under , which means that

| (23) |

Figure 10.

Pigou–Dalton transfer relative to q. A distribution is transformed relative to q by first moving some amount of weight from to where are such that whenever and , with small enough such that this relation remains true after the transformation, and then mapping the transformed distribution back to by (see Definition 6).

By construction, minimally increases uncertainty in while staying in the image of under , by keeping the values of P constant in each partition, and therefore can be considered as the most basic way of how to increase uncertainty relative to q.

Definition 6

(Elementary computation relative to q). We call a transformation on of the form

(24) with such that , and ε satisfying Equation (23), a Pigou–Dalton transfer relative to q, and its inverse an elementary computation relative to q.

We are now in the position to state our final definition of a decision-making process.

Definition 7

(Decision-making process). A decision-making process is a gradual transformation

of a prior to a posterior , such that each step decreases uncertainty relative to q. This means that p is obtained from q by successive application of a mapping ϕ between probability distributions on Ω, such that can be obtained from by finitely many elementary computations relative to q, in particular

(25) where quantifies the total costs of a distribution , and means that and .

In other words, a decision-making process can be viewed as traversing probability space from prior q to posterior p by moving pieces of probability from one option to another option such that uncertainty is reduced relative to q, while expending a certain amount of resources determined by the cost function .

3. Bounded Rationality

3.1. Bounded Rational Decision-Making

In this section, we consider decision-making processes that trade off utility against costs. Such decision-makers either maximize a utility function subject to a constraint on the cost function, for example an author of a scientific article who optimizes the article’s quality until a deadline is reached, or minimize the cost function subject to a utility constraint, for example a high-school student who minimizes effort such that the requirement to pass a certain class is met. In both cases, the decision-makers are called bounded rational, since in the limit of no resource constraints they coincide with rational decision-makers.

In general, depending on the underlying system, such an optimization process might have additional process-dependent constraints that are not directly given by resource limitations, for example in cases when the optimization takes place in a parameter space that has fewer degrees of freedom than the full probability space. Abstractly, this is expressed by allowing the optimization process to search only in a subset of the full probability space.

Definition 8

(Bounded rational decision-making process). Let be a given utility function, and . A decision-making process with prior q, posterior , and cost function is called bounded rational if its posterior satisfies

(26) for a given upper bound , or equivalently

(27) for a given lower bound . In the case when the process constraints disappear, i.e., if , then a bounded rational decision-maker is called bounded-optimal.

The equivalence between Equation (26) and Equation (27) is easily seen from the equivalent optimization problem given by the formalism of Lagrange multipliers [61],

$$\operatorname*{arg\,min}_{p}\Big( C(p) - \beta\, \mathbb{E}_p[U] \Big) \;=\; \operatorname*{arg\,max}_{p}\Big( \mathbb{E}_p[U] - \frac{1}{\beta}\, C(p) \Big) \tag{28}$$

where $C$ denotes the cost function, the optimization runs over the admissible subset of distributions, and the cost or utility constraint is expressed by a trade-off between utility and cost, or cost and utility, with a trade-off parameter given by the Lagrange multiplier $\beta$, which is chosen such that the constraint given by the upper bound on the cost or by the lower bound on the utility is satisfied. It is easily seen from the maximization problem on the right side of Equation (28) that a larger value of $\beta$ decreases the weight of the cost term and thus allows for higher values of the cost function. Hence, $\beta$ parameterizes the amount of resources the decision-maker can afford with respect to the cost function $C$, and, at least in non-trivial cases (non-constant utilities), it is therefore a resource parameter with respect to $C$ in the sense of Definition 5. In particular, for $\beta \to 0$, the decision-maker minimizes its cost function irrespective of the expected utility, and therefore stays at the prior, $p = q$, whereas $\beta \to \infty$ makes the cost term disappear so that the decision-maker becomes purely rational with a Dirac posterior centered on the optima of the utility function U.

For example, in Figure 11, we can see how the posteriors of bounded-optimal decision-makers with different cost functions for and with utility leave a trace in probability space, by moving away from an exemplary prior and eventually arriving at the rational solution .

Figure 11.

Paths of bounded-optimal decision-makers in for . The straight lines in the background denote level sets of expected utility, the solid lines are level sets of the cost functions, and the dashed curves represent the paths of a bounded-optimal decision-maker given by Equation (28) with utility , prior , and cost functions given by: (a) Kullback-Leibler divergence; (b) Tsallis relative entropy of order ; and (c) Burg relative entropy.

For informational costs (i.e., proportional to Kullback-Leibler divergence), β is a resource parameter with respect to any cost function.

Proposition 3.

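If $(p_\beta)_{\beta > 0}$ is a family of bounded-optimal posteriors given by Equation (28) with the Kullback-Leibler divergence as cost function, then β is a resource parameter with respect to any cost function, in particular

$$\beta < \beta' \;\Longrightarrow\; p_\beta \prec_q p_{\beta'} \tag{29}$$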

This generalizes a result in [37] to the case of non-uniform priors, by making use of our new Characterization (v) of $\prec_q$, by which it suffices to show that the quantities compared in (v) are increasing functions of β for all indices specified in Theorem 2 (see Proposition A8 in Appendix A for details). For simplicity, we restrict ourselves to the case of the Kullback-Leibler divergence; however, the proof is analogous for cost functions of the form of Equation (20) with f being differentiable and strictly convex on its domain (so that $f'$ is strictly monotonically increasing and thus invertible; see [37] for the case of uniform priors).

Hence, for any $\beta' > 0$, the posteriors $(p_\beta)_{\beta < \beta'}$ of a bounded-optimal decision-making process with the Kullback-Leibler divergence as cost function can be regarded as the steps of a decision-making process (i.e., satisfying Equation (25)) with posterior $p_{\beta'}$, where each step optimally trades off utility against informational cost. This means that with increasing β the posteriors reduce uncertainty not only in the sense of the Kullback-Leibler divergence, but also in the sense of any other cost function.
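The monotonicity along the path of bounded-optimal posteriors can be checked numerically. The sketch below assumes the Boltzmann form $p_\beta(x) \propto q(x)\, e^{\beta U(x)}$ for the posteriors with Kullback-Leibler cost, and uses the chi-squared divergence as one example of a different cost function. The prior, utility values, and grid of β are illustrative.

```python
import math

def boltzmann(q, U, beta):
    """Assumed bounded-optimal posterior: p(x) proportional to q(x) * exp(beta * U(x))."""
    w = [qi * math.exp(beta * ui) for qi, ui in zip(q, U)]
    z = sum(w)
    return [wi / z for wi in w]

def kl(p, q):
    """Kullback-Leibler divergence in nats."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def chi2(p, q):
    """Chi-squared divergence, an f-divergence with f(t) = (t - 1)^2."""
    return sum((pi - qi) ** 2 / qi for pi, qi in zip(p, q))

q = [0.5, 0.3, 0.2]
U = [0.0, 1.0, 2.0]
betas = [0.0, 0.5, 1.0, 2.0, 4.0]

path = [boltzmann(q, U, b) for b in betas]
kl_costs = [kl(p, q) for p in path]
chi2_costs = [chi2(p, q) for p in path]
utilities = [sum(pi * ui for pi, ui in zip(p, U)) for p in path]

for b, c1, c2, u in zip(betas, kl_costs, chi2_costs, utilities):
    print(f"beta={b:3.1f}  KL={c1:.4f}  chi2={c2:.4f}  E[U]={u:.4f}")
```

In this example both costs and the expected utility grow with β, starting from zero cost at the prior for β = 0. This is a spot check for one prior and one utility function, not a substitute for the proof referenced in the text.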

The important case of bounded-optimal decision-makers with informational costs is termed information-theoretic bounded rationality [14,18,62] and is studied more closely in the following sections.

3.2. Information-Theoretic Bounded Rationality

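For bounded-optimal decision-making processes with informational costs, the unconstrained optimization problem in Equation (28) takes the form $\max_{p} F[p]$, where

$$F[p] := \mathbb{E}_p[U] - \frac{1}{\beta}\, D_{\mathrm{KL}}(p\,\|\,q) \tag{30}$$

which has a unique maximum $p_\beta$, the bounded-optimal posterior given by

$$p_\beta(x) = \frac{1}{Z_\beta}\, q(x)\, e^{\beta U(x)} \tag{31}$$

with normalization constant $Z_\beta := \sum_{x\in\Omega} q(x)\, e^{\beta U(x)}$. This form can easily be derived by finding the zeros of the functional derivative of the objective functional in Equation (30) with respect to p (with an additional normalization constraint), whereas the uniqueness follows from the convexity of the mapping $p \mapsto D_{\mathrm{KL}}(p\,\|\,q)$. For the actual maximum of $F$ we obtain

$$F[p_\beta] = \frac{1}{\beta} \log Z_\beta$$

so that $p_\beta(x) = q(x)\, e^{\beta\,(U(x) - F[p_\beta])}$.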
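The closed-form maximum can be verified numerically. The sketch below assumes the Free Energy $F[p] = \mathbb{E}_p[U] - \frac{1}{\beta} D_{\mathrm{KL}}(p\|q)$ and the Boltzmann-type maximizer $p_\beta \propto q\, e^{\beta U}$, and checks that the Free Energy at the maximizer equals $\frac{1}{\beta}\log Z_\beta$ and that a few other candidate distributions do not exceed it. All numbers are illustrative.

```python
import math

def free_energy(p, q, U, beta):
    """Expected utility minus (1/beta) times the KL divergence from the prior (nats)."""
    expected_utility = sum(pi * ui for pi, ui in zip(p, U))
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return expected_utility - kl / beta

def bounded_optimal(q, U, beta):
    """Return the assumed maximizer p(x) = q(x) exp(beta U(x)) / Z together with Z."""
    w = [qi * math.exp(beta * ui) for qi, ui in zip(q, U)]
    z = sum(w)
    return [wi / z for wi in w], z

q = [0.5, 0.3, 0.2]
U = [0.0, 1.0, 2.0]
beta = 1.5

p_star, z = bounded_optimal(q, U, beta)
f_star = free_energy(p_star, q, U, beta)

# A few alternative distributions for comparison (arbitrary choices).
candidates = [q, [1 / 3, 1 / 3, 1 / 3], [0.1, 0.2, 0.7], [0.05, 0.05, 0.9]]
candidate_values = [free_energy(c, q, U, beta) for c in candidates]

print(f_star, math.log(z) / beta, candidate_values)
```

Note that the Free Energy at the prior is just the prior's expected utility, so the gap between `f_star` and the first candidate value measures how much the decision-maker gains from deliberating at this value of β.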

Due to its analogy with physics, in particular thermodynamics (see, e.g., [18]), the maximization of Equation (30) is known as the Free Energy principle of information-theoretic bounded rationality, pioneered in [14,18,62], further developed in [63,64], and applied in recent studies of artificial systems, such as generative neural networks trained by Markov chain Monte Carlo methods [65], or in reinforcement learning as an adaptive regularization strategy [66,67], as well as in recent experimental studies on human behavior [68,69]. Note that there is a formal connection between Equation (30) and the Free Energy principle of active inference [70]; however, as discussed in [64] (Section 6.3), the two Free Energy principles have conceptually different interpretations.

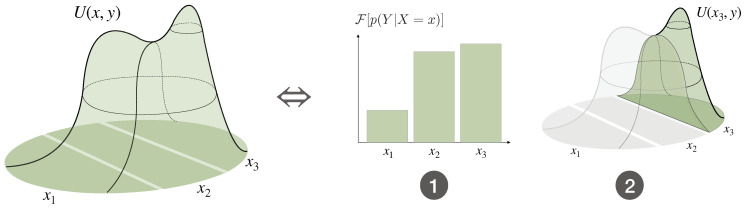

Example 4

(Bayes rule as a bounded-optimal posterior). In Bayesian inference, the parameter θ of the distribution $p(y|\theta)$ of a random variable Y is inferred from a given dataset $d = \{y_1, \dots, y_N\}$ of observations of Y by treating the parameter itself as a random variable Θ with a prior distribution $q(\theta)$. The parameterized distribution of Y evaluated at an observation $y_i$ given a certain value of Θ, i.e., $p(y_i|\theta)$, is then understood as a function of θ, known as the likelihood of the datapoint $y_i$ under the assumption of $\Theta = \theta$. After seeing the dataset d, the belief about Θ is updated by using Bayes rule

$$p(\theta|d) = \frac{q(\theta)\, p(d|\theta)}{p(d)}, \qquad p(d|\theta) = \prod_{i=1}^{N} p(y_i|\theta).$$

This takes the form of a bounded-optimal posterior in Equation (31) with $\beta = N$ and utility function given by the average log-likelihood per datapoint,

$$U(\theta) := \frac{1}{N} \sum_{i=1}^{N} \log p(y_i|\theta),$$

since then Bayes rule reads

$$p(\theta|d) = \frac{1}{Z}\, q(\theta)\, e^{N U(\theta)} \tag{32}$$

The corresponding Free Energy in Equation (30), which is maximized by Equation (32),

$$F[p] = \mathbb{E}_{p(\theta)}[U(\theta)] - \frac{1}{N}\, D_{\mathrm{KL}}(p\,\|\,q) = -\frac{1}{N}\, \mathbb{E}_{p(\theta)}\!\left[ \log \frac{p(\theta)}{q(\theta)\, p(d|\theta)} \right] \tag{33}$$

coincides with the variational Free Energy from Bayesian statistics. Indeed, from Equation (33) it is easy to see that $F$ assumes its maximum when $p(\theta)$ is proportional to $q(\theta)\, p(d|\theta)$, that is when $p$ is given by Equation (32). In the literature, $F$ is used in the variational characterization of Bayes rule, in cases when the form of Equation (32) cannot be achieved exactly but instead is approximated by optimizing Equation (33) over the parameters ϑ of a parameterized distribution $p_\vartheta$ [71,72].
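The correspondence between Bayes rule and the bounded-optimal posterior can be illustrated with a toy model. The sketch below assumes independent Bernoulli observations and a hypothetical three-point grid for θ; it computes the Bayes posterior directly and, separately, as a posterior of the form $q(\theta)\, e^{\beta U(\theta)} / Z$ with $\beta = N$ and $U$ the average log-likelihood per datapoint.

```python
import math

thetas = [0.2, 0.5, 0.8]          # hypothetical grid of Bernoulli parameters
prior = [0.25, 0.5, 0.25]         # prior belief q(theta)
data = [1, 1, 0, 1, 1, 0, 1]      # observations y_1, ..., y_N (illustrative)

def likelihood(y, theta):
    """Bernoulli likelihood p(y | theta)."""
    return theta if y == 1 else 1.0 - theta

# Bayes rule: posterior proportional to prior times product of likelihoods.
joint = [pr * math.prod(likelihood(y, th) for y in data)
         for pr, th in zip(prior, thetas)]
evidence = sum(joint)
bayes = [j / evidence for j in joint]

# Bounded-optimal form with beta = N and utility = average log-likelihood.
N = len(data)
U = [sum(math.log(likelihood(y, th)) for y in data) / N for th in thetas]
weights = [pr * math.exp(N * u) for pr, u in zip(prior, U)]
Z = sum(weights)
bounded_optimal = [w / Z for w in weights]

print(bayes)
print(bounded_optimal)
```

The two posteriors agree up to floating-point error, and the normalization constant `Z` coincides with the evidence $p(d)$.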

In the following section, we show that the Free Energy of a bounded rational decision-making process satisfies a recursivity property, which allows the interpretation of as a certainty-equivalent.

3.3. The Recursivity of and the Value of a Decision Problem

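Consider a bounded-optimal decision-maker with an informational cost function deciding about a random variable Z with values in Ω that is decomposed into the random variables X and Y, i.e., $Z = (X, Y)$. This decomposition can be understood as a two-step process, where first a decision about a partition of the full search space is made, represented by a random variable X with values in I, followed by a decision about Y inside the partition selected by X (see Figure 6).

Since $p(z) = p(x)\, p(y|x)$ for $z = (x, y)$, by the additivity of the Kullback-Leibler divergence (Proposition A7), we have

$$D_{\mathrm{KL}}\big(p(Z)\,\|\,q(Z)\big) = D_{\mathrm{KL}}\big(p(X)\,\|\,q(X)\big) + \mathbb{E}_{p(X)}\Big[ D_{\mathrm{KL}}\big(p(Y|X)\,\|\,q(Y|X)\big) \Big]$$

and therefore, if $F_x := \mathbb{E}_{p(Y|x)}[U(x, Y)] - \frac{1}{\beta}\, D_{\mathrm{KL}}\big(p(Y|x)\,\|\,q(Y|x)\big)$ denotes the Free Energy of the second step,

$$F[p] = \mathbb{E}_{p(X)}\big[ F_X \big] - \frac{1}{\beta}\, D_{\mathrm{KL}}\big(p(X)\,\|\,q(X)\big) \tag{34}$$

In particular, the Free Energy of the second decision-step plays the role of the utility function of the first decision-step (see Figure 12). In Equation (34), the two decision-steps have the same resource parameter β, controlling the strength of the constraint on the total informational costs $D_{\mathrm{KL}}\big(p(Z)\,\|\,q(Z)\big)$.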

Figure 12.

Recursivity of the Free Energy under coarse-graining. The decision about is equivalent to a two-step process consisting of the decision about X and the decision about Y given X. The objective function for the first step is the Free Energy of the second step.

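More generally, each step might have a separate information-processing constraint, which requires two resource parameters $\beta_1$ and $\beta_2$, and results in the total Free Energy

$$F[p] = \mathbb{E}_{p(X)}\Big[ \mathbb{E}_{p(Y|X)}[U(X, Y)] - \frac{1}{\beta_2}\, D_{\mathrm{KL}}\big(p(Y|X)\,\|\,q(Y|X)\big) \Big] - \frac{1}{\beta_1}\, D_{\mathrm{KL}}\big(p(X)\,\|\,q(X)\big)$$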

Example 5.

Consider a bounded-rational decision-maker with informational cost function and a utility function U defined on a set with values as given in Figure 13 and an information-processing bound of bits (). If we partition Ω into the disjoint subsets and , then the decision about Z can be decomposed into two steps, , the decision about X corresponding to the choice of the partition and the decision about Y given X corresponding to the choice of inside the given partition determined by X. According to Equation (34), the choice of the partition is not in favor of the achieved expected utility inside each partition, but of the Free Energy (see Figure 13).

Figure 13.

The Free Energy as certainty-equivalent (Example 5). (a) Utility function U as a function of (top) and expected utilities for the coarse-grained partitions and corresponding to the choices and , respectively, for a bounded-rational decision-maker with (bottom). (b) The bounded optimal probability distribution over (top) does not correspond to the expected utilities in (a) but to the Free Energy of the second decision-step, i.e., the decision about Y given X (bottom).

Therefore, a bounded rational decision-maker that has the choice among decision-problems ideally should base its decision not on the expected utility that might be achieved but on the Free Energy of the subordinate problems. In other words, the Free Energy quantifies the value of a decision-problem that, besides the achieved average utility, also takes the information-processing costs into account.
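The recursivity can be checked on a small two-level problem. The sketch below assumes informational costs with a common resource parameter β for both steps, so that the maximal Free Energy of each step has the form $\frac{1}{\beta}\log \sum_i q_i\, e^{\beta u_i}$. The partition labels, priors, and utility values are hypothetical and do not reproduce Example 5.

```python
import math

beta = 2.0

# Hypothetical two-level problem: X selects a partition, Y an element inside it.
U = {"a": [1.0, 0.2], "b": [0.6, 0.7, 0.5]}           # utilities U(x, y)
q_x = {"a": 0.5, "b": 0.5}                            # prior over partitions
q_y = {"a": [0.5, 0.5], "b": [1 / 3, 1 / 3, 1 / 3]}   # priors inside partitions

def max_free_energy(prior, utilities, beta):
    """Maximal Free Energy (1/beta) * log sum_i prior_i * exp(beta * utility_i)."""
    z = sum(pr * math.exp(beta * u) for pr, u in zip(prior, utilities))
    return math.log(z) / beta

# Second step: Free Energy of the decision about Y inside each partition.
F_second = {x: max_free_energy(q_y[x], U[x], beta) for x in U}

# First step: the second-step Free Energies act as the utility of X.
F_two_step = max_free_energy([q_x[x] for x in U], [F_second[x] for x in U], beta)

# Flat decision about Z = (X, Y) with product prior q(x) q(y|x).
flat_prior = [q_x[x] * qy for x in U for qy in q_y[x]]
flat_utility = [u for x in U for u in U[x]]
F_flat = max_free_energy(flat_prior, flat_utility, beta)

print(F_second, F_two_step, F_flat)
```

The two-step value and the flat value coincide, which is the numerical counterpart of the statement that the Free Energy of a subordinate problem serves as its certainty-equivalent in the superordinate decision.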

3.4. Multi-Task Decision-Making and the Optimal Prior

Thus far, we have considered decision-making problems with utility functions defined on Ω only, modeling a single decision-making task. This is extended to multi-task decision-making problems by utility functions of the form $U(x, w)$, where the additional variable $w$ represents the current state of the world. Different world states w in general lead to different optimal decisions $x^*(w)$. For example, in a chess game, the optimal moves depend on the current board configurations the players are faced with.

The prior q for a bounded-rational multi-task decision-making problem may either depend or not depend on the world state $w$. In the first case, the multi-task decision-making problem is just given by multiple single-task problems, i.e., for each $w$, $q(\cdot|w)$ and $p(\cdot|w)$ are the prior and posterior of a bounded rational decision-making process with utility function $U(\cdot, w)$, as described in the previous sections. In the case when there is a single prior $q$ for all world states $w$, the Free Energy is

$$F[p] = \sum_{w} \rho(w) \left( \mathbb{E}_{p(X|w)}[U(X, w)] - \frac{1}{\beta}\, D_{\mathrm{KL}}\big(p(X|w)\,\|\,q(X)\big) \right) \tag{35}$$

where $\rho$ is a given world state distribution. Note that, for simplicity, we assume that β is independent of $w$, which means that only the average information-processing is constrained, in contrast to the information-processing being constrained for each world state, which in general would result in β being a function of w. Similar to single-task decision-making (Equation (31)), the maximum of Equation (35) is achieved by

$$p_\beta(x|w) = \frac{1}{Z_\beta(w)}\, q(x)\, e^{\beta U(x, w)} \tag{36}$$

with normalization constant $Z_\beta(w) = \sum_{x} q(x)\, e^{\beta U(x, w)}$. Interestingly, the deliberation cost in Equation (35) depends on how well the prior was chosen to reach all posteriors without violating the processing constraint. In fact, viewing the Free Energy in Equation (35) as a function of both posterior and prior, $F[p, q]$, and optimizing for the prior yields the marginal of the joint distribution $\rho(w)\, p(x|w)$, i.e., the mean of the posteriors for the different world states,

$$q^*(x) = \sum_{w} \rho(w)\, p(x|w) \tag{37}$$

Similar to Equation (31), Equation (37) follows from finding the zeros of the functional derivative of the Free Energy with respect to $q$ (modified by an additional term for the normalization constraint).

Optimizing the Free Energy for both prior and posterior can be achieved by iterating Equations (36) and (37). This results in an alternating optimization algorithm, originally developed independently by Blahut and Arimoto to calculate the capacity of a memoryless channel [73,74] (see [75] for a convergence proof by Csiszár and Tusnády). Note that, when the prior is given by the marginal $p(X)$ in Equation (37), the average Kullback-Leibler divergence between posteriors and prior satisfies

$$\mathbb{E}_{W}\Big[ D_{\mathrm{KL}}\big(p(X|W)\,\|\,p(X)\big) \Big] = I(W; X),$$

in particular, the information-processing cost is now given by the mutual information between the random variables W and X. In this form, we can see that the Free Energy optimization with respect to prior and posterior is equivalent to the optimization problem in classical rate distortion theory [76], where U is given by the negative of the distortion measure.
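The alternating optimization can be sketched in a few lines. The code below is a minimal illustration, assuming the posterior update $p(x|w) \propto q(x)\, e^{\beta U(x,w)}$ and the prior update $q(x) = \sum_w \rho(w)\, p(x|w)$, with a hypothetical identity-like utility and world state distribution. It is not the implementation used for the figures.

```python
import math

def blahut_arimoto(U, rho, beta, n_iter=50):
    """Alternate posterior and prior updates.

    U[w][x] is the utility of choice x in world state w and rho[w] the
    world state probability. Returns the prior q, the posteriors p[w][x],
    and the Free Energy after each posterior update.
    """
    n_x = len(U[0])
    q = [1.0 / n_x] * n_x              # start from a uniform prior
    trace = []
    for _ in range(n_iter):
        # Posterior step: Boltzmann-type update for every world state.
        p, free_energy = [], 0.0
        for w, rho_w in enumerate(rho):
            weights = [q[x] * math.exp(beta * U[w][x]) for x in range(n_x)]
            z = sum(weights)
            p.append([wt / z for wt in weights])
            free_energy += rho_w * math.log(z) / beta
        trace.append(free_energy)
        # Prior step: marginal of the posteriors over world states.
        q = [sum(rho[w] * p[w][x] for w in range(len(rho))) for x in range(n_x)]
    return q, p, trace

# Illustrative problem: utility 1 if the choice matches the world state, else 0.
U = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
rho = [0.6, 0.3, 0.1]
q_opt, p_opt, trace = blahut_arimoto(U, rho, beta=2.0)

# Information-processing cost: mutual information between W and X (nats).
mutual_info = sum(
    rho[w] * p_opt[w][x] * math.log(p_opt[w][x] / q_opt[x])
    for w in range(len(rho)) for x in range(len(q_opt))
)
print(q_opt, trace[0], trace[-1], mutual_info)
```

Because each of the two updates maximizes the Free Energy in one argument while the other is held fixed, the recorded Free Energy values do not decrease from one iteration to the next.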

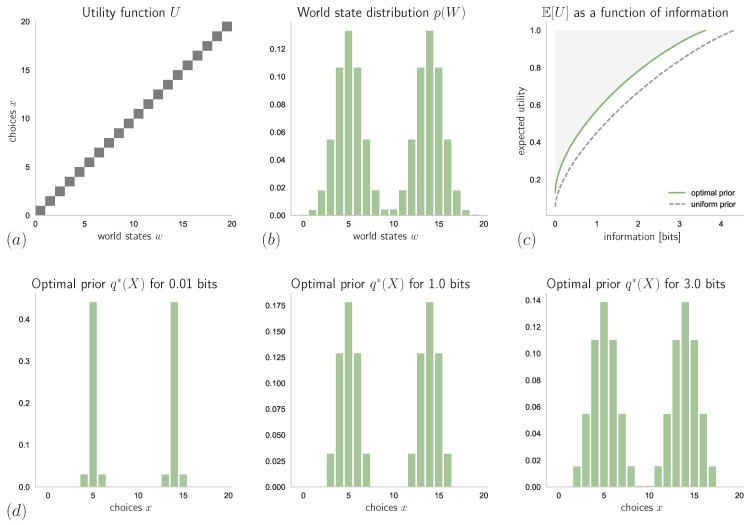

Similarly to rate-distortion theory, where compression algorithms are analyzed with respect to the rate-distortion function, any decision-making system can now be analyzed with respect to informational bounded-optimality. More precisely, when plotting the achieved expected utility against the information-processing resources of a bounded-rational decision-maker with optimal prior, we obtain a Pareto-optimality curve that forms an efficiency-frontier that cannot be surpassed by any decision-making process (see Figure 14c).

Figure 14.

Absolute identification task with known world state distribution: (a) utility function; (b) world states distribution (a mixture of two Gaussians); (c) expected utility as a function of information-processing resources for a bounded-optimal decision-maker with a uniform and with an optimal prior (the shaded region cannot be reached by any decision-making process); and (d) exemplary optimal priors for different information-processing bounds.

3.5. Multi-Task Decision-Making with Unknown World State Distribution

A bounded rational decision-making process with informational cost and utility that has an optimal prior given by the marginal in Equation (37) must have perfect knowledge about the world state distribution . In contrast, here we consider the case when the exact shape of the world state distribution is unknown to the decision-maker and therefore has to be inferred from the already seen world states. More precisely, we assume that the world state distribution is parameterized by a parameter , i.e., for a given parameterized distribution . Since the true parameter is unknown, is treated as a random variable by itself, so that . After a dataset of samples from has been observed the joint distribution of all involved random variables can be written as

where denotes the decision-maker’s prior belief about , and is the likelihood of the previously observed world states. Therefore, the resulting (multi-task) Free Energy (see Equation (35)) is given by

| (38) |

It turns out that we obtain Bayesian inference as a byproduct of optimizing Equation (38) with respect to the prior . Indeed, by calculating the functional derivative with respect to of the Free Energy in Equation (38) plus an additional term for the normalization constraint of (with Lagrange multiplier ), we can see that any distribution that optimizes Equation (38) must satisfy

where is chosen such that for any . This is equivalent to

where denotes the normalization constant of , given by , since as well as . Therefore, we obtain

with as defined in Equation (39). Hence, we have shown

Proposition 4

(Optimality of Bayesian inference). The optimal prior that maximizes Equation (38) is given by , where is the Bayes posterior

(39)

4. Example: Absolute Identification Task with Known and Unknown Stimulus Distribution

Consider a bounded rational decision-maker with a multi-task utility function U such that, for each , is non-zero for only one choice , as shown in Figure 14. Here, the decision and world spaces are both finite sets of elements. The world state distribution is given by a mixture of two Gaussian distributions, as shown in Figure 14b. Due to some world states being more likely than others, there are some choices that are less likely to be optimal.

4.1. Known Stimulus Distribution

As can be seen in Figure 14c (dashed line), here it is not ideal to have a uniform prior distribution, $q(x) = 1/|\Omega|$ for all $x \in \Omega$. Instead, if the world state distribution is known perfectly and the prior has the form suggested by Equation (37), i.e., the marginal of the bounded-optimal posteriors over the world states, then, as can be seen in Figure 14c (solid line), achieving the same expected utility as with a uniform prior requires fewer informational resources. In particular, the explicit form of the optimal prior depends on the resource parameter β, see Figure 14d. For low resource availability (small β), only the choices that correspond to the most probable world states are considered. However, for $\beta \to \infty$, we have

because here is the posterior of a rational decision-maker, where denotes the Kronecker- (which is only non-zero if ). Hence, for decision-makers with abundant information-processing resources (large ) the optimal prior approaches the form of the world state distribution (since here ).

4.2. Unknown Stimulus Distribution

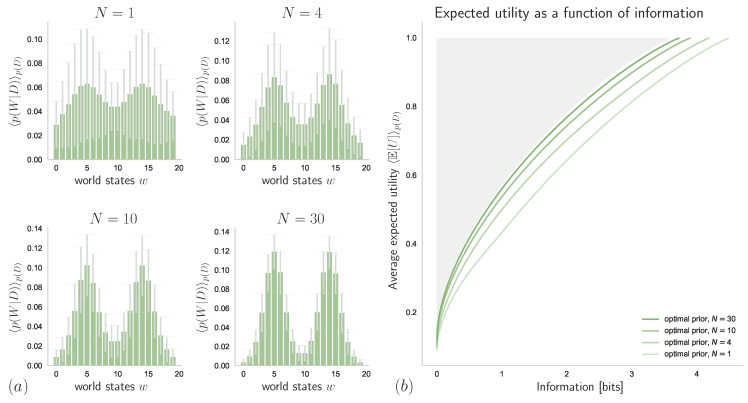

In the case when the decision-maker has to infer its knowledge about from a set of samples , we know from Section 3.5 that this is optimally done via Bayesian inference. Here, we assume a mixture of two Gaussians as a parameterization of , so that , where and denote mean and standard-deviation of the ith component, respectively (for simplicity, with fixed equal weights for the two mixture components).

In Figure 15a, we can see how different values of N affect the belief about the world state distribution, , when is given by the Bayes posterior (39) with a uniform prior belief . The resulting expected utilities (averaged over samples from ) as functions of available information-processing resources are displayed in Figure 15b, which shows how the performance of a bounded-rational decision-maker with optimal prior and perfect knowledge about the true world state distribution is approached by bounded rational decision-makers with limited but increasing knowledge given by the sample size N.

Figure 15.

Absolute identification task with unknown world state distribution: (a) average of inferred world state distributions for different sizes N of datasets (standard-deviations across datasets indicated by error bars); and (b) resulting utility-information curves of a bounded-rational decision-maker with optimal prior that has to infer the world state distribution from datasets with different sizes N.

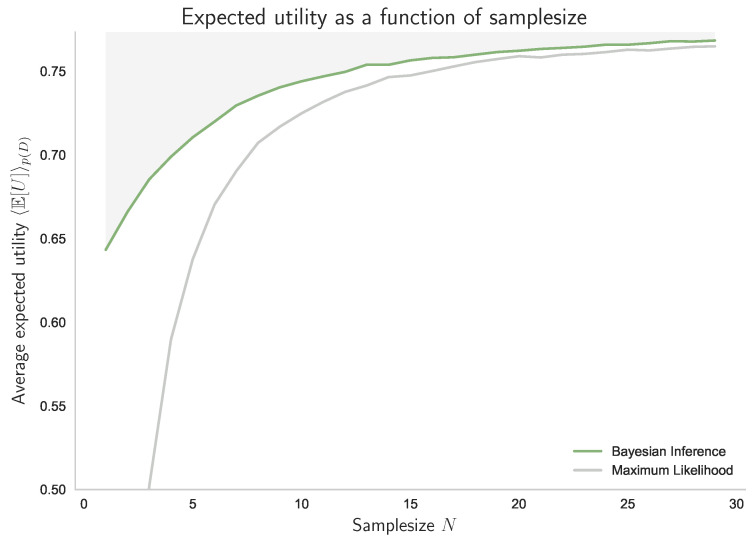

Abstractly, we can view Equation (39) as the bounded optimal solution to the decision-making problem that starts with a prior and arrives at a posterior after processing the samples in (see also Example 4). In fact, the posteriors shown in Figure 15a satisfy the requirements for a decision-making process with resource given by the number of data N, when averaged over . In particular, by increasing N the posteriors contain less and less uncertainty with respect to the preorder ≺ given by majorization. Accordingly, if we plot the achieved expected utility against the number of samples, we obtain an optimality curve similar to Figure 14c and Figure 15b. In Figure 16, we can see how Bayesian Inference outperforms Maximum Likelihood when evaluated with respect to the average expected utility of a bounded-rational decision-maker with 2 bits of information-processing resources.

Figure 16.

Optimality curve given by Bayesian inference. The average expected utility as a function of N achieved by a bounded-rational decision-maker that infers the world state distribution with Bayes rule in Equation (39) forms an efficiency frontier that cannot be surpassed by any other inference scheme, like for example Maximum Likelihood, when starting from the same prior belief about the world.

5. Discussion

In this work, we have developed a generalized notion of decision-making in terms of uncertainty reduction. Based on the simple idea of transferring pieces of probability between the elements of a probability distribution, which we call elementary computations, we have promoted a notion of uncertainty which is known in the literature as majorization, a preorder ≺ on the space of probability distributions. Taking non-uniform initial distributions into account, we extended the concept to the notion of relative uncertainty, which corresponds to relative majorization $\prec_q$. Even though a large amount of research has been done on majorization theory, from the early works [29,34,38] through further developments [27,30,31,32,36,77,78] to modern applications [39,40,41], there is a lack of results on the more general concept of relative majorization. This does not seem to be due to a lack of interest, as can be seen from the results [28,57,58,79], but mostly because relative majorization loses some of the appealing properties of majorization, which makes it harder to deal with; for example, permutations no longer leave the ordering invariant, in contrast to the case of a uniform prior. However, this restriction does not affect our application of the concept to decision-making, as permutations are not considered as elementary computations, since they do not diminish uncertainty. By reducing the non-uniform to the uniform case, we managed to prove new results on relative majorization (Theorem 2), which then enabled new results in other parts of the paper (Example 3 and Propositions A6 and A8), and allowed an intuitive interpretation of our final definition of a decision-making process (Definition 7) in terms of elementary computations with respect to non-uniform priors (Definition 6).

More precisely, starting from stepwise elimination of uncertain options (Section 2.1), we have argued that decision-making can be formalized by transitions between probability distributions (Section 2.2), and arrived at the concept of decision-making processes traversing probability space from prior to posterior by successively moving pieces of probability between options such that uncertainty relative to the prior is reduced (Section 2.3). Such transformations can be quantified by cost functions, which we define as order-preserving functions with respect to $\prec_q$ and which capture the resource costs that must be expended by the process. We have shown (Propositions A1 and A6) that many known generalized entropies and divergences, which are examples of such cost functions (Examples 1 and 3), satisfy superadditivity with respect to coarse-graining. This means that under such cost functions, decision-making costs can potentially be reduced by a more intelligent search strategy, in contrast to Kullback-Leibler divergence, which was characterized as the only additive cost function (Proposition A7). There are plenty of open questions for further investigation in that regard. First, it is not clear under which assumptions on the cost functions superadditivity could be improved to with and . Additionally, it would be an interesting challenge to find sufficient conditions implying superadditivity that include more cost functions than f-divergences. The field of information geometry might give further insights on the topic, since there are studies in similar directions, in particular characterizations of divergence measures in terms of information monotonicity and the data-processing inequality [48,80,81,82]. One interesting result is the characterization of Kullback-Leibler divergence as the single divergence measure being both an f-divergence and a Bregman divergence.

In Section 3, bounded rational decision-makers were defined as decision-making processes that are maximizing utility under constraints on the cost function, or equivalently minimizing resource costs under a minimal utility requirement. In the important case of additive cost functions (i.e., proportional to Kullback-Leibler divergence), this leads to information-theoretic bounded rationality [14,18,62,63,64], which has precursors in the economic and game-theoretic literature [4,8,11,12,13,14,15,16,19,83,84,85]. We have shown that the posteriors of a bounded rational decision-maker with increasing informational constraints leave a path in probability space that can itself be considered an anytime decision-making process, in each step perfectly trading off utility against processing costs (Proposition 3). In particular, this means that the path of a bounded rational decision-maker with informational cost decreases uncertainty with respect to all cost functions, not just Kullback-Leibler divergence. We have also studied the role of the prior in bounded rational multi-task decision-making, where we have seen that imperfect knowledge about the world state distribution leads to Bayesian inference as a byproduct, which is in line with the characterization of Bayesian inference as minimizing prediction surprise [86], but also demonstrates the wide applicability of the developed theory of decision-making with limited resources.

Finally, in Section 4, we have presented the results of a simulated bounded rational decision-maker solving an absolute identification task with and without knowledge about the world state distribution. Additionally, we have seen that Bayesian inference can be considered a decision-making process with limited resources by itself, where the resource is given by the number of available data points.

6. Conclusions

To our knowledge, this is the first principled approach to decision-making based on the intuitive idea of Pigou–Dalton-type probability transfers (elementary computations). Information-theoretic bounded rationality has been introduced by other axiomatic approaches before [8,62]. For example, in [62], a precise relation between rewards and information value is derived by postulating that systems will choose those states with high probability that are desirable for them. This leads to a direct coupling of probabilities and utility, where utility and information inherit the same structure, and only differ with respect to normalization (see [87] for similar ideas). In contrast, we assume utility and probability to be independent objects a priori that only have a strict relationship in the case of bounded-optimal posteriors. The approach in [8] introduces Kullback-Leibler divergence as disutility for decision control. Based on Hobson’s characterization [59], the authors argued that cost functions should be monotonic with respect to uniform distributions (the property in Equation (22)) and invariant under decomposition, which coincides with additivity under coarse-graining (see Examples 1 and 3). Both assumptions are special cases of our more general treatment, where cost functions must be monotonic with respect to elementary computations and are generally not restricted to being additive.

In the literature, there are many mechanistic models of decision-making that instantiate decision-making processes with limited resources. Examples include reinforcement learning algorithms with variable depth [88,89], Markov chain Monte Carlo (MCMC) models where only a certain number of samples can be evaluated [65,85,90], and evidence accumulation models that accumulate noisy evidence until a threshold is reached, where the threshold is either fixed [91,92,93,94,95] or determined dynamically by explicit cost functions depending on the number of allowed evidence accumulation steps [96,97]. Many of these concrete models may be described abstractly by resource parameterizations (Definition 5). More precisely, in such cases, the posteriors are generated by an explicit process with process constraints and resource parameter r. For example, in diffusion processes r may correspond to the amount of time allowed for evidence accumulation, in Monte Carlo algorithms r may reflect the number of MCMC steps, and in a reinforcement learning agent r may represent the number of forward-simulations. If the resource restriction is described by a monotonic cost function [96,97], then the process can be optimized by a maximization problem of the form

where are non-negative constants, denotes the subset of probability distributions with resource r, and denotes a cost function such that for is strictly monotonically increasing. In particular, such cases can also be regarded as bounded rational decision-making problems of the form of Equation (26).

Bounded rationality models in the literature come in a variety of flavors. In the heuristics and biases paradigm, the notion of optimization is often dismissed in its entirety [7], even though decision-makers still have to have a notion of options being better or worse, for example to adapt their aspiration levels in a satisficing scheme [98]. We have argued that from an abstract normative perspective we can formulate satisficing in probabilistic terms, such that one could investigate the efficiency of heuristics within this framework. Another prominent approach to bounded rationality is given by systems capable of decision-making about decision-making, i.e., meta-decision-making. Explicit decision-making processes composed of two decision steps have been studied, for example, in the reinforcement learning literature [88,89,99,100], where the first step is represented by a meta decision about whether a cheap model-free or a more expensive model-based learning algorithm is used in the second step. The meta step consists of a trade-off between the estimated utility and the decision-making costs of the second decision step. In the information-theoretic framework of bounded rationality, this could be seen as a natural property of multi-step decision-making and the recursivity property in Equation (34), from which it follows that the value of a decision-making problem is given by its free energy that, besides the achieved utility, also takes the corresponding processing costs into account. A further approach to bounded rationality is computational rationality [19], where the focus lies on finding bounded-optimal programs that solve constrained optimization problems presented by the decision-maker’s architecture and the task environment. As described above, such architectural constraints could be represented by a process-dependent subset , and in fact our resource costs could be included into such a subset as well. From this point of view, both frameworks would look for bounded-optimal solutions in that the search space is first restricted and then the best solution in the restricted search space is found. However, our search space would consist of distributions describing probabilistic input-output maps, whereas the search space of programs would be far more detailed.

The notion of decision-making presented in this work was developed from the basic concept of uncertainty reduction given by elementary computations and is motivated by the simple idea of progressively eliminating options. On the one hand, it provides a promising theoretical playground that is open for further investigation (e.g., superadditivity of cost functions and minimality of relative entropy), potentially providing new insights into the connection between rationality theory and information theory. On the other hand, it serves as a general framework to describe and analyze all kinds of decision-making processes (e.g., in terms of bounded-optimality).

Appendix A. Proofs of Technical Results from Section 2 and Section 3

Proposition A1

(Superadditivity of generalized entropies under coarse-graining, Example 1). All cost functions of the form

(A1) with f (strictly) convex and differentiable on , and , are superadditive with respect to coarse-graining, that is

whenever , and and .

Proof.

As shown in the following proof, strict convexity is not needed for superadditivity, but it is required for the definition of a cost function. First, since , notice that we can always redefine the convex function f in Equation (A1) by for an arbitrary constant without changing for all . Hence, without loss of generality, we may assume , so that is a global minimum of f (since due to convexity). Since C is symmetric, superadditivity under coarse-graining is equivalent to

(A2) This simply follows by induction, since Equation (A2) corresponds to the partitioning with and for all (see also [101] Proposition 2.3.5). In terms of f, Equation (A2) reads

By setting and , this is equivalent to

(A3) for all . Writing and noting that and , it suffices to show that , which shows that is monotonically decreasing from to and thus for all . We have for all

By the symmetry of under the replacement of v by , without loss of generality, we may assume that , so that . Since f is convex, is monotonically increasing on , and thus . In particular,

and thus, since , it follows that , which completes the proof. □
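The statement of Proposition A1 can be probed numerically. The sketch below rests on two labeled assumptions, since the displayed equations are not reproduced here: cost functions are taken to have the form C(p) = Σ f(p_i), and superadditivity under coarse-graining is taken to mean that C(p) is at least C(p_Z) plus the sum over blocks of p_Z(i) times the cost of the conditional distribution within block i, where p_Z is the distribution over blocks. The convex function f(t) = t² − t (with f(0) = f(1) = 0) and the partition `blocks` are illustrative choices; f(t) = t log t, i.e., negative Shannon entropy, is included as the additive reference case.

```python
import numpy as np

def cost(p, f):
    """Cost of the assumed form C(p) = sum_i f(p_i)."""
    return float(np.sum(f(np.asarray(p, dtype=float))))

def coarse_grain_terms(p, blocks, f):
    """Return C(p) and C(p_Z) + sum_i p_Z(i) * C(p(.|i)) for a partition."""
    p = np.asarray(p, dtype=float)
    block_mass = np.array([p[list(b)].sum() for b in blocks])
    within = sum(
        m * cost(p[list(b)] / m, f)
        for b, m in zip(blocks, block_mass) if m > 0
    )
    return cost(p, f), cost(block_mass, f) + within

def f_quadratic(t):
    # Illustrative convex choice with f(0) = f(1) = 0.
    return t**2 - t

def f_shannon(t):
    # t * log t with the convention 0 * log 0 = 0.
    t = np.asarray(t, dtype=float)
    safe = np.where(t > 0, t, 1.0)
    return t * np.log(safe)

rng = np.random.default_rng(0)
blocks = [(0, 1, 2), (3, 4), (5,)]
gaps_quadratic, gaps_shannon = [], []
for _ in range(1000):
    p = rng.dirichlet(np.ones(6))
    total, decomposed = coarse_grain_terms(p, blocks, f_quadratic)
    gaps_quadratic.append(total - decomposed)
    total, decomposed = coarse_grain_terms(p, blocks, f_shannon)
    gaps_shannon.append(total - decomposed)
```

Under these assumptions the gap is non-negative for the quadratic choice and vanishes, up to rounding, for negative Shannon entropy. A random check of this kind illustrates the inequality but does not replace the proof.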

Proposition A2

(Characterization of Shannon entropy, Example 1). If a cost function C is additive under coarse-graining, that is if with the notation from Proposition A1, then

for some , i.e., C is proportional to the negative Shannon entropy .

Proof.

Since uniform distributions over N options are majorized by uniform distributions over fewer options (see Equation (7)), it follows for any cost function C that the function f defined by is monotonically increasing. Therefore, the claim follows directly from Shannon’s proof [22], which shows that this monotonicity, additivity under coarse-graining, and continuity determine Shannon entropy up to a constant factor. □

Proposition A3

(Proposition 1). The uniform distribution is the unique minimal element in with respect to ≺, i.e.,

(A4)

Proof.

For the proof of Equation (A4), let be the family of all cyclic permutations of the N entries of a probability vector p, such that

It follows that for all , where as above, and therefore the uniform distribution is given by a convex combination of permutations of p, so that in Theorem 1 implies . There are many different ways to prove uniqueness. A direct way is to assume there exists with for all , so that by in Theorem 1 there exists a stochastic matrix A with . However, since (A stochastic), it follows that . An indirect way would be to use that if for all , then from Example 1 we know that this implies for all , where H denotes the Shannon entropy, simply because is a cost function. In particular, q maximizes H and therefore coincides with the uniform distribution . □
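The averaging argument in this proof can be illustrated with a short sketch. It averages all cyclic shifts of a probability vector and checks majorization with the classical partial-sum criterion on decreasingly sorted entries. The helper `majorizes` and the example vector `p` are illustrative and not taken from the paper.

```python
import numpy as np

def majorizes(p, q, tol=1e-12):
    """True if p majorizes q: sorted partial sums of p dominate those of q."""
    p_sorted = np.sort(p)[::-1]
    q_sorted = np.sort(q)[::-1]
    return bool(np.all(np.cumsum(p_sorted) >= np.cumsum(q_sorted) - tol))

p = np.array([0.5, 0.25, 0.15, 0.1])
N = len(p)

# Each entry of p visits every position exactly once across the N shifts,
# so the average of the shifts has all entries equal to sum(p) / N = 1 / N.
cyclic_shifts = [np.roll(p, k) for k in range(N)]
average = np.mean(cyclic_shifts, axis=0)
uniform = np.full(N, 1.0 / N)
```

Here `average` coincides with `uniform`, and `majorizes(p, uniform)` holds while `majorizes(uniform, p)` does not, in line with the uniform distribution being minimal.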

Proposition A4

(Equivalence of in Theorem 2). The following are equivalent

- (i)

, i.e., contains more uncertainty relative to q than p (Definition 4).

- (iii)

for a q-stochastic matrix A, i.e., and .

- (v)

for all and , where , and the arrows indicate that is ordered decreasingly.

Proof.

We use the fact that has a left inverse satisfying , where denotes the identity on . This can be verified by simply multiplying the corresponding matrices, given by

and noting that, by definition, .

Characterization follows from of Theorem 1 and Definition 4, since if and only if

for all , from which is an immediate consequence.

: Assuming that , we have and, therefore, by in Theorem 1, there exists a doubly stochastic matrix B such that . With , it follows that

where we use that and which is easy to check, and from B being a stochastic matrix. Note that, by slightly abusing notation, here e is always the constant vector irrespective of the number of its entries (N or , depending on which space the operator is defined). Moreover, we have

where we have used that by definition is the uniform distribution on , i.e., , and therefore also . In particular, since also ( have non-negative entries), it follows that A is a q-stochastic matrix such that .

: Similarly, if A is a q-stochastic matrix with , then , where satisfies and , where we have used that , , , and . In particular, since also , B is doubly stochastic and therefore , i.e., . □

Proposition A5

(Proposition 2). The prior is the unique minimal element in with respect to , that is for all .

Proof.

Let , and let denote its representation in . Then, by Proposition A3 (uniform distributions are minimal) and therefore , in particular q is minimal with respect to . For uniqueness, let be possibly another minimal element. Then, and therefore by in Theorem 2 there exists a q-stochastic matrix A with . However, since A is q-stochastic, , and thus . □

Proposition A6

(Example 3: Superadditivity of f-divergences under coarse-graining). All relative cost functions of the form

(A5) with f (strictly) convex and differentiable on , and , are superadditive with respect to coarse-graining, that is

whenever , and and , which for cost functions that are symmetric with respect to permutations of (such as Equation (20)) is equivalent to

(A6)

Proof.

This is a simple corollary to Proposition A1, after establishing the following interesting property of cost functions of the form of Equation (A5):

(A7) where denotes the representation mapping defined in Section 2.3 that maps q to a uniform distribution on an elementary decision space of elements, given by whenever , where is a disjoint partition of such that . Equation (A7) then follows from

where we use that . Hence, the case of a non-uniform prior reduces to the case of a uniform prior, which is shown in Proposition A1. □
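The reduction to a uniform prior can be checked on a small example. The sketch assumes that relative cost functions of the form of Equation (A5) are f-divergences, Σ q_i f(p_i / q_i), and that the prior has rational entries q_i = k_i / M, so that the representation spreads each p_i evenly over k_i elementary options. The function `represent`, the block sizes `counts`, and the choice f(t) = (t − 1)² are illustrative assumptions.

```python
import numpy as np

def represent(p, counts):
    """Spread each p_i evenly over counts[i] elementary options."""
    p = np.asarray(p, dtype=float)
    counts = np.asarray(counts)
    return np.repeat(p / counts, counts)

def f_divergence(p, q, f):
    """Assumed form of the relative cost: sum_i q_i * f(p_i / q_i)."""
    return float(np.sum(q * f(p / q)))

def f_chi2(t):
    # Illustrative convex choice with f(1) = 0.
    return (t - 1.0) ** 2

counts = np.array([1, 2, 3, 4])      # block sizes k_i, so M = 10
q = counts / counts.sum()            # prior with entries k_i / M
p = np.array([0.4, 0.3, 0.2, 0.1])

p_rep = represent(p, counts)         # posterior on the elementary space
q_rep = represent(q, counts)         # uniform on M elementary options

original = f_divergence(p, q, f_chi2)
represented = f_divergence(p_rep, q_rep, f_chi2)
```

In this example `q_rep` is uniform over ten elementary options and `original` equals `represented` up to rounding, so the cost relative to q matches the cost of the representation relative to a uniform prior.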

Proposition A7

(Example 3: Characterization of Kullback-Leibler divergence). If is a continuous cost function relative to q that is additive under coarse-graining, that is in the notation of Proposition A6, then

(A8) for some , where denotes the Kullback-Leibler divergence .

Proof.

First, we show that any relative cost function that is additive under coarse-graining satisfies Equation (22), the monotonicity property for uniform distributions: If denotes the cost of a uniform distribution over M elements relative to a uniform distribution over elements, then (22) is true. Once Equation (22) has been established, then Equation (A8) goes back to a result by Hobson [59] (see also [8]), whose proof is a modification of Shannon’s axiomatic characterization [22].

The first property in Equation (22) actually is true for all relative cost functions: For with , we have iff and thus the first property follows from Equation (7), and the same is true in the case when , since we always assume that are absolutely continuous with respect to q, which allows us to redefine to only contain the N options covered by q.

For the proof of the second property in Equation (22), we let the random variable X index the partitions of , where denotes the support of and its complement, and let Y represent the choice inside of the selected partition given . Letting , and , then it follows from additivity under coarse-graining that , and letting , we obtain

since , , and , and thus . □
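That Kullback-Leibler divergence is additive under coarse-graining can be checked numerically. The sketch assumes that additivity takes the chain-rule form: the divergence between p and q equals the divergence between the block distributions plus the sum over blocks of p_Z(i) times the divergence between the conditional distributions within block i. The partition `blocks` and the random test distributions are illustrative.

```python
import numpy as np

def kl(p, q):
    """Kullback-Leibler divergence of p from q (natural logarithm)."""
    p, q = np.asarray(p, dtype=float), np.asarray(q, dtype=float)
    mask = p > 0                     # convention: 0 * log 0 = 0
    return float(np.sum(p[mask] * np.log(p[mask] / q[mask])))

def kl_decomposition(p, q, blocks):
    """Divergence between block distributions plus weighted within-block terms."""
    p, q = np.asarray(p, dtype=float), np.asarray(q, dtype=float)
    p_blocks = np.array([p[list(b)].sum() for b in blocks])
    q_blocks = np.array([q[list(b)].sum() for b in blocks])
    within = sum(
        pm * kl(p[list(b)] / pm, q[list(b)] / qm)
        for b, pm, qm in zip(blocks, p_blocks, q_blocks) if pm > 0
    )
    return kl(p_blocks, q_blocks) + within

rng = np.random.default_rng(1)
blocks = [(0, 1), (2, 3, 4), (5, 6)]
p = rng.dirichlet(np.ones(7))
q = rng.dirichlet(np.ones(7))

direct = kl(p, q)
decomposed = kl_decomposition(p, q, blocks)
```

The values `direct` and `decomposed` agree up to rounding for any partition, in contrast to the strict gap that other f-divergences can exhibit.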

Proposition A8