Abstract

Permutation Entropy (PE) and Multiscale Permutation Entropy (MPE) have been extensively used in the analysis of time series searching for regularities. Although PE has been explored and characterized, there is still a lack of theoretical background regarding MPE. Therefore, we expand the available MPE theory by developing an explicit expression for the estimator’s variance as a function of time scale and ordinal pattern distribution. We derived the MPE Cramér–Rao Lower Bound (CRLB) to test the efficiency of our theoretical result. We also tested our formulation against MPE variance measurements from simulated surrogate signals. We found the MPE variance symmetric around the point of equally probable patterns, showing clear maxima and minima. This implies that the MPE variance is directly linked to the MPE measurement itself, and there is a region where the variance is maximum. This effect arises directly from the pattern distribution, and it is unrelated to the time scale or the signal length. The MPE variance also increases linearly with time scale, except when the MPE measurement is close to its maximum, where the variance presents quadratic growth. The expression approaches the CRLB asymptotically, with fast convergence. The theoretical variance is close to the results from simulations, and appears consistently below the actual measurements. By knowing the MPE variance, it is possible to have a clear precision criterion for statistical comparison in real-life applications.

Keywords: Multiscale Permutation Entropy, ordinal patterns, estimator variance, Cramér–Rao Lower Bound, finite-length signals

1. Introduction

Information entropy, originally defined by Shannon [1], has been used as a measure of information content in the field of communications. Several other applications of entropy measurements have been proposed, such as the analysis of physiological electrical signals [2], where a reduction in entropy has been linked to aging [3] and various motor diseases [4]. Another application is the characterization of electrical load behavior, which can be used to perform non-intrusive load disaggregation and to design smart grid applications [5].

Multiple types of entropy measures [6,7,8] have been proposed in recent years to assess the information content of time series. One notable approach is the Permutation Entropy (PE) [9], used to measure the recurrence of ordinal patterns within a discrete signal. PE is fast to compute and robust even in the presence of outliers [9]. To better measure the information content at different time scales, Multiscale Permutation Entropy (MPE) [10] was formulated as an extension of PE, by using the multiscale approach proposed in [11]. Multiscaling is particularly useful in measuring the information content in long range trends. The main disadvantage of PE is the necessity of a large data set for it to be reliable [12]. This is especially important in MPE, where the signal length is reduced geometrically at each time scale. Signal-length limitations have been addressed and improved with Composite MPE and Refined Composite MPE [13].

PE theory and properties have been extensively explored [14,15,16]. However, there is still a lack of understanding regarding the statistical properties of MPE. In our previous work [17], we already derived the expected value of the MPE measurement, taking into consideration the time scale and the finite-length constraints. We found the MPE expected value to be biased. Nonetheless, this bias depends only on the MPE parameters, and it is constant with respect to the pattern probability distribution. In practice, the MPE bias does not depend on the signal [17], and thus, can be easily corrected.

In the present article, our goal is to continue this statistical analysis by obtaining the variance of the MPE estimator by means of Taylor series expansion. We develop an explicit equation for the MPE variance. We also obtain the Cramér–Rao Lower Bound (CRLB) of MPE, and compare it to our obtained expression to assess its theoretical efficiency. Lastly, we compare these results with simulated data with known parameters. This gives a better understanding of the MPE statistic, which is helpful in the interpretation of this measurement in real-life applications. By knowing the precision of the MPE, it is possible to take informed decisions regarding experimental design, sampling, and hypothesis testing.

The remainder of the article is organized as follows: In Section 2, we lay out the necessary background on PE and MPE, the main derivation of the MPE variance, and the CRLB. We also develop the statistical model used to generate the surrogate data simulations for later testing. In Section 3, we show and discuss the results obtained, including the properties of the MPE variance, its theoretical efficiency, and its agreement with our simulations. Finally, in Section 4, we add some general remarks regarding the results obtained.

2. Materials and Methods

In this section, we establish the concepts and tools necessary for the derivation of the MPE model. In Section 2.1 we review the formulation of PE and MPE in detail. In Section 2.2, we show the derivation of the MPE variance. In Section 2.3, we derive the expression for the CRLB of the MPE, and we compare it to the variance. Finally, in Section 2.4, we explain the statistical model used to generate surrogate signals, which are used to test the MPE model.

2.1. Theoretical Background

2.1.1. Permutation Entropy

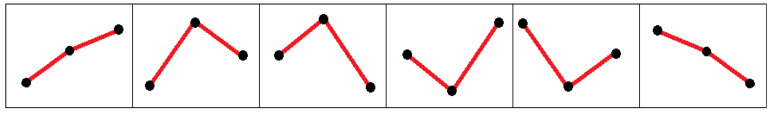

PE [9] measures the information content by counting the ordinal patterns present within a signal. An ordinal pattern is defined as the comparison between the values of adjacent data points in a segment of size d, known as the embedded dimension. For example, for a discrete signal $x_1, \ldots, x_n, \ldots, x_N$ of length N and $d = 2$, only two possible patterns can be found within the series, $x_n < x_{n+1}$ and $x_n > x_{n+1}$. For $d = 3$, there are six possible patterns, as shown in Figure 1. In general, for any integer value of d, there exist d factorial ($d!$) possible patterns within any given signal segment. To calculate PE, we must first obtain the sample probabilities within the signal, by counting the number of times a certain pattern occurs. This is formally expressed as follows:

$$
\hat{p}(\pi_i) = \frac{\#\{\, n \mid 1 \le n \le N-(d-1),\ (x_n, \ldots, x_{n+d-1}) \text{ has type } \pi_i \,\}}{N-(d-1)} \tag{1}
$$

where $\pi_i$ is the label of a particular ordinal pattern i, and $\hat{p}(\pi_i)$ is the estimated pattern probability. Some authors [15] also introduce a downsampling parameter in Equation (1), to address the analysis of an oversampled signal. For the purposes of this article, we assume a properly sampled signal, and thus we do not include this parameter.

Figure 1.

All possible patterns for embedded dimension $d = 3$, from a three-point sequence $\{x_n, x_{n+1}, x_{n+2}\}$. The six possible orderings of the three points are shown from left to right. The difference in amplitude between data points does not affect the pattern, as long as the order is preserved.

Using these estimations, we can apply the entropy definition [1] to obtain the PE of the signal

$$
\hat{\mathcal{H}} = -\frac{1}{\ln(d!)} \sum_{i=1}^{d!} \hat{p}(\pi_i) \ln \hat{p}(\pi_i) \tag{2}
$$

where $\hat{\mathcal{H}}$ is the estimated normalized PE from the data.

PE is a very simple and fast estimator to calculate, and it is invariant to non-linear monotonic transformations [15]. It is also convenient to note that we need no prior assumptions on the probability distribution of the patterns, which makes PE a very robust estimator. The major disadvantage of PE involves the length of the signal, where we need $N \gg d!$ for PE to be meaningful. This imposes a practical constraint on the use of PE for short signals, or in conditions where the data length is reduced.
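The counting procedure of Equations (1) and (2) can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the function names are hypothetical, patterns are labeled by the index order that sorts each window, and ties between equal values are broken by position, which is an assumption the text does not address.

```python
import math
from collections import Counter


def ordinal_pattern(window):
    """Label a window by the index order that sorts its values."""
    # Ties are broken by position (an assumption; the text is silent on ties).
    return tuple(sorted(range(len(window)), key=lambda k: (window[k], k)))


def pattern_probabilities(x, d):
    """Estimate the pattern probabilities by relative frequency, as in Equation (1)."""
    n_windows = len(x) - (d - 1)
    if n_windows < 1:
        raise ValueError("signal is shorter than the embedded dimension")
    counts = Counter(ordinal_pattern(x[n:n + d]) for n in range(n_windows))
    return {pattern: c / n_windows for pattern, c in counts.items()}


def permutation_entropy(x, d, normalized=True):
    """Permutation entropy of x; divided by ln(d!) when normalized, as in Equation (2)."""
    probs = pattern_probabilities(x, d)
    h = -sum(p * math.log(p) for p in probs.values())
    return h / math.log(math.factorial(d)) if normalized else h
```

For instance, a strictly increasing signal contains a single pattern and has zero PE, while a signal that alternates between rising and falling steps with equal frequency reaches the maximum normalized value of 1 for $d = 2$.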

2.1.2. Multiscale Permutation Entropy

The MPE consists of applying the classical PE analysis on coarse-grained signals [10]. First, we define m, as the time scale parameter for the MPE analysis. The coarse-graining procedure consists in dividing the original signal into adjacent, non-overlapping segments of size m, where m is a positive integer less than N. The data points within each segment are averaged to produce a single data point, which represents the segment at the given time scale [11].

$$
x_k^{(m)} = \frac{1}{m} \sum_{j=m(k-1)+1}^{km} x_j \tag{3}
$$

MPE consists in applying the PE procedure in Equation (2) to the coarse-grained signals in Equation (3) for different time scales m. This technique allows us to measure the information inside longer trends and time scales, which is not possible using PE. Nonetheless, the resulting coarse-grained signals have a length of $N/m$, which limits the analysis. Moreover, for a sufficiently large m, the condition $N/m \gg d!$ is eventually not satisfied.

At this point, it is important to discuss some constraints regarding the interaction between the time scale m and the signal length N. First, strictly speaking, $N/m$ must be an integer. In practice, the coarse-grained signal length is the largest integer not exceeding $N/m$. Second, the proper domain of m is $1 \le m \le N$, as the segment size can, at most, equal the length of the signal itself. It is handy to use a normalized scale $m/N$ with domain $(0, 1]$, which does not change between signals. This normalized scale is the inverse of the coarse-grained signal length. Taking the length constraint from the previous section, this means that $m/N \ll 1/d!$. For $d = 2$, a normalized scale of $m/N = 1/2$ results in a coarse-grained signal of 2 data points, which is not meaningful for this analysis. For $d = 3$, the normalized scale must be significantly less than $1/6$, which corresponds to a coarse-grained signal of six elements. Therefore, for practical applications, we restrict our analysis to values of $m/N$ close to zero, by selecting a small time scale m, a large signal length N, or both.
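As an illustration of the coarse-graining step followed by PE, the sketch below averages non-overlapping segments and evaluates the normalized PE at several scales. The function names are hypothetical, trailing samples that do not fill a complete segment are simply dropped, and ties are broken by position; these are assumptions rather than choices stated in the text.

```python
import math
from collections import Counter


def coarse_grain(x, m):
    """Average adjacent, non-overlapping segments of length m (Equation (3))."""
    if m < 1 or m > len(x):
        raise ValueError("time scale m must satisfy 1 <= m <= len(x)")
    n_segments = len(x) // m  # samples beyond the last full segment are dropped
    return [sum(x[k * m:(k + 1) * m]) / m for k in range(n_segments)]


def permutation_entropy(x, d):
    """Normalized permutation entropy (Equation (2)); ties broken by position."""
    n_windows = len(x) - (d - 1)
    if n_windows < 1:
        raise ValueError("signal is shorter than the embedded dimension")
    counts = Counter(
        tuple(sorted(range(d), key=lambda k: (x[n + k], k)))
        for n in range(n_windows)
    )
    h = -sum((c / n_windows) * math.log(c / n_windows) for c in counts.values())
    return h / math.log(math.factorial(d))


def multiscale_permutation_entropy(x, d, scales):
    """Return a dict mapping each scale m to the PE of the coarse-grained signal."""
    return {m: permutation_entropy(coarse_grain(x, m), d) for m in scales}
```

Because each scale shortens the signal to roughly $N/m$ points, the set of scales passed to `multiscale_permutation_entropy` should be chosen so that the coarse-grained length stays well above $d!$.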

2.2. Variance of MPE Statistic

The calculated MPE can be interpreted as a sample statistic that estimates the true entropy value, with an associated expected value, variance, and bias, for each time scale. We have previously developed the calculation of the expected value of the MPE in [17], where the bias has been found to be independent from the pattern probabilities. We expand on this result by first proposing an explicit equation to the MPE statistic, and then we formulate the variance of MPE, as a function of the true pattern probabilities and time scale.

For the following development, we use H, the non-normalized version of Equation (2), for convenience.

$$
\hat{H} = -\sum_{i=1}^{d!} \hat{p}_i \ln \hat{p}_i, \qquad \hat{p}_i \equiv \hat{p}(\pi_i) \tag{4}
$$

By taking the Taylor expansion of Equation (4) on a coarse-grained signal in Equation (3), we get

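$$
\hat{H} = H - \frac{m}{N} \sum_{i=1}^{d!} (1 + \ln p_i)\, \Delta Y_i + \sum_{k=2}^{\infty} \frac{(-1)^{k-1}}{k(k-1)} \left(\frac{m}{N}\right)^{k} \sum_{i=1}^{d!} \frac{\Delta Y_i^{k}}{p_i^{k-1}} \tag{5}
$$

where $\hat{H}$ is the MPE estimator, H is the true unknown MPE value, m is the time scale, N is the signal length, and d the embedded dimension. The probabilities $p_i$ correspond to the true probabilities (unknown parameters) of each pattern, and $\Delta Y_i$ corresponds to the random part in the multinomially (Mu) distributed frequency of each pattern,

$$
Y_i = \frac{N}{m}\hat{p}_i = \frac{N}{m} p_i + \Delta Y_i, \qquad [Y_1, \ldots, Y_{d!}]^T \sim \mathrm{Mu}\!\left(\frac{N}{m}, p_1, \ldots, p_{d!}\right) \tag{6}
$$

$Y_i$ being the number of pattern counts of type i in the signal.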

If we take into consideration the length constraints discussed in Section 2.1.2, we know that the normalized time scale $m/N$ is very close to zero. This implies that the high-order terms of Equation (5) quickly vanish for increasing values of k. Therefore, we propose the simplest non-linear approximation of Equation (5), keeping the terms up to $k = 2$. By doing this, we get the following expression:

$$
\hat{H} \approx H - \frac{m}{N} \sum_{i=1}^{d!} (1 + \ln p_i)\, \Delta Y_i - \frac{1}{2} \left(\frac{m}{N}\right)^{2} \sum_{i=1}^{d!} \frac{\Delta Y_i^{2}}{p_i} \tag{7}
$$

Using our previous results involving MPE [17], we know the expected value of Equation (7) is

$$
E[\hat{H}] \approx H - \frac{d!-1}{2}\, \frac{m}{N} \tag{8}
$$

The statistic presents a bias that does not depend on the pattern probabilities $p_i$, and thus can be easily corrected for any signal.
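As a brief worked check of this bias, the expectation of a second-order truncation of the entropy expansion can be computed directly. The sketch assumes that the pattern counts follow $\mathrm{Mu}(N/m, p_1, \ldots, p_{d!})$ and that $\Delta Y_i$ denotes the deviation of the count $Y_i$ from its mean $(N/m)p_i$; the notation is ours where the text does not fix it.

$$
E[\Delta Y_i] = 0, \qquad E[\Delta Y_i^{2}] = \frac{N}{m}\, p_i (1 - p_i)
$$

$$
E[\hat{H}] \approx H - \frac{1}{2}\left(\frac{m}{N}\right)^{2} \sum_{i=1}^{d!} \frac{1}{p_i} \cdot \frac{N}{m}\, p_i (1 - p_i) = H - \frac{m}{2N} \sum_{i=1}^{d!} (1 - p_i) = H - \frac{d!-1}{2}\, \frac{m}{N}
$$

The probabilities cancel in the sum, which is why the bias depends only on d, m, and N.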

Now, we move further and calculate the variance of the MPE estimator. First, it is convenient to express Equation (4) in vectorial form.

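$$
\hat{H} = -\hat{\mathbf{l}}^{T} \hat{\mathbf{p}} \tag{9}
$$

where

$$
\hat{\mathbf{p}} = [\hat{p}_1, \ldots, \hat{p}_{d!}]^{T}, \qquad \hat{\mathbf{l}} = [\ln \hat{p}_1, \ldots, \ln \hat{p}_{d!}]^{T} \tag{10}
$$

$\hat{\mathbf{p}}$ being the column vector of pattern probability estimators, $\hat{\mathbf{l}}$ the column vector of the logarithm of each pattern probability estimator, and T the transpose symbol. We can now rewrite Equation (7) as

$$
\hat{H} \approx H - \frac{m}{N} (\mathbf{1} + \mathbf{l})^{T} \Delta\mathbf{Y} - \frac{1}{2} \left(\frac{m}{N}\right)^{2} (\mathbf{p}^{\circ -1})^{T} \Delta\mathbf{Y}^{\circ 2} \tag{11}
$$

where

$$
\mathbf{p} = [p_1, \ldots, p_{d!}]^{T}, \quad \mathbf{l} = [\ln p_1, \ldots, \ln p_{d!}]^{T}, \quad \mathbf{1} = [1, \ldots, 1]^{T}, \quad \Delta\mathbf{Y} = [\Delta Y_1, \ldots, \Delta Y_{d!}]^{T} \tag{12}
$$

The circle notation ∘ represents the Hadamard power (element-wise).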

Now, we can obtain the variance of the MPE estimator (11),

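$$
\mathrm{var}(\hat{H}) \approx \left(\frac{m}{N}\right)^{2} (\mathbf{1} + \mathbf{l})^{T} \boldsymbol{\Sigma}_{\mathbf{p}} (\mathbf{1} + \mathbf{l}) + \left(\frac{m}{N}\right)^{3} (\mathbf{1} + \mathbf{l})^{T} \mathbf{S}_{\mathbf{p}}\, \mathbf{p}^{\circ -1} + \frac{1}{4} \left(\frac{m}{N}\right)^{4} (\mathbf{p}^{\circ -1})^{T} \mathbf{K}_{\mathbf{p}}\, \mathbf{p}^{\circ -1} \tag{13}
$$

Now, we know that $\boldsymbol{\Sigma}_{\mathbf{p}} = E[\Delta\mathbf{Y} \Delta\mathbf{Y}^{T}]$ is the covariance matrix of $\Delta\mathbf{Y}$, which is the multinomial random variable defined in Equation (6). The matrix $\mathbf{S}_{\mathbf{p}} = E[\Delta\mathbf{Y} (\Delta\mathbf{Y}^{\circ 2})^{T}]$ is the coskewness matrix, and $\mathbf{K}_{\mathbf{p}}$, the covariance matrix of $\Delta\mathbf{Y}^{\circ 2}$, is the cokurtosis matrix. We know that (see Appendix A),

$$
\boldsymbol{\Sigma}_{\mathbf{p}} = \frac{N}{m} \left( \mathrm{diag}(\mathbf{p}) - \mathbf{p}\mathbf{p}^{T} \right) \tag{14}
$$

| (15) |

| (16) |

where $\mathrm{diag}(\mathbf{p})$ is a diagonal matrix whose diagonal elements are the elements of $\mathbf{p}$.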

We can further summarize the covariance matrix (14) as follows:

| (17) |

We also rewrite the following term in Equation (13),

| (18) |

By combining Equations (14) to (18) explicitly in Equation (13), we get the expression,

| (19) |

We note that Equation (19) is a cubic polynomial with respect to the normalized scale $m/N$. Since we are still working in the region where $m/N$ is close to (but not including) zero, we propose to further simplify this expression using only the linear term. This means that we can approximate Equation (19) as follows:

$$
\mathrm{var}(\hat{H}) \approx \frac{m}{N} \left( \mathbf{l}^{T} \mathrm{diag}(\mathbf{p})\, \mathbf{l} - \mathbf{l}^{T} \mathbf{p}\mathbf{p}^{T} \mathbf{l} \right) = \frac{m}{N} \left( \sum_{i=1}^{d!} p_i \ln^{2} p_i - H^{2} \right) \tag{20}
$$

We note that Equation (20) is equal to zero for a perfectly uniform pattern distribution (which yields a maximum PE). In this particular case, Equation (20) is not a good approximation of the MPE variance, and we need, at least, the quadratic term in Equation (19). Except for extremely high or low values of MPE, we expect the linear approximation of the variance to be accurate. We discuss more properties of this statistic in Section 3.2.
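To make the behavior of the linear term concrete, the following sketch evaluates it for an arbitrary pattern distribution. It assumes that the linear term is the first-order multinomial variance of the non-normalized entropy, $(m/N)\left(\sum_i p_i \ln^2 p_i - H^2\right)$; the function name is hypothetical.

```python
import math


def mpe_variance_linear(p, m, n):
    """Linear-in-(m/n) approximation of var(H_hat) for the non-normalized entropy.

    p : true pattern probabilities (must sum to 1); zero entries contribute 0.
    m : time scale; n : signal length.
    """
    if abs(sum(p) - 1.0) > 1e-9:
        raise ValueError("pattern probabilities must sum to 1")
    probs = [pi for pi in p if pi > 0]
    logs = [math.log(pi) for pi in probs]
    entropy = -sum(pi * li for pi, li in zip(probs, logs))
    second_moment = sum(pi * li ** 2 for pi, li in zip(probs, logs))
    return (m / n) * (second_moment - entropy ** 2)
```

For a uniform distribution the two terms cancel and the function returns zero (up to rounding), which is the case where the quadratic term of the full expression is needed. To express the result for the normalized entropy, divide by $(\ln d!)^2$.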

2.3. MPE Cramér–Rao Lower Bound

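In this section, we compare the MPE variance (20) to the CRLB, to test the efficiency of our estimator. The CRLB is defined as

$$
\mathrm{CRLB}(H) = \frac{\left( 1 + \dfrac{\partial B}{\partial H} \right)^{2}}{I(H)} \tag{21}
$$

where B is the bias of the MPE expected value from Equation (8) and

$$
I(H) = -E\!\left[ \frac{\partial^{2} \ln f(\hat{H}; H)}{\partial H^{2}} \right] \tag{22}
$$

where $I(H)$ is the Fisher information, and $f(\hat{H}; H)$ is the probability distribution function of $\hat{H}$.

Although we do not have an explicit expression for $f(\hat{H}; H)$, we can express $I(H)$ as a function of $I(\mathbf{p})$ as follows [18]:

$$
I^{-1}(H) = \left( \frac{\partial H}{\partial \mathbf{p}} \right)^{T} I^{-1}(\mathbf{p})\, \frac{\partial H}{\partial \mathbf{p}} \tag{23}
$$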

where

| (24) |

| (25) |

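is the Fisher information matrix for $\mathbf{Y}$, which we know has a multinomial distribution related to Equation (6). Each element of $I(\mathbf{p})$ is defined as

$$
I_{ij}(\mathbf{p}) = -E\!\left[ \frac{\partial^{2} \ln f(\mathbf{y}; \mathbf{p})}{\partial p_i\, \partial p_j} \right] \tag{26}
$$

where $f(\mathbf{y}; \mathbf{p})$ is the probability distribution of $\mathbf{Y}$, which is distributed as in Equation (6) (for the full calculation of the CRLB, see Appendix B). Thus, by obtaining the inverse of $I(\mathbf{p})$ and all partial derivatives of H with respect to each element of $\mathbf{p}$, we obtain the CRLB. Because the bias in Equation (8) does not depend on H, the numerator of Equation (21) equals one.

$$
\mathrm{CRLB}(\hat{H}) = \frac{m}{N} \left( \mathbf{l}^{T} \mathrm{diag}(\mathbf{p})\, \mathbf{l} - \mathbf{l}^{T} \mathbf{p}\mathbf{p}^{T} \mathbf{l} \right) \tag{27}
$$

As we recall from our results in Equations (19) and (20), the CRLB corresponds to the first term of the Taylor series expansion of the MPE variance. The higher-order terms in Equation (19) increase the MPE variance above the CRLB. For small values of $m/N$, the higher-order terms in Equation (19) become negligible, which makes the MPE variance approximation converge to the CRLB.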

2.4. Simulations

To test the MPE variance, we need an appropriate benchmark. We need to design a proper signal model with the following goals in mind: first, the model must preserve the pattern probabilities across the entire generated signal; second, the function must have the pattern probability as an explicit parameter, easily modifiable for testing. The following equation satisfies these criteria for dimension $d = 2$:

| (28) |

where

| (29) |

| (30) |

This is a non-stationary process, with a trend function . is a Gaussian noise term with variance , without loss of generality. Although different values of will indeed modify the trend function, it will not affect the pattern probabilities in the simulation, as PE is invariant to non-linear monotonous transformations [15].

For dimension , and , which are the probabilities of each of the two possible patterns. The formulation of comes from the Gaussian Complementary Cumulative Distribution function, taking p as a parameter. This guarantees that we can directly modify the pattern probabilities p and for simulation.

Although p is not invariant across time scales, it is consistent within each coarse-grained signal, which suffices for our purposes. We chose to restrict our simulation analysis to the embedded dimension $d = 2$. Although our theoretical work holds for any value of d (see Section 2.2), it is difficult to visualize the results at higher dimensions.

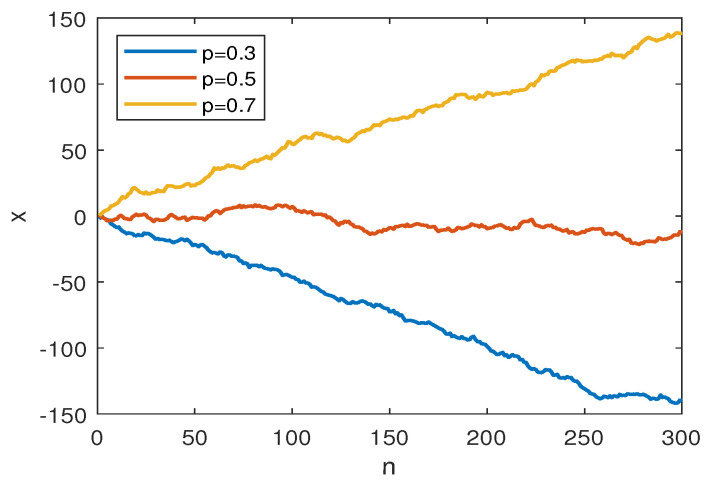

This surrogate model (28) was implemented in the MATLAB environment. For the test, we generated 1000 signals for each of 99 different values of p. The signal length was set to . Some sample paths are shown in Figure 2. These signals were then subjected to the coarse-graining procedure (3) for time scale . The MPE measurement was taken for each coarse-grained simulated signal using Equation (2). Finally, we obtained the mean and variance of the resulting MPE values for each scale. These simulation results are discussed in Section 3.2.
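For readers who want to experiment with a comparable benchmark, the sketch below generates a surrogate with a prescribed pattern probability for $d = 2$. It is an assumed stand-in, not necessarily the model of Equation (28): a Gaussian random walk with constant drift $\delta = \Phi^{-1}(p)$, where $\Phi$ is the standard normal cumulative distribution function, so that each increment is positive with probability p. The function names and the unit noise variance are illustrative choices.

```python
import random
from statistics import NormalDist


def surrogate_signal(p, n, rng=None):
    """Random walk with drift whose increments are positive with probability p.

    Assumed model (a stand-in, not necessarily the authors' Equation (28)):
        x[k] = x[k-1] + delta + eps[k],  eps[k] ~ N(0, 1),
    with delta = Phi^{-1}(p), so that P(x[k] > x[k-1]) = p.
    """
    if not 0.0 < p < 1.0:
        raise ValueError("p must lie strictly between 0 and 1")
    rng = rng or random.Random()
    delta = NormalDist().inv_cdf(p)  # drift that yields the requested probability
    x = [0.0]
    for _ in range(n - 1):
        x.append(x[-1] + delta + rng.gauss(0.0, 1.0))
    return x


def fraction_increasing(x):
    """Empirical probability of the increasing pattern for d = 2."""
    ups = sum(1 for a, b in zip(x, x[1:]) if b > a)
    return ups / (len(x) - 1)
```

Passing a seeded `random.Random` instance makes the paths reproducible. Repeating the generation many times per value of p and computing the sample variance of the resulting entropy estimates gives an empirical curve that can be compared against a theoretical variance expression.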

Figure 2.

Simulated paths for MPE testing, where p is the probability of for dimension . The graph shows sample paths for , ,

3. Results and Discussion

In this section, we explore the results from the theoretical MPE variance. In Section 3.1, we contrast the results from the model against the MPE variance measured from the simulations. In Section 3.2, we discuss these findings in detail.

3.1. Results

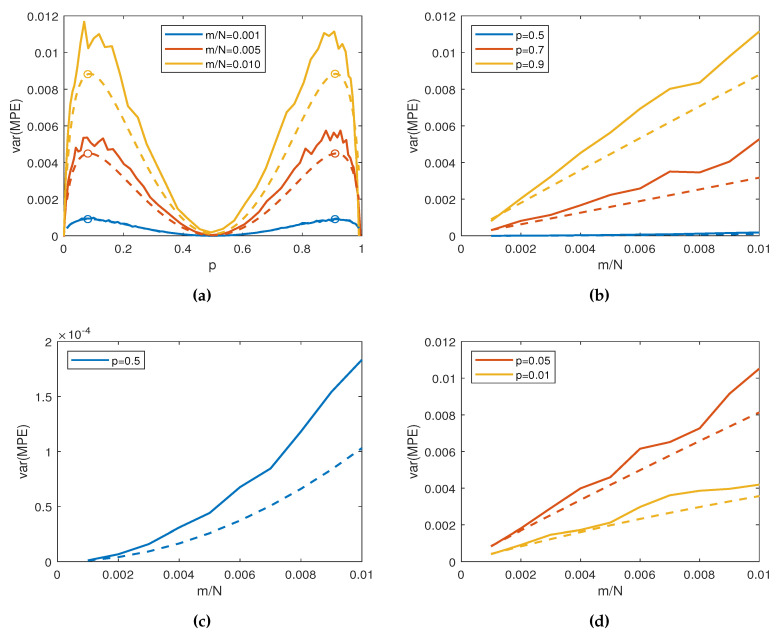

Here, we compare the theoretical results with the surrogate data obtained by means of the procedure described in Section 2.4. We use the cubic model from Equation (19) instead of the linear approximation in Equation (20), to take into consideration non-linear effects that could arise from simulations. Figure 3 shows the theoretical predictions in dotted lines, and simulation measurements in solid lines.

Figure 3.

$\mathrm{var}(\hat{H})$ for embedded dimension $d = 2$ from theory (dotted lines) and simulations (solid lines). (a) $\mathrm{var}(\hat{H})$ vs. pattern probability p for different normalized scales $m/N$. (b) $\mathrm{var}(\hat{H})$ vs. $m/N$ for different values of p. (c) $\mathrm{var}(\hat{H})$ vs. $m/N$ at $p = 0.5$, which corresponds to maximum entropy. (d) $\mathrm{var}(\hat{H})$ vs. $m/N$ for small p, which approaches minimum entropy.

Figure 3a shows the variance of the MPE (19) as a function of p for $d = 2$. Each line corresponds to a different normalized time scale $m/N$. The variance is symmetric with respect to the middle value $p = 0.5$. This is to be expected, as for $d = 2$ the variance has only one degree of freedom. The structure is preserved, albeit scaled, for different values of m.

Figure 3b–d show the MPE variance versus the normalized time scale, for different values of pattern probability p. As we can see in Figure 3b, the variance increases linearly with respect to the normalized scale in the positive vicinity of zero, as predicted in Equation (20). This is not the case when $p = 0.5$ (maximum entropy), as shown in Figure 3c, where both the theory and simulations show a clear non-linear tendency. Finally, Figure 3d shows the case where we have extreme pattern probability distributions, with entropy close to zero. Although we use the complete cubic model (19), the predicted curves are almost linear.

In general, we can observe that the simulation results have greater variance than the prediction of the model. The real values from the simulations correspond to the sample variance from the signals, calculated from instead of . Nonetheless, the simulations and theoretical graphs have the same shape. It is interesting to note that the discrepancies increase with the scale. This effect is addressed in Section 3.2.

3.2. Discussion

It is interesting to explore the particular structure of the variance. As we can observe from Figure 3b, the MPE variance increases linearly with respect to the time scale for a wide range of pattern distributions, as described in Equation (20). This holds even for highly uneven distributions, where the entropy is very close to zero, as shown in Figure 3d. Nonetheless, Equation (20) collapses to zero when all pattern probabilities are the same, that is, when $p_i = 1/d!$ for every pattern, for any embedded dimension d. This particular pattern probability distribution is the discrete uniform distribution, which yields the maximum possible entropy in Equation (2). As we can observe from Figure 3c, the linear approximation in Equation (20) is not enough to estimate the variance in this case. Nonetheless, the results suggest a quadratic increase. This agrees with previous results by Little and Kane [16], who characterized the classical normalized PE variance for white noise under finite-length constraints.

| (31) |

This result matches our model in Equation (19) (taking the quadratic term) for the specific case of a uniform pattern distribution and time scale $m = 1$. This suggests that, when we approach the maximum entropy, the quadratic approximation is necessary.
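The quadratic behavior at maximum entropy can be motivated with a short calculation. The sketch below assumes multinomial pattern counts $Y_i$ with $N/m$ trials and a second-order truncation of the entropy expansion; the chi-squared step is a large-sample approximation, not an exact result.

$$
p_i = \frac{1}{d!} \ \Rightarrow\ (1 + \ln p_i) \text{ is the same for all } i, \qquad \sum_{i=1}^{d!} \Delta Y_i = 0 \ \Rightarrow\ \text{the linear term vanishes}
$$

$$
\hat{H} - H \approx -\frac{1}{2} \left(\frac{m}{N}\right)^{2} \sum_{i=1}^{d!} \frac{\Delta Y_i^{2}}{p_i} = -\frac{1}{2}\, \frac{m}{N}\, X^{2}, \qquad X^{2} = \sum_{i=1}^{d!} \frac{\left( Y_i - \frac{N}{m} p_i \right)^{2}}{\frac{N}{m} p_i}
$$

$$
X^{2} \overset{\text{approx.}}{\sim} \chi^{2}_{d!-1}, \quad \mathrm{var}(X^{2}) \approx 2(d!-1) \ \Rightarrow\ \mathrm{var}(\hat{H}) \approx \frac{d!-1}{2} \left(\frac{m}{N}\right)^{2}
$$

Here $X^2$ is Pearson's statistic for the pattern counts. Dividing by $(\ln d!)^2$ gives the corresponding expression for the normalized entropy.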

Contrary to the bias in the expected value of MPE [17], the variance is strongly dependent on the pattern probabilities present in the signal, as shown in Figure 3a. For the MPE with embedded dimension $d = 2$, the variance of MPE has a symmetric shape around equally probable patterns. We observe, for a fixed time scale, that the variance increases the further we deviate from the center, and sharply decreases for extreme probabilities. The variance presents its lowest points at the center and the extremes of the pattern probability distributions, which correspond to the points where the entropy is maximum and minimum, respectively. For $d = 2$, we can calculate the variance (20) more explicitly,

$$
\mathrm{var}(\hat{H}) \approx \frac{m}{N}\, p (1 - p) \left[ \ln\!\left( \frac{p}{1-p} \right) \right]^{2} \tag{32}
$$

It is evident that the zeros of this equation correspond to $p = 0$, $p = 1$ (points of minimum entropy), and $p = 1/2$ (maximum entropy). We can get the critical points by calculating the first derivative of Equation (32) with respect to p

$$
\frac{\partial\, \mathrm{var}(\hat{H})}{\partial p} \approx \frac{m}{N}\, \ln\!\left( \frac{p}{1-p} \right) \left[ (1 - 2p) \ln\!\left( \frac{p}{1-p} \right) + 2 \right] \tag{33}
$$

which we set equal to zero to find the extreme points. From the first factor of Equation (33), we obtain the critical point $p = 1/2$, which is a minimum. If the second factor is equal to zero, we need to solve the transcendental equation

$$
\ln\!\left( \frac{p}{1-p} \right) = \frac{2}{2p - 1} \tag{34}
$$

Numerically, we found the maxima at $p \approx 0.083$ and $p \approx 0.917$, as shown in Figure 3a. Both values yield a normalized PE of approximately $0.413$. This implies that, when we obtain values of MPE close to this value, we are in a region of maximum variance. This effect arises directly from the pattern probability distribution. Hence, in the region around $\hat{\mathcal{H}} \approx 0.413$ for $d = 2$, we have maximum estimation uncertainty, even before factoring in the finite length of the signal or the time scale. Therefore, Equation (20) implies an uneven variance across all possible values of the entropy measurement, regardless of time scale and embedded dimension. It also implies a region where this variance is maximum.
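The location of the maxima can be reproduced with a simple root search. The sketch below assumes that, for $d = 2$, the linear variance term is proportional to $p(1-p)\ln^2\!\left(p/(1-p)\right)$, so the non-trivial critical points satisfy $(1-2p)\ln\!\left(p/(1-p)\right) + 2 = 0$; the function names and the bracketing interval are illustrative choices.

```python
import math


def variance_shape(p):
    """p(1-p) ln^2(p/(1-p)): the d = 2 linear variance term without the m/N factor."""
    return p * (1.0 - p) * math.log(p / (1.0 - p)) ** 2


def stationarity_condition(p):
    """Second factor of the derivative: (1 - 2p) ln(p/(1-p)) + 2."""
    return (1.0 - 2.0 * p) * math.log(p / (1.0 - p)) + 2.0


def bisect(f, lo, hi, tol=1e-12):
    """Plain bisection; assumes f(lo) and f(hi) have opposite signs."""
    f_lo = f(lo)
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        f_mid = f(mid)
        if (f_mid > 0) == (f_lo > 0):
            lo, f_lo = mid, f_mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def binary_entropy_normalized(p):
    """Normalized PE for d = 2, i.e., the binary entropy in bits."""
    return -(p * math.log(p) + (1.0 - p) * math.log(1.0 - p)) / math.log(2.0)


# Search on the upper half of the interval; the other maximum is 1 - p_max by symmetry.
p_max = bisect(stationarity_condition, 0.5 + 1e-6, 1.0 - 1e-9)
h_at_max = binary_entropy_normalized(p_max)
```

Under these assumptions, `p_max` evaluates to approximately 0.917 and `h_at_max` to approximately 0.413.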

Lastly, as noted in Section 2.3, the MPE variance (20) approaches the CRLB for small values of m. This is further supported by the MPE variance measured from the simulations shown in Figure 3, which is consistently above the theoretical prediction. We can attribute this effect to the number of iterations of the testing model in Equation (28), where an increasing number of repetitions will yield a more precise MPE estimation, with a reduced variance. Since we already include the effect of the time scale in Equations (19) and (20), the difference between the theoretical results and the simulations does not come directly from the signal length or the pattern distribution.

4. Conclusions

By following on from our previous work [17], we further explored and characterized the MPE statistic. By using a Taylor series expansion, we were able to obtain an explicit expression of the MPE variance. We also derived the Cramér–Rao Lower bound of the MPE, and compared it to our obtained expression. Finally, we proposed a suitable signal model for testing our results against simulations.

By analyzing the properties of the MPE variance graph, we found the variance to be symmetric around the point of equally probable patterns. Moreover, the variance is minimum at both the points of maximum and minimum entropy. This implies, first, that the variance of the MPE depends on the MPE estimate itself. In regions where the entropy measurement is near the maximum or minimum, the estimation has minimum variance. On the other hand, there is an MPE value at which the variance is maximum. This effect comes solely from the pattern distribution, and not from the signal length or the MPE time scale. We should take this effect into account when comparing real entropy measurements, as it could affect the interpretation of statistically significant differences.

Regarding the time scale, the linear approximation of the MPE variance is sufficiently accurate for almost all pattern distributions, provided that the time scale is small compared to the signal length. An important exception is the case where the pattern probability distribution is almost uniform. For equally probable patterns, the linear term of the MPE variance vanishes, regardless of time scale. In this case, we need to increase the order of the approximation to the quadratic term. Hence, for MPE values close to the maximum, the variance grows quadratically with respect to scale.

We found the MPE variance estimator obtained in this article to be almost efficient. When the time scale is small compared to the signal length, the MPE variance resembles the MPE CRLB closely. Since the CRLB is equal to the first term on the Taylor series approximation for the variance, this implies that the efficiency limit also changes with the MPE measurement itself. Since this effect also comes purely from the pattern distribution, we cannot correct it with the established improvements of the MPE algorithm, like Composite MPE or Refined Composite MPE [13].

By knowing the variance of the MPE, we can improve the interpretation of this estimator in real-life applications. This is important because researchers can impose a precision criterion over the MPE measurements, given the characteristics of the data to analyze. For example, the electrical load behavior analysis can be achieved using short-term measurements where the time scale is a limiting factor for MPE. By knowing the variance, we can compute the maximum time scale until which the MPE is still significant.

By better understanding the advantages and disadvantages of the MPE technique, it is possible to have a clear benchmark for statistically significant comparisons between signals at any time scale.

Acknowledgments

This work was supported by CONACyT, Mexico, with scholarship 439625.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
| --- | --- |
| PE | Permutation Entropy |
| MSE | Multiscale Entropy |
| MPE | Multiscale Permutation Entropy |
| CRLB | Cramér–Rao Lower Bound |

Appendix A. Multinomial Moment Matrices

In this section we will briefly derive the expressions for the Covariance, Coskewness, and Cokurtosis matrices for a multinomial distribution. These will be necessary in the calculation of the MPE variance in Section 2.2.

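First, we start with a multinomial random variable with the following characteristics,

$$
\mathbf{Y} = \frac{N}{m} \hat{\mathbf{p}} = \frac{N}{m} \mathbf{p} + \Delta\mathbf{Y} \sim \mathrm{Mu}\!\left( \frac{N}{m}, p_1, \ldots, p_{d!} \right) \tag{A1}
$$

where we use the same definitions as in Equations (10) and (12), where $\mathbf{p}$ is the vector composed of all pattern probabilities $p_i$, and $\hat{\mathbf{p}}$ is the estimation of $\mathbf{p}$. We also use the definitions in Section 2.2, where $d!$ is the number of possible patterns (number of events in the sample space), m is the MPE time scale, and N is the length of the signal. For the remainder of this section, we assume m and N are such that $N/m$ is an integer.

We note that $\mathbf{Y}$ is composed of a deterministic part $\frac{N}{m}\mathbf{p}$ and a random part $\Delta\mathbf{Y}$. It follows that $E[\Delta\mathbf{Y}] = \mathbf{0}$ and that $\Delta\mathbf{Y}$ has the same central moments as $\mathbf{Y}$.

We proceed to calculate the covariance matrix of the multinomial random variable $\Delta\mathbf{Y}$. We know that

$$
E[\Delta Y_i^{2}] = \frac{N}{m}\, p_i (1 - p_i) \tag{A2}
$$

$$
E[\Delta Y_i\, \Delta Y_j] = -\frac{N}{m}\, p_i p_j \tag{A3}
$$

for $i = 1, \ldots, d!$ and $j \neq i$. Thus, if we gather all possible combinations of i and j in the covariance matrix, we get

$$
\boldsymbol{\Sigma}_{\mathbf{p}} = E[\Delta\mathbf{Y} \Delta\mathbf{Y}^{T}] = \frac{N}{m} \left( \mathrm{diag}(\mathbf{p}) - \mathbf{p}\mathbf{p}^{T} \right) \tag{A4}
$$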
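The closed form of the multinomial covariance matrix, $n\left(\mathrm{diag}(\mathbf{p}) - \mathbf{p}\mathbf{p}^T\right)$ with n trials, can be checked exactly for small cases by enumerating every possible count vector. The sketch below does this in plain Python; the function names are hypothetical, and the enumeration is only practical for a handful of trials and patterns.

```python
import math
from itertools import product


def multinomial_pmf(counts, probs):
    """Probability of a count vector under Mu(sum(counts), probs)."""
    coeff = math.factorial(sum(counts))
    for c in counts:
        coeff //= math.factorial(c)  # exact integer multinomial coefficient
    return coeff * math.prod(q ** c for q, c in zip(probs, counts))


def exact_covariance(n_trials, probs):
    """E[dY dY^T], summed over every outcome with sum(counts) == n_trials."""
    k = len(probs)
    cov = [[0.0] * k for _ in range(k)]
    for counts in product(range(n_trials + 1), repeat=k):
        if sum(counts) != n_trials:
            continue
        w = multinomial_pmf(counts, probs)
        dev = [c - n_trials * q for c, q in zip(counts, probs)]
        for i in range(k):
            for j in range(k):
                cov[i][j] += w * dev[i] * dev[j]
    return cov


def closed_form_covariance(n_trials, probs):
    """n (diag(p) - p p^T), the standard multinomial covariance."""
    k = len(probs)
    return [[n_trials * ((probs[i] if i == j else 0.0) - probs[i] * probs[j])
             for j in range(k)] for i in range(k)]
```

The same enumeration can be extended to third and fourth moments by accumulating products such as `dev[i] ** 2 * dev[j]`, which gives a way to sanity-check closed-form coskewness and cokurtosis expressions numerically.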

Similarly, the skewness and coskewness can be expressed as,

| (A5) |

| (A6) |

which yields the Coskewness matrix

| (A7) |

where we use again the vector definitions in Equation (12).

Lastly, we follow the same procedure to obtain the Cokurtosis matrix, by obtaining the values

| (A8) |

| (A9) |

which combines in the matrix as follows

| (A10) |

By taking advantage of these expressions, we are able to calculate the MPE variance in Section 2.2.

Appendix B. Cramér–Rao Lower Bound of MPE

In this section we explicitly develop the calculation of the CRLB of the MPE. Before calculating the elements of the information matrix, we should note that the parameter vector $\mathbf{p}$ in Equation (12) has $d! - 1$ degrees of freedom. Since the elements of $\mathbf{p}$ represent the probabilities of each possible pattern in the sample space, the sum of the elements of $\mathbf{p}$ must be one. We are subject to the restriction

$$
\sum_{i=1}^{d!} p_i = 1 \tag{A11}
$$

We should note that, as a consequence of this restriction, $p_{d!}$ is not an independent variable. We define a new parameter vector $\mathbf{p}^{*}$, such that

$$
\mathbf{p}^{*} = [p_1, \ldots, p_{d!-1}]^{T} \tag{A12}
$$

which has the same degrees of freedom as $\mathbf{p}$. This will be necessary to use the lemma (A19), as we shall see later in this section.

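Now, we calculate all the partial derivatives of H (4) with respect to the pattern probabilities $p_i$, with $p_{d!} = 1 - \sum_{i=1}^{d!-1} p_i$, such that, for $i = 1, \ldots, d!-1$,

$$
\frac{\partial H}{\partial p_i} = \ln p_{d!} - \ln p_i, \qquad \frac{\partial H}{\partial \mathbf{p}^{*}} = -\left( \mathbf{l}^{*} - \ln(p_{d!})\, \mathbf{1}^{*} \right) \tag{A13}
$$

where

$$
\mathbf{l}^{*} = [\ln p_1, \ldots, \ln p_{d!-1}]^{T} \tag{A14}
$$

$$
\mathbf{1}^{*} = [1, \ldots, 1]^{T} \in \mathbb{R}^{d!-1} \tag{A15}
$$

following the same logic as Equation (A12).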

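The next step is to compute the Fisher information matrix. We know from Equation (6) that $\hat{\mathbf{p}}$, the pattern probabilities estimated from a signal, are multinomially distributed up to the factor $N/m$. The number of trials in the multinomial variable is $N/m$.

$$
f(\mathbf{y}; \mathbf{p}) = \frac{(N/m)!}{\prod_{i=1}^{d!} y_i!} \prod_{i=1}^{d!} p_i^{\,y_i} \tag{A16}
$$

where

$$
y_i = \frac{N}{m}\, \hat{p}_i \tag{A17}
$$

is the count of patterns of type i in the signal, as described in Equations (1) and (6). Since $\mathbf{Y} = \frac{N}{m} \hat{\mathbf{p}}$, they have the same probability distribution up to scaling. Using (A16), $I(\mathbf{p}^{*})$ is calculated as follows,

$$
I(\mathbf{p}^{*}) = \frac{N}{m} \left( \mathrm{diag}(\mathbf{p}^{*})^{-1} + \frac{1}{p_{d!}}\, \mathbf{1}^{*} \mathbf{1}^{*T} \right) \tag{A18}
$$

where $\mathbf{1}^{*} \mathbf{1}^{*T}$ is a square matrix of ones, with rank 1. We should note that $i = 1, \ldots, d!-1$ and $j = 1, \ldots, d!-1$, so $I(\mathbf{p}^{*})$ is of size $(d!-1) \times (d!-1)$. Because of the constraint in (A11), $p_{d!}$ offers no additional information. Moreover, we guarantee that $I(\mathbf{p}^{*})$ is non-singular, and thus invertible.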

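To get the CRLB, we need the inverse of $I(\mathbf{p}^{*})$. We use the following lemma from [19]: if $\mathbf{A}$ and $\mathbf{A} + \mathbf{B}$ are non-singular matrices, and $\mathbf{B}$ has rank 1, then,

$$
(\mathbf{A} + \mathbf{B})^{-1} = \mathbf{A}^{-1} - \frac{1}{1+g}\, \mathbf{A}^{-1} \mathbf{B} \mathbf{A}^{-1}, \qquad g = \mathrm{tr}(\mathbf{B} \mathbf{A}^{-1}) \tag{A19}
$$

therefore

$$
I^{-1}(\mathbf{p}^{*}) = \frac{m}{N} \left( \mathrm{diag}(\mathbf{p}^{*}) - \mathbf{p}^{*} \mathbf{p}^{*T} \right) \tag{A20}
$$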
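The inverse can be verified numerically for a specific distribution. The sketch below assumes the standard reduced-parameter multinomial Fisher information, $n\left(\mathrm{diag}(\mathbf{p}^*)^{-1} + \tfrac{1}{p_{d!}}\mathbf{1}\mathbf{1}^T\right)$ with $n = N/m$ trials, and the candidate inverse $\tfrac{1}{n}\left(\mathrm{diag}(\mathbf{p}^*) - \mathbf{p}^*\mathbf{p}^{*T}\right)$; the function names are hypothetical.

```python
import math


def fisher_information(p_star, p_last, n_trials):
    """n (diag(p*)^-1 + (1/p_last) 1 1^T) for the reduced parameter vector p*."""
    k = len(p_star)
    return [[n_trials * ((1.0 / p_star[i] if i == j else 0.0) + 1.0 / p_last)
             for j in range(k)] for i in range(k)]


def fisher_inverse(p_star, n_trials):
    """(1/n) (diag(p*) - p* p*^T), the candidate inverse from the rank-one lemma."""
    k = len(p_star)
    return [[((p_star[i] if i == j else 0.0) - p_star[i] * p_star[j]) / n_trials
             for j in range(k)] for i in range(k)]


def matmul(a, b):
    """Product of two square matrices stored as lists of rows."""
    k = len(a)
    return [[sum(a[i][r] * b[r][j] for r in range(k)) for j in range(k)]
            for i in range(k)]


def crlb_entropy(p, n_trials):
    """gradient^T * inverse Fisher * gradient for H = -sum p ln p (unbiased case)."""
    p_star, p_last = p[:-1], p[-1]
    grad = [math.log(p_last) - math.log(q) for q in p_star]
    inv = fisher_inverse(p_star, n_trials)
    return sum(grad[i] * inv[i][j] * grad[j]
               for i in range(len(grad)) for j in range(len(grad)))
```

Multiplying the two matrices should return the identity, and `crlb_entropy` should agree with $\tfrac{1}{n}\left(\sum_i p_i \ln^2 p_i - H^2\right)$ evaluated over the full probability vector.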

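Lastly, if we introduce Equations (A13) and (A20) into (23), we get

$$
\mathrm{CRLB}(\hat{H}) = \frac{m}{N} \left( \mathbf{l}^{*} - \ln(p_{d!})\, \mathbf{1}^{*} \right)^{T} \left( \mathrm{diag}(\mathbf{p}^{*}) - \mathbf{p}^{*} \mathbf{p}^{*T} \right) \left( \mathbf{l}^{*} - \ln(p_{d!})\, \mathbf{1}^{*} \right) \tag{A21}
$$

By noting the following relations, which hold because the term $i = d!$ contributes zero to each sum and because a variance is unchanged when a constant is subtracted,

$$
\sum_{i=1}^{d!-1} p_i \left( \ln p_i - \ln p_{d!} \right)^{k} = \sum_{i=1}^{d!} p_i \left( \ln p_i - \ln p_{d!} \right)^{k}, \quad k = 1, 2
$$

$$
\sum_{i=1}^{d!} p_i \left( \ln p_i - \ln p_{d!} \right)^{2} - \left( \sum_{i=1}^{d!} p_i \left( \ln p_i - \ln p_{d!} \right) \right)^{2} = \sum_{i=1}^{d!} p_i \ln^{2} p_i - \left( \sum_{i=1}^{d!} p_i \ln p_i \right)^{2}
$$

we can simplify and rewrite Equation (A21) as

$$
\mathrm{CRLB}(\hat{H}) = \frac{m}{N} \left( \mathbf{l}^{T} \mathrm{diag}(\mathbf{p})\, \mathbf{l} - \mathbf{l}^{T} \mathbf{p}\mathbf{p}^{T} \mathbf{l} \right)
$$

which is what we obtained in Equation (27).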

Author Contributions

Conceptualization, D.A. and J.M.; methodology, D.A.; software, D.A.; validation, J.M., R.P. and B.O.; formal analysis, D.A.; resources, D.A.; writing—original draft preparation, D.A.; writing—review and editing, J.M. and R.P.; visualization, D.A.; supervision, B.O.; project administration, B.O.

Funding

The work is part of the ECCO project supported by the French Centre Val-de-Loire region under the contract #15088PRI.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Shannon C.E. A Mathematical Theory of Communication. SIGMOBILE Mob. Comput. Commun. Rev. 2001;5:3–55. doi: 10.1145/584091.584093.

2. Goldberger A.L., Peng C.K., Lipsitz L.A. What is physiologic complexity and how does it change with aging and disease? Neurobiol. Aging. 2002;23:23–26. doi: 10.1016/S0197-4580(01)00266-4.

3. Cashaback J.G.A., Cluff T., Potvin J.R. Muscle fatigue and contraction intensity modulates the complexity of surface electromyography. J. Electromyogr. Kinesiol. 2013;23:78–83. doi: 10.1016/j.jelekin.2012.08.004.

4. Wu Y., Chen P., Luo X., Wu M., Liao L., Yang S., Rangayyan R.M. Measuring signal fluctuations in gait rhythm time series of patients with Parkinson’s disease using entropy parameters. Biomed. Signal Process. Control. 2017;31:265–271. doi: 10.1016/j.bspc.2016.08.022.

5. Aquino A.L.L., Ramos H.S., Frery A.C., Viana L.P., Cavalcante T.S.G., Rosso O.A. Characterization of electric load with Information Theory quantifiers. Physica A. 2017;465:277–284. doi: 10.1016/j.physa.2016.08.017.

6. Pincus S.M. Approximate entropy as a measure of system complexity. Proc. Natl. Acad. Sci. USA. 1991;88:2297–2301. doi: 10.1073/pnas.88.6.2297.

7. Richman J.S., Moorman J.R. Physiological time-series analysis using approximate entropy and sample entropy. Am. J. Physiol. Heart Circ. Physiol. 2000;278:H2039–H2049. doi: 10.1152/ajpheart.2000.278.6.H2039.

8. Liu C., Li K., Zhao L., Liu F., Zheng D., Liu C., Liu S. Analysis of heart rate variability using fuzzy measure entropy. Comput. Biol. Med. 2013;43:100–108. doi: 10.1016/j.compbiomed.2012.11.005.

9. Bandt C., Pompe B. Permutation Entropy: A Natural Complexity Measure for Time Series. Phys. Rev. Lett. 2002;88:174102. doi: 10.1103/PhysRevLett.88.174102.

10. Aziz W., Arif M. Multiscale Permutation Entropy of Physiological Time Series; Proceedings of the 2005 Pakistan Section Multitopic Conference; Karachi, Pakistan. 24–25 December 2005; pp. 1–6.

11. Costa M., Peng C.K., Goldberger A.L., Hausdorff J.M. Multiscale entropy analysis of human gait dynamics. Physica A. 2003;330:53–60. doi: 10.1016/j.physa.2003.08.022.

12. Zanin M., Zunino L., Rosso O.A., Papo D. Permutation Entropy and Its Main Biomedical and Econophysics Applications: A Review. Entropy. 2012;14:1553–1577. doi: 10.3390/e14081553.

13. Humeau-Heurtier A., Wu C.W., Wu S.D. Refined Composite Multiscale Permutation Entropy to Overcome Multiscale Permutation Entropy Length Dependence. IEEE Signal Process. Lett. 2015;22:2364–2367. doi: 10.1109/LSP.2015.2482603.

14. Bandt C., Shiha F. Order Patterns in Time Series. J. Time Ser. Anal. 2007;28:646–665. doi: 10.1111/j.1467-9892.2007.00528.x.

15. Zunino L., Pérez D.G., Martín M.T., Garavaglia M., Plastino A., Rosso O.A. Permutation entropy of fractional Brownian motion and fractional Gaussian noise. Phys. Lett. A. 2008;372:4768–4774. doi: 10.1016/j.physleta.2008.05.026.

16. Little D.J., Kane D.M. Permutation entropy of finite-length white-noise time series. Phys. Rev. E. 2016;94:022118. doi: 10.1103/PhysRevE.94.022118.

17. Dávalos A., Jabloun M., Ravier P., Buttelli O. Theoretical Study of Multiscale Permutation Entropy on Finite-Length Fractional Gaussian Noise; Proceedings of the 26th European Signal Processing Conference (EUSIPCO); Rome, Italy. 3–7 September 2018; pp. 1092–1096.

18. Friedlander B., Francos J.M. Estimation of Amplitude and Phase Parameters of Multicomponent Signals. IEEE Trans. Signal Process. 1995;43:917–926. doi: 10.1109/78.376844.

19. Miller K.S. On the Inverse of the Sum of Matrices. Math. Mag. 1981;54:67–72. doi: 10.1080/0025570X.1981.11976898.