Abstract

Fluctuation theorems are a class of equalities that express universal properties of the probability distribution of a fluctuating path functional such as heat, work or entropy production over an ensemble of trajectories during a non-equilibrium process with a well-defined initial distribution. Jinwoo and Tanaka (Jinwoo, L.; Tanaka, H. Sci. Rep. 2015, 5, 7832) have shown that work fluctuation theorems hold even within an ensemble of paths to each state, making it clear that entropy and free energy of each microstate encode heat and work, respectively, within the conditioned set. Here we show that information that is characterized by the point-wise mutual information for each correlated state between two subsystems in a heat bath encodes the entropy production of the subsystems and heat bath during a coupling process. To this end, we extend the fluctuation theorem of information exchange (Sagawa, T.; Ueda, M. Phys. Rev. Lett. 2012, 109, 180602) by showing that the fluctuation theorem holds even within an ensemble of paths that reach a correlated state during dynamic co-evolution of two subsystems.

Keywords: local non-equilibrium thermodynamics, fluctuation theorem, mutual information, entropy production, local mutual information, thermodynamics of information, stochastic thermodynamics

1. Introduction

Thermal fluctuations play an important role in the functioning of molecular machines: fluctuations mediate the exchange of energy between molecules and the environment, enabling molecules to overcome free energy barriers and to stabilize in low free energy regions. They make positions and velocities random variables, and thus make path functionals such as heat and work fluctuating quantities. In the past two decades, a class of relations called fluctuation theorems has shown that there are universal laws that regulate fluctuating quantities during a process that drives a system far from equilibrium. The Jarzynski equality, for example, links work to the change of equilibrium free energy [1], and the Crooks fluctuation theorem relates the probability of work to the dissipation of work [2], to mention a few. There are many variations on these basic relations. Seifert has extended the second law to the level of individual trajectories [3], and Hatano and Sasa have considered transitions between steady states [4]. Experiments at the single-molecule level have verified the fluctuation theorems, providing critical insights into the behavior of bio-molecules [5,6,7,8,9,10,11,12,13].

Information is an essential subtopic of fluctuation theorems [14,15,16]. Beginning with pioneering studies on feedback controlled systems [17,18], unifying formulations of information thermodynamics have been established [19,20,21,22,23]. In particular, Sagawa and Ueda have introduced information to the realm of fluctuation theorems [24]. They have established a fluctuation theorem of information exchange, unifying non-equilibrium processes of measurement and feedback control [25]. They have considered a situation where a system, say X, evolves in a manner that depends on state y of another system Y, the state of which is fixed during the evolution of the state of X. In this setup, they have shown that establishing a correlation between the two subsystems is accompanied by entropy production. Very recently, we have relaxed the constraint that Sagawa and Ueda assumed, and proved that the same form of the fluctuation theorem of information exchange holds even when both subsystems X and Y co-evolve in time [26].

In the context of fluctuation theorems, external control $\lambda_t$ defines a process by varying the parameter in a predetermined manner during $0 \le t \le \tau$. One repeats the process according to initial probability distribution $P_0$, and the system then generates, as a response, an ensemble of microscopic trajectories $\{x_t\}$. Jinwoo and Tanaka [27,28] have shown that the Jarzynski equality and the Crooks fluctuation theorem hold even within an ensemble of trajectories conditioned on a fixed microstate $x_\tau$ at final time $\tau$, where the local form of non-equilibrium free energy replaces the role of equilibrium free energy in the equations, making it clear that the free energy of microstate $x_\tau$ encodes the amount of work supplied for reaching $x_\tau$ during process $\lambda_t$. Here, local means that a term is related to microstate x at time $\tau$ considered as an ensemble.

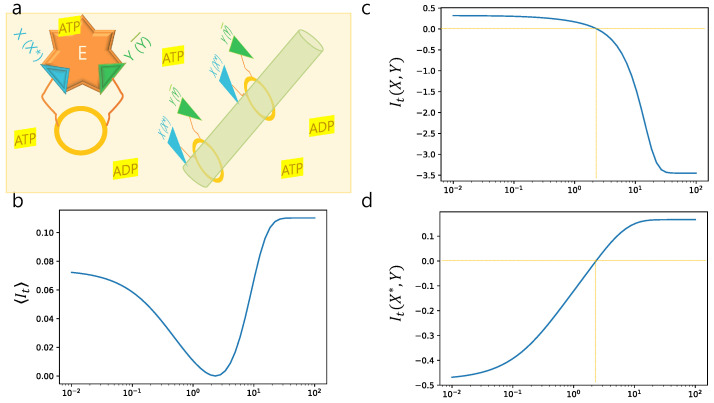

In this paper, we apply this conceptual framework of considering a single microstate as an ensemble of trajectories to the fluctuation theorem of information exchange (see Figure 1a). We show that the mutual information of correlated-microstates encodes the amount of entropy production within the ensemble of paths that reach the correlated-states. This local version of the fluctuation theorem of information exchange provides much more detailed information for each pair of correlated-microstates than the results in [25,26]. In the existing approaches that consider the ensemble of all paths, the point-wise mutual information does not provide specific details on a given pair of correlated-microstates, whereas in this new approach of focusing on a subset of the ensemble, local mutual information provides detailed knowledge of particular correlated-states.

Figure 1.

Ensemble of conditioned paths and dynamic information exchange: (a) $\Gamma$ and $\Gamma_{x_\tau, y_\tau}$ denote, respectively, the set of all trajectories during process $\lambda_t$ for $0 \le t \le \tau$ and that of paths that reach $(x_\tau, y_\tau)$ at time $\tau$. Red curves schematically represent some members of $\Gamma_{x_\tau, y_\tau}$. (b) A single trajectory from the left panel is magnified to give a detailed view of the dynamic coupling of $(x_\tau, y_\tau)$ during process $\lambda_t$. The point-wise mutual information $I_t(x_t, y_t)$ need not vary monotonically.

We organize the paper as follows: In Section 2, we briefly review some fluctuation theorems that we have mentioned. In Section 3, we prove the main theorem and its corollary. In Section 4, we provide illustrative examples, and in Section 5, we discuss the implication of the results.

2. Conditioned Nonequilibrium Work Relations and Sagawa–Ueda Fluctuation Theorem

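We consider a system in contact with a heat bath of inverse temperature $\beta := 1/(k_B T)$, where $k_B$ is the Boltzmann constant and T is the temperature of the heat bath. External parameter $\lambda_t$ drives the system away from equilibrium during $0 \le t \le \tau$. We assume that the initial probability distribution is the equilibrium one at control parameter $\lambda_0$. Let $\Gamma$ be the set of all microscopic trajectories, and $\Gamma_{x_\tau}$ be that of paths conditioned on $x_\tau$ at time $\tau$. Then, the Jarzynski equality [1] and the end-point conditioned version [27,28] of it read as follows:

$$\left\langle e^{-\beta W} \right\rangle_{\Gamma} = e^{-\beta \left[ F_{eq}(\lambda_\tau) - F_{eq}(\lambda_0) \right]}, \tag{1}$$

$$\left\langle e^{-\beta W} \right\rangle_{\Gamma_{x_\tau}} = e^{-\beta \left[ F(x_\tau, \tau) - F_{eq}(\lambda_0) \right]}, \tag{2}$$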

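respectively, where the brackets $\langle \cdot \rangle_{\Gamma}$ indicate the average over all trajectories in $\Gamma$ and $\langle \cdot \rangle_{\Gamma_{x_\tau}}$ indicate the average over trajectories reaching $x_\tau$ at time $\tau$. Here W indicates the work done on the system through $\lambda_t$, $F_{eq}(\lambda_t)$ is the equilibrium free energy at control parameter $\lambda_t$, and $F(x_\tau, \tau)$ is the local non-equilibrium free energy of $x_\tau$ at time $\tau$. Work measurement over a specific ensemble of paths gives us the equilibrium free energy as a function of $\lambda_\tau$ through Equation (1) and the local non-equilibrium free energy as a micro-state function of $x_\tau$ at time $\tau$ through Equation (2). The following fluctuation theorem links Equations (1) and (2):

$$\left\langle e^{-\beta F(x_\tau, \tau)} \right\rangle_{x_\tau} = e^{-\beta F_{eq}(\lambda_\tau)}, \tag{3}$$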

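where the brackets $\langle \cdot \rangle_{x_\tau}$ indicate the average over all microstates $x_\tau$ at time $\tau$ [27,28]. Defining the reverse process by $\lambda'_t := \lambda_{\tau - t}$ for $0 \le t \le \tau$, the Crooks fluctuation theorem [2] and the end-point conditioned version [27,28] of it read as follows:

$$\frac{P_{\Gamma}(W)}{P'_{\Gamma}(-W)} = e^{\beta \left[ W - F_{eq}(\lambda_\tau) + F_{eq}(\lambda_0) \right]}, \tag{4}$$

$$\frac{P_{\Gamma_{x_\tau}}(W)}{P'_{\Gamma_{x_\tau}}(-W)} = e^{\beta \left[ W - F(x_\tau, \tau) + F_{eq}(\lambda_0) \right]}, \tag{5}$$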

respectively, where $P_{\Gamma}(W)$ and $P_{\Gamma_{x_\tau}}(W)$ are probability distributions of work W normalized over all paths in $\Gamma$ and $\Gamma_{x_\tau}$, respectively. Here $P'$ indicates the corresponding probabilities for the reverse process. For Equation (4), the initial probability distribution of the reverse process is the equilibrium one at control parameter $\lambda_\tau$. On the other hand, for Equation (5), the initial probability distribution for the reverse process should be the non-equilibrium probability distribution of the forward process at control parameter $\lambda_\tau$. By identifying the value of W at which $P_{\Gamma}(W) = P'_{\Gamma}(-W)$, one obtains $F_{eq}(\lambda_\tau) - F_{eq}(\lambda_0)$, the difference in equilibrium free energy between $\lambda_0$ and $\lambda_\tau$, through Equation (4) [9]. A similar identification may provide $F(x_\tau, \tau)$ through Equation (5).
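The way Equation (3) links Equations (1) and (2) can be checked in two lines. The sketch below rests on two assumptions that are stated here rather than taken from the equations above: the local free energy has the form $F(x, \tau) = E(x, \lambda_\tau) + k_B T \ln P_\tau(x)$, where $E$ is the energy of microstate x (a symbol introduced here for illustration) and $P_\tau$ is the probability distribution at time $\tau$ [27], and the right-hand side of Equation (2) is $e^{-\beta [F(x_\tau, \tau) - F_{eq}(\lambda_0)]}$.

$$
\begin{aligned}
\left\langle e^{-\beta F(x_\tau, \tau)} \right\rangle_{x_\tau}
&= \sum_{x} P_\tau(x)\, e^{-\beta E(x, \lambda_\tau) - \ln P_\tau(x)}
= \sum_{x} e^{-\beta E(x, \lambda_\tau)}
= e^{-\beta F_{eq}(\lambda_\tau)}, \\
\left\langle e^{-\beta W} \right\rangle_{\Gamma}
&= \sum_{x} P_\tau(x) \left\langle e^{-\beta W} \right\rangle_{\Gamma_{x}}
= e^{\beta F_{eq}(\lambda_0)} \left\langle e^{-\beta F(x_\tau, \tau)} \right\rangle_{x_\tau}
= e^{-\beta \left[ F_{eq}(\lambda_\tau) - F_{eq}(\lambda_0) \right]}.
\end{aligned}
$$

The first line is the content of Equation (3); the second shows that averaging the conditioned relation over end points recovers the Jarzynski equality.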

Now we turn to the Sagawa–Ueda fluctuation theorem of information exchange [25]. Specifically, we discuss the generalized version [26] of it. To this end, we consider two subsystems X and Y in the heat bath of inverse temperature $\beta$. During process $\lambda_t$, they interact and co-evolve with each other. Then, the fluctuation theorem of information exchange reads as follows:

$$\left\langle e^{-\sigma + \Delta I} \right\rangle_{\Gamma} = 1, \tag{6}$$

where the brackets $\langle \cdot \rangle_{\Gamma}$ indicate the ensemble average over all paths of the combined subsystems, $\sigma$ is the sum of the entropy production of system X, system Y, and the heat bath, and $\Delta I$ is the change in mutual information between X and Y. We note that in the original version of the Sagawa–Ueda fluctuation theorem, only system X is in contact with the heat bath and Y does not evolve during the process [25,26]. In this paper, we prove an end-point conditioned version of Equation (6):

$$\left\langle e^{-(\sigma + I_0(x_0, y_0))} \right\rangle_{x_\tau, y_\tau} = e^{-I_\tau(x_\tau, y_\tau)}, \tag{7}$$

where the brackets $\langle \cdot \rangle_{x_\tau, y_\tau}$ indicate the ensemble average over all paths to $x_\tau$ and $y_\tau$ at time $\tau$, and $I_t(x_t, y_t)$ ($0 \le t \le \tau$) is the local form of mutual information between microstates of X and Y at time t (see Figure 1b). If there is no initial correlation, i.e., $I_0(x_0, y_0) = 0$, Equation (7) clearly indicates that local mutual information $I_\tau$ as a function of correlated-microstates $(x_\tau, y_\tau)$ encodes entropy production within the end-point conditioned ensemble of paths. In the same vein, we may interpret initial correlation $I_0(x_0, y_0)$ as encoded entropy production for the preparation of the initial condition.

3. Results

3.1. Theoretical Framework

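Let X and Y be finite classical stochastic systems in the heat bath of inverse temperature $\beta$. We allow external parameter $\lambda_t$ to drive one or both subsystems away from equilibrium during time $0 \le t \le \tau$ [29,30,31]. We assume that classical stochastic dynamics describes the time evolution of X and Y during process $\lambda_t$ along trajectories $\{x_t\}$ and $\{y_t\}$, respectively, where $x_t$ ($y_t$) denotes a specific microstate of X (Y) at time t for $0 \le t \le \tau$ on each trajectory. Since trajectories fluctuate, we repeat process $\lambda_t$ with initial joint probability distribution $P_0(x, y)$ over all microstates $(x, y)$ of systems X and Y. Then the subsystems generate a joint probability distribution $P_t(x, y)$ for $0 \le t \le \tau$. Let $P_t(x) := \int P_t(x, y)\, dy$ and $P_t(y) := \int P_t(x, y)\, dx$ be the corresponding marginal probability distributions. We assume

$$P_0(x, y) \neq 0 \quad \text{for all } (x, y), \tag{8}$$

so that we have $P_t(x, y) \neq 0$, $P_t(x) \neq 0$, and $P_t(y) \neq 0$ for all x and y during $0 \le t \le \tau$. Now we consider the entropy production $\sigma$ of system X along $\{x_t\}$, of system Y along $\{y_t\}$, and of the heat bath, with $Q_b$ the heat dissipated into the bath, during process $\lambda_t$ for $0 \le t \le \tau$ as follows:

$$\sigma := \Delta s + \beta Q_b, \tag{9}$$

where

$$\Delta s := \Delta s_x + \Delta s_y, \quad \Delta s_x := -\ln P_\tau(x_\tau) + \ln P_0(x_0), \quad \Delta s_y := -\ln P_\tau(y_\tau) + \ln P_0(y_0). \tag{10}$$

We remark that Equation (10) is different from the change of stochastic entropy of the combined super-system composed of X and Y, which reads $\ln P_0(x_0, y_0) - \ln P_\tau(x_\tau, y_\tau)$ and reduces to Equation (10) if processes $\{x_t\}$ and $\{y_t\}$ are independent. The discrepancy leaves room for correlation, Equation (11) below [25]. Here the stochastic entropy $s[P_t(\circ)] := -\ln P_t(\circ)$ of microstate ○ at time t is the uncertainty of ○ at time t: the more uncertain the occurrence of microstate ○, the greater the stochastic entropy of ○. We also note that in [25], system X was in contact with the heat reservoir, but system Y was not. Nor did system Y evolve. Thus their entropy production reads $\Delta s_x + \beta Q_b$.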

Now we assume that, during process $\lambda_t$, system X exchanges information with system Y. By this, we mean that trajectory $\{x_t\}$ of system X evolves depending on trajectory $\{y_t\}$ of system Y (see Figure 1b). Then, the local form of mutual information $I_t$ at time t between $x_t$ and $y_t$ is the reduction of uncertainty of $x_t$ due to given $y_t$ [25]:

$$I_t(x_t, y_t) := s[P_t(x_t)] - s[P_t(x_t | y_t)] = \ln \frac{P_t(x_t, y_t)}{P_t(x_t) P_t(y_t)}, \tag{11}$$

where $s[P] := -\ln P$ is the stochastic entropy and $P_t(x_t | y_t)$ is the conditional probability distribution of $x_t$ given $y_t$. The more information is shared between $x_t$ and $y_t$ for their occurrence, the larger the value of $I_t(x_t, y_t)$ is. We note that if $x_t$ and $y_t$ are independent at time t, $I_t(x_t, y_t)$ becomes zero. The average of $I_t(x_t, y_t)$ with respect to $P_t(x_t, y_t)$ over all microstates is the mutual information between the two subsystems, which is greater than or equal to zero [32].
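The remark that the sum of the subsystem entropy changes differs from the entropy change of the combined super-system can be made quantitative. As a sketch, assume the stochastic entropy is $-\ln P$ and the local mutual information is $I_t(x_t, y_t) = \ln [P_t(x_t, y_t) / (P_t(x_t) P_t(y_t))]$, and write $\Delta s_{xy} := \ln P_0(x_0, y_0) - \ln P_\tau(x_\tau, y_\tau)$ for the super-system (a label introduced here for illustration). Then

$$
\begin{aligned}
\Delta s_x + \Delta s_y - \Delta s_{xy}
&= \ln \frac{P_\tau(x_\tau, y_\tau)}{P_\tau(x_\tau) P_\tau(y_\tau)} - \ln \frac{P_0(x_0, y_0)}{P_0(x_0) P_0(y_0)} \\
&= I_\tau(x_\tau, y_\tau) - I_0(x_0, y_0).
\end{aligned}
$$

The discrepancy is therefore exactly the change of local mutual information along the trajectory, and it vanishes when the two processes are independent.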

3.2. Proof of Fluctuation Theorem of Information Exchange Conditioned on a Correlated-Microstates

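Now we are ready to prove the fluctuation theorem of information exchange conditioned on correlated-microstates. We define the reverse process $\lambda'_t := \lambda_{\tau - t}$ for $0 \le t \le \tau$, where the external parameter is time-reversed [33,34]. The initial probability distribution $P'_0(x, y)$ for the reverse process should be the final probability distribution for the forward process, so that we have

$$P'_0(x, y) = P_\tau(x, y), \quad P'_0(x) = \int P'_0(x, y)\, dy = P_\tau(x), \quad P'_0(y) = \int P'_0(x, y)\, dx = P_\tau(y). \tag{12}$$

Then, by Equation (8), we have $P'_t(x, y) \neq 0$, $P'_t(x) \neq 0$, and $P'_t(y) \neq 0$ for all x and y during $0 \le t \le \tau$. For each pair of trajectories $\{x_t\}$ and $\{y_t\}$ for $0 \le t \le \tau$, we define the time-reversed conjugates as follows:

$$\{x'_t\} := \{x^*_{\tau - t}\}, \quad \{y'_t\} := \{y^*_{\tau - t}\}, \tag{13}$$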

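where ∗ denotes momentum reversal. Let $\Gamma$ be the set of all trajectories $\{x_t\}$ and $\{y_t\}$, and $\Gamma_{x_\tau, y_\tau}$ be that of trajectories conditioned on correlated-microstates $(x_\tau, y_\tau)$ at time $\tau$. Due to the time-reversal symmetry of the underlying microscopic dynamics, the set of all time-reversed trajectories is identical to $\Gamma$, and the set of time-reversed trajectories conditioned on $x'_0$ and $y'_0$ is identical to $\Gamma_{x_\tau, y_\tau}$. Thus we may use the same notation for both forward and backward pairs. We note that the path probabilities $P_{\Gamma}$ and $P_{\Gamma_{x_\tau, y_\tau}}$ are normalized over all paths in $\Gamma$ and $\Gamma_{x_\tau, y_\tau}$, respectively (see Figure 1a). With this notation, the microscopic reversibility condition that enables us to connect the probability of forward and reverse paths to dissipated heat reads as follows [2,35,36,37]:

$$\frac{P'_{\Gamma}(\{x'_t\}, \{y'_t\} \,|\, x'_0, y'_0)}{P_{\Gamma}(\{x_t\}, \{y_t\} \,|\, x_0, y_0)} = e^{-\beta Q_b}, \tag{14}$$

where $P_{\Gamma}(\{x_t\}, \{y_t\} \,|\, x_0, y_0)$ is the conditional joint probability distribution of paths $\{x_t\}$ and $\{y_t\}$ conditioned on initial microstates $x_0$ and $y_0$, and $P'_{\Gamma}(\{x'_t\}, \{y'_t\} \,|\, x'_0, y'_0)$ is that for the reverse process. Now we restrict our attention to those paths that are in $\Gamma_{x_\tau, y_\tau}$, and divide both numerator and denominator of the left-hand side of Equation (14) by $P(\Gamma_{x_\tau, y_\tau})$. Since $P(\Gamma_{x_\tau, y_\tau})$ is identical to $P'(\Gamma_{x_\tau, y_\tau})$, Equation (14) becomes as follows:

$$\frac{P'_{\Gamma_{x_\tau, y_\tau}}(\{x'_t\}, \{y'_t\} \,|\, x'_0, y'_0)}{P_{\Gamma_{x_\tau, y_\tau}}(\{x_t\}, \{y_t\} \,|\, x_0, y_0)} = e^{-\beta Q_b}, \tag{15}$$

since the probability of paths is now normalized over $\Gamma_{x_\tau, y_\tau}$. Then we have the following: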

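$$
\begin{align}
\frac{P'_{\Gamma_{x_\tau, y_\tau}}(\{x'_t\}, \{y'_t\})}{P_{\Gamma_{x_\tau, y_\tau}}(\{x_t\}, \{y_t\})}
&= \frac{P'_{\Gamma_{x_\tau, y_\tau}}(\{x'_t\}, \{y'_t\} \,|\, x'_0, y'_0)}{P_{\Gamma_{x_\tau, y_\tau}}(\{x_t\}, \{y_t\} \,|\, x_0, y_0)} \cdot \frac{P'_0(x'_0, y'_0)}{P_0(x_0, y_0)} \tag{16} \\
&= \frac{P'_{\Gamma_{x_\tau, y_\tau}}(\{x'_t\}, \{y'_t\} \,|\, x'_0, y'_0)}{P_{\Gamma_{x_\tau, y_\tau}}(\{x_t\}, \{y_t\} \,|\, x_0, y_0)} \cdot \frac{P'_0(x'_0, y'_0)}{P'_0(x'_0) P'_0(y'_0)} \cdot \frac{P_0(x_0) P_0(y_0)}{P_0(x_0, y_0)} \cdot \frac{P'_0(x'_0) P'_0(y'_0)}{P_0(x_0) P_0(y_0)} \tag{17} \\
&= \exp\{-\beta Q_b + I_\tau(x_\tau, y_\tau) - I_0(x_0, y_0) - \Delta s_x - \Delta s_y\} \tag{18} \\
&= \exp\{-\sigma + I_\tau(x_\tau, y_\tau) - I_0(x_0, y_0)\}. \tag{19}
\end{align}
$$

To obtain Equation (17) from Equation (16), we multiply Equation (16) by $\frac{P'_0(x'_0) P'_0(y'_0)}{P'_0(x'_0) P'_0(y'_0)}$ and $\frac{P_0(x_0) P_0(y_0)}{P_0(x_0) P_0(y_0)}$, which are 1. We obtain Equation (18) by applying Equations (10)–(12) and (15) to Equation (17). Finally, we use Equation (9) to obtain Equation (19) from Equation (18). Now we multiply both sides of Equation (19) by $e^{-I_\tau(x_\tau, y_\tau)}$ and $P_{\Gamma_{x_\tau, y_\tau}}(\{x_t\}, \{y_t\})$, and take the integral over all paths in $\Gamma_{x_\tau, y_\tau}$ to obtain the fluctuation theorem of information exchange conditioned on correlated-microstates:

$$
\begin{align}
\left\langle e^{-(\sigma + I_0(x_0, y_0))} \right\rangle_{x_\tau, y_\tau}
&:= \int_{\Gamma_{x_\tau, y_\tau}} e^{-(\sigma + I_0(x_0, y_0))}\, P_{\Gamma_{x_\tau, y_\tau}}(\{x_t\}, \{y_t\})\, d\{x_t\}\, d\{y_t\} \notag \\
&= \int_{\Gamma_{x_\tau, y_\tau}} e^{-I_\tau(x_\tau, y_\tau)}\, P'_{\Gamma_{x_\tau, y_\tau}}(\{x'_t\}, \{y'_t\})\, d\{x'_t\}\, d\{y'_t\}
= e^{-I_\tau(x_\tau, y_\tau)}. \tag{20}
\end{align}
$$

Here we use the facts that $e^{-I_\tau(x_\tau, y_\tau)}$ is constant for all paths in $\Gamma_{x_\tau, y_\tau}$, that probability distribution $P'_{\Gamma_{x_\tau, y_\tau}}$ is normalized over all paths in $\Gamma_{x_\tau, y_\tau}$, and that $d\{x_t\} = d\{x'_t\}$ and $d\{y_t\} = d\{y'_t\}$ due to the time-reversal symmetry [38]. Equation (20) clearly shows that just as local free energy encodes work [27], and local entropy encodes heat [28], the local form of mutual information between correlated-microstates $(x_\tau, y_\tau)$ encodes entropy production within the ensemble of paths that reach each microstate. The following corollary provides more information on entropy production in terms of energetic costs.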
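The conditioned fluctuation theorem can be checked numerically on a toy model. The following is a minimal sketch, not the setting of the proof above: it assumes discrete time, two two-state subsystems, randomly drawn strictly positive transition matrices, and a heat defined through the log-ratio of forward and backward transition probabilities (a local detailed balance convention). All names (`T`, `p0`, `local_mi`, and so on) are hypothetical. It enumerates every joint path exactly and compares the conditioned average of $e^{-(\sigma + I_0)}$ with $e^{-I_\tau}$ for each end point.

```python
import itertools
import math

import numpy as np

rng = np.random.default_rng(0)
NX, NY, STEPS = 2, 2, 3
N = NX * NY  # joint state index z = x * NY + y


def random_stochastic(n):
    """Row-stochastic matrix with strictly positive entries (toy dynamics)."""
    m = rng.uniform(0.1, 1.0, size=(n, n))
    return m / m.sum(axis=1, keepdims=True)


# One transition matrix per time step stands in for the protocol lambda_t.
T = [random_stochastic(N) for _ in range(STEPS)]

# Correlated, strictly positive initial joint distribution (cf. Equation (8)).
p0 = rng.uniform(0.1, 1.0, size=N)
p0 /= p0.sum()
p_tau = p0.copy()
for Tk in T:
    p_tau = p_tau @ Tk


def marginals(p):
    joint = p.reshape(NX, NY)
    return joint.sum(axis=1), joint.sum(axis=0)


p0x, p0y = marginals(p0)
ptx, pty = marginals(p_tau)


def local_mi(p, px, py, z):
    """Point-wise mutual information ln[P(x,y) / (P(x) P(y))]."""
    x, y = divmod(z, NY)
    return math.log(p[z] / (px[x] * py[y]))


num = np.zeros(N)  # sum of P(path) * exp(-(sigma + I_0)) per end point
den = np.zeros(N)  # sum of P(path) per end point
for path in itertools.product(range(N), repeat=STEPS + 1):
    prob = p0[path[0]]
    beta_q = 0.0  # beta * heat dissipated into the bath (assumed definition)
    for k in range(STEPS):
        a, b = path[k], path[k + 1]
        prob *= T[k][a, b]
        beta_q += math.log(T[k][a, b] / T[k][b, a])
    x0, y0 = divmod(path[0], NY)
    xt, yt = divmod(path[-1], NY)
    ds_x = -math.log(ptx[xt]) + math.log(p0x[x0])
    ds_y = -math.log(pty[yt]) + math.log(p0y[y0])
    sigma = ds_x + ds_y + beta_q
    i0 = local_mi(p0, p0x, p0y, path[0])
    num[path[-1]] += prob * math.exp(-(sigma + i0))
    den[path[-1]] += prob

lhs = num / den  # conditioned average for each end point (x_tau, y_tau)
rhs = np.array([math.exp(-local_mi(p_tau, ptx, pty, z)) for z in range(N)])
print(np.allclose(lhs, rhs))
```

Because the check enumerates all $4^4$ paths, agreement holds to floating-point precision rather than statistically; the design choice of random transition matrices is only meant to show that the identity does not depend on a particular model.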

3.3. Corollary

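To discuss entropy production in terms of energetic costs, we define the local free energy $F_x$ of $x_t$ and $F_y$ of $y_t$ at control parameter $\lambda_t$ as follows:

$$F_x(x_t, t) := E_x(x_t, t) - k_B T\, s[P_t(x_t)], \quad F_y(y_t, t) := E_y(y_t, t) - k_B T\, s[P_t(y_t)], \tag{21}$$

where T is the temperature of the heat bath, $k_B$ is the Boltzmann constant, $E_x$ and $E_y$ are the internal energy of systems X and Y, respectively, and $s[P_t(\circ)] := -\ln P_t(\circ)$ is stochastic entropy [2,3]. Work done on either one or both systems through process $\lambda_t$ is expressed by the first law of thermodynamics as follows:

$$W := \Delta E + Q_b, \tag{22}$$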

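where $\Delta E$ is the change in internal energy of the total system composed of X and Y. If we assume that systems X and Y are weakly coupled, in that the interaction energy between X and Y is negligible compared to the internal energy of X and Y, we may have

$$\Delta E = \Delta E_x + \Delta E_y, \tag{23}$$

where $\Delta E_x := E_x(x_\tau, \tau) - E_x(x_0, 0)$ and $\Delta E_y := E_y(y_\tau, \tau) - E_y(y_0, 0)$ [39]. We rewrite Equation (18) by adding and subtracting the change of internal energy $\Delta E_x$ of X and $\Delta E_y$ of Y as follows:

$$
\begin{align}
\frac{P'_{\Gamma_{x_\tau, y_\tau}}(\{x'_t\}, \{y'_t\})}{P_{\Gamma_{x_\tau, y_\tau}}(\{x_t\}, \{y_t\})}
&= \exp\{-\beta (Q_b + \Delta E_x + \Delta E_y) + \beta \Delta E_x - \Delta s_x + \beta \Delta E_y - \Delta s_y + I_\tau(x_\tau, y_\tau) - I_0(x_0, y_0)\} \tag{24} \\
&= \exp\{-\beta (W - \Delta F_x - \Delta F_y) + I_\tau(x_\tau, y_\tau) - I_0(x_0, y_0)\}, \tag{25}
\end{align}
$$

where we have applied Equations (21)–(23) consecutively to Equation (24) to obtain Equation (25). Here $\Delta F_x := F_x(x_\tau, \tau) - F_x(x_0, 0)$ and $\Delta F_y := F_y(y_\tau, \tau) - F_y(y_0, 0)$. Now we multiply both sides of Equation (25) by $e^{-I_\tau(x_\tau, y_\tau)}$ and $P_{\Gamma_{x_\tau, y_\tau}}(\{x_t\}, \{y_t\})$, and take the integral over all paths in $\Gamma_{x_\tau, y_\tau}$ to obtain the following:

$$\left\langle e^{-\beta (W - \Delta F_x - \Delta F_y) - I_0(x_0, y_0)} \right\rangle_{x_\tau, y_\tau} = e^{-I_\tau(x_\tau, y_\tau)}, \tag{26}$$

which generalizes known relations in the literature [24,39,40,41,42,43]. We note that Equation (26) holds under the weak-coupling assumption between systems X and Y during process $\lambda_t$, and that $\Delta F_x + \Delta F_y$ in Equation (26) is the difference in non-equilibrium free energy, which is different from the change in equilibrium free energy that appears in similar relations in the literature [24,40,41,42,43]. If there is no initial correlation, i.e., $I_0(x_0, y_0) = 0$, Equation (26) indicates that local mutual information $I_\tau$ as a state function of correlated-microstates $(x_\tau, y_\tau)$ encodes entropy production, $\beta (W - \Delta F_x - \Delta F_y)$, within the ensemble of paths in $\Gamma_{x_\tau, y_\tau}$. In the same vein, we may interpret initial correlation $I_0(x_0, y_0)$ as encoded entropy production for the preparation of the initial condition.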

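Sagawa and Ueda [25] showed that the entropy of X can be decreased without any heat flow due to a negative mutual information change, under the assumption that one of the two systems does not evolve in time. Equation (20) implies that a negative mutual information change can decrease the entropy of X and that of Y simultaneously without any heat flow by the following:

$$\langle \Delta s_x \rangle_{x_\tau, y_\tau} + \langle \Delta s_y \rangle_{x_\tau, y_\tau} \ge \Delta I_\tau(x_\tau, y_\tau), \tag{27}$$

provided $\langle Q_b \rangle_{x_\tau, y_\tau} = 0$. Here $\Delta I_\tau(x_\tau, y_\tau) := I_\tau(x_\tau, y_\tau) - \langle I_0(x_0, y_0) \rangle_{x_\tau, y_\tau}$. In terms of energetics, Equation (26) implies that a negative mutual information change can increase the free energy of X and that of Y simultaneously without any external supply of energy by the following:

$$\langle \Delta F_x \rangle_{x_\tau, y_\tau} + \langle \Delta F_y \rangle_{x_\tau, y_\tau} \le -k_B T\, \Delta I_\tau(x_\tau, y_\tau), \tag{28}$$

provided $\langle W \rangle_{x_\tau, y_\tau} = 0$.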
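The step from the integral relation of Equation (20) to an inequality of this kind is Jensen's inequality. As a sketch, assume Equation (20) has the form $\langle e^{-(\sigma + I_0)} \rangle_{x_\tau, y_\tau} = e^{-I_\tau(x_\tau, y_\tau)}$ with $\sigma = \Delta s_x + \Delta s_y + \beta Q_b$, and write $\langle \cdot \rangle$ for the average conditioned on $(x_\tau, y_\tau)$. Convexity of the exponential gives

$$
\begin{aligned}
e^{-I_\tau(x_\tau, y_\tau)} = \left\langle e^{-(\sigma + I_0)} \right\rangle \ge e^{-\langle \sigma + I_0 \rangle}
\quad &\Longrightarrow \quad
\langle \sigma \rangle \ge I_\tau(x_\tau, y_\tau) - \langle I_0 \rangle, \\
\langle Q_b \rangle = 0
\quad &\Longrightarrow \quad
\langle \Delta s_x \rangle + \langle \Delta s_y \rangle \ge I_\tau(x_\tau, y_\tau) - \langle I_0 \rangle.
\end{aligned}
$$

The same argument applied to Equation (26), assuming it reads $\langle e^{-\beta (W - \Delta F_x - \Delta F_y) - I_0} \rangle = e^{-I_\tau}$, gives $\beta \langle W - \Delta F_x - \Delta F_y \rangle \ge I_\tau - \langle I_0 \rangle$, which becomes a bound on the free-energy changes alone when $\langle W \rangle = 0$.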

4. Examples

4.1. A Simple One

Let X and Y be two systems that weakly interact with each other and are in contact with the heat bath of inverse temperature $\beta$. We may think of X and Y, for example, as bio-molecules that interact with each other, or of X as a device that measures the state of another system and Y as the measured system. We consider a dynamic coupling process as follows: Initially, X and Y are separately in equilibrium such that the initial correlation $I_0(x_0, y_0)$ is zero for all $x_0$ and $y_0$. At time $t = 0$, system X starts a (weak) interaction with system Y, which lasts until time $t = \tau$. During the coupling process, external parameter $\lambda_t$ for $0 \le t \le \tau$ may exchange work with either one or both systems (see Figure 1b). Since each process fluctuates, we repeat the process many times to obtain probability distribution $P_t(x, y)$ for $0 \le t \le \tau$. We allow both systems to co-evolve interactively, and thus $I_t(x_t, y_t)$ need not vary monotonically. Let us assume that the final probability distribution $P_\tau(x_\tau, y_\tau)$ is as shown in Table 1.

Table 1.

The joint probability distribution of x and y at final time $\tau$: Here we assume that both systems X and Y have three states, 0, 1, and 2.

| X \ Y | 0 | 1 | 2 |
|---|---|---|---|
| 0 | 1/6 | 1/9 | 1/18 |
| 1 | 1/18 | 1/6 | 1/9 |
| 2 | 1/9 | 1/18 | 1/6 |

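Then, a few representative values of the local mutual information read as follows:

$$I_\tau(x_\tau = 0, y_\tau = 0) = \ln \frac{1/6}{(1/3)(1/3)} = \ln \frac{3}{2}, \quad I_\tau(x_\tau = 0, y_\tau = 1) = \ln \frac{1/9}{(1/3)(1/3)} = 0, \quad I_\tau(x_\tau = 0, y_\tau = 2) = \ln \frac{1/18}{(1/3)(1/3)} = \ln \frac{1}{2}. \tag{29}$$

By Jensen’s inequality [32], Equation (20) implies

$$\langle \sigma \rangle_{x_\tau, y_\tau} \ge I_\tau(x_\tau, y_\tau). \tag{30}$$

Thus coupling $x_\tau = 0, y_\tau = 0$ is accompanied on average by entropy production of at least $\ln(3/2)$, which is greater than 0. Coupling $x_\tau = 0, y_\tau = 1$ may not produce entropy on average. Coupling $x_\tau = 0, y_\tau = 2$ may on average produce negative entropy, down to $\ln(1/2)$. These three individual inequalities provide more detailed information than that currently available from [25,26].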
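The values quoted for this example can be reproduced directly from Table 1. The following is a small sketch that assumes the rows of the table index the state of X and the columns the state of Y; the names `P`, `px`, `py`, and `local_mi` are introduced here for illustration only.

```python
import math
from fractions import Fraction as Fr

# Table 1 (assumed orientation: P[x][y] with rows x_tau and columns y_tau).
P = [
    [Fr(1, 6), Fr(1, 9), Fr(1, 18)],
    [Fr(1, 18), Fr(1, 6), Fr(1, 9)],
    [Fr(1, 9), Fr(1, 18), Fr(1, 6)],
]

# Marginal distributions of X and Y at the final time.
px = [sum(row) for row in P]
py = [sum(P[x][y] for x in range(3)) for y in range(3)]


def local_mi(x, y):
    """Point-wise mutual information ln[P(x,y) / (P(x) P(y))] in nats."""
    return math.log(P[x][y] / (px[x] * py[y]))


# Ensemble-averaged mutual information, which must be non-negative.
mean_mi = sum(
    float(P[x][y]) * local_mi(x, y) for x in range(3) for y in range(3)
)

for y in range(3):
    print(f"I_tau(x=0, y={y}) = {local_mi(0, y):+.4f}")
print(f"mean mutual information = {mean_mi:.4f}")
```

Each printed local value is the lower bound on the entropy production averaged over the paths that end in that pair of states, while the mean is the weaker bound obtained from the ensemble of all paths.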

4.2. A “Tape-Driven” Biochemical Machine

In [44], McGrath et al. proposed a physically realizable device that exploits or creates mutual information, depending on system parameters. The system is composed of an enzyme E in a chemical bath, interacting with a tape that is decorated with a set of pairs of molecules (see Figure 2a). A pair is composed of substrate molecule X (or phosphorylated ) and activator Y of the enzyme (or which denotes the absence of Y). The binding of molecule Y to E converts the enzyme into active mode , which catalyzes phosphate exchange between ATP and X:

| (31) |

Figure 2.

Analysis of a “tape-driven” biochemical machine: (a) a schematic illustration of enzyme E, pairs of and in the chemical bath including ATP and ADP. (b) The graph of as a function of time t, which shows the non-monotonicity of . (c) The graph of which decreases monotonically and composed of trajectories that harness mutual information to work against the chemical bath. (d) The graph of that increases monotonically and composed of paths that create mutual information between and Y.

The tape is prepared in a correlated manner through a single parameter :

| (32) |

If , a pair of Y and is abundant so that the interaction of enzyme E with molecule Y activates the enzyme, driving the catalytic reaction of Equation (31) from right to left and producing ATP from ADP. If the bath were prepared such that , the reaction corresponds to work done on the chemical bath against the concentration gradient. Note that this interaction causes the conversion of to X, which reduces the initial correlation between and Y, resulting in the conversion of mutual information into work. If E interacts with a pair of and X, which is also abundant for , the enzyme becomes inactive due to the absence of Y, preventing the reaction of Equation (31) from running left to right. The enzyme thus acts as a ratchet that blocks the conversion of X and ATP to and ADP, which might otherwise happen due to the concentration gradient of the bath.

On the other hand, if , a pair of Y and X is abundant which allows the enzyme to convert X into using the pressure of the chemical bath, creating the correlation between Y and . If E interacts with a pair of and which is also abundant for , the enzyme is again inactive, preventing the de-phosphorylation of , keeping the created correlation. In this regime, the net effect is the conversion of work (due to the chemical gradient of the bath) to mutual information. The concentration of ATP and ADP in the chemical bath is adjusted via such that

| (33) |

relative to a reference concentration . For the analysis of various regimes of different parameters, we refer the reader to [44].

In this example, we concentrate on the case with and , to which Ref. [44] pays special attention. They analyzed the dynamics of mutual information during . Due to the high initial correlation, the enzyme converts the mutual information between and Y into work against the pressure of the chemical bath with . As the reactions proceed, the correlation drops until it reaches its minimum, which is zero. Eventually the reaction is inverted, and the bath begins to do work to create mutual information between and Y, as shown in Figure 2b.

We split the ensemble of paths into composed of trajectories reaching at each t and composed of those reaching at time t. Then, we calculate and using the analytic form of probability distributions that they derived. Figure 2c,d show and , respectively, as a function of time t. During the whole process, mutual information monotonically decreases. For , it keeps positive, and after that, it becomes negative which is possible for local mutual information. Trajectories in harness mutual information between and Y, converting to X and ADP to ATP against the chemical bath. Contrary to this, increases monotonically. It becomes positive after , indicating that the members in create mutual information between and Y by converting X to using the excess of in the chemical bath. The effect accumulates, and the negative values of turn to the positive after .

5. Conclusions

We have proved the fluctuation theorem of information exchange conditioned on correlated-microstates, Equation (20), and its corollary, Equation (26). These theorems make it clear that local mutual information, as a state function of correlated-states, encodes entropy production within an ensemble of paths that reach the correlated-states. Equation (20) also yields a lower bound on entropy production, Equation (30), within a subset of path-ensembles, which provides more detailed information than the fluctuation theorem for the ensemble of all paths. Equation (26) gives the exact relationship between work, non-equilibrium free energy, and mutual information. This end-point conditioned version of the theorem also provides more detailed information on the energetics of coupling than current approaches in the literature. This framework may be useful for analyzing the thermodynamics of dynamic molecular information processes [44,45,46] and dynamic allosteric transitions [47,48].

Funding

L.J. was supported by the National Research Foundation of Korea Grant funded by the Korean Government (NRF-2010-0006733, NRF-2012R1A1A2042932, NRF-2016R1D1A1B02011106), and in part by Kwangwoon University Research Grant in 2017.

Conflicts of Interest

The author declares no conflict of interest.

References

1. Jarzynski C. Nonequilibrium equality for free energy differences. Phys. Rev. Lett. 1997;78:2690–2693. doi: 10.1103/PhysRevLett.78.2690.
2. Crooks G.E. Entropy production fluctuation theorem and the nonequilibrium work relation for free energy differences. Phys. Rev. E. 1999;60:2721–2726. doi: 10.1103/PhysRevE.60.2721.
3. Seifert U. Entropy production along a stochastic trajectory and an integral fluctuation theorem. Phys. Rev. Lett. 2005;95:040602. doi: 10.1103/PhysRevLett.95.040602.
4. Hatano T., Sasa S.I. Steady-state thermodynamics of Langevin systems. Phys. Rev. Lett. 2001;86:3463–3466. doi: 10.1103/PhysRevLett.86.3463.
5. Hummer G., Szabo A. Free energy reconstruction from nonequilibrium single-molecule pulling experiments. Proc. Natl. Acad. Sci. USA. 2001;98:3658–3661. doi: 10.1073/pnas.071034098.
6. Liphardt J., Onoa B., Smith S.B., Tinoco I., Bustamante C. Reversible unfolding of single RNA molecules by mechanical force. Science. 2001;292:733–737. doi: 10.1126/science.1058498.
7. Liphardt J., Dumont S., Smith S., Tinoco I., Jr., Bustamante C. Equilibrium information from nonequilibrium measurements in an experimental test of Jarzynski’s equality. Science. 2002;296:1832–1835. doi: 10.1126/science.1071152.
8. Trepagnier E.H., Jarzynski C., Ritort F., Crooks G.E., Bustamante C.J., Liphardt J. Experimental test of Hatano and Sasa’s nonequilibrium steady-state equality. Proc. Natl. Acad. Sci. USA. 2004;101:15038–15041. doi: 10.1073/pnas.0406405101.
9. Collin D., Ritort F., Jarzynski C., Smith S.B., Tinoco I., Bustamante C. Verification of the Crooks fluctuation theorem and recovery of RNA folding free energies. Nature. 2005;437:231–234. doi: 10.1038/nature04061.
10. Alemany A., Mossa A., Junier I., Ritort F. Experimental free-energy measurements of kinetic molecular states using fluctuation theorems. Nat. Phys. 2012;8:688–694. doi: 10.1038/nphys2375.
11. Holubec V., Ryabov A. Cycling Tames Power Fluctuations near Optimum Efficiency. Phys. Rev. Lett. 2018;121:120601. doi: 10.1103/PhysRevLett.121.120601.
12. Šiler M., Ornigotti L., Brzobohatý O., Jákl P., Ryabov A., Holubec V., Zemánek P., Filip R. Diffusing up the Hill: Dynamics and Equipartition in Highly Unstable Systems. Phys. Rev. Lett. 2018;121:230601. doi: 10.1103/PhysRevLett.121.230601.
13. Ciliberto S. Experiments in Stochastic Thermodynamics: Short History and Perspectives. Phys. Rev. X. 2017;7:021051. doi: 10.1103/PhysRevX.7.021051.
14. Strasberg P., Schaller G., Brandes T., Esposito M. Quantum and Information Thermodynamics: A Unifying Framework Based on Repeated Interactions. Phys. Rev. X. 2017;7:021003. doi: 10.1103/PhysRevX.7.021003.
15. Demirel Y. Information in biological systems and the fluctuation theorem. Entropy. 2014;16:1931–1948. doi: 10.3390/e16041931.
16. Demirel Y. Nonequilibrium Thermodynamics: Transport and Rate Processes in Physical, Chemical and Biological Systems. 4th ed. Elsevier; Amsterdam, The Netherlands: 2019.
17. Lloyd S. Use of mutual information to decrease entropy: Implications for the second law of thermodynamics. Phys. Rev. A. 1989;39:5378–5386. doi: 10.1103/PhysRevA.39.5378.
18. Cao F.J., Feito M. Thermodynamics of feedback controlled systems. Phys. Rev. E. 2009;79:041118. doi: 10.1103/PhysRevE.79.041118.
19. Gaspard P. Multivariate fluctuation relations for currents. New J. Phys. 2013;15:115014. doi: 10.1088/1367-2630/15/11/115014.
20. Barato A.C., Seifert U. Unifying Three Perspectives on Information Processing in Stochastic Thermodynamics. Phys. Rev. Lett. 2014;112:090601. doi: 10.1103/PhysRevLett.112.090601.
21. Barato A.C., Seifert U. Stochastic thermodynamics with information reservoirs. Phys. Rev. E. 2014;90:042150. doi: 10.1103/PhysRevE.90.042150.
22. Horowitz J.M., Esposito M. Thermodynamics with continuous information flow. Phys. Rev. X. 2014;4:031015. doi: 10.1103/PhysRevX.4.031015.
23. Rosinberg M.L., Horowitz J.M. Continuous information flow fluctuations. EPL Europhys. Lett. 2016;116:10007. doi: 10.1209/0295-5075/116/10007.
24. Sagawa T., Ueda M. Generalized Jarzynski equality under nonequilibrium feedback control. Phys. Rev. Lett. 2010;104:090602. doi: 10.1103/PhysRevLett.104.090602.
25. Sagawa T., Ueda M. Fluctuation theorem with information exchange: Role of correlations in stochastic thermodynamics. Phys. Rev. Lett. 2012;109:180602. doi: 10.1103/PhysRevLett.109.180602.
26. Jinwoo L. Fluctuation Theorem of Information Exchange between Subsystems that Co-Evolve in Time. Symmetry. 2019;11:433. doi: 10.3390/sym11030433.
27. Jinwoo L., Tanaka H. Local non-equilibrium thermodynamics. Sci. Rep. 2015;5:7832. doi: 10.1038/srep07832.
28. Jinwoo L., Tanaka H. Trajectory-ensemble-based nonequilibrium thermodynamics. arXiv. 2014;1403.1662v1.
29. Jarzynski C. Equalities and inequalities: Irreversibility and the second law of thermodynamics at the nanoscale. Annu. Rev. Condens. Matter Phys. 2011;2:329–351. doi: 10.1146/annurev-conmatphys-062910-140506.
30. Seifert U. Stochastic thermodynamics, fluctuation theorems and molecular machines. Rep. Prog. Phys. 2012;75:126001. doi: 10.1088/0034-4885/75/12/126001.
31. Spinney R., Ford I. Nonequilibrium Statistical Physics of Small Systems. Wiley-VCH Verlag GmbH & Co. KGaA; Weinheim, Germany: 2013. Fluctuation Relations: A Pedagogical Overview; pp. 3–56.
32. Cover T.M., Thomas J.A. Elements of Information Theory. John Wiley & Sons; Hoboken, NJ, USA: 2012.
33. Ponmurugan M. Generalized detailed fluctuation theorem under nonequilibrium feedback control. Phys. Rev. E. 2010;82:031129. doi: 10.1103/PhysRevE.82.031129.
34. Horowitz J.M., Vaikuntanathan S. Nonequilibrium detailed fluctuation theorem for repeated discrete feedback. Phys. Rev. E. 2010;82:061120. doi: 10.1103/PhysRevE.82.061120.
35. Kurchan J. Fluctuation theorem for stochastic dynamics. J. Phys. A Math. Gen. 1998;31:3719. doi: 10.1088/0305-4470/31/16/003.
36. Maes C. The fluctuation theorem as a Gibbs property. J. Stat. Phys. 1999;95:367–392. doi: 10.1023/A:1004541830999.
37. Jarzynski C. Hamiltonian derivation of a detailed fluctuation theorem. J. Stat. Phys. 2000;98:77–102. doi: 10.1023/A:1018670721277.
38. Goldstein H., Poole C., Safko J. Classical Mechanics. 3rd ed. Pearson; London, UK: 2001.
39. Parrondo J.M., Horowitz J.M., Sagawa T. Thermodynamics of information. Nat. Phys. 2015;11:131–139. doi: 10.1038/nphys3230.
40. Kawai R., Parrondo J.M.R., den Broeck C.V. Dissipation: The phase-space perspective. Phys. Rev. Lett. 2007;98:080602. doi: 10.1103/PhysRevLett.98.080602.
41. Takara K., Hasegawa H.H., Driebe D.J. Generalization of the second law for a transition between nonequilibrium states. Phys. Lett. A. 2010;375:88–92. doi: 10.1016/j.physleta.2010.11.002.
42. Hasegawa H.H., Ishikawa J., Takara K., Driebe D.J. Generalization of the second law for a nonequilibrium initial state. Phys. Lett. A. 2010;374:1001–1004. doi: 10.1016/j.physleta.2009.12.042.
43. Esposito M., Van den Broeck C. Second law and Landauer principle far from equilibrium. Europhys. Lett. 2011;95:40004. doi: 10.1209/0295-5075/95/40004.
44. McGrath T., Jones N.S., ten Wolde P.R., Ouldridge T.E. Biochemical Machines for the Interconversion of Mutual Information and Work. Phys. Rev. Lett. 2017;118:028101. doi: 10.1103/PhysRevLett.118.028101.
45. Becker N.B., Mugler A., ten Wolde P.R. Optimal Prediction by Cellular Signaling Networks. Phys. Rev. Lett. 2015;115:258103. doi: 10.1103/PhysRevLett.115.258103.
46. Ouldridge T.E., Govern C.C., ten Wolde P.R. Thermodynamics of Computational Copying in Biochemical Systems. Phys. Rev. X. 2017;7:021004. doi: 10.1103/PhysRevX.7.021004.
47. Tsai C.J., Nussinov R. A unified view of “how allostery works”. PLoS Comput. Biol. 2014;10:e1003394. doi: 10.1371/journal.pcbi.1003394.
48. Cuendet M.A., Weinstein H., LeVine M.V. The allostery landscape: Quantifying thermodynamic couplings in biomolecular systems. J. Chem. Theory Comput. 2016;12:5758–5767. doi: 10.1021/acs.jctc.6b00841.