Abstract

Action potentials (spikes) can trigger the release of a neurotransmitter at chemical synapses between neurons. Such release is uncertain, as it occurs only with a certain probability. Moreover, synaptic release can occur independently of an action potential (asynchronous release) and depends on the history of synaptic activity. We focus here on short-term synaptic facilitation, in which a sequence of action potentials can temporarily increase the release probability of the synapse. Whereas information transmission under short-term depression has been quantified, the information transmission in facilitating synapses has not. We find rigorous lower and upper bounds for the rate of information transmission in a model of synaptic facilitation. We treat the synapse as a two-state binary asymmetric channel, in which the arrival of an action potential shifts the synapse to a facilitated state, while in the absence of a spike, the synapse returns to its baseline state. The information bounds are functions of both the asynchronous and synchronous release parameters. If synchronous release facilitates more than asynchronous release, the mutual information rate increases. In contrast, short-term facilitation degrades information transmission when the synchronous release probability is intrinsically high. As synaptic release is energetically expensive, we exploit the information bounds to determine the energy–information trade-off in facilitating synapses. We show that, unlike the information rate, the energy-normalized information rate is robust with respect to variations in the strength of facilitation.

Keywords: short-term synaptic facilitation, release site, information theory, binary asymmetric channel, mutual information rate, information bound

1. Introduction

Action potentials are the key carriers of information in the brain. The arrival of an action potential at a synapse opens calcium channels in the presynaptic site, which leads to the release of vesicles filled with neurotransmitters [1]. In turn, the released neurotransmitters activate post-synaptic receptors, thereby leading to a change in the post-synaptic potential.

This process of release, however, is stochastic. The release probability is affected by the level of intracellular calcium and the size of the readily releasable pool of vesicles [2,3]. Moreover, the release of a vesicle is not necessarily synchronized with the spiking process; a synapse may release asynchronously tens of milliseconds after the arrival of an action potential [4], or sometimes even spontaneously [5].

The release properties of a synapse also change on different time scales. The successive release of vesicles can deplete the pool of vesicles, thereby depressing the synapse. On the other hand, a sequence of action potentials with short inter-spike intervals can “prime” the release mechanism and increase the release probability, inducing short-term facilitation [6].

Several studies have addressed the modulatory role of short-term depression on synaptic information transmission [7,8,9]. In contrast, the information rate of a facilitating synapse is not yet fully understood, though it has been suggested that short-term facilitation temporally filters the incoming spike train [10].

To study the impact of short-term facilitation on synaptic information efficacy, we employ a binary asymmetric channel with two states. The model synapse switches between a baseline state and facilitated state based on the history of the input. Each state has distinct release probabilities, both for synchronous and asynchronous release. We derive a lower bound and an upper bound for the mutual information rate of such a facilitating synapse and assess the functional role of short-term facilitation on the synaptic information efficacy.

Short-term facilitation increases the release probability and consequently raises the metabolic energy consumption of the synapse [11]. We calculate the rate of information transmission per unit of energy to evaluate the compromises that a facilitating synapse makes to balance energy consumption and information transmission.

2. Synapse Model and Information Bounds

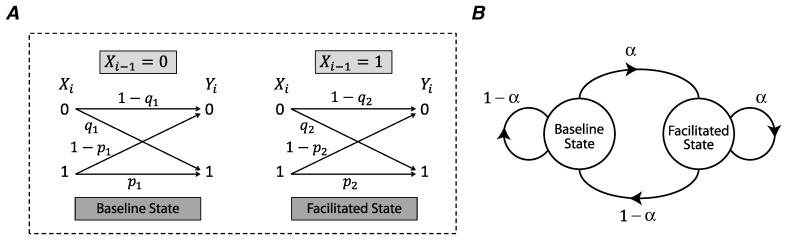

We use a binary asymmetric channel to model the stochasticity of release in a synapse (Figure 1A) [12]. The input of the model is the presynaptic spike process $X = \{X_i\}$, where $X_i$ is a binary random variable corresponding to the presence ($X_i = 1$) or absence ($X_i = 0$) of a spike at time i. We assume that X is an i.i.d. random process, and $X_i$ is a Bernoulli random variable with $P(X_i = 1) = \alpha$. The output process of the channel, $Y = \{Y_i\}$, represents a release ($Y_i = 1$) or lack of release ($Y_i = 0$) at time i. The synchronous spike-evoked release probability is characterized as $p = P(Y_i = 1 \mid X_i = 1)$, and the asynchronous release probability as $q = P(Y_i = 1 \mid X_i = 0)$.

Figure 1.

(A) Short-term facilitation in a synapse is modeled by a binary asymmetric channel whose state at time i is determined by the previous input, $X_{i-1}$. If $X_{i-1} = 0$, the synapse remains in the baseline state; the synapse goes to the facilitated state after an action potential, $X_{i-1} = 1$. (B) The transition between the baseline state and the facilitated state is modeled by a two-state Markov chain, and the transition probabilities are determined by the normalized input spike rate $\alpha = P(X_i = 1)$.

In short-term synaptic facilitation, a presynaptic input spike facilitates the synaptic release for the next spike. We model this phenomenon as a binary asymmetric channel whose state is determined by the previous input of the channel (Figure 1A). In the absence of a presynaptic spike at time $i-1$ ($X_{i-1} = 0$), the channel is in the baseline state and the probabilities of synchronous spike-evoked and asynchronous release are $p_1$ and $q_1$. If a presynaptic spike occurs at time $i-1$, i.e., $X_{i-1} = 1$, the state of the channel is switched to the facilitated state and the synchronous and asynchronous release probabilities are increased to $p_2$ and $q_2$ as follows,

$$p_2 = p_1 + u\,(p_{\max} - p_1), \tag{1}$$

$$q_2 = q_1 + v\,(q_{\max} - q_1). \tag{2}$$

Here, u and v are facilitation coefficients of synchronous and asynchronous release probabilities ($0 \le u, v \le 1$), and $p_{\max}$ and $q_{\max}$ are the maximum release probabilities of these two modes of release. A Markov chain describes the transitions between the baseline state and the facilitated state, and the transition probabilities correspond to the presence or absence of an action potential in the presynaptic neuron (Figure 1B).
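The two-state channel described above can be sketched as a short simulation. This is a minimal illustration, not the authors' code: the names `alpha` (normalized input spike rate), `p1`, `q1` (baseline synchronous and asynchronous release probabilities), `p_max`, `q_max`, and both function names are assumptions, as is the linear form used for the facilitated probabilities, in which each probability moves a fraction u or v of the way from its baseline value to its maximum.

```python
import random


def facilitated(base, coeff, maximum):
    """Assumed facilitation rule: move a fraction `coeff` from `base` toward `maximum`."""
    return base + coeff * (maximum - base)


def simulate_synapse(alpha, p1, q1, u, v, p_max=1.0, q_max=1.0, n=10000, seed=0):
    """Simulate n time steps of the two-state binary asymmetric channel.

    Returns (xs, ys): the input spike train and the release train, as lists of 0/1.
    """
    rng = random.Random(seed)
    p2 = facilitated(p1, u, p_max)
    q2 = facilitated(q1, v, q_max)
    x_prev = 0  # assumption: the synapse starts in the baseline state
    xs, ys = [], []
    for _ in range(n):
        # i.i.d. Bernoulli(alpha) input spike
        x = 1 if rng.random() < alpha else 0
        # the previous input selects the state: facilitated after a spike, else baseline
        p, q = (p2, q2) if x_prev == 1 else (p1, q1)
        # synchronous release if a spike is present, asynchronous release otherwise
        release_prob = p if x == 1 else q
        y = 1 if rng.random() < release_prob else 0
        xs.append(x)
        ys.append(y)
        x_prev = x
    return xs, ys


if __name__ == "__main__":
    xs, ys = simulate_synapse(alpha=0.3, p1=0.2, q1=0.05, u=0.5, v=0.5)
    print("empirical spike rate:", sum(xs) / len(xs))
    print("empirical release rate:", sum(ys) / len(ys))
```

The parameter values in the usage lines are arbitrary and are not taken from the figures.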

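If $R_1$ and $R_2$ represent the mutual information rates of the binary asymmetric channels corresponding to the baseline state and facilitated state, then for $j \in \{1, 2\}$,

$$R_j = h(\alpha p_j + \bar{\alpha} q_j) - \alpha\, h(p_j) - \bar{\alpha}\, h(q_j), \tag{3}$$

where $\bar{\alpha} = 1 - \alpha$ and $h(x) = -x \log_2 x - (1 - x) \log_2 (1 - x)$. Here, $\alpha$ is the probability of a presynaptic spike, and $p_j$ and $q_j$ are the synchronous and asynchronous release probabilities in state j. First, we derive a lower bound for the information rate between the input spike process X and the output process of the release site Y (the proofs of the theorems are in Appendix A).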

Theorem 1

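(Lower Bound). Let $R$ denote the mutual information rate of a synapse with short-term facilitation, modeled by the two-state binary asymmetric channel (Figure 1A). Then $R_{LB} = \bar{\alpha} R_1 + \alpha R_2$ is a lower bound for $R$, where $\alpha$ is the probability of a presynaptic spike, $\bar{\alpha} = 1 - \alpha$, and $R_1$ and $R_2$ are the information rates of the baseline and facilitated states.

Since the synapse is in the baseline state with probability $P(X_{i-1} = 0) = \bar{\alpha}$ and in the facilitated state with probability $P(X_{i-1} = 1) = \alpha$, $R_{LB}$ is the statistical average over the mutual information rates of the two constituent states of the release site. Therefore, our theorem shows that, at least in this simple model of facilitation, the mutual information rate is at least as high as the statistical average over the mutual information rates of the single states. This contrasts with the result for the two-state model of depression [12], for which the corresponding statistical average is an exact result for the mutual information rate.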
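For concreteness, the per-state rates and their state-weighted average can be evaluated numerically. The sketch below assumes that Equation (3) takes the standard form of the mutual information of a binary asymmetric channel with Bernoulli input, h(αp + (1 − α)q) − αh(p) − (1 − α)h(q), with h the binary entropy in bits, and that the baseline and facilitated states are weighted by 1 − α and α, the stationary probabilities of the Markov chain in Figure 1B. The function and argument names are hypothetical.

```python
from math import log2


def h(x):
    """Binary entropy in bits, with h(0) = h(1) = 0."""
    if x <= 0.0 or x >= 1.0:
        return 0.0
    return -x * log2(x) - (1.0 - x) * log2(1.0 - x)


def bac_rate(alpha, p, q):
    """Mutual information (bits per time step) of a binary asymmetric channel.

    alpha: probability of an input spike
    p:     release probability given a spike (synchronous)
    q:     release probability given no spike (asynchronous)
    """
    return h(alpha * p + (1.0 - alpha) * q) - alpha * h(p) - (1.0 - alpha) * h(q)


def lower_bound(alpha, p1, q1, p2, q2):
    """State-averaged rate: baseline weighted by 1 - alpha, facilitated by alpha."""
    return (1.0 - alpha) * bac_rate(alpha, p1, q1) + alpha * bac_rate(alpha, p2, q2)


if __name__ == "__main__":
    # Arbitrary illustrative values, not the parameters of the figures.
    alpha, p1, q1, p2, q2 = 0.3, 0.2, 0.05, 0.6, 0.1
    print("baseline rate:   ", bac_rate(alpha, p1, q1))
    print("facilitated rate:", bac_rate(alpha, p2, q2))
    print("lower bound:     ", lower_bound(alpha, p1, q1, p2, q2))
```

A noiseless channel (p = 1, q = 0) with α = 0.5 gives one bit per time step, and a channel with p = q carries no information; both cases are useful sanity checks.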

Theorem 2

(Upper Bound). The mutual information rate of the two-state model of facilitation is upper-bounded by

(4) where

(5)

(6)

(7)

(8)

(9)

(10)

(11)

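In a facilitating synapse, the release probability and, consequently, the energy consumption of the synapse increase. We define the ratio of the mutual information rate to the release probability as the energy-normalized information rate of the synapse. With $\alpha$ the probability of a presynaptic spike, $\bar{\alpha} = 1 - \alpha$, and $p_j$ and $q_j$ the synchronous and asynchronous release probabilities in the baseline ($j = 1$) and facilitated ($j = 2$) states, the energy-normalized information rate of the synapse without facilitation, denoted by $E_1$, is then

$$E_1 = \frac{R_1}{\alpha p_1 + \bar{\alpha} q_1}. \tag{12}$$

Moreover, the release probability of the synapse in the two-state model of facilitation is

$$P(Y_i = 1) = \bar{\alpha}\,(\alpha p_1 + \bar{\alpha} q_1) + \alpha\,(\alpha p_2 + \bar{\alpha} q_2), \tag{13}$$

which is independent of i. Hence, the energy-normalized information rate of a facilitated synapse, $E$, as well as the lower and upper bounds of the energy-normalized information rate, $E_{LB}$ and $E_{UB}$, are calculated by dividing $R$, $R_{LB}$, and $R_{UB}$ by $P(Y_i = 1)$.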
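The energy normalization can be sketched numerically as well. Assuming the names `alpha`, `p1`, `q1`, `p2`, `q2` for the input spike probability and the baseline and facilitated release probabilities (names not fixed by the text), the release probability of the two-state model is the state-weighted average of the per-state release probabilities, and any rate or bound is divided by it. The function names are hypothetical.

```python
def release_prob_static(alpha, p, q):
    """Release probability of a single-state synapse: spike-evoked plus asynchronous."""
    return alpha * p + (1.0 - alpha) * q


def release_prob_facilitated(alpha, p1, q1, p2, q2):
    """Release probability of the two-state model.

    The baseline state occurs with probability 1 - alpha (no preceding spike),
    the facilitated state with probability alpha (preceding spike).
    """
    return ((1.0 - alpha) * release_prob_static(alpha, p1, q1)
            + alpha * release_prob_static(alpha, p2, q2))


def energy_normalized(rate, release_prob):
    """Information rate per release (bits per release)."""
    if release_prob <= 0.0:
        raise ValueError("release probability must be positive")
    return rate / release_prob


if __name__ == "__main__":
    # Arbitrary illustrative values; `rate` stands for any rate or bound in bits per step.
    alpha, p1, q1, p2, q2 = 0.3, 0.2, 0.05, 0.6, 0.1
    rate = 0.05
    print(energy_normalized(rate, release_prob_facilitated(alpha, p1, q1, p2, q2)))
```

Keeping the rate as an input lets the same helper normalize the exact rate, the lower bound, or the upper bound.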

3. Results

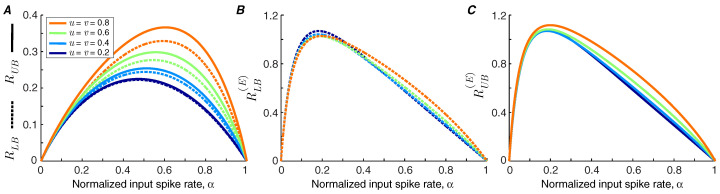

We use Theorems 1 and 2 to calculate the lower and upper bounds of the information transmission rate of a synapse under short-term facilitation (Figure 2A). The bounds are tighter for synapses with lower facilitation levels. We find that the information rate increases with the level of facilitation. By contrast, the bounds on the energy-normalized information rate of the synapse are relatively invariant to the strength of facilitation (Figure 2B,C). This finding indicates that a synapse can change the balance between its energy consumption and transmission rate by altering its level of facilitation without affecting the information rate per release.

Figure 2.

(A) Bounds of information rate in a synapse with short-term facilitation. The lower bound and upper bound are plotted as a function of for different values of facilitation coefficients, u and v. (B) The lower bound of energy-normalized information rate of a synapse under short-term facilitation. (C) The upper bound of energy-normalized information rate. The model parameters are , , , and .

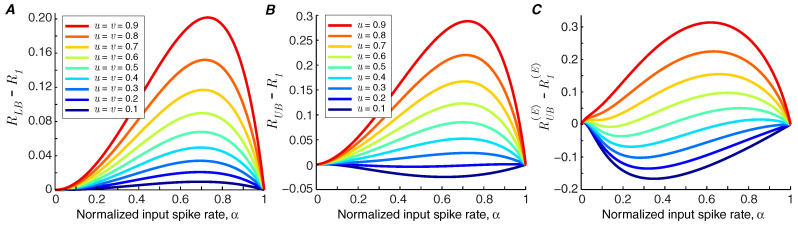

If the lower bound of the information rate is greater than the information rate of the synapse in the baseline state, we can conclude that short-term facilitation increases the mutual information rate of the synapse. Figure 3A shows that for the modeled synapse (with and ), short-term facilitation always increases the mutual information rate, provided that synchronous spike-evoked release and asynchronous release are facilitated equally, i.e., $u = v$.

Figure 3.

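(A) The difference between the lower bound of the information rate and the information rate of the synapse in the baseline state, against input spike rate for various facilitation coefficients. It is assumed that the facilitation coefficients of synchronous spike-evoked release and asynchronous release are identical, $u = v$. (B) The difference between the upper bound of the information rate and the information rate of the synapse without facilitation. (C) The difference between the upper bound of the energy-normalized information rate and the energy-normalized information rate of the synapse without facilitation. In (B) and (C), the facilitation coefficient of asynchronous release is fixed at . The other parameters are , , and .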

Recent findings suggest that synchronous and asynchronous release may be governed by different mechanisms [4], and consequently they may show distinct levels of facilitation. We study the impact of different facilitation coefficients in the modeled synapse by fixing the facilitation coefficient of asynchronous release at and calculating the information bounds for different values of u. Short-term facilitation reduces the mutual information rate of the synapse if the upper bound of the rate falls below the information rate of the synapse without facilitation. Figure 3B shows that short-term facilitation degrades the information rate of the synapse if the facilitation level of synchronous release is much lower than that of asynchronous release. The degrading effect of facilitation is more pronounced when we compare the upper bound of the energy-normalized information rate of the synapse with facilitation with the energy-normalized information rate of a static synapse. The values below zero in Figure 3C show the operating points of synapses in which facilitation reduces the energy-normalized information rate.

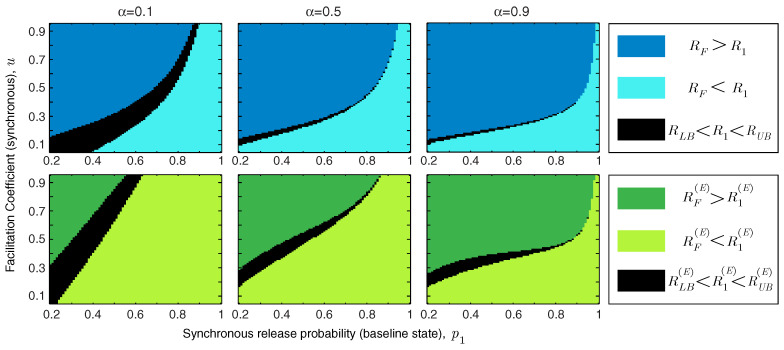

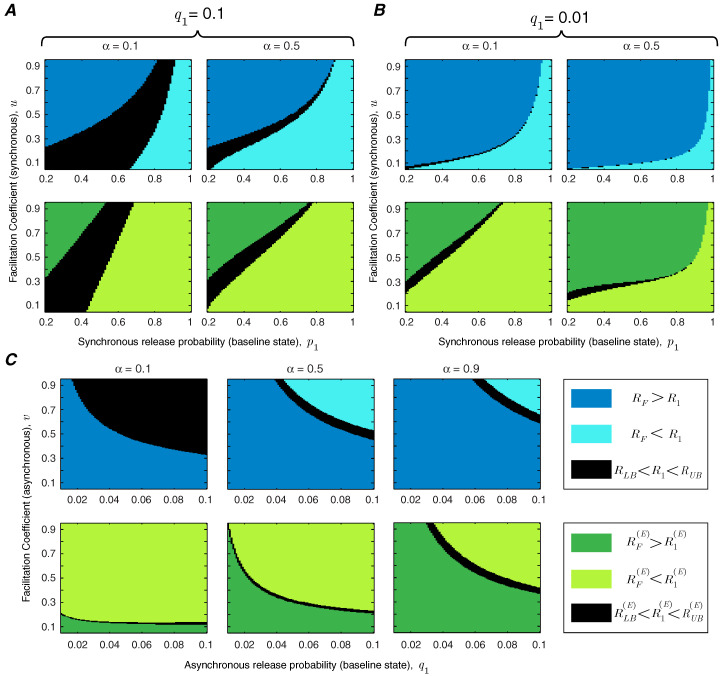

In addition to the facilitation coefficient, the release probability of the synapse in the baseline state plays a critical role in determining the functional role of short-term facilitation. We study the interaction between u and the baseline release probability in Figure 4, which shows the regime of parameters for which short-term facilitation increases or decreases the mutual information rate and energy-normalized information rate of the synapse. If the information rate of the static synapse lies between the lower and upper bounds (or, likewise, the energy-normalized rate of the static synapse lies between the energy-normalized bounds), the bounds cannot specify whether facilitation increases or decreases the rate of information transmission (or energy-normalized information rate); these regions are marked in black in Figure 4. For an unreliable synapse (with a small baseline release probability) and a relatively large facilitation coefficient, u, short-term facilitation increases both the mutual information rate and the energy-normalized information rate of the synapse, since the enhancement of synchronous release dominates the rise of asynchronous release. Interestingly, it has been observed that for many facilitating synapses the baseline release probability is quite low [13,14]. For synapses that are more reliable a priori, the relative facilitation of asynchronous release counteracts the improvement in the information rate. In reliable synapses (with a higher baseline release probability) and relatively small facilitation coefficients, short-term facilitation decreases not only the energy-normalized information rate of the synapse but also the information transmission rate. In addition, Figure 4 shows that higher input spike rates expand the range of synaptic parameters (the baseline release probability and u) for which short-term facilitation enhances the rate-energy performance of the synapse.
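The classification used in Figure 4 reduces to comparing the two bounds with the corresponding rate of the static synapse. A minimal sketch follows, with a hypothetical function name and hypothetical labels; the bounds are supplied by the caller, and the same function applies to energy-normalized quantities.

```python
def classify_facilitation(lower, upper, static):
    """Classify the effect of facilitation from bounds on the facilitated rate.

    lower, upper: lower and upper bounds on the rate of the facilitating synapse
    static:       the corresponding rate of the synapse without facilitation
    """
    if lower > upper:
        raise ValueError("lower bound exceeds upper bound")
    if lower > static:
        # even the lower bound beats the static synapse
        return "increases"
    if upper < static:
        # even the upper bound falls short of the static synapse
        return "decreases"
    # the static rate lies between the bounds, so the sign of the change is unknown
    return "undetermined"


if __name__ == "__main__":
    print(classify_facilitation(lower=0.30, upper=0.50, static=0.20))
    print(classify_facilitation(lower=0.10, upper=0.15, static=0.20))
    print(classify_facilitation(lower=0.10, upper=0.30, static=0.20))
```

The "undetermined" outcome corresponds to the black regions of Figure 4.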

Figure 4.

The regime of parameters (u and ) for which short-term facilitation increases/decreases the mutual information rate or energy-normalized information rate of the synapse. Asynchronous release is fixed at and . The other parameters are and .

To study the effect of asynchronous release, we repeat the analysis of Figure 4 for very high () and very low () asynchronous release probabilities. Comparing Figure 5A,B reveals that decreasing the level of asynchronous release expands the range of synchronous release parameters, u and , for which short-term facilitation increases the mutual information rate and energy-normalized information rate. We also study the interaction between asynchronous release probability and the facilitation coefficient of asynchronous release v, keeping the parameters of synchronous release fixed at and (Figure 5C). To benefit from short-term facilitation, the synapse needs to decrease the release probability and/or the facilitation coefficient of the asynchronous mode of release. For synapses with very high asynchronous release probabilities, short-term facilitation can still boost the information rate and energy-normalized rate of the synapse, provided that the facilitation coefficient of the asynchronous release is small enough. Similar to the results in Figure 4, by increasing the normalized spike rate, the synapse spends more time in the facilitated state and therefore, the impact of short-term facilitation on rate-energy efficiency of the synapse is enhanced.

Figure 5.

The functional impact of asynchronous release. (A) The range of synchronous release parameters, u and , in which short-term facilitation enhances energy-rate efficiency of the synapse; the asynchronous release probability is . (B) Similar to (A) for . (C) The functional classes of short-term facilitation are modified by the baseline release probability, , and facilitation coefficient, v, of the asynchronous release probability. The other simulation parameters are in (A,B), and and in (C). For all simulations, and .

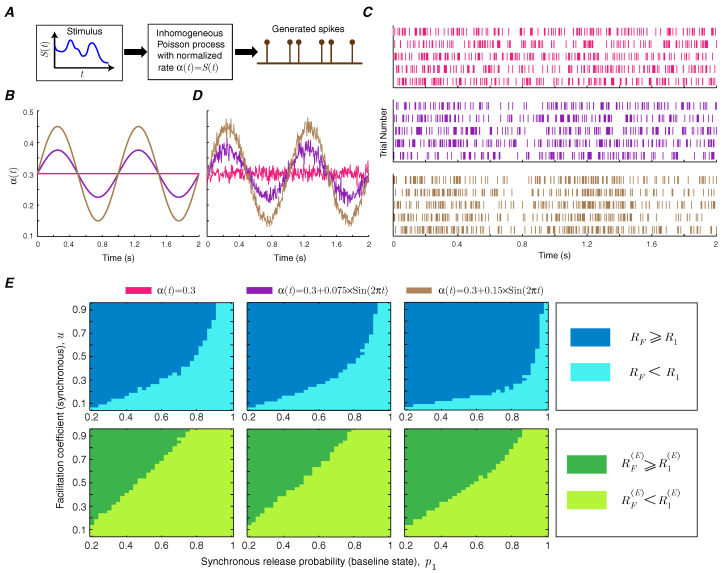

Short-term facilitation creates a memory for the synapse, since the release probability of the synapse depends on the history of spiking activity. It is, therefore, important to study how short-term facilitation modulates information transmission rate in synapses with temporally correlated spike trains by making the Poisson rate of the input spike train time-dependent [15] (Figure 6A). We use a sinusoidal rate stimulus with a frequency of 1 Hz on top of a baseline rate (Figure 6B) and use the context-tree weighting algorithm to numerically estimate the mutual information and energy-normalized information rates of the facilitating synapse [16]. The amplitude of the sinusoidal signal specifies the level of correlation. The raster plots of the neurons show the synchrony between the spiking activity of the neuron and the sinusoidal instantaneous rate (Figure 6C). The instantaneous firing rate, averaged over 1000 trials, provides a good estimate of the stimulus (Figure 6D). The functional classes of short-term facilitation are calculated as a function of baseline release probability and facilitation coefficient of synchronous release for different levels of correlation. This numerical analysis shows that correlations in the presynaptic spike train can slightly enlarge the regions in which short-term facilitation increases the mutual information rate and energy-normalized information rate (Figure 6E).
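One simple way to produce such a rate-modulated spike train is to draw an independent Bernoulli spike in each time bin with a sinusoidally varying probability. The sketch below is an illustration under stated assumptions and is not claimed to match the procedure of [15]: the bin width `dt`, the default amplitude, and the function names are hypothetical, while the 1 Hz frequency and the mean normalized rate of 0.3 follow Figure 6B.

```python
import math
import random


def sinusoidal_rate(t, mean=0.3, amplitude=0.1, freq_hz=1.0):
    """Normalized rate (spike probability per bin) at time t, in seconds."""
    return mean + amplitude * math.sin(2.0 * math.pi * freq_hz * t)


def correlated_spike_train(n_bins, dt=0.001, mean=0.3, amplitude=0.1,
                           freq_hz=1.0, seed=0):
    """Draw one spike train from a discretized inhomogeneous Poisson process.

    Returns (rates, spikes): the per-bin spike probabilities and the 0/1 spike train.
    """
    if mean - abs(amplitude) < 0.0 or mean + abs(amplitude) > 1.0:
        raise ValueError("rate must stay within [0, 1]")
    rng = random.Random(seed)
    rates = [sinusoidal_rate(i * dt, mean, amplitude, freq_hz) for i in range(n_bins)]
    # independent Bernoulli draw per bin, with a time-dependent probability
    spikes = [1 if rng.random() < r else 0 for r in rates]
    return rates, spikes


if __name__ == "__main__":
    rates, spikes = correlated_spike_train(n_bins=5000)
    print("mean normalized rate:", sum(rates) / len(rates))
    print("empirical spike rate:", sum(spikes) / len(spikes))
```

Setting `amplitude=0.0` recovers the uncorrelated (homogeneous) input, and repeating the draw with different seeds gives the trials over which an instantaneous firing rate can be averaged.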

Figure 6.

(A) Generation of correlated spike trains. (B) Sinusoidal stimulus signals, with a frequency of 1 Hz, average value of 0.3, and amplitudes 0, , and , are used as the normalized rate of the inhomogeneous Poisson process. (C) The spike raster plots of the simulated neurons (5 trials for each amplitude). (D) The estimation of the instantaneous neuronal firing rate from 1000 trials. (E) Functional classes of short-term facilitation for correlated input. The first column corresponds to the uncorrelated input () and the second and third columns correspond to the correlated spike trains generated by sinusoidal stimulus signals with amplitudes and . The other simulation parameters are , , , and .

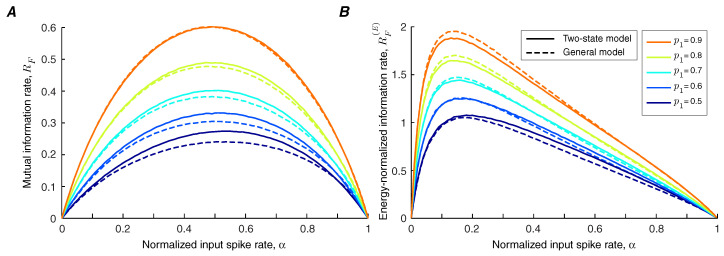

In the general model of facilitation, it is assumed that the state of the synapse at time i is affected not only by the spiking activity of the presynaptic neuron at time $i-1$, but also by the whole history of the spiking events. Synchronous and asynchronous release probabilities converge to the limit probabilities, and , exponentially with time constants and . The arrival of an action potential at time i increases the limit probabilities and by and respectively; the initial values of the limit probabilities are and . In the quiescent intervals, the limit probabilities decay to the baseline values, and , with facilitation decay time constants, and . Numerical methods are used to compare the synaptic information efficacy of the two-state model with the general model of short-term facilitation. We show that the two-state model provides a good approximation for the mutual information rate (Figure 7A) and energy-normalized information rate (Figure 7B) of a facilitating synapse, provided that the facilitation decays rapidly. If the facilitation decay time constant is large, the parameters of the two-state model can be tuned to provide a better estimate, similar to the approach in [12].

Figure 7.

(A) Mutual information rate of the two-state model (solid lines) and general model (dashed lines) of short-term facilitation as a function of normalized input spike rate for various values of baseline synchronous release probability, . (B) Similar to (A) for energy-normalized information rates. The simulation parameters are , , , , msec, and msec.

4. Discussion

We studied how prior spikes, by facilitating the release of neurotransmitter at a synapse, modulate the rate of synaptic information transfer. Most components of neural hardware are noisy, hybrid analog-digital devices. In particular, the synapse maps quite naturally onto an asymmetric binary channel in communication theory. Some neurons, such as thalamic relay neurons, act as nodes in a network for long-range communication using spikes, so it is natural to quantify the performance of the synapses in bits [17,18,19,20,21,22,23]. Synaptic information efficacy quantifies the amount of information that the post-synaptic potential contains about the spiking activity of the presynaptic neuron. This analysis, however, does not guarantee that the post-synaptic neuron accesses or uses this information, which rather depends on the biophysical mechanisms of encoding and decoding.

To capture the phenomenon of facilitation, we extended the binary asymmetric channel to two states. The resulting model permits the short-term dynamics of synchronous and asynchronous release to differ, which enabled us to assess the impact of each release mode on the efficacy of synaptic information transfer. We first assumed identical facilitation coefficients for synchronous and asynchronous release (i.e., $u = v$) and demonstrated that the lower bound of the information rate of a facilitating synapse is higher than the information rate of a static synapse (Figure 3A). We were, therefore, able to show that synapses quantifiably transmit more information through short-term facilitation, as long as synchronous and asynchronous release of neurotransmitter obey the same dynamics. Indeed, the increase in information can outweigh the higher energy consumption, as measured by the energy-normalized information rate, provided that synchronous release is facilitated more than asynchronous release. In contrast, when facilitation enhances the asynchronous component of release more strongly than the synchronous component, short-term facilitation has the opposite effect, namely a decrease in synaptic information efficacy.

In previous work, we studied the information transmission in a synapse during short-term depression [9,12]. There, the state of the binary asymmetric channel, which models the synapse, depended on the history of the output Y. Facilitation, in contrast, depends on the history of the input X. This simple change makes the problem much more challenging mathematically, as it is, in fact, isomorphic to an unsolved problem in information theory, namely the entropy rate of a hidden Markov process [24]. Nevertheless, the lower bound we derive for a facilitating synapse mirrors the exact result we had derived earlier for short-term depression. Moreover, in practice, this bound is fairly tight.

The bounds derived here are only a first step towards understanding information transmission in facilitating synapses. The two-state binary asymmetric channel simplifies the process of facilitation by making the release probability depend only on the presence or absence of a presynaptic spike at the previous time-point. Yet when the facilitation decays rapidly, the two-state model converges in behavior to a more general model that considers the entire history of spiking.

Our analytical results do not account for temporal correlations in the presynaptic spike train. Instead, in line with many other studies, the time series of spikes was assumed to obey Poisson statistics [8,22,25]. This simplification made the information-theoretic analysis of the synapse tractable and allowed us to derive the upper and lower bounds of the information rate. Different methods have been suggested for modeling correlated spike trains, such as inhomogeneous Poisson processes [15,26], autoregressive point processes [27], and random spike selections from a set of spike trains [15]. We used an inhomogeneous Poisson process to generate correlated spike trains and estimated the mutual information rate and energy-normalized information rate of the synapse numerically. In the future, it will be of interest to study the effect of correlated input on the information efficacy of the general model of facilitation in which the release probabilities are determined by the whole history of spiking activity.

In this study, we have assumed that in response to an incoming action potential, the release site releases at most one vesicle. To capture multiple releases, the model should be extended to a communication channel with binary input and multiple outputs. The analysis of this channel will reveal the impact of multiple releases on the mutual information rate of a static and dynamic synapse.

Appendix A

Proof of Theorem 1.

Let . By definition,

(A1) where

(A2) The chain rule implies that

(A3)

(A4) The model in Figure 1A posits that depends only on and . Given , is independent of and consequently

(A5) Also

(A6) From (A6),

(A7) Hence,

(A8) and together with (A3),

(A9)

(A10) By applying (A9) and (A10) to (A2),

(A11)

(A12) Using the definition of conditional mutual information (for ),

(A13)

(A14) Finally, the theorem follows from (A1), (A12) and (A14). □

Proof of Theorem 2.

The non-negativity of mutual information implies that

(A15) By conditioning on ,

(A16) and similarly

(A17) Moreover

(A18)

(A19) and

(A20) We have

(A21)

(A22)

(A23)

(A24) Also

(A25) By conditioning on , and given the fact that and are independent,

(A26) Hence,

(A27) and similarly, we can show that

(A28) Therefore,

(A29) With the same approach,

(A30) The conditional entropy can be written as

(A31) and for , we can infer from (A16)–(A19), (A29), and (A30),

(A32)

(A33) We also conclude that is independent of i for . Moreover, we can easily obtain

(A34)

(A35)

(A36)

(A37) Therefore, from (A33) and (A35),

(A38) and by dividing by n and calculating the limit when n goes to infinity,

(A39) and the proof is complete. □

Author Contributions

Conceptualization: M.S. (Mehrdad Salmasi), M.S. (Martin Stemmler), S.G., A.L.; Formal analysis: M.S. (Mehrdad Salmasi); Investigation: M.S. (Mehrdad Salmasi), M.S. (Martin Stemmler), S.G., A.L.; Methodology: M.S. (Mehrdad Salmasi), M.S. (Martin Stemmler), S.G., A.L.; Software: M.S. (Mehrdad Salmasi); Data curation: M.S. (Mehrdad Salmasi); Resources: S.G.; Supervision: M.S. (Martin Stemmler), S.G., A.L.; Visualization: M.S. (Mehrdad Salmasi); Funding acquisition: S.G.; Writing—original draft: M.S. (Mehrdad Salmasi), M.S. (Martin Stemmler), S.G., A.L.; Writing—review and editing: M.S. (Mehrdad Salmasi), M.S. (Martin Stemmler), S.G., A.L.

Funding

This research was funded by Bundesministerium für Bildung und Forschung (BMBF), German Center for Vertigo and Balance Disorders, grant number 01EO1401 (recipients: M.S. (Mehrdad Salmasi), S.G.), and Bundesministerium für Bildung und Forschung (BMBF), grant number 01GQ1004A (recipient: M.S. (Martin Stemmler)).

Conflicts of Interest

The authors declare no conflict of interest.

References

- 1. Kandel E.R., Schwartz J.H., Jessell T.M., Siegelbaum S.A., Hudspeth A.J., Mack S. Principles of Neural Science. Volume 4. McGraw-Hill; New York, NY, USA: 2000.

- 2. Awatramani G.B., Price G.D., Trussell L.O. Modulation of transmitter release by presynaptic resting potential and background calcium levels. Neuron. 2005;48:109–121. doi: 10.1016/j.neuron.2005.08.038.

- 3. Branco T., Staras K. The probability of neurotransmitter release: Variability and feedback control at single synapses. Nat. Rev. Neurosci. 2009;10:373–383. doi: 10.1038/nrn2634.

- 4. Kaeser P.S., Regehr W.G. Molecular mechanisms for synchronous, asynchronous, and spontaneous neurotransmitter release. Annu. Rev. Physiol. 2014;76:333–363. doi: 10.1146/annurev-physiol-021113-170338.

- 5. Kavalali E.T. The mechanisms and functions of spontaneous neurotransmitter release. Nat. Rev. Neurosci. 2015;16:5–16. doi: 10.1038/nrn3875.

- 6. Zucker R.S., Regehr W.G. Short-term synaptic plasticity. Annu. Rev. Physiol. 2002;64:355–405. doi: 10.1146/annurev.physiol.64.092501.114547.

- 7. Goldman M.S. Enhancement of information transmission efficiency by synaptic failures. Neural Comput. 2004;16:1137–1162. doi: 10.1162/089976604773717568.

- 8. Fuhrmann G., Segev I., Markram H., Tsodyks M. Coding of temporal information by activity-dependent synapses. J. Neurophysiol. 2002;87:140–148. doi: 10.1152/jn.00258.2001.

- 9. Salmasi M., Loebel A., Glasauer S., Stemmler M. Short-term synaptic depression can increase the rate of information transfer at a release site. PLoS Comput. Biol. 2019;15:e1006666. doi: 10.1371/journal.pcbi.1006666.

- 10. Fortune E.S., Rose G.J. Short-term synaptic plasticity as a temporal filter. Trends Neurosci. 2001;24:381–385. doi: 10.1016/S0166-2236(00)01835-X.

- 11. Attwell D., Laughlin S.B. An energy budget for signaling in the grey matter of the brain. J. Cereb. Blood Flow Metab. 2001;21:1133–1145. doi: 10.1097/00004647-200110000-00001.

- 12. Salmasi M., Stemmler M., Glasauer S., Loebel A. Information Rate Analysis of a Synaptic Release Site Using a Two-State Model of Short-Term Depression. Neural Comput. 2017;29:1528–1560. doi: 10.1162/NECO_a_00962.

- 13. Dobrunz L.E., Stevens C.F. Heterogeneity of release probability, facilitation, and depletion at central synapses. Neuron. 1997;18:995–1008. doi: 10.1016/S0896-6273(00)80338-4.

- 14. Markram H., Wang Y., Tsodyks M. Differential signaling via the same axon of neocortical pyramidal neurons. Proc. Natl. Acad. Sci. USA. 1998;95:5323–5328. doi: 10.1073/pnas.95.9.5323.

- 15. Brette R. Generation of correlated spike trains. Neural Comput. 2009;21:188–215. doi: 10.1162/neco.2009.12-07-657.

- 16. Jiao J., Permuter H.H., Zhao L., Kim Y.H., Weissman T. Universal estimation of directed information. IEEE Trans. Inf. Theory. 2013;59:6220–6242. doi: 10.1109/TIT.2013.2267934.

- 17. Palmer S.E., Marre O., Berry M.J., Bialek W. Predictive information in a sensory population. Proc. Natl. Acad. Sci. USA. 2015;112:6908–6913. doi: 10.1073/pnas.1506855112.

- 18. Osborne L.C., Bialek W., Lisberger S.G. Time course of information about motion direction in visual area MT of macaque monkeys. J. Neurosci. 2004;24:3210–3222. doi: 10.1523/JNEUROSCI.5305-03.2004.

- 19. Sincich L.C., Horton J.C., Sharpee T.O. Preserving information in neural transmission. J. Neurosci. 2009;29:6207–6216. doi: 10.1523/JNEUROSCI.3701-08.2009.

- 20. Arabzadeh E., Panzeri S., Diamond M.E. Whisker vibration information carried by rat barrel cortex neurons. J. Neurosci. 2004;24:6011–6020. doi: 10.1523/JNEUROSCI.1389-04.2004.

- 21. Dimitrov A.G., Lazar A.A., Victor J.D. Information theory in neuroscience. J. Comput. Neurosci. 2011;30:1–5. doi: 10.1007/s10827-011-0314-3.

- 22. Panzeri S., Schultz S.R., Treves A., Rolls E.T. Correlations and the encoding of information in the nervous system. Proc. R. Soc. Lond. Ser. B Biol. Sci. 1999;266:1001–1012. doi: 10.1098/rspb.1999.0736.

- 23. Gjorgjieva J., Sompolinsky H., Meister M. Benefits of pathway splitting in sensory coding. J. Neurosci. 2014;34:12127–12144. doi: 10.1523/JNEUROSCI.1032-14.2014.

- 24. Han G., Marcus B. Analyticity of entropy rate of hidden Markov chains. IEEE Trans. Inf. Theory. 2006;52:5251–5266. doi: 10.1109/TIT.2006.885481.

- 25. Softky W.R., Koch C. The highly irregular firing of cortical cells is inconsistent with temporal integration of random EPSPs. J. Neurosci. 1993;13:334–350. doi: 10.1523/JNEUROSCI.13-01-00334.1993.

- 26. Song S., Abbott L.F. Cortical development and remapping through spike timing-dependent plasticity. Neuron. 2001;32:339–350. doi: 10.1016/S0896-6273(01)00451-2.

- 27. Farkhooi F., Strube-Bloss M.F., Nawrot M.P. Serial correlation in neural spike trains: Experimental evidence, stochastic modeling, and single neuron variability. Phys. Rev. E. 2009;79:021905. doi: 10.1103/PhysRevE.79.021905.