Abstract

We explore a well-known integral representation of the logarithmic function, and demonstrate its usefulness in obtaining compact, easily computable exact formulas for quantities that involve expectations and higher moments of the logarithm of a positive random variable (or the logarithm of a sum of i.i.d. positive random variables). The integral representation of the logarithm proves useful in a variety of information-theoretic applications, including universal lossless data compression, entropy and differential entropy evaluations, and the calculation of the ergodic capacity of the single-input, multiple-output (SIMO) Gaussian channel with random parameters (known to both transmitter and receiver). This integral representation and its variants are anticipated to serve as a useful tool in additional applications, as a rigorous alternative to the popular (but non-rigorous) replica method (at least in some situations).

Keywords: integral representation, logarithmic expectation, universal data compression, entropy, differential entropy, ergodic capacity, SIMO channel, multivariate Cauchy distribution

1. Introduction

In analytic derivations pertaining to many problem areas in information theory, one frequently encounters the need to calculate expectations and higher moments of expressions that involve the logarithm of a positive-valued random variable, or more generally, the logarithm of the sum of several i.i.d. positive random variables. The common practice, in such situations, is either to resort to upper and lower bounds on the desired expression (e.g., using Jensen’s inequality or other well-known inequalities), or to apply the Taylor series expansion of the logarithmic function. A more modern approach is to use the replica method (see, e.g., [1] (Chapter 8)), which is a popular (but non-rigorous) tool that has been borrowed from the field of statistical physics with considerable success.

The purpose of this work is to point out an alternative approach and to demonstrate its usefulness in some frequently encountered situations. In particular, we consider the following integral representation of the logarithmic function (to be proved in the sequel):

$$\ln x=\int_0^\infty\frac{e^{-u}-e^{-ux}}{u}\,\mathrm{d}u,\qquad x>0. \tag{1}$$

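The immediate use of this representation is in situations where the argument of the logarithmic function is a positive-valued random variable, X, and we wish to calculate the expectation, $\mathbb{E}\{\ln X\}$. By commuting the expectation operator with the integration over u (assuming that this commutation is valid), the calculation of $\mathbb{E}\{\ln X\}$ is replaced by the (often easier) calculation of the moment-generating function (MGF) of X, $M_X(s)\triangleq\mathbb{E}\{e^{sX}\}$, as

$$\mathbb{E}\{\ln X\}=\int_0^\infty\frac{e^{-u}-M_X(-u)}{u}\,\mathrm{d}u. \tag{2}$$

Moreover, if $X_1,\ldots,X_n$ are positive i.i.d. random variables, distributed like X, then

$$\mathbb{E}\{\ln(X_1+\ldots+X_n)\}=\int_0^\infty\frac{e^{-u}-\big[M_X(-u)\big]^n}{u}\,\mathrm{d}u. \tag{3}$$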
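Identities (1)–(3) are easy to check numerically. The following Python sketch is our own illustration, not code from the paper. It substitutes $u=e^{s}$, which turns $\mathrm{d}u/u$ into $\mathrm{d}s$ and makes the plain trapezoidal rule highly accurate for these smooth, rapidly decaying integrands. The test case assumes $X_i\sim\mathrm{Exp}(1)$, so that $M_X(-u)=1/(1+u)$ and $X_1+\ldots+X_n$ is Gamma$(n,1)$-distributed. Its logarithmic expectation is the digamma value $\psi(n)=-\gamma+\sum_{k=1}^{n-1}1/k$. The helper names and truncation limits are hypothetical choices made for this sketch.

```python
import math

EULER_GAMMA = 0.5772156649015329  # Euler-Mascheroni constant


def log_scale_integral(g, lo=-60.0, hi=100.0, step=0.05):
    """Trapezoidal rule for int_0^inf g(u) du/u, using s = ln u so that du/u = ds."""
    n_steps = int(round((hi - lo) / step))
    total = 0.0
    for k in range(n_steps + 1):
        weight = 0.5 if k in (0, n_steps) else 1.0
        total += weight * g(math.exp(lo + k * step))
    return total * step


def log_via_integral(x):
    """ln x from (1): int_0^inf (e^{-u} - e^{-ux}) / u du, for x > 0."""
    return log_scale_integral(lambda u: math.exp(-u) - math.exp(-u * x))


def expected_log_sum_exponentials(n):
    """E{ln(X_1 + ... + X_n)} from (3), assuming X_i ~ Exp(1), i.e., M_X(-u) = 1/(1+u)."""
    return log_scale_integral(lambda u: math.exp(-u) - (1.0 + u) ** (-n))


def digamma_integer(n):
    """psi(n) = -gamma + sum_{k=1}^{n-1} 1/k for a positive integer n."""
    return -EULER_GAMMA + sum(1.0 / k for k in range(1, n))


for x in (0.1, 2.0, 50.0):
    print(f"x = {x}: integral {log_via_integral(x):.12f}, math.log {math.log(x):.12f}")
for n in (1, 2, 10):
    print(f"n = {n}: integral {expected_log_sum_exponentials(n):.12f}, psi(n) {digamma_integer(n):.12f}")
```

The logarithmic substitution is a design choice. It removes the $1/u$ factor, and it lets a uniform grid resolve behavior at both very small and very large u.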

This simple idea is not quite new. It has been used in the physics literature; see, e.g., [1] (Exercise 7.6, p. 140), [2] (Equation (2.4) and onward), and [3] (Equation (12) and onward). With the exception of [4], we are not aware of any work in the information theory literature where it has been used. The purpose of this paper is to demonstrate additional information-theoretic applications, as the need to evaluate logarithmic expectations is far from rare in many problem areas of information theory. Moreover, the integral representation (1) is also useful for evaluating higher moments of $\ln X$, most notably, the second moment or variance, in order to assess the statistical fluctuations around the mean.

We demonstrate the usefulness of this approach in several application areas, including entropy and differential entropy evaluations, performance analysis of universal lossless source codes, and calculations of the ergodic capacity of the Rayleigh single-input multiple-output (SIMO) channel. In some of these examples, we also demonstrate the calculation of variances associated with the relevant random variables of interest. As a side remark, in the same spirit of introducing integral representations and applying them, Simon and Divsalar [5,6] brought to the attention of communication theorists useful, definite-integral forms of the Q-function (Craig’s formula [7]) and the Marcum Q-function, and demonstrated their utility in applications.

It should be pointed out that most of our results remain in the form of a single- or double-definite integral of certain functions that depend on the parameters of the problem in question. Strictly speaking, such a definite integral may not be considered a closed-form expression, but nevertheless, we can say the following.

(a) In most of our examples, the expression we obtain is more compact, more elegant, and often more insightful than the original quantity.

(b) The resulting definite integral can actually be considered a closed-form expression “for every practical purpose”, since definite integrals in one or two dimensions can be calculated instantly using built-in numerical integration operations in MATLAB, Maple, Mathematica, or other mathematical software tools. This is largely similar to the case of expressions that include standard functions (e.g., trigonometric, logarithmic, and exponential functions), which are commonly considered to be closed-form expressions.

(c) The integrals can also be evaluated by power series expansions of the integrand, followed by term-by-term integration.

(d) Owing to Item (c), the asymptotic behavior in the parameters of the model can be evaluated.

(e) In at least two of our examples, we show how to pass from an n-dimensional integral (with an arbitrarily large n) to one- or two-dimensional integrals. This passage is in the spirit of the transition from a multiletter expression to a single-letter expression.

To give some preliminary flavor of our message in this work, we conclude this introduction by mentioning a possible use of the integral representation in the context of calculating the entropy of a Poissonian random variable. For a Poissonian random variable, N, with parameter $\lambda>0$, the entropy (in nats) is given by

$$H(N)=\mathbb{E}\left\{\ln\frac{N!\,e^{\lambda}}{\lambda^{N}}\right\}=\lambda\ln\frac{e}{\lambda}+\mathbb{E}\{\ln N!\}, \tag{4}$$

where the nontrivial part of the calculation is associated with the last term, $\mathbb{E}\{\ln N!\}$. In [8], this term was handled by using a nontrivial formula due to Malmstén (see [9] (pp. 20–21)), which represents the logarithm of Euler’s Gamma function in an integral form (see also [10]). In Section 2, we derive the relevant quantity using (1), in a simpler and more transparent form which is similar to [11] ((2.3)–(2.4)).

The outline of the remaining part of this paper is as follows. In Section 2, we provide some basic mathematical background concerning the integral representation (2) and some of its variants. In Section 3, we present the application examples. Finally, in Section 4, we summarize and provide some outlook.

2. Mathematical Background

In this section, we present the main mathematical background associated with the integral representation (1), and provide several variants of this relation, most of which are later used in this paper. For reasons that will become apparent shortly, we extend the scope to the complex plane.

Proposition 1.

(5)

Proof.

(6)

(7)

(8)

(9)

(10) where Equation (7) holds as for all , based on the assumption that ; (8) holds by switching the order of integration. □

Remark 1.

In [12] (p. 363, Identity (3.434.2)), it is stated that

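$$\int_0^\infty\frac{e^{-\mu x}-e^{-\nu x}}{x}\,\mathrm{d}x=\ln\frac{\nu}{\mu},\qquad\mathrm{Re}(\mu)>0,\;\mathrm{Re}(\nu)>0. \tag{11}$$

Proposition 1 also applies to any purely imaginary number, z, which is of interest too (see Corollary 1 in the sequel, and the identity with the characteristic function in (14)).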

Proposition 1 paves the way to obtaining some additional related integral representations of the logarithmic function for the reals.

Corollary 1.

([12] (p. 451, Identity 3.784.1)) For every ,

(12)

Proof.

By Proposition 1 and the identity (with ), we get

(13) Subtracting both sides by the integral in (13) for (which is equal to zero) gives (12). □

Let X be a real-valued random variable, and let be the characteristic function of X. Then, by Corollary 1,

| (14) |

where we are assuming, here and throughout the sequel, that the expectation operation and the integration over u are commutable, i.e., Fubini’s theorem applies.

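Similarly, by returning to Proposition 1 (confined to a real-valued argument of the logarithm), the calculation of $\mathbb{E}\{\ln X\}$ for a positive random variable X can be replaced by the calculation of the MGF of X, $M_X(s)\triangleq\mathbb{E}\{e^{sX}\}$, as

$$\mathbb{E}\{\ln X\}=\int_0^\infty\frac{e^{-u}-M_X(-u)}{u}\,\mathrm{d}u. \tag{15}$$

In particular, if $X_1,\ldots,X_n$ are positive i.i.d. random variables, distributed like X, then

$$\mathbb{E}\{\ln(X_1+\ldots+X_n)\}=\int_0^\infty\frac{e^{-u}-\big[M_X(-u)\big]^n}{u}\,\mathrm{d}u. \tag{16}$$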

Remark 2.

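One may further manipulate (15) and (16) as follows. Since $\ln x=\frac{1}{\alpha}\ln x^{\alpha}$ for any $x>0$ and $\alpha>0$, the expectation of $\ln X$ can also be represented as

$$\mathbb{E}\{\ln X\}=\frac{1}{\alpha}\int_0^\infty\frac{e^{-u}-\mathbb{E}\{e^{-uX^{\alpha}}\}}{u}\,\mathrm{d}u,\qquad\alpha>0. \tag{17}$$

The idea is that if, for some $\alpha\neq1$, $\mathbb{E}\{e^{-uX^{\alpha}}\}$ can be expressed in closed form, whereas it cannot for $\alpha=1$ (or even for some , but not for ), then (17) may prove useful. Moreover, if $X_1,\ldots,X_n$ are positive i.i.d. random variables, $\alpha>0$, and , then

| (18) |

For example, if $X_1,\ldots,X_n$ are i.i.d. standard Gaussian random variables and $\alpha=2$, then (18) enables one to calculate the expected value of the logarithm of a chi-squared distributed random variable with n degrees of freedom. In this case,

$$\mathbb{E}\{e^{-uX_1^2}\}=\frac{1}{\sqrt{1+2u}},\qquad u\ge0, \tag{19}$$

and, from (18) with $\alpha=2$,

$$\mathbb{E}\left\{\ln\sum_{i=1}^n X_i^2\right\}=\int_0^\infty\frac{e^{-u}-(1+2u)^{-n/2}}{u}\,\mathrm{d}u. \tag{20}$$

Note that, according to the pdf of a chi-squared distribution, one can express $\mathbb{E}\{\ln\sum_{i=1}^n X_i^2\}$ as a one-dimensional integral even without using (18). However, for general $\alpha>0$, the direct calculation of $\mathbb{E}\{\ln\sum_{i=1}^n|X_i|^{\alpha}\}$ leads to an n-dimensional integral, whereas (18) provides a one-dimensional integral whose integrand in turn involves the calculation of another one-dimensional integral.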
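As a numerical sanity check of (20), the sketch below evaluates its right-hand side with the same log-scale trapezoidal rule. It is our own illustration, not code from the paper. The result is compared with the known closed form $\mathbb{E}\{\ln\chi^2_n\}=\ln2+\psi(n/2)$, where $\psi$ is the digamma function. The small digamma helper is a hypothetical convenience that handles only the integer and half-integer arguments this check needs.

```python
import math

EULER_GAMMA = 0.5772156649015329  # Euler-Mascheroni constant


def log_scale_integral(g, lo=-60.0, hi=100.0, step=0.05):
    """Trapezoidal rule for int_0^inf g(u) du/u, using s = ln u so that du/u = ds."""
    n_steps = int(round((hi - lo) / step))
    total = 0.0
    for k in range(n_steps + 1):
        weight = 0.5 if k in (0, n_steps) else 1.0
        total += weight * g(math.exp(lo + k * step))
    return total * step


def expected_log_chi_squared(n):
    """E{ln(X_1^2 + ... + X_n^2)} for i.i.d. N(0,1) X_i, via (20)."""
    return log_scale_integral(lambda u: math.exp(-u) - (1.0 + 2.0 * u) ** (-0.5 * n))


def digamma_half_integer(x):
    """psi(x) for x in {1/2, 1, 3/2, 2, ...}, using psi(x + 1) = psi(x) + 1/x."""
    if abs(x - round(x)) < 1e-12:      # integer argument: start from psi(1) = -gamma
        value, start = -EULER_GAMMA, 1.0
    else:                              # half-integer: start from psi(1/2) = -gamma - 2 ln 2
        value, start = -EULER_GAMMA - 2.0 * math.log(2.0), 0.5
    while start < x - 1e-12:
        value += 1.0 / start
        start += 1.0
    return value


for n in (1, 2, 5):
    closed_form = math.log(2.0) + digamma_half_integer(0.5 * n)
    print(n, expected_log_chi_squared(n), closed_form)
```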

Identity (1) also proves useful when one is interested not only in the expected value of $\ln X$, but also in higher moments, in particular, its second moment or variance. In this case, the one-dimensional integral becomes a two-dimensional one. Specifically, for any $x>0$,

| (21) |

| (22) |

| (23) |

| (24) |

More generally, for a pair of positive random variables, , and for ,

| (25) |
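Squaring (1) and taking the expectation inside the double integral (again assuming Fubini's theorem applies) gives

$$\mathbb{E}\{\ln^2X\}=\int_0^\infty\!\!\int_0^\infty\frac{e^{-u-v}-e^{-u}M_X(-v)-e^{-v}M_X(-u)+M_X(-u-v)}{uv}\,\mathrm{d}u\,\mathrm{d}v.$$

The Python sketch below evaluates this two-dimensional integral on a log-spaced tensor grid. It is our own illustration, written directly from (1) rather than transcribed from (21)–(24). As test cases we assume $X\sim\mathrm{Exp}(1)$, for which $\mathbb{E}\{\ln^2X\}=\gamma^2+\pi^2/6$, and $X\sim\mathrm{Gamma}(2,1)$, for which $\mathbb{E}\{\ln^2X\}=(1-\gamma)^2+\pi^2/6-1$. The grid limits and step are hypothetical choices.

```python
import math

EULER_GAMMA = 0.5772156649015329  # Euler-Mascheroni constant


def expected_log_squared(mgf_neg, lo=-30.0, hi=40.0, step=0.2):
    """E{(ln X)^2} as a 2-D trapezoidal integral in log coordinates s = ln u, t = ln v.

    mgf_neg(u) must return M_X(-u) = E{exp(-u X)} for u >= 0.
    """
    n_steps = int(round((hi - lo) / step))
    grid = [math.exp(lo + k * step) for k in range(n_steps + 1)]
    weights = [0.5 if k in (0, n_steps) else 1.0 for k in range(n_steps + 1)]
    exp_neg = [math.exp(-u) for u in grid]
    mgf = [mgf_neg(u) for u in grid]
    total = 0.0
    for i, u in enumerate(grid):
        for j, v in enumerate(grid):
            # E{(e^{-u} - e^{-uX}) (e^{-v} - e^{-vX})}, expanded term by term
            value = (exp_neg[i] * exp_neg[j] - exp_neg[i] * mgf[j]
                     - exp_neg[j] * mgf[i] + mgf_neg(u + v))
            total += weights[i] * weights[j] * value
    return total * step * step


exact = EULER_GAMMA ** 2 + math.pi ** 2 / 6.0
print(expected_log_squared(lambda u: 1.0 / (1.0 + u)), exact)  # X ~ Exp(1)
```

Subtracting the square of the result of (2) from this value gives the variance of $\ln X$, which is how the variance formulas in the applications below are meant to be used.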

For later use, we present the following variation of the basic identity.

Proposition 2.

Let X be a random variable, and let

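$$M_X(s)\triangleq\mathbb{E}\{e^{sX}\},\qquad s\in\mathbb{R}, \tag{26}$$

be the MGF of X. If X is non-negative, then

$$\mathbb{E}\{\ln(1+X)\}=\int_0^\infty\frac{e^{-u}}{u}\,\big(1-M_X(-u)\big)\,\mathrm{d}u, \tag{27}$$

$$\mathbb{E}\{\ln^2(1+X)\}=\int_0^\infty\!\!\int_0^\infty\frac{e^{-u-v}}{uv}\,\big(1-M_X(-u)-M_X(-v)+M_X(-u-v)\big)\,\mathrm{d}u\,\mathrm{d}v. \tag{28}$$

Proof.

Equation (27) is a trivial consequence of (15). As for (28), we have

$$\mathbb{E}\{\ln^2(1+X)\}=\mathbb{E}\left\{\int_0^\infty\frac{e^{-u}}{u}\big(1-e^{-uX}\big)\,\mathrm{d}u\int_0^\infty\frac{e^{-v}}{v}\big(1-e^{-vX}\big)\,\mathrm{d}v\right\} \tag{29}$$

$$=\int_0^\infty\!\!\int_0^\infty\frac{e^{-u-v}}{uv}\,\mathbb{E}\big\{(1-e^{-uX})(1-e^{-vX})\big\}\,\mathrm{d}u\,\mathrm{d}v \tag{30}$$

$$=\int_0^\infty\!\!\int_0^\infty\frac{e^{-u-v}}{uv}\,\mathbb{E}\big\{1-e^{-uX}-e^{-vX}+e^{-(u+v)X}\big\}\,\mathrm{d}u\,\mathrm{d}v \tag{31}$$

$$=\int_0^\infty\!\!\int_0^\infty\frac{e^{-u-v}}{uv}\,\big(1-M_X(-u)-M_X(-v)+M_X(-u-v)\big)\,\mathrm{d}u\,\mathrm{d}v. \tag{32}$$ □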

The following result relies on the validity of (5) to the right-half complex plane, and its derivation is based on the identity for all . In general, it may be used if the characteristic function of a random variable X has a closed-form expression, whereas the MGF of does not admit a closed-form expression (see Proposition 2). We introduce the result, although it is not directly used in the paper.

Proposition 3.

Let X be a real-valued random variable, and let

(33) be the characteristic function of X. Then,

(34) and

(35)

As a final note, we point out that the fact that the integral representation (2) replaces the expectation of the logarithm of X by the expectation of an exponential function of X has an additional interesting consequence. An expression like $\mathbb{E}\{\ln N!\}$ becomes the integral of the sum of a geometric series, which, in turn, is easy to express in closed form (see [11] ((2.3)–(2.4))). Specifically,

$$\begin{aligned}
\ln N!&=\sum_{k=1}^{N}\ln k=\int_0^\infty\frac{1}{u}\left(N e^{-u}-\frac{e^{-u}\big(1-e^{-uN}\big)}{1-e^{-u}}\right)\mathrm{d}u,\\
\mathbb{E}\{\ln N!\}&=\int_0^\infty\frac{e^{-u}}{u}\left(\mathbb{E}\{N\}-\frac{1-M_N(-u)}{1-e^{-u}}\right)\mathrm{d}u.
\end{aligned} \tag{36}$$

Thus, for a non-negative integer-valued random variable, N, the calculation of $\mathbb{E}\{\ln N!\}$ requires merely the calculation of $\mathbb{E}\{N\}$ and the MGF, $M_N(-u)=\mathbb{E}\{e^{-uN}\}$. For example, if N is a Poissonian random variable, as discussed near the end of the Introduction, both $\mathbb{E}\{N\}=\lambda$ and $M_N(-u)=e^{-\lambda(1-e^{-u})}$ are easy to evaluate. This approach is a simple, direct alternative to the one taken in [8] (see also [10]), where Malmstén’s nontrivial formula for $\ln\Gamma(z)$ (see [9] (pp. 20–21)) was invoked. (Malmstén’s formula for $\ln\Gamma(z)$ applies to a general, complex-valued z with $\mathrm{Re}(z)>0$. In the present context, however, only positive integer values of z are needed, and this allows the simplification shown in (36).) The idea of the geometric series described above will also be used in one of our application examples, in Section 3.4.
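The recipe based on (4) and (36) can be checked against a brute-force entropy sum. The following sketch is our own illustration, not the authors' code. It uses $\mathbb{E}\{N\}=\lambda$ and $M_N(-u)=e^{-\lambda(1-e^{-u})}$ for a Poissonian N, and evaluates the integral after the substitution $u=e^{s}$. It computes $1-e^{-u}$ with `math.expm1` to avoid cancellation near $u=0$. The truncation point of the direct sum is an arbitrary but generous choice.

```python
import math


def log_scale_integral(g, lo=-60.0, hi=10.0, step=0.05):
    """Trapezoidal rule for int_0^inf g(u) du/u, using s = ln u so that du/u = ds."""
    n_steps = int(round((hi - lo) / step))
    total = 0.0
    for k in range(n_steps + 1):
        weight = 0.5 if k in (0, n_steps) else 1.0
        total += weight * g(math.exp(lo + k * step))
    return total * step


def poisson_entropy_integral(lam):
    """H(N) in nats for N ~ Poisson(lam), via (4) and (36)."""
    def integrand(u):
        one_minus_e = -math.expm1(-u)                      # 1 - e^{-u}, accurate near u = 0
        one_minus_mgf = -math.expm1(-lam * one_minus_e)    # 1 - M_N(-u)
        return math.exp(-u) * (lam - one_minus_mgf / one_minus_e)
    expected_log_factorial = log_scale_integral(integrand)  # E{ln N!}
    return lam * (1.0 - math.log(lam)) + expected_log_factorial


def poisson_entropy_direct(lam):
    """Brute-force -sum_k p_k ln p_k, truncated far into the upper tail."""
    k_max = int(lam + 40.0 * math.sqrt(lam) + 50)
    entropy = 0.0
    for k in range(k_max + 1):
        log_p = -lam + k * math.log(lam) - math.lgamma(k + 1)
        entropy -= math.exp(log_p) * log_p
    return entropy


for lam in (0.3, 5.0, 50.0):
    print(lam, poisson_entropy_integral(lam), poisson_entropy_direct(lam))
```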

3. Applications

In this section, we show the usefulness of the integral representation of the logarithmic function in several problem areas in information theory. To demonstrate the direct computability of the relevant quantities, we also present graphs of their numerical calculation. In some of the examples, we also demonstrate calculations of the second moments and variances.

3.1. Differential Entropy for Generalized Multivariate Cauchy Densities

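Let $\mathbf{X}=(X_1,\ldots,X_n)$ be a random vector whose probability density function is of the form

$$f(\mathbf{x})=\frac{C_n}{\big[1+\sum_{i=1}^n g(x_i)\big]^{q}},\qquad\mathbf{x}=(x_1,\ldots,x_n)\in\mathbb{R}^n, \tag{37}$$

for a certain non-negative function g and positive constant q such that

$$\int_{\mathbb{R}^n}\frac{\mathrm{d}\mathbf{x}}{\big[1+\sum_{i=1}^n g(x_i)\big]^{q}}<\infty. \tag{38}$$

We refer to this kind of density as a generalized multivariate Cauchy density, because the multivariate Cauchy density is obtained as a special case where $g(x)=x^2$ and $q=\frac{n+1}{2}$. Using the Laplace transform relation,

$$\frac{1}{s^{q}}=\frac{1}{\Gamma(q)}\int_0^\infty t^{q-1}e^{-st}\,\mathrm{d}t,\qquad s>0, \tag{39}$$

f can be represented as a mixture of product measures:

$$f(\mathbf{x})=\frac{C_n}{\Gamma(q)}\int_0^\infty t^{q-1}e^{-t}\prod_{i=1}^n e^{-t\,g(x_i)}\,\mathrm{d}t. \tag{40}$$

Defining

$$Z(t)\triangleq\int_{-\infty}^{\infty}e^{-t\,g(x)}\,\mathrm{d}x,\qquad t>0, \tag{41}$$

we get from (40),

$$1=\int_{\mathbb{R}^n}f(\mathbf{x})\,\mathrm{d}\mathbf{x}=\frac{C_n}{\Gamma(q)}\int_0^\infty t^{q-1}e^{-t}Z^n(t)\,\mathrm{d}t, \tag{42}$$

and so,

$$C_n=\frac{\Gamma(q)}{\int_0^\infty t^{q-1}e^{-t}Z^n(t)\,\mathrm{d}t}. \tag{43}$$

The calculation of the differential entropy of f is associated with the evaluation of the expectation $\mathbb{E}\{\ln[1+\sum_{i=1}^n g(X_i)]\}$. Using (27),

$$\mathbb{E}\left\{\ln\Big[1+\sum_{i=1}^n g(X_i)\Big]\right\}=\int_0^\infty\frac{e^{-u}}{u}\left(1-\mathbb{E}\Big\{e^{-u\sum_{i=1}^n g(X_i)}\Big\}\right)\mathrm{d}u. \tag{44}$$

From (40) and by interchanging the integration,

$$\mathbb{E}\Big\{e^{-u\sum_{i=1}^n g(X_i)}\Big\}=\frac{C_n}{\Gamma(q)}\int_0^\infty t^{q-1}e^{-t}Z^n(t+u)\,\mathrm{d}t. \tag{45}$$

In view of (40), (44), and (45), the differential entropy of $\mathbf{X}$ is therefore given by

$$h(\mathbf{X})=q\int_0^\infty\frac{e^{-u}}{u}\left(1-\frac{C_n}{\Gamma(q)}\int_0^\infty t^{q-1}e^{-t}Z^n(t+u)\,\mathrm{d}t\right)\mathrm{d}u-\ln C_n. \tag{46}$$

For $g(x)=|x|^{\theta}$, with an arbitrary $\theta>0$, we obtain from (41) that

$$Z(t)=\frac{2\,\Gamma(1/\theta+1)}{t^{1/\theta}},\qquad t>0. \tag{47}$$

In particular, for $\theta=2$ and $q=\frac{n+1}{2}$, we get the multivariate Cauchy density from (37). In this case, as $\Gamma(\frac12)=\sqrt{\pi}$, it follows from (47) that $Z(t)=\sqrt{\pi/t}$ for $t>0$, and from (43)

$$C_n=\frac{\Gamma\big(\frac{n+1}{2}\big)}{\pi^{(n+1)/2}}. \tag{48}$$

Combining (46), (47), and (48) gives

$$h(\mathbf{X})=\frac{n+1}{2}\int_0^\infty\frac{e^{-u}}{u}\left(1-\frac{1}{\sqrt{\pi}}\int_0^\infty\frac{t^{(n-1)/2}\,e^{-t}}{(t+u)^{n/2}}\,\mathrm{d}t\right)\mathrm{d}u+\ln\frac{\pi^{(n+1)/2}}{\Gamma\big(\frac{n+1}{2}\big)}. \tag{49}$$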

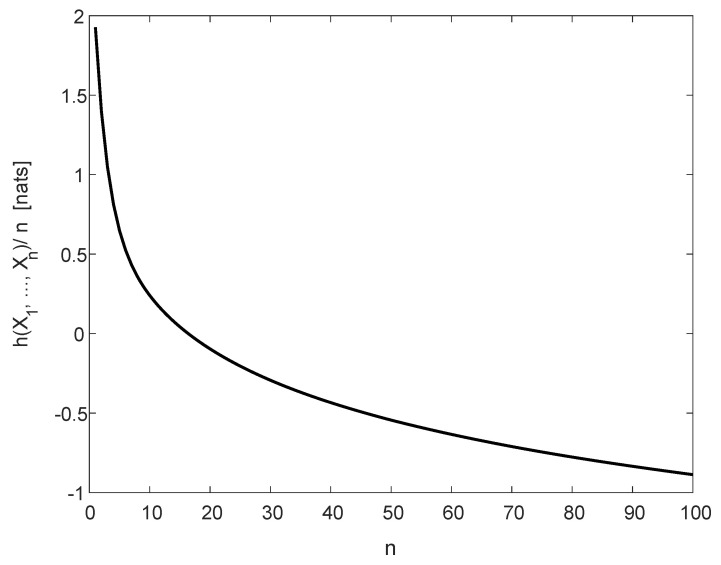

Figure 1 displays the normalized differential entropy, $h(\mathbf{X})/n$, as a function of n.

Figure 1.

The normalized differential entropy, $h(\mathbf{X})/n$ (see (49)), for a multivariate Cauchy density, $f(\mathbf{x})=C_n/\big[1+\sum_{i=1}^n x_i^2\big]^{(n+1)/2}$, with $C_n$ in (48).

We believe that the interesting point conveyed in this application example is that (46) provides a kind of “single-letter expression”: the n-dimensional integral, associated with the original expression of the differential entropy $h(\mathbf{X})$, is replaced by the two-dimensional integral in (46), independently of n.
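The following Python sketch evaluates the two-dimensional integral in (49) for the multivariate Cauchy density. It is our own illustration. It writes the inner integral as

$$\mathbb{E}\{e^{-u\|\mathbf X\|^2}\}=\pi^{-1/2}\int_0^\infty t^{(n-1)/2}(t+u)^{-n/2}e^{-t}\,\mathrm{d}t,$$

which is what (45) gives with $Z(t)=\sqrt{\pi/t}$ and $C_n=\Gamma(\frac{n+1}{2})/\pi^{(n+1)/2}$. As an independent check, it uses the known result $\mathbb{E}\{\ln(1+\|\mathbf X\|^2)\}=\psi(\frac{n+1}{2})-\psi(\frac12)$, which holds because $1/(1+\|\mathbf X\|^2)$ is Beta$(\frac12,\frac n2)$-distributed. This yields $2\ln2$ for $n=1$ and $2$ for $n=2$, and the standard Cauchy entropy $\ln(4\pi)$. The grid limits and step sizes are hypothetical choices.

```python
import math


def log_scale_integral(g, lo=-50.0, hi=5.0, step=0.125):
    """Trapezoidal rule for int_0^inf g(x) dx/x, using s = ln x so that dx/x = ds."""
    n_steps = int(round((hi - lo) / step))
    total = 0.0
    for k in range(n_steps + 1):
        weight = 0.5 if k in (0, n_steps) else 1.0
        total += weight * g(math.exp(lo + k * step))
    return total * step


def laplace_of_norm_squared(u, n):
    """E{exp(-u ||X||^2)} for the n-dimensional Cauchy density (inner integral of (49))."""
    # t^{(n-1)/2} (t+u)^{-n/2} e^{-t} dt, rewritten with dt = t * (dt/t)
    integrand = lambda t: t ** (0.5 * (n + 1)) * (t + u) ** (-0.5 * n) * math.exp(-t)
    return log_scale_integral(integrand) / math.sqrt(math.pi)


def expected_log_one_plus_norm_squared(n):
    """E{ln(1 + ||X||^2)} via (27): int_0^inf (e^{-u}/u)(1 - E{exp(-u ||X||^2)}) du."""
    return log_scale_integral(lambda u: math.exp(-u) * (1.0 - laplace_of_norm_squared(u, n)))


def cauchy_differential_entropy(n):
    """h(X) in nats: -ln C_n + q E{ln(1 + ||X||^2)}, with q = (n+1)/2 and C_n from (48)."""
    q = 0.5 * (n + 1)
    log_c_n = math.lgamma(q) - q * math.log(math.pi)
    return -log_c_n + q * expected_log_one_plus_norm_squared(n)


print(cauchy_differential_entropy(1), math.log(4.0 * math.pi))
```

The cost of each evaluation is that of a fixed two-dimensional grid, whatever the dimension n, which is the practical content of the “single-letter” remark above.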

As a final note, we mention that a lower bound on the differential entropy of a different form of extended multivariate Cauchy distributions (cf. [13] (Equation (42))) was derived in [13] (Theorem 6). The latter result relies on obtaining lower bounds on the differential entropy of random vectors whose densities are symmetric log-concave or -concave (i.e., densities f for which is concave for some ).

3.2. Ergodic Capacity of the Fading SIMO Channel

Consider the SIMO channel with L receive antennas and assume that the channel transfer coefficients, , are independent, zero–mean, circularly symmetric complex Gaussian random variables with variances . Its ergodic capacity (in nats per channel use) is given by

| (50) |

where , , and is the signal–to–noise ratio (SNR) (see, e.g., [14,15]).

Paper [14] is devoted, among other things, to the exact evaluation of (50) by finding the density of the random variable defined by , and then taking the expectation w.r.t. that density. Here, we show that the integral representation in (5) suggests a more direct approach to the evaluation of (50). It should also be pointed out that this approach is more flexible than the one in [14], as the latter strongly depends on the assumption that are Gaussian and statistically independent. The integral representation approach also allows other distributions of the channel transfer gains, as well as possible correlations between the coefficients and/or the channel inputs. Moreover, we are also able to calculate the variance of , as a measure of the fluctuations around the mean, which is obviously related to the outage.

Specifically, in view of Proposition 2 (see (27)), let

| (51) |

For all ,

| (52) |

where (52) holds since

| (53) |

From (27), (50) and (52), the ergodic capacity (in nats per channel use) is given by

| (54) |

A similar approach appears in [4] (Equation (12)).
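A minimal numerical sketch of this approach is given below. It assumes a parametrization of our own, which may differ from the exact normalization in (50): the capacity is taken to be $C=\mathbb{E}\{\ln(1+\rho\sum_{\ell=1}^L\sigma_\ell^2|G_\ell|^2)\}$ with independent $|G_\ell|^2\sim\mathrm{Exp}(1)$. The MGF then factorizes as $\prod_\ell(1+\rho\sigma_\ell^2u)^{-1}$, and (27) yields a one-dimensional integral. A plain Monte Carlo estimate serves as an independent check. The SNR and variance values are arbitrary examples.

```python
import math
import random


def log_scale_integral(g, lo=-50.0, hi=6.0, step=0.05):
    """Trapezoidal rule for int_0^inf g(u) du/u, using s = ln u so that du/u = ds."""
    n_steps = int(round((hi - lo) / step))
    total = 0.0
    for k in range(n_steps + 1):
        weight = 0.5 if k in (0, n_steps) else 1.0
        total += weight * g(math.exp(lo + k * step))
    return total * step


def simo_capacity_integral(snr, variances):
    """E{ln(1 + snr * sum_l var_l |G_l|^2)} in nats via (27), with |G_l|^2 ~ Exp(1)."""
    def integrand(u):
        mgf = 1.0
        for var in variances:
            mgf /= 1.0 + snr * var * u  # E{exp(-u * snr * var * |G|^2)} = 1/(1 + snr*var*u)
        return math.exp(-u) * (1.0 - mgf)
    return log_scale_integral(integrand)


def simo_capacity_monte_carlo(snr, variances, samples=100_000, seed=1):
    """Plain Monte Carlo estimate of the same expectation, for comparison only."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(samples):
        gain = sum(var * rng.expovariate(1.0) for var in variances)
        total += math.log1p(snr * gain)
    return total / samples


snr = 10.0 ** (10.0 / 10.0)  # 10 dB, an arbitrary example
variances = [0.5, 1.0]       # arbitrary example values
c_nats = simo_capacity_integral(snr, variances)
print(f"C = {c_nats:.6f} nats = {c_nats / math.log(2.0):.6f} bits per channel use")
print(f"Monte Carlo: {simo_capacity_monte_carlo(snr, variances):.6f} nats")
```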

As for the variance, from Proposition 2 (see (28)) and (52),

| (55) |

A similar analysis holds for the multiple-input single-output (MISO) channel. By partial–fraction decomposition of the expression (see the right side of (54))

the ergodic capacity C can be expressed as a linear combination of integrals of the form

| (56) |

where is the (modified) exponential integral function, defined as

| (57) |

A similar representation appears also in [14] (Equation (7)).

Consider the example of , and . From (54), the ergodic capacity of the SIMO channel is given by

| (58) |

The variance in this example (see (55)) is given by

| (59) |

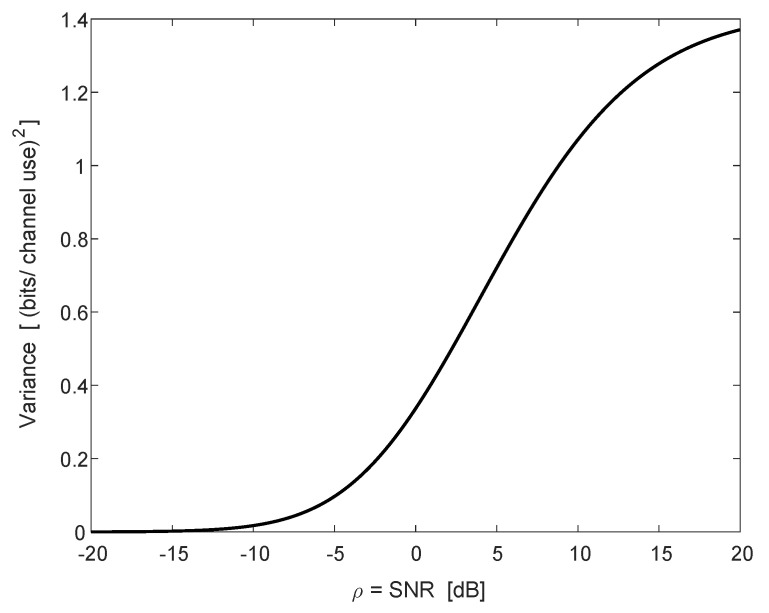

Figure 2 depicts the ergodic capacity C as a function of the SNR in dB (see (58), and divide by $\ln2$ for conversion to bits per channel use). Exactly the same example appears in the lower graph of Figure 1 in [14]. The variance appears in Figure 3 (see (59), and divide by $\ln^2 2$ for conversion to squared bits).

Figure 2.

The ergodic capacity C (in bits per channel use) of the SIMO channel as a function of (in dB) for receive antennas, with noise variances and .

Figure 3.

The variance of (in ) of the SIMO channel as a function of (in dB) for receive antennas, with noise variances and .

3.3. Universal Source Coding for Binary Arbitrarily Varying Sources

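Consider a source coding setting, where there are n binary DMSs, and let $\theta_i\in[0,1]$ denote the Bernoulli parameter of source no. $i\in\{1,\ldots,n\}$. Assume that a hidden memoryless switch selects uniformly at random one of these sources, and the data is then emitted by the selected source. Since it is unknown a priori which source is selected at each instant, a universal lossless source encoder (e.g., a Shannon or Huffman code) is designed to match a binary DMS whose Bernoulli parameter is given by $\frac1n\sum_{i=1}^n\theta_i$. Neglecting integer length constraints, the average redundancy in the compression rate (measured in nats per symbol), due to the unknown realization of the hidden switch, is about

$$R_n(\theta_1,\ldots,\theta_n)=h\left(\frac1n\sum_{i=1}^n\theta_i\right)-\frac1n\sum_{i=1}^n h(\theta_i), \tag{60}$$

where $h(\cdot)$ is the binary entropy function (defined to the base e), and the redundancy is given in nats per source symbol. Now, let us assume that the Bernoulli parameters of the n sources are i.i.d. random variables, $\Theta_1,\ldots,\Theta_n$, all having the same density as that of some generic random variable X, whose support is the interval $[0,1]$. We wish to evaluate the expected value of the above defined redundancy, under the assumption that the realizations of $\Theta_1,\ldots,\Theta_n$ are known. We are then facing the need to evaluate

$$\mathbb{E}\{R_n(\Theta_1,\ldots,\Theta_n)\}=\mathbb{E}\left\{h\left(\frac1n\sum_{i=1}^n\Theta_i\right)\right\}-\mathbb{E}\{h(X)\}. \tag{61}$$

We now express the first and second terms on the right-hand side of (61) as functions of the MGF of X.

In view of (5), the binary entropy function admits the integral representation

$$h(x)=\int_0^\infty\frac1u\Big(x\,e^{-ux}+(1-x)\,e^{-u(1-x)}-e^{-u}\Big)\,\mathrm{d}u,\qquad x\in[0,1], \tag{62}$$

which implies that

$$\mathbb{E}\{h(\bar\Theta_n)\}=\int_0^\infty\frac1u\Big(\mathbb{E}\{\bar\Theta_n e^{-u\bar\Theta_n}\}+\mathbb{E}\{(1-\bar\Theta_n)\,e^{-u(1-\bar\Theta_n)}\}-e^{-u}\Big)\,\mathrm{d}u,\qquad\bar\Theta_n\triangleq\frac1n\sum_{i=1}^n\Theta_i. \tag{63}$$

The expectations on the right-hand side of (63) can be expressed as functionals of the MGF of X, $M_X(s)=\mathbb{E}\{e^{sX}\}$, and its derivative, $M_X'(s)$, for $s\in\mathbb{R}$. For all $u\ge0$,

$$\mathbb{E}\{\bar\Theta_n e^{-u\bar\Theta_n}\}=M_X^{n-1}\!\left(-\frac un\right)M_X'\!\left(-\frac un\right), \tag{64}$$

and

$$\mathbb{E}\{(1-\bar\Theta_n)\,e^{-u(1-\bar\Theta_n)}\}=e^{-u}M_X^{n-1}\!\left(\frac un\right)\left[M_X\!\left(\frac un\right)-M_X'\!\left(\frac un\right)\right]. \tag{65}$$

On substituting (64) and (65) into (63), we readily obtain

$$\mathbb{E}\{h(\bar\Theta_n)\}=\int_0^\infty\frac1u\left(M_X^{n-1}\!\left(-\frac un\right)M_X'\!\left(-\frac un\right)+e^{-u}M_X^{n-1}\!\left(\frac un\right)\left[M_X\!\left(\frac un\right)-M_X'\!\left(\frac un\right)\right]-e^{-u}\right)\mathrm{d}u. \tag{66}$$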

Define . Then,

| (67) |

which yields, in view of (66), (67) and the change of integration variable, , the following:

| (68) |

As in Section 3.1, here too we pass from an n-dimensional integral to a one-dimensional integral. In general, similar calculations can be carried out for higher integer moments, thus passing from an n-dimensional integral for a moment of order s to an s-dimensional integral, independently of n.

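For example, if $\Theta_1,\ldots,\Theta_n$ are i.i.d. and uniformly distributed on [0,1], then the MGF of a generic random variable X distributed like each $\Theta_i$ is given by

$$M_X(s)=\begin{cases}\dfrac{e^{s}-1}{s}, & s\neq0,\\[4pt] 1, & s=0.\end{cases} \tag{69}$$

From (68), it can be verified numerically that the expected binary entropy $\mathbb{E}\{h(\frac1n\sum_{i=1}^n\Theta_i)\}$ is monotonically increasing in n, being equal (in nats) to 1/2, 0.602, 0.634, 0.650, and 0.659 for $n=1,\ldots,5$, respectively, with the limit $\ln2\approx0.693$ as we let $n\to\infty$ (as expected from the law of large numbers).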
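For a uniform X, the law of $\bar\Theta_n=\frac1n\sum_{i=1}^n\Theta_i$ is symmetric about $\frac12$. The two expectations $\mathbb{E}\{\bar\Theta_ne^{-u\bar\Theta_n}\}$ and $\mathbb{E}\{(1-\bar\Theta_n)e^{-u(1-\bar\Theta_n)}\}$ therefore coincide, and substituting $u=nt$ gives

$$\mathbb{E}\{h(\bar\Theta_n)\}=\int_0^\infty\big[2M_X^{n-1}(-t)M_X'(-t)-e^{-nt}\big]\frac{\mathrm{d}t}{t}.$$

The Python sketch below evaluates this integral with $M_X$ from (69). It is our own derivation from (62), not a transcription of (68). A power series is used for $M_X'(-t)$ near $t=0$ to avoid cancellation. For $n=2$, a direct calculation with the triangular density of $\bar\Theta_2$ gives $\frac56-\frac{\ln2}{3}\approx0.602$, which serves as a check.

```python
import math


def mgf_neg(t):
    """M_X(-t) = E{exp(-tX)} for X ~ Uniform[0, 1], from (69), t > 0."""
    return -math.expm1(-t) / t


def mgf_deriv_neg(t):
    """M_X'(-t) = E{X exp(-tX)} for X ~ Uniform[0, 1], t > 0."""
    if t < 1.0:
        # Power series sum_k (-t)^k / (k! (k + 2)), free of cancellation near t = 0.
        total, term = 0.0, 1.0  # term holds (-t)^k / k!
        for k in range(30):
            total += term / (k + 2)
            term *= -t / (k + 1)
        return total
    return (1.0 - (1.0 + t) * math.exp(-t)) / (t * t)


def expected_entropy_of_mean(n, lo=-40.0, hi=40.0, step=0.05):
    """E{h(mean of n i.i.d. Uniform[0,1])} in nats, trapezoidal rule in s = ln t."""
    n_steps = int(round((hi - lo) / step))
    total = 0.0
    for k in range(n_steps + 1):
        t = math.exp(lo + k * step)
        value = 2.0 * mgf_neg(t) ** (n - 1) * mgf_deriv_neg(t) - math.exp(-n * t)
        weight = 0.5 if k in (0, n_steps) else 1.0
        total += weight * value
    return total * step


for n in range(1, 6):
    print(n, round(expected_entropy_of_mean(n), 3))
```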

3.4. Moments of the Empirical Entropy and the Redundancy of K–T Universal Source Coding

Consider a stationary, discrete memoryless source (DMS), P, with a finite alphabet $\mathcal{X}$ of size $|\mathcal{X}|$ and letter probabilities $\{P(x)\}_{x\in\mathcal{X}}$. Let $X^n=(X_1,\ldots,X_n)$ be an n-vector emitted from P, and let $\hat P_{X^n}$ be the empirical distribution associated with $X^n$, that is, $\hat P_{X^n}(x)=N(x)/n$ for all $x\in\mathcal{X}$, where $N(x)$ is the number of occurrences of the letter x in $X^n$.

It is well known that in many universal lossless source codes for the class of memoryless sources, the dominant term of the length function for encoding $X^n$ is $n\hat H(X^n)$, where $\hat H(X^n)$ is the empirical entropy,

$$\hat H(X^n)=-\sum_{x\in\mathcal{X}}\hat P_{X^n}(x)\ln\hat P_{X^n}(x). \tag{70}$$

For code length performance analysis (as well as for entropy estimation per se), there is therefore interest in calculating the expected value $\mathbb{E}\{\hat H(X^n)\}$ as well as $\mathrm{Var}\{\hat H(X^n)\}$. Another motivation comes from the quest for estimating the entropy as an objective in its own right, and then the expectation and the variance suffice for the calculation of the mean square error of the estimate, $\hat H(X^n)$. Most of the results that are available in the literature, in this context, concern the asymptotic behavior for large n as well as bounds (see, e.g., [16,17,18,19,20,21,22,23,24,25,26,27,28,29,30], as well as many other related references therein). The integral representation of the logarithm in (5), on the other hand, allows exact calculations of the expectation and the variance. The expected value of the empirical entropy is given by

| (71) |

For convenience, let us define the function as

| (72) |

which yields,

| (73) |

| (74) |

where and are first and second order derivatives of w.r.t. t, respectively. From (71) and (73),

| (75) |

where the integration variable in (75) was changed using a simple scaling by n.

Before proceeding with the calculation of the variance of , let us first compare the integral representation in (75) to the alternative sum, obtained by a direct, straightforward calculation of the expected value of the empirical entropy. A straightforward calculation gives

| (76) |

| (77) |

We next compare the computational complexity of implementing (75) to that of (77). For large n, in order to avoid numerical problems in computing (77) by standard software, one may use the gammaln function in MATLAB (GAMMALN in Excel) or LogGamma in Mathematica (built-in functions for calculating the natural logarithm of the Gamma function) to obtain that

| (78) |

The right-hand side of (75) is the sum of integrals, and the computational complexity of each integral depends on neither n, nor . Hence, the computational complexity of the right-hand side of (75) scales linearly with . On the other hand, the double sum on the right-hand side of (77) consists of terms. Let be fixed, which is expected to be large () if a good estimate of the entropy is sought. The computational complexity of the double sum on the right-hand side of (77) grows like , which scales quadratically in . Hence, for a DMS with a large alphabet, or when , there is a significant computational reduction by evaluating (75) in comparison to the right-hand side of (77).
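The comparison can be illustrated with a short Python sketch of our own. For a binomial count $N\sim\mathrm{Bin}(n,p)$, differentiating the binomial MGF gives $\mathbb{E}\{Ne^{-uN}\}=np\,e^{-u}(1-p+pe^{-u})^{n-1}$. Hence (1) yields

$$\mathbb{E}\{\hat H\}=\ln n-\sum_xP(x)\int_0^\infty e^{-u}\big[1-(1-P(x)(1-e^{-u}))^{n-1}\big]\frac{\mathrm{d}u}{u},$$

where $P(x)$ are the letter probabilities. We expect this to be equivalent to (75) up to the rescaling of the integration variable. The sketch evaluates this form and, for comparison, the direct binomial double sum with log-Gamma weights in the spirit of (77)–(78). The integral route uses a fixed number of quadrature points per letter, whereas the direct sum grows linearly in n per letter.

```python
import math


def log_scale_integral(g, lo=-50.0, hi=6.0, step=0.05):
    """Trapezoidal rule for int_0^inf g(u) du/u, using s = ln u so that du/u = ds."""
    n_steps = int(round((hi - lo) / step))
    total = 0.0
    for k in range(n_steps + 1):
        weight = 0.5 if k in (0, n_steps) else 1.0
        total += weight * g(math.exp(lo + k * step))
    return total * step


def expected_empirical_entropy_integral(n, probs):
    """E{H_hat(X^n)} in nats from the integral representation (one integral per letter)."""
    total = 0.0
    for p in probs:
        def integrand(u, p=p):
            v = -math.expm1(-u)  # 1 - e^{-u}
            # 1 - (1 - p v)^{n-1}, computed without cancellation for small u
            return math.exp(-u) * -math.expm1((n - 1) * math.log1p(-p * v))
        total += p * log_scale_integral(integrand)
    return math.log(n) - total


def expected_empirical_entropy_direct(n, probs):
    """Same quantity via ln n - (1/n) sum_x sum_k Bin(n, p_x)(k) k ln k."""
    total = 0.0
    for p in probs:
        for k in range(2, n + 1):  # k = 0 and k = 1 contribute k ln k = 0
            log_pmf = (math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
                       + k * math.log(p) + (n - k) * math.log1p(-p))
            total += math.exp(log_pmf) * k * math.log(k)
    return math.log(n) - total / n


for n in (10, 100, 1000):
    print(n, expected_empirical_entropy_integral(n, [0.5, 0.5]),
          expected_empirical_entropy_direct(n, [0.5, 0.5]))
```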

We next move on to calculate the variance of .

| (79) |

| (80) |

The second term on the right-hand side of (80) has already been calculated. For the first term, let us define, for ,

| (81) |

| (82) |

| (83) |

| (84) |

Observe that

| (85) |

| (86) |

For , we have

| (87) |

| (88) |

| (89) |

and for ,

| (90) |

| (91) |

| (92) |

Therefore,

| (93) |

Defining (see (74) and (86))

| (94) |

we have

| (95) |

To obtain numerical results, it is convenient to specialize the analysis to the binary symmetric source (BSS). From (75),

| (96) |

For the variance, it follows from (84) that for with and ,

| (97) |

| (98) |

and, from (87)–(89), for

| (99) |

From (72), for and ,

| (100) |

| (101) |

and, from (90)–(92), for ,

| (102) |

Combining Equations (93), (99), and (102) gives the following closed-form expression for the variance of the empirical entropy:

| (103) |

where

| (104) |

| (105) |

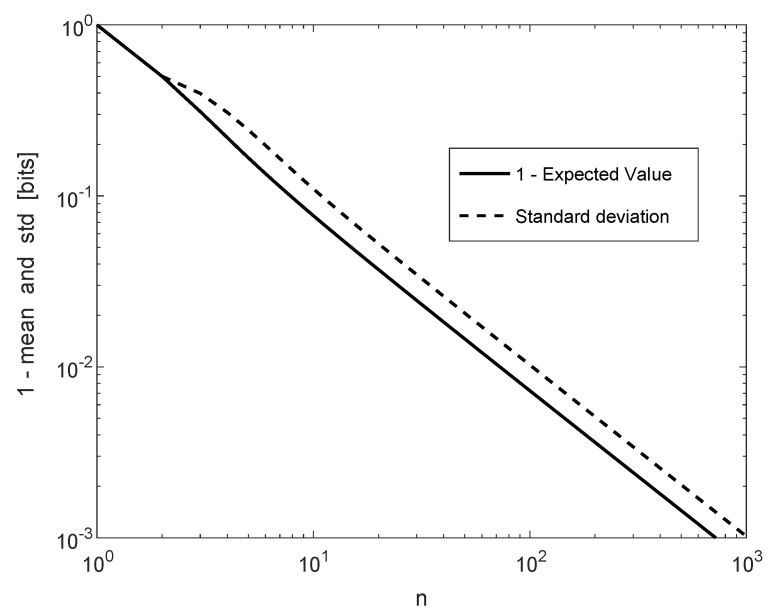

For the BSS, and the standard deviation of both decay at the rate of as n grows without bound, according to Figure 4. This asymptotic behavior of is supported by the well-known result [31] (see also [18] (Section 3.C) and references therein) that for the class of discrete memoryless sources with a given finite alphabet ,

| (106) |

in law, where is a chi-squared random variable with d degrees of freedom. The left-hand side of (106) can be rewritten as

| (107) |

and so, decays like , which is equal to for the BSS. In Figure 4, the base of the logarithm is 2, and therefore, decays like . It can be verified numerically that (in bits) is equal to and for and , respectively (see Figure 4), which confirms (106) and (107). Furthermore, the exact result here for the standard deviation, which decays like , scales similarly to the concentration inequality in [32] ((9)).

Figure 4.

and for a BSS (in bits per source symbol) as a function of n.

We conclude this subsection by exploring a quantity related to the empirical entropy, which is the expected code length associated with the universal lossless source code due to Krichevsky and Trofimov [23]. In a nutshell, this is a predictive universal code, which at each time instant t, sequentially assigns probabilities to the next symbol according to (a biased version of) the empirical distribution pertaining to the data seen thus far, . Specifically, consider the code length function (in nats),

| (108) |

where

| (109) |

is the number of occurrences of the symbol in , and is a fixed bias parameter needed for the initial coding distribution ().

We now calculate the redundancy of this universal code,

| (110) |

where H is the entropy of the underlying source. From Equations (108), (109), and (110), we can represent as follows,

| (111) |

The expectation on the right-hand side of (111) satisfies

| (112) |

which gives from (111) and (112) that the redundancy is given by

| (113) |

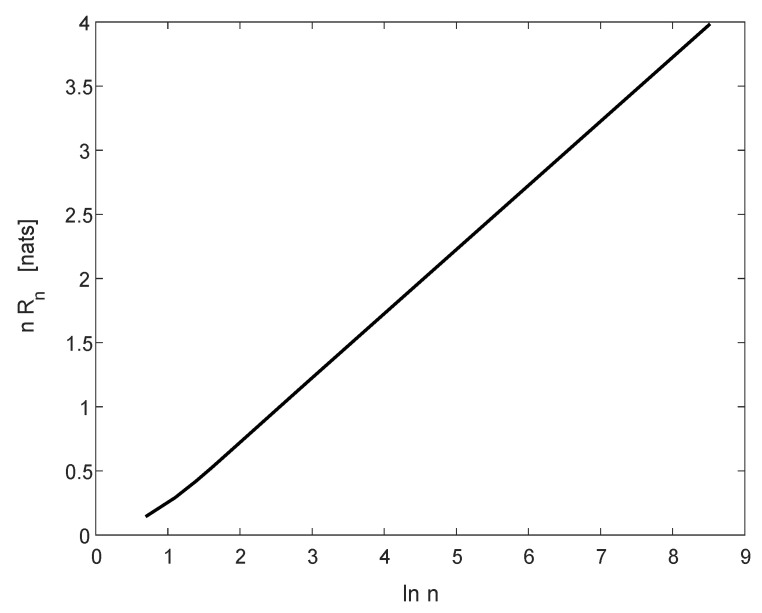

Figure 5 displays as a function of for in the range . As can be seen, the graph is nearly a straight line with slope , which is in agreement with the theoretical result that (in nats per symbol) for large n (see [23] (Theorem 2)).

Figure 5.

The function vs. for the BSS and , in the range .
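The geometric-series device of (36) also makes the redundancy of this code easy to evaluate numerically. The sketch below assumes a reading of our own (the exact form of (108)–(109) may differ): the code length is taken to be $L(x^n)=-\sum_{t=1}^n\ln\frac{N_{t-1}(x_t)+s}{t-1+s|\mathcal X|}$, where $N_{t-1}(x)\sim\mathrm{Bin}(t-1,P(x))$ counts past occurrences of x and $s>0$ is the bias ($s=\frac12$ is the classical K–T choice). Applying (1) to $\ln(N_{t-1}(x_t)+s)$ and summing the binomial MGFs over t as a geometric series gives a single integral. This integral is compared with a brute-force evaluation with $O(n^2|\mathcal X|)$ terms. The function names are hypothetical.

```python
import math


def log_scale_integral(g, lo=-50.0, hi=10.0, step=0.05):
    """Trapezoidal rule for int_0^inf g(u) du/u, using s = ln u so that du/u = ds."""
    n_steps = int(round((hi - lo) / step))
    total = 0.0
    for k in range(n_steps + 1):
        weight = 0.5 if k in (0, n_steps) else 1.0
        total += weight * g(math.exp(lo + k * step))
    return total * step


def kt_redundancy_integral(n, probs, bias=0.5):
    """Per-symbol redundancy (nats) of the assumed K-T-type code, via (1) and a geometric series."""
    size = len(probs)
    entropy = -sum(p * math.log(p) for p in probs)
    # sum_{t=1}^{n} ln(t - 1 + bias*size) = ln Gamma(n + bias*size) - ln Gamma(bias*size)
    log_denominators = math.lgamma(n + bias * size) - math.lgamma(bias * size)

    def integrand(u):
        v = -math.expm1(-u)  # 1 - e^{-u}
        # sum_x p_x sum_{t=1}^{n} (1 - p_x v)^{t-1} = sum_x (1 - (1 - p_x v)^n) / v
        geometric = sum(-math.expm1(n * math.log1p(-p * v)) for p in probs) / v
        return n * math.exp(-u) - math.exp(-bias * u) * geometric

    expected_log_numerators = log_scale_integral(integrand)
    return (log_denominators - expected_log_numerators) / n - entropy


def kt_redundancy_direct(n, probs, bias=0.5):
    """Brute-force evaluation of the same quantity with O(n^2 |X|) binomial terms."""
    size = len(probs)
    entropy = -sum(p * math.log(p) for p in probs)
    expected_length = 0.0
    for t in range(1, n + 1):
        m = t - 1  # number of past symbols; N_{t-1}(x) ~ Bin(m, p_x)
        expected_length += math.log(m + bias * size)
        for p in probs:
            for k in range(m + 1):
                log_pmf = (math.lgamma(m + 1) - math.lgamma(k + 1) - math.lgamma(m - k + 1)
                           + k * math.log(p) + (m - k) * math.log1p(-p))
                expected_length -= p * math.exp(log_pmf) * math.log(k + bias)
    return expected_length / n - entropy


for n in (10, 100, 1000):
    print(n, kt_redundancy_integral(n, [0.5, 0.5]), 0.5 * math.log(n) / n)
```

The last column prints only the leading term $\frac{|\mathcal X|-1}{2n}\ln n$ for the BSS, so agreement is expected only up to $O(1/n)$ corrections.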

4. Summary and Outlook

In this work, we have explored a well-known integral representation of the logarithmic function, and demonstrated its applications in obtaining exact formulas for quantities that involve expectations and second-order moments of the logarithm of a positive random variable (or the logarithm of a sum of i.i.d. such random variables). We anticipate that this integral representation and its variants can serve as useful tools in many additional applications, representing a rigorous alternative to the replica method in some situations.

Our work in this paper focused on exact results. In future research, it would be interesting to explore whether the integral representation we have used is also useful for obtaining upper and lower bounds on expectations (and higher-order moments) of expressions that involve logarithms of positive random variables. In particular, could the integrand of (1) be bounded from below and/or above in a nontrivial manner that would lead to new and interesting bounds? Moreover, it would be even more useful if the corresponding bounds on the integrand would lend themselves to closed-form expressions of the resulting definite integrals.

Another route for further research relies on [12] (p. 363, Identity (3.434.1)), which states that

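$$\int_0^\infty\frac{e^{-\mu x}-e^{-\nu x}}{x^{\rho+1}}\,\mathrm{d}x=(\mu^{\rho}-\nu^{\rho})\,\Gamma(-\rho),\qquad\mathrm{Re}(\mu)>0,\;\mathrm{Re}(\nu)>0,\;\mathrm{Re}(\rho)<1. \tag{114}$$

Let $\mu=1$, $\rho\in(0,1)$, and $\nu=\sum_{i=1}^n X_i$, where $X_1,\ldots,X_n$ are positive i.i.d. random variables. Taking expectations of both sides of (114) and rearranging terms gives

$$\mathbb{E}\big\{(X_1+\ldots+X_n)^{\rho}\big\}=1+\frac{\rho}{\Gamma(1-\rho)}\int_0^\infty\frac{e^{-u}-\big[M_X(-u)\big]^n}{u^{\rho+1}}\,\mathrm{d}u, \tag{115}$$

where X is a random variable having the same density as that of the $X_i$'s, and $M_X(u)=\mathbb{E}\{e^{uX}\}$ (for $u\in\mathbb{R}$) denotes the MGF of X. Since

$$\lim_{\rho\to0}\frac{\mathbb{E}\big\{(X_1+\ldots+X_n)^{\rho}\big\}-1}{\rho}=\mathbb{E}\{\ln(X_1+\ldots+X_n)\}, \tag{116}$$

it follows that (115) generalizes (3) for the logarithmic expectation. Identity (115), for the $\rho$-th moment of a sum of i.i.d. positive random variables with $\rho\in(0,1)$, may be used in some information-theoretic contexts rather than invoking Jensen’s inequality.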
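A quick numerical check of (115) is sketched below as our own illustration. It assumes $X_i\sim\mathrm{Exp}(1)$, so that $M_X(-u)=1/(1+u)$ and $\mathbb{E}\{(X_1+\ldots+X_n)^{\rho}\}=\Gamma(n+\rho)/\Gamma(n)$. It evaluates $1+\frac{\rho}{\Gamma(1-\rho)}\int_0^\infty\frac{e^{-u}-M_X^n(-u)}{u^{\rho+1}}\,\mathrm{d}u$ after the substitution $u=e^{s}$. The difference $e^{-u}-(1+u)^{-n}$ is computed with `math.expm1` and `math.log1p`, because the factor $u^{-\rho}$ would otherwise amplify rounding errors near $u=0$.

```python
import math


def log_scale_integral(g, lo=-80.0, hi=100.0, step=0.05):
    """Trapezoidal rule for int_0^inf g(u) du/u, using s = ln u so that du/u = ds."""
    n_steps = int(round((hi - lo) / step))
    total = 0.0
    for k in range(n_steps + 1):
        weight = 0.5 if k in (0, n_steps) else 1.0
        total += weight * g(math.exp(lo + k * step))
    return total * step


def fractional_moment_of_sum(n, rho):
    """E{(X_1 + ... + X_n)^rho} for 0 < rho < 1 via (115), assuming X_i ~ Exp(1)."""
    def integrand(u):
        # e^{-u} - (1+u)^{-n}, written as a difference of expm1 terms to limit cancellation
        diff = math.expm1(-u) - math.expm1(-n * math.log1p(u))
        return diff * u ** (-rho)  # remaining factor u^{-rho} of u^{-(rho+1)}
    return 1.0 + rho / math.gamma(1.0 - rho) * log_scale_integral(integrand)


for n, rho in ((1, 0.5), (3, 0.5), (5, 0.25)):
    exact = math.gamma(n + rho) / math.gamma(n)
    print(n, rho, fractional_moment_of_sum(n, rho), exact)
```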

Acknowledgments

The authors are thankful to Cihan Tepedelenlioǧlu and Zbigniew Golebiewski for bringing references [4,11], respectively, to their attention.

Author Contributions

Investigation, N.M. and I.S.; Writing-original draft, N.M. and I.S.; Writing-review & editing, N.M. and I.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Mézard M., Montanari A. Information, Physics, and Computation. Oxford University Press; New York, NY, USA: 2009.

2. Esipov S.E., Newman T.J. Interface growth and Burgers turbulence: The problem of random initial conditions. Phys. Rev. E. 1993;48:1046–1050. doi: 10.1103/PhysRevE.48.1046.

3. Song J., Still S., Rojas R.D.H., Castillo I.P., Marsili M. Optimal work extraction and mutual information in a generalized Szilárd engine. arXiv. 2019 (arXiv:1910.04191). doi: 10.1103/PhysRevE.103.052121.

4. Rajan A., Tepedelenlioǧlu C. Stochastic ordering of fading channels through the Shannon transform. IEEE Trans. Inf. Theory. 2015;61:1619–1628. doi: 10.1109/TIT.2015.2400432.

5. Simon M.K. A new twist on the Marcum Q-function and its application. IEEE Commun. Lett. 1998;2:39–41. doi: 10.1109/4234.660797.

6. Simon M.K., Divsalar D. Some new twists to problems involving the Gaussian probability integral. IEEE Trans. Commun. 1998;46:200–210. doi: 10.1109/26.659479.

7. Craig J.W. MILCOM 91-Conference Record. IEEE; Piscataway, NJ, USA: 1991. A new, simple and exact result for calculating the probability of error for two-dimensional signal constellations; pp. 25.5.1–25.5.5.

8. Appledorn C.R. The entropy of a Poisson distribution. SIAM Rev. 1988;30:314–317. doi: 10.1137/1029046.

9. Erdélyi A., Magnus W., Oberhettinger F., Tricomi F.G., Bateman H. Higher Transcendental Functions. Volume 1. McGraw-Hill; New York, NY, USA: 1987.

10. Martinez A. Spectral efficiency of optical direct detection. JOSA B. 2007;24:739–749. doi: 10.1364/JOSAB.24.000739.

11. Knessl C. Integral representations and asymptotic expansions for Shannon and Rényi entropies. Appl. Math. Lett. 1998;11:69–74. doi: 10.1016/S0893-9659(98)00013-5.

12. Ryzhik I.M., Gradshteĭn I.S. Tables of Integrals, Series, and Products. Academic Press; New York, NY, USA: 1965.

13. Marsiglietti A., Kostina V. A lower bound on the differential entropy of log-concave random vectors with applications. Entropy. 2018;20:185. doi: 10.3390/e20030185.

14. Dong A., Zhang H., Wu D., Yuan D. 2015 IEEE 82nd Vehicular Technology Conference (VTC2015-Fall). IEEE; Piscataway, NJ, USA: 2015. Logarithmic expectation of the sum of exponential random variables for wireless communication performance evaluation.

15. Tse D., Viswanath P. Fundamentals of Wireless Communication. Cambridge University Press; Cambridge, UK: 2005.

16. Barron A., Rissanen J., Yu B. The minimum description length principle in coding and modeling. IEEE Trans. Inf. Theory. 1998;44:2743–2760. doi: 10.1109/18.720554.

17. Blumer A.C. Minimax universal noiseless coding for unifilar and Markov sources. IEEE Trans. Inf. Theory. 1987;33:925–930. doi: 10.1109/TIT.1987.1057366.

18. Clarke B.S., Barron A.R. Information-theoretic asymptotics of Bayes methods. IEEE Trans. Inf. Theory. 1990;36:453–471. doi: 10.1109/18.54897.

19. Clarke B.S., Barron A.R. Jeffreys’ prior is asymptotically least favorable under entropy risk. J. Stat. Plan. Infer. 1994;41:37–60. doi: 10.1016/0378-3758(94)90153-8.

20. Davisson L.D. Universal noiseless coding. IEEE Trans. Inf. Theory. 1973;19:783–795. doi: 10.1109/TIT.1973.1055092.

21. Davisson L.D. Minimax noiseless universal coding for Markov sources. IEEE Trans. Inf. Theory. 1983;29:211–215. doi: 10.1109/TIT.1983.1056652.

22. Davisson L.D., McEliece R.J., Pursley M.B., Wallace M.S. Efficient universal noiseless source codes. IEEE Trans. Inf. Theory. 1981;27:269–278. doi: 10.1109/TIT.1981.1056355.

23. Krichevsky R.E., Trofimov V.K. The performance of universal encoding. IEEE Trans. Inf. Theory. 1981;27:199–207. doi: 10.1109/TIT.1981.1056331.

24. Merhav N., Feder M. Universal prediction. IEEE Trans. Inf. Theory. 1998;44:2124–2147. doi: 10.1109/18.720534.

25. Rissanen J. A universal data compression system. IEEE Trans. Inf. Theory. 1983;29:656–664. doi: 10.1109/TIT.1983.1056741.

26. Rissanen J. Universal coding, information, prediction, and estimation. IEEE Trans. Inf. Theory. 1984;30:629–636. doi: 10.1109/TIT.1984.1056936.

27. Rissanen J. Fisher information and stochastic complexity. IEEE Trans. Inf. Theory. 1996;42:40–47. doi: 10.1109/18.481776.

28. Shtarkov Y.M. Universal sequential coding of single messages. Probl. Inf. Transm. 1987;23:175–186.

29. Weinberger M.J., Rissanen J., Feder M. A universal finite memory source. IEEE Trans. Inf. Theory. 1995;41:643–652. doi: 10.1109/18.382011.

30. Xie Q., Barron A.R. Asymptotic minimax regret for data compression, gambling, and prediction. IEEE Trans. Inf. Theory. 2000;46:431–445.

31. Wald A. Tests of statistical hypotheses concerning several parameters when the number of observations is large. Trans. Am. Math. Soc. 1943;54:426–482. doi: 10.1090/S0002-9947-1943-0012401-3.

32. Mardia J., Jiao J., Tánczos E., Nowak R.D., Weissman T. Concentration inequalities for the empirical distribution of discrete distributions: Beyond the method of types. Inf. Inference. 2019:1–38. doi: 10.1093/imaiai/iaz025.