Abstract

This tutorial paper focuses on the variants of the bottleneck problem from an information-theoretic perspective and discusses practical methods to solve it, as well as its connections to coding and learning. The intimate connections of this setting to remote source coding under the logarithmic loss distortion measure, information combining, common reconstruction, the Wyner–Ahlswede–Korner problem, and the efficiency of investment information, as well as to generalization, variational inference, representation learning, autoencoders, and others, are highlighted. We discuss its extension to the distributed information bottleneck problem with emphasis on the Gaussian model and highlight the basic connections to the uplink Cloud Radio Access Networks (CRAN) with oblivious processing. For this model, the optimal trade-offs between relevance (i.e., information) and complexity (i.e., rates) in the discrete and vector Gaussian frameworks are determined. In the concluding outlook, some interesting problems are mentioned, such as the characterization of the optimal input (“features”) distributions under power limitations that maximize the “relevance” for the Gaussian information bottleneck under “complexity” constraints.

Keywords: information bottleneck, rate distortion theory, logarithmic loss, representation learning

1. Introduction

A growing body of work focuses on developing learning rules and algorithms using information theoretic approaches (e.g., see [1,2,3,4,5,6] and references therein). Most relevant to this paper is the Information Bottleneck (IB) method of Tishby et al. [1], which seeks the right balance between data fit and generalization by using the mutual information as both a cost function and a regularizer. Specifically, IB formulates the problem of extracting the relevant information that some signal $X$ provides about another one $Y$ that is of interest as that of finding a representation $U$ that is maximally informative about $Y$ (i.e., large mutual information $I(U;Y)$) while being minimally informative about $X$ (i.e., small mutual information $I(U;X)$). In the IB framework, $I(U;Y)$ is referred to as the relevance of $U$ and $I(U;X)$ is referred to as the complexity of $U$, where complexity here is measured by the minimum description length (or rate) at which the observation is compressed. Accordingly, the performance of learning with the IB method and the optimal mapping of the data are found by solving the Lagrangian formulation

$$\max_{P_{U|X}} \; I(U;Y) - \beta\, I(U;X), \tag{1}$$

where $P_{U|X}$ is a stochastic map that assigns the observation $X$ to a representation $U$ from which $Y$ is inferred and $\beta$ is the Lagrange multiplier. Several methods, which we detail below, have been proposed to obtain solutions $P_{U|X}$ to the IB problem in Equation (4) in several scenarios, e.g., when the distribution of the sources $(X,Y)$ is perfectly known or only samples from it are available.

The IB approach, as a method both to characterize performance limits and to design mappings, has found remarkable applications in supervised and unsupervised learning problems such as classification, clustering, and prediction. Perhaps key to the analysis and theoretical development of the IB method is its elegant connection with information-theoretic rate-distortion problems, as it is now well known that the IB problem is essentially a remote source coding problem [7,8,9] in which the distortion is measured under logarithmic loss. Recent works show that this connection is useful for better understanding the fundamental limits of learning problems, including the performance of deep neural networks (DNN) [10], the emergence of invariance and disentanglement in DNN [11], the minimization of PAC-Bayesian bounds on the test error [11,12], prediction [13,14], or as a generalization of the evidence lower bound (ELBO) used to train variational auto-encoders [15,16], geometric clustering [17], or extracting the Gaussian “part” of a signal [18], among others. Other, more intriguing connections also exist with seemingly unrelated problems such as privacy and hypothesis testing [19,20,21] or multiterminal networks with oblivious relays [22,23] and non-binary LDPC code design [24]. More connections with other coding problems such as the problems of information combining and common reconstruction, the Wyner–Ahlswede–Korner problem, and the efficiency of investment information are unveiled and discussed in this tutorial paper, together with extensions to the distributed setting.

The abstract viewpoint of IB also seems instrumental to a better understanding of so-called representation learning [25], which is an active research area in machine learning that focuses on identifying and disentangling the underlying explanatory factors that are hidden in the observed data in an attempt to render learning algorithms less dependent on feature engineering. More specifically, one important question, which is often controversial in statistical learning theory, is the choice of a “good” loss function that measures discrepancies between the true values and their estimated fits. There is however numerical evidence that models that are trained to maximize mutual information, or equivalently minimize the error’s entropy, often outperform ones that are trained using other criteria such as mean-square error (MSE) and higher-order statistics [26,27]. On this aspect, we also mention Fisher’s dissertation [28], which contains an investigation of the application of information theoretic metrics to blind source separation and subspace projection using Renyi’s entropy as well as what appears to be the first usage of the now popular Parzen windowing estimator of information densities in the context of learning. Although a complete and rigorous justification of the usage of mutual information as a cost function in learning is still awaited, a partial explanation recently appeared in [29], where the authors showed that under some natural data processing property Shannon’s mutual information uniquely quantifies the reduction of prediction risk due to side information. Along the same line of work, Painsky and Wornell [30] showed that, for binary classification problems, by minimizing the logarithmic-loss (log-loss), one actually minimizes an upper bound to any choice of loss function that is smooth, proper (i.e., unbiased and Fisher consistent), and convex. 
Perhaps this partially justifies why mutual information (or, equivalently, the corresponding loss function, which is the log-loss fidelity measure) is widely used in learning theory and has already been adopted in many algorithms in practice such as the infomax criterion [31], the tree-based algorithm of Quinlan [32], or the well known Chow–Liu algorithm [33] for learning tree graphical models, with various applications in genetics [34], image processing [35], computer vision [36], etc. The logarithmic loss measure also plays a central role in the theory of prediction [37] (Ch. 9) where it is often referred to as the self-information loss function, as well as in Bayesian modeling [38] where priors are usually designed to maximize the mutual information between the parameter to be estimated and the observations. The goal of learning, however, is not merely to learn model parameters accurately for previously seen data. Rather, in essence, it is the ability to successfully apply rules that are extracted from previously seen data to characterize new unseen data. This is often captured through the notion of “generalization error”. The generalization capability of a learning algorithm hinges on how sensitive the output of the algorithm is to modifications of the input dataset, i.e., its stability [39,40]. In the context of deep learning, it can be seen as a measure of how much the algorithm overfits the model parameters to the seen data. In fact, efficient algorithms should strike a good balance between their ability to fit the training dataset and their ability to generalize well to unseen data. In statistical learning theory [37], this dilemma is reflected in the fact that minimizing the “population risk” (or “test error” in the deep learning literature) amounts to minimizing the sum of two terms that are generally difficult to minimize simultaneously: the “empirical risk” on the training data and the generalization error. 
To prevent over-fitting, regularization methods can be employed, which include parameter penalization, noise injection, and averaging over multiple models trained with distinct sample sets. Although it is not yet very well understood how to optimally control model complexity, recent works [41,42] show that the generalization error can be upper-bounded using the mutual information between the input dataset and the output of the algorithm. This result actually formalizes the intuition that the less information a learning algorithm extracts from the input dataset the less it is likely to overfit, and justifies, partly, the use of mutual information also as a regularizer term. The interested reader may refer to [43] where it is shown that regularizing with mutual information alone does not always capture all desirable properties of a latent representation. We also point out that there exists an extensive literature on building optimal estimators of information quantities (e.g., entropy, mutual information), as well as their Matlab/Python implementations, including in the high-dimensional regime (see, e.g., [44,45,46,47,48,49] and references therein).

This paper provides a review of the information bottleneck method, its classical solutions, and recent advances. In addition, we unveil some useful connections with coding problems such as remote source-coding, information combining, common reconstruction, the Wyner–Ahlswede–Korner problem, the efficiency of investment information, and CEO source coding under logarithmic-loss distortion measure, and with learning problems such as inference, generalization, and representation learning. Leveraging these connections, we discuss its extension to the distributed information bottleneck problem with emphasis on its solutions and the Gaussian model and highlight the basic connections to the uplink Cloud Radio Access Networks (CRAN) with oblivious processing. For this model, the optimal trade-offs between relevance and complexity in the discrete and vector Gaussian frameworks are determined. In the concluding outlook, some interesting problems are mentioned, such as the characterization of the optimal input distributions under power limitations that maximize the “relevance” for the Gaussian information bottleneck under “complexity” constraints.

Notation

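Throughout, uppercase letters denote random variables, e.g., $X$; lowercase letters denote realizations of random variables, e.g., $x$; and calligraphic letters denote sets, e.g., $\mathcal{X}$. The cardinality of a set is denoted by $|\mathcal{X}|$. For a random variable $X$ with probability mass function (pmf) $P_X$, we use $P_X(x) = p(x)$, $x \in \mathcal{X}$, for short. Boldface uppercase letters denote vectors or matrices, e.g., $\mathbf{X}$, where context should make the distinction clear. For random variables $(X_1, X_2, \ldots)$ and a set of integers $\mathcal{K} \subseteq \mathbb{N}$, $X_{\mathcal{K}}$ denotes the set of random variables with indices in the set $\mathcal{K}$, i.e., $X_{\mathcal{K}} = \{X_k : k \in \mathcal{K}\}$. If $\mathcal{K} = \emptyset$, $X_{\mathcal{K}} = \emptyset$. For $k \in \mathcal{K}$, we let $X_{\mathcal{K}/k} = (X_1, \ldots, X_{k-1}, X_{k+1}, \ldots, X_K)$, and assume that $X_0 = X_{K+1} = \emptyset$. In addition, for zero-mean random vectors $\mathbf{X}$ and $\mathbf{Y}$, the quantities $\mathbf{\Sigma}_{\mathbf{x}}$, $\mathbf{\Sigma}_{\mathbf{x},\mathbf{y}}$ and $\mathbf{\Sigma}_{\mathbf{x}|\mathbf{y}}$ denote, respectively, the covariance matrix of the vector $\mathbf{X}$, the covariance matrix of vector $(\mathbf{X}, \mathbf{Y})$, and the conditional covariance matrix of $\mathbf{X}$, conditionally on $\mathbf{Y}$, i.e., $\mathbf{\Sigma}_{\mathbf{x}} = \mathrm{E}[\mathbf{X}\mathbf{X}^{H}]$, $\mathbf{\Sigma}_{\mathbf{x},\mathbf{y}} = \mathrm{E}[\mathbf{X}\mathbf{Y}^{H}]$, and $\mathbf{\Sigma}_{\mathbf{x}|\mathbf{y}} = \mathbf{\Sigma}_{\mathbf{x}} - \mathbf{\Sigma}_{\mathbf{x},\mathbf{y}}\mathbf{\Sigma}_{\mathbf{y}}^{-1}\mathbf{\Sigma}_{\mathbf{y},\mathbf{x}}$. Finally, for two probability measures $P_X$ and $Q_X$ on the random variable $X \in \mathcal{X}$, the relative entropy or Kullback–Leibler divergence is denoted as $D_{\mathrm{KL}}(P_X \| Q_X)$. That is, if $P_X$ is absolutely continuous with respect to $Q_X$, $P_X \ll Q_X$ (i.e., for every $x \in \mathcal{X}$, if $P_X(x) > 0$, then $Q_X(x) > 0$), $D_{\mathrm{KL}}(P_X \| Q_X) = \mathrm{E}_{P_X}\big[\log\big(P_X(X)/Q_X(X)\big)\big]$; otherwise $D_{\mathrm{KL}}(P_X \| Q_X) = \infty$.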

2. The Information Bottleneck Problem

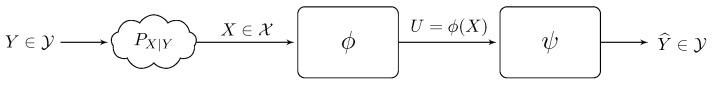

The Information Bottleneck (IB) method was introduced by Tishby et al. [1] as a method for extracting the information that some variable provides about another one that is of interest, as shown in Figure 1.

Figure 1.

Information bottleneck problem.

Specifically, the IB method consists of finding the stochastic mapping $P_{U|X}$ that from an observation $X$ outputs a representation $U \in \mathcal{U}$ that is maximally informative about $Y$, i.e., large mutual information $I(U;Y)$, while being minimally informative about $X$, i.e., small mutual information $I(U;X)$. (As such, the usage of Shannon’s mutual information seems to be motivated by the intuition that such a measure provides a natural quantitative approach to the questions of meaning, relevance, and common-information, rather than the solution of a well-posed information-theoretic problem; a connection with source coding under logarithmic loss measure appeared later on in [50].) The auxiliary random variable $U$ satisfies that $U - X - Y$ is a Markov Chain in this order; that is, the joint distribution of $(X, Y, U)$ satisfies

$$P_{X,Y,U}(x,y,u) = P_X(x)\, P_{Y|X}(y|x)\, P_{U|X}(u|x), \tag{2}$$

and the mapping $P_{U|X}$ is chosen such that $U$ strikes a suitable balance between the degree of relevance of the representation as measured by the mutual information $I(U;Y)$ and its degree of complexity as measured by the mutual information $I(U;X)$. In particular, such $U$, or effectively the mapping $P_{U|X}$, can be determined to maximize the IB-Lagrangian defined as

$$\mathcal{L}_\beta^{\mathrm{IB}}(P_{U|X}) := I(U;Y) - \beta\, I(U;X) \tag{3}$$

over all mappings $P_{U|X}$ that satisfy $U - X - Y$, where the trade-off parameter $\beta$ is a positive Lagrange multiplier associated with the constraint on $I(U;X)$.

Accordingly, for a given $\beta$ and source distribution $P_{X,Y}$, the optimal mapping of the data, denoted by $P^{*,\beta}_{U|X}$, is found by solving the IB problem, defined as

$$\mathcal{L}_\beta^{\mathrm{IB},*}(X,Y) := \max_{P_{U|X}} \; I(U;Y) - \beta\, I(U;X), \tag{4}$$

over all mappings $P_{U|X}$ that satisfy $U - X - Y$. It follows from the classical application of Carathéodory’s theorem [51] that, without loss of optimality, $U$ can be restricted to satisfy $|\mathcal{U}| \le |\mathcal{X}| + 1$.

In Section 3 we discuss several methods to obtain solutions $P^{*,\beta}_{U|X}$ to the IB problem in Equation (4) in several scenarios, e.g., when the distribution of $(X,Y)$ is perfectly known or only samples from it are available.

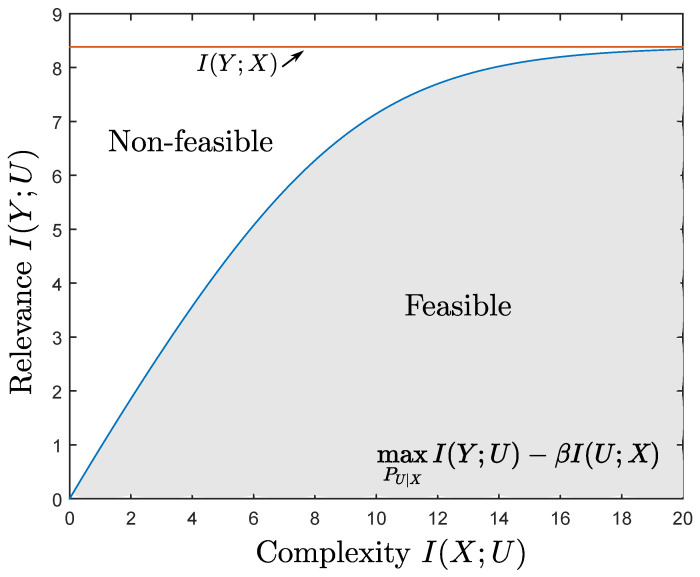

2.1. The IB Relevance–Complexity Region

The optimization of the IB-Lagrangian in Equation (4) for a given $\beta \ge 0$ and $P_{X,Y}$ results in an optimal mapping $P^{*,\beta}_{U|X}$ and a relevance–complexity pair $(\Delta_\beta, R_\beta)$, where $\Delta_\beta = I(U_\beta;Y)$ and $R_\beta = I(U_\beta;X)$ are, respectively, the relevance and the complexity resulting from generating $U_\beta$ with the solution $P^{*,\beta}_{U|X}$. By optimizing over all $\beta \ge 0$, the resulting relevance–complexity pairs $(\Delta_\beta, R_\beta)$ characterize the boundary of the region of simultaneously achievable relevance–complexity pairs for a distribution $P_{X,Y}$ (see Figure 2). In particular, for a fixed $P_{X,Y}$, we define this region as the union of relevance–complexity pairs $(\Delta, R) \in \mathbb{R}_+^2$ that satisfy

$$\Delta \le I(U;Y), \qquad R \ge I(X;U), \tag{5}$$

where the union is over all $P_{U|X}$ such that $U - X - Y$ form a Markov Chain in this order. Any pair $(\Delta, R)$ outside of this region is not simultaneously achievable by any mapping $P_{U|X}$.

Figure 2.

Information bottleneck relevance–complexity region. For a given $\beta$, the solution $P^{*,\beta}_{U|X}$ to the optimization of the IB-Lagrangian in Equation (3) results in a pair $(\Delta_\beta, R_\beta)$ on the boundary of the IB relevance–complexity region (colored in grey).
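As a minimal sketch of how a single relevance–complexity pair can be evaluated for a discrete source, the snippet below computes $I(U;Y)$ and $I(U;X)$ for a given joint pmf and a given encoder under the Markov chain $U - X - Y$. The function names and the toy distributions are illustrative assumptions, not part of the original text.

```python
import math

def mutual_information(joint):
    """Mutual information (in bits) of a joint pmf given as joint[a][b]."""
    p_a = [sum(row) for row in joint]
    p_b = [sum(col) for col in zip(*joint)]
    return sum(
        p * math.log2(p / (p_a[a] * p_b[b]))
        for a, row in enumerate(joint)
        for b, p in enumerate(row)
        if p > 0
    )

def relevance_complexity(p_xy, p_u_given_x):
    """Return (I(U;Y), I(U;X)) when U is generated from X only (U - X - Y)."""
    nx, ny, nu = len(p_xy), len(p_xy[0]), len(p_u_given_x[0])
    p_x = [sum(row) for row in p_xy]
    # Joint pmf of (U, X): p(u, x) = p(x) p(u|x)
    p_ux = [[p_x[x] * p_u_given_x[x][u] for x in range(nx)] for u in range(nu)]
    # Joint pmf of (U, Y): p(u, y) = sum_x p(x, y) p(u|x)
    p_uy = [[sum(p_xy[x][y] * p_u_given_x[x][u] for x in range(nx))
             for y in range(ny)] for u in range(nu)]
    return mutual_information(p_uy), mutual_information(p_ux)

# Toy (hypothetical) source: binary X, Y that disagree with probability 0.1.
p_xy = [[0.45, 0.05], [0.05, 0.45]]
identity = [[1.0, 0.0], [0.0, 1.0]]   # U = X: maximal relevance and complexity
constant = [[1.0], [1.0]]             # U constant: zero relevance and complexity
noisy = [[0.8, 0.2], [0.2, 0.8]]      # an intermediate point inside the region

for name, enc in [("identity", identity), ("constant", constant), ("noisy", noisy)]:
    print(name, relevance_complexity(p_xy, enc))
```

Every encoder yields a pair inside the region; by the data processing inequality the relevance never exceeds the complexity.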

3. Solutions to the Information Bottleneck Problem

As shown in the previous section, the IB problem provides a methodology to design mappings $P_{U|X}$ performing at different relevance–complexity points within the region of feasible $(\Delta, R)$ pairs, characterized by the IB relevance–complexity region, by optimizing the IB-Lagrangian in Equation (3) for different values of $\beta$. However, in general, this optimization is challenging as it requires computation of mutual information terms.

In this section, we describe how, for a fixed parameter $\beta$, the optimal solution $P^{*,\beta}_{U|X}$, or an efficient approximation of it, can be obtained under: (i) particular distributions, e.g., Gaussian and binary symmetric sources; (ii) known general discrete memoryless distributions; and (iii) unknown distributions, when only samples are available.

3.1. Solution for Particular Distributions: Gaussian and Binary Symmetric Sources

In certain cases, when the joint distribution $P_{X,Y}$ is known, e.g., it is binary symmetric or Gaussian, information theoretic inequalities can be used to optimize the IB-Lagrangian in (4) in closed form.

3.1.1. Binary IB

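Let $X$ and $Y$ be doubly symmetric binary sources (DSBS), i.e., $(X,Y) \sim \mathrm{DSBS}(p)$ for some $0 \le p \le 1/2$. (A DSBS is a pair $(X,Y)$ of binary random variables with $X \sim \mathrm{Bern}(1/2)$, $Y \sim \mathrm{Bern}(1/2)$ and $X \oplus Y \sim \mathrm{Bern}(p)$, where ⊕ is the sum modulo 2. That is, $Y$ is the output of a binary symmetric channel with crossover probability $p$ corresponding to the input $X$, and $X$ is the output of the same channel with input $Y$.) Then, it can be shown that the optimal $U$ in (4) is such that $(X,U) \sim \mathrm{DSBS}(q)$ for some $0 \le q \le 1$. Such a $U$ can be obtained with the mapping $P_{U|X}$ such that

$$U = X \oplus Q, \quad \text{with } Q \sim \mathrm{Bern}(q). \tag{6}$$

In this case, straightforward algebra shows that the complexity level is given by

$$I(U;X) = 1 - h_2(q), \tag{7}$$

where, for $0 \le x \le 1$, $h_2(x)$ is the entropy of a Bernoulli-$(x)$ source, i.e., $h_2(x) = -x\log_2(x) - (1-x)\log_2(1-x)$, and the relevance level is given by

$$I(U;Y) = 1 - h_2(p \star q), \tag{8}$$

where $p \star q = p(1-q) + q(1-p)$. The result extends easily to discrete symmetric mappings with binary X (one bit output quantization) and discrete non-binary Y.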
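The closed form for the binary case can be traced numerically. The following is a small sketch, assuming the test channel $U = X \oplus Q$ with $Q \sim \mathrm{Bern}(q)$, so that the complexity is $1 - h_2(q)$ and the relevance is $1 - h_2(p \star q)$ with $p \star q = p(1-q) + q(1-p)$; the function names are illustrative.

```python
import math

def h2(x):
    """Binary entropy function in bits, with h2(0) = h2(1) = 0."""
    if x <= 0.0 or x >= 1.0:
        return 0.0
    return -x * math.log2(x) - (1.0 - x) * math.log2(1.0 - x)

def binary_convolution(p, q):
    """p * q = p(1 - q) + q(1 - p): crossover probability of two cascaded BSCs."""
    return p * (1.0 - q) + q * (1.0 - p)

def binary_ib_point(p, q):
    """(relevance, complexity) for a DSBS(p) source and the channel U = X xor Bern(q)."""
    complexity = 1.0 - h2(q)
    relevance = 1.0 - h2(binary_convolution(p, q))
    return relevance, complexity

# Sweep q from 0 (U = X) to 1/2 (U independent of X) for p = 0.1.
for q in [0.0, 0.05, 0.1, 0.2, 0.3, 0.5]:
    rel, comp = binary_ib_point(0.1, q)
    print(f"q={q:.2f}  complexity={comp:.4f}  relevance={rel:.4f}")
```

Sweeping $q$ moves the operating point along the curve from $(1 - h_2(p), 1)$ at $q = 0$ down to $(0, 0)$ at $q = 1/2$.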

3.1.2. Vector Gaussian IB

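Let $(\mathbf{X}, \mathbf{Y}) \in \mathbb{C}^{N_x} \times \mathbb{C}^{N_y}$ be a pair of jointly Gaussian, zero-mean, complex-valued random vectors, of dimension $N_x > 0$ and $N_y > 0$, respectively. In this case, the optimal solution of the IB-Lagrangian in Equation (3) (i.e., test channel $P_{\mathbf{U}|\mathbf{X}}$) is a noisy linear projection to a subspace whose dimensionality is determined by the trade-off parameter $\beta$. The subspaces are spanned by basis vectors in a manner similar to the well known canonical correlation analysis [52]. For small $\beta$, only the vector associated to the dimension with more energy, i.e., corresponding to the largest eigenvalue of a particular Hermitian matrix, will be considered in $\mathbf{U}$. As $\beta$ increases, additional dimensions are added to $\mathbf{U}$ through a series of critical points that are similar to structural phase transitions. This process continues until $\mathbf{U}$ becomes rich enough to capture all the relevant information about $\mathbf{Y}$ that is contained in $\mathbf{X}$. In particular, the boundary of the optimal relevance–complexity region was shown in [53] to be achievable using a test channel $P_{\mathbf{U}|\mathbf{X}}$, which is such that $(\mathbf{U}, \mathbf{X})$ is Gaussian. Without loss of generality, let

$$\mathbf{U} = \mathbf{A}\mathbf{X} + \boldsymbol{\xi}, \tag{9}$$

where $\mathbf{A}$ is an $N_u \times N_x$ complex valued matrix and $\boldsymbol{\xi} \in \mathbb{C}^{N_u}$ is a Gaussian noise that is independent of $(\mathbf{X}, \mathbf{Y})$ with zero-mean and covariance matrix $\mathbf{I}_{N_u}$. For a given non-negative trade-off parameter $\beta$, the matrix $\mathbf{A}$ has a number of rows that depends on $\beta$ and is given by [54] (Theorem 3.1)

$$\mathbf{A} = \begin{cases} \big[\mathbf{0}^{\dagger}; \ldots; \mathbf{0}^{\dagger}\big], & 0 \le \beta < \beta_1^{c} \\ \big[\alpha_1 \mathbf{v}_1^{\dagger}; \mathbf{0}^{\dagger}; \ldots; \mathbf{0}^{\dagger}\big], & \beta_1^{c} \le \beta < \beta_2^{c} \\ \big[\alpha_1 \mathbf{v}_1^{\dagger}; \alpha_2 \mathbf{v}_2^{\dagger}; \mathbf{0}^{\dagger}; \ldots; \mathbf{0}^{\dagger}\big], & \beta_2^{c} \le \beta < \beta_3^{c} \\ \qquad \vdots & \qquad \vdots \end{cases} \tag{10}$$

where $\{\mathbf{v}_1^{\dagger}, \mathbf{v}_2^{\dagger}, \ldots, \mathbf{v}_{N_x}^{\dagger}\}$ are the left eigenvectors of $\mathbf{\Sigma}_{\mathbf{x}|\mathbf{y}}\mathbf{\Sigma}_{\mathbf{x}}^{-1}$ sorted by their corresponding ascending eigenvalues $\lambda_1, \lambda_2, \ldots, \lambda_{N_x}$. Furthermore, for $i = 1, \ldots, N_x$, $\beta_i^{c} = \frac{1}{1-\lambda_i}$ are critical $\beta$-values, $\alpha_i = \sqrt{\frac{\beta(1-\lambda_i) - 1}{\lambda_i r_i}}$ with $r_i = \mathbf{v}_i^{\dagger}\mathbf{\Sigma}_{\mathbf{x}}\mathbf{v}_i$, $\mathbf{0}^{\dagger}$ denotes the $N_x$-dimensional zero vector and semicolons separate the rows of the matrix. It is interesting to observe that the optimal projection consists of eigenvectors of $\mathbf{\Sigma}_{\mathbf{x}|\mathbf{y}}\mathbf{\Sigma}_{\mathbf{x}}^{-1}$, combined in a judicious manner: for values of $\beta$ that are smaller than $\beta_1^{c}$, reducing complexity is of prime importance, yielding extreme compression $\mathbf{U} = \boldsymbol{\xi}$, i.e., independent noise and no information preservation at all about $\mathbf{Y}$. As $\beta$ increases, it undergoes a series of critical points $\{\beta_i^{c}\}$, at each of which a new eigenvector is added to the matrix $\mathbf{A}$, yielding a more complex but richer representation; the rank of $\mathbf{A}$ increases accordingly.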

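For the specific case of scalar Gaussian sources, that is $N_x = N_y = 1$, e.g., $X = \sqrt{\mathrm{snr}}\, Y + N$ where $N$ is standard Gaussian with zero-mean and unit variance (and $Y$ has unit variance), the above result simplifies considerably. In this case, let without loss of generality the mapping $P_{U|X}$ be given by

$$U = aX + Q, \tag{11}$$

where $Q$ is Gaussian with zero-mean and variance $\sigma_q^2$. In this case, for $I(U;X) = R$ (in nats), we get

$$I(U;Y) = \frac{1}{2}\log\left(\frac{1 + \mathrm{snr}}{1 + \mathrm{snr}\, e^{-2R}}\right). \tag{12}$$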
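The scalar Gaussian trade-off can be checked numerically. The sketch below assumes the model $X = \sqrt{\mathrm{snr}}\,Y + N$ with unit-variance real Gaussian $Y$ and $N$, and the test channel $U = X + Q$ with $Q \sim \mathcal{N}(0, \sigma_q^2)$ (these normalizations are assumptions made for illustration). It compares the relevance computed directly from the variances with the closed form $\tfrac{1}{2}\log\frac{1+\mathrm{snr}}{1+\mathrm{snr}\,e^{-2R}}$, with $R$ in nats.

```python
import math

def direct_pair(snr, sigma_q2):
    """(relevance, complexity) in nats computed from variances for U = X + Q."""
    var_x = 1.0 + snr                       # Var(X) for X = sqrt(snr) Y + N
    complexity = 0.5 * math.log(1.0 + var_x / sigma_q2)          # I(U;X)
    # Var(U) = var_x + sigma_q2 and Var(U | Y) = 1 + sigma_q2
    relevance = 0.5 * math.log((var_x + sigma_q2) / (1.0 + sigma_q2))  # I(U;Y)
    return relevance, complexity

def closed_form_relevance(snr, rate):
    """Relevance as a function of the complexity R = I(U;X), in nats."""
    return 0.5 * math.log((1.0 + snr) / (1.0 + snr * math.exp(-2.0 * rate)))

snr = 4.0
for sigma_q2 in [10.0, 1.0, 0.1, 0.001]:
    rel, rate = direct_pair(snr, sigma_q2)
    print(f"R={rate:.4f}  direct={rel:.6f}  closed_form={closed_form_relevance(snr, rate):.6f}")
```

As the noise variance shrinks, the complexity grows without bound while the relevance saturates at $I(X;Y) = \tfrac{1}{2}\log(1+\mathrm{snr})$.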

3.2. Approximations for Generic Distributions

Next, we present an approach to obtain solutions to the information bottleneck problem for generic distributions, both when the distribution is known and when it is unknown. The method consists in defining a variational (lower) bound on the IB-Lagrangian, which can be optimized more easily than optimizing the IB-Lagrangian directly.

3.2.1. A Variational Bound

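Recall the IB goal of finding a representation $U$ of $X$ that is maximally informative about $Y$ while being concise enough (i.e., bounded $I(U;X)$). This corresponds to optimizing the IB-Lagrangian

$$\max_{P_{U|X}} \; \mathcal{L}_\beta^{\mathrm{IB}}(P_{U|X}), \qquad \mathcal{L}_\beta^{\mathrm{IB}}(P_{U|X}) = I(U;Y) - \beta\, I(U;X), \tag{13}$$

where the maximization is over all stochastic mappings $P_{U|X}$ such that $U - X - Y$ and $|\mathcal{U}| \le |\mathcal{X}| + 1$. In this section, we show that optimizing Equation (13) is equivalent to optimizing the variational cost

$$\mathcal{L}_\beta^{\mathrm{VIB}}(P_{U|X}, Q_{Y|U}, S_U) := \mathrm{E}_{P_{U|X}}\big[\log Q_{Y|U}(Y|U)\big] - \beta\, D_{\mathrm{KL}}(P_{U|X} \| S_U), \tag{14}$$

where $Q_{Y|U}(y|u)$ is a given stochastic map $Q_{Y|U}: \mathcal{U} \to [0,1]$ (also referred to as the variational approximation of $P_{Y|U}$ or decoder) and $S_U(u): \mathcal{U} \to [0,1]$ is a given stochastic map (also referred to as the variational approximation of $P_U$), and $D_{\mathrm{KL}}(P_{U|X} \| S_U)$ is the relative entropy between $P_{U|X}$ and $S_U$.

Then, we have the following bound for any valid $P_{U|X}$, i.e., satisfying the Markov Chain in Equation (2),

$$\mathcal{L}_\beta^{\mathrm{IB}}(P_{U|X}) \ge \mathcal{L}_\beta^{\mathrm{VIB}}(P_{U|X}, Q_{Y|U}, S_U), \tag{15}$$

where the bound is tightest when $Q_{Y|U} = P_{Y|U}$ and $S_U = P_U$, i.e., when the variational approximations correspond to the true values. (With these choices the two sides differ only by the entropy term $H(Y)$ that is dropped in the derivation below; this term does not depend on the mappings and therefore does not affect the optimization.)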

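In the following, we derive the variational bound. Fix $P_{U|X}$ (an encoder) and the variational decoder approximation $Q_{Y|U}$. The relevance $I(U;Y)$ can be lower-bounded as

$$I(U;Y) = \mathrm{E}_{P_{U,Y}}\left[\log\frac{P_{Y|U}(Y|U)}{P_Y(Y)}\right] \tag{16}$$

$$\overset{(a)}{=} \mathrm{E}_{P_{U,Y}}\left[\log\frac{Q_{Y|U}(Y|U)}{P_Y(Y)}\right] + D_{\mathrm{KL}}(P_{Y|U} \| Q_{Y|U} \,|\, P_U) \tag{17}$$

$$\overset{(b)}{\ge} \mathrm{E}_{P_{U,Y}}\left[\log\frac{Q_{Y|U}(Y|U)}{P_Y(Y)}\right] \tag{18}$$

$$= H(Y) + \mathrm{E}_{P_{U,Y}}\big[\log Q_{Y|U}(Y|U)\big] \tag{19}$$

$$\overset{(c)}{\ge} \mathrm{E}_{P_{U,Y}}\big[\log Q_{Y|U}(Y|U)\big] \tag{20}$$

$$\overset{(d)}{=} \mathrm{E}_{P_X P_{Y|X} P_{U|X}}\big[\log Q_{Y|U}(Y|U)\big], \tag{21}$$

where in (a) the term $D_{\mathrm{KL}}(P_{Y|U} \| Q_{Y|U} \,|\, P_U)$ is the conditional relative entropy between $P_{Y|U}$ and $Q_{Y|U}$, given $P_U$; (b) holds by the non-negativity of relative entropy; (c) holds by the non-negativity of entropy; and (d) follows using the Markov Chain $U - X - Y$.

Similarly, let $S_U$ be a given variational approximation of $P_U$. Then, we get

$$I(U;X) = \mathrm{E}_{P_{U,X}}\left[\log\frac{P_{U|X}(U|X)}{P_U(U)}\right] \tag{22}$$

$$= \mathrm{E}_{P_{U,X}}\left[\log\frac{P_{U|X}(U|X)}{S_U(U)}\right] - D_{\mathrm{KL}}(P_U \| S_U) \tag{23}$$

$$\le \mathrm{E}_{P_{U,X}}\left[\log\frac{P_{U|X}(U|X)}{S_U(U)}\right], \tag{24}$$

where the inequality follows since the relative entropy is non-negative.

Combining Equations (21) and (24), we get

$$I(U;Y) - \beta\, I(U;X) \ge \mathrm{E}_{P_X P_{Y|X} P_{U|X}}\big[\log Q_{Y|U}(Y|U)\big] - \beta\, \mathrm{E}_{P_{U,X}}\left[\log\frac{P_{U|X}(U|X)}{S_U(U)}\right], \tag{25}$$

whose right-hand side is the variational cost in Equation (14).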

The use of the variational bound in Equation (14) over the IB-Lagrangian in Equation (13) has some advantages. First, it allows the derivation of alternating algorithms that yield a solution by optimizing over the encoders and decoders. Second, it is easier to obtain an empirical estimate of Equation (14) by sampling from: (i) the joint distribution $P_{X,Y}$; (ii) the encoder $P_{U|X}$; and (iii) the prior $S_U$. Additionally, as noted in Equation (15), when evaluated for the optimal decoder $Q_{Y|U}$ and prior $S_U$, the variational bound becomes tight. All this makes it possible to design algorithms that obtain good approximate solutions to the IB problem, as shown next. Further theoretical implications of this variational bound are discussed in [55].
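To make the bound concrete, here is a small numerical sketch for discrete alphabets. It evaluates the IB-Lagrangian $I(U;Y) - \beta I(U;X)$ and the variational cost $\mathrm{E}[\log Q_{Y|U}(Y|U)] - \beta\,\mathrm{E}[\log(P_{U|X}/S_U)]$ in nats. The function names and toy distributions are assumptions for illustration only. With the true decoder and marginal plugged in, the remaining gap in this sketch equals $H(Y)$, the constant entropy term dropped in the derivation.

```python
import math

def marginals(p_xy, enc):
    """Return p(x), p(y), p(u) and the joint p(u, y) for encoder enc[x][u]."""
    nx, ny, nu = len(p_xy), len(p_xy[0]), len(enc[0])
    p_x = [sum(row) for row in p_xy]
    p_y = [sum(p_xy[x][y] for x in range(nx)) for y in range(ny)]
    p_u = [sum(p_x[x] * enc[x][u] for x in range(nx)) for u in range(nu)]
    p_uy = [[sum(p_xy[x][y] * enc[x][u] for x in range(nx))
             for y in range(ny)] for u in range(nu)]
    return p_x, p_y, p_u, p_uy

def ib_lagrangian(p_xy, enc, beta):
    """I(U;Y) - beta * I(U;X), in nats."""
    p_x, p_y, p_u, p_uy = marginals(p_xy, enc)
    i_uy = sum(p * math.log(p / (p_u[u] * p_y[y]))
               for u, row in enumerate(p_uy) for y, p in enumerate(row) if p > 0)
    i_ux = sum(p_x[x] * e * math.log(e / p_u[u])
               for x, row in enumerate(enc) for u, e in enumerate(row) if e > 0)
    return i_uy - beta * i_ux

def vib_cost(p_xy, enc, dec, prior, beta):
    """E[log Q(Y|U)] - beta * E[log(P(U|X) / S(U))] for decoder dec[u][y]."""
    p_x, _, _, p_uy = marginals(p_xy, enc)
    fit = sum(p * math.log(dec[u][y])
              for u, row in enumerate(p_uy) for y, p in enumerate(row) if p > 0)
    kl = sum(p_x[x] * e * math.log(e / prior[u])
             for x, row in enumerate(enc) for u, e in enumerate(row) if e > 0)
    return fit - beta * kl

p_xy = [[0.45, 0.05], [0.05, 0.45]]       # toy joint pmf (hypothetical)
enc = [[0.8, 0.2], [0.2, 0.8]]            # some encoder P_{U|X}
beta = 0.5

# Arbitrary variational decoder and prior: the bound holds.
print(ib_lagrangian(p_xy, enc, beta), vib_cost(p_xy, enc, [[0.6, 0.4], [0.3, 0.7]], [0.3, 0.7], beta))

# True decoder P_{Y|U} and marginal P_U: the bound is as tight as it can be.
_, p_y, p_u, p_uy = marginals(p_xy, enc)
true_dec = [[p_uy[u][y] / p_u[u] for y in range(len(p_y))] for u in range(len(p_u))]
print(ib_lagrangian(p_xy, enc, beta) - vib_cost(p_xy, enc, true_dec, p_u, beta))
```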

3.2.2. Known Distributions

Using the variational formulation in Equation (14), when the data model is discrete and the joint distribution $P_{X,Y}$ is known, the IB problem can be solved by using an iterative method that optimizes the variational IB cost function in Equation (14), alternating over the distributions $P_{U|X}$, $Q_{Y|U}$, and $S_U$. In this case, the maximizing distributions $P_{U|X}$, $Q_{Y|U}$, and $S_U$ can be efficiently found by an alternating optimization procedure similar to the expectation-maximization (EM) algorithm [56] and the standard Blahut–Arimoto (BA) method [57]. In particular, a solution $P_{U|X}$ to the constrained optimization problem is determined by the following self-consistent equations, for all $(u,x,y) \in \mathcal{U} \times \mathcal{X} \times \mathcal{Y}$, [1]

$$p(u|x) = \frac{p(u)}{Z(\beta,x)} \exp\big(-\beta\, D_{\mathrm{KL}}(P_{Y|x} \| P_{Y|u})\big) \tag{26a}$$

$$p(u) = \sum_{x \in \mathcal{X}} p(x)\, p(u|x) \tag{26b}$$

$$p(y|u) = \sum_{x \in \mathcal{X}} p(y|x)\, p(x|u) \tag{26c}$$

where $p(x|u) = p(u|x)p(x)/p(u)$ and $Z(\beta,x)$ is a normalization term. (In these equations, as in [1], $\beta$ weights the relevance term.) It is shown in [1] that alternating iterations of these equations converge to a solution of the problem for any initial $p(u|x)$. However, in contrast to the standard Blahut–Arimoto algorithm [57,58], which is classically used in the computation of rate-distortion functions of discrete memoryless sources and for which convergence to the optimal solution is guaranteed, convergence here may be to a local optimum only. If $\beta = 0$, the optimization is non-constrained and one can set $U = \emptyset$, which yields minimal relevance and complexity levels. Increasing the value of $\beta$ steers towards more accurate and more complex representations, until $U = X$ in the limit of very large (infinite) values of $\beta$, for which the relevance reaches its maximal value $I(X;Y)$.

For discrete sources with (small) alphabets, the updating equations described by Equation (26) are relatively easy computationally. However, if the variables $X$ and $Y$ lie in a continuum, solving the equations described by Equation (26) is very challenging. In the case in which $X$ and $Y$ are jointly multivariate Gaussian, the problem of finding the optimal representation $U$ was shown to be analytically tractable in [53] (see also the related [54,59]), as discussed in Section 3.1.2. Leveraging the optimality of Gaussian mappings to restrict the optimization of $P_{U|X}$ to Gaussian distributions as in Equation (9) reduces the search for update rules to those of the associated parameters, namely covariance matrices. When $Y$ is a deterministic function of $X$, the IB curve cannot be explored, and other Lagrangians have been proposed to tackle this problem [60].
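A minimal sketch of the alternating iterations for small discrete alphabets is given below. It assumes the convention in which $\beta$ multiplies the relevance term (so that $\beta = 0$ gives a trivial representation), a strictly positive joint pmf, and a random initial encoder; the function names, the iteration count, and the toy source are illustrative choices rather than part of the original method description.

```python
import math
import random

def kl_divergence(p, q):
    """Relative entropy D(p || q) in nats for two pmfs given as lists."""
    return sum(a * math.log(a / b) for a, b in zip(p, q) if a > 0)

def iterative_ib(p_xy, n_u, beta, iters=200, seed=0):
    """Alternate the self-consistent updates; returns p(u|x) as enc[x][u]."""
    rng = random.Random(seed)
    nx, ny = len(p_xy), len(p_xy[0])
    p_x = [sum(row) for row in p_xy]
    p_y_given_x = [[p_xy[x][y] / p_x[x] for y in range(ny)] for x in range(nx)]
    # Random, strictly positive initial encoder p(u|x).
    enc = []
    for _ in range(nx):
        w = [rng.random() + 0.1 for _ in range(n_u)]
        total = sum(w)
        enc.append([v / total for v in w])
    for _ in range(iters):
        # Marginal update: p(u) = sum_x p(x) p(u|x).
        p_u = [sum(p_x[x] * enc[x][u] for x in range(nx)) for u in range(n_u)]
        # Decoder update: p(y|u) = sum_x p(x, y) p(u|x) / p(u); empty clusters skipped.
        p_y_given_u = [
            [sum(p_xy[x][y] * enc[x][u] for x in range(nx)) / p_u[u] for y in range(ny)]
            if p_u[u] > 0 else None
            for u in range(n_u)
        ]
        # Encoder update: p(u|x) proportional to p(u) exp(-beta * D(P_{Y|x} || P_{Y|u})).
        for x in range(nx):
            w = [
                p_u[u] * math.exp(-beta * kl_divergence(p_y_given_x[x], p_y_given_u[u]))
                if p_u[u] > 0 else 0.0
                for u in range(n_u)
            ]
            z = sum(w)
            enc[x] = [v / z for v in w]
    return enc

p_xy = [[0.45, 0.05], [0.05, 0.45]]   # toy joint pmf (hypothetical)
for beta in [0.0, 1.0, 5.0, 50.0]:
    print(beta, iterative_ib(p_xy, n_u=2, beta=beta))
```

Because convergence may be to a local optimum only, in practice one would typically run the iterations from several initial encoders (different `seed` values here) and keep the best result.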

3.3. Unknown Distributions

The main drawback of the solutions presented thus far for the IB principle is that, with the exception of small-sized discrete $(X,Y)$, for which iterating Equation (26) converges to an (at least local) solution, and jointly Gaussian $(X,Y)$, for which an explicit analytic solution was found, solving Equation (3) is generally computationally costly, especially for high dimensionality. Another important barrier in solving Equation (3) directly is that IB necessitates knowledge of the joint distribution $P_{X,Y}$. In this section, we describe a method to provide an approximate solution to the IB problem in the case in which the joint distribution is unknown and only a given training set of $N$ samples $\{(x_i, y_i)\}_{i=1}^{N}$ is available.

A major step ahead, which widened the range of applications of IB inference for various learning problems, appeared in [48], where the authors used neural networks to parameterize the variational inference lower bound in Equation (14) and showed that its optimization can be done through the classic and widely used stochastic gradient descent (SGD). This method, denoted by Variational IB in [48] and detailed below, has made it possible to handle high-dimensional, possibly continuous, data, even in the case in which the distributions are unknown.

3.3.1. Variational IB

The goal of the variational IB when only samples $\{(x_i, y_i)\}_{i=1}^{N}$ are available is to solve the IB problem by optimizing an approximation of the cost function. For instance, for a given training set $\{(x_i, y_i)\}_{i=1}^{N}$, the right hand side of Equation (14) can be approximated as

$$\frac{1}{N}\sum_{i=1}^{N}\Big[\mathrm{E}_{P_{U|X}(\cdot|x_i)}\big[\log Q_{Y|U}(y_i|U)\big] - \beta\, D_{\mathrm{KL}}\big(P_{U|X}(\cdot|x_i) \,\|\, S_U\big)\Big]. \tag{27}$$

However, in general, the direct optimization of this cost is challenging. In the variational IB method, this optimization is done by parameterizing the encoding and decoding distributions $P_{U|X}$, $Q_{Y|U}$, and $S_U$ that are to be optimized using a family of distributions whose parameters are determined by DNNs. This allows us to formulate Equation (14) in terms of the DNN parameters, i.e., its weights, and optimize it by using the reparameterization trick [15], Monte Carlo sampling, and stochastic gradient descent (SGD)-type algorithms.

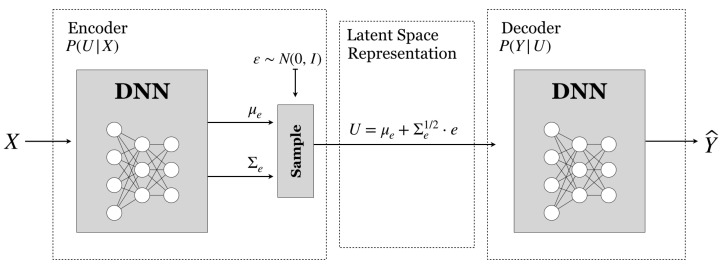

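Let $P_\theta(u|x)$ denote the family of encoding probability distributions $P_{U|X}$ over $\mathcal{U}$ for each element on $\mathcal{X}$, parameterized by the output of a DNN $f_\theta$ with parameters $\theta$. A common example is the family of multivariate Gaussian distributions [15], which are parameterized by the mean $\boldsymbol{\mu}_\theta$ and covariance matrix $\mathbf{\Sigma}_\theta$, i.e., $\boldsymbol{\gamma} := (\boldsymbol{\mu}_\theta, \mathbf{\Sigma}_\theta)$. Given an observation $X$, the values of $(\boldsymbol{\mu}_\theta(x), \mathbf{\Sigma}_\theta(x))$ are determined by the output of the DNN $f_\theta$, whose input is $X$, and the corresponding family member is given by $P_\theta(u|x) = \mathcal{N}(u; \boldsymbol{\mu}_\theta(x), \mathbf{\Sigma}_\theta(x))$. For discrete distributions, a common example is that of concrete variables [61] (or Gumbel-Softmax [62]). Some details are given below.

Similarly, for the decoder $Q_{Y|U}$ over $\mathcal{Y}$ for each element on $\mathcal{U}$, let $Q_\phi(y|u)$ denote the family of distributions parameterized by the output of the DNN $f_\phi$. Finally, for the prior distributions $S_U(u)$ over $\mathcal{U}$ we define the family of distributions $S_\varphi(u)$, which do not depend on a DNN.

By restricting the optimization of the variational IB cost in Equation (14) to the encoder, decoder, and prior within the families of distributions $P_\theta(u|x)$, $Q_\phi(y|u)$, and $S_\varphi(u)$, we get

$$\max_{P_{U|X}} \max_{Q_{Y|U}, S_U} \mathcal{L}_\beta^{\mathrm{VIB}}(P_{U|X}, Q_{Y|U}, S_U) \ge \max_{\theta, \phi, \varphi} \mathcal{L}_\beta^{\mathrm{NN}}(\theta, \phi, \varphi), \tag{28}$$

where $\theta$, $\phi$, and $\varphi$ denote the DNN parameters, e.g., its weights, and the cost $\mathcal{L}_\beta^{\mathrm{NN}}$ is given by

$$\mathcal{L}_\beta^{\mathrm{NN}}(\theta, \phi, \varphi) := \mathrm{E}_{P_{Y,X}}\Big\{\mathrm{E}_{P_\theta(U|X)}\big[\log Q_\phi(Y|U)\big] - \beta\, D_{\mathrm{KL}}\big(P_\theta(U|X) \,\|\, S_\varphi(U)\big)\Big\}. \tag{29}$$

Next, using the training samples $\{(x_i, y_i)\}_{i=1}^{N}$, the DNNs are trained to maximize a Monte Carlo approximation of Equation (29) over $\theta, \phi, \varphi$ using optimization methods such as SGD or ADAM [63] with backpropagation. However, in general, the direct computation of the gradients of Equation (29) is challenging due to the dependency of the averaging on the encoding $P_\theta$, which makes it hard to approximate the cost by sampling. To circumvent this problem, the reparameterization trick [15] is used to sample from $P_\theta(U|X)$. In particular, consider $P_\theta(U|X)$ to belong to a parametric family of distributions that can be sampled by first sampling a random variable $Z$ with distribution $P_Z(z)$, $z \in \mathcal{Z}$, and then transforming the samples using some function $g_\theta: \mathcal{X} \times \mathcal{Z} \to \mathcal{U}$ parameterized by $\theta$, such that $U = g_\theta(x, Z) \sim P_\theta(U|x)$. Various parametric families of distributions fall within this class for both discrete and continuous latent spaces, e.g., the Gumbel-Softmax distributions and the Gaussian distributions. Next, we detail how to sample from both examples: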

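- Sampling from Gaussian Latent Spaces: When the latent space is a continuous vector space of dimension $D$, e.g., $\mathcal{U} = \mathbb{R}^{D}$, we can consider multivariate Gaussian parametric encoders of mean $\boldsymbol{\mu}_\theta$ and covariance $\mathbf{\Sigma}_\theta$, i.e., $P_\theta(u|x) = \mathcal{N}(u; \boldsymbol{\mu}_\theta(x), \mathbf{\Sigma}_\theta(x))$. To sample $U \sim \mathcal{N}(\boldsymbol{\mu}_\theta(x), \mathbf{\Sigma}_\theta(x))$, where $\boldsymbol{\mu}_\theta(x)$ and $\mathbf{\Sigma}_\theta(x)$ are determined as the output of a NN, sample a random variable $Z \sim \mathcal{N}(\mathbf{0}, \mathbf{I})$ i.i.d. and, given the data sample $x \in \mathcal{X}$, generate the $j$th sample as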

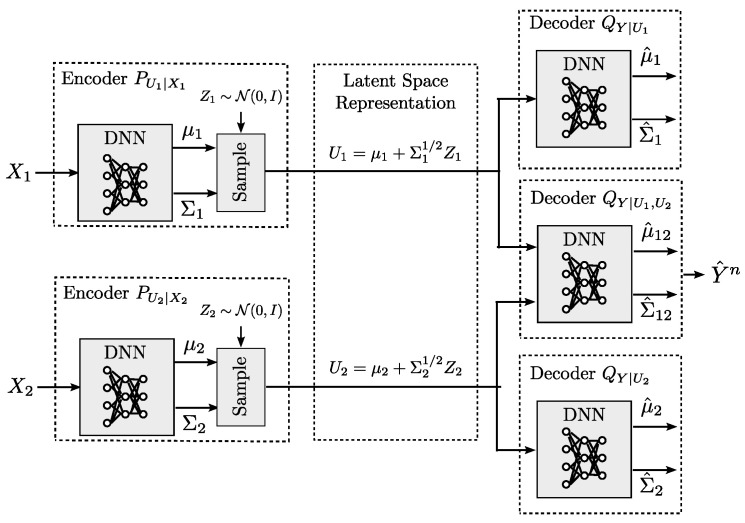

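where $\boldsymbol{\epsilon}_j$ is a sample of $Z \sim \mathcal{N}(\mathbf{0}, \mathbf{I})$, which is an independent Gaussian noise, and $\boldsymbol{\mu}_\theta(x)$ and $\mathbf{\Sigma}_\theta(x)$ are the output values of the NN with weights $\theta$ for the given input sample $x$; that is, the sample takes the form

$$u_j = \boldsymbol{\mu}_\theta(x) + \mathbf{\Sigma}_\theta^{1/2}(x)\, \boldsymbol{\epsilon}_j. \tag{30}$$

An example of the resulting VIB architecture to optimize with an encoder, a latent space, and a decoder parameterized by Gaussian distributions is shown in Figure 3.

- Sampling from a discrete latent space with the Gumbel-Softmax: If $U$ is a categorical random variable on the finite set $\mathcal{U}$ of size $D$ with probabilities $\boldsymbol{\pi} := (\pi_1, \ldots, \pi_D)$, we can encode it as $D$-dimensional one-hot vectors lying on the corners of the $(D-1)$-dimensional simplex, $\Delta_{D-1}$. In general, cost functions involving sampling from categorical distributions are non-differentiable. Instead, we consider Concrete variables [61] (or Gumbel-Softmax [62]), which are continuous differentiable relaxations of categorical variables on the interior of the simplex, and are easy to sample. To sample from a Concrete random variable $U \in \Delta_{D-1}$ at temperature $\lambda \in (0, \infty)$, with probabilities $\boldsymbol{\pi} \in (0,1)^{D}$, sample $G_d \sim \mathrm{Gumbel}(0,1)$ i.i.d. (the $\mathrm{Gumbel}(0,1)$ distribution can be sampled by drawing $u \sim \mathrm{Uniform}(0,1)$ and calculating $g = -\log(-\log(u))$), and set for each of the components of $U = (U_1, \ldots, U_D)$

$$U_d = \frac{\exp\big((\log \pi_d + G_d)/\lambda\big)}{\sum_{i=1}^{D} \exp\big((\log \pi_i + G_i)/\lambda\big)}, \quad d = 1, \ldots, D. \tag{31}$$

We denote by $Q_{\boldsymbol{\pi},\lambda}(u|x)$ the Concrete distribution with parameters $(\boldsymbol{\pi}(x), \lambda)$. When the temperature $\lambda$ approaches 0, the samples from the concrete distribution become one-hot and $\Pr\{\lim_{\lambda \to 0} U_d = 1\} = \pi_d$ [61]. Note that, for discrete data models, standard application of Caratheodory’s theorem [64] shows that the latent variables $U$ that appear in Equation (3) can be restricted to have bounded alphabet size.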
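The two sampling procedures above can be sketched in a few lines. The snippet below is illustrative only: it assumes a diagonal covariance for the Gaussian case (so that the square root of the covariance is a vector of standard deviations), uses plain Python lists in place of neural network outputs, and the function names are hypothetical.

```python
import math
import random

def sample_gaussian(mu, sigma, rng):
    """Reparameterized Gaussian sample u = mu + sigma * eps, with eps ~ N(0, I).

    mu and sigma stand in for the encoder network outputs for one input x;
    a diagonal covariance is assumed, so sigma holds per-coordinate std devs.
    """
    return [m + s * rng.gauss(0.0, 1.0) for m, s in zip(mu, sigma)]

def sample_gumbel(rng):
    """Gumbel(0, 1) sample obtained as -log(-log(u)) with u ~ Uniform(0, 1)."""
    u = min(max(rng.random(), 1e-12), 1.0 - 1e-12)  # keep both logs finite
    return -math.log(-math.log(u))

def sample_concrete(pi, temperature, rng):
    """Concrete (Gumbel-Softmax) sample on the simplex for probabilities pi."""
    logits = [(math.log(p) + sample_gumbel(rng)) / temperature for p in pi]
    top = max(logits)                        # subtract the max for numerical stability
    weights = [math.exp(v - top) for v in logits]
    total = sum(weights)
    return [w / total for w in weights]

rng = random.Random(0)
print(sample_gaussian([0.0, 1.0], [1.0, 0.5], rng))
print(sample_concrete([0.2, 0.3, 0.5], temperature=1.0, rng=rng))
print(sample_concrete([0.2, 0.3, 0.5], temperature=0.05, rng=rng))  # typically close to one-hot
```

In both cases the randomness enters only through the auxiliary draws (`eps` and the Gumbel samples), so the sample is a deterministic, differentiable function of the distribution parameters, which is what makes gradient-based training possible.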

Figure 3.

Example parametrization of Variational Information Bottleneck using neural networks.

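The reparametrization trick transforms the cost function in Equation (29) into one which can be approximated by sampling $M$ independent samples $\{u_{i,j}\}_{j=1}^{M} \sim P_\theta(u|x_i)$ for each training sample $(x_i, y_i)$, $i = 1, \ldots, N$, and allows computing estimates of the gradient using backpropagation [15]. Sampling is performed by using $u_{i,j} = g_\theta(x_i, z_j)$ with $\{z_j\}_{j=1}^{M}$ i.i.d. sampled from $P_Z$. Altogether, we have the empirical-IB cost for the $i$th sample in the training dataset:

$$\mathcal{L}_{\beta,i}^{\mathrm{emp}}(\theta, \phi, \varphi) = \frac{1}{M}\sum_{j=1}^{M} \log Q_\phi(y_i|u_{i,j}) - \beta\, D_{\mathrm{KL}}\big(P_\theta(U_i|x_i) \,\|\, S_\varphi(U_i)\big). \tag{32}$$

Note that, for many distributions, e.g., multivariate Gaussian, the divergence $D_{\mathrm{KL}}(P_\theta(U_i|x_i) \| S_\varphi(U_i))$ can be evaluated in closed form. Alternatively, an empirical approximation can be considered.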
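As an illustration of the closed-form case, the sketch below evaluates the per-sample empirical cost when the encoder is a diagonal Gaussian and the prior is a standard normal, for which the divergence equals $\tfrac{1}{2}\sum_d (\sigma_d^2 + \mu_d^2 - 1 - \log \sigma_d^2)$. The standard normal prior, the diagonal covariance, and the function names are assumptions made for this example; the decoder log-likelihood values are taken as given inputs.

```python
import math

def kl_diag_gaussian_to_standard(mu, sigma):
    """D_KL( N(mu, diag(sigma^2)) || N(0, I) ) in nats, in closed form."""
    return 0.5 * sum(s * s + m * m - 1.0 - math.log(s * s) for m, s in zip(mu, sigma))

def empirical_ib_cost(log_q_values, kl_value, beta):
    """Per-sample empirical cost: Monte Carlo average of log Q(y_i | u_ij) minus beta * KL.

    log_q_values holds log Q(y_i | u_ij) for the M latent samples u_ij drawn
    from the encoder for one training pair (x_i, y_i).
    """
    return sum(log_q_values) / len(log_q_values) - beta * kl_value

# Hypothetical encoder output for one input and decoder log-likelihoods for M = 3 samples.
mu, sigma = [0.5, -0.3], [0.8, 1.2]
kl = kl_diag_gaussian_to_standard(mu, sigma)
print(kl, empirical_ib_cost([-0.4, -0.6, -0.5], kl, beta=0.01))
```

The divergence vanishes exactly when the encoder output matches the prior (zero mean, unit variance), in which case the representation carries no information about the input.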

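Finally, we maximize the empirical-IB cost over the DNN parameters $\theta, \phi, \varphi$ as

$$\max_{\theta, \phi, \varphi} \; \frac{1}{N}\sum_{i=1}^{N} \mathcal{L}_{\beta,i}^{\mathrm{emp}}(\theta, \phi, \varphi). \tag{33}$$

By the law of large numbers, for large $N, M$, we have $\frac{1}{N}\sum_{i=1}^{N} \mathcal{L}_{\beta,i}^{\mathrm{emp}}(\theta, \phi, \varphi) \to \mathcal{L}_\beta^{\mathrm{NN}}(\theta, \phi, \varphi)$ almost surely. After convergence of the DNN parameters to $\theta^*, \phi^*, \varphi^*$, for a new observation $X$, the representation $U$ can be obtained by sampling from the encoders $P_{\theta^*}(U|X)$. In addition, note that a soft estimate of the remote source $Y$ can be inferred by sampling from the decoder $Q_{\phi^*}(Y|U)$. The notion of encoder and decoder in the IB-problem will become clear from its relationship with lossy source coding in Section 4.1.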

4. Connections to Coding Problems

The IB problem is a one-shot coding problem, in the sense that the operations are performed letter-wise. In this section, we now consider the relationship between the IB problem and (asymptotic) coding problems in which the coding operations are performed over blocks of size n, with n assumed to be large, and the joint distribution of the data is in general assumed to be known a priori. The connections between these problems allow extending results from one setup to another, and considering generalizations of the classical IB problem to other setups, e.g., as shown in Section 6.

4.1. Indirect Source Coding under Logarithmic Loss

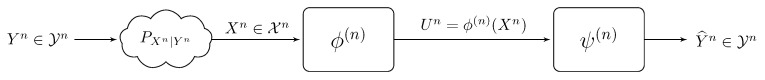

Let us consider the (asymptotic) indirect source coding problem shown in Figure 4, in which Y designates a memoryless remote source and X a noisy version of it that is observed at the encoder.

Figure 4.

A remote source coding problem.

A sequence of n samples is mapped by an encoder which outputs a message from a set , that is, the encoder uses at most R bits per sample to describe its observation and the range of the encoder map is allowed to grow with the size of the input sequence as

| (34) |

This message is mapped with a decoder to generate a reconstruction of the source sequence as . As already observed in [50], the IB problem in Equation (3) is essentially equivalent to a remote point-to-point source coding problem in which the distortion between the source and its reconstruction is measured under the logarithmic loss (log-loss) fidelity criterion [65]. That is, rather than just assigning a deterministic value to each sample of the source, the decoder gives an assessment of the degree of confidence or reliability on each estimate. Specifically, given the output description of the encoder, the decoder generates a soft-estimate of in the form of a probability distribution over , i.e., . The incurred discrepancy between and the estimation under log-loss for the observation is then given by the per-letter logarithmic loss distortion, which is defined as

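| $\ell_{\log}(y, \hat{y}) := \log \dfrac{1}{\hat{y}(y)}$ | (35) |

for $y \in \mathcal{Y}$, where $\hat{y} \in \mathcal{P}(\mathcal{Y})$ designates here a probability distribution on $\mathcal{Y}$ and $\hat{y}(y)$ is the value of that distribution evaluated at the outcome $y \in \mathcal{Y}$.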

That is, the encoder uses at most R bits per sample to describe its observation to a decoder which is interested in reconstructing the remote source to within an average distortion level D, using a per-letter distortion metric, i.e.,

| (36) |

where the incurred distortion between two sequences and is measured as

| (37) |

and the per-letter distortion is measured in terms of that given by the logarithmic loss in Equation (35).

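The rate distortion region of this model is given by the union of all pairs $(R, D)$ that satisfy [7,9]

| $R \geq I(U; X)$ | (38a) |

| $D \geq H(Y \mid U)$ | (38b) |

where the union is over all auxiliary random variables U such that $U - X - Y$ forms a Markov Chain in this order. Invoking the support lemma [66] (p. 310), it is easy to see that this region is not altered if one restricts U to satisfy $\lvert \mathcal{U} \rvert \leq \lvert \mathcal{X} \rvert + 1$. In addition, using the substitution $\Delta := H(Y) - D$, the region can be written equivalently as the union of all pairs $(R, \Delta)$ that satisfy

| $R \geq I(U; X)$ | (39a) |

| $\Delta \leq I(U; Y)$ | (39b) |

where the union is over all Us with pmf $P_{U \mid X}$ that satisfy $\lvert \mathcal{U} \rvert \leq \lvert \mathcal{X} \rvert + 1$, with $U - X - Y$.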

The boundary of this region is equivalent to the one described by the IB principle in Equation (3) if solved for all , and therefore the IB problem is essentially a remote source coding problem in which the distortion is measured under the logarithmic loss measure. Note that, operationally, the IB problem is equivalent to that of finding an encoder which maps the observation X to a representation U that satisfies the bit rate constraint R and such that U captures enough relevance of Y so that the posterior probability of Y given U satisfies an average distortion constraint.
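To make the operational reading concrete, here is a small sketch that evaluates, for a discrete joint pmf and a given stochastic encoder, the rate I(U;X), the log-loss distortion H(Y|U), and the relevance I(U;Y), and checks that relevance equals H(Y) minus distortion. The function names, the doubly symmetric binary source, and the binary symmetric encoder are illustrative assumptions.

```python
import numpy as np

def entropy(p):
    """Shannon entropy in bits of a pmf given as an array of any shape."""
    p = np.asarray(p, dtype=float).ravel()
    p = p[p > 0]
    return float(-np.sum(p * np.log2(p)))

def mutual_information(p_ab):
    """I(A;B) in bits from a joint pmf matrix p_ab[a, b]."""
    p_ab = np.asarray(p_ab, dtype=float)
    return entropy(p_ab.sum(axis=1)) + entropy(p_ab.sum(axis=0)) - entropy(p_ab)

def rate_and_distortion(p_xy, p_u_given_x):
    """Return (I(U;X), H(Y|U), I(U;Y)) for an encoder P(u|x) under U - X - Y."""
    p_x = p_xy.sum(axis=1)
    p_xu = p_x[:, None] * p_u_given_x        # joint P(x, u)
    p_uy = p_u_given_x.T @ p_xy              # joint P(u, y) = sum_x P(u|x) P(x, y)
    rate = mutual_information(p_xu)
    distortion = entropy(p_uy) - entropy(p_uy.sum(axis=1))   # H(U,Y) - H(U)
    relevance = mutual_information(p_uy)
    return rate, distortion, relevance

# Hypothetical source: X is Y observed through a binary symmetric channel.
p_xy = np.array([[0.4, 0.1],
                 [0.1, 0.4]])
# Hypothetical encoder: another binary symmetric channel from X to U.
encoder = np.array([[0.9, 0.1],
                    [0.1, 0.9]])

rate, distortion, relevance = rate_and_distortion(p_xy, encoder)
h_y = entropy(p_xy.sum(axis=0))
print(f"R = {rate:.4f} bits, D = {distortion:.4f} bits, Delta = {relevance:.4f} bits")
print(f"H(Y) - D = {h_y - distortion:.4f} bits")
```

Sweeping over encoders and keeping the best relevance for each rate would trace an inner approximation of the boundary discussed above.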

4.2. Common Reconstruction

Consider the problem of source coding with side information at the decoder, i.e., the well known Wyner–Ziv setting [67], with the distortion measured under logarithmic-loss. Specifically, a memoryless source X is to be conveyed lossily to a decoder that observes a statistically correlated side information Y. The encoder uses R bits per sample to describe its observation to the decoder which wants to reconstruct an estimate of X to within an average distortion level D, where the distortion is evaluated under the log-loss distortion measure. The rate distortion region of this problem is given by the set of all pairs that satisfy

| (40) |

The optimal coding scheme utilizes standard Wyner–Ziv compression [67] at the encoder and the decoder map is given by

| (41) |

for which it is easy to see that

| (42) |

Now, assume that we constrain the coding in a manner that the encoder is able to produce an exact copy of the compressed source constructed by the decoder. This requirement, termed common reconstruction constraint (CR), was introduced and studied by Steinberg [68] for various source coding models, including the Wyner–Ziv setup, in the context of a “general distortion measure”. For the Wyner–Ziv problem under log-loss measure that is considered in this section, such a CR constraint causes some rate loss because the reproduction rule in Equation (41) is no longer possible. In fact, it is not difficult to see that under the CR constraint the above region reduces to the set of pairs that satisfy

| (43a) |

| (43b) |

for some auxiliary random variable for which holds. Observe that Equation (43b) is equivalent to and that, for a given prescribed fidelity level D, the minimum rate is obtained for a description U that achieves the inequality in Equation (43b) with equality, i.e.,

| (44) |

Because , we have

| (45) |

Under the constraint , it is easy to see that minimizing amounts to maximizing , an aspect which bridges the problem at hand with the IB problem.

In the above, the side information Y is used for binning but not for the estimation at the decoder. If the encoder ignores whether Y is present or not at the decoder side, the benefit of binning is reduced—see the Heegard–Berger model with common reconstruction studied in [69,70].

4.3. Information Combining

Consider again the IB problem. Assume one wishes to find the representation U that maximizes the relevance for a given prescribed complexity level, e.g., . For this setup, we have

| (46) |

| (47) |

where the first equality holds since is a Markov Chain. Maximizing is then equivalent to minimizing . This is reminiscent of the problem of information combining [71,72], where X can be interpreted as a source information that is conveyed through two channels: the channel and the channel . The outputs of these two channels are conditionally independent given X, and they should be processed in a manner such that, when combined, they preserve as much information as possible about X.
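One way to see the claimed equivalence is through the identity I(U;Y) = I(X;Y) + I(U;X) - I(X;U,Y), which holds whenever U - X - Y is a Markov Chain: with I(U;X) fixed, maximizing I(U;Y) is the same as minimizing I(X;U,Y). The sketch below checks this identity numerically on a randomly drawn joint pmf and encoder; the alphabet sizes and helper names are illustrative assumptions.

```python
import numpy as np

def entropy(p):
    """Shannon entropy in bits of a pmf given as an array of any shape."""
    p = np.asarray(p, dtype=float).ravel()
    p = p[p > 0]
    return float(-np.sum(p * np.log2(p)))

def mutual_information(p_ab):
    """I(A;B) in bits from a joint pmf matrix p_ab[a, b]."""
    return entropy(p_ab.sum(axis=1)) + entropy(p_ab.sum(axis=0)) - entropy(p_ab)

rng = np.random.default_rng(1)

# Random joint pmf P(x, y) and random encoder P(u | x).
p_xy = rng.random((3, 4))
p_xy /= p_xy.sum()
p_u_given_x = rng.random((3, 2))
p_u_given_x /= p_u_given_x.sum(axis=1, keepdims=True)

# Joint pmf P(x, u, y) = P(x, y) P(u | x), which enforces U - X - Y.
p_xuy = p_xy[:, None, :] * p_u_given_x[:, :, None]

i_uy = mutual_information(p_xuy.sum(axis=0))        # I(U;Y)
i_xy = mutual_information(p_xy)                     # I(X;Y)
i_xu = mutual_information(p_xuy.sum(axis=2))        # I(X;U)
i_x_uy = mutual_information(p_xuy.reshape(3, -1))   # I(X;(U,Y))

print(f"I(U;Y)                       = {i_uy:.6f}")
print(f"I(X;Y) + I(X;U) - I(X;(U,Y)) = {i_xy + i_xu - i_x_uy:.6f}")
```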

4.4. Wyner–Ahlswede–Körner Problem

Here, the two memoryless sources X and Y are encoded separately at rates and , respectively. A decoder gets the two compressed streams and aims at recovering Y losslessly. This problem was studied and solved separately by Wyner [73] and Ahlswede and Körner [74]. For given , the minimum rate that is needed to recover Y losslessly is

| (48) |

Thus, we get

and therefore, solving the IB problem is equivalent to solving the Wyner–Ahlswede–Körner problem.
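As a sketch of why the two problems coincide, written in assumed notation ($R_X$ for the rate of the encoder observing X, $R_Y^{\star}(R_X)$ for the minimum rate needed to recover Y, and U an auxiliary variable with $U - X - Y$), the standard single-letter form of the minimum rate and its consequence read:

$$
R_Y^{\star}(R_X) = \min_{U:\; I(U;X) \leq R_X} H(Y \mid U),
\qquad
\max_{U:\; I(U;X) \leq R_X} I(U;Y) = H(Y) - R_Y^{\star}(R_X).
$$

The second relation follows from $I(U;Y) = H(Y) - H(Y \mid U)$ and the fact that $H(Y)$ does not depend on the choice of U.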

4.5. The Privacy Funnel

Consider again the setting of Figure 4, and let us assume that the pair models data that a user possesses and which have the following properties: the data Y are some sensitive (private) data that are not meant to be revealed at all, or else not beyond some level ; and the data X are non-private and are meant to be shared with another user (analyst). Because X and Y are correlated, sharing the non-private data X with the analyst possibly reveals information about Y. For this reason, there is a trade-off between the amount of information that the user shares about X and the information that the user keeps private about Y. The data X are passed through a randomized mapping whose purpose is to make maximally informative about X while being minimally informative about Y.

The analyst performs an inference attack on the private data Y based on the disclosed information U. Let be an arbitrary loss function with reconstruction alphabet that measures the cost of inferring Y after observing U. Given and under the given loss function ℓ, it is natural to quantify the difference between the prediction losses in predicting Y before and after observing U. Let

| (49) |

where is deterministic and is any measurable function of . The quantity quantifies the reduction in the prediction loss under the loss function ℓ that is due to observing , i.e., the inference cost gain. In [75] (see also [76]), it is shown that under some mild conditions the inference cost gain as defined by Equation (49) is upper-bounded as

| (50) |

where L is a constant. The inequality in Equation (50) holds irrespective of the choice of the loss function ℓ, and this justifies the usage of the logarithmic loss function as given by Equation (35) in the context of finding a suitable trade-off between utility and privacy, since

| (51) |

Under the logarithmic loss function, the design of the mapping should strike the right balance between the utility for inferring the non-private data X as measured by the mutual information and the privacy metric about the private data Y as measured by the mutual information .

4.6. Efficiency of Investment Information

Let Y model stock market data and X some correlated information. In [77], Erkip and Cover investigated how the description of the correlated information X improves the investment in the stock market Y. Specifically, let denote the maximum increase in growth rate when X is described to the investor at rate C. Erkip and Cover found a single-letter characterization of the incremental growth rate . When specialized to the horse race market, this problem is related to the aforementioned source coding with side information of Wyner [73] and Ahlswede–Körner [74], and, thus, also to the IB problem. The work in [77] provides explicit analytic solutions for two horse race examples, jointly binary and jointly Gaussian horse races.

5. Connections to Inference and Representation Learning

In this section, we consider the connections of the IB problem with learning, inference and generalization, for which, typically, the joint distribution of the data is not known and only a set of samples is available.

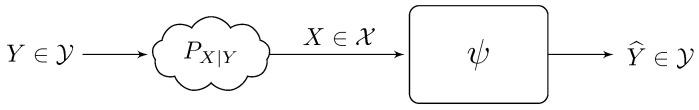

5.1. Inference Model

Let a measurable variable and a target variable with unknown joint distribution be given. In the classic problem of statistical learning, one wishes to infer an accurate predictor of the target variable based on observed realizations of . That is, for a given class of admissible predictors and a loss function that measures discrepancies between true values and their estimated fits, one aims at finding the mapping that minimizes the expected (population) risk

| (52) |

An abstract inference model is shown in Figure 5.

Figure 5.

An abstract inference model for learning.

The choice of a “good” loss function is often controversial in statistical learning theory. There is however numerical evidence that models that are trained to minimize the error’s entropy often outperform ones that are trained using other criteria such as mean-square error (MSE) and higher-order statistics [26,27]. This corresponds to choosing the loss function given by the logarithmic loss, which is defined as

| $\ell_{\log}(y, \hat{y}) := \log \dfrac{1}{\hat{y}(y)}$ | (53) |

for $y \in \mathcal{Y}$, where $\hat{y} \in \mathcal{P}(\mathcal{Y})$ designates here a probability distribution on $\mathcal{Y}$ and $\hat{y}(y)$ is the value of that distribution evaluated at the outcome $y \in \mathcal{Y}$. Although a complete and rigorous justification of the usage of the logarithmic loss as distortion measure in learning is still awaited, recently a partial explanation appeared in [30] where Painsky and Wornell showed that, for binary classification problems, by minimizing the logarithmic-loss one actually minimizes an upper bound to any choice of loss function that is smooth, proper (i.e., unbiased and Fisher consistent), and convex. Along the same line of work, the authors of [29] showed that under some natural data processing property Shannon’s mutual information uniquely quantifies the reduction of prediction risk due to side information. This perhaps partially justifies why the logarithmic-loss fidelity measure is widely used in learning theory and has already been adopted in many algorithms in practice such as the infomax criterion [31], the tree-based algorithm of Quinlan [32], or the well known Chow–Liu algorithm [33] for learning tree graphical models, with various applications in genetics [34], image processing [35], computer vision [36], and others. The logarithmic loss measure also plays a central role in the theory of prediction [37] (Ch. 9), where it is often referred to as the self-information loss function, as well as in Bayesian modeling [38] where priors are usually designed to maximize the mutual information between the parameter to be estimated and the observations.

When the joint distribution is known, the optimal predictor and the minimum expected (population) risk can be characterized. Let, for every , . It is easy to see that

| (54a) |

| (54b) |

| (54c) |

| (54d) |

with equality iff the predictor is given by the conditional posterior . That is, the minimum expected (population) risk is given by

| (55) |
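The characterization above can be checked numerically on a small discrete example: under the logarithmic loss, the conditional posterior attains an expected risk equal to the conditional entropy H(Y|X), and any other predictor pays an additional non-negative penalty. The joint pmf and the randomly drawn competing predictors below are illustrative assumptions.

```python
import numpy as np

def expected_log_loss(p_xy, q_y_given_x):
    """Population risk E[-log Q(Y|X)] in nats for a soft predictor Q(y|x)."""
    return float(-np.sum(p_xy * np.log(q_y_given_x)))

def conditional_entropy(p_xy):
    """H(Y|X) in nats for a strictly positive joint pmf p_xy[x, y]."""
    posterior = p_xy / p_xy.sum(axis=1, keepdims=True)
    return float(-np.sum(p_xy * np.log(posterior)))

# Hypothetical joint pmf on a 3 x 2 alphabet.
p_xy = np.array([[0.30, 0.10],
                 [0.05, 0.25],
                 [0.10, 0.20]])

posterior = p_xy / p_xy.sum(axis=1, keepdims=True)
risk_posterior = expected_log_loss(p_xy, posterior)
h_y_given_x = conditional_entropy(p_xy)

# Risks of 100 arbitrary predictors: one random pmf over Y for each x.
rng = np.random.default_rng(0)
risks_random = [expected_log_loss(p_xy, rng.dirichlet(np.ones(2), size=3))
                for _ in range(100)]

print(f"H(Y|X)              = {h_y_given_x:.4f} nats")
print(f"risk of posterior   = {risk_posterior:.4f} nats")
print(f"best random predictor = {min(risks_random):.4f} nats")
```

The excess risk of a predictor Q over the posterior is the average Kullback–Leibler divergence between the posterior and Q, which is why it can never be negative.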

If the joint distribution is unknown, which is most often the case in practice, the population risk as given by Equation (52) cannot be computed directly, and, in the standard approach, one usually resorts to choosing the predictor with minimal risk on a training dataset consisting of n labeled samples that are drawn independently from the unknown joint distribution . In this case, one is interested in optimizing the empirical risk, which for a set of n i.i.d. samples from , , is defined as

| (56) |

The difference between the empirical and population risks is normally measured in terms of the generalization gap, defined as

| (57) |
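The following sketch illustrates the two risks on synthetic data. It draws n samples from a known joint pmf, fits a predictor on those samples, and compares its empirical risk with its population risk. The gap is taken here as population risk minus empirical risk, which is an assumption about the sign convention; the joint pmf, the sample size, and the add-one smoothing used to keep the predictor strictly positive are also illustrative choices.

```python
import numpy as np

def population_risk(p_xy, q_y_given_x):
    """E[-log Q(Y|X)] under the true joint pmf (nats)."""
    return float(-np.sum(p_xy * np.log(q_y_given_x)))

def empirical_risk(xs, ys, q_y_given_x):
    """Average log-loss of Q over the training samples (nats)."""
    return float(-np.mean(np.log(q_y_given_x[xs, ys])))

def fit_smoothed_posterior(xs, ys, num_x, num_y, alpha=1.0):
    """Empirical conditional pmf of Y given X with additive smoothing alpha."""
    counts = np.full((num_x, num_y), alpha)
    np.add.at(counts, (xs, ys), 1.0)
    return counts / counts.sum(axis=1, keepdims=True)

rng = np.random.default_rng(0)
p_xy = np.array([[0.30, 0.10],
                 [0.05, 0.25],
                 [0.10, 0.20]])

# Draw n i.i.d. pairs (x_i, y_i) from the joint pmf.
n = 50
flat = rng.choice(p_xy.size, size=n, p=p_xy.ravel())
xs, ys = np.unravel_index(flat, p_xy.shape)

q_hat = fit_smoothed_posterior(xs, ys, *p_xy.shape)
risk_emp = empirical_risk(xs, ys, q_hat)
risk_pop = population_risk(p_xy, q_hat)
gap = risk_pop - risk_emp

print(f"empirical risk  = {risk_emp:.4f} nats")
print(f"population risk = {risk_pop:.4f} nats")
print(f"gap             = {gap:.4f} nats")
```

Re-running the experiment with a larger n typically shrinks the gap, since the fitted predictor approaches the true posterior.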

5.2. Minimum Description Length

One popular approach to reducing the generalization gap is to restrict the set of admissible predictors to a low-complexity (or constrained-complexity) class to prevent over-fitting. One way to limit the model’s complexity is by restricting the range of the prediction function, as shown in Figure 6. This is the so-called minimum description length complexity measure, often used in the learning literature to limit the description length of the weights of neural networks [78]. A connection between the use of the minimum description complexity for limiting the description length of the input encoding and accuracy is studied in [79], and a connection between the weight complexity and accuracy is given in [11]. Here, the stochastic mapping is a compressor with

| (58) |

for some prescribed “input-complexity” value R, or equivalently prescribed average description-length.

Figure 6.

Inference problem with constrained model’s complexity.

Minimizing the constrained description length population risk is now equivalent to solving

| (59) |

| (60) |

It can be shown that this problem takes its minimum value with the choice of and

| (61) |

The solution to Equation (61) for different values of R is effectively equivalent to the IB-problem in Equation (4). Observe that the right-hand side of Equation (61) is larger for small values of R; it is clear that a good predictor should strike a right balance between reducing the model’s complexity and reducing the error’s entropy, or, equivalently, maximizing the mutual information about the target variable Y.

5.3. Generalization and Performance Bounds

The IB-problem appears as a relevant problem in fundamental performance limits of learning. In particular, when is unknown, and instead n i.i.d. samples from are available, the optimization of the empirical risk in Equation (56) leads to a mismatch between the true loss given by the population risk and the empirical risk. This gap is measured by the generalization gap in Equation (57). Interestingly, the relationship between the true loss and the empirical loss can be bounded (with high probability) in terms of the IB-problem as [80]

where and are the empirical encoder and decoder and is the optimal decoder. and are the empirical loss and the mutual information resulting from the dataset and is a function that measures the mismatch between the optimal decoder and the empirical one.

This bound shows explicitly the trade-off between the empirical relevance and the empirical complexity. The pairs of relevance and complexity that are simultaneously achievable are precisely characterized by the IB-problem. Therefore, by designing estimators based on the IB problem, as described in Section 3, one can operate at different regimes of performance, complexity and generalization.

Another interesting connection between learning and the IB-method is the connection of the logarithmic-loss as metric to common performance metrics in learning:

- The logarithmic-loss gives an upper bound on the probability of misclassification (i.e., one minus the accuracy):

- The logarithmic-loss is equivalent to maximum likelihood for large n:

- The true distribution P minimizes the expected logarithmic-loss:
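The first item in the list above can be illustrated numerically. Assuming a classifier that draws its label from the soft predictor Q(·|x), its probability of error is 1 - E[Q(Y|X)], and Jensen's inequality gives 1 - E[Q(Y|X)] <= 1 - exp(-E[-log Q(Y|X)]), so a small expected logarithmic loss forces a small error probability. The randomized-classifier model, the joint pmf, and the function names are assumptions made for this sketch.

```python
import numpy as np

def misclassification_probability(p_xy, q_y_given_x):
    """P(Y_hat != Y) when Y_hat is sampled from Q(.|x): 1 - E[Q(Y|X)]."""
    return float(1.0 - np.sum(p_xy * q_y_given_x))

def log_loss_bound(p_xy, q_y_given_x):
    """Upper bound 1 - exp(-E[-log Q(Y|X)]) obtained from Jensen's inequality."""
    expected_log_loss = -np.sum(p_xy * np.log(q_y_given_x))
    return float(1.0 - np.exp(-expected_log_loss))

p_xy = np.array([[0.30, 0.10],
                 [0.05, 0.25],
                 [0.10, 0.20]])

rng = np.random.default_rng(0)
pairs = []
for _ in range(100):
    q = rng.dirichlet(np.ones(2), size=3)   # arbitrary soft predictor Q(y|x)
    pairs.append((misclassification_probability(p_xy, q),
                  log_loss_bound(p_xy, q)))

worst_slack = min(bound - err for err, bound in pairs)
print(f"smallest (bound - error) over 100 predictors: {worst_slack:.4f}")
```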

Since, for $n \to \infty$, the joint distribution can be perfectly learned, the link between these common criteria allows the use of the IB-problem to derive asymptotic performance bounds, as well as design criteria, in most of the learning scenarios of classification, regression, and inference.

5.4. Representation Learning, ELBO and Autoencoders

The performance of machine learning algorithms depends strongly on the choice of data representation (or features) on which they are applied. For that reason, feature engineering, i.e., the set of all pre-processing operations and transformations applied to data in the aim of making them support effective machine learning, is important. However, because it is both data- and task-dependent, such feature-engineering is labor intensive and highlights one of the major weaknesses of current learning algorithms: their inability to extract discriminative information from the data themselves instead of hand-crafted transformations of them. In fact, although it may sometimes appear useful to deploy feature engineering in order to take advantage of human know-how and prior domain knowledge, it is highly desirable to make learning algorithms less dependent on feature engineering to make progress towards true artificial intelligence.

Representation learning is a sub-field of learning theory that aims at learning representations of the data that make it easier to extract useful information, possibly without recourse to any feature engineering. That is, the goal is to identify and disentangle the underlying explanatory factors that are hidden in the observed data. In the case of probabilistic models, a good representation is one that captures the posterior distribution of the underlying explanatory factors for the observed input. For related works, the reader may refer, e.g., to the proceedings of the International Conference on Learning Representations (ICLR), see https://iclr.cc/.

The use of the Shannon’s mutual information as a measure of similarity is particularly suitable for the purpose of learning a good representation of data [81]. In particular, a popular approach to representation learning are autoencoders, in which neural networks are designed for the task of representation learning. Specifically, we design a neural network architecture such that we impose a bottleneck in the network that forces a compressed knowledge representation of the original input, by optimizing the Evidence Lower Bound (ELBO), given as

| (62) |

over the neural network parameters . Note that this is precisely the variational-IB cost in Equation (32) for and , i.e., the IB variational bound when particularized to distributions whose parameters are determined by neural networks. In addition, note that the architecture shown in Figure 3 is the classical neural network architecture for autoencoders, and that it coincides with the variational IB solution resulting from the optimization of the IB-problem in Section 3.3.1. In addition, note that Equation (32) provides an operational meaning to the -VAE cost [82], as a criterion to design estimators on the relevance–complexity plane for different values, since the -VAE cost is given as

| (63) |

which coincides with the empirical version of the variational bound found in Equation (32).
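A minimal sketch of such a cost for a single sample is given below, written as a quantity to be maximized: a Monte Carlo estimate of the reconstruction log-likelihood minus a multiplier times the divergence between the encoder and the prior. The linear Gaussian decoder with unit variance, the standard normal prior, the symbol `beta` for the multiplier, and all parameter values are assumptions chosen for illustration; in practice the encoder and decoder would be neural networks trained by stochastic gradient methods.

```python
import numpy as np

def gaussian_log_density(x, mean):
    """log N(x; mean, I) evaluated for each row of `mean`."""
    d = x.size
    return -0.5 * np.sum((x - mean) ** 2, axis=-1) - 0.5 * d * np.log(2 * np.pi)

def beta_vae_cost(x, mu, sigma, weights, bias, beta, num_samples, rng):
    """Empirical cost for one sample x; returns (cost, kl)."""
    # Reparametrized samples u_m = mu + sigma * eps_m from the encoder.
    eps = rng.standard_normal((num_samples, mu.size))
    u = mu + sigma * eps
    # Hypothetical linear decoder: mean of Q(x|u) is u @ weights + bias.
    reconstruction = gaussian_log_density(x, u @ weights + bias).mean()
    # Closed-form KL between N(mu, diag(sigma^2)) and the N(0, I) prior.
    kl = 0.5 * np.sum(mu**2 + sigma**2 - 1.0 - 2.0 * np.log(sigma))
    return float(reconstruction - beta * kl), float(kl)

# Illustrative dimensions: 3-dimensional input, 2-dimensional representation.
x = np.array([0.2, -0.4, 1.0])
mu = np.array([0.5, -1.0])
sigma = np.array([0.8, 1.5])
weights = np.array([[0.3, -0.2, 0.5],
                    [0.1, 0.4, -0.6]])
bias = np.zeros(3)

cost_beta1, kl = beta_vae_cost(x, mu, sigma, weights, bias, 1.0, 64,
                               np.random.default_rng(0))
cost_beta4, _ = beta_vae_cost(x, mu, sigma, weights, bias, 4.0, 64,
                              np.random.default_rng(0))
print(f"beta = 1: {cost_beta1:.4f}, beta = 4: {cost_beta4:.4f}, KL = {kl:.4f}")
```

With the multiplier equal to one the expression is an ELBO estimate for this toy model; larger values penalize complexity more heavily and move the operating point along the relevance–complexity plane.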

5.5. Robustness to Adversarial Attacks

Recent advances in deep learning have allowed the design of high accuracy neural networks. However, it has been observed that the high accuracy of trained neural networks may be compromised under nearly imperceptible changes in the inputs [83,84,85]. The information bottleneck has also found applications in providing methods to improve robustness to adversarial attacks when training models. In particular, the use of the variational IB method of Alemi et al. [48] showed the advantages of the resulting neural network for classification in terms of robustness to adversarial attacks. Recently, alternative strategies for extracting features in supervised learning were proposed in [86] to construct classifiers robust to small perturbations in the input space. Robustness is measured in terms of the (statistical) Fisher information, given for two random variables as

| (64) |

The method in [86] builds upon the idea of the information bottleneck by introducing an additional penalty term that encourages the Fisher information in Equation (64) of the extracted features to be small, when parametrized by the inputs. For this problem, under jointly Gaussian vector sources , the optimal representation is also shown to be Gaussian, in line with the results in Section 6.2.1 for the IB without robustness penalty. For general source distributions, a variational method is proposed similar to the variational IB method in Section 3.3.1. The problem shows connections with the I-MMSE [87], de Bruijn identity [88,89], Cramér–Rao inequality [90], and Fano’s inequality [90].

6. Extensions: Distributed Information Bottleneck

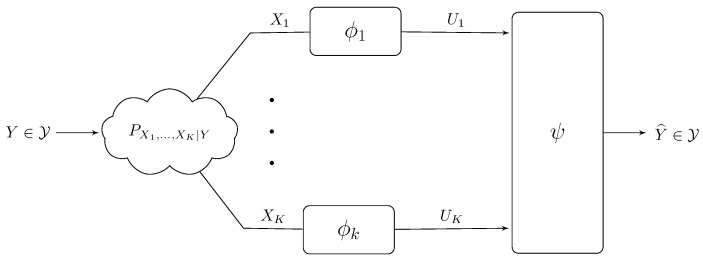

Consider now a generalization of the IB problem in which the prediction is to be performed in a distributed manner. The model is shown in Figure 7. Here, the prediction of the target variable is to be performed on the basis of samples of statistically correlated random variables that are observed each at a distinct predictor. Throughout, we assume that the following Markov Chain holds for all ,

| (65) |

Figure 7.

A model for distributed, e.g., multi-view, learning.

The variable Y is a target variable and we seek to characterize how accurately it can be predicted from a measurable random vector when the components of this vector are processed separately, each by a distinct encoder.

6.1. The Relevance–Complexity Region

The distributed IB problem of Figure 7 is studied in [91,92] on information-theoretic grounds. For both discrete memoryless (DM) and memoryless vector Gaussian models, the authors established fundamental limits of learning in terms of optimal trade-offs between relevance and complexity, leveraging the connection between the IB-problem and source coding. The following theorem states the result for the case of discrete memoryless sources.

Theorem 1

([91,92]). The relevance–complexity region of the distributed learning problem is given by the union of all non-negative tuples that satisfy

(66) for some joint distribution of the form .

Proof.

The proof of Theorem 1 can be found in Section 7.1 of [92] and is reproduced in Section 8.1 for completeness. □

For a given joint data distribution , Theorem 1 extends the single encoder IB principle of Tishby in Equation (3) to the distributed learning model with K encoders, which we denote by Distributed Information Bottleneck (DIB) problem. The result characterizes the optimal relevance–complexity trade-off as a region of achievable tuples in terms of a distributed representation learning problem involving the optimization over K conditional pmfs and a pmf . The pmfs correspond to stochastic encodings of the observation to a latent variable, or representation, which captures the relevant information of Y in observation . Variable T corresponds to a time-sharing among different encoding mappings (see, e.g., [51]). For such encoders, the optimal decoder is implicitly given by the conditional pmf of Y from , i.e., .

The characterization of the relevance–complexity region can be used to derive a cost function for the D-IB similarly to the IB-Lagrangian in Equation (3). For simplicity, let us consider the problem of maximizing the relevance under a sum-complexity constraint. Let and

We define the DIB-Lagrangian (under sum-rate) as

| (67) |

The optimization of Equation (67) over the encoders allows obtaining mappings that perform on the boundary of the relevance–sum complexity region . To see this, note that the relevance–sum complexity region is composed of all the pairs for which , with

| (68) |

where the maximization is over joint distributions that factorize as . The pairs that lie on the boundary of can be characterized as given in the following proposition.

Proposition 1.

For every pair that lies on the boundary of the region , there exists a parameter such that , with

(69)

(70) where is the set of conditional pmfs that maximize the cost function in Equation (67).

Proof.

The proof of Proposition 1 can be found in Section 7.3 of [92] and is reproduced here in Section 8.2 for completeness. □

The optimization of the distributed IB cost function in Equation (67) generalizes the centralized Tishby’s information bottleneck formulation in Equation (3) to the distributed learning setting. Note that for the optimization in Equation (69) reduces to the single encoder cost in Equation (3) with a multiplier .

6.2. Solutions to the Distributed Information Bottleneck

The methods described in Section 3 can be extended to the distributed information bottleneck case in order to find the mappings in different scenarios.

6.2.1. Vector Gaussian Model

In this section, we show that for the jointly vector Gaussian data model it is enough to restrict to Gaussian auxiliaries in order to exhaust the entire relevance–complexity region. In addition, we provide an explicit analytical expression of this region. Let be a jointly Gaussian random vector that satisfies the Markov Chain in Equation (65). Without loss of generality, let the target variable be a complex-valued, zero-mean multivariate Gaussian with covariance matrix , i.e., , and given by

| (71) |

where models the linear model connecting to the observation at encoder k and is the noise vector at encoder k, assumed to be Gaussian with zero-mean, covariance matrix , and independent from all other noises and .

For the vector Gaussian model in Equation (71), the result of Theorem 1, which can be extended to continuous sources using standard techniques, characterizes the relevance–complexity region of this model. The following theorem gives this region explicitly; we denote it hereafter as . The theorem also shows that in order to exhaust this region it is enough to restrict to no time sharing, i.e., and multivariate Gaussian test channels

| (72) |

where projects and is a zero-mean Gaussian noise with covariance .

Theorem 2.

For the vector Gaussian data model, the relevance–complexity region is given by the union of all tuples that satisfy

for some matrices .

Proof.

The proof of Theorem 2 can be found in Section 7.5 of [92] and is reproduced here in Section 8.4 for completeness. □

Theorem 2 extends the result of [54,93] on the relevance–complexity trade-off characterization of the single-encoder IB problem for jointly Gaussian sources to K encoders. The theorem also shows that the optimal test channels are multivariate Gaussian, as given by Equation (72).

Consider the following symmetric distributed scalar Gaussian setting, in which and

| (73a) |

| (73b) |

where and are standard Gaussian with zero-mean and unit variance, both independent of Y. In this case, for and , the optimal relevance is

| (74) |

An easy upper bound on the relevance can be obtained by assuming that and are encoded jointly at rate , to get

| (75) |

The reader may notice that, if and are encoded independently, an achievable relevance level is given by

| (76) |

6.3. Solutions for Generic Distributions

Next, we present how the distributed information bottleneck can be solved for generic distributions. Similar to the case of single encoder IB-problem, the solutions are based on a variational bound on the DIB-Lagrangian. For simplicity, we look at the D-IB under sum-rate constraint [92].

6.4. A Variational Bound

The optimization of Equation (67) generally requires computing marginal distributions that involve the descriptions , which might not be possible in practice. In what follows, we derive a variational lower bound on the DIB cost function in terms of families of stochastic mappings (a decoder), and priors . For the simplicity of the notation, we let

| (77) |

The variational D-IB cost for the DIB-problem is given by

| (78) |

Lemma 1.

For fixed , we have

(79) In addition, there exists a unique that achieves the maximum , and is given by, ,

(80a)

(80b)

(80c) where the marginals and the conditional marginals and are computed from .

Proof.

The proof of Lemma 1 can be found in Section 7.4 of [92] and is reproduced here in Section 8.3 for completeness. □

Then, the optimization in Equation (69) can be written in terms of the variational DIB cost function as follows,

| (81) |

The variational DIB cost in Equation (78) is a generalization to distributed learning with K-encoders of the evidence lower bound (ELBO) of the target variable Y given the representations [15]. If , the bound generalizes the ELBO used for VAEs to the setting of encoders. In addition, note that Equation (78) also generalizes and provides an operational meaning to the -VAE cost [82] with , as a criterion to design estimators on the relevance–complexity plane for different values.

6.5. Known Memoryless Distributions

When the data model is discrete and the joint distribution is known, the DIB problem can be solved by using an iterative method that optimizes the variational DIB cost function in Equation (81), alternating over the distributions . The optimal encoders and decoders of the D-IB under sum-rate constraint satisfy the following self-consistent equations,

where .

Alternating iterations of these equations converge to a solution for any initial , similarly to the Blahut–Arimoto algorithm and the expectation-maximization (EM) algorithm.
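To give a feel for this kind of alternating iteration, the sketch below implements the classical single-encoder IB updates (the centralized special case), not the distributed equations themselves: the encoder is refreshed as P(u|x) proportional to P(u) exp(-beta KL(P(y|x) || P(y|u))), followed by recomputation of P(u) and P(y|u). The multiplier `beta` weighting relevance, the number of clusters, the small floor on the encoder probabilities used as a numerical safeguard, and the example pmf are all assumptions of this sketch.

```python
import numpy as np

def entropy(p):
    """Shannon entropy in bits of a pmf given as an array of any shape."""
    p = np.asarray(p, dtype=float).ravel()
    p = p[p > 0]
    return float(-np.sum(p * np.log2(p)))

def mutual_information(p_ab):
    """I(A;B) in bits from a joint pmf matrix p_ab[a, b]."""
    return entropy(p_ab.sum(axis=1)) + entropy(p_ab.sum(axis=0)) - entropy(p_ab)

def iterative_ib(p_xy, num_clusters, beta, num_iters=100, seed=0):
    """Alternate the single-encoder IB self-consistent updates; return P(u|x)."""
    rng = np.random.default_rng(seed)
    p_x = p_xy.sum(axis=1)
    p_y_given_x = p_xy / p_x[:, None]
    encoder = rng.random((p_x.size, num_clusters))
    encoder /= encoder.sum(axis=1, keepdims=True)
    for _ in range(num_iters):
        # Marginal P(u) and decoder P(y|u) induced by the current encoder.
        p_u = p_x @ encoder
        p_y_given_u = (encoder * p_x[:, None]).T @ p_y_given_x / p_u[:, None]
        # KL(P(y|x) || P(y|u)) in nats for every pair (x, u).
        log_ratio = (np.log(p_y_given_x[:, None, :])
                     - np.log(p_y_given_u[None, :, :]))
        kl = np.sum(p_y_given_x[:, None, :] * log_ratio, axis=2)
        # Encoder update, computed in the log domain for stability.
        logits = np.log(p_u)[None, :] - beta * kl
        logits -= logits.max(axis=1, keepdims=True)
        encoder = np.maximum(np.exp(logits), 1e-12)   # floor avoids empty clusters
        encoder /= encoder.sum(axis=1, keepdims=True)
    return encoder

# Hypothetical strictly positive joint pmf on a 4 x 3 alphabet.
p_xy = np.array([[0.15, 0.05, 0.02],
                 [0.12, 0.06, 0.03],
                 [0.02, 0.05, 0.20],
                 [0.03, 0.07, 0.20]])

encoder = iterative_ib(p_xy, num_clusters=2, beta=5.0)
p_x = p_xy.sum(axis=1)
complexity = mutual_information(p_x[:, None] * encoder)   # I(U;X)
relevance = mutual_information(encoder.T @ p_xy)          # I(U;Y)
print(f"I(U;X) = {complexity:.4f} bits, I(U;Y) = {relevance:.4f} bits")
```

Running the routine for several values of `beta` yields different operating points, and, as with other alternating schemes of this type, the fixed point that is reached can depend on the initialization.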

6.5.1. Distributed Variational IB

When the data distribution is unknown and only data samples are available, the variational DIB cost in Equation (81) can be optimized following similar steps as for the variational IB in Section 3.3.1 by parameterizing the encoding and decoding distributions using a family of distributions whose parameters are determined by DNNs. This allows us to formulate Equation (81) in terms of the DNN parameters, i.e., its weights, and optimize it by using the reparameterization trick [15], Monte Carlo sampling, and stochastic gradient descent (SGD)-type algorithms.

Considering encoders and decoders parameterized by DNN parameters , the DIB cost in Equation (81) can be optimized by considering the following empirical Monte Carlo approximation:

| (82) |

where are samples obtained from the reparametrization trick by sampling from K random variables . The details of the method can be found in [92]. The resulting architecture is shown in Figure 8. This architecture generalizes that from autoencoders to the distributed setup with K encoders.

Figure 8.

Example parameterization of the Distributed Variational Information Bottleneck method using neural networks.

6.6. Connections to Coding Problems and Learning

Similar to the point-to-point IB-problem, the distributed IB problem also has abundant connections with (asymptotic) coding and learning problems.

6.6.1. Distributed Source Coding under Logarithmic Loss

A key element of the proof of the converse part of Theorem 3 is the connection with the Chief Executive Officer (CEO) source coding problem. For the case of encoders, while the characterization of the optimal rate-distortion region of this problem for general distortion measures has eluded information theorists for more than four decades, a characterization of the optimal region in the case of logarithmic loss distortion measure has been provided recently in [65]. A key step in [65] is that the log-loss distortion measure admits a lower bound in the form of the entropy of the source conditioned on the decoders’ input. Leveraging this result, in our converse proof of Theorem 3, we derive a single letter upper bound on the entropy of the channel inputs conditioned on the indices that are sent by the relays, in the absence of knowledge of the codebook indices . In addition, the rate region of the vector Gaussian CEO problem under logarithmic loss distortion measure has been found recently in [94,95].

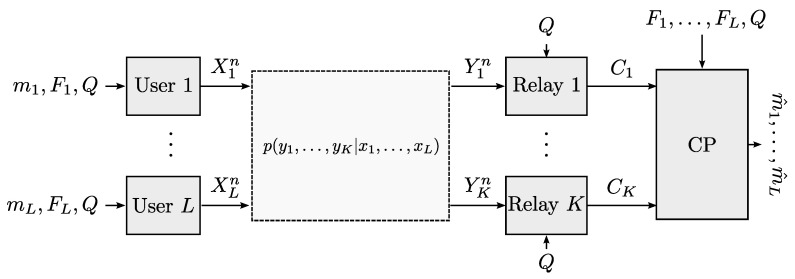

6.6.2. Cloud RAN

Consider the discrete memoryless (DM) CRAN model shown in Figure 9. In this model, L users communicate with a common destination or central processor (CP) through K relay nodes, where and . Relay node k, , is connected to the CP via an error-free finite-rate fronthaul link of capacity . In what follows, we let and indicate the set of users and relays, respectively. Similar to Simeone et al. [96], the relay nodes are constrained to operate without knowledge of the users’ codebooks and only know a time-sharing sequence , i.e., a set of time instants at which users switch among different codebooks. The obliviousness of the relay nodes to the actual codebooks of the users is modeled via the notion of randomized encoding [97,98]. That is, users or transmitters select their codebooks at random and the relay nodes are not informed about the currently selected codebooks, while the CP is given such information.

Figure 9.

CRAN model with oblivious relaying and time-sharing.

Consider the following class of DM CRANs in which the channel outputs at the relay nodes are independent conditionally on the users’ inputs. That is, for all and all ,

| (83) |

forms a Markov Chain in this order.

The following theorem provides a characterization of the capacity region of this class of DM CRAN problem under oblivious relaying.

Theorem 3

([22,23]). For the class of DM CRANs with oblivious relay processing and enabled time-sharing for which Equation (83) holds, the capacity region is given by the union of all rate tuples which satisfy

for all non-empty subsets and all , for some joint measure of the form

(84)

The direct part of Theorem 3 can be obtained by a coding scheme in which each relay node compresses its channel output by using Wyner–Ziv binning to exploit the correlation with the channel outputs at the other relays, and forwards the bin index to the CP over its rate-limited link. The CP jointly decodes the compression indices (within the corresponding bins) and the transmitted messages, i.e., Cover-El Gamal compress-and-forward [99] (Theorem 3) with joint decompression and decoding (CF-JD). Alternatively, the rate region of Theorem 3 can also be obtained by a direct application of the noisy network coding (NNC) scheme of [64] (Theorem 1).

The connection between this problem, source coding, and the distributed information bottleneck is discussed in [22,23], particularly in the derivation of the converse part of the theorem. Note also the similarity between the resulting capacity region in Theorem 3 and the relevance–complexity region of the distributed information bottleneck in Theorem 1, despite the significant differences between the setups.

6.6.3. Distributed Inference, ELBO and Multi-View Learning

In many data analytics problems, data are collected from various sources of information or feature extractors and are intrinsically heterogeneous. For example, an image can be identified by its color or texture features, and a document may contain text and images. Conventional machine learning approaches concatenate all available data into one big row vector (or matrix) on which a suitable algorithm is then applied. Treating different observations as a single source might cause overfitting and is not physically meaningful because each group of data may have different statistical properties. Alternatively, one may partition the data into groups according to sample homogeneity, and each group of data can be regarded as a separate view. This paradigm, termed multi-view learning [100], has received growing interest, and various algorithms exist, sometimes under references such as co-training [101,102,103,104], multiple kernel learning [104], and subspace learning [105]. By using distinct encoder mappings to represent distinct groups of data, and jointly optimizing over all mappings to remove redundancy, multi-view learning offers a degree of flexibility that is not only desirable in practice but is also likely to result in better learning capability. Indeed, as shown in [106], local learning algorithms produce fewer errors than global ones. Viewing the problem as that of function approximation, the intuition is that it is usually not easy to find a single function that has good predictability properties over the entire data space.

Besides, the distributed learning of Figure 7 clearly finds application in all those scenarios in which learning is performed collaboratively but distinct learners either only access subsets of the entire dataset (e.g., due to physical constraints) or access independent noisy versions of the entire dataset.

In addition, similar to the single-encoder case, the distributed IB also finds applications in establishing fundamental performance limits and in formulating cost functions from an operational point of view. One such example is the generalization of the commonly used ELBO, given in Equation (62), to the setup with K views or observations, as formulated in Equation (78). Similarly, from the formulation of the DIB problem, a natural generalization of the classical autoencoders emerges, as given in Figure 8.

7. Outlook

A variant of the bottleneck problem in which the encoder’s output is constrained in terms of its entropy, rather than its mutual information with the encoder’s input as done originally in [1], was considered in [107]. The solution of this problem turns out to be a deterministic encoder map as opposed to the stochastic encoder map that is optimal under the IB framework of Tishby et al. [1], which results in a reduction of the algorithm’s complexity. This idea was then used and extended to the case of available resource (or time) sharing in [108].

In the context of privacy against inference attacks [109], the authors of [75,76] considered a dual of the information bottleneck problem in which Y represents some private data that are correlated with the non-private data X. A legitimate receiver (analyst) wishes to infer as much information as possible about the non-private data X but does not need to infer any information about the private data Y. Because X and Y are correlated, sharing the non-private data X with the analyst possibly reveals information about Y. For this reason, there is a trade-off between the amount of information that the user shares about X, as measured by the mutual information I(U;X), and the information that is revealed about the private data Y, as measured by the mutual information I(U;Y), where U, X, and Y form the Markov chain U - X - Y.
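To make this trade-off concrete, the following sketch evaluates both mutual informations for a discrete toy example. It assumes that the released variable U is generated from the non-private data X alone through a stochastic map, so that U, X, and Y form a Markov chain in this order. The joint pmf, the two release maps, and all function names are hypothetical.

```python
import numpy as np

def mutual_information(p_ab):
    """Mutual information (in nats) of a joint pmf given as a 2-D array."""
    p_a = p_ab.sum(axis=1, keepdims=True)
    p_b = p_ab.sum(axis=0, keepdims=True)
    mask = p_ab > 0
    return float(np.sum(p_ab[mask] * np.log(p_ab[mask] / (p_a @ p_b)[mask])))

def release_tradeoff(p_xy, p_u_given_x):
    """Return (I(U;X), I(U;Y)) when U depends on (X, Y) only through X.

    p_xy[x, y] is the joint pmf of the shared and private data (assumption).
    p_u_given_x[u, x] is the release map; each column sums to one.
    """
    p_x = p_xy.sum(axis=1)
    p_ux = p_u_given_x * p_x[None, :]   # joint pmf of (U, X)
    p_uy = p_u_given_x @ p_xy           # joint pmf of (U, Y) under the chain
    return mutual_information(p_ux), mutual_information(p_uy)

# Toy example: X and Y are correlated binary variables.
p_xy = np.array([[0.4, 0.1],
                 [0.1, 0.4]])
full_release = np.eye(2)                    # U = X: shares everything about X
no_release = np.array([[1.0, 1.0],
                       [0.0, 0.0]])         # U constant: shares nothing
shared_full, leaked_full = release_tradeoff(p_xy, full_release)
shared_none, leaked_none = release_tradeoff(p_xy, no_release)
```

Releasing X in full maximizes the shared information but also leaks I(X;Y) about Y, whereas a constant release leaks nothing and shares nothing. Intermediate stochastic maps trade one quantity against the other.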

Among the interesting problems that are left unaddressed in this paper is that of characterizing optimal input distributions under rate-constrained compression at the relays where, e.g., discrete signaling is already known to sometimes outperform Gaussian signaling for single-user Gaussian CRAN [97]. It is conjectured that the optimal input distribution is discrete. Other issues might relate to extensions to continuous-time filtered Gaussian channels, in parallel to the regular bottleneck problem [108], or extensions to settings in which fronthauls may not be available at some radio units, a fact that is unknown to the system. That is, the more radio units are connected to the central unit, the higher the rate that could be conveyed over the CRAN uplink [110]. Alternatively, one may consider finding the worst-case noise under given input distributions, e.g., Gaussian, and rate-constrained compression at the relays. Furthermore, there are interesting aspects that address processing constraints of continuous waveforms, e.g., sampling at a given rate [111,112] with focus on remote logarithmic distortion [65], which in turn boils down to the distributed bottleneck problem [91,92]. We also mention finite-sample size analysis (i.e., finite block length n, which relates to the literature on finite block length coding in information theory). Finally, it is interesting to observe that the bottleneck problem relates to an interesting problem when R does not necessarily scale with the block length n.

8. Proofs

8.1. Proof of Theorem 1

The proof relies on the equivalence of the studied distributed learning problem with the Chief Executive Officer (CEO) problem under logarithmic-loss distortion measure, which was studied in [65] (Theorem 10). For the K-encoder CEO problem, let us consider K encoding functions satisfying and a decoding function , which produces a probabilistic estimate of Y from the outputs of the encoders, i.e., is the set of distributions on . The quality of the estimation is measured in terms of the average log-loss.

Definition 1.

A tuple is said to be achievable in the K-encoder CEO problem for for which the Markov Chain in Equation (83) holds, if there exists a length n, encoders for , and a decoder , such that

(85)

(86)

The rate-distortion region is given by the closure of all achievable tuples .

The following lemma shows that the minimum average logarithmic loss is the conditional entropy of Y given the descriptions. The result is essentially equivalent to [65] (Lemma 1) and it is provided for completeness.

Lemma 2.

Let us consider and the encoders , and the decoder . Then,

(87)

with equality if and only if .

Proof.

Let be the argument of and be a distribution on . We have for :

(88)

(89)

(90)

(91)

where Equation (91) is due to the non-negativity of the KL divergence and the equality holds if and only if for where for all z and . Averaging over Z completes the proof. □
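The statement of Lemma 2 can be checked numerically in a single-letter toy setting. The sketch below assumes a small, randomly drawn joint pmf of (Y, Z) and compares the expected log-loss of the true posterior with that of an arbitrary mismatched soft estimate. The pmf and all names are hypothetical, and logarithms are natural.

```python
import numpy as np

def expected_log_loss(p_yz, q_y_given_z):
    """E[-log Q(Y|Z)] under the joint pmf p_yz[y, z]."""
    return float(-np.sum(p_yz * np.log(q_y_given_z)))

def conditional_entropy(p_yz):
    """H(Y|Z) in nats for a strictly positive joint pmf p_yz[y, z]."""
    posterior = p_yz / p_yz.sum(axis=0, keepdims=True)
    return float(-np.sum(p_yz * np.log(posterior)))

rng = np.random.default_rng(0)

# Random strictly positive joint pmf of (Y, Z) on a 3 x 4 alphabet.
p_yz = rng.random((3, 4)) + 0.1
p_yz /= p_yz.sum()

# The decoder that outputs the true posterior P(y|z).
posterior = p_yz / p_yz.sum(axis=0, keepdims=True)

# An arbitrary mismatched soft decoder Q(y|z); each column sums to one.
mismatched = rng.random((3, 4)) + 0.1
mismatched /= mismatched.sum(axis=0, keepdims=True)

loss_posterior = expected_log_loss(p_yz, posterior)
loss_mismatched = expected_log_loss(p_yz, mismatched)
entropy = conditional_entropy(p_yz)
```

The posterior attains the conditional entropy exactly, and the mismatched decoder exceeds it by the average KL divergence between the posterior and the decoder output, in line with the argument above.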

Essentially, Lemma 2 states that minimizing the average log-loss is equivalent to maximizing relevance as given by the mutual information . Formally, the connection between the distributed learning problem under study and the K-encoder CEO problem studied in [65] can be formulated as stated next.

Proposition 2.

A tuple if and only if .

Proof.

Let the tuple be achievable for some encoders . It follows by Lemma 2 that, by letting the decoding function , we have , and hence .

Conversely, assume the tuple is achievable. It follows by Lemma 2 that , which implies with . □

The characterization of rate-distortion region has been established recently in [65] (Theorem 10). The proof of the theorem is completed by noting that Proposition 2 implies that the result in [65] (Theorem 10) can be applied to characterize the region , as given in Theorem 1.

8.2. Proof of Proposition 1

Let be the maximizing in Equation (69). Then,

| (92) |

| (93) |

| (94) |

| (95) |

| (96) |

where Equation (94) is due to the definition of in Equation (67); Equation (95) holds since using Equation (70); and Equation (96) follows by using Equation (68).

Conversely, if is the solution to the maximization in the function in Equation (68) such that , then and and we have, for any , that

| (97) |

| (98) |

| (99) |

| (100) |

| (101) |

| (102) |

| (103) |

where in Equation (100) we use that , which follows by using the Markov Chain ; Equation (101) follows since is the maximum over all possible distributions (possibly distinct from the that maximizes ); and Equation (102) is due to Equation (69). Finally, Equation (103) is valid for any and . Given s, and hence , letting yields . Together with Equation (96), this completes the proof of Proposition 1.

8.3. Proof of Lemma 1

Let, for a given random variable Z and , a stochastic mapping be given. It is easy to see that

| (104) |

In addition, we have

| (105) |

| (106) |

Substituting it into Equation (67), we get

| (107) |

| (108) |

where Equation (108) follows by the non-negativity of relative entropy. In addition, note that the inequality in Equation (108) holds with equality iff is given by Equation (80).

8.4. Proof of Theorem 2

The proof of Theorem 2 relies on deriving an outer bound on the relevance–complexity region, as given by Equation (66), and showing that it is achievable with Gaussian pmfs and without time-sharing. In doing so, we use the technique of [89] (Theorem 8), which relies on the de Bruijn identity and the properties of Fisher information and MMSE.

Lemma 3

([88,89]). Let be a pair of random vectors with pmf . We have

(109)

where the conditional Fisher information matrix is defined as

(110)

and the minimum mean square error (MMSE) matrix is

(111)

For and fixed , choose , satisfying such that

| (112) |

Note that such exists since , for all , and .

Using Equation (66), we get

| (113) |

where the inequality is due to Lemma 3, and Equation (113) is due to Equation (112).

In addition, we have

| (114) |

| (115) |

where Equation (114) is due to Lemma 3 and Equation (115) is due to the following equality, which relates the MMSE matrix in Equation (112) and the Fisher information, and whose proof follows,

| (116) |

To show Equation (116), we use the de Bruijn identity to relate the Fisher information with the MMSE as given in the following lemma, the proof of which can be found in [89].

Lemma 4.

Let be a random vector with finite second moments and independent of . Then,

(117)
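As a sanity check of this kind of relation between the MMSE and the Fisher information, the sketch below verifies it numerically in the fully Gaussian, real-valued case without conditioning. It assumes that the relation takes the commonly stated form mmse(V | V + N) = Σ_N − Σ_N J(V + N) Σ_N, where Σ_N is the covariance of the Gaussian noise N. For Gaussian V, both sides are available in closed form. The covariance matrices and function names are hypothetical.

```python
import numpy as np

def gaussian_mmse(cov_v, cov_n):
    """MMSE matrix of V given V + N for independent zero-mean Gaussians."""
    return cov_v - cov_v @ np.linalg.inv(cov_v + cov_n) @ cov_v

def gaussian_fisher(cov_v, cov_n):
    """Fisher information matrix of V + N, Gaussian with covariance cov_v + cov_n."""
    return np.linalg.inv(cov_v + cov_n)

def random_covariance(dim, rng):
    """Random symmetric positive definite matrix (illustrative only)."""
    a = rng.standard_normal((dim, dim))
    return a @ a.T + dim * np.eye(dim)

rng = np.random.default_rng(1)
cov_v = random_covariance(3, rng)
cov_n = random_covariance(3, rng)

# Left-hand side: MMSE matrix computed from the linear estimator.
lhs = gaussian_mmse(cov_v, cov_n)
# Right-hand side: assumed Fisher-information form of the same matrix.
rhs = cov_n - cov_n @ gaussian_fisher(cov_v, cov_n) @ cov_n
```

Both expressions reduce to the inverse of the sum of the inverse covariances of V and N, so they agree up to numerical precision. The lemma itself holds more generally, for non-Gaussian vectors with finite second moments, which this check does not cover.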

From the MMSE of Gaussian random vectors [51],

| (118) |

where and , and

| (119) |

Note that is independent of due to the orthogonality principle of the MMSE and its Gaussian distribution. Hence, it is also independent of .

Thus, we have

| (120) |

| (121) |

where Equation (120) follows since the cross terms are zero due to the Markov Chain (see Appendix V of [89]); and Equation (121) follows due to Equation (112) and .

Finally, we have

| (122) |

| (123) |

| (124) |

where Equation (122) is due to Lemma 4; Equation (123) is due to Equation (121); and Equation (124) follows due to Equation (119).