Abstract

In this paper, we introduce a new class of robust model selection criteria. These criteria are defined by estimators of the expected overall discrepancy using pseudodistances and the minimum pseudodistance principle. Theoretical properties of these criteria are proved, namely asymptotic unbiasedness, robustness, consistency, as well as the limit laws. The case of the linear regression models is studied and a specific pseudodistance based criterion is proposed. Monte Carlo simulations and applications for real data are presented in order to exemplify the performance of the new methodology. These examples show that the new selection criterion for regression models is a good competitor of some well known criteria and may have superior performance, especially in the case of small and contaminated samples.

Keywords: model selection, minimum pseudodistance estimation, robustness

1. Introduction

Model selection is fundamental to the practical application of statistics, and there is a substantial literature on this issue. Classical model selection criteria include, among others, Mallows' $C_p$-criterion, the Akaike Information Criterion (AIC), based on the Kullback-Leibler divergence, the Bayesian Information Criterion (BIC), and the General Information Criterion (GIC), which corresponds to a general class of criteria that also estimate the Kullback-Leibler divergence. These criteria were proposed in [1,2,3,4], respectively, and represent powerful tools for choosing the best model among the candidate models that can be used to fit a given data set. On the other hand, many classical procedures for model selection are extremely sensitive to outliers and to other departures from the distributional assumptions of the model. Robust versions of classical model selection criteria, which are not strongly affected by outliers, have been proposed, for example, in [5,6,7]. Some recent proposals for robust model selection are criteria based on divergences and minimum divergence estimators. We recall here the Divergence Information Criteria (DIC) based on the density power divergences introduced in [8], the Modified Divergence Information Criteria (MDIC) introduced in [9] and the criteria based on minimum dual divergence estimators introduced in [10].

Interest in statistical methods based on divergence measures has grown significantly in recent years. For a wide variety of models, statistical methods based on divergences have high model efficiency and are also robust, representing attractive alternatives to the classical methods. We refer to the monographs [11,12] for an excellent presentation of such methods, their importance and their applications. The pseudodistances that we use in the present paper were originally introduced in [13], where they are called “type-0” divergences and where the corresponding minimum divergence estimators are studied. They are also presented and extensively studied in [14], where they are called $\gamma$-divergences, as well as in [15] in the context of decomposable pseudodistances. Like divergences, the pseudodistances are not mathematical metrics in the strict sense of the term. They satisfy two properties, namely nonnegativity and the fact that the pseudodistance between two probability measures equals zero if and only if the two measures are equal. The divergences are moreover characterized by the information processing property, that is, the complete invariance with respect to statistically sufficient transformations of the observation space. In general, a pseudodistance may not satisfy this property. We have adopted the term pseudodistance for this reason, but the other terms mentioned above are also encountered in the literature.

The pseudodistances that we consider in this paper have also been used to define robustness and efficiency measures, as well as the corresponding optimal robust M-estimators, following Hampel’s infinitesimal approach in [16]. The minimum pseudodistance estimators for general parametric models have been studied in [15] and consist of minimizing an empirical version of a pseudodistance between the assumed theoretical model and the true model underlying the data. These estimators have the advantage of not requiring any prior smoothing and reconcile robustness with high efficiency, providing a high degree of stability under model misspecification, often with a minimal loss in model efficiency. Such estimators are also defined and studied in the case of the multivariate normal model, as well as for linear regression models in [17,18], where applications to portfolio optimization models are also presented.

In the present paper we propose new criteria for model selection, based on pseudodistances and on minimum pseudodistance estimators. These new criteria have robustness properties, are asymptotically unbiased, consistent and compare well with some other known model selection criteria, even for small samples.

The paper is organized as follows. Section 2 is devoted to minimum pseudodistance estimators and to their asymptotic properties, which will be needed in the next sections. Section 3 presents new estimators of the expected overall discrepancy using pseudodistances, together with corresponding theoretical properties including robustness, consistency and limit laws. The new asymptotically unbiased model selection criteria are presented in Section 3.3, where the case of the univariate normal model and the case of linear regression models are investigated. Applications based on Monte Carlo simulations and on real data, illustrating the performance of the new methodology in the case of linear regression models, are included in Section 4.

2. Minimum Pseudodistance Estimators

The construction of new model selection criteria is based on using the following family of pseudodistances (see [15]). For two probability measures P and Q admitting densities p and q respectively with respect to the Lebesgue measure, the family of pseudodistances of order is defined by

| (1) |

and satisfies the limit relation

| (2) |

where is the modified Kullback-Leibler divergence.

Let be a parametric model indexed by , where is a d-dimensional parameter space, and be the corresponding densities with respect to the Lebesgue measure . Let be a random sample on , . For fixed, a minimum pseudodistance estimator of the unknown parameter from the law is defined by replacing the measure in the pseudodistance by the empirical measure pertaining to the sample, and then minimizing this empirical quantity with respect to on the parameter space. Since the middle term in does not depend on , these estimators are defined by

| (3) |

or equivalently as

| (4) |

where . Denoting , these estimators can be written as

| (5) |

The optimum given above need not be uniquely defined.
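As an illustration, the following Python sketch computes a minimum pseudodistance estimate for the univariate normal model. It is not the implementation used in this paper. It assumes that the criterion in (4) is the ratio of the empirical mean of $p_\theta^\gamma(X_i)$ to $\left(\int p_\theta^{\gamma+1}\,d\lambda\right)^{\gamma/(\gamma+1)}$, and it solves the resulting stationarity equations by a simple fixed-point iteration. The function name, the starting values (median and rescaled median absolute deviation) and the stopping rule are our own hypothetical choices.

```python
import math
import random
import statistics


def min_pseudodistance_normal(xs, gamma, max_iter=500, tol=1e-10):
    """Illustrative fixed-point iteration for the N(mu, sigma^2) model.

    Assumed stationarity equations (derived from the assumed criterion):
        mu      = sum(w_i x_i) / sum(w_i)
        sigma^2 = (gamma + 1) * sum(w_i (x_i - mu)^2) / sum(w_i)
    with weights w_i = exp(-gamma (x_i - mu)^2 / (2 sigma^2)).
    """
    # Robust starting point, so that the iteration targets a sensible
    # local optimum (the optimum need not be unique).
    mu = statistics.median(xs)
    mad = statistics.median([abs(x - mu) for x in xs])
    sigma2 = (1.4826 * mad) ** 2
    for _ in range(max_iter):
        # Weights proportional to p_theta(x_i)^gamma: outliers get tiny weights.
        w = [math.exp(-gamma * (x - mu) ** 2 / (2.0 * sigma2)) for x in xs]
        sw = sum(w)
        new_mu = sum(wi * x for wi, x in zip(w, xs)) / sw
        new_sigma2 = (gamma + 1.0) * sum(
            wi * (x - new_mu) ** 2 for wi, x in zip(w, xs)
        ) / sw
        converged = abs(new_mu - mu) + abs(new_sigma2 - sigma2) < tol
        mu, sigma2 = new_mu, new_sigma2
        if converged:
            break
    return mu, math.sqrt(sigma2)


# Hypothetical data: 200 draws from N(0, 1) plus 20 gross outliers at 10.
rng = random.Random(0)
data = [rng.gauss(0.0, 1.0) for _ in range(200)] + [10.0] * 20

mu_hat, sigma_hat = min_pseudodistance_normal(data, gamma=0.5)
sample_mean = statistics.mean(data)
```

On such a contaminated sample the estimates should stay close to the parameters of the clean component, whereas the sample mean is pulled toward the outliers. Because the optimum need not be unique, the result of the iteration depends on the starting point.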

On the other hand,

| (6) |

and here is the unique optimizer, since implies .

Define

An estimator of is defined by

| (7) |

The following regularity conditions of the model will be assumed throughout the rest of the paper.

(C1) The density has continuous partial derivatives with respect to up to the third order (for all x -a.e.).

(C2) There exists a neighborhood of such that the first-, the second- and the third- order partial derivatives with respect to of are dominated on by some -integrable functions.

(C3) The integrals and exist.

Theorem 1.

Assume that conditions (C1), (C2) and (C3) are fulfilled. Then

- (a)

Let . Then, as , with probability one, the function attains a local maximal value at some point in the interior of B, which implies that the estimator is -consistent.

- (b)

converges in distribution to a centered multivariate normal random variable with covariance matrix

| (8) |

where and .

- (c)

converges in distribution to a centered normal variable with variance .

We refer to [15] for details regarding these estimators and for the proofs of the above asymptotic properties.

3. Model Selection Criteria Based on Pseudodistances

Model selection is a method for selecting the best model among candidate models that can be used to fit a given data set. A model selection criterion can be considered as an approximately unbiased estimator of the expected overall discrepancy, a nonnegative quantity which measures the distance between the true unknown model and a fitted approximating model. If the value of the criterion is small, then the approximated candidate model can be chosen. In the following, by applying the same methodology used for AIC, we construct new criteria for model selection using pseudodistances (1) and minimum pseudodistance estimators.

Let be a random sample from the distribution associated with the true model Q with density q and let be the density of a candidate model from a parametric family , where .

3.1. The Expected Overall Discrepancy

For fixed, we consider the quantity

| (9) |

which is the same as the pseudodistance without the middle term, which remains constant irrespective of the model used.

The target theoretical quantity that will be approximated by an asymptotically unbiased estimator is given by

| (10) |

where is a minimum pseudodistance estimator defined as in (3). The same pseudodistance is used for both and . The quantity (10) can be seen as an average distance between Q and up to a constant and is called the expected overall discrepancy between Q and .

The next Lemma gives the gradient vector and the Hessian matrix of and is useful for the evaluation of through Taylor expansion.

Throughout this paper, for a scalar function , the quantity denotes the d-dimensional gradient vector of with respect to the vector and denotes the corresponding Hessian matrix. We also use the notations and for the first and the second order derivatives of with respect to .

We assume the following conditions allowing derivation under the integral sign:

(C4) There exists a neighborhood of such that

(C5) There exists a neighborhood of such that

Lemma 1.

Under (C4) and (C5), the gradient vector and the Hessian matrix of are

(11)

When the true model Q belongs to the parametric model , hence and , the gradient vector and the Hessian matrix of simplify to

| (12) |

| (13) |

where

| (14) |

In the following propositions we suppose that the true model Q belongs to the parametric model , hence , and is the value of the parameter corresponding to the true model . We also say that is the true value of the parameter (the proofs of all propositions are given in Appendix A).

Proposition 1.

When the true model Q belongs to the parametric model , assuming that (C4) and (C5) are fulfilled for and , the expected overall discrepancy is given by

| (15) |

where , is given by (14).

3.2. Estimation of the Expected Overall Discrepancy

In this section, we introduce an estimator of the expected overall discrepancy, under the hypothesis that the true model Q belongs to the parametric model . Hence, and the unknown parameter will be estimated by a minimum pseudodistance estimator

For a given , a natural estimator of is defined by

| (16) |

Lemma 2.

Assuming (C4), the gradient vector and the Hessian matrix of are given by

Proposition 2.

When the true model Q belongs to the parametric model , by imposing the conditions (C1)-(C5), it holds

| (17) |

where .

The following result allows us to define an asymptotically unbiased estimator of the expected overall discrepancy.

Proposition 3.

When the true model Q belongs to the parametric model , under (C1)-(C5), it holds

| (18) |

where and .

3.2.1. Limit Properties of the Estimator

Under the hypothesis that the true model Q belongs to the family of models , hence , we prove the consistency and the asymptotic normality for the estimator .

Note that

| (19) |

| (20) |

where and is given by (7).

First we prove that is a consistent estimator of . Indeed, using Theorem 1 and the fact that , a Taylor expansion of in around gives

| (21) |

Using the weak law of large numbers,

| (22) |

Combining (21) and (22), we obtain that converges to in probability.

Then, using the continuous mapping theorem, since is a continuous function, we get

in probability.

On the other hand, using the asymptotic normality of the estimator (according to Theorem 1 (c)) together with the univariate delta method, we obtain the asymptotic normality of . The Proposition below summarizes the above asymptotic results.

Proposition 4.

Under (C1)-(C3), when , it holds

(a) converges to in probability.

(b) converges in distribution to a centered univariate normal random variable with variance , being defined in Theorem 1.

3.2.2. Robustness Properties of the Estimator

The influence function is a useful tool for describing the robustness of an estimator. Recall that a map T, defined on a set of probability measures and taking values in the parameter space, is a statistical functional corresponding to an estimator of the parameter , whenever , where is the empirical measure associated with the sample. The influence function of T at is defined by

where , being the Dirac measure putting all mass at x. The gross error sensitivity of the estimator is defined by

Whenever the influence function is bounded with respect to x, the corresponding estimator is called B-robust (see [19]).

In what follows, for a given , we derive the influence function of the estimator . The statistical functional associated with this estimator, which we denote by U, is defined by

where T is the statistical functional corresponding to the minimum pseudodistance estimator used, namely

where .

Due to the Fisher consistency of the functional T, according to (6), we have which implies that .

Proposition 5.

When , the influence function of is given by

(23)

Note that the influence function of the estimator does not depend on the estimator , but depends on the used pseudodistance. Usually, is bounded with respect to x and therefore is a robust estimator with respect to .

For comparison at the level of the influence function, we consider the AIC criterion which is defined by

where is the maximum value of the likelihood function for the model, the maximum likelihood estimator and d the dimension of the parameter. The statistical functional corresponding to the statistic is

where T here is the statistical functional corresponding to the maximum likelihood estimator. The influence function of the functional V is given by

| (24) |

This influence function is not bounded with respect to x, therefore the statistic is not robust.

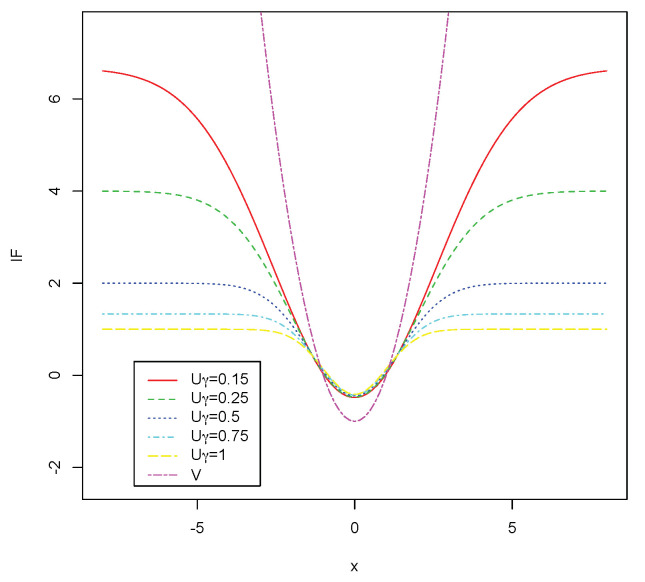

For example, in the case of the univariate normal model, for a positive , the influence function (23) writes as

| (25) |

while the influence function (24) writes as

| (26) |

(here ). For all the pseudodistances, the influence function (25) is bounded with respect to x, therefore the selection criteria based on the statistic will be robust. On the other hand, the influence function (26) is not bounded with respect to x, showing the non-robustness of AIC in this case. Moreover, the gross error sensitivities corresponding to these influence functions are and . These results show that, in the case of the normal model, the gross error sensitivity decreases as increases. Therefore, larger values of are associated with more robust procedures. For the particular case and , the influence functions (25) and (26) are represented in Figure 1.

Figure 1.

Influence functions in the case of the normal model.
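The contrast between a bounded and an unbounded influence function in the normal case can be checked numerically. The sketch below is only indicative: the closed forms it uses are assumptions obtained from the standard derivation for the normal model, namely $\frac{1}{\gamma}\left[1-\sqrt{\gamma+1}\,e^{-\gamma z^{2}/2}\right]$ for the pseudodistance-based statistic and $z^{2}-1$ for the likelihood-based one, with $z=(x-\mu)/\sigma$. They should be compared with (25) and (26) before being relied upon. The function names and the grid are hypothetical.

```python
import math


def if_pseudodistance_normal(x, gamma, mu=0.0, sigma=1.0):
    """Assumed influence function of the pseudodistance-based statistic."""
    z = (x - mu) / sigma
    return (1.0 - math.sqrt(gamma + 1.0) * math.exp(-gamma * z * z / 2.0)) / gamma


def if_aic_normal(x, mu=0.0, sigma=1.0):
    """Assumed influence function of the likelihood-based (AIC) statistic."""
    z = (x - mu) / sigma
    return z * z - 1.0


gamma = 0.5
grid = [x / 10.0 for x in range(-500, 501)]  # contamination points in [-50, 50]

# Largest absolute influence over the grid for each statistic.
sup_pd = max(abs(if_pseudodistance_normal(x, gamma)) for x in grid)
sup_aic = max(abs(if_aic_normal(x)) for x in grid)
```

Under these assumed forms, `sup_pd` never exceeds `1 / gamma` however far the grid is extended, while `sup_aic` grows quadratically with the grid width.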

3.3. Model Selection Criteria Using Pseudodistances

3.3.1. The Case of Univariate Normal Family

The criteria that we propose in this section correspond to the case where the candidate model is a univariate normal model from the family of normal models indexed by . We also suppose that the true model Q belongs to .

In the case of the univariate normal model, defined in (14) expresses as

| (27) |

where V is the asymptotic covariance matrix given by (8) and the matrix is given by

For small positive values of , the matrix can be approximated by the identity matrix I.

According to Theorem 1, is asymptotically multivariate normal and then the statistic has approximately a distribution. For large n, it holds

| (28) |

Also, for the normal model, it holds

| (29) |

Therefore, (18) becomes

| (30) |

Using the central limit theorem and asymptotic properties of given in Theorem 1, the following hold

| (31) |

| (32) |

Using (30), (31) and (32) we obtain:

Proposition 6.

For the univariate normal family, an asymptotically unbiased estimator of the expected overall discrepancy is given by

| (33) |

where is a minimum pseudodistance estimator given by (3).

Under the hypothesis that is the univariate normal model, as we supposed in this subsection, the function h writes as

| (34) |

and it can be easily checked that all the conditions (C1)–(C5) are fulfilled. Therefore we can use all results presented in the preceding subsections, such that Proposition 6 is fully justified.

Moreover, the selection criteria based on (33) are consistent on the basis of Proposition 4. It should also be noted that the bias correction term in (33) decreases slowly as the parameter increases, always staying very close to zero. As expected, the larger the sample size, the smaller the bias correction. As we saw in Section 3.2.2, since the gross error sensitivity of is , larger values of are associated with more robust procedures. On the other hand, the approximation of with the identity matrix holds for values of close to zero. Thus, positive values of smaller than 0.5, for example, could represent choices satisfying both the robustness requirement and the approximation of by the identity matrix, which is necessary to construct the criterion in this case.

3.3.2. The Case of Linear Regression Models

In the following, we adapt the pseudodistance based model selection criterion in the case of linear regression models. Consider the linear regression model

| (35) |

where and e is independent of X. Suppose we have a sample given by the i.i.d. random vectors , , such that .

We consider the joint distribution of the entire data and write a pseudodistance between the theoretical model and the true model corresponding to the data. Let , , be the probability measure associated to the theoretical model given by the random vector and Q the probability measure associated to the true model corresponding to the data. Denote by , respectively by q the corresponding densities. For , the pseudodistance between and Q is defined by

| (36) |

Similar to [18], since the middle term above does not depend on , a minimum pseudodistance estimator of the parameter is defined by

| (37) |

where is the empirical measure associated with the sample. This estimator can be written as

| (38) |

where is the density of the random variable . Then, the estimator can be written as

| (39) |

In order to construct an asymptotically unbiased estimator of the expected overall discrepancy in the case of linear regression models, we evaluate the second and the third terms in (18).

For values of close to 0 ( smaller than 0.3), we found the following approximation of the matrix

| (40) |

where V is the asymptotic covariance matrix of and I is the identity matrix. We refer to [15] for the asymptotic properties of the minimum pseudodistance estimators in the case of linear regression models. Since is asymptotically multivariate normal distributed, using the distribution, we obtain the approximation

| (41) |

Also, the third term in (18) is given by

| (42) |

Then, according to Proposition 3, an asymptotically unbiased estimator of the expected overall discrepancy is given by

| (43) |

where is given by (39). Note that, using the asymptotic properties of and the central limit theorem, the last two terms in (18) of Proposition 3 are .

When we compare different linear regression models, as in Section 4 below, we can ignore the terms depending only on n and in (43). Therefore, we can use as model selection criterion the simplified expression

| (44) |

which we call Pseudodistance based Information Criterion (PIC).

4. Applications

4.1. Simulation Study

In order to illustrate the performance of the PIC criterion (44) in the case of linear regression models, we performed a simulation study using for comparison the model selection criteria AIC, BIC and MDIC. These criteria are defined respectively by

where n is the sample size, p the number of covariates of the model and the classical unbiased estimator of the variance of the model,

with and

where is a consistent estimate of the vector of unknown parameters involved in the model with p covariates and is the associated probability density function. Note that MDIC is based on the well known BHHJ family of divergence measures indexed by a parameter and on the minimum divergence estimating method for robust parameter estimation (see [20]). The value of was found in [9] to be an ideal one for a great variety of settings. The above three criteria have been chosen to be used in this comparative study with PIC not only due to their popularity, but also due to their special characteristics. Indeed, AIC is the classical representative of asymptotically efficient criteria, BIC is known to be consistent, while MDIC is associated with robust estimations (see e.g., [20,21,22,23]).

Let be four variables following respectively the normal distributions , , and . We consider the model

with and . This is the uncontaminated model. In order to evaluate the robustness of the new PIC criterion, we also consider the contaminated model

where and such that . Note that for and the uncontaminated model is obtained.

The simulated data corresponding to the contaminated model are

for , where are values of the variables independently generated from the normal distributions , , , correspondingly.

With a set of four possible regressors there are possible model specifications that include at least one regressor. These 15 possible models constitute the set of candidate models in our study. More precisely, this set contains the full model () given by

as well as all 14 possible subsets of the full model consisting of one (), two () and three () of the four regressors and , with , and .
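The set of candidate models can be enumerated mechanically. The short Python sketch below lists every non-empty subset of four regressors. The labels `X1` to `X4` are placeholder names introduced here for illustration only.

```python
from itertools import combinations

# Placeholder labels for the four regressors (hypothetical names).
regressors = ["X1", "X2", "X3", "X4"]

# All non-empty subsets, ordered by the number of regressors they contain.
candidate_models = [
    subset
    for size in range(1, len(regressors) + 1)
    for subset in combinations(regressors, size)
]

# Number of candidate models for each model size.
counts_by_size = {
    size: sum(1 for model in candidate_models if len(model) == size)
    for size in range(1, len(regressors) + 1)
}
```

The list contains the full model together with the 14 proper subsets, that is, 4 one-regressor, 6 two-regressor and 4 three-regressor models.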

In our simulation study, for several values of the parameter associated with the pseudodistance, we compared the new criterion PIC with the other model selection criteria. Different levels of contamination and different sample sizes have been considered. In the examples presented in this work, and . Additional examples for have been analyzed (results not shown) with similar findings (see below). For each setting, fifty experiments were performed in order to select the best model among the available candidate models. In the framework of each of the fifty experiments, on the basis of the simulated observations, the value of each of the above model selection criteria was calculated for each of the 15 possible models. Then, for each criterion, the 15 candidate models were ranked from 1st to 15th according to the value of the criterion. The model chosen by a given criterion is the one for which the value of the criterion is the lowest among all the 15 candidate models.
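The selection and tallying steps described above can be sketched as follows. This is a minimal illustration, not the code used for the study: the function names are hypothetical, and the criterion values in the example are made-up numbers that only show the mechanics for two candidate models and four experiments.

```python
from collections import Counter


def select_model(criterion_values):
    """Return the candidate model with the lowest criterion value."""
    return min(criterion_values, key=criterion_values.get)


def selection_proportions(experiments):
    """Percentage of experiments in which each model is selected."""
    counts = Counter(select_model(values) for values in experiments)
    total = len(experiments)
    return {model: 100.0 * k / total for model, k in counts.items()}


# Made-up criterion values (one dict per experiment), for illustration only.
experiments = [
    {("X1", "X2"): 10.2, ("X1", "X2", "X3"): 11.0},
    {("X1", "X2"): 9.7, ("X1", "X2", "X3"): 9.1},
    {("X1", "X2"): 8.4, ("X1", "X2", "X3"): 8.9},
    {("X1", "X2"): 7.5, ("X1", "X2", "X3"): 7.9},
]

proportions = selection_proportions(experiments)
```

In the study itself, each dictionary would hold the values of one criterion for all 15 candidate models, and the tally would run over the fifty experiments of a given setting.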

Tables 1–12 present the proportions of models selected by the considered criteria. Among the 15 candidate models only 4 were chosen at least once. These four models are the same in all instances and appear in the 2nd column of all tables.

Table 1.

Proportions of selected models by the considered criteria (n = 20, ).

| Criteria | Variables | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| PIC | | 90 | 84 | 88 | 84 | 92 | 90 | 86 |
| AIC | | 62 | 56 | 52 | 56 | 66 | 56 | 60 |
| BIC | | 74 | 76 | 60 | 74 | 72 | 68 | 70 |
| MDIC | | 86 | 86 | 64 | 78 | 84 | 80 | 74 |

Table 2.

Proportions of selected models by the considered criteria (n = 20, ).

| Criteria | Variables | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| PIC | | 80 | 84 | 90 | 82 | 82 | 80 | 80 |
| AIC | | 60 | 52 | 56 | 62 | 64 | 54 | 52 |
| BIC | | 76 | 70 | 78 | 72 | 84 | 76 | 76 |
| MDIC | | 86 | 76 | 88 | 74 | 92 | 78 | 86 |

Table 3.

Proportions of selected models by the considered criteria (n = 20, ).

| Criteria | Variables | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| PIC | | 82 | 88 | 80 | 94 | 82 | 88 | 86 |
| AIC | | 78 | 50 | 66 | 70 | 66 | 64 | 66 |
| BIC | | 84 | 64 | 74 | 84 | 84 | 76 | 82 |
| MDIC | | 90 | 74 | 82 | 88 | 88 | 80 | 88 |

Table 4.

Proportions of selected models by the considered criteria (n = 20, ).

| Criteria | Variables | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| PIC | | 86 | 86 | 86 | 86 | 88 | 82 | 92 |
| AIC | | 64 | 74 | 62 | 58 | 64 | 62 | 70 |
| BIC | | 78 | 90 | 78 | 80 | 82 | 80 | 74 |
| MDIC | | 84 | 92 | 88 | 88 | 88 | 88 | 80 |

Table 5.

Proportions of selected models by the considered criteria (n = 50, ).

| Criteria | Variables | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| PIC | | 86 | 96 | 94 | 90 | 88 | 86 | 90 |
| AIC | | 74 | 64 | 82 | 62 | 64 | 78 | 72 |
| BIC | | 94 | 86 | 96 | 86 | 90 | 88 | 90 |
| MDIC | | 94 | 82 | 98 | 82 | 86 | 88 | 90 |

Table 6.

Proportions of selected models by the considered criteria (n = 50, ).

| Criteria | Variables | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| PIC | | 92 | 88 | 92 | 90 | 82 | 94 | 86 |
| AIC | | 70 | 64 | 62 | 64 | 66 | 74 | 72 |
| BIC | | 92 | 88 | 82 | 92 | 88 | 88 | 86 |
| MDIC | | 92 | 86 | 76 | 88 | 84 | 88 | 86 |

Table 7.

Proportions of selected models by the considered criteria (n = 50, ).

| Criteria | Variables | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| PIC | | 94 | 92 | 92 | 88 | 84 | 90 | 88 |
| AIC | | 70 | 62 | 66 | 68 | 70 | 72 | 58 |
| BIC | | 96 | 82 | 92 | 86 | 92 | 92 | 86 |
| MDIC | | 90 | 78 | 88 | 86 | 86 | 90 | 82 |

Table 8.

Proportions of selected models by the considered criteria (n = 50, ).

| Criteria | Variables | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| PIC | | 94 | 90 | 80 | 84 | 90 | 94 | 88 |
| AIC | | 64 | 68 | 62 | 68 | 66 | 64 | 62 |
| BIC | | 86 | 86 | 86 | 90 | 86 | 94 | 82 |
| MDIC | | 84 | 84 | 82 | 88 | 84 | 90 | 82 |

Table 9.

Proportions of selected models by the considered criteria (n = 100, ).

| Criteria | Variables | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| PIC | | 94 | 94 | 94 | 92 | 88 | 88 | 94 |
| AIC | | 70 | 82 | 78 | 70 | 68 | 68 | 72 |
| BIC | | 90 | 96 | 98 | 90 | 96 | 94 | 88 |
| MDIC | | 86 | 96 | 92 | 86 | 92 | 90 | 88 |

Table 10.

Proportions of selected models by the considered criteria (n = 100, ).

| Criteria | Variables | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| PIC | | 88 | 92 | 96 | 88 | 88 | 88 | 86 |
| AIC | | 68 | 72 | 78 | 66 | 70 | 78 | 60 |
| BIC | | 98 | 98 | 96 | 88 | 92 | 94 | 92 |
| MDIC | | 90 | 90 | 96 | 84 | 82 | 90 | 82 |

Table 11.

Proportions of selected models by the considered criteria (n = 100, ).

| Criteria | Variables | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| PIC | | 90 | 88 | 92 | 90 | 98 | 96 | 92 |
| AIC | | 70 | 78 | 78 | 66 | 82 | 68 | 68 |
| BIC | | 96 | 92 | 92 | 94 | 96 | 94 | 88 |
| MDIC | | 90 | 88 | 82 | 90 | 94 | 84 | 88 |

Table 12.

Proportions of selected models by the considered criteria (n = 100, ).

| Criteria | Variables | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| PIC | | 94 | 96 | 92 | 92 | 96 | 90 | 94 |
| AIC | | 78 | 74 | 72 | 74 | 70 | 62 | 74 |
| BIC | | 96 | 100 | 92 | 96 | 94 | 90 | 100 |
| MDIC | | 94 | 92 | 86 | 90 | 86 | 80 | 94 |

For small sample sizes (, ) the criteria PIC and MDIC yield the best results. When the level of contamination is 10% or 20%, the PIC criterion yields very good results and outperforms the other criteria almost all the time. When the level of contamination is small, for example 5%, or when there is no contamination, the two criteria are comparable, in the sense that in many cases the proportions of models selected by the two criteria are very close, so that sometimes PIC wins and sometimes MDIC wins. Tables 1–4 present these results for , but similar results are obtained for , too.

For medium sample sizes (, ), the criteria PIC and BIC yield the best results. The results for are given in Tables 5–8. Note that the PIC criterion yields the best results for 0% and 10% contamination. For the other levels of contamination, there are values of for which PIC is the best among all the considered criteria. On the other hand, in most cases when BIC wins, the proportions of selections of the true model by BIC and PIC are close.

When the sample size is large (, , ), BIC generally yields better results than PIC, which stays relatively close behind, but sometimes BIC and PIC have the same performance. Tables 9–12 present the results obtained for .

Thus, the new PIC criterion works very well for small to medium sample sizes and for levels of contamination up to 20%, but falls behind BIC for large sample sizes. Note that for contaminated data, PIC with prevails in most of the considered cases. On the other hand, for uncontaminated data, it is PIC with that prevails in all the considered instances. It is also worth mentioning that PIC with appears to behave very satisfactorily in most cases irrespective of the proportion of contamination (–) and the sample size. Observe also that in all cases AIC has the highest overestimation rate, which is somewhat expected (see [24]).

Although consistency is the main focus of the applications presented in this work, one should point out that if prediction is part of the objective of a regression analysis, then model selection carried out using criteria such as the ones used in this work has desirable properties. In fact, for finite-dimensional normal regression models, criteria such as AIC and BIC have been shown to be associated with satisfactory prediction errors (see [25]). Furthermore, it should be pointed out that in many instances PIC behaves quite similarly to the above criteria by choosing the same models. Also, according to the presented simulation results, the proportion of selections of the true model by PIC is always higher than that of AIC (even in the case of uncontaminated data) and is sometimes higher than that of BIC. These results suggest a satisfactory prediction ability for the proposed PIC criterion.

In conclusion, the new PIC criterion is a good competitor of the well known model selection criteria AIC, BIC and MDIC and may have superior performance especially in the case of small and contaminated samples.

4.2. Real Data Example

In order to illustrate the proposed method, we used the Hald cement data (see [26]), which represent a popular example for multiple linear regression. This example concerns the heat evolved in calories per gram of cement Y as a function of the amount of each of four ingredients in the mix: tricalcium aluminate ($X_1$), tricalcium silicate ($X_2$), tetracalcium alumino-ferrite ($X_3$) and dicalcium silicate ($X_4$). The data are presented in Table 13.

Table 13.

Hald cement data.

| $X_1$ | $X_2$ | $X_3$ | $X_4$ | Y |
|---|---|---|---|---|
| 7 | 26 | 6 | 60 | 78.5 |
| 1 | 29 | 15 | 52 | 74.3 |
| 11 | 56 | 8 | 20 | 104.3 |
| 11 | 31 | 8 | 47 | 87.6 |
| 7 | 52 | 6 | 33 | 95.9 |
| 11 | 55 | 9 | 22 | 109.2 |
| 3 | 71 | 17 | 6 | 102.7 |
| 1 | 31 | 22 | 44 | 72.5 |
| 2 | 54 | 18 | 22 | 93.1 |
| 21 | 47 | 4 | 26 | 115.9 |
| 1 | 40 | 23 | 34 | 83.8 |
| 11 | 66 | 9 | 12 | 113.3 |
| 10 | 68 | 8 | 12 | 109.4 |

Since 4 variables are available, there are 15 possible candidate models (involving at least one regressor) for this data set. Note that the 4 single-variable models should be excluded from the analysis, because cement involves a mixture of at least two components that react chemically (see [27], p. 102). The model selection criteria that have been used are PIC for several values of , AIC, BIC and MDIC with . Table 14 shows the model selected by each of the considered criteria.

Table 14.

Selected models by model selection criteria.

| Criteria | Variables |
|---|---|
| PIC, | |
| PIC, | |
| PIC, | |
| PIC, | |
| PIC, | |
| AIC | |
| BIC | |
| MDIC | |

Observe that, in this example, PIC behaves similarly to AIC and MDIC, with a slight tendency towards overestimation. Note that for this specific dataset the collinearity is quite strong with and as well as and being seriously correlated. It should be pointed out that the model is chosen not only by AIC and PIC, but also by Mallows’ $C_p$ criterion ([1]) with coming a very close second. Note further that has also been chosen by cross validation ([28], p. 33) and PRESS ([26], p. 325). Finally, it is worth noticing that these two models share the highest adjusted $R^2$ values, which are almost identical (0.976 for and 0.974 for ), making the distinction between them extremely hard. Thus, in this example, the new PIC criterion gives results as good as those of other recognized classical model selection criteria.
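The candidate set and the classical criteria used in this example can be sketched in a few lines of Python. This is only an illustrative sketch, not the implementation used for the reported results: it fits each candidate by ordinary least squares, and it assumes the common Gaussian forms $n\log(\mathrm{SSE}/n) + 2k$ for AIC and $n\log(\mathrm{SSE}/n) + k\log n$ for BIC, with $k$ counting the regression coefficients plus the error variance. PIC and MDIC are not included, since their formulas are not restated in this section. The names `fit_ols`, `criteria`, `candidates` and `results` are hypothetical.

```python
from itertools import combinations

import numpy as np

# Hald cement data from Table 13; columns are X1, X2, X3, X4, Y.
hald = np.array([
    [7, 26, 6, 60, 78.5],
    [1, 29, 15, 52, 74.3],
    [11, 56, 8, 20, 104.3],
    [11, 31, 8, 47, 87.6],
    [7, 52, 6, 33, 95.9],
    [11, 55, 9, 22, 109.2],
    [3, 71, 17, 6, 102.7],
    [1, 31, 22, 44, 72.5],
    [2, 54, 18, 22, 93.1],
    [21, 47, 4, 26, 115.9],
    [1, 40, 23, 34, 83.8],
    [11, 66, 9, 12, 113.3],
    [10, 68, 8, 12, 109.4],
])
X_all, y = hald[:, :4], hald[:, 4]
n = len(y)


def fit_ols(cols):
    """Least-squares fit with intercept; returns (SSE, number of coefficients)."""
    Z = np.column_stack([np.ones(n), X_all[:, list(cols)]])
    beta, *_ = np.linalg.lstsq(Z, y, rcond=None)
    resid = y - Z @ beta
    return float(resid @ resid), Z.shape[1]


def criteria(cols):
    """AIC, BIC (assumed Gaussian forms) and adjusted R^2 for one candidate."""
    sse, p = fit_ols(cols)
    k = p + 1  # regression coefficients plus the error variance
    sst = float(np.sum((y - y.mean()) ** 2))
    return {
        "aic": n * np.log(sse / n) + 2 * k,
        "bic": n * np.log(sse / n) + k * np.log(n),
        "adj_r2": 1 - (sse / (n - p)) / (sst / (n - 1)),
    }


# Subsets with at least two regressors: 15 - 4 = 11 candidate models.
candidates = [c for r in range(2, 5) for c in combinations(range(4), r)]
results = {c: criteria(c) for c in candidates}

# Print the candidates ordered by AIC (smaller is better).
for c, res in sorted(results.items(), key=lambda kv: kv[1]["aic"]):
    label = "".join(f"X{j + 1}" for j in c)
    print(f"{label:10s} AIC={res['aic']:7.3f} BIC={res['bic']:7.3f} "
          f"adjR2={res['adj_r2']:.3f}")

# Pairwise correlations of the regressors, to inspect the collinearity.
print(np.round(np.corrcoef(X_all, rowvar=False), 3))
```

The printed adjusted $R^2$ values can be compared with those quoted in the text, and the correlation matrix shows which pairs of regressors are strongly related.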

5. Conclusions

In this work, by applying the same methodology as for AIC to a family of pseudodistances, we constructed new model selection criteria using minimum pseudodistance estimators. We proved theoretical properties of these criteria, including asymptotic unbiasedness, robustness, consistency, as well as the limit laws. The case of the linear regression models was studied in detail and specific selection criteria based on pseudodistances were proposed.

For linear regression models, a comparative study based on Monte Carlo simulations illustrates the performance of the new methodology. For small sample sizes, the criteria PIC and MDIC yield the best results and in many cases PIC wins, for example when the level of contamination is 10% or 20%. For medium sample sizes, the criteria PIC and BIC yield the best results. When the sample size is large, BIC generally yields better results than PIC, which stays relatively close behind, but sometimes BIC and PIC have the same performance.
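The performance measure used in such a comparison, the proportion of replications in which a criterion selects the true model, can be sketched as follows. Everything about the design here is a hypothetical illustration and not the simulation setting of this paper: four nested candidate models, a true model with two regressors, standard normal errors, and contamination by errors with standard deviation 5. Only AIC and BIC are included, in their common Gaussian forms, because the PIC formula is not restated in this section.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical design: nested candidates; the true model uses regressors 0 and 1.
CANDIDATES = [(0,), (0, 1), (0, 1, 2), (0, 1, 2, 3)]
TRUE_MODEL = (0, 1)


def select(X, y, penalty):
    """Return the candidate minimizing n*log(SSE/n) + penalty*k."""
    n = len(y)
    scores = []
    for cols in CANDIDATES:
        Z = np.column_stack([np.ones(n), X[:, list(cols)]])
        beta, *_ = np.linalg.lstsq(Z, y, rcond=None)
        sse = float(np.sum((y - Z @ beta) ** 2))
        k = Z.shape[1] + 1  # coefficients plus the error variance
        scores.append(n * np.log(sse / n) + penalty * k)
    return CANDIDATES[int(np.argmin(scores))]


def proportion_true(n, eps, reps=200):
    """Proportion of replications in which each criterion picks TRUE_MODEL."""
    hits = {"AIC": 0, "BIC": 0}
    for _ in range(reps):
        X = rng.normal(size=(n, 4))
        # Each error is drawn from the contaminating law with probability eps.
        contaminated = rng.random(n) < eps
        noise = np.where(contaminated,
                         rng.normal(scale=5.0, size=n),
                         rng.normal(size=n))
        y = 1.0 + X[:, 0] + X[:, 1] + noise
        hits["AIC"] += select(X, y, 2.0) == TRUE_MODEL
        hits["BIC"] += select(X, y, np.log(n)) == TRUE_MODEL
    return {name: count / reps for name, count in hits.items()}


for n in (20, 50, 100):
    for eps in (0.0, 0.1, 0.2):
        print(n, eps, proportion_true(n, eps))
```

A robust criterion such as PIC would enter this loop as one more scoring rule, with the least-squares fit replaced by the corresponding minimum pseudodistance estimator.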

Based on the results of the simulation study and on the real data example, we conclude that the new PIC criterion is a good competitor of the well known criteria AIC, BIC and MDIC, with a very satisfactory overall performance across the sample sizes and contamination rates considered. Also, PIC may have superior performance, especially in the case of small and contaminated samples.

An important issue that needs further investigation is the choice of the appropriate value for the parameter associated with the procedure. The findings of the presented simulation study show that, for contaminated data, the value leads to very good results, irrespective of the sample size. Also, produces overall very satisfactory results, irrespective of the sample size and the contamination rate. We hope to explore this problem further and provide a clear solution in future work. We also intend to extend this methodology to other types of models, including nonlinear or time series models.

Acknowledgments

The work of the first author was partially supported by a grant of the Romanian National Authority for Scientific Research, CNCS-UEFISCDI, project number PN-II-RU-TE-2012-3-0007. The work of the third author was completed as part of the activities of the Laboratory of Statistics and Data Analysis of the University of the Aegean.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| AIC | Akaike Information Criterion |
| BIC | Bayesian Information Criterion |
| GIC | General Information Criterion |
| DIC | Divergence Information Criterion |
| MDIC | Modified Divergence Information Criterion |
| PIC | Pseudodistance based Information Criterion |
| BHHJ family of measures | Basu, Harris, Hjort and Jones family of measures |

Appendix A

Proof of Proposition 1.

Using a Taylor expansion of around the true parameter and taking , on the basis of (12) and (13) we obtain

(A1)

Then (15) holds. □

Proof of Proposition 2.

Using a Taylor expansion of around to and taking , we obtain

(A2) Note that by the very definition of .

By applying the weak law of large numbers and the continuous mapping theorem, we get

(A3) and using (13)

(A4) Then, using the consistency of and (A4), we obtain

(A5) Consequently,

(A6) and we deduce (17). □

Proof of Proposition 3.

Using Proposition 1 and Proposition 2, we obtain

(A7) where .

In order to evaluate , note that

(A8) A Taylor expansion of the function around to yields

(A9) Then

where .

On the other hand,

(A10) Consequently,

(A11)

Proof of Proposition 5.

For the contaminated model , it holds

(A12) Derivation with respect to yields

Thus we obtain

(A13) □

Author Contributions

A.T. conceived the methodology and obtained the theoretical results. A.T., A.K. and P.T. conceived the application part. A.K. and P.T. implemented the method in R and obtained the numerical results. All authors wrote the paper. All authors have read and approved the final manuscript.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Mallows C.L. Some comments on Cp. Technometrics. 1973;15:661–675.
2. Akaike H. Proceedings of the Second International Symposium on Information Theory Petrov. Springer; Berlin/Heidelberg, Germany: 1973. Information theory and an extension of the maximum likelihood principle; pp. 267–281.
3. Schwarz G. Estimating the dimension of a model. Ann. Stat. 1978;6:461–464. doi: 10.1214/aos/1176344136.
4. Konishi S., Kitagawa G. Generalised information criteria in model selection. Biometrika. 1996;83:875–890. doi: 10.1093/biomet/83.4.875.
5. Ronchetti E. Robust model selection in regression. Statist. Probab. Lett. 1985;3:21–23. doi: 10.1016/0167-7152(85)90006-9.
6. Ronchetti E., Staudte R.G. A robust version of Mallows’ Cp. J. Am. Stat. Assoc. 1994;89:550–559.
7. Agostinelli C. Robust model selection in regression via weighted likelihood estimating equations. Stat. Probab. Lett. 2002;76:1930–1934. doi: 10.1016/j.spl.2006.04.048.
8. Mattheou K., Lee S., Karagrigoriou A. A model selection criterion based on the BHHJ measure of divergence. J. Stat. Plann. Inf. 2009;139:228–235. doi: 10.1016/j.jspi.2008.04.022.
9. Mantalos P., Mattheou K., Karagrigoriou A. An improved divergence information criterion for the determination of the order of an AR process. Commun. Stat.-Simul. Comput. 2010;39:865–879. doi: 10.1080/03610911003650391.
10. Toma A. Model selection criteria using divergences. Entropy. 2014;16:2686–2698. doi: 10.3390/e16052686.
11. Pardo L. Statistical Inference Based on Divergence Measures. Chapman & Hall; London, UK: 2006.
12. Basu A., Shioya H., Park C. Statistical Inference: The Minimum Distance Approach. Chapman & Hall; London, UK: 2011.
13. Jones M.C., Hjort N.L., Harris I.R., Basu A. A comparison of related density-based minimum divergence estimators. Biometrika. 2001;88:865–873. doi: 10.1093/biomet/88.3.865.
14. Fujisawa H., Eguchi S. Robust parameter estimation with a small bias against heavy contamination. J. Multivar. Anal. 2008;99:2053–2081. doi: 10.1016/j.jmva.2008.02.004.
15. Broniatowski M., Toma A., Vajda I. Decomposable pseudodistances and applications in statistical estimation. J. Stat. Plan. Infer. 2012;142:2574–2585. doi: 10.1016/j.jspi.2012.03.019.
16. Toma A., Leoni-Aubin S. Optimal robust M-estimators using Renyi pseudodistances. J. Multivar. Anal. 2013;115:359–373. doi: 10.1016/j.jmva.2012.10.003.
17. Toma A., Leoni-Aubin S. Robust portfolio optimization using pseudodistances. PLoS ONE. 2015;10:e0140546. doi: 10.1371/journal.pone.0140546.
18. Toma A., Fulga C. Robust estimation for the single index model using pseudodistances. Entropy. 2018;20:374. doi: 10.3390/e20050374.
19. Hampel F.R., Ronchetti E., Rousseeuw P.J., Stahel W. Robust Statistics: The Approach Based on Influence Functions. Wiley Blackwell; Hoboken, NJ, USA: 1986.
20. Basu A., Harris I.R., Hjort N.L., Jones M.C. Robust and efficient estimation by minimising a density power divergence. Biometrika. 1998;85:549–559. doi: 10.1093/biomet/85.3.549.
21. Karagrigoriou A. Asymptotic efficiency of the order selection of a nongaussian AR process. Stat. Sin. 1997;7:407–423.
22. Vonta F., Karagrigoriou A. Generalized measures of divergence in survival analysis and reliability. J. Appl. Prob. 2010;47:216–234. doi: 10.1239/jap/1269610827.
23. Karagrigoriou A., Mattheou K., Vonta F. On asymptotic properties of AIC variants with applications. Open J. Stat. 2011;1:105–109. doi: 10.4236/ojs.2011.12012.
24. Shibata R. Selection of the order of an autoregressive model by Akaike’s information criterion. Biometrika. 1976;63:117–126. doi: 10.1093/biomet/63.1.117.
25. Speed T.P., Yu B. Model selection and prediction: Normal regression. Ann. Inst. Stat. Math. 1993;45:35–54. doi: 10.1007/BF00773667.
26. Draper N.R., Smith H. Applied Regression Analysis. 2nd ed. Wiley Blackwell; Hoboken, NJ, USA: 1981.
27. Burnham K.P., Anderson D.R. Model Selection and Multimodel Inference: A Practical Information-Theoretic Approach. Springer; Berlin/Heidelberg, Germany: 2002.
28. Hjorth J.S.U. Computer Intensive Statistical Methods: Validation, Model Selection and Bootstrap. Chapman and Hall; London, UK: 1994.