Abstract

A distributed binary hypothesis testing (HT) problem involving two parties, a remote observer and a detector, is studied. The remote observer has access to a discrete memoryless source, and communicates its observations to the detector via a rate-limited noiseless channel. The detector observes another discrete memoryless source, and performs a binary hypothesis test on the joint distribution of its own observations with those of the observer. While the goal of the observer is to maximize the type II error exponent of the test for a given type I error probability constraint, it also wants to keep a private part of its observations as concealed from the detector as possible. Considering both equivocation and average distortion under a causal disclosure assumption as possible measures of privacy, the trade-off between the communication rate from the observer to the detector, the type II error exponent, and privacy is studied. For the general HT problem, we establish single-letter inner bounds on both the rate-error exponent-equivocation and rate-error exponent-distortion trade-offs. Subsequently, single-letter characterizations for both trade-offs are obtained (i) for testing against conditional independence of the observer’s observations from those of the detector, given some additional side information at the detector; and (ii) when the communication rate constraint over the channel is zero. Finally, we show, by providing a counter-example, that the strong converse, which holds for distributed HT without a privacy constraint, does not hold when a privacy constraint is imposed. This implies that, in general, the rate-error exponent-equivocation and rate-error exponent-distortion trade-offs are not independent of the type I error probability constraint.

Keywords: Hypothesis testing, privacy, testing against conditional independence, error exponent, equivocation, distortion, causal disclosure

1. Introduction

Data inference and privacy are often contradicting objectives. In many multi-agent systems, each agent/user reveals information about its data to a remote service, application or authority, which, in turn, provides certain utility to the users based on their data. Many emerging networked systems can be thought of in this context, from social networks to smart grids and communication networks. While obtaining the promised utility is the main goal of the users, the privacy of the shared data is becoming increasingly important. Thus, it is critical that the users ensure a desired level of privacy for the sensitive information revealed, while maximizing the utility subject to this constraint.

In many distributed learning or distributed decision-making applications, typically the goal is to learn the joint probability distribution of data available at different locations. In some cases, there may be prior knowledge about the joint distribution, for example, that it belongs to a certain set of known probability distributions. In such a scenario, the nodes communicate their observations to the detector, which then applies hypothesis testing (HT) on the underlying joint distribution of the data based on its own observations and those received from other nodes. However, with the efficient data mining and machine learning algorithms available today, the detector can illegitimately infer some unintended private information from the data provided to it exclusively for HT purposes. Such threats are becoming increasingly imminent as large amounts of seemingly irrelevant yet sensitive data are collected from users, such as in medical research [1], social networks [2], online shopping [3] and smart grids [4]. Therefore, there is an inherent trade-off between the utility acquired by sharing data and the associated privacy leakage.

There are several practical scenarios where the above-mentioned trade-off arises. For example, consider the issue of consumer privacy in the context of online shopping. A consumer would like to share some information about his/her shopping behavior, e.g., shopping history and preferences, with the shopping portal to get better deals and recommendations on relevant products. The shopping portal would like to determine whether the consumer belongs to its target age group (e.g., below 30 years old) before sending special offers to this customer. Assuming that the shopping patterns of users within and outside the target age group are independent, the shopping portal performs a hypothesis test to check whether the consumer’s shared data is correlated with the data of its own customers. If the consumer is indeed within the target age group, the shopping portal would like to gather more information about this potential customer, such as particular interests or a more accurate age estimate, while the user is reluctant to provide any further information. Another relevant example is user privacy in the context of wearable Internet of Things (IoT) devices such as smart watches and fitness trackers, which collect information on routine daily activities and often have a third-party cloud interface.

In this paper, we study distributed HT (DHT) with a privacy constraint, in which an observer communicates its observations to a detector over a noiseless rate-limited channel of rate R nats per observed sample. Using the data received from the observer, the detector performs binary HT on the joint distribution of its own observations and those of the observer. The performance of the HT is measured by the asymptotic exponential rate of decay of the type II error probability, known as the type II error exponent (or error exponent henceforth), for a given constraint on the type I error probability (definitions will be given below). While the goal is to maximize the performance of the HT, the observer also wants to maintain a certain level of privacy against the detector for some latent private data that is correlated with its observations. We are interested in characterizing the trade-off between the communication rate from the observer to the detector over the channel, error exponent achieved by the HT and the amount of information leakage of private data. A special case of HT known as testing against conditional independence (TACI) will be of particular interest. In TACI, the detector tests whether its own observations are independent of those at the observer, conditioned on additional side information available at the detector.

1.1. Background

Distributed HT without any privacy constraint has been studied extensively from an information-theoretic perspective in the past, although many open problems remain. The fundamental results for this problem were first established in [5], which includes a single-letter lower bound on the optimal error exponent and a strong converse result stating that the optimal error exponent is independent of the constraint on the type I error probability. An exact single-letter characterization of the optimal error exponent for the testing against independence (TAI) problem, i.e., TACI with no side information at the detector, is also obtained there. The lower bound established in [5] is further improved in [6,7]. The strong converse is studied in the context of complete data compression and zero-rate compression in [6,8], respectively, where in the former, the observer communicates to the detector using a message set of size two, while in the latter, it uses a message set whose size grows sub-exponentially with the number of observed samples. The TAI problem with multiple observers remains open (similar to several other distributed compression problems when a non-trivial fidelity criterion is involved); however, the optimal error exponent is obtained in [9] when the sources observed at different observers follow a certain Markov relation. The scenario in which, in addition to HT, the detector is also interested in obtaining a reconstruction of the observer’s source is studied in [10]. The authors characterize the trade-off between the achievable error exponent and the average distortion between the observer’s observations and the detector’s reconstruction. TACI was first studied in [11], where the optimality of a random binning-based encoding scheme is shown. The optimal error exponent for TACI over a noisy communication channel is established in [12].
An extension of this work to general HT over a noisy channel is considered in [13], where lower bounds on the optimal error exponent are obtained by using a separation-based scheme and also by using hybrid coding for the communication between the observer and the detector. TACI with a single observer and multiple detectors is studied in [14], where each detector tests for the conditional independence of its own observations from those of the observer. The general HT version of this problem over a noisy broadcast channel and DHT over a multiple access channel are explored in [15]. While all the above works consider the asymmetric objective of maximizing the error exponent under a constraint on the type I error probability, the trade-off between the exponential rates of decay of the type I and type II error probabilities is considered in [16,17,18].

Data privacy has been a hot topic of research in the past decade, spanning across multiple disciplines in computer and computational sciences. Several practical schemes have been proposed that deal with the protection or violation of data privacy in different contexts, e.g., see [19,20,21,22,23,24]. More relevant to our work, HT under mutual information and maximal leakage privacy constraints has been studied in [25,26], respectively, where the observer uses a memoryless privacy mechanism to convey a noisy version of its observed data to the detector. The detector performs HT on the probability distribution of the observer’s data, and the optimal privacy mechanism that maximizes the error exponent while satisfying the privacy constraint is analyzed. Recently, a distributed version of this problem has been studied in [27], where the observer applies a privacy mechanism to its observed data prior to further coding for compression, and the goal at the detector is to perform a HT on the joint distribution of its own observations with those of the observer. In contrast with [25,26,27], we study DHT with a privacy constraint, but without considering a separate privacy mechanism at the observer. In Section 2, we will further discuss the differences between the system model considered here and that of [27].

It is important to note here that the data privacy problem is fundamentally different from that of data security against an eavesdropper or an adversary. In data security, sensitive data is to be protected against an external malicious agent distinct from the legitimate parties in the system. The techniques for guaranteeing data security usually involve either cryptographic methods in which the legitimate parties are assumed to have additional resources unavailable to the adversary (e.g., a shared private key) or the availability of better communication channel conditions (e.g., using wiretap codes). However, in data privacy problems, the sensitive data is to be protected from the same legitimate party that receives the messages and provides the utility; and hence, the above-mentioned techniques for guaranteeing data security are not applicable. Another model frequently used in the context of information-theoretic security assumes the availability of different side information at the legitimate receiver and the eavesdropper [28,29]. A DHT problem with security constraints formulated along these lines is studied in [30], where the authors propose an inner bound on the rate-error exponent-equivocation trade-off. While our model is related to that in [30] when the side information at the detector and eavesdropper coincide, there are some important differences which will be highlighted in Section 2.3.

Many different privacy measures have been considered in the literature to quantify the amount of private information leakage, such as k-anonymity [31], differential privacy (DP) [32], mutual information leakage [33,34,35], maximal leakage [36], and total variation distance [37], to name a few; see [38] for a detailed survey. Among these, mutual information between the private and revealed information (or, equivalently, the equivocation of private information given the revealed information) is perhaps the most commonly used measure in the information-theoretic studies of privacy. It is well known that a necessary and sufficient condition for two random variables to be statistically independent is that the mutual information between them is zero. Furthermore, the average information leakage measured using an arbitrary privacy measure is upper bounded by a constant multiplicative factor of that measured by mutual information [34]. It is also shown in [33] that a differentially private scheme is not necessarily private when the information leakage is measured by mutual information. This is done by constructing an example that is differentially private, yet the mutual information leakage is arbitrarily high. Mutual information-based measures have also been used in cryptographic security studies. For example, the notion of semantic security defined in [39] is shown to be equivalent to a measure based on mutual information in [40].
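As a small illustration of the measure discussed above, the sketch below computes the mutual information $I(S;Y)$ and the equivocation $H(S \mid Y) = H(S) - I(S;Y)$, in nats, for a finite joint pmf of a private symbol S and a revealed symbol Y. The function names and the example pmfs are hypothetical; the point is only that zero leakage coincides with independence, in which case the equivocation equals $H(S)$.

```python
import math

def entropy(pmf):
    """Shannon entropy in nats of a pmf given as {outcome: probability}."""
    return -sum(p * math.log(p) for p in pmf.values() if p > 0)

def marginal(joint, axis):
    """Marginal pmf of coordinate `axis` of a joint pmf over pairs."""
    out = {}
    for pair, p in joint.items():
        out[pair[axis]] = out.get(pair[axis], 0.0) + p
    return out

def equivocation(joint):
    """H(S | Y) = H(S, Y) - H(Y), for a joint pmf over pairs (s, y)."""
    return entropy(joint) - entropy(marginal(joint, 1))

def mutual_information(joint):
    """I(S; Y) = H(S) - H(S | Y): leakage about S from the revealed Y."""
    return entropy(marginal(joint, 0)) - equivocation(joint)

# S uniform on {0, 1}; Y is S observed through a binary symmetric channel (crossover 0.1).
bsc = {(0, 0): 0.45, (0, 1): 0.05, (1, 0): 0.05, (1, 1): 0.45}
# Y independent of S: zero leakage, and the equivocation equals H(S) = log 2.
indep = {(s, y): 0.25 for s in (0, 1) for y in (0, 1)}
```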

A rate-distortion approach to privacy was first explored by Yamamoto in [41] for a rate-constrained noiseless channel, where in addition to a distortion constraint for legitimate data, a minimum distortion requirement is enforced for the private part. Recently, several works have used distortion as a security or privacy metric in different contexts, such as side-information privacy in discriminatory lossy source coding [42] and rate-distortion theory of secrecy systems [43,44]. More specifically, in [43], the distortion-based security measure is analyzed under a causal disclosure assumption, in which the data samples to be protected are causally revealed to the eavesdropper (excluding the current sample), yet the average distortion over the entire block has to satisfy a desired lower bound. This assumption makes distortion more robust as a secrecy measure (see ([43], Section I-A)), and could in practice model scenarios in which the sensitive data to be protected is eventually available to the eavesdropper with some delay, but the protection of the current data sample is important. In this paper, we will consider both equivocation and average distortion under a causal disclosure assumption as measures of privacy. In [45], the error exponent of a HT adversary is considered as a privacy measure. This can be viewed as the opposite of our setting, in the sense that while the goal here is to increase the error exponent under a privacy leakage constraint, the goal in [45] is to reduce the error exponent under a constraint on possible transformations that can be applied to the data.

It is instructive to compare the privacy measures considered in this paper with DP. Towards this, note that average distortion and equivocation (see Definitions 1 and 2) are “average case” privacy measures, while DP is a “worst case” measure that focuses on the statistical indistinguishability of neighboring datasets that differ in just one entry. Considering this aspect, it may appear that these privacy measures are unrelated. However, as shown in [46], there is an interesting connection between them. More specifically, the maximum conditional mutual information leakage between the revealed data Y and an entry $X_i$ of the dataset given all the other entries $X^{-i}$, i.e., $\max I(X_i; Y \mid X^{-i})$, is sandwiched between the so-called $\epsilon$-DP and $(\epsilon, \delta)$-DP in terms of the strength of the privacy measure, where the maximization is over all distributions on $X^n$ and entries $i \in \{1, \ldots, n\}$ ([46], Theorem 1). This implies that as a privacy measure, equivocation (equivalent to mutual information leakage) is weaker than $\epsilon$-DP, and stronger than $(\epsilon, \delta)$-DP, at least for some probability distributions on the data. On the other hand, equivocation and average distortion are relatively well-behaved privacy measures compared to DP, and often result in clean, exactly computable characterizations of the optimal trade-off for the problem at hand. Moreover, as already shown in [39,40,47,48], the trade-off resulting from “average” constraints turns out to be the same as that with stricter constraints in many interesting cases. Hence, it is of interest to consider such average case privacy measures as a starting point for further investigation with stricter measures.

DP has been used extensively in privacy studies, including those that involve learning and HT [49,50,51,52,53,54,55,56,57,58,59]. More relevant to the distributed HT problem at hand is the local differentially private model employed in [49,50,51,56], in which, depending on the privacy requirement, a certain amount of random noise is injected into the user’s data before further processing, while the utility is maximized subject to this constraint. Nevertheless, there are key differences between these models and ours. For example, in [49], the goal is to learn from differentially private “examples” the underlying “concept” (model that maps examples to “labels”) such that the error probability in predicting the label for future examples is minimized, irrespective of the statistics of the examples. Hence, the utility in [49] is to learn an unknown model accurately, whereas our objective is to test between two known probability distributions. Furthermore, in our setting (unlike [49,50,51,56]), there is an additional requirement to satisfy in terms of the communication rate. These differences perhaps also make DP less suitable as a privacy measure in our model relative to equivocation and average distortion. On one hand, imposing a DP measure in our setting may be overly restrictive since there are only two probability distributions involved and DP is tailored for situations where the statistics of the underlying data are unknown. On the other hand, DP is also more unwieldy to analyze under a rate constraint compared to mutual information or average distortion.
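The noise-injection idea behind the local model can be illustrated with binary randomized response, a textbook $\epsilon$-local-DP mechanism. This is a minimal sketch for intuition only, not the specific mechanism of [49,50,51,56]; the function names are hypothetical. The privacy loss, i.e., the logarithm of the worst-case ratio $P(y \mid x)/P(y \mid x')$ over outputs y and inputs $x, x'$, equals $\epsilon$.

```python
import math
import random

def keep_probability(eps):
    """Probability that binary randomized response reports the true bit."""
    return math.exp(eps) / (1.0 + math.exp(eps))

def randomized_response(bit, eps, rng):
    """Release `bit` w.p. e^eps / (1 + e^eps); otherwise release the flipped bit."""
    return bit if rng.random() < keep_probability(eps) else 1 - bit

def privacy_loss(eps):
    """log of max_{y, x, x'} P(y | x) / P(y | x') for the mechanism above."""
    p = keep_probability(eps)
    channel = {(y, x): (p if y == x else 1.0 - p) for x in (0, 1) for y in (0, 1)}
    return math.log(max(channel[(y, x)] / channel[(y, x2)]
                        for y in (0, 1) for x in (0, 1) for x2 in (0, 1)))

# Seeded simulation: empirical frequency of truthful reports for eps = 1.
rng = random.Random(0)
reports = [randomized_response(1, 1.0, rng) for _ in range(10000)]
```

The flip probability shrinks as $\epsilon$ grows, which is the utility-privacy trade-off described above in its simplest form.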

The amount of private information leakage that can be tolerated depends on the specific application at hand. While it may be possible to tolerate a moderate amount of information leakage in applications like online shopping or social networks, it may no longer be the case in matters related to information sharing among government agencies or corporations. While it is obvious that maximum privacy can be attained by revealing no information, this typically comes at the cost of zero utility. On the other hand, maximum utility can be achieved by revealing all the information, but at the cost of minimum privacy. Characterizing the optimal trade-off between the utility and the minimum privacy leakage between these two extremes is a fundamental and challenging research problem.

1.2. Main Contributions

The main contributions of this work are as follows.

- In Section 3, Theorem 1 (resp. Theorem 2), we establish a single-letter inner bound on the rate-error exponent-equivocation (resp. rate-error exponent-distortion) trade-off for DHT with a privacy constraint. The distortion and equivocation privacy constraints we consider, which are given in (6) and (7), respectively, are slightly stronger than those usually considered in the literature (stated in (8) and (9), respectively).

- Exact characterizations are obtained for some important special cases in Section 4. More specifically, a single-letter characterization of the optimal rate-error exponent-equivocation (resp. rate-error exponent-distortion) trade-off is established for:

  (a) TACI with a privacy constraint (for a vanishing type I error probability constraint) in Section 4.1, Proposition 1 (resp. Proposition 2);

  (b) DHT with a privacy constraint for zero-rate compression in Section 4.2, Proposition 4 (resp. Proposition 3).

  Since the optimal trade-offs in Propositions 3 and 4 are independent of the constraint on the type I error probability, they are strong converse results in the context of HT.

- Finally, in Section 5, we provide a counter-example showing that for a positive rate $R > 0$, the strong converse result does not hold in general for TAI with a privacy constraint.

1.3. Organization

The organization of the paper is as follows. Basic notations are introduced in Section 2.1. The problem formulation and associated definitions are given in Section 2.2. Main results are presented in Sections 3 to 5. The proofs of the results are presented either in the Appendix or immediately after the statement of the result. Finally, Section 6 concludes the paper with some open problems for future research.

2. Preliminaries

2.1. Notations

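$\mathbb{N}$, $\mathbb{R}$ and $\mathbb{R}_{\geq 0}$ stand for the sets of natural numbers, real numbers and non-negative real numbers, respectively. For , and for , ($:=$ represents equality by definition). Calligraphic letters, e.g., $\mathcal{A}$, denote sets, while $|\mathcal{A}|$ and $\mathcal{A}^c$ denote its cardinality and complement, respectively. $\mathbb{1}(\cdot)$ denotes the indicator function, while $O(\cdot)$, $o(\cdot)$ and $\Omega(\cdot)$ stand for the standard asymptotic notations of Big-O, Little-O and Big-$\Omega$, respectively. For a real sequence $\{a_n\}_{n \in \mathbb{N}}$ and $b \in \mathbb{R}$, $a_n \xrightarrow{(n)} b$ represents $\lim_{n \to \infty} a_n = b$. Similar notations apply for asymptotic inequalities, e.g., $a_n \overset{(n)}{\geq} b$ means that $\lim_{n \to \infty} a_n \geq b$. Throughout this paper, the base of the logarithms is taken to be e, and whenever the range of the summation is not specified, it means summation over the entire support, e.g., $\sum_u$ denotes $\sum_{u \in \mathcal{U}}$.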

All the random variables (r.v.’s) considered in this paper are discrete with finite support unless specified otherwise. We denote r.v.’s, their realizations and support by upper case, lower case and calligraphic letters (e.g., X, x and $\mathcal{X}$), respectively. The joint probability distribution of r.v.’s X and Y is denoted by $P_{XY}$, while their marginals are denoted by $P_X$ and $P_Y$. The sets of all probability distributions with support $\mathcal{X}$ and $\mathcal{X} \times \mathcal{Y}$ are represented by $\mathcal{P}(\mathcal{X})$ and $\mathcal{P}(\mathcal{X} \times \mathcal{Y})$, respectively. For $j \in \mathbb{N}$, the random vector $(X_1, \ldots, X_j)$ is denoted by $X^j$, while $X_i^j$, $i \leq j$, stands for $(X_i, \ldots, X_j)$. Similar notation holds for the vector of realizations. $X - Y - Z$ denotes a Markov chain relation between the r.v.’s X, Y and Z. $\mathbb{P}_P(\mathcal{E})$ denotes the probability of event $\mathcal{E}$ with respect to the probability measure induced by distribution P, and $\mathbb{E}_P[\cdot]$ denotes the corresponding expectation. The subscript P is omitted when the distribution involved is clear from the context. For two probability distributions P and Q defined on a common support, $P \ll Q$ denotes that P is absolutely continuous with respect to Q.

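Following the notation in [60], for $P_X \in \mathcal{P}(\mathcal{X})$ and $\delta > 0$, the $P_X$-typical set is

$$\mathcal{T}^n_{[P_X]_\delta} := \left\{x^n \in \mathcal{X}^n : |P_{x^n}(x) - P_X(x)| \leq \delta,\ \forall x \in \mathcal{X}, \text{ and } P_{x^n}(x) = 0 \text{ whenever } P_X(x) = 0\right\},$$

where $P_{x^n}$ denotes the type (empirical distribution) of $x^n$, and the $P_X$-type class (set of sequences of type or empirical distribution $P_X$) is $\mathcal{T}_{P_X} := \{x^n \in \mathcal{X}^n : P_{x^n} = P_X\}$. The set of all possible types of sequences of length n over an alphabet $\mathcal{X}$ and the set of types in $\mathcal{F} \subseteq \mathcal{P}(\mathcal{X})$ are denoted by $\mathcal{P}_n(\mathcal{X})$ and $\mathcal{P}_n(\mathcal{F})$, respectively. Similar notations apply for pairs and larger combinations of r.v.’s, e.g., $\mathcal{T}^n_{[P_{XY}]_\delta}$, $\mathcal{T}_{P_{XY}}$, $\mathcal{P}_n(\mathcal{X} \times \mathcal{Y})$ and $\mathcal{P}_n(\mathcal{F})$ for $\mathcal{F} \subseteq \mathcal{P}(\mathcal{X} \times \mathcal{Y})$. The conditional type class of a sequence $y^n \in \mathcal{Y}^n$ for a conditional type $P_{X|Y}$ is

$$\mathcal{T}_{P_{X|Y}}(y^n) := \left\{x^n \in \mathcal{X}^n : P_{x^n y^n}(x, y) = P_{y^n}(y)\, P_{X|Y}(x \mid y),\ \forall (x, y) \in \mathcal{X} \times \mathcal{Y}\right\}. \tag{1}$$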
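The Csiszár–Körner notions used above can be sketched in a few lines: the type of $x^n$ is its empirical distribution, $x^n$ is δ-typical for $P_X$ if every empirical frequency is within δ of $P_X$ and no zero-probability symbol occurs, and the type class corresponds to δ = 0. Exact `Fraction` arithmetic is an implementation choice here, so that type-class membership is tested without rounding; the helper names are hypothetical.

```python
from collections import Counter
from fractions import Fraction

def empirical_type(seq, alphabet):
    """Type (empirical distribution) of a finite sequence over `alphabet`."""
    counts = Counter(seq)
    return {a: Fraction(counts[a], len(seq)) for a in alphabet}

def is_typical(seq, pmf, delta):
    """Csiszar-Korner delta-typicality: |P_seq(a) - P(a)| <= delta for every
    symbol a, and symbols with P(a) = 0 do not occur in seq at all."""
    if any(a not in pmf for a in seq):
        return False
    t = empirical_type(seq, pmf)
    return all(abs(t[a] - pmf[a]) <= delta and (pmf[a] > 0 or t[a] == 0)
               for a in pmf)

def in_type_class(seq, pmf):
    """Membership in the type class of pmf, i.e., typicality with delta = 0."""
    return is_typical(seq, pmf, 0)
```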

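The standard information-theoretic quantities like Kullback–Leibler (KL) divergence between distributions $P_X$ and $Q_X$, the entropy of X with distribution $P_X$, the conditional entropy of X given Y and the mutual information between X and Y with joint distribution $P_{XY}$, are denoted by $D(P_X \| Q_X)$, $H_{P_X}(X)$, $H_{P_{XY}}(X|Y)$ and $I_{P_{XY}}(X;Y)$, respectively. When the distributions of the r.v.’s involved are clear from the context, the last three quantities are denoted simply by $H(X)$, $H(X|Y)$ and $I(X;Y)$, respectively. Given realizations $x^n$ and $y^n$, $H_e(x^n|y^n)$ denotes the conditional empirical entropy given by

$$H_e(x^n \mid y^n) := H_{P_{\tilde{X}\tilde{Y}}}(\tilde{X} \mid \tilde{Y}), \tag{2}$$

where $P_{\tilde{X}\tilde{Y}}$ denotes the joint type of $(x^n, y^n)$. Finally, the total variation between probability distributions $P_X$ and $Q_X$ defined on the same support $\mathcal{X}$ is

$$\|P_X - Q_X\| := \frac{1}{2} \sum_{x} |P_X(x) - Q_X(x)|.$$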
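The conditional empirical entropy in (2) depends on $(x^n, y^n)$ only through their joint type, so it can be computed directly from pair counts. The sketch below assumes the total variation is normalized with the factor $\frac{1}{2}$, so that it lies in [0, 1]; the function names are hypothetical.

```python
import math
from collections import Counter

def conditional_empirical_entropy(xs, ys):
    """H_e(x^n | y^n): H(X | Y) in nats evaluated under the joint type of (x^n, y^n)."""
    n = len(xs)
    joint = Counter(zip(xs, ys))
    y_counts = Counter(ys)
    # -sum_{x,y} P(x, y) log P(x | y), where P is the joint type
    return -sum((c / n) * math.log(c / y_counts[y]) for (_, y), c in joint.items())

def total_variation(p, q):
    """Total variation (1/2) * sum_x |p(x) - q(x)| of pmfs given as dicts."""
    support = set(p) | set(q)
    return 0.5 * sum(abs(p.get(a, 0.0) - q.get(a, 0.0)) for a in support)
```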

2.2. Problem Formulation

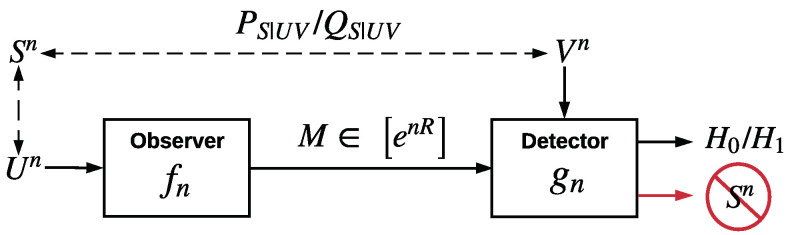

Consider the HT setup illustrated in Figure 1, where denote n independent and identically distributed (i.i.d.) copies of triplet of r.v.’s . The observer observes and sends the message index M to the detector over an error-free channel, where and , . Given its own observation , the detector performs a HT on the joint distribution of and with null hypothesis

and alternate hypothesis

Figure 1.

DHT with a privacy constraint.

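Let H and $\hat{H}$ denote the r.v.’s corresponding to the true hypothesis and the output of the HT, respectively, with support $\mathcal{H} = \{0, 1\}$, where 0 denotes the null hypothesis and 1 the alternate hypothesis. Let $g_n: \mathcal{M} \times \mathcal{V}^n \to \mathcal{P}(\mathcal{H})$ denote the decision rule at the detector, which outputs $\hat{H} \sim g_n(\cdot \mid M, V^n)$. Then, the type I and type II error probabilities achieved by a pair $(f_n, g_n)$ are given by

$$\alpha_n(f_n, g_n) := \mathbb{P}(\hat{H} = 1 \mid H = 0) = P_{\hat{H}}(1)$$

and

$$\beta_n(f_n, g_n) := \mathbb{P}(\hat{H} = 0 \mid H = 1) = Q_{\hat{H}}(0),$$

respectively, where

$$P_{\hat{H}}(1) = \sum_{u^n, v^n, m} \Big(\prod_{i=1}^n P_{UV}(u_i, v_i)\Big) f_n(m \mid u^n)\, g_n(1 \mid m, v^n)$$

and

$$Q_{\hat{H}}(0) = \sum_{u^n, v^n, m} \Big(\prod_{i=1}^n Q_{UV}(u_i, v_i)\Big) f_n(m \mid u^n)\, g_n(0 \mid m, v^n).$$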
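For very small n, the type I and type II error probabilities $\mathbb{P}(\hat{H} = 1 \mid H = 0)$ and $\mathbb{P}(\hat{H} = 0 \mid H = 1)$ of a (possibly stochastic) encoder and decoder pair can be evaluated exactly by enumerating all $(u^n, v^n, m)$ under the i.i.d. distributions of the two hypotheses. The sketch below is illustrative only (its cost grows exponentially in n); the function signature and the binary example, in which the pair is correlated under $H_0$ and independent uniform under $H_1$ (a TAI-type alternative), are assumptions.

```python
import itertools

def error_probabilities(P_uv, Q_uv, encoder, decoder, n, U, V, M):
    """Exact type I and type II error probabilities of (f_n, g_n) by enumeration.

    P_uv, Q_uv: single-letter pmfs {(u, v): prob} under H0 and H1 (i.i.d. over n).
    encoder(m, un) = f_n(m | u^n);  decoder(h, m, vn) = g_n(h | m, v^n).
    """
    alpha = beta = 0.0
    for un in itertools.product(U, repeat=n):
        for vn in itertools.product(V, repeat=n):
            # i.i.d. product probabilities of (u^n, v^n) under each hypothesis
            p = q = 1.0
            for u, v in zip(un, vn):
                p *= P_uv[(u, v)]
                q *= Q_uv[(u, v)]
            for m in M:
                f = encoder(m, un)
                alpha += p * f * decoder(1, m, vn)   # declare H1 while H0 holds
                beta += q * f * decoder(0, m, vn)    # declare H0 while H1 holds
    return alpha, beta

# Hypothetical example: U = V w.p. 0.9 under H0, independent uniform under H1.
P_uv = {(0, 0): 0.45, (1, 1): 0.45, (0, 1): 0.05, (1, 0): 0.05}
Q_uv = {(u, v): 0.25 for u in (0, 1) for v in (0, 1)}

# n = 1: send u itself and declare H0 iff the message agrees with v.
alpha1, beta1 = error_probabilities(
    P_uv, Q_uv,
    encoder=lambda m, un: 1.0 if m == un[0] else 0.0,
    decoder=lambda h, m, vn: 1.0 if (h == 0) == (m == vn[0]) else 0.0,
    n=1, U=(0, 1), V=(0, 1), M=(0, 1))

# n = 2: send u^n uncompressed; declare H0 iff at least one coordinate agrees.
alpha2, beta2 = error_probabilities(
    P_uv, Q_uv,
    encoder=lambda m, un: 1.0 if m == un else 0.0,
    decoder=lambda h, m, vn: 1.0 if (h == 0) == any(a == b for a, b in zip(m, vn)) else 0.0,
    n=2, U=(0, 1), V=(0, 1), M=list(itertools.product((0, 1), repeat=2)))
```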

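Let $\bar{P}$ and $\bar{Q}$ denote the joint distribution of $(U^n, V^n, S^n, M, \hat{H})$ under the null and alternate hypotheses, respectively. For a given type I error probability constraint $\epsilon \in (0, 1)$, define the minimum type II error probability over all possible detectors as

$$\bar{\beta}_n(f_n, \epsilon) := \inf_{g_n} \left\{ \beta_n(f_n, g_n) : \alpha_n(f_n, g_n) \leq \epsilon \right\}. \tag{3}$$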
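For a fixed encoder $f_n$, the infimum in (3) is over decision rules acting on $(M, V^n)$, whose distributions under the two hypotheses are induced by $f_n$. One standard way to evaluate it for a finite observation alphabet is the Neyman–Pearson lemma: accept $H_0$ on observations in decreasing order of the likelihood ratio, randomizing on the boundary outcome so that the type I constraint is met exactly. The sketch below implements this greedy rule; the names are hypothetical, and in practice the observation pmfs would be computed from $f_n$ and the source distributions under each hypothesis.

```python
def min_type2_error(p, q, eps):
    """Smallest Q(accept H0) over randomized tests with P(reject H0) <= eps.

    p, q: pmfs of the detector's observation under H0 and H1 (dicts).
    Outcomes are accepted in increasing order of q/p (i.e., decreasing
    likelihood ratio p/q), with a fractional (randomized) acceptance of the
    boundary outcome.
    """
    need = 1.0 - eps                       # P-mass that must be accepted
    # outcomes with p = 0 never help meet the type I constraint
    outcomes = [o for o in p if p[o] > 0]
    outcomes.sort(key=lambda o: q.get(o, 0.0) / p[o])
    beta = 0.0
    for o in outcomes:
        if need <= 0:
            break
        frac = min(1.0, need / p[o])       # acceptance probability of outcome o
        beta += frac * q.get(o, 0.0)
        need -= frac * p[o]
    return beta
```

For instance, with `p = {0: 0.9, 1: 0.1}`, `q = {0: 0.5, 1: 0.5}` and `eps = 0.1`, the rule accepts outcome 0 only and returns 0.5.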

The performance of HT is measured by the error exponent achieved by the test for a given constraint on the type I error probability, i.e., $\liminf_{n \to \infty} -\frac{1}{n} \log \bar{\beta}_n(f_n, \epsilon)$. Although the goal of the detector is to maximize the error exponent achieved for the HT, it is also curious about a latent r.v. $S^n$ that is correlated with the observer’s observations $U^n$. $S^n$ is referred to as the private part of $U^n$, and is distributed i.i.d. according to the joint distribution $P_{SUV}$ and $Q_{SUV}$ under the null and alternate hypothesis, respectively. It is desired to keep the private part as concealed as possible from the detector. We consider two measures of privacy for $S^n$ at the detector. The first is the equivocation defined as $H(S^n \mid M, V^n)$. The second one is the average distortion between $S^n$ and its reconstruction $\hat{S}^n$ at the detector, measured according to an arbitrary bounded additive distortion metric $d: \mathcal{S} \times \hat{\mathcal{S}} \to [0, \infty)$ with multi-letter distortion defined as

$$d(s^n, \hat{s}^n) := \frac{1}{n} \sum_{i=1}^n d(s_i, \hat{s}_i). \tag{4}$$

We adopt the causal disclosure assumption, i.e., $\hat{S}_i$ may depend on $S^{i-1}$ in addition to $(M, V^n)$. The goal is to ensure that the error exponent for HT is maximized, while satisfying the constraints on the type I error probability and the privacy of $S^n$. In the sequel, we study the trade-off between the rate, the error exponent and privacy achieved in the above setting. Before delving into that, a few definitions are in order.
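The multi-letter distortion and the causal disclosure assumption can be sketched as follows, with Hamming distortion as an example of a bounded single-letter metric: the reconstruction of the i-th private symbol may use the message, the detector’s observations and the past private symbols, but not the current one. The reconstruction rule `phi`, its argument order and the toy rule below are hypothetical.

```python
def hamming(s, s_hat):
    """Single-letter Hamming distortion, a bounded metric with values in {0, 1}."""
    return 0.0 if s == s_hat else 1.0

def block_distortion(s_seq, s_hat_seq, d=hamming):
    """Multi-letter additive distortion (1/n) * sum_i d(s_i, s_hat_i)."""
    return sum(d(s, t) for s, t in zip(s_seq, s_hat_seq)) / len(s_seq)

def causal_reconstruction(m, v_seq, s_seq, phi):
    """Causal disclosure: the estimate at (0-based) index i is
    phi(i, m, v^n, s_seq[:i]); only the strictly past private symbols
    are passed to phi, never s_seq[i] itself."""
    return [phi(i, m, v_seq, s_seq[:i]) for i in range(len(s_seq))]

# Hypothetical rule: repeat the most recently disclosed private symbol.
repeat_last = lambda i, m, v_seq, past: past[-1] if past else 0
s = [0, 1, 1, 0]
s_hat = causal_reconstruction(None, None, s, repeat_last)
```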

Definition 1.

For a given type I error probability constraint ϵ, a rate-error exponent-distortion tuple is achievable, if there exists a sequence of encoding and decoding functions , and such that

(5) and for any , there exists an such that

(6) where , and denotes an arbitrary stochastic reconstruction map at the detector. The rate-error exponent-distortion region is the closure of the set of all such achievable tuples for a given ϵ.

Definition 2.

For a given type I error probability constraint ϵ, a rate-error exponent-equivocation (It is well known that equivocation as a privacy measure is a special case of average distortion under the causal disclosure assumption and log-loss distortion metric [43]. However, we provide a separate definition of the rate-error exponent-equivocation region for completeness.) tuple is achievable, if there exists a sequence of encoding and decoding functions and such that (5) is satisfied, and for any , there exists a such that

(7) The rate-error exponent-equivocation region is the closure of the set of all such achievable tuples for a given ϵ.

Please note that the privacy measures considered in (6) and (7) are stronger than

| (8) |

| (9) |

respectively. To see this for the equivocation privacy measure, note that if , , for some , then an equivocation pair is achievable under the constraint given in (9), while it is not achievable under the constraint given in (7).

2.3. Relation to Previous Work

Before stating our results, we briefly highlight the differences between our system model and the ones studied in [27,30]. In [27], the observer applies a privacy mechanism to the data before releasing it to the transmitter, which performs further encoding prior to transmission to the detector. More specifically, the observer first checks whether its observations satisfy a given condition (see [27]) and, if so, sends the output of a memoryless privacy mechanism applied to its observations to the transmitter. Otherwise, it outputs an n-length zero-sequence. The privacy mechanism plays the role of randomizing the data (or adding noise) to achieve the desired privacy. Such randomized privacy mechanisms are popular in privacy studies, and have been used in [25,26,61]. In our model, the tasks of coding for privacy and compression are done jointly by using all the available data samples at the observer. Also, while we consider the equivocation (and average distortion) between the revealed information and the private part as the privacy measure, in [27], the mutual information between the observer’s observations and the output of the memoryless mechanism is the privacy measure. As a result of these differences, there exist some points in the rate-error exponent-privacy trade-off that are achievable in our model, but not in [27]. For instance, a perfect privacy condition for testing against independence in ([27], Theorem 2) would imply that the error exponent is also zero, since the output of the memoryless mechanism has to be independent of the observer’s observations (under both hypotheses). However, as we later show in Example 2, a positive error exponent is achievable while guaranteeing perfect privacy in our model.

On the other hand, the difference between our model and [30] arises from the difference in the privacy constraint as well as the privacy measure. Specifically, the goal in [30] is to keep the observer’s observations private from an illegitimate eavesdropper, while the objective here is to keep a r.v. that is correlated with the observer’s observations (the private part) private from the detector. Also, we consider the more general average distortion (under causal disclosure) as a privacy measure, in addition to equivocation in [30]. Moreover, as already noted, the equivocation privacy constraint in (7) is more stringent than (9) that is considered in [30]. To satisfy the distortion requirement or the stronger equivocation privacy constraint in (7), we require that the a posteriori probability distribution of the private part given the observations at the detector is close in some sense to a desired “target” memoryless distribution. To achieve this, we use a stochastic encoding scheme to induce the necessary randomness for the private part at the detector, which to the best of our knowledge has not been considered previously in the context of DHT. Consequently, the analysis of the type I and type II error probabilities and of the privacy achieved is novel. Another subtle yet important difference is that the marginal distributions of the observer’s observations and the side information at the eavesdropper are assumed to be the same under the null and alternate hypotheses in [30], which is not the case here. This necessitates separate analysis for the privacy achieved under the two hypotheses.

Next, we state some supporting results that will be useful later for proving the main results.

2.4. Supporting Results

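Let

$$g_n(1 \mid m, v^n) := 1 - \mathbb{1}\big((m, v^n) \in \mathcal{A}_n\big), \quad (m, v^n) \in \mathcal{M} \times \mathcal{V}^n, \tag{10}$$

denote a deterministic detector with acceptance region $\mathcal{A}_n \subseteq \mathcal{M} \times \mathcal{V}^n$ for $H_0$ and $\mathcal{A}_n^c$ for $H_1$. Then, the type I and type II error probabilities are given by

$$\alpha_n(f_n, g_n) = P_{MV^n}(\mathcal{A}_n^c), \tag{11}$$

$$\beta_n(f_n, g_n) = Q_{MV^n}(\mathcal{A}_n), \tag{12}$$

where $P_{MV^n}$ and $Q_{MV^n}$ denote the distributions of $(M, V^n)$ induced by $f_n$ under $H_0$ and $H_1$, respectively.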
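With a deterministic detector, the error probabilities reduce to the probability of the complement of the acceptance region under $H_0$ and of the acceptance region itself under $H_1$. A minimal sketch, assuming the pmfs of $(M, V^n)$ under both hypotheses are available as dictionaries and the acceptance region is given as a predicate (both hypothetical representations):

```python
def detector_errors(P_mv, Q_mv, accept_h0):
    """Type I and type II errors of a deterministic detector with acceptance region A.

    P_mv, Q_mv: pmfs of the observation (m, v^n) under H0 and H1, as dicts.
    accept_h0: predicate returning True iff (m, v^n) lies in A (declare H0).
    """
    alpha = sum(p for mv, p in P_mv.items() if not accept_h0(mv))   # P(A^c)
    beta = sum(q for mv, q in Q_mv.items() if accept_h0(mv))        # Q(A)
    return alpha, beta

# Hypothetical example with n = 1 and m = u: correlated under H0, independent under H1.
P_mv = {(0, 0): 0.45, (1, 1): 0.45, (0, 1): 0.05, (1, 0): 0.05}
Q_mv = {(m, v): 0.25 for m in (0, 1) for v in (0, 1)}
```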

Lemma 1.

Any error exponent that is achievable is also achievable by a deterministic detector of the form given in (10) for some $\mathcal{A}_n \subseteq \mathcal{M} \times \mathcal{V}^n$, where $\mathcal{A}_n$ and $\mathcal{A}_n^c$ denote the acceptance regions for $H_0$ and $H_1$, respectively.

The proof of Lemma 1 is given in Appendix A for completeness. Due to Lemma 1, henceforth we restrict our attention to a deterministic $g_n$ as given in (10).

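The next result shows that without loss of generality (w.l.o.g.), it is also sufficient to consider the stochastic reconstruction map in Definition 1 to be a deterministic function of the form

$$\hat{S}_i = \phi_i(M, V^n, S^{i-1}), \quad i \in \{1, \ldots, n\}, \tag{13}$$

for the minimization in (6), where $\phi_i: \mathcal{M} \times \mathcal{V}^n \times \mathcal{S}^{i-1} \to \hat{\mathcal{S}}$, $i \in \{1, \ldots, n\}$, denotes an arbitrary deterministic function.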

Lemma 2.

The infimum in (6) is achieved by a deterministic function as given in (13), and hence it is sufficient to restrict to such deterministic reconstruction maps in (6).
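The intuition behind Lemma 2 is that the expected distortion is linear in the randomization of the reconstruction map, so a deterministic Bayes rule that picks, for each observation, the reconstruction minimizing the posterior expected distortion is never worse than any stochastic map. The single-step sketch below illustrates this; the variable `y` is an assumption standing in for the detector’s information at step i, the names are hypothetical, and this is not the proof given in Appendix B.

```python
import random

def expected_distortion(joint, recon, d):
    """E[d(S, S_hat)] when S_hat ~ recon[y], a pmf over reconstruction symbols."""
    return sum(p * sum(r * d(s, t) for t, r in recon[y].items())
               for (s, y), p in joint.items())

def best_deterministic(joint, s_hat_alphabet, d):
    """Map each observation y to argmin_t sum_s P(s, y) d(s, t) (Bayes rule)."""
    rule = {}
    for y in sorted({y for (_, y) in joint}):
        rule[y] = min(s_hat_alphabet,
                      key=lambda t, y=y: sum(p * d(s, t)
                                             for (s, yy), p in joint.items() if yy == y))
    return rule

def as_stochastic(rule):
    """View a deterministic rule y -> t as a degenerate stochastic map."""
    return {y: {t: 1.0} for y, t in rule.items()}

# Hypothetical example: S uniform binary, y a noisy copy of S; Hamming distortion.
joint = {(0, 0): 0.45, (1, 0): 0.05, (0, 1): 0.05, (1, 1): 0.45}
ham = lambda s, t: 0.0 if s == t else 1.0
rule = best_deterministic(joint, [0, 1], ham)
best = expected_distortion(joint, as_stochastic(rule), ham)
```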

The proof of Lemma 2 is given in Appendix B. Next, we state some lemmas that will be handy for upper bounding the amount of privacy leakage in the proofs of the main results stated below. The following one is a well-known result proved in [60] that upper bounds the difference in entropy of two r.v.’s (with a common support) in terms of the total variation distance between their probability distributions.

Lemma 3.

([60], Lemma 2.7) Let and be distributions defined on a common support and let . Then, for
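As a numerical sanity check of Lemma 3, the sketch below uses the form of ([60], Lemma 2.7) in which the distance is the L1 distance θ = Σ_x |P(x) − Q(x)| ≤ 1/2 and the bound is −θ log(θ/|X|), with entropies in bits. If total variation is normalized with a factor 1/2, θ should be doubled accordingly. The alphabet size, the random distributions and the helper names are our own assumptions for illustration.

```python
import math
import random


def entropy_bits(p):
    """Shannon entropy (bits) of a PMF given as a list of probabilities."""
    return -sum(x * math.log2(x) for x in p if x > 0)


def l1_distance(p, q):
    """sum_x |P(x) - Q(x)|."""
    return sum(abs(a - b) for a, b in zip(p, q))


def continuity_bound(theta, alphabet_size):
    """-theta * log2(theta / |X|), the bound assumed valid for 0 <= theta <= 1/2."""
    if theta == 0:
        return 0.0
    return -theta * math.log2(theta / alphabet_size)


def random_pmf(k, rng):
    w = [rng.random() for _ in range(k)]
    s = sum(w)
    return [x / s for x in w]


def check_bound(k=5, trials=1000, seed=0):
    """Return the smallest observed slack bound - |H(P) - H(Q)| over random pairs
    with ||P - Q||_1 <= 1/2 (a negative value would indicate a violation)."""
    rng = random.Random(seed)
    worst = float("inf")
    for _ in range(trials):
        p = random_pmf(k, rng)
        r = random_pmf(k, rng)
        lam = 0.25 * rng.random()  # mixing gives ||P - Q||_1 <= 2 * lam <= 1/2
        q = [(1 - lam) * a + lam * b for a, b in zip(p, r)]
        theta = l1_distance(p, q)
        gap = abs(entropy_bits(p) - entropy_bits(q))
        worst = min(worst, continuity_bound(theta, k) - gap)
    return worst
```

The perturbation Q = (1 − λ)P + λR is one convenient way to generate pairs within the lemma's range; by the lemma, the slack returned by `check_bound` should be nonnegative.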

The next lemma will be useful in proving Theorems 1 and 2, Proposition 3, and the counter-example to the strong converse presented in Section 5.

Lemma 4.

Let denote n i.i.d. copies of r.v.’s , and and denote two joint probability distributions on . For , define

(14)

If , then for sufficiently small, there exist and such that for all ,

(15)

If , then for any , there exist and such that for all ,

(16)

Also, for any , there exist and such that for all ,

(17)

Proof.

The proof is presented in Appendix C. ☐

In the next section, we establish inner bounds on and .

3. Main Results

The following two theorems are the main results of this paper providing inner bounds for and , respectively.

Theorem 1.

For , if there exists an auxiliary r.v. W, such that , and

(18)

(19)

(20)

(21)

where

(22)

(23)

Theorem 2.

For a given bounded additive distortion measure and , if there exist an auxiliary r.v. W and deterministic functions and , such that and (18) and (19),

(24)

(25)

are satisfied, where and are as defined in Theorem 1.

The proof of Theorems 1 and 2 is given in Appendix D. While the rate-error exponent trade-off in Theorems 1 and 2 is the same as that achieved by the Shimokawa-Han-Amari (SHA) scheme [7], the coding strategy achieving it is different due to the privacy constraint. As mentioned above, in order to obtain a single-letter lower bound on the achievable distortion (and achievable equivocation) of the private part at the detector, we require that the a posteriori probability distribution of given the observations at the detector be close in some sense to a desired “target” memoryless distribution. For this purpose, we use the so-called likelihood encoder [62,63] (at the observer) in our achievability scheme. The likelihood encoder is a stochastic encoder that induces the necessary randomness for at the detector and, to the best of our knowledge, has not been used before in the context of DHT. The analysis of the type I and type II error probabilities and of the privacy achieved by our scheme is novel and involves the application of the well-known channel resolvability or soft-covering lemma [62,64,65]. Properties of the total variation distance between probability distributions mentioned in [43] play a key role in this analysis. The analysis also reveals the interesting fact that the coding schemes in Theorems 1 and 2, although quite different from the SHA scheme, achieve the same lower bound on the error exponent.

Theorems 1 and 2 provide single-letter inner bounds on and , respectively. A complete computable characterization of these regions would require a matching converse. This is a hard problem, since such a characterization is not available even for the DHT problem without a privacy constraint, in general (see [5]). However, it is known that a single-letter characterization of the rate-error exponent region exists for the special case of TACI [11]. In the next section, we show that TACI with a privacy constraint also admits a single-letter characterization, in addition to other optimality results.

4. Optimality Results for Special Cases

4.1. TACI with a Privacy Constraint

Assume that the detector observes two discrete memoryless sources and , i.e., . In TACI, the detector tests for the conditional independence of U and Y, given Z. Thus, the joint distributions of the r.v.’s under the null and alternate hypotheses are given by

| (26a) |

and

| (26b) |

respectively.

Let and denote the rate-error exponent-equivocation and rate-error exponent-distortion regions, respectively, for the case of vanishing type I error probability constraint, i.e.,

Assume that the privacy constraint under the alternate hypothesis is inactive. Thus, we are interested in characterizing the set of all tuples and , where

| (27) |

Please note that and correspond to the equivocation and average distortion of at the detector, respectively, when is available directly at the detector under the alternate hypothesis. The above assumption is motivated by scenarios in which the observer is more eager to protect when there is a correlation between its own observation and that of the detector, such as the online shopping portal example mentioned in Section 1. In that example, , and correspond to the shopping behavior, more information about the customer, and the customer data available to the shopping portal, respectively.

For the above-mentioned case, we have the following results.

Proposition 1.

For the HT given in (26), if and only if there exists an auxiliary r.v. W, such that , and

(28)

(29)

(30)

for some joint distribution of the form .

Proof.

For TACI, the inner bound in Theorem 1 yields that for , if there exists an auxiliary r.v. W, such that , and

(31)

(32)

(33)

(34)

where

(35)

(36)

Please note that since , we have

(37)

Let . Then, for , we have

Hence,

(38)

By noting that (by the data processing inequality), we have shown that for , if (28)–(30) are satisfied. This completes the proof of achievability.

Converse: Let . Let T be a r.v. uniformly distributed over and independent of all the other r.v.’s . Define an auxiliary r.v. , where , . Then, we have for sufficiently large n that

(39)

(40)

(41)

(42)

Here, (39) follows since the sequences are memoryless; (40) follows since form a Markov chain; and (41) follows from the fact that T is independent of all the other r.v.’s.

The equivocation of under the null hypothesis can be bounded as follows.

(43)

(44)

where for some conditional distribution . In (43), we used the fact that conditioning reduces entropy.

Finally, we prove the upper bound on . For any encoding function and decision region for such that , we have

(45)

Here, (45) follows from the log-sum inequality [60]. Thus,

(46)

(47)

where (46) follows since . The last term can be single-letterized as follows:

(48)

Substituting (48) in (47), we obtain

(49)

Also, note that holds. To see this, note that are i.i.d. across . Hence, any information in on is only through M as a function of , and so given , is independent of . The above Markov chain then follows from the fact that T is independent of . This completes the proof of the converse and the proposition. ☐

Next, we state the result for TACI with a distortion privacy constraint, where the distortion is measured using an arbitrary distortion measure . Let .

Proposition 2.

For the HT given in (26), if and only if there exist an auxiliary r.v. W and a deterministic function such that

(50)

(51)

(52) for some as defined in Proposition 1.

Proof.

The proof of achievability follows from Theorem 2, similarly to the way Proposition 1 is obtained from Theorem 1. Hence, only the differences will be highlighted. Analogously to the inequality in the proof of Proposition 1, we need to prove the inequality , where for some conditional distribution . This can be shown as follows:

(53)

where in (53), is chosen such that

Converse: Let denote the auxiliary r.v. defined in the converse of Proposition 1. Inequalities (50) and (51) follow as in the proof of Proposition 1. It remains to prove (52). Defining , we have

(54)

where (54) is due to (A1) (in Appendix B). Hence, any satisfying (6) satisfies

This completes the proof of the converse and the proposition. ☐

A more general version of Propositions 1 and 2 is claimed in [66] as Theorems 7 and 8, respectively, in which a privacy constraint under the alternate hypothesis is also imposed. However, we have identified a mistake in the converse proof there; hence, a single-letter characterization of this more general problem remains open.

To complete the single-letter characterization in Propositions 1 and 2, we bound the alphabet size of the auxiliary r.v. W in the following lemma.

Lemma 5.

In Propositions 1 and 2, it suffices to consider auxiliary r.v.’s W such that .

The proof of Lemma 5 uses standard arguments based on the Fenchel–Eggleston–Carathéodory’s theorem and is given in Appendix E.

Remark 1.

When , a tight single-letter characterization of and exists even if the privacy constraint is active under the alternate hypothesis. This is due to the fact that given and , M is independent of under the alternate hypothesis. In this case, if and only if there exists an auxiliary r.v. W, such that , and

(55)

(56)

(57)

(58)

for some as in Proposition 1. Similarly, we have that if and only if there exist an auxiliary r.v. W and a deterministic function such that (55), (56),

(59)

(60)

are satisfied for some as in Proposition 1.

The computation of the trade-off given in Proposition 1 is challenging despite the cardinality bound on the auxiliary r.v. W provided by Lemma 5, as closed-form solutions do not exist in general. To see this, note that the inequality constraints defining are not convex in general, and hence even computing specific points in the trade-off could be a hard problem. This is evident from the fact that in the absence of the privacy constraint in Proposition 1, i.e., (30), computing the maximum error exponent for a given rate constraint is equivalent to the information bottleneck problem [67], which is known to be a hard non-convex optimization problem. Also, the complexity of a brute-force search is exponential in , and hence intractable for large values of . Below, we provide an example that can be solved in closed form and hence computed easily.

Example 1.

Let , , constant, , , , and . Then, if there exists such that

(61)

(62)

(63)

where for , , and is the binary entropy function given by

The above characterization is exact for , i.e., only if there exists such that (61)–(63) are satisfied. (Numerical computation shows that the characterization given in (61)–(63) is exact even when .)

Proof.

Taking , and , the constraints defining the trade-off given in Proposition 1 simplify to

On the other hand, if , note that . Hence, the same constraints can be bounded as follows:

(64)

(65)

where is the inverse of the binary entropy function. Here, the inequalities in (64) and (65) follow from Mrs. Gerber’s lemma [68], since under the null hypothesis and is independent of U and W. Also, since . Noting that , and defining , the result follows. ☐
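The step invoking Mrs. Gerber’s lemma can be checked numerically in its standard binary form: if X is binary, N ~ Bernoulli(p) is independent of (X, W) and Y = X xor N, then H(Y|W) ≥ h(h⁻¹(H(X|W)) ∗ p), where a ∗ b = a(1 − b) + (1 − a)b. The sketch below evaluates both sides for randomly drawn W; the distributions are arbitrary test values, not the parameters of Example 1, and `h2_inv` inverts the binary entropy function on [0, 1/2] by bisection.

```python
import math
import random


def h2(x):
    """Binary entropy function in bits, with h2(0) = h2(1) = 0."""
    if x <= 0.0 or x >= 1.0:
        return 0.0
    return -x * math.log2(x) - (1 - x) * math.log2(1 - x)


def h2_inv(v, tol=1e-13):
    """Inverse of h2 restricted to [0, 1/2], computed by bisection."""
    lo, hi = 0.0, 0.5
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if h2(mid) < v:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def bconv(a, b):
    """Binary convolution a * b = a(1 - b) + (1 - a)b."""
    return a * (1 - b) + (1 - a) * b


def mgl_slack(p_w, q_x_given_w, p_noise):
    """H(Y|W) - h2(h2_inv(H(X|W)) * p) for Y = X xor N, N ~ Bern(p) independent of (X, W).

    p_w[i] = P(W = i) and q_x_given_w[i] = P(X = 1 | W = i).
    """
    h_x_w = sum(pw * h2(q) for pw, q in zip(p_w, q_x_given_w))
    h_y_w = sum(pw * h2(bconv(q, p_noise)) for pw, q in zip(p_w, q_x_given_w))
    return h_y_w - h2(bconv(h2_inv(h_x_w), p_noise))
```

A nonnegative `mgl_slack` is exactly the inequality used in (64) and (65); equality holds when W is constant.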

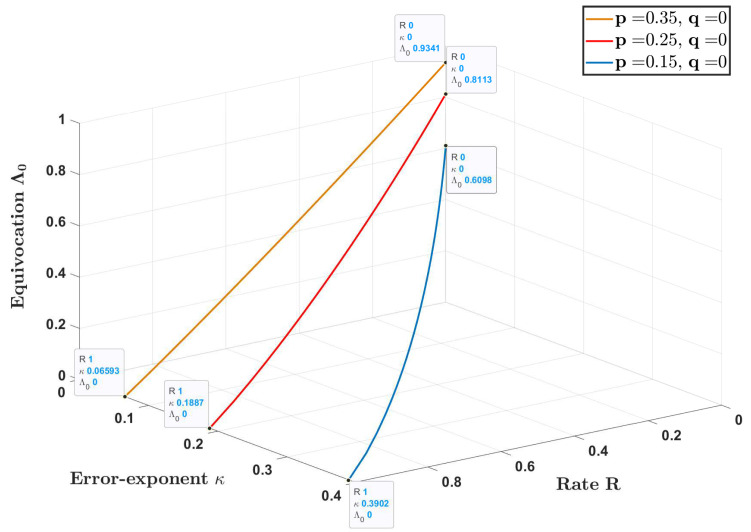

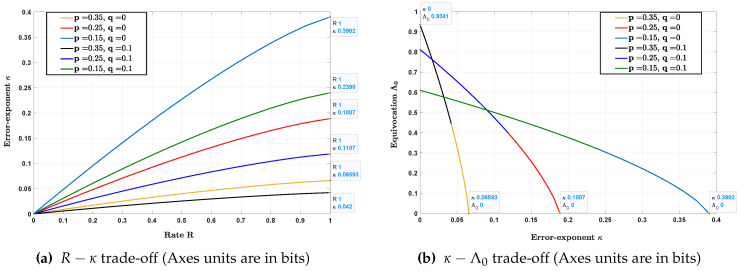

Figure 2 depicts the curve for and , as r is varied in the range . The projection of this curve on the and plane is shown in Figure 3a,b, respectively, for and the same values of p. As expected, the error exponent increases with rate R while the equivocation decreases with at the boundary of .

Figure 2.

trade-off at the boundary of in Example 1 (axis units are in bits).

Figure 3.

Projections of Figure 2 in the plane and plane

Proposition 1 (resp. Proposition 2) provides a characterization of (resp. ) under a vanishing type I error probability constraint. Consequently, the converse parts of these results are known as weak converse results in the context of HT. In the next subsection, we establish the optimal error exponent-privacy trade-off for the special case of zero-rate compression. This trade-off is independent of the type I error probability constraint , and hence is known as a strong converse result.

4.2. Zero-Rate Compression

Assume the following zero-rate constraint on the communication between the observer and the detector,

| (66) |

Please note that (66) does not imply that , i.e., that nothing can be transmitted, but rather that the message set cardinality can grow at most sub-exponentially in n. Such a scenario is practically motivated by low-power or low-bandwidth applications in which communication is costly. Propositions 3 and 4 stated below provide an optimal single-letter characterization of and in this case. While the coding schemes in the achievability part of these results are inspired by that in [6], the analysis of privacy achieved at the detector is new. Lemma 4 serves as a crucial tool for this purpose. We next state the results. Let

| (67a) |

| (67b) |

Proposition 3.

For , if and only if it satisfies,

(68)

(69)

(70)

where is a deterministic function and

Proof.

First, we prove that satisfying (68)–(70) is achievable. While the encoding and decoding scheme is the same as that in [6], we mention it for the sake of completeness.

Encoding: The observer sends the message if , , and otherwise.

Decoding: The detector declares if and , . Otherwise, is declared.
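The one-bit scheme above can be simulated as follows. This is a minimal sketch assuming binary alphabets and δ-typicality measured by the distance between the empirical frequency of ones and the corresponding marginal; the exact typicality sets used in the proof are not reproduced here, and all parameter values are hypothetical. Because the detector receives only one bit about u^n, it can essentially only check the marginal types of u^n and v^n.

```python
import random


def freq_of_ones(seq):
    return sum(seq) / len(seq)


def marginals(joint):
    """Return (P_U(1), P_V(1)) for a joint PMF on {0,1}^2 given as {(u, v): prob}."""
    pu1 = sum(p for (u, _), p in joint.items() if u == 1)
    pv1 = sum(p for (_, v), p in joint.items() if v == 1)
    return pu1, pv1


def observer_bit(u_seq, pu1, delta):
    """One-bit message: 1 iff the frequency of ones in u^n is delta-close to P_U(1)."""
    return 1 if abs(freq_of_ones(u_seq) - pu1) <= delta else 0


def detector_decides_h0(m, v_seq, pv1, delta):
    """Declare H0 iff the bit is 1 and v^n is delta-typical with respect to P_V."""
    return m == 1 and abs(freq_of_ones(v_seq) - pv1) <= delta


def accept_rate(true_joint, null_joint, n, delta, trials, seed=0):
    """Fraction of trials in which H0 is declared when (U, V) pairs are drawn
    i.i.d. from true_joint; typicality is checked against null_joint's marginals."""
    rng = random.Random(seed)
    pu1, pv1 = marginals(null_joint)
    pairs = list(true_joint)
    weights = [true_joint[pr] for pr in pairs]
    accepted = 0
    for _ in range(trials):
        sample = rng.choices(pairs, weights=weights, k=n)
        u_seq = [u for u, _ in sample]
        v_seq = [v for _, v in sample]
        if detector_decides_h0(observer_bit(u_seq, pu1, delta), v_seq, pv1, delta):
            accepted += 1
    return accepted / trials
```

For instance, with a hypothetical `P = {(0, 0): 0.5, (0, 1): 0.1, (1, 0): 0.2, (1, 1): 0.2}` under H0 and a product PMF `Q` whose V-marginal puts mass 0.7 on 1, both `1 - accept_rate(P, P, 2000, 0.05, 100)` (type I) and `accept_rate(Q, P, 2000, 0.05, 100)` (type II) are small; a `Q` with the same marginals as `P` would not be rejected by this test.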

We analyze the type I and type II error probabilities for the above scheme. Please note that for any , the weak law of large numbers implies that

Hence, the type I error probability tends to zero, asymptotically. The type II error probability can be written as follows:

where

Next, we lower bound the average distortion for achieved by this scheme at the detector. Defining

(71)

(72)

(73) we can write

(74)

(75)

(76)

where (74) holds since with probability one by the encoding scheme; (75) follows from

(77)

and ([43], Property 2(b)); and (76) is due to (17). Similarly, it can be shown using (16) that if , then

(78)

On the other hand, if and is small enough, we have

(79)

Hence, for small enough, we can write

(80)

(81)

(82)

where (80) holds since with probability one; (81) is due to (79) and ([43], Property 2(b)); and (82) follows from (15). This completes the proof of achievability.

We next prove the converse. Please note that by the strong converse result in [8], the right-hand side (R.H.S.) of (68) is an upper bound on the achievable error exponent for all even without a privacy constraint (and hence also with a privacy constraint). Also,

(83)

Here, (83) follows from the fact that the detector can always reconstruct as a function of for . Similarly,

Hence, any achievable and must satisfy (69) and (70), respectively. This completes the proof. ☐

The following proposition is the analogue of Proposition 3 when the privacy measure is equivocation.

Proposition 4.

For , if and only if it satisfies (68) and

(84)

(85)

Proof.

For proving the achievability part, the encoding and decoding scheme is the same as in Proposition 3. Hence, the analysis of the error exponent given in Proposition 3 holds. To lower bound the equivocation of at the detector, defining , and as in (71)–(73), we can write

(86)

(87)

where (86) follows from Lemma 3, ([60], Lemma 2.12), and the fact that the entropy of a r.v. is bounded by the logarithm of the cardinality of its support; and (87) follows from (17) in Lemma 4 since . In a similar way, it can be shown using (16) that if , then

(88)

On the other hand, if and is small enough, we can write

(89)

where (89) follows from Lemma 3 and (79). It follows from (15) in Lemma 4 that for sufficiently small, for some , thus implying that the R.H.S. of (89) tends to zero. This completes the proof of achievability.

The converse follows from the results in [6,8], which show that the R.H.S. of (68) is the optimal error exponent achievable for all values of even when there is no privacy constraint, together with the following inequality:

(90)

This concludes the proof of the proposition. ☐

In Section 2.2, we mentioned that it is possible to achieve a positive error exponent with perfect privacy in our model. Here, we provide an example of TAI with an equivocation privacy constraint under both hypotheses, and show that perfect privacy is possible. Recall that TAI is a special case of TACI in which constant; hence, the null and alternate hypotheses are given by

Example 2.

Let , ,

and , where . Then, we have bits. Also, noting that under the null hypothesis, , bits. It follows from the inner bound given by Equations (31)–(34), and, (37) and (38) that , if

where and for some conditional distribution . If we set , then we have bit, bit, bits, and bits. Thus, by revealing only W to the detector, it is possible to achieve a positive error exponent while ensuring maximum privacy under both the null and alternate hypotheses, i.e., the tuple , .

5. A Counter-Example to the Strong Converse

Ahlswede and Csiszár obtained a strong converse result for the DHT problem without a privacy constraint in [5], where they showed that for any positive rate R, the optimal achievable error exponent is independent of the type I error probability constraint . Here, we explore whether a similar result holds in our model, in which an additional privacy constraint is imposed. We will show through a counter-example that this is not the case in general. The basic idea behind the counter-example is a “time-sharing” argument: starting from a coding scheme that achieves the optimal rate-error exponent-equivocation trade-off under a vanishing type I error probability constraint, we construct a new coding scheme that satisfies the given type I error probability constraint and achieves the same error exponent as before, yet achieves a higher equivocation for at the detector. This concept has been used previously in other contexts, e.g., in the characterization of the first-order maximal channel coding rate of the additive white Gaussian noise (AWGN) channel in the finite block-length regime [69], and subsequently in the characterization of the second-order maximal coding rate in the same setting [70]. Here, however, we provide a self-contained proof of the counter-example using Lemma 4.

Assume that the joint distribution is such that . Proving the strong converse amounts to showing that any for some also belongs to . Consider TAI problem with an equivocation privacy constraint, in which and . Then, from the optimal single-letter characterization of given in Proposition 1, it follows by taking that . Please note that is the maximum error exponent achievable for any type I error probability constraint , even when is observed directly at the detector. Thus, for vanishing type I error probability constraint and , the term denotes the maximum achievable equivocation for under the null hypothesis. From the proof of Proposition 1, the coding scheme achieving this tuple is as follows:

Quantize to codewords in and send the index of quantization to the detector, i.e., if , send , where j is the index of in . Else, send .

At the detector, if , declare . Else, declare if for some , and otherwise.

The type I error probability of the above scheme tends to zero asymptotically with n. Now, for a fixed , consider a modification of this coding scheme as follows:

If , send with probability , where j is the index of in , and with probability , send . If , send .

At the detector, if , declare . Else, declare if for some , and otherwise.

It is easy to see that for this modified coding scheme, the type I error probability is asymptotically equal to , while the error exponent remains the same as since the probability of declaring is decreased. Recalling that , we also have

| (91) |

| (92) |

| (93) |

| (94) |

where denotes some sequence of positive numbers such that , and

| (95) |

| (96) |

| (97) |

Equation (91) follows similarly to the proof of Theorem 1 in [71]. Equation (92) is obtained as follows:

| (98) |

| (99) |

Here, (98) is obtained by an application of Lemma 3; and (99) is due to the assumption that .

It follows from Lemma 4 that , which in turn implies that

| (100) |

From (95), (97) and (100), we have that . Hence, Equation (94) implies that for some . Since , this implies that in general, the strong converse does not hold for HT with an equivocation privacy constraint. The same counter-example can be used in a similar manner to show that the strong converse does not hold for HT with an average distortion privacy constraint either.
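The error-probability bookkeeping for the modified coding scheme in this section can be made explicit. This is a sketch under the assumption that the randomization is independent of the sources; we write γ for the probability with which the error message is sent instead of the quantization index, and α_n, β_n for the type I and type II error probabilities of the original scheme (our notation). Since H0 can only be declared when the index is sent and the original scheme would declare H0,

```latex
\begin{aligned}
1-\alpha_n' &= (1-\gamma)(1-\alpha_n)
  &&\Longrightarrow\quad \alpha_n' = \gamma + (1-\gamma)\,\alpha_n \xrightarrow[n\to\infty]{} \gamma,\\
\beta_n' &= (1-\gamma)\,\beta_n
  &&\Longrightarrow\quad -\tfrac{1}{n}\log\beta_n' = -\tfrac{1}{n}\log\beta_n - \tfrac{1}{n}\log(1-\gamma).
\end{aligned}
```

For fixed γ < 1, the last term vanishes as n grows, so the type II error exponent is unchanged while the type I error probability converges to γ.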

6. Conclusions

We have studied the DHT problem with a privacy constraint, with equivocation and average distortion under a causal disclosure assumption as the measures of privacy. We have established single-letter inner bounds on the rate-error exponent-equivocation and rate-error exponent-distortion trade-offs. We have also obtained the optimal rate-error exponent-equivocation and rate-error exponent-distortion trade-offs for two special cases: when the communication rate over the channel is zero, and for TACI under a privacy constraint. Interestingly, the strong converse for DHT does not hold when there is an additional privacy constraint in the system. Extending these results to the case when the communication between the observer and detector takes place over a noisy communication channel is an interesting avenue for future research. Another important topic worth exploring is the trade-off between rate, error probability and privacy in the finite sample regime for the setting considered in this paper.

Abbreviations

The following abbreviations are used in this manuscript:

| HT | Hypothesis testing |

| DHT | Distributed hypothesis testing |

| TACI | Testing against conditional independence |

| TAI | Testing against independence |

| DP | Differential privacy |

| KL | Kullback–Leibler |

| SHA | Shimokawa-Han-Amari |

Appendix A. Proof of Lemma 1

Please note that for a stochastic detector, the type I and type II error probabilities are linear functions of . As a result, for each fixed n and , and for a stochastic detector can be thought of as the type I and type II errors achieved by “time-sharing” among a finite number of deterministic detectors. To see this, consider some ordering on the elements of the set and let , , where i denotes the element of and . Then, we can write

Then, it is easy to see that , where and is an N length vector with 1 at the component and 0 elsewhere. Now, suppose and denote the pair of type I and type II error probabilities achieved by deterministic detectors and , respectively. Let and denote their corresponding acceptance regions for . Let denote the stochastic detector formed by using and with probabilities and , respectively. From the above-mentioned linearity property, it follows that achieves type I and type II error probabilities of and , respectively. Let . Then, for ,

Hence, either

or

Thus, since , a stochastic detector does not offer any advantage over deterministic detectors in the trade-off between the error exponent and the type I error probability.
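The linearity argument above can be illustrated on a toy observation alphabet, a hypothetical stand-in for the detector's observation. The sketch computes the type I and type II error probabilities of two deterministic acceptance regions and of the stochastic detector that uses the first region with probability λ. The mixture's errors are the corresponding convex combination, and since β_mix ≥ max(λβ_1, (1 − λ)β_2), the constant λ only shifts −log β_mix by a bounded amount, so the mixture's type II exponent cannot exceed that of either deterministic detector.

```python
def deterministic_errors(acceptance, p, q):
    """Type I/II error probabilities of a deterministic detector.

    acceptance: set of observations on which H0 is declared.
    p, q: PMFs (dicts) of the detector's observation under H0 and H1.
    """
    alpha = sum(prob for z, prob in p.items() if z not in acceptance)
    beta = sum(prob for z, prob in q.items() if z in acceptance)
    return alpha, beta


def stochastic_errors(accept_prob, p, q):
    """Same, for a detector that declares H0 on z with probability accept_prob[z]."""
    alpha = sum(p[z] * (1 - accept_prob[z]) for z in p)
    beta = sum(q[z] * accept_prob[z] for z in q)
    return alpha, beta


def mixture(acc1, acc2, lam, outcomes):
    """Stochastic detector: use region acc1 w.p. lam and acc2 w.p. 1 - lam."""
    return {z: lam * (z in acc1) + (1 - lam) * (z in acc2) for z in outcomes}
```

Because `stochastic_errors` is linear in `accept_prob`, evaluating it on `mixture(...)` reproduces the λ-weighted average of the two deterministic error pairs.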

Appendix B. Proof of Lemma 2

Let and denote the joint distribution of the r.v.’s under hypothesis and , respectively, where denotes for . Then, we have

where

Continuing, we have

| (A1) |

This completes the proof.

Appendix C. Proof of Lemma 4

We will first prove (15). Fix . For , define the following sets:

| (A2) |

| (A3) |

Then, we can write

| (A4) |

Next, note that

| (A5) |

for sufficiently large n (depending on ), since . Thus, for n large enough,

| (A6) |

We can bound the last term in (A4) as follows:

| (A7) |

| (A8) |

Let denote the type of and define

Then, for , arbitrary and n sufficiently large (depending on ), it follows from ([60], Lemma 2.6) that

| (A9) |

| (A10) |

From (A4), (A6) and (A8)–(A10), it follows that

| (A11) |

We next show that for sufficiently small and . This would imply that the R.H.S. of (A11) converges exponentially to zero (for small enough) with exponent , thus proving (15). We can write

| (A12) |

| (A13) |

where

Here, (A12) follows from the convexity of KL divergence, and (A13) is due to Pinsker’s inequality [60]. We also have, from the triangle inequality for the total variation distance, that

For ,

Also, for ,

Hence,

Since , for sufficiently small and . This completes the proof of (15).
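Step (A13) uses Pinsker’s inequality. With divergence in bits and ‖·‖₁ the L1 distance, it reads D(P‖Q) ≥ ‖P − Q‖₁² / (2 ln 2). The short check below evaluates it on random full-support distributions; the alphabet size and distributions are arbitrary test values.

```python
import math
import random


def kl_bits(p, q):
    """KL divergence D(P||Q) in bits (assumes q > 0 wherever p > 0)."""
    return sum(a * math.log2(a / b) for a, b in zip(p, q) if a > 0)


def l1_distance(p, q):
    return sum(abs(a - b) for a, b in zip(p, q))


def pinsker_slack(p, q):
    """D(P||Q) - ||P - Q||_1^2 / (2 ln 2); Pinsker's inequality says this is >= 0."""
    return kl_bits(p, q) - l1_distance(p, q) ** 2 / (2 * math.log(2))


def random_pmf(k, rng):
    """Random full-support PMF on k symbols."""
    w = [rng.random() + 1e-9 for _ in range(k)]
    s = sum(w)
    return [x / s for x in w]
```

In nats the same inequality is D(P‖Q) ≥ ‖P − Q‖₁²/2; the factor 1/ln 2 only converts units.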

We next prove (17). Similar to (A4) and (A5), we have,

| (A14) |

and

| (A15) |

since .

Also, for and sufficiently large n (depending on ), we have

| (A16) |

| (A17) |

where to obtain (A16), we used

| (A18) |

| (A19) |

Here, (A18) follows from ([60], Lemma 2.12), and (A19) follows from ([60], Lemmas 2.10 and 2.12). Thus, from (A14), (A15) and (A17), we can write

This completes the proof of (17). The proof of (16) is exactly the same as (17), with the only difference that the sets and are used in place of and , respectively.
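Steps (A9)–(A10) rely on the type-class probability bounds of ([60], Lemma 2.6): for every type Q of sequences of length n, (n + 1)^(−|X|) 2^(−nD(Q‖P)) ≤ P^n(T_Q) ≤ 2^(−nD(Q‖P)). The sketch below verifies these bounds exactly for a ternary alphabet by computing P^n(T_Q) through the multinomial coefficient; the choice of P and n is arbitrary.

```python
import math


def kl_bits(q, p):
    """D(Q||P) in bits with the convention 0 log 0 = 0 (p assumed to have full support)."""
    return sum(a * math.log2(a / b) for a, b in zip(q, p) if a > 0)


def log2_type_class_prob(counts, p):
    """log2 of P^n(T_Q) for the type Q = counts / n, via the multinomial coefficient."""
    n = sum(counts)
    log_multinomial = math.lgamma(n + 1) - sum(math.lgamma(k + 1) for k in counts)
    log_prob = sum(k * math.log(px) for k, px in zip(counts, p))
    return (log_multinomial + log_prob) / math.log(2)


def types_of_length(n):
    """All count vectors (k0, k1, k2) with k0 + k1 + k2 = n (ternary alphabet)."""
    for k0 in range(n + 1):
        for k1 in range(n + 1 - k0):
            yield (k0, k1, n - k0 - k1)


def check_type_class_bounds(p, n, tol=1e-9):
    """Check both bounds of the lemma for every type of length n (in the log2 domain)."""
    for counts in types_of_length(n):
        q = [k / n for k in counts]
        exponent = -n * kl_bits(q, p)
        lp = log2_type_class_prob(counts, p)
        if lp > exponent + tol:
            return False
        if lp < exponent - len(p) * math.log2(n + 1) - tol:
            return False
    return True
```

Working with `math.lgamma` in the log domain avoids overflow of the factorials; the upper bound is met with equality for types concentrated on a single symbol.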

Appendix D. Proof of Theorems 1 and 2

We describe the encoding and decoding operations which are the same for both Theorems 1 and 2. Fix positive numbers (small) , and let and .

Codebook Generation: Fix a finite alphabet and a conditional distribution . Let , , denote a random codebook such that each is randomly and independently generated according to distribution , where

Denote a realization of by and the support of by .

Encoding: For a given codebook , let

| (A20) |

denote the likelihood encoding function. If , the observer performs uniform random binning on the indices in , i.e., for each , it selects an index uniformly at random from the set . Denote the random binning function by and a realization of it by . If , set as the identity function with probability one, i.e., . If , then the observer outputs the message if or otherwise, where is chosen randomly with probability and t denotes the index of the joint type of in the set of types . If , the observer outputs the error message . Please note that since the total number of types in is upper bounded by ([60], Lemma 2.2). Let , and let and denote its realization and probability distribution, respectively. For a given , let represent the encoder induced by the above operations, where and .
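The likelihood encoder of [62,63], in its standard form, selects index m with probability proportional to P^n_{U|W}(u^n | w^n(m)) for a random codebook with i.i.d. codewords. The minimal sketch below implements only that step; it does not reproduce the exact expression (A20), the random binning or the joint-type index, and the alphabets, `p_w`, `p_u_given_w`, block length and codebook size are hypothetical.

```python
import random


def product_likelihood(u_seq, w_seq, p_u_given_w):
    """P_{U|W}^n(u^n | w^n) = prod_i P_{U|W}(u_i | w_i) for a memoryless channel."""
    prob = 1.0
    for u, w in zip(u_seq, w_seq):
        prob *= p_u_given_w[w][u]
    return prob


def generate_codebook(num_codewords, n, p_w, rng):
    """Random codebook: each codeword has i.i.d. symbols drawn from p_w."""
    symbols = list(p_w)
    weights = [p_w[s] for s in symbols]
    return [rng.choices(symbols, weights=weights, k=n) for _ in range(num_codewords)]


def likelihood_encoder_pmf(u_seq, codebook, p_u_given_w):
    """P(m | u^n) proportional to P_{U|W}^n(u^n | w^n(m))."""
    likelihoods = [product_likelihood(u_seq, w_seq, p_u_given_w) for w_seq in codebook]
    total = sum(likelihoods)
    if total == 0:
        raise ValueError("u^n has zero likelihood under every codeword")
    return [lk / total for lk in likelihoods]


def likelihood_encode(u_seq, codebook, p_u_given_w, rng):
    """Draw a codeword index from the likelihood encoder's conditional PMF."""
    pmf = likelihood_encoder_pmf(u_seq, codebook, p_u_given_w)
    return rng.choices(range(len(codebook)), weights=pmf, k=1)[0]
```

Unlike a joint-typicality encoder, this rule randomizes over all codewords in proportion to their likelihood, which is the property the soft-covering arguments below exploit. For long blocks, one would work with log-likelihoods to avoid floating-point underflow.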

Decoding: If or , is declared. Else, given and , the detector decodes for a codeword in the codebook such that

Denote the above decoding rule by , where . The detector declares if and otherwise. Let stand for the decision rule induced by the above operations.

System induced distributions and auxiliary distributions:

The system induced probability distribution when is given by

| (A21) |

and

| (A22) |

Consider two auxiliary distributions and given by

| (A23) |

and

| (A24) |

Let and denote probability distributions under defined by the R.H.S. of (A21)–(A23) with replaced by , and let denote the R.H.S. of (A24) with replaced by . Please note that the encoder is such that and hence, the only difference between the joint distribution and is the marginal distribution of . By the soft-covering lemma [62,64], it follows that for some ,

| (A25) |

Hence, from ([43], Property 2(d)), it follows that

| (A26) |

Also, note that the only difference between the distributions and is when . Since

| (A27) |

it follows that

| (A28) |

Equations (A26) and (A28) together imply via ([43], Property 2(c)) that

| (A29) |

Please note that for , the joint distribution satisfies

| (A30) |

Also, since , by the application of soft-covering lemma,

| (A31) |

for some .

If , then it again follows from the soft-covering lemma that

| (A32) |

thereby implying that

| (A33) |

Also, note that the only difference between the distributions and is when . Since implies , it follows that

| (A34) |

Equations (A33) and (A34) together imply that

| (A35) |

Let and denote the expected probability measures (random coding measures) induced by PMFs and , respectively. Then, note that from (A24), (A29), (A31) and the weak law of large numbers,

| (A36) |

Analysis of type I and type II error probabilities:

We analyze type I and type II error probabilities of the coding scheme mentioned above averaged over the random ensemble .

Type I error probability:

Please note that a type I error occurs only if one of the following events occurs:

Let . Then, the expected type I error probability over can be upper bounded as

| (A37) |

Please note that tends to 0 asymptotically by the weak law of large numbers. From (A36), . Given and holds, it follows from the Markov chain relation and the Markov lemma [68] that . Also, as in the proof of Theorem 2 in [13], it follows that

| (A38) |

where . Thus, if , it follows by choosing that for small enough, the R.H.S. of (A38) tends to zero asymptotically. By the union bound on probability, the R.H.S. of (A37) tends to zero.

Type II error probability:

Let . Please note that a type II error occurs only if and , i.e., and . Hence, we can restrict the type II error analysis to only such . Denoting the event that a type II error occurs by , we have

| (A39) |

Let . The last term in (A39) can be upper bounded as follows:

| (A40) |

| (A41) |

| (A42) |

where (A41) follows since the term in (A40) is independent of the indices due to the symmetry of the codebook generation, encoding and decoding procedure. The first term in (A42) can be upper bounded as

| (A43) |

To obtain (A43), we used the fact that in (A20) is invariant to the joint type of (keeping all the other codewords fixed). This in turn implies that given , each sequence in the conditional type class is equally likely (in the randomness induced by and stochastic encoding in (A20)) and its probability is upper bounded by . Defining the events

| (A44) |

| (A45) |

| (A46) |

| (A47) |

the last term in (A42) can be written as

| (A48) |

The analysis of the terms in (A48) is essentially similar to that given in the proof of Theorem 2 in [13], except for a subtle difference that we mention next. To bound the binning error event , we require an upper bound similar to

| (A49) |

that is used in the proof of Theorem 2 in [13]. Please note that the stochastic encoding scheme considered here is different from the encoding scheme in [13]. In place of (A49), we will show that for ,

| (A50) |

which suffices for the proof. Please note that

| (A51) |

| (A52) |

Since the codewords are generated independently of each other and the binning operation is done independently of the codebook generation, we have

| (A53) |

and

| (A54) |

Also, note that

| (A55) |

Next, consider the term in (A51). Let

Then, the numerator and denominator of (A51) can be written as

| (A56) |

and

| (A57) |

respectively. The R.H.S. of (A56) (resp. (A57)) denotes the average probability that is chosen by given , and (resp. ) other independent codewords in . Let

Please note that

| (A58) |

Hence, denoting by the probability measure induced by , we have

| (A59) |

| (A60) |

| (A61) |

| (A62) |

| (A63) |

where (A59) is due to (A58); (A61) holds since the term within in (A60) is upper bounded by one; (A62) holds since for some , which follows similarly to ([68], Section 3.6.3); and (A63) follows since the term within the expectation, which is exponential in order, dominates the double-exponential term. Then, (A50) follows from (A52)–(A55) and (A63). The analysis of the other terms in (A48) is the same as for the SHA scheme in [7], and results in the error exponent (within an additive term) claimed in the theorem. We refer the reader to ([13], Theorem 2) for a detailed proof. (In [13], the communication channel between the observer and the detector is a DMC. However, since the coding scheme used in the achievability part of Theorem 2 in [13] is a separation-based scheme, the error exponent when the channel is noiseless can be recovered by setting and in Theorem 2 to ∞.) By the random coding argument followed by the standard expurgation technique [72] (see ([13], Proof of Theorem 2)), there exists a deterministic codebook and binning function pair such that the type I and type II error probabilities are within a constant multiplicative factor of their average values over the random ensemble , and

| (A64) |

| (A65) |

| (A66) |

| (A67) |

where and are some positive numbers. Since the average type I error probability for our scheme tends to zero asymptotically, and the error exponent is unaffected by a constant multiplicative scaling of the type II error probability, this codebook achieves the same type I error probability and error exponent as the average over the random ensemble. Using this deterministic codebook for encoding and decoding, we first lower bound the equivocation and average distortion of at the detector as follows:

First consider the equivocation of under the null hypothesis.

| (A68) |

| (A69) |

| (A70) |

| (A71) |

| (A72) |

| (A73) |

| (A74) |

| (A75) |

Here, (A68) follows from (A27); (A69) follows since M is a function of for a deterministic codebook; (A71) follows from (A65) and Lemma 3; (A72) follows from (A24); and (A75) follows from (A67) and .

If , it follows as above that

| (A76) |

| (A77) |

| (A78) |

| (A79) |

| (A80) |

Finally, consider the case and . We have for small enough that

| (A81) |

Hence, for small enough, we can write

| (A82) |

| (A83) |

| (A84) |

| (A85) |

Here, (A82) follows from (A81); (A83) follows since implies ; and (A84) follows from Lemma 3 and (15). Thus, since is arbitrary, we have shown that for , if (18)–(21) hold.
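For intuition about the quantity bounded in (A68)–(A85), the following sketch computes an equivocation for a single-letter (n = 1) toy source with a deterministic encoder. The equivocation here is the conditional entropy of the private component given the message and the detector's observation. The sketch also checks the generic chain-rule inequality H(S|M,V) ≥ H(S|V) − H(M|V), which holds because H(S|V) ≤ H(S,M|V) = H(M|V) + H(S|M,V). The variable names S (private part), U (observer's observation), V (detector's observation), the toy pmf, and both encoders are hypothetical choices for illustration. The sketch does not reproduce the paper's codebook or the individual steps of the proof.

```python
import math
from collections import defaultdict


def cond_entropy(joint, target, given):
    """H(target | given) in bits.

    `joint` maps outcome tuples to probabilities; `target` and `given` are
    tuples of coordinate indices into those outcome tuples.
    """
    p_given = defaultdict(float)
    p_both = defaultdict(float)
    for outcome, p in joint.items():
        g = tuple(outcome[i] for i in given)
        t = tuple(outcome[i] for i in target)
        p_given[g] += p
        p_both[(t, g)] += p
    return -sum(p * math.log2(p / p_given[g])
                for (t, g), p in p_both.items() if p > 0)


def add_message(joint_suv, encoder):
    """Extend each outcome (s, u, v) with the deterministic message m = encoder(u)."""
    return {(s, u, v, encoder(u)): p for (s, u, v), p in joint_suv.items()}


# Hypothetical toy source: S and B are independent fair bits,
# the observer sees U = 2*S + B, and the detector sees V = B.
JOINT = {(s, 2 * s + b, b): 0.25 for s in (0, 1) for b in (0, 1)}
S, U, V, M = 0, 1, 2, 3  # coordinate indices in the extended outcome tuples

if __name__ == "__main__":
    encoders = [("reveals S", lambda u: u // 2), ("reveals B", lambda u: u % 2)]
    for name, enc in encoders:
        j = add_message(JOINT, enc)
        equivocation = cond_entropy(j, (S,), (M, V))
        bound = cond_entropy(j, (S,), (V,)) - cond_entropy(j, (M,), (V,))
        print(name, equivocation, ">=", bound)
```

The encoder that sends S leaves no equivocation. The encoder that sends only what the detector already sees leaves the full 1 bit of uncertainty about S.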

On the other hand, the average distortion of at the detector can be lower bounded under as follows:

| (A86) |

| (A87) |

| (A88) |

| (A89) |

| (A90) |

Here, (A86) follows from Lemma 2; (A87) follows from ([43], Property 2(b)) due to (A65) and the boundedness of the distortion measure; (A88) follows from the Markov chain in (A64); and (A89) follows from (A67) and the fact that .

Next, consider the case and . Then, similarly, we can write

| (A91) |

| (A92) |

| (A93) |

| (A94) |

If and , we have

| (A95) |

| (A96) |

Here, (A95) follows from (15) in Lemma 4, and (A96) follows from (A81). Thus, since is arbitrary, we have shown that , , provided that (18), (19), (24) and (25) are satisfied. This completes the proof of the theorem.
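The distortion bounds above concern the best reconstruction the detector can form. The sketch below is a single-letter illustration that ignores causal disclosure: the detector here does not observe past private symbols. For a finite joint pmf, it computes the minimum average distortion over estimators, min E[d(S, ŝ(M, V))]. The minimization decouples: for each observed pair (m, v), the optimal estimator picks the reconstruction that minimizes the conditional expected distortion. The function names, the Hamming distortion, and the toy pmfs are assumptions for illustration only.

```python
from collections import defaultdict


def min_avg_distortion(joint, recon_alphabet, dist):
    """min over estimators s_hat(m, v) of E[dist(S, s_hat(M, V))].

    `joint` maps (s, m, v) to probabilities. For each observed (m, v) the
    best reconstruction minimizes sum_s P(s, m, v) * dist(s, s_hat), and
    these per-observation minima add up to the overall minimum.
    """
    by_obs = defaultdict(lambda: defaultdict(float))
    for (s, m, v), p in joint.items():
        by_obs[(m, v)][s] += p
    return sum(
        min(sum(p * dist(s, s_hat) for s, p in p_s.items())
            for s_hat in recon_alphabet)
        for p_s in by_obs.values()
    )


def hamming(a, b):
    """Hamming distortion: 0 if the reconstruction is correct, 1 otherwise."""
    return 0.0 if a == b else 1.0


if __name__ == "__main__":
    # Hypothetical toy pmfs over (s, m, v) with a uniform private bit S.
    uninformative = {(0, 0, 0): 0.5, (1, 0, 0): 0.5}  # (M, V) says nothing about S
    revealing = {(0, 0, 0): 0.5, (1, 1, 0): 0.5}      # M = S
    print(min_avg_distortion(uninformative, (0, 1), hamming))  # 0.5
    print(min_avg_distortion(revealing, (0, 1), hamming))      # 0.0
```

A larger minimum distortion means the detector's best estimate of the private part is worse. The proof lower bounds this quantity.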

Appendix E. Proof of Lemma 5

Consider the functions of ,

| (A97) |

| (A98) |

| (A99) |

| (A100) |

where

Thus, by the Fenchel–Eggleston–Carathéodory theorem [68], it suffices to have at most points in the support of W to preserve and three more to preserve , and . Noting that and are automatically preserved since is preserved (and holds), points suffice to preserve the right-hand sides of Equations (28)–(30). This completes the proof for the case of . Similarly, considering the functions of given in (A97)–(A99) and

where

a similar result also holds for the case of .
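The support-size argument rests on a standard fact: a distribution P_W can be replaced by one with few atoms that preserves the expectations of finitely many functions. The sketch below implements the classical Carathéodory reduction, which guarantees at most d + 1 atoms when d functionals are preserved. The Fenchel–Eggleston refinement invoked above saves one further atom when the set of feature vectors is connected; it is not implemented here. Each point stands for a hypothetical vector of function values, such as those in (A97)–(A100), evaluated at one value of w. The helper names are hypothetical, and exact rational arithmetic via Python's `fractions.Fraction` keeps the reduction exact.

```python
from fractions import Fraction


def null_vector(rows):
    """Return a nonzero c with sum_j rows[i][j] * c[j] == 0 for every i.

    Uses exact Gauss-Jordan elimination; assumes len(rows[0]) > len(rows),
    so at least one free column (and hence a nonzero solution) exists.
    """
    a = [[Fraction(x) for x in row] for row in rows]
    n_rows, n_cols = len(a), len(a[0])
    pivots, r = [], 0
    for col in range(n_cols):
        if r == n_rows:
            break
        pivot = next((i for i in range(r, n_rows) if a[i][col] != 0), None)
        if pivot is None:
            continue  # no pivot in this column: it is a free column
        a[r], a[pivot] = a[pivot], a[r]
        lead = a[r][col]
        a[r] = [x / lead for x in a[r]]
        for i in range(n_rows):
            if i != r and a[i][col] != 0:
                f = a[i][col]
                a[i] = [x - f * y for x, y in zip(a[i], a[r])]
        pivots.append(col)
        r += 1
    free = next(j for j in range(n_cols) if j not in pivots)
    c = [Fraction(0)] * n_cols
    c[free] = Fraction(1)
    for row, col in enumerate(pivots):
        c[col] = -a[row][free]  # reduced row: c[col] + a[row][free] * 1 == 0
    return c


def caratheodory_reduce(weights, points):
    """Shrink a distribution over `points` (vectors in R^d) to at most d + 1
    atoms while preserving total mass and the weighted mean of the points."""
    w = [Fraction(x) for x in weights]
    pts = [[Fraction(x) for x in p] for p in points]
    d = len(pts[0])
    while True:
        support = [i for i, x in enumerate(w) if x > 0]
        if len(support) <= d + 1:
            return w
        # Columns (x_i, 1): a null vector c has sum c_i x_i = 0 and sum c_i = 0,
        # so c is nonzero with at least one positive entry.
        rows = [[pts[i][k] for i in support] for k in range(d)]
        rows.append([Fraction(1)] * len(support))
        c = null_vector(rows)
        # Largest step keeping weights nonnegative; it zeroes at least one atom.
        t = min(w[i] / ci for i, ci in zip(support, c) if ci > 0)
        for i, ci in zip(support, c):
            w[i] -= t * ci


if __name__ == "__main__":
    points = [(0, 0), (1, 0), (0, 1), (1, 1), (2, 1), (1, 2)]
    reduced = caratheodory_reduce([Fraction(1, 6)] * 6, points)
    print([str(x) for x in reduced])
```

Each pass moves the weights along a direction that leaves both the total mass and the mean vector unchanged, and stops once an atom's weight hits zero. The support therefore shrinks until at most d + 1 atoms remain.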

Author Contributions

Conceptualization, S.S., A.C. and D.G.; writing—original draft preparation, S.S.; supervision, A.C. and D.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research was partially funded by the European Research Council Starting Grant project BEACON (grant agreement number 677854).

Conflicts of Interest

The authors declare no conflict of interest.

References

- 1. Appari A., Johnson E. Information security and privacy in healthcare: Current state of research. Int. J. Internet Enterp. Manag. 2010;6:279–314. doi: 10.1504/IJIEM.2010.035624.

- 2. Gross R., Acquisti A. Information revelation and privacy in online social networks; Proceedings of the ACM workshop on Privacy in Electronic Society; Alexandria, VA, USA. 7 November 2005; pp. 71–80.

- 3. Miyazaki A., Fernandez A. Consumer Perceptions of Privacy and Security Risks for Online Shopping. J. Consum. Aff. 2001;35:27–44. doi: 10.1111/j.1745-6606.2001.tb00101.x.

- 4. Giaconi G., Gündüz D., Poor H.V. Privacy-Aware Smart Metering: Progress and Challenges. IEEE Signal Process. Mag. 2018;35:59–78. doi: 10.1109/MSP.2018.2841410.

- 5. Ahlswede R., Csiszár I. Hypothesis Testing with Communication Constraints. IEEE Trans. Inf. Theory. 1986;32:533–542. doi: 10.1109/TIT.1986.1057194.

- 6. Han T.S. Hypothesis Testing with Multiterminal Data Compression. IEEE Trans. Inf. Theory. 1987;33:759–772. doi: 10.1109/TIT.1987.1057383.

- 7. Shimokawa H., Han T.S., Amari S. Error Bound of Hypothesis Testing with Data Compression; Proceedings of the IEEE International Symposium on Information Theory; Trondheim, Norway. 27 June–1 July 1994.

- 8. Shalaby H.M.H., Papamarcou A. Multiterminal Detection with Zero-Rate Data Compression. IEEE Trans. Inf. Theory. 1992;38:254–267. doi: 10.1109/18.119685.

- 9. Zhao W., Lai L. Distributed Testing Against Independence with Multiple Terminals; Proceedings of the 52nd Annual Allerton Conference; Monticello, IL, USA. 30 September–3 October 2014; pp. 1246–1251.

- 10. Katz G., Piantanida P., Debbah M. Distributed Binary Detection with Lossy Data Compression. IEEE Trans. Inf. Theory. 2017;63:5207–5227. doi: 10.1109/TIT.2017.2688348.

- 11. Rahman M.S., Wagner A.B. On the Optimality of Binning for Distributed Hypothesis Testing. IEEE Trans. Inf. Theory. 2012;58:6282–6303. doi: 10.1109/TIT.2012.2206793.

- 12. Sreekumar S., Gündüz D. Distributed Hypothesis Testing Over Noisy Channels; Proceedings of the IEEE International Symposium on Information Theory; Aachen, Germany. 25–30 June 2017; pp. 983–987.

- 13. Sreekumar S., Gündüz D. Distributed Hypothesis Testing Over Discrete Memoryless Channels. IEEE Trans. Inf. Theory. 2020;66:2044–2066. doi: 10.1109/TIT.2019.2953750.

- 14. Salehkalaibar S., Wigger M., Timo R. On Hypothesis Testing Against Conditional Independence with Multiple Decision Centers. IEEE Trans. Commun. 2018;66:2409–2420. doi: 10.1109/TCOMM.2018.2798659.

- 15. Salehkalaibar S., Wigger M. Distributed Hypothesis Testing based on Unequal-Error Protection Codes. arXiv. 2018, arXiv:1806.05533. doi: 10.1109/TIT.2020.2993172.

- 16. Han T.S., Kobayashi K. Exponential-Type Error Probabilities for Multiterminal Hypothesis Testing. IEEE Trans. Inf. Theory. 1989;35:2–14. doi: 10.1109/18.42171.

- 17. Haim E., Kochman Y. On Binary Distributed Hypothesis Testing. arXiv. 2018, arXiv:1801.00310.

- 18. Weinberger N., Kochman Y. On the Reliability Function of Distributed Hypothesis Testing Under Optimal Detection. IEEE Trans. Inf. Theory. 2019;65:4940–4965. doi: 10.1109/TIT.2019.2910065.

- 19. Bayardo R., Agrawal R. Data privacy through optimal k-anonymization; Proceedings of the International Conference on Data Engineering; Tokyo, Japan. 5–8 April 2005; pp. 217–228.

- 20. Agrawal R., Srikant R. Privacy-preserving data mining; Proceedings of the ACM SIGMOD International Conference on Management of Data; Dallas, TX, USA. 18–19 May 2000; pp. 439–450.

- 21. Bertino E. Big Data-Security and Privacy; Proceedings of the IEEE International Congress on BigData; New York, NY, USA. 27 June–2 July 2015; pp. 425–439.

- 22. Gertner Y., Ishai Y., Kushilevitz E., Malkin T. Protecting Data Privacy in Private Information Retrieval Schemes. J. Comput. Syst. Sci. 2000;60:592–629. doi: 10.1006/jcss.1999.1689.

- 23. Hay M., Miklau G., Jensen D., Towsley D., Weis P. Resisting structural re-identification in anonymized social networks. J. Proc. VLDB Endow. 2008;1:102–114. doi: 10.14778/1453856.1453873.

- 24. Narayanan A., Shmatikov V. De-anonymizing Social Networks; Proceedings of the IEEE Symposium on Security and Privacy; Berkeley, CA, USA. 17–20 May 2009.

- 25. Liao J., Sankar L., Tan V., Calmon F. Hypothesis Testing Under Mutual Information Privacy Constraints in the High Privacy Regime. IEEE Trans. Inf. Forensics Secur. 2018;13:1058–1071. doi: 10.1109/TIFS.2017.2779108.

- 26. Liao J., Sankar L., Calmon F., Tan V. Hypothesis testing under maximal leakage privacy constraints; Proceedings of the IEEE International Symposium on Information Theory; Aachen, Germany. 25–30 June 2017.

- 27. Gilani A., Amor S.B., Salehkalaibar S., Tan V. Distributed Hypothesis Testing with Privacy Constraints. Entropy. 2019;21:478. doi: 10.3390/e21050478.

- 28. Gündüz D., Erkip E., Poor H.V. Secure lossless compression with side information; Proceedings of the IEEE Information Theory Workshop; Porto, Portugal. 5–9 May 2008; pp. 169–173.

- 29. Gündüz D., Erkip E., Poor H.V. Lossless compression with security constraints; Proceedings of the IEEE International Symposium on Information Theory; Toronto, ON, Canada. 6–11 July 2008; pp. 111–115.

- 30. Mhanna M., Piantanida P. On secure distributed hypothesis testing; Proceedings of the IEEE International Symposium on Information Theory; Hong Kong, China. 14–19 June 2015; pp. 1605–1609.

- 31. Sweeney L. K-anonymity: A model for protecting privacy. Int. J. Uncertain. Fuzziness Knowl. Based Syst. 2002;10:557–570. doi: 10.1142/S0218488502001648.

- 32. Dwork C., McSherry F., Nissim K., Smith A. Theory of Cryptography. Springer; Berlin/Heidelberg, Germany: 2006. Calibrating Noise to Sensitivity in Private Data Analysis; pp. 265–284.

- 33. Calmon F., Fawaz N. Privacy Against Statistical Inference; Proceedings of the 50th Annual Allerton Conference; Monticello, IL, USA. 1–5 October 2012; pp. 1401–1408.

- 34. Makhdoumi A., Salamatian S., Fawaz N., Medard M. From the information bottleneck to the privacy funnel; Proceedings of the IEEE Information Theory Workshop; Hobart, Australia. 2–5 November 2014; pp. 501–505.

- 35. Calmon F., Makhdoumi A., Medard M. Fundamental limits of perfect privacy; Proceedings of the IEEE International Symposium on Information Theory; Hong Kong, China. 14–19 June 2015; pp. 1796–1800.

- 36. Issa I., Kamath S., Wagner A.B. An Operational Measure of Information Leakage; Proceedings of the Annual Conference on Information Science and Systems; Princeton, NJ, USA. 16–18 March 2016; pp. 1–6.

- 37. Rassouli B., Gündüz D. Optimal Utility-Privacy Trade-off with Total Variation Distance as a Privacy Measure. IEEE Trans. Inf. Forensics Secur. 2019;15:594–603. doi: 10.1109/TIFS.2019.2903658.

- 38. Wagner I., Eckhoff D. Technical Privacy Metrics: A Systematic Survey. arXiv. 2015, arXiv:1512.00327v1. doi: 10.1145/3168389.

- 39. Goldwasser S., Micali S. Probabilistic encryption. J. Comput. Syst. Sci. 1984;28:270–299. doi: 10.1016/0022-0000(84)90070-9.

- 40. Bellare M., Tessaro S., Vardy A. Semantic Security for the Wiretap Channel; Proceedings of the Advances in Cryptology-CRYPTO 2012; Heidelberg, Germany. 19–23 August 2012; pp. 294–311.

- 41. Yamamoto H. A Rate-Distortion Problem for a Communication System with a Secondary Decoder to be Hindered. IEEE Trans. Inf. Theory. 1988;34:835–842. doi: 10.1109/18.9781.

- 42. Tandon R., Sankar L., Poor H.V. Discriminatory Lossy Source Coding: Side Information Privacy. IEEE Trans. Inf. Theory. 2013;59:5665–5677. doi: 10.1109/TIT.2013.2259613.

- 43. Schieler C., Cuff P. Rate-Distortion Theory for Secrecy Systems. IEEE Trans. Inf. Theory. 2014;60:7584–7605. doi: 10.1109/TIT.2014.2365175.

- 44. Agarwal G.K. On Information Theoretic and Distortion-based Security. Ph.D. Thesis. University of California; Los Angeles, CA, USA: 2019. Available online: https://escholarship.org/uc/item/7qs7z91g (accessed on 3 January 2020).

- 45. Li Z., Oechtering T., Gündüz D. Privacy against a hypothesis testing adversary. IEEE Trans. Inf. Forensics Secur. 2019;14:1567–1581. doi: 10.1109/TIFS.2018.2882343.

- 46. Cuff P., Yu L. Differential privacy as a mutual information constraint; Proceedings of the 2016 ACM SIGSAC Conference on Computer and Communications Security; Vienna, Austria. 24–28 October 2016; pp. 43–54.

- 47. Goldfeld Z., Cuff P., Permuter H.H. Semantic-Security Capacity for Wiretap Channels of Type II. IEEE Trans. Inf. Theory. 2016;62:3863–3879. doi: 10.1109/TIT.2016.2565483.

- 48. Sreekumar S., Bunin A., Goldfeld Z., Permuter H.H., Shamai S. The Secrecy Capacity of Cost-Constrained Wiretap Channels. arXiv. 2020, arXiv:2004.04330.

- 49. Kasiviswanathan S.P., Lee H.K., Nissim K., Raskhodnikova S., Smith A. What can we learn privately? SIAM J. Comput. 2011;40:793–826. doi: 10.1137/090756090.

- 50. Duchi J.C., Jordan M.I., Wainwright M.J. Local Privacy and Statistical Minimax Rates; Proceedings of the 2013 IEEE 54th Annual Symposium on Foundations of Computer Science; Berkeley, CA, USA. 26–29 October 2013; pp. 429–438.

- 51. Duchi J.C., Jordan M.I., Wainwright M.J. Privacy Aware Learning. J. ACM. 2014;61:1–57. doi: 10.1145/2666468.

- 52. Wang Y., Lee J., Kifer D. Differentially Private Hypothesis Testing, Revisited. arXiv. 2015, arXiv:1511.03376.

- 53. Gaboardi M., Lim H., Rogers R., Vadhan S. Differentially Private Chi-Squared Hypothesis Testing: Goodness of Fit and Independence Testing; Proceedings of the 33rd International Conference on Machine Learning; New York City, NY, USA. 19–24 June 2016; pp. 2111–2120.

- 54. Rogers R.M., Roth A., Smith A.D., Thakkar O. Max-Information, Differential Privacy, and Post-Selection Hypothesis Testing. arXiv. 2016, arXiv:1604.03924.