Key Points

Question

How accurate are physicians and medical students in interpreting electrocardiograms (ECGs)?

Findings

In this meta-analysis of 78 original studies, the accuracy of ECG interpretation was low in the absence of training and varied widely across studies. Accuracy was higher after training but remained relatively low; as expected, it also rose with progressive training and specialization.

Meaning

Physicians at all training levels may have deficiencies in ECG interpretation, even after educational interventions.

Abstract

Importance

The electrocardiogram (ECG) is the most common cardiovascular diagnostic test. Physicians’ skill in ECG interpretation is incompletely understood.

Objectives

To identify and summarize published research on the accuracy of physicians’ ECG interpretations.

Data Sources

A search of PubMed/MEDLINE, Embase, Cochrane CENTRAL (Central Register of Controlled Trials), PsycINFO, CINAHL (Cumulative Index to Nursing and Allied Health), ERIC (Education Resources Information Center), and Web of Science was conducted for articles published from database inception to February 21, 2020.

Study Selection

Of 1138 articles initially identified, 78 studies that assessed the accuracy of physicians’ or medical students’ ECG interpretations in a test setting were selected.

Data Extraction and Synthesis

Data on study purpose, participants, assessment features, and outcomes were abstracted, and methodological quality was appraised with the Medical Education Research Study Quality Instrument. Results were pooled using random-effects meta-analysis.

Main Outcomes and Measures

Accuracy of ECG interpretation.

Results

Of 1138 studies initially identified, 78 assessed the accuracy of ECG interpretation. Across all training levels, the median accuracy was 54% (interquartile range [IQR], 40%-66%; n = 62 studies) on pretraining assessments and 67% (IQR, 55%-77%; n = 47 studies) on posttraining assessments. Accuracy varied widely across studies. The pooled accuracy for pretraining assessments was 42.0% (95% CI, 34.3%-49.6%; n = 24 studies; I2 = 99%) for medical students, 55.8% (95% CI, 48.1%-63.6%; n = 37 studies; I2 = 96%) for residents, 68.5% (95% CI, 57.6%-79.5%; n = 10 studies; I2 = 86%) for practicing physicians, and 74.9% (95% CI, 63.2%-86.7%; n = 8 studies; I2 = 22%) for cardiologists.

Conclusions and Relevance

Physicians at all training levels had deficiencies in ECG interpretation, even after educational interventions. Improved education across the practice continuum appears warranted. Wide variation in outcomes could reflect real differences in training or skill or differences in assessment design.

This systematic review and meta-analysis identifies and summarizes published research on the accuracy of physicians’ interpretations of electrocardiograms.

Introduction

Electrocardiography is the most commonly performed cardiovascular diagnostic test,1 and electrocardiogram (ECG) interpretation is an essential skill for most physicians.2,3,4 Interpretation of an ECG is a complex task that requires integration of knowledge of anatomy, electrophysiology, and pathophysiology, visual pattern recognition, and diagnostic reasoning.5 Despite the importance of this diagnostic test and several position statements regarding education in ECG interpretation,2,3,4,6,7,8 evidence regarding the optimal techniques for training, assessing, and maintaining this skill is lacking.9 As part of understanding the potential need for further educational reform, it would be helpful to know the accuracy of physicians and physician trainees in interpreting ECGs. A systematic review10 published in 2003 found frequent errors and disagreements in physicians’ ECG interpretations as reported in 32 studies. However, that review10 did not offer a quantitative synthesis of results and is now 18 years old. Other reviews9,11,12,13,14 of physicians’ ECG interpretations have focused on training interventions rather than accuracy. We believe an updated review of evidence regarding physicians’ ECG interpretation accuracy, together with a quantitative synthesis, would be useful to physicians in practice, medical school teachers, and administrators.

The purpose of the present study (part of a larger systematic review of ECG education) is to systematically identify and summarize published research that measured the accuracy of physicians’ ECG interpretations. We focused this review on studies that assessed interpretation accuracy in a controlled (educational test) setting; this approach permits adjudication relative to a single correct (accurate) response, which is challenging in real clinical practice.

Methods

Data Sources and Searches

We systematically searched the PubMed/MEDLINE, Embase, Cochrane CENTRAL (Central Register of Controlled Trials), PsycINFO, CINAHL (Cumulative Index to Nursing and Allied Health), ERIC (Education Resources Information Center), and Web of Science databases for articles published from database inception to February 21, 2020, using a search strategy developed with assistance from a research librarian. The full search strategy can be found in the eBox in the Supplement; key terms included the topic (ECG, EKG, and electrocardiogram), population (medical education, medical students, residents, and physicians), and outcomes (learning effectiveness, learning outcomes, learning efficiency, and impact). We also hand searched the references of reviews to identify omitted articles.9,10,11,12,13,14 This study followed the Preferred Reporting Items for Systematic Reviews and Meta-analyses (PRISMA) reporting guideline.15

Study Selection

We included all studies that assessed the accuracy of physicians’ or medical students’ ECG interpretations in a test setting. We made no exclusions based on the language or date of publication. Working independently, 2 authors (M.V.P. and S.Y.O.) screened each study for inclusion, reading first the title and abstract and second the full text if needed. Conflicts were resolved by consensus.

Data Extraction and Quality Assessment

For each included article, all authors (D.A.C., M.V.P., and S.Y.O.) worked in pairs using software designed for systematic reviews (DistillerSR, Evidence Partners Inc) to abstract data on study purpose (training intervention, survey of competence, or assessment validation), study participants (number and training level), accuracy scores, assessment features (number and selection of items, response format, and scoring rubric), validity evidence, and study methodological quality. We recorded accuracy scores separately for assessments conducted before training (including survey studies without intervention) and those performed after a training intervention. Interrater agreement was substantial (κ>0.6) for all extracted elements. All disagreements were resolved by consensus.
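
As an illustration of the agreement statistic mentioned above, the following minimal sketch computes Cohen's κ for two reviewers who code the same set of articles. The text reports only that κ exceeded 0.6; the use of Cohen's κ specifically, the function name `cohens_kappa`, and the example labels are assumptions made for illustration and are not taken from the study.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who labeled the same items.

    Illustrative helper (hypothetical name); inputs are equal-length
    sequences of categorical labels.
    """
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("Both raters must label the same non-empty set of items.")
    n = len(rater_a)
    # Observed agreement: share of items given identical labels.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of each rater's marginal label frequencies.
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    expected = sum(count_a[label] * count_b[label] for label in count_a) / n**2
    if expected == 1.0:
        # Both raters used a single identical label; agreement is complete.
        return 1.0
    return (observed - expected) / (1 - expected)


# Hypothetical codes for study design assigned by two reviewers.
reviewer_1 = ["rct", "rct", "survey", "survey", "prepost", "rct", "survey", "prepost"]
reviewer_2 = ["rct", "rct", "survey", "prepost", "prepost", "rct", "survey", "prepost"]
print(f"kappa = {cohens_kappa(reviewer_1, reviewer_2):.2f}")
```

Values above 0.6 are conventionally described as substantial agreement, which is the threshold cited in the text.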

We appraised general methodological quality using the Medical Education Research Study Quality Instrument,16 which was developed to appraise the methodological quality of any quantitative medical education research study. We appraised the quality of the outcome measure (ie, accuracy test) using the 5 sources validation framework,17 which identifies 5 potential sources of validity evidence: content, response process, internal structure, relations with other variables, and consequences.18,19

Statistical Analysis

We used the I2 statistic20 to quantify inconsistency (heterogeneity) across studies. The I2 statistic estimates the percentage of variability across studies not attributable to chance, and values greater than 50% indicate substantial inconsistency. Because we anticipated (and confirmed) substantial inconsistency across studies, we pooled accuracy scores within each physician subgroup and weighted by sample size using random-effects meta-analysis. Given that meta-analysis may be inappropriate in the setting of large differences in test content and difficulty, we also reported the median score. We planned subgroup analyses by timing (before vs after a training intervention), response format (free text vs selection from a predefined list), and author-reported examination difficulty. We found only 1 study21 that restricted items to a single empirically determined level of difficulty and thus used the number of correct diagnoses per ECG (1 or >1 [more difficult]) as a surrogate for item difficulty. We used the z test22 to evaluate the statistical significance of subgroup interactions. We planned sensitivity analyses restricted to survey studies and to studies using robust approaches to determine correct answers. We used SAS software, version 9.4 (SAS Institute Inc), for all analyses. Statistical significance was defined by a 2-sided α = .05.
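
The pooling and inconsistency calculations described above can be sketched as follows. This is a minimal illustration, not the study's SAS procedure: the text does not name the between-study variance estimator, so the DerSimonian-Laird method is assumed here, the within-study variance is approximated from a binomial proportion (which requires each accuracy to lie strictly between 0 and 1), and the function name `pool_random_effects` and the input values are hypothetical.

```python
import math


def pool_random_effects(studies):
    """Pool per-study accuracies with a random-effects model.

    studies: list of (accuracy as a proportion in (0, 1), number of participants).
    Returns (pooled estimate, (lower, upper) 95% CI, I2 as a percentage).
    Assumes the DerSimonian-Laird estimator and binomial within-study variance.
    """
    if len(studies) < 2:
        raise ValueError("At least 2 studies are needed to estimate heterogeneity.")
    y = [p for p, _ in studies]
    v = [p * (1 - p) / n for p, n in studies]  # within-study variance (assumption)
    w = [1 / vi for vi in v]                   # inverse-variance (fixed-effect) weights

    fixed = sum(wi * yi for wi, yi in zip(w, y)) / sum(w)
    # Cochran Q: weighted squared deviations from the fixed-effect estimate.
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, y))
    df = len(studies) - 1

    # I2: percentage of variability across studies not attributable to chance.
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0

    # Between-study variance (tau squared), truncated at zero.
    c = sum(w) - sum(wi**2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - df) / c)

    # Random-effects weights add tau squared to each within-study variance.
    w_re = [1 / (vi + tau2) for vi in v]
    pooled = sum(wi * yi for wi, yi in zip(w_re, y)) / sum(w_re)
    se = math.sqrt(1 / sum(w_re))
    return pooled, (pooled - 1.96 * se, pooled + 1.96 * se), i2


# Hypothetical study-level results: (mean accuracy, participants).
example = [(0.40, 50), (0.55, 120), (0.62, 80), (0.35, 200)]
pooled, (low, high), i2 = pool_random_effects(example)
print(f"pooled = {pooled:.3f} (95% CI, {low:.3f}-{high:.3f}); I2 = {i2:.0f}%")
```

When I2 is large, the random-effects weights become more nearly equal across studies, so small studies influence the pooled estimate more than they would under a fixed-effect model.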

Results

In this systematic review and meta-analysis, of the 1138 articles initially identified, 78 studies (enrolling a total of 10 056 participants) reported accuracy data (Figure 1).12,21,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63,64,65,66,67,68,69,70,71,72,73,74,75,76,77,78,79,80,81,82,83,84,85,86,87,88,89,90,91,92,93,94,95,96,97,98 For 2 studies,59,68 data were reported in several different publications; in each case, we abstracted 1 report (the most complete). The eTable in the Supplement summarizes the key features of study designs and tests.

Figure 1. Trial Flow Diagram.

Features of Studies

Forty-one studies23,24,25,27,29,31,34,35,36,40,42,43,48,51,52,56,57,59,60,61,62,64,65,67,69,71,72,73,74,75,77,81,82,84,85,86,91,93,95,96,98 involved medical students (n = 4256 participants), 42 studies12,21,23,24,26,32,33,35,36,37,38,39,41,44,45,46,47,49,50,51,53,54,55,58,60,61,62,63,66,69,70,75,76,79,80,84,88,89,90,91,94,97 involved postgraduate physicians (n = 2379), 11 studies25,38,47,60,66,68,78,83,87,92,94 involved noncardiologist practicing physicians (n = 1074), and 10 studies25,28,30,36,47,68,83,87,91,92 involved cardiologists or cardiology fellows (n = 2094); 4 mixed-participant studies35,47,74,84 did not report training level–specific sample sizes (n = 253). Twenty-six studies12,27,29,31,35,36,39,56,57,58,59,62,64,65,71,72,74,75,77,80,81,82,85,86,96,98 were randomized trials, 11 studies21,23,24,34,42,43,48,52,53,73,97 were 2-group nonrandomized comparisons, 19 studies25,30,32,41,50,66,69,70,78,79,84,87,88,89,90,92,93,94,95 were single-group pre-post comparisons, and 22 studies26,28,33,37,38,40,44,45,46,47,49,51,54,55,60,61,63,67,68,76,83,91 were cross-sectional (single time point). Twenty-two studies were identified as surveys.26,30,33,36,38,39,40,41,44,45,46,49,51,53,54,55,61,63,66,67,68,83 Of the 47 studies reporting posttraining data,12,23,24,25,27,28,29,31,32,34,35,37,41,42,43,47,48,50,52,56,57,59,62,64,65,66,69,71,72,73,74,75,77,78,79,80,81,82,84,85,86,87,88,89,95,96,97 the training interventions included face-to-face lectures or seminars (22 studies12,25,27,29,31,32,42,43,52,57,59,62,72,74,75,77,80,81,82,88,95,97), computer tutorials (20 studies12,27,29,35,47,48,66,69,71,73,74,75,77,78,79,82,84,86,87,89), independent study materials (7 studies24,25,34,57,64,85,96), clinical training activities (4 studies28,37,41,50), and 7 other miscellaneous activities23,24,52,56,65,90,92 (some studies used >1 intervention type). 
On further quality appraisal, all studies reported an objective outcome of learning (ie, accuracy), 54 studies23,24,25,26,28,30,31,32,33,34,35,37,38,39,40,41,42,43,44,46,47,48,49,50,51,52,53,54,55,56,57,58,59,61,62,64,65,67,68,74,75,76,78,81,85,88,89,90,92,93,94,96,97,98 had high (≥75%) follow-up, 16 studies12,26,28,37,41,44,45,51,55,66,67,70,75,76,79,87 involved more than 1 institution, and 66 studies12,21,23,24,27,29,32,33,34,35,36,37,39,40,41,42,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63,64,65,66,67,68,69,70,71,72,73,74,75,76,78,79,80,81,82,85,86,87,88,90,91,93,94,95,96,97,98 used appropriate statistical tests.

Features of ECG Tests

The number of test items ranged from 1 to 100 (median, 10; IQR, 9-20). The ECG diagnoses represented in the test were reported in 53 studies (68%)12,21,25,26,30,31,33,34,36,37,38,40,43,44,45,46,47,49,50,51,52,53,54,55,57,59,61,63,64,66,67,68,69,70,71,75,76,78,80,83,84,85,86,87,88,89,90,91,92,94,95,96,98; these studies included normal ECGs (26 studies31,33,34,37,38,40,45,47,49,51,52,54,63,66,68,69,75,76,78,84,87,90,91,92,94,96) and abnormalities of rhythm (45 studies12,25,26,31,33,34,36,37,40,43,44,45,46,47,49,50,51,52,54,55,57,59,61,63,64,66,67,68,70,71,75,76,78,80,83,84,85,86,87,88,89,90,95,96,98), ischemia (42 studies12,21,25,26,30,31,33,34,36,37,38,40,44,46,47,49,51,52,55,57,59,61,63,67,68,69,70,71,75,76,78,83,84,85,88,89,90,91,92,94,95,96), structure (41 studies12,21,25,26,31,33,34,36,37,38,40,44,45,46,47,49,50,51,52,54,55,57,59,61,63,66,67,68,70,75,76,78,80,83,84,85,87,89,90,91,96), and metabolism/inflammation (23 studies21,25,26,31,34,36,38,46,47,49,53,54,55,57,61,63,66,68,70,75,76,91,96). The ECG complexity or difficulty was intentionally set or empirically determined in 32 studies.21,24,26,28,30,31,32,33,36,38,43,44,45,49,51,53,54,56,59,60,61,63,66,67,69,75,90,91,92,94,95,98 Among these, the ECGs reflected a simple interpretation (single diagnosis) in 20 studies,26,31,33,38,43,44,45,49,51,53,54,59,61,66,67,75,92,94,95,98 several straightforward diagnoses in 2 studies,28,63 deliberately complex cases in 3 studies,21,30,36 and a mixture of simple and complex cases in 7 studies.24,32,56,60,69,90,91 Participants provided free-text responses in 26 studies21,23,30,32,33,36,37,38,39,40,42,44,45,46,49,50,51,55,59,61,68,70,80,90,94,97 and selected from a predefined list of answers in 24 studies.12,28,47,53,54,56,62,66,67,69,71,75,76,77,79,81,84,87,91,92,93,95,96,98

We grouped studies according to the method of confirming the correct answer: (1) clinical data (such as laboratory test or echocardiogram; n = 5 studies30,38,53,69,94), (2) robust expert panel (≥2 people with clearly defined expertise and independent initial review or explicit consensus on final answers; n = 18 studies26,36,39,41,44,45,46,49,55,60,61,62,63,67,68,79,91,98), or (3) less robust expert panel, single individual, or undefined (n = 55 studies12,21,23,24,25,27,28,29,31,32,33,34,35,37,40,42,43,47,48,50,51,52,54,56,57,58,59,64,65,66,70,71,72,73,74,75,76,77,78,80,81,82,83,84,85,86,87,88,89,90,92,93,95,96,97). Thirty-seven studies (47%)21,26,28,30,31,34,36,38,39,41,44,45,46,49,51,53,54,55,56,58,59,60,61,62,63,65,67,68,69,70,73,75,76,79,91,94,98 reported information about test development, content, and scoring (content validity evidence), 9 studies (12%)29,39,41,46,47,56,59,70,81 reported reliability or other internal structure validity evidence, 9 studies (12%)23,31,60,61,75,76,80,91,93 reported associations with scores from another instrument or with training status (relations with other variables validity evidence), 3 studies (4%)28,59,76 reported consequences validity evidence, and 1 study (1%)36 reported response process validity evidence.

Accuracy of Physicians’ ECG Interpretations

Across all studies and all training levels, the median accuracy on the 62 pretraining assessments12,21,23,24,25,26,27,29,30,31,32,33,34,35,36,38,39,40,41,43,44,45,46,47,49,50,51,53,54,55,57,58,59,60,61,62,63,66,67,68,69,70,71,73,76,77,78,79,83,84,87,88,89,90,91,92,93,94,95,96,97,98 was 54% (range, 4%-95%; IQR, 40%-66%) (Figure 2). For the 47 studies12,23,24,25,27,28,29,31,32,34,35,37,41,42,43,47,48,50,52,56,57,59,62,64,65,66,69,71,72,73,74,75,77,78,79,80,81,82,84,85,86,87,88,89,95,96,97 that reported posttraining assessments, the median accuracy was 67% (range, 10%-88%; IQR, 55%-77%) (eFigure in the Supplement).

Figure 2. Physician Electrocardiogram (ECG) Interpretation Accuracy Without Additional ECG Training, for All Training Levels Combined.

Basic 1 indicates a single straightforward diagnosis; basic 2, 2 or more straightforward diagnoses; Card, cardiologists in practice or cardiology fellows; I, ischemia/infarction, M, metabolism/inflammation (eg, pericarditis, hyperkalemia, or drug effect); MS, medical students; N, normal; O, other; PG, postgraduate physicians (residents); R, rhythm; S, structure (eg, hypertrophy or conduction block). Boxes indicate the mean accuracy score for each study. The diamond and dashed vertical line indicate the median score across studies.

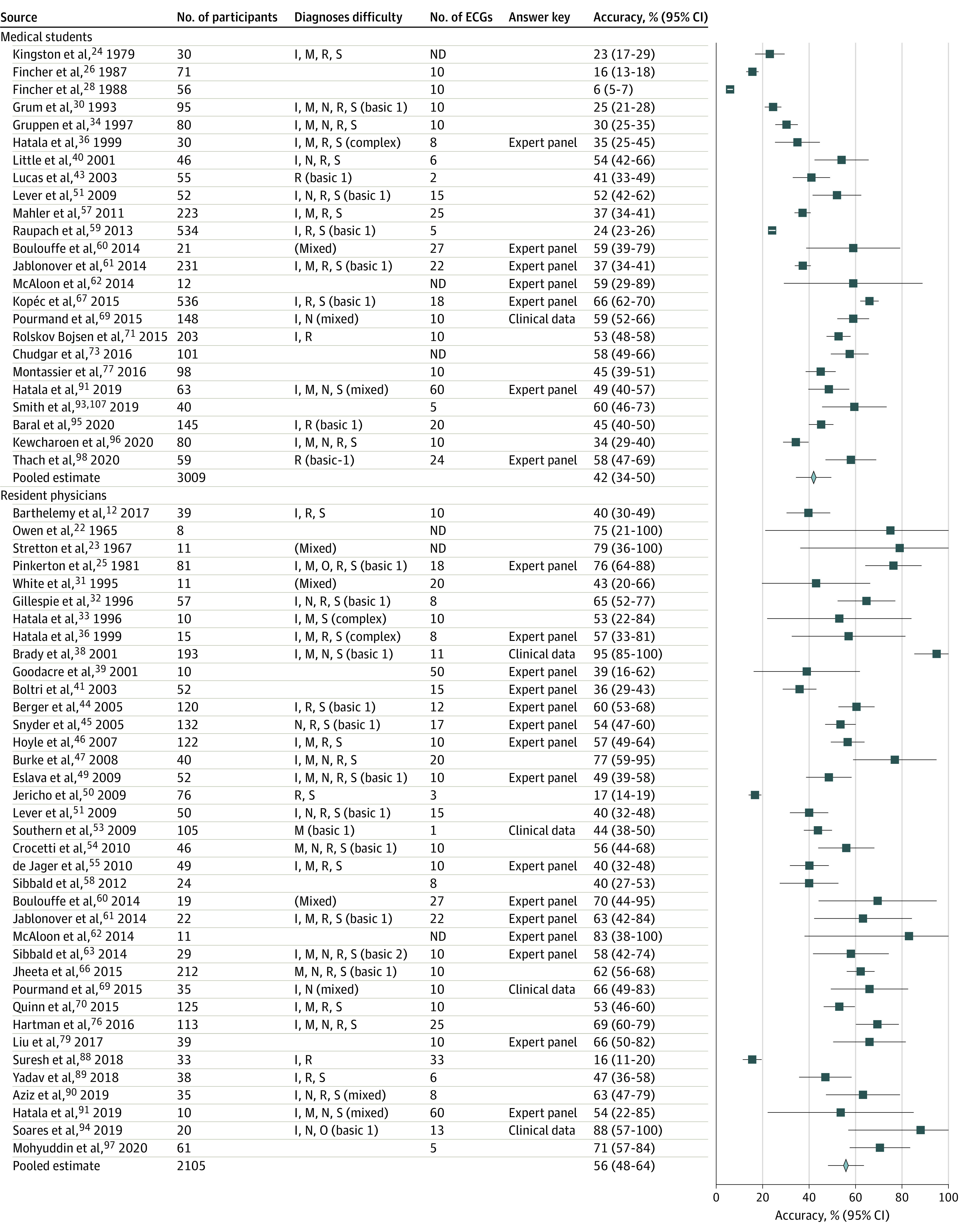

We conducted random-effects meta-analyses of pretraining assessment scores for each training group (Figure 3). For medical students, the pooled accuracy was 42.0% (95% CI, 34.3%-49.6%; median, 45%; IQR, 32%-58%; n = 24 studies25,27,29,31,34,36,40,43,51,57,59,60,61,62,67,69,71,73,77,91,93,95,96,98) with substantial inconsistency (I2 = 99%). For residents, the pooled accuracy was 55.8% (95% CI, 48.1%-63.6%; median, 57%; IQR, 44%-69%; n = 37 studies12,21,23,24,26,32,36,38,39,41,44,45,46,47,49,50,51,53,54,55,58,60,61,62,63,66,69,70,76,79,88,89,90,91,94,97; I2 = 96%). For practicing physicians, the pooled accuracy was 68.5% (95% CI, 57.6%-79.5%; median, 66%; IQR, 63%-78%; n = 10 studies25,38,60,66,68,78,83,87,92,94; I2 = 86%). For cardiologists and cardiology fellows, the pooled accuracy was 74.9% (95% CI, 63.2%-86.7%; median, 79%; IQR, 68%-86%; n = 8 studies25,30,36,68,83,87,91,92; I2 = 22%).

Figure 3. Random-Effects Meta-analysis of Physician Electrocardiogram (ECG) Interpretation Accuracy Without Additional ECG Training, for Medical Students and Resident Physicians.

See Figure 2 for explanation of abbreviations and difficulty levels. Boxes indicate the mean accuracy score for each study, diamonds indicate pooled estimates across studies, and horizontal lines indicate 95% CIs.

The pooled accuracies of posttraining assessment scores were higher than the pretraining scores. For medical students, the pooled accuracy after training was 61.5% (95% CI, 56.0%-66.9%; median, 61%; IQR, 55%-72%; n = 29 studies23,24,25,27,29,31,34,42,43,48,52,56,57,59,62,64,65,69,71,72,73,75,77,81,82,85,86,95,96; I2 = 91%; P<.001 for interaction comparing pretraining with posttraining scores). For residents, the pooled accuracy was 66.5% (95% CI, 57.3%-75.7%; median, 75%; IQR, 51%-79%; n = 15 studies12,32,37,41,47,50,62,66,69,75,79,80,88,89,97; I2 = 84%; P = .08 for interaction). For practicing physicians, the pooled accuracy was 80.1% (95% CI, 72.7%-87.5%; median, 81%; range, 72.9%-83.6%; n = 3 studies66,78,87; I2 = 37%; P = .09 for interaction). For cardiologists and cardiology fellows, the pooled accuracy was 87.5% (95% CI, 84.8%-90.2%; median, 88%; range, 83.0%-90.5%; n = 3 studies28,47,87; I2 = 0%; P = .04 for interaction).
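
The interaction P values above compare two pooled estimates. A minimal sketch of such a comparison follows, assuming that the test is a simple z test on the difference between two independent estimates and that each standard error can be recovered from the reported 95% CI as (upper − lower)/(2 × 1.96). Both points are assumptions about the method, and the function names are hypothetical; the numeric inputs are the pooled estimates and CIs reported in the text.

```python
import math


def se_from_ci(lower, upper, z_crit=1.96):
    """Recover a standard error from a symmetric 95% CI (assumption)."""
    return (upper - lower) / (2 * z_crit)


def interaction_z_test(est_a, ci_a, est_b, ci_b):
    """Two-sided z test for the difference between two independent estimates."""
    se_a = se_from_ci(*ci_a)
    se_b = se_from_ci(*ci_b)
    z = (est_b - est_a) / math.sqrt(se_a**2 + se_b**2)
    # Two-sided P value from the standard normal distribution.
    p = math.erfc(abs(z) / math.sqrt(2))
    return z, p


# Pretraining and posttraining pooled accuracy (%) with 95% CIs, from the text.
groups = {
    "medical students": ((42.0, (34.3, 49.6)), (61.5, (56.0, 66.9))),
    "residents": ((55.8, (48.1, 63.6)), (66.5, (57.3, 75.7))),
    "practicing physicians": ((68.5, (57.6, 79.5)), (80.1, (72.7, 87.5))),
    "cardiologists": ((74.9, (63.2, 86.7)), (87.5, (84.8, 90.2))),
}
for name, ((pre, pre_ci), (post, post_ci)) in groups.items():
    z, p = interaction_z_test(pre, pre_ci, post, post_ci)
    print(f"{name}: z = {z:.2f}, P = {p:.3f}")
```

Under these assumptions, the sketch gives P values close to those reported for each training level, although the original analysis may have differed in detail.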

We planned subgroup analyses according to item difficulty. Nineteen pretraining assessments26,31,33,38,43,44,45,49,51,53,54,59,61,66,67,92,94,95,98 included only ECGs with 1 diagnosis (less difficult), and 10 assessments21,24,30,32,36,60,63,69,90,91 included ECGs with multiple diagnoses or empirically determined high difficulty. In analyses limited to these 29 studies,21,24,26,30,31,32,33,36,38,43,44,45,49,51,53,54,59,60,61,63,66,67,69,90,91,92,94,95,98 the median pretraining accuracy (across all participants) for less difficult ECGs was 56% (IQR, 44%-66%; n = 19 studies26,31,33,38,43,44,45,49,51,53,54,59,61,66,67,92,94,95,98) and for difficult ECGs was 59% (IQR, 53%-67%; n = 10 studies21,24,30,32,36,60,63,69,90,91).

We also conducted subgroup analyses according to response format. Twenty-three pretraining assessments21,23,30,32,33,36,38,39,40,44,45,46,49,50,51,55,59,61,68,70,90,94,97 used free-text response, with a median accuracy of 54% (IQR, 43%-65%). Twenty assessments12,47,53,54,62,66,67,69,71,76,77,79,84,87,91,92,93,95,96,98 used a predefined list of answers, with a median accuracy of 60% (IQR, 48%-68%).

Acknowledging that assessments linked to training interventions might be enriched for ECG findings specific to that intervention (and thus less representative of real-life prevalence), we performed sensitivity analyses limited to the 22 survey studies26,30,33,36,38,39,40,41,44,45,46,49,51,53,54,55,61,63,66,67,68,83 (which would presumably be designed to ascertain performance in a more representative fashion). This analysis (across all participants) found a median accuracy of 55% (IQR, 44%-65%). Finally, in sensitivity analyses limited to assessments using clinical data or a robust panel to confirm the correct answer, the median pretraining accuracy was 58% (IQR, 44%-67%; n = 23 studies26,30,36,38,39,41,44,45,46,49,53,55,60,61,62,63,67,68,69,79,91,94,98).

Discussion

This systematic review and meta-analysis identified 78 studies that assessed the accuracy of physicians’ ECG interpretations in a controlled (test) setting. Accuracy scores varied widely across studies, ranging from 4% to 95%. The median accuracy across all training levels was relatively low (54%), and scores increased as expected with progressive training and specialization (medical students, residents, physicians in noncardiology practice, and cardiologists). Scores assessed after a training intervention were modestly higher but remained low (median, 67%). These findings have implications for training, assessment, and setting standards in ECG interpretation.

Integration With Prior Work

Several reviews9,11,12,13 have examined training on ECG interpretation, documenting improved accuracy after training compared with no intervention and evaluating the comparative effectiveness of several instructional modalities and methods. The only previous review10 of the accuracy of physicians’ ECG interpretations found 32 studies of postgraduate trainees and physicians in practice; to that report, we have added 46 studies, an expanded population (the addition of medical students), a robust quantitative synthesis, and detailed data visualizations. We found limited validity evidence for the original outcome measures, as has been previously reported for educational assessments in other domains, including clinical skills,99 continuing medical education,100 and simulation-based assessments.19,101

Implications for Future Work

This review has important implications for practitioners, educators, and researchers. First and foremost, according to these findings, physicians at all training levels could improve their ECG interpretation skills. Even cardiologists had performance gaps, with a pooled accuracy of 74.9%. Moreover, substantial deficiencies persisted after training. These findings suggest that novel training approaches that span the training continuum are needed. Recent guidelines endorsed by professional societies3,4 have identified developmentally appropriate competencies for ECG interpretation and reviewed several options for training and assessment. Other original studies highlight creative use of workshops,95 peer groups,102 online self-study,87 and social media.79 Large ECG databanks, properly indexed by diagnosis and difficulty (such as the NYU Emergency Care Electrocardiogram Database) could enable regular and repeated practice in these skills. Research also highlights the disparate impact of different cognitive strategies on learning and performing ECG interpretation.64,103 Adaptive computer instructional technologies might further facilitate efficient learning of ECG interpretation.104 Enhanced clinical decision support at the point of care may also be helpful; automated computer ECG interpretations have demonstrated variable accuracy,105,106 but novel artificial intelligence–driven algorithms may improve on past performance.107 Careful consideration should also be given to instructor qualifications.4

Interpretation accuracy varied widely across studies, ranging from 49% to 92% for cardiologists and even more for other groups (Figure 3, Figure 4). High variability persisted after training. This finding suggests a role for increased standardization in ECG interpretation education. National or international agreement on relevant competencies, development and dissemination of training resources and assessment tools that embody educational best practices, and adoption of a mastery learning (competency-based) paradigm might help remediate these performance gaps. Inasmuch as ECGs can be simulated perfectly in a digital environment, online educational resources may prove particularly useful and easily shared. These resources might include adaptive training that accounts for variation in baseline performance and learning rate and aims for achievement of a defined benchmark (mastery). In addition, given available information, it is difficult to disentangle true differences in physicians’ skill or training from differences in ECG selection (sampling across domains and difficulty) and test calibration. Robust test item selection procedures were infrequently reported, and we propose this as an area for improved assessment.

Figure 4. Random-Effects Meta-analysis of Physician Electrocardiogram (ECG) Interpretation Accuracy Without Additional ECG Training, for Practicing Physicians and Cardiologists and Cardiology Fellows.

See Figure 2 for explanation of abbreviations and difficulty levels. Boxes indicate the mean accuracy score for each study, diamonds indicate pooled estimates across studies, and horizontal lines indicate 95% CIs.

The data in the present study highlight at least 4 additional areas for improvement in the assessment of ECG interpretation. First, nearly all the tests were developed de novo for a given study and never used again; the adoption or adaptation of previously used tests would streamline test development and facilitate cross-study comparisons. Second, tests were generally short (median, 10 ECGs), which limits reliability and precision of estimates. In comparison with the more than 120 diagnostic statements cataloged by the American Heart Association108 or the 37 common and essential ECG patterns identified in recent guidelines,4 a 10-item test seems unlikely to fully represent the domain. Third, investigators rarely used robust procedures for confirming the correct answer, such as independent expert review and consensus or use of clinical data. Fourth, validity evidence in general was rarely reported. In addition to the above-mentioned steps regarding the content of ECG assessments, we suggest reporting evidence to support their internal structure (eg, reliability) and relations with other variables.

Strengths and Limitations

This study has several strengths, including the inclusion of studies representing a broad range of designs, a literature search supported by a librarian trained in systematic reviews, duplicate review at all stages, and a robust quantitative synthesis with planned subgroup and sensitivity analyses.

This study also has limitations. As with all reviews, the information obtained is limited by the methodological and reporting quality of the original studies. The tests used to assess interpretation accuracy varied widely and were often suboptimal; however, when analysis was limited to studies that used more robust approaches to select ECGs and confirm correct answers, the results were largely unchanged. We found limited validity evidence for the original outcome measures. We also note a paucity of outcomes for practicing physicians.

By design, this study focused its search and inclusion criteria on assessments conducted in a test setting in which the correct answers can be known. We believe this represents a best-case scenario for interpretation and expect that performance in a fast-paced clinical practice would typically be worse. Although tests created for educational purposes may not reflect the spectrum of difficulty or disease seen in clinical practice (eg, test ECGs might be enriched for challenging or rare cases), we did not find a difference in accuracy between more- and less-difficult tests. We restricted our study to physicians, acknowledging that a wide range of nonphysicians, including nurses, physician assistants, and paramedics, also interpret ECGs.

The meta-analysis results should not be interpreted as an estimate of or a suggestion that there is a single true level of accuracy in physicians’ ECG interpretation; indeed, the between-study variation in diagnoses and difficulty (with difficulty sometimes targeted deliberately by the investigators) indicates that such is not the case. Rather, these analyses help to succinctly represent the existing evidence and support the implications suggested above.

Conclusions

Physicians at all training levels had deficiencies in ECG interpretation, even after educational interventions. Improvement in both training in and assessment of ECG interpretation appears warranted, across the practice continuum. Standardized competencies, educational resources, and mastery benchmarks could address all these concerns.

eBox. Full Search Strategy

eTable. Features of Studies of Physician ECG Interpretation Accuracy

eFigure. Physician ECG Interpretation Accuracy After an Educational Intervention, All Training Levels Combined

References

- 1.Kligfield P, Gettes LS, Bailey JJ, et al. ; American Heart Association Electrocardiography and Arrhythmias Committee, Council on Clinical Cardiology; American College of Cardiology Foundation; Heart Rhythm Society . Recommendations for the standardization and interpretation of the electrocardiogram: part I: the electrocardiogram and its technology: a scientific statement from the American Heart Association Electrocardiography and Arrhythmias Committee, Council on Clinical Cardiology; the American College of Cardiology Foundation; and the Heart Rhythm Society: endorsed by the International Society for Computerized Electrocardiology. Circulation. 2007;115(10):1306-1324. doi: 10.1161/CIRCULATIONAHA.106.180200 [DOI] [PubMed] [Google Scholar]

- 2.Salerno SM, Alguire PC, Waxman HS; American College of Physicians . Training and competency evaluation for interpretation of 12-lead electrocardiograms: recommendations from the American College of Physicians. Ann Intern Med. 2003;138(9):747-750. doi: 10.7326/0003-4819-138-9-200305060-00012 [DOI] [PubMed] [Google Scholar]

- 3.American Academy of Family Physicians Electrocardiograms, family physician interpretation (position paper). Accessed February 14, 2020. https://www.aafp.org/about/policies/all/electrocardiograms.html

- 4.Antiperovitch P, Zareba W, Steinberg JS, et al. . Proposed in-training electrocardiogram interpretation competencies for undergraduate and postgraduate trainees. J Hosp Med. 2018;13(3):185-193. [DOI] [PubMed] [Google Scholar]

- 5.Wood G, Batt J, Appelboam A, Harris A, Wilson MR. Exploring the impact of expertise, clinical history, and visual search on electrocardiogram interpretation. Med Decis Making. 2014;34(1):75-83. doi: 10.1177/0272989X13492016 [DOI] [PubMed] [Google Scholar]

- 6.Fisch C. Clinical competence in electrocardiography: a statement for physicians from the ACP/ACC/AHA Task Force on Clinical Privileges in Cardiology. Circulation. 1995;91(10):2683-2686. doi: 10.1161/01.CIR.91.10.2683 [DOI] [PubMed] [Google Scholar]

- 7.Kadish AH, Buxton AE, Kennedy HL, et al. ; American College of Cardiology/American Heart Association/American College of Physicians-American Society of Internal Medicine Task Force; International Society for Holter and Noninvasive Electrocardiology . ACC/AHA clinical competence statement on electrocardiography and ambulatory electrocardiography: a report of the ACC/AHA/ACP-ASIM Task Force on Clinical Competence (ACC/AHA Committee to Develop a Clinical Competence Statement on Electrocardiography and Ambulatory Electrocardiography) endorsed by the International Society for Holter and Noninvasive Electrocardiology. Circulation. 2001;104(25):3169-3178. doi: 10.1161/circ.104.25.3169 [DOI] [PubMed] [Google Scholar]

- 8.Balady GJ, Bufalino VJ, Gulati M, Kuvin JT, Mendes LA, Schuller JL. COCATS 4 Task Force 3: training in electrocardiography, ambulatory electrocardiography, and exercise testing. J Am Coll Cardiol. 2015;65(17):1763-1777. doi: 10.1016/j.jacc.2015.03.021 [DOI] [PubMed] [Google Scholar]

- 9. Rourke L, Leong J, Chatterly P. Conditions-based learning theory as a framework for comparative-effectiveness reviews: a worked example. Teach Learn Med. 2018;30(4):386-394. doi: 10.1080/10401334.2018.1428611

- 10. Salerno SM, Alguire PC, Waxman HS. Competency in interpretation of 12-lead electrocardiograms: a summary and appraisal of published evidence. Ann Intern Med. 2003;138(9):751-760. doi: 10.7326/0003-4819-138-9-200305060-00013

- 11. Fent G, Gosai J, Purva M. Teaching the interpretation of electrocardiograms: which method is best? J Electrocardiol. 2015;48(2):190-193. doi: 10.1016/j.jelectrocard.2014.12.014

- 12. Barthelemy FX, Segard J, Fradin P, et al. ECG interpretation in Emergency Department residents: an update and e-learning as a resource to improve skills. Eur J Emerg Med. 2017;24(2):149-156. doi: 10.1097/MEJ.0000000000000312

- 13. Pontes PAI, Chaves RO, Castro RC, de Souza EF, Seruffo MCR, Francês CRL. Educational software applied in teaching electrocardiogram: a systematic review. Biomed Res Int. 2018;2018:8203875. doi: 10.1155/2018/8203875

- 14. Breen CJ, Kelly GP, Kernohan WG. ECG interpretation skill acquisition: A review of learning, teaching and assessment. J Electrocardiol. 2019;S0022-0736(18)30641-1. Published online April 12, 2019. doi: 10.1016/j.jelectrocard.2019.03.010

- 15. Moher D, Liberati A, Tetzlaff J, Altman DG; PRISMA Group. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. Ann Intern Med. 2009;151(4):264-269, W64. doi: 10.7326/0003-4819-151-4-200908180-00135

- 16. Reed DA, Cook DA, Beckman TJ, Levine RB, Kern DE, Wright SM. Association between funding and quality of published medical education research. JAMA. 2007;298(9):1002-1009. doi: 10.1001/jama.298.9.1002

- 17. American Educational Research Association, American Psychological Association, National Council on Measurement in Education. Validity. Standards for Educational and Psychological Testing. Washington, DC: American Educational Research Association; 2014:11-31.

- 18. Cook DA, Beckman TJ. Current concepts in validity and reliability for psychometric instruments: theory and application. Am J Med. 2006;119(2):166.e7-166.e16. doi: 10.1016/j.amjmed.2005.10.036

- 19. Cook DA, Zendejas B, Hamstra SJ, Hatala R, Brydges R. What counts as validity evidence? examples and prevalence in a systematic review of simulation-based assessment. Adv Health Sci Educ Theory Pract. 2014;19(2):233-250. doi: 10.1007/s10459-013-9458-4

- 20. Higgins JPT, Thompson SG, Deeks JJ, Altman DG. Measuring inconsistency in meta-analyses. BMJ. 2003;327(7414):557-560. doi: 10.1136/bmj.327.7414.557

- 21. Hatala RA, Norman GR, Brooks LR. The effect of clinical history on physicians’ ECG interpretation skills. Acad Med. 1996;71(10)(suppl):S68-S70. doi: 10.1097/00001888-199610000-00047

- 22. Borenstein M, Hedges LV, Higgins JPT, Rothstein HR. Subgroup Analyses. Introduction to Meta-Analysis. Wiley; 2009. doi: 10.1002/9780470743386

- 23. Owen SG, Hall R, Anderson J, Smart GA. Programmed learning in medical education: an experimental comparison of programmed instruction by teaching machine with conventional lecturing in the teaching of electrocardiography to final year medical students. Postgrad Med J. 1965;41(474):201-205. doi: 10.1136/pgmj.41.474.201

- 24. Stretton TB, Hall R, Owen SG. Programmed instruction in medical education: comparison of teaching-machine and programmed textbook. Br J Med Educ. 1967;1(3):165-168. doi: 10.1111/j.1365-2923.1967.tb01693.x

- 25. Kingston ME. Electrocardiograph course. J Med Educ. 1979;54(2):107-110.

- 26. Pinkerton RE, Francis CK, Ljungquist KA, Howe GW. Electrocardiographic training in primary care residency programs. JAMA. 1981;246(2):148-150. doi: 10.1001/jama.1981.03320020040021

- 27. Fincher RM, Abdulla AM, Sridharan MR, Gullen WH, Edelsberg JS, Henke JS. Comparison of computer-assisted and seminar learning of electrocardiogram interpretation by third-year students. J Med Educ. 1987;62(8):693-695. doi: 10.1097/00001888-198708000-00015

- 28. Hancock EW, Norcini JJ, Webster GD. A standardized examination in the interpretation of electrocardiograms. J Am Coll Cardiol. 1987;10(4):882-886. doi: 10.1016/S0735-1097(87)80284-X

- 29. Fincher RE, Abdulla AM, Sridharan MR, Houghton JL, Henke JS. Computer-assisted learning compared with weekly seminars for teaching fundamental electrocardiography to junior medical students. South Med J. 1988;81(10):1291-1294. doi: 10.1097/00007611-198810000-00020

- 30. Dunn PM, Levinson W. The lack of effect of clinical information on electrocardiographic diagnosis of acute myocardial infarction. Arch Intern Med. 1990;150(9):1917-1919. doi: 10.1001/archinte.1990.00390200101019

- 31. Grum CM, Gruppen LD, Woolliscroft JO. The influence of vignettes on EKG interpretation by third-year students. Acad Med. 1993;68(10)(suppl):S61-S63. doi: 10.1097/00001888-199310000-00047

- 32. White T, Woodmansey P, Ferguson DG, Channer KS. Improving the interpretation of electrocardiographs in an accident and emergency department. Postgrad Med J. 1995;71(833):132-135. doi: 10.1136/pgmj.71.833.132

- 33. Gillespie ND, Brett CT, Morrison WG, Pringle SD. Interpretation of the emergency electrocardiogram by junior hospital doctors. J Accid Emerg Med. 1996;13(6):395-397. doi: 10.1136/emj.13.6.395

- 34. Gruppen LD, Grum CM, Fitzgerald JT, McQuillan MA. Comparing two formats for practising electrocardiograph interpretation. In: Scherpbier AJJ, Van der Vleuten CPM, Rethans JJ, VanderSteeg AFW, eds. Advances in Medical Education. Springer; 1997:756-758. doi: 10.1007/978-94-011-4886-3_230

- 35. Devitt P, Worthley S, Palmer E, Cehic D. Evaluation of a computer based package on electrocardiography. Aust N Z J Med. 1998;28(4):432-435. doi: 10.1111/j.1445-5994.1998.tb02076.x

- 36. Hatala R, Norman GR, Brooks LR. Impact of a clinical scenario on accuracy of electrocardiogram interpretation. J Gen Intern Med. 1999;14(2):126-129. doi: 10.1046/j.1525-1497.1999.00298.x

- 37. Sur DK, Kaye L, Mikus M, Goad J, Morena A. Accuracy of electrocardiogram reading by family practice residents. Fam Med. 2000;32(5):315-319.

- 38. Brady WJ, Perron AD, Chan T. Electrocardiographic ST-segment elevation: correct identification of acute myocardial infarction (AMI) and non-AMI syndromes by emergency physicians. Acad Emerg Med. 2001;8(4):349-360. doi: 10.1111/j.1553-2712.2001.tb02113.x

- 39. Goodacre S, Webster A, Morris F. Do computer generated ECG reports improve interpretation by accident and emergency senior house officers? Postgrad Med J. 2001;77(909):455-457. doi: 10.1136/pmj.77.909.455

- 40. Little B, Mainie I, Ho KJ, Scott L. Electrocardiogram and rhythm strip interpretation by final year medical students. Ulster Med J. 2001;70(2):108-110.

- 41. Boltri JM, Hash RB, Vogel RL. Are family practice residents able to interpret electrocardiograms? Adv Health Sci Educ Theory Pract. 2003;8(2):149-153. doi: 10.1023/A:1024943613613

- 42. Hatala RM, Brooks LR, Norman GR. Practice makes perfect: the critical role of mixed practice in the acquisition of ECG interpretation skills. Adv Health Sci Educ Theory Pract. 2003;8(1):17-26. doi: 10.1023/A:1022687404380

- 43. Lucas J, McKay S, Baxley E. EKG arrhythmia recognition: a third-year clerkship teaching experience. Fam Med. 2003;35(3):163-164.

- 44. Berger JS, Eisen L, Nozad V, et al. Competency in electrocardiogram interpretation among internal medicine and emergency medicine residents. Am J Med. 2005;118(8):873-880. doi: 10.1016/j.amjmed.2004.12.004

- 45. Snyder CS, Bricker JT, Fenrich AL, et al. Can pediatric residents interpret electrocardiograms? Pediatr Cardiol. 2005;26(4):396-399. doi: 10.1007/s00246-004-0759-5

- 46. Hoyle RJ, Walker KJ, Thomson G, Bailey M. Accuracy of electrocardiogram interpretation improves with emergency medicine training. Emerg Med Australas. 2007;19(2):143-150. doi: 10.1111/j.1742-6723.2007.00946.x

- 47. Burke JF, Gnall E, Umrudden Z, Kyaw M, Schick PK. Critical analysis of a computer-assisted tutorial on ECG interpretation and its ability to determine competency. Med Teach. 2008;30(2):e41-e48. doi: 10.1080/01421590801972471

- 48. Nilsson M, Bolinder G, Held C, Johansson BL, Fors U, Ostergren J. Evaluation of a web-based ECG-interpretation programme for undergraduate medical students. BMC Med Educ. 2008;8:25. doi: 10.1186/1472-6920-8-25

- 49. Eslava D, Dhillon S, Berger J, Homel P, Bergmann S. Interpretation of electrocardiograms by first-year residents: the need for change. J Electrocardiol. 2009;42(6):693-697. doi: 10.1016/j.jelectrocard.2009.07.020

- 50. Jericho BG, DeChristopher PJ, Schwartz DE. A structured educational curriculum for residents in the anesthesia preoperative evaluation clinic. Internet Journal of Anesthesiology. 2009;22:2.

- 51. Lever NA, Larsen PD, Dawes M, Wong A, Harding SA. Are our medical graduates in New Zealand safe and accurate in ECG interpretation? N Z Med J. 2009;122(1292):9-15.

- 52. Rubinstein J, Dhoble A, Ferenchick G. Puzzle based teaching versus traditional instruction in electrocardiogram interpretation for medical students: a pilot study. BMC Med Educ. 2009;9:4. doi: 10.1186/1472-6920-9-4

- 53. Southern WN, Arnsten JH. The effect of erroneous computer interpretation of ECGs on resident decision making. Med Decis Making. 2009;29(3):372-376. doi: 10.1177/0272989X09333125

- 54. Crocetti M, Thompson R. Electrocardiogram interpretation skills in pediatric residents. Ann Pediatr Cardiol. 2010;3(1):3-7. doi: 10.4103/0974-2069.64356

- 55. de Jager J, Wallis L, Maritz D. ECG interpretation skills of South African emergency medicine residents. Int J Emerg Med. 2010;3(4):309-314. doi: 10.1007/s12245-010-0227-3

- 56. Gregory A, Walker I, McLaughlin K, Peets AD. Both preparing to teach and teaching positively impact learning outcomes for peer teachers. Med Teach. 2011;33(8):e417-e422. doi: 10.3109/0142159X.2011.586747

- 57. Mahler SA, Wolcott CJ, Swoboda TK, Wang H, Arnold TC. Techniques for teaching electrocardiogram interpretation: self-directed learning is less effective than a workshop or lecture. Med Educ. 2011;45(4):347-353. doi: 10.1111/j.1365-2923.2010.03891.x

- 58. Sibbald M, de Bruin AB. Feasibility of self-reflection as a tool to balance clinical reasoning strategies. Adv Health Sci Educ Theory Pract. 2012;17(3):419-429. doi: 10.1007/s10459-011-9320-5

- 59. Raupach T, Brown J, Anders S, Hasenfuss G, Harendza S. Summative assessments are more powerful drivers of student learning than resource intensive teaching formats. BMC Med. 2013;11:61. doi: 10.1186/1741-7015-11-61

- 60. Boulouffe C, Doucet B, Muschart X, Charlin B, Vanpee D. Assessing clinical reasoning using a script concordance test with electrocardiogram in an emergency medicine clerkship rotation. Emerg Med J. 2014;31(4):313-316. doi: 10.1136/emermed-2012-201737

- 61. Jablonover RS, Lundberg E, Zhang Y, Stagnaro-Green A. Competency in electrocardiogram interpretation among graduating medical students. Teach Learn Med. 2014;26(3):279-284. doi: 10.1080/10401334.2014.918882

- 62. McAloon C, Leach H, Gill S, Aluwalia A, Trevelyan J. Improving ECG competence in medical trainees in a UK district general hospital. Cardiol Res. 2014;5(2):51-57. doi: 10.14740/cr333e

- 63. Sibbald M, Davies EG, Dorian P, Yu EH. Electrocardiographic interpretation skills of cardiology residents: are they competent? Can J Cardiol. 2014;30(12):1721-1724. doi: 10.1016/j.cjca.2014.08.026

- 64. Blissett S, Cavalcanti R, Sibbald M. ECG rhythm analysis with expert and learner-generated schemas in novice learners. Adv Health Sci Educ Theory Pract. 2015;20(4):915-933. doi: 10.1007/s10459-014-9572-y

- 65. Dong R, Yang X, Xing B, et al. Use of concept maps to promote electrocardiogram diagnosis learning in undergraduate medical students. Int J Clin Exp Med. 2015;8(5):7794-7801.

- 66. Jheeta JS, Narayan O, Krasemann T. Republished: Accuracy in interpreting the paediatric ECG: a UK-wide study and the need for improvement. Postgrad Med J. 2015;91(1078):436-438. doi: 10.1136/postgradmedj-2013-305788rep

- 67. Kopeć G, Magoń W, Hołda M, Podolec P. Competency in ECG interpretation among medical students. Med Sci Monit. 2015;21:3386-3394. doi: 10.12659/MSM.895129

- 68. Novotny T, Bond RR, Andrsova I, et al. Data analysis of diagnostic accuracies in 12-lead electrocardiogram interpretation by junior medical fellows. J Electrocardiol. 2015;48(6):988-994. doi: 10.1016/j.jelectrocard.2015.08.023

- 69. Pourmand A, Tanski M, Davis S, Shokoohi H, Lucas R, Zaver F. Educational technology improves ECG interpretation of acute myocardial infarction among medical students and emergency medicine residents. West J Emerg Med. 2015;16(1):133-137. doi: 10.5811/westjem.2014.12.23706

- 70. Quinn KL, Baranchuk A. Feasibility of a novel digital tool in automatic scoring of an online ECG examination. Int J Cardiol. 2015;185:88-89. doi: 10.1016/j.ijcard.2015.03.135

- 71. Rolskov Bojsen S, Räder SB, Holst AG, et al. The acquisition and retention of ECG interpretation skills after a standardized web-based ECG tutorial: a randomised study. BMC Med Educ. 2015;15:36. doi: 10.1186/s12909-015-0319-0

- 72. Zeng R, Yue RZ, Tan CY, et al. New ideas for teaching electrocardiogram interpretation and improving classroom teaching content. Adv Med Educ Pract. 2015;6:99-104. doi: 10.2147/AMEP.S75316

- 73. Chudgar SM, Engle DL, Grochowski COC, Gagliardi JP. Teaching crucial skills: an electrocardiogram teaching module for medical students. J Electrocardiol. 2016;49(4):490-495. doi: 10.1016/j.jelectrocard.2016.03.021

- 74. Davies A, Macleod R, Bennett-Britton I, McElnay P, Bakhbakhi D, Sansom J. E-learning and near-peer teaching in electrocardiogram education: a randomised trial. Clin Teach. 2016;13(3):227-230. doi: 10.1111/tct.12421

- 75. Fent G, Gosai J, Purva M. A randomized control trial comparing use of a novel electrocardiogram simulator with traditional teaching in the acquisition of electrocardiogram interpretation skill. J Electrocardiol. 2016;49(2):112-116. doi: 10.1016/j.jelectrocard.2015.11.005

- 76. Hartman ND, Wheaton NB, Williamson K, Quattromani EN, Branzetti JB, Aldeen AZ. A novel tool for assessment of emergency medicine resident skill in determining diagnosis and management for emergent electrocardiograms: a multicenter study. J Emerg Med. 2016;51(6):697-704. doi: 10.1016/j.jemermed.2016.06.054

- 77. Montassier E, Hardouin JB, Segard J, et al. e-Learning versus lecture-based courses in ECG interpretation for undergraduate medical students: a randomized noninferiority study. Eur J Emerg Med. 2016;23(2):108-113. doi: 10.1097/MEJ.0000000000000215

- 78. Porras L, Drezner J, Dotson A, Stafford H, Berkoff D, Chung EH, Agnihotri K. Novice interpretation of screening electrocardiograms and impact of online training. J Electrocardiol. 2016;49(3):462-466. doi: 10.1016/j.jelectrocard.2016.02.004

- 79. Liu SS, Zakaria S, Vaidya D, Srivastava MC. Electrocardiogram training for residents: a curriculum based on Facebook and Twitter. J Electrocardiol. 2017;50(5):646-651. doi: 10.1016/j.jelectrocard.2017.04.010

- 80. Mirtajaddini M. A new algorithm for arrhythmia interpretation. J Electrocardiol. 2017;50(5):634-639. doi: 10.1016/j.jelectrocard.2017.05.007

- 81. Monteiro S, Melvin L, Manolakos J, Patel A, Norman G. Evaluating the effect of instruction and practice schedule on the acquisition of ECG interpretation skills. Perspect Med Educ. 2017;6(4):237-245. doi: 10.1007/s40037-017-0365-x

- 82. Rui Z, Lian-Rui X, Rong-Zheng Y, Jing Z, Xue-Hong W, Chuan Z. Friend or foe? flipped classroom for undergraduate electrocardiogram learning: a randomized controlled study. BMC Med Educ. 2017;17(1):53. doi: 10.1186/s12909-017-0881-8

- 83. Compiet SAM, Willemsen RTA, Konings KTS, Stoffers HEJH. Competence of general practitioners in requesting and interpreting ECGs: a case vignette study. Neth Heart J. 2018;26(7-8):377-384. doi: 10.1007/s12471-018-1124-2

- 84. Kellman PJ, Krasne S. Accelerating expertise: perceptual and adaptive learning technology in medical learning. Med Teach. 2018;40(8):797-802. doi: 10.1080/0142159X.2018.1484897

- 85. Kopeć G, Waligóra M, Pacia M, et al. Electrocardiogram reading: a randomized study comparing 2 e-learning methods for medical students. Pol Arch Intern Med. 2018;128(2):98-104.

- 86. Nag K, Rani P, Kumar YRH, Monickam A, Singh DR, Sivashanmugam T. Effectiveness of algorithm based teaching on recognition and management of periarrest bradyarrhythmias among interns: a randomized control study. Anaesthesia Pain and Intensive Care. 2018;22:81-86.

- 87. Riding NR, Drezner JA. Performance of the BMJ learning training modules for ECG interpretation in athletes. Heart. 2018;104(24):2051-2057. doi: 10.1136/heartjnl-2018-313066

- 88. Suresh K, Badrinath AK, Suresh B, Eswarawaha M. Evaluation on ECG training program for interns and postgraduates. J Evol Med Dent Sci. 2018;7:407-410. doi: 10.14260/jemds/2018/91

- 89. Yadav R, Vidyarthi A. Electrocardiogram interpretation skills in psychiatry trainees. The Psychiatrist. 2013;37:94-97. doi: 10.1192/pb.bp.112.038992

- 90. Aziz F, Yeh B, Emerson G, Way DP, San Miguel C, King AM. Asteroids® and electrocardiograms: proof of concept of a simulation for task-switching training. West J Emerg Med. 2019;20(1):94-97. doi: 10.5811/westjem.2018.10.39722

- 91. Hatala R, Gutman J, Lineberry M, Triola M, Pusic M. How well is each learner learning? validity investigation of a learning curve-based assessment approach for ECG interpretation. Adv Health Sci Educ Theory Pract. 2019;24(1):45-63. doi: 10.1007/s10459-018-9846-x

- 92. Knoery CR, Bond R, Iftikhar A, et al. SPICED-ACS: study of the potential impact of a computer-generated ECG diagnostic algorithmic certainty index in STEMI diagnosis: towards transparent AI. J Electrocardiol. 2019;57S:S86-S91. doi: 10.1016/j.jelectrocard.2019.08.006

- 93. Smith MW, Abarca Rondero D. Predicting electrocardiogram interpretation performance in Advanced Cardiovascular Life Support simulation: comparing knowledge tests and simulation performance among Mexican medical students. PeerJ. 2019;7:e6632. doi: 10.7717/peerj.6632

- 94. Soares WE III, Price LL, Prast B, Tarbox E, Mader TJ, Blanchard R. Accuracy screening for ST elevation myocardial infarction in a task-switching simulation. West J Emerg Med. 2019;20(1):177-184. doi: 10.5811/westjem.2018.10.39962

- 95. Baral R, Murphy DC, Mahmood A, Vassiliou VS. The effectiveness of a nationwide interactive ECG teaching workshop for UK medical students. J Electrocardiol. 2020;58:74-79. doi: 10.1016/j.jelectrocard.2019.11.047

- 96. Kewcharoen J, Charoenpoonsiri N, Thangjui S, Panthong S, Hongkan W. A comparison between peer-assisted learning and self-study for electrocardiography interpretation in Thai medical students. J Adv Med Educ Prof. 2020;8(1):18-24.

- 97. Mohyuddin GR, Jobe A, Thomas L. Does use of high-fidelity simulation improve resident physician competency and comfort identifying and managing bradyarrhythmias? Cureus. 2020;12(2):e6872. doi: 10.7759/cureus.6872

- 98. Thach TH, Blissett S, Sibbald M. Worked examples for teaching electrocardiogram interpretation: salient or discriminating features? Med Educ. 2020;54(8):720-726. doi: 10.1111/medu.14066

- 99. Kogan JR, Holmboe ES, Hauer KE. Tools for direct observation and assessment of clinical skills of medical trainees: a systematic review. JAMA. 2009;302(12):1316-1326. doi: 10.1001/jama.2009.1365

- 100. Ratanawongsa N, Thomas PA, Marinopoulos SS, et al. The reported validity and reliability of methods for evaluating continuing medical education: a systematic review. Acad Med. 2008;83(3):274-283. doi: 10.1097/ACM.0b013e3181637925

- 101. Cook DA, Hamstra SJ, Brydges R, et al. Comparative effectiveness of instructional design features in simulation-based education: systematic review and meta-analysis. Med Teach. 2013;35(1):e867-e898. doi: 10.3109/0142159X.2012.714886

- 102. Raupach T, Hanneforth N, Anders S, Pukrop T, ten Cate OTJ, Harendza S. Impact of teaching and assessment format on electrocardiogram interpretation skills. Med Educ. 2010;44(7):731-740. doi: 10.1111/j.1365-2923.2010.03687.x

- 103. Sibbald M, Sherbino J, Ilgen JS, et al. Debiasing versus knowledge retrieval checklists to reduce diagnostic error in ECG interpretation. Adv Health Sci Educ Theory Pract. 2019;24(3):427-440. doi: 10.1007/s10459-019-09875-8

- 104. Kulik JA, Fletcher JD. Effectiveness of intelligent tutoring systems: a meta-analytic review. Rev Educ Res. 2016;86(1):42-78. doi: 10.3102/0034654315581420

- 105. Smulyan H. The computerized ECG: friend and foe. Am J Med. 2019;132(2):153-160. doi: 10.1016/j.amjmed.2018.08.025

- 106. Schläpfer J, Wellens HJ. Computer-interpreted electrocardiograms: benefits and limitations. J Am Coll Cardiol. 2017;70(9):1183-1192. doi: 10.1016/j.jacc.2017.07.723

- 107. Smith SW, Walsh B, Grauer K, et al. A deep neural network learning algorithm outperforms a conventional algorithm for emergency department electrocardiogram interpretation. J Electrocardiol. 2019;52:88-95. doi: 10.1016/j.jelectrocard.2018.11.013

- 108. Mason JW, Hancock EW, Gettes LS, et al; American Heart Association Electrocardiography and Arrhythmias Committee, Council on Clinical Cardiology; American College of Cardiology Foundation; Heart Rhythm Society. Recommendations for the standardization and interpretation of the electrocardiogram, part II: electrocardiography diagnostic statement list: a scientific statement from the American Heart Association Electrocardiography and Arrhythmias Committee, Council on Clinical Cardiology; the American College of Cardiology Foundation; and the Heart Rhythm Society: endorsed by the International Society for Computerized Electrocardiology. Circulation. 2007;115(10):1325-1332. doi: 10.1161/CIRCULATIONAHA.106.180201

Associated Data

Supplementary Materials

eBox. Full Search Strategy

eTable. Features of Studies of Physician ECG Interpretation Accuracy

eFigure. Physician ECG Interpretation Accuracy After an Educational Intervention, All Training Levels Combined