Abstract

Constructive feedback is an important aspect of medical education that helps students improve performance in cognitive and clinical skills assessments. However, to act on feedback appropriately, students must be able to recognize quality feedback and have opportunities to practice giving, receiving, and acting on it. We incorporated feedback literacy into a case-based concept mapping small group-learning course. Student groups peer reviewed group-constructed concept maps and provided written peer feedback. Faculty also provided written feedback on group concept maps and used a simple rubric to assess the quality of peer feedback. Groups received feedback weekly, providing an opportunity for timely improvement. Precourse and postcourse evaluations, along with peer-review feedback assessment scores, were used to measure changes in both group and individual student feedback quality. Feedback quality was compared with that of a control student cohort that took the identical course without peer review or feedback assessment. Student feedback quality was significantly improved with feedback training compared with the control cohort. Furthermore, our analysis shows that this skill transferred to the quality of student feedback on course evaluations. Feedback training using a simple rubric, along with opportunities to act on feedback, greatly enhanced student feedback quality.

Keywords: Constructive feedback, feedback rubric, feedback quality, collaborative learning, peer review

Introduction

Feedback is an important aspect of education that allows students and instructors to engage in a process of reflection and improvement to enhance learning.1 In medical education, effective feedback strategies can be an integral part of training to promote student learning in areas such as communication, note-taking, and presentation skills.2-5 Feedback can take many forms, such as praise, cues, goal setting, and correction, and is meant to be formative in nature.1,6,7 Feedback can be provided by peers or by instructors through any number of mechanisms, including computer-based, written, or face-to-face. One traditional way for instructors to provide feedback is the feedback sandwich, in which negative comments are placed between positive ones.8 However, this method has been called into question and does not appear to be an effective way to elicit real change in student performance or learning.9-12 The method also lends itself to a 1-way dialogue in which the instructor transmits information to the student. Such 1-way feedback mechanisms are thought to be purely corrective: data collected on student performance are used to try to change that performance.1,13,14 More recently, feedback has evolved to include 2-way communication between student and instructor to establish clear expectations and a common set of objectives and goals.15,16 One way to achieve this is student peer review, which makes the student an active participant in feedback and fosters self-reflection and higher order thought processes such as knowledge application and problem solving.17,18 Student peer review has been shown to be an effective feedback approach, for example, in improving academic writing.19

For feedback to be effective in promoting learning, students must have a timely opportunity to act on it, thus closing the feedback loop.15,20,21 This allows the instructor to assess changes in students’ knowledge application and allows students to continue improving. For the feedback loop to occur, such opportunities should be integrated within the medical curriculum.14,22 However, few opportunities for feedback and feedback training are formally introduced into undergraduate medical education.23 In this report, we describe and analyze the impact of curriculum-integrated peer-review feedback training in an active learning course for first-year medical students.

Methods

Participants

Two cohorts of first-year medical students took part in a concept map case-based small group-learning course.24 The 11-week course consisted of weekly 2-h sessions of medical case-based concept mapping, with students working in groups of 4 to 6 learners. In the small group setting, students created group concept maps to integrate basic science and clinical practice knowledge. Faculty assessed student-group concept maps weekly using a defined rubric.24 Both cohorts of students participated in the concept map small group learning.

Cohort 1 (n = 127), designated the “Feedback” cohort, was trained in and undertook the additional task of concept map peer review. During the first session of the course, students were trained in concept mapping and in the expectations for peer review. To promote the development of quality peer review, a single-criterion, 3-point rubric was provided to students and used by faculty to assess feedback quality (Table 1).24 In each session, after completing their concept maps, student groups exchanged maps and performed peer review using the same concept map assessment rubric as the faculty (Figure 1). In addition to the rubric score, each reviewing group provided written feedback on the concept map, which was shared with the group under review and with faculty facilitators. Faculty facilitators used the peer feedback assessment rubric (Table 1) to assess the quality of each group’s peer review and incorporated the score into students’ weekly grade. Faculty also provided written feedback on each group concept map weekly to model constructive feedback (Figure 2).

Table 1.

Peer feedback assessment rubric.

| Exceeds expectations (3 points) | Meets expectations (2 points) | Below expectations (1 point) |
|---|---|---|
| • Critical assessment of peer’s concept map using 4 categories listed on rubric (content; connection; links; and organization). • In addition, group provides specific comments on improvement that can be integrated in following sessions. Peer review matches or exceeds faculty assessment. | • Critical assessment of peer’s concept map using 4 categories listed on rubric (content; connection; links; and organization). • Written critiques but not specific enough for improvement. Peer review close to matching faculty assessment but still needs some improvement. | • No critical assessment of peer’s concept map. • No critiques for improvement. Peer review does not come close to matching faculty assessment. |

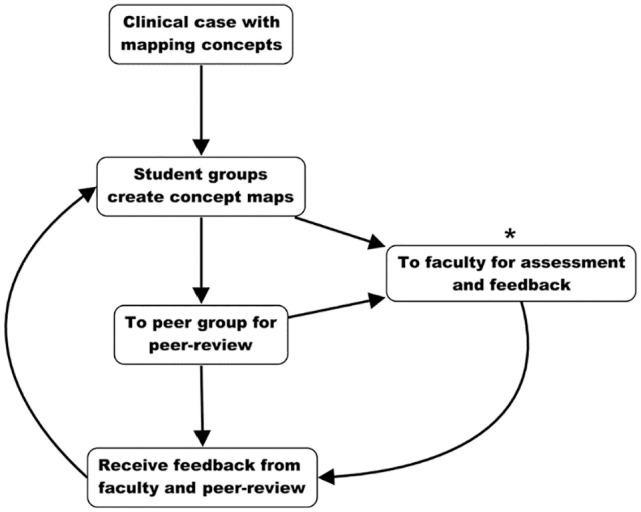

Figure 1.

Flow diagram of each concept map case-based small group-learning session. Groups of students created concept maps based on clinical cases. Student-group concept maps were submitted to faculty for assessment and shared with other student groups for peer review. Each group’s peer review was submitted to faculty for assessment and to the reviewed group as feedback. The asterisk marks where data were collected: student-group concept maps and the assessments of concept maps and peer review.

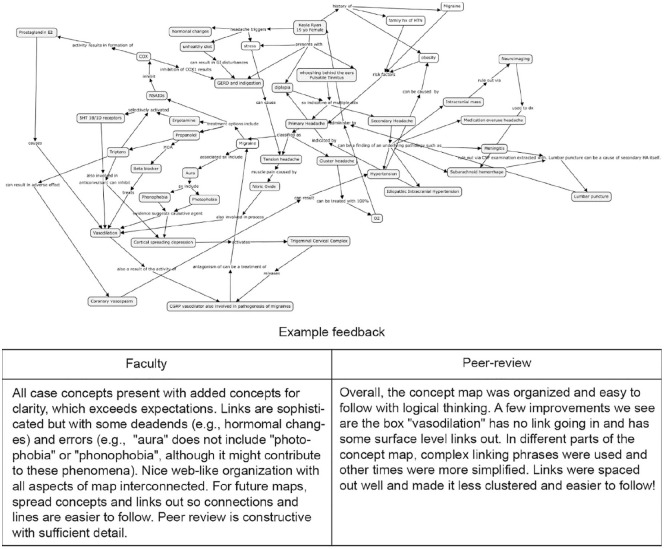

Figure 2.

Example of a student-group concept map and the associated feedback from faculty and peer review. The clinical case topic, “migraine headache,” was chosen to integrate foundational science and clinical practice concepts.

Cohort 2 (n = 316) was designated the “Control” cohort. The Control cohort underwent the same concept mapping case-based learning small group activity. However, no peer-review process was implemented, and weekly written feedback was not provided by faculty facilitators. Students in the Control cohort did receive scores from faculty using the concept map assessment rubric. This study received an exempt designation by the New York Institute of Technology Institutional Review Board.

Feedback and rubric scoring

In the Feedback cohort, student groups received weekly peer feedback on the case-based concept maps created during each learning session. Following completion of each session and before the next, faculty facilitators also provided written feedback on student-group concept maps, including a point total from the concept map assessment rubric.24 In addition to concept map assessment rubric scores, student groups were assessed on the quality of their peer-review feedback using the peer-review feedback assessment rubric (Table 1).

As an independent measure of feedback quality, open-response data collected from precourse and postcourse evaluations were analyzed using the constructive feedback rubric (Table 2). The rubric was modified from a template available through the Center for Engaged Teaching and Learning at the University of California Merced (https://cetl.ucmerced.edu/). It was designed to identify specific but neutral language in student feedback, as complimentary language such as “great job” does not enhance performance.25,26 The constructive feedback rubric also emphasized whether students provided specific actions to guide future decisions.6 Blinded data sets were scored using the rubric and compiled for analysis. The control of syntax and mechanics criterion (Row 1, Table 2) was analyzed separately from the rest of the rubric, as no difference in this criterion was found between any data set or cohort.

Table 2.

Providing constructive feedback rubric.

| Criteria | Exceeds expectations (3 points) | Meets expectations (2 points) | Below expectations (1 point) |
|---|---|---|---|
| Control of syntax and mechanics | Uses graceful language that skillfully communicates meaning to readers with clarity and fluency, and is error-free. | Uses straightforward language that generally conveys meaning to readers. The language has few errors. | Uses language that sometimes impedes meaning because of errors in usage. Multiple errors detected in language. |
| Quality of comments | Comments are nonjudgmental and descriptive rather than evaluative (focus on description rather than judgment). Eg, “Providing examples would help to understand the concept you were explaining.” | Comments are nonevaluative but are judgmental. Eg, “Please add more examples.” | Comments are both judgmental and evaluative in nature. Eg, “Poor work.” |
| Balance of comments | Comments provide a good balance of positive and negative feedback. Eg, “You include a thought-provoking topic, but it seems to me that it needs more elaboration with examples.” | Comments are more negative than positive and provide no reinforcement of appropriate actions. Eg, “Will you elaborate on the topic?” | Comments are negative, dismissive, and discouraging, with no reinforcement of appropriate actions. Eg, “Needs elaboration.” |
| Positive feedback phrasing | Attribute positive feedback to internal causes and give it in the second person (you). Eg, “You worked hard to explain the material well using relevant sources.” | Attribute positive feedback in the third person. Eg, “This was a relevant exercise.” | Positive feedback is not attributed or tied to any accomplishment. Eg, “Good job.” |
| Negative feedback phrasing | Give negative information in the first person (I) and then shift to third person (s/he), or shift from a statement to a question that frames the problem objectively. Eg, “I thought I understood the organization of the material from the lecture, but then I was not sure . . .” | Give negative information in the first person (I) only. Eg, “I was not sure where you were going in this assignment.” | Give negative information in an accusatory and subjective delivery. Eg, “This is very poor. You lost me.” |
| Appropriate suggestions | Offer specific suggestions that model appropriate behavior. Eg, “Have you considered trying . . .? How do you think that would work?” | Offer specific suggestions that direct the blame at the person. Eg, “Why haven’t you tried . . .?” | Offer specific suggestions that are negative in tone and direct the blame at the person. Eg, “This was a waste of time.” |
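The Methods do not spell out how the Table 2 criteria were combined into the single numerical score reported in the Results. The Python sketch below is an illustration under stated assumptions, not the authors’ procedure: it assumes the total is the sum of the 1-to-3 scores on the five criteria other than control of syntax and mechanics (which is returned separately, as it was analyzed separately), and that a criterion not scored for a comment is simply absent. The criterion keys and the function name are hypothetical.

```python
from typing import Mapping, Optional, Tuple

# Hypothetical keys for the six rows of Table 2.
SYNTAX_KEY = "syntax_mechanics"  # scored, but kept out of the feedback total
FEEDBACK_CRITERIA = (
    "quality_of_comments",
    "balance_of_comments",
    "positive_phrasing",
    "negative_phrasing",
    "appropriate_suggestions",
)
VALID_LEVELS = {1: "Below expectations", 2: "Meets expectations", 3: "Exceeds expectations"}


def constructive_feedback_score(scores: Mapping[str, int]) -> Tuple[int, Optional[int]]:
    """Return (feedback total, syntax score) for one open-response comment.

    Assumption: each criterion is scored 1-3, and criteria that were not
    scored for a comment are absent from `scores`.
    """
    for key, value in scores.items():
        if key != SYNTAX_KEY and key not in FEEDBACK_CRITERIA:
            raise KeyError(f"unknown criterion: {key}")
        if value not in VALID_LEVELS:
            raise ValueError(f"{key} must be scored 1, 2, or 3, got {value}")
    # Sum only the non-syntax criteria; syntax is reported on its own.
    total = sum(scores[c] for c in FEEDBACK_CRITERIA if c in scores)
    return total, scores.get(SYNTAX_KEY)


# Example: one invented comment scored on all six rows of Table 2.
comment = {
    "syntax_mechanics": 3,
    "quality_of_comments": 2,
    "balance_of_comments": 2,
    "positive_phrasing": 1,
    "negative_phrasing": 2,
    "appropriate_suggestions": 3,
}
print(constructive_feedback_score(comment))  # (10, 3)
```

Keeping the syntax score out of the total mirrors the decision to analyze that criterion separately, so differences in grammar cannot inflate or deflate the constructive feedback score.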

Data collection and analysis

Data used for analysis were collected throughout the course. One data set came from anonymous course evaluations, which were provided electronically to the Feedback cohort during the first and last sessions of the course. Completion of the course evaluations was voluntary. The evaluations included ordinal 5-point Likert-type scale questions along with freeform open responses, as previously described.24 Likert-type scale responses were exported to a spreadsheet for statistical analysis. The Control cohort was provided the same postcourse evaluation with Likert-type scale questions and open responses. All open-response data were exported, and the quality of each response was assessed using the constructive feedback rubric to produce a numerical score (Table 2). Likert-type scale responses and constructive feedback rubric scores were compared using the Mann-Whitney U test. Peer-review feedback assessment scores (Table 1), which were collected after each session, were compared between the first and final sessions for the Feedback cohort using Student’s t-test. Responses to the 2 precourse evaluation questions on feedback were correlated using Pearson correlation. Figure 1 shows a flow diagram illustrating the methodology step by step and the points of data collection.
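The Mann-Whitney U test compares two independent samples by ranks, which suits ordinal Likert-type responses and rubric totals. As a hedged, standard-library illustration (the authors presumably used a statistics package, and the function names here are hypothetical), the sketch below assigns midranks to tied values, computes U for the first sample, and obtains a two-sided P value from the tie-corrected normal approximation without a continuity correction. The example responses are invented, not study data.

```python
from collections import Counter
from math import sqrt
from statistics import NormalDist
from typing import List, Sequence, Tuple


def midranks(values: Sequence[float]) -> List[float]:
    """Rank values 1..n, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with the one at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # sorted positions i..j hold ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    return ranks


def mann_whitney_u(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Return (U for x, two-sided P) via the tie-corrected normal approximation."""
    n1, n2 = len(x), len(y)
    n = n1 + n2
    pooled = list(x) + list(y)
    ranks = midranks(pooled)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    mean_u = n1 * n2 / 2
    # Ties (very common with Likert data) shrink the variance of U.
    tie_term = sum(t ** 3 - t for t in Counter(pooled).values())
    var_u = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if var_u == 0:
        return u1, 1.0  # every observation tied: no evidence of a difference
    z = (u1 - mean_u) / sqrt(var_u)
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return u1, p


# Invented 5-point Likert responses, for illustration only.
pre_comfort = [3, 4, 3, 4, 5, 3, 4, 2]
post_comfort = [4, 5, 4, 5, 5, 4, 4, 5]
u, p = mann_whitney_u(pre_comfort, post_comfort)
print(f"U = {u}, two-sided P = {p:.3f}")
```

A package routine such as `scipy.stats.mannwhitneyu` would normally be used instead; its P values can differ slightly from this sketch because of continuity correction or exact computation for small samples.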

Results

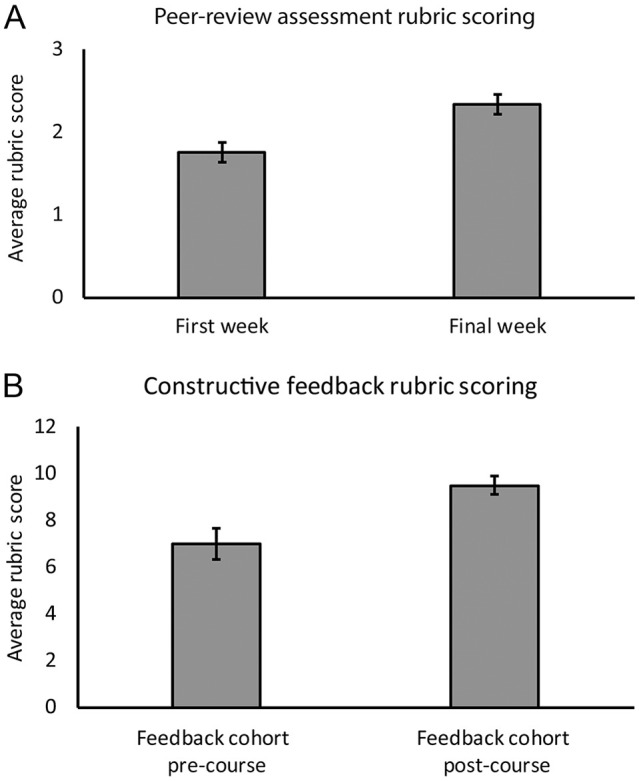

To begin to determine the impact of combined peer review and faculty feedback on student feedback quality, peer-review feedback assessment scores for each student group (n = 24) in the Feedback cohort were compared between the first and last sessions of the course (Figure 3A). On the 3-point peer-review assessment rubric (Table 1), the average student-group score for the first session was 1.75 (SEM: 0.12). Peer-review feedback assessment scores improved by 0.58 points, to an average of 2.33 (SEM: 0.12), by the end of the course. Comparison of the first and final group rubric scores yielded P < .01 (t = 3.44), indicating that the increase is statistically significant. However, these data reflect group feedback responses rather than individual student feedback.
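As a rough consistency check, the reported t statistic can be approximately recovered from the published group means and SEMs. This assumes an independent-samples comparison of the 24 groups in each session; the source does not say whether a paired or unpaired test was used, and a paired test would require per-group differences that are not reported.

$$
t \approx \frac{\bar{x}_{\text{final}} - \bar{x}_{\text{first}}}{\sqrt{\mathrm{SEM}_{\text{final}}^{2} + \mathrm{SEM}_{\text{first}}^{2}}}
= \frac{2.33 - 1.75}{\sqrt{0.12^{2} + 0.12^{2}}}
= \frac{0.58}{0.1697}
\approx 3.42
$$

This agrees with the reported t = 3.44 to within the rounding of the published means and SEMs. With 24 groups per session, a pooled-variance test would have 24 + 24 - 2 = 46 degrees of freedom.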

Figure 3.

Feedback improvement in the Feedback cohort: (A) Comparison of group peer-review feedback assessment rubric scores (Table 1) from the Feedback cohort between the first and final weeks of the course, P < .01; (B) open-response constructive feedback rubric scores (Table 2) from Feedback cohort precourse and postcourse evaluations, P < .01. Error bars, standard error of the mean.

To determine whether the feedback training had a measurable impact on individual student feedback, open-response feedback on precourse and postcourse evaluations was analyzed. The quality of individual students’ open responses was assessed using a rubric (Table 2) modified from the Center for Engaged Teaching and Learning at UC Merced (see Methods) to produce a numerical score. The criterion “control of syntax and mechanics” was scored separately from all other criteria, as no differences were found in use of grammar or language between data sets. Average constructive feedback rubric scores were compared between precourse and postcourse evaluations. Of the 122 precourse evaluations, 12 (10%) included open-response feedback for analysis. Of the 124 postcourse evaluations, 56 (45%) included open-response feedback. Individual constructive feedback rubric scores increased from an average of 7.0 (SEM: 0.67) on precourse evaluations to 9.50 (SEM: 0.39) on postcourse evaluations (Figure 3B; P < .01). As with student-group feedback, individual student feedback quality improved over the course.
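The response percentages above are simple proportions of submitted evaluations, and an SEM is conventionally the sample standard deviation divided by the square root of the sample size. A minimal Python sketch of both calculations, using the Feedback cohort counts reported in this paragraph; the function names are hypothetical, and the SEM formula is the standard definition rather than a documented detail of the authors’ analysis.

```python
from math import sqrt
from statistics import stdev
from typing import Sequence


def response_rate(responses: int, evaluations: int) -> float:
    """Percentage of submitted evaluations that included an open response."""
    return 100 * responses / evaluations


def sem(values: Sequence[float]) -> float:
    """Standard error of the mean: sample standard deviation / sqrt(n)."""
    return stdev(values) / sqrt(len(values))


# Counts reported for the Feedback cohort: (open responses, evaluations submitted).
feedback_counts = {"precourse": (12, 122), "postcourse": (56, 124)}
for label, (responses, evaluations) in feedback_counts.items():
    print(f"{label}: {response_rate(responses, evaluations):.0f}%")
```

Run as written, this prints 10% for the precourse evaluations and 45% for the postcourse evaluations, matching the rounded figures in the text.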

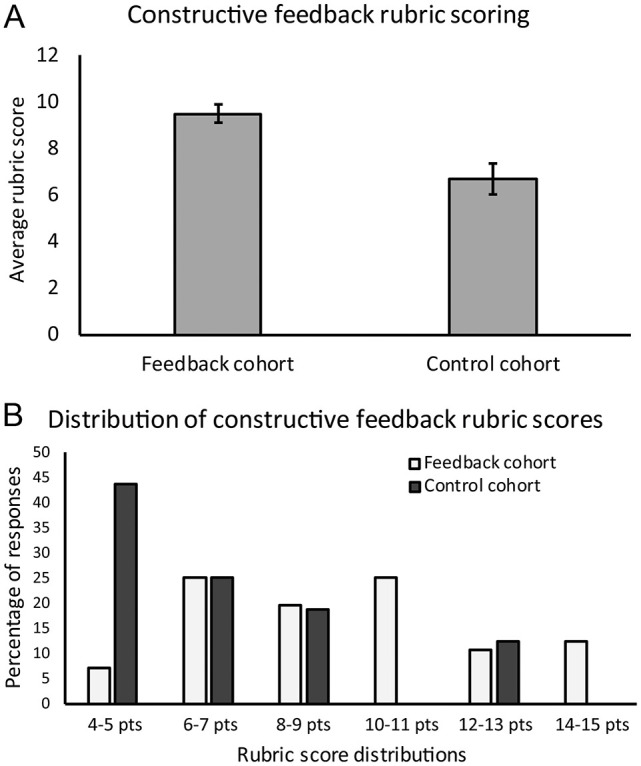

To further test whether feedback training improved student feedback quality, we compared feedback quality between the Feedback cohort and a Control cohort. Control cohort students participated in the identical case-based concept mapping small group-learning course, but without student-group peer review or weekly written feedback from faculty. Students in the Control cohort were given the same postcourse evaluation as the Feedback cohort, consisting of Likert-type scale questions and open-response feedback. As with the Feedback cohort, open responses from the Control cohort were analyzed using the constructive feedback rubric (Table 2). Of the 62 postcourse evaluations submitted by the Control cohort, 16 (26%) included open-response feedback. The average constructive feedback rubric score for the Control cohort was 6.69 (SEM: 0.67; Figure 4A). This score was not significantly different from the Feedback cohort precourse score (6.69 and 7.0, respectively; P = .6). However, it was significantly lower than the Feedback cohort postcourse average (Figure 4A; P < .01). The differences in constructive feedback quality between the Feedback and Control cohorts are supported by the distribution of constructive feedback rubric scores (Figure 4B). The Control cohort had a larger percentage of comments in the lower range of scores (4-5 points), whereas the Feedback cohort had a larger percentage of comments at the higher end of the range (greater than 10 points) on postcourse evaluations.
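Figure 4B summarizes each cohort’s comments as the percentage falling at each rubric total, and the comparison above reads off the share of comments in a low band (4-5 points) versus a high band (above 10 points). A minimal sketch of that summary in Python; the function names are hypothetical and the scores are invented for illustration, not the study data.

```python
from collections import Counter
from typing import Dict, Sequence


def score_distribution(scores: Sequence[int]) -> Dict[int, float]:
    """Percentage of comments at each constructive feedback rubric total."""
    counts = Counter(scores)
    return {score: 100 * counts[score] / len(scores) for score in sorted(counts)}


def percent_in_range(scores: Sequence[int], low: int, high: int) -> float:
    """Percentage of comments whose total lies in the inclusive range [low, high]."""
    return 100 * sum(low <= s <= high for s in scores) / len(scores)


# Invented example totals, for illustration only.
control = [4, 5, 5, 6, 7, 8, 9, 11]
feedback = [6, 8, 9, 10, 11, 11, 12, 13]

print("Control distribution:", score_distribution(control))
print("Control, 4-5 points:", percent_in_range(control, 4, 5))
print("Feedback, >10 points:", percent_in_range(feedback, 11, max(feedback)))
```

Reporting percentages rather than raw counts matters here because the cohorts contributed different numbers of open responses (16 Control and 56 Feedback postcourse comments).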

Figure 4.

Comparison of constructive feedback rubric scores between student cohorts: (A) open-response constructive feedback rubric scores (Table 2) between Feedback and Control cohorts from postcourse evaluations. P < .01. Error bars, standard error of the mean. (B) Constructive feedback rubric score distribution of Feedback and Control cohorts.

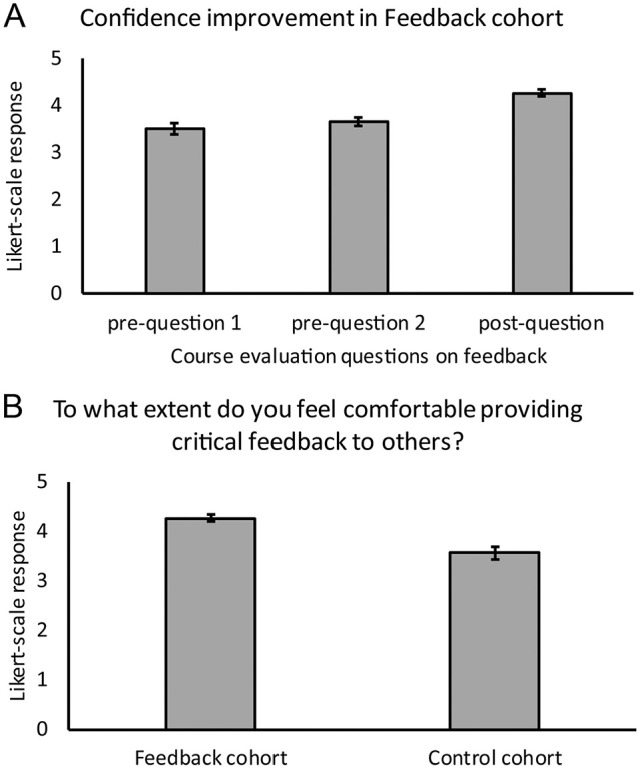

Student comfort with providing feedback was determined using self-reported Likert-type scale questions on precourse and postcourse evaluations. Feedback cohort precourse evaluations contained 2 questions relating to feedback. Prequestion-1 asked, “Have you had any experience providing constructive feedback to others in a professional setting?” while prequestion-2 asked, “How comfortable do you feel in providing feedback to others in a professional setting?” Responses to the 2 questions were significantly correlated (P < .001), indicating that students who had little experience providing feedback were also not comfortable giving it. The strong correlation between the 2 precourse evaluation questions also supports the internal reliability of the survey. Feedback cohort responses to the precourse evaluation questions were compared with responses to the postcourse evaluation question, “To what extent do you feel comfortable providing critical feedback to others?” By the end of the course, student comfort with providing feedback had increased in the Feedback cohort (Figure 5A). Comparing responses to prequestion-2 (Likert-type scale average = 3.66, SEM: 0.11, n = 122) and the postcourse question (Likert-type scale average = 4.27, SEM: 0.08, n = 124) showed a significant increase (P < .001). Student comfort with providing feedback was also compared postcourse between the Feedback and Control cohorts (Figure 5B). Students in the Control cohort reported a lower level of comfort (Likert-type scale average = 3.56, SEM: 0.13, n = 62) than students in the Feedback cohort (P < .0001).
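The precourse correlation pairs each respondent’s answer to prequestion-1 with the same respondent’s answer to prequestion-2. A minimal Python sketch of Pearson’s r on such paired Likert responses, together with the usual t statistic for testing r against zero; the function names are hypothetical, the responses are invented, and converting t to a P value would additionally require the t distribution with n - 2 degrees of freedom (for example from a statistics package), which is omitted here.

```python
from math import sqrt
from statistics import mean
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient for paired observations x[i], y[i]."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences with at least 2 pairs")
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined when one variable is constant")
    return sxy / sqrt(sxx * syy)


def t_statistic(r: float, n: int) -> float:
    """t = r * sqrt((n - 2) / (1 - r^2)), referred to t with n - 2 df."""
    if abs(r) >= 1:
        raise ValueError("t is unbounded for a perfect correlation")
    return r * sqrt((n - 2) / (1 - r ** 2))


# Invented 5-point Likert answers from the same 8 respondents (not study data).
experience = [1, 2, 2, 3, 4, 4, 5, 3]
comfort = [2, 2, 3, 3, 4, 5, 5, 3]
r = pearson_r(experience, comfort)
print(f"r = {r:.2f}, t = {t_statistic(r, len(experience)):.2f}")
```

Pairing matters: the two lists must be aligned by respondent, which is possible here because both questions appear on the same precourse evaluation.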

Figure 5.

Student-reported comfort with providing feedback: (A) Feedback cohort precourse and postcourse evaluation survey responses. Prequestion 1: Have you had any experience providing constructive feedback to others in a professional setting? Prequestion 2: How comfortable do you feel in providing feedback to others in a professional setting? Postquestion: To what extent do you feel comfortable providing critical feedback to others? Comparison of prequestion 1 and postquestion, P < .001. Comparison of prequestion 2 and postquestion, P < .001. (B) Comparison of comfort with feedback between Feedback and Control cohorts, P < .001. Error bars, standard error of the mean.

Discussion

Here, we report that feedback training, including peer review and faculty feedback exemplars, in a first-year medical student active learning course measurably increased the quality of student feedback. The 3 data sets collected (peer-review feedback assessment, constructive feedback assessment, and course evaluations) support the conclusion that feedback training with an emphasis on quality improved both group and individual feedback. The iterative process of weekly peer review of group-constructed concept maps, along with faculty feedback, helped improve concept map quality and knowledge integration24 and increased the quality of peer feedback throughout the course (this study). The improvement in feedback quality is apparent not only from comparing the Feedback cohort at the beginning and end of the course but also from comparing the Feedback and Control cohorts. The Control cohort displayed lower feedback quality, which corresponds with the lower level of comfort in providing feedback reported at the end of the course. Differences in feedback quality between cohorts were not due to use of language and grammar, as control of syntax and mechanics scores were not significantly different between the 2 cohorts on postcourse evaluations. Therefore, the measurable differences are most likely explained by the interventions used in the Feedback cohort.

One issue with providing feedback, especially to large groups of learners, is sustainability.14,27 The feedback and training method we employed allowed feedback to be integrated into a curriculum serving a large number of students without requiring faculty to provide extensive feedback to every student. Most of the effort was targeted at small groups of 4 to 6 learners. Nevertheless, the group-level feedback had a detectable and significant impact on individual students. Another aspect of sustainability in our method was the incorporation of peer review. Actively engaging students in the feedback process keeps it from being a teacher-centered approach.14-16 Involving students as integral and equal participants in the feedback process has been shown to increase the acceptance, use, and seeking of feedback28,29 and to create an appropriate environment for feedback literacy.15 With combined faculty and student peer-review feedback, we also observed significant increases in student feedback quality. It is possible that students became proficient at mimicking the faculty example feedback during peer review. However, this seems unlikely, as feedback quality was significantly better on postcourse evaluations in the Feedback cohort, an instrument independent of the concept map assessment feedback used by students and faculty.

The improved quality of feedback in the Feedback cohort appears to be associated with training on feedback and expectations.30,31 The Control cohort, although engaged in the identical course, had no feedback training or peer review and did not show the same level of comfort with, or quality of, feedback responses. Similar improvements from feedback training have been observed in other educational settings.23,32-36 Another important feature was the use of the peer-review feedback assessment rubric (Table 1).24 The rubric was designed to encourage specific, neutral language directed toward the task of concept map assessment.22 Improved academic performance has been demonstrated in students using rubrics for self-assessment.37 We believe the rubric contributed to feedback quality by providing clear expectations, an important feature in reducing anxiety about assessment.38,39 Peer-review feedback was supported by faculty models of proper feedback, consistent with the exemplar model of providing clear examples.6,15,40 Future studies can be designed to determine the relative contribution of each of these forms of feedback in the course. However, the combination of methods had a strong and positive impact on learners. After each round of faculty and peer feedback, student groups could use the feedback to improve both their group-constructed concept maps and the quality of their peer-review feedback, thus completing the feedback loop.14,15,20,21

Data for the study were collected over a single year at a single institution. However, a similar improvement in feedback quality has been found in a second class of first-year medical students undergoing the same process as the Feedback cohort (data not shown). Some of the differences detected between cohorts may be due to differences in motivation to comment in the open-response sections of the course evaluations. For example, the lack of emphasis on feedback in the Control cohort may have reduced students’ motivation to provide what could have been quality feedback by the standard of the constructive feedback rubric. This would skew the data in favor of the Feedback cohort. However, not providing feedback even when given the opportunity also strengthens our conclusion that training and peer review increase feedback quality. This study was also limited to a single course and a single form of feedback, written feedback. However, long-term effects of student feedback training during a single course have been shown.23 Despite these limitations, easily integrated interventions in the active learning course had measurable and significant impacts on student feedback. Future work can be designed to implement similar strategies for verbal feedback, such as in case-based learning,41 as well as more purposeful feedback sessions for clinical skills courses and patient simulation laboratories.

Conclusion

The work presented confirms that feedback training measurably enhances student feedback quality. Peer review along with faculty modeling of constructive feedback allowed students to develop a strong understanding of specific, constructive feedback. The use of a peer-review feedback rubric provided clear expectations and promoted quality student feedback. The constructive student feedback went beyond the specific task of group concept map construction and transferred to course evaluations, showing how supporting feedback literacy can have an impact beyond the task in which it was introduced. Instructors and institutions that want to enhance the quality of, and response to, students’ course evaluations may need to train students in providing proper feedback and can use the simple model described in this report.

Acknowledgments

The authors would like to thank Clinton Iadanza and Drs Dosha Cummins and Rajendram Rajnarayanan for their constructive feedback and support for this project.

Footnotes

Funding: The author(s) received no financial support for the research, authorship, and/or publication of this article.

Declaration of conflicting interests: The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Author Contributions: Both authors contributed equally to the design, data collection and analysis, drafting, revision, and all other aspects of this work.

ORCID iD: Troy Camarata  https://orcid.org/0000-0002-7628-2716


References

1. Hattie J, Timperley H. The power of feedback. Rev Educ Res. 2007;77:81-112.
2. Ende J. Feedback in clinical medical education. JAMA. 1983;250:777-781.
3. Spickard A 3rd, Gigante J, Stein G, Denny JC. Automatic capture of student notes to augment mentor feedback and student performance on patient write-ups. J Gen Intern Med. 2008;23:979-984.
4. Gigante J, Dell M, Sharkey A. Getting beyond “Good job”: how to give effective feedback. Pediatrics. 2011;127:205-207.
5. Engerer C, Berberat PO, Dinkel A, Rudolph B, Sattel H, Wuensch A. Specific feedback makes medical students better communicators. BMC Med Educ. 2019;19:51.
6. Krackov SK. Giving feedback. In: Dent JA, Harden RM, eds. A Practical Guide for Medical Teachers. London, England: Churchill Livingstone; 2013:323-332.
7. Mulliner E, Tucker M. Feedback on feedback practice: perceptions of students and academics. Assess Eval High Educ. 2017;42:266-288.
8. Dohrenwend A. Serving up the feedback sandwich. Fam Pract Manag. 2002;9:43-46.
9. Shute VJ. Focus on formative feedback. Rev Educ Res. 2008;78:153-189.
10. Molloy E. Time to pause: giving and receiving feedback in clinical education. In: Delany C, Molloy E, eds. Clinical Education in the Health Professions. Sydney, NSW, Australia: Churchill Livingstone; 2009:128-146.
11. Kluger AN, Van Dijk D. Feedback, the various tasks of the doctor, and the feedforward alternative. Med Educ. 2010;44:1166-1174.
12. Johnson C, Molloy E. Building evaluative judgement through the process of feedback. In: Boud D, Ajjawi R, Dawson P, Tai J, eds. Developing Evaluative Judgement in Higher Education: Assessment for Knowing and Producing Quality Work. London, England: Routledge; 2018:166-175.
13. Gibbs G, Simpson C. Conditions under which assessment supports student learning. Learn Teach High Educ. 2004;1:1-31.
14. Boud D, Molloy E. Rethinking models of feedback for learning: the challenge of design. Assess Eval High Educ. 2013;38:698-712.
15. Carless D, Boud D. The development of student feedback literacy: enabling uptake of feedback. Assess Eval High Educ. 2018;43:1315-1325.
16. Molloy E, Ajjawi R, Bearman M, Noble C, Rudland J, Ryan A. Challenging feedback myths: values, learner involvement and promoting effects beyond the immediate task. Med Educ. 2020;54:33-39.
17. Milan FB, Parish SJ, Reichgott MJ. A model for educational feedback based on clinical communication skills strategies: beyond the “Feedback Sandwich.” Teach Learn Med. 2006;18:42-47.
18. Nicol D, Thomson A, Breslin C. Rethinking feedback practices in higher education: a peer review perspective. Assess Eval High Educ. 2014;39:102-122.
19. Huisman B, Saab N, van den Broek P, van Driel J. The impact of formative peer feedback on higher education students’ academic writing: a meta-analysis. Assess Eval High Educ. 2019;44:863-880.
20. Sutton P. Conceptualizing feedback literacy: knowing, being, and acting. Innov Educ Teach Int. 2012;49:31-40.
21. Rudland J, Wilkinson T, Wearn A, et al. A student-centred feedback model for educators. Clin Teach. 2013;10:99-102.
22. Ramani S, Krackov SK. Twelve tips for giving feedback effectively in the clinical environment. Med Teach. 2012;34:787-791.
23. Kruidering-Hall M, O’Sullivan PS, Chou CL. Teaching feedback to first-year medical students: long-term skill retention and accuracy of student self-assessment. J Gen Intern Med. 2009;24:721-726.
24. Slieman TA, Camarata T. Case-based group learning using concept maps to achieve multiple educational objectives and behavioral outcomes. J Med Educ Curric Dev. 2019;6:1-7.
25. Boehler ML, Rogers DA, Schwind CJ, et al. An investigation of medical student reactions to feedback: a randomised controlled trial. Med Educ. 2006;40:746-749.
26. Rogers DA, Boehler ML, Schwind CJ, Meier AH, Wall JCH, Brenner MJ. Engaging medical students in the feedback process. Am J Surg. 2012;203:21-25.
27. Carless D, Salter D, Yang M, Lam J. Developing sustainable feedback practices. Stud High Educ. 2011;36:395-407.
28. Telio S, Regehr G, Ajjawi R. Feedback and the educational alliance: examining credibility judgements and their consequences. Med Educ. 2016;50:933-942.
29. Sargeant J, Lockyer JM, Mann K, et al. The R2C2 model in residency education: how does it foster coaching and promote feedback use? Acad Med. 2018;93:1055-1063.
30. Patton C. “Some kind of weird, evil experiment”: student perceptions of peer assessment. Assess Eval High Educ. 2012;37:719-731.
31. Tai JH-M, Canny BJ, Haines TP, Molloy EK. The role of peer-assisted learning in building evaluative judgement: opportunities in clinical medical education. Adv Health Sci Educ Theory Pract. 2016;21:659-676.
32. Berg EC. The effects of trained peer response on ESL students’ revision types and writing quality. J Second Lang Writ. 1999;8:215-241.
33. Hu G. Using peer review with Chinese ESL student writers. Lang Teach Res. 2005;9:321-342.
34. Min H-T. Training students to become successful peer reviewers. System. 2005;33:293-308.
35. Min H-T. The effects of trained peer review on EFL students’ revision types and writing quality. J Second Lang Writ. 2006;15:118-141.
36. Rahimi M. Is training student reviewers worth its while? A study of how training influences the quality of students’ feedback and writing. Lang Teach Res. 2013;17:67-89.
37. He X, Canty A. Empowering student learning through rubric-referenced self-assessment. J Chiropr Educ. 2012;26:24-31.
38. Yucel R, Bird FL, Young J, Blanksby T. The road to self-assessment: exemplar marking before peer review develops first-year students’ capacity to judge the quality of a scientific report. Assess Eval High Educ. 2014;39:971-986.
39. Carless D, Chan KKH. Managing dialogic use of exemplars. Assess Eval High Educ. 2017;42:930-941.
40. Cantillon P, Sargeant J. Giving feedback in clinical settings. BMJ. 2008;337:a1961.
41. Bird EC, Osheroff N, Pettepher CC, Cutrer WB, Carnahan RH. Using small case-based learning groups as a setting for teaching medical students how to provide and receive peer feedback. Med Sci Educ. 2017;27:759-765.