Abstract

Background:

There is limited research about the effects of video quality on the accuracy of assessments of physical function.

Methods:

A repeated measures study design was used to assess the reliability and validity of the finger–nose test (FNT) and the finger-tapping test (FTT), administered to 50 veterans with impairment in gross and/or fine motor coordination. Performance was scored by trained raters under eight differing conditions: in-person, high definition video with slow motion review, and standard speed videos with varying bit rates and frame rates.

Results:

FTT inter-rater reliability was excellent with slow motion video (ICC 0.98–0.99) and good (ICC 0.59) under the normal speed conditions. Inter-rater reliability for FNT ‘attempts’ was excellent (ICC 0.97–0.99) for all viewing conditions; for FNT ‘misses’ it was good to excellent (ICC 0.83) with slow motion review but substantially worse (ICC 0.44) on the normal speed videos. FTT criterion validity (i.e. compared with slow motion review) was excellent (β = 0.94) for the in-person rater and good (β = 0.77) on normal speed videos. Criterion validity for FNT ‘attempts’ was excellent under all conditions (β ⩾ 0.97), and for FNT ‘misses’ it was good to excellent under all conditions (β = 0.61–0.81).

Conclusions:

In general, the inter-rater reliability and validity of the FNT and FTT assessed via video technology are similar to those of standard clinical practice, but are enhanced by slow motion review and/or higher bit rate.

Keywords: Telemedicine, coordination impairment, task performance and analysis, motor skills, psychometrics

Introduction

Increasing access to low-cost internet technologies has brought with it the ability to deliver healthcare services from afar. This has led to considerable interest in telehealth for diverse conditions and interventions, which can vary widely in the nature of the care provided.1 Characterizing physical abilities is central to numerous specialties (e.g. neurology, orthopaedic surgery, physical therapy) and typically requires hands-on evaluation. It is vital, therefore, to determine whether clinicians can remotely evaluate physical performance with fidelity similar to that of in-person evaluation.

The accuracy of commonly used measures of physical abilities may be affected by many factors, and when measures are obtained remotely they may also be affected by the technology itself. Most telerehabilitation studies have used high internet bandwidth and/or have supplemented video visits with other technologies (e.g. remote sensors, in-home messaging devices, video recordings) and/or in-person visits to compensate for a lack of high bandwidth.2–8 While internet-based audio–video communication is widely available, specialized technology may not be, and internet bandwidth may be limited. Widespread implementation of telehealth therefore requires an understanding of how commonly available technology may affect clinical evaluations.

When low bandwidth conditions occur during use of video technology, standard compression algorithms which reduce frame rate and/or bit rate can affect the quality of the video image, in turn potentially affecting accuracy when evaluating physical performance. For example, video ‘freezing’ due to lower frame rate could interfere with evaluating the fluidity of movement (e.g. frequency of tremors) and ‘tiling’ due to lower bit rate could limit the ability to perceive fine deviations (e.g. the extent of tremors) and/or the interface of the patient with the environment (e.g. fumbling). Preliminary studies show that bandwidth indeed may affect accurate measurement of fine motor coordination.9 Additionally, most telehealth clinics are supported by a single camera. The loss of stereoscopic vision might limit assessment of three-dimensional activities.
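To make the frame-rate concern concrete, the Python sketch below models finger tapping as a train of brief finger-table contacts and counts how many contacts coincide with at least one video frame. It is a hypothetical illustration, not part of the study's methods: the perfectly regular rhythm, the 0.1 s contact duration, and the rule that a tap is 'seen' only if a frame lands during contact are all assumptions, and real observers also use motion cues between frames. The rate of 4.2 taps/s is chosen to be close to the mean tapping rate on slow motion review reported in the Results.

```python
import math


def visible_taps(tap_hz, fps, duration_s=10.0, contact_s=0.1, phase_s=0.0):
    """Return (taps performed, taps captured by at least one frame).

    Hypothetical model: taps are perfectly regular, each finger-table
    contact lasts `contact_s` seconds, and each video frame is an
    instantaneous snapshot taken at phase_s + k / fps.
    """
    period = 1.0 / tap_hz
    frame_dt = 1.0 / fps
    n_taps = round(duration_s * tap_hz)
    seen = 0
    for i in range(n_taps):
        onset = i * period
        # First frame at or after this contact's onset; the small tolerance
        # guards against floating-point round-off at exact frame boundaries.
        k = math.ceil((onset - phase_s) / frame_dt - 1e-9)
        if phase_s + k * frame_dt <= onset + contact_s:
            seen += 1
    return n_taps, seen


for fps in (30, 15, 8):
    total, seen = visible_taps(tap_hz=4.2, fps=fps)
    print(f"{fps:>2} fps: {seen}/{total} taps captured")
```

Under these assumptions every tap is captured at 30 and 15 fps, because frames are spaced more closely than the assumed contact time, whereas at 8 fps (frames 0.125 s apart) some contacts fall between frames. The counts depend entirely on the assumed contact duration and should not be read as a prediction of the study's results.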

Many tests of fine motor function are either timed or scored by counting the objects moved within a pre-specified time span. Neither approach is likely to reveal how video technology affects the assessment of movement quality, because video times performance very accurately and items are easy to count even when an image freezes. Qualitative measures of fine motor function designed for specific patient populations (e.g. the Action Research Arm Test for stroke) are also of limited value for identifying technological limitations that may affect diverse clinicians across diverse patient populations. In contrast, the finger-tapping test (FTT) and the finger–nose test (FNT) are widely used by many types of clinicians across multiple populations, and their accuracy might be affected by image quality and the lack of three-dimensional perception.

The FTT is a subtest of the Halstead–Reitan neuropsychological battery, developed to examine fine motor function, kinaesthetic ability and visual-motor ability. Individuals are instructed to tap their index finger as quickly as possible for 10 s while keeping the hand and arm stationary.10 The FNT was developed to test cerebellar function; the individual uses their forefinger to touch their nose and then a distal object (e.g. the examiner’s finger), alternating between the two as fast as possible.11

The goal of this study was to examine the effect of video transmission characteristics (frame rate and bit rate) and camera viewing angle (frontal, lateral, both) on the reliability and criterion validity of the FTT and FNT.

Methods

This study is part of a larger study examining the effects of video parameters on tests of fine and gross motor coordination; the present report focuses on fine motor coordination.

Study design

We used a repeated measures design to examine the reliability and validity of two fine motor coordination tests under multiple technological configurations.

Study sample

Study subjects were recruited through advertisements and through letters mailed to veterans treated in physical therapy, occupational therapy, neurology and/or rheumatology clinics at the Durham Veterans Affairs Medical Center (DVAMC). Exclusion criteria for the larger study were as follows: (1) unable to ambulate ⩾ 10 feet (3 m) without human help; (2) unable to rise from sit to stand without human help; (3) unable to stand for ⩾ 10 s without human help; (4) unable to provide informed consent.

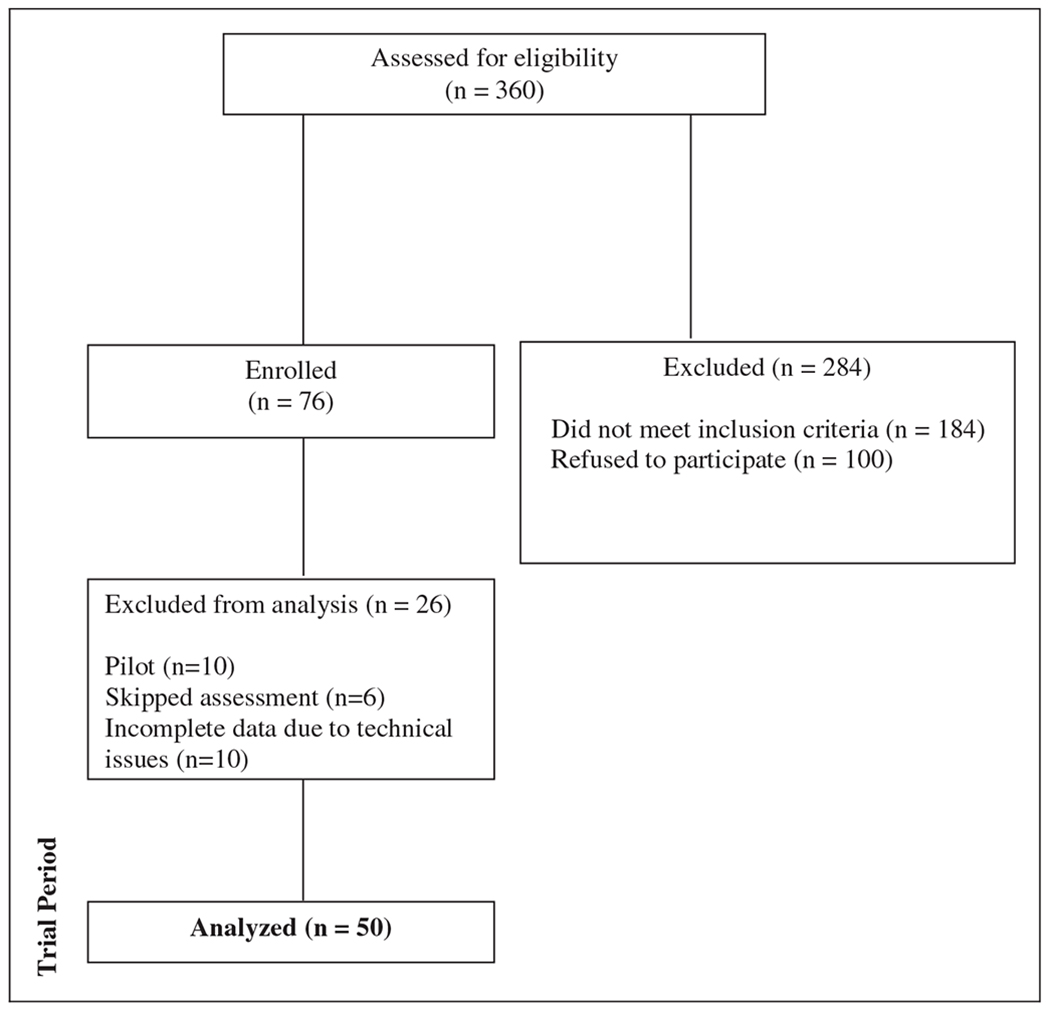

A total of 360 veterans were screened over the recruitment period, of whom 184 were excluded per the criteria above and 100 refused to participate. Of the remaining 76 veterans, 26 were excluded from analysis due to incomplete data and/or technical issues for a final study sample of 50 (13.9% of those screened) (Figure 1).

Figure 1.

CONSORT diagram.

Fine motor coordination tests

Finger-tapping test.

Participants were seated at a table with their palm on the table top. Using the right index finger, participants tapped in a designated area as quickly as possible for 10 s. The procedure was then repeated with the left hand, and the number of finger taps in 10 s was counted. Raters scored the frontal and lateral video recordings separately (with the order randomized across raters) and then provided a combined score after observing both views.

Finger–nose test.

Participants were seated at a table with eyes open and were instructed to begin by touching their nose with the right elbow flexed, then to touch a 13.18 inch dowel placed at arm’s length and return to touch their nose again, repeating this cycle as fast as possible for 10 s. The procedure was repeated with the left upper extremity. The number of attempts to touch the dowel and the number of missed attempts in 10 s were counted. As with the FTT, raters scored the frontal and lateral video recordings separately and then provided a combined score based on both views.

Technological configurations

Both tests of fine motor coordination were rated under the following conditions and technological configurations:

- in-person
- high definition video recording with optional slow motion review (deemed the gold standard)
- video recording at real-time speed with fixed resolution and bit rate (768 kbps) but variable frame rates:
  - 8 frames per second (fps)
  - 15 fps
  - 30 fps
- video recording at real-time speed with a fixed frame rate of 30 fps but variable bit rate (bandwidth):
  - 128 kilobits per second (kbps)
  - 384 kbps
  - 768 kbps

The in-person rating and video recording were done on the same day and in the same location. To mimic the conditions under which home telehealth visits might be viewed, video recordings were viewed on individual desktop computers with 19-inch, flat panel 1280 × 1024 DVI VGA LCD monitors (Dell 1098FP) placed on a 29-inch-high desktop, at a self-selected, comfortable viewing distance.

Rater selection and training

A total of 14 raters assessed performance across the eight potential conditions listed above. In-person rating was limited to a single rater, who was always the same individual. The ‘gold standard’ (slow motion, high definition) recordings were always rated by the same two ‘expert’ raters, who were selected on the basis of years of clinical experience and availability to serve in an expert role. All other conditions were rated by two raters each, with raters randomized across subjects and conditions.

All raters were staff members of the DVAMC Physical Medicine & Rehabilitation Service with at least 1 year of experience, including physical therapists (n = 4), occupational therapists (n = 8), a physical therapy assistant (n = 1) with ⩾2 years of clinical experience and a rehabilitation research coordinator (n = 1) with >4 years of experience administering similar tests. Training manuals, videos and protocols were created to reduce potential bias among raters, and all raters were required to rate one demonstration video, two practice tests and a final test video. Raters were deemed ‘certified’ if their final test scores fell within an allowable range of the ‘true’ score, defined as the mean score of the expert raters on slow motion review; the allowable range was ± the square root of the known inter-rater reliability times the standard deviation squared.
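The certification rule can be expressed as a small check. The text does not state the grouping of its formula explicitly; the Python sketch below assumes the literal reading ±√(r × SD²), where r is the known inter-rater reliability and SD is the standard deviation of the score. The function names and the example numbers are hypothetical and are not taken from the study's training materials.

```python
import math


def allowable_halfwidth(reliability, sd):
    # Assumed literal reading of the text: sqrt(reliability * sd**2)
    return math.sqrt(reliability * sd ** 2)


def is_certified(rater_score, expert_scores, reliability, sd):
    """True if a trainee's score falls within the allowable range of the
    'true' score, taken as the mean of the expert raters' scores."""
    true_score = sum(expert_scores) / len(expert_scores)
    return abs(rater_score - true_score) <= allowable_halfwidth(reliability, sd)


# Hypothetical example: experts counted 40 and 42 taps; r = 0.81, SD = 10
print(allowable_halfwidth(0.81, 10.0))         # half-width of about 9 taps
print(is_certified(48, [40, 42], 0.81, 10.0))  # 48 lies within 41 +/- 9
print(is_certified(51, [40, 42], 0.81, 10.0))  # 51 lies outside that range
```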

Video recording and editing

Subjects were recorded from lateral and frontal views using two Toshiba Camileo X200 video cameras with SD memory cards (for detailed information, see http://support.toshiba.com/support/staticContentDetail?contentId=3182210&isFromTOCLink=false). For the FTT, recordings captured the entire hand and the table surface; for the FNT, recordings captured the entire upper extremity and the test dowel.

Videos were edited on an iMac desktop using Final Cut Pro software to delete irrelevant footage (e.g. demonstrations of the tests to the subjects) and to ensure the same sequence and timing of test presentation for all raters. Once edited, videos were compressed using Final Cut Pro Compressor software to manipulate the frame rate and/or bit rate per the configurations above while holding other variables (e.g. frame size) constant, so that all conditions had the same video size and aspect ratio.
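The study used Final Cut Pro Compressor for this step. For readers who want to produce comparable renditions with open-source tools, the Python sketch below swaps in the ffmpeg command-line encoder instead and only builds the command lines. The output file names, the H.264 codec (libx264) and the 1280x720 frame size are assumptions, not settings reported in the study; note also that ffmpeg's `-b:v` sets a target average bit rate rather than a hard cap.

```python
from typing import List

# (frames per second, kilobits per second) pairs mirroring the configurations
# listed above; 30 fps at 768 kbps appears in both series.
FRAME_RATE_SERIES = [(30, 768), (15, 768), (8, 768)]
BIT_RATE_SERIES = [(30, 768), (30, 384), (30, 128)]


def ffmpeg_args(src: str, dst: str, fps: int, kbps: int,
                size: str = "1280x720") -> List[str]:
    """Build an ffmpeg command that changes only frame rate and bit rate."""
    return [
        "ffmpeg", "-y",        # overwrite the output file if it exists
        "-i", src,
        "-an",                 # drop audio; only the picture is rated
        "-c:v", "libx264",     # assumed codec
        "-r", str(fps),        # output frame rate (frames dropped as needed)
        "-b:v", f"{kbps}k",    # target average video bit rate in kbit/s
        "-s", size,            # hold frame size constant across renditions
        dst,
    ]


def all_renditions(src: str) -> List[List[str]]:
    """One command per distinct (fps, kbps) configuration."""
    done = set()
    commands = []
    for fps, kbps in FRAME_RATE_SERIES + BIT_RATE_SERIES:
        if (fps, kbps) in done:
            continue  # skip the configuration shared by both series
        done.add((fps, kbps))
        commands.append(
            ffmpeg_args(src, f"clip_{fps}fps_{kbps}kbps.mp4", fps, kbps))
    return commands


for cmd in all_renditions("session.mp4"):
    print(" ".join(cmd))
```

On a machine with ffmpeg installed, each list could be passed to `subprocess.run(cmd, check=True)` to perform the encoding.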

Ethical approval

The study was approved by the Durham VAMC Institutional Review Board (MIRB#01648/0023) and informed consent was obtained from all participants.

Statistical methods

Missing value rates were assessed for the right and left hands and for the frontal and lateral ratings. Comparative analyses were carried out on the mean of the right and left hand scores, using the final combined score provided by the raters after review of the frontal and lateral views.

Initial analyses examined the mean and variance of the dependent variables (FTT and FNT scores) across the three main configurations for viewing and rating these tests (in-person, slow motion review of a high definition video recording, and real-time review of video recordings with variable bit rate and/or frame rate). We then examined results for the individual technological configurations (i.e. the variations in frame rate and bit rate) for video recordings viewed at normal speed. Having confirmed in preliminary analyses that the dependent measures met assumptions of normality, we used SAS PROC GLM and SAS PROC MIXED for these analyses.12 Although raters were randomized across configurations, rater was treated as a random effect to control for any potential rater-specific differences. Inter-rater reliability was determined using SAS PROC VARCOMP according to the methods described by Streiner and Norman,13 again treating rater as a random component. Finally, we used regression models to estimate a ‘validity coefficient’ for the overall association between rater and criterion scores, controlling for rater,14 to examine differences in validity according to technological configuration. In these analyses, we did not use overall tests of contrasts (i.e. across multiple measures) but instead tested each configuration/measure individually. As this was an exploratory study, we did not correct for multiple comparisons, and statistical significance was set at p < 0.05.
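Reliability in the study was estimated with SAS PROC VARCOMP, treating rater as a random component. As a simplified, hypothetical illustration of the same idea (reliability as the share of score variance attributable to true differences between subjects), the Python sketch below estimates variance components from two-way ANOVA mean squares and returns σ²_subject / (σ²_subject + σ²_rater + σ²_error). It assumes a fully crossed design in which every subject is rated by the same k raters with no missing values. That is not the study's design (pairs of raters were randomized across subjects and conditions), so this is a teaching sketch rather than a reproduction of the authors' analysis.

```python
def icc_two_way_random(scores):
    """Single-rater ICC from ANOVA variance components (fully crossed design).

    scores: one row per subject, each row holding the k raters' scores in the
    same rater order, with no missing values.
    """
    n, k = len(scores), len(scores[0])
    if n < 2 or k < 2 or any(len(row) != k for row in scores):
        raise ValueError("need >= 2 subjects and >= 2 raters, no missing values")
    grand = sum(sum(row) for row in scores) / (n * k)
    subject_means = [sum(row) / k for row in scores]
    rater_means = [sum(row[j] for row in scores) / n for j in range(k)]

    # Sums of squares for subjects, raters and residual error
    ss_subject = k * sum((m - grand) ** 2 for m in subject_means)
    ss_rater = n * sum((m - grand) ** 2 for m in rater_means)
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_error = ss_total - ss_subject - ss_rater

    ms_subject = ss_subject / (n - 1)
    ms_rater = ss_rater / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    # Method-of-moments variance components, truncated at zero
    var_subject = max((ms_subject - ms_error) / k, 0.0)
    var_rater = max((ms_rater - ms_error) / n, 0.0)
    var_error = max(ms_error, 0.0)
    total = var_subject + var_rater + var_error
    if total == 0:
        raise ValueError("scores have no variance")
    return var_subject / total


# Hypothetical tap counts: 4 subjects, each rated by the same 2 raters
ratings = [[42, 44], [30, 29], [55, 57], [38, 36]]
print(round(icc_two_way_random(ratings), 3))
```

Apart from the truncation of negative components, this ratio equals the single-rater, absolute-agreement ICC of a two-way random effects model, so a constant offset between raters lowers it even when their rankings of subjects agree perfectly.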

Results

The study population was reflective of the veteran population as a whole, with a mean age of 61.3 years; 52% of participants were Caucasian and 80% were male (see Table 1). The most common medical condition among participants was arthritis (62% of subjects), and 58% of subjects had ⩾3 comorbid conditions.

Table 1.

Subject characteristics.

| Characteristics | N | Mean (SD) or % |
|---|---|---|
| Age (years) | 50 | 61.3 (1.8) |
| Race | | |
| • Caucasian (%) | 26 | 52.0 |
| • African American (%) | 24 | 48.0 |
| • Other (%) | 0 | 0.0 |
| Gender | | |
| • Male (%) | 40 | 80.0 |
| • Female (%) | 10 | 20.0 |
| Cardiac diagnosis (%) | 3 | 6.0 |
| Neurological diagnosis (%) | 12 | 24.0 |
| Arthritis diagnosis (%) | 31 | 62.0 |
| Diabetes diagnosis (%) | 1 | 2.0 |
| Cancer diagnosis (%) | 3 | 6.0 |
| Total number of diagnoses ⩾ 3 (%) | 29 | 58.0 |

Simple descriptive analyses of the FTT and the FNT revealed low rates of missing data and/or inability to rate. For the FTT, a total of 3 ratings (0.5%) were missing (i.e. missing data and/or deemed ‘unable to rate’ by the rater) for the combined rating of both right and left hands. For the FTT combined hand rating, the frontal and lateral views had 22 (3.3%) and 32 (4.8%) missing values, respectively, with 3 (0.5%) missing on both views and therefore missing the final rating. For the FNT, 13 ratings (2.0%) were missing for the combined mean of both right and left hands; the combined hand rating on the frontal and lateral views had 27 (4.1%) and 32 (4.8%) missing values, respectively, with 13 (2.0%) missing/unable to rate on both views and therefore missing the final rating.

There was wide variability across subjects in performance on both tests (see Table 2). On the ‘gold standard’ slow motion review of the FTT, subjects tapped a mean of 42.4 times (standard deviation (SD) 14.8). Significantly more taps were counted on average in-person and on slow motion review than on videos rated at normal speed across all bit rates and frame rates (Table 3). The number of taps counted did not differ significantly according to bit rate (p-values for contrasts among bit rates ranged from 0.69 to 0.81), but significantly more taps were counted at 30 fps and 15 fps than at the slower 8 fps frame rate (p < 0.001 for 30 fps vs 8 fps and for 15 fps vs 8 fps; p = 0.85 for 30 fps vs 15 fps). For the FNT, the gold standard slow motion review showed a mean of 19.9 (SD 6.4) attempts to touch the dowel, with a mean of 2.0 (SD 2.6) misses. Thus, overall, subjects made many more successful than unsuccessful attempts to touch the dowel. We found no difference in the number of total attempts or missed attempts according to viewing method (i.e. in-person vs video, slow motion vs standard speed video across all bandwidths and frame rates), nor any difference in these ratings according to frame rate or bit rate.

Table 2.

Descriptive data for FTT and FNT according to viewing speed, frame rate and bit rate.

| Technological configuration | Finger taps/10 s, N | Finger taps/10 s, mean (SD) | FNT attempts/10 s, N | FNT attempts/10 s, mean (SD) | FNT misses/10 s, N | FNT misses/10 s, mean (SD) |
|---|---|---|---|---|---|---|
| Viewing method | | | | | | |
| IP – in-person rater | 50 | 44.2 (18.2) | 49 | 19.39 (6.4) | 50 | 1.50 (1.54) |
| SM – optional slow motion | 100 | 42.4 (14.8) | 101 | 19.91 (6.4) | 101 | 2.03 (2.56) |
| NS – normal speed (all frame rates & bandwidths) | 492 | 36.6 (13.6) | 497 | 19.81 (6.4) | 499 | 2.53 (2.83) |
| Frame rate (normal speed, bit rate 768 kbps) | | | | | | |
| 30 fps | 99 | 39.6 (15.0) | 100 | 19.94 (6.3) | 102 | 2.65 (3.25) |
| 15 fps | 96 | 39.3 (13.7) | 100 | 19.98 (6.4) | 100 | 2.52 (2.74) |
| 8 fps | 102 | 26.9 (7.2) | 101 | 19.89 (6.2) | 103 | 3.17 (3.16) |
| Bit rate (normal speed, frame rate 30 fps) | | | | | | |
| 768 kbps | 99 | 39.6 (15.0) | 100 | 19.94 (6.33) | 102 | 2.65 (3.25) |
| 384 kbps | 97 | 39.0 (12.9) | 95 | 19.34 (6.48) | 95 | 2.08 (2.21) |
| 128 kbps | 98 | 38.6 (13.6) | 99 | 19.87 (6.48) | 99 | 2.21 (2.52) |

Table 3.

Contrasts for FTT and FNT according to viewing speed, frame rate and bit rate.

| Technological configuration | Finger taps, p-value | FNT attempts, p-value | FNT misses, p-value |
|---|---|---|---|
| Viewing method | | | |
| Heteroscedasticity | <0.001 | 0.89 | 0.10 |
| SM vs IP | 0.59 | 0.64 | 0.39 |
| NS vs IP | 0.02 | 0.66 | 0.25 |
| NS vs SM | 0.02 | 0.88 | 0.39 |
| Frame rate (normal speed, bit rate 768 kbps) | | | |
| Heteroscedasticity | <0.001 | 0.51 | 0.94 |
| 30 vs 15 fps | 0.85 | 0.92 | 0.86 |
| 30 vs 8 fps | <0.001 | 0.95 | 0.13 |
| 15 vs 8 fps | <0.001 | 0.97 | 0.18 |
| Bit rate (normal speed, frame rate 30 fps) | | | |
| Heteroscedasticity | 0.24 | 0.10 | 0.91 |
| 768 vs 384 kbps | 0.81 | 0.70 | 0.67 |
| 768 vs 128 kbps | 0.69 | 0.85 | 0.38 |
| 384 vs 128 kbps | 0.81 | 0.57 | 0.66 |

IP: in-person rater; SM: optional slow motion review; NS: normal speed.

Inter-rater reliability for the FTT was excellent with slow motion video (intraclass correlation coefficient (ICC) 0.99, 95% confidence interval (CI) 0.99–0.99) but declined for videos viewed at normal speed (ICC 0.59, 95% CI 0.53–0.64); the non-overlapping confidence intervals indicate a statistically significant difference. Sub-analyses did not reveal any significant differences in inter-rater reliability according to frame rate or bit rate, with ICCs ranging from 0.76 to 0.87. See Table 4 for detailed results.

Table 4.

Reliability and validity of FTT and FNT according to viewing method, frame rate and bandwidth.

| Technological configuration | FTT mean taps/10 s: inter-rater reliability, ICC (95% CI)ᵃ | FTT mean taps/10 s: criterion validity, regression coefficient (95% CI)ᵇ | FNT total attempts: inter-rater reliability, ICC (95% CI)ᵃ | FNT total attempts: criterion validity, regression coefficient (95% CI)ᵇ | FNT missed attempts: inter-rater reliability, ICC (95% CI)ᵃ | FNT missed attempts: criterion validity, regression coefficient (95% CI)ᵇ |
|---|---|---|---|---|---|---|
| Viewing method | | | | | | |
| (IP) in-person | NAᶜ | 0.94 (0.83, 1.05)* | NAᶜ | 0.97 (0.89, 1.04) | NAᶜ | 0.81 (0.64, 0.98) |
| (SM) slow motion | 0.99 (0.99, 0.99)* | NAᵈ | 0.99 (0.99, 0.99)* | NAᵈ | 0.83 (0.76, 0.88)* | NAᵈ |
| (NS) normal speed | 0.59 (0.53, 0.64)* | 0.77 (0.72, 0.83)* | 0.97 (0.96, 0.97)* | 0.99 (0.97, 1.00) | 0.44 (0.36, 0.51)* | 0.61 (0.54, 0.68) |
| Frame rate (normal speed, bit rate 768 kbps) | | | | | | |
| 30 fps | 0.87 (0.82, 0.91) | 0.92 (0.84, 0.99)* | 0.95 (0.92, 0.96) | 0.98 (0.93, 1.02) | 0.57 (0.42, 0.69) | 0.52 (0.34, 0.69) |
| 15 fps | 0.82 (0.74, 0.88) | 0.92 (0.84, 0.99)* | 0.99 (0.994, 0.997)* | 1.00 (0.99, 1.01) | 0.59 (0.45, 0.71) | 0.71 (0.56, 0.87) |
| 8 fps | 0.76 (0.67, 0.83) | 0.35 (0.16, 0.54)* | 0.95 (0.91, 0.96) | 0.97 (0.92, 1.02) | 0.70 (0.58, 0.78) | 0.73 (0.59, 0.88) |
| Bit rate (normal speed, frame rate 30 fps) | | | | | | |
| 768 kbps | 0.87 (0.82, 0.91) | 0.92 (0.84, 0.99) | 0.95 (0.92, 0.96) | 0.98 (0.93, 1.02) | 0.57 (0.42, 0.69) | 0.52 (0.34, 0.69) |
| 384 kbps | 0.79 (0.70, 0.86) | 0.90 (0.84, 0.96) | 0.88 (0.82, 0.92)* | 0.98 (0.93, 1.02) | 0.35 (0.16, 0.52) | 0.63 (0.45, 0.80) |
| 128 kbps | 0.78 (0.68, 0.84) | 0.95 (0.87, 1.02) | 0.95 (0.93, 0.97) | 1.00 (0.99, 1.02) | 0.45 (0.28, 0.59) | 0.65 (0.51, 0.79) |

Asterisk (*): confidence intervals do not overlap. Viewing method: FTT reliability, NS vs SM; FTT validity, NS vs IP; FNT attempts reliability, SM vs NS; FNT misses reliability, SM vs NS. Frame rate: FTT validity, 8 fps vs 15 fps and 30 fps; FNT attempts reliability, 15 fps vs 8 fps and 30 fps. Bit rate: FNT attempts reliability, 384 kbps vs 768 kbps and 128 kbps.

ᵃ Reliability based on Streiner and Norman.13 Confidence intervals estimated using Fisher Z transformation.

ᵇ Validity estimated using the standardized regression coefficient for the relationship between the rated score and the criterion measure.

ᶜ With a single in-person rater, reliability could not be estimated.

ᵈ Criterion measure in validity analyses = slow motion review.
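Table 4 reports confidence intervals obtained with the Fisher Z transformation, and the text treats non-overlapping intervals as evidence of a statistically significant difference. The Python sketch below shows the textbook version of both steps for a correlation-type coefficient. The standard error 1/√(n − 3) is an assumption (variants of the transformation tailored to ICCs use a different standard error), and the sample sizes in the example are hypothetical rather than the study's effective n.

```python
import math


def fisher_z_ci(r, n, z_crit=1.959963984540054):
    """Approximate two-sided 95% CI for a correlation-type coefficient.

    Assumes the textbook Fisher z standard error 1 / sqrt(n - 3).
    """
    if not -1.0 < r < 1.0:
        raise ValueError("r must lie strictly between -1 and 1")
    if n <= 3:
        raise ValueError("n must be greater than 3")
    z = math.atanh(r)  # Fisher's z = 0.5 * ln((1 + r) / (1 - r))
    se = 1.0 / math.sqrt(n - 3)
    return math.tanh(z - z_crit * se), math.tanh(z + z_crit * se)


def intervals_overlap(a, b):
    """True if closed intervals a = (lo, hi) and b = (lo, hi) share a point."""
    return a[0] <= b[1] and b[0] <= a[1]


# Hypothetical sample sizes chosen only for illustration
slow_motion = fisher_z_ci(0.99, 100)
normal_speed = fisher_z_ci(0.59, 490)
print(slow_motion, normal_speed, intervals_overlap(slow_motion, normal_speed))
```

Because tanh compresses values near ±1, the back-transformed interval is asymmetric around r, extending further on the side away from 1.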

Inter-rater reliability for FNT attempts was excellent (ICC 0.97–0.99) for both slow motion and normal speed viewing across bandwidths and frame rates (see Table 4). Reliability was higher at 15 fps than at 30 fps or 8 fps (ICC 0.99, 95% CI 0.994–0.997 vs ICC 0.95, 95% CI 0.92–0.96 and ICC 0.95, 95% CI 0.91–0.96, respectively), with non-overlapping confidence intervals indicating statistically significant differences. However, this difference is unlikely to be clinically significant, given that all ICCs were ⩾0.95 (generally considered excellent). Inter-rater reliability also differed according to bandwidth, with non-overlapping confidence intervals for 384 kbps (ICC 0.88, 95% CI 0.82–0.92) compared with 768 kbps (ICC 0.95, 95% CI 0.92–0.96) and 128 kbps (ICC 0.95, 95% CI 0.93–0.97). Again, the clinical significance of these differences is modest at best: the lowest ICC was 0.88 (at 384 kbps and 30 fps), and the confidence limits nearly overlap.

We did find concerning differences in inter-rater reliability for FNT misses (see Table 4). Inter-rater reliability was good to excellent for slow motion review (ICC 0.83, 95% CI 0.76–0.88) but declined substantially for videos reviewed at normal speed across the various bit rates and frame rates (ICC 0.44, 95% CI 0.36–0.51); the non-overlapping confidence intervals indicate that this difference was statistically significant. Sub-analyses did not reveal statistically significant differences in reliability according to bit rate or frame rate. Inter-rater reliability was moderate at all frame rates (ICC 0.57–0.70) and poor to moderate at the various bit rates (ICC 0.35–0.57).

Compared with slow motion review, criterion validity for the FTT was excellent for the in-person rater (β = 0.94, 95% CI 0.83–1.05) and good for normal speed across the various bandwidths and frame rates combined (β = 0.77, 95% CI 0.72–0.83); the non-overlapping confidence intervals indicate that this difference was statistically significant (see Table 4). When criterion validity was examined for specific frame rates and bit rates, it did not differ significantly according to bandwidth but did differ significantly according to frame rate. Validity declined markedly at the slowest frame rate (8 fps: β = 0.35, 95% CI 0.16–0.54) compared with 30 fps (β = 0.92, 95% CI 0.84–0.99) and 15 fps (β = 0.92, 95% CI 0.84–0.99), with non-overlapping confidence intervals indicating statistically significant differences.

Criterion validity for FNT attempts was excellent (β ⩾ 0.97) under all conditions, with no statistically significant differences across video configurations. For FNT misses, however, criterion validity was good for the in-person rating (β = 0.81, 95% CI 0.64–0.98) and moderate for normal speed across all frame and bit rates (β = 0.61, 95% CI 0.54–0.68). Criterion validity differed little according to frame rate or bit rate: regression coefficients ranged from 0.52 to 0.73, and the 95% confidence limits overlapped across conditions (i.e. differences were not statistically significant).
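The validity coefficients above are described as standardized regression coefficients. In the simplest case of a single predictor, the standardized slope equals Pearson's r, so it does not matter which score (rater or criterion) is treated as the predictor. The Python sketch below illustrates that case only; the study's models also controlled for rater, which this minimal version omits, and the example tap counts are hypothetical.

```python
import math


def standardized_slope(x, y):
    """Standardized OLS slope of y on x for a single predictor.

    The unstandardized slope Sxy / Sxx is rescaled by sd(x) / sd(y); with
    one predictor the result equals Pearson's correlation r.
    """
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    # The (n - 1) denominators of the two SDs cancel, so sums of squares suffice
    return slope * math.sqrt(sxx / syy)


# Hypothetical tap counts: criterion (slow motion) vs a normal speed rater
criterion = [42, 30, 55, 38, 61, 25]
rater = [40, 27, 50, 39, 52, 24]
print(round(standardized_slope(criterion, rater), 3))
```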

Discussion

Our findings show that conditions typically available for telehealth, even in remote locations, would support acceptable inter-rater reliability and validity for use of the FTT or FNT to evaluate fine motor coordination. In general, reliability and validity were not substantively affected by diminished frame rate, internet bandwidth or a single planar view of the movement, the main exception being FTT validity at the lowest frame rate (8 fps). These findings are highly reassuring for potential use of video for common neurological tests of coordination such as the FTT and FNT.

On the FTT, the total number of finger taps counted differed significantly according to frame rate, but only the lowest frame rate (8 fps) produced a substantive decrease. On the FNT, the count of total attempted touches showed excellent inter-rater reliability and criterion validity under all conditions. The count of missed FNT attempts showed good inter-rater reliability with slow motion review, but reliability diminished at the lower bit rates.

We studied the FTT and the FNT because we expected that the effects of video parameters might differ between the two tests. In particular, we thought the FTT might be vulnerable to low frame rates, because taps could go unseen as the frame rate diminished. Indeed, our results support the notion that high frequency fine motor movements (e.g. tremors) may be poorly assessed at very low frame rates. In contrast, we thought the FNT might be vulnerable to low bit rates and to the limitations of a single camera view, either of which could make it particularly difficult to discern when an attempted touch missed the dowel. We did find that inter-rater reliability for missed attempts diminished to levels generally considered poor at 128–384 kbps. However, with slow motion review, the FNT had high inter-rater reliability and correlated well with in-person measurement.

Tests of rapidly alternating movements such as the FTT and FNT are commonly employed to examine motor function in patients with various neurological disorders.15 Inter-rater reliability for the FTT is good (r = 0.68).16–18 Use of computerized technology can reduce potential observer bias and improve accuracy with the FTT;16,17 however, it requires patients to be in a setting where such technology is available, and there is good concurrent validity across various finger tapping assessments regardless of technology.18 Several studies have examined the reliability and validity of the FNT. For example, Swaine and Sullivan found moderate intrarater reliability (kappa 0.18–0.31) and fair inter-rater reliability (kappa 0.26–0.40) among persons with a head injury.19 Feys et al. reported moderate to good inter-rater reliability (kappa 0.33–0.74) using the FNT to rate various tremors among persons with multiple sclerosis.21 We note that some studies of the FNT quantified performance differently than we did (e.g. use of a Likert scale vs counting the number of attempted and missed target hits).20–23 Nonetheless, our findings support inter-rater reliability and validity comparable to those of standard clinical practice for both the FTT and the FNT under commonly available conditions for telehealth, particularly at higher bandwidths and/or with slow motion review.

While our study conditions mimicked standard clinical care, we did not examine all circumstances under which such tests may be carried out. For example, to maximize internal validity, the in-person rater was restricted to a fixed position comparable to that of the fixed cameras, whereas in real clinical care a rater may move about to obtain an optimal view. Similarly, the FNT may be evaluated in other ways in clinical care (e.g. degree of dysmetria when moving from nose to finger, degree to which the target was met or missed); the metric we used examined only one aspect of performance (i.e. successfully touching vs missing the target). Because the larger study dealt with both gross and fine motor function, the study population was selected to ensure safety during tests of gross motor coordination (e.g. gait) and consisted predominantly of people with musculoskeletal disorders and relatively well preserved fine motor coordination. Results may differ for neurological patients with greater impairment, although we suspect that greater impairment would, if anything, improve reliability and validity, as these tests are prone to ‘floor’ effects. Finally, this study did not examine supplemental methods, other than slow motion review of a high quality video, to compensate for subpar conditions (e.g. use of electronic counters for finger tapping).

There were a few missing values, which could arise either because the rater did not score a video due to poor quality or because the rater neglected to enter a score. Given the very low missing value rate under any view and the potential for inconsistency among raters in indicating the reasons for a missing value, we did not distinguish between these possibilities. However, because the missing value rate tended to be lower for the combined rating (i.e. when the rater considered both views), poor quality was likely the primary factor underlying these missing values. As this was an exploratory study, we did not correct for multiple comparisons; results should therefore be interpreted in light of the increased risk of Type I statistical error.

In summary, our results provide reassurance that standard video-based technology can be used with confidence to assess fine motor coordination and that slow motion review and/or high bandwidth transmission can be used to enhance accuracy in the face of clinical uncertainty.

Acknowledgments

Funding

The author(s) disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: Funding for this study was provided by VHA Rehabilitation Research & Development (grant number F0900-R).

Footnotes

Declaration of Conflicting Interests

The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

References

1. Dorsey ER and Topol EJ. State of telehealth. N Engl J Med 2016; 375: 154–161.

2. Chumbler NR, Quigley P, Li X, et al. Effects of telerehabilitation on physical function and disability for stroke patients: a randomized, controlled trial. Stroke 2012; 43: 2168–2174.

3. Sanford JA, Griffiths PC, Richardson P, et al. The effects of in-home rehabilitation on task self-efficacy in mobility-impaired adults: a randomized clinical trial. J Am Geriatr Soc 2006; 54: 1641–1648.

4. Schein RM, Schmeler MR, Holm MB, et al. Telerehabilitation wheeled mobility and seating assessments compared with in person. Arch Phys Med Rehabil 2010; 91: 874–878.

5. Russell TG, Buttrum P, Wootton R, et al. Internet-based outpatient telerehabilitation for patients following total knee arthroplasty: a randomized controlled trial. J Bone Joint Surg Am 2011; 93: 113–120.

6. Agostini M, Moja L, Banzi R, et al. Telerehabilitation and recovery of motor function: a systematic review and meta-analysis. J Telemed Telecare 2015; 21: 202–213.

7. Levy CE, Geiss M and Omura D. Effects of physical therapy delivery via home video telerehabilitation on functional and health-related quality of life outcomes. J Rehabil Res Dev 2015; 52: 361.

8. Jackson J, Ely EW, Morey MC, et al. Cognitive and physical rehabilitation of intensive care unit survivors: results of the RETURN randomized controlled pilot investigation. Crit Care Med 2011; 40: 1088–1097.

9. Hoenig H, Landerman LR, Tate L, et al. A quality assurance study on the accuracy of measuring physical function under current conditions for use of clinical video telehealth. Arch Phys Med Rehabil 2013; 94: 998–1002.

10. Reitan RM and Wolfson D. The Halstead–Reitan neuropsychological test battery: theory and clinical interpretation. Tucson, AZ: Neuropsychology Press, 1985.

11. Wang YC, Magasi SR, Bohannon RW, et al. Assessing dexterity function: a comparison of two alternatives for the NIH toolbox. J Hand Ther 2011; 24: 313–320.

12. SAS Institute Inc. SAS/STAT software, version 9.4. Data quality server reference. Cary, NC: SAS Institute Inc, 2014.

13. Streiner D and Norman G. Health measurement scales: a practical guide to their development and use. 3rd ed. Oxford: Oxford University Press, 2003.

14. Spreen O and Strauss E. A compendium of neuropsychological tests: administration, norms and commentary. 2nd ed. New York: Oxford University Press, 1998.

15. Arceneaux JM, Kirkendall DJ, Hill SK, et al. Validity and reliability of rapidly alternating movement tests. Int J Neurosci 1997; 89: 281–286.

16. Gualtieri CT and Johnson LG. Reliability and validity of a computerized neurocognitive test battery, CNS Vital Signs. Arch Clin Neuropsychol 2006; 21: 623–643.

17. Hubel KA, Yund EW, Herron TJ, et al. Computerized measures of finger tapping: reliability, malingering, and traumatic brain injury. J Clin Exp Neuropsychol 2013; 35: 745–758.

18. Christianson MK and Leathem JM. Development and standardization of the computerized finger tapping test: comparison with other finger tapping instruments. N Z J Psychol 2004; 33: 44–49.

19. Swaine BR and Sullivan SJ. Reliability of the scores for the finger-to-nose test in adults with traumatic brain injury. Phys Ther 1993; 73: 71–78.

20. Hooper J, Taylor R, Pentland B, et al. Rater reliability of Fahn’s tremor rating scale in patients with multiple sclerosis. Arch Phys Med Rehabil 1998; 79: 1076–1079.

21. Feys P, Davies-Smith A, Jones R, et al. Intention tremor rated according to different finger-to-nose test protocols: a survey. Arch Phys Med Rehabil 2003; 84: 79–82.

22. Fugl-Meyer A, Jaasko L, Leyman I, et al. The post-stroke hemiplegic patient. Scand J Rehabil Med 1975; 7: 13–31.

23. Swaine BR, Lortie E and Gravel D. The reliability of the time to execute various forms of the finger-to-nose test in healthy subjects. Physiother Theory Pract 2005; 21: 271–279.