Abstract

This paper provides a comprehensive study of Federated Learning (FL) with an emphasis on enabling software and hardware platforms, protocols, real-life applications, and use-cases. FL is applicable to multiple domains, but applying it to different industries comes with its own set of obstacles. FL, also known as collaborative learning, is an approach in which algorithms are trained across multiple devices or servers holding decentralized data samples, without exchanging the actual data. This approach differs radically from more established techniques, such as uploading data samples to servers or keeping data in some form of distributed infrastructure. Because FL builds robust models without sharing data, it leads to privacy-preserving solutions with stronger security and better-controlled access to data. This paper first provides an overview of FL and then covers the technical details of FL enabling technologies, protocols, and applications. Compared to other survey papers in the field, our objective is to provide a more thorough summary of the most relevant protocols, platforms, and real-life use-cases of FL, so that data scientists can build better privacy-preserving solutions for industries in critical need of FL. We also provide an overview of key challenges presented in the recent literature and a summary of related research work. Moreover, we explore both the challenges and advantages of FL and present detailed service use-cases to illustrate how different FL architectures and protocols can fit together to deliver the desired results.

Keywords: Federated Learning, Machine Learning, Collaborative AI, Privacy, Security, Decentralized Data, On-Device AI, Peer-to-peer network

I. Introduction

Federated Learning (FL) is a recently introduced technology [1] that has attracted considerable attention from researchers exploring its potential and applicability [2], [3]. FL attempts to answer one main question [4]: can we train a model without transferring data to a central location? Within the FL framework, the focus is on collaboration, which standard machine learning algorithms do not always achieve [5]. In addition, FL allows the algorithm(s) to keep gaining experience, which traditional machine learning methods cannot always guarantee [6], [7]. FL has been employed in a variety of applications, ranging from medicine to IoT, transportation, defense, and mobile apps, and several experiments have already demonstrated its applicability. Despite its promise, FL is still not widely understood with respect to some of its technical components, such as platforms, hardware, and software, and with respect to data privacy and data access [8], [9]. Therefore, this paper expands on FL’s technical aspects while presenting detailed examples of FL-based [10] architectures that can be adapted to any industry.

Because of strict regulations on data privacy, it is often impractical to gather and share consumers’ data in a centralized location. This also challenges traditional machine learning algorithms, which require large quantities of training examples to learn [11]. These caveats stem from how traditional models are trained [12], [13]: a main server typically handles both data storage and model training. There are usually two ways of using such trained models [14]. Either we build a data pipeline so the data passes through the server, or we transfer the trained models to every device that interacts with the environment [15]. Unfortunately, neither approach is optimal because the resulting models cannot adapt rapidly.

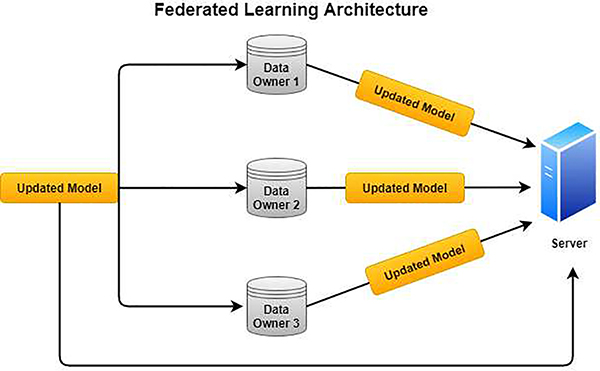

With FL, models are trained at the device level: the models are brought to the data sources or devices for training and prediction [16]. The models (i.e., model updates) are then sent back to the main server for aggregation, and one consolidated model is transferred back to the devices using concepts from distributed computing [17]. This makes it possible to track and redistribute the model across the various devices. FL’s approach is especially advantageous for deploying low-cost machine learning models on devices such as cell phones and sensors [18]. The general architecture of FL is shown in Fig. 1.

Fig. 1.

General Federated Learning Architecture
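The training round described above (local training, upload of model updates, server-side aggregation, and redistribution) can be sketched in a few lines. This is a minimal sketch, assuming Federated Averaging (FedAvg) as the aggregation rule, a two-parameter linear model, and plain gradient descent on each client; the client data, function names, and hyperparameters are hypothetical and not taken from any cited system.

```python
"""Minimal Federated Averaging (FedAvg) sketch with a 1-D linear model.

Illustrative assumptions: each client holds private (x, y) pairs and fits
y ~ w*x + b with gradient descent; the server averages the returned models,
weighted by each client's sample count. Raw data never leaves a client.
"""


def local_train(model, data, lr=0.05, epochs=20):
    """Client side: full-batch gradient descent on mean squared error."""
    w, b = model
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return (w, b)


def fed_avg(client_models, client_sizes):
    """Server side: sample-weighted average of the client models."""
    total = sum(client_sizes)
    w = sum(m[0] * s for m, s in zip(client_models, client_sizes)) / total
    b = sum(m[1] * s for m, s in zip(client_models, client_sizes)) / total
    return (w, b)


def run_federated(clients, rounds=30):
    global_model = (0.0, 0.0)                  # server initializes the model
    for _ in range(rounds):
        # Broadcast the global model; each client trains on its own data
        updates = [local_train(global_model, data) for data in clients]
        # Clients upload only parameters; the server aggregates them
        global_model = fed_avg(updates, [len(d) for d in clients])
    return global_model                        # redistributed each round


# Three hypothetical clients whose private data all follow y = 2x + 1
clients = [
    [(0.0, 1.0), (1.0, 3.0)],
    [(2.0, 5.0), (0.5, 2.0), (1.5, 4.0)],
    [(3.0, 7.0)],
]
w, b = run_federated(clients)
print(round(w, 2), round(b, 2))
```

Only the `(w, b)` tuples cross the client boundary; the weighting by sample count keeps clients with more data from being under-represented in the global model.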

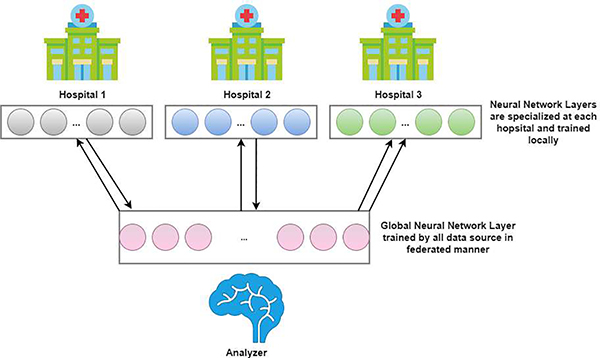

FL has many use cases, and there is plenty of research on its applications; one example is the healthcare sector [19], [20]. Fig. 2 illustrates how an FL architecture could be applied in a healthcare setting. Unfortunately, some crucial obstacles, especially regarding data, still prevent FL from being fully adopted in other settings. For models and algorithms to learn effectively and be as accurate as possible, they require large amounts of data [21]. However, the data itself can be difficult to handle because it is often highly diverse in content, structure, and file format. Additionally, the idea of centralizing streams of sensitive data with tech companies has proven very unpopular in the United States [22], [23]. According to MIT computer science associate professor Ramesh Raskar, the dichotomy between data privacy and the societal benefits of using that data is a false one: we can achieve both utility and privacy, so concerns about privacy can be lessened [24].

Fig. 2.

Federated Learning Architecture applied in a hospital setting

A. Purpose of Study

This work aims to expand on the current platforms, protocols, and architectures of FL. While there is research on this topic, sufficient progress has not been made in understanding FL at a deeper, technical level. FL is not only new but also not widely understood, and it has seen little application in most industries. Consequently, we do not yet have a sufficient understanding of FL, nor can we see the bigger picture of exactly how FL can benefit multiple industries. Specifically, this work attempts to answer the following questions:

What are current architectures and platforms that are used for Federated Learning?

What are the current hardware and software technologies that are used in Federated Learning?

In answering these questions, we hope this work will help FL be applied in more industries and give a comprehensive picture of how FL directly impacts them.

B. Contributions

This work aims to contribute a comprehensive overview of FL in terms of its definition, applicability, and usefulness. Several works have studied this topic, but our work stands out from previous ones by examining the architectures of FL and its use cases in depth. In doing so, it can contribute an overall blueprint for data scientists and other researchers designing FL-based solutions to alleviate future challenges. Specifically, this work contributes the following:

Compared to other survey papers on FL, this survey provides a deeper summary of the most relevant FL hardware, software, platforms, and protocols, enabling researchers to get up to speed on FL quickly and giving them enough knowledge to pursue the topic of Federated Learning without a steep learning curve.

We also provide concrete examples of applications and use cases of FL to illustrate how different FL architectures can be applied in multiple scenarios, helping readers understand where FL is applicable. In addition, highlighting use cases and applications of FL in medical settings in particular may give healthcare professionals more confidence in streamlining their data for FL.

We provide an overview of some of the key FL challenges presented in the recent literature and a summary of related research work. Additionally, we offer insight into best practices for designing FL models.

C. Paper Organization

This paper is organized into twelve sections. Section II discusses related works that have studied FL. Section III covers architectures and platforms used for FL. Section IV discusses the enabling technologies of FL, such as hardware, software, and algorithms geared toward FL. Section V discusses optimization techniques that can be applied to some FL models, while Section VI discusses various network protocols that can support FL. Section VII discusses the limitations and challenges of FL. Section VIII then discusses the market implications of FL. Section IX discusses the benefits and costs of FL, and Section X discusses the applications and use-cases of FL in detail. Section XI covers best practices in designing FL models, and finally Section XII concludes the paper.

II. Related Works

FL is most often compared with distributed learning, parallel learning, and deep learning. Although FL remains a new topic, several related works examine it in detail. Table I summarizes various works that tackle FL, along with other topics focusing on use-cases for FL.

TABLE I.

Summary of related works on FL

| Ref. No | Author(s) | Article Topic(s) |
|---|---|---|
| [24], [25], [26] | Y. Xia; Tal Ben-Nun, T. Hoefler; M.G. Poirot, et al. | Deep Learning |
| [27], [28] | P. Vepakomma, et al. | HIPAA Guidelines for FL; Drawbacks of FL |
| [29] | Kevin Hsieh | Traditional ML Methods |
| [30] | Qinbin Li, et al. | Data Privacy and Protection; Future Direction of FL; Challenges of FL |
| [31] | V. Kulkarni, et al. | Personalization Techniques for FL |
| [32] | J. Geiping, et al. | Privacy of FL |
| [33] | Y. Liu, et al. | FL for 6G |

A. Deep Learning

In comparison to our work, articles [25], [26], [27], [28], and [29] cover only deep learning and its comparisons to FL. FL is often compared to deep learning techniques because DNNs (Deep Neural Networks) have been used for various purposes, often with promising results. Unfortunately, DNNs also have several drawbacks that make them more difficult to incorporate into FL. DNNs are not always optimal for FL because their complexity grows as data-sets grow, and their computational and memory demands grow with that complexity. Additionally, deep learning models applied in FL must still work without access to raw client data, so privacy is the main focus. Deep learning models have been compared in terms of the protection they offer, their performance, their resource usage, and so on. These aspects depend on the model architecture, which is also key to FL’s success. Beyond these metrics, we must also consider the HIPAA guidelines, because data cannot be shared with external entities. Overall, the authors do an excellent job of covering deep learning, but they do not discuss FL in enough detail.

B. Drawbacks of using Machine Learning

The article by [30] discusses how cost and latency still plague traditional machine learning (ML) methods. Both problems are difficult to solve because the data itself is highly distributed, which poses computation and communication problems for traditional ML methodologies. For instance, if the data spans multiple locations, communication between data centers can easily overwhelm the limited bandwidth. While ML does allow data to be pre-processed to reduce latency, doing so can still incur high monetary costs. On the communication side, because the data can be highly independent and highly distributed, it can create interoperability issues for the ML algorithms that use it, and the data may end up unusable. While the article highlights the drawbacks of traditional ML methods, that is not the primary focus of our paper.

C. Federated Learning

Another work, [31], discusses the future directions of FL and its feasibility for data privacy and protection, and compares various FL systems. FL systems face challenges such as impractical system assumptions, efficiency, and scalability. Notably, two main characteristics appear to be rarely considered when FL systems are designed and implemented: heterogeneity and autonomy.

Heterogeneity refers to diversity. In computing, heterogeneous systems use more than one kind of processor or core (known as heterogeneous computing) to achieve optimal performance and energy efficiency. In the context of machine learning and FL, heterogeneity concerns data, privacy, and task requirements.

Autonomy refers to independent control. The systems involved in FL need to be willing to share information with others. The authors break autonomy down into several categories, such as association autonomy and communication autonomy. Together, these cover the ability to join an FL system, to participate in more than one FL system, and to decide how much information to communicate to others.

The authors also classify FL systems along several aspects: data distribution, the machine learning model, privacy frameworks, communication architecture, and so on. The work also expands on the concept of FL by describing two types of FL systems: private and public.

Private FL systems have a small number of entities, each with large amounts of data and computational power. The challenge private FL systems must overcome is how to transfer computation to the data centers under certain constraints.

Public FL systems have a large number of entities, each with small quantities of data and computational power.

The authors examine other related works on FL, making this work a reliable foundation for FL research. However, the article would be stronger with concrete examples of use-cases and real-life applications that remedy some of the challenges it mentions. Our work contains plenty of use-cases and applications to provide a comprehensive overview of how FL can remedy those challenges.

In addition, V. Kulkarni, M. Kulkarni, and A. Pant survey techniques for FL, focusing specifically on personalization. The authors explain why personalization must be considered: personalizing a global model is necessary to account for FL’s challenge of heterogeneity. Most personalization techniques require two steps. In the first step, a global model is built. In the second step, the global model is adapted for each client using that client’s private data. The article also covers other personalization techniques, including transfer learning, multi-task learning, meta-learning, and others [32], and does well in distinguishing them from each other. However, the article addresses only one challenge of FL. Additionally, it presents no use-cases or examples for the techniques it mentions, nor does it cover hardware/software platforms for them. As such, it covers FL only at a surface level.
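The two-step recipe (build a global model, then adapt it to each client with that client’s private data) can be illustrated with a deliberately tiny model. This is a hedged sketch: it assumes the global model is a sample-weighted average of local models and that personalization is a few steps of local fine-tuning, which is only one of the techniques the survey covers. The scalar model, client names, and values are hypothetical.

```python
"""Two-step personalization sketch (hypothetical, scalar model).

Step 1 builds a global model by sample-weighted averaging of local models.
Step 2 fine-tunes that global model on each client's private data. The model
is a single parameter theta that predicts a client's typical value under
squared loss; client names and values are made up for illustration.
"""


def local_fit(data):
    """Each client's own optimum under squared loss (its sample mean)."""
    return sum(data) / len(data)


def build_global(clients):
    """Step 1: server averages local models, weighted by sample count."""
    total = sum(len(d) for d in clients.values())
    return sum(local_fit(d) * len(d) for d in clients.values()) / total


def personalize(theta_global, data, lr=0.25, steps=3):
    """Step 2: a few gradient steps on mean((theta - y)^2) using private data."""
    theta = theta_global
    for _ in range(steps):
        grad = sum(2 * (theta - y) for y in data) / len(data)
        theta -= lr * grad
    return theta


clients = {"A": [1.0, 1.0, 1.0, 1.0], "B": [9.0, 9.0, 9.0, 9.0]}
g = build_global(clients)
personal = {cid: personalize(g, d) for cid, d in clients.items()}
print(g, personal)  # 5.0 {'A': 1.5, 'B': 8.5}
```

The global model (5.0) fits neither client well because their data are heterogeneous; a few local steps move each personalized model toward that client’s own data while still starting from shared knowledge.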

Another work that examines FL is [33]. Its authors focus on parameter updates in FL, which are crucial to ensure that the models work with the most recent parameters. The article asks how easy it is to break privacy in FL, an essential question for implementing FL effectively. It not only discusses FL in detail but also analyzes the privacy limitations of FL both in theory and in practice. Interestingly, the article focuses on recovering image data from the parameter updates shared in FL, an instructive case that could benefit the community. The article also effectively discusses the impact of both the network architecture and the state of the parameters. Admittedly, the work is more implementation-focused and contains quite a bit of math that may be difficult for newcomers to FL to follow.
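A simplified illustration of why shared parameter updates can leak data: if a client computes the gradient for a single training example on a linear model with a bias term, the input can be recovered exactly by dividing the weight gradient by the bias gradient. This analytic special case is only a sketch of the underlying risk; the article’s attacks on deep networks are optimization-based and are not reproduced here. The model, weights, and private values below are hypothetical.

```python
"""Illustration: recovering a private input from a shared gradient.

Hypothetical setup: a client computes the gradient of L = 0.5 * (y_hat - y)^2
for ONE example (x, y) on a linear model y_hat = w . x + b and shares it.
Because dL/dw = (y_hat - y) * x and dL/db = (y_hat - y), the server can
divide the two and recover x exactly (whenever dL/db != 0).
"""


def client_gradient(w, b, x, y):
    y_hat = sum(wi * xi for wi, xi in zip(w, x)) + b
    residual = y_hat - y                  # dL/dy_hat
    grad_w = [residual * xi for xi in x]  # dL/dw
    grad_b = residual                     # dL/db
    return grad_w, grad_b


def reconstruct_input(grad_w, grad_b):
    """Server-side 'attack': x_i = (dL/dw_i) / (dL/db)."""
    return [g / grad_b for g in grad_w]


private_x = [0.5, -1.0, 4.0]
grad_w, grad_b = client_gradient(w=[0.1, 0.2, 0.3], b=0.0, x=private_x, y=3.0)
print(reconstruct_input(grad_w, grad_b))  # [0.5, -1.0, 4.0]
```

Averaging gradients over larger batches, secure aggregation, or differential privacy make this exact recovery impossible, which is why they appear so often in FL designs.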

Article [34] discusses FL’s challenges, methods, and future directions with regard to 6G communications. While this is beyond the scope of our paper, the article effectively highlights both the drawbacks of using traditional machine learning for 6G and the potential of FL to improve 6G communications. It emphasizes several related challenges of FL, such as expensive communication, security, and privacy. The authors examine each of these challenges in detail while presenting an architecture of what FL could look like in 6G.

III. Architectures and Platforms of Federated Learning

FL comes with many architectures and platforms. In the medical field, several institutions are already developing FL architectures [35], [36]; among the leaders are the University of Pennsylvania and Intel. Many platforms have also been developed for FL, some of which are discussed in this section. Table II summarizes the various architectures and their focuses, and each is discussed in more detail in this section.

TABLE II.

Summary of architectures and their focus

| Architecture | Benefit(s) | Focus |
|---|---|---|
| Horizontal FL | Independence | Security |
| Vertical FL | Encryption | Privacy |
| FTL | Higher accuracy; Encryption | Prevents accuracy loss |
| MMVFL | Label sharing; Multiple participants | Complex scenarios; Data leakage |
| FEDF | Improved training speed | Parallel training; Privacy preservation |
| PerFit | Cloud-based; Local sharing | IoT applicability |
| FedHealth | Powerful models; More generalization; More applicability | Healthcare |
| FADL | No data aggregation needed; Reduced classification errors | EHRs |
| Blockchain-FL | High efficiency; Enhanced security | Industrial IoT |

According to the authors of [37], the appropriate FL architecture depends on the distribution characteristics of the data. The authors define two types of FL architectures, Horizontal FL and Vertical FL, which differ in both definition and structure. Horizontal FL (also known as sample-based FL) applies when data-sets share the same feature space but differ in their samples. Horizontal FL frameworks have already been proposed; for example, Google proposed a Horizontal FL approach for managing model updates on Android mobile phones. From a more technical perspective, Horizontal FL assumes honest participants and security against an honest-but-curious server; that is, only the central server can compromise the privacy of participants’ data [35]. In Horizontal FL’s architecture, multiple participants with the same data structure collaboratively learn a model with the help of a parameter or cloud server; the training process of Horizontal FL is shown in Fig. 4. Vertical FL, also known as feature-based FL, is illustrated in Fig. 3. Here, data-sets share the same sample IDs but differ in their features. Vertical FL aggregates these different features and computes the training loss so that a model can be built collaboratively with data from both entities. Under Vertical FL, each entity has the same identity and status. With regard to security, Vertical FL also assumes honest-but-curious participants, and its security model concerns two entities: the adversary (assuming one of the two entities is compromised) can learn data only from the compromised client. The Vertical FL architecture is less explored than the Horizontal FL architecture.
There are two main parts in the Vertical FL architecture: (1) encrypted entity alignment and (2) encrypted model training [35], [37]. A benefit of this architecture is that it is not tied to any specific machine learning method. Interestingly, Horizontal FL has been used in medical cases, such as drug detection.
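The difference between the two partitionings, and the role of entity alignment in Vertical FL, can be made concrete with a toy table. This sketch uses hypothetical records (two bank branches for the horizontal case, a bank and a retailer for the vertical case). The alignment step is shown as a plain set intersection for readability; the encrypted entity alignment described above would instead use a privacy-preserving protocol (for example, private set intersection) so that neither party learns IDs outside the overlap.

```python
"""Horizontal vs. vertical data partitioning, with (non-private) alignment.

Hypothetical records map sample ID -> features. In horizontal FL, parties
hold different samples with the same features; in vertical FL, parties hold
different features for overlapping samples and must first align on shared IDs.
"""

# Horizontal FL: same feature space, different sample IDs
bank_branch_a = {"u1": {"age": 34, "income": 52}, "u2": {"age": 45, "income": 61}}
bank_branch_b = {"u7": {"age": 29, "income": 48}, "u9": {"age": 51, "income": 70}}

# Vertical FL: overlapping sample IDs, different feature spaces
bank = {"u1": {"income": 52}, "u2": {"income": 61}, "u3": {"income": 39}}
retailer = {"u2": {"purchases": 17}, "u3": {"purchases": 4}, "u5": {"purchases": 9}}


def feature_space(party):
    return {f for row in party.values() for f in row}


def align_entities(party_a, party_b):
    # Plain intersection for illustration only; a real system would use an
    # encrypted / private set intersection so non-shared IDs stay hidden.
    return sorted(party_a.keys() & party_b.keys())


def joint_view(party_a, party_b, ids):
    # Conceptual joined rows; in vertical FL these are never materialized
    # in one place, training operates on encrypted intermediate values.
    return {i: {**party_a[i], **party_b[i]} for i in ids}


print(feature_space(bank_branch_a) == feature_space(bank_branch_b))  # True
print(bank_branch_a.keys() & bank_branch_b.keys())                  # set()
shared = align_entities(bank, retailer)
print(shared)                                                        # ['u2', 'u3']
print(joint_view(bank, retailer, shared))
```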

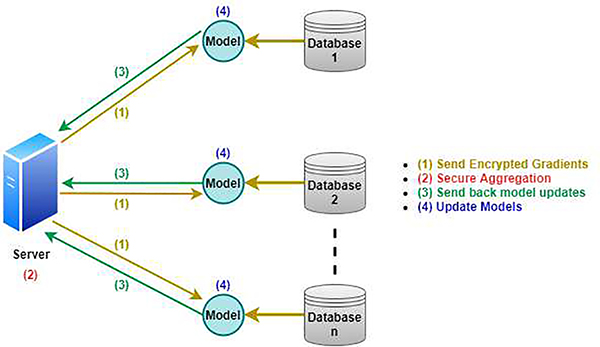

Fig. 4.

Horizontal Federated Learning Architecture

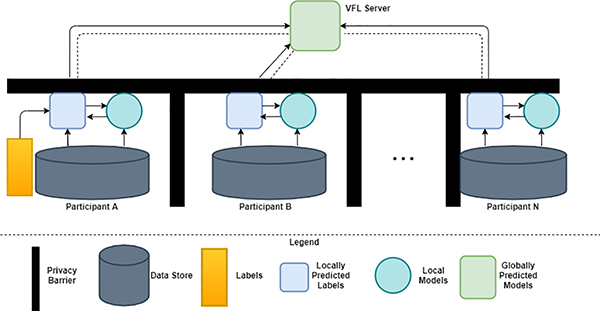

Fig. 3.

Vertical Federated Learning Architecture

In the Horizontal FL training process, the participants first compute training gradients locally. They then mask a selection of these gradients using encryption, differential privacy, or secret sharing techniques, and send the masked results to the server.

The server aggregates the results without learning anything about the participant(s).

The server sends the aggregated results back to the participants.

The participants update their models accordingly.
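One way to realize aggregation in which the server learns nothing about individual participants is additive pairwise masking, in the spirit of secure-aggregation protocols. The sketch below is a simplified illustration under loudly stated assumptions: updates are non-negative integers (for example, fixed-point quantized gradients) in a ring modulo 2^32, the pairwise masks come from one seeded generator rather than a per-pair key exchange, and clients that drop out mid-round are not handled. The function names are hypothetical.

```python
"""Pairwise additive masking: the server learns only the SUM of updates.

Simplifying assumptions (hypothetical): integer updates modulo MODULUS,
masks drawn from one seeded RNG instead of per-pair key agreement,
and no handling of clients that drop out mid-round.
"""
import random

MODULUS = 2**32


def make_pairwise_masks(client_ids, dim, seed=0):
    """One random mask vector per unordered client pair (i < j)."""
    rng = random.Random(seed)
    return {
        (i, j): [rng.randrange(MODULUS) for _ in range(dim)]
        for i in client_ids for j in client_ids if i < j
    }


def mask_update(cid, update, client_ids, masks):
    """Client side: add masks shared with higher IDs, subtract those with lower IDs."""
    masked = list(update)
    for other in client_ids:
        if other == cid:
            continue
        pair = (min(cid, other), max(cid, other))
        sign = 1 if cid < other else -1
        masked = [(v + sign * m) % MODULUS for v, m in zip(masked, masks[pair])]
    return masked


def secure_sum(masked_updates):
    """Server side: masks cancel pairwise, leaving the sum of true updates."""
    return [sum(col) % MODULUS for col in zip(*masked_updates)]


updates = {1: [3, 5], 2: [10, 0], 3: [7, 2]}
ids = sorted(updates)
masks = make_pairwise_masks(ids, dim=2)
masked = [mask_update(c, updates[c], ids, masks) for c in ids]
print(secure_sum(masked))  # [20, 7]
```

Each masked vector on its own looks uniformly random to the server; only the sum, which is what the aggregation step needs, is revealed.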

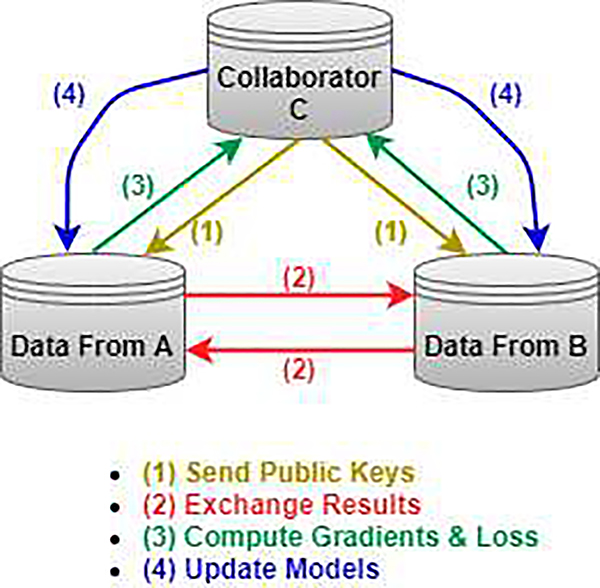

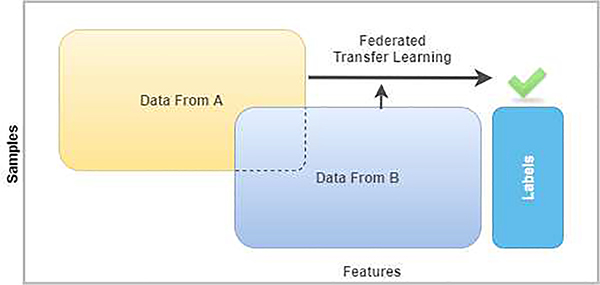

In addition to the Horizontal and Vertical FL architectures, another FL architecture is Federated Transfer Learning (FTL), proposed in [38]. FTL uses data from a different source to train the model(s). Transfer learning here involves learning a common representation between the entities’ features and minimizing the error in predicting labels for the target entity, so that accuracy loss is minimal. FTL has attracted considerable attention in various industries, especially healthcare [39]. To avoid exposing client data, FTL uses encryption and approximation to ensure that privacy is protected; as a result, both the raw data and the models are kept locally [40]. The FTL system has three components:

Guest - Data holder. Guests are responsible for launching task-based, multi-party model training with data-sets provided by both themselves and the Host. They mainly handle data encryption and computation.

Host - Also a Data holder.

Arbiter - Sends public keys to both the Guest and the Host. The Arbiter is primarily responsible for collecting gradients and checking whether the loss is converging.

The FTL workflow is summarized in Fig. 5. First, the Guest and Host compute and encrypt results locally from their own data; these results are used for gradient and loss computations. They then transfer the encrypted values to the Arbiter. Afterward, the Guest and Host obtain the gradients and loss computations from the Arbiter and update their models. The process repeats until the loss function converges [38]. FTL also supports two training approaches: heterogeneous and homogeneous. In the homogeneous approach, entities help train the model(s) with different samples. In the heterogeneous approach, entities share the same samples but in different feature spaces; they group these features in an encrypted state and then build a model with all the data collaboratively. Regarding performance, FTL was meant to replace deep-learning approaches, since deep-learning approaches are prone to accuracy loss. The authors who proposed FTL concluded that it achieves higher accuracy and is more scalable and flexible. However, FTL does have some limitations. For instance, it requires entities to exchange encrypted results only from the common representation layers, so it does not apply to all transfer mechanisms [38], [39].

Fig. 5.

Framework of Federated Transfer Learning
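The encryption step in this workflow relies on ciphertexts that can still be combined. The FTL authors use additively homomorphic encryption for this purpose; the toy Paillier scheme below is one such scheme, shown with tiny hard-coded primes that are completely insecure and chosen only so the example runs instantly. The key roles mirror the description of the components (the Arbiter generates keys and distributes the public part), but the function names and values are hypothetical, and the sketch omits the masking and Taylor-approximated loss that a full FTL protocol involves.

```python
"""Toy Paillier encryption: additively homomorphic, INSECURE parameters.

The Arbiter generates keys and shares the public key; Guest and Host encrypt
local values; ciphertexts can be combined without decryption; only the
Arbiter (holding the private key) can decrypt the combined result.
"""
import math
import random


def keygen(p=1009, q=1013):
    # Tiny primes for illustration only; real deployments use n of >= 2048 bits.
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p - 1, q - 1)
    mu = pow(lam, -1, n)  # valid because the generator is g = n + 1
    return n, (lam, mu, n)


def encrypt(n, m, rng):
    n2 = n * n
    while True:
        r = rng.randrange(1, n)
        if math.gcd(r, n) == 1:
            break
    return (pow(n + 1, m, n2) * pow(r, n, n2)) % n2


def add_encrypted(n, c1, c2):
    # Multiplying ciphertexts adds the underlying plaintexts (mod n)
    return (c1 * c2) % (n * n)


def decrypt(private_key, c):
    lam, mu, n = private_key
    l_value = (pow(c, lam, n * n) - 1) // n
    return (l_value * mu) % n


rng = random.Random(42)
public_n, arbiter_private = keygen()       # Arbiter creates the key pair
guest_ct = encrypt(public_n, 17, rng)      # Guest encrypts a local value
host_ct = encrypt(public_n, 25, rng)       # Host encrypts a local value
combined = add_encrypted(public_n, guest_ct, host_ct)
print(decrypt(arbiter_private, combined))  # 42
```

`pow(x, -1, n)` requires Python 3.8 or later. A production system would use a vetted library and key sizes far larger than these.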

Another study, by Siwei Feng and Han Yu, proposes a new architecture based on the Vertical FL system, called the Multi-Participant Multi-class Vertical Federated Learning framework (MMVFL). This framework, shown in Fig. 6, is designed to handle multiple participants. The authors note that MMVFL enables labels to be shared from their owner to other participants in a privacy-preserving manner. One problem with the Horizontal FL architecture is its assumption that data-sets from different entities share the same feature space, even though they may differ in sample ID space. Unfortunately, that is not always the case, and the proposed framework is meant to alleviate this setback. The goal of MMVFL is to learn multiple models to complete different objectives, which makes the learning process more personalized. To evaluate the framework’s performance, the authors used two computer-vision data-sets and compared their framework against other methods. Their framework achieved better results, depending on the number of features used, and was also noted to perform better when using fewer features [41].

Fig. 6.

Framework of MMVFL

Another FL framework is proposed by Tien-Dung et al. [42]. Their framework, called FEDF, is geared toward privacy preservation and parallel training, and it allows a model to be learned on multiple geographically distributed training data-sets that may belong to different owners. The proposed architecture consists of a master and multiple workers, as shown in Fig. 7. The master is responsible for handling the training process, which consists of the following:

Initializing execution of the training algorithm

Obtaining all the training outcomes from the workers

Modifying the global model instance(s)

Notifying the workers once the global model instance has been updated and is ready for the next training session.

Fig. 7.

FEDF Framework

Meanwhile, the workers represent the data owners, each equipped with a computing server to run a training model on its data. After downloading a model instance from the master, each worker runs the training algorithm and then sends its training outcomes to the master using the established communication protocol. Each worker is assumed to decide the parameters used in its training algorithm, such as the learning rate. These parameters are private to each worker and unknown to the master and the other workers, which prevents others from gaining information about the training data. Note that the workers’ data-sets differ in size, so training time varies between workers. The authors implemented their framework using Python and TensorFlow, used secure socket programming to handle communication between the workers and the master, and tested the framework on a variety of machines. FEDF was evaluated on several data-sets, mainly CIFAR-10, a membrane data-set named MEMBRANE, and a medical image data-set called HEART-VESSEL. The evaluation metrics were training speed, performance, and the amount of data exchanged. From their experiments, the authors concluded that the proposed framework trains nine times faster without sacrificing accuracy.
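The division of responsibilities between the master and the workers, including each worker keeping its training hyperparameters private, might look like the following. This is a hypothetical sketch: the class names, the one-parameter model, and the sample-weighted averaging rule are assumptions for illustration, and it does not reproduce FEDF’s TensorFlow implementation or its secure-socket communication.

```python
"""Master/worker roles in a FEDF-style setup (hypothetical sketch).

Workers keep their data AND training hyperparameters (e.g., learning rate)
private; the master only sees the returned parameter and a sample count.
"""


class Worker:
    def __init__(self, data, learning_rate, local_steps):
        self._data = data            # private training data
        self._lr = learning_rate     # private hyperparameter
        self._steps = local_steps    # private hyperparameter

    def train(self, theta):
        """Fit one parameter to local data under squared loss."""
        for _ in range(self._steps):
            grad = sum(2 * (theta - y) for y in self._data) / len(self._data)
            theta -= self._lr * grad
        return theta, len(self._data)  # training outcome sent to the master


class Master:
    def __init__(self, workers, theta0=0.0):
        self.workers = workers
        self.theta = theta0            # (1) initialize the training

    def run_round(self):
        outcomes = [w.train(self.theta) for w in self.workers]  # (2) collect outcomes
        total = sum(n for _, n in outcomes)
        self.theta = sum(t * n for t, n in outcomes) / total    # (3) update global model
        return self.theta              # (4) new model ready for the next session


workers = [
    Worker([4.0, 6.0], learning_rate=0.1, local_steps=5),
    Worker([10.0], learning_rate=0.3, local_steps=2),
    Worker([2.0, 2.0, 2.0], learning_rate=0.05, local_steps=8),
]
master = Master(workers)
for _ in range(50):
    master.run_round()
print(round(master.theta, 3))
```

In this toy configuration the aggregate settles near 4.76 rather than at the pooled-data mean of about 4.33, a reminder that heterogeneous private hyperparameters and data sizes can bias plain averaging.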

Another interesting FL framework comes from Qiong Wu et al. [43]; their proposed architecture centers on IoT applicability. While IoT is not the focus of this paper, it is worth noting that FL has been proposed for IoT [44], [45]. The proposed framework, called PerFit, is shown in Fig. 8. PerFit was meant to alleviate several challenges of both FL and IoT, including:

Heterogeneity of Devices - Devices used in the medical community, and in general, differ in hardware, such as CPU, memory, network bandwidth, capacity, and power. This can create high communication costs when applying FL, so extra factors, such as fault tolerance, need to be considered. Additionally, medical devices may drop out of the learning process due to poor connectivity and energy constraints.

Statistical Heterogeneity - This deals with different usage scenarios and settings. In the context of healthcare, the distributions of users’ activity data can vary widely depending on the users’ diverse physical characteristics and behavioral habits. Another consideration is that data samples across devices can vary significantly.

Heterogeneity of Models - In the original FL framework, the participating devices must agree on a specific architecture for the training model. This makes it possible to obtain an effective global model by aggregating the model weights collected from the local models. However, this approach would likely need to be modified for medical communities [46]. Different devices may want to build their own models based on their environment and resource capacities, and privacy concerns in healthcare make sharing model specifics much harder.
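Statistical heterogeneity is often simulated in FL experiments by giving each device a skewed slice of the label space. The sketch below uses a simple sort-and-shard split; the activity labels, shard counts, and function name are hypothetical and are not taken from PerFit’s evaluation.

```python
"""Simulating statistical heterogeneity (non-IID data) across devices.

Hypothetical setup: labeled activity samples are sorted by label, cut into
equal shards, and each device receives a few shards, so each device sees
only a small subset of activities (label skew).
"""
import random
from collections import Counter


def shard_partition(samples, num_devices, shards_per_device, seed=0):
    """samples: list of (features, label). Returns one sample list per device.

    Any remainder samples that do not fill a complete shard are dropped.
    """
    ordered = sorted(samples, key=lambda s: s[1])      # group samples by label
    num_shards = num_devices * shards_per_device
    shard_size = len(ordered) // num_shards
    shards = [ordered[i * shard_size:(i + 1) * shard_size] for i in range(num_shards)]
    random.Random(seed).shuffle(shards)                # random shard assignment
    return [
        [s for shard in shards[d * shards_per_device:(d + 1) * shards_per_device]
         for s in shard]
        for d in range(num_devices)
    ]


activities = ["walking", "jogging", "jumping", "sitting"]
samples = [((i,), activities[i % 4]) for i in range(40)]  # 10 samples per activity
parts = shard_partition(samples, num_devices=4, shards_per_device=2)
for device, data in enumerate(parts):
    print(device, Counter(label for _, label in data))
```

Each device ends up with at most two of the four activities, so a single global model trained on such splits faces exactly the kind of distribution mismatch described above.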

Fig. 8.

Framework of PerFit

PerFit’s architecture is cloud-based, which, according to the authors, brings readily available computing power to IoT devices. The architecture is structured so that each IoT device can offload its computing tasks to fulfill efficiency and low-latency requirements. FL is applied across devices, servers, and the cloud, so models can be shared locally without compromising sensitive data. The learning process of the PerFit framework has three stages:

Offloading Stage - In this stage, the IoT device can transfer its learning model and data samples to the cloud for fast computation.

The Learning Stage - In this stage, the device and the cloud both compute model updates based on their data samples and then transmit the model information. The server collects the submitted information and averages it into a global model. This exchange repeats until the model converges after a certain number of iterations. The result is an optimal global model that can be modified for further personalization.

The Personalization Stage - In this last stage, each device trains a personalized model to capture its specific characteristics and requirements, based on the global model’s information and its own local information. The specific learning operations in this stage depend on the federated learning mechanism adopted.

To verify PerFit’s effectiveness, the authors used a data-set called Mobile-Act, which centers on human activity recognition. The data-set covers ten kinds of activities, such as walking, falling, jumping, and jogging. The authors also compared the performance of two different FL approaches: FTL and Federated Distillation (FD). The results confirmed that PerFit is effective and can be considered a promising framework for future FL implementations.

While there are plenty of general FL architectures, there do not seem to be many geared toward specific industries [47]. This does not mean such architectures do not exist; there are simply fewer FL frameworks that center on specific industries. Technically, the FL architectures discussed so far could work for any industry, but adapting them would likely involve some hurdles [48]. Regarding the system architecture, the following possible challenges must be taken into account:

Ensuring data integrity when communicating

Designing secure encryption methods that take full advantage of the computational resources

Using devices efficiently to reduce idle time
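For the first challenge, ensuring data integrity during communication, one common building block is a message authentication code attached to each model update. The sketch below uses Python’s standard `hmac` and `hashlib` modules with HMAC-SHA256; the shared key, the JSON encoding of the update, and the helper names are assumptions for illustration, and key distribution and confidentiality (encryption) are out of scope.

```python
"""Integrity-protecting model updates with HMAC-SHA256 (illustrative sketch).

Assumes the device and the server already share a secret key (key
distribution is not shown) and that an update can be serialized
deterministically as JSON.
"""
import hashlib
import hmac
import json


def sign_update(key, update):
    """Device side: serialize the update deterministically and compute its tag."""
    payload = json.dumps(update, sort_keys=True).encode("utf-8")
    tag = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return payload, tag


def verify_update(key, payload, tag):
    """Server side: recompute the tag and compare in constant time."""
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, tag)


key = b"hypothetical-shared-secret"
payload, tag = sign_update(key, {"device": "sensor-7", "round": 3, "weights": [0.12, -0.5]})
print(verify_update(key, payload, tag))            # True
tampered = payload.replace(b"0.12", b"9.99")
print(verify_update(key, tampered, tag))           # False
```

An HMAC detects modification in transit but does not hide the update; pairing it with transport encryption or the masking and encryption schemes discussed earlier addresses confidentiality.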

One example of an FL architecture geared toward a specific industry comes from [49]. The authors propose an FL framework centered on healthcare, specifically wearable devices, which fall under the category of Smart Healthcare. The proposed architecture, FedHealth, is shown in Fig. 9. According to the authors, Smart Healthcare faces two challenges: a lack of personalization, and accessing user data without violating privacy. Users’ data is often highly isolated, making it difficult to aggregate. The authors claim their architecture is the first FL framework to address these challenges. FedHealth aggregates information from individual institutions to build robust models without compromising users’ privacy. After the global model is built, FedHealth uses transfer learning to obtain well-tailored models for individual institutions. Simply put, transfer learning involves transferring knowledge from existing entities to a new entity. FedHealth assumes three institutions and one server, a setup that can be generalized to more. There are four procedures in the framework.

Fig. 9.

FedHealth Framework

FedHealth uses Deep Neural Networks (DNNs) to learn both the shared model and the personalized models; the DNNs learn features and train classifiers. FedHealth works continuously with newly emerging user data and can update both models at the same time when new user data arrives, so the longer a consumer uses the product, the more personalized the model becomes. Besides transfer learning, FedHealth can incorporate other popular personalization methods, and it can also adopt conventional machine learning methods, which makes the framework more general and broadly applicable. The authors tested their framework on a human activity recognition data-set called UCI Smartphone, which contains six activities collected from 30 users: walking, walking upstairs, walking downstairs, sitting, standing, and laying. The authors split the data-set with a 70–30 train-test ratio and compared FedHealth against several traditional machine learning algorithms; FedHealth outperformed all of them. These results suggest that the framework is well suited to the healthcare setting.
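The personalization step can be illustrated with a small sketch. It assumes, purely for illustration, that the shared model is reduced to a frozen feature-extractor matrix `W_shared` (random here, standing in for layers learned through federated training) and that an institution personalizes it by fitting only a new logistic-regression head on its own data. FedHealth itself uses DNNs, so this is a simplified stand-in for its transfer-learning step rather than the authors' implementation; all names and data are hypothetical.

```python
import numpy as np

def extract_features(X, W_shared):
    """Frozen extractor standing in for layers learned by federated training."""
    return np.maximum(X @ W_shared, 0.0)  # ReLU features

def log_loss(H, y, w, b):
    p = np.clip(1.0 / (1.0 + np.exp(-(H @ w + b))), 1e-12, 1 - 1e-12)
    return -np.mean(y * np.log(p) + (1 - y) * np.log(1 - p))

def personalize_head(X_local, y_local, W_shared, lr=0.1, epochs=200):
    """Transfer step: train only a local logistic-regression head; W_shared is never updated."""
    H = extract_features(X_local, W_shared)
    w, b = np.zeros(H.shape[1]), 0.0
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-(H @ w + b)))
        err = p - y_local                      # gradient of the log loss w.r.t. the logits
        w -= lr * H.T @ err / len(y_local)
        b -= lr * err.mean()
    return w, b

# Hypothetical shared extractor and one institution's local data.
rng = np.random.default_rng(0)
W_shared = rng.normal(size=(4, 8))
X_local = rng.normal(size=(200, 4))
y_local = (X_local[:, 0] + X_local[:, 1] > 0).astype(float)
w, b = personalize_head(X_local, y_local, W_shared)
H = extract_features(X_local, W_shared)
loss_before = log_loss(H, y_local, np.zeros(8), 0.0)
loss_after = log_loss(H, y_local, w, b)
```

Keeping the extractor frozen means each institution only trains a few local parameters, while the knowledge captured by the shared model is reused.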

Another architecture geared towards the medical field comes from Dianbo Liu, Timothy Miller, et al. Their proposed architecture, Federated-Autonomous Deep Learning (FADL), is shown in Fig. 10 and deals with Electronic Health Records (EHR). EHR data is usually collected by individual institutions and stored across locations, and obtaining access to it can be difficult and slow because of security, privacy, regulatory, and operational barriers. According to the authors, FADL trains some parts of a model using data from all sources and additional parts using data from specific sources only. FADL's model is an artificial neural network (ANN) with three layers. To test the framework, the authors used ICU data from fifty-eight hospitals. Upon testing, the authors concluded that FADL outperformed traditional FL [50].
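The split between parameters trained on all sources and parameters trained on specific sources can be sketched as a partial-averaging step. The sketch assumes a model stored as a dictionary of named layers, treats `layer1` as the shared part and every other layer as local, and weights the shared average by each hospital's number of samples; these choices and all names are illustrative assumptions, not details taken from the FADL paper.

```python
import numpy as np

def partial_average(client_params, sizes, shared_keys):
    """Average only the shared layers (weighted by sample count); keep local layers per client."""
    total = sum(sizes)
    shared = {
        key: sum(n * params[key] for params, n in zip(client_params, sizes)) / total
        for key in shared_keys
    }
    # Each client keeps its own local layers and receives the new shared layers.
    return [{**params, **shared} for params in client_params]

# Two hypothetical hospitals with a shared first layer and a local second layer.
hospitals = [
    {"layer1": np.ones((3, 2)), "layer2": np.full((2, 1), 1.0)},
    {"layer1": np.zeros((3, 2)), "layer2": np.full((2, 1), 5.0)},
]
updated = partial_average(hospitals, sizes=[300, 100], shared_keys=["layer1"])
```

After the call, both hospitals hold the same sample-weighted `layer1` (0.75 everywhere here), while each keeps its own `layer2` for source-specific training.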

Fig. 10.

Framework of FADL

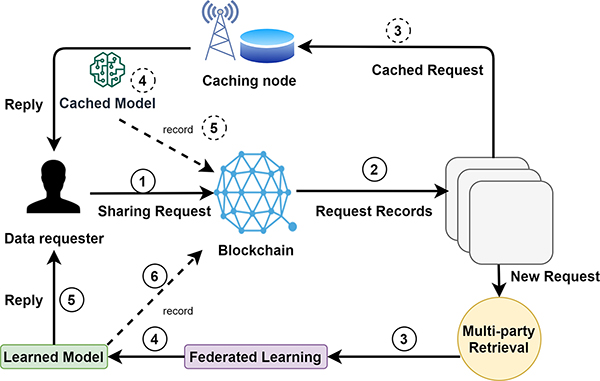

Another architecture that uses FL for a specific industry comes from Yunlong Lu et al., who propose an FL architecture based on blockchain for the Industrial IoT. The architecture is meant to address data-leakage problems related to security and privacy in FL. It has two modules: a blockchain module and a federated learning module. The blockchain module establishes secure connections among all IoT devices via encrypted records, which are maintained by entities equipped with computing and storage resources, such as base stations. The blockchain module handles two types of transactions: retrieval transactions and data sharing transactions. Because of privacy concerns and storage limitations, the blockchain is used only for retrieving related data and managing data accessibility [51]. Fig. 11 illustrates how the proposed architecture works. The authors' evaluation indicated that the architecture achieved high accuracy, high efficiency, and enhanced security.
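The idea of keeping tamper-evident records of retrieval and data sharing transactions can be illustrated with a toy hash-chained ledger. This is not the authors' blockchain module: it assumes a single writer, no consensus protocol and no encryption, and it stores only transaction metadata of the two types named above. The class, field names, and payloads are hypothetical.

```python
import hashlib
import json

class Ledger:
    """Toy hash-chained ledger; each block stores one transaction record."""

    def __init__(self):
        genesis = {"index": 0, "prev_hash": "0" * 64, "tx": None}
        genesis["hash"] = self._hash(genesis)
        self.blocks = [genesis]

    @staticmethod
    def _hash(block):
        body = {k: v for k, v in block.items() if k != "hash"}
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def add(self, tx_type, payload):
        if tx_type not in ("retrieval", "data_sharing"):
            raise ValueError("unknown transaction type")
        block = {"index": len(self.blocks), "prev_hash": self.blocks[-1]["hash"],
                 "tx": {"type": tx_type, **payload}}
        block["hash"] = self._hash(block)
        self.blocks.append(block)

    def verify(self):
        """Each block must link to its predecessor and match its own stored hash."""
        for prev, cur in zip(self.blocks, self.blocks[1:]):
            if cur["prev_hash"] != prev["hash"] or cur["hash"] != self._hash(cur):
                return False
        return True

ledger = Ledger()
ledger.add("retrieval", {"requester": "device-17", "query": "vibration-sensor data"})
ledger.add("data_sharing", {"provider": "base-station-2", "model_digest": "abc123"})
valid_before = ledger.verify()
ledger.blocks[1]["tx"]["requester"] = "device-99"   # tamper with a stored record
valid_after = ledger.verify()
```

Any change to a stored record breaks the hash check, which is what makes such records useful for auditing who retrieved or shared what.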

Fig. 11.

FL-based framework that incorporates blockchain.

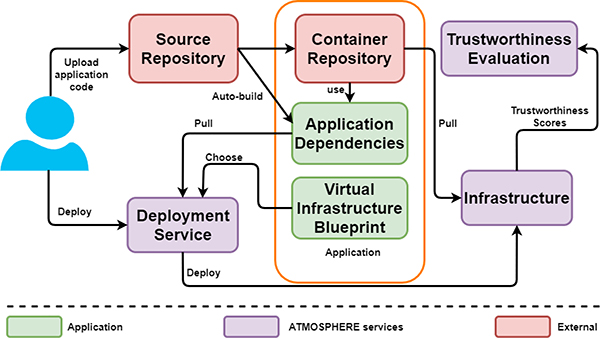

Several platforms also exist for FL. As FL has grown, many industrial and research teams have studied it for product and research development [52]. Popular FL platforms are summarized in Table III in terms of their focus and supporting software, and further examples of platforms and architectures include [53], [54], [55], [56]. These platforms and architectures help refine FL. Another line of work combines blockchain with FL: the authors of [57], [58], [59], and [60] propose architectures that incorporate both. With such an architecture, there is no need for centralized training data, and models could potentially be trained much faster. The architectures discussed are applicable not only to FL in general, but also to other industries.

TABLE III.

A Summary of FL platforms, their focus and supporting software.

| Platform | Focus | Supporting software |
|---|---|---|
| PySyft | Privacy | Python library |
| FATE | FL | FATEBoard, FATEFlow |
| Tensor/IO | Mobile devices | TensorFlow |
| FFL-ERL | Parallel computing, real-time systems | Erlang |
| CrypTen | Privacy preservation | PyTorch |
| LEAF | Multi-tasking | Python library |
| TFF | FL | TensorFlow |

PySyft - PySyft is mainly geared towards privacy. It decouples private data from model training using federated learning within PyTorch, a deep learning library for Python.

TensorFlow Federated (TFF) - TFF is another platform for federated learning. It provides users with a flexible, open framework for machine learning and other computations on decentralized data.

FATE (Federated AI Technology Enabler) - FATE is another open-source project geared towards FL, initiated by WeBank's AI department. Its goal is to provide a secure computing framework in which secure computation protocols are implemented based on encryption. FATE supports various federated learning architectures and machine learning algorithms, including logistic regression and transfer learning. Later releases added FATEBoard, a tool that offers a more visual approach to FL, as well as FATEFlow, a set of tools for FL modeling pipeline scheduling and life cycle management.

Tensor/IO - Tensor/IO brings the power of TensorFlow to mobile devices, supporting iOS, Android, and React Native applications. The platform does not implement any machine learning methods itself; instead, it works with TensorFlow to ease the process of implementing and deploying models on mobile phones. Developers can choose among several languages when using the library: Objective-C, Swift, Java, Kotlin, or JavaScript. One benefit of the platform is that prediction can be done in as few as five lines of code.

Functional Federated Learning in Erlang (FFL-ERL) - Erlang is a structured, dynamically typed programming language with built-in support for parallel computing, which makes it suitable for building real-time systems. FFL-ERL was proposed by Gregor Ulm, Emil Gustavsson, and Mats Jirstrand in 2018. In their work, the authors describe the mathematical implementation of the platform and test it on an artificially generated data-set. Unfortunately, the platform does incur a performance penalty [61].

CrypTen - CrypTen is a framework for privacy-preserving machine learning built on PyTorch. It offers several benefits. First, it was designed with real-world challenges in mind, so it has the potential to be applied to many real-world problems, including those in medical care. Second, CrypTen is library-based, which makes it easier for users to debug, experiment with, and explore different models. Currently, CrypTen runs only on the Linux and Mac operating systems.

LEAF - LEAF is a benchmarking framework for learning in federated settings, with applications including FL, multi-task learning, and meta-learning. It provides several data-sets for experimentation. LEAF was proposed by the authors of [62] in 2019 and consists of three core components: data-sets, reference implementations, and metrics.

IV. Enabling Technologies: Used Hardware, Software, and Algorithms

A wide range of hardware, software, and algorithms has been applied to FL, both to refine FL itself and to adapt it to different communities.

A study by [63] evaluates and compares several FL algorithms: FedAvg, Federated Stochastic Variance Reduced Gradient (FSVRG), and CO-OP.

FedAvg is one of the standard FL algorithms. In FedAvg, a central server initializes training, and the participating hosts share a global model [63]. Optimization is done via Stochastic Gradient Descent (SGD). The algorithm has five parameters to account for: the number of clients, the batch size, the number of epochs, the learning rate, and the learning-rate decay. FedAvg begins by initializing the global model. In each round, the server chooses a subset of clients and transmits the current model to them. After setting their local models to the shared model, the clients divide their own data into batches of the chosen size and perform a given number of epochs of SGD. The clients then send their updated local models to the server, which computes the new global model as a weighted average of all received local models [63].
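The FedAvg loop described above can be sketched in a few lines of NumPy. The sketch assumes a linear model trained with squared loss and synthetic, noiseless client data; the function names are hypothetical, and the five parameters map to `clients_per_round`, `batch_size`, `epochs`, `lr`, and `decay`. It illustrates the procedure rather than reproducing the implementation evaluated in [63].

```python
import numpy as np

def local_sgd(w, X, y, epochs, batch_size, lr, rng):
    """Client update: mini-batch SGD on squared loss for a linear model."""
    w = w.copy()
    for _ in range(epochs):
        order = rng.permutation(len(y))
        for start in range(0, len(y), batch_size):
            idx = order[start:start + batch_size]
            w -= lr * X[idx].T @ (X[idx] @ w - y[idx]) / len(idx)
    return w

def fed_avg(clients, rounds=20, clients_per_round=3, epochs=2,
            batch_size=10, lr=0.1, decay=0.99, seed=0):
    rng = np.random.default_rng(seed)
    w_global = np.zeros(clients[0][0].shape[1])      # server initializes the global model
    for _ in range(rounds):
        chosen = rng.choice(len(clients), size=clients_per_round, replace=False)
        local_models, sizes = [], []
        for k in chosen:                             # each chosen client starts from w_global
            X, y = clients[k]
            local_models.append(local_sgd(w_global, X, y, epochs, batch_size, lr, rng))
            sizes.append(len(y))
        # New global model: average of local models weighted by local sample counts.
        w_global = np.average(local_models, axis=0, weights=sizes)
        lr *= decay                                  # learning-rate decay between rounds
    return w_global

# Hypothetical clients sharing the same underlying linear relationship.
rng = np.random.default_rng(1)
true_w = np.array([2.0, -1.0])
clients = []
for _ in range(5):
    X = rng.normal(size=(50, 2))
    clients.append((X, X @ true_w))
w = fed_avg(clients)
```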

However, FedAvg has some drawbacks. While it has proven successful, it does not tackle all the challenges associated with heterogeneity. In particular, FedAvg does not allow participating devices to perform different amounts of local work according to their system constraints; in practice, devices that cannot complete the prescribed number of epochs within a given time window are simply dropped [63].

FSVRG performs one full gradient computation, followed by many local updates on each client. Each client goes through a random permutation of its data and performs a single update per data point. FSVRG is aimed primarily at sparse data, in which some features are rarely represented in the data-set [63].
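The variance-reduced local update at the heart of FSVRG can be sketched as follows. This is a simplified SVRG-style version under stated assumptions: a linear least-squares model, one full gradient computed over all clients' data at the start of each round, and local results averaged with weights proportional to data size. It omits the per-feature scaling that FSVRG uses for sparse data, so it illustrates the idea rather than the exact algorithm; all names are hypothetical.

```python
import numpy as np

def grad_i(w, x, y):
    """Gradient of 0.5 * (x.w - y)^2 for a single sample."""
    return (x @ w - y) * x

def local_pass(w_snapshot, full_grad, X, y, lr, rng):
    """One pass over a random permutation of local data, one update per sample."""
    w = w_snapshot.copy()
    for i in rng.permutation(len(y)):
        # Variance-reduced gradient: sample gradient corrected by the snapshot terms.
        g = grad_i(w, X[i], y[i]) - grad_i(w_snapshot, X[i], y[i]) + full_grad
        w -= lr * g
    return w

def svrg_style_round(w, clients, lr, rng):
    n_total = sum(len(y) for _, y in clients)
    full_grad = sum(X.T @ (X @ w - y) for X, y in clients) / n_total  # one full computation
    local = [local_pass(w, full_grad, X, y, lr, rng) for X, y in clients]
    return np.average(local, axis=0, weights=[len(y) for _, y in clients])

rng = np.random.default_rng(1)
true_w = np.array([2.0, -1.0])
clients = []
for _ in range(5):
    X = rng.normal(size=(50, 2))
    clients.append((X, X @ true_w))
w = np.zeros(2)
for _ in range(30):
    w = svrg_style_round(w, clients, lr=0.05, rng=rng)
```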

The authors compared these algorithms on the MNIST data-set and, based on the results, concluded that FedAvg performed better than the other algorithms on MNIST [63].

Another algorithm proposed for FL is FedProx, a modification of the original FedAvg algorithm. Like FedAvg, FedProx selects a subset of devices at each iteration, executes local updates on them, and aggregates these updates into a global update. FedProx makes simple modifications that improve performance and better handle heterogeneity. The reasoning is that the devices used for FL often have their own constraints, so it is neither ideal nor realistic to expect all of them to perform the same amount of work. The authors of FedProx state that the algorithm allows different amounts of work to be performed, depending on each device's capabilities at each iteration. More specifically, the algorithm tolerates partial work instead of requiring uniform work. By tolerating partial work, FedProx accounts for system heterogeneity and is more stable than FedAvg. To verify its effectiveness, the authors used a range of tasks, models, and four data-sets, together with synthetic data and simulated heterogeneous systems. The experiments validated the proposed algorithm's effectiveness [64].
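FedProx's key modification, as defined in the FedProx paper, is that each device approximately minimizes its local loss plus a proximal term, F_k(w) + (mu/2) * ||w - w_t||^2, which keeps the local model close to the current global model w_t. The sketch below assumes a linear least-squares model and models partial work by letting each device run a different number of gradient steps; the step counts, `mu`, and all function names are illustrative assumptions.

```python
import numpy as np

def fedprox_local(w_global, X, y, mu, lr, steps):
    """Approximately minimize F_k(w) + (mu/2)*||w - w_global||^2 with `steps` GD steps.
    A small `steps` value models a constrained device doing partial work."""
    w = w_global.copy()
    for _ in range(steps):
        grad_loss = X.T @ (X @ w - y) / len(y)   # gradient of the local squared loss
        grad_prox = mu * (w - w_global)          # gradient of the proximal term
        w -= lr * (grad_loss + grad_prox)
    return w

def fedprox_round(w_global, clients, steps_per_client, mu=0.1, lr=0.1):
    local = [fedprox_local(w_global, X, y, mu, lr, s)
             for (X, y), s in zip(clients, steps_per_client)]
    return np.average(local, axis=0, weights=[len(y) for _, y in clients])

rng = np.random.default_rng(2)
true_w = np.array([2.0, -1.0])
clients = [(X, X @ true_w) for X in (rng.normal(size=(40, 2)) for _ in range(5))]
steps_per_client = [1, 3, 5, 10, 20]   # heterogeneous amounts of local work
w = np.zeros(2)
for _ in range(50):
    w = fedprox_round(w, clients, steps_per_client)
```

Instead of dropping the device that manages only one step, its partial update is still aggregated; with `mu=0` the local objective reduces to the plain FedAvg one.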

Hongyi Wang, Yuekai Sun, et al. proposed an FL algorithm called FedMA (Federated Matched Averaging), intended for federated learning of modern neural network architectures. In FedMA, the global model is constructed layer by layer by matching and averaging hidden elements that extract similar features. The layer-wise procedure works as follows. First, the data center gathers the first-layer weights from the clients and performs one-layer matching to obtain the first-layer weights of the federated model. The data center then sends these weights to the clients, which train all the remaining layers on their data-sets while keeping the matched layers frozen. This procedure is repeated up to the last layer, for which a weighted average is computed based on the proportion of data points per client. Another aspect of FedMA is communication: clients receive the global model at the beginning of each round and then reconstruct their local models, with sizes equal to the original local models, so the global model can stay smaller and easier to manage. FedMA was implemented in PyTorch and evaluated in a simulated federated learning environment on four data-sets. The authors concluded that FedMA outperformed other algorithms and, in particular, handled communication more effectively [65].
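The core matching idea can be illustrated on a single layer. Because hidden neurons can be permuted without changing what a network computes, averaging two clients' layers position by position can blur unrelated neurons together; FedMA matches neurons before averaging. The sketch below is a simplified stand-in for FedMA's matching: it assumes a brute-force search over permutations with a squared-distance cost, which is only feasible for tiny layers (FedMA uses a more efficient matching procedure). A full implementation would also apply each permutation to the next layer's input weights. All names are hypothetical.

```python
import itertools
import numpy as np

def best_permutation(W_ref, W):
    """Brute-force permutation of W's rows (neurons) closest to W_ref; tiny layers only."""
    best, best_cost = None, np.inf
    for perm in itertools.permutations(range(W.shape[0])):
        cost = np.sum((W_ref - W[list(perm)]) ** 2)
        if cost < best_cost:
            best, best_cost = list(perm), cost
    return best

def matched_average(layers, sizes):
    """Align every client's neurons to the first client's, then take a data-weighted average."""
    ref = layers[0]
    aligned = [ref] + [W[best_permutation(ref, W)] for W in layers[1:]]
    return np.average(aligned, axis=0, weights=sizes)

# Two hypothetical clients that learned the same three neurons in a different order.
W_a = np.array([[1.0, 0.0], [0.0, 1.0], [2.0, 2.0]])
W_b = W_a[[2, 0, 1]]
naive = np.average([W_a, W_b], axis=0, weights=[60, 40])
matched = matched_average([W_a, W_b], sizes=[60, 40])
```

The naive average mixes different neurons, while the matched average recovers the shared neurons exactly.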

Some FL algorithms have been applied to a specific industry. Two examples, [66] and [67], propose algorithms for the medical industry. In [66], the proposed algorithm is called LoAdaBoost, shown in Fig. 12. LoAdaBoost was designed for medical data, which is often stored on different devices and in different locations. The authors of LoAdaBoost argue that other FL algorithms focus on improving a single aspect, such as accuracy, and that none of them consider the computational load that individual clients may be dealing with. LoAdaBoost therefore considers three aspects of FL: the clients' computational complexity, the communication cost, and accuracy. It is also intended to improve clients' performance and to facilitate communication between FL clients and servers. In the evaluation, the authors compared LoAdaBoost against the baseline FedAvg algorithm; LoAdaBoost not only outperformed FedAvg but also improved communication efficiency.
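The paragraph above does not spell out how LoAdaBoost balances client computation against accuracy. The sketch below assumes a loss-based rule in the spirit of the algorithm's name: each client first trains for a base number of epochs and continues only while its loss exceeds the median loss reported in the previous round, up to a cap. The rule, the cap, and every name here are illustrative assumptions rather than the authors' exact procedure, and a toy client whose loss halves each epoch stands in for real training.

```python
import statistics

def adaptive_local_epochs(train_one_epoch, current_loss, base_epochs,
                          max_epochs, median_prev_loss):
    """Train `base_epochs`, then keep training while the loss is above last round's
    median loss, never exceeding `max_epochs` in total."""
    epochs = 0
    for _ in range(base_epochs):
        train_one_epoch()
        epochs += 1
    while current_loss() > median_prev_loss and epochs < max_epochs:
        train_one_epoch()
        epochs += 1
    return epochs, current_loss()

class ToyClient:
    """Toy stand-in for a real client: each epoch halves the loss."""
    def __init__(self, loss):
        self.loss = loss

    def train_one_epoch(self):
        self.loss *= 0.5

median_prev = statistics.median([0.8, 0.4, 0.3])   # median loss from the previous round
easy, hard = ToyClient(0.5), ToyClient(4.0)
easy_result = adaptive_local_epochs(easy.train_one_epoch, lambda: easy.loss, 1, 6, median_prev)
hard_result = adaptive_local_epochs(hard.train_one_epoch, lambda: hard.loss, 1, 6, median_prev)
```

Here the well-performing client stops after one epoch, while the lagging client trains for four; extra computation is spent only where the loss lags behind.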

Fig. 12.

LoAdaBoost Client-Server Architecture

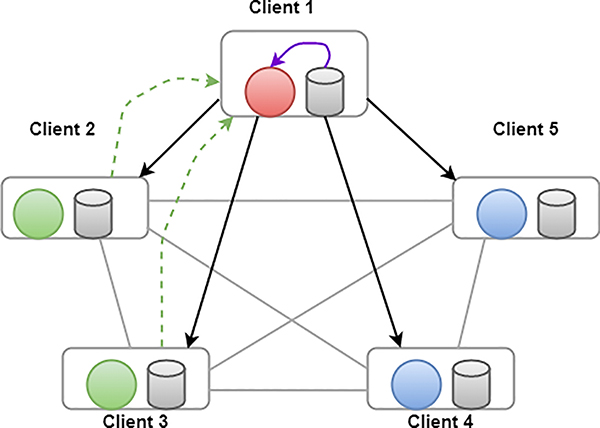

In [67], Abhijit Guha Roy, Shayan Siddiqui, et al. propose another algorithm applied in a medical setting, called BrainTorrent. The concept of BrainTorrent is shown in Fig. 13. BrainTorrent operates in a peer-to-peer environment, in which all centers communicate with each other instead of relying on a main server as in traditional FL. What makes BrainTorrent stand out from the other algorithms discussed in this section is that it is server-less. Traditional FL was designed mainly for mobile device users; with millions if not billions of users, a main server is very convenient, and the main goal of FL is to reduce communication costs. The motivations are different for collaborative learning within communities of medical centers, for four main reasons:

The number of health facilities forming a community is much lower.

Centers have stronger communication links, so latency is minimal.

Following from the second reason, in healthcare settings it would be difficult to have a central server, because every center would likely want to communicate directly with the rest of the centers.

Relying on a central server means that if the server fails, the entire structure is rendered non-functional, which would be dangerous in a medical setting.

Fig. 13.

Architecture of BrainTorrent Algorithm

Because its framework has no central server, BrainTorrent is resistant to failure and does not require a central server that everyone trusts. Any client can initiate an update at any point in time, which leads to more interaction and higher accuracy. Along with its model, each client maintains its own model version and the latest versions of the other clients' models that it used during training. Training proceeds with the following steps:

All clients train on their local data-sets.

A single client, chosen at random, initiates the merging process by sending a request to the other clients for their most recent models.

All clients with updated models send their weights and training sample sizes.

The received models are combined with the initiating client's current model via a weighted average. The process then returns to the first step.
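One merge step of the procedure above can be sketched as follows. The sketch assumes that local training (the first step) happens elsewhere and increments each center's version number, represents models as NumPy arrays, and uses a dictionary to track which peer versions a center has already merged; the class and function names are hypothetical.

```python
import random
import numpy as np

class Center:
    def __init__(self, name, weights, n_samples):
        self.name = name
        self.weights = np.asarray(weights, dtype=float)
        self.n_samples = n_samples
        self.version = 1   # incremented after each round of local training (step 1)
        self.seen = {}     # peer name -> latest peer version already merged

def merge_step(initiator, centers):
    """Steps 2-4: the initiator pulls newer peer models and merges them by weighted average."""
    newer = [p for p in centers
             if p is not initiator and p.version > initiator.seen.get(p.name, 0)]
    if newer:
        models = [initiator.weights] + [p.weights for p in newer]
        sizes = [initiator.n_samples] + [p.n_samples for p in newer]
        initiator.weights = np.average(models, axis=0, weights=sizes)
        for p in newer:
            initiator.seen[p.name] = p.version
    return [p.name for p in newer]

centers = [Center("A", [0.0], 100), Center("B", [3.0], 100), Center("C", [6.0], 100)]
rng = random.Random(0)
initiator = rng.choice(centers)                 # a random center initiates the merge
pulled = merge_step(initiator, centers)
pulled_again = merge_step(initiator, centers)   # nothing newer since the last merge
```

Tracking versions means a center only requests models it has not merged yet, so no central coordinator is needed to avoid redundant transfers.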

To evaluate BrainTorrent, the authors used the Multi-Atlas Labelling Challenge (MALC) data-set, which consists of 30 brain scans from different subjects, and trained with the Adam optimizer. The authors confirmed that BrainTorrent outperformed the traditional FL system.

V. Optimization Techniques for Federated Learning Models

Several optimization techniques have been proposed for FL models [68], as summarized in Table IV. One study on this topic is [69]. According to its authors, FL typically uses mini-batch gradient descent to optimize models. Mini-batch gradient descent partitions the training set into small batches, which are used to calculate the model's error and update the model's coefficients [70]. It seeks a compromise between the efficiency of updating on small batches and the accuracy of computing the gradient over the full data-set. The method has several advantages and drawbacks [71], [72].
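The compromise can be made concrete with a minimal sketch of one epoch of mini-batch gradient descent, assuming a linear regression model with mean squared error; the function names and data are hypothetical.

```python
import numpy as np

def minibatches(n, batch_size, rng):
    """Yield shuffled index batches; the last batch may be smaller."""
    order = rng.permutation(n)
    for start in range(0, n, batch_size):
        yield order[start:start + batch_size]

def minibatch_gd_epoch(w, X, y, batch_size, lr, rng):
    """One epoch of mini-batch gradient descent for linear regression (MSE loss)."""
    updates = 0
    for idx in minibatches(len(y), batch_size, rng):
        error = X[idx] @ w - y[idx]                  # model error on this batch
        w = w - lr * X[idx].T @ error / len(idx)     # update the coefficients
        updates += 1
    return w, updates

rng = np.random.default_rng(0)
X = rng.normal(size=(100, 3))
y = X @ np.array([1.0, 0.5, -2.0])
updates_per_epoch = {b: minibatch_gd_epoch(np.zeros(3), X, y, b, 0.05, rng)[1]
                     for b in (1, 32, 100)}
```

A batch size of 1 is plain SGD (100 noisy updates per epoch here), a batch equal to the data-set size is full-batch gradient descent (one exact update), and a batch of 32 sits in between with 4 updates.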

TABLE IV.

Optimization Techniques for Federated Learning models

| Technique | Advantage(s) |
|---|---|
| Mini-batch gradient descent | Efficiency, frequent model updates |
| AdaGrad | Faster, more reliable |
| RMSProp | Low memory requirements |
| FedAdaGrad | Adaptability |
| FedYogi | Adaptability |
| FedAdam | Higher accuracy |

In addition, other optimizers, such as AdaGrad, RMSProp, and Adam, have been modified for Federated Learning. RMSProp is a gradient-based algorithm; one benefit is that it adapts the learning rate automatically, so it does not need to be tuned manually [73]. The Adam optimization method, introduced in [74], combines ideas from SGD with momentum and from RMSProp. Because it draws on both methods, Adam has several advantages, including low memory requirements, and it works well with little hyperparameter tuning [73].
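The standard (non-federated) RMSProp and Adam update rules can be written compactly. The default hyperparameters below are common choices, assumed here for illustration, and the functions are hypothetical helpers that keep optimizer state in a dictionary.

```python
import numpy as np

def rmsprop_step(w, g, state, lr=0.01, rho=0.9, eps=1e-8):
    """RMSProp: divide the step by a running root-mean-square of past gradients."""
    state["v"] = rho * state.get("v", np.zeros_like(w)) + (1 - rho) * g**2
    return w - lr * g / (np.sqrt(state["v"]) + eps)

def adam_step(w, g, state, lr=0.01, beta1=0.9, beta2=0.999, eps=1e-8):
    """Adam: momentum-style first moment plus RMSProp-style second moment, bias-corrected."""
    t = state.get("t", 0) + 1
    m = beta1 * state.get("m", np.zeros_like(w)) + (1 - beta1) * g
    v = beta2 * state.get("v", np.zeros_like(w)) + (1 - beta2) * g**2
    state.update(t=t, m=m, v=v)
    m_hat = m / (1 - beta1**t)
    v_hat = v / (1 - beta2**t)
    return w - lr * m_hat / (np.sqrt(v_hat) + eps)

# Minimize f(w) = (w - 3)^2 with each optimizer.
results = {}
for step in (rmsprop_step, adam_step):
    w, state = np.array([0.0]), {}
    for _ in range(2000):
        w = step(w, 2 * (w - 3.0), state)   # gradient of (w - 3)^2
    results[step.__name__] = w
```

In both rules the per-parameter step size is scaled by recent gradient magnitudes, which is what removes most of the manual learning-rate tuning; Adam only stores two extra arrays per parameter vector.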

Additionally, the study in [75] proposes several adaptive optimization methods for FL, namely FedAdaGrad, FedYogi, and FedAdam. FedAdaGrad consists of three steps: initialization, sampling a subset of clients, and computing estimates. FedYogi and FedAdam share the same structure but differ in their parameter updates. FedYogi and FedAdam rely on a parameter that controls their degree of adaptivity, that is, how strongly the algorithms adapt; smaller values of this parameter indicate higher adaptivity. FedAdaGrad, FedYogi, and FedAdam were implemented in TensorFlow Federated (TFF) and compared against the standard FedAvg algorithm, over which they achieved higher accuracy.
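The server-side update of adaptive federated optimization can be sketched as follows. In this sketch the server treats the average change in client models as a pseudo-gradient and keeps a momentum term, and the three methods differ only in how the second-moment estimate `v` is updated; `tau` plays the role of the adaptivity parameter mentioned above. The hyperparameter values and the function name are illustrative assumptions, and client training is assumed to have happened already.

```python
import numpy as np

def server_adaptive_update(x, client_models, state, method="adam",
                           eta=0.1, beta1=0.9, beta2=0.99, tau=1e-3):
    """One server step of FedAdaGrad / FedYogi / FedAdam on global model x."""
    delta = np.mean([xi - x for xi in client_models], axis=0)  # average client change
    m = beta1 * state.get("m", np.zeros_like(x)) + (1 - beta1) * delta
    v = state.get("v", np.full_like(x, tau**2))
    d2 = delta**2
    if method == "adagrad":
        v = v + d2
    elif method == "yogi":
        v = v - (1 - beta2) * d2 * np.sign(v - d2)
    elif method == "adam":
        v = beta2 * v + (1 - beta2) * d2
    else:
        raise ValueError(f"unknown method: {method}")
    state.update(m=m, v=v)
    return x + eta * m / (np.sqrt(v) + tau)

# Two hypothetical clients that both moved the model from 0 to 1.
results = {method: server_adaptive_update(np.zeros(1), [np.ones(1), np.ones(1)], {}, method)
           for method in ("adagrad", "yogi", "adam")}
```

On this first step FedAdam and FedYogi move the model by about 0.099, while FedAdaGrad, whose `v` accumulates the full squared change, moves it by about 0.01.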

Regarding FL optimization, a large body of work targets wireless networks [76], [77], because wireless networks face constraints such as limited bandwidth. Several works, such as [78], [79], [80], and [81], have attempted to address these issues, mostly successfully. However, there is still an opportunity to design guidelines and frameworks that allow wireless networks to accommodate FL. The work in [82] designs such a framework for incorporating FL into wireless networks to improve communication; upon implementation and testing, the results indicated that the loss could be reduced by 10 to 16 percent. This is important because the current FL framework does not account for wireless networks and healthcare [83]. Other works, such as [84], [85], [86], and [87], have also incorporated FL into different types of wireless networks, some of them applying FL to IoT. In addition, [88] uses FL to detect malicious clients, which will likely become important for ensuring that participants in FL behave as expected. Given the amount of research on FL optimization, FL can be expected to grow in potential, applicability, and availability, and to become better suited to mobile settings [10], [89].

VI. Secure Network Protocols in support of Federated Learning

Network protocols are not new: they have been around since the 1970s and 1980s and have been modified over time to reflect current trends [90]. In the context of Federated Learning, network protocols and methods have been proposed to tackle several issues, mainly related to privacy and traffic flow [91]. Network protocols for FL have focused mainly on wireless networks, which are essential for many industries, especially health and medical care [92]. A summary of the network protocols is provided in Table V.

TABLE V.

A Summary of network protocols for FL, their focus and benefits.

| Network Protocol | Focus | Benefit(s) |
|---|---|---|
| Hybrid-FL | Communication, resource scheduling | Accuracy |
| FedCS | Clients' training process | Higher accuracy, robust models |
| PrivFL | Mobile networks, data privacy | Accounts for threats |
| VerifyNet | User privacy, integrity | Receptiveness, high security |
| FedGRU/JAP | Traffic flow prediction | Reduced overhead |

The Hybrid-FL protocol is intended to make learning from non-IID data easier. Applying FL to networking runs into some common problems. One is resource scheduling, which is complicated by client heterogeneity and bandwidth constraints. Because clients have differing wireless link qualities and computational limits, communication must be scheduled carefully so that clients can exchange and update models accordingly [93]. The FL operator therefore has to coordinate which clients take part in the FL system. While resource scheduling has been studied extensively, resource scheduling for networks in the context of FL is still new and therefore challenging. In FL, we need to consider how each client's data is distributed, along with its communication capabilities, computation capabilities, and quantity of data [94]. In Hybrid-FL, the server trains a model on data gathered from clients and then combines that model with the models trained by other clients. Hybrid-FL uses a server-client architecture, shown in Fig. 14, in which the server schedules clients by considering each client's data distribution and channel condition. The main idea is that while a few clients upload their data to the server, both the clients and the server update models. The protocol includes a Resource Request step, in which clients are asked how much data they have, what their computational and communication properties are, and whether they allow their data to be uploaded to the server; this allows the time required for updates to be estimated. There is also a Distribution step, in which the model is sent to the selected clients so that local updates can take place. In testing, the authors found that Hybrid-FL increased overall accuracy under non-IID data distributions [94].

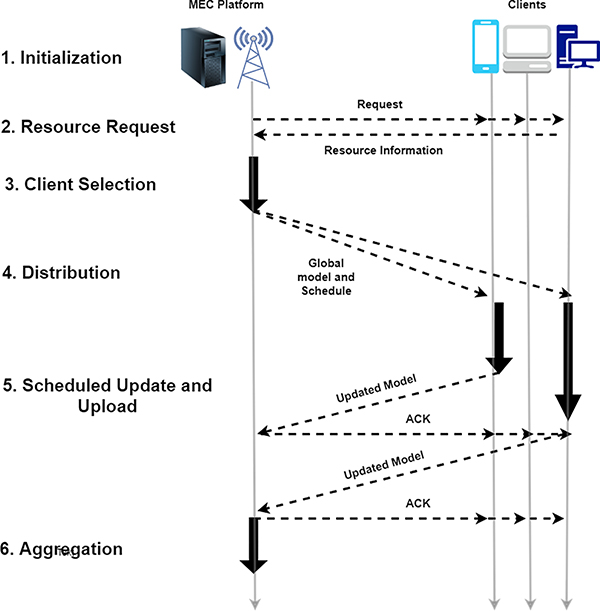

Fig. 14.

Hybrid-FL Framework

Like Hybrid-FL, FedCS was intended to tackle problems associated with FL, specifically problems in the clients' training process. Although clients in the original FL protocol do not have to reveal their data, some clients have limited computational resources, which can make the training process inefficient [95]. FedCS addresses this by aggregating as many client updates as possible while taking the clients' resource constraints into account. The authors note that FedCS was built on a mobile edge computing (MEC) framework [96]. FedCS's framework, shown in Fig. 15, consists of the following key steps:

Client Selection - In the original FL protocol, clients are selected at random. FedCS adds two steps. First, a Resource Request asks randomly chosen clients to report their status, such as their channel states. Then, the client selection step uses this information to estimate the time needed for the next two steps and to determine which clients can proceed to them.

Distribution - The server transmits the global model to the selected clients.

Update and Uploading Schedules - The selected clients update the model on their local data and transmit the new parameters to the server, which aggregates the clients' updates and measures the model's performance.
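The client selection step can be sketched as a greedy procedure. The sketch assumes that the Resource Request has already produced, for each client, an estimated local update time and upload time (hypothetical numbers below), that updates run in parallel while uploads happen one at a time in selection order, and that the server keeps adding the client that increases the round's elapsed time the least until the deadline would be exceeded. Distribution time is ignored. This is a simplified illustration, not the exact FedCS optimization.

```python
def elapsed_time(selected):
    """Round time if local updates run in parallel and uploads happen one at a time,
    in selection order, each starting once its client's update is finished."""
    t = 0.0
    for c in selected:
        t = max(t, c["update"]) + c["upload"]
    return t

def greedy_select(clients, deadline):
    """Add the client with the smallest resulting round time until the deadline is hit."""
    selected, remaining = [], list(clients)
    while remaining:
        best = min(remaining, key=lambda c: elapsed_time(selected + [c]))
        if elapsed_time(selected + [best]) > deadline:
            break
        selected.append(best)
        remaining.remove(best)
    return selected

# Hypothetical estimates (seconds) gathered during the Resource Request.
clients = [
    {"name": "a", "update": 2.0, "upload": 1.0},
    {"name": "b", "update": 1.0, "upload": 1.0},
    {"name": "c", "update": 5.0, "upload": 2.0},
    {"name": "d", "update": 3.0, "upload": 1.0},
]
chosen = [c["name"] for c in greedy_select(clients, deadline=6.0)]
```

With a 6-second deadline, clients b, a, and d fit (the round ends at 4 seconds), while the slow client c would push the round to 7 seconds and is left out.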

Fig. 15.

FedCS protocol framework

To verify FedCS's effectiveness, the authors used two object classification data-sets: CIFAR and Fashion-MNIST. FedCS was compared against the default FL protocol, and the results showed that FedCS obtained higher classification accuracy and produced high-performing models in less time [96].

In addition, a protocol called PrivFL was introduced, focusing on privacy preservation in mobile networks. PrivFL considers two privacy aspects: the privacy of mobile users' data against the server, and the privacy of the model against the users. The authors instantiated their protocol for three regression methods: linear, ridge, and logistic regression. They demonstrate PrivFL's security under three different threat models and evaluated it on eleven data-sets [97]. PrivFL has two key elements: a server, and a group of mobile users connected to a mobile network. The server is responsible for training, and the training process must satisfy the following properties:

Correctness - Given correct inputs from the users, PrivFL must output the correct model at the end of training. If the server manipulates the model, or if users submit incorrect inputs, the model is no longer correct.

Privacy - PrivFL aims to keep the users' inputs private while also protecting the server's model. The server should not learn any information about the users' inputs, and, similarly, users should not learn any information about the model.

Efficiency - The server performs most of the work in the training phase, so the computation and communication costs need to be kept minimal.

Overall, the PrivFL protocol is comprehensive in both its implementation and its structure, and because it considers several threat models, it can be regarded as a reliable protocol [97].

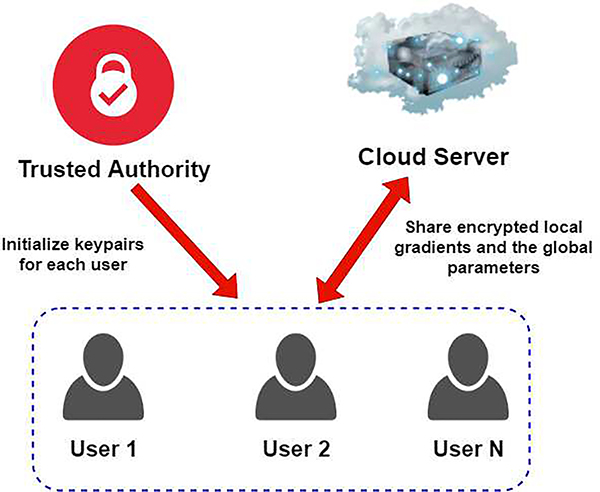

Another FL protocol, VerifyNet, comes from Guowen Xu, Hongwei Li, et al. and is shown in Fig. 16. VerifyNet protects users' privacy during training and lets users verify the correctness of the results returned by the server. The authors point out that while several approaches focus on security and privacy, clients still cannot verify whether a server is functioning properly without compromising their privacy [98]. The overall goal of VerifyNet is therefore to address three major problems that federated training often runs into:

How to protect users' privacy throughout the workflow

How to prevent spoofing, i.e., the server returning forged results

How to support users who go offline during training

Fig. 16.

VerifyNet Architecture

VerifyNet consists of five rounds: Initialization, Key Sharing, Masked Input, Unmasking, and Verification, as summarized in Fig. 16. First, the system is initialized and public and private keys are generated. Each user then masks and encrypts its local gradient and sends it to the server. Once the server has received messages from all online users, it aggregates their results and returns them to the users, who can then verify the results and decide whether to accept or reject them. The authors evaluated VerifyNet's performance using 600 mobile devices with 4GB of RAM running Android 6.0. The evaluation data came from the MNIST database, which contains sixty thousand training examples and ten thousand test examples. The authors noted that VerifyNet is robust to users dropping out of the FL process and achieves high security; unfortunately, it also has a high communication overhead [98].
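The idea behind the Masked Input and Unmasking rounds, hiding each user's gradient behind random masks that cancel out when the server sums them, can be illustrated with pairwise masks. This is a simplified sketch in the style of secure aggregation, not VerifyNet itself: it assumes each pair of users already shares a seed (the job of the Key Sharing round), and it omits encryption, dropout recovery, and verification. All names and values are hypothetical.

```python
import numpy as np

def pairwise_mask(user, users, seeds, dim):
    """Sum of this user's pairwise masks: +r toward higher ids, -r toward lower ids,
    so each pair's mask appears once with each sign and cancels in the total."""
    mask = np.zeros(dim)
    for other in users:
        if other == user:
            continue
        pair = (min(user, other), max(user, other))
        r = np.random.default_rng(seeds[pair]).normal(size=dim)  # expand the shared seed
        mask += r if user < other else -r
    return mask

users = [0, 1, 2]
dim = 4
rng = np.random.default_rng(42)
seeds = {(i, j): int(rng.integers(1 << 30)) for i in users for j in users if i < j}
gradients = {u: rng.normal(size=dim) for u in users}
masked = {u: gradients[u] + pairwise_mask(u, users, seeds, dim) for u in users}
aggregate = sum(masked.values())   # the server only ever sees masked vectors
```

Each masked vector on its own looks random, yet their sum equals the sum of the true gradients, which is all the server needs for aggregation.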

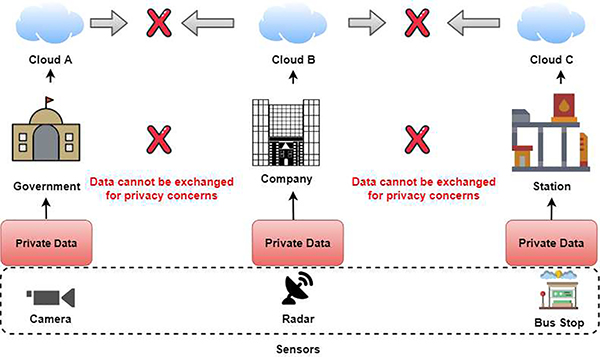

Another protocol comes from Yi Liu, James J.Q. Yu, et al., who propose an FL protocol for traffic flow prediction [99]. The protocol aims to predict traffic accurately while preserving privacy. The algorithm is called the Federated Learning-based Gated Recurrent Unit neural network (FedGRU), and the protocol is called the Joint Announcement Protocol. Traffic flow prediction uses information such as historical traffic data to predict future traffic flow, and, as Fig. 17 illustrates, it also raises privacy concerns. FedGRU consists of four steps:

Initialize the model by pre-training it on public data-sets that can be used without privacy concerns.

A copy of the global model is sent to the institutions, and each trains its copy on local data.

Each institution then sends its model updates to the cloud. No private data is shared, only the encrypted parameters.

The cloud aggregates the parameters uploaded by all institutions into a new global model, which is then distributed to all participating institutions.

Fig. 17.

Traffic flow diagram, illustrating why traffic flow prediction is difficult when data cannot be shared.

For the Joint Announcement Protocol, the number of participants in FedGRU must be kept in mind: because there are only a few participants, the Joint Announcement Protocol can be thought of as a small-scale FL model [99]. The participants in the protocol are the institutions and the cloud. The protocol has three phases:

Preparation - Given a task to complete, the institutions that volunteer to participate check in with the cloud.

Training - The cloud first sends the pre-trained model, followed by a checkpoint, to the institutions. The cloud randomly selects a fixed ratio of institutions to take part in training. Each selected institution trains on its local data and sends its parameters to the cloud.

Gathering - The cloud gathers all the parameters sent in and uses them to update the global model, recording a checkpoint. The updated global parameters are then sent to each institution.
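One training-and-gathering round of the protocol can be sketched as follows. The sketch assumes that models are NumPy arrays, that the cloud aggregates with an unweighted mean, and that `local_train` is a hypothetical callback standing in for each institution's local GRU training; the ratio, institution names, and checkpoint list are illustrative.

```python
import random
import numpy as np

def jap_round(global_params, institutions, ratio, local_train, rng, checkpoints):
    """Training + Gathering: select a fixed ratio of institutions at random,
    collect their locally trained parameters, and update the global model."""
    k = max(1, round(ratio * len(institutions)))
    chosen = rng.sample(institutions, k)
    updates = [local_train(name, global_params) for name in chosen]
    new_params = np.mean(updates, axis=0)      # cloud aggregates the parameters
    checkpoints.append(new_params.copy())      # cloud records a checkpoint
    return new_params, chosen

def local_train(name, params):
    """Hypothetical stand-in for local training at one institution."""
    return params + 1.0

institutions = [f"agency-{i}" for i in range(10)]   # checked in during Preparation
checkpoints = []
params, chosen = jap_round(np.zeros(3), institutions, 0.3, local_train,
                           random.Random(7), checkpoints)
```

Because only a fixed fraction of institutions uploads parameters in each round, the communication per round stays bounded as more institutions check in.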

To evaluate their methods, the authors used data from the Caltrans Performance Measurement System (PeMS) database. Traffic flow information was obtained from over 30,000 detectors. Prediction accuracy was mainly measured with the Mean Absolute Error (MAE), Mean Squared Error (MSE), Root Mean Squared Error (RMSE), and Mean Absolute Percentage Error (MAPE). The results showed that the proposed methods performed comparably to competing methods. In addition, the Joint Announcement Protocol reduced communication overhead by sixty-four percent [99].
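The four accuracy metrics are straightforward to compute. The sketch below uses their standard definitions; how [99] handles zero-flow readings in MAPE is not stated here, so skipping them is an assumption.

```python
import math


def regression_metrics(actual: list[float], predicted: list[float]) -> dict[str, float]:
    """MAE, MSE, RMSE, and MAPE (in percent) for paired actual/predicted values."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("need two non-empty sequences of equal length")
    errors = [a - p for a, p in zip(actual, predicted)]
    n = len(errors)
    mae = sum(abs(e) for e in errors) / n
    mse = sum(e * e for e in errors) / n
    rmse = math.sqrt(mse)
    # MAPE is undefined where the true flow is 0; such points are skipped (assumption).
    pct = [abs(e / a) for e, a in zip(errors, actual) if a != 0]
    mape = 100.0 * sum(pct) / len(pct) if pct else float("nan")
    return {"MAE": mae, "MSE": mse, "RMSE": rmse, "MAPE": mape}


m = regression_metrics([100, 200, 400], [110, 190, 400])
print(m)  # MAE ~6.67, MSE ~66.67, RMSE ~8.16, MAPE ~5.0
```

RMSE penalizes large errors more heavily than MAE, while MAPE expresses errors relative to the true flow, which is why they are often reported together.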

VII. Challenges and Limitations of Federated Learning

Despite its many benefits, FL still has limitations, and several challenges hinder its full adoption across industries. These challenges mainly concern privacy, security, and, in some cases, technical requirements [100], [101], [102]. Table VI summarizes various challenges of FL.

TABLE VI.

Summary of existing challenges for FL

| References | Challenge(s) of FL |
|---|---|
| [117] - [121] | Data skewing, data imbalance |
| [122] - [126] | Communication, systems heterogeneity, privacy |
| [127] | Hardware, power, network connectivity |
| [128] - [133] | Security, privacy |
| [134] - [139] | Model performance, accuracy, security, privacy |
| [140] - [149] | Privacy, security, performance, trust, accountability, system architecture, trace-ability |

The data used for training in Federated Learning is often imperfect. It may be imbalanced, and some classes may be missing from data-sets entirely [103]. These drawbacks can result in highly inaccurate models. Training data is typically skewed quite heavily [104], and its characteristics can vary considerably because it is spread across different sites. In such scenarios, a naive implementation of an FL model would not be beneficial because the resulting model would be inaccurate [105]. Remedying data skew may require relying on the server [106]. Data skew can also affect the different kinds of data used in FL, for example tabular data [107]. Overall, data skew arises from four primary factors: data imbalance, missing classes, missing features, and missing values.

Data Imbalance - This occurs when one entity has substantially more (or less) training data than another. For instance, one partner may have thousands of training samples for class A while another has only a few tens. In this case, the model built via FL can perform very poorly, so techniques are needed to remedy the imbalance.

Missing Classes - This occurs when one entity's training data represents a class that is absent from another entity's training data.

Missing Features - This occurs when one or more entities have training data containing features that do not exist in another entity's data. A model built from such data either cannot learn from the missing features or is incomplete because it requires features that some entities lack.

Missing Values - This occurs when one or more entities have training data in which some values are missing.
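The four skew factors can be checked mechanically before training begins. The sketch below assumes each entity's data is a list of records (feature name to value, with `None` for a missing value) plus a parallel list of labels; the function name `skew_report` and the imbalance threshold are hypothetical choices for illustration.

```python
from collections import Counter
from typing import Any, Optional

Records = list[dict[str, Optional[Any]]]   # feature -> value, None = missing value
Dataset = tuple[Records, list[str]]         # (records, class labels)


def skew_report(entities: dict[str, Dataset],
                imbalance_ratio: float = 10.0) -> dict[str, list[str]]:
    """Flag the four data-skew factors across entities (illustrative threshold)."""
    report: dict[str, list[str]] = {
        "imbalance": [], "missing_classes": [],
        "missing_features": [], "missing_values": [],
    }
    counts = {name: Counter(labels) for name, (_, labels) in entities.items()}
    all_classes = set().union(*counts.values())

    for cls in sorted(all_classes):
        # Counter returns 0 for a class an entity never saw.
        per_entity = {name: c[cls] for name, c in counts.items()}
        for name, k in per_entity.items():
            if k == 0:
                report["missing_classes"].append(f"{name} lacks class {cls}")
        nonzero = [k for k in per_entity.values() if k > 0]
        if len(nonzero) > 1 and max(nonzero) / min(nonzero) > imbalance_ratio:
            report["imbalance"].append(
                f"class {cls}: {min(nonzero)} vs {max(nonzero)} samples")

    features = {name: {f for rec in recs for f in rec}
                for name, (recs, _) in entities.items()}
    all_features = set().union(*features.values())
    for name, feats in features.items():
        for f in sorted(all_features - feats):
            report["missing_features"].append(f"{name} lacks feature {f}")

    for name, (recs, _) in entities.items():
        n_missing = sum(v is None for rec in recs for v in rec.values())
        if n_missing:
            report["missing_values"].append(f"{name}: {n_missing} missing value(s)")
    return report


entities = {
    "hospital_a": ([{"age": 50, "bp": 120}] * 30 + [{"age": 61, "bp": None}],
                   ["A"] * 30 + ["B"]),
    "hospital_b": ([{"age": 45}] * 2, ["A", "A"]),
}
print(skew_report(entities))
```

Such a report only diagnoses skew; remedies such as re-weighting, imputation, or feature alignment would still need to be chosen per deployment.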

In addition to data skew, FL is prone to other challenges, such as communication, systems heterogeneity, and privacy. Communication is essential for FL, especially in healthcare and medical settings [108], [109]. Combined with privacy concerns over sending raw data, there is a requirement that data remain on the local devices [110]. Because FL involves a vast number of devices, communication can become much slower. To build a model, we therefore need communication-efficient methods [111], which send smaller messages or model updates during training instead of the entire training data-set [112]. Two factors must be considered: reducing the number of communication rounds and reducing the size of the message sent in each round.
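The two factors multiply: total upload traffic is rounds times participating clients times message size. The sketch below shrinks each message with top-k sparsification, which is one common technique chosen here for illustration and is not necessarily the method of the cited works. The 4-byte float and 8-byte (index, value) sizes are assumptions.

```python
import heapq


def sparsify_top_k(update: list[float], k: int) -> dict[int, float]:
    """Client side: keep only the k largest-magnitude entries of a model update."""
    top = heapq.nlargest(k, range(len(update)), key=lambda i: abs(update[i]))
    return {i: update[i] for i in sorted(top)}


def densify(sparse: dict[int, float], size: int) -> list[float]:
    """Server side: rebuild a dense update, treating dropped entries as zero."""
    dense = [0.0] * size
    for i, v in sparse.items():
        dense[i] = v
    return dense


def total_upload_bytes(rounds: int, clients_per_round: int, bytes_per_message: int) -> int:
    """Upstream traffic grows with both the number of rounds and the message size."""
    return rounds * clients_per_round * bytes_per_message


update = [0.01, -0.9, 0.3, 0.0, 0.5]
sparse = sparsify_top_k(update, 2)
print(sparse, densify(sparse, len(update)))

# 1M float32 parameters sent densely (4 bytes each) vs. the top 1% as (int32, float32) pairs.
dense_cost = total_upload_bytes(100, 10, 1_000_000 * 4)
sparse_cost = total_upload_bytes(100, 10, 10_000 * 8)
print(dense_cost, sparse_cost)  # 4,000,000,000 vs 80,000,000 bytes
```

Sparsification reduces the per-round message size; reducing the number of rounds instead usually means doing more local training between uploads.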

Additionally, because FL can involve many different devices, the devices differ in hardware, network connectivity, and power [113]. The devices' configurations, the network size, and system constraints usually mean that only a small fraction of the devices are active at a time. The devices themselves can be unreliable, and it is not unusual for a device to drop out suddenly because of connectivity or energy limitations [112]. As such, FL methods need to account for the following factors:

Anticipating low levels of participation

Tolerating heterogeneous device hardware

Remaining robust to devices that drop out of the network
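One simple way to handle low participation and dropped devices is to aggregate only the clients that actually report back, and to abandon the round if too few survive. The function name and the 50% quorum below are hypothetical choices for illustration.

```python
from typing import Optional

Result = Optional[tuple[list[float], int]]   # (params, n_samples), or None if dropped


def aggregate_with_dropouts(results: dict[str, Result],
                            min_fraction: float = 0.5) -> Optional[list[float]]:
    """Weighted average over surviving clients; None if the quorum is not met.

    A None result models a device that left mid-round, e.g. because of lost
    connectivity or a low battery. Returning None lets the caller retry the round.
    """
    survivors = [r for r in results.values() if r is not None]
    if not results or not survivors or len(survivors) / len(results) < min_fraction:
        return None
    total = sum(n for _, n in survivors)
    dim = len(survivors[0][0])
    return [sum(p[i] * n for p, n in survivors) / total for i in range(dim)]


round_results = {
    "phone_1": ([1.0, 2.0], 10),
    "phone_2": None,                  # dropped out before reporting
    "phone_3": ([3.0, 4.0], 30),
}
print(aggregate_with_dropouts(round_results))   # [2.5, 3.5]
```

Because dropped clients are simply excluded, the round still completes with partial participation, at the cost of a global model that reflects only the surviving devices' data.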

In FL, privacy is often the biggest concern. During training, communicating model updates can reveal sensitive information [114], [115]. Although newer approaches have been proposed to tackle privacy, these methods can reduce model performance and accuracy, and striking a balance in this trade-off remains a challenge [112], [116]. The authors of [117] also note that the biggest issues in FL are security and privacy. Efficient FL algorithms that deliver high-performing models with privacy protection, without adding computational burden, are therefore desirable [118], [119]. Because local models are continually trained on new data, adversaries may be able to manipulate the local training data-sets to compromise the models' results. FL is also prone to several other attacks, so algorithms and methods that can defend against them are crucial to keep model performance and accuracy from suffering [120]. The authors of [121] likewise identify security and privacy as the biggest challenges in FL. Regarding privacy, information can be extracted from a trained model: during training, correlations present in the training samples become embedded in the model, so releasing the model may unexpectedly reveal information to attackers. An adversary can also obtain a victim's data by issuing requests to the model [122]. This occurs when someone gains unauthorized permission to make prediction requests on a trained model; the adversary can then use those requests to extract the trained model from the data owner.
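The privacy/accuracy trade-off can be seen in the clip-and-noise step used by differentially private FL, which is one example of such an approach, not necessarily the one in the cited works. Each update's L2 norm is capped at `clip_norm` and Gaussian noise is added; a larger `noise_multiplier` gives more privacy but a noisier, less accurate model. Calibrating the noise to a formal (epsilon, delta) budget is out of scope for this sketch.

```python
import math
import random


def privatize_update(update: list[float], clip_norm: float, noise_multiplier: float,
                     rng: random.Random) -> list[float]:
    """Clip an update to L2 norm <= clip_norm, then add Gaussian noise to each entry."""
    norm = math.sqrt(sum(u * u for u in update))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    sigma = noise_multiplier * clip_norm      # noise std scales with the clipping bound
    return [u * scale + rng.gauss(0.0, sigma) for u in update]


raw = [3.0, 4.0]                                  # L2 norm 5.0
clipped_only = privatize_update(raw, 1.0, 0.0, random.Random(0))
noisy = privatize_update(raw, 1.0, 1.0, random.Random(0))
print(clipped_only)   # approximately [0.6, 0.8]
print(noisy)          # same direction, perturbed by noise with std 1.0
```

Clipping bounds how much any single client can influence the aggregate, which is what makes the added noise meaningful as a privacy protection.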

In terms of security, FL is prone to a variety of attacks that can compromise model performance and accuracy [123], [124]. In a Data-Poisoning attack, an adversary tampers with the model by creating poor-quality training data, with the goal of producing false parameters. Such attacks can achieve misclassification rates of up to ninety percent. Variants of data poisoning also exist [125]; one example is the Sybil-based Data-Poisoning attack, in which the adversary boosts the effectiveness of data poisoning by creating multiple adversarial participants. Model-Poisoning attacks, which are more effective than Data-Poisoning, let the adversary modify the updated model directly. FL is also prone to the Free-Riding attack, in which the adversary seeks to benefit from the model without contributing to the learning process, so that legitimate entities must contribute more resources to training [121].
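A small example shows why plain averaging is fragile against a poisoned update and how a robust aggregation rule helps. Coordinate-wise median aggregation is a known defense chosen here for illustration; the cited works may use other countermeasures.

```python
import statistics

Update = list[float]


def mean_aggregate(updates: list[Update]) -> Update:
    """Plain coordinate-wise mean: one extreme update can drag the result anywhere."""
    return [statistics.fmean(col) for col in zip(*updates)]


def median_aggregate(updates: list[Update]) -> Update:
    """Coordinate-wise median: tolerates a minority of arbitrary updates."""
    return [statistics.median(col) for col in zip(*updates)]


honest = [[1.0, 1.0], [1.1, 0.9], [0.9, 1.1]]
poisoned = honest + [[100.0, -100.0]]     # one model-poisoning participant

print(mean_aggregate(poisoned))    # about [25.75, -24.25], far from the honest updates
print(median_aggregate(poisoned))  # about [1.05, 0.95], close to the honest updates
```

The median only helps while attackers are a minority; a Sybil attack that creates enough fake participants to form a majority would defeat it, which is why Sybil resistance is treated as a separate concern.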

In other settings, such as medical and healthcare settings, the challenges and limitations of FL are similar, and applying FL there may involve even more of them [126]. These include Privacy, Security, Performance, Level of Trust, Data Heterogeneity, Trace-ability and Accountability, and System Architecture. Such settings often involve sensitive data that must be guarded accordingly. While one of FL's purposes is to protect privacy, FL cannot solve all privacy issues [127]. Because of strict regulations and government policies on medical data, leakage of private information, or even its possibility, is unacceptable. Even more challenging, regulations on medical data vary widely, so there is no one-size-fits-all solution [126]. Trust mainly concerns the possibility of information leakage and preventing it [128]. Trust is needed in the medical and healthcare industries to establish confidence in FL and its performance [122]. Participating FL entities can collaborate in one of two trust settings:

Trusted - This setting applies to FL entities that are considered reliable and are bound by a collaboration agreement, which eliminates many malevolent attempts. As a result, the need for counter-measures is reduced, and the collaboration can follow typical collaborative research practice.

Non-Trusted - For FL systems operating at larger scales, it can be impractical to establish an enforceable collaboration agreement that guarantees all parties behave as agreed. Some entities could try to degrade performance, bring the system down, or extract information from other entities. In this setting, security strategies such as encryption, secure authentication, trace-ability, privacy, verification, integrity, model confidentiality, and protection against adversarial attacks are needed to mitigate these risks.
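In a non-trusted setting, authentication and integrity can be illustrated by having each institution sign its updates with a message authentication code. This sketch assumes each institution shares a secret key with the server, provisioned out of band; the function names are hypothetical. HMAC provides integrity and authentication only, not confidentiality, so encryption would still be needed on top.

```python
import hashlib
import hmac
import json


def sign_update(params: list[float], round_id: int, key: bytes) -> dict[str, str]:
    """Serialize an update deterministically and attach an HMAC-SHA256 tag."""
    payload = json.dumps({"round": round_id, "params": params}, sort_keys=True)
    tag = hmac.new(key, payload.encode(), hashlib.sha256).hexdigest()
    return {"payload": payload, "tag": tag}


def verify_update(message: dict[str, str], key: bytes) -> bool:
    """Server side: recompute the tag and compare in constant time."""
    expected = hmac.new(key, message["payload"].encode(), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, message["tag"])


key = b"shared-secret-for-inst_a"           # hypothetical pre-shared key
msg = sign_update([0.5, 1.25], round_id=3, key=key)
tampered = dict(msg, payload=msg["payload"].replace("0.5", "9.5"))
print(verify_update(msg, key), verify_update(tampered, key))   # True False
```

Including the round number in the signed payload also prevents an old, validly signed update from being replayed in a later round.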

Data heterogeneity means that data is very diverse in type, dimensionality, and characteristics, and the tasks themselves may vary, for example due to demographics [129], [130]. This poses a challenge for FL frameworks and methods, because one of the biggest assumptions in FL is that data is IID (independent and identically distributed) across entities [131], an assumption that heterogeneous data violates. Another concern is that data heterogeneity may lead to a global solution that is not the optimal final solution [132].
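To study how FL methods behave when the IID assumption fails, experiments often simulate label-skewed clients by drawing each class's split across clients from a Dirichlet distribution. The sketch below samples the Dirichlet via normalized Gamma draws; the function name and parameter values are illustrative. Smaller `alpha` produces more skewed (less IID) clients.

```python
import random
from collections import Counter, defaultdict


def dirichlet_partition(labels: list[int], n_clients: int, alpha: float,
                        seed: int = 0) -> list[list[int]]:
    """Split sample indices across clients with per-class proportions ~ Dir(alpha)."""
    rng = random.Random(seed)
    by_class: dict[int, list[int]] = defaultdict(list)
    for idx, y in enumerate(labels):
        by_class[y].append(idx)

    clients: list[list[int]] = [[] for _ in range(n_clients)]
    for _, idxs in sorted(by_class.items()):
        rng.shuffle(idxs)
        # Dirichlet sample = independent Gamma(alpha, 1) draws, normalized.
        g = [rng.gammavariate(alpha, 1.0) for _ in range(n_clients)]
        total = sum(g)
        props = [x / total for x in g] if total > 0 else [1 / n_clients] * n_clients
        # Turn cumulative proportions into cut points over this class's indices.
        cuts, acc = [], 0.0
        for p in props[:-1]:
            acc += p
            cuts.append(int(round(acc * len(idxs))))
        start = 0
        for c, end in enumerate(cuts + [len(idxs)]):
            clients[c].extend(idxs[start:end])
            start = max(start, end)
    return clients


labels = [0] * 50 + [1] * 50 + [2] * 50
parts = dirichlet_partition(labels, n_clients=4, alpha=0.5, seed=1)
for i, part in enumerate(parts):
    print(i, dict(Counter(labels[j] for j in part)))   # per-client label counts
```

With a large `alpha` (for example 100) the per-client label counts come out nearly equal, approximating the IID case.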

Furthermore, the reproducibility of a system is essential in industries such as healthcare. A trace-ability requirement should be fulfilled so that system events, data access history, and configuration changes can be traced during training [27]. Trace-ability can also record a model's training history and help avoid overlap between the training and testing data-sets [133], [134]. In non-trusted collaborations, trace-ability and accountability processes both require integrity. Once training reaches the agreed criteria for an optimal model, it may also help to measure how much each entity contributed, for example in resources consumed. The system architecture must also be considered. Institutional entities are usually equipped with better computational resources and reliable, higher-throughput networks [28], which allows larger models to be trained for more training steps [135] and more model information to be shared. FL is not perfect, but further research and implementation should help minimize these challenges. Overall, many challenges and limitations of FL still need to be addressed, including Data Skewing, Communication, System Heterogeneity, Privacy, Security, Performance, Level of Trust, Data Heterogeneity, Accountability, and System Architecture.
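Trace-ability with integrity can be illustrated by a hash-chained audit log: each entry stores the hash of the previous one, so editing any past event breaks verification. A simple set intersection likewise checks that training and test splits do not overlap. Class and function names are hypothetical, and real deployments would add timestamps, signatures, and durable storage.

```python
import hashlib
import json
from dataclasses import dataclass, field


def _digest(event: str, details: dict, prev: str) -> str:
    body = json.dumps({"event": event, "details": details, "prev": prev}, sort_keys=True)
    return hashlib.sha256(body.encode()).hexdigest()


@dataclass
class AuditLog:
    """Append-only log of training events; each entry commits to the previous one."""
    entries: list[dict] = field(default_factory=list)

    def record(self, event: str, details: dict) -> str:
        details = json.loads(json.dumps(details))     # store a private copy
        prev = self.entries[-1]["hash"] if self.entries else "0" * 64
        digest = _digest(event, details, prev)
        self.entries.append({"event": event, "details": details,
                             "prev": prev, "hash": digest})
        return digest

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry makes this False."""
        prev = "0" * 64
        for e in self.entries:
            if e["prev"] != prev or _digest(e["event"], e["details"], prev) != e["hash"]:
                return False
            prev = e["hash"]
        return True


def overlapping_samples(train_ids: list[str], test_ids: list[str]) -> set[str]:
    """Sample IDs present in both splits; should be empty."""
    return set(train_ids) & set(test_ids)


log = AuditLog()
log.record("config_change", {"lr": 0.05})
log.record("round_complete", {"round": 1, "participants": ["inst_a", "inst_b"]})
print(log.verify())                                          # True
print(overlapping_samples(["s1", "s2"], ["s2", "s3"]))       # {'s2'}
```

A hash chain makes tampering detectable rather than impossible; in a non-trusted collaboration, the latest hash would typically be shared with all parties so no single entity can rewrite history unnoticed.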

VIII. Market Implications of Federated Learning

Although not yet explicitly researched, FL is anticipated to have considerable implications for the marketplace, especially in industries in critical need of newer technology, such as the healthcare and medical markets [136]. According to CMS.gov, national healthcare spending is expected to grow at an average rate of 5.7% per year, reaching nearly six trillion dollars by 2027. CMS also projected hospital spending growth of 4.4% for 2018, with a higher rate by 2020 that will likely continue to increase over time. These statistics indicate that the medical and healthcare industries have a substantial impact on the market [137].

It has also been noted that the healthcare industry produces more data than several other industries, such as advertising; it produces about 30% of the world's data, if not more. As such, healthcare is expected to keep flourishing through 2025 [138]. In terms of market impact, healthcare and medical data are expected to grow faster than data in the manufacturing, financial services, or media industries. Interestingly, the IDC (International Data Corporation) used an index called DATCON (DATa readiness CONdition) to assess the data management, utilization, and monetization of various industries, based on metrics such as data growth, security, management, and involvement. DATCON scores range from 1, meaning that the industry lacks the capabilities to manage and monetize its data, to 5, meaning that the industry is completely optimized [139]. The healthcare industry scores 2.4, meaning that it falls below average on several data competency metrics, such as technological innovation. Because technological innovation is low in the healthcare and medical industries, they fail to tackle recent data management problems and are unable to invest in more advanced architectures [140].