Abstract

Objective:

The authors reviewed educational interventions for improving literature searching skills in the health sciences.

Methods:

We performed a scoping review of experimental and quasi-experimental studies published in English and German, irrespective of publication year. Targeted outcomes were objectively measurable literature searching skills (e.g., quality of search strategy, study retrieval, precision). The search methods consisted of searching databases (CINAHL, Embase, MEDLINE, PsycINFO, Web of Science), tracking citations, free web searching, and contacting experts. Two reviewers performed screening and data extraction. To evaluate the completeness of reporting, the Template for Intervention Description and Replication (TIDieR) was applied.

Results:

We included 6 controlled trials and 8 pre-post trials from 8,484 identified references. Study participants were mainly students in various health professions; the remaining studies involved physicians or nurses. The educational formats of the interventions varied. Outcomes clustered into 2 categories: (1) developing search strategies (e.g., identifying search concepts, selecting databases, applying Boolean operators) and (2) database searching skills (e.g., searching PubMed, MEDLINE, or CINAHL). In addition to baseline and post-intervention measurement, 5 studies reported follow-up. Almost all studies adequately described their intervention procedures and delivery but did not provide access to the educational material. The expertise of the intervention facilitators was described in only 3 studies.

Conclusions:

The results showed a wide range of study populations, interventions, and outcomes. Studies often lacked information about educational material and facilitators' expertise. Further research should focus on intervention effectiveness using controlled study designs and long-term follow-up. To ensure transparency, replication, and comparability, studies should describe their interventions rigorously.

INTRODUCTION

Identifying and evaluating available scientific evidence by means of literature review is one component of evidence-based practice (EBP) in health care [1]. Systematic reviews often form the basis of wide-ranging, important decisions in clinical practice [2]. Therefore, high-quality literature searches have considerable scientific, practical, and ethical relevance. Constructing appropriate search strategies to retrieve the literature relevant to a clinical question is fundamental to systematic reviews. Indeed, effective searching is a core competency of EBP for health sciences professionals, researchers, and librarians [3–5].

In practice, however, many systematic reviews do not employ high-quality literature searches. Several analyses demonstrate shortcomings of literature searches described by published systematic reviews. For example, 7–9 out of 10 search strategies in Cochrane reviews or other systematic reviews contain at least 1 error, such as missing Medical Subject Headings (MeSH), unwarranted explosion of MeSH terms, irrelevant MeSH or free-text terms, missed spelling variants, failure to tailor the search strategy for other databases, and misuse of logical operators [6, 7]. Furthermore, 50%–80% of these errors potentially lower the recall of relevant studies and may impact the overall results of the review [6–8].
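Recall (sensitivity) and precision recur throughout this review as measures of search performance. The minimal sketch below illustrates both, assuming a hypothetical set of relevant record IDs (for example, the studies known to be eligible for a review) and the set of IDs returned by one search; all IDs are invented for illustration.

```python
def recall_and_precision(retrieved: set[str], relevant: set[str]) -> tuple[float, float]:
    """Return (recall, precision) for a single search.

    recall    = relevant records retrieved / all relevant records
    precision = relevant records retrieved / all records retrieved
    """
    hits = retrieved & relevant  # relevant records the search actually found
    recall = len(hits) / len(relevant) if relevant else 0.0
    precision = len(hits) / len(retrieved) if retrieved else 0.0
    return recall, precision


# Hypothetical example: 4 relevant studies exist; the search returns 10 records, 3 of them relevant.
relevant = {"s1", "s2", "s3", "s4"}
retrieved = {"s1", "s2", "s3"} | {f"noise{i}" for i in range(7)}
recall, precision = recall_and_precision(retrieved, relevant)
print(f"recall={recall:.2f}, precision={precision:.2f}")  # recall=0.75, precision=0.30
```

A search error such as a missing MeSH term that causes study `s3` to be missed would drop recall to 0.50 in this toy example, which is the kind of loss the error analyses cited above describe.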

Education in EBP should integrate training on literature searching [3]. A recent systematic review indicates that training can improve evidence-based knowledge and skills among health care professionals [9]. However, the actual impact of such training on EBP remains unclear, largely because its implementation and patient-relevant outcomes are rarely examined or are affected by risk of bias [9, 10]. Although training in EBP might improve the skills of health professionals [9], the complexity of these interventions precludes drawing conclusions about their impact on literature searching skills. EBP training can contain several intervention components, such as (1) formulating a research question, (2) developing a search strategy, (3) critically appraising the evidence, and (4) communicating research results to patients. These educational interventions and their components are characterized by varying durations and intensities of training as well as differing materials and methods of delivery [9].

Literature searching skills are, therefore, one outcome of interest among others, and the complexity of EBP training makes it challenging to isolate components and outcomes specifically related to literature searching skills. An older review published in 2003 found some evidence for a positive impact of training on health professionals' skill levels in literature searching; however, the included studies suffered from methodological shortcomings and were underpowered [11]. To scope educational interventions for improving literature searching skills in the health sciences, an updated review is warranted. Therefore, the objective of the current review was to answer the following research questions: What is known about educational interventions to improve literature searching skills in the health sciences? Which outcomes were measured? How completely are these interventions reported in the published literature?

METHODS

The authors conducted a scoping review following the stages defined by Arksey and O'Malley to (1) map the available evidence relevant to our broad research questions, (2) describe the number and characteristics of available studies, and (3) evaluate the design and reporting of these studies [12–17]. We used an internal review protocol developed by all authors to guide the process and applied PRISMA-ScR for reporting [18].

Eligibility criteria

We included journal articles that described experimental or quasi-experimental studies in English or German, irrespective of publication year, as we were interested in all available studies focusing on educational interventions with outcome evaluation. The populations of interest were researchers, students, and librarians in the health sciences and health professionals such as nurses, physicians, and pharmacists, regardless of their searching experience or expertise. We included any type of educational intervention (e.g., training, instruction, course, information, peer review) aimed at improving health sciences–related literature searching skills.

We were interested in outcomes that were objectively measurable in terms of improvements in literature searching skills (e.g., proficiency, quality or correctness of search strategies, study retrieval). We excluded studies in which literature searching was one skill or intervention component among others (e.g., EBP courses, courses on reviewing the literature) and those concerning the performance or effectiveness of search filters, hand searching, or citation tracking. We also excluded studies addressing subjective outcomes (e.g., self-perceived knowledge and confidence in literature searching skills), as there is evidence of a weak correlation between self-perceived and objectively assessed literature searching skills [19]. In summary, we were interested in outcomes indicating an impact on literature searching performance.
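The core inclusion and exclusion rules above can be condensed into a single screening predicate. This is a minimal sketch only: the `Record` fields and the design labels are hypothetical simplifications, and the population criteria are omitted for brevity; it is not the review's actual screening form.

```python
from dataclasses import dataclass


# Hypothetical, simplified screening record; field names are illustrative only.
@dataclass
class Record:
    language: str            # publication language, e.g. "English"
    design: str              # e.g. "RCT", "controlled trial", "pre-post trial", "survey"
    search_specific: bool    # intervention targets literature searching only (not a whole EBP course)
    objective_outcome: bool  # searching skills measured objectively rather than self-rated


ELIGIBLE_LANGUAGES = {"English", "German"}
# Experimental and quasi-experimental designs (labels assumed for this sketch)
ELIGIBLE_DESIGNS = {"RCT", "controlled trial", "pre-post trial"}


def is_eligible(record: Record) -> bool:
    """Apply the criteria; publication year is deliberately not checked."""
    return (
        record.language in ELIGIBLE_LANGUAGES
        and record.design in ELIGIBLE_DESIGNS
        and record.search_specific
        and record.objective_outcome
    )


print(is_eligible(Record("German", "pre-post trial", True, True)))      # True
print(is_eligible(Record("English", "RCT", False, True)))               # False: EBP course
print(is_eligible(Record("English", "controlled trial", True, False)))  # False: self-rated outcome
```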

Information sources

We searched CINAHL, Embase, MEDLINE via PubMed, Web of Science Core Collection, and PsycINFO via Ovid. Additionally, we performed free web searching via Google Scholar and hand searching of reviews identified as relevant. We also contacted expert health sciences librarians through four email lists (Netzwerk Fachbibliotheken Gesundheit, Canadian Medical Libraries, Expertsearch, and MEDBIB-L/German-speaking medical librarians). We retrieved additional references by forward and backward citation tracking of the included studies. For citation tracking, we used Scopus because this database appeared to cover the largest number of relevant citations for the purpose of this review [20]. Starting from the included studies, we used the “cited by” and “citing” buttons in Scopus, exported the retrieved references, and, after de-duplication, performed the study selection process described below. Whenever newly identified studies were eligible, we repeated this process for them until no further studies were included.
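The iterative citation tracking described above, repeated until no further studies are included, behaves like a breadth-first traversal of the citation network. The sketch below is illustrative only: `linked` is a hypothetical callable standing in for the manual Scopus “cited by” and “citing” lookups, `is_eligible` stands in for the screening decision, and the study IDs are invented.

```python
from collections import deque
from typing import Callable


def track_citations(
    seeds: set[str],
    linked: Callable[[str], set[str]],
    is_eligible: Callable[[str], bool],
) -> set[str]:
    """Iterative forward/backward citation tracking.

    Starting from the included studies (`seeds`), screen every study that cites
    or is cited by an included study. Newly included studies are tracked in turn,
    and the process stops once no newly included study is left to track.
    """
    included = set(seeds)
    screened = set(seeds)  # de-duplication: never screen the same record twice
    queue = deque(seeds)
    while queue:
        study = queue.popleft()
        for candidate in linked(study) - screened:
            screened.add(candidate)
            if is_eligible(candidate):
                included.add(candidate)
                queue.append(candidate)
    return included


# Hypothetical citation links and screening outcomes
links = {"A": {"B", "C"}, "B": {"A", "D"}, "D": {"E"}}
eligible = {"A", "B", "D"}
result = track_citations({"A"}, lambda s: links.get(s, set()), lambda s: s in eligible)
print(sorted(result))  # ['A', 'B', 'D']
```

Keeping a `screened` set mirrors the de-duplication step, so a study reached through several citation paths is screened only once.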

Search

The search strategy combined database-specific controlled vocabulary and free-text terms. We identified initial search terms on the basis of our experience, an orienting search, and literature already known to us. To identify further search terms and synonyms, we used the MeSH Browser, COREMINE Medical, and a thesaurus. We also analyzed the keywords of relevant publications, and of similar articles identified via PubMed, to determine frequently occurring terms for inclusion in the search strategy. Because indexing with controlled vocabulary can lag behind publication, we also searched for controlled vocabulary terms in the title and abstract fields [21]. The search strategy is provided in supplemental Appendix A and Appendix B.
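The block structure of such a strategy (synonyms combined with OR within a concept, concepts combined with AND, and controlled vocabulary terms also searched in title and abstract) can be sketched as a small query builder. The sketch uses PubMed-style field tags (`[Mesh]`, `[tiab]`); the concepts and terms are hypothetical examples, not the strategy reported in Appendix B.

```python
def concept_block(mesh_terms: list[str], free_text: list[str]) -> str:
    """OR-combine the synonyms of one concept into a parenthesised block.

    Each controlled-vocabulary term is searched both as an indexing term ([Mesh])
    and in title/abstract ([tiab]), so that records not yet indexed are still found.
    """
    parts: list[str] = []
    for term in mesh_terms:
        parts += [f'"{term}"[Mesh]', f'"{term}"[tiab]']
    parts += [f'"{term}"[tiab]' for term in free_text]
    return "(" + " OR ".join(parts) + ")"


def build_query(concepts: list[tuple[list[str], list[str]]]) -> str:
    """AND-combine one block per concept."""
    return " AND ".join(concept_block(mesh, text) for mesh, text in concepts)


# Hypothetical two-concept strategy: education AND literature searching
query = build_query([
    (["Education"], ["training", "instruction"]),
    (["Information Storage and Retrieval"], ["literature search"]),
])
print(query)
```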

Selection of sources of evidence

Two authors (Hirt, Nordhausen) designed the search strategy after consultation with the senior authors (Zeller, Meyer). An information specialist (Braun) reviewed the search strategy using the Peer Review of Electronic Search Strategies (PRESS) guideline [5]. One author (Hirt) conducted the search. Two authors (Hirt, Nordhausen) independently screened titles, abstracts, and full texts for inclusion using Rayyan and Citavi. We resolved disagreements by discussion until consensus was reached.
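With two independent screeners, the records that need discussion are those on which the decisions differ. The tool-agnostic sketch below flags them, assuming each reviewer's decisions are stored as a hypothetical mapping from record ID to include (`True`) or exclude (`False`).

```python
def find_conflicts(reviewer_a: dict[str, bool], reviewer_b: dict[str, bool]) -> list[str]:
    """Return the IDs of records screened by both reviewers with differing decisions."""
    shared = reviewer_a.keys() & reviewer_b.keys()
    return sorted(rid for rid in shared if reviewer_a[rid] != reviewer_b[rid])


# Hypothetical screening decisions (True = include)
a = {"r1": True, "r2": False, "r3": True}
b = {"r1": True, "r2": True, "r3": False}
print(find_conflicts(a, b))  # ['r2', 'r3']
```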

Data charting process

Two authors (Hirt, Nordhausen) developed a standardized data extraction sheet. One author (Meichlinger) extracted data on study design, country, setting, participants (i.e., number, gender, age), intervention and control characteristics, outcome measurement, time of measurement, and main results. A second author (Hirt or Nordhausen) checked data extraction. We clarified uncertainties by consulting with the senior authors (Zeller, Meyer). To determine the completeness of reporting in included studies, we applied the Template for Intervention Description and Replication (TIDieR) [22]. For this purpose, two authors (Hirt, Nordhausen) defined a set of minimum required information to meet a TIDieR criterion (supplemental Appendix C). One author (Meichlinger) assessed the reporting of interventions, which was checked by a second author (Hirt or Nordhausen).

Synthesis of results

One author (Hirt) narratively summarized study characteristics, interventions, outcomes, results, and the reporting of interventions.

RESULTS

Search and study selection

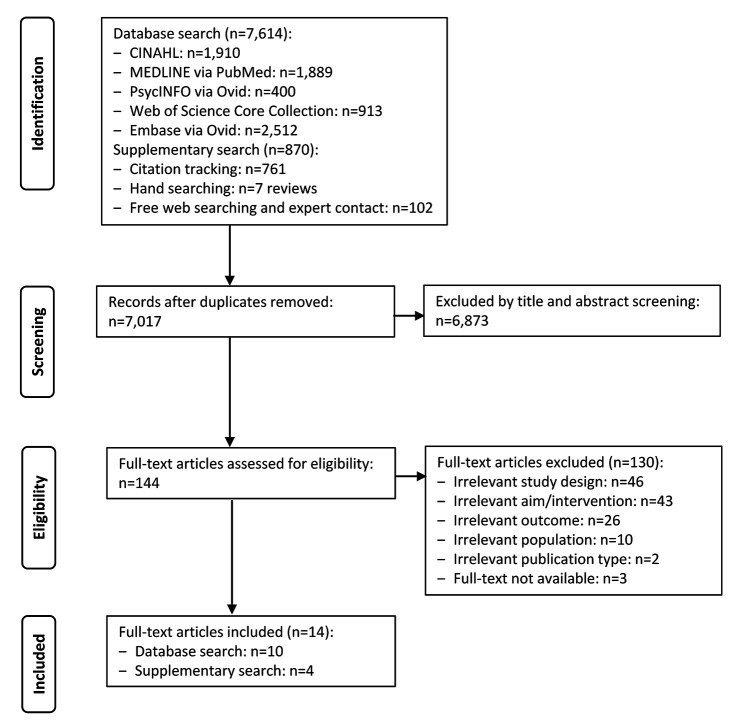

The systematic database and supplementary search yielded 8,484 references. After removal of duplicates, we screened 7,017 titles and abstracts and 144 full texts, resulting in the inclusion of 14 studies (Figure 1).

Figure 1.

Search and selection process
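The totals reported above imply how many records were removed at each stage of Figure 1. A small arithmetic sketch using only those totals, assuming that every record passing title and abstract screening was assessed in full text:

```python
identified = 8_484               # database and supplementary searches
screened_title_abstract = 7_017  # after removal of duplicates
full_texts = 144
included = 14

duplicates_removed = identified - screened_title_abstract       # 1,467
excluded_title_abstract = screened_title_abstract - full_texts  # 6,873
excluded_full_text = full_texts - included                      # 130

print(duplicates_removed, excluded_title_abstract, excluded_full_text)  # 1467 6873 130
```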

Study characteristics

The included studies (Table 1, with additional details provided in supplemental Appendix D) were published between 1989 and 2019 and were conducted in the United States [23–30], Canada [31, 32], the United Kingdom [33, 34], Australia [35], and Pakistan [36]. Eight studies were pre-post trials [24, 27–31, 33, 36], 3 were randomized controlled trials (RCTs) [23, 32, 34], and 3 were controlled trials [25, 26, 35]. One RCT had 2 intervention groups [23]. Most studies were performed in a university setting [24–26, 29–31, 33–36], whereas 4 were conducted in a hospital setting [23, 27, 28, 32]. Participants were physicians or physicians-in-training in 3 studies [23, 28, 32], intensive care unit nurses in 1 study [27], and students of different health sciences fields in the remaining 10 studies. The number of participants ranged from 9 to 300 (mean, 77 participants; median, 42 participants). Most studies did not report participants' gender or age.

Table 1.

Characteristics, interventions, and outcomes of included studies

| Reference | Design | Participants | n | Intervention | Outcome |
|---|---|---|---|---|---|
| Erickson and Warner, 1998 [23] | RCT | Residents in obstetrics and gynecology | 31 | IG1: Hands-on tutorial session on the use of MEDLINE by a health sciences librarian with hands-on instruction; IG2: Tutorial on the use of MEDLINE at prescribed sessions performed by a health sciences librarian | MEDLINE search recall and precision rates of 4 searches |
| Hobbs et al., 2015 [24] | Pre-post trial | Senior undergraduate radiologic science students | 17 | Library instruction on planning literature searches, developing search strategies, and searching health-related and medicine-related databases | Questionnaire on knowledge and skills in information literacy developed by the health sciences librarian and the Radiologic Science Faculty |
| Carlock and Anderson, 2007 [25] | CT | Undergraduate nursing students | 90 | Librarian instruction including homework and an in-class assignment | Self-created rubric comparing the search history of a predefined search in CINAHL |
| Gruppen et al., 2005 [26] | CT | Fourth-year medical students | 92 | Instructional intervention on EBP-based techniques for searching MEDLINE for evidence related to a clinical problem, taught by medical librarians | Structured clinical scenario (described in a publication), scored for literature search quality and search errors using criteria developed by librarians at the University of Michigan, based on a template designed by librarians at the University of Rochester |
| Rosenfeld et al., 2002 [27] | Pre-post trial | Intensive care unit nurses | 36 | Educational sessions complemented by a web tutorial on information literacy competencies, delivered by the medical librarian | Self-defined, point-based competency rating scale |
| Vogel et al., 2002 [28] | Pre-post trial | Second-year medicine residents | 42 | Workshop on using Ovid's version of MEDLINE | Participants completed the MEDLINE performance checklist |
| Grant et al., 1996 [29] | Pre-post trial | Pharmacy students | 48 | Lecture on a systematic approach combined with an online demonstration in Ovid on developing search strategies, plus homework assignments to perform a literature search | Evaluation of 2 written search strategies (one sensitive, one specific) for a predefined research question using pre-established scoring criteria |
| Bradigan and Mularski, 1989 [30] | Pre-post trial | Second-year medical students | 9 | Mini-module courses delivered by 2 librarian instructors | Number of correct answers to 3 questions on the ability to extract the important concepts from a statement of a medical problem to be searched online |
| Sikora et al., 2019 [31] | Pre-post trial | Undergraduate or graduate health sciences or medical students | 29 | Scheduled individualized research consultations for students, delivered by librarians | Self-developed information literacy rubric for scoring open-ended questions on the use of appropriate keywords and search strategies |
| Haynes et al., 1993 [32] | RCT | Physicians and physicians-in-training | 264 | Feedback on the first 10 searches and assignment by a clinical MEDLINE preceptor | Participants performed 10 MEDLINE searches on individual research questions; outcome was the percentage of successful searches, a search being defined as successful if at least 1 relevant reference was retrieved |
| Grant and Brettle, 2006 [33] | Pre-post trial | Postgraduate students in research in health and social care | 13 | Web-based MEDLINE tutorial self-developed by an information specialist and a tutor | Modified Rosenberg assessment tool comprising a skills checklist |
| Brettle and Raynor, 2013 [34] | RCT | Undergraduate nursing students | 55 | Online in-house information literacy tutorial (session 1) and face-to-face follow-up information skills session after one month (session 2) | Test of skills in searching CINAHL for evidence on specific research questions, scored with a rubric identifying key features of the search strategy |
| Wallace et al., 2000 [35] | CT | Undergraduate nursing, health, and behavioral sciences students | 300 | Curriculum-integrated information literacy program | Objective test of library catalog skills in 5 domains |
| Qureshi et al., 2015 [36] | Pre-post trial | Postgraduate dental students | 42 | Workshop comprising 3 sessions of lectures and hands-on practice | Questions from the Fresno Test tool |

Abbreviations: CT=Controlled trial; EBP=Evidence-based practice; IG=Intervention group; MeSH=Medical Subject Headings; NA=Not applicable; RCT=Randomized controlled trial.
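The participant summary given under Study characteristics can be recomputed from the n column of Table 1. The sketch below uses Python's standard `statistics` module; it reproduces the range (9 to 300) and median (42), and the tabulated values sum to 1,068 participants, giving a mean of about 76.3.

```python
from statistics import mean, median

# Sample sizes (n) as listed in Table 1, in table order from [23] to [36]
sample_sizes = [31, 17, 90, 92, 36, 42, 48, 9, 29, 264, 13, 55, 300, 42]

print(min(sample_sizes), max(sample_sizes))  # 9 300
print(sum(sample_sizes))                     # 1068
print(round(mean(sample_sizes), 1))          # 76.3
print(median(sample_sizes))                  # 42.0 (average of the 7th and 8th values)
```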

Intervention characteristics

Intervention groups received an instructional session [23, 24, 26], an instructional session combined with homework [25], an instructional or educational session complemented by a web-based tutorial [27], a consultation [31], training and feedback [32], a workshop [28, 36], a course [30], a curriculum-integrated program [35], a lecture [29], an online tutorial with a face-to-face follow-up session [34], a hands-on tutorial [23], or a web-based tutorial [33]. Control groups received a face-to-face session and follow-up sessions [34], training without feedback [33], or no intervention [23, 26, 32, 35].

Study outcomes

Outcomes clustered into two groups: (1) search strategy development and (2) database searching skills (Table 2). In addition to baseline and post-intervention measurement, five studies reported follow-up outcomes one to eleven months later [26, 28, 29, 32, 33].

Table 2.

Outcomes clustered in two categories: (1) search strategy development and (2) database search skills

| Search strategy development | Database searching skills |
|---|---|
| Using appropriate search strategies | Showing database search skills in general |
| Using appropriate keywords | Searching more than one database |
| Developing search strategies in general | Creating sensitive and specific search strategies using Ovid |
| Identifying search concepts | Retrieving a manageable number of references in MEDLINE |
| Selecting databases | Having recall and precision in MEDLINE |
| Applying Boolean operators | Searching PubMed |
| Applying indexing terms | Searching MEDLINE |
| Applying search limits | Searching CINAHL |
| Search quality and errors | Searching a library catalog |

Outcomes related to developing search strategies included using appropriate keywords and search strategies [31]; developing search strategies in general [24]; identifying search concepts [24, 30]; selecting databases and knowing where to find evidence [24, 34, 36]; applying Boolean operators, indexing terms, and search limits [27, 30, 33]; and search quality and search errors [26].

Outcomes related to database searching skills included database searching skills in general or searching more than one database [24, 27]; creating sensitive and specific search strategies using Ovid [29]; retrieving a manageable and relevant number of references in MEDLINE [33]; recall and precision of MEDLINE searches [23]; searching PubMed [36], MEDLINE [28, 32], or CINAHL [25, 34]; and searching a library catalog [35].

Study results

Most of the included studies, whether pre-post trials, RCTs, or controlled trials, reported positive outcomes (i.e., an improvement in literature searching skills). Some pre-post trials reported significant improvements in the development of search strategies [31, 33, 36] and database searching skills [33, 36]. Other pre-post trials reported non-significant improvements in the development of search strategies [24, 27, 30] and database searching skills [24, 27, 29]. One study reported a significant improvement in database searching skills without reporting descriptive data [28].

Concerning RCTs and controlled trials, one reported a significant improvement in database searching skills [34], whereas others reported non-significant improvements in database searching skills [25, 32, 35]. Two studies reported no improvements in database searching skills [23, 34]. In one study, the intervention group had a significantly higher search quality score and significantly lower number of search errors [26].

Reporting of interventions

In six studies, the educational materials used were not clearly described [24–26, 34–36]. Almost all studies adequately reported the procedures of the interventions; the two exceptions were [31] and [32]. Only three studies characterized the persons who delivered the intervention in terms of their professional background, expertise, and/or specific training [23, 31, 32]. All studies reported how the intervention was delivered. Four studies did not report the location of the intervention [23, 29, 31, 34], and three did not report the number or frequency of educational sessions [26, 31, 35]. Only three studies reported tailoring of the intervention [26, 31, 33]. No study reported modifications of the intervention or planned intervention fidelity, and only one reported actual fidelity [34] (Table 3).

Table 3.

Reporting assessment of included studies with Template for Intervention Description and Replication (TIDieR) (n=14)

| Study | What: materials | What: procedures | Who provided | How | Where | When and how often | Tailoring | Modifications | How well (planned) | How well (actual) |
|---|---|---|---|---|---|---|---|---|---|---|
| Erickson and Warner, 1998 [23] | Y | Y | Y | Y | N | Y | — | — | — | — |
| Hobbs et al., 2015 [24] | N | Y | N | Y | Y | Y | — | — | — | — |
| Carlock and Anderson, 2007 [25] | N | Y | N | Y | Y | Y | — | — | — | — |
| Gruppen et al., 2005 [26] | N | Y | N | Y | Y | N | Y | — | — | — |
| Rosenfeld et al., 2002 [27] | Y | Y | N | Y | Y | Y | — | — | — | — |
| Vogel et al., 2002 [28] | Y | Y | N | Y | Y | Y | — | — | — | — |
| Grant et al., 1996 [29] | Y | Y | N | Y | N | Y | — | — | — | — |
| Bradigan and Mularski, 1989 [30] | Y | Y | N | Y | Y | Y | — | — | — | — |
| Sikora et al., 2019 [31] | Y | N | Y | Y | N | N | Y | — | — | — |
| Haynes et al., 1993 [32] | Y | N | Y | Y | Y | Y | — | — | — | — |
| Grant and Brettle, 2006 [33] | Y | Y | N | Y | Y | Y | Y | — | — | — |
| Brettle and Raynor, 2013 [34] | N | Y | N | Y | N | Y | — | — | — | Y |
| Wallace et al., 2000 [35] | N | Y | N | Y | Y | N | — | — | — | — |
| Qureshi et al., 2015 [36] | N | Y | N | Y | Y | Y | — | — | — | — |

Abbreviations: Y=Yes, reported; N=No, not reported; —=Unclear whether it was conducted.
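The counts given under Reporting of interventions can be checked by tallying Table 3 item by item. In this minimal sketch, each study's row is encoded as a ten-character string in the table's column order, with `Y` for reported, `N` for not reported, and `-` standing in for the table's dash (unclear whether conducted).

```python
# Table 3 encoded row by row; column order follows the table header.
ITEMS = [
    "materials", "procedures", "who provided", "how", "where",
    "when and how often", "tailoring", "modifications",
    "how well (planned)", "how well (actual)",
]
TABLE3 = {
    23: "YYYYNY----", 24: "NYNYYY----", 25: "NYNYYY----", 26: "NYNYYNY---",
    27: "YYNYYY----", 28: "YYNYYY----", 29: "YYNYNY----", 30: "YYNYYY----",
    31: "YNYYNNY---", 32: "YNYYYY----", 33: "YYNYYYY---", 34: "NYNYNY---Y",
    35: "NYNYYN----", 36: "NYNYYY----",
}
assert all(len(codes) == len(ITEMS) for codes in TABLE3.values())

# For each TIDieR item, list the reference numbers of the studies that reported it
reported = {
    item: [ref for ref, codes in sorted(TABLE3.items()) if codes[i] == "Y"]
    for i, item in enumerate(ITEMS)
}

for item, refs in reported.items():
    print(f"{item}: {len(refs)}/{len(TABLE3)} {refs}")
```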

DISCUSSION

This scoping review focused on intervention studies aiming to improve literature searching skills in the health sciences. The results showed a wide range of study populations, educational interventions and their components, and outcomes. Overall, the reporting of these studies lacked the details about educational materials and intervention delivery that are needed to understand how the educational interventions were applied.

Database searching is a key element of systematic literature searches [37]. Systematic reviews require a high methodological standard of literature searching using several databases and highly sensitive search strategies [38]. Eight of the fourteen included studies tested database-specific educational interventions to improve searching skills in CINAHL, MEDLINE, and PubMed [23, 25, 28, 29, 32–34, 36]. Since the advent of electronic databases in the late 1970s, technological progress and usability requirements resulted in fundamental changes in database functionalities, capabilities, and layouts [39]. These changes were paralleled by changes in search features and user interfaces. Recent examples are the launch of “new PubMed” as well as updated search functions and layout of the Cochrane Library. These evolutions should be taken into account when designing contemporary educational interventions to improve literature searching skills. Only two studies with database-specific educational interventions were published within the last ten years [34, 36]; thus, the timeliness of these interventions is questionable.

Furthermore, there was a general lack of complete reporting among the included studies, including detailed descriptions of database-specific educational interventions and outcomes. While the intervention procedure, format, and intensity (i.e., when and how much) were often reported, many studies lacked information about the educational material used, and most lacked information about the expertise of the persons delivering the intervention. Both aspects, the limited timeliness of the interventions and their incomplete reporting, might hinder the transfer of educational interventions into current practice [40]. To better describe the context in which educational interventions are implemented and to ensure study transparency, replicability, adaptability, and comparability, authors should describe their interventions thoroughly [9] using the TIDieR [22] or CReDECI-2 [41] guidelines.

Participants in the included studies were students, physicians, or nurses. Thus, these studies mainly addressed university education and clinical practice. One finding of our review was a lack of experimental and quasi-experimental studies on objectively measurable literature searching skills with librarians as participants, despite the increasing demand to involve skilled librarians and information specialists as methodological peer reviewers or as members of systematic review teams [42, 43]. This gap matters because there is evidence underlining the importance of the active involvement of expert librarians in systematic reviews. A recently published analysis showed that systematic reviews coauthored by librarians had a lower risk of bias than reviews in which librarians' contributions were only mentioned in the acknowledgments or were unclear [44]. A recently developed competency framework for librarians involved in systematic reviews contains indicators that help determine whether librarians can perform the associated tasks independently [45].

Furthermore, a discussion-based framework presenting propositions for planning, developing, and evaluating training interventions for expert librarians to participate effectively in systematic review teams was recently published [46]. These two contextual propositions might underpin the theoretical framework for effective involvement of expert librarians in systematic review teams. However, based on the results of our scoping review, it remains unclear how librarians' searching skills might be further developed or improved. Given their increasingly important role in systematic reviewing, the effectiveness of literature search skill training for librarians should be considered in future research [46].

The development of feasible and effective interventions should be accompanied by the selection of relevant outcomes that are measured at appropriate times [47]. The included studies involved different outcomes, but these were predominantly measured at baseline and post-intervention. Only five studies conducted a follow-up measurement, at intervals ranging widely from one to eleven months. Therefore, it is difficult to draw conclusions regarding the long-term effects of educational interventions. Studies indicate that literature searching skills can deteriorate over time [48]. Future research should, therefore, employ long-term evaluations to determine whether the effects of interventions are sustained. This might also promote the development of durable information materials, such as manuals, that guide students, researchers, and librarians in systematic literature searching.

Literature search recall and precision can also be improved by other interventions, including the development of search filters, supplementary search methods such as hand searching and citation tracking, structured search forms, and objectively derived search terms [37, 49–52]. These interventions were excluded because we focused on educational interventions. Nevertheless, they should be considered when aiming to improve the effectiveness of systematic literature searching [53].

The included studies were not critically appraised [18]. Nevertheless, significant limitations of internal and external validity were evident. Most studies were neither controlled nor randomized and had small sample sizes. The presentation of results was insufficient in some studies (e.g., confidence intervals and/or estimated effect sizes were not reported). Furthermore, the included studies used different types of outcomes, impeding comparison of their results. To enhance further research, authors should ensure objective, valid, and reliable outcome measurement [54], which could lead to greater internal validity and comparability of results.

A strength of our scoping review was our comprehensive search using a peer-reviewed search strategy [5]. To translate our research question into a search strategy, we initially intended to use two search components: education and literature search. However, this yielded more search results than we could feasibly screen with our resources. To further limit the search results, we added three concepts under the guidance of an expert medical librarian (Braun): (1) aim of the intervention (e.g., competence, improvement, success); (2) health sciences–related participants (e.g., clinician, librarian, nurse, physician); and (3) study design (e.g., randomized, trial, quasi-experimental). Adding these search components reduced the number of results but increased the risk of missing relevant studies [55]. Therefore, to avoid missing studies that might contribute to the body of evidence, we applied several supplementary search methods (citation tracking, free web searching, hand searching in relevant reviews, and contacting experts [37, 56]), which identified four additional studies.
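The trade-off described above follows from set logic: combining another concept block with AND can only shrink the result set (or leave it unchanged), and any relevant record that does not match the new block is lost. A toy sketch with invented record IDs, treating each concept block as the set of records it matches:

```python
# Hypothetical record IDs matched by each concept block (OR within a concept)
education = {1, 2, 3, 4, 5, 6, 7, 8}
literature_search = {2, 3, 4, 5, 6, 9}
study_design = {3, 4, 6}  # e.g. "randomized", "trial", "quasi-experimental"

# Suppose records 3 and 5 are eligible, but record 5 never names its design
relevant = {3, 5}

two_concepts = education & literature_search  # AND of two blocks
three_concepts = two_concepts & study_design   # adding a third block

print(len(two_concepts), len(three_concepts))  # 5 3
print(relevant - three_concepts)               # {5}: missed by the narrower search
```

Records lost in this way can only be recovered by methods that do not depend on the query, which is the role the supplementary searches played in this review.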

However, a limitation of our search approach was that we did not search trial registries, conference proceedings, or specialized educational and library-related databases such as Education Resources Information Center (ERIC); Library, Information Science & Technology Abstracts (LISTA); and Library and Information Science Abstracts (LISA). Instead, we focused on databases covering multiple disciplines such as CINAHL, PsycINFO, and Web of Science Core Collection. We did not use specialized library-related databases because of limited subscriptions at our institution, which may be one reason why no studies describing educational interventions for librarians were identified.

Another strength of our review was that we ensured high data quality by using two independent reviewers for study selection and a second reviewer to check data extraction and the assessment of reporting. The analysis using TIDieR enabled a comprehensive assessment of the reporting of educational interventions to improve literature searching skills in the health sciences [57]. To increase the reliability of data extraction, two reviewers (Hirt, Nordhausen) defined minimum requirements for each TIDieR item.

Our research question was limited to experimental and quasi-experimental studies employing search-specific educational interventions to improve literature searching skills. Consistent with scoping review methodology [12, 17], this review did not address the effectiveness of interventions or the quality of studies. Conclusions concerning the positive impact of the identified educational interventions are, therefore, limited. Given the small number of rigorous study designs we identified, and given that the most recent RCT was published in 2013 [34], we conclude that a systematic review to determine the effectiveness of interventions and the internal validity of studies might not be warranted at the moment. Further primary research is needed and should focus on the long-term effectiveness of educational interventions to improve literature searching skills using controlled study designs and follow-up outcome measurement.

To understand which interventions are effective for whom and why, the development of educational interventions should follow a systematic process based on available evidence and the needs of end users and librarians. This review indicates that there is still a need for high-quality research employing well-developed educational interventions and contemporary search methods using rigorous study designs [11].

SUPPLEMENTAL FILES

Appendix A: Search components and search terms

Appendix B: Database-specific search strategies

Appendix C: Characteristics, interventions, outcomes, and main results of included studies

REFERENCES

- 1. Greenhalgh T. How to read a paper: the basics of evidence-based medicine and healthcare. 6th ed. Hoboken, NJ: Wiley-Blackwell; 2019. p. xix, 262.

- 2. Abbas Z, Raza S, Ejaz K. Systematic reviews and their role in evidence-informed health care. J Pak Med Assoc. 2008 Oct;58(10):561–7.

- 3. Albarqouni L, Hoffmann T, Straus S, Olsen NR, Young T, Ilic D, Shaneyfelt T, Haynes RB, Guyatt G, Glasziou P. Core competencies in evidence-based practice for health professionals: consensus statement based on a systematic review and Delphi survey. JAMA Netw Open. 2018 Jun 1;1(2):e180281. DOI: 10.1001/jamanetworkopen.2018.0281.

- 4. Medical Library Association. Competencies for lifelong learning and professional success [Internet]. The Association; 2017 [cited 15 Mar 2020]. <https://www.mlanet.org/page/mla-competencies>.

- 5. McGowan J, Sampson M, Salzwedel DM, Cogo E, Foerster V, Lefebvre C. PRESS Peer Review of Electronic Search Strategies: 2015 guideline statement. J Clin Epidemiol. 2016 Jul;75:40–6.

- 6. Sampson M, McGowan J. Errors in search strategies were identified by type and frequency. J Clin Epidemiol. 2006 Oct;59(10):1057–63. DOI: 10.1016/j.jclinepi.2006.01.007.

- 7. Salvador-Oliván JA, Marco-Cuenca G, Arquero-Avilés R. Errors in search strategies used in systematic reviews and their effects on information retrieval. J Med Libr Assoc. 2019 Apr;107(2):210–21. DOI: 10.5195/jmla.2019.567.

- 8. Faggion CM, Huivin R, Aranda L, Pandis N, Alarcon M. The search and selection for primary studies in systematic reviews published in dental journals indexed in MEDLINE was not fully reproducible. J Clin Epidemiol. 2018 Jun;98:53–61. DOI: 10.1016/j.jclinepi.2018.02.011.

- 9. Hecht L, Buhse S, Meyer G. Effectiveness of training in evidence-based medicine skills for healthcare professionals: a systematic review. BMC Med Educ. 2016 Apr 4;16:103. DOI: 10.1186/s12909-016-0616-2.

- 10. Horsley T, Hyde C, Santesso N, Parkes J, Milne R, Stewart R. Teaching critical appraisal skills in healthcare settings. Cochrane Database Syst Rev. 2011 Nov 9;(11):CD001270. DOI: 10.1002/14651858.CD001270.pub2.

- 11. Garg A, Turtle KM. Effectiveness of training health professionals in literature search skills using electronic health databases-a critical appraisal. Health Inf Libr J. 2003 Mar;20(1):33–41.

- 12. Arksey H, O'Malley L. Scoping studies: towards a methodological framework. Int J Soc Res Methodol. 2005;8(1):19–32. DOI: 10.1080/1364557032000119616.

- 13. Munn Z, Peters MDJ, Stern C, Tufanaru C, McArthur A, Aromataris E. Systematic review or scoping review? guidance for authors when choosing between a systematic or scoping review approach. BMC Med Res Methodol. 2018 Nov 19;18(1):143. DOI: 10.1186/s12874-018-0611-x.

- 14. Grant MJ, Booth A. A typology of reviews: an analysis of 14 review types and associated methodologies. Health Inf Libr J. 2009 Jun;26(2):91–108.

- 15. Khalil H, Peters M, Godfrey CM, McInerney P, Soares CB, Parker D. An evidence-based approach to scoping reviews. Worldviews Evid Based Nurs. 2016 Apr;13(2):118–23. DOI: 10.1111/wvn.12144.

- 16. Holly C. Other types of reviews: rapid, scoping, integrated, and reviews of text. In: Holly C, Salmond SW, Saimbert MK, eds. Comprehensive systematic review for advanced nursing practice. New York, NY: Springer Publishing Company; 2016. p. 321–37.

- 17. Peters MDJ, Godfrey CM, Khalil H, McInerney P, Parker D, Soares CB. Guidance for conducting systematic scoping reviews. Int J Evid Based Healthc. 2015 Sep;13(3):141–6. DOI: 10.1097/XEB.0000000000000050.

- 18. Tricco AC, Lillie E, Zarin W, O'Brien KK, Colquhoun H, Levac D, Moher D, Peters MDJ, Horsley T, Weeks L, Hempel S, Akl EA, Chang C, McGowan J, Stewart L, Hartling L, Aldcroft A, Wilson MG, Garritty C, Lewin S, Godfrey CM, Macdonald MT, Langlois EV, Soares-Weiser K, Moriarty J, Clifford T, Tunçalp Ö, Straus SE. PRISMA extension for scoping reviews (PRISMA-ScR): checklist and explanation. Ann Intern Med. 2018 Oct 2;169(7):467–73. DOI: 10.7326/M18-0850.

- 19. Lai NM, Teng CL. Self-perceived competence correlates poorly with objectively measured competence in evidence based medicine among medical students. BMC Med Educ. 2011 May 28;11:25. DOI: 10.1186/1472-6920-11-25.

- 20. Li J, Burnham JF, Lemley T, Britton RM. Citation analysis: comparison of Web of Science®, Scopus™, SciFinder®, and Google Scholar. J Electron Resour Med Libr. 2010 Jul;7(3):196–217. DOI: 10.1080/15424065.2010.505518.

- 21. Rodriguez RW. Comparison of indexing times among articles from medical, nursing, and pharmacy journals. Am J Health Syst Pharm. 2016 Apr 14;73(8):569–75. DOI: 10.2146/ajhp150319.

- 22. Hoffmann TC, Glasziou P, Boutron I, Milne R, Perera R, Moher D, Altman DG, Barbour V, MacDonald H, Johnston M, Lamb SE, Dixon-Woods M, McCulloch P, Wyatt JC, Chan AW, Michie S. Better reporting of interventions: template for intervention description and replication (TIDieR) checklist and guide. BMJ. 2014 Mar 7;348:g1687.

- 23. Erickson S, Warner ER. The impact of an individual tutorial session on MEDLINE use among obstetrics and gynaecology residents in an academic training programme: a randomized trial. Med Educ. 1998 May;32(3):269–73.

- 24. Hobbs DL, Guo R, Mickelsen W, Wertz CI. Assessment of library instruction to develop student information literacy skills. Radiol Technol. 2015 Jan-Feb;86(3):344–9.

- 25. Carlock D, Anderson J. Teaching and assessing the database searching skills of student nurses. Nurse Educ. 2007 Nov-Dec;32(6):251–5.

- 26. Gruppen LD, Rana GK, Arndt TS. A controlled comparison study of the efficacy of training medical students in evidence-based medicine literature searching skills. Acad Med. 2005 Oct;80(10):940–4.

- 27. Rosenfeld P, Salazar-Riera N, Vieira D. Piloting an information literacy program for staff nurses: lessons learned. Comput Inform Nurs. 2002 Nov-Dec;20(6):236–41.

- 28. Vogel EW, Block KR, Wallingford KT. Finding the evidence: teaching medical residents to search MEDLINE. J Med Libr Assoc. 2002 Jul;90(3):327–30.

- 29. Grant KL, Herrier RN, Armstrong EP. Teaching a systematic search strategy improves literature retrieval skills of pharmacy students. Am J Pharm Educ. 1996;60(3):281–6.

- 30. Bradigan PS, Mularski CA. End-user searching in a medical school curriculum: an evaluated modular approach. Bull Med Libr Assoc. 1989 Oct;77(4):348–56.

- 31. Sikora L, Fournier K, Rebner J. Exploring the impact of individualized research consultations using pre and posttesting in an academic library: a mixed methods study. Evid Based Libr Inf Pract. 2019;14(1):2–21. DOI: 10.18438/eblip29500.

- 32. Haynes RB, Johnston ME, McKibbon KA, Walker CJ, Willan AR. A program to enhance clinical use of MEDLINE: a randomized controlled trial. Online J Curr Clin Trials. 1993 May 11;Doc No 56.

- 33. Grant MJ, Brettle AJ. Developing and evaluating an interactive information skills tutorial. Health Inf Libr J. 2006 Jun;23(2):79–86.

- 34. Brettle A, Raynor M. Developing information literacy skills in pre-registration nurses: an experimental study of teaching methods. Nurse Educ Today. 2013 Feb;33(2):103–9.

- 35. Wallace MC, Shorten A, Crookes PA. Teaching information literacy skills: an evaluation. Nurse Educ Today. 2000 Aug;20(6):485–9.

- 36. Qureshi A, Bokhari SAH, Pirvani M, Dawani N. Understanding and practice of evidence based search strategy among postgraduate dental students: a preliminary study. J Evid Based Dent Pract. 2015 Jun;15(2):44–9.

- 37. Cooper C, Booth A, Varley-Campbell J, Britten N, Garside R. Defining the process to literature searching in systematic reviews: a literature review of guidance and supporting studies. BMC Med Res Methodol. 2018 Aug 14;18(1):85. DOI: 10.1186/s12874-018-0545-3.

- 38. Lefebvre C, Glanville J, Briscoe S, Littlewood A, Marshall C, Metzendorf MI, Noel-Storr A, Rader T, Shokraneh F, Thomas J, Wieland LS. Searching for and selecting studies. In: Higgins JPT, Thomas J, eds. Cochrane handbook for systematic reviews of interventions. Hoboken, NJ: Wiley Online Library; 2019. p. 67–108.

- 39. Reed JG, Baxter PM. Using reference databases. In: Cooper H, Hedges LV, Valentine JC, eds. The handbook of research synthesis and meta-analysis. New York, NY: Russell Sage Foundation; 2009. p. 73–102.

- 40. Albarqouni L, Glasziou P, Hoffmann T. Completeness of the reporting of evidence-based practice educational interventions: a review. Med Educ. 2018 Feb;52(2):161–70. DOI: 10.1111/medu.13410.

- 41. Möhler R, Köpke S, Meyer G. Criteria for reporting the development and evaluation of complex interventions in healthcare: revised guideline (CReDECI 2). Trials. 2015 May 3;16:204.

- 42. Higgins JPT, Thomas J, eds. Cochrane handbook for systematic reviews of interventions. 2nd ed. Hoboken, NJ: Wiley Online Library; 2019.

- 43. Aromataris E, Munn Z, eds. JBI reviewer's manual: the Joanna Briggs Institute [Internet]. Adelaide, Australia: The Institute; 2017 [cited 21 Oct 2019]. <https://wiki.joannabriggs.org/display/MANUAL/>.

- 44. Aamodt M, Huurdeman H, Strømme H. Librarian co-authored systematic reviews are associated with lower risk of bias compared to systematic reviews with acknowledgement of librarians or no participation by librarians. Evid Based Libr Inf Pract. 2019;14(4):103–27. DOI: 10.18438/eblip29601.

- 45. Townsend WA, Anderson PF, Ginier EC, MacEachern MP, Saylor KM, Shipman BL, Smith JE. A competency framework for librarians involved in systematic reviews. J Med Libr Assoc. 2017 Jul;105(3):268–75. DOI: 10.5195/jmla.2017.189.

- 46. Foster MJ, Halling TD, Pepper C. Systematic reviews training for librarians: planning, developing, and evaluating. J Eur Assoc Health Inf Libr. 2018;14(1):4–8.

- 47. Craig P, Dieppe P, Macintyre S, Michie S, Nazareth I, Petticrew M; Medical Research Council Guidance. Developing and evaluating complex interventions: the new Medical Research Council guidance. BMJ. 2008 Sep 29;337:a1655.

- 48. Just ML. Is literature search training for medical students and residents effective? a literature review. J Med Libr Assoc. 2012 Oct;100(4):270–6. DOI: 10.3163/1536-5050.100.4.008.

- 49. Booth A, O'Rourke AJ, Ford NJ. Structuring the pre-search reference interview: a useful technique for handling clinical questions. Bull Med Libr Assoc. 2000 Jul;88(3):239–46.

- 50. Jenkins M. Evaluation of methodological search filters - a review. Health Inf Libr J. 2004 Sep;21(3):148–63.

- 51. Damarell RA, May N, Hammond S, Sladek RM, Tieman JJ. Topic search filters: a systematic scoping review. Health Inf Libr J. 2019 Mar;36(1):4–40. DOI: 10.1111/hir.12244.

- 52. Hausner E, Guddat C, Hermanns T, Lampert U, Waffenschmidt S. Prospective comparison of search strategies for systematic reviews: an objective approach yielded higher sensitivity than a conceptual one. J Clin Epidemiol. 2016 Sep;77:118–24. DOI: 10.1016/j.jclinepi.2016.05.002.

- 53. Cooper C, Varley-Campbell J, Booth A, Britten N, Garside R. Systematic review identifies six metrics and one method for assessing literature search effectiveness but no consensus on appropriate use. J Clin Epidemiol. 2018 Jul;99:53–63. DOI: 10.1016/j.jclinepi.2018.02.025.

- 54. Rana GK, Bradley DR, Hamstra SJ, Ross PT, Schumacher RE, Frohna JG, Haftel HM, Lypson ML. A validated search assessment tool: assessing practice-based learning and improvement in a residency program. J Med Libr Assoc. 2011 Jan;99(1):77–81. DOI: 10.3163/1536-5050.99.1.013.

- 55. Bramer WM, de Jonge GB, Rethlefsen ML, Mast F, Kleijnen J. A systematic approach to searching: an efficient and complete method to develop literature searches. J Med Libr Assoc. 2018 Oct;106(4):531–41. DOI: 10.5195/jmla.2018.283.

- 56. Cooper C, Booth A, Britten N, Garside R. A comparison of results of empirical studies of supplementary search techniques and recommendations in review methodology handbooks: a methodological review. Syst Rev. 2017 Nov 28;6(1):234. DOI: 10.1186/s13643-017-0625-1.

- 57. Altman DG, Simera I. Using reporting guidelines effectively to ensure good reporting of health research. In: Moher D, Altman DG, Schulz KF, Simera I, Wager E, eds. Guidelines for reporting health research: a user's manual. Chichester, UK: John Wiley & Sons; 2014. p. 32–40.
