Abstract

Objective

Much has been invested in big data analytics to improve health and reduce costs. However, it is unknown whether these investments have achieved the desired goals. We performed a scoping review to determine the health and economic impact of big data analytics for clinical decision-making.

Materials and Methods

We searched Medline, Embase, Web of Science, and the National Health Service Economic Evaluation Database for relevant articles. We included peer-reviewed papers that reported the health economic impact of analytics that assist clinical decision-making. We extracted the economic methods and estimated impact and assessed the quality of the methods used. In addition, we estimated how many studies assessed “big data analytics” based on a broad definition of this term.

Results

The search yielded 12 133 papers but only 71 studies fulfilled all eligibility criteria. Only a few papers were full economic evaluations; many were performed during development. Papers frequently reported savings for healthcare payers but only 20% also included costs of analytics. Twenty studies examined “big data analytics” and only 7 reported both cost-savings and better outcomes.

Discussion

The promised potential of big data is not yet reflected in the literature, partly because few full and properly performed economic evaluations have been published. This scarcity, together with the lack of a clear definition of “big data,” makes it difficult for policy makers and healthcare professionals to determine which big data initiatives are worth implementing.

Keywords: big data; clinical decision-making; economics; data science; cost-effectiveness

INTRODUCTION

Extracting valuable knowledge from big healthcare data has been an important aim of many research endeavors and commercial entities. While no clear definition of big data is available, big data are often described according to their complexity and the characteristics of the data, such as the size of a dataset (Volume), the speed with which data are retrieved (Velocity), and the fact that the data come from many different sources (Variety).1 Bates et al2 emphasize that big data comprise both the data, with their large volume, variety, and velocity, and the use of analytics. In this respect, analytics are the “discovery and communication of patterns in data.”

Big data’s potential to assist clinical decision-making has been expressed for a variety of clinical fields, such as intensive care,3,4 the emergency department,2,5 cardiovascular diseases,6,7 dementia,8 diabetes,9 oncology,10–12 and asthma.13 Big data analytics could also lead to economic benefits.1,2,14–17 Providing timely, personalized care has been estimated to save the United States (US) healthcare system more than US$140 billion annually.18

Over the years, much has been invested to achieve the promised benefits of big data. For instance, the US has invested millions of dollars in its Big Data to Knowledge centers.19 In Europe, many calls and projects in the Horizon 2020 program have focused on the use of big data for better healthcare (eg, AEGLE, OACTIVE, BigMedilytics). In 2018, US$290 million was allocated to the All of Us initiative, which aims to personalize care using a wide variety of data sources (eg, genomic data, monitoring data, electronic health record data) from 1 million US citizens.20 Government investments are far exceeded by investments in “big data technologies” in the commercial sector.21 For example, IBM has invested billions of dollars in “Dr. Watson” and big data analytics,22 and Roche purchased Flatiron Health for US$1.9 billion in 2018.11

For optimal spending of scarce resources, economic evaluations can be used to assess the (potential) return on investment of novel technologies. Economic evaluations are comparative analyses of the costs and consequences of alternative courses of action.23 Economic evaluations that provide evidence on the health and economic impact of a technology can assist decision-making and justify further investments required to achieve a technology’s potential. Despite the promise that big data analytics can lead to savings, it is unclear whether this promise is corroborated by good evidence. Therefore, we aimed to determine the health and economic impact of big data analytics to support clinical decision-making. Given the absence of a clear definition for big data, we first determined how analytics impacted clinical practice. We then considered which of these analytics could be classified as big data analytics.

MATERIALS AND METHODS

The study follows the Preferred Reporting Items for Systematic reviews and Meta-Analyses extension for Scoping Reviews (PRISMA-ScR) Checklist.24

Search strategy and study inclusion

Since there is no consensus on the definition of big data,1 we widened the scope of our search to identify economic evaluations of a variety of analytics. An information specialist from the Academic Library at the Erasmus University Medical Centre was consulted when developing the search strategy (Supplementary Appendix A). In the search strategy, we included MeSH and title/abstract terms related to (big data) analytics, economic evaluations, and healthcare. These terms for (big data) analytics included artificial intelligence, tools used to extract patterns from big data (such as machine learning), and generic tools that use analytics to enable decision-making (such as clinical decision support). We combined these with terms such as economic evaluations and cost-effectiveness, and with terms to exclude studies that had no relation to healthcare (eg, veterinary care).

Four major databases were searched: Embase, Medline, Web of Science, and the NHS Economic Evaluation Database. We included all English-language, peer-reviewed, primary research papers and limited our search to studies of humans. The primary search was performed in March 2018 and updated in December 2019. Initial screening was performed by 1 author (LB). Thereafter, all studies whose inclusion was uncertain were discussed with 2 other authors (JA, WR). Studies were included if they met the following criteria: a) the study reported pattern discovery, interpretation, and communication to assist decision-making of clinical experts at the individual patient level; b) the study implemented analytics in clinical practice using computerized technology; and c) the study reported a monetary estimation of the potential impact of the analytics. Applying these 3 criteria led to the exclusion of studies that only reported time or computation savings and of studies that did not assist clinical experts at the individual patient level. Thus, we did not include studies that informed guidelines or policy makers. We also excluded analytics whose results could easily be printed on paper for use in clinical practice (eg, the Ottawa Ankle Rules) and studies that used data mining technologies only to extract records from an electronic health record (EHR), without performing any analyses of the extracted data.

Data extraction

Data extraction was performed by 1 author (LB). For a random 10% of papers, data were also extracted by a second author (WR) to check for concordance; there were no significant differences in the results. We extracted the following data for each study: patient population; description of the technology in which the analytics are embedded (eg, clinical decision support systems); the analytics used for discovery and communication of patterns in data; description of the data; the intervention and the comparator in the economic evaluation; the perspective, outcomes, and costs included; and results, recommendations, and conflicts of interest. Conflicts of interest included those reported in the paper (related and unrelated), commercial employment of authors, and funding by industry.

We also recorded the type of economic evaluation (ie, full or partial). A full economic evaluation compares 2 or more alternatives and includes both costs and consequences. Partial economic evaluations do not contain a comparison or exclude either costs or consequences.23 Thus, when a study reported cost estimates but no health outcomes, it was classified as partial. For full economic evaluations, we reported the ratio of incremental costs to incremental effects, known as the incremental cost-effectiveness ratio. Furthermore, economic evaluations can offer valuable insights for decision-makers at different stages of the development process (eg, during and after development).25 After development, they can assist healthcare payers in choosing the novel technologies in which to invest their constrained budget. During development, an “early” economic evaluation can assist developers by identifying minimal requirements of the technology, areas for further research, and viable exploitation and market access strategies.25–27
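The full/partial rule and the incremental cost-effectiveness ratio described above can be made concrete with a short sketch. This is a minimal illustration under our own assumptions, not the tool used for data extraction in this review; the `Evaluation` record, its field names, and the numbers in the example are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Evaluation:
    """Hypothetical summary of what a study reports; field names are illustrative."""
    n_alternatives: int         # intervention plus comparator(s)
    reports_costs: bool
    reports_consequences: bool  # health outcomes, eg, QALYs or life-years


def evaluation_type(ev: Evaluation) -> str:
    """'full' only if >= 2 alternatives are compared AND both costs and consequences are included."""
    if ev.n_alternatives >= 2 and ev.reports_costs and ev.reports_consequences:
        return "full"
    return "partial"


def icer(cost_new: float, cost_comparator: float,
         effect_new: float, effect_comparator: float) -> Optional[float]:
    """Incremental cost-effectiveness ratio (C_new - C_comp) / (E_new - E_comp).

    Returns None when the incremental effect is zero, because the ratio is undefined.
    """
    delta_effect = effect_new - effect_comparator
    if delta_effect == 0:
        return None
    return (cost_new - cost_comparator) / delta_effect


# A study with cost estimates but no health outcomes is partial.
print(evaluation_type(Evaluation(n_alternatives=2, reports_costs=True, reports_consequences=False)))  # partial
print(round(icer(12_000, 10_000, 5.2, 5.0)))  # 10000
```

In the hypothetical example, the new option costs 2 000 more and yields 0.2 additional QALYs, giving an ICER of about 10 000 per QALY gained. A negative ICER is ambiguous on its own (the new option may be dominant or dominated), so incremental costs and effects are usually reported alongside the ratio.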

In our results, we also distinguished the stage of development in which the economic evaluation was performed. If a study provided recommendations for developing a technology that did not yet exist, it was categorized as “before” development. Studies were categorized as “during” development when the economic evaluation was performed and presented alongside development, unless the aim of the study was to inform purchasing decisions of funding bodies (eg, from the perspective of the National Health Service) or the analytics were already implemented in clinical practice. All remaining studies were categorized as being performed “after” development.
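The categorization rules above can be read as a simple decision procedure, sketched below. The sketch assumes that each rule reduces to a yes/no judgment by the reviewer; the function and parameter names are hypothetical and do not come from the review.

```python
def development_stage(recommends_nonexistent_technology: bool,
                      presented_alongside_development: bool,
                      informs_funding_body_purchase: bool,
                      implemented_in_clinical_practice: bool) -> str:
    """Assign 'before', 'during', or 'after' following the rules described above.

    All parameter names are hypothetical labels for the judgments a reviewer makes.
    """
    # Recommendations for a technology that does not yet exist -> 'before'.
    if recommends_nonexistent_technology:
        return "before"
    # Presented alongside development, unless aimed at funders' purchasing
    # decisions or already implemented in clinical practice -> 'during'.
    if presented_alongside_development and not (
        informs_funding_body_purchase or implemented_in_clinical_practice
    ):
        return "during"
    # Everything else -> 'after'.
    return "after"


print(development_stage(False, True, False, False))  # during
print(development_stage(False, True, True, False))   # after
```

Ordering the checks this way mirrors the text: the “during” category is defined by exclusion, and “after” is the residual category.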

We also performed an analysis to identify economic evaluations that might be classified as “big data analytics.” We used broad criteria to select the highest possible number of papers, thereby sketching a best-case scenario. We defined these criteria based on the volume, variety, and velocity of the data. We classified papers as having a big volume when they used next generation sequencing data or EHR or claims data with a sample size of more than 100 000 units (eg, patients, admissions); all imaging papers published after 2013 were also classified as having a big volume. Papers were included because of their variety when they combined multiple data types (eg, structured and unstructured data, or multiple data sources). All papers that used monitoring data and were published after 2013 were included because they might fulfil the velocity criterion.
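As an illustration only, the broad volume, velocity, and variety criteria can be written as a rule-based check. The data-type labels (`ngs`, `medical_history`, `imaging`, `monitoring`), the `Study` record, and the reading of “after 2013” as a publication year of 2014 or later are assumptions made for this sketch.

```python
from dataclasses import dataclass
from typing import FrozenSet, Set


@dataclass(frozen=True)
class Study:
    """Hypothetical summary of a study; data-type labels are illustrative."""
    data_types: FrozenSet[str]   # eg, {"ngs"}, {"medical_history"}, {"imaging"}, {"monitoring"}
    sample_size: int             # units such as patients or admissions
    publication_year: int
    combines_data_types: bool = False  # eg, structured + unstructured, or multiple sources


def big_data_criteria(study: Study) -> Set[str]:
    """Return which of the broad volume/velocity/variety criteria a study meets."""
    met: Set[str] = set()
    # Volume: NGS data, medical history (EHR or claims) data with N > 100 000,
    # or any imaging study published after 2013.
    if "ngs" in study.data_types:
        met.add("volume")
    if "medical_history" in study.data_types and study.sample_size > 100_000:
        met.add("volume")
    if "imaging" in study.data_types and study.publication_year > 2013:
        met.add("volume")
    # Velocity: monitoring data in studies published after 2013.
    if "monitoring" in study.data_types and study.publication_year > 2013:
        met.add("velocity")
    # Variety: multiple data types combined.
    if study.combines_data_types:
        met.add("variety")
    return met


example = Study(frozenset({"medical_history"}), sample_size=250_000,
                publication_year=2015, combines_data_types=True)
print(sorted(big_data_criteria(example)))  # ['variety', 'volume']
```

Under this reading, a study counts as a candidate for “big data analytics” when at least one criterion is met, that is, when the returned set is non-empty.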

RESULTS

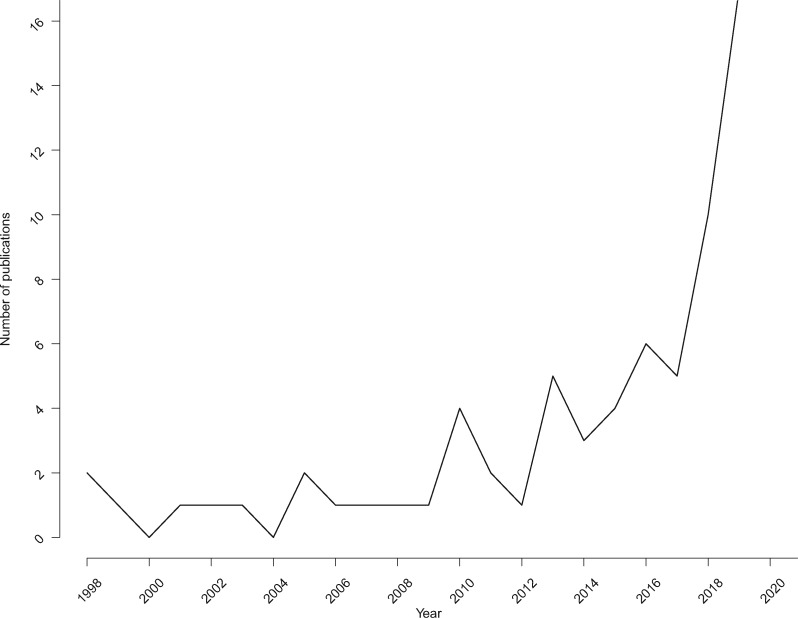

The initial search yielded 12 133 records, of which 71 papers were included in the final analysis after title/abstract and full-text screening (Figure 1). The most important reasons for excluding full-text papers were the absence of monetary estimates and the absence of analytics.

Figure 1.

PRISMA flowchart.

Summary of papers

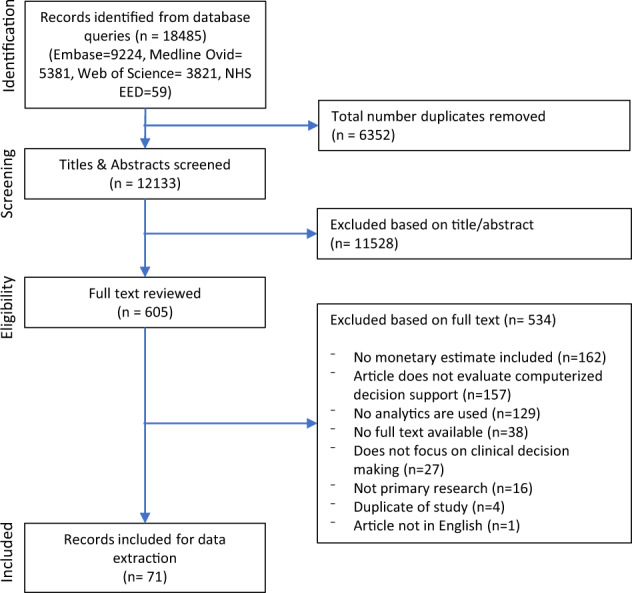

We found that all papers could be classified into 4 categories according to the type of data used: medical history databases (eg, data from EHRs, clinical trial databases, claims databases), imaging data, monitoring data (ie, continuous data collection using sensors), and omics data (eg, proteomics, genomics, transcriptomics, metabolomics) (Table 1). Almost all papers originated from North America and Europe (87%). The US was well represented, with 39 papers mainly focusing on the use of medical history and omics data. Considerably fewer papers originated from Europe (n = 20), while few or no papers originated from South America, Australia, and Africa. There has been a clear increase in the number of publications from 2016 onwards (Figure 2).

Table 1.

Summary of all records according to data type used

| | Total | Medical history | Imaging | Monitoring | Omics |
|---|---|---|---|---|---|
| Total | 71 | 44 | 8 | 8 | 11 |
| Continent | | | | | |
| North America | 42 | 27 | 3 | 3 | 9 |
| Europe | 20 | 11 | 2 | 5 | 2 |
| Asia | 7 | 5 | 2 | – | – |
| Africa | 1 | – | 1 | – | – |
| South America | 1 | 1 | – | – | – |
| Australia | – | – | – | – | – |
| Type of economic evaluation | | | | | |
| Full | 22 | 8 | 5 | 2 | 7 |
| Partial | 49 | 36 | 3 | 6 | 4 |
| Perspective | | | | | |
| Payer perspective | 7 | 3 | – | – | 4 |
| National healthcare system | 8 | 3 | 1 | 2 | 2 |
| Provider perspective | 3 | 1 | – | 1 | – |
| Other | 2 | – | 2 | – | – |
| No perspective reported | 52 | 37 | 5 | 5 | 5 |
| Stage of development | | | | | |
| Before development | 1 | 1 | – | – | – |
| During development | 33 | 31 | 2 | – | – |
| After development | 37 | 12 | 6 | 8 | 11 |
| Measure of effectiveness | | | | | |
| QALYs and life-years | 15 | 5 | 4 | 2 | 4 |
| Model performance | 29 | 27 | 2 | – | – |
| Other | 20 | 10 | 2 | 3 | 5 |
| Not included | 7 | 2 | – | 3 | 2 |
| Incremental health effects | | | | | |
| Decrease in effects | 5 | 2 | 1 | – | 2 |
| No difference | 5 | 3 | – | 2 | – |
| Increase in effects | 41 | 23 | 7 | 4 | 7 |
| Not included | 20 | 16 | – | 2 | 2 |
| Incremental costs | | | | | |
| Savings | 54 | 39 | 5 | 5 | 5 |
| No difference | 5 | 2 | – | 3 | – |
| Increase in costs | 12 | 3 | 3 | – | 6 |
| Includes costs of implementing analytics | 22 | 2 | 5 | 5 | 10 |
| Recommendations for research & development | | | | | |
| Focus development on improving the analytics | 30 | 23 | 2 | 2 | 3 |
| Validation and feasibility of implementation | 19 | 13 | 3 | 2 | 1 |
| Development for other clinical areas or subgroups | 11 | 8 | 2 | 1 | – |
| Pricing and economics of the analytics | 9 | 2 | 3 | – | 4 |
| Cost-effectiveness research | 5 | 4 | – | 1 | – |
| Development of the intervention that follows | 3 | 3 | – | – | – |
| Multidisciplinary collaboration | 2 | 2 | – | – | – |
| Refers to big data in the text | 6 | 6 | – | – | – |
| Potential to be classified as big data analytics | 20 | 8 | 5 | 4 | 3 |

Abbreviations: QALYs, quality-adjusted life-years.

Figure 2.

Number of publications according to the year of publication.

Most studies were partial economic evaluations and found that analytics may improve outcomes and generate savings. A perspective was rarely reported (52 of 71 studies reported none), and no study reported a societal perspective. Almost all partial economic evaluations reported savings, compared with half of the full economic evaluations. When studies were grouped according to conflict of interest, no significant differences were found in the percentage of studies that reported savings and improved health. Of the economic evaluations without a conflict of interest, 61% were performed during development, compared with 22% of those with a conflict of interest. All but 1 reported savings.

In the following paragraphs we will discuss economic results for all 4 data types. An overview of the economic results for all papers can be found in Supplementary Appendix B. A detailed description of all analytics and data used can be found in Supplementary Appendix C.

Analytics for medical history data

The first category consisted of studies that used historic databases containing information on patient demographics and medical history (eg, test results and drug prescriptions) (n = 44).28–71 All papers presented predictive or prescriptive analytics that assist clinical decision-making using a variety of techniques, such as regression, support vector machines, and Markov decision processes. The risk of readmission (n = 9) and problems in the emergency department (n = 5) were most often examined, and 1 study addressed pediatric care.42 Both structured data, such as demographics and laboratory results, and unstructured data, such as free text messages (n = 4),37,40,44,50 were used, and the sample size varied from N = 80 patients65 to more than 800 000 urine samples.68 This was the only category in which authors referred to the term “big data” (n = 6).32,35,37,40,50,60

Most of the studies in this category were partial economic evaluations (n = 36), and most were conducted during development (n = 31). Results were often limited to model performance (eg, classification accuracy, area under the curve) and were rarely translated into health benefits such as quality-adjusted life-years. Almost all studies found that the analytics could lead to monetary savings, yet only 2 papers included implementation costs of the analytics.33,62 These costs could, for instance, consist of licensing costs and costs of implementing analytics within a hospital system. Authors often recommended continuing development and focusing on improving the analytics. Furthermore, the need for further validation prior to implementation was frequently emphasized.

Analytics for imaging data

Eight studies presented predictive analytics for 7 different types of imaging data (CT, MRI, chest radiographs, digital cervical smears, mammograms, digital photographs, and ventilation-perfusion lung scans).72–79 The proportions of full economic evaluations73,75–77,79 and of studies performed after development73–76,78 were both higher than in the first group of papers, which used medical history data. Four studies measured effects in (quality-adjusted) life-years,73,75–77 and more than half of the studies included implementation costs of analytics.73–77 The proportion of studies that found the analytics could lead to cost-savings was again high (63%).72–74,78,79 As with the studies that used medical history data, authors in this category emphasized the need for further validation prior to implementation. However, several studies also emphasized the balance between the requirements of the technologies (eg, test sensitivity) and potential health benefits and cost-savings.75,76,79

Analytics for monitoring data

Monitoring data collected with a variety of devices and sensors (eg, airflow monitoring, continuous glucose monitoring, continuous performance tests, infrared cameras, vital signs monitors) were used in 8 studies.80–87 Five of these studies reported descriptive analytics that monitored patient outcomes and compared them with a range or reference value.81,83–85,87 This group of papers differed from those using imaging and medical history data in that most analytics were implemented in a medical device. All technologies were evaluated after development, and most evaluations were partial. Roughly half of the studies reported improved effects,82–84,86 reported savings,82,84–87 and included costs of the device and/or analytics.81,86,87

Analytics for omics data

Eleven papers reported the potential impact of predictive and prescriptive analytics of omics data, often with the aim of applying them as a test in clinical practice.88–98 Only 2 of these papers focused on the use of next generation sequencing data,94,96 and 1 paper combined multiple types of data (pharmacogenomics, literature, medical history).89 The remaining papers used microarray data, and all the analytics that were adopted as a test were used in oncology (n = 9).88,90–93,95–98

Compared with the other categories, the proportion of full economic evaluations was high.90,92,93,95–98 In half of the studies, the perspective used was that of the payer or the healthcare system. Furthermore, as with the studies that used monitoring data, all economic evaluations were performed after development. Seven studies reported increased effects,88,90–93,96,97 and 6 studies reported that use of the analytics would increase costs.90,93–95,97,98 Only 1 study89 did not include the costs of the analytics or of the test in which the analytics were implemented. Moreover, unlike the other categories, several papers discussed price thresholds at which the analytics or the test would be cost-neutral or dominant (ie, more effects and lower costs), or at which they would be cost-effective (ie, where the incremental cost-effectiveness ratio would be below a specific cost-effectiveness threshold).
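The price thresholds mentioned above follow from simple algebra if the price of the test is assumed to enter the incremental costs linearly, per patient, and the test is assumed to improve outcomes. With incremental effect ΔE > 0, other incremental costs ΔC (negative for savings), and a cost-effectiveness threshold λ, the cost-neutral price is -ΔC and the maximum cost-effective price is λ·ΔE - ΔC. The sketch below encodes these assumptions; it is not taken from any of the included studies, and all names and inputs are hypothetical.

```python
def threshold_prices(delta_effect: float, delta_other_costs: float,
                     wtp_threshold: float) -> dict:
    """Per-patient price thresholds for a test whose price enters costs linearly.

    delta_effect:      incremental effect per patient (eg, QALYs); assumed > 0
    delta_other_costs: incremental costs per patient excluding the test price
                       (negative values are downstream savings)
    wtp_threshold:     cost-effectiveness threshold per unit of effect
    """
    if delta_effect <= 0:
        raise ValueError("these thresholds assume the test improves outcomes")
    # Cost-neutral: price + delta_other_costs == 0.
    cost_neutral = -delta_other_costs
    # Cost-effective: (price + delta_other_costs) / delta_effect <= wtp_threshold.
    cost_effective = wtp_threshold * delta_effect - delta_other_costs
    return {"cost_neutral": cost_neutral, "cost_effective": cost_effective}


# Hypothetical inputs: 0.05 QALYs gained, 300 saved elsewhere, threshold 50 000 per QALY.
print(threshold_prices(0.05, -300.0, 50_000))
```

With these hypothetical inputs, the test would be dominant at any price below 300 per patient and cost-effective up to about 2 800 per patient.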

Big data analytics

We found that less than a third of all papers (n = 20) might fulfil the criteria for classification as “big data analytics” (Table 2). Most papers were included because their volume might be large enough to be considered big data (eg, N > 100 000, imaging data), and studies that used monitoring data were included because of the potential speed with which the data are collected (velocity). Eight of these papers used medical history data,32,37,40,44,45,50,60,68 5 used imaging data,72–74,76,78 4 used monitoring data,80,82,83,87 and 3 used omics data.89,94,96 Most were partial economic evaluations (n = 15), and 12 were performed after development. All but 5 studies44,76,80,83,94 corroborated expectations that big data analytics could result in cost-savings, which varied from US$126 per patient89 to more than US$500 million for the entire US healthcare system.72 However, only 6 papers included the costs of the analytics.73,74,76,87,94,96

Table 2.

Classification of papers that could be defined as “big data” studies based on the criteria of volume, velocity, and variety. These papers represent a subset of the initial 71 papers.

| Article | Volume: next generation sequencing data | Volume: medical history data with N > 100 000 | Volume: imaging data published after 2013 | Velocity: monitoring data published after 2013 | Variety: combines multiple data types |
|---|---|---|---|---|---|

| Burton (2019) | X | ||||

| Duggal (2016) | X | ||||

| Golas (2018) | X | ||||

| Hunter-Zinck (2019) | X | X | |||

| Jamei (2017) | X | ||||

| Lee (2015) | X | X | |||

| Rider (2019) | X | ||||

| Wang (2019) | X | ||||

| Carballido-Gamio (2019) | X | ||||

| Crespo (2019) | X | ||||

| Philipsen (2015) | X | ||||

| Sato (2014) | X | ||||

| Sreekumari (2019) | X | ||||

| Brocklehurst (2018) | X | ||||

| Calvert (2017) | X | ||||

| Hollis (2018) | X | ||||

| Sánchez-Quiroga (2018) | X | ||||

| Brixner (2016) | X | ||||

| Mathias (2016) | X | ||||

| Nicholson (2019) | X | ||||

| Total | 2 | 6 | 5 | 4 | 5 |

DISCUSSION

In this review, we aimed to determine the health and economic impact of big data analytics for clinical decision-making. We found that expectations of big data analytics with respect to savings and health benefits are not yet reflected in the academic literature. Most studies are partial economic evaluations, and the costs of implementing analytics are rarely included in the calculations. To ensure optimal decision-making, guidelines recommend a full economic evaluation that includes all relevant costs for payers (eg, costs of analytics). Our results align with earlier research noting that deployment costs are rarely considered, even though these costs can be a major barrier to successfully implementing analytics.99

We found that only a small subset of studies might be classified as big data analytics, even though we adopted a broad definition of big data to maximize the number of studies that would be considered studies of big data. Therefore, the actual number would likely be even lower if papers were assessed by a panel of experts. This corroborates a previous study from 2018, which found that quantified benefits of big data analytics are scarce.1

The studies were grouped into 4 categories according to the data sources used, which were similar to those reported by Mehta et al.1 Two main differences were that we grouped all databases that reported information relating to a patient’s medical history (instead of separating claims and EHR data) and that we included a category of studies that evaluated analytics for monitoring data generated in the hospital. This category was not available in the classification used by Mehta et al. However, they reported some categories (eg, social media and wearable sensors) that are not yet represented in the literature on economic evaluations. None of the studies evaluated technologies that used patient-generated data collected using other methods, such as healthcare trackers.

Recommendations for future economic evaluations

Good policy decisions about the use of analytics require knowledge of the impact that the analytics will have on costs and health outcomes. With this in mind, policy makers could provide incentives to developers of analytics to perform good-quality economic evaluations. Economic evaluations of analytics are still scarce, and the available studies often did not adhere to best-practice guidelines, which limits their value for decision-making. Often a partial instead of a full economic evaluation was performed, the costs of purchasing and implementing the analytics were excluded, or only intermediate outcomes were reported. For payers and policy makers, excluding the costs of the analytics could, for instance, result in an underestimation of the investment needed to implement the technology or an overestimation of its financial benefits. By means of incentives, policy makers could stimulate developers to adhere to guidelines and best-practice recommendations (eg, Drummond,23 Buisman,26 Morse99). This could improve the quality of results and thus their ability to inform decision-making.

We found a relatively high number of studies that performed an economic evaluation of analytics during development compared with other fields (eg, drug or medical device development).100,101 A possible explanation is the high cost of validating and deploying analytics, which are known to be important barriers to implementation.11,99 Few artificial intelligence and big data analytics solutions have been implemented successfully.3,11 To overcome this challenge, Frohlich et al recommend the use of pilot trials to illustrate the potential effectiveness and efficiency of analytics; these results can then be used to find new investors for clinical research.11 In our results, we also saw that studies without a conflict of interest (eg, from academia) were more often published during development, which might be explained by the need to attract funding for further development.

Defining big data to assist evaluation

Without consensus on a definition, no objective assessment can be made of whether investments following the introduction of big data in healthcare have realized expectations, whether they can be considered good value for money, and whether future investments should be stimulated. In our analysis, we found it likely that only a small number of studies have performed an economic evaluation of big data analytics. However, this absolute number is uncertain, since a clear definition of “big data” is still lacking almost 10 years after its introduction in healthcare. For policy makers and those who wish to practice evidence-based medicine, it is essential to know where and how big data analytics would result in health and financial benefits before investing in products described in mainstream media as “big data” technologies (eg, Afirma GSC, YouScript).102,103 This remains challenging without consensus on the definition. Therefore, we recommend that experts in the field reconsider the possibility of generating a quantitative definition of big data in healthcare.

Defining big data is no easy task, and we think that a definition will only be accepted by the healthcare field if it is developed by a multidisciplinary collaboration of experts from academia, healthcare organizations, insurers, federal entities, policy makers, and commercial parties. Many authors have described the term in slightly different words,1,104 some have tried to quantify it,105 and others have purposefully refrained from doing so.14 Auffray et al14 stated in 2016 that a single definition of big data would probably be “too abstract to be useful” and proposed a workable definition in which big data covers the high volume and diversity of data sources managed with best-practice technologies such as advanced analytics solutions. However, descriptions such as “best-practice,” “advanced,”14 or “traditional”106 are time-dependent. What is “traditional” in 2014 is not necessarily “traditional” in 2020. Thus, perhaps a definition of big data should quantify the “data” element, include a concrete list of analytics that are considered advanced or best practice, and be time-dependent and updated regularly. We recognize that it might be extremely difficult to achieve wide consensus, and we do not think this can be realized without support from academic, clinical, policy, federal, and commercial stakeholders.

Limitations

One limitation of our research is that economic evaluations do not always describe the analytics element of the intervention that was being evaluated. For instance, in studies of omics data, the papers generally referred to the tool (eg, Afirma GSC) but did not describe the analytics used in this tool. One way to ensure that economic evaluations that assess a big data technology are included in future reviews would be to specify, for each data type, explicit tools that might contain big data analytics (eg, Afirma GSC) in the search strategy. However, such a list is likely to be very long, and compiling it will also be challenging without a definition of big data. Research into the economic value of big data analytics might also be facilitated by better reporting in economic evaluations of the data and analytics used for development. Another limitation is that studies that did not refer to cost estimations in their title/abstract were excluded. This could have led to the exclusion of studies that performed a cost estimation but did not report it as a primary outcome in the abstract. A possible solution for future research would be to include studies for full-text screening when 1 of the authors is a health economist or is employed in a health policy or economics department.

Also, since our review included only published economic evaluations, it is possible that our results are influenced by the absence of an incentive to submit an academic paper and by publication bias. Commercial developers do not always have an incentive to publish but do have an incentive to market their products using the results of economic analyses. If these studies do not include costs of analytics in their estimation of benefits, this would only underline the importance of our recommendations. It is also possible that studies that do not find a technology cost-effective include costs of analytics more often and are rejected for publication because of negative results.

Methodological limitations were that study selection and data extraction were performed by a single reviewer, owing to the large number of hits from the search strategy, and that Business Source Complete (BSC) was not included in the literature search. While this may have resulted in the exclusion of some relevant studies, we expect this number to be small and not to affect the conclusions of our study. Our search was limited to analytics for decision-making of clinical experts at the individual patient level. There are many other ways in which analytics could improve health, such as managing epidemics and policy making to improve population health, which were beyond the scope of this article. Finally, it is possible that developers sometimes have a valid reason for not including costs of analytics, which we did not consider in this study.

CONCLUSION

To our knowledge, this is the first study to assess the health and economic impact of big data analytics for clinical decision-making. Despite high expectations, the potential benefits of big data analytics for clinical practice cannot yet be corroborated by the academic literature. We found that economic evaluations were sometimes used to estimate the potential of analytics. However, many studies were partial economic evaluations and did not include the costs of implementing analytics. Therefore, economic evaluations that adhere to best-practice guidelines should be encouraged. The scarcity of such evaluations and the lack of an appropriate definition of big data complicate the justification of future expenditure and make it exceedingly difficult to determine whether expectations of big data analytics have thus far been realized. We therefore recommend that key experts in the field of data science in healthcare reconsider the possibility of defining big data analytics for healthcare.

FUNDING

This work was supported by the European Union’s Horizon 2020 Research and Innovation Programme grant number 644906.

AUTHOR CONTRIBUTIONS

LB contributed to developing the research question, the search strategy, execution of the search, performed study selection, data extraction, and revision of the manuscript. JA contributed to developing the research question, assisted in study selection and data extraction, and revision of the manuscript. CUG contributed to the conceptual design of the research question and study and revision of the manuscript. WR contributed to developing the research question, assisted in study selection and data extraction, and revision of the manuscript. All authors (LB, JA, CUG, and WR) approved the final version for publication.

SUPPLEMENTARY MATERIAL

Supplementary material is available at Journal of the American Medical Informatics Association online.


ACKNOWLEDGMENTS

We would like to thank Wichor Bramer and Maarten Engel, medical information specialists at the Erasmus Medical Centre-Erasmus University Medical Centre, Rotterdam, for their assistance in deriving the search strategy and the systematic retrieval of data.

CONFLICT OF INTEREST STATEMENT

Carin Uyl-de Groot reports unrestricted grants from Boehringer Ingelheim, Celgene, Janssen-Cilag, Genzyme, Astellas, Sanofi, Roche, AstraZeneca, Amgen, Gilead, Merck, and Bayer, outside the submitted work. The remaining authors have no competing interests to declare.

REFERENCES

- 1. Mehta N, Pandit A. Concurrence of big data analytics and healthcare: a systematic review. Int J Med Inform 2018; 114: 57–65.

- 2. Bates DW, Saria S, Ohno-Machado L, et al. Big data in health care: using analytics to identify and manage high-risk and high-cost patients. Health Aff (Millwood) 2014; 33 (7): 1123–31.

- 3. Sanchez-Pinto LN, Luo Y, Churpek MM. Big data and data science in critical care. Chest 2018; 154 (5): 1239–48.

- 4. Celi LA, Mark RG, Stone DJ, et al. “Big data” in the intensive care unit. Closing the data loop. Am J Respir Crit Care Med 2013; 187 (11): 1157–60.

- 5. Janke AT, Overbeek DL, Kocher KE, et al. Exploring the potential of predictive analytics and Big Data in emergency care. Ann Emerg Med 2016; 67 (2): 227–36.

- 6. Hemingway H, Asselbergs FW, Danesh J, et al. Big data from electronic health records for early and late translational cardiovascular research: challenges and potential. Eur Heart J 2018; 39 (16): 1481–95.

- 7. Rumsfeld JS, Joynt KE, Maddox TM. Big data analytics to improve cardiovascular care: promise and challenges. Nat Rev Cardiol 2016; 13 (6): 350–9.

- 8. Hofmann-Apitius M. Is dementia research ready for big data approaches? BMC Med 2015; 13 (1): 145.

- 9. Alyass A, Turcotte M, Meyre D. From big data analysis to personalized medicine for all: challenges and opportunities. BMC Med Genomics 2015; 8 (1): 33.

- 10. Beckmann JS, Lew D. Reconciling evidence-based medicine and precision medicine in the era of big data: challenges and opportunities. Genome Med 2016; 8 (1): 134.

- 11. Fröhlich H, Balling R, Beerenwinkel N, et al. From hype to reality: data science enabling personalized medicine. BMC Med 2018; 16 (1): 150.

- 12. Phillips KA, Trosman JR, Kelley RK, et al. Genomic sequencing: assessing the health care system, policy, and big-data implications. Health Aff (Millwood) 2014; 33 (7): 1246–53.

- 13. Prosperi M, Min JS, Bian J, et al. Big data hurdles in precision medicine and precision public health. BMC Med Inform Decis Mak 2018; 18 (1): 139.

- 14. Auffray C, Balling R, Barroso I, et al. Making sense of big data in health research: towards an EU action plan. Genome Med 2016; 8 (1): 71.

- 15. Roski J, Bo-Linn GW, Andrews TA. Creating value in health care through big data: opportunities and policy implications. Health Aff (Millwood) 2014; 33 (7): 1115–22.

- 16. Raghupathi W, Raghupathi V. Big data analytics in healthcare: promise and potential. Health Inf Sci Syst 2014; 2 (1): 3.

- 17. Yang C, Kong G, Wang L, et al. Big data in nephrology: are we ready for the change? Nephrology 2019; 24 (11): 1097–102.

- 18. Groves P, Kayyali B, Knott D, et al. The ‘big data’ revolution in healthcare: Accelerating value and innovation. McKinsey & Company; 2013.

- 19. National Institutes of Health. Big Data to Knowledge [Internet]. Bethesda: National Institutes of Health; 2020 [cited 2019 January 8]. https://commonfund.nih.gov/bd2k

- 20. Morello L, Guglielmi G. US science agencies set to win big in budget deal. Nature 2018; 555 (7698): 572. https://www.nature.com/articles/d41586-018-03700-9

- 21. Banks MA. Sizing up big data. Nat Med 2020; 26 (1): 5–6.

- 22. Kisner J. Creating Shareholder Value with AI? Not so Elementary, My Dear Watson [Internet]. Jefferies Group LLC; 2017 [cited 2019 January 8]. https://javatar.bluematrix.com/pdf/fO5xcWjc

- 23. Drummond MF, Sculpher MJ, Claxton K, Stoddart GL, Torrance GW. Methods for the Economic Evaluation of Health Care Programmes. 3rd ed. New York: Oxford University Press; 2005.

- 24. Tricco AC, Lillie E, Zarin W, et al. PRISMA extension for scoping reviews (PRISMA-ScR): checklist and explanation. Ann Intern Med 2018; 169 (7): 467–73.

- 25. Ijzerman MJ, Steuten L. Early assessment of medical technologies to inform product development and market access. Appl Health Econ Health Policy 2011; 9 (5): 331–47.

- 26. Buisman LR, Rutten-van Mölken M, Postmus D, et al. The early bird catches the worm: early cost-effectiveness analysis of new medical tests. Int J Technol Assess Health Care 2016; 32 (1–2): 46–53.

- 27. Pietzsch JB, Paté-Cornell ME. Early technology assessment of new medical devices. Int J Technol Assess Health Care 2008; 24 (1): 36–44.

- 28. Ashfaq A, Sant’Anna A, Lingman M, et al. Readmission prediction using deep learning on electronic health records. J Biomed Inform 2019; 10 (97).

- 29. Bennett CC, Hauser K. Artificial intelligence framework for simulating clinical decision-making: a Markov decision process approach. Artif Intell Med 2013; 57 (1): 9–19.

- 30. Bremer V, Becker D, Kolovos S, et al. Predicting therapy success and costs for personalized treatment recommendations using baseline characteristics: data-driven analysis. J Med Internet Res 2018; 20 (8): e10275.

- 31. Brisimi TS, Xu T, Wang T, et al. Predicting diabetes-related hospitalizations based on electronic health records. Stat Methods Med Res 2019; 28 (12): 3667–82.

- 32. Burton RJ, Albur M, Eberl M, Cuff SM. Using artificial intelligence to reduce diagnostic workload without compromising detection of urinary tract infections. BMC Med Inform Decis Mak 2019; 19 (1): 171.

- 33. Chae YM, Lim HS, Lee JH, et al. Development of an intelligent laboratory information system for community health promotion center. Medinfo 2001; 10: 425–8.

- 34. Chi CL, Street WN, Katz DA. A decision support system for cost-effective diagnosis. Artif Intell Med 2010; 50 (3): 149–61.

- 35. Choi HS, Choe JY, Kim H, et al. Deep learning based low-cost high-accuracy diagnostic framework for dementia using comprehensive neuropsychological assessment profiles. BMC Geriatr 2018; 18 (1): 234.

- 36. Cooper GF, Abraham V, Aliferis CF, et al. Predicting dire outcomes of patients with community acquired pneumonia. J Biomed Inform 2005; 38 (5): 347–66.

- 37. Duggal R, Shukla S, Chandra S, et al. Predictive risk modelling for early hospital readmission of patients with diabetes in India. Int J Diabetes Dev Ctries 2016; 36 (4): 519–28.

- 38. Elkin PL, Liebow M, Bauer BA, et al. The introduction of a diagnostic decision support system (DXplain™) into the workflow of a teaching hospital service can decrease the cost of service for diagnostically challenging Diagnostic Related Groups (DRGs). Int J Med Inform 2010; 79 (11): 772–7.

- 39. Escudero J, Ifeachor E, Zajicek JP, et al. Machine learning-based method for personalized and cost-effective detection of Alzheimer’s disease. IEEE Trans Biomed Eng 2013; 60 (1): 164–8.

- 40. Golas SB, Shibahara T, Agboola S, et al. A machine learning model to predict the risk of 30-day readmissions in patients with heart failure: a retrospective analysis of electronic medical records data. BMC Med Inform Decis Mak 2018; 18 (1): 44.

- 41. Govers TM, Rovers MM, Brands MT, et al. Integrated prediction and decision models are valuable in informing personalized decision making. J Clin Epidemiol 2018; 104: 73–83.

- 42. Grinspan ZM, Patel AD, Hafeez B, et al. Predicting frequent emergency department use among children with epilepsy: a retrospective cohort study using electronic health data from 2 centers. Epilepsia 2018; 59 (1): 155–69.

- 43. Harrison D, Muskett H, Harvey S, et al. Development and validation of a risk model for identification of non-neutropenic, critically ill adult patients at high risk of invasive Candida infection: the Fungal Infection Risk Evaluation (FIRE) Study. Health Technol Assess 2013; 17 (3): 1–156.

- 44. Hunter-Zinck HS, Peck JS, Strout TD, et al. Predicting emergency department orders with multilabel machine learning techniques and simulating effects on length of stay. J Am Med Inform Assoc 2019; 26 (12): 1427–36.

- 45. Jamei M, Nisnevich A, Wetchler E, et al. Predicting all-cause risk of 30-day hospital readmission using artificial neural networks. PLoS One 2017; 12 (7): e0181173.

- 46. Javitt JC, Steinberg G, Locke T, et al. Using a claims data-based sentinel system to improve compliance with clinical guidelines: results of a randomized prospective study. Am J Manag Care 2005; 11: 93–102.

- 47. Kocbek P, Fijacko N, Soguero-Ruiz C, et al. Maximizing interpretability and cost-effectiveness of Surgical Site Infection (SSI) predictive models using feature-specific regularized logistic regression on preoperative temporal data. Comput Math Methods Med 2019; 2019: 1–13.

- 48. Kofoed K, Zalounina A, Andersen O, et al. Performance of the TREAT decision support system in an environment with a low prevalence of resistant pathogens. J Antimicrob Chemother 2009; 63 (2): 400–4.

- 49. Ladabaum U, Wang G, Terdiman J, et al. Strategies to identify the Lynch syndrome among patients with colorectal cancer: a cost-effectiveness analysis. Ann Intern Med 2011; 155 (2): 69–79.

- 50. Lee EK, Atallah HY, Wright MD, et al. Transforming hospital emergency department workflow and patient care. Interfaces 2015; 45 (1): 58–82.

- 51. Lee HK, Jin R, Feng Y, et al. An analytical framework for TJR readmission prediction and cost-effective intervention. IEEE J Biomed Health Inform 2019; 23 (4): 1760–72.

- 52. Lin Y, Huang S, Simon GE, et al. Cost-effectiveness analysis of prognostic-based depression monitoring. IISE Trans Healthcare Syst Eng 2019; 9 (1): 41–54.

- 53. Ling CX, Sheng VS, Yang Q. Test strategies for cost-sensitive decision trees. IEEE Trans Knowl Data Eng 2006; 18 (8): 1055–67.

- 54. Marble RP, Healy JC. A neural network approach to the diagnosis of morbidity outcomes in trauma care. Artif Intell Med 1999; 15 (3): 299–307.

- 55. Monahan M, Jowett S, Lovibond K, et al. Predicting out-of-office blood pressure in the clinic for the diagnosis of hypertension in primary care: an economic evaluation. Hypertension 2018; 71 (2): 250–61.

- 56. Murtojärvi M, Halkola AS, Airola A, et al. Cost-effective survival prediction for patients with advanced prostate cancer using clinical trial and real-world hospital registry datasets. Int J Med Inform 2020; 133: 104014.

- 57. Parekattil SJ, Fisher HAG, Kogan BA. Neural network using combined urine nuclear matrix protein-22, monocyte chemoattractant protein-1 and urinary intercellular adhesion molecule-1 to detect bladder cancer. J Urol 2003; 169 (3): 917–20.

- 58. Petersen ML, LeDell E, Schwab J, et al. Super learner analysis of electronic adherence data improves viral prediction and may provide strategies for selective HIV RNA monitoring. J Acquir Immune Defic Syndr 2015; 69 (1): 109–18.

- 59. Pölsterl S, Singh M, Katouzian A, et al. Stratification of coronary artery disease patients for revascularization procedure based on estimating adverse effects. BMC Med Inform Decis Mak 2015; 15 (1): 9.

- 60. Rider NL, Miao D, Dodds M, et al. Calculation of a primary immunodeficiency “risk vital sign” via population-wide analysis of claims data to aid in clinical decision support. Front Pediatr 2019; 7: 70.

- 61. Ruff C, Koukalova L, Haefeli WE, et al. The role of adherence thresholds for development and performance aspects of a prediction model for direct oral anticoagulation adherence. Front Pharmacol 2019; 10: 113.

- 62. Shazzadur Rahman AAM, Langley I, Galliez R, et al. Modelling the impact of chest X-ray and alternative triage approaches prior to seeking a tuberculosis diagnosis. BMC Infect Dis 2019; 19 (1): 93.

- 63. Shi J, Su Q, Zhang C, et al. An intelligent decision support algorithm for diagnosis of colorectal cancer through serum tumor markers. Comput Methods Programs Biomed 2010; 100 (2): 97–107.

- 64. Singh S, Nosyk B, Sun H, et al. Value of information of a clinical prediction rule: informing the efficient use of healthcare and health research resources. Int J Technol Assess Health Care 2008; 24 (1): 112–9.

- 65. Tan BK, Lu G, Kwasny MJ, et al. Effect of symptom-based risk stratification on the costs of managing patients with chronic rhinosinusitis symptoms. Int Forum Allergy Rhinol 2013; 3 (11): 933–40.

- 66. Teferra RA, Grant BJB, Mindel JW, et al. Cost minimization using an artificial neural network sleep apnea prediction tool for sleep studies. Ann Am Thorac Soc 2014; 11 (7): 1064–74.

- 67. Walsh CG, Sharman K, Hripcsak G. Beyond discrimination: a comparison of calibration methods and clinical usefulness of predictive models of readmission risk. J Biomed Inform 2017; 76: 9–18.

- 68. Wang HY, Hung CC, Chen CH, et al. Increase Trichomonas vaginalis detection based on urine routine analysis through a machine learning approach. Sci Rep 2019; 9 (1): 11074.

- 69. Warner JL, Zhang P, Liu J, et al. Classification of hospital acquired complications using temporal clinical information from a large electronic health record. J Biomed Inform 2016; 59: 209–17.

- 70. Webb BJ, Sorensen J, Mecham I, et al. Antibiotic use and outcomes after implementation of the drug resistance in pneumonia score in ED patients with community-onset pneumonia. Chest 2019; 156 (5): 843–51.

- 71. Zhang C, Paolozza A, Tseng PH, et al. Detection of children/youth with fetal alcohol spectrum disorder through eye movement, psychometric, and neuroimaging data. Front Neurol 2019; 10: 80. doi: 10.3389/fneur.2019.00080

- 72. Carballido-Gamio J, Yu A, Wang L, et al. Hip fracture discrimination based on Statistical Multi-parametric Modeling (SMPM). Ann Biomed Eng 2019; 47 (11): 2199–212.

- 73. Crespo C, Linhart M, Acosta J, et al. Optimisation of cardiac resynchronisation therapy device selection guided by cardiac magnetic resonance imaging: cost-effectiveness analysis. Eur J Prev Cardiol 2019; 27 (6): 622–32.

- 74. Philipsen RH, Sánchez CI, Maduskar P, et al. Automated chest-radiography as a triage for Xpert testing in resource-constrained settings: a prospective study of diagnostic accuracy and costs. Sci Rep 2015; 5 (1): 12215.

- 75. Radensky PW, Mango LJ. Interactive neural network-assisted screening: an economic assessment. Acta Cytol 1998; 42 (1): 246–52.

- 76. Sato M, Kawai M, Nishino Y, et al. Cost-effectiveness analysis for breast cancer screening: double reading versus single + CAD reading. Breast Cancer 2014; 21 (5): 532–41.

- 77. Scotland GS, McNamee P, Fleming AD, et al. Costs and consequences of automated algorithms versus manual grading for the detection of referable diabetic retinopathy. Br J Ophthalmol 2010; 94 (6): 712–9.

- 78. Sreekumari A, Shanbhag D, Yeo D, et al. A deep learning-based approach to reduce rescan and recall rates in clinical MRI examinations. AJNR Am J Neuroradiol 2019; 40 (2): 217–23.

- 79. Tourassi GD, Floyd CE, Coleman RE. Acute pulmonary embolism: cost-effectiveness analysis of the effect of artificial neural networks on patient care. Radiology 1998; 206 (1): 81–8.

- 80. Brocklehurst P, Field D, Greene K, et al. Computerised interpretation of the fetal heart rate during labour: a randomised controlled trial (INFANT). Health Technol Assess 2018; 22 (9): 1–218.

- 81. Brüggenjürgen B, Israel CW, Klesius AA, et al. Health services research in heart failure patients treated with a remote monitoring device in Germany: a retrospective database analysis in evaluating resource use. J Med Econ 2012; 15 (4): 737–45.

- 82. Calvert J, Hoffman J, Barton C, et al. Cost and mortality impact of an algorithm-driven sepsis prediction system. J Med Econ 2017; 20 (6): 646–51.

- 83. Hollis C, Hall CL, Guo B, et al. The impact of a computerised test of attention and activity (QbTest) on diagnostic decision-making in children and young people with suspected attention deficit hyperactivity disorder: single-blind randomised controlled trial. J Child Psychol Psychiatr 2018; 59 (12): 1298–308.

- 84. Levin RI, Koenig KL, Corder MP, et al. Risk stratification and prevention in chronic coronary artery disease: use of a novel prognostic and computer-based clinical decision support system in a large primary managed-care group practice. Dis Manag 2002; 5 (4): 197–213.

- 85. Marchetti A, Jacobs J, Young M, et al. Costs and benefits of an early-alert surveillance system for hospital inpatients. Curr Med Res Opin 2007; 23 (1): 9–16.

- 86. Salzsieder E, Augstein P. The Karlsburg Diabetes Management System: translation from research to eHealth application. J Diabetes Sci Technol 2011; 5 (1): 13–22.

- 87. Sánchez-Quiroga MA, Corral J, Gómez-de-Terreros FJ, et al. Primary care physicians can comprehensively manage patients with sleep apnea: a noninferiority randomized controlled trial. Am J Respir Crit Care Med 2018; 198 (5): 648–56.

- 88. Abeykoon JP, Mueller L, Dong F, et al. The effect of implementing gene expression classifier on outcomes of thyroid nodules with indeterminate cytology. Horm Cancer 2016; 7 (4): 272–8.

- 89. Brixner D, Biltaji E, Bress A, et al. The effect of pharmacogenetic profiling with a clinical decision support tool on healthcare resource utilization and estimated costs in the elderly exposed to polypharmacy. J Med Econ 2016; 19 (3): 213–28.

- 90. D’Andrea E, Choudhry NK, Raby B, et al. A bronchial-airway gene-expression classifier to improve the diagnosis of lung cancer: clinical outcomes and cost-effectiveness analysis. Int J Cancer 2020; 146: 781–90.

- 91. Green N, Al-Allak A, Fowler C. Benefits of introduction of Oncotype DX® testing. Ann R Coll Surg Engl 2019; 101 (1): 55–9.

- 92. Labourier E. Utility and cost-effectiveness of molecular testing in thyroid nodules with indeterminate cytology. Clin Endocrinol 2016; 85 (4): 624–31.

- 93. Lobo JM, Trifiletti DM, Sturz VN, et al. Cost-effectiveness of the Decipher genomic classifier to guide individualized decisions for early radiation therapy after prostatectomy for prostate cancer. Clin Genitourin Cancer 2017; 15 (3): e299–309.

- 94. Mathias PC, Tarczy-Hornoch P, Shirts BH. Modeling the costs of clinical decision support for genomic precision medicine. AMIA Summits Transl Sci Proc 2016; 2016: 60–4.

- 95. Nelson RE, Stenehjem D, Akerley W. A comparison of individualized treatment guided by VeriStrat with standard of care treatment strategies in patients receiving second-line treatment for advanced non-small cell lung cancer: a cost-utility analysis. Lung Cancer 2013; 82 (3): 461–8.

- 96. Nicholson KJ, Roberts MS, McCoy KL, et al. Molecular testing versus diagnostic lobectomy in Bethesda III/IV thyroid nodules: a cost-effectiveness analysis. Thyroid 2019; 29 (9): 1237–43.

- 97. Seguí MÁ, Crespo C, Cortés J, et al. Genomic profile of breast cancer: cost-effectiveness analysis from the Spanish National Healthcare System perspective. Expert Rev Pharmacoecon Outcomes Res 2014; 14 (6): 889–99.

- 98. Shapiro S, Pharaon M, Kellermeyer B. Cost-effectiveness of gene expression classifier testing of indeterminate thyroid nodules utilizing a real cohort comparator. Otolaryngol Head Neck Surg 2017; 157 (4): 596–601.

- 99. Morse KE, Bagley SC, Shah NH. Estimate the hidden deployment cost of predictive models to improve patient care. Nat Med 2020; 26 (1): 18–9.

- 100. Markiewicz K, Van Til JA, IJzerman MJ. Medical devices early assessment methods: systematic literature review. Int J Technol Assess Health Care 2014; 30 (2): 137–46.

- 101. Pham B, Tu HAT, Han D, et al. Early economic evaluation of emerging health technologies: protocol of a systematic review. Syst Rev 2014; 3 (1): 81.

- 102. Analytical Performance of Afirma GSC: A Genomic Sequencing Classifier for Cytology-Indeterminate Thyroid Nodule FNA Specimens. https://www.afirma.com/physicians/ata-2017/qa-3/ (accessed January 2020).

- 103. Miller K. Big Data and Clinical Genomics. https://www.clinicalomics.com/magazine-editions/volume-6-issue-number-2-march-april-2019/big-data-and-clinical-genomics/ (accessed January 2020).

- 104. Shilo S, Rossman H, Segal E. Axes of a revolution: challenges and promises of big data in healthcare. Nat Med 2020; 26 (1): 29–38.

- 105. Baro E, Degoul S, Beuscart R, et al. Toward a literature-driven definition of big data in healthcare. Biomed Res Int 2015; 2015: 1–9.

- 106. Frost & Sullivan. Drowning in big data? Reducing information technology complexities and costs for healthcare organizations [Internet]. Mountain View: Frost & Sullivan; 2012. [cited 2019 January 8]. https://www.academia.edu/6563567/A_Frost_and_Sullivan_White_Paper_Drowning_in_Big_Data_Reducing_Information_Technology_Complexities_and_Costs_For_Healthcare_Organizations_CONTENTS
