Summary

Perception is a proactive “predictive” process, in which the brain takes advantage of past experience to make informed guesses about the world to test against sensory data. Here we demonstrate that in the judgment of the gender of faces, beta rhythms play an important role in communicating perceptual experience. In a forced-choice task, observers classified as male or female a sequence of face stimuli, which were physically constructed to be male, female, or androgynous (equal morph). Classification of the androgynous stimuli oscillated rhythmically between male and female, following a complex waveform comprising 13.5 and 17 Hz components. Parsing the trials based on the preceding stimulus showed that responses to androgynous stimuli preceded by male stimuli oscillated reliably at 17 Hz, whereas those preceded by female stimuli oscillated at 13.5 Hz. These results suggest that perceptual priors for face perception from recent perceptual memory are communicated through frequency-coded beta rhythms.

Subject Areas: Human-Computer Interaction, Social Sciences, Psychology

Highlights

- Perception is strongly influenced by prior expectations and perceptual experience

- We show perceptual priors for face perception are communicated through beta rhythms

- Priors for male and female are communicated via distinct beta frequencies

Introduction

There is now very good evidence that perceptual performance is not constant over time, but oscillates rhythmically at various frequencies within the beta, alpha, and theta ranges. Behavioral oscillations have been reported for many performance measures, including reaction times, accuracy, and response bias (for reviews see (Bastos et al., 2015; Bonnefond et al., 2017; Fries, 2015)). Oscillations occur at various frequencies, usually lower for reaction times and sensitivity (typically theta range (Landau and Fries, 2012; Huang et al., 2015; Lakatos et al., 2008; Fiebelkorn et al., 2013)) than for criterion (Ho et al., 2017; Cecere et al., 2015; Zhang et al., 2019). In order to reveal the oscillatory behavioral performance, it is necessary to synchronize endogenous neural oscillations in some way, usually with a trigger. Various experiments have shown that either abrupt perceptual stimuli, such as visual attentional cues or sound bursts (Landau and Fries, 2012; Romei et al., 2012; Huang et al., 2015; Fiebelkorn et al., 2013; Song et al., 2014), or motor actions such as hand or eye movements (Tomassini et al., 2015, 2017; Benedetto et al., 2016, 2018, 2020; Hogendoorn, 2016; Zhang et al., 2019; Wutz et al., 2016) can act as a trigger to synchronise oscillations, so small gains in performance can be averaged over several trials to reveal statistically significant oscillatory behavior.

To date, most of the evidence for perceptual oscillations comes from simple “low-level” stimuli such as grating patches or tones, probably processed in primary sensory cortex. Recently, however, oscillations have also been observed in discrimination of faces. Using the bubbles approach, Gosselin and colleagues have reported oscillations in sensitivity around 10 Hz (Vinette et al., 2004; Blais et al., 2013; Dupuis-Roy et al., 2019). Wang and Luo (2017) showed that during a priming task, reaction times for face discrimination oscillate at about 5 Hz, but only for congruent trials, suggesting that priming information is communicated via a rhythmic mechanism. This is consistent with a recent suggestion that oscillations reflect top-down transmission of expectations and perceptual priors (Friston, 2005; Summerfield and De Lange, 2014; Summerfield and Koechlin, 2008).

It has long been known that perception depends not only on the current stimulus but also on prior information including expectations and perceptual experience (Gregory, 1968; von Helmholtz, 1867). One recent and effective technique to study effects of past stimuli and responses on perception is serial dependence: under many conditions, the appearance of images in a sequence depends strongly on the stimulus presented just prior to the current one. Judgments of orientation (Fischer and Whitney, 2014), numerosity (Cicchini et al., 2014), facial identity or gender (Liberman et al., 2014; Taubert et al., 2016), beauty, and even perceived body size (Alexi et al., 2018, 2019) are strongly biased toward the previous image. The biases introduced by serial dependence are not random errors but can lead to more efficient perception, tending to reduce overall error (Cicchini et al., 2018). As the world tends to remain constant over the short term, past events are good predictors of the future. Thus the previous stimulus acts as a predictor, or a prior, to be combined with the current sensory data to enhance the signal.

The neuronal mechanisms of serial dependence, and more generally of priors, are largely unknown. It is assumed that the prior is generated at mid to high levels of analysis and fed back to early sensory areas, which in turn modify the prior (Rao and Ballard, 1999); but we do not know how this information is propagated. One strong possibility, mentioned above, is that recursive propagation and updating of the prior is reflected in low-frequency neural oscillations (Friston et al., 2015; Vanrullen, 2017; Sherman et al., 2016). Vanrullen and Macdonald (2012) suggest that past perceptual history may be stored in memory as a “perceptual echo,” a 1-s burst of neural activity, and provide evidence for this idea from reverse correlation of EEG. This activity could serve to maintain sensory representations over time, which could influence subsequent perception. A recent study (Ho et al., 2019) reinforced this idea, with evidence that perceptual priors about ear of origin (in an auditory task) are propagated through alpha rhythms at about 9 Hz. Oscillations occurred only for trials preceded by a target tone to the same ear, either on the previous trial or two trials back, suggesting that communication of perceptual history generates neural oscillations within specific perceptual circuits.

The current study aims to test whether judgments of gender show oscillations in accuracy and bias, as has been reported for auditory tones and simple gratings (Ho et al., 2017; Zhang et al., 2019) and to test whether these oscillations are related to the previous stimuli and may therefore be instrumental in communicating perceptual expectations, as they are in audition.

Results

Choice of Stimuli

We first ran a small experiment on a subsample of five subjects to select appropriate stimuli from our morphed set. Our aim was to choose male and female stimuli that were scored 75% and 25% male, respectively (weak enough for there to be some error), and androgynous stimuli that were perfectly ambiguous (50% male response). To this end we presented all 11 morphed stimuli, varying in strength between pure female and pure male, in random order to the five subjects, with 20 repetitions. From the data we constructed an aggregate psychometric function (Figure 1A) and chose stimuli as near as possible to the desired performance.
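The selection step can be illustrated with a minimal sketch. It assumes the aggregate psychometric function is available as one proportion of “male” responses per morph level, and simply picks the measured level closest to each target. The function name `pick_stimuli`, the morph-level coding, and any numbers passed to it are hypothetical and are not taken from the study.

```python
import numpy as np

def pick_stimuli(morph_levels, p_male, targets=(0.25, 0.50, 0.75)):
    """Return, for each target proportion, the morph level whose measured
    proportion of 'male' responses lies closest to that target.

    morph_levels : sequence of morph strengths (hypothetical coding)
    p_male       : aggregate proportion of 'male' responses per level
    """
    morph_levels = np.asarray(morph_levels, dtype=float)
    p_male = np.asarray(p_male, dtype=float)
    chosen = {}
    for target in targets:
        # Index of the measured point nearest the desired performance
        idx = int(np.argmin(np.abs(p_male - target)))
        chosen[target] = (morph_levels[idx], p_male[idx])
    return chosen
```

Choosing among the measured levels, rather than interpolating a fitted curve, matches the description of picking stimuli “as near as possible” to the desired performance, but fitting a smooth psychometric function first would be an equally reasonable design.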

Figure 1.

Stimuli and Preliminary Psychophysics

(A) Proportion of trials classified as male, as a function of morphing level, averaged over five participants. These preliminary data served to select stimuli corresponding to 25%, 50%, and 75% male (three examples shown), to be used for the main experiment. Error bars show ± 1 SE.

(B) Illustration of experimental timeline. Participants initiated each trial by pressing the lower button (1) with their right thumb. After a randomly variable period between 0 and 800 ms, a randomly selected stimulus was presented for 20 ms followed by a blank screen. After waiting at least 1 s, the participant responded “male” or “female,” by pressing buttons 2 or 3 with their index or middle finger, respectively.

(C) Responses of all 16 participants to the three stimulus classes, male (blue), androgynous (black), and female (red). Dashed lines show averages.

(D) Probability of responding “male” to an androgynous face after viewing a male (blue bars) or female (red bars) one or two trials back. The baseline is the response of all trials, 55%.

The responses for all individual subjects, averaged over all trials, are shown in Figure 1C. On average, the androgynous stimuli were scored around 50% male, but the male stimuli were more often scored male than the female were scored female: on average 85% male for male, compared with 35% for female (only 65% correct). For this reason we confined our major analysis of bias oscillation to androgynous stimuli, as the unmatched accuracy could lead to apparent biases. Indeed, this is what we find when we analyze bias for male and female stimuli.

Figure 1D shows the dependence on previous stimuli. The baseline is the response to all androgynous stimuli averaged over all trials and subjects. The bars show the average response to androgynous stimuli when preceded by male (blue) or female (red) stimuli, on the previous trial (first two columns) or two trials back (last two columns). There is a weak and non-significant (p = 0.11, one-tailed binomial test) positive assimilation for the immediately preceding trial, with about 1.4% higher probability of responding male if the previous stimulus was a male face. The effects of preceding female faces and of stimuli two trials back were clearly negligible. Overall there were no significant serial effects on average data under the conditions of our study.
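A serial-dependence tally of this kind can be sketched as follows. The sketch assumes trials are coded as `"M"`, `"F"`, or `"A"` (androgynous) and responses as 1 for “male” and 0 for “female”; these codes, the function names, and the choice of baseline passed to the test are illustrative assumptions, since the exact procedure is described only in the supplemental Methods.

```python
import math

def p_male_given_previous(stimuli, responses, current="A", previous="M"):
    """Count 'male' responses (1) on `current` trials that were immediately
    preceded by a `previous` stimulus. Returns (n_male, n_trials)."""
    n_male = n_trials = 0
    for prev, cur, resp in zip(stimuli[:-1], stimuli[1:], responses[1:]):
        if cur == current and prev == previous:
            n_trials += 1
            n_male += resp
    return n_male, n_trials

def binom_sf(k, n, p):
    """Exact one-tailed binomial probability P(X >= k) for X ~ Bin(n, p),
    with 0 < p < 1. Terms are computed in log space so that large n does
    not overflow."""
    total = 0.0
    for i in range(k, n + 1):
        log_pmf = (math.lgamma(n + 1) - math.lgamma(i + 1)
                   - math.lgamma(n - i + 1)
                   + i * math.log(p) + (n - i) * math.log(1.0 - p))
        total += math.exp(log_pmf)
    return total
```

With real data, `binom_sf(n_male, n_trials, baseline)` would give the one-tailed probability of observing at least that many “male” responses if the preceding stimulus had no effect, where `baseline` is the overall proportion of “male” responses to androgynous stimuli.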

Oscillations in Bias

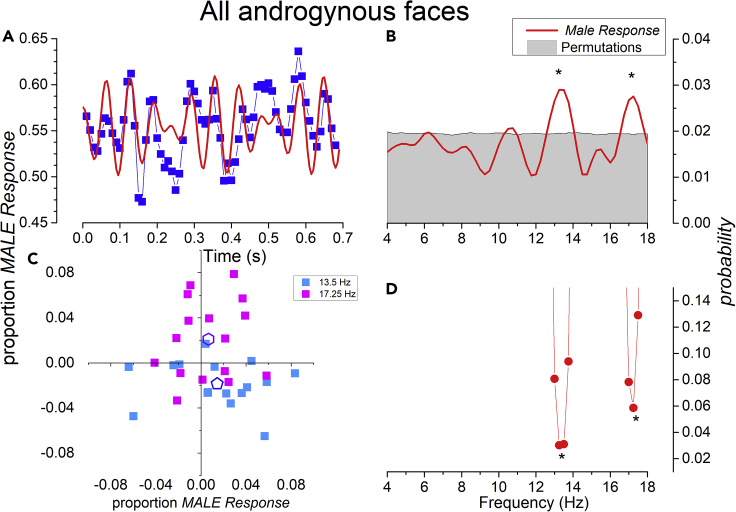

A primary goal of the study was to search for oscillations in bias in gender judgments. For this reason, we presented a large number of androgynous stimuli (50% of trials). Having no clear gender, these stimuli should be most susceptible to both rhythmic changes in criteria and temporal contextual effects. The blue symbols of Figure 2A show the average responses (averaged over all trials and subjects) to all androgynous stimuli as a function of time after the thumb press that initiated the trial. There is a clear oscillation in the response bias, evident on inspection of Figure 2A. The oscillation is brought out clearly in the frequency domain of Figure 2B, which shows distinct amplitude peaks at 13.5 and 17 Hz. The gray shading shows the 95% confidence interval for amplitude of the surrogate data (see Methods). The lower trace (Figure 2C) shows the significance of the oscillations, calculated by a very stringent test, where we compared the amplitude of the real data to the maximal amplitude of the surrogate data at any frequency within the range 4–18 Hz (see Methods). The two peaks at 13.5 and 17 Hz are both significant (p < 0.05, corrected). Figure 2D shows the 2D vectors of the individual participants, at 13.5 and 17 Hz. It is clear that the results of the aggregate participant are quite representative of all participants. The average amplitudes and phases are 0.023 ± 0.011 and −52° ± 38° for 13.8 Hz and 0.022 ± 0.011 and 72° ± 35° for 17.25 Hz. The Hotelling T² test (p = 0.025 and p = 0.01 for 13.8 and 17.25 Hz, respectively) demonstrates that the consistency across subjects is significant, and the vectors do not distribute randomly around zero.
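The stringent maximum-amplitude test described above can be sketched in a few lines. This is an illustration under stated assumptions, not the authors' implementation (which is given in the supplemental Methods): it assumes the amplitude at each frequency is obtained by a least-squares fit of a cosine and sine regressor to single-trial responses, and that surrogates are built by shuffling responses across trial times. The function names and default parameters are hypothetical.

```python
import numpy as np

def design_pinvs(t, freqs):
    """Pseudo-inverse of the [1, cos, sin] design matrix at each frequency."""
    pinvs = []
    for f in freqs:
        X = np.column_stack([np.ones_like(t),
                             np.cos(2 * np.pi * f * t),
                             np.sin(2 * np.pi * f * t)])
        pinvs.append(np.linalg.pinv(X))
    return pinvs

def amplitudes(pinvs, y):
    """Oscillation amplitude sqrt(b1^2 + b2^2) at each frequency."""
    out = np.empty(len(pinvs))
    for i, P in enumerate(pinvs):
        _, b1, b2 = P @ y          # least-squares coefficients
        out[i] = np.hypot(b1, b2)
    return out

def max_stat_pvalues(t, y, freqs, n_perm=1000, seed=0):
    """For each frequency, the proportion of permutations whose maximum
    amplitude (over all tested frequencies) reaches the observed amplitude."""
    t = np.asarray(t, dtype=float)
    y = np.asarray(y, dtype=float)
    rng = np.random.default_rng(seed)
    pinvs = design_pinvs(t, freqs)
    observed = amplitudes(pinvs, y)
    max_null = np.empty(n_perm)
    for i in range(n_perm):
        # Shuffling responses breaks any relation between response and time
        max_null[i] = amplitudes(pinvs, rng.permutation(y)).max()
    p = (max_null[None, :] >= observed[:, None]).mean(axis=1)
    return observed, p
```

Comparing each observed amplitude with the permutation maximum over all frequencies controls for the number of frequencies tested, which is why a peak can fail this test while still exceeding the surrogate distribution at its own frequency.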

Figure 2.

Oscillations in Bias in Gender Perception

(A) Average response “male” to androgynous stimuli as a function of time after button-press (blue symbols and connecting lines, bin size 10 ms, running average on 20 ms). The red curve is the sum of the two best fitting frequencies from Fourier transform, added in correct amplitude and phase.

(B) Fourier transform of the waveform, showing two distinct peaks at 13.5 and 17 Hz. The gray region shows the results of the permutation analysis (95% confidence interval). The labels and responses were shuffled, and the analysis repeated 10,000 times.

(C) Statistical significance, as a function of frequency, calculated from permutations, expressed as the proportion of times the maximum permutation (of any frequency) was of higher amplitude than the original data at that frequency.

(D) β1 and β2 2D plot of individual participants at 13.5 Hz (light blue) and 17 Hz (magenta). The open pentagons show the group means.
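The across-participant consistency test on such 2D vectors can be sketched as a one-sample Hotelling T² test against the origin. The sketch assumes each participant contributes one (β1, β2) pair at a given frequency; the function name is hypothetical. For two dimensions the F distribution with (2, n − 2) degrees of freedom has a closed-form upper tail, so no statistics library is needed.

```python
import numpy as np

def hotelling_t2_2d(vectors):
    """One-sample Hotelling T^2 test that the mean of 2D vectors is (0, 0).

    vectors : array of shape (n_participants, 2), e.g. (beta1, beta2) pairs
    Returns (T2, F, p_value).
    """
    x = np.asarray(vectors, dtype=float)
    n, p = x.shape
    if p != 2 or n < 3:
        raise ValueError("expected at least 3 two-dimensional vectors")
    mean = x.mean(axis=0)
    cov = np.cov(x, rowvar=False)            # unbiased sample covariance
    t2 = n * mean @ np.linalg.solve(cov, mean)
    f_stat = (n - p) / (p * (n - 1)) * t2     # F with (2, n - 2) d.o.f.
    dof2 = n - p
    # Closed-form upper tail of F(2, dof2): (1 + 2F/dof2)^(-dof2/2)
    p_value = (1.0 + 2.0 * f_stat / dof2) ** (-dof2 / 2.0)
    return t2, f_stat, p_value
```

A small p-value indicates that the individual vectors cluster around a common amplitude and phase instead of scattering symmetrically around zero.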

Dependence on Previous Stimuli

We then examined how oscillations depended on the previous stimuli. Although the average data showed no significant serial dependence, it is possible that oscillations are dependent on the perceptual history, implicating them in the transmission of perceptual expectations. Figure 3A shows the amplitude and significance of average oscillations for the aggregate observer for androgynous stimuli preceded by male stimuli (a quarter of the total trials). There is a clear, single-peaked oscillation at 17 Hz, statistically significant against the surrogate maximum over the range (p < 0.005). The peak at 13.5 Hz, which was very strong when all the data were pooled (Figure 2), was completely absent for trials preceded by male stimuli. For trials preceded by female faces (Figure 3B, again 25% of total trials), the situation is complementary. There is a clear oscillation in amplitude at 13.5 Hz, which again is statistically significant against the maximum permuted amplitude. There is no peak near 17 Hz. Interestingly, the amplitudes of these peaks are about twice those of Figure 2, despite the reduced number of trials, to be expected if the oscillations in Figure 2 were present in only half the trials.
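The amplitude argument can be made explicit with a short worked equation. As a simplifying assumption (not stated in the text), suppose a fraction $w$ of trials carries an oscillation of amplitude $A$ at frequency $f$ around a baseline $p_0$, while the remaining trials sit at the same baseline with no modulation at that frequency. The pooled response is then

```latex
\bar{p}(t) = w\,\bigl[p_0 + A\cos(2\pi f t + \varphi)\bigr] + (1 - w)\,p_0
           = p_0 + wA\cos(2\pi f t + \varphi)
```

so pooling scales the amplitude by $w$. With $w = 1/2$ the pooled amplitude is $A/2$, which matches the roughly twofold difference noted between the contingent and the pooled analyses.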

Figure 3.

Dependence of Oscillations on Previous Stimuli

(A) Fourier transform of “male” responses to androgynous stimuli preceded by a male stimulus. There is a clear single peak at 17 Hz.

(B) Fourier transform of “male” responses to androgynous stimuli preceded by a female stimulus. There is a clear single peak at 13.5 Hz. The gray region shows the 95% confidence limits of the permutation analysis.

(C and D) Statistical significance of responses preceded by female (C) or male (D), calculated by the same method as in Figure 2.

Thus the two oscillations observed in judgments of androgynous faces (Figure 2), at the distinct frequencies of 13.5 and 17 Hz, have two separate generators. The 17 Hz oscillation occurs when the previous stimulus was a male and the 13.5 Hz oscillation when the previous stimulus was female.

Oscillations in Accuracy

We next explored oscillations in accuracy, necessarily restricting analysis to the male and female stimuli, where accuracy could be scored. The blue symbols of Figure 4A show the average accuracy for all male and female stimuli, pooled over all subjects, as a function of time after button-press. Inspection suggests an oscillation, at a lower frequency than for bias. The amplitude in the frequency domain of Figure 4B shows peaks around 5.5 and 10.5 Hz. However, the only frequency to remain significant after correction is 10.5 Hz, as shown in Figure 4C.

Figure 4.

Oscillations in Accuracy

(A) Average accuracy for identifying male and female stimuli, pooled over all subjects, as a function of time after button-press (blue symbols and lines, bin size 10 ms, running average of 20 ms). The red line shows the 10.5 Hz significant oscillation in appropriate amplitude and phase.

(B) Fourier transform of the waveform, showing two distinct peaks at 5.5 and 10.5 Hz. The gray region shows the 95% confidence limits of the permutation analysis.

(C) Statistical significance, as a function of frequency, calculated from permutations, expressed as the proportion of times the maximum permutation (of any frequency) was of higher amplitude than the original data at that frequency. Only the 10.5 Hz oscillation remained significant with this stringent test.

(D) β1 and β2 2D plot of the 5.5 and 10.5 Hz components for individual observers, shown by green and orange squares. The light green and red pentagons show the group means.

Figure 4D shows the complementary analysis, performed separately for each subject at 5.5 and 10.5 Hz. The average amplitudes and phases are 0.023 ± 0.009 (correct responses) and 126° ± 32° for 5.5 Hz and 0.015 ± 0.009 and 132° ± 53° for 10.5 Hz. Both the 5.5 Hz and 10.5 Hz peaks are significant on the Hotelling T² test (p < 0.005 and p < 0.05 for 5.5 and 10.5 Hz, respectively), as can be appreciated by the clustering of phases of these frequencies across the 16 subjects. This result suggests that the 5.5 Hz peak that reaches significance against permutation (but without correction) in the aggregate subject may be real, given that the phase is highly congruent across subjects.

We did not attempt to perform any serial dependence analysis on sensitivity for two reasons. Firstly, there is little data, as the only stimuli where sensitivity can be scored are the male and female stimuli, and these need to be preceded by male or female stimuli (12.5% of trials each for congruent and incongruent stimuli). Secondly it makes little sense to analyze serial dependence of sensitivity, as the order of stimuli was completely randomized, so the previous trial carried no information about the current trial. For example, if the previous trial was female, it may bias the current response toward female, which will result in a greater chance of a hit if the trial is female, but an equally greater chance of a miss if the trial is (with equal probability) male. Under this particular experimental design, serial dependence cannot affect sensitivity.
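The cancellation can be written out as a short worked equation. Let $h_F$ and $h_M$ be the proportions of correct responses to female and male stimuli, and suppose, as in the example above, that a preceding female stimulus shifts responses toward “female” by the same amount $\delta$ for both stimulus classes (an idealization used only for illustration). With male and female stimuli equally likely, accuracy after a female stimulus is

```latex
\mathrm{Acc}_{\text{after } F}
  = \tfrac{1}{2}\,(h_F + \delta) + \tfrac{1}{2}\,(h_M - \delta)
  = \tfrac{1}{2}\,(h_F + h_M)
```

which is independent of $\delta$: the gain on female trials is exactly offset by the loss on male trials.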

Accuracy and Bias

To study bias and its contingency on previous stimuli, we restricted the analysis to the androgynous stimuli (Figures 2 and 3), as that is the cleanest situation: they should be most susceptible to bias, free to move in both directions, and accuracy cannot influence the result, as there is no correct classification of androgynous stimuli with our paradigm. However, for completeness, we repeated the bias analysis on male and female faces, both pooling over both classes of stimuli, and separately.

The results are shown in Figure 5. The time course data (pooling results for male and female) show a principal oscillation at 4.75 Hz, illustrated by the blue curve (Figure 5A). The frequency analysis of these data (Figure 5B) shows four distinct peaks, at 4.75, 11, 15, and 17 Hz. Only the peak near 5 Hz reaches significance by the strict criterion of maximal comparison that we have adopted to date (blue line), which is to be expected if multiple peaks do exist (competing with each other in the maximum-amplitude test). However, with a less stringent criterion (comparison with surrogate data at only that frequency) all four peaks emerge as significant. That is to say, these data on response bias for male and female stimuli show oscillations at frequencies close to those previously observed in bias (of androgynous stimuli) and in accuracy (of male/female stimuli).

Figure 5.

Oscillations for Male and Female Stimuli

(A) Average bias toward male in the responses to male and female stimuli, pooled over all subjects, as a function of time after button-press (blue symbols and lines, bin size 10 ms, running average on 20 ms). The red line shows the 4.75 Hz principal oscillation in appropriate amplitude and phase.

(B) Fourier transform of the waveform of combined male and female data, showing four distinct peaks at 4.75, 11, 15, and 17 Hz. The gray region shows the 95% confidence limits of the permutation analysis. Asterisk (∗) refers to significance against max response of any frequency, p < 0.05.

(C) Same as B, considering only responses to female stimuli.

(D) Same as B, considering only responses to male stimuli.

Figures 5C and 5D suggest why this may have occurred. They show results separately for female (C) and male (D) stimuli. When considered separately, bias becomes equivalent to accuracy: higher accuracy for male stimuli will result in a bias toward male, and vice versa for female. Indeed, the oscillations previously seen in accuracy, near 5 and 10 Hz, are very clearly evident in biases to female stimuli, where the response at 11 Hz survives the very stringent statistical test. They are also evident in the responses to male stimuli, but weaker, barely meeting the less stringent significance criterion. The male and female oscillations are clearly not of the same amplitude, with the male oscillations barely reaching the weak level of significance (uncorrected), so the oscillations to female stimuli will dominate the combined data, and accuracy will masquerade as bias. In theory, the male and female oscillations should have opposite phase, but the male oscillations are too weak and noisy to verify this assertion.

Discussion

In this study we demonstrate clear oscillations in the judgements of gender of faces, separately for accuracy and bias. Accuracy oscillated at two frequencies, about 5 and 10 Hz, consistent with previous studies (Wang and Luo, 2017; Vinette et al., 2004; Blais et al., 2013). Bias (of androgynous stimuli) also oscillated at two distinctly higher frequencies within the beta: 13.5 and 17 Hz. Further analysis showed that the 13.5 Hz oscillation occurred only for stimuli preceded by female faces and the 17 Hz oscillation only for stimuli preceded by male faces, strongly implicating these oscillations in the transmission of information about perceptual history.

This result nicely reinforces our previous report that judgments of criteria for both the auditory and visual systems oscillate at frequencies quite different from lower-frequency oscillations in sensitivity (Ho et al., 2017). As with this study, oscillations in criteria depended strongly on the previous stimulus, occurring only if the previous stimulus had been in the same ear as the current one (Ho et al., 2019).

It is now well established that to improve efficiency, observers take advantage of past information to anticipate forthcoming sensory input. The fact that oscillations were highly dependent on past stimuli suggests that this predictive perception may be implemented rhythmically through oscillations, along the lines of the “perceptual echo” suggested by Vanrullen and Macdonald (2012). Furthermore, they suggest the use of a frequency code in transmitting the information: when the preceding stimulus was male the oscillation was clearly 17 Hz and when female at 13.5 Hz. Interestingly, the oscillations were more reliable for the male contingent selection. Perhaps this is because the male stimulus was easier to classify, with a higher percent correct across subjects. This is consistent with the idea that the more salient the perception, the stronger will be the reverberant trace in memory that may be responsible for the oscillating prior.

It is interesting that although the gender-contingent oscillations in criteria were very clear and highly significant, there were no significant effects on average measures of bias in this experiment. It is not clear why there was no bias in the average psychophysical judgments, as has often been observed previously (Taubert et al., 2016; Liberman et al., 2014), but there are several possible explanations. There was quite a long pause between successive stimuli in this study, on average about 2 s, which may have minimized the perceptual effect, but nevertheless left an oscillatory trace. Other factors are also possible, such as simultaneous adaptation and serial dependence canceling each other out, and also non-perceptual effects, such as a tendency of observers to alternate responses. But whatever the reason, our results suggest that oscillations may be a far more sensitive signature of temporal contextual effects than biases in mean responses.

Besides the clear stimulus-dependent oscillations in bias, we observed clear oscillations in accuracy, at two distinct frequencies, 5 and 10 Hz. Behavioral oscillations have previously been observed for priming of face identity, at 5 Hz (Wang and Luo, 2017), and also in sensitivity for face discrimination (Blais et al., 2013; Vinette et al., 2004). Wang and Luo used a match-to-sample “priming” task and measured reaction times as a function of the interval between prime and probe. RTs oscillated rhythmically with time from prime to probe, at about 5 Hz, when the prime was congruent with the probe. As reaction time is a measure of performance that correlates with accuracy, the 5 Hz oscillation they report is quite consistent with our results. The stronger and more significant oscillation near 10 Hz may or may not be a harmonic of the 5 Hz oscillation, which continues to reverberate for a longer time. Interestingly, oscillations at these two frequencies also emerged when looking at the bias in judging male and female stimuli. However, this most likely reflects a leakage from accuracy to apparent bias, as the sensitivity to male and female stimuli was not exactly balanced. It was for this reason that we presented many androgynous stimuli and used them as the main measure of oscillations in bias and their dependency on previous gender.

The most interesting observation in this study was that oscillations in gender discrimination depended on the previous stimulus. These oscillations may reflect the communication of prior information, as was observed for audition. After viewing a particular stimulus, male or female, the tendency to see an androgynous face as male or female oscillated at a low beta frequency, 13.5 or 17 Hz. Two things are different between this and the previous study on audition (Ho et al., 2017). Firstly, the oscillations are at far higher frequencies, in the low-beta range, whereas those for audition were at 9 Hz, low alpha. Secondly, although for audition the oscillation frequencies for stimuli preceded by right or left ear had been the same (but of opposite phase), here they are quite distinct for the two possible response alternatives (male or female).

We can only speculate on why predictive oscillatory behavior should be different for the two studies. One possibility is that in the auditory study, participants made a simple judgment of ear of origin, which would correspond to the perceived spatial position of the tone. This categorical task is intrinsically bimodal: it is either left ear (and space) or right ear and space. This is a fairly simple task, probably involving fairly low-level processes. Face recognition and discrimination, on the other hand, is a highly complex, multidimensional problem, involving many identifiable features, including gender, age, race etc, besides actual identity. Perhaps to signal expectations in this space, a single frequency is not sufficient, and some form of “frequency-tagging” occurs. Interestingly, there is literature pointing toward a role of beta oscillations in processing local features (Smith et al., 2006; Romei et al., 2011; Liu and Luo, 2019). It is plausible that different frequencies of beta oscillations could account for the response following female or male stimuli and more generally for complex stimuli. Local configurations of nose, mouth, eyes etc. may well act as cues for masculine or feminine interpretations of the stimuli, reflecting the different beta frequency peaks observed here. However, this remains speculation at present, and more work is required, also with other visual tasks, to understand how the frequency acts.

In conclusion, the results of this study suggest that perceptual priors for face perception, accumulated from recent perceptual memory, are communicated through frequency-coded beta rhythms. Priors for male faces show oscillatory effects at 17 Hz and those for female faces at 13.5 Hz. It is yet to be tested whether priors for other important aspects such as age, race, and attractiveness are also transmitted in an oscillatory fashion and, if so, at what frequencies.

Limitations of Study

This study uses psychophysical techniques, examining perceptual biases in gender judgment. It is assumed that oscillatory behavior is synchronized by the simple action of button pressing. Although there are several advantages in the psychophysical approach, such as the certainty that oscillations are associated with perceptual changes, other more direct electrophysiological measures, such as electroencephalogram (EEG), magnetoencephalogram (MEG), and fMRI could, and should, be used to probe neural activity more directly. Another possible limitation is that we did not counterbalance the fingers used to respond male and female, so we cannot exclude the possibility that the male bias could partly be an “index finger” bias, as previous studies have suggested (Zhang et al., 2019).

Resource Availability

Lead Contact

Requests for further information should be directed to the Lead Contact, David Burr, davidcharles.burr@in.cnr.it.

Materials Availability

This study did not generate new materials.

Data and Code Availability

Requests for data and code should be addressed to the lead contact, David Burr.

Methods

All methods can be found in the accompanying Transparent Methods supplemental file.

Acknowledgments

This research was supported by European Union (EU) and Horizon 2020 – ERC Advanced Grant N. 832813 “GenPercept”, by the Italian Ministry of Education PRIN2017 program Grant number 2017SBCPZY, and by Australian Research Council grant DP150101731.

Author Contributions

All authors participated in the general design of the experiment, discussions on the results, and editing the manuscript. KC generated the stimuli, JB wrote the experimental programs and collected the data, MCM analyzed the data, and DB wrote the paper.

Declarations of Interest

The authors declare no competing interests.

Published: October 23, 2020

Footnotes

Supplemental Information can be found online at https://doi.org/10.1016/j.isci.2020.101573.

References

- Alexi J., Cleary D., Dommisse K., Palermo R., Kloth N., Burr D., Bell J. Past visual experiences weigh in on body size estimation. Sci. Rep. 2018;8:215. doi: 10.1038/s41598-017-18418-3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Alexi J., Palermo R., Rieger E., Bell J. Evidence for a perceptual mechanism relating body size misperception and eating disorder symptoms. Eat. Weight Disord. 2019;24:615–621. doi: 10.1007/s40519-019-00653-4. [DOI] [PubMed] [Google Scholar]

- Bastos A.M., Vezoli J., Fries P. Communication through coherence with inter-areal delays. Curr. Opin. Neurobiol. 2015;31:173–180. doi: 10.1016/j.conb.2014.11.001. [DOI] [PubMed] [Google Scholar]

- Benedetto A., Burr D.C., Morrone M.C. Perceptual oscillation of audiovisual time simultaneity. eNeuro. 2018;5:1–12. doi: 10.1523/ENEURO.0047-18.2018. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Benedetto A., Morrone M.C., Tomassini A. The common rhythm of action and perception. J. Cogn. Neurosci. 2020;32:187–200. doi: 10.1162/jocn_a_01436. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Benedetto A., Spinelli D., Morrone M.C. Rhythmic modulation of visual contrast discrimination triggered by action. Proc. Biol. Sci. 2016;283:20160692. doi: 10.1098/rspb.2016.0692. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Blais C., Arguin M., Gosselin F. Human visual processing oscillates: evidence from a classification image technique. Cognition. 2013;128:353–362. doi: 10.1016/j.cognition.2013.04.009. [DOI] [PubMed] [Google Scholar]

- Bonnefond M., Kastner S., Jensen O. Communication between brain areas based on nested oscillations. eNeuro. 2017;4:1–14. doi: 10.1523/ENEURO.0153-16.2017. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cecere R., Rees G., Romei V. Individual differences in alpha frequency drive crossmodal illusory perception. Curr. Biol. 2015;25:231–235. doi: 10.1016/j.cub.2014.11.034. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cicchini G.M., Anobile G., Burr D.C. Compressive mapping of number to space reflects dynamic encoding mechanisms, not static logarithmic transform. Proc. Natl. Acad. Sci. U S A. 2014;111:7867–7872. doi: 10.1073/pnas.1402785111. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cicchini G.M., Mikellidou K., Burr D.C. The functional role of serial dependence. Proc. Biol. Sci. 2018;285:20181722. doi: 10.1098/rspb.2018.1722.

- Dupuis-Roy N., Faghel-Soubeyrand S., Gosselin F. Time course of the use of chromatic and achromatic facial information for sex categorization. Vis. Res. 2019;157:36–43. doi: 10.1016/j.visres.2018.08.004.

- Fiebelkorn I.C., Saalmann Y.B., Kastner S. Rhythmic sampling within and between objects despite sustained attention at a cued location. Curr. Biol. 2013;23:2553–2558. doi: 10.1016/j.cub.2013.10.063.

- Fischer J., Whitney D. Serial dependence in visual perception. Nat. Neurosci. 2014;17:738–743. doi: 10.1038/nn.3689.

- Fries P. Rhythms for cognition: communication through coherence. Neuron. 2015;88:220–235. doi: 10.1016/j.neuron.2015.09.034.

- Friston K. A theory of cortical responses. Philos. Trans. R. Soc. Lond. B Biol. Sci. 2005;360:815–836. doi: 10.1098/rstb.2005.1622.

- Friston K.J., Bastos A.M., Pinotsis D., Litvak V. LFP and oscillations-what do they tell us? Curr. Opin. Neurobiol. 2015;31:1–6. doi: 10.1016/j.conb.2014.05.004.

- Gregory R.L. Perceptual illusions and brain models. Proc. R. Soc. Lond. B Biol. Sci. 1968;171:279–296. doi: 10.1098/rspb.1968.0071.

- Ho H.T., Burr D.C., Alais D., Morrone M.C. Auditory perceptual history is propagated through alpha oscillations. Curr. Biol. 2019;29:4208–4217.e3. doi: 10.1016/j.cub.2019.10.041.

- Ho H.T., Leung J., Burr D.C., Alais D., Morrone M.C. Auditory sensitivity and decision criteria oscillate at different frequencies separately for the two ears. Curr. Biol. 2017;27:3643–3649.e3. doi: 10.1016/j.cub.2017.10.017.

- Hogendoorn H. Voluntary saccadic eye movements ride the attentional rhythm. J. Cogn. Neurosci. 2016;28:1625–1635. doi: 10.1162/jocn_a_00986.

- Huang Y., Chen L., Luo H. Behavioral oscillation in priming: competing perceptual predictions conveyed in alternating theta-band rhythms. J. Neurosci. 2015;35:2830–2837. doi: 10.1523/JNEUROSCI.4294-14.2015.

- Lakatos P., Karmos G., Mehta A.D., Ulbert I., Schroeder C.E. Entrainment of neuronal oscillations as a mechanism of attentional selection. Science. 2008;320:110–113. doi: 10.1126/science.1154735.

- Landau A.N., Fries P. Attention samples stimuli rhythmically. Curr. Biol. 2012;22:1000–1004. doi: 10.1016/j.cub.2012.03.054.

- Liberman A., Fischer J., Whitney D. Serial dependence in the perception of faces. Curr. Biol. 2014;24:2569–2574. doi: 10.1016/j.cub.2014.09.025.

- Liu L., Luo H. Behavioral oscillation in global/local processing: global alpha oscillations mediate global precedence effect. J. Vis. 2019;19:12. doi: 10.1167/19.5.12.

- Rao R.P., Ballard D.H. Predictive coding in the visual cortex: a functional interpretation of some extra-classical receptive-field effects. Nat. Neurosci. 1999;2:79–87. doi: 10.1038/4580.

- Romei V., Driver J., Schyns P.G., Thut G. Rhythmic TMS over parietal cortex links distinct brain frequencies to global versus local visual processing. Curr. Biol. 2011;21:334–337. doi: 10.1016/j.cub.2011.01.035.

- Romei V., Gross J., Thut G. Sounds reset rhythms of visual cortex and corresponding human visual perception. Curr. Biol. 2012;22:807–813. doi: 10.1016/j.cub.2012.03.025.

- Sherman M.T., Kanai R., Seth A.K., Vanrullen R. Rhythmic influence of top-down perceptual priors in the phase of prestimulus occipital alpha oscillations. J. Cogn. Neurosci. 2016;28:1318–1330. doi: 10.1162/jocn_a_00973.

- Smith M.L., Gosselin F., Schyns P.G. Perceptual moments of conscious visual experience inferred from oscillatory brain activity. Proc. Natl. Acad. Sci. U S A. 2006;103:5626–5631. doi: 10.1073/pnas.0508972103.

- Song K., Meng M., Chen L., Zhou K., Luo H. Behavioral oscillations in attention: rhythmic alpha pulses mediated through theta band. J. Neurosci. 2014;34:4837–4844. doi: 10.1523/JNEUROSCI.4856-13.2014.

- Summerfield C., De Lange F.P. Expectation in perceptual decision making: neural and computational mechanisms. Nat. Rev. Neurosci. 2014;15:745–756. doi: 10.1038/nrn3838.

- Summerfield C., Koechlin E. A neural representation of prior information during perceptual inference. Neuron. 2008;59:336–347. doi: 10.1016/j.neuron.2008.05.021.

- Taubert J., Alais D., Burr D. Different coding strategies for the perception of stable and changeable facial attributes. Sci. Rep. 2016;6:32239. doi: 10.1038/srep32239.

- Tomassini A., Ambrogioni L., Medendorp W.P., Maris E. Theta oscillations locked to intended actions rhythmically modulate perception. Elife. 2017;6:e25618. doi: 10.7554/eLife.25618.

- Tomassini A., Spinelli D., Jacono M., Sandini G., Morrone M.C. Rhythmic oscillations of visual contrast sensitivity synchronized with action. J. Neurosci. 2015;35:7019–7029. doi: 10.1523/JNEUROSCI.4568-14.2015.

- von Helmholtz H. Leipzig: Voss; 1867. Handbuch der physiologischen Optik.

- Vanrullen R. Predictive coding and neural communication delays produce alpha-band oscillatory impulse response functions. Conf. Cogn. Comput. Neurosci. 2017:1–12.

- Vanrullen R., Macdonald J.S. Perceptual echoes at 10 Hz in the human brain. Curr. Biol. 2012;22:995–999. doi: 10.1016/j.cub.2012.03.050.

- Vinette C., Gosselin F., Schyns P.G. Spatio-temporal dynamics of face recognition in a flash: it's in the eyes. Cogn. Sci. 2004;28:289–301.

- Wang Y., Luo H. Behavioral oscillation in face priming: prediction about face identity is updated at a theta-band rhythm. Prog. Brain Res. 2017;236:211–224. doi: 10.1016/bs.pbr.2017.06.008.

- Wutz A., Muschter E., Van Koningsbruggen M.G., Weisz N., Melcher D. Temporal integration windows in neural processing and perception aligned to saccadic eye movements. Curr. Biol. 2016;26:1659–1668. doi: 10.1016/j.cub.2016.04.070.

- Zhang H., Morrone M.C., Alais D. Behavioural oscillations in visual orientation discrimination reveal distinct modulation rates for both sensitivity and response bias. Sci. Rep. 2019;9:1115. doi: 10.1038/s41598-018-37918-4.

Data Availability Statement

Requests for data and code should be addressed to the lead contact, David Burr.