Abstract

Background

With opioid misuse, opioid use disorder (OUD), and opioid overdose deaths persisting at epidemic levels in the U.S., the largest implementation study in addiction research—the HEALing Communities Study (HCS)—is evaluating the impact of the Communities That Heal (CTH) intervention on reducing opioid overdose deaths in 67 disproportionately affected communities from four states (i.e., “sites”). Community-tailored dashboards are central to the CTH intervention’s mandate to implement a community-engaged and data-driven process. These dashboards support a participating community’s decision-making for selection and monitoring of evidence-based practices to reduce opioid overdose deaths.

Methods/Design

A community-tailored dashboard is a web-based set of interactive data visualizations of community-specific metrics. Metrics include opioid overdose deaths and other OUD-related measures, as well as drivers of change of these outcomes in a community. Each community-tailored dashboard is a product of a co-creation process between HCS researchers and stakeholders from each community. The four research sites used a varied set of technical approaches and solutions to support the scientific design and CTH intervention implementation. Ongoing evaluation of the dashboards combines quantitative and qualitative data on key factors posited to shape dashboard use with website analytics.

Discussion

The HCS presents an opportunity to advance how community-tailored dashboards can foster community-driven solutions to address the opioid epidemic. Lessons learned can be applied to inform interventions for public health concerns and issues that have disproportionate impact across communities and populations (e.g., racial/ethnic and sexual/gender minorities and other marginalized individuals).

Trial registration

ClinicalTrials.gov (NCT04111939)

Keywords: Opioid use disorder, Overdose, Dashboards, Implementation science, Interventions, Health surveillance, Information visualization, Helping to End Addiction Long-term, HEALing Communities Study

1. Introduction

The U.S. is experiencing a national crisis of opioid-related harms. In 2018, nearly 47,000 people died from an opioid overdose (Wilson et al., 2020). Evidence-based strategies exist to prevent and treat opioid misuse and opioid use disorder (OUD), but there are few models for combining strategies and bringing together different sectors to collectively combat the epidemic number of opioid overdoses. For these reasons, the National Institute on Drug Abuse (NIDA), in collaboration with the Substance Abuse and Mental Health Services Administration (SAMHSA), initiated the largest implementation study in addiction research to test a coordinated approach for delivering prevention and treatment services across health care, behavioral health, and justice settings.

The HEALing Communities Study (HCS) is a four-year, multi-site, parallel group, cluster randomized wait-list controlled trial to test the impact of the Communities That Heal (CTH) intervention on reducing opioid overdose deaths in 67 disproportionately affected communities in Kentucky, Massachusetts, New York, and Ohio (The HEALing Communities Study Consortium, 2020). The participating communities were randomized to either the CTH intervention (“Wave 1” communities) or a wait-list control condition (“Wave 2” communities). The CTH intervention has three components: (1) a community engagement strategy designed to facilitate data-driven selection and implementation of evidence-based practices (EBPs); (2) the Opioid-overdose Reduction Continuum of Care Approach; and (3) communication campaigns to reduce stigma and raise awareness about EBPs.

A key feature of the CTH intervention is the participation of community members in reviewing timely local indicators of the opioid epidemic (e.g., the number of people in the community with OUD, overdoses, use of medications to treat OUD, administration of naloxone). To implement and reinforce this data-driven ethos, the CTH intervention uses community-tailored dashboards. Within the context of the CTH, “dashboard” refers to web-based interactive data visualizations created to support decision-making and monitoring of community-specific metrics. Each community-tailored dashboard is co-created by HCS researchers and key stakeholders from each community. The co-creation process is grounded in the idea that products developed with end users, as opposed to for end users, result in more useful and effective systems (Reeves et al., 2011; Endsley, 2016). This co-creation process also aligns with a key principle of user-centered design in technology.

Dashboards are an important tool for public health leaders and professionals, health care providers, community leaders, policymakers, and other stakeholders to provide information on standardized health-related performance metrics (Dowding et al., 2015; Joshi et al., 2017). Web-based, interactive data visualizations offer advantages over more traditional means of data dissemination (e.g., static reports), such as immediate availability of data, accessibility at any time and place with internet access, and the ability for users to customize data presentations to reveal trends and relationships of interest. Well-chosen and rapidly disseminated metrics can help users quickly assess the effectiveness and impact (or lack thereof) of intervention efforts for populations, communities, and/or subpopulations within a community (e.g., by sex/gender, race/ethnicity) (Nash, 2020). Thus, timely dissemination of relevant metrics, which can be further strengthened with quality epidemiological, programmatic, and demographic data, is integral to the success of the HCS and other large-scale efforts to reduce opioid-related harms. Since data can be visualized temporally and geospatially as different EBPs are implemented, dashboards help communities track progress and identify where to make adjustments when efforts are not achieving their desired impact.

This paper describes the methodology for creating and implementing community-tailored dashboards in the CTH intervention. Specifically, we detail the following: (1) key requirements and considerations that informed dashboard content and functionality; (2) the process of developing and deploying community-tailored dashboards in the first year of the CTH intervention; and (3) challenges and early lessons learned. This information and attendant implications could strengthen and accelerate future dashboarding efforts designed to foster community-driven solutions for existing and emerging public health issues.

2. Background

The HCS consortium centralized planning, decision-making, and implementation of the dashboard procedures and protocols through a workgroup of data and visualization experts. This workgroup involved representatives from each of the HCS sites, a Data Coordinating Center (RTI International), the HCS Steering Committee, NIDA, and SAMHSA. Before describing the development and implementation process for the HCS’s community-tailored dashboards, we present two key tenets of the CTH intervention—community engagement and data-driven decision-making by community members—that provided the impetus for the project and informed the process.

2.1. Community-tailored dashboards as a mechanism for community engagement in the CTH intervention

The CTH intervention uses community engagement (CE) as a strategy for collaborative identification, adoption, and implementation of community-tailored EBPs to address the overdose crisis (The HEALing Communities Study Consortium, 2020). Fundamental to the CE approach, the HCS research team works collaboratively with community coalitions—composed of key stakeholders and community members—created or designated specifically for the CTH intervention (see Sprague Martinez et al., 2020). Using data available on the dashboards, the coalitions and their HCS research partners make informed decisions about the selection and implementation of EBPs. The dashboards also meet coalitions’ need for a clear, comprehensible, and user-friendly data dissemination tool. Furthermore, the CE approach encourages community coalitions to continuously monitor implementation outputs and refine choices and strategies based on evolving data. Altogether, community-tailored dashboards help further community engagement by aiding understanding and interpretation of data; identifying and prioritizing community needs; providing a resource to support consensus development and data-driven decision-making; and informing collective action related to implementation and monitoring of EBPs. Stakeholders at the state and local levels are well-positioned to help design the most effective and accessible presentation of critical evidence needed to inform the deployment of initiatives and implementation strategies in ways that optimize programmatic impact.

2.2. Data and data visualizations in the CTH intervention

Access to visualizations of integrated data from different sectors can improve communities’ capacity to plan, monitor, innovate, and support improvements in community-level outcomes (O’Neil et al., 2020a, 2020b). The CE approach facilitates discussions within the coalitions and across community-based organizations to define which data sources and metrics are important for visualization on a dashboard. The process of establishing an interactive data visualization platform can also encourage data sharing relationships between community stakeholders and thus enhance cross-sector collaboration.

2.3. Conceptual framework guiding the design of community dashboards

The conceptual framework for community-tailored dashboard development was based on user-centered design principles. These principles included: (1) the use of a multi-disciplinary approach, (2) active involvement of end users to achieve a clear understanding of user and task requirements, and (3) an iterative design and evaluation process (Mao et al., 2005). Research has shown that these approaches tend to result in earlier improvements and better alignment with the needs of end users (Fareed et al., 2020).

Community-tailored dashboard design and development included research experts and experienced, strategically positioned professionals (e.g., directors of community-based service programs, state policymakers) in substance use prevention and treatment, as well as people in related health professions and disciplines (e.g., emergency medicine, criminal justice, housing services). The HCS also drew upon specialists in information technology, software engineering, health communications, and educational technology. Such multi-sector CE-driven approaches to data sharing and data visualization have been used to improve health outcomes and provide a more comprehensive view of a health condition (O’Neil et al., 2020a, 2020b). With respect to the HCS goals, key metrics visualized on community-tailored dashboards were related to the primary outcome for the study, opioid overdose mortality, and key secondary measures related to the EBPs that were expected to drive change.

We also engaged community members in co-creation of the dashboard. Details on the steps that included end users for co-creation are presented in Section 3.5. Briefly, sites made concerted efforts to ensure that end users would be able to understand high-level, multi-faceted information about the opioid crisis in their community at a glance. Our data visualizations were guided by the work of Shneiderman (1996). We prioritized having (1) a clear data overview section first, then (2) features to allow end users to zoom and filter the data, and finally (3) provision of details-on-demand for end users to acquire more technical details about the metrics and/or gain a more nuanced understanding (Shneiderman, 1996). The interactive functions (e.g., zooming and filtering to focus on items of interest and tool tips to get more information about selected items) allowed users to smoothly navigate dashboards, while preserving the complexity of the data used in the visualizations (Card, 2012; Shneiderman et al., 2017).

Ensuing sections of this article discuss the design and evaluation process. Specifically, Sections 3.4 and 3.5 describe an iterative co-creation approach, and Section 3.6 describes the analytics used for evaluation and iteration.

3. Methods/Protocol

3.1. Dashboard requirements for coordination across HCS research sites

A workgroup of data and visualization experts first developed specifications for community-tailored dashboards at a high level to stipulate what must be consistent across sites without over-specifying exact implementation details. All sites were required to conform to the HCS style guidelines and include the following metrics: (1) number of opioid overdose deaths; (2) number of naloxone units distributed in a community; (3) number of individuals with OUD receiving buprenorphine; (4) number of people receiving new “high-risk” opioid prescriptions; and (5) number of emergency medical services (EMS) events that involved naloxone administration. These metrics were required because they directly related to hypotheses being tested by the study (Metrics 1–4) (Slavova et al., 2020) or were an indicator for monitoring trial safety (Metric 5). Other requirements included restricting access to users who were approved by the HCS research team (and requiring users to create/use their own password to access the dashboards), restricting users to only see metrics for the communities they serve or reside in (metrics aggregated across all other communities implementing the CTH intervention were allowed to be displayed), only creating dashboards for communities that are actively deploying the CTH intervention, and including essential information in language appropriate for a general audience to understand each visualization. Finally, the collection of data visualizations on a community’s dashboard was a component of a larger “portal” made available to each community, with the portal offering other coalition and community-specific support (e.g., meeting materials, event calendars, links to local OUD-related resources) for that community as well as other static/non-interactive presentations of data (e.g., community profiles).
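As a minimal sketch of how a site might check a dashboard configuration against the five required metrics listed above, the Python below encodes them as an enumeration. The identifier strings (e.g., `naloxone_units_distributed`) and the function `missing_required_metrics` are hypothetical names chosen for illustration, not the consortium's actual specification.

```python
from enum import Enum


class RequiredMetric(Enum):
    """The five metrics every community dashboard had to include.

    Identifier strings are hypothetical labels for illustration only.
    """
    OPIOID_OVERDOSE_DEATHS = "opioid_overdose_deaths"                # Metric 1
    NALOXONE_UNITS_DISTRIBUTED = "naloxone_units_distributed"        # Metric 2
    BUPRENORPHINE_RECIPIENTS_WITH_OUD = "buprenorphine_with_oud"     # Metric 3
    NEW_HIGH_RISK_OPIOID_PRESCRIPTIONS = "new_high_risk_opioid_rx"   # Metric 4
    EMS_EVENTS_WITH_NALOXONE = "ems_naloxone_events"                 # Metric 5 (safety)


def missing_required_metrics(dashboard_metrics: set[str]) -> list[str]:
    """Return the required metric identifiers absent from a dashboard's configuration."""
    return sorted(m.value for m in RequiredMetric if m.value not in dashboard_metrics)


# A hypothetical dashboard configuration that forgot the EMS safety metric.
draft = {
    "opioid_overdose_deaths",
    "naloxone_units_distributed",
    "buprenorphine_with_oud",
    "new_high_risk_opioid_rx",
    "community_profile_text",  # extra, site-specific content is allowed
}
print(missing_required_metrics(draft))  # ['ems_naloxone_events']
```

Extra, community-specific metrics pass the check untouched, which mirrors the intent to fix only what must be consistent across sites.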

3.2. Technical aspects and considerations for implementing community dashboards

Each site chose its own staff, hardware, software, and services to host community-tailored dashboards for its respective state that met the requirements specified above. Data use agreements sometimes limited what information could be shown. Most commonly, these agreements stipulated a range within which values must be suppressed to prevent inadvertent subject identification. Outcomes were calculated and stored in a simple common data model format (see Slavova et al., 2020 for details). Dashboard platforms utilized software and services that met the cost and security requirements for each research site. Each research site considered sustainability (presented in more detail in the next section) and reproducibility when selecting technologies to implement these guidelines. Page and data visualization solutions differed across sites but included Microsoft Power BI, Tableau, SharePoint, D3.js (Data-Driven Documents), Drupal, and WordPress.
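Small-count suppression of the kind stipulated by data use agreements can be sketched as follows. This is an illustrative assumption, not any site's implementation: the record layout stands in for the common data model, and the default suppression range of 1 to 9 is hypothetical, since actual ranges were set by each agreement.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class MetricRecord:
    """Hypothetical common-data-model row: one metric, one community, one period."""
    community_id: str
    period: str            # e.g., "2020-Q1"
    metric: str
    value: Optional[int]   # None means the value has been suppressed


def suppress(record: MetricRecord, low: int = 1, high: int = 9) -> MetricRecord:
    """Return a copy with the value blanked if it falls inside [low, high].

    The 1..9 default is an illustrative assumption; each data use agreement
    specified its own suppression range.
    """
    if record.value is not None and low <= record.value <= high:
        return replace(record, value=None)
    return record


row = MetricRecord("community_a", "2020-Q1", "opioid_overdose_deaths", 4)
print(suppress(row).value)  # None: 4 falls inside the assumed 1..9 range
```

Keeping `low` and `high` as parameters lets one function serve agreements with different rules (for example, `low=0` where zero counts must also be hidden).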

Restricting access to community-tailored dashboards was a primary concern for data security and scientific reasons (e.g., prohibiting individuals in Wave 2 communities from seeing data from Wave 1 communities). Research sites were required to protect each community’s visualizations (and hence the community’s data) behind password-protected accounts that could be assigned to appropriate coalition members. These accounts also limited each user’s access to their own community.
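The access rules above (one community per user, pooled aggregates across CTH communities permitted, and no dashboards for communities not yet deploying the intervention) can be illustrated with a minimal server-side filter. The sketch assumes a simple in-memory list of rows; the class `User`, the set `ACTIVE_CTH_COMMUNITIES`, and the label `all_cth_communities` are hypothetical, and password authentication is assumed to be handled elsewhere by the hosting platform.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class User:
    username: str
    community_id: str  # the one community this coalition member is approved to view


# Hypothetical identifiers; not actual HCS community codes.
ACTIVE_CTH_COMMUNITIES = {"community_a", "community_b"}
AGGREGATE_ID = "all_cth_communities"  # pooled metrics across CTH communities


def rows_for_user(user: User, rows: list[dict]) -> list[dict]:
    """Filter on the server before anything is sent to the browser.

    Only the user's own community and the pooled aggregate are returned, so
    other communities' data never reach the client and cannot be recovered
    from the page source.
    """
    if user.community_id not in ACTIVE_CTH_COMMUNITIES:
        return []  # no dashboard for communities not yet deploying the intervention
    allowed = {user.community_id, AGGREGATE_ID}
    return [row for row in rows if row["community_id"] in allowed]
```

Filtering before serialization, rather than hiding rows in the browser, is the design choice that matters here: anything sent to the client can be read by the client.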

Technical requirements also accommodated the need to refine and improve content and presentation. Each research site created a mechanism for tracking new visualization requests, including integrating, where possible, existing dashboards already available in the community or otherwise linking to that content. User behavior and access are logged; analytical use of these data is discussed in more detail in Section 3.6.

3.3. Sustainability as a key consideration

We sought to develop and implement the data dashboards in a way that would be sustainable once research funding expires. The teams at each site utilized key aspects of the seven elements of the Program Sustainability Framework, previously demonstrated to be related to program sustainability (Mancini and Marek, 2004). A priori, sites avoided products with high acquisition costs and high-bandwidth solutions to reduce the burden of sustaining the proposed framework. Sites developed training materials to make the dashboards easier to use. Each site sought to understand the needs of their communities, created space for collaboration with end users, ensured program responsiveness to feedback, and provided regular reports to communities on dashboard effectiveness. Below are some of the site-specific considerations and solutions to promote sustainability of community dashboards:

- The Kentucky site focused on open-source technologies to remove barriers related to software costs. Additionally, many administrative functions of hosting the portal were created by the research team to make long-term adoption easier; this included user management, usage analytics, and specific content management.

- The Massachusetts site considered that capacity for maintaining dashboards in the future is likely to vary across communities. The team worked with the state Department of Public Health to identify an existing platform, the Population Health Information Tool (PHIT; https://www.mass.gov/orgs/population-health-information-tool-phit), to share community-specific data across communities. The Massachusetts community-tailored dashboards and PHIT both used Power BI for visualizations, and PHIT presented an opportunity for scaling and sustaining visualizations created with state-based administrative data across Massachusetts communities after the study.

- The New York site considered the difficulties associated with acquiring and sharing data. The team implemented a data management platform with tools and capabilities to receive and send data easily (i.e., interfaces directly with systems housing the original data); store and catalog data; automate extract, transform, load (ETL) procedures; and enable individual user access for analysis and publication (e.g., to dashboards). These capabilities were implemented with cyberinfrastructure, procedures, and policies that enabled compliance with a variety of regulatory and security requirements, as well as specific requirements contained in data use agreements between data providers and recipients.

- The Ohio site considered the importance of sustaining a tailoring process. The team implemented a solution that enables custom views of the dashboard, specifically allowing users to select preferences on demand. The team balanced the capabilities of the system with the complexity of managing and administering the system, especially since the system will be deployed and maintained by community members.
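The automated ETL procedures attributed to the New York site above can be illustrated with a toy pipeline. This is a sketch under stated assumptions, not that site's platform: the input columns (`event_date`, `community_id`), the metric label, and the `metric_counts` table are hypothetical, and Python's built-in `sqlite3` stands in for whatever data store a site actually uses.

```python
import csv
import io
import sqlite3
from collections import Counter


def extract(csv_text: str) -> list[dict]:
    """Extract: parse a provider's CSV export (hypothetical columns)."""
    return list(csv.DictReader(io.StringIO(csv_text)))


def transform(rows: list[dict], metric: str) -> list[tuple[str, str, str, int]]:
    """Transform: aggregate event-level rows into (community, month, metric, count)."""
    counts = Counter((r["community_id"], r["event_date"][:7]) for r in rows)  # "YYYY-MM"
    return [(c, month, metric, n) for (c, month), n in sorted(counts.items())]


def load(conn: sqlite3.Connection, records: list[tuple[str, str, str, int]]) -> None:
    """Load: upsert aggregated counts into a common-data-model table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS metric_counts ("
        "community_id TEXT, month TEXT, metric TEXT, value INTEGER, "
        "PRIMARY KEY (community_id, month, metric))"
    )
    conn.executemany("INSERT OR REPLACE INTO metric_counts VALUES (?, ?, ?, ?)", records)
    conn.commit()


# Usage with a tiny, invented export.
conn = sqlite3.connect(":memory:")
raw = "event_date,community_id\n2020-03-02,A\n2020-03-17,A\n2020-04-01,B\n"
load(conn, transform(extract(raw), "ems_naloxone_events"))
print(conn.execute("SELECT * FROM metric_counts ORDER BY community_id, month").fetchall())
```

Using an upsert keyed on community, month, and metric means a late or corrected provider file can be re-run without creating duplicate rows.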

3.4. Sequence of activities

Planning and protocol development that specified dashboard requirements and technical implementation commenced at the onset of CTH Phase 0 (“Preparation”), which began in October 2019 (see Sprague Martinez et al., 2020 for a full description of CTH phases). Dashboard staffing and infrastructure preparation continued through subsequent CTH phases such that sites were fully prepared to launch their data dashboards by CTH Phase 3. By the end of April 2020, each site’s study team developed and launched a “version 1” (v1) of the dashboards with the required metrics (described in Section 3.1) in each community. The research teams held trainings in each community to ensure coalition members understood how to access the web-based platform, how to accurately interpret data visualizations, what data sources underlie the metrics and visualizations, and how to navigate the web-page elements that displayed graphs, summaries, and other components of the data visualizations. The co-creation process in CTH Phase 3 (described in Section 3.5) culminated in a co-created version 2 (v2) of the dashboards for each community. To underscore that dashboards are community-tailored, we explicitly note that the resulting v2 dashboard for each community may look different from the other communities’ dashboards. The co-created v2 community-tailored dashboards, launched by the end of September 2020, were the last dashboard deliverable for CTH Phase 3. Community-tailored dashboards will continue to be refined and revised in subsequent phases, based on bug reports, feedback, and feature requests from researchers and end users.

3.5. Co-creation procedures

Each site developed a formal process to work collaboratively with coalition members, data champions, and/or other community members to co-develop the dashboards. This included establishing a base visualization and having a clear process for subsequent modifications via iterative feedback. This co-creation process occurred in stages. First, HCS researchers introduced v1 of the community-tailored dashboards to coalitions to gather feedback on basic design and functionality. Second, each site developed a formal process for more dedicated, in-depth refinement and revision. Example activities included surveys, focus groups, testing of sample visualization formats created by a site’s technologists, and user acceptance testing. Involved parties from each community included coalition members, data champions, and other interested community members. Key areas for feedback and revisions often included: ease of use and navigation; balancing appropriate depth/complexity with ease of comprehension; minimizing potential misinterpretation; and additional data/metrics that would aid communities in monitoring progress and/or data-driven decision-making. Third, during these co-creation activities (or coalition meetings), community stakeholders identified additional data sources for describing the opioid overdose crisis, detailed plans for data sharing, and discussed visualization preferences.

Feedback and suggestions were incorporated into v2, taking resource, time, and data curation constraints into account. COVID-19 was a compelling example of the need for, and value of, revising dashboards beyond refining existing visualizations. The COVID-19 pandemic in the U.S. emerged during the implementation of the CTH intervention. Many community-wide interventions were put in place to mitigate the pandemic, including restrictions on large gatherings, business and school closures, and other social distancing measures. The resulting disruption to the service delivery system created a need to adapt the planned CE approach (see Sprague Martinez et al., 2020 for more details). As of this writing, revisions are underway to help end users gain insight into the impact of COVID-19 or associated community-wide restrictions on key study metrics.

As noted above, refinement of dashboards will continue for the duration of the CTH intervention. When Wave 2 communities start to implement the CTH intervention, we anticipate starting with a more refined version based on prior experiences with Wave 1.

3.6. Assessing and learning about use of community-tailored dashboards

The HCS also aims to understand the use of community-tailored dashboards within the CTH intervention. Specifically, procedures were developed and attendant mechanisms were implemented to determine how end users are using community-tailored dashboards to make decisions. Domains for data collection were derived from an adaptation of the Technology Acceptance Model (TAM; Davis et al., 1989; Venkatesh and Davis, 2000) and included the following: perceived usefulness, perceived ease of use, intention to use, and actual use of the dashboards. Perceived usefulness was defined as the degree to which a person believes that using the dashboard will enhance data-driven decision-making. Perceived ease of use was defined as the degree to which a person believes that using a dashboard will require little effort. Intention to use was defined as a person’s commitment or plans to use the dashboard to inform future behaviors. Finally, usage behavior is represented by the actual use of the dashboard as captured via website analytics. Dashboard server log file data allow us to determine user access to specific areas of the website and the frequency of visits to specific dashboard pages. These metrics will be supplemented by qualitative and quantitative data on TAM domains, ultimately generating insight into the role of dashboards in end users’ participation in data-driven decision-making.
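A minimal sketch of the kind of server-log summary described above, counting visits and distinct users per dashboard page. The log line format is an assumption for illustration; real web servers and hosted platforms record usage in their own formats, and the function `summarize` is a hypothetical helper.

```python
import re
from collections import Counter, defaultdict

# Hypothetical log format: "<ISO timestamp> user=<id> path=<page>"
LOG_LINE = re.compile(r"^(?P<ts>\S+) user=(?P<user>\S+) path=(?P<path>\S+)$")


def summarize(lines: list[str]) -> dict[str, dict[str, int]]:
    """Count total visits and distinct users for each dashboard page."""
    visits: Counter = Counter()
    users: defaultdict = defaultdict(set)
    for line in lines:
        m = LOG_LINE.match(line.strip())
        if not m:
            continue  # skip malformed or unrelated lines
        visits[m["path"]] += 1
        users[m["path"]].add(m["user"])
    return {p: {"visits": visits[p], "unique_users": len(users[p])} for p in visits}


log = [
    "2020-06-01T10:15:00 user=u1 path=/dash/overdose",
    "2020-06-01T10:16:00 user=u1 path=/dash/overdose",
    "2020-06-02T09:00:00 user=u2 path=/dash/overdose",
    "2020-06-02T09:05:00 user=u2 path=/dash/naloxone",
    "not a log line",
]
print(summarize(log))
```

Separating visits from distinct users helps distinguish a few heavy users from broad uptake, which matters when relating actual use to the TAM survey domains.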

3.7. Examples

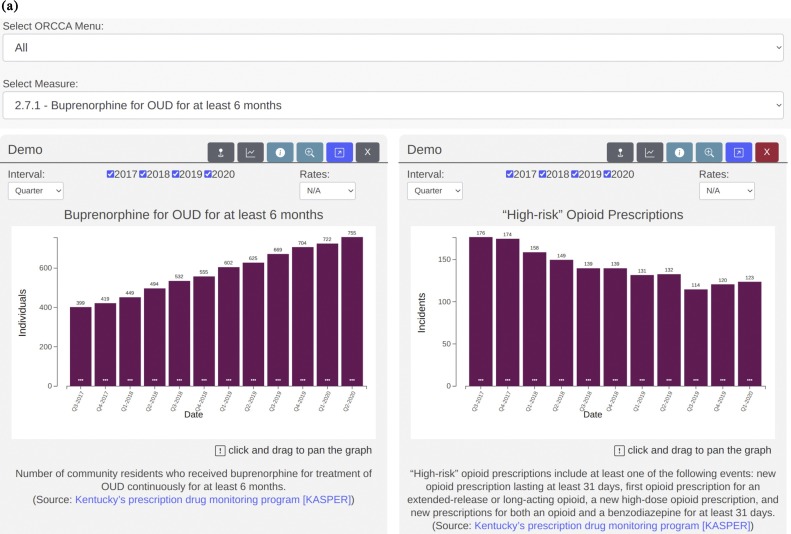

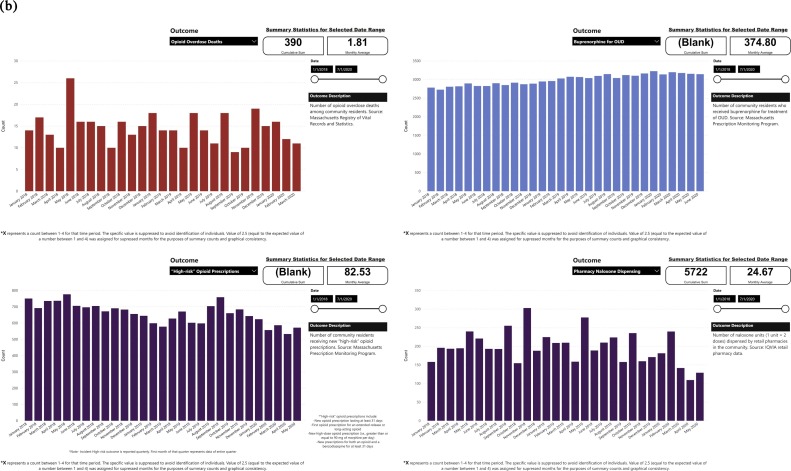

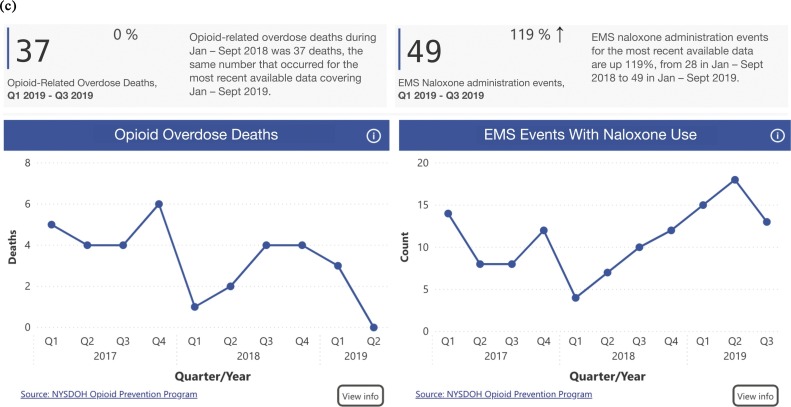

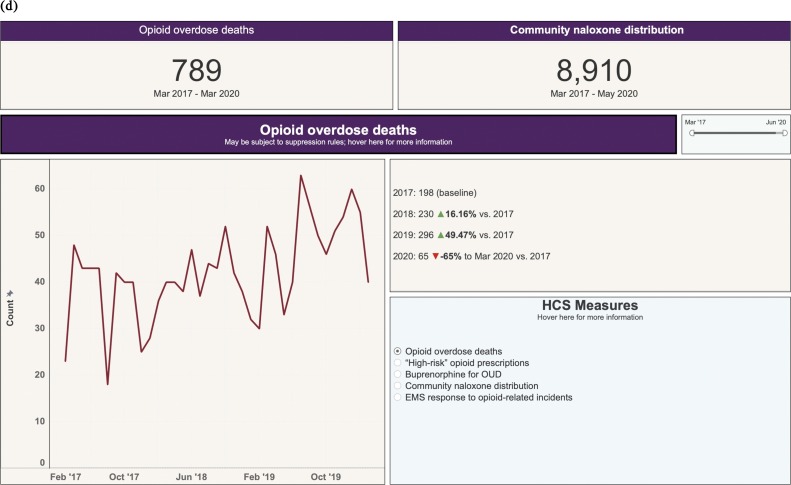

Each research site used common guidelines to develop dashboards. Input from end users indicated a need for visualizations to include plain-language descriptions suitable for a general audience; such language helped users to comprehend and contextualize the data displayed. Because of differences in the actual technology used for implementation, each site’s platform has a unique user experience. Fig. 1 presents sample screenshots from each site’s community-tailored dashboards.

Fig. 1.

(a) Sample community dashboard, Kentucky site: Users can select specific visualization(s) to view and compare from dropdown menus; subsets of visualizations are filterable based on their relevance to specific CTH intervention domains. (b) Sample community dashboard, Massachusetts site: Users can select specific visualization(s) to view and compare from dropdown menus. (c) Sample community dashboard, New York site: “Stories” at the top highlight annual trends for two metrics. Users can scroll down to see additional visualizations. Additional information (e.g., explanations in language appropriate for a general audience) appears when users click on the information button in each graph. (d) Sample community dashboard, Ohio site: Panels at the top summarize the most recent data point for key metrics. Users select the measure to be graphed from a radio-button list, and additional details on temporal trends are summarized in an adjacent panel.

Aside from the underlying technologies used to host, create, and publish the community-tailored dashboards, one can observe different approaches to the overview page (e.g., numerical call-out boxes by the Massachusetts and Ohio sites, “highlights” by the New York site), interactivity with data (e.g., filtering via pop-up menus by the Kentucky site and radio buttons by the Ohio site, zooming and filtering with slider bars by the Massachusetts and Ohio sites), and provision of information and details (e.g., footnotes by the Kentucky site, clickable elements that display or link to additional information by the New York site).

The following vignette describes how the dashboards may be used as a decision support tool for making selections of EBP strategies and for continuous monitoring of implementation progress. Based on a review of community assets during the first CTH intervention phases, coalition members and their stakeholders become aware of resource gaps that need to be filled to address the opioid overdose epidemic in their community. For example, a coalition might observe a gap related to emergency departments linking persons with OUD to treatment resources. The coalition could then prioritize the linkage to medications for opioid use disorder in their action plans. Furthermore, coalitions can use the dashboards for process monitoring, such as visualizing progress of implementation of overdose education and naloxone distribution at the level of each implementing partner (venue/setting), or at an aggregate community level. This enables coalitions to take greater ownership of the implementation process, identify implementation challenges quickly as they occur, and modify action plans accordingly, including selecting new strategies or modifying failing ones.

4. Discussion

Data visualizations succeed by making complex or dense information easier to process, faster to understand, and more memorable to viewers (Sarikaya et al., 2019). Community-tailored dashboards allow community members to easily view key data depicting the impact of OUD in their communities, to make data-driven decisions related to EBP selection, and to monitor the implementation and impact of these EBPs over time. Disseminating key metrics via dashboards can also inform the intervention and implementation activities of a wide array of societal stakeholders across multiple sectors (e.g., health care, education, public, nonprofit, community-based, advocacy, research) and at multiple levels (e.g., county, state, federal levels) (Nash, 2020).

4.1. Challenges

Large studies, such as the HCS, can benefit from the use of a public-facing system to disseminate metrics that relate to the study goals. As the HCS sought to achieve this via community-tailored dashboards, several challenges emerged. Platform selection proved difficult as there is no standard for hosting a community-engaged dashboard and/or data visualization. Each research site had access to and experience with different technologies. It was not feasible to implement a single technology platform without time delays and added cost; thus, each site chose the technologies best suited to its own institution. Ensuring that a coalition member can see only the appropriate data presented another challenge. For example, it was not sufficient to restrict the presentation of results client-side and simply hide data from other communities. The filtering needed to be done server-side to avoid a scenario where a user could inspect the source of the dashboard and view inappropriate data. The data suppression requirements described in Section 3.2 presented a graphical challenge for conveying information. Subsetting cases in a community (e.g., data separated by race/ethnicity, sex/gender) results in smaller cell sizes and increases the likelihood that suppression rules will need to be applied. Furthermore, smaller communities may face higher levels of suppression in their visualizations; it was conceivable that rural communities might not benefit from community-tailored dashboards as much as their larger, urban counterparts in the state. This challenge was compounded when different public health departments had varying requirements for data suppression, which made it difficult to standardize across community-tailored dashboards.
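The point that subsetting shrinks cell sizes and triggers more suppression can be made concrete with a few lines of arithmetic. The counts below are invented for illustration, and the 1 to 9 suppression range is an assumption, since ranges differed across data providers.

```python
def suppressed_share(counts: list[int], low: int = 1, high: int = 9) -> float:
    """Fraction of cells whose count falls in the (assumed) suppression range."""
    if not counts:
        return 0.0
    hidden = sum(1 for c in counts if low <= c <= high)
    return hidden / len(counts)


# Invented counts for one community and one period.
community_total = [23]          # a single cell: reportable
by_subgroup = [15, 5, 3]        # the same 23 cases split into three subgroups
small_community_total = [8]     # a smaller community's single cell

print(suppressed_share(community_total))        # 0.0
print(suppressed_share(small_community_total))  # 1.0
print(round(suppressed_share(by_subgroup), 2))  # 0.67
```

Splitting 23 cases three ways leaves two of three cells unreportable, and a smaller community can lose even its overall count, mirroring the concerns about stratified and rural data raised above.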

Finally, a particularly noteworthy challenge was the need for timely data. It is not uncommon for public health and/or publicly available data to have a reporting lag of 12 months or more. In some cases, the data may simply be too old to inform selection, tailoring, and optimization of programs. The HCS aimed to have a maximum lag of six months in data for required metrics (see accompanying paper from Slavova et al., 2020); great efforts were undertaken to improve on that specification (e.g., achieving a lag of less than three months) in order to allow communities to make decisions based on more timely data. Building on procedures we developed to address the aforementioned challenges, we anticipate that Wave 2 communities will have more optimized dashboards at the onset of their implementation of the CTH intervention. However, we also note that additional support and investment in state and local surveillance infrastructure may be needed to adequately reduce the time lag in geographic areas not participating in the HCS.
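Checking reporting lag against the six-month maximum and the sub-three-month aim described above reduces to simple date arithmetic. The sketch below counts whole calendar months between the latest month with data and the dashboard refresh date; the function names and this month-counting convention are assumptions for illustration.

```python
from datetime import date


def lag_in_months(latest_data_month: date, as_of: date) -> int:
    """Whole calendar months between the most recent reported month and the refresh date."""
    return (as_of.year - latest_data_month.year) * 12 + (as_of.month - latest_data_month.month)


def lag_status(latest_data_month: date, as_of: date, max_lag: int = 6, target_lag: int = 3) -> str:
    """Classify a metric's lag against an assumed maximum and a stricter target."""
    lag = lag_in_months(latest_data_month, as_of)
    if lag > max_lag:
        return "exceeds maximum"
    if lag < target_lag:
        return "meets target"
    return "within maximum"


print(lag_status(date(2020, 7, 1), date(2020, 9, 15)))   # meets target (2 months)
print(lag_status(date(2020, 3, 1), date(2020, 9, 15)))   # within maximum (6 months)
print(lag_status(date(2019, 12, 1), date(2020, 9, 15)))  # exceeds maximum (9 months)
```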

4.2. Limitations

These findings have several limitations. The first is generalizability. We are publishing dashboards in only four states and only in communities that were selected for study inclusion because of their high opioid overdose mortality rates. Thus, the uptake levels and implementation processes of dashboards that we find in this study may not reflect the needs of other states or of communities with lower rates of OUD and opioid overdose deaths. Second, all sites in this study had pre-existing relationships with, and support from, state and county public health departments. All states’ public health departments were committed to successfully carrying out the study; this could strengthen coalitions and thus predispose the CTH intervention to success. Nonetheless, public health departments varied in the financial and staff resources available to collect, clean, and disseminate the data, which made these processes difficult to standardize and could obscure or confound future inferences and conclusions. The logistics of coordinating multiple diverse public health departments and providing them with sufficient resources to develop a dashboard should not be underestimated. Other states wishing to develop dashboards for their communities may first need to establish a mechanism to harmonize data collection and dissemination efforts. They may also need to support under-resourced local health departments in obtaining and disseminating accurate data in a timely manner. Third, the HCS required community stakeholders and others accessing the dashboards to have user accounts and passwords to view data visualizations; this model may not generalize to communities or states that would prefer broader access to their data (e.g., “open access” dashboards). Finally, HCS communities could access only their own data in their individual dashboards. This restriction was intended to reduce confounding and interference in the study design, in which communities are randomized to treatment conditions. However, in non-research contexts, it may be helpful for a community to compare its metrics with those of other communities to help determine how well its programs are working and to share best practices.
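The access restriction described above can be sketched as a simple filter applied after a user has logged in. This Python example is a hypothetical illustration, not the HCS implementation: the `User` and `MetricRecord` types, the `visible_records` function, and the `allow_comparison` switch are our assumptions, authentication itself is not shown, and the community names and values are placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MetricRecord:
    community: str
    metric: str
    period: str
    value: float


@dataclass(frozen=True)
class User:
    username: str
    community: str  # the single community this (already authenticated) account belongs to


def visible_records(
    user: User, records: list[MetricRecord], allow_comparison: bool = False
) -> list[MetricRecord]:
    """Return only the user's own community's records, unless cross-community
    comparison is explicitly enabled (e.g., in a non-research deployment)."""
    if allow_comparison:
        return list(records)
    return [r for r in records if r.community == user.community]


if __name__ == "__main__":
    # Placeholder records; names and values are made up for illustration.
    records = [
        MetricRecord("Community A", "opioid overdose deaths", "2020Q1", 10.0),
        MetricRecord("Community B", "opioid overdose deaths", "2020Q1", 20.0),
    ]
    user = User("coalition_member", "Community A")
    print(len(visible_records(user, records)))  # prints: 1
```

Keeping the restriction as an explicit parameter makes the research-context default (own data only) visible in code, while leaving room for the cross-community comparisons the text suggests could help outside a randomized trial.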

4.3. Other public health issues and concerns

Beyond OUD and opioid overdose, the COVID-19 pandemic, whose impact on the HCS is described in Section 3.5, is another public health issue that benefits from a coordinated multi-state/multi-national effort and, in turn, may benefit from the use of dashboards and data visualizations (Dong et al., 2020). Community-tailored dashboards can help communities monitor their own progress and inform efforts and policies that address medical and public health issues other than opioid overdose. Furthermore, comparing data between regions (or using visualizations that directly present such comparisons) can generate additional insights into the structural or policy issues that shape other epidemics.

Dashboards also hold great promise for reducing health disparities. For example, if race/ethnicity data are available for a metric, visualizations can display the metric by race/ethnicity or show differences among racial/ethnic groups, which could help identify health disparities and inequities faced by Black, Indigenous, and People of Color (BIPOC). In addition, visualizations can be used to inform, monitor, and/or evaluate EBPs, communication and public awareness efforts, policies, and advocacy that redress the structural barriers and stigma that harm the health of BIPOC, limit their ability to receive services, and diminish the quality of the services they receive.
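One common way to display differences among groups is to compute a rate for each group and compare it with a reference group. The Python sketch below computes rates per 100,000 population, rate differences, and rate ratios. It is a minimal illustration under stated assumptions: the choice of these two disparity measures, the function names, and the group labels and counts are hypothetical and are not drawn from HCS data.

```python
def rate_per_100k(events: int, population: int) -> float:
    """Events per 100,000 population."""
    if population <= 0:
        raise ValueError("population must be positive")
    return events * 100_000 / population


def disparity_table(
    counts: dict[str, int], populations: dict[str, int], reference: str
) -> dict[str, tuple[float, float, float]]:
    """Map each group to (rate, rate difference vs. reference, rate ratio vs. reference)."""
    ref_rate = rate_per_100k(counts[reference], populations[reference])
    table = {}
    for group, events in counts.items():
        rate = rate_per_100k(events, populations[group])
        # A ratio is undefined when the reference rate is zero.
        ratio = rate / ref_rate if ref_rate > 0 else float("nan")
        table[group] = (rate, rate - ref_rate, ratio)
    return table


if __name__ == "__main__":
    # Made-up counts for two placeholder groups.
    counts = {"Group A": 30, "Group B": 40}
    populations = {"Group A": 50_000, "Group B": 200_000}
    for group, (rate, diff, ratio) in disparity_table(counts, populations, "Group B").items():
        print(f"{group}: rate={rate:.1f}, difference={diff:.1f}, ratio={ratio:.2f}")
```

Reporting both an absolute measure (difference) and a relative measure (ratio) helps avoid overstating disparities when rates are small, or understating them when rates are large.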

Altogether, the HCS presents an opportunity to show how dashboards can be used to foster community-driven solutions to the opioid overdose epidemic. Within the study, community-tailored dashboards were co-created by researchers and community stakeholders to ensure alignment with both the goals of the HCS and the expectations of end users. Strong consideration was given to data security, timeliness of metrics, ease of understanding by end users, and sustainability of the dashboards. Community coalitions use the dashboards to identify key drivers of their local opioid crisis, inform the selection of EBPs, and monitor the impact of their chosen EBPs on OUD, opioid overdose deaths, and other related metrics. We anticipate that the CTH intervention will evolve over time and across communities. Future research on community-tailored dashboards can describe these changes, the lessons learned, and the resulting recommendations for future researchers and developers of community-tailored dashboards. Furthermore, these lessons and an analysis of variation in implementation across sites could lead to generalizable recommendations that inform responses to other epidemics and pandemics and help redress the health and social inequities faced by BIPOC, sexual/gender minorities, and other populations that experience marginalization and/or stigma.

5. Contributors

EW and JV conceptualized the manuscript. All authors contributed to the study procedures and provided oversight at their respective research site regarding the development of community-tailored dashboards and co-creation process as part of the CTH intervention. All authors contributed to drafts of the manuscript, critical revisions, and approved the final manuscript.

Role of funding source

This research was supported by the National Institutes of Health through the NIH HEAL Initiative under award numbers UM1DA049394, UM1DA049406, UM1DA049412, UM1DA049415, and UM1DA049417 (ClinicalTrials.gov Identifier: NCT04111939). This study protocol (Pro00038088) was approved by Advarra Inc., the HEALing Communities Study single Institutional Review Board (sIRB). The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health or its NIH HEAL Initiative.

Declaration of Competing Interest

MRL reports receiving consulting funds for research paid to his institution by OptumLabs, outside the submitted work. All other authors declare no conflicts of interest.

Acknowledgements

This work was made possible only by the invaluable expertise, dedication, and contributions of the members of the workgroup focused on data and visualization; we are indebted to the following: Maneesha Aggarwal, Sharon Coleman, Paul Falvo, Jose Garcia, Andrew Gordon, Tyler Griesenbrock, Pankaj Gupta, Dalia Khoury, Puneet Mathur, John McCarthy, Frank Mierzwa, Denis Nash, Gregory Patts, Daniel Redmond, Peter Rock, Carter Roeber, Jeffrey Samet, Reginald Shih, Thomas Stopka, Nathan Surgenor, and Sarah Kosakowski Tufts.

References

- Card S. Information visualization. In: Jacko J.A., editor. The Human-Computer Interaction Handbook: Fundamentals, Evolving Technologies, and Emerging Applications. Human Factors and Ergonomics. CRC Press; Boca Raton, FL: 2012. pp. 515–548.

- Davis F.D., Bagozzi R.P., Warshaw P.R. User acceptance of computer technology: a comparison of two theoretical models. Manag. Sci. 1989;35:982–1003. doi: 10.1287/mnsc.35.8.982.

- Dong E., Du H., Gardner L. An interactive web-based dashboard to track COVID-19 in real time. Lancet Infect. Dis. 2020;20:533–534. doi: 10.1016/S1473-3099(20)30120-1.

- Dowding D., Randell R., Gardner P., Fitzpatrick G., Dykes P., Favela J., Hamer S., Whitewood-Moores Z., Hardiker N., Borycki E., Currie L. Dashboards for improving patient care: review of the literature. Int. J. Med. Inform. 2015;84:87–100. doi: 10.1016/j.ijmedinf.2014.10.001.

- Endsley M.R. Designing for Situation Awareness: An Approach to User-Centered Design. 2nd ed. CRC Press; 2016.

- Fareed N., Swoboda C.M., Jonnalagadda P., Griesenbrock T., Gureddygari H.R., Aldrich A. Visualizing opportunity index data using a dashboard application: a tool to communicate infant mortality-based area deprivation index information. Appl. Clin. Inform. 2020;11:515–527. doi: 10.1055/s-0040-1714249.

- Joshi A., Amadi C., Katz B., Kulkarni S., Nash D. A Human-Centered Platform for HIV Infection Reduction in New York: Development and Usage Analysis of the Ending the Epidemic (ETE) Dashboard. JMIR Public Health Surveill. 2017;3:e95. doi: 10.2196/publichealth.8312.

- Mancini J.A., Marek L.I. Sustaining community-based programs for families: conceptualization and measurement. Fam. Relat. 2004;53:339–347. doi: 10.1111/j.0197-6664.2004.00040.x.

- Mao J.Y., Vredenburg K., Smith P.W., Carey T. The state of user-centered design practice. Commun. ACM. 2005;48:105–109. doi: 10.1145/1047671.1047677.

- Sprague Martinez L., Rapkin B.D., Young A., Freisthler B., Glasgow L., Hunt T., Salsberry P., Oga E.A., Bennet-Fallin A., Plouck T.J., Drainoni M.-L., Freeman P.R., Surratt H., Gulley J., Hamilton G.A., Bowman P., Roeber C.A., El-Bassel N., Battaglia T. Community engagement to implement evidence-based practices in the HEALing Communities Study. Drug Alcohol Depend. 2020;217:108326. doi: 10.1016/j.drugalcdep.2020.108326.

- Nash D. Designing and disseminating metrics to support jurisdictional efforts to end the public health threat posed by HIV epidemics. Am. J. Public Health. 2020;110:53–57. doi: 10.2105/AJPH.2019.305398.

- O’Neil S., Hoe E., Ward E., Goyal R. Data Across Sectors for Health Initiative: Systems Alignment to Enhance Cross-Sector Data Sharing (Health Policy Assessment Issue Brief). Mathematica; 2020.

- O’Neil S., Hoe E., Ward E., Goyal R., Staatz C. Data Across Sectors for Health Initiative: Promoting a Culture of Health Through Cross-Sector Data Networks. Mathematica; 2020.

- Reeves T.C., Huerta T.R., Ford E.W. Co-creating management education: moving towards emergent education in a complex world. Int. J. Info. Operations Manage Ed. 2011;4:265–283. doi: 10.1504/ijiome.2011.044615.

- Sarikaya A., Correll M., Bartram L., Tory M., Fisher D. What do we talk about when we talk about dashboards? IEEE Trans. Visual. Comput. Graphics. 2019;25:682–692. doi: 10.1109/TVCG.2018.2864903.

- Shneiderman B. The eyes have it: a task by data type taxonomy for information visualizations. In: International Workshop on Multi-Media Database Management Systems: Proceedings, August 14–16, 1996, Blue Mountain Lake, New York. IEEE Computer Society Press; Los Alamitos, CA: 1996. pp. 336–343.

- Shneiderman B., Plaisant C., Cohen M., Jacobs S.M., Elmqvist N. Designing the User Interface: Strategies for Effective Human-Computer Interaction. 6th ed. Pearson; Boston: 2017.

- Slavova S., LaRochelle M.R., Root E., Feaster D.J., Villani J., Knott C.E., Talbert J., Mack A., Crane D., Bernson D., Booth A., Walsh S.L. Operationalizing and selecting outcome measures for the HEALing Communities Study. Drug Alcohol Depend. 2020;217:108328. doi: 10.1016/j.drugalcdep.2020.108328.

- Venkatesh V., Davis F.D. A theoretical extension of the technology acceptance model: four longitudinal field studies. Manag. Sci. 2000;46:186–204. doi: 10.1287/mnsc.46.2.186.11926.

- The HEALing Communities Study Consortium. HEALing (Helping to End Addiction Long-term) Communities Study: Protocol for a cluster randomized trial at the community level to reduce opioid overdose deaths through implementation of an integrated set of evidence-based practices. Drug Alcohol Depend. 2020;217:108335. doi: 10.1016/j.drugalcdep.2020.108335.

- Wilson N., Kariisa M., Seth P., Smith H. IV, Davis N.L. Drug and opioid-involved overdose deaths — United States, 2017–2018. MMWR Morb. Mortal. Wkly. Rep. 2020;69:290–297. doi: 10.15585/mmwr.mm6911a4.