Abstract

Social anxiety (SA) is thought to be maintained in part by avoidance of social threat, which exacerbates fear of negative evaluation. Yet, relatively little research has been conducted to evaluate the connection between social anxiety and attentional processes in realistic contexts. The current pilot study examined patterns of attention (eye movements) in a commonly feared social context – public speaking. Participants (N = 84) with a range of social anxiety symptoms gave an impromptu five-minute speech in an immersive 360°-video environment, while wearing a virtual reality headset equipped with eye-tracking hardware. We found evidence for the expected interaction between fear of public speaking and social threat (uninterested vs. interested audience members). Consistent with prediction, participants with greater fear of public speaking looked fewer times at uninterested members of the audience (high social threat) compared to interested members of the audience (low social threat), b = 0.418, p = 0.046, 95% CI [0.008, 0.829]. Analyses of attentional indices over the course of the speech revealed that the interaction between fear of public speaking and gaze on audience members was only significant in the first three minutes. Our results provide support for theoretical models implicating avoidance of social threat as a maintaining factor in social anxiety. Future research is needed to test whether guided attentional training targeting in vivo attentional avoidance may improve clinical outcomes for those presenting with social anxiety.

Keywords: Social anxiety; Avoidance; Virtual reality; Eye movements; Visual attention

Social anxiety disorder (SAD) is a highly prevalent (Davidson, Hughes, George, & Blazer, 1993; Kessler, Chiu, Demler, Merikangas, & Walters, 2005; Ruscio et al., 2007) and disabling psychiatric disorder characterized by marked fear or anxiety about one or more social situations in which the individual is exposed to possible scrutiny by others (American Psychiatric Association, 2013). Attentional processes figure prominently in most contemporary models of SAD (Clark & Wells, 1995; Rapee & Heimberg, 1997; Hofmann, 2007; Wong & Rapee, 2016) and have been the focus of considerable empirical work (Bögels & Mansell, 2004). Several distinct attentional profiles have been implicated. These include attentional hypervigilance to social-evaluative cues (Rapee & Heimberg, 1997), attentional avoidance (Cisler & Koster, 2010), self-focused attention (Clark & Wells, 1995; Clark & McManus, 2002), and attentional switching between internal and external social-evaluative threat cues (Rapee & Heimberg, 1997). Despite the importance of attention to both cognitive and behavioral features of social anxiety, more empirical work is needed to clarify its role in the disorder. Following from computerized assessment of attention in social anxiety using tasks such as the dot-probe (Asmundson & Stein, 1994), computerized attention modification has emerged as a potential avenue for treatment; however, the efficacy of such treatments has been limited (Heeren, Mogoașe, Philippot, & McNally, 2015). One possible explanation is that operationalizations of biased attention that rely on computerized paradigms may not accurately reflect attentional processes among those with social anxiety (Kruijt, Parsons, & Fox, 2019).

Naturalistic assessments of attentional patterns in social anxiety have primarily examined eye movements in conjunction with public speaking. This approach has the advantage of evaluating attentional patterns in a realistic context and in response to more ecologically valid social threat (i.e. an active audience rather than static faces). Moreover, biased attention to social information may be a dynamic process that fluctuates over time, and a speech task makes it possible to evaluate attention over the course of the speech rather than as an aggregate of brief experimental trials. In two separate studies, Chen et al. (2015) and Lin et al. (2016) had participants with either high or low social anxiety give an impromptu speech in front of a pre-recorded audience displayed on a computer monitor. Each audience member was represented with a video recording of their face as part of a grid. The researchers also had the actors use more naturalistic social threat cues (e.g. sighing, head shaking) rather than the frowning faces typically used in computerized paradigms. Chen et al. (2015) found that, compared to controls, socially anxious participants avoided looking at the faces of the audience, as indexed by greater fixation time on non-face regions. In contrast, Lin et al. (2016) found that high socially anxious participants spent more time looking at the faces of the more socially threatening actors during a speech task, although they did not include a non-face region, which may partially account for the discrepant findings. Kim et al. (2018) had individuals with social anxiety and healthy controls give a speech to a digitally generated VR audience while tracking their eye movements. Compared to controls, socially anxious participants avoided looking at the general area of the audience. In recent work using real-world eye tracking, researchers found greater avoidant gaze among socially anxious individuals when walking down a predefined path in public, outside (Rubo, Huestegge, & Gamer, 2019).

While real-world eye tracking is the most ecologically valid method currently available, without experimental control over who participants see, it is difficult to evaluate the effect of different social behaviors on attention. Prior work on eye movements and social anxiety during a public speaking challenge used either videos displayed on a computer monitor or digital avatars presented in virtual reality (VR). While both approaches offer improvements over the existing computerized paradigms, there is room to improve both realism and immersion, which may enhance assessment of attentional processes in social anxiety. Moreover, the relationship between social anxiety and attention to specific, but realistic, behaviors that are socially threatening has yet to be examined when participants are in an immersive environment. Further, no prior research has examined how attention to social threat changes over the course of a public speaking challenge. Examining changes in attention over time may lead to additional insights about its role in social anxiety.

The present study

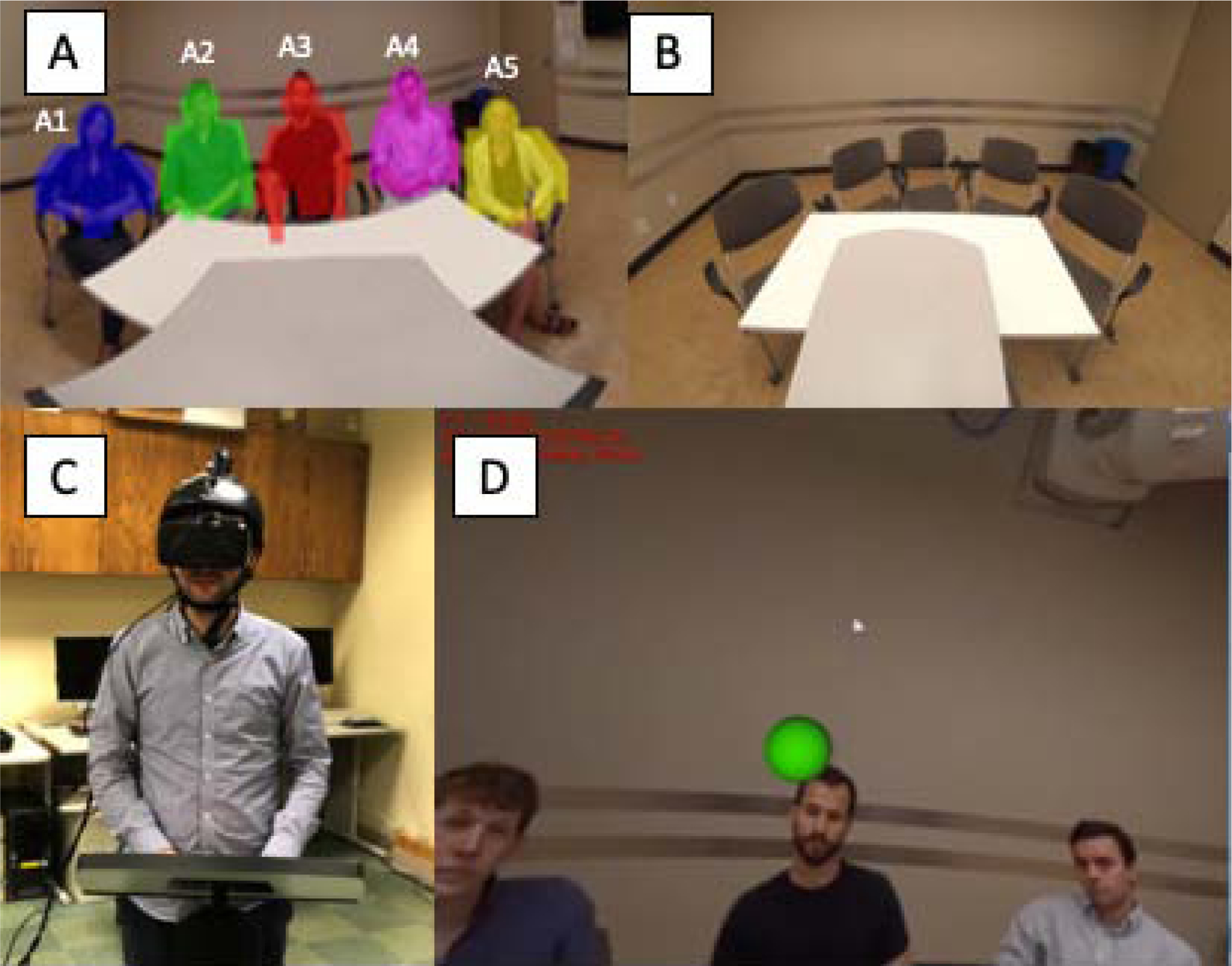

As a pilot study, we investigated the influence of social anxiety on patterns of avoidant gaze to socially threatening behaviors during a public speaking challenge using 360° video presented with a VR headset (see Figure 1). We used this setup to maximize the immersion and realism of the public speaking challenge. Social anxiety was indexed by both the total score of the Liebowitz Social Anxiety Scale (LSAS; Liebowitz, 1987) as well as the fear of public speaking subscale (Safren, Heimberg, Horner, Juster, Schneier, & Liebowitz, 1999). We investigated the relationship between fixation frequency and audience members’ level of interest in the speech (interested vs. uninterested), which was quasi-experimentally manipulated (see Figure 1A). We hypothesized that individuals with greater social anxiety (both general and fear of public speaking, specifically) would avoid looking at uninterested audience members compared to the interested audience members. To better understand the time-course of attention across the speech, we explored the effects of social anxiety on fixation frequency at 1-minute increments.

Figure 1.

(A) The room with the audience members all shown. Audience members A1 and A4 were coached to behave uninterested, audience members A2 and A5 were coached to behave interested, and audience member A3 was coached to behave neutrally. Overlaid on each audience member is the region of interest used to detect fixation-location for that frame. The regions were dynamic and so changed frame to frame based on the movement of the audience members. Note that the perspective of what participants saw was both narrower and more detailed when using the Oculus headset – see Figure 1D for illustration. (B) The empty room participants saw when they were being oriented to the VR environment. (C) The researcher (M.R.) wearing the Oculus headset and the HiBall motion-tracking system (which was attached to the helmet). He is standing at the same podium used for filming. (D) A screen capture of what the researcher is seeing. The green dot represents where he is looking, but cannot be seen when actually wearing the headset.

Methods

Participants

Participants (N = 96) in the study were students at the University of Texas and non-students residing in the surrounding Austin community. Twelve participants were excluded from analyses. Reasons for exclusion included keeping their eyes closed during the speech (n = 3), technical issues with the eye tracking hardware resulting in missing data (n = 6), failing to complete the pre-speech protocol (n = 2), and withdrawing from the study (n = 1). The final sample consisted of 84 participants (see Table 1). The study was approved by the University of Texas Institutional Review Board (IRB). All participants provided informed consent. No monetary compensation was provided to participants. Participants in the introductory psychology course received experimental credit for their participation.

Table 1.

Demographic Summary (n = 84)

| Variable | Mean (SD); range |
|---|---|
| Liebowitz Social Anxiety Scale (LSAS) | 36.31 (19.91); 2–97 |
| Fear of Public Speaking Subscale | 7.24 (2.85); 2–15 |
| Anticipated Fear | 50.17 (24.20); 0–100 |
| Peak Fear | 42.42 (28.25); 0–100 |
| Age | 19.79 (4.02); 18–45 |
| | No. (%) |
| Sex (female) | 39 (46.4) |
| Ethnicity (Hispanic) | 20 (23.8) |
| Race | |
| Black or African American | 7 (8.3) |
| Asian | 20 (23.8) |
| White | 46 (54.8) |
| Other | 11 (13.1) |
| LSAS by social anxiety likelihood cut-off | |
| Unlikely (0–30) | 36 (42.9) |
| Probable (30–60) | 40 (47.6) |
| Likely (60–90) | 6 (7.1) |
| Highly Likely (greater than 90) | 2 (2.4) |

Social Anxiety Indices

Liebowitz Social Anxiety Scale Self-Report (LSAS-SR).

The LSAS self-report scale (Fresco et al., 2001) is a 24-item measure of fear and avoidance concerning social interactions and performance situations (e.g. telephoning in public, talking to people in authority). Participants rate each item twice on a 0–3 Likert scale, once for Fear or Anxiety (0 = “none” to 3 = “severe”) and once for Avoidance (0 = “never (0%)” to 3 = “usually (67–100%)”), giving 48 ratings and a total score ranging from 0 to 144. The alpha coefficient for the current sample was 0.95. We also used the fear of public speaking subscale (Safren et al., 1999), which consists of the fear ratings of five items taken from the LSAS. The alpha coefficient of the subscale for the current study was 0.84. (Also see Table 1.)
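As an illustration of the scoring and reliability figures reported here, the following minimal sketch sums 0–3 ratings into a total score and computes Cronbach's alpha with the standard formula. The function names and the toy ratings are hypothetical and are not taken from the study's materials; the sketch makes no claim about which five items form the public speaking subscale.

```python
from statistics import pvariance

def lsas_total(fear, avoidance):
    """Sum fear and avoidance ratings (each 0-3) into a total score."""
    ratings = list(fear) + list(avoidance)
    if any(r not in (0, 1, 2, 3) for r in ratings):
        raise ValueError("ratings must be integers from 0 to 3")
    return sum(ratings)

def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of item ratings."""
    k = len(responses[0])  # number of items
    # Variance of each item across respondents.
    item_vars = [pvariance([row[i] for row in responses]) for i in range(k)]
    # Variance of the summed score across respondents.
    total_var = pvariance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)

# Hypothetical fear ratings for a five-item subscale (four respondents).
toy_subscale = [[0, 1, 0, 1, 0], [1, 1, 2, 1, 1], [2, 3, 2, 2, 3], [3, 3, 3, 2, 3]]
print(round(cronbach_alpha(toy_subscale), 2))
```

A five-item subscale scored this way ranges from 0 to 15, which is consistent with the observed range of 2–15 in Table 1.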

Materials

360°-video virtual reality speech environment.

The 360°-video virtual reality (VR) environment consisted of an audience of five individuals sitting in chairs at a table (Figure 1A). The actors in the video were graduate students in psychology at the University or volunteers from the Austin community and were coached to behave in different ways: some acted socially threatening (e.g. uninterested: yawning, looking at their phone), some acted socially positive (e.g. interested: leaning forward, smiling), and the central audience member acted neutrally. The video was filmed with assistance from the faculty at the Moody College of Communication, using two SP360° 4K VR Cameras mounted on a tripod. To provide participants with the perspective that they were standing behind a podium, the tripod was placed in front of the podium during filming. During the actual speech, participants stood behind the same podium while wearing the VR headset and delivering their speech in the lab (Figure 1). To assess the integrity of our 360°-video environment, we assessed anticipated fear (“Please rate the highest level of fear you expect to experience during the speech”) and peak fear (“Please rate the highest level of fear you experienced at any time during the speech”). Both anticipated and peak fear were rated using a continuous scale from no fear = 0 to extreme fear = 100. As seen in Table 1, participants reported moderate levels of anticipated and peak fear. After the speech, immersion was assessed using a 5-point Likert scale (“To what extent did you feel as though you were really in the VR environment (i.e. immersed)?”); 83% of participants rated themselves as somewhat, a lot, or completely immersed. Realism was assessed using a 5-point Likert scale (“During the speech how often did you forget you were giving a speech to a digital audience?”); 56% of participants reported forgetting that they were giving the speech to a digital audience some of the time, most of the time, or all of the time.

Virtual Reality Headset and Eye Tracker.

Participants wore the Oculus Rift DKII virtual reality headset with built-in position tracking. The Oculus was upgraded with an SMI eyetracker to provide high-resolution eye tracking at a sampling rate of 60 Hz. A HiBall motion-tracking system (3rdTech) was used to track vertical and horizontal head movements. However, because the video was filmed from a fixed viewpoint, only the rotations (and not the translations) were used to update the image in the HMD. Participants completed a brief 3-point calibration prior to beginning the speech. Videos of the eye tracking and the video-display (i.e. what the participants saw) were recorded at each video-frame and saved as a .MOV file. These .MOV files were used to later verify the automated eye-gaze analyses.

Procedure

Participants signed up for the study via an online web platform for undergraduate students in an introductory psychology course, or by email for community participants. All self-report data were collected using the Qualtrics survey platform (Qualtrics, Provo, UT).

Participants first completed a series of self-report questionnaires including demographic information. Participants were then briefly oriented to the virtual reality environment. This consisted of them putting on the VR equipment and viewing the “empty” 360°-video virtual reality environment, which was filmed without the audience members present (Figure 1B). Participants were given 90 seconds to look around the environment. Following this orientation, we assessed whether participants experienced any dizziness/nausea. Participants were then given two minutes to prepare a five-minute speech on the topic “something you are proud of”. Participants completed a brief (30 to 60 second) eye-tracking calibration procedure and were then instructed to deliver their speech. Participants who paused for more than 30 seconds or indicated they had nothing more to say before the 5 minutes had elapsed were prompted by the researcher to continue.

Gaze Analysis

Eye Movement Data Pre-Processing.

A data file that contained the eye tracking data was saved from Vizard 4 (WorldViz). We first used OpenPose (Cao, Simon, Wei, & Sheikh, 2018) to generate the regions of interest (ROIs) related to uninterested and interested audience members to be used for hypothesis testing, illustrated in Figure 1A.

The 360°-video was run through OpenPose body, hand, and face keypoint detection. We then used custom MATLAB code to create a background vs. audience member mask. This was done by combining convex hulls that contain all face, hand, and torso keypoints, respectively, along with masks created by tracing thick lines for the arm (shoulder to elbow) and forearm (elbow to hand) with pixel radii of 28.3 and 13.9, respectively. Once gaze was determined in image coordinates, each frame’s gaze position was used to determine whether gaze was on the background (outside the aforementioned mask) or on an audience member (within the mask). For non-background gaze, the particular audience member (A1–A5) being looked at was determined based on which OpenPose keypoint was closest (OpenPose creates separate skeletons for each person detected in the image). The average ROI (i.e. mask) size in visual angle was 26.41° (SD = 7.61) horizontally and 28.21° (SD = 9.00) vertically.
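The per-frame assignment rule described above can be sketched as follows. This is an illustrative Python version, not the authors' MATLAB code: the function name, the data layout (a dictionary of keypoints per audience member), and the toy coordinates are assumptions, and the mask test is passed in as a precomputed boolean.

```python
import math

def assign_gaze(gaze_xy, in_mask, keypoints):
    """Label one frame's gaze as 'background' or the nearest audience member.

    gaze_xy   : (x, y) gaze position in image pixels
    in_mask   : True if the gaze pixel falls inside the audience mask
    keypoints : dict mapping member label -> list of (x, y) keypoints
    """
    if not in_mask:
        return "background"
    best_label, best_dist = None, math.inf
    for label, points in keypoints.items():
        for px, py in points:
            # Euclidean pixel distance from gaze to this keypoint.
            d = math.hypot(gaze_xy[0] - px, gaze_xy[1] - py)
            if d < best_dist:
                best_label, best_dist = label, d
    return best_label

# Hypothetical keypoints for two audience members in one frame.
frame_keypoints = {"A1": [(100, 200), (110, 260)], "A2": [(400, 210), (395, 270)]}
print(assign_gaze((390, 205), True, frame_keypoints))
```

Because the keypoints move from frame to frame, this lookup would be repeated for every video frame with that frame's keypoints and mask.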

An automated program developed in house (Kit, Katz, Sullivan, Snyder, Ballard, & Hayhoe, 2014; Li, Aivar, Kit, Tong, & Hayhoe, 2016; Tong, Zohar, & Hayhoe, 2017; Li, Aivar, Tong, & Hayhoe, 2018) was used to identify fixations from the raw gaze data in three steps: (a) data were filtered with a moving window of three frames using a median filter to remove outliers, and an averaging filter to smooth the eye tracking signals; (b) data were segmented into fixations and saccades by setting the parameters of the program to identify a fixation when the eye movement velocity fell below 50 degrees/second for a period of at least 85 milliseconds (ms); (c) consecutive fixations were combined if they were less than 1 degree apart in space and less than 80 ms separated in time. Brief track losses were ignored if the fixation ROIs from the OpenPose pipeline were identical before and after the track loss.
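A velocity-threshold segmentation of this kind can be sketched as below. This is a simplified illustration rather than the in-house program: it assumes gaze is already expressed as horizontal and vertical angles in degrees, uses the 60 Hz sampling rate and the thresholds stated in the text, omits the averaging filter and track-loss handling, and uses hypothetical function names.

```python
import math
from statistics import median

SAMPLE_RATE_HZ = 60.0   # eye-tracker sampling rate reported in the text
VEL_THRESH = 50.0       # deg/s; slower samples count as fixation
MIN_FIX_MS = 85.0       # minimum fixation duration
MERGE_DEG = 1.0         # merge fixations closer than this in space
MERGE_MS = 80.0         # ...and closer than this in time

def median3(values):
    """Three-sample running median; endpoints are left unchanged."""
    out = list(values)
    for i in range(1, len(values) - 1):
        out[i] = median(values[i - 1:i + 2])
    return out

def detect_fixations(x_deg, y_deg):
    """Return fixations as dicts with start/end sample index and mean position."""
    dt_ms = 1000.0 / SAMPLE_RATE_HZ
    x, y = median3(x_deg), median3(y_deg)          # (a) outlier removal
    # Sample-to-sample angular speed in deg/s (first sample gets speed 0).
    speed = [0.0] + [
        math.hypot(x[i] - x[i - 1], y[i] - y[i - 1]) * SAMPLE_RATE_HZ
        for i in range(1, len(x))
    ]
    # (b) group consecutive slow samples into candidate fixations.
    fixations, start = [], None
    for i, s in enumerate(speed + [math.inf]):     # sentinel closes the last run
        if s < VEL_THRESH and start is None:
            start = i
        elif s >= VEL_THRESH and start is not None:
            n = i - start
            if n * dt_ms >= MIN_FIX_MS:
                fixations.append({"start": start, "end": i - 1,
                                  "x": sum(x[start:i]) / n,
                                  "y": sum(y[start:i]) / n})
            start = None
    # (c) merge neighbouring fixations that are close in space and time.
    merged = []
    for f in fixations:
        if merged:
            p = merged[-1]
            gap_ms = (f["start"] - p["end"] - 1) * dt_ms
            dist = math.hypot(f["x"] - p["x"], f["y"] - p["y"])
            if dist < MERGE_DEG and gap_ms < MERGE_MS:
                p["end"] = f["end"]   # extend the previous fixation, keep its centre
                continue
        merged.append(dict(f))
    return merged
```

At 60 Hz one sample lasts about 16.7 ms, so the 85 ms minimum corresponds to at least six consecutive slow samples in this sketch.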

Eye movement data manual checks.

The automated gaze data were checked manually by two independent coders who examined 30-second segments randomly selected from each data file to assess whether the gaze location in the .MOV file (e.g. see Figure 1) agreed with the ROIs determined by the automated program. Across 3,694 fixations separately evaluated by each rater, full agreement with the program was reached in 94.7% of the cases.

Eye movement data metrics.

To address our hypotheses, we computed the total fixation frequency to interested and uninterested audience members. For our exploratory analyses investigating the time course of gaze during the speech, we divided the eye movements into five 1-minute intervals and calculated the fixation frequency to interested and uninterested audience members for each of those intervals.
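The two metrics can be sketched as a simple count over labelled fixations. The role assignments follow the Figure 1 caption; the input layout (fixation onset in seconds paired with an ROI label) and the rule that a fixation belongs to the minute in which it begins are assumptions made for this illustration.

```python
from collections import Counter

# Audience roles as described in the Figure 1 caption.
ROLE = {"A1": "uninterested", "A4": "uninterested",
        "A2": "interested", "A5": "interested", "A3": "neutral"}

def fixation_counts(fixations, n_minutes=5):
    """Count fixations per (minute, role).

    fixations : iterable of (onset_seconds, roi_label) pairs, where roi_label
                is 'A1'..'A5' or 'background'.
    """
    counts = Counter()
    for onset_s, roi in fixations:
        minute = int(onset_s // 60) + 1            # 1-based minute of the speech
        if 1 <= minute <= n_minutes:               # drop anything outside the speech
            counts[(minute, ROLE.get(roi, "background"))] += 1
    return counts

def total_by_role(counts):
    """Collapse the per-minute counts into whole-speech totals per role."""
    totals = Counter()
    for (_, role), n in counts.items():
        totals[role] += n
    return totals

# Hypothetical labelled fixations.
toy = [(5.0, "A1"), (59.9, "A4"), (60.0, "A2"), (130.2, "background"), (299.0, "A3")]
per_minute = fixation_counts(toy)
print(per_minute[(1, "uninterested")], total_by_role(per_minute)["interested"])
```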

Data Analysis

We conducted Generalized Linear Mixed Models using version 1.1-21 of the lme4 package in R (Bates, Mächler, Bolker, & Walker, 2014). We evaluated number of fixations (i.e. fixation frequency) as the dependent variable. General social anxiety (total LSAS score) and fear of public speaking (LSAS subscale) were standardized and entered as self-report predictors of fixation frequency. Audience member behavior (uninterested vs. interested) was entered as a factor variable.

To address our primary hypothesis, we evaluated the interaction between each self-report measure and audience member (uninterested vs. interested). We included a random effect for participant to account for multiple observations within person across levels of the audience member factor. For the exploratory analyses, we added an interaction term with a continuous variable for time (each 1-minute interval during the speech) as well as a random slope for time. For all analyses, we used a Poisson distribution with an observation-level random effect to account for overdispersion and controlled for fixations to background and fixations to the neutral audience member. We set α = 0.05 for all analyses, and 95% confidence intervals around the parameter estimates for each model were calculated using the Wald method. The data and syntax used for the analyses are available in the supplementary materials (https://osf.io/u3tyx/).
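One way to write out the primary model described above is given below. The notation is introduced here for illustration and is not taken from the authors' syntax: $y_{ij}$ is the fixation count for participant $i$ toward audience category $j$, $A_{ij}$ is an indicator for audience member behavior (the coding direction is not stated in the text), $S_i$ is the standardized self-report score, and $B_i$ and $N_i$ are the fixation counts to the background and to the neutral audience member. How the two covariates were scaled is an assumption left open here.

$$
\begin{aligned}
y_{ij} &\sim \mathrm{Poisson}(\mu_{ij}),\\
\log \mu_{ij} &= \beta_0 + \beta_1 A_{ij} + \beta_2 S_i + \beta_3 (A_{ij} \times S_i) + \beta_4 B_i + \beta_5 N_i + u_i + e_{ij},\\
u_i &\sim \mathcal{N}(0, \sigma_u^2), \qquad e_{ij} \sim \mathcal{N}(0, \sigma_e^2).
\end{aligned}
$$

Here $u_i$ is the participant-level random intercept and $e_{ij}$ is the observation-level random effect that absorbs overdispersion. Under this formulation the primary hypothesis concerns $\beta_3$, and a 95% Wald interval for any coefficient is $\hat{\beta} \pm 1.96 \, \mathrm{SE}(\hat{\beta})$. The exploratory models would add time, its interactions with $A_{ij}$ and $S_i$, and a random slope for time.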

Results

During the speech, do participants with greater social anxiety avoid the uninterested audience members compared to the interested ones?

See Figure 2A–B for an overview of the findings. There was a large main effect of audience member behavior on fixation frequency, with a greater number of fixations to interested audience members (M = 63.3 ± 5.44, SD = 49.8) relative to uninterested audience members (M = 49.3 ± 4.67, SD = 42.8), b = 0.833, p < 0.001, 95% confidence interval (CI) [0.414, 1.25]. We did not find an interaction between LSAS and audience member behavior, b = 0.266, p = 0.209, 95% CI [−0.149, 0.681]. However, we did find an interaction between the fear of public speaking subscale and audience member behavior, where there was greater avoidance (i.e. fewer fixations) of uninterested compared to interested audience members, b = 0.418, p = 0.046, 95% CI [0.008, 0.829].
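Because the intervals are Wald intervals, the standard error, z statistic, and p-value can be approximately recovered from a reported estimate and its interval. The short check below does this for the fear of public speaking interaction; the function name is hypothetical, and small discrepancies are expected because the reported values are rounded.

```python
import math

def wald_summary(b, ci_low, ci_high, z_crit=1.959964):
    """Recover SE, z, and two-sided p from an estimate and its 95% Wald CI."""
    se = (ci_high - ci_low) / (2 * z_crit)   # half-width divided by critical z
    z = b / se
    p = math.erfc(abs(z) / math.sqrt(2))     # two-sided normal p-value
    return se, z, p

se, z, p = wald_summary(0.418, 0.008, 0.829)
print(f"SE = {se:.3f}, z = {z:.2f}, p = {p:.3f}")
```

For this interaction the recovered values are a standard error of about 0.21 and a z statistic of about 2.0, which gives a p-value close to the reported 0.046.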

Figure 2.

(A) There was an interaction between fear of public speaking and audience members (uninterested vs. interested); we found fewer fixations to uninterested audience members with greater fear of public speaking. (B) There was no relationship between general social anxiety and number of fixations to uninterested compared to interested audience members. (C) Greater fear of public speaking was associated with fewer fixations to uninterested compared to interested audience members. Post-hoc Bonferroni corrected contrasts indicated that the difference was significant for the first three minutes of the speech. (D) A similar pattern emerged related to general social anxiety, but the overall effect was not significant.

Note. For all plots the x-axis reflects standardized values. Shaded regions reflect 95% confidence intervals. LSAS = Liebowitz Social Anxiety Scale.

Do patterns of attention to uninterested compared to interested audience members change over the course of the speech?

See Figure 2C–D for an overview of the findings. The interaction between LSAS, audience member, and time did not reach statistical significance, b = −0.059, p = 0.168, 95% CI [−0.144, 0.025]. However, the interaction was significant for fear of public speaking, b = −0.087, p = 0.042, 95% CI [−0.172, −0.003]. For the fear of public speaking subscale, initial avoidance of uninterested audience members compared to interested audience members declined over the course of the speech. Post-hoc Bonferroni-corrected contrasts showed that there was a significant effect of fear of public speaking on avoidance of social threat in each of the first three minutes of the speech, but not in the fourth or fifth minutes.

Discussion

The primary aim of this pilot study was to test the hypothesis that social anxiety was associated with avoidance of social threat during a public speaking challenge. Avoidance is thought to serve as a behavior maintaining social anxiety through failure to challenge fear of negative evaluation (Wells, Clark, Salkovskis, Ludgate, Hackmann, & Gelder, 2016). This study was the first to examine the potential utility of using a 360°-video environment as an immersive and realistic, controlled environment for conducting research on attentional processes in social anxiety. Indeed, we found that participants reported elevated anticipated fear as well as elevated peak fear during the speech; moreover, most participants rated the experience as immersive and realistic, despite being told ahead of time that they would be speaking to a pre-recorded audience. Together, these data suggest that 360°-video environments may be useful for conducting public-speaking exposure trials and could potentially be adapted for other social contexts.

Support for our primary hypothesis was specific to the interaction between fear of public speaking and audience member (interested vs. uninterested). During the speech, those with greater fear of public speaking looked fewer times at uninterested (socially threatening) audience members compared to interested (non-threatening) audience members. We also found that initial avoidance of social threat diminished over the course of the speech.

Fear of negative evaluation is a core feature of social anxiety (Wong & Rapee, 2016). In the context of public speaking, if someone interprets uninterested audience behaviors as evidence of negative evaluation, then avoidance of those audience members only serves to reinforce the (faulty) belief that negative evaluation is truly threatening. For instance, if an audience member looks at their phone and the speaker’s interpretation is “I must be terrible at this”, then avoiding that information (i.e. uninterested audience member behaviors) in the future only serves to reinforce the negative belief. No one who is giving a talk likes to see audience members looking at their phones. Indeed, in the current study there was a very large effect showing that all participants looked less frequently at the uninterested audience members compared to the interested audience members. The distinction is that those with greater fear of public speaking looked even less at the uninterested audience members. Interestingly, this gaze behavior was driven by differences in fixation frequency at the beginning of the speech that diminished over time. Rather than categorical avoidance of social threat, one interpretation could be that the change in attentional avoidance with greater fear of public speaking reflects a slower rate of habituation to perceived negative evaluation, which is a factor maintaining social anxiety (Avery & Blackford, 2016). This interpretation fits neatly into the current conceptualization of evidence-based psychotherapy for social anxiety, where habituation to feared social situations drives symptom reduction (Berry, Rosenfield, & Smits, 2009). However, physiological measurement during the course of the speech would be needed to provide a more direct test of this habituation hypothesis.

Contrary to our expectations, we did not find evidence that social anxiety symptoms generally influenced gaze behavior. It may be that attentional avoidance during public speaking is driven primarily by domain-specific fear (i.e. fear of public speaking). Alternatively, the sample in the current study included few individuals with probable social anxiety disorder; perhaps greater symptom severity would be associated with attentional avoidance irrespective of context. We found no evidence of hypervigilance to social threat. Recent (unpublished) work using real-world eye tracking demonstrated that in a specific context (a waiting room) socially anxious individuals did show some evidence of hypervigilance-avoidance (Rosler, Gohring, Strunz, & Gamer, 2019). Allowing individuals to look around freely and taking the context into account when assessing attentional allocation may be important considerations for future research.

Virtual and video environments offer the advantage of greater experimental control, but generalization of attentional processes to the real world is an important goal for future research on attentional processes during public speaking. This is particularly important to consider in the context of attention training strategies as a treatment modality for social anxiety. Working to develop in vivo attention modification interventions would provide a much stronger test of how attentional mechanisms are causally linked to social anxiety symptoms.

The study has several limitations that warrant mention. First, the sample was primarily a non-clinical one and although diverse with some representation of individuals from the Austin community, most participants were university students. Second, while the composition and arrangement of the interested and uninterested audience members in the 360°-video was balanced, there was only one neutral audience member centrally located (see Figure 1). We considered exploring this neutral audience member as an additional contrast, but it would have been impossible to disentangle the effects of central fixation tendency (Tatler, 2007) from threat processing. Future studies are needed to determine the impact of neutral audience responding on attentional avoidance during an in vivo public speaking challenge.

The present findings from this pilot study identified an interaction between attentional avoidance of uninterested (socially threatening) audience members and fear of public speaking symptoms during a public speaking challenge in an immersive, realistic, virtual environment. Avoidant eye movement behaviors may serve to block or at least attenuate the processing of threat-disconfirming information, a core therapeutic mechanism implicated in successful treatment of pathological anxiety (Bögels & Mansell, 2004). The use of 360°-video presented in an immersive environment coupled with eye tracking (using a VR headset) offers a potential platform for directly modifying these potentially maladaptive avoidant eye movement patterns by prescribing specific gaze behaviors (i.e., redirecting participants to attend to audience members’ faces) during in vivo interactions in socially threatening contexts such as public speaking and other socially relevant interactions.

Supplementary Material

- Realistic 360°-video immersive environment elicits anxiety during a speech
- Greater fear of public speaking is linked to specific gaze behaviors
- Uninterested (socially threatening) audience members are avoided most
- Greater avoidance is linked to greater fear of public speaking
- Avoidance fades over the course of the speech

Acknowledgments

We would like to thank Deepak Chetty and Donald Howard (director) of the UT3D program in the Department of Radio/Television/Film, UT Austin for their assistance and expertise in the creation of the 360°-video used in the current study.

Funding: This work was supported by the National Institutes of Health (NIH) [R01 EY05729; F31 MH118784].

References

- American Psychiatric Association: Diagnostic and Statistical Manual of Mental Disorders, Fifth Edition Arlington, VA, American Psychiatric Association, 2013. [Google Scholar]

- Asmundson GJG, & Stein MB (1994). Selective Processing of Social Threat in Patients With Generalized Social Phobia: Evaluation Using a Dot-Probe Paradigm. Journal of Anxiety Disorders, 107–117. [Google Scholar]

- Avery SN, & Blackford JU (2016). Slow to warm up: The role of habituation in social fear. Social cognitive and affective neuroscience, 11(11), 1832–1840. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Berry AC, Rosenfield D, & Smits JA (2009). Extinction retention predicts improvement in social anxiety symptoms following exposure therapy. Depression and anxiety, 26(1), 22–27. [DOI] [PubMed] [Google Scholar]

- Bögels SM, & Mansell W (2004). Attention processes in the maintenance and treatment of social phobia: hypervigilance, avoidance and self-focused attention. Clinical Psychology Review, 24(7), 827–856. 10.1016/j.cpr.2004.06.005 [DOI] [PubMed] [Google Scholar]

- Cao Z, Simon T, Wei SE, & Sheikh Y (2018). OpenPose: real-time multi-person keypoint detection library for body, face, and hands estimation.

- Chen NTM, Thomas LM, Clarke PJF, Hickie IB, & Guastella AJ (2015). Hyperscanning and avoidance in social anxiety disorder: The visual scanpath during public speaking. Psychiatry Research, 225(3), 667–672. 10.1016/j.psychres.2014.11.025 [DOI] [PubMed] [Google Scholar]

- Chen YP, Ehlers A, Clark DM, & Mansell W (2002). Patients with generalized social phobia direct their attention away from faces. Behaviour Research and Therapy, 40(6), 677–687. 10.1016/S0005-7967(01)00086-9 [DOI] [PubMed] [Google Scholar]

- Cisler JM, & Koster EHW (2010). Mechanisms of attentional biases towards threat in anxiety disorders: An integrative review. Clinical Psychology Review, 30(2), 203–216. 10.1016/j.cpr.2009.11.003 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Clarke DM & Wells A A cognitive model of social phobia In Heimberg RG, Liebowitz M, Hope DA, and Schneier FR (Eds.), Social phobia: Diagnosis, assessment and treatment (pp. 69–93). [Google Scholar]

- Davidson J, Hughes D, George L, & Blazer D (1993). The epidemiology of social phobia: findings from the Duke Epidemiological Catchment Area Study. Psychological Medicine, 23(3), 709–718. doi: 10.1017/s0033291700025484 [DOI] [PubMed] [Google Scholar]

- Fresco DM, Coles ME, Heimberg RG, Liebowitz MR, Hami S, Stein MB, & Goetz D (2001). The Liebowitz Social Anxiety Scale: a comparison of the psychometric properties of self-report and clinician-administered formats. Psychological Medicine, 31(6), 1025–1035.

- Garner M, Mogg K, & Bradley BP (2006). Orienting and maintenance of gaze to facial expressions in social anxiety. Journal of Abnormal Psychology, 115(4), 760–770. 10.1037/0021-843X.115.4.760

- Heeren A, Mogoașe C, Philippot P, & McNally RJ (2015). Attention bias modification for social anxiety: a systematic review and meta-analysis. Clinical Psychology Review, 40, 76–90.

- Hofmann SG (2007). Cognitive Factors that Maintain Social Anxiety Disorder: a Comprehensive Model and its Treatment Implications. Cognitive Behaviour Therapy, 36(4), 193–209. 10.1080/16506070701421313

- Horley K, Williams LM, Gonsalvez C, & Gordon E (2003). Social phobics do not see eye to eye: A visual scanpath study of emotional expression processing. Journal of Anxiety Disorders, 17(1), 33–44.

- Kessler RC, Chiu WT, Demler O, Merikangas KR, & Walters EE (2005). Prevalence, severity, and comorbidity of 12-month DSM-IV disorders in the National Comorbidity Survey Replication. Archives of General Psychiatry, 62(6), 617–627. 10.1001/archpsyc.62.6.617

- Kim H, Shin JE, Hong Y-J, Shin Y-B, Shin YS, Han K, et al. (2018). Aversive eye gaze during a speech in virtual environment in patients with social anxiety disorder. Australian and New Zealand Journal of Psychiatry, 52(3), 279–285. 10.1177/0004867417714335

- Kit D, Katz L, Sullivan B, Snyder K, Ballard D, & Hayhoe M (2014). Eye movements, visual search and scene memory, in an immersive virtual environment. PLoS One, 9(4), e94362.

- Kruijt AW, Parsons S, & Fox E (2019). A meta-analysis of bias at baseline in RCTs of attention bias modification: no evidence for dot-probe bias towards threat in clinical anxiety and PTSD. Journal of Abnormal Psychology, 128(6), 563.

- Kwon JH, Powell J, & Chalmers A (2013). How level of realism influences anxiety in virtual reality environments for a job interview. International Journal of Human-Computer Studies, 71(10), 978–987. 10.1016/j.ijhcs.2013.07.003

- Li CL, Aivar MP, Kit DM, Tong MH, & Hayhoe MM (2016). Memory and visual search in naturalistic 2D and 3D environments. Journal of Vision, 16(8), 9–9.

- Li CL, Aivar MP, Tong MH, & Hayhoe MM (2018). Memory shapes visual search strategies in large-scale environments. Scientific Reports, 8(1), 4324.

- Liebowitz MR. Social phobia. Modern Problems of Pharmacopsychiatry, 22, 141–173.

- Lin M, Hofmann SG, Qian M, Kind S, & Yu H (2016). Attention allocation in social anxiety during a speech. Cognition & Emotion, 30(6), 1122–1136. 10.1080/02699931.2015.1050359

- Mogg K, Philippot P, & Bradley BP (2004). Selective Attention to Angry Faces in Clinical Social Phobia. Journal of Abnormal Psychology, 113(1), 160–165. 10.1037/0021-843X.113.1.160

- Pishyar R, Harris LM, & Menzies RG (2004). Attentional bias for words and faces in social anxiety. Anxiety, Stress & Coping, 17(1), 23–36. 10.1080/10615800310001601458

- Qualtrics. 2005. Qualtrics [computer software]. Provo, Utah, USA: Qualtrics.

- R Core Team (2017). R: A language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria. https://www.R-project.org/

- Rösler L, Göhring S, Strunz M, & Gamer M (2019). Social anxiety modulates heart rate but not gaze in a real social interaction. https://psyarxiv.com/gps3h/

- Rubo M, Huestegge L, & Gamer M (2019). Social anxiety modulates visual exploration in real life–but not in the laboratory. British Journal of Psychology.

- Ruscio AM, Brown TA, Chiu WT, Sareen J, Stein MB, & Kessler RC (2007). Social fears and social phobia in the USA: results from the National Comorbidity Survey Replication. Psychological Medicine, 38(01), 282–28. 10.1017/S0033291707001699

- Safren SA, Heimberg RG, Horner KJ, Juster HR, Schneier FR, & Liebowitz MR (1999). Factor structure of social fears: the Liebowitz Social Anxiety Scale. Journal of Anxiety Disorders, 13(3), 253–270.

- Tatler BW (2007). The central fixation bias in scene viewing: Selecting an optimal viewing position independently of motor biases and image feature distributions. Journal of Vision, 7(14), 4–4. 10.1167/7.14.4

- Tong MH, Zohar O, & Hayhoe MM (2017). Control of gaze while walking: task structure, reward, and uncertainty. Journal of Vision, 17(1), 28–28.

- Wells A, Clark DM, Salkovskis P, Ludgate J, Hackmann A, & Gelder M (2016). Social Phobia: The Role of In-Situation Safety Behaviors in Maintaining Anxiety and Negative Beliefs–Republished Article. Behavior Therapy, 47(5), 669–674.

- Wermes R, Lincoln TM, & Helbig-Lang S (2018). Attentional biases to threat in social anxiety disorder: time to focus our attention elsewhere? Anxiety, Stress, & Coping, 1–16. 10.1016/j.neubiorev.2018.12.007

- Wong QJJ, & Rapee RM (2016). The aetiology and maintenance of social anxiety disorder: A synthesis of complementary theoretical models and formulation of a new integrated model. Journal of Affective Disorders, 203, 84–100. 10.1016/j.jad.2016.05.069

- WorldViz. 2014. Vizard4 [computer software]. Santa Barbara, CA, USA: WorldViz LLC.
