Abstract

Methylation of the O6-methylguanine-DNA methyltransferase (MGMT) gene promoter is correlated with the effectiveness of the current standard of care in glioblastoma patients. In this study, a deep learning pipeline is designed for automatic prediction of MGMT status in 87 glioblastoma patients with contrast-enhanced T1-weighted images (CE-T1WI) and 66 with fluid-attenuated inversion recovery (FLAIR) images. The end-to-end pipeline performs both tumor segmentation and status classification. Tumor segmentation is better on FLAIR images (Dice score, 0.897 ± 0.007) than on CE-T1WI (Dice score, 0.828 ± 0.108), and status prediction is also better on FLAIR images (accuracy, 0.827 ± 0.056; recall, 0.852 ± 0.080; precision, 0.821 ± 0.022; and F1 score, 0.836 ± 0.072). The proposed pipeline not only saves time in tumor annotation and avoids interrater variability in glioma segmentation but also achieves good prediction of MGMT methylation status. It could help identify molecular biomarkers from routine medical images and further facilitate treatment planning.

1. Introduction

Glioblastoma multiforme (GBM) is the most common and aggressive type of primary brain tumor in adults. It accounts for 45% of primary central nervous system tumors, and the 5-year survival rate is around 5.1% [1, 2]. The standard treatment for GBM is surgical resection followed by radiation therapy and temozolomide (TMZ) chemotherapy, which improves median survival by 3 months compared to radiotherapy alone [3]. Several studies have indicated that O6-methylguanine-DNA methyltransferase (MGMT) gene promoter methylation, which is reported in 30-60% of glioblastomas [4], can enhance the response to TMZ and is a prognostic biomarker in GBM patients [3, 5]. Thus, determining MGMT promoter methylation status is important for medical decision-making.

Genetic analysis of surgical specimens is the reference standard for assessing MGMT methylation status, but testing by methylation-specific polymerase chain reaction requires a large tissue sample [6]. The major limitations are the high cost and the possibility of incomplete biopsy samples due to spatial tumor heterogeneity [7]. In addition, it cannot be used for real-time monitoring of methylation status.

Magnetic resonance imaging (MRI) is a standard examination in the diagnosis, preoperative planning, and therapy evaluation of GBM [8, 9]. Recently, radiomics, which extracts large numbers of quantitative features from medical images, has been proposed to explore the correlation between image features and underlying genetic traits [10–12]. There is growing evidence that radiomics can be used to predict the status of MGMT promoter methylation [13–15]. However, most previous works relied on handcrafted features, a procedure that includes tumor segmentation, feature extraction, and informatics analysis [16–19]. Tumor segmentation in particular is a challenging and important step because most works depend on manual delineation, which is burdensome and time-consuming and unavoidably subject to inter- and intraobserver disagreement. Deep learning, which can extract features automatically, has been emerging as an innovative technology in many fields [20]. The convolutional neural network (CNN) has proven effective in image segmentation, disease diagnosis, and other medical image analysis tasks [21–25]. Compared to traditional methods with handcrafted features, deep learning offers several advantages, including robustness to distortions such as changes in shape and lower computational cost. A few studies have shown that deep learning can be used to segment tumors and predict MGMT methylation status in glioma [26]. However, to the best of our knowledge, no previous report has described a pipeline that performs both glioma segmentation and MGMT methylation status prediction in an end-to-end manner. Therefore, in this study we investigate the feasibility of integrating tumor segmentation and status prediction for GBM patients into a single deep learning pipeline.

2. Methods

2.1. Data Collection

A total of 106 GBM patients were analyzed in our study. MR images, including presurgical axial contrast-enhanced T1-weighted images (CE-T1WI) and T2-weighted fluid-attenuated inversion recovery (FLAIR) images, were collected from The Cancer Imaging Archive (http://www.cancerimagingarchive.net). The images originated from four centers (Henry Ford Hospital, University of California San Francisco, Anderson Cancer Center, and Emory University). Clinical and molecular data were also obtained from the open-access data tier of the TCGA website.

Genomic data were from the TCGA data portal. MGMT methylation status analysis was performed on Illumina HumanMethylation27 and HumanMethylation450 BeadChip platforms. A median cutoff using the level 3 beta-value present in the TCGA was utilized for categorizing methylation status. Illumina Human Methylation probes (cg12434587 and cg12981137) were selected in this study [27].
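The median cutoff described above can be illustrated with a minimal sketch. The patient IDs and beta-values below are hypothetical, the function name is ours, and two details are assumptions not stated in the text: each patient is represented by a single beta-value (how the two probes are combined is not specified), and values strictly above the median are treated as methylated.

```python
from statistics import median


def methylation_labels(beta_by_patient):
    """Label patients as methylated (1) or unmethylated (0) with a median cutoff."""
    cutoff = median(beta_by_patient.values())
    # Assumption: a beta-value strictly above the cohort median means "methylated".
    return {pid: int(beta > cutoff) for pid, beta in beta_by_patient.items()}


# Hypothetical beta-values, one per patient.
example_betas = {"P01": 0.12, "P02": 0.65, "P03": 0.08, "P04": 0.91}
example_labels = methylation_labels(example_betas)
```

A median cutoff yields roughly balanced classes by construction, which is in line with the near-even methylated/unmethylated case counts in Table 1.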

Of the 106 GBM cases, 87 had CE-T1W images and 66 had FLAIR images. We randomly split the cases into training and testing sets at a ratio of 8:2 and applied 10-fold cross-validation to the training set using the scikit-learn library (https://scikit-learn.org/stable/). The dataset distribution is listed in Table 1.

Table 1.

Dataset distribution of each experiment.

| Modality | Phase | Cases (methylated/unmethylated) | CE-T1WI slices (methylated/unmethylated) | FLAIR slices (methylated/unmethylated) |
|---|---|---|---|---|
| FLAIR | Training | 51 (25/26) | - | 676 (288/388) |
| FLAIR | Testing | 15 (7/8) | - | 167 (62/105) |
| CE-T1WI | Training | 70 (36/34) | 1208 (609/599) | - |
| CE-T1WI | Testing | 17 (10/7) | 220 (109/111) | - |

Note: FLAIR: fluid-attenuated inversion recovery; CE-T1WI: contrast-enhanced T1-weighted imaging.
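The case-level split and 10-fold cross-validation can be sketched in plain Python as follows. This is only an illustration under stated assumptions: the study used scikit-learn, whereas this sketch uses the standard library; the case IDs, the seed, and the function names are hypothetical; and no stratification by methylation status is applied because the text does not mention any.

```python
import random


def split_cases(case_ids, train_fraction=0.8, seed=0):
    """Randomly split cases (not slices) into training and testing sets."""
    ids = list(case_ids)
    random.Random(seed).shuffle(ids)
    n_train = round(len(ids) * train_fraction)
    return ids[:n_train], ids[n_train:]


def k_fold(train_ids, k=10):
    """Yield (fit_ids, validation_ids); each case is used for validation once."""
    folds = [train_ids[i::k] for i in range(k)]
    for i in range(k):
        fit_ids = [c for j, fold in enumerate(folds) if j != i for c in fold]
        yield fit_ids, folds[i]


# Hypothetical IDs for the 87 cases with CE-T1W images.
cases = [f"case_{i:03d}" for i in range(87)]
train_ids, test_ids = split_cases(cases)
folds = list(k_fold(train_ids))
```

Splitting by case rather than by slice keeps all slices of one patient on the same side of the split, which avoids leaking patient-specific appearance from training into testing.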

2.2. Image Preprocessing

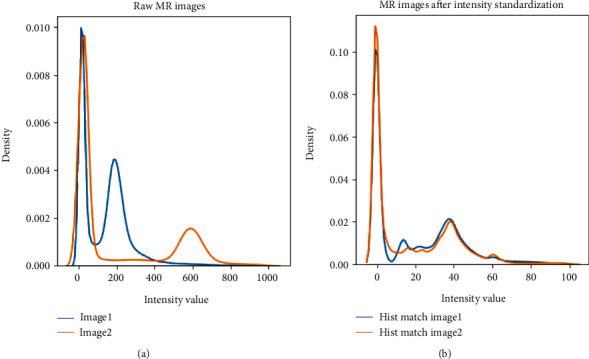

For general images, the pixel values contain reliable image information. However, MR images do not have a standard intensity scale. Figure 1(a) shows the density plots of two raw MR images. Each plot has two peaks: the peak around 0 corresponds to background pixels, and the other corresponds to white matter. The white matter peaks of the two images are far apart. Thus, MR image normalization is needed to ensure that the grey values of the same tissue are close to each other across different MR images [28].

Figure 1.

Density plot of two different MR images (a) before and (b) after piece-wise linear histogram matching.

Piece-wise linear histogram matching was used to normalize the intensity distribution of the MR images [29]. First, we learned a standard histogram by averaging the 1st to 99th percentiles of all images. Then, we linearly mapped the intensities of each image to this standard histogram. As shown in Figure 1(b), the white matter peaks of the two images coincide after normalization. Next, the images were normalized to zero mean and unit standard deviation, computed only on valued voxels. Finally, data augmentation was used to increase the dataset size and reduce overfitting. We rotated the images in 5-degree steps from -20 to +20 degrees (9 rotation angles), resulting in a 9-fold increase in the number of MR images.
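The preprocessing steps above can be sketched in plain Python. This is a simplified illustration, not the authors' code: the set of landmark percentiles, the linear extrapolation outside the landmark range, the explicit foreground mask, and all function names are assumptions, and the two "scans" are synthetic lists of intensities.

```python
from statistics import mean, pstdev

# Assumed landmark percentiles; the text only states that the 1st to 99th
# percentiles were averaged over all images.
PERCENTILES = [1, 10, 20, 30, 40, 50, 60, 70, 80, 90, 99]


def percentile(sorted_vals, p):
    """Linearly interpolated p-th percentile of an ascending list."""
    pos = (len(sorted_vals) - 1) * p / 100.0
    lo = int(pos)
    hi = min(lo + 1, len(sorted_vals) - 1)
    return sorted_vals[lo] + (pos - lo) * (sorted_vals[hi] - sorted_vals[lo])


def landmarks(voxels):
    """Intensity landmarks of one image given as a flat list of voxel values."""
    vals = sorted(voxels)
    return [percentile(vals, p) for p in PERCENTILES]


def standard_scale(images):
    """Average each landmark over all images to obtain the standard scale."""
    return [mean(col) for col in zip(*(landmarks(img) for img in images))]


def piecewise_map(v, src, dst):
    """Map intensity v from image landmarks `src` onto standard landmarks `dst`."""
    k = 0
    # Find the segment containing v; values outside the landmark range are
    # extrapolated with the first or last segment.
    while k < len(src) - 2 and v > src[k + 1]:
        k += 1
    span = src[k + 1] - src[k]
    if span == 0:  # degenerate segment (repeated landmark)
        return dst[k]
    return dst[k] + (v - src[k]) * (dst[k + 1] - dst[k]) / span


def match_histogram(voxels, standard):
    src = landmarks(voxels)
    return [piecewise_map(v, src, standard) for v in voxels]


def zscore(voxels, mask):
    """Zero mean and unit standard deviation, computed over masked voxels only."""
    inside = [v for v, m in zip(voxels, mask) if m]
    mu, sd = mean(inside), pstdev(inside)
    return [(v - mu) / sd if m else 0.0 for v, m in zip(voxels, mask)]


# Two synthetic "scans" of the same anatomy on different intensity scales.
scan_a = [float(v) for v in range(10, 210, 2)]
scan_b = [3.0 * v + 5.0 for v in scan_a]
standard = standard_scale([scan_a, scan_b])
matched_a = match_histogram(scan_a, standard)
matched_b = match_histogram(scan_b, standard)
normalized_a = zscore(matched_a, [True] * len(matched_a))

# Rotation angles for augmentation: -20 to +20 degrees in 5-degree steps.
ANGLES = list(range(-20, 21, 5))
```

In this toy case the two scans differ only by an affine intensity change, so their matched intensities coincide, which mirrors the alignment of the white matter peaks in Figure 1(b). The list `ANGLES` contains 9 entries, which accounts for the 9-fold increase from augmentation.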

2.3. Segmentation

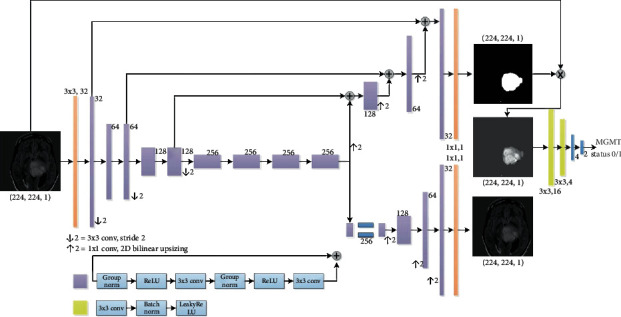

For tumor segmentation, a state-of-the-art model [30] from the BraTS 2018 challenge (Multimodal Brain Tumor Segmentation Challenge 2018, http://braintumorsegmentation.org/) was adapted. The whole network architecture is shown in Figure 2.

Figure 2.

An end-to-end deep learning pipeline for both tumor segmentation and status classification.

In short, the deep learning model added a variational autoencoder (VAE) branch to a fully convolutional network model. The encoder part was shared by the segmentation and VAE tasks. The prior distribution used for the KL divergence in the VAE part was N(0, 1). The ResNet blocks used in the architecture [31] consisted of two 3 × 3 convolutions with normalization and ReLU, together with skip connections. In the encoder part, each strided convolution downsampled the image dimensions by a factor of 2 and doubled the number of channels. The decoder part had a structure similar to that of the encoder but used upsampling. The decoder endpoint had the same size as the input image and was followed by a sigmoid activation; its output was the tumor segmentation. In the VAE part, the encoder output was reduced to 256, and the input image was reconstructed with a structure similar to that of the decoder but without skip connections. Thus, the segmentation branch output the tumor segmentation, and the VAE branch attempted to reconstruct the input image. Except for the input and output layers, all blocks in Figure 2 used the ResNet block with different channel numbers (shown beside each layer). The input layer was a 3 × 3 convolution with 3 channels; for both output layers, a 3 × 3 convolution with a dropout rate of 0.2 and L2 regularization with a weight of 1e-3 was used to avoid overfitting. The loss function consists of 3 terms as shown in

| (1) |

where $L_{\mathrm{Dice}}$ is the soft Dice loss between the predicted segmentation and the ground truth labels. The ground truth labels were manually annotated with ImageJ (https://imagej.nih.gov) by one neuroradiologist with 10 years of experience in brain disease diagnosis. $L_{\mathrm{L2}}$ is the L2 loss between the VAE branch output image and the input image, and $L_{\mathrm{KL}}$ is the standard VAE penalty term [32, 33]. The Dice coefficient defined in Equation (2) was then calculated to assess segmentation performance:

| (2) |

where $p_i$ is the ground truth for pixel $i$, $\hat{p}_i$ is the prediction for pixel $i$, and $\epsilon$ = 1e-8.
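To make the roles of the three loss terms and of the Dice coefficient concrete, the following is a minimal sketch in plain Python. It is not the authors' implementation: the 0.1 weights on the L2 and KL terms are those of the reference model [30] and are an assumption here, as are the mean reduction in `l2_loss`, the placement of ε in the denominator of the Dice coefficient, and all function names.

```python
import math

EPS = 1e-8


def dice_coefficient(truth, pred, eps=EPS):
    """Overlap between ground truth and prediction, both flat lists in [0, 1]."""
    intersection = sum(t * p for t, p in zip(truth, pred))
    return 2.0 * intersection / (sum(truth) + sum(pred) + eps)


def l2_loss(image, reconstruction):
    """Mean squared difference between input and VAE reconstruction (assumed reduction)."""
    return sum((x - r) ** 2 for x, r in zip(image, reconstruction)) / len(image)


def kl_to_standard_normal(mu, log_var):
    """KL divergence between N(mu, sigma^2) and the N(0, 1) prior, summed over dimensions."""
    return 0.5 * sum(m * m + math.exp(lv) - lv - 1.0 for m, lv in zip(mu, log_var))


def total_loss(truth, pred, image, reconstruction, mu, log_var, w_l2=0.1, w_kl=0.1):
    """Soft Dice loss plus weighted L2 and KL terms (weights are assumptions)."""
    dice_loss = 1.0 - dice_coefficient(truth, pred)
    return (dice_loss
            + w_l2 * l2_loss(image, reconstruction)
            + w_kl * kl_to_standard_normal(mu, log_var))


# Toy example: perfect segmentation, perfect reconstruction, latent equal to the prior.
perfect = total_loss([1, 1, 0, 0], [1.0, 1.0, 0.0, 0.0],
                     [0.5, 0.2], [0.5, 0.2], [0.0], [0.0])
```

In this toy example all three terms vanish, so the total loss is close to zero; any segmentation error, reconstruction error, or departure of the latent distribution from N(0, 1) increases it.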

2.4. Status Classification

For the classification of MGMT methylation status, a 4-layer CNN was designed and cascaded with the tumor segmentation model. During model design, classification networks with different numbers of convolutional layers (2 to 5) were tried, and we found that 2 convolutional layers followed by 2 fully connected (FC) layers performed best for this task. The first convolutional layer had 16 filters, and the second had 4 filters. All convolutional layers had a kernel size of 3 × 3 and a stride of 1 and were followed by LeakyReLU, batch normalization, and max pooling. LeakyReLU is a variant of the ReLU activation that avoids dead neurons by using a slope of 0.3 on the negative half-axis instead of 0. Its advantages include good performance in eliminating gradient saturation, low computational cost, and fast convergence. Batch normalization was used to normalize features by the mean and variance within a mini-batch. It helps to mitigate the covariate shift issue and eases optimization. Max pooling with a 4 × 4 filter was used to downsample the image features extracted by the convolutional layers, which were then fed into the 2 FC layers. ReLU and softmax were adopted as the activation functions for the first and second FC layers, respectively. All layers were initialized with the He-normal method [34].
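The two activation functions that distinguish this classifier can be written out in a few lines of plain Python. This is only an illustrative sketch with hypothetical function names and inputs; in practice, the corresponding Keras layers would be used.

```python
import math


def leaky_relu(x, negative_slope=0.3):
    """Identity for x >= 0; a small linear slope (0.3 here) for x < 0."""
    return x if x >= 0 else negative_slope * x


def softmax(logits):
    """Convert the outputs of the last FC layer into class probabilities."""
    shift = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical logits for (unmethylated, methylated).
probabilities = softmax([0.2, 1.5])
activated = [leaky_relu(v) for v in (-1.0, 0.0, 2.0)]
```

Because the negative slope is nonzero, a unit that receives negative inputs still passes a gradient, which is the "dead neuron" problem that the text refers to.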

2.5. Parameter Settings and Software

All experiments were conducted with the open-source framework Keras (https://keras.io/) on one GeForce RTX 2080Ti GPU. The segmentation and classification models have 6,014,721 and 3,498 parameters, respectively. For tumor segmentation, the Adam optimizer was adopted with a self-designed learning rate scheduler: the learning rate was initialized at 1e-4 and divided by 2 whenever the validation loss had not decreased over the past 5 epochs. The number of epochs was set to 50 and the batch size to 8. Each epoch took around 50 seconds. For status classification, the 4-layer CNN was trained for up to 50 epochs using Adam with a learning rate of 2e-4 and a batch size of 32. Training was stopped early if the validation accuracy remained stable for over 10 epochs. The average elapsed time per epoch was 5 seconds.
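The learning rate schedule and the stopping rule can be sketched without any framework. The class and function names are hypothetical, and the tolerance used to decide that the accuracy is "stable" is an assumption, since the text does not quantify it.

```python
class HalveOnPlateau:
    """Divide the learning rate by 2 when validation loss stalls for `patience` epochs."""

    def __init__(self, lr=1e-4, patience=5):
        self.lr = lr
        self.patience = patience
        self.best = float("inf")
        self.wait = 0

    def step(self, val_loss):
        if val_loss < self.best:
            self.best = val_loss
            self.wait = 0
        else:
            self.wait += 1
            if self.wait >= self.patience:
                self.lr /= 2.0
                self.wait = 0
        return self.lr


def should_stop(val_accuracies, patience=10, tol=1e-3):
    """True when accuracy varied by at most `tol` (assumed) over more than `patience` epochs."""
    if len(val_accuracies) <= patience:
        return False
    recent = val_accuracies[-(patience + 1):]
    return max(recent) - min(recent) <= tol


# Toy validation losses: improvement for two epochs, then a plateau of five epochs.
scheduler = HalveOnPlateau()
learning_rates = [scheduler.step(loss) for loss in [1.0, 0.9, 0.9, 0.9, 0.9, 0.9, 0.9]]
```

In the toy run the rate stays at 1e-4 until the fifth consecutive epoch without improvement and is then halved to 5e-5.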

2.6. Statistical Analysis

The Dice coefficient was calculated to evaluate the performance of tumor segmentation. For the MGMT methylation status classification, the receiver operating characteristic (ROC) curve was plotted, and the area under the ROC curve (AUC) was reported to measure classification performance. All metrics were calculated in Python (version 3.6.8; Wilmington, DE, USA; http://www.python.org/) using PyCharm. In addition, the accuracy, recall, precision, and F1 score were calculated according to the equations below:

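$$
\begin{aligned}
\text{Accuracy} &= \frac{TP + TN}{TP + TN + FP + FN}, \\
\text{Recall} &= \frac{TP}{TP + FN}, \\
\text{Precision} &= \frac{TP}{TP + FP}, \\
F_1 &= \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}},
\end{aligned}
\tag{3}
$$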

where TP is the true positive, TN is the true negative, FP is the false positive, and FN is the false negative.
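These metrics, together with the AUC, can be computed as in the sketch below. The function names and the confusion counts are hypothetical; the AUC is computed through its rank interpretation (the probability that a randomly chosen positive case scores higher than a randomly chosen negative case), and no guard against empty classes or zero denominators is included.

```python
def classification_metrics(tp, tn, fp, fn):
    """Accuracy, recall, precision, and F1 score from confusion counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    recall = tp / (tp + fn)
    precision = tp / (tp + fp)
    f1 = 2 * precision * recall / (precision + recall)
    return {"accuracy": accuracy, "recall": recall, "precision": precision, "f1": f1}


def auc(labels, scores):
    """Area under the ROC curve via pairwise comparison; ties count as one half."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in positives for n in negatives)
    return wins / (len(positives) * len(negatives))


# Hypothetical confusion counts and prediction scores.
metrics = classification_metrics(tp=8, tn=7, fp=2, fn=3)
example_auc = auc([1, 1, 0, 0], [0.9, 0.4, 0.5, 0.1])
```

Unlike the four threshold-based metrics, the AUC depends only on how the scores rank the cases, which is why it is reported separately from Table 4.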

3. Results

3.1. Tumor Segmentation

3.1.1. Qualitative Observation

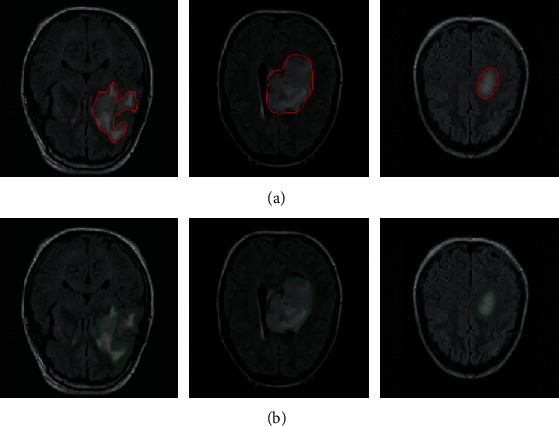

Tumors could be accurately delineated by the proposed pipeline. Figure 3 shows the annotated ground truth (first row) and the corresponding segmentation results (second row) for GBM in FLAIR images. The deep learning network accurately localizes the tumor boundaries and delineates the major hyperintense regions. In the three cases shown, the automatic segmentation is quite close to the ground truth.

Figure 3.

Automatic segmentation results of brain tumors with FLAIR images. (a) The ground truth of tumor boundaries in FLAIR images and (b) automatic segmentation results using the proposed network with FLAIR images.

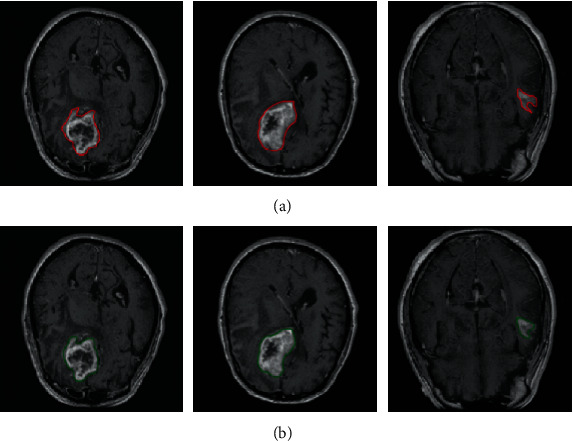

Figure 4 shows the ground truth (first row) and the segmentation results (second row) for GBM in CE-T1W images. Tumor boundaries are localized, the suspicious regions are largely contoured, and there is no obvious difference between the manual annotation and the corresponding segmentation from the proposed network. In the three cases shown, the segmentation results from the deep network approximate the manual delineation.

Figure 4.

Three representative cases of brain tumor manual annotation and automatic segmentation with CE-T1WI images. (a) The manual annotation and (b) the automatic segmentation results with our proposed network.

3.1.2. Quantitative Evaluation

The quantitative performance of automatic tumor segmentation is summarized in Table 2. The deep network achieved good testing performance on tumor segmentation using CE-T1WI (Dice score, 0.828 ± 0.108) and FLAIR (Dice score, 0.897 ± 0.007). The mean Dice scores from FLAIR were higher than those from CE-T1WI by about 0.06 to 0.07 across the training, validation, and testing sets. The maximum difference between the Dice scores on the training and validation sets for CE-T1W images was 0.026, indicating that the model was not overfitting.

Table 2.

Dice scores of the deep network on tumor segmentation using MR images.

| Modality | Training | Validation | Testing |
|---|---|---|---|
| CE-T1WI | 0.832 ± 0.009 | 0.831 ± 0.012 | 0.828 ± 0.108 |
| FLAIR | 0.893 ± 0.004 | 0.892 ± 0.008 | 0.897 ± 0.007 |

Note: the numbers in the table are the mean ± standard deviation over the 10 cross-validation experiments. CE-T1WI: contrast-enhanced T1-weighted imaging; FLAIR: fluid-attenuated inversion recovery.

3.1.3. Computational Performance

The time required per MR slice for manual annotation and for automatic prediction is compared in Table 3. We recorded the total time and divided it by the number of slices, so the times listed in Table 3 are average segmentation times per slice. The deep network was far more efficient: it took less than 0.2 seconds to segment an MR slice, whereas manual annotation required more than 30 seconds.

Table 3.

Inference time (seconds) of one MR slice for glioma segmentation.

| Modality | Manual annotation | Deep model |
|---|---|---|
| CE-T1WI | 50 s | 0.11 s |
| FLAIR | 60 s | 0.07 s |

Note: CE-T1WI: contrast-enhanced T1-weighted imaging; FLAIR: fluid-attenuated inversion recovery.

3.2. Classification of MGMT Promoter Methylation Status

Table 4 shows the prediction performance for MGMT promoter methylation status, evaluated with four classification metrics (accuracy, recall, precision, and F1 score) at three stages (training, validation, and testing) for each type of MR image (CE-T1WI and FLAIR). The model trained with FLAIR images achieves better mean results than the model trained with CE-T1W images on all metrics at all three stages. Specifically, the accuracy, recall, precision, and F1 score of the deep model trained with FLAIR images reach 0.827, 0.852, 0.821, and 0.836 in the testing stage, respectively.

Table 4.

Results of MGMT methylation status classification.

| Modality | Phase | Accuracy | Recall | Precision | F1 score |
|---|---|---|---|---|---|
| CE-T1WI | Training | 0.894 ± 0.012 | 0.906 ± 0.007 | 0.886 ± 0.018 | 0.896 ± 0.010 |
| CE-T1WI | Validation | 0.839 ± 0.046 | 0.866 ± 0.044 | 0.823 ± 0.051 | 0.845 ± 0.045 |
| CE-T1WI | Testing | 0.804 ± 0.011 | 0.818 ± 0.033 | 0.798 ± 0.014 | 0.808 ± 0.015 |
| FLAIR | Training | 0.941 ± 0.056 | 0.943 ± 0.104 | 0.947 ± 0.026 | 0.945 ± 0.081 |
| FLAIR | Validation | 0.885 ± 0.090 | 0.941 ± 0.105 | 0.857 ± 0.028 | 0.889 ± 0.101 |
| FLAIR | Testing | 0.827 ± 0.056 | 0.852 ± 0.080 | 0.821 ± 0.022 | 0.836 ± 0.072 |

Note: the numbers in the table are the mean ± standard deviation over the 10 cross-validation experiments. CE-T1WI: contrast-enhanced T1-weighted imaging; FLAIR: fluid-attenuated inversion recovery.

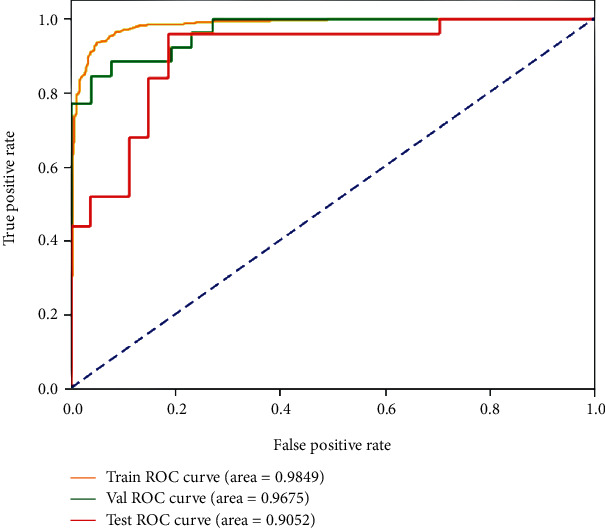

ROC curves of the prediction results are shown in Figures 5 and 6. Figure 5 shows the best status classification result obtained with FLAIR images, with AUCs of 0.985 (yellow curve), 0.968 (green curve), and 0.905 (red curve) on the training, validation, and testing datasets, respectively.

Figure 5.

ROC curves of the best result on the FLAIR images for MGMT promoter methylation status classification on the training, validation, and testing datasets.

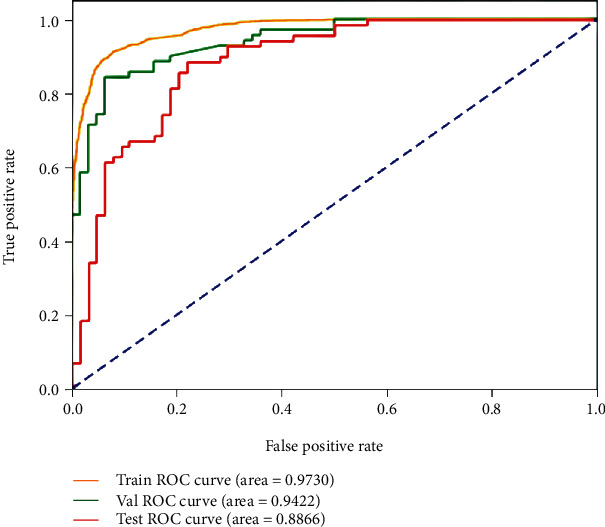

Figure 6.

ROC curves of the best result on the CE-T1W images for MGMT promoter methylation status classification in the training, validation, and testing datasets.

The best status classification result obtained with CE-T1W images is shown in Figure 6. The trained model achieves AUCs of 0.973 (yellow curve), 0.942 (green curve), and 0.887 (red curve) on the training, validation, and testing datasets, respectively.

4. Discussion

This study presents an MR-based deep learning pipeline for automatic tumor segmentation and MGMT methylation status classification in an end-to-end manner for GBM patients. Experimental results demonstrate promising performance on accurate glioma delineation (Dice score, 0.897) and MGMT status prediction (accuracy, 0.827; recall, 0.852; precision, 0.821; and F1 score, 0.836) coming from the model trained with FLAIR images. In addition, the proposed pipeline dramatically shortens the inference time on glioma segmentation.

For glioma segmentation, a state-of-the-art deep model is used and obtains impressive performance on the MGMT dataset involved in this study. Its performance is comparable to that reported in other deep network-based tumor segmentation studies. Hussain et al. [35] reported a CNN approach for glioma MRI segmentation that achieved a Dice score of 0.87 on the BRATS 2013 and 2015 datasets. Cui et al. [36] proposed an automatic semantic segmentation model on the BRATS 2013 dataset, with a Dice score near 0.80 on the combined high- and low-grade glioma datasets. Kaldera et al. [37] proposed a Faster R-CNN method and achieved a Dice score of 0.91 on data from 233 patients. These studies suggest that deep networks have great potential for accurate tumor segmentation in MR images.

Several deep models have been designed for the classification of MGMT methylation status in GBM patients. Chang et al. [38] proposed a deep neural network that achieved a classification accuracy of 83% in 259 glioma patients with T1W, T2W, and FLAIR images. Korfiatis et al. [26] compared ResNet baseline models of different sizes and reached a highest accuracy of 94.9% in 155 GBM patients with T2W images. Han et al. [39] proposed a bidirectional convolutional recurrent neural network architecture for MGMT methylation classification, but the accuracy was around 62% in 262 GBM patients with T1W, T2W, and FLAIR images. In this study, a shallow CNN is used, and the classification performance is promising. The best performance comes from the model trained with FLAIR images, which achieved an accuracy of 0.827 and a recall of 0.852, a satisfactory result considering the relatively small dataset.

In previous studies, Drabycz et al. [40] analyzed handcrafted features to distinguish methylated from unmethylated GBM and found that texture features from T2-weighted images were important for predicting MGMT methylation status. Han et al. [41] found that MGMT promoter-methylated GBM was prone to more tumor necrosis, and the T2-weighted FLAIR sequence may be more sensitive to necrosis than T1-weighted images. Interestingly, we also find that both GBM segmentation and molecular classification perform better on FLAIR images in our study, although the CE-T1W and FLAIR images did not all come from the same patients.

The strengths of this study lie in fully automatic glioma segmentation and prediction of MGMT methylation status based on a small dataset. Generally, tumor annotation takes a radiologist about one minute per slice, whereas the inference time of the deep learning model is about 0.1 seconds, roughly 1/600 of the time needed for manual annotation. Additionally, manual annotation is burdensome and prone to inter- and intraobserver variability, whereas a well-trained deep learning model can perform tumor segmentation continuously and repeatably regardless of the observer. Furthermore, the training strategy in this study is beneficial for small dataset analysis. In general, a deep model requires a large number of training instances, but it is challenging or even impossible to provide massive numbers of high-quality images in medical imaging. Finally, although several studies have used deep networks for automatic glioma segmentation [35, 36, 42] or molecular classification [26, 38, 39], the network proposed in this study integrates both glioma segmentation and classification in a seamlessly connected pipeline, and its performance is competitive with state-of-the-art studies in tumor segmentation and classification.

There are several limitations to our study. First, the sample size is small; we will further confirm the findings in a study with a larger sample. Second, a multicenter research trial would help validate the capability of the proposed pipeline, although variations in MR imaging sequences, equipment vendors, and other factors could make model building more difficult. Third, we did not investigate the value of combining CE-T1WI and FLAIR for tumor segmentation and classification because of the limited number of samples. In the future, we will explore additional MR sequences for MGMT methylation status prediction, such as amide-proton-transfer-weighted imaging and diffusion-weighted imaging, which may have great potential to improve prediction performance.

5. Conclusion

An MRI-based end-to-end deep learning pipeline is designed for tumor segmentation and MGMT methylation status prediction in GBM patients. It can save time and avoid interobserver variability in tumor segmentation and help discover molecular biomarkers from routine medical images to aid in diagnosis and treatment decision-making.

Acknowledgments

This study was supported by the Key R&D Program of Guangdong Province (2018B030339001), the National Science Fund for Distinguished Young Scholars (No. 81925023), the National Natural Science Foundation of China (Nos. 81601469, 81771912, 81871846, 81971574, and 81802227), the Guangzhou Science and Technology Project of Health (No. 20191A011002), the Natural Science Foundation of Guangdong Province (2018A030313282), the Fundamental Research Funds for the Central Universities, SCUT (2018MS23), and the Guangzhou Science and Technology Project (202002030268 and 201804010032).

Contributor Information

Zaiyi Liu, Email: zyliu@163.com.

Xinhua Wei, Email: weixinhua@aliyun.com.

Xinqing Jiang, Email: gzcmmcjxq@163.com.

Data Availability

All MRI data are available in The Cancer Imaging Archive (https://www.cancerimagingarchive.net/), and the clinical and molecular data were obtained from the open-access data tier of the TCGA website.

Conflicts of Interest

The authors declare that there is no conflict of interest.

Authors' Contributions

Xin Chen, Min Zeng, and Yichen Tong contributed equally.

References

- 1.Morgan L. L. The epidemiology of glioma in adults: a "state of the science" review. Neuro-Oncology. 2015;17(4):623–624. doi: 10.1093/neuonc/nou358. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Ostrom Q. T., Gittleman H., Truitt G., Boscia A., Kruchko C., Barnholtz-Sloan J. S. CBTRUS statistical report: primary brain and other central nervous system tumors diagnosed in the United States in 2011-2015. Neuro-oncology. 2018;20(supplement_4):iv1–iv86. doi: 10.1093/neuonc/noy131. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Hegi M. E., Diserens A. C., Gorlia T., et al. MGMT Gene Silencing and Benefit from Temozolomide in Glioblastoma. The New England Journal of Medicine. 2005;352(10):997–1003. doi: 10.1056/NEJMoa043331. [DOI] [PubMed] [Google Scholar]

- 4.Weller M., Stupp R., Reifenberger G., et al. MGMT promoter methylation in malignant gliomas: ready for personalized medicine? Nature Reviews Neurology. 2010;6(1):39–51. doi: 10.1038/nrneurol.2009.197. [DOI] [PubMed] [Google Scholar]

- 5.Brandes A. A., Franceschi E., Tosoni A., et al. MGMT Promoter Methylation Status Can Predict theIncidence and Outcome of Pseudoprogression After Concomitant Radiochemotherapy in Newly Diagnosed Glioblastoma Patients. Journal of Clinical Oncology. 2008;26(13):2192–2197. doi: 10.1200/JCO.2007.14.8163. [DOI] [PubMed] [Google Scholar]

- 6.Wang L., Li Z., Liu C., et al. Comparative assessment of three methods to analyze MGMT methylation status in a series of 350 gliomas and gangliogliomas. Pathology-Research and Practice. 2017;213(12):1489–1493. doi: 10.1016/j.prp.2017.10.007. [DOI] [PubMed] [Google Scholar]

- 7.Parker N. R., Hudson A. L., Khong P., et al. Intratumoral heterogeneity identified at the epigenetic, genetic and transcriptional level in glioblastoma. Scientific Reports. 2016;6(1, article 22477) doi: 10.1038/srep22477. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Wen P. Y., Macdonald D. R., Reardon D. A., et al. Updated response assessment criteria for high-grade gliomas: response assessment in neuro-oncology working group. Journal of clinical oncology. 2010;28(11):1963–1972. doi: 10.1200/JCO.2009.26.3541. [DOI] [PubMed] [Google Scholar]

- 9.van den Bent M. J., Wefel J. S., Schiff D., et al. Response assessment in neuro-oncology (a report of the RANO group): assessment of outcome in trials of diffuse low-grade gliomas. The lancet oncology. 2011;12(6):583–593. doi: 10.1016/S1470-2045(11)70057-2. [DOI] [PubMed] [Google Scholar]

- 10.Macyszyn L., Akbari H., Pisapia J. M., et al. Imaging patterns predict patient survival and molecular subtype in glioblastoma via machine learning techniques. Neuro-Oncology. 2015;18(3):417–425. doi: 10.1093/neuonc/nov127. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Hu L. S., Ning S., Eschbacher J. M., et al. Radiogenomics to characterize regional genetic heterogeneity in glioblastoma. Neuro-Oncology. 2017;19(1):128–137. doi: 10.1093/neuonc/now135. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Hong E. K., Choi S. H., Shin D. J., et al. Radiogenomics correlation between MR imaging features and major genetic profiles in glioblastoma. European radiology. 2018;28(10):4350–4361. doi: 10.1007/s00330-018-5400-8. [DOI] [PubMed] [Google Scholar]

- 13.Kanas V. G., Zacharaki E. I., Thomas G. A., Zinn P. O., Megalooikonomou V., Colen R. R. Learning MRI-based classification models for MGMT methylation status prediction in glioblastoma. Computer Methods and Programs in Biomedicine. 2017;140:249–257. doi: 10.1016/j.cmpb.2016.12.018. [DOI] [PubMed] [Google Scholar]

- 14.Xi Y. B., Guo F., Xu Z. L., et al. Radiomics signature: a potential biomarker for the prediction of MGMT promoter methylation in glioblastoma. Journal of Magnetic Resonance Imaging. 2018;47(5):1380–1387. doi: 10.1002/jmri.25860. [DOI] [PubMed] [Google Scholar]

- 15.Tixier F., Um H., Bermudez D., et al. Preoperative MRI-radiomics features improve prediction of survival in glioblastoma patients over MGMT methylation status alone. Oncotarget. 2019;10(6):660–672. doi: 10.18632/oncotarget.26578. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Kickingereder P., Neuberger U., Bonekamp D., et al. Radiomic subtyping improves disease stratification beyond key molecular, clinical, and standard imaging characteristics in patients with glioblastoma. Neuro-Oncology. 2018;20(6):848–857. doi: 10.1093/neuonc/nox188. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Liu Z., Zhang X. Y., Shi Y. J., et al. Radiomics analysis for evaluation of pathological complete response to neoadjuvant chemoradiotherapy in locally advanced rectal cancer. Clinical Cancer Research. 2017;23(23):7253–7262. doi: 10.1158/1078-0432.CCR-17-1038. [DOI] [PubMed] [Google Scholar]

- 18.Lambin P., Rios-Velazquez E., Leijenaar R., et al. Radiomics: extracting more information from medical images using advanced feature analysis. European Journal of Cancer. 2012;48(4):441–446. doi: 10.1016/j.ejca.2011.11.036. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Aerts H. J., Velazquez E. R., Leijenaar R. T., et al. Decoding tumour phenotype by noninvasive imaging using a quantitative radiomics approach. Nature Communications. 2014;5(1) doi: 10.1038/ncomms5006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.LeCun Y., Bengio Y., Hinton G. Deep learning. Nature. 2015;521(7553):436–444. doi: 10.1038/nature14539. [DOI] [PubMed] [Google Scholar]

- 21.Rajpurkar P., Irvin J., Ball R. L., et al. Deep learning for chest radiograph diagnosis: a retrospective comparison of the CheXNeXt algorithm to practicing radiologists. PLoS medicine. 2018;15(11, article e1002686) doi: 10.1371/journal.pmed.1002686. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Yang Y., Yan L. F., Zhang X., et al. Glioma grading on conventional MR images: a deep learning study with transfer learning. Frontiers in Neuroscience. 2018;12 doi: 10.3389/fnins.2018.00804. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Akay A., Hess H. Deep learning: current and emerging applications in medicine and technology. EEE journal of biomedical and health informatics. 2019;23(3):906–920. doi: 10.1109/JBHI.2019.2894713. [DOI] [PubMed] [Google Scholar]

- 24.Tandel G. S., Biswas M., Kakde O. G., et al. A review on a deep learning perspective in brain cancer classification. Cancers. 2019;11(1) doi: 10.3390/cancers11010111. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Zou L., Yu S., Meng T., Zhang Z., Liang X., Xie Y. A technical review of convolutional neural network-based mammographic breast cancer diagnosis. Computational and mathematical methods in medicine. 2019;2019:16. doi: 10.1155/2019/6509357.6509357 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Korfiatis P., Kline T. L., Lachance D. H., Parney I. F., Buckner J. C., Erickson B. J. Residual deep convolutional neural network predicts MGMT methylation status. Journal of Digital Imaging. 2017;30(5):622–628. doi: 10.1007/s10278-017-0009-z. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Bady P., Sciuscio D., Diserens A.-C., et al. MGMT methylation analysis of glioblastoma on the Infinium methylation BeadChip identifies two distinct CpG regions associated with gene silencing and outcome, yielding a prediction model for comparisons across datasets, tumor grades, and CIMP-status. Acta Neuropathologica. 2012;124(4):547–560. doi: 10.1007/s00401-012-1016-2.

- 28.Reinhold J. C., Dewey B. E., Carass A., Prince J. L. Evaluating the impact of intensity normalization on MR image synthesis. SPIE Medical Imaging; 2019; San Diego, California, United States.

- 29.Shah M., Xiao Y., Subbanna N., et al. Evaluating intensity normalization on MRIs of human brain with multiple sclerosis. Medical Image Analysis. 2011;15(2):267–282. doi: 10.1016/j.media.2010.12.003.

- 30.Myronenko A. 3D MRI Brain Tumor Segmentation Using Autoencoder Regularization. International MICCAI Brainlesion Workshop: Springer; 2019.

- 31.He K., Zhang X., Ren S., Sun J. Deep residual learning for image recognition. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR); 2016; Las Vegas, NV, USA. pp. 770–778.

- 32.Doersch C. Tutorial on variational autoencoders. 2016. https://arxiv.org/abs/1606.05908.

- 33.Kingma D. P., Welling M. Auto-encoding variational Bayes. 2013. https://arxiv.org/abs/1312.6114.

- 34.He K., Zhang X., Ren S., Sun J. Delving deep into rectifiers: surpassing human-level performance on ImageNet classification. Proceedings of the IEEE International Conference on Computer Vision; 2015; Santiago, Chile. pp. 1026–1034.

- 35.Hussain S., Anwar S. M., Majid M. Segmentation of glioma tumors in brain using deep convolutional neural network. Neurocomputing. 2018;282:248–261. doi: 10.1016/j.neucom.2017.12.032.

- 36.Cui S., Mao L., Jiang J., Liu C., Xiong S. Automatic semantic segmentation of brain gliomas from MRI images using a deep cascaded neural network. Journal of Healthcare Engineering. 2018;2018:14. doi: 10.1155/2018/4940593.

- 37.Kaldera H., Gunasekara S., Dissanayake M. B. MRI based glioma segmentation using deep learning algorithms. 2019 International Research Conference on Smart Computing and Systems Engineering (SCSE); 2019; Colombo, Sri Lanka. pp. 51–56.

- 38.Chang P., Grinband J., Weinberg B. D., et al. Deep-learning convolutional neural networks accurately classify genetic mutations in gliomas. AJNR. American Journal of Neuroradiology. 2018;39(7):1201–1207. doi: 10.3174/ajnr.A5667.

- 39.Han L., Kamdar M. R. MRI to MGMT: predicting methylation status in glioblastoma patients using convolutional recurrent neural networks. Biocomputing. 2018;23:331–342. doi: 10.1142/9789813235533_0031.

- 40.Drabycz S., Roldán G., de Robles P., et al. An analysis of image texture, tumor location, and MGMT promoter methylation in glioblastoma using magnetic resonance imaging. NeuroImage. 2010;49(2):1398–1405. doi: 10.1016/j.neuroimage.2009.09.049.

- 41.Han Y., Yan L. F., Wang X. B., et al. Structural and advanced imaging in predicting MGMT promoter methylation of primary glioblastoma: a region of interest based analysis. BMC Cancer. 2018;18(1) doi: 10.1186/s12885-018-4114-2.

- 42.Wu S., Li H., Quang D., Guan Y. Three-plane-assembled deep learning segmentation of gliomas. Radiology: Artificial Intelligence. 2020;2(1, article e190011) doi: 10.1148/ryai.2020190011.

Data Availability Statement

All MRI data are available in The Cancer Imaging Archive (https://www.cancerimagingarchive.net/), and the clinical and molecular data were obtained from the open-access data tier of the TCGA website.