Abstract

Background

In response to the U.S. opioid epidemic, the HEALing (Helping to End Addiction Long-term℠) Communities Study (HCS) is a multisite, wait-listed, community-level cluster-randomized trial that aims to test the novel Communities That HEAL (CTH) intervention in 67 communities. CTH will expand an integrated set of evidence-based practices (EBPs) across health care, behavioral health, justice, and other community-based settings to reduce opioid overdose deaths. We present the rationale for and adaptation of the RE-AIM/PRISM framework and methodological approach used to capture the CTH implementation context and to evaluate implementation fidelity.

Methods

HCS measures key domains of the internal and external CTH implementation context with repeated annual surveys and qualitative interviews with community coalition members and key stakeholders. Core constructs of fidelity include dosage, adherence, quality, and program differentiation—the adaptation of the CTH intervention to fit each community’s needs. Fidelity measures include a monthly CTH checklist, collation of artifacts produced during CTH activities, coalition and workgroup attendance, and coalition meeting minutes. Training and technical assistance delivered by the research sites to the communities are tracked monthly.

Discussion

To help attenuate the nation’s opioid epidemic, the adoption of EBPs must be increased in communities. The HCS represents one of the largest and most complex implementation research experiments yet conducted. Our systematic examination of implementation context and fidelity will significantly advance understanding of how to best evaluate community-level implementation of EBPs and assess relations among implementation context, fidelity, and intervention impact.

Keywords: Opioid Use Disorder, Overdose, Implementation Context, Implementation Strategy, Community-Level Implementation, Fidelity, RE-AIM framework, PRISM Framework, Helping to End Addiction Long-term, HEALing Communities Study

1. Introduction

The opioid epidemic is a national emergency in the United States. Opioid overdoses resulted in more than 750,000 deaths from 1999 to 2018 (CDC and National Center for Health Statistics, 2020). There are effective medications to treat opioid use disorder (MOUD), including methadone, buprenorphine, and naltrexone (Substance Abuse and Mental Health Services Administration, 2017), and naloxone can reverse opioid overdoses (Wermeling, 2015; McDonald and Strang, 2016). However, individuals experience stigma and barriers to accessing these interventions, and these barriers are also influenced by community dynamics and other factors, such as limited uptake of these interventions by providers (Krupitsky et al., 2011; Mojtabai et al., 2019; National Academies of Sciences et al., 2019; Sordo et al., 2017). The HEALing (Helping to End Addiction Long-term℠) Communities Study (HCS) is a multisite, wait-listed, community-level cluster-randomized trial that is testing a promising intervention for expanding an integrated set of evidence-based practices (EBPs) across health care, behavioral health, justice, and other community-based settings in 67 communities experiencing high rates of opioid use disorder (OUD) and overdose fatalities. The primary study outcome is a reduction in opioid overdose deaths (The HEALing Communities Study Consortium, 2020).

Researchers from four sites in Kentucky, Massachusetts, New York, and Ohio collaborated to develop the Communities That HEAL (CTH) intervention, which is based on the Communities That Care model (CTC; Oesterle et al., 2018). The CTH intervention includes three elements:

1. Community engagement with existing or new coalitions to develop and deploy comprehensive, data-driven plans for EBP selection and implementation across multiple community sectors (Sprague Martinez et al., 2020);

2. The Opioid Reduction Continuum of Care Approach (ORCCA), which consists of a menu of EBPs and strategies to expand overdose education and naloxone distribution (OEND); expand access to and retention in MOUD treatment (i.e., methadone, buprenorphine, and naltrexone); and increase safer opioid prescribing (Winhusen et al., 2020); and

3. Community-informed communication campaigns to address stigma and raise awareness about OEND and MOUD (Lefebvre et al., 2020).

The HCS is one of the largest and most complex implementation science research projects conducted to date. Similar to the CTC model, community coalitions play a major role in identifying community needs and setting priorities by selecting EBPs and strategies from the ORCCA. However, the CTH intervention is more complex in that the EBPs to be implemented span a continuum of overdose prevention and OUD treatment, in contrast to CTC’s focus on youth drug use prevention. Furthermore, the EBPs are to be implemented in a wider range of settings, which adds complexity. Moreover, the inclusion of communication campaigns represents an additional innovation of CTH relative to CTC.

The 67 communities recruited to the study are randomized to one of two waves in a wait-list, controlled design that separates initiation of the CTH by two years, with primary outcome measurement relying on administrative sources of data (Slavova et al., 2020). Overall, the CTH intervention is designed to engage and mobilize communities to reduce opioid overdose deaths through the adoption and implementation of EBPs. This paper presents the selection and adaptation of the framework and methodological approach used to capture the context in which the CTH is implemented and to evaluate implementation fidelity.

1.1. Identifying a Guiding Framework

The field of implementation science offers many theories, models, and frameworks to guide the implementation process, identify determinants of practice, and evaluate implementation outcomes (Birken et al., 2017b; Nilsen, 2015; Tabak et al., 2012). Practitioners and researchers select among these depending on their aims, the conceptual level of focus (e.g., team, organizational, or interorganizational), or the practicalities of using one over another (Birken et al., 2017a). As a result, implementation science researchers have presented several comparisons and cross-framework reviews that seek to identify common elements and integrate different approaches to framing implementation evaluation (Damschroder et al., 2009; Davies et al., 2010; Flottorp et al., 2013; Greenhalgh et al., 2004; Li et al., 2018; Moullin et al., 2015; Nilsen and Bernhardsson, 2019; Tabak et al., 2012).

This diversity of theoretical orientations is illustrated by the experience of the four HCS sites. The funding opportunity announcement for the HCS did not specify an implementation science framework, resulting in the four sites proposing four different conceptual frameworks to guide implementation of EBPs to reduce opioid overdose deaths and evaluate their impacts within their respective studies. The originally proposed frameworks were: the Exploration, Preparation, Implementation, and Sustainment (EPIS) framework (Aarons et al., 2011); the Integrated Promoting Action on Research Implementation in Health Services (I-PARIHS) framework (Kitson et al., 2008); the Reach, Effectiveness, Adoption, Implementation, and Maintenance (RE-AIM) evaluation framework (Glasgow et al., 1999); and an integration of the Collective Impact (CI) framework (Cabaj and Weaver, 2016; Christens and Inzeo, 2015; Flood et al., 2015; Kania and Kramer, 2011; Kania and Kramer, 2013) with the Consolidated Framework for Implementation Research (CFIR; Damschroder et al., 2009). However, once sites agreed to multisite collaboration as a single study, selecting one framework became a critical task.

Selecting a single theory, model, or framework requires an understanding of the trade-offs between them. For instance, the RE-AIM model is largely an outcome framework and on its own does not sufficiently capture the multilevel dynamics between individuals, their organizations, and their communities (Harden et al., 2018). The I-PARIHS framework examines four core constructs that are posited to lead to the success or failure of implementation: the facilitation process, the innovation or intervention being implemented, the individuals involved, and the context. While highly focused on context, I-PARIHS concentrates on process and has less of a focus on the measurable outcomes needed to evaluate the CTH intervention. Like I-PARIHS, the EPIS model sufficiently captures the multilevel context of the HCS study but focuses less on the implementation strategies used to support the CTH intervention. The integrated CI and CFIR approach combines a focus on implementation strategies (i.e., CI) with evaluation of those strategies, but CFIR’s focus on process (i.e., implementation strategies) is not as well positioned to evaluate the needs, outcomes, and impact of the intervention.

The cooperative arrangement of the HCS allowed the four research sites to engage in a consensus-based approach involving an iterative review and discussion of the implementation science literature. This work leveraged a collaborative process often used in team science to capitalize on the transdisciplinary distributed network of researchers involved in the HCS (Hall et al., 2008; Hall et al., 2012; Stokols et al., 2008a; Stokols et al., 2008b). During an in-person kickoff meeting focused on the design of the HCS, all four sites presented their implementation science frameworks during a breakout session in which each site had multiple representatives. After the presentations, site representatives discussed whether any of the four presented frameworks was suitable if HCS evolved into a single protocol study. Given that each site’s framework had been selected under the assumption of the site’s proposed research design, representatives reached consensus that none of the proposed frameworks was ideally suited for the emerging single protocol. Through discussion, the hallmarks of a viable alternative framework were identified, and a framework was then suggested by one site that had these hallmarks. Representatives quickly coalesced around this alternative framework.

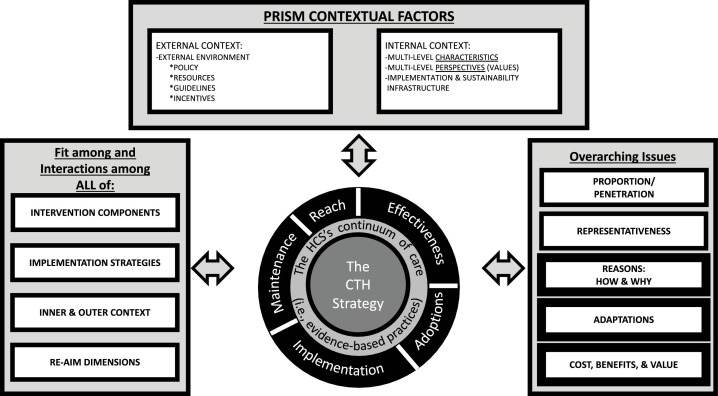

The framework selected through this process was a unified RE-AIM and Practical, Robust Implementation and Sustainability Model (PRISM) framework (see Fig. 1; Glasgow et al., 2019; Harden et al., 2019; Kwan et al., 2019). For a complete description of this model applied to the context of the HCS, please see The HEALing Communities Study Consortium (2020). Rather than create a model de novo, we adapted the integrated RE-AIM/PRISM framework, applying the prior evidence regarding the use of these models in implementation science to the HCS context. The integrated RE-AIM/PRISM framework emphasizes dynamics within the intervention as well as system-level interrelations among the contexts both internal and external to the intervention. External factors include policy, resources, guidelines, and incentives. This framework also highlights the importance of communication campaigns, a key element of the CTH, as an implementation strategy. More specifically, the integrated RE-AIM/PRISM framework addresses some of the limitations of the frameworks originally proposed by the four sites, as it uses a multifocal lens to consider issues of context (e.g., internal coalition factors and external environment), process (i.e., the CTH intervention), and outcomes (e.g., overdose deaths, reach of EBPs). This model additionally examines a variety of implementation and intervention outcomes that can be evaluated using mixed-methods approaches.

Fig. 1. RE-AIM/PRISM Model Adapted for the HCS. Revised, enhanced RE-AIM/PRISM 2019 model (Glasgow et al., 2019).

This adapted RE-AIM/PRISM framework has driven the development of a mixed-methods approach to measurement that incorporates administrative data, surveys, qualitative interviews, and data recorded by study staff. The RE-AIM outcomes for the EBPs included in the ORCCA and the cost-effectiveness evaluation, which are largely captured by administrative data (Slavova et al., 2020) and study records of costs incurred (Aldridge et al., 2020), are described in separate papers. In this manuscript, we describe the HCS approach to measuring the PRISM internal and external contextual factors through primary data collection as well as fidelity to the CTH intervention.

2. Materials and Methods

2.1. PRISM Contextual Factors: Community Coalition and Key Stakeholder Survey

2.1.1. Survey Design and Eligibility

To measure key domains of the internal and external context within which the CTH intervention is implemented, the HCS is designed to include repeated annual surveys of community coalition members and key stakeholders in the 67 communities. For individuals participating in coalitions as part of HCS (henceforth, HCS-designated coalitions), survey data are collected at four time points (baseline [pre-intervention] and 12-, 24-, and 36-month follow-up).

To be eligible for annual surveys, individuals are required to be at least 18 years old. For the baseline survey, individuals are required to be either members of existing community coalitions that have agreed to participate in the HCS or stakeholders who would be knowledgeable about the HCS community if a new coalition were to be formed as part of the early phase of the intervention. At baseline, research sites recruited from existing coalitions when possible, and the Massachusetts and New York sites also recruited key stakeholders in all communities. For subsequent annual surveys, individuals are required to be participants in the HCS-designated coalitions. Key stakeholders in communities without existing coalitions at baseline who do not join the HCS-designated coalitions are surveyed only once at baseline. New individuals who join the HCS coalitions over the course of the intervention are eligible to participate beginning with the next annual survey. Thus, the sample size may grow or shrink over time, based on the size of the HCS-designated coalitions.

2.1.2. Survey Measures

The baseline survey includes measures adapted from the literature and measures developed specifically for the HCS (see Supplementary Table 1 for baseline survey). The internal context is conceptualized as the characteristics and perspectives within the coalition. For communities with existing coalitions, these internal context measures include diversity and representativeness (Fawcett et al., 1997), coalition functioning (Hays et al., 2000; Israel et al., 1994; Rogers et al., 1993), the coalition’s readiness for change (Shea et al., 2014), and the coalition’s leadership (Aarons et al., 2014) with regard to supporting MOUD and OEND.

Coalition members and key stakeholders also report about the external context, conceptualized as community readiness to implement MOUD and OEND in varied medical, behavioral health, and criminal justice venues. Developed specifically for the HCS, due to a lack of existing measures on community readiness to expand the ORCCA, these measures ask respondents to rate the perceived importance of expanding MOUD and OEND in these community venues and the magnitude of the barriers to expansion in these venues. Perceived community stigma about persons with OUD (Luoma et al., 2010) and toward MOUD and OEND are additional measures of external context. In addition, participants provide information on interorganizational networks in the community (including relationship quality, value, and trust) using measures from the Program to Analyze, Record, and Track Networks to Enhance Relationships (PARTNER) tool (Retrum et al., 2013). Participants provide information on the sectors that they represent (e.g., organizational types, person with lived experience) as well as demographic characteristics of age, gender, race, Hispanic/Latino ethnicity, and educational attainment. Annual follow-up surveys will field many of these same measures and will add measures such as satisfaction with the CTH intervention and perceived changes in access to EBPs within respondents’ communities. We hypothesize that the CTH intervention will improve internal factors, such as coalition functioning and coalition leadership, thereby further supporting uptake of EBPs. We also hypothesize that the CTH intervention will impact external factors, such as community stigma. Baseline levels of coalition functioning and external factors (e.g., stigma) may serve as moderators, such that the CTH is more effective in communities with higher levels of baseline readiness and lower levels of stigma.

2.1.3. Survey Pilot Study

Before we fielded the baseline survey, we undertook a pilot study in which 15 individuals in non-HCS communities were recruited to complete the survey and then provide feedback on both the recruitment materials and survey via a telephone or in-person interview. All sites performed the pilot in non-HCS communities, but sites had flexibility to recruit individuals participating in non-HCS coalitions or through existing professional networks. Such flexibility was important because it was anticipated that the baseline survey would be deployed in communities with coalitions and in communities where new coalitions would be formed as part of the HCS. Information from the pilot study was used to refine the recruitment materials and the baseline survey. Two changes resulted from the pilot. First, the order of items was revised to group all questions about OEND separately from those about MOUD, as pilot participants struggled with the focus shifting back and forth between the two EBPs. Second, the stigma section was streamlined for the baseline survey, as pilot participants indicated that it was redundant because it contained multiple scales.

2.1.4. Survey Recruitment

At baseline and subsequent annual follow-up surveys, recruitment procedures are as follows. Before recruitment, research site staff work with coalition leaders to obtain rosters of members or, in communities without pre-existing coalitions, staff communicate with state and local contacts to identify key stakeholders in each community. Efforts for the baseline survey resulted in the identification of 3,592 coalition members and key stakeholders. Before survey deployment, sites may engage a survey champion to introduce the survey to potential participants either via e-mail or during in-person coalition meetings. Survey champions may be local or state government officials, members of the research team who are well-known to communities, or the chairperson of the coalition.

The recruitment process proceeds on a four-week schedule, relying heavily on email invitations with instructions regarding how to access the survey via each site’s REDCap (Research Electronic Data Capture) website (Harris et al., 2019; Harris et al., 2009). Sites may engage in additional recruitment strategies, such as including a PDF of the survey so that participants can download the survey and mail or fax it back to the research site. In weeks 2–4, if there has been no response, email reminders are sent that include an offer to complete the survey by telephone or in person. Survey champions may send a separate reminder email during the data collection periods, and sites may also follow up with non-respondents by phone, text, letter, or fax. At week 4, non-respondents may be mailed a packet that includes a letter requesting participation, a paper survey, and a prepaid addressed envelope.

2.1.5. Survey Data Collection Methods

Surveys may be completed via the following modes: self-administered Web-based survey in REDCap, self-administered hard copy at a coalition meeting or returned by mail, or structured telephone interview. The baseline survey requires about 45 minutes to complete. In Kentucky, Massachusetts, and New York, participants are compensated for each survey unless the participant declines compensation. Ohio does not compensate participants.

For the baseline survey, data collection occurred from November 2019 through January 2020. For Wave 1 communities, data collection occurred before the first Phase 1 coalition meeting (i.e., start of the intervention). For Wave 2 communities, data collection ended on January 31, 2020. At baseline, 1,055 individuals responded to the survey (31.9% of the 3,309 individuals invited). Response rates by state were 43.8% in Kentucky (n = 189), 33.9% in Massachusetts (n = 243), 40.8% in New York (n = 323), and 21.9% in Ohio (n = 300).
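The overall baseline response rate is simple arithmetic on the counts reported above. The following Python sketch reproduces it as a consistency check. The function name `response_rate` is hypothetical and is not part of any HCS tooling; only figures stated in this section are used, and state-level rates are not recomputed because state-level invitation counts are not reported here.

```python
# Respondent counts by state, as reported in the text.
respondents_by_state = {
    "Kentucky": 189,
    "Massachusetts": 243,
    "New York": 323,
    "Ohio": 300,
}
invited_total = 3309  # individuals invited at baseline, as reported in the text


def response_rate(respondents: int, invited: int) -> float:
    """Return the response rate as a percentage rounded to one decimal place."""
    if invited <= 0:
        raise ValueError("invited must be a positive count")
    return round(100 * respondents / invited, 1)


# The state counts should sum to the overall number of respondents.
total_respondents = sum(respondents_by_state.values())
overall_rate = response_rate(total_respondents, invited_total)

print(f"{total_respondents} respondents of {invited_total} invited: {overall_rate}%")
```

The state counts sum to the reported 1,055 respondents, and dividing by the 3,309 invited individuals gives the reported 31.9%.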

2.2. PRISM Contextual Factors: Community Coalition and Key Stakeholder Qualitative Interviews

To measure the key domains of the internal and external context in greater depth, the HCS includes repeated annual, semi-structured interviews of community coalition members and key stakeholders in the 67 communities. These interviews are conducted on the same timeline as the annual surveys and use the same eligibility criteria.

2.2.1. Qualitative Interview Guide

The semi-structured interview guides are based on the RE-AIM/PRISM framework. Two interview guides were used at baseline: one for existing coalition members and one for key informants in communities without pre-existing coalitions (see Supplementary Table 2 for interview guides). Both guides employ framework-directed questions and flexible prompts to solicit participants’ understanding of and experiences with opioid-related issues in their broader communities, or external context. Interview questions inquire about the nature of the local opioid use problem; existing initiatives to address opioid-related overdoses; community attitudes toward opioid use, treatment and prevention; and the need for additional services. The baseline interview guide for existing coalition members asks about the internal context of the coalition, including coalition structure, goals, the individual’s role in the coalition, resources that support coalition activities, and current coalition-led initiatives to address opioid use. Internal context questions in the key informants’ baseline interview guide are similarly structured but inquire about local organizations rather than coalitions, as well as the informant’s role in addressing community opioid use.

A uniform follow-up interview guide investigating evolving dynamics within the external community and internal coalition contexts will be used across all 67 communities annually. The follow-up interview guide will also investigate intervention and implementation experiences, including how coalitions employ community engagement practices, select ORCCA interventions, and provide input on the communication campaigns. Coalition members will be asked to describe their perceptions of outcomes associated with the interventions, including which target groups are reached; the perceived effectiveness, adoption, and maintenance of interventions; and any implementation challenges and adaptations associated with CTH intervention.

2.2.2. Qualitative Interview Pilot Study

To promote relevance and clarity, we pilot-tested baseline interview guides with 16 stakeholders from coalitions, treatment agencies, and prevention organizations in non-HCS communities. Similar to the survey pilot study, sites recruited participants using professional and community networks to identify individuals from non-HCS communities who were involved in addressing the opioid epidemic. Participants were compensated for completing the interview and providing feedback on interview questions, topics, and phrasing. We revised the qualitative interview guide based on feedback from the pilot. The delivery format was not modified, but we revised the background section and the questions that pilot interviewees found confusing or identified as using too much jargon. For example, rather than asking about how the coalition “functioned,” the question was revised to ask about how the coalition worked together to achieve a shared goal. We also revised the order of questions to make them flow more logically. These suggestions were incorporated into the final guides.

2.2.3. Qualitative Interview Recruitment

To obtain broad community perspectives in each state, we purposively sampled qualitative interview participants (Palinkas et al., 2015) based on roles and sectors. Participants were sampled from the rosters of coalitions that had committed to participate in HCS or, in communities without pre-existing HCS coalitions at baseline, from the list of key stakeholders. In Massachusetts communities that lacked pre-existing coalitions, research site staff communicated with state public health agency contacts to identify key stakeholders in the community. The recruitment target was a minimum of four individuals per community, representing varying roles and sectors, including individuals who work for agencies that provide treatment (e.g., specialty substance use disorder treatment/MOUD treatment, community mental health centers), harm reduction programs (e.g., syringe service programs and local public health departments), and criminal justice organizations (e.g., jail, probation/parole). We also aimed to include individuals with lived experience of OUD, either personally or through a family member, although we were unable to purposively sample for this. In communities with pre-existing coalitions, we sought to obtain perspectives of the chairperson and coordinators.

Identification of potential participants for the baseline qualitative interview was done in two ways. In Massachusetts and Ohio, recruitment was initiated by responses to the Coalition Member and Key Stakeholder Survey, which included a question asking whether participants would be willing to take part in a qualitative interview. In Kentucky and New York, purposive sampling was used, irrespective of survey participation. Recruitment for annual follow-up interviews will rely on active coalition lists.

At baseline and subsequent follow-up interviews, the recruitment process proceeds on a four-week schedule, relying heavily on email invitations with instructions regarding how to schedule an interview with research site staff. Additional reminders via email, telephone, text, or letters are used to prompt individuals to schedule an interview.

2.2.4. Qualitative Interview Data Collection

Interviews are scheduled according to participant convenience and are conducted in person or via telephone/Zoom, whichever the participant prefers. For in-person interviews, staff travel to the participant’s community. All qualitative interviews are open-ended, with interviewers asking the basic questions in the semi-structured interview guide, followed by probes as needed. All interviewers are trained in qualitative interviewing, as well as in substance use disorder content. The baseline qualitative interview takes approximately 45 minutes to complete. In Kentucky, Massachusetts, and New York, participants are compensated for each interview unless they decline compensation. Ohio does not compensate participants.

All qualitative interviews are audio recorded with interviewee permission. After the interview, the audio recording is transcribed verbatim by a professional transcription company or transcription software. The transcript is then reviewed by the interviewer for accuracy. The transcript is stored in a password-protected folder on a secure server and prepared for analysis using the qualitative software program NVivo 12.0. In line with current ethical standards, any identifying data are removed by the study’s Data and Coordination Center before data archiving (McLellan et al., 2003).

Baseline qualitative data collection occurred from November 2019 through January 2020, using the same time frame as survey data collection. In total, 389 individuals participated in the qualitative interviews at baseline (participation rate = 40.8%)—80 in Kentucky (participation rate = 86.0%), 103 in Massachusetts (participation rate = 42.4%), 87 in New York (participation rate = 21.3%), and 119 in Ohio (participation rate = 56.9%).

2.3. Fidelity Monitoring

Fidelity monitoring is a core construct of the RE-AIM/PRISM model and is used for both research purposes and for feedback for ongoing quality improvement of the CTH intervention implementation. Fidelity is defined as “the extent to which an intervention is implemented as intended” (Nelson et al., 2012; Sanetti and Kratochwill, 2009). For the HCS, the core constructs of fidelity (Allen et al., 2012; Gearing et al., 2011) are: (1) dosage; (2) adherence; (3) quality; and (4) program differentiation—that is, adaptation or modification of the CTH intervention to fit each community’s needs. Collectively, these fidelity measures demonstrate the relation between the implementation of the CTH intervention (independent variable) in the 34 Wave 1 communities and the primary outcome of reducing opioid overdose deaths (dependent variable) compared to the 33 Wave 2 communities with delayed implementation of CTH (see The HEALing Communities Study Consortium, 2020 for more details on study design).

Several considerations have guided the design and selection of fidelity measures (Schoenwald et al., 2011). First, measures that cover the core constructs and have established reliability or can be standardized across communities are prioritized. Second, our measurement seeks to minimize respondent burden and reduce risk of breaching the confidentiality of coalition participants. A third consideration is the ability to develop standard operating procedures that promote speed of data collection to allow for rapid monitoring and feedback. Finally, long-term sustainability and replicability of the fidelity measures in future dissemination of the CTH intervention have been considered.

2.3.1. Fidelity Measures of Dose, Adherence, Quality, and Adaptation

Table 1 provides an overview of the fidelity measures that are described in detail below.

Table 1. Fidelity Constructs and Measurement.

| Source/Measure | Dose/Exposure | Adherence | Quality | Adaptation |
|---|---|---|---|---|
| Coalition | | | | |
| Meeting attendance | X | | | |
| Meeting minutes | X | X | | |
| Qualitative interviews | X | | | |
| CTH materials | | | | |
| Milestone and benchmark checklist | X | X | | |
| Document/artifact review | X | X | X | |

CTH Milestone and Benchmark Checklist: The CTH Milestone and Benchmark Checklist is the primary source of adherence data. The CTH Checklist is adapted from the milestones and benchmarks checklist used in multiple trials of the Communities That Care model (Arthur et al., 2010; Quinby et al., 2008). The CTH Checklist measures the degree to which CTH activities are completed using a five-point Likert scale (0 = not started yet to 4 = completed). The CTH Checklist also qualitatively documents barriers and facilitators encountered during each activity and whether adaptations or modifications have occurred. Research staff complete the checklist monthly for each of the communities in the active intervention wave. Responses are entered into REDCap each month, and data reports on progress in implementing the CTH intervention are distributed to the research team.
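As an illustration of how monthly checklist ratings could be summarized for a progress report, the Python sketch below computes a mean rating and the share of activities rated as completed. This is a hedged example only: the text does not describe how checklist data are aggregated, so the function `summarize_checklist`, the two summary statistics, and the placeholder activity names are all assumptions. Only the 0 to 4 scale endpoints come from the text.

```python
from typing import Dict, Mapping

# Scale endpoints stated in the text: 0 = not started yet, 4 = completed.
MIN_SCORE, MAX_SCORE = 0, 4


def summarize_checklist(scores: Mapping[str, int]) -> Dict[str, float]:
    """Summarize one community-month of checklist ratings (hypothetical helper)."""
    if not scores:
        raise ValueError("at least one activity score is required")
    for activity, score in scores.items():
        if not MIN_SCORE <= score <= MAX_SCORE:
            raise ValueError(f"{activity!r} has out-of-range score {score}")
    n = len(scores)
    completed = sum(1 for s in scores.values() if s == MAX_SCORE)
    return {
        "mean_score": sum(scores.values()) / n,
        "share_completed": completed / n,
    }


# Placeholder activity names and ratings, invented for illustration.
example_month = {"activity_a": 4, "activity_b": 2, "activity_c": 0, "activity_d": 4}
summary = summarize_checklist(example_month)
print(summary)
```

Range validation is included because ratings are entered by hand each month; a real report would presumably also carry the qualitative notes on barriers, facilitators, and adaptations that the checklist records.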

CTH Documents/Artifacts: The checklist is supplemented by electronic copies of documents or artifacts produced during CTH activities. Examples of documents that are archived include the coalition charter, the communication campaign distribution plan, a community profile, the coalition’s ORCCA EBP action plans, and implementation plans with partner organizations. These artifacts are additional data to document progress of CTH activities and assess their adherence and quality. Specific coding procedures have not yet been developed, but these documents will be available for future content analyses.

Coalition and Workgroup Attendance: Dose or exposure to the CTH intervention is operationalized by the level of attendance at regular HCS-designated coalition and workgroup meetings. The attendance form includes names of participants, organizational affiliation, and contact information. Research staff enter the data into REDCap using a participant ID and organization ID. The monthly attendance report for each community coalition includes the total number of participants who attended the primary monthly meeting (not including research staff), the number of organizations represented, the current size of the coalition by number of members and number of organizations, and the date and length of the meeting. Similar attendance data are collected from subcommittee and work group meetings that occur outside the primary monthly HCS coalition meetings. By comparing attendance to the size of the coalition over time, analyses can examine whether coalitions experiencing a greater dose of the CTH intervention through greater meeting attendance are more successful in achieving the primary HCS outcomes.
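One way to operationalize the comparison of attendance to coalition size is a monthly attendance ratio averaged over time. The Python sketch below shows this under stated assumptions: the text does not specify a dose formula, so the `MonthlyAttendance` record, the ratio, and the unweighted mean are hypothetical choices, and the example counts are invented for illustration.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class MonthlyAttendance:
    """One primary monthly coalition meeting (hypothetical record layout)."""
    month: str           # e.g. "2020-01"
    attendees: int       # participants present, excluding research staff
    coalition_size: int  # current number of coalition members


def attendance_ratio(record: MonthlyAttendance) -> float:
    """Share of current coalition members who attended the meeting."""
    if record.coalition_size <= 0:
        raise ValueError("coalition_size must be positive")
    return record.attendees / record.coalition_size


def mean_dose(records: Sequence[MonthlyAttendance]) -> float:
    """Unweighted mean of monthly attendance ratios (an assumed dose index)."""
    if not records:
        raise ValueError("at least one monthly record is required")
    return sum(attendance_ratio(r) for r in records) / len(records)


# Invented example data for a single community.
example = [
    MonthlyAttendance("2020-01", attendees=12, coalition_size=20),
    MonthlyAttendance("2020-02", attendees=15, coalition_size=20),
    MonthlyAttendance("2020-03", attendees=9, coalition_size=18),
]
dose = mean_dose(example)
print(f"mean attendance ratio: {dose:.3f}")
```

Dividing by the coalition size in the same month keeps the index comparable across coalitions that grow or shrink over time. Other choices, such as counting organizations represented or weighting by meeting length, are equally plausible given the data described above.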

Coalition Meeting Minutes: Quality of CTH implementation is documented using meeting minutes. Research staff take minutes at every HCS-designated coalition meeting using a template that includes the agenda, updates on action items from prior meetings, discussion of new business, summary of decisions related to the CTH activities, and action items. HCS staff regularly apprise coalition members that meeting minutes will be used for research purposes to improve understanding of how the coalitions are implementing the CTH intervention. Coalition members may designate any comments “off the record” and edit minutes as needed. Meeting minutes are uploaded monthly. Currently, meeting minutes are being archived to support future content analyses but have not yet been coded. Research sites may use meeting minutes to monitor adherence to planned CTH activities and as another source of information regarding barriers, facilitators, and adaptation of the CTH intervention across communities.

2.4. Training and Technical Assistance Tracking

As research sites partner with community coalitions and local organizations to support the implementation of the ORCCA, training and technical assistance (TTA) is provided to support expansion of EBPs in communities. The Training and Technical Assistance Tracking (TTAT) form measures, at the community level, TTA directly provided or coordinated by research sites outside of the context of HCS-designated coalition meetings and subcommittee meetings. Although much of the TTA is focused on the ORCCA, TTA may also focus on stigma, community engagement, data visualization, and other topics related to the CTH intervention. Some aspects of TTA, such as buprenorphine waiver training, may be time-limited. Other forms of TTA, such as building data capacity to use dashboards or redesigning workflows to support EBP implementation, may entail multiple ongoing contacts. Only TTA that is funded or partially funded using HCS grant funding is included in the TTAT form.

During the CTH intervention, research staff complete the TTAT form in REDCap for each community to record the cumulative TTA activities for a given month. TTAT measures include the number of episodes of TTA, the total number of hours of TTA provided, the reach of TTA in terms of number of recipients, the modalities of TTA (e.g., in person, videoconference), and the foci of TTA. In addition, the TTAT form records whether TTA was delivered solely by the research site or in partnership with other organizations, and whether it was supported solely by HCS or other funding sources were leveraged. The TTA measures will be used to test hypotheses regarding whether the dose and reach of TTA are correlated with community-level EBP implementation after adjusting for baseline community readiness. The measures of dose and reach of TTA also support the health economics costing of the CTH intervention.
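
As an illustration of how episode-level TTA records might be rolled up into the monthly community-level measures listed above, a minimal Python sketch follows. The dictionary keys (`hours`, `recipients`, `modality`, `partnered`) and the `summarize_tta` function are hypothetical and do not reflect the actual structure of the TTAT form in REDCap.

```python
from collections import Counter


def summarize_tta(episodes):
    """Aggregate one community-month of TTA episodes.

    Each episode is a dict with hypothetical keys:
    hours (float), recipients (int), modality (str), partnered (bool).
    """
    return {
        "n_episodes": len(episodes),
        "total_hours": sum(e["hours"] for e in episodes),
        # Sums recipients per episode, so a person who attends two
        # episodes is counted twice.
        "total_recipients": sum(e["recipients"] for e in episodes),
        "modalities": dict(Counter(e["modality"] for e in episodes)),
        "n_partnered": sum(1 for e in episodes if e["partnered"]),
    }


# Hypothetical episodes for one community in one month.
episodes = [
    {"hours": 1.5, "recipients": 12, "modality": "videoconference", "partnered": False},
    {"hours": 2.0, "recipients": 8, "modality": "in person", "partnered": True},
    {"hours": 0.5, "recipients": 5, "modality": "videoconference", "partnered": False},
]
monthly = summarize_tta(episodes)
```

Because recipients are summed per episode, this sketch measures contacts rather than unique individuals; a count of unique recipients would require participant identifiers.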

2.5. Ethics

The pilot studies of the coalition member/key stakeholder survey and qualitative interview guide were approved by Advarra Inc., the HCS single institutional review board (sIRB; Pro00037850). The study protocol, including de novo data collection procedures at baseline and follow-up, was approved by Advarra Inc. (Pro00038088). Informed consent is obtained from all community members who participate in the pilot studies and in the de novo data collection. When surveys are self-administered via REDCap, participants are provided with information about the study and their rights as participants and asked to indicate online whether they agree to participate. When in-person data collection from community members occurs, written informed consent is obtained. Verbal consent is obtained when data are collected via telephone/videoconference interviews.

3. Discussion

As part of the HCS, the CTH intervention is designed to help increase adoption of EBPs for treating OUDs and to reduce opioid overdose deaths in communities experiencing high rates of OUD and overdose fatalities. If found to be effective, the CTH intervention can be disseminated and implemented in communities across the US to help save thousands of lives. Guided by the RE-AIM/PRISM model, which was adapted for the HCS, we are capturing the external and internal context within which CTH is being implemented. We are also measuring fidelity to the CTH intervention and will examine the extent to which the impact of CTH is moderated or mediated, or both, by one or more measures of implementation context or fidelity. Analyses of the relations among implementation context, implementation fidelity, and CTH impact are of critical importance because successful implementation has been hypothesized to depend on the fit and interactions among the components of the EBPs, the implementation strategies, and the internal and external contexts, as well as on fidelity.

During the first six months of the HCS, researchers from the four sites collaborated to address several challenges to measuring implementation context and fidelity for a community engagement intervention. One of the first challenges was reaching consensus on a guiding framework. Despite the plethora of available theories, models, and frameworks from which to choose, consensus-building was facilitated by the previous work each site conducted to select models as part of its grant application process as well as by a cross-site review of the implementation science literature. Thus, consensus on the RE-AIM/PRISM model was achieved relatively easily.

A second challenge was reaching consensus on the contextual measures and on the method (i.e., quantitative, qualitative) and frequency (e.g., monthly, quarterly) of measurement. Researchers sought to balance the need for data on schedules similar to the measurement of the primary outcome (i.e., monthly measures of overdose deaths) with the burdens of primary data collection on communities. The final decision was to focus on the core constructs within the RE-AIM/PRISM model (external context, internal context, intervention and implementation, and outcomes), using both survey and qualitative methods of data collection conducted annually so as to reduce burden on community members. However, data on intervention fidelity are measured monthly, because that effort is borne by research site staff rather than by the communities.

A third challenge was reaching consensus on an efficient, timely, and sustainable process for fidelity data collection to facilitate real-time monitoring and quality improvement while also ensuring rigor, standardization, and reliability. Indeed, there was much discussion and compromise on the plan for collecting and scoring the CTH fidelity checklist. Ultimately, we reached consensus on simplified scoring guidelines for each activity in the CTH checklist and identified documents/artifacts as output from core CTH tasks (e.g., community profiles, community action plans) that could be used to verify the extent and quality of completion. Another challenge to assessing implementation fidelity is the iterative, nonlinear nature of the CTH intervention, which makes it difficult to measure adherence. For example, some CTH tasks have finite endpoints (e.g., a distribution plan for the communication campaign), whereas other CTH tasks are iterative (e.g., creation of community profiles and action plans that are likely to be revised and updated over the course of the intervention). Moreover, some CTH tasks, such as sustainability planning and training on ORCCA EBPs including MOUD and OEND, once selected by communities, are ongoing and do not lend themselves to the linear sequence and discrete categories of the checklist. In response to these challenges, we have collaborated with other work groups in HCS to develop additional measurement tools that are more flexible than the checklist.

Other elements of measurement relevant to the PRISM framework are in development. The four sites are working to develop common measures to track federal, state, and local policy barriers and policy changes that occur during the CTH intervention to supplement the follow-up qualitative interviews. Another external factor is opioid-related, non-HCS community funding, particularly federal funding allocated to states. As part of the health economics evaluation of the HCS, such preceding and concurrent funding is being tracked to describe the additional resources that are helping communities address the opioid epidemic and to inform intervention sustainability.

3.1. Limitations

Although the HCS has numerous strengths, several limitations in the implementation science approach should be noted. First, community-based studies like HCS must be able to understand the impact of disruptive, large-scale events. The COVID-19 pandemic, which emerged during the first year of the intervention, has resulted in adaptations to the CTH intervention and may impact the outcomes achieved. The impact of COVID-19 is now being integrated into follow-up surveys and qualitative interviews at the annual follow-up, exploring both internal and external contexts. Second, with 67 communities included in the HCS, decisions regarding primary data collection needed to reflect a manageable scope of work. Thus, surveys and interviews have focused somewhat narrowly on the coalitions that have been engaged in the project. With each community represented by a single coalition, the perspectives of the coalition members may only represent a subset of the community. The communities vary in that some have coalitions that existed before the HCS while other coalitions are being formed specifically for the project. This variation may have impacted responses and response rate to the baseline survey, as sites had varying levels of relationships with coalition members and key stakeholders. The large number of communities engaged in the HCS should help address this limitation to some degree, but it should be noted that the communities in the four states are somewhat geographically clustered, which may limit generalizability.

3.2. Conclusion

To address the nation’s opioid epidemic, there is an urgent need to increase the adoption of EBPs that expand OEND, improve access to and retention in MOUD treatment, and increase safer opioid prescribing in communities. As part of the HCS, which represents one of the largest and most complex implementation science research experiments conducted to date, if not the largest, we are testing the impact of the CTH intervention on the adoption of EBPs across health care, behavioral health, justice, and other community-based settings. Ideally, the knowledge gained as part of the HCS will extend beyond how best to implement EBPs for addressing OUDs and reducing opioid overdoses. For example, if the RE-AIM/PRISM model can successfully guide implementation research as complex as the HCS, it would seem highly promising for guiding future implementation research addressing other significant health problems. Additionally, if the CTH intervention is found to be effective in increasing adoption of EBPs for addressing OUDs and reducing opioid overdoses in communities, it could support communities in the adoption of EBPs for other health conditions. Finally, regardless of the extent to which the CTH intervention is found to be effective, we believe our examination of implementation context and implementation fidelity will lead to significant advancements in understanding how to evaluate community-level implementation of EBPs and assess relationships among implementation context, fidelity, and intervention impact.

Contributors

All authors are responsible for this reported research. The following individuals contributed to the development of the implementation science framework: HKK, KRM, ASM, TRH, LG, MD, DGE, ANCC, DMW, BRG, AMA, CBO, ELC. The following individuals contributed to the development of measures, including de novo measures collected from coalition members and key stakeholders via surveys and qualitative interviews, and fidelity measures: HKK, TRH, LG, MD, CBO, DGE, BRG, AMA, ELC, ANCC, ASM, EAO, DMW. The following individuals contributed to the acquisition or analysis of data, including survey data, qualitative interview data, or fidelity data: HKK, ASM, TRH, LG, MD, DGE, DMW, AMA, ELC, ALS. The following individuals made substantial contributions to the drafted work, substantively revised it, or both: HKK, MD, LG, TRH, CBO, AMA, ANCC, ELC, BRG, LMG, DGE, KRM, ASM, EAO, ALS, DMW. All authors read and approved the final manuscript.

Trial registration

ClinicalTrials.gov (NCT04111939).

Role of the Funding Source

This research is supported by the National Institutes of Health through the NIH HEAL Initiative under award numbers UM1DA049406 (Kentucky), UM1DA049417 (Ohio), UM1DA049412 (Massachusetts), UM1DA049415 (New York), and UM1DA049394 (RTI). Survey data collection through REDCap was also supported by the Boston University Clinical & Translational Science Institute (National Center for Advancing Translational Sciences, NCATS Grant UL1TR001430), The Ohio State University Center for Clinical and Translational Science (NCATS Grant UL1TR002733), and the University of Kentucky Center for Clinical and Translational Science (NCATS Grant UL1TR001998). The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health or its NIH HEAL Initiative.

Declaration of Competing Interest

The authors report no declarations of interest.

Acknowledgments

The Implementation Science Work Group within the HCS is grateful for the support of the site research staff who are rigorously tracking the implementation of the study, as well as that of community members who have participated in de novo data collection.

Footnotes

Supplementary material related to this article can be found, in the online version, at https://doi.org/10.1016/j.drugalcdep.2020.108330.

References

- Aarons G.A., Ehrhart M.G., Farahnak L.R. The Implementation Leadership Scale (ILS): development of a brief measure of unit level implementation leadership. Implement. Sci. 2014;9:45. doi: 10.1186/1748-5908-9-45.

- Aarons G.A., Hurlburt M., Horwitz S.M. Advancing a conceptual model of evidence-based practice implementation in public service sectors. Adm. Policy Ment. Health. 2011;38:4–23. doi: 10.1007/s10488-010-0327-7.

- Aldridge A.P., Barbosa C., Barocas J.A., Bush J.L., Chhatwal J., Harlow K.J., Hyder A., Linas B.P., McCollister K.E., Morgan J.R., Murphy S.M., Savitzky C., Schackman B.R., Seiber E.E., Starbird L.E., Villani J., Zarkin G.A. Health economic design for cost, cost-effectiveness and simulation analyses in the HEALing Communities Study. Drug Alcohol Depend. 2020;217. doi: 10.1016/j.drugalcdep.2020.108336.

- Allen J.D., Linnan L.A., Emmons K.M. In: Dissemination and Implementation Research in Health: Translating Science to Practice. Brownson R.C., Colditz G.A., Proctor E.K., editors. Oxford Press; New York: 2012. Fidelity and its relationship to implementation effectiveness, adaptation, and dissemination.

- Arthur M.W., Hawkins J.D., Brown E.C., Briney J.S., Oesterle S., Abbott R.D. Implementation of the Communities That Care Prevention System by Coalitions in the Community Youth Development Study. J. Community Psychol. 2010;38:245–258. doi: 10.1002/jcop.20362.

- Birken S.A., Powell B.J., Presseau J., Kirk M.A., Lorencatto F., Gould N.J., Shea C.M., Weiner B.J., Francis J.J., Yu Y., Haines E., Damschroder L.J. Combined use of the Consolidated Framework for Implementation Research (CFIR) and the Theoretical Domains Framework (TDF): a systematic review. Implement. Sci. 2017;12:2. doi: 10.1186/s13012-016-0534-z.

- Birken S.A., Powell B.J., Shea C.M., Haines E.R., Alexis Kirk M., Leeman J., Rohweder C., Damschroder L., Presseau J. Criteria for selecting implementation science theories and frameworks: results from an international survey. Implement. Sci. 2017;12:124. doi: 10.1186/s13012-017-0656-y.

- Cabaj M., Weaver L. 2016. Collective impact 3.0: an evolving framework for community change. https://www.collectiveimpactforum.org/sites/default/files/Collective%20Impact%203.0.pdf (accessed July 13, 2020).

- CDC, National Center for Health Statistics. 2020. Wide-ranging online data for epidemiological research (WONDER). http://wonder.cdc.gov

- Christens B.D., Inzeo P.T. Widening the view: Situating collective impact among frameworks for community-led change. Community Dev. J. 2015;46:420–435.

- Damschroder L.J., Aron D.C., Keith R.E., Kirsh S.R., Alexander J.A., Lowery J.C. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement. Sci. 2009;4:50. doi: 10.1186/1748-5908-4-50.

- Davies P., Walker A.E., Grimshaw J.M. A systematic review of the use of theory in the design of guideline dissemination and implementation strategies and interpretation of the results of rigorous evaluations. Implement. Sci. 2010;5:14. doi: 10.1186/1748-5908-5-14.

- Fawcett S., Foster D., Francisco V. In: From the Ground Up: A Workbook on Coalition Building and Community Development. Kaye G., Wolff T., editors. AHEC/Community Partners; Amherst, MA: 1997. Monitoring and evaluation of coalition activities and success; pp. 163–185.

- Flood J., Minkler M., Hennessey Lavery S., Estrada J., Falbe J. The Collective Impact Model and Its Potential for Health Promotion: Overview and Case Study of a Healthy Retail Initiative in San Francisco. Health Educ. Behav. 2015;42:654–668. doi: 10.1177/1090198115577372.

- Flottorp S.A., Oxman A.D., Krause J., Musila N.R., Wensing M., Godycki-Cwirko M., Baker R., Eccles M.P. A checklist for identifying determinants of practice: a systematic review and synthesis of frameworks and taxonomies of factors that prevent or enable improvements in healthcare professional practice. Implement. Sci. 2013;8:35. doi: 10.1186/1748-5908-8-35.

- Gearing R.E., El-Bassel N., Ghesquiere A., Baldwin S., Gillies J., Ngeow E. Major ingredients of fidelity: a review and scientific guide to improving quality of intervention research implementation. Clin. Psychol. Rev. 2011;31:79–88. doi: 10.1016/j.cpr.2010.09.007.

- Glasgow R.E., Harden S.M., Gaglio B., Rabin B., Smith M.L., Porter G.C., Ory M.G., Estabrooks P.A. RE-AIM Planning and Evaluation Framework: Adapting to New Science and Practice With a 20-Year Review. Front. Public Health. 2019;7:64. doi: 10.3389/fpubh.2019.00064.

- Glasgow R.E., Vogt T.M., Boles S.M. Evaluating the public health impact of health promotion interventions: the RE-AIM framework. Am. J. Public Health. 1999;89:1322–1327. doi: 10.2105/ajph.89.9.1322.

- Greenhalgh T., Robert G., Macfarlane F., Bate P., Kyriakidou O. Diffusion of innovations in service organizations: systematic review and recommendations. Milbank Q. 2004;82:581–629. doi: 10.1111/j.0887-378X.2004.00325.x.

- Hall K.L., Feng A.X., Moser R.P., Stokols D., Taylor B.K. Moving the science of team science forward: collaboration and creativity. Am. J. Prev. Med. 2008;35:S243–249. doi: 10.1016/j.amepre.2008.05.007.

- Hall K.L., Vogel A.L., Stipelman B., Stokols D., Morgan G., Gehlert S. A Four-Phase Model of Transdisciplinary Team-Based Research: Goals, Team Processes, and Strategies. Transl. Behav. Med. 2012;2:415–430. doi: 10.1007/s13142-012-0167-y.

- Harden S.M., Smith M.L., Ory M.G., Smith-Ray R.L., Estabrooks P.A., Glasgow R.E. RE-AIM in Clinical, Community, and Corporate Settings: Perspectives, Strategies, and Recommendations to Enhance Public Health Impact. Front. Public Health. 2018;6:71. doi: 10.3389/fpubh.2018.00071.

- Harden S.M., Strayer T.E., 3rd, Smith M.L., Gaglio B., Ory M.G., Rabin B., Estabrooks P.A., Glasgow R.E. National Working Group on the RE-AIM Planning and Evaluation Framework: Goals, Resources, and Future Directions. Front. Public Health. 2019;7:390. doi: 10.3389/fpubh.2019.00390.

- Harris P.A., Taylor R., Minor B.L., Elliott V., Fernandez M., O’Neal L., McLeod L., Delacqua G., Delacqua F., Kirby J., Duda S.N., REDCap Consortium. The REDCap consortium: building an international community of software platform partners. J. Biomed. Inform. 2019;95:103208. doi: 10.1016/j.jbi.2019.103208.

- Harris P.A., Taylor R., Thielke R., Payne J., Gonzalez N., Conde J.G. Research electronic data capture (REDCap)—a metadata-driven methodology and workflow process for providing translational research informatics support. J. Biomed. Inform. 2009;42:377–381. doi: 10.1016/j.jbi.2008.08.010.

- Hays C.E., Hays S.P., DeVille J.O., Mulhall P.F. Capacity for effectiveness: The relationship between coalition structure and community impact. Eval. Program Plan. 2000;23:373–379. doi: 10.1016/S0149-7189(00)00026-4.

- Israel B.A., Checkoway B., Schulz A., Zimmerman M. Health education and community empowerment: conceptualizing and measuring perceptions of individual, organizational, and community control. Health Educ. Q. 1994;21:149–170. doi: 10.1177/109019819402100203.

- Kania J., Kramer M. Collective impact. Stanf. Soc. Innov. Rev. 2011;201:36–41.

- Kania J., Kramer M. Embracing emergence: how collective impact addresses complexity. Stanf. Soc. Innov. Rev. 2013.

- Kitson A.L., Rycroft-Malone J., Harvey G., McCormack B., Seers K., Titchen A. Evaluating the successful implementation of evidence into practice using the PARiHS framework: theoretical and practical challenges. Implement. Sci. 2008;3:1. doi: 10.1186/1748-5908-3-1.

- Krupitsky E., Nunes E.V., Ling W., Illeperuma A., Gastfriend D.R., Silverman B.L. Injectable extended-release naltrexone for opioid dependence: a double-blind, placebo-controlled, multicentre randomised trial. Lancet. 2011;377:1506–1513. doi: 10.1016/s0140-6736(11)60358-9.

- Kwan B.M., McGinnes H.L., Ory M.G., Estabrooks P.A., Waxmonsky J.A., Glasgow R.E. RE-AIM in the Real World: Use of the RE-AIM Framework for Program Planning and Evaluation in Clinical and Community Settings. Front. Public Health. 2019;7:345. doi: 10.3389/fpubh.2019.00345.

- Lefebvre R.C., Chandler R.K., Helme D.W., Kerner R., Mann S., Stein M.D., Reynolds J., Slater M.D., Anakaraonye A.R., Beard D., Burrus O., Frkovich J., Hedrick H., Lewis N., Rodgers E. Health communication campaigns to drive demand for evidence-based practices and reduce stigma in the HEALing Communities Study. Drug Alcohol Depend. 2020;217. doi: 10.1016/j.drugalcdep.2020.108338.

- Li S.A., Jeffs L., Barwick M., Stevens B. Organizational contextual features that influence the implementation of evidence-based practices across healthcare settings: a systematic integrative review. Syst. Rev. 2018;7:72. doi: 10.1186/s13643-018-0734-5.

- Luoma J.B., O’Hair A.K., Kohlenberg B.S., Hayes S.C., Fletcher L. The development and psychometric properties of a new measure of perceived stigma toward substance users. Subst. Use Misuse. 2010;45:47–57. doi: 10.3109/10826080902864712.

- Sprague Martinez L., Rapkin B.D., Young A., Freisthler B., Glasgow L., Hunt T., Salsberry P., Oga E.A., Bennet-Fallin A., Plouck T.J., Drainoni M.-L., Freeman P.R., Surratt H., Gulley J., Hamilton G.A., Bowman P., Roeber C.A., El-Bassel N., Battaglia T. Community engagement to implement evidence-based practices in the HEALing Communities Study. Drug Alcohol Depend. 2020;217. doi: 10.1016/j.drugalcdep.2020.108326.

- McDonald R., Strang J. Are take‐home naloxone programmes effective? Systematic review utilizing application of the Bradford Hill criteria. Addiction. 2016;111:1177–1187. doi: 10.1111/add.13326.

- McLellan E., MacQueen K., Neidig J.L. Beyond the qualitative interview: data preparation and transcription. Field Methods. 2003;15:63–84. doi: 10.1177/1525822X02239573.

- Mojtabai R., Mauro C., Wall M.M., Barry C.L., Olfson M. Medication Treatment For Opioid Use Disorders In Substance Use Treatment Facilities. Health Aff. (Millwood) 2019;38:14–23. doi: 10.1377/hlthaff.2018.05162.

- Moullin J.C., Sabater-Hernandez D., Fernandez-Llimos F., Benrimoj S.I. A systematic review of implementation frameworks of innovations in healthcare and resulting generic implementation framework. Health Res. Policy Syst. 2015;13:16. doi: 10.1186/s12961-015-0005-z.

- National Academies of Sciences, Engineering, and Medicine, Health and Medicine Division, Board on Health Sciences Policy, Committee on Medication-Assisted Treatment for Opioid Use Disorder. The National Academies Press; Washington, DC: 2019. Medications for Opioid Use Disorder Save Lives.

- Nelson M.C., Cordray D.S., Hulleman C.S., Darrow C.L., Sommer E.C. A procedure for assessing intervention fidelity in experiments testing educational and behavioral interventions. J. Behav. Health Serv. Res. 2012;39:374–396. doi: 10.1007/s11414-012-9295-x.

- Nilsen P. Making sense of implementation theories, models and frameworks. Implement. Sci. 2015;10:53. doi: 10.1186/s13012-015-0242-0.

- Nilsen P., Bernhardsson S. Context matters in implementation science: a scoping review of determinant frameworks that describe contextual determinants for implementation outcomes. BMC Health Serv. Res. 2019;19:189. doi: 10.1186/s12913-019-4015-3.

- Oesterle S., Kuklinski M.R., Hawkins J.D., Skinner M.L., Guttmannova K., Rhew I.C. Long-Term Effects of the Communities That Care Trial on Substance Use, Antisocial Behavior, and Violence Through Age 21 Years. Am. J. Public Health. 2018;108:659–665. doi: 10.2105/ajph.2018.304320.

- Palinkas L.A., Horwitz S.M., Green C.A., Wisdom J.P., Duan N., Hoagwood K. Purposeful Sampling for Qualitative Data Collection and Analysis in Mixed Method Implementation Research. Adm. Policy Ment. Health. 2015;42:533–544. doi: 10.1007/s10488-013-0528-y.

- Quinby R.K., Hanson K., Brooke-Weiss B., Arthur M.W., Hawkins J.D., Fagan A.A. Installing the communities that care prevention system: implementation progress and fidelity in a randomized controlled trial. J. Community Psychol. 2008;36:313–332. doi: 10.1002/jcop.20194.

- Retrum J.H., Chapman C.L., Varda D.M. Implications of network structure on public health collaboratives. Health Educ. Behav. 2013;40:13S–23S. doi: 10.1177/1090198113492759.

- Rogers T., Howard-Pitney B., Feighery E.C., Altman D.G., Endres J.M., Roeseler A.G. Characteristics and participant perceptions of tobacco control coalitions in California. Health Educ. Res. 1993;8 345-257.

- Sanetti L.M.H., Kratochwill T.R. Toward developing a science of treatment integrity: Introduction to the special series. School Psych. Rev. 2009:38.

- Schoenwald S.K., Garland A.F., Chapman J.E., Frazier S.L., Sheidow A.J., Southam-Gerow M.A. Toward the effective and efficient measurement of implementation fidelity. Adm. Policy Ment. Health. 2011;38:32–43. doi: 10.1007/s10488-010-0321-0.

- Shea C.M., Jacobs S.R., Esserman D.A., Bruce K., Weiner B.J. Organizational readiness for implementing change: a psychometric assessment of a new measure. Implement. Sci. 2014;9:7. doi: 10.1186/1748-5908-9-7.

- Slavova S., LaRochelle M.R., Root E., Feaster D.J., Villani J., Knott C.E., Talbert J., Mack A., Crane D., Bernson D., Booth A., Walsh S.L. Operationalizing and selecting outcome measures for the HEALing Communities Study. Drug Alcohol Depend. 2020;217. doi: 10.1016/j.drugalcdep.2020.108328.

- Sordo L., Barrio G., Bravo M.J., Indave B.I., Degenhardt L., Wiessing L., Ferri M., Pastor-Barriuso R. Mortality risk during and after opioid substitution treatment: systematic review and meta-analysis of cohort studies. Br. Med. J. 2017;357:j1550. doi: 10.1136/bmj.j1550.

- Stokols D., Hall K.L., Taylor B.K., Moser R.P. The science of team science: overview of the field and introduction to the supplement. Am. J. Prev. Med. 2008;35:S77–89. doi: 10.1016/j.amepre.2008.05.002.

- Stokols D., Misra S., Moser R.P., Hall K.L., Taylor B.K. The ecology of team science: understanding contextual influences on transdisciplinary collaboration. Am. J. Prev. Med. 2008;35:S96–115. doi: 10.1016/j.amepre.2008.05.003.

- Substance Abuse and Mental Health Services Administration. Center for Behavioral Health Statistics and Quality, Substance Abuse and Mental Health Services Administration; Rockville, MD: 2017. Key Substance Use and Mental Health Indicators in the United States: Results from the 2016 National Survey on Drug Use and Health (HHS Publication No. SMA 17-5044, NSDUH Series H-52).

- Tabak R.G., Khoong E.C., Chambers D.A., Brownson R.C. Bridging research and practice: models for dissemination and implementation research. Am. J. Prev. Med. 2012;43:337–350. doi: 10.1016/j.amepre.2012.05.024.

- The HEALing Communities Study Consortium. HEALing (Helping to End Addiction Long-term) Communities Study: Protocol for a cluster randomized trial at the community level to reduce opioid overdose deaths through implementation of an integrated set of evidence-based practices. Drug Alcohol Depend. 2020;217. doi: 10.1016/j.drugalcdep.2020.108335.

- Wermeling D.P. Review of naloxone safety for opioid overdose: practical considerations for new technology and expanded public access. Ther. Adv. Drug. Safe. 2015;6:20–31. doi: 10.1177/2042098614564776.

- Winhusen T., Walley A., Fanucchi L.C., Hunt T., Lyons M., Lofwall M., Brown J.L., Freeman P.R., Nunes E., Beers D., Saitz R., Stambaugh L., Oga E.A., Herron N., Baker T., Cook C.D., Roberts M.F., Alford D.P., Starrels J.L., Chandler R.K. The Opioid-overdose Reduction Continuum of Care Approach (ORCCA): Evidence-based practices in the HEALing Communities Study. Drug Alcohol Depend. 2020;217. doi: 10.1016/j.drugalcdep.2020.108325.
