Abstract

Global warming, extreme climate events, earthquakes and their accompanying socioeconomic disasters pose significant risks to humanity. Yet due to the nonlinear feedbacks, multiple interactions and complex structures of the Earth system, understanding and, in particular, predicting such disruptive events remain formidable challenges for both the scientific and policy communities. In recent years, the emergence and evolution of Earth system science has attracted much attention and produced new concepts and frameworks. In particular, novel techniques based on statistical physics and complex networks have been developed and implemented to advance our knowledge of the Earth system, including climate extreme events, earthquakes and geological relief features, leading to substantially improved predictive performance. We present here a comprehensive review of recent scientific progress in the development and application of combined statistical physics and complex systems science approaches, such as critical phenomena, network theory, percolation, tipping points analysis, and entropy, to complex Earth systems. Notably, these integrated tools and approaches provide new insights and perspectives for understanding the dynamics of the Earth system. The overall aim of this review is to show readers how statistical physics concepts and theories can be useful in the field of Earth system science.

Keywords: Statistical physics, Complex Earth systems, Complex network, Climate change, Earthquake

1. Introduction

1.1. The Earth as a complex system

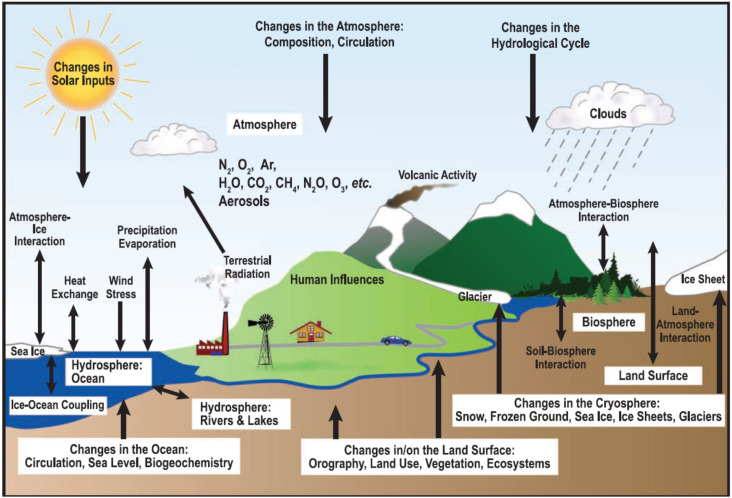

The Earth behaves as an integrated system composed of the geosphere, atmosphere, hydrosphere, cryosphere and biosphere, with nonlinear interactions and feedback loops between and within them [1]. These components can also be regarded as self-regulating systems in their own right, and can be further broken down into more specialized subsystems. Nevertheless, the growing understanding of the multi-component interactions between physical, chemical, biological and human processes suggests that one should bring different disciplines together and consider the Earth system as a whole. Such studies and results initiated the emergence of a new ‘science of the Earth’: Earth System Science (ESS) [2]. The ESS framework has already demonstrated its potential as a powerful tool for exploring the dynamical and structural properties of how the Earth operates as a complex system.

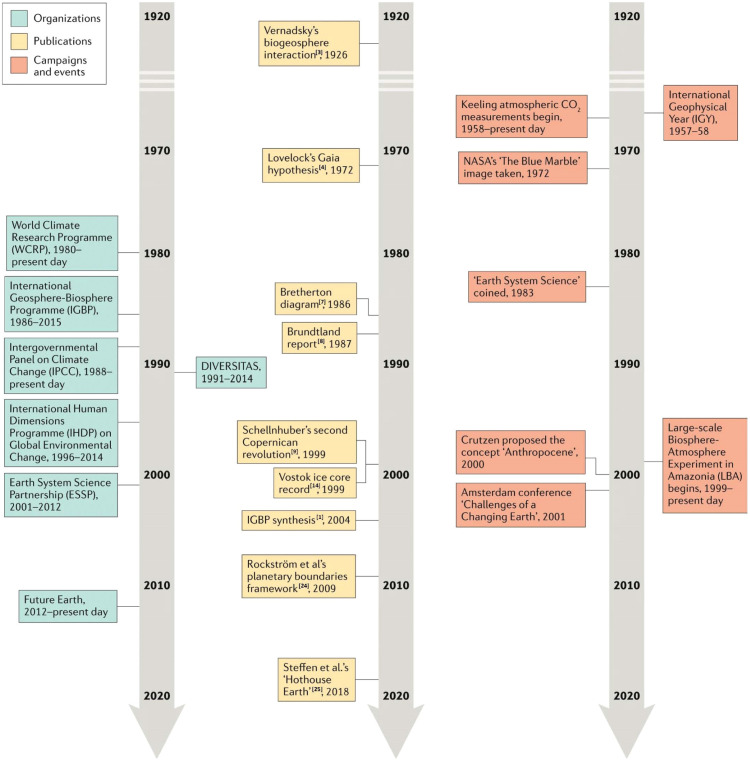

ESS emerged in the early to mid-20th century and has developed rapidly during the last decades. Its historical evolution can be briefly divided into four phases [2]: (i) Precursors and beginnings (pre-1970s). The systemic nature of Earth was mainly described and emphasized through conceptualizations such as Vernadsky’s biosphere concept, that life has a strong influence on the physical and chemical properties of Earth [3], and Lovelock’s Gaia hypothesis, that Earth is a synergistic and self-regulating complex system [4]. These conceptualizations play vital roles in the contemporary understanding of the Earth system. In particular, the International Geophysical Year (IGY) 1957–1958 promoted the development of international science and led to the emergence of two contemporary paradigms, modern climatology and plate tectonics [5], [6]. (ii) Founding a new science (1980s). In the 1980s, a series of workshops and conference reports called for the recognition of Earth as an integrated entity, the Earth system. In particular, the Bretherton diagram developed by the National Aeronautics and Space Administration (NASA) [7] was the first systems-dynamics representation of the Earth system to couple the physical climate system and biogeochemical cycles. The Brundtland report, published by the World Commission on Environment and Development in 1987 [8], developed guiding principles for sustainable development and recognized the importance of environmental problems for the Earth system. Several international organizations were also established in this stage. For example, the World Climate Research Programme (WCRP) aims to determine the predictability of the climate and the effects of human activities on the climate. The International Geosphere–Biosphere Programme (IGBP) was launched in 1987 to coordinate international research on global-scale and regional-scale interactions between Earth’s biological, chemical and physical processes and their interactions with human systems. The Intergovernmental Panel on Climate Change (IPCC) was created in 1988 to provide policymakers with scientific assessments of climate change, its implications and potential future risks, as well as to put forward adaptation and mitigation options. (iii) Going global (1990s–2015). Owing to the great research efforts of international programmes such as the IGBP, and the widespread use of the Bretherton diagram, ESS then developed rapidly, from the ‘new science of the Earth’ movement into a global one. In particular, the International Human Dimensions Programme (IHDP) on Global Environmental Change was founded with the aim to frame, develop and integrate social science research on global change. (iv) Contemporary ESS (beyond 2015). In the 21st century, the ESS framework was well established and initiated a new programme, Future Earth, which integrated several international organizations and programmes, such as the IGBP and IHDP. Future Earth’s mission is to accelerate transformations to global sustainability through research and innovation. Following Ref. [1], we highlight in Fig. 1 the key organizations, publications, campaigns and events that characterize the evolution of ESS.

Fig. 1.

Timeline illustration of the development of Earth System Science (ESS). The figure shows the key organizations, publications, campaigns, events and concepts that have helped to define and develop the ESS.

Reprinted figure from Ref. [2].

Among the pioneering publications for the evolution of ESS, Hans Joachim Schellnhuber introduced and developed a fundamental concept for ESS: the dynamic, co-evolutionary relationship between nature and human factors at the planetary scale [9]. He proposed that the Earth system can be conceptually represented by the following mathematical form:

$$E = (N, H), \tag{1}$$

where $N = (a, b, c, \ldots)$; $H = (A, S)$. This model suggests that the overall Earth system $E$ contains two main components: $N$ stands for the ecosphere and $H$ is interpreted as the human drivers. $N$ is composed of an alphabet of intricately interacting planetary sub-spheres $a$ (atmosphere), $b$ (biosphere), $c$ (cryosphere), etc. The human factor is much more subtle: $A$ denotes the ‘physical’ sub-component, and the ‘metaphysical’ sub-component $S$ reflects the emergence of a ‘global subject’. This work provided the conceptual framework for fully integrating human dynamics into the Earth system and built a unified understanding of the Earth.

Numerous tools and approaches support the development of ESS. They can be grouped into three interrelated foci: observations; modeling and computer simulations; and assessments and syntheses [2]. Earth observations usually refer to instrumental data, for example, those collected by meteorological stations or by polar-orbiting and geostationary satellites. However, such datasets extend, at best, about one to two centuries into the past. Climate proxies fill this gap and extend our reach thousands or even millions of years back in time. There are multiple proxy records, including coral records [10], marine sediments [11], stalagmite time series [12], tree rings [13], as well as the Vostok [14] and EPICA [15] ice core records, that help us to better understand the past Earth system. Mathematical models are currently key tools for understanding and projecting the climate and Earth systems. These models range from conceptual climate models, such as Energy Balance Models (EBMs) [16], to more complex Earth models, the General Circulation Models (GCMs) [17]. In addition, integrated assessment models (IAMs) were designed to take human dynamics into account as an integral component, and aim to understand how human development and societal choices affect each other and the natural world, including climate change [18], [19]. Based on the GCMs, Earth system Models of Intermediate Complexity (EMICs) were developed to investigate the Earth system on long timescales or at reduced computational cost [20]. These models provide knowledge integration for exploring the dynamic properties and basic mechanisms of the Earth system across multiple space and time scales. Assessments and syntheses have also played crucial roles in building new scientific knowledge, linking the scientific and political communities, and facilitating new research fields based on feedback from the political sector.

Several new concepts and theories have indeed arisen from the evolution of ESS, including sustainability [21], the Anthropocene [22], tipping elements [23], and the planetary boundaries framework [24], as well as planetary thresholds and state shifts [25]. Taken together, they create powerful ways to better understand and project the future trajectory of the Earth system.

1.2. Why Statistical Physics?

Yet, despite the rapid development of ESS, the nature and statistical properties of extreme climate events and earthquakes remain elusive and debated, and the existence of early warning signals for these phenomena is still a major open question. In this review, we therefore present several novel approaches based on, or stemming from, statistical physics that could enhance our understanding of how the Earth system may evolve. In particular, the interdisciplinary perspective combining statistical physics and the Earth system yields improved prediction skill for high-impact disruptive events within the climate and earthquake systems.

Statistical physics is a branch of physics that draws heavily on the laws of probability and the statistics of many interacting components. It can describe a wide variety of systems with an inherently stochastic nature, aiming to predict and explain measurable properties and behaviors of macroscopic systems. It has been applied to many problems in physics, biology, chemistry, engineering and the social sciences. Note that statistical physics does not focus on the dynamics of every individual particle but on the macroscopic behavior of a large number of particles. The basic theory and ideas of statistical physics are presented in many textbooks, such as [26], [27], [28].

Sethna motivated the relationship between statistical physics and complex systems in his book [29] as follows: “Many systems in nature are far too complex to analyze directly. Solving for the motion of all the atoms in a block of ice – or the boulders in an earthquake fault, or the nodes on the Internet – is simply infeasible. Despite this, such systems often show simple, striking behavior. We use statistical mechanics to explain the simple behavior of complex systems”. A complex system is usually defined as a system composed of many components that interact with each other. Given the large variety of its components and interrelations, the Earth system can thus be interpreted as an evolving complex system. The concepts and methods of statistical physics carry over to ESS in several ways. (i) Critical phenomena are analogous to tipping elements: a system that is close to a phase transition or tipping point can collapse and break down [30]. Critical phenomena and transitions exist widely in the Earth system [31], such as in atmospheric precipitation [32] and the percolation phase transition in sea ice [33]. (ii) The concept of fractals has been used to describe the Earth’s relief, shape, coastlines and islands [34]. (iii) The earthquake process is regarded as a complex spatio-temporal phenomenon and has been viewed within the self-organized criticality (SOC) paradigm [35]. Moreover, seismic activity exhibits scaling properties in both the temporal and spatial dimensions [36].

Complex network theory provides a powerful tool to study the structure, dynamics and function of complex systems [37], [38], [39], [40]. Statistical physics, in turn, is a fundamental framework that has brought theoretical insights into many properties of complex networks. From an applied perspective, statistical physics has led to the definition of null models for real-world networks that reproduce global and local features [41]. Complex network theory is an emerging multidisciplinary field that has been applied in mathematics, physics, biology, computer science, sociology, epidemiology and other areas [42]. Ideas from network science have also been successfully applied to the climate system, revealing interesting mechanisms underlying its functions and leading to the emergence of a new concept, the Climate Network (CN) [43]. The nodes in a CN represent the available, geographically localized time series, typically on regular latitude–longitude grids, while the level of similarity or causality between the nonlinear climate records of different grid points represents the CN’s links [44], [45]. The development of this data-based approach is providing radically new ways to investigate the patterns and dynamics of climate variability [46].

In this review, we will introduce how to apply statistical physics methods in Earth system science. Particularly, we focus largely on the surface of the Earth system, including the climate system, Earth’s relief as well as the earthquakes system, but not including the whole planetary interior.

1.3. Outline of the report

Apart from this introductory preamble, the report is organized into three sections.

Section 2 presents the overall methodology that underpins the rest of our discussion. It covers several topics that are essential but rather formal, including the CN approach, percolation theory, tipping points analysis, entropy theory and complexity. There, we attempt to define an overall theoretical framework encompassing the different situations and systems that are treated extensively later.

Section 3 gives a comprehensive review of applications, especially those studied in climate systems, the Earth’s geometric surface relief and earthquake systems.

Finally, Section 4 presents our concluding remarks and perspectives.

2. Methodology

2.1. Climate networks

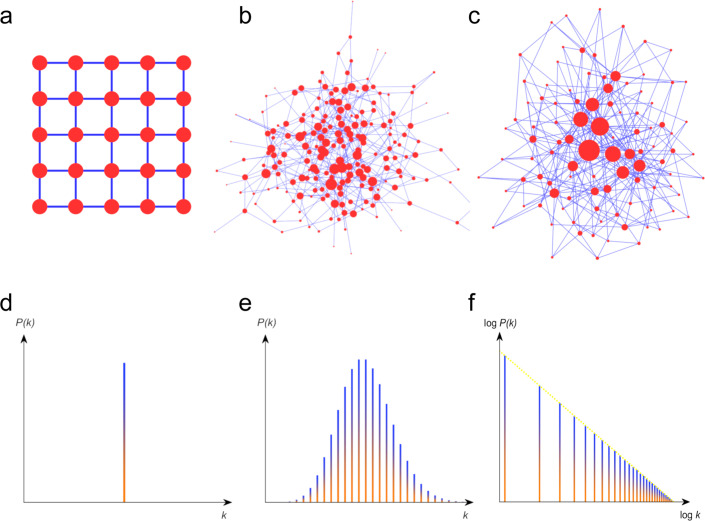

For more than two decades, the complex network paradigm has demonstrated its great potential as a versatile tool for exploring dynamical and structural properties of complex systems, from a wide variety of disciplines in physics, biology, social science, economics, and many other fields [37], [40], [47], [48], [49], [50], [51], [52]. In the context of network theory, a complex network is a graph with non-trivial topological features which do not occur in simple networks such as regular lattices (Fig. 2a) or random Erdős–Rényi graphs (Fig. 2b). One of the novelties of complex network theory is that it can relate the topological characteristics to the function and dynamics of the system. Complex network theory has been successfully applied to many real world systems and revealed fundamental mechanisms underlying their functions. It has been also found that many real world networks are scale-free networks (Fig. 2c) [38], [53].

Fig. 2.

Characteristics of the basic model networks explored. (a) A regular 2D square lattice is the most degree-homogeneous network. (b) An Erdős–Rényi (ER) network has a Poisson degree distribution, and its degree heterogeneity is determined by the average degree $\langle k \rangle$. (c) A scale-free (SF) network has a power-law degree distribution, yielding large degree heterogeneity. The degree distributions are shown for (d) the 2D square lattice, (e) the ER network and (f) the SF network.

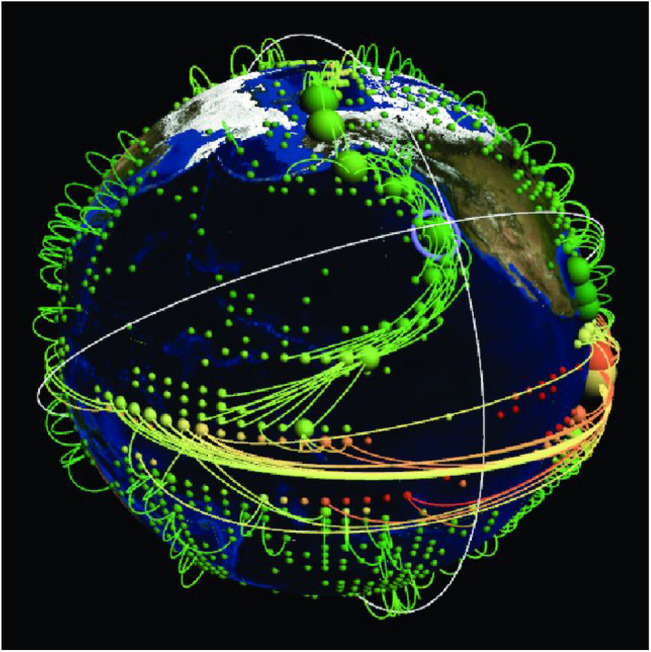

In recent years, the ideas of network theory have also been implemented in climate sciences to construct CNs [43], [44], [54]. In CNs, the geographical locations (or grid points) are regarded as the nodes of the network, and the level of similarity (causality) between the records (time series) of two grid points represents the links and their strength. Various climate data such as temperature, pressure, wind and precipitation can be used to construct a network. The climate network approach allows one to study the interrelationships between different locations on the globe and thus represents the global behavior of the climate system, e.g., how energy and matter are transferred from one location to another. These networks have been used successfully to analyze, model, understand, and even predict various climate phenomena [44], [45], [46], [55], [56], [57], [58], [59], [60], [61], [62], [63], [64], [65], [66], [67], [68], [69], [70]. A visualization of a climate network is shown in Fig. 3 as an example.

Fig. 3.

Visualization of a climate network with surface air temperature taking into account the spatial embedding of vertices on the Earth’s surface.

Figure from Ref. [71].

We first provide an introduction of definitions, notations and basic quantities used to describe the topology of a network, and then present an overview on how to construct a climate network.

2.1.1. Network characteristics

In this section we review the basic characteristics and metrics used to describe and analyze networks, most of which come from graph theory [72]. Graph theory is a large field containing many branches, and we present only a small fraction of its results here, focusing on those most relevant to the study of the complex Earth system. A network, also called a graph in the mathematical literature, is a collection of vertices connected by edges. Vertices and edges are also called nodes and links in computer science, sites and bonds in physics, and actors and ties in sociology [40]. Throughout this review we will denote the number of vertices by $N$ and the number of edges by $M$, which is also a common notation in the mathematical and physics literature.

The adjacency matrix

Most of the networks we will study in this review have at most a single edge between any pair of vertices, and self-edges (self-loops) are not allowed. A simple representation of such a network is the adjacency matrix $A$, with elements $A_{ij}$ such that

$$A_{ij} = \begin{cases} 1 & \text{if vertices } i \text{ and } j \text{ are connected by an edge}, \\ 0 & \text{otherwise}. \end{cases} \tag{2}$$

Note that the diagonal elements $A_{ii}$ of the adjacency matrix are all zero. In some situations it is useful to represent edges as having a strength, weight, or value, usually a real number. Such weighted or valued networks can be represented by giving the elements of the adjacency matrix values equal to the weights of the corresponding connections. A directed network or directed graph, also called a digraph for short, is a network in which each edge has a direction, pointing from one vertex to another. Such edges are themselves called directed edges. In this case, the adjacency matrix is usually not symmetric, and becomes

$$A_{ij} = \begin{cases} 1 & \text{if there is an edge from } j \text{ to } i, \\ 0 & \text{otherwise}. \end{cases} \tag{3}$$
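As a minimal sketch of the two representations above, the following pure-Python snippet builds the adjacency matrix of a small undirected network and of its directed counterpart. The helper names (`adjacency_matrix`, `is_symmetric`) and the four-node edge list are hypothetical, and the directed convention used here (entry $A_{ij} = 1$ for an edge pointing from $j$ to $i$) is an assumption stated in the code.

```python
def adjacency_matrix(n, edges, directed=False):
    """Build an n x n 0/1 adjacency matrix from (source, target) pairs."""
    A = [[0] * n for _ in range(n)]
    for source, target in edges:
        if source == target:
            raise ValueError("self-loops are not allowed in a simple network")
        # Assumed directed convention: A[i][j] = 1 for an edge from j to i.
        A[target][source] = 1
        if not directed:
            # An undirected edge is stored in both directions.
            A[source][target] = 1
    return A


def is_symmetric(A):
    """True if A equals its transpose."""
    n = len(A)
    return all(A[i][j] == A[j][i] for i in range(n) for j in range(n))


# Hypothetical example: a triangle 0-1-2 with a pendant vertex 3.
edges = [(0, 1), (1, 2), (2, 0), (2, 3)]
A_undirected = adjacency_matrix(4, edges)
A_directed = adjacency_matrix(4, edges, directed=True)

print(is_symmetric(A_undirected), is_symmetric(A_directed))
```

A dense list of lists is used only for readability; for gridded climate data with many nodes, a sparse representation would usually be preferable.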

Degree

The degree of a vertex in a network is the number of edges connected to it. We denote the degree of vertex $i$ by $k_i$. For an undirected network of $N$ vertices the degree can be written in terms of the adjacency matrix (Eq. (2)) as

$$k_i = \sum_{j=1}^{N} A_{ij}. \tag{4}$$

Every edge in an undirected network has two ends, so the number of edges $M$ is equal to the sum of the degrees of all the vertices divided by 2:

$$M = \frac{1}{2}\sum_{i=1}^{N} k_i = \frac{1}{2}\sum_{i,j} A_{ij}. \tag{5}$$

The mean degree of a node in an undirected graph is $\langle k \rangle = \frac{1}{N}\sum_{i=1}^{N} k_i = 2M/N$.

The concept of degree is more complicated in directed networks. In a directed network each vertex or node has two degrees. The in-degree is the number of in-going edges connected to a node and the out-degree is the number of out-going edges. From the adjacency matrix of a directed network, Eq. (3), with the convention that $A_{ij} = 1$ denotes an edge from $j$ to $i$, they can be written as

$$k_i^{\mathrm{in}} = \sum_{j=1}^{N} A_{ij}, \qquad k_j^{\mathrm{out}} = \sum_{i=1}^{N} A_{ij}. \tag{6}$$

Bear in mind that the number of edges $M$ in a directed network is equal to the total number of in-going ends of edges at all vertices, or equivalently to the total number of out-going ends of edges.
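The degree relations above can be checked numerically on a small example. The sketch below is illustrative only: the two matrices are hypothetical, the function names are ours, and the directed matrix assumes the convention that entry `[i][j]` equals 1 for an edge from `j` to `i`.

```python
def degrees(A):
    """Degrees of an undirected network: row sums of the adjacency matrix."""
    return [sum(row) for row in A]


def edge_count(A):
    """Number of undirected edges: half the sum of all degrees."""
    return sum(degrees(A)) // 2


def in_out_degrees(A):
    """In- and out-degrees, assuming A[i][j] = 1 for an edge from j to i."""
    n = len(A)
    k_in = [sum(A[i][j] for j in range(n)) for i in range(n)]   # row sums
    k_out = [sum(A[i][j] for i in range(n)) for j in range(n)]  # column sums
    return k_in, k_out


# Hypothetical undirected network: triangle 0-1-2 plus the edge 2-3.
A = [[0, 1, 1, 0],
     [1, 0, 1, 0],
     [1, 1, 0, 1],
     [0, 0, 1, 0]]
# Hypothetical directed network with edges 0->1, 1->2, 2->0, 2->3.
D = [[0, 0, 1, 0],
     [1, 0, 0, 0],
     [0, 1, 0, 0],
     [0, 0, 1, 0]]

k = degrees(A)
M = edge_count(A)
mean_degree = sum(k) / len(k)
k_in, k_out = in_out_degrees(D)
print(k, M, mean_degree)  # [2, 2, 3, 1] 4 2.0
print(k_in, k_out)        # [1, 1, 1, 1] [1, 1, 2, 0]
```

Both the in-degrees and the out-degrees of `D` sum to its four edges, in line with the remark that every directed edge has exactly one in-going and one out-going end.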

Degree distributions

One of the most fundamental properties of a network that can be measured directly is the degree distribution $P(k)$, the fraction of nodes having $k$ connections (degree $k$). A well-known result for the Erdős–Rényi network [73] is that its degree distribution follows a Poisson distribution,

$$P(k) = e^{-\langle k \rangle} \frac{\langle k \rangle^{k}}{k!}, \tag{7}$$

where $\langle k \rangle$ is the average degree. As shown in Fig. 2e, despite the fact that the position of the edges is random, a typical random graph is rather homogeneous, with the majority of the nodes having approximately the same number of edges.

However, direct measurements of the degree distribution for real networks, such as the Internet [74], WWW [38], email network [75], metabolic networks [76], airline networks [77], neuronal networks [78], and many more, show that the Poisson law does not apply. Instead, they exhibit an approximate power-law degree distribution

$$P(k) = c\, k^{-\gamma}, \tag{8}$$

where $c$ is a normalization factor. The constant $\gamma$ is known as the exponent of the power law. Values in the range $2 \le \gamma \le 3$ are typical, although values slightly outside this range are possible and are observed occasionally [79]. Networks with power-law degree distributions are called scale-free networks. The simplest strategy to determine the scale-free properties is to look at a histogram of the degree distribution on a log–log plot, as we did in Fig. 2f, to see if we have a straight line. Several models have been proposed for the evolution of scale-free networks, each of which may lead to a different ensemble. The first proposal was the preferential attachment model of Barabási and Albert, which is known as the Barabási–Albert model [38]. Several variants of this model have been suggested, see, e.g., Ref. [80].
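To make the Poisson result for random graphs concrete, the following sketch measures the empirical degree distribution of one ER realization and prints it next to the Poisson probabilities with the same mean degree. The network size, target mean degree and random seed are arbitrary illustrative choices, and the helper names are hypothetical.

```python
import math
import random
from collections import Counter


def degree_distribution(degree_list):
    """Empirical P(k): the fraction of nodes that have degree k."""
    n = len(degree_list)
    counts = Counter(degree_list)
    return {k: c / n for k, c in sorted(counts.items())}


def poisson_pmf(k, mean_degree):
    """Poisson probability of degree k for a given average degree."""
    return math.exp(-mean_degree) * mean_degree ** k / math.factorial(k)


def er_degrees(n, p, rng):
    """Degrees of one ER realization: each pair is linked with probability p."""
    deg = [0] * n
    for i in range(n):
        for j in range(i + 1, n):
            if rng.random() < p:
                deg[i] += 1
                deg[j] += 1
    return deg


rng = random.Random(0)           # fixed seed, only for reproducibility
n, mean_k = 500, 6.0             # hypothetical size and target mean degree
deg = er_degrees(n, mean_k / (n - 1), rng)
P = degree_distribution(deg)

# Compare empirical and Poisson values at a few degrees.
for k in range(0, 13, 3):
    print(k, round(P.get(k, 0.0), 3), round(poisson_pmf(k, mean_k), 3))
```

For a scale-free network the same `degree_distribution` helper can be used, with the values plotted on log–log axes to look for the straight line described above.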

Clustering coefficient

The extent to which nodes cluster together on very short scales in a network is measured by the clustering coefficient. The definition of clustering is related to the number of triangles in the network. The clustering is high if two nodes sharing a neighbor have a high probability of being connected to each other. The most common way of defining the clustering coefficient is

$$C = \frac{3 \times \text{number of triangles}}{\text{number of connected triples}}. \tag{9}$$

Here a “connected triple” means three nodes $u$, $v$, $w$ with edges $(u, v)$ and $(v, w)$. The factor of three in the numerator arises because each triangle is counted three times when the connected triples in the network are counted.

We can also obtain a local clustering coefficient for a single vertex $i$ by defining

$$C_i = \frac{\text{number of triangles connected to vertex } i}{\text{number of triples centered on vertex } i}. \tag{10}$$

That is, to calculate $C_i$, we go through all distinct pairs of vertices that are neighbors of $i$, count the number of such pairs that are connected to each other, and divide by the total number of pairs. $C_i$ represents the average probability that a pair of $i$’s friends are friends of one another. For vertices with degree 0 or 1, for which both numerator and denominator are zero, we assume $C_i = 0$. The clustering coefficient for the whole network [37] is then the average $C = \frac{1}{N}\sum_{i} C_i$. In both cases, the clustering is in the range $0 \le C \le 1$.
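The two definitions generally give different numbers for the same network. The short sketch below computes both for a hypothetical four-node graph (a triangle with one pendant vertex); the function names are ours and the implementation favors clarity over efficiency.

```python
from itertools import combinations


def neighbors(A, i):
    """Indices of the vertices adjacent to vertex i."""
    return [j for j, a in enumerate(A[i]) if a]


def local_clustering(A, i):
    """Fraction of pairs of neighbors of i that are themselves linked."""
    nbrs = neighbors(A, i)
    k = len(nbrs)
    if k < 2:
        return 0.0  # convention for vertices of degree 0 or 1
    linked = sum(1 for u, v in combinations(nbrs, 2) if A[u][v])
    return linked / (k * (k - 1) / 2)


def average_clustering(A):
    """Network clustering as the mean of the local coefficients."""
    return sum(local_clustering(A, i) for i in range(len(A))) / len(A)


def transitivity(A):
    """Network clustering as 3 x triangles / connected triples."""
    closed = triples = 0
    for i in range(len(A)):
        nbrs = neighbors(A, i)
        triples += len(nbrs) * (len(nbrs) - 1) // 2
        # Each triangle is seen once from each of its three corners,
        # so this sum equals 3 x (number of triangles).
        closed += sum(1 for u, v in combinations(nbrs, 2) if A[u][v])
    return closed / triples if triples else 0.0


# Hypothetical example: triangle 0-1-2 plus the edge 2-3.
A = [[0, 1, 1, 0],
     [1, 0, 1, 0],
     [1, 1, 0, 1],
     [0, 0, 1, 0]]
print(transitivity(A))        # 3 closed triples out of 5
print(average_clustering(A))  # mean of 1, 1, 1/3 and 0
```

Here the triple-based value is 3/5, while the average of the local coefficients is 7/12, which illustrates that the two measures weight low-degree vertices differently.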

Subgraphs

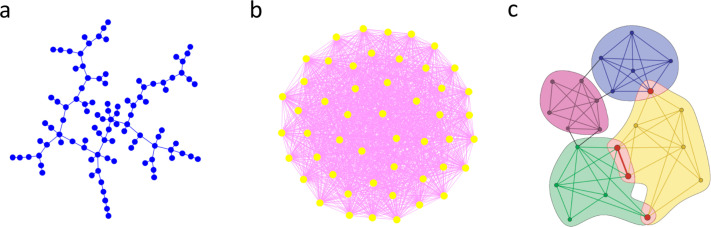

A graph $G_1$ consisting of a set $P_1$ of nodes and a set $E_1$ of edges is a subgraph of a graph $G = \{P, E\}$ if all nodes in $P_1$ are also nodes of $P$ and all edges in $E_1$ are also edges of $E$. The simplest examples of subgraphs are cycles, trees, and complete subgraphs [81]. A cycle of order $k$ is a closed loop of $k$ edges such that every two consecutive edges, and only those, have a common node. Graphically, a triangle is a cycle of order 3, while a rectangle is a cycle of order 4. The average degree of a cycle is equal to 2, since every node has two edges. A tree, as shown in Fig. 4a, is a connected, undirected network that contains no closed loops. Here, by “connected” we mean that every node in the network is reachable from every other via some path through the network. A river network is an example of a naturally occurring tree with directed links [82]. The most important property of trees is that, since they have no closed loops, there is exactly one path between any pair of nodes. Thus the number of edges in a tree with $N$ nodes is always exactly $N - 1$. Complete subgraphs of order $k$, also called $k$-cliques, contain $k$ nodes and all the possible $k(k-1)/2$ edges; in other words, they are completely connected [see Fig. 4b].

Fig. 4.

Illustration of the concept of subgraphs. Examples of (a) a tree network, (b) a fully completed network, (c) overlapping -clique communities at .

(c): Reprinted figure from Ref. [84].

Let us consider the ER model, in which we start from $N$ isolated nodes and then connect every pair of nodes with a probability $p$. Most generally, we can ask whether there is a critical probability $p_c(N)$ that marks the appearance of arbitrary subgraphs consisting of $k$ nodes and $l$ edges. A few important special cases that directly give an answer to this question [81] are: (i) the threshold probability of having a tree of order $k$ is $p_c(N) \sim N^{-k/(k-1)}$; (ii) the threshold probability of having a cycle of order $k$ is $p_c(N) \sim N^{-1}$; (iii) the critical probability of having a complete subgraph of order $k$ is $p_c(N) \sim N^{-2/(k-1)}$.

Generally, a network is composed of many separate subgraphs or components, i.e., groups of nodes connected internally but disconnected from others. In each such component there exists a path between any two nodes, but there is no path between nodes in different components. A network component whose size is proportional to that of the entire network, $N$, is called a giant component.
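Components can be identified with a breadth-first search started from every node that has not yet been visited. The sketch below is one straightforward way to do this; the six-node example network and the function name are hypothetical.

```python
from collections import deque


def connected_components(A):
    """Return the components of an undirected network, largest first."""
    n = len(A)
    seen = [False] * n
    components = []
    for start in range(n):
        if seen[start]:
            continue
        # Breadth-first search collects every node reachable from `start`.
        seen[start] = True
        queue = deque([start])
        component = []
        while queue:
            u = queue.popleft()
            component.append(u)
            for v in range(n):
                if A[u][v] and not seen[v]:
                    seen[v] = True
                    queue.append(v)
        components.append(component)
    return sorted(components, key=len, reverse=True)


# Hypothetical network: a triangle 0-1-2, a pair 3-4 and an isolated node 5.
n = 6
A = [[0] * n for _ in range(n)]
for i, j in [(0, 1), (1, 2), (2, 0), (3, 4)]:
    A[i][j] = A[j][i] = 1

components = connected_components(A)
largest_fraction = len(components[0]) / n
print([len(c) for c in components], largest_fraction)  # [3, 2, 1] 0.5
```

In practice, one tracks how the fraction of nodes in the largest component changes as the network grows, since a giant component is one whose share of the nodes does not vanish for large networks.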

Similar to the component, the community is one of the most fundamental concepts in complex networks [83]. The nodes of a network may be grouped into tightly connected groups, between which there are still links but fewer of them. A single node of the network may belong to more than one community, and most real networks are made of highly overlapping communities of nodes [84]. Since community structures are quite common in real networks, community detection is of great importance for better understanding the function of a network. An example of overlapping $k$-clique communities is shown in Fig. 4c. A good review of community detection in graphs can be found in Ref. [85].

Network models

Erdős–Rényi model: It is defined through the following two well-studied graph ensembles: the ensemble $G(N, M)$ of all graphs having $N$ vertices and $M$ edges, and the ensemble $G(N, p)$ consisting of graphs with $N$ vertices, where each possible edge is realized with probability $p$. These two families, initially studied by Erdős and Rényi [73], are known to be similar if $M = p\binom{N}{2}$. They are referred to as the Erdős–Rényi (ER) model. These descriptions are quite similar to the microcanonical and canonical ensembles studied in statistical physics [86]. The ER model, with its Poissonian degree distribution, see Eq. (7), has traditionally been the dominant subject of study in the field of random graphs [81].
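A minimal sketch of the two ER ensembles, under the assumption that a network is stored simply as a list of node pairs: one generator fixes the connection probability, the other fixes the number of edges. The function names, sizes and seed are illustrative choices, not part of the original model description.

```python
import random
from itertools import combinations


def er_gnp(n, p, rng):
    """Keep each of the n(n-1)/2 possible edges independently with probability p."""
    return [pair for pair in combinations(range(n), 2) if rng.random() < p]


def er_gnm(n, m, rng):
    """Choose exactly m distinct edges uniformly at random."""
    return rng.sample(list(combinations(range(n), 2)), m)


rng = random.Random(42)               # fixed seed, only for reproducibility
n, p = 200, 0.05                      # hypothetical size and probability
expected_edges = p * n * (n - 1) / 2  # mean edge count of the fixed-p ensemble

edges_gnp = er_gnp(n, p, rng)
edges_gnm = er_gnm(n, round(expected_edges), rng)
print(len(edges_gnp), len(edges_gnm), expected_edges)
```

The fixed-probability generator produces a fluctuating number of edges around `expected_edges`, whereas the fixed-edge generator always returns exactly that many, which mirrors the canonical versus microcanonical analogy.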

Barabási–Albert model: It is based on two simple assumptions regarding network evolution [38]. (i) Growth: Starting with a small number ($m_0$) of nodes, at every time step a new node is added with $m$ ($\le m_0$) edges that link the new node to $m$ different nodes already present in the system. (ii) Preferential attachment: This is the heart of the model. When choosing the nodes to which the new node will be connected, the probability $\Pi$ that the new node will be connected to node $i$ depends on the degree $k_i$ of node $i$, such that $\Pi(k_i) = k_i / \sum_j k_j$. After $t$ time steps, this process results in a network with $N = t + m_0$ nodes and $mt$ edges. Theoretical and numerical results show that this network model evolves into a state with a power-law degree distribution, see Eq. (8).
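The growth and preferential attachment rules can be sketched in a few lines. Two simplifications in the snippet below are our own assumptions rather than part of the model definition: the seed is a small fully connected group of `m + 1` nodes (so that every seed node has a nonzero degree), and the distinct targets of each new node are obtained by redrawing duplicates.

```python
import random
from collections import Counter


def barabasi_albert(n, m, rng):
    """Grow a network to n nodes; each new node attaches m edges preferentially."""
    if not 1 <= m < n:
        raise ValueError("need 1 <= m < n")
    # Assumed seed: a complete graph on the first m + 1 nodes.
    edges = [(i, j) for i in range(m + 1) for j in range(i + 1, m + 1)]
    # Every node appears in `ends` once per incident edge, so a uniform draw
    # from `ends` selects node i with probability k_i / sum_j k_j.
    ends = [v for edge in edges for v in edge]
    for new in range(m + 1, n):
        targets = set()
        while len(targets) < m:  # redraw until m distinct targets are found
            targets.add(rng.choice(ends))
        for t in sorted(targets):
            edges.append((new, t))
            ends.extend((new, t))
    return edges


rng = random.Random(7)  # fixed seed, only for reproducibility
n, m = 200, 2           # hypothetical parameters
edges = barabasi_albert(n, m, rng)
degree = Counter(v for edge in edges for v in edge)
print(len(edges), min(degree.values()), max(degree.values()))
```

Every node ends up with degree at least `m`, while the earliest nodes tend to accumulate many more links, which is the degree heterogeneity expected from a power-law distribution.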

Watts–Strogatz model: In 1998, Watts and Strogatz [37] proposed a one-parameter model that interpolates between a regular lattice and a random graph. The details behind the model are the following: (i) Start with order: Start with a ring lattice with $N$ nodes in which every node is connected to its $K$ closest neighbors. In order to have a sparse but connected network at all times, consider $N \gg K \gg \ln N \gg 1$. (ii) Randomize: Randomly rewire each edge of the lattice with probability $p$ such that self-connections and duplicate edges are excluded. This process introduces long-range edges. The resulting networks have the small-world property and a high clustering coefficient. Specifically, a small-world network is a network in which the characteristic path length $L$ grows proportionally to the logarithm of the number of nodes, $L \sim \ln N$ [37].
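The effect of a small amount of rewiring on the path length can be illustrated with the following sketch. It is one possible reading of the two steps above, with assumptions of our own: only the far end of each lattice edge is rewired, the network is stored as a list of neighbor sets, and the mean path length is averaged over reachable pairs only. All parameter values are hypothetical.

```python
import random
from collections import deque


def watts_strogatz(n, k, p, rng):
    """Ring of n nodes, each linked to its k nearest neighbors, rewired with probability p."""
    if k % 2 or not 0 < k < n:
        raise ValueError("k must be even and satisfy 0 < k < n")
    nbrs = [set() for _ in range(n)]
    for i in range(n):
        for d in range(1, k // 2 + 1):
            j = (i + d) % n
            nbrs[i].add(j)
            nbrs[j].add(i)
    for d in range(1, k // 2 + 1):
        for i in range(n):
            j = (i + d) % n
            if rng.random() >= p:
                continue
            # Exclude self-connections and duplicate edges.
            candidates = [v for v in range(n) if v != i and v not in nbrs[i]]
            if not candidates:
                continue
            new = rng.choice(candidates)
            nbrs[i].remove(j)
            nbrs[j].remove(i)
            nbrs[i].add(new)
            nbrs[new].add(i)
    return nbrs


def mean_path_length(nbrs):
    """Average shortest-path length over all reachable ordered pairs (BFS)."""
    total = pairs = 0
    for s in range(len(nbrs)):
        dist = {s: 0}
        queue = deque([s])
        while queue:
            u = queue.popleft()
            for v in nbrs[u]:
                if v not in dist:
                    dist[v] = dist[u] + 1
                    queue.append(v)
        total += sum(dist.values())
        pairs += len(dist) - 1
    return total / pairs


lattice = watts_strogatz(200, 4, 0.0, random.Random(0))
small_world = watts_strogatz(200, 4, 0.1, random.Random(0))
print(mean_path_length(lattice), mean_path_length(small_world))
```

Rewiring preserves the total number of edges, so any drop in the mean path length comes purely from the long-range shortcuts.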

2.1.2. Pearson correlation climate network

Our climate system is made up of an enormous number of nonlinear sub-systems having mutual nonlinear interactions and feedback loops active on a wide-range of temporal and spatial scales. Therefore, modeling the Earth climate system from the point of view of complex networks can clearly provide critical insights into the underlying dynamics of the evolving climate system. As discussed above, in CNs, geographical regions of Earth are regarded as nodes, and the bivariate statistical analysis of similarities between pairs of climatological variables time series represent the links.

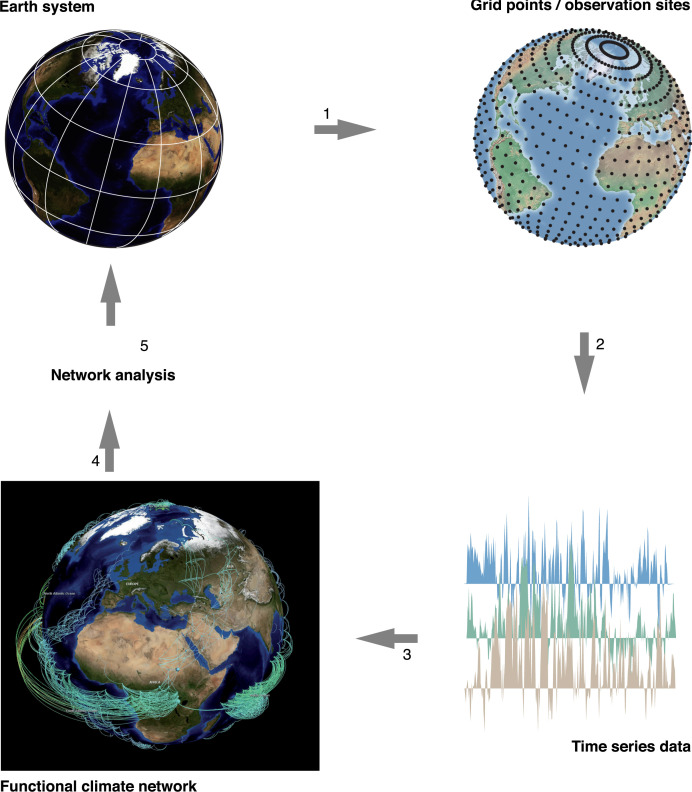

In general, there are five steps in the construction and analysis of CNs [46]; the procedure is displayed in Fig. 5, adapted from [87]. Step (i), nodes: the nodes in CNs are usually defined as locations on a longitude–latitude spatial grid at various resolutions. Step (ii), climatological variable: we select the suitable climatological time series to be analyzed, e.g., surface air temperature, sea surface temperature, precipitation, wind, etc. Some pre-processing is also often needed. For example, to avoid the strong effect of seasonality, we usually subtract the mean seasonal cycle and divide by the seasonal standard deviations for each grid point time series. Step (iii), edges: we compute a statistical similarity measure that quantifies the interdependencies between pairs of time series, and the strength of each edge is based on this measure. There are many such measures, and CNs are named after the measure used. For example, a Pearson correlation climate network uses the Pearson correlation to quantify the cross-correlations between time series, an event synchronization climate network uses the event synchronization method, and a mutual information climate network is based on mutual information. Step (iv), construction: we construct the CN, which typically involves some thresholding criterion to select only statistically significant edges. Considering, for example, that the significant links have similarity values $S_{ij}$ above a given threshold $\theta$, the adjacency matrix is determined as

$$A_{ij} = \Theta(S_{ij} - \theta), \qquad i \neq j, \tag{11}$$

where $\Theta$ is the Heaviside function. We then investigate the obtained network by using various network characteristics. Finally, in step (v), climatological interpretation, the results of this analysis are interpreted in terms of dynamical processes of the climate system (e.g., atmospheric circulation, ocean currents, planetary waves, etc.).

Fig. 5.

Methodology used to construct a climate network from climatic time series.

Reprinted figure from Ref. [87].
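Steps (iii) and (iv) can be sketched on synthetic data as follows. Everything specific in this example is an assumption made for illustration: the four "grid points" are artificial series (two sharing a common signal, two independent), the similarity measure is the zero-lag Pearson correlation, and the threshold value of 0.5 is arbitrary.

```python
import math
import random


def pearson(x, y):
    """Zero-lag Pearson correlation coefficient of two equal-length series."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def correlation_matrix(series):
    """Symmetric matrix of pairwise correlations (diagonal left at zero)."""
    n = len(series)
    C = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            C[i][j] = C[j][i] = pearson(series[i], series[j])
    return C


def threshold_network(C, theta):
    """Link i and j (i != j) when their similarity exceeds the threshold."""
    n = len(C)
    return [[1 if i != j and C[i][j] > theta else 0 for j in range(n)]
            for i in range(n)]


rng = random.Random(3)  # fixed seed, only for reproducibility
T = 500                 # hypothetical number of time steps
signal = [rng.gauss(0, 1) for _ in range(T)]
series = [
    [s + 0.3 * rng.gauss(0, 1) for s in signal],  # node 0: signal + noise
    [s + 0.3 * rng.gauss(0, 1) for s in signal],  # node 1: signal + noise
    [rng.gauss(0, 1) for _ in range(T)],          # node 2: independent noise
    [rng.gauss(0, 1) for _ in range(T)],          # node 3: independent noise
]

C = correlation_matrix(series)
A = threshold_network(C, theta=0.5)
for row in A:
    print(row)
```

Only the two nodes driven by the common signal end up linked. With real data, the threshold is usually chosen from a significance test or a target link density rather than fixed by hand.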

In the following, we will demonstrate how to construct a Pearson correlation climate network. Suppose that the climatic variable is the daily surface air temperature, either from observations, proxy reconstructions, reanalyses, or simulations, gathered at static measurement stations, or provided at grid cells. At each node of the network, we calculate the daily atmospheric temperature anomalies (actual temperature value minus the climatological average which is then divided by the climatological standard deviation) for each calendar day.
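The anomaly calculation for a single node can be sketched as below. The input is assumed to be a daily series together with the calendar day of each value (leap days ignored for simplicity), and the synthetic ten-year record with a sinusoidal seasonal cycle is purely illustrative.

```python
import math
import random
from collections import defaultdict


def standardized_anomalies(values, day_of_year):
    """Subtract the calendar-day mean and divide by the calendar-day std."""
    groups = defaultdict(list)
    for v, d in zip(values, day_of_year):
        groups[d].append(v)
    stats = {}
    for d, vs in groups.items():
        mean = sum(vs) / len(vs)
        std = math.sqrt(sum((v - mean) ** 2 for v in vs) / len(vs))
        stats[d] = (mean, std)
    # A calendar day with zero spread is mapped to a zero anomaly.
    return [(v - stats[d][0]) / stats[d][1] if stats[d][1] > 0 else 0.0
            for v, d in zip(values, day_of_year)]


rng = random.Random(11)  # fixed seed, only for reproducibility
years, days = 10, 365    # hypothetical record length
day_of_year = [d for _ in range(years) for d in range(days)]
temperature = [15 + 10 * math.sin(2 * math.pi * d / days) + rng.gauss(0, 2)
               for d in day_of_year]

anomalies = standardized_anomalies(temperature, day_of_year)
sample = [a for a, d in zip(anomalies, day_of_year) if d == 100]
print(round(sum(sample) / len(sample), 6))
```

After this step the seasonal cycle no longer dominates the correlations between nodes, because the anomalies for every calendar day have zero mean and unit variance by construction.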

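To obtain the time evolution of the weight of the link between a pair of nodes $i$ and $j$, we follow [66] and compute, for each time window $t$ (such as a month or a year) over the whole time span, the time-delayed cross-correlation function defined as

$$C_{i,j}^{t}(-\tau) = \frac{\langle T_i^{t}(d)\,T_j^{t}(d-\tau)\rangle - \langle T_i^{t}(d)\rangle\langle T_j^{t}(d-\tau)\rangle}{\sqrt{\langle (T_i^{t}(d) - \langle T_i^{t}(d)\rangle)^2\rangle}\;\sqrt{\langle (T_j^{t}(d-\tau) - \langle T_j^{t}(d-\tau)\rangle)^2\rangle}} \tag{12}$$

and

$$C_{i,j}^{t}(\tau) = \frac{\langle T_i^{t}(d-\tau)\,T_j^{t}(d)\rangle - \langle T_i^{t}(d-\tau)\rangle\langle T_j^{t}(d)\rangle}{\sqrt{\langle (T_i^{t}(d-\tau) - \langle T_i^{t}(d-\tau)\rangle)^2\rangle}\;\sqrt{\langle (T_j^{t}(d) - \langle T_j^{t}(d)\rangle)^2\rangle}}, \tag{13}$$

where $T_i^{t}(d)$ is the temperature anomaly at node $i$ on day $d$ and the brackets denote an average over the past 365 days, according to

$$\langle f(d)\rangle = \frac{1}{365}\sum_{a=1}^{365} f(d-a). \tag{14}$$

Here, $\tau \in [0, \tau_{\max}]$ is the time lag in days. The reliable estimate of the background noise level, i.e., the appropriate value of $\tau_{\max}$, was discussed in [88], [89]. Based on the correlation functions, Eqs. (12), (13), a weighted and directed link between nodes $i$ and $j$ was defined in Refs. [59], [61], [64]. This is done by identifying the value of the highest peak (or lowest valley) of the cross-correlation function and denoting the corresponding time lag of this peak (valley) as $\theta_{i,j}^{t}$. The sign of $\theta_{i,j}^{t}$ indicates the direction of each link; i.e., when the time lag is positive ($\theta_{i,j}^{t} > 0$), the direction of the link is from $i$ to $j$ [64]. The positive and negative links and their weights are determined via $C_{i,j}^{t}(\theta)$, and are defined as

$$W_{i,j}^{+,t} = \frac{\max\left(C_{i,j}^{t}\right) - \mathrm{mean}\left(C_{i,j}^{t}\right)}{\mathrm{std}\left(C_{i,j}^{t}\right)} \tag{15}$$

and

$$W_{i,j}^{-,t} = \frac{\min\left(C_{i,j}^{t}\right) - \mathrm{mean}\left(C_{i,j}^{t}\right)}{\mathrm{std}\left(C_{i,j}^{t}\right)}, \tag{16}$$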

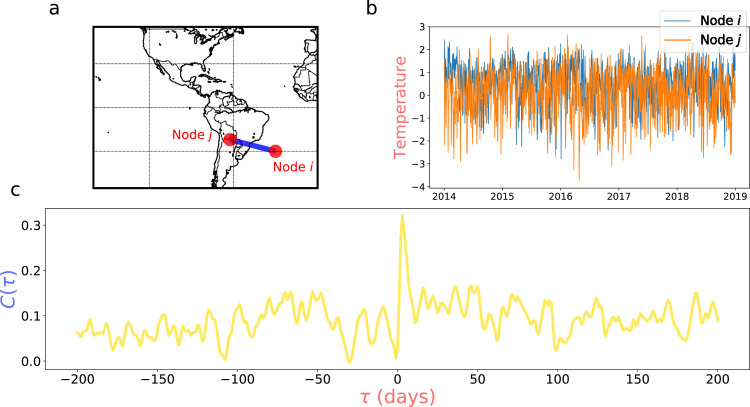

where “max” and “min” are the maximum and minimum values of the cross-correlation function, “mean” and “std” are the mean and standard deviation. Typical time series and their cross-correlation functions are shown in Fig. 6. For demonstration, two nodes are selected, a node is located in the Southwest Atlantic and a node is in the South American continent (Fig. 6a). The near surface daily air temperature anomalies are shown in Fig. 6b for the time period between 2014 to 2018. The cross-correlation function between the time series is shown in Fig. 6c, where the absolute value of a maximum cross-correlation function is much larger than the level of noise and the minimum value. Since , then the direction of the link is from to . According to Eq. (15), we calculate its weight to be . This value represents 5.71 standard deviations above the noise level, i.e., highly significant.

Fig. 6.

Typical weighted and directed link in a Pearson Correlation Climate Network. (a) Node is located on the Southwest Atlantic and node is in the South American continent. (b) The near surface daily air temperature anomalies for the period [2014,2018]. (c) The cross-correlation function between the time series shown in (b). The direction of this link is from to with weight .

Finally, networks can be constructed by establishing links between pairs of nodes with weights larger than some significant threshold. The advantage of this method is that it overcomes the problem of strong auto-correlation values in the data [88].
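The link definition above can be illustrated with a short sketch. It is a simplification under stated assumptions: the correlation at each lag is computed over the whole overlapping record rather than over a sliding window of past days, and the names `lagged_cross_correlation` and `positive_link` are hypothetical. The weight follows the "(max − mean)/std" construction of Eq. (15), and a positive peak lag is read as the first series leading the second.

```python
import math

def pearson(x, y):
    """Pearson correlation of two equally long sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy) if sx > 0 and sy > 0 else 0.0

def lagged_cross_correlation(x, y, max_lag):
    """Return {lag: C(lag)} for lag in [-max_lag, max_lag].

    A positive lag pairs x[t] with y[t + lag], i.e. x leads y.
    """
    n = len(x)
    c = {}
    for lag in range(-max_lag, max_lag + 1):
        if lag >= 0:
            a, b = x[:n - lag], y[lag:]
        else:
            a, b = x[-lag:], y[:n + lag]
        c[lag] = pearson(a, b)
    return c

def positive_link(c):
    """Lag of the highest peak and its weight, (max - mean) / std."""
    values = list(c.values())
    mean = sum(values) / len(values)
    std = math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))
    peak_lag = max(c, key=c.get)
    weight = (c[peak_lag] - mean) / std if std > 0 else 0.0
    return peak_lag, weight
```

Because the weight is expressed in units of the standard deviation of the cross-correlation function, it is less sensitive to the autocorrelation of the individual records than the raw peak value.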

2.1.3. Event synchronization climate network

The event synchronization method provides an alternative way to construct a network from climate observations: the event synchronization CN. It was originally proposed by Quian Quiroga et al. [90], who used it to measure synchronization and infer time delays between signals in neuroscience. Event synchronization is based on the relative timings of events, such as rainfall, in a time series; an event is defined, e.g., by the crossing of a threshold or by local maxima or minima. For instance, an extreme rainfall event can be defined as a day on which precipitation exceeds the 99th percentile of all days. Event synchronization is especially appropriate for studying extreme events. The degree of synchronization is obtained from the number of quasi-simultaneous events, and the delay is calculated from the precedence of events in one time series (signal) with respect to the other.

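Event synchronization starts by constructing two event series from two discrete univariate time series, $x_i(t)$ and $x_j(t)$. An event $l$ that occurs at $i$ at time $t_l^{i}$ is considered to be synchronized with an event $m$ that occurs at $j$ at time $t_m^{j}$ within a time lag $\tau_{lm}^{ij}$, if $0 < t_l^{i} - t_m^{j} < \tau_{lm}^{ij}$, where

$$\tau_{lm}^{ij} = \frac{1}{2}\min\left\{t_{l+1}^{i} - t_{l}^{i},\; t_{l}^{i} - t_{l-1}^{i},\; t_{m+1}^{j} - t_{m}^{j},\; t_{m}^{j} - t_{m-1}^{j}\right\}. \tag{17}$$

Here, $l = 1, 2, \dots, S_i$ and $m = 1, 2, \dots, S_j$, where $S_i$ and $S_j$ are the numbers of events in $x_i$ and $x_j$, respectively. The number of times an event appears in $x_i$ shortly after it occurs in $x_j$ is counted:

$$c(i|j) = \sum_{l=1}^{S_i}\sum_{m=1}^{S_j} J_{ij}, \tag{18}$$

with

$$J_{ij} = \begin{cases} 1 & \text{if } 0 < t_l^{i} - t_m^{j} < \tau_{lm}^{ij},\\ 1/2 & \text{if } t_l^{i} = t_m^{j},\\ 0 & \text{otherwise}, \end{cases} \tag{19}$$

and analogously to Eq. (18) we can define $c(j|i)$. Finally, the symmetrical and anti-symmetrical combinations

$$Q_{ij} = \frac{c(i|j) + c(j|i)}{\sqrt{S_i S_j}}, \qquad q_{ij} = \frac{c(j|i) - c(i|j)}{\sqrt{S_i S_j}} \tag{20}$$

are used to measure the synchronization of the events and their delay behavior, respectively. They are normalized to $0 \le Q_{ij} \le 1$ and $-1 \le q_{ij} \le 1$. It should be noted that if several extreme events are very close in one record, then only the first one is considered. In particular, $Q_{ij} = 1$ if and only if the events of the signals are fully synchronized. In addition, if the events in $x_i$ always precede those in $x_j$, then we get $q_{ij} = 1$.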
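A compact sketch of the event synchronization measure is given below. The function name `event_synchronization` is hypothetical, and one boundary convention is an assumption of this sketch: the first and last event of each series have no neighbour on one side, so only interior events are counted and the normalization uses the number of interior events. The sign of the delay measure is chosen so that it equals 1 when events in the first series always precede those in the second.

```python
import math

def event_synchronization(ti, tj):
    """Return (Q, q) for two sorted lists of event times.

    Q measures the strength of synchronization, q the delay direction
    (q = 1 if events in ti always shortly precede those in tj).
    """
    def count(a, b):
        # c(a|b): how often an event in `a` occurs shortly after one in `b`
        c = 0.0
        for l in range(1, len(a) - 1):
            for m in range(1, len(b) - 1):
                # local time window: half the smallest neighbouring gap
                tau = min(a[l + 1] - a[l], a[l] - a[l - 1],
                          b[m + 1] - b[m], b[m] - b[m - 1]) / 2
                d = a[l] - b[m]
                if 0 < d < tau:
                    c += 1.0
                elif d == 0:
                    c += 0.5
        return c

    c_ij = count(ti, tj)   # events in i shortly after events in j
    c_ji = count(tj, ti)   # events in j shortly after events in i
    norm = math.sqrt((len(ti) - 2) * (len(tj) - 2))
    return (c_ij + c_ji) / norm, (c_ji - c_ij) / norm
```

For two series whose events coincide exactly, every interior pair contributes 1/2 to each count, so the strength is 1 and the delay measure is 0.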

The event synchronization method has been found useful for the study of electroencephalogram signals [90], neurophysiological signals [91], and the patterns of extreme rainfall events [92], [93], [94], [95], [96].

2.1.4. Mutual information climate network

The mutual information method is also one of the common tools for quantifying the interdependencies between time series and for constructing climate networks. The mutual information of two random variables is a measure of the mutual dependence between the two variables. The concept of mutual information is intricately linked to that of the entropy of a random variable [97]. Let $X$ and $Y$ be a pair of random variables with values over the space $\mathcal{X} \times \mathcal{Y}$. If their joint distribution is $P_{XY}(x,y)$, and their associated marginal probability distribution functions are $P_X(x)$ and $P_Y(y)$, then the mutual information is defined as

$$I(X;Y) = \sum_{x \in \mathcal{X}}\sum_{y \in \mathcal{Y}} P_{XY}(x,y)\,\log\frac{P_{XY}(x,y)}{P_X(x)\,P_Y(y)}. \tag{21}$$

States with zero probability of occurrence are ignored.

Intuitively, $I(X;Y)$ is a measure of the inherent dependence expressed in the joint distribution of $X$ and $Y$ relative to their joint distribution under the assumption of independence. Mutual information therefore captures nonlinear as well as linear dependence; in fact, $I(X;Y) = 0$ if and only if $X$ and $Y$ are independent random variables, i.e., $P_{XY}(x,y) = P_X(x)P_Y(y)$. Moreover, mutual information is non-negative, symmetric and can also be computed with a time lag [98]. After we get the mutual information coefficient, Eq. (21), for each pair of nodes, we can construct a mutual information climate network. It has been shown that the mutual information CN can well capture the westward propagation of sea surface temperature (SST) anomalies that occur in the Atlantic multidecadal oscillation [99].
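For discrete (or pre-binned) data, the mutual information can be estimated directly from empirical frequencies. The sketch below is a plug-in estimator with the hypothetical name `mutual_information`; it assumes the two series have already been discretized, which for continuous climate variables requires a binning or symbolization step that is not shown here. States that never occur do not appear in the counters, so they are ignored automatically.

```python
import math
from collections import Counter

def mutual_information(xs, ys):
    """Plug-in estimate of I(X;Y) in nats from paired discrete samples."""
    n = len(xs)
    joint = Counter(zip(xs, ys))
    px = Counter(xs)
    py = Counter(ys)
    mi = 0.0
    for (x, y), count in joint.items():
        p_xy = count / n
        mi += p_xy * math.log(p_xy / ((px[x] / n) * (py[y] / n)))
    return mi
```

Two identical binary series with equally likely states give $\log 2$, while two independent ones give zero; the estimator is symmetric in its arguments by construction.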

To summarize, in this chapter we have described different network characteristics and presented various linear and nonlinear tools of time series analysis, which can be used to construct, define and characterize CNs. Linear and nonlinear methods include Pearson correlation, event synchronization, and information-theory measures such as entropy and mutual information. There are also some other powerful tools, such as, spectral analysis, empirical orthogonal function analysis and symbolic ordinal analysis that can be used to reconstruct CNs [46].

2.2. Percolation theory

Percolation originally has been used to describe the movement and filtering of fluids through porous materials [100]. The idea of percolation was also considered by Flory and Stockmayer on polymerization and the sol–gel transition [101]. In recent years, it has been a cornerstone in the theory of spatial stochastic processes with broad applications to diverse problems in fields such as statistical physics [102], [103], [104], [105], phase transitions [106], [107], materials [108], [109], epidemiology [110], [111], [112], [113], networks [30], [38], [40], [114], [115], [116], [117], [118], [119], [120], [121], colloids [122], [123], semiconductors [124], traffic [125], turbulence [126], as well as Earth systems [33], [65], [67], [127], [128], [129], [130]. Percolation theory also plays a pivotal role in studying the structure, robustness and functions of complex systems [131].

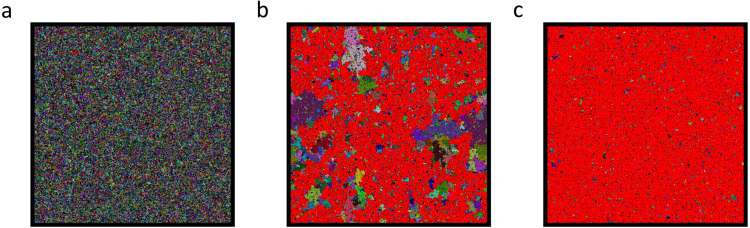

It is well known that in lattices and other ordered networks [106], [107], [132], for dimensions greater than one, a percolation phase transition occurs. The percolation process is a simple model in which the nodes (sites) or edges (bonds) are occupied with some probability $p$ and unoccupied with probability $1-p$. Take a regular lattice as an example (see Fig. 2a). A system is regarded as percolating if there is a path from one side to the opposite, parallel side, passing only through occupied bonds and sites. When such a path exists, the component or cluster of sites that spans the lattice is called the spanning cluster or the infinite percolation cluster. At low concentration $p$, the sites are either isolated or form small clusters of nearest-neighbor sites. Two sites belong to the same cluster if they are connected by a path of nearest-neighbor sites. At large $p$ values, on the other hand, at least one path between opposite sides exists (see Fig. 7). The percolation phase transition occurs at some critical density $p_c$ that depends on the type and dimensionality of the lattice.

Fig. 7.

Bond percolation clusters on a 512 × 512 2D square lattice for $p =$ (a) 0.3, (b) 0.5 and (c) 0.55, respectively. Different cluster sizes have different colors. The color of the larger clusters depends on their size and varies from blue (small clusters) to red (infinite cluster). The percolation threshold is $p_c = 0.5$.

For many complex networks, the notion of a side does not exist. However, the ideas and tools of percolation theory can still be applied [51]. The main difference is that the condition for percolation is no longer the existence of a spanning cluster, but rather the existence of a cluster containing $O(N)$ nodes, where $N$ is the number of nodes in the network. If such a component exists, we call it the giant component, which was discussed in Section 2.1. Indeed, the condition of the existence of a giant component applies also to lattice networks, and therefore it can be used as a more general condition than the spanning property.

There exist two types of percolation. In site percolation, each site is occupied with probability $p$ and empty with probability $1-p$. In bond percolation, it is the bonds that are occupied with probability $p$, and above $p_c$ they form a giant component of connected sites. In this review, we will focus on the behavior of percolation near the percolation threshold $p_c$, where for the first time a giant component is formed or the system collapses [133]. These aspects are called critical phenomena, and the fundamental theory to describe them is the scaling theory of phase transitions.
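As a concrete illustration of site percolation, the following sketch occupies the sites of an $L \times L$ square lattice with probability $p$ and measures the fraction of sites in the largest cluster using a flood fill. The function name `largest_cluster_fraction` and the open (non-periodic) boundaries are choices made for this sketch only.

```python
import random

def largest_cluster_fraction(L, p, seed=0):
    """Fraction of all L*L sites that belong to the largest occupied cluster."""
    rng = random.Random(seed)
    occupied = [[rng.random() < p for _ in range(L)] for _ in range(L)]
    seen = [[False] * L for _ in range(L)]
    largest = 0
    for i in range(L):
        for j in range(L):
            if not occupied[i][j] or seen[i][j]:
                continue
            # flood fill over nearest neighbours with an explicit stack
            size = 0
            stack = [(i, j)]
            seen[i][j] = True
            while stack:
                a, b = stack.pop()
                size += 1
                for da, db in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    u, v = a + da, b + db
                    if 0 <= u < L and 0 <= v < L and occupied[u][v] and not seen[u][v]:
                        seen[u][v] = True
                        stack.append((u, v))
            largest = max(largest, size)
    return largest / (L * L)
```

Scanning $p$ from 0 to 1 with this function shows the largest cluster staying microscopic at low occupation and taking up most of the lattice at high occupation, with the crossover sharpening as $L$ grows.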

2.2.1. Phase transition

The concept of phase transition is usually used to describe transitions between solid, liquid, and gaseous states of matter for thermodynamic physical systems, where an ordered phase (e.g., solid) changes into a disordered phase (e.g., liquid) at some critical temperature $T_c$ [26]. Another classical example of a phase transition is the magnetic phase transition, explained by the Ising model, where a spontaneous magnetization appears at low temperature without any external field. With increasing temperature, the spontaneous magnetization decreases and vanishes at $T_c$.

Percolation is indeed a simple geometrical phase transition, where the percolation threshold $p_c$ distinguishes a phase of finite clusters (disordered phase) from a phase of an infinite cluster (ordered phase). The occupation probability $p$ of sites or bonds plays the same role as the temperature in the thermal phase transition. Such quantities are usually called control parameters in statistical physics.

Percolation order parameter

The percolation order parameter $P_\infty$ is the relative size of the infinite cluster, defined as the fraction of the sites belonging to the infinite cluster. For $p < p_c$, there exist only finite clusters, thus $P_\infty = 0$; for $p > p_c$, however, $P_\infty$ is analogous to the magnetization below $T_c$ and behaves near $p_c$ as a power law

$$P_\infty \sim (p - p_c)^{\beta}. \tag{22}$$

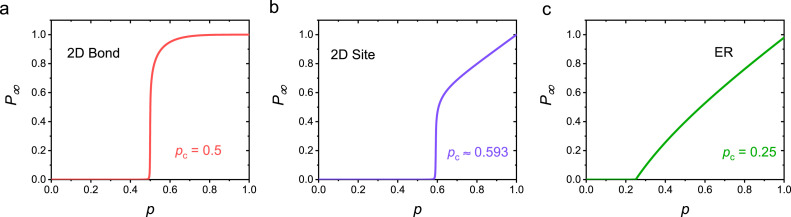

It describes the order in the percolation system and is therefore called the order parameter. We show in Fig. 8 how $P_\infty$ behaves as a function of $p$, for both bond and site percolation in the 2D square lattice model, as well as for site percolation in the ER network. It is worth noting that we adopt $p$ as the control parameter in Fig. 8, which results in $P_\infty$ being a monotonically increasing function. When $p$ is gradually decreased (from 1), this can be regarded as removal (or attack) of nodes or links, and the system collapses after removing a fraction $1 - p_c$. Thus, $p_c$ can be a measure of the resilience of the system, i.e., how close it is to collapse.

Fig. 8.

Percolation phase transitions in lattice and network systems. The percolation order parameter as a function of occupied probability for (a) 2D bond percolation with , (b) 2D site percolation with and (c) ER network with . Here the average degree for ER network.

Cluster size distribution

The distribution of finite components near criticality follows the scaling form [106], [107]

$$n_s \sim s^{-\tau} e^{-s/s^{*}}, \tag{23}$$

where $s$ is the component size, and $n_s$ represents the number of components of size $s$ (per site). At the percolation threshold $s^{*}$ diverges and the tail of the distribution behaves as a power law with the critical exponent $\tau$.

Average cluster size

For any given site, the probability that it belongs to a cluster of size $s$ is $s\,n_s$. The probability that any given site is part of a finite cluster is therefore

$$\sum_{s} s\,n_s, \tag{24}$$

which is the first moment of the cluster size distribution. Hence, for any given site of any given finite cluster, the probability that the cluster is of size $s$ is

$$w_s = \frac{s\,n_s}{\sum_{s} s\,n_s}, \tag{25}$$

with $\sum_s w_s = 1$. For any given site of any given finite cluster, the average size of the cluster is

$$S = \sum_{s} s\,w_s = \frac{\sum_{s} s^2 n_s}{\sum_{s} s\,n_s}. \tag{26}$$

Note that the average size $S$, excluding the infinite cluster, diverges near the critical point as

$$S \sim |p - p_c|^{-\gamma}. \tag{27}$$
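The quantities in Eqs. (24)–(26) are straightforward to evaluate from a list of finite cluster sizes. The sketch below assumes that the caller has already removed the infinite (or largest) cluster and that $n_s$ is normalized per lattice site; the function name `cluster_statistics` is hypothetical.

```python
from collections import Counter

def cluster_statistics(cluster_sizes, n_sites):
    """Return (n_s, w_s, S) for a list of finite cluster sizes.

    n_s[s]: number of clusters of size s per site
    w_s[s]: probability that a site in a finite cluster sits in one of size s
    S:      average finite cluster size (second moment over first moment)
    """
    counts = Counter(cluster_sizes)
    n_s = {s: c / n_sites for s, c in counts.items()}
    first_moment = sum(s * n for s, n in n_s.items())
    second_moment = sum(s * s * n for s, n in n_s.items())
    w_s = {s: s * n / first_moment for s, n in n_s.items()}
    return n_s, w_s, second_moment / first_moment
```

Note that $S$ is a site-weighted average: large clusters count more than they would in a plain mean over clusters, because a randomly chosen occupied site is more likely to sit in a large cluster.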

Correlation length

The correlation function $g(r)$ is the probability that a site at distance $r$ from an occupied site in a finite cluster belongs to the same cluster.

Typically, for large $r$, there is an exponential cutoff, i.e., $g(r) \sim e^{-r/\xi}$, at the correlation length $\xi$. The correlation length is defined as

$$\xi^2 = \frac{\sum_{r} r^2 g(r)}{\sum_{r} g(r)}. \tag{28}$$

$\xi$ measures the mean distance between two sites on the same finite cluster. When $p$ approaches $p_c$, $\xi$ increases as

$$\xi \sim |p - p_c|^{-\nu}. \tag{29}$$

The quantities $P_\infty$ and $S$ are analogous to the magnetization and the susceptibility in magnetic systems. $\beta$, $\gamma$ and $\nu$ are called the critical exponents and describe the critical behavior of the percolation phase transition.

2.2.2. Structural properties

Next, we will briefly introduce some fundamental measurements that are used to characterize the structural properties of a percolation cluster. For more details, the readers can refer to Ref. [106].

Fractal dimension

The fractal concept was introduced into the physical world by Mandelbrot [134] and applied to percolation by Stanley [135] to describe the cluster shapes at the percolation threshold $p_c$. The infinite cluster is self-similar on all length scales, and can be regarded as a fractal. The fractal dimension $d_f$ describes how, on average, the mass $M$ of the cluster within a sphere of radius $r$ from a site of the cluster changes with $r$,

$$M(r) \sim r^{d_f}. \tag{30}$$

For length scales smaller than the correlation length $\xi$, a fractal structure exists. For length scales larger than $\xi$, the cluster becomes homogeneous. This can be summarized as

$$M(r) \sim \begin{cases} r^{d_f}, & r \ll \xi,\\ r^{d}, & r \gg \xi. \end{cases} \tag{31}$$

The relative size of the giant component, $P_\infty$, can also be expressed as

$$P_\infty \sim \frac{\xi^{d_f}}{\xi^{d}}. \tag{32}$$

The fractal dimension of percolation clusters can be related to the critical exponents $\beta$ and $\nu$. Substituting Eqs. (22), (29) into Eq. (32) yields $d_f = d - \beta/\nu$.

Fractal analysis was also applied in the study of complex networks [136]. Generally, there are two basic methods to calculate the fractal dimension of a given system, i.e., the box counting method [137] and the cluster growing method [138]. (i) The box counting method: Let $N_B$ be the number of boxes of linear size $l_B$ that are needed to cover the object; the fractal dimension $d_B$ is then given by $N_B \sim l_B^{-d_B}$. It means that the average number of nodes $\langle M_B(l_B)\rangle$ within a box of size $l_B$ is

$$\langle M_B(l_B)\rangle \sim l_B^{d_B}. \tag{33}$$

The fractal dimension can be obtained by a power law fit. (ii) The cluster growing method: Similar to Eq. (30), the dimension can be calculated by

$$\langle M_c(l)\rangle \sim l^{d_f}, \tag{34}$$

where $\langle M_c(l)\rangle$ is the average mass of the cluster, defined as the average number of nodes within linear size $l$ in the cluster. Note that the above methods are difficult to apply directly to networks, since networks are generally not embedded in space. In order to measure the fractal dimension of networks one usually uses the concept of renormalization [136], i.e., for each chemical size $l_B$, boxes are placed until the network is covered. Then each box is replaced by a node (renormalization), and the renormalized nodes are connected if there is at least one link between the non-renormalized boxes. This procedure is repeated, and the number of boxes scales with $l_B$ as $N_B(l_B) \sim l_B^{-d_B}$.
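The box counting method can be checked on an object whose fractal dimension is known exactly. The sketch below builds a Sierpinski carpet on a grid (an example chosen here for illustration, not one taken from the text), counts occupied boxes at several box sizes, and fits the slope of the log–log relation by least squares. All function names are hypothetical. For the carpet the expected dimension is $\log 8/\log 3 \approx 1.893$.

```python
import math

def sierpinski_carpet(level):
    """Set of filled cells of a Sierpinski carpet on a 3**level grid."""
    size = 3 ** level

    def filled(x, y):
        # a cell is removed if, at any scale, both base-3 digits equal 1
        while x > 0 or y > 0:
            if x % 3 == 1 and y % 3 == 1:
                return False
            x //= 3
            y //= 3
        return True

    return {(x, y) for x in range(size) for y in range(size) if filled(x, y)}

def box_count(points, box):
    """Number of boxes of linear size `box` containing at least one point."""
    return len({(x // box, y // box) for x, y in points})

def fit_slope(xs, ys):
    """Least-squares slope of ys against xs."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    num = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    den = sum((x - mx) ** 2 for x in xs)
    return num / den

def box_counting_dimension(points, boxes):
    """Estimate d_B from N_B(l_B) ~ l_B**(-d_B)."""
    log_l = [math.log(b) for b in boxes]
    log_n = [math.log(box_count(points, b)) for b in boxes]
    return -fit_slope(log_l, log_n)
```

Choosing box sizes that are powers of 3 aligns the boxes with the self-similar structure of the carpet; for irregular objects such as percolation clusters the estimate depends on the fitting range and should be read off the scaling regime only.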

Graph dimensions $d_{\min}$ and $d_l$

The fractal dimension $d_{\min}$ has been used to describe the structural properties of the shortest path between two arbitrary sites in the same cluster. Let $l$ be the path length, which is often called the “chemical distance” [139]; it scales with $r$ as

$$l \sim r^{d_{\min}}, \tag{35}$$

and the number of sites within $l$ is

$$M(l) \sim l^{d_l}, \tag{36}$$

where $d_l$ is often called the “graph dimension” or “chemical dimension”. Combining Eqs. (30), (35), (36), we obtain the relation between $d_f$, $d_{\min}$ and $d_l$: $d_l = d_f/d_{\min}$.

Besides the fractal dimension exponents $d_f$, $d_{\min}$ and $d_l$ discussed above, there are also other exponents, such as the backbone exponent and the red bonds exponent, that describe the fractal dimensions of the substructures composing percolation clusters [26], [107].

2.2.3. Scaling theory

The scaling hypotheses of phase transitions were developed by Kenneth G. Wilson during the last century and honored by the 1982 Nobel prize. The scaling theory of percolation clusters relates the critical exponents of the percolation transition to the cluster size distribution, $n_s$. According to the scaling hypotheses, it is possible to state the following relation for $n_s(p)$,

$$n_s(p) = s^{-\tau} f\left((p - p_c)\,s^{\sigma}\right), \tag{37}$$

where $\tau$ is the Fisher exponent [102], $\sigma$ is a second critical exponent, and $f(x)$ is a scaling function that decays rapidly to zero for $|x| \gg 1$.

The correlation length is defined as the root mean square distance between occupied sites on the same finite cluster for all clusters, see Eq. (28). For clusters with sites, the root mean square distance between all pairs of sites on each cluster, is

| (38) |

and thus,

| (39) |

Close to the percolation threshold , , and we obtain from the above equation

| (40) |

To calculate the sums in Eq. (40), we transform them into integrals, and obtain

| (41) |

which yields the scaling relations between and

| (42) |

where is the system’s dimension.

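Consider the $k$th moment of the cluster size distribution

$$M_k = \sum_{s} s^{k} n_s, \tag{43}$$

which scales in the critical region as

$$M_k \sim |p - p_c|^{(\tau - 1 - k)/\sigma}. \tag{44}$$

Next we consider the percolation order parameter $P_\infty$, which behaves as [106]

$$P_\infty \sim (p - p_c)^{(\tau - 2)/\sigma}. \tag{45}$$

Combining with Eq. (22), we have

$$\beta = \frac{\tau - 2}{\sigma}. \tag{46}$$

Similarly, we obtain for $S$ the relation $S \sim |p - p_c|^{(\tau - 3)/\sigma}$, yielding

$$\gamma = \frac{3 - \tau}{\sigma}. \tag{47}$$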

Thus, the critical exponents are not independent of each other but satisfy two sets of scaling and hyperscaling relations. The scaling relations can be easily expressed by Eqs. (42), (46), (47).
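As a quick consistency check, the standard scaling relations $\beta = (\tau-2)/\sigma$, $\gamma = (3-\tau)/\sigma$ and $d_f = d - \beta/\nu$, together with the hyperscaling relation $\tau = 1 + d/d_f$, can be evaluated with the exactly known exponents of two-dimensional percolation ($\tau = 187/91$, $\sigma = 36/91$, $\nu = 4/3$). The sketch below uses exact rational arithmetic; the function name `derived_exponents` is hypothetical.

```python
from fractions import Fraction

def derived_exponents(tau, sigma, nu, d):
    """Derive beta, gamma and d_f from tau, sigma, nu and the dimension d."""
    beta = (tau - 2) / sigma
    gamma = (3 - tau) / sigma
    d_f = d - beta / nu
    return {"beta": beta, "gamma": gamma, "d_f": d_f}

# exactly known exponents of 2D percolation
exponents_2d = derived_exponents(
    tau=Fraction(187, 91), sigma=Fraction(36, 91), nu=Fraction(4, 3), d=2
)
print(exponents_2d)  # beta = 5/36, gamma = 43/18, d_f = 91/48
```

Only two of the exponents are independent: fixing $\tau$ and $\sigma$ (or any other pair) together with the dimension determines all the others.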

2.2.4. Universal gap scaling

An interesting property of percolation is universality, which is a fundamental principle of behavior at or near a phase transition critical point. As a result, the critical exponents depend only on the dimensionality of the system, and are independent of the microscopic interaction details of the system. The behavior of a system is characterized by a set of critical exponents, as discussed in the last section. If two systems have the same values of critical exponents, they belong to the same universality class. The universality property is a general feature of phase transitions. A phase transition is also characterized by scaling functions that govern the finite-size behaviors [140]. The concept of finite-size scaling provides a versatile tool to study the percolation transition.

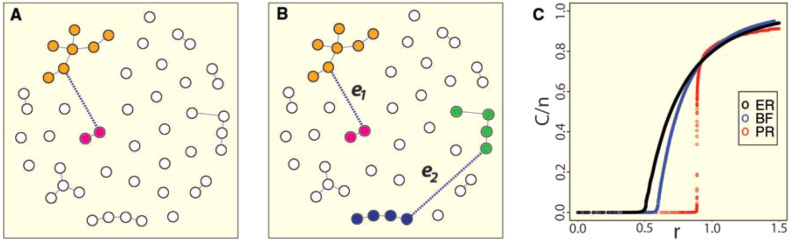

Usually, a lattice or network is expected to undergo a continuous percolation phase transition during a random occupation or failure process [81]. Explosive, hybrid and genuinely discontinuous percolation, which arise in processes that are not random but competitive link-addition processes, have attracted much attention in recent years [116], [141], [142], [143], [144], [145], [146], [147], [148], [149], [150], [151], [152]. The explosive percolation model is illustrated in Fig. 9.

Fig. 9.

Schematic of the explosive percolation model. (A) For each step two vertices are chosen randomly and connected by an edge. (B) At each time step of the Product Rule (PR) process, two edges, and , compete for addition. The selected edge is the one minimizing the product of the sizes of the components it merges. Here is accepted and is rejected. (C) Typical evolution of an ER, Bohman Frieze (BF), and PR process on a system of size .

Figure from Ref. [116].

Instead of performing a finite-size scaling analysis at the critical point of the phase transition, one can consider the finite-size scaling of the percolation properties and the critical scaling of the size of the largest gap in the order parameter. For instance, Fan et al. [153] developed a new universal gap scaling theory and proposed six gap critical exponents to describe the universality of percolation phase transitions. This theoretical framework can be applied to both continuous and discontinuous percolation in various lattice and network models by using finite-size scaling functions.

Gap exponents

Starting with an empty lattice, or network system with isolated nodes, bonds or links are added randomly or by competitive link-addition processes one by one. The control parameter is denoted as , which represents the link density , with being the maximal number of edges. The order parameter is the size of the largest cluster, given by the largest connected component in the entire system. During the evolution of the system, we record the size of the largest cluster at time step , and calculate its largest one-step gap

| (48) |

The step with the largest jump defines and its relative transition point as . Moreover, the percolation strength is defined as the size of the largest connected component at , i.e., .
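The largest one-step gap can be tracked efficiently with a union–find structure while links are added one by one. The sketch below is an illustration with hypothetical names (`largest_gap`, `random_edges`); it returns raw counts, so dividing the gap and the cluster size by the number of nodes, and the step by the number of nodes or possible edges, is left to the caller. The random edge generator corresponds to the simple random (ER-like) process, not to a competitive rule.

```python
import random

def largest_gap(n, edges):
    """Track the largest cluster while adding edges in order.

    Returns (gap, step, size): the largest one-step jump of the largest
    cluster, the 1-based step at which it occurs, and the size of the
    largest cluster right after that step.
    """
    parent = list(range(n))
    size = [1] * n

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    largest = 1
    best_gap, best_step, size_at_gap = 0, 0, 1
    for step, (u, v) in enumerate(edges, start=1):
        previous = largest
        ru, rv = find(u), find(v)
        if ru != rv:
            if size[ru] < size[rv]:
                ru, rv = rv, ru
            parent[rv] = ru            # union by size
            size[ru] += size[rv]
            largest = max(largest, size[ru])
        gap = largest - previous
        if gap > best_gap:
            best_gap, best_step, size_at_gap = gap, step, largest
    return best_gap, best_step, size_at_gap

def random_edges(n, m, seed=0):
    """m random links between distinct nodes (repeats allowed)."""
    rng = random.Random(seed)
    return [tuple(rng.sample(range(n), 2)) for _ in range(m)]
```

Repeating the measurement over many independent realizations and several system sizes gives the averages and fluctuations whose scaling with system size defines the gap exponents.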

Critical phenomena such as percolation exhibit scale-free behaviors that are quantified by scaling relations, see discussions in previous sections. Similarly, the averages , and are anticipated to exhibit the following power-law relations [153], as a function of ,2

| (49) |

| (50) |

| (51) |

where , and are three critical exponents describing the universal class of the percolation, and is the percolation threshold in the thermodynamic infinity limit, . Their corresponding fluctuations , and , are also investigated,

| (52) |

| (53) |

| (54) |

where is the number of independent realizations. We expect that , and decay algebraically with with the following scaling relations

| (55) |

| (56) |

| (57) |

where , and constitute another set of critical exponents. The universality class of the percolation is characterized by the new six gap critical exponents. Note that they are highly related to the standard percolation exponents, and not fully independent from each other. The relationships between the gap exponents and the standard percolation critical exponents are derived as [153],

| (58) |

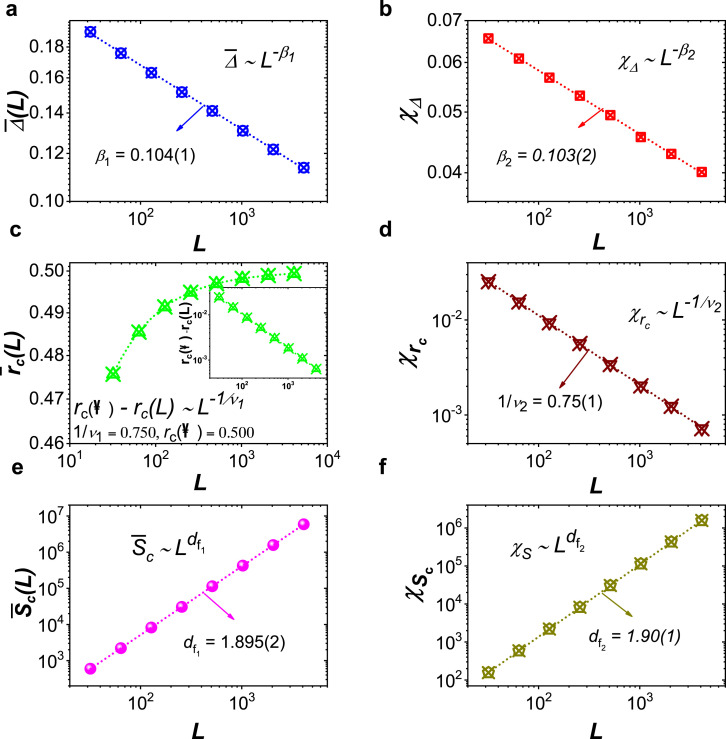

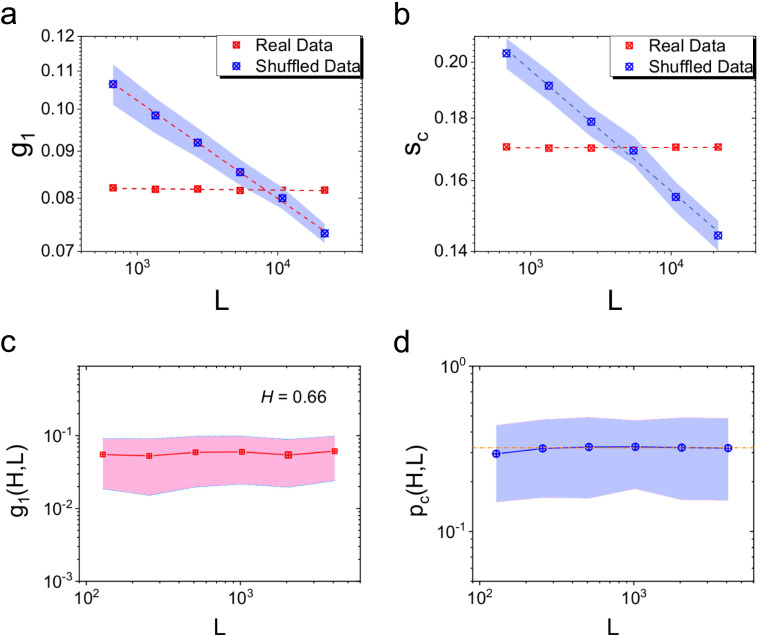

In particular, the values of can imply the order of the percolation. That is, indicates the percolation is a first order transition; whereas models with are continuous [120], [147]. We present the numerical results for bond percolation on a 2D square lattice in Fig. 10, where we obtain , , and , and . All results are in agreement with the known theoretical values [103].

Fig. 10.

Gap critical exponents for bond percolation on 2D square lattice systems. (a) and (b), and as functions of . (c) and (d), log–log plot of the percolation threshold and versus . (e) and (f). The power-law relations of and with . Inset in (c) shows the log–log plot of as a function of .

Reprinted figure from Ref. [153].

2.3. Tipping points analysis

The notion of a tipping point, “little things can make a big difference”, was popularized by Malcolm Gladwell in his book [154]. It means that, at a particular moment in time, a small change can result in large and long-term consequences in a complex system. Tipping points are usually associated with bifurcations [155]. A tipping point is defined as “the moment of critical mass, the threshold, the boiling point”. Many complex systems experience sudden shifts in behavior, often referred to as tipping points or critical transitions, ranging from climate [23], [156], [157], [158], [159], [160], [161], ecosystems [158], [162], [163], [164], [165], [166], [167], to social science [168], [169], financial markets [170], [171], medicine [172], [173], [174] and even macroeconomic agent-based models [175].

Complex systems can shift abruptly from one state to another at tipping points, which may imply a growing threat and risk of abrupt and irreversible changes [161]. It is thus of great practical importance to understand the theoretical mechanisms and predict the tipping phenomena. Although predicting such critical points before they occur is notoriously difficult, theory proposes the existence of generic early-warning signals (EWS) that may indicate for a wide class of systems whether a critical threshold is approaching [176], [177], [178]. EWS are currently one of the most powerful tools for predicting critical transitions. The tipping point analysis technique provides a vital tool to anticipate, detect and predict tipping points in complex dynamical systems. The methodology usually relies on monitoring memory in time series, including the dynamically derived lag-1 autocorrelation [179], the power-law scaling exponent of detrended fluctuation analysis (DFA) [180], [181], and power-spectrum-based analysis [182].
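One of the simplest memory indicators mentioned above, the lag-1 autocorrelation in a sliding window, can be sketched as follows. The function names (`lag1_autocorrelation`, `rolling_indicator`, `ar1`) are hypothetical, and the AR(1) generator is included only as a toy stand-in for a system whose recovery from perturbations slows down as its memory parameter approaches 1; detrending, which is usually applied before computing the indicator on real data, is omitted.

```python
import random

def lag1_autocorrelation(x):
    """Lag-1 autocorrelation of a sequence (0.0 for a constant sequence)."""
    n = len(x)
    mean = sum(x) / n
    num = sum((x[t] - mean) * (x[t + 1] - mean) for t in range(n - 1))
    den = sum((v - mean) ** 2 for v in x)
    return num / den if den > 0 else 0.0

def rolling_indicator(x, window):
    """Lag-1 autocorrelation in each sliding window of the given length."""
    return [lag1_autocorrelation(x[s:s + window])
            for s in range(len(x) - window + 1)]

def ar1(phi, n, seed=0):
    """Toy AR(1) series x[t+1] = phi * x[t] + Gaussian noise."""
    rng = random.Random(seed)
    x = [0.0]
    for _ in range(n - 1):
        x.append(phi * x[-1] + rng.gauss(0.0, 1.0))
    return x
```

A sustained upward trend of the rolling indicator towards 1 is the signature usually interpreted as critical slowing down; whether the trend is significant has to be assessed separately, for example against surrogate series.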

2.3.1. Basic concepts

Defining tipping points: Following Ref. [177], a tipping point is the critical point at which the future state of the system is qualitatively changed. A single control parameter $\rho$ is identified, for which there exists a threshold $\rho_{\mathrm{crit}}$, from which a small perturbation $\delta\rho > 0$ leads to a qualitative change $\hat{F}$ in a crucial feature of the system $F$, after some observation time $T > 0$. The actual change is defined as:

$$\left|F(\rho \ge \rho_{\mathrm{crit}} + \delta\rho \mid T) - F(\rho_{\mathrm{crit}} \mid T)\right| \ge \hat{F} > 0. \tag{59}$$

In this definition, the critical threshold $\rho_{\mathrm{crit}}$ is considered as the tipping point, beyond which a qualitative change occurs. Note that such changes may occur immediately or much later after the cause.

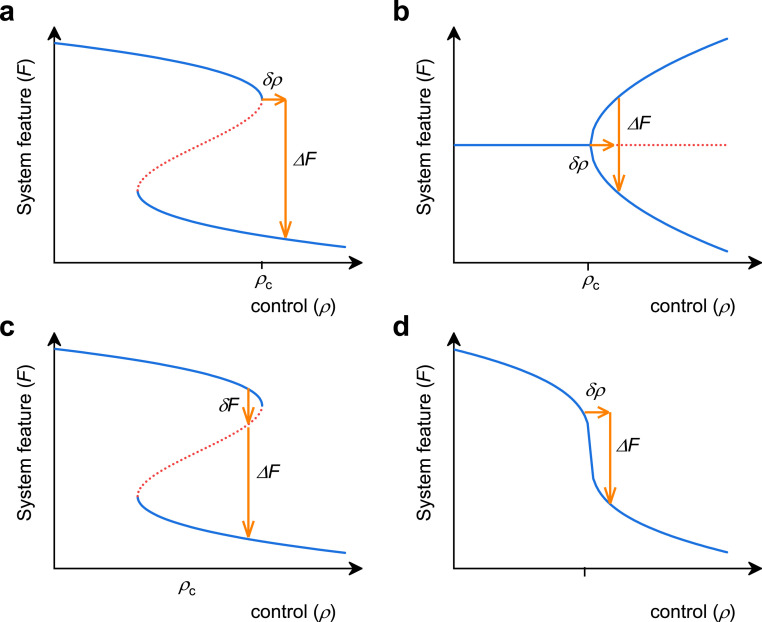

Types of tipping points: The theoretical mechanisms behind tipping phenomena in a complex system can be effectively divided into three distinct categories: bifurcation-induced, noise-induced and rate-dependent tipping [183].

Bifurcation-induced tipping means that a small change in forcing past a critical threshold causes a large, nonlinear change in the system state, thus meeting the tipping point definition in Eq. (59). Given a conceptualized open system [184],

$$\frac{dx}{dt} = f\left(x, \lambda(t)\right), \tag{60}$$

where $x$ is the state of the system and $\lambda(t)$ is in general a time-varying input. In the case that $\lambda$ is constant, we refer to Eq. (60) as the parameterized system with parameter $\lambda$ and to its stable solution as the quasi-static attractor. If $\lambda$ passes through a bifurcation point of the system where a quasi-static attractor loses stability, it is intuitively clear that the system may ‘tip’ directly as a result of varying that parameter. We present two bifurcation-induced tipping point examples in Fig. 11, where the system’s states are described by

| (61) |

and

| (62) |

for Fig. 11 a and b, respectively. In general, as a system approaches a bifurcation tipping point, where its current state becomes unstable, it leads to a shift to an alternative attractor.
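Bifurcation-induced tipping can be illustrated numerically with a generic bistable model. The sketch below integrates $dx/dt = x - x^3 + \lambda$ with a slowly ramped input $\lambda$; this equation is an illustrative choice made here and is not claimed to be one of the models shown in Fig. 11. For this model the lower stable branch disappears in a fold bifurcation at $\lambda_c = 2/(3\sqrt{3}) \approx 0.385$. The function name `ramp_through_fold` and all parameter values are assumptions of the sketch.

```python
def ramp_through_fold(lam_end, total_time=200.0, dt=0.01, x0=-1.0):
    """Euler integration of dx/dt = x - x**3 + lam with lam ramped 0 -> lam_end.

    The system starts on the lower stable branch (x0 = -1 at lam = 0) and
    the input is increased linearly and slowly over `total_time`.
    Returns the final state.
    """
    steps = round(total_time / dt)
    x = x0
    for k in range(steps):
        lam = lam_end * k / steps          # slowly varying input
        x += dt * (x - x ** 3 + lam)       # explicit Euler step
    return x

below = ramp_through_fold(0.2)   # input stays below the fold: no tipping
beyond = ramp_through_fold(1.0)  # input crosses the fold: state jumps
```

When the ramp ends below the fold, the state tracks the lower quasi-static attractor and stays negative; when the ramp crosses the fold, the state jumps to the upper branch and does not return if the input is subsequently reduced only slightly, which is the hysteresis characteristic of this type of tipping.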

Fig. 11.

Different sources of cascading tipping point types. (a) and (b) Bifurcation-induced, (c) noise-induced transitions, (d) reversible tipping points. Solid lines are stable steady states, dashed lines are unstable steady states.

Noise-induced tipping refers to noise-induced transitions between existing stable states of a complex system (Fig. 11c). These can also be regarded as tipping points [185]; however, they do not meet the definition of forced changes, as in Eq. (59). Noise-induced tipping means that noisy fluctuations result in the system departing from a neighborhood of a quasi-static attractor. For example, Pikovsky and Kurths studied coherence resonance in a noise-driven excitable FitzHugh–Nagumo system and found that the effect of coherence resonance is explained by different noise dependencies of the activation and the excursion times [186]. The abrupt warming events during the last ice age, known as Dansgaard–Oeschger events, provide a real-world example of noise-induced tipping [185]. In addition, Sutera [187] studied noise-induced tipping points in a simple global zero-dimensional energy balance model with ice-albedo and greenhouse feedback [188]. The results indicate a characteristic time of 100,000 years for random transitions between ‘warm’ and ‘cold’ climate states, which matches the observed data very well. The noise-induced tipping points approach has also been used successfully for modeling changes in other climate models and phenomena [189]. In contrast to approaching bifurcations, it was found that noise-induced transitions are fundamentally unpredictable and show none of the EWS [177].

Rate-dependent, in which the system fails to track a continuously changing quasi-static attractor. To better understand the phenomenon of rate-dependent tipping, Ashwin et al. introduced a linear model with a tipping radius and discussed three examples where rate-dependent tipping appears [183]. Given a system for and the parameter has a quasi-static equilibrium with a tipping radius , then for some initial with , the evolution of with time is given by

| (63) |

where is a fixed stable linear operator (i.e. as ). is a time-varying parameter, that represents the input to the subsystem. The tipping radius may be related to the basin of attraction boundary for the nonlinear problem. This model shows only rate-dependent tipping—because is fixed and there is no bifurcation in the system and no noise is present. In particular, the model can be generalized to include and that vary with . Eq. (63) can be solved with the initial condition to give

| (64) |

Note that the rate-dependent tipping was also observed in the zero-dimensional global energy balance model [188].

Besides the above three tipping types, there is potentially another type, a reversible tipping point. In Fig. 11d, we show an example of a reversible tipping point, in which a mono-stable system exhibits non-linear but reversible change [159].

2.3.2. Tipping elements in the Earth’s climate system

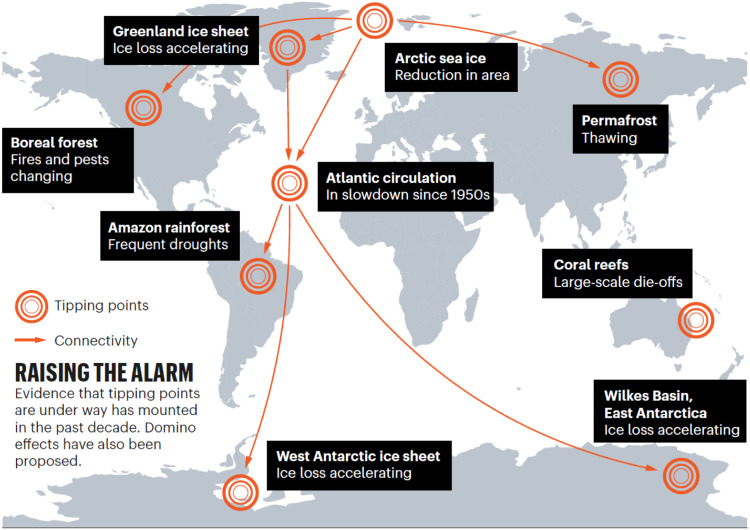

In Ref. [23] Lenton et al. introduced the term tipping elements to describe large-scale components of the Earth system that may pass a tipping point, and assessed where their tipping points lie. They emphasized that human activities may play a vital role in pushing components of the Earth system past their tipping points, resulting in profound impacts on our social and natural systems. They also defined the subset of policy-relevant tipping elements as those satisfying the following conditions: (i) the first condition is described in Eq. (59); (ii) the second one is related to the “political time horizon” $T_P$; (iii) the third one is related to the “ethical time horizon” $T_E$; (iv) the last one is that a qualitative change should correspondingly be defined in terms of impacts. According to these four conditions, we review here some policy-relevant potential tipping elements in the climate system, as shown in Fig. 12. For each tipping element, we consider its (1) feature of the system $F$ (see Eq. (59)) or direction of change, (2) control parameter(s) $\rho$, (3) critical value(s) $\rho_{\mathrm{crit}}$, (4) transition timescale $T$, as well as (5) key impacts.

Fig. 12.

Some basic main tipping elements in the Earth’s climate system.

Figure from Ref. [161].

Arctic Sea-Ice. For both summer and winter Arctic sea-ice, the area coverage is declining and the ice has thinned significantly over a large area [190]. In the IPCC projections with ocean–atmosphere GCMs, half of them will become ice-free in September during this century [191], at a polar temperature C [192]. The decline in the areal extent can be regarded as the feature of the system, denoted by ; the local air temperature and ocean heat transport can be regarded as control parameters; a numerical value of the critical threshold is still lacking in literature; the transition timescale is about years; the declining of the Arctic Sea-Ice can amplify warming and cause ecosystem changes.

Greenland ice sheet. It has been reported that the Greenland ice sheet is melting at an accelerating rate [193], which could add an additional 7 meters to sea level over thousands of years if the ice sheet passes a particular threshold. The decrease of the ice volume can be regarded as , the feature of the system; the local air temperature is the control parameter; the critical value °; the transition timescale can reach about 300 years.

West Antarctic ice sheet. According to the IPCC report [193], the Amundsen Sea embayment of West Antarctica might have already passed a tipping point, i.e., the ‘grounding line’ (where ice, ocean and bedrock meet) is retreating irreversibly. This could also destabilize the rest of the West Antarctic ice sheet [194]. Paleoclimate data show that such a widespread collapse of the West Antarctic ice sheet occurred repeatedly in the past. As for the Greenland ice sheet, the decrease of the ice volume can be regarded as ; the local air temperature is the control parameter; the critical value °; the transition timescale can reach 300 years. The collapse of the West Antarctic ice sheet may lead to about 5 meters of sea-level rise on a timescale of centuries to millennia [23].

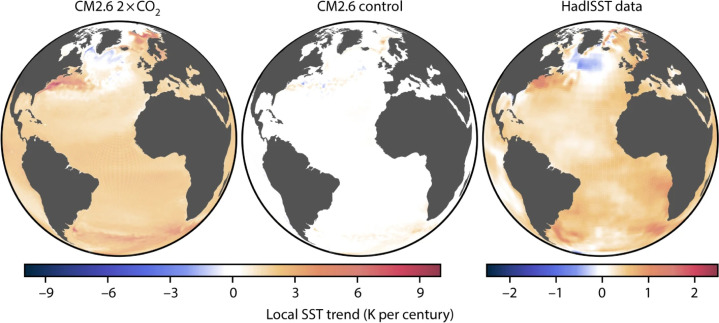

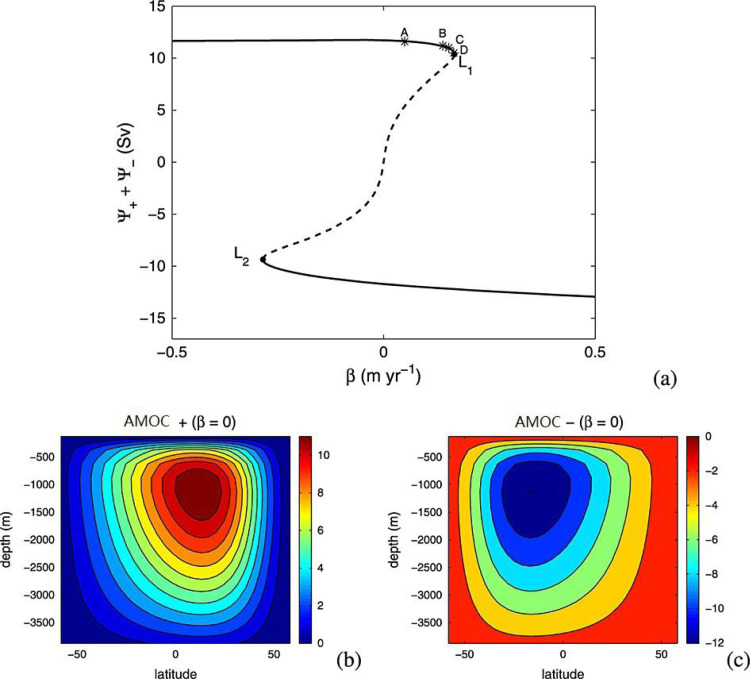

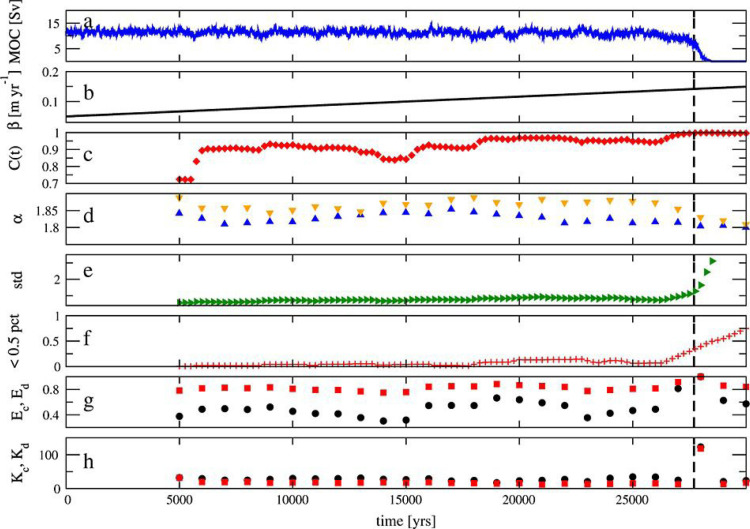

Atlantic circulation. The Atlantic meridional overturning circulation (AMOC) is one of Earth’s major ocean circulation systems, redistributing heat and affecting the climate. Research has provided evidence for a weakening of the AMOC by about sverdrups (around 15 per cent)3 since the mid-twentieth century [160]. The AMOC is also considered as one of the main tipping elements of the Earth system [23], [156]. The overturning of the Atlantic circulation can be regarded as ; the additional North Atlantic freshwater input is the control parameter; the critical value Sv; the transition timescale can reach 100 years. A slowdown of the AMOC is associated with a southward shift of the tropical rainfall belt by influencing the Intertropical Convergence Zone, and a warming of the Southern Ocean and Antarctica [17].

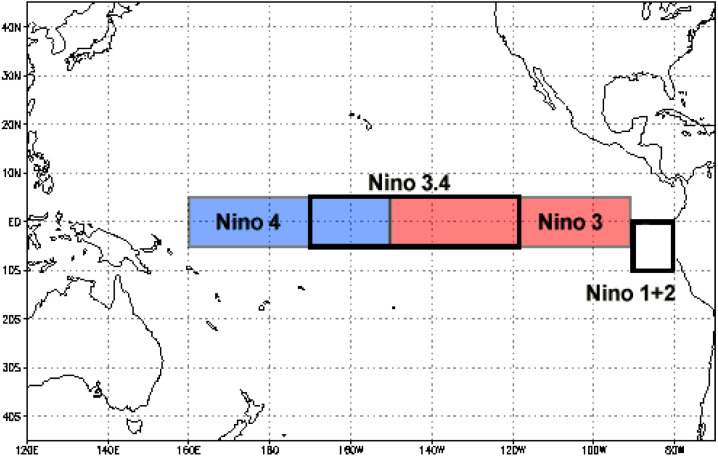

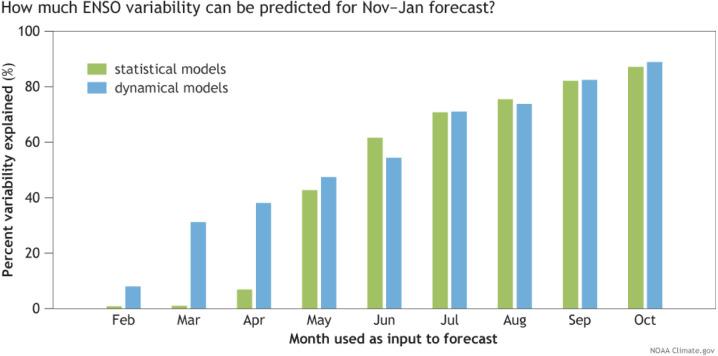

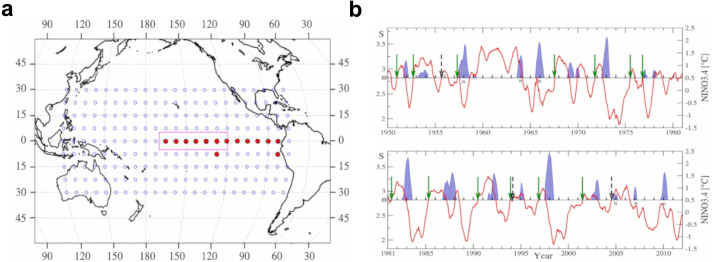

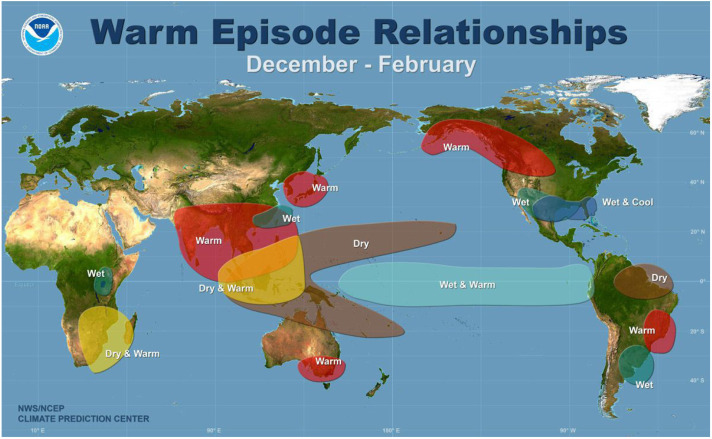

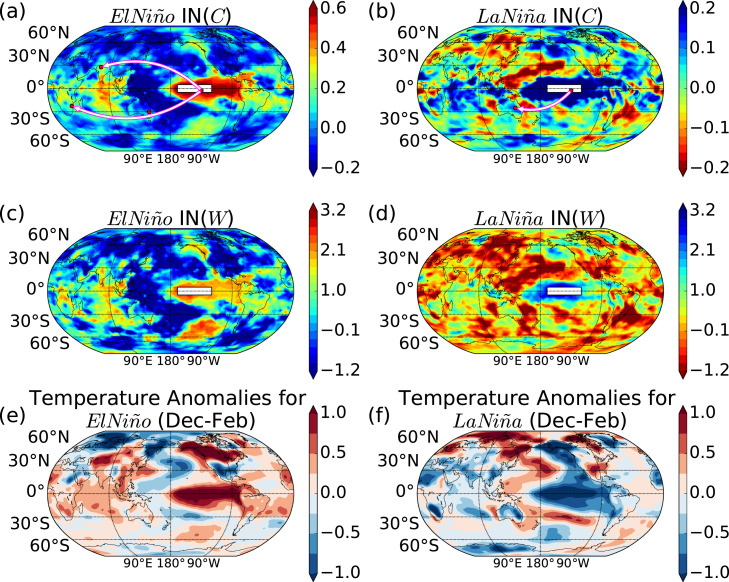

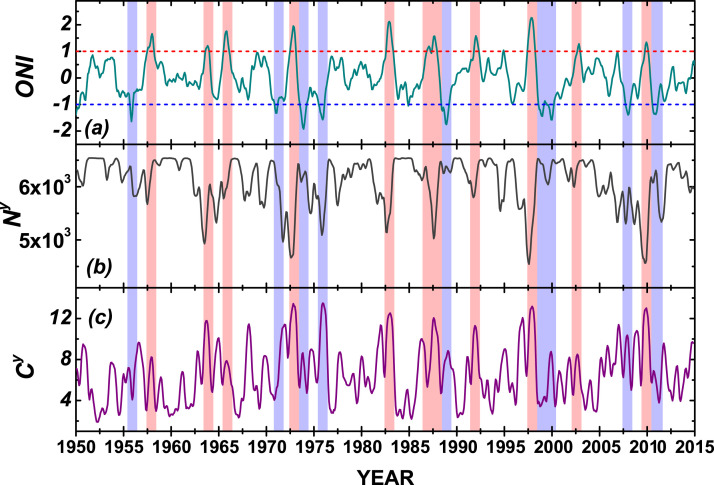

El Niño–Southern Oscillation (ENSO). ENSO, the interannual fluctuation between anomalous warm and cold conditions in the tropical Pacific, is one of the most influential coupled ocean–atmosphere climate phenomena on Earth [195], [196], [197]. It has been reported that extreme El Niño events are likely to increase in frequency in the 21st century [198]. The tipping point behavior of ENSO in a warming world was discussed in Ref. [199]. The amplitude of ENSO is regarded as ; the zonal mean thermocline depth, the thermocline sharpness in the eastern equatorial Pacific, and the strength of the annual cycle are the control parameters; the transition timescale can reach 100 years. A stronger El Niño usually causes more extreme events (e.g., floods, droughts, or severe storms), which have serious consequences for economies, societies, agriculture and ecosystems.

Indian summer monsoon. The Indian summer monsoon rainfall (ISMR) has a decisive influence on India’s agricultural output and economy. The monsoon season (from June to September) can bring drought and food shortages or severe flooding, depending on how much rain falls. The land-to-ocean pressure gradient drives the monsoon circulation, which is related to the moisture-advection feedback [200]. The ISMR has shown a declining trend since 1953 [201]. It has been reported that under some plausible decadal-scale scenarios of land use, greenhouse gas and aerosol forcing, the Indian summer monsoon switches between two metastable regimes corresponding to the “active” and “weak” monsoon phases [23]. Thus, the Indian summer monsoon can also be regarded as a tipping element. In particular, the ISMR is regarded as ; the planetary albedo over India is the control parameter; the critical value Sv; and the transition timescale is 1 year.

Amazon rainforest. Tropical forests play a vital role in the global carbon cycle [202] and are home to more than half of the known species worldwide [203]. Deforestation and climate change are destabilizing the tropical forests, with annual deforestation rates of around 0.5% since the 1990s and a strong increase in recent years [204]. An empirical finding suggests that the observed tropical forest fragmentation is close to the critical point on three continents, including the Americas, Africa and Asia–Australia [205]. In particular, we focus on the Amazon rainforest, since it is the world’s largest rainforest and is home to one in ten known species [161]. If forests are close to tipping points, an Amazon dieback could release 90 gigatonnes of carbon dioxide. Finding the tipping point of the Amazon rainforest is thus essential for staying within the emissions budget. Here the rainforest in the Amazon is regarded as ; the precipitation and dry season length are the control parameters; the critical value mm/year; its transition timescale is about 50 years.

Besides the seven tipping elements mentioned above, there are other potential policy-relevant tipping elements in the climate system, such as the Sahara/Sahel and West African monsoon, boreal forest, Antarctic bottom water, tundra, permafrost, marine methane hydrates, ocean anoxia and Arctic ozone. Interested readers can find them in Ref. [23]. As noted in Ref. [161], evidence that “tipping points are under way has mounted in the past decade. Domino effects have also been proposed”.

2.3.3. Early warning of tipping points

As discussed above, many complex dynamical systems, in particular climate systems, can have tipping points and carry the risk of unwanted collapse. Although predicting such tipping points before they are reached is a big challenge, the existence of generic EWS provides useful indicators for anticipating such critical transitions [176], [178]. Hence, if an early warning of a tipping point can be identified, it could help society, scientists and policymakers to act early and reduce the damage associated with system collapse. Thus, numerous studies have been dedicated to detecting and predicting these critical transitions, often making use of EWS. In this review, we highlight the tipping point analysis techniques that are used to anticipate, detect and forecast critical transitions in a dynamical system.

Critical slowing down near tipping points

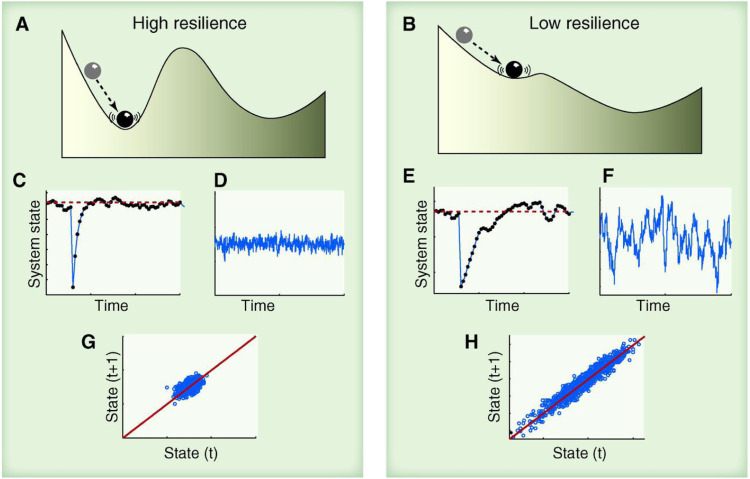

The “critical slowing down” phenomenon has been suggested as an indicator of whether a dynamical system is getting close to a critical threshold [206]. This happens, for instance, at the fold bifurcation (Fig. 11), which is often associated with tipping points. Critical slowing down means that the rate at which a system recovers from small perturbations becomes slow, and the slowness can be inferred from rising “memory” in small fluctuations in the state of the system. For example, Fig. 13 illustrates that critical slowing down is an indicator that the system has lost resilience and may be tipped more easily into an alternative state (as reflected by lag-1 autocorrelation) [178].

Fig. 13.

Critical slowing down as an indicator for foreseeing tipping points.

Figure from Ref. [178].

In order to illustrate the relation between the critical slowing down phenomenon, increased autocorrelation and increased variance, here we show a simple example that reveals the underlying mechanism. We consider a simple autoregressive model of first order,

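| $x_{n+1} - \bar{x} = e^{\lambda \Delta t}\,(x_n - \bar{x}) + \sigma \varepsilon_n$, | (65) |

where $\bar{x}$ is the stable equilibrium of the model, $\Delta t$ is the period and $\lambda$ is the recovery speed (with $\lambda < 0$ for a stable equilibrium). Here $y_n = x_n - \bar{x}$ is the deviation of the state variable $x$ from the equilibrium, $\varepsilon_n$ is a random number obtained from a standard normal distribution and $\sigma$ is the standard deviation. If $\lambda$ and $\Delta t$ are independent of $y_n$, Eq. (65) can be written as:

| $y_{n+1} = \alpha y_n + \sigma \varepsilon_n, \quad \alpha \equiv e^{\lambda \Delta t}$. | (66) |

The lag-1 autocorrelation $\alpha$ is zero for white noise and close to one for red noise. The expectation value of a first-order autoregressive process $y_{n+1} = c + \alpha y_n + \sigma \varepsilon_n$ is

| $E(y_{n+1}) = E(c) + \alpha E(y_n) + E(\sigma \varepsilon_n) \;\Rightarrow\; \mu = c + \alpha \mu + 0 \;\Rightarrow\; \mu = \frac{c}{1-\alpha}$. | (67) |

Let us consider $c = 0$; then the expectation value equals zero and the variance is

| $\mathrm{Var}(y_{n+1}) = E(y_n^2) - \mu^2 = \frac{\sigma^2}{1-\alpha^2}$. | (68) |

The return speed to equilibrium decreases when the system is close to the critical point. This implies that $\lambda$ tends to zero and $\alpha$ approaches one. According to Eq. (68), the variance tends to infinity. In summary, critical slowing down leads to an increase in lag-1 autocorrelation ($\alpha$) and in the resulting pattern of fluctuations (variance).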
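As a rough numerical check of this argument, the following sketch simulates a first-order autoregressive process and compares the sample variance and lag-1 autocorrelation with the values expected as the recovery speed approaches zero. It assumes the AR(1) form $y_{n+1} = \alpha y_n + \sigma \varepsilon_n$ with $\alpha = e^{\lambda \Delta t}$ and stationary variance $\sigma^2/(1-\alpha^2)$; the function names, the values of $\lambda$ and the series length are illustrative choices, not taken from the cited works.

```python
import math
import random


def simulate_ar1(alpha, sigma, n_steps, seed=0):
    """Simulate y_{n+1} = alpha * y_n + sigma * eps_n with eps_n ~ N(0, 1)."""
    rng = random.Random(seed)
    y = [0.0]
    for _ in range(n_steps - 1):
        y.append(alpha * y[-1] + sigma * rng.gauss(0.0, 1.0))
    return y


def variance(y):
    """Population variance of the sequence y."""
    mean = sum(y) / len(y)
    return sum((v - mean) ** 2 for v in y) / len(y)


def lag1_autocorrelation(y):
    """Sample correlation between values one time step apart."""
    mean = sum(y) / len(y)
    num = sum((a - mean) * (b - mean) for a, b in zip(y[:-1], y[1:]))
    den = sum((v - mean) ** 2 for v in y)
    return num / den


def theoretical_variance(alpha, sigma):
    """Stationary AR(1) variance sigma^2 / (1 - alpha^2) (assumed form)."""
    return sigma ** 2 / (1.0 - alpha ** 2)


if __name__ == "__main__":
    dt, sigma = 1.0, 1.0  # illustrative values
    # lambda -> 0 from below mimics the approach to the critical point
    for lam in (-1.0, -0.3, -0.1, -0.03):
        alpha = math.exp(lam * dt)
        y = simulate_ar1(alpha, sigma, 50_000, seed=1)
        print(f"lambda={lam:6.2f}  alpha={alpha:.3f}  "
              f"ACF(1)={lag1_autocorrelation(y):.3f}  "
              f"var={variance(y):8.2f}  "
              f"theory={theoretical_variance(alpha, sigma):8.2f}")
```

As $\lambda$ is moved towards zero, both the estimated lag-1 autocorrelation and the variance should grow, in line with the argument above.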

Slowing down causes the intrinsic rates of change to decrease, and thus the state of the system becomes more like its past state, i.e., the autocorrelation increases. The resulting increase in “memory” can be measured in a variety of ways from the frequency spectrum of the system.

Autocorrelation function. The lag-1 autocorrelation function (ACF(1)) indicator is a simple way to provide an EWS for an impending tipping event. For instance, Held and Kleinen have shown that the autocorrelation increases in the vicinity of a bifurcation in a model of the thermohaline circulation [179]; Dakos et al. found that the autocorrelation increases before eight well-known climate transitions in the past data [157].

The coefficient of the correlation between two values in a time series is called the ACF. For example, the ACF for a time series $y_n$, see Eq. (66) in the autoregressive model, is given by $C(s) = \sum_{n=1}^{N-s}(y_n-\bar{y})(y_{n+s}-\bar{y}) \big/ \sum_{n=1}^{N}(y_n-\bar{y})^2$, where $N$ is the length of the series, $\bar{y}$ is its mean, and $s$ is the time gap, called the lag. In particular, a lag-1 autocorrelation is the correlation between values that are one time step apart. More generally, a lag-$s$ autocorrelation is the correlation between values that are $s$ time steps apart.

The ACF scaling exponent, $\gamma$, characterizes the power-law decay of the autocorrelation function with increasing lag [207]. Let us denote by $C(s)$ the autocorrelation with lag $s$ of a time series; then the scaling is defined as

| $C(s) \sim s^{-\gamma}$ | (69) |

for long-range correlations. Notably, for short-range correlated records, decays exponentially and only is indicative of a data variability close to a tipping point.
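The ACF(1) indicator is usually evaluated in a sliding window, so that a rising trend can be read as a possible early warning. The sketch below is one minimal way to do this, assuming the standard sample autocorrelation estimator and a synthetic AR(1) series whose coefficient drifts slowly towards one; the names `acf`, `rolling_acf1` and `drifting_ar1`, as well as the window length and step, are hypothetical choices made for illustration only.

```python
import random


def acf(y, lag):
    """Sample autocorrelation of the sequence y at a given lag."""
    n = len(y)
    mean = sum(y) / n
    den = sum((v - mean) ** 2 for v in y)
    num = sum((y[i] - mean) * (y[i + lag] - mean) for i in range(n - lag))
    return num / den


def rolling_acf1(y, window, step):
    """ACF(1) in sliding windows y[k : k + window], with k advancing by step."""
    return [acf(y[k:k + window], 1)
            for k in range(0, len(y) - window + 1, step)]


def drifting_ar1(n_steps, alpha_start, alpha_end, sigma=1.0, seed=0):
    """AR(1) series whose coefficient drifts linearly from alpha_start to
    alpha_end, mimicking a slow approach to a threshold (synthetic data)."""
    rng = random.Random(seed)
    y = [0.0]
    for n in range(1, n_steps):
        alpha = alpha_start + (alpha_end - alpha_start) * n / (n_steps - 1)
        y.append(alpha * y[-1] + sigma * rng.gauss(0.0, 1.0))
    return y


if __name__ == "__main__":
    series = drifting_ar1(4000, 0.2, 0.95, seed=2)
    for k, value in enumerate(rolling_acf1(series, window=500, step=500)):
        print(f"window {k}: ACF(1) = {value:.2f}")
```

In practice the series would first be detrended and the window length chosen with care, since both choices affect the indicator; neither step is shown here.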

Detrended fluctuation analysis. Slowing down causes an increase in memory, which can also be measured using detrended fluctuation analysis (DFA); see [180] for the detailed method. DFA is often used to detect long-range correlations or the persistence of diverse time series including DNA sequences [208], heart rate [209], [210], [211], earthquakes [212], and also climate records [213]. If the time series is long-range correlated, the fluctuation function, $F(s)$, increases according to a power-law relation:

| $F(s) \sim s^{\delta}$, | (70) |

where $s$ is the window size and $\delta$ the DFA scaling exponent. Here, $F(s) = \sqrt{\left\langle \frac{1}{s}\sum_{i=1}^{s}\left[Y(i) - p_k(i)\right]^2 \right\rangle}$, with the average $\langle\cdot\rangle$ taken over all windows of size $s$, where $Y$ is the cumulative sum or the “profile” of a time series $y$, and $p_k$ is the fitting polynomial; $k$ stands for the (polynomial) order of the DFA. The DFA exponent is calculated as the slope of a linear fit to the log–log graph of $F(s)$ vs. $s$. It has been found that the DFA exponent in the temporal range is sensitive to changes in a dynamical system, which is similar to [181].
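The DFA procedure described above can be sketched as follows for the first-order case (linear detrending in non-overlapping windows). This is a simplified illustration under stated assumptions, not the implementation used in Ref. [180]: the helper names, the choice of scales and the use of non-overlapping windows taken from the start of the record are all assumptions made here.

```python
import math
import random


def linear_fit(xs, ys):
    """Least-squares slope and intercept of ys against xs."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


def dfa_fluctuation(y, s):
    """F(s) for first-order DFA: RMS residual of the profile about a
    straight-line fit, averaged over non-overlapping windows of size s."""
    mean = sum(y) / len(y)
    profile, total = [], 0.0
    for v in y:
        total += v - mean          # cumulative sum of the anomalies
        profile.append(total)
    xs = list(range(s))
    n_windows = len(profile) // s
    sq_sum = 0.0
    for w in range(n_windows):
        seg = profile[w * s:(w + 1) * s]
        slope, intercept = linear_fit(xs, seg)
        sq_sum += sum((seg[i] - (slope * i + intercept)) ** 2
                      for i in range(s)) / s
    return math.sqrt(sq_sum / n_windows)


def dfa_exponent(y, scales):
    """Slope of log F(s) against log s over the given window sizes."""
    log_s = [math.log(s) for s in scales]
    log_f = [math.log(dfa_fluctuation(y, s)) for s in scales]
    return linear_fit(log_s, log_f)[0]


if __name__ == "__main__":
    rng = random.Random(0)
    white = [rng.gauss(0.0, 1.0) for _ in range(4096)]
    # Uncorrelated noise is expected to give an exponent near 0.5
    print(f"DFA exponent of white noise: "
          f"{dfa_exponent(white, [16, 32, 64, 128, 256]):.2f}")
```

An exponent close to 0.5 indicates no memory, while larger values indicate increasing persistence; a random walk, for instance, yields a value near 1.5.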

Power spectrum. Recently, Prettyman et al. introduced a novel scaling indicator based on the decay rate of the power spectrum (PS) [214]. PS analysis partitions the amount of variation in a time series into different frequencies. When a system is close to a critical transition, it tends to show spectral reddening, i.e., higher variation at low frequencies [215]. The PS scaling exponent is calculated by estimating the slope of the power spectrum of the data, from the scaling relationship

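| $S(f) \sim f^{-\beta}$, | (71) |

where $S(f)$ is the power at frequency $f$ and $\beta$ is the PS scaling exponent. Analytically, the three scaling exponents (the ACF exponent $\gamma$, the DFA exponent $\delta$ and the PS exponent $\beta$) have the linear relationship: $\gamma = 2 - 2\delta = 1 - \beta$. It has been reported that the PS-indicator is a useful technique which behaves similarly to the related ACF(1)- and DFA-indicators. In addition, it also shows signs of providing an EWS for a real geophysical system, tropical cyclones, whereas the ACF(1)-indicator fails [214].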
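To make the PS-indicator concrete, the following sketch estimates a spectral exponent $\beta$, assuming the scaling form $S(f) \sim f^{-\beta}$, from the slope of a raw periodogram in log–log space. It is a simplified illustration and not the procedure of Ref. [214]: the direct DFT, the unsmoothed periodogram, the fit over all positive frequencies and the function names are assumptions made here for a self-contained example.

```python
import cmath
import math
import random


def periodogram(y):
    """Frequencies and power of the mean-removed series, via a direct DFT
    (O(n^2); adequate for short illustrative series)."""
    n = len(y)
    mean = sum(y) / n
    centered = [v - mean for v in y]
    twiddle = [cmath.exp(-2j * math.pi * m / n) for m in range(n)]
    freqs, power = [], []
    for k in range(1, n // 2):
        coeff = sum(centered[t] * twiddle[(k * t) % n] for t in range(n))
        freqs.append(k / n)
        power.append(abs(coeff) ** 2 / n)
    return freqs, power


def ps_exponent(y):
    """Estimate beta in S(f) ~ f^(-beta) by least squares in log-log space."""
    freqs, power = periodogram(y)
    lx = [math.log(f) for f in freqs]
    ly = [math.log(p) for p in power]
    mx, my = sum(lx) / len(lx), sum(ly) / len(ly)
    slope = (sum((a - mx) * (b - my) for a, b in zip(lx, ly))
             / sum((a - mx) ** 2 for a in lx))
    return -slope


if __name__ == "__main__":
    rng = random.Random(0)
    white = [rng.gauss(0.0, 1.0) for _ in range(512)]
    red = [0.0]
    for _ in range(511):
        red.append(0.9 * red[-1] + rng.gauss(0.0, 1.0))  # AR(1), alpha = 0.9
    print(f"beta (white noise): {ps_exponent(white):.2f}")
    print(f"beta (red noise):   {ps_exponent(red):.2f}")
```

The red-noise series should give a clearly larger exponent than the white-noise one, which is the spectral reddening referred to above.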

Besides the slower recovery from perturbations and the increased autocorrelation and memory [see Eq. (67)], increased variance [measured as standard deviation, see Eq. (68)] is another possible indicator of critical slowing down as a critical transition is approached. For example, Carpenter and Brock have shown that the variance increases in the vicinity of a bifurcation in a lake model [216].

Flickering before transitions. Another notable EWS is a system’s back-and-forth oscillation between two stable states in the vicinity of a critical transition. This oscillation has been called flickering and was observed in models of lake eutrophication [217] and trophic cascades [218].

Skewness and Kurtosis. In addition to the aforementioned EWS before a catastrophic bifurcation, two further precursors, observed when approaching a critical transition and thus suggested as EWS, are changes in the skewness and kurtosis of the distribution of states [219], [220], [221]. Skewness indicates asymmetry in the distribution, with a negative skew indicating a right-sided concentration and a positive skew indicating the opposite. Skewness is the standardized third moment around the mean of a distribution,

| $\gamma_1 = \frac{\frac{1}{n}\sum_{i=1}^{n}(x_i-\bar{x})^3}{\left[\frac{1}{n}\sum_{i=1}^{n}(x_i-\bar{x})^2\right]^{3/2}}$, | (72) |

where $x_i$ ($i = 1, \dots, n$) are the observed states and $\bar{x}$ is their mean.

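Similarly, kurtosis is a measure of the “peakedness” of the distribution, with positive excess kurtosis (kurtosis above 3, the value for a normal distribution) indicating a peak higher than that of a normal distribution and negative excess kurtosis indicating a lower peak. Kurtosis is the standardized fourth moment around the mean of a distribution, estimated as

| $\kappa = \frac{\frac{1}{n}\sum_{i=1}^{n}(x_i-\bar{x})^4}{\left[\frac{1}{n}\sum_{i=1}^{n}(x_i-\bar{x})^2\right]^{2}}$, | (73) |

where $x_i$ ($i = 1, \dots, n$) are the observed states and $\bar{x}$ is their mean.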
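Both moments are straightforward to compute from a sample. The sketch below assumes the standardized third and fourth central moments with $1/n$ normalization (no small-sample bias correction); the function names are illustrative.

```python
def central_moment(x, order):
    """Central moment of the given order, with 1/n normalization."""
    mean = sum(x) / len(x)
    return sum((v - mean) ** order for v in x) / len(x)


def skewness(x):
    """Standardized third moment around the mean."""
    return central_moment(x, 3) / central_moment(x, 2) ** 1.5


def kurtosis(x):
    """Standardized fourth moment around the mean (equals 3 for a normal
    distribution; subtract 3 to obtain the excess kurtosis)."""
    return central_moment(x, 4) / central_moment(x, 2) ** 2


if __name__ == "__main__":
    symmetric = [1, 2, 3, 4, 5]
    right_tailed = [0, 0, 0, 10]   # mass on the left, long tail to the right
    print(skewness(symmetric), kurtosis(symmetric))
    print(skewness(right_tailed), kurtosis(right_tailed))
```

When used as early warning signals, these quantities would typically be evaluated in a sliding window and inspected for trends rather than interpreted from a single value.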

2.3.4. Precursors of transitions in real systems

In the last section, we highlighted the theoretical background of the indicators that may occur in non-equilibrium dynamics before critical transitions. Nonetheless, detecting EWS in real complex systems, such as climate, social and ecological systems, remains a major challenge. Developing reliable predictive systems based on these generic properties can strengthen our capacity to navigate systemic failure and guide us in designing more resilient systems.

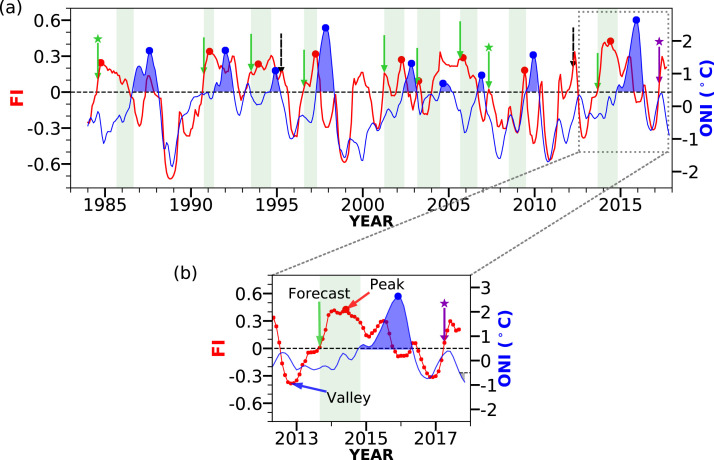

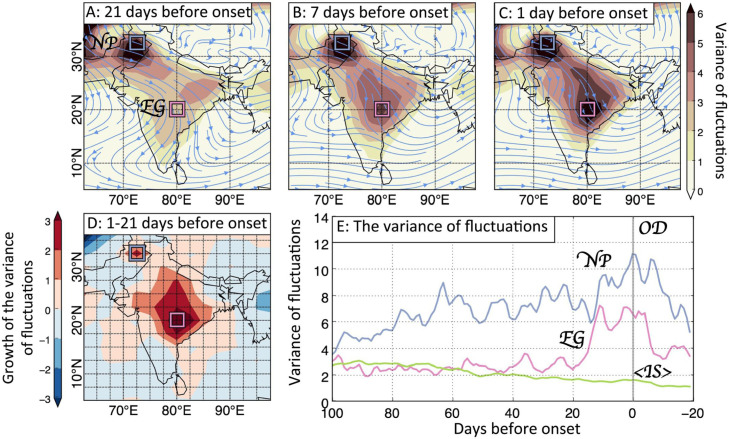

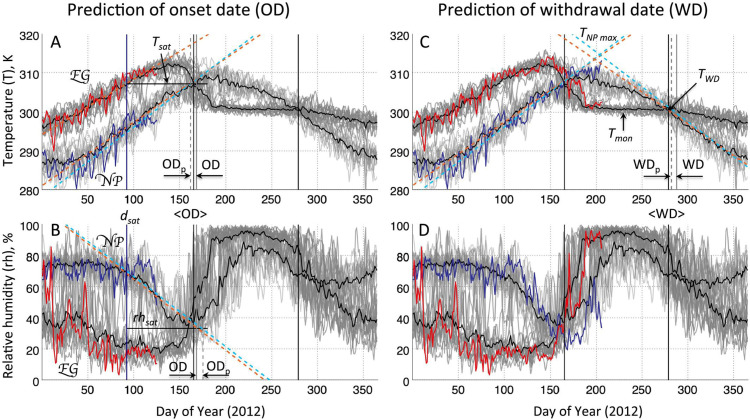

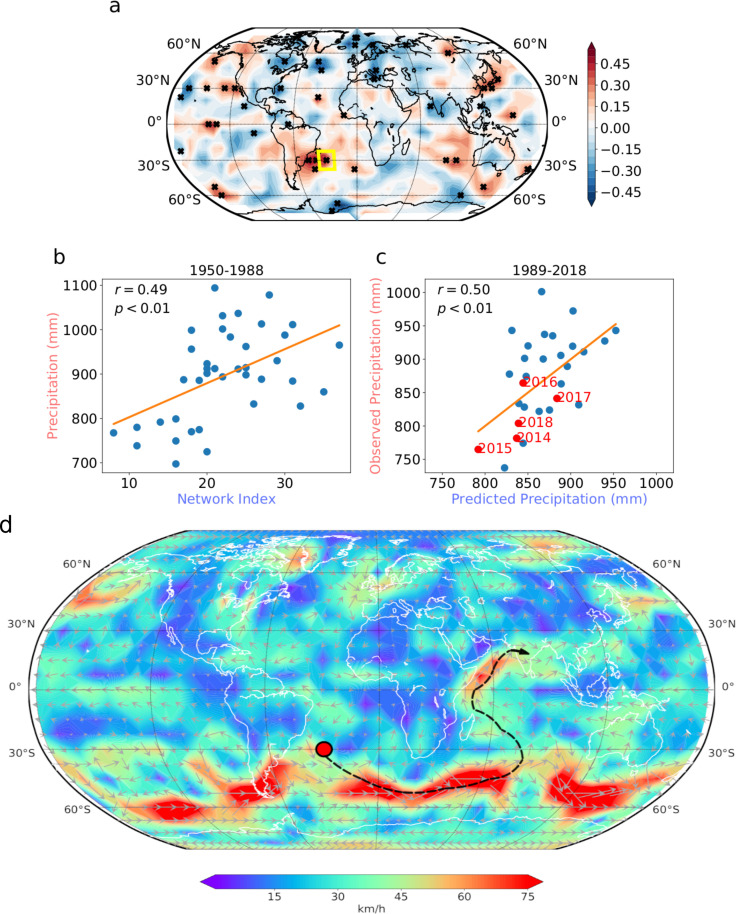

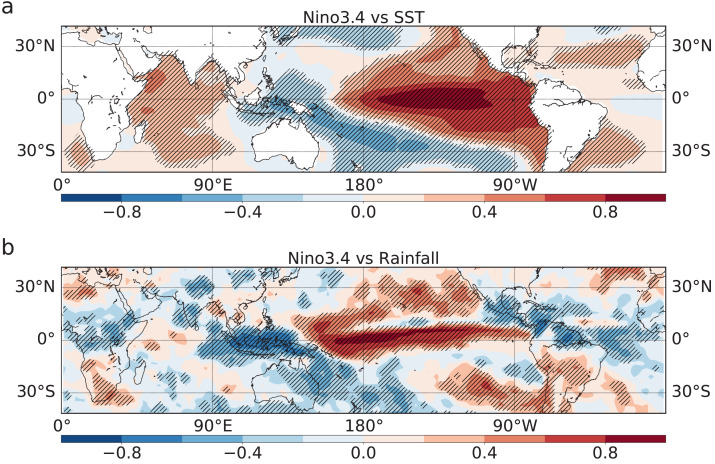

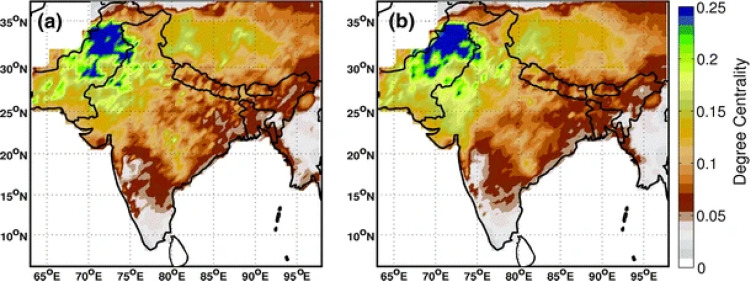

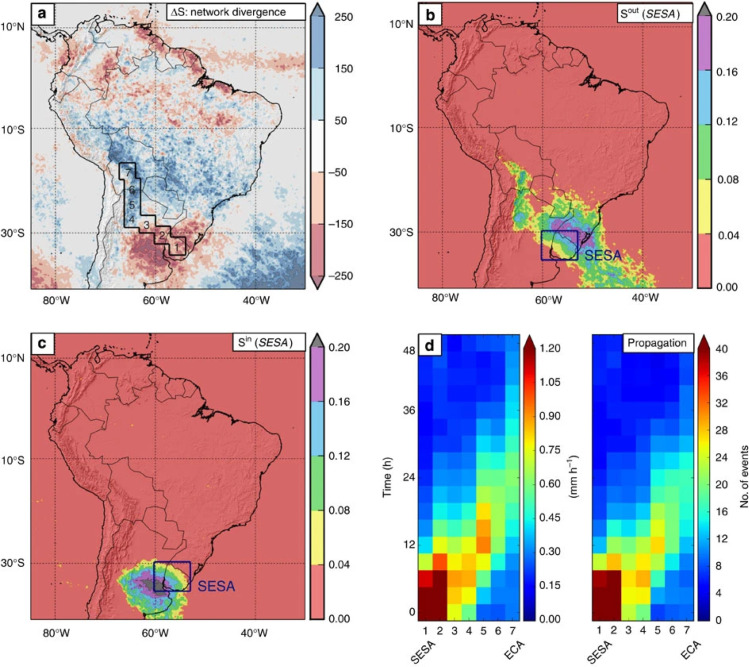

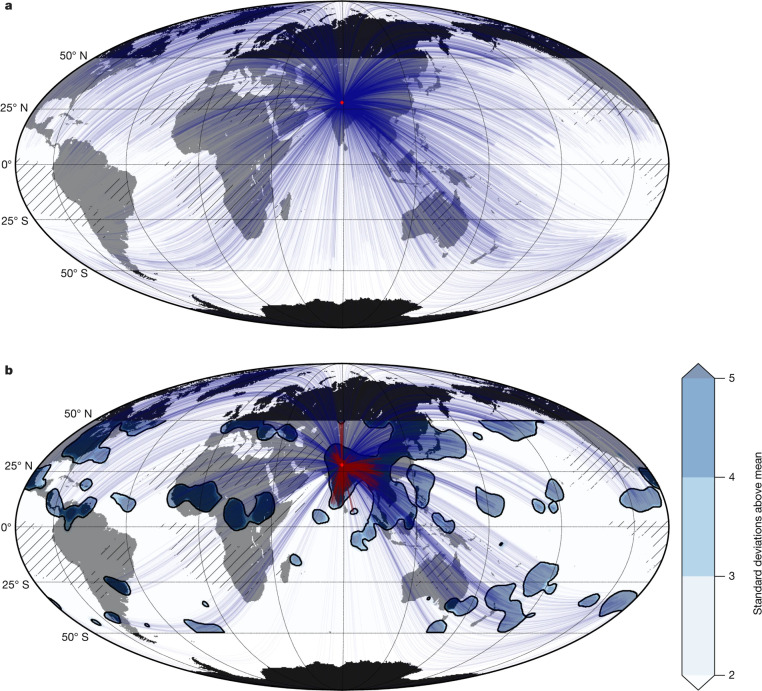

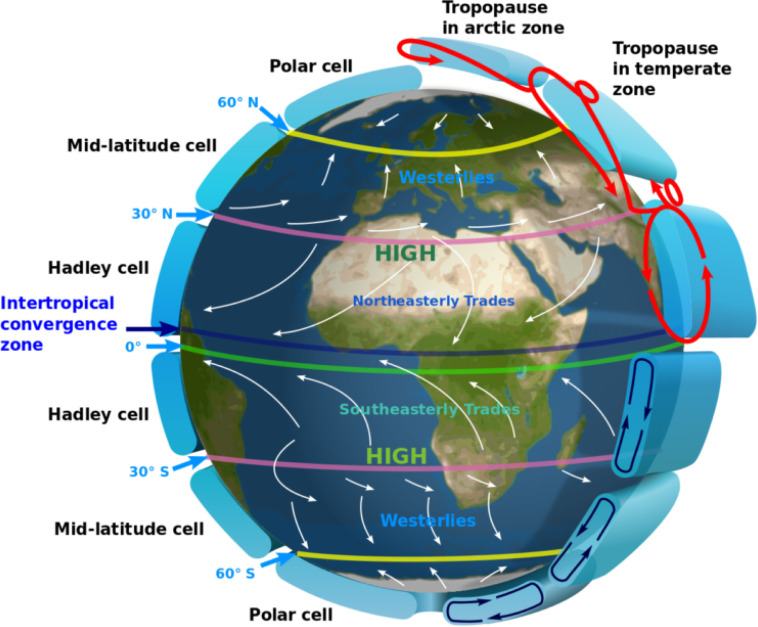

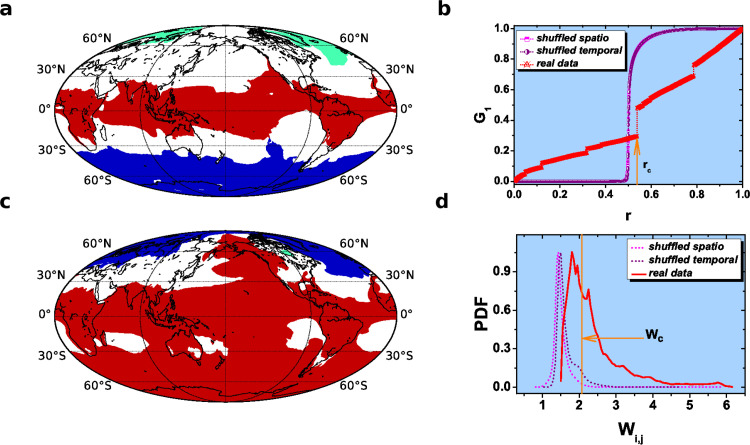

We briefly review emerging precursors of transitions in different real systems, including climate, ecosystems, medicine and finance. An abrupt climate change occurs when the Earth system is forced to cross a threshold to a new climate state [222]. Large, abrupt, and widespread climate changes include the Carboniferous Rainforest Collapse [223], the Younger Dryas [224], the greenhouse–icehouse transition [225], Dansgaard–Oeschger events, Heinrich events and the Paleocene–Eocene Thermal Maximum [226]. All these rapid climate changes could be explained as critical transitions [176]. For instance, a significant increase in autocorrelation is regarded as a precursor of eight well-known climate transitions [157]. A flickering phenomenon was found to precede the abrupt end of the Younger Dryas cold period, and can also be regarded as a precursor [227].