Abstract

Secure messaging, or “e-visits,” between patients and providers has sharply increased in recent years, and many hope they will help improve healthcare quality, while increasing provider capacity. Using a panel data set from a large healthcare system in the United States, we find that e-visits trigger about 6% more office visits, with mixed results on phone visits and patient health. These additional visits come at the sacrifice of new patients: physicians accept 15% fewer new patients each month following e-visit adoption. Our data set on nearly 100,000 patients spans from 2008 to 2013, which includes the rollout and diffusion of e-visits in the health system we study. Identification comes from difference-in-differences estimates leveraging variation in the timing of e-visit adoption by both patients and providers. We conduct several robustness checks, including matching analyses and an instrumental variable analysis to account for possible time-varying characteristics among patient e-visit adopters.

Keywords: healthcare operations, service operations, primary care, e-visits, patient portals, empirical

1. Introduction

Electronic communication between patients and physicians (“e-visits”) is a recent technological innovation in primary care that affords patients a low-cost alternative to physician office visits. Many medical providers promoted the use of e-visits through patient portals over the past decade, hoping that they can substitute for office and phone visits to allow for larger panel sizes and improved patient health. The efforts to diffuse the technology have been successful; according to a 2012 survey, 57% of healthcare providers have a patient portal and many of those that do not have a patient portal intend to deploy one (KLAS Research 2012). In addition to providing patients with e-visits, these portals typically help patients access their laboratory results, medical histories, appointment schedules, and prescriptions. E-visits can also play an important role in mitigating rising healthcare costs and dealing with the projected shortage of primary care physicians (Petterson et al. 2012).

To date there has been limited empirical research as to whether e-visits are successful in reducing the use of traditional service methods (such as phone or in-person visits) or improving the quality of care. While there are a number of plausible arguments for the benefits of e-visits, it is also possible that the adoption of e-visits may increase the consumption of healthcare services since easier access to healthcare providers may generate additional reasons for an office visit without any attendant health benefit. Thus, the impact of e-visits on physician utilization and health outcomes is an empirical question, the answer to which is important for understanding whether and how to promote this technology.

In this study, we estimate the impact of e-visit usage on visit frequency of office and phone encounters as well as on patient health outcomes. We focus on visit frequency because it has direct consequences for physician panel sizes and also the cost of accessing care for patients. Studying patient health is important because its improvement is the core goal of medical innovations, and e-visits may impact this important goal in unknown ways. Our measures of patient health include blood cholesterol¹ (LDL) and blood glucose (HbA1c) levels. These measures have the advantage that they are closely tied to health condition for a substantial class of chronically ill patients, and are regularly measured for a significant fraction of the population. These outcomes can be influenced by e-visits either directly, such as the doctor providing diet or exercise recommendations, or indirectly, by changing the frequency of care. Together, visit frequency and patient health also provide useful information about the impact of e-visits on physician productivity (although we do not attempt direct measurement of productivity due to imperfect data on appointment duration and related variables needed to fully characterize inputs and outputs in this context).

The empirical evaluation of e-visits is challenging because their adoption is likely influenced by patient (and perhaps physician) self-selection. Patients who are relatively healthy (or ill) may adopt e-visits at a greater rate than other patients. Moreover, at least some of the correlates of this self-selection may be unobservable and vary over time. If patients who adopt e-visits are systematically different from nonadopters in ways that are correlated with their visit frequencies or health outcomes, an attempt to measure the effect of e-visit adoption on these outcomes will be biased. Prior papers have addressed selection effects by using matching estimators based on observable patient characteristics (Zhou et al. 2007, 2010). However, this approach is limited to patient characteristics that are observable and available for analysis. Even if researchers can account for time-invariant patient selection effects, time-varying patient characteristics may be an important source of self-selection. To deal with this latter concern, we conduct robustness checks using an instrument for patient e-visit adoption.

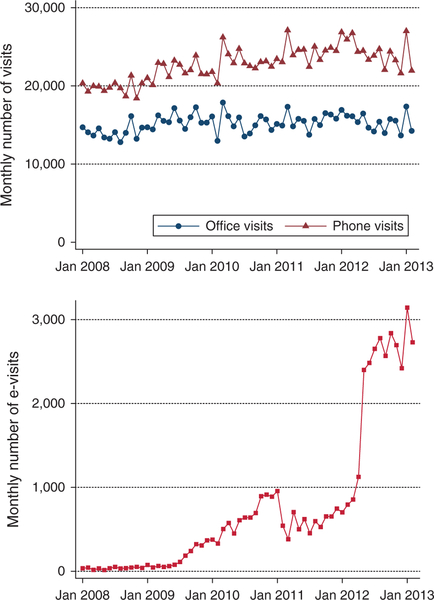

Using a unique panel data set on healthcare interactions for a large primary care practice, we estimate the relationship between e-visit adoption and performance outcomes with particular attention to addressing selection effects. Our data include five years of healthcare encounters in multiple channels (e-visit, phone, and in-office) for 140,025 patients who access services from 90 providers. As shown in Figure 1, the data span a period from essentially no use of e-visits in the system (96 e-visits in the first two months of 2008) to extensive adoption by patients (6,449 e-visits in the first two months of 2013), with an observable push by the system to adopt e-visits in April and May of 2012. This diffusion of e-visits contrasts with the mild growth in phone and office visits over the period, which likely increase because of the general growth in the practice we study. The broad cross section of patients and large time dimension of our panel enable us to address time-invariant selection by including patient fixed effects (FEs), which can control for all observed and unobserved patient characteristics that do not vary over the span of our data. To address possible time-varying selection effects, we leverage the variation across physicians in the timing and intensity of e-visit adoption. Since a physician recommendation to use e-visits has substantial influence on a patient’s decision to do so, this provides a plausibly exogenous source of variation in patient e-visit adoption. One potential concern with this instrument is that physicians may change their practice styles following adoption. To mitigate this concern, we conduct an auxiliary analysis to verify that physicians do not reduce the frequency or duration of appointments following the adoption of e-visits.

Figure 1.

Monthly Primary Care Encounters in the Studied Health System over the Analysis Period

We begin our analyses by conducting a difference-in-differences analysis of the impact of patient e-visit adoption on office and phone visit frequency, and find that e-visits “trigger” about 6%−7% additional office and phone encounters. These results persist in an analysis restricted to a sample of patient adopters who are matched to nonadopters on key baseline variables, such as primary physician, office and phone visit frequencies, and measures of blood cholesterol and blood glucose. Finally, we use an instrumental variable approach to address potential unobservable selection effects using physician adoption of e-visits as an instrument for patient adoption. Here, our results are directionally consistent with our difference-in-differences results, suggesting that e-visits are associated with a substantial increase in office visits. The instrumental variable point estimates are about six times larger but imprecisely estimated, so their 95% confidence intervals contain our original results. We do not find any significant results for phone visits in the instrumental variable analysis.

Given that e-visits appear to increase office visit use, we turn our attention to whether these additional visits are associated with improvements in patient health. We find some evidence of a positive relationship between e-visit adoption and our two health outcomes in the difference-in-differences analysis. However, these results are no longer significant when we include instrumental variables. Interestingly, we find that the additional visits appear to come at the sacrifice of new patients: after adopting e-visits, providers see 15% fewer new patients each month. The additional office visits also appear to crowd out some care to nonadopters, particularly via a reduction in phone visits.

Our results withstand a variety of robustness checks. The estimates are stable to different definitions of patient e-visit usage. The main definition of e-visit usage in this paper is simply whether the patient has ever used an e-visit, but our results are robust to whether we refine this binary variable to turn on with the patient’s second e-visit, or whether we define the variable more subtly by allowing the variable to stay “on” for only three months after each e-visit. This latter definition allows us to be sure that our results are not driven by patients who adopt e-visits, but only ever use them for a short period of time. We also conduct a placebo analysis and randomize e-visit adoption among our sample to verify that these placebo-induced adoptions do not lead to spurious results. Together, these results provide evidence that e-visits may be triggering additional encounters without any obvious improvements in patient health.

The remainder of this paper is organized as follows. We present our theoretical motivation for our key outcomes in Section 2. In Section 3, we explain our data set and the institutional features of the health system that we study in our analysis. We discuss in detail our empirical strategies in Section 4, followed by our results on visit frequency and patient health and their discussion in Sections 5 and 6, respectively. Section 7 provides robustness checks on our estimates, and we conclude and highlight areas for future research in Section 8.

2. Theoretical Motivation for Outcomes

In this section, we use insights from the literature to motivate the relationship between e-visit adoption and the two sets of outcomes we study: visit frequency and patient health.

2.1. E-Visit Adoption, Visit Frequency, and the Gateway Effect

The first goal of our analysis is to estimate the impact of e-visit adoption on visit frequency, or the number of primary care encounters that a patient has with his or her physician. We measure this by separately summing up the number of office visits and phone visits conducted each month by each patient. These variables are important because they directly affect physician workload, patient health, and healthcare costs. The frequency with which patients require primary care encounters also has a mechanical impact, all else equal, on the number of patients that a physician can serve: a physician whose average patient visits once every two months can handle twice the panel size of a physician whose average patient visits every month. The argument is analogous for phone visits.
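As a worked version of this arithmetic, consider a minimal sketch that assumes a physician has a fixed monthly capacity of $C$ encounters and that the average patient generates $f$ visits per month. The symbols $C$, $f$, and $N$ are introduced here for illustration only and are not the paper's notation. Under those assumptions the supportable panel size $N$ and the ratio in the example above are

$$N = \frac{C}{f}, \qquad \frac{N(f = 1/2)}{N(f = 1)} = \frac{C / (1/2)}{C / 1} = 2.$$

Holding capacity fixed, any intervention that raises $f$ therefore lowers the panel size a physician can support in the same proportion.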

Our study on visit frequency is related to three main streams of literature. First, our work adds to the operations management literature within primary care examining the impact of various operational interventions, such as provider flexibility, on system outcomes (Green and Savin 2008, Zacharias and Pinedo 2014, Liu and Ziya 2014, Balasubramanian et al. 2012, Deo et al. 2013). Second, because e-visits are only one component of the comprehensive set of services offered by patient portals, our findings inform the literature evaluating the impact of adoption of health information technology (Adler-Milstein and Huckman 2013, Angst et al. 2011, Devaraj et al. 2013). Third, our paper joins the small number of studies within the medical literature examining various aspects of e-visits, including what types of patients use them and what impacts they have on care quality and visit frequency (Leveille et al. 2016; North et al. 2014; Irizarry et al. 2015; Zhou et al. 2007, 2010).

Conceptually, the addition of e-visits to a health system can change several features of the ways in which physicians take care of patients. Because e-visits provide patients with a new, additional (and typically free, as in our system) channel through which they can communicate with their providers, they can be used to either substitute for or complement traditional visits. If patients use e-visits to substitute for office and phone visits, patient e-visit adoption would be expected to lower the frequency of office and phone visits. A number of researchers have argued for this substitution effect, including Bergmo et al. (2005), Kilo (2005), and Zhou et al. (2007).

A number of factors may lead to an increase in the frequency of visits following the adoption of e-visits. First, e-visit adopters may view electronic communication as a low-cost channel for reaching their physicians and bypassing the usual practice gatekeepers, such as office staff and nurses. If this is the case, more communication with the physician creates more potential opportunities for a physician to be obligated to see the patient in the office. It is also possible that an e-visit could require phone follow-up as well but this may be less likely because e-visits and phone conversations are closer substitutes than an e-visit and a physical office visit. For example, in the used car market, Overby and Jap (2009) show that buyers and sellers use physical and electronic channels for different types of transactions. Additionally, behavioral and financial factors can influence physician behavior in initiating office and phone visits following an e-visit (Chandra et al. 2011). The impact of behavioral and environmental factors on server behavior (in our case, physician behavior) is broadly well-documented in the operations management literature (Kc and Terwiesch 2009, Batt and Terwiesch 2017, Tan and Netessine 2014, Berry Jaeker and Tucker 2017, Song et al. 2015).

We call the possibility that e-visit adoption leads to more frequent visits the gateway effect. This effect is consistent with studies based on randomized controlled trials, which show that more frequent phone contact increases the chance of patient readmission to the hospital (Weinberger et al. 1996). Two relevant studies in the literature find that the number of phone visits is not impacted by e-visits (Katz et al. 2003, 2004). These two studies, however, are limited in size and scope and do not address office visits and patient health. It is plausible that e-visits might show different effects on phone visits, which are closer substitutes since neither provides physical interaction, as compared to office visits. The closest prior work investigating this type of effect is Campbell and Frei (2010), which found that the adoption of online banking reduced the use of other self-service channels but increased the use of employee-service channels. However, in their study, both phone and in-person branch transactions increased.

Second, e-visits can be weak in transferring the right types and amounts of information between patients and providers. Prior literature has shown that provision of ambiguous information may lead to more information-seeking behavior (Cox 1967, Murray 1991, Leckie et al. 1996). Kumar and Telang (2012) study the effects of a web-based self-service channel at the call center of a health insurance firm. They find that if the information is unambiguous and easily retrievable on the web, the related calls decrease by 29%. The authors, however, find the opposite effect for ambiguous information. Similar effects have been shown in the financial sector (Miller 1972).

Third, it is possible that e-visits are adopted systematically by “worried-well” patients who overreact to typically minor symptoms (Wagner and Curran 1984). These patients may value the ability to contact their providers quickly via an e-visit to be reassured that they are not sick. In this case, e-visits may aid patient well-being without measurable improvements in patient health. The problem of endogenous adoption of electronic service delivery channels that we face in this setting has been studied in other settings. For example, in the banking sector, research shows that online customers tend to be younger, are more profitable, and have shorter relationships with the bank (Frei and Harker 2000, Hitt and Frei 2002, Xue et al. 2007). Degeratu et al. (2000) record similar observations for online ordering in supermarkets. An advantage in our setting is that the panel data structure allows us to estimate patient fixed effects that eliminate concerns about certain patient types adopting e-visits more than others.

2.2. E-Visit Adoption and Patient Health

The impact of e-visit adoption on patient health is of central consideration in evaluating patient portals. First, e-visit adoption may impact patient health by changes in the amount of service received through the traditional channels of care delivery—office and phone visits—as discussed earlier. Second, an e-visit is a new channel for care delivery, and patient health could improve from this innovation by patients receiving more care and increased monitoring (Zhou et al. 2010, Baer 2011). Third, e-visits may improve the quality or efficiency of subsequent visits, which we will attempt to shed light on by supplementing our health analysis with appointment duration data. In a different but relevant setting, Buell et al. (2010) investigate the impact of multichannel service delivery in the retail banking industry, and find that while these additional self-service delivery channels do not necessarily improve customer satisfaction, they can help retain customer business.

To investigate whether e-visits improve patient health, we consider two patient health outcomes that are used extensively in the primary care literature (Friedberg et al. 2010): blood glucose (hemoglobin A1c, or HbA1c) and blood cholesterol (low density lipoprotein, or LDL). These health outcome metrics are favored in the health literature because they are easy to measure and responsive to the quality of primary care services. They are also particularly relevant for evaluating patient health in primary care due to the prevalence of chronic heart disease and diabetes in the United States: 71 million American adults (33.5%) have high LDL (Centers for Disease Control and Prevention 2011), and 29.1 million people (9.3%) have diabetes (Centers for Disease Control and Prevention 2014).

3. Data and Sample Definition

We use a panel data set from a large healthcare system in the United States that is involved with research and clinical care. It operates multiple hospitals (with over 2,000 beds in total) and medical centers, along with several primary and specialty care practices in its region. Our data consist of all primary care encounters (office visits, phone visits, and e-visits), all blood cholesterol tests, and all blood glucose tests for patients of the 90 physicians with the largest panel sizes in the health system. These 90 physicians represent nine practices in a similar geographical vicinity, and we obtained data on all patients ever seen by these physicians in the time period studied. This totals 2,566,145 primary care encounters between January 2008 and February 2013 for 143,025 patients. Because the data are structured at the patient level, these encounters can be with physicians or nonphysician providers, such as nurses and residents.

Our only sampling restriction is to keep patients with three or more office visits over the time period studied. We enforce this restriction because the focus of our study is active users of primary care in our health system, and prior literature considers a patient to be on a physician’s panel if he or she visited the provider in the past 18 months (Green et al. 2007). This reduces our sample to 96,566 patients, and since we observe each patient for 62 months, we have 5,987,092 patient-month observations. During the 2008 to 2013 time period we study, the health system began offering patients the option of having e-visit encounters with their providers, and we observe that 12,975 patients (13.4% of our sample) use e-visits at least once. All but five physicians use e-visits in this time period, although the timing of their adoption varies greatly. These varying times and intensities with which patients and physicians adopted e-visits over this time period lend themselves naturally to difference-in-differences approaches comparing visit frequencies and health outcomes for the patients we study.
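The sampling rule and the resulting panel dimensions can be sketched as follows. This is a minimal illustration, not the authors' code: the function name `build_patient_months`, the input layout, and the toy records are all hypothetical, and only the thresholds (three office visits, 62 months) and the patient count come from the text.

```python
from collections import Counter

def build_patient_months(office_visits, n_months=62, min_office_visits=3):
    """Keep patients with enough office visits and expand them to a balanced panel.

    office_visits: iterable of (patient_id, month_index) pairs, one per office visit.
    Returns a list of (patient_id, month) rows, one per patient-month.
    """
    counts = Counter(patient_id for patient_id, _month in office_visits)
    kept = sorted(pid for pid, n in counts.items() if n >= min_office_visits)
    return [(pid, t) for pid in kept for t in range(1, n_months + 1)]

# Hypothetical toy input: p1 has three office visits, p2 only two.
toy_visits = [("p1", 1), ("p1", 5), ("p1", 9), ("p2", 2), ("p2", 3)]
panel = build_patient_months(toy_visits)
print(len(panel))     # 62 rows, all for p1
print(96_566 * 62)    # 5987092, the patient-month count reported in the text
```

The second print statement simply confirms that 96,566 patients observed for 62 months each give the 5,987,092 patient-months stated above.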

In keeping with the literature, we define the number of office and phone visits at a monthly level by summing up the number of office visits (and separately, phone visits) for each patient-month. Table 1 shows the summary statistics for all patients, e-visit nonadopters, and e-visit adopters in columns (1), (2), and (3), respectively; column (4) shows the results of a t-test on the difference between the adopter and nonadopter samples. We use the term “e-visit adopters” for patients who have communicated with any primary care provider via the secure messaging service of the patient portal at least once. Contrary to traditional technology adoption patterns, we find that e-visit adopters are, on average, about five years older than nonadopters (age 56 versus 51 in 2013). Consistent with technology adoption patterns, however, e-visit adopters in our sample are more likely to be male (42% versus 40%) and nonminority. About 72% of all e-visit users are white even though they are 56% of the sample. On the other hand, only 17% of e-visit users are black even though they comprise 33% of the sample. We also observe a foreshadowing of our results in the summary statistics for the visit frequency outcomes: on average, e-visit adopters have 0.03 additional office visits and 0.07 additional phone visits per month.

Table 1.

Summary Statistics by Patient E-Visit Adoption

| | (1) All patients | | (2) Nonadopters | | (3) Adopters | | (4) |
|---|---|---|---|---|---|---|---|
| | Mean | SD | Mean | SD | Mean | SD | t-stat. |
| Demographics | | | | | | | |
| Age (in 2013) | 51.96 | 19.03 | 51.34 | 19.55 | 55.99 | 14.59 | −25.99*** |
| Male | 0.40 | 0.49 | 0.40 | 0.49 | 0.42 | 0.49 | −5.78*** |
| Black | 0.33 | 0.47 | 0.36 | 0.48 | 0.17 | 0.38 | 41.59*** |
| White | 0.56 | 0.50 | 0.54 | 0.50 | 0.72 | 0.45 | −40.46*** |
| Asian | 0.03 | 0.18 | 0.03 | 0.18 | 0.04 | 0.19 | −3.07** |
| Other race | 0.07 | 0.26 | 0.07 | 0.26 | 0.06 | 0.25 | 4.09*** |
| Outcomes | | | | | | | |
| Monthly office visits | 0.16 | 0.12 | 0.15 | 0.12 | 0.18 | 0.12 | −19.03*** |
| Monthly phone visits | 0.24 | 0.28 | 0.23 | 0.27 | 0.30 | 0.31 | −29.39*** |
| Unhealthy level of LDLᵃ | 0.49 | 0.44 | 0.48 | 0.44 | 0.50 | 0.42 | −2.65** |
| Unhealthy level of HbA1cᵃ | 0.14 | 0.30 | 0.15 | 0.31 | 0.10 | 0.25 | 11.38*** |
| Number of patients | 96,566 | | 83,591 | | 12,975 | | |

Notes. Column (4) shows the t-statistic testing the difference of mean summary statistics between nonadopters and adopters. Table A.1 in the appendix provides a correlation table for these variables.

ᵃ LDL and HbA1c measurements are only available for patients who have laboratory tests. We observe LDL measurements for 75,777 patients and HbA1c measurements for 35,826 patients. LDL and HbA1c are binary variables that equal 1 if at least one measurement of the relevant outcome is unhealthy in a given month.

Significance levels: * p < 0.10; ** p < 0.05; *** p < 0.01.
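The t-statistics in column (4) can be approximated from the reported means, standard deviations, and group sizes. The sketch below assumes a pooled-variance (equal-variance) two-sample t-test. The text does not state which variant the authors used, so this is an assumption, although with the rounded values in Table 1 the pooled form gives a value close to the reported age statistic. The function name `pooled_t_stat` is hypothetical.

```python
import math

def pooled_t_stat(mean1, sd1, n1, mean2, sd2, n2):
    """Two-sample t-statistic with a pooled variance estimate (assumed test variant)."""
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2)
    standard_error = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / standard_error

# Age row of Table 1: nonadopters (column 2) minus adopters (column 3).
t_age = pooled_t_stat(51.34, 19.55, 83_591, 55.99, 14.59, 12_975)
print(round(t_age, 2))  # close to the -25.99 reported in column (4)
```

Rows with heavily rounded means, such as the binary demographic indicators, cannot be reproduced this precisely from the table alone.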

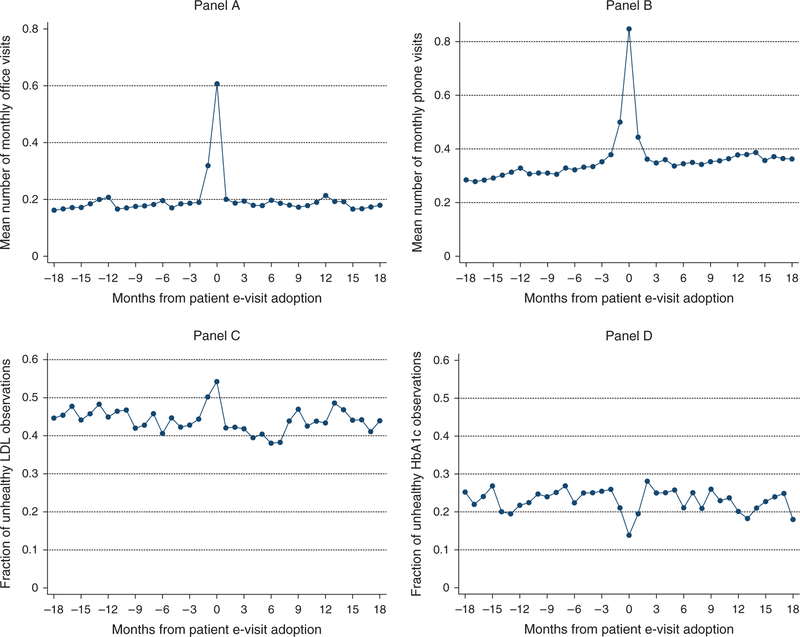

Figure 2 plots the four outcomes for the patients that adopt e-visits. The horizontal axis in each of these graphs is time from the month of adoption, where zero equals the month of adoption. We observe in panel A of this figure that office visits spike in the month before and the month of adoption, consistent with our expectation that patients tend to adopt e-visits following an office visit. We observe the same trend for phone visits in panel B, though there is additionally an increased level of phone visits in the month following e-visit adoption. To be conservative and focus on longer-term impacts, however, we exclude the month before adoption, the month of adoption, and the month following adoption from all our analyses. The other two panels show plots of the mean fraction of unhealthy observations on blood cholesterol (panel C) and blood glucose (panel D) levels. Our sample sizes are smaller for these plots because not all patients have health measurements; however, we do observe a small spike in unhealthy observations of blood cholesterol. On the other hand, we observe a small dip in the unhealthy observations of blood glucose. These spikes are again indicative of abnormal behavior on and potentially around the month of adoption, supporting our decision to remove these few months from our analyses. This reduces the number of patient-months in our sample from 5,987,092 to 5,948,239.

Figure 2.

Visit Frequency and Patient Health by E-Visit Adoption

Notes. Panels A and B plot patient-month-level data for the 12,975 patients who adopt e-visits. Panels C and D plot the health outcomes for this subset of patients. Thus, panels A and B use data from 390,785 patient-months; we do not observe the full 18 months before and after adoption for all patients. Panel C uses 27,122 patient-months, and panel D uses 14,777 patient-months. The panels are unbalanced because patients do not have health observations in every month.
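The exclusion of the months around adoption can be expressed as a simple filter on event time. This is a sketch under stated assumptions: the function name `keep_observation` and the use of `None` for nonadopters are hypothetical conventions, and months are indexed 1 to 62 as in the panel described earlier.

```python
def keep_observation(month, adoption_month, window=1):
    """Return False for patient-months within `window` months of adoption.

    adoption_month is None for nonadopters, whose observations are always kept.
    """
    if adoption_month is None:
        return True
    return abs(month - adoption_month) > window

months = range(1, 63)
kept_mid = [t for t in months if keep_observation(t, adoption_month=30)]
kept_edge = [t for t in months if keep_observation(t, adoption_month=1)]
kept_never = [t for t in months if keep_observation(t, adoption_month=None)]
print(len(kept_mid), len(kept_edge), len(kept_never))  # 59 60 62
```

An adopter in the middle of the panel loses three months, while one who adopts in the first or last month loses only two. That would be consistent with the reported drop of 38,853 patient-months (5,987,092 minus 5,948,239) being slightly below 3 × 12,975 = 38,925, although the text does not spell out this accounting.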

4. Empirical Strategy

We use a threefold empirical approach to study our two sets of outcomes. First, we conduct a standard difference-in-differences analysis using data on all e-visit adopters and nonadopters. Second, we use matching on multiple dimensions to obtain a better set of controls for the e-visit adopters and reestimate our difference-in-differences model on this subset of patients. Third, to explore the role that time-varying unobserved patient characteristics may play in our analyses, we conduct an instrumental variable analysis where patient e-visit adoption is instrumented by physician intensity of e-visit adoption.

All our analyses are conducted at the patient-month level and include both patient and physician fixed effects. The patient fixed effects ensure that our estimates are immune to biases due to observable time-invariant patient characteristics, such as birth-year and race; other observable but unavailable (in our data) factors, such as income and education; and unobservable factors, such as personal preferences for the consumption of health services. Physician fixed effects are useful because they control for all time-invariant characteristics such as demographics, training, or practice style. We are able to identify physician and patient fixed effects separately because some of the patients in our data move between providers. Following a similar logic as the one in Abowd et al. (1999), a relatively small amount of patient mobility would suffice to identify patient and physician fixed effects separately. The median number of providers that a patient interacts with in our data is 2 (the mean is 2.1). The patient-provider assignment process typically involves patients phoning or visiting the primary care offices and having a clerk forward their requests to providers. Since assignment depends on provider availability and the health need, patients visit multiple providers, which helps us identify provider fixed effects. Throughout our analyses, we cluster the standard errors at both the patient and provider-month levels to account for the common variances in these observations.

One detail in our analyses is that because we structure our analyses at the patient-month level, there are many months in which the patient does not have any primary care encounters. However, we need to assign a physician to that patient in each month to properly estimate the physician fixed effects. The patient’s physician in a given month is assigned as follows: in the most recent month where the patient has any primary care encounter, we first look to see whether there was any office visit. If there was, this physician is recorded as the assigned physician.² In cases in which the patient has multiple visits of any type in the recent month, we look at the most recent visit of that type in that month.³ This process results in a provider assignment for each patient-month. As mentioned before, we omit observations from the month before adoption, the month of adoption, and the month after adoption for e-visit adopters.
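The assignment rule above can be sketched as a carry-forward over months. Two parts of this sketch are assumptions rather than the authors' stated procedure: when the most recent active month has no office visit, the code falls back to the latest encounter of any other type, and months before a patient's first encounter are left unassigned (`None`). The function name and the record layout are hypothetical.

```python
def assign_providers(encounters, n_months=62):
    """Assign one provider to every month for a single patient.

    encounters: list of (month, day, visit_type, provider) tuples.
    Returns {month: provider or None}.
    """
    by_month = {}
    for month, day, visit_type, provider in encounters:
        by_month.setdefault(month, []).append((day, visit_type, provider))

    assigned, current = {}, None
    for t in range(1, n_months + 1):
        if t in by_month:
            office = [e for e in by_month[t] if e[1] == "office"]
            # Assumption: with no office visit, use the other encounters that month.
            pool = office or by_month[t]
            # With several candidates, take the latest one in the month.
            current = max(pool, key=lambda e: e[0])[2]
        assigned[t] = current  # carried forward through months with no encounter
    return assigned

toy = [(3, 5, "phone", "Dr A"), (3, 20, "office", "Dr B"),
       (3, 10, "office", "Dr C"), (7, 2, "phone", "Dr D")]
providers = assign_providers(toy)
print(providers[2], providers[3], providers[6], providers[7])  # None Dr B Dr B Dr D
```

Carrying the assignment forward gives every patient-month after the first encounter exactly one provider, which is what the provider fixed effects require.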

We use the same model to study phone visits and our outcomes on patient health. Our dependent variable is the number of monthly office or phone visits, so each data point is the number of visits (office or phone) for patient i in month-year t. Since our data span 62 months from January 2008 to February 2013, t takes on values 1 to 62. For each patient-month, we create a binary variable $\text{PatientE-VisitAdoption}_{it}$ that equals 1 if patient i has adopted e-visits on or before month t. Adoption of e-visits is perfectly “sticky” in our main specifications: for each patient i, the value of $\text{PatientE-VisitAdoption}_{it}$ is 0 in all months before adoption, and after the patient uses e-visits once, it stays 1 for all months after adoption. We relax this assumption in our robustness checks and find similar results.
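The "sticky" adoption indicator, and a relaxed variant that switches off again, can be sketched as follows. The function names are hypothetical. The windowed variant follows the robustness check described in the introduction (the variable stays on for three months after each e-visit), and treating the e-visit month itself as "on" is an assumption of this sketch.

```python
def sticky_adoption(evisit_months, n_months=62):
    """1 from the month of the first e-visit onward, 0 before (all 0 for nonadopters)."""
    first = min(evisit_months) if evisit_months else None
    return [int(first is not None and t >= first) for t in range(1, n_months + 1)]

def windowed_adoption(evisit_months, n_months=62, window=3):
    """1 in the month of each e-visit and for `window` months after it (assumed convention)."""
    return [int(any(0 <= t - m <= window for m in evisit_months))
            for t in range(1, n_months + 1)]

evisits = [10, 40]  # hypothetical months in which one patient used an e-visit
sticky = sticky_adoption(evisits)
windowed = windowed_adoption(evisits)
print(sum(sticky), sum(windowed))  # 53 8
```

For this toy patient the sticky indicator is on for months 10 through 62, while the windowed indicator is on only for months 10 to 13 and 40 to 43.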

4.1. Difference-in-Differences on E-Visit Adopters and Nonadopters

To estimate the effect of e-visit adoption on visit frequency, we use the following difference-in-differences specification:

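$$\text{OfficeVisits}_{it} = \beta \cdot \text{PatientE-VisitAdoption}_{it} + \text{PatientFE}_{i} + \text{ProviderFE}_{j(i,t)} + \text{MonthFE}_{t} + \text{YearFE}_{t} + \varepsilon_{it} \tag{1}$$

where β is the coefficient of interest and captures the impact of patient e-visit adoption on monthly office visits, $j(i,t)$ denotes the provider assigned to patient i in month t, and $\varepsilon_{it}$ is the error term. The model also includes fixed effects for patients and providers in addition to fixed effects for month to control for seasonality and for year to control flexibly for time trends.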
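To make the fixed effects logic concrete, the sketch below estimates a simplified version of Equation (1) on a tiny balanced panel with staggered adoption. It is not the authors' estimator: it keeps only patient and period fixed effects (no provider effects, no separate month and year effects, no clustered standard errors), and every number in it is hypothetical. On a balanced panel, subtracting patient means and period means and adding back the grand mean removes both sets of fixed effects, so β can be recovered by a one-regressor least squares fit on the demeaned data.

```python
def two_way_demean(values):
    """values[i][t] for a balanced panel: remove unit and period means, add back the grand mean."""
    n, periods = len(values), len(values[0])
    unit_mean = [sum(row) / periods for row in values]
    time_mean = [sum(values[i][t] for i in range(n)) / n for t in range(periods)]
    grand = sum(unit_mean) / n
    return [[values[i][t] - unit_mean[i] - time_mean[t] + grand
             for t in range(periods)] for i in range(n)]

def twfe_beta(y, d):
    """Least squares slope of demeaned y on demeaned d (two-way fixed effects estimate)."""
    y_dm, d_dm = two_way_demean(y), two_way_demean(d)
    num = sum(a * b for ry, rd in zip(y_dm, d_dm) for a, b in zip(ry, rd))
    den = sum(b * b for rd in d_dm for b in rd)
    return num / den

# Hypothetical panel: three patients, six months, one never adopts.
periods = 6
adopt_month = [None, 3, 5]
patient_fe = [0.10, 0.20, 0.15]
month_fe = [0.00, 0.01, 0.02, 0.00, 0.03, 0.01]
true_beta = 0.05
d = [[int(a is not None and t + 1 >= a) for t in range(periods)] for a in adopt_month]
y = [[patient_fe[i] + month_fe[t] + true_beta * d[i][t] for t in range(periods)]
     for i in range(len(adopt_month))]
beta_hat = twfe_beta(y, d)
print(abs(beta_hat - true_beta) < 1e-9)  # True: the noiseless effect is recovered
```

Because the toy outcome has no noise, the estimate equals the assumed effect up to floating-point error. With real data the same transformation would be followed by inference that accounts for clustering.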

4.2. Difference-in-Differences on Matched Samples

Since we find that adopter and nonadopter patients have systematic differences on characteristics, such as age and race, in Table 1, we undertake several matching strategies to improve the comparison between these groups. We conduct two sets of nearest-neighbor matching analyses to obtain better control groups for our e-visit adopters and then run our difference-in-differences specification in Equation (1).⁴

In each matching analysis, we match each patient e-visit adopter to a nonadopter based on age in 2013 (equivalent to matching on birth-year), gender, baseline office visit frequency, baseline phone visit frequency, and baseline health levels (either blood cholesterol or blood glucose) in 2008 and 2009. Because we believe that matching on baseline outcomes is important, we limit our sample to patients who adopted e-visits in January 2010 onward; this provides us with 24 months of baseline data from 2008 and 2009. In the nearest neighbor matching procedure we used, each nonadopter may be matched to multiple adopters, and we verified that our analyses are not sensitive to whether we allow this many-to-one match. The matching procedure uses a weighted function of the covariates for each observation to identify a “nearest neighbor” match for each patient adopter.
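A minimal sketch of nearest-neighbor matching with replacement is given below. The text says only that the procedure uses a weighted function of the covariates, so the weighted squared distance, the specific weights, and the toy covariate values here are assumptions for illustration, and the function name is hypothetical.

```python
def nearest_neighbor_match(adopters, nonadopters, weights):
    """Match each adopter to the closest nonadopter, allowing controls to be reused.

    adopters, nonadopters: {patient_id: tuple of covariates in the same order as weights}.
    Returns {adopter_id: matched_nonadopter_id}.
    """
    def distance(a, b):
        # Assumed metric: weighted sum of squared covariate differences.
        return sum(w * (x - y) ** 2 for w, x, y in zip(weights, a, b))

    return {
        adopter_id: min(nonadopters, key=lambda nid: distance(cov, nonadopters[nid]))
        for adopter_id, cov in adopters.items()
    }

# Covariates: (age in 2013, male, baseline office visits, baseline phone visits, baseline LDL).
weights = (0.01, 1.0, 10.0, 10.0, 1.0)  # hypothetical weights
adopters = {"A1": (59, 1, 0.19, 0.30, 0.5), "A2": (60, 1, 0.20, 0.28, 0.5)}
nonadopters = {"N1": (59, 1, 0.18, 0.31, 0.5), "N2": (35, 0, 0.05, 0.10, 0.0)}
matches = nearest_neighbor_match(adopters, nonadopters, weights)
print(matches)  # {'A1': 'N1', 'A2': 'N1'}
```

Both toy adopters are matched to the same control, which illustrates the many-to-one matching described above: the number of distinct matched controls can be smaller than the number of adopters.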

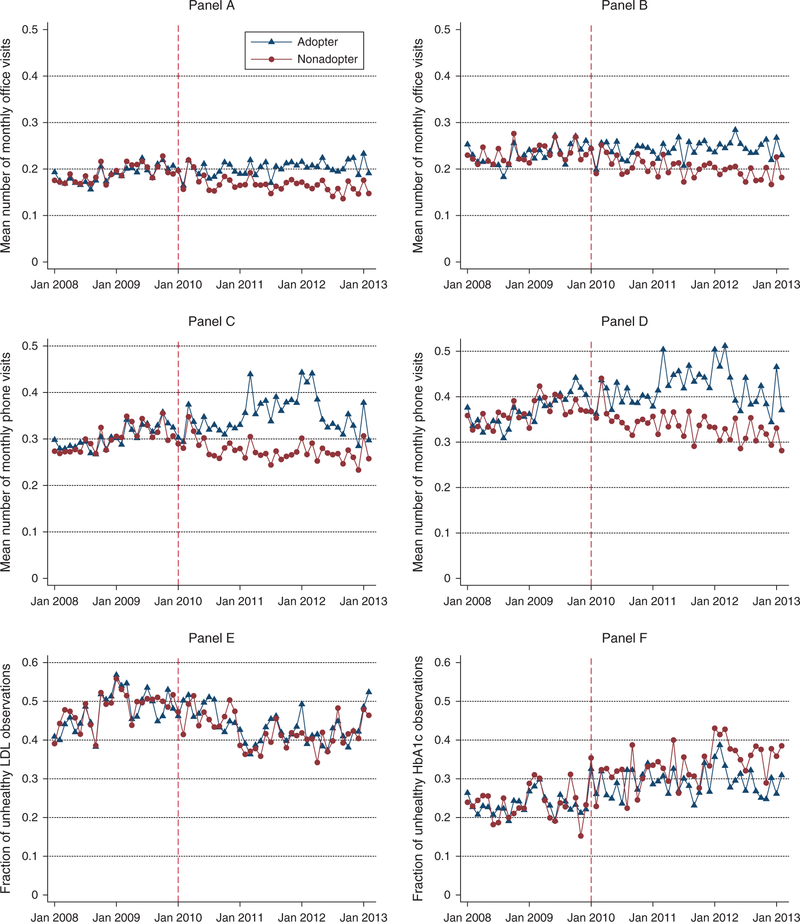

The first matching analysis further restricts the sample to patients with at least one blood cholesterol (LDL) measurement in 2008 or 2009. We match each patient e-visit adopter (who adopted in January 2010 or afterward) to a nonadopter based on birth-year, gender, baseline office visit frequency, baseline phone visit frequency, and baseline blood cholesterol levels.⁵ The summary statistics for the 7,202 e-visit adopters and their 6,343 nonadopter controls are provided in Table 2. There are no statistically significant differences between the two groups on any of the variables used for matching. Figure 3 provides an illustration of our matching on baseline visit frequency and health measures. The differences in visit frequency and health measurements following the baseline time period provide a preview of the results we find regarding these outcomes.

Table 2.

Summary Statistics by Patient E-Visit Adoption for Matched Samples

| | LDL match: (1) Nonadopters, Mean | (1) Nonadopters, SD | (2) Adopters, Mean | (2) Adopters, SD | (3) t-stat. | HbA1c match: (4) Nonadopters, Mean | (4) Nonadopters, SD | (5) Adopters, Mean | (5) Adopters, SD | (6) t-stat. |
|---|---|---|---|---|---|---|---|---|---|---|
| Demographics | | | | | | | | | | |
| Age (in 2013) | 59.10 | 13.24 | 59.22 | 13.03 | −0.52 | 61.86 | 12.47 | 61.75 | 12.27 | 0.29 |
| Male | 0.45 | 0.50 | 0.46 | 0.50 | −0.63 | 0.49 | 0.50 | 0.50 | 0.50 | −0.19 |
| Baseline outcomes | | | | | | | | | | |
| Monthly office visits | 0.19 | 0.14 | 0.19 | 0.14 | 0.89 | 0.23 | 0.16 | 0.23 | 0.16 | 0.27 |
| Monthly phone visits | 0.30 | 0.34 | 0.30 | 0.34 | −0.44 | 0.37 | 0.41 | 0.37 | 0.42 | −0.21 |
| Unhealthy level of LDL | 0.52 | 0.46 | 0.52 | 0.46 | −0.20 | — | — | — | — | — |
| Unhealthy level of HbA1c | — | — | — | — | — | 0.16 | 0.33 | 0.16 | 0.32 | 0.63 |
| Number of patients | 6,343 | | 7,202 | | | 1,980 | | 2,226 | | |

Note. Columns (3) and (6) show the t-statistic testing the difference of mean summary statistics between nonadopters and adopters in each of the matched samples.
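As a rough check on columns (3) and (6), a t-statistic can be recomputed from the rounded means, standard deviations, and sample sizes in Table 2. The sketch below assumes an unequal-variance two-sample test, which is an assumption on our part because the exact test is not stated in the note; the function name is illustrative.

```python
from math import sqrt

def two_sample_t(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Unequal-variance t-statistic for the difference mean_a - mean_b."""
    return (mean_a - mean_b) / sqrt(sd_a ** 2 / n_a + sd_b ** 2 / n_b)

# Age (in 2013) in the LDL-matched sample: nonadopters versus adopters.
t_age = two_sample_t(59.10, 13.24, 6343, 59.22, 13.03, 7202)
```

Because the inputs are rounded to two decimals, the recomputed value should be close to, but need not exactly equal, the reported statistic of −0.52.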

Figure 3.

Visit Frequency and Patient Health by E-Visit Adoption for Matched Samples

Notes. Panels A–F plot the four outcome variables at the patient-month level for two matched samples. Panels A, C, and E are for the LDL-matched sample, and panels B, D, and F are for the HbA1c-matched sample.

The second matching analysis differs from the first only in that we restrict the sample to patients with at least one blood glucose (HbA1c) measurement in 2008 or 2009 and match on this level in addition to the other variables. Far fewer patients have measurements for blood glucose, but we are able to effectively match our 2,226 e-visit adopters with 1,980 nonadopters; the summary statistics for these groups are provided in Table 2. As in the first matching analysis, the adopter and nonadopter groups have no statistically significant differences on the matching variables.

4.3. Instrumental Variable Analysis

One of the empirical challenges in our analyses is accounting for both time-invariant and time-varying unobservable patient characteristics. All our analyses use patient fixed effects to account for time-invariant unobservable patient characteristics. Time-varying unobservables do not have a standard econometric treatment, however, and there may be concerns that time-varying patient characteristics that are correlated with e-visit adoption will bias our results. For example, patients may modify their behavior based on variation in their health condition.

To account for the endogeneity of e-visit adoption, we run an IV analysis in which we use the number of e-visits conducted by the patient’s physician with all of his or her other patients in a given month-year as an instrument for whether the patient is an e-visit adopter in that month-year. Keeping with our notation in Equation (1) of patient i interacting with physician j in month-year t, our first-stage equation is given by

| (2) |

We use a linear probability model despite the binary endogenous variable to accommodate the estimation of fixed effects in our data set; also, the standard 2SLS estimator must have a linear first stage.6 This type of instrument is often referred to as a “leave-one-out” instrument as for each patient-month, the instrument leverages variation in the behavior among all other patients in that same month. The second-stage equation is then

| (3) |

where the fitted adoption variable is the predicted value from Equation (2), δit is an error term, and βIV is the selection-corrected 2SLS estimator of the impact of e-visit adoption on monthly office visits.
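The two-stage logic can be sketched on toy data. The example below is a stylized illustration only: it omits the patient, provider, month, and year fixed effects, uses a continuous rather than binary endogenous variable, and all function names and numbers are hypothetical. It first builds a leave-one-out count of a provider's e-visits with all other patients and then runs a just-identified two-stage least squares fit.

```python
from collections import defaultdict

def leave_one_out(evisits):
    """Map (patient, provider, month) to the provider's e-visits with
    all other patients in that month."""
    totals = defaultdict(int)
    for (_, provider, month), n in evisits.items():
        totals[(provider, month)] += n
    return {k: totals[(k[1], k[2])] - n for k, n in evisits.items()}

def ols(x, y):
    """Intercept and slope from a one-regressor least-squares fit."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    slope = (sum((a - mx) * (b - my) for a, b in zip(x, y))
             / sum((a - mx) ** 2 for a in x))
    return my - slope * mx, slope

def two_stage_least_squares(z, x, y):
    """Regress x on z, then regress y on the fitted values of x."""
    a0, a1 = ols(z, x)
    x_hat = [a0 + a1 * zi for zi in z]
    return ols(x_hat, y)[1]

# Leave-one-out instrument for three patients of one provider in one month.
counts = {("p1", "dr", 1): 2, ("p2", "dr", 1): 0, ("p3", "dr", 1): 5}
instrument = leave_one_out(counts)

# Toy data: c is an unobserved shock that drives both x and y but is
# uncorrelated with the instrument z by construction.
z = [0, 1, 2, 3, 4, 5]
c = [1, -1, 0, 0, -1, 1]
x = [0.1 * zi + ci for zi, ci in zip(z, c)]
y = [0.5 * xi + 2 * ci for xi, ci in zip(x, c)]

beta_iv = two_stage_least_squares(z, x, y)   # recovers the true effect of 0.5
beta_ols = ols(x, y)[1]                      # contaminated by the shock c
```

In this constructed example the naive regression overstates the effect because the shock moves both variables, while the instrumented estimate recovers the coefficient used to generate the data.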

For the IV analysis to provide an unbiased estimate, two conditions must be satisfied. First, the instrument must be relevant in the sense that it is correlated with e-visit adoption. We can verify this by analyzing the F-statistic associated with γ in Equation (2). We report the Wald F-statistic and the p-value of the underidentification test by Kleibergen and Paap (2006) as well as the 10% critical values of Stock and Yogo (2005) with all IV analyses. Second, the instrument must plausibly satisfy the exclusion restriction, which in this case means that the physician’s intensity of e-visit usage with other patients does not directly influence a particular patient’s visit frequency or health outcomes.7 This assumption cannot be directly tested, but we can conduct auxiliary analyses that rule out some plausible ways in which it could be violated through changes in physician behavior. One possibility is that adoption of e-visits by other patients changes the amount of time available for a visit by the focal patient, leading to changes in health outcomes. We obtained and analyzed appointment duration data that suggest this is not the case. Similarly, we investigated whether e-visits are associated with a change (in particular, a reduction) in laboratory tests, which cannot be done through the electronic channel. We find that testing rates do not decline with e-visit adoption, suggesting this effect is unlikely. We address other potential concerns with our instrument when we present the results.8

5. Results on Visit Frequency

Table 3 presents the difference-in-differences results. Column (1) shows the impact of patient e-visit adoption on office visits using the full sample of adopters and nonadopters, and here we find an estimate that remains robust in later analyses. We find that patient e-visit adoption is associated with an increase in office visits by about 0.01 each month, which off a base of 0.16 represents an effect size of about 6%. We also find a statistically (and practically) significant effect of patient e-visit adoption on subsequent phone visits in column (2): the estimate is 0.017, which off a base of 0.24 is an effect size of about 7%.

Table 3.

Difference-in-Differences Estimates from Full and Matched Samples on Visit Frequency

| | Full diff-in-diff: (1) Office | Full diff-in-diff: (2) Phone | LDL match: (3) Office | LDL match: (4) Phone | HbA1c match: (5) Office | HbA1c match: (6) Phone |
|---|---|---|---|---|---|---|
| Patient E-Visit Adoption | 0.010*** | 0.017*** | 0.011*** | 0.026*** | 0.013** | 0.043*** |
| | (0.002) | (0.004) | (0.003) | (0.006) | (0.005) | (0.012) |
| Patient FEs | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Provider FEs | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Month FEs | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Year FEs | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Mean of dep. var. | 0.16 | 0.24 | 0.19 | 0.30 | 0.23 | 0.37 |
| R² | 0.002 | 0.001 | 0.003 | 0.002 | 0.004 | 0.003 |
| Sample | All | All | Matched (LDL) | Matched (LDL) | Matched (HbA1c) | Matched (HbA1c) |
| Patient-months | 5,948,239 | 5,948,239 | 818,184 | 818,184 | 254,094 | 254,094 |

Note. Standard errors in parentheses are robust and two-way clustered at the patient and the provider-month levels.

*p < 0.10; **p < 0.05; ***p < 0.01.

Next, we show the results for our difference-in-differences analysis using matched samples. Columns (3) and (4) of Table 3 show estimates from matching on selected covariates and blood cholesterol levels. Our estimate of the impact of patient e-visit adoption on subsequent office visits remains at 0.01, and statistically significant, and our estimate of the impact on phone visits increases from 0.017 to 0.026. These results are sustained in columns (5) and (6) of Table 3, which show the same estimates on visit frequency for matching on selected covariates and blood glucose levels. The effect size of about 6% for the impact of e-visit adoption on office visits is stable across model specifications. The estimates on phone visits are statistically significant and range from 7% in column (2) to 12% in column (6).

Finally, Table 4 shows the IV results on visit frequency. Column (1) reports the first-stage regression, and the instrument is highly statistically significant (F(1,6013) = 135, p < 0.001). The estimate of 0.001 can be interpreted in the following way: for every 100 e-visits that a patient’s provider conducts with all his or her other patients, that patient’s likelihood of using e-visits in a given month increases by 10 percentage points. The goal of this analysis is to check whether our earlier estimates are upward biased due to negative selection into e-visits. In column (2), we find a large estimate of the impact of patient e-visit adoption on office visits: the coefficient is 0.059 and corresponds to a 33% effect size. The 95% confidence interval is wide, however, and includes our earlier estimates. Column (3) shows the impact on phone visits, and while our results are noisy, our point estimate is consistent with our earlier estimates.

Table 4.

Instrumental Variable Estimates on Visit Frequency

| | (1) Patient e-visit adoption (first stage) | (2) Office (second stage) | (3) Phone (second stage) |
|---|---|---|---|
| Patient E-Visit Adoption | | 0.059** | 0.022 |
| | | (0.029) | (0.044) |
| Provider’s Number of E-Visits with All Other Patients | 0.001*** | | |
| | (0.0001) | | |
| Patient FEs | ✓ | ✓ | ✓ |
| Provider FEs | ✓ | ✓ | ✓ |
| Month FEs | ✓ | ✓ | ✓ |
| Year FEs | ✓ | ✓ | ✓ |
| Mean of dep. var. | 0.04 | 0.16 | 0.24 |
| R² | 0.099 | — | — |
| Patient-months | 5,948,239 | 5,948,239 | 5,948,239 |
| Weak id. (KP rk Wald F-stat.) | 135.36 | — | — |
| Underid. (KP rk LM p-value) | < 0.001 | — | — |

Notes. The Wald F-statistic and test of under identification are based on Kleibergen and Paap (2006). The Stock and Yogo (2005) critical value is 16.38 for the IV estimates to have no more than 10% of the bias of the ordinary least squares estimates. Standard errors in parentheses are robust and two-way clustered at the patient and the provider-month levels.

*p < 0.10; **p < 0.05; ***p < 0.01.

Together, our results on visit frequency show a consistent effect of patient e-visit adoption on subsequent office and phone visits. Given the substantial increase in the coefficient in the IV analysis, and the fact that its confidence interval includes our original estimate, we focus on the practical impact of our (non-IV) 0.01 estimate. This represents an effect size of about 6% and translates to an extra office visit every 100 months. While this is not very large for an individual patient, for an average physician in our data with a panel size of 1,611 patients and a 13.4% e-visit adoption rate, this amounts to 0.01 × 0.134 × 1,611 = 2.16 additional visits each month. Given the average appointment duration of about 20 minutes (Shaw et al. 2014),9 this equals about 2.16 × (20/60) = 0.72 hours, or about 43.2 minutes, of appointments each month (not including the time needed to provide e-visits and the additional phone visits). If the true value is closer to our IV estimates, the impact could be substantially larger.
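The back-of-the-envelope calculation above can be reproduced directly. The sketch below uses only the figures quoted in the text; the variable names are ours.

```python
# Inputs quoted in the text.
effect = 0.01             # extra monthly office visits per adopter (Table 3, column (1))
base_rate = 0.16          # mean monthly office visits in the full sample
panel_size = 1611         # average patients per physician
adoption_rate = 0.134     # share of patients who adopt e-visits
appointment_minutes = 20  # average appointment duration

relative_effect = effect / base_rate                 # about 6%
extra_visits = effect * adoption_rate * panel_size   # about 2.16 visits per month
extra_minutes = extra_visits * appointment_minutes   # about 43 minutes per month

print(f"{relative_effect:.1%}, {extra_visits:.2f} visits, {extra_minutes:.1f} minutes")
```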

5.1. Evaluating the Gateway Effect: Who Conducts the Additional Visits?

Thus far, we have documented that patient e-visit adoption is linked with increased office and phone visits postadoption. One potential mechanism explaining this effect is that e-visits remove the “gatekeeper” between patients and physicians. Consistent with this potential mechanism, we find evidence that patients use e-visits to connect directly to the physician and bypass gatekeepers, such as office staff and nurses.

For this analysis, we estimate the regression specified in Equation (1) but decompose the dependent variable into four parts: “monthly office visits with a physician,” “monthly office visits with a resident,” “monthly office visits with a nurse practitioner,” and “monthly office visits with other,” in which the last category includes all other provider types. The results of this set of analyses are in Table 5. Recalling that our estimate in our full difference-in-differences analysis was 0.01, we find that this decomposes into 0.012 visits with physicians and −0.002 visits with residents, no additional visits with nurse practitioners, and 0.001 additional visits with other providers in columns (1)–(4).10 The observation that the additional office visits are driven by physician encounters indicates that e-visits may allow patients to bypass the usual “gatekeepers” in the primary care system.

Table 5.

Difference-in-Differences Estimates on the Gateway Effect for Visit Frequency

| | Office: (1) Physician | Office: (2) Resident | Office: (3) NP | Office: (4) Other | Phone: (5) Physician | Phone: (6) Resident | Phone: (7) NP | Phone: (8) Other |
|---|---|---|---|---|---|---|---|---|
| Patient E-Visit Adoption | 0.012*** | −0.002*** | 0.000 | 0.001*** | 0.028*** | −0.008*** | 0.000 | −0.003*** |
| | (0.002) | (0.001) | (0.001) | (0.000) | (0.004) | (0.001) | (0.000) | (0.001) |
| Patient FEs | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Provider FEs | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Month FEs | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Year FEs | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Mean of dep. var. | 0.12 | 0.02 | 0.01 | 0.01 | 0.18 | 0.02 | 0.01 | 0.03 |
| R² | 0.002 | 0.001 | 0.000 | 0.000 | 0.001 | 0.001 | 0.000 | 0.001 |
| Patient-months | 5,948,239 | 5,948,239 | 5,948,239 | 5,948,239 | 5,948,239 | 5,948,239 | 5,948,239 | 5,948,239 |

Notes. This table presents our results for the effect of e-visit adoption on the number of office and phone visits with physicians (columns (1) and (5)), residents (columns (2) and (6)), nurse practitioners (columns (3) and (7)), and other providers (columns (4) and (8)). Standard errors in parentheses are robust and two-way clustered at the patient and the provider-month levels.

*p < 0.10; **p < 0.05; ***p < 0.01.

We find similar results on phone visits in Table 5. Our 0.017 estimate from the difference-in-differences analysis is decomposed into 0.028 additional phone visits with physicians, −0.008 with residents, no additional ones with nurse practitioners, and −0.003 with other providers in columns (5)–(8). Again, physicians appear to bear the burden of these additional encounters.

5.2. Where Do the Additional Visits Come From?

We find an increase of 6% in office visits for patient e-visit adopters, and we have shown that these additional visits are mostly conducted by physicians (not other providers). We next want to know how the physicians are able to provide these additional visits. There are four main possibilities we consider: (1) the physician reduces the amount of time with nonadopter patients; (2) the physician admits fewer new patients; (3) the physician works more hours to provide the additional appointments; and (4) the physician conducts shorter duration appointments. We formally test each of these possibilities and find evidence for the first and second channels.

Nonadopter Visits.

We test the “crowding out” hypothesis on nonadopter patients by regressing the number of office and phone visits (at the patient level) on physician e-visit adoption. The basic idea is to test whether the visit frequency changes for patients who do not adopt e-visits, before and after their physician adopts e-visits. For each physician, we define the variable Provider E-Visit Adoption in the same way we define Patient E-Visit Adoption in our earlier analyses: the variable equals 1 for the month in which a physician first has an e-visit, and for all months afterward. We also include the five physicians who never adopt e-visits in our sample to enable a better difference-in-differences analysis. The regression equation is the following:

| (4) |

where the last term captures error. The results are in columns (1) and (2) of Table 6 for office and phone visits, respectively. We do not find a significant effect on office visits, but we do find a statistically significant reduction in the number of phone visits conducted with patients who do not adopt e-visits. We take this as evidence for some limited level of crowding out of the physician’s time due to e-visit adoption.

Table 6.

Visit Patterns After Provider Adoption

| | Visit frequency for nonadopter patients: (1) Office | Visit frequency for nonadopter patients: (2) Phone | Number of new patients: (3) Two years | Number of new patients: (4) Three years | Provider monthly visits: (5) Office | Appointment duration: (6) Duration |
|---|---|---|---|---|---|---|
| Provider E-Visit Adoption | −0.004 | −0.009*** | −1.590*** | −1.231** | −0.300 | −0.007 |
| | (0.002) | (0.003) | (0.485) | (0.594) | (2.878) | (0.005) |
| Patient FEs | ✓ | ✓ | — | — | — | — |
| Provider FEs | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Month FEs | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Year FEs | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Mean of dep. var. | 0.15 | 0.23 | 10.85 | 8.73 | 135.34 | 0.52 |
| R² | 0.002 | 0.001 | 0.680 | 0.695 | 0.058 | 0.203 |
| Sample | Nonadopter patients | Nonadopter patients | All physicians | All physicians | All physicians | All physicians |
| Observations | 5,182,642 | 5,182,642 | 3,193 | 2,197 | 5,056 | 4,398 |

Notes. This table presents our results for the effect of provider e-visit adoption on the number of office and phone visits with patients who do not adopt e-visits (columns (1) and (2)), new patients (columns (3) and (4)), number of total monthly visits (column (5)), and duration of each appointment in hours (column (6)). The titles of columns (3) and (4) refer to the length of observation window in the data allowed to determine whether a patient is new, that is, has had any prior visit in the data set. Standard errors in columns (1) and (2) are two-way clustered at the patient and the provider-month levels.

*p < 0.10; **p < 0.05; ***p < 0.01.

New Patients.

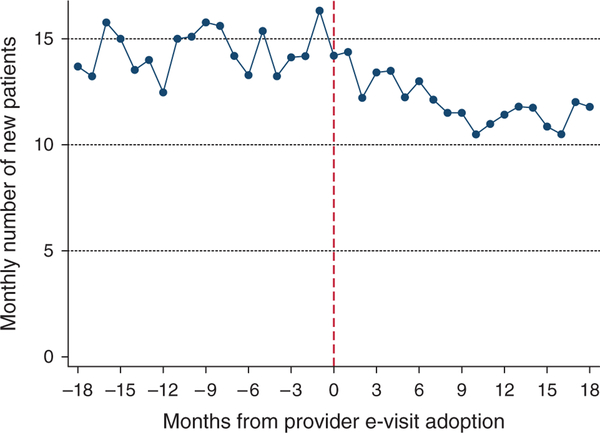

During our conversations with the physicians in the system, a recurring concern was the impact of e-visit adoption on their ability to handle more patients. Impacts on new patients are critical in evaluating e-visits because a common goal of e-visits is to enable a physician to use the technology to care for a larger number of patients. We examine this concern by regressing the monthly number of new patients on physician e-visit adoption. We use a rolling two-year window to count the number of “new patients” in our data. At each month, the number of new patients for physician j is the number of patients who visit the physician and have not had any previous visits (office, phone, or e-visits) in the system over the past two years. The motivation for using a two-year cutoff is to be conservative relative to prior research, which uses an 18-month cutoff for an appointment to define a patient as belonging to a physician’s panel (Green et al. 2007). Also, in our data, the 99th percentile of the number of days between two encounters for a patient is 1.8 years.11 We perform a robustness check for this definition using a rolling three-year window.12 The regression specification is as follows, for physician j in month-year t:

| (5) |

where ζjt is an error term capturing provider-month shocks. The results for the two-year window are in column (3) of Table 6. We find that physicians who adopt e-visits have 1.59 fewer monthly new patient visits. Given that the mean number of new patient visits per month for each physician is 10.85, this is an effect size of 14.7%. The estimated reduction in new patients is similar in column (4), which uses a rolling three-year window. Figure 4 corroborates this result as there is a visible drop in the monthly number of new patients after adoption. Since our analysis shows that physicians accept fewer new patients following e-visit adoption, we infer that these patients were diverted to other physicians or to another health system.
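The rolling-window definition of a new patient can be sketched as follows. This is a minimal illustration under stated assumptions: months are integer indices, a patient counts as new when the gap since the last encounter of any type strictly exceeds the window, and the sketch does not handle left-censoring at the start of the data (a patient's first observed encounter is always counted as new here). The function name and toy records are hypothetical.

```python
from collections import defaultdict

def count_new_patients(encounters, window_months=24):
    """encounters: iterable of (patient, physician, month_index) covering
    office, phone, and e-visits. Returns {(physician, month): new patients}."""
    last_seen = {}
    new_counts = defaultdict(int)
    for patient, physician, month in sorted(encounters, key=lambda e: e[2]):
        previous = last_seen.get(patient)
        # New if never seen before, or not seen within the rolling window.
        if previous is None or month - previous > window_months:
            new_counts[(physician, month)] += 1
        last_seen[patient] = month
    return dict(new_counts)

toy = [
    ("a", "dr1", 0), ("a", "dr1", 10),  # second encounter falls inside the window
    ("a", "dr1", 40),                   # 30-month gap, so counted as new again
    ("b", "dr1", 40), ("b", "dr2", 41), # b is new at dr1 but not at dr2 a month later
]
new_patients = count_new_patients(toy)
```

Passing `window_months=36` gives the three-year variant used as a robustness check.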

Figure 4.

Monthly Number of New Patients Before and After Provider Adoption

Number and Duration of Appointments.

We also examined whether e-visit adoption by providers has an effect on the monthly number of appointments and appointment duration. The physician may grant more office and phone appointments, or engage in shorter office and phone appointments, to accommodate the new office visits triggered by e-visits. Also, as discussed earlier in our instrumental variable analysis, one way in which the exclusion restriction assumption could be invalidated is if a physician’s practice style changes with the number of e-visits he or she conducts in each month. To ensure that physician practice styles are at least not changing along these dimensions, we analyze data from the appointment scheduling system for the physicians we study.

These data are available for the entirety of the time period studied and contain appointment-level information on the patient requesting the appointment, the physician with whom the appointment was scheduled, and the timing of the appointment. The timing data are slightly imperfect, however, because the time is sometimes recorded as the scheduled appointment time and sometimes as the appointment check-in time. While measurement errors are important, it is useful to note that they are less problematic when affecting the dependent variable. An additional drawback of this data structure is that we cannot separate physician slack capacity from a long appointment: if the time between two appointments for a physician is four hours, for example, we do not know whether this was the result of a very long appointment or of slack capacity between appointments. Though our results do not depend on the following restriction, we limit our analysis to typical appointments, which last between 10 minutes and two hours (based on our conversations with the providers in the system we study).

For each of the 90 physicians in our data set (we exclude the seven “other” provider categories in this analysis), we calculate the total number of office visits provided each month. Since we have 62 months in our study period, the maximum number of physician-month observations is 90 × 62 = 5,580; because some physicians have no observations in some months (due to late entry or early exit from our sample), we end up with 5,056 physician-month observations. Specifically, the regression equation is similar to (5) with the dependent variable being the number of monthly office visits provided by physicians, and the estimate of λ from this regression is provided in column (5) of Table 6. We observe no significant differences in the number of monthly office visits provided by physicians after adoption of e-visits.

Next, we use the appointment scheduling data to determine whether patients of physicians who adopt e-visits experience changes in appointment duration. The basic idea is to see whether appointments provided by a given physician change in duration after the physician begins using e-visits. Our regression specification for this analysis is the same as the one in (5) with the physician’s monthly average appointment duration as the dependent variable. The estimate of λ from this analysis is in column (6), Table 6. We do not observe any significant changes in appointment duration upon physician adoption of e-visits.13

Since we do not find any evidence that the number of appointments provided or the duration of these appointments change with physician e-visit adoption, we are more comfortable assuming that other factors in physician practice style may also remain unchanged after e-visit adoption.

Putting together these results, we can obtain an estimate of how physicians provide the additional visits to e-visit adopters. Our estimates show that the number of office visits increases by 0.01 office visits per month for adopters. The average panel size and the percentage of adopters in our data are 1,611 and 13.4%, respectively. This translates to 0.01 × 0.134 × 1,611 = 2.16 additional office visits each month for an average physician. To decompose the source of these additional visits, we use our results on new patients: we observe 1.59 fewer new patients each month on a provider’s panel after the provider adopts e-visits. Assuming each of these new patients would have had one office visit, this results in 1.59 fewer office visits per month, leaving 2.16 – 1.59 = 0.57 office visits per month to be explained by the reduction in office visits from e-visit nonadopters. Therefore, 1.59/2.16 = 74% of the new office visits generated by e-visit adopters occur at the expense of new patient visits, and the remaining 26% occur at the expense of nonadopters.
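The decomposition in this paragraph is simple arithmetic on the reported estimates and can be reproduced as below. As in the text, it assumes that each forgone new patient would have had one office visit; the variable names are ours.

```python
extra_visits = round(0.01 * 0.134 * 1611, 2)  # 2.16 additional visits per month
fewer_new_patient_visits = 1.59               # Table 6, column (3)

residual_visits = extra_visits - fewer_new_patient_visits   # attributed to nonadopters
share_new_patients = fewer_new_patient_visits / extra_visits
share_nonadopters = 1 - share_new_patients

print(f"{residual_visits:.2f} visits, {share_new_patients:.0%} vs {share_nonadopters:.0%}")
```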

6. Results on Patient Health

Our empirical strategy for measuring the impact of e-visit adoption on patient health is the same as the ones that we used for visit frequency, outlined in Equations (1) and (3). As stated before, our two key measures of health are LDL and HbA1c levels. Higher levels of these measures are generally correlated with worse patient health, and the medical community has established important cutoff values for each of these measurements that map to whether patient health is “under control” or unhealthy. We define HbA1cit and LDLit to be binary indicators of this cutoff, where 1 indicates an unhealthy observation (≥ 100 mg/dL for LDL and ≥ 7% for HbA1c). We also run the analysis using raw levels of LDL and HbA1c and the results are consistent with our analysis with dichotomized versions of these variables.
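The dichotomized health outcomes can be sketched as follows. The cutoffs are those stated above; treating a month as unhealthy when at least one measurement is at or above the cutoff follows the notes to Table 7. The function name and the use of `None` for months without a measurement are illustrative choices.

```python
LDL_CUTOFF = 100.0   # mg/dL
HBA1C_CUTOFF = 7.0   # percent

def unhealthy_month(measurements, cutoff):
    """Return 1 if any measurement in the month is at or above the cutoff,
    0 if all are below it, and None if the month has no measurement."""
    if not measurements:
        return None
    return int(any(value >= cutoff for value in measurements))

ldl_flag = unhealthy_month([95.0, 101.0], LDL_CUTOFF)   # one reading above the cutoff
hba1c_flag = unhealthy_month([6.5], HBA1C_CUTOFF)       # reading below the cutoff
missing_flag = unhealthy_month([], LDL_CUTOFF)          # no reading this month
```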

Table 7 presents the results on patient health for the sample of patients who have at least one observation over the time period studied. Columns (1) and (2) show the results of the difference-in-differences specification introduced in (1) for patients who adopt e-visits. In column (1), we find that the likelihood of an unhealthy observation of LDL decreases by 0.016 off a base of 0.46, reflecting an effect size of about 3%. In column (2), we find that the likelihood of an unhealthy observation of HbA1c decreases by 0.018 off a base of 0.27, reflecting an effect size of about 7%.

Table 7.

Difference-in-Differences Estimates from Full and Matched Samples on Patient Health

| Estimation method | Full diff-in-diff | Full diff-in-diff | LDL matched diff-in-diff | HbA1c matched diff-in-diff |
|---|---|---|---|---|
| Outcome | (1) LDL | (2) HbA1c | (3) LDL | (4) HbA1c |
| Patient E-Visit Adoption | −0.016** | −0.018** | −0.011 | −0.029** |
| | (0.007) | (0.009) | (0.008) | (0.012) |
| Patient FEs | ✓ | ✓ | ✓ | ✓ |
| Provider FEs | ✓ | ✓ | ✓ | ✓ |
| Month FEs | ✓ | ✓ | ✓ | ✓ |
| Year FEs | ✓ | ✓ | ✓ | ✓ |
| Mean of dep. var. | 0.46 | 0.27 | 0.52 | 0.16 |
| R² | 0.013 | 0.015 | 0.018 | 0.024 |
| Sample | All | All | Matched (LDL) | Matched (HbA1c) |
| Patient-months | 235,978 | 121,225 | 58,960 | 25,083 |

Notes. LDL and HbA1c are binary variables that equal 1 if at least one measurement of the relevant outcome is unhealthy in a given month. Column (3) shows estimates from a sample of patients matched on baseline LDL along with other covariates, and column (4) shows the equivalent for HbA1c. Standard errors in parentheses are robust and two-way clustered at the patient and the provider-month levels.

*p < 0.10; **p < 0.05; ***p < 0.01.

Column (3) of Table 7 presents the results on blood cholesterol for the sample of patient e-visit adopters matched with nonadopters based on selected covariates and baseline levels of blood cholesterol; while our point estimate is similar to that in column (1), it is not statistically significant. Our blood glucose estimate remains statistically significant at the 5% level, however, and the point estimate of −0.029 in column (4) of Table 7 suggests an 18% effect size on the likelihood of an unhealthy observation. Overall, we find small and noisy results of the impact of patient e-visit adoption on blood cholesterol, but stronger results on blood glucose.

Next, we analyze our patient health outcomes using the instrumental variable analysis to check for unobservable, time-varying patient selection into e-visit adoption. Our results are shown in Table 8. We estimate two first-stage equations in columns (1) and (3), one for each sample of patients with at least one measurement for the corresponding health outcome. The instrument continues to be highly significant on these subsamples (F(1,5786) = 321, p < 0.001 for LDL and F(1,5664) = 152, p < 0.001 for HbA1c). Neither of our IV estimates in columns (2) and (4) on patient health is statistically different from zero, but the confidence intervals are wide and do not preclude our earlier estimates.

Table 8.

Instrumental Variable Estimates on Patient Health

| | LDL: (1) First stage | LDL: (2) Second stage | HbA1c: (3) First stage | HbA1c: (4) Second stage |
|---|---|---|---|---|
| Patient E-Visit Adoption | | −0.037 | | −0.041 |
| | | (0.028) | | (0.039) |
| Provider’s Number of E-Visits with All Other Patients | 0.002*** | | 0.001*** | |
| | (0.0001) | | (0.0001) | |
| Patient FEs | ✓ | ✓ | ✓ | ✓ |
| Provider FEs | ✓ | ✓ | ✓ | ✓ |
| Month FEs | ✓ | ✓ | ✓ | ✓ |
| Year FEs | ✓ | ✓ | ✓ | ✓ |
| Mean of dep. var. | 0.06 | 0.46 | 0.07 | 0.27 |
| R² | 0.141 | — | 0.127 | — |
| Patient-months | 235,978 | 235,978 | 121,225 | 121,225 |
| Weak id. (KP rk Wald F-stat.) | 320.65 | — | 151.91 | — |
| Underid. (KP rk LM p-value) | <0.0001 | — | <0.0001 | — |

Notes. LDL and HbA1c are binary variables that equal 1 if at least one measurement of the relevant outcome is unhealthy in a given month. The Wald F-statistic and test of underidentification are based on Kleibergen and Paap (2006). The Stock and Yogo (2005) critical value is 16.38 for the IV estimates to have no more than 10% of the bias of the OLS estimates. Standard errors in parentheses are robust and two-way clustered at the patient and the provider-month levels.

*p < 0.10; **p < 0.05; ***p < 0.01.

When we analyze patient health, there is a concern that e-visit adopters may have fewer measurements of blood cholesterol and blood glucose, which could bias our results. This could occur if physicians are less likely to prescribe laboratory tests via e-visits because they cannot be conducted using an electronic channel. If we assume that e-visits do not harm patient health, then undertesting is a problem because we would be systematically missing data from patients who have improved health due to e-visits. To check whether this is a concern in our setting, we examine whether patients who adopt e-visits experience decreases in testing rates postadoption. Table A.2 in the appendix presents the results on this analysis. Perhaps not surprisingly in the context of our results on office visits, we find that adopters experience no change in the levels of LDL testing, and increases in the levels of HbA1c testing among patients with at least one test.14 These findings suggest that e-visit adoption increases care consumption not only through increased office and phone visits with providers, but also through the utilization of other system resources, such as the testing facilities.

7. Robustness

Our main results withstand a variety of robustness checks, five of which we describe in this section. Our first robustness check concerns the measurement of e-visit adoption: in our main analysis, we take e-visit adoption to be a binary variable that equals 1 in the month that a patient first uses e-visits and stays 1 afterward. Since 38% of adopters in our sample use e-visits only once, however, there is a concern that our e-visit adoption variable does not accurately reflect users of e-visits. Hence, in our robustness check, we refine our definition of patient adoption to equal 1 in the month of the patient’s second e-visit and in all months afterward. Table A.3 in the appendix contains the results of using this definition for our analyses. Interestingly, we observe an even larger effect of patient e-visit adoption on visit frequency using this measure of e-visit adoption: office visits increase by 0.03 each month, and phone visits by 0.05 each month, and each coefficient is statistically significant at the 1% level. We continue to find no effect on cholesterol but do observe a 2.5% decrease in the likelihood of an unhealthy blood glucose observation postadoption that is statistically significant.
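The two adoption definitions compared in this check differ only in which e-visit switches the indicator on. A minimal sketch, assuming integer month indices and a hypothetical helper name:

```python
def adoption_indicator(evisit_months, all_months, nth=1):
    """Return {month: 0 or 1}, equal to 1 from the month of the patient's
    nth e-visit onward. evisit_months lists one entry per e-visit."""
    ordered = sorted(evisit_months)
    if len(ordered) < nth:
        # The patient never reaches the nth e-visit, so never adopts.
        return {m: 0 for m in all_months}
    start = ordered[nth - 1]
    return {m: int(m >= start) for m in all_months}

months = range(6)
main_definition = adoption_indicator([2, 4], months, nth=1)    # on from month 2
second_visit = adoption_indicator([2, 4], months, nth=2)       # on from month 4
one_time_user = adoption_indicator([3], months, nth=2)         # never on
```

Under the stricter definition, a patient with a single e-visit is treated as a nonadopter throughout.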

A related second robustness check defines patient e-visit adoption to equal 1 in each month in which the patient had an e-visit and for only the three months afterward. The goal of this analysis is to test more precisely the “trigger” hypothesis we postulate on e-visits, which would be expected to appear within three months of an e-visit. Table A.4 in the appendix shows the results of this analysis for the full difference-in-differences sample: in column (1) we find that patient e-visit adoption leads to 0.07 additional office visits, and column (2) shows that these patients experience 0.10 additional phone visits. We do not find any effects on blood cholesterol and continue to find statistically significant improvements in blood glucose. As earlier, we drop the original month of adoption, along with the months before and after adoption, in these regressions.

As our third robustness check, we perform a placebo analysis that randomizes the timing of patient e-visit adoption across our sample. The reason for conducting this exercise is to ensure that we are not picking up spurious results in our main analyses as they rely on difference-in-differences estimation. We conduct the placebo check by first randomly assigning 13.4% of all patients to e-visit adoption (based on the percentage of e-visit adoption we observe) and then randomizing these patients to a month-year of adoption between January 2008 and February 2013. The results of our placebo analysis are provided in Table A.5 in the appendix. We do not find any significant results on visit frequency or patient health, providing some assurance that our main estimates are not spurious.
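The placebo assignment described above can be sketched as follows. This is an illustration only: the function name, the seed, and the choice to draw adoption months uniformly are assumptions, since the text specifies only the 13.4% share and the January 2008 to February 2013 range (62 months, indexed here as 0 to 61).

```python
import random
from typing import Dict, Sequence


def assign_placebo_adoption(
    patient_ids: Sequence[str],
    share: float = 0.134,   # share of patients assigned to placebo adoption
    n_months: int = 62,     # January 2008 through February 2013, inclusive
    seed: int = 0,          # hypothetical seed, for reproducibility only
) -> Dict[str, int]:
    """Randomly pick `share` of patients as placebo adopters and give each
    a random adoption month index in 0..n_months-1 (uniform by assumption)."""
    rng = random.Random(seed)
    n_adopters = round(share * len(patient_ids))
    adopters = rng.sample(list(patient_ids), n_adopters)
    return {pid: rng.randrange(n_months) for pid in adopters}


# Hypothetical panel of 1,000 patients.
patients = [f"patient_{i}" for i in range(1000)]
placebo = assign_placebo_adoption(patients)
```

Patients absent from the returned mapping are treated as never adopting. The regressions are then rerun with these placebo adoption months in place of the observed ones.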

As our fourth robustness check, we run our difference-in-differences estimation on visit frequency controlling for patient health in each month. Because we do not observe patient health in every month, we impute the LDL and HbA1c variables using their last observed values. The coefficients on the impact of patient e-visit adoption on office and phone visits remain positive, statistically significant, and similar in magnitude to our previous estimates, suggesting that time-varying patient attributes are not a major concern in e-visit adoption.
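Last-observation-carried-forward imputation of a monthly health series can be sketched as below. The function name and the example series are hypothetical. Leaving months before the first observation missing is an assumption of this sketch, as the text does not say how such months are handled.

```python
from typing import List, Optional


def carry_forward(values: List[Optional[int]]) -> List[Optional[int]]:
    """Fill each missing month (None) with the most recent observed value.
    Months before the first observation stay missing."""
    filled: List[Optional[int]] = []
    last: Optional[int] = None
    for value in values:
        if value is not None:
            last = value
        filled.append(last)
    return filled


# Hypothetical unhealthy-HbA1c indicator observed in months 1 and 4 only.
observed = [None, 1, None, None, 0, None]
imputed = carry_forward(observed)
```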

Our fifth robustness check concerns our patient health analysis. The estimates in Tables 7 and 8 are obtained from regressions that drop the months of missing health observations for each patient. To mitigate concerns about the potential nonrandomness of these missing observations, we conduct three further analyses in which we impute the missing observations using (i) the last observed value for each patient, (ii) the mean value for each patient, and (iii) multiple imputation. All three methods yield similar interpretations, indicating that our estimates are unlikely to be biased because of the handling of missing health observations.
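As an illustration of the patient-mean approach, the sketch below replaces each missing month with the mean of that patient's observed values. The function name and example series are hypothetical, and multiple imputation is not shown.

```python
from typing import List, Optional


def impute_patient_mean(values: List[Optional[float]]) -> List[Optional[float]]:
    """Replace missing months (None) with the mean of the patient's observed
    values. A patient with no observations at all is returned unchanged."""
    observed = [v for v in values if v is not None]
    if not observed:
        return list(values)
    mean = sum(observed) / len(observed)
    return [mean if v is None else v for v in values]


# Hypothetical unhealthy-LDL indicator observed in months 1 and 3 only.
imputed_mean = impute_patient_mean([None, 1, None, 0])
```

Because the health outcomes are binary, the imputed months take fractional values under this approach, in contrast to carrying the last observed value forward.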

8. Conclusion

E-visits have the potential to enhance primary care delivery by enabling cost reductions and larger panel sizes without sacrifices in the quality of care (Green et al. 2013). Almost all large health systems today use patient portals to promote e-visits, telemedicine, and other health technologies. E-visits also make it easier for patients to contact their providers directly, bypassing the usual gatekeepers in the practice, such as office staff and nurses. From an operations standpoint, these innovations are easier to use because they do not require the physician and the patient to be available simultaneously for an interaction. The medical education sector is also responding to the market for new skills in online communication: the University of Texas at Austin’s new medical school has a focus on the topic, and Kaiser Permanente plans to open a medical school in 2019 that will specialize in training physicians to use online tools (Khullar 2016).

We find that e-visits trigger additional office visits, contrary to common expectations that they serve as a substitute. The overall impact of e-visits on a health system will depend on the extent to which the system is (a) at capacity and (b) compensated on a fee-for-service basis. In particular, the bottom line will improve for health systems that are not at capacity and in which physician compensation is primarily on a fee-for-service basis, since e-visits can increase physician utilization. Systems that are already at capacity or paid on a capitation basis may not see the benefits of e-visits that they expect, since the additional visits by existing patients may reduce capacity for new patients without necessarily generating incremental revenue.

Our analysis improves on those in the literature by better accounting for patient fixed effects, physician fixed effects, flexible time trends, and seasonality, all of which are particularly important in this setting. We are also able to explore the role that unobservable and time-varying patient characteristics may play in patient e-visit adoption via an instrumental variable analysis that leverages variation in physician intensity of e-visit usage. Our selection-corrected estimates show that time-varying patient characteristics are unlikely to be a driver of e-visit adoption.

Our main results are robust to different specifications of e-visit adoption and we are able to show that the increase in office visits crowds out some phone visits to patients who do not adopt e-visits. We also show that provider e-visit adoption is linked to about a 15% reduction in the number of new patients each month, challenging notions that e-visits may increase provider capacity by offloading some care to an online channel. Together, our findings highlight the importance of considering patient and physician responses when introducing new models of service delivery in healthcare (Dobson et al. 2009, Bavafa et al. 2017).

Our analysis has several limitations that may motivate future work. First, regarding the visit frequency analyses, our study is based on data from a health system in which providers are compensated on a fee-for-service basis, and there is evidence that physician incentives affect physician behavior and treatment choices (Shumsky and Pinker 2003, Gosden et al. 2000, Lee et al. 2010). Although fee-for-service is still the most prevalent type of compensation in the United States, it may be the case that physicians behave differently under capitation or salaried payments. Future work can extend the current analysis to settings in which physicians are compensated with incentive schemes other than fee-for-service. Another related topic for future research is to study the impact of tying financial incentives to e-visits. Currently, most health plans, including Medicare and Medicaid, do not reimburse providers for e-visits; this is also true in our setting. However, a handful of health institutions have experimented with charging patients annual fees or co-payments for e-access to their physicians.

A second limitation is that we measure patient health via blood cholesterol and blood glucose levels in keeping with the primary care literature (Friedberg et al. 2010), but e-visits may influence other aspects of patient health or satisfaction in ways not captured by these outcomes. Improved measures on the quality of postadoption care would be especially helpful in examining whether office and phone visits following e-visits are more efficient, and measures of patient satisfaction would also be interesting to analyze. Third, while we are able to obtain quasi-random variation in patient e-visit adoption using our instrumental variable analysis, an ideal study would leverage lottery-like randomization in patient e-visit adoption. Fourth, we study the staggered adoption of e-visits in a relatively short time period of five years, but there may be a novelty effect associated with this technology that dissipates with time, and future work can look at longer-term impacts of such technologies. Until these additional analyses can emerge in the literature, we hope that our study will help inform managerial decisions on whether and how to promote e-visits within primary care systems.

Acknowledgments

The authors are grateful to David A. Asch and Susan Day for their support.

Funding: The authors are grateful for financial research support from the Mack Institute for Innovation Management and the Fishman-Davidson Center for Service and Operations Management, both at the Wharton School, University of Pennsylvania, and from the Wisconsin Alumni Research Foundation at the University of Wisconsin-Madison.

Appendix

Table A.1.

Correlation Table of Patient Demographics, Outcomes, and the Instrumental Variable

| | Age | Male | White | Black | Asian | Office | Phone | LDL | HbA1c | Instrument |
|---|---|---|---|---|---|---|---|---|---|---|
| Age | 1.000 | | | | | | | | | |
| Male | 0.018 | 1.000 | | | | | | | | |
| White | 0.187 | 0.194 | 1.000 | | | | | | | |
| Black | −0.175 | −0.227 | −0.847 | 1.000 | | | | | | |
| Asian | −0.048 | 0.013 | −0.176 | −0.136 | 1.000 | | | | | |
| Office | −0.038 | −0.041 | −0.136 | 0.150 | −0.011 | 1.000 | | | | |
| Phone | −0.048 | −0.079 | −0.107 | 0.127 | −0.020 | 0.210 | 1.000 | | | |
| LDLᵃ | −0.172 | −0.118 | −0.121 | 0.123 | 0.001 | 0.049 | 0.031 | 1.000 | | |
| HbA1cᵃ | −0.032 | −0.005 | −0.122 | 0.149 | −0.024 | 0.020 | 0.085 | −0.026 | 1.000 | |
| Instrument | −0.026 | 0.006 | 0.059 | −0.056 | −0.002 | −0.001 | 0.029 | 0.014 | −0.048 | 1.000 |

Note. The mean and standard deviation of the instrument, defined as the number of e-visits a patient’s provider conducts with all other patients in a given month, are 10.97 and 27.50, respectively.

ᵃ LDL and HbA1c measurements are only available for patients who have laboratory tests. We observe LDL measurements for 75,777 patients and HbA1c measurements for 35,826 patients. LDL and HbA1c are binary variables that equal 1 if at least one measurement of the relevant outcome is unhealthy in a given month.
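The instrument defined in the note to Table A.1, the number of e-visits a patient’s provider conducts with all other patients in a given month, is a leave-one-out count. A minimal sketch of that computation follows. The function name, the tuple layout, and the example records are assumptions made for illustration.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple

Key = Tuple[str, str, int]  # (provider_id, patient_id, month index)


def leave_one_out_instrument(evisits: Iterable[Key], panel: Iterable[Key]) -> Dict[Key, int]:
    """For each (provider, patient, month) row in `panel`, count the
    provider's e-visits that month with every patient except this one.

    `evisits` holds one record per e-visit; `panel` lists the patient-month
    rows for which the instrument is needed, including patients who had no
    e-visits at all.
    """
    provider_total: Counter = Counter()
    patient_own: Counter = Counter()
    for provider, patient, month in evisits:
        provider_total[(provider, month)] += 1
        patient_own[(provider, patient, month)] += 1
    return {
        (provider, patient, month):
            provider_total[(provider, month)] - patient_own[(provider, patient, month)]
        for provider, patient, month in panel
    }


# Hypothetical records: one provider, three patients, month 0.
evisits = [("dr_a", "p1", 0), ("dr_a", "p2", 0), ("dr_a", "p2", 0), ("dr_a", "p3", 1)]
panel = [("dr_a", "p1", 0), ("dr_a", "p2", 0), ("dr_a", "p3", 0)]
instrument = leave_one_out_instrument(evisits, panel)
```

Subtracting the patient's own e-visits keeps the instrument from mechanically reflecting that patient's own usage.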

Table A.2.

Number of LDL and HbA1c Tests by Patient E-Visit Adoption

| | Patients with at least one test | Patients with at least one test | All patients | All patients |
|---|---|---|---|---|
| | (1) LDL tests | (2) HbA1c tests | (3) LDL tests | (4) HbA1c tests |
| Patient E-Visit Adoption | 0.001 | 0.009*** | 0.002** | 0.007*** |
| | (0.001) | (0.001) | (0.001) | (0.001) |
| Patient FEs | ✓ | ✓ | ✓ | ✓ |
| Provider FEs | ✓ | ✓ | ✓ | ✓ |
| Month FEs | ✓ | ✓ | ✓ | ✓ |
| Year FEs | ✓ | ✓ | ✓ | ✓ |
| Mean of dep. var. | 0.058 | 0.062 | 0.046 | 0.023 |
| R² | 0.001 | 0.003 | 0.001 | 0.001 |
| Patient-months | 4,662,017 | 2,202,476 | 5,948,239 | 5,948,239 |

Notes. For months in which patients do not have any tests, the number of tests is recorded as zero. Column (1) includes all patients who have at least one LDL test in our time period, and column (2) includes all patients who have at least one HbA1c test in our time period. Columns (3) and (4) include all patients. Standard errors in parentheses are robust and two-way clustered at the patient and the provider-month levels.

\*p < 0.10; \*\*p < 0.05; \*\*\*p < 0.01.
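The dependent variable in Table A.2 is a monthly test count that equals zero in months without a test. One way to build such a zero-filled series for a single patient is sketched below. The function name and the example data are hypothetical.

```python
from collections import Counter
from typing import List


def monthly_test_counts(test_months: List[int], n_months: int) -> List[int]:
    """Count tests per month over months 0..n_months-1, recording zero for
    months in which the patient has no test."""
    counts = Counter(test_months)  # missing months read as 0
    return [counts[month] for month in range(n_months)]


# Hypothetical patient with two LDL tests in month 1 and one in month 4.
ldl_counts = monthly_test_counts([1, 1, 4], n_months=6)
```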

Table A.3.

All Estimates of Patient E-Visit Adoption (Second E-Visit Definition)

| | Full diff-in-diff | Full diff-in-diff | Full diff-in-diff | Full diff-in-diff | IV | IV | IV | IV |
|---|---|---|---|---|---|---|---|---|
| | (1) Office | (2) Phone | (3) LDL | (4) HbA1c | (5) Office | (6) Phone | (7) LDL | (8) HbA1c |
| Patient E-Visit Adoption (second e-visit definition) | 0.031*** | 0.046*** | −0.008 | −0.025** | 0.076** | 0.029 | −0.028 | −0.076 |
| | (0.003) | (0.006) | (0.008) | (0.010) | (0.037) | (0.057) | (0.038) | (0.059) |
| Patient FEs | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Provider FEs | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Month FEs | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Year FEs | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| R² | 0.002 | 0.001 | 0.013 | 0.015 | — | — | — | — |
| Patient-months | 5,948,239 | 5,948,239 | 235,978 | 121,225 | 5,948,239 | 5,948,239 | 235,978 | 121,225 |
| Weak id. (KP rk Wald F-stat.) | — | — | — | — | 130.61 | 130.61 | 271.21 | 135.22 |
| Underid. (KP rk LM p-value) | — | — | — | — | < 0.001 | < 0.001 | < 0.001 | < 0.001 |

Notes. Patient E-Visit Adoption is defined as 1 for all months in and following the patient’s second e-visit, and is 0 otherwise. We continue to drop the month before, month of, and month following original e-visit adoption from all our analyses. LDL and HbA1c are binary variables that equal 1 if at least one measurement of the relevant outcome is unhealthy in a given month. The Wald F-statistic and test of underidentification are based on Kleibergen and Paap (2006). The Stock and Yogo (2005) critical value is 16.38 for the IV estimates to have no more than 10% of the bias of the ordinary least squares estimates. Standard errors in parentheses are robust and two-way clustered at the patient and the provider-month levels.

\*p < 0.10; \*\*p < 0.05; \*\*\*p < 0.01.

Table A.4.

All Estimates of Patient E-Visit Adoption (Less “Sticky” Definition)

| | Full diff-in-diff | Full diff-in-diff | Full diff-in-diff | Full diff-in-diff | IV | IV | IV | IV |
|---|---|---|---|---|---|---|---|---|
| | (1) Office | (2) Phone | (3) LDL | (4) HbA1c | (5) Office | (6) Phone | (7) LDL | (8) HbA1c |
| Patient E-Visit Adoption (less “sticky”) | 0.069*** | 0.102*** | −0.006 | −0.016* | 0.119** | 0.045 | −0.043 | −0.045 |
| | (0.003) | (0.006) | (0.007) | (0.008) | (0.058) | (0.090) | (0.033) | (0.043) |
| Patient FEs | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Provider FEs | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Month FEs | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Year FEs | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| R² | 0.002 | 0.002 | 0.013 | 0.015 | 0.002 | 0.002 | 0.013 | 0.014 |
| Patient-months | 5,948,239 | 5,948,239 | 235,978 | 121,225 | 5,948,239 | 5,948,239 | 235,978 | 121,225 |
| Weak id. (KP rk Wald F-stat.) | — | — | — | — | 152.00 | 152.00 | 315.02 | 141.07 |
| Underid. (KP rk LM p-value) | — | — | — | — | < 0.001 | < 0.001 | < 0.001 | < 0.001 |