Abstract

The rhotic sound /r/ is one of the latest-emerging sounds in English, and many children receive treatment for residual errors affecting /r/ that persist past the age of 9. Auditory-perceptual abilities of children with residual speech errors are thought to be different from those of their typically developing peers. This study examined auditory-perceptual acuity in children with residual speech errors affecting /r/ and the relation of these skills to production accuracy, both before and after a period of treatment incorporating visual biofeedback. Identification of items along an /r/-/w/ continuum was assessed prior to treatment. Production accuracy for /r/ was acoustically measured from standard /r/ stimulability probes elicited before and after treatment. Fifty-nine children aged 9–15 with residual speech errors (RSE) affecting /r/ completed treatment, and forty-eight age-matched controls who completed the same auditory-perceptual task served as a comparison group. It was hypothesized that children with RSE would show lower auditory-perceptual acuity than typically developing speakers and that higher auditory-perceptual acuity would be associated with more accurate production before treatment. It was also hypothesized that auditory-perceptual acuity would serve as a mediator of treatment response. Results indicated that typically developing children have more acute perception of the /r/-/w/ contrast than children with RSE. Contrary to hypothesis, baseline auditory-perceptual acuity for /r/ did not predict baseline production severity. With respect to biofeedback efficacy, there was an interaction between baseline auditory-perceptual acuity and gender, such that higher auditory-perceptual acuity was associated with greater treatment response in female, but not male, participants.

Keywords: perception, rhotic misarticulation, personalized learning, residual speech errors

Introduction

English /r/ is considered a particularly challenging sound to acquire, even among native speakers. It is one of the latest emerging sounds in the English phonetic inventory, with a significant fraction of typically-developing children acquiring it as late as 8 years (Smit, Hand, Freilinger, Bernthal, & Bird, 1990). In a survey of speech-language pathologists, /r/ was reported to be the sound most likely to fail to improve with treatment (Ruscello, 1995). Children who do not achieve phonetically typical production of the /r/ sound by the age of 9 may be diagnosed with residual speech errors (RSE) (Shriberg, Gruber, & Kwiatkowski, 1994). Such residual errors are estimated to persist into adulthood in roughly 1–2% of the population (Culton, 1986), with potentially negative consequences for peer perception (Crowe Hall, 1991) and socio-emotional well-being (Hitchcock, Harel, & McAllister Byun, 2015).

One reason that English /r/ can be difficult to acquire is that it requires a complex articulatory configuration that consists of two lingual constrictions (Delattre & Freeman, 1968; Gick, Bernhardt, Bacsfalvi, & Wilson, 2008). There is also considerable variability in tongue shapes utilized for /r/ production both across and within individuals (Boyce, 2015; Mielke, Baker, & Archangeli, 2016; Tiede, Boyce, Holland, & Choe, 2004), which can make it difficult to determine what articulator placement cues will be most appropriate for a given individual in intervention. In addition to its distinctive articulatory properties, the English /r/ sound has unusual acoustic characteristics. The most salient acoustic cue that distinguishes /r/ from other English sounds is a substantial lowering of the third formant (F3) during the rhotic interval (Espy-Wilson, Boyce, Jackson, Narayanan, & Alwan, 2000; Lehiste, 1964). Although lowered F3 is the primary acoustic feature of /r/, studies have used the difference between the second and third formants (F3-F2 distance) to quantify the degree of rhoticity of a production because it partially adjusts for individual differences in vocal tract size (Shriberg, Flipsen, Karlsson, & McSweeny, 2001; Whalen, 2011). English /r/ is more likely to be perceived by a listener as accurate when there is a narrow difference between the second and third formants, compared to a wider difference between the two formants (Campbell, Harel, Hitchcock, & McAllister Byun, 2018; Hamilton Dugan et al., 2019; Klein, Grigos, McAllister Byun, & Davidson, 2012; Shriberg et al., 2001).

Auditory-Perceptual Differences in Children With SSD

Previous studies investigating children with speech sound disorder (SSD) have found that this group can exhibit auditory-perceptual difficulties in comparison to age-matched typically developing (TD) children (Cabbage, Hogan, & Carrell, 2016; Hearnshaw, Baker, & Munro, 2018; Rvachew, 1994, 2006, 2007; Rvachew & Grawburg, 2006; Rvachew, Ohberg, Grawburg, & Heyding, 2003). A recent meta-analysis (Hearnshaw et al., 2018) found that 70 of 73 studies reviewed presented evidence of speech perception difficulties in children with SSD. However, numerous studies have reported heterogeneity in auditory-perceptual performance in children with SSD, with some children showing deficits and some children performing in the normal range (Bird & Bishop, 1992; Hoffman, Daniloff, Bengoa, & Schuckers, 1985; Hoffman, Stager, & Daniloff, 1983; Jamieson & Rvachew, 1992; McReynolds, Kohn, & Williams, 1975; Rogow Waldman, Singh, & Hayden, 1978; Rvachew & Jamieson, 1989; Rvachew et al., 2003; Shuster, 1998). It thus appears that individuals with deficits in speech production can have varying levels of auditory-perceptual deficits, or no auditory-perceptual deficits at all.

One reason for divergent outcomes in previous studies of perception in children with SSD pertains to the fact that some studies tested perception of a broad range of sounds, while others assessed children’s perception of the specific sounds they produce in error (Cabbage et al., 2016; Hamilton Dugan et al., 2019; Hearnshaw et al., 2018; Hoffman et al., 1985; Hoffman et al., 1983; Rvachew & Jamieson, 1989; Shuster, 1998). Children with SSD may have reduced auditory-perceptual acuity for the sound(s) that they produce inaccurately (Rvachew & Jamieson, 1989), while their auditory-perceptual acuity for other sounds may remain largely intact. In the specific context of children with SSD affecting /r/, Cabbage et al. (2016) and Shuster (1998) found that /r/ misarticulation was accompanied by auditory-perceptual deficits affecting /r/.

A recent study examined auditory-perceptual abilities of adults, children with RSE affecting /r/, and age-matched TD children (Hamilton Dugan et al., 2019). Participants heard natural speech stimuli collected from children with RSE who ranged in age from 8 to 16 years. Stimuli were monosyllabic and bisyllabic whole words containing prevocalic or postvocalic /r/. A clinically trained listener determined whether the /r/ in each word was best categorized as correct or incorrect (i.e., a substitution, omission, or distortion). Participants were instructed to judge the accuracy of the /r/ sound in each word (correct or incorrect), and their responses were scored relative to the clinically trained listener’s ratings. Children with and without RSE demonstrated highly variable performance when judging the accuracy of /r/ in single words. Contrary to the results reported by Cabbage et al. (2016), children with RSE and TD children did not differ significantly in auditory-perceptual performance on this task, although both groups of children were significantly different from the adult group. The discrepancy between the two studies may be partly attributable to the fact that stimuli were naturally produced speech in Hamilton Dugan et al. (2019) and synthetically manipulated speech in Cabbage et al. (2016), as we discuss in more detail below.

A topic that has received relatively little attention in previous literature on perception in SSD is the role of sex or gender in performance on auditory-perceptual tasks. Previous literature has provided evidence that central auditory responses are larger and faster in females than males, with increasing divergence over the course of maturation (Krizman, Bonacina, & Kraus, 2019). Specifically, in their study comparing a child sample (3–5 years old), an adolescent sample (14–15 years old), and a young adult sample (22–26 years old), Krizman et al. (2019) found that the gender difference in central auditory response was greatest in the young adult group. In addition, the relationship between listener and talker gender could influence performance on auditory-perceptual tasks, since previous research on adults has suggested that performance in listening tasks is more accurate when there is a match in gender between the talker and the listener (Yoho, Borrie, Barrett, & Whittaker, 2019). This suggests that speaker gender may be an important factor to consider when analysing auditory-perceptual performance.

Perception-Production Relations

There is evidence that within individuals, degree of auditory-perceptual acuity is correlated with acoustic precision in production (Ghosh et al., 2010; McAllister Byun & Tiede, 2017). In a study by Ghosh et al. (2010), the degree to which an individual could discriminate between two sounds was positively correlated with that same individual’s degree of acoustic distinctness between the same two sounds in production, at least for the sibilant sounds examined. Furthermore, McAllister Byun and Tiede (2017) found that auditory-perceptual acuity correlated significantly with acoustically measured accuracy of /r/ production in TD children. These results suggest that speech perception and production abilities may be interrelated. More precisely, it is possible that differences in auditory-perceptual acuity may account for some of the variation in how speech contrasts are produced across speakers.

Given what is known about the relationship between perception and production, it follows that auditory-perceptual acuity also could potentially predict outcomes in treatment. This idea is supported by the Personalized Learning framework (Perrachione, Lee, Ha, & Wong, 2011; Wong, Vuong, & Liu, 2017), which posits that learning can be optimized by tailoring a training paradigm to meet participants’ specific learning needs. For example, in a study by Wong and Perrachione (2007), participants’ success in a second language (L2) tonal learning task was predicted by their ability to identify pitch patterns in a non-linguistic context. Participants with higher accuracy of pitch identification in a non-linguistic context were more successful at the L2 learning task than participants with lower pitch identification accuracy. Moreover, individuals with high aptitude for pitch identification were more responsive to a training paradigm involving high-variability stimuli than low-variability stimuli, while the low-aptitude listeners showed the reverse pattern of response. The results of this study indicate the potential benefits of identifying individual differences and utilizing them to assign learners to training paradigms.

Applying the Personalized Learning framework to intervention for RSE affecting /r/, we can speculate that children’s response to different types of treatment may be influenced by their auditory-perceptual acuity. Traditional treatment approaches rely primarily on targets modelled in the auditory modality, and if a child does not reliably perceive the contrast between their incorrect production and the target sound, they may derive little benefit from this approach. However, we might expect better outcomes for these children when the target sound is enhanced with a visual display, and children can visually evaluate the proximity of their own productions to the target (Shuster, 1998). In visual biofeedback intervention, instrumentation is used to obtain and visually display measurements of physiology or behaviour to a learner in real time (Davis & Drichta, 1980; Volin, 1998). The dynamic display of the child’s actual production can be contrasted with or superimposed on a visual representation of correct production of the target sound, and the child can be encouraged to adjust their production to achieve a better match with the visual target. This visual enhancement of the auditory target may enable treatment gains in children with reduced auditory-perceptual acuity who have not made progress with traditional forms of intervention.

There are many different biofeedback tools that supply various types of information about speech output to learners. The two types of real-time biofeedback used in the current study are visual-acoustic biofeedback, which displays the acoustic signal of speech, and ultrasound biofeedback, which provides a dynamic display of the shape and movements of the tongue during speech. Numerous previous studies using case study or single-case experimental methodologies have reported positive effects of biofeedback treatment for children with RSE affecting /r/. These include studies of visual-acoustic biofeedback in the form of a spectrogram (Shuster, Ruscello, & Smith, 1992; Shuster, Ruscello, & Toth, 1995) or a linear predictive coding (LPC) spectrum (McAllister Byun, 2017; McAllister Byun & Campbell, 2016; McAllister Byun & Hitchcock, 2012), as well as articulatory biofeedback using ultrasound (Adler-Bock, Bernhardt, Gick, & Bacsfalvi, 2007; Bernhardt, Gick, Bacsfalvi, & Adler-Bock, 2005; Preston et al., 2018; Preston et al., 2019; Preston et al., 2014; see the systematic review by Sugden, Lloyd, Lam, & Cleland, 2019). However, these studies have also reported heterogeneity in treatment outcomes, with virtually all describing a subset of children with RSE who do not respond to biofeedback treatment. Currently, it is unclear why some children with RSE do not respond to biofeedback treatment, indicating a need to identify individual differences that could be predictive of response to biofeedback treatment.

Further research measuring auditory-perceptual acuity in conjunction with treatment response is needed to determine if auditory-perceptual acuity can serve as a predictor of how a child with RSE will respond to biofeedback intervention. However, there are multiple theoretically plausible ways in which the relationship between perception and treatment response could manifest itself. One possibility is that children with poor auditory-perceptual acuity could show greater relative response to biofeedback treatment because biofeedback specifically targets their area of weakness by providing a visual target to supplement a compromised auditory target. Conversely, it is also possible that children who have poor auditory-perceptual acuity for /r/ will not exhibit the same degree of improvement as children who have relatively strong auditory-perceptual acuity. This is related to the above-mentioned hypothesis that children with low auditory-perceptual acuity also have low production accuracy at baseline. That is, individuals with low auditory-perceptual acuity may be at a disadvantage for learning subtle auditory-perceptual contrasts, such as the distinction between /r/ and phonetically similar sounds such as the labiodental glide /ʋ/.

The current study measured perception of /r/, production of /r/, and response to biofeedback treatment in a large sample of children with RSE, pooled across multiple studies. It addressed three research questions: (1) Is there a group difference in auditory-perceptual acuity for rhotic sounds, measured via identification of stimuli along a synthetic continuum from /r/ to /w/, between children with RSE affecting /r/ and typically developing children in the same age range? (2) Among children with RSE affecting /r/, is there an association between auditory-perceptual acuity for /r/ and severity of /r/ misarticulation, as indexed by acoustic properties of /r/ production in a standard stimulability measure, while controlling for age and gender? (3) Is there a relationship between auditory-perceptual acuity at baseline and response to biofeedback treatment targeting /r/, while controlling for age and gender?

First, we hypothesized that we would observe a group difference between TD and RSE speakers. This hypothesis is in agreement with previous research by Cabbage et al. (2016) but contrasts with the results of the recent study by Hamilton Dugan et al. (2019). The present study resembles Cabbage et al. (2016) and differs from Hamilton Dugan et al. (2019) in using synthetically generated rather than naturally produced speech stimuli. We thus hypothesized that our use of a synthetic continuum with well-controlled acoustic properties would allow us to detect differences that may not be apparent in a study using natural speech stimuli.

Secondly, we hypothesized that children with more severe /r/ misarticulation would show poorer auditory-perceptual acuity for /r/, in agreement with previous results showing correlations in ability across perception and production domains (e.g., McAllister Byun & Tiede, 2017). To test this hypothesis, we administered the categorical perception task for /r/ developed by McAllister Byun and Tiede (2017) and used the same acoustic measure, F3-F2 distance, to evaluate rhoticity in speech production by children with RSE. While we acknowledge that speech production accuracy may also be influenced by other factors, such as motor control, we restricted our focus in the present study to the measurement of auditory-perceptual abilities.

Finally, building on research in the Personalized Learning framework, we hypothesized that there would be a significant association between auditory-perceptual acuity and response to biofeedback treatment. However, since a logical argument can be made for either of two potential directions of association between these factors, we left this question open to be addressed empirically. In addition, because previous research has indicated that speaker gender and age can influence both the acoustic and auditory-perceptual characteristics of children’s /r/ sounds (Campbell et al., 2018), we included these factors as predictors in our analyses for research questions 2 and 3.

Methods

Participants

Data for the present study were drawn from a multisite collaborative project that included New York University, Syracuse University, Haskins Laboratories, and the University of Cincinnati. Initially, 64 children aged 9 to 15 with RSE affecting /r/ were identified for inclusion in this study. Five of these participants were excluded due to non-convergent scores on the identification task that served as our primary measure of auditory-perceptual acuity, as described in the next section; this resulted in a final sample of 59 participants who were included in the study. All participants were native speakers of English; however, speaking other languages was not an exclusionary criterion. Participants were required to demonstrate receptive language ability in the broadly average range. At Syracuse University, Haskins Laboratories, and the University of Cincinnati, participants were required to have a standard score above 80 on the Peabody Picture Vocabulary Test–Fourth Edition (Dunn & Dunn, 2007) and a scaled score above 6 on the Recalling Sentences subtest of the Clinical Evaluation of Language Fundamentals-5 (Semel, Wiig, & Secord, 2013). At New York University, participants were required to score within one standard deviation of the mean for their age on the Auditory Comprehension subtest of the Test of Auditory Processing Skills-3rd Edition (Martin & Brownell, 2005). All participants were also required to score below the 85th percentile on the Goldman-Fristoe Test of Articulation-Second Edition (Goldman & Fristoe, 2000) and pass a pure-tone hearing screening at 500, 1000, 2000, and 4000 Hz at 20 dB HL. As detailed in Table 1 in the next subsection, all children were enrolled in biofeedback treatment, but the treatment type (visual-acoustic or ultrasound) and schedule were heterogeneous across sites. This heterogeneity is a known limitation that will be taken into account in our data analysis to follow.

Table 1:

Information about treatment studies included in the present analysis

| Site | Study | Number of Participants Included | Duration of Treatment | Session Frequency & Duration | Treatment Type |
|---|---|---|---|---|---|
| Syracuse University | Sjolie, Leece, and Preston (2016) | 1 | 7–8 weeks | 2x/week, 60 minutes | Ultrasound |
| | Preston, Leece, McNamara, and Maas (2017) | 1 | 7–8 weeks | 2x/week, 60 minutes | Ultrasound |
| | Preston and Leece (2017) | 1 | 1 week | 14x/week, 60 minutes | Ultrasound |
| | Preston, Hitchcock, and Leece (submitted) | 15 | 7–8 weeks | 2x/week, 45 minutes | Ultrasound |
| | TOTAL: | 18 | | | |
| New York University | McAllister Byun and Campbell (2016) | 11 | 10 weeks | 2x/week, 30 minutes | Visual-Acoustic & Traditional |
| | McAllister Byun (2017) | 7 | 10 weeks | 2x/week, 30 minutes | Visual-Acoustic & Traditional |
| | TOTAL: | 18 | | | |
| Haskins Laboratories | Preston et al. (2018) | 7 | 8 weeks | 2x/week, 60 minutes | Ultrasound |
| | Preston et al. (2019) | 10 | 8 weeks | 2x/week, 60 minutes | Ultrasound & Traditional |
| | TOTAL: | 17 | | | |
| University of Cincinnati | Wnek et al. (2019) | 6 | 8 weeks | 2x/week, 60 minutes | Ultrasound |

Additionally, comparison data were drawn from 50 typically developing children aged 9 to 15 who did not have a history of neurobehavioral, speech-language, or hearing impairment. Forty of these children came from a previous study of perception-production relations (McAllister Byun & Tiede, 2017), while an additional 10 were collected after that study (seven at New York University and three at the University of Cincinnati). Applying the above-mentioned criterion of discarding participants with non-convergent scores on our primary auditory-perceptual measure, two typically developing participants were dropped from the sample. This resulted in a final sample of 48 typically developing children who were included in the comparison group. All participants were required to pass a pure-tone hearing screening at 500, 1000, 2000, and 4000 Hz at 20 dB HL. Additionally, they were required to be native speakers of English (again, speaking other languages was not exclusionary) and to have no history of speech, language, or hearing difficulties, per parent report. These children completed baseline perception and production tasks, but did not participate in biofeedback treatment.

Procedure

Baseline Perception

Each child’s auditory-perceptual acuity for /r/ was measured using a computerized task drawn from McAllister Byun and Tiede (2017). Stimuli were a synthetic nine-step continuum between the sounds /r/ and /w/, generated from naturally produced tokens of the words rake and wake by a child speaker (female, aged 10 years). The choice of wake as the other endpoint of the continuum was motivated by the fact that the most common error in production of syllable-initial /r/ is typically perceived as /w/ or a similar sound such as the labiodental glide /ʋ/. Each step along the continuum was synthesized from the weighted average of the linear predictive coding coefficients, the gain, and the associated /r/ residuals that were computed from the voiced region of the aligned natural rake and wake utterances. Participants heard each step of the continuum 8 times, randomly ordered, for a total of 72 trials. After hearing a single token repeated twice with a 500-ms interstimulus interval, participants were asked to identify the token pair as rake or wake in a forced-choice task by using a mouse to click the appropriate written word on a computer screen. Once the participant selected the word that they heard, the program automatically advanced to the next trial. The auditory-perceptual task was completed with headphones in a soundproof booth or sound-attenuated room.
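As an illustration of the trial structure described above (nine continuum steps, eight presentations each, randomly ordered), a minimal sketch is given here. The function name `build_trial_list` and the use of a seed are assumptions made for this example; the original task software is not described at this level of detail.

```python
import random


def build_trial_list(n_steps=9, n_repeats=8, seed=None):
    """Return a randomly ordered list of continuum step numbers (1..n_steps).

    Each step appears n_repeats times, so the defaults give 9 * 8 = 72 trials.
    Hypothetical helper for illustration only.
    """
    rng = random.Random(seed)
    trials = [step for step in range(1, n_steps + 1) for _ in range(n_repeats)]
    rng.shuffle(trials)
    return trials


trials = build_trial_list(seed=1)
print(len(trials))  # 72
```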

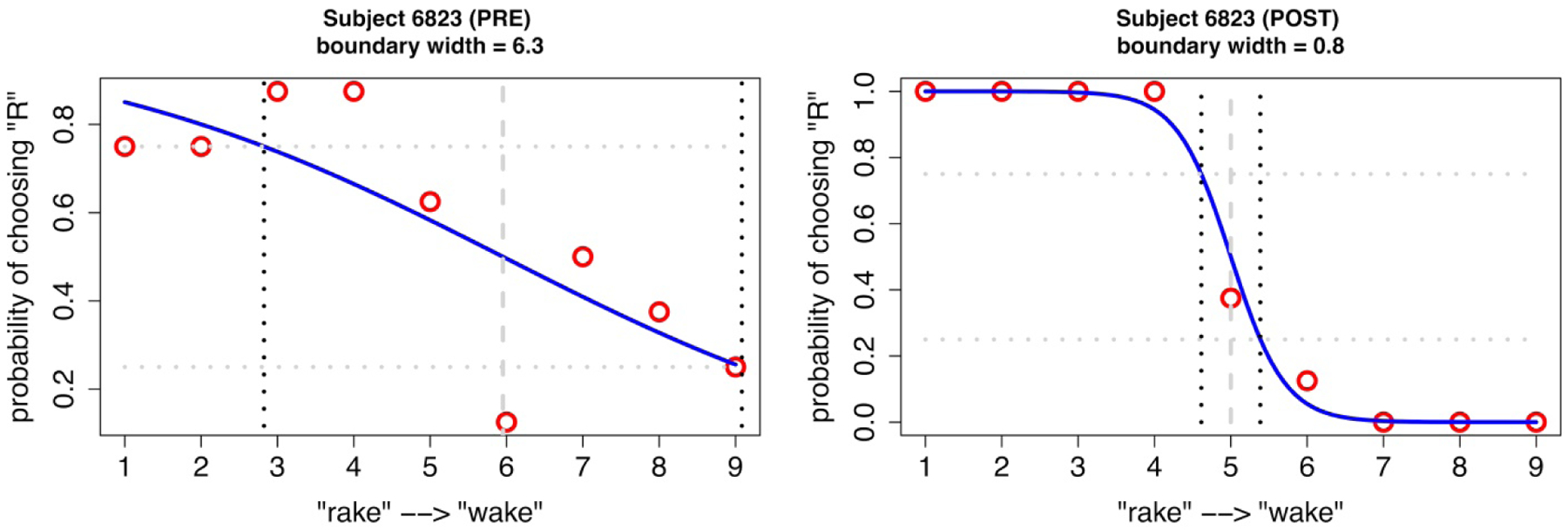

For each participant, the proportion of presentations that were identified as rake was plotted for each stimulus along the continuum. These data points were fitted to logistic functions via maximum likelihood estimation. Auditory-perceptual acuity for /r/ was measured as the width of the fitted function from the 25th to the 75th percentile of probability. In this measure, a narrower boundary region indicates a higher degree of response consistency that is interpreted as indicative of more acute perception of the contrast in question (Hazan & Barrett, 2000; McAllister Byun & Tiede, 2017). As an example, fitted continua for a single participant (subject identifier 6823) at two different time points are shown in Figure 1. (Note that acuity at pre-treatment versus post-treatment is not investigated in the present study, outside of the context of this example, because not all sites collected the auditory-perceptual measure at the post-treatment time point.) The x-axis represents the 9 steps of the continuum, with F3 increasing from step 1 to 9; the y-axis represents the probability that the participant labeled each stimulus as /r/. The participant depicted in Figure 1 has a relatively wide boundary width (6.3) at pre-treatment, indicating poor acuity for the /r/-/w/ contrast. At post-treatment, the participant shows a narrower boundary width (0.8) compared to pre-treatment, suggesting improved auditory-perceptual acuity. In a subset of cases, the logistic function yielded a boundary width that was greater than the total number of continuum steps. These cases were deemed non-convergent and discarded from further analysis, as noted above (number of affected participants = 5 in the RSE group and 2 in the TD group). The rationale for the exclusion of these participants was that they may reflect cases of inattention to the task rather than an authentic measure of participants’ auditory-perceptual acuity.

Figure 1:

Response data and best-fit logistic functions for one participant with RSE (subject 6823) before and after treatment

Production Probes

Participants with RSE affecting /r/ completed a standard probe evaluating stimulability for /r/ in a range of syllable positions and vowel contexts (Miccio, 2002) prior to initiation of biofeedback treatment. The participants were asked to repeat the clinician’s model of a syllable or disyllable containing /r/ in initial, medial, and final positions across varying vowel environments. There were 10 different targets repeated three times, for a total of 30 productions. Participants completed the same /r/ stimulability probe at post-treatment.

Treatment

All children with residual rhotic errors received either ultrasound biofeedback or visual-acoustic biofeedback treatment using LPC spectra (KayPENTAX CSL Sona-Match). In ultrasound biofeedback, participants were shown a real-time display of the surface of their tongue with an image representing a target tongue shape either adjacent to or superimposed over the real-time display. They were cued to adjust their tongue shape to match the target shape, which had been selected in an individualized manner based on an exploratory period in which the clinician cued the child to attempt various lingual configurations. In visual-acoustic biofeedback, participants viewed a real-time LPC spectrum representing the resonant frequencies of their vocal tract and were instructed to adjust the frequency of the third formant (‘peak’ or ‘bump’) to a position close to the second formant. A template representing the formant frequencies of a child estimated to have a similar vocal tract size was superimposed over the real-time acoustic display to act as a visual target. The total duration of treatment varied across sites and studies, ranging from one week to 10 weeks. All treatment was administered by a certified speech-language pathologist at each site. Table 1 gives additional information for each data collection site and study, including the number of participants included, the duration of the treatment phase, the frequency and duration of treatment sessions, and the type of treatment (including type of biofeedback and whether there was a traditional treatment component).

Of note, the only participants in the study who received visual-acoustic biofeedback came from the New York University collection site. Participants at New York University received visual-acoustic biofeedback treatment using LPC spectra for 10 sessions and traditional articulation intervention for 10 sessions. Participants at Syracuse University, Haskins Laboratories, and the University of Cincinnati received ultrasound biofeedback instead of visual-acoustic biofeedback. Session duration ranged from 30 minutes to one hour across sites. Some participants received intensive treatment within a one-week period, while others received treatment twice per week over a span of time ranging from seven to 10 weeks. In summary, the sample included in the present study had a particularly high degree of heterogeneity with regard to the treatment provided. This is potentially of concern for research question (3), which asked whether auditory-perceptual acuity predicts the magnitude of treatment response. One potential strategy to address this heterogeneity would be to statistically control for factors that varied across the component studies, such as treatment duration and treatment type. However, controlling for these factors could be problematic because of the small number of participants in some conditions (e.g., only one participant received treatment following a one-week intensive schedule). In addition, these factors were not of primary interest for the purposes of the present study. Therefore, we pursued a different strategy in which participants’ response to treatment was coarsely coded into ‘responder’ and ‘nonresponder’ status, defined below. Although the treatment differences described above could affect the magnitude of an individual’s treatment response, they were judged unlikely to influence the broad categorization of individuals as responders or nonresponders.

Measurements

Acoustic measurements of formant frequencies of /r/ sounds produced at baseline and post-treatment were obtained using Praat (Boersma & Weenink, 2019). There are known limitations in the accuracy of formant measurements for the speech of children, whose high fundamental frequencies lead to wide harmonic spacing that may compromise the accuracy of automated formant trackers. In particular, measurement errors may be unavoidable when the fundamental frequency is greater than 200 Hz (Chen, Whalen, & Shadle, 2019; Shadle, Nam, & Whalen, 2016). To address this limitation to the best of our ability, a combination of hand annotation and automated measurement was used to arrive at formant measurements for our child speakers. LPC filter order was determined individually for each participant (Derdemezis et al., 2016). The first and the second author independently determined the optimal filter order by visually comparing the match between automated formant tracking and visible areas of energy concentration on the spectrogram at different settings. The selected settings were compared and a consensus determination was reached for all cases of disagreement. Trained research assistants at New York University then used these settings to measure formants from each production. They first identified the /r/ interval in each token using both playback of the audio recording and the visible spectrogram with automated tracking of the first five formants. They then placed their cursor on the point judged to offer the most representative F3 frequency within the rhotic interval. A Praat script (Lennes, 2003) was used to obtain measurements of the first three formants within a 50 ms Gaussian window around the selected point within each word. Accuracy of /r/ production was operationalized as the distance between the second and third formants (F3-F2), where a lower F3-F2 value is indicative of more accurate /r/ production in comparison to a larger difference (Campbell et al., 2018). 
F3-F2 was additionally normalized relative to data from a sample of TD age-matched peers from Lee, Potamianos, and Narayanan (1999), as published in Flipsen, Shriberg, Weismer, Karlsson, and McSweeny (2001). Each acoustic measure was converted to a z-score reflecting the distance of that measurement, in standard deviation units, from the mean for typically developing children of the speaker’s age and presented gender. This normalized measure was used because it was found in previous research to correlate more strongly with listeners’ judgements of accuracy than raw F3-F2 distance (Campbell et al., 2018). Normalized F3-F2 values were then averaged across the 30 tokens elicited in each stimulability probe.
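The normalization step described above can be illustrated with a minimal Python sketch. It is not the authors' script (their analyses were run in R), and the reference means and standard deviations in `REFERENCE_NORMS` are placeholder numbers invented for illustration, not the published values from Lee et al. (1999) or Flipsen et al. (2001). The function and variable names are likewise hypothetical.

```python
from statistics import mean

# Hypothetical reference norms: (age in years, gender) -> (mean, SD) of F3-F2 in Hz
# for typically developing children. Placeholder numbers, NOT the published values.
REFERENCE_NORMS = {
    (10, "F"): (700.0, 150.0),
    (10, "M"): (650.0, 140.0),
}

def normalized_f3_f2(f2_hz, f3_hz, age, gender, norms=REFERENCE_NORMS):
    """Return the z-score of one token's F3-F2 distance against the reference group."""
    ref_mean, ref_sd = norms[(age, gender)]
    return ((f3_hz - f2_hz) - ref_mean) / ref_sd

def probe_score(tokens, age, gender, norms=REFERENCE_NORMS):
    """Average the normalized F3-F2 distance over all tokens in one probe."""
    return mean(normalized_f3_f2(f2, f3, age, gender, norms) for f2, f3 in tokens)

# Three made-up (F2, F3) pairs in Hz for a 10-year-old female speaker.
example_tokens = [(1500.0, 2800.0), (1600.0, 3000.0), (1550.0, 2900.0)]
example_score = probe_score(example_tokens, age=10, gender="F")
print(round(example_score, 2))
```

A larger positive score means the token's F3-F2 distance lies further above the typically developing mean, which in this study corresponds to less accurate /r/ production.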

Reliability

To evaluate the reliability of initial measurements, 10% of files in the data set were remeasured by a different research assistant. A paired samples t-test indicated that there was no significant difference in F3-F2 distance between the original and remeasured samples, t(131) = 0.90, p = 0.37. There was a significant correlation of large magnitude between the original and reliability measures of F3-F2 distance, r(130) = 0.90, p < 0.001. Thus, despite the limitations identified in the previous section, formant measurements in the present study were judged to meet typical expectations for measurement reliability.
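As a rough illustration of the two reliability statistics reported above, the following Python sketch computes a paired-samples t statistic and a Pearson correlation for two sets of measurements of the same tokens. The data values are made up, and the helper names are hypothetical. The reported degrees of freedom, t(131) and r(130), are both consistent with 132 remeasured items, since the paired test uses n − 1 and the correlation uses n − 2.

```python
import math
from statistics import mean, stdev

def paired_t(x, y):
    """Paired-samples t statistic and its degrees of freedom (n - 1)."""
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1

def pearson_r(x, y):
    """Pearson correlation coefficient and its degrees of freedom (n - 2)."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy), len(x) - 2

# Made-up F3-F2 distances (Hz) for five tokens, each measured twice.
original = [1200.0, 1350.0, 980.0, 1500.0, 1100.0]
remeasured = [1180.0, 1400.0, 1010.0, 1460.0, 1120.0]

t_stat, t_df = paired_t(original, remeasured)
r_value, r_df = pearson_r(original, remeasured)
print(f"t({t_df}) = {t_stat:.2f}, r({r_df}) = {r_value:.2f}")
```

The two statistics answer different questions: the t-test checks for a systematic offset between raters, while the correlation checks whether the raters rank tokens similarly.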

Analyses

All computations and data visualization for the present study were carried out in the R software environment (R Core Team, 2017). Data wrangling and plotting were completed with the ‘tidyverse’ (Wickham, 2016b) set of packages, including ‘dplyr’ (Wickham, Francois, Henry, & Müller, 2015), and ‘ggplot2’ (Wickham, 2016a). Regression models were fitted using the ‘lme4’ package (Bates, Maechler, Bolker, & Walker, 2015).

A two-tailed t-test for independent samples was conducted to compare auditory-perceptual acuity for rhotic sounds in typically developing children and children with RSE at the pre-treatment time point. Two regression models were fit to test research questions two and three. The first model used linear regression to test if there was an association between auditory-perceptual acuity for /r/ and severity of /r/ misarticulation among children with RSE prior to treatment. The dependent variable was normalized F3-F2 distance in stimulability probes administered at baseline, and the independent variables were auditory-perceptual acuity at baseline, age, and gender, as well as two-way interactions of age and gender with acuity. These specific interactions were included to account for the possibility that the effect of auditory-perceptual acuity on /r/ production accuracy differs across developmental stages and gender.

The second model used logistic regression to test if there was a relationship between baseline auditory-perceptual acuity and response to biofeedback treatment in children with residual speech errors. The dependent variable was response or non-response to treatment, operationalized as follows. First, the effect size of each participant’s treatment response was calculated using Cohen’s d to compare mean F3-F2 distance at pre- and post-treatment time points. Effect sizes were then converted to binary scores reflecting response or non-response. Participants were classified as nonresponders if they had an effect size equal to or less than 1.0, which indicated that the difference between pre-treatment and post-treatment means was no greater than the pooled standard deviation. This criterion was adopted because 1.0 has been identified in previous research as the minimum standardized effect size to be considered clinically relevant (Maas & Farinella, 2012). As in the first model, the independent variables included auditory-perceptual acuity (boundary width) at baseline, age, and gender, as well as the two-way interactions of age and gender with acuity. Accuracy of /r/ production (in the present study, normalized F3-F2 distance) at baseline was also included as an independent variable, since accuracy at the pre-treatment time point has been found in previous research to be a significant predictor of treatment outcomes (McAllister Byun & Campbell, 2016). Complete data and code to reproduce all figures and analyses in the paper can be retrieved at https://osf.io//N2ZFW/.
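The responder classification described above can be sketched as follows. This is an illustrative Python version, not the authors' code. The sign convention is an assumption: d is computed as pre-treatment mean minus post-treatment mean, so that a positive value reflects a decrease in F3-F2 distance (movement toward more accurate /r/). The function names and the example values are hypothetical.

```python
import math
from statistics import mean, variance

RESPONSE_THRESHOLD = 1.0  # criterion stated in the text (Maas & Farinella, 2012)

def cohens_d(pre, post):
    """Standardized pre-to-post change using the pooled standard deviation.

    Assumed sign convention: positive d means F3-F2 distance decreased.
    """
    n1, n2 = len(pre), len(post)
    pooled_var = ((n1 - 1) * variance(pre) + (n2 - 1) * variance(post)) / (n1 + n2 - 2)
    return (mean(pre) - mean(post)) / math.sqrt(pooled_var)

def is_responder(pre, post, threshold=RESPONSE_THRESHOLD):
    """Responder only if d is strictly greater than the threshold;
    an effect size equal to or less than the threshold counts as non-response."""
    return cohens_d(pre, post) > threshold

# Made-up normalized F3-F2 values for one participant's probes.
pre_tokens = [7.0, 6.5, 7.5, 6.8, 7.2]
post_tokens = [5.0, 5.5, 4.8, 5.2, 5.6]
print(cohens_d(pre_tokens, post_tokens), is_responder(pre_tokens, post_tokens))
```

Dichotomizing at 1.0 discards information about the size of the change, which is why Figure 4 plots the continuous effect size alongside the binary coding.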

Results

Research Question 1

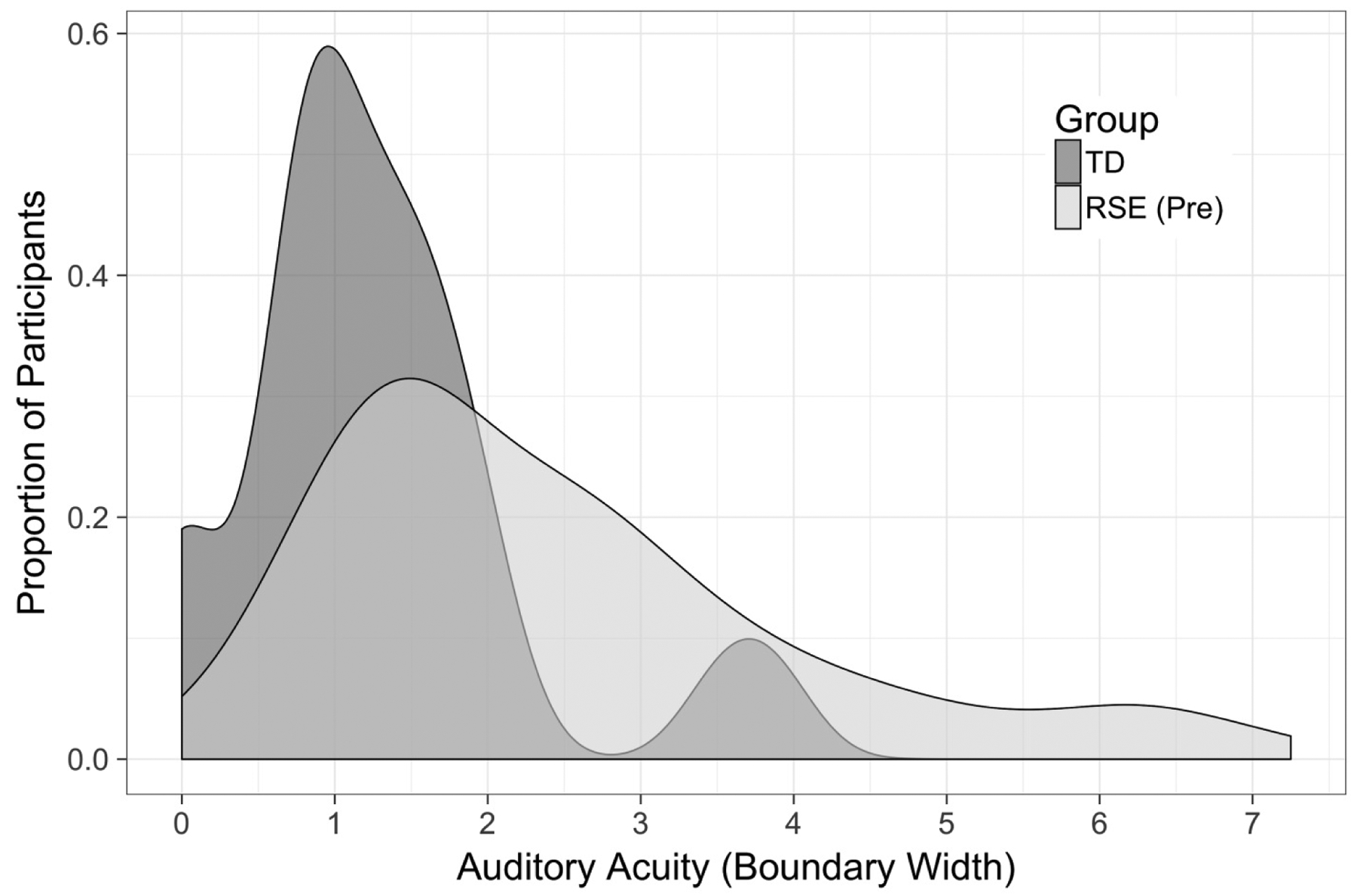

An independent samples t-test was conducted to compare auditory-perceptual acuity for rhotic sounds in the groups of typically developing children and children with RSE affecting /r/. The typically developing children had significantly smaller boundary widths (M = 1.28, SD = 0.93) than the children with RSE (M = 2.48, SD = 1.59), t(95.99) = 4.86, p < 0.0001. The effect size of the difference between these values was large (Cohen’s d = 0.90). A density plot of auditory-perceptual acuity for each group is provided in Figure 2. Although the means differ across the two groups, the plot shows that there is overlap between them, indicating that some children with RSE have high auditory-perceptual acuity (narrow boundary width), whereas some typically developing children exhibit poor acuity. It is suspected that inattention to the task may have played a role in the poor performance exhibited by a subset of typically developing children, although that hypothesis cannot be confirmed or refuted on the basis of the presently collected data. The finding that some typically developing children show poor performance in an auditory-perceptual task involving the /r/ sound is consistent with findings from Hamilton Dugan et al. (2019).
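The reported statistics can be approximately recovered from the summary values alone. The sketch below is an illustration rather than the authors' analysis: it assumes group sizes of 48 (TD) and 59 (RSE) as given in the abstract, and it assumes a Welch (unequal-variance) test, which is an inference from the fractional degrees of freedom. Because the inputs are rounded to two decimals, the results are expected to match the reported values only approximately.

```python
import math

def welch_t_from_summary(m1, s1, n1, m2, s2, n2):
    """Welch t statistic and Welch-Satterthwaite degrees of freedom."""
    v1, v2 = s1 ** 2 / n1, s2 ** 2 / n2
    t = (m2 - m1) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df

def cohens_d_from_summary(m1, s1, n1, m2, s2, n2):
    """Cohen's d using the pooled standard deviation of the two groups."""
    pooled_var = ((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / (n1 + n2 - 2)
    return (m2 - m1) / math.sqrt(pooled_var)

# Boundary width: TD group first, RSE group second (values from the text).
td = (1.28, 0.93, 48)
rse = (2.48, 1.59, 59)

t_stat, df = welch_t_from_summary(*td, *rse)
d = cohens_d_from_summary(*td, *rse)
print(f"t({df:.2f}) = {t_stat:.2f}, d = {d:.2f}")
```

Small discrepancies from the published degrees of freedom are attributable to rounding of the means and standard deviations.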

Figure 2: Auditory-perceptual acuity as measured by boundary width of typically developing (TD) children and children with residual speech errors (RSE)

Research Question 2

Research question two asked whether there is a relationship between auditory-perceptual acuity at baseline and severity of misarticulation in production at the same time point. To answer this question, a linear regression was run that tested whether auditory-perceptual acuity for /r/ predicted the acoustically measured severity of /r/ misarticulation among children with RSE prior to treatment. Across all participants, the mean normalized F3-F2 distance at baseline was 6.92 (SD = 3.31), which indicates that the average participant’s mean F3-F2 distance was roughly 7 standard deviations higher than the mean of their age- and gender-matched reference sample (Flipsen et al., 2001; Lee et al., 1999). The results of the regression indicated that there was no significant association between auditory-perceptual acuity for /r/ and severity of /r/ misarticulation among children with RSE (β = −1.40, SE = 1.60, p = 0.39). Additionally, there were no significant effects of gender (β = −1.42, SE = 1.67, p = 0.40) or age (β = −0.02, SE = 0.03, p = 0.57). There was no significant interaction between acuity and gender (β = −0.19, SE = 0.60, p = 0.75), nor was there a significant interaction between acuity and age (β = 0.01, SE = 0.01, p = 0.48). In fact, the overall regression model was non-significant (F(5, 53) = 1.47, p = 0.22), indicating that the model with all variables included did not account for significantly more variance than the intercept-only model. This suggests that there may be other predictors of baseline performance that were not included in our model. We return to this point in the Discussion. Complete results of the regression are provided in Appendix A.

For visual inspection of the results of this regression, Figure 3 shows baseline production accuracy (measured by mean normalized F3-F2 distance) as a function of auditory-perceptual acuity (measured by boundary width). Visually, the best-fit line in Figure 3 shows a slight downward slope, but as noted above, this association was not significant when controlling for other factors. Moreover, this slope represents a trend in the opposite of the predicted direction: larger boundary widths (i.e., poorer acuity) appear in the plot to be associated with slightly lower mean F3-F2 distance (i.e., more accurate production) than smaller boundary widths.

Figure 3: Auditory-perceptual acuity (boundary width) and production accuracy (normalized F3-F2 distance) in children with residual speech errors (RSE) prior to visual biofeedback treatment

Research Question 3

To answer our third research question, the above-described logistic regression was fit to test if there was a relationship between baseline auditory-perceptual acuity and response or non-response to biofeedback treatment (operationalized as a standardized effect size greater than 1.0 versus less than or equal to 1.0) in children with RSE. There was a significant effect of gender on the likelihood of response to biofeedback treatment in children with RSE (β = −5.83, SE = 2.91, p = 0.045). In this case, the negative coefficient indicates that male participants showed a lower likelihood of being classified as responsive to treatment than females. There was also a significant interaction between auditory-perceptual acuity and gender (β = 2.86, SE = 1.37, p = 0.037). This interaction can be visualized in Figure 4. Although the statistical model used coarse coding (responder vs non-responder), this plot shows effect size as a continuous variable on the y-axis, while the coarse coding is represented in the figure via a difference in point shape. Data have been further partitioned by gender. Figure 4 shows that female participants who were classified as responders to treatment consistently had small boundary widths (less than 2.0 for all but one responder), while female nonresponders had higher boundary widths (greater than 2.0 for all but one nonresponder). In contrast, among male participants, both responders and nonresponders spanned a wide range of auditory-perceptual acuity values. More generally, setting aside the responder/nonresponder distinction, female participants tended to show a greater magnitude of response to treatment when their baseline auditory-perceptual acuity was high, while male participants showed no clear association between auditory-perceptual acuity and treatment response. There were no other significant effects or interactions in the logistic regression. Complete results of this regression are reported in Appendix B.
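To make the interaction concrete, the following Python sketch shows how the log-odds slope for boundary width differs by gender under the fitted coefficients listed in Appendix B. It is an interpretive aid, not part of the authors' analysis. It assumes that gender is dummy-coded with female as 0 and male as 1 (consistent with "reference level: F"), and because the unit in which age entered the model is not stated in this section, `age` is left as a free parameter and the value used in the example is hypothetical.

```python
import math

# Coefficients copied from Appendix B (log-odds scale).
B_ACUITY = -1.175         # boundary width, at the reference level (female)
B_ACUITY_GENDER = 2.855   # additional slope for male participants
B_ACUITY_AGE = -0.011     # change in slope per unit of age (unit not stated here)

def acuity_slope(is_male, age):
    """Change in log-odds of responding per unit increase in boundary width."""
    return B_ACUITY + B_ACUITY_GENDER * is_male + B_ACUITY_AGE * age

example_age = 100.0  # hypothetical value, in whatever unit the model used
female_slope = acuity_slope(0, example_age)
male_slope = acuity_slope(1, example_age)

# At any fixed age, the male-minus-female difference equals the interaction term.
slope_difference = male_slope - female_slope
odds_ratio_multiplier = math.exp(B_ACUITY_GENDER)
print(female_slope, male_slope, slope_difference, odds_ratio_multiplier)
```

Because larger boundary widths indicate poorer acuity, a more negative slope for female participants corresponds to the pattern described above, in which poorer acuity goes with a lower likelihood of response.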

Figure 4: Auditory-perceptual acuity (boundary width) and response to biofeedback treatment (standardized effect size, partitioned into ‘responder’ and ‘non-responder’ categories relative to a threshold value of 1.0) in children with residual speech errors (RSE)

Discussion

This study compared perception abilities of typically developing children and children with RSE for synthetic tokens of the /r/ sound and additionally examined the perception-production relationship in children before and after treatment for RSE. The first research question tested the hypothesis that there would be a group difference in perception of /r/ between children with RSE and typically developing children. Children with RSE showed poorer average performance than TD children in a perceptual identification task using a synthetic continuum from /r/ to /w/. This is consistent with a previous general finding that children with SSD tend to show auditory-perceptual deficits affecting sounds that are produced in error. The specific finding of a perceptual deficit for synthetic /r/ in children with RSE is in agreement with previous research by Cabbage et al. (2016). There was also heterogeneity within each group, with some children with RSE exhibiting high auditory-perceptual acuity and some typically developing children showing poor performance.

The present findings contrast with those of Hamilton Dugan et al. (2019), who also compared /r/ perception in groups of children with and without RSE, but using naturally-produced speech stimuli instead of synthetic stimuli. Although recordings of natural speech have direct relevance to the real-world listening environment, natural stimuli differ from one another along many acoustic parameters, and it is difficult to know which of those characteristics drive a listener’s decision to respond in a particular way (Ohde & Sharf, 1988; Sharf & Benson, 1982; Sharf & Ohde, 1983). Synthesized speech continua can potentially offer a better-controlled comparison of auditory-perceptual abilities across listeners by measuring response to stimuli that only vary along one or two dimensions. In addition, synthetically generated speech stimuli are inherently somewhat impoverished in acoustic cues relative to naturally produced speech (Pisoni, 1997), with the consequence that tasks incorporating synthetic stimuli are generally more challenging and less prone to ceiling effects than tasks using exclusively naturally produced speech. These differences between natural and synthesized speech stimuli provide a likely explanation for the difference between the findings of Hamilton Dugan et al. (2019) versus those of the present study and of Cabbage et al. (2016). The finding that auditory-perceptual differences between TD and RSE groups were apparent in studies with synthetic stimuli but not in a study with natural stimuli suggests that these differences are subtle and may only become apparent in challenging tasks. On the other hand, the effect size of the observed difference in the present study was robust, suggesting that the auditory-perceptual limitations of children with RSE could indeed have a clinically significant impact.

The second research question examined whether there is an association between auditory-perceptual acuity for /r/ and acoustically measured severity of /r/ misarticulation at baseline among the group of children with RSE. Based on previous literature documenting significant correlations between perception and production skills, we hypothesized that individuals with higher auditory-perceptual acuity would also produce more accurate /r/ sounds at baseline. However, no such association was found. This was particularly surprising in light of the significant perception-production link found by McAllister Byun and Tiede (2017) in typical children using the same stimuli. We also note that it was not only auditory-perceptual acuity that failed to show a significant association with baseline accuracy; the other included predictors of age and gender also failed to reach significance, and the model as a whole did not differ significantly in goodness of fit from the intercept-only model. This suggests that there may be other important predictors of /r/ production accuracy that were not included in the current model. This aligns with previous research indicating that speech sound disorders are multifactorial in nature (McNutt, 1977; Shriberg et al., 2010). In addition to auditory-perceptual acuity, somatosensory acuity and motor skill are potentially relevant factors in determining pre-treatment severity of /r/ misarticulation. Other factors, such as the duration of previous treatment or the presence or absence of other error sounds in the child’s speech development history (Flipsen, 2015) could also be investigated as potential predictors. In short, the present null result points to a need for further exploration of factors that influence characteristics of speech production in children with RSE prior to treatment.

The third research question, building on work in the Personalized Learning framework, examined whether there is a relationship between baseline auditory-perceptual acuity and response to treatment targeting /r/. We identified two possible directions of association between auditory-perceptual acuity and treatment response. The first possibility was that individuals with low auditory-perceptual acuity would show greater response to biofeedback treatment because it directly fills their need by providing visual enhancement that could compensate for a poorly specified auditory target or weak auditory feedback processing. The second possibility was that better perceivers would also be more successful learners over the course of training due to the perception-production links described previously. The present study revealed no main effect of acuity on the likelihood of response to treatment. We discuss this null result in greater detail in the Limitations section below. However, there was a significant effect of gender, with females showing greater average gains than males. This finding is not entirely unexpected: as reviewed in the introduction, there is evidence that females have stronger central auditory responses than males (Krizman et al., 2019). Moreover, our use of a female talker as the basis for the synthetic continuum could have proven advantageous for female participants, since gender match between speaker and listener has been reported to improve performance in perceptual tasks (Yoho et al., 2019). A more surprising result was the finding of a significant interaction between acuity and gender. Among females, high auditory-perceptual acuity was associated with a more favourable response to treatment, while among males, the likelihood of being a responder to treatment did not appear to differ based on auditory-perceptual acuity.
This unexpected result led us to explore an additional literature on gender differences in sensory gating, or how the sensory system responds to stimuli across repeated exposures, which we briefly review below.

In a study by Hammer and Krueger (2014), male and female participants were asked to detect puffs of air presented to the laryngeal mucosa (a somatosensory acuity task) in two conditions, active voicing and tidal breathing. The authors found that male and female speakers did not differ in sensitivity during tidal breathing, but during active voicing, females showed greater sensitivity to somatosensory stimulation than males. This result was interpreted as an indication that females show a lower degree of gating in the somatosensory domain. Gating is a phenomenon whereby sensory responses are partially suppressed when the sensory stimulus is presented repeatedly or self-produced (as in the case of somatosensory stimulation during active voicing). Interestingly, previous research by Hetrick et al. (1996) examined sensory gating in response to repeated auditory stimuli in the auditory domain and found that females showed a diminished degree of gating relative to males. Thus, it is possible that females tend to show a lesser degree of gating than males across sensory modalities. Although we are not aware of research specifically examining a relationship between sensory gating and response to treatment in the speech domain, it is possible to speculate how such a relationship could exist and could differ across male and female speakers. Specifically, speech intervention requires extended monitoring of self-produced speech across multiple repetitions. Gender-related differences in gating could yield an outcome where male speakers’ sensory response to these repeated self-produced tokens is diminished over the course of treatment, while female speakers’ response remains more active. Such differences in auditory self-monitoring could, in turn, yield a difference in the influence of baseline auditory-perceptual acuity on treatment response. 
Of course, this line of explanation remains highly speculative at this time, and considerable additional research would be required to substantiate it. Whatever the final explanation should prove to be, our finding underscores the need for further investigation of gender as a relevant variable in the study of speech disorders and treatment response.

Limitations

There are several factors that limit the strength of the conclusions that can be drawn from this study. The sample measured in this study contained a relatively small number of female participants due to lower prevalence of RSE in females (Shriberg, 2009). Future research should evaluate whether an interaction between gender and auditory-perceptual acuity is also observed in a sample with a more balanced representation of female participants. Another limitation is the above-described fact that the characteristics of the treatment provided were heterogeneous across data collection sites. Treatment duration varied across sites, with some participants receiving treatment over the course of 10 weeks and others receiving intensive treatment for 1 to 2 weeks. For future studies, it would be better to control treatment duration across all participants. In addition, while all sites provided biofeedback treatment, some participants received ultrasound biofeedback while others received visual-acoustic biofeedback. These differences in treatment type could have played an especially consequential role in the null result reported for our third research question pertaining to the association between auditory-perceptual acuity and response to treatment, since different biofeedback interventions may interact differently with acuity. Visual-acoustic biofeedback provides a visual representation of the acoustic signal, which could be expected to assist individuals who, due to poor perception, would not otherwise know whether they are approaching or achieving the target sound based on auditory feedback alone. The visual display of the acoustic signal may be less helpful for individuals with high acuity who can hear the difference between correct and incorrect productions but have not yet determined appropriate articulatory placement to achieve the target sound. 
Ultrasound, which directly displays the movements of the articulators, might be more beneficial for individuals in this latter category. By this logic, we recommend that future models that analyse treatment response include biofeedback type as a predictor in a larger and more balanced dataset.

A final limitation is that both baseline accuracy and response to treatment were measured using the acoustics of participants’ /r/ productions in the context of a stimulability probe, which is considered the most supportive context for production of target sounds. The choice to use the stimulability probe was made because stimulability was the only production measure that was collected in an identical way across sites. It would be helpful to examine measures of accuracy and treatment response in less supportive contexts, such as a probe evaluating /r/ production accuracy in untreated words, which can provide more information about generalization learning. It is possible that effects or interactions of gender and auditory-perceptual acuity would be different at the word or sentence level. Thus, future research studies should standardize materials across sites for better aggregation potential.

Conclusion

This study examined differences in auditory-perceptual acuity between children with residual speech errors (RSE) and age-matched typically developing peers. Additionally, auditory-perceptual acuity was investigated as a possible predictor of both baseline severity and response to a period of treatment incorporating visual biofeedback. Identification of items along a synthetically generated /r/-/w/ continuum was assessed prior to treatment, and /r/ production accuracy was acoustically measured from standard /r/ stimulability probes elicited before and after treatment in children with RSE affecting /r/. Results indicated that typically developing children exhibited more acute perception of the /r/-/w/ contrast than children with RSE, which is consistent with expectations based on previous research. Contrary to our hypothesis, baseline auditory-perceptual acuity for /r/ did not predict baseline production accuracy. However, there was a significant interaction between acuity and gender when testing the relationship between baseline auditory-perceptual acuity and response to biofeedback treatment targeting /r/. In female participants, higher auditory-perceptual acuity was associated with a greater likelihood of a positive response to treatment, but in male participants, there was no association between auditory-perceptual acuity and treatment response. This study emphasizes the importance of taking auditory-perceptual acuity into consideration when planning and providing treatment for children with residual speech errors, and also highlights a need for further investigation of gender as a mediating factor in determining treatment response.

Acknowledgments

Many thanks to all participants and their families for their cooperation throughout the study. Additional thanks are extended to Kimberly Kraus-Preminger, Kristina Doyle, Samantha Ayala, Christian Savarese, and Erin Doty for completing formant measurements, as well as to Siemens for the ultrasound machines they made available to the New York University, University of Cincinnati, and Haskins Laboratories sites.

Funding Details: This work was supported by the National Institute on Deafness and Other Communication Disorders of the National Institutes of Health under Grant R01DC013668 (D. Whalen, PI), Grant R01DC017476 (McAllister, PI), and Grant R03DC012152 (J. Preston, PI).

Appendix A. Complete results of regression for research question (2)

Call:

Residuals:

| Min | 1Q | Median | 3Q | Max |
|---|---|---|---|---|
| −7.721 | −1.735 | 0.307 | 1.820 | 8.105 |

Coefficients:

| | Estimate | Std. Error | t value | Pr(>\|t\|) |
|---|---|---|---|---|
| (Intercept) | 11.445 | 4.553 | 2.514 | 0.015 |
| Acuity | −1.402 | 1.601 | −0.876 | 0.385 |
| gender (reference level: F) | −1.416 | 1.671 | −0.847 | 0.401 |
| Age | −0.018 | 0.031 | −0.568 | 0.573 |
| acuity:gender | −0.191 | 0.605 | −0.316 | 0.753 |
| acuity:age | 0.008 | 0.011 | 0.716 | 0.477 |

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

Residual standard error: 3.242 on 53 degrees of freedom

Multiple R-squared: 0.122, Adjusted R-squared: 0.039

F-statistic: 1.466 on 5 and 53 DF, p-value: 0.217
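As a reading aid for the summary lines above, this short Python sketch shows how the t value, F-statistic, and adjusted R-squared relate to the other quantities in the output. It uses only the rounded values printed in this appendix, so agreement with the reported figures is approximate. The sample size of 59 is inferred from 53 residual degrees of freedom plus six estimated coefficients.

```python
# Values copied from the regression output above (rounded as printed).
n_obs = 59          # inferred: 53 residual df + 6 coefficients
n_predictors = 5    # acuity, gender, age, acuity:gender, acuity:age
df_resid = n_obs - n_predictors - 1
r_squared = 0.122

# Each t value is the estimate divided by its standard error.
t_acuity = -1.402 / 1.601

# Overall F test of the full model against the intercept-only model.
f_stat = (r_squared / n_predictors) / ((1 - r_squared) / df_resid)

# Adjusted R-squared penalizes R-squared for the number of predictors.
adj_r_squared = 1 - (1 - r_squared) * (n_obs - 1) / df_resid

print(df_resid, round(t_acuity, 3), round(f_stat, 2), round(adj_r_squared, 3))
```

The gap between multiple and adjusted R-squared reflects how little variance the five predictors explain relative to the degrees of freedom they consume.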

Appendix B. Complete results of regression for research question (3)

Call:

Deviance Residuals:

| Min | 1Q | Median | 3Q | Max |
|---|---|---|---|---|
| −1.933 | −1.089 | 0.492 | 0.858 | 1.769 |

Coefficients:

| | Estimate | Std. Error | z value | Pr(>\|z\|) |
|---|---|---|---|---|
| (Intercept) | 0.542 | 5.028 | 0.108 | 0.914 |
| Acuity | −1.175 | 1.778 | −0.661 | 0.509 |
| gender (reference level: F) | −5.830 | 2.909 | −2.004 | 0.045* |
| Age | 0.041 | 0.027 | 1.534 | 0.125 |
| normalized F3-F2 at baseline | −0.032 | 0.137 | −0.234 | 0.815 |
| acuity:gender | 2.855 | 1.368 | 2.088 | 0.037* |
| acuity:age | −0.011 | 0.009 | −1.280 | 0.201 |

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

(Dispersion parameter for binomial family taken to be 1)

Null deviance: 76.823 on 58 degrees of freedom

Residual deviance: 60.350 on 52 degrees of freedom

AIC: 74.35

Number of Fisher Scoring iterations: 6
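The quantities in the logistic regression output above are related in ways that can be checked by hand. The following Python sketch, offered as a reading aid rather than as the authors' code, recomputes the Wald z values and two-sided p-values for the two significant terms, the drop in deviance relative to the null model, and the AIC. The AIC identity used here (residual deviance plus twice the number of parameters) holds for ungrouped binary outcomes, which is assumed to be the case for the responder coding.

```python
import math

def two_sided_p(z):
    """Two-sided p-value for a standard normal test statistic."""
    return math.erfc(abs(z) / math.sqrt(2))

# (estimate, standard error) pairs copied from the coefficient table above.
coefficients = {
    "gender": (-5.830, 2.909),
    "acuity:gender": (2.855, 1.368),
}
wald_z = {name: est / se for name, (est, se) in coefficients.items()}
p_values = {name: two_sided_p(z) for name, z in wald_z.items()}

null_deviance, residual_deviance = 76.823, 60.350
n_parameters = 7  # intercept plus the six predictors in the table

deviance_drop = null_deviance - residual_deviance  # improvement over the null model
df_drop = 58 - 52                                  # one df per added predictor
aic = residual_deviance + 2 * n_parameters

for name in coefficients:
    print(name, round(wald_z[name], 3), round(p_values[name], 3))
print(round(deviance_drop, 3), df_drop, round(aic, 2))
```

Small differences in the third decimal place of the z values arise because the printed estimates and standard errors are themselves rounded.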

Footnotes

Declaration of Interest

The authors report no conflict of interest.

Contributor Information

Laine Cialdella, Department of Communicative Sciences & Disorders, New York University, New York, NY, USA.

Heather Kabakoff, Department of Communicative Sciences & Disorders, New York University, New York, NY, USA.

Jonathan L. Preston, Department of Communication Sciences and Disorders, Syracuse University, Syracuse, NY & Haskins Laboratories, New Haven, CT, USA.

Sarah Dugan, Department of Communication Sciences and Disorders, University of Cincinnati, Cincinnati, OH, USA.

Caroline Spencer, Department of Communication Sciences and Disorders, University of Cincinnati, Cincinnati, OH, USA.

Suzanne Boyce, Department of Communication Sciences and Disorders, University of Cincinnati, Cincinnati, OH & Haskins Laboratories, New Haven, CT, USA.

Mark Tiede, Haskins Laboratories, New Haven, CT, USA.

Douglas H. Whalen, Program in Speech-Language-Hearing Sciences, City University of New York Graduate Center, New York, NY & Haskins Laboratories, New Haven, CT, USA.

Tara McAllister, Department of Communicative Sciences & Disorders, New York University, New York, NY, USA.

References

- Adler-Bock M, Bernhardt BM, Gick B, & Bacsfalvi P (2007). The use of ultrasound in remediation of North American English /r/ in 2 adolescents. American Journal of Speech-Language Pathology, 16(2), 128–139. doi: 10.1044/1058-0360(2007/017) [DOI] [PubMed] [Google Scholar]

- Bates D, Maechler M, Bolker B, & Walker S (2015). Fitting linear mixed-effects models using ‘lme4’. Journal of Statistical Software, 67(1), 1–48. doi: 10.18637/jss.v067.i01 [DOI] [Google Scholar]

- Bernhardt B, Gick B, Bacsfalvi P, & Adler-Bock M (2005). Ultrasound in speech therapy with adolescents and adults. Clinical Linguistics & Phonetics, 19(6–7), 605–617. [DOI] [PubMed] [Google Scholar]

- Bird J, & Bishop D (1992). Perception and awareness of phonemes in phonologically impaired children. International Journal of Language and Communication Disorders, 27(4), 289–311. doi: 10.3109/13682829209012042 [DOI] [PubMed] [Google Scholar]

- Boersma P, & Weenink D (2019). Praat: doing phonetics by computer (Version 6.0.50) [Computer program] Retrieved from www.fon.hum.uva.nl/praat/

- Boyce SE (2015). The articulatory phonetics of /r/ for residual speech errors. Seminars in Speech and Language, 36(04), 257–270. doi: 10.1055/s-0035-1562909 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cabbage KL, Hogan TP, & Carrell TD (2016). Speech perception differences in children with dyslexia and persistent speech delay. Speech Communication, 82, 14–25. doi: 10.1016/j.specom.2016.05.002 [DOI] [Google Scholar]

- Campbell H, Harel D, Hitchcock E, & McAllister Byun T (2018). Selecting an acoustic correlate for automated measurement of American English rhotic production in children. International Journal of Speech-Language Pathology, 20(6), 635–643. doi: 10.1080/17549507.2017.1359334 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chen W, Whalen D, & Shadle CH (2019). F0-induced formant measurement errors result in biased variabilities. Journal of the Acoustical Society of America, 145(5), EL360–EL366. doi: 10.1121/1.5103195 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Crowe Hall BJ (1991). Attitudes of fourth and sixth graders toward peers with mild articulation disorders. Language, Speech, and Hearing Services in Schools, 22(1), 334–340. doi: 10.1044/0161-1461.2201.334 [DOI] [Google Scholar]

- Culton GL (1986). Speech disorders among college freshmen: a 13-year survey. Journal of Speech and Hearing Disorders, 51(1), 3–7. doi: 10.1044/jshd.5101.03 [DOI] [PubMed] [Google Scholar]

- Davis SM, & Drichta CE (1980). Biofeedback: Theory and application to speech pathology. Speech and language: Advances in basic research and practice, 283–286. doi: 10.1016/B978-0-12-608603-4.50015-9 [DOI] [Google Scholar]

- Delattre P, & Freeman DC (1968). A dialect study of American r’s by x-ray motion picture. Linguistics, 6(44), 29–68. doi: 10.1515/ling.1968.6.44.29 [DOI] [Google Scholar]

- Derdemezis E, Vorperian HK, Kent RD, Fourakis M, Reinicke EL, & Bolt DM (2016). Optimizing vowel formant measurements in four acoustic analysis systems for diverse speaker groups. American Journal of Speech-Language Pathology, 25(3), 335–354. doi: 10.1044/2015_AJSLP-15-0020 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dunn LM, & Dunn DM (2007). Peabody Picture Vocabulary Test - 4th edition. Bloomington, MN: Pearson Assessments. [Google Scholar]

- Espy-Wilson CY, Boyce SE, Jackson M, Narayanan S, & Alwan A (2000). Acoustic modeling of American English /r/. Journal of the Acoustical Society of America, 108(1), 343–356. doi: 10.1121/1.429469 [DOI] [PubMed] [Google Scholar]

- Flipsen P (2015). Emergence and prevalence of persistent and residual speech errors. Seminars in Speech and Language, 36(4), 217–223. doi: 10.1055/s-0035-1562905 [DOI] [PubMed] [Google Scholar]

- Flipsen P, Shriberg LD, Weismer G, Karlsson HB, & McSweeny JL (2001). Acoustic phenotypes for speech-genetics studies: reference data for residual /3/ distortions. Clinical Linguistics & Phonetics, 15(8), 603–630. doi: 10.1080/02699200110069410 [DOI] [PubMed] [Google Scholar]

- Ghosh SS, Matthies ML, Maas E, Hanson A, Tiede M, Ménard L, … Perkell JS (2010). An investigation of the relation between sibilant production and somatosensory and auditory acuity. Journal of the Acoustical Society of America, 128(5), 3079–3087. doi: 10.1121/1.3493430

- Gick B, Bernhardt B, Bacsfalvi P, & Wilson I (2008). Chapter 11. Ultrasound imaging applications in second language acquisition. In Phonology and second language acquisition (Vol. 36, pp. 309–322). John Benjamins Publishing Company.

- Goldman R, & Fristoe M (2000). Goldman-Fristoe Test of Articulation - 2nd Edition (GFTA-2). Bloomington, MN: Pearson/PsychCorp.

- Hamilton Dugan S, Silbert N, McAllister T, Preston JL, Sotto C, & Boyce SE (2019). Modelling category goodness judgments in children with residual sound errors. Clinical Linguistics & Phonetics, 295–315. doi: 10.1080/02699206.2018.1477834

- Hammer MJ, & Krueger MA (2014). Voice-related modulation of mechanosensory detection thresholds in the human larynx. Experimental Brain Research, 232(1), 13–20. doi: 10.1007/s00221-013-3703-1

- Hazan V, & Barrett S (2000). The development of phonemic categorization in children aged 6–12. Journal of Phonetics, 28(4), 377–396. doi: 10.1006/jpho.2000.0121

- Hearnshaw S, Baker E, & Munro N (2018). The speech perception skills of children with and without speech sound disorder. Journal of Communication Disorders, 71, 61–71. doi: 10.1044/2019_JSLHR-S-18-0519

- Hetrick WP, Sandman CA, Bunney WE Jr, Jin Y, Potkin SG, & White MH (1996). Gender differences in gating of the auditory evoked potential in normal subjects. Biological Psychiatry, 39(1), 51–58. doi: 10.1016/0006-3223(95)00067-4

- Hitchcock E, Harel D, & McAllister Byun T (2015). Social, emotional, and academic impact of residual speech errors in school-aged children: A survey study. Seminars in Speech and Language, 36(4), 283–293. doi: 10.1055/s-0035-1562911

- Hoffman PR, Daniloff RG, Bengoa D, & Schuckers GH (1985). Misarticulating and normally articulating children’s identification and discrimination of synthetic [r] and [w]. Journal of Speech and Hearing Disorders, 50(1), 46–53. doi: 10.1044/jshd.5001.46

- Hoffman PR, Stager S, & Daniloff RG (1983). Perception and production of misarticulated /r/. Journal of Speech and Hearing Disorders, 48(2), 210–215. doi: 10.1044/jshd.4802.210

- Jamieson DG, & Rvachew S (1992). Remediating speech production errors with sound identification training. Journal of Speech-Language Pathology and Audiology, 16(3), 201–210. doi:1993-27184-001

- Klein HB, Grigos MI, McAllister Byun T, & Davidson L (2012). The relationship between inexperienced listeners’ perceptions and acoustic correlates of children’s /r/ productions. Clinical Linguistics & Phonetics, 26(7), 628–645. doi: 10.3109/02699206.2012.682695

- Krizman J, Bonacina S, & Kraus N (2019). Sex differences in subcortical auditory processing emerge across development. Hearing Research, 380, 166–174. doi: 10.1016/j.heares.2019.07.002

- Lee S, Potamianos A, & Narayanan S (1999). Acoustics of children’s speech: Developmental changes of temporal and spectral parameters. Journal of the Acoustical Society of America, 105(3), 1455–1468. doi: 10.1121/1.426686

- Lehiste I (1964). Acoustical characteristics of selected English consonants. Bloomington, IN: Indiana University Research Center in Anthropology, Folklore, and Linguistics.

- Lennes M (2003). Collect formant data from files [Praat script]. Retrieved from http://www.helsinki.fi/~lennes/praat-scripts/public/collect_formant_data_from_files.praat

- Maas E, & Farinella KA (2012). Random versus blocked practice in treatment for childhood apraxia of speech. Journal of Speech, Language, and Hearing Research, 55(2), 561–578. doi: 10.1044/1092-4388(2011/11-0120)

- Martin N, & Brownell R (2005). Test of Auditory Processing Skills: TAPS-3. Novato, CA: Academic Therapy Publications.

- McAllister Byun T (2017). Efficacy of visual-acoustic biofeedback intervention for residual rhotic errors: A single-subject randomization study. Journal of Speech, Language, and Hearing Research, 60, 1175–1193. doi: 10.1044/2016_JSLHR-S-16-0038

- McAllister Byun T, & Campbell H (2016). Differential effects of visual-acoustic biofeedback intervention for residual speech errors. Frontiers in Human Neuroscience, 10(567), 1–17. doi: 10.3389/fnhum.2016.00567

- McAllister Byun T, & Hitchcock ER (2012). Investigating the use of traditional and spectral biofeedback approaches to intervention for /r/ misarticulation. American Journal of Speech-Language Pathology, 21(3), 207–221. doi: 10.1044/1058-0360(2012/11-0083)

- McAllister Byun T, & Tiede M (2017). Perception-production relations in later development of American English rhotics. PloS One, 12(2), e0172022. doi: 10.1371/journal.pone.0172022

- McNutt JC (1977). Oral sensory and motor behaviors of children with /s/ or /r/ misarticulations. Journal of Speech, Language, and Hearing Research, 20(4), 694–703. doi: 10.1044/jshr.2101.192

- McReynolds LV, Kohn J, & Williams GC (1975). Articulatory-defective children’s discrimination of their production errors. Journal of Speech and Hearing Disorders, 40(3), 327–338. doi: 10.1044/jshd.4003.327

- Miccio AW (2002). Clinical problem solving: assessment of phonological disorders. American Journal of Speech-Language Pathology, 11(3), 221–229. doi: 10.1044/1058-0360(2002/023)

- Mielke J, Baker A, & Archangeli D (2016). Individual-level contact limits phonological complexity: Evidence from bunched and retroflex /ɹ/. Language, 92(1), 101–140. doi: 10.1353/lan.2016.0019

- Ohde RN, & Sharf DJ (1988). Perceptual categorization and consistency of synthesized /r-w/ continua by adults, normal children and /r/-misarticulating children. Journal of Speech, Language, and Hearing Research, 31(4), 556–568. doi: 10.1044/jshr.3104.556

- Perrachione TK, Lee J, Ha LY, & Wong PC (2011). Learning a novel phonological contrast depends on interactions between individual differences and training paradigm design. Journal of the Acoustical Society of America, 130(1), 461–472. doi: 10.1121/1.3593366

- Pisoni DB (1997). Perception of synthetic speech. In Van Santen JPH, Sproat RW, Olive JP, & Hirschberg J (Eds.), Progress in Speech Synthesis (pp. 541–560). Springer-Verlag.

- Preston JL, Hitchcock E, & Leece MC (submitted). Auditory perception and ultrasound biofeedback treatment outcomes for children with residual /ɹ/ distortions: A randomized controlled trial.

- Preston JL, & Leece MC (2017). Intensive treatment for persisting rhotic distortions: A case series. American Journal of Speech-Language Pathology, 26(4), 1066–1079. doi: 10.1044/2017_AJSLP-16-0232

- Preston JL, Leece MC, McNamara K, & Maas E (2017). Variable practice to enhance speech learning in ultrasound biofeedback treatment for childhood apraxia of speech: A single case experimental study. American Journal of Speech-Language Pathology, 26(3), 840–852. doi: 10.1044/2017_AJSLP-16-0155

- Preston JL, McAllister T, Phillips E, Boyce S, Tiede M, Kim JS, & Whalen DH (2018). Treatment for residual rhotic errors with high- and low-frequency ultrasound visual feedback: a single-case experimental design. Journal of Speech, Language, and Hearing Research, 61(8), 1875–1892. doi: 10.1044/2018_JSLHR-S-17-0441

- Preston JL, McAllister T, Phillips E, Boyce S, Tiede M, Kim JS, & Whalen DH (2019). Remediating residual rhotic errors with traditional and ultrasound-enhanced treatment: a single-case experimental study. American Journal of Speech-Language Pathology, 28, 1167–1183.

- Preston JL, McCabe P, Rivera-Campos A, Whittle JL, Landry E, & Maas E (2014). Ultrasound visual feedback treatment and practice variability for residual speech sound errors. Journal of Speech, Language, and Hearing Research, 57(6), 2102–2115. doi: 10.1044/2014_JSLHR-S-14-0031

- R Core Team. (2017). R: A language and environment for statistical computing [Software]: R Foundation for Statistical Computing. Retrieved from https://www.R-project.org/

- Rogow Waldman F, Singh S, & Hayden ME (1978). A comparison of speech-sound production and discrimination in children with functional articulation disorders. Language and Speech, 21(3), 205–220. doi: 10.1177/002383097802100301

- Ruscello DM (1995). Visual feedback in treatment of residual phonological disorders. Journal of Communication Disorders, 28(4), 279–302. doi: 10.1016/0021-9924(95)00058-X

- Rvachew S (1994). Speech perception training can facilitate sound production learning. Journal of Speech, Language, and Hearing Research, 37(2), 347–357. doi: 10.1044/jshr.3702.347

- Rvachew S (2006). Longitudinal predictors of implicit phonological awareness skills. American Journal of Speech-Language Pathology, 15(2), 165–176. doi: 10.1044/1058-0360(2006/016)

- Rvachew S (2007). Phonological processing and reading in children with speech sound disorders. American Journal of Speech-Language Pathology, 16, 260–270. doi: 10.1044/1058-0360(2007/030)

- Rvachew S, & Grawburg M (2006). Correlates of phonological awareness in preschoolers with speech sound disorders. Journal of Speech, Language, and Hearing Research, 49, 74–87. doi: 10.1044/1092-4388(2006/006)

- Rvachew S, & Jamieson DG (1989). Perception of voiceless fricatives by children with a functional articulation disorder. Journal of Speech and Hearing Disorders, 54(2), 193–208. doi: 10.1044/jshd.5402.193

- Rvachew S, Ohberg A, Grawburg M, & Heyding J (2003). Phonological awareness and phonemic perception in 4-year-old children with delayed expressive phonology skills. American Journal of Speech-Language Pathology, 12(4), 463–471. doi: 10.1044/1058-0360(2003/092)

- Semel E, Wiig EH, & Secord WA (2013). Clinical Evaluation of Language Fundamentals, 5th Ed. (CELF-5). Toronto, Ontario: Pearson: The Psychological Corporation.

- Shadle CH, Nam H, & Whalen D (2016). Comparing measurement errors for formants in synthetic and natural vowels. Journal of the Acoustical Society of America, 139(2), 713–727. doi: 10.1121/1.4940665

- Sharf DJ, & Benson PJ (1982). Identification of synthesized /r–w/ continua for adult and child speakers. Journal of the Acoustical Society of America, 71(4), 1008–1015. doi: 10.1121/1.387652

- Sharf DJ, & Ohde RN (1983). Perception of distorted “R” sounds in the synthesized speech of children and adults. Journal of Speech, Language, and Hearing Research, 26(4), 516–524. doi: 10.1044/jshr.2604.516

- Shriberg LD (2009). Childhood speech sound disorders: From post-behaviorism to the postgenomic era. In Paul R & Flipsen P Jr. (Eds.), Speech Sound Disorders in Children: In Honor of Lawrence D. Shriberg (pp. 1–34). San Diego, CA: Plural.

- Shriberg LD, Flipsen P, Karlsson HB, & McSweeny JL (2001). Acoustic phenotypes for speech-genetics studies: an acoustic marker for residual /ɝ/ distortions. Clinical Linguistics & Phonetics, 15(8), 631–650. doi: 10.1080/02699200110069429

- Shriberg LD, Fourakis M, Hall SD, Karlsson HB, Lohmeier HL, McSweeny JL, … Tilkens CM (2010). Extensions to the speech disorders classification system (SDCS). Clinical Linguistics & Phonetics, 24(10), 795–824. doi: 10.3109/02699206.2010.503006

- Shriberg LD, Gruber FA, & Kwiatkowski J (1994). Developmental phonological disorders III: long-term speech-sound normalization. Journal of Speech, Language, and Hearing Research, 37(5), 1151–1177. doi: 10.1044/jshr.3705.1151

- Shuster LI (1998). The perception of correctly and incorrectly produced /r/. Journal of Speech, Language, and Hearing Research, 41(4), 941–950. doi: 10.1044/jslhr.4104.941

- Shuster LI, Ruscello DM, & Smith KD (1992). Evoking [r] using visual feedback. American Journal of Speech-Language Pathology, 1(3), 29–34. doi: 10.1044/1058-0360.0103.29

- Shuster LI, Ruscello DM, & Toth AR (1995). The use of visual feedback to elicit correct /r/. American Journal of Speech-Language Pathology, 4(2), 37–44. doi: 10.1044/1058-0360.0402.37

- Sjolie GM, Leece MC, & Preston JL (2016). Acquisition, retention, and generalization of rhotics with and without ultrasound visual feedback. Journal of Communication Disorders, 64, 62–77. doi: 10.1016/j.jcomdis.2016.10.003

- Smit AB, Hand L, Freilinger JJ, Bernthal JE, & Bird A (1990). The Iowa articulation norms project and its Nebraska replication. Journal of Speech and Hearing Disorders, 55(4), 779–798. doi: 10.1044/jshd.5504.779

- Sugden E, Lloyd S, Lam J, & Cleland J (2019). Systematic review of ultrasound visual biofeedback in intervention for speech sound disorders. International Journal of Language and Communication Disorders, 54(5), 705–728.

- Tiede MK, Boyce SE, Holland CK, & Choe KA (2004). A new taxonomy of American English /r/ using MRI and ultrasound. Journal of the Acoustical Society of America, 115(5), 2633–2634. doi: 10.1121/1.4784878