Abstract

Understanding how certain brain regions relate to a specific neurological disorder has been an important area of neuroimaging research. A promising approach to identifying the salient regions is to use Graph Neural Networks (GNNs), which can analyze graph-structured data, e.g., brain networks constructed from functional magnetic resonance imaging (fMRI). We propose an interpretable GNN framework with a novel salient region selection mechanism to determine neurological brain biomarkers associated with disorders. Specifically, we design novel regularized pooling layers that highlight salient regions of interest (ROIs), so that we can infer which ROIs are important for identifying a certain disease from the node pooling scores calculated by the pooling layers. Our proposed framework, Pooling Regularized-GNN (PR-GNN), encourages reasonable ROI selection and provides flexibility to preserve either individual- or group-level patterns. We apply the PR-GNN framework to a Biopoint Autism Spectrum Disorder (ASD) fMRI dataset. We investigate different choices of the hyperparameters and show that PR-GNN outperforms baseline methods in terms of classification accuracy. The salient ROI detection results show high correspondence with previously reported neuroimaging-derived biomarkers for ASD.

Keywords: fMRI Biomarker, Graph Neural Network, Autism

1. Introduction

Explaining the underlying roots of neurological disorders (i.e., which brain regions are associated with the disorder) has been a main goal in the fields of neuroscience and medicine [1,2,3,4]. Functional magnetic resonance imaging (fMRI), a non-invasive neuroimaging technique that measures neural activation, has been paramount in advancing our understanding of the functional organization of the brain [5,6,7]. The functional network of the brain can be modeled as a graph in which each node is a brain region and the edges represent the strength of the connection between those regions.

The past few years have seen the growing prevalence of using graph neural networks (GNN) for graph classification [8]. Like pooling layers in convolutional neural networks (CNNs) [9,10], the pooling layer in GNNs is an important design to compress a large graph to a smaller one for lower dimensional feature extraction. Many node pooling strategies have been studied and can be divided into the following categories: 1) clustering-based pooling, which clusters nodes to a super node based on graph topology [11,12,13] and 2) ranking-based pooling, which assigns each node a score and keeps the top ranked nodes [14,15]. Clustering-based pooling methods do not preserve node assignment mapping in the input graph domain, hence they are not inherently interpretable at the node level. For our purpose of interpreting node importance, we focus on ranking-based pooling methods. Currently, existing methods of this type [14,15] have the following key limitations when applying them to salient brain ROI analysis: 1) ranking scores for the discarded nodes and the remaining nodes may not be significantly distinguishable, which is not suitable for identifying salient and representative regional biomarkers, and 2) the nodes in different graphs in the same group may be ranked totally differently (usually caused by overfitting), which is problematic when our objective is to find group-level biomarkers. To reach group-level analysis, such approaches typically require additional steps to summarize statistics (such as averaging). For these two-stage methods, if the results from the first stage are not reliable, significant errors can be induced in the second stage.

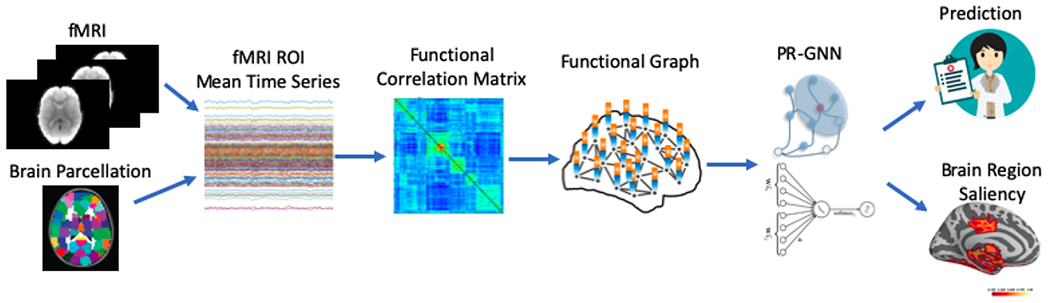

To utilize GNN for fMRI learning and meet the need of group-level biomarker finding, we propose a pooling regularized GNN framework (PR-GNN) for classifying neurodisorder patients vs healthy control subjects and discovering disorder related biomarkers jointly. The overview of our methods is depicted in Fig. 1. Our key contributions are:

- We formulate an end-to-end framework for fMRI prediction and biomarker (salient brain ROIs) interpretation.

- We propose novel regularization terms for ranking-based pooling methods to encourage more reasonable node selection and provide flexibility between individual-level and group-level interpretation in GNN.

Fig. 1:

The overview of the pipeline. fMRI images are parcellated by atlas and transferred to graphs. Then, the graphs are sent to our proposed PR-GNN, which gives the prediction of specific tasks and jointly selects salient brain regions that are informative to the prediction task.

2. Graph Neural Network for Brain Network Analysis

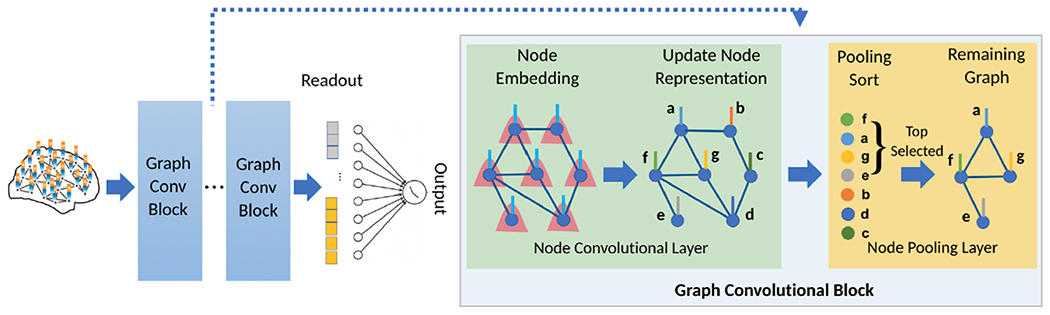

The architecture of our PR-GNN is shown in Fig. 2. Below, we introduce the notation and the layers in PR-GNN. For simplicity, we focus on Graph Attention Convolution (GATConv) [16,17] as the node convolutional layer. For node pooling layers, we test two existing ranking based pooling methods: TopK pooling [14] and SAGE pooling [15].

Fig. 2:

PR-GNN for brain graph classification and the details of its key component - Graph Convolutional Block. Each Graph Convolutional Block contains a node convolutional layer followed by a node pooling layer.

2.1. Notation and Problem Definition

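We first parcellate the brain into $N$ ROIs based on its T1 structural MRI. We define the ROIs as graph nodes $\mathcal{V} = \{v_1, \dots, v_N\}$. We define an undirected weighted graph as $G = (\mathcal{V}, \mathcal{E})$, where $\mathcal{E}$ is the edge set, i.e., a collection of $(v_i, v_j)$ linking vertices $v_i$ and $v_j$. $G$ has an associated node feature matrix $H = [h_1, \dots, h_N]^\top$, where $h_i$ is the feature vector associated with node $v_i$. For every edge connecting two nodes, $(v_i, v_j) \in \mathcal{E}$, we have its strength $e_{ij} \in \mathbb{R}$ with $e_{ij} > 0$. We also define $e_{ij} = 0$ for $(v_i, v_j) \notin \mathcal{E}$, and therefore the adjacency matrix $E = [e_{ij}] \in \mathbb{R}^{N \times N}$ is well defined.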
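As a small illustration of this notation, the sketch below builds the adjacency matrix of an undirected weighted graph from a list of weighted edges, leaving zeros wherever no edge exists. The helper name `build_adjacency` and the toy edge strengths are hypothetical and only serve the example.

```python
import numpy as np

def build_adjacency(num_nodes, weighted_edges):
    """Return the symmetric N x N matrix E with e_ij = 0 where no edge exists."""
    E = np.zeros((num_nodes, num_nodes))
    for i, j, strength in weighted_edges:
        E[i, j] = strength
        E[j, i] = strength  # undirected graph: mirror each edge
    return E

# Toy graph with N = 4 ROIs; the edge strengths are made-up illustrative values.
E = build_adjacency(4, [(0, 1, 0.8), (1, 2, 0.5), (2, 3, 0.3)])
```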

2.2. Graph Convolutional Block

Node Convolutional Layer

To improve GATConv [8], we incorporate edge features in the brain graph as suggested by Gong & Cheng [18] and Yang et al. [17]. We define $h_i^{(l)} \in \mathbb{R}^{d^{(l)}}$ as the feature for the $i$th node in the $l$th layer and $H^{(l)} = [h_1^{(l)}, \dots, h_{N^{(l)}}^{(l)}]^\top$, where $N^{(l)}$ is the number of nodes at the $l$th layer (the same for $E^{(l)}$). The propagation model for the forward-pass update of node representation is calculated as:

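$$\tilde{h}_i^{(l+1)} = \phi^{\Theta^{(l)}}\big(h_i^{(l)}, E^{(l)}\big) = \sum_{j \in \mathcal{N}(i) \cup \{i\}} \alpha_{ij}^{(l)} \Theta^{(l)} h_j^{(l)}, \tag{1}$$

where the attention coefficients $\alpha_{ij}^{(l)}$ are computed as

$$\alpha_{ij}^{(l)} = \frac{\exp\big(\hat{\alpha}_{ij}^{(l)}\big)}{\sum_{k \in \mathcal{N}(i) \cup \{i\}} \exp\big(\hat{\alpha}_{ik}^{(l)}\big)}, \qquad \hat{\alpha}_{ij}^{(l)} = e_{ij}^{(l)}\, \mathrm{ReLU}\Big(\big(a^{(l)}\big)^\top \big[\Theta^{(l)} h_i^{(l)} \,\|\, \Theta^{(l)} h_j^{(l)}\big]\Big), \tag{2}$$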

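where $\mathcal{N}(i)$ denotes the set of indices of neighboring nodes of $v_i$, $\|$ denotes concatenation, and $\Theta^{(l)} \in \mathbb{R}^{d^{(l+1)} \times d^{(l)}}$ and $a^{(l)} \in \mathbb{R}^{2d^{(l+1)}}$ are model parameters.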
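To illustrate this kind of layer, the following NumPy sketch implements one attention-based node update in which the edge strengths scale the attention logits before a softmax over each node's neighborhood. The ReLU nonlinearity, the unit-strength self-loops, and all function and variable names are assumptions made for the example rather than details confirmed by the text.

```python
import numpy as np

def edge_weighted_attention_conv(H, E, Theta, a):
    """One attention update. H: (N, d_in), E: (N, N) nonnegative edge strengths,
    Theta: (d_out, d_in), a: (2 * d_out,). Returns new features and attention."""
    N = H.shape[0]
    Z = H @ Theta.T                                  # projected features, (N, d_out)
    d_out = Z.shape[1]
    # a^T [z_i || z_j] splits into a part depending on i and a part depending on j.
    left, right = Z @ a[:d_out], Z @ a[d_out:]
    raw = np.maximum(left[:, None] + right[None, :], 0.0)   # ReLU (assumed)
    E_self = E + np.eye(N)                           # unit self-loops (assumed)
    logits = np.where(E_self > 0, E_self * raw, -np.inf)    # mask non-neighbors
    logits -= logits.max(axis=1, keepdims=True)      # numerical stability
    weights = np.exp(logits)
    alpha = weights / weights.sum(axis=1, keepdims=True)    # softmax per node
    return alpha @ Z, alpha

rng = np.random.default_rng(0)
N, d_in, d_out = 5, 4, 3
H = rng.normal(size=(N, d_in))
E = np.zeros((N, N))
E[0, 1] = E[1, 0] = 0.9
E[1, 2] = E[2, 1] = 0.4
E[3, 4] = E[4, 3] = 0.7
H_tilde, alpha = edge_weighted_attention_conv(
    H, E, rng.normal(size=(d_out, d_in)), rng.normal(size=2 * d_out))
```

Each row of `alpha` sums to one and is zero for node pairs without an edge, so a node only aggregates information from itself and its neighbors.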

Node Pooling Layer

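The choice of which nodes to keep in TopK pooling and SAGE pooling is determined by the node importance score $s^{(l)} \in \mathbb{R}^{N^{(l)}}$, which is calculated in one of two ways:

$$s^{(l)} = \begin{cases} \mathrm{sigmoid}\left(\tilde{H}^{(l+1)} w^{(l)} / \|w^{(l)}\|_2\right), & \text{TopK pooling} \\ \mathrm{sigmoid}\left(\phi^{\theta^{(l)}}\big(\tilde{H}^{(l+1)}, E^{(l)}\big)\right), & \text{SAGE pooling} \end{cases} \tag{3}$$

where $\tilde{H}^{(l+1)} = [\tilde{h}_1^{(l+1)}, \dots, \tilde{h}_{N^{(l)}}^{(l+1)}]^\top$ is calculated in Eq. (1) and $w^{(l)}$ and $\theta^{(l)}$ are model parameters. Note that $\theta^{(l)}$ is different from $\Theta^{(l)}$ in Eq. (1) such that the output of $\phi^{\theta^{(l)}}$ is a scalar for each node.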

Given s(l) the following equation roughly describes the pooling procedure:

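$$\mathrm{idx} = \mathrm{top}\big(s^{(l)}, k^{(l)}\big), \qquad H^{(l+1)} = \Big(\tilde{H}^{(l+1)} \odot \big(s^{(l)} \mathbf{1}^\top\big)\Big)_{\mathrm{idx},:}, \qquad E^{(l+1)} = E^{(l)}_{\mathrm{idx},\mathrm{idx}}. \tag{4}$$

The notation above finds the indices corresponding to the largest $k^{(l)}$ elements in the score vector $s^{(l)}$, $\odot$ is the elementwise product, and $(\cdot)_{i,j}$ is an indexing operation which takes elements at row indices specified by $i$ and column indices specified by $j$. Because each node's features are scaled by its score, nodes receiving lower scores retain less of their features.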

Lastly, we seek a “flattening” operation to translate graph information to a vector. Supposing the last layer is $L$, we use $z = \operatorname{mean}_{i}\, h_i^{(L)}$, where the mean is taken elementwise over the remaining nodes. Then $z$ is sent to a multilayer perceptron (MLP) to give the final prediction.
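The ranking-based pooling and mean readout described here can be sketched in NumPy as follows. The sketch assumes the TopK variant with a sigmoid-squashed projection score and score-weighted gating of the kept features; `topk_pool`, `readout`, and the random inputs are hypothetical names and data chosen for illustration.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def topk_pool(H_tilde, E, w, ratio=0.5):
    """Keep the highest-scoring nodes and the edges among them."""
    scores = sigmoid(H_tilde @ w / np.linalg.norm(w))   # one score per node
    k = int(np.ceil(ratio * H_tilde.shape[0]))
    idx = np.argsort(-scores)[:k]                       # indices of the top-k scores
    H_next = (H_tilde * scores[:, None])[idx]           # gate features by score
    E_next = E[np.ix_(idx, idx)]                        # induced subgraph
    return H_next, E_next, scores, idx

def readout(H_last):
    """Elementwise mean over the remaining nodes."""
    return H_last.mean(axis=0)

rng = np.random.default_rng(0)
H_tilde = rng.normal(size=(84, 16))      # 84 ROIs, 16 features per node
W = rng.random((84, 84))
E = (W + W.T) / 2                        # toy symmetric edge strengths
H1, E1, scores, idx = topk_pool(H_tilde, E, rng.normal(size=16))
z = readout(H1)
```

With a pooling ratio of 0.5, the 84 input nodes are reduced to 42, and `z` has one entry per feature dimension regardless of how many nodes remain.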

3. Proposed Regularizations

3.1. Distance Loss

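To overcome the limitation of existing methods that the ranking scores of the discarded nodes and the remaining nodes may not be distinguishable, we propose two distance losses that encourage them to differ. Before introducing them, we first rank the elements of the $m$th instance's scores, $s_m^{(l)}$, in descending order, denote the result as $\hat{s}_m^{(l)} = [\hat{s}_{m,1}^{(l)}, \dots, \hat{s}_{m,N^{(l)}}^{(l)}]$, and denote its top $k^{(l)}$ elements as $\hat{s}_{a,m}^{(l)} = [\hat{s}_{m,1}^{(l)}, \dots, \hat{s}_{m,k^{(l)}}^{(l)}]$ and the remaining elements as $\hat{s}_{b,m}^{(l)} = [\hat{s}_{m,k^{(l)}+1}^{(l)}, \dots, \hat{s}_{m,N^{(l)}}^{(l)}]$. We apply two types of constraint to all $M$ training instances.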

MMD Loss

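Maximum mean discrepancy (MMD) loss [19,20] has been used in generative adversarial nets (GANs) to quantify the difference of the scores between real and generated samples. In our application, we define MMD loss for the pooling layer as:

$$L_{MMD}^{(l)} = -\frac{1}{M}\sum_{m=1}^{M}\Bigg[\frac{1}{(k^{(l)})^2}\sum_{i,j=1}^{k^{(l)}} \kappa\big(\hat{s}_{m,i}^{(l)}, \hat{s}_{m,j}^{(l)}\big) + \frac{1}{(N^{(l)}-k^{(l)})^2}\sum_{i,j=k^{(l)}+1}^{N^{(l)}} \kappa\big(\hat{s}_{m,i}^{(l)}, \hat{s}_{m,j}^{(l)}\big) - \frac{2}{k^{(l)}(N^{(l)}-k^{(l)})}\sum_{i=1}^{k^{(l)}}\sum_{j=k^{(l)}+1}^{N^{(l)}} \kappa\big(\hat{s}_{m,i}^{(l)}, \hat{s}_{m,j}^{(l)}\big)\Bigg]$$

where $\hat{s}_{m,i}^{(l)}$ is the $i$th largest score of the $m$th instance, $\kappa(a, b) = \exp(-\|a - b\|^2/\sigma)$ is a Gaussian kernel and $\sigma$ is a scaling factor.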
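A minimal sketch of an MMD-style separation loss for a single instance is given below. It assumes the standard Gaussian kernel form with a negative exponent, the biased (diagonal-including) MMD estimate, and a negated sign so that minimizing the loss pushes the kept and discarded scores apart; the function names are hypothetical.

```python
import numpy as np

def gaussian_kernel(a, b, sigma):
    """Pairwise Gaussian kernel between two 1-D score vectors."""
    return np.exp(-(a[:, None] - b[None, :]) ** 2 / sigma)

def mmd_separation_loss(scores, k, sigma=5.0):
    """Negative squared MMD between the top-k scores and the remaining scores."""
    ranked = np.sort(scores)[::-1]            # descending order
    top, rest = ranked[:k], ranked[k:]
    mmd2 = (gaussian_kernel(top, top, sigma).mean()
            + gaussian_kernel(rest, rest, sigma).mean()
            - 2.0 * gaussian_kernel(top, rest, sigma).mean())
    return -mmd2

separated = np.array([0.9, 0.9, 0.9, 0.9, 0.1, 0.1, 0.1, 0.1])
uniform = np.full(8, 0.5)
loss_separated = mmd_separation_loss(separated, k=4)
loss_uniform = mmd_separation_loss(uniform, k=4)
```

Well-separated scores give a lower (more negative) loss than indistinguishable scores, which is the behavior the regularizer is meant to reward.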

BCE Loss

Ideally, the scores for the selected nodes should be close to 1 and the scores for the unselected nodes should be close to 0. Binary cross entropy (BCE) loss is calculated as:

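$$L_{BCE}^{(l)} = -\frac{1}{M}\sum_{m=1}^{M}\frac{1}{N^{(l)}}\Bigg[\sum_{i=1}^{k^{(l)}}\log \hat{s}_{m,i}^{(l)} + \sum_{i=k^{(l)}+1}^{N^{(l)}}\log\big(1-\hat{s}_{m,i}^{(l)}\big)\Bigg], \tag{5}$$

where $\hat{s}_{m,i}^{(l)}$ denotes the $i$th element of the $m$th instance's scores ranked in descending order.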

The effect of this constraint will be shown in Section 4.3.
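For a single instance, the BCE constraint can be sketched as a binary cross entropy in which the top-ranked scores are given target 1 and the remaining scores target 0. The averaging over all nodes and the clipping constant `eps` are assumptions of this sketch, and the function name is hypothetical.

```python
import numpy as np

def bce_separation_loss(scores, k, eps=1e-7):
    """BCE with target 1 for the k largest scores and target 0 for the rest."""
    ranked = np.clip(np.sort(scores)[::-1], eps, 1.0 - eps)  # avoid log(0)
    top, rest = ranked[:k], ranked[k:]
    return -(np.log(top).sum() + np.log(1.0 - rest).sum()) / ranked.size

loss_uniform = bce_separation_loss(np.full(4, 0.5), k=2)
loss_separated = bce_separation_loss(np.array([0.9, 0.1, 0.9, 0.1]), k=2)
```

Scores stuck at 0.5 incur a loss of log 2 per node, while scores near 1 for kept nodes and near 0 for discarded nodes drive the loss toward zero.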

3.2. Group-level Consistency Loss

Note that $s^{(l)}$ in Eq. (4) is computed from the input $H^{(l)}$. Therefore, for $H^{(l)}$ from different instances, the ranking of the entries of $s^{(l)}$ can be very different. For our application, we want to find the common patterns/biomarkers for a certain neuro-prediction task. Thus, we add regularization to force the $s^{(l)}$ vectors to be similar for different input instances in the first pooling layer, where the group-level biomarkers are extracted. We call this novel regularization group-level consistency (GLC) and apply it only to the first pooling layer, as the nodes in the following layers from different instances might be different. Suppose there are $M_c$ instances for class $c$ in a batch, where $c \in \{1, \dots, C\}$ and $C$ is the number of classes. We form the scoring matrix $S_c^{(1)} = [s_{1,c}^{(1)}, \dots, s_{M_c,c}^{(1)}] \in \mathbb{R}^{N \times M_c}$. The GLC loss can be expressed as:

$$L_{GLC} = \sum_{c=1}^{C}\sum_{i,j=1}^{M_c}\big\|s_{i,c}^{(1)} - s_{j,c}^{(1)}\big\|^2 = 2\sum_{c=1}^{C}\mathrm{Tr}\Big(S_c^{(1)} L_c \big(S_c^{(1)}\big)^\top\Big), \tag{6}$$

where $L_c = D_c - W_c$, $W_c$ is an $M_c \times M_c$ matrix with all 1s, and $D_c$ is an $M_c \times M_c$ diagonal matrix with $M_c$ as its diagonal elements. We propose to use the Euclidean distance between $s_{i,c}$ and $s_{j,c}$ due to the benefits of convexity and computational efficiency.
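The Laplacian form of this penalty can be checked numerically against the sum of pairwise squared Euclidean distances between the score vectors of one class. In the sketch below the score vectors are stored as columns of a matrix, which is an assumed layout, and both function names are hypothetical.

```python
import numpy as np

def glc_pairwise(S):
    """Sum of squared distances over all ordered pairs of columns of S (N x M_c)."""
    diff = S[:, :, None] - S[:, None, :]
    return (diff ** 2).sum()

def glc_laplacian(S):
    """Same quantity written with L_c = D_c - W_c, where W_c is all ones."""
    M = S.shape[1]
    L = M * np.eye(M) - np.ones((M, M))
    return 2.0 * np.trace(S @ L @ S.T)

rng = np.random.default_rng(0)
S = rng.random((84, 6))                          # toy scores: 84 ROIs, 6 instances
value_pairwise = glc_pairwise(S)
value_laplacian = glc_laplacian(S)
value_identical = glc_laplacian(np.tile(S[:, :1], (1, 6)))
```

The two computations agree, and the penalty vanishes when every instance in the class has the same score vector, which is the group-level pattern the regularizer encourages.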

Cross entropy loss Lce is used for the final prediction. Then, the final loss function is formed as:

$$L_{total} = L_{ce} + \lambda_1 \sum_{l=1}^{L} L_{Dist}^{(l)} + \lambda_2 L_{GLC}, \tag{7}$$

where λ’s are tunable hyper-parameters, l indicates the lth GNN block and L is the total number of GNN blocks, Dist is either MMD or BCE.
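As a sketch of how the terms combine, assuming the distance loss is summed over all GNN blocks while the GLC term comes from the first pooling layer only, the weighted objective can be written as below. The function name and the numeric loss values are hypothetical.

```python
def total_loss(ce, dist_per_block, glc, lambda1=0.1, lambda2=0.1):
    """Cross entropy plus weighted distance losses (one per block) and GLC loss."""
    return ce + lambda1 * sum(dist_per_block) + lambda2 * glc

# Made-up loss values for a model with two GNN blocks.
loss = total_loss(ce=0.6, dist_per_block=[0.3, 0.2], glc=1.5)
```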

4. Experiments and Results

4.1. Data and Preprocessing

We collected fMRI data from a group of 75 children with ASD and 43 age- and IQ-matched healthy controls (HC), acquired under the “biopoint” task [21]. The fMRI data were preprocessed following the pipeline in Yang et al. [22]. The Desikan-Killiany atlas [23] was used to parcellate brain images into 84 ROIs. The mean time series for each node was extracted from a random 1/3 of the voxels in the ROI by bootstrapping. In this way, we augmented the data 10 times. Edges were defined by the top 10% positive partial correlations to achieve sparse connections. If this led to isolated nodes, we added back the largest edge for each of them. For node attributes, we used the Pearson correlation coefficients to nodes 1–84. Pearson correlation and partial correlation are different measures of fMRI connectivity; we combine them by using one to build edge connections and the other to build node features.
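The graph construction described here can be sketched as follows. The sketch assumes that "top 10%" is taken over all node pairs, that partial correlations are obtained from the inverse covariance matrix, and that an isolated node is reconnected through its single strongest partial correlation; the function names and the random toy time series are hypothetical.

```python
import numpy as np

def partial_correlation(ts):
    """Partial correlations from the inverse covariance of (N, T) time series."""
    prec = np.linalg.inv(np.cov(ts))
    d = np.sqrt(np.diag(prec))
    P = -prec / np.outer(d, d)
    np.fill_diagonal(P, 0.0)
    return P

def sparsify(P, keep_frac=0.10):
    """Keep the strongest positive entries of P as edges, then reconnect isolated nodes."""
    n = P.shape[0]
    rows, cols = np.triu_indices(n, k=1)
    vals = P[rows, cols]
    n_keep = int(round(keep_frac * vals.size))
    A = np.zeros_like(P)
    for t in np.argsort(-vals)[:n_keep]:
        if vals[t] > 0:                       # only positive correlations become edges
            A[rows[t], cols[t]] = A[cols[t], rows[t]] = vals[t]
    for i in np.where(A.sum(axis=1) == 0)[0]:
        j = int(np.argmax(P[i]))              # strongest connection of an isolated node
        A[i, j] = A[j, i] = P[i, j]
    return A

rng = np.random.default_rng(0)
ts = rng.normal(size=(20, 200))               # toy data: 20 ROIs, 200 time points
X = np.corrcoef(ts)                           # node features: Pearson correlations
A = sparsify(partial_correlation(ts))         # sparse weighted adjacency
```

Row `i` of `X` serves as the feature vector of node `i`, so the feature dimension equals the number of ROIs, while `A` only determines which nodes exchange messages.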

4.2. Implementation Details

The model architecture was implemented with two convolutional layers and two pooling layers as shown in Fig. 2, with parameters $d^{(0)} = 84$, $d^{(1)} = 16$, $d^{(2)} = 16$. We designed a 3-layer MLP (with 16, 8 and 2 neurons in each layer) that takes the flattened graph as input and predicts ASD vs. HC. The pooling layer kept the top 50% important nodes ($k^{(l)} = 0.5N^{(l)}$). We discuss the variation of $\lambda_1$ and $\lambda_2$ in Section 4.3. We randomly split the data into five folds based on subjects, which means that the graphs from a single subject can only appear in either the training or the test set. Four folds were used as training data, and the left-out fold was used for testing. Adam was used as the optimizer. We trained the model for 100 epochs with an initial learning rate of 0.001, halved every 20 epochs. We set $\sigma = 5$ in the MMD loss to match the scale of the BCE loss.
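The subject-wise split and the step learning-rate schedule can be sketched as below. The sketch assumes that each of the 118 subjects contributes 10 augmented graphs and that the learning rate is halved at epochs 20, 40, 60 and 80; the function names and the random seed are hypothetical.

```python
import numpy as np

def subject_folds(subject_ids, n_folds=5, seed=0):
    """Return graph indices per fold so that no subject spans two folds."""
    rng = np.random.default_rng(seed)
    subjects = rng.permutation(np.unique(subject_ids))
    chunks = np.array_split(subjects, n_folds)
    return [np.where(np.isin(subject_ids, chunk))[0] for chunk in chunks]

def learning_rate(epoch, base_lr=1e-3, step=20, factor=0.5):
    """Step schedule: multiply the rate by `factor` every `step` epochs."""
    return base_lr * factor ** (epoch // step)

subject_ids = np.repeat(np.arange(118), 10)   # 118 subjects x 10 augmented graphs
folds = subject_folds(subject_ids)
test_idx = folds[0]                            # one left-out fold for testing
train_idx = np.concatenate(folds[1:])          # remaining four folds for training
```

Splitting on subject identifiers rather than on individual graphs keeps augmented copies of the same subject out of both the training and the test set at once.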

4.3. Hyperparameter Discussion and Ablation Study

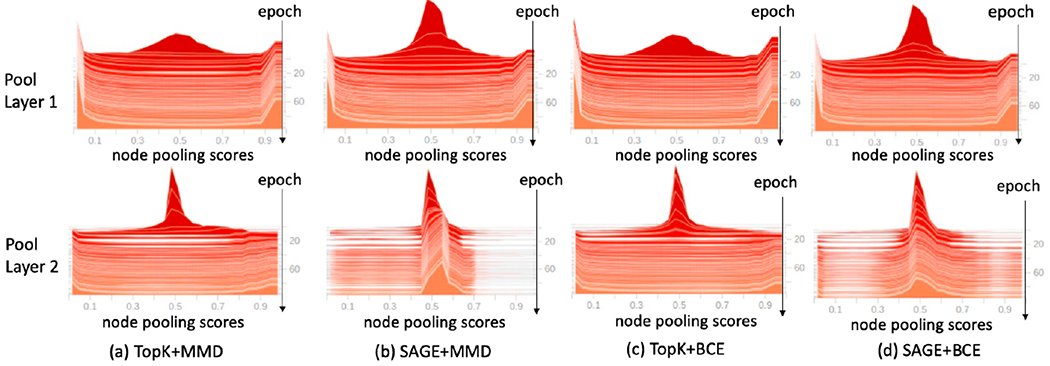

We tuned the parameters $\lambda_1$ and $\lambda_2$ in the loss function Eq. (7) and report the results in Table 1. $\lambda_1$ encourages more separable node importance scores for selected and unselected nodes after pooling. $\lambda_2$ controls the similarity of the selected nodes for instances within the same class; a larger $\lambda_2$ moves toward group-level interpretation of biomarkers. We first performed an ablation study by comparing settings (0-0) and (0.1-0). With MMD or BCE loss, mean accuracies increased by about 3% for TopK and by 1-2% for SAGE. To demonstrate the effectiveness of $L_{Dist}$, we show the distribution of node pooling scores of the two pooling layers over epochs in Fig. 3 for different combinations of pooling functions and distance losses, with $\lambda_1 = 0.1$ and $\lambda_2 = 0$. In the early epochs, the scores centered around 0.5. Then the scores of the top 50% important nodes moved toward 1 and the scores of unimportant nodes moved toward 0 (less obvious for the second pooling layer using SAGE, which may explain why SAGE achieved lower accuracies than TopK). Hence, significantly higher scores were attributed to the selected important nodes in the pooling layer. Then, we investigated the effect of $\lambda_2$ on the accuracy by varying it from 0 to 1, with $\lambda_1$ fixed at 0.1. Without $L_{GLC}$, the model overfit the training set more easily, while a larger weight on $L_{GLC}$ may result in underfitting. As the results in Table 1 show, the accuracy increased when $\lambda_2$ increased from 0 to 0.1 (except for TopK+MMD) and dropped when $\lambda_2$ was increased to 1. For the following baseline comparison experiments, we set $\lambda_1$-$\lambda_2$ to 0.1-0.1.

Table 1:

Model variations and hyperparameter (λ1-λ2) discussion.

| Loss | Pool | 0-0 | 0.1-0 | 0.1-0.1 | 0.1-0.5 | 0.1-1 |
|---|---|---|---|---|---|---|
| MMD | TopK | 0.753(0.042) | 0.784(0.062) | 0.781(0.038) | 0.780(0.059) | 0.744(0.060) |
| MMD | SAGE | 0.751(0.022) | 0.770(0.039) | 0.771(0.051) | 0.773(0.047) | 0.751(0.050) |
| BCE | TopK | 0.750(0.046) | 0.779(0.053) | 0.797(0.051) | 0.789(0.066) | 0.762(0.044) |
| BCE | SAGE | 0.755(0.041) | 0.767(0.033) | 0.773(0.047) | 0.764(0.050) | 0.755(0.041) |

Fig. 3:

Distributions of node pooling scores over epochs (offset from far to near).

4.4. Comparison with Existing Models

We compared our method with several brain connectome-based methods, including Random Forest (1000 trees), SVM (RBF kernel), and MLP (one hidden layer with 20 nodes), a state-of-the-art CNN-based method, BrainNetCNN [24], and a recent GNN method on fMRI [25], in terms of accuracy and number of parameters. For BrainNetCNN, we used the parameter settings indicated in the original paper [24]. The inputs and the architecture parameter settings (node conv, pooling and MLP layers) of the alternative GNN method were the same as for PR-GNN. The inputs of BrainNetCNN were Pearson correlation matrices. The inputs of the other alternative methods were the flattened upper triangles of the Pearson correlation matrices. Note that the inputs of the GNN models contained both Pearson and partial correlations. For a fair comparison with the non-GNN models, we used Pearson correlations (node features) as their inputs, because Pearson correlations were the embedded features, while partial correlations (edge weights) only served as message passing filters in the GNN models. The results are shown in Table 2. Our PR-GNN outperformed the alternative models. With regularization terms on the pooling function, PR-GNN achieved better accuracy than the recent GNN [25]. Also, PR-GNN needs only about 5% of the parameters of the MLP and less than 1% of the parameters of BrainNetCNN.
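For the non-graph baselines, flattening the upper triangle of a symmetric correlation matrix can be sketched as below. Excluding the diagonal is an assumption of this sketch, and the function name and random toy time series are hypothetical.

```python
import numpy as np

def flatten_upper_triangle(corr):
    """Return the strictly upper-triangular entries of a square matrix as a vector."""
    rows, cols = np.triu_indices(corr.shape[0], k=1)
    return corr[rows, cols]

rng = np.random.default_rng(0)
corr = np.corrcoef(rng.normal(size=(84, 100)))   # toy 84 x 84 Pearson matrix
features = flatten_upper_triangle(corr)          # 84 * 83 / 2 = 3486 values
```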

Table 2:

Comparison with different baseline models.

| Metric / Model | SVM | Random Forest | MLP | BrainNetCNN [24] | Li et al. [25] | PR-GNN* |
|---|---|---|---|---|---|---|
| Acc | 0.686(0.111) | 0.723(0.020) | 0.727(0.047) | 0.781(0.044) | 0.753(0.033) | 0.797(0.051) |
| #Par | 3k | 3k | 137k | 1438k | 16k | 6k |

Acc: Accuracy; #Par: The number of trainable parameters; PR-GNN*: TopK+BCE.

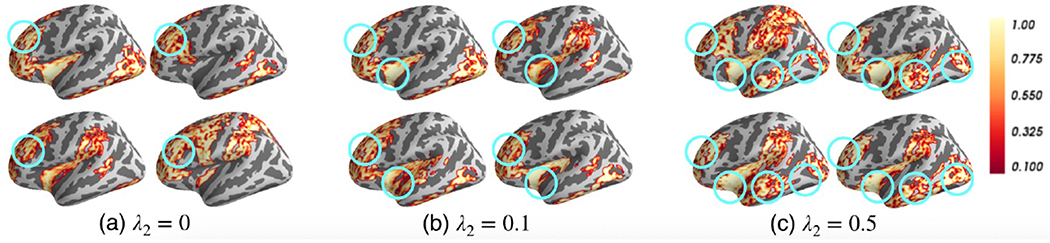

4.5. Biomarker Interpretation

Without loss of generality, we investigated the selected salient ROIs using the model TopK+BCE ($\lambda_1 = 0.1$) with different levels of interpretation by tuning $\lambda_2$. As discussed in Section 3.2, a large $\lambda_2$ leads to group-level interpretation and a small $\lambda_2$ leads to individual-level interpretation. We varied $\lambda_2$ from 0 to 0.5 and show the salient ROI detection results of four randomly selected ASD instances in Fig. 4. We show the 21 ROIs remaining after the 2nd pooling layer (with pooling ratio = 0.5, 25% of the nodes are left) and the corresponding node pooling scores. As shown in Fig. 4(a), when $\lambda_2 = 0$, we could rarely find any overlapping areas among the instances. In Fig. 4(b–c), we circled the large overlapping areas across the instances. By visually examining the salient ROIs, we found two overlapping areas in Fig. 4(b) and four overlapping areas in Fig. 4(c). By averaging the node importance scores (1st pooling layer) over all the instances, the dorsal striatum, thalamus and frontal gyrus were the most salient ROIs associated with identifying ASD. These ROIs are related to the neurological functions of social communication, perception and execution [26,27,28,29], which are clearly deficient in ASD.
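The group-level summary obtained by averaging node importance scores over instances can be sketched as follows. The score values and ROI names below are made up for illustration, and the function name is hypothetical.

```python
import numpy as np

def rank_rois_by_mean_score(score_matrix, roi_names, top=3):
    """Average scores over instances (rows) and return the highest-ranked ROIs."""
    mean_scores = score_matrix.mean(axis=0)
    order = np.argsort(-mean_scores)[:top]
    return [(roi_names[i], float(mean_scores[i])) for i in order]

# Two instances, three ROIs; the values are illustrative only.
score_matrix = np.array([[0.9, 0.1, 0.5],
                         [0.7, 0.3, 0.6]])
ranked = rank_rois_by_mean_score(score_matrix, ["roi_0", "roi_1", "roi_2"], top=2)
```

Because the first pooling layer scores every ROI for every instance, its scores can be averaged directly, whereas later layers only see the nodes that survived earlier pooling.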

Fig. 4:

Selected salient ROIs (importance score indicated by yellow-red color) of four randomly selected ASD individuals with different weights λ2 on GLC. The commonly detected salient ROIs across different individuals are circled in green.

5. Conclusion

In this paper, we propose PR-GNN, an interpretable graph neural network for fMRI analysis. PR-GNN takes graphs built from fMRI as inputs and outputs prediction results together with interpretation results. With its built-in interpretability, PR-GNN not only performs better on classification than alternative methods, but also detects the brain regions that are salient for classification. The novel loss term gives us the flexibility to use the same method for individual-level biomarker analysis (small $\lambda_2$) and group-level biomarker analysis (large $\lambda_2$). We believe that this is the first work using a single model in fMRI study that fills the critical interpretation gap between individual- and group-level analysis. Our interpretation results reveal the salient ROIs for distinguishing individuals with ASD from healthy controls. Our method has potential for understanding neurological disorders and ultimately benefiting neuroimaging research. We will extend and validate our methods on larger benchmark datasets in future work.

Acknowledgements

This research was supported in part by NIH grants [R01NS035193, R01MH100028].

References

- 1. Kaiser MD, Hudac CM, Shultz S, Lee SM, Cheung C, Berken AM, Deen B, Pitskel NB, Sugrue DR, Voos AC, et al., “Neural signatures of autism,” Proceedings of the National Academy of Sciences, vol. 107, no. 49, pp. 21223–21228, 2010.

- 2. Goldani AA, Downs SR, Widjaja F, Lawton B, and Hendren RL, “Biomarkers in autism,” Frontiers in psychiatry, vol. 5, p. 100, 2014.

- 3. Baker JT, Holmes AJ, Masters GA, Yeo BT, Krienen F, Buckner RL, and Öngür D, “Disruption of cortical association networks in schizophrenia and psychotic bipolar disorder,” JAMA psychiatry, vol. 71, no. 2, pp. 109–118, 2014.

- 4. McDade E, Wang G, Gordon BA, Hassenstab J, Benzinger TL, Buckles V, Fagan AM, Holtzman DM, Cairns NJ, Goate AM, et al., “Longitudinal cognitive and biomarker changes in dominantly inherited Alzheimer disease,” Neurology, vol. 91, no. 14, pp. e1295–e1306, 2018.

- 5. Worsley KJ, Liao CH, Aston J, Petre V, Duncan G, Morales F, and Evans A, “A general statistical analysis for fMRI data,” Neuroimage, vol. 15, no. 1, pp. 1–15, 2002.

- 6. Poldrack RA, Halchenko YO, and Hanson SJ, “Decoding the large-scale structure of brain function by classifying mental states across individuals,” Psychological science, vol. 20, no. 11, pp. 1364–1372, 2009.

- 7. Wang X, Liang X, Jiang Z, Nguchu BA, Zhou Y, Wang Y, Wang H, Li Y, Zhu Y, Wu F, et al., “Decoding and mapping task states of the human brain via deep learning,” Human Brain Mapping, 2019.

- 8. Hamilton W, Ying Z, and Leskovec J, “Inductive representation learning on large graphs,” in Advances in neural information processing systems, pp. 1024–1034, 2017.

- 9. Simonyan K and Zisserman A, “Very deep convolutional networks for large-scale image recognition,” arXiv preprint arXiv:1409.1556, 2014.

- 10. Long M, Zhu H, Wang J, and Jordan MI, “Unsupervised domain adaptation with residual transfer networks,” in Advances in neural information processing systems, pp. 136–144, 2016.

- 11. Defferrard M, Bresson X, and Vandergheynst P, “Convolutional neural networks on graphs with fast localized spectral filtering,” in Advances in neural information processing systems, pp. 3844–3852, 2016.

- 12. Dhillon IS, Guan Y, and Kulis B, “Weighted graph cuts without eigenvectors a multilevel approach,” IEEE transactions on pattern analysis and machine intelligence, vol. 29, no. 11, pp. 1944–1957, 2007.

- 13. Ying Z, You J, Morris C, Ren X, Hamilton W, and Leskovec J, “Hierarchical graph representation learning with differentiable pooling,” in Advances in neural information processing systems, pp. 4800–4810, 2018.

- 14. Gao H and Ji S, “Graph U-Nets,” arXiv preprint arXiv:1905.05178, 2019.

- 15. Lee J, Lee I, and Kang J, “Self-attention graph pooling,” arXiv preprint arXiv:1904.08082, 2019.

- 16. Veličković P et al., “Graph attention networks,” in ICLR, 2018.

- 17. Yang H, Li X, Wu Y, Li S, Lu S, Duncan JS, Gee JC, and Gu S, “Interpretable multimodality embedding of cerebral cortex using attention graph network for identifying bipolar disorder,” in International Conference on Medical Image Computing and Computer-Assisted Intervention, pp. 799–807, Springer, 2019.

- 18. Gong L and Cheng Q, “Exploiting edge features for graph neural networks,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 9211–9219, 2019.

- 19. Gretton A, Borgwardt KM, Rasch MJ, Schölkopf B, and Smola A, “A kernel two-sample test,” Journal of Machine Learning Research, vol. 13, no. Mar, pp. 723–773, 2012.

- 20. Li C-L, Chang W-C, Cheng Y, Yang Y, and Póczos B, “MMD GAN: Towards deeper understanding of moment matching network,” in Advances in Neural Information Processing Systems, pp. 2203–2213, 2017.

- 21. Kaiser MD et al., “Neural signatures of autism,” PNAS, 2010.

- 22. Yang D et al., “Brain responses to biological motion predict treatment outcome in young children with autism,” Translational psychiatry, vol. 6, no. 11, p. e948, 2016.

- 23. Desikan RS, Ségonne F, Fischl B, Quinn BT, Dickerson BC, Blacker D, Buckner RL, Dale AM, Maguire RP, Hyman BT, et al., “An automated labeling system for subdividing the human cerebral cortex on MRI scans into gyral based regions of interest,” Neuroimage, vol. 31, no. 3, pp. 968–980, 2006.

- 24. Kawahara J, Brown CJ, Miller SP, Booth BG, Chau V, Grunau RE, Zwicker JG, and Hamarneh G, “BrainNetCNN: Convolutional neural networks for brain networks; towards predicting neurodevelopment,” NeuroImage, vol. 146, pp. 1038–1049, 2017.

- 25. Li X, Dvornek NC, Zhou Y, Zhuang J, Ventola P, and Duncan JS, “Graph neural network for interpreting task-fMRI biomarkers,” in International Conference on Medical Image Computing and Computer-Assisted Intervention, pp. 485–493, Springer, 2019.

- 26. Schuetze M, Park MTM, Cho IY, MacMaster FP, Chakravarty MM, and Bray SL, “Morphological alterations in the thalamus, striatum, and pallidum in autism spectrum disorder,” Neuropsychopharmacology, vol. 41, no. 11, pp. 2627–2637, 2016.

- 27. Hardan AY, Girgis RR, Adams J, Gilbert AR, Keshavan MS, and Minshew NJ, “Abnormal brain size effect on the thalamus in autism,” Psychiatry Research: Neuroimaging, vol. 147, no. 2-3, pp. 145–151, 2006.

- 28. Bhanji JP and Delgado MR, “The social brain and reward: social information processing in the human striatum,” Wiley Interdisciplinary Reviews: Cognitive Science, vol. 5, no. 1, pp. 61–73, 2014.

- 29. Press C, Weiskopf N, and Kilner JM, “Dissociable roles of human inferior frontal gyrus during action execution and observation,” Neuroimage, vol. 60, no. 3, pp. 1671–1677, 2012.